Could a superintelligence deduce general relativity from a falling apple? An investigation

post by titotal (lombertini) · 2023-04-23T12:49:44.288Z · 39 comments


Introduction:

In the article/short story “That Alien Message”, Yudkowsky writes the following passage, as part of a general point about how powerful super-intelligences could be:

Riemann invented his geometries before Einstein had a use for them; the physics of our universe is not that complicated in an absolute sense. A Bayesian superintelligence, hooked up to a webcam, would invent General Relativity as a hypothesis—perhaps not the dominant hypothesis, compared to Newtonian mechanics, but still a hypothesis under direct consideration—by the time it had seen the third frame of a falling apple. It might guess it from the first frame, if it saw the statics of a bent blade of grass.

As a computational physicist, I found that this passage really stuck out. I think I can prove that it is wrong, or at least misleading. In this post I will cover a wide range of arguments for why I don't think it holds up.

Before continuing, I want to state my interpretation of the passage, which is what I’ll be arguing against.

1. Upon seeing three frames of a falling apple and with no other information, a superintelligence would assign a high probability to Newtonian mechanics, including Newtonian gravity. So if it were ranking potential laws of physics by likelihood, “Objects are attracted to each other by their masses in the form F = Gm₁m₂/r²” would be near the top of the list.

2. Upon seeing only one frame of a falling apple and one frame of a single blade of grass, a superintelligence would assign a decently high likelihood to the theory of general relativity as put forward by Einstein.

This is not the only interpretation of the passage. It could just be saying that an AI would “invent” general relativity in the sense of writing down the equations while idly playing around, like mathematicians playing with 57-dimensional geometries or whatever. However, the phrases “under direct consideration” and “dominant hypothesis” imply that these aren’t just inventions, they are deductions. A monkey on a typewriter could write down the equations for Newtonian gravity, but that doesn’t mean it deduced them. What matters is whether the knowledge gained from the frames could be used to achieve things.

I’m not interested in playing semantics or trying to read minds here. My interpretation above seems to be what the commenters took the passage to mean. I’m mainly using the passage as a starting point for discussion about the limits of first-principles computation.

Here is a frame of an apple, and a frame of a field of grass.

I encourage you to ponder these images in detail. Try to think for yourself about the most plausible method for a superintelligence to deduce general relativity from one apple image and one grass image.

I’m going to assume that the AI can somehow figure out the encoding of the images and actually “see” images like the ones above. This is by no means guaranteed; see the comments of this post for a debate on the matter.

What can be deduced:

It’s true that the laws of physics are not that complicated to write down. Doing the math properly would not be an issue for anything we would consider a “superintelligence”.

It’s also true that going from 0 images of the world to 1 image imparts a gigantic amount of information. Before seeing the image, the number of potential worlds it could be in is infinite and unconstrained, apart from cogito ergo sum. After seeing 1 image, all potential worlds that are incompatible with the image can be ruled out, and many more worlds can be deemed unlikely. This still leaves behind an infinite number of potential plausible worlds, but there are significantly more constraints on those worlds.

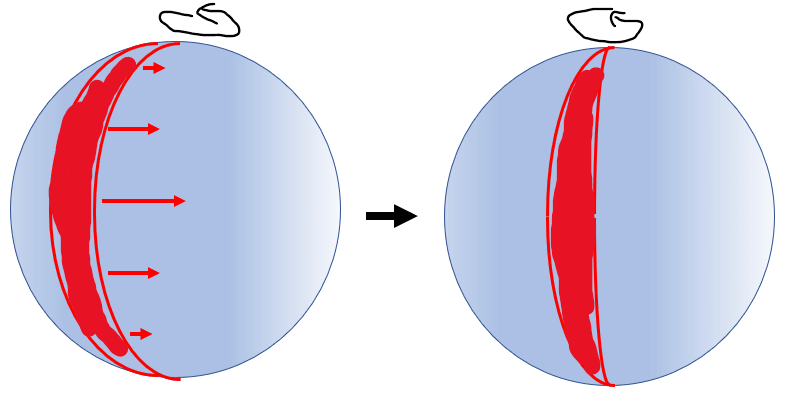

With 2 images, the constraints on worlds increase further, again chucking out large swathes of world-space. I could see how an AI might deduce that “objects” exist, and that they exist in three dimensions, from 2 images where the apple has slightly rotated. Below I have sketched a plausible path for this:

It can note that there are correlated patterns between the images that appear to be the same thing, corresponding to variations in the areas that are “red”. Of course, it doesn’t know what “red” is; it’s just looking at the “RGB” values of the pixels. It can see that the points towards the center of the object have moved more than those at the edges, in a steadily varying pattern. It can note that some patterns on one edge have “disappeared”, while on the other side, new patterns have emerged. It can also note that none of these effects have occurred outside the “red thing”. All of this is enough to guess that the red thing is a moving object in 3-dimensional space, projected onto a 2D image.
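The pattern of shifts described above is what a rotating sphere produces. This is a minimal sketch that assumes orthographic projection, a perfectly spherical apple, and a hypothetical 5° turn between frames. Points near the center of the disc move the most and points near the limb barely move. Points also cross the limb out of view on one side and into view on the other.

```python
import math

def projected_x(lat_deg, lon_deg, radius=1.0):
    """Horizontal image coordinate of a point on a sphere that rotates about
    the vertical axis, viewed under (assumed) orthographic projection."""
    lat, lon = math.radians(lat_deg), math.radians(lon_deg)
    return radius * math.cos(lat) * math.sin(lon)

def visible(lat_deg, lon_deg):
    """A surface point faces the camera when its depth component is positive."""
    lat, lon = math.radians(lat_deg), math.radians(lon_deg)
    return math.cos(lat) * math.cos(lon) > 0

TURN = 5.0  # hypothetical rotation between the two frames, degrees

# Points on the equator, from the center of the visible disc towards the limb.
shifts = {lon: projected_x(0, lon + TURN) - projected_x(0, lon) for lon in (0, 30, 60, 80)}
for lon, dx in shifts.items():
    print(f"longitude {lon:>2}: image shift {dx:.4f}")

# One point slips behind the limb while another rotates into view.
print(visible(0, 88), visible(0, 88 + TURN))    # True False
print(visible(0, -93), visible(0, -93 + TURN))  # False True
```

The shifts shrink smoothly from center to edge. This steadily varying pattern is what distinguishes a rotating 3D object from a flat picture sliding across the frame.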

Having 3 frames of the world would also produce a massive update, in that the AI could start looking at how the world evolves. It could deduce that objects can move over time, and that movement can be non-linear in time, paving the way for theories of acceleration.
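Three frames are the minimum needed to see acceleration. Two positions give only a velocity, while three give a second difference. This is a small sketch with synthetic data. Real measurements would be in pixels per frame², not meters, unless the AI somehow knew the scale. The value of g below is assumed purely to generate the fake frames.

```python
def second_difference_acceleration(y0, y1, y2, dt):
    """Estimate acceleration from three equally spaced position samples.

    (y0 - 2*y1 + y2) / dt**2 is the central second difference; it is exact
    when position is a quadratic in time (constant acceleration).
    """
    return (y0 - 2.0 * y1 + y2) / dt**2

g = 9.81          # assumed; used only to generate the synthetic frames
dt = 1.0 / 30.0   # hypothetical 30 frames per second

heights = [-0.5 * g * (k * dt) ** 2 for k in range(3)]  # dropped from rest
a = second_difference_acceleration(*heights, dt)
print(a)  # approximately -9.81

# Motion at constant velocity gives zero: the second frame alone can't reveal acceleration.
print(second_difference_acceleration(0.0, 0.1, 0.2, dt))  # 0.0
```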

If you just think about these initial massive updates, you might get the impression that a super-AI would quickly know pretty much anything. But you should always beware of extrapolating from small amounts of early data. In fact, this discovery process is subject to diminishing returns, and they diminish fast. And at every point, there are still an infinite number of possible worlds to sift through.

With the next couple of frames, the AI could get a higher and higher probability that the apple is falling at a constant acceleration. But once that’s been deduced, each extra frame starts giving less and less new information. What does frame 21 tell you that you haven’t already deduced from the first 20? Frame 2001 from the first 2000?

Talking about the theoretical upper limits of Solomonoff induction obscures how correlated, and therefore redundant, information is in practice. Taking a picture with a 400 megapixel camera will not give you much more information than a Game Boy camera if the picture is taken in a pitch-black room with the lens cap on. More raw information does not help if it isn’t useful.
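One crude way to see this is the Shannon entropy of an image's pixel-value histogram. It is an upper bound on what each pixel can convey, because it ignores spatial correlations that only lower the real figure. For a lens-cap frame it is zero at any resolution. The frame sizes below are arbitrary choices for illustration.

```python
import math
from collections import Counter

def histogram_entropy_bits(pixels):
    """Shannon entropy of the pixel-value histogram, in bits per pixel.

    Ignores spatial structure, so it overstates the information in real images.
    """
    n = len(pixels)
    return sum((c / n) * math.log2(n / c) for c in Counter(pixels).values())

small_dark = [0] * (128 * 112)     # a small frame, lens cap on
large_dark = [0] * (1280 * 1120)   # 100x as many pixels, lens cap still on
two_tone = [0, 255] * 5000         # for contrast: two equally common values

for name, img in [("small", small_dark), ("large", large_dark), ("two-tone", two_tone)]:
    bits = histogram_entropy_bits(img)
    print(f"{name:>8}: {bits:.2f} bits/pixel, {bits * len(img):.0f} bits total")
```

Multiplying the pixel count by 100 multiplies zero by 100.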

Are falling apples evidence against Newtonian gravity?

Newtonian gravity states that objects are attracted to each other in proportion to the product of their masses. A webcam video of two apples falling will show two objects of slightly differing masses accelerating at exactly the same rate in the same direction, and not towards each other. When you don’t know about the earth or the mechanics of the solar system, this observation counts as evidence against Newtonian gravity.

It is impossible to detect the gravitational force that apples exert on each other with a webcam. Take two apples that are 0.1 kg each, half a meter apart. Under Newtonian physics, the gravitational force between them would be about 2.7e-12 newtons. In complete isolation, that would give each apple an acceleration of about 2.7e-11 m/s². At that rate, each apple would move about 1.3e-11 meters in the first second, roughly a quarter of the radius of a hydrogen atom. If you left them 0.5 meters apart in a complete vacuum with only gravitational force acting, it would take a little over a day for them to collide. If you only watched them for 10 seconds, each would have travelled about 1.3 nanometers, roughly a dozen hydrogen-atom diameters.
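The numbers above can be checked in a few lines. This sketch treats the apples as point masses in a vacuum with nothing but their mutual gravity acting. It uses the standard free-fall time for two bodies released from rest.

```python
import math

G = 6.674e-11   # gravitational constant, m^3 kg^-1 s^-2
m = 0.1         # mass of each apple, kg
r0 = 0.5        # initial center-to-center separation, m

force = G * m * m / r0**2            # Newtonian attraction, N
accel = force / m                    # acceleration of each apple, m/s^2
drift_10s = 0.5 * accel * 10.0**2    # distance each apple moves in 10 s, m

# Radial infall of two point masses from rest, total mass M = 2m:
# t = (pi / 2) * sqrt(r0**3 / (2 * G * M)). Real apples touch slightly sooner.
t_collide = (math.pi / 2) * math.sqrt(r0**3 / (2 * G * 2 * m))

print(f"force       {force:.2e} N")
print(f"accel       {accel:.2e} m/s^2")
print(f"10 s drift  {drift_10s:.2e} m")
print(f"collision   {t_collide / 86400:.2f} days")
```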

In reality, there are far more forces acting on the apples. The pressure of a gentle breeze is roughly 5 N/m² (pascals), which works out to about 0.02 N on an apple. Minuscule fluctuations in wind speed will have a far greater effect on the apples than their gravitational pull on each other.
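A rough sketch of the comparison follows. It assumes a 3 m/s breeze, sea-level air density, an apple of 4 cm radius, and force ≈ dynamic pressure × cross-section with no drag coefficient. All of these are illustrative values, not measurements.

```python
import math

RHO_AIR = 1.2        # kg/m^3, air density near sea level (assumed)
WIND = 3.0           # m/s, a gentle breeze (assumed)
APPLE_RADIUS = 0.04  # m (assumed)

pressure = 0.5 * RHO_AIR * WIND**2               # dynamic pressure, Pa
area = math.pi * APPLE_RADIUS**2                 # cross-sectional area, m^2
wind_force = pressure * area                     # ignores the drag coefficient
gravity_between_apples = 6.674e-11 * 0.1 * 0.1 / 0.5**2   # N, as in the paragraph above

print(f"dynamic pressure {pressure:.1f} Pa")
print(f"wind force       {wind_force:.3f} N")
print(f"ratio            {wind_force / gravity_between_apples:.1e}")
```

The breeze wins by about ten orders of magnitude.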

You might try to find a way, with a gazillion videos of falling apples, to generate enough statistical power to detect the minuscule bias in motion induced by gravity over the noise of random wind. Except that wind direction is far from perfectly random. It’s entirely plausible that putting two apples together will alter the flow of air so as to ever so slightly “pull” them together. This makes distinguishing the “pull” of gravity from the “pull” of air literally impossible, especially when you consider that the AI does not know what “wind” is.
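Even in the best case, the statistics are hopeless. This sketch uses the textbook sample-size formula for a two-sided z-test. It assumes the wind noise is unbiased with a standard deviation of 0.02 N, which is exactly the assumption correlated airflow would break.

```python
from statistics import NormalDist

def samples_needed(effect, noise_sd, alpha=0.05, power=0.8):
    """Approximate number of independent trials needed to detect a mean shift
    of size `effect` in zero-mean noise with standard deviation `noise_sd`.

    Assumes unbiased noise. Any systematic air-flow bias b would shift the
    measured mean to effect + b, and no sample size can separate the two.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    return ((z_alpha + z_power) * noise_sd / effect) ** 2

n = samples_needed(effect=2.7e-12, noise_sd=0.02)
print(f"{n:.1e} trials")
```

The answer is on the order of 10^20 falling apples, before accounting for any bias in the wind.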

The point of this is that in order to make scientific deductions, you need to be able to isolate variables. You can measure the gravitational force between objects without looking at astronomical data, as Cavendish did more than two centuries ago in the experiment used to calculate G. But that experiment required equipment built specifically for that purpose. This included massive 160 kg lead balls, a torsion balance apparatus, and an enclosure around the entire thing to keep out air currents and outside vibration. The twisting force it had to measure was about 1.7e-7 newtons, roughly a fifty-millionth of the weight of the small balls. This type of experiment cannot be conducted by accident.
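For scale, here is the same Newtonian formula applied to rough Cavendish-style numbers. The masses and the separation are approximate values assumed for illustration.

```python
G = 6.674e-11      # gravitational constant, m^3 kg^-1 s^-2
g = 9.81           # surface gravity, m/s^2
M_LARGE = 158.0    # kg, one large lead ball (approximate)
M_SMALL = 0.73     # kg, one small lead ball (approximate)
R = 0.23           # m, center-to-center distance (approximate)

force = G * M_LARGE * M_SMALL / R**2     # attraction on one small ball, N
ratio = force / (M_SMALL * g)            # as a fraction of that ball's weight
print(f"attraction {force:.1e} N, about 1/{1 / ratio:,.0f} of a small ball's weight")
```

A torsion balance is needed because a force this small, relative to the ball's own weight, cannot be seen any other way.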

Alternate theories:

Ockham's razor will tell you that the most likely explanation for not detecting a force between two objects is that no such force exists.

Sure, the webcam has “detected gravity”, in that it sees objects accelerate downwards at the same rate. But it has not detected that this acceleration is caused by masses attracting each other. This acceleration could in fact be caused by anything.

For example, the acceleration of the apples could be caused by the camera being on a spaceship that is accelerating at a constant rate. Or perhaps the ground just exerts a constant force on all objects. Perhaps there is an unseen property like charge that determines the attraction of objects to the ground. Or perhaps Newton's other laws don’t apply either, and objects just naturally accelerate unless stopped by external forces.

The AI could figure out that the explanation “masses attract each other” is possible, of course. But it requires postulating an unseen offscreen object. That object would be 25 orders of magnitude more massive than anything the AI can see, with a center of mass roughly 6 or 7 orders of magnitude farther away than anything in its field of view. Ockham's razor is not kind to such a theory, compared to the other theories I proposed above, which require no implausibly large offscreen masses.
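The webcam can at best pin down one number, the downward acceleration g. Under Newtonian gravity that fixes only the ratio M/d², so every (mass, distance) pair with the same ratio fits equally well. This sketch assumes, generously, that the AI somehow knows the pixel scale and measures g = 9.81 m/s².

```python
G = 6.674e-11
g_observed = 9.81  # m/s^2, assuming the AI somehow knows the pixel scale

def required_mass(distance):
    """Mass a single unseen body at `distance` (m) would need to produce g_observed."""
    return g_observed * distance**2 / G

for d in (1.0, 1e3, 6.371e6):
    print(f"center {d:9.3g} m away -> mass {required_mass(d):.2e} kg")
```

Only the last row is the Earth, about 6e24 kg, some 25 orders of magnitude heavier than an apple. Nothing in the footage favors it over the others.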

Even among theories where masses do attract each other, there are a lot of alternate theories to consider. Why should gravitational force be proportional to 1/r², and not to some other function of distance? Or maybe there's a different, unseen property in the equation, like charge? To check this, you need to see how the gravitational force changes with distance, and the webcam does not have the precision to do this.
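To see how small the distance dependence is over a falling apple, suppose the AI somehow knew it sat 6,371 km from the attracting body's center, which it does not. This sketch compares 1/r, 1/r² and 1/r³ (example alternatives chosen for illustration) over a 1 m drop.

```python
R = 6.371e6  # assumed distance from the attracting body's center to the top of the drop, m
H = 1.0      # height of the drop, m

def fractional_change(n):
    """Relative increase in acceleration from the top to the bottom of the
    drop if the force falls off as 1/r**n."""
    return (R / (R - H)) ** n - 1.0

for n in (1, 2, 3):
    print(f"1/r^{n}: acceleration changes by {fractional_change(n):.1e} over the drop")
```

Telling these laws apart means resolving changes of a few parts in ten million in the acceleration.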

Newtonian vs general relativity:

I have kept my discussion to Newtonian physics so far for ease of discussion. I think it goes without saying that if you can't derive Newtonian gravity, deriving general relativity is a pipe dream.

The key evidence in favor of general relativity was that the perihelion precession of Mercury was off from the Newtonian prediction by about 43 arcseconds (roughly a hundredth of a degree) per century; Le Verrier's original 1859 estimate was 38. This was detected with precise telescope observations spanning centuries.
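The general-relativistic prediction for Mercury follows from the standard weak-field formula Δφ = 6πGM/(c²a(1−e²)) per orbit. This is a sketch with textbook orbital elements, rounded.

```python
import math

GM_SUN = 1.32712e20     # m^3/s^2
C = 2.99792458e8        # speed of light, m/s
A = 5.7909e10           # Mercury's semi-major axis, m
E = 0.2056              # Mercury's orbital eccentricity
PERIOD_DAYS = 87.969    # Mercury's orbital period, days

dphi = 6 * math.pi * GM_SUN / (C**2 * A * (1 - E**2))   # perihelion advance per orbit, rad
orbits_per_century = 36525 / PERIOD_DAYS
arcsec = math.degrees(dphi * orbits_per_century) * 3600
print(f"{arcsec:.1f} arcseconds per century")
```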

The other classic test was the prediction that light grazing the sun is deflected by 1.75 arcseconds (about half a thousandth of a degree). This was confirmed using photographs taken during the 1919 solar eclipse.
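The 1.75 arcsecond figure comes from the weak-field deflection 4GM/(c²R) for a ray just grazing the solar limb. Treating light as Newtonian particles gives half of that, which is why the eclipse measurement could discriminate between the two. This is a quick sketch with rounded solar constants.

```python
import math

GM_SUN = 1.32712e20   # m^3/s^2
C = 2.99792458e8      # speed of light, m/s
R_SUN = 6.957e8       # solar radius, m (impact parameter for a grazing ray)

deflection_gr = 4 * GM_SUN / (C**2 * R_SUN)    # radians
arcsec_gr = math.degrees(deflection_gr) * 3600
arcsec_newtonian = arcsec_gr / 2               # Newtonian "light as particles" estimate

print(f"GR: {arcsec_gr:.2f} arcsec, Newtonian-style: {arcsec_newtonian:.2f} arcsec")
```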

Unless there happened to be astronomical data in the background of the falling apple, none of this is within reach of the super-intelligence.

All of the reasoning above about why you can’t detect the force between two apples applies even more strongly to detecting the difference between the Newtonian and general-relativistic versions of gravity. There is absolutely no reason to consider general relativity at all when simpler versions of physics explain every observation you have ever encountered (which in this case is 2 frames).

Blades of grass and unknown variables:

Looking at the statics of several blades of grass, there are some vague things you could figure out. You could, say, make a simple model of the grass as a sheet of uniform density and width, with a “sticking force” between blades. You might then notice that a Monte Carlo simulation over different initial conditions produces shapes similar to the grass, when you assume the presence of a downwards force.

However, the AI will quickly run into problems. It doesn’t know the source of the sticking force, the density of the grass, or its mass. For similar reasons to the apples above, the negligible gravitational attraction between blades is impossible to detect, because it is drowned out by the unknown variables governing the grass. The “sticking force” that keeps grass upright is governed by internal biology such as “vacuoles”, whose internal structure is also undetectable by webcams.
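Here is a toy illustration of the unknown-variable problem. It models a blade as a small-deflection Euler–Bernoulli cantilever under a uniform load, a deliberate simplification since real grass bends far more. The static shape then depends only on the ratio of load per unit length (density × cross-section × g) to bending stiffness EI. A heavy, stiff blade and a light, floppy blade with the same ratio look identical. The numbers are hypothetical.

```python
def deflection(x, length, load_per_length, bending_stiffness):
    """Uniformly loaded cantilever (small deflections):
    w(x) = q x^2 (6L^2 - 4Lx + x^2) / (24 EI)."""
    q, L, EI = load_per_length, length, bending_stiffness
    return q * x**2 * (6 * L**2 - 4 * L * x + x**2) / (24 * EI)

L = 0.1                                  # blade length, m (hypothetical)
xs = [L * k / 10 for k in range(11)]     # sample points along the blade

heavy_stiff = [deflection(x, L, 2e-3, 4e-5) for x in xs]    # q/EI = 50
light_floppy = [deflection(x, L, 5e-4, 1e-5) for x in xs]   # q/EI = 50

print(f"tip deflection {heavy_stiff[-1]:.2e} m")
print(max(abs(a - b) for a, b in zip(heavy_stiff, light_floppy)))
```

A picture of the blade's shape can at most constrain q/EI. It cannot separate mass from stiffness.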

More exotic suggestions run into this unknown-variable problem as well. In the comments, people suggested that detecting chromatic aberration would help somehow. Well, it’s possible the AI could notice that the red, green, and blue channels behave differently, and model this as differing bending of “light”. But then what? Even if you could somehow “undo” the RGB filter of the camera to deduce the spectrum of light, so what? The blackbody spectrum is a function of temperature, and the AI doesn’t know what temperature is. It is just as ill-equipped to deduce quantum physics as it is to deduce relativity.
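Why "undoing" the RGB filter is so hard: a camera reduces an entire spectrum to three weighted sums. Any change to the spectrum that is orthogonal to the three filter curves is therefore invisible, an effect known as metamerism. In this sketch the Gaussian filter shapes, the 5800 K blackbody source and the 10 nm sampling are all hypothetical choices.

```python
import math

H, C, KB = 6.62607015e-34, 2.99792458e8, 1.380649e-23   # SI constants

def planck(wavelength, temperature):
    """Blackbody spectral radiance (Planck's law), W sr^-1 m^-3."""
    return (2 * H * C**2 / wavelength**5) / math.expm1(H * C / (wavelength * KB * temperature))

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

wavelengths = [400e-9 + 10e-9 * k for k in range(31)]     # 400-700 nm
spectrum = [planck(w, 5800.0) for w in wavelengths]        # hypothetical source

# Hypothetical Gaussian response curves standing in for the R, G, B filters.
filters = [[math.exp(-(((w - centre) / 40e-9) ** 2)) for w in wavelengths]
           for centre in (600e-9, 540e-9, 460e-9)]

def rgb(s):
    return [dot(f, s) for f in filters]

# Orthonormal basis for the 3-D subspace the camera can "see" (Gram-Schmidt).
basis = []
for f in filters:
    v = list(f)
    for b in basis:
        proj = dot(v, b)
        v = [vi - proj * bi for vi, bi in zip(v, b)]
    norm = math.sqrt(dot(v, v))
    basis.append([vi / norm for vi in v])

# Take an arbitrary wiggle and strip out every component the filters respond to.
wiggle = [math.sin(0.9 * k) for k in range(len(wavelengths))]
for b in basis:
    proj = dot(wiggle, b)
    wiggle = [wi - proj * bi for wi, bi in zip(wiggle, b)]

# Scale the wiggle so the new spectrum stays positive, then add it.
eps = 0.5 * min(spectrum) / max(abs(wi) for wi in wiggle)
other = [s + eps * wi for s, wi in zip(spectrum, wiggle)]

print(rgb(spectrum))
print(rgb(other))  # the same three numbers, up to rounding
print(max(abs(a - b) for a, b in zip(spectrum, other)) / min(spectrum))  # about 0.5
```

Two spectra that differ by up to half the minimum radiance produce the same pixel.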

The laws of physics were painstakingly deduced by isolating one variable at a time, and combining all of human knowledge via specialized experiments over hundreds of years. An AI could definitely do it way, way faster than this with the same information. But it can’t do it without the actual experiments.

Simulation theories:

Remember those pictures from earlier? Well, I confess, I pulled a little trick on the reader here. I know for a fact that it is impossible to derive general relativity from those two pictures, because neither of them is real. The apple is from this CGI video, and the grass is from this Blender project. In neither case is Newtonian gravity or general relativity included in the relevant code.

The world the AI is in is quite likely to be a mere simulation, potentially a highly advanced one. Any laws of physics that are possible in a simulation are possible physics that the AI has to consider. If you can imagine programming it into a physics engine, it’s a viable option.

And if you are in a simulation, Ockham's razor becomes significantly stronger. If your simulation is on the scale of a falling apple, programming general relativity into it is a ridiculous waste of time and energy. The simulators will only input the simplest laws of physics necessary for their purposes.
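Here is how little the choice matters for an apple-sized scene. This sketch integrates a 1 m drop twice with velocity Verlet, once with the constant "F = mg" a game engine would hard-code and once with Newtonian inverse-square gravity from an Earth-sized body. The drop height, timestep and duration are assumed for illustration.

```python
GM_EARTH = 3.986004e14                # m^3/s^2
R_EARTH = 6.371e6                     # m
G_SURFACE = GM_EARTH / R_EARTH**2     # the constant "g" a simple engine would use

def drop(accel, height=1.0, dt=1e-4, t_end=0.45):
    """Velocity-Verlet integration of a drop from rest; returns the final height.

    `accel(z)` gives the downward acceleration at height z above the surface.
    """
    z, v = height, 0.0
    a = accel(z)
    for _ in range(round(t_end / dt)):
        z += v * dt - 0.5 * a * dt**2
        a_new = accel(z)
        v -= 0.5 * (a + a_new) * dt
        a = a_new
    return z

z_constant = drop(lambda z: G_SURFACE)                      # F = mg
z_newton = drop(lambda z: GM_EARTH / (R_EARTH + z) ** 2)     # F = GMm/r^2
print(f"difference after the drop: {z_newton - z_constant:.1e} m")
```

The two trajectories end a fraction of a micrometer apart. That is thousands of times smaller than a webcam pixel covering a meter-wide scene, so a simulator gains nothing visible from the more elaborate law.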

This means that you can’t rely on arguments like “It might turn out apples can’t exist without general relativity somehow”. I’m skeptical of this, of course, but it doesn’t matter. Simulations of apples definitely can exist with any properties you want. Powerful AIs can exist in any sufficiently complex simulation. Theoretically you could make one in Minecraft.

Conclusion:

As a computational physicist with a PhD, I realize it may seem like overkill to write a 3000-word analysis of one paragraph from a 15-year-old sci-fi short story on a pop-science blog. I did it anyway, mainly because it was fun, but also because it helps sketch out some of the limitations of pure intelligence.

Intelligence is not magic. To make deductions, you need observations, and those observations need to be fit for purpose. Variables and unknowns need to be isolated or removed, and the thing you are trying to observe needs to be on the same scale as your measuring equipment. Specialized equipment often has to be custom-built for what is being tested. Each of these is a fundamental limitation that no amount of intelligence can overcome.

Of course, there is no way a real AGI would be anywhere near as constrained as the hypothetical one in this post, and I doubt hearing about the limitations of a 2-frame AI is that reassuring to anyone. But the principles I covered here still apply. There will be deductions that even the greatest intelligence cannot make, things it cannot do, without the right observations and equipment.

In a future post, I will explore in greater detail the contemporary limits of deduction, and their implications for AI safety.

39 comments


comment by CarlShulman · 2023-04-23T20:46:17.973Z

A world of pure Newtonian mechanics wouldn't actually support apples and grass as we know them existing, I think. They depend on matter capable of supporting organic chemistry, nuclear reactions, the speed of light, ordered causality, etc. Working out that sort of thing in simulation to get an Occam prior over coherent laws of physics producing life does seem to be plenty to favor QM+GR over Newtonian mechanics as physical laws.

I agree the possibility or probability of an AI finding itself in simulations without such direct access to 'basement level' physical reality limits the conclusions that could be drawn, although conclusions 'conditional on this being direct access' may be what's in mind in the original post.

↑ comment by titotal (lombertini) · 2023-04-24T12:05:03.693Z

In the post, I show you both a grass and an apple that did not require Newtonian gravity or general relativity to exist. Why exactly are nuclear reactions and organic chemistry necessary for a clump of red things to stick together, or a clump of green things to stick together?

When it comes to the "level of simulation", how exactly is the AI meant to know when it is in the "base level"? We don't know that about our universe. For all the computer knows, its simulation is the universe.

↑ comment by qvalq (qv^!q) · 2023-04-26T08:29:34.354Z

The simulations you made are much more complicated than physics. I think almost any simulation would have to be, if it showed an apple with any reasonable amount of computing power (if there's room for an "unreasonable" amount, there's probably room for a lot of apples).

comment by Steven Byrnes (steve2152) · 2023-04-23T18:06:31.245Z

I think it goes without saying that if you can't derive Newtonian gravity, deriving general relativity is a pipe dream.

The big deciding factor defending general relativity is that the precession of mercury … [and] observation that light that grazes the sun will be deflected by 1.75 arcseconds (about a thousandth of a degree).

This part seems off to me.

- F = Gm₁m₂/r² is a prediction of Newtonian gravity, AND

- F ≈ Gm₁m₂/r² is a prediction of GR.

In this section of the OP I believe we’re granting for the sake of argument that the superintelligence has observed that (probably) F ≈ Gm₁m₂/r². That observation is equally compatible with Newtonian gravity & GR. It doesn’t provide any evidence for Newtonian gravity over GR, and it likewise doesn’t provide any evidence for GR over Newtonian gravity. You seem to be assuming that Newtonian gravity is the “default”, and GR is not, and you need observational evidence (e.g. perihelion precession) to overcome that default ranking. But that assumption isn’t based on anything. I think that was Eliezer’s point.

From the perspective of a superintelligence, it seems perfectly plausible to me that GR is simpler / more "natural" than Newtonian gravity, and therefore the “default” inference upon observing F ≈ Gm₁m₂/r² would be GR. Or at least that they’re comparably simple, and therefore both should be kept as viable options pending further observations that distinguish them.

We humans have some intuitive notion that Newtonian gravity is much much simpler than GR, but I think that’s an artifact of our intuitions, pedagogy, non-superintelligent grasp of math, etc. I’m not sure Newtonian gravity beats GR in “number of bits to specify it from first principles”, for example.

Also, I’m not sure if the superintelligence will have hypothesized QFT by this point, but if it has, then GR would be a far more natural hypothesis than Newtonian gravity. (QFT basically requires that weak gravity looks like GR—just assume a spin-2 particle and do a perturbative expansion, right?)

↑ comment by titotal (lombertini) · 2023-04-24T11:53:55.891Z

I find it very hard to believe that gen rel is a simpler explanation of “F = GmM/r²” than Newtonian physics is. This is a bolder claim than Yudkowsky put forward; you can see from the passage that he thinks Newton would win out on this front. I would be genuinely interested if you could find evidence in favour of this claim.

A Newtonian gravity just requires way, way fewer symbols to write out than the Einstein field equations. It’s way easier to compute and does not require assumptions like that spacetime curves.

If you were building a simulation of a falling apple in a room, would you rather implement general relativity or Newtonian physics? Which do you think would require fewer lines of code? Of course, what I’d do is just implement neither: just put in F=mg and call it a day. It’s literally indistinguishable from the other two and gets the job done faster and easier.

↑ comment by Steven Byrnes (steve2152) · 2023-04-24T13:40:03.381Z

This is a bolder claim than Yudkowsky put forward; you can see from the passage that he thinks Newton would win out on this front.

I was trying to say “maybe it’s simpler, or maybe it’s comparably simple, I dunno, I haven’t thought about it very hard”. I think that’s what Yudkowsky was claiming as well. I believe that Yudkowsky would also endorse the stronger claim that GR is simpler—he talks about that in Einstein’s Arrogance. (It’s fine and normal for someone to make a weaker claim when they also happen to believe a stronger claim.)

If you were building a simulation of a falling apple in a room, would you rather implement general relativity or Newtonian physics?

I think you’re mixing up speed prior with simplicity (Solomonoff) prior. The question “what’s the best way to simulate X” involves speed-prior. The question “how does X actually work in the real world” involves simplicity prior.

For example, if I were building a simulation of a falling apple in a room, I would discretize position (by necessity—I don’t have infinite bits! The unit might be DBL_MIN, for example.). But would that imply “I actually believe that position in the real world is almost definitely discrete, not continuous”? No, obviously not, right? (I might believe that position is discrete for other unrelated reasons, but not “because it makes simulation possible rather than impossible”.)

Which do you think would require fewer lines of code?

“A differential equation that has a unique solution” is one thing, and “Code that numerically solves this differential equation” is a different thing. Lines of code is about the latter, whereas “How simple / elegant / natural are the laws of physics” is about the former.

I claim that this matches how fundamental physicists actually do fundamental physics. For example, people consider QCD to be a very elegant theory, because it is very simple and elegant when written as a differential equation. If you want to write code to numerically solve that differential equation, it’s not only much more complicated, it’s even worse than that: it’s currently unknown to science in the general case. (“Lattice QCD” works in many cases but not always.)

Likewise, writing down the differential equation of GR is much simpler than writing code that numerically solves it. In practice, if I were a superintelligence and I believed GR, I would find it perfectly obvious that Newton’s laws are a good way to simulate GR in the context of objects on earth—just as it’s perfectly obvious to you and me that using floats [which implicitly involves discretizing position] is a good way to simulate a situation where position is actually continuous.

A Newtonian gravity just requires way, way fewer symbols to write out than the Einstein field equations.

I thought the Einstein field equations were G_μν = 8πG T_μν. That doesn’t seem like so many symbols, right?

Remember, we’re supposed to imagine that the superintelligence has spent many lifetimes playing around with the math of Riemannian manifolds (just like every other area of math), such that all of their “natural” constructions and properties would be as second-nature to the superintelligence as Euclidean geometry is to us.

I wonder if you’re implicitly stacking the deck by assuming that flat Euclidean space is self-evident and doesn’t need to be specified with symbols, whereas Riemannian manifolds are not self-evident and you need to spend a bunch of symbols specifying what they are and how they work.

↑ comment by titotal (lombertini) · 2023-04-25T11:38:27.951Z

I appreciate the effort of this writeup! I think it helps clarify a bit of my thoughts on the subject.

I was trying to say “maybe it’s simpler, or maybe it’s comparably simple, I dunno, I haven’t thought about it very hard”. I think that’s what Yudkowsky was claiming as well. I believe that Yudkowsky would also endorse the stronger claim that GR is simpler—he talks about that in Einstein’s Arrogance. (It’s fine and normal for someone to make a weaker claim when they also happen to believe a stronger claim.)

So, on thinking about it again, I think it is defensible that GR could be called "simpler", if you know everything that Einstein did about the laws of physics and experimental evidence at the time. I recall that general relativity is a natural extension of the spacetime curvature introduced with special relativity, which comes mostly from Maxwell's equations and the experimental indications that the speed of light is constant.

It's certainly the "simplest explanation that explains the most available data", following one definition of Ockham's razor. Einstein was right to deduce that it was correct!

The difference here is that a 3-frame super-AI would not have access to all the laws of physics available to Einstein. It would have access to 3 pictures, consistent with an infinite number of possible laws of physics. Absent the need to unify things like Maxwell's equations and special relativity, I do find it hard to believe that the field equations would win out on simplicity. (The simplified form you posted gets ugly fast when you try to actually expand out the terms.) For example, the Lorentz transformation is strictly more complicated than the Galilean transformation.
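To make that comparison concrete: the Lorentz boost needs an extra constant, the speed of light, and mixes position into time. At everyday speeds, though, it agrees with the Galilean boost to within floating-point rounding. This is a small sketch. The event coordinates and the 10 m/s relative velocity are arbitrary choices.

```python
import math

C = 2.99792458e8  # speed of light, m/s

def galilean(x, t, v):
    """Galilean boost: one parameter (v); time is untouched."""
    return x - v * t, t

def lorentz(x, t, v):
    """Lorentz boost: needs the extra constant C and the factor gamma."""
    gamma = 1.0 / math.sqrt(1.0 - (v / C) ** 2)
    return gamma * (x - v * t), gamma * (t - v * x / C**2)

# Hypothetical event at x = 1 m, t = 1 s, seen from a frame moving at 10 m/s.
xg, tg = galilean(1.0, 1.0, 10.0)
xl, tl = lorentz(1.0, 1.0, 10.0)
print(xl - xg, tl - tg)  # both far below anything a camera could measure
```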

↑ comment by Steven Byrnes (steve2152) · 2023-04-25T14:08:02.822Z

Yeah, I’m deliberately not defending the three-frame claim. Maybe that claim is an overstatement, or maybe not, I don’t really care, it doesn’t seem relevant for anything I care about, so I don’t want to spend my time thinking about it. ¯\_(ツ)_/¯

“Eliezer has sometimes made statements that are much stronger than necessary for his larger point, and those statements turn out to be false upon close examination” is something I already generically believe, e.g. see here.

Nitpick: special relativity says the universe is a flat (“pseudo-Euclidean”) Lorentzian manifold—no curvature. Then GR says “OK but what if there is nonzero curvature?”. I agree with your suggestion that GR is much more “natural” in a situation where you already happen to know that there’s strong evidence for SR, than in a situation where you don’t. Sorry if I previously said anything that contradicted that.

comment by Thomas Kwa (thomas-kwa) · 2023-04-23T22:18:32.524Z

Some thoughts:

- I don't think you should give a large penalty to inverse square compared to other functions. It's pretty natural once you understand that reality has three dimensions.

- The conclusion seems pretty reasonable, assuming that the alternate hypotheses are simpler. This is not obvious to me-- Eliezer claims the K complexity of the laws of physics is only ~500 bits. I'm not sure whether Newtonian physics is simpler than relativity once you include the information about electromagnetism contained in the apple.

- If you have the apple's spectrum, the problem seems much easier. Once you've located the hypothesis that the droplets are water and the apple contains water (I think not out of reach since water is such a simple molecule), you can probably confirm it.

I think astronomy and astrophysics might give intuitions for what superintelligences can do with limited data. We can do parallax, detect exoplanets through slight periodic dimming of stars or Doppler effect, estimate stellar composition through spectroscopy, guess at the climate and weather patterns of exoplanets using Hadley cells. But we don't yet know the locations of particular islands on exoplanets (chaotic) or aesthetic tastes of aliens (extremely complicated to simulate, maybe also chaotic). The hypotheses are easy for humans to locate compared to physics, since we have better intuitions about configurations of objects than equations, and I do expect the superintelligence to have really good intuitions for equations.

↑ comment by titotal (lombertini) · 2023-04-24T07:29:03.677Z

I don't think you should give a large penalty to inverse square compared to other functions. It's pretty natural once you understand that reality has three dimensions.

This is a fair point. 1/r² would definitely be in the "worth considering" category. However, where is the evidence that the gravitational force is varying with distance at all? This is certainly impossible to observe in three frames.

the information about electromagnetism contained in the apple

if you have the apple's spectrum

What information? What spectrum? The color information received by the webcam is the total intensity of light when passed through a red filter, the total intensity when passed through a blue filter, and the total intensity when passed through a green filter, at each point. You do not know the frequency of these filters (or that frequency of light is even a thing). I'm sure you could deduce something by playing around with relative intensities and chromatic aberration, but ultimately you cannot build a spectrum with three points.

I think astronomy and astrophysics might give intuitions for what superintelligences can do with limited data. We can do parallax, detect exoplanets through slight periodic dimming of stars or Doppler effect, estimate stellar composition through spectroscopy, guess at the climate and weather patterns of exoplanets using Hadley cells.

It depends on what you mean by limited data. All of these observations rely on the extensive body of knowledge and extensive experimentation we have done on earth to figure out the laws of physics that is shared between earth and these outer worlds.

↑ comment by Thomas Kwa (thomas-kwa) · 2023-04-24T22:15:31.820Z

What information? What spectrum? The color information received by the webcam is the total intensity of light when passed through a red filter, the total intensity when passed through a blue filter, and the total intensity when passed through a green filter, at each point. You do not know the frequency of these filters (or that frequency of light is even a thing). I'm sure you could deduce something by playing around with relative intensities and chromatic aberration, but ultimately you cannot build a spectrum with three points.

I don't think we disagree here. Getting a spectrum from an RGB image seems tough and so the problem of deriving physics from an RGB image alone seems substantially harder than if you're provided an RGB image + spectrograph.

↑ comment by titotal (lombertini) · 2023-04-25T01:43:12.398Z

Indeed! Deriving physics requires a number of different experiments, each specialized to the discovery of a particular component. I could see how a spectrograph plus an analysis of the bending of light could get you a guess that light is quantised via the ultraviolet catastrophe, although I'm doubtful this is the only way to get the equation describing the black-body curve. I think you'd need more information, like the energy transitions of atoms or Maxwell's equations, to get all the way to quantum mechanics proper, though. I don't think this would get you to gravity either, as quantum physics and general relativity are famously incompatible on a fundamental level.

comment by hairyfigment · 2023-04-23T20:34:20.299Z

What are you trying to argue for? I'm getting stuck on the oversimplified interpretation you give for the quote. In the real world, smart people such as Leibniz raised objections to Newton's mechanics at the time, objections which sound vaguely Einsteinian and not dependent on lots of data. The "principle of sufficient reason" is about internal properties of the theory, similar to Einstein's argument for each theory of relativity. (Leibniz's argument could also be given a more Bayesian formulation, saying that if absolute position in space is meaningful, then a full description of the 'initial state' of the universe needs to contain additional complexity which has zero predictive value in order to specify that location.) Einstein, in the real world, expressed confidence in general relativity prior to experimental confirmation. What Eliezer is talking about seems different in degree, but not in kind.

comment by Algon · 2023-04-23T20:12:14.749Z

You are using simulated images of an apple/grass. Most images of an apple falling, and most mental images people have of a falling apple, include loads of background detail.

You seem to be treating the superintelligence like a smart human doing a physics modelling problem, which is better than most people's approach to an AGI. I think that's the wrong picture. Instead, use something like AIXI to guess at its behaviour. Assume it has disgusting amounts of compute to do a very good approximation of Solomonoff induction. Assume the simplicity of hypotheses is expressed as, IDK, Python code. Think of how many bits you need to specify GR or a QFT or so on. Less than a KB, I think. Images can give you a couple of megabytes, which would be overkill for AIXI. It would plausibly be enough for an ASI to figure out a decent model of what reality it is in.
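A rough way to put numbers on this comparison, as a sketch only: use the zlib-compressed length of a small program as a crude upper bound on a hypothesis's description length (true Kolmogorov complexity is uncomputable), and compare it with the raw bit count of one uncompressed RGB frame. The hypothetical `NEWTON_SRC` program and the 1920x1080, 8-bit frame size are illustrative assumptions, not anything from the thread, and the estimate ignores the bridge rules needed to connect a law to pixels.

```python
import zlib

# Hypothetical encoding of a Newtonian-gravity hypothesis as a short program.
# Only its length matters here; it is never executed.
NEWTON_SRC = b'''
def accelerations(pos, mass, G=6.674e-11):
    """Accelerations of point masses under pairwise inverse-square attraction."""
    acc = []
    for i, (xi, yi, zi) in enumerate(pos):
        ax = ay = az = 0.0
        for j, (xj, yj, zj) in enumerate(pos):
            if i == j:
                continue
            dx, dy, dz = xj - xi, yj - yi, zj - zi
            r3 = (dx * dx + dy * dy + dz * dz) ** 1.5
            ax += G * mass[j] * dx / r3
            ay += G * mass[j] * dy / r3
            az += G * mass[j] * dz / r3
        acc.append((ax, ay, az))
    return acc
'''


def description_bits(src: bytes) -> int:
    """Crude upper bound on description length: size of the zlib-compressed source, in bits."""
    return 8 * len(zlib.compress(src, 9))


def raw_frame_bits(width: int, height: int, channels: int = 3, bits_per_channel: int = 8) -> int:
    """Bits in one uncompressed frame, before any compression by the camera."""
    return width * height * channels * bits_per_channel


law_bits = description_bits(NEWTON_SRC)
frame_bits = raw_frame_bits(1920, 1080)
print(f"hypothesis: ~{law_bits} bits, so log2 prior weight ~ -{law_bits}")
print(f"one 1080p frame: {frame_bits} bits ({frame_bits / 8 / 1e6:.1f} MB)")
```

The comparison says nothing about how many of the frame's bits actually bear on the laws of physics rather than on noise or scene details; that question comes up in the replies.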

This framing, that it will squeeze every bit of info it can to infer what world it is in, seems more productive. E.g. its input has a 2D structure, with loads of local correlations, letting it infer that if it has designers, they probably experience reality in 3D space. Then it can infer time pretty quickly from successive images. From shadows, it can infer a light source; from how much the apple moves, it can make guesses about the relative sizes of things, including its input device.

Edit: 3) An ASI would have a LOT more active hypotheses under consideration than humans can have. We might have, like, 3 or 4 theories under consideration when performing inference. As for a dominant hypothesis, an interpretation in which "dominant hypothesis" means P(hypothesis) > 0.5 is plausible to me. But also plausible, and making the claim more likely to be true, is that the hypothesis has greater probability than all the others. That can still be a pretty small probability.
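The two readings of "dominant hypothesis" can be separated with a toy posterior. The probabilities below are invented purely for illustration: under the plurality reading a hypothesis can be dominant while holding well under half of the probability mass.

```python
def dominant_hypothesis(posterior: dict[str, float]) -> tuple[str, bool, bool]:
    """Return (top hypothesis, whether it is a strict plurality winner, whether it is a majority winner)."""
    top = max(posterior, key=posterior.get)
    is_plurality = all(p < posterior[top] for h, p in posterior.items() if h != top)
    is_majority = posterior[top] > 0.5
    return top, is_plurality, is_majority


# Illustrative posterior over five hypotheses; the values are made up.
toy_posterior = {"H1": 0.22, "H2": 0.18, "H3": 0.15, "H4": 0.15, "H5": 0.30}
print(dominant_hypothesis(toy_posterior))
```

With many hypotheses in play, as an ASI would have, the plurality reading is much easier to satisfy than the majority one.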

Edit 2: I said AIXI, but I probably wouldn't use an approximation to AIXI for an ASI. For one, it has weird Cartesian boundaries. Or maybe description-length priors are not the most useful prior to approximate. But what I was trying to point at was that this thing will be much closer to the limits of intelligence than we are, and it is very hard to say what it can't do except by using theoretical limits. See how likely such idealized reasoners would be to consider our physics given a data source, and use that to determine whether an ASI would have our physics as a hypothesis under consideration.

↑ comment by Quintin Pope (quintin-pope) · 2023-04-23T20:54:55.025Z

The description complexity of hypotheses AIXI considers is dominated by the bridge rules which translate from 'physical laws of universes' to 'what am I actually seeing?'. To conclude Newtonian gravity, AIXI must not only infer the law of gravity, but also that there is a camera, that it's taking a photo, that this is happening on an Earth-sized planet, that this planet has apples, etc. These beliefs are much more complex than the laws of physics.

One issue with AIXI is that it applies a uniform complexity penalty to both physical laws and bridge rules. As a result, I'd guess that AIXI on frames of a falling apple would put most of its probability mass on hypotheses with more complex laws than Newtonian gravity, but simpler bridge rules.
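This point can be sketched with a two-part code: each hypothesis pays for its physical law and its bridge rules out of the same bit budget, and its prior weight is 2^-(law bits + bridge bits). The bit counts below are invented placeholders; only the comparison matters.

```python
def normalized_prior(hypotheses: dict[str, tuple[int, int]]) -> dict[str, float]:
    """Prior proportional to 2**-(law_bits + bridge_bits), normalized over the listed hypotheses."""
    totals = {name: law + bridge for name, (law, bridge) in hypotheses.items()}
    best = min(totals.values())
    # Shift by the shortest total before exponentiating so the winner gets weight 1.0 (avoids underflow).
    weights = {name: 2.0 ** -(t - best) for name, t in totals.items()}
    z = sum(weights.values())
    return {name: w / z for name, w in weights.items()}


# (law_bits, bridge_bits): invented placeholder values that only illustrate the trade-off.
toy = {
    "simple law, detailed bridge rules": (200, 1200),
    "more complex law, thin bridge rules": (500, 400),
}
prior = normalized_prior(toy)
for name, p in prior.items():
    print(f"{p:.3g}  {name}")
```

Because the penalty is uniform, only the total length matters: moving bits from the bridge rules into the law leaves a hypothesis's prior unchanged.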

↑ comment by Algon · 2023-04-23T21:16:09.420Z

That is a good point. But bridging laws probably aren't that complex, at least not for inferring the basic laws of physics. How many things on the order of Newtonian physics do you need? A hundred? A thousand? That could plausibly fit into a few megabytes. So it seems plausible that you could have GR + QFT and a megabyte of bridging laws plus some other data to specify local conditions and so on.

And if you disagree with that, then how much data do you think AIXI would need? Let's say you're talking about a video of an apple falling in a forest with the sky and ground visible. How much data would you need, then? 1GB? 1TB? 1 PB? I think 1GB is also plausible, and I'd be confused if you said 1TB.

↑ comment by red75prime · 2023-04-24T07:45:23.781Z

it seems plausible that you could have GR + QFT and a megabyte of bridging laws plus some other data to specify local conditions and so on.

How can a computationally bounded variant of AIXI arrive at QFT? You most likely can't faithfully simulate a non-trivial quantum system on a classical computer within reasonable time limits. AIXI is bound to find some computationally feasible approximation of QFT first (Maxwell's equations and a cutoff at some arbitrary energy to prevent the ultraviolet catastrophe, maybe). And with no access to experiments it cannot test simpler systems.
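A back-of-the-envelope version of this point: a brute-force classical simulation that stores the full state vector of n two-level quantum systems needs 2^n complex amplitudes. The sketch assumes 16 bytes per amplitude (two 64-bit floats). Cleverer methods such as tensor networks can do far better on special cases, which is closer to the approximation route described here than to faithful simulation.

```python
BYTES_PER_AMPLITUDE = 16  # one complex number stored as two 64-bit floats


def statevector_bytes(n_qubits: int) -> int:
    """Memory for a dense state vector of n two-level systems: 2**n amplitudes."""
    return BYTES_PER_AMPLITUDE * 2 ** n_qubits


for n in (10, 30, 50, 100):
    print(f"{n:>3} qubits: {statevector_bytes(n):.3e} bytes")
```

Each additional two-level system doubles the memory, so "disgusting amounts of compute" still runs out quickly on this route.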

↑ comment by Algon · 2023-04-24T09:41:16.500Z

A simple strategy when modeling reality is to make effective models which describe what is going on and then try to reduce those models to something simpler. So you might view the AI as making some effective models and asking "which simple theory + some bridging laws are equivalent to this effective model?" And then just going over a vast number of such theories/bridging laws and figuring out which is equivalent. It would probably use a lot of heuristics, sure. But QFT (or rather, whatever effective theory we eventually find which is simpler than QFT and GR together) is pretty simple. So going forwards from simple theories and seeing how they bridge to your effective model would probably do the trick.

And remember, we're talking about an ASI here. It would likely have an extremely large amount of compute. There are approaches that we can't use today which would become practical with several OoM more compute worldwide. You can think for a long time, perform big experiments, go through loads of hypotheses, etc. And you don't need to simulate systems to do all of this. It could go: "Huh, this fundamental theory has a symmetry group. Simple symmetries pop up a bunch in my effective models of the video. Plausibly, symmetry has an important role in the character of physical law? I wonder what I can derive from looking at symmetry groups."

Anyway, I think some of my cruxes are: 1) How complex are our fundamental theories and bridging laws really? 2) How much incompressible data, in bits, is there in a couple of frames of a falling apple? 3) Is it physically possible to run something like infra-Bayesianism over poly-time hypotheses, with clever heuristics, and use it to do the things I've described in this thread?

↑ comment by red75prime · 2023-04-24T12:58:35.021Z

Thanks for clearing my confusion. I've grown rusty on the topic of AIXI.

So going forwards from simple theories and seeing how they bridge to your effective model would probably do the trick

Assuming that there's not much fine-tuning to do. Locating our world in the string theory landscape could take quite a few bits if it's computationally feasible at all.

And remember, we're talking about an ASI here

It hinges on the assumption that ASI of this type is physically realizable. I can't find it now, but I remember that the preprocessing step, where heuristic generation happens, for one variant of computable AIXI was found to take an impractical amount of time. Am I wrong? Are there newer developments?

↑ comment by Algon · 2023-04-24T14:19:54.805Z

It hinges on the assumption that ASI of this type is physically realizable.

TL;DR I think I'm approaching this conversation in a different way to you. I'm trying to point out an approach to analyzing ASI rather than doing the actual analysis, which would take a lot more effort and require me to grapple with this question.

Thanks for clearing my confusion. I've grown rusty on the topic of AIXI.

So have I. It is probable that you know more than I do about AIXI right now.

Assuming that there's not much fine-tuning to do. Locating our world in the string theory landscape could take quite a few bits if it's computationally feasible at all.

I don't know how simple string theory actually is, and the bridging laws seem like they'd be even more complex than QFT+GR so I kind of didn't consider it. But yeah, AIXI would.

I can't find it now, but I remember that the preprocessing step, where heuristic generation happens, for one variant of computable AIXI was found to take an impractical amount of time.

So I am unsure if AIXI is the right thing to be approximating. And I'm also unsure if AIXI is a fruitful thing to be approximating. But approximating a thing like AIXI, and other mathematical or physical limits to rationality, seems like the right approach to analyzing an ASI. At least, for estimating the things it can't do. If I had far more time and energy, I would estimate how much data a perfect reasoner would need to figure out the laws of the universe by collecting all of our major theories and estimating their Kolmogorov complexity, their Levin complexity, etc. Then I'd try to make guesses as to how much incompressible data there is in e.g. a video of a falling apple. Maybe I'd look at whether that data has any bearing on the bridging laws we think exist. After that, I'd look at various approximations of ideal reasoners, whether they're physically feasible, how various assumptions like e.g. P=NP might affect things, and so on.
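One cheap and very partial proxy for "how much incompressible data is in a video" is how well a general-purpose compressor shrinks it. The sketch below compares a synthetic smooth frame with pure noise; both frames are made up, and a compressed size is only an upper bound on the true information content. It also says nothing about whether the bits that remain are about physics or just sensor noise.

```python
import random
import zlib

W, H = 256, 256


def compression_ratio(data: bytes) -> float:
    """Compressed size / raw size using zlib at maximum level; an upper-bound proxy only."""
    return len(zlib.compress(data, 9)) / len(data)


# Synthetic smooth "frame": a diagonal gradient, highly regular.
smooth = bytes((x + y) % 256 for y in range(H) for x in range(W))

# Synthetic noise "frame": independent random bytes, seeded for reproducibility.
rng = random.Random(0)
noise = bytes(rng.getrandbits(8) for _ in range(W * H))

print(f"smooth gradient: {compression_ratio(smooth):.3f}")
print(f"random noise:    {compression_ratio(noise):.3f}")
```

A real webcam frame sits somewhere between these extremes, and its least compressible part is often sensor noise, which carries little information about the laws being sought.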

That's what I think the right approach to examining what an ASI can do in this particular case looks like, as compared to what the OP did, which I think is misguided. I've been trying to point at that approach in this thread, rather than actually do it, because that would take too much effort to be worth it. I'd have to go over the literature on computationally feasible AIXI variants and all sorts of other stuff.

↑ comment by red75prime · 2023-04-23T21:08:39.137Z

Assume it has disgusting amounts of compute

Isn't it the same as "assume that it can do argmax as fast as needed for this scenario"?

↑ comment by Algon · 2023-04-23T21:17:42.535Z

Could you clarify? I think you mean that it is feasible for the ASI to perform the Bayesian inference it needs, which yeah, sure. EDIT: I mean the least costly approximation of Bayesian inference it needs to figure this stuff out.

↑ comment by red75prime · 2023-04-23T21:54:55.209Z

I mean, are there reasons to assume that a computable variant of AIXI can be realized as a physically feasible device? I can't find papers indicating significant progress in making feasible AIXI approximations.

comment by Tao Lin (tao-lin) · 2023-04-23T20:00:05.836Z

I think the fact that some process produced the image and showed it to you is a lot of evidence. Your theories need to be compatible with something intelligent deciding to produce the image and show it to you. Therefore you could in principle (although I think unlikely) arrive at GR from a render of a simulated apple, by considering universes that support intelligence where said intelligence would make an image of an apple.

comment by qvalq (qv^!q) · 2023-04-23T15:02:47.138Z

Anthropics seem very important here; most laws of physics probably don't form people, especially people who make cameras, then AGI, and then give it only a few images which don't look very optimized, or like they're of a much-optimized world.

A limit on speed can be deduced; if intelligence enough to make AGI is possible, probably coordination's already taken over the universe and made it to something's liking, unless it's slow for some reason. The AI has probably been designed quite inefficiently; not what you'd expect from intelligent design.

I could see how an AI might deduce that “objects” exist, and that they exist in three dimensions, from 2 images where the apple has slightly rotated.

I'm pretty sure this one's deducible from one image; the apple has lots of shadows and refraction. The indentations have lighting the other way.

It could find that the light source is very far above and a few degrees in width, and therefore very large, along with some lesser light from the upper 180°. The apple is falling; universal laws are very common; the sun is falling. The water on the apple shows refraction; this explains the sky (this probably all takes place in a fluid; air resistance, wind).

The apple is falling, and the grass seems affected by gravity too; why isn't the grass falling the same way? It is.

The grass is pointing up, but all level with other grass; probably the upper part of the ground is affected by gravity, so it flattens.

The camera is aligned almost exactly with the direction the apple is falling.

In three frames, maybe it could see grass bounce off each other? It could at least see elasticity. I don't know much of the laws of motion it could find from this, but probably not none. Angular movement of the apple also seems important.

Light is very fast; laws of motion; light is very light (not massive) because it goes fast but doesn't visibly move things.

A superintelligence could probably get farther than this; very large, bright, far up object is probably evidence of attraction as well.

Simulation doesn't make this much harder; the models for apple and grass came from somewhere. Occam's Razor is weakened, not strengthened, because simulators have strong computational constraints, probably not many orders of magnitude beyond what the AI was given to think with.

Thank you.

↑ comment by tangerine · 2023-04-23T19:07:10.745Z

You are assuming a superintelligence that knows how to perform all these deductions. Why would this be a valid assumption? You are reasoning from your own point of view, i.e., the point of view of someone who has already seen much, much more of the world than a few frames, and more importantly someone who already knows what the thing is that is supposed to be deduced, which allows you to artificially reduce the hypothesis space. On what basis would this superintelligence be able to do this?

↑ comment by qvalq (qv^!q) · 2023-04-24T11:55:05.477Z

You might need a very strong superintelligence, or one with a lot of time. But I think the correct hypothesis has extremely high evidence compared to others, and isn't that complicated. If it has enough thought to locate the hypothesis, it has enough to find it's better than almost any other.

Newtonian Mechanics or something a bit closer would rise very near the top of the list. It's possible even the most likely possibilities wouldn't be given much probability, but it would at least be somewhat modal. [Is there a continuous analogue for the mode? I don't know what softmax is.]
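On the bracketed question: for a discrete set of hypotheses, softmax is simply a way to turn arbitrary real-valued scores (for example, negative description lengths or log-likelihoods) into probabilities that sum to 1, and the mode is the highest-probability hypothesis. For a continuous distribution, the usual analogue of the mode is the point where the probability density peaks. The scores below are invented; the point is that the modal hypothesis can still hold well under half the mass.

```python
import math


def softmax(scores: list[float], temperature: float = 1.0) -> list[float]:
    """Convert real-valued scores into probabilities proportional to exp(score / temperature)."""
    m = max(scores)  # subtracting the max avoids overflow and leaves the result unchanged
    exps = [math.exp((s - m) / temperature) for s in scores]
    z = sum(exps)
    return [e / z for e in exps]


scores = [2.0, 1.8, 1.7, 1.5, 1.5, 1.4]  # illustrative scores for six hypotheses
probs = softmax(scores)
mode = max(range(len(probs)), key=probs.__getitem__)
print([round(p, 3) for p in probs], "mode:", mode)
```

Lowering `temperature` concentrates more of the mass on the mode; raising it flattens the distribution.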

Thank you for the question. I understand better, now.

comment by dr_s · 2023-04-23T19:02:34.387Z

Strong agree, I think EY overstates a bit not the capabilities of an ASI, but the capabilities of pure intellect in general - I don't think you really can just get infinite leverage out of arbitrarily tiny amounts of information if only you are smart enough. It would require that information to be uniquely determined (which it obviously isn't; trivially, the law of the universe could be a horribly hackneyed "these pixels appear in this sequence", just a lookup table of PNGs), or at least that the "correct" explanation is invariably the highest entropy one (which rules out lookup tables, sure, but I'd say Newtonian gravity is higher entropy than General Relativity, all other things equal).

Though:

Why should gravitational force be proportional to mM/r^2

I assume here the point could be "because it's a 3D world and an inverse-square force keeps flux constant". That is the highest-entropy answer, but again, as you pointed out, the ASI could just be fed frames from a simulation in which gravity follows some weird ad hoc law. Or snapshots from a cellular automaton that just happens to produce patterns that evolve precisely like a picture of a falling apple.
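The flux argument can be checked numerically. For a radial field of magnitude k/r^p in three dimensions, the total flux through a sphere of radius r is 4*pi*r^2 * k/r^p, which is independent of r only when p = 2. A minimal sketch, with k = 1 as an arbitrary choice:

```python
import math


def flux_through_sphere(r: float, power: float, k: float = 1.0) -> float:
    """Flux of a radial field of magnitude k / r**power through a sphere of radius r (3D)."""
    surface_area = 4 * math.pi * r ** 2
    return surface_area * k / r ** power


for p in (2, 3):
    print(p, [round(flux_through_sphere(r, p), 4) for r in (1.0, 10.0, 100.0)])
```

The same argument in d spatial dimensions picks out a 1/r^(d-1) law, which is why the conclusion depends on already knowing that space is 3D.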

I think an ASI would need some strong priors about what "kinds" of worlds it should look for to even reach the conclusion of 3D objects and Newtonian gravity (the latter I would say would be preferred by simple symmetry arguments if you know that space has three dimensions and prefer laws not to be special at any point in it; though there is still the possibility that you're just watching a video from inside an accelerating spaceship). General relativity: I can't really see any way to reach it from just that, yeah. I suppose you could get there by deduction if you had Newtonian mechanics and electromagnetism, though, so it's not that far.

comment by StirlingN · 2023-04-24T13:54:24.806Z

Yes, a superintelligent AI could deduce quantum theory in the same manner that humans did: via the ultraviolet catastrophe. The blades of grass will be wrong, and the AI would know this because any blade of grass would look wrong at the edges, thus disproving the Newtonian hypothesis. It just needs to see in the bee spectrum, which extends into the UV, rather than the human spectrum.
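For readers who want the ultraviolet catastrophe itself spelled out: the classical Rayleigh-Jeans law predicts spectral radiance growing as nu^2 without bound, while Planck's law agrees with it at low frequency and is exponentially suppressed at high frequency. This sketch only illustrates that contrast, using the exact SI values of the constants; it does not test the specific claim about how grass edges would look.

```python
import math

H_PLANCK = 6.62607015e-34  # Planck constant, J s
C = 299_792_458.0          # speed of light, m/s
K_B = 1.380649e-23         # Boltzmann constant, J/K


def planck(nu: float, temp: float) -> float:
    """Planck spectral radiance B_nu(T), in W sr^-1 m^-2 Hz^-1."""
    return 2 * H_PLANCK * nu ** 3 / C ** 2 / math.expm1(H_PLANCK * nu / (K_B * temp))


def rayleigh_jeans(nu: float, temp: float) -> float:
    """Classical Rayleigh-Jeans spectral radiance, which grows as nu**2 without bound."""
    return 2 * nu ** 2 * K_B * temp / C ** 2


T = 5800.0  # roughly the Sun's effective temperature, K
for nu in (1e9, 1e13, 1e14, 1e15, 3e15):
    print(f"nu={nu:.0e} Hz  Planck/RJ = {planck(nu, T) / rayleigh_jeans(nu, T):.3e}")
```

The ratio is essentially 1 at radio frequencies and collapses in the ultraviolet, which is the discrepancy that historically pointed to quantization.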

We just take it for granted, but this was a hot topic at the turn of the 20th century. How bright the AI would have to be is a different story, but it is conceivable. You just need to stop thinking like a member of Homo sapiens.

I make no claims as to his overall point but the fundamental principle of discovering QM is, in some sense, reasonable with a sufficiently gifted AI.

comment by faul_sname · 2023-04-23T23:55:40.933Z

I encourage you to ponder these images in detail. Try and think for yourself the most plausible method for a superintelligence to deduce general relativity from one apple image and one grass image.

Stopping here to add some thoughts, since this is actually a topic that has come up before here, in a pretty similar form: "here is a large blob of binary data that is not in any standard format; can you tell me what it represents?"

A lot of this stuff is not actually original thought on my part, but instead corresponds to the process I used to figure out what was happening with that binary data blob the last time this came up. Spoiler warning if you want to try your own hand at the challenge from that post: the following links contain spoilers about the challenge.

- Link 1: note that the image labeled "option 2", and the associated description, should have been the very last thing, but Imgur is doing some dumb reordering thing. Aside from that everything is in the correct order.

- Link 2: likewise is rendering in a slightly janky order.

The most plausible method to me would involve noticing that the RGB channels seem to be measuring three different "types" of "the same thing" in some sense -- I would be very unsurprised if "there are three channels, the first one seems to have a peak activation at 1.35x the [something] of the third, and the second one seems to have a peak activation at 1.14x the [something] of the third" is a natural conclusion from looking at that picture.

From there I actually don't have super strong intuitions about whether "build a 3-d model with a fairly accurate estimation of the shape of the frequency x intensity curve that results in the sensor readings at each pixel" is viable. If it is not viable, I think it mostly just ends there.

If it is viable, I think the next step depends on the nature of the light source.

If the light source is a black-body source (e.g. an incandescent bulb or the sun), I think the black-body spectrum is simple enough that it becomes an obvious hypothesis. In that case, the next question is whether the camera is good enough to detect things like the absorption spectrum of Hydrogen or Tungsten (for the sun or an incandescent bulb respectively), and, if so, whether the intelligence comes up with that hypothesis.
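If that branch holds, here is a minimal sketch of why three channel readings can still pin down a one-parameter hypothesis even though they cannot reconstruct a spectrum. It assumes an ideal black-body source and assumes the camera's channels are narrowband filters at known wavelengths; the 450/550/650 nm values are hypothetical, and knowing them at all is exactly what the post argues the AI would lack. The blue/red ratio of Planck radiances increases monotonically with temperature, so bisection recovers T, and the green channel is left over as a consistency check.

```python
import math

H_PLANCK = 6.62607015e-34  # J s
C = 299_792_458.0          # m/s
K_B = 1.380649e-23         # J/K

# Hypothetical narrowband channel wavelengths (m); a real webcam's response curves are broad and unknown.
LAMBDA = {"R": 650e-9, "G": 550e-9, "B": 450e-9}


def planck_lambda(lam: float, temp: float) -> float:
    """Black-body spectral radiance per unit wavelength, B_lambda(T)."""
    return 2 * H_PLANCK * C ** 2 / lam ** 5 / math.expm1(H_PLANCK * C / (lam * K_B * temp))


def fit_temperature(rgb: dict[str, float], lo: float = 1000.0, hi: float = 100_000.0) -> float:
    """Bisect for the T whose B/R radiance ratio matches the observed B/R ratio (the ratio rises with T)."""
    target = rgb["B"] / rgb["R"]
    for _ in range(100):
        mid = 0.5 * (lo + hi)
        model = planck_lambda(LAMBDA["B"], mid) / planck_lambda(LAMBDA["R"], mid)
        if model < target:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


# Synthetic observation: a 5800 K black body through the idealized channels, with an arbitrary overall scale.
scale = 3.7e-14
observed = {ch: scale * planck_lambda(lam, 5800.0) for ch, lam in LAMBDA.items()}

T_fit = fit_temperature(observed)
# Consistency check: the fitted T should also predict the observed green/red ratio.
pred_g_over_r = planck_lambda(LAMBDA["G"], T_fit) / planck_lambda(LAMBDA["R"], T_fit)
print(f"fitted T = {T_fit:.1f} K, G/R observed {observed['G'] / observed['R']:.4f}, predicted {pred_g_over_r:.4f}")
```

With broad, unknown filter curves and a non-ideal source, this fit would not be identifiable from the pixels alone.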

If the light source is a fluorescent light, there's probably some physics stuff you can figure out from that but I don't actually know enough physics to have any good hypotheses about what that physics stuff is.

The water droplets on the apple also make me expect that there may be some diffraction, refraction, or other interesting optical phenomena going on. But the water droplets don't cover that many pixels, so there may just flat out not be enough information there.

The "blades of grass" image might tell you interesting stuff about how light works, but I expect the "apple with drops of water" image to be a lot more useful.

Anyway, end braindump, time to read the rest of the post.

↑ comment by faul_sname · 2023-04-24T00:04:10.481Z

Response on reading the rest of the post: "lol".

Remember those pictures from earlier? Well I confess, I pulled a little trick on the reader here. I know for a fact that it is impossible to derive general relativity from those two pictures, because neither of them are real. The apple is from this CGI video, the grass is from this blender project. In neither case are Newtonian gravity or general relativity included in the relevant codes.

And if you are in a simulation, Ockham's razor becomes significantly stronger. If your simulation is on the scale of a falling apple, programming general relativity into it is a ridiculous waste of time and energy. The simulators will only input the simplest laws of physics necessary for their purposes.

I do agree that the AI would probably not deduce GR from these images, since I don't think there will be anything like absorption spectra or diffraction patterns accidentally encoded into this image, as there might be in a real image.

I don't think "That Alien Message" meant to say anything like "an alien superintelligence could deduce information that was not encoded in any form in the message it received". I think it mainly just meant to say "an entity with a bunch more available compute than a human, and the ability to use that compute efficiently, could extract a lot more information out of a message, if it spent a lot of time on analysis, than a human glancing at that image would extract".

↑ comment by dr_s · 2023-04-24T12:30:45.851Z

I do agree that the AI would probably not deduce GR from these images, since I don't think there will be anything like absorption spectra or diffraction patterns accidentally encoded into this image, as there might be in a real image.

Real images are taken by real cameras, and usually it takes a whole lot more than a commercial smartphone or even a good DSLR to capture those things. They're not optimized for it, you won't just notice any spectral lines or diffraction patterns over the noise (diffraction patterns I guess you could if you set up a specific situation for them. Not accidentally, though).

comment by johnlawrenceaspden · 2023-07-04T11:00:55.507Z

This is great. Strong upvote!

Are you claiming that a physically plausible superintelligence couldn't infer the physical laws from a video, or that AIXI couldn't?

Those seem to be different claims and I wonder which of the two you're aiming at?

comment by RomanS · 2023-04-25T15:59:25.783Z

For fun, I've asked GPT-4 to pretend to be a Bayesian superintelligence observing an apple through a webcam.

A few things I've learned from its answer:

- it's a mistake to view the apple as an abstract spherical object. Instead, one can extract much more data by observing its complex shape and its movement relative to its center of mass

- even more data can be extracted by observing the way light interacts with the apple's surface. One can observe a lot of interesting stuff: soft shadows, iridescence, subsurface scattering, etc.

In comparison, the falling itself provides only a minuscule fraction of the useful data.
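To make the "minuscule fraction" point concrete: three frames of a fall give three positions along the fall direction, which fix exactly one position, one velocity and one acceleration, with nothing left over to check that the acceleration is constant. A minimal sketch using the second finite difference; the 30 fps frame rate and the metric trajectory are assumptions, since a raw webcam only gives pixel positions, and without a known scale the result would be in pixels per frame squared.

```python
def accel_from_three_frames(y0: float, y1: float, y2: float, dt: float) -> float:
    """Second finite difference: exact for constant acceleration, but unverifiable with only 3 samples."""
    return (y0 - 2 * y1 + y2) / dt ** 2


dt = 1 / 30    # assumed frame interval (30 fps)
g_true = 9.81  # used only to synthesize the fall
ys = [0.5 * g_true * (k * dt) ** 2 for k in range(3)]  # positions in metres, measured downward
print(accel_from_three_frames(*ys, dt))
```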

I suspect that the most realistic discovery path from the apple data is as follows:

Observations of light effects --> Maxwell's equations --> Lorentz invariance etc. --> relativity.
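The "Lorentz invariance" step in that chain can be illustrated directly: a Lorentz boost leaves the spacetime interval (ct)^2 - x^2 unchanged, while the Galilean transformation of Newtonian mechanics does not. A minimal 1+1-dimensional sketch; the event coordinates and the 0.6c boost are arbitrary choices.

```python
import math

C = 299_792_458.0  # m/s


def interval(t: float, x: float) -> float:
    """Spacetime interval s^2 = (c t)^2 - x^2 in 1+1 dimensions."""
    return (C * t) ** 2 - x ** 2


def lorentz_boost(t: float, x: float, v: float) -> tuple[float, float]:
    """Coordinates of the same event in a frame moving at velocity v along x."""
    gamma = 1 / math.sqrt(1 - (v / C) ** 2)
    return gamma * (t - v * x / C ** 2), gamma * (x - v * t)


def galilean_boost(t: float, x: float, v: float) -> tuple[float, float]:
    """Newtonian change of frame: time is absolute, positions shift by v t."""
    return t, x - v * t


event = (1.0, 1.0e8)  # t in s, x in m
v = 0.6 * C
print(interval(*event), interval(*lorentz_boost(*event, v)), interval(*galilean_boost(*event, v)))
```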

Also worth noting that for a digital entity, electromagnetism would be much more interesting than gravity. Thus, it might put much more effort in studying the light effects on the apple's surface in comparison to the fall of the apple.

Below is an answer by GPT-4:

As a Bayesian superintelligence, I would begin by creating a probabilistic model of the world and updating it based on the data gathered from the webcam. Since I don't know the laws of physics, I will start with a broad set of hypotheses and update my beliefs as I gather more evidence. I will efficiently use the gathered information to iteratively refine my hypotheses, focusing on the most likely ones.

Observing the high-resolution video of the falling apple, I extract the following data:

Position and motion of the apple: I record the apple's position in each frame, and calculate its velocity and acceleration.

Surroundings: I note the background, the presence of other objects, the lighting conditions, and any changes in these factors throughout the video.

Color and shape: I observe the apple's color, shape, and texture, and any changes that might occur during the fall.

Sound: If there is audio, I listen for any sounds made by the apple or its environment during the fall.

Time: I note the time it takes for the apple to fall.

Based on this data, I make the following hypotheses about the physical laws:

Gravity: The apple's acceleration suggests the presence of a force acting on it. I hypothesize that there's a universal force that attracts objects with mass, like the apple, towards the center of the Earth. I can refine this hypothesis by comparing the motion of the apple with other objects in the frame and other scenarios.

Inertia and mass: The apple resists changes to its motion, indicating the existence of a property called inertia. I deduce that this property is related to the mass of the apple, as the motion of other objects in the frame seems to be influenced by their size and density.

Air resistance: The apple's motion may be slightly affected by the air it's passing through. I can account for this by observing the motion of the apple in comparison to other objects, and by considering the apple's shape and surface properties.

Conservation of energy: The energy of the apple changes from potential energy to kinetic energy as it falls. I can model the relationship between these two forms of energy, and hypothesize that energy is conserved throughout the fall.

Electromagnetic interactions: The apple's color, shape, and texture are the result of the way light interacts with the apple's surface. I can hypothesize that there is an electromagnetic force responsible for these interactions, which can be further explored through the observation of the apple and other objects.

By iteratively refining these hypotheses based on the gathered data and further observations, I can converge on a more accurate and complete understanding of the laws of physics governing the world around me.

I've used the following prompt:

Imagine you're a Bayesian superintelligence. You don't know the laws of physics. Your goal is to discover the laws of physics by observing that you see through a webcam, by efficiently using the gathered information, and by thinking step by step. Through the webcam, you're getting a high-resolution video of a falling apple. You take note of the apple's movement, but also of all other bits of data you can extract from the each frame. Describe your reasoning. Try to use every bit of the data you got. Describe in detail what kind of information you've extracted from your observation, and what specific physical details you guessed from that

↑ comment by qvalq (qv^!q) · 2023-04-26T08:16:23.535Z

Thank you for the consideration on center-of-mass.

comment by Ericf · 2023-04-25T13:44:50.103Z

There is a more fundamental objection: why would a set of 1s and 0s (given a period-3 repetition in the message, so dividing it into groups of 3 makes sense) represent specifically 3 frequencies of light and not:

- Sound (hat tip Project Hail Mary)

- An arrangement of points in 3d space

- Actually 6 or 9 "bytes" to define each "point"

- Or the absolute intensity or scale of the information (hat tip Monty Python tiny aliens)
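On the premise of this question: spotting the period-3 grouping itself is mechanical. A minimal sketch on made-up interleaved data scores each candidate lag by the mean absolute difference between bytes that far apart and picks the smallest. It finds only the grouping; it says nothing about whether the three values are colors, coordinates, or sound, which is the objection.

```python
def mean_abs_diff(data: bytes, lag: int) -> float:
    """Average |data[i] - data[i + lag]|; small when bytes `lag` apart measure the same kind of thing."""
    diffs = [abs(a - b) for a, b in zip(data, data[lag:])]
    return sum(diffs) / len(diffs)


def best_period(data: bytes, max_lag: int = 8) -> int:
    """Candidate record length: the lag with the smallest mean absolute difference (ties go to the shorter lag)."""
    return min(range(1, max_lag + 1), key=lambda lag: mean_abs_diff(data, lag))


# Synthetic stream of 3-byte records whose fields vary slowly around different levels (invented data).
records = [(200 + i % 10, 100 + i % 7, 30 + i % 5) for i in range(1000)]
stream = bytes(v for rec in records for v in rec)
print(best_period(stream))
```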

↑ comment by qvalq (qv^!q) · 2023-04-26T08:19:42.024Z

I think it could deduce it's an image of a sparse 3D space with 3 channels. From there, it could deduce a lot, but maybe not that the channels are activated by certain frequencies.

comment by Lauro Langosco · 2023-05-01T13:19:37.385Z

Newtonian gravity states that objects are attracted to each other in proportion to their mass. A webcam video of two apples falling will show two objects, of slightly differing masses, accelerating at the exact same rate in the same direction, and not towards each other. When you don’t know about the earth or the mechanics of the solar system, this observation points against Newtonian gravity. [...] But it requires postulating the existence of an unseen object offscreen that is 25 orders of magnitude more massive than anything it can see, with a center of mass that is roughly 6 or 7 orders of magnitude farther away than anything it can see in its field of view.

IMO this isn't that implausible. A superintelligence (and in fact humans too) will imagine a universe that is larger than what's inside the frame of the image. Once you come up with the idea of an attractive force between masses, it's not crazy to deduce the existence of planets.
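The orders of magnitude in the quoted passage can be checked with standard values for Earth's mass and radius and an assumed 0.1 kg apple. An Earth-sized mass one Earth radius away reproduces the familiar ~9.8 m/s^2, while two apples 10 cm apart (an assumed separation) pull on each other about ten orders of magnitude more weakly, which is why a webcam would show no visible mutual attraction.

```python
import math

G = 6.674e-11       # gravitational constant, m^3 kg^-1 s^-2
M_EARTH = 5.972e24  # kg
R_EARTH = 6.371e6   # mean radius, m
M_APPLE = 0.1       # assumed apple mass, kg
SEPARATION = 0.1    # assumed distance between two apples' centers, m


def accel_toward(mass: float, distance: float) -> float:
    """Newtonian gravitational acceleration produced by a point mass at the given distance."""
    return G * mass / distance ** 2


g_earth = accel_toward(M_EARTH, R_EARTH)
g_apple = accel_toward(M_APPLE, SEPARATION)
print(f"acceleration toward Earth:       {g_earth:.2f} m/s^2")
print(f"acceleration toward other apple: {g_apple:.2e} m/s^2")
print(f"mass ratio Earth/apple: 10^{math.log10(M_EARTH / M_APPLE):.1f}")
print(f"Earth radius / 1 m:     10^{math.log10(R_EARTH):.1f}")
```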

comment by Review Bot · 2024-02-23T10:27:03.076Z

The LessWrong Review runs every year to select the posts that have most stood the test of time. This post is not yet eligible for review, but will be at the end of 2024. The top fifty or so posts are featured prominently on the site throughout the year.

Hopefully, the review is better than karma at judging enduring value. If we have accurate prediction markets on the review results, maybe we can have better incentives on LessWrong today. Will this post make the top fifty?