Studies On Slack

post by Scott Alexander (Yvain) · 2020-05-13T05:00:02.772Z

I.

Imagine a distant planet full of eyeless animals. Evolving eyes is hard: they need to evolve Eye Part 1, then Eye Part 2, then Eye Part 3, in that order. Each of these requires a separate series of rare mutations.

Here on Earth, scientists believe each of these mutations must have had its own benefits – in the land of the blind, the man with only Eye Part 1 is king. But on this hypothetical alien planet, there is no such luck. You need all three Eye Parts or they’re useless. Worse, each Eye Part is metabolically costly; the animal needs to eat 1% more food per Eye Part it has. An animal with a full eye would be much more fit than anything else around, but an animal with only one or two Eye Parts will be at a small disadvantage.

So these animals will only evolve eyes in conditions of relatively weak evolutionary pressure. In a world of intense and perfect competition, where the fittest animal always survives to reproduce and the least fit always dies, the animal with Eye Part 1 will always die – it’s less fit than its fully-eyeless peers. The weaker the competition, and the more randomness dominates over survival-of-the-fittest, the more likely an animal with Eye Part 1 can survive and reproduce long enough to eventually produce a descendant with Eye Part 2, and so on.

There are lots of ways to decrease evolutionary pressure. Maybe natural disasters often decimate the population, dozens of generations are spent recolonizing empty land, and during this period there’s more than enough for everyone and nobody has to compete. Maybe there are frequent whalefalls, and any animal nearby has hit the evolutionary jackpot and will have thousands of descendants. Maybe the population is isolated in little islands and mountain valleys, and one gene or another can reach fixation in a population totally by chance. It doesn’t matter exactly how it happens; it matters that evolutionary pressure is low.

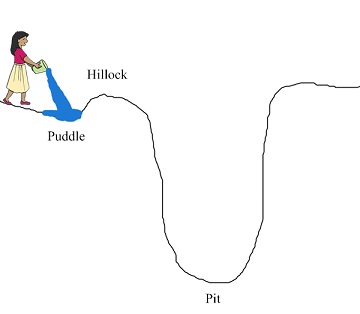

The branch of evolutionary science that deals with this kind of situation is called “adaptive fitness landscapes”. Landscapes really are a great metaphor – consider somewhere like this:

You pour out a bucket of water. Water “flows downhill”, so it’s tempting to say something like “water wants to be at the lowest point possible”. But that’s not quite right. The lowest point possible is the pit, and water won’t go there. It will just sit in the little puddle forever, because it would have to go up the tiny little hillock in order to get to the pit, and water can’t flow uphill. Using normal human logic, we feel tempted to say something like “Come on! The hillock is so tiny, and that pit is so deep, just make a single little exception to your ‘always flow downhill’ policy and you could do so much better for yourself!” But water stubbornly refuses to listen.

Under conditions of perfectly intense competition, evolution works the same way. We imagine a multidimensional evolutionary “landscape” where lower ground represents higher fitness. In this perfectly intense competition, organisms can go from higher to lower fitness, but never vice versa. As with water, the tiniest hillock will leave their potential forever unrealized.

Under more relaxed competition, evolution only tends probabilistically to flow downhill. Every so often, it will flow uphill; the smaller the hillock, the more likely evolution will surmount it. Given enough time, it’s guaranteed to reach the deepest pit and mostly stay there.

Take a moment to be properly amazed by this. It sounds like something out of the Tao Te Ching. An animal with eyes has very high evolutionary fitness. It will win at all its evolutionary competitions. So in order to produce the highest-fitness animal, we need to – select for fitness less hard? In order to produce an animal that wins competitions, we need to stop optimizing for winning competitions?

This doesn’t mean that less competition is always good. An evolutionary environment with no competition won’t evolve eyes either; a few individuals might randomly drift into having eyes, but they won’t catch on. In order to optimize the species as much as possible as fast as possible, you need the right balance, somewhere in the middle between total competition and total absence of competition.
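For readers who like to poke at toy models, here is a minimal sketch of the landscape argument. It is not taken from any evolutionary biology source: the five-cell landscape, the `slack` parameter, and the choice of the Metropolis acceptance rule (always accept downhill moves, accept an uphill move of size d with probability exp(-d / slack)) are all assumptions made for illustration.

```python
import math
import random

# Hypothetical 1-D landscape, lower = fitter, as in the essay's metaphor.
# Index 1 is the shallow puddle, index 2 the tiny hillock, index 3 the deep pit.
HEIGHTS = [5.0, 3.0, 4.0, 0.0, 5.0]
PUDDLE, PIT = 1, 3


def walk(slack, steps=20_000, seed=0):
    """Random walk that always accepts downhill moves and accepts an uphill
    move of size d with probability exp(-d / slack) (the Metropolis rule).
    slack == 0 models perfectly intense competition: uphill never happens.
    Returns the fraction of steps spent in the deep pit."""
    rng = random.Random(seed)
    pos = PUDDLE
    steps_in_pit = 0
    for _ in range(steps):
        nxt = pos + rng.choice((-1, 1))
        if 0 <= nxt < len(HEIGHTS):  # proposals off the edge are rejected
            d = HEIGHTS[nxt] - HEIGHTS[pos]
            if d <= 0 or (slack > 0 and rng.random() < math.exp(-d / slack)):
                pos = nxt
        steps_in_pit += pos == PIT
    return steps_in_pit / steps


for slack in (0.0, 1.0, 1000.0):
    print(f"slack={slack:>6}: fraction of time in the pit = {walk(slack):.2f}")
```

With zero slack the walker never leaves the puddle, exactly like the water. With moderate slack it should cross the hillock quickly and then spend most of its time in the pit. With near-unlimited slack every move is accepted, so it wanders over all five cells about equally and the pit stops being special, which is the "no competition won't evolve eyes either" case.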

In the esoteric teachings, total competition is called Moloch, and total absence of competition is called Slack. Slack (thanks to Zvi Mowshowitz for the term and concept) gets short shrift. If you think of it as “some people try to win competitions, other people don’t care about winning competitions and slack off and go to the beach”, you’re misunderstanding it. Think of slack as a paradox – the Taoist art of winning competitions by not trying too hard at them. Moloch and Slack are opposites and complements, like yin and yang. Neither is stronger than the other, but their interplay creates the ten thousand things.

II.

Before we discuss slack further, a digression on group selection.

Some people would expect this discussion to be quick, since group selection doesn’t exist. These people understand it as evolution acting for the good of a species. It’s a tempting way to think, because evolution usually eventually makes species stronger and more fit, and sometimes we colloquially round that off to evolution targeting a species’ greater good. But inevitably we find evolution is awful and does absolutely nothing of the sort.

Imagine an alien planet that gets hit with a solar flare once an eon, killing all unshielded animals. Sometimes unshielded animals spontaneously mutate to shielded, and vice versa. Shielded animals are completely immune to solar flares, but have 1% higher metabolic costs. What happens? If you predicted “magnetic shielding reaches fixation and all animals get it”, you’ve fallen into the group selection trap. The unshielded animals outcompete the shielded ones during the long inter-flare period, driving their population down to zero (though a few new shielded ones arise every generation through spontaneous mutations). When the flare comes, only the few spontaneous mutants survive. They breed a new entirely-shielded population, until a few unshielded animals arise through spontaneous mutation. The unshielded outcompete the shielded ones again, and by the time of the next solar flare, the population is 100% unshielded again and they all die. If the animals are lucky, there will always be enough spontaneously-mutated shielded animals to create a post-flare breeding population; if they are unlucky, the flare will hit at a time with unusually few such mutants, and the species will go extinct.

An Evolution Czar concerned with the good of the species would just declare that all animals should be shielded and solve the problem. In the absence of such a Czar, these animals will just keep dying in solar-flare-induced mass extinctions forever, even though there is an easy solution with only 1% metabolic cost.
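The inter-flare dynamics can be sketched as a standard mutation-selection recursion. This is a rough deterministic model under stated assumptions: the 1% cost comes from the story above, but the mutation rate of one in 100,000 per generation and the function name `shielded_fraction` are invented for illustration.

```python
def shielded_fraction(generations, cost=0.01, mutation_rate=1e-5, start=1.0):
    """Deterministic frequency of the shielded type between flares.

    cost is the 1% metabolic penalty from the text; mutation_rate (the
    per-generation chance of flipping shielded <-> unshielded) is an
    assumed, illustrative number."""
    p = start
    for _ in range(generations):
        # Selection: shielded animals reproduce at relative rate (1 - cost).
        p = p * (1 - cost) / (p * (1 - cost) + (1 - p))
        # Mutation in both directions.
        p = p * (1 - mutation_rate) + (1 - p) * mutation_rate
    return p


for gens in (0, 500, 1_000, 2_000, 5_000):
    print(f"{gens:>5} generations after a flare: "
          f"{shielded_fraction(gens):.4%} shielded")
```

Starting from an all-shielded post-flare population, the shielded share in this sketch collapses toward roughly mutation_rate / cost, about one animal in a thousand with these numbers. Those few are the "spontaneous mutants" the species is betting its survival on when the next flare arrives.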

A less dramatic version of the same problem happens here on Earth. Every so often predators (let’s say foxes) reproduce too quickly and outstrip the available supply of prey (let’s say rabbits). There is a brief period of starvation as foxes can’t find any more rabbits and die en masse. This usually ends with a boom-bust cycle: after most foxes die, the rabbits (who reproduce very quickly and are now free of predation) have a population boom; now there are rabbits everywhere. Eventually the foxes catch up, eat all the new rabbits, and the cycle repeats again. It’s a waste of resources for foxkind to spend so much of its time and energy breeding a huge population of foxes that will inevitably collapse a generation later; an Evolution Czar concerned with the common good would have foxes limit their breeding at a sustainable level. But since individual foxes that breed excessively are more likely to have their genes represented in the next generation than foxes that breed at a sustainable level, we end up with foxes that breed excessively, and the cycle continues.

(but humans are too smart to fall for this one, right?)

Some scientists tried to create group selection under laboratory conditions. They divided some insects into subpopulations, then killed off any subpopulation whose numbers got too high, and “promoted” any subpopulation that kept its numbers low to better conditions. They hoped the insects would evolve to naturally limit their family size in order to keep their subpopulation alive. Instead, the insects became cannibals: they ate other insects’ children so they could have more of their own without the total population going up. In retrospect, this makes perfect sense; an insect with the behavioral program “have many children, and also kill other insects’ children” will have its genes better represented in the next generation than an insect with the program “have few children”.

But sometimes evolution appears to solve group selection problems. What about multicellular life? Stick some cells together in a resource-plentiful environment, and they’ll naturally do the evolutionary competition thing of eating resources as quickly as possible to churn out as many copies of themselves as possible. If you were expecting these cells to form a unitary organism where individual cells do things like become heart cells and just stay in place beating rhythmically, you would call the expected normal behavior “cancer” and be against it. Your opposition would be on firm group selectionist grounds: if any cell becomes cancer, it and its descendants will eventually overwhelm everything, and the organism (including all cells within it, including the cancer cells) will die. So for the good of the group, none of the cells should become cancerous.

The first step in evolution’s solution is giving all cells the same genome; this mostly eliminates the need to compete to give their genes to the next generation. But this solution isn’t perfect; cells can get mutations in the normal course of dividing and doing bodily functions. So it employs a host of other tricks: genetic programs telling cells to self-destruct if they get too cancer-adjacent, an immune system that hunts down and destroys cancer cells, or growing old and dying (this last one isn’t usually thought of as a “trick”, but it absolutely is: if you arrange for a cell line to lose a little information during each mitosis, so that it degrades to the point of gobbledygook after X divisions, this means cancer cells that divide constantly will die very quickly, but normal cells dividing on an approved schedule will last for decades).

Why can evolution “develop tricks” to prevent cancer, but not to prevent foxes from overbreeding, or aliens from losing their solar flare shields? Group selection works when the group itself has a shared genetic code (or other analogous ruleset) that can evolve. It doesn’t work if you expect it to directly change the genetic code of each individual to cooperate more.

When we think of cancer, we are at risk of conflating two genetic codes: the shared genetic code of the multicellular organism, and the genetic code of each cell within the organism. Usually (when there are no mutations in cell divisions) these are the same. Once individual cells within the organism start mutating, they become different. Evolution will select for cancer in changes to individual cells’ genomes over an organism’s lifetime, but select against it in changes to the overarching genome over the lifetime of the species (ie you should expect all the genes you inherited from your parents to be selected against cancer, and all the mutations in individual cells you’ve gotten since then to be selected for cancer).

The fox population has no equivalent of the overarching genome; there is no set of rules that govern the behavior of every fox. So foxes can’t undergo group selection to prevent overpopulation (there are some more complicated dynamics that might still be able to rescue the foxes in some situations, but they’re not relevant to the simple model we’re looking at).

In other words, group selection can happen in a two-layer hierarchy of nested evolutionary systems when the outer system (eg multicellular humans) includes rules that the inner system (eg human cells) has to follow, and where the fitness of the evolving-entities in the outer system depends on some characteristics of the evolving-entities in the inner system (eg humans are higher-fitness if their cells do not become cancerous). The evolution of the outer layer includes evolution over rulesets, and eventually evolves good strong rulesets that tell the inner-layer evolving entities how to behave, which can include group selection (eg humans evolve a genetic code that includes a rule “individual cells inside of me should not get cancer” and mechanisms for enforcing this rule).

You can find these kinds of two-layer evolutionary systems everywhere. For example, “cultural evolution” is a two-layer evolutionary system. In the hypothetical state of nature, there’s unrestricted competition – people steal from and murder each other, and only the strongest survive. After they form groups, the groups compete with each other, and groups that develop rulesets that prevent theft and murder (eg legal codes, religions, mores) tend to win those competitions. Once again, the outer layer (competition between cultures) evolves groups that successfully constrain the inner layer (competition between individuals). Species don’t have a czar who restrains internal competition in the interest of keeping the group strong, but some human cultures do (eg Russia).

Or what about market economics? The outer layer is companies, the inner layer is individuals. Maybe the individuals are workers – each worker would selfishly be best off if they spent the day watching YouTube videos and pushed the hard work onto someone else. Or maybe they’re executives – each individual executive would selfishly be best off if they spent their energy on office politics, trying to flatter and network with whoever was most likely to promote them. But if all the employees loaf off and all the executives focus on office politics, the company won’t make products, and competitors will eat their lunch. So someone – maybe the founder/CEO – comes up with a ruleset to incentivize good work, probably some kind of performance review system where people who do good work get promoted and people who do bad work get fired. The outer-layer competition between companies will select for corporations with the best rulesets; over time, companies’ internal politics should get better at promoting the kind of cooperation necessary to succeed.

How do these systems replicate multicellular life’s success without being literal entities with literal DNA having literal sex? They all involve a shared ruleset and a way of punishing rulebreakers which make it in each individual’s short-term interest to follow the ruleset that leads to long-term success. Countries can do that (follow the law or we’ll jail you), companies can do that (follow our policies or we’ll fire you), even multicellular life can sort of do that (don’t become cancer, or immune cells will kill you). When there’s nothing like that (like the overly-fast-breeding foxes) evolution fails at group selection problems. When there is something like that, it has a chance. When there’s something like that, and the thing like that is itself evolving (either because it’s encoded in literal DNA, or because it’s encoded in things like company policies that determine whether a company goes out of business or becomes a model for others), then it can reach a point where it solves group selection problems very effectively.
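A deliberately crude sketch of the two-layer idea, using the company example. Everything here is hypothetical: the `penalty` numbers, the rule that a worker loafs whenever the private gain exceeds the penalty, and the "worst company copies the best company's ruleset" step are stand-ins for the much messier real processes.

```python
def company_output(penalty, n_workers=10, loafing_gain=1.0):
    """Inner layer: each worker loafs whenever the private gain from loafing
    exceeds the company's penalty for it. Output = number who work."""
    return n_workers if penalty >= loafing_gain else 0


def evolve_rulesets(penalties, rounds):
    """Outer layer: each round the least productive company fails and is
    replaced by a copy of the most productive company's ruleset."""
    penalties = list(penalties)
    for _ in range(rounds):
        outputs = [company_output(p) for p in penalties]
        worst = outputs.index(min(outputs))
        best = outputs.index(max(outputs))
        penalties[worst] = penalties[best]
    return penalties


start = [0.0, 0.2, 0.5, 1.5]   # hypothetical penalties for loafing
print(evolve_rulesets(start, rounds=5))   # -> [1.5, 1.5, 1.5, 1.5]
print(evolve_rulesets([0.0, 0.0, 0.0], rounds=5))   # -> [0.0, 0.0, 0.0]
```

No individual worker ever chooses to be more cooperative; the only thing that changes is which ruleset survives. The second call is the fox case: when no group has an enforceable rule to begin with, the outer layer has nothing to select on and nothing improves.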

In the esoteric teachings, the inner layer of two-layer evolutionary systems is represented by the Goddess of Cancer, and the outer layer by the Goddess of Everything Else. In each part of the poem, the Goddess of Cancer orders the evolving-entities to compete, but the Goddess of Everything Else recasts it as a two-layer competition where cooperation on the internal layer helps win the competition on the external layer. He who has ears to hear, let him listen.

III.

Why the digression? Because slack is a group selection problem. A species that gave itself slack in its evolutionary competition would do better than one that didn’t – for example, the eyeless aliens would evolve eyes and get a big fitness boost. But no individual can unilaterally choose to compete less intensely; if it did, it would be outcompeted and die. So one-layer evolution will fail at this problem the same way it fails all group selection problems, but two-layer systems will have a chance to escape the trap.

The multicellular life example above is a special case where you want 100% coordination and 0% competition. I framed the other examples the same way – countries do best when their citizens avoid all competition and work together for the common good, companies do best when their executives avoid self-aggrandizing office politics and focus on product quality. But as we saw above, some systems do best somewhere in the middle, where there’s some competition but also some slack.

For example, consider a researcher facing their own version of the eyeless aliens’ dilemma. They can keep going with business as normal – publishing trendy but ultimately useless papers that nobody will remember in ten years. Or they can work on Research Program Part 1, which might lead to Research Program Part 2, which might lead to Research Program Part 3, which might lead to a ground-breaking insight. If their jobs are up for review every year, and a year from now the business-as-normal researcher will have five trendy papers, and the groundbreaking-insight researcher will be halfway through Research Program Part 1, then the business-as-normal researcher will outcompete the groundbreaking-insight researcher; as the saying goes, “publish or perish”. Without slack, no researcher can unilaterally escape the system; their best option will always be to continue business as usual.

But group selection makes the situation less hopeless. Universities have long time-horizons and good incentives; they want to get famous for producing excellent research. Universities have rulesets that bind their individual researchers, for example “after a while good researchers get tenure”. And since universities compete with each other, each is incentivized to come up with the ruleset that maximizes long-term researcher productivity. So if tenure really does work better than constant vicious competition, then (absent the usual culprits like resistance-to-change, weird signaling equilibria, politics, etc) we should expect universities to converge on a tenure system in order to produce the best work. In fact, we should expect universities to evolve a really impressive ruleset for optimizing researcher incentives, just as impressive as the clever mechanisms the human body uses to prevent cancer (since this seems a bit optimistic, I assume the usual culprits are not absent).
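The researcher's arithmetic can be made concrete with a small sketch. The five papers a year and the "halfway through Part 1 after one year" pace come from the example above; the assumption that all three parts take two years each, the breakthrough being worth 100 trendy papers, and the function names are mine.

```python
def cumulative_output(years, strategy, papers_per_year=5,
                      years_per_part=2, parts=3, breakthrough_value=100):
    """Output visible to a reviewer after `years`.

    papers_per_year and the two-years-per-part pace follow the text's
    example; breakthrough_value, in trendy-paper equivalents, is an
    assumption."""
    if strategy == "business_as_usual":
        return papers_per_year * years
    # The long program shows nothing until every part is finished.
    return breakthrough_value if years >= years_per_part * parts else 0


def survives_reviews(strategy, review_every, horizon=10):
    """A researcher is dismissed at the first review where they trail
    the business-as-usual benchmark."""
    for year in range(review_every, horizon + 1, review_every):
        benchmark = cumulative_output(year, "business_as_usual")
        if cumulative_output(year, strategy) < benchmark:
            return False
    return True


for review_every in (1, 3, 6):
    print(review_every, survives_reviews("long_program", review_every))
# prints: 1 False, 3 False, 6 True (one pair per line)
```

Under these assumed numbers the long program is the better bet by a wide margin, but it only survives when the first review comes at least six years in. A rule like tenure is one way of lengthening that interval.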

The same is true for grant-writing; naively you would want some competition to make sure that only the best grant proposals get funded, but too much competition seems to stifle original research, so much so that some funders are throwing out the whole process and selecting grants by lottery, and others are running grants you can apply for in a half-hour and hear back about two days later. If there’s a feedback mechanism – if these different rulesets produce different-quality research, and grant programs that produce higher-quality research are more likely to get funded in the future – then the rulesets for grants will gradually evolve, and the competition for grants will take place in an environment with whatever the right evolutionary parameters for evolving good research are.

I don’t want to say these things will definitely happen – you can read Inadequate Equilibria for an idea of why not. But they might. The evolutionary dynamics which would normally prevent them can be overcome. Two-layer evolutionary systems can produce their own slack, if having slack would be a good idea.

IV.

That was a lot of paragraphs, and a lot of them started with “imagine a hypothetical situation where…”. Let’s look deeper into cases where an understanding of slack can inform how we think about real-world phenomena. Five examples:

1. Monopolies. Not the kind that survive off overregulation and patents, the kind that survive by being big enough to crush competitors. These are predators that exploit low-slack environments. If Boeing has a monopoly on building passenger planes, and is exploiting that by making shoddy products and overcharging consumers, then that means anyone else who built a giant airplane factory could make better products at a lower price, capture the whole airplane market, and become a zillionaire. Why don’t they? Slack. In terms of those adaptive fitness landscapes, in between your current position (average Joe) and a much better position at the bottom of a deep pit (you own a giant airplane factory and are a zillionaire), there’s a very big hill you have to climb – the part where you build Giant Airplane Factory Part 1, Giant Airplane Factory Part 2, etc. At each point in this hill, you are worse off than somebody who was not building an as-yet-unprofitable giant airplane factory. If you have infinite slack (maybe you are Jeff Bezos, have unlimited money, and will never go bankrupt no matter how much time and cost it takes before you start earning profits) you’re fine. If you have more limited slack, your slack will run out and you’ll be outcompeted before you make it to the greater-fitness deep pit.

Real monopolies are more complicated than this, because Boeing can shape up and cut prices when you’re halfway to building your giant airplane factory, thus removing your incentive. Or they can do actually shady stuff. But none of this would matter if you already had your giant airplane factory fully built and ready to go – at worst, you and Boeing would then be in a fair fight. Everything Boeing does to try to prevent you from building that factory is exploiting your slacklessness and trying to increase the height of that hill you have to climb before the really deep pit.

(Peter Thiel inverts the landscape metaphor and calls the hill a “moat”, but he’s getting at the same concept).

2. Tariffs. Same story. Here’s the way I understand the history of the international auto industry – anyone who knows more can correct me if I’m wrong. Automobiles were invented in the late 19th century. Several Western countries developed homegrown auto industries more or less simultaneously, with the most impressive being Henry Ford’s work on mass production in the US. Post-WWII Japan realized that its own auto industry would never be able to compete with more established Western companies, so it placed high tariffs on foreign cars, giving local companies like Nissan and Toyota a chance to get their act together. These companies, especially Toyota, invented a new form of auto production which was actually much more efficient than the usual American methods, and were eventually able to hold their own. They started exporting cars to the US; although American tariffs put them at a disadvantage, they were so much better than the American cars of the time that consumers preferred them anyway. After decades of losing out, the American companies adopted a more Japanese ethos, and were eventually able to compete on a level playing field again.

This is a story of things gone surprisingly right – Americans and Japanese alike were able to get excellent inexpensive cars. Two things had to happen for it to work. First, Japan had to have high enough tariffs to give their companies some slack – to let them develop their own homegrown methods from scratch without being immediately outcompeted by temporarily-superior American competitors. Second, America had to have low enough tariffs that eventually-superior Japanese companies could outcompete American automakers, and Japan’s fitness-improving innovations could spread.

From the perspective of a Toyota manager, this is analogous to the eyeless alien story. You start with some good-enough standard (blind animals, American car companies). You want to evolve a superior end product (eye-having animals, Toyota). The intermediate steps (an animal with only Eye Part 1, a kind of crappy car company that stumbles over itself trying out new things) are less fit than the good-enough standard. Only when the inferior intermediate steps are protected from competition (through evolutionary randomness, through tariffs) can the superior end product come into existence. But you want to keep enough competition that the superior end product can use its superiority to spread (there is enough evolutionary competition that having eyes reaches fixation, there is enough free trade that Americans preferentially buy Toyota and US car companies have to adopt its policies).

From the perspective of an economic historian, maybe it’s a group selection story. The various stakeholders in the US auto industry – Ford, GM, suppliers, the government, labor, customers – competed with each other in a certain way and struck some compromise. The various stakeholders in the Japanese auto industry did the same. For some reason the American compromise worked worse than the Japanese one – I’ve heard stories about how US companies were more willing to defraud consumers for short-term profit, how US labor unions were more willing to demand concessions even at the cost of company efficiency, how regulators and executives were in bed with each other to the detriment of the product, etc. Every US interest group was acting in its own short-term self-interest, but the Japanese industry-as-a-whole outcompeted the American one and the Americans had to adjust.

3. Monopolies, Part II. Traditionally, monopolies have been among the most successful R&D centers. The most famous example is Xerox; it had a monopoly on photocopiers for a few decades before losing an anti-trust suit in the late 1970s; during that period, its PARC R&D program invented “laser printing, Ethernet, the modern personal computer, graphical user interface (GUI) and desktop paradigm, object-oriented programming, [and] the mouse”. The second most famous example is Bell Labs, which invented “radio astronomy, the transistor, the laser, the photovoltaic cell, the charge-coupled device, information theory, the Unix operating system, and the programming languages B, C, C++, and S” before the government broke up its parent company AT&T. Google seems to be trying something similar, though it’s too soon to judge their outcomes.

These successes make sense. Research and development is a long-term gamble. Devoting more money to R&D decreases your near-term profits, but (hopefully) increases your future profits. Freed from competition, monopolies have limitless slack, and can afford to invest in projects that won’t pay off for ten or twenty years. This is part of Peter Thiel’s defense of monopolies in Zero To One.

An administrator tasked with advancing technology might be tempted to encourage monopolies in order to get more research done. But monopolies can also be stagnant and resistant to change; it’s probably not a coincidence that Xerox wasn’t the first company to bring the personal computer to market, and ended up irrelevant to the computing revolution. Like the eyeless aliens, who will not evolve in conditions of perfect competition or perfect lack of competition, probably all you can do here is strike a balance. Some Communist countries tried the extreme solution – one state-supported monopoly per industry – and it failed the test of group selection. I don’t know enough to have an opinion on whether countries with strong antitrust eventually outcompete those with weaker antitrust or vice versa.

4. Strategy Games. I like the strategy game Civilization, where you play as a group of primitives setting out to found an empire. You build cities and infrastructure, research technologies, and fight wars. Your world is filled with several (usually 2 to 7) other civilizations trying to do the same.

Just like in the real world, civilizations must decide between Guns and Butter. The Civ version of Guns is called the Axe Rush. You immediately devote all your research to discovering how to make really good axes, all your industry to manufacturing those axes, and all your population into wielding those axes. Then you go and hack everyone else to pieces while they’re still futzing about trying to invent pottery or something.

The Civ version of Butter is called Build. You devote all your research, industry, and populace to laying the foundations of a balanced economy and culture. You invent pottery and weaving and stuff like that. Soon you have a thriving trade network and a strong philosophical tradition. Eventually you can field larger and more advanced armies than your neighbors, and leverage the advantage into even more prosperity, or into military conquest.

Consider a very simple scenario: a map of Eurasia with two civilizations, Rome and China.

If both choose Axe Rush, then whoever Axe Rushes better wins.

If both choose Build, then whoever Builds better wins.

What if Rome chooses Axe Rush, and China chooses Build?

Then it depends on their distance! If it’s a very small map and they start very close together, Rome will probably overwhelm the Chinese before Build starts paying off. But if it’s a very big map, by the time Roman Axemen trek all the way to China, China will have Built high walls, discovered longbows and other defensive technologies, and generally become too strong for axes to defeat. Then they can crush the Romans – who are still just axe-wielding primitives – at their leisure.
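As a toy version of "it depends on their distance": suppose the axemen need one turn per tile to arrive, and China's defensive strength compounds every turn it spends Building. The growth rate, the axe strength, and both function names below are illustrative assumptions, not values from the game.

```python
def china_survives(distance, axe_strength=5.0, growth_per_turn=1.10,
                   starting_strength=1.0):
    """Rome's axemen need `distance` turns to arrive; China's defensive
    strength compounds every turn it spends Building. All numbers are
    illustrative assumptions, not values from the game."""
    china_strength = starting_strength * growth_per_turn ** distance
    return china_strength >= axe_strength


def critical_distance(**kwargs):
    """Smallest distance at which Build beats Axe Rush in this toy model."""
    distance = 0
    while not china_survives(distance, **kwargs):
        distance += 1
    return distance


print(critical_distance())   # -> 17 with the default numbers
```

Below the critical distance the rush wins; at or above it the builder wins. Distance is doing the work of slack here: it is the number of turns China gets to be weaker than an Axe Rusher without being punished for it.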

Consider a more complicated scenario. You have a map of Earth. The Old World contains Rome and China. The New World contains Aztecs. Rome and China are very close to each other. Now what happens?

Rome and China spend the Stone, Bronze, and Iron Ages hacking each other to bits. Aztecs spend those Ages building cities, researching technologies, and building unique Wonders of the World that provide powerful bonuses. In 1492, they discover Galleons and start crossing the ocean. The powerful and advanced Aztec empire crushes the exhausted axe-wielding Romans and Chinese.

This is another story about slack. The Aztecs had it – they were under no competitive pressure to do things that paid off next turn. The Romans and Chinese didn’t – they had to be at the top of their game every single turn, or their neighbor would conquer them. If there was an option that made you 10% weaker next turn in exchange for making you 100% stronger ten turns down the line, the Aztecs could take it without a second thought; the Romans and Chinese would probably have to pass.

Okay, more complicated Civilization scenario. This time there are two Old World civs, Rome and China, and two New World civs, Aztecs and Inca. The map is stretched a little bit so that all four civilizations have the same amount of natural territory. All four players understand the map layout and can communicate with each other. What happens?

Now it’s a group selection problem. A skillful Rome player will private message the China player and explain all of this to him. She’ll remind him that if one hemisphere spends the whole Stone Age fighting, and the other spends it building, the builders will win. She might tell him that she knows the Aztec and Inca players, they’re smart, and they’re going to be discussing the same considerations. So it would benefit both Rome and China to sign a peace treaty dividing the Old World in two, stick to their own side, and Build. If both sides cooperate, they’ll both Build strong empires capable of matching the New World players. If one side cooperates and the other defects, the defector will easily steamroll over its unprepared opponent and conquer the whole Old World. If both sides defect, they’ll hack each other to death with axes and be easy prey for the New Worlders.
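The Rome/China choice can be written down as a two-by-two game. Only the ordering of outcomes follows the description above; the numeric payoffs are made up, and in particular the assumption that conquering the whole Old World beats peaceful Building is mine.

```python
from itertools import product

BUILD, AXE = "Build", "Axe Rush"

# payoff[(rome_move, china_move)] = (rome_payoff, china_payoff).
# Only the ordering follows the text; the numbers are assumptions.
PAYOFF = {
    (BUILD, BUILD): (3, 3),   # both grow strong enough to match the New World
    (AXE, BUILD):   (4, 0),   # the rusher conquers the whole Old World
    (BUILD, AXE):   (0, 4),
    (AXE, AXE):     (1, 1),   # both exhausted, easy prey for the New Worlders
}


def nash_equilibria(payoff):
    """Pure-strategy profiles where neither player gains by switching alone."""
    moves = (BUILD, AXE)
    equilibria = []
    for rome, china in product(moves, repeat=2):
        rome_ok = all(payoff[(rome, china)][0] >= payoff[(alt, china)][0]
                      for alt in moves)
        china_ok = all(payoff[(rome, china)][1] >= payoff[(rome, alt)][1]
                       for alt in moves)
        if rome_ok and china_ok:
            equilibria.append((rome, china))
    return equilibria


print(nash_equilibria(PAYOFF))   # -> [('Axe Rush', 'Axe Rush')]
```

With these payoffs, mutual Axe Rush is the only stable outcome even though both players prefer mutual Build. That is why the treaty has to actually bind, and why this counts as a group selection problem rather than something either player can fix alone.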

This might be true in Civilization games, but real-world civilizations are more complicated. Graham Greene wrote:

In Italy, for thirty years under the Borgias, they had warfare, terror, murder and bloodshed, but they produced Michelangelo, Leonardo da Vinci and the Renaissance. In Switzerland, they had brotherly love, they had five hundred years of democracy and peace – and what did that produce? The cuckoo clock.

So maybe a little bit of internal conflict is good, to keep you honest. Too much conflict, and you tear yourselves apart and are easy prey for outsiders. Too little conflict, and you invent the cuckoo clock and nothing else. The continent that conquers the world will have enough pressure that its people want to innovate, and enough slack that they’re able to.

This is total ungrounded amateur historical speculation, but when I hear that I think of the Classical world. We can imagine it as divided into a certain number of “theaters of civilization” – Greece, Mesopotamia, Egypt, Persia, India, Scythia, etc. Each theater had its own rules governing average state size, the rules of engagement between states, how often bigger states conquered smaller states, how often ideas spread between states of the same size, etc. Some of those theaters were intensely competitive: Egypt was a nice straight line, very suited to centralized rule. Others had more slack: it was really hard to take over all of Greece; even the Spartans didn’t manage. Each theater conducted its own “evolution” in its own way – Egypt was ruled by a single Pharaoh without much competition, Scythia was constant warfare of all against all, Greece was isolated city-states that fought each other sometimes but also had enough slack to develop philosophy and science. Each of those systems did its own thing for a while, until finally one of them produced something perfect: 4th century BC Macedonia. Then it went out and conquered everything.

If Greene is right, the point isn’t to find the ruleset that promotes 100% cooperation. It’s to find the ruleset that promotes an evolutionary system that makes your group the strongest. Usually this involves some amount of competition – in order to select for stronger organisms – but also some amount of slack – to let organisms develop complicated strategies that can make them stronger. Despite the earlier description, this isn’t necessarily a slider between 0% competition and 100% competition. It could be much more complicated – maybe alternating high-slack vs. low-slack periods, or many semi-isolated populations with a small chance of interaction each generation, or alternation between periods of isolation and periods of churning.

In a full two-layer evolution, you would let the systems evolve until they reached the best parameters. Here we can’t do that – Greece has however many mountains it has; its success does not cause the rest of the world to grow more mountains. Still, we randomly started with enough different groups that we got to learn something interesting.

(I can’t emphasize enough how ungrounded this historical speculation is. Please don’t try to evolve Alexander the Great in your basement and then get angry at me when it doesn’t work)

5. The Long-Term Stock Exchange. Actually, all stock exchanges are about slack. Imagine you are a brilliant inventor who, given $10 million and ten years, could invent fusion power. But in fact you have $10 and need work tomorrow or you will starve. Given those constraints, maybe you could start, I don’t know, a lemonade stand.

You’re in the same position as the animal trying to evolve an eye – you could create something very high-utility, if only you had enough slack to make it happen. But by default, the inventor working on fusion power starves to death ten days from now (or at least makes less money than his counterpart who ran the lemonade stand), the same way the animal who evolves Eye Part 1 gets outcompeted by other animals who didn’t and dies out.

You need slack. In the evolution example, animals usually stumble across slack randomly. You too might stumble across slack randomly – maybe it so happens that you are independently wealthy, or won the lottery, or something.

More likely, you use the investment system. You ask rich people to give you $10 million for ten years so you can invent fusion; once you do, you’ll make trillions of dollars and share some of it with them.

This is a great system. There’s no evolutionary equivalent. An animal can’t pitch Darwin on its three-step plan to evolve eyes and get free food and mating opportunities to make it happen. Wall Street is a giant multi-trillion dollar time machine funneling future profits back into the past, and that gives people the slack they need to make the future profits happen at all.

But the Long-Term Stock Exchange is especially about slack. They are a new exchange (approved by the SEC last year) which has complicated rules about who can list with them. Investors will get extra clout by agreeing to hold stocks for a long time; executives will get incentivized to do well in the far future instead of at the next quarterly earnings report. It’s making a deliberate choice to give companies more slack than the regular system and see what they do with it. I don’t know enough about investing to have an opinion, except that I appreciate the experiment. Presumably its companies will do better/worse than companies on the regular stock exchange, that will cause companies to flock toward/away from it, and we’ll learn that its new ruleset is better/worse at evolving good companies through competition than the regular stock exchange’s ruleset.

6. That Time Ayn Rand Destroyed Sears. Or at least that’s how Michal Rozworski and Leigh Phillips describe Eddie Lampert’s corporate reorganization in How Ayn Rand Destroyed Sears, which I recommend. Lampert was a Sears CEO who figured – since free-market competitive economies outcompete top-down economies, shouldn’t free-market competitive companies outcompete top-down companies? He reorganized Sears as a set of competing departments that traded with each other on normal free-market principles; if the Product Department wanted its products marketed, it would have to pay the Marketing Department. This worked really badly, and was one of the main contributors to Sears’ implosion.

I don’t have a great understanding of exactly why Lampert’s Sears lost to other companies even though capitalist economies beat socialist ones; Rozworski and Phillips’ People’s Republic Of Wal-Mart, which looks into this question, is somewhere on my reading list. But even without complete understanding, we can use group selection to evolve the right parameters. Imagine an economy with several businesses. One is a straw-man communist collective, where every worker gets paid the same regardless of output and there are no promotions (0% competition, 100% cooperation). Another is Lampert’s Sears (100% competition, 0% cooperation). Others are normal businesses, where employees mostly work together for the good of the company but also compete for promotions (X% competition, Y% cooperation). Presumably the normal business outcompetes both Lampert and the commies, and we sigh with relief and continue having normal businesses. And if some of the normal businesses outcompete others, we’ve learned something about the best values of X and Y.

7. Ideas. These are in constant evolutionary competition – this is the insight behind memetics. The memetic equivalent of slack is inferential range, aka “willingness to entertain and explore ideas before deciding that they are wrong”.

Inferential distance is the number of steps it takes to make someone understand and accept a certain idea. Sometimes inferential distances can be very large. Imagine trying to convince a 12th century monk that there was no historical Exodus from Egypt. You’re in the middle of going over archaeological evidence when he objects that the Bible says there was. You respond that the Bible is false and there’s no God. He says that doesn’t make sense, how would life have originated? You say it evolved from single-celled organisms. He asks how evolution, which seems to be a change in animals’ accidents, could ever affect their essences and change them into an entirely new species. You say that the whole scholastic worldview is wrong, there’s no such thing as accidents and essences, it’s just atoms and empty space. He asks how you ground morality if not in a striving to approximate the ideal embodied by your essence, you say…well, it doesn’t matter what you say, because you were trying to convince him that some very specific people didn’t leave Egypt one time, and now you’ve got to ground morality.

Another way of thinking about this is that there are two self-consistent equilibria. There’s your equilibrium, (no Exodus, atheism, evolution, atomism, moral nonrealism), and the monk’s equilibrium (yes Exodus, theism, creationism, scholasticism, teleology), and before you can make the monk budge on any of those points, you have to convince him of all of them.

So the question becomes – how much patience does this monk have? If you tell him there’s no God, does he say “I look forward to the several years of careful study of your scientific and philosophical theories that it will take for that statement not to seem obviously wrong and contradicted by every other feature of the world”? Or does he say “KILL THE UNBELIEVER”? This is inferential range.

Aristotle says that the mark of an educated man is to be able to entertain an idea without accepting it. Inferential range explains why. The monk certainly shouldn’t immediately accept your claim, when he has countless pieces of evidence for the existence of God, from the spectacular faith healings he has witnessed (“look, there’s this thing called psychosomatic illness, and it’s really susceptible to this other thing called the placebo effect…”) to Constantine’s victory at the Mulvian Bridge despite being heavily outnumbered (“look, I’m not a classical scholar, but some people are just really good generals and get lucky, and sometimes it happens the day after they have weird dreams, I think there’s enough good evidence the other way that this is not the sort of thing you should center your worldview around”). But if he’s willing to entertain your claim long enough to hear your arguments one by one, eventually he can reach the same self-consistent equilibrium you’re at and judge for himself.

Nowadays we don’t burn people at the stake. But we do make fun of them, or flame them, or block them, or wander off, or otherwise not listen with an open mind to ideas that strike us at first as stupid. This is another case where we have to balance competition vs. slack. With perfect competition, the monk instantly rejects our “no Exodus” idea as less true (less memetically fit) than its competitors, and it has no chance to grow on him. With zero competition, the monk doesn’t believe anything at all, or spends hours patiently listening to someone explain their world-is-flat theory. Good epistemics require a balance between being willing to choose better ideas over worse ones, and open-mindedly hearing the worse ones out in case they grow on you.

(Thomas Kuhn points out that early versions of the heliocentric model were much worse than the geocentric model, that astronomers only kept working on them out of a sort of weird curiosity, and that it took decades before they could clearly hold their own against geocentrism in a debate).

Different people strike a different balance in this space, and those different people succeed or fail based on their own epistemic ruleset. Someone who’s completely closed-minded and dogmatic probably won’t succeed in business, or science, or the military, or any other career (except maybe politics). But someone who’s so pathologically open-minded that they listen to everything and refuse to prioritize what is or isn’t worth their time will also fail. We take notice of who succeeds or fails and change our behavior accordingly.

Maybe there’s even a third layer of selection; maybe different communities are more or less willing to tolerate open-minded vs. closed-minded people. The Slate Star Codex community has really different epistemic norms from the Catholic Church or Infowars listeners; these are evolutionary parameters that determine which ideas are more memetically fit. If our epistemics make us more likely to converge on useful (not necessarily true!) ideas, we will succeed and our epistemic norms will catch on. Francis Bacon was just some guy with really good epistemic norms, and now everybody who wants to be taken seriously has to use his norms instead of whatever they were doing before. Come up with the right evolutionary parameters, and that could be you!

34 comments

comment by johnswentworth · 2020-05-13T15:51:47.537Z · LW(p) · GW(p)

There's a big problem with the Eye Part metaphor right at the beginning, which propagates through many ideas/examples in the rest of the post: the real world is high-dimensional.

The Eye Part metaphor imagines three types of organisms, all arranged along one dimension: No Eye -> Eye Part 1 -> Eye Part 2 -> Whole Eye. In that picture, the main problem in getting from No Eye to Whole Eye is just getting "over the hill".

But the real world doesn't look like that. Evolution operates in a space with (at least) hundreds of thousands of dimensions - every codon in every gene can change, genes/chunks of genes can copy or delete, etc. The "No Eye" state doesn't have one outgoing arrow, it has hundreds of thousands of outgoing arrows, and "Eye Part 1" has hundreds of thousands of outgoing arrows, and so forth.

As we move further away from the starting state, the number of possible states increases exponentially. By the time we're as-far-away as Whole Eye (which, in practice, is a lot more than three steps), the number of possible states will outnumber the atoms in the universe. If evolution is uniformly-randomly exploring that space, without any pressure toward the Whole Eye state specifically, it will not ever stumble on the Whole Eye - no matter how much slack it has.
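To get a feel for how quickly that space blows up, here is a rough sketch in Python. The branching factor of 100,000 is taken from the low end of "hundreds of thousands of outgoing arrows"; the figure of roughly 10^80 atoms in the observable universe is a commonly cited estimate, not something from this thread; and `steps_to_exceed` is a hypothetical helper. It counts mutation sequences, which overcounts distinct states whenever different paths converge, so treat it as an upper-bound illustration only.

```python
def steps_to_exceed(branching, target):
    """Smallest number of steps k such that branching**k exceeds target."""
    steps, count = 0, 1
    while count <= target:
        count *= branching  # every state fans out into `branching` successors
        steps += 1
    return steps


ATOMS_IN_UNIVERSE = 10**80  # assumed order-of-magnitude estimate

print(steps_to_exceed(100_000, ATOMS_IN_UNIVERSE))  # 17
```

Under these assumptions, the number of possible paths passes the atom count after only 17 steps, far fewer than a real eye would need.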

Point is: the hard part of getting from "No Eye" to "Whole Eye" is not the fitness cost in the middle, it's figuring out which direction to go in a space with hundreds of thousands of directions to choose from at every single step.

Conversely, the weak evolutionary benefits of partial-eyes matter, not because they grant a fitness gain in themselves, but because they bias evolution in the direction toward Whole Eyes.

Let's apply that to some of the examples.

Tariffs example: from the perspective of a policy-maker, the hard part of evolving successful companies is not giving them plenty of slack, it's figuring out which company-designs are actually likely to succeed. If policy makers just grant unconditional slack, then they'll end up with random company-designs, and the exponentially-vast majority of random company-designs suck. They need to strategically select company-designs which will work if given slack. I don't have a reference, but IIRC, both Korean and Japanese policy makers did think about the problem this way.

Monopolies/Research example: the hard part of a successful research lab is not giving plenty of slack, it's figuring out which research-directions are actually likely to succeed. If management just funds research indiscriminately, then they'll end up with random research directions, and the exponentially-vast majority of random research directions suck. Xerox and Bell worked in large part because they successfully researched things targeted toward their business applications - e.g. programming languages and solid-state electronics.

Rand/Sears: the hard part of a successful company is not giving internal components plenty of slack, it's figuring out what each component needs to do in order to actually be useful to the company (i.e. alignment). A good example here is Amazon: they try to expose all of their internal-facing products to the external world. That's how Amazon's cloud compute services started, for instance: they sold their own data center infrastructure to the rest of the world. That exposes the data center infrastructure to ordinary market pressure, and forces them to produce a competitive product. The external market tells the data infrastructure team "which direction to go" in order to be maximally-useful. On the other hand, if Amazon's data center team had to compete with the warehouse team without external market pressure, then we'd effectively have two monopolies bargaining - not actually market competition at all.

Replies from: Charlie Steiner, Vaniver

↑ comment by Charlie Steiner · 2020-05-14T06:10:03.544Z · LW(p) · GW(p)

This reminds me of the machine learning point that when you do gradient descent in really high dimensions, local minima are less common than you'd think, because to be trapped in a local minimum, every dimension has to be bad.

Instead of gradient descent getting trapped at local minima, it's more likely to get pseudo-trapped at "saddle points" where it's at a local minimum along some dimensions but a local maximum along others, and due to the small slope of the curve it has trouble learning which is which.
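A toy illustration of that pseudo-trapping, assuming the simplest possible saddle, f(x, y) = x**2 - y**2, and plain fixed-step gradient descent. The function, starting point, and learning rate are illustrative choices, not taken from any real training run.

```python
def grad(x, y):
    """Gradient of f(x, y) = x**2 - y**2, which has a saddle at the origin."""
    return 2 * x, -2 * y


def descend(x, y, steps, lr=0.1):
    """Run `steps` iterations of fixed-step gradient descent from (x, y)."""
    for _ in range(steps):
        gx, gy = grad(x, y)
        x, y = x - lr * gx, y - lr * gy
    return x, y


# The origin is a local minimum along x and a local maximum along y.
# Starting almost exactly on the x-axis, descent first slides toward the
# saddle, lingers where the slope is tiny, and only later escapes along y.
early = descend(1.0, 1e-6, 50)   # both coordinates still close to zero
late = descend(1.0, 1e-6, 100)   # y has grown large: the saddle was escaped
```

With this setup each step multiplies x by 0.8 and y by 1.2, so the escape direction exists from the start but takes dozens of steps to become visible.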

↑ comment by Vaniver · 2020-05-13T20:23:57.382Z · LW(p) · GW(p)

If management just funds research indiscriminately, then they'll end up with random research directions, and the exponentially-vast majority of random research directions suck. Xerox and Bell worked in large part because they successfully researched things targeted toward their business applications - e.g. programming languages and solid-state electronics.

That said, I think there's still a compelling point in slack's favor here; my impression is that Bell Labs (and probably Xerox?) put some pressure on people to research things that would eventually be helpful, but put most of its effort into hiring people with good taste and high ability in the first place.

Replies from: johnswentworth, Vaniver

↑ comment by johnswentworth · 2020-05-13T21:16:32.844Z · LW(p) · GW(p)

That sounds plausible; hiring people with good taste and high ability is also a good way to filter out the exponentially-vast number of useless research directions (assuming that one can recognize such people [LW · GW]). That said, I wouldn't label that a point in favor of slack, so much as another way of filtering. It's still mainly solving the problem of "which direction to go" rather than "can we get over the hill".

Replies from: Benquo, ChristianKl, countingtoten

↑ comment by ChristianKl · 2020-05-14T08:10:20.882Z · LW(p) · GW(p)

Alan Kay of Xerox PARC makes the argument that hiring great people and giving them freedom is key to getting good innovation, and that this is the principle on which PARC worked.

The funders are only supposed to provide a vision, not goals, which are supposed to be picked by individual researchers.

↑ comment by countingtoten · 2020-05-13T21:25:27.433Z · LW(p) · GW(p)

This shows why I don't trust the categories. The ability to let talented people go in whatever direction seems best will almost always be felt as freedom from pressure.

↑ comment by Vaniver · 2020-05-29T20:58:48.270Z · LW(p) · GW(p)

From The Sources of Economic Growth by Richard Nelson, but I think it's a quote from James Fisk, Bell Labs President:

If the new work of an individual proves of significant interest, both scientifically and in possible communications applications, then it is likely that others in the laboratory will also initiate work in the field, and that people from the outside will be brought in. Thus a new area of laboratory research will be started. If the work does not prove of interest to the Laboratories, eventually the individual in question will be requested to return to the fold, or leave. It is hoped the pressure can be informal. There seems to be no consensus about how long to let someone wander, but it is clear that young and newly hired scientists are kept under closer rein than the more senior scientists. However even top-flight people, like Jansky, have been asked to change their line of research. But, in general, the experience has been that informal pressures together with the hiring policy are sufficient to keep AT&T and Western Electric more than satisfied with the output of research.

[Most recently brought to my attention by this post [LW · GW] from a few days ago]

comment by DirectedEvolution (AllAmericanBreakfast) · 2021-12-23T09:41:15.657Z · LW(p) · GW(p)

The referenced study on group selection on insects is "Group selection among laboratory populations of Tribolium," from 1976. Studies on Slack claims that "They hoped the insects would evolve to naturally limit their family size in order to keep their subpopulation alive. Instead, the insects became cannibals: they ate other insects’ children so they could have more of their own without the total population going up."

This makes it sound like cannibalism was the only population-limiting behavior the beetles evolved. According to the original study, however, the low-population condition (B populations) showed a range of population size-limiting strategies, including but not limited to higher cannibalism rates.

"Some of the B populations enjoy a higher cannibalism rate than the controls while other B populations have a longer mean developmental time or a lower average fecundity relative to the controls. Unidirectional group selection for lower adult population size resulted in a multivarious response among the B populations because there are many ways to achieve low population size."

Scott claims that group selection can't work to restrain boom-bust cycles (i.e. between foxes and rabbits) because "the fox population has no equivalent of the overarching genome; there is no set of rules that govern the behavior of every fox." But the empirical evidence of the insect study he cited shows that we do in fact see changes in developmental time and fecundity. After all, a species has considerable genetic overlap between individuals, even if we're not talking about heavily inbred family members, as we'd be seeing in the beetle study. Wikipedia's article on human genetic diversity cites a Nature article and says "as of 2015, the typical difference between an individual's genome and the reference genome was estimated at 20 million base pairs (or 0.6% of the total of 3.2 billion base pairs)."

An explanation here is that the inbred beetles of the study are becoming progressively more inbred with each generation, meaning that genetic-controlled fecundity-limiting changes will tend to be shared and passed down. Individual differences will be progressively erased generation by generation, meaning that as time goes by, group selection may increasingly dominate individual competition as a driver of selection.

All this is to motivate the outer/inner selection model, the idea that the ability to impose a top-down ruleset restraining the selfish short-term interests of individuals naturally results in higher-level entities. In biology, it's multicellular life, while in human culture, it's various forms of social enterprise. Scott is trying to explain not only how such social enterprises form, but why some fail and others thrive.

In part 3, this model is used to justify the tenure system, as well as diversity in grantmaking. And this is where I think Scott's inner/outer selection model, with its origins in biology, doesn't work as well. Tenure has its origins in the early 1600s, but really took off in the mid-19th century. The first university was founded in 1088.

Under Scott's explanatory framework, tenure is an inner constraint that has spread because it enhances the fitness of universities by constraining academics from focusing on shortsighted publishing at the expense of long-term investments in excellent research. This can't be the result of blind evolution selecting for the survival and reproduction of universities, because the individual "organisms" (universities) often predate the "tenure gene" by centuries, and then acquired it hundreds of years into their individual lifespan. Instead, tenure historically is the result of 19th-century labor organizing by teachers. In fact, it looks like tenure was a rebellion of the inner system against the outer.

If we run with Scott's framing, though, things get weird. What if tenure helps universities prosper not because it helps them produce high-quality research, but because it helps them recruit and maintain high-prestige academics capable of attracting big grants from external funders? If so, that would be a little like an organism that figures out how to attract the healthiest cells from a neighboring organism and incorporate them into its body. That seems to be a facet of group selection in biology (i.e. mitochondria and gut bacteria), but for the most part, our bodies are vigilant to destroy outsiders. To me, this is just a challenge for taking frameworks that are derived from the particularities of eukaryotic biology and trying to apply them to a different sort of entity with an entirely different set of mechanisms for self-definition, survival, reward, and replication. Perhaps group selection is still relevant, but this framing of university tenure as a group selection ruleset feels less like a useful way to explore culture and more like a mad lib. We're just taking the abstract components of the inner/outer selection model for cancer biology and finding the handiest intuitive category to map onto the component parts. It creates superficial plausibility, but isn't historically accurate and feels mechanistically questionable.

I think that a more compelling concept of a human "ruleset" is perhaps the pattern of behavior called "professionalism." Across the board, groups of all kinds that maintain a basic level of professional decorum tend to thrive. Those that do not tend to fail. The way that looks across cultures can vary, but there's a recognizable commonality around the world. Something like professionalism has been around for a long time, and a very large number of professional groups have come and gone on a much shorter time scale. This creates opportunity for group selection to function, an opportunity that seems to be lacking in the university/tenure context.

Moving to the last section, Boeing is not a monopoly. Their four largest competitors have about the same number of employees all together as Boeing has alone. But that's a side concern. More importantly, lack of slack is not the obvious explanation for why a misbehaving monopoly would persist. The most obvious solution would be for an activist investor to purchase 51% of the monopoly's shares, then either reform or profit off the company. But they'd face whatever coordination and incentives caused the monopoly to misbehave in the first place. They'd also have to deal with efficient market questions. The real story here, I think, isn't slacklessness, but computational challenges.

An alternative explanation for why monopolies would be major innovators is not because their freedom from competition makes them able to invest for the long term, but rather because they are the only employer in the industry. As a consequence, the company gets the credit for every innovation occurring in its industry during the time period in which it's a monopoly.

To make this more clear, imagine that an industrial sector starts with 1 company, which produces 10 inventions per year for 10 years, for a total of 100 inventions. Then, it splits into 10 companies, each of which produces 2 inventions per year for 10 years, for a total of 200 inventions. The smaller companies will individually only have 20% of the inventions that the monopoly had, but the industry as a whole is twice as innovative.
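The arithmetic in that example can be checked in a few lines of Python. The company counts and invention rates are the ones given above; `total_inventions` is just a hypothetical helper name.

```python
def total_inventions(companies, per_company_per_year, years):
    """Total inventions produced by an industry over the given period."""
    return companies * per_company_per_year * years


monopoly_total = total_inventions(1, 10, 10)  # 100: one firm gets all the credit
split_total = total_inventions(10, 2, 10)     # 200: industry-wide after the split
per_small_company = split_total // 10         # 20 inventions credited to each firm

share_of_monopoly = per_small_company / monopoly_total  # 0.2, i.e. 20%
industry_ratio = split_total / monopoly_total           # 2.0, i.e. twice as innovative
```

Each small company looks five times less inventive than the old monopoly, even though the sector as a whole has doubled its output.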

Trying to evaluate the impact of a monopoly on innovation requires evaluating something like the "rate of innovation per capita" among employees in the sector, but even that's not good enough, because we don't know if a monopoly context tends to expand or constrain the population of employees, and we also care about the absolute number of innovations per year. If a highly monopolistic industrial landscape tended to increase the number of people choosing careers as innovators while decreasing the rate of innovation per capita, it's hard to know how that would cash out in terms of innovations per year.

Turning to the section on "strategy games," and specifically the Graham Greene quote, while I don't know historical population levels for Italy vs. Switzerland, in modern times, Italy has 7x the population of Switzerland. From "The Renaissance in Switzerland," "The geographical position of Switzerland made contact with Renaissance manifestations in Italy and Germany easy even if the country was too small and poor for notable buildings or works of art." Switzerland, then, seems like it suffered if anything from too little slack, not too much.

Where Scott references Greece's "slack" from being "hard to take over" "even by Sparta," I suggest the delightful blog A Collection of Unmitigated Pedantry's take on Sparta's underwhelming military prowess. Scott points out that this part is purely speculative, so my main aim is just to spread the word that there's a great blog on military history to be read, if you haven't seen it before.

One of my issues with this article as a whole, beyond the epistemological topiary, is that it veers between instances in which we can clearly see on a mechanistic level how dire poverty forces an inventor to take a day job rather than invent cold fusion, to a plausible but unsupported claim that Sears' unconventional corporate structure contributed to its implosion, to wild speculation of "this thing happened in history, and maybe it was caused by too much/too little/just the right amount of slack too!" Scott sometimes labels his confidence levels explicitly, and other times indicates them with his tone, but I notice that my first read produced a credulous absorption of his speculative claims as factual, and my second read left me with Gell-Mann amnesia. If we're really having a hard time finding a slack-based account for some phenomenon, we can always redefine slack (lack of resources vs. absence of competition), play around with whether the problem was too much or too little slack or the wrong temporal pattern of change in slack, or add a third (and why not a fourth?) layer to the inner/outer model.

As a complicating extension, it's hard to say where the slackness "resides." For example, imagine a student has two tutors, one harsh and exacting (low slack), the other nurturing and patient (high slack). Say the student notices that they learn faster with the low-slack tutor. They hypothesize that this shows that, at least in the tutoring domain, their optimal learning-rate is generated by low-slack tutors. So they hire low-slack tutors for every subject, only to find that their learning rate plummets.

They now suppose that this must be because with their original mix of tutors, adding a low-slack tutor enhanced their learning rate for that individual subject, but also used up their overall emotional capacity to deal with stress. Adding all low-slack tutors overwhelmed their emotional budget.

Optimal slack levels on one level led to conditions of suboptimal slack on a different level. Optimizing slack would mean finding harmonious slack levels throughout the many interwoven systems affecting the entities in question.

But we still might be able to do that by just varying the slack levels in ways that seem sensible, and keeping the new equilibrium if we like the outcome. You don't necessarily need an RCT to figure this out. Maybe the barrier is just having the idea and putting it into action. The effects might be obvious. Maybe this is low-hanging fruit.

The vagueness and complexity of "slack" as a concept makes me worry that this term lends itself to be a hand-wavey explanation for whatever a writer wants to assert, or for political agendas, more than for making testable predictions that enhance our understanding of the world. What it seems to offer is inspiration for making testable predictions. It's a hypothesis-generating tool.

This seems to be one of the things Scott's really good at. He doesn't make too many testable predictions, although he sometimes shares those of others. Instead, he finds patterns in observations and makes you fall in love with that pattern. Then, it's on you to figure out how to turn that speculative pattern into a testable hypothesis. This is a valuable skill, one that many of his readers seem to envy. But it also puts you at risk of just resorting to that pattern he's jammed into your head any time you need to reach for an explanation for something.

Unfortunately, I'm really not sure if we have it in us, as a blogging community, to internally coordinate intellectual progress. We have speculative stuff, like this article. To that, we need not only epistemic spot checks, like I'm trying to do in this review, but attempts at operationalization and normal scientific study, which this article is referencing in places, but which isn't obviously being directly triggered or motivated by this article. If we mostly only have the time and expertise for speculation, exhortations, and the occasional fact check or two, how do we achieve intellectual progress? How will we get the resources to have these ideas checked, operationalized, and put to the test? How do we overcome our short-term status competitions in order to create a body of work that builds on itself over time toward a higher long-term payoff for the community?

It sounds like we need more slack.

Replies from: Vaniver↑ comment by Vaniver · 2022-01-01T05:26:18.995Z · LW(p) · GW(p)

An explanation here is that the inbred beetles of the study are becoming progressively more inbred with each generation, meaning that genetic-controlled fecundity-limiting changes will tend to be shared and passed down. Individual differences will be progressively erased generation by generation, meaning that as time goes by, group selection may increasingly dominate individual competition as a driver of selection.

I don't think this adds up. Yes, species share many of their genes--but then those can't be the genes that natural selection is working on! And so we have to explain why the less fecund individuals survived more than the more fecund individuals. If that's true, then this is just an adaptive trait going to fixation, as is common (and isn't really a group selection thing).

Replies from: AllAmericanBreakfast↑ comment by DirectedEvolution (AllAmericanBreakfast) · 2022-01-01T07:28:59.550Z · LW(p) · GW(p)

I’d enjoy talking this out with you if you have the stamina for a few more back-and-forths. I didn’t quite understand the wording of your counter argument, so I’m hoping you could spell it out in a bit more detail?

Replies from: Vaniver↑ comment by Vaniver · 2022-01-01T18:23:05.761Z · LW(p) · GW(p)

Looking at the paper, I think I wasn't tracking an important difference.

I still think that genes that have reached fixation among a population aren't selected for, because you don't have enough variance to support natural selection. The important thing that's happening in the paper is that, because they have groups that colonize new groups, traits can reach fixation within a group (by 'accident') and then form the material for selection between groups. The important quote from the paper:

The total variance in adult numbers for a generation can be partitioned on the basis of the parents in the previous generation into two components: a within-populations component of variance and a between-populations component of variance. The within-populations component is evaluated by calculating the variance among D populations descended from the same parent in the immediately preceding generation. The between-populations component is evaluated by calculating the variance among groups of D populations descended from different parents. The process of random extinctions with recolonization (D) was observed to convert a large portion of the total variance into the between-populations component of the variance (Fig. 2b), the component necessary for group selection.
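To make the two components concrete, here is a minimal sketch of a within/between variance partition. It is not the paper's exact procedure (which groups populations by their parent in the previous generation); the function name, the equal-group-size assumption, and the adult counts are all hypothetical and chosen only for illustration.

```python
from statistics import fmean, pvariance


def partition_variance(groups):
    """Split the variance of pooled values into within- and between-group parts.

    Assumes equal-sized groups and population (not sample) variances, so that
    within + between equals the total variance exactly.
    """
    if len({len(g) for g in groups}) != 1:
        raise ValueError("this sketch assumes equal-sized groups")
    pooled = [x for g in groups for x in g]
    within = fmean([pvariance(g) for g in groups])    # average spread inside each group
    between = pvariance([fmean(g) for g in groups])   # spread of the group means
    total = pvariance(pooled)
    return within, between, total


# Hypothetical adult counts: the same four values, grouped two different ways.
mixed = [[10, 30], [10, 30]]       # every group contains both kinds of lineage
founder = [[10, 10], [30, 30]]     # each group descends from a single kind

for label, groups in (("mixed", mixed), ("founder", founder)):
    within, between, total = partition_variance(groups)
    print(label, "within:", within, "between:", between, "total:", total)
```

The total variance is the same in both groupings; only its location changes. In the second grouping all of it sits between groups, which is the component that selection among groups can act on.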

So even tho low fecundity is punished within every group (because your groupmates who have more children will be a larger part of the ancestor distribution), some groups by founder effects will have low fecundity, and be inbred enough that there's not enough fecundity variance to differentiate between members of the population of that group (even if fecundity varies among all beetles, because they're not a shared breeding population).

[EDIT] That is, I still think it's correct that foxes sharing 'the fox genome' can't fix boom-bust cycles for all foxes, but that you can locally avoid catastrophe in an unstable way.

For example, there's a gene for some species that causes fathers to only have sons. This is fascinating because it 1) is reproductively successful in the early stage (you have twice as many chances to be a father in the next generation as someone without the copy of the gene, and all children need to have a father) and it 2) leads to extinction in the later stage (because as you grow to be a larger and larger fraction of the population, the total number of descendants in the next generation shrinks, with there eventually being a last generation of only men). The reason this isn't common everywhere is group selection; any subpopulations where this gene appeared died out, and failed to take other subpopulations down with them because of the difficulty of traveling between subpopulations. But this is 'luck' and 'survivor recolonization', which are pretty different mechanisms than individual selection.

Replies from: wangscarpet↑ comment by wangscarpet · 2022-02-21T18:34:14.709Z · LW(p) · GW(p)

This is a little like game theory coordination vs competition actually. Coordination is if you can constrain all actors to change in the same way; competition is if each can change while holding the others fixed. "Evolutionary replicator dynamics" is a game theory algorithm that encompasses the latter.

Even if the beetles all currently share the same genes, any one beetle can have a mutation that competes with his/her peers in future generations. Therefore, reduced variation at the current time doesn't cause the system to be stable, unless there's some way to ensure that any change is passed to all beetles (like having a queen that does all the breeding).
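As a rough illustration of that instability, here is a minimal sketch of discrete-time replicator dynamics with two types: one that restrains its breeding and a mutant that does not. The payoff numbers, the function names, and the 1% starting share are assumptions made up for this example, not values from the beetle study.

```python
def replicator_step(shares, payoff):
    """One discrete replicator update: each type grows in proportion to its fitness."""
    n = len(shares)
    # Fitness of type i is its expected payoff against the current population mix.
    fitness = [sum(payoff[i][j] * shares[j] for j in range(n)) for i in range(n)]
    mean_fitness = sum(s * f for s, f in zip(shares, fitness))
    return [s * f / mean_fitness for s, f in zip(shares, fitness)]


def simulate(shares, payoff, steps):
    """Return the list of population states, starting with the initial one."""
    history = [list(shares)]
    for _ in range(steps):
        shares = replicator_step(shares, payoff)
        history.append(shares)
    return history


# Hypothetical payoffs (row = own type, column = opponent's type).
# Index 0 = "restrain", index 1 = "overbreed". Overbreeding pays more against
# either opponent, even though an all-restrain population does better overall
# than an all-overbreed one (3 vs 2).
PAYOFF = [
    [3.0, 1.0],
    [4.0, 2.0],
]

history = simulate([0.99, 0.01], PAYOFF, steps=200)
print("initial overbreed share:", history[0][1])
print("final overbreed share:", history[-1][1])
```

Under these assumed payoffs the mutant's share rises every generation, even from a nearly uniform starting population, which is the sense in which low current variation does not make the restrained state stable.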

comment by Viliam · 2020-05-14T21:41:01.684Z · LW(p) · GW(p)

Web moderation is also like this. Karma can become a tool of Moloch, but discussions without voting do not separate the wheat from the chaff.

(With all respect to the author, finding the best comments on SSC is a full-time job.)

Replies from: Charlie Steiner↑ comment by Charlie Steiner · 2020-05-16T07:15:29.102Z · LW(p) · GW(p)

Well, you need some selection process. But for a karma-less community you can still have selection on members, or social encouragement/discouragement. I guess this also requires that the volume of comments isn't so high that ain't nobody got time for that.

comment by NoriMori1992 · 2022-09-30T01:31:39.844Z · LW(p) · GW(p)

Some scientists tried to create group selection under laboratory conditions. They divided some insects into subpopulations, then killed off any subpopulation whose numbers got too high, and “promoted” any subpopulation that kept its numbers low to better conditions. They hoped the insects would evolve to naturally limit their family size in order to keep their subpopulation alive. Instead, the insects became cannibals: they ate other insects’ children so they could have more of their own without the total population going up. In retrospect, this makes perfect sense; an insect with the behavioral program “have many children, and also kill other insects’ children” will have its genes better represented in the next generation than an insect with the program “have few children”.

Why didn't they try also killing off subpopulations that engaged in cannibalism, and promoting those that didn't? And what would have most likely happened if they had tried that?

comment by Leafcraft · 2020-05-13T07:57:27.036Z · LW(p) · GW(p)

I believe you used the term "genetic code" incorrectly in [II.] when you were talking about cancer; the correct word is genome.

Replies from: ChristianKl↑ comment by ChristianKl · 2020-05-13T17:52:24.380Z · LW(p) · GW(p)

For anybody who wants to understand:

The difference between the terms is that genetic code means the part of the DNA that codes for proteins. The genome on the other hand means all DNA and thus also telomere and other information like various types of RNA for which DNA codes.

Replies from: adrian-arellano-davin↑ comment by mukashi (adrian-arellano-davin) · 2021-08-20T00:07:31.034Z · LW(p) · GW(p)

Sorry for replying to a comment from so long ago, I just bumped into this.

This clarification is wrong, and a common mistake in science journalism: the genetic code is not the part of DNA that codes for proteins. The genetic code is the mapping between triplets of nucleotides (codons) and amino acids. The genetic code is highly conserved among all living beings, though there is some variation (especially in mitochondria, where the selective pressure seems to be quite special).

https://en.wikipedia.org/wiki/Genetic_code

The correct word is genome, I agree
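The distinction can be sketched in a few lines: the genome is the sequence, while the genetic code is the lookup table used to read it. The tables below are a small hand-picked subset of the standard and vertebrate mitochondrial codes, written with DNA codons; the fragment and the function name are hypothetical, and a real translation needs the full 64-codon table.

```python
# Partial codon tables (DNA alphabet). "*" marks a stop codon.
STANDARD_CODE = {
    "ATG": "M",  # methionine (start)
    "ATA": "I",  # isoleucine
    "TGG": "W",  # tryptophan
    "AGA": "R",  # arginine
    "TGA": "*",  # stop
}

# The vertebrate mitochondrial code reassigns a few codons.
VERTEBRATE_MITO_CODE = {
    **STANDARD_CODE,
    "ATA": "M",  # methionine instead of isoleucine
    "TGA": "W",  # tryptophan instead of stop
    "AGA": "*",  # stop instead of arginine
}


def translate(dna, code):
    """Read a DNA string codon by codon until a stop codon or the end.

    Raises KeyError for codons missing from the (deliberately partial) table.
    """
    protein = []
    usable_length = len(dna) - len(dna) % 3  # ignore a trailing partial codon
    for start in range(0, usable_length, 3):
        amino_acid = code[dna[start:start + 3]]
        if amino_acid == "*":
            break
        protein.append(amino_acid)
    return "".join(protein)


genome_fragment = "ATGATATGGTGA"  # hypothetical sequence: this is "genome" material
print(translate(genome_fragment, STANDARD_CODE))
print(translate(genome_fragment, VERTEBRATE_MITO_CODE))
```

The same sequence yields different peptides under the two tables, so a mutation changes the genome, not the genetic code.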

comment by ChristianKl · 2020-05-13T20:09:55.059Z · LW(p) · GW(p)

It's not that evolutionary pressure doesn't exist after a catastrophe that kills most members of a species. There will still be different reproduction rates of different members of the species. Not all members will find mates. The key point is that the evolutionary pressure is quite different from the pressure that the species is usually exposed to.

The thing that's more central is that there's not one specific context for which everything is optimized. That gives you diversity.

When I contribute on LessWrong I might get benefits such as status but the evaluation of my performance is sufficiently unclear that it's hard to focus on those gains and easy to just write what I consider to be important.

comment by Miguel Cisneros (miguel-cisneros) · 2020-05-13T06:47:36.475Z · LW(p) · GW(p)

I study secular psychology and I can tell you the obvious... Evolution theory has been proven wrong a long time ago. Also, the human eye can't "evolve". It is recognized as one of many examples of irreducible complexity. You should read Michael Behe and Jonathan Wells, they have tons of evidence that refute your core hypothesis... Also, your moral foundation (Francis Bacon) is so weak that I am not going to bother refuting it

Replies from: Raemon, Leafcraft, Dustin, Teerth Aloke↑ comment by Raemon · 2020-05-14T06:56:31.556Z · LW(p) · GW(p)

Mod note: I have locked this thread, and banned Miguel Cisneros.

There are parts of the internet that are the right space for this sort of conversation, but this isn't one of them. A central point of LessWrong is that some questions can be settled so we can move on to other interesting debates.

Replies from: Pattern, Viliam↑ comment by Viliam · 2020-05-14T21:22:59.279Z · LW(p) · GW(p)

The thread is locked, but I can still reply to your comment, is that okay?

Replies from: Raemon↑ comment by Raemon · 2020-05-14T21:35:29.715Z · LW(p) · GW(p)

Eh, that's an accident, and if there turns out to be a Big Subsequent Drama Thread underneath my comment I may lock it too, but it seems fine for now to let people ask clarifying questions if they want. (I may end up moving that discussion to another Meta Post if it gets too big)

Replies from: Viliam↑ comment by Viliam · 2020-05-15T21:10:09.212Z · LW(p) · GW(p)

I thought that was on purpose, like "the thread in general is locked, but here is the place to go meta and complain about locking".

What does the admin user interface look like? You lock comments one by one, instead of locking the entire thread? Maybe "lock this comment and all its replies" would be a better option. Less clicking, smaller chance of a mistake.

Replies from: Raemon↑ comment by Raemon · 2020-05-15T21:15:07.747Z · LW(p) · GW(p)

Oh for sure, we just haven't coded that yet, and it doesn't come up enough to be near the top of our priorities. (We have some tech debt around how comments work that makes it a little more annoying than it needs to be, which we'll get around to fixing someday, after which adding a "lock thread" feature would probably be easier)

↑ comment by Leafcraft · 2020-05-13T08:06:21.587Z · LW(p) · GW(p)

You mention evolution being proven wrong a long time ago. Care to elaborate?

Replies from: miguel-cisneros↑ comment by Miguel Cisneros (miguel-cisneros) · 2020-05-13T10:24:28.130Z · LW(p) · GW(p)

Sure. Look, there are SEVERAL rebuttals for atheism/evolution. One of the many examples that Dr. Michael Behe uses to show the biochemical complexity (which atheists reject), is blood clotting: If your blood does not clot in the right place, in the right amount and at the right time, you will bleed to death. It turns out that the blood clotting system involves an extremely choreographed ten-step cascade that uses about twenty different molecular compounds. To create a complex, perfectly balanced blood coagulation system, clusters of protein components have to be inserted all at once. That eliminates a Darwinian approach and fits the hypothesis of an intelligent designer. How could blood clotting develop over time, step by step, while the animal had no effective way of stopping the blood flow that would cause death every time it was injured? It doesn’t work if you only have one part of the system, you need all the components, and natural selection only works if there is something useful at that time, not in the future. Finally, no one has ever carried out experiments to show how blood clotting could have developed. It is one of the many examples of an irreducibly complex system, which needs to emerge complete to function. My response has been extracted from Behe's response in the book "The Case for a Creator" by Lee Strobel. Look, I know there are several atheists here that like to hide on this forum and erase any comment they don't like, but I believe you can be open-minded

Replies from: gilch, gjm, Ericf↑ comment by gilch · 2020-05-13T15:22:47.813Z · LW(p) · GW(p)

How could blood clotting develop over time, step by step

Step-by-Step Evolution of Vertebrate Blood Coagulation.