«Boundaries», Part 3a: Defining boundaries as directed Markov blankets

post by Andrew_Critch · 2022-10-30T06:31:00.277Z · 20 comments


This is Part 3a of my «Boundaries» Sequence on LessWrong.

Here I attempt to define (organismal) boundaries in a manner intended to apply to AI alignment and existential safety, in theory and in practice. A more detailed name for this concept might be an approximate directed (dynamic) Markov blanket.

Skip to the end if you're eager for a comparison to related work including Scott Garrabrant's Cartesian frames, Karl Friston's active inference, and Eliezer Yudkowsky's functional decision theory; these are not prerequisites.

Motivation

In Part 3b, I'm hoping to survey a list of problems that I believe are related, insofar as they would all benefit from a better notion of what constitutes the boundary of a living system and a better normative theory for interfacing with those boundaries. Here are the problems:

- AI boxing / Containment — the method and challenge of confining an AI system to a "box", i.e., preventing the system from interacting with the external world except through specific restricted output channels (Bostrom, 2014, p.129).

- Corrigibility — the problem of constructing a mind that will cooperate with what its creators regard as a corrective intervention (Soares et al, 2015).

- Mild Optimization — the problem of designing AI systems and objective functions that, in an intuitive sense, don’t optimize more than they have to (Taylor et al, 2016).

- Impact Regularization — the problem of formalizing "change to the environment" in a way that can be effectively used as a regularizer penalizing negative side effects from AI systems (Amodei et al, 2016).

- Counterfactuals in Decision Theory — the problem of defining what would have happened if an AI system had made a different choice, such as in the Twin Prisoner's Dilemma (Yudkowsky & Soares, 2017).

- Mesa-optimizers — instances of learned models that are themselves optimizers, which give rise to the so-called inner alignment problem (Hubinger et al, 2019).

- Preference Plasticity — the possibility of changes to the preferences of humans over time, and the challenge of defining alignment in light of time-varying preferences (Russell, 2019, p.263).

- (Unscoped) Consequentialism — the problem that an AI system engaging in consequentialist reasoning, for many objectives, is at odds with corrigibility and containment (Yudkowsky, 2022, no. 23).

Also, in the comments after Part 1 of this sequence, I asked commenters to vote on which of the above 8 topics I should write a deeper analysis on; here's the current state of the vote:

Go cast your vote here! Or read this part first and then vote :)

Boundaries, defined

Boundaries include things like a cell membrane, a fence around a yard, and a national border; see Part 1. In short, a boundary is going to be something that separates the inside of a living system from the outside of the system. More fundamentally, a living system or organism will be defined as

- a) a part of the world, with

- b) a subsystem called its boundary which approximately causally separates another subsystem called its viscera from the rest of the world,

- c) where the boundary state decomposes into active and passive features that direct causal influence outward and inward respectively, such that

- d) the boundary and viscera together implement a decision-making process that perpetuates these four defining properties.

One reason this combination of properties is interesting is that systems that make decisions to self-perpetuate tend to last longer and therefore be correspondingly more prevalent in the world; i.e., "survival of the survivalists".

But more importantly, this definition will be directly relevant both to x-risk and to individual humans. In particular, we want the living system called humanity to use its model of itself to perpetuate its own existence, and we want AI to be respectful of that and hopefully even help us out with it. It might seem like continuing our species is just an arbitrary subjective preference among many that humanity would espouse. However, I'll later argue that the preservation of boundaries is a special kind of preference that can play a special role in bargaining, due to having a (relatively) objective or intersubjectively verifiable meaning.

To get started, let's expand the concise definition above with more mathematical precision, one part at a time. Eventually my goal is to unpack the following diagrams:

Definition part (a): "part of the world"

First, let's define what it means for the living system to be a part of the world. For that, let's represent the world as a Markov chain (see Wikipedia for a definition), which intuitively just means the future only depends on the past via the present.

- $\mathcal{W}$ is the set of possible fully-detailed states of the entire world, including all details of the world, which will include the living system in question and its boundary. A world state is not a compressed or simplified model of the world the agent lives in; it's a fully detailed description of the entire world.

- $\mathcal{W}^{\mathbb{N}}$ is the set of all possible sequences of world states, i.e., complete world histories. In future work we can add a parameter for the resolution of time steps, but I don't think that's crucial here.

- $f : \mathcal{W} \to \Delta(\mathcal{W})$ is a (stochastic) transition function, where $\Delta(X)$ denotes the set of probability distributions on $X$, defining the probability $f(w' \mid w)$ that the world will transition to state $w'$ in the next time step, given that it is in state $w$. By modeling the world as a linear time series like this, I'm knowingly omitting considerations of special relativity (where time is relative), general relativity (where spacetime is curved), and quantum mechanics (where wave amplitudes are more fundamental than probabilities). I don't think any of these omissions render useless the concept of boundaries developed here.

- $\mu_0 \in \Delta(\mathcal{W})$ is a specified initial distribution on worlds at the start of time or earliest time of interest ($t = 0$), which defines a distribution over all possible histories from that time forward.

- $f^* : \Delta(\mathcal{W}) \to \Delta(\mathcal{W}^{\mathbb{N}})$ is the natural map which, given a distribution $\mu$ over world states, returns a distribution over futures obtained by repeated application of $f$ over time.

- $f^*(w) := f^*(\delta_w)$ denotes, for any world state $w$, the future of the Dirac (100% concentrated) distribution $\delta_w$ on the world state $w$.

- $f^*(\mu_0)$ denotes the distribution over futures generated by $f$ from the initial distribution $\mu_0$.

- $W^t$ is the state of the world at time $t$, as a random variable, obtained by projecting $\mathcal{W}^{\mathbb{N}}$ onto its $t$-th component.

- $W^{<t}$ is the sequence of states of the world prior to time $t$ (as a random variable).

- $W^{>t}$ is the sequence of states of the world after time $t$.

Since the world at each time is generated purely from the previous moment in time, it follows that history satisfies the temporal Markov property: $W^{>t} \perp W^{<t} \mid W^t$. In other words, the future is independent of the past, conditional on the present.
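To make part (a) concrete, here is a minimal sketch of a finite world, assuming a made-up three-state space in place of $\mathcal{W}$. The list `F` stands in for the transition function $f$ (row $w$ is $f(\cdot \mid w)$) and `MU0` for $\mu_0$; all numbers are hypothetical. `marginal` computes the distribution of $W^t$ under $f^*(\mu_0)$ by pushing $\mu_0$ forward $t$ times, and `sample_history` draws a history in which each next state is sampled using only the present state, which is what makes the temporal Markov property hold.

```python
import random

# Hypothetical three-state world; row w of F is the distribution f(. | w).
F = [
    [0.9, 0.1, 0.0],
    [0.2, 0.7, 0.1],
    [0.0, 0.3, 0.7],
]
MU0 = [1.0, 0.0, 0.0]  # initial distribution mu_0: a Dirac on state 0


def step(mu, F):
    """One application of f to a distribution: mu'(w2) = sum_w mu(w) * f(w2 | w)."""
    return [sum(mu[w] * F[w][w2] for w in range(len(mu))) for w2 in range(len(F[0]))]


def marginal(mu0, F, t):
    """Distribution of W^t under f^*(mu0), obtained by applying f t times."""
    mu = list(mu0)
    for _ in range(t):
        mu = step(mu, F)
    return mu


def sample_history(mu0, F, T, rng):
    """Sample (W^0, ..., W^T) from f^*(mu0); each step looks only at the present state."""
    states = range(len(mu0))
    w = rng.choices(states, weights=mu0)[0]
    history = [w]
    for _ in range(T):
        w = rng.choices(states, weights=F[w])[0]
        history.append(w)
    return history
```

For instance, `marginal(MU0, F, 2)` is approximately `[0.83, 0.16, 0.01]`. Real world states are of course astronomically richer; the point is only the shape of the objects $f$, $\mu_0$, and $f^*(\mu_0)$.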

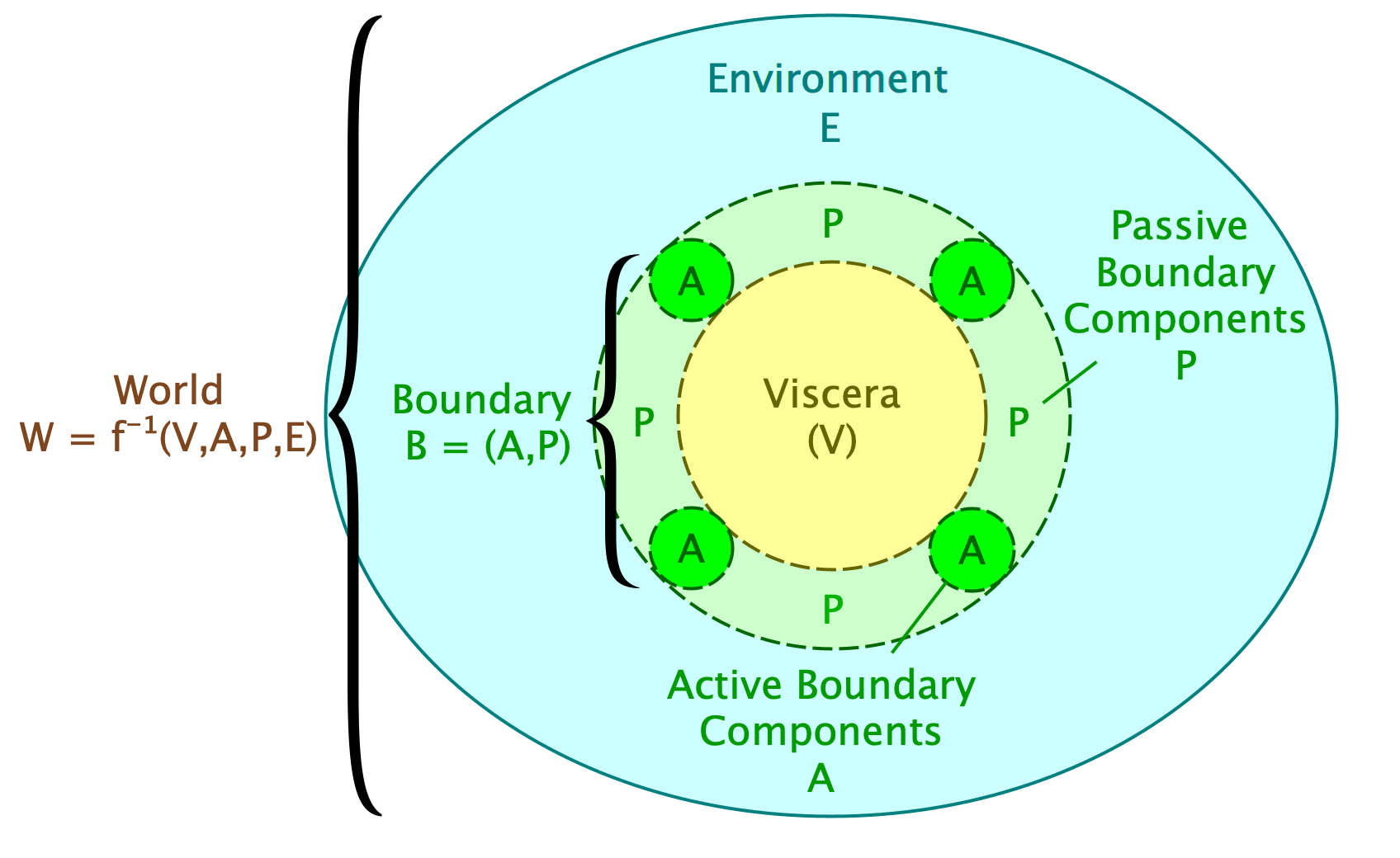

Now, for the living system to exist "within" the world, the world should be factorable into features that are and are not part of the living system. In short, the state (W) of the world should be factorable into an environment state (E), the state of the boundary of the living system (B), and the state of the interior of the living system, which I'll call its viscera (V).

Definition parts (b) & (c): the active boundary, passive boundary, and viscera

We're going to want to view the system as taking actions, so let's assume the boundary state can be further factored into what I'll call the active boundary, A (the features or parts of the boundary primarily controlled by the viscera, interpretable as "actions" of the system), and the passive boundary, P (the features or parts of the boundary primarily controlled by the environment, interpretable as "perceptions" of the system). These could also be called "output" and "input", respectively, but for later reasons I prefer the active/passive or action/perception terminology.

To formalize this, I want a collection of state spaces and maps, like so:

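- $\mathcal{W}$: the set of world states (includes everything)

- $\mathcal{V}$: the set of viscera states

- $\mathcal{B}$: the set of boundary states

- $\mathcal{A}$: the set of states of the active boundary

- $\mathcal{P}$: the set of states of the passive boundary

- $\mathcal{E}$: the set of environment states

- the projection maps $\mathcal{W} \to \mathcal{V}$, $\mathcal{W} \to \mathcal{B}$, $\mathcal{W} \to \mathcal{E}$, $\mathcal{B} \to \mathcal{A}$, $\mathcal{B} \to \mathcal{P}$, and the composites $\mathcal{W} \to \mathcal{A}$ and $\mathcal{W} \to \mathcal{P}$,

... which fit nicely into a diagram like this: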

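For each time $t$, we define a state variable for each state space by projecting from $W^t$: the viscera state $V^t$, boundary state $B^t$, environment state $E^t$, active-boundary state $A^t$, and passive-boundary state $P^t$, with $B^t = (A^t, P^t)$.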

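Each of these factorizations is assumed to be bijective, in the sense of accounting for everything that matters and not double-counting anything, i.e.,

- $\mathcal{W} \to \mathcal{V} \times \mathcal{B} \times \mathcal{E}$ is bijective;

- $\mathcal{B} \to \mathcal{A} \times \mathcal{P}$ is bijective;

- $\mathcal{W} \to \mathcal{V} \times \mathcal{A} \times \mathcal{P} \times \mathcal{E}$ is bijective (which follows from the above two).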
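When the state spaces are finite, these bijectivity conditions can be checked mechanically. The sketch below uses invented feature sets and, as an illustrative assumption, represents each world state as a tuple `(v, a, p, e)`; the post only requires that such a factorization exist, not that states literally be tuples. The projection names `pi_V`, `pi_B`, and so on are hypothetical. Two deliberately bad candidates show the two failure modes the definition rules out: losing information (not one-to-one) and double-counting (not onto).

```python
from itertools import product

# Hypothetical feature sets, for illustration only.
V = ["calm", "alert"]        # viscera states
A = ["stay", "move"]         # active-boundary states
P = ["dark", "light"]        # passive-boundary states
E = ["cold", "warm", "hot"]  # environment states
B = list(product(A, P))      # boundary states b = (a, p)

# Assumption: each world state is represented as a tuple (v, a, p, e).
W = list(product(V, A, P, E))

def pi_V(w): return w[0]
def pi_B(w): return (w[1], w[2])
def pi_E(w): return w[3]
def pi_A(b): return b[0]
def pi_P(b): return b[1]


def factor_W(w):
    return (pi_V(w), pi_B(w), pi_E(w))


def factor_B(b):
    return (pi_A(b), pi_P(b))


def drops_passive(w):
    """A bad 'factorization' that forgets p entirely: not one-to-one."""
    return (pi_V(w), pi_A(pi_B(w)), pi_E(w))


def double_counts_p(w):
    """A bad 'factorization' that puts p in both B and E: not onto."""
    return (pi_V(w), pi_B(w), (w[2], w[3]))


def is_bijective(domain, fn, codomain):
    """True iff fn maps the finite domain one-to-one onto the finite codomain."""
    images = [fn(x) for x in domain]
    return len(set(images)) == len(images) and set(images) == set(codomain)
```

Here `factor_W` and `factor_B` pass, while `drops_passive` (checked against $\mathcal{V} \times \mathcal{A} \times \mathcal{E}$) and `double_counts_p` (checked against $\mathcal{V} \times \mathcal{B} \times (\mathcal{P} \times \mathcal{E})$) fail.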

These decompositions needn't correspond to physically distinct or conspicuous regions of space, but it might be helpful to visualize the world — if it were laid out in a physical space — as being broken down into a disjoint union of parts, like this:

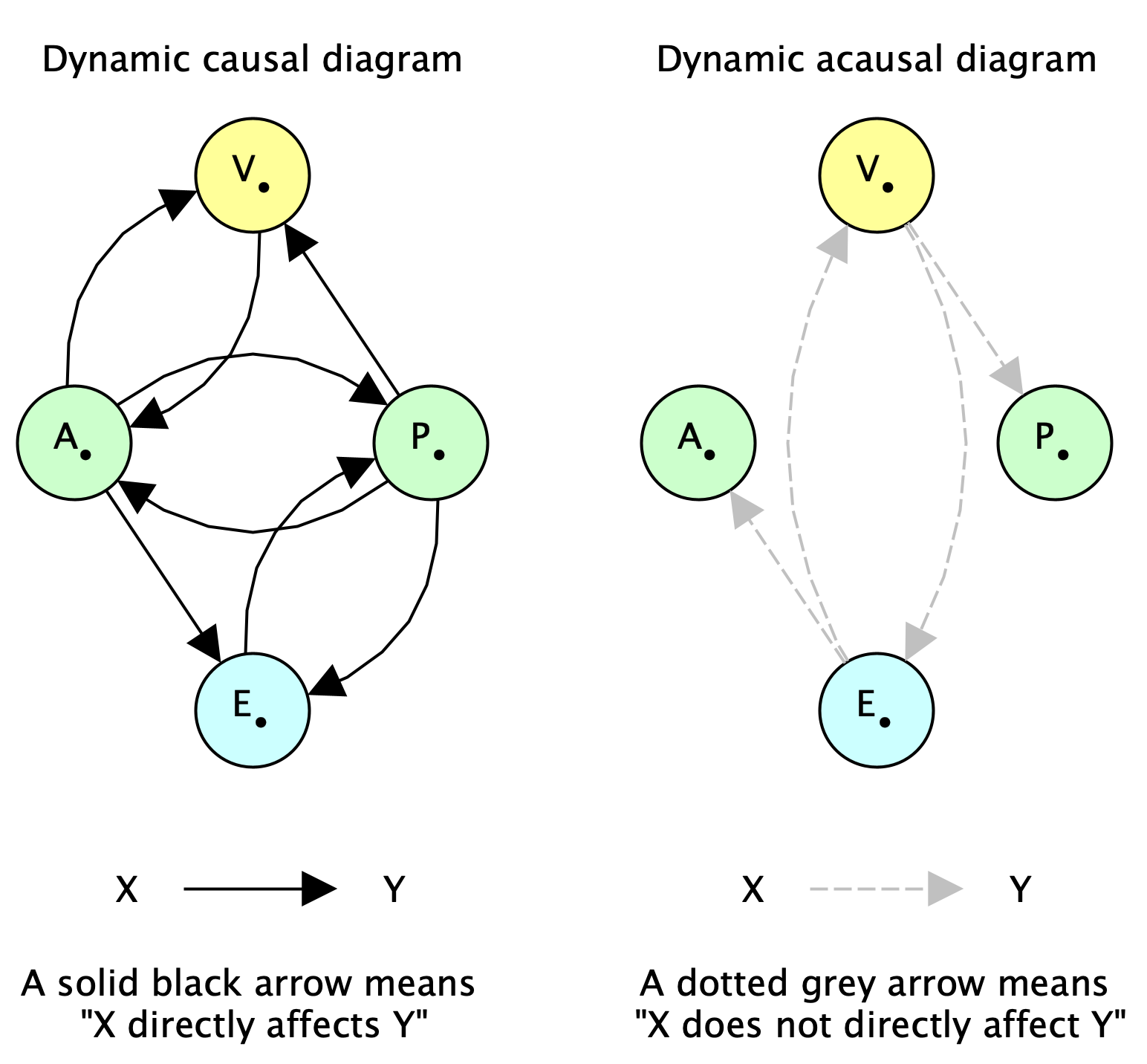

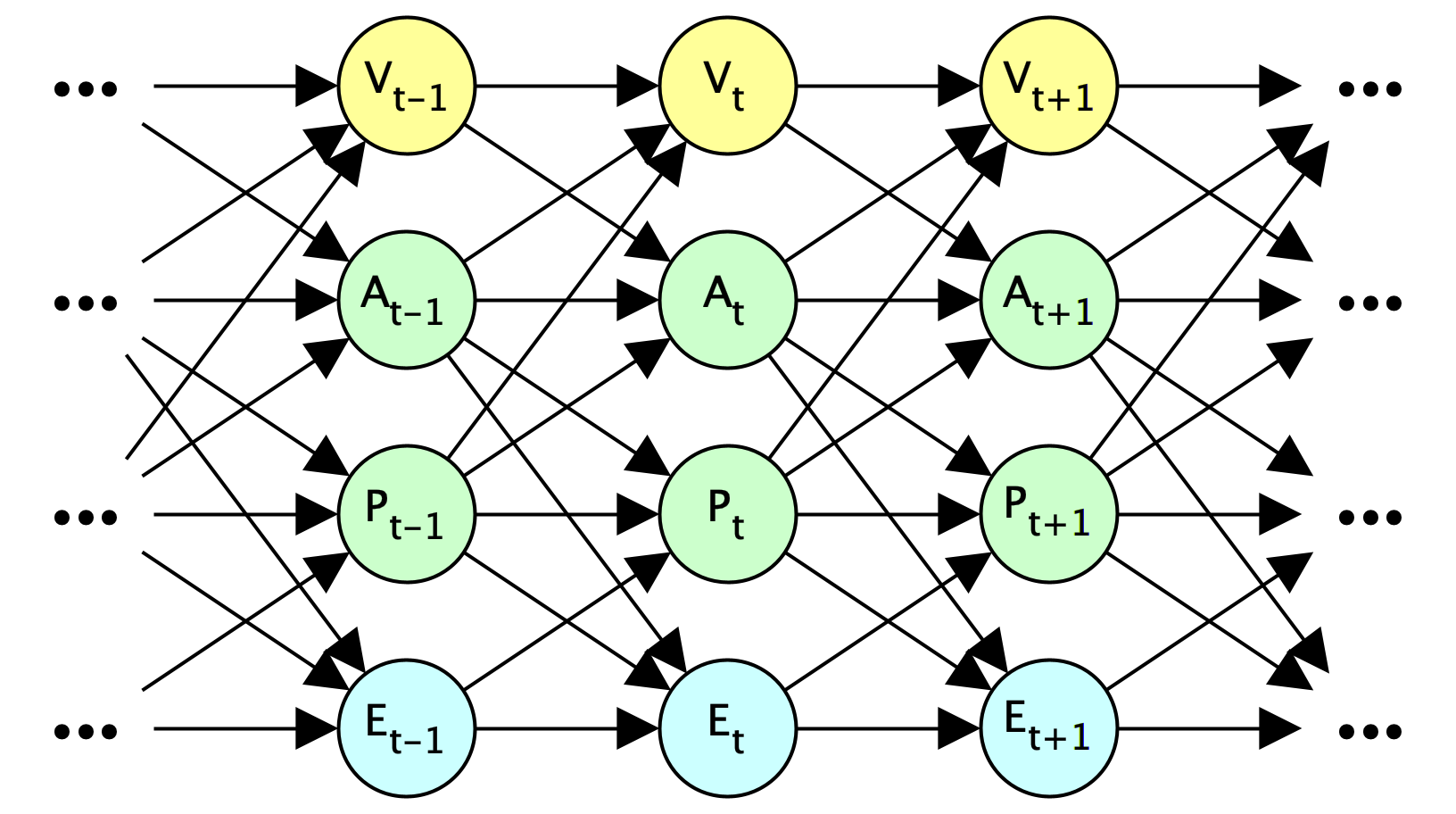

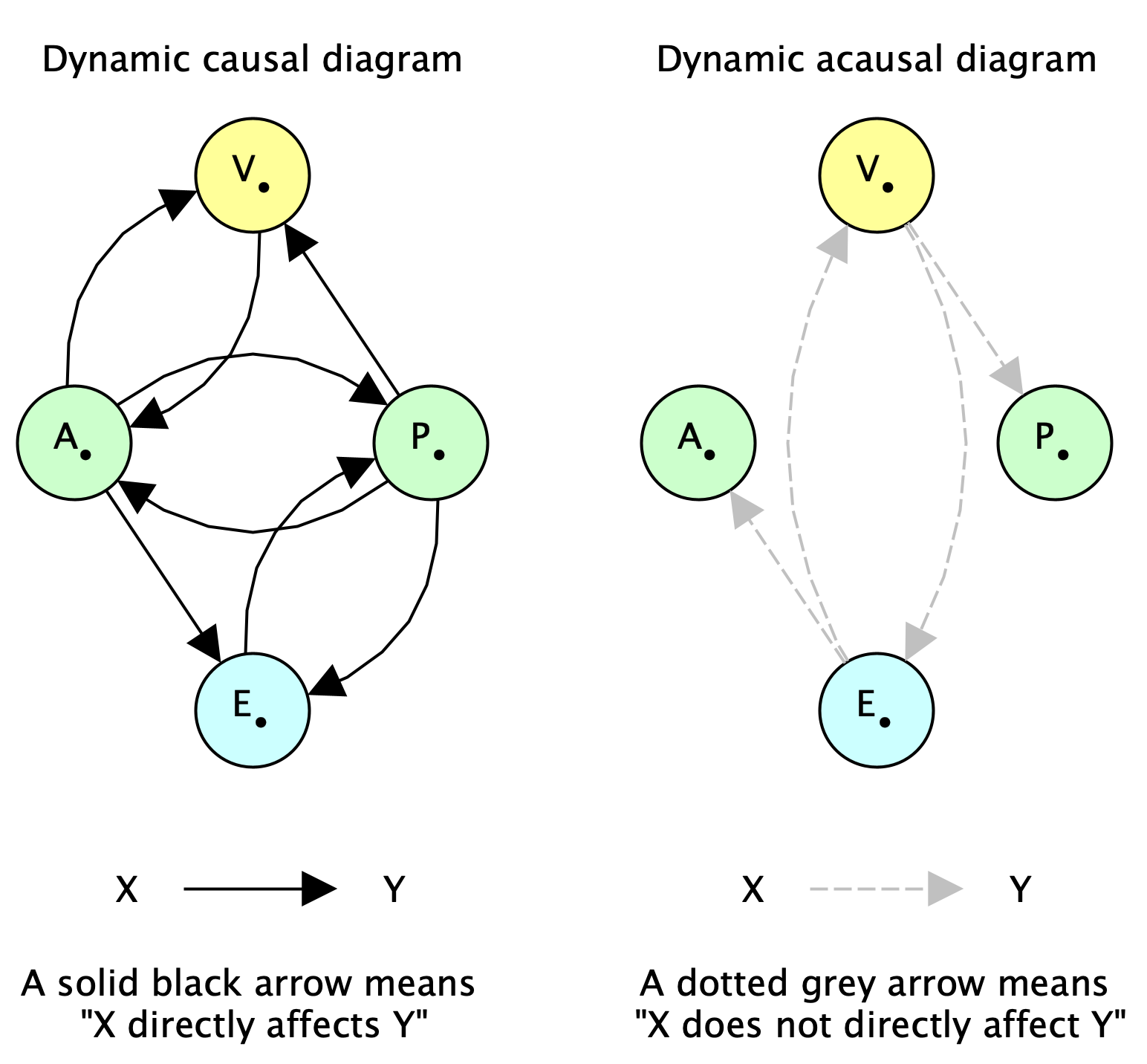

Now, when I say the boundary or its decomposition approximately causally separates the viscera from the environment, I mean that the following Pearl-style causal diagram of the world approximately holds:

This diagram is easier to parse if we highlight the arrows that are not present from each time step to the next:

If we fold each horizontal time series into a single node, we get a much simpler-looking dynamic causal diagram (or dynamic Bayes net) and what I'll call a dynamic acausal diagram, as in the earlier Figure 1:

Since I only want to assume these causal relationships are approximately valid, let's describe the approximation quantitatively. Let $I(X; Y \mid Z)$ denote the conditional mutual information of $X$ and $Y$ given $Z$, under any given distribution over world histories on which $X$, $Y$, and $Z$ are defined. Let $\mathrm{Agg}_t$ denote an aggregation function for aggregating quantities over time, like averaging, discounted averaging, or max. Define:

- "Infiltration" of information from the environment into the active boundary & viscera: $\mathrm{Infil} := \mathrm{Agg}_t \, I\big((V^{t+1}, A^{t+1}); E^t \mid V^t, B^t\big)$

- "Exfiltration" of information from the viscera into the passive boundary & environment: $\mathrm{Exfil} := \mathrm{Agg}_t \, I\big((P^{t+1}, E^{t+1}); V^t \mid B^t, E^t\big)$

When infiltration and exfiltration are both zero, a perfect information boundary exists between the (otherwise putative) inside and outside of the system, with a clear separation of perception and action as distinct directions of inward and outward causal influence.
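Each per-step term inside infiltration and exfiltration is a conditional mutual information, which is easy to compute for small finite distributions. The sketch below is a minimal illustration, assuming per-step infiltration is $I\big((V^{t+1}, A^{t+1}); E^t \mid V^t, B^t\big)$. The toy world `one_step_joint` is hypothetical: it collapses $(V^t, B^t)$ to a bit `z`, $E^t$ to an independent bit `x`, and $(V^{t+1}, A^{t+1})$ to a bit `y`. A sealed boundary lets `y` depend only on `z`; a leaky one lets the environment write `y` directly.

```python
from collections import defaultdict
from math import log2


def cond_mutual_info(joint):
    """I(X; Y | Z) in bits for a finite joint distribution {(x, y, z): prob}."""
    p_z, p_xz, p_yz = defaultdict(float), defaultdict(float), defaultdict(float)
    for (x, y, z), p in joint.items():
        p_z[z] += p
        p_xz[(x, z)] += p
        p_yz[(y, z)] += p
    total = 0.0
    for (x, y, z), p in joint.items():
        if p > 0:
            # p(x,y,z) * log2[ p(x,y,z) p(z) / (p(x,z) p(y,z)) ]
            total += p * log2(p * p_z[z] / (p_xz[(x, z)] * p_yz[(y, z)]))
    return total


def one_step_joint(leaky):
    """Hypothetical world: x = E^t and z = (V^t, B^t) are independent fair bits;
    y = (V^{t+1}, A^{t+1}) equals z if the boundary is sealed, or x if it is leaky."""
    joint = defaultdict(float)
    for z in (0, 1):
        for x in (0, 1):
            y = x if leaky else z
            joint[(x, y, z)] += 0.25
    return dict(joint)
```

The sealed world has 0 bits of infiltration and the fully leaky world has 1 bit, matching the intuition that the environment has bypassed the boundary entirely. The same function can compute per-step exfiltration by using $V^t$, $(P^{t+1}, E^{t+1})$, and $(B^t, E^t)$ as the three variables.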

With all of the above, a short yet descriptive answer to the question "what is a living system boundary?" is:

- an "approximate directed Markov blanket"

Why this phrase? Well, the boundary B = (A,P) is:

- an approximate Markov blanket, meaning that A and P approximately causally separate V and E from each other, and the separation is

- "directed" in that there are discernible outward and inward channels, namely, A and P.

In the next section, infiltration and exfiltration will be related to a decision rule followed by the organism.

Definition part (d): "making decisions"

Next, let's formalize how a living system implements a decision-making process that perpetuates the defining properties of the system (including this one!). In plain terms, the system takes actions that continue its own survival. Somewhat circularly, the survival of the system as a decision-making entity involves perpetuating the particular sense in which it is a decision-making entity. So, the definition here is going to involve a fixed-point-like constraint; stay tuned 🙂

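More formally, for each time step $t$, we need to characterize the degree to which the true transition probability function, restricted to the system's next viscera and active-boundary state,

$$f_{VA} : \mathcal{W} \to \Delta(\mathcal{V} \times \mathcal{A}), \qquad f_{VA}(v', a' \mid w) := \Pr\big(V^{t+1} = v', A^{t+1} = a' \mid W^t = w\big),$$

can be summarized by a description of the form "the system makes a decision about how to transform its viscera and action subject to some (soft) constraints". So, define a decision rule as any function of the form

- $d : \mathcal{V} \times \mathcal{B} \to \Delta(\mathcal{V} \times \mathcal{A})$.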

Notice how $f_{VA}$ conditions on all of $W^t$ (equivalently, on $(V^t, B^t, E^t)$), while a decision rule will only look at $(V^t, B^t)$ as an input. Thus, using $d$ to predict $(V^{t+1}, A^{t+1})$ relies on the imperfectly-accurate assumption that the system's "decisions" are not directly affected by its environment. This assumption holds precisely when the quantity $I\big((V^{t+1}, A^{t+1}); E^t \mid V^t, B^t\big)$ is zero.

Dual to this we have what might be called a situation rule:

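- $s : \mathcal{B} \times \mathcal{E} \to \Delta(\mathcal{P} \times \mathcal{E})$.

The situation rule works well exactly when $I\big((P^{t+1}, E^{t+1}); V^t \mid B^t, E^t\big)$ is zero. The rest of this post is focused on $d$, and dual statements will exist for $s$.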

As a reminder: these variables are not compressed representations. The states $(V^t, A^t, P^t, E^t)$ are not a simplified description of the world; together they describe literally everything in the world. In a later post I might talk about compressed versions of these variables that could be represented inside the mind of the organism itself or another organism, but for now we're not assuming any kind of lossy compression. Nonetheless, despite the map $\mathcal{W} \to \mathcal{V} \times \mathcal{A} \times \mathcal{P} \times \mathcal{E}$ being lossless, probably every decision rule will be somewhat wrong as a description of reality, because by construction it ignores the direct causal influences of $V$ and $E$ on each other, of $V$ on $P$, and of $E$ on $A$.

Now, let's suppose we have some parametrized space of decision rules $d_\theta$, i.e., a decision rule $d_\theta$ for every parameter $\theta$ in a parameter space $\Theta$. For example, if $d_\theta$ is defined by a neural net with a vector of $n$ weights, $\Theta$ could be $\mathbb{R}^n$. Procedurally, $d_\theta$ could implement a process like "Compute a Bayesian update by observing $P^t$, store the result in $V^{t+1}$, and choose action $A^{t+1}$ randomly amongst options that approximately optimize expected utility according to some utility function". More realistically, $d_\theta$ could be an implementation of a satisficing rule rather than an optimization. The particular choice of $\Theta$ and its implementation are not crucial for this post, only the type signature of $d_\theta$ as a map $\mathcal{V} \times \mathcal{B} \to \Delta(\mathcal{V} \times \mathcal{A})$.
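As a concrete and entirely hypothetical instance of such a parametrized family, the sketch below follows the procedural description above: a Bayesian update on the perception is stored as the next viscera state, followed by a uniformly random choice among actions within a slack `theta` of the best expected utility. The viscera state is a single belief probability, and `LIKELIHOOD`, `UTILITY`, and `make_decision_rule` are invented for illustration. With `theta = 0` only (tied) maximizers are chosen; a positive `theta` gives a simple satisficing rule. What matters is the type signature: the rule takes $(v, b)$, returns a distribution over $(v', a')$, and never looks at the environment.

```python
# Hypothetical model: the hidden fact is whether food is nearby.
LIKELIHOOD = {"smell": (0.8, 0.1), "none": (0.2, 0.9)}   # (P(p | food), P(p | no food))
UTILITY = {"approach": (1.0, -0.5), "rest": (0.2, 0.2)}  # (U(a | food), U(a | no food))


def make_decision_rule(theta):
    """Return a decision rule d_theta mapping (v, b) to a distribution over (v', a').

    v is the system's belief that food is nearby, and b = (a, p) is the boundary
    state. theta >= 0 is a satisficing slack: every action whose expected utility
    is within theta of the best is chosen with equal probability.
    """
    def d(v, b):
        _, p = b  # this toy rule only uses the perception part of the boundary
        lf, ln = LIKELIHOOD[p]
        v_next = lf * v / (lf * v + ln * (1 - v))  # Bayesian update, kept as next viscera
        eu = {a: uf * v_next + un * (1 - v_next) for a, (uf, un) in UTILITY.items()}
        best = max(eu.values())
        ok = [a for a, u in eu.items() if u >= best - theta]
        return {(v_next, a): 1 / len(ok) for a in ok}

    return d
```

For example, `make_decision_rule(0.0)(0.5, ("rest", "smell"))` puts all its mass on `"approach"`, while `make_decision_rule(1.0)` applied to the same input splits its mass evenly between `"approach"` and `"rest"`.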

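Next, define a description error function $\mathrm{DE}_t(d, f) \geq 0$ to be a function that evaluates the error of a decision rule $d$ as a description of the true transition rule $f$, from the perspective of anyone trying to predict or describe how the system behaves at time $t$. For instance, we could use either of these:

- Example: $\mathrm{DE}_t(d, f) := \overline{D}_{\mathrm{KL}}(f_{VA} \,\|\, d) = \mathbb{E}\big[D_{\mathrm{KL}}\big(f_{VA}(\cdot \mid W^t) \,\|\, d(V^t, B^t)\big)\big]$, i.e., how surprising $f_{VA}$ is if we predict samples from it using $d$. Here $\overline{D}_{\mathrm{KL}}$ denotes the (average) KL divergence, averaged over the value of $W^t$ that the two distributions are conditioned on.

- Example: $\mathrm{DE}_t(d, f) := \overline{D}_{\mathrm{Wass}}(f_{VA}, d)$, where $\overline{D}_{\mathrm{Wass}}$ denotes an average Wasserstein distance, if there's a natural metric on $\mathcal{V} \times \mathcal{A}$, which there is for many applications.

As with the particular implementation of $d_\theta$, the particular choice of $\mathrm{DE}_t$ is not important for this post; what matters is that it measures the failure of $d$ as a description of the system's true transition function $f_{VA}$, and in particular that it is zero precisely when $d$ agrees perfectly with $f_{VA}$. When no confusion will result, I'll write $\mathrm{DE}_t(d)$ as shorthand for $\mathrm{DE}_t(d, f)$. Thus we have:

- Assumption: $\mathrm{DE}_t(d) = 0$ if and only if $d(V^t, B^t) = f_{VA}(\cdot \mid W^t)$ almost surely.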

From this very natural assumption on the meaning of "description error", it follows that:

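- Corollary: If $\mathrm{DE}_t(d) = 0$ for all $t$, then $\mathrm{Infil} = 0$, since $f_{VA}(\cdot \mid W^t)$ then depends on $W^t$ only through $(V^t, B^t)$, so each per-step term $I\big((V^{t+1}, A^{t+1}); E^t \mid V^t, B^t\big)$ vanishes.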

In other words, for the decision rule to perfectly describe the system, there must be no infiltration, i.e., no inward boundary crossing.
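The corollary can be seen numerically under the KL choice of description error. For any rule that only sees $(V^t, B^t)$, the standard decomposition of expected KL divergence implies that the best such rule is $d^*(\cdot \mid z) = \Pr\big((V^{t+1}, A^{t+1}) = \cdot \mid (V^t, B^t) = z\big)$, that its description error equals the per-step infiltration $I\big((V^{t+1}, A^{t+1}); E^t \mid V^t, B^t\big)$, and that every other blind rule does at least as badly. The minimal sketch below assumes a hypothetical world, `leaky_joint`, in which the environment bit leaks into the next viscera/action state with probability `eps`; the function names are illustrative.

```python
from collections import defaultdict
from math import log2


def leaky_joint(eps):
    """Hypothetical one-step world as {(x, y, z): prob}.

    z stands for (V^t, B^t), x for E^t, y for (V^{t+1}, A^{t+1}); x and z are
    independent fair bits. y copies z, except that with probability eps the
    environment bit x leaks straight through the boundary into y.
    """
    joint = defaultdict(float)
    for z in (0, 1):
        for x in (0, 1):
            joint[(x, z, z)] += 0.25 * (1 - eps)
            joint[(x, x, z)] += 0.25 * eps
    return dict(joint)


def best_blind_rule(joint):
    """The rule d*(y | z) = P(y | z): the best predictor that never sees x."""
    p_z, p_yz = defaultdict(float), defaultdict(float)
    for (x, y, z), p in joint.items():
        p_z[z] += p
        p_yz[(y, z)] += p
    return lambda z: {y: q / p_z[z] for (y, zz), q in p_yz.items() if zz == z}


def description_error_kl(joint, d):
    """Average KL divergence, in bits, of a blind rule d(z) from the true f(y | x, z)."""
    p_xz = defaultdict(float)
    for (x, y, z), p in joint.items():
        p_xz[(x, z)] += p
    total = 0.0
    for (x, y, z), p in joint.items():
        if p > 0:
            q = d(z).get(y, 0.0)
            if q == 0.0:
                return float("inf")  # d rules out something that actually happens
            total += p * log2((p / p_xz[(x, z)]) / q)
    return total
```

With `eps = 0` the best blind rule has zero description error, with `eps = 1` it has 1 bit, and partial leaks fall in between. A uniform rule `lambda z: {0: 0.5, 1: 0.5}` has 1 bit of error even in the sealed world: not because it makes poor choices, but because it poorly predicts what the system does.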

Important: Note that negative description error, $-\mathrm{DE}_t(d)$, is not a measure of how "optimally" the system makes decisions or predictions; it's a measure of how well the rule predicts what the system will do.

Next, we need another function $\mathrm{Agg}'$ for aggregating description error over time, e.g., max or avg. Here $\mathrm{Agg}'$ may or may not be the same as the previous aggregation function $\mathrm{Agg}$, but there should be some relationship between them such that bounding one can bound the other (e.g., if they're both averages or both max, then this works). For any such function $\mathrm{Agg}'$, define an aggregate description error function as

$$\mathrm{DE}_{[t_0, t_1]}(d) := \mathrm{Agg}'_{t_0 \le t \le t_1} \, \mathrm{DE}_t(d).$$
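A minimal sketch of this aggregation step, assuming the per-step description errors have already been computed and stored in a list indexed by $t$. The aggregators are the examples the post names (max, plain average, and a discounted average, here with a hypothetical discount factor `gamma = 0.9`); `is_good_fit` and its `tolerance` are an illustrative stand-in for "small", not a threshold the post specifies.

```python
from statistics import fmean


def discounted_avg(xs, gamma=0.9):
    """Weighted average with weights gamma**k, emphasizing earlier steps in the window."""
    weights = [gamma ** k for k in range(len(xs))]
    return sum(w * x for w, x in zip(weights, xs)) / sum(weights)


AGGREGATORS = {"max": max, "avg": fmean, "discounted": discounted_avg}


def aggregate_description_error(errors_by_t, t0, t1, how="max"):
    """Aggregate per-step description errors DE_t(d) over t0 <= t <= t1 (inclusive)."""
    window = [errors_by_t[t] for t in range(t0, t1 + 1)]
    return AGGREGATORS[how](window)


def is_good_fit(errors_by_t, t0, t1, tolerance, how="max"):
    """Hypothetical criterion: d is a good fit on [t0, t1] if the aggregate is <= tolerance."""
    return aggregate_description_error(errors_by_t, t0, t1, how) <= tolerance
```

The choice of aggregator matters: with per-step errors `[0.0, 0.1, 0.05, 0.4]`, a single bad final step fails a max-based check at tolerance 0.2, while the average over the same window still passes. Since an average never exceeds the max, a bound under max also bounds the average, which is one way the two aggregators can be related.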

We say $d_\theta$ is a good fit for the time interval $[t_0, t_1]$ if $\mathrm{DE}_{[t_0, t_1]}(d_\theta)$ is small. This implies several things:

- infiltration can't be too large in that time interval, i.e., the boundary remains fairly well intact;

- for each $t \in [t_0, t_1]$, the decisions $(V^{t+1}, A^{t+1})$ do not destroy the present or subsequent validity of $d_\theta$ too badly, i.e., the system "makes sufficiently self-preserving choices"; and

Thus, if $d_\theta$ is a good fit, then the first two points together say that the decisions made by $d_\theta$ will perpetuate the four defining properties (a)-(d) of the definition.

Dual to this, for a situation rule $s$ to work well requires that exfiltration is not too large, and that for each $t$, the updates $(P^{t+1}, E^{t+1})$ do not destroy the present or subsequent validity of $s$ too badly, i.e., the environment "is sufficiently hospitable". This may be viewed as a definition of a living system having a niche, a property I discussed in a subsection of Part 2 in the context of jobs and work/life balance.

Together, the survival of the organism requires both $d$ and $s$ to not violate the future validity of $d$ and $s$ too badly.

Discussion

Non-violent boundary-crossings

Real-world living systems sometimes do funky things like opening up their boundaries for each other, or even merging. For instance, consider two paramecia named Alex and Bailey. Part of Alex's decision rule involves deciding to open Alex's boundary in order to exchange DNA with Bailey. If Alex does this in a way that allows Bailey's decision rule to continue operating and decide for Bailey to open up, then the exchange of DNA has not violated Bailey's decision rule. In other words, while there is a boundary crossing event, one could say it is not a violation of Bailey's boundary, because it respected (proceeded in accordance with) Bailey's decision rule.

Respect for boundaries as non-arbitrary coordination norms

Epistemic status: speculation, but I think there's a theorem here.

In my current estimation, respect for boundaries as described above is more than a matter of Alex and Bailey respecting each other's "preferences" as paramecia. I hypothesize that, in the emergence of fairly arbitrary colonies of living systems, standard protocols for respecting boundaries tend to emerge as well. In other words, respect for boundaries may be a "Schelling" concept that plays a crucial role in coordinating and governing positive-sum interactions between living systems. Essentially, preferences that are easily expressed in broadly and intersubjectively meaningful concepts — like Shannon's mutual information and Pearl's causation — are more likely to be pluralistically represented and agreed upon than other more idiosyncratic preferences.

Incidentally, respect for human autonomy — the ability to make decisions — is something that many humans want to preserve through the advent of pervasive AI services and/or super-human agents. Interestingly, respect for autonomy is one of the most strongly codified ethical principles for how the scientific establishment — a kind of super-human intelligence — is supposed to treat experimental human subjects. See the Belmont Report, which is not only required reading for scientists performing human studies at many US universities, but also carries legal force in defining violations of human rights by the scientific establishment. Personally, I find it to be one of the most direct and to-the-point codifications of how a highly intelligent non-human institution (science) is supposed to treat human beings.

Comparison to related work

Cartesian frames

The formalism here is a lot like a time-extended version of a Cartesian Frame (Garrabrant, 2020), except that what Scott calls an "agent" is further subdivided here into its "boundary" and its "viscera". I'm also not using the word "agent" because my focus is on living systems, which are often not very agentic, even when they can be said to have preferences in a meaningful way. After reading a draft of this post, Scott also informed me that he'd like to reserve the term "frame" for talking about "factoring" (in feature space), and "boundary" for talking about "subdividing" (in physical space). I agree with drawing this distinction, but neither of us is currently excited about the word "frame" for naming the dual concept to "boundary".

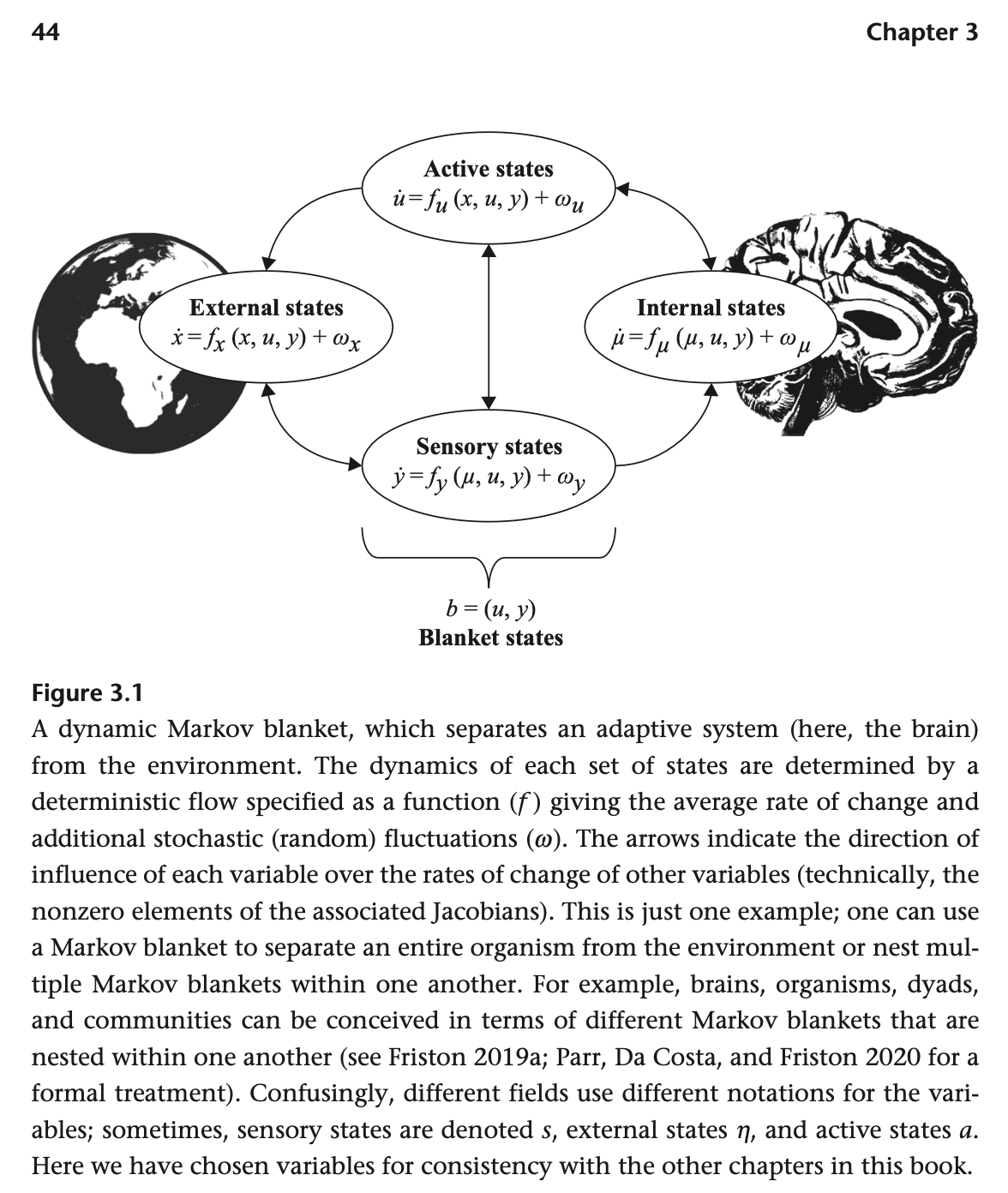

Active inference

The physical ontology here is very similar to Prof. Karl Friston's view of living systems as dynamical subsystems engaging in what Friston calls active inference (Friston, 2009). Notably, Friston is one of the most widely cited scientists alive today, with over 300,000 citations on Google Scholar. Unfortunately, I find Friston's writings to be somewhat inscrutable regarding what does or doesn't constitute a "decision", "inference", or "action". So, despite the at-least-superficial philosophical alignment with Friston's perspective, I'm building things mathematically from scratch using Judea Pearl's approach of modeling causality with Bayes nets, which I find much more readily applicable in a decision-theoretic setting.

After finishing my second draft of this post, I found out about a book by two other authors trying to clarify Friston's active inference principle, with Friston as a co-author (Parr, Pezzulo, and Friston, 2022), which seems to have gained in popularity since I began writing this sequence. Unlike me, they assume the system is a "minimizer" of a free energy objective, which I think is a crucial mistake, on three counts:

- Many organisms are better described as satisficers than optimizers.

- The presumption of energy minimization fails to notice collective bargaining opportunities whereby organisms can conserve energy to spend on other (arbitrary/idiosyncratic) goals, effectively "combatting Moloch" in the language of Scott Alexander (2014).

- Rather than minimizing surprise to their world models, I think real-world organisms are more likely to exist due to a tendency to perpetuate their functioning as decision-making entities.

Despite these differences, on page 44 they draw a decomposition nearly identical to my Figure 1, and refer to the separation of the interior from the exterior as a Markov blanket. They even talk about communities as having boundaries, as in Part 1 of this series:

Overall, I find these similarities encouraging. With so many people inspired by Friston's writings, it makes sense that people are converging somewhat in trying to clarify ideas in this space. On page ix, Friston humbly writes:

"I have a confession to make. I did not write much of this book. Or, more precisely, I was not allowed to. This book’s agenda calls for a crisp and clear writing style that is beyond me. Although I was allowed to slip in a few of my favorite words, what follows is a testament to Thomas and Giovanni, their deep understanding of the issues at hand, and, importantly, their theory of mind—in all senses."

Functional decision theory (FDT)

FDT (Yudkowsky and Soares, 2017) presents a promising way for artificial or living systems in the physical world to coordinate better, by noticing that they're essentially implementing the same function and choosing their outputs accordingly. When an agent in the 3D world starts thinking like an FDT agent, it draws its boundary around all parts of the world that are running the same function, and considers them all to be "itself". This raises a question: how do two identical or nearly identical algorithms recognize, or decide, that they are in fact implementing essentially the same function? I'm not going to go deep into that here, but my best short answer is that algorithms still need to draw some boundaries in some abstract algorithm space (e.g., in the Solomonoff prior or the speed prior) that delineate what are considered their inputs, their outputs, their internals, and their externals. So, FDT sort of punts the problem of where to draw boundaries, moving the question out of physical space and into the space of (possible) algorithms.

Markov blankets

Many other authors have elaborated on the importance of the Markov blanket concept, including LessWrong author John Wentworth, who I've seen presenting on and discussing the idea at several AI safety related meetings. I think for decision-theoretic purposes, one needs to further subdivide an organism's Markov blanket into active and passive components, for action and perception.

Recap

In this post, I delineated 8 problems that I intend to address in terms of a formal definition of boundaries, and laid out the basic structure of the formal definition. A living system is defined in terms of a decomposition of the world (with state variable W) into an environment (state: E), active boundary (state: A), passive boundary (state: P), and viscera (state: V). The boundary state B=(A,P) forms an "approximate directed Markov blanket" separating the viscera from the environment, with A mediating outward causal influence and P mediating inward causal influence. This allows conceiving of the living system (V,A,P) as engaged in decision making according to some decision rule $d$ that approximates reality. In order to "survive" as a $d$-following decision-making entity, the system must make decisions in a manner that does not bring an end to $d$ as an approximately-valid description of its behavior, and in particular, does not destroy the approximate Markov property of the boundary $B = (A, P)$, and does not destroy the outward and inward causal influence directions of the active boundary $A$ and passive boundary $P$. In other words, $d$ is assumed to perpetuate its own validity. This assumption is justified by the observation that self-perpetuating systems are made more noticeable and impactful by their continued existence.

From there, I argue briefly that non-violence and respect for boundaries are non-arbitrary coordination norms, because of the ability to define boundaries entirely information-theoretically, without reference to other more idiosyncratic aspects of individual preferences. Comparisons are drawn to Cartesian frames (which are not time-extended), functional decision theory (which conceives of decision-theoretic causation in a logical space rather than a physical space), and Friston's notion of active inference. After writing the definitions, I found a strong similarity to Chapter 3 of Parr, Pezzulo, and Friston (2022), in describing perception ("sensing") and action systems as constituting a Markov blanket, around both individual organisms and communities. However, Friston and Parr both characterize the living system in question as an optimizer, which specifically minimizes surprise to its world model. I consider both of these assumptions to be problematic, enough so that I don't believe the active inference concept is quite right for capturing respect for boundaries as a moral precept.

Reminder to vote

If you have a minute to cast a vote on which alignment-related problem I should most focus on applying these definitions to in Part 3b, please do so here. Thanks!

20 comments


comment by Scott Garrabrant · 2022-10-31T15:21:16.048Z

Overall, this is my favorite thing I have read on lesswrong in the last year.

Agreements:

I agree very strongly with most of this post, both in the way you are thinking about boundaries, and in the scale and scope of applications of boundaries to important problems.

In particular on the applications, I think that boundaries as you are defining them are crucial to developing decision theory and bargaining theory (and indeed are already helpful for thinking about bargaining and fairness in real life), but I also agree with your other potential applications.

In particular on the theory, I agree that the boundary of an agent (or agent-like-thing) should be thought of as that which screens off the viscera of the agent from its environment. I agree that the agent should think of decisions as intervening on its boundary. I agree that the boundary (as the agent sees it) only partially does the screening-off thing. I agree that the agent should in part be focused on actively maintaining its boundary, as this is crucial to its integrity as an agent.

I believe the above mostly independently of this post, but the place where I think this post is doing better than my default way of thinking is in the directionality of the arrows. I have been thinking about this in a pretty symmetric way: the notion of B screening off V from E is symmetric in swapping V and E. I was aware this was a mistake (because logical mutual information is not symmetric), but this post makes it clear to me how important that mistake was. Thanks!

Disagreements:

Philosophical nitpick: I think the boundary should be thought of as part of the agent/organism and simultaneously as part of the environment. Indeed, the screening off property can be thought of as an informational (as opposed to physical) way of saying that the boundary is the intersection of the agent and environment.

I think the boundary factorization into active and passive is wrong. I am not sure what is right here. My default proposal is to think of the active as the minimal part that contains all information flow from the viscera, and the perceptive as the minimal part that contains all information flow from the environment. By definition, these cover the boundary, but they might intersect. (An alternative proposal is to define the active as the part that the agent thinks of its interventions as living, and the perceptive as where the agent thinks of its perceptions as living, and now they don't cover the boundary.)

In both of the above, I am pushing for the claim that we are not yet in the part of the theory where we need to break agent-environment symmetry in the theory. (Although we do need to track the directions of information flow separately!)

I think that thinking of there as being physical nodes is wrong. Unfortunately Finite Factored Sets is not yet able to handle directionality of information flow, so I see how it is the only way you can express an important part of the model. We need to fix that, so we can think of viscera, environments, boundaries, etc. as features of the world rather than sets of nodes.

I also think that the time-embedded picture is wrong. I often complain about models that have a thing persisting across linear time like this, but I think it is especially important here. As far as I can tell, time is mostly about screening-off, and boundaries are also mostly about screening-off, so I think that this is a domain in which it is especially important to get time right.

↑ comment by Andrew_Critch · 2022-10-31T23:34:45.715Z

Thanks, Scott!

I think the boundary factorization into active and passive is wrong.

Are you sure? The informal description I gave for A and P allows for the active boundary to be a bit passive and the passive boundary to be a bit active. From the post:

the active boundary, A — the features or parts of the boundary primarily controlled by the viscera, interpretable as "actions" of the system— and the passive boundary, P — the features or parts of the boundary primarily controlled by the environment, interpretable as "perceptions" of the system.

There's a question of how to factor B into a zillion fine-grained features in the first place, but given such a factorization, I think we can define A and P fairly straightforwardly using Shapley value to decide how much V versus E is controlling each feature, and then A and P won't overlap and will cover everything.

↑ comment by Scott Garrabrant · 2022-11-01T00:09:50.762Z

Oh yeah, oops, that is what it says. Wasn’t careful, and was responding to reading an old draft. I agree that the post is already saying roughly what I want there. Instead, I should have said that the B=AxP bijection is especially unrealistic. Sorry.

↑ comment by Andrew_Critch · 2022-11-01T04:51:15.181Z

Why is it unrealistic? Do you actually mean it's unrealistic that the set I've defined as "A" will be interpretable as "actions" in the usual coarse-grained sense? If so I think that's a topic for another post when I get into talking about the coarsened variables ...

↑ comment by Scott Garrabrant · 2022-11-01T05:15:14.231Z

I mean, the definition is a little vague. If your meaning is something like "It goes in A if it is more accurately described as controlled by the viscera, and it goes in P if it is more accurately described as controlled by the environment," then I guess you can get a bijection by definition, but it is not obvious that these are natural categories. I think there will be parts of the boundary that feel like they are controlled by both or neither, depending on how strictly you mean "controlled by."

↑ comment by Scott Garrabrant · 2022-11-01T00:12:23.401Z

Forcing the A×P bijection is an interesting idea, but it feels a little too approximate to my taste.

↑ comment by Scott Garrabrant · 2022-11-01T00:26:36.118Z

To be clear, everywhere I say “is wrong,” I mean I wish the model were slightly different, not that anything is actually mistaken. In most cases, I don’t really have much of an idea how to actually implement my recommendation.

↑ comment by Scott Garrabrant · 2022-11-01T00:38:01.393Z

comment by Alex Flint (alexflint) · 2022-10-31T20:35:29.914Z

I have the sense that boundaries are so effective as a coordination mechanism that we have come to believe that they are an end in themselves. To me it seems that the over-use of boundaries leads to loneliness that eventually obviates all the goodness of the successful coordination. It's as if we discovered that cars were a great way to get from place to place, but then we got so used to driving in cars that we just never got out of them, and so kind of lost all the value of being able to get from place to place. It was precisely because cars were so effective as transportation devices that we started to emphasize them so heavily in our lives.

You say "real-world living systems sometimes do funky things like opening up their boundaries" but that's like saying "real-world humans sometimes do funky things like getting out of their cars" -- we shouldn't begin with the view that boundaries are the default thing and then consider some "extreme cases" where people open up their boundaries.

Some specific cases to consider for a theory of boundaries-as-arising-from-coordination:

- A baby grows inside a mother and is born, gradually establishing boundaries. You might say the baby has zero boundaries just prior to conception and full boundaries at age 10? Age 15? Age 20? How do you make appropriate sense of the coming into existence of boundaries over time?

- A human dies, gradually losing agency over years. What is the appropriate way to view the attenuation-to-zero of this person's boundaries?

- During an adult human life, a person finds themselves in situations where it is extremely difficult, for practical reasons, to establish certain boundaries. For example, two people locked in a tiny closet together are unable to establish, perhaps, any boundary around personal space. Perhaps it was a mistake to get locked in there in the first place, but now that they are in there, they need a way to coordinate without being able to establish certain boundaries.

Overall, I would ask "what is an effective set of boundaries given our situation and our goal?" rather than "how can we coordinate on our goals given our situation and our a priori fixed boundaries?"

↑ comment by Chipmonk · 2023-08-25T22:28:21.080Z

Oooo, I like this comment, especially the first two examples.

also,

For example, two people locked in a tiny closet together are unable to establish, perhaps, any boundary around personal space.

Personally, I wouldn't call this a «boundary». I don't consider boundaries to be things that are "set" or "established".

comment by Alexander Gietelink Oldenziel (alexander-gietelink-oldenziel) · 2022-10-30T21:29:31.898Z

Woah, Andrew, this is fantastic work! I am seriously excited about this direction! I liked your previous posts on boundaries very much too, but I had no idea your thoughts on boundaries were this technically refined, and that they tie in so beautifully with Markov blankets!

re: Friston.

Friston has a particular style that could justifiably be called obscurantist. His writing is extremely verbose, often fails to define key terms, and very nontrivial equations are often posited without derivation or citation. After spending considerable effort trying to understand the rather lofty prose, I would often realize the key ideas were indeed quite interesting, but could be explained far better. There are some big claims in his work, but too few details are worked out to ascertain to what degree these claims can be justified. A group of people have taken up the thankless task of Friston exegesis, but unfortunately they have not really developed a sufficiently clear and crisp account. The book you cite is an example of this literature. Long story short, I am excited that your recent work might finally resolve this cloud of confusion!

In the context of this post, the following paper is particularly relevant:

https://royalsocietypublishing.org/doi/10.1098/rsif.2013.0475

Apart from being very unclear, almost deliberately so, it apparently also contains serious errors.

Nevertheless, it contains a definition of a decomposition of a Markov blanket and boundary into action, observation, internal, and external states that seems especially similar to your story [but I think you've gone much farther already!]. I talked a little about Friston's decomposition of Markov boundaries recently for the MetaUni Abstraction seminar, see here for the relevant slide.
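For readers who want the textbook object behind this discussion: in a causal DAG, the Markov blanket of a set of internal nodes is their parents, their children, and their children's other parents, minus the internal nodes themselves. The sketch below computes that, then applies a hypothetical labelling that is only loosely in the spirit of the active/sensory decomposition mentioned above (it is not Friston's exact definition nor the post's): a blanket node is "active" if it is driven by internal nodes but not external ones, "sensory" if the reverse, and "mixed" otherwise. The toy graph and all names are assumptions.

```python
from typing import Dict, Set

DAG = Dict[str, Set[str]]  # maps each node to the set of its parents

def children(dag: DAG, node: str) -> Set[str]:
    return {c for c, parents in dag.items() if node in parents}

def markov_blanket(dag: DAG, internal: Set[str]) -> Set[str]:
    """Parents, children, and children's other parents of the internal set."""
    blanket: Set[str] = set()
    for n in internal:
        blanket |= dag[n]
        for c in children(dag, n):
            blanket |= {c} | dag[c]
    return blanket - internal

def split_blanket(dag: DAG, internal: Set[str]) -> Dict[str, str]:
    """Hypothetical labelling of blanket nodes by where their parents live."""
    blanket = markov_blanket(dag, internal)
    labels: Dict[str, str] = {}
    for b in blanket:
        from_internal = dag[b] & internal
        from_external = dag[b] - internal - blanket
        if from_internal and not from_external:
            labels[b] = "active"
        elif from_external and not from_internal:
            labels[b] = "sensory"
        else:
            labels[b] = "mixed"  # driven by both sides, or by neither
    return labels

# Toy world: external e drives sensory s, which drives internal i,
# which drives active a, which (together with e) drives e_next.
world: DAG = {"e": set(), "s": {"e"}, "i": {"s"}, "a": {"i"}, "e_next": {"e", "a"}}
print(sorted(markov_blanket(world, {"i"})))  # ['a', 's']
print(split_blanket(world, {"i"}))
```

The "mixed" label is where a strict two-way split would have to make an arbitrary call, which echoes Scott's worry about parts of the boundary controlled by both sides or neither.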

↑ comment by winstonne · 2023-11-17T16:41:39.748Z

If anyone is interested in joining a learning community around the ideas of active inference, the mission of https://www.activeinference.org/ is to educate the community about these topics. There's a study group around the 2022 active inference textbook by Parr, Friston, and Pezzulo. I'm in the 5th cohort and it's been very useful for me.

comment by Alex Flint (alexflint) · 2022-10-31T19:55:22.986Z

The post begins with "this is part 3b of...", but I think you meant to say 3a.

↑ comment by Andrew_Critch · 2022-10-31T23:22:27.546Z

Thanks, fixed!

comment by Alex_Altair · 2023-05-24T19:32:40.198Z

Here I'm going to log some things I notice while reading, mostly as a way to help check my understanding, but also to help find possible errata.

In Definition part (a), you've got a whole lot of W-type symbols, and I'm not 100% sure I follow each of their uses. You use a couple times which is legit, but it looks a lot like , so maybe it could be replaced with ?

See this comment for two errata with the different w's.

denotes, for any world state , the future of the Dirac (100% concentrated) distribution on the world state .

Maybe you could just say, is shorthand for , since will map to the right thing of type . Then you can avoid bringing in the somewhat exotic Dirac delta function. Of course, that now means that itself is not the first item in the resulting sequence. I'm not sure if you need that to be the case for later. But also, everything above is ambiguous about whether the argument to was in the sequence anyway.
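The symbols in the quoted definition did not survive, but the idea can still be sketched under an assumption: suppose the world evolves by a stochastic one-step transition from states to distributions over states. Then "the future of the Dirac distribution on w" is the sequence of pushforwards starting from the point mass on w. All names below are hypothetical, not the post's notation. The `include_start` flag makes explicit the ambiguity Alex mentions, namely whether the starting distribution is the first item in the sequence, and the last line checks his observation that pushing a Dirac distribution forward one step gives the same thing as applying the transition to the state directly.

```python
from typing import Callable, Dict, List

Dist = Dict[str, float]         # finite distribution over world states
Kernel = Callable[[str], Dist]  # hypothetical one-step transition

def dirac(w: str) -> Dist:
    """The 100%-concentrated distribution on world state w."""
    return {w: 1.0}

def push(kernel: Kernel, dist: Dist) -> Dist:
    """Advance a distribution by one step of the kernel."""
    out: Dist = {}
    for w, p in dist.items():
        for w_next, q in kernel(w).items():
            out[w_next] = out.get(w_next, 0.0) + p * q
    return out

def future(kernel: Kernel, dist: Dist, steps: int, include_start: bool = True) -> List[Dist]:
    """Sequence of distributions obtained by repeatedly pushing `dist` forward."""
    seq = [dist] if include_start else []
    for _ in range(steps):
        dist = push(kernel, dist)
        seq.append(dist)
    return seq

weather: Dict[str, Dist] = {"sun": {"sun": 0.8, "rain": 0.2},
                            "rain": {"sun": 0.5, "rain": 0.5}}

def kernel(w: str) -> Dist:
    return weather[w]

print(future(kernel, dirac("sun"), 1))                       # [{'sun': 1.0}, {'sun': 0.8, 'rain': 0.2}]
print(future(kernel, dirac("sun"), 1, include_start=False))  # [{'sun': 0.8, 'rain': 0.2}]
print(push(kernel, dirac("sun")) == kernel("sun"))           # True
```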

The character ⫫ doesn't render for me. (I could figure out what it was by pasting the Unicode into Google, but maybe it could be done with LaTeX instead?)
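For reference, one common way to typeset the conditional-independence symbol ⫫ in plain LaTeX, without extra symbol packages, is to overlap two `\perp` symbols using negative thin spaces. This is a general typesetting idiom, not notation taken from the post:

```latex
% "X is conditionally independent of Y given Z"
X \mathrel{\perp\!\!\!\perp} Y \mid Z
```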

To formalize this, I want a collection of state spaces and maps, like so:

Is the following bulleted list missing an entry for ?

Each of these factorizations are assumed to be bijective, in the sense of accounting for everything that matters and not double-counting anything

I was wondering if you were going to say something like and . It sounds like that's almost right, except that you allow the factors to pass through arbitrary functions first, as long as they're bijective. Is that right?
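The quoted condition, that a factorization "accounts for everything that matters and does not double-count anything", amounts to asking that the map from world states to the product of factors be a bijection: surjective onto the product and injective. Here is a minimal sketch assuming finite state spaces. The 3-bit encoding of world states into environment, boundary, and viscera components and every function name are hypothetical. It also illustrates Alex's reading: passing a factor through a bijection first keeps the factorization bijective, while dropping information breaks it.

```python
from itertools import product
from typing import Callable, Hashable, Iterable, Sequence, Tuple

def is_bijective_factorization(
    states: Iterable[Hashable],
    factors: Sequence[Iterable[Hashable]],
    phi: Callable[[Hashable], Tuple[Hashable, ...]],
) -> bool:
    """True iff phi maps `states` one-to-one onto the product of `factors`."""
    images = [phi(w) for w in states]
    no_double_counting = len(set(images)) == len(images)       # injective
    covers_everything = set(images) == set(product(*factors))  # surjective
    return no_double_counting and covers_everything

bit = (0, 1)
worlds = range(8)  # hypothetical world states, read as 3-bit codes

def split(w: int) -> Tuple[int, int, int]:
    """(environment, boundary, viscera) bits of a world state."""
    return ((w >> 2) & 1, (w >> 1) & 1, w & 1)

def split_relabelled(w: int) -> Tuple[int, int, int]:
    """Same split, with the boundary factor passed through a bijection (a bit flip)."""
    e, b, v = split(w)
    return (e, 1 - b, v)

def split_lossy(w: int) -> Tuple[int, int, int]:
    """Forgets the viscera, so distinct worlds collide."""
    e, b, _ = split(w)
    return (e, b, 0)

print(is_bijective_factorization(worlds, [bit] * 3, split))             # True
print(is_bijective_factorization(worlds, [bit] * 3, split_relabelled))  # True
print(is_bijective_factorization(worlds, [bit] * 3, split_lossy))       # False
```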

We say is a good fit

You bring back here, but I don't see the doing anything yet. Might be better not to introduce it until later, to free up a bit of the reader's working memory.

See this comment for a broken link.

comment by rajashree (miraya) · 2023-04-02T23:18:22.985Z

Some notational bugs in the bulleted list in "Definition part (a): 'part of the world'" that came up when trying to communicate about this with someone:

In the first bullet, should probably be both for consistency with later bullets, and so that it does not seem like is subscript-indexed over some . (I'm inferring that subscript W is just part of the name of .)

In bullet 6 about , the sentence should end with , not .

The last three bullet points should maybe use instead of , or else maybe all the lowercase s should be made capital.

comment by Alex_Altair · 2022-12-01T05:26:48.397Z

My browser thinks this is an invalid link and won't let me open it.

comment by scottviteri · 2024-04-02T06:26:31.301Z

If I try to use this framework to express two agents communicating, I get an image with V1, A1, P1, V2, A2, and P2, with cross arrows from A1 to P2 and from A2 to P1. This admits many ways to get a round-trip message. We could have A1 -> P2 -> A2 -> P1 directly, or A1 -> P2 -> V2 -> A2 -> P1, or many cycles among P2, V2, and A2 before P1 receives a message. But in none of these could I hope to get a response in one time step, the way I would if both agents simultaneously took an action and then simultaneously read from their inputs and their current state to get their next state. So I have the feeling that pi : S -> Action and update : Observation x S -> S already bake in this active/passive distinction by virtue of their type signatures, and this framing may just be taking away the computational teeth/specificity. And I can write the same infiltration and exfiltration formulas by substituting S_t for V_t, Obs_t for P_t, Action_t for A_t, and S_env_t for E_t.
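To make the timing comparison concrete, here is a sketch of the simultaneous-update scheme described above, with each agent given by a policy `pi : S -> Action` and a rule `update : Observation x S -> S`. The `Agent` class, the `run` loop, and the ping/ack agents are hypothetical illustrations. Each round, both agents act at once and then each updates on the other's action, so agent 2's reply to agent 1's message appears in the very next round.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

@dataclass
class Agent:
    state: str
    pi: Callable[[str], str]           # pi : S -> Action
    update: Callable[[str, str], str]  # update : Observation x S -> S

def run(a1: Agent, a2: Agent, steps: int) -> List[Tuple[str, str]]:
    """Each round: both agents act at once, then each updates on the other's action."""
    log: List[Tuple[str, str]] = []
    for _ in range(steps):
        act1, act2 = a1.pi(a1.state), a2.pi(a2.state)  # simultaneous actions
        log.append((act1, act2))
        a1.state = a1.update(act2, a1.state)           # simultaneous updates
        a2.state = a2.update(act1, a2.state)
    return log

# Agent 1 sends "ping" once; agent 2 acknowledges whatever it last observed.
sender = Agent("start", lambda s: "ping" if s == "start" else "idle", lambda obs, s: "done")
echoer = Agent("", lambda s: "ack " + s if s == "ping" else "idle", lambda obs, s: obs)
print(run(sender, echoer, 2))  # [('ping', 'idle'), ('idle', 'ack ping')]
```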

↑ comment by scottviteri · 2024-04-16T19:50:25.997Z

I take back the part about pi and update determining the causal structure, because many causal diagrams are consistent with the same poly diagram.

comment by Chipmonk · 2023-09-19T21:05:45.790Z

I've written a conceptual distillation of the Markov blanket aspect of this post: Formalizing «Boundaries» with Markov blankets.