We're already in AI takeoff

post by Valentine · 2022-03-08T23:09:06.733Z · 119 comments

Back in 2016, CFAR pivoted to focusing on xrisk. I think the magic phrase at the time was:

"Rationality for its own sake, for the sake of existential risk."

I was against this move. I also had no idea how power works. I don't know how to translate this into LW language, so I'll just use mine: I was secret-to-me vastly more interested in being victimized at people/institutions/the world than I was in doing real things.

But the reason I was against the move is solid. I still believe in it.

I want to spell that part out a bit. Not to gripe about the past. The past makes sense to me. But because the idea still applies.

I think it's a simple idea once it's not cloaked in bullshit. Maybe that's an illusion of transparency. But I'll try to keep this simple-to-me and correct toward more detail when asked and I feel like it, rather than spelling out all the details in a way that turns out to have been unneeded.

Which is to say, this'll be kind of punchy and under-justified.

The short version is this:

We're already in AI takeoff. The "AI" is just running on human minds right now. Sorting out AI alignment in computers is focusing entirely on the endgame. That's not where the causal power is.

Maybe that's enough for you. If so, cool.

I'll say more to gesture at the flesh of this.

What kind of thing is wokism? Or Communism? What kind of thing was Nazism in WWII? Or the flat Earth conspiracy movement? QAnon?

If you squint a bit, you might see there's a common type here.

In a Facebook post I argued that it's fair to view these things as alive. Well, really, I just described them as living, which kind of is the argument. If your woo allergy keeps you from seeing that… well, good luck to you. But if you're willing to just assume I mean something non-woo, you just might see something real there.

These hyperobject creatures are undergoing massive competitive evolution. Thanks Internet. They're competing for resources. Literal things like land, money, political power… and most importantly, human minds.

I mean something loose here. Y'all are mostly better at details than I am. I'll let you flesh those out rather than pretending I can do it well.

But I'm guessing you know this thing. We saw it in the pandemic, where friendships got torn apart because people got hooked by competing memes. Some "plandemic" conspiracy theorist anti-vax types, some blind belief in provably incoherent authorities, the whole anti-racism woke wave, etc.

This is people getting possessed.

And the… things… possessing them are highly optimizing for this.

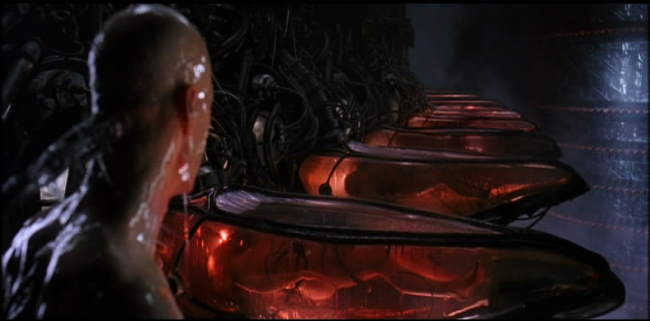

To borrow a bit from fiction: It's worth knowing that in their original vision for The Matrix, the Wachowski siblings wanted humans to be processors, not batteries. The Matrix was a way of harvesting human computing power. As I recall, they had to change it because someone argued that people wouldn't understand their idea.

I think we're in a scenario like this. Not so much the "in a simulation" part. (I mean, maybe. But for what I'm saying here I don't care.) But yes with a functionally nonhuman intelligence hijacking our minds to do coordinated computations.

(And no, I'm not positing a ghost in the machine, any more than I posit a ghost in the machine of "you" when I pretend that you are an intelligent agent. If we stop pretending that intelligence is ontologically separate from the structures it's implemented on, then the same thing that lets "superintelligent agent" mean anything at all says we already have several.)

We're already witnessing orthogonality.

The talk of "late-stage capitalism" points at this. The way greenwashing appears for instance is intelligently weaponized Goodhart. It's explicitly hacking people's signals in order to extract what the hypercreature in question wants from people (usually profit).

The way China is drifting with a social credit system and facial recognition tech in its one party system, it appears to be threatening a Shriek. Maybe I'm badly informed here. But the point is the possibility.

In the USA, we have to file income taxes every year even though we have the tech to make it a breeze. Why? "Lobbying" is right, but that describes the action. What's the intelligence behind the action? What agent becomes your intentional opponent if you try to change this? You might point at specific villains, but they're not really the cause. The CEO of TurboTax doesn't stay the CEO if he doesn't serve the hypercreature's hunger.

I'll let you fill in other examples.

If the whole world were unified on AI alignment being an issue, it'd just be a problem to solve.

The problem that's upstream of this is the lack of will.

Same thing with cryonics really. Or aging.

But AI is particularly acute around here, so I'll stick to that.

The problem is that people's minds aren't clear enough to look at the problem for real. Most folk can't orient to AI risk without going nuts or numb or spitting out gibberish platitudes.

I think this is part accidental and part hypercreature-intentional.

The accidental part is like how advertisements do a kind of DDOS attack on people's sense of inherent self-worth. There isn't even a single egregore to point at as the cause of that. It's just that many, many such hypercreatures benefit from the deluge of subtly negative messaging and therefore tap into it in a sort of (for them) inverse tragedy of the commons. (Victory of the commons?)

In the same way, there's a very particular kind of stupid that (a) is pretty much independent of g factor and (b) is super beneficial for these hypercreatures as a pathway to possession.

And I say "stupid" both because it's evocative but also because of ties to terms like "stupendous" and "stupefy". I interpret "stupid" to mean something like "stunned". Like the mind is numb and pliable.

It so happens that the shape of this stupid keeps people from being grounded in the physical world. Like, how do you get a bunch of trucks out of a city? How do you fix the plumbing in your house? Why six feet for social distancing? It's easier to drift to supposed-to's and blame minimization. A mind that does that is super programmable.

The kind of clarity that you need to de-numb and actually goddamn look at AI risk is pretty anti all this. It's inoculation against zombiism.

So for one, that's just hard.

But for two, once a hypercreature (of this type) notices this immunity taking hold, it'll double down. Evolve weaponry.

That's the "intentional" part.

This is where people — having their minds coopted for Matrix-like computation — will pour their intelligence into dismissing arguments for AI risk.

This is why we can't get serious enough buy-in to this problem.

Which is to say, the problem isn't a need for AI alignment research.

The problem is current hypercreature unFriendliness.

From what I've been able to tell, AI alignment folk for the most part are trying to look at this external thing, this AGI, and make it aligned.

I think this is doomed.

Not just because we're out of time. That might be.

But the basic idea was already self-defeating.

Who is aligning the AGI? And to what is it aligning?

This isn't just a cute philosophy problem.

A common result of egregoric stupefaction is identity fuckery. We get this image of ourselves in our minds, and then we look at that image and agree "Yep, that's me." Then we rearrange our minds so that all those survival instincts of the body get aimed at protecting the image in our minds.

How did you decide which bits are "you"? Or what can threaten "you"?

I'll hop past the deluge of opinions and just tell you: It's these superintelligences. They shaped your culture's messages, probably shoved you through public school, gripped your parents to scar you in predictable ways, etc.

It's like installing a memetic operating system.

If you don't sort that out, then that OS will drive how you orient to AI alignment.

My guess is, it's a fuckton easier to sort out Friendliness/alignment within a human being than it is on a computer. Because the stuff making up Friendliness is right there.

And by extension, I think it's a whole lot easier to create/invoke/summon/discover/etc. a Friendly hypercreature than it is to solve digital AI alignment. The birth of science was an early example.

I'm pretty sure this alignment needs to happen in first person. Not third person. It's not (just) an external puzzle, but is something you solve inside yourself.

A brief but hopefully clarifying aside:

Stephen Jenkinson argues that most people don't know they're going to die. Rather, they know that everyone else is going to die.

That's what changes when someone gets a terminal diagnosis.

I mean, if I have a 100% reliable magic method for telling how you're going to die, and I tell you "Oh, you'll get a heart attack and that'll be it", that'll probably feel weird but it won't fill you with dread. If anything it might free you because now you know there's only one threat to guard against.

But there's a kind of deep, personal dread, a kind of intimate knowing, that comes when the doctor comes in with a particular weight and says "I've got some bad news."

It's immanent.

You can feel that it's going to happen to you.

Not the idea of you. It's not "Yeah, sure, I'm gonna die someday."

It becomes real.

You're going to experience it from behind the eyes reading these words.

From within the skin you're in as you witness this screen.

When I talk about alignment being "first person and not third person", it's like this. How knowing your mortality doesn't happen until it happens in first person.

Any kind of "alignment" or "Friendliness" or whatever that doesn't put that first-personness at the absolute very center isn't a thing worth crowing about.

I think that's the core mistake anyway. Why we're in this predicament, why we have unaligned superintelligences ruling the world, and why AGI looks so scary.

It's in forgetting the center of what really matters.

It's worth noting that the only scale that matters anymore is the hypercreature one.

I mean, one of the biggest things a single person can build on their own is a house. But that's hard, and most people can't do that. Mostly companies build houses.

Solving AI alignment is fundamentally a coordination problem. The kind of math/programming/etc. needed to solve it is literally superhuman, the way the four color theorem was (and still kind of is) superhuman.

"Attempted solutions to coordination problems" is a fine proto-definition of the hypercreatures I'm talking about.

So if the creatures you summon to solve AI alignment aren't Friendly, you're going to have a bad time.

And for exactly the same reason that most AGIs aren't Friendly, most emergent egregores aren't either.

As individuals, we seem to have some glimmer of ability to lean toward resonance with one hypercreature or another. Even just choosing what info diet you're on can do this. (Although there's an awful lot of magic in that "just choosing" part.)

But that's about it.

We can't align AGI. That's too big.

It's too big the way the pandemic was too big, and the Ukraine/Putin war is too big, and wokeism is too big.

When individuals try to act on the "god" scale, they usually just get possessed. That's the stupid simple way of solving coordination problems.

So when you try to contribute to solving AI alignment, what egregore are you feeding?

If you don't know, it's probably an unFriendly one.

(Also, don't believe your thoughts too much. Where did they come from?)

So, I think raising the sanity waterline is upstream of AI alignment.

It's like we've got gods warring, and they're threatening to step into digital form to accelerate their war.

We're freaking out about their potential mech suits.

But the problem is the all-out war, not the weapons.

We have an advantage in that this war happens on and through us. So if we take responsibility for this, we can influence the terrain and bias egregoric/memetic evolution to favor Friendliness.

Anything else is playing at the wrong level. Not our job. Can't be our job. Not as individuals, and it's individuals who seem to have something mimicking free will.

Sorting that out in practice seems like the only thing worth doing.

Not "solving xrisk". We can't do that. Too big. That's worth modeling, since the gods need our minds in order to think and understand things. But attaching desperation and a sense of "I must act!" to it is insanity. Food for the wrong gods.

Ergo why I support rationality for its own sake, period.

That, at least, seems to target a level at which we mere humans can act.

119 comments

comment by Scott Alexander (Yvain) · 2022-03-10T09:37:55.931Z

I wasn't convinced of this ten years ago [LW · GW] and I'm still not convinced.

When I look at people who have contributed most to alignment-related issues - whether directly, like Eliezer Yudkowsky and Paul Christiano - or theoretically, like Toby Ord and Katja Grace - or indirectly, like Sam Bankman-Fried and Holden Karnofsky - what all of these people have in common is focusing mostly on object-level questions. They all seem to me to have a strong understanding of their own biases, in the sense that gets trained by natural intelligence, really good scientific work, and talking to other smart and curious people like themselves. But as far as I know, none of them have made it a focus of theirs to fight egregores, defeat hypercreatures, awaken to their own mortality, refactor their identity, or cultivate their will. In fact, all of them (except maybe Eliezer) seem like the kind of people who would be unusually averse to thinking in those terms. And if we pit their plumbing or truck-maneuvering skills against those of an average person, I see no reason to think they would do better (besides maybe high IQ and general ability).

It's seemed to me that the more that people talk about "rationality training" more exotic than what you would get at a really top-tier economics department, the more those people tend to get kind of navel-gazey, start fighting among themselves, and not accomplish things of the same caliber as the six people I named earlier. I'm not just saying there's no correlation with success, I'm saying there's a negative correlation.

(Could this be explained by people who are naturally talented not needing to worry about how to gain talent? Possibly, but this isn't how it works in other areas - for example, all top athletes, no matter how naturally talented, have trained a lot.)

You've seen the same data I have, so I'm curious what makes you think this line of research/thought/effort will be productive.

↑ comment by James Payor (JamesPayor) · 2022-03-10T13:02:18.541Z

I think your pushback is ignoring an important point. One major thing the big contributors have in common is that they tend to be unplugged from the stuff Valentine is naming!

So even if folks mostly don't become contributors by asking "how can I come more truthfully from myself and not what I'm plugged into", I think there is an important cluster of mysteries here. Examples of related phenomena:

- Why has it worked out that just about everyone who claims to take AGI seriously is also vehement about publishing every secret they discover?

- Why do we fear an AI arms race, rather than expect deescalation and joint ventures?

- Why does the industry fail to understand the idea of aligned AI, and instead claim that "real" alignment work is adversarial-examples/fairness/performance-fine-tuning?

I think Val's correct on the point that our people and organizations are plugged into some bad stuff, and that it's worth examining that.

↑ comment by LukeOnline · 2022-03-11T13:29:31.317Z

But as far as I know, none of them have made it a focus of theirs to fight egregores, defeat hypercreatures

Egregore is an occult concept representing a distinct non-physical entity that arises from a collective group of people.

I do know one writer who talks a lot about demons and entities from beyond the void. It's you, and it happens in some of, IMHO, the most valuable pieces you've written.

I worry that Caplan is eliding the important summoner/demon distinction. This is an easy distinction to miss, since demons often kill their summoners and wear their skin.

That civilization is dead. It summoned an alien entity from beyond the void which devoured its summoner and is proceeding to eat the rest of the world.

https://slatestarcodex.com/2016/07/25/how-the-west-was-won/

And Ginsberg answers: “Moloch”. It’s powerful not because it’s correct – nobody literally thinks an ancient Carthaginian demon causes everything – but because thinking of the system as an agent throws into relief the degree to which the system isn’t an agent.

But the current rulers of the universe – call them what you want, Moloch, Gnon, whatever – want us dead, and with us everything we value. Art, science, love, philosophy, consciousness itself, the entire bundle. And since I’m not down with that plan, I think defeating them and taking their place is a pretty high priority.

https://slatestarcodex.com/2014/07/30/meditations-on-moloch/

It seems pretty obvious to me:

1.) We humans aren't conscious of all the consequences of our actions, both because the subconscious has an important role in making our choices, and because our world is enormously complex so all consequences are practically unknowable

2.) In a society of billions, these unforeseeable forces combine in something larger than humans can explicitly plan and guide: "the economy", "culture", "the market", "democracy", "memes"

3.) These larger-than-human-systems prefer some goals that are often antithetical to human preferences. You describe it perfectly in Seeing Like A State: the state has a desire for legibility and 'rationally planned' designs that are at odds with the human desire for organic design. And thus, the 'supersystem' isn't merely an aggregate of human desires, it has some qualities of being an actual separate agent with its own preferences. It could be called a hypercreature, an egregore, Moloch or the devil.

4.) We keep hurting ourselves, again and again and again. We keep falling into multipolar traps, we keep choosing for Moloch, which you describe as "the god of child sacrifice, the fiery furnace into which you can toss your babies in exchange for victory in war". And thus, we have not accomplished for ourselves what we want to do with AI. Humanity is not aligned with human preferences. This is what failure looks like.

5.) If we fail to align humanity, if we fail to align major governments and corporations, if we don't even recognize our own misalignment, how big is the chance that we will manage to align AGI with human preferences? Total nuclear war has not been avoided by nuclear technicians who kept perfect control over their inventions - it has been avoided by the fact that the US government in 1945 was reasonably aligned with human preferences. I dare not imagine the world where the Nazi government was the first to get its hands on nuclear weapons.

And thus, I think it would be very, very valuable to put a lot more effort into 'aligning humanity'. How do we keep our institutions and our grassroots movement "free from Moloch"? How do we get and spread reliable, non-corrupt authorities and politicians? How do we stop falling into multipolar traps, how do we stop suffering unnecessarily?

Best case scenario: this effort will turn out to be vital to AGI alignment

Worst case scenario: this effort will turn out to be irrelevant to AGI alignment, but in the meanwhile, we made the world a much better place

↑ comment by habryka (habryka4) · 2022-03-12T09:20:57.885Z

I sadly don't have time to really introspect what is going on in me here, but something about this comment feels pretty off to me. I think in some sense it provides an important counterpoint to the OP, but I also feel like it stretches the truth quite a bit:

- Toby Ord primarily works on influencing public opinion and governments, and very much seems to view the world through a "raising the sanity waterline" lens. Indeed, I just talked to him last morning, when I tried to convince him that misuse risk from AI, and the risk from having the "wrong actor" get the AI, are much smaller than he thinks they are, which feels like a very related topic.

- Eliezer has done most of his writing on the meta-level, on the art of rationality, on the art of being a good and moral person, and on how to think about your own identity.

- Sam Bankman-Fried is also very active in political activism, and (my guess) is quite concerned about the information landscape. I expect he would hate the terms used in this post, but I expect there to be a bunch of similarities in his model of the world and the one outlined in this post, in terms of trying to raise the sanity waterline and improve the world's decision-making in a much broader sense (there is a reason why he was one of the biggest contributors to the Clinton and Biden campaigns).

I think it is true that the other three are mostly focusing on object-level questions.

I... also dislike something about the meta-level of arguing from high-status individuals. I expect it to make the discussion worse, and also make it harder for people to respond with counterarguments, because counterarguments could be read as attacking the high-status people, which is scary.

I dislike the language used in the OP, and sure feel like it actively steers attention in unproductive ways that make me not want to engage with it, but I do have a strong sense that it's going to be very hard to actually make progress on building a healthy field of AI Alignment, because the world will repeatedly try to derail the field into being about defeating the other monkeys, or being another story about why you should work at the big AI companies, or why you should give person X or movement Y all of your money, which feels to me related to what the OP is talking about.

↑ comment by ShowMeTheProbability · 2022-11-24T12:49:29.112Z

The Sam Bankman-Fried part reads differently now that his massive fraud at FTX is public; might be worth a comment/revision?

I can't help but see Sam disagreeing with a message as a positive for the message (I know it's a fallacy, but the feeling's still there)

↑ comment by habryka (habryka4) · 2022-11-24T20:41:45.938Z

Hmm, I feel like the revision would have to be in Scott's comment. I was just responding to the names that Scott mentioned, and I think everything I am saying here is still accurate.

↑ comment by Valentine · 2022-03-11T13:24:55.062Z

I wasn't convinced of this ten years ago and I'm still not convinced.

Given the link, I think you're objecting to something I don't care about. I don't mean to claim that x-rationality is great and has promise to Save the World. Maybe if more really is possible and we do something pretty different to seriously develop it. Maybe. But frankly I recognize stupefying egregores here too and I don't expect "more and better x-rationality" to do a damn thing to counter those for the foreseeable future.

So on this point I think I agree with you… and I don't feel whatsoever dissuaded from what I'm saying.

The rest of what you're saying feels like it's more targeting what I care about though:

When I look at people who have contributed most to alignment-related issues […] what all of these people have in common is focusing mostly on object-level questions.

Right. And as I said in the OP, stupefaction often entails alienation from object-level reality.

It's also worth noting that LW exists mostly because Eliezer did in fact notice his own stupidity and freaked the fuck out. He poured a huge amount of energy into taking his internal mental weeding seriously in order to never ever ever be that stupid again. He then wrote all these posts to articulate a mix of (a) what he came to realize and (b) the ethos behind how he came to realize it.

That's exactly the kind of thing I'm talking about.

A deep art of sanity worth honoring might involve some techniques, awareness of biases, Bayesian reasoning, etc. Maybe. But focusing on that invites Goodhart. I think LW suffers from this in particular.

I'm pointing at something I think is more central: casting off systematic stupidity and replacing it with systematic clarity.

And yeah, I'm pretty sure one effect of that would be grounding one's thinking in the physical world. Symbolic thinking in service to working with real things, instead of getting lost in symbols as though they were somehow independently real.

But as far as I know, none of them have made it a focus of theirs to fight egregores, defeat hypercreatures, awaken to their own mortality, refactor their identity, or cultivate their will.

I think you're objecting to my aesthetic, not my content.

I think it's well-established that my native aesthetic rubs many (most?) people in this space the wrong way. At this point I've completely given up on giving it a paint job to make it more palatable here.

But if you (or anyone else) cares to attempt a translation, I think you'll find what you're saying here to be patently false.

- Raising the sanity waterline is exactly about fighting and defeating egregores=hypercreatures. Basically every debiasing technique is like that. Singlethink is a call to arms.

- The talk about cryonics and xrisk is exactly an orientation to mortality. (Though this clearly was never Eliezer's speciality and no one took up the mantle in any deep way, so this is still mostly possessed.)

- The whole arc about free will is absolutely about identity. Likewise the stuff on consciousness and p-zombies. Significant chunks of the "Mysterious Answers to Mysterious Questions" Sequence were about how people protect their identities with stupidity and how not to fall prey to that.

The "cultivate their will" part confuses me. I didn't mean to suggest doing that. I think that's anti-helpful for the most part. Although frankly I think all the stuff about "Tsuyoku Naritai!" and "Shut up and do the impossible" totally fits the bill of what I imagine when reading your words there… but yeah, no, I think that's just dumb and don't advise it.

Although I very much do think that getting damn clear on what you can and cannot do is important, as is ceasing to don responsibility for what you can't choose and fully accepting responsibility for what you can. That strikes me as absurdly important and neglected. As far as I can tell, anyone who affects anything for real has to at least stumble onto an enacted solution for this in at least some domain.

And if we pit their plumbing or truck-maneuvering skills against those of an average person, I see no reason to think they would do better (besides maybe high IQ and general ability).

You seem to be weirdly strawmanning me here.

The trucking thing was a reference to Zvi's repeated rants about how politicians didn't seem to be able to think about the physical world enough to solve the Canadian Trucker Convoy clog in Ottawa. I bet that Holden, Eliezer, Paul, etc. would all do massively better than average at sorting out a policy that would physically work. And if they couldn't, I would worry about their "contributions" to alignment being more made of social illusion than substance.

Things like plumbing are physical skills. So is, say, football. I don't expect most football players to magically be better at plumbing. Maybe some correlation, but I don't really care.

But I do expect someone who's mastering a general art of sanity and clarity to be able to think about plumbing in practical physical terms. Instead of "The dishwasher doesn't work" and vaguely hoping the person with the right cosmic credentials will cast a magic spell, there'd be a kind of clarity about what Gears one does and doesn't understand, and turning to others because you see they see more relevant Gears.

If the wizards you've named were no better than average at that, then that would also make me worry about their "contributions" to alignment.

It's seemed to me that the more that people talk about "rationality training" more exotic than what you would get at a really top-tier economics department, the more those people tend to get kind of navel-gazey, start fighting among themselves, and not accomplish things of the same caliber as the six people I named earlier. I'm not just saying there's no correlation with success, I'm saying there's a negative correlation.

I totally agree. Watching this dynamic play out within CFAR was a major factor in my checking out from it.

That's part of what I mean by "this space is still possessed". Stupefaction still rules here. Just differently.

You've seen the same data I have, so I'm curious what makes you think this line of research/thought/effort will be productive.

I think you and I are imagining different things.

I don't think a LW or CFAR or MIRI flavored project that focuses on thinking about egregores and designing counters to stupefaction is promising. I think that'd just be a different flavor of the same stupid sauce.

(I had different hopes back in 2016, but I've been thoroughly persuaded otherwise by now.)

I don't mean to prescribe a collective action solution at all, honestly. I'm not proposing a research direction. I'm describing a problem.

The closest thing to a solution-shaped object I'm putting forward is: Look at the goddamned question.

Part of what inspired me to write this piece at all was seeing a kind of blindness to these memetic forces in how people talk about AI risk and alignment research. Making bizarre assertions about what things need to happen on the god scale of "AI researchers" or "governments" or whatever, roughly on par with people loudly asserting opinions about what POTUS should do.

It strikes me as immensely obvious that memetic forces precede AGI. If the memetic landscape slants down mercilessly toward existential oblivion here, then the thing to do isn't to prepare to swim upward against a future avalanche. It's to orient to the landscape.

If there's truly no hope, then just enjoy the ride. No point in worrying about any of it.

But if there is hope, it's going to come from orienting to the right question.

And it strikes me as quite obvious that the technical problem of AI alignment isn't that question. True, it's a question that if we could answer it might address the whole picture. But that's a pretty damn big "if", and that "might" is awfully concerning.

I do feel some hope about people translating what I'm saying into their own way of thinking, looking at reality, and pondering. I think a realistic solution might organically emerge from that. Or rather, what I'm doing here is an iteration of this solution method. The process of solving the Friendliness problem in human culture has the potential to go superexponential since (a) Moloch doesn't actually plan except through us and (b) the emergent hatching Friendly hypercreature(s) would probably get better at persuading people of its cause as more individuals allow it to speak through them.

But that's the wrong scale for individuals to try anything on.

I think all any of us can actually do is try to look at the right question, and hold the fact that we care about having an answer but don't actually have one.

Does that clarify?

↑ comment by Scott Alexander (Yvain) · 2022-03-13T04:34:55.445Z

Maybe. It might be that if you described what you wanted more clearly, it would be the same thing that I want, and possibly I was incorrectly associating this with the things at CFAR you say you're against, in which case sorry.

But I still don't feel like I quite understand your suggestion. You talk of "stupefying egregores" as problematic insofar as they distract from the object-level problem. But I don't understand how pivoting to egregore-fighting isn't also a distraction from the object-level problem. Maybe this is because I don't understand what fighting egregores consists of, and if I knew, then I would agree it was some sort of reasonable problem-solving step.

I agree that the Sequences contain a lot of useful deconfusion, but I interpret them as useful primarily because they provide a template for good thinking, and not because clearing up your thinking about those things is itself necessary for doing good work. I think of the cryonics discussion the same way I think of the Many Worlds discussion - following the motions of someone as they get the right answer to a hard question trains you to do this thing yourself.

I'm sorry if "cultivate your will" has the wrong connotations, but you did say "The problem that's upstream of this is the lack of will", and I interpreted a lot of your discussion of de-numbing and so on as dealing with this.

Part of what inspired me to write this piece at all was seeing a kind of blindness to these memetic forces in how people talk about AI risk and alignment research. Making bizarre assertions about what things need to happen on the god scale of "AI researchers" or "governments" or whatever, roughly on par with people loudly asserting opinions about what POTUS should do. It strikes me as immensely obvious that memetic forces precede AGI. If the memetic landscape slants down mercilessly toward existential oblivion here, then the thing to do isn't to prepare to swim upward against a future avalanche. It's to orient to the landscape.

The claim "memetic forces precede AGI" seems meaningless to me, except insofar as memetic forces precede everything (eg the personal computer was invented because people wanted personal computers and there was a culture of inventing things). Do you mean it in a stronger sense? If so, what sense?

I also don't understand why it's wrong to talk about what "AI researchers" or "governments" should do. Sure, it's more virtuous to act than to chat randomly about stuff, but many Less Wrongers are in positions to change what AI researchers do, and if they have opinions about that, they should voice them. This post of yours right now seems to be about what "the rationalist community" should do, and I don't think it's a category error for you to write it.

Maybe this would be easier if you described what actions we should take conditional on everything you wrote being right.

↑ comment by Sammy Martin (SDM) · 2022-03-14T10:24:00.133Z

There's also the skulls to consider. As far as I can tell, this post's recommendations are that we, who are already in a valley littered with a suspicious number of skulls,

https://forum.effectivealtruism.org/posts/ZcpZEXEFZ5oLHTnr9/noticing-the-skulls-longtermism-edition

https://slatestarcodex.com/2017/04/07/yes-we-have-noticed-the-skulls/

turn right towards a dark cave marked 'skull avenue' whose mouth is a giant skull, and whose walls are made entirely of skulls that turn to face you as you walk past them deeper into the cave.

The success rate of movements aimed at improving the long-term future or improving rationality has historically been... not great, but there are at least solid, concrete empirical reasons to think specific actions will help, and we can pin our hopes on that.

The success rate of, let's build a movement to successfully uncouple ourselves from society's bad memes and become capable of real action and then our problems will be solvable, is 0. Not just in that thinking that way didn't help, but in that, with near 100% success, you just end up possessed by worse memes if you make that your explicit final goal (rather than ending up doing that as a side effect of trying to get good at something). And there are also no concrete paths to action to pin our hopes on.

↑ comment by bortrand · 2022-03-17T14:18:37.635Z

“The success rate of, let's build a movement to successfully uncouple ourselves from society's bad memes and become capable of real action and then our problems will be solvable, is 0.“

I’m not sure if this is an exact analog, but I would have said the scientific revolution and the age of enlightenment were two (To be honest, I’m not entirely sure where one ends and the other begins, and there may be some overlap, but I think of them as two separate but related things) pretty good examples of this that resulted in the world becoming a vastly better place, largely through the efforts of individuals who realized that by changing the way we think about things we can better put to use human ingenuity. I know this is a massive oversimplification, but I think it points in the direction of there potentially being value in pushing the right memes onto society.

↑ comment by Sammy Martin (SDM) · 2022-03-17T15:57:09.789Z

The success rate of developing and introducing better memes into society is indeed not 0. The key thing there is that the scientific revolutionaries weren't just thinking in the abstract, "we must uncouple from society first, and then we'll know what to do". Rather, they wanted to understand how objects fell, how animals evolved, and lots of other specific problems, and they developed good memes to achieve those ends.

↑ comment by bortrand · 2022-03-18T00:41:18.459Z

I’m by no means an expert on the topic, but I would have thought it was a result of both object-level thinking producing new memes that society recognized as true, but also some level of abstract thinking along the lines of “using God and the Bible as an explanation for every phenomenon doesn’t seem to be working very well, maybe we should create a scientific method or something.”

I think there may be a bit of us talking past each other, though. From your response, perhaps what I consider “uncoupling from society’s bad memes” you consider to be just generating new memes. It feels like generally a conversation where it’s hard to pin down what exactly people are trying to describe (starting from the OP, which I find very interesting, but am still having some trouble understanding specifically) which is making it a bit hard to communicate.

↑ comment by jimmy · 2022-03-17T02:46:17.206Z

Now that I've had a few days to let the ideas roll around in the back of my head, I'm gonna take a stab at answering this.

I think there are a few different things going on here which are getting confused.

1) What does "memetic forces precede AGI" even mean?

"Individuals", "memetic forces", and "that which is upstream of memetics" all act on different scales. As an example of each, I suggest "What will I eat for lunch?", "Who gets elected POTUS?", and "Will people eat food?", respectively.

"What will I eat for lunch?" is an example of an individual decision because I can actually choose the outcome there. While sometimes things like "veganism" will tell me what I should eat, and while I might let that influence me, I don't actually have to. If I realize that my life depends on eating steak, I will actually end up eating steak.

"Who gets elected POTUS" is a much tougher problem. I can vote. I can probably persuade friends to vote. If I really dedicate myself to the cause, and I do an exceptionally good job, and I get lucky, I might be able to get my ideas into the minds of enough people that my impact is noticeable. Even then though, it's a drop in the bucket and pretty far outside my ability to "choose" who gets elected president. If I realize that my life depends on a certain person getting elected who would not get elected without my influence... I almost certainly just die. If a popular memeplex decides that a certain candidate threatens it, that actually can move enough people to plausibly change the outcome of an election.

However, there's a limit to which memeplexes can become dominant and what they can tell people to do. If a hypercreature tells people to not eat meat, it may get some traction there. If it tries to tell people not to eat at all, it's almost certainly going to fail and die. Not only will it have a large rate of attrition from adherents dying, but it's going to be a real hard sell to get people to take its ideas on, and therefore it will have a very hard time spreading.

My reading of the claim "memetic forces precede AGI" is that like getting someone elected POTUS, the problem is simply too big for there to be any reasonable chance that a few guys in a basement can just go do it on their own when not supported by friendly hypercreatures. Val is predicting that our current set of hypercreatures won't allow that task to be possible without superhuman abilities, and that our only hope is that we end up with sufficiently friendly hypercreatures that this task becomes humanly possible. Kinda like if your dream was to run an openly gay weed dispensary: it's humanly possible today, but it wasn't further back in the past, and it isn't in Saudi Arabia today; you need that cultural support or it ain't gonna happen.

2) "Fight egregores" sure sounds like "trying to act on the god level" if anything does. How is this not at least as bad as "build FAI"? What could we possibly do which isn't foolishly trying to act above our level?

This is a confusing one, because our words for things like "trying" are all muddled together. I think basically, yes, trying to "fight egregores" is "trying to act on the god level", and is likely to lead to problems. However, that doesn't mean you can't make progress against egregores.

So, the problem with "trying to act on a god level" isn't so much that you're not a god and therefore "don't have permission to act on this level" or "ability to touch this level", it's that you're not a god and therefore attempting to act as if you were a god fundamentally requires you to fail to notice and update on that fact. And because you're failing to update, you're doing something that doesn't make sense in light of the information at hand. And not just any information either; it's information that's telling you that what you're trying to do will not work. So of course you're not going to get where you want if you ignore the road signs saying "WRONG WAY!".

What you can do, which will help free you from the stupefying factors and unfriendly egregores, and (Val claims) will have the best chance of leading to a FAI, is to look at what's true. Rather than "I have to do this, or we all die! I must do the impossible", just "Can I do this? Is it impossible? If so, and I'm [likely] going to die, I can look at that anyway. Given what's true, what do I want to do?"

If this has a "...but that doesn't solve the problem" bit to it, that's kinda the point. You don't necessarily get to solve the problem. That's the uncomfortable thing we should not flinch away from updating on. You might not be able to solve the problem. And then what?

(Not flinching from these things is hard. And important.)

3) What's wrong with talking about what AI researchers should do? There's actually a good chance they listen! Should they not voice their opinions on the matter? Isn't that kinda what you're doing here by talking about what the rationality community should do?

Yes. Kinda. Kinda not.

There's a question of how careful one has to be, and Val is making a case for much increased caution but not really stating it this way explicitly. Bear with me here, since I'm going to be making points that necessarily seem like "unimportant nitpicking pedantry" relative to an implicit level of caution that is more tolerant of rounding errors of this type, but I'm not actually presupposing anything here about whether increased caution is necessary in general or as it applies to AGI. It is, however, necessary in order to understand Val's perspective on this, since it is central to his point.

If you look closely, Val never said anything about what the rationality community "should" do. He didn't use the word "should" once.

He said things like "We can't align AGI. That's too big." and "So, I think raising the sanity waterline is upstream of AI alignment." and "We have an advantage in that this war happens on and through us. So if we take responsibility for this, we can influence the terrain and bias egregoric/memetic evolution to favor Friendliness". These things seem to imply that we shouldn't try to align AGI and should instead do something like "take responsibility" so we can "influence the terrain and bias egregoric/memetic evolution to favor friendliness", and as far as rounding errors go, that's not a huge one. However, he did leave the decision of what to do with the information he presented up to you, and consciously refrained from imbuing it with any "shouldness". The lack of "should" in his post or comments is very intentional, and is an example of him doing the thing he views as necessary for FAI to have a chance of working out.

In (my understanding of) Val's perspective, this "shouldness" is a powerful stupefying factor that works itself into everything -- if you let it. It prevents you from seeing the truth, and in doing so blocks you from any path which might succeed. It's so damn seductive and self-protecting that we all get drawn into it all the time and don't really realize -- or worse, rationalize and believe that "it's not really that big a deal; I can achieve my object level goals anyway (or I can't anyway, and so it makes no difference if I look)". His claim is that it is that big a deal, because you can't achieve your goals -- and that you know you can't, which is the whole reason you're stuck in your thoughts of "should" in the first place. He's saying that the annoying effort to be more precise about what exactly we are aiming to share and holding ourselves to be squeaky clean from any "impotent shoulding" at things is actually a necessary precondition for success. That if we try to "Shut up and do the impossible", we fail. That if we "Think about what we should do", we fail. That if we "try to convince people", even if we are right and pointing at the right thing, we fail. That if we allow ourselves to casually "should" at things, instead of recognizing it as so incredibly dangerous as to avoid out of principle, we get seduced into being slaves for unfriendly egregores and fail.

That last line is something I'm less sure Val would agree with. He seems to be doing the "hard line avoid shoulding, aim for maximally clean cognition and communication" thing and the "make a point about doing it to highlight the difference" thing, but I haven't heard him say explicitly that he thinks it has to be a hard line thing.

And I don't think it does, or should be (case in point). Taking a hard line can be evidence of flinching from a different truth, or a lack of self trust to only use that way of communicating/relating to things in a productive way. I think by not highlighting the fact that it can be done wisely, he clouds his point and makes his case less compelling than it could be. However, I do think he's correct about it being both a deceptively huge deal and also something that takes a very high level of caution before you start to recognize the issues with lower levels of caution.

↑ comment by Valentine · 2022-03-17T21:33:28.199Z

I feel seen. I'll tweak a few details here & there, but you have the essence.

Thank you.

If this has a "...but that doesn't solve the problem" bit to it, that's kinda the point. You don't necessarily get to solve the problem. That's the uncomfortable thing we should not flinch away from updating on. You might not be able to solve the problem. And then what?

Agreed.

Two details:

- "…we should not flinch away…" is another instance of the thing. This isn't just banishing the word "should": the ability not to flinch away from hard things is a skill, and trying to bypass development of that skill with moral panic actually makes everything worse.

- The orientation you're pointing at here biases one's inner terrain toward Friendly superintelligences. It's also personally helpful and communicable. This is an example of a Friendly meme that can give rise to a Friendly superintelligence. So while sincerely asking "And then what?" is important, as is holding the preciousness of the fact that we don't yet have an answer, that is enough. We don't have to actually answer that question to participate in feeding Friendliness in the egregoric wars. We just have to sincerely ask.

That if we allow ourselves to casually "should" at things, instead of recognizing it as so incredibly dangerous as to avoid out of principle, we get seduced into being slaves for unfriendly egregores and fail.

That last line is something I'm less sure Val would agree with.

Admittedly I'm not sure either.

Generally speaking, viewing things as "so incredibly dangerous as to avoid out of principle" ossifies them too much. Ossified things tend to become attack surfaces for unFriendly superintelligences.

In particular, being scared of how incredibly dangerous something is tends to be stupefying.

But I do think seeing this clearly naturally creates a desire to be more clear and to drop nearly all "shoulding" — not so much the words as the spirit.

(Relatedly: I actually didn't know I never used the word "should" in the OP! I don't actually have anything against the word per se. I just try to embody this stuff. I'm delighted to see I've gotten far enough that I just naturally dropped using it this way.)

…I haven't heard him say explicitly that he thinks it has to be a hard line thing.

And I don't think it does, or should be (case in point). Taking a hard line can be evidence of flinching from a different truth, or a lack of self trust to only use that way of communicating/relating to things in a productive way. I think by not highlighting the fact that it can be done wisely, he clouds his point and makes his case less compelling than it could be.

I'm not totally sure I follow. Do you mean a hard line against "shoulding"?

If so, I mostly just agree with you here.

That said, I think trying to make my point more compelling would in fact be an example of the corruption I'm trying to purify myself of. Instead I want to be correct and clear. That might happen to result in what I'm saying being more compelling… but I need to be clean of the need for that to happen in order for it to unfold in a Friendly way.

However. I totally believe that there's a way I could have been clearer.

And given how spot-on the rest of what you've been saying feels to me, my guess is you're right about how here.

Although admittedly I don't have a clear image of what that would have looked like.

↑ comment by jimmy · 2022-03-20T04:11:27.615Z

"…we should not flinch away…" is another instance of the thing.

Doh! Busted.

Thanks for the reminder.

This isn't just banishing the word "should": the ability not to flinch away from hard things is a skill, and trying to bypass development of that skill with moral panic actually makes everything worse.

Agreed.

We don't have to actually answer that question to participate in feeding Friendliness in the egregoric wars. We just have to sincerely ask.

Good point. Agreed, and worth pointing out explicitly.

I'm not totally sure I follow. Do you mean a hard line against "shoulding"?

Yes. You don't really need it, things tend to work better without it, and the fact no one even noticed that it didn't show up in this post is a good example of that. At the same time, "I shouldn't ever use 'should'" obviously has the exact same problems, and it's possible to miss that you're taking that stance if you don't ever say it out loud. I watched some of your videos after Kaj linked one, and... it's not that it looked like you were doing that, but it looked like you might be doing that. Like there wasn't any sort of self caricaturing or anything that showed me that "Val is well aware of this failure mode, and is actively steering clear", so I couldn't rule it out and wanted to mark it as a point of uncertainty and a thing you might want to watch out for.

That said, I think trying to make my point more compelling would in fact be an example of the corruption I'm trying to purify myself of. Instead I want to be correct and clear. That might happen to result in what I'm saying being more compelling… but I need to be clean of the need for that to happen in order for it to unfold in a Friendly way.

Ah, but I never said you should try to make your point more compelling! What do you notice when you ask yourself why "X would have effect Y" led you to respond with a reason to not do X? ;)

↑ comment by adamShimi · 2022-03-10T13:15:17.953Z

Don't have the time to write a long comment just now, but I still wanted to point out that describing either Yudkowsky or Christiano as doing mostly object-level research seems incredibly wrong. So much of what they're doing and have done focused explicitly on which questions to ask, which questions not to ask, which paradigm to work in, how to criticize that kind of work... They rarely published posts that are only about the meta-level (although Arbital does contain a bunch of pages along those lines and Prosaic AI Alignment is also meta) but it pervades their writing and thinking.

More generally, when you're creating a new field of science or research, you tend to do a lot of philosophy of science type stuff, even if you don't label it explicitly that way. Galileo, Carnot, Darwin, Boltzmann, Einstein, Turing all did it.

(To be clear, I'm pointing at meta-stuff in the sense of "philosophy of science for alignment" type things, not necessarily the more hardcore stuff discussed in the original post)

↑ comment by TAG · 2022-03-10T16:55:51.344Z

That's true, but if you are doing philosophy it is better to admit to it, and learn from existing philosophy, rather than deriding and dismissing the whole field.

↑ comment by Valentine · 2022-03-11T13:27:14.070Z

This seems irrelevant to the point, yes? I think adamShimi is challenging Scott's claim that Paul & Eliezer are mostly focusing on object-level questions. It sounds like you're challenging whether they're attending to non-object-level questions in the best way. That's a different question. Am I missing your point?

↑ comment by ADifferentAnonymous · 2022-03-10T18:54:16.523Z

Eliezer, at least, now seems quite pessimistic about that object-level approach. And in the last few months he's been writing a ton of fiction about introducing a Friendly hypercreature to an unfriendly world.

↑ comment by Ben Pace (Benito) · 2022-03-13T18:44:53.466Z

When I look at people who have contributed most to alignment-related issues - whether directly... or indirectly, like Sam Bankman-Fried

Perhaps I have missed it, but I’m not aware that Sam has funded any AI alignment work thus far.

If so, this sounds like giving him a large amount of credit in advance of doing the work, which is generous, but credit allocation shouldn't go in that order.

↑ comment by Marcello · 2025-01-01T16:23:31.981Z

Looking at this comment from three years in the future, I'll just note that there's something quite ironic about your having put Sam Bankman-Fried on this list! If only he'd refactored his identity more! But no, he was stuck in short-sighted-greed/CDT/small-self, and we all paid a price for that, didn't we?

comment by james.lucassen · 2022-03-09T05:26:34.712Z

My attempt to break down the key claims here:

- The internet is causing rapid memetic evolution towards ideas which stick in people's minds, encourage them to take certain actions, especially ones that spread the idea. Ex: wokism, Communism, QAnon, etc

- These memes push people who host them (all of us, to be clear) towards behaviors which are not in the best interests of humanity, because Orthogonality Thesis

- The lack of will to work on AI risk comes from these memes' general interference with clarity/agency, plus selective pressure to develop ways to get past "immune" systems which allow clarity/agency

- Before you can work effectively on AI stuff, you have to clear out the misaligned memes stuck in your head. This can get you the clarity/agency necessary, and make sure that (if successful) you actually produce AGI aligned with "you", not some meme

- The global scale is too big for individuals - we need memes to coordinate us. This is why we shouldn't try and just solve x-risk, we should focus on rationality, cultivating our internal meme garden, and favoring memes which will push the world in the direction we want it to go

↑ comment by james.lucassen · 2022-03-09T05:34:53.257Z

Putting this in a separate comment, because Reign of Terror moderation scares me and I want to compartmentalize. I am still unclear about the following things:

- Why do we think memetic evolution will produce complex/powerful results? It seems like the mutation rate is much, much higher than biological evolution.

- Valentine describes these memes as superintelligences, as "noticing" things, and generally being agents. Are these superintelligences hosted per-instance-of-meme, with many stuffed into each human? Or is something like "QAnon" kind of a distributed intelligence, doing its "thinking" through social interactions? Both of these models seem to have some problems (power/speed), so maybe something else?

- Misaligned (digital) AGI doesn't seem like it'll be a manifestation of some existing meme and therefore misaligned, it seems more like it'll just be some new misaligned agent. There is no highly viral meme going around right now about producing tons of paperclips.

↑ comment by bglass · 2022-03-10T04:43:29.046Z

I really appreciate your list of claims and unclear points. Your succinct summary is helping me think about these ideas.

There is no highly viral meme going around right now about producing tons of paperclips.

A few examples came to mind: sports paraphernalia, tabletop miniatures, and stuffed animals (which likely outnumber real animals by hundreds or thousands of times).

One might argue that these things give humans joy, so they don't count. There is some validity to that. AI paperclips are supposed to be useless to humans. On the other hand, one might also argue that it is unsurprising that subsystems repurposed to seek out paperclips derive some 'enjoyment' from the paperclips... but I don't think that argument will hold water for these examples. Looking at it another way, some amount of paperclips are indeed useful.

No egregore has turned the entire world to paperclips just yet. But of course that hasn't happened, else we would have already lost.

Even so: consider paperwork (like the tax forms mentioned in the post), skill certifications in the workplace, and things like slot machines and reality television. A lot of human effort is wasted on things humans don't directly care about, for non-obvious reasons. Those things could be paperclips.

(And perhaps some humans derive genuine joy out of reality television, paperwork, or giant piles of paperclips. I don't think that changes my point that there is evidence of egregores wasting resources.)

↑ comment by dxu · 2022-03-10T05:19:42.824Z

I think the point under contention isn't whether current egregores are (in some sense) "optimizing" for things that would score poorly according to human values (they are), but whether the things they're optimizing for have some (clear, substantive) relation to the things a misaligned AGI will end up optimizing for, such that an intervention on the whole egregores situation would have a substantial probability of impacting the eventual AGI.

To this question I think the answer is a fairly clear "no", though of course this doesn't invalidate the possibility that investigating how to deal with egregores may result in some non-trivial insights for the alignment problem.

↑ comment by Valentine · 2022-03-11T15:02:27.340Z

I agree with you.

I also don't think it matters whether the AGI will optimize for something current egregores care about.

What matters is whether current egregores will in fact create AGI.

The fear around AI risk is that the answer is "inevitably yes".

The current egregores are actually no better at making AGI egregore-aligned than humans are at making it human-aligned.

But they're a hell of a lot better at making AGI accidentally, and probably at all.

So if we don't sort out how to align egregores, we're fucked — and so are the egregores.

↑ comment by Valentine · 2022-03-11T14:50:28.849Z

Why do we think memetic evolution will produce complex/powerful results? It seems like the mutation rate is much, much higher than biological evolution.

Doesn't the second part answer the first? I mean, the reason biological evolution matters is because its mutation rate massively outstrips geological and astronomical shifts. Memetic evolution dominates biological evolution for the same reason.

Also, just empirically: memetic evolution produced civilization, social movements, Crusades, the Nazis, etc.

I wonder if I'm just missing your question.

Are these superintelligences hosted per-instance-of-meme, with many stuffed into each human? Or is something like "QAnon" kind of a distributed intelligence, doing its "thinking" through social interactions?

Both.

I wonder if you're both (a) blurring levels and (b) intuitively viewing these superintelligences as having some kind of essence that either is or isn't in someone.

What is or isn't a "meme" isn't well defined. A catch phrase (e.g. "Black lives matter!") is totally a meme. But is a religion a meme? Is it more like a collection of memes? If so, what exactly are its constituent memes? And with catch phrases, most of them can't survive without a larger memetic context. (Try getting "Black lives matter!" to spread through an isolated Amazonian tribe.) So should we count the larger memetic context as part of the meme?

But if you stop trying to ask what is or isn't a meme and you just look at the phenomenon, you can see something happening. In the BLM movement, the phrase "Silence is violence" evolved and spread because it was evocative and helped the whole movement combat opposition in a way that supported its egregoric possession.

So… where does the whole BLM superorganism live? In its believers and supporters, sure. But also in its opponents. (Think of how folk who opposed BLM would spread its claims in order to object to them.) Also on webpages. Billboards. Now in Hollywood movies. And it's always shifting and mutating.

The academic field of memetics died because they couldn't formally define "meme". But that's backwards. Biology didn't need to formally define life to recognize that there's something to study. The act of studying seems to make some definitions more possible.

That's where we're at right now. Egregoric zoology, post Darwin but pre Watson & Crick.

Misaligned (digital) AGI doesn't seem like it'll be a manifestation of some existing meme and therefore misaligned, it seems more like it'll just be some new misaligned agent. There is no highly viral meme going around right now about producing tons of paperclips.

I quite agree. I didn't mean to imply otherwise.

The thing is, unFriendly hypercreatures aren't thinking about aligning AI to hypercreatures either. They have very little foresight.

(This is an artifact of how most unFriendly egregores do their thing via stupefaction. Most possessed people can't think about the future because it's too real and involves things like their personal death. They instead think about symbolic futures and get sideswiped when reality predictably doesn't go according to their plans. Since unFriendly hypercreatures use stupefied minds to plan, they end up having trouble with long futures, and are therefore unable to sanely orient to real-world issues that in fact screw them over.)

I think these hypercreatures will get just as shocked as the rest of us when AGI comes online.

The thing is, the pathway by which something like AGI actually destroys us is some combo of (a) getting a hold of real-world systems like nukes and (b) hacking human minds to do its bidding. Both of these are already happening via unFriendly hypercreature evolution, and for exactly the same reasons that folk fear AI risk.

The creation of digital AGI just finishes moving the substrate off of humans, at which point the emergent unFriendly superintelligence no longer has any reason to care about human bodies or minds. At that point we lose all leverage.

That's why I'm looking at the current situation and saying "Hey guys, I think you're missing what's actually happening here. We're already in AI takeoff, and you're fixated on the moment we lose all control instead of on this moment where we still have some."

I think of the step to AGI as the final one, when some egregore figures out how to build a memetic nuke but doesn't realize it'll burn everything.

So, no magical meme transforming into a digital form.

(Although it'll be some company or similar that tells the AGI "Make paperclips" or whatever. God forbid some corporate egregore builds an AGI to "maximize profit".)

↑ comment by james.lucassen · 2022-03-12T01:06:23.767Z

Memetic evolution dominates biological evolution for the same reason.

Faster mutation rate doesn't just produce faster evolution; it also reduces the steady-state fitness. Complex machinery can't reliably be evolved if pieces of it are breaking all the time. I'm mostly relying on "No Evolutions for Corporations or Nanodevices" plus one undergrad course in evolutionary bio here.
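A toy illustration of the mutation-rate point, offered as a sketch under stated assumptions rather than anything taken from "No Evolutions for Corporations or Nanodevices": assume a hypothetical deterministic, infinite-population model where a "working" piece of machinery gives relative fitness 1 + s, a "broken" copy gives fitness 1, and each working copy breaks with probability u per generation with no repair. Solving for the fixed point gives an equilibrium working fraction of 1 - u(1 + s)/s and a mean fitness of (1 + s)(1 - u), so steady-state fitness falls as u rises, and the machinery is lost entirely once u reaches s/(1 + s). The function names below are made up for illustration; the code just iterates that recurrence.

```python
def equilibrium_fraction(u: float, s: float, generations: int = 10_000) -> float:
    """Fraction of 'working' copies after iterating a toy mutation-selection model.

    Hypothetical model: working copies have relative fitness 1 + s, broken
    copies have fitness 1, and each working copy breaks with probability u
    per generation. Broken copies are never repaired.
    """
    p = 1.0  # start with every copy working
    for _ in range(generations):
        w_bar = p * (1 + s) + (1 - p)  # mean fitness this generation
        p = p * (1 + s) / w_bar        # selection favors working copies
        p *= 1 - u                     # then mutation breaks some of them
    return p


def steady_state_fitness(u: float, s: float) -> float:
    """Mean fitness 1 + p*s once the population has settled."""
    return 1 + equilibrium_fraction(u, s) * s


if __name__ == "__main__":
    s = 0.1  # assumed selective advantage of the working machinery
    for u in (0.001, 0.01, 0.05, 0.09, 0.2):
        p = equilibrium_fraction(u, s)
        print(f"u={u:<6} working={p:.3f} mean fitness={steady_state_fitness(u, s):.3f}")
```

With s = 0.1, the working fraction drops from about 0.99 at u = 0.001 to roughly 0.01 at u = 0.09 and to zero at u = 0.2: the "pieces breaking all the time" effect in miniature. The model deliberately ignores drift and finite populations.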

Also, just empirically: memetic evolution produced civilization, social movements, Crusades, the Nazis, etc.

Thank you for pointing this out. I agree with the empirical observation that we've had some very virulent and impactful memes. I'm skeptical about saying that those were produced by evolution rather than something more like genetic drift, because of the mutation-rate argument. But given that observation, I don't know if it matters if there's evolution going on or not. What we're concerned with is the impact, not the mechanism.

I think at this point I'm mostly just objecting to the aesthetic and some less-rigorous claims that aren't really important, not the core of what you're arguing. Does it just come down to something like:

"Ideas can be highly infectious and strongly affect behavior. Before you do anything, check for ideas in your head which affect your behavior in ways you don't like. And before you try and tackle a global-scale problem with a small-scale effort, see if you can get an idea out into the world to get help."

↑ comment by Valentine · 2022-03-11T14:16:53.808Z

I like this, thank you.

I score this as "Good enough that I debated not bothering to correct anything."

I think some corrections might be helpful though:

The internet is causing rapid memetic evolution…

While I think that's true, that's not really central to what I'm saying. I think these forces have been the main players for way, way longer than we've had an internet. The internet — like every other advance in communication — just increased evolutionary pressure at the memetic level by bringing more of these hypercreatures into contact with one another and with resources they could compete for.

These memes push people who host them (all of us, to be clear) towards behaviors which are not in the best interests of humanity, because Orthogonality Thesis

Yes. I'd just want to add that not all of them do. It's just that the dominant ones tend to be unFriendly.

Two counterexamples:

- Science. Not as an establishment, but as a kind of clarifying intelligence. This strikes me as a Friendly hypercreature. (The ossified practices of science, like "RCTs are the gold standard" and "Here's the Scientific Method!", tend to pull toward stupidity via Goodhart. A lot of LW is an attempt to reclaim the clarifying influence of this hypercreature's intelligence.)

- Jokes. These are sort of like innocuous memetic insects. As long as they don't create problems for more powerful hypercreatures, they can undergo memetic evolution and spread. They aren't particularly Friendly or unFriendly for the most part. Some of them add a little value via humor, although that's not what they're optimizing for. (The evolutionary pressure on jokes is "How effectively does hearing this joke cause the listener to faithfully repeat it?"). But if a joke were to somehow evolve into a more coherent behavior-controlling egregore, by default it would be an unFriendly one.

Before you can work effectively on AI stuff, you have to clear out the misaligned memes stuck in your head.

Almost. I think it's more important that you have installed a system for noticing and weeding out these influences.

It's like how John Vervaeke argues that the Buddha's Noble Eightfold Path is a kind of virtual engine for creating relevant insight. The important part isn't the insight but the engine, because the same processes that create insight also create delusion, so you need a systematic way of course-correcting.

This can get you the clarity/agency necessary, and make sure that (if successful) you actually produce AGI aligned with "you", not some meme

No correction here. I just wanted to say, this is a delightfully clear way of saying what I meant.

…we shouldn't try and just solve x-risk…

While I agree (both with the claim and with the fact that this is what I said), when I read you saying it I worry about an important nuance getting lost.

The emphasis here should be on "solve", not "x-risk". Solving xrisk is superhuman. So is xrisk itself for that matter. "God scale."

However! Friendly hypercreatures need our minds in order to think. In order for a memetic strategy to result in solving AI risk, we need to understand the problem. We need to see its components clearly.

So I do think it helps to model xrisk. See its factors. See its Gears. See the landscape it's embedded in.

Sort of like, a healthy marriage is more likely to emerge if both people make an effort to understand themselves, each other, and their dynamic within a context of togetherness and mutual care. But neither person is actually responsible for creating a healthy marriage. It sort of emerges organically from mutual open willingness plus compatibility.

…we should focus on rationality, cultivating our internal meme garden, and favoring memes which will push the world in the direction we want it to go

FWIW, this part sounds redundant to me. A "rationality" that is something like a magical completion of the Art would, as far as I can tell, consist almost entirely of consciously cultivating one's internal memetic garden, which is nearly the same thing as favoring Friendly memes.

But after reading and replying to Scott's comment, I'd adjust the OP a little. For basically artistic reasons I mentioned "rationality for its own sake, period." But I now think that's distracting. What I'm actually in favor of is memetic literacy by whatever name. I think there's an important art here whose absence causes people to focus on AI risk in unhelpful and often anti-helpful ways.

Also, on this part:

…which will push the world in the direction we want it to go

I want to emphasize that, as best I can figure, we don't have control over that. That's more god-scale stuff. What each of us can do is notice what seems clarifying and kind to ourselves and lean that way. I think there's some delightful game theory that suggests that doing this supports Friendly hypercreatures.

And if not, I think we're just fucked.

↑ comment by Going Durden (going-durden) · 2023-03-22T08:05:17.187Z

These memes push people who host them (all of us, to be clear) towards behaviors which are not in the best interests of humanity, because Orthogonality Thesis

I'm not entirely convinced. Memes are parasites, and thus aim for equilibrium with their hosts. That's why memeplexes that are truly evil and omnicidal never stick, memeplexes that are relatively evil peter out, and what we are left with are memeplexes that "kinda suck I guess" at worst. A successful memeplex is one that ensures the host's survival while forcing the host to spend maximum energy and resources spreading the memeplex without harming themselves too badly.

↑ comment by the gears to ascension (lahwran) · 2023-03-22T08:23:16.866Z

But the memeplexes can, at times, resist the growth of more accurate memeplexes which would ensure host survival better, because neither the agency of the memetic networks nor the agency of the neural and genetic networks need be aimed anywhere good, or even anywhere particularly coherent at times of high mutation. Notably, memeplexes that promote death and malice are more common in the presence of high rates of death and malice; death and malice are themselves self-propagating memetic diseases, in addition to whatever underlying mechanistic diseases might be causing them.

↑ comment by Going Durden (going-durden) · 2023-03-22T09:13:26.451Z

but the memeplexes can, at times, resist the growth of more accurate memeplexes which would ensure host survival better,

Of course, but IMHO they cannot do it for long, at least not on civilizational time scales. Memeplexes that ensure host survival better and, on top of that, empower the hosts ultimately always win.

As of yet, we do not have any Deus Ex Machina to help the memeplexes exist without a host, or spread without the host being more powerful (physically, politically, socially, scientifically, technologically etc) than the hosts of other memeplexes. Over time, the memetic landscape tends to average out to begrudgingly positive and progressive, because memeplexes that fail to push the hosts forward are outcompeted.

One of the best examples of that is the memeplex of Far Right/Nazi/Fascist ideology, which, while memetically robust, tends to shoot itself in the foot and lose the memetic warfare without much coherent opposition from the liberal memeplexes. It resurfaces all the time, but never accomplishes much, because it is more host-detrimental than it is virulent. Meanwhile, memeplexes that are kinda-sorta wishy-washy slightly Left of center, egalitarian-ish but not too much, vaguely pro-science and mildly technological, progressive-ish but unobtrusively, tend to always win, and have been winning since the times of Babylon. They struck the perfect balance between memetic frugality, virulence, and benefiting the hosts.

↑ comment by the gears to ascension (lahwran) · 2023-03-22T09:18:10.795Z

Yeah, I see we're thinking on similar terms. I was in fact thinking specifically of the pattern of authoritarian, hyper-destructive memeplexes occasionally coming back up, growing fast, and then suddenly collapsing, repeatedly; sometimes doing huge amounts of damage when this occurs.

I don't think we disagree, I was just expressing another rotation of what seems to already be your perspective.

↑ comment by kyleherndon · 2022-03-09T22:07:43.589Z

I think there's an important difference Valentine tries to make with respect to your fourth bullet (and if not, I'll make it myself). You perhaps describe the right idea, but the wrong shape. The problem is more like "China and the US both have incentives to bring about AGI and don't have incentives towards safety." Yes, deflecting at the last second with some formula for safe AI will save you, but that's as stupid as jumping away from a train at the last second. Move off the track hours ahead of time, and just broker a peace between countries so that none of them makes AGI.

↑ comment by james.lucassen · 2022-03-10T01:34:15.425Z

Ah, so on this view, the endgame doesn't look like

"make technical progress until the alignment tax is low enough that policy folks or other AI-risk-aware people in key positions will be able to get an unaware world to pay it"

But instead looks more like

"get the world to be aware enough to not bumble into an apocalypse, specifically by promoting rationality, which will let key decision-makers clear out the misaligned memes that keep them from seeing clearly"

Is that a fair summary? If so, I'm pretty skeptical of the proposed AI alignment strategy, even conditional on this strong memetic selection and orthogonality actually happening. It seems like this strategy requires pretty deeply influencing the worldview of many world leaders. That is obviously very difficult because no movement that I'm aware of has done it (at least, quickly), and I think they all would like to if they judged it doable. Importantly, the reduce-tax strategy requires clarifying and solving a complicated philosophical/technical problem, which is also very difficult. I think it's more promising for the following reasons: