The Basic Double Crux pattern

post by Eli Tyre (elityre) · 2020-07-22T06:41:28.130Z


I’ve spent a lot of time developing tools and frameworks for bridging “intractable” disagreements. I’m also the person affiliated with CFAR who has taught Double Crux the most, and done the most work with it.

People often express to me something to the effect of:

The important thing about Double Crux is all the low level habits of mind: being curious, being open to changing your mind, paraphrasing to check that you’ve understood, operationalizing, etc. The ‘Double Crux’ framework itself is not very important.

I half agree with that sentiment. I do think that the low level cognitive and conversational patterns are the most important thing, and at Double Crux trainings that I have run, most of the time is spent focusing on specific exercises to instill those low level TAPs.

However, I don’t think that the only value of the Double Crux schema is in training those low level habits. Double cruxes are extremely powerful machines that allow one to identify, if not the most efficient conversational path, then at least a very high-efficiency one. Effectively navigating down a chain of Double Cruxes, resolving a disagreement at the bottom of the chain, iterating back to the top, and finding that you've actually changed your mind is like magic. So I’m sad when people write it off as useless.

This post represents my current best attempt to encapsulate the minimum viable Double Crux (TM) skill: a basic conversational pattern for steering toward and finding double cruxes. I’m going to try to outline the basic Double Crux pattern, the series of four moves that make Double Crux work, and give a (simple, silly) example of that pattern in action.

Again, this is only a basic schema. These four moves are not (always) sufficient for making a Double Crux conversation work; that also depends on a number of other mental habits and TAPs. And real conversations can throw up all kinds of problems that require more specific techniques and strategies. But this pattern is, according to me, at the core of the Double Crux formalism.

The pattern

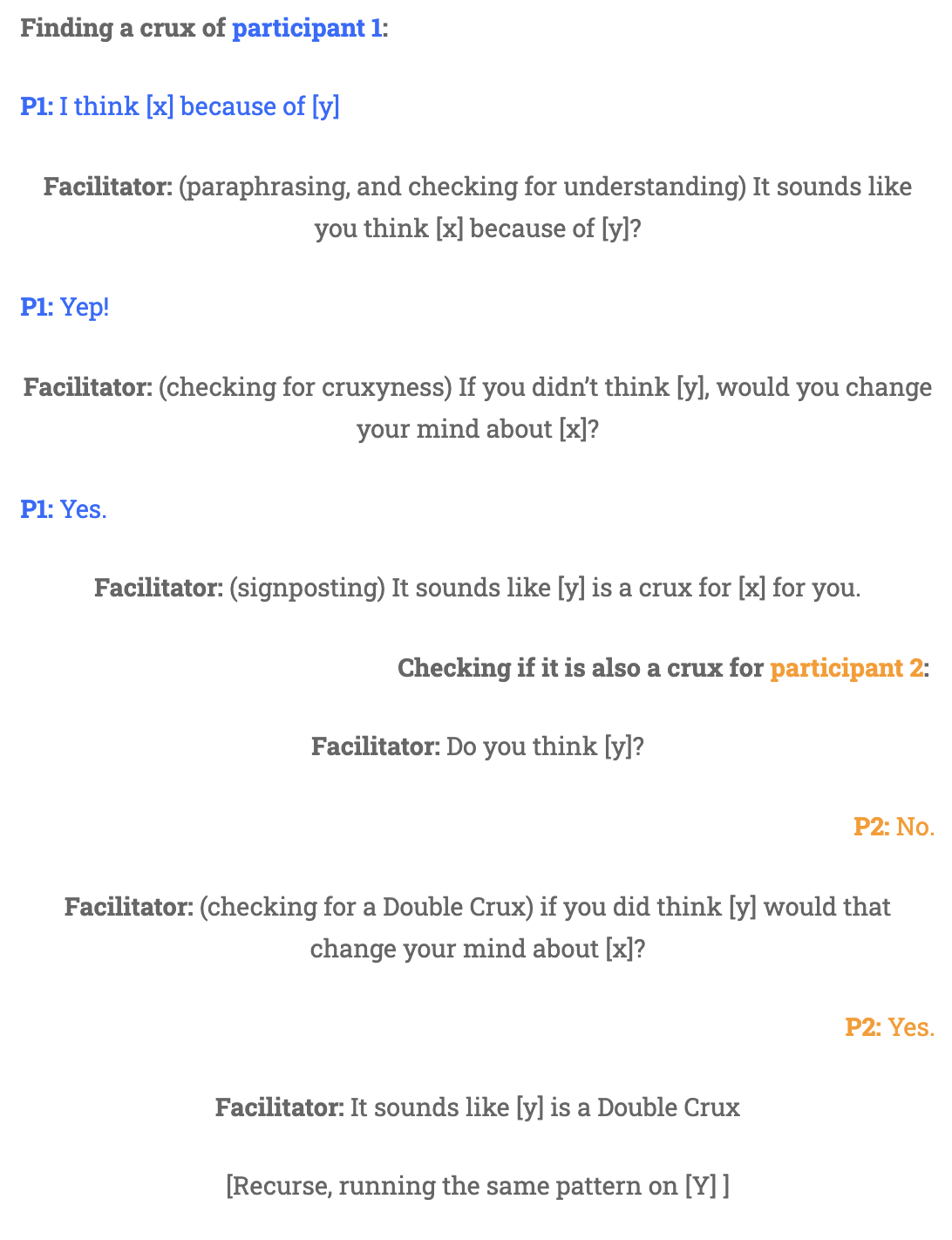

For simplicity, I have described this in the form of a three-person Double Crux conversation, with two participants and a facilitator. Of course, one can execute these same moves in a two-person conversation, as one of the participants. But that adds complexity that is hard for beginners to manage, so I usually have people practice in a facilitation role, to factor out the difficulty of steering the conversation from the difficulty of introspecting on one's own mental content.

The pattern has two parts, finding a crux and finding a double crux, and each part is composed of two main facilitation moves.

All together, those four moves are…

- Clarifying that you understood the first person’s point.

- Checking if that point is a crux.

- Checking the second person’s belief about the truth value of the first person’s crux.

- Checking whether the first person’s crux is also a crux for the second person.
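As a rough illustration only, the four moves can be read as a sequence of yes/no checks. Each check has to come out the "convenient" way before the facilitator moves on to the next one. The Python sketch below is a toy model built on that assumption. The names `Answers`, `Outcome` and `run_pattern`, and the outcome labels, are hypothetical and were invented for this sketch. The post does not prescribe what a facilitator does after each inconvenient answer.

```python
from dataclasses import dataclass
from enum import Enum, auto


class Outcome(Enum):
    """Hypothetical labels for where the basic pattern stops (not from the post)."""
    NEEDS_MORE_DISTILLATION = auto()  # move 1: P1 doesn't endorse the paraphrase yet
    NOT_A_CRUX = auto()               # move 2: P1 would keep their view anyway
    BOTH_ALREADY_AGREE = auto()       # move 3: P2 already believes P1's point
    NOT_A_CRUX_FOR_P2 = auto()        # move 4: P2 disagrees, but it wouldn't move them
    DOUBLE_CRUX = auto()              # all four checks came out "convenient"


@dataclass
class Answers:
    """Answers to the four facilitation moves (toy model, assumed field names)."""
    p1_endorses_paraphrase: bool    # 1. "Let me check if I've got that..."
    p1_would_update_if_false: bool  # 2. "If that weren't true, would you change your mind?"
    p2_believes_point: bool         # 3. "Do you currently think that's true?"
    p2_would_update_if_true: bool   # 4. "If it were true, would you change your mind?"


def run_pattern(a: Answers) -> Outcome:
    """Walk the four moves in order and report the first check that fails."""
    if not a.p1_endorses_paraphrase:
        return Outcome.NEEDS_MORE_DISTILLATION
    if not a.p1_would_update_if_false:
        return Outcome.NOT_A_CRUX
    if a.p2_believes_point:
        return Outcome.BOTH_ALREADY_AGREE
    if not a.p2_would_update_if_true:
        return Outcome.NOT_A_CRUX_FOR_P2
    return Outcome.DOUBLE_CRUX


if __name__ == "__main__":
    # The best-case answer sequence from the tea example: yes, yes, no, yes.
    tea = Answers(True, True, False, True)
    print(run_pattern(tea))  # Outcome.DOUBLE_CRUX
```

Under this model, move 3 is the only check whose convenient answer is "no": the second person has to disagree with the first person's crux for it to mark the locus of the disagreement.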

In practice

The conversational flow of these moves looks something like this:

Example

This is an intentionally silly, over-the-top example, meant to demonstrate the pattern without any unnecessary complexity.

Two people, Alex and Barbra, disagree about tea. Alex thinks that tea is great, drinks it all the time, and thinks that more people should drink tea; Barbra thinks that tea is bad and that no one should drink it.

Facilitator: So, Barbra, why do you think tea is bad?

Barbra: Well it’s really quite simple. You see, tea causes cancer.

Facilitator: Let me check if I’ve got that: you think that tea causes cancer?

Barbra: That’s right.

Facilitator: Wow. Ok. Well if you found out that tea actually didn’t cause cancer, would you be fine with people drinking tea?

Barbra: Yeah. Really the main thing that I’m concerned with is the cancer-causing. If tea didn’t cause cancer, then it seems like tea would be fine.

Facilitator: Cool. Well it sounds like this is a crux for you Barb. Alex, do you currently think that tea causes cancer?

Alex: No. That sounds like crazy-talk to me.

Facilitator: Ok. But aside from how realistic it seems right now, if you found out that tea actually does cause cancer, would you change your mind about people drinking tea?

Alex: Well, to be honest, I’ve always been opposed to cancer, so yeah, if I found out that tea causes cancer, then I would think that people shouldn’t drink tea.

Facilitator: Well, it sounds like we have a double crux!

[They high five, write down their double crux, and then recurse, seeking a double crux on the claim "tea causes cancer."]

Caveats and complications

Obviously, in actual conversation, there is a lot more complexity than this example, and a lot of other things that are going on.

For one thing, I’ve only outlined the best case pattern, where the participants give exactly the most convenient answer for moving the conversation forward (yes, yes, no, yes). In actual practice, it is quite likely that one of those answers will be reversed. If a participant gives an "inconvenient answer", the facilitator has to respond effectively in light of that.

For another thing, applying this formalism is rarely so simple. You might have to do a lot of conversational work to clarify the claims enough that you can ask whether a claim B is a crux for a belief A.

In particular, in a real conversation, the first step (checking whether you've understood) usually requires a fair amount of distillation. Most often, folks won't express their point in a simple, crisp sentence. Rather, they'll produce many, many paragraphs, triangulating around their key point. Part of the facilitator's function is finding a simple statement (or, often, a micro-story) that the participant (enthusiastically) agrees captures their point without leaving out any important detail. [I might write more about how to do distillation sometime.] Only after you've done that distillation to get to a crisp, if highly conjunctive, statement can you move on to doing the crux checking.

Getting through each of these steps might take fifteen minutes, in which case rather than four basic moves, this pattern describes four phases of conversation. (I claim that one of the core skills of a savvy facilitator is tracking which stage the conversation is at, which goals have already been successfully hit, and which is the current proximal subgoal. Indeed, the facilitator will sometimes need to summarize the current state of the conversational stack, to help the participants reorient when they get lost.)
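One way to picture the "conversational stack" is as a literal stack of open disagreements. The starting disagreement sits at the bottom. Each double crux found beneath it is pushed on top and becomes the current proximal subgoal. Resolving that subgoal pops the conversation back up to the claim above it. The sketch below assumes that reading. `CruxStack` and its methods are hypothetical names for illustration, not a tool the post describes.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class CruxStack:
    """Hypothetical tracker for a chain of nested disagreements.

    claims[0] is the disagreement the conversation started from;
    claims[-1] is the current proximal subgoal.
    """
    claims: list[str] = field(default_factory=list)

    def push(self, claim: str) -> None:
        """Open a new disagreement (the starting one, or a double crux found under it)."""
        self.claims.append(claim)

    def current(self) -> str | None:
        """Return the claim currently being discussed, or None if nothing is open."""
        return self.claims[-1] if self.claims else None

    def resolve_current(self) -> str:
        """Mark the innermost claim resolved and step back up to the one above it."""
        return self.claims.pop()

    def summary(self) -> str:
        """One-line recap a facilitator might give when participants get lost."""
        if not self.claims:
            return "(no open disagreements)"
        return " -> ".join(self.claims)


if __name__ == "__main__":
    stack = CruxStack()
    stack.push("people should drink tea")
    stack.push("tea causes cancer")  # the double crux found in the tea example
    print(stack.summary())  # people should drink tea -> tea causes cancer
    stack.resolve_current()
    print(stack.current())  # people should drink tea
```

A stack keeps the path back to the top explicit, which matches the "iterating back to the top" step. Whether resolving the bottom claim actually changes anyone's mind about the claims above it is, of course, up to the participants.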

There is also a judgment call about which person to treat as “participant 1” (the person who generates the point that is tested for cruxyness). As a first-order heuristic, the person who is closer to making a positive claim over and above the default should usually be “p1”. But this is only one heuristic.

All of which is to say, in a real conversation, it often doesn’t go as smoothly as our caricatured example above. But this is the rhythm of Double Crux, at least as I apply it.

21 comments


comment by Mark Xu (mark-xu) · 2020-07-23T03:14:20.781Z

My experience with trying the above led to the discovery of a double crux that didn't feel very useful for bridging the disagreement. If you think of disagreeing about whether to drink tea as "surface level" and disagreeing about whether tea causes cancer as "deeper", then the crux that was identified felt like it was "sideways."

The disagreement was about whether or not advertising was net bad. The identified crux was a disagreement about how bad the negative parts of advertising were. In some sense, if I changed my mind about how bad the negative parts of advertising were, then I _would_ change my mind about advertising; however, the crux seems like it's just a restatement of the initial disagreement.

What I think would have helped here is identifying multiple cruxes and picking the one that felt most substantive to continue with.

↑ comment by Eli Tyre (elityre) · 2020-07-23T05:17:04.328Z

For context, Mark participated in a session I ran via Zoom last weekend, that covered this pattern.

For what it's worth, that particular conversation is the main thing that caused me to add a paragraph about distillation (even just as a bookmark) to the OP. I'm not super confident what would have most helped there, though.

↑ comment by johnswentworth · 2020-07-23T22:42:15.264Z

Seems like there's a big difference between:

- the thing which originally caused me to believe X was learning Y

- because I believe X, I ended up convinced of Y

- things which caused me to believe X also caused me to believe Y

... and in all three cases, Y might be a "crux", since changing my belief about Y would also lead me to change my belief about X as the update propagates. Yet the cases are really quite different:

- first case: if I changed my belief about Y, then my original reason for believing X would be gone, so it would make sense to change my belief about X

- second case: if the falsehood of Y is really strong evidence for the falsehood of X, then changing my mind on Y could make me change my mind on X, but I'd also end up really confused because whatever evidence originally made me believe X would still be there.

- third case: falsehood of Y is evidence that my underlying beliefs are somehow wrong, but I still have no idea where the mistake is.

↑ comment by Eli Tyre (elityre) · 2020-07-24T08:57:38.059Z

This is not really a response, but it is related: A Taxonomy of Cruxes [LW · GW].

comment by Liron · 2020-07-23T11:17:44.926Z

Great post refreshing our understanding of an important concept. The example dialogue made it easy to understand.

I often just use Single Crux: I say my current view, and that I would change my mind if [X]. This makes me feel like whenever I “take a stance”, it’s an athletic stance with knees bent.

↑ comment by Eli Tyre (elityre) · 2020-07-23T21:20:16.016Z

This makes me feel like whenever I “take a stance”, it’s an athletic stance with knees bent.

Hell yeah.

I might steal this metaphor.

comment by Dustin · 2020-07-22T17:29:07.887Z

In my experience, where Double Crux is easiest is also where it's the least interesting to resolve a disagreement because usually such disagreements are already fairly easily resolved or the disagreement is just uninteresting.

An inconveniently large portion of the time disagreements are so complex that the effort required to drill down to the real crux is just...exhausting. By "complex" I don't necessarily mean the disagreements are based upon some super advanced model of the world, but just that the real cruxes are hidden under so much human baggage.

This is related to a point I've made here before about Aumann's agreement theorem being used as a cudgel in an argument...in many of the most interesting and important cases it usually requires a lot of effort to get people on the same page and the number of times where all participants in a conversation are willing to put in that effort seems vanishingly small.

In other words, double crux is most useful when all participants are equally interested in seeking truth. It's least useful in most of the real disagreements people have.

I don't think this is an indictment of double cruxin', but just a warning for someone who reads this and thinks "hot damn, this is going to help me so much".

↑ comment by Eli Tyre (elityre) · 2020-07-23T01:19:34.915Z

In my experience, where Double Crux is easiest is also where it's the least interesting to resolve a disagreement because usually such disagreements are already fairly easily resolved or the disagreement is just uninteresting.

An inconveniently large portion of the time disagreements are so complex that the effort required to drill down to the real crux is just...exhausting. By "complex" I don't necessarily mean the disagreements are based upon some super advanced model of the world, but just that the real cruxes are hidden under so much human baggage.

So I broadly agree with this.

When I facilitate "real conversations", including those in the Double Crux framework, I usually prefer to schedule at least a 4 hour block. Most of that time is spent navigating the psychological threads that arise (things like triggeredness, defensiveness, subtle bucket errors [LW · GW], and compulsions to stand up for something important) and/or iteratively parsing what one person is saying well enough to translate it into the other person's ontology, as opposed to finding cruxes.

in many of the most interesting and important cases it usually requires a lot of effort to get people on the same page and the number of times where all participants in a conversation are willing to put in that effort seems vanishingly small.

Seems right. I will say that people are often more inclined to put in 4+ hours if they have reference experiences of that actually working.

But, yep.

In other words, double crux is most useful when all participants are equally interested in seeking truth.

I'll add a caveat to this, though: the spirit of Double Crux is one of trying to learn and change your own mind, not trying to persuade others. And you can do almost all components of a Double Crux conversation (paraphrasing, operationalizing, checking if things are cruxes for you and noting if they are, etc.) unilaterally. And people are very often interested in spending a lot of time being listened to sincerely, even if they are not very interested in getting to the truth themselves.

That said, I don't think that you can easily apply this pattern, in particular, unilaterally.

↑ comment by Raemon · 2020-07-23T01:24:35.430Z

And you can do almost all components of a Double Crux conversation (paraphrasing, operationalizing, checking if things are cruxes for you and noting if they are, etc.) unilaterally

I've been wondering about specifically training "Single Cruxing", to hammer home that doublecrux isn't about changing the other person's mind. I have some sense that the branding for Double Crux is somehow off, similar to how people seem to reliably misinterpret Crocker's Rules to mean "I get to be rude to other people".

I try to introduce doublecrux to people with the definition "Double Crux is where two people help each other each change their own mind." My guess is that having that be the first sentence would help people orient to it better. But, I'm not really sure who "owns" the concept of Double Crux, and whether this is reasonable as the official definition.

↑ comment by Eli Tyre (elityre) · 2020-07-23T06:13:20.659Z

This comment helped me articulate something that I hadn't quite put my finger on before.

There are actually two things that I want to stand up for, which are, from a naive perspective, in tension. So I think I need to make sure not to lump them together.

On the one hand, yeah, I think it is deeply true that you can unilaterally do the thing, and with sufficient skill, you can make "the Double Crux thing" work, even with a person who doesn't explicitly opt in to that kind of discourse (because curiosity and empathy are contagious, and many (but not all, I think) of the problems of people "not being truth-seeking" are actually defense mechanisms, rather than persistent character traits).

However, sometimes people have said things like "we should focus on and teach methods that involve seeking out your own single cruxes, because that's where the action is at." This has generally made me frown, for the reasons I outlined at the head of my post here: I feel like this is overlooking or discounting the really cool power of the Full Double Crux formalism. I don't want the awareness of that awesomeness to fall out of the lexicon. (Granted, the current state is less like "people are using this awesome technique, but maybe we're going to lose it as a community" and more like "there's this technique that most people are frustrated with because it doesn't seem to work very well, but there is a nearby version that does seem useful to them, but I'm sitting here on the sidelines insisting that the 'mainline version' actually is awesome, at least in some limited circumstances.")

Anyway, I think these are separate things, and I should optimize for them separately, instead of (something like) trying to uphold both at once.

↑ comment by Shmi (shminux) · 2020-07-23T04:14:47.905Z

Maybe we live in different bubbles.

comment by Leafcraft · 2020-07-23T12:35:58.996Z

Is there any online community where people can practice DC that you would recommend?

↑ comment by Eli Tyre (elityre) · 2020-07-23T21:16:16.983Z

"Community" is a bit strong for these early stages, but I'm running small training sessions via Zoom, with an eye towards scaling them up.

If you're interested, put down your details on this Google form.

Also, this is a little bit afield, but there are online Nonviolent Communication communities. Bay NVC, for instance, does a weekly dojo, now online due to the pandemic. NVC is very distinctly not Double Crux, but they do have a number of overlapping sub-skills. And in general, I think that most of the conflicts that people have in "real life" are better served by NVC than DC.

comment by habryka (habryka4) · 2020-07-23T01:23:16.125Z

Note: The images appear to be hosted on Google in a way where I can't access them (I think this also happened with a previous post of yours; my guess is you tried to copy-paste them from Google Docs?). If you send them to me, I'm happy to upload them for you and replace them, but I can't fix it unilaterally since I don't have access to the images.

↑ comment by Eli Tyre (elityre) · 2020-07-23T04:46:44.006Z

Gar. I thought I finally got images to work on a post of mine.

comment by Shmi (shminux) · 2020-07-23T00:58:11.241Z

Well if you found out that tea actually didn’t cause cancer, would you be fine with people drinking tea?

In my experience, that's where most attempts to get to a mutual understanding fail. People refuse to entertain a hypothetical that contradicts their deeply held beliefs, period. Not just "those irrational people", but basically everyone, you and me included. If the belief/alief in question is a core one, there is almost no chance of us earnestly considering that it might be false, not in a single conversation, anyway.

An example:

"What if you found out that vaccines caused autism?" "They don't, the only study claiming this was decisively debunked." "But just imagine if they did" "Are you trolling? We know they don't!"

An opposite example:

"What if you found out that vaccines didn't cause autism?" "They do, it's a conspiracy by the pharma companies and the government, they poison you with mercury." "Just for the sake of argument, what if they didn't?" "You are so brainwashed by the media, you need to open your eyes to reality!"

↑ comment by Eli Tyre (elityre) · 2020-07-23T07:46:22.932Z

First of all, yep, the kind of map-territory distinction that enables one to even do the crux-checking move at all is reasonably sophisticated. And I suspect that some people, for all practical purposes, just can't do it.

Second, even for those of us who can execute that move, in principle, it gets harder, to the point of impossibility, as the conversation becomes more heated, or the person becomes more triggered.

Third, when a person is in a public, low-nuance context, or is used to thinking in public, low-nuance contexts, they are likely to have resistance to acknowledging that [x] is a crux for [y], because that can sound like an endorsement of [x] to a casual observer.

So there are some real difficulties here.

However, I think there are strategies that help in light of these difficulties.

In terms of doing this move yourself...

You can just practice this until it becomes habitual. In Double Crux sessions, I sometimes include an exercise that involves just doing crux-checking: taking a bunch of statements, isolating the [A] because [B] structure, and then checking if [B] is a crux for [A], for you.

And certainly there are people around (me) who will habitually respond to some claim with "that would be a crux for me" / "that wouldn't be a crux for me."

In terms of helping your conversational partner do this move...

First of all, it goes a long way to have a spirit of open curiosity, where you are actually trying to understand where they are coming from. If a person expects that you're going to jump on them and exploit any "misstep" they make, they're not going to be relaxed enough to consider counterfactual-from-their-view hypotheticals. Sincerely offering your own cruxes often helps as a sign of good faith, but keep in mind that there is no substitute for just actually wanting to understand, instead of trying to persuade.

Furthermore, when a person is resistant to doing the crux-checking, this is often because there is some bucket error or conflation happening, and if you step back and help them untangle it, this goes a long way. You should actively go out of your way to help your partner avoid accidentally gaslighting themselves.

For instance, I was having a conversation with someone this week about culture war related topics.

A few hours into the discussion I asked,

Suppose that the leaders of the Black Lives Matter movement (not the "rank and file") had a seriously flawed impact model, such that all of the energy going into this area didn't actually resolve any of these terrible problems. In that case, would you have a different feeling about the movement?

(In fact, I asked a somewhat more pointed question: "If that was the case, would you feel more inclined to push a button to 'roll back' the recent flourishing of activity around BLM?")

I asked this question, and the person said some things in response, and then the conversation drifted away. I brought us back, and asked it again, and again we kind of "slid off."

So I (gently) pointed this out,

We've asked this question twice now, and both times we've sort of drifted away. This suggests to me that maybe there's some bucket error or false dichotomy in play, and I imagine that some part of you is trying to protect something, or making sure that something doesn't slip in sideways. How do you feel about trying to focus on and articulate that thing, directly?

We went into that, and together, we drew out that there were two things at stake, and two (not incompatible) ways that you could view the situation:

- On the one hand BLM, and the recent protests, and other things in that space, are a strategic social change movement, which has some goals, and is trying to achieve them.

- But also, it is an expression of rage and frustration at the pain that black people in the United States, as a group, have had to endure for decades and decades. And separately from the question of "will these actions result in the social change that they're aiming for?", there's just something bad about telling those people to shut up, and something important about this kind of emotional expression on the societal level.

(Which, to translate a little, is to say "no, the leaders having the wrong impact model, on its own, wouldn't be a crux, because that is only part of the story.")

Now, if we hadn't drawn this out explicitly, my conversational partner might have been in danger of making a bucket error, gaslighting themselves into believing that they think it is correct or morally permissible to tell people or groups that they should repress their pain, or that they shouldn't be allowed to express it.

And for my part, this was itself a productive exploration, because, while it seems sort of obvious in retrospect (as these things often do), I had only been thinking of "all these things" as strategic societal reform movements, and not mass expressions of frustration. But, actually, that seems like a sort of crucial thing to be tracking if I want to understand what is happening in the world, and/or I want to try and plot a path to actual solutions. For instance, I had already been importing my models of social change and intervention-targeting, but now I'm also importing my models of trauma and emotional healing.

(To be clear, I'm very unsure how my individual-level models of trauma apply at the societal level. I do think it can be dangerous to assume a one-to-one correspondence between people and groups of people. But also, I've learned how to do Double Crux from doing IDC, and vice versa, and I think modeling groups of people as individuals writ large is often a very good starting point for analysis.)

So overall we went from a place of "this person seems kind of unwilling to consider the question" to "we found some insights that have changed my sense of the situation."

Granted, this was with a rationalist-y person, who I already knew pretty well and with whom I had mutual trust, who was familiar with the concept of bucket errors, and had experience with Focusing and introspection in general.

So on the one hand, this was easy mode.

But on the other hand, one takeaway from this is "with sufficient skill between the two people, you can get past this kind of problem."

↑ comment by Raemon · 2020-07-23T01:20:30.907Z

I think you're selling people way short.

I agree there's a depressingly huge majority out there that act the way you describe, but, like, the whole point of having a rationalist community is to train ourselves to deal with that fact, and I think the people I interact with regularly basically have the skill of at least making a serious attempt at confronting deeply held beliefs.

(I certainly think many people in LW circles still struggle with it, especially in domains that are different from the domains they were training on. But, I've seen people do at least a half-passable job at it pretty frequently)

↑ comment by dxu · 2020-07-23T04:16:58.984Z

I think shminux may have in mind one or more specific topics of contention that he's had to hash out with multiple LWers in the past (myself included), usually to no avail.

(Admittedly, the one I'm thinking of is deeply, deeply philosophical, to the point where the question "what if I'm wrong about this?" just gets the intuition generator to spew nonsense. But I would say that this is less about an inability to question one's most deeply held beliefs, and more about the fact that there are certain aspects of our world-models that are still confused, and querying them directly may not lead to any new insight.)

comment by Shmi (shminux) · 2022-04-03T06:06:06.731Z

It would be nice if Double Crux worked like that... Most of the time the exchange would be more like

Facilitator: Wow. Ok. Well if you found out that tea actually didn’t cause cancer, would you be fine with people drinking tea?

Barbra: That's just crazy talk, everyone knows tea causes cancer. You must be one of those Big Tea shills! (Instantly judges you as belonging to an outgroup and you lose all credibility with her.)

If you think that only them unreasonable people react like that, replace tea with AGI and cancer with x-risk.