Thoughts on the AI Safety Summit company policy requests and responses

post by So8res · 2023-10-31T23:54:09.566Z · 14 comments

Contents:
1. Thoughts on the AI Safety Policy categories
2. Higher priorities for governments
3. Thoughts on the submitted AI Safety Policies

Over the next two days, the UK government is hosting an AI Safety Summit focused on “the safe and responsible development of frontier AI”. They requested that seven companies (Amazon, Anthropic, DeepMind, Inflection, Meta, Microsoft, and OpenAI) “outline their AI Safety Policies across nine areas of AI Safety”.

Below, I’ll give my thoughts on the nine areas the UK government described; I’ll note key priorities that I don’t think are addressed by company-side policy at all; and I’ll say a few words (with input from Matthew Gray, whose discussions here I’ve found valuable) about the individual companies’ AI Safety Policies.[1]

My overall take on the UK government’s asks is: most of these are fine asks; some things are glaringly missing, like independent risk assessments.

My overall take on the labs’ policies is: none are close to adequate, but some are importantly better than others, and most of the organizations are doing better than sheer denial of the primary risks.

1. Thoughts on the AI Safety Policy categories

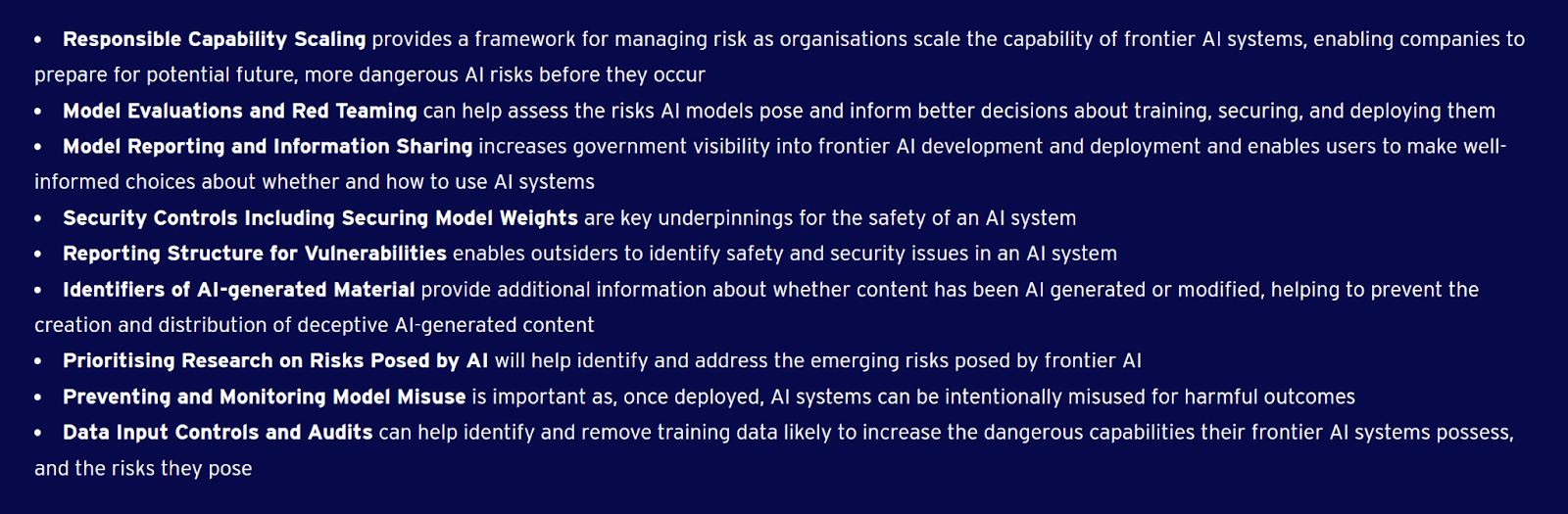

Responsible Capability Scaling provides a framework for managing risk as organisations scale the capability of frontier AI systems, enabling companies to prepare for potential future, more dangerous AI risks before they occur

There is no responsible scaling of frontier AI systems right now — any technical efforts that move us closer to smarter-than-human AI come with an unacceptable level of risk.[2]

That said, it’s good for companies to start noting conditions under which they’d pause, as a first step towards the sane don’t-advance-toward-the-precipice-at-all policy.

In the current regime, I think our situation would look a lot less dire if developers were saying “we won’t scale capabilities or computational resources further unless we really need to, and we consider the following to be indicators that we really need to: [X]”. The reverse situation that we’re currently in, where the default is for developers to scale up to stronger systems and where the very most conscientious labs give vague conditions under which they’ll stop scaling, seems like a clear recipe for disaster. (Albeit a more dignified disaster than the one where they scale recklessly without ever acknowledging the possible issues with that!)

Model Evaluations and Red Teaming can help assess the risks AI models pose and inform better decisions about training, securing, and deploying them

You’ll need to evaluate more than just foundation models, but evaluation doesn’t hurt. There’s an important question about what to do when the evals trigger, but evals are at least harmless, and can be actively useful in the right circumstance.

Red teaming is reasonable. Keep in mind that red teamers may need privacy and discretion in order to properly do their work.

Red teaming would obviously be essential and a central plank of the technical work if we were in a position to solve the alignment problem and safely wield (at least some) powerful AGI systems.

We mostly aren’t in that position: there’s some faint hope that alignment could turn out to be tractable in the coming decades, but I think the main target we should be shooting for is an indefinite pause on progress toward AGI, and a redirection of efforts away from AGI and alignment and toward other technological avenues for improving the world. The main value I see from evals and red teaming at this point is that they might make it obvious sooner that a shutdown is necessary, and they might otherwise slow down the AGI race to some extent.

Model Reporting and Information Sharing increases government visibility into frontier AI development and deployment and enables users to make well-informed choices about whether and how to use AI systems

This seems clearly good, given the background views I outlined above. I advise building such infrastructure.

Security Controls Including Securing Model Weights are key underpinnings for the safety of an AI system

This seems good; even better would be to make it super explicit that the Earth can’t survive open-sourcing model weights indefinitely. At some point (possibly in a few decades, possibly next year), AIs will be capable enough that open-sourcing those capabilities effectively guarantees human extinction.

And publishing algorithmic ideas, open-sourcing model weights, etc. even today causes more harm than good. Publishing increases the number of actors in a way that makes it harder to mitigate race dynamics and harder to slow down; when a dangerous insight is found privately, the public trail of results published before the developer stopped publishing makes it easier for others to reconstruct that insight; and publishing contributes to a general culture of reflexively sharing insights without considering their likely long-term consequences.

Reporting Structure for Vulnerabilities enables outsiders to identify safety and security issues in an AI system

Sure; this idea doesn’t hurt.

Identifiers of AI-generated Material provide additional information about whether content has been AI generated or modified, helping to prevent the creation and distribution of deceptive AI-generated content

This seems like good infrastructure to build out, especially insofar as you’re actually building the capacity to track down violations. The capacity to know everyone who’s building AIs and make sure that they’re following basic precautions is the key infrastructure here that might help you further down the line with bigger issues.

Prioritising Research on Risks Posed by AI will help identify and address the emerging risks posed by frontier AI

This section sounds like it covers important things, but also sounds somewhat off-key to me.

For one thing, “identify emerging risks” sounds to me like it involves people waxing philosophical about AI. People have been doing plenty of that for a long time; doing more of this on the margin mostly seems unhelpful to me, as it adds noise and doesn’t address civilization’s big bottlenecks regarding AGI.

For another thing, the “address the emerging risks” sounds to me like it visualizes a world where labs keep an eye on their LLMs and watch for risky behavior, which they then address before proceeding. Whereas it seems pretty likely to me that anyone paying careful attention will eventually realize that the whole modern AI paradigm does not scale safely to superintelligence, and that wildly different (and, e.g., significantly more effable and transparent) paradigms are needed.

If that’s the world we live in, “identify and address the emerging risks” doesn’t sound quite like what we want, as opposed to something more like “prioritizing technical AI alignment research”, which phrasing leaves more of a door open to realizing that an entire development avenue needs abandoning, if humanity is to survive this.

(Note that this is a critique of what the UK government asked for, not necessarily a critique of what the AI companies provided.)[3]

Preventing and Monitoring Model Misuse is important as, once deployed, AI systems can be intentionally misused for harmful outcomes

Setting up monitoring infrastructure seems reasonable. I doubt it serves as much of a defense against the existential risks, but it’s nice to have.

Data Input Controls and Audits can help identify and remove training data likely to increase the dangerous capabilities their frontier AI systems possess, and the risks they pose

This kind of intervention seems fine, though pretty minor from my perspective. I doubt that this will be all that important for all that long.

2. Higher priorities for governments

The whole idea of asking companies to write up AI Safety Policies strikes me as useful, but much less important than some other steps governments should take to address existential risk from smarter-than-human AI. Off the top of my head, governments should also:

1. set compute thresholds for labs, and more generally set capabilities thresholds;

2. centralize and monitor chips;

3. indefinitely halt the development of improved chips;

4. set up independent risk assessments;

5. and have a well-developed plan for what we’re supposed to do when we get to the brink.

Saying a few more words about #4 (which I haven’t seen others discussing much):

I recommend setting up some sort of panel of independent actuaries who assess the risks coming from major labs (as distinct from the value on offer), especially if those actuaries are up to the task of appreciating the existential risks of AGI, as well as the large-scale stakes (all of the resources in the reachable universe, and the long-term role of humanity in the cosmos) involved.

Independent risk assessments are a key component in figuring out whether labs should be allowed to continue at all. (Or, more generally and theoretically purely, what their “insurance premiums” should be, with the premiums paid immediately to the citizens of Earth that they put at risk, in exchange for the risk.)[4]

Stepping back a bit: What matters is not what written-up answers companies provide to governments about their security policies or the expertise of their red teams; what matters is their actual behavior on the ground and the consequences that result. There’s an urgent need for mechanisms that will create consensus estimates of how risky companies actually are, so that we don’t have to just take their word for it when every company chimes in with “of course we’re being sufficiently careful!”. Panels of independent actuaries who assess the risks coming from major labs are a way of achieving that.

Saying a few more words about #5 (which I also haven’t seen others discussing much): suppose that the evals start triggering and the labs start saying “we cannot proceed safely from here”, and we find that small research groups are not too far behind the labs: what then? It’s all well and good to hope that the issues labs run into will be easy to resolve, but that’s not my guess at what will happen.

What is the plan for the case where the result of “identifying and addressing emerging risks” is that we identify a lot of emerging risks, and cannot address them until long after the technology is widely and cheaply available? If we’re taking those risks seriously, we need to plan for those cases now.

You might think I’d put technical AI alignment research (outside of labs) as another priority on my list above. I haven’t, because I doubt that the relevant actors will be able to evaluate alignment progress. This poses a major roadblock both for companies and for regulators.

What I’d recommend instead is investment in alternative routes (whole-brain emulation, cognitive augmentation, etc.), on the part of the research community and governments. I would also possibly recommend requiring relatively onerous demonstrations of comprehension of model workings before scaling, though this seems difficult enough for a regulatory body to execute that I mostly think it’s not worth pursuing. The important thing is to achieve an indefinite pause on progress toward smarter-than-human AI (so we can potentially pursue alternatives like WBE, or buy time for some other miracle to occur); if “require relatively onerous demonstrations of comprehension” interferes at all with our ability to fully halt progress, and to stay halted for a very long time, then it’s probably not worth it.

If the UK government is maintaining a list of interventions like this (beyond just politely asking labs to be responsible in various ways), I haven’t seen it. I think that eliciting AI Safety Policies from companies is a fine step to be taking, but I don’t think it should be the top priority.

3. Thoughts on the submitted AI Safety Policies

Looking briefly at the individual companies’ stated policies (and filling in some of the gaps with what I know of the organizations), I’d say on a skim that none of the AI Safety Policies meet a “basic sanity / minimal adequacy” threshold — they all imply imposing huge and unnecessary risks on civilization writ large.

In relative terms:

- The best of the policies seems to me to be Anthropic’s, followed by OpenAI’s. I lean toward Anthropic’s being better than OpenAI’s mainly because Anthropic’s RSP seemed to take ASL-4 more seriously as a possibility, and give it more lip service, than any analog on the OpenAI side. But it’s possible that I just missed some degree of seriousness on OpenAI’s side, and that they'll overtake Anthropic once they substantiate their RDP.

- DeepMind’s policy seemed a lot worse to me, followed closely by Microsoft’s.

- Amazon’s policy struck me as far worse than Microsoft’s.

- Meta had the worst stated policy, far worse than Amazon’s.

Anthropic and OpenAI pass a (far lower, but still relevant) bar of “paying lip service to many of the right high-level ideals and priorities”. Microsoft comes close to that bar, or possibly narrowly meets it (perhaps because of its close relationship to OpenAI). DeepMind’s AI Safety Policy doesn’t meet this bar from my perspective, and lands squarely in “low-content corporate platitudes” territory.

Matthew Gray read the policies more closely than I did (and I respect his reasoning on the issue), and writes:

Unlike Nate, I’d rank Anthropic and OpenAI’s write-ups as ~equally good. Mostly I think comparing their plans will depend on how OpenAI’s Risk-Informed Development Policy compares to Anthropic’s Responsible Scaling Policy.[5] For now, only Anthropic’s RSP has shipped, and we’re waiting on OpenAI’s RDP. I’d also rank DeepMind’s write-up as far better than Microsoft’s, and Amazon’s as only modestly worse than Microsoft’s. Otherwise, I agree with Nate’s rankings.

By comparison, CFI’s recent rankings look like the following (though CFI’s reviewers were only asking whether these companies’ AI Safety Policies satisfy the UK government’s requirements, not asking whether these policies are good):[6]

My read of the policy write-ups was:

- Anthropic: Believes in evals and responsible scaling; aspirational about security. (In contrast to more established tech companies, which can point to their cybersecurity expertise over decades, Anthropic’s proposal can only point to them advocating for strengthening cybersecurity controls at frontier AI labs.)

- OpenAI: Believes in alignment research; decent on security. I think OpenAI’s “we’ll solve superalignment in 4 years!” plan is wildly unrealistic, but I greatly appreciate that they’re acknowledging the problem and sticking their neck out with a prediction of what’s required to solve it; I’d like to see more falsifiable plans from other organizations about how they plan to address alignment.

- DeepMind: Believes in scientific progress; takes security seriously.

- Microsoft: Experienced tech company, along for the ride with OpenAI. My read is that Microsoft is focused on the short-term security risks; they seem to want to operate a frontier AI model datacenter more than they want to unlock an intelligence explosion.

- Amazon: Experienced tech company; wants to sell products to customers; takes security seriously. Like DeepMind, Amazon provides detailed answers on the security questions that point to lots of things they’ve done in the past.[7]

- Meta: Believes in open source; fighting the brief at several points.

I see these policies as largely discussing two different things: security (e.g., making it difficult for outside actors to steal data from your servers, like your model weights), and safe deployment (not accidentally releasing something that goes rogue, not enabling a bad customer to do something catastrophic, etc.). These involve different skill sets, and different sets of companies seem to me to be most credible on one versus the other. E.g., Anthropic and OpenAI seemed to be thinking the most seriously about safe deployment, but I don’t think Anthropic or OpenAI have security as impressive as Amazon-the-company (though I don’t know about the security standards of Amazon’s AI development teams specifically).

In an earlier draft, Nate ranked Microsoft’s response as better than DeepMind’s, because “DeepMind seemed more like it was trying to ground things out into short-term bias concerns, and Microsoft seemed on a skim to be throwing up less smoke and mirrors.” However, I think DeepMind’s responses were equal to or better than Microsoft’s on all nine categories. On my read, DeepMind’s answers contained a lot of “we’re part of Google, a company that has lots of experience handling this stuff, you can trust us”, and these sections brought up near-term bias issues as part of Google’s track record. However, I think this wasn’t done in order to deflect or minimize the problem. E.g., DeepMind writes:

The first part of this quote looks like it might be a deflection, but DeepMind then explicitly flags that they have catastrophic risks in mind (“assemble a bioweapon”). In contrast, Microsoft never once brings up biorisks, catastrophes, nuclear risks, etc. I think Microsoft wants to make sure their servers aren’t hacked and they comply with laws, whereas DeepMind is thinking about the fact that they’ve made something you could use to help you kill someone.

The part of DeepMind’s response that struck me as smoke-and-mirrors-ish was instead their choice to redirect a lot of the conversation to abstract discussions of scientific and technological progress. For example, while talking about monitoring AlphaFold usage, they talk about using logs of usage to tally the benefits to the research community instead of any actual “monitoring” benefit, like whether or not users were generating proteins that could be useful for harming others. While it is appropriate to weigh both the benefits and the risks of new technology, subtly changing the subject from how risks are being monitored to benefits seems like a distraction.

My argument here was enough to persuade Nate on this point, and he updated his ranking to place DeepMind a bit higher than Microsoft.

I think evaluating the proposals has the downside that, unless someone fights the brief or gives a concretely dumb answer somewhere, there isn’t actually much to evaluate. One company offers $15k maximum for a bug bounty, another company $20k maximum; does that matter? Did another company which didn’t write a number offer more, or less? Meaningfully evaluating these companies will likely require looking at the companies’ track records and other statements (as Nate and I both tried to do to some degree), rather than looking at these policies in isolation. These considerations also make me very interested in Nate’s proposal of using independent actuaries to assess labs’ risk.

[1]

Inflection is a late addition to the list, so Matt and I won’t be reviewing their AI Safety Policy here.

Thanks to Rob Bensinger for assembling, editing, and occasionally rephrasing/extending my draft of this post, with shallow-but-not-deep thumbs up from me.

[2]

And, as OpenAI’s write-up notes: “We refer to our policy as a Risk-Informed Development Policy rather than a Responsible Scaling Policy because we can experience dramatic increases in capability without significant increase in scale, e.g., via algorithmic improvements.”

[3]

Matthew Gray writes: “I think OpenAI did a surprisingly good job of responding to this with ‘the real deal’.” Matt cites this line from OpenAI’s discussion of “superalignment”:

Our current techniques for aligning AI, such as reinforcement learning from human feedback, rely on human ability to supervise AI. But these techniques will not work for superintelligence, because humans will be unable to reliably supervise AI systems much smarter than us.

[4]

Doing this fully correctly would also require that you in some sense hold the money that goes to possible future people for risking their fate. Taking into account only the interests of people who are presently alive still doesn’t properly line up all the incentives, since present people could then have a selfish excessive incentive to trade away large amounts of future people’s value in exchange for relatively small amounts of present-day gains.

[5]

I (Nate) agree with Matt here.

[6]

Unlike the CFI post authors, I (Nate) would give all of the companies here an F. However, some get a much higher F grade than others.

[7]

From DeepMind:

This is why we are building on our industry-leading general and infrastructure security approach. Our models are developed, trained, and stored within Google’s infrastructure, supported by central security teams and by a security, safety and reliability organisation consisting of engineers and researchers with world-class expertise. We were the first to introduce zero-trust architecture and software security best practices like fuzzing at scale, and we have built global processes, controls, and systems to ensure that all development (including AI/ML) has the strongest security and privacy guarantees. Our Detection & Response team provides a follow-the-sun model for 24/7/365 monitoring of all Google products, services and infrastructure - with a dedicated team for insider threat and abuse. We also have several red teams that conduct assessments of our products, services, and infrastructure for safety, security, and privacy failures.

14 comments


comment by Daniel Kokotajlo (daniel-kokotajlo) · 2023-11-01T15:46:11.726Z

In the current regime, I think our situation would look a lot less dire if developers were saying “we won’t scale capabilities or computational resources further unless we really need to, and we consider the following to be indicators that we really need to: [X]”. The reverse situation that we’re currently in, where the default is for developers to scale up to stronger systems and where the very most conscientious labs give vague conditions under which they’ll stop scaling, seems like a clear recipe for disaster. (Albeit a more dignified disaster than the one where they scale recklessly without ever acknowledging the possible issues with that!)

Big +1. For a while I've been saying that the big change that needs to happen is a shift in the burden of proof. I am still somewhat hopeful that the current regulatory push will accomplish this.

comment by Vaniver · 2023-11-01T00:36:36.459Z

(I'm Matthew Gray)

Inflection is a late addition to the list, so Matt and I won’t be reviewing their AI Safety Policy here.

My sense from reading Inflection's response now is that they say the right things about red teaming and security and so on, but I am pretty worried about their basic plan / they don't seem to be grappling with the risks specific to their approach at all. Quoting from them in two different sections:

Inflection’s mission is to build a personal artificial intelligence (AI) for everyone. That means an AI that is a trusted partner: an advisor, companion, teacher, coach, and assistant rolled into one.

Internally, Inflection believes that personal AIs can serve as empathetic companions that help people grow intellectually and emotionally over a period of years or even decades. **Doing this well requires an understanding of the opportunities and risks that is grounded in long-standing research in the fields of psychology and sociology.** We are presently building our internal research team on these issues, and will be releasing our research on these topics as we enter 2024.

I think AIs thinking specifically about human psychology--and how to convince people to change their thoughts and behaviors--are very dual use (i.e. can be used for both positive and negative ends) and at high risk for evading oversight and going rogue. The potential for deceptive alignment seems quite high, and if Inflection is planning on doing any research on those risks or mitigation efforts specific to that, it doesn't seem to have shown up in their response.

I don't think this type of AI is very useful for closing the acute risk window, and so probably shouldn't be made until much later.

↑ comment by trevor (TrevorWiesinger) · 2023-11-01T03:37:48.569Z

I think AIs thinking specifically about human psychology--and how to convince people to change their thoughts and behaviors--are very dual use (i.e. can be used for both positive and negative ends) and at high risk for evading oversight and going rogue. The potential for deceptive alignment seems quite high,

I'd be interested in hearing some more reasoning about that; until reading this, my understanding of psychology-focused AI was that the operators were generally safe, and were as likely to be goodharted/deceived by the AI (or the humans being influenced) as with any other thing, and therefore inner alignment risk (which I understand less well) would become acute at around the same time as non-psychology AI. Maybe I'm displaying flawed thinking that's prevalent among people like me, who spend orders of magnitude more time thinking about contemporary psychology systems than AI risk itself. Are you thinking that psychology-focused AI would notice the existence of their operators sooner than non-psychology AI? Or is it more about influence AI that people deliberately point at themselves instead of others?

and if Inflection is planning on doing any research on those risks or mitigation efforts specific to that, it doesn't seem to have shown up in their response.

I don't think this type of AI is very useful for closing the acute risk window, and so probably shouldn't be made until much later.

All the companies at this summit, not just Inflection, are heavily invested in psychology AI (the companies, not the labs). I've also argued elsewhere that the American government and intelligence agencies are the primary actors with an interest in researching and deploying psychology AI.

I currently predict that the AI safety community is best off picking its battles and should not try to interfere with technologies that are as directly critical to national security as psychology AI is; it would be wiser to focus on reducing the attack surface of the AI safety community itself, and otherwise stay out of the way whenever possible since the alignment problem is obviously a much higher priority.

↑ comment by Vaniver · 2023-11-01T17:24:09.919Z

Are you thinking that psychology-focused AI would notice the existence of their operators sooner than non-psychology AI? Or is it more about influence AI that people deliberately point at themselves instead of others?

I am mostly thinking about the former; I am worried that psychology-focused AI will develop more advanced theory of mind and be able to hide going rogue from operators/users more effectively, develop situational awareness more quickly, and so on.

I currently predict that the AI safety community is best off picking its battles and should not try to interfere with technologies that are as directly critical to national security as psychology AI is;

My view is that the AI takeover problem is fundamentally a 'security' problem. Building a robot army/police force has lots of benefits (I prefer it to a human one in many ways) but it means it's that much easier for a rogue AI to seize control; a counter-terrorism AI also can be used against domestic opponents (including ones worried about the AI), and so on. I think jumping the gun on these sorts of things is more dangerous than jumping the gun on non-security uses (yes, you could use a fleet of self-driving cars to help you in a takeover, but it'd be much harder than a fleet of self-driving missile platforms).

↑ comment by trevor (TrevorWiesinger) · 2023-11-01T21:41:14.215Z

Sorry, my bad. When I said "critical to national security", I meant that the US and China probably already see psychology AI as critical to state survival. It's not like it's a good thing for this tech to be developed (idk what Bostrom/FHI was thinking when he wrote VWH in 2019), it's just that the US and China are already in a state of moloch where they are worried about each other (and Russia) using psychology AI which already exists to hack public opinion and pull the rug out from under the enemy regime. The NSA and CCP can't resist developing psychological warfare/propaganda applications for SOTA AI systems, because psychology AI is also needed for defensively neutralizing/mitigating successful public opinion influence operations after they get through and turn millions of people (especially elites). As a result, it seems to me that the AI safety community should pick different battles than opposing psychological AI.

I don't see how psychology-focused AI would develop better theory of mind than AI with tons of books in the training set. At the level where inner misalignment kills everyone, it seems like even something as powerful as the combination of social media and scrolling data would cause a dimmer awareness of humans than from the combination of physics and biology and evolution and history textbooks. I'd be happy to understand your thinking better since I don't know much of the technical details of inner alignment or how psych AI is connected to that.

comment by ryan_greenblatt · 2023-11-01T00:38:33.623Z

I think Anthropic's write up and current position is considerably better than OpenAI's because they actually have a concrete policy with evals and commitments. Of course, when OpenAI releases an RDP my position might change considerably.

↑ comment by Orpheus16 (akash-wasil) · 2023-11-01T13:11:38.779Z

Since OpenAI hasn't released its RDP, I agree with the claim that Anthropic's RSP is currently more concrete than OpenAI's RDP. In other words, "Anthropic has released something, and OpenAI has not yet released something."

That said, I think this comment might make people think Anthropic's RSP is more concrete than it actually is. I encourage people to read this comment, as well as the ensuing discussion between Evan and Habryka.

Especially this part of one of Habryka's comments:

The RSP does not specify the conditions under which Anthropic would stop scaling models (it only says that in order to continue scaling it will implement some safety measures, but that's not an empirical condition, since Anthropic is confident it can implement the listed security measures)

The RSP does not specify under what conditions Anthropic would scale to ASL-4 or beyond, though they have promised they will give those conditions.

Before I read the RSP, people were saying "Anthropic has made concrete commitments that specify the conditions under which they would stop scaling", and I think this was misleading (at least based on the way I interpreted such comments). I offer some examples of what I thought a concrete/clear commitment would look like here.

That said, I do think it's great for Anthropic to be transparent about its current thinking. Anthropic is getting a disproportionate amount of criticism because they're the ones who spoke up, but it is indeed important to recognize that the other scaling labs do not have scaling policies at all.

I'll conclude by noting that I think the "who is doing better than whom" frame can sometimes be useful, but right now it's mostly a distracting frame. Unless the RSPs or RDPs get much better (e.g., specify concrete conditions under which they would stop scaling, reconsider whether or not it makes sense to scale to ASL-4, make it clear that the burden of proof is on developers to show that their systems are safe/understandable/controllable as opposed to safety teams or evaluators to show that a model has dangerous capabilities), I think it's reasonable to conclude "some RSPs are better than others, but the entire RSP framework seems insufficient. Also, on the margin I want more community talent going into government interventions that are more ambitious than what the labs are willing or able to agree to in the context of a race to AGI."


↑ comment by Vaniver · 2023-11-01T17:02:56.758Z

FWIW I read Anthropic's RSP and came away with the sense that they would stop scaling if their evals suggested that a model being trained either registered as ASL-3 or was likely to (if they scaled it further). They would then restart scaling once they 1) had a definition of the ASL-4 model standard and lab standard and 2) met the standard of an ASL-3 lab.

Do you not think that? Why not?

↑ comment by Orpheus16 (akash-wasil) · 2023-11-01T18:56:21.895Z

I got the impression that Anthropic wants to do the following things before it scales beyond systems that trigger their ASL-3 evals:

- Have good enough infosec so that it is "unlikely" for non-state actors to steal model weights, and state actors can only steal them "with significant expense."

- Be ready to deploy evals at least once every 4X in effective compute

- Have a blog post that tells the world what they plan to do to align ASL-4 systems.

The security commitment is the most concrete, and I agree with Habryka that these don't seem likely to cause Anthropic to stop scaling:

> Like, I agree that some of these commitments are costly, but I don't see how there is any world where Anthropic would like to continue scaling but finds itself incapable of doing so, which is what I would consider a "pause" to mean. Like, they can just implement their checklist of security requirements and then go ahead.

> Maybe this is quibbling over semantics, but it does really feel quite qualitatively different to me. When OpenAI said that they would spend some substantial fraction of their compute on "Alignment Research" while they train their next model, I think it would be misleading to say "OpenAI has committed to conditionally pausing model scaling".

The commitment to define ASL-4 and tell us how they plan to align it does not seem like a concrete commitment. A concrete commitment would look something like "we have solved open problem X in alignment, as verified via verification method Y" or "we have the ability to pass test X with Y% accuracy."

As is, the commitment is very loose. Anthropic could just publish a post saying "ASL-4 systems are systems that can replicate autonomously in the wild, perform at human-level at most cognitive tasks, or substantially boost AI progress. To align it, we will use Constitutional AI 2.0. And we are going to make our information security even better."

To be clear, the RSP is consistent with a world in which Anthropic actually chooses to pause before scaling to ASL-4 systems. Like, maybe they will want their containment measures for ASL-4 to be really, really good, which will require a major pause. But the RSP does not commit Anthropic to having any particular containment measures or any particular evidence that it is safe to scale to ASL-4; it only commits Anthropic to publish a post about ASL-4 systems. This is why I don't consider the ASL-4 section to be a concrete commitment.

The same thing holds for the evals point: Anthropic could say "we feel like our evals are good enough" or they could say "ah, we actually need to pause for a long time to get better evals." But the RSP is consistent with either of these worlds, and Anthropic has enough flexibility/freedom here that I don't think it makes sense to call this a concrete commitment.

Note, though, that the prediction market on Anthropic's security commitments currently gives Anthropic a 35% chance of pausing for at least one month, which has updated me somewhat in the direction of "maybe the security commitment is more concrete than I thought". Still, I think it's a bad idea to train a model capable of making biological weapons if it can be stolen by state actors at significant expense. The commitment would be more concrete if it said something like "state actors would not be able to steal this model unless they spent at least $X, which we will operationally define as passing red-teaming effort Y by independent group Z."

↑ comment by Vaniver · 2023-11-01T21:36:52.038Z

> I got the impression that Anthropic wants to do the following things before it scales beyond ASL-3:

Did you mean ASL-2 here? This seems like a pretty important detail to get right. (What they would need to do to scale beyond ASL-3 is meet the standard of an ASL-4 lab, which they have not developed yet.)

> I agree with Habryka that these don't seem likely to cause Anthropic to stop scaling:

By design, RSPs are conditional pauses; you pause until you have met the standard, and then you continue. If you get the standard in place soon enough, you don't need to pause at all. This incentivizes implementing the security and safety procedures as soon as possible, which seems good to me.

> But the RSP does not commit Anthropic to having any particular containment measures or any particular evidence that it is safe to scale to ASL-4; it only commits Anthropic to publish a post about ASL-4 systems. This is why I don't consider the ASL-4 section to be a concrete commitment.

Yes, I agree that the ASL-4 part is an IOU, and I predict that when they eventually publish it there will be controversy over whether or not they got it right. (Ideally, by then we'll have a consensus framework and independent body that develops those standards, which Anthropic will just sign on to.)

Again, this is by design; the underlying belief of the RSP is that we can only see so far ahead thru the fog, and so we should set our guidelines bit-by-bit, rather than pausing until we can see our way all the way to an aligned sovereign.

↑ comment by Orpheus16 (akash-wasil) · 2023-11-02T11:02:19.912Z

> Did you mean ASL-2 here?

My understanding is that their commitment is to stop once their ASL-3 evals are triggered. They hope that their ASL-3 evals will be conservative enough to trigger before they actually have an ASL-3 system, but I think that's an open question. I've edited my comment to say "before Anthropic scales beyond systems that trigger their ASL-3 evals". See this section from their RSP below (bolding my own):

"We commit to define ASL-4 evaluations before we first train ASL-3 models (i.e., before continuing training beyond when ASL-3 evaluations are triggered)."

> By design, RSPs are conditional pauses; you pause until you have met the standard, and then you continue.

Yup, this makes sense. I don't think we disagree on the definition of a conditional pause. But I think if a company says "we will do X before we keep scaling", and then X is a relatively easy standard to meet, I would think it's misleading to say "the company has specified concrete commitments under which they would pause." Even if technically accurate, it gives an overly rosy picture of what happened, and I would expect it to systematically mislead readers into thinking that the commitments were stronger.

For the Anthropic RSP in particular, I think it's accurate & helpful to say "Anthropic has said that they will not scale past systems that substantially increase misuse risk [if they are able to identify this] until they have better infosec and until they have released a blog post defining ASL-4 systems and telling the world how they plan to develop those safely."

Then, separately, readers can decide for themselves how "concrete" or "good" these commitments are. In my opinion, these are not particularly concrete, and I was expecting much more when I heard the initial way that people were communicating about RSPs.

> the underlying belief of the RSP is that we can only see so far ahead thru the fog, and so we should set our guidelines bit-by-bit, rather than pausing until we can see our way all the way to an aligned sovereign.

This feels a bit separate from the above discussion, and the "wait until we can see all the way to an aligned sovereign" is not an accurate characterization of my view, but here's how I would frame this.

My underlying problem with the RSP framework is that it presumes that companies should be allowed to keep scaling until there is clear and imminent danger, at which point we do [some unspecified thing]. I think a reasonable response from RSP defenders is something like "yes, but we also want stronger regulation and we see this as a step in the right direction." And then the crux becomes something like "OK, on balance, what effect will RSPs have on government regulations (perhaps relative to nothing, or perhaps relative to what would've happened if the energy that went into RSPs had gone into advocating for something else)?"

I currently have significant concerns that if the RSP framework, as it has currently been described, is used as the basis for regulation, it will lock in an incorrect burden of proof. In other words, governments might endorse some sort of "you can keep scaling until auditors can show clear signs of danger and prove that your safeguards are insufficient." This is the opposite of what we expect in other high-risk sectors.

That said, it's not impossible that RSPs will actually get us closer to better regulation; I do buy some sort of general "if industry does something, it's easier for governments to implement it" logic. But I want to see RSP advocates engage more with the burden of proof concerns.

To make this more concrete: I would be enthusiastic if ARC Evals released a blog post saying something along the lines of: "we believe the burden of proof should be on Frontier AI developers to show us affirmative evidence of safety. We have been working on dangerous capability evaluations, which we think will be a useful part of regulatory frameworks, but we would strongly support regulations that demand more evidence than merely the absence of dangerous capabilities. Here are some examples of what that would look like..."

↑ comment by Vaniver · 2023-11-03T03:57:29.189Z

> My understanding is that their commitment is to stop once their ASL-3 evals are triggered.

Ok, we agree. By "beyond ASL-3" I thought you meant "stuff that's outside the category ASL-3" instead of "the first thing inside the category ASL-3".

> For the Anthropic RSP in particular, I think it's accurate & helpful to say

Yep, that summary seems right to me. (I also think the "concrete commitments" statement is accurate.)

> But I want to see RSP advocates engage more with the burden of proof concerns.

Yeah, I also think putting the burden of proof on scaling (instead of on pausing) is safer and probably appropriate. I am hesitant about it on process grounds; it seems to me like evidence of safety might require the scaling that we're not allowing until we see evidence of safety. On net, it seems like the right decision on the current margin, but the same lock-in concerns (if we do the right thing now for the wrong reasons, perhaps we will do the wrong thing for the same reasons in the future) make me worry about simply switching the burden of proof instead of coming up with a better system to evaluate risk.

comment by Zach Stein-Perlman · 2023-11-01T00:26:51.022Z

Nice.

> You’ll need to evaluate more than just foundation models

Not sure what this is gesturing at. Do you mean you need to evaluate other kinds of models, or whole labs, or foundation-models-plus-finetuning-and-scaffolding, or something else?

(I think "model evals" means "model+finetuning+scaffolding evals," at least to the AI safety community + Anthropic.)

comment by Oliver Sourbut · 2023-11-01T08:47:34.236Z

Another high(er?) priority for governments:

- start building multilateral consensus and preparations on what to do if/when
    - AI developers go rogue
    - AI is leaked to/stolen by rogue operators
    - AI goes rogue