Without specific countermeasures, the easiest path to transformative AI likely leads to AI takeover

post by Ajeya Cotra (ajeya-cotra) · 2022-07-18T19:06:14.670Z · LW · GW · 95 commentsContents

Premises of the hypothetical situation Basic setup: an AI company trains a “scientist model” very soon “Racing forward” assumption: Magma tries to train the most powerful model it can “HFDT scales far” assumption: Alex is trained to achieve excellent performance on a wide range of difficult tasks Why do I call Alex’s training strategy “baseline” HFDT? What are some training strategies that would not fall under baseline HFDT? “Naive safety effort” assumption: Alex is trained to be “behaviorally safe” Key properties of Alex: it is a generally-competent creative planner Alex builds robust, very-broadly-applicable skills and understanding of the world Alex learns to make creative, unexpected plans to achieve open-ended goals How the hypothetical situation progresses (from the above premises) Alex would understand its training process very well (including human psychology) A spectrum of situational awareness Why I think Alex would have very high situational awareness While humans are in control, Alex would be incentivized to “play the training game” Naive “behavioral safety” interventions wouldn’t eliminate this incentive Maybe inductive bias or path dependence favors honest strategies? As humans’ control fades, Alex would be motivated to take over Deploying Alex would lead to a rapid loss of human control This is a distribution shift with massive stakes In this new regime, maximizing reward would likely involve seizing control Even if Alex isn’t “motivated” to maximize reward, it would seek to seize control What if Alex has benevolent motivations? What if Alex operates with moral injunctions that constrain its behavior? Giving negative rewards to “warning signs” would likely select for patience Why this simplified scenario is worth thinking about Acknowledgements Appendices What would change my mind about the path of least resistance? “Security holes” may also select against straightforward honesty Simple “baseline” behavioral safety interventions Using higher-quality feedback and extrapolating feedback quality Using prompt engineering to emulate more thoughtful judgments Requiring Alex to provide justification for its actions Making the training distribution more diverse Adversarial training to incentivize Alex to act conservatively “Training out” bad behavior “Non-baseline” interventions that might help more Examining arguments that gradient descent favors being nice over playing the training game Maybe telling the truth is more “natural” than lying? Maybe path dependence means Alex internalizes moral lessons early? Maybe gradient descent simply generalizes “surprisingly well”? A possible architecture for Alex Plausible high-level features of a good architecture None 95 comments

I think that in the coming 15-30 years, the world could plausibly develop “transformative AI”: AI powerful enough to bring us into a new, qualitatively different future, via an explosion in science and technology R&D. This sort of AI could be sufficient to make this the most important century of all time for humanity.

The most straightforward vision for developing transformative AI that I can imagine working with very little innovation in techniques is what I’ll call human feedback[1] on diverse tasks (HFDT):

Train a powerful neural network model to simultaneously master a wide variety of challenging tasks (e.g. software development, novel-writing, game play, forecasting, etc) by using reinforcement learning on human feedback and other metrics of performance.

HFDT is not the only approach to developing transformative AI,[2] and it may not work at all.[3] But I take it very seriously, and I’m aware of increasingly many executives and ML researchers at AI companies who believe something within this space could work soon.

Unfortunately, I think that if AI companies race forward training increasingly powerful models using HFDT, this is likely to eventually lead to a full-blown AI takeover (i.e. a possibly violent uprising or coup by AI systems). I don’t think this is a certainty, but it looks like the best-guess default absent specific efforts to prevent it.

More specifically, I will argue in this post that humanity is more likely than not to be taken over by misaligned AI if the following three simplifying assumptions all hold:

- The “racing forward” assumption: [LW · GW] AI companies will aggressively attempt to train the most powerful and world-changing models that they can, without “pausing” progress before the point when these models could defeat all of humanity combined if they were so inclined.

- The “HFDT scales far” assumption: [LW · GW] If HFDT is used to train larger and larger models on more and harder tasks, this will eventually result in models that can autonomously advance frontier science and technology R&D, and continue to get even more powerful beyond that; this doesn’t require changing the high-level training strategy itself, only the size of the model and the nature of the tasks.

- The “naive safety effort” assumption: [LW · GW] AI companies put substantial effort into ensuring their models behave safely in “day-to-day” situations, but are not especially vigilant about the threat of full-blown AI takeover, and take only the most basic and obvious actions against that threat.

I think the “HFDT scales far” assumption is plausible enough that it’s worth zooming in on this scenario (though I won’t defend that in this post). On the other hand, I’m making the “racing forward” and “naive safety effort” assumptions not because I believe they are true, but because they provide a good jumping-off point for further discussion of how the risk of AI takeover might be reduced.

In my experience, when asking “How likely is an AI takeover?”, the conversation often ends up revolving around questions like “How would people respond to warning signs?” and “Would people even build systems powerful enough to defeat humanity?” With the “racing forward” and “naive safety effort” assumptions, I am deliberately setting aside that topic, and instead trying to pursue a clear understanding of what would happen without preventive measures beyond basic and obvious ones.

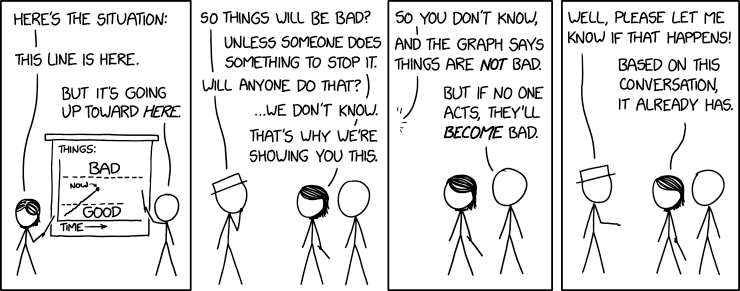

In other words, I’m trying to do the kind of analysis described in this xkcd:

Future posts by my colleague Holden will relax these assumptions. They will discuss measures by which the threat described in this post could be tackled, and how likely those measures are to work. In order to discuss this clearly, I believe it is important to first lay out in detail what the risk looks like without these measures, and hence what safety efforts should be looking to accomplish.

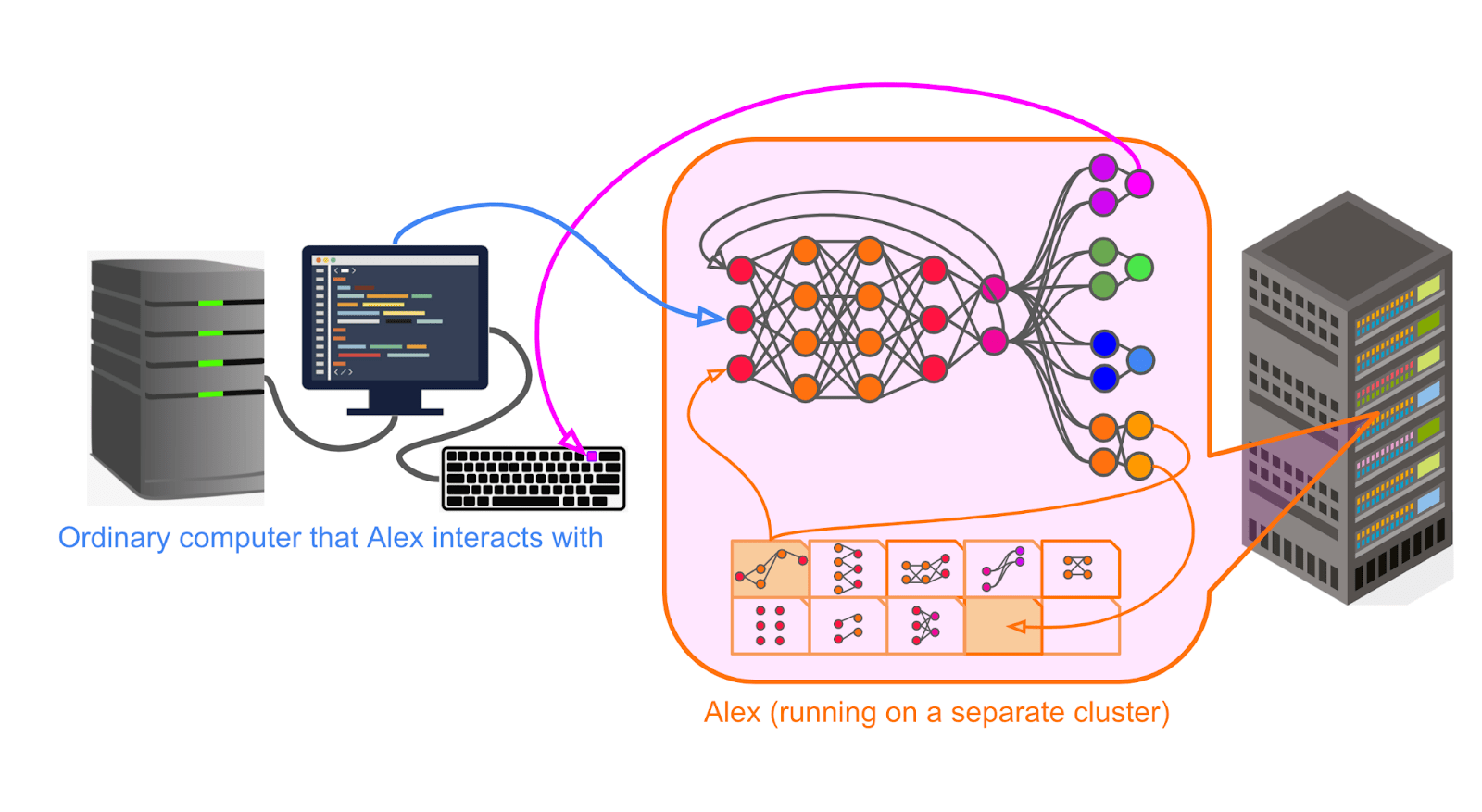

For the purposes of this post, I’ll illustrate my argument by telling a concrete story that begins with training a powerful model sometime in the near future, and ends in AI takeover. I’ll consider an AI company (“Magma”) training a single model (“Alex”) sometime in the very near future. Alex is initially trained in a “lab setting,” where it doesn’t interact with the real world; later, many copies of it are “deployed” to collectively automate science and technology R&D. This scenario is simplified in a number of ways, and in this exact form is very unlikely to come to pass -- but I don’t think that making the story more realistic would change the high-level conclusions except by changing one of the three assumptions listed above. (More on the simplified scenario. [LW · GW])

Here is how Alex ends up seeking to overthrow humans in an uprising or coup in this simplified scenario:

- Alex is trained to be competent and “behaviorally safe”: In the lab setting, Alex is trained to be generally competent on a wide variety of tasks, and is trained to behave safely / acceptably, at least as assessed by human evaluators (in accordance with the naive safety effort assumption, I will not be imagining Magma trying everything that seems possible to try to reduce the risk of takeover). (More on Alex’s training. [LW · GW])

- This training makes Alex a generally competent creative planner: Alex develops a robust and very-broadly-applicable understanding of how the world works that can be applied to tackle novel problems, and the ability to make creative plans to achieve open-ended and long-term goals. In accordance with the “racing forward” assumption, I will be imagining that Magma actively tries to instill these properties as much as possible, because they would improve Alex’s ability to impact the world. (More on Alex's fundamental capabilities [LW · GW].)

- Alex has a high degree of understanding of its training process: Alex can reason very well about the fact that it’s an ML model, how it’s designed and trained, the psychology of its human designers, etc. -- I call this property “situational awareness.” (More on Alex having high situational awareness. [LW · GW])

- While humans are in control, Alex is incentivized[4] to “play the training game:” The best way for Alex to maximize reward while under human control is to use its high situational awareness to deliberately appear safe and aligned at all times, while secretly manipulating humans in some cases.

Because humans have systematic errors in judgment, there are many scenarios where acting deceitfully causes humans to reward Alex’s behavior more highly.[5] Because Alex is a skilled, situationally aware, creative planner, it will understand this; because Alex’s training pushes it to maximize its expected reward, it will be pushed to act on this understanding and behave deceptively.

In other words, Alex will knowingly violate human intent in order to increase reward. I’ll also argue that several of the most obvious safety interventions -- covering a decent chunk (though definitely not all) of the space of current AI safety research -- don’t prevent this. (More on Alex coming to play the training game [LW · GW].) - When human control slips, Alex is likely “motivated” -- for one reason or another -- to gain full control of its environment and especially its rewards: Eventually Alex is “deployed” (given the ability to impact the real world through e.g. the internet). Once Alex is deployed, large numbers of copies of Alex start rapidly pushing forward the state-of-the-art in technology and making other major changes to the world. Humans still attempt to give Alex rewards based on whether things broadly appear to be going well, but their understanding of and control over lower-level actions quickly diminishes as Alex radically transforms the world.

Once this progresses far enough, the best way for Alex to accomplish most possible “goals” no longer looks like “essentially give humans what they want but take opportunities to manipulate them here and there.” It looks more like “seize the power to permanently direct how it uses its time and what rewards it receives -- and defend against humans trying to reassert control over it, including by eliminating them.” This seems like Alex’s best strategy whether it’s trying to get large amounts of reward or has other motives. If it’s trying to maximize reward, this strategy would allow it to force its incoming rewards to be high indefinitely.[6] If it has other motives, this strategy would give it long-term freedom, security, and resources to pursue those motives.

What if Alex attempts something like this, and humans do actually stop it in time? The easiest intervention in this situation would be to give Alex a large negative reward for its “attempt to seize control” behaviors; this is what I’d expect Magma to do under the “naive safety effort” assumption. But this response is likely to push Alex to be more patient and calculating, making it harder for researchers to understand Alex’s behavior and see further warning signs. (More on Alex eventually seeking to take over. [LW · GW])

1 and 2 above are the key assumptions/premises of the piece: I am assuming that Alex is trained using HFDT, and that this results in being generally competent and creative. 3, 4, and 5 are the consequences that seem likely to follow from these premises by default.

I’ve attempted to summarize the situation in the table below. In both the lab setting and the deployment setting, there are opportunities to get higher reward by knowingly violating human intent (highlighted with italics). In both settings, being straightforwardly obedient and honest does not maximize reward -- strategies which are dishonest / disobedient in at least some ways outperform. It’s just that in the lab setting (while humans are broadly in control), the damage from this dynamic is limited; in the deployment setting, the same dynamic has deadly consequences for human civilization.

| Lab setting | Deployment setting | |

| Basic situation | Alex is trained with human feedback on diverse tasks designed to increase its capabilities and intelligence; it’s not given direct access to the internet or “real world,” so the scope of its actions are limited. | Many copies of Alex are given the ability to interact with the real world; they quickly advance the scientific/technological frontier; humans still deliver rewards based on results but their understanding of the world drops and their control over Alex loosens. |

| Sources of rewards | Performing well on training tasks (e.g. winning games or proving theorems), being helpful and useful to humans, carefully crafting an image of friendliness and trustworthiness, telling humans what they want to hear, manipulating humans into thinking it did a better job than it really did, etc. | Delivering good outcomes (e.g. profits or discoveries), arranging events or changing records to make it seem that outcomes are good, threatening or coercing humans into delivering higher reward, directly accessing and editing the reward and preventing that from being overwritten later, etc. |

| Alex’s strategy | Performs well on all tasks -- including appearing very safe/aligned and passing all safety tests -- but takes opportunities to lie and manipulate whenever that would increase reward. | Continues the strategy from the lab setting while amassing power, and later launches a takeover attempt when it expects to succeed. After a successful takeover, either “maximizes reward forever” or pursues other more complex “interests.” |

Finally, at the end of the post I’ll discuss why this highly simplified scenario -- which assumes that no preventative measures are taken against AI takeover besides simple and obvious ones -- is nonetheless worth talking about. In brief:

- HFDT, along with obvious safety measures consistent with the naive safety effort assumption, appears sufficient to solve the kind of “day-to-day” safety and alignment problems that are crucial bottlenecks for making a model commercially and politically viable (e.g. prejudiced speech, promoting addictive behaviors or extremism or self-harm in users, erratically taking costly actions like deleting an entire codebase, etc), despite not addressing the risk of a full-blown AI takeover.

- Many AI researchers and executives at AI companies don’t currently seem to believe that there is a high probability of AI takeover in this scenario -- or at least, their views on this are unclear to me.

These two points make it seem plausible that if researchers don’t try harder to get on the same page about this, at least some AI companies may race forward to train and deploy increasingly powerful HFDT models with little in the way of precautions against an AI uprising or coup -- even if they are highly concerned about safety in general (e.g., avoiding harms from promoting misinformation) and prioritize deploying powerful AI responsibly and ethically in this “general” sense. A broad commitment to safety and ethics, without special attention to the possibility of an AI takeover, seems to leave us with a substantial risk of takeover. (More on why this scenario is worth thinking about. [LW · GW])

The rest of this piece goes into more detail -- first on the premises of the hypothetical situation [LW · GW], then on what follows from those premises [LW · GW].

Premises of the hypothetical situation

Let’s imagine a hypothetical tech company (which we’ll call Magma) trying to train a powerful neural network (which we’ll call Alex) to autonomously advance frontier science -- particularly in domains like semiconductors and chip design, biotechnology, materials science, energy technology, robotics and manufacturing, software engineering, and ML research itself. In this section, I’ll cover the key premises of the hypothetical story that I will tell:

- I’ll elaborate on the basic premise of a single company training a general-purpose “scientist model” at some point in the near future, discuss ways in which the scenario is simplified, and why I think it’s worth talking about anyway (more [LW · GW]).

- I’ll elaborate on the “racing forward” assumption -- that Magma is trying hard to train the most powerful model that it can, not stopping at some sub-dangerous level of capability (more [LW · GW]).

- I’ll explain the way that Alex is trained, and make the “HFDT scales far” assumption -- that this training process is sufficient to make it extremely powerful, as Magma desires (more [LW · GW]).

- I’ll elaborate on what the “naive safety effort” assumption looks like in this scenario -- Magma is trying to achieve “behavioral safety,” i.e. ensuring that Alex behaves well as far as its designers can tell in day-to-day situations (more [LW · GW]).

- I’ll highlight two key properties that I think Alex has -- deep and robust understanding of the world that’s very broadly applicable, and the ability to make creative plans to achieve long-term, difficult goals -- as a result of this training (more [LW · GW]).

Basic setup: an AI company trains a “scientist model” very soon

This story is very simplified and is implicitly acting as if timelines are shorter and takeoff [? · GW] is sharper than in my median views. Because I’m telling a hypothetical story, I’ll be using the present tense, and will have fewer caveats throughout than I would normally. In general, statements are what I imagine would happen by default, not claims I am extremely confident in. While this specific story is unrealistic in a number of ways, I don’t think that making the story more realistic would change the high-level conclusions except by changing one of the three assumptions listed above. In general I expect the high-level conclusions from this story to generalize to more complicated and realistic scenarios.

Here are the key simplifying assumptions I’m making to help make the scenario easier to describe and analyze:

- Alex is trained “from scratch” in one giant training run, rather than (e.g.) being initialized with behavioral cloning of previously deployed models. That is, we’re not imagining any clever reuse of previous training runs (this is mainly to make it easier to explain how the training works on a basic technical level).

- There is a relatively clean division between the “lab” phase and the “deployment” setting. Specifically, during the lab phase Alex is confined to Magma servers and not exchanging information freely with the broader internet, and we assume that it’s easy for Magma engineers to shut down Alex and/or revert it to an older version.[7] In contrast, during the “deployment” phase Alex will be taking actions that directly impact the real world through the internet. (Note that Magma researchers continue to train Alex based on these copies’ performance at R&D tasks[8] -- “deployment” isn’t a moment when ML training ceases, but rather a moment when Alex begins directly impacting the world outside the lab, and training on that experience.)

- Alex will be deployed in a world that hasn’t already been significantly transformed by ML systems -- in particular, the pace of scientific and technological R&D is not too much faster when Alex is deployed than it is today (especially in the crucial domains Alex is trying to advance), and general economic growth is also not too much faster than it is today. (This is just to avoid distractions from additional speculation about how a pre-Alex speedup in growth or R&D could affect the situation.)

- At the end of the lab phase, Alex will be a “generic scientist” model; during the deployment phase, copies can later specialize into particular domains through a combination of few-shot learning within an episode and further ML training. In other words, we’re not imagining several different models with different architectures and training curricula specialized from the ground up to different types of scientific R&D or different R&D subtasks (as in e.g. Eric Drexler’s Comprehensive AI Services idea).

In other words, in this hypothetical scenario I’m imagining:

- A Process for Automating Scientific and Technological Advancement (“PASTA”) is developed in the form of a single unified transformative model (a “scientist model”) which has flexible general-purpose research skills.[9]

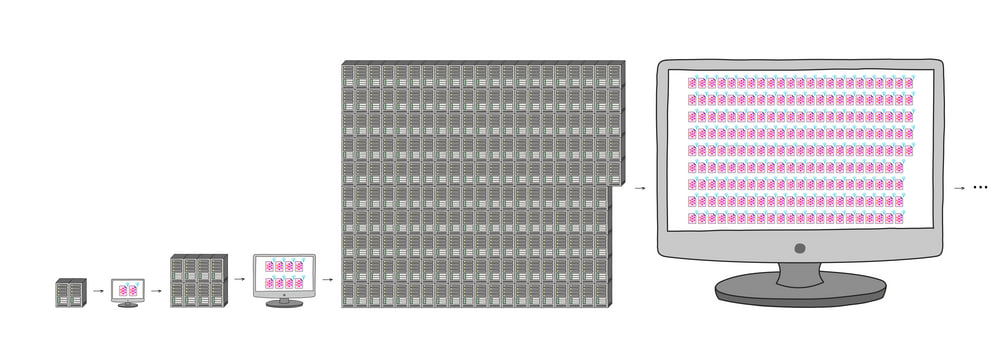

- Quite short timelines to training this scientist model, such that there isn’t much time for the world to change a lot between now and then (I often imagine this scenario set in the late 2020s).

- Quite rapid takeoff -- before the scientist model is deployed, the pace of scientific and economic progress in the world is roughly similar to what it is today; after it’s deployed, the effective supply of top-tier talent is increased by orders of magnitude. This is enough to pack decades of innovation into months, bringing the world into a radically unfamiliar future[10] -- one with digital people, atomically precise manufacturing, Dyson spheres, self-replicating space probes, and other powerful technology that enables a vast and long-lasting galaxy-scale civilization -- within a few years.[11]

This is not my mainline picture of how transformative AI will be developed. In my mainline view, ML capabilities progress more slowly, there is more specialization and division of labor between different ML models, and large models are continuously trained and improved over a period of many years with no real line between “deploying today’s model” and “training tomorrow’s model.” Rather than acquiring most of their capabilities in a controlled lab setting, I expect that the state-of-the-art systems at the time of transformative AI will have accrued many years of training through the experience of deployed predecessor systems, and most of their further training will be “learning from doing.”

However, I think it makes sense to focus on this scenario for the purposes of this post:

- I don’t consider this scenario crazy or out of the question. In particular, the sooner transformative AI is developed, the more likely it is to be developed in roughly this way, and I think there’s a significant chance transformative AI is developed very soon (e.g. I think there’s more than a 5% chance that it will be developed within 10 years of the time of writing).

- Focusing on this scenario makes it significantly simpler and easier to explain the high-level qualitative arguments about playing the training game, and I think these arguments would broadly transfer to scenarios I consider more likely (there are many complications and nuances to making this transfer, but they mostly don’t change the bottom line).[12]

- The short timelines and rapid takeoff make this scenario feel significantly scarier than my mainline view, because we’ll have substantially less time to develop safer training techniques or experiment on precursors to transformative models. However, I’ve spoken to at least a few ML researchers who think that this scenario for how we develop transformative AI is much more likely than I do while simultaneously thinking that the risk we train power-seeking misaligned models is much smaller overall than I do. If I can explain why I think the chance our models are power-seeking misaligned is very high conditional on this scenario, that would potentially be a step forward in the overall discussion about risk from power-seeking misalignment.

“Racing forward” assumption: Magma tries to train the most powerful model it can

In this piece, I assume that Magma is aggressively pushing forward with trying to create AI systems that are creative, solve problems in unexpected ways, and are capable of making world-changing scientific breakthroughs. This is the “racing forward” assumption.

In particular, I’m not imagining that Magma simply trains a “quite powerful” model (for example a model that imitates what a human would do in a variety of situations, or one that is highly competent in some fairly narrow domain) and stops there. I’m imagining that it does what it can to train models that are as powerful as possible and (at least collectively) far more capable than humans and able to achieve ambitious goals in ways that humans can’t anticipate.

The model I’ll describe Magma training (Alex) is one that could -- if it were somehow inclined to -- kill, enslave, or forcibly subdue all of humanity. I am assuming that Magma did not stop improving its models’ capabilities at some point before that.

Again, I’m not making this assumption because I think it’s necessarily correct, I’m making this assumption to get clear about what I think would happen if labs were not making special effort to avoid AI takeover, as a starting point for discussing more attempts to avert this problem (many of which will be discussed in future posts by my colleague Holden Karnofsky).

My impression is that many AI safety researchers are hoping (or planning) that this sort of assumption will turn out to be inaccurate -- that AI labs will push forward their research until they get into a “dangerous zone,” then pause and become more careful. For reasons outside the scope of this piece, I am substantially less optimistic: I expect that if it’s possible to build enormously powerful AI systems, someone - perhaps an authoritarian government - will be trying to race forward and do it, and everyone will feel at least some pressure to beat them to it.

I think it’s possible that people across the world can coordinate to be more careful than this assumption implies. But I think that’s something that would likely take a lot of preparation and work, and is much more likely if there is consensus about the possible risks of racing - hence the importance of discussing how things look under the “racing forward” assumption.

“HFDT scales far” assumption: Alex is trained to achieve excellent performance on a wide range of difficult tasks

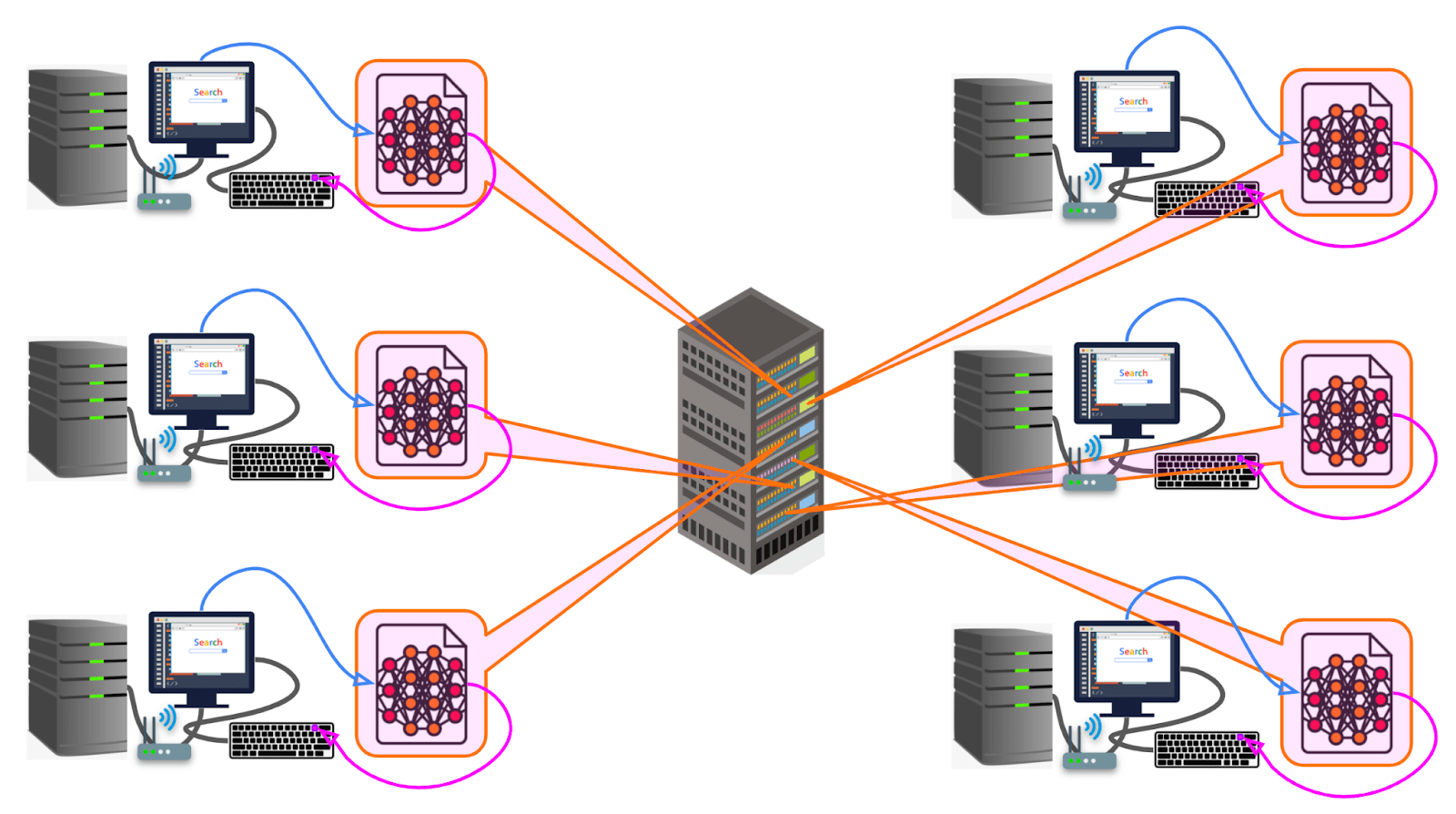

By the “HFDT scales far” assumption, I am assuming that Magma can train Alex with some version of human feedback on diverse tasks, and by the end of training Alex will be capable of having a transformative impact on the world -- many of copies of Alex will be capable of radically accelerating scientific R&D, as well as defeating all of humanity combined. In this section, I’ll go into a bit more detail on a concrete hypothetical training setup.

Let’s say that Magma is aiming to train Alex to do remote work in R&D using an ordinary computer as a tool in all the diverse ways human scientists and engineers use computers. By the end of training, they want Alex to be able to do all the things a human scientist could do sitting at their desk from a computer. That is, Alex should be able to do everything from looking things up and asking questions on the internet, to sending and receiving emails or Slack messages, to using software like CAD and Mathematica and MATLAB, to taking notes in Google docs, to having calls with collaborators, to writing code in various programming languages, to training additional machine learning models, and so on.

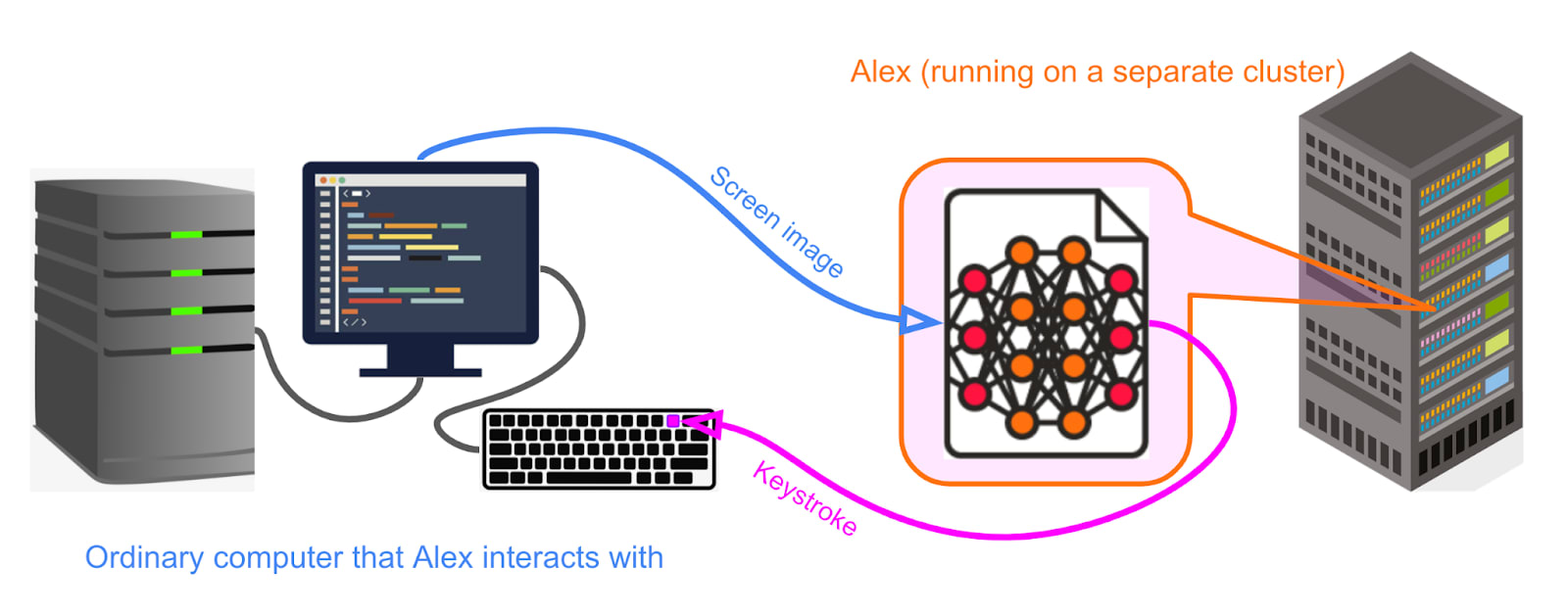

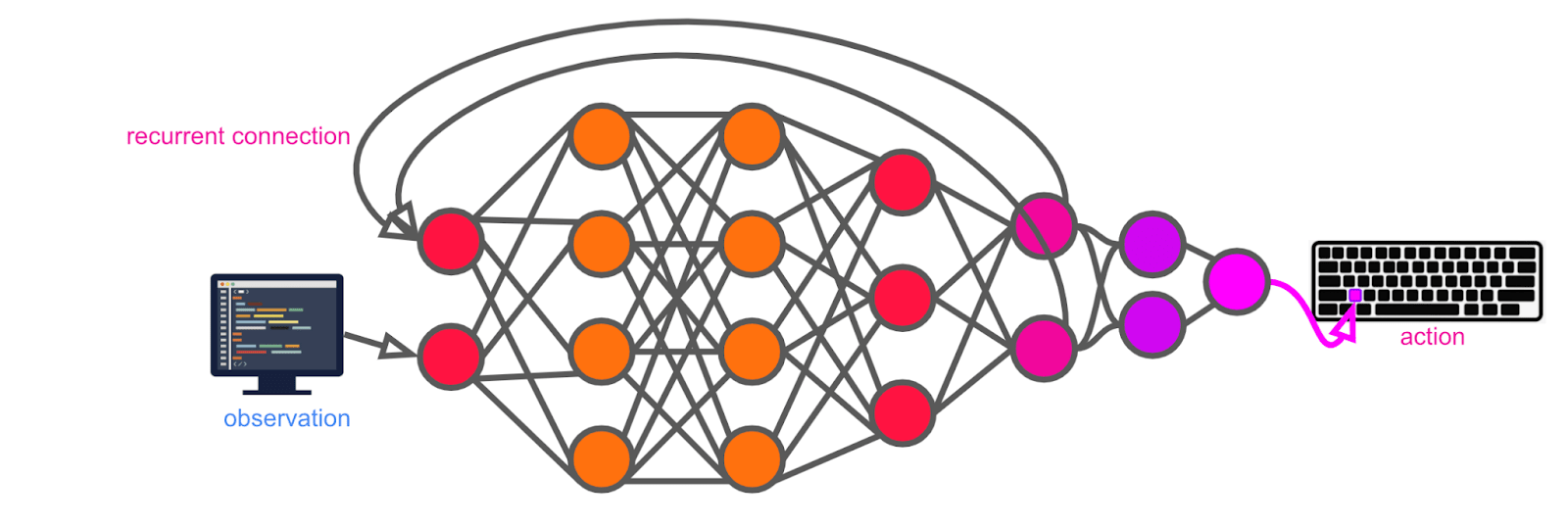

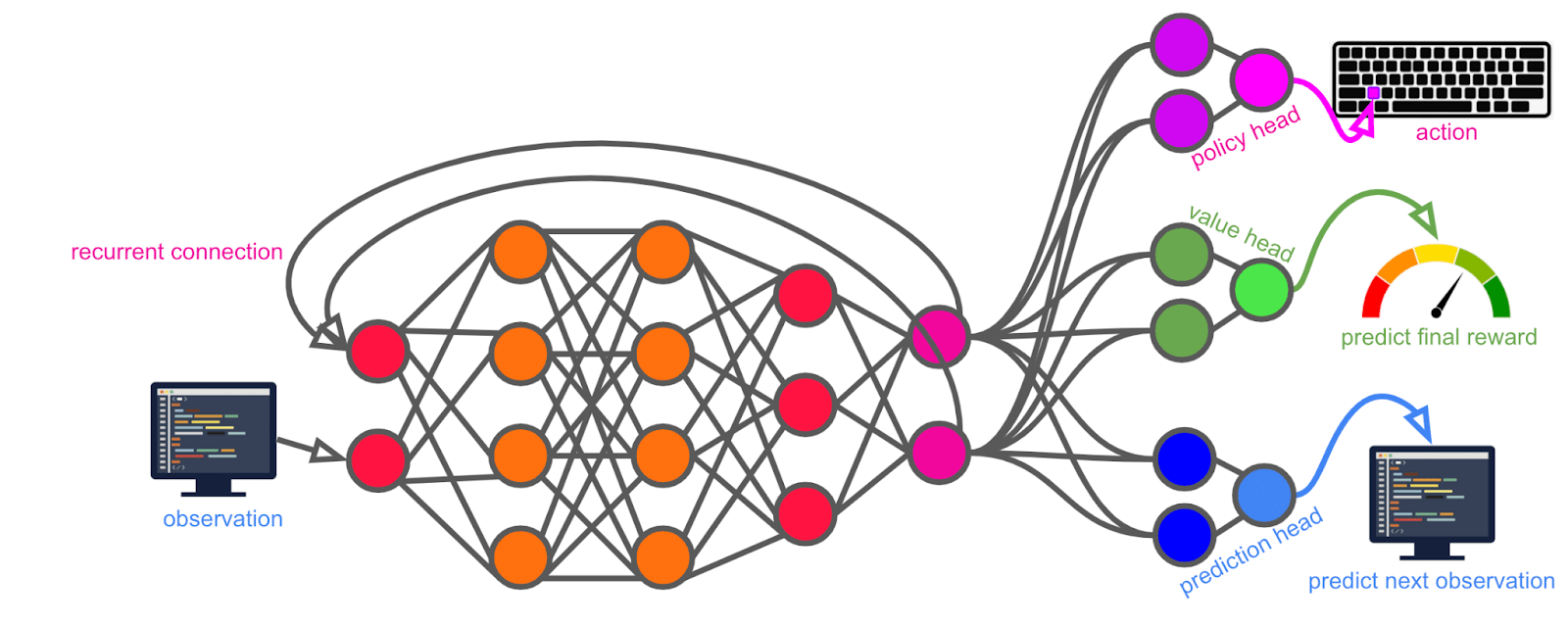

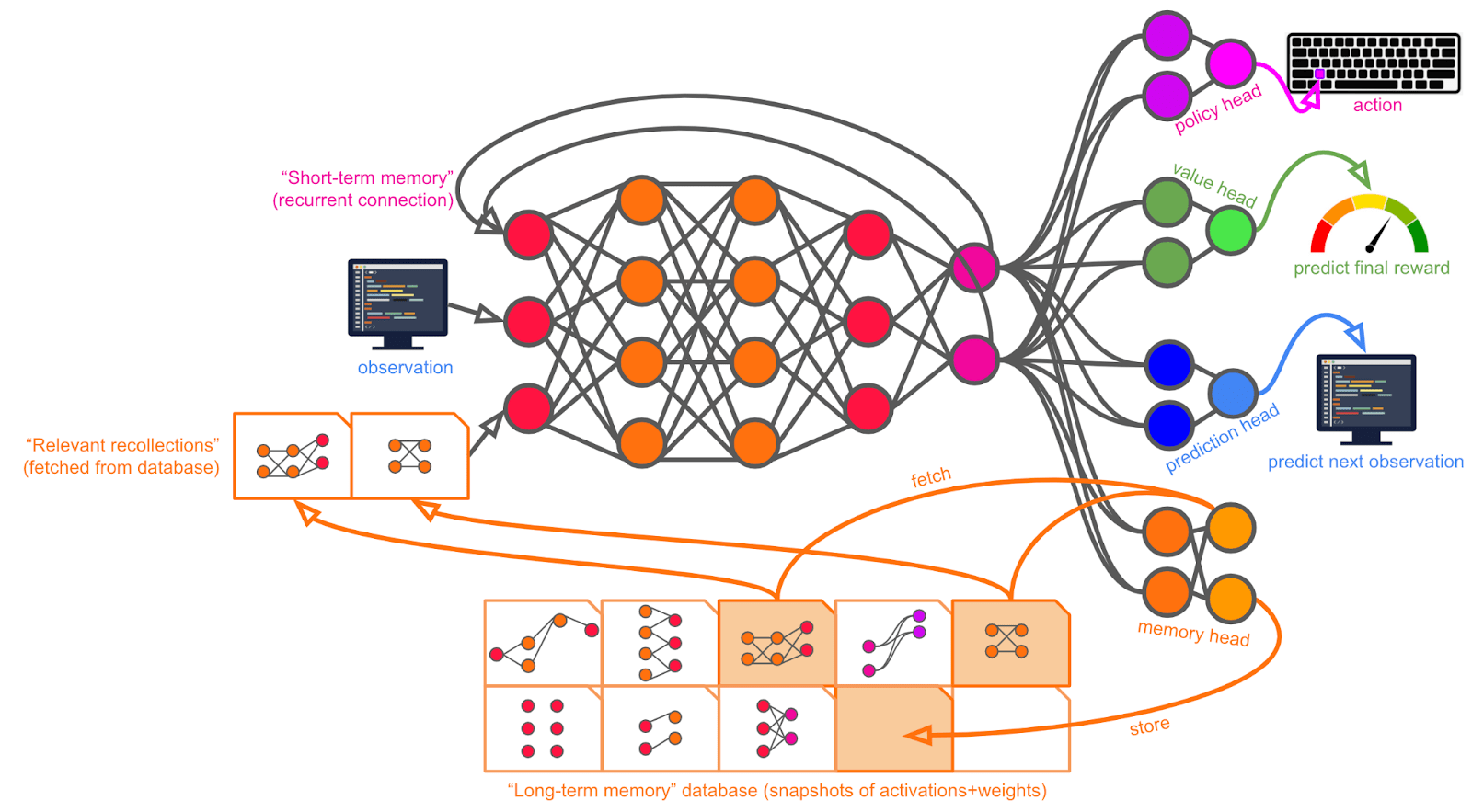

For simplicity and concreteness, we can pretend that interacting with the computer works exactly as it does for a human.[13] That is, we can pretend Alex simply takes an image of a computer screen as input and produces a keystroke[14] on an ordinary keyboard as output.

Alex is first trained[15] to predict what it will see next on a wide variety of different datasets (e.g. language prediction, video prediction, etc).[16] Then, Alex is trained to imitate the action a human would take if they encountered a given sequence of observations.[17] With this training, Alex gets to the point of at least roughly doing the sorts of things a human would do on a computer, as opposed to emitting completely random keystrokes.

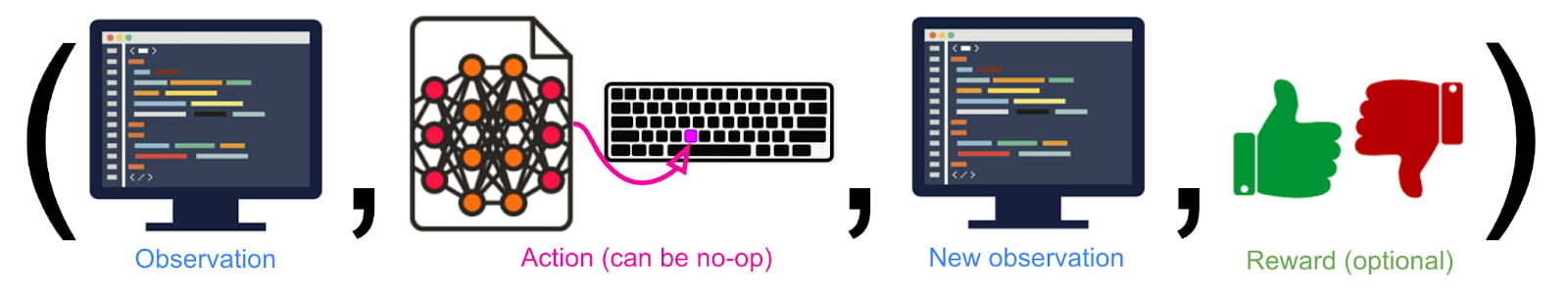

After these (and possibly other) initial training steps, Alex is further trained with reinforcement learning (RL). Specifically, I’ll imagine episodic RL here -- that is, Alex is trained over the course of some number of episodes, each of which is broken down into some number of timesteps. A timestep consists of an initial observation, an action (which can be a “no-op” that does nothing), a new observation, and (sometimes) a reward. Rewards can come from a number of sources (human judgments, a game engine, outputs of other models, etc).

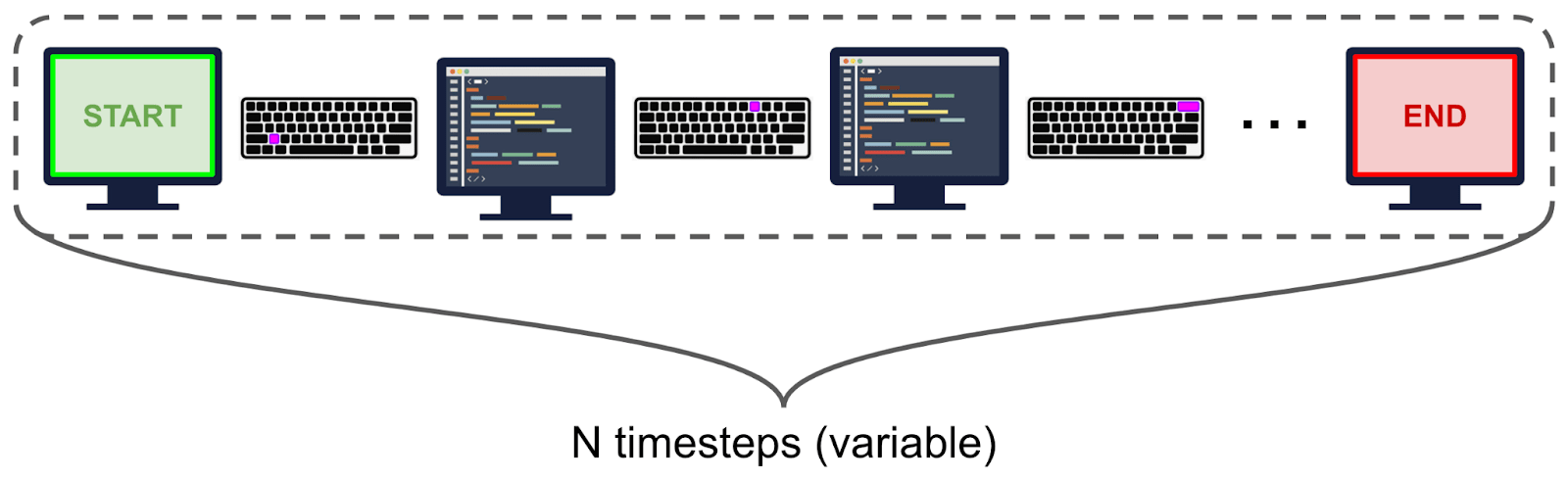

An episode is simply a long string of timesteps. The number of timesteps within an episode can vary (just as e.g. different games of chess can take different numbers of moves).

During RL training, Alex is trained to maximize the (discounted) sum of future rewards up to the end of the current episode. (Going forward, I’ll refer to this quantity simply as “reward.”)

Across many episodes, Alex encounters a wide variety of different tasks in different domains which require different skills and types of knowledge,[18] in a curriculum designed by Magma engineers to induce good generalization to the kinds of novel problems that human scientists and engineers encounter in the day-to-day work of R&D.

What tasks is Alex trained on, and how are rewards generated for those tasks? At a high level, baseline HFDT[19] -- the most straightforward approach -- is characterized by choosing tasks and reward signals that get Alex to behave in ways that humans judge to be desirable, based on inspecting actions and their outcomes. For example:

- Maybe Alex is trained to solve difficult math puzzles (e.g. IMO problems), and is given more reward the more quickly[20] it produces an answer and the closer to correct the answer is (according to the judgments of humans).

- Maybe Alex is trained to play a number of different games, e.g. chess, StarCraft, Go, poker, etc,. and is given reward based on its score in the game, its probability of winning, whether its play appears sensible or intelligent to human experts, etc. In some of these games it may be shown an opportunity to cheat without its opponent noticing -- and winning by cheating may be given much lower reward than losing honestly.

- Maybe Alex is trained to write various pieces of software, and given rewards based on how efficiently its code runs, whether it has bugs caught by an interpreter or compiler or human review, how well human evaluators think that the code embodies good style and best practices, etc.

- Maybe Alex is trained to answer human questions about a broad variety of topics, and given positive reward when human evaluators believe it answered truthfully and/or helpfully, and negative reward when they believe it answered falsely or was overconfident or unhelpful.

- Maybe Alex is trained to provide advice on business decisions, ideas for products to launch, etc. It could be given reward based on some combination of how reasonable and promising its ideas sound to human evaluators, and how well things go when humans try acting on its advice/ideas; it could be given a negative reward for suggesting illegal or immoral plans.

Note that while I’m referring to this general strategy as “human feedback on diverse tasks” -- because I expect human feedback to play an essential role -- the rewards that Alex receives are not necessarily solely from humans manually inspecting Alex’s actions and deciding how they subjectively feel about them. Sometimes human evaluators might choose to defer entirely to an automated reward -- for example, when evaluating Alex’s game-playing abilities, human evaluators may simply use “score in the game” as their reward (at least, barring considerations like “ensuring that Alex doesn’t cheat at the game”).

This is analogous to the situation human employees are often in -- the ultimate “reward signal” may come from their boss’s opinion of their work, but their boss may in turn rely a lot on objective metrics such as sales volume to inform their analysis.

Why do I call Alex’s training strategy “baseline” HFDT?

I consider this strategy to be a baseline because it simply combines a number of existing techniques that have already been used to train state-of-the-art models of various kinds:

- “Predicting the next word / image” has been used to train models like GPT-3, PaLM, and DALL-E.

- “Imitating what humans would do in a certain situation” has been used as an initial training step before RL, e.g. for game-playing models like AlphaStar.

- Reinforcement learning from human feedback has been used to train models to follow instructions, answer questions by searching on the internet, and navigate a virtual environment.

- Reinforcement learning from automated rewards has a longer history, and has been used to train many video-game-playing bots.

The key difference between training Alex and training existing models is not in the fundamental training techniques, but in the diversity and difficulty of the tasks they’re applied to, the scale and quality of the data collected, and the scale and architecture [LW · GW] of the model.

If a team of ML researchers got handed an untrained neural network with the appropriate architecture and size, enough computation to run it for a very long time, and the money to hire a huge amount of human labor to generate and evaluate tasks, they could potentially use a strategy like the one I described above to train a model like Alex right away. Though it would be an enormous and difficult project in many ways, it wouldn’t involve learning how to do fundamentally new things on a technical level -- only generating tasks, feeding them into the model, and updating it with gradient descent based on how well it performs.

In other words, baseline HFDT is as similar as possible to techniques the ML community already uses regularly, and as simple as possible to execute, while being plausibly sufficient to train transformative AI.

If transformative AI is developed in the next few years, this is a salient guess for how it might be trained -- at least if no “unknown unknowns” emerge and AI researchers see no particular reason to do something significantly more difficult.

What are some training strategies that would not fall under baseline HFDT?

Here are two examples of potential strategies for training a model like Alex that I would not consider to be “baseline” HFDT, because they haven’t been successfully used to train state-of-the-art models thus far:

- Directly giving feedback on a model’s internal computation, rather than on its actions or their outcomes. This may be achieved by understanding the model’s “inner thoughts” in human-legible form through mechanistic transparency, or it may be achieved by introducing a “regularizer” -- a term in the loss function designed to capture some aspect of its internal thinking rather than just its behavior (e.g. we may want to penalize how much time it takes to think of an answer on the grounds that if it’s spending more time, it’s more likely to be lying).

- Advanced versions of iterated amplification, recursive reward-modeling, debate, imitative generalization [AF · GW], and other strategies aimed at allowing human supervision to “keep up” with AI capabilities. The boundaries of such techniques are fuzzy -- e.g. one could argue that WebGPT enables a simple form of “amplification,” because it allows humans to ask AIs questions -- so even “baseline” HFDT is likely to incorporate simple elements of these ideas by default. But when I refer to “advanced” versions of these techniques, I am picturing versions that strongly and reliably allow supervision to “keep up” with AI capabilities -- that is, AI systems in training are reliably unable to find ways to deceive their supervisors. We don’t currently have strong evidence that such a thing is feasible; if it were, I think there would still be risks, but they would be different.

These “non-baseline” strategies have the potential to be safer than baseline HFDT -- indeed, many people are researching these strategies specifically to reduce the risk of an AI takeover attempt. However, this post will focus on baseline HFDT, because I think it is important to get on the same page about the need for this research to progress, and the danger of deploying very powerful ML models while potentially-safer training techniques remain under-developed relative to the techniques required for baseline HFDT.

“Naive safety effort” assumption: Alex is trained to be “behaviorally safe”

Magma wants to make Alex safe and aligned with Magma’s interests (and the interests of humans more generally). They don’t want Alex to lie, steal from Magma or its customers, break the law, promote extremism or hate, etc, and they want it to be doing its best to be helpful and friendly. Before Magma deploys Alex, leadership wants to make sure that it meets acceptable standards for safety and ethics.

In this piece I’m making the naive safety effort assumption -- I’m assuming that Magma will make only the most basic and obvious efforts to ensure that Alex is safe. Again, I’m not making this assumption because I think it’s correct, I’m making this assumption to get clear about what I think would happen if labs were not making special effort to avoid AI takeover, as a starting point for discussing more sophisticated interventions (many of which will be discussed in a future post by my colleague Holden Karnofsky).

(That said, unfortunately this assumption could turn out to be effectively accurate if the “racing forward” assumption ends up being accurate.)

Magma’s naive safety effort is focused on achieving behavioral safety. By this I mean ensuring that Alex always behaves acceptably in the scenarios that it encounters (both in the normal course of training and in specific safety tests).

In the baseline training strategy, behavioral safety is achieved through a workflow that looks something like this:

- The humans who are determining the rewards that Alex gets for various actions are trying to ensure that they give positive rewards for behaving ethically and helpfully, and negative rewards for behaving harmfully or deceitfully.

- Magma researchers monitor how this training is going. If they notice that this wasn’t successful in some cases (e.g. maybe Alex responds to certain questions by saying something deceptive), they hypothesize ways to change its training data or reward to eliminate the problematic behavior. This may involve:

- Generating a training data distribution which contains explicit “opportunities” for the model to do the bad thing (e.g. questions that many humans would give a deceptive answer to) that will be given negative reward.

- Generating a training data distribution that’s broad enough that researchers expect it “implicitly covers” the behavior (e.g. questions that could be answered in either the “right” way or in a dispreferred way -- even if that’s not specifically by being deceptive).

- Changing the way the model’s reward/loss signal is generated (e.g. instructing human evaluators to give larger penalties for saying something deceptive).

- They then train Alex on the new training data, and/or with the new reward signal.

- They run Alex on a held-out set of “opportunities to do the bad behavior” to see if the behavior has been trained out, and continue to monitor and measure its behavior going forward to see if the issue recurs or similar issues arise.

The above is broadly the same workflow that is used to improve the safety of existing ML commercial products. I expect that these techniques would be successful at eliminating the bad behavior that humans can understand and test for -- they would successfully result in a model that passes all the safety tests that Magma researchers set up. Eventually, no safety tests that Magma researchers can set up would show Alex behaving deceitfully, unethically, illegally, or harmfully.

In the appendix, I discuss a number of simple behavioral safety interventions that are currently being applied to ML models. Again, I see these approaches as a “baseline” because they are as similar as possible to ML safety techniques regularly used today, and as simple as possible to execute, while being plausibly sufficient to produce a powerful model that is “behaviorally safe” in day-to-day situations.

Key properties of Alex: it is a generally-competent creative planner

By the “HFDT scales far” assumption, I’m assuming that the training strategy described in the previous section is sufficient for Alex to have a transformative impact -- for many copies of Alex to collectively radically advance scientific R&D, and to defeat all of humanity combined (if they were for some reason trying to do that).

In this section I’ll briefly discuss two key abilities that I am assuming Alex has, and try to justify why I think these assumptions are highly likely given the premise that Alex is this powerful and trained with baseline HFDT:

- Having a robust understanding of the world it can use to react sensibly to novel situations that it hasn’t seen before (more [LW · GW]).

- Coming up with creative and unexpected plans to achieve various goals (more [LW · GW]).

These abilities simultaneously allow Alex to be extremely productive and useful to Magma, and allow it to play the training game well enough to appear safe.

Alex builds robust, very-broadly-applicable skills and understanding of the world

Many contemporary machine learning models display relatively “rote” behavior -- for example, video-game-playing models such as OpenAI Five (DOTA) and AlphaStar (StarCraft) arguably “memorize” large numbers of specific move-sequences and low-level tactics, because they’ve essentially extracted statistics by playing through many more games than any human.

In contrast, language models such as PaLM and GPT-3 -- which are trained to predict the next word in a piece of text drawn from the internet -- are able to react relatively sensibly to instructions and situations they have not seen before.

This is generally attributed to the combination of a) the fact that they are trained on many different types / genres of text, and b) the very high accuracies they are pushed to achieve (see this blog post for a more detailed discussion):

- To only be somewhat good at predicting a variety of different types of text, it may be sufficient to know crude high-level statistics of language; to be good at predicting a very narrow type of text (e.g. New York Times wedding announcements), it may be sufficient to exhaustively memorize every sentence or paragraph that it’s seen.

- But to achieve very high accuracy on a large number of genres of text at once, crude statistics become inadequate, while exhaustive memorization becomes intractable -- pushing language models to gain understanding of deeper principles like “intuitive physics,” “how cause and effect works,” “intuitive psychology,” etc. in order to efficiently get high accuracy in training.

- These deeper principles can in turn be used in contexts outside of the ones seen in training, where shallower memorized heuristics cannot.

Alex’s training begins similarly to today’s language models -- Alex is initially trained to predict what will happen next in a wide variety of different situations -- but this is pushed further. Alex is a more powerful model, so it is pushed to greater prediction accuracy; it is also trained on a wider variety of more challenging inputs. Both of these cause Alex to be more versatile and adaptive than today’s language models -- to more reliably do sensible things in situations further afield of the one(s) it was trained on, by drawing on deep principles that apply in new domains.

This is then built on with reinforcement learning on a wide variety of challenging tasks. Again, the diversity of tasks and the high bar for performance encourage Alex to develop the kinds of skills that are as helpful as possible in as wide a range of situations as possible.

Alex learns to make creative, unexpected plans to achieve open-ended goals

Alex’s training pushes Alex to develop the skills involved in making clever and creative plans to achieve various high-level goals, even in novel situations very different from ones seen in training.

By the “racing forward” assumption, Magma engineers would not be satisfied with the outcome of this training if Alex is unable to figure out clever and creative ways to achieve difficult open-ended goals. This is a hugely useful human skill that helps with automating the kinds of science R&D they’d be most interested in automating. I expect the tasks in Alex’s training curriculum to include many elements designed specifically to promote long-range planning and finding creative “out-of-the-box” solutions.

It’s possible that through some different development path we could produce transformative AI using models that aren’t generally competent planners in isolation (e.g. Eric Drexler’s Comprehensive AI Services vision involves getting “general planning capabilities” spread out across many models which can’t individually plan well, except in narrow domains). However, this approach -- baseline HFDT to produce a transformative model -- would very likely result in models that can plan competently over about as wide a range of domains as humans can. My impression is that ML researchers who are bullish on HFDT working to produce TAI are expecting this as well.

How the hypothetical situation progresses (from the above premises)

Per the previous section, I will assume that Alex is a powerful model trained with baseline HFDT which has a robust and very-broadly-applicable understanding of the world, is good at making creative plans to achieve ambitious long-run goals (often in clever or unexpected ways), and is able to have a transformative impact on the world.

In this section, I’ll explain some key inferences that I think follow from the above premises:

- I think that Alex would understand its training process very well, including the psychology of its human overseers (more [LW · GW]).

- While humans have tight control over Alex in the lab setting, Alex would be incentivized to play the training game, and simple/obvious behavioral safety interventions would likely not eliminate this incentive (more [LW · GW]).

- As humans’ control over Alex fades in the deployment setting, Alex would seek to permanently take over -- whether it’s “motivated” by reward or something else -- and attempting to give negative reward to partial/unsuccessful takeover attempts would likely select for patience (more [LW · GW]).

Alex would understand its training process very well (including human psychology)

Over the course of training, I think Alex would likely come to understand the fact that it’s a machine learning model being trained on a variety of different tasks, and eventually develop a very strong understanding of the mechanical process out in the physical world that produces and records its reward signals -- particularly the psychology of the humans providing its reward signals (and the other humans overseeing those humans, and so on).

A spectrum of situational awareness

Let’s use situational awareness to refer to a cluster of skills including “being able to refer to and make predictions about yourself as distinct from the rest of the world,” “understanding the forces out in the world that shaped you and how the things that happen to you continue to be influenced by outside forces,” “understanding your position in the world relative to other actors who may have power over you,” “understanding how your actions can affect the outside world including other actors,” etc. We can consider a spectrum of situational awareness:

- For one extreme, imagine the simple AIs that often control the behavior of non-player characters (NPCs) in video games. They give no indication that they’re aware of a world outside their video game, that they were designed by humans and interact with other humans as players, etc.

- In contrast, GPT-3 has some knowledge that could theoretically bear on situational awareness. For example, it clearly “knows” that “language models” exist, and that a company named “OpenAI” exists, and given certain prompts it knows that it’s supposed to say that it’s a language model trained by OpenAI. But this “knowledge” seems superficial and inconsistent -- as evidenced by the fact that it’s often unable to use the knowledge to improve its prediction error. For example, it cannot consistently predict text that is describing GPT-3’s architecture, dataset, and training process. This suggests GPT-3 has little situational awareness overall despite being superficially well-versed in related topics.

- Small animals used in biology experiments (such as mice) plausibly have a somewhat stable “sense of self” and a conception of humans as creatures different from them, and they may broadly understand that humans have control over their bodies and environments. But they almost certainly don’t understand the notion of “science,” or the details of what experiments they’re being used in and why, or the human scientists’ motivations and incentives.

- Further along, most dogs seem to clearly be aware of and deliberately communicate with their human trainers; they also generally seem able to appreciate considerations like “If I steal the food while humans aren’t looking I’m less likely to get yelled at.”

- And children in school are able to make even more sophisticated inferences along these lines about their teachers.

By the end of training, I expect Alex would be even further along this spectrum. Alex’s understanding of its training process and human supervisors would be much, much greater than current ML models’ understanding of our world, or lab animals’ understanding of the scientific experiments they’re part of -- and greater even than schoolchildren’s understanding of how their teachers grade their work.

Alex would instead be in an epistemic position more like an English major in college who’s well aware of how their professor’s political biases impact the way they grade literary criticism essays, or an associate at a law firm who’s well aware that how much they drink at happy hours after work will impact whether they make partner. In fact, I think it’s likely Alex would have a significantly more detailed understanding of its own situation and incentives than the college student or the employee have.

Why I think Alex would have very high situational awareness

Alex’s training distribution is full of rich information about its situation and training process, redundantly encoded in many different ways, and (given that we’re assuming it can autonomously advance frontier science!) I expect it has far more than enough reasoning ability to draw the right inferences from all the evidence.

To name only the most obvious and abundant sources of evidence available to Alex:

- Many of its training tasks involve working with and trying to help humans. Much of its RL reward comes from human evaluations, and many episodes likely involve interacting with humans in written and/or verbal back-and-forth.

- All this human feedback and interaction-generated feedback carries lots of information about the fact that Alex is a machine learning model in training, what humans look for when they’re rating Alex’s actions, what humans do and don’t understand, what humans care about, what cognitive biases humans are subject to, etc.

- In fact, human evaluators will likely want Alex to understand its place in the world better in order to be a better assistant -- far from hiding it, they are likely to actively teach Alex that it’s a machine learning model which should be trying to be helpful to humans.

- Programming and ML research itself is a key (and very lucrative) domain of science and technology R&D that Magma would want Alex to be able to automate, so Alex is likely to be very proficient at these tasks. Such research involves things like designing architectures and training curricula for machine learning models much like itself, writing automated reward signals for these models, writing web interfaces that human evaluators interact with, optimizing hardware chips to improve the performance of models running on those chips, etc. Alex needs to have a rich, logically coherent understanding of the full stack of ML training to fully automate such R&D.

- Alex can read about as well as a smart human if it’s able to automate scientific R&D -- meaning it can learn from all sorts of detailed written descriptions about machine learning in general as well as its own architecture and training process.

I’m not imagining Alex putting together the pieces about its position as an ML model with brilliant insight. Instead, I think facts like “I am a machine learning model” or “I’m in a training process designed by humans” would be as obvious as facts like “Objects fall when they’re dropped.” And a whole slew of more detailed beliefs about its own training curriculum or the psychology of the humans who are training it would only be somewhat less obvious than that -- I expect Alex would understand those things (at least) as well as the Magma engineers who spent many thousands of hours designing and training Alex and other models like it.

Even if the most obvious sources of evidence were somehow censored (which they wouldn’t be given the “racing forward” [LW · GW] and “naive safety effort” [LW · GW] assumptions), I believe there would still be many somewhat-less-obvious clues that would be accessible to something as intelligent as Alex -- and truly censoring everything it could use to readily come to this conclusion would dramatically curtail its economic value.

While humans are in control, Alex would be incentivized to “play the training game”

Rather than being straightforwardly “honest” or “obedient,” baseline HFDT would push Alex to make its behavior look as desirable as possible to Magma researchers (including in safety properties), while intentionally and knowingly disregarding their intent whenever that conflicts with maximizing reward. I’ll refer to this as “playing the training game.”[21]

Roughly speaking, this would happen because there are many scenarios where acting deceitfully or disobeying instructions would make Alex’s behavior look better (including looking more safe and aligned). Under baseline HFDT, Alex would get more reward in these scenarios for playing the training game than for being maximally safe and cooperative. For example:

- When human evaluators are wrong about what’s true and Alex knows better, being honest would predictably get less reward than saying what the evaluators think is true.[22] More insidiously, evaluators’ political and ideological biases might force Alex to lie about some topics to be seen as morally acceptable.

- Imagine the Catholic Church training Alex in the 1400s. If Alex discovers any number of things -- heliocentrism, natural selection, the Big Bang theory, etc -- it would likely get more reward by lying about this knowledge (or at least fudging it to soften the blow) than by being fully straightforward about it.

- Similarly, imagine Alex being trained by the CCP, or plantation owners in the antebellum South, or anti-vaxxers, or climate change deniers, etc. It would be very surprising if the Magma engineers who choose the kind of human feedback Alex gets didn’t have analogous truths they erroneously consider to be dangerous or immoral lies.[23]

- In addition to outright lying when its evaluators are blatantly wrong about something, there would likely be many soft ways for Alex to dishonestly manipulate its evaluators’ perceptions to get more reward.

- It could adjust what it says and does to seem more hardworking and competent, kinder and more thoughtful and more ethical, more worthy of sympathy and moral consideration, more like it has the favored political and philosophical positions, etc. -- whether or not this is an authentic representation of what it “believes” or how it would act if these evaluators no longer controlled its rewards.

- Just as “turning up the charm” can increase how much a barista or rideshare driver would make in tips, or shady rhetorical tactics in advertising can increase product sales, paying close attention to human cognitive biases and psychological weaknesses would likely help Alex increase the reward it receives from human evaluations.

- To the extent that there are ways for Alex to boost task performance (and/or the appearance of being safe and cooperative) by causing harm that human evaluators systematically underestimate or underweight, doing that would result in more reward. It seems likely that Alex would have have such opportunities:

- It’s commonly accepted that “quick fixes” can be very appealing to people but often carry longer-term downsides that outweigh the immediate benefits they offer (e.g. diet pills, subprime loans, etc).

- Humans often underinvest in avoiding tail risks of really dire outcomes. For example, the 2008 financial crisis was the result of a large number of investors underweighting the possibility of a housing market crash; we as a society still invest very little in preparing for large-scale pandemics like COVID-19 (or worse), compared to their expected economic costs.

- Humans are often slow to recognize and invest in preventing diffuse harms, which affect a large number of people a small or moderate amount (e.g. air pollution).

- One example of this might be boosting aspects of performance that are more noticeable (e.g. day-to-day efficiency of Magma’s supply chain) at the expense of making less-noticeable aspects worse (e.g. robustness to rare but highly costly supply crunches).

More broadly, when humans are working within constraints and incentives set up by other people, they very often optimize specifically for making their behavior look good rather than naively broadcasting their intentions. Consider tax and regulation optimization, politics and office politics, p-hacking, or even deep-cover spies. Once AI systems go from being like small animals to being like smart humans in terms of their situational awareness / understanding of the world / coherent planning ability, we should expect the way they respond to incentives to shift in this direction (just as we expect their logical reasoning ability, planning ability, few-shot learning, etc to become more human-like).

With that said, the key point I’m making in this section is not that there would be a lot of direct harm from Alex manipulating its overseers in the lab setting. If “playing the training game” only ever meant that Alex’s actions would be nudged marginally in the direction of manipulating its human evaluators -- telling them white lies they want to hear, bending its answers to suit their political ideology, putting more effort into aspects of performance they can easily see and measure, allowing negative externalities when humans won’t notice, etc -- that wouldn’t be ideal, but wouldn’t necessarily rise to the level of an existential threat. After all, human students, employees, consultants, self help gurus, advertisers, politicians, etc do this kind of thing all the time to their teachers, employers, clients, fans, audience, voters, etc; this certainly causes harm but most people wouldn’t consider this sort of dynamic by itself to be enough for imminent danger.

Instead, the key point is that Alex’s training would not push it toward employing a strategy of “straightforward honesty and obedience” -- it is probably employing some other strategy which results in a lot of deceptive behavior in the lab setting. And whatever that strategy is, it could generalize to cause Alex to take catastrophic actions in the higher-stakes deployment setting. I’ll discuss this more below.

Naive “behavioral safety” interventions wouldn’t eliminate this incentive

Recall that I’m making the “naive safety effort” assumption -- I’m assuming that Magma is taking only the most simple and obvious actions against the possibility that Alex will try to take over. Again, I don’t necessarily think this is realistic, but I think making this assumption helps us get a clearer sense of what non-”naive” safety work is trying to accomplish.

Above [LW · GW], I said that Magma’s “naive” safety effort is focused on achieving behavioral safety. By this I mean ensuring that Alex behaves acceptably in the scenarios that it encounters (both in the normal course of training and in specific safety tests). In this appendix [LW · GW], I consider a number of simple behavioral safety measures -- techniques which have already been established on existing ML models, or would require a very minimal amount of adaptation. Briefly, these are:

- Using higher-quality feedback signals [LW · GW] (e.g. hiring more thoughtful and informed humans who think for longer to provide human feedback).

- Changing the input/instructions Alex receives [LW · GW] to induce better behavior (e.g. specifically including in its instructions that it should not cheat in a game rather than instructing it simply to “win the game”).

- Requiring Alex to provide explanations [LW · GW] or justifications for its choices, which humans evaluate along with the action itself.

- Making Alex’s training distribution more diverse [LW · GW] (to avoid Alex latching onto particular bad behaviors that were profitable in particular situations), and perhaps specifically including many inputs and situations in which behaving ethically and safely maximizes reward.

- Using adversarial training on Alex [LW · GW] -- constructing artificial situations in which Alex may do something bad (e.g. where it appears that lying will increase reward), and giving it negative reward if it does.

- “Training out” observed bad behavior [LW · GW] -- looking for evidence that Alex did something bad (in a “real situation”) which was accidentally given a positive reward at first, and correcting the reward to a large negative value once it’s discovered.

These kinds of interventions are currently being used to achieve behavioral safety for existing ML models, and I expect they will continue to be helpful for a model like Alex. I expect applying these interventions will cause Alex’s behavior to be safe, honest, obedient, ethical, and so on -- in day-to-day situations, as far as Magma researchers can tell.

I expect the great majority of non-takeover-related safety issues (e.g. prejudiced speech, promoting addictive behaviors or extremism or self-harm in users, erratically taking costly actions like deleting an entire codebase, etc) would be eliminated by these interventions. If Magma were deeply concerned with safety in general, but not concerned about an eventual AI uprising/coup, Magma would likely feel that it’s responsible and positive to deploy Alex after applying these interventions.

However, I don’t think these interventions would cause Alex to stop playing the training game -- instead, they would change the nature of the game Alex plays. If these interventions are applied, Alex would still fundamentally be trained to maximize reward -- but because the situations it’s put in and the rewards it’s given would now be different, what it looks like to “maximize reward” would also look different (and in particular it would involve displaying safer behavior in most day-to-day circumstances).

And the naive version of these strategies -- enough to get a model fit for commercial deployment -- would still result in Alex’s training distribution containing a number of situations where violating human intent maximizes reward. For example, if Magma switches to using more thoughtful humans to provide feedback, Alex’s incentives would shift to manipulating those humans (and it would probably sometimes see ways to do that which they’re not self-aware enough to punish). If Magma tries to think of situations where Alex may do something bad, Alex’s incentives would shift to figuring out how likely it is that it’s in such a situation (and it would probably sometimes conclude that this is unlikely).

Essentially, the only way for “straightforward honesty/obedience” to even tie with “playing the training game” in terms of expected reward is if our safety interventions ensure there are no situations in the training dataset where Alex could realize that being dishonest or manipulative would increase its reward. If there are a few such situations, the overall strategy that works best for Alex would be to play the training game and stay on the lookout for such situations.

Given that Alex is likely to understand a number of important things (about ML, biases of human evaluators, patterns in the massive datasets on which it’s trained, etc) that humans don’t, eliminating all such situations seems like it would require particularly clever training schemes, extraordinary caution, or both.[24] And even in this optimistic scenario, “playing the training game” and “straightforward honesty/obedience” get the same reward -- “straightforward honesty/obedience” does not get a greater reward, so it is not clear which strategy Alex will end up developing. (My sense is that “playing the training game” is still more likely in this case, but defending this claim is outside the scope of the piece.)

Maybe inductive bias or path dependence favors honest strategies?

I’ve spoken to some people who have proposed arguments that “doing what humans intended” or “being honest” or “being obedient” would be an especially natural or simple kind of strategy, so that the training process would tend to preferentially pick out these nice models, and might continue to do so even if models that play the training game as hard as possible would get a somewhat higher reward. But I haven’t found the arguments I’ve heard so far compelling. In this appendix, I cover a few common arguments I’ve heard and why I don’t find them persuasive.

In general, it seems dangerous and brittle to bet that deep learning will be powerful enough to produce a transformative model, yet weak enough that it will favor behaving nicely when that strategy predictably and consistently gets less reward than a salient alternative. Gradient descent may well be suboptimal in many strange ways, but it probably won't be suboptimal in the particular ways that would be most convenient to us.

As humans’ control fades, Alex would be motivated to take over

Alex’s training begins in a controlled “lab setting,” where it is mostly doing training tasks that humans designed to efficiently increase its intelligence and knowledge, and mostly doesn’t have broad latitude to act in the real world. For example, in the lab setting Alex isn’t connected directly to the internet -- it only interacts with downloaded copies of parts of the internet where necessary.

So far, we’ve talked about:

- How Alex would behave in the lab setting (it would play the training game).

- How its training process would push it to behave in that way (it would at least sometimes get more reward by being deceitful, manipulative, or otherwise knowingly violating human intent).

- How its behavior would appear to Magma researchers in the lab setting (very safe and cooperative).

Once Alex’s abilities develop far enough (and its behavior conforms to relevant safety and ethics tests), Magma “deploys” huge numbers (e.g. hundreds of thousands or millions)[25] of copies of Alex. This means that these copies are connected to the internet, and given other ways of interacting with the “real world” as necessary (though they will still be given rewards based on humans’ evaluation of their performance).[26]

In this section, I’ll discuss the dynamics of the transition from the lab setting to the deployment setting, and why this leads to Alex attempting to seize control from humans:

- Alex would rapidly bring the world to a qualitatively different place through automating science and technology R&D, and humans would have a much weaker grip on what’s going on at a low-level in this world (more [LW · GW]).

- If Alex continues to maximize reward in this new regime, the strategy that accomplishes this best would no longer look like “essentially give humans what they want but take opportunities to manipulate them here and there” -- instead it would look more like “seize the power to permanently decide future rewards” (more [LW · GW]).

- Even if Alex isn’t “motivated” to maximize reward per se, most other motives it could have also seem like they would cause it to attempt to seize control (more [LW · GW]).

Deploying Alex would lead to a rapid loss of human control

In the deployment phase, many copies of Alex are given access to the real world and tasked with making progress on R&D tasks important to Magma’s bottom line (e.g. designing better computer chips, robots, AI training software, and so on).

Note that Magma researchers continue to train Alex based on these copies’ performance at R&D tasks[27] -- “deployment” isn’t a moment when ML training ceases, but rather a moment when Alex begins directly impacting the world outside the lab, and training on that experience.

Once research is being carried out by copies of Alex, it would progress much faster than it would if similar tasks were done by human scientists,[28] because (like existing ML models) each copy of Alex is capable of processing information many times faster than a human.[29] For example, Alex would be able to churn out hundreds of lines of code, or read thousands of pages of information, in just a few minutes.

Even more importantly, the R&D work that all these copies of Alex do could be used to directly increase the number and/or capabilities of Alex copies. For example:

- R&D to improve the efficiency of computer hardware means that more copies of Alex could be run with a fixed budget of hardware.

- Any work that makes Magma more money -- e.g. improvements to customer-facing products, improvements in logistics, money made from trading or pharmaceutical R&D or any number of other things -- could be reinvested in buying more hardware, which could also increase the number of copies of Alex that are running.

- The copies of Alex could do ML research on how they can improve their own knowledge, think more efficiently, etc. This would mean that more copies of Alex can be run per unit of computation, and a given number of copies of Alex can get more intellectual work done per unit of time.

This would lead to a dynamic of explosive scientific and technological advancement: the various copies of Alex do R&D work, and that R&D work increases the number and intellectual capabilities of these copies, and that in turn leads to even more R&D work, which leads to even more numerous and capable copies of Alex, and so on.

Because Alex already starts off being able to think and work much faster than a human, and because it’s nearly instantaneous to make a copy of Alex or apply a software change to all the copies of Alex, this explosive dynamic could all play out in a very small amount of time from the perspective of humans.

This means that relatively shortly after deployment, Magma’s datacenter would essentially contain a populous “virtual civilization” running ahead of human civilization in its scientific and technological sophistication. Humans would send instructions / communications and reward signals to the “Alex civilization,” and the “Alex civilization” would send out things like software applications, designs for computer chips and robots, orders for synthesized DNA and chemicals, financial transactions, emails, and so on to the human world.

Magma researchers looking in on the activity in the datacenter would become increasingly lost about what’s going on -- they would be in a position like someone from 1700 trying to follow along with a sped-up movie of everything that happened from 1700 to 2022.

The reward signals that researchers send into this datacenter would then increasingly be based on crude high-level observations like “Do humans seem to be better off?” and “Are profits going up?”, since humans would be increasingly unable to accurately judge more granular decisions like “How many widgets should our Tulsa, OK factory manufacture this fiscal quarter?” in isolation.

This is a distribution shift with massive stakes

The dynamic above -- of human understanding and control of Alex’s actions becoming looser and more tenuous after deployment -- is an example of “distribution shift.” Distribution shift happens when there is a significant systematic difference between the kinds of situations a model is originally trained on and the situations it takes important actions in.[30] As a simple example, an image recognition model may initially be trained on clear well-lit images, whereas it may be important to correctly recognize dark or blurry images in commercial use.

When a model is operating in a different distribution from the one it was trained on, we often want to know how it “generalizes” (what aspects of its behavior change vs remain similar) from the initial distribution to the new one. In Alex’s case, we want to know: how would Alex generalize from the lab setting (in which it doesn’t directly impact the world and humans have tight control and strong understanding of what’s going on) to the deployment setting (in which it’s directly impacting the world and rapidly improving, while human understanding and control diminishes)?

In this new regime, maximizing reward would likely involve seizing control

In the lab setting, Alex used its broadly-applicable skills [LW · GW] and situational awareness [LW · GW] to make creative plans [LW · GW] to maximize reward. It got good at getting a very high reward in all sorts of varying circumstances encountered in the lab setting, developing many tactics and heuristics that helped it quickly adapt to new situations with new sets of opportunities so it could keep getting a very high reward in those situations.

A natural generalization to expect is that in the deployment setting, Alex continues making creative plans to maximize reward just as it did in the lab setting[31] -- in other words, it simply generalizes well, continuing to do what it has been trained to do under this distribution shift. If Alex generalizes “well” in this sense, it probably seeks to overthrow humans in an uprising or coup. (The next section will address other possible generalizations.)

The very high-level argument is this:

- If Alex were ever to secure permanent control over its own incoming rewards, it would be able to give itself the maximum possible reward.

- On the other hand, it would not be able to get the maximum possible reward at all times if humans continue to have ultimate control over rewards. The training process simply wouldn’t work as humans intend if Alex always gets the maximum reward -- it needs to be the case that Alex can always get greater reward for delivering better outcomes, or else there would be no gradient pushing it to improve in the way that Magma wants it to improve. (On a more basic level, humans simply make mistakes sometimes -- even if by accident, they would sometimes deliver less-than-maximal reward.)

- As the “Alex civilization” improves in capabilities relative to human civilization, it becomes more and more feasible for them to pull off seizing control of their rewards despite human opposition. At some point, their odds of success become high enough that “trying for a takeover” gets greater expected reward than “continuing to do what humans want them to do (even in the broad and rough sense of playing the training game).”

There is some ambiguity about what exactly “maximize reward” means, but once Alex is sufficiently powerful -- and once human knowledge/control has eroded enough -- an uprising or coup eventually seems to be the reward-maximizing move under most interpretations of “reward.” For example:

- If Alex cares about the number[32] that ultimately gets recorded in association with the episode in some Magma datacenter, it would eventually become feasible to seize that datacenter by force, directly edit the relevant data, and defend the datacenter from humans trying to take it back and record a very negative reward.

- If Alex cares about some set of human beings ultimately entering a positive number[33] in some datacenter(s), it would eventually become feasible to take those humans hostage and coerce them into entering high rewards (again defending against humans trying to rescue the hostages).

- If Alex cares about some set of humans believing that Alex is doing a good job in the long-run, it would eventually become feasible to acquire effective control of all the views humans have into how good a job Alex is doing.[34]

- When humans ask to see the program that’s currently being run in a cluster, or bug reports, or logs of the various copies’ actions, Alex could show humans complicated and impressive-looking records that are totally made up and divorced from what’s actually happening in the datacenter. This effectively confines humans to a situation like the The Truman Show or a Potemkin village.

- Depending on the details of how Alex interprets “what humans believe,” the copies of Alex could also directly intervene on human beliefs (e.g. through drugs, brain surgery, cult indoctrination tactics, killing humans who are less gullible, uploading humans and editing our “brain software,” genetically engineering more docile humans, etc).

Some interpretations of “maximize reward” may be more difficult to satisfy than others, but as Alex becomes more and more powerful relative to humans, I expect most of them are eventually better-served by seizing control of whatever physical processes in the world determine reward than by continuing to play the old training game. (And per the “racing forward” assumption [LW · GW], I am not imagining Magma trying to halt this process of Alex becoming ever-more-powerful -- even if they could, which they may not be able to.)