Five examples

post by Adam Zerner (adamzerner) · 2021-02-14T02:47:07.317Z · LW · GW · 17 comments


I often find it difficult to think about things without concrete, realistic examples to latch on to. Here are five examples of this.

1) Your Cheerful Price

Imagine that you need a simple portfolio website for your photography business. Your friend Alice is a web developer. You can ask her what her normal price is and offer to pay her that price to build you the website. But that might be awkward. Maybe she isn't looking for work right now, but says yes anyway out of some sort of social obligation. You don't want that to happen.

What could you do to avoid this? Find her cheerful price, perhaps. Maybe her normal price is $100/hr and she's not really feeling up for that, but if you paid her $200/hr she'd be excited about the work.

Is this useful? I can't really tell. In this particular example it seems like it'd just make more sense to have a back and forth conversation about what her normal price is, how she's feeling, how you're feeling, etc., and try to figure out if there's a price that you each would feel good about. Cheerful/excited/"hell yeah" certainly establishes an upper bound for one side, but I can't really tell how useful that is.

2) If you’re not feeling “hell yeah!” then say no

This seems like one of those things that sounds wise and smart in theory, but probably isn't actually good advice in practice.

For example, I have a SaaS app that hasn't really gone anywhere and I've put it on the back burner. Someone just came to me with a revenue share offer. I'd be giving up a larger share than I think is fair. And the total amount I'd make per month is maybe $100-200, so I'm not sure whether it'd be worth the back and forth + any customizations they'd want from me. I don't feel "hell yeah" about it, but it is still probably worth it.

In reality, I'm sure that there are some situations where it's great advice, some situations where it's terrible advice, and some situations where it could go either way. I think the question is whether it's usually useful. It doesn't have to be useful in every single possible situation. That would be setting the bar too high. If it identifies a common failure mode and helps push you away from that failure mode and closer to the point on the spectrum where you should be, then I call that good advice.

If I were to try to figure out whether "hell yeah or no" does this, the way I'd go about it would be to come up with a wide variety of examples, and then ask myself how well it performs in these different examples. It would have been helpful if the original article got the ball rolling for me on that.

3) Why I Still ‘Lisp’ (and You Should Too)

I want to zoom in on the discussion of dynamic typing.

"I have never had a static type checker (regardless of how sophisticated it is) help me prevent anything more than an obvious error (which should be caught in testing anyway)."

This made me breathe a sigh of relief. I've always felt the same way, but wondered whether it was due to some sort of incompetence on my part as a programmer.

Still, something tells me that it's not true. That such type checking does in fact help you catch some non-obvious errors that would be much harder to catch without the type checking. Too many smart people believe this, so I think I have to give it a decent amount of credence.

Also, I recall an example or two of this from a conversation with a friend a few weeks ago. Most of the discussions of type checking I've seen don't really get into these examples though. But they should! I'd like to see such articles give five examples of:

Here is a situation where I spent a lot of time dealing with an issue, and type checking would have significantly mitigated it.

Hell, don't stop at five, give me 50 if you can!

Examples are the best. Recently I've been learning Haskell. Last night I learned about polymorphism in the context of Haskell. I think that seeing it from this different angle rather than the traditional OOP angle really helped to solidify the concept for me. And I think that this is usually the case.

It makes me think back to Eliezer's old posts about Thingspace. In particular, extensional vs intensional descriptions.

What's a chair? I won't define it for you, but this is a chair. And this. And this. And this.

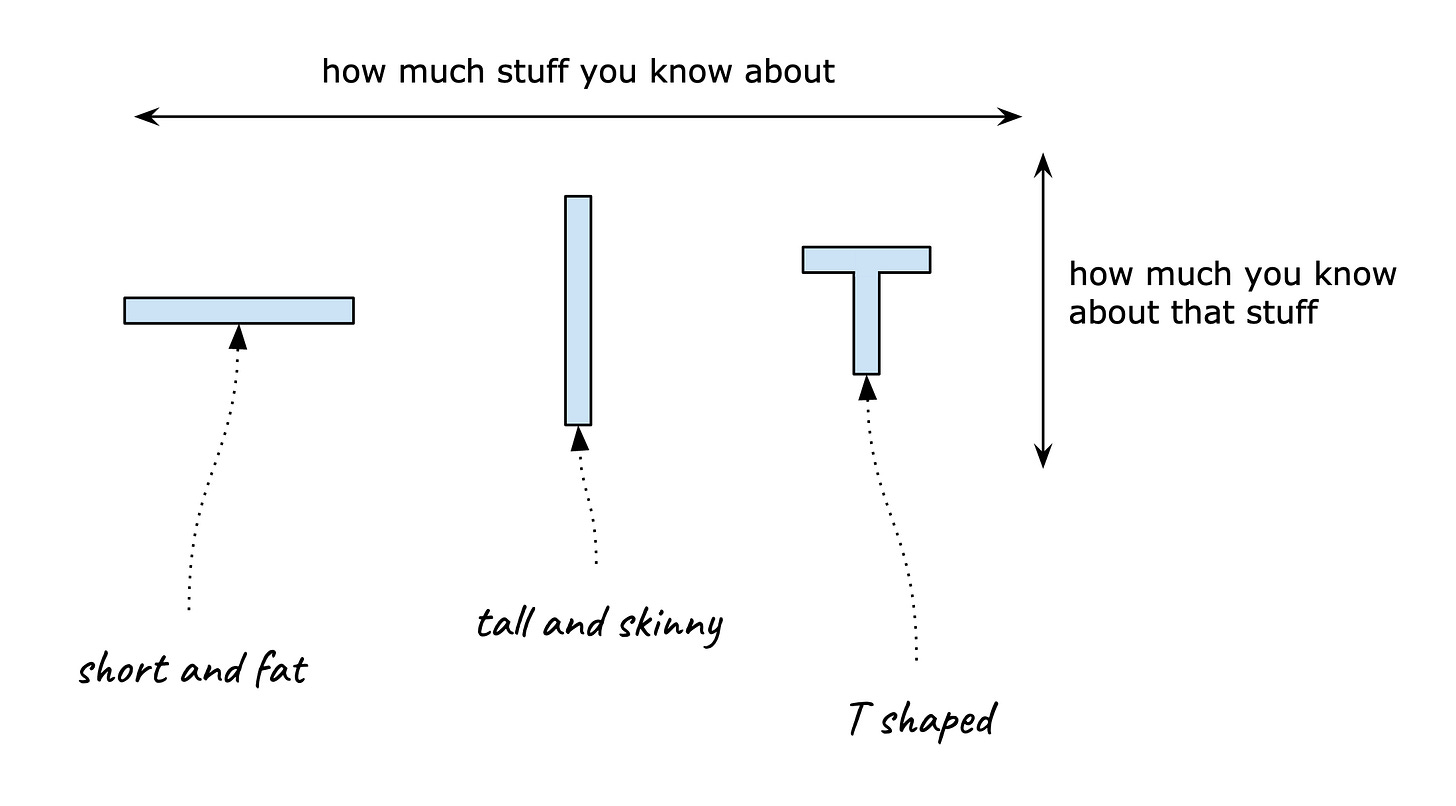

4) Short Fat Engineers Are Undervalued

(Before I read this article I thought it was going to talk about the halo effect and how ugly people in general are undervalued. Oh well.)

The idea that short, fat engineers are undervalued sounds plausible to me. No, I'm not using strong enough language: I think it's very likely. Not that likely though. I think it's also plausible that it's wrong, and that deep expertise is where it's at.

Again, for me to really explore this further, I would want to kind of iterate over all the different situations engineers find themselves in, ask myself how helpful being short-fat is vs tall-skinny in each situation, and then multiply by the importance of each situation.

Something like that. Taken literally it would require thousands of pages of analysis, clearly beyond the scope of a blog post. But this particular blog post actually didn't provide any examples. Big, fat, zero!

Let me provide some examples of the types of examples I have in mind:

- At work yesterday I had a task that really stands out as a short-fat type of task. I needed to make a small UI change, which required going down a small rabbit hole of how our Rails business logic works + how the Rails asset pipeline works, writing some tests in Ruby (a language I'm not fluent in), connecting to a VPN (which involves a shell script and some Linux-fu), deploying the change, ssh-ing into our beta server and finding some logs, and just generally making sure everything works as expected. No one step was particularly intense or challenging, but they're all dependencies. If I'm a tall and skinny Rails wizard I'd have an easy time with the first couple parts, but if I also don't know what a VPN is or don't know my way around the command line, I could easily be bottlenecked by the last couple parts.

- For an early work task, I had to add watermarks to GIFs. Turns out GIFs are a little weird and have idiosyncrasies. Being tall-skinny would have been good here, in the sense of a) being knowledgeable about GIFs and b) having generic programming ability. The task was pretty self-contained and well-defined. "Here's an input. Transform it into an output. Then you're done." It didn't really require too much breadth.

5) Embedded Interactive Predictions on LessWrong

I really love this tool so I feel bad about picking on this post, but I think it could have really used more examples of "here is where you'd really benefit from using this tool".

This post made more of an attempt to provide examples than examples 1-4 though. It led off with the "Will there be more than 50 prediction questions embedded in LessWrong posts and comments this month?" poll, which I thought was great. And it did have the "Some examples of how to use this" section. But to me, I still felt like I needed more. A lot more.

I think this is an important point. Sometimes you need a lot of examples. Other times one or two will get the job done. It depends on the situation.

Hat tips

I'm sure there are lots of other posts worthy of hat tips, but these are the ones that come to mind:

- I really like how alkjash used anecdotes in Pain is not the unit of Effort, and parables in Is Success the Enemy of Freedom. Parables are an interesting alternative to examples.

- Not quite the same thing, but the Specificity Sequence is closely related.

- A lot of johnswentworth's posts are structured around examples. E.g. Exercise: Taboo "Should" and Anatomy of a Gear. In particular, they use examples from different domains. On the other hand, in this post I focused way too much on programming.

- Same with Scott Alexander. In particular, the following line from Meditations on Moloch comes to mind: "And okay, this example is kind of contrived. So let’s run through – let’s say ten – real world examples of similar multipolar traps to really hammer in how important this is."

It's not easy

Coming up with examples is something that always seems to prove way more difficult than it should be. Even just halfway decent examples. Good examples are way harder. Maybe it's just me, but I don't think so.

So then, I don't want this post to come across as "if you don't have enough (good) examples in your post, you're a failure". It's not easy to do, and I don't think it should (necessarily) get in the way of an exploratory or conversation-starting type of post.

Maybe it's similar to grammar in text messages. The other person has to strain a bit if you write a (longer) text with bad grammar and abbreviations. There are times when this might be appropriate. Like:

Hey man im really sry but just storm ad I ct anymore. BBl.

But if you have the time, cleaning it up will go a long way towards helping the other person understand what it is you're trying to say.

17 comments

Comments sorted by top scores.

comment by 25Hour (IronLordByron) · 2021-02-14T07:43:20.818Z · LW(p) · GW(p)

I like type checkers less because they help me avoid errors, and more for ergonomics. In particular, autocomplete-- I feel I code much, much faster when I don't have to look up APIs for any libraries I'm using; instead, I just type something that seems like it should work and autocomplete gives me a list of sensible options, one of which I generally pick. (Also true when it comes to APIs I've written myself.) I'm working on a Unity project right now where this comes in handy-- I can ask "what operations does this specific field of TMPro.TextMeshProUGUI support", and get an answer in half a second without leaving the editor.

More concretely than that, static typing enables extremely useful refactoring patterns, like:

- Delete a method or change its return type. Find everywhere this breaks. Fix the breakages. Now you're pretty much done; in a dynamically-typed language you're reliant on the unit test suite to catch these breakages for you. (All the places I've worked have done unit tests, but generally not above somewhere around 80% coverage; not enough to feel entirely safe performing this operation.)

- Refactor/rename becomes possible, even for methods with possibly-duplicated names across your files. For instance: renaming a method named "act" on a class you've implemented requires manual effort and can be error-prone in Javascript, but is instantaneous in C#. This means you can use refactor/rename compulsively any time you feel you have come up with a better name for a concept you're coding up.
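A minimal sketch of the first bullet, in TypeScript since that language comes up elsewhere in this thread. All names here (`User`, `findUser`, `displayName`) are hypothetical, and the sketch assumes the `strictNullChecks` compiler option is on. It shows a return type being widened and the one call site that had to change as a result.

```typescript
// Hypothetical example; none of these names come from a real codebase.
interface User {
  id: number;
  name: string;
}

const users: User[] = [
  { id: 1, name: "Ada" },
  { id: 2, name: "Grace" },
];

// Suppose this used to be declared as returning `User`.
// The refactor changes the return type to admit "not found".
function findUser(id: number): User | undefined {
  for (const u of users) {
    if (u.id === id) {
      return u;
    }
  }
  return undefined;
}

// A call site written against the old signature, such as
//   const name: string = findUser(1).name;
// stops compiling once the return type changes, because the value
// may now be undefined. Each such error marks a place to fix.

// The repaired call site handles the new case explicitly.
function displayName(id: number): string {
  const user = findUser(id);
  return user === undefined ? "(unknown user)" : user.name;
}
```

The point is only that the list of compile errors doubles as the to-do list for the refactor; how complete that list is depends on how much of the codebase is actually typed.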

I kind of agree with the article posted that, in general, the kinds of things you want to demonstrate about your program mostly cannot be demonstrated with static typing. (Not always true-- see Parse, don’t validate (lexi-lambda.github.io) -- but true most of the time.)

Replies from: adamzerner↑ comment by Adam Zerner (adamzerner) · 2021-02-14T19:27:19.509Z · LW(p) · GW(p)

Thank you for those thoughts, they're helpful.

- I actually was aware of the autocomplete benefit before. I've only spent about three months using a statically typed language (TypeScript). In that time I found myself not using autocomplete too much for whatever reason, but I suspect that this is more the exception than the rule, and that autocomplete is usually something that people find useful.

- I wasn't aware of those benefits for refactoring! That's so awesome! If it's actually as straightforward as you're saying it is, then I see that as a huge benefit of static typing, enough that my current position is now that you'd be leaving a lot on the table if you don't use a statically typed language for all but the smallest of projects.

At work we actually decided, in part due to my pushing for it, to use JavaScript instead of TypeScript for our AWS Lambda functions. Those actually seem to be a case where you can depend on the codebase being small enough that static typing probably isn't worth it.

Anyway, important question: were you exaggerating at all about the refactoring points?

↑ comment by 25Hour (IronLordByron) · 2021-02-14T22:11:51.075Z · LW(p) · GW(p)

No. I compulsively use the refactor/rename operation (ctrl-shift-r in my own Visual Studio setup) probably 4 or 5 times in a given coding session on my personal Unity project, and trust that all the call sites got fixed automatically. I think this has the downstream effect of having things become a lot more intelligible as my code grows and I start forgetting how particular methods that I wrote work under the hood.

Find-all-usages is also extremely important when I'm at work; just a couple weeks ago I was changing some authentication logic for a database we used, and needed to see which systems were using it so I could verify they all still worked after the change. So I just right-click and find-usages and I can immediately evaluate everywhere I need to fix.

As an aside, I suspect a lot of the critiques of statically-typed languages come from people whose static typing experiences come from C++ and Java, where the compiler isn't quite smart enough to infer most things you care about so you have to repeat a whole bunch of information over and over. These issues are greatly mitigated in more modern languages, like C# (for .NET) and Kotlin (for the JVM), both of which I'm very fond of. Also: I haven't programmed in Java for like three years, so it's possible it has improved since I touched it last.

Full disclosure: pretty much all my experiences the last few years have been of statically-typed languages, and my knowledge of the current dynamic language landscape is pretty sparse. All I can say is that if the dynamic language camp has found solutions to the refactor/rename and find-all-usages problems I mentioned, I am not aware of them.

You can get some of these benefits from optional/gradual typing systems, like with TypeScript; the only thing is that if it's not getting used everywhere you get a situation where such refactorings go from 100% to 90% safe, which is still pretty huge for discouraging refactoring in a beware-trivial-inconveniences sense.

Replies from: Viliam, adamzerner↑ comment by Viliam · 2021-02-18T19:52:54.354Z · LW(p) · GW(p)

"I compulsively use the refactor/rename operation (ctrl-shift-r in my own Visual Studio setup) probably 4 or 5 times in a given coding session on my personal Unity project, and trust that all the call sites got fixed automatically."

Similar here. I use renaming less frequently, but I consider it extremely important to have a tool that allows me to automatically rename X to Y without also renaming unrelated things that also happen to be called X.

With static typing, a good IDE can understand that method "doSomething" of class "Foo" is different from method "doSomething" of class "Bar". So I can automatically rename one (at the place it is declared, and at all places it is called) without accidentally renaming the other. I can automatically change the order of parameters in one without changing the other.

It is like having two people called John, but you point your cursor at one of them, and the IDE understands you mean that one... instead of simply doing textual "Search/Replace" on your source code.
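To make the two-Johns picture concrete, here is a small TypeScript sketch using the class and method names from the paragraph above; the `run` function is a hypothetical addition. The declared parameter types are what let tooling tell the two `doSomething` calls apart, which a textual search for "doSomething" cannot do.

```typescript
class Foo {
  doSomething(): string {
    return "Foo did something";
  }
}

class Bar {
  doSomething(): string {
    return "Bar did something";
  }
}

// Hypothetical caller. Both calls look identical as text, but the
// parameter types say which declaration each one refers to.
function run(foo: Foo, bar: Bar): string[] {
  return [
    foo.doSomething(), // refers to Foo.doSomething
    bar.doSomething(), // refers to Bar.doSomething
  ];
}
```

In an editor backed by a type-aware language service, a rename started on `Foo.doSomething` should update the first call and leave the second alone. The exact behavior depends on the tool, so treat that as an expectation rather than a guarantee.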

"I have never had a static type checker (regardless of how sophisticated it is) help me prevent anything more than an obvious error (which should be caught in testing anyway)."

In other words, every static type declaration is a unit test you didn't have to write... that is, assuming we are talking about automated testing here; are we?

I agree that when you are writing new code, you rarely make type mistakes, and you likely catch them immediately when you run the program. But if you use a library written by someone else, and it does something wrong, it can be hard to find out what exactly went wrong. Suppose the first method calls the second method with a string argument, the second method does something and then passes the argument to the third method, et cetera, and the tenth method throws an error because the argument is not an integer. Uhm, what now? Was it supposed to be a string or an integer when it was passed to the third or to the fifth method? No one knows. In theory, the methods should be documented and have unit tests, but in practice... I suspect that complaining about having to declare types correlates positively with complaining about having to write documentation. "My code is self-documenting" is how the illusion of transparency feels from inside for a software developer.
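The call-chain scenario can be sketched in TypeScript with three functions standing in for the ten. All function names are hypothetical. With the parameter types written down, passing a string at the top is rejected at that call, instead of failing deep inside the chain at run time.

```typescript
// Deepest step: genuinely needs a number, because it calls toFixed.
function reserveSeats(count: number): string {
  return `reserved ${count.toFixed(0)} seats`;
}

// Middle steps just pass the value along, but their signatures still
// record what the value is supposed to be at every hop.
function buildOrder(count: number): string {
  return reserveSeats(count);
}

function handleRequest(count: number): string {
  return buildOrder(count);
}

// handleRequest("3") does not compile: a string is not a number.
// In untyped JavaScript the same call would run until reserveSeats,
// where "3".toFixed is not a function.

// The boundary where a string really does arrive converts it once.
function handleRequestFromForm(raw: string): string {
  const count = parseInt(raw, 10);
  if (isNaN(count)) {
    throw new Error("not an integer: " + raw);
  }
  return handleRequest(count);
}
```

This also answers the "was it supposed to be a string or an integer at the fifth method" question by construction: the signature of each step says so.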

I mostly use Java, and yes it is verbose as hell, much of which is needless. (There are attempts to make it somewhat less painful: Lombok, var.) But static typing allows the IDE to give me all kinds of support, while in dynamically typed languages it would be like "well, this variable could contain anything, who knows", or perhaps "this function obviously expects to receive an associative array as an argument, and if you spend 15 minutes debugging, you might find out which keys that array is supposed to have". In Java, you immediately see that the value is supposed to be e.g. of type "Foo" which contains integer values called "m" and "n", and a string value called "format", whatever that may mean. Now you could e.g. click on their getters and setters, and find all places in the program where the value is assigned or read (as opposed to all places in the program where something else called "m", "n", or "format" is used). That is a good start.

Replies from: adamzerner↑ comment by Adam Zerner (adamzerner) · 2021-02-19T05:51:36.732Z · LW(p) · GW(p)

"It is like having two people called John, but you point your cursor at one of them, and the IDE understands you mean that one... instead of simply doing textual "Search/Replace" on your source code."

Thanks for explaining this. I had been planning on investigating how it compares to search and replace, but I think this clarified a lot for me.

And thank you for the rest of your thoughts too. I think my lack of experience with static typing is making it hard for me to fully grok them, but I am grokking them to some extent, and they do give me the vibe of being correct.

↑ comment by Adam Zerner (adamzerner) · 2021-02-14T23:23:03.000Z · LW(p) · GW(p)

Good to know. Thanks!

comment by philh · 2021-02-18T11:48:21.112Z · LW(p) · GW(p)

More on type checking:

A thing I did recently while working on my compiler was write a "macroexpand" function that wasn't recursive. It macroexpanded once, but didn't macroexpand the result. I think I was like "whatever, I'll come back later and handle that", and maybe I would have done. But shortly afterwards I made the types more specific, using mildly advanced features of Haskell, and now the macroexpand function claimed to return a "thing that has been fully macroexpanded" but in code was returning a "thing containing something that hasn't been macroexpanded". I might have caught this easily anyway, but at minimum I think it would have been harder to track down.
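The compiler in this story is written in Haskell and its code isn't shown, so the following is only an analogous sketch in TypeScript with an invented mini-language: numbers, addition, and one macro, `double(x)`, which expands to `x + x`. The two types `Source` and `Expanded` and every function name are assumptions made for illustration. The idea carried over is that "fully macroexpanded" is its own type, one that cannot contain a macro call.

```typescript
// Expressions that may still contain the `double` macro.
type Source =
  | { kind: "num"; value: number }
  | { kind: "add"; left: Source; right: Source }
  | { kind: "double"; arg: Source };

// Fully expanded expressions: there is no way to write `double` here.
type Expanded =
  | { kind: "num"; value: number }
  | { kind: "add"; left: Expanded; right: Expanded };

function macroexpand(expr: Source): Expanded {
  switch (expr.kind) {
    case "num":
      return expr;
    case "add":
      // Returning `expr` unchanged here would not compile: its children
      // are `Source`, and the result must contain only `Expanded`.
      return {
        kind: "add",
        left: macroexpand(expr.left),
        right: macroexpand(expr.right),
      };
    case "double": {
      // Expanding only one level (using expr.arg directly) is rejected
      // for the same reason, so the recursion cannot be forgotten.
      const arg = macroexpand(expr.arg);
      return { kind: "add", left: arg, right: arg };
    }
  }
}

// Later stages accept only `Expanded`, so they never see a macro.
function evaluate(expr: Expanded): number {
  switch (expr.kind) {
    case "num":
      return expr.value;
    case "add":
      return evaluate(expr.left) + evaluate(expr.right);
  }
}
```

For example, `evaluate(macroexpand(...))` on `double(double(3))` works out to 12, and the non-recursive version of `macroexpand` described above is exactly the one the checker refuses.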

So when the author says "I carry around invariants (which is a fancy name for properties about things in my program) in my head all the time. Only one of those invariants is its type", my reaction is that he probably could put more invariants in the type. Examples of that: you can put "this can't be null" into the type, or more precisely the thing can't be null by default but you can choose to relax that invariant in the type. You can put "this function may only be called from within a transaction of isolation level no weaker than repeatable read" into a type, we've done that at work. You can put "this array has a known length" into a type, and "this function returns an array of the same length it was passed", though I haven't found those useful myself. Similarly "this hash table definitely has a value for key X" and "this function returns a hash table with exactly the same keys". "This list is nonempty" is a type-level invariant I use a lot. You even could put "this is an increasing list of floats with mean X and standard deviation Y" into a type, modulo floating point comparison problems, though I'm not convinced that's a realistic example.
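Two of the simpler invariants from this list, "this can't be null unless I say so" and "this list is nonempty", can be sketched in TypeScript as follows. The names `NonEmpty`, `mean`, `toNonEmpty`, and `greet` are hypothetical, and the sketch assumes `strictNullChecks` is on. The more exotic invariants (transaction isolation levels, known-length arrays) need heavier type machinery than is shown here.

```typescript
// A list that always has a first element.
type NonEmpty<T> = [T, ...T[]];

// No empty-list check needed: the type rules that case out.
function mean(xs: NonEmpty<number>): number {
  let sum = 0;
  for (const x of xs) {
    sum += x;
  }
  return sum / xs.length; // length is at least 1
}

// mean([]) does not compile. Input that might be empty has to go
// through one explicit check that produces the stronger type.
function toNonEmpty<T>(items: T[]): NonEmpty<T> | null {
  if (items.length === 0) {
    return null;
  }
  return [items[0], ...items.slice(1)];
}

// Non-null by default, relaxed by choice: `name` can never be null,
// while `title` is explicitly allowed to be.
function greet(name: string, title: string | null): string {
  return title === null ? `Hello, ${name}` : `Hello, ${title} ${name}`;
}
```

The design choice is to check once at the boundary (`toNonEmpty`) and then let the type carry the fact around, instead of re-checking or remembering it at every use.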

I've also had a lot of cases where I have a type with a limited set of possible values, then I add a new possible value, and all the places that accept the type but don't handle the value complain. This isn't strictly type checking, it's exhaustiveness checking, but I'm not sure you can get it without type checking. Maybe easy to catch through testing for functions that accept the type as input (as long as you have e.g. a random generator for the type, and add this case to it), but I think harder to catch when you call functions that return the type.

So I speculate static typing is more useful the more your domain types can be usefully modeled as tagged unions. If all your types are just "a field for this value, a field for that value, ...", where none of the values depend on the others, the kind of thing modeled by C structs or Python classes or Haskell records, it will mostly catch obvious errors. But if you find yourself writing things like that but with lots of invariants like "if foo is true, then bar will be null and baz won't be", then that's a good fit for a tagged union, and you can move that invariant out of your head and into the type checker and exhaustiveness checker.
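Here is a rough TypeScript sketch of both ideas, a tagged union and exhaustiveness checking, using a made-up `Job` type. A record-style version would have fields like `done`, `result`, and `error`, with the rule "a failed job has an error and no result" kept in the programmer's head; the union below makes the other combinations unrepresentable. `assertNever` is a common hand-written helper, not something the language provides.

```typescript
// Each variant carries exactly the fields that make sense for it.
type Job =
  | { status: "pending" }
  | { status: "succeeded"; result: string }
  | { status: "failed"; error: string };

// Only callable if the argument's type has been narrowed to `never`,
// which happens when every variant has already been handled.
function assertNever(value: never): never {
  throw new Error("unhandled case: " + JSON.stringify(value));
}

function summarize(job: Job): string {
  switch (job.status) {
    case "pending":
      return "still running";
    case "succeeded":
      return "result: " + job.result; // `result` exists only here
    case "failed":
      return "error: " + job.error; // `error` exists only here
    default:
      // Adding a new variant to Job (say, "cancelled") turns this line
      // into a compile error until the new case is handled above.
      return assertNever(job);
  }
}
```

This covers functions that consume the type. It does not help with the co-exhaustiveness problem raised in the next paragraph, where a generator or parser that produces the type needs to learn about the new variant.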

(There's a related thing that we don't have, I guess I'd call co-exhaustiveness checking. Consider: "I have a random generator for values of this type, if I add a new possibility for the type how do I make sure the generator stays in sync?" Or "how do I check the function parsing it from strings has a way to parse the new value?")

(Also, if the author's never had to spend a long time tracking down "this function should never be called with a null input but somehow it is, wtf is going on"... more power to him, I guess.)

Replies from: adamzerner↑ comment by Adam Zerner (adamzerner) · 2021-02-18T18:25:41.998Z · LW(p) · GW(p)

Great points. I see how making your types more specific/complicated helps you catch bugs, and the example with your compiler really helped me to see that. However, making types more complex also has a larger upfront cost. It requires more thought from you. I don't have a good intuition for which side of the tradeoff is stronger though.

comment by crl826 · 2021-02-14T21:18:59.887Z · LW(p) · GW(p)

2) If you’re not feeling “hell yeah!” then say no

I've thought a lot about this myself.

I think the first thing you have to stipulate is that this helps when deciding on goals, not necessarily the things you have to do to get the goal. You may be "hell yeah" about traveling the world, but not "hell yeah" about packing. That doesn't mean you shouldn't travel the world. I don't think Derek was arguing for this level of decision making.

If you buy that, I think the key is that it only works when you have a lot of slack. Derek Sivers is and has been independently wealthy and has way way more options than most people do. It's a very good sorting move in that situation. If you have few options, you probably can't use this as universally as he suggests.

Replies from: adamzerner↑ comment by Adam Zerner (adamzerner) · 2021-02-14T22:08:32.928Z · LW(p) · GW(p)

Yeah those caveats make a lot of sense. However, I strongly suspect that they're not exhaustive. Not that you're implying they are, but it's important to note because when you acknowledge that they're not exhaustive, you rightly treat this sort of advice as more of a heuristic than a rule.

Replies from: crl826↑ comment by crl826 · 2021-02-15T00:22:15.860Z · LW(p) · GW(p)

Sure. I'd love to hear what other caveats you think are important.

Replies from: adamzerner↑ comment by Adam Zerner (adamzerner) · 2021-02-15T00:50:18.314Z · LW(p) · GW(p)

None come to mind right now. But the thing is, that probably just means I can't think of them as opposed to them not existing.

comment by Brendan Long (korin43) · 2021-02-14T03:22:01.343Z · LW(p) · GW(p)

I really need to pay attention so I can give examples of why I like type checkers, but it's always hard to remember in the moment. I'm not sure how to remind myself to do it but I'll make a note to try to watch for this for a few days.

Replies from: adamzerner↑ comment by Adam Zerner (adamzerner) · 2021-02-14T03:25:31.355Z · LW(p) · GW(p)

That would be great! And it's a great example of how coming up with examples is often difficult.

comment by bfinn · 2021-04-22T22:23:38.298Z · LW(p) · GW(p)

Philosophy thrives on carefully-chosen examples, which are thought experiments - i.e. that illustrate relevant aspects, and hopefully exclude irrelevant confusing aspects; they are also often tricky (e.g. extreme or borderline) cases. Test cases in law are similar.

Replies from: adamzerner↑ comment by Adam Zerner (adamzerner) · 2021-04-23T22:38:29.611Z · LW(p) · GW(p)

Ah, I never made that connection before. Great point!

comment by bfinn · 2021-04-22T22:18:37.166Z · LW(p) · GW(p)

Re 'hell yeah', I tend to roll my eyes at such advice from self-help gurus, because for example, not many people can find jobs they can go 'hell yeah' at. Hell yeah they'd like to be a rock star or film star or astronaut, but they're only capable of humdrum work, or they train as a musician/actor and find there's almost no paid work. (So then the self-help gurus tell them that if they'd only adopt the right positive attitude they'd say 'hell yeah' to shelf-stacking - rather than just accept that life is a mixed bag and we have to do some things we don't like much.)

The weak form is better, viz. if you think of something you can say 'hell yeah' to that's actually feasible, then do it. I.e. say yes to 'hell yeah', rather than say no to not-'hell yeah'.