Agency in Conway’s Game of Life

post by Alex Flint (alexflint) · 2021-05-13T01:07:19.125Z · LW · GW · 93 comments


Financial status: This is independent research. I welcome financial support to make further posts like this possible.

Epistemic status: I have been thinking about these ideas for years but still have not clarified them to my satisfaction.

Outline

- This post asks whether it is possible, in Conway’s Game of Life, to arrange for a certain game state to arise after a certain number of steps given control only of a small region of the initial game state.

- This question is then connected to questions of agency and AI, since one way to answer this question in the positive is by constructing an AI within Conway’s Game of Life.

- I argue that the permissibility or impermissibility of AI is a deep property of our physics.

- I propose the AI hypothesis, which is that any pattern that solves the control question does so, essentially, by being an AI.

Introduction

In this post I am going to discuss a cellular automaton known as Conway’s Game of Life.

In Conway’s Game of Life, which I will now refer to as just "Life", there is a two-dimensional grid of cells where each cell is either on or off. Over time, the cells switch between on and off according to a simple set of rules:

- A cell that is "on" and has fewer than two neighbors that are "on" switches to "off" at the next time step

- A cell that is "on" and has more than three neighbors that are "on" switches to "off" at the next time step

- A cell that is "off" and has exactly three neighbors that are "on" switches to "on" at the next time step

- Otherwise, the cell doesn’t change
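The four rules above can be sketched in a few lines of Python. This is a minimal illustration rather than anything from the post: it assumes an unbounded grid represented as a set of (row, column) coordinates of "on" cells, and the name `life_step` is made up for the example.

```python
from collections import Counter


def life_step(live):
    """Advance one generation. `live` is a set of (row, col) cells that are on."""
    # For every cell adjacent to at least one "on" cell, count its "on" neighbors.
    neighbor_counts = Counter(
        (r + dr, c + dc)
        for (r, c) in live
        for dr in (-1, 0, 1)
        for dc in (-1, 0, 1)
        if (dr, dc) != (0, 0)
    )
    # An "on" cell stays on with 2 or 3 "on" neighbors; an "off" cell turns on
    # with exactly 3. Every other cell is off in the next generation.
    return {
        cell
        for cell, n in neighbor_counts.items()
        if n == 3 or (n == 2 and cell in live)
    }


# A "blinker": three cells in a row that alternate between horizontal and vertical.
blinker = {(1, 0), (1, 1), (1, 2)}
after_one = life_step(blinker)
after_two = life_step(after_one)
```

Here `after_one` is the vertical phase of the blinker and `after_two` is the original pattern again, which is a quick sanity check that the rules are encoded as stated.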

It turns out that these simple rules are rich enough to permit patterns that perform arbitrary computation. It is possible to build logic gates and combine them together into a computer that can simulate any Turing machine, all by setting up a particular elaborate pattern of "on" and "off" cells that evolve over time according to the simple rules above. Take a look at this awesome video of a Universal Turing Machine operating within Life.

The control question

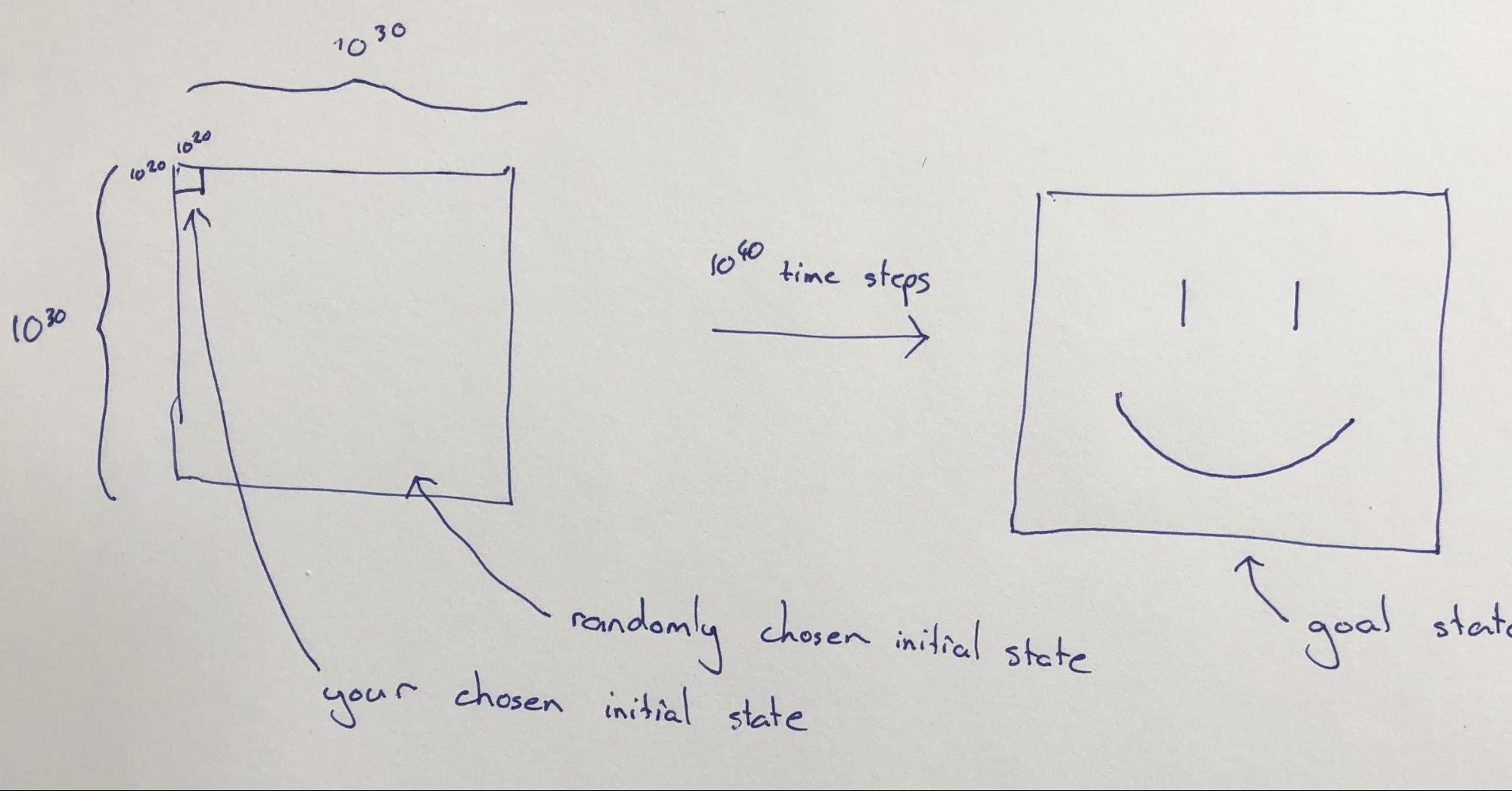

Suppose that we are working with an instance of Life with a very large grid, say rows by columns. Now suppose that I give you control of the initial on/off configuration of a region of size by in the top-left corner of this grid, and set you the goal of configuring things in that region so that after, say, time steps the state of the whole grid will resemble, as closely as possible, a giant smiley face.

The cells outside the top-left corner will be initialized at random, and you do not get to see what their initial configuration is when you decide on the initial configuration for the top-left corner.

The control question is: Can this goal be accomplished?

To repeat that: we have a large grid of cells that will evolve over time according to the laws of Life. We are given power to control the initial on/off configuration of the cells in a square region that is a tiny fraction of the whole grid. The initial on/off configuration of the remaining cells will be chosen randomly. Our goal is to pick an initial configuration for the controllable region in such a way that, after a large number of steps, the on/off configuration of the whole grid resembles a smiley face.

The control question is: Can we use this small initial region to set up a pattern that will eventually determine the configuration of the whole system, to any reasonable degree of accuracy?
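To make the setup concrete, here is a toy-scale sketch of the experiment the control question describes. Everything specific in it is an assumption for illustration: the grid is tiny, cells beyond the edge are treated as permanently off, "resembles" is scored as the fraction of matching cells, and the names `step`, `run_trial`, and `success_fraction` are hypothetical.

```python
import random


def step(grid):
    """One Life generation on a finite grid (list of 0/1 rows); off-grid cells count as off."""
    rows, cols = len(grid), len(grid[0])

    def on_neighbors(r, c):
        return sum(
            grid[r + dr][c + dc]
            for dr in (-1, 0, 1)
            for dc in (-1, 0, 1)
            if (dr, dc) != (0, 0) and 0 <= r + dr < rows and 0 <= c + dc < cols
        )

    return [
        [
            1 if (n := on_neighbors(r, c)) == 3 or (n == 2 and grid[r][c]) else 0
            for c in range(cols)
        ]
        for r in range(rows)
    ]


def run_trial(controlled, size, steps, target, rng):
    """Put the square `controlled` pattern in the top-left corner, randomize the
    rest, run for `steps` generations, and return the fraction of cells matching `target`."""
    k = len(controlled)
    grid = [[rng.randint(0, 1) for _ in range(size)] for _ in range(size)]
    for r in range(k):
        for c in range(k):
            grid[r][c] = controlled[r][c]
    for _ in range(steps):
        grid = step(grid)
    matches = sum(grid[r][c] == target[r][c] for r in range(size) for c in range(size))
    return matches / (size * size)


def success_fraction(controlled, size, steps, target, trials, threshold, seed=0):
    """Fraction of random initializations whose final score reaches `threshold`."""
    rng = random.Random(seed)
    wins = sum(
        run_trial(controlled, size, steps, target, rng) >= threshold
        for _ in range(trials)
    )
    return wins / trials


if __name__ == "__main__":
    empty_target = [[0] * 16 for _ in range(16)]
    corner = [[0] * 4 for _ in range(4)]
    print(success_fraction(corner, 16, 50, empty_target, trials=20, threshold=0.9))
```

A pattern that answers the control question positively would be one whose `success_fraction` stays high as the grid grows, for a target as structured as a smiley face. The toy version only shows how the question is posed, not how to solve it.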

[Updated 5/13 following feedback in the comments] Now there are actually some ways that we could get trivial negative answers to this question, so we need to refine things a bit to make sure that our phrasing points squarely at the spirit of the control question. Richard Kennaway points out [LW(p) · GW(p)] that for any pattern that attempts to solve the control question, we could consider the possibility that the randomly initialized region contains the same pattern rotated 180 degrees in the diagonally opposite corner, and is otherwise empty. Since the initial state is symmetric, all future states will be symmetric, which rules out creating a non-rotationally-symmetric smiley face. More generally, as Charlie Steiner points out [LW(p) · GW(p)], what happens if there are patterns in the randomly initialized region that are trying to control the eventual configuration of the whole universe just as we are? To deal with this, we might amend the control question to require a pattern that "works" for at least 99% of configurations of the randomly initialized area, since most configurations of that area will not be adversarial. See further discussion in the brief appendix below.
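The symmetry argument can be checked directly on a small example. The sketch below is an illustration under assumptions, not part of the original argument: it uses an unbounded grid stored as a set of "on" cells, a glider as the stand-in "controlled" pattern, and hypothetical helper names (`life_step`, `rotate_180`, `stays_symmetric`).

```python
from collections import Counter


def life_step(live):
    """One Life generation on an unbounded grid; `live` is a set of (row, col)."""
    counts = Counter(
        (r + dr, c + dc)
        for (r, c) in live
        for dr in (-1, 0, 1)
        for dc in (-1, 0, 1)
        if (dr, dc) != (0, 0)
    )
    return {cell for cell, n in counts.items() if n == 3 or (n == 2 and cell in live)}


def rotate_180(live, height, width):
    """Rotate a pattern 180 degrees about the centre of a height-by-width box."""
    return {(height - 1 - r, width - 1 - c) for (r, c) in live}


def stays_symmetric(pattern, height, width, steps):
    """Start from `pattern` plus its rotated copy in the opposite corner and
    report whether every later state is still its own 180-degree rotation."""
    state = pattern | rotate_180(pattern, height, width)
    for _ in range(steps):
        state = life_step(state)
        if state != rotate_180(state, height, width):
            return False
    return True


# A glider in the top-left corner, heading toward its mirror image.
glider = {(0, 1), (1, 2), (2, 0), (2, 1), (2, 2)}
symmetric_forever = stays_symmetric(glider, 40, 40, 200)
```

The check passes because the Life rule treats a cell and its 180-degree image identically, so a symmetric start can never evolve into an asymmetric target.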

Connection to agency

On the surface of it, I think that constructing a pattern within Life that solves the control question looks very difficult. Try playing with a Life simulator set to max speed to get a feel for how remarkably intricate the evolution of even simple initial states can be. And when an evolving pattern comes into contact with even a small amount of random noise (say, a single stray cell set to "on"), the evolution of the pattern changes shape quickly and dramatically. So designing a pattern that unfolds across the entire universe and produces a goal state no matter what random noise is encountered seems very challenging. It’s remarkable, then, that the following strategy actually seems like a plausible solution:

One way that we might answer the control question is by building an AI. That is, we might find a by array of on/off values that evolve under the laws of Life in a way that collects information using sensors, forms hypotheses about the world, and takes actions in service of a goal. The goal we would give to our AI would be arranging for the configuration of the grid to resemble a smiley face after game steps.

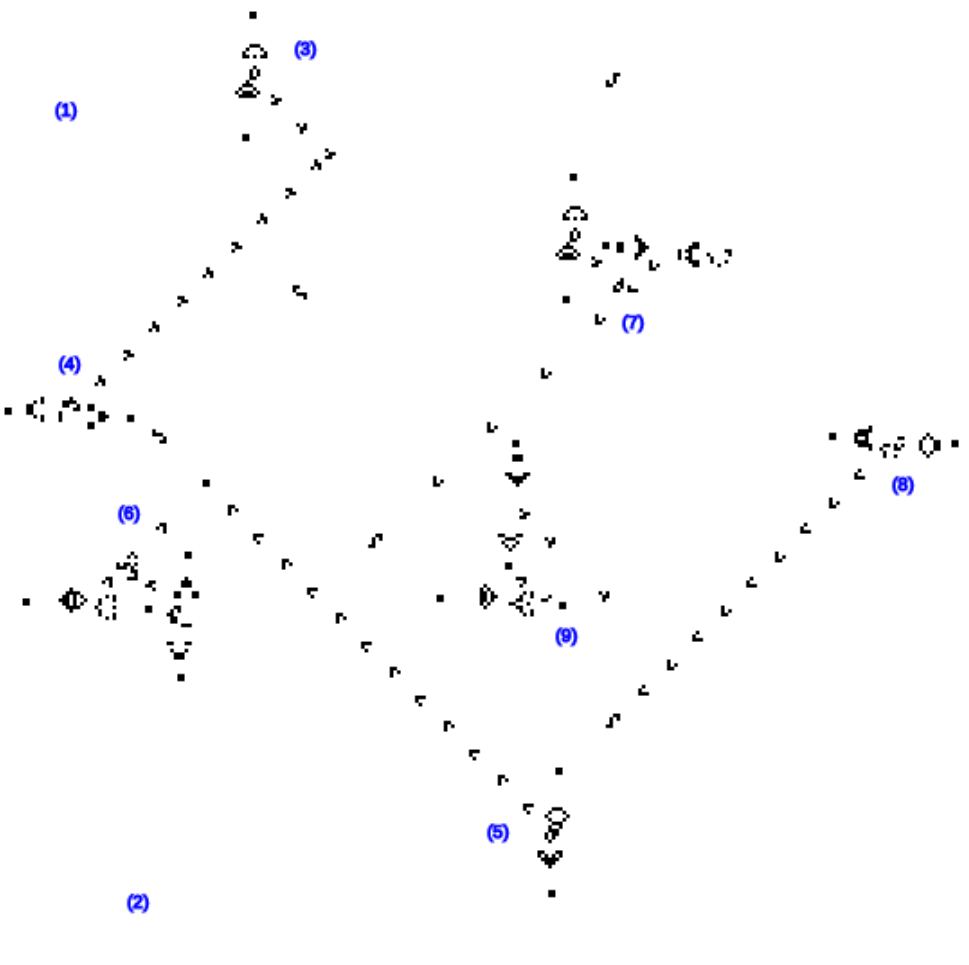

What does it mean to build an AI in the region whose initial state is under our control? Well it turns out that it’s possible to assemble little patterns in Life that act like logic gates, and out of those patterns one can build whole computers. For example, here is what one construction of an AND gate looks like:

And here is a zoomed-out view of a computer within Life that adds integers together:

It has been proven that computers within Life can compute anything that can be computed under our own laws of physics[1], so perhaps it is possible to construct an AI within Life. Building an AI within Life is much more involved than building a computer, not only because we don’t yet know how to construct AGI software, but also because an AI requires apparatus to perceive and act within the world, as well as the ability to move and grow if we want it to eventually exert influence over the entire grid. Most constructions within Life are extremely sensitive to perturbations. The computer construction shown above, for example, will stop working if almost any "on" cell is flipped to "off" at any time during its evolution. In order to solve the control question, we would need to build a machine that is not only able to perceive and react to the random noise in the non-user-controlled region, but is also robust to glider impacts from that region.

Moreover, building large machines that move around or grow over time is highly non-trivial in Life since movement requires a machine that can reproduce itself in different spatial positions over time. If we want such a machine to also perceive, think, and act then these activities would need to be taking place simultaneously with self-reproducing movement.

So it’s not clear that a positive answer to the control question can be given in terms of an AI construction, but neither is it clear that such an answer cannot be given. The real point of the control question is to highlight the way that AI can be seen as not just a particularly powerful conglomeration of parts but as a demonstration of the permissibility of patterns that start out small but eventually determine the large-scale configuration of the whole universe. The reason to construct such thought experiments in Life rather than in our native physics is that the physics of Life is very simple and we are not as used to seeing resource-collecting, action-taking entities in Life as we are in our native physics, so the fundamental significance of these patterns is not as easy to overlook in Life as it is in our native physics.

Implications

If it is possible to build an AI inside Life, and if the answer to the control question is thus positive, then we have discovered a remarkable fact about the basic dynamics of Life. Specifically, we have learned that there are certain patterns within Life that can determine the fate of the entire grid, even when those patterns start out confined to a small spatial region. In the setup described above, the region that we get to control is much less than a trillionth of the area of the whole grid. There are a lot of ways that the remaining grid could be initialized, but the information in these cells seems destined to have little impact on the eventual configuration of the grid compared to the information within at least some parts of the user-controlled region[2].

We are used to thinking about AIs as entities that might start out physically small and grow over time in the scope of their influence. It seems natural to us that such entities are permitted by the laws of physics, because we see that humans are permitted by the laws of physics, and humans have the same general capacity to grow in influence over time. But it seems to me that the permissibility of such entities is actually a deep property of the governing dynamics of any world that permits their construction. The permissibility (or not) of AI is a deep property of physics.

Most patterns that we might construct inside Life do not have this tendency to expand and determine the fate of the whole grid. A glider gun does not have this property. A solitary logic gate does not have this property. And most patterns that we might construct in the real world do not have this property either. A chair does not have the tendency to reshape the whole of the cosmos in its image. It is just a chair. But it seems there might be patterns that do have the tendency to reshape the whole of the cosmos over time. We can call these patterns "AIs" or "agents" or "optimizers", or describe them as "intelligent" or "goal-directed" but these are all just frames for understanding the nature of these profound patterns that exert influence over the future.

It is very important that we study these patterns, because if such patterns do turn out to be permitted by the laws of physics and we do construct one then it might determine the long-run configuration of the whole of our region of the cosmos. Compared to the importance of understanding these patterns, it is relatively unimportant to understand agency for its own sake or intelligence for its own sake or optimization for its own sake. Instead we should remember that these are frames for understanding these patterns that exert influence over the future.

But even more important than this, we should remember that when we study AI, we are studying a profound and basic property of physics. It is not like constructing a toaster oven. A toaster oven is an unwieldy amalgamation of parts that do things. If we construct a powerful AI then we will be touching a profound and basic property of physics, analogous to the way fission reactors touch a profound and basic property of nuclear physics, namely the permissibility of nuclear chain reactions. A nuclear reactor is itself an unwieldy amalgamation of parts, but in order to understand it and engineer it correctly, the most important thing to understand is not the details of the bits and pieces out of which it is constructed but the basic property of physics that it touches. It is the same situation with AI. We should focus on the nature of these profound patterns themselves, not on the bits and pieces out of which AI might be constructed.

The AI hypothesis

The above thought experiment suggests the following hypothesis:

Any pattern of physics that eventually exerts control over a region much larger than its initial configuration does so by means of perception, cognition, and action that are recognizably AI-like.

In order to not include things like an exploding supernova as "controlling a region much larger than its initial configuration" we would want to require that such patterns be capable of arranging matter and energy into an arbitrary but low-complexity shape, such as a giant smiley face in Life.

Influence as a definition of AI

If the AI hypothesis is true then we might choose to define AI as a pattern within physics that starts out small but whose initial configuration significantly influences the eventual shape of a much larger region. This would provide an alternative to intelligence as a definition of AI. The problem with intelligence as a definition of AI is that it is typically measured as a function of discrete observations received by some agent, and the actions produced in response. But an unfolding pattern within Life need not interact with the world through any such well-defined input/output channels, and constructions in our native physics will not in general do so either. It seems that AI requires some form of intelligence in order to produce its outsized impact on the world, but it also seems non-trivial to define the intelligence of general patterns of physics. In contrast, influence as defined by the control question is well-defined for arbitrary patterns of physics, although it might be difficult to efficiently predict whether a certain pattern of physics will eventually have a large impact or not.

Conclusion

This post has described the control question, which asks whether, under a given physics, it is possible to set up small patterns that eventually exert significant influence over the configuration of large regions of space. We examined this question in the context of Conway’s Game of Life in order to highlight the significance of either a positive or negative answer to this question. Finally, we proposed the AI hypothesis, which is that any such spatially influential pattern must operate by means of being, in some sense, an AI.

Appendix: Technicalities with the control question

The following are some refinements to the control question that may be needed.

-

There are some patterns that can never be produced in Conway’s Game of Life, since they have no possible predecessor configuration. To deal with this, we should phrase the control question in terms of producing a configuration that is close to rather than exactly matching a single target configuration.

-

There are possible configurations of the whole grid , but only possible configurations of the user-controlled section of the universe. Each configuration of the user-controlled section of the universe will give rise to exactly one final configuration, meaning that the majority of possible final configurations are unreachable. To deal with this we can again phrase things in terms of closeness to a target configuration, and also make sure that our target configuration has reasonably low Kolmogorov complexity.

-

Say we were to find some pattern A that unfolds to final state X and some other pattern B that unfolds to a different final state Y. What happens, then, if we put A and B together in the same initial state (say, starting in opposite corners of the universe)? The result cannot be both X and Y. In this case we might have two AIs with different goals competing for control. Some tiny fraction of random initializations will contain AIs, so it is probably not possible for the control question to have an unqualified positive answer. We could refine the question so that our initial pattern has to produce the desired goal state for at least 1% of the possible random initializations of the surrounding universe.

-

A region of by cells may not be large enough. Engineering in Life tends to take up a lot of space. It might be necessary to scale up all my numbers.
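The counting argument in the second refinement above can be written out as a short sketch. The symbols $N$ (number of cells in the whole grid) and $k$ (number of cells in the user-controlled region) are introduced here only for illustration, and the configuration of the surrounding region is assumed to be held fixed.

```latex
\[
\underbrace{2^{k}}_{\text{choices for the controlled region}}
\;\ll\;
\underbrace{2^{N}}_{\text{configurations of the whole grid}},
\qquad
\frac{\#\{\text{reachable final configurations}\}}{\#\{\text{all configurations}\}}
\;\le\; \frac{2^{k}}{2^{N}} \;=\; 2^{-(N-k)} .
\]
```

Since each choice for the controlled region leads to exactly one final configuration, at most $2^{k}$ of the $2^{N}$ configurations can be reached, which is why the target has to be specified up to closeness rather than exactly.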

[1] Rendell, P., 2011, July. A universal Turing machine in Conway's game of life. In 2011 International Conference on High Performance Computing & Simulation (pp. 764-772). IEEE.

[2] There are some configurations of the randomly initialized region that affect the final configuration, such as configurations that contain AIs with different goals. This is addressed in the appendix.

93 comments

Comments sorted by top scores.

comment by Oscar_Cunningham · 2021-05-13T07:58:11.100Z · LW(p) · GW(p)

See here https://conwaylife.com/forums/viewtopic.php?f=7&t=1234&sid=90a05fcce0f1573af805ab90e7aebdf1 and here https://discord.com/channels/357922255553953794/370570978188591105/834767056883941406 for discussion of this topic by Life hobbyists who have a good knowledge of what's possible and not in Life.

What we agree on is that the large random region will quickly settle down into a field of 'ash': small stable or oscillating patterns arranged at random. We wouldn't expect any competitor AIs to form in this region since an area of 10^120 will only be likely to contain arbitrary patterns of sizes up to log(10^120), which almost certainly isn't enough area to do anything smart.

So the question is whether our AI will be able to cut into this ash and clear it up, leaving a blank canvas for it to create the target pattern. Nobody knows a way to do this, but it's also not known to be impossible.

Recently I tried an experiment where I slowly fired gliders at a field of ash, along twenty adjacent lanes. My hope had been that each collision of a glider with the ash would on average destroy more ash than it created, thus carving a diagonal path of width 20 into the ash. Instead I found that the collisions created more ash, and so a stalagmite of ash grew towards the source at which I was creating the gliders.

EDIT: There's been a development of new GoL tech that might be able to clear ash: https://www.conwaylife.com/forums/viewtopic.php?p=135539#p135539

Replies from: charbel-raphael-segerie, JenniferRM, alexflint, itaibn0, Measure

↑ comment by Charbel-Raphaël (charbel-raphael-segerie) · 2021-05-14T11:55:45.653Z · LW(p) · GW(p)

Could you explain a bit more what "will only be likely to contain arbitrary patterns of sizes up to log(10^120)" means, please? Or give some pointers to other uses of this kind of calculation?

Replies from: Oscar_Cunningham

↑ comment by Oscar_Cunningham · 2021-05-14T12:32:38.359Z · LW(p) · GW(p)

This is very much a heuristic, but good enough in this case.

Suppose we want to know how many times we expect to see a pattern with n cells in a random field of area A. Ignoring edge effects, there are A different offsets at which the pattern could appear. Each of these has a 1/2^n chance of being the pattern. So we expect at least one copy of the pattern if n < log_2(A).

In this case the area is (10^60)^2, so we expect patterns of size up to 398.631. In other words, we expect the ash to contain any pattern you can fit in a 20 by 20 box.
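The heuristic above is easy to reproduce numerically. The following sketch simply evaluates the two quantities from the comment; the function names are hypothetical, and it inherits the comment's assumptions (independent fair-coin cells, edge effects ignored).

```python
import math


def expected_copies(n_cells, area):
    """Expected number of appearances of one specific n-cell pattern in a random
    field: `area` possible offsets, each matching with probability 1 / 2**n_cells."""
    return area / 2 ** n_cells


def largest_expected_pattern(area):
    """Pattern size n at which the expected number of copies drops to one: log2(area)."""
    return math.log2(area)


area = (10 ** 60) ** 2
threshold = largest_expected_pattern(area)   # about 398.63, matching the figure above
box_cells = 20 * 20                          # cells in a 20 by 20 box
```

Note that a 20 by 20 box has 400 cells, slightly more than the threshold, so "any pattern you can fit in a 20 by 20 box" is a rounded statement of the bound.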

Replies from: alexflint

↑ comment by Alex Flint (alexflint) · 2021-05-14T15:27:06.860Z · LW(p) · GW(p)

So just to connect this back to your original point: if we knew that it were possible to construct some kind of intelligent entity in a region with area of, say, 1,000,000,000 cells, then if our overall grid had total cells and we initialized it at random, then we would expect an intelligent entity to pop up by chance at least once in the whole grid.

Replies from: Oscar_Cunningham

↑ comment by Oscar_Cunningham · 2021-05-14T15:54:52.893Z · LW(p) · GW(p)

Yeah, although probably you'd want to include a 'buffer' at the edge of the region to protect the entity from gliders thrown out from the surroundings. A 1,000,000 cell thick border filled randomly with blocks at 0.1% density would do the job.

↑ comment by JenniferRM · 2021-07-20T19:28:16.441Z · LW(p) · GW(p)

I performed the same experiment with glider guns and got the same "stalagmite of ash" result.

I didn't use that name for it, but I instantly recognized my result under that description <3

When I performed that experiment, I was relatively naive about physics and Turing machines and so on, and sort of didn't have the "more dakka [LW · GW]" gut feeling that you can always try crazier things once you have granted that you're doing math, and so infinities are as nothing to your hypothetical planning limits. Applying that level of effort to Conway's Life... something that might be interesting would be to play with 2^N glider guns in parallel, with variations in their offsets, periodicities, and glider types, for progressively larger values of N? Somewhere in all the variety it might be possible to generate a "cleaning ray"?

If fully general cleaning rays are impossible, that would also be an interesting result! (Also, it is the result I expect.)

My current hunch is that a "cleaning ray with a program" (that is allowed to be fed some kind of setup information full of cheat codes about the details of the finite ash that it is aimed at) might be possible.

Then I would expect there to be a lurking result where there was some kind of Maxwell's Demon style argument about how many bits of cheatcode are necessary to clean up how much random ash... and then you're back to another result confirming the second law of thermodynamics, but now with greater generality about a more abstract physics? I haven't done any of this, but that's what my hunch is.

Replies from: alexflint, Alex_Altair

↑ comment by Alex Flint (alexflint) · 2021-07-31T01:43:28.085Z · LW(p) · GW(p)

If you can create a video of any of your constructions in Life, or put the constructions up in a format that I can load into a simulator at my end, I would be fascinated to take a look at what you've put together!

↑ comment by Alex_Altair · 2022-04-28T21:18:04.699Z · LW(p) · GW(p)

I would expect there to be a lurking result where

I can definitely see this intuition! But one of the biggest differences between our universe and Life is that Life's rules aren't reversible, which means entropy can go down universally. So I think that's pretty good reason to believe that e.g. an ash-clearing machine is possible.
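The irreversibility mentioned above can be seen in a very small example: several different states share the same successor, so the previous state cannot be recovered from the current one. This is only a sketch of the many-to-one property, using a hypothetical `life_step` helper on an unbounded grid; it says nothing about whether an ash-clearing machine exists.

```python
from collections import Counter


def life_step(live):
    """One Life generation on an unbounded grid; `live` is a set of (row, col)."""
    counts = Counter(
        (r + dr, c + dc)
        for (r, c) in live
        for dr in (-1, 0, 1)
        for dc in (-1, 0, 1)
        if (dr, dc) != (0, 0)
    )
    return {cell for cell, n in counts.items() if n == 3 or (n == 2 and cell in live)}


# Three different starting states...
empty = set()
lone_cell = {(0, 0)}
domino = {(0, 0), (0, 1)}

# ...that all evolve to the same (empty) state after one step.
successors = [life_step(state) for state in (empty, lone_cell, domino)]
```

Because the map from states to successors is many-to-one, information about the past is destroyed, which is the sense in which the number of distinguishable states can shrink over time.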

↑ comment by Alex Flint (alexflint) · 2021-05-13T14:35:51.549Z · LW(p) · GW(p)

Interesting! Thank you for these links

↑ comment by itaibn0 · 2021-06-02T03:12:35.483Z · LW(p) · GW(p)

Thanks for linking to my post! I checked the other link, on Discord, and for some reason it's not working.

Replies from: Oscar_Cunningham

↑ comment by Oscar_Cunningham · 2021-06-02T07:50:18.088Z · LW(p) · GW(p)

The link still works for me. Perhaps you must first become a member of that discord? Invite link: https://discord.gg/nZ9JV5Be (valid for 7 days)

Replies from: itaibn0

↑ comment by Measure · 2021-05-13T12:17:22.336Z · LW(p) · GW(p)

At a large enough scale the ash field would probably contain glider guns that would restart the chaos and expand the ash frontier.

Replies from: Oscar_Cunningham

↑ comment by Oscar_Cunningham · 2021-05-13T13:16:28.646Z · LW(p) · GW(p)

Most glider guns in random ash will immediately be destroyed by the chaos they cause. Those that don't will eventually reach an eater which will neutralise them. But yes, such things could pose a nasty surprise for any AI trying to clean up the ash. When it removes the eater it will suddenly have a glider stream coming towards it! But this doesn't prove it's impossible to clear up the ash.

comment by Dave Greene (dave-greene) · 2022-01-01T16:07:10.432Z · LW(p) · GW(p)

There has been a really significant amount of progress on this problem in the last year, since this article was posted. The latest experiments can be found here, from October 2021:

https://conwaylife.com/forums/viewtopic.php?p=136948#p136948

The technology for clearing random ash out of a region of space isn't entirely proven yet, but it's looking a lot more likely than it was a year ago, that a workable "space-cleaning" mechanism could exist in Conway's Life.

As previous comments have pointed out, it certainly wouldn't be absolutely foolproof. But it might be surprisingly reliable at clearing out large volumes of settled random ash -- which could very well enable a 99+% success rate for a Very Very Slow Huge-Smiley-Face Constructor.

↑ comment by Alex Flint (alexflint) · 2022-12-08T14:42:29.645Z · LW(p) · GW(p)

Thanks for this note Dave

comment by paulfchristiano · 2021-05-13T22:56:56.880Z · LW(p) · GW(p)

It seems like our physics has a few fundamental characteristics that change the flavor of the question:

- Reversibility. This implies that the task must be impossible on average---you can only succeed under some assumption about the environment (e.g. sparsity).

- Conservation of energy/mass/momentum (which seem fundamental to the way we build and defend structures in our world).

I think this is an interesting question, but if poking around it would probably be nicer to work with simple rules that share (at least) these features of physics.

Replies from: ben-lang, alexflint, FordO

↑ comment by Ben (ben-lang) · 2022-12-08T17:28:01.439Z · LW(p) · GW(p)

The reversibility seems especially important to me. In some fundamental sense our universe doesn't actually allow an AI (or human), no matter how intelligent, to bring the universe into a controlled state. The reversibility gives us a thermodynamics such that in order to bring any part of the world from an unknown state to a known state we have to scramble something we did know back to a state of unknowing.

So, in our universe, the AI needs access to fuel (negative entropy) at least up to the task it is set. (Of course it can find fuel out there in its environment, but everything it finds can either be fuel or canvas for its creation, and usually it cannot be both, because the fuel needs to be randomised (essentially serving as a dump for entropy), while the canvas needs to be un-randomised.)

↑ comment by Alex Flint (alexflint) · 2021-05-14T15:30:43.797Z · LW(p) · GW(p)

Yeah I agree. There was a bit of discussion re conservation of energy here [LW(p) · GW(p)] too. I do like thought experiments in cellular automata because of the spatially localized nature of the transition function, which matches our physics. Do you have any suggestions for automata that also have reversibility and conservation of energy?

Replies from: Oscar_Cunningham, paulfchristiano

↑ comment by Oscar_Cunningham · 2021-05-14T15:51:36.264Z · LW(p) · GW(p)

https://en.wikipedia.org/wiki/Critters_(cellular_automaton)

Replies from: paulfchristiano

↑ comment by paulfchristiano · 2021-05-14T17:10:02.180Z · LW(p) · GW(p)

That seems great. Is there any reason people talk a lot about Life instead of Critters?

(Seems like Critters also supports universal computers and many other kinds of machines. Are there any respects in which it is known to be less rich than Life?)

Replies from: Oscar_Cunningham

↑ comment by Oscar_Cunningham · 2021-05-14T20:50:42.473Z · LW(p) · GW(p)

You're probably right, but I can think of the following points.

Its rule is more complicated than Life's, so it's worse as an example of emergent complexity from simple rules (which was Conway's original motivation).

It's also a harder location to demonstrate self replication. Any self replicator in Critters would have to be fed with some food source.

↑ comment by paulfchristiano · 2021-05-14T16:56:49.209Z · LW(p) · GW(p)

I feel like they must exist (and there may not be that many simple nice ones). I expect someone who knows more physics could design them more easily.

My best guess would be to get both properties by defining the system via some kind of discrete hamiltonian. I don't know how that works, i.e. if there is a way of making the hamiltonian discrete (in time and in values of the CA) that still gives you both properties and is generally nice. I would guess there is and that people have written papers about it. But it also seems like that could easily fail in one way or another.

It's surprisingly non-trivial to find that by googling though I didn't try very hard. May look a bit more tonight (or think about it a bit since it seems fun). Finding a suitable replacement for the game of life that has good conservation laws + reversibility (while still having a similar level of richness) would be nice.

Replies from: paulfchristiano

↑ comment by paulfchristiano · 2021-05-14T17:05:52.048Z · LW(p) · GW(p)

I guess the important part of the hamiltonian construction may be just having the next state depend on x(t) and x(t-1) (apparently those are called second-order cellular automata). Once you do that it's relatively easy to make them reversible, you just need the dependence of x(t+1) on x(t-1) to be a permutation. But I don't know whether using finite differences for the hamiltonian will easily give you conservation of momentum + energy in the same way that it would with derivatives.
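The second-order construction described above can be sketched as follows. This is one standard way to get reversibility, offered as an illustration rather than as the construction the comment has in mind: the next state is a function of the current neighborhood XORed with the previous state, and XOR with a fixed bit is a permutation of {0, 1}. The one-dimensional ring, the particular `local_rule`, and all function names are assumptions. The sketch demonstrates reversibility only, not conservation of energy or momentum.

```python
import random


def local_rule(left, center, right):
    """Arbitrary local update (hypothetical choice): on iff exactly one input is on."""
    return 1 if left + center + right == 1 else 0


def forward(prev, cur):
    """Map (x(t-1), x(t)) to (x(t), x(t+1)) on a ring of cells."""
    n = len(cur)
    nxt = [local_rule(cur[i - 1], cur[i], cur[(i + 1) % n]) ^ prev[i] for i in range(n)]
    return cur, nxt


def backward(cur, nxt):
    """Invert `forward`: recover (x(t-1), x(t)) from (x(t), x(t+1))."""
    n = len(cur)
    prev = [local_rule(cur[i - 1], cur[i], cur[(i + 1) % n]) ^ nxt[i] for i in range(n)]
    return prev, cur


def round_trip(n_cells, steps, seed=0):
    """Run forward then backward and report whether the start is recovered exactly."""
    rng = random.Random(seed)
    start = ([rng.randint(0, 1) for _ in range(n_cells)],
             [rng.randint(0, 1) for _ in range(n_cells)])
    state = start
    for _ in range(steps):
        state = forward(*state)
    for _ in range(steps):
        state = backward(*state)
    return state == start
```

`round_trip` returns `True` for any choice of `local_rule`, because the backward step recomputes the same neighborhood function and cancels it with a second XOR.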

↑ comment by FordO · 2021-05-14T19:15:43.601Z · LW(p) · GW(p)

I have never thought much about reversibility. Do humans make heavy use of reversible processes? And what is the relation between cross entropy and reversibility?

Replies from: ben-lang

↑ comment by Ben (ben-lang) · 2022-12-08T17:45:52.147Z · LW(p) · GW(p)

I don't know if GR or some cosmological thing (inflation) breaks reversibility. But classical and quantum mechanics are both reversible. So I would say that all of the lowest-level processes used by human beings are reversible. (Although of course thermodynamics does the normal counter-intuitive thing where the reversibility of the underlying steps is the reason why the overall process is, for all practical purposes, irreversible.)

This paper looks at mutual information (which I think relates to the cross entropy you mention), and how it connects to reversibility and entropy. https://bayes.wustl.edu/etj/articles/gibbs.vs.boltzmann.pdf

(Aside: there is no way that whoever maintains the website hosting that paper and the LW community don't overlap. The mutual information is too high.)

comment by gilch · 2021-05-19T22:16:58.171Z · LW(p) · GW(p)

Have you heard of Von Neumann's universal constructor? This seems relevant. He invented the concept of "cellular automaton" and engineered a programmable replicator in one. His original formulation was a lot more complex than Conway's Life though, with 29 different states per cell. Edgar Codd later demonstrated an 8-state automaton powerful enough to build a universal constructor with.

comment by evhub · 2021-05-19T21:37:11.045Z · LW(p) · GW(p)

Have you seen “Growing Neural Cellular Automata?” It seems like the authors there are trying to do something pretty similar to what you have in mind here.

Replies from: alexflint

↑ comment by Alex Flint (alexflint) · 2021-05-25T04:30:19.314Z · LW(p) · GW(p)

Yes - I found that work totally wild. Yes, they are setting up a cellular automaton in such a way that it evolves towards and then fixates at a target state, but iirc what they are optimizing over is the rules of the automaton itself, rather than over a construction within the automaton.

Replies from: daniel-kokotajlo

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2021-05-25T12:37:13.689Z · LW(p) · GW(p)

Wow, that's cool! Any idea how complex (how large the filesize) the learned CA's rules were? I wonder how it compares to the filesize of the target image. Many orders of magnitude bigger? Just one? Could it even be... smaller?

Replies from: alexflint

↑ comment by Alex Flint (alexflint) · 2021-05-25T16:57:30.057Z · LW(p) · GW(p)

Yeah I had the sense that the project could have been intended as a compression mechanism since compressing in terms of CA rules kind of captures the spatial nature of image information quite well.

Replies from: daniel-kokotajlo↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2021-05-25T21:11:12.886Z · LW(p) · GW(p)

I wonder if there are some sorts of images that are really hard to compress via this particular method.

I wonder if you can achieve massive reliable compression if you aren't trying to target a specific image but rather something in a general category. For example, maybe this specific lizard image requires a CA rule filesize larger than the image to express, but in the space of all possible lizard images there are some nice looking lizards that are super compressible via this CA method. Perhaps using something like DALL-E we could search this space efficiently and find such an image.

comment by Gurkenglas · 2021-05-13T06:55:43.694Z · LW(p) · GW(p)

I would say it all depends on whether there is a wall gadget which protects everything on one side from anything on the other side. (And don't forget the corner gadget.)

If so, cover the edges of the controlled portion in it, except for a "gate" gadget which is supposed to be a wall except openable and closable. (This is relatively easier since a width of 100 ought to be enough, and since we can stack 10000 of these in case one is broken through - rarely should chaos be able to reach through a 100x10000 rectangle.)

Wait 10^40 steps for the chaos to lose entropy. The structures that remain should be highly compressible in a CS sense, and made of a small number of natural gadget types. Send out ships that cover everything in gliders. This should resurrect the chaos temporarily, but decrease entropy further in the long run. Repeat 10^10 times, waiting 10^40 steps in between.

The rest should be a simple matter of carefully distinguishing the remaining natural gadgets with ship sensors to dismantle each. Programming a smiley face deployer that starts from an empty slate is a trivial matter.

If walls are constructible, there's no need for gates, and also one could allow a margin for error in the sensory ships: One could advance the walls after claiming some area, in case a rare encounter summons another era of chaos.

All this is less AGI than ordinary game AI - a bundle of programmed responses.

Replies from: Oscar_Cunningham, alexflint↑ comment by Oscar_Cunningham · 2021-05-13T08:04:32.634Z · LW(p) · GW(p)

Making a 'ship sensor' is tricky. If it collides with something unexpected it will create more chaos that you'll have to clear up.

↑ comment by Alex Flint (alexflint) · 2021-05-13T14:56:18.495Z · LW(p) · GW(p)

Seems plausible to me. I guess the fundamental difference between our universe and Life is that in Life (1) you can do things without "collecting resources" because there is no conservation of energy, and (2) it is perhaps relatively easy to dismantle arbitrary chaos.

Any idea how to amend the control question to more directly point at this issue of whether "influence = AI" under our own physics?

comment by Charlie Steiner · 2021-05-13T03:52:17.035Z · LW(p) · GW(p)

The truly arbitrary version seems provably impossible. For example, what if you're trying to make a smiley face, but some other part of the world contains an agent just like you except they're trying to make a frowny face - you obviously can't both succeed. Instead you need some special environment with low entropy, just like humans do in real life.

Replies from: alexflint, tailcalled↑ comment by Alex Flint (alexflint) · 2021-05-13T14:29:59.277Z · LW(p) · GW(p)

Yeah absolutely - see third bullet in the appendix. One way to resolve this would be to say that to succeed at answering the control question you have to succeed in at least 1% of randomly chosen environments.

↑ comment by tailcalled · 2021-05-13T09:44:26.070Z · LW(p) · GW(p)

Unlike our universe, game of life is not reversible, so I don't think entropy is the key.
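One way to see the irreversibility concretely: Life's update rule is many-to-one, so distinct states can share a successor and the past cannot be recovered from the present. The following is a minimal Python sketch, assuming the standard rules (birth on three neighbors, survival on two or three) on an unbounded grid. The `step` helper and the three example patterns are illustrative names, not anything from the post.

```python
from collections import Counter

def step(live):
    """Advance a set of live (row, col) cells by one generation on an unbounded grid."""
    # Count, for every cell adjacent to a live cell, how many live neighbors it has.
    counts = Counter(
        (r + dr, c + dc)
        for (r, c) in live
        for dr in (-1, 0, 1)
        for dc in (-1, 0, 1)
        if (dr, dc) != (0, 0)
    )
    # Birth on exactly 3 neighbors; survival on 2 or 3.
    return {cell for cell, n in counts.items() if n == 3 or (n == 2 and cell in live)}

empty = set()              # nothing alive
lonely = {(0, 0)}          # a single cell dies of underpopulation
pair = {(0, 0), (0, 1)}    # two adjacent cells also die

# Three different pasts, one identical future: the map has no inverse.
successors = [step(empty), step(lonely), step(pair)]
print(successors)
```

Since all three starting states collapse to the empty grid, knowing the current state does not determine the previous one, which is the sense in which the dynamics are not reversible.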

comment by Ben Pace (Benito) · 2021-05-13T01:26:19.033Z · LW(p) · GW(p)

I recall once seeing someone say with 99.9% probability that the sun would still rise 100 million years from now, citing information about the life-cycle of stars like our sun. Someone else pointed out that this was clearly wrong, that by default the sun would be taken apart for fuel on that time scale, by us or some AI, and that this was a lesson in people's predictions about the future being highly inaccurate.

But also, "the thing that means there won't be a sun sometime soon" is one of the things I'm pointing to when talking about "general intelligence". This post reminded me of that.

comment by itaibn0 · 2021-06-02T04:57:25.666Z · LW(p) · GW(p)

While I appreciate the analogy between our real universe and simpler physics-like mathematical models like the game of life, assuming intelligence doesn't arise elsewhere in your configuration, this control problem does not seem substantially different from, or more AI-like than, any other engineering problem. After all, there are plenty of other problems that involve leveraging a narrow form of control on a predictable physical system to achieve a more refined control, e.g. building a rocket that hits a specific target. The structure that arises from a randomly initialized pattern in Life should be homogeneous in a statistical sense and so highly predictable. I expect almost all of it should stabilize to debris of stable periodic patterns. It's not clear whether it's possible to manipulate or clear the debris in controlled ways, but if it is possible, then a single strategy will work for the entire grid. It may take a great deal of intelligence to come up with such a strategy, but once such a strategy is found it can be hard-coded into the initial Life pattern, without any need for an "inner optimizer". The easiest-to-design solution may involve computer-like patterns, with the pattern keeping track of state involved in debris-clearing and each part tracking its location to determine its role in making the final smiley pattern, but I don't see any need for any AI-like patterns beyond that. On the other hand, if there are inherent limits in the ability to manipulate debris then no amount of reflection by our starting pattern is going to fix that.

That is assuming intelligence doesn't arise in the random starting pattern. If it does, our starting configuration would need to overpower every other intelligence that arises and tries to control the space, and this would reasonably require it to be intelligent itself. But if this is the case then the evolution of the random pattern already encodes the concept of intelligence in a much simpler way than this control problem. To predict the structures that would arise from a random initial configuration the idea of intelligence would naturally come up. Meanwhile, to solve the control problem in an environment full of intelligence only requires marginally more intelligence at best, and compared to the no-control prediction problem the control problem adds on some complexity for not very much increase in intelligence. Indeed, the solution to the control problem may even be less intelligent than the structures it competes against, and make up for that with hard-coded solutions to NP-hard problems in military strategy.

On a different note, I'm flattered to see a reference in the comments [LW(p) · GW(p)] to some of my own thoughts on working through debris in the Game of Life. It was surprising to see interest in that resurge, and especially surprising to see that interest come from people in AI alignment.

Replies from: alexflint↑ comment by Alex Flint (alexflint) · 2021-06-03T16:24:26.704Z · LW(p) · GW(p)

Thank you for this thoughtful comment itaibn0.

Matter and energy are also approximately homogeneously distributed in our own physical universe, yet building a small device that expands its influence over time and eventually rearranges the cosmos into a non-trivial pattern would seem to require something like an AI.

It might be that the same feat can be accomplished in Life using a pattern that is quite unintelligent. In that case I am very interested in what it is about our own physical universe that makes it different in this respect from Life.

Now it could actually be that in our own physical universe it is also possible to build not-very-intelligent machines that begin small but eventually rearrange the cosmos. In this case I am personally more interested in the nature of these machines than in "intelligent machines", because the reason I am interested in intelligence in the first place is due to its capacity to influence the future in a directed way, and if there are simpler avenues to influence in the future in a directed way then I'd rather spend my energy investigating those avenues than investigating AI. But I don't think it's possible to influence the future in a directed way in our own physical universe without being intelligent.

to solve the control problem in an environment full of intelligence only requires marginally more intelligence at best

What do you mean by this?

the solution to the control problem may even be less intelligent than the structures it competes against, and make up for that with hard-coded solutions to NP-hard problems in military strategy.

But if one entity reliably outcompetes another entity, then on what basis do you say that this other entity is the more intelligent one?

Replies from: itaibn0↑ comment by itaibn0 · 2021-06-04T07:58:32.661Z · LW(p) · GW(p)

Thanks too for responding. I hope our conversation will be productive.

A crucial notion that plays into many of your objections is the distinction between "inner intelligence" and "outer intelligence" of an object (terms derived from "inner vs. outer optimizer"). Inner intelligence is the intelligence the object has in itself as an agent, determined through its behavior in response to novel situations, and outer intelligence is the intelligence that it requires to create this object, and is determined through the ingenuity of its design. I understand your "AI hypothesis" to mean that any solution to the control problem must have inner intelligence. My response is claiming that while solving the control problem may require a lot of outer intelligence, I think it only requires a small amount of inner intelligence. This is because it seems like the environment in Conway's Game of Life with random dense initial conditions is very low variety and requires a small number of strategies to handle. (Although just as I'm open-minded about intelligent life somehow arising in this environment, it's possible that there are patterns much more frequent than abiogenesis that make the environment much more variegated.)

Matter and energy are also approximately homogeneously distributed in our own physical universe, yet building a small device that expands its influence over time and eventually rearranges the cosmos into a non-trivial pattern would seem to require something like an AI.

The universe is only homogeneous at the largest scales; at smaller scales it is highly inhomogeneous in highly diverse ways, like stars and planets and raindrops. The value of our intelligence comes from being able to deal with the extreme diversity of intermediate-scale structures. Meanwhile, at the computationally tractable scale in CGOL, dense random initial conditions do not produce intermediate-scale structures between the random small-scale sparks and ashes and the homogeneous large-scale. That said, conditional on life being rare in the universe, I expect that the control problem for our universe requires lower-than-human inner intelligence.

You mention the difficulty of "building a small device that...", but that is talking about outer intelligence. Your AI hypothesis states that, however such a device can or cannot be built, the device itself must be an AI. That's where I disagree.

Now it could actually be that in our own physical universe it is also possible to build not-very-intelligent machines that begin small but eventually rearrange the cosmos. In this case I am personally more interested in the nature of these machines than in "intelligent machines", because the reason I am interested in intelligence in the first place is due to its capacity to influence the future in a directed way, and if there are simpler avenues to influence in the future in a directed way then I'd rather spend my energy investigating those avenues than investigating AI. But I don't think it's possible to influence the future in a directed way in our own physical universe without being intelligent.

Again, the distinction between inner and outer intelligence is crucial. In a pure mathematical sense of existence there exist arrangements of matter that solve the control problem for our universe, but for that to be relevant for our future there also has to be a natural process that creates these arrangements of matter at a non-negligible rate. If the arrangement requires a high outer intelligence then this process must be intelligent. (For this discussion, I'm considering natural selection to be a form of intelligent design.) So intelligence is still highly relevant for influencing the future. Machines that are mathematically possible but cannot practically be created are not "simpler avenues to influence in the future".

"to solve the control problem in an environment full of intelligence only requires marginally more intelligence at best"

What do you mean by this?

Sorry. I meant that the solution to the control problem need only be marginally more intelligent than the intelligent beings in its environment. The difference in intelligence between a controller in an intelligent environment and a controller in an unintelligent environment may be substantial. I realize the phrasing you quote is unclear.

In chess, one player can systematically beat another if the first is ~300 ELO rating points higher, but I'm considering that as a marginal difference in skill on the scale from zero-strategy to perfect play. If our environment is creating the equivalent of a 2000 ELO intelligence, and the solution to the control problem has 2300 ELO, then the specification of the environment contributed 2000 ELO of intelligence, and the specification of the control problem only contributed an extra 300 ELO. In other words, open-world control problems need not be an efficient way of specifying intelligence.
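For a sense of scale on the 300-point gap, here is a minimal sketch using the standard logistic Elo expected-score formula. That formula is an assumption brought in for illustration (the comment does not specify a rating model), and `expected_score` is a hypothetical helper name.

```python
def expected_score(rating_a, rating_b):
    """Expected score of A against B (win = 1, draw = 0.5, loss = 0) under the Elo model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))

controller, environment = 2300, 2000   # the ratings used in the example above
p = expected_score(controller, environment)
print(f"expected score of the {controller}-rated side: {p:.3f}")
```

Under this model the higher-rated side expects to score roughly 0.85 per game, which fits the picture of one player systematically beating another while the 300-point gap stays small next to the 2000 points attributed to the environment.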

But if one entity reliably outcompetes another entity, then on what basis do you say that this other entity is the more intelligent one?

On the basis of distinguishing narrow intelligence from general intelligence. A solution to the control problem is guaranteed to outcompete other entities in force or manipulation, but it might be worse at most other tasks. The sort of thing I had in mind for "NP-hard problems in military strategy" would be "this particular pattern of gliders is particularly good at penetrating a defensive barrier, and the only way to find this pattern is through a brute force search". Knowing this can give the controller a decisive advantage in military conflicts without making it any better at any other tasks, and can permit the controller to have lower general intelligence while still dominating.

comment by Alex_Altair · 2023-01-18T01:32:05.643Z · LW(p) · GW(p)

This post very cleverly uses Conway's Game of Life as an intuition pump for reasoning about agency in general. I found it to be both compelling, and a natural extension of the other work on LW relating to agency & optimization. The post also spurred some object-level engineering work in Life, trying to find a pattern that clears away Ash. It also spurred people in the comments to think more deeply about the implications of the reversibility of the laws of physics. It's also reasonably self-contained, making it a good candidate for inclusion in the Review books.

Replies from: Raemon↑ comment by Raemon · 2023-01-20T02:31:51.606Z · LW(p) · GW(p)

It was unclear to me whether this post directly spurred the work on clearing away Ash or if that's just the sort of thing Game of Life community would have been doing anyway. Did you have a strong sense of that?

Replies from: alexflint↑ comment by Alex Flint (alexflint) · 2023-01-25T13:53:25.450Z · LW(p) · GW(p)

Oh the only information I have about that is Dave Green's comment, plus a few private messages from people over the years who had read the post and were interested in experimenting with concrete GoL constructions. I just messaged the author of the post on the GoL forum asking about whether any of that work was spurred by this post.

comment by gwern · 2021-05-13T02:38:54.034Z · LW(p) · GW(p)

My immediate impulse is to say that it ought to be possible to create the smiley face, and that it wouldn't be that hard for a good Life hacker to devise it.

I'd imagine it to go something like this. Starting from a Turing machine or simpler, you could program it to place arbitrary 'pixels': either by finding a glider-like construct which terminates at specific distances into a still life, so the constructor can crawl along an x/y axis, shooting off the terminating-glider to create stable pixels in a pre-programmed pattern. (If that doesn't exist, then one could use two constructors crawling along the x/y axes, shooting off gliders intended to collide, with the delays properly pre-programmed.) The constructor then terminates in a stable still life; this guarantees perpetual stability of the finished smiley face. If one wants to specify a more dynamic environment for realism, then the constructor can also 'wall off' the face using still blocks. Once that's done, nothing from the outside can possibly affect it, and it's internally stable, so the pattern is then eternal.

Replies from: Oscar_Cunningham↑ comment by Oscar_Cunningham · 2021-05-13T08:01:51.957Z · LW(p) · GW(p)

This sounds like you're treating the area as empty space, whereas the OP specifies that it's filled randomly outside the area where our AI starts.

Replies from: gwern↑ comment by gwern · 2021-05-13T14:23:46.227Z · LW(p) · GW(p)

OP said I can initialize a large chunk as I like (which I initialize to be empty aside from my constructors to avoid interfering with placing the pixels), and then the rest might be randomly or arbitrarily initialized, which is why I brought up the wall of still-life eaters to seal yourself off from anything that might then disrupt it. If his specific values don't give me enough space, but larger values do, then that's an answer to the general question as nothing hinges on the specific values.

Replies from: alexflint↑ comment by Alex Flint (alexflint) · 2021-05-13T14:40:07.882Z · LW(p) · GW(p)

I was imagining that the goal configuration would be defined over the whole grid, so that it wouldn't be possible to satisfy the objective within the initial region, since that seems most analogous to constructing an AI in, say, a single room on Earth and having it eventually influence the overall arrangement of matter and energy in the Milky Way.

comment by Bird Concept (jacobjacob) · 2021-07-09T07:27:07.612Z · LW(p) · GW(p)

Curated.

I think this post strikes a really cool balance between discussing some foundational questions about the notion of agency and its importance, as well as posing a concrete puzzle that caused some interesting comments.

For me, Life is a domain that makes it natural to have reductionist intuitions. Compared to say neural networks, I find there are fewer biological metaphors or higher-level abstractions where you might sneak in mysterious answers [? · GW] that purport to solve the deeper questions. I'll consider this post next time I want to introduce someone to some core alignment questions on the back of a napkin, in a shape that makes it more accessible to start toying with the problem without immediately being led astray. (Though this is made somewhat harder by the technicalities mentioned in the post, and Paul's concerns [LW(p) · GW(p)] about whether Life is similar enough to our physics to be super helpful for poking around).

comment by romeostevensit · 2021-05-13T05:41:56.325Z · LW(p) · GW(p)

Related to sensitivity of instrumental convergence, i.e. the question of whether we live in a universe of strong or weak instrumental convergence. In a strong instrumental convergence universe, most possible optimizers wind up in a relatively small space of configurations regardless of starting conditions, while in a weak one they may diverge arbitrarily in design space. This can be thought of as one way of crisping up concepts around orthogonality, e.g. in some universes orthogonality would be locally true but globally false, or vice versa, or locally and globally true or vice versa.

Replies from: alexflint↑ comment by Alex Flint (alexflint) · 2021-05-13T15:18:08.224Z · LW(p) · GW(p)

Romeo if you have time, would you say more about the connection between orthogonality and Life / the control question / the AI hypothesis? It seems related to me but I just can't quite put my finger on exactly what the connection is.

comment by drocta · 2021-05-13T22:48:23.664Z · LW(p) · GW(p)

nitpick : the appendix says possible configurations of the whole grid, while it should say possible configurations. (Similarly for what it says about the number of possible configurations in the region that can be specified.)

Replies from: alexflint↑ comment by Alex Flint (alexflint) · 2021-05-14T15:31:34.569Z · LW(p) · GW(p)

Thank you. Fixed.

comment by Richard_Ngo (ricraz) · 2021-05-13T15:41:18.131Z · LW(p) · GW(p)

It feels like this post pulls a sleight of hand. You suggest that it's hard to solve the control problem because of the randomness of the starting conditions. But this is exactly the reason why it's also difficult to construct an AI with a stable implementation. If you can do the latter, then you can probably also create a much simpler system which creates the smiley face.

Similarly, in the real world, there's a lot of randomness which makes it hard to carry out tasks. But there are a huge number of strategies for achieving things in the world which don't require instantiating an intelligent controller. For example, trees and bacteria started out small but have now radically reshaped the earth. Do they count as having "perception, cognition, and action that are recognizably AI-like"?

Replies from: alexflint, AprilSR↑ comment by Alex Flint (alexflint) · 2021-05-13T15:58:59.531Z · LW(p) · GW(p)

Well yes, I do think that trees and bacteria exhibit this phenomenon of starting out small and growing in impact. The scope of their impact is limited in our universe by the spatial separation between planets, and by the presence of even more powerful world-reshapers in their vicinity, such as humans. But on this view of "which entities are reshaping the whole cosmos around here?", I don't think there is a fundamental difference in kind between trees, bacteria, humans, and hypothetical future AIs. I do think there is a fundamental difference in kind between those entities and rocks, armchairs, microwave ovens, the Opportunity mars rovers, and current Waymo autonomous cars, since these objects just don't have this property of starting out small and eventually reshaping the matter and energy in large regions.

(Surely it's not that it's difficult to build an AI inside Life because of the randomness of the starting conditions -- it's difficult to build an AI inside Life because writing full-AGI software is a difficult design problem, right?)

Replies from: ricraz↑ comment by Richard_Ngo (ricraz) · 2021-05-13T19:10:43.462Z · LW(p) · GW(p)

I don't think there is a fundamental difference in kind between trees, bacteria, humans, and hypothetical future AIs

There's at least one important difference: some of these are intelligent, and some of these aren't.

It does seem plausible that the category boundary you're describing is an interesting one. But when you indicate in your comment below that you see the "AI hypothesis" and the "life hypothesis" as very similar, then that mainly seems to indicate that you're using a highly nonstandard definition of AI, which I expect will lead to confusion.

Replies from: alexflint↑ comment by Alex Flint (alexflint) · 2021-05-13T20:50:49.312Z · LW(p) · GW(p)

But when you indicate in your comment below that you see the "AI hypothesis" and the "life hypothesis" as very similar, then that mainly seems to indicate that you're using a highly nonstandard definition of AI, which I expect will lead to confusion.

Well surely if I built a robot that was able to gather resources and reproduce itself as effectively as either a bacterium or a tree, I would be entirely justified in calling it an "AI". I would certainly have no problem using that terminology for such a construction at any mainstream robotics conference, even if it performed no useful function beyond self-reproduction. Of course we wouldn't call an actual tree or an actual bacterium an "AI" because they are not artificial.

↑ comment by AprilSR · 2021-05-13T15:56:25.157Z · LW(p) · GW(p)

I think the stuff about the supernovas addresses this: a central point is that the “AI” must be capable of generating an arbitrary world state within some bounds.

Replies from: alexflint↑ comment by Alex Flint (alexflint) · 2021-05-13T16:07:42.789Z · LW(p) · GW(p)

Well in case it's relevant here, I actually almost wrote "the AI hypothesis" as "the life hypothesis" and phrased it as

Any pattern of physics that eventually exerts control over a region much larger than its initial configuration does so by means of perception, cognition, and action that are recognizably life-like.

Perhaps in this form it's too vague (what does "life-like" mean?) or too circular (we could just define life-like as having an outsized physical impact).

But in whatever way we phrase it, there is very much a substantial hypothesis under the hood here: the claim is that there is a low-level physical characterization of the general phenomenon of open-ended intelligent autonomy. The thing I'm personally most interested in is the idea that the permissibility of AI is a deep property of our physics.

comment by chasmani · 2021-05-13T09:36:54.676Z · LW(p) · GW(p)

I think a problem you would have is that the speed of information in the game is the same as the speed of, say, a glider. So an AI that is computing within Life would not be able to sense and react to a glider quickly enough to build a control structure in front of it.

Replies from: alexflint, Measure↑ comment by Alex Flint (alexflint) · 2021-05-13T15:09:44.827Z · LW(p) · GW(p)

Yeah this is true and is a very good point. Though consider that under our native physics, information in biological brains travels much more slowly than photons, too. Yet it is possible for structures in our native physics to make net measurements of large numbers of photons, use this information to accumulate knowledge about the environment, and use that knowledge to manipulate the environment, all without being able to build control structures in front of the massive number of photons bouncing around us.

Also, another bit of intuition: photons in our native physics will "knock over" most information-processing structures that we might build that are only one or two orders of magnitude larger than individual photons, such as tiny quantum computers constructed out of individual atoms, or tiny classical computers constructed out of transistors that are each just a handful of atoms. We don't generally build such tiny structures in our universe because it's actually harder for us, being embedded within our world, to build tiny structures than to build large structures. But when we consider building things in Life, we naturally start thinking at the microscopic scale, since we're not starting with a bunch of already-understandable macroscopic building blocks like we are in our native physics.

↑ comment by Measure · 2021-05-13T12:14:39.816Z · LW(p) · GW(p)

The common glider moves at C/4. I don't think there are any that are faster than that, but I'm pretty sure you can make "fuses" that can do a one-time relay at C/2 or maybe even C. You'd have to have an extremely sparse environment, though, to have time to rebuild your fuses.

I'm sceptical that you can make a wall against dense noise though. Maybe there are enough OOMs that you can have a large empty buffer to fill with ash and a wall beyond that?

Replies from: AprilSR↑ comment by AprilSR · 2021-05-14T03:28:22.900Z · LW(p) · GW(p)

There are C/2 spaceships, you don't even need a fuse for that.

Replies from: Oscar_Cunningham↑ comment by Oscar_Cunningham · 2021-05-14T08:07:14.620Z · LW(p) · GW(p)

The glider moves at c/4 diagonally, while the c/2 ships move horizontally. A c/2 ship moving right and then down will reach its destination at the same time the c/4 glider does. In fact, gliders travel at the empty space speed limit.

Replies from: AprilSR↑ comment by AprilSR · 2021-05-14T18:01:51.591Z · LW(p) · GW(p)

Huh. Something about the way speed is calculated feels unintuitive to me, then.

Replies from: Oscar_Cunningham↑ comment by Oscar_Cunningham · 2021-05-14T20:55:56.026Z · LW(p) · GW(p)

The weird thing is that there are two metrics involved: information can propagate through a nonempty universe at 1 cell per generation in the sense of the l_infinity metric, but it can only propagate into empty space at 1/2 a cell per generation in the sense of the l_1 metric.
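The glider figures in this exchange can be checked with a short simulation. This is a minimal sketch assuming the standard Life rules on an unbounded grid; `step` and `run` are illustrative helper names, and the glider orientation is one arbitrary choice. It advances a glider 40 generations and measures its displacement in both metrics.

```python
from collections import Counter

def step(live):
    """Advance a set of live (row, col) cells by one generation on an unbounded grid."""
    counts = Counter(
        (r + dr, c + dc)
        for (r, c) in live
        for dr in (-1, 0, 1)
        for dc in (-1, 0, 1)
        if (dr, dc) != (0, 0)
    )
    return {cell for cell, n in counts.items() if n == 3 or (n == 2 and cell in live)}

def run(live, generations):
    """Apply `step` repeatedly and return the final set of live cells."""
    for _ in range(generations):
        live = step(live)
    return live

# A glider heading toward increasing row and column.
glider = {(0, 1), (1, 2), (2, 0), (2, 1), (2, 2)}
generations = 40
final = run(glider, generations)

# Displacement of the pattern's bounding-box corner.
d_row = min(r for r, _ in final) - min(r for r, _ in glider)
d_col = min(c for _, c in final) - min(c for _, c in glider)

linf_speed = max(abs(d_row), abs(d_col)) / generations  # 0.25 cells per generation
l1_speed = (abs(d_row) + abs(d_col)) / generations      # 0.5 cells per generation
print(linf_speed, l1_speed)
```

The same motion reads as c/4 when measured by the larger coordinate change and as 1/2 cell per generation when the two coordinate changes are summed, which is why a diagonal c/4 glider and an orthogonal c/2 ship can reach the same destination at the same time.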

comment by Isaac King (KingSupernova) · 2021-07-10T01:53:49.943Z · LW(p) · GW(p)

This is an interesting question, but I think your hypothesis is wrong.

Any pattern of physics that eventually exerts control over a region much larger than its initial configuration does so by means of perception, cognition, and action that are recognizably AI-like.

In order to not include things like an exploding supernova as "controlling a region much larger than its initial configuration" we would want to require that such patterns be capable of arranging matter and energy into an arbitrary but low-complexity shape, such as a giant smiley face in Life.

If it is indeed possible to build some sort of simple and robust "area clearer" pattern as the other comments discuss (related post here), then nothing approaching the complexity of an AI is necessary. Simply initialize the small region with the area clearer pattern facing outwards and a smiley face constructor behind it.

It seems to me that the only reason you'd need an AI for this is if that sort of "area clearer" pattern is not possible. In that case, the only way to clear the large square would be to individually sense and interact with each piece of ash and tailor a response to its structure, and you'd need something somewhat "intelligent" to do that. Even in this case, I'm unconvinced that the amount of "intelligence" necessary to do this would put it on par with what we think of as a general intelligence.

comment by Rohin Shah (rohinmshah) · 2021-05-16T23:46:51.780Z · LW(p) · GW(p)

Planned summary for the Alignment Newsletter:

Conway’s Game of Life (GoL) is a simple cellular automaton which is Turing-complete. As a result, it should be possible to build an “artificial intelligence” system in GoL. One way that we could phrase this is: if we imagine a GoL board with 10^30 rows and 10^30 columns, and we are able to set the initial state of the top left 10^20 by 10^20 square, can we set that initial state appropriately such that after a suitable amount of time, the full board evolves to a desired state (perhaps a giant smiley face), for the vast majority of possible initializations of the remaining area?

This requires us to find some setting of the initial 10^20 by 10^20 square that has [expandable, steerable influence](https://www.lesswrong.com/posts/tmZRyXvH9dgopcnuE/life-and-expanding-steerable-consequences). Intuitively, the best way to do this would be to build “sensors” and “effectors” to have inputs and outputs, and then have some program decide what the effectors should do based on the input from the sensors, and the “goal” of the program would be to steer the world towards the desired state. Thus, this is a framing of the problem of AI (both capabilities and alignment) in GoL, rather than in our native physics.

Planned opinion:

With the tower of abstractions we humans have built, we now naturally think in terms of inputs and outputs for the agents we build. This hypothetical seems good for shaking us out of that mindset, as we don’t really know what the analogous inputs and outputs in GoL would be, and so we are forced to consider those aspects of the design process as well.

Replies from: alexflint

↑ comment by Alex Flint (alexflint) · 2021-05-17T00:54:36.095Z · LW(p) · GW(p)

Yeah this seems right to me.

Thank you for all the summarization work you do, Rohin.

comment by Richard_Kennaway · 2021-05-13T07:35:42.109Z · LW(p) · GW(p)

Here is a simple disproof of the control question.

Among the possible ways the rest of the grid could be filled, one is that it is empty except for the diagonally opposite corner, where there is a duplicate of the top left corner, rotated 180 degrees. Since this makes the whole grid symmetrical under that rotation, every future state must also be symmetrical. The smiley does not have that symmetry, therefore it cannot be achieved.
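The symmetry argument can be checked on a toy board. The following is a minimal sketch, assuming a small finite grid where cells outside the grid count as off; the helper names (`step`, `rotate180`, `symmetric_board`) and the choice of a glider as the "corner" pattern are mine, for illustration only.

```python
def step(grid):
    """One Life step on a finite grid; cells outside the grid count as off."""
    n, m = len(grid), len(grid[0])
    new = [[0] * m for _ in range(n)]
    for r in range(n):
        for c in range(m):
            live = sum(
                grid[r + dr][c + dc]
                for dr in (-1, 0, 1)
                for dc in (-1, 0, 1)
                if (dr, dc) != (0, 0) and 0 <= r + dr < n and 0 <= c + dc < m
            )
            # Birth on exactly 3 neighbors, survival on 2 or 3.
            new[r][c] = 1 if live == 3 or (grid[r][c] and live == 2) else 0
    return new


def rotate180(grid):
    """Rotate the whole grid by 180 degrees."""
    return [row[::-1] for row in grid[::-1]]


def symmetric_board(corner, size):
    """Put `corner` in the top left and its 180-degree rotation in the bottom right."""
    k = len(corner)
    assert size >= 2 * k, "the two corners must not overlap"
    board = [[0] * size for _ in range(size)]
    for r in range(k):
        for c in range(k):
            board[r][c] = corner[r][c]
            board[size - 1 - r][size - 1 - c] = corner[r][c]
    return board


# Hypothetical "controlled region": a single glider heading down-right.
glider = [
    [0, 1, 0],
    [0, 0, 1],
    [1, 1, 1],
]

board = symmetric_board(glider, 12)
for _ in range(40):
    board = step(board)
    # The update rule treats all directions alike, so symmetry is never broken.
    assert board == rotate180(board)
```

Any target that is not itself symmetric under the 180-degree rotation is unreachable from this start, whatever the corner contains.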

Also, what Charlie Steiner said [LW(p) · GW(p)].

Replies from: Oscar_Cunningham↑ comment by Oscar_Cunningham · 2021-05-13T08:00:42.223Z · LW(p) · GW(p)

My understanding was that we just want to succeed with high probability. The vast majority of configurations will not contain enemy AIs.

Replies from: alexflint↑ comment by Alex Flint (alexflint) · 2021-05-13T14:42:05.379Z · LW(p) · GW(p)

Yeah success with high probability was how I thought the question would need to be amended to deal with the case of multiple AIs. I mentioned this in the appendix but should have put it in the main text. Will add a note.

comment by JBlack · 2021-05-13T02:50:56.506Z · LW(p) · GW(p)

My strong expectation is that in Life, there is no configuration that you can put in the starting area that is robust against randomly initializing the larger area.

There are very likely other cellular automata which do support arbitrary computation, but which are much less fragile versus evolution of randomly initialized spaces nearby.

comment by the gears to ascension (lahwran) · 2023-01-18T06:34:12.661Z · LW(p) · GW(p)

an AI requires apparatus to perceive and act within the world, as well as the ability to move and grow if we want it to eventually exert influence over the entire grid. Most constructions within Life are extremely sensitive to perturbations.

This may be more tractable in Lenia, because Lenia's smoothness means that cell-level changes take many simulation steps and can occur over multiple spatial frequencies. That might also make it harder to study, but I think more people should know about smoothlife variants. Smoothlife gliders have:

- free motion relative to other gliders

- smooth state spaces

- complex phenomena that can be abstracted to simpler ones, a key difficulty of real physics we'd like to have a testbed for

- the potential to be trained with gradient descent, allowing generation of very complex patterns to test algorithms such as discovering agency

- there's already quite a bit of work on these topics, check out the page!

↑ comment by Alex Flint (alexflint) · 2023-01-25T14:03:45.735Z · LW(p) · GW(p)

Interesting. Thank you for the pointer.

The real question, though, is whether it is possible within our physics.

comment by Alex Flint (alexflint) · 2022-12-08T14:50:39.886Z · LW(p) · GW(p)

This is a post about the mystery of agency. It sets up a thought experiment in which we consider a completely deterministic environment that operates according to very simple rules, and ask what it would be for an agentic entity to exist within that.

People in the game of life community actually spent some time investigating the empirical questions that were raised in this post. Dave Greene notes:

The technology for clearing random ash out of a region of space isn't entirely proven yet, but it's looking a lot more likely than it was a year ago, that a workable "space-cleaning" mechanism could exist in Conway's Life.

As previous comments have pointed out, it certainly wouldn't be absolutely foolproof. But it might be surprisingly reliable at clearing out large volumes of settled random ash -- which could very well enable a 99+% success rate for a Very Very Slow Huge-Smiley-Face Constructor.

I have the sense that the most important question raised in this post is about whether it is possible to construct a relatively small object in the physical world that steers the configuration of a relatively large region of the physical world into a desired configuration. The Game of Life analogy is intended to make that primary question concrete, and also to highlight how fundamental the question of such an object's existence is.

The main point of this post was that the feasibility or non-feasibility of AI systems that exert precise influence over regions of space much larger than themselves may actually be a basic kind of descriptive principle for the physical world. It would be great to write a follow-up post highlighting this point.

comment by Vanessa Kosoy (vanessa-kosoy) · 2021-09-09T23:46:09.237Z · LW(p) · GW(p)

I think the GoL is not the best example for this sort of question. See this post by Scott Aaronson discussing the notion of "physical universality" which seems relevant here.

Also, like other commenters pointed out, I don't think the object you get here is necessarily AI. That's because the "laws of physics" and the distribution of initial conditions are assumed to be simple and known. An AI would be something that can accomplish an objective of this sort while also having to learn the rules of the automaton or detect patterns in the initial conditions. For example, instead of initializing the rest of the field uniformly randomly, you could initialize it using something like the Solomonoff prior.

comment by michael_dello · 2023-01-26T03:44:58.096Z · LW(p) · GW(p)

I really enjoyed this read, thanks. I'm an enjoyer of Life from afar so there may be a trivial answer to this question.

Is it possible to reverse engineer a state in Life? E.g., for time state X, can you easily determine a possible time state X-1? I know that multiple X-1 time states can lead to the same X time state, but is it possible to generate one? Can you reverse engineer any possible X-100 time state for a given time state X? I ask because I wonder if you could generate an X-(10^60) time state on a 10^30 by 10^30 grid where time state X is a large smiley face.

This almost certainly wouldn't give you a 10^20 by 10^20 corner that generates a smiley face for all or even a small fraction of iterations of the rest of the grid. But perhaps you could randomise the reverse engineering and keep generating X-(10^60) states until you get one that creates a smiley face for the greatest number of 10^30 by 10^30 states (randomly generating that part of the grid and running it for 10^60 steps), then call that your solution to the control problem.

I used the numbers from your example in mine, but perhaps one could demonstrate this with a much smaller grid. Say for example, a 10^2 by 10^2 grid with 10^5 time steps, and reverse engineer a smiley face.

This might be a way of brute forcing the control problem, so to speak. Proving it is possible in a smaller grid might show it's possible with larger grids.

Replies from: gwern, alexflint↑ comment by gwern · 2023-01-26T22:36:55.902Z · LW(p) · GW(p)

Is it possible to reverse engineer a state in Life? E.g., for time state X, can you easily determine a possible time state X-1?

Wouldn't the existence of Garden of Eden states, which have no predecessor, prove that you cannot easily create a predecessor in general? You could then make any construction non-predecessable by embedding some Garden of Eden blocks somewhere in them.

Replies from: michael_dello↑ comment by michael_dello · 2023-01-27T00:26:43.975Z · LW(p) · GW(p)

That's true, but would I be right in saying that as long as there are no Garden of Eden states, you could in theory at least generate one possible prior state?

Replies from: Throwaway2367↑ comment by Throwaway2367 · 2023-01-27T10:47:46.147Z · LW(p) · GW(p)

There is some terminological confusion in this thread: a Garden of Eden of a cellular automaton is a configuration which has no predecessor. (A configuration is a function assigning a state to every cell.) An orphan is a finite subset of cells, together with their states, with the property that any configuration containing the orphan is a Garden of Eden configuration. Obviously every orphan can be extended to a Garden of Eden configuration (by choosing a state arbitrarily for the cells not in the orphan), but interestingly it is also true that every Garden of Eden contains an orphan. So the answer to your question is yes: if there are no orphans in the configuration then it is not a Garden of Eden and therefore, by definition, it has a predecessor.
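For readers who prefer symbols, here is one way to write those definitions down. The notation is an assumption of this sketch (state set S, global one-step update map G on configurations, restriction to a finite set D), not something used elsewhere in the thread.

```latex
% Assumed notation: S = \{0, 1\} is the cell state set and
% G is the global one-step update map on configurations.
\begin{align*}
&\text{Configuration: } c : \mathbb{Z}^2 \to S \\
&\text{Garden of Eden: } c \text{ such that } G(c') \neq c \text{ for every configuration } c' \\
&\text{Orphan: } p : D \to S,\ D \subset \mathbb{Z}^2 \text{ finite, such that } G(c')|_D \neq p \text{ for every configuration } c' \\
&\text{Claim: } c \text{ is a Garden of Eden} \iff c|_D \text{ is an orphan for some finite } D \subset \mathbb{Z}^2
\end{align*}
```

The right-to-left direction is the "obvious" one above; the left-to-right direction is the interesting one, and is usually proved with a compactness argument.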

↑ comment by Alex Flint (alexflint) · 2023-01-26T19:31:38.764Z · LW(p) · GW(p)

Thanks for the note.

In Life, I don't think it's easy to generate an X-1 time state that leads to an X time state, unfortunately. The reason is that each cell in an X time state puts a logical constraint on 9 cells in an X-1 time state. It is therefore possible to set up certain constraint satisfaction problems in terms of finding an X-1 time state that leads to an X time state, and in general these can be NP-hard.

However, in practice, it is very very often quite easy to find an X-1 time state that leads to a given X time state, so maybe this experiment could be tried in an experimental form anyhow.
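A toy version of that backwards search can be sketched by brute force. This is only an illustration under strong assumptions: the world is a tiny finite grid, cells outside it always count as off, and `find_predecessor` is a hypothetical helper that tries every candidate X-1 state in turn. Each target cell constrains the 3 by 3 neighborhood around it, which is exactly what `step` checks.

```python
from itertools import product


def step(grid):
    """One Life step on a finite grid; cells outside the grid count as off."""
    n, m = len(grid), len(grid[0])
    new = [[0] * m for _ in range(n)]
    for r in range(n):
        for c in range(m):
            live = sum(
                grid[r + dr][c + dc]
                for dr in (-1, 0, 1)
                for dc in (-1, 0, 1)
                if (dr, dc) != (0, 0) and 0 <= r + dr < n and 0 <= c + dc < m
            )
            new[r][c] = 1 if live == 3 or (grid[r][c] and live == 2) else 0
    return new


def find_predecessor(target):
    """Return some grid whose next step equals `target`, or None if none exists.

    Tries all 2**(rows * cols) candidates, so it is only usable on tiny grids.
    """
    n, m = len(target), len(target[0])
    for bits in product((0, 1), repeat=n * m):
        candidate = [list(bits[r * m:(r + 1) * m]) for r in range(n)]
        if step(candidate) == target:
            return candidate
    return None


# Target at time X: a horizontal blinker on a 3 by 3 grid.
target = [
    [0, 0, 0],
    [1, 1, 1],
    [0, 0, 0],
]
pred = find_predecessor(target)
assert pred is not None and step(pred) == target
```

The candidate count doubles with every extra cell, so this approach stops being practical after a few dozen cells; going back many steps means repeating the search on each result.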

In our own universe, the corresponding operation would be to consider some goal configuration of the whole universe, and propagate that configuration backwards to our current time. However, this would generally just tell us that we should completely reconfigure the whole universe right now, and that is generally not within our power, since we can only act locally, have access only to certain technologies, and such.

I think it is interesting to push on this "brute forcing" approach to steering the future, though. I'd be interested to chat more about it.

Replies from: michael_dello↑ comment by michael_dello · 2023-01-27T00:45:35.428Z · LW(p) · GW(p)

It surprises me a little that there hasn't been more work on working backwards in Life. Perhaps it's just too hard/not useful given the number of possible X-1 time slices.

With the smiley face example, there could be a very large number of combinations for the squares outside the smiley face at X-1 which result in the same empty grid space (i.e. many possible self-destructing patterns).

I'm unreasonably fond of brute forcing problems like these. I don't know if I'd have anything useful to say on this topic that I haven't already, but I'm interested to follow this work. I think this is a fascinating analogy for the control problem.

Edit - It just occurred to me, thanks to a friend, that instead of reverse engineering the desired state, it might be easier to just randomise the inputs until you get the outcome you want (not sure why this didn't occur to me). Still very intensive, but perhaps easier.

Replies from: Pavgran↑ comment by Pavgran · 2023-01-27T10:09:25.716Z · LW(p) · GW(p)

Well, there are programs like Logic Life Search that can solve reasonably small instances of backwards search. This problem is quite hard in general. Part of the reason is that you have to consider sparks in the search. The pattern in question could have evolved from a small predecessor pattern, but the reaction could have moved from the starting point and several dying sparks could have been emitted all around. So you have to somehow figure out the exact location, timing and nature of these sparks to successfully evolve the pattern backwards. Otherwise, it quite quickly devolves into a bigger and bigger blob of seemingly random dust. In practice, backwards search is regularly applied to find smaller predecessors in terms of bounding box and/or population. See, for example, Max page. And the number of ticks backwards is quite small, we are talking about a single-digit number here.

comment by Lionel Levine · 2021-05-16T19:51:11.886Z · LW(p) · GW(p)

I think this is possible and it doesn’t require AI. It only requires a certain kind of "infectious Turing machine" described below.

Following Gwern’s comment [LW(p) · GW(p)], let’s consider first the easier problem of writing a program on a small portion of a Turing machine’s tape, which draws a large smiley face on the rest of the tape. This is easy even with the *worst case* initialization of the rest of the tape. Whereas our problem is not solvable in the worst case [LW(p) · GW(p)], as pointed out by Richard_Kennaway.

What makes our problem harder is errors caused by the random environment. We could model these errors by spontaneous random changes in the letters written on the Turing machine's tape. But it’s easy to make a computation robust to such errors: A naive way to do it is to repeat each square 100 times and assign a repair bot to repeatedly scan the 100 squares, compute majority, and convert the minority squares back to the majority letter. This is not so different from how your laptop repeatedly scans its memory to prevent errors.
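The naive repetition scheme can be sketched in a few lines. This is a toy model, not a construction in Life: the tape is a list of blocks, each holding 100 copies of one letter, and the function names (`encode`, `corrupt`, `repair`, `decode`) are made up for this sketch. To keep the demonstration deterministic in outcome, `corrupt` overwrites a fixed number of cells per block rather than flipping each cell with some probability.

```python
import random
from collections import Counter


def encode(symbols, copies=100):
    """Store each tape letter as a block of `copies` identical cells."""
    return [[s] * copies for s in symbols]


def corrupt(tape, flips, alphabet, rng):
    """Overwrite `flips` randomly chosen cells in every block with random letters."""
    for block in tape:
        for i in rng.sample(range(len(block)), flips):
            block[i] = rng.choice(alphabet)


def repair(tape):
    """The 'repair bot': reset every cell in a block to that block's majority letter."""
    for block in tape:
        majority, _ = Counter(block).most_common(1)[0]
        block[:] = [majority] * len(block)


def decode(tape):
    """Read each block back as its majority letter."""
    return [Counter(block).most_common(1)[0][0] for block in tape]


rng = random.Random(0)
tape = encode("SMILEY")
# Fewer than half the cells in a block are touched, so the majority survives.
corrupt(tape, flips=30, alphabet="ABCDEFGHIJKLMNOPQRSTUVWXYZ", rng=rng)
repair(tape)
assert decode(tape) == list("SMILEY")
```

The scheme only fails if more than half of a block is damaged between two passes of the repair bot, which is why the repair has to run repeatedly rather than once.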

Now we get to what I think is the hard part of the problem: How to organize a random environment into squares of Turing machine tape? I don’t know how to do it in Conway's Life, but I would guess it's possible. An interesting question is whether *any* cellular automaton that supports a Turing machine can also support an infectious Turing machine. Again I would guess yes.

Does an infectious Turing machine require AI? There is certainly something lifelike about a device that gradually converts its environment into squares of its own tape. The tape squares reproduce, but they hardly need to be intelligent.