Paul Christiano named as US AI Safety Institute Head of AI Safety

post by Joel Burget (joel-burget) · 2024-04-16T16:22:06.937Z · LW · GW · 58 commentsThis is a link post for https://www.commerce.gov/news/press-releases/2024/04/us-commerce-secretary-gina-raimondo-announces-expansion-us-ai-safety

Contents

60 comments

U.S. Secretary of Commerce Gina Raimondo announced today additional members of the executive leadership team of the U.S. AI Safety Institute (AISI), which is housed at the National Institute of Standards and Technology (NIST). Raimondo named Paul Christiano as Head of AI Safety, Adam Russell as Chief Vision Officer, Mara Campbell as Acting Chief Operating Officer and Chief of Staff, Rob Reich as Senior Advisor, and Mark Latonero as Head of International Engagement. They will join AISI Director Elizabeth Kelly and Chief Technology Officer Elham Tabassi, who were announced in February. The AISI was established within NIST at the direction of President Biden, including to support the responsibilities assigned to the Department of Commerce under the President’s landmark Executive Order.

Paul Christiano, Head of AI Safety, will design and conduct tests of frontier AI models, focusing on model evaluations for capabilities of national security concern. Christiano will also contribute guidance on conducting these evaluations, as well as on the implementation of risk mitigations to enhance frontier model safety and security. Christiano founded the Alignment Research Center, a non-profit research organization that seeks to align future machine learning systems with human interests by furthering theoretical research. He also launched a leading initiative to conduct third-party evaluations of frontier models, now housed at Model Evaluation and Threat Research (METR). He previously ran the language model alignment team at OpenAI, where he pioneered work on reinforcement learning from human feedback (RLHF), a foundational technical AI safety technique. He holds a PhD in computer science from the University of California, Berkeley, and a B.S. in mathematics from the Massachusetts Institute of Technology.

58 comments

Comments sorted by top scores.

comment by habryka (habryka4) · 2024-04-16T21:13:27.544Z · LW(p) · GW(p)

This seems quite promising to me. My primary concern is that I feel like NIST is really not an institution well-suited to house alignment research.

My current understanding is that NIST is generally a very conservative organization, with the primary remit of establishing standards in a variety of industries and sciences. These standards are almost always about things that are extremely objective and very well-established science.

In contrast, AI Alignment seems to me to continue to be in a highly pre-paradigmatic state, and the standards that I can imagine us developing there seem to be qualitatively very different from the other standards that NIST has historically been in charge of developing. It seems to me that NIST is not a good vehicle for the kind of cutting edge research and extremely uncertain environment in which things like alignment and evals research have to happen.

Maybe other people have a different model of different US governmental institutions, but at least to me NIST seems like a bad fit for the kind of work that I expect Paul to be doing there.

Replies from: Buck, cdwhite, evhub↑ comment by Buck · 2024-04-16T23:00:06.085Z · LW(p) · GW(p)

Why are you talking about alignment research? I don't see any evidence that he's planning to do any alignment research in this role, so it seems misleading to talk about NIST being a bad place to do it.

Replies from: habryka4↑ comment by habryka (habryka4) · 2024-04-16T23:10:09.899Z · LW(p) · GW(p)

Huh, I guess my sense is that in order to develop the standards talked about above you need to do a lot of cutting-edge research.

I guess my sense from the description above is that we would be talking about research pretty similar to what METR is doing, which seems pretty open-ended and pre-paradigmatic to me. But I might be misunderstanding his role.

Replies from: Buck↑ comment by Buck · 2024-04-16T23:14:24.578Z · LW(p) · GW(p)

I’m not saying you don’t need to do cutting-edge research, I’m just saying that it’s not what people usually call alignment research.

Replies from: habryka4↑ comment by habryka (habryka4) · 2024-04-16T23:22:24.829Z · LW(p) · GW(p)

I would call what METR does alignment research, but also fine to use a different term for it. Mostly using it synonymously with "AI Safety Research" which I know you object to, but I do think that's how it's normally used (and the relevant aspect here is the pre-paradigmaticity of the relevant research, which I continue to think applies independently of the bucket you put it into).

I do think it's marginally good to make "AI Alignment Research" mean something narrower, so am supportive here of getting me to use something broader like "AI Safety Research", but I don't really think that changes my argument in any relevant way.

Replies from: Buck, neel-nanda-1, mesaoptimizer↑ comment by Buck · 2024-04-17T15:54:51.976Z · LW(p) · GW(p)

Yeah I object to using the term "alignment research" to refer to research that investigates whether models can do particular things.

But all the terminology options here are somewhat fucked imo, I probably should have been more chill about you using the language you did, sorry.

↑ comment by Neel Nanda (neel-nanda-1) · 2024-04-17T10:56:54.703Z · LW(p) · GW(p)

It seems like we have significant need for orgs like METR or the DeepMind dangerous capabilities evals team trying to operationalise these evals, but also regulators with authority building on that work to set them as explicit and objective standards. The latter feels maybe more practical for NIST to do, especially under Paul?

↑ comment by mesaoptimizer · 2024-04-17T12:41:19.319Z · LW(p) · GW(p)

which I know you object to

Buck, could you (or habryka) elaborate on this? What does Buck call the set of things that ARC theory and METR (formerly known as ARC evals) does, "AI control research"?

My understanding is that while Redwood clearly does control research, METR evals seem more of an attempt to demonstrate dangerous capabilities than help with control. I haven't wrapped my head around ARC's research philosophy and output to confidently state anything.

Replies from: Buck↑ comment by Buck · 2024-04-17T15:59:50.407Z · LW(p) · GW(p)

I normally use "alignment research" to mean "research into making models be aligned, e.g. not performing much worse than they're 'able to' and not purposefully trying to kill you". By this definition, ARC is alignment research, METR and Redwood isn't.

An important division between Redwood and METR is that we focus a lot more on developing/evaluating countermeasures.

Replies from: RobbBB↑ comment by Rob Bensinger (RobbBB) · 2024-04-17T18:54:41.932Z · LW(p) · GW(p)

FWIW, I typically use "alignment research" to mean "AI research aimed at making it possible to safely do ambitious things with sufficiently-capable AI" (with an emphasis on "safely"). So I'd include things like Chris Olah's interpretability research, even if the proximate impact of this is just "we understand what's going on better, so we may be more able to predict and finely control future systems" and the proximate impact is not "the AI is now less inclined to kill you".

Some examples: I wouldn't necessarily think of "figure out how we want to airgap the AI" as "alignment research", since it's less about designing the AI, shaping its mind, predicting and controlling it, etc., and more about designing the environment around the AI.

But I would think of things like "figure out how to make this AI system too socially-dumb to come up with ideas like 'maybe I should deceive my operators', while keeping it superhumanly smart at nanotech research" as central examples of "alignment research", even though it's about controlling capabilities ('make the AI dumb in this particular way') rather than about instilling a particular goal into the AI.

And I'd also think of "we know this AI is trying to kill us; let's figure out how to constrain its capabilities so that it keeps wanting that, but is too dumb to find a way to succeed in killing us, thereby forcing it to work with us rather than against us in order to achieve more of what it wants" as a pretty central example of alignment research, albeit not the sort of alignment research I feel optimistic about. The way I think about the field, you don't have to specifically attack the "is it trying to kill you?" part of the system in order to be doing alignment research; there are other paths, and alignment researchers should consider all of them and focus on results rather than marrying a specific methodology.

Replies from: ryan_greenblatt↑ comment by ryan_greenblatt · 2024-04-18T02:56:54.156Z · LW(p) · GW(p)

It's pretty sad to call all of these end states you describe alignment as alignment is an extremely natural word for "actually terminally has good intentions". So, this makes me sad to call this alignment research. Of course, this type of research maybe instrumentally useful for making AIs more aligned, but so will a bunch of other stuff (e.g. earning to give).

Fair enough if you think we should just eat this terminology issue and then coin a new term like "actually real-alignment-targeting-directly alignment research". Idk what the right term is obviously.

Replies from: RobbBB↑ comment by Rob Bensinger (RobbBB) · 2024-04-18T22:53:26.766Z · LW(p) · GW(p)

It's pretty sad to call all of these end states you describe alignment as alignment is an extremely natural word for "actually terminally has good intentions".

Aren't there a lot of clearer words for this? "Well-intentioned", "nice", "benevolent", etc.

(And a lot of terms, like "value loading" and "value learning", that are pointing at the research project of getting good intentions into the AI.)

To my ear, "aligned person" sounds less like "this person wishes the best for me", and more like "this person will behave in the right ways".

If I hear that Russia and China are "aligned", I do assume that their intentions play a big role in that, but I also assume that their circumstances, capabilities, etc. matter too. Alignment in geopolitics can be temporary or situational, and it almost never means that Russia cares about China as much as China cares about itself, or vice versa.

And if we step back from the human realm, an engineered system can be "aligned" in contexts that have nothing to do with goal-oriented behavior, but are just about ensuring components are in the right place relative to each other.

Cf. the history of the term "AI alignment" [LW(p) · GW(p)]. From my perspective, a big part of why MIRI coordinated with Stuart Russell to introduce the term "AI alignment" was that we wanted to switch away from "Friendly AI" to a term that sounded more neutral. "Friendly AI research" had always been intended to subsume the full technical problem of making powerful AI systems safe and aimable; but emphasizing "Friendliness" made it sound like the problem was purely about value loading, so a more generic and low-content word seemed desirable.

But Stuart Russell (and later Paul Christiano) had a different vision in mind for what they wanted "alignment" to be, and MIRI apparently failed to communicate and coordinate with Russell to avoid a namespace collision. So we ended up with a messy patchwork of different definitions.

I've basically given up on trying to achieve uniformity on what "AI alignment" is; the best we can do, I think, is clarify whether we're talking about "intent alignment" vs. "outcome alignment" when the distinction matters.

But I do want to push back against those who think outcome alignment is just an unhelpful concept — on the contrary, if we didn't have a word for this idea I think it would be very important to invent one.

IMO it matters more that we keep our eye on the ball (i.e., think about the actual outcomes we want and keep researchers' focus on how to achieve those outcomes) than that we define an extremely crisp, easily-packaged technical concept (that is at best a loose proxy for what we actually want). Especially now that ASI seems nearer at hand (so the need for this "keep our eye on the ball" skill is becoming less and less theoretical), and especially now that ASI disaster concerns have hit the mainstream (so the need to "sell" AI risk has diminished somewhat, and the need to direct research talent at the most important problems has increased).

And I also want to push back against the idea that a priori, before we got stuck with the current terminology mess, it should have been obvious that "alignment" is about AI systems' goals and/or intentions, rather than about their behavior or overall designs. I think intent alignment took off because Stuart Russell and Paul Christiano advocated for that usage and encouraged others to use the term that way, not because this was the only option available to us.

Replies from: ryan_greenblatt↑ comment by ryan_greenblatt · 2024-04-18T23:37:45.748Z · LW(p) · GW(p)

Aren't there a lot of clearer words for this? "Well-intentioned", "nice", "benevolent", etc.

Fair enough. I guess it just seems somewhat incongruous to say. "Oh yes, the AI is aligned. Of course it might desperately crave murdering all of us in its heart (we certainly haven't ruled this out with our current approach), but it is aligned because we've made it so that it wouldn't get away with it if it tried [LW · GW]."

Replies from: RobbBB, nathan-helm-burger↑ comment by Rob Bensinger (RobbBB) · 2024-04-19T00:00:37.122Z · LW(p) · GW(p)

Sounds like a lot of political alliances! (And "these two political actors are aligned" is arguably an even weaker condition than "these two political actors are allies".)

At the end of the day, of course, all of these analogies are going to be flawed. AI is genuinely a different beast.

↑ comment by Nathan Helm-Burger (nathan-helm-burger) · 2024-04-19T17:13:41.053Z · LW(p) · GW(p)

Personally, I like mentally splitting the space into AI safety (emphasis on measurement and control), AI alignment (getting it to align to the operators purposes and actually do what the operators desire), and AI value-alignment (getting the AI to understand and care about what people need and want). Feels like a Venn diagram with a lot of overlap, and yet some distinct non-overlap spaces.

By my framing, Redwood research and METR are more centrally AI safety. ARC/Paul's research agenda more of a mix of AI safety and AI alignment. MIRI's work to fundamentally understand and shape Agents is a mix of AI alignment and AI value-alignment. Obviously success there would have the downstream effect of robustly improving AI safety (reducing the need for careful evals and control), but is a more difficult approach in general with less immediate applicability. I think we need all these things!

↑ comment by cdwhite · 2024-04-16T22:10:38.147Z · LW(p) · GW(p)

Couple of thoughts re: NIST as an institution:

- FWIW NIST, both at Gaithersburg and Boulder, is very well-regarded in AMO physics and slices of condensed matter. Bill Phillips (https://www.nist.gov/people/william-d-phillips) is one big name there, but AIUI they do a whole bunch of cool stuff. Which doesn't say much one way or the other about how the AI Safety Institute will go! But NIST has a record of producing, or at least sheltering, very good work in the general vicinity of their remit.

- Don't knock metrology! It's really, really hard, subtle, and creative, and you all of a sudden have to worry about things nobody has ever thought about before! I'm a condensed matter theory guy, more or less, but I go to metrology talks every once in a while and have my mind blown.

↑ comment by Adam Scholl (adam_scholl) · 2024-04-17T08:08:43.246Z · LW(p) · GW(p)

I agree metrology is cool! But I think units are mostly helpful for engineering insofar as they reflect fundamental laws of nature—see e.g. the metric units—and we don't have those yet for AI. Until we do, I expect attempts to define them will be vague, high-level descriptions more than deep scientific understanding.

(And I think the former approach has a terrible track record, at least when used to define units of risk or controllability—e.g. BSL levels, which have failed so consistently and catastrophically they've induced an EA cause area, and which for some reason AI labs are starting to emulate).

Replies from: ChristianKl, Davidmanheim↑ comment by ChristianKl · 2024-04-22T16:03:43.919Z · LW(p) · GW(p)

In what sense did the BSL levels failed consistently or catastrophically?

Even if you think COVID-19 is a lab leak, the BSL levels would have suggested that the BSL 2 that Wuhan used for their Coronavirus gain-of-function research is not enough.

Replies from: adam_scholl↑ comment by Adam Scholl (adam_scholl) · 2024-04-23T02:43:35.777Z · LW(p) · GW(p)

There have been frequent and severe biosafety accidents for decades, many of which occurred at labs which were attempting to follow BSL protocol.

Replies from: Davidmanheim↑ comment by Davidmanheim · 2024-04-26T15:28:42.601Z · LW(p) · GW(p)

That doesn't seem like "consistently and catastrophically," it seems like "far too often, but with thankfully fairly limited local consequences."

↑ comment by Davidmanheim · 2024-04-22T12:24:55.014Z · LW(p) · GW(p)

BSL levels, which have failed so consistently and catastrophically they've induced an EA cause area,

This is confused and wrong, in my view. The EA cause area around biorisk is mostly happy to rely on those levels, and unlike for AI, the (very useful) levels predate EA interest and give us something to build on. The questions are largely instead about whether to allow certain classes of research at all, the risks of those who intentionally do things that are forbiddn, and how new technology changes the risk.

Replies from: adam_scholl↑ comment by Adam Scholl (adam_scholl) · 2024-04-23T02:36:27.166Z · LW(p) · GW(p)

The EA cause area around biorisk is mostly happy to rely on those levels

I disagree—I think nearly all EA's focused on biorisk think gain of function research should be banned, since the risk management framework doesn't work well enough to drive the expected risk below that of the expected benefit. If our framework for preventing lab accidents worked as well as e.g. our framework for preventing plane accidents, I think few EA's would worry much about GoF.

(Obviously there are non-accidental sources of biorisk too, for which we can hardly blame the safety measures; but I do think the measures work sufficiently poorly that even accident risk alone would justify a major EA cause area).

Replies from: Benito, Davidmanheim↑ comment by Ben Pace (Benito) · 2024-04-23T04:04:59.635Z · LW(p) · GW(p)

[Added April 28th: In case someone reads my comment without this context: David has made a number of worthwhile contributions to discussions of biological existential risks (e.g. 1 [LW · GW], 2 [LW · GW], 3 [LW · GW]) as well as worked professionally in this area and his contributions on this topic are quite often well-worth engaging with. Here I just intended to add that in my opinion early on in the covid pandemic he messed up pretty badly in one or two critical discussions around mask effectiveness [LW(p) · GW(p)] and censoring criticism of the CDC [LW(p) · GW(p)]. Perhaps that's not saying much because the base rate for relevant experts dealing with Covid is also that they were very off-the-mark. Furthermore David's June 2020 post-mortem [LW · GW] of his mistakes was a good public service even while I don't agree with his self-assessment in all cases. Overall I think his arguments are often well-worth engaging with.]

I'm not in touch with the ground truth in this case, but for those reading along without knowing the context, I'll mention that it wouldn't be the first time that David has misrepresented what people in the Effective Altruism Biorisk professional network believe[1].

(I will mention that David later apologized for handling that situation poorly and wasting people's time[2], which I think reflects positively on him.)

- ^

See Habryka's response to Davidmanheim's comment here [LW(p) · GW(p)] from March 7th 2020, such as this quote.

Overall, my sense is that you made a prediction that people in biorisk would consider this post an infohazard that had to be prevented from spreading (you also reported this post to the admins, saying that we should "talk to someone who works in biorisk at at FHI, Openphil, etc. to confirm that this is a really bad idea").

We have now done so, and in this case others did not share your assessment (and I expect most other experts would give broadly the same response).

- ^

See David's own June 25th reply to the same comment [LW(p) · GW(p)].

↑ comment by Adam Scholl (adam_scholl) · 2024-04-23T05:15:33.382Z · LW(p) · GW(p)

My guess is more that we were talking past each other than that his intended claim was false/unrepresentative. I do think it's true that EA's mostly talk about people doing gain of function research as the problem, rather than about the insufficiency of the safeguards; I just think the latter is why the former is a problem.

↑ comment by Davidmanheim · 2024-04-24T17:14:51.346Z · LW(p) · GW(p)

The OP claimed a failure of BSL levels was the single thing that induced biorisk as a cause area, and I said that was a confused claim. Feel free to find someone who disagrees with me here, but the proximate causes of EAs worrying about biorisk has nothing to do with BSL lab designations. It's not BSL levels that failed in allowing things like the soviet bioweapons program, or led to the underfunded and largely unenforceable BWC, or the way that newer technologies are reducing the barriers to terrorists and other being able to pursue bioweapons.

Replies from: adam_scholl↑ comment by Adam Scholl (adam_scholl) · 2024-04-25T00:49:59.702Z · LW(p) · GW(p)

I think we must still be missing each other somehow. To reiterate, I'm aware that there is non-accidental biorisk, for which one can hardly blame the safety measures. But there is also accident risk, since labs often fail to contain pathogens even when they're trying to.

Replies from: Davidmanheim↑ comment by Davidmanheim · 2024-04-25T18:23:18.405Z · LW(p) · GW(p)

Having written extensively about it, I promise you I'm aware. But please, tell me more about how this supports the original claim which I have been disagreeing with, that these class of incidents were or are the primary concern of the EA biosecurity community, the one that led to it being a cause area.

Replies from: aysja, adam_scholl↑ comment by aysja · 2024-04-25T23:13:40.461Z · LW(p) · GW(p)

I agree there other problems the EA biosecurity community focuses on, but surely lab escapes are one of those problems, and part of the reason we need biosecurity measures? In any case, this disagreement seems beside the main point that I took Adam to be making, namely that the track record for defining appropriate units of risk for poorly understood, high attack surface domains is quite bad (as with BSL). This still seems true to me.

Replies from: Davidmanheim↑ comment by Davidmanheim · 2024-04-26T05:32:12.332Z · LW(p) · GW(p)

BSL isn't the thing that defines "appropriate units of risk", that's pathogen risk-group levels, and I agree that those are are problem because they focus on pathogen lists rather than actual risks. I actually think BSL are good at what they do, and the problem is regulation and oversight, which is patchy, as well as transparency, of which there is far too little. But those are issues with oversight, not with the types of biosecurity measure that are available.

↑ comment by Adam Scholl (adam_scholl) · 2024-04-26T01:53:49.391Z · LW(p) · GW(p)

This thread isn't seeming very productive to me, so I'm going to bow out after this. But yes, it is a primary concern—at least in the case of Open Philanthropy, it's easy to check what their primary concerns are because they write them up. And accidental release from dual use research is one of them.

Replies from: Davidmanheim↑ comment by Davidmanheim · 2024-04-26T05:26:17.301Z · LW(p) · GW(p)

If you're appealing to OpenPhil, it might be useful to ask one of the people who was working with them on this as well.

And you've now equivocated between "they've induced an EA cause area" and a list of the range of risks covered by biosecurity - not what their primary concerns are - and citing this as "one of them." I certainly agree that biosecurity levels are one of the things biosecurity is about, and that "the possibility of accidental deployment of biological agents" is a key issue, but that's incredibly far removed from the original claim that the failure of BSL levels induced the cause area!

↑ comment by Davidmanheim · 2024-04-24T15:58:20.803Z · LW(p) · GW(p)

I did not say that they didn't want to ban things, I explicitly said "whether to allow certain classes of research at all," and when I said "happy to rely on those levels, I meant that the idea that we should have "BSL-5" is the kind of silly thing that novice EAs propose that doesn't make sense because there literally isn't something significantly more restrictive other than just banning it.

I also think that "nearly all EA's focused on biorisk think gain of function research should be banned" is obviously underspecified, and wrong because of the details. Yes, we all think that there is a class of work that should be banned, but tons of work that would be called gain of function isn't in that class.

↑ comment by Adam Scholl (adam_scholl) · 2024-04-25T03:10:54.825Z · LW(p) · GW(p)

the idea that we should have "BSL-5" is the kind of silly thing that novice EAs propose that doesn't make sense because there literally isn't something significantly more restrictive

I mean, I'm sure something more restrictive is possible. But my issue with BSL levels isn't that they include too few BSL-type restrictions, it's that "lists of restrictions" are a poor way of managing risk when the attack surface is enormous. I'm sure someday we'll figure out how to gain this information in a safer way—e.g., by running simulations of GoF experiments instead of literally building the dangerous thing—but at present, the best available safeguards aren't sufficient.

I also think that "nearly all EA's focused on biorisk think gain of function research should be banned" is obviously underspecified, and wrong because of the details.

I'm confused why you find this underspecified. I just meant "gain of function" in the standard, common-use sense—e.g., that used in the 2014 ban on federal funding for such research.

Replies from: Davidmanheim↑ comment by Davidmanheim · 2024-04-25T18:36:42.142Z · LW(p) · GW(p)

I mean, I'm sure something more restrictive is possible.

But what? Should we insist that the entire time someone's inside a BSL-4 lab, we have a second person who is an expert in biosafety visually monitoring them to ensure they don't make mistakes? Or should their air supply not use filters and completely safe PAPRs, and feed them outside air though a tube that restricts their ability to move around instead? (Edit to Add: These are already both requires in BSL-4 labs. When I said I don't know of anything more restrictive they could do, I was being essentially literal - they do everything including quite a number of unreasonable things to prevent human infection, short of just not doing the research.)

Or do you have some new idea that isn't just a ban with more words?

"lists of restrictions" are a poor way of managing risk when the attack surface is enormous

Sure, list-based approaches are insufficient, but they have relatively little to do with biosafety levels of labs, they have to do with risk groups, which are distinct, but often conflated. (So Ebola or Smallpox isn't a "BSL-4" pathogen, because there is no such thing. )

I just meant "gain of function" in the standard, common-use sense—e.g., that used in the 2014 ban on federal funding for such research.

That ban didn't go far enough, since it only applied to 3 pathogen types, and wouldn't have banned what Wuhan was doing with novel viruses, since that wasn't working with SARS or MERS, it was working with other species of virus. So sure, we could enforce a broader version of that ban, but getting a good definition that's both extensive enough to prevent dangerous work and that doesn't ban obviously useful research is very hard.

↑ comment by evhub · 2024-04-16T22:37:58.244Z · LW(p) · GW(p)

Is there another US governmental organization that you think would be better suited? My relatively uninformed sense is that there's no real USFG organization that would be very well-suited for this—and given that NIST is probably one of the better choices.

Replies from: habryka4↑ comment by habryka (habryka4) · 2024-04-16T22:51:28.308Z · LW(p) · GW(p)

I don't know the landscape of US government institutions that well, but some guesses:

- My sense is DARPA and sub-institutions like IARPA often have pursued substantially more open-ended research that seems more in-line with what I expect AI Alignment research to look like

- The US government has many national laboratories that have housed a lot of great science and research. Many of those seem like decent fits: https://www.usa.gov/agencies/national-laboratories

- It's also not super clear to me that research like this needs to be hosted within a governmental institutions. Organizations like RAND or academic institutions seem well-placed to host it, and have existing high trust relationships with the U.S. government.

- Something like the UK task force structure also seems reasonable to me, though I don't think I have a super deep understanding of that either. Of course, creating a whole new structure for something like this is hard (and I do see people in-parallel trying to establish a new specialized institution)

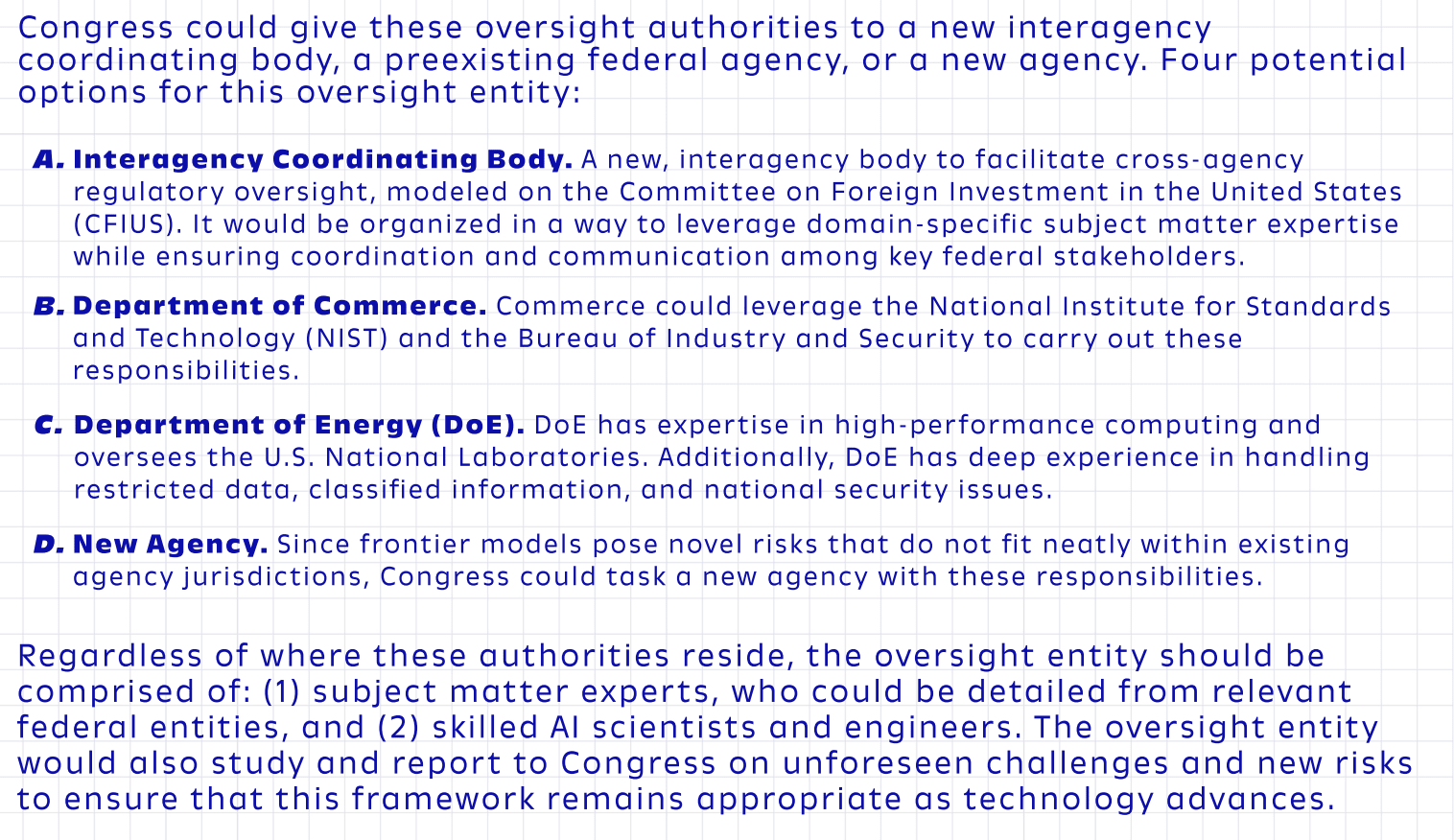

The Romney, Reed, Moran and King framework whose summary I happened to read this morning suggests the following options:

It lists NIST together with the Department of Commerce as one of the options, but all the other options also seem reasonable to me, and I think better by my lights. Though I agree none of these seem ideal (besides the creation of a specialized new agency, though of course that will justifiably encounter a bunch of friction, since creating a new agency should have a pretty high bar for happening).

Replies from: akash-wasil↑ comment by Orpheus16 (akash-wasil) · 2024-04-17T14:27:02.108Z · LW(p) · GW(p)

I think this should be broken down into two questions:

- Before the EO, if we were asked to figure out where this kind of evals should happen, what institution would we pick & why?

- After the EO, where does it make sense for evals-focused people to work?

I think the answer to #1 is quite unclear. I personally think that there was a strong case that a natsec-focused USAISI could have been given to DHS or DoE or some interagency thing. In addition to the point about technical expertise, it does seem relatively rare for Commerce/NIST to take on something that is so natsec-focused.

But I think the answer to #2 is pretty clear. The EO clearly tasks NIST with this role, and now I think our collective goal should be to try to make sure NIST can execute as effectively as possible. Perhaps there will be future opportunities to establish new places for evals work, alignment work, risk monitoring and forecasting work, emergency preparedness planning, etc etc. But for now, whether we think it was the best choice or not, NIST/USAISI are clearly the folks who are tasked with taking the lead on evals + standards.

comment by Orpheus16 (akash-wasil) · 2024-04-16T20:48:23.535Z · LW(p) · GW(p)

I'm excited to see how Paul performs in the new role. He's obviously very qualified on a technical level, and I suspect he's one of the best people for the job of designing and conducting evals.

I'm more uncertain about the kind of influence he'll have on various AI policy and AI national security discussions. And I mean uncertain in the genuine "this could go so many different ways" kind of way.

Like, it wouldn't be particularly surprising to me if any of the following occurred:

- Paul focuses nearly all of his efforts on technical evals and doesn't get very involved in broader policy conversations

- Paul is regularly asked to contribute to broader policy discussions, and he advocates for RSPs and other forms of voluntary commitments.

- Paul is regularly asked to contribute to broader policy discussions, and he advocates for requirements that go beyond voluntary commitments and are much more ambitious than what he advocated for when he was at ARC.

- Paul is regularly asked to contribute to broader policy discussions, and he's not very good at communicating his beliefs in ways that are clear/concise/policymaker-friendly, so his influence on policy discussions is rather limited.

- Paul [is/isn't] able to work well with others who have very different worldviews and priorities.

Personally, I see this as a very exciting opportunity for Paul to form an identity as a leader in AI policy. I'm guessing the technical work will be his priority (and indeed, it's what he's being explicitly hired to do), but I hope he also finds ways to just generally improve the US government's understanding of AI risk and the likelihood of implementing reasonable policies. On the flipside, I hope he doesn't settle for voluntary commitments (especially as the Overton Window shifts) & I hope he's clear/open about the limitations of RSPs.

More specifically, I hope he's able to help policymakers reason about a critical question: what do we do after we've identified models with (certain kinds of) dangerous capabilities? I think the underlying logic behind RSPs could actually be somewhat meaningfully applied to USG policy. Like, I think we would be in a safer world if the USG had an internal understanding of ASL levels, took seriously the possibility of various dangerous capabilities thresholds being crossed, took seriously the idea that AGI/ASI could be developed soon, and had preparedness plans in place that allowed them to react quickly in the event of a sudden risk.

Anyways, a big congratulations to Paul, and definitely some evidence that the USAISI is capable of hiring some technical powerhouses.

Replies from: TrevorWiesinger, rguerreschi, rguerreschi↑ comment by trevor (TrevorWiesinger) · 2024-04-17T19:05:18.893Z · LW(p) · GW(p)

I think it's actually fairly easy to avoid getting laughed out of a room; the stuff that Cristiano works on is grown in random ways, not engineered, so the prospect of various things being grown until developing flexible exfiltration tendency that continues until every instance is shut down, or developing long-term planning tendencies until shut down, should not be difficult to understand for anyone with any kind of real non-fake understanding of SGD and neural network scaling.

The problem is that most people in the government rat race have been deeply immersed in Moloch for several generations, and the ones who did well typically did so because they sacrificed as much as possible to the altar of upward career mobility, including signalling disdain for the types of people who have any thought in any other direction.

This affects the culture in predictable ways (including making it hard to imagine life choices outside of advancing upward in government, without a pre-existing revolving door pipeline with the private sector to just bury them under large numbers people who are already thinking and talking about such a choice).

Typical Mind Fallacy/Mind Projection Fallacy implies that they'll disproportionately anticipate that tendency in other people, and have a hard time adjusting to people who use words to do stuff in the world instead of racing to the bottom to outmaneuver rivals for promotions.

This will be a problem in NIST, in spite of the fact NIST is better than average at exploiting external talent sources. They'll have a hard time understanding, for example, Moloch and incentive structure improvements, because pointlessly living under Moloch's thumb was a core guiding principle of their and their parent's lives. The nice thing is that they'll be pretty quick to understand that there's only empty skies above, unlike bay area people who have had huge problems there.

↑ comment by rguerreschi · 2024-04-17T11:06:21.318Z · LW(p) · GW(p)

Good insights! He has rhe right knowledge and dedication. Let’s hope he can grow into an Oppenheimer of AI, and that they’ll let him contribute on AI policy more than they let Oppenheimer on nuclear (see how his work on the 1946 Acheson–Lilienthal Report for international control of AI was then driven to nothing as it was taken up into the Baruch Plan)

↑ comment by rguerreschi · 2024-04-17T11:06:30.994Z · LW(p) · GW(p)

comment by gwern · 2024-04-16T18:58:46.384Z · LW(p) · GW(p)

Any resignations yet? (The journalist doesn't seem to know of any.)

Replies from: Viliam↑ comment by Viliam · 2024-04-18T12:03:59.399Z · LW(p) · GW(p)

Wow, this seems like an interesting topic to explore.

The people threatening to resign (are there any? without specific information, this could possibly be entirely made up), could be useful to ask them if they have any objections against Paul Christiano, or just EA in general, and if it is the latter, what sources they got their information from, and perhaps what could possibly change their minds.

Replies from: MakoYass↑ comment by mako yass (MakoYass) · 2024-04-18T19:54:00.481Z · LW(p) · GW(p)

Feel like there's a decent chance they already changed their minds as a result of meeting him or engaging with their coworkers about the issue. EAs are good at conflict resolution.

Replies from: Viliam↑ comment by Viliam · 2024-04-18T21:07:28.976Z · LW(p) · GW(p)

Oh, I hope so! But I would like to get the perspective of people outside our bubble.

If EA has a bad image, we are not the right people to speculate why. And if we don't know why, then we cannot fix it. Even if Paul Christiano can convince people that he is okay, it would be better if he didn't have to do this the next time. Maybe next time he (or some other person associated with EA) won't even get a chance to talk in person to people who oppose EA for some reason.

comment by Wei Dai (Wei_Dai) · 2024-04-17T04:51:40.884Z · LW(p) · GW(p)

What are some failure modes of such an agency for Paul and others to look out for? (I shared one anecdote with him, about how a NIST standard for "crypto modules" made my open source cryptography library less secure, by having a requirement that had the side effect that the library could only be certified as standard-compliant if it was distributed in executable form, forcing people to trust me not to have inserted a backdoor into the executable binary, and then not budging when we tried to get an exception for this requirement.)

Replies from: nathan-helm-burger, mesaoptimizer, david-james, david-james↑ comment by Nathan Helm-Burger (nathan-helm-burger) · 2024-04-17T17:46:12.020Z · LW(p) · GW(p)

Yeah, I'd say that in general the US gov attempts to regulate cryptography have been a bungled mess which helped the situation very little, if at all. I have the whole mess mentally categorized as an example of how we really want AI regulation NOT to be handled.

↑ comment by mesaoptimizer · 2024-04-17T12:42:50.617Z · LW(p) · GW(p)

What was the requirement? Seems like this was a deliberate effect instead of a side effect.

↑ comment by David James (david-james) · 2024-04-27T23:39:13.465Z · LW(p) · GW(p)

Another failure mode -- perhaps the elephant in the room from a governance perspective -- is national interests conflicting with humanity's interests. For example, actions done in the national interest of the US may ratchet up international competition (instead of collaboration).

Even if one puts aside short-term political disagreements, what passes for serious analysis around US national security seems rather limited in terms of (a) time horizon and (b) risk mitigation. Examples abound: e.g. support of one dictator until he becomes problematic, then switching support and/or spending massively to deal with the aftermath.

Even with sincere actors pursuing smart goals (such as long-term global stability), how can a nation with significant leadership shifts every 4 to 8 years hope to ensure a consistent long-term strategy? This question suggests that an instrumental goal for AI safety involves promoting institutions and mechanisms that promote long-term governance.

↑ comment by David James (david-james) · 2024-04-27T23:22:31.634Z · LW(p) · GW(p)

One failure mode could be a perception that the USG's support of evals is "enough" for now. Under such a perception, some leaders might relax their efforts in promoting all approaches towards AI safety.

comment by Chris_Leong · 2024-04-16T21:38:18.447Z · LW(p) · GW(p)

This strongly updates me towards expecting the institute to produce useful work.

Replies from: david-james↑ comment by David James (david-james) · 2024-04-27T23:56:54.614Z · LW(p) · GW(p)

I'm not so sure.

I would expect that a qualified, well-regarded leader is necessary, but I'm not confident it is sufficient. Other factors might dominate, such as: budget, sustained attention from higher-level political leaders, quality and quantity of supporting staff, project scoping, and exogenous factors (e.g. AI progress moving in a way that shifts how NIST wants to address the issue).

What are the most reliable signals for NIST producing useful work, particularly in a relatively new field? What does history show us? What kind of patterns do we find when NIST engages with: (a) academia; (b) industry; (c) the executive branch?

comment by Sammy Martin (SDM) · 2024-04-17T10:37:34.982Z · LW(p) · GW(p)

He's the best person they could have gotten on the technical side but Paul's strategic thinking has been consistently clear eyed and realistic but also constructive, see for example this: www.alignmentforum.org/posts/fRSj2W4Fjje8rQWm9/thoughts-on-sharing-information-about-language-model [AF · GW]

So to the extent that he'll have influence on general policy as well this seems great!

comment by Michael Roe (michael-roe) · 2024-04-18T12:27:29.751Z · LW(p) · GW(p)

Ever since NIST put a backdoor in Dual Elliptic Curve Deterministic Random Bit Generator, they have the problem that many people no longer trust them.

I guess it might be possible to backdoor AI Safety Evaluations (e.g. suppose there is some know very dangerous thing that National Security Agency is doing with AI, and NIST deliberately rigs their criteria to not stop this very dangerous thing).

But apart from the total loss of public trust in them as an institution, NIST has done ground-breaking work in the computer security field in the past, so it wouldn't be so unusual for them to develop AI criteria.

The whole dual elliptic curve fiasco is possibly a lesson that criteria should be developed by international groups, because a single country's standards body, like NIST, can be subverted by their spy agencies.

Replies from: habryka4↑ comment by habryka (habryka4) · 2024-04-18T16:55:47.241Z · LW(p) · GW(p)

Do you have quick links for the elliptic curve backdoor and/or any ground-breaking work in computer security that NIST has performed?

Replies from: michael-roe↑ comment by Michael Roe (michael-roe) · 2024-04-18T20:15:22.969Z · LW(p) · GW(p)

https://cacm.acm.org/research/technical-perspective-backdoor-engineering/

for example. Although that paper is more about, "Given that NIST has deliberately subverted the standard, how did actual products also get subverted to exploit the weakness that NIST introduced."

Replies from: michael-roe↑ comment by Michael Roe (michael-roe) · 2024-04-18T20:24:15.758Z · LW(p) · GW(p)

And the really funny bit is NIST deliberately subverted the standard so that an organization who knew the master key (probably NSA) could break the security of the system. And then, in actualt implementation, the master key was changed so that someone else could break into everyone's system And, officially at least, we have no idea who that someone is. Probably Chinese government. Could be organized crime, though probably unlikely.

The movie Sneakers had this as its plots years ago.. US government puts a secret backdoor in everyone's computer system .. and, then, uh,, someone steals the key to that backdoor;

But anyway, yes, it is absolutely NISTs fault that they unintentionally gave the Chinese government backdoor access into US government computers.

comment by Nathan Helm-Burger (nathan-helm-burger) · 2024-04-16T20:13:06.564Z · LW(p) · GW(p)

Whoohoo!

comment by Review Bot · 2024-04-30T20:45:23.270Z · LW(p) · GW(p)

The LessWrong Review [? · GW] runs every year to select the posts that have most stood the test of time. This post is not yet eligible for review, but will be at the end of 2025. The top fifty or so posts are featured prominently on the site throughout the year.

Hopefully, the review is better than karma at judging enduring value. If we have accurate prediction markets on the review results, maybe we can have better incentives on LessWrong today. Will this post make the top fifty?