2011 Survey Results

post by Scott Alexander (Yvain) · 2011-12-05T10:49:02.810Z · 513 comments


A big thank you to the 1090 people who took the second Less Wrong Census/Survey.

Does this mean there are 1090 people who post on Less Wrong? Not necessarily. 165 people said they had zero karma, and 406 people skipped the karma question - I assume a good number of the skippers were people with zero karma or without accounts. So we can only prove that 519 people post on Less Wrong. Which is still a lot of people.

I apologize for failing to ask who had or did not have an LW account. Because there are a number of these failures, I'm putting them all in a comment to this post so they don't clutter the survey results. Please talk about changes you want for next year's survey there.

Of our 1090 respondents, 972 (89%) were male, 92 (8.4%) female, 7 (.6%) transexual, and 19 gave various other answers or objected to the question. As abysmally male-dominated as these results are, the percent of women has tripled since the last survey in mid-2009.
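Most of the percentages in this post appear to be simple counts divided by all 1090 respondents. A minimal Python sketch using the gender counts above shows the calculation and checks that the categories add back up to the total; the helper name `shares` and the category labels are illustrative, not from the survey spreadsheet.

```python
# Gender counts as reported above; labels are illustrative.
counts = {"male": 972, "female": 92, "transsexual": 7, "other": 19}
TOTAL = 1090

def shares(counts, total):
    """Return each category's share of `total` as a percentage rounded to 1 decimal."""
    return {k: round(100 * v / total, 1) for k, v in counts.items()}

# The four categories should account for every respondent.
assert sum(counts.values()) == TOTAL
print(shares(counts, TOTAL))
```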

We're also a little more diverse than we were in 2009; our percent non-whites has risen from 6% to just below 10%. Along with 944 whites (86%) we include 38 Hispanics (3.5%), 31 East Asians (2.8%), 26 Indian Asians (2.4%) and 4 blacks (.4%).

Age ranged from a supposed minimum of 1 (they start making rationalists early these days?) to a more plausible minimum of 14, to a maximum of 77. The mean age was 27.18 years. Quartiles (25%, 50%, 75%) were 21, 25, and 30. 90% of us are under 38, 95% of us are under 45, but there are still eleven Less Wrongers over the age of 60. The average Less Wronger has aged about one week since spring 2009 - so clearly all those anti-agathics we're taking are working!

In order of frequency, we include 366 computer scientists (32.6%), 174 people in the hard sciences (16%), 80 people in finance (7.3%), 63 people in the social sciences (5.8%), 43 people involved in AI (3.9%), 39 philosophers (3.6%), 15 mathematicians (1.5%), 15 people involved in law (1.5%), 14 statisticians (1.3%) and 5 people in medicine (.5%).

48 of us (4.4%) teach in academia, 470 (43.1%) are students, 417 (38.3%) do for-profit work, 34 (3.1%) do non-profit work, 41 (3.8%) work for the government, and 72 (6.6%) are unemployed.

418 people (38.3%) have yet to receive any degrees, 400 (36.7%) have a Bachelor's or equivalent, 175 (16.1%) have a Master's or equivalent, 65 people (6%) have a Ph.D, and 19 people (1.7%) have a professional degree such as an MD or JD.

345 people (31.7%) are single and looking, 250 (22.9%) are single but not looking, 286 (26.2%) are in a relationship, and 201 (18.4%) are married. There are striking differences between men and women: women are more likely to be in a relationship and less likely to be single and looking (33% men vs. 19% women). All of these numbers look a lot like the ones from 2009.

27 people (2.5%) are asexual, 119 (10.9%) are bisexual, 24 (2.2%) are homosexual, and 902 (82.8%) are heterosexual.

625 people (57.3%) described themselves as monogamous, 145 (13.3%) as polyamorous, and 298 (27.3%) didn't really know. These numbers were similar between men and women.

The most popular political view, at least according to the much-maligned categories on the survey, was liberalism, with 376 adherents and 34.5% of the vote. Libertarianism followed at 352 (32.3%), then socialism at 290 (26.6%), conservatism at 30 (2.8%) and communism at 5 (.5%).

680 people (62.4%) were consequentialist, 152 (13.9%) virtue ethicist, 49 (4.5%) deontologist, and 145 (13.3%) did not believe in morality.

801 people (73.5%) were atheist and not spiritual, 108 (9.9%) were atheist and spiritual, 97 (8.9%) were agnostic, 30 (2.8%) were deist or pantheist or something along those lines, and 39 people (3.5%) described themselves as theists (20 committed plus 19 lukewarm).

425 people (38.1%) grew up in some flavor of nontheist family, compared to 297 (27.2%) in committed theist families and 356 (32.7%) in lukewarm theist families. Common family religious backgrounds included Protestantism with 451 people (41.4%), Catholicism with 289 (26.5%), Judaism with 102 (9.4%), Hinduism with 20 (1.8%), Mormonism with 17 (1.6%) and traditional Chinese religion with 13 (1.2%).

There was much derision on the last survey over the average IQ supposedly being 146. Clearly Less Wrong has been dumbed down since then, since the average IQ has fallen all the way down to 140. Numbers ranged from 110 all the way up to 204 (for reference, Marilyn vos Savant, who holds the Guinness World Record for highest adult IQ ever recorded, has an IQ of 185).

89 people (8.2%) have never looked at the Sequences; a further 234 (21.5%) have only given them a quick glance. 170 people (15.6%) have read about 25% of the Sequences, 169 (15.5%) about 50%, 167 (15.3%) about 75%, and 253 people (23.2%) said they've read almost all of them. This last number is actually lower than the 302 people who have been here since the Overcoming Bias days when the Sequences were still being written (27.7% of us).

The other 72.3% of people had to find Less Wrong the hard way. 121 people (11.1%) were referred by a friend, 259 people (23.8%) were referred by blogs, 196 people (18%) were referred by Harry Potter and the Methods of Rationality, 96 people (8.8%) were referred by a search engine, and only one person (.1%) was referred by a class in school.

Of the 259 people referred by blogs, 134 told me which blog referred them. There was a very long tail here, with most blogs only referring one or two people, but the overwhelming winner was Common Sense Atheism, which is responsible for 18 current Less Wrong readers. Other important blogs and sites include Hacker News (11 people), Marginal Revolution (6 people), TV Tropes (5 people), and a three-way tie for fifth between Reddit, SebastianMarshall.com, and You Are Not So Smart (3 people).

Of those people who chose to list their karma, the mean value was 658 and the median was 40 (these numbers are pretty meaningless, because some people with zero karma put that down and other people did not).

Of those people willing to admit the time they spent on Less Wrong, after eliminating one outlier (sorry, but you don't spend 40579 minutes daily on LW; even I don't spend that long) the mean was 21 minutes and the median was 15 minutes. There were at least a dozen people in the two to three hour range, and the winner (well, except the 40579 guy) was someone who says he spends five hours a day.
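Dropping an impossible value before averaging matters because a single extreme entry drags the mean a long way while the median barely moves (the same skew explains the karma mean of 658 against a median of 40). A minimal sketch with made-up minutes-per-day answers; the cutoff of 1440 minutes (one full day) is an assumed plausibility rule, not necessarily the one used for the survey.

```python
import statistics

# Hypothetical daily-minutes answers; 40579 is the impossible entry from the post.
minutes = [5, 10, 15, 15, 20, 30, 45, 40579]

MINUTES_PER_DAY = 24 * 60  # 1440: no one can spend longer than a day per day

# Keep only answers that could physically be true.
plausible = [m for m in minutes if m <= MINUTES_PER_DAY]

# The mean with the outlier is dominated by it; the median shifts only slightly.
print(statistics.mean(minutes), statistics.median(minutes))
print(statistics.mean(plausible), statistics.median(plausible))
```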

I'm going to give all the probabilities in the form [mean, (25%-quartile, 50%-quartile/median, 75%-quartile)]. There may have been some problems here involving people who gave numbers like .01: I didn't know whether they meant 1% or .01%. Excel helpfully rounded all numbers down to two decimal places for me, and after a while I decided not to make it stop: unless I wanted to do geometric means, I can't do justice to very small gradations of probability.

The Many Worlds hypothesis is true: 56.5, (30, 65, 80)

There is intelligent life elsewhere in the Universe: 69.4, (50, 90, 99)

There is intelligent life elsewhere in our galaxy: 41.2, (1, 30, 80)

The supernatural (ontologically basic mental entities) exists: 5.38, (0, 0, 1)

God (a supernatural creator of the universe) exists: 5.64, (0, 0, 1)

Some revealed religion is true: 3.40, (0, 0, .15)

Average person cryonically frozen today will be successfully revived: 21.1, (1, 10, 30)

Someone now living will reach age 1000: 23.6, (1, 10, 30)

We are living in a simulation: 19, (.23, 5, 33)

Significant anthropogenic global warming is occurring: 70.7, (55, 85, 95)

Humanity will make it to 2100 without a catastrophe killing >90% of us: 67.6, (50, 80, 90)
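The [mean, (25%, median, 75%)] summaries above can be reproduced with Python's standard `statistics` module. A sketch under stated assumptions: the answers are invented, and `method="inclusive"` is chosen because it should interpolate quartiles the same way Excel's QUARTILE / PERCENTILE.INC functions do (the post does not say exactly which Excel function was used). The last lines show how rounding to two decimals wipes out small probabilities.

```python
import statistics

def summarize(values):
    """Return (mean, (Q1, median, Q3)) in the post's [mean, (quartiles)] layout.

    method="inclusive" interpolates between data points at position (n - 1) * p,
    which is assumed here to match Excel's inclusive quartiles.
    """
    q1, q2, q3 = statistics.quantiles(values, n=4, method="inclusive")
    return round(statistics.mean(values), 1), (q1, q2, q3)

# Hypothetical percent answers to a single probability question.
answers = [0, 1, 5, 10, 30, 50, 80, 90, 99]
print(summarize(answers))

# Rounding to two decimal places erases small probabilities entirely,
# e.g. a 0.4% estimate entered as the decimal 0.004:
print(round(0.004, 2))
```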

There were a few significant demographic differences here. Women tended to be more skeptical of the extreme transhumanist claims like cryonics and antiagathics (for example, men thought the current generation had a 24.7% chance of seeing someone live to 1000 years; women thought there was only a 9.2% chance). Older people were less likely to believe in transhumanist claims, a little less likely to believe in anthropogenic global warming, and more likely to believe in aliens living in our galaxy. Community veterans were more likely to believe in Many Worlds, less likely to believe in God, and - surprisingly - less likely to believe in cryonics (significant at 5% level; could be a fluke). People who believed in high existential risk were more likely to believe in global warming, more likely to believe they had a higher IQ than average, and more likely to believe in aliens (I found the same result last time, and it puzzled me then too).

Intriguingly, even though the sample size increased by more than 6 times, most of these results are within one to two percent of the numbers on the 2009 survey, so this supports taking them as a direct line to prevailing rationalist opinion rather than the contingent opinions of one random group.

Of possible existential risks, the most feared was a bioengineered pandemic, which got 194 votes (17.8%) - a natural pandemic got 89 (8.2%), making pandemics the overwhelming leader. Unfriendly AI followed with 180 votes (16.5%), then nuclear war with 151 (13.9%), ecological collapse with 145 votes (13.3%), economic/political collapse with 134 votes (12.3%), and asteroids and nanotech bringing up the rear with 46 votes each (4.2%).

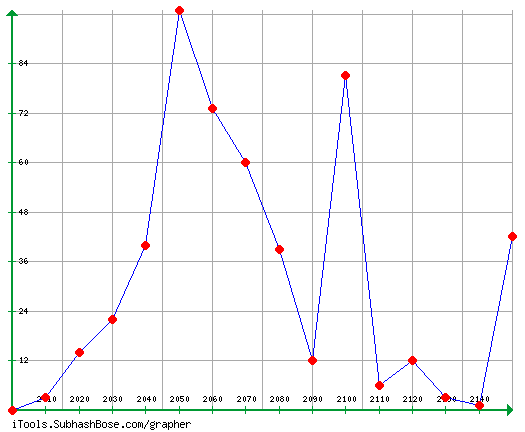

The mean for the Singularity question is useless because of the very high numbers some people put in, but the median was 2080 (quartiles 2050, 2080, 2150). The Singularity has gotten later since 2009: the median guess then was 2067. There was some discussion about whether people might have been anchored by the previous mention of 2100 in the x-risk question. I changed the order after 104 responses to prevent this; a t-test found no significant difference between the responses before and after the change (in fact, the trend was in the wrong direction).
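A before/after comparison like the question-order check can be run as a two-sample t-test. The sketch below computes Welch's t statistic (which does not assume equal variances) and its approximate degrees of freedom by hand; the post does not say which t-test variant was used, and the year guesses are invented. To get a p-value you would normally use a library routine such as SciPy's `scipy.stats.ttest_ind` with `equal_var=False`.

```python
import math
import statistics

def welch_t(a, b):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    va, vb = statistics.variance(a), statistics.variance(b)  # sample variances (n - 1)
    na, nb = len(a), len(b)
    se2 = va / na + vb / nb  # squared standard error of the difference in means
    t = (statistics.mean(a) - statistics.mean(b)) / math.sqrt(se2)
    df = se2 ** 2 / ((va / na) ** 2 / (na - 1) + (vb / nb) ** 2 / (nb - 1))
    return t, df

# Hypothetical Singularity-year guesses before and after the question order changed.
before = [2040, 2050, 2070, 2080, 2100]
after = [2045, 2060, 2080, 2090, 2150]
t, df = welch_t(before, after)
print(f"t = {t:.2f}, df = {df:.1f}")
```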

Only 49 people (4.5%) have never considered cryonics or don't know what it is. Of the remainder, 388 (35.6% of all respondents) reject it, 583 (53.5%) are considering it, and 47 (4.3%) are already signed up for it. That's more than double the percent signed up in 2009.

231 people (23.4% of respondents) have attended a Less Wrong meetup.

The average person was 37.6% sure their IQ would be above average - underconfident! Imagine that! (quartiles were 10, 40, 60). The mean was 54.5% for people whose IQs really were above average, and 29.7% for people whose IQs really were below average. There was a correlation of .479 (significant at less than 1% level) between IQ and confidence in high IQ.
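The .479 figure (and the r = -.24 for the Newton question) is a Pearson correlation coefficient. A hand-rolled sketch with invented (IQ, confidence) pairs; on Python 3.10 and later, `statistics.correlation` computes the same coefficient.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)

# Hypothetical respondents: reported IQ and % confidence their IQ is above the survey average.
iq = [115, 125, 130, 140, 145, 155]
confidence = [10, 30, 20, 50, 60, 70]
print(round(pearson_r(iq, confidence), 3))
```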

Isaac Newton published his Principia Mathematica in 1687. Although people guessed dates as early as 1250 and as late as 1960, the mean was...1687 (quartiles were 1650, 1680, 1720). This marks the second consecutive survey on which the average answer to these difficult historical questions has been exactly right (to be fair, last time it was the median that was exactly right and the mean was all of eight months off). Let no one ever say that the wisdom of crowds is not a powerful tool.

The average person was 34.3% confident in their answer, but 41.9% of people got the question right (again with the underconfidence!). There was a highly significant correlation of r = -.24 between confidence and number of years error.

This graph may take some work to read. The x-axis is confidence. The y-axis is what percent of people were correct at that confidence level. The red line you recognize as perfect calibration. The thick green line is your results from the Newton problem. The black line is results from the general population I got from a different calibration experiment tested on 50 random trivia questions; take the intercomparability of the two with a grain of salt.

As you can see, Less Wrong does significantly better than the general population. However, there are a few areas of failure. First, as usual, people who put zero and one hundred percent had nonzero chances of getting the question right or wrong: 16.7% of people who put "0" were right, and 28.6% of people who put "100" were wrong (interestingly, people who put 100 did worse than the people in the 90-99 bracket, of whom only 12.2% erred). Second, the line is pretty horizontal from zero to fifty or so. People who thought they had a >50% chance of being right had excellent calibration, but people who gave themselves a low chance of being right were poorly calibrated. In particular, I was surprised to see so many people put numbers like "0". If you're pretty sure Newton lived after the birth of Christ but before the present day, that alone gives you a 1% chance of randomly picking the correct 20-year interval.
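The calibration graph groups answers by stated confidence and checks what fraction in each group was actually right. A minimal sketch with invented (confidence, correct) pairs and an assumed bucket width of 10 points; answers of exactly 100 get their own bucket, matching the separate treatment of "100" above. The last line reproduces the back-of-envelope figure: about 2011 / 20 ≈ 100 twenty-year intervals lie between year 0 and 2011, so a blind guess is right roughly 1% of the time.

```python
from collections import defaultdict

def calibration_table(answers, width=10):
    """Map each confidence bucket (lower bound, in %) to the fraction answered correctly."""
    buckets = defaultdict(list)
    for confidence, correct in answers:
        # 0-9 -> 0, 10-19 -> 10, ..., 90-99 -> 90, and 100 -> its own bucket 100.
        buckets[confidence // width * width].append(correct)
    # True counts as 1 and False as 0, so sum/len is the hit rate.
    return {b: sum(v) / len(v) for b, v in sorted(buckets.items())}

# Hypothetical (stated confidence %, got the date right?) pairs.
answers = [(0, False), (5, True), (20, False), (35, True), (55, True),
           (60, False), (80, True), (90, True), (100, True), (100, False)]
print(calibration_table(answers))

# Chance of a blind guess landing in the right 20-year interval between year 0 and 2011.
print(f"{20 / 2011:.1%}")
```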

160 people wanted their responses kept private. They have been removed. The rest have been sorted by age to remove any information about the time they took the survey. I've converted what's left to a .xls file, and you can download it here.

513 comments


comment by Jack · 2011-12-04T20:39:02.051Z

People who believed in high existential risk were ... more likely to believe in aliens (I found that same result last time, and it puzzled me then too.)

Aliens existing but not yet colonizing multiple systems or broadcasting heavily is the response consistent with the belief that a Great Filter lies in front of us.

comment by Unnamed · 2011-12-05T19:20:42.619Z

Strength of membership in the LW community was related to responses for most of the questions. There were 3 questions related to strength of membership: karma, sequence reading, and time in the community, and since they were all correlated with each other and showed similar patterns I standardized them and averaged them together into a single measure. Then I checked if this measure of strength in membership in the LW community was related to answers on each of the other questions, for the 822 respondents (described in this comment) who answered at least one of the probability questions and used percentages rather than decimals (since I didn't want to take the time to recode the answers which were given as decimals).

All effects described below have p < .01 (I also indicate when there is a nonsignificant trend with p<.2). On questions with categories I wasn't that rigorous - if there was a significant effect overall I just eyeballed the differences and reported which categories have the clearest difference (and I skipped some of the background questions which had tons of different categories and are hard to interpret).

Compared to those with a less strong membership in the LW community, those with a strong tie to the community are:

Background:

- Gender - no difference

- Age - no difference

- Relationship Status - no difference

- Sexual Orientation - no difference

- Relationship Style - less likely to prefer monogamous, more likely to prefer polyamorous or to have no preference

- Political Views - less likely to be socialist, more likely to be libertarian (but this is driven by the length of time in the community, which may reflect changing demographics - see my reply to this comment)

- Religious Views - more likely to be atheist & not spiritual, especially less likely to be agnostic

- Family Religion - no difference

- Moral Views - more likely to be consequentialist

- IQ - higher

Probabilities:

- Many Worlds - higher

- Aliens in the universe - lower (edited: I had mistakenly reversed the two aliens questions)

- Aliens in our galaxy - trend towards lower (p=.04)

- Supernatural - lower

- God - lower

- Religion - trend towards lower (p=.11, and this is statistically significant with a different analysis)

- Cryonics - lower

- Anti-Agathics - trend towards higher (p=.13) (this was the one question with a significant non-monotonic relationship: those with a moderately strong tie to the community had the highest probability estimate, while those with weak or strong ties had lower estimates)

- Simulation - trend towards higher (p=.20)

- Global Warming - higher

- No Catastrophe - lower (i.e., think it is less likely that we will make it to 2100 without a catastrophe, i.e. think the chances of xrisk are higher)

Other Questions:

- Singularity - sooner (this is statistically significant after truncating the outliers), and more likely to give an estimate rather than leave it blank

- Type of XRisk - more likely to think that Unfriendly AI is the most likely XRisk

- Cryonics Status - More likely to be signed up or to be considering it, less likely to be not planning to or to not have thought about it
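The composite "strength of membership" measure described at the top of this comment (standardize karma, Sequence reading and time in the community, then average them) might look roughly like this. The sketch assumes complete numeric data for every respondent and uses invented values; how the Sequence and time questions were actually coded for the analysis isn't stated.

```python
import statistics

def zscores(values):
    """Standardize to mean 0 and (sample) standard deviation 1."""
    mu, sd = statistics.mean(values), statistics.stdev(values)
    return [(v - mu) / sd for v in values]

def composite(*measures):
    """Average several standardized measures, respondent by respondent."""
    standardized = [zscores(m) for m in measures]
    return [statistics.mean(row) for row in zip(*standardized)]

# Hypothetical respondents (same order in every list).
karma = [0, 40, 300, 2000]
sequences_read = [0, 25, 50, 100]  # percent of the Sequences read
years_here = [0.5, 1, 2, 5]
print(composite(karma, sequences_read, years_here))
```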

↑ comment by Unnamed · 2011-12-05T21:04:56.027Z

Political Views - less likely to be socialist, more likely to be libertarian

I looked at this one a little more closely, and this difference in political views is driven almost entirely by the "time in community" measure of strength of membership in the LW community; it's not even statistically significant with the other two. I'd guess that is because LW started out on Overcoming Bias, which is a relatively libertarian blog, so the old timers tend to share those views. We've also probably added more non-Americans over time, who are more likely to be socialist.

All of the other relationships in the above post hold up when we replace the original measure of membership strength with one that is only based on the two variables of karma & sequence reading, but this one does not.

↑ comment by Normal_Anomaly · 2011-12-07T21:58:05.881Z

Cryonics - lower

Cryonics Status - More likely to be signed up or to be considering it, less likely to be not planning to or to not have thought about it

So long-time participants were less likely to believe that cryonics would work for them but more likely to sign up for it? Interesting. This could be driven by any of: fluke, greater rationality, greater age and income, less akrasia, more willingness to take long-shot bets based on shutting up and multiplying.

↑ comment by Unnamed · 2011-12-08T03:43:47.490Z

I looked into this a little more, and it looks like those who are strongly tied to the LW community are less likely to give high answers to p(cryonics) (p>50%), but not any more or less likely to give low answers (p<10%). That reduction in high answers could be a sign of greater rationality - less affect-heuristic-driven irrational exuberance about the prospects for cryonics - or just more knowledge about the topic. But I'm surprised that there's no change in the frequency of low answers.

There is a similar pattern in the relationship between cryonics status and p(cryonics). Those who are signed up for cryonics don't give a higher p(cryonics) on average than those who are not signed up, but they are less likely to give a probability under 10%. The group with the highest average p(cryonics) is those who aren't signed up but are considering it, and that's the group that's most likely to give a probability over 50%.

Here are the results for p(cryonics) broken down by cryonics status, showing what percent of each group gave p(cryonics)<.1, what percent gave p(cryonics)>.5, and what the average p(cryonics) is for each group. (I'm expressing p(cryonics) here as probabilities from 0-1 because I think it's easier to follow that way, since I'm giving the percent of people in each group.)

Never thought about it / don't understand (n=26): 58% give p<.1, 8% give p>.5, mean p=.17

No, and not planning to (n=289): 60% give p<.1, 6% give p>.5, mean p=.14

No, but considering it (n=444): 38% give p < .1, 18% give p>.5, mean p=.27

Yes - signed up or just finishing up paperwork (n=36): 39% give p<.1, 8% give p>.5, mean p=.21

Overall: 47% give p<.1, 13% give p>.5, mean p=.22
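As a consistency check, the "Overall" line is just the group figures weighted by each group's size. The sketch below uses only the numbers quoted above (the group labels are shortened) and recovers 47%, 13% and .22.

```python
# (n, share giving p < .1, share giving p > .5, mean p) for each cryonics-status group above.
groups = {
    "never thought about it": (26, 0.58, 0.08, 0.17),
    "no, not planning to": (289, 0.60, 0.06, 0.14),
    "no, but considering it": (444, 0.38, 0.18, 0.27),
    "yes, signed up": (36, 0.39, 0.08, 0.21),
}

def pooled(groups):
    """Size-weighted average of each per-group statistic."""
    total = sum(g[0] for g in groups.values())
    return tuple(
        sum(g[0] * g[i] for g in groups.values()) / total
        for i in (1, 2, 3)
    )

low, high, mean_p = pooled(groups)
print(f"{low:.0%} give p<.1, {high:.0%} give p>.5, mean p={mean_p:.2f}")
```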

↑ comment by Randolf · 2011-12-08T01:07:21.947Z

I think the main reason for this is that these people have simply spent more time thinking about cryonics than other people have. By spending time on this forum they have had a good chance of running into a discussion which inspired them to read about it and sign up. Or perhaps people who are interested in cryonics are also interested in other topics LW has to offer, and hence stay here. In either case, it follows that they are probably also more knowledgeable about cryonics and hence understand what cryotechnology can realistically offer now or in the near future. In addition, these long-time guys might be more open to things such as cryonics on ethical grounds.

↑ comment by gwern · 2011-12-08T02:51:39.470Z

I think the main reason for this is that these persons have simply spent more time thinking about cryonics compared to other people.

I don't think this is obvious at all. If you had asked me in advance which of the following 4 possible sign-pairs would be true with increasing time spent thinking about cryonics:

- less credence, less sign-ups

- less credence, more sign-ups

- more credence, more sign-ups

- more credence, less sign-ups

I would have said 'obviously #3, since everyone starts from "that won't ever work" and moves up from there, and then one is that much more likely to sign up'

The actual outcome, #2, would be the one I would expect least. (Hence, I am strongly suspicious of anyone claiming to expect or predict it as suffering from hindsight bias.)

↑ comment by CarlShulman · 2011-12-08T22:05:35.428Z

It is noted above that those with strong community attachment think that there is more risk of catastrophe. If human civilization collapses or is destroyed, then cryonics patients and facilities will also be destroyed.

↑ comment by brianm · 2011-12-14T15:03:43.302Z

I would expect the result to be a more accurate estimate of the chance of success, combined with more sign-ups. #2 is an example of this if, in fact, the more accurate assessment is lower than the assessment of someone with a different level of information.

I don't think it's true that everyone starts from "that won't ever work" - we know some people think it might work, and we may be inclined to some wishful thinking or susceptibility to hype that inflates our estimate above the conclusion we'd reach if we invested the time to consider the issue in more depth. It's also worth noting that we're not comparing the general public to those who've seriously considered signing up, but the lesswrong population, who are probably a lot more exposed to the idea of cryonics.

I'd agree that it's not what I would have predicted in advance (having no more expectation for the likelihood assigned to go up as down with more research), but it would be predictable for someone proceeding from the premise that the lesswrong community overestimates the likelihood of cryonics success compared to those who have done more research.

↑ comment by Randolf · 2011-12-08T11:14:12.660Z

Yeah, I think you have a point. However, maybe the following explanation would be better: currently cryonics isn't likely to work. People who sign up for cryonics do research on the subject before or after signing up, and hence become aware that cryonics isn't likely to work.

comment by Scott Alexander (Yvain) · 2011-12-04T19:14:42.997Z

Running list of changes for next year's survey:

- Ask who's a poster versus a lurker!

- A non-write-in "Other" for most questions

- Replace "gender" with "sex" to avoid complaints/philosophizing.

- Very very clear instructions to use percent probabilities and not decimal probabilities

- Singularity year question should have explicit instructions for people who don't believe in singularity

- Separate out "relationship status" and "looking for new relationships" questions to account for polys

- Clarify that research is allowed on the probability questions

- Clarify possible destruction of humanity in cryonics/antiagathics questions.

- What does it mean for aliens to "exist in the universe"? Light cone?

- Make sure people write down "0" if they have 0 karma.

- Add "want to sign up, but not available" as cryonics option.

- Birth order.

- Have children?

- Country of origin?

- Consider asking about SAT scores for Americans to have something to correlate IQs with.

- Consider changing morality to PhilPapers version.

↑ comment by A1987dM (army1987) · 2011-12-04T21:43:39.309Z

One about nationality (and/or native language)? I guess that would be much more relevant than e.g. birth order.

↑ comment by orthonormal · 2011-12-04T19:32:37.840Z

Regarding #4, you could just write a % symbol to the right of each input box.

↑ comment by A1987dM (army1987) · 2011-12-04T21:47:50.707Z

BTW, I'd also disallow 0 and 100, and give the option of giving log-odds instead of probability (and maybe encourage people to do that for probabilities below 1% or above 99%). Someone's “epsilon” might be 10^-4 whereas someone else's might be 10^-30.
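Log-odds turn an "epsilon" into a concrete number: 10^-4 and 10^-30 both look like "basically zero" as decimals, but they sit about 26 units apart on a base-10 log-odds scale. A minimal sketch, assuming base-10 logs (so the unit is the "ban"; multiply by 10 for decibans); natural logs work just as well if used consistently. The function names are illustrative.

```python
import math

def log_odds(p, base=10):
    """Log-odds of probability p; p must lie strictly between 0 and 1."""
    if not 0 < p < 1:
        raise ValueError("0 and 1 have infinite log-odds")
    return math.log(p / (1 - p), base)

def from_log_odds(x, base=10):
    """Inverse of log_odds: convert log-odds back to a probability."""
    return 1 / (1 + base ** -x)

# Two different "epsilons" end up far apart in log-odds.
for p in (0.5, 0.99, 1e-4, 1e-30):
    print(p, round(log_odds(p), 2))
```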

↑ comment by brilee · 2011-12-05T15:32:08.046Z

I second that. See my post at http://lesswrong.com/r/discussion/lw/8lr/logodds_or_logits/ for a concise summary. Getting the LW survey to use log-odds would go a long way towards getting LW to start using log-odds in normal conversation.

↑ comment by Luke_A_Somers · 2011-12-05T16:40:31.019Z

People will mess up the log-odds, though. Non-log odds seem safer.

Odds of ...

Someone living today living for over 1000 subjectively experienced years : No one living today living for over 1000 subjectively experienced years

[ ] : [ ]

Two fields instead of one, but it seems cleaner than any of the other alternatives.

↑ comment by A1987dM (army1987) · 2011-12-05T18:41:35.393Z

The point is not having to type lots of zeros (or of nines) with extreme probabilities (so that people won't weasel out and use ‘epsilon’); having to type 1:999999999999999 is no improvement over having to type 0.000000000000001.

↑ comment by Kaj_Sotala · 2011-12-05T21:37:33.546Z

Is such precision meaningful? At least for me personally, 0.1% is about as low as I can meaningfully go - I can't really discriminate between me having an estimate of 0.1%, 0.001%, or 0.0000000000001%.

↑ comment by dlthomas · 2011-12-05T21:41:05.441Z

I expect this is incorrect.

Specifically, I would guess that you can distinguish the strength of your belief that a lottery ticket you might purchase will win the jackpot from one in a thousand (a.k.a. 0.1%). Am I mistaken?

↑ comment by MBlume · 2011-12-16T02:14:03.818Z

That's a very special case -- in the case of the lottery, it is actually possible-in-principle to enumerate BIG_NUMBER equally likely mutually-exclusive outcomes. Same with getting the works of Shakespeare out of your random number generator. The things under discussion don't have that quality.

↑ comment by A1987dM (army1987) · 2011-12-07T11:31:56.077Z

I agree in principle, but on the other hand the questions on the survey are nowhere near as easy as "what's the probability of winning such-and-such lottery".

↑ comment by Kaj_Sotala · 2011-12-06T10:07:17.247Z

You're right, good point.

↑ comment by [deleted] · 2011-12-07T00:08:24.914Z

I'd force log odds, as they are the more natural representation and much less susceptible to irrational certainty and nonsense answers.

Someone has to actually try and comprehend what they are doing to troll logits; -INF seems a lot more out to lunch than p = 0.

I'd also like someone to go thru the math to figure out how to correctly take the mean of probability estimates. I see no obvious reason why you can simply average [0, 1] probability. The correct method would probably involve cooking up a hypothetical bayesian judge that takes everyone's estimates as evidence.

Edit: since logits can be a bit unintuitive, I'd give a few calibration examples like odds of rolling a 6 on a die, odds of winning some lottery, fair odds, odds of surviving a car crash, etc.

↑ comment by A1987dM (army1987) · 2011-12-07T11:28:17.746Z

I'd force log odds, as they are the more natural representation and much less susceptible to irrational certainty and nonsense answers.

Personally, for probabilities roughly between 20% and 80% I find probabilities (or non-log odds) easier to understand than log-odds.

Someone has to actually try and comprehend what they are doing to troll logits; -INF seems a lot more out to lunch than p = 0.

Yeah. One of the reasons why I proposed this is the median answer of 0 in several probability questions. (I'd also require a rationale in order to enter probabilities more extreme than 1%/99%.)

I'd also like someone to go thru the math to figure out how to correctly take the mean of probability estimates. I see no obvious reason why you can simply average [0, 1] probability. The correct method would probably involve cooking up a hypothetical bayesian judge that takes everyone's estimates as evidence.

I'd go with the average of log-odds, but this requires all of them to be finite...
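The two ways of averaging discussed here can give quite different answers when a few people are near-certain. A sketch comparing the plain mean of probabilities with the probability implied by the mean of log-odds (natural logs here); as noted, the latter is undefined if anyone answers exactly 0 or 1, and this sketch does not attempt any clipping rule for such answers. The estimates and function names are illustrative.

```python
import math

def logit(p):
    """Natural-log odds of p, for 0 < p < 1."""
    return math.log(p / (1 - p))

def expit(x):
    """Inverse of logit: map log-odds back to a probability."""
    return 1 / (1 + math.exp(-x))

def mean_probability(ps):
    """Plain arithmetic mean of the probabilities."""
    return sum(ps) / len(ps)

def mean_log_odds(ps):
    """Average in log-odds space, then convert back to a probability."""
    return expit(sum(logit(p) for p in ps) / len(ps))

# Hypothetical estimates for one question.
estimates = [0.001, 0.05, 0.5, 0.9]
print(round(mean_probability(estimates), 3), round(mean_log_odds(estimates), 3))
```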

↑ comment by dlthomas · 2011-12-07T00:15:18.432Z

The correct method would probably involve cooking up a hypothetical bayesian judge that takes everyones estimates as evidence.

Weighting, in part, by the calibration questions?

↑ comment by [deleted] · 2011-12-07T00:27:28.243Z

I dunno how you would weight it. I think you'd want to have a maxentropy 'fair' judge at least for comparison.

Calibration questions are probably the least controversial way of weighting. Compare to, say, trying to weight using karma.

This might be an interesting thing to develop. A voting system backed up by solid bayes-math could be useful for more than just LW surveys.

↑ comment by Jack · 2011-12-04T20:43:02.211Z

I'd love a specific question on moral realism instead of leaving it as part of the normative ethics question. I'd also like to know about psychiatric diagnoses (autism spectrum, ADHD, depression, whatever else seems relevant)-- perhaps automatically remove those answers from a spreadsheet for privacy reasons.

↑ comment by NancyLebovitz · 2011-12-05T01:27:02.601Z

I don't care about moral realism, but psychiatric diagnoses (and whether they're self-diagnosed or formally diagnosed) would be interesting.

↑ comment by MixedNuts · 2011-12-04T19:30:56.056Z

You are aware that if you ask people for their sex but not their gender, and say something like "we have more women now", you will be philosophized into a pulp, right?

↑ comment by wedrifid · 2011-12-06T10:53:05.978Z

You are aware that if you ask people for their sex but not their gender, and say something like "we have more women now", you will be philosophized into a pulp, right?

Only if people here are less interested in applying probability theory than they are in philosophizing about gender... Oh.

↑ comment by FiftyTwo · 2011-12-05T22:19:33.454Z

Why not ask for both?

↑ comment by Emile · 2011-12-06T11:58:50.891Z

Because the two are so highly correlated that having both would give us almost no extra information. One goal of the survey should be to maximize the useful-info-extracted / time-spent-on-it ratio, hence also the avoidance of write-ins for many questions (which make people spend more time on the survey, to get results that are less exploitable) (a write-in for gender works because people are less likely to write a manifesto for that than for politics).

↑ comment by ShardPhoenix · 2011-12-06T08:49:24.154Z

How about, "It's highly likely that we have more women now"?

↑ comment by lavalamp · 2011-12-05T20:31:01.822Z

Suggestion: "Which of the following did you change your mind about after reading the sequences? (check all that apply)"

- [] Religion

- [] Cryonics

- [] Politics

- [] Nothing

- [] et cetera.

Many other things could be listed here.

↑ comment by TheOtherDave · 2011-12-05T21:27:55.866Z

I'm curious, what would you do with the results of such a question?

For my part, I suspect I would merely stare at them and be unsure what to make of a statistical result that aggregates "No, I already held the belief that the sequences attempted to convince me of" with "No, I held a contrary belief and the sequences failed to convince me otherwise." (That it also aggregates "Yes, I held a contrary belief and the sequences convinced me otherwise." and "Yes, I initially held the belief that the sequences attempted to convince me of, and the sequences convinced me otherwise" is less of a concern, since I expect the latter group to be pretty small.)

↑ comment by lavalamp · 2011-12-05T22:14:14.808Z

Originally I was going to suggest asking, "what were your religious beliefs before reading the sequences?"-- and then I succumbed to the programmer's urge to solve the general problem.

However, I guess measuring how effective the sequences are at changing people's minds is something an LW survey can't do anyway: to answer that accurately, you'd also need to ask people who read the sequences but didn't stick around.

Mainly I was curious how many deconversions the sequences caused or hastened.

↑ comment by taryneast · 2011-12-06T17:34:09.380Z

OK, so use radio buttons: "believed before, still believe" "believed before, changed my mind now" "didn't believe before, changed my mind now" "never believed, still don't"

↑ comment by TheOtherDave · 2011-12-06T19:25:17.333Z

...and "believed something before, believe something different now"
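Taken together, taryneast's four radio buttons and TheOtherDave's fifth option cover every combination of "before" and "after". That separates the cases TheOtherDave worried would be lumped together. Below is a minimal Python sketch. It assumes each respondent reports a position before and after reading the sequences as a plain label. `classify_change`, `BeliefChange` and the labels are hypothetical and are not part of any actual survey:

```python
from enum import Enum
from typing import Optional


class BeliefChange(Enum):
    """The five answer buckets proposed in this thread (hypothetical encoding)."""
    KEPT = "believed before, still believe"
    ABANDONED = "believed before, changed my mind now"
    ADOPTED = "didn't believe before, changed my mind now"
    NEVER = "never believed, still don't"
    SWITCHED = "believed something before, believe something different now"


def classify_change(before: Optional[str], after: Optional[str], target: str) -> BeliefChange:
    """Map a respondent's before/after positions onto one bucket.

    `target` is the position the question asks about; None means "no position".
    """
    if before == target:
        return BeliefChange.KEPT if after == target else BeliefChange.ABANDONED
    if after == target:
        return BeliefChange.ADOPTED
    # Neither answer is the target: no change counts as NEVER,
    # any other change (including None -> some position) as SWITCHED.
    return BeliefChange.NEVER if before == after else BeliefChange.SWITCHED


print(classify_change("theism", "atheism", target="atheism").name)  # ADOPTED
print(classify_change("deism", "theism", target="atheism").name)    # SWITCHED
```

In this encoding, a respondent who had no position before and then adopts a non-target position lands in the "something different" bucket. Whether that is the right call is a survey-design choice.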

↑ comment by Alejandro1 · 2011-12-05T23:03:44.901Z

I think the question is too vague as formulated. Does any probability update, no matter how small, count as changing your mind? But if you ask for precise probability changes, then the answers will likely be nonsense because most people (even most LWers, I'd guess) don't keep track of numeric probabilities, just think "oh, this argument makes X a bit more believable" and such.

↑ comment by prase · 2011-12-05T20:01:46.343Z

When asking for race/ethnicity, you should really drop the standard American classification into White - Hispanic - Black - Indian - Asian - Other. From a non-American perspective this looks weird, especially the "White Hispanic" category. Is a Spaniard White Hispanic, or just White? If only White, how does the race change when one moves to another continent? And if White Hispanic, why not also have "Italic" or "Scandinavic" or "Arabic" or whatever other peninsula-ic races?

Since I believe the question was intended to determine the cultural background of LW readers, I am surprised that there was no question about country of origin, which would be more informative. There is certainly greater cultural difference between e.g. Turks (White, non-Hispanic I suppose) and White non-Hispanic Americans than between the latter and their Hispanic compatriots.

Also, making a statistic based on nationalities could help people determine whether there is a chance for a meetup in their country. And it would be nice to know whether LW has regular readers in Liechtenstein, of course.

↑ comment by [deleted] · 2011-12-22T03:03:10.393Z

I was also...well, not surprised per se, but certainly annoyed to see that "Native American" in any form wasn't even an option. One could construe that as revealing, I suppose.

I don't know how relevant the question actually is, but if we want to track ancestry and racial, ethnic or cultural group affiliation, the following scheme is pretty hard to mess up:

Country of origin:

Country of residence:

Primary Language:

Native Language (if different):

Heritage language (if different):

Note: A heritage language is one spoken by your family or identity group.

Heritage group:

Diaspora: Means your primary heritage and identity group moved to the country you live in within historical or living memory, as colonists, slaves, workers or settlers.

European diaspora ("white" North America, Australia, New Zealand, South Africa, etc)

African diaspora ("black" in the US, West Indian, more recent African emigrant groups; also North African diaspora)

Asian diaspora (includes Turkic, Arab, Persian, Central and South Asian, Siberian native)

Indigenous: Means your primary heritage and identity group was resident in the following location prior to 1400, OR prior to the arrival of the majority culture in antiquity (for example: Ainu, Basque, Taiwanese native, etc):

-Africa

-Asia

-Europe

-North America (between Panama and Canada, also includes Greenland and the Caribbean)

-Oceania (including Australia)

-South America

Mixed: Select two or more:

European Diaspora

African Diaspora

Asian Diaspora

African Indigenous

American Indigenous

Asian Indigenous

European Indigenous

Oceania Indigenous

What the US census calls "Non-white Hispanic" would be marked as "Mixed" > "European Diaspora" + "American Indigenous" with Spanish as either a Native or Heritage language. Someone who identifies as (say) Mexican-derived but doesn't speak Spanish at all would be impossible to tell from someone who was Euro-American and Cherokee who doesn't speak Cherokee, but no system is perfect...

↑ comment by [deleted] · 2011-12-08T10:08:08.483Z

Most LessWrong posters and readers are American, perhaps even the vast majority (I am not). Hispanic, white and black Americans differ from one another culturally and socio-economically, not just on average but in systemic ways, regardless of whether the person in question defines himself as Irish American, Kenyan American, white American or just plain American. From the US we have robust sociological data that allows us to compare LWers based on this information. The same is true of race in Latin America, parts of Africa and, more recently, Western Europe.

Nationality is not the same thing as racial or even ethnic identity in multicultural societies.

Considering every now and then people bring up a desire to lower barriers to entry for "minorities" (whatever that means in a global forum), such stats are useful for those who argue on such issues and also for ascertaining certain biases.

Adding a nationality and/or citizenship question would probably be useful though.

↑ comment by prase · 2011-12-08T18:37:51.905Z

Nationality is not the same thing as racial or even ethnic identity in multicultural societies.

I have not said that it is. I was objecting to the arbitrariness of the "Hispanic race". I believe the difference between Hispanic White Americans and non-Hispanic White Americans is not significantly greater than the difference between either group and non-Americans. I also believe the proportion of non-Americans among LW users would be higher than the 3.5% reported for Hispanics. I am not sure what exact sociological data we can extract from the survey. In any case, comparison with standard American sociological datasets will be problematic, because the LW data are contaminated by the presence of non-Americans. There is no way to say by how much, since people were not asked about that.

↑ comment by [deleted] · 2011-12-08T19:01:09.601Z

I have not said that it is.

I didn't mean to imply you did; I just wanted to emphasise that the racial breakdown does give us data. Especially in the American context, race sits at the strange junction of appearance, class, heritage, ethnicity, religion and subculture, and it's hard to capture it with any one of these metrics.

I am not sure what exact sociological data we may extract from the survey, but in any case, the comparison to standard American sociological datasets will be problematic because the LW data are contaminated by presence of non-Americans and there is no way to say how much, because people were not asked about that.

Once we have data on how many are American (and this is something we really should have) this will be easier to say.

↑ comment by [deleted] · 2011-12-08T10:09:00.782Z

If only White, how does the race change when one moves to another continent? And if White Hispanic, why not have also "Italic" or "Scandinavic" or "Arabic" or whatever other peninsula-ic races?

Because we don't have as much useful sociological data on this. Obviously we can start collecting data on any of the proposed categories, but if we're the only ones, it won't much help us figure out how LW differs from what one might expect of a group that fits its demographic profile.

Since I believe the question was intended to determine the cultural background of LW readers, I am surprised that there was no question about country of origin, which would be more informative. There is certainly greater cultural difference between e.g. Turks (White, non-Hispanic I suppose) and White non-Hispanic Americans than between the latter and their Hispanic compatriots.

Much of the difference in the example of Turks is captured by the Muslim family background question.

↑ comment by prase · 2011-12-08T18:22:38.914Z

Much of the difference in the example of Turks is captured by the Muslim family background question.

Much, but not most. Religion is easy to ascertain, but there are other cultural differences that are more difficult to classify but still significant*. Substitute Egyptian Christians for Turks and the example still works. (And not because of theological differences between Coptic and Protestant Christianity.)

*) Among the culturally determined attributes are: political opinion, musical taste and general aesthetic preferences, favourite food, familiarity with different literature and films, ways of relaxation, knowledge of geography and history, language(s), moral code. Most of these things are independent of religion or only very indirectly influenced by it.

↑ comment by NancyLebovitz · 2011-12-05T22:45:54.585Z

Offer a text field for race. You'll get some distances, not to mention "human" or "other", but you could always use that to find out whether having a contrary streak about race/ethnicity correlates with anything.

If you want people to estimate whether a meetup could be worth it, I recommend location rather than nationality-- some nations are big enough that just knowing nationality isn't useful.

↑ comment by Jayson_Virissimo · 2011-12-05T11:15:51.115Z

I think using your stipulative definition of "supernatural" was a bad move. I would be very surprised if I asked a theologian to define "supernatural" and they replied "ontologically basic mental entities". Even as a rational reconstruction of their reply, it would be quite a stretch. Using such specific definitions of contentious concepts isn't a good idea, if you want to know what proportion of Less Wrongers self-identify as atheist/agnostic/deist/theist/polytheist.

↑ comment by TheOtherDave · 2011-12-05T15:05:54.220Z

OTOH, using a vague definition isn't a good idea either, if you want to know something about what Less Wrongers believe about the world.

I had no problem with the question as worded; it was polling LWers' confidence in a specific belief, using terms from the LW Sequences. That the particular belief is irrelevant to what people who self-identify with various groups consider important about that identification is worth remembering, but it is not in and of itself a problem with the question.

But, yeah... if we want to know what proportion of LWers self-identify as (e.g.) atheist, that question won't tell us.

↑ comment by selylindi · 2011-12-05T19:37:22.018Z

Yet another alternate, culture-neutral way of asking about politics:

Q: How involved are you in your region's politics compared to other people in your region?

A: [choose one]

() I'm among the most involved

() I'm more involved than average

() I'm about as involved as average

() I'm less involved than average

() I'm among the least involved

↑ comment by FiftyTwo · 2011-12-05T22:21:04.355Z

Requires people to self-assess against a cultural baseline, and self-assessments of this sort are notoriously inaccurate. (I predict everyone will think they have above-average involvement.)

↑ comment by Prismattic · 2011-12-14T04:01:53.226Z

Within a US-specific context, I would eschew these comparisons to a notional average and use the following levels of participation:

0 = indifferent to politics and ignorant of current events

1 = attentive to current events, but does not vote

2 = votes in presidential elections, but irregularly otherwise

3 = always votes

4 = always votes and contributes to political causes

5 = always votes, contributes, and engages in political activism during election seasons

6 = always votes, contributes, and engages in political activism both during and between election seasons

7 = runs for public office

I suspect that the average US citizen of voting age is a 2, but I don't have data to back that up, and I am not motivated to research it. I am a 4, so I do indeed think that I am above average.

Those categories could probably be modified pretty easily to match a parliamentary system by leaving out the reference to presidential elections and just having "votes irregularly" and "always votes".

Editing to add -- for mandatory voting jurisdictions, include a caveat that "spoiled ballot = did not vote"
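Prismattic's scale is cumulative, so a respondent's level is the highest rung whose conditions they all meet. Below is a minimal Python sketch. It assumes yes/no answers to a handful of hypothetical survey fields; the field and function names are illustrative only. Following the caveat above, a spoiled ballot in a mandatory-voting jurisdiction should be entered as not voting:

```python
from dataclasses import dataclass


@dataclass
class Participation:
    # Hypothetical yes/no survey fields. For mandatory-voting jurisdictions,
    # a spoiled ballot should be recorded as not voting.
    follows_news: bool = False
    votes_presidential: bool = False
    always_votes: bool = False
    contributes: bool = False
    activist_in_season: bool = False
    activist_off_season: bool = False
    ran_for_office: bool = False


def participation_level(p: Participation) -> int:
    """Return the highest rung (0-7) of Prismattic's cumulative scale that p reaches."""
    if p.ran_for_office:
        return 7
    if p.always_votes and p.contributes:
        if p.activist_in_season and p.activist_off_season:
            return 6
        if p.activist_in_season:
            return 5
        return 4
    if p.always_votes:
        return 3
    if p.votes_presidential:
        return 2
    return 1 if p.follows_news else 0


print(participation_level(Participation(always_votes=True, contributes=True)))  # 4
```

Because the scale is strictly cumulative, someone who campaigns or donates but rarely votes ends up on a low rung. A scale that scored each activity separately would avoid that, at the cost of no longer producing a single number.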

↑ comment by TheOtherDave · 2011-12-14T05:01:15.542Z

Personally, I'm not sure I necessarily consider the person who runs for public office to be at a higher level of participation than the person who works for them.

↑ comment by Nornagest · 2011-12-16T17:44:18.153Z

I agree denotationally with that estimate, but I think you're putting too much emphasis on voting in at least the 0-4 range. Elections (in the US) only come up once or exceptionally twice a year, after all. If you're looking for an estimate of politics' significance to a person's overall life, I think you'd be better off measuring degree of engagement with current events and involvement in political groups -- the latter meaning not only directed activism, but also political blogs, non-activist societies with a partisan slant, and the like.

For example: do you now, or have you ever, owned a political bumper sticker?

↑ comment by A1987dM (army1987) · 2011-12-15T15:51:30.603Z

There might be people who don't always (or even usually) vote yet they contribute to political causes/engage in political activism, for certain values of “political” at least.

↑ comment by thomblake · 2011-12-15T16:27:05.357Z

spoiled ballot = did not vote

I had not before encountered this form of protest. If I were living in a place with mandatory voting and anonymous ballots, I would almost surely write my name on the ballot to spoil it.

↑ comment by A1987dM (army1987) · 2011-12-19T17:54:19.474Z

I have never actually spoiled a ballot in a municipality-or-higher-level election (though voting for a list with hardly any chance whatsoever of passing the election threshold has a very similar effect), but in high school I did vote for Homer Simpson as a students' representative, and there were lots of similarly hilarious votes, including (IIRC) ones for God, Osama bin Laden, and Silvio Berlusconi.

↑ comment by wedrifid · 2011-12-15T16:32:20.541Z

Requires people to self-assess against a cultural baseline, and self-assessments of this sort are notoriously inaccurate. (I predict everyone will think they have above-average involvement.)

I'd actually have guessed an average of below average.

↑ comment by thomblake · 2011-12-15T16:23:49.444Z

I predict everyone will think they have above-average involvement

Bad prediction. While it's hard to say since so few people around here actually vote, my involvement in politics is close enough to 0 that I'd be very surprised if I was more involved than average.

↑ comment by NancyLebovitz · 2011-12-05T22:36:41.334Z

I think I have average or below-average involvement.

Maybe it would be better to ask about the hours/year spent on politics.

↑ comment by FiftyTwo · 2011-12-16T02:28:27.303Z

For comparison, what would you say the average person's level of involvement in politics consists of? (To avoid contamination, don't research or overthink the question; just give us the average you were comparing yourself to.)

Edit: The intuitive average other commenters compared themselves to would also be of interest.

↑ comment by NancyLebovitz · 2011-12-16T16:35:59.765Z

Good question. I don't know what the average person's involvement is, and I seem to know a lot of people (at least online) who are very politically involved, so I may be misestimating whether my political activity is above or below average.

↑ comment by FiftyTwo · 2011-12-19T22:07:12.364Z

On posting this I made the prediction that the average assumed by most lesswrong commenters would be above the actual average level of participation.

I hypothesise this is because most LW commenters come from relatively educated or affluent social groups, where political participation is quite high, whereas large portions of the population do not participate in politics at all (in the US and UK a significant percentage don't even vote in the 4-yearly national elections).

Because of this I would be very sceptical of self reported participation levels, and would agree a quantifiable measure would be better.

↑ comment by Pfft · 2011-12-06T01:01:38.311Z

Replacing gender with sex seems like the wrong way to go to me. For example, note how Randall Munroe asked about sex, then regretted it.

↑ comment by jefftk (jkaufman) · 2012-04-02T21:49:54.810Z

I don't think I'd describe that post as regretting asking "do you have a Y chromosome". He's apologizing for asking for data for one purpose (checking for colorblindness) and then using it for another (color names if you're a guy/girl).

↑ comment by Scott Alexander (Yvain) · 2011-12-07T13:11:27.980Z

Everyone who's suggesting changes: you are much more likely to get your way if you suggest a specific alternative. For example, instead of "handle politics better", something like "your politics question should have these five options: a, b, c, d, and e." Or instead of "use a more valid IQ measure", something more like "Here's a site with a quick and easy test that I think is valid."

↑ comment by ChrisHallquist · 2011-12-07T21:21:22.054Z

In that case: use the exact ethics questions from the PhilPapers Survey (http://philpapers.org/surveys/), probably minus lean/accept distinction and the endless drop-down menu for "other."

↑ comment by ChrisHallquist · 2011-12-15T04:18:50.348Z

For IQ: maybe you could nudge people to greater honesty by splitting up the question: (1) have you ever taken an IQ test with [whatever features were specified on this year's survey], yes or no? (2) if yes, what was your score?

↑ comment by [deleted] · 2011-12-22T01:53:08.855Z

Replace "gender" with "sex" to avoid complaints/philosophizing.

http://en.wikipedia.org/wiki/Intersex

Otherwise agreed.

↑ comment by [deleted] · 2012-08-07T16:25:35.020Z

Strongly disagree with previous self here. I do not think replacing "gender" with "sex" avoids complaints or "philosophizing", and "philosophizing" in context feels like a shorthand/epithet for "making this more complex than prevailing, mainstream views on gender."

For a start, it seems like even "sex" in the sense used here is getting at a mainly-social phenomenon: that of sex assigned at birth. This is a judgement call by the doctors and parents. The biological correlates used to make that decision are just weighed in aggregate; some people are always going to throw an exception. If you're not asking about the size of gametes and their delivery mechanism, the hormonal makeup of the person, their reproductive anatomy where applicable, or their secondary sexual characteristics, then "sex" is really just asking the "gender" question but hazily referring to biological characteristics instead.

Ultimately, gender is what you're really asking for. Using "sex" as a synonym blurs the data into unintelligibility for some LWers; pragmatically, it also amounts to a tacit "screw you" to trans people. I suggest biting the bullet and dealing with the complexity involved in asking that question -- in many situations people collecting that demographic info don't actually need it, but it seems like useful information for LessWrong.

A suggested approach:

Two optional questions with something like the following phrasing:

Optional: Gender (pick what best describes how you identify):

-Male

-Female

-Genderqueer, genderfluid, other

-None, neutrois, agender

-Prefer not to say

Optional: Sex assigned at birth:

-Male

-Female

-Intersex

-Prefer not to say

↑ comment by RobertLumley · 2011-12-19T16:11:12.905Z

A series of four questions, one on each Myers-Briggs indicator, would be good, although I'm sure the data would be woefully unsurprising. Perhaps link to an online test for people who don't already know their type.

↑ comment by DanArmak · 2011-12-15T15:25:40.287Z

Very very clear instructions to use percent probabilities and not decimal probabilities

You can accomplish this by adding a percent sign in the survey itself, to the right of every textbox entry field.

Edit: sorry, already suggested.
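A percent sign next to each field prevents the problem in future surveys. Answers that have already been collected still need a cleanup pass, and that pass has to guess. Below is a minimal Python sketch of one such heuristic. It is an assumption, not how the survey data was actually processed: values strictly between 0 and 1 are treated as decimal probabilities and rescaled, and everything else is treated as a percentage already:

```python
def normalize_percent(raw: float) -> float:
    """Convert a probability answer to percent under a simple (lossy) heuristic.

    Values strictly between 0 and 1 are assumed to be decimal probabilities
    (0.3 -> 30%); everything else is assumed to already be a percentage.
    Answers of exactly 0 or 1 are left unchanged, since "1" could mean 1% or 100%.
    """
    if not 0 <= raw <= 100:
        raise ValueError(f"out of range for a probability: {raw}")
    return raw * 100 if 0 < raw < 1 else raw
```

The weakness of this heuristic is exactly why a visible percent sign is better. A respondent who genuinely meant 0.5% would be misread as 50%, and an answer of exactly 1 stays ambiguous.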

↑ comment by ChrisHallquist · 2011-12-07T05:00:11.971Z

As per my previous comments on this, separate out normative ethics and meta-ethics.

And maybe be extra-clear on not answering the IQ question unless you have official results? Or is that a lost cause?

↑ comment by Armok_GoB · 2011-12-04T20:22:53.152Z

That list is way, way too short. I entirely gave up on the survey partway through because an actual majority of the questions were inapplicable or downright offensive to my sensibilities, or just incomprehensible, or I couldn't answer them for some other reason.

Not that I can think of anything that WOULDN'T have that effect on me without being specifically tailored to me which sort of destroys the point of having a survey... Maybe I'm just incompatible with surveys in general.

↑ comment by NancyLebovitz · 2011-12-04T21:00:04.470Z

Would you be willing to write a discussion post about the questions you want to answer?

↑ comment by Armok_GoB · 2011-12-04T23:47:57.743Z

No, because I fail utterly at writing things, and because my complaints are way too many, so it'd take too much time typing them out.

↑ comment by MixedNuts · 2011-12-04T23:51:15.342Z

Random sample of complaints?

↑ comment by Armok_GoB · 2011-12-05T15:04:21.008Z

Good idea!

Many of the questions were USA-centric, assuming people grew up with some religion or political climate common in the US. I didn't get indoctrinated to republicans or democrats, I got indoctrinated to environmentalism, and there's just no way to map that onto American politics where it's an issue rather than a faction. And it might in some ways be the closest match on the religion question as well, being a question of fact that I later had to try to de-bias myself on.

↑ comment by NancyLebovitz · 2011-12-05T16:01:12.383Z

The US-centricity is a real problem, and probably worth a discussion post. Do political beliefs tell us something important about LW posters? If so, are there general ways to ask about them that aren't tied to a particular country? If there isn't a general way, how can this be handled?

Question I'd like to see added: how much attention do you give to politics? That question should probably be split between attention to theory, attention to news, and attention to trying to make things happen.

↑ comment by kilobug · 2011-12-05T16:21:17.644Z

I suggested in the survey thread to ask for Political Compass scores instead of a liberal/conservative/libertarian/socialist question. The Compass is slightly US-biased, but it contains enough questions for the end result to be significant even so. How much attention to politics would be an interesting question, I second that.

↑ comment by NancyLebovitz · 2011-12-05T16:27:16.958Z

I suspect the compass is very US-based, though better than a short list or a single dimension.

There's one more thing about interest in politics that I had trouble phrasing. There's a thing I call practical politics, which I don't do: working for particular candidates or being one yourself, or knowing in some detail the right place to push to get something to happen or not happen. It's the step beyond voting, emailing your representative and signing petitions.

I'd be surprised if very many LWers do practical politics, but that might just be typical mind fallacy.

↑ comment by A1987dM (army1987) · 2011-12-05T19:12:53.748Z

They do admit they're biased, but the bias is not exactly American (indeed, they are British). And given that LW has lots of readers from non-US western countries but few from (say) China, while not ideal, it would be a lot better than the very US-centric answers in the last survey. (For example, I'd bet that a lot of people would have self-identified as socialist libertarians if given the chance.)

↑ comment by duckduckMOO · 2011-12-06T14:44:36.645Z

On politics, I would like a way to say "I don't identify with any political theory." To me this is like asking "What religion do you identify most with?" with the options Christianity, Islam, Hinduism and other, plus the option to click no boxes. If, as an atheist with no religious ties, you click "other", you're in with Shintoists, Satanists and other unmentioned religions. If you don't answer, you're just not giving an answer; you could simply not want to say. In any case, the question frames things so that you have to subscribe to a questionable framework to answer it at all.

Solutions:

An option like the one on the morality question, perhaps: options indicating zero identification, alongside "other" and "prefer not to say", while retaining the ability to click no boxes (though there are probably other reasons to click no boxes). Or, as someone suggested in the original thread, another question to gauge how much you identify with something. As it stands, if I had to pick an answer I could probably dredge up some preference for one theory or another, but I'd be in the same box as someone who actively promotes, and is part of, whatever option they clicked. Boxes for "strongly identify", "identify" and "weakly identify", maybe. Or something.

Got to go.

edit: Could someone kindly explain the downvotes? I'm guessing: too esoteric? Personally not bothered? Bothered that I'm bothered?

edit2: Just realised some line breaks in the comment box haven't translated to line breaks in the published comment. Is the post just hard to read?

comment by Craig_Heldreth · 2011-12-04T20:00:47.690Z

Intriguingly, even though the sample size increased by more than 6 times, most of these results are within one to two percent of the numbers on the 2009 survey, so this supports taking them as a direct line to prevailing rationalist opinion rather than the contingent opinions of one random group.

This is not just intriguing. To me this is the single most significant finding in the survey.

↑ comment by steven0461 · 2011-12-05T03:16:44.604Z

It's also worrying, because it means we're not getting better on average.

↑ comment by Richard_Kennaway · 2011-12-05T12:59:48.228Z

If the readership of LessWrong has gone up similarly in that time, then I would not expect to see an improvement, even if everyone who reads LessWrong improves.

↑ comment by steven0461 · 2011-12-05T23:06:43.113Z

Yes, I was thinking that. Suppose it takes a certain fixed amount of time for any LessWronger to learn the local official truth. Then if the population grows exponentially, you'd expect the fraction that knows the local official truth to remain constant, right? But I'm not sure the population has been growing exponentially, and even so you might have expected the local official truth to become more accurate over time, and you might have expected the community to get better over time at imparting the local official truth.

Regardless of what we should have expected, my impression is LessWrong as a whole tends to assume that it's getting closer to the truth over time. If that's not happening because of newcomers, that's worth worrying about.
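steven0461's first point is easy to check numerically. Below is a minimal Python sketch under some hypothetical assumptions. Membership grows by a constant factor `growth` each year, nobody leaves, and every member needs a fixed `lag` years to learn the local consensus. The function name and parameters are illustrative:

```python
def fraction_up_to_speed(growth: float, lag: int, year: int, initial: float = 100.0) -> float:
    """Fraction of members in `year` who joined at least `lag` years earlier.

    Assumes membership is initial * growth**t (exponential growth, nobody leaves),
    so members who have been around for `lag` years number initial * growth**(year - lag).
    """
    if year < lag:
        return 0.0
    total = initial * growth ** year
    seasoned = initial * growth ** (year - lag)
    return seasoned / total


for year in range(2, 6):
    print(year, round(fraction_up_to_speed(growth=2.0, lag=2, year=year), 3))  # 0.25 every year
```

Under exponential growth the fraction is always `growth ** -lag`, so it stays flat no matter how long the site has existed. With linear growth it would instead creep toward 1 over time, which is why the shape of the growth curve matters to the argument.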

↑ comment by JoachimSchipper · 2011-12-06T15:29:26.817Z

Note that it is possible for newcomers to hold the same inaccurate beliefs as their predecessors while the core improves its knowledge or expands in size. In fact, as LW grows it will have to recruit from, say, Hacker News (where I first heard of LW) instead of Singularity lists, producing newcomers less in tune with the local truth.

(Unnamed's comment shows interesting differences in opinion between a "core" and the rest, but (s)he seems to have skipped the only question with an easily-verified answer, i.e. Newton.)

↑ comment by Unnamed · 2011-12-06T18:52:47.405Z

The calibration question was more complicated to analyze, but now I've looked at it and it seems like core members were slightly more accurate at estimating the correct year (p=.05 when looking at size of the error, and p=.12 when looking at whether or not it was within the 20-year range), but there's no difference in calibration.

("He", btw.)

↑ comment by curiousepic · 2011-12-06T18:53:22.009Z

Couldn't the current or future data be correlated with length of readership to determine this?

comment by J_Taylor · 2011-12-04T20:27:29.134Z

The supernatural (ontologically basic mental entities) exists: 5.38, (0, 0, 1)

God (a supernatural creator of the universe) exists: 5.64, (0, 0, 1)

??

↑ comment by Unnamed · 2011-12-04T21:25:19.378Z

P(Supernatural) What is the probability that supernatural events, defined as those involving ontologically basic mental entities, have occurred since the beginning of the universe?

P(God) What is the probability that there is a god, defined as a supernatural (see above) intelligent entity who created the universe?

So deism (God creating the universe but not being involved in the universe once it began) could make p(God) > p(Supernatural).

Looking at the data by individual instead of in aggregate, 82 people have p(God) > p(Supernatural); 223 have p(Supernatural) > p(God).

↑ comment by J_Taylor · 2011-12-04T21:31:04.847Z

Given this, the numbers no longer seem anomalous. Thank you.

↑ comment by Sophronius · 2011-12-04T20:53:41.143Z

Yeah, I noticed that too. They are so close together that I wrote it off as noise, though. Otherwise, it can be explained by religious people being irrational and unwilling to place God in the same category as ghosts and other "low status" beliefs. That doesn't indicate irrationality on the part of the rest of Less Wrong.

↑ comment by DanielLC · 2011-12-04T22:48:05.690Z

They are so close together that I wrote it off as noise, though.

That would work if these were separate surveys, but to get that result on one survey, individual people would have to give a higher probability to God than to the supernatural.

↑ comment by Sophronius · 2011-12-04T23:23:07.787Z

True, but this could be the result of a handful of people giving a crazy answer (noise). That's not really indicative of Less Wrong as a whole. I imagine most Less Wrongers gave negligible probabilities for both, allowing a few religious people to skew the results.

↑ comment by DanielLC · 2011-12-05T02:28:28.835Z

I was thinking you meant statistical error.

Do you mean trolls, or people who don't understand the question?

↑ comment by Sophronius · 2011-12-05T11:52:18.891Z

Neither; I meant people who don't understand that the probability of a god should be less than the probability of something supernatural existing. Add in religious certainty and you get a handful of people giving answers like P(god) = 99% and P(supernatural) = 50%, which can easily skew the results if the rest of Less Wrong gives probabilities like 1% and 2% respectively. Given what Yvain wrote in the OP, though, I think there's also plenty of evidence of trolls upsetting the results somewhat at points.

Of course, it would make much more sense to ask Yvain for more data on how people answered this question rather than speculate on this matter :p
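DanielLC's and Sophronius's points are compatible, and a few lines of arithmetic show why. This sketch uses the illustrative numbers from the comment above (99% vs 50% for a handful of confident respondents, 1% vs 2% for everyone else); the group sizes of 10 and 290 are assumptions, not survey data. The mean P(God) comes out above the mean P(supernatural) even though 290 of 300 respondents order them the other way. Some individuals must still rank God higher for this to happen, as DanielLC says, but they need not be many. (The actual counts Unnamed reported were 82 and 223.)

```python
from statistics import mean

# Sophronius's hypothetical answers, in percent, as (P(God), P(supernatural)).
# The group sizes (10 and 290) are assumptions made for illustration.
answers = [(99.0, 50.0)] * 10 + [(1.0, 2.0)] * 290

mean_god = mean(god for god, _ in answers)
mean_supernatural = mean(sup for _, sup in answers)

# Count respondents by which of the two they rated higher.
god_higher = sum(god > sup for god, sup in answers)
supernatural_higher = sum(sup > god for god, sup in answers)

print(f"mean P(God) = {mean_god:.2f}%, mean P(supernatural) = {mean_supernatural:.2f}%")
print(f"{god_higher} put God higher; {supernatural_higher} put the supernatural higher")
```

Means are sensitive to a few extreme answers, which is why the survey's quartiles (0, 0, 1 for both questions) say more about the typical respondent than the means do.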

↑ comment by byrnema · 2011-12-05T20:38:07.943Z

Could someone break down what is meant by "ontologically basic mental entities"? In particular, I'm not certain of the role of the word 'mental'.

↑ comment by Nornagest · 2011-12-05T20:48:38.114Z

It's a bit of a nonstandard definition of the supernatural, but I took it to mean mental phenomena as causeless nodes in a causal graph: that is, that mental phenomena (thoughts, feelings, "souls") exist which do not have physical causes and yet generate physical consequences. By this interpretation, libertarian free will and most conceptions of the soul would both fall under supernaturalism, as would the prerequisites for most types of magic, gods, spirits, etc.

I'm not sure I'd have picked that phrasing, though. It seems to be entangled with epistemological reductionism in a way that might, for a sufficiently careful reading, obscure more conventional conceptions of the "supernatural": I'd expect more people to believe in naive versions of free will than do in, say, fairies. Still, it's a pretty fuzzy concept to begin with.

↑ comment by byrnema · 2011-12-05T23:25:31.405Z

OK, thanks. I also tend to interpret "ontologically basic" as a causeless node in a causal graph. I'm not sure what is meant by 'mental' (for example, in the case of free will or a soul). I think this is important, because "ontologically basic" in and of itself isn't something I'd be skeptical about. For example, as far as I know, matter is ontologically basic at some level.

A hypothesis: "mental" perhaps implies subjective in some sense, perhaps even to the point that an ontologically basic entity counts as mental if it is a node that not only has no physical cause but also has no physical effect. In that case, I again see no reason to be skeptical of their existence as a category.
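The two readings in this exchange can be made concrete on a toy causal graph. This is only an illustration under stated assumptions: the graph, its node names, and the idea of supplying "mental" as an external tag are all hypothetical, not part of the survey's definition. It shows byrnema's point that being causeless is a purely structural property, shared here by `quark` and `soul` alike; what separates them is the extra "mental" label, and Nornagest's reading and byrnema's hypothesis differ only in whether such a node has effects.

```python
from typing import Dict, List, Set

# A causal graph as {node: [direct causes]}. Node names are hypothetical examples.
CausalGraph = Dict[str, List[str]]


def causeless(graph: CausalGraph) -> Set[str]:
    """Root nodes: nothing in the graph causes them ("ontologically basic")."""
    return {node for node, causes in graph.items() if not causes}


def has_effects(graph: CausalGraph) -> Set[str]:
    """Nodes that directly cause at least one other node."""
    return {cause for causes in graph.values() for cause in causes}


def classify_basic(graph: CausalGraph, mental: Set[str]) -> Dict[str, str]:
    """Label each root node; 'mental' is a tag supplied from outside the graph."""
    effects = has_effects(graph)
    labels = {}
    for node in sorted(causeless(graph)):
        if node not in mental:
            labels[node] = "basic, not mental"
        elif node in effects:
            labels[node] = "basic, mental, with effects"  # Nornagest's reading
        else:
            labels[node] = "basic, mental, no effects"  # byrnema's hypothesis
    return labels


example = {
    "quark": [],                  # basic physical stuff
    "atom": ["quark"],
    "soul": [],                   # uncaused, but drives behavior
    "decision": ["soul", "atom"],
    "arm_moves": ["decision"],
    "epiphenomenal_mind": [],     # uncaused and causes nothing
}
print(classify_basic(example, mental={"soul", "epiphenomenal_mind"}))
```

Nothing in the graph structure itself tells `quark` and `soul` apart; the classification depends entirely on the `mental` set passed in, which is the ambiguity byrnema is asking about.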

↑ comment by scav · 2011-12-06T13:01:44.247Z

It's barely above background noise, but my guess is when specifically asked about ontologically basic mental entities, people will say no (or huh?), but when asked about God a few will decline to define supernatural in that way or decline to insist on God as supernatural.

It's an odd result if you think everyone is being completely consistent about how they answer all the questions, but if you ask me, if they all were it would be an odd result in itself.

↑ comment by Jayson_Virissimo · 2011-12-05T11:12:21.790Z

I think Yvain's stipulative definition of "supernatural" was a bad move. I would be very surprised if I asked a theologian to define "supernatural" and they replied "ontologically basic mental entity". Even as a rational reconstruction of their reply, it would be quite a stretch. Using such specific definitions of contentious concepts isn't a good idea, if you want to know what proportion of Less Wrongers are atheist/agnostic/deist/theist/polytheist.

comment by Vladimir_Nesov · 2011-12-04T20:53:18.141Z

"less likely to believe in cryonics"

Rather, believe the probability of cryonics producing a favorable outcome to be lower. This was a confusing question, because it didn't specify whether it meant total probability. If it did, then the probability of global catastrophe had to be taken into account and, depending on your expectation about the usefulness of frozen heads to an FAI's value, the probability of FAI as well (in addition to the usual failure-of-preservation risks). As a result, even though I'm almost certain that cryonics fundamentally works, I gave only something like 3% probability. Should I really be classified as "doesn't believe in cryonics"?

(The same issue applied to live-to-1000. If there is a global catastrophe anywhere in the next 1000 years, then living-to-1000 doesn't happen, so it's a heavy discount factor. If there is a FAI, it's also unclear whether original individuals remain and it makes sense to count their individual lifespans.)

↑ comment by Unnamed · 2011-12-05T20:30:01.804Z

The same issue applied to live-to-1000. If there is a global catastrophe anywhere in the next 1000 years, then living-to-1000 doesn't happen, so it's a heavy discount factor. If there is a FAI, it's also unclear whether original individuals remain and it makes sense to count their individual lifespans.

Good point, and I think it explains one of the funny results that I found in the data. There was a relationship between strength of membership in the LW community and the answers to a lot of the questions, but the anti-agathics question was the one case where there was a clear non-monotonic relationship. People with a moderate strength of membership (nonzero but small karma, read 25-50% of the sequences, or been in the LW community for 1-2 years) were the most likely to think that at least one currently living person will reach an age of 1,000 years; those with a stronger or weaker tie to LW gave lower estimates.

There was some suggestion of a similar pattern on the cryonics question, but it was only there for the sequence reading measure of strength of membership and not for the other two.

↑ comment by steven0461 · 2011-12-04T21:32:47.864Z

Do you think catastrophe is extremely probable, do you think frozen heads won't be useful to a Friendly AI's value, or is it a combination of both?

↑ comment by Vladimir_Nesov · 2011-12-04T22:30:36.190Z

Below is my attempt to re-do the calculations that led to that conclusion (this time, it's 4%).

FAI before WBE: 3%; Surviving to WBE: 60%; I assume cryonics revival is feasible mostly only after WBE; Given WBE, cryonics revival (actually happening for a significant portion of cryonauts) before catastrophe or FAI: 10%; FAI given WBE (but before cryonics revival): 2%; Heads preserved long enough (given no catastrophe): 50%; Heads (equivalently, living humans) mattering/useful to FAI: less than 50%.

In total, that's 6% for post-WBE revival potential and 4% for FAI revival potential; discounted by the 50% preservation probability and the 50% mattering-to-FAI probability, this gives 4%.

(By "humans useful to FAI", I don't mean that specific people should be discarded, but that the difference to utility of the future between a case where a given human is initially present, and where they are lost, is significantly less than moral value of current human life, so that it might be better to keep them than not, but not that much better, for fungibility reasons.)
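One way to reproduce the 4% from the figures listed above, as a sketch: the grouping here is an interpretation, not necessarily how the original calculation was done. It reads the 6% as 60% × 10% (survive to WBE, then revival) and the 4% as 3% + 60% × 2% (FAI before WBE, or FAI after WBE), applies the 50% preservation discount to both branches, and applies the 50% mattering-to-FAI discount only to the FAI branch. Since the comment says "less than 50%" for mattering to FAI, treating it as exactly 50% makes the result an upper bound under this reading.

```python
# Figures from the comment above; how they are combined is one reading of it.
p_fai_before_wbe = 0.03
p_survive_to_wbe = 0.60
p_revival_given_wbe = 0.10     # revival before catastrophe or FAI
p_fai_given_wbe = 0.02         # FAI after WBE but before revival
p_heads_preserved = 0.50
p_heads_matter_to_fai = 0.50   # stated as "less than 50%", so this is a ceiling

post_wbe_revival = p_survive_to_wbe * p_revival_given_wbe             # about 6%
fai_revival = p_fai_before_wbe + p_survive_to_wbe * p_fai_given_wbe    # about 4%

# Preservation discounts both branches; mattering-to-FAI discounts only the FAI one.
total = p_heads_preserved * (post_wbe_revival + p_heads_matter_to_fai * fai_revival)
print(f"post-WBE: {post_wbe_revival:.1%}, FAI: {fai_revival:.1%}, total: {total:.2%}")
```

Under this reading the total is about 4.05%, which rounds to the 4% quoted.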

↑ comment by jefftk (jkaufman) · 2012-04-03T01:58:07.662Z

I'm trying to sort this out so I can add it to the collection of cryonics Fermi calculations. Do I have this right:

Either we get FAI first (3%) or WBE (97%). If WBE, 60% chance we die out first. Once we do get WBE but before revival, 88% chance of catastrophe, 2% chance of FAI, leaving 10% chance of revival. 50% chance heads are still around.

If at any point we get FAI, then 50% chance heads are still around and 50% chance it's interested in reviving us.

So, combining it all:

(0.5 heads still around) *
  ((0.03 FAI first) * (0.5 humans useful to FAI) +
   (0.97 WBE first) * (0.4 don't die first) *
     ((.02 FAI before revival) * (0.5 humans useful to FAI) +
      (.1 revival with no catastrophe or FAI)))
= .5*(0.03*0.5 + 0.97*0.4*(0.02*0.5 + 0.1))
= 2.9%

This is less than your 4%, but I don't see where I'm misinterpreting you.
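The expression above can be recomputed with the survival factor left as a parameter. One apparent source of the gap (an observation from the numbers, not something either commenter states): the original list gives "Surviving to WBE: 60%", while this reconstruction uses 0.4 as the chance of not dying first. With 0.4 the expression gives about 2.9%; with 0.6 it gives about 4.0%, in line with the 4% estimate. The function name is hypothetical.

```python
def combined_estimate(p_no_early_death: float) -> float:
    """jkaufman's expression with the 'don't die first' factor as a parameter."""
    heads_still_around = 0.5
    fai_first, wbe_first = 0.03, 0.97
    humans_useful_to_fai = 0.5
    fai_before_revival = 0.02
    revival_no_catastrophe_or_fai = 0.1
    return heads_still_around * (
        fai_first * humans_useful_to_fai
        + wbe_first * p_no_early_death
        * (fai_before_revival * humans_useful_to_fai + revival_no_catastrophe_or_fai)
    )


print(f"{combined_estimate(0.4):.2%}")  # 0.4, as in the reconstruction above
print(f"{combined_estimate(0.6):.2%}")  # 0.6, reading "Surviving to WBE: 60%" literally
```

The estimate is linear in the survival factor, so this one substitution accounts for most of the difference between the two totals.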

Do you also think that the following events are so close to impossible that approximating them at 0% is reasonable?

- The cryonics process doesn't preserve everything

- You die in a situation (location, legality, unfriendly hospital, ...) where you can't be frozen quickly enough

- The cryonics people screw up in freezing you

↑ comment by steven0461 · 2011-12-04T22:53:11.436Z

I'm not sure how to interpret the uploads-after-WBE-but-not-FAI scenario. Does that mean FAI never gets invented, possibly in a Hansonian world of eternally competing ems?

↑ comment by Vladimir_Nesov · 2011-12-04T23:13:31.187Z

If you refer to "cryonics revival before catastrophe or FAI", I mean that catastrophe or FAI could happen (shortly) after; no-catastrophe-or-superintelligence seems very unlikely. I expect catastrophe to be very likely after WBE, which also accounts for most of the probability of revival not happening after WBE. After WBE, greater tech argues for a lower FAI-to-catastrophe ratio and better FAI theory argues otherwise.

↑ comment by steven0461 · 2011-12-04T23:59:30.383Z

So the 6% above is where cryonauts get revived by WBE, and then die in a catastrophe anyway?

↑ comment by Vladimir_Nesov · 2011-12-05T00:03:35.995Z

Yes. Still, if implemented as WBEs, they could live for significant subjective time, and then there's that 2% of FAI.

↑ comment by steven0461 · 2011-12-05T00:10:55.497Z

In total, you're assigning about a 4% chance of a catastrophe never happening, right? That seems low compared to most people, even most people "in the know". Do you have any thoughts on what is causing the difference?

↑ comment by Vladimir_Nesov · 2011-12-05T01:10:27.433Z

I expect that "no catastrophe" is almost the same as "eventually, FAI is built". I don't expect a non-superintelligent singleton that prevents most risks (so that it can build a FAI eventually). Whenever FAI is feasible, I expect UFAI to be feasible too, and easier, and so more likely to come first; UFAI is also possible when FAI is not yet feasible (while the theory isn't ready). In physical time, WBE sets a soft deadline on catastrophe or superintelligence, making either happen sooner.

comment by Dr_Manhattan · 2011-12-06T15:55:01.223Z

I think "has children" is an (unsurprising but important) omission in the survey.

↑ comment by taryneast · 2011-12-06T19:29:24.145Z

Possibly less surprising given the extremely low average age... I agree it should be added as a question, possibly along with options for "none but want to have them someday" vs. "none and don't want any".

↑ comment by Prismattic · 2011-12-07T01:30:23.860Z

↑ comment by Dr_Manhattan · 2011-12-06T20:02:12.496Z

Less surprising than 'unsurprising' - you win! :) The additional categories are good.

comment by NancyLebovitz · 2011-12-04T20:12:39.908Z