Robin Hanson's Grabby Aliens model explained - part 1

post by Writer · 2021-09-22T18:51:29.594Z · 30 comments

This is a link post for https://youtu.be/l3whaviTqqg

Contents

- Introduction
- The hard-steps model, planet habitability, and human earliness
- Other reasons why GAs are plausible

This article is the script of the video linked above. An earlier version differed slightly from the video due to some phrasing changes in the narration; it now matches the narration exactly. Here, I aim to explain the rationale for Robin Hanson's Grabby Aliens model. In short, humanity's appearance date looks implausibly early. Hanson explains this puzzle by positing that civilizations he calls "grabby" set a deadline for other civilizations to appear, which creates a selection effect. This topic might be important for EAs and rationalists because it's a plausible theory of how an essential aspect of the far future will unfold. The next video/article will have more specific numbers to work with.

EA Forum cross-post.

Introduction

Welcome. Today’s topic is... aliens. In particular, we’ll talk about a recent model of how intelligent life expands and distributes itself in the universe. Its variables can be estimated from observation, and it makes many predictions. For example, it predicts when we should expect to meet another civilization, and it has things to say about our chances of hearing alien messages and of becoming an interplanetary civilization ourselves. It also explains why we don’t see aliens yet, despite the universe’s large number of stars and galaxies.

The model we’ll discuss is one of the most interesting of its kind, but it's very recent and still not widely known. Its main author is Robin Hanson. You may know him as the person who first introduced the idea of the Great Filter in 1996. He published a paper with three coauthors detailing his new model in March 2021. [Here's the paper, here's the website, with lots of links].

The model’s basic assumption is that some civilizations in the universe at some point become “grabby”. This means that they (1) expand from their origin planet at a fraction of the speed of light, (2) make significant and visible changes wherever they go, and (3) last a long time.

The hard-steps model, planet habitability, and human earliness

But why do we have reason to think that such civilizations should exist? The starting point of the model, the thing that makes it necessary in the first place, is a question: “Why are humans so early?”

To understand where this question comes from, we need to step back and ask another question.

What is the probability that simple dead matter becomes a civilization like ours? Robin Hanson uses a simple statistical model introduced in 1983 by the physicist Brandon Carter, which many others have developed since then. It posits that life has to go through a series of difficult phases, from when a planet first becomes habitable to when an advanced civilization is born. These difficult phases are called “hard steps”. For example, hard steps could be the creation of the first self-replicating molecules, the passage from prokaryotic to eukaryotic cells, multicellularity, or the emergence of particular combinations of body parts. Each step has a certain probability of being completed per unit of time.
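To make the mechanics concrete, here is a minimal Monte Carlo sketch of a hard-steps process in which every parameter is hypothetical. Each step's waiting time is drawn from an exponential distribution, and only the rare trials where all steps finish within a fixed habitable window are kept. The function name `conditional_step_durations` and the chosen means are illustrative assumptions. This is not Hanson's or Carter's code.

```python
import random

def conditional_step_durations(mean_steps, window, trials, seed=0):
    """Toy hard-steps simulation (all parameters hypothetical).

    Step i takes an exponentially distributed time with mean mean_steps[i].
    A trial succeeds if every step completes within `window`. Returns the
    number of successes and the average duration of each step among them.
    """
    rng = random.Random(seed)
    totals = [0.0] * len(mean_steps)
    successes = 0
    for _ in range(trials):
        durations = [rng.expovariate(1.0 / m) for m in mean_steps]
        if sum(durations) <= window:  # condition on success within the window
            successes += 1
            for i, d in enumerate(durations):
                totals[i] += d
    averages = [t / successes for t in totals] if successes else []
    return successes, averages

# Three hard steps whose unconditional means (2, 5, 10) all exceed the window (1.0).
successes, averages = conditional_step_durations([2.0, 5.0, 10.0], 1.0, 300_000)
print(successes, [round(a, 2) for a in averages])
```

In runs like this, the successful trials tend to show the three steps taking similar times on average, close to window / (n + 1) = 0.25, even though their unconditional means differ fivefold. In hindsight, very hard steps tend to look evenly spaced. This is why observed durations can stand in for the typical length of a hard step.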

This model also appears in biology as a standard way of describing the appearance of cancer. A cell has to suffer many mutations before becoming cancerous, and each mutation has a certain probability of occurring per unit of time. The probability that all the required mutations occur in any given cell is so low that, on average, a single cell would take much longer than the organism’s lifetime to become cancerous. But there are so many cells in the human body that a few outliers accumulate enough mutations within a normal lifetime and become cancerous. The number of mutations required is typically 6 or 7, but sometimes just 2 are enough.

Now, let’s try to estimate how many hard steps life on Earth had to go through. Hanson points to at least two periods in our planet’s history whose lengths can serve as estimates of how long a typical hard step takes. First: the period between now and when Earth will become uninhabitable, which is 1.1 billion years. Second: the period from when Earth first became habitable to when life first appeared, which is 0.4 billion years. If we use 1.1 billion years as the typical duration of a hard step, then we should expect Earth to have undergone 3.9 hard steps. If we use 0.4 billion years, we should expect it to have undergone 12.5 hard steps. A middle estimate between these two is 6 hard steps.
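The two estimates can be reproduced with a short calculation. This is a minimal sketch. It relies on the standard hard-steps result that, conditional on success, each of the n hard steps and the leftover time before the window closes last about L / (n + 1) on average. That gives n ≈ L / τ - 1 for a typical step duration τ. The total habitable window of about 5.4 billion years (roughly 4.3 billion so far plus the 1.1 billion remaining) is our assumption and does not come from the text. It was chosen because it reproduces both quoted numbers.

```python
def implied_hard_steps(window_gyr, step_gyr):
    """Hard-steps heuristic: n steps plus the leftover time each average
    about window / (n + 1), so n is roughly window / step - 1."""
    return window_gyr / step_gyr - 1

# Assumed total habitable window for Earth, in billions of years.
WINDOW_GYR = 5.4

for step_gyr in (1.1, 0.4):  # remaining habitability; time to first life
    n = implied_hard_steps(WINDOW_GYR, step_gyr)
    print(f"step = {step_gyr} Gyr -> n = {n:.1f}")
```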

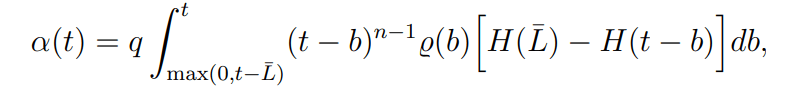

This concept is generalizable from planets to large volumes of space containing many stars. The number of hard steps appears as a parameter in a function that takes a time t and returns the probability that advanced life will appear at that time in a certain volume of space. We use this law to estimate human earliness:

You don’t need to understand every term here. But you should know that “n” is the number of hard steps estimated, and the L with the bar on top is the maximum lifetime of planets that are considered suitable for advanced life. Varying these two parameters leads to different estimates for how early advanced life on Earth looks compared to past and future advanced life in the rest of the universe, as predicted by the law you just saw.

Let’s see what each of the two parameters means regarding human earliness.

First, a large number of hard steps greatly favors later appearance dates for advanced life, making humans look very early. This makes sense: the more hard steps life has to overcome, the more steeply its chance of success grows with time. As a result, most civilizations appear late, and it becomes less likely that one appears as early as we did.

Second, the same is true for increasing the maximum lifetime of habitable planets. This is because Earth is a relatively short-lived planet. It's estimated that it will become uninhabitable in around 1.1 billion years, and the Sun will run out of hydrogen in about 5 billion years, turning into a red giant and potentially swallowing Earth. But stars and planets can have far longer lifetimes, on the order of trillions of years, thousands of times longer than Earth’s. The universe itself is just 13.8 billion years old. Therefore, if those longer-lived planets are habitable by advanced life, then humans on Earth look very early. Why? Because the overwhelming majority of advanced life in the universe would then appear on those longer-lived planets over the coming trillions of years.
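A toy calculation shows why both parameters push in the same direction. As a simplification, this sketch assumes that a planet's chance of producing advanced life by time t grows as t^n (the usual hard-steps power law) until its habitable window L ends. It ignores the star-formation history and the spread of planet lifetimes that Hanson's full model includes, so its numbers are not meant to match Hanson's graph. Under that assumption, among planets that do produce advanced life, the fraction that do so by time t is (t / L)^n.

```python
def fraction_arrived_by(t, window, n_steps):
    """Toy model: P(advanced life by t) grows as t**n_steps until the
    window closes, so the share of all arrivals that occur by t is
    (t / window) ** n_steps."""
    if t >= window:
        return 1.0
    return (t / window) ** n_steps

# Hypothetical arrival time of 5 Gyr after habitability begins.
for window in (10.0, 1000.0):      # habitable window L, in Gyr
    for n_steps in (3, 6, 10):
        share = fraction_arrived_by(5.0, window, n_steps)
        print(f"L = {window:g} Gyr, n = {n_steps}: first {share:.2e} of arrivals")
```

Raising either n or L shrinks the share of civilizations that arrive as early as the hypothetical one. That is the sense in which such a civilization looks "early".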

But whether advanced life is possible around longer-lived stars is an open question, because longer-lived stars are less massive than our Sun. Plus, the estimate for the number of hard steps is itself uncertain.

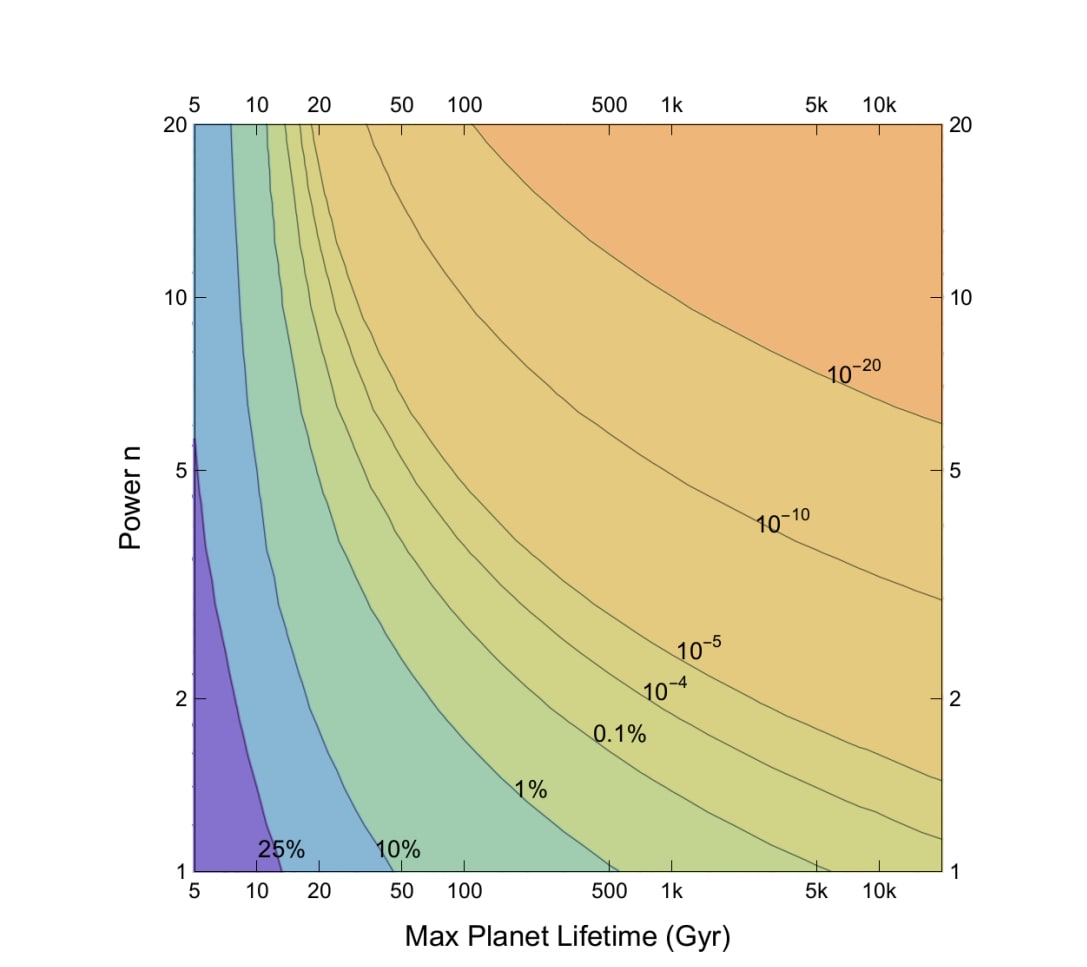

But even with this uncertainty, we can look at how early advanced life on Earth is predicted to be by many combinations of values chosen for the two parameters. Robin Hanson does this in this graph:

The horizontal axis represents the maximum lifetime of stars that allow habitable planets, expressed in billions of years. The vertical axis is the number of hard steps. For every combination of those two values, you get a measure of how early advanced life on Earth is, according to the mathematical law we saw earlier. Colder colors mean that we are not that early; warmer colors mean that we look very early. You can also look at the numbers. They represent the percentile rank of today’s date, 13.8 billion years after the Big Bang, within the distribution of advanced-life arrival dates predicted by the equation we saw. Confused? Here’s an example. See the number “1%” written on that line? It means that every combination of n and L that falls on that line implies that humans are among the first 1% of all advanced life that will appear in the universe. All the combinations to the left of that line imply that we came later than the first 1%, and all the combinations to the right of that line imply that we came even earlier, within a smaller fraction than the first 1%.

Let’s try a couple more examples. Let’s set the maximum planet lifetime at 10 trillion years and the number of hard steps at 10. We get a rank of less than 10^-20, which is one in a hundred quintillion. We look unbelievably early. Now, let’s try something more conservative: our middle estimate for the number of hard steps, which is 6, and a very restrictive maximum planet lifetime of 10 billion years, the lifetime of the Sun. In this case, we find that we are roughly among the first 10% of all advanced life that will ever appear in the universe. So, still surprisingly early.

In order to not look early, we have to assume unreasonably restrictive values for the number of hard steps and the maximum lifetimes of habitable planets.

So, we are back to the question we asked at the beginning of the video: why are we early? This is a puzzle in need of explanation. Being early means that we are in an unlikely situation, and when you see evidence that's unlikely according to your models, there’s probably a need for an explanation. Something that you didn’t expect to happen has happened. Or, said differently: when you encounter a piece of evidence that is unlikely according to your beliefs, that piece of evidence tells you to give more weight to hypotheses that make that piece of evidence more likely.
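The last sentence is Bayes' rule. Here is a minimal illustration using made-up numbers, not estimates from Hanson's paper. Suppose a hypothesis H starts at 10% credence, and our observed earliness is fifty times more likely if H is true than if it is false:

```python
def posterior(prior, p_evidence_if_true, p_evidence_if_false):
    """Bayes' rule for a yes/no hypothesis H after observing evidence E."""
    joint_true = prior * p_evidence_if_true
    joint_false = (1.0 - prior) * p_evidence_if_false
    return joint_true / (joint_true + joint_false)

# Hypothetical numbers: H = "grabby aliens exist", E = "we look early".
updated = posterior(prior=0.10, p_evidence_if_true=0.5, p_evidence_if_false=0.01)
print(f"credence in H: 0.10 -> {updated:.2f}")
```

Evidence that is much more likely under H shifts credence strongly toward H, even from a low prior.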

Robin Hanson’s solution to this earliness riddle is… Grabby Aliens. They serve as the main assumption of his model, and their existence is a hypothesis that gains credence from the evidence provided by the apparent earliness of life on Earth. Along with this hypothesis, the credibility of Hanson’s whole model gets a boost.

As anticipated, the word “grabby” means that such aliens (1) expand from their origin planet at a fraction of the speed of light, (2) make significant and visible changes wherever they go, and (3) last a long time.

But why do grabby aliens explain human earliness? One consequence of grabby aliens is that they set a deadline for the appearance of other advanced civilizations. The model predicts that if such aliens exist, they will soon occupy most of the observable universe. And when they do, other civilizations can’t appear anymore, because all of the habitable planets are taken. Therefore, if grabby aliens exist, ours is no longer an unlikely situation. Civilizations like ours (advanced but not yet grabby) can only appear early, because later on every habitable planet has already been taken.
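The deadline effect can be seen in the same kind of toy model. This sketch assumes that arrivals follow a t^n law. The law is cut off either when planets' habitable windows end or, if grabby aliens exist, at a much earlier deadline after which no new civilizations appear. The cutoff values and our arrival time are hypothetical.

```python
def share_arrived_by(t, cutoff, n_steps):
    """Toy model: arrivals follow a t**n_steps law and stop at `cutoff`
    (planet death, or a grabby-alien deadline). Returns the share of all
    arrivals that happen by time t."""
    return min(1.0, (t / cutoff) ** n_steps)

N_STEPS = 6       # the text's middle estimate
OUR_TIME = 5.0    # hypothetical arrival time, in Gyr

no_deadline = share_arrived_by(OUR_TIME, cutoff=1000.0, n_steps=N_STEPS)
with_deadline = share_arrived_by(OUR_TIME, cutoff=6.0, n_steps=N_STEPS)
print(f"without a deadline: first {no_deadline:.1e} of arrivals")
print(f"with a deadline at 6 Gyr: first {with_deadline:.2f} of arrivals")
```

Without a deadline, we sit in a vanishingly small early tail. With one, a civilization arriving when we did is unremarkable.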

Before becoming grabby, every advanced civilization must observe what we are also observing: that it is early. This means that grabby aliens explain the current evidence. They make it look likely. They make us look not that special anymore. We become exactly what a typical non-grabby civilization would look like. If you know the history of science, you know that looking special is very fishy.

To put it another way: without the grabby aliens hypothesis, we appear very early among all the possible advanced civilizations in the past and future history of the universe, which is an unlikely situation. But once we hypothesize grabby aliens, the future advanced civilizations that are not yet grabby disappear. Their existence is prevented by some of the early civilizations, which became grabby and occupied all the habitable planets on which new civilizations could have been born. Therefore, the grabby aliens hypothesis makes us look more typical: every civilization like us will follow the same path of appearing early and then potentially expanding into space.

Other reasons why GAs are plausible

Grabby aliens are also plausible for other reasons. Life on Earth, and humans in particular, look grabby in many ways. Species, cultures, and organizations tend to expand into new niches and territories when possible. Species expanding into new territories can access new resources and increase their population. Therefore, we should expect behaviors that encourage such colonization to be selected for, and species to evolve to exhibit these behaviors.

Imagine two species that consume the same resources. They inhabit two different territories, separated by uninhabited, resource-rich land. One of the two species has no particular impulse to consume more resources; its population simply remains stable in its territory. The other, instead, expands into the uninhabited territory and produces more offspring. This second species will therefore soon outnumber the first. With each successive episode of expansion, the second species will become larger and larger, and evolution will move in the direction of grabby behavior.
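The compounding in this thought experiment can be sketched numerically. Assume, hypothetically, that both species start with equal populations, that the stable species never grows, and that the expanding species multiplies its population by 1.5 with each episode of expansion:

```python
def expanding_share(episodes, growth_per_episode=1.5):
    """Share of the combined population held by the expanding species
    after each episode, assuming equal starting populations and a stable
    (non-growing) rival."""
    stable = expanding = 1.0
    shares = []
    for _ in range(episodes):
        expanding *= growth_per_episode  # new territory, more offspring
        shares.append(expanding / (stable + expanding))
    return shares

print([round(s, 2) for s in expanding_share(5)])
```

Any steady growth factor above 1 drives the expanding species' share toward 100%. This is the selection pressure toward grabby behavior described in the thought experiment.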

This kind of selection effect looks general enough that it might very well be valid for species on planets other than Earth.

In addition, expansion usually means consuming resources and changing the environment. Grabby aliens, if they exist, should make visible changes to the volumes of space they occupy. They might build structures to harness the energy of stars, such as Dyson spheres or Dyson swarms, build technology on the planets they occupy, and mine other planets and asteroids for resources. Even with the tools we have today, some of these changes wouldn’t escape astronomers’ notice, so they can be considered visible to other advanced civilizations.

But the idea of grabby aliens relies on interstellar travel being possible. How likely is that? Even today, we foresee a nontrivial chance that humanity will expand to other planets and star systems. Don’t picture human-crewed spaceships; there are far easier ways. Consider, for example, probes. Self-replicating probes that settle on a planet to plant the seed of a new civilization are plausible technologies in the realm of speculative engineering. They’re called von Neumann probes, and they are made of components that are likely to be developed at some point. Each of these components already exists in nature or in human technology: a capacity for self-replication, a way to store and possibly collect energy, some kind of AI, manufacturing capability, and an engine.

Cosmic distances aren’t much of an impediment. There are many possible ways to achieve propulsion, but consider, for example, solar sails: they are basically mirrors that reflect light from a star or a laser and accelerate due to the force that light exerts on them. With sails pushed by powerful lasers, it may be possible to reach significant fractions of the speed of light.
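For a sense of scale, here is a back-of-the-envelope sketch for an idealized light sail. It assumes a perfectly reflecting sail facing the beam, so the force is 2P/c for beam power P. It ignores relativistic effects and beam divergence; in practice, the laser can only push while the sail stays in focus. The 100 GW beam and the 1-gram sail are hypothetical inputs chosen for illustration.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s

def sail_acceleration(beam_power_w, sail_mass_kg):
    """Ideal, perfectly reflecting sail facing the beam head-on:
    force = 2 * P / c (non-relativistic approximation)."""
    force_n = 2.0 * beam_power_w / SPEED_OF_LIGHT
    return force_n / sail_mass_kg

# Hypothetical inputs: a 100 GW laser pushing a 1-gram sail.
accel = sail_acceleration(100e9, 0.001)
time_to_tenth_c = 0.1 * SPEED_OF_LIGHT / accel  # constant acceleration, seconds
print(f"{accel:.3g} m/s^2, {time_to_tenth_c:.0f} s to reach 0.1 c")
```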

Even at lower speeds, estimates for the time needed to colonize the Milky Way range from 5 million to a few hundred million years. That is very little compared to the age of the universe, or even the age of the Earth. But we’ll see that the Grabby Aliens model invokes speeds of at least ⅓ of the speed of light to explain why we don’t see any trace of aliens in our skies.
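The travel-time part of such estimates is simple arithmetic: at a fraction v of light speed, crossing d light-years takes d / v years. This sketch assumes a round figure of 100,000 light-years for the Milky Way's disk. It counts only time in transit and leaves out the stops needed to build new probes. It therefore gives a lower bound and does not reproduce the quoted estimates.

```python
MILKY_WAY_DIAMETER_LY = 100_000      # assumed round figure
UNIVERSE_AGE_YEARS = 13.8e9

def crossing_time_years(distance_ly, speed_fraction_of_c):
    """Transit time only: one light-year at the speed of light takes one year."""
    return distance_ly / speed_fraction_of_c

for v in (0.01, 0.1, 1 / 3):
    years = crossing_time_years(MILKY_WAY_DIAMETER_LY, v)
    print(f"v = {v:.2f} c: {years:,.0f} years "
          f"({years / UNIVERSE_AGE_YEARS:.1e} of the universe's age)")
```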

Perhaps there is only a tiny chance that any given civilization becomes grabby. But even in this case, the ones that do will have a disproportionate impact on the universe.

If grabby aliens exist, they will spread through the universe relatively soon. In the next video, we’ll talk about how soon and when we'll meet them, along with many other predictions. If you want to know more, stay tuned.

30 comments


comment by Steven Byrnes (steve2152) · 2022-12-09T17:02:37.721Z

I continue to believe that the Grabby Aliens model rests on an extremely sketchy foundation, namely the anthropic assumption “humanity is randomly-selected out of all intelligent civilizations in the past present and future”.

For one thing, given that the Grabby Aliens model does not weight civilizations by their populations, it follows that, if the Grabby Aliens model is right, then all the “popular” anthropic priors like SIA and SSA and UDASSA and so on are all wrong, IIUC.

For another (related) thing, in order to believe the Grabby Aliens model, we need to make both of the following two claims:

- We SHOULD do the following: (1) observe that we (humanity) seem early with respect to all intelligent civilizations that will ever exist; (2) feel surprised at that observation; and then (3) update our credences-about-astrobiology-etc. accordingly;

- We SHOULD NOT do the following: (1) observe that we (humanity in 2022) seem early with respect to all humans that will ever exist; (2) feel surprised at that observation; and then (3) update our credences-about-astrobiology-etc. accordingly.

I don’t understand on what grounds somebody could hold both of these opinions simultaneously, but that’s what the Grabby Aliens paper asks us to do. (The second bullet point by the way is the doomsday argument; here’s Robin Hanson’s rationale for rejecting it.)

There was some discussion on this topic at my Question Post “Is Grabby Aliens built on good anthropic reasoning?”. Judge for yourself, but the impression I got from that discussion was that roughly nobody outside the study coauthors was really enthusiastic about the anthropic foundation of the Grabby Aliens model, and that at least one person who had thought the anthropic foundation was fine turned out to have misunderstood it.

Anyway, this is a review for the LessWrong 2021 review, so I guess the question should be: is this post (and corresponding YouTube video) a “highlight of intellectual progress on this website” (or something along those lines)? Well, I thought it was a lovely and well-crafted YouTube video that faithfully explained the paper. But I also think that it was basically endorsing (or at least, failing to criticize) a paper that is deeply flawed. So I’m strong-voting against including this post in the review, but I’m also upvoting this post itself. :)

UPDATE 2024: I screenshotted this comment on X and Robin Hanson responded: Link.

comment by Steven Byrnes (steve2152) · 2021-09-22T23:16:31.054Z

Please correct me if I'm wrong, but this model seems to take for granted the idea that we can line up all the intelligent civilizations in the past and future history of the universe, and treat "humans on earth" as if it's randomly drawn from that distribution. That seems to be a core piece of the model, if I'm understanding it right.

So if the distribution-of-intelligent-civilizations contains much much much more civilizations after "humans on earth" than before, then this is evidence that that's not the real distribution, and maybe we should rethink our assumptions and find a story wherein the "humans on earth" draw is not quite so atypical for the distribution. That's the "humans are early" argument as I understand it.

Is that right? If so, I find that "random draw from the distribution" premise questionable.

There wasn't actually literally a process where, like, I started out as a soul hanging out in heaven, like in that Pixar movie, and then God picked an intelligent civilization out of His hat, and assigned me to that civilization, and sent me down into an appropriate body.

So there has to be some different argument justifying this premise, and I don't know what that argument is.

Here's why I'm skeptical. It seems to me—and I'm very much not an expert—that "I" am the thought processes going on in a particular brain in a particular body at a particular time. I'm in a reference class of one. The idea that "I could have been born into a different species in a different galaxy" strikes me as nonsensical, similar to the idea "I could have been a rock".

↑ comment by Richard_Ngo (ricraz) · 2021-09-27T20:54:22.176Z

I'm in a reference class of one.

It depends what purpose you want to use the reference class for. You can always fall back on a reference class of one, but then you'll have a lot of difficulty reasoning about, say, your chance of dying this year - sure, the base rate is pretty low, but using that base rate means implicitly assigning yourself to some reference class.

The anthropic case isn't exactly analogous, but it shares the property that you need to choose a useful reference class by abstracting away from unimportant features. In this case, the unimportant features we're proposing to abstract away from are "every feature except the fact that we're an intelligent species trying to reason about our place in the universe". And then we can try to infer how successful a given chain of reasoning would be for each of those species in every possible world we can imagine, and update our probabilities accordingly.

↑ comment by Steven Byrnes (steve2152) · 2021-09-28T13:47:28.023Z

Thanks, that helps! Here's where I'm at now:

The "chance of dying" argument goes:

- There's a reference class (people in my age bracket in my country)

- Everyone in the reference class is doing a thing (living normal human lives)

- Something happens with blah% probability to everyone in the reference class (dying this year)

- Since I am a member of the reference class too, I should consider that maybe that thing will happen to me with blah% probability as well (my risk of death this year is blah%).

OK, now aliens.

- There's a reference class (alien civilizations from the past present and future)

- Everyone in the reference class is doing a thing (doing analyses of how their civilization compares with the distribution of all alien civilizations from the past, present, and future)

- Something happens with 99.9% probability to everyone in the reference class (the alien civilization doing this analysis finds that they are somewhere in the middle 99.9% of the distribution, in terms of civilization origination date).

- Since I am a member of the reference class too, I should consider that maybe that thing will happen to me with 99.9% probability too.

…So if a purported distribution of alien civilizations has me in the first 0.05%, I should treat it like a hypothesis that made a 99.9% confident prediction and the prediction was wrong, and I should reduce my credence on that hypothesis accordingly.

Is that right?

If so, I find the first argument convincing but not the second one. They don't seem sufficiently parallel to me.

For example, in the first argument, I want to make the reference class as much like me as possible, up to the limits of the bias-variance tradeoff. But in the second case, if I update the reference class to "alien civilizations from the first 14 billion years of the universe", then it destroys the argument: we're not early anymore. Or is that cheating? An issue here is that the first argument is a prediction and the second is a postdiction, and in a postdiction it seems like cheating to condition on the thing you're trying to postdict. But that disanalogy is fixable: I'll turn the first argument into a postdiction by saying that I'm trying to estimate "my chances of having died in the past year", i.e., I know in hindsight that I didn't die, but was it because I got lucky, or was that to be expected?

So then I'm thinking: what does it even mean to produce a "correct" probability distribution for a postdiction? Why wouldn't I update on all the information I have (including the fact that I didn't die) and say "well gee I guess my chance of dying last year was 0%!"? Well, in this chance-of-dying case, I have a great way to ground out what I'm trying to do here: I can talk about "re-running the past year with a new (metaphorical) "seed" on all the quantum random number generators". Then the butterfly effect would lead to a distribution of different-but-plausible rollouts of the past year, and we can try to reason about what would have happened. It's still a hard problem, but at least there's a concrete thing that we're trying to figure out.

But there seems to be no analogous way in the alien case to ground out what a "correct" postdictive probability distribution is, because like I said above (with the Pixar movie comment), there was no actual random mechanism involved in the fact that I am a human rather than a member of a future alien civilization that will evolve a trillion years from now in a different galaxy. (We can talk about re-rolling the history of earth, but it seems to me that this kind of analysis would look like normal common-sense reasoning about hard steps in evolution etc., and not weird retrocausal inferences like "if earth-originating life is likely to prevent later civilizations from arising, then that provides such-and-such evidence about whether and when intelligent life would arise in a re-roll of earth's history".)

I can also point out various seemingly arbitrary choices involved in the alien-related reference class, like whether we should put more weight on the civilizations that have higher population, or higher-population-of-people-doing-anthropic-probability-analyses, and whether non-early aliens are less likely to be thinking about how early they are (as opposed to some other interesting aspect of their situation … do we need a Bonferroni correction to account for all the possible ways that an intelligent civilization could appear atypical??), etc. Also, I feel like there's a kind of reductio where this argument is awfully similar in structure to the doomsday argument, so that I find it hard to imagine how one could accept the grabby aliens argument but reject the doomsday argument, but they're kinda contradictory I think. It makes me skeptical of this whole enterprise. :-/ But again I'll emphasize that I'm just thinking this through out loud and I'm open to being convinced. :-)

↑ comment by Richard_Ngo (ricraz) · 2021-09-28T17:05:09.633Z

For the purposes of this discussion it's probably easier to get rid of the "alien" bit and just talk about humans. For instance, consider this thought experiment (discussed here by Bostrom):

A firm plan was formed to rear humans in two batches: the first batch to be of three humans of one sex, the second of five thousand of the other sex. The plan called for rearing the first batch in one century. Many centuries later, the five thousand humans of the other sex would be reared. Imagine that you learn you’re one of the humans in question. You don’t know which centuries the plan specified, but you are aware of being female. You very reasonably conclude that the large batch was to be female, almost certainly. If adopted by every human in the experiment, the policy of betting that the large batch was of the same sex as oneself would yield only three failures and five thousand successes. . . . [Y]ou mustn’t say: ‘My genes are female, so I have to observe myself to be female, no matter whether the female batch was to be small or large. Hence I can have no special reason for believing it was to be large.’ (Ibid. pp. 222–3)
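The quoted conclusion can be made quantitative with Bayes' rule. This is a minimal sketch. It assumes, as the betting-policy framing suggests, that you reason as if you were a randomly selected member of the experiment, and it starts from even odds on which batch was female:

```python
from fractions import Fraction

SMALL, LARGE = 3, 5000
TOTAL = SMALL + LARGE

# Chance that a randomly selected participant is female under each hypothesis.
p_female_if_large_female = Fraction(LARGE, TOTAL)
p_female_if_small_female = Fraction(SMALL, TOTAL)

prior = Fraction(1, 2)  # assumed even odds before noticing your own sex
numerator = prior * p_female_if_large_female
posterior = numerator / (numerator + (1 - prior) * p_female_if_small_female)
print(posterior, float(posterior))
```

This agrees with the tally in the quote: only three of the 5,003 bettors lose.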

I'm curious about whether you agree or disagree with the reasoning here (and more generally with the rest of Bostrom's reasoning in the chapter I linked).

To respond to your points more specifically: I don't think your attempted analogy is correct; here's the replacement I'd use:

- Consider the group of all possible alien civilisations (respectively: all living humans).

- Everyone in the reference class is either in a grabby universe or not (respectively: is either going to die this year or not).

- Those who are in grabby universes are more likely to be early (respectively: those who will survive this year are more likely to be young).

- When you observe how early you are, you should think you're more likely to be in a grabby universe (respectively: when you observe how young you are, you should update that you're more likely to survive).

↑ comment by Steven Byrnes (steve2152) · 2021-09-28T19:22:15.352Z

Thanks!!

- First and foremost, I haven't thought about it very much :)

- I admit, the Bostrom book arguments you cite do seem intuitively compelling. Also, if Bostrom and lots of other reasonable people think SSA is sound, I guess I'm somewhat reluctant to disagree.

- (However, I thought the "grabby aliens" argument was NOT based on SSA, and in fact is counter to SSA, because they're not weighing the alien civilizations by their total populations?)

- On the other hand, I find the following argument equally compelling:

Alice walks up to me and says, "Y'know, I was just reading, it turns out that local officials in China have weird incentives related to population reporting, and they've been cooking the books for years. It turns out that the real population of China is more like 1.9B than 1.4B!" I would have various good reasons to believe Alice here, and various other good reasons to disbelieve Alice. But "The fact that I am not Chinese" does not seem like a valid reason to disbelieve Alice!!

- Maybe here's a compromise position: Strong evidence is common. I am in possession of probably millions of bits of information pertaining to x-risks and the future of humanity, and then the Doomsday Argument provides, like, 10 additional bits of information beyond that. It's not that the argument is wrong, it's just that it's an infinitesimally weak piece of evidence compared to everything else. And ditto with the grabby aliens argument versus "everything humanity knows pertaining to astrobiology". Maybe these anthropic-argument thought experiments are getting a lot of mileage out of the fact that there's no other information whatsoever to go on, and so we need to cling for dear life to any thread of evidence we can find, and maybe that's just not the usual situation for thinking about things, given that we do in fact know the laws of physics and so on. (I don't know if that argument holds up to scrutiny, it's just something that occurred to me just now.) :-)

↑ comment by ESRogs · 2021-09-29T19:46:10.522Z

Maybe here's a compromise position: Strong evidence is common. I am in possession of probably millions of bits of information pertaining to x-risks and the future of humanity, and then the Doomsday Argument provides, like, 10 additional bits of information beyond that. It's not that the argument is wrong, it's just that it's an infinitesimally weak piece of evidence compared to everything else.

Thanks for making this point and connecting it to that post. I've been thinking that something like this might be the right way to think about a lot of this anthropics stuff — yes, we should use anthropic reasoning to inform our priors, but also we shouldn't be afraid to update on all the detailed data we do have. (And some examples of anthropics-informed reasoning seem not to do enough of that updating.)

↑ comment by Ben Pace (Benito) · 2021-09-29T20:10:43.443Z

FWIW this has also been my suspicion for a while.

↑ comment by Richard_Ngo (ricraz) · 2021-09-29T12:23:55.168Z

On the other hand, I find the following argument equally compelling

The argument you discuss is an example of very weak anthropic evidence, so I don't think it's a good intuition pump about the validity of anthropic reasoning in general. In general, anthropic evidence can be quite strong - the presumptuous philosopher thought experiment, for instance, argues for an update of a trillion to one.

However, I thought the "grabby aliens" argument was NOT based on SSA, and in fact is counter to SSA, because they're not weighing the alien civilizations by their total populations?

I think there's a terminological confusion here. People sometimes talk about SSA vs SIA, but in Bostrom's terminology the two options for anthropic reasoning are SSA + SIA, or SSA + not-SIA. So in Bostrom's terminology, every time you're doing anthropic reasoning, you're accepting SSA; and the main reason I linked his chapter was just to provide intuitions about why anthropic reasoning is valuable, not as an argument against SIA. (In fact, the example I quoted above has the same outcome regardless of whether you accept or reject SIA, because the population size is fixed.)

I don't know whether Hanson is using SIA or not; the previous person who's done similar work tried both possibilities. But either would be fine, because anthropic reasoning has basically been solved by UDT, in a way which dissolves the question of whether or not to accept SIA - as explained by Stuart Armstrong here.

↑ comment by Richard_Ngo (ricraz) · 2021-09-28T16:07:09.136Z · LW(p) · GW(p)

↑ comment by JBlack · 2021-09-24T05:42:37.559Z · LW(p) · GW(p)

Yes, the choice of reference class is the core shaky step for all anthropic arguments. It's analogous to a choice of prior in ordinary Bayesian reasoning, but at least priors are in practice informed by previous experience of what our universe is like. In anthropic reasoning, we (in theory) discard all knowledge of how our universe works, and then treat all our observations including who we are and what sort of universe (and society) we live in as new evidence that we're supposed to use to update the relative weight of various hypotheses.

In principle it's a reasonable idea, but in practice we can't even apply it to drastically simplified toy problems without disagreements. My estimate of the actual reliability of such arguments in discovering true knowledge about the world is near zero, but they're still fun to talk about sometimes.

comment by habryka (habryka4) · 2021-09-27T00:39:55.652Z · LW(p) · GW(p)

Promoted to curated: At least for me, this was by far your most useful video, and the transcript was also quite good. I've been wanting to look into Hanson's grabby alien model for a few weeks, but kept bouncing off of some of his blogposts, and this introduction felt quite good and clear, and finally caused me to jump over the hump. I think this kind of work of distilling and making ideas more accessible is quite valuable, and is extra valuable if it's in a new format that might attract a different audience, or reach people that the written content might never reach.

Thanks a lot for the work you are doing!

comment by rodarmor · 2021-09-27T03:45:33.353Z · LW(p) · GW(p)

Thanks for making this post and video. It helped me understand the grabby aliens concept much better.

One nagging question.

I find myself as an individual in a non-grabby civilization. But, according to the grabby aliens model, the vast, vast preponderance of individuals will exist in huge, universe-spanning, grabby alien civilizations. And, using the style of argument used in the grabby aliens model, summed up by Steven Byrnes in his sibling comment:

Please correct me if I'm wrong, but this model seems to take for granted the idea that we can line up all the intelligent civilizations in the past and future history of the universe, and treat "humans on earth" as if it's randomly drawn from that distribution. That seems to be a core piece of the model, if I'm understanding it right.

This is incredibly unlikely, and must be explained, which seems like a weakness of the model.

↑ comment by Boris Kashirin (boris-kashirin) · 2021-09-27T19:25:09.427Z · LW(p) · GW(p)

I find the idea of having children morally abhorrent. Granted, right now the alternatives are even worse, so it is the lesser evil, but an evil nonetheless. Presumably a grabby civilization will be able to come up with better alternatives. Maybe you will become an individual of a grabby civilization, an individual with a great personality and a rich inner world spanning multiple solar systems?

comment by RobinHanson · 2021-09-29T18:38:47.281Z · LW(p) · GW(p)

Wow, it seems that EVERYONE here has this counter argument "You say humans look weird according to this calculation, but here are other ways we are weird that you don't explain." But there is NO WAY to explain all ways we are weird, because we are in fact weird in some ways. For each way that we are weird, we should be looking for some other way to see the situation that makes us look less weird. But there is no guarantee of finding that; we might just actually be weird. https://www.overcomingbias.com/2021/07/why-are-we-weird.html

↑ comment by rodarmor · 2021-10-02T22:11:33.099Z · LW(p) · GW(p)

What do you think about my counter-argument here, which I think is close, but not exactly of the form you describe: https://www.lesswrong.com/posts/RrG8F9SsfpEk9P8yi/robin-hanson-s-grabby-aliens-model-explained-part-1?commentId=zTn8t2kWFHoXqA3Zc [LW(p) · GW(p)]

To sum up: "You say humans look weird according to this calculation and come up with a model which explains that; however, that model makes humans look even weirder, so if the argument for the model being correct is that it reduces weirdness, that argument isn't very strong."

comment by Tristan Cook · 2021-10-02T19:19:47.422Z · LW(p) · GW(p)

Thanks for this great explainer! For the past few months I've been working on the Bayesian update from Hanson's argument and hoping to share it in the next month or two.

comment by JBlack · 2021-09-24T05:17:33.472Z · LW(p) · GW(p)

While the Grabby argument does provide a reason why P(observer is early | observer's species is confined to one planet) is not ridiculously small, it doesn't at all explain why P(observer is early) is not ridiculously small. The Grabby hypothesis actually makes the latter probability very much tinier than without a Grabby hypothesis, since Grabby species have vastly more observers later in the timeline.

The simplest way to salvage this seems to be an additional hypothesis that whatever the Grabby entities are, they do not qualify as observers for the purposes of these anthropic arguments. Maybe they're non-sentient but highly effective replicators that nonetheless prevent emergence of sentient life, and unlikely to ever evolve sentience.

In such a scenario, we get high P(observer is early): despite massive spread of something that prevents development of other species, no late observers exist.
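The two probabilities in this comment can be made concrete with observer counts. Every number in the sketch below is hypothetical. They are chosen only to show the direction of each effect and are not taken from Hanson's model. Without grabby civilizations, planet-bound observers keep appearing late. With them, late planet-bound observers are cut off, but grabby observers vastly outnumber everyone.

```python
def p_early(early: float, late: float) -> float:
    """Probability that a randomly chosen observer is an early one."""
    return early / (early + late)


# Hypothetical observer counts (orders of magnitude chosen for illustration).
EARLY_PLANET_BOUND = 1e11

# World A: no grabby civilizations, so planet-bound civilizations keep
# arising on long-lived planets for a very long time.
no_grabby = p_early(EARLY_PLANET_BOUND, late=1e14)

# World B: grabby civilizations set a deadline, so few planet-bound
# observers arise late, but grabby civilizations contain enormous
# numbers of late observers.
LATE_PLANET_BOUND = 1e9
LATE_GRABBY = 1e20

grabby_given_planet_bound = p_early(EARLY_PLANET_BOUND, LATE_PLANET_BOUND)
grabby_all_observers = p_early(EARLY_PLANET_BOUND, LATE_PLANET_BOUND + LATE_GRABBY)

# The proposed salvage: if grabby entities are not observers, only
# planet-bound observers count, and P(observer is early) equals the
# conditional value again.
grabby_not_observers = grabby_given_planet_bound

print(f"no grabby aliens:              {no_grabby:.1e}")
print(f"grabby, given planet-bound:    {grabby_given_planet_bound:.2f}")
print(f"grabby, all observers:         {grabby_all_observers:.1e}")
print(f"grabby, grabby not observers:  {grabby_not_observers:.2f}")
```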

comment by RobinHanson · 2021-09-23T22:14:10.235Z · LW(p) · GW(p)

You have the date of the great filter paper wrong; it was 1998, not 1996.

↑ comment by Writer · 2021-09-23T22:39:12.007Z · LW(p) · GW(p)

Robin Hanson, you know nothing about Robin Hanson. You first wrote the paper in 1996 and then last updated it in 1998.

... or so says Wikipedia, that's why I wrote 1996. I just made this clear in the video description anyway, tell me if Wikipedia got this wrong.

Btw, views have nicely snowballed from your endorsement on Twitter, so thanks a lot for it.

↑ comment by holomanga · 2021-10-09T13:52:15.912Z · LW(p) · GW(p)

Ah, yeah, good point, especially since the whole point of the grabby aliens model is that the durations of hard steps are influenced very strongly by survivorship bias.

Scratch my quantitative claims, though I'm still confused because the time for abiogenesis is an actual hard step in the past that has anything at all to do with evolution (and looking at it gives you one datapoint for estimating the number of hard steps in general, since with N hard steps each hard step takes 1/N of the time from planetary formation to civilisation on average), while the time for a planet to become uninhabitable due to its star's lifecycle is unrelated and just seems to be about the right order of magnitude for our civilisation and star in particular.

EDIT: Daniel Eth explains why: https://www.lesswrong.com/posts/JdjxcmwM84vqpGHhn/great-filter-hard-step-math-explained-intuitively [LW · GW]
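The hard-steps claim in this exchange can be checked with a small Monte Carlo sketch. The assumptions are my own and are not taken from the post or the linked explanation:

- There are N steps, each with an exponentially distributed duration whose mean is longer than the habitable window T.
- Only histories in which every step finishes inside the window are kept. This is the survivorship condition.

Under those assumptions, the conditioned steps each take roughly T/(N+1). That is about 1/N of the time until civilization appears. The time left over before the window closes comes out about the same size as a typical step.

```python
import random


def simulate_hard_steps(n_steps=3, window=1.0, mean_step=3.0,
                        trials=300_000, seed=0):
    """Monte Carlo sketch of the hard-steps model (assumed parameters).

    Each step's duration is exponential with mean `mean_step`, which is
    larger than `window` so that the steps are "hard". Only histories in
    which all steps finish before the window closes are kept. Returns the
    average duration of each step plus, in the last slot, the average
    leftover time, along with the number of accepted histories.
    """
    rng = random.Random(seed)
    totals = [0.0] * (n_steps + 1)  # last slot accumulates leftover time
    accepted = 0
    for _ in range(trials):
        durations = [rng.expovariate(1.0 / mean_step) for _ in range(n_steps)]
        elapsed = sum(durations)
        if elapsed < window:  # all steps completed before the deadline
            accepted += 1
            for i, duration in enumerate(durations):
                totals[i] += duration
            totals[-1] += window - elapsed
    if accepted == 0:
        raise ValueError("no history finished in time; raise `trials`")
    return [t / accepted for t in totals], accepted


means, accepted = simulate_hard_steps()
print(accepted, [round(m, 3) for m in means])
```

With N = 3 and T = 1, each of the four printed averages should land near 0.25. The leftover is slightly larger because these steps are only moderately hard. Making `mean_step` much larger than `window` pushes all four averages toward T/(N+1), but it also makes rejection sampling much slower.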

comment by rahulxyz · 2021-09-22T21:40:32.653Z · LW(p) · GW(p)

Thanks for the great writeup (and the video). I think I finally understand the gist of the argument now.

The argument seems to raise another interesting question about the grabby aliens part.

He's using the hypothesis of grabby aliens to explain away the model's low probability of us appearing early (and I presume we're one of these grabby aliens). But this leads to a similar problem: Robin Hanson (or anyone reading this) has a very low probability of appearing this early amongst all the humans to ever exist.

This low probability would also require a similar hypothesis to explain away. The only way to explain that is some hypothesis where he's not actually that early amongst the total humans to ever exist which means we turn out not to be "grabby"?

This seems like one of the problems with anthropic reasoning arguments, and I'm unsure how seriously to take them.

↑ comment by Writer · 2021-09-22T22:01:22.835Z · LW(p) · GW(p)

The only way to explain that is some hypothesis where he's not actually that early amongst the total humans to ever exist which means we turn out not to be "grabby".

You've rediscovered the doomsday argument! Fun fact: According to Wikipedia, this argument was first formally proposed by Brandon Carter, the author of the hard-steps model. He also gave the anthropic principle its name.
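Here is a sketch of the common textbook form of the doomsday argument. It makes one assumption, and this is the contested anthropic step: your birth rank r is equally likely to be anywhere among the N humans who will ever live, so r/N is uniform. Then, with 95% probability, r/N ≥ 0.05, which means N ≤ 20r. The birth rank used below is a hypothetical round number, and the function names are my own.

```python
import random


def doomsday_upper_bound(birth_rank: float, confidence: float = 0.95) -> float:
    """Upper bound on the total number of humans ever born, holding with
    probability `confidence` if birth_rank / total is uniform on (0, 1]."""
    if not 0.0 < confidence < 1.0:
        raise ValueError("confidence must be in (0, 1)")
    return birth_rank / (1.0 - confidence)


def coverage(confidence: float = 0.95, trials: int = 100_000, seed: int = 1) -> float:
    """Check the bound by simulation: draw a total population, pick a
    uniformly random birth rank within it, and count how often it holds."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        total = rng.randint(1, 10**6)
        rank = rng.randint(1, total)
        if total <= doomsday_upper_bound(rank, confidence):
            hits += 1
    return hits / trials


# A hypothetical birth rank of 100 billion gives a bound of about 2 trillion.
print(f"{doomsday_upper_bound(1e11):.3e}")
print(coverage())  # should be close to 0.95
```

The simulation only confirms the arithmetic once the uniform-rank assumption is granted. Whether that assumption is legitimate is exactly what the surrounding discussion disputes.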

Edit: note that us not becoming grabby doesn't contradict the model. There's a chance that we will not. Plus, the model tells us that hearing alien messages or discovering alien ruins would be terrible news in that regard. I'll explain the reason in the next part.

↑ comment by simple_name (ksv) · 2021-09-23T18:02:04.738Z · LW(p) · GW(p)

We can also become grabby without being there anymore, e.g. the paperclip maximizer scenario.

↑ comment by Viliam · 2021-09-23T23:11:58.268Z · LW(p) · GW(p)

There may be a tradeoff, where by becoming simpler the civilization can spread across the universe faster. So the fastest spreading civilizations are non-sentient von-Neumann-probe maximizers.

That would explain why sentient beings find themselves early in the universe.

↑ comment by rahulxyz · 2021-09-28T20:10:12.802Z · LW(p) · GW(p)

Small world, I guess :) I knew I heard this type of argument before, but I couldn't remember the name of it.

So it seems like the grabby aliens model contradicts the doomsday argument unless one of these is true:

- We live in a "grabby" universe, but one with few or no sentient beings long-term?

- The reference classes for the 2 arguments are somehow different (like discussed above)

comment by MSRayne · 2022-06-18T19:06:15.172Z · LW(p) · GW(p)

This doesn't make sense to me. I don't doubt that all civilizations that last long enough will inevitably become grabby. That's obvious. But how would that retroactively affect the past? We are here now, not there then. What will happen in the future cannot causally affect why we are here now.

Edit: oh, it has something to do with anthropics. I hate anthropics. It makes no sense.

↑ comment by Boris Kashirin (boris-kashirin) · 2021-10-11T16:14:13.182Z · LW(p) · GW(p)

>So children are good, and just because not everyone agrees on that doesn't somehow make children not good.

I can't make sense of this statement. If my terminal value is that children are bad, then that's it. How can you even start to argue with that?

↑ comment by Boris Kashirin (boris-kashirin) · 2021-10-11T11:41:11.957Z · LW(p) · GW(p)

They are slaves. But it does not really matter. I may be wrong. You may be wrong. We may have different terminal values. The point is, not all people think that children are good even right now, much less far in the future. The idea that a grabby civilization will consist of many individuals is not a given. Who knows, maybe they will even become a single individual. That sounds like a bad idea to me now, but maybe it will become the obvious choice for future me?

comment by holomanga · 2021-09-29T21:17:13.856Z · LW(p) · GW(p)

First: the period between now and when Earth will first become uninhabitable, which is 1.1 billion years.

I'm not too clear on why this would be related to hard steps; it's just a fact about changes in the brightness of the Sun that on the face of it seems unrelated to evolution. If I were asked about a planet orbiting a red dwarf star at a distance that gives an Earth-like temperature, then I'd expect the time between the formation of the planet and life emerging to still be about the same 0.4 billion years, but the time until the planet becomes uninhabitable would be many times longer.