Why has nuclear power been a flop?

post by jasoncrawford · 2021-04-16T16:49:15.789Z · LW · GW · 50 comments

This is a link post for https://rootsofprogress.org/devanney-on-the-nuclear-flop

Contents

- The Gordian knot
- Nuclear is expensive but should be cheap
- Safety
- Linear No Threshold
- ALARA
- The Gold Standard
- Regulator incentives
- The Big Lie
- Testing
- Competition
- What to do?
- Metanoeite

To fully understand progress, we must contrast it with non-progress. Of particular interest are the technologies that have failed to live up to the promise they seemed to have decades ago. And few technologies have failed more to live up to a greater promise than nuclear power.

In the 1950s, nuclear was the energy of the future. Two generations later, it provides only about 10% of world electricity, and reactor design hasn’t fundamentally changed in decades. (Even “advanced reactor designs” are based on concepts first tested in the 1960s.)

So as soon as I came across it, I knew I had to read a book just published last year by Jack Devanney: Why Nuclear Power Has Been a Flop.

What follows is my summary of the book—Devanney‘s arguments and conclusions, whether or not I fully agree with them. I‘ll give my own thoughts at the end.

The Gordian knot

There is a great conflict between two of the most pressing problems of our time: poverty and climate change. To avoid global warming, the world needs to massively reduce CO2 emissions. But to end poverty, the world needs massive amounts of energy. In developing economies, every kWh of energy consumed is worth roughly $5 of GDP.

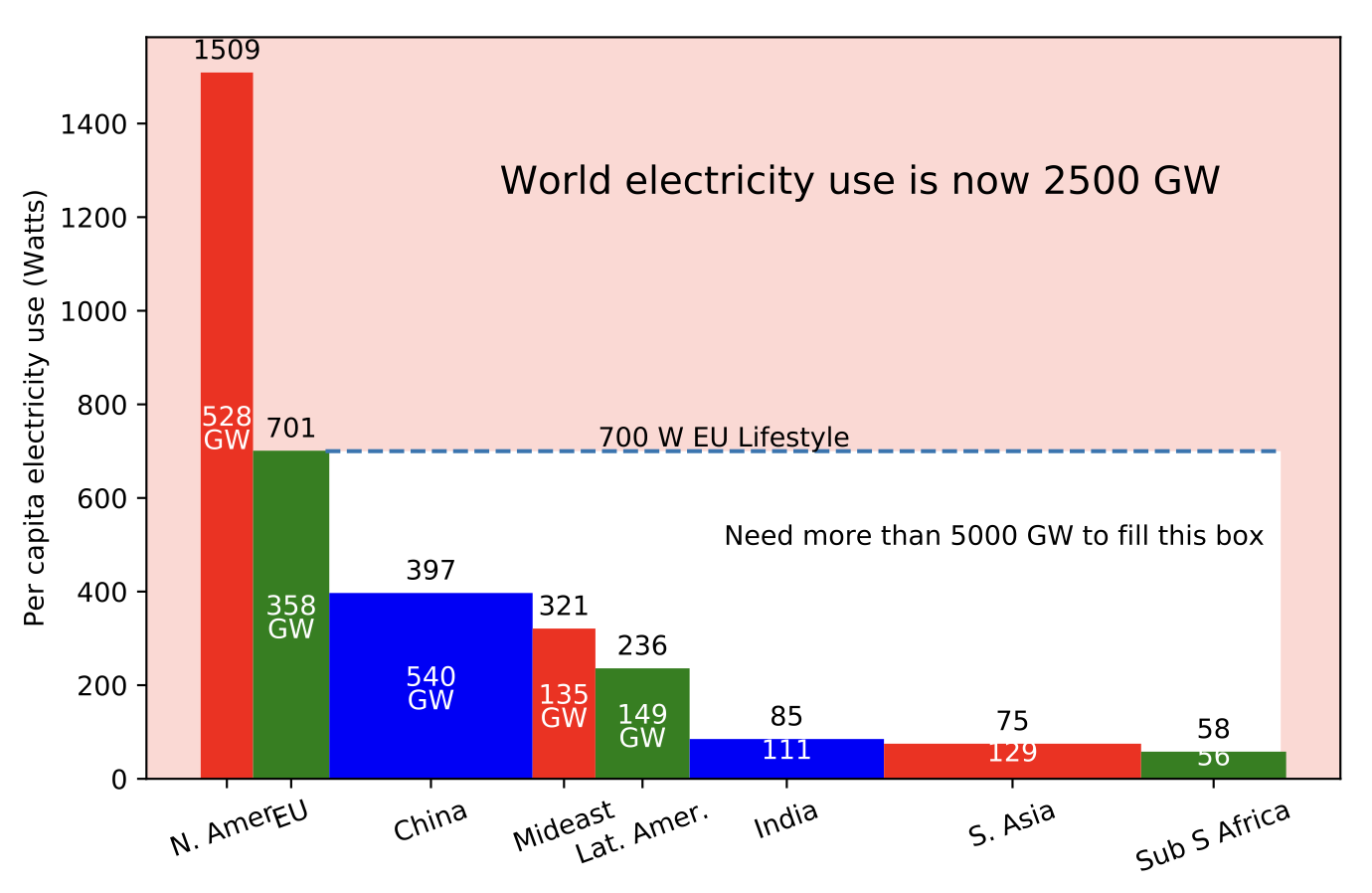

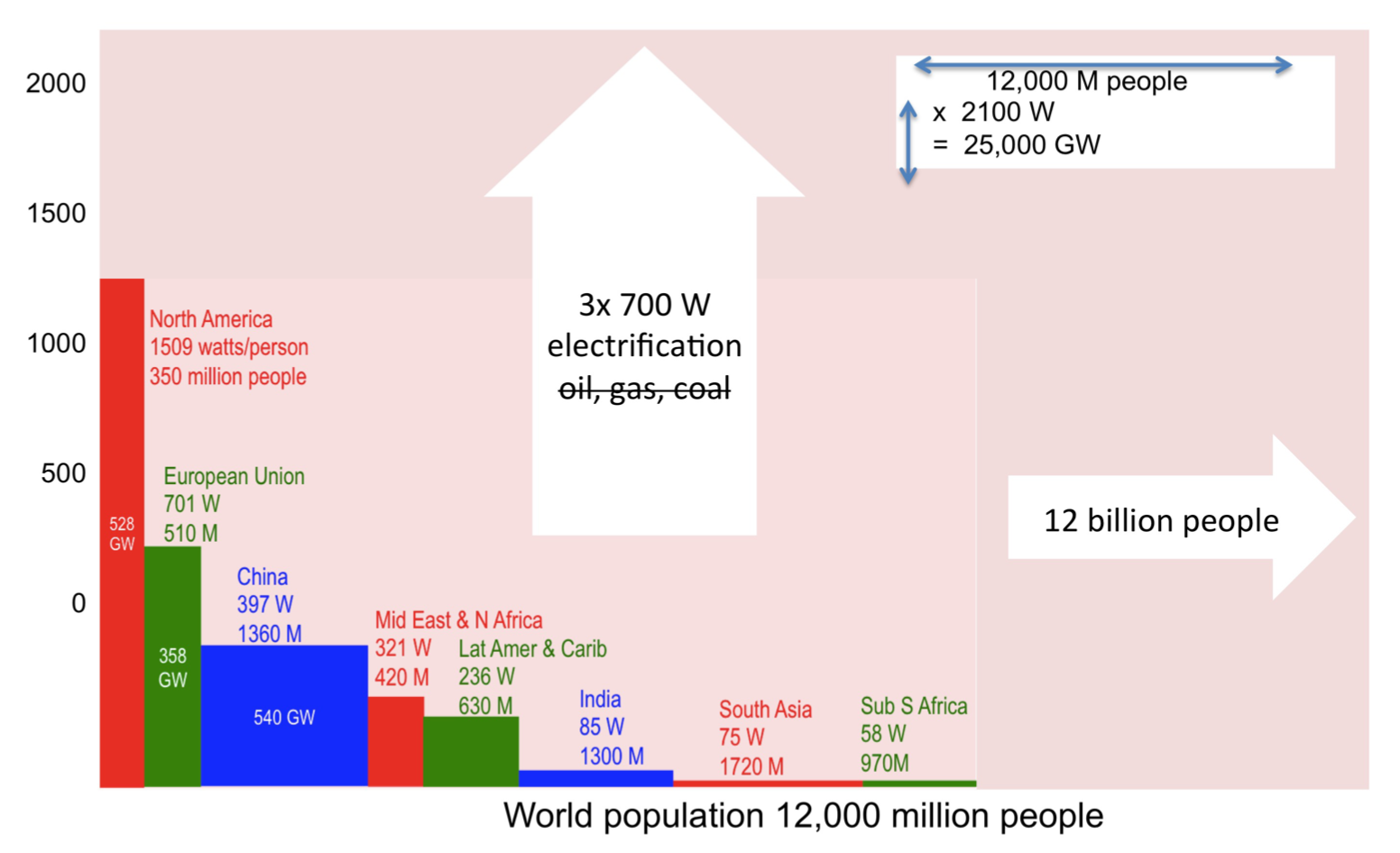

How much energy do we need? Just to give everyone in the world the per-capita energy consumption of Europe (which is only half that of the US), we would need to more than triple world energy production, increasing our current 2.3 TW by over 5 additional TW:

If we account for population growth, and for the decarbonization of the entire economy (building heating, industrial processes, electric vehicles, synthetic fuels, etc.), we need more like 25 TW:

This is the Gordian knot. Nuclear power is the sword that can cut it: a scalable source of dispatchable (i.e., on-demand), virtually emissions-free energy. It takes up very little land, consumes very little fuel, and produces very little waste. It’s the technology the world needs to solve both energy poverty and climate change.

So why isn’t it much bigger? Why hasn’t it solved the problem already? Why has it been “such a tragic flop?”

Nuclear is expensive but should be cheap

The proximal cause of nuclear‘s flop is that it is expensive. In most places, it can‘t compete with fossil fuels. Natural gas can provide electricity at 7–8 cents/kWh; coal at 5 c/kWh.

Why is nuclear expensive? I‘m a little fuzzy on the economic model, but the answer seems to be that it‘s in design and construction costs for the plants themselves. If you can build a nuclear plant for around $2.50/W, you can sell electricity cheaply, at 3.5–4 c/kWh. But costs in the US are around 2–3x that. (Or they were—costs are so high now that we don‘t even build plants anymore.)
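To make the link between construction cost and electricity price a little more concrete, here is a minimal sketch of the capital-cost component only. It is not the book's model: the 7% cost of capital, 40-year repayment period, and 90% capacity factor are all assumptions chosen for illustration, and the function names are hypothetical. Operating costs, fuel, and interest during construction are left out.

```python
def capital_recovery_factor(rate: float, years: int) -> float:
    """Fraction of the up-front cost that must be repaid each year (standard annuity formula)."""
    growth = (1 + rate) ** years
    return rate * growth / (growth - 1)


def capital_cost_cents_per_kwh(
    overnight_cost_per_watt: float,
    rate: float = 0.07,            # assumed cost of capital
    years: int = 40,               # assumed repayment period
    capacity_factor: float = 0.9,  # assumed fraction of the year spent at full power
) -> float:
    """Capital cost per kWh generated, in cents, for a plant built at the given $/W."""
    annual_payment_per_kw = overnight_cost_per_watt * 1000 * capital_recovery_factor(rate, years)
    kwh_per_kw_year = 8760 * capacity_factor  # hours in a year times capacity factor
    return 100 * annual_payment_per_kw / kwh_per_kw_year


# Compare the $2.50/W target with builds costing 2x and 3x as much.
for cost in (2.50, 5.00, 7.50):
    print(f"${cost:.2f}/W -> {capital_cost_cents_per_kwh(cost):.1f} c/kWh of capital cost")
```

Under these assumptions the capital component at $2.50/W comes out somewhat below 2.5 c/kWh, and it scales linearly with construction cost, so a plant that costs 2–3x as much to build carries 2–3x the capital charge on every kWh it sells.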

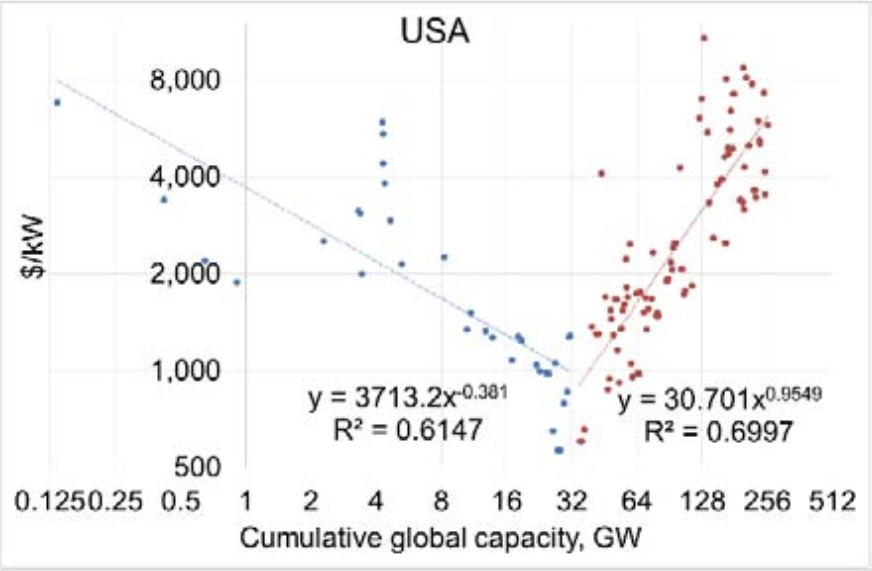

Why are the construction costs high? Well, they weren‘t always high. Through the 1950s and ‘60s, costs were declining rapidly. A law of economics says that costs in an industry tend to follow a power law as a function of production volume: that is, every time production doubles, costs fall by a constant percent (typically 10 to 25%). This function is called the experience curve or the learning curve. Nuclear followed the learning curve up until about 1970, when it inverted and costs started rising:

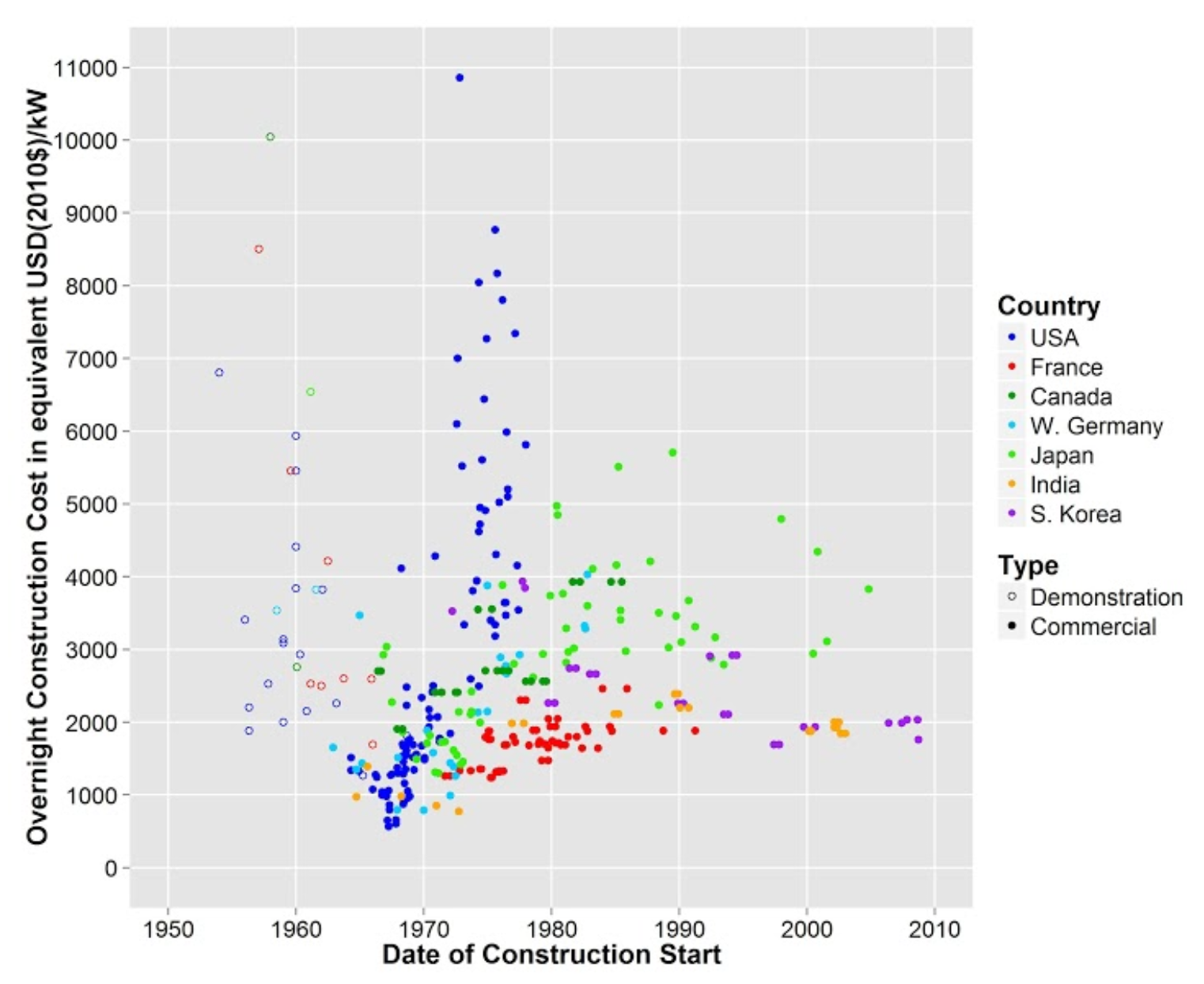
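The learning curve described above can be written as a power law: if costs fall by a fixed fraction with each doubling of cumulative production, then cost is proportional to production raised to the power log2(1 − learning rate). A minimal sketch follows; the starting cost of 100 and the 20% learning rate are illustrative values, not figures from the book.

```python
import math


def unit_cost(cumulative_units: float, first_unit_cost: float, learning_rate: float) -> float:
    """Cost of the n-th unit when cost falls by `learning_rate` with every doubling of output."""
    exponent = math.log2(1 - learning_rate)  # negative for 0 < learning_rate < 1
    return first_unit_cost * cumulative_units ** exponent


# Illustrative: a 20% learning rate, starting from a cost of 100 (arbitrary units).
for n in (1, 2, 4, 8, 16):
    print(n, round(unit_cost(n, 100.0, 0.20), 1))
```

Each doubling multiplies the cost by the same factor (0.8 here), which is why the curve is a straight line on a log-log plot. An "inverted" learning curve, as in US nuclear after about 1970, corresponds to the exponent turning positive.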

Plotted over time, with a linear y-axis, the effect is even more dramatic. Devanney calls it the “plume,” as US nuclear construction costs skyrocketed upwards:

This chart also shows that South Korea and India were still building cheaply into the 2000s. Elsewhere in the text, Devanney mentions that Korea, as late as 2013, was able to build for about $2.50/W.

The standard story about nuclear costs is that radiation is dangerous, and therefore safety is expensive. The book argues that this is wrong: nuclear can be made safe and cheap. It should be 3 c/kWh—cheaper than coal.

Safety

Fundamental to the issues of safety is the question: what amount of radiation is harmful?

Very high doses of radiation can cause burns and sickness. But in nuclear power safety, we‘re usually talking about much lower doses. The concern with lower doses is increased long-term cancer risk. Radiation can damage DNA, potentially creating cancerous cells.

But wait: we‘re exposed to radiation all the time. It occurs naturally in the environment—from sand and stone, from altitude, even from bananas (which contain radioactive potassium). So it can‘t be that even the tiniest amount of radiation is a mortal threat.

How, then, does cancer risk relate to the dose of radiation received? Does it make a difference if the radiation hits you all at once, vs. being spread out over a longer period? And is there anything like a “safe” dose, any threshold below which there is no risk?

Linear No Threshold

The official model guiding US government policy, both at the EPA and the Nuclear Regulatory Commission (NRC), is the Linear No Threshold model (LNT). LNT says that cancer risk is directly proportional to dose, that doses are cumulative over time (rate doesn’t matter), and that there is no threshold or safe dose.

The problem with LNT is that it flies in the face of both evidence and theory.

First, theory. We know that cells have repair mechanisms to fix broken DNA. DNA gets broken all the time, and not just from radiation. And remember, there is natural background radiation from the environment. If cells weren’t able to repair DNA, life would not have survived and evolved on this planet.

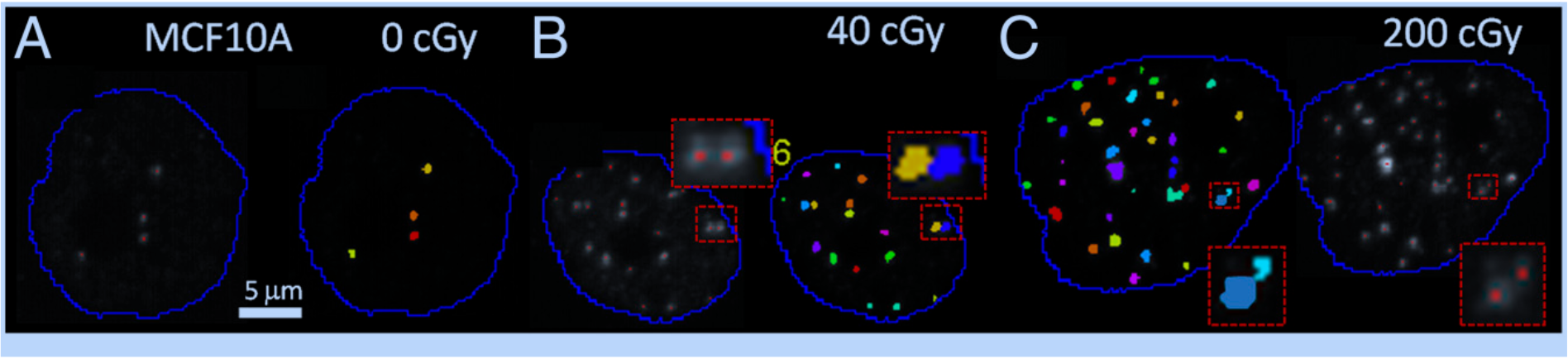

When DNA breaks, it migrates to special “repair centers” within the cell, which put the strands back together within hours. However, this is a highly non-linear process: these centers can correctly repair breaks at a certain rate, but as the break rate increases, the error rate of the repair process goes up drastically. This also implies that dose rate matters: a given amount of radiation is more harmful if received all at once, and less if spread out over time. (In both of these details, I think of this as analogous to alcohol being processed out of the bloodstream by the liver: a low dose can be handled; but overwhelm the system and it quickly becomes toxic. One beer a night for a month might not even get you tipsy; the same amount in a single night would kill you.)

Radiotherapy takes advantage of this. When radiotherapy is applied to tumors, non-linear effects allow doctors to do much more damage to the tumor than to surrounding tissue. And doses of therapy are spread out over multiple days, to give the patient time to recover.

Devanney also assembles a variety of types of evidence about radiation damage from a range of sources. Indeed, his argument against LNT is by far the longest chapter in the book, weighing in at over 50 pages (out of fewer than 200). He looks at studies of:

- The nuclear bomb survivors of Hiroshima and Nagasaki

- The effects of radon gas

- Animal experiments in beagles and mice

- UK radiologists (tracked over 100 years)

- Radiation workers across fifteen countries

- Nuclear shipyard workers (using a closely matched control group of non-nuclear workers in the same yards)

- Areas with naturally high levels of background radiation from sources such as thorium-containing sand or radon: Finland; Ramsar, Iran; Guarapari, Brazil; Yangjiang, China; and Kerala, India

- The population of Washington County, Utah, 200 miles downwind of a nuclear test site in Nevada that was used in the 1950s

- The Chernobyl cleanup crew, including the guys who had to shovel chunks of core graphite off the roof of one of the buildings and toss them into the gaping hole from the explosion

- An incident in Taipei in which an apartment was accidentally built with rebar containing radioactive cobalt-60

- The women who hand-painted radium onto watch dials in the early 20th century (some of whom would lick the brushes to form a point)

- A 1950 trial that violated every conceivable standard of medical ethics by injecting unknowing and non-consenting patients with plutonium

In the last case, all of the patients had been diagnosed with terminal disease. None of them died from the plutonium—including one patient, Albert Stevens, who had been misdiagnosed with terminal stomach cancer that turned out to be an operable ulcer. He lived for more than twenty years after the experiment, over which time he received a cumulative dose of 64 sieverts, one-tenth of which would have killed him if received all at once. He died from heart failure at the age of 79.

The weight of all of this evidence is that low doses of radiation do not cause detectable harm. Little to no cancer, or at least far less than predicted by LNT, is found in the subjects receiving low doses, such as workers operating under modern safety standards, or populations in high-background areas (in fact, there is some evidence of a beneficial effect from very low doses, although nothing in Devanney‘s overall argument depends on this, nor does he stress it). In populations where some subjects did receive high doses, the response curves tend to look decidedly non-linear.

The other finding from these studies is that dose rate matters. This was the explicit finding of an MIT study in mice, and it is the unmistakeable conclusion of the case of Albert Stevens, who lived over two decades with plutonium in his bloodstream.

(At least, all this is Devanney‘s interpretation—it is not always the conclusion written in the papers. Devanney argues, not unconvincingly, that in many cases the researchers‘ conclusions are not supported by their own data.)

ALARA

Excessive concern about low levels of radiation led to a regulatory standard known as ALARA: As Low As Reasonably Achievable. What defines “reasonable”? It is an ever-tightening standard. As long as the costs of nuclear plant construction and operation are in the ballpark of other modes of power, then they are reasonable.

This might seem like a sensible approach, until you realize that it eliminates, by definition, any chance for nuclear power to be cheaper than its competition. Nuclear can‘t even innovate its way out of this predicament: under ALARA, any technology, any operational improvement, anything that reduces costs, simply gives the regulator more room and more excuse to push for more stringent safety requirements, until the cost once again rises to make nuclear just a bit more expensive than everything else. Actually, it‘s worse than that: it essentially says that if nuclear becomes cheap, then the regulators have not done their job.

What kinds of inefficiency resulted?

An example was a prohibition against multiplexing, resulting in thousands of sensor wires leading to a large space called a cable spreading room. Multiplexing would have cut the number of wires by orders of magnitude while at the same time providing better safety by multiple, redundant paths.

A plant that required 670,000 yards of cable in 1973 required almost double that, 1,267,000, by 1978, whereas “the cabling requirement should have been dropping precipitously” given progress at the time in digital technology.

Another example was the acceptance in 1972 of the Double-Ended-Guillotine-Break of the primary loop piping as a credible failure. In this scenario, a section of the piping instantaneously disappears. Steel cannot fail in this manner. As usual Ted Rockwell put it best, “We can’t simulate instantaneous double ended breaks because things don’t break that way.” Designing to handle this impossible casualty imposed very severe requirements on pipe whip restraints, spray shields, sizing of Emergency Core Cooling Systems, emergency diesel start up times, etc., requirements so severe that it pushed the designers into using developmental, unrobust technology. A far more reliable approach is Leak Before Break by which the designer ensures that a stable crack will penetrate the piping before larger scale failure.

Or take this example (quoted from T. Rockwell, “What’s wrong with being cautious?”):

A forklift at the Idaho National Engineering Laboratory moved a small spent fuel cask from the storage pool to the hot cell. The cask had not been properly drained and some pool water was dribbled onto the blacktop along the way. Despite the fact that some characters had taken a midnight swim in such a pool in the days when I used to visit there and were none the worse for it, storage pool water is defined as a hazardous contaminant. It was deemed necessary therefore to dig up the entire path of the forklift, creating a trench two feet wide by a half mile long that was dubbed Toomer’s Creek, after the unfortunate worker whose job it was to ensure that the cask was fully drained.

The Bannock Paving Company was hired to repave the entire road. Bannock used slag from the local phosphate plants as aggregate in the blacktop, which had proved to be highly satisfactory in many of the roads in the Pocatello, Idaho area. After the job was complete, it was learned that the aggregate was naturally high in thorium, and was more radioactive than the material that had been dug up, marked with the dreaded radiation symbol, and hauled away for expensive, long-term burial.

The Gold Standard

Overcautious regulation interacted with economic history in a particular way in the mid–20th century that played out very badly for the nuclear industry.

Nuclear engineering was born with the Manhattan Project during WW2. Nuclear power was initially adopted by the Navy. Until the Atomic Energy Act of 1954, all nuclear technology was the legal monopoly of the US government.

In the ‘50s and ‘60s, the nuclear industry began to grow. But it was competing with extremely abundant and cheap fossil fuels, a mature and established technology. Amazingly, the nuclear industry was not killed by this intense competition—evidence of the extreme promise of nuclear.

Then came the oil shocks of the ‘70s. Between 1969 and 1973, oil prices tripled to $11/barrel. This should have been nuclear‘s moment! And indeed, there was a boom in both coal and nuclear.

But as supply expands to meet demand, costs rise to meet prices. The costs of both coal and nuclear rose. In the coal power industry, this took the form of more expensive coal from marginal mines, higher wages paid to labor who now had more bargaining power, etc. In the nuclear industry, it took the form of ever more stringent regulation, and the formal adoption of ALARA. Prices were high, so the pressure was on to get construction approved as quickly as possible, regardless of cost. Nuclear companies stopped pushing back on the regulators and started agreeing to anything in order to move the process along. The regulatory regime that resulted is now known as the Gold Standard.

The difference between the industries is that the cost rises in coal could, and did, reverse as prices came down. But regulation is a ratchet. It goes in one direction. Once a regulation is in place, it’s very difficult to undo.

Even worse was the practice of “backfitting”:

The new rules would be imposed on plants already under construction. A 1974 study by the General Accounting Office of the Sequoyah plant documented 23 changes “where a structure or component had to be torn out and rebuilt or added because of required changes.” The Sequoyah plant began construction in 1968, with a scheduled completion date of 1973 at a cost of $300 million. It actually went into operation in 1981 and cost $1700 million. This was a typical experience.

Bottom line: Ever since the ‘70s, nuclear has been stuck with burdensome regulation and high prices—to the point where it‘s now accepted that nuclear is inherently expensive.

Regulator incentives

The individuals who work at NRC are not anti-nuclear. They are strongly pro-nuclear—that‘s why they went to work for a nuclear agency in the first place. But they are captive to institutional logic and to their incentive structure.

The NRC does not have a mandate to increase nuclear power, nor any goals based on its growth. They get no credit for approving new plants. But they do own any problems. For the regulator, there’s no upside, only downside. No wonder they delay.

Further, the NRC does not benefit when power plants come online. Their budget does not increase proportional to gigawatts generated. Instead, the nuclear companies themselves pay the NRC for the time they spend reviewing applications, at something close to $300 an hour. This creates a perverse incentive: the more overhead, the more delays, the more revenue for the agency.

The result: the NRC approval process now takes several years and costs literally hundreds of millions of dollars.

The Big Lie

Devanney puts a significant amount of blame on the regulators, but he also lays plenty at the feet of industry.

The irrational fear of very low doses of radiation leads to the idea that any reactor core damage, leading to any level whatsoever of radiation release, would be a major public health hazard. This has led the entire nuclear complex to foist upon the public a huge lie: that such a release is virtually impossible and will never happen, or with a frequency of less than one in a million reactor-years.

In reality, we‘ve seen three major disasters—Chernobyl, Three Mile Island, and Fukushima—in less than 15,000 reactor-years of operation worldwide. We should expect about one accident per 3,000 reactor-years going forward, not one per million. If nuclear power were providing most of the world‘s electricity, there would be an accident every few years.
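As a back-of-the-envelope check on these numbers, the sketch below asks two questions. First, if the "one in a million reactor-years" claim were true, how likely would three or more accidents be in 15,000 reactor-years? Second, at the book's rate of one per 3,000 reactor-years, how often would a large fleet see an accident? It treats accidents as independent events with a constant rate (a Poisson model), which is my simplifying assumption, and the fleet size of 1,000 reactors is a hypothetical round number, not a figure from the book.

```python
import math

REACTOR_YEARS = 15_000        # upper bound on operating experience, from the text
CLAIMED_RATE = 1 / 1_000_000  # accidents per reactor-year: the "one in a million" claim
BOOK_RATE = 1 / 3_000         # accidents per reactor-year: the book's going-forward estimate
HYPOTHETICAL_FLEET = 1_000    # reactors; an assumed round number for illustration


def prob_at_least(k: int, expected: float) -> float:
    """P(N >= k) for a Poisson-distributed count with mean `expected`."""
    below_k = sum(expected ** i / math.factorial(i) for i in range(k))
    return 1 - math.exp(-expected) * below_k


def years_between_accidents(fleet_size: int, rate: float) -> float:
    """Average calendar years between accidents for a fleet of the given size."""
    return 1 / (rate * fleet_size)


p_three = prob_at_least(3, CLAIMED_RATE * REACTOR_YEARS)
print(f"P(3+ accidents in {REACTOR_YEARS:,} reactor-years at the claimed rate) = {p_three:.1e}")
print(f"Years between accidents at the book's rate, {HYPOTHETICAL_FLEET:,} reactors: "
      f"{years_between_accidents(HYPOTHETICAL_FLEET, BOOK_RATE):.1f}")
```

Under these assumptions, the observed record would itself be a less-than-one-in-a-million fluke if the claimed rate were right, and a fleet of 1,000 reactors would average an accident about every three years.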

Instead of selling a lie that a radiation release is impossible, the industry should communicate the truth: releases are rare, but they will happen; and they are bad, but not unthinkably bad. The deaths from Chernobyl, 35 years ago, were due to unforgivably bad reactor design that we‘ve advanced far beyond now. There were zero deaths from radiation at Three Mile Island or at Fukushima. (The only deaths from the Fukushima disaster were caused by the unnecessary evacuation of 160,000 people, including seniors in nursing homes.)

In contrast, consider aviation: An airplane crash is a tragedy. It kills hundreds of people. The public accepts this risk not only because of the value of flying, but because these crashes are rare. And further, because the airline industry does not lie about the risk of crashes. Rather than saying “a crash will never happen,” they put data-collecting devices on every plane so that when one inevitably does crash, they can learn from it and improve. This is a healthy attitude towards risk that the nuclear industry should emulate.

Testing

Another criticism the book makes of the industry is its approach to QA and the general lack of testing.

Many questions arise during NRC design review: how a plant will handle the failure of this valve or that pump, etc. A natural way to answer these questions would be to build a reactor and test it, and for the design application to be based in large part on data from actual tests. For instance, one advanced reactor design comes from NuScale:

NuScale is not really a new technology, just a scaled down Pressurized Water Reactor; but the scale down allows them to rely on natural circulation to handle the decay heat. No AC power is required to do this. The design also uses boron, a neutron absorber, in the cooling water to control the reactivity. The Advisory Committee on Reactor Safeguards (ACRS), an independent government body, is concerned that in emergency cooling mode some of the boron will not be recirculated into the core, and that could allow the core to restart. NuScale offers computer analyses that they claim show this will not happen. ACRS and others remain unconvinced.

The solution is simple. Build one and test it. But under NRC rules, you cannot build even a test reactor without a license, and you can’t get a license until all such questions are resolved.

Instead, a lot of analysis is done by building models. In particular, NRC relies on a method called Probabilistic Risk Assessment: enumerate all possible causes of a meltdown, and all the events that might lead up to them, and assign a probability to each branch of each path. In theory, this lets you calculate the frequency of meltdowns. However, this method suffers from all the problems of any highly complex model based on little empirical data: it‘s impossible to predict all the things that might go wrong, or to assign anything like accurate probabilities even to the scenarios you do dream up:

In March, 1975, a workman accidentally set fire to the sensor and control cables at the Browns Ferry Plant in Alabama. He was using a candle to check the polyurethane foam seal that he had applied to the opening where the cables entered the spreading room. The foam caught fire and this spread to the insulation. The whole thing got out of control and the plant was shut down for a year for repairs. Are we to blame the PRA analysts for not including this event in their fault tree? (If they did, what should they use for the probability?)

In practice, different teams using the same method come up with answers that are orders of magnitude apart, and which result to accept is a matter of negotiation. Probabilistic models were used in the past to estimate that reactors would have a core damage frequency of less than one in a million reactor-years. They were wrong.
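To see why such estimates are so fragile, here is a toy version of the calculation. Each accident sequence is an initiating event with a yearly frequency, followed by safety systems that each fail with some probability; the core damage frequency is the sum over sequences. The sequence names and every number below are made up for illustration and do not come from any real PRA.

```python
from math import prod

# Hypothetical sequences: (initiating events per reactor-year, [failure probability of each barrier])
SEQUENCES = {
    "loss of offsite power": (1e-2, [1e-2, 1e-2]),
    "small pipe leak": (1e-3, [1e-3]),
}


def core_damage_frequency(sequences: dict) -> float:
    """Sum, over all enumerated sequences, of initiator frequency times barrier failure odds."""
    return sum(freq * prod(failures) for freq, failures in sequences.values())


baseline = core_damage_frequency(SEQUENCES)

# Another team judges one backup system to be ten times less reliable.
pessimistic = dict(SEQUENCES)
pessimistic["loss of offsite power"] = (1e-2, [1e-1, 1e-2])
revised = core_damage_frequency(pessimistic)

print(f"baseline: {baseline:.1e} per reactor-year")
print(f"revised:  {revised:.1e} per reactor-year")
```

A single disputed input moves the answer several-fold, and any sequence nobody thought to list (a candle igniting cable insulation, say) contributes exactly zero to the total.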

Later, during construction, a similar issue arises. The standard in the industry is to use “formal QA” processes that amount to paperwork and box-checking, a focus on following bureaucratic rules rather than producing reliable outcomes. Devanney saw the same mentality in US Navy shipyards, which produced billion-dollar ships that don‘t even work. Instead, the industry should be more like the Korean shipyards, which are able to deliver reliably on schedule, with higher quality and lower cost. They do this by inspecting the work product, rather than the process used to create it: “test the weld, not the welder.” And they require formal guarantees (such as warranties) of meeting a rigorous spec given up front.

Competition

Finally, Devanney laments the lack of real competition in the market. He paints the industry as a set of bloated incumbents and government labs, all “feeding at the public trough.” For instance:

One of the biggest labs is Argonne outside Chicago. At Argonne, they monitor people going in and out of some of the buildings for radiation contamination. The alarms are set so low that, if it’s raining, incoming people must wipe off their shoes after they walk across the wet parking lot. And you can still set off the alarm, which means everything comes to a halt while you wait for the Health Physics monitor to show up, wand you down, and pronounce you OK to come in. What has happened is that the rain has washed some of the naturally occurring radon daughters out of the air, and a few of these mostly alpha particles have stuck to your shoes. In other words, Argonne is monitoring rain water.

Nuclear incumbents aren‘t upset that billions of dollars are thrown away on waste disposal and unnecessary cleanup projects—they are getting those contracts. For instance, 8,000 people are employed in cleanup at Hanford, Washington, costing $2.5B a year, even though the level of radiation is only a few mSv/year, well within the range of normal background radiation.

What to do?

Devanney has a practical alternative for everything he criticizes. Here are the ones that stood out to me as most important:

Replace LNT with a model that more closely matches both theory and evidence. As one workable alternative, he suggests using a sigmoid, or S-curve, instead of a linear fit, in a model he calls Sigmoid No Threshold. In this model, risk is monotonic with dose (there are no beneficial effects at low doses) and it is nonzero for every nonzero dose (there is no “perfectly safe” dose). But the risk is orders of magnitude lower than LNT at low doses. S-curves are standard for dose-response models in other areas.
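To illustrate the difference in shape, the sketch below compares a linear model with one possible S-curve. The log-logistic form, the midpoint, the steepness, and the dose units are all my assumptions for illustration; this is not Devanney's fitted Sigmoid No Threshold curve. The linear slope is chosen so that both models agree at the midpoint dose.

```python
def lnt_risk(dose: float, slope: float = 0.5) -> float:
    """Linear No Threshold: risk proportional to dose (capped at certainty)."""
    return min(1.0, slope * dose)


def sigmoid_risk(dose: float, midpoint: float = 1.0, steepness: float = 2.0) -> float:
    """Illustrative S-curve: zero only at zero dose, rising monotonically toward 1."""
    if dose <= 0:
        return 0.0
    return 1.0 / (1.0 + (midpoint / dose) ** steepness)


# Doses in arbitrary units, where 1.0 is the (assumed) dose giving 50% risk.
for dose in (0.001, 0.01, 0.1, 1.0):
    ratio = sigmoid_risk(dose) / lnt_risk(dose)
    print(f"dose {dose:>5}: LNT {lnt_risk(dose):.1e}, sigmoid {sigmoid_risk(dose):.1e}, ratio {ratio:.1e}")
```

Both curves assign some risk to every nonzero dose and both rise with dose, but at a thousandth of the midpoint dose the S-curve's risk is hundreds of times smaller than the linear estimate. How large that gap is in reality depends entirely on the fitted parameters.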

Drop ALARA. Replace it with firm limits: choose a threshold of radiation deemed safe; enforce that limit and nothing more. Further, these limits should balance risk vs. benefit, recognizing that nuclear is an alternative to other modes of power, including fossil fuels, that have their own health impacts.

Encourage incident reporting, on the model of the FAA‘s Aviation Safety Reporting System. This system enables anonymous reports, and in case of accidental rule violations, it treats workers more leniently if they can show that they proactively reported the incident.

Enable testing. Don‘t regulate test reactors like production ones. Rather than requiring licensing up front, have testing monitored by a regulator, who has the power to shut down test reactors deemed unsafe. Then, a design can be licensed for production based on real data from actual tests, instead of theoretical models.

We could even designate a federal nuclear testing park, the “Protopark,” in an unpopulated region. The park would be funded by rent from tenants, so that the market, rather than the government, would decide who uses it. Tenants would have to obtain insurance, which would force a certain level of safety discipline.

Align regulator incentives with the industry. Instead of an hourly fee for regulatory review, fund the NRC by a tax on each kilowatt-hour of nuclear electricity, giving them a stake in the outcome and the growth of the industry.

Allow arbitration of regulation. Regulators today have absolute power. There should be an appeals process by which disputes can be taken to a panel of arbitrators, to decide whether regulatory action is consistent with the law. City police are held accountable for their use and abuse of power; the nuclear police should be too.

Metanoeite

At the end of the day, though, what is needed is not a few reforms, but “metanoeite”: a deep repentance, a change to the industry’s entire way of thinking. Devanney is not optimistic that this will happen in the US or any wealthy country; they’re too comfortable and too able to fund fantasies of “100% renewables.” Instead, he thinks the best prospect for nuclear is a poor country with a strong need for cheap, clean power. (I assume that’s why his company, ThorCon, is building its thorium-fueled molten salt reactor in Indonesia.)

Again, all of the above is Devanney’s analysis and conclusions, not necessarily mine. What to make of all this?

I’m still early in my research on this topic, so I don’t yet know enough to fully evaluate it. But the arguments are compelling to me. Devanney quantifies his arguments where possible and cites references for his claims. He places blame on systems and incentives rather than on evil or stupid individuals. And he offers reasonable, practical alternatives.

I would have liked to see the nuclear economic model made more explicit. How much of the cost of electricity is the capital cost of the plant, vs. operating costs, vs. fuel? How much is financing, and how sensitive is this to construction times and interest rates? Etc.

A few important topics were not addressed. One is weapons proliferation. Another is the role of the utility companies and the structure of the power industry. Electricity utilities are often regulated monopolies. At least some of them, I believe, have a profit margin that is guaranteed by law. (!) That seems like an important element in the lack of competition and perverse incentive structure.

I would be interested in hearing thoughtful counterarguments to the book’s arguments. But overall, Why Nuclear Power Has Been a Flop pulls together academic research, industry anecdotes, and personal experience into a cogent narrative that pulls no punches. Well worth reading. Buy the paperback on Amazon, or download a revised and updated PDF edition for free.

50 comments

Comments sorted by top scores.

comment by jimrandomh · 2021-04-16T20:27:35.470Z

This all makes sense. But also, I strongly suspect that sabotaging the nuclear power industry this way was a deliberate choice, driven by nuclear weapon proliferation concerns. The experience curve for nuclear power has a lot of overlapping pieces with the experience curve for nuclear weapons; if the US went all-nuclear for its electricity, then other countries would follow, and it'd be a lot harder to stop a country from acquiring nuclear weapons when they already have nuclear power. Similarly, nuclear reactors are a vital piece of US naval power, and commoditized nuclear power generation would undermine that.

I don't think these concerns were worth sacrificing nuclear power, given how much of a problem CO2 emissions turned out to be. But it does mean that the right strategy for fixing things might be subtler than it looks, and involve finding a department hidden away somewhere and convincing them that there are new reactor designs with less proliferation risk.

Replies from: beriukay

↑ comment by beriukay · 2021-04-16T22:31:21.498Z

And so, it came as a surprise to me to learn recently that such an alternative has been available to us since World War II, but not pursued because it lacked weapons applications.

It feels perverse, to me, that thorium has been an available option since WW2, and was ignored because it was NOT good for making weapons; and now it is cited that embracing thorium increases the risk of nuclear proliferation.

Replies from: romeostevensit, jimrandomh

↑ comment by romeostevensit · 2021-04-16T23:51:03.763Z

AFAIK, no one knows how to make a thorium reactor that doesn't create dirty bomb risk even though their enrichment risk can be made low.

Replies from: DPiepgrass, FireStormOOO

↑ comment by DPiepgrass · 2021-10-11T21:51:11.809Z

I like to point people to the DHS on dirty bombs, because you know the DHS is not in the business of downplaying national security risks.

It is very difficult to design an RDD that would deliver radiation doses high enough to cause immediate health effects or fatalities in a large number of people. Therefore, experts generally agree that an RDD would most likely be used to:

- Contaminate facilities or places where people live and work, disrupting lives and livelihoods.

- Cause anxiety in those who think they are being, or have been, exposed

That last point is obviously correct: people still act terrified of radiation and "exposure". This year I got a solid dose of ~20 mSv for a single medical test. The way a lot of people talk about radiation, that's a huge exposure. But if it's administered by a doctor instead of a hypothetical dirty bomb, I expect people don't worry too much about it.

But let's look at the terrorists we know about. How many terrorist groups do you know of that are less interested in killing people and more interested in causing anxiety and inconvenience?

I mean, I guess if someone disrupts the power grid by blowing up some transformers, or puts a carcinogenic substance in our drinking water (hot dogs? kidding), we might call them terrorists, but that's not a normal terrorist M.O., right?

But also, if the "terrorist"'s goal is to just disrupt and not kill, there must be easier ways to do that than to steal used nuclear fuel. Used fuel is protected by security guards, containment buildings and containment casks, right? I'm not an expert on this, but couldn't somebody just buy various carcinogens from a Home Depot and spray them into a reservoir?

↑ comment by FireStormOOO · 2021-04-20T01:02:33.911Z

Any reactor does that though, and it doesn't even have to be a power reactor; hardly a meaningful differentiator.

Dirty bombs just require any reasonably short halflife radioactives (~tens to hundereds of year halflife ideally) that can spread the dust over an area. In some sense the fear is really overblown; they're only effective in the sense that any first world country will predictably overreact to even trace, harmless, radioactive contamination and spend billions on cleanup and have a massive panic. Thus making it an effective terror weapon even if it was so impotent as to cause no actual harm from radiation.

↑ comment by jimrandomh · 2021-04-17T02:10:19.128Z · LW(p) · GW(p)

Maybe inconsistent actions by different government agencies as a result of poor communication? Where nuclear weapons are concerned, poor communication is to be expected.

comment by 1a3orn · 2023-01-14T17:43:11.714Z · LW(p) · GW(p)

This post is a good review of a book about a space where small regulatory reform could result in great gains, and it also changed my mind about LNT. As an introduction to the topic, more focus on economic details would be great, but you can't be all things to all men.

comment by Angela Pretorius · 2023-01-16T11:23:17.978Z · LW(p) · GW(p)

Brilliant article. I’m also curious about the economics side of things.

I found an article which estimates that nuclear power would be two orders of magnitude cheaper if the regulatory process were to be improved, but it doesn’t explain the calculations which led to the ‘two orders of magnitude’ claim. https://www.mackinac.org/blog/2022/nuclear-wasted-why-the-cost-of-nuclear-energy-is-misunderstood

comment by Gurkenglas · 2021-04-16T19:57:18.432Z · LW(p) · GW(p)

a tax on each kilowatt-hour

Wouldn't this almost precisely incentivize approving anything immediately?

Replies from: jasoncrawford, ChristianKl, FireStormOOO↑ comment by jasoncrawford · 2021-04-16T20:23:26.452Z · LW(p) · GW(p)

Presumably you would still hold them accountable for safety though? The point is to have a balance that properly recognizes tradeoffs.

↑ comment by ChristianKl · 2021-04-20T09:16:10.476Z · LW(p) · GW(p)

If you head the department for nuclear safety that does give you an incentive to approve things because you want to grow your budget. At the same time a nuclear safety scandal is still bad for your reputation and career.

↑ comment by FireStormOOO · 2021-04-20T01:21:24.698Z · LW(p) · GW(p)

Fair point. We should be going for government sponsored insurance. The tax should be inclusive of both the review and premiums for insuring against reasonably necessary disaster cleanup, with the tax expected to pay for this but not generate substantially more revenue than that.

comment by philh · 2021-04-21T22:48:41.716Z · LW(p) · GW(p)

(This is intended as curiosity, not disagreement.)

Instead, the industry should be more like the Korean shipyards, which are able to deliver reliably on schedule, with higher quality and lower cost. They do this by inspecting the work product, rather than the process used to create it: “test the weld, not the welder.”

Is it possible in general to "test the weld"? I'm thinking of things like, if a building is supposed to stand up to a certain magnitude of earthquake, or a bridge to a certain amount of wind, is that something you can test? Or can you learn it by inspection somehow? Or, can you test that individual components of the structure do what they're supposed to, and then you use your physics knowledge to confirm that the whole structure will stand what it's supposed to?

I vaguely recall an incident that happened partly because the production used some type of epoxy in a situation where regulations required a different type of epoxy. Could something like that have been discovered by inspecting the product? (I'm getting this from the podcast "causality", but I don't remember the incident or details. Of course this shows that "test the welder" can fail too. Turns out, sometimes people don't follow regulations.)

comment by naasking · 2021-04-20T18:19:49.537Z · LW(p) · GW(p)

From prior research, I understood the main problem of nuclear power plant cost to be constant site-specific design adjustments leading to constant cost and schedule overruns. This means there is no standard plant design or construction: each installation is unique, with its own quirks, its own parts, and its own customizations, so nothing is fungible and training is barely transferable.

This was the main economic promise behind small modular reactors: small, standard reactor modules that can be assembled at a factory, shipped to a site using regular transportation, and just installed with little assembly, and which you can "daisy chain" to get whatever power output you need. This strikes right at the heart of some of the biggest costs of nuclear.

Of course, it might just be a little too late, as renewables are now cheaper than nuclear in almost every sense. Just need more investment in infrastructure and grid storage.

comment by [deleted] · 2021-04-17T03:28:28.490Z · LW(p) · GW(p)

Things you have neglected:

1. Accidents contaminating large areas of land. These are events that occur infrequently and can negate the lifetime profits from many reactors (for example, the Fukushima price tag of 187 billion).

2. The very nature of what it means to innovate or cost reduce a product. In any other industry, when you try to make something cheaper, you change the design to remove parts, or cheapen a part that is better than it needs to be. Even if you accept that the NRC is over-zealous, the risk of #1 is a strong incentive not to do either.

Other competing sources of energy, the worst case scenario is acceptable. If you notice, grid-scale battery installations are outdoors and separated by a gap between each metal cabinet. This is so that a lithium fire will be limited to a single battery cabinet. That's an acceptable failure. Ditto the worst case for other forms of power generation. "Contaminating a nearby city and making it permanently unusable if things go badly enough" is not an acceptable scenario.

Anyways, what this means is that solar/wind/batteries are going to keep getting cheaper. And they also have the potential to decarbonize the planet as well. And you can keep innovating and reducing cost wherever possible because the worst case scenario when a solar panel/battery/wind turbine fails is a warranty claim or small fire.

Replies from: Dumbledore's Army, ChristianKl↑ comment by Dumbledore's Army · 2021-04-17T14:05:22.972Z · LW(p) · GW(p)

What is your definition of contaminate? If Devanney is correct that low doses of radiation are acceptable - and I believe he is - then much land which is described as ‘contaminated’ is in fact perfectly liveable. (Also see the people who illegally live in the Chernobyl exclusion zone). For a reasonable definition of ’contaminate’ then, it follows that a nuclear accident contaminates much smaller areas of land and is less expensive.

Your anti-nuclear argument also ignores the status quo of non nuclear energy. In America alone, fossil fuels (read coal) kill tens of thousands every year. So if you replaced all coal power with nuclear and had a Chernobyl every year (unrealistic extreme scenario), it would still save lives on net.

That said, I can see the argument that renewables are safer than both today, but OP is absolutely right to analyse the decades-long failure to replace coal with nuclear in the period before we had renewables.

Replies from: ejacob, None↑ comment by ejacob · 2021-04-17T14:26:43.970Z · LW(p) · GW(p)

Other competing sources of energy, the worst case scenario is acceptable.

I read this as a nod to the status quo bias of nuclear regulators. Millions(?) of quality-adjusted life-years lost per year from fossil fuels are basically ignored in the cost-benefit analysis.

↑ comment by [deleted] · 2021-04-18T06:27:33.999Z · LW(p) · GW(p)

What is your definition of contaminate? If Devanney is correct that low doses of radiation are acceptable - and I believe he is - then much land which is described as ‘contaminated’ is in fact perfectly liveable. (Also see the people who illegally live in the Chernobyl exclusion zone). For a reasonable definition of ’contaminate’ then, it follows that a nuclear accident contaminates much smaller areas of land and is less expensive.

One issue is that it is not possible to rigorously prove it's livable because the parameter you are trying to measure - extra cancers and subtle damage - won't show up for 20-30 years. Over such a long timescale it is difficult to even tease out causation. Your data will be incomplete, your subjects won't all have lived long enough for any radiation damage to matter, some of them smoke, etc. But for the sake of argument I will let the conclusions be conclusive that radiation is harmless below a threshold.

I agree with you that the NRC's decision making is not rational in that it is not factoring in the consequences of a decision to the host society. It's factoring in the consequences of the decision to the NRC. This is true for most regulatory agencies, at best they are captured by not wanting to do something that endangers their own reputation.

Anyways even if all of the above is true the innovation cost I mentioned above isn't there. Nuclear is also a small market, in that many advancements do not make economic sense because few reactors are being built; up to a point, this would remain true even if more were being built.

Solar and batteries are enormous market scales, and thus many improvements make economic sense.

Replies from: ChristianKl↑ comment by ChristianKl · 2021-04-20T19:35:14.202Z · LW(p) · GW(p)

One issue is that it is not possible to rigorously prove it's livable because the parameter you are trying to measure - extra cancers and subtle damage - won't show up for 20-30 years.

We could measure the effects that radiation has on rats in the lab to get a better model of how the dose of radiation relates to the frequency of effects.

To the extent that your model predicts that radiation causes DNA damage, DNA sequencing could also uncover that damage.

↑ comment by ChristianKl · 2021-04-17T15:52:14.110Z · LW(p) · GW(p)

Other competing sources of energy, the worst case scenario is acceptable.

The number of people killed by coal every year world-wide is about that of the Rwandan genocide. I don't hold that to be acceptable and that's the average scenario.

Replies from: None↑ comment by [deleted] · 2021-04-18T06:28:40.590Z · LW(p) · GW(p)

Fair enough. Unfortunately you can walk around with a geiger counter and perceive the dangers of nuclear in the 2 disaster areas. You can't perceive the coal pollution in most areas except when it gets bad enough.

Replies from: ChristianKl↑ comment by ChristianKl · 2021-04-18T17:42:48.630Z · LW(p) · GW(p)

You can't perceive the coal pollution in most areas except when it gets bad enough.

While you can't measure it without devices, you can also measure pollution if it's not bad enough to be visible to everybody.

A geiger counter doesn't perceive dangers. It just measures radiation. The problem is that the value that it measures gets combined with pseudoscientific models of what radiation does to the human body.

If you eat a banana every three days, that exposes you to more radiation than living near Chernobyl. BBC gives 0.1 micro Sievert per banana, which gives you 12 micro Sievert per year if you eat a banana every three days. On the other hand, living in the exclusion zone only gives you 8.8 micro Sievert per year.
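As a quick check of the banana arithmetic above, here is a minimal sketch. The per-banana dose is the BBC figure quoted in the comment; the function name and the 365-day year are assumptions made purely for illustration.

```python
# Sanity check of the banana-dose arithmetic quoted above.
# DOSE_PER_BANANA_USV is the BBC figure cited in the comment;
# the helper name and the 365-day year are illustrative assumptions.

DOSE_PER_BANANA_USV = 0.1  # microsieverts per banana


def annual_banana_dose_usv(days_between_bananas: float, days_per_year: int = 365) -> float:
    """Return the yearly dose in microsieverts from eating one banana every N days."""
    bananas_per_year = days_per_year / days_between_bananas
    return bananas_per_year * DOSE_PER_BANANA_USV


if __name__ == "__main__":
    dose = annual_banana_dose_usv(3)
    print(f"One banana every three days: about {dose:.1f} microsieverts per year")
```

This only reproduces the roughly 12 micro Sievert figure for bananas; it says nothing about whether the exclusion-zone number is right.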

Replies from: None↑ comment by [deleted] · 2021-04-20T17:49:51.210Z · LW(p) · GW(p)

Agree with everything but the last bit. It is possible to find fragments of the core itself still in the area within a kilometer or so of the reactor. These tiny fragments are high level nuclear waste.

Replies from: ChristianKl↑ comment by ChristianKl · 2021-04-20T19:09:57.861Z · LW(p) · GW(p)

To the extent that you can currently find fragments of the core, that's the result of it not being worthwhile to clean those up. Cleaning up an area of a kilometer is not that hard if you want to do it.

comment by Дмитрий Зеленский (dmitrii-zelenskii-1) · 2023-01-14T21:17:29.676Z · LW(p) · GW(p)

I love the description, sounds compelling, should actually read the book :D

comment by rmoehn · 2021-04-18T02:08:16.599Z · LW(p) · GW(p)

Nuclear power is the sword that can cut it: a scalable source of dispatchable (i.e., on-demand), virtually emissions-free energy. It takes up very little land, consumes very little fuel, and produces very little waste.

For balance, here are a few counterpoints, which I recently heard from a friend and have not verified myself:

- There is only enough uranium to provide half of the world's power for fifty years.

- Renewable energy sources have become cheaper than nuclear power in recent years.

- Mining for uranium ore and producing uranium does occupy much land, consume much energy and emit much carbon dioxide. The reason is that there is only about one ton of uranium per forty tons of ore. (Renewables don't consume as much land as some people say, since you can put solar cells on roofs, grow crops under the panels of solar farms etc.)

- Mining and producing uranium also results in much waste in the form of tailings.

- Smart grids can solve the base load power problem with renewables.

Personally, I haven't made up my mind in the go nuclear/stop nuclear dimension. I don't need to, since it's not something I'm going to or trying to have much influence on. But the above are points I would like to see addressed when arguing for increasing nuclear energy production. They're also great points to put numbers on and compare with renewables.

Replies from: gilch, ChristianKl↑ comment by gilch · 2021-04-19T06:38:18.073Z · LW(p) · GW(p)

There is only enough uranium to provide half of the world's power for fifty years.

Not sure where that number came from, but it's not accounting for breeding U-233 from thorium or breeding fissile plutonium from depleted uranium. Fast neutron reactors can burn most actinides, including the bulk of the so-called "nuclear waste" from lightwater reactors, and as ChristianKl already mentioned, we know how to extract uranium from seawater. This is much more expensive, but nuclear reactors require so little fuel that it amounts to a tiny fraction of their operating costs, so it wouldn't affect the price of nuclear power much. Getting enough fuel is really not the issue.

Renewable energy sources have become cheaper than nuclear power in recent years.

Nuclear should be a lot cheaper than it is, and this is due to the red tape, as explained in the post.

Mining for uranium ore and producing uranium does occupy much land, consume much energy and emit much carbon dioxide. The reason is that there is only about one ton of uranium per forty tons of ore. (Renewables don't consume as much land as some people say, since you can put solar cells on roofs, grow crops under the panels of solar farms etc.)

Mostly true for mining anything these days. And you still need minerals to produce solar panels, wind turbines, and the batteries required to make them work in place of baseload, etc. Nuclear, on the other hand, requires uranium in very small quantities.

I dispute that you can grow crops under solar panels. Wind turbines, sure, but they're loud. Maybe some plants are OK with some degree of shade, but they do require sunlight, and efficient harvesting requires access with big tractors.

Smart grids can solve the base load power problem with renewables.

Smart grids aren't good enough, but they could help. Renewables can't easily be used for baseload without some kind of grid-scale storage, but advancing battery technology may address this in the near future.

Replies from: rmoehn, gilch↑ comment by gilch · 2022-01-22T05:53:24.486Z · LW(p) · GW(p)

I have since read articles about growing shade crops under solar panels. This is a thing. The panels have to be spaced out though. It's not going to be as energy-dense as a dedicated solar plant.

Enhanced Geothermal looks really promising, particularly heat mining of hot dry rock. It's both green and baseload, and it leverages a lot of the oil-drilling tech, including deep bores and fracking. I wonder how much Big Oil is going to transition to geothermal over the coming decade.

Fusion also looks really promising right now. The joke was that it was always just fifty years away, even ten years later. I don't think it's that long anymore. Tokamaks basically work now, and improved superconductors have made the required electromagnets much more compact and less expensive. There are also other promising approaches in the works. Only one has to work.

↑ comment by ChristianKl · 2021-04-18T17:43:03.828Z · LW(p) · GW(p)

There is only enough uranium to provide half of the world's power for fifty years.

That's what people said about oil in the 19th century. We know how to mine Uranium from seawater, so if we have a problem with mining it the traditional way we can just take it from the ocean that has plenty.

Smart grids can solve the base load power problem with renewables.

No. Smart grids do nothing to give you energy in dark winter months.

Renewable energy sources have become cheaper than nuclear power in recent years.

Not for 365/7/24 energy needs.

Replies from: gilch, TAG, rmoehn↑ comment by gilch · 2021-04-19T07:05:25.944Z · LW(p) · GW(p)

Not for 365/7/24 energy needs.

Wind and PV will require grid-scale energy storage. At this rate it looks like batteries will get there first, but there are other possibilities. Any fair accounting for the cost of renewables vs nuclear has to account for this part, since nuclear is a baseload source in its own right and doesn't need the batteries. The "cheap" renewables right now are wind and photovoltaic.

However, there are many baseload renewable sources, such as hydro, ocean wave, geothermal, solar thermal, and high-altitude wind. Most of the easy geothermal and hydro sources have been tapped already, and more would be damaging to their local environment.

Solar thermal and enhanced hot dry rock geothermal have great potential as renewable baseload sources. They work practically anywhere, although not equally well anywhere.

↑ comment by TAG · 2021-04-18T18:32:27.299Z · LW(p) · GW(p)

No. Smart grids do nothing to give you energy in dark winter months.

Wind and wave are renewables.

Replies from: ChristianKl↑ comment by ChristianKl · 2021-04-18T19:12:51.720Z · LW(p) · GW(p)

Wind doesn't blow every day either. Waves do have the advantage of being constant, but we don't see wave-powered electricity generation at the price/quantity of the others.

↑ comment by rmoehn · 2021-04-19T12:14:27.535Z · LW(p) · GW(p)

Thanks for your counter-counterpoints. I've added them to my notes.

Re. smart grids: Of course they don't produce energy themselves. We would need the capacity to produce enough during winter. But they address the problem of supply variability. And the energy grid modelers at my friend's company have found that they can address it sufficiently.

Replies from: ChristianKl↑ comment by ChristianKl · 2021-04-20T09:17:37.279Z · LW(p) · GW(p)

Supply variability happens on different time-spans. Batteries and smart grid technology allow you to handle 24 hour variability.

Unfortunately, if you use mainly renewable energy, a solution that just handles the 24 hour variability while not handling the variability over longer timescales doesn't get you far.

You likely need to turn the surplus energy in the summer into hydrogen or methane, store that and then burn it when needed with turbines. Those turbines can then not only handle the variability over a year but also that over shorter timeframes.

Failing to handle electricity variation for an hour gives you an outage of an hour, which isn't a big deal. On the other hand, failing to handle inter-month variation and having a few days of power outage is very costly.

Replies from: rmoehn

comment by ChristianKl · 2021-04-16T17:15:14.092Z · LW(p) · GW(p)

However, this is a highly non-linear process: these centers can correctly repair breaks at a certain rate, but as the break rate increases, the error rate of the repair process goes up drastically.

This seems like a surprising claim to me. I don't see how "repair at a certain rate" would happen in the underlying biology. On the other hand, I would expect that some errors are of a quality that the error detection mechanisms don't catch them.

Does anybody know more details?

Replies from: jasoncrawford↑ comment by jasoncrawford · 2021-04-16T20:28:35.213Z · LW(p) · GW(p)

So here is the relevant excerpt, section 4.7.1:

Our bodies are equipped with damage repair systems that are pretty darn effective at low dose rates. If this were not the case, then life would never have evolved as it has. Life started about 3 billion years ago when average background radiation was about 10 mSv/y, about 4 times the current average. Life without repair mechanisms would be impossible. But these repair mechanisms can be overwhelmed by high dose rate damage.

The repair mechanisms take a bewildering number of forms, all of which seem to have names requiring a dictionary. And the strategies are remarkably clever. At doses below 3 mSv, a damaged cell attempts no repair but triggers its premature death. However, at higher doses, it triggers the repair process.23 This scheme avoids an unnecessary and possibly erroneous repair process when cell damage rate is so low that the cell can be sacrificed. But if the damage rate is high enough that the loss of the cell would cause its own problems, then the repair process is initiated. This magic is accomplished by activating/repressing a different set of genes for high and low doses.[143][page 15] LNT denies this is possible.

Even at the cell level, the repair process is fascinating. In terms of cancer, we are most interested in how the cell repairs breaks in its DNA. Single strand breaks are astonishingly frequent, tens of thousands per cell per day. Almost all these breaks are caused by ionized oxygen molecules from metabolism within the cell. MIT researchers observed that 100 mSv/y dose rates increased this number by about 12 per day.[114] Breaks that snap only one side of the chain are repaired almost automatically by the clever chemistry of the double helix itself.

The interesting question is: what happens if both sides of the double helix are broken? Double strand breaks (DSB) also occur naturally. Endogenous, non-radiogenic causes generate a DSB about once every ten days per cell. Average natural background radiation creates a DSB about every 10,000 days per cell.[50] However the break was caused, the DNA molecule is split in two.

Clever experiments at Berkeley show that the two halves migrate to “repair centers”, areas within the cell that are specialized in putting the DNA back together.[105] Berkeley actually has pictures of this process, Figure 4.15, which is largely complete in about 2 hours for acute doses below 100 mSv and 10 hours for doses around 1000 mSv. These experiments show that if a “repair center” is only faced with one DSB, the repair process rarely makes a mistake in reconstructing the DNA. But if there are multiple breaks per repair center, then the error rate goes up drastically. A few of these errors will survive and a few of those will result in a viable mutation that will eventually cause cancer. The key feature of this process is it is non-linear. And it is critically dose rate dependent. If the damage rate is less than the repair rate, we are in good shape. If the damage rate is greater than the repair rate, we have a problem.

The Berkeley work was part of the DOE funded Low Dose Radiation Research Program. Despite the progress at Berkeley and other labs and bipartisan congressional support, DOE shut the program down in 2015. When the DOE administrator of the program, Dr. Noelle Metting, attempted to defend her program, she was fired and denied access to her office. The program records were not properly archived as required by DOE procedures.

Footnote 23 says:

To be a bit more precise, some repairs can only take place in the G2 phase just before cell division. Radiation to the cell above 3 mSv, activates the ATM-gene, which arrests the cell in the G2 phase. This allows time for the repair process to take place.
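To put the break rates from the excerpt side by side, here is a rough sketch. The double strand break intervals and the 12 extra single strand breaks per day come from the quoted text; the baseline of 10,000 single strand breaks per day is an assumption (the excerpt only says "tens of thousands"), and all names are made up for illustration.

```python
# Break rates quoted in the excerpt from section 4.7.1; names are illustrative.
ENDOGENOUS_DSB_INTERVAL_DAYS = 10      # one double strand break per cell every ~10 days
BACKGROUND_DSB_INTERVAL_DAYS = 10_000  # one per cell every ~10,000 days from background radiation
EXTRA_SSB_PER_DAY_AT_100_MSV_Y = 12    # extra single strand breaks per cell per day

# ASSUMPTION: the excerpt only says "tens of thousands" of single strand breaks
# per cell per day; 10,000 is used here as a deliberately low baseline, so the
# fraction computed below is an upper bound.
ASSUMED_BASELINE_SSB_PER_DAY = 10_000


def breaks_per_day(interval_days: float) -> float:
    """Convert 'one break every N days' into breaks per day."""
    return 1.0 / interval_days


def dsb_ratio() -> float:
    """Endogenous double strand breaks per background-radiation double strand break."""
    return breaks_per_day(ENDOGENOUS_DSB_INTERVAL_DAYS) / breaks_per_day(BACKGROUND_DSB_INTERVAL_DAYS)


def extra_ssb_fraction() -> float:
    """Extra single strand breaks at 100 mSv/y as a fraction of the assumed baseline."""
    return EXTRA_SSB_PER_DAY_AT_100_MSV_Y / ASSUMED_BASELINE_SSB_PER_DAY


if __name__ == "__main__":
    print(f"Endogenous DSBs per background DSB: about {dsb_ratio():.0f}")
    print(f"Extra single strand breaks at 100 mSv/y: at most about {extra_ssb_fraction():.2%} of baseline")
```

On these figures, natural non-radiogenic double strand breaks outnumber background-radiation ones by roughly a thousand to one. This is only arithmetic on the quoted numbers, not a model of the repair process itself.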

comment by DPiepgrass · 2021-10-11T21:22:00.400Z · LW(p) · GW(p)

Nuclear followed the learning curve up until about 1970, when it inverted and costs started rising

This confused me because I thought that TMI in 1979 was when nuclear power really started dying off in the U.S., but Wikipedia agrees: Not a single reactor started construction in the U.S. after 1978! So I looked back on my favorite source on this, and its chart, starting in 1972, shows monotonically rising costs. Thanks for the correction. Rather than TMI happening somewhere in the middle of the coffin-construction, it really was the final nail.

Perhaps anti-nuclear activism started all the way back in 1945? I wonder how things would've been different if Truman wasn't so damn eager to drop atomic bombs on civilians (edit: his diary says he would drop it on a military target, which sounds incredibly naive; but I remember hearing somewhere-or-other that he was eager to use it.)

An incident in Taipei in which an apartment was accidentally built with rebar containing radioactive cobalt-60

I'm curious what the book has to say about this, because I read that study after seeing it featured on a site called X-LNT as one of (only) four studies that ostensibly supported the radiation hormesis hypothesis (the idea that low-dose radiation is good for you). As you may know, there are far, far more than four studies on low-dose radiation exposure, so the lack of data listed on the site was a little suspicious.

But when I actually went and looked at the cited studies, I saw that only one directly supported hormesis. The conclusion of the study on the Taipei incident said "The results suggest that prolonged low dose-rate radiation exposure appeared to increase risks of developing certain cancers in specific subgroups of this population in Taiwan". The study itself did not seem high-quality (the p-hacking is plain as day) but the point is that some people seem to have deliberately misinterpreted it. IIUC, the researchers created a simple model to predict how many cancers they expected to see in the population, and the actual number of cancers among the irradiated group was lower than their prediction, but the researchers themselves didn't think that this was evidence of radiation being good for you.

Regardless, I've seen plenty of support in scientific literature for the idea that radiation is less than proportionally harmful at lower doses, although there seem to be a bunch of scientists that continue to support LNT as well. I am not sure how to tell how much scientific support each side of the debate has.

By the way, who told me about X-LNT in support of his views? None other than Robert Hargraves of Thorcon, the same company that employs Devanney. I'm glad to hear that Devanney does not promote hormesis.

My favorite 1990 book on nuclear power agrees with the general idea that small doses are less harmful:

In view of all this evidence, both UNSCEAR12 and NCRP19 estimate that risks at low dose and low dose rate are lower than those obtained from the straight line relationship by a factor of 2 to 10. For example, if 1 million mrem gives a cancer risk of 0.78, the risk from 1 mrem is not 0.78 chances in a million as stated previously, but only 1/2 to 1/10 of that (0.39 to 0.078 chances in a million). The 1980 BEIR Committee accepted the concept of reduced risk at low dose and used it in its estimates. The 1990 BEIR Committee acknowledges the effect but states that there is not enough information available to quantify it and, therefore, presents results ignoring it but with a footnote stating that these results should be reduced.
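The arithmetic in the quoted passage can be reproduced with a short sketch. The 0.78 risk at 1 million mrem and the reduction factors of 2 to 10 are taken from the quote; the function names are hypothetical, and the straight-line scaling is exactly the LNT assumption that the passage goes on to discount.

```python
# Reproduces the worked example in the quoted passage.
# RISK_AT_1M_MREM and the 2x-10x reduction factors come from the quote;
# function names are illustrative only.

RISK_AT_1M_MREM = 0.78  # cancer risk attributed to 1,000,000 mrem in the quote


def linear_risk(dose_mrem: float) -> float:
    """Straight-line (LNT) risk: scale the 1,000,000 mrem risk down proportionally."""
    return RISK_AT_1M_MREM * dose_mrem / 1_000_000


def reduced_risk_range(dose_mrem: float, min_factor: float = 2, max_factor: float = 10):
    """Return (low, high) risk after dividing the linear estimate by the quoted factors."""
    base = linear_risk(dose_mrem)
    return base / max_factor, base / min_factor


if __name__ == "__main__":
    low, high = reduced_risk_range(1)
    print(f"LNT risk from 1 mrem: {linear_risk(1) * 1e6:.2f} chances in a million")
    print(f"Reduced estimate: {low * 1e6:.3f} to {high * 1e6:.2f} chances in a million")
```

For a 1 mrem dose this gives the same 0.078 to 0.39 chances in a million as the quote.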

But it should be stressed that if you accept the LNT hypothesis and therefore triple the book's risk estimates, that would only change the conclusion from "nuclear power is fantastic and extremely safe" to "nuclear power is very good and quite safe".

The only deaths from the Fukushima disaster were caused by the unnecessary evacuation of 160,000 people, including seniors in nursing homes.

While it seems to me plausibly unjustifiable to have evacuated nursing homes or ICUs, it's important to make a distinction between evacuation and relocation. Evacuation is a much, much smaller step than relocation. It's a way of saying "okay, please move while we evaluate the risks here", and after Fukushima there would have been a certain amount of short-lived iodine isotopes that you don't want in your body. But these isotopes are basically gone after a few weeks, and iodine pills might be sufficient to protect you and allow you to move back home.

What made Fukushima really bad, I think, was the decision to do sudden and permanent relocation instead of just an evacuation, especially as they forced out elderly people who, even if they were to get a large radiation dose, probably wouldn't have lived long enough to develop cancer from it. I don't really understand how a thousand people can die of "stress" as the media reported (I don't speak Japanese so how can I do more research on this?), but regardless of how their deaths happened, it seems clearly dangerous and wrong to suddenly relocate everyone, and not allow them to even visit their homes.

And then there's the bizarre debacle of all the giant drums filled with "radioactive" water that is pretty much safe to drink. My working hypothesis is that after making a bad relocation decision that killed a lot of people, the government can't admit it made a mistake, so it must double down. The public only knows what the media says, and if the media in Japan is like American media, it misrepresents badly. Here's Cohen's 1980s survey:

Here and elsewhere in communicating with the public, I try to represent the position of the great majority of radiation health scientists. [...] In 1982, I became concerned that I had no real proof that I was properly representing the scientific community. I, therefore, decided to conduct a poll by mail.

The selection of the sample to be polled was done by generally approved random sampling techniques using membership lists from Health Physics Society and Radiation Research Society, the principal professional societies for radiation health scientists. Selections were restricted to those employed by universities, since they would be less likely to be influenced by questions of employment security and more likely to be in contact with research. Procedures were such that anonymity was guaranteed.

Questionnaires were sent to 310 people, and 211 were returned, a reasonable response for a survey of this type. The questions and responses are given in Figure 1.

TABLE 1

1. In comparing the general public's fear of radiation with actual dangers of radiation, I would say that the public's fear is (check one):

- 2 grossly less than realistic (i.e., not enough fear).
- 9 substantially less than realistic.
- 8 approximately realistic.
- 18 slightly greater than realistic.
- 104 substantially greater than realistic.
- 70 grossly greater than realistic (i.e., too much fear).

2. The impressions created by television coverage of the dangers of radiation (check one)

- 59 grossly exaggerate the danger.
- 110 substantially exaggerate the danger.
- 26 slightly exaggerate the danger.
- 5 are approximately correct.
- 3 slightly underplay the danger.
- 2 substantially underplay the danger.
- 1 grossly underplay the danger.
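For anyone who wants the survey tallies as percentages, here is a small sketch. The counts are copied from the table above; the dictionary keys and the helper name are shorthand I made up, and shares are computed over the answers actually given to each question.

```python
# Tallies copied from Cohen's survey table above; keys are illustrative shorthand.
FEAR_VS_REALITY = {
    "grossly_less": 2,
    "substantially_less": 9,
    "approximately_realistic": 8,
    "slightly_greater": 18,
    "substantially_greater": 104,
    "grossly_greater": 70,
}

TV_COVERAGE = {
    "grossly_exaggerate": 59,
    "substantially_exaggerate": 110,
    "slightly_exaggerate": 26,
    "approximately_correct": 5,
    "slightly_underplay": 3,
    "substantially_underplay": 2,
    "grossly_underplay": 1,
}

TOO_MUCH_FEAR = ["slightly_greater", "substantially_greater", "grossly_greater"]
EXAGGERATE = ["grossly_exaggerate", "substantially_exaggerate", "slightly_exaggerate"]


def share(tallies, chosen):
    """Fraction of the answers to one question that fall in the chosen categories."""
    total = sum(tallies.values())
    return sum(tallies[key] for key in chosen) / total


if __name__ == "__main__":
    print(f"Public fear greater than realistic: {share(FEAR_VS_REALITY, TOO_MUCH_FEAR):.1%}")
    print(f"TV coverage exaggerates the danger: {share(TV_COVERAGE, EXAGGERATE):.1%}")
```

The first question's tallies sum to the 211 returned questionnaires and the second's to 206. That works out to about 91% of respondents saying public fear is greater than realistic and about 95% saying television exaggerates the danger.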

Not surprisingly, I think, the public does not know about all the efforts to make nuclear regulations more strict. In the 1970s someone listening to the media might think "nuclear power is a bit dangerous, but at least it's affordable", but after all the strict regulations are added, by 1990 they think "nuclear power is dangerous AND expensive!"

This is quite different than what happened with airplanes, where we got multiple TV shows about airplane accidents, which always talk at the end about how regulations and procedures were changed to ensure This Will Never Happen Again.

Even though flying is thousands of times safer than driving (per mile), people still feel it's a little scary. Luckily, though, it's not so scary that activists are picketing airports and demanding that the "passenger missiles" be prohibited from flying within 15 miles of a populated area.

comment by Felipe Dias (felipe-dias) · 2024-11-28T01:15:41.775Z · LW(p) · GW(p)

Can someone elaborate on how the risk of military attacks on nuclear power plants is usually accounted for?

During the invasion of Ukraine in 2022, I remember a great fuss around the shelling of the Zaporizhzhia Nuclear Power Plant. It was argued that a disaster of the magnitude of Chernobyl would be impossible, but I'm unaware of the technical aspects involved, and some people were still very afraid.

If nuclear (be it large or SMR) is to become commonplace, what kind of risks are involved in these attacks?

comment by bfinn · 2021-04-20T14:23:55.031Z · LW(p) · GW(p)

Good post. Sorry I didn't read it all, but I get the impression you didn't cover the unfortunate influence of the environmental movement on the decline of nuclear power, which is presumably a major reason for the overregulation, and for political opposition to & closure of nuclear power stations in recent decades in countries like Germany.

Environmentalists should have been all in favour of nuclear power, but many strongly opposed it until quite recently. (Possibly because of somewhat Luddite attitudes to modern technology, capitalism, etc. - I speculate.)

Replies from: ChristianKl↑ comment by ChristianKl · 2021-04-20T19:55:13.147Z · LW(p) · GW(p)

In the German case, I think opposition to nuclear power historically has a lot to do with opposition to nuclear weapons on German soil. The antiwar and environmental movements overlapped heavily for a long time.

comment by Blippo · 2021-04-17T11:58:48.027Z · LW(p) · GW(p)

The waste makes nuclear power too risky and thus too expensive if you think long-term.

In Germany we have the situation that the country is backing out of nuclear power completely. That decision was made by the German government after the Fukushima incident, and because Germany is so densely populated that an incident like Fukushima would impact all of Europe. Other countries next door, like France, which also has to maintain a nuclear arsenal, will keep producing nuclear power. I'm pretty sure that the possibility of building nukes is the one big argument for keeping and subsidizing a nuclear power industry at all.

The main political issue and the elephant in the room is the nuclear waste management, which is very expensive if you want to have long term safety. And we are talking about stuff that is dangerous for thousands of years. In the 50s and 60s that stuff was simply thrown into the sea or dumped in abandoned mine shafts (that's why the cost curve had been falling). Germany is already spending billions of Euros now to walk back those original sins - and it's complicated because no one wants to pay. The utilities already got some questionable deals and shook off their responsibility. Government and taxpayer money will now have to deal with hundreds of tons of nuclear waste for generations to come. If you want to advocate a nuclear power renaissance (instead of renewables and possibly fusion power some day), you must answer the question of waste management - technically and economically.

↑ comment by ChristianKl · 2021-04-17T21:32:03.517Z · LW(p) · GW(p)

The main political issue and the elephant in the room is the nuclear waste management, which is very expensive if you want to have long term safety.

It's only expensive when you want to have long-term safety. Given that we don't even have short term safety with coal that kills a lot of people, nuclear without any long-term safety would be an improvement on the status quo.

↑ comment by jimrandomh · 2021-04-17T15:56:27.638Z · LW(p) · GW(p)

Online discussion about this subject is traditionally full of people making bald assertions like this, without evidence they understand any of the subject quantitatively. "Hundreds of tons" is not an intensifier that you stick in a sentence like the word "very"; it's a number that (a) should be sourced, and (b) is only meaningful in comparison with other waste streams, eg fly ash.

Replies from: Blippo, TAG↑ comment by Blippo · 2022-03-23T19:14:21.108Z · LW(p) · GW(p)

Ok, you got me on my lack of precision and missing sources. Estimates like "billions of Euros" and "hundreds of tons" are horribly vague and not a proper base for discussion.

To restore my reputation I want to add this link: https://world-nuclear.org/information-library/country-profiles/countries-g-n/germany.aspx which provides a lot of hard facts. According to this report Germany has to manage 11,500 metric tons of nuclear fuel. There's a state fund of 23.6 billion € for managing the waste. Also, the energy companies have set aside 38 billion € to dismantle the German reactors. "Millions of years" was plain wrong; spent nuclear fuel has half-lives as high as 24,000 years, which is not nothing but something to do math with.

Where it is difficult to do the math is the total cost of nuclear power in Germany. There are about 187 billion € of state subsidies that some sources factor in and some don't. The risk of minor and major accidents and how to account for them is debated heavily. The same goes for renewables, where some sources factor in external costs for manufacturing, land and network, and some don't. It's a mess and I no longer wonder why there are so many stark opinions for and against nuclear power.

It would make sense from an economic standpoint to let the running reactors run long term and to refurbish older ones rather than build new power plants. Shutting down the German reactors early was a political decision fueled by the political problems around the waste and fear of accidents, not a scientific one. The same goes for the discussion about a nuclear renaissance, which could simply be decided on a political basis. But if people want to pay a price (like higher energy prices) to reduce risk, all economic discussion will fall short. The war in Ukraine will shift public opinion towards nuclear, just because fossil fuels from Russia have become a political burden, especially in Germany. But public opinion on big infrastructure projects has also become very strained after the disastrous Berlin airport project.

It's not about the cost. It's about public opinion and I'm not looking forward to the debate. Ugh.

↑ comment by TAG · 2021-04-17T16:01:08.751Z · LW(p) · GW(p)

“Hundreds of tons” is not an intensifier that you stick in a sentence like the word “very”;

Here's the first result I got when I googled "Germany tonnes nuclear waste"

Blippo could have googled, but you could have googled.

Replies from: gilch