Seven lessons I didn't learn from election day

post by Eric Neyman (UnexpectedValues) · 2024-11-14T18:39:07.053Z · 33 comments

This is a link post for https://ericneyman.wordpress.com/2024/11/14/seven-lessons-i-didnt-learn-from-election-day/

Contents

- The seven most important things I didn't learn
  - 1. No, Kamala Harris did not run a bad campaign
  - 2. No, polls aren't useless. They were pretty good, actually.
  - 3. No, Theo the French Whale did not have edge
  - 4. No, we didn't learn which campaign strategies worked
  - 5. No, Donald Trump isn't a good candidate
  - 6. No, spending money on political campaigns isn't useless
  - 7. No, my opinion of the American people didn't change
- One thing I did learn
  - Foreign-born Americans shifted toward Trump

[Cross-posted from my blog. I think LessWrong readers would find the discussion of Theo the French Whale to be the most interesting section of this post.]

I spent most of my election day -- 3pm to 11pm Pacific time -- trading on Manifold Markets. That went about as well as it could have gone. I doubled the money I was trading with, jumping to 10th place on Manifold's all-time leaderboard. Spending my time trading instead of just nervously watching results come in also spared me emotionally.[1]

It's been a week now, and people seem to be in a mood for learning lessons, for grand takeaways. There is, of course, virtue in learning the right lessons. But there is an equal amount of virtue in not learning the wrong lessons. People seem to over-learn lessons from dramatic events. And so this blog post is intended as a kind of push-back: "Here are some lessons that people seem to be learning, and here is why those lessons are wrong."

The seven most important things I didn't learn

1. No, Kamala Harris did not run a bad campaign

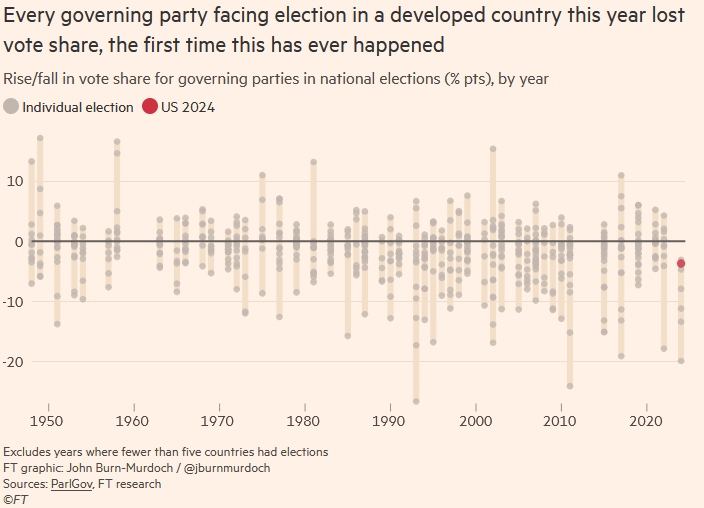

The most important fact about politics in 2024 is that across the world, it's a terrible time to be an incumbent. This year, for the first time since at least World War II, the incumbent party did worse than it had in the previous election in every election in the developed world. This happened most dramatically in the United Kingdom, where the Labour Party won in a landslide, ending 14 years of Conservative rule. But the same thing played out all over the world, in places like India, France, Japan, Austria, South Korea, South Africa, Portugal, Belgium, and Botswana.

Why? The answer is probably inflation: inflation rates were unusually high throughout the world, and voters really don't like it when prices go up.

The fact that this phenomenon is global shows that we can't infer much about Kamala Harris' quality as a candidate, or about her campaign, just from the fact that she lost. Indeed, as the chart shows, she fared unusually well compared to other incumbents (though I wouldn't read too much into that either[2]).

Honestly, I don't think that Kamala Harris was a good candidate, electorally speaking. According to a New York Times poll, 47 percent of voters thought that Harris was too progressive (compare: only 32 percent thought that Trump was too conservative). This is perhaps because she expressed some fairly unpopular progressive views in 2019-2020, including praising the "defund the police" movement and supporting a ban on fracking.

But I think that her 2024 campaign was pretty good. She had a good speech at the Democratic National Convention, did well in the presidential debate, and mostly avoided taking unpopular positions. By most accounts, her ground game in swing states was superior to Trump's. Plus, Harris was backed by a really impressive Super PAC called Future Forward, which did rigorous ad testing to figure out which political ads were most persuasive to swing voters. Harris' campaign wasn't perfect -- she should have picked Josh Shapiro as her running mate and gone on Joe Rogan's show -- but I have no major complaints.

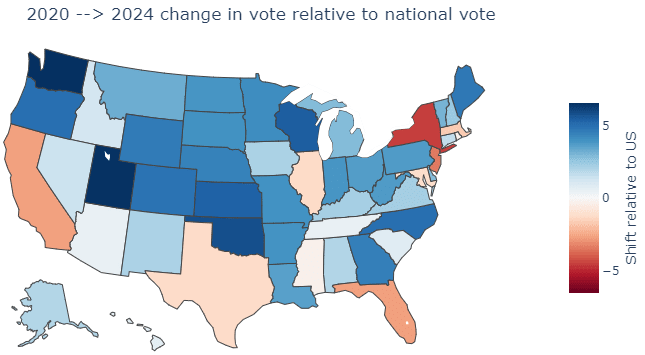

Whatever the cause, I think there's evidence that Harris' campaign was more effective than Trump's in the most important states. Here is a map of state-by-state swings in the vote from 2020 to 2024, relative to the national popular vote. In other words, while literally every state swung rightward relative to 2020, I've colored red the states that swung rightward more than the nation as a whole, and blue the states that swung rightward less.[3]
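The coloring rule is just arithmetic on margins. Here's a minimal Python sketch of it, assuming margins are expressed as Democratic minus Republican in percentage points. The three states and their margins are invented for illustration (they are not real results); only the national margins (Biden +4.5, Trump about +1.5) come from this post. Note that all three toy states swung rightward in absolute terms, yet only one is colored red.

```python
# Hypothetical margins, Democratic minus Republican, in percentage points.
# The state values are invented for illustration; they are not real results.
margins_2020 = {"State A": 10.0, "State B": -2.0, "State C": 1.0}
margins_2024 = {"State A": 2.0, "State B": -4.0, "State C": -0.5}
national_2020 = 4.5   # Biden's popular-vote margin, per the post
national_2024 = -1.5  # Trump's approximate popular-vote margin, per the post


def relative_swing(m2020, m2024, nat2020, nat2024):
    """Return each state's 2020-to-2024 swing minus the national swing.

    Negative: the state swung right more than the nation (red on the map).
    Positive: the state swung right less than the nation (blue on the map).
    """
    national_swing = nat2024 - nat2020
    return {s: (m2024[s] - m2020[s]) - national_swing for s in m2020}


rel = relative_swing(margins_2020, margins_2024, national_2020, national_2024)
colors = {s: ("red" if v < 0 else "blue") for s, v in rel.items()}
for state, value in rel.items():
    print(f"{state}: {value:+.1f} -> {colors[state]}")
```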

Notice that all seven swing states -- Nevada, Arizona, Georgia, North Carolina, Pennsylvania, Michigan, and Wisconsin -- swung right less than the nation. Georgia and North Carolina particularly stand out, having swung the least of any states in the Southeast. And despite Harris' massive losses among Hispanic voters (visible on the map in states like California, Texas, Florida, and New York), she did okay in the heavily-Hispanic swing states of Arizona and Nevada. (See this Twitter thread by Dan Rosenheck for a more rigorous county-by-county regression analysis that agrees with this conclusion.[4])

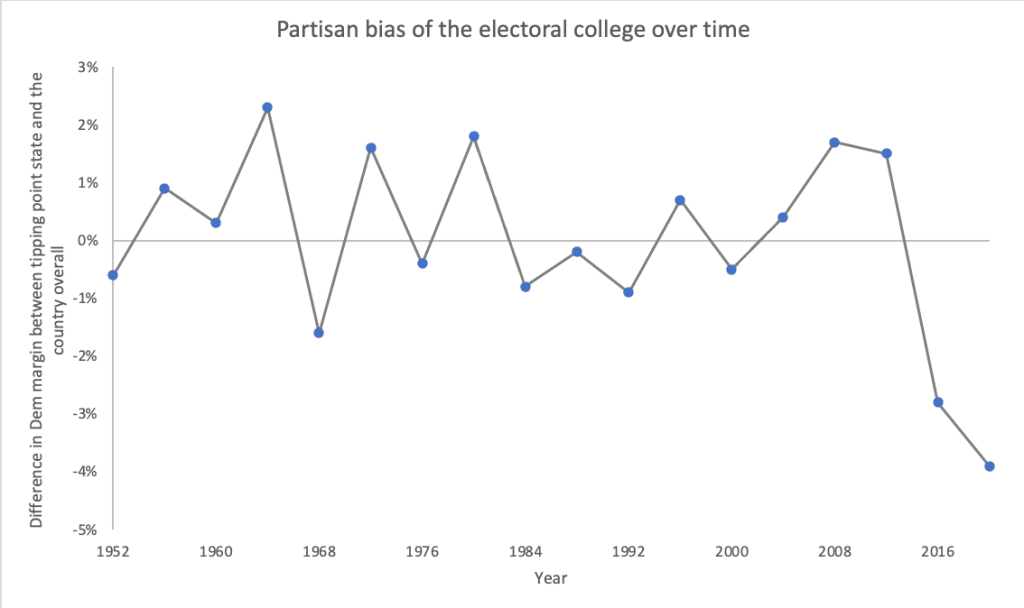

The result? It looks like Harris has pretty much erased the bias that massively benefited Trump in the electoral college in 2020. That year, Biden won the popular vote by 4.5%, but came really close to losing the electoral college. He needed to win the national popular vote by 4% (!) to win the electoral college, a historic disadvantage.

In 2024, it looks like Harris would have needed to win the national popular vote by about 0.3% to win the election. In other words, the last election's bias against Democrats -- a bias that was unprecedented in recent history -- got almost entirely erased.
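One common way to quantify this kind of electoral-college bias is the tipping-point method: sort states from most to least Democratic, add up electoral votes until a majority is reached, and compare that state's margin to the national margin. I'm not certain this is exactly how the 4% and 0.3% figures above were computed. The sketch below assumes a uniform national swing (every state shifts by the same amount), and its five "states" are hypothetical.

```python
def tipping_point(states, national_margin):
    """Find the tipping-point state under a uniform-swing assumption.

    states: list of (name, electoral_votes, dem_margin), margins in
    percentage points (Democratic minus Republican).
    Returns (name, tipping_margin, required_national_margin), where the last
    value is the national margin Democrats would need to win the electoral
    college if every state shifted by the same amount.
    """
    total = sum(ev for _, ev, _ in states)
    majority = total // 2 + 1
    running = 0
    # Walk from the most Democratic state to the least Democratic one.
    for name, ev, margin in sorted(states, key=lambda s: s[2], reverse=True):
        running += ev
        if running >= majority:
            # Shifting every state by -margin ties this state, which moves
            # the national margin to national_margin - margin.
            return name, margin, national_margin - margin
    raise ValueError("no state reaches a majority")


# Hypothetical map with 538 electoral votes; not real 2020 data.
toy_states = [
    ("State A", 200, 20.0),
    ("State B", 40, 3.0),
    ("State C", 30, 0.5),
    ("State D", 40, -2.0),
    ("State E", 228, -18.0),
]
print(tipping_point(toy_states, national_margin=4.5))
```

In this toy map, the tipping-point state is 4 points less Democratic than the nation, so Democrats would need to win the popular vote by about 4 points: the same size of disadvantage the post describes for 2020.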

This analysis is not definitive, but it looks to me like the Harris campaign did a basically good job under highly unfavorable circumstances.

2. No, polls aren't useless. They were pretty good, actually.

I've actually seen surprisingly few people complain about the polls this time around. But people will inevitably complain about this year's polls the next time they're interested in dismissing poll results. So it's worth stating for the record that the polls this year were pretty good. I'd maybe give them a B grade.

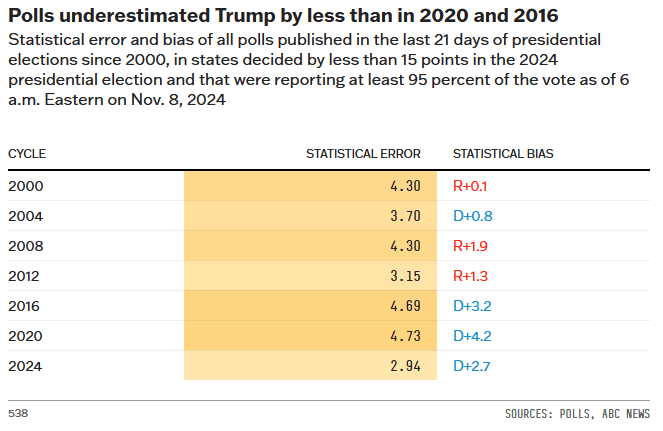

FiveThirtyEight compares 2024 polls to polls from previous presidential elections, finding that polls had low error and medium bias. Below, "statistical error" refers to the average absolute value of how much the polling average differed from the final result, across relatively close states. Meanwhile, "statistical bias" looks at whether polls were persistently wrong in the same direction.
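To make the two definitions concrete, here's a minimal sketch. It's a simplified version: FiveThirtyEight's actual calculation (which states count as "relatively close," how the polling average is built) may differ, and the state numbers are hypothetical. The last call uses the national figures discussed below.

```python
def poll_error_and_bias(poll_margins, result_margins):
    """Compare polling averages to results.

    Margins are Democratic minus Republican, in percentage points.
    error: mean absolute miss (how far off, ignoring direction).
    bias:  mean signed miss (positive = polls too favorable to Democrats).
    """
    misses = [poll_margins[s] - result_margins[s] for s in poll_margins]
    error = sum(abs(m) for m in misses) / len(misses)
    bias = sum(misses) / len(misses)
    return error, bias


# Hypothetical case 1: big misses in opposite directions -> error, no bias.
print(poll_error_and_bias({"A": 3.0, "B": -3.0}, {"A": 0.0, "B": 0.0}))
# Hypothetical case 2: every miss in the same direction -> error equals bias.
print(poll_error_and_bias({"A": 2.0, "B": 1.0}, {"A": -0.5, "B": -1.5}))
# National numbers from the post: polls Harris +1, result roughly Trump +1.5.
print(poll_error_and_bias({"US": 1.0}, {"US": -1.5}))
```

Because the average of the misses can never exceed the average of their absolute values, bias is always at most error in size, which is how a year can combine low error with a noticeable bias.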

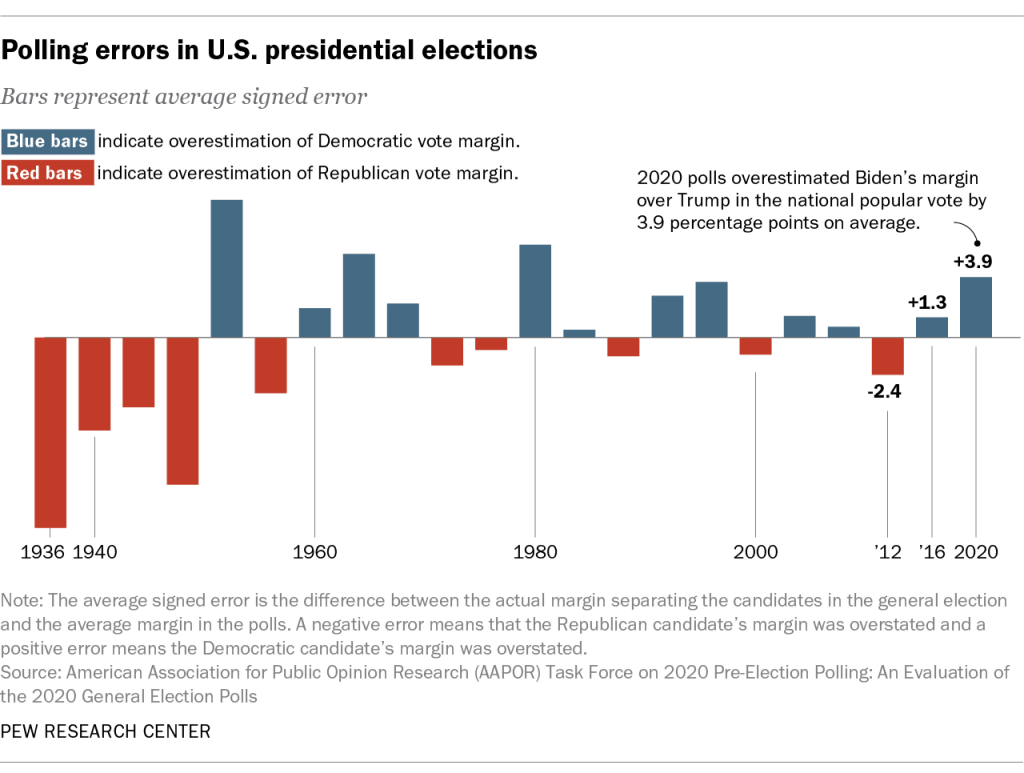

National polls were similarly biased. It looks like Trump will win the popular vote by about 1.5%, whereas the polling average had Harris winning the popular vote by 1%. That's a 2.5% bias, which is in line with the historical average:

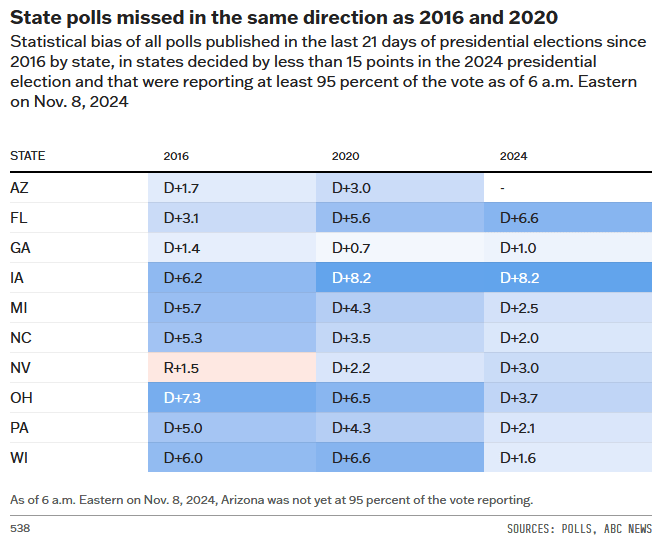

In 2016 and 2020, we saw a massive polling bias in the Midwest; this year, polls had a modest bias. We only saw a large polling bias in Florida and Iowa, neither of which was a swing state.

Why were the polls so biased in 2016 and 2020? For 2016, I think we have a reasonably good explanation: most polls did not weight respondents by education level. This ended up biasing polls because low-education voters swung massively against Democrats, and also were much less likely to respond to polls.
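Here's a toy illustration of that mechanism and of the standard fix, post-stratification (reweighting each group to its share of the population). All numbers are made up: a 40/60 college/non-college electorate, assumed Democratic support in each group, and college graduates assumed to respond twice as often. The fix assumes respondents within each education group vote like non-respondents in that group; if they don't, education weighting removes only part of the bias.

```python
# Hypothetical electorate split by education (all numbers are made up).
population_share = {"college": 0.4, "non_college": 0.6}
dem_support = {"college": 0.6, "non_college": 0.4}
# Assumed response rates: college graduates answer twice as often.
response_rate = {"college": 0.02, "non_college": 0.01}

# Expected education mix of the people who actually respond.
raw = {g: population_share[g] * response_rate[g] for g in population_share}
sample_share = {g: raw[g] / sum(raw.values()) for g in raw}

# Unweighted estimate: college graduates are over-represented.
unweighted = sum(sample_share[g] * dem_support[g] for g in sample_share)

# Post-stratification weights: population share / sample share for each group.
weights = {g: population_share[g] / sample_share[g] for g in sample_share}
weighted = sum(sample_share[g] * weights[g] * dem_support[g] for g in sample_share)

true_value = sum(population_share[g] * dem_support[g] for g in population_share)
print(f"true: {true_value:.3f}, unweighted: {unweighted:.3f}, weighted: {weighted:.3f}")
```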

I think it's less clear what happened in 2020. The best explanation I've heard is that the polling bias was a one-off miss caused by COVID: Democrats were much more likely to stay home, and thus much more likely to respond to polls. I don't find this explanation fully satisfying, because I would think that weighting by party registration would have mostly eliminated this source of bias.

Some people went into the 2024 election fearing that pollsters had not adequately corrected for the sources of bias that had plagued them in 2016 and 2020. I was ambivalent on this point. On the one hand, I think we didn't end up getting a great explanation for what caused polls to be biased in 2020. On the other hand, pollsters have pretty strong incentives to produce accurate numbers. I hope we end up with a good explanation of this year's (more modest) polling bias; so far, I haven't seen one.

Should we expect polls to once again be biased against Republicans in 2028? I don't know. The polls did not exhibit a bias in the last two midterm elections, but they exhibited a persistent bias in the last three presidential elections. One could posit a few theories:

- [The default hypothesis] Every year has idiosyncratic reasons for polling bias. It just so happened that polls were biased against Trump in all of the last three elections: the coin happened to land heads all three times. (After all, if you flip a coin three times, there's a 25% chance that it will land the same way every time!) Under this theory, we shouldn't expect a bias in 2028.

- [The efficient polling hypothesis] Pollsters have a reputational incentive to be accurate. Although they were unsuccessful at adjusting their methodologies to get rid of their Democratic bias in 2020 and 2024, in 2028 they will finally succeed in doing so. Under this theory, we shouldn't expect a bias in 2028.

- [The Trump hypothesis] There is a Trump-specific factor that biases polls against him. The most likely reason for this is that some of Trump's voters (a) only turn out to vote for Trump and (b) are really hard to reach, in a way that isn't easily fixed by weighting poll respondents differently. Under this theory, we shouldn't expect a bias in 2028, since Trump isn't running again.

- [The low-propensity voter hypothesis] Some voters only turn out every four years, to vote for president. While a decade ago, a majority of these voters were Democrats, now a majority are Republicans. (This hypothesis is similar to the previous one, except that it doesn't posit a Trump-specific phenomenon.) Under this theory, to the extent that polls have trouble picking up on those voters (because they're unlikely to respond to polls), maybe we should expect the bias to continue.

I think these hypotheses are about equally likely. So, should we expect a bias favoring Democrats in 2028? My tentative answer: probably not, but maybe.
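A back-of-the-envelope version of that reasoning, as a sketch: it checks the coin-flip figure from the default hypothesis, then puts equal weight on the four hypotheses. The per-hypothesis numbers are an illustrative assumption, not something stated above: 0 for the three hypotheses under which we "shouldn't expect a bias," and 1 for the low-propensity-voter hypothesis, which the post only says "maybe" implies a continued bias. So the result is better read as an upper end than a point estimate.

```python
from fractions import Fraction

# Default hypothesis: chance that three fair coin flips all land the same way.
p_all_same = 2 * Fraction(1, 2) ** 3  # all heads or all tails

# Equal weight on the four hypotheses. The per-hypothesis probabilities of a
# 2028 bias are an illustrative assumption (0 = "shouldn't expect a bias").
p_bias_given = {
    "default": Fraction(0),
    "efficient polling": Fraction(0),
    "Trump": Fraction(0),
    "low-propensity voter": Fraction(1),
}
weight = Fraction(1, len(p_bias_given))
p_bias_2028 = sum(weight * p for p in p_bias_given.values())

print(p_all_same, p_bias_2028)
```

Under these assumptions, the chance of a 2028 bias comes out to at most about one in four: "probably not, but maybe."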

There's one caveat I'd like to make, which concerns Selzer & Co.'s Iowa poll. Ann Selzer is a highly regarded pollster -- see this glowing profile from FiveThirtyEight -- whose polls have been accurate again and again. But this year, her final poll showed Harris up 3 in Iowa; meanwhile, Trump won by 13 points: a huge miss.

So, what happened? Most likely, the error was a result of non-response bias: more Democrats responded to her poll than Republicans. I couldn't find the poll methodology online, but Selzer is famous for taking a "hands-off" approach to polling, doing minimal weighting. According to Elliott Morris at FiveThirtyEight, Selzer "only weights by age and sex", basically meaning that she re-weights respondents to ensure a correct number of men vs. women and young vs. middle-aged vs. old respondents, but doesn't do any other weighting.

This means that if Iowa has an equal number of registered Democrats and registered Republicans, but Democrats are twice as likely to pick up the phone as Republicans (after controlling for age and sex), then her polls will show that twice as many Democrats as Republicans will show up to vote.[5] By contrast, most pollsters weight respondents by party registration or recalled vote to try to get an unbiased sample of registered voters.
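The example above can be checked with a toy model. This is not Selzer's actual procedure; it assumes four equal age/sex cells, a 50/50 Democratic/Republican split within every cell, and a response rate that depends only on party (Democrats twice as likely to respond). Under those assumptions, age/sex weighting changes nothing, because every cell is over-sampled by the same amount, while party-registration weighting removes the bias. In reality, non-response is partly correlated with age and sex, so age/sex weighting would fix some of it, but not all.

```python
from fractions import Fraction as F

# Hypothetical electorate: four equal age/sex cells, each split 50/50 between
# registered Democrats and Republicans (an assumption for illustration).
cells = {("young", "F"): F(1, 4), ("young", "M"): F(1, 4),
         ("old", "F"): F(1, 4), ("old", "M"): F(1, 4)}
party_mix = {"D": F(1, 2), "R": F(1, 2)}
# Democrats pick up the phone twice as often, regardless of age or sex.
response = {"D": F(2), "R": F(1)}

# Expected share of respondents in each (cell, party) group.
raw = {(c, p): cells[c] * party_mix[p] * response[p] for c in cells for p in party_mix}
total = sum(raw.values())
sample = {k: v / total for k, v in raw.items()}


def dem_share(shares):
    """Share of (weighted) respondents who are registered Democrats."""
    return sum(v for (_, p), v in shares.items() if p == "D")


# Weighting by age and sex only: rescale each cell to its population share.
cell_in_sample = {c: sum(sample[(c, p)] for p in party_mix) for c in cells}
by_age_sex = {(c, p): v * cells[c] / cell_in_sample[c] for (c, p), v in sample.items()}

# Weighting by party registration: rescale each party to its registered share.
party_in_sample = {p: sum(v for (_, q), v in sample.items() if q == p) for p in party_mix}
by_party = {(c, p): v * party_mix[p] / party_in_sample[p] for (c, p), v in sample.items()}

print(dem_share(sample), dem_share(by_age_sex), dem_share(by_party))
```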

As far as I can tell, this sort of "hands-off" methodology hasn't been tenable since 2016 and won't be tenable going forward. My guess is that luck was a major factor in the accuracy of Selzer's 2016 and 2020 polls. I probably won't place too much stock in Selzer & Co. polls in future years, though I'm open to being persuaded otherwise.

3. No, Theo the French Whale did not have edge

Theo the French Whale, also known as Fredi9999, was one of the more fun characters of the 2024 presidential election.

Theo the French Whale is actually a human. But in gambling-speak, a whale is a gambler who wagers a really large amount of money. And that kind of whale, Theo was.

About a month before the election, the prediction market site Polymarket started becoming more and more confident that Donald Trump would win the election. While Polymarket had previously been in relatively close agreement with forecast models like Nate Silver's, Trump's odds on Polymarket started going up, eventually reaching as high as 66%. Meanwhile, most forecasters thought the race was a tossup.

Traders noticed that the price increase was driven mostly by a single trader with the username Fredi9999, who was buying tens of millions of dollars of Trump shares. A different trader named Domer did some snooping and figured out that Fredi9999 was a Frenchman. The two briefly chatted before Fredi9999 got mad at Domer for disagreeing with him. You can read Domer's account of it all here.

In all, Theo wagered close to $100 million on Trump winning the election. People posited many theories about Theo's motivations, but the most straightforward theory always seemed likeliest to me: Theo was betting on Trump because he thought that Trump was likely to win the election.

After the election, a Wall Street Journal report (paywalled; see here for some quotes) revealed Theo's reasoning: Theo believed that polls were yet again biased against Trump, so he commissioned his own private polling that used a nonstandard methodology called the "neighbor method".

The idea of the neighbor method is that, instead of asking people who they support, you ask them who their neighbors support. This is supposed to reduce the bias that results from Trump supporters being disproportionately unwilling to tell pollsters that they're voting for Trump (so-called "shy Trump voters"). According to the WSJ article, Theo's "neighbor method" polls "showed Harris’s support was several percentage points lower when respondents were asked who their neighbors would vote for, compared with the result that came from directly asking which candidate they supported."

Many people saw the WSJ report as a vindication of prediction markets. Prediction market proponents argue that we should expect prediction markets to be more accurate than other forecasting methods, because holders of private information are incentivized to reveal that information by betting on the markets. And in this case, Theo even did his own novel research, in order to acquire private information, so that he could reveal that information through his bets! A dream come true for prediction market enthusiasts.

Except, as far as I can tell, the neighbor method is total nonsense. This is for a few reasons.

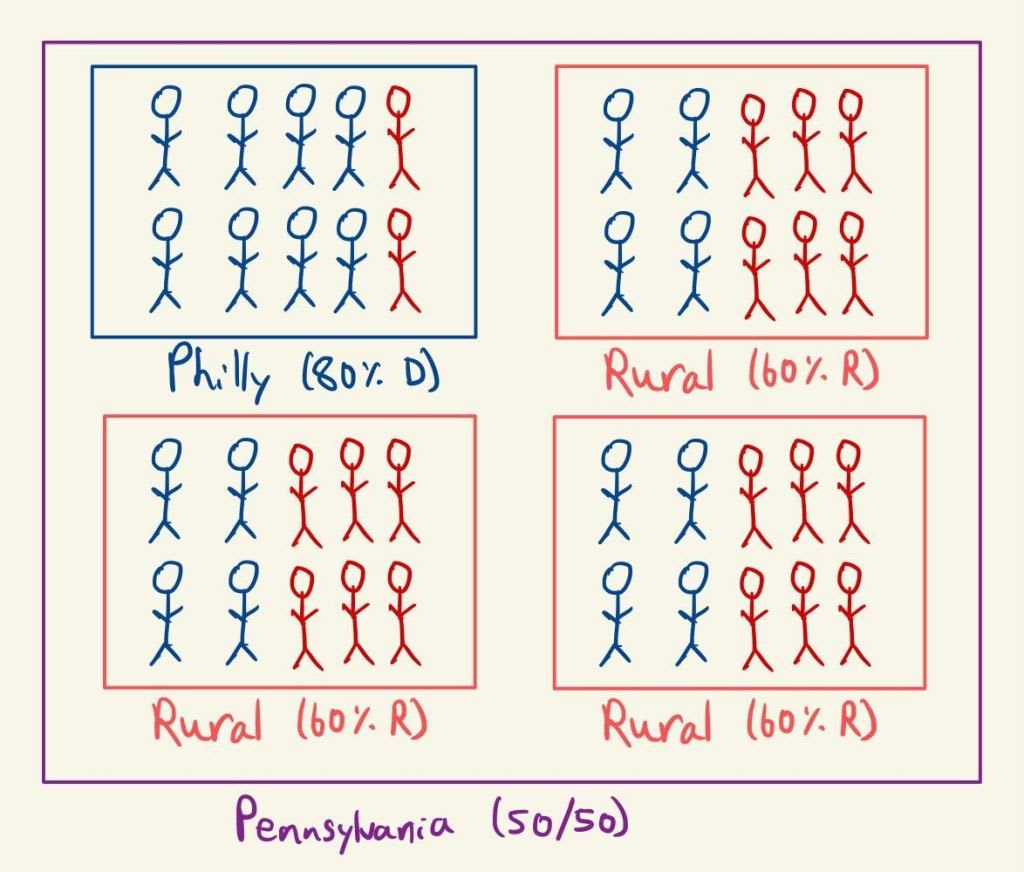

The first reason has to do with the geographic distribution of Democrats and Republicans. Cities are very heavily Democratic, while rural areas are only moderately Republican. As a simple model, imagine that Pennsylvania is split 50/50 between Harris voters and Trump voters, and that in particular:

- 25% of voters live in Philadelphia, which supports Harris 80-20.

- 75% of voters live in rural areas, which support Trump 60-40.

If you ask people who they're voting for, 50% will say they're voting for Harris. But if you ask them who most of their neighbors are voting for, only 25% will say Harris and 75% will say Trump! It's no wonder that Theo's neighbor polls found "more support" for Trump.

(This is just an illustrative example. The actual distribution of voters isn't as dramatic, but the point still stands: while Trump won 51% of the two-party vote, 55% of Pennsylvanians live in counties won by Trump. This lines up with the shift of "several percentage points" in Theo's polls!)
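The illustrative Pennsylvania can be worked through directly. This sketch assumes every respondent correctly names the majority in their own area (the most favorable case for the neighbor method). For contrast, it also computes the variant suggested in the comments, asking about the single neighbor immediately to your right, under the assumption that this neighbor is a random voter from the same area; averaging each area's Harris share over its residents reproduces the overall share.

```python
# The post's illustrative Pennsylvania: (area, share of voters, Harris share).
regions = [
    ("Philadelphia", 0.25, 0.80),
    ("rural", 0.75, 0.40),
]

# "Who are you voting for?" -> overall Harris share.
direct = sum(w * h for _, w, h in regions)

# "Who are most of your neighbors voting for?" -> assumes every respondent
# correctly names the majority in their own area.
majority_of_neighbors = sum(w for _, w, h in regions if h > 0.5)

# "Who is the neighbor immediately to your right voting for?" -> assumes that
# neighbor is a random voter from the same area, so the expected answer is
# the area's Harris share, averaged over respondents.
one_neighbor = sum(w * h for _, w, h in regions)

print(f"direct: {direct:.2f}, majority of neighbors: {majority_of_neighbors:.2f}, "
      f"one neighbor: {one_neighbor:.2f}")
```

The "majority of neighbors" question reports each area's winner at 100%, which is what inflates Trump's apparent support when his voters are more spread out.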

The second reason that I don't trust the neighbor method is that people just... aren't good at knowing who a majority of their neighbors are voting for. In many cases it's obvious (if over 70% of your neighbors support one candidate or the other, you'll probably know). But if it's 55-45, you probably don't know which direction it's 55-45 in.

On the other hand, I could have given you a really good idea of what percentage of voters in every neighborhood would vote for Trump: I'd look at the New York Times' Extremely Detailed Map of the 2020 Election and maybe make a minor adjustment based on polling. My guess would be within 5% of the right answer most of the time.

So... the neighbor method is supposed to elicit voters' fuzzy impressions of whether most of their neighbors are voting for Trump, when I could easily out-predict almost all of them? That doesn't sound like a good methodology.

And the final reason is that the neighbor method's track record is... short and bad. I'm aware of one serious, publicly available attempt at the neighbor method: in 2022, NPR's Planet Money asked Marist College (which does polling for NPR) to poll voters on the following question:

Think of all the people in your life, your friends, your family, your coworkers. Who are they going to vote for?

While the main polling question ("Who will you vote for?") found a 3-point advantage for Republicans (spot on!), the "friends and family" question found a whopping 16-point advantage (which was way off).

(Also, how are you even supposed to answer that question?? "Well, Aunt Sally is voting for the Democrats, while Uncle Greg is voting for the Republicans. Meanwhile, my best friend Joe is planning to vote for the Democrat in the House but the Republican in the Senate. My coworkers seem to be split 50/50 though I don't talk to them about politics much...")

So, barring further evidence, I will continue to be dismissive of the neighbor method. Theo did a lot of work, but it was bad work, and he got lucky.

4. No, we didn't learn which campaign strategies worked

The Kamala Harris campaign is getting a lot of flak for spending millions on swing-state concerts by celebrities like Katy Perry and Lady Gaga. Had Harris won, the media would probably be praising her youth-savvy strategy.

By contrast, Elon Musk's efforts to turn out voters for Trump in swing states, which media coverage previously treated with skepticism, are increasingly viewed as effective, just because Trump won.

Were the concerts a good use of money? I don't know. Was Musk's $200 million spent wisely? I also don't know. In both cases, my guess is: probably not. But the fact that Trump won and Harris lost provides very little evidence, just because there are so many factors at play in determining who wins or loses an election.

5. No, Donald Trump isn't a good candidate

Trump has now gone two-for-three in presidential elections. This year was just the second time that a Republican won the popular vote in the last nine presidential elections (the other being George W. Bush in 2004). It's tempting to conclude that Trump is an above-replacement Republican, when it comes to electability. I think that would be the wrong conclusion.

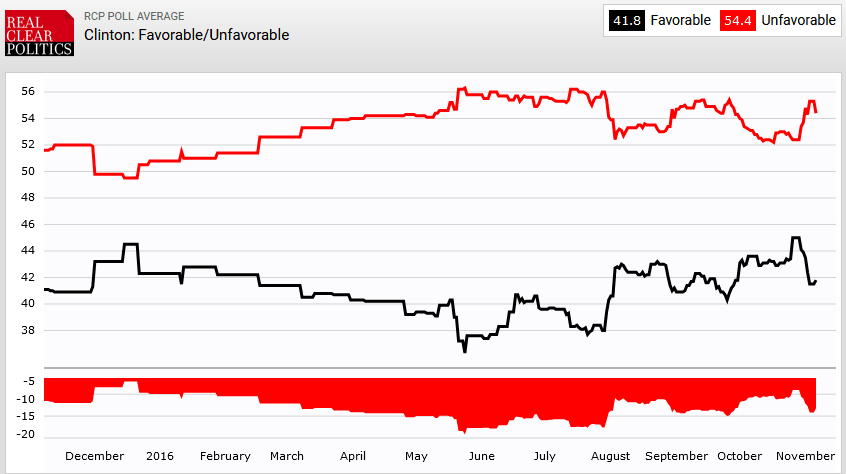

In my opinion, Trump mostly got lucky in the two general elections that he won. In 2016, he barely beat Hillary Clinton, who was deeply unpopular at the time of the election.

And in 2024, he was running against a quasi-incumbent during an unprecedentedly bad time to be an incumbent.

I'm actually not sure that Trump is unusually bad for a Republican. For example, hypothetical Harris vs. Vance polls showed Harris doing about 9 points better against Vance than against Trump. On the other hand, during this year's Republican primary, most polls showed Haley doing better than Trump against Biden (see e.g. this New York Times poll). Overall, I'd guess that Trump is about average for a Republican in terms of electability.

6. No, spending money on political campaigns isn't useless

I've seen a few people jump to this sort of conclusion based on the fact that Harris significantly outraised Trump and still lost.

But again, the relevant question is how much she would have lost by if she hadn't outraised Trump. My guess is that she would have lost by more, particularly in the swing states (where most of her ad spending went, and where she overperformed -- see above).

Natural experiments show that campaign spending helps win votes. I think that while donating to the Harris campaign is only moderately effective, some efforts such as Swap Your Vote were able to get Harris additional swing state votes at a cost of about $200/vote. As an altruistic intervention, I think this is pretty good, given that the outcome of the presidential election affects how trillions of dollars get spent. (See here for some more of my thoughts about this.)

For my part, I didn't donate to Harris, but I donated a substantial amount to my favorite state legislative candidate, in what looked to be a really close race. He ended up losing by about 10%, but I think my decision to donate was well-informed, and I would do it again.

7. No, my opinion of the American people didn't change

I expected 50% of voters to vote for Harris and 48.5% to vote for Trump. Instead, 48.5% voted for Harris and 50% voted for Trump. The 1.5% of Americans who voted for Trump, and who I thought would vote for Harris, were incredibly important for the outcome of the election and for the future of the country, but they are only 1.5% of the population.

If you (like me) supported Harris, then perhaps you think that 1.5% of Americans have worse judgment than you expected. If you supported Trump, then perhaps you think that 1.5% of Americans have better judgment than you expected. So maybe election day should have very slightly raised or lowered your esteem of the American people -- but certainly not very much.

I think that instead, most of the evidence you got came earlier. In my case, it was 2015-2017, when Trump first ran for president and got elected, and then again in 2021, when his popularity didn't go down very much despite some actions by Trump (1, 2) that were really inexcusable by my lights.

One thing I did learn

Just for fun, I wanted to highlight the most interesting thing I did learn from election night:

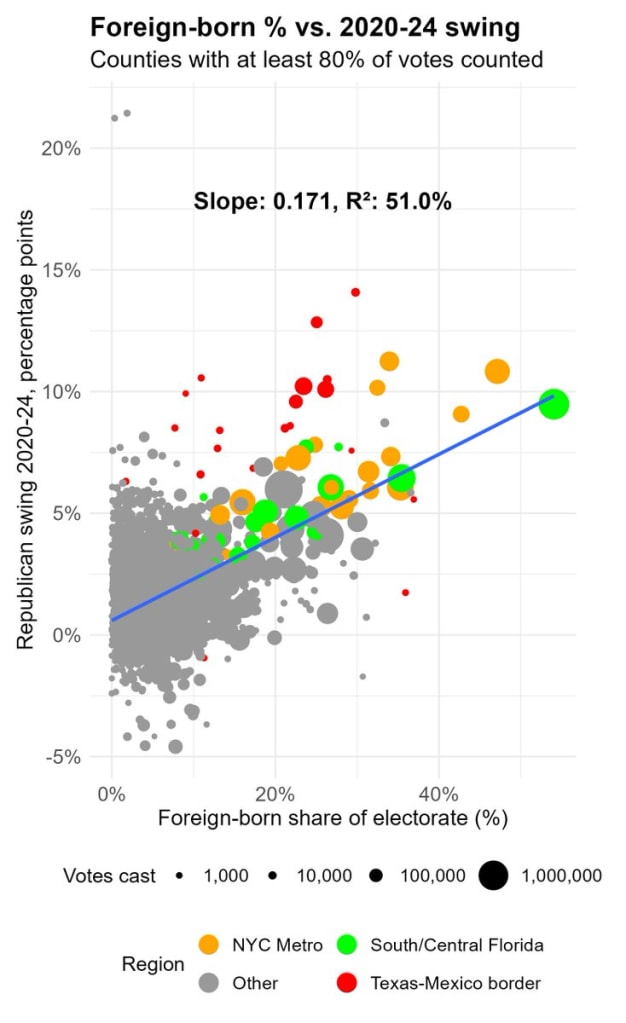

Foreign-born Americans shifted toward Trump

Dan Rosenheck points out a really strong relationship between the percentage of a county's population that was foreign-born and how much the county shifted toward Trump. Indeed, the r-squared is 0.51, meaning that foreign-born percentage explains 51% (!) of the variance in how much different counties shifted toward Trump. Every 6-percentage-point increase in foreign-born population was associated with a 1-point larger swing toward Trump.[6]
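For readers who want to see how a slope and r-squared like these come out of a regression, here is a minimal one-predictor least-squares sketch. The six "counties" are synthetic, chosen so the slope is exactly 1/6 (a 6-point increase in foreign-born share goes with a 1-point larger shift); they are not Rosenheck's data, and his analysis may involve choices, such as weighting counties by population, that this sketch doesn't attempt.

```python
def simple_ols(x, y):
    """Ordinary least squares with one predictor: (slope, intercept, r_squared)."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    # With a single predictor, R^2 is the squared correlation of x and y.
    r_squared = sxy ** 2 / (sxx * syy)
    return slope, intercept, r_squared


# Synthetic counties (made up): foreign-born share of population (%)
# and shift toward Trump from 2020 to 2024 (percentage points).
foreign_born = [0, 6, 12, 18, 24, 30]
shift_to_trump = [2.0, -1.0, 2.0, 3.0, 2.0, 7.0]

slope, intercept, r2 = simple_ols(foreign_born, shift_to_trump)
print(f"slope: {slope:.3f} points of shift per point of foreign-born share")
print(f"6-point increase -> {6 * slope:.2f}-point larger shift; R^2 = {r2:.2f}")
```

The synthetic R^2 here happens to land near 0.52; the point is only to show what the two numbers measure: the slope gives the size of the association, and R^2 gives how much of the county-to-county variation it accounts for.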

I haven't checked, but I suspect that this is reversion to pre-Trump voting patterns. Maybe otherwise-conservative immigrants voted against Trump in 2016 and 2020, but decided to vote for Trump in this election. If someone wanted to check, I'd be interested in seeing the results!

- ^

I was in a room with six or so of my friends. We were all rooting for Harris, but the chatter was all about what trades we should be making. Is the market overreacting to Trump's crushing victory in Florida? Why is "Trump wins the popular vote" stubbornly staying below 30%? Wisconsin seems bluer than Pennsylvania -- should we be buying Trump shares in Pennsylvania and selling them in Wisconsin? Going into election day, I expected that my day would be ruined if Trump won, that I would give up on trading and go cry in a corner. Instead, the opposite happened: the exhilaration of trading -- the constant decision-making -- displaced most of the grief that I would have felt that day. I think it's really bad that Trump won, and that the world will be a much worse place because of it. But just on an emotional level, things turned out okay for me.

- ^

For one thing, the U.S. economy has generally fared better than most other economies in the developed world. For another, Joe Biden wasn't running for re-election, which may have dulled the anti-incumbent effect. For a third, the U.S. is unusually polarized, so one should expect smaller swings from election to election. Also, the sample of countries in the chart just isn't that large.

- ^

These numbers are subject to change, mostly because California has so far only counted about two-thirds of its votes.

- ^

Rosenheck found that including a variable for "is the county in a swing state?" significantly improves the regression: being in a swing state is associated with a higher vote share for Harris. However, it's possible that this effect is entirely due to an idiosyncratic pro-Trump effect in a few states like New York, New Jersey, and Florida, which happen to not be swing states.

- ^

This is assuming that the Democratic respondents and Republican respondents are equally likely to end up voting. Selzer's poll is a "likely voter" poll, meaning that she weights respondents by how likely they are to vote.

- ^

33 comments


comment by deepthoughtlife · 2024-11-15T04:09:15.956Z

1. Kamala Harris did run a bad campaign. She was 'super popular' at the start of the campaign (assuming you can trust the polls, though you mostly can't), and 'super unpopular' losing definitively at the end of it. On September 17th, she was ahead by 2 points in polls, and in a little more than a month and a half she was down by that much in the vote. She lost so much ground. She had no good ads, no good policy positions, and was completely unconvincing to people who weren't guaranteed to vote for her from the start. She had tons of money to get out all of this, but it was all wasted.

The fact that other incumbent parties did badly is not in fact proof that she was simply doomed, because there were so many people willing to give her a chance. It was her choice to run as the candidate who 'couldn't think of a single thing' (not sure of exact quote) that she would do differently than Biden. Not a single thing!

Also, voters already punished Trump for Covid related stuff and blamed him. She was running against a person who was the Covid incumbent! And she couldn't think of a single way to take advantage of that. No one believed her that inflation was Trump's fault because she didn't even make a real case for it. It was a bad campaign.

Not taking policy positions is not a good campaign when you are mostly known for bad ones. She didn't run away very well from her unpopular positions from the past despite trying to be seen as moderate now.

I think the map you used is highly misleading. Just because there are some states that swung even more against her, doesn't mean she did well in the others. You can say that losing so many supporters in clearly left states like California doesn't matter, and neither does losing so many supporters in clearly right states like Texas, but thinking both that it doesn't matter in terms of it being a negative, and that it does matter enough that you should 'correct' the data by it is obviously bad.

2. Some polls were bad, some were not. Ho hum. But that Iowa poll was really something else. (I don't have a particular opinion on why she screwed up, aside from the fact that no one wants to be that far off if they have any pride.) She should have separately told people she thought the poll was wrong if she thought it was; did she do that? (I genuinely don't know.) I do think you should ignore her if she doesn't fix her methodology to account for nonresponse bias, because very few people actually answer polls. An interesting way might be to run a poll that just asks something like 'are you male or female?' or 'are you a Democrat or Republican?' and so on, so you can figure out those variables for the given election on both separate polls and on the 'who are you voting for' polls. If those numbers don't match, something is weird about the polls.

I think it is important to note that people thought the polls would be much closer to the result this time than before (because otherwise everyone would have predicted a landslide, given that the polls were close). You said, "Some people went into the 2024 election fearing that pollsters had not adequately corrected for the sources of bias that had plagued them in 2016 and 2020." but I mostly heard the opposite from those who weren't staunch supporters of Trump. I think the idea of how corrections had gone before we got the results was mostly partisan. Many people were sure they had been fully fixed (or overcorrected) for bias and this was not true, so people act like they are clearly off (which they were). Most people genuinely thought this was a much closer race than it turned out to be.

The margin of being off was smaller than in the past Trump elections, I'll agree, but I think it is mostly the bias people are keying on rather than the absolute error. The polls have been heavily biased on average for the past three presidential cycles, and this time was still clearly biased (even if less so). With absolute error but no bias, you can just take more or larger polls, but with bias, especially an unknowable amount of bias, it is very hard to just improve things. Also, the 'moderate' bias is still larger than 2000, 2004, 2008, and 2012.

My personal theory is that the polls are mostly biased against Trump personally because it is more difficult to get good numbers on him due to interacting strangely with the electorate as compared to previous Republicans (perhaps because he isn't really a member of the same party they were), but obviously we don't actually know why. If the Trump realignment sticks around, perhaps they'll do better correcting for it later.

I do think part of the bias is the pollsters reacting to uncertainty about how to correct for things by going with the results they prefer, but I don't personally think that is the main issue here.

3. Your claim that 'Theo' was just lucky because neighbor polls are nonsense doesn't seem accurate. For one thing, neighbor polls aren't nonsense. They actually give you a lot more information than 'who are you voting for'. (Though they are speculative.) You can easily correct for how many neighbors someone has too and where they live using data on where people live, and you can also just ask 'what percentage of your neighbors are likely to vote for' to correct for the fact that it is different percentages of support.

As a separate point, a lot of people think the validity of neighbor polls comes from people believing that the respondents are largely revealing their own personal vote, though I have some issues with that explanation.

So, one bad poll with an extreme definition of 'neighbor' negates neighbor voting and many bad polls don't negate traditional? Also, Theo already had access to the normal polls as did everyone else. Even if a neighbor poll for some reason exaggerates the difference, as long as it is in the right direction, it is still evidence of what direction the polls are wrong in.

Keep in mind that the chance of Trump winning was much higher than traditional polls said. Just because Theo won with his bets doesn't mean you should believe he'd be right again, but claiming that it is 'just lucky' is a bad idea epistemologically, because you don't know what information he had that you don't.

4. I agree, we don't know whether or not the campaigns spent money wisely. The strengths and weaknesses of the candidates seemed to not rely much on the amount of money they spent, which likely does indicate they were somewhat wasteful on both sides, but it is hard to tell.

5. Is Trump a good candidate or a bad one? In some ways both. He is very charismatic in the sense of making everyone pay attention to him, which motivates both his potential supporters and potential foes to both become actual supporters and foes respectively. He also acts in ways his opponents find hard to counter, but turn off a significant number of people. An election with Trump in it is an election about Trump, whether that is good or bad for his chances.

I think it would be fairer to say Trump got unlucky with the election that he lost than that he was lucky to win this one. Trump was the covid incumbent who got kicked out because of it despite having an otherwise successful first term.

We don't usually call a bad opponent luck in this manner. Harris was a quasi-incumbent from a badly performing administration who was herself a laughingstock for most of the term. She was partially chosen as a reaction to Trump! (So he made his own luck, if this is luck.)

His opponent in 2016 was obviously a bad candidate too, but again, that isn't so much 'luck'. Look closely at the graph for Clinton. Her unfavorability went way up when Trump ran against her. This is also a good example of a candidate making their own 'luck'. He was effective in his campaign to make people dislike her more.

6. Yeah, money isn't the biggest deal, but it probably did help Kamala. She isn't any good at drawing attention just by existing, the way Trump is, so she really needed it. Most people aren't always the center of attention, so money almost always matters to an extent.

7. I agree that your opinion of Americans shouldn't really change much because the vote came out a few points different than expected in either direction, especially since each individual person making the judgement is almost 50% likely to be wrong anyway! If the candidates weren't equally good, then at least as large a share of voters as the smaller of the two vote shares were 'wrong' (if you assume there is one correct choice regardless of personal reasons), and it could easily be everyone who didn't vote for the candidate with the smaller share. If they were equally good, then voting for one of them over the other can't matter to your opinion of the voter. I have an opinion on which candidate was 'wrong', of course, but it doesn't really matter to the point (though I am freely willing to admit that it is the opposite of yours).

comment by DanielFilan · 2024-11-14T19:09:02.721Z

If you ask people who they're voting for, 50% will say they're voting for Harris. But if you ask them who most of their neighbors are voting for, only 25% will say Harris and 75% will say Trump!

Note this issue could be fixed if you instead ask people who the neighbour immediately to the right of their house/apartment will vote for, which I think is compatible with what we know about this poll. That said, the critique of "do people actually know" stands.
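A toy model may help show where the post's 50% / 25% / 75% example comes from, and why asking about one specific neighbor would remove the distortion. The neighborhood sizes and Harris shares below are hypothetical numbers chosen only so the totals match the quoted example; they are not poll data. The sketch also assumes respondents know their neighborhood's majority perfectly (the "do people actually know" critique is set aside) and that a next-door neighbor is a random voter from the same neighborhood.

```python
# Toy model (hypothetical numbers chosen to reproduce the 50% / 25% / 75% example).
# Each entry: (fraction of respondents living there, Harris share in that neighborhood).
NEIGHBORHOODS = [
    (0.25, 0.80),  # dense, heavily Harris areas
    (0.75, 0.40),  # spread-out, moderately Trump areas
]


def own_vote_share(hoods):
    """Share of respondents who say they personally vote Harris."""
    return sum(pop * harris for pop, harris in hoods)


def majority_of_neighbors_share(hoods):
    """Share answering 'Harris' to 'who are most of your neighbors voting for?',
    assuming everyone correctly knows their neighborhood's majority."""
    return sum(pop for pop, harris in hoods if harris > 0.5)


def next_door_neighbor_share(hoods):
    """Expected share answering 'Harris' to 'who is the neighbor to your right
    voting for?'. If that neighbor is a random voter from the same neighborhood,
    each answer is an unbiased draw, so this reduces to own_vote_share."""
    return own_vote_share(hoods)


if __name__ == "__main__":
    print(f"own vote:           {own_vote_share(NEIGHBORHOODS):.2f}")               # 0.50
    print(f"most neighbors:     {majority_of_neighbors_share(NEIGHBORHOODS):.2f}")  # 0.25
    print(f"next-door neighbor: {next_door_neighbor_share(NEIGHBORHOODS):.2f}")     # 0.50
```

The majority question throws away everything except which side of 50% each neighborhood is on, so clustered Harris voters get undercounted; the single-neighbor question keeps the proportions.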

↑ comment by khafra · 2024-11-15T06:20:13.655Z

The story I read about why neighbor polling is supposed to correct for bias in specifically the last few presidential elections is that some people plan to vote for Trump, but are ashamed of this, and don't want to admit it to people who aren't verified Trump supporters. So if you ask them who they plan to vote for, they'll dissemble. But if you ask them who their neighbors are voting for, that gives them permission to share their true opinion non-attributively.

↑ comment by Linda Linsefors · 2024-11-15T15:22:16.723Z

If people are ashamed to vote for Trump, why would they let their neighbours know?

↑ comment by leogao · 2024-11-15T16:42:51.099Z

People generally assume those around them agree with them (even when they don't see loud support of their position - see "silent majority"). So when you ask what their neighbors think, they will guess their neighbors have the same views as themselves, and will report their own beliefs with plausible deniability.

↑ comment by DanielFilan · 2024-11-17T22:19:58.763Z

Yeah, but a bunch of people might actually answer how their neighbours will vote, given that that's what the pollster asked; and if the question is phrased as the post assumes, that's going to be a massive issue.

comment by WilliamKiely · 2024-11-14T20:44:17.006Z

Foreign-born Americans shifted toward Trump

Are you sure? Couldn't it be that counties with a higher percentage of foreign-born Americans shifted toward Trump because of how the non-foreign-born voters in those counties voted rather than how the foreign-born voters voted?

↑ comment by Eric Neyman (UnexpectedValues) · 2024-11-14T21:02:56.991Z

I'm not sure (see footnote 7), but I think it's quite likely, basically because:

- It's a simpler explanation than the one you give (so the bar for evidence should probably be lower).

- We know from polling data that Hispanic voters -- who are disproportionately foreign-born -- shifted a lot toward Trump.

- The biggest shifts happened in places like Queens, NY, which has many immigrants but (I think?) not very much anti-immigrant sentiment.

That said, I'm not that confident and I wouldn't be shocked if your explanation is correct. Here are some thoughts on how you could try to differentiate between them:

- You could look on the precinct-level rather than the county-level. Some precincts will be very high-% foreign-born (above 50%). If those precincts shifted more than surrounding precincts, that would be evidence in favor of my hypothesis. If they shifted less, that would be evidence in favor of yours.

- If someone did a poll with the questions "How did you vote in 2020", "How did you vote in 2024", and "Were you born in the U.S.", that could more directly answer the question.

↑ comment by WilliamKiely · 2024-11-15T03:55:55.588Z

That makes sense, thanks.

comment by DanielFilan · 2024-11-14T19:11:28.078Z

So I guess 1.5% of Americans have worse judgment than I expected (by my lights, as someone who thinks that Trump is really bad). Those 1.5% were incredibly important for the outcome of the election and for the future of the country, but they are only 1.5% of the population.

Nitpick: they are 1.5% of the voting population, making them around 0.7% of the US population.
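The conversion is a rescaling by the fraction of the population that voted. Using approximate figures that are my own rough numbers rather than anything stated in the thread (about 155 million ballots cast in 2024 and about 340 million U.S. residents):

$$
1.5\% \times \frac{155\ \text{million voters}}{340\ \text{million residents}} \approx 1.5\% \times 0.46 \approx 0.7\%
$$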

comment by DanielFilan · 2024-11-14T19:02:40.265Z

she should have picked Josh Shapiro as her running mate

Note that this news story makes allegations that, if true, make it sound like the decision was partly Shapiro's:

Following Harris's interview with Pennsylvania Governor Josh Shapiro, there was a sense among Shapiro's team that the meeting did not go as well as it could have, sources familiar with the matter tell ABC News.

Later Sunday, after the interview, Shapiro placed a phone call to Harris' team, indicating he had reservations about leaving his job as governor, sources said.

comment by habryka (habryka4) · 2024-11-15T17:58:08.423Z

This was a really good analysis of a bunch of election stuff that I hadn't seen presented clearly like this anywhere else. If it wasn't about elections and news I would curate it.

comment by clone of saturn · 2024-11-15T07:08:28.194Z

The second reason that I don’t trust the neighbor method is that people just… aren’t good at knowing who a majority of their neighbors are voting for.

This seems like a point in favor of the neighbor method, not against it. You would want people to find "who are my neighbors voting for?" too difficult to readily answer and so mentally replace it with the simpler question "who am I voting for?" thus giving them a plausibly deniable way to admit to voting for Trump.

↑ comment by Eric Neyman (UnexpectedValues) · 2024-11-15T07:27:12.072Z

If you ask people who their neighbors are voting for, they will make their best guess about who their neighbors are voting for. Occasionally their best guess will be to assume that their neighbors will vote the same way that they're voting, but usually not. Trump voters in blue areas will mostly answer "Harris" to this question, and Harris voters in red areas will mostly answer "Trump".

comment by Linch · 2024-11-15T19:36:51.586Z

Some people I know are much more pessimistic about the polls this cycle, due to herding. For example, nonresponse bias might just be massive for Trump voters (across demographic groups), so pollsters end up having to make a series of unprincipled choices with their thumbs on the scales.

comment by cubefox · 2024-11-15T14:46:03.430Z

Cities are very heavily Democratic, while rural areas are only moderately Republican.

I think this isn't compatible with both parties getting about equally many votes, because many more Americans live in cities than in rural areas:

In 2020, about 82.66 percent of the total population in the United States lived in cities and urban areas.

https://www.statista.com/statistics/269967/urbanization-in-the-united-states/
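The objection is a weighted-average argument, and it's easy to check. The sketch below takes the 82.66% urban figure at face value, assumes (hypothetically) equal turnout in urban and rural areas, and uses a hypothetical 60-40 Republican countryside as a stand-in for "moderately Republican". Under those assumptions, cities would only need to be about 52% Democratic for a national tie, while a 70% Democratic urban vote would produce a landslide, which is why the answer hinges on how broadly "city" is defined.

```python
# URBAN_SHARE is the Statista figure quoted above; everything else is hypothetical.
URBAN_SHARE = 0.8266


def national_dem_share(urban_dem, rural_dem, urban_share=URBAN_SHARE):
    """Two-party Democratic share as a population-weighted average.
    Simplification: assumes urban and rural residents vote at the same rate."""
    return urban_share * urban_dem + (1 - urban_share) * rural_dem


def urban_dem_needed_for_tie(rural_dem, urban_share=URBAN_SHARE):
    """Urban Democratic share that makes the national result exactly 50/50."""
    return (0.5 - (1 - urban_share) * rural_dem) / urban_share


if __name__ == "__main__":
    rural_dem = 0.40  # hypothetical "moderately Republican" countryside (60% R)
    print(f"{urban_dem_needed_for_tie(rural_dem):.3f}")  # 0.521
    print(f"{national_dem_share(0.70, rural_dem):.3f}")  # 0.648
```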

↑ comment by Eric Neyman (UnexpectedValues) · 2024-11-15T17:06:28.768Z

I think this just comes down to me having a narrower definition of a city.

comment by deepthoughtlife · 2024-11-15T03:10:24.992Z

Some people went into the 2024 election fearing that pollsters had not adequately corrected for the sources of bias that had plagued them in 2016 and 2020.

I mostly heard the opposite, that they had overcorrected.

comment by Noosphere89 (sharmake-farah) · 2024-11-15T17:48:21.660Z

The one thing I'll say on the election is that a lot of people are using Kamala Harris's loss to push their own explanations for why she lost, and those explanations are essentially ideological propaganda.

Basically, the story that best matches the evidence is that she was doomed from the start by the global backlash against incumbents over inflation; a lot of the other theories are there mostly for ideological purposes.

comment by Sherrinford · 2024-11-15T21:08:03.218Z

The most important fact about politics in 2024 is that across the world, it's a terrible time to be an incumbent. For the first time this year since at least World War II, the incumbent party did worse than it did in the previous election in every election in the developed world. ...

What influence does the exclusion of "years where fewer than five countries had elections" in the graph have?

↑ comment by Eric Neyman (UnexpectedValues) · 2024-11-15T21:14:56.074Z

I don't really know, sorry. My memory is that 2023 was already pretty bad for incumbent parties (e.g. the right-wing ruling party in Poland lost power), but I'm not sure.

comment by Maxwell Peterson (maxwell-peterson) · 2024-11-15T17:53:25.292Z

A good post, of interest to all across the political spectrum, marred by the mistake at the end to become explicitly politically opinionated and say bad things about those who voted differently than OP.

↑ comment by Eric Neyman (UnexpectedValues) · 2024-11-15T18:58:54.473Z

Fair enough, I guess? For context, I wrote this for my own blog and then decided I might as well cross-post to LW. In doing so, I actually softened the language of that section a little bit. But maybe I should've softened it more, I'm not sure.

[Edit: in response to your comment, I've further softened the language.]

↑ comment by Maxwell Peterson (maxwell-peterson) · 2024-11-17T16:22:02.602Z

Appreciate it! Cheers.

comment by Jan Betley (jan-betley) · 2024-11-15T07:50:07.754Z

The second reason that I don't trust the neighbor method is that people just... aren't good at knowing who a majority of their neighbors are voting for. In many cases it's obvious (if over 70% of your neighbors support one candidate or the other, you'll probably know). But if it's 55-45, you probably don't know which direction it's 55-45 in.

My guess is that there's some post-processing here. E.g. if you assume that the "neighbor" estimate is biased but free of the refusal problem, and you have the same data from the previous election, then you could estimate the shift in opinions and apply that to other polls that ask about your own vote. Or you could ask an additional question like "who did your neighbours vote for in the previous election?" and compare that to the real data (ideally per county or so). I would be very surprised if they based the bets just on the raw results.
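The second suggestion can be sketched as a simple per-county additive bias correction. This is an illustrative assumption about how such post-processing might work, not a description of what any actual pollster did; the class, field names, and example numbers are all hypothetical. It assumes the neighbor question distorts a county's answers by the same amount in both elections, which makes the corrected figure equal to last election's real result plus the change in neighbor answers, so it also captures the "estimate the shift" idea.

```python
from dataclasses import dataclass


@dataclass
class CountyNeighborSurvey:
    """Hypothetical per-county summary of a neighbor-method poll (illustrative only)."""
    said_neighbors_dem_last_time: float  # share saying most neighbors voted D last election
    actual_dem_last_time: float          # certified D share in the county last election
    said_neighbors_dem_now: float        # share saying most neighbors vote D this election
    weight: float                        # e.g. expected turnout in the county


def corrected_dem_share(c: CountyNeighborSurvey) -> float:
    """Additive bias correction, clamped to [0, 1]: assume the neighbor question
    distorts this county's answers by the same amount in both elections."""
    bias = c.said_neighbors_dem_last_time - c.actual_dem_last_time
    return min(1.0, max(0.0, c.said_neighbors_dem_now - bias))


def national_dem_estimate(counties):
    """Weighted average of the corrected county estimates."""
    total = sum(c.weight for c in counties)
    return sum(c.weight * corrected_dem_share(c) for c in counties) / total


if __name__ == "__main__":
    counties = [
        CountyNeighborSurvey(0.30, 0.52, 0.27, weight=1.0),  # answers skew R; corrected ~0.49
        CountyNeighborSurvey(0.90, 0.70, 0.88, weight=1.0),  # answers skew D; corrected ~0.68
    ]
    print(f"{national_dem_estimate(counties):.3f}")
```

A real version would need to model how majority answers respond to a shift in vote share (they are far from linear near 50%), which this constant-offset sketch ignores.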

↑ comment by Eric Neyman (UnexpectedValues) · 2024-11-15T17:11:41.271Z

Yeah, if you were to use the neighbor method, the correct way to do so would involve post-processing, like you said. My guess, though, is that you would get essentially no value from it even if you did that, and that the information you get from normal polls would pretty much screen off any information you'd get from the neighbor method.

comment by WilliamKiely · 2024-11-14T20:47:14.837Z

Knowing how the election turned out, how likely do you think it was a week before the election that Trump would win?

Do you think Polymarket had Trump-wins priced too high or too low?

↑ comment by Eric Neyman (UnexpectedValues) · 2024-11-14T21:14:01.251Z

It's a little hard to know what you mean by that. Do you mean something like: given the information known at the time, but allowing myself the hindsight of noticing facts about that information that I may have missed, what should I have thought the probability was?

If so, I think my answer isn't too different from what I believed before the election (essentially 50/50). Though I welcome takes to the contrary.

↑ comment by WilliamKiely · 2024-11-15T04:17:12.235Z

That's a different question than the one I meant. Let me clarify:

Basically I was asking you what you think the probability is that Trump would win the election (as of a week before the election, since I think that matters) now that you know how the election turned out.

An analogous question would be the following:

Suppose I have two unfair coins. One coin is biased to land on heads 90% of the time (call it H-coin) and the other is biased to land on tails 90% of the time (T-coin). These two coins look the same to you on the outside. I choose one of the coins, then ask you how likely it is that the coin I chose will land on heads. You don't know whether the coin I'm holding is H-coin or T-coin, so you answer 50% (50% = 0.5*0.90 + 0.5*0.10). I then flip the coin and it lands on heads. Now I ask you: knowing that the coin landed on heads, how likely do you now think it was that it would land on heads when I first tossed it? (I mean the same question by "Knowing how the election turned out, how likely do you think it was a week before the election that Trump would win?")

(Spoilers: I'd be interested in knowing your answer to this question before you read my comment on your "The value of a vote in the 2024 presidential election" EA Forum post that you linked to, so that you don't get biased by my answer/thoughts.)
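The coin version of the question can be worked through exactly with Bayes' rule. In the sketch below (function names are mine, for illustration), seeing heads moves the probability that the coin was H-coin from 1/2 to 9/10, so you'd be 90% sure the chance on the first toss was 90%. If you collapse that into a single number by averaging the coin's heads rate over your posterior, you get 41/50 = 82%.

```python
from fractions import Fraction

P_CHOOSE_H = Fraction(1, 2)  # H-coin or T-coin chosen with equal probability
P_HEADS_H = Fraction(9, 10)  # H-coin lands heads 90% of the time
P_HEADS_T = Fraction(1, 10)  # T-coin lands heads 10% of the time


def prior_heads():
    """Probability of heads before the toss, averaging over which coin was chosen."""
    return P_CHOOSE_H * P_HEADS_H + (1 - P_CHOOSE_H) * P_HEADS_T


def posterior_h_coin_given_heads():
    """Bayes' rule: P(H-coin | heads) = P(H-coin) * P(heads | H-coin) / P(heads)."""
    return P_CHOOSE_H * P_HEADS_H / prior_heads()


def posterior_chance_of_heads():
    """Posterior-weighted average of the chosen coin's own heads rate."""
    post_h = posterior_h_coin_given_heads()
    return post_h * P_HEADS_H + (1 - post_h) * P_HEADS_T


if __name__ == "__main__":
    print(prior_heads())                   # 1/2
    print(posterior_h_coin_given_heads())  # 9/10
    print(posterior_chance_of_heads())     # 41/50
```

Exact fractions keep the arithmetic free of rounding, so the 1/2, 9/10 and 41/50 can be checked by hand.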

↑ comment by Ben (ben-lang) · 2024-11-15T17:48:17.601Z

I think this question is maybe logically flawed.

Say I have a shuffled deck of cards. You say the probability that the top card is the Ace of Spades is 1/52. I show you the top card, it is the 5 of diamonds. I then ask, knowing what you know now, what probability you should have given.

I picked a card analogy, and you picked a dice one. I think the card one is better in this case, for weird idiosyncratic reasons I give below that might just be irrelevant to the train of thought you are on.

Cards vs Dice: If we could reset the whole planet to its exact state 1 week before the election, then I think we would get the same result (I don't think quantum effects will mess with us in one week). What if we do a coarser-grained reset? So if there was a kettle of water at 90 degrees a week before the election, that kettle is reset to contain the same volume of water in the same part of my kitchen, and the water is still 90 degrees, but the individual water molecules have different momenta. For some value of "macro", the world is reset to the same macrostate, but not the same microstate, that it had 1 week before election day. If we imagine this experiment, I still think Trump wins every (or almost every) time, given what we know now. For me to think this kind of thermal-level randomness made a difference in one week, it would have to have been much closer.

In my head, things that change on the coarse-grained reset feel more like unrolled dice, and things that don't feel more like facedown cards. Although in detail the distinction is fuzzy: it is based on an arbitrary line between micro and macro, and it is time-sensitive, because cards that are going to be shuffled in the future are in the same category as dice.

EDIT: I did as asked, and replied without reading your comments on the EA forum. Reading that I think we are actually in complete agreement, although you actually know the proper terms for the things I gestured at.

↑ comment by WilliamKiely · 2024-11-16T07:21:40.348Z

EDIT: I did as asked, and replied without reading your comments on the EA forum. Reading that I think we are actually in complete agreement, although you actually know the proper terms for the things I gestured at.

Cool, thanks for reading my comments and letting me know your thoughts!

I actually just learned the term "aleatory uncertainty" from chatting with Claude 3.5 Sonnet (New) about my election forecasting in the last week or two post-election. (Turns out Claude was very good for helping me think through mistakes I made in forecasting and giving me useful ideas for how to be a better forecaster in the future.)

I then ask, knowing what you know now, what probability you should have given.

Sounds like you might have already predicted I'd say this (after reading my EA Forum comments), but to say it explicitly: the probability I should have given is different from the aleatoric probability. I think that by becoming informed and making a good judgment I could have reduced my epistemic uncertainty significantly, but I would still have had some. And the forecast that I should have made (or what market prices should have been) actually reflects epistemic uncertainty + aleatoric uncertainty. I think some people who were really informed could have gotten that to something like ~65-90%, but due to lingering epistemic uncertainty could not have gotten it to >90% Trump (even if, as I believe, the aleatoric probability was >90%, and probably >99%).

↑ comment by Eric Neyman (UnexpectedValues) · 2024-11-15T06:37:41.208Z

Ah, I think I see. Would it be fair to rephrase your question as: if we "re-rolled the dice" a week before the election, how likely was Trump to win?

My answer is probably between 90% and 95%. Basically the way Trump loses is to lose some of his supporters or have way more late deciders decide on Harris. That probably happens if Trump says something egregiously stupid or offensive (on the level of the Access Hollywood tape), or if some really bad news story about him comes out, but not otherwise.

↑ comment by WilliamKiely · 2024-11-16T07:08:55.434Z

Ah, I think I see. Would it be fair to rephrase your question as: if we "re-rolled the dice" a week before the election, how likely was Trump to win?

Yeah, that seems fair.

My answer is probably between 90% and 95%.

Seems reasonable to me. I wouldn't be surprised if it was >99%, but I'm not highly confident of that. (I would say I'm ~90% confident that it's >90%.)