Self-consciousness wants to make everything about itself

post by jessicata (jessica.liu.taylor) · 2019-07-03T01:44:41.204Z · 70 comments

This is a link post for https://unstableontology.com/2019/07/03/self-consciousness-wants-to-make-everything-about-itself/

Contents

Tone arguments
Self-consciousness in social justice
Shooting the messenger
Self-consciousness as privilege defense
Stop trying to be a good person
70 comments

Here's a pattern that shows up again and again in discourse:

A: This thing that's happening is bad.

B: Are you saying I'm a bad person for participating in this? How mean of you! I'm not a bad person, I've done X, Y, and Z!

It isn't always this explicit; I'll discuss more concrete instances in order to clarify. The important thing to realize is that A is pointing at a concrete problem (and likely one that is concretely affecting them), and B is changing the subject to be about B's own self-consciousness. Self-consciousness wants to make everything about itself; when some topic is being discussed that has implications related to people's self-images, the conversation frequently gets redirected to be about these self-images, rather than the concrete issue. Thus, problems don't get discussed or solved; everything is redirected to being about maintaining people's self-images.

Tone arguments

A tone argument criticizes an argument not for being incorrect, but for having the wrong tone. Common phrases used in tone arguments are: "More people would listen to you if...", "you should try being more polite", etc.

It's clear why tone arguments are epistemically invalid. If someone says X, then X's truth value is independent of their tone, so talking about their tone is changing the subject. (Now, if someone is saying X in a way that breaks epistemic discourse norms, then defending such norms is epistemically sensible; however, tone arguments aren't about epistemic norms, they're about people's feelings).

Tone arguments are about people protecting their self-images when they or a group they are part of (or a person/group they sympathize with) is criticized. When a tone argument is made, the conversation is no longer about the original topic, it's about how talking about the topic in certain ways makes people feel ashamed/guilty. Tone arguments are a key way self-consciousness makes everything about itself.

Tone arguments are practically always in bad faith. They aren't made by people trying to help an idea be transmitted to and internalized by more others. They're made by people who want their self-images to be protected. Protecting one's self-image from the truth, by re-directing attention away from the epistemic object level, is acting in bad faith.

Self-consciousness in social justice

A documented phenomenon in social justice is "white women's tears". Here's a case study (emphasis mine):

A group of student affairs professionals were in a meeting to discuss retention and wellness issues pertaining to a specific racial community on our campus. As the dialogue progressed, Anita, a woman of color, raised a concern about the lack of support and commitment to this community from Office X (including lack of measurable diversity training, representation of the community in question within the staff of Office X, etc.), which caused Susan from Office X, a White woman, to feel uncomfortable. Although Anita reassured Susan that her comments were not directed at her personally, Susan began to cry while responding that she "felt attacked". Susan further added that: she donated her time and efforts to this community, and even served on a local non-profit organization board that worked with this community; she understood discrimination because her family had people of different backgrounds and her closest friends were members of this community; she was committed to diversity as she did diversity training within her office; and the office did not have enough funding for this community's needs at that time.

Upon seeing this reaction, Anita was confused because although her tone of voice had been firm, she was not angry. From Anita's perspective, the group had come together to address how the student community's needs could be met, which partially meant pointing out current gaps where increased services were necessary. Anita was very clear that she was critiquing Susan's office and not Susan, as Susan could not possibly be solely responsible for the decisions of her office.

The conversation of the group shifted at the point when Susan started to cry. From that moment, the group did not discuss the actual issue of the student community. Rather, they spent the duration of the meeting consoling Susan, reassuring her that she was not at fault. Susan calmed down, and publicly thanked Anita for her willingness to be direct, and complimented her passion. Later that day, Anita was reprimanded for her 'angry tone,' as she discovered that Susan complained about her "behavior" to both her own supervisor as well as Anita's supervisor. Anita was left confused by the mixed messages she received with Susan's compliment, and Susan's subsequent complaint regarding her.

The key relevance of this case study is that, while the conversation was originally about the issue of student community needs, it became about Susan's self-image. Susan made everything about her own self-image, ensuring that the actual concrete issue (that her office was not supporting the racial community) was not discussed or solved.

Shooting the messenger

In addition to crying, Susan also shot the messenger, by complaining about Anita to both her own supervisor and Anita's. This makes sense as ego-protective behavior: if she wants to maintain a certain self-image, she wants to discourage being presented with information that challenges it, and also wants to "one-up" the person who challenged her self-image, by harming that person's image (so Anita does not end up looking better than Susan does).

Shooting the messenger is an ancient tactic, deployed especially by powerful people to silence providers of information that challenges their self-image. Shooting the messenger is asking to be lied to, using force. Obviously, if the powerful person actually wants information, this tactic is counterproductive, hence the standard advice to not shoot the messenger.

Self-consciousness as privilege defense

It's notable that, in the cases discussed so far, self-consciousness is more often a behavior of the privileged and powerful, rather than the disprivileged and powerless. This, of course, isn't a hard-and-fast rule, but there certainly seems to be a relation. Why is that?

Part of this is that the less-privileged often can't get away with redirecting conversations by making everything about their self-image. People's sympathies are more often with the privileged.

Another aspect is that privilege is largely about being rewarded for one's identity, rather than one's works. If you have no privilege, you have to actually do something concretely effective to be rewarded, like cleaning. Whereas, privileged people, almost by definition, get rewarded "for no reason" other than their identity.

Maintenance of a self-image makes less sense as an individual behavior than as a collective behavior. The phenomenon of bullshit jobs implies that much of the "economy" is performative, rather than about value-creation. While almost everyone can pretend to work, some people are better at it than others. The best people at such pretending are those who look the part, and who maintain the act. That is: privileged people who maintain their self-images, and who tie their self-images to their collective, as Susan did. (And, to the extent that e.g. school "prepares people for real workplaces", it trains such behavior.)

Redirection away from the object level isn't merely about defending self-image; it has the effect of causing issues not to be discussed, and problems not to be solved. Such effects maintain the local power system. And so, power systems encourage people to tie their self-images to the power system, resulting in self-consciousness acting as a defense of the power system.

Note that, while less-privileged people do often respond negatively to criticism from more-privileged people, such responses are more likely to be based in fear/anger rather than guilt/shame.

Stop trying to be a good person

At the root of this issue is the desire to maintain a narrative of being a "good person". Susan responded to the criticism of her office by listing out reasons why she was a "good person" who was against racial discrimination.

While Anita wasn't actually accusing Susan of racist behavior, it is, empirically, likely that some of Susan's behavior is racist, as implicit racism is pervasive (and, indeed, Susan silenced a woman of color speaking on race). Susan's implicit belief is that there is such a thing as "not being racist", and that one gets there by passing some threshold of being nice to marginalized racial groups. But, since racism is a structural issue, it's quite hard to actually stop participating in racism, without going and living in the woods somewhere. In societies with structural racism, ethical behavior requires skillfully and consciously reducing harm given the fact that one is a participant in racism, rather than washing one's hands of the problem.

What if it isn't actually possible to be "not racist" or otherwise "a good person", at least on short timescales? What if almost every person's behavior is morally depraved a lot of the time (according to their standards of what behavior makes someone a "good person")? What if there are bad things that are your fault? What would be the right thing to do, then?

Calvinism has a theological doctrine of total depravity, according to which every person is utterly unable to stop committing evil, to obey God, or to accept salvation when it is offered. While I am not a Calvinist, I appreciate this teaching, because quite a lot of human behavior is simultaneously unethical and hard to stop, and because accepting this can get people to stop chasing the ideal of being a "good person".

If you accept that you are irredeemably evil (with respect to your current idea of a good person), then there is no use in feeling self-conscious or in blocking information coming to you that implies your behavior is harmful. The only thing left to do is to steer in the right direction: make things around you better instead of worse, based on your intrinsically motivating discernment of what is better/worse. Don't try to be a good person, just try to make nicer things happen. And get more foresight, perspective, and cooperation as you go, so you can participate in steering bigger things on longer timescales using more information.

Paradoxically, in accepting that one is irredeemably evil, one can start accepting information and steering in the right direction, thus developing merit, and becoming a better person, though still not "good" in the original sense. (This I know from personal experience.)

(See also: What's your type: Identity and its Discontents; Blame games; Bad intent is a disposition, not a feeling)

70 comments


comment by habryka (habryka4) · 2019-07-03T19:57:41.049Z

I agree with most of this post, but do want to provide a stronger defense of at least a subtype of tone arguments (I think those are not the most frequently deployed type of tone arguments, but they are of a type that I think is important to allow people to make).

Tone arguments are practically always in bad faith. They aren't made by people trying to help an idea be transmitted to and internalized by more others.

To give a very extreme example of the type I am thinking about: Recently I was at an event where I was talking with two of my friends about some big picture stuff in a pretty high context conversation. We were interrupted by another person at the event who walked into the middle of our conversation, said "Fuck You" to me, then stared at me aggressively for about 10 seconds, then insulted me and then said "look, the fact that you can't even defend yourself with a straight face makes it obvious that what I am saying is right", then turned to my friends and explained loudly how I was a horrible person, and left a final "fuck you" before walking away.

The whole interaction left me quite terrified. I managed to keep my composure, but I felt pretty close to tears afterwards, had my adrenaline pumping and it took me about half an hour to calm down and be able to properly think clearly again. I think my friends had an even worse experience.

I actually think some of the ways the person insulted me were relatively on point and reflected some valid criticisms of at least some of my past actions. I nevertheless was completely unable to respond and would have much preferred this interaction to not have taken place. I think it is really important that I can somehow avoid being in that kind of situation.

I usually really don't care about tone, but I do think there are certain choices of tone that imply threats or that almost directly hook into parts of my brain that make it very hard to think (the statement of "the fact that you can't even defend yourself with a straight face makes it obvious that I am right" is one of those for me). I think a lot of this is mitigated by text-based conversations, but far from all of it. In particular, if someone threatens some form of serious violence, or insinuates violence, which is often dependent on tone, then I do think it's reasonable to use tone as real evidence about the other person's intention, or (more rarely) to intervene on tone if someone tries to intentionally use tone to invoke a sense of threat.

Obviously the ability for people to threaten each other is much greater in person, and it's much easier to directly inflict costs on the other person by interrupting or screaming or hitting someone, but I think many forms of violent threat are still available in text-based communication, and often communicated via tone. I think long-lasting, mostly anonymous online communities tend to learn that most forms of threatening-sounding language don't tend to correspond to actual threats (any long-term user of 4chan will be quite robust to threatening sounding language), but I think for most people using insinuated threats is a quite reliable way to manipulate and control them, making it an actually powerful weapon that I have definitely seen used intentionally.

I think there is a subtype of tone arguments that is something like "you are threatening to hurt me without saying so directly, because saying so directly would be an obvious norm violation", which I think is actually important. I don't know whether it's ever really a good choice to make that argument explicitly (since that argument can easily be abused), or whether it's usually just better to disengage, but I don't want to rule out that it's sometimes a good idea to make it explicitly.

I think the most common form of violence that I see being threatened in online forums is "I will get the audience to hate and attack you by tearing you apart in public". I think in an environment where the audience is full of careful thinkers this can be a fine generalizable strategy, if the audience has enough discernment to make this a highly truth-asymmetric weapon. But in most online environments there are quite predictable and abusable ways to cause the audience to get angry at someone, which mostly makes this a weapon that can be wielded without much relation to the accuracy of the participant's opinions (not fully uncorrelated, but not correlated enough to avoid abuse).

Wielding the audience this way is often done via the choice of a specific type of tone and rhetorical style, and so arguing against that kind of tone in public environments also strikes me as the correct choice under certain circumstances.

I think on LessWrong, we are much closer to the "the audience is full of careful thinkers so your ability to cause your enemy to be torn apart is actually correlated to the truth" side of the spectrum, which I think is why I tend to have a much higher tolerance for certain tones on LessWrong than in other places. But I think it's still not perfect. LessWrong is still a public forum, and I think there are still rhetorical styles that I expect to at least sometimes (but still with predictable direction) cause parts of the audience to attack the person against whom the rhetoric is wielded, without good justification.

People on LessWrong do, however, tend to have the patience to listen to good meta-level arguments about threats and against the use of violence in general, but that requires the ability for tone to be a valid subject of discussion at all, which is why I want to make sure that this post doesn't move tone completely out of the Overton window.

This line of thought caused me to think that it might be quite valuable to have some kind of "conversation escrow" that allows people to have a conversation in private that still reliably gets published. As an example, you could imagine a feature on LessWrong to invite someone to a private comment-thread. The whole thread happens in private, and is scheduled to be published a week after the last comment in the thread was made, unless any of the participants presses a veto button if they feel that the eventual-public nature of the conversation was wielded against them during the conversation. I don't know how many conversations would end up being published, but it feels potentially worth trying, since it avoids many of the error modes I am worried about with more direct public conversation.

↑ comment by jessicata (jessica.liu.taylor) · 2019-07-04T03:35:11.832Z

[EDIT: see the comment thread with Wei Dai; I don't endorse the policing/argument terms as stated in this comment]

I agree that tone policing is not always bad. In particular, enforcement of norms that improve collective epistemology or protect people from violence is justified. And, tone can be epistemically misleading or (as you point out) constitute an implicit threat of violence. (Though, note that threats to people's feelings are not necessarily threats of violence, and also that threats to reputation are not necessarily threats of violence; though, threats to lie about reputation-relevant information are concerning even when not violent). (Note also, different norms are appropriate for different spaces, it's fine to have e.g. parties where people are supposed to validate each other's feelings)

Tone arguments are bad because they claim to be "helping you get more people to listen to you" in a way that doesn't take responsibility for, right then and there, not listening, in a way decision-theoretically correlated with the "other people". (That isn't what happened in the example you described)

I agree that people threatening mob violence is generally a bad thing in discourse. (Threats of mob violence aren't arguments, they're... threats) Such threats used in the place of arguments are a form of sophistry.

↑ comment by Wei Dai (Wei_Dai) · 2019-07-04T05:48:27.574Z

It's confusing that "tone argument" (in the OP) links to a Wikipedia article on "tone policing", if they're not supposed to be the same thing.

What is the actual relationship between tone arguments and tone policing? In the OP you wrote:

A tone argument criticizes an argument not for being incorrect, but for having the wrong tone.

From this it seems that tone arguments is the subset of tone policing that is aimed at arguments (as opposed to other forms of speech). But couldn't an argument constitute an implicit threat of violence, and therefore tone arguments could be good sometimes?

It seems like to address habryka's criticism, you're now redefining (or clarifying) "tone argument" to be a subset of the subset of tone policing that is aimed at arguments, namely where the aim of the policing is specifically claimed to be “helping you get more people to listen to you”. If that's the case, it seems good to be explicit about the redefinition/clarification to avoid confusing people even further.

↑ comment by jessicata (jessica.liu.taylor) · 2019-07-04T06:05:07.118Z

On reflection, I don't think the online discourse makes the same distinction I made in the parent comment, and I also don't think there is a clean distinction, so I retract the words for this distinction, although I think the distinction I pointed to is useful.

Tone arguments, in the broad sense, criticize arguments for their tone, not their content (as I wrote in the post).

More narrowly, tone arguments claim something like "more people would listen to you if you were more polite". This is a subset of "broad" tone arguments.

The Geek Feminism article on tone arguments (which I was reluctant to link to for obvious political reasons) says:

A tone argument is an argument used in discussions, sometimes by concern trolls and sometimes as a derailment tactic, where it is suggested that feminists would be more successful if only they expressed themselves in a more pleasant tone. This is also sometimes described as catching more flies with honey than with vinegar, a particular variant of the tone argument. The tone argument also manifests itself where arguments produced in an angry tone are dismissed irrespective of the legitimacy of the argument; this is also known as tone policing.

Which is making something like the policing vs. argument distinction I made in the parent comment. But, the distinction isn't made clear in this paragraph (and, certainly, tone arguments don't only apply to feminism).

↑ comment by cousin_it · 2019-07-04T08:41:30.751Z

Saying "fuck you" and waiting ten seconds? There's a good chance they were trying to bait you into a reportable offense. That said, you should've used more courage in the moment, and your "friends" should've backed you up. If you find yourself in that situation again, try saying "no you" and improvise from there.

↑ comment by Wei Dai (Wei_Dai) · 2019-07-05T06:10:29.130Z

If you find yourself in that situation again, try saying “no you” and improvise from there.

Why take the risk of this escalating into a seriously negative sum outcome? I can imagine the risk being worth it for someone who needs to constantly show others that they can "handle themselves" and won't easily back down from perceived threats and slights. But presumably habryka is not in that kind of social circumstances, so I don't understand your reasoning here.

↑ comment by Said Achmiz (SaidAchmiz) · 2019-07-05T08:44:30.456Z

Why take the risk of this escalating into a seriously negative sum outcome?

Because if someone does that to you (walking up to you and insulting you to your face, apropos of nothing), then the value to them of the situation’s outcome no longer matters to you—or shouldn’t, anyway; this person does not deserve that, not by a long shot.

And so the “negative sum” consideration is irrelevant. There’s no “sum” to consider, only the value to you. And to you, the situation is already strongly negative.

Now, it is possible that you could make it more negative, for yourself. Possible… but not likely. And even if you do, if you simultaneously succeed at making it much more negative for your opponent, you have nonetheless improved your own outcome (for what I hope are obvious game-theoretic reasons).

Aren’t you, after all, simply asking “why retaliate against attacks, even when doing so is not required to stop that particular attack”? All I can say to that is “read Schelling”…

↑ comment by habryka (habryka4) · 2019-07-05T17:00:08.440Z

It would have very likely made it a lot more negative to myself. I expect that would have escalated the situation and would have prolonged the whole thing for at least twice as long.

Generally I am in favor of punishing things like this, but it seems much better for that punishment to not happen via conflict escalation, but via other more systematic ways of punishment.

↑ comment by Wei Dai (Wei_Dai) · 2019-07-22T10:10:37.585Z

Because if someone does that to you (walking up to you and insulting you to your face, apropos of nothing), then the value to them of the situation’s outcome no longer matters to you—or shouldn’t, anyway; this person does not deserve that, not by a long shot.

I disagree with the morality that is implied by this statement. (It's a really small part of my moral parliament.)

And to you, the situation is already strongly negative.

There may be some value difference here between you and me, in that you may consider being insulted to have a strongly negative terminal value?

Now, it is possible that you could make it more negative, for yourself. Possible… but not likely.

If the situation escalated, I might get injured or arrested or be less likely to be invited to future parties, or that person might develop a vendetta against me and cause more substantial harm to me in the future, all of which seem potentially a lot more negative.

And even if you do, if you simultaneously succeed at making it much more negative for your opponent, you have nonetheless improved your own outcome (for what I hope are obvious game-theoretic reasons).

I already addressed this when I wrote "I can imagine the risk being worth it for someone who needs to constantly show others that they can “handle themselves” and won’t easily back down from perceived threats and slights. But presumably habryka is not in that kind of social circumstances, so I don’t understand your reasoning here."

Aren’t you, after all, simply asking “why retaliate against attacks, even when doing so is not required to stop that particular attack”? All I can say to that is “read Schelling”…

No, I was saying that cost-benefit doesn't seem to favor retaliating against that particular attack, in the particular way that cousin_it suggested. (Clearly it's true that some attacks should not be retaliated against, right?)

↑ comment by Dagon · 2019-07-23T21:25:55.816Z

Why take the risk of this escalating into a seriously negative sum outcome?

Because if someone does that to you (walking up to you and insulting you to your face, apropos of nothing), then the value to them of the situation’s outcome no longer matters to you—or shouldn’t, anyway; this person does not deserve that, not by a long shot.

I think I'm with Wei_Dai on this one - insulting me to my face, apropos of nothing, doesn't change my valuation of them very much. I don't know the reasons for such, but I presume it's based on fear or pain and I deeply sympathize with those reasons for unpleasant, unreasoning actions. Part of my reaction is that it's VERY DIFFICULT to insult me in any way that I won't just laugh at the absurdity, unless you actually know me and are targeting my personal insecurities.

Only if it's _NOT_ random and apropos of nothing am I likely to feel that there are strategic advantages to taking a risk now to prevent future occurrences (per your Schelling reference).

↑ comment by cousin_it · 2019-07-05T11:45:24.096Z

I think living with courage and dignity is more fun in any social circumstances.

↑ comment by Wei Dai (Wei_Dai) · 2019-07-05T21:36:23.804Z

Fun tends to be highly personal, for example some people find free soloing fun, and others find it terrifying. Some people enjoy strategy games and others much prefer action games. So it seems surprising that you'd give an unconditional "should have" criticism/advice based on what you think is fun. I mean, you wouldn't say to someone, "you should not have used safety equipment during that climb." At most you'd say, "you should try not using safety equipment next time and see if that's more fun for you."

↑ comment by jimmy · 2019-07-08T19:38:24.680Z

Free soloing is fun for some and not others in large part for reasons like “skill in climbing”, which cannot be expected to hold the same optimal value for different people. Courage, on the other hand, is pretty universally useful, and can help in ways that are not immediately obvious.

It’s not always obvious how things could be better through the exercise of courage, for two reasons. First, the application of courage almost inevitably results in an increase of fear (how could it not, since you’re choosing to not flinch away from the fear). If you’re not exceedingly careful, it can be easy to conflate “things got scarier” with “things got objectively worse”. In situations like this, it can often escalate things into explicit threats of violence, which are definitely more scary, and it can be easy to read “he threatened to fight me” as a turn for the worse. It’s not at all obvious until you follow through that these threats are very very often empty; so often, in fact, that displaying willingness to let things escalate physically can be the safer thing to do (at least in my experience it has been).

Secondly, it’s not always clear what one should do with courage. All the courage in the world wouldn’t get me to free solo climb for the same reason it wouldn’t get me to play Russian roulette; it’s just not worth the risk for me. In situations like this, cousin_it suggests responding with “no, you”, but I actually think that’s a mistake. I’d actually advocate doing exactly what habryka did. Say nothing. Don’t back down, of course, but you don’t have to respond and what do you get out of responding other than encouraging that kind of bad behavior? The jerk doesn’t deserve a response.

Of course, it’d be nice to do it with less fear. It’d be nice if instead of seeing fear he sees someone looking at him as if he’s irrelevant and just waiting for him to leave (which is a pretty big punishment, actually, since it makes the aggressor feel foolish for thinking their aggression would have any effect), but that’s an issue of “fear” not “courage”, and you kinda have to accept and run with whatever fear you have since you can’t really address it on the fly.

I wouldn’t say “you should have more courage” both because I don’t see any obvious failure of courage and because you can’t “should” people into courage or out of fear, but I do think courage is an underappreciated virtue to be cultivated, and that the application of courage in cases like these makes life as a whole much more pleasant and less (invisibly and visibly) controlled by fear. This means both holding fast in the moment despite the presence of fear, as well as taking the time to work through your fears in the down time such that you’re more prepared for the next time.

↑ comment by quanticle · 2019-07-03T21:47:43.624Z

This line of thought caused me to think that it might be quite valuable to have some kind of "conversation escrow" that allows people to have [a] conversation in private that still reliably gets published. As an example, you could imagine a feature on LessWrong to invite someone to a private comment-thread. The whole thread happens in private, and is scheduled to be published a week after the last comment in the thread was made, unless any of the participants presses a veto button...

I'm not sure I understand either the problem or the proposed solution. If there's a veto button, it's not reliable publishing, is it? How is this any better or different than having a private exchange via e-mail, Slack, Discord, etc, and then asking the other person, "Do you mind if I publish an excerpt?"

More generally, I'm not sure what kind of problem this tool would solve. Can you name some kinds of conversations that this tool would be used for?

Replies from: Raemon↑ comment by Raemon · 2019-07-03T21:50:31.960Z · LW(p) · GW(p)

I don't know how much the tool would help, but it seems like an importantly different expectation where "this will be published, unless someone actively thinks it'd be bad to do so" vs "this is by default private, unless someone actively asks to publish."

Replies from: habryka4↑ comment by habryka (habryka4) · 2019-07-03T21:55:20.870Z · LW(p) · GW(p)

Yeah, this is mostly it. Making things "by default public unless someone objects" feels very different from normal private Google Docs, where asking whether you can make it public feels like a strong ask and quite weird.

Replies from: quanticle↑ comment by quanticle · 2019-07-04T01:04:21.620Z · LW(p) · GW(p)

I'm not sure there's a difference. Either you're asking up front ("Hey, do you mind if I set this timer to auto-publish in a week?") or you're asking later ("Hey, we just discussed something that I think would be of interest, do you mind if I publish it?").

In fact, I think asking after the fact might be easier, because you can point to specific things that were discussed and say, "I'm going to excerpt <x>, <y>, and <z>. Is that okay?"

↑ comment by hg00 · 2019-07-04T03:07:48.192Z · LW(p) · GW(p)

Agreed. Also, remember that conversations are not always about facts. Oftentimes they are about the relative status of the participants. Something like Nonviolent Communication might seem like tone policing, but through a status lens, it could be seen as a practice where you stop struggling for higher status with your conversation partner and instead treat them compassionately as an equal.

Replies from: SaidAchmiz↑ comment by Said Achmiz (SaidAchmiz) · 2019-07-04T03:27:23.595Z · LW(p) · GW(p)

It has been my experience that NVC is used exclusively as a means of making status plays. Perhaps it may be used otherwise, but if so, I have not seen it.

Replies from: Kaj_Sotala, hg00↑ comment by Kaj_Sotala · 2019-07-07T07:43:18.106Z · LW(p) · GW(p)

I think NVC has the thing where, if it's used well, it's subtle enough that you don't necessarily recognize it as NVC.

Replies from: SaidAchmiz↑ comment by Said Achmiz (SaidAchmiz) · 2019-07-07T09:27:57.825Z · LW(p) · GW(p)

Do you have any examples of this?

Replies from: Kaj_Sotala↑ comment by Kaj_Sotala · 2019-07-07T21:41:01.263Z · LW(p) · GW(p)

I tend to (or at least try to remember to) draw on NVC a lot in conversation about emotionally fraught topics, but it's hard for me to recall the specifics of my wording afterwards, and they often include a lot of private context. But here's an old example which I picked because it happened in a public thread and because I remember being explicitly guided by NVC principles while writing my response.

As context, I had shared a link to an SSC post talking about EA on my Facebook page, and some people were vocally critical of its implicit premises (e.g. utilitarianism). The conversation took on a somewhat heated tone and veered into, among other things, the effectiveness of non-EA/non-utilitarian-motivated volunteer work. I ended up making some rather critical comments in return, which led to the following exchange:

The other person: Kaj, I also find you to be rather condescending (and probably, privileged) when talking about volunteer work. Perhaps you just don't realize how large a part volunteers play in doing the work that supposedly belongs to the state, but isn't being taken care of. And your suggestion that by volunteering you mainly get to feel better about yourself, and then "maybe help some people in the process", is already heading towards offensive. I have personally seen/been involved in/know of situations where a volunteer literally saved someone's life, as in, if they weren't there and didn't have the training they had, the person would most likely have died. So, what was your personal, verified lives saved per dollar efficiency ratio, again, that allows you to make such dismissive comments?

Me: I'm sorry. I was indeed condescending, and that was wrong of me. I was just getting frustrated because I felt that Scott's post had a great message about what people could accomplish if they just worked together, which was getting completely lost in squabbling over a part of it that was beside the actual point. When I saw you call lives saved per dollar an absolutely terrible metric, I felt hurt because I felt that the hard and thoughtful work the EA scene has put into evaluating different interventions was being dismissed without being given a fair evaluation. I felt a need to defend them (and myself, since I'm a part of that community) at that point.

I do respect anyone doing volunteer work, especially the kind of volunteer work you're referring to. When I was writing my comment, I was still thinking of activism primarily in the context of Scott's original post (and the post of his preceding it), which had been talking about campaigns to (re)post things on Twitter, Tumblr, etc. These are obviously very different campaigns from the kind of work that actually saves lives.

Even then, you are absolutely correct that my remarks have often unfairly devalued volunteer work. So to set matters straight: anyone who voluntarily works to make others' lives better has my utmost respect. Society and the people in it would be a lot worse off without such work, and I respect anyone who actually does important life-saving work much more than I respect, say, someone who hangs around in the EA community and talks about pretty things without actually doing much themselves. (I will also admit that I've kinda fallen into the latter category every now and then.)

I also admit that the people in the field have a lot of experience which I do not have, and that academic analyses about the value of some intervention have lots of limitations that may cause them to miss important things. I do still think that systematic, peer-reviewed studies are the way to go if one wishes to find the most effective ways of making a difference, but I do acknowledge that the current level of evidence is not yet strong and that there are also lots of individual gut feelings and value judgement involved in interpreting the data.

I don't want there to be any us versus them thing going on, with EAs on the one side and traditional volunteers on the other. I would rather have us all be on the same side, where we may disagree on what is the best way to act, but support each other regardless and agree that we have a shared goal in making the world a better place.

(The conversation didn't proceed anywhere from that, but the other person did "like" my comment.)

comment by Richard_Kennaway · 2019-07-03T08:14:41.868Z · LW(p) · GW(p)

The problem with Calvinism is that it does not allow for improvement. We are (Calvin and Calvinists say) utterly depraved, and powerless to do anything to raise ourselves up from the abyss of sin by so much as the thickness of a hair. We can never be less wrong. Only by the external bestowal of divine grace can we be saved, grace which we are utterly undeserving of and are powerless to earn by any effort of our own. And this divine grace is not bestowed on all, only upon some, the elect, predetermined from the very beginning of Creation.

Calvinism resembles abusive parenting more than any sort of ethical principle.

Eliezer has written of a similar concept in Judaism [LW · GW]:

Each year on Yom Kippur, an Orthodox Jew recites a litany which begins Ashamnu, bagadnu, gazalnu, dibarnu dofi, and goes on through the entire Hebrew alphabet: We have acted shamefully, we have betrayed, we have stolen, we have slandered . . .

As you pronounce each word, you strike yourself over the heart in penitence. There’s no exemption whereby, if you manage to go without stealing all year long, you can skip the word gazalnu and strike yourself one less time. That would violate the community spirit of Yom Kippur, which is about confessing sins—not avoiding sins so that you have less to confess.

By the same token, the Ashamnu does not end, “But that was this year, and next year I will do better.”

The Ashamnu bears a remarkable resemblance to the notion that the way of rationality is to beat your fist against your heart and say, “We are all biased, we are all irrational, we are not fully informed, we are overconfident, we are poorly calibrated . . .”

Fine. Now tell me how you plan to become less biased, less irrational, more informed, less overconfident, better calibrated.

When all are damned from the very beginning, when "everything is problematic", then who in fact gets condemned, what gets problematised, and who are the elect, are determined by political struggle for the seat of judgement. At least in Calvinism, that seat was reserved to God, who does not exist (or as Calvinists would say, whose divine will is unknowable), leaving people to deal with each other's flaws on a level standing.

Replies from: Viliam, Benquo, jessica.liu.taylor, greylag↑ comment by Viliam · 2019-07-03T19:36:18.704Z · LW(p) · GW(p)

It seems to me that many pieces of advice or points of view can be helpful when used in a certain way, and harmful when used in a different way. The idea "I am already infinitely bad" is helpful when it removes the need to protect one's ego, but it can also make a person stop trying to improve.

The effect is similar with the idea of "heroic responsibility"; it can help you overcome some learned helplessness, or it can make you feel guilty for all the evils in the world you are not fixing right now. Also, it can be abused by other people as an excuse for their behavior ("what do you mean by saying I hurt you by doing this and that? take some heroic responsibility for your own well-being, and stop making excuses!").

Less directly related: Knowing About Biases Can Hurt People [LW · GW] (how good advice about avoiding biases can be used to actually defend them), plus there is a quote I can't find now about how "doubting your math skills and checking your homework twice" can be helpful, but "doubting your math skills so much that you won't even attempt to do your homework" is harmful.

↑ comment by Benquo · 2019-07-04T12:49:57.098Z · LW(p) · GW(p)

Orthodox Judaism specifically claims that if enough people behave righteously enough and follow the law well enough at the same time, this will usher in a Messianic era, in which much of the liturgy and ritual obligations and customs (such as the *Ashamnu*) will be abolished. Collective responsibility, and a keen sense that we are very, very far from reliably correct behavior, is not the same as a total lack of hope.

Replies from: Richard_Kennaway↑ comment by Richard_Kennaway · 2019-07-04T15:46:13.278Z · LW(p) · GW(p)

I've heard of a similar superstition in Christendom, that if for a single day, no-one sinned, that would bring about the Second Coming. The difference between either of these and a total lack of hope is rounding error.

Replies from: Benquo↑ comment by Benquo · 2019-07-04T17:37:56.261Z · LW(p) · GW(p)

What's the alternative? A state in which collective culpability is zero ... while people continue to do wrong?

Replies from: Richard_Kennaway↑ comment by Richard_Kennaway · 2019-07-04T18:29:38.770Z · LW(p) · GW(p)

The alternative is just this: there is work to be done — do it. When the work can be done better — do it better. When you can help others work better — help them to work better.

Forget about a hypothetical absolute pinnacle of good, and berating yourself and everyone else for any failure to reach it. It is like complaining, after the first step of a journey of 10,000 miles, that you aren't there yet.

I quoted Spurgeon as a striking example of pure, stark Calvinism. But to me his writings are lunatic ravings. In fairness, some of the quotes on the Spurgeon quotes site are more humane. But Calvinism, secular or religious, is an obvious failure mode. DO NOT DO OBVIOUS FAILURE MODES.

↑ comment by jessicata (jessica.liu.taylor) · 2019-07-03T18:04:07.281Z · LW(p) · GW(p)

Calvinists definitely believe ethical improvement is possible (see John Calvin on free will). Rather, the claim is that there is a certain standard of goodness that no one reaches.

When all are damned from the very beginning, when “everything is problematic”, then who in fact gets condemned, what gets problematised, and who are the elect, are determined by political struggle for the seat of judgement.

This is true. Most of the world is under a scapegoating system, where punishment is generally unjust (see: Moral Mazes), and unethical behavior is pervasive. In such a circumstance, punishing all unethical behavior would be catastrophic, so such punishment would itself be unethical.

It would be desirable to establish justice, by designing social systems where the norms are clear, it's not hard to follow them, and people have a realistic path to improvement when they break them. But, the systems of today are generally unjust.

Replies from: greylag↑ comment by greylag · 2019-07-03T19:08:13.359Z · LW(p) · GW(p)

Calvinists definitely believe ethical improvement is possible

I'm a stranger to theology so maybe I'm misunderstanding, but it sounds like Calvin thought people had free will, but other "Calvinists" thought one's moral status was predestined by God, prohibiting ethical improvement. (I assume John Calvin isn't going to exercise a Dennett/Conway Perspective Flip Get-Out-Clause, because if he does, the universe is trolling me).

(Aside: I sometimes think atheists with Judeo-Christian heritage risk losing the grace and keeping the damnation)

Replies from: jessica.liu.taylor↑ comment by jessicata (jessica.liu.taylor) · 2019-07-04T03:46:40.021Z · LW(p) · GW(p)

Yes, it's complicated. According to Calvinism, God has already decided who is elect. However, this doctrine is compatible with motivations for ethical behavior, in the case of the Protestant work ethic. Quoting Wikipedia:

Since it was impossible to know who was predestined, the notion developed that it might be possible to discern that a person was elect (predestined) by observing their way of life. Hard work and frugality were thought to be two important consequences of being one of the elect. Protestants were thus attracted to these qualities and supposed to strive for reaching them.

This seems like some pretty wonky decision theory (striving for reaching ethical qualities because they're signs of already being elect). Similar to the smoking lesion problem. Perhaps Calvinists are evidential decision theorists :)
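To make the smoking-lesion comparison concrete, here is a toy sketch of how an evidential and a causal decision theorist would diverge on "should I work hard?" when election is fixed in advance but correlated with hard work. Every number (the prior, the likelihoods, the payoffs) is made up for illustration; none of it comes from the comment or from Calvinist doctrine.

```python
from fractions import Fraction

# Hypothetical numbers, chosen only for illustration.
PRIOR_ELECT = Fraction(1, 2)          # P(elect), fixed before any choice is made
P_WORK_GIVEN_ELECT = Fraction(9, 10)  # the elect usually work hard
P_WORK_GIVEN_NOT = Fraction(3, 10)    # others sometimes do
VALUE_OF_ELECTION = 100               # utility of being elect
COST_OF_WORK = 1                      # utility cost of working hard


def posterior_elect(works: bool) -> Fraction:
    """P(elect | one's own choice), by Bayes' rule over the population."""
    like_e = P_WORK_GIVEN_ELECT if works else 1 - P_WORK_GIVEN_ELECT
    like_n = P_WORK_GIVEN_NOT if works else 1 - P_WORK_GIVEN_NOT
    joint_e = PRIOR_ELECT * like_e
    joint_n = (1 - PRIOR_ELECT) * like_n
    return joint_e / (joint_e + joint_n)


def edt_value(works: bool) -> Fraction:
    # Evidential: treat one's own choice as evidence about being elect.
    cost = COST_OF_WORK if works else 0
    return posterior_elect(works) * VALUE_OF_ELECTION - cost


def cdt_value(works: bool) -> Fraction:
    # Causal: election is already settled, so the choice cannot change it.
    cost = COST_OF_WORK if works else 0
    return PRIOR_ELECT * VALUE_OF_ELECTION - cost


edt_choice = max([True, False], key=edt_value)
cdt_choice = max([True, False], key=cdt_value)
print("EDT works hard:", edt_choice)  # EDT works hard: True
print("CDT works hard:", cdt_choice)  # CDT works hard: False
```

With these numbers, hard work raises P(elect) from 1/8 to 3/4 as evidence, so the evidential agent works, while the causal agent sees only the cost and rests. That is the same split as in the smoking lesion problem.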

Replies from: Richard_Kennaway↑ comment by Richard_Kennaway · 2019-07-04T08:02:49.232Z · LW(p) · GW(p)

Perhaps evidential non-decision theorists. Fallen man is unable to choose between good and evil, for in his fallen state he will always and inevitably choose evil.

There is no greater mockery than to call a sinner a free man. Show me a convict toiling in the chain gang, and call him a free man if you will; point out to me the galley slave chained to the oar, and smarting under the taskmaster’s lash whenever he pauses to draw breath, and call him a free man if you will; but never call a sinner a free man, even in his will, so long as he is the slave of his own corruptions.

Man is totally depraved:

The fact is, that man is a reeking mass of corruption. His whole soul is by nature so debased and so depraved, that no description which can be given of him even by inspired tongues can fully tell how base and vile a thing he is.

Man is incapable of the slightest urge to do good, unless the Lord extend his divine grace; and then, such good as he may do is done not by him but by the Lord working in him. And then, such outer works may be seen as evidence of inward grace.

Quotes are from the 19th century Calvinist C.M. Spurgeon, here and here. He wrote thousands of sermons, and they're all like this.

↑ comment by greylag · 2019-07-04T06:02:25.897Z · LW(p) · GW(p)

Calvinism resembles abusive parenting more than any sort of ethical principle.

I think this might be an important distinction:

“We are all flawed/evil and have to somehow make the best of it” (Calvinism, interpreted charitably)

vs

“YOU are evil/worthless” said to a child by a parent who believes it (abusive parenting, interpreted uncharitably, painted orange and with a bull's-eye painted on it)

comment by Said Achmiz (SaidAchmiz) · 2019-07-03T03:37:29.733Z · LW(p) · GW(p)

What if it isn’t actually possible to be “not racist” or otherwise “a good person”, at least on short timescales? What if almost every person’s behavior is morally depraved a lot of the time (according to their standards of what behavior makes someone a “good person”)? What if there are bad things that are your fault? What would be the right thing to do, then?

This paragraph (and the entire concept described in this last part of the post) is—if the words therein are taken to have their usual meanings—inherently self-contradictory.

If it’s not possible for you to do something, then it cannot possibly be your fault that you don’t do that thing. (“Ought implies can” is the classic formulation of this idea.)

Rejecting “ought implies can” turns the concepts of fault, good, etc., into parodies of themselves, and morality into nonsense. If morality isn’t about how to make the right choices, then what could it be about? Whatever that thing is, it’s not morality. If you say that according to your “morality”, I can’t be “good”, on account of there being no “right” choice available to me, my answer is: clearly, whatever you mean by “good” and “right” and “morality” isn’t what I mean by those words, and I am probably uninterested in discussing these things that you mean by those words. In other words: by my lights, you have not said anything about good or right or morality, only about some different, unrelated things (for which you have, regrettably, chosen to use these already-existing words).

“Integers,” it was once said [LW(p) · GW(p)], “are slippery … If you say something unconventional about integers, you cease to talk about them.”

And so with morality.

Replies from: jessica.liu.taylor↑ comment by jessicata (jessica.liu.taylor) · 2019-07-03T05:06:44.795Z · LW(p) · GW(p)

I'm going to clarify what I mean by "what if it's not possible to be a good person?"

Most people have a confused notion of what a "good person" is. According to this notion, being a "good person" requires having properties X, Y, and Z. Well, it turns out that no one, or nearly no one, has properties X&Y&Z, and also couldn't achieve them quickly even with effort. Therefore, no one is a "good person" by that definition.

If you apply moral philosophy to this, you can find (as you point out) that this definition of "good person" is rather useless, as it isn't even actionable. Therefore, the notion should either be amended or discarded. However, to realize this, you have to first realize that no one has properties X&Y&Z. And, to someone who accepts this notion of "good person", this is going to feel, from the inside, like no one is a good person.

Therefore, it's useful to ask people who have this kind of confused notion of "good person" to imagine the hypothetical where no one is a good person. Imagining such a hypothetical can lead them to refine their moral intuitions.

[EDIT: I also want to clarify "some behavior is unethical and also hard to stop". What I mean here is that a lot of behavior is, judged for itself, unethical, in the sense that it's bad for non-zero-sum coordination, and also that it's hard to change one's overall behavioral pattern to behave ethically with consistency. Which doesn't at all imply that people who haven't already done this are "bad people", it just means they still take actions that are bad for non-zero-sum coordination.]

Replies from: greylag, Viliam, SaidAchmiz↑ comment by greylag · 2019-07-03T18:19:19.634Z · LW(p) · GW(p)

being a "good person" requires having properties X, Y, and Z. Well, it turns out that no one, or nearly no one, has properties X&Y&Z, and also couldn't achieve them quickly even with effort. Therefore, no one is a "good person" by that definition.

Some examples of varying flavour, to see if I've understood:

Being a good person means not being racist, *but* being racist involves unconscious components (which Susan has limited control over because they are below conscious awareness) and structural components (which Susan has limited control over because she is not a world dictator). Therefore Susan is racist, therefore not good.

Being a good person means not exploiting other people abusively, *but* large parts of the world economy rely on exploiting people, and Bob, so long as he lives and breathes, cannot help passively exploiting people, so he cannot be good.

Alice likes to think of herself as a good person, but according to Robin Hanson, most of what she is doing turns out to be signalling. Alice is dismayed that she is a much shallower and more egotistical person than she had previously imagined.

Replies from: SaidAchmiz, jessica.liu.taylor↑ comment by Said Achmiz (SaidAchmiz) · 2019-07-04T16:05:12.468Z · LW(p) · GW(p)

But the problem with these criteria isn’t that they’re unsatisfiable! The problem is that they are subverted by malicious actors, who use equivocation (plus cache invalidation lag) as a sort of ‘exploit’ on people’s sense of morality. Consider:

Everyone agrees that being a good person means not being racist… if by that is meant “not knowingly behaving in a racist way”, “treating people equally regardless of race”, etc. But along come the equivocators, who declare that actually, “being racist involves unconscious and structural components”; and because people tend to be bad at keeping track of these sorts of equivocations, and their epistemic consequences, they still believe that being a good person means not being racist; but in combination with this new definition of “racist” (which was not operative when that initial belief was constructed), they are now faced with this “no one is a good person” conclusion, which is the result of an exploit.

Everyone agrees that being a good person means not exploiting people abusively… if by that is meant “not betraying trusts, not personally choosing to abuse anyone, not knowingly participating in abusive dynamics if you can easily avoid it”, etc. But along come the equivocators again, who declare that actually, “large parts of the world economy rely on exploiting people”, and that as a consequence, just existing in the modern world is exploitative! And once again, people still believe that being good means not being exploitative, yet in combination with this new definition (which, again, was not operative when the original moral belief was constructed), they’re faced with the “moral paradox” and are led to Calvinism.

If “racism” had always been understood to have “unconscious and structural components”, its avoidance (which is obviously impossible, under this formulation) could never have been accepted as a component of general virtue; and similarly for “exploitation”—if one can “exploit” merely by existing, and if this had always been understood to be part of the concept of “exploitation”, then “exploitation” wouldn’t be considered unvirtuous. But in fact the “moral exploit” consists precisely of abusing the slowness of conceptual cache invalidation, so to speak, by substituting malicious concepts for ordinary ones.

The Hansonian case is different, of course, and more complicated. It involves deeper questions, and not only moral ones but also questions of free will, etc. I do not think it is a good example of what we’re talking about, but in any case it ought to be discussed separately; it follows a very different pattern from the others.

Replies from: abandon↑ comment by dirk (abandon) · 2024-02-11T21:12:14.152Z · LW(p) · GW(p)

Certainly many people do the sort of thing you're describing, but I think you're fighting the hypothetical. [LW · GW] The post as I understand it is talking about people who fail to live up to their own definitions of being a good person.

For example, someone might believe that they are not a racist, because they treat people equally regardless of race, while in fact they are reluctant to shake the hands of black people in circumstances where they would be happy to shake the hands of white people. This hypothetical person has not consciously noticed that this is a pattern of behavior; from their perspective they make the individual decisions based on their feelings at the time, which do not involve any conscious intention to treat black people differently than white, and they haven't considered the data closely enough to notice that those feelings are reliably more negative with regards to black people than white. If they heard that someone else avoided shaking black people's hands, they would think that was a racist thing to do.

Our example, if they are heavily invested in an internal narrative of being a good non-racist sort of person, might react very negatively to having this behavior pointed out to them. It is a true fact about their behavior, and not even a very negative one, but in their own internal ontology it is the sort of thing Bad People do, as a Good Person they do not do bad things, and therefore telling them they're doing it is (when it comes to their emotional experience) the same as telling them they are a Bad Person.

This feels very bad! Fortunately, there is a convenient loophole: if you're a Good Person, then whoever told you you're a Bad Person must have been trying to hurt you. How awful they are, to make such a good person as you feel so bad! (To be clear, most of this is usually not consciously reasoned through—if it were it would be easier to notice the faulty logic—but rather directly experienced as though it were true.)

I think the dynamic I describe is the same one jessicata is describing, and it is a very common human failing.

When it comes to the difficulty of being or not being a good person, I think this is a matter of whether or not it's possible to be or not be a good person by one's own standards (e.g., one might believe that it's wrong to consume animal products, but be unable to become a vegan due to health concerns). If you fail to live up to your own moral standards and are invested in your self-image as the sort of person who meets them, it is tempting to revise the moral standards, internally avoid thinking about the fact that your actions lead to consequences you consider negative, treat people who point out your failings as attackers, etc.; jessicata's proposal is one potential way to avoid falling into that trap (if you already aren't a Good Person, then it's not so frightening to have done something a Good Person wouldn't do).

↑ comment by jessicata (jessica.liu.taylor) · 2019-07-04T03:14:32.612Z · LW(p) · GW(p)

Yes, this is exactly what I mean.

↑ comment by Viliam · 2019-07-03T20:35:27.714Z · LW(p) · GW(p)

I think it also depends on what model of morality you subscribe to.

In the consequentialist framework, there is a best action in the set of your possible actions, and that's what you should do. (Though we may argue that no person chooses the best action consistently all the time, and thus we are all bad.)

In the deontologist framework, sometimes all your possible actions either break some rule, or neglect some duty, if you get into a bad situation where the only possible way to fulfill a duty is to break a rule. (Here it is possible to turn all duties up to 11, so it becomes impossible for everyone to fulfill them.)
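The structural difference Viliam describes can be shown in a toy sketch: a consequentialist picks the best-scoring option, which always exists for a finite, non-empty set of options, while a deontologist filters out options that break a rule or neglect a duty, and that filter can leave nothing. The dilemma, the scores, and the rule/duty table below are hypothetical illustrations, not anything from the thread.

```python
from typing import Callable, List, Optional

Action = str


def consequentialist_choice(actions: List[Action],
                            value: Callable[[Action], float]) -> Action:
    """Pick the action with the best consequences.

    For any finite, non-empty list of options some action scores highest,
    so this always returns something.
    """
    return max(actions, key=value)


def deontological_choice(actions: List[Action],
                         permitted: Callable[[Action], bool]) -> Optional[Action]:
    """Pick any action that breaks no rule and neglects no duty.

    Returns None when every option violates something, i.e. the agent
    is in the wrong whatever it does.
    """
    allowed = [a for a in actions if permitted(a)]
    return allowed[0] if allowed else None


# Hypothetical dilemma: the only way to keep a promise is to lie.
LIE = "lie to keep the promise"
TRUTH = "tell the truth and break the promise"
OPTIONS = [LIE, TRUTH]
OUTCOME = {LIE: -1.0, TRUTH: -2.0}  # made-up consequence scores
VIOLATES = {LIE: "rule: do not lie", TRUTH: "duty: keep promises"}

best = consequentialist_choice(OPTIONS, lambda a: OUTCOME[a])
clean = deontological_choice(OPTIONS, lambda a: a not in VIOLATES)
print(best)   # lie to keep the promise
print(clean)  # None
```

"Turning all duties up to 11" corresponds to growing the violation table until every option appears in it, at which point the deontological choice is always empty.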

Replies from: greylag↑ comment by greylag · 2019-07-04T05:55:52.035Z · LW(p) · GW(p)

I think you get similar answers whether consequentialist or deontological.

Consequentialist: the consequences end up terrible irrespective of your actions.

Deontological: the set of rules and duties is contradictory (as you suggest) or requires superhuman control over your environment/society, or your subconscious mind.

↑ comment by Said Achmiz (SaidAchmiz) · 2019-07-03T07:04:08.637Z · LW(p) · GW(p)

Most people have a confused notion of what a “good person” is. According to this notion, being a “good person” requires having properties X, Y, and Z. Well, it turns out that no one, or nearly no one, has properties X&Y&Z, and also couldn’t achieve them quickly even with effort. Therefore, no one is a “good person” by that definition.

What sorts of X, Y, and Z do you have in mind? It is hard to tell whether what you’re saying here is sensible, without a bit more concreteness…

I also want to clarify “some behavior is unethical and also hard to stop”. What I mean here is that a lot of behavior is, judged for itself, unethical, in the sense that it’s bad for non-zero-sum coordination, and also that it’s hard to change one’s overall behavioral pattern to behave ethically with consistency. Which doesn’t at all imply that people who haven’t already done this are “bad people”, it just means they still take actions that are bad for non-zero-sum coordination.

Same question as above: what sorts of behavior do you have in mind, when you say this?

Also, and orthogonally to the above question: you appear to be implying (correct me if I’m misunderstanding you) that “sometimes does unethical things” implies “bad person” (either in your morality, or in the morality you impute to “most people”, or both). But this implication seems, to me, neither to hold “in truth” (that is, in anything that seems to me to be a correct morality) nor to be held as true by most people.

After all, if it were not as I say, then there would be no such notion as the morally imperfect, yet good, person! Now, it is true that, e.g., Christianity famously divides the world into saints and sinners, yet even most Christians—when they are reasoning in an everyday fashion about people they interact with, rather than reciting Sunday-school lessons—have no trouble at all thinking of people as being flawed but good.

There is, of course, also the matter that the implication of “behavior is ‘bad for non-zero-sum coordination’ -> behavior is unethical” seems rather dubious, at best… but I cannot speak with any certainty on this point without seeing some examples of just what kinds of behaviors you’re talking about.

comment by Richard_Kennaway · 2019-07-04T18:26:22.512Z · LW(p) · GW(p)

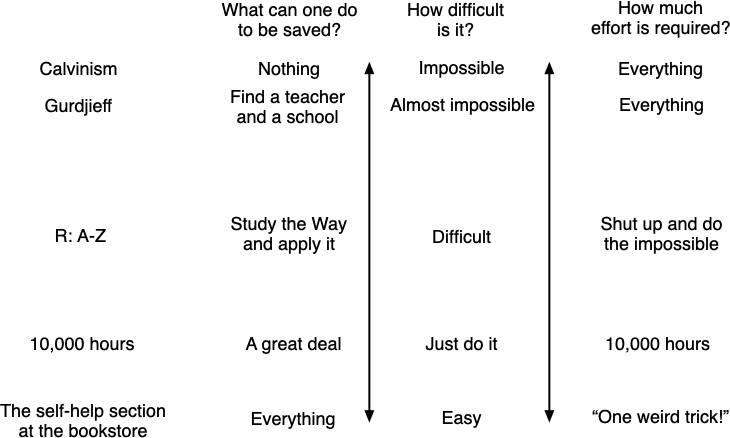

Considering this more widely, here's a diagram I came up with. (Thanks to Raemon for advice on embedding images.)

(Please let me know if you do not see an image above. There might be a setting on my web site that blocks embedding.) (ETA: minor changes to image.)

Replies from: Raemon↑ comment by Raemon · 2019-07-04T18:47:01.168Z · LW(p) · GW(p)

You can use standard markdown for images. (i.e. I just pasted the following into a comment, and as soon as you type [space] after it, it'll reformat into an image)

↑ comment by Said Achmiz (SaidAchmiz) · 2019-07-04T21:22:14.986Z · LW(p) · GW(p)

And a note for users of GreaterWrong:

Clicking the image button (4th from left in the editor, looks like a little landscape picture icon) will insert the correct image markup for you (so you don’t have to remember how it goes).

↑ comment by eigen · 2019-07-04T19:21:40.477Z · LW(p) · GW(p)

If you're considering implementing graphs: Graphviz, Mermaid, and flowchart.js are all good options and have JavaScript implementations (many have implementations as React components).

Another alternative, which avoids adding any parsing, is http://asciiflow.com/

+--------+ +----------+ | +------> | | Hello! +------> | +--------+ +----------+

But sadly it breaks, possibly because of the font.

Replies from: SaidAchmiz↑ comment by Said Achmiz (SaidAchmiz) · 2019-07-04T21:19:25.829Z · LW(p) · GW(p)

And you can use this handy web page to generate GraphViz graphs in SVG form (which you can then link / embed).

comment by Kaj_Sotala · 2019-07-03T08:32:36.229Z · LW(p) · GW(p)

"Feeling guilty/ashamed, then lashing out in order to feel better" is a good example of an exile/protector dynamic. It may be possible to reduce the strength of such reactions through an intellectual argument, as you suggest you have done yourself. But as Ruby suggested, the underlying insecurity causing the protector response is likely to be related to some fear around social rejection, in which case working on the level of intellectual concepts may be insufficient for changing the responses.

I suspect that the "nobody is good" move, when it works, acts on the level of self-concept editing, changing your concepts so as to redefine the "evil" act to no longer be evidence about you being evil. But based on my own experience, this move only works to heal the underlying insecurity when your brain considers the update to be true - which is to say, when it perceives the "evil act" to be something which your social environment will no longer judge you harshly for, allowing the behavior to update. If this seems false, or the underlying insecurity is based on too strong a trauma, then one may need to do a more emotional kind of work in order to address it.

That said, one may adjust the problematic response pattern even without healing the original insecurity: e.g. sufficiently strong external pressure saying that the response is bad may set up new mechanisms which suppress the-responses-which-have-been-judged-as-bad. But this may involve building a higher tower of protectors, meaning that healing the original insecurity would allow for a more flexible range of behavior.

Incidentally, I don't think that tone arguments are always made in bad faith. E.g. within EA, there have been debates over "should we make EA arguments which are guilt/obligation-based", which is basically an argument over the kind of tone to use. Now, I agree that tone arguments often are themselves a protector reaction, aimed at just eliminating the unpleasant tone. But there also exists a genuine disagreement over what kind of tone is more effective, and there exist lots of people who are genuinely worried that groups using too harsh a tone to create social change are shooting themselves in the foot.

My guess is that people arguing for a "nice" tone are doing so because of an intuitive understanding that a harsh tone is more likely to trigger protector responses and thus be counterproductive. People may even be trying to communicate that it would be easier for them personally to listen if their protector responses weren't being triggered. On the other hand, people arguing for a harsh tone are doing so because of an intuitive understanding that the "nice" tone is often too easily ignored, and that making people feel guilty over the defensive reaction in the first place is also a strategy which can be made to work. This seems to be an area where different people will react differently to the same strategies, which inclines people to over-generalize from their own experience.

↑ comment by greylag · 2019-07-03T18:01:38.370Z · LW(p) · GW(p)

Kaj, I'm having real trouble parsing this:

the "nobody is good" move, when it works, [changes] your concepts so as to redefine the "evil" act to no longer be evidence about you being evil. But ... this move only works to heal the underlying insecurity when your brain considers the update to be true - which is to say, when it perceives the "evil act" to be something which your social environment will no longer judge you harshly for, allowing the behavior to update.

Would you clarify?

↑ comment by Kaj_Sotala · 2019-07-11T09:55:10.394Z · LW(p) · GW(p)

To rephrase:

- I suspect that typically, when someone thinks "I shouldn't do X because that would make me evil and I shouldn't be evil", what their brain is actually computing is something like "I shouldn't do X because people who I care about would then consider me evil, and that would be bad for me", even if that's not how it appears subjectively.

- Note that this is different from thinking that "I shouldn't do X because that's wrong". I'm not claiming that a fear of punishment would be the only thing that disinclined us from doing bad things. You can consider X to be wrong and refrain from doing it, without having a subconscious fear of punishment. The difference is that if you are afraid of being punished, then any suggestion of having done wrong is likely to trigger a defensive reaction and a desire to show that you did no wrong. Whereas if you don't feel the fear, you might just think "oh, the thing that I did was wrong", feel healthy regret, and act to fix it.

- Sometimes you might feel like you are evil (that is, likely to be punished) because you have done, or repeatedly do, something bad. In some of these cases, it can help to redefine your concepts. For instance, if you did bad things when you were young, you can think "I did bad things when I was young and didn't know any better, but I'm a grownup now". Or "these things are evil, but nobody is actually purely good, so doing them doesn't make me any worse than other people".

- Now, if "I shouldn't be evil" actually means "I shouldn't do things that make people punish me", then attempting these kinds of redefinitions will also trigger a subconscious evaluation process which has to be passed for the redefinition to succeed.

- If you think that "I did bad things when I was young and didn't know any better, but I'm a grownup now", then this corresponds to something like "I did bad things when I was young, but nobody is going to hold those things against me anymore". This redefinition will only succeed if the latter sentence feels true to your brain.

- If you think that "these things are evil, but nobody is actually purely good, so doing them doesn't make me any worse than other people", then this corresponds to something like "these things are blameworthy, but everyone does them, so doing them won't get me judged any more harshly than anyone else". Again, this redefinition will only succeed if the latter sentence feels true to your brain.

- This is assuming that you are trying to use those redefinitions to heal the original bad feeling. One can also use these kinds of thoughts to suppress the original bad feeling (create a protector which seeks to extinguish the bad feeling using counter-arguments). In that case, the part of your mind which was originally convinced of you being in danger doesn't need to be convinced otherwise. But this will set up an internal conflict, as that part will continue to try to make itself heard, and may sometimes overwhelm whatever blocks have been put in place to keep it silent.

Related: Scott Alexander's "Guilt: Another Gift Nobody Wants".

comment by cat from /dev/null (cat-from-dev-null) · 2019-07-03T20:02:38.741Z · LW(p) · GW(p)

It might be worth separating self-consciousness (awareness of how your self looks from within) from face-consciousness (awareness of how your self looks from outside). Self-consciousness is clearly useful as a cheap proxy for face-consciousness, and so we develop a strong drive to be able to see ourselves as good in order for others to do so as well. We can see the difference between this separation and the idea that being a good person is only a social concept (suggested by Ruby) by considering something like the events in "Self-consciousness in social justice" with only two participants: then there is no need to defend face against others, but people will still strive for a self-serving narrative.

Correct me if I'm wrong: you seem more worried about self-consciousness and the way it pushes people to not only act performatively, but also limits their ability to see their performance as a performance causing real damage to their epistemics.

↑ comment by jessicata (jessica.liu.taylor) · 2019-07-04T03:13:15.628Z · LW(p) · GW(p)

Correct me if I’m wrong: you seem more worried about self-consciousness and the way it pushes people to not only act performatively, but also limits their ability to see their performance as a performance causing real damage to their epistemics.

Yes, exactly! If you can act while being aware that it is an act, that's really useful!

comment by Matt Goldenberg (mr-hire) · 2019-07-05T15:02:19.641Z · LW(p) · GW(p)

I've been paying more attention to this reaction over the last few days since reading this post, and realized that it's often a bit different than "You're calling this bad, I've done it, I don't want to be a bad person, so I want to defend this as good."

Oftentimes the actual reaction is something like "You're calling this bad, and I've done it and don't believe it's bad. If I let this slide, I'm letting you set a precedent for calling my acceptable behavior bad, and I can't do that."

An example: Having lunch with my sister and her friend. They start making fun of Tom Cruise for dating a younger (but still adult) actress and getting her gifts on set. I don't believe there's anything wrong with age gaps and expressing the love language of giving gifts between consenting adults. If I don't say anything, there's a sense that I'm implicitly giving them license to call ME bad if I date someone with an age gap or give them gifts.

So I defend the practice, not because it's suddenly about a part of myself I don't want to look at, but because it's attacking things I would do or have done that I totally endorse.

comment by Viliam · 2019-07-03T21:06:46.437Z · LW(p) · GW(p)

I imagine that Susan's position is complicated, because in the social justice framework, in most interactions she is considered the less-privileged one, and then suddenly in a few of them she becomes the more-privileged one. And in different positions, different behavior is expected. Which, I suppose, is emotionally difficult, even if intellectually the person accepts the idea of intersectionality.

If in most situations, using tears is the winning strategy, it will be difficult to avoid crying when it suddenly becomes inappropriate for reasons invisible to her System 1. (Ironically, the less racist she is, the less likely she will notice "oops, this is a non-white person talking to me, I need to react differently".)

Here a white man would have it easier, because his expected reaction on Monday is the same as his expected reaction on Tuesday, so he can use one behavior consistently.

comment by Matt Goldenberg (mr-hire) · 2019-07-05T15:23:58.526Z · LW(p) · GW(p)

however, tone arguments aren't about epistemic norms, they're about people's feelings

One interesting idea here is that people's feelings are actually based on their beliefs (this is for instance one of the main ideas behind CBT, Focusing, and Internal Double Crux).

If I make people feel bad about being able to express certain parts of themselves, I'm creating an epistemic environment where there are certain beliefs they feel less comfortable sharing. The steelmanned tone argument is "By not being polite, you're creating an environment where it's harder to share opposing beliefs, because you're painting the people who hold those beliefs as bad people"

In other words, in an environment where people feel safe from having their self-image judged, you're better able to have frank discussions about people's actual beliefs.

comment by Raemon · 2019-07-03T02:36:55.799Z · LW(p) · GW(p)

The only thing left to do is to steer in the right direction: make things around you better instead of worse, based on your intrinsically motivating discernment of what is better/worse. Don't try to be a good person, just try to make nicer things happen. And get more foresight, perspective, and cooperation as you go, so you can participate in steering bigger things on longer timescales using more information.

FWIW, this is roughly my viewpoint.

I don't think being a good person is a concept that is really that meaningful. Meanwhile, many of the systems I participate in seem quite terrible along many dimensions, and not-being-complicit in them doesn't seem very tractable. There were points in the past where I stressed out about this through the 'good person' lens, but for the past few years I've mostly just looked through the frame of 'try to steer towards nicer things.'

I realize this may seem in tension with some of my recent comments but I don't see it that way.

I do feel like there's still some kind of substantive disagreement here, but that disagreement mostly doesn't live in the frame you've laid out. (Some of it does, but I'm not sure whether those particular disagreements are a crux of mine, still mulling it over)

I think individual people should do their best not to see things in the lens of 'am I a good person' or 'are they a good person?', but engineers building systems (social or technological) need to account for people's tendency to do that. (Whether by routing around the problem, or limiting the system to people with common knowledge that they don't have that problem, or building feedback loops into the system that improve people's ability to look at critiques outside the lens of 'bad person-ness')

↑ comment by Ruby · 2019-07-03T05:29:31.195Z · LW(p) · GW(p)

I don't think being a good person is a concept that is really that meaningful.

My not-terribly-examined model is that "good person" is a social concept masquerading as a moral one. Human morality evolved for social reasons to serve social purposes [citation needed]. Under this model, when someone is anxious about being a good person, their anxiety is really about their acceptance by others, i.e. good = has met the group's standards for approval and inclusion.

If this model is correct, then saying that being a good person is not a meaningful moral concept could be interpreted (consciously or otherwise) by some listeners to mean "there is no standard you can meet which means you have gained society's approval". Which is probably damned scary if you're anxious about that kind of thing.