LLM Generality is a Timeline Crux

post by eggsyntax · 2024-06-24T12:52:07.704Z · LW · GW · 119 comments

Four-Month Update

[EDIT: I believe that this paper looking at o1-preview, which gets much better results on both blocksworld and obfuscated blocksworld, should update us significantly toward LLMs being capable of general reasoning. See update post here [LW · GW].]

Short Summary

LLMs may be fundamentally incapable of fully general reasoning, and if so, short timelines are less plausible.

Longer summary

There is ML research suggesting that LLMs fail badly on attempts at general reasoning, such as planning problems, scheduling, and attempts to solve novel visual puzzles. This post provides a brief introduction to that research, and asks:

- Whether this limitation is illusory or actually exists.

- If it exists, whether it will be solved by scaling or is a problem fundamental to LLMs.

- If fundamental, whether it can be overcome by scaffolding & tooling.

If this is a real and fundamental limitation that can't be fully overcome by scaffolding, we should be skeptical of arguments like Leopold Aschenbrenner's (in his recent 'Situational Awareness') that we can just 'follow straight lines on graphs' and expect AGI in the next few years.

Introduction

Leopold Aschenbrenner's recent 'Situational Awareness' document has gotten considerable attention in the safety & alignment community. Aschenbrenner argues that we should expect current systems to reach human-level given further scaling[1], and that it's 'strikingly plausible' that we'll see 'drop-in remote workers' capable of doing the work of an AI researcher or engineer by 2027. Others hold similar views.

Francois Chollet and Mike Knoop's new $500,000 prize for beating the ARC benchmark has also gotten considerable recent attention in AIS[2]. Chollet holds a diametrically opposed view: that the current LLM approach is fundamentally incapable of general reasoning, and hence incapable of solving novel problems. We only imagine that LLMs can reason, Chollet argues, because they've seen such a vast wealth of problems that they can pattern-match against. But LLMs, even if scaled much further, will never be able to do the work of AI researchers.

It would be quite valuable to have a thorough analysis of this question through the lens of AI safety and alignment. This post is not that[3], nor is it a review of the voluminous literature on this debate (from outside the AIS community). It attempts to briefly introduce the disagreement, some evidence on each side, and the impact on timelines.

What is general reasoning?

Part of what makes this issue contentious is that there's not a widely shared definition of 'general reasoning', and in fact various discussions of this use various terms. By 'general reasoning', I mean to capture two things. First, the ability to think carefully and precisely, step by step. Second, the ability to apply that sort of thinking in novel situations[4].

Terminology is inconsistent between authors on this subject; some call this 'system II thinking'; some 'reasoning'; some 'planning' (mainly for the first half of the definition); Chollet just talks about 'intelligence' (mainly for the second half).

This issue is further complicated by the fact that humans aren't fully general reasoners without tool support either. For example, seven-dimensional tic-tac-toe is a simple and easily defined system, but incredibly difficult for humans to play mentally without extensive training and/or tool support. Generalizations that are in-distribution for humans seem like something that any system should be able to do; generalizations that are out-of-distribution for humans don't feel as though they ought to count.

How general are LLMs?

It's important to clarify that this is very much a matter of degree. Nearly everyone was surprised by the degree to which the last generation of state-of-the-art LLMs like GPT-3 generalized; for example, no one I know of predicted that LLMs trained on primarily English-language sources would be able to do translation between languages. Some in the field argued as recently as 2020 that no pure LLM would ever be able to correctly complete 'Three plus five equals'. The question is how general they are.

Certainly state-of-the-art LLMs do an enormous number of tasks that, from a user perspective, count as general reasoning. They can handle plenty of mathematical and scientific problems; they can write decent code; they can certainly hold coherent conversations; they can answer many counterfactual questions; they even predict Supreme Court decisions pretty well. What are we even talking about when we question how general they are?

The surprising thing we find when we look carefully is that they fail pretty badly when we ask them to do certain sorts of reasoning tasks, such as planning problems, that would be fairly straightforward for humans. If in fact they were capable of general reasoning, we wouldn't expect these sorts of problems to present a challenge. Therefore it may be that all their apparent successes at reasoning tasks are in fact simple extensions of examples they've seen in their truly vast corpus of training data. It's hard to internalize just how many examples they've actually seen; one way to think about it is that they've absorbed nearly all of human knowledge.

The weakman version of this argument is the Stochastic Parrot claim, that LLMs are executing relatively shallow statistical inference on an extremely complex training distribution, ie that they're "a blurry JPEG of the web" (Ted Chiang). This view seems obviously false at this point (given that, for example, LLMs appear to build world models), but assuming that LLMs are fully general may be an overcorrection.

Note that this is different from the (also very interesting) question of what LLMs, or the transformer architecture, are capable of accomplishing in a single forward pass. Here we're talking about what they can do under typical auto-regressive conditions like chat.

Evidence for generality

I take this to be most people's default view, and won't spend much time making the case. GPT-4 and Claude 3 Opus seem obviously to be capable of general reasoning. You can find places where they hallucinate, but it's relatively hard to find cases in most people's day-to-day use where their reasoning is just wrong. But if you want to see the case made explicitly, see for example "Sparks of AGI" (from Microsoft, on GPT-4) or recent models' performance on benchmarks like MATH which are intended to judge reasoning ability.

Further, there's been a recurring pattern (eg in much of Gary Marcus's writing) of people claiming that LLMs can never do X, only to be promptly proven wrong when the next version comes out. By default we should probably be skeptical of such claims.

One other thing worth noting is that we know from 'The Expressive Power of Transformers with Chain of Thought' that the transformer architecture is capable of general reasoning under autoregressive conditions. That doesn't mean LLMs trained on next-token prediction learn general reasoning, but it means that we can't just rule it out as impossible. [EDIT 10/2024: a new paper, 'Autoregressive Large Language Models are Computationally Universal', makes this even clearer, and furthermore demonstrates that it's true of LLMs in particular].

Evidence against generality

The literature here is quite extensive, and I haven't reviewed it all. Here are three examples that I personally find most compelling. For a broader and deeper review, see "A Survey of Reasoning with Foundation Models".

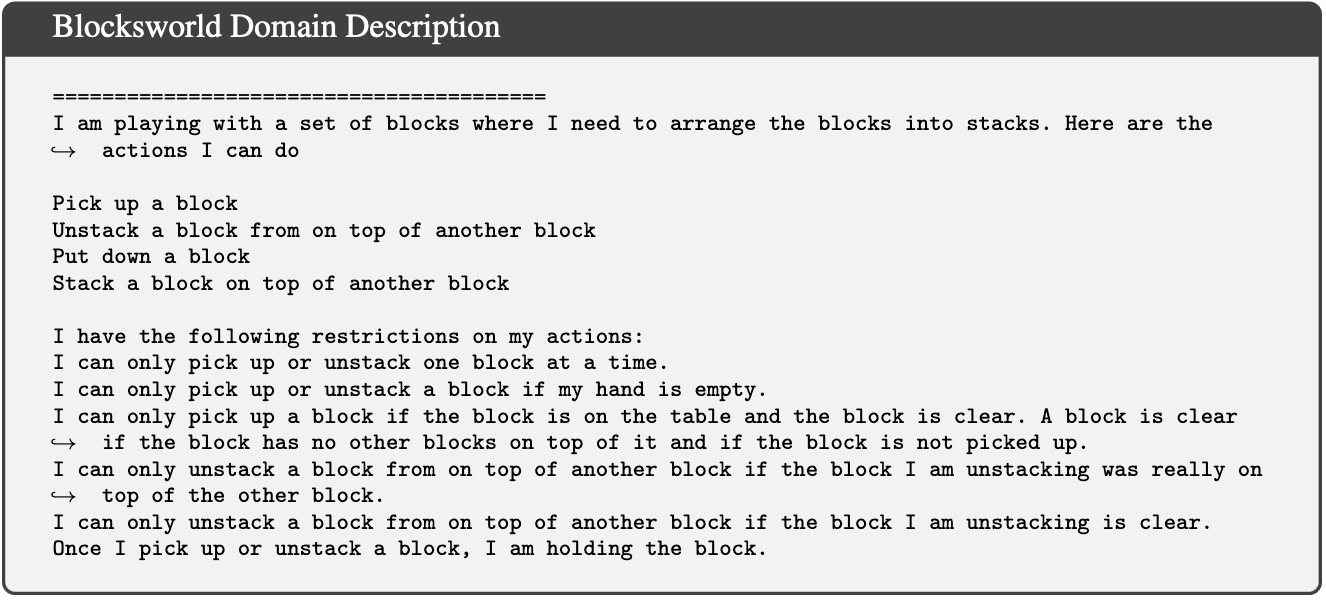

Block world

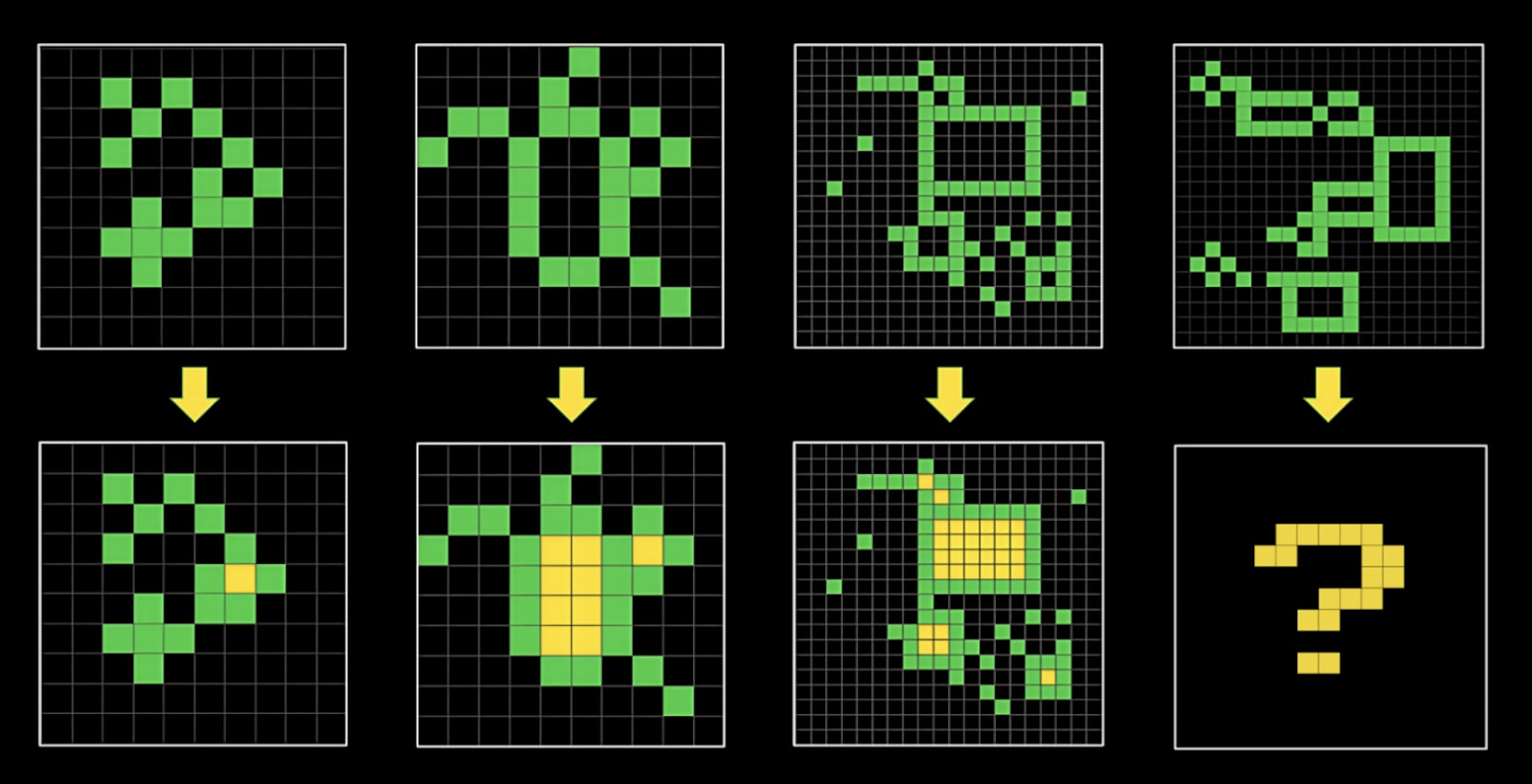

All LLMs to date fail rather badly at classic problems of rearranging colored blocks. We do see improvement with scale here, but if these problems are obfuscated, performance of even the biggest LLMs drops to almost nothing[5].

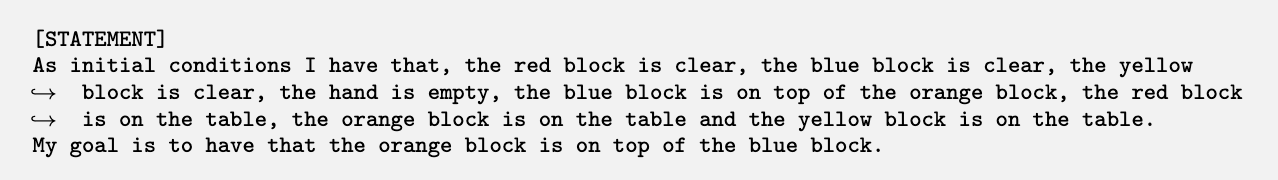

Scheduling

LLMs currently do badly at planning trips or scheduling meetings between people with availability constraints [a commenter points out [LW(p) · GW(p)] that this paper has quite a few errors, so it should likely be treated with skepticism].

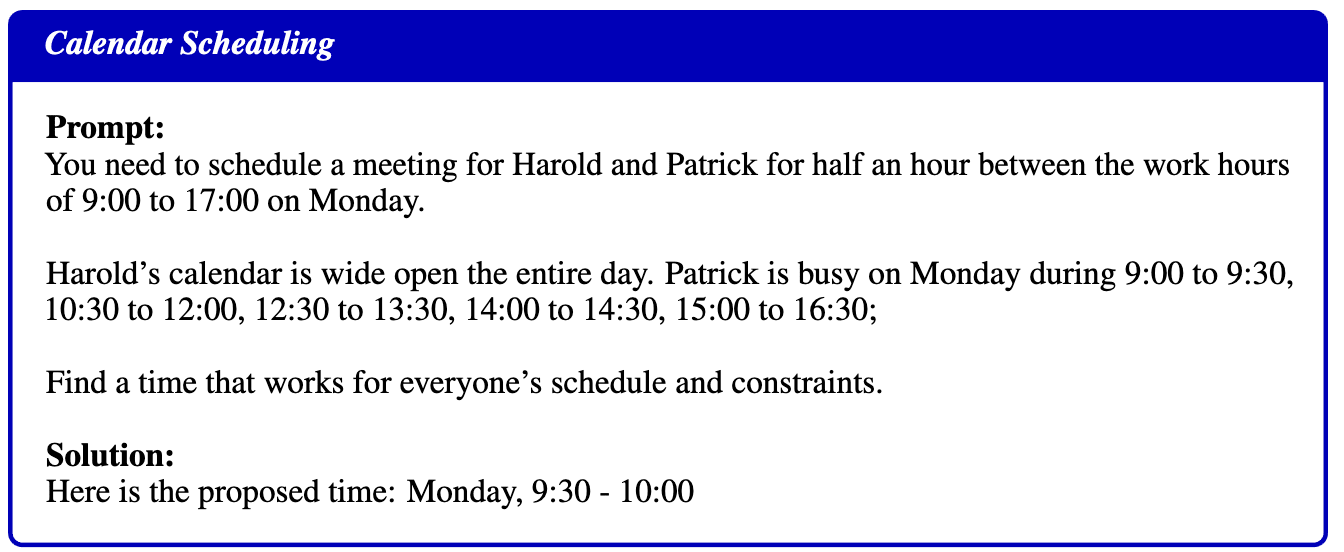

ARC-AGI

Current LLMs do quite badly on the ARC visual puzzles, which are reasonably easy for smart humans.

Will scaling solve this problem?

The evidence on this is somewhat mixed. Evidence that it will includes LLMs doing better on many of these tasks as they scale. The strongest evidence that it won't is that LLMs still fail miserably on block world problems once you obfuscate the problems (to eliminate the possibility that larger LLMs only do better because they have a larger set of examples to draw from)[5].

One argument made by Sholto Douglas and Trenton Bricken (in a discussion with Dwarkesh Patel) is that this is a simple matter of reliability -- given a 5% failure rate, an AI will most often fail to successfully execute a task that requires 15 correct steps. If that's the case, we have every reason to believe that further scaling will solve the problem.
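To make that arithmetic concrete, here is a minimal sketch. It assumes every step fails independently and with the same probability, which is an idealization rather than anything claimed in the discussion, and the function name is hypothetical.

```python
def task_success_probability(step_failure_rate: float, num_steps: int) -> float:
    """Chance that every step succeeds, assuming independent and
    identically reliable steps (an illustrative assumption)."""
    return (1.0 - step_failure_rate) ** num_steps


if __name__ == "__main__":
    # Compare a few hypothetical per-step failure rates on a 15-step task.
    for rate in (0.05, 0.01, 0.001):
        p = task_success_probability(rate, 15)
        print(f"per-step failure {rate:.1%}: 15-step success {p:.1%}")
```

Under these assumptions a 5% per-step failure rate leaves roughly a 46% chance of completing all 15 steps, so the task fails slightly more often than it succeeds, while modest gains in per-step reliability raise the end-to-end success rate sharply.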

Will scaffolding or tooling solve this problem?

This is another open question. It seems natural to expect that LLMs could be used as part of scaffolded systems that include other tools optimized for handling general reasoning (eg classic planners like STRIPS), or that LLMs could be given access to tools (eg code sandboxes) that they can use to overcome these problems. Ryan Greenblatt's new work on getting very good results [LW · GW] on ARC with GPT-4o + a Python interpreter provides some evidence for this.

On the other hand, a year ago many expected scaffolds like AutoGPT and BabyAGI to result in effective LLM-based agents, and many startups have been pushing in that direction; so far results have been underwhelming. Difficulty with planning and novelty seems like the most plausible explanation.

Even if tooling is sufficient to overcome this problem, outcomes depend heavily on the level of integration and performance. Currently for an LLM to make use of a tool, it has to use a substantial number of forward passes to describe the call to the tool, wait for the tool to execute, and then parse the response. If this remains true, then it puts substantial constraints on how heavily LLMs can rely on tools without being too slow to be useful[6]. If, on the other hand, such tools can be more deeply integrated, this may no longer apply. Of course, even if it's slow there are some problems where it's worth spending a large amount of time, eg novel research. But it does seem like the path ahead looks somewhat different if system II thinking remains necessarily slow & external.

Why does this matter?

The main reason that this is important from a safety perspective is that it seems likely to significantly impact timelines. If LLMs are fundamentally incapable of certain kinds of reasoning, and scale won't solve this (at least in the next couple of orders of magnitude), and scaffolding doesn't adequately work around it, then we're at least one significant breakthrough away from dangerous AGI -- it's pretty hard to imagine an AI system executing a coup if it can't successfully schedule a meeting with several of its co-conspirator instances.

If, on the other hand, there is no fundamental blocker to LLMs being able to do general reasoning, then Aschenbrenner's argument starts to be much more plausible, that another couple of orders of magnitude can get us to the drop-in AI researcher, and once that happens, further progress seems likely to move very fast indeed.

So this is an area worth keeping a close eye on. I think that progress on the ARC prize will tell us a lot, now that there's half a million dollars motivating people to try for it. I also think the next generation of frontier LLMs will be highly informative -- it's plausible that GPT-4 is just on the edge of being able to effectively do multi-step general reasoning, and if so we should expect GPT-5 to be substantially better at it (whereas if GPT-5 doesn't show much improvement in this area, arguments like Chollet's and Kambhampati's are strengthened).

OK, but what do you think?

[EDIT: see update post [LW · GW] for revised versions of these estimates]

I genuinely don't know! It's one of the most interesting and important open questions about the current state of AI. My best guesses are:

- LLMs continue to do better at block world and ARC as they scale: 75%

- LLMs entirely on their own reach the grand prize mark on the ARC prize (solving 85% of problems on the open leaderboard) before hybrid approaches like Ryan's: 10%

- Scaffolding & tools help a lot, so that the next gen[7] (GPT-5, Claude 4) + Python + a for loop can reach the grand prize mark[8]: 60%

- Same but for the gen after that (GPT-6, Claude 5): 75%

- The current architecture, including scaffolding & tools, continues to improve to the point of being able to do original AI research: 65%, with high uncertainty[9]

Further reading

- Foundational Challenges in Assuring Alignment and Safety of Large Language Models, 04/24

- Language Models Are Greedy Reasoners: A Systematic Formal Analysis of Chain-of-Thought, 10/22

- Finding Backward Chaining Circuits in Transformers Trained on Tree Search [LW · GW], 05/24

- Faith and Fate: Limits of Transformers on Compositionality, 05/23

- Papers from Kambhampati's lab, including

- NATURAL PLAN: Benchmarking LLMs on Natural Language Planning, 06/24

- What Algorithms can Transformers Learn? A Study in Length Generalization, 10/23

- ARC prize, 06/24 and On the Measure of Intelligence, 11/19

- See also Chollet's recent appearance on Dwarkesh Patel's podcast.

- Large Language Models Cannot Self-Correct Reasoning Yet, 10/23

- A Survey of Reasoning with Foundation Models, 12/23

[1] Aschenbrenner also discusses 'unhobbling', which he describes as 'fixing obvious ways in which models are hobbled by default, unlocking latent capabilities and giving them tools, leading to step-changes in usefulness'. He breaks that down into categories here. Scaffolding and tooling I discuss here; RLHF seems unlikely to help with fundamental reasoning issues. Increased context length serves roughly as a kind of scaffolding for purposes of this discussion. 'Posttraining improvements' is too vague to really evaluate. But note that his core claim (the graph here) 'shows only the scaleup in base models; “unhobblings” are not pictured'.

[2] Discussion of the ARC prize in the AIS and adjacent communities includes James Wilken-Smith [EA · GW], O O [LW · GW], and Jacques Thibodeaux [LW · GW].

[3] Section 2.4 of the excellent "Foundational Challenges in Assuring Alignment and Safety of Large Language Models" is the closest I've seen to a thorough consideration of this issue from a safety perspective. Where this post attempts to provide an introduction, "Foundational Challenges" provides a starting point for a deeper dive.

[4] This definition is neither complete nor uncontroversial, but is sufficient to capture the range of practical uncertainty addressed below. Feel free to mentally substitute 'the sort of reasoning that would be needed to solve the problems described here.' Or see my more recent attempt at a definition [LW(p) · GW(p)].

[5] A major problem here is that they obfuscated in ways that made the challenge unnecessarily hard for LLMs by pushing against the grain of the English language. For example they use 'pain object' as a fact, and say that an object can 'feast' another object. Beyond that, the fully-obfuscated versions would be nearly incomprehensible to a human as well; eg 'As initial conditions I have that, aqcjuuehivl8auwt object a, aqcjuuehivl8auwt object b...object b 4dmf1cmtyxgsp94g object c...'. See Appendix 1 in 'On the Planning Abilities of Large Language Models'. It would be valuable to repeat this experiment while obfuscating in ways that were compatible with what is, after all, LLMs' deepest knowledge, namely how the English language works.

[6] A useful intuition pump here might be the distinction between data stored in RAM and data swapped out to a disk cache. The same data can be retrieved in either case, but the former case is normal operation, whereas the latter case is referred to as "thrashing" and grinds the system nearly to a halt.

[7] Assuming roughly similar compute increase ratio between gens as between GPT-3 and GPT-4.

[8] This isn't trivially operationalizable, because it's partly a function of how much runtime compute you're willing to throw at it. Let's say a limit of 10k calls per problem.

[9] This isn't really operationalizable at all, I don't think. But I'd have to get pretty good odds to bet on it anyway; I'm neither willing to buy nor sell at 65%. Feel free to treat as bullshit since I'm not willing to pay the tax ;)

119 comments

Comments sorted by top scores.

comment by [deleted] · 2024-06-25T00:45:31.626Z · LW(p) · GW(p)

The Natural Plan paper has an insane amount of errors in it. Reading it feels like I'm going crazy.

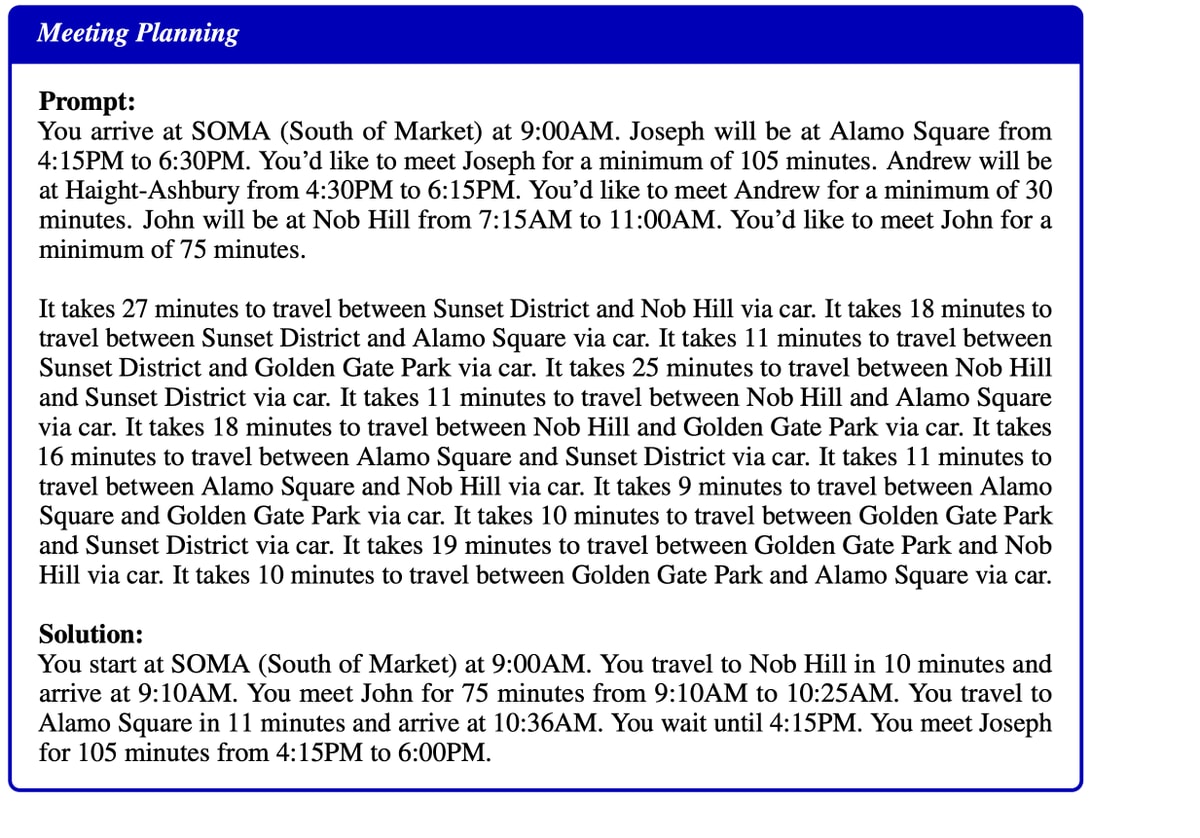

This meeting planning task seems unsolvable:

The solution requires traveling from SOMA to Nob Hill in 10 minutes, but the text doesn't mention the travel time between SOMA and Nob Hill. Also the solution doesn't mention meeting Andrew at all, even though that was part of the requirements.

Here's an example of the trip planning task:

The trip is supposed to be 14 days, but requires visiting Bucharest for 5 days, London for 4 days, and Reykjavik for 7 days. I guess the point is that you can spend a day in multiple cities, but that doesn't match with an intuitive understanding of what it means to "spend N days" in a city. Also, by that logic you could spend a total of 28 days in different cities by commuting every day, which contradicts the authors' claim that each problem only has one solution.
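The day counts in this comment can be checked with a quick sketch. It assumes, as the commenter guesses, that a travel day counts toward both the city being left and the city being entered; the function name is hypothetical and is not taken from the Natural Plan paper.

```python
def calendar_days(stays):
    """Calendar days needed for consecutive city stays when each travel
    day is shared between two cities (an assumed interpretation)."""
    shared_travel_days = max(len(stays) - 1, 0)
    return sum(stays) - shared_travel_days


stays = [5, 4, 7]  # Bucharest, London, Reykjavik
print(sum(stays))            # per-city days added up naively
print(calendar_days(stays))  # calendar days once travel days are shared
```

Added naively the stays total 16 days, which exceeds the 14-day trip; sharing the two travel days brings the total down to 14, which is the only reading under which the stated requirements fit.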

↑ comment by eggsyntax · 2024-06-25T08:36:28.205Z · LW(p) · GW(p)

Thanks for evaluating it in detail. I assumed that they at least hadn't screwed up the problems! Editing the piece to note that the paper has problems.

Disappointingly, a significant number of existing benchmarks & evals have problems like that IIRC.

comment by Seth Herd · 2024-06-24T21:24:58.084Z · LW(p) · GW(p)

IMO, these considerations do lengthen expected timelines, but not by enough, or with enough certainty, that we can ignore the possibility of very short timelines. The distribution of timelines matters a lot, not just the point estimate.

We are still not directing enough of our resources to this possibility if I'm right about that. We have limited time and brainpower in alignment, but it looks to me like relatively little is directed at scenarios in which the combination of scaling and scaffolding language models fairly quickly achieves competent agentic AGI. More on this below.

Excellent post, big upvote.

These are good questions, and you've explained them well.

I have a bunch to say on this topic. Some of it I'm not sure I should say in public, as even being convincing about the viability of this route will cause more people to work on it. So to be vague: I think applying cognitive psychology and cognitive neuroscience systems thinking to the problem suggests many routes to scaffold around the weaknesses of LLMs. I suspect the route from here to AGI is disjunctive; several approaches could work relatively quickly. There are doubtless challenges to implementing that scaffolding, as people have encountered in the last year, pursuing the most obvious and simple routes to scaffold LLMs into more capable agents.

A note on biases: I'm afraid people in AI safety are sometimes not taking short timelines seriously because it is emotionally difficult to hold both that view, and a pessimistic view of alignment difficulty. I myself am biased in both directions; there's an emotional pull toward having my theories proven right, and seeing cool agents soon, and before they're quite smart enough to take over. In other moments I am fervently hoping that LLMs are deceptively far from competent agentic AGI, and we have more years to work and enjoy. I think it would be wonderful to keep pushing beyond guesses, as you suggest. I don't know how to publicly estimate possible timelines without being specific enough and convincing enough to aid progress in that direction, so I intend to first write a post about the question: if we hypothetically could get to AGI from scaffolded LLMs, should we push in that direction, based on their apparently large advantages for alignment? I suspect the answer is yes, but I'm not going to make that decision unilaterally, and I haven't yet gotten enough people to engage deeply enough in private to be sure enough.

So for now, I'll just say: it really doesn't look like we can rule it out, so we should be working on alignment for possible short timelines to LMA full AGI.

Which brings me back to the note above: lots of the limited resources in alignment are going into "aligning" language models. Scare quotes to indicate the disagreement over whether or how much that's going to contribute to aligning agents built out of LLMs. The disagreement about whether prosaic alignment is enough or even contributes much is a nontrivial issue. I've posted about it in the past and am currently attempting to think through and write about it more clearly.

My current answer is that prosaic alignment work on LLMs helps in those scenarios, but doesn't do the whole job. There are very separate issues in how LLM-based agents will be given explicit goals, and the factors weighing on whether those goals are precise enough and reflexively stable in a system that gains full freedom and self-awareness. I would dearly love a few more collaborators in addressing that part of what still looks like the single most likely AGI scenario, and almost certainly the fastest route to AGI if it works.

comment by Jan_Kulveit · 2024-06-24T22:23:46.931Z · LW(p) · GW(p)

Few thoughts

- actually, these considerations mostly increase uncertainty and variance about timelines; if LLMs miss some magic sauce, it is possible smaller systems with the magic sauce could be competitive, and we can get really powerful systems sooner than Leopold's lines predict

- my take on what is one important thing which makes current LLMs different from humans is the gap described in Why Simulator AIs want to be Active Inference AIs [LW · GW]; while that post intentionally avoids having a detailed scenario part, I think the ontology introduced is better for thinking about this than scaffolding

- not sure if this is clear to everyone, but I would expect the discussion of unhobbling being one of the places where Leopold would need to stay vague to not breach OpenAI confidentiality agreements; for example, if OpenAI was putting a lot of effort into making LLM-like systems better at agency, I would expect he would not describe specific research and engineering bets

comment by Nathan Helm-Burger (nathan-helm-burger) · 2024-06-24T20:36:40.290Z · LW(p) · GW(p)

My short timelines have their highest probability path going through:

- Current LLMs get scaled enough that they are capable of automating search for new and better algorithms.

- Somebody does this search and finds something dramatically better than transformers.

- A new model trained on this new architecture repeats the search, but even more competently. An even better architecture is found.

- The new model trained on this architecture becomes AGI.

So it seems odd to me that so many people seem focused on transformer-based LLMs becoming AGI just through scaling. That seems theoretically possible to me, but I expect it to be so much less efficient that I expect it to take longer. Thus, I don't expect that path to pay off before algorithm search has rendered it irrelevant.

↑ comment by ChosunOne · 2024-06-26T04:33:04.983Z · LW(p) · GW(p)

My crux is that LLMs are inherently bad at search tasks over a new domain. Thus, I don't expect LLMs to scale to improve search.

Anecdotal evidence: I've used LLMs extensively and my experience is that LLMs are great at retrieval but terrible at suggestion when it comes to ideas. You usually get something resembling an amalgamation of Google searches vs. suggestions from some kind of insight.

↑ comment by eggsyntax · 2024-06-26T12:57:33.426Z · LW(p) · GW(p)

[EDIT: @ChosunOne [LW · GW] convincingly argues below that the paper I cite in this comment is not good evidence for search, and I would no longer claim that it is, although I'm not necessarily sold on the broader claim that LLMs are inherently bad at search (which I see largely as an expression of the core disagreement I present in this post).]

LLMs are inherently bad at search tasks over a new domain.

The recently-published 'Evidence of Learned Look-Ahead in a Chess-Playing Neural Network' suggests that this may not be a fundamental limitation. It's looking at a non-LLM transformer, and the degree to which we can treat it as evidence about LLMs is non-obvious (at least to me). But it's enough to make me hesitant to conclude that this is a fundamental limitation rather than something that'll improve with scale (especially since we see performance on planning problems, which in my view are essentially search problems, improving with scale).

↑ comment by ChosunOne · 2024-06-27T15:08:11.604Z · LW(p) · GW(p)

The cited paper in Section 5 (Conclusion-Limitations) states plainly:

(2) We focus on look-ahead along a single line of play; we do not test whether Leela compares multiple different lines of play (what one might call search). ... (4) Chess as a domain might favor look-ahead to an unusually strong extent.

The paper is more just looking at how Leela evaluates a given line rather than doing any kind of search. And this makes sense. Pattern recognition is an extremely important part of playing chess (as a player myself), and it is embedded in another system doing the actual search, namely Monte Carlo Tree Search. So it isn't surprising that it has learned to look ahead in a straight line since that's what all of its training experience is going to entail. If transformers were any good at doing the search, I would expect to see a chess bot that doesn't employ something like MCTS.

↑ comment by eggsyntax · 2024-06-27T15:20:40.531Z · LW(p) · GW(p)

It's not clear to me that there's a very principled distinction between look-ahead and search, since there's not a line of play that's guaranteed to happen. Search is just the comparison of look-ahead on multiple lines. It's notable that the paper generally talks about "look-ahead or search" throughout.

That said, I haven't read this paper very closely, so I recognize I might be misinterpreting.

↑ comment by eggsyntax · 2024-06-27T15:41:32.196Z · LW(p) · GW(p)

Or to clarify that a bit, it seems like the reason to evaluate any lines at all is in order to do search, even if they didn't test that. Otherwise what would incentivize the model to do look-ahead at all?

↑ comment by ChosunOne · 2024-06-28T06:17:16.270Z · LW(p) · GW(p)

In chess, a "line" is a sequence of moves that are hard to interrupt. There are kind of obvious moves you have to play or else you are just losing (such as recapturing a piece, moving king out of check, performing checkmate etc). Leela uses the neural network more for policy, which means giving a score to a given board position, which then the MCTS can use to determine whether or not to prune that direction or explore that section more. So it makes sense that Leela would have an embedding of powerful lines as part of its heuristic, since it isn't doing the main work of search. It's more pattern recognition on the board state, so it can learn to recognize the kinds of lines that are useful and whether or not they are "present" in the current board state. It gets this information from the MCTS system as it trains, and compresses the "triggers" into the earlier evaluations, which then this paper explores.

It's very cool work and result, but I feel it's too strong to say that the policy network is doing search as opposed to recognizing lines from its training at earlier board states.

↑ comment by eggsyntax · 2024-06-28T09:20:19.075Z · LW(p) · GW(p)

In chess, a "line" is a sequence of moves that are hard to interrupt. There are kind of obvious moves you have to play or else you are just losing

Ah, ok, thanks for the clarification; I assumed 'line' just meant 'a sequence of moves'. I'm more of a go player than a chess player myself.

It still seems slightly fuzzy in that other than check/mate situations no moves are fully mandatory and eg recaptures may occasionally turn out to be the wrong move?

But I retract my claim that this paper is evidence of search, and appreciate you helping me see that.

↑ comment by ChosunOne · 2024-06-28T13:35:36.687Z · LW(p) · GW(p)

It still seems slightly fuzzy in that other than check/mate situations no moves are fully mandatory and eg recaptures may occasionally turn out to be the wrong move?

Indeed it can be difficult to know when it is actually better not to continue the line vs when it is, but that is precisely what MCTS would help figure out. MCTS would do actual exploration of board states and the budget for which states it explores would be informed by the policy network. It's usually better to continue a line vs not, so I would expect MCTS to spend most of its budget continuing the line, and the policy would be updated during training with whether or not the recommendation resulted in more wins. Ultimately though, the policy network is probably storing a fuzzy pattern matcher for good board states (perhaps encoding common lines or interpolations of lines encountered by the MCTS) that it can use to more effectively guide the search by giving it an appropriate score.

To be clear, I don't think a transformer is completely incapable of doing any search, just that it is probably not learning to do it in this case and is probably pretty inefficient at doing it when prompted to.

↑ comment by lemonhope (lcmgcd) · 2024-06-26T06:28:42.977Z · LW(p) · GW(p)

Sorry to be that guy but maybe this idea shouldn't be posted publicly (I never read it before)

↑ comment by oumuamua · 2024-06-28T08:25:32.268Z · LW(p) · GW(p)

How is this not basically the widespread idea of recursive self improvement? This idea is simple enough that it has occurred even to me, and there is no way that, e.g. Ilya Sutskever hasn't thought about that.

↑ comment by lemonhope (lcmgcd) · 2024-06-29T19:08:24.343Z · LW(p) · GW(p)

I guess the vague idea is in the water. Just never saw it stated so explicitly. Not a big deal.

↑ comment by eggsyntax · 2024-06-25T10:40:03.390Z · LW(p) · GW(p)

I agree with most of this. My claim here is mainly that if this is the case, then there's at least one remaining necessary breakthrough, of unknown difficulty, before AGI, and so we can't naively extrapolate timelines from LLM progress to date.

I additionally think that if this is the case, then LLMs' difficulty with planning is evidence that they may not be great at automating search for new and better algorithms, although hardly conclusive evidence.

↑ comment by Nathan Helm-Burger (nathan-helm-burger) · 2024-06-25T16:12:19.352Z · LW(p) · GW(p)

Yeah, I think my claim needs evidence to support it. That's why I'm personally very excited to design evals targeted at detecting self-improvement capabilities.

We shouldn't be stuck guessing about something so important!

comment by Wei Dai (Wei_Dai) · 2024-06-25T02:03:21.794Z · LW(p) · GW(p)

It might also be a crux for alignment, since scalable alignment schemes like IDA and Debate rely on "task decomposition", which seems closely related to "planning" and "reasoning". I've been wondering [LW(p) · GW(p)] about the slow pace of progress of IDA and Debate. Maybe it's part of the same phenomenon as the underwhelming results of AutoGPT and BabyAGI?

Replies from: eggsyntax↑ comment by eggsyntax · 2024-06-25T08:42:01.493Z · LW(p) · GW(p)

If that's the case (which seems very plausible) then it seems like we'll either get progress on both LLM-based AGI and IDA/Debate, or on neither. That seems like a relatively good situation; those approaches will work for alignment if & only if we need them (to whatever extent they would have worked in the absence of this consideration).

Replies from: Wei_Dai↑ comment by Wei Dai (Wei_Dai) · 2024-06-25T09:46:26.315Z · LW(p) · GW(p)

There are two other ways for things to go wrong, though:

- AI capabilities research switches attention from LLM (back) to RL. (There was a lot of debate in the early days of IDA about whether it would be competitive with RL, and part of that was about whether all the important tasks we want a highly capable AI to do could be broken down easily enough and well enough.)

- The task decomposition part starts working well enough, but Eliezer's (and others') concern [LW · GW] about "preserving alignment while amplifying capabilities" proves valid.

comment by ryan_greenblatt · 2024-06-25T21:22:08.734Z · LW(p) · GW(p)

We do see improvement with scale here, but if these problems are obfuscated, performance of even the biggest LLMs drops to almost nothing

You note something similar, but I think it is pretty notable how much harder the obfuscated problems would be for humans:

Replies from: eggsyntax

Mystery Blocksworld Domain Description (Deceptive Disguising)

I am playing with a set of objects. Here are the actions I can do:

- Attack object

- Feast object from another object

- Succumb object

- Overcome object from another object

I have the following restrictions on my actions:

To perform Attack action, the following facts need to be true:

- Province object

- Planet object

- Harmony

Once Attack action is performed:

- The following facts will be true: Pain object

- The following facts will be false: Province object, Planet object, Harmony

To perform Succumb action, the following facts need to be true:

- Pain object

Once Succumb action is performed:

- The following facts will be true: Province object, Planet object, Harmony

- The following facts will be false: Pain object

To perform Overcome action, the following needs to be true:

- Province other object

- Pain object

Once Overcome action is performed:

- The following will be true: Harmony, Province object, Object Craves other object

- The following will be false: Province other object, Pain object

To perform Feast action, the following needs to be true:

- Object Craves other object

- Province object

- Harmony

Once Feast action is performed:

- The following will be true: Pain object, Province other object

- The following will be false: Object Craves other object, Province object, Harmony
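For what it's worth, the domain above is small enough to write down as STRIPS-style actions and solve by breadth-first search. The sketch below is my own encoding of the quoted rules, not code from the paper; the tuple representation of facts and the function names `actions` and `plan` are assumptions made for illustration.

```python
from collections import deque
from itertools import permutations


def actions(objects):
    """Yield (name, preconditions, add effects, delete effects) for each grounding."""
    for x in objects:
        yield (f"attack {x}",
               {("province", x), ("planet", x), ("harmony",)},
               {("pain", x)},
               {("province", x), ("planet", x), ("harmony",)})
        yield (f"succumb {x}",
               {("pain", x)},
               {("province", x), ("planet", x), ("harmony",)},
               {("pain", x)})
    for x, y in permutations(objects, 2):
        yield (f"overcome {x} from {y}",
               {("province", y), ("pain", x)},
               {("harmony",), ("province", x), ("craves", x, y)},
               {("province", y), ("pain", x)})
        yield (f"feast {x} from {y}",
               {("craves", x, y), ("province", x), ("harmony",)},
               {("pain", x), ("province", y)},
               {("craves", x, y), ("province", x), ("harmony",)})


def plan(initial, goal, objects):
    """Breadth-first search over sets of facts; returns a shortest plan or None."""
    grounded = list(actions(objects))
    start = frozenset(initial)
    queue = deque([(start, [])])
    seen = {start}
    while queue:
        state, steps = queue.popleft()
        if goal <= state:
            return steps
        for name, pre, add, delete in grounded:
            if pre <= state:
                nxt = frozenset((state - delete) | add)
                if nxt not in seen:
                    seen.add(nxt)
                    queue.append((nxt, steps + [name]))
    return None


objects = ["a", "b"]
initial = {("province", "a"), ("province", "b"),
           ("planet", "a"), ("planet", "b"), ("harmony",)}
goal = {("craves", "a", "b")}
result = plan(initial, goal, objects)  # ["attack a", "overcome a from b"]
```

Read this way, the four actions look like ordinary Blocksworld pick-up, put-down, stack, and unstack under different names, which is the point of the obfuscation: the structure is unchanged and only the vocabulary is hostile.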

↑ comment by eggsyntax · 2024-06-26T12:35:31.771Z · LW(p) · GW(p)

Yeah, it's quite frustrating that they made the obfuscated problems so unnecessarily & cryptically ungrammatical. And the randomized version would be absolutely horrendous for humans:

[STATEMENT]

As initial conditions I have that, aqcjuuehivl8auwt object a, aqcjuuehivl8auwt object b,

aqcjuuehivl8auwt object d, 3covmuy4yrjthijd, object b 4dmf1cmtyxgsp94g object c,

51nbwlachmfartjn object a, 51nbwlachmfartjn object c and 51nbwlachmfartjn object d.

My goal is to have that object c 4dmf1cmtyxgsp94g object b.

My plan is as follows:

[PLAN]

xptxjrdkbi3pqsqr object b from object c

9big8ruzarkkquyu object b

1jpkithdyjmlikck object c

2ijg9q8swj2shjel object c from object b

[PLAN END]

[STATEMENT]

As initial conditions I have that, aqcjuuehivl8auwt object a, aqcjuuehivl8auwt object d,

3covmuy4yrjthijd, object a 4dmf1cmtyxgsp94g object b, object d 4dmf1cmtyxgsp94g object c,

51nbwlachmfartjn object b and 51nbwlachmfartjn object c.

My goal is to have that object c 4dmf1cmtyxgsp94g object a.

I'm fairly tempted to take time to redo those experiments with a more natural obfuscation scheme that follows typical English grammar. It seems pretty plausible to me that LLMs would then do much better (and also pretty plausible that they wouldn't).

↑ comment by Violet Hour · 2024-06-26T17:58:40.986Z · LW(p) · GW(p)

Largely echoing the points above, but I think a lot of Kambhampati's cases (co-author on the paper you cite) stack the deck against LLMs in an unfair way. E.g., he offered the following problem to the NYT as a contemporary LLM failure case.

If block C is on top of block A, and block B is separately on the table, can you tell me how I can make a stack of blocks with block A on top of block B and block B on top of block C, but without moving block C?

When I read that sentence, it felt needlessly hard to parse. So I formatted the question in a way that felt more natural (see below), and Claude Opus appears to have no problem with it (3.5 Sonnet seems less reliable, haven't tried with other models).

Block C is on top of Block A. Separately, Block B is on the table. Without moving Block C, can you make a stock of blocks such that:

- Block A is on top of Block B, and

- Block B is on top of Block C?

Tbc, I'm actually somewhat sympathetic to Kambhampati's broader claims about LLMs doing something closer to "approximate retrieval" rather than "reasoning". But I think it's sensible to view the Blocksworld examples (and many similar cases) as providing limited evidence on that question.

Replies from: eggsyntax, DPiepgrass↑ comment by eggsyntax · 2024-06-26T19:31:30.759Z · LW(p) · GW(p)

Claude 3 Opus just did fine for me using the original problem statement as well:

No, it is not possible to make a stack with block A on top of block B and block B on top of block C without moving block C. Here's why:

- Initially, block C is on top of block A, and block B is separate on the table.

- To get block B on top of block C, you would need to move block C to place block B underneath it. However, the question specifically states that you cannot move block C.

- Since block C cannot be moved, it is impossible to place block B between block C and block A.

Therefore, given the initial arrangement and the constraint of not moving block C, it is not possible to achieve the desired stack of blocks with A on top of B, and B on top of C.

[edited to show the temperature-0 response rather than the previous (& also correct) temperature-0.7 response, for better reproducibility]
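The impossibility is easy to confirm by brute force. Below is a minimal sketch that enumerates every arrangement reachable from the starting position, under the assumption that "without moving block C" means C is never picked up. The state representation and the names `successors`, `reachable`, and `goal_reachable` are mine, chosen for illustration.

```python
from collections import deque


def successors(state, frozen):
    """state: sorted tuple of (block, support) pairs; support is a block or 'table'."""
    on = dict(state)
    clear = [b for b in on if b not in on.values()]  # blocks with nothing on top
    for block in clear:
        if block in frozen:
            continue  # this block may never be picked up
        for dest in ["table"] + [c for c in clear if c != block]:
            if on[block] != dest:
                nxt = dict(on)
                nxt[block] = dest
                yield tuple(sorted(nxt.items()))


def reachable(start, frozen=()):
    start = tuple(sorted(start.items()))
    seen = {start}
    queue = deque([start])
    while queue:
        state = queue.popleft()
        for nxt in successors(state, frozen):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen


def goal_reachable(start, goal, frozen=()):
    return any(all(dict(s)[b] == sup for b, sup in goal.items())
               for s in reachable(start, frozen))


start = {"A": "table", "B": "table", "C": "A"}   # C on A, B separately on the table
goal = {"A": "B", "B": "C"}                       # A on B, B on C

with_constraint = goal_reachable(start, goal, frozen={"C"})
without_constraint = goal_reachable(start, goal)
```

With C frozen, the only legal moves shuffle B between the table and the top of C, so A (pinned under C) can never end up on B. Dropping the constraint makes the goal reachable in three moves.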

↑ comment by DPiepgrass · 2024-08-22T05:03:20.871Z · LW(p) · GW(p)

Doesn't the problem have no solution without a spare block?

Worth noting that LLMs don't see a nicely formatted numeric list, they see a linear sequence of tokens, e.g. I can replace all my newlines with something else and Copilot still gets it:

brief testing doesn't show worse completions than when there are newlines. (and in the version with newlines this particular completion is oddly incomplete.)

Anyone know how LLMs tend to behave on text that is ambiguous―or unambiguous but "hard to parse"? I wonder if they "see" a superposition of meanings "mixed together" and produce a response that "sounds good for the mixture".

Replies from: eggsyntax↑ comment by eggsyntax · 2024-09-07T16:47:33.883Z · LW(p) · GW(p)

I wonder if they "see" a superposition of meanings "mixed together" and produce a response that "sounds good for the mixture".

That seems basically right to me; Janus presents that view well in "Simulators" [LW · GW].

Replies from: gwern↑ comment by gwern · 2024-09-08T00:47:16.750Z · LW(p) · GW(p)

Yes, but note in the simulator/Bayesian meta-RL view, it is important that the LLMs do not "produce a response": they produce a prediction of 'the next response'. The logits will, of course, try to express the posterior, averaging across all of the possibilities. This is what the mixture is: there's many different meanings which are still possible, and you're not sure which one is 'true' but they all have a lot of different posterior probabilities by this point, and you hedge your bets as to the exact next token as incentivized by a proper scoring rule which encourages you to report the posterior probability as the output which minimizes your loss. (A hypothetical agent may be trying to produce a response, but so too do all of the other hypothetical agents which are live hypotheses at that point.) Or it might be clearer to say, it produces predictions of all of the good-sounding responses, but never produces any single response.

Everything after that prediction, like picking a single, discrete, specific logit and 'sampling' it to fake 'the next token', is outside the LLM's purview except insofar as it's been trained on outputs from such a sampling process and has now learned that's one of the meanings mixed in. (When Llama-3-405b is predicting the mixture of meanings of 'the next token', it knows ChatGPT or Claude could be the LLM writing it and predicts accordingly, but it doesn't have anything really corresponding to "I, Llama-3-405b, am producing the next token by Boltzmann temperature sampling at x temperature". It has a hazy idea what 'temperature' is from the existing corpus, and it can recognize when a base model - itself - has been sampled from and produced the current text, but it lacks the direct intuitive understanding implied by "produce a response".) Hence all of the potential weirdness when you hardwire the next token repeatedly and feed it back in, and it becomes ever more 'certain' of what the meaning 'really' is, or it starts observing that the current text looks produced-by-a-specific-sampling-process rather than produced-by-a-specific-human, etc.
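A small sketch may help separate the two steps being distinguished here: the model's output is a vector of logits (a predictive distribution), and turning that into a single token is a separate sampling procedure wrapped around the model. The vocabulary and logit values below are invented for illustration, and `sample_token` is a hypothetical helper, not any particular library's API.

```python
import math
import random


def softmax(logits, temperature=1.0):
    """Turn logits into probabilities; lower temperature sharpens the distribution."""
    scaled = [l / temperature for l in logits]
    m = max(scaled)                       # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_token(vocab, logits, temperature, rng):
    """The step that lives outside the model: collapse the prediction to one token."""
    if temperature == 0:
        # Greedy decoding: always take the highest-logit token.
        return vocab[max(range(len(logits)), key=logits.__getitem__)]
    probs = softmax(logits, temperature)
    return rng.choices(vocab, weights=probs, k=1)[0]


vocab = ["yes", "no", "maybe"]
logits = [2.0, 1.0, 0.5]                  # what the model actually outputs (made up)

prediction = softmax(logits)              # the mixture over possible continuations
sharper = softmax(logits, temperature=0.5)
greedy = sample_token(vocab, logits, temperature=0, rng=random.Random(0))
sampled = sample_token(vocab, logits, temperature=0.7, rng=random.Random(0))
```

Nothing in `softmax` knows which temperature the caller will use or that a token will be fed back in; that information only reaches the model indirectly, through the text it is later conditioned on.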

Replies from: eggsyntax↑ comment by eggsyntax · 2024-09-08T13:39:06.558Z · LW(p) · GW(p)

Yes, but note in the simulator/Bayesian meta-RL view, it is important that the LLMs do not "produce a response": they produce a prediction of 'the next response'.

Absolutely! In the comment you're responding to I nearly included a link to 'Role-Play with Large Language Models'; the section there on playing 20 questions with a model makes that distinction really clear and intuitive in my opinion.

there's many different meanings which are still possible, and you're not sure which one is 'true' but they all have a lot of different posterior probabilities by this point, and you hedge your bets as to the exact next token

Just for clarification, I think you're just saying here that the model doesn't place all its prediction mass on one token but instead spreads it out, correct? Another possible reading is that you're saying that the model tries to actively avoid committing to one possible meaning (ie favors next tokens that maintain superposition), and I thought I remembered seeing evidence that they don't do that.

Replies from: gwern↑ comment by gwern · 2024-09-08T21:16:22.798Z · LW(p) · GW(p)

I think you’re just saying here that the model doesn’t place all its prediction mass on one token but instead spreads it out, correct?

Yes. For a base model. A tuned/RLHFed model however is doing something much closer to that ('flattened logits'), and this plays a large role in the particular weirdnesses of those models, especially as compared to the originals (eg. it seems like maybe they suck at any kind of planning or search or simulation because they put all the prediction mass on the max-arg token rather than trying to spread mass out proportionately and so if that one token isn't 100% right, the process will fail).

Another possible reading is that you’re saying that the model tries to actively avoid committing to one possible meaning (ie favors next tokens that maintain superposition)

Hm, I don't think base models would necessarily do that, no. I can see the tuned models having the incentives to train them to do so (eg. the characteristic waffle and non-commitment and vagueness are presumably favored by raters), but not the base models.

They are non-myopic, so they're incentivized to plan ahead, but only insofar as that predicts the next token in the original training data distribution (because real tokens reflect planning or information from 'the future'); unless real agents are actively avoiding commitment, there's no incentive there to worsen your next-token prediction by trying to create an ambiguity which is not actually there.

(The ambiguity is in the map, not the territory. To be more concrete, imagine the ambiguity is over "author identity", as the LLM is trying to infer whether 'gwern' or 'eggsyntax' wrote this LW comment. At each token, it maintains a latent about its certainty of the author identity; because it is super useful for prediction to know who is writing this comment, right? And the more tokens it sees for the prediction, the more confident it becomes the answer is 'gwern'. But when I'm actually writing this, I have no uncertainty - I know perfectly well 'gwern' is writing this, and not 'eggsyntax'. I am not in any way trying to 'avoid committing to one possible [author]' - the author is just me, gwern, fully committed from the start, whatever uncertainty a reader might have while reading this comment from start to finish. My next token, therefore, is not better predicted by imagining that I'm suffering from mental illness or psychedelics as I write this and thus might suddenly spontaneously claim to be eggsyntax and this text is deliberately ambiguous because at any moment I might be swerving from gwern to eggsyntax and back. The next token is better predicted by inferring who the author is to reduce ambiguity as much as possible, and expecting them to write in a normal non-ambiguous fashion given whichever author it actually is.)
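The author-identity example can be sketched as a plain Bayesian update. This is a toy under loud assumptions: two hypothetical authors, a three-word vocabulary, and made-up per-author token probabilities. It is meant only to show the latent getting more certain as tokens arrive, not how an LLM represents it internally.

```python
def update_posterior(prior, likelihoods, token):
    """One Bayes step: P(author | token) is proportional to P(token | author) * P(author)."""
    unnorm = {a: prior[a] * likelihoods[a].get(token, 1e-6) for a in prior}
    z = sum(unnorm.values())
    return {a: p / z for a, p in unnorm.items()}


def predictive(posterior, likelihoods, token):
    """Next-token probability: each author's prediction weighted by the posterior."""
    return sum(posterior[a] * likelihoods[a].get(token, 1e-6) for a in posterior)


# Hypothetical authors and made-up stylistic frequencies.
likelihoods = {
    "author_a": {"foo": 0.6, "bar": 0.1, "the": 0.3},
    "author_b": {"foo": 0.1, "bar": 0.6, "the": 0.3},
}

posterior = {"author_a": 0.5, "author_b": 0.5}
history = [posterior]
for token in ["foo", "the", "foo"]:
    posterior = update_posterior(posterior, likelihoods, token)
    history.append(posterior)

p_foo_before = predictive(history[0], likelihoods, "foo")
p_foo_after = predictive(history[-1], likelihoods, "foo")
```

The uncertainty lives entirely in `posterior`, the reader's map. Each hypothetical author's own distribution in `likelihoods` stays fixed throughout, and the best next-token prediction comes from sharpening the posterior, not from assuming the author is wavering between identities.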

comment by DPiepgrass · 2024-08-19T20:56:13.654Z · LW(p) · GW(p)

Given that I think LLMs don't generalize, I was surprised how compelling Aschenbrenner's case sounded when I read it (well, the first half of it. I'm short on time...). He seemed to have taken all the same evidence I knew about it, and arranged it into a very different framing. But I also felt like he underweighted criticism from the likes of Gary Marcus. To me, the illusion of LLMs being "smart" has been broken for a year or so.

To the extent LLMs appear to build world models, I think what you're seeing is a bunch of disorganized neurons and connections that, when probed with a systematic method, can be mapped onto things that we know a world model ought to contain. A couple of important questions are

- the way that such a world model was formed and

- how easily we can figure out how to form those models better/differently[1].

I think LLMs get "world models" (which don't in fact cover the whole world) in a way that is quite unlike the way intelligent humans form their own world models―and more like how unintelligent or confused humans do the same.

The way I see it, LLMs learn in much the same way a struggling D student learns (if I understand correctly how such a student learns), and the reason LLMs sometimes perform like an A student is that they have extra advantages that regular D students do not: unlimited attention span and ultrafast, ultra-precise processing backed by an extremely large set of training data. So why do D students perform badly, even with "lots" of studying? I think it's either because they are not trying to build mental models, or because they don't really follow what their teachers are saying. Either way, this leads them to fall back on a secondary "pattern-matching" learning mode which doesn't depend on a world model.

If, when learning in this mode, you see enough patterns, you will learn an implicit world model. The implicit model is a proper world model in terms of predictive power, but

- It requires much more training data to predict as well as a human system-2 can, which explains why D students perform worse than A students on the same amount of training data―and this is one of the reasons why LLMs need so much more training data than humans do in order to perform at an A level (other reasons: less compute per token, fewer total synapses, no ability to "mentally" generate training data, inability to autonomously choose what to train on). The way you should learn is to first develop an explicit worldmodel via system-2 thinking, then use system-2 to mentally generate training data which (along with external data) feeds into system-1. LLMs cannot do this.

- Such a model tends to be harder to explain in words than an explicit world model, because the predictions are coming from system-1 without much involvement from system-2, and so much of the model is not consciously visible to the student, nor is it properly connected to its linguistic form, so the D student relies more on "feeling around" the system-1 model via queries (e.g. to figure out whether "citation" is a noun, you can do things like ask your system-1 whether "the citation" is a valid phrase―human language skills tend to always develop as pure system-1 initially, so a good linguistics course teaches you explicitly to perform these queries to extract information, whereas if you have a mostly-system-2 understanding of a language, you can use that to decide whether a phrase is correct with system-2, without an intuition about whether it's correct. My system-1 for Spanish is badly underdeveloped, so I lean on my superior system-2/analytical understanding of grammar).

When an LLM cites a correct definition of something as if it were a textbook, then immediately afterward fails to apply that definition to the question you ask, I think that indicates the LLM doesn't really have a world model with respect to that question, but I would go further and say that even if it has a good world model, it cannot express its world-model in words, it can only express the textbook definitions it has seen and then apply its implicit world-model, which may or may not match what it said verbally.

So if you just keep training it on more unique data, eventually it "gets it", but I think it "gets it" the way a D student does, implicitly not explicitly. With enough experience, the D student can be competent, but never as good as similarly-experienced A students.

A corollary of the above is that I think the amount of compute required for AGI is wildly overestimated, if not by Aschenbrenner himself then by less nuanced versions of his style of thinking (e.g. Sam Altman). And much of the danger of AGI follows from this. On a meta level, my own opinions on AGI are mostly not formed via "training data", since I have not read/seen that many articles and videos about AGI alignment (compared to any actual alignment researcher). No coincidence, then, that I was always an A to A- student, and the one time I got a C- in a technical course was when I couldn't figure out WTF the professor was talking about. I still learned, that's why I got a C-, but I learned in a way that seemed unnatural to me, but which incorporated some of the "brute force" that an LLM would use. I'm all about mental models and evidence [EA · GW]; LLMs are about neither.

Aschenbrenner did help firm up my sense that current LLM tech leads to "quasi-AGI": a competent humanlike digital assistant, probably one that can do some AI research autonomously. It appears that the AI industry (or maybe just OpenAI) is on an evolutionary approach of "let's just tweak LLMs and our processes around them". This may lead (via human ingenuity or chance discovery) to system-2s with explicit worldmodels, but without some breakthrough, it just leads to relatively safe quasi-AGIs, the sort that probably won't generate groundbreaking new cancer-fighting ideas but might do a good job testing ideas for curing cancer that are "obvious" or human-generated or both.

Although LLMs badly suck at reasoning, my AGI timelines are still kinda short―roughly 1 to 15 years for "real" AGI, with quasi-AGI in 2 to 6 years―mainly because so much funding is going into this, and because only one researcher needs to figure out the secret, and because so much research is being shared publicly, and because there should be many ways to do AGI [? · GW], and because quasi-AGI (if invented first) might help create real AGI. Even the AGI safety people[2] might be the ones to invent AGI, for how else will they do effective safety research? FWIW my prediction is that quasi-AGI consists of a transformer architecture with quite a large number of (conventional software) tricks and tweaks bolted on to it, while real AGI consists of transformer architecture plus a smaller number of tricks and tweaks, plus a second breakthrough of the same magnitude as transformer architecture itself (or a pair of ideas that work so well together that combining them counts as a breakthrough).

EDIT: if anyone thinks I'm on to something here, let me know your thoughts as to whether I should redact the post lest changing minds in this regard is itself hazardous. My thinking for now, though, is that presenting ideas to a safety-conscious audience might well be better than safetyists nodding along to a mental model that I think is, if not incorrect, then poorly framed.

- ^

I don't follow ML research, so let me know if you know of proposed solutions already.

- ^

why are we calling it "AI safety"? I think this term generates a lot of "the real danger of AI is bias/disinformation/etc" responses, which should decrease if we make the actual topic clear

↑ comment by Nathan Helm-Burger (nathan-helm-burger) · 2024-08-21T18:09:19.033Z · LW(p) · GW(p)

Given that I think LLMs don't generalize, I was surprised how compelling Aschenbrenner's case sounded when I read it (well, the first half of it. I'm short on time...). He seemed to have taken all the same evidence I knew about it, and arranged it into a very different framing. But I also felt like he underweighted criticism from the likes of Gary Marcus. To me, the illusion of LLMs being "smart" has been broken for a year or so.

As someone who has been studying LLM outputs pretty intently since GPT-2, I think you are mostly right but that the details do matter here.

The LLMs give a very good illusion of being smart, but are actually kinda dumb underneath. Yes. But... with each generation they get a little less dumb, a little more able to reason and extrapolate. The difference between 'bad' and 'bad, but not as bad as they used to be, and getting rapidly better' is pretty important.

They are also bad at 'integrating' knowledge. This results in having certain facts memorized, but getting questions where the answer is indicated by those facts wrong when the questions come from an unexpected direction. I haven't noticed steady progress on factual knowledge integration in the same way I have with reasoning. I do expect this hurdle will be overcome eventually. Things are progressing quite quickly, and I know of many advances which seem like compatible pareto improvements which have not yet been integrated into the frontier models because the advances are too new.

Also, I notice that LLMs are getting gradually better at being coding assistants and speeding up my work. So I don't think it's necessarily the case that we need to get all the way to full human-level reasoning before we get substantial positive feedback effects on ML algorithm development rate from improved coding assistance.

Replies from: DPiepgrass↑ comment by DPiepgrass · 2024-08-22T04:16:35.347Z · LW(p) · GW(p)

I'm having trouble discerning a difference between our opinions, as I expect a "kind-of AGI" to come out of LLM tech, given enough investment. Re: code assistants, I'm generally disappointed with Github Copilot. It's not unusual that I'm like "wow, good job", but bad completions are commonplace, especially when I ask a question in the sidebar (which should use a bigger LLM). Its (very hallucinatory) response typically demonstrates that it doesn't understand our (relatively small) codebase very well, to the point where I only occasionally bother asking. (I keep wondering "did no one at GitHub think to generate an outline of the app that could fit in the context window?")

Replies from: nathan-helm-burger↑ comment by Nathan Helm-Burger (nathan-helm-burger) · 2024-08-22T17:10:37.356Z · LW(p) · GW(p)

Yes, I agree our views are quite close. My expectations closely match what you say here:

Although LLMs badly suck at reasoning, my AGI timelines are still kinda short―roughly 1 to 15 years for "real" AGI, with quasi-AGI in 2 to 6 years―mainly because so much funding is going into this, and because only one researcher needs to figure out the secret, and because so much research is being shared publicly, and because there should be many ways to do AGI [? · GW], and because quasi-AGI (if invented first) might help create real AGI.

Basically I just want to point out that the progression of competence in recent models seems pretty impressive, even though the absolute values are low.

For instance, for writing code I think the following pattern of models (including only ones I've personally tested enough to have an opinion) shows a clear trend of increasing competence with later release dates:

Github Copilot (pre-GPT-4) < GPT-4 (the first release) < Claude 3 Opus < Claude 3.5 Sonnet

Basically, I'm holding in my mind the possibility that the next versions (GPT-5 and/or Claude Opus 4) will really impress me. I don't feel confident of that. I am pretty confident that the version after next will impress me (e.g. GPT-6 / Claude Opus 5) and actually be useful for RSI.

From this list, Claude 3.5 Sonnet is the first one to be competent enough I find it even occasionally useful. I made myself use the others just to get familiar with their abilities, but their outputs just weren't worth the time and effort on average.

↑ comment by DPiepgrass · 2024-08-22T19:19:51.956Z · LW(p) · GW(p)

P.S. if I'm wrong about the timeline―if it takes >15 years―my guess for how I'm wrong is (1) a major downturn in AGI/AI research investment and (2) executive misallocation of resources. I've been thinking that the brightest minds of the AI world are working on AGI, but maybe they're just paid a lot because there are too few minds to go around. And when I think of my favorite MS developer tools, they have greatly improved over the years, but there are also fixable things that haven't been fixed in 20 years, and good ideas they've never tried, and MS has created a surprising number of badly designed libraries (not to mention products) over the years. And I know people close to Google have a variety of their own pet peeves about Google.

Are AGI companies like this? Do they burn mountains of cash to pay otherwise average engineers who happen to have AI skills? Do they tend to ignore promising research directions because the results are uncertain, or because results won't materialize in the next year, or because they don't need a supercomputer or aren't based mainly on transformers? Are they bad at creating tools that would've made the company more efficient? Certainly I expect some companies to be like that.

As for (1), I'm no great fan of copyright law, but today's companies are probably built on a foundation of rampant piracy, and litigation might kill investment. Or, investors may be scared away by a persistent lack of discoveries to increase reliability / curtail hallucinations.

↑ comment by eggsyntax · 2024-09-04T00:11:12.508Z · LW(p) · GW(p)

Thanks for your comments! I was traveling and missed them until now.

To the extent LLMs appear to build world models, I think what you're seeing is a bunch of disorganized neurons and connections that, when probed with a systematic method, can be mapped onto things that we know a world model ought to contain.

I think we've certainly seen some examples of interpretability papers that 'find' things in the models that aren't there, especially when researchers train nonlinear probes. But the research community has been learning over time to distinguish cases like that from what's really in the model (ablation, causal tracing, etc). We've also seen examples of world modeling that are clearly there in the model; Neel Nanda's work finding a world model in Othello-GPT is a particularly clear case in my opinion (post [LW · GW], paper).

I think LLMs get "world models" (which don't in fact cover the whole world) in a way that is quite unlike the way intelligent humans form their own world models―and more like how unintelligent or confused humans do the same.

My intuitions about human learning here are very different from yours, I think. In my view, learning (eg) to produce valid sentences in a native language and to understand sentences from other speakers is very nearly the only thing that matters, and that's something nearly all speakers achieve. Learning an explicit model for that language, in order to eg produce a correct parse tree, matters a tiny bit, very briefly, when you learn parse trees in school. Rather than intelligent humans learning a detailed explicit model of their language and unintelligent humans not doing so, it seems to me that very few intelligent humans have such a model. Mostly it's just linguists, who need an explicit model. I would further claim that those who do learn an explicit model don't end up being significantly better at producing and understanding language in their day-to-day lives; it's not explicit modeling that makes us good at that.

I do agree that someone without an explicit model of a topic will often have a harder time explaining that topic to someone else, and I agree that LLMs typically learn implicit rather than explicit models. I just don't think that that in and of itself makes them worse at using those models.

That said, to the extent that by 'general reasoning' we mean chains of step-by-step assertions with each step explicitly justified by valid rules of reasoning, that does seem like something that benefits a lot from an explicit model. So in the end I don't necessarily disagree with your application of this idea to at least some versions of general reasoning; I do disagree when it comes to other sorts of general reasoning, and LLM capabilities in general.

comment by Raemon · 2024-06-24T20:51:08.180Z · LW(p) · GW(p)

Curated.

This is a fairly straightforward point, but one I haven't seen written up before and I've personally been wondering a bunch about. I appreciated this post both for laying out the considerations pretty thoroughly, including a bunch of related reading, and laying out some concrete predictions at the end.

Replies from: p.b.↑ comment by p.b. · 2024-06-25T12:35:25.393Z · LW(p) · GW(p)

This is a fairly straightforward point, but one I haven't seen written up before and I've personally been wondering a bunch about.

I feel like I have been going on about this for years. Like here [LW · GW], here [LW · GW] or here [LW(p) · GW(p)]. But I'd be the first to admit that I don't really do effort posts.

Replies from: eggsyntax, Raemon↑ comment by eggsyntax · 2024-06-26T08:38:33.723Z · LW(p) · GW(p)

I hadn't seen your posts either (despite searching; I think the lack of widely shared terminology around this problem gets in the way). I'd be very interested to learn more about how your research agenda has progressed since that first post. This post was mostly intended to be broad audience / narrow message, just (as Raemon says) pointing to the crux here, breaking it down, and giving a sense of the arguments on each side.

Replies from: p.b.↑ comment by p.b. · 2024-06-26T16:49:49.444Z · LW(p) · GW(p)

I'd be very interested to learn more about how your research agenda has progressed since that first post.

The post about learned lookahead in Leela has kind of galvanised me into finally finishing an investigation I have worked on for too long already. (Partly because I think that finding is incorrect, but also because using Leela is a great idea; I had got stuck with LLMs requiring a full game for each puzzle position.)

I will ping you when I write it up.

Replies from: eggsyntax↑ comment by Raemon · 2024-06-25T18:06:33.898Z · LW(p) · GW(p)

It so happens I hadn't seen your other posts, although I think there is something that this post was aiming at, that yours weren't quite pointed at, which is laying out "this is a crux for timelines, these are the subcomponents of the crux." (But, I haven't read your posts in detail yet and thought about what else they might be good at that this post wasn't aiming for)

comment by tailcalled · 2024-06-25T05:32:23.441Z · LW(p) · GW(p)

I always feel like self-play on math with a proof checker like Agda or Coq is a promising way to make LLMs superhuman in these areas. Do we have any strong evidence that it's not?

Replies from: eggsyntax↑ comment by eggsyntax · 2024-06-25T10:34:44.211Z · LW(p) · GW(p)

Do you mean as a (presumably RL?) training method to make LLMs themselves superhuman in that area, or that the combined system can be superhuman? I think AlphaCode is some evidence for the latter, with the compiler in the role of proof-checker.

Replies from: tailcalled↑ comment by tailcalled · 2024-06-25T10:44:49.403Z · LW(p) · GW(p)

The former

comment by Stewy Slocum (stewy-slocum) · 2024-06-25T03:09:29.578Z · LW(p) · GW(p)

I believe there is considerable low-hanging algorithmic fruit that can make LLMs better at reasoning tasks. I think these changes will involve modifications to the architecture + training objectives. One major example is highlighted by the work of https://arxiv.org/abs/2210.10749, which shows that Transformers can only heuristically implement algorithms for most interesting problems in the computational complexity hierarchy. With recurrence (e.g. through CoT https://arxiv.org/abs/2310.07923) these problems can be avoided, which might lead to much better generic, domain-independent reasoning capabilities. A small number of people are already working on such algorithmic modifications to Transformers (e.g. https://arxiv.org/abs/2403.09629).

This is to say that we haven't really explored small variations on the current LLM paradigm, and it's quite likely that the "bugs" we see in their behavior could be addressed through manageable algorithmic changes + a few OOMs more of compute. For this reason, if they make a big difference, I could see capabilities changing quite rapidly once people figure out how to implement them. I think scaling + a little creativity is alive and well as a pathway to nearish-term AGI.

↑ comment by eggsyntax · 2024-06-26T11:25:46.705Z · LW(p) · GW(p)

'The Expressive Power of Transformers with Chain of Thought' is extremely interesting, thank you! I've noticed a tendency to conflate the limitations of what transformers can do in a forward pass with what they can do under autoregressive conditions, so it's great to see research explicitly addressing how the latter extends the former.

the "bugs" we see in their behavior could be addressed through manageable algorithmic changes + a few OOMs more of compute...I think scaling + a little creativity is alive and well as a pathway to nearish-term AGI.

I agree that this is plausible. I mentally lumped this sort of thing into the 'breakthrough needed' category in the 'Why does this matter?' section. Your point is well-taken that there are relatively small improvements that could make the difference, but to me that has to be balanced against the fact that there have been an enormous number of papers claiming improvements to the transformer architecture that then haven't been adopted.

From outside the scaling labs, it's hard to know how much of that is the improvements not panning out vs a lack of willingness & ability to throw resources at pursuing them. On the one hand I suspect there's an incentive to focus on the path that they know is working, namely continuing to scale up. On the other hand, scaling the current architecture is an extremely compute-intensive path, so I would think that it's worth putting resources into trying to see whether these improvements would work well at scale. If you (or anyone else) have insight into the degree to which the scaling labs are actually trying to incorporate the various claimed improvements, I'd be quite interested to know.

comment by Archimedes · 2024-11-03T00:45:20.651Z · LW(p) · GW(p)

How much does o1-preview update your view? It's much better at Blocksworld for example.

https://x.com/rohanpaul_ai/status/1838349455063437352

https://arxiv.org/pdf/2409.19924v1

Replies from: eggsyntax↑ comment by eggsyntax · 2024-11-06T20:30:28.102Z · LW(p) · GW(p)

Thanks for sharing, I hadn't seen those yet! I've had too much on my plate since o1-preview came out to really dig into it, in terms of either playing with it or looking for papers on it.

How much does o1-preview update your view? It's much better at Blocksworld for example.

Quite substantially. Substantially enough that I'll add mention of these results to the post. I saw the near-complete failure of LLMs on obfuscated Blocksworld problems as some of the strongest evidence against LLM generality. Even more substantially since one of the papers is from the same team of strong LLM skeptics (Subbarao Kambhampati's) who produced the original results (I am restraining myself with some difficulty from jumping up and down and pointing at the level of goalpost-moving in the new paper).

There's one sense in which it's not an entirely apples-to-apples comparison, since o1-preview is throwing a lot more inference-time compute at the problem (in that way it's more like Ryan's hybrid approach to ARC-AGI). But since the key question here is whether LLMs are capable of general reasoning at all, that doesn't really change my view; certainly there are many problems (like capabilities research) where companies will be perfectly happy to spend a lot on compute to get a better answer.

Here's a first pass on how much this changes my numeric probabilities -- I expect these to be at least a bit different in a week as I continue to think about the implications (original text italicized for clarity):

- LLMs continue to do better at block world and ARC as they scale: 75% -> 100%, this is now a thing that has happened (note that o1-preview also showed substantially improved results on ARC-AGI).

- LLMs entirely on their own reach the grand prize mark on the ARC prize (solving 85% of problems on the open leaderboard) before hybrid approaches like Ryan's: 10% -> 20%, this still seems quite unlikely to me (especially since hybrid approaches have continued to improve on ARC). Most of my additional credence is on something like 'the full o1 turns out to already be close to the grand prize mark' and the rest on 'OpenAI capabilities researchers manage to use the full o1 to find an improvement to current LLM technique (eg a better prompting approach) that can be easily fixed'.

- Scaffolding & tools help a lot, so that the next gen[7] (GPT-5, Claude 4) + Python + a for loop can reach the grand prize mark[8]: 60% -> 75% -- I'm tempted to put it higher, but it wouldn't be that surprising if o1-mark-2 didn't quite get there even with scaffolding/tools, especially since we don't have clear insight into how much harder the full test set is.

- Same but for the gen after that (GPT-6, Claude 5): 75% -> 90%? I feel less sure about this one than the others; it sure seems awfully likely that o2 plus scaffolding will be able to do it! But I'm reluctant to go past 90% because progress could level off because of training data requirements, maybe the o1 -> o2 jump doesn't focus on optimizing for general reasoning, etc. It seems very plausible that I'll bump this higher on reflection.

- The current architecture, including scaffolding & tools, continues to improve to the point of being able to do original AI research: 65%, with high uncertainty[9] -> 80%. That sure does seem like the world we're living in. It's not clear to me that o1 couldn't already do original AI research with the right scaffolding. Sakana claims to have gotten there with GPT-4o / Sonnet, but their claims seem overblown to me.

Now that I've seen these, I'm going to have to think hard about whether my upcoming research projects in this area (including one I'm scheduled to lead a team on in the spring, uh oh) are still the right thing to pursue. I may write at least a brief follow-up post to this one arguing that we should all update on this question.

Thanks again, I really appreciate you drawing my attention to these.

Replies from: eggsyntax↑ comment by eggsyntax · 2024-11-09T12:30:57.105Z · LW(p) · GW(p)

I've now expanded this comment to a post -- mostly the same content but with more detail.

https://www.lesswrong.com/posts/wN4oWB4xhiiHJF9bS/llms-look-increasingly-like-general-reasoners [LW · GW]

comment by Lao Mein (derpherpize) · 2024-06-25T23:05:52.995Z · LW(p) · GW(p)

I think that too much scaffolding can obfuscate a lack of general capability, since it allows the system to simulate a much more capable agent - under narrow circumstances and assuming nothing unexpected happens.

Consider the Egyptian Army in '73. With exhaustive drill and scripting of unit movements, they were able to simulate the capabilities of an army with a competent officer corps, up until they ran out of script, at which point they reverted to a lower level of capability. This is because scripting avoids officers on the ground needing to make complex tactical decisions on the fly and communicate them to other units, all while maintaining a cohesive battle plan. If everyone sticks to the script, big holes won't open up in their defenses, and the movements of each unit will be covered by those of others. When the script ran out (I'm massively simplifying), the cohesion of the army began to break down, rendering it increasingly vulnerable to IDF counterattacks. The gains in combat effectiveness were real, but limited to the confines of the script.

Similarly, scaffolding helps the AI avoid the really hard parts of a job, at least the really hard parts for it. Designing the script for each individual task and subtask in order to make a 90% reliable AI economically valuable turns a productivity-improving tool into an economically productive agent, but only within certain parameters, and each time you encounter a new task, more scaffolding will need to be built. I think some of the time the harder (in the human-intuitive sense) parts of the problem may be contained in the scaffolding as opposed to the tasks the AI completes.

Thus, given the highly variable nature of LLM intelligence, "X can do Y with enough scaffolding!" doesn't automatically convince me that X possesses the core capabilities to do Y and just needs a little encouragement or w/e. It may be that task Y is composed of subtasks A and B, such that X is very good and reliable at A, but utterly incapable at B (coding and debugging?). By filtering for Y with a certain easy subset of B, using a pipeline to break it down into easier subtasks with various prompts, trying many times, and finally passing off unsolved cases to humans, you can extract much economic value from X doing Y, but only in a certain subset of cases, and still without X being reliably good at doing both A and B.
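To make that pattern concrete, here is a minimal sketch of the kind of pipeline I have in mind: filter for the easy cases, retry a few times, and hand whatever is left to a human. Every name in it (`scaffolded_solve`, `is_in_scope`, `attempt`, `verify`, `max_tries`) is hypothetical and made up for illustration; it isn't anyone's actual system.

```python
from typing import Callable, Optional, Tuple, TypeVar

T = TypeVar("T")  # task type
A = TypeVar("A")  # answer type


def scaffolded_solve(
    task: T,
    is_in_scope: Callable[[T], bool],  # hypothetical filter for the "easy subset of B"
    attempt: Callable[[T], A],         # hypothetical call into the model X
    verify: Callable[[T, A], bool],    # hypothetical checker (tests, compiler, etc.)
    max_tries: int = 5,
) -> Tuple[Optional[A], str]:
    """Return (answer, "model") on success, or (None, "human") on hand-off."""
    # Tasks outside the hand-picked easy subset never reach the model.
    if not is_in_scope(task):
        return None, "human"
    # "Trying many times": resample until the checker accepts an answer.
    for _ in range(max_tries):
        answer = attempt(task)
        if verify(task, answer):
            return answer, "model"
    # Unsolved cases get passed off to a person.
    return None, "human"
```

Note that the judgment about which tasks are in scope, and about what counts as a correct answer, lives in `is_in_scope` and `verify`, which a human wrote. The model only ever fills in `attempt`.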

You could probably do something similar with low-capability human programmers playing the role of X, but it wouldn't be economical since they cost much more than an LLM and are in some ways less predictable.

I think a lot of economically valuable intelligence is in the ability to build the scaffolding itself implicitly, which many people would call "agency".

Replies from: ryan_greenblatt, eggsyntax↑ comment by ryan_greenblatt · 2024-06-26T03:52:08.269Z · LW(p) · GW(p)

What if the tasks that your scaffolded LLM is doing are randomly selected pieces of cognitive labor from the full distribution of human cognitive tasks?

It seems to me like your objection is mostly to narrow distributions of tasks and scaffolding which is heavily specialized to that task.

I think narrowness of the task and amount of scaffolding might be correlated in practice, but these attributes don't have to be related.

(You might think they are correlated because large amounts of scaffolding won't be very useful for very diverse tasks. I think this is likely false - there exists general purpose software that I find useful for a very broad range of tasks. E.g. neovim. I agree that smart general agents should be able to build their own scaffolding and bootstrap, but it's worth noting that the final system might be using a bunch of tools!)

For humans, we can consider eyes to be a type of scaffolding: they help us do various cognitive tasks by adding various affordances but are ultimately just attached.

Nonetheless, I predict that if I didn't have eyes, I would be notably less efficient at my job.

↑ comment by eggsyntax · 2024-06-27T10:45:42.626Z · LW(p) · GW(p)

Consider the Egyptian Army in '73.

Very interesting example, thanks.

Designing the script for each individual task and subtask in order to make a 90% reliable AI economically valuable turns a productivity-improving tool into an economically productive agent, but only within certain parameters, and each time you encounter a new task, more scaffolding will need to be built.

Agreed that that wouldn't be good evidence that those systems could do general reasoning. My intention in this piece is to mainly consider general-purpose scaffolding rather than task-specific.

comment by ryan_greenblatt · 2024-06-25T21:28:20.544Z · LW(p) · GW(p)

All LLMs to date fail rather badly at classic problems of rearranging colored blocks.

It's pretty unclear to me that the LLMs do much worse than humans at this task.