Causal Universes

post by Eliezer Yudkowsky (Eliezer_Yudkowsky) · 2012-11-29T04:08:18.859Z · LW · GW · Legacy · 395 comments

Followup to: Stuff that Makes Stuff Happen

Previous meditation: Does the idea that everything is made of causes and effects meaningfully constrain experience? Can you coherently say how reality might look, if our universe did not have the kind of structure that appears in a causal model?

I can describe to you at least one famous universe that didn't look like it had causal structure, namely the universe of J. K. Rowling's Harry Potter.

You might think that J. K. Rowling's universe doesn't have causal structure because it contains magic - that wizards wave their wands and cast spells, which doesn't make any sense and goes against all science, so J. K. Rowling's universe isn't 'causal'.

In this you would be completely mistaken. The domain of "causality" is just "stuff that makes stuff happen and happens because of other stuff". If Dumbledore waves his wand and therefore a rock floats into the air, that's causality. You don't even have to use words like 'therefore', let alone big fancy phrases like 'causal process', to put something into the lofty-sounding domain of causality. There's causality anywhere there's a noun, a verb, and a subject: 'Dumbledore's wand lifted the rock.' So far as I could tell, there wasn't anything in Lord of the Rings that violated causality.

You might worry that J. K. Rowling had made a continuity error, describing a spell working one way in one book, and a different way in a different book. But we could just suppose that the spell had changed over time. If we actually found ourselves in that apparent universe, and saw a spell have two different effects on two different occasions, we would not conclude that our universe was uncomputable, or that it couldn't be made of causes and effects.

No, the only part of J. K. Rowling's universe that violates 'cause and effect' is...

...the Time-Turners, of course.

A Time-Turner, in Rowling's universe, is a small hourglass necklace that sends you back in time 1 hour each time you spin it. In Rowling's universe, this time-travel doesn't allow for changing history; whatever you do after you go back, it's already happened. The universe containing the time-travel is a stable, self-consistent object.

If a time machine does allow for changing history, it's easy to imagine how to compute it; you could easily write a computer program which would simulate that universe and its time travel, given sufficient computing power. You would store the state of the universe in RAM and simulate it under the programmed 'laws of physics'. Every nanosecond, say, you'd save a copy of the universe's state to disk. When the Time-Changer was activated at 9pm, you'd retrieve the saved state of the universe from one hour ago at 8pm, load it into RAM, and then insert the Time-Changer and its user in the appropriate place. This would, of course, dump the rest of the universe from 9pm into oblivion - no processing would continue onward from that point, which is the same as ending that world and killing everyone in it.[1]

Still, if we don't worry about the ethics or the disk space requirements, then a Time-Changer which can restore and then change the past is easy to compute. There's a perfectly clear order of causality in metatime, in the linear time of the simulating computer, even if there are apparent cycles as seen from within the universe. The person who suddenly appears with a Time-Changer is the causal descendant of the older universe that just got dumped from RAM.
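As a minimal sketch of that save-and-reload procedure, here is a toy version in Python. Everything in it is made up for illustration: the dictionary-shaped universe, the `step` function standing in for the 'laws of physics', and the `already_jumped` flag, which is an assumption added only so that the restored timeline doesn't keep re-triggering the Time-Changer forever.

```python
import copy

def step(state):
    # Hypothetical 'laws of physics': the clock advances and everyone ages one tick.
    state["clock"] += 1
    for name in state["people"]:
        state["people"][name] += 1

def run(metatime_ticks, activate_at=9, jump_back=1):
    state = {"clock": 0, "people": {"Alice": 0}}
    saved = {}                # clock reading -> saved copy of the universe ("disk")
    already_jumped = False    # assumption: the Time-Changer is used only once
    for _ in range(metatime_ticks):      # one loop iteration = one tick of metatime
        saved[state["clock"]] = copy.deepcopy(state)
        if state["clock"] == activate_at and not already_jumped:
            traveller_age = state["people"]["Alice"]
            # Reload the older state; the universe we just left is never computed further.
            state = copy.deepcopy(saved[activate_at - jump_back])
            state["people"]["Alice (time traveller)"] = traveller_age
            already_jumped = True
        else:
            step(state)
    return state

final = run(metatime_ticks=12)
print(final)
```

The loop variable is the metatime: it only ever counts upward, even though the in-universe `clock` goes 8, 9, 8, 9, 10. The traveller's age is copied out of the discarded state, which is the sense in which she is a causal descendant of the universe that got dumped.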

But what if instead, reality is always - somehow - perfectly self-consistent, so that there's apparently only one universe with a future and a past that never changes, so that the person who appears at 8PM has always seemingly descended from the very same universe that then develops by 9PM...?

How would you compute that in one sweep-through, without any higher-order metatime?

What would a causal graph for that look like, when the past descends from its very own future?

And the answer is that there isn't any such causal graph. Causal models are sometimes referred to as DAGs, which stands for Directed Acyclic Graph. If instead there's a directed cycle, there's no obvious order in which to compute the joint probability table. Even if you somehow knew that at 8PM somebody was going to appear with a Time-Turner used at 9PM, you still couldn't compute the exact state of the time-traveller without already knowing the future at 9PM, and you couldn't compute the future without knowing the state at 8PM, and you couldn't compute the state at 8PM without knowing the state of the time-traveller who just arrived.
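To make "no obvious order in which to compute" concrete, here is a small sketch using Python's standard `graphlib` module. The node names and the helper `evaluation_order` are assumptions for illustration; each graph maps a node to the set of nodes it is computed from.

```python
from graphlib import CycleError, TopologicalSorter

def evaluation_order(parents):
    """Return an order in which every node follows its parents, or None if a cycle exists."""
    try:
        return list(TopologicalSorter(parents).static_order())
    except CycleError:
        return None

# Ordinary causal chain: 7pm -> 8pm -> 9pm.
ordinary = {"8pm": {"7pm"}, "9pm": {"8pm"}}

# Time-Turner: 8pm also depends on 9pm, which depends on 8pm.
time_turner = {"8pm": {"7pm", "9pm"}, "9pm": {"8pm"}}

print(evaluation_order(ordinary))     # ['7pm', '8pm', '9pm']
print(evaluation_order(time_turner))  # None
```

The acyclic graph yields an order you can sweep through once; the graph with the directed cycle has no such order at all.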

In a causal model, you can compute p(9pm|8pm) and p(8pm|7pm) and it all starts with your unconditional knowledge of p(7pm) or perhaps the Big Bang, but with a Time-Turner we have p(9pm|8pm) and p(8pm|9pm) and we can't untangle them - multiplying those two conditional matrices together would just yield nonsense.
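Written out for the three-node chain (using the same informal notation as above), the ordinary causal case is just the factorization

$$
p(7\text{pm},\, 8\text{pm},\, 9\text{pm}) \;=\; p(7\text{pm})\; p(8\text{pm} \mid 7\text{pm})\; p(9\text{pm} \mid 8\text{pm}),
$$

where each factor conditions only on things already computed. With the Time-Turner the available pieces are $p(9\text{pm} \mid 8\text{pm})$ and $p(8\text{pm} \mid 9\text{pm})$, and neither can serve as the unconditional starting term that such a product needs.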

Does this mean that the Time-Turner is beyond all logic and reason?

Complete philosophical panic is basically never justified. We should even be reluctant to say anything like, "The so-called Time-Turner is beyond coherent description; we only think we can imagine it, but really we're just talking nonsense; so we can conclude a priori that no such Time-Turner can exist; in fact, there isn't even a meaningful thing that we've just proven can't exist." This is also panic - it's just been made to sound more dignified. The first rule of science is to accept your experimental results, and generalize based on what you see. What if we actually did find a Time-Turner that seemed to work like that? We'd just have to accept that Causality As We Previously Knew It had gone out the window, and try to make the best of that.

In fact, despite the somewhat-justified conceptual panic which the protagonist of Harry Potter and the Methods of Rationality undergoes upon seeing a Time-Turner, a universe like that can have a straightforward logical description even if it has no causal description.

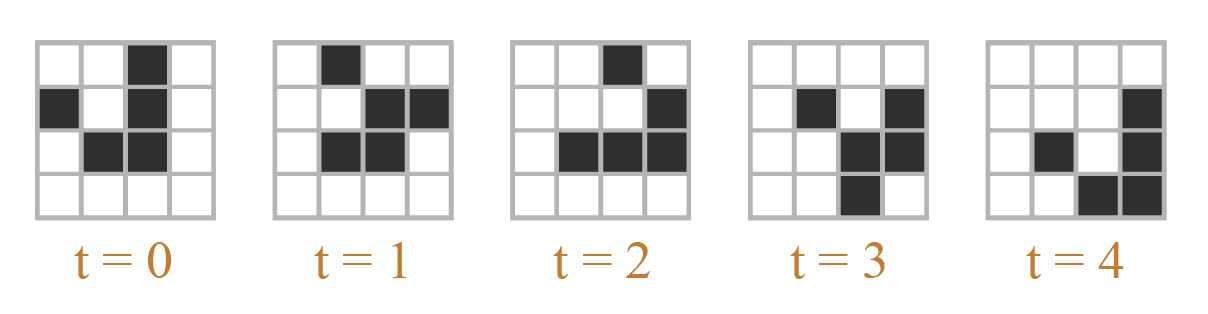

Conway's Game of Life is a very simple specification of a causal universe; what we would today call a cellular automaton. The Game of Life takes place on a two-dimensional square grid, so that each cell is surrounded by eight others, and the Laws of Physics are as follows:

- A cell with 2 living neighbors during the last tick, retains its state from the last tick.

- A cell with 3 living neighbors during the last tick, will be alive during the next tick.

- A cell with fewer than 2 or more than 3 living neighbors during the last tick, will be dead during the next tick.
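The three rules above fit in a few lines of Python. This is only a sketch under one assumption not in the text: the grid is unbounded and is represented as the set of coordinates of living cells (the name `life_step` is likewise just a label chosen here).

```python
from collections import Counter

def life_step(live):
    """Advance one tick. `live` is a set of (row, col) coordinates of living cells."""
    # Count, for every cell adjacent to a living cell, how many living neighbors it has.
    counts = Counter(
        (r + dr, c + dc)
        for (r, c) in live
        for dr in (-1, 0, 1)
        for dc in (-1, 0, 1)
        if (dr, dc) != (0, 0)
    )
    # 3 neighbors: alive. 2 neighbors: keep previous state. Anything else: dead.
    return {cell for cell, n in counts.items() if n == 3 or (n == 2 and cell in live)}

blinker = {(1, 0), (1, 1), (1, 2)}   # three cells in a row
print(sorted(life_step(blinker)))    # [(0, 1), (1, 1), (2, 1)]
```

The horizontal bar becomes a vertical bar, and applying `life_step` again flips it back: the simplest oscillator in Life.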

It is my considered opinion that everyone should play around with Conway's Game of Life at some point in their lives, in order to comprehend the notion of 'laws of physics'. Playing around with Life as a kid (on a Mac Plus) helped me gut-level-understand the concept of a 'lawful universe' developing under exceptionless rules.

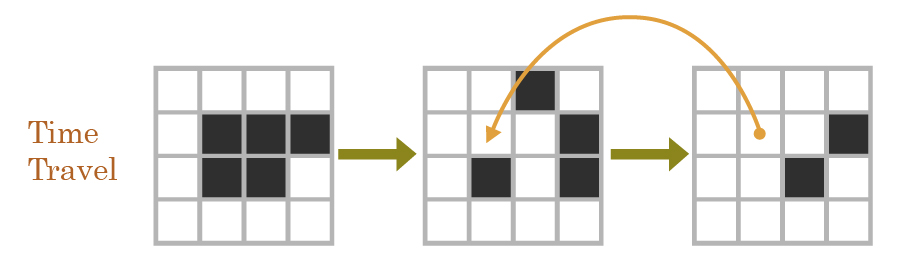

Now suppose we modify the Game of Life universe by adding some prespecified cases of time travel - places where a cell will descend from neighbors in the future, instead of the past.

In particular we shall take a 4x4 Life grid, and arbitrarily hack Conway's rules to say:

- On the 2nd tick, the cell at (2,2) will have its state determined by that cell's state on the 3rd tick, instead of its neighbors on the 1st tick.

It's no longer possible to compute the state of each cell at each time in a causal order where we start from known cells and compute their not-yet-known causal descendants. The state of the cells on the 3rd tick depends on the state of the cells on the 2nd tick, which depends on the state on the 3rd tick.

In fact, the time-travel rule, on the same initial conditions, also permits a live cell to travel back in time, not just a dead cell - this just gives us the "normal" grid! Since you can't compute things in order of cause and effect, even though each local rule is deterministic, the global outcome is not determined.

However, you could simulate Life with time travel merely by brute-force searching through all possible Life-histories, discarding all histories which disobeyed the laws of Life + time travel. If the entire universe were a 4-by-4 grid, it would take 16 bits to specify a single slice through Time - the universe's state during a single clock tick. If the whole of Time was only 3 ticks long, there would be only 48 bits making up a candidate 'history of the universe' - it would only take 48 bits to completely specify a History of Time. 2^48 is just 281,474,976,710,656, so with a cluster of 2GHz CPUs it would be quite practical to find, for this rather tiny universe, the set of all possible histories that obey the logical relations of time travel.
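Here is a sketch of that brute-force search, shrunk so it runs in well under a second. The shrinking is my assumption, not the setup described above: a 3x3 grid instead of 4x4, cells beyond the edge treated as permanently dead, the first tick fixed to a known initial condition, and the centre cell as the one that time-travels from tick 3 to tick 2. That leaves 2^18 = 262,144 candidate histories for ticks 2 and 3.

```python
from functools import lru_cache
from itertools import product

SIZE = 3                  # assumed 3x3 grid, stored as a flat tuple of 0/1
LOOP = 1 * SIZE + 1       # index of the time-travelling cell (the centre)

def living_neighbors(state, i):
    r, c = divmod(i, SIZE)
    return sum(
        state[rr * SIZE + cc]
        for rr in range(r - 1, r + 2)
        for cc in range(c - 1, c + 2)
        if (rr, cc) != (r, c) and 0 <= rr < SIZE and 0 <= cc < SIZE
    )

@lru_cache(maxsize=None)
def life_step(state):
    """Ordinary causal Life rules; cells off the grid count as dead."""
    out = []
    for i, alive in enumerate(state):
        n = living_neighbors(state, i)
        out.append(1 if n == 3 else alive if n == 2 else 0)
    return tuple(out)

def obeys_laws(t1, t2, t3):
    """Check a candidate history against Life + the single time-travel rule."""
    causal_t2 = life_step(t1)
    for i in range(SIZE * SIZE):
        if i == LOOP:
            if t2[i] != t3[i]:          # tick-2 state is copied from tick 3
                return False
        elif t2[i] != causal_t2[i]:     # every other cell follows from tick 1
            return False
    return t3 == life_step(t2)          # tick 3 follows from tick 2 as usual

def consistent_histories(t1):
    slices = list(product((0, 1), repeat=SIZE * SIZE))
    return [(t1, t2, t3) for t2 in slices for t3 in slices if obeys_laws(t1, t2, t3)]

BLINKER = (0, 0, 0,
           1, 1, 1,
           0, 0, 0)
histories = consistent_histories(BLINKER)
print(len(histories))     # 2
```

For this initial condition the filter leaves exactly two histories: one where a live cell travels back (the "normal" blinker) and one where a dead cell does. Nothing in the rules picks between them.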

It would no longer be possible to point to a particular cell in a particular history and say, "This is why it has the 'alive' state on tick 3". There's no "reason" - in the framework of causal reasons - why the time-traveling cell is 'dead' rather than 'alive', in the history we showed. (Well, except that Alex, in the real universe, happened to pick it out when I asked him to generate an example.) But you could, in principle, find out what the set of permitted histories would be for a large digital universe, given lots and lots of computing power.

Here's an interesting question I do not know how to answer: Suppose we had a more complicated set of cellular automaton rules, on a vastly larger grid, such that the cellular automaton was large enough, and supported enough complexity, to permit people to exist inside it and be computed. Presumably, if we computed out cell states in the ordinary way, each future following from its immediate past, the people inside it would be as real as we humans computed under our own universe's causal physics.

Now suppose that instead of computing the cellular automaton causally, we hack the rules of the automaton to add large time-travel loops - change their physics to allow Time-Turners - and with an unreasonably large computer, the size of two to the power of the number of bits comprising an entire history of the cellular automaton, we enumerate all possible candidates for a universe-history.

So far, we've just generated all 2^N possible bitstrings of size N, for some large N; nothing more. You wouldn't expect this procedure to generate any people or make any experiences real, unless enumerating all finite strings of size N causes all lawless universes encoded in them to be real. There's no causality there, no computation, no law relating one time-slice of a universe to the next...

Now we set the computer to look over this entire set of candidates, and mark with a 1 those that obey the modified relations of the time-traveling cellular automaton, and mark with a 0 those that don't.

If N is large enough - if the size of the possible universe and its duration is large enough - there would be descriptions of universes which experienced natural selection, evolution, perhaps the evolution of intelligence, and of course, time travel with self-consistent Time-Turners, obeying the modified relations of the cellular automaton. And the checker would mark those descriptions with a 1, and all others with a 0.

Suppose we pick out one of the histories marked with a 1 and look at it. It seems to contain a description of people who remember experiencing time travel.

Now, were their experiences real? Did we make them real by marking them with a 1 - by applying the logical filter using a causal computer? Even though there was no way of computing future events from past events; even though their universe isn't a causal universe; even though they will have had experiences that literally were not 'caused', that did not have any causal graph behind them, within the framework of their own universe and its rules?

I don't know. But...

Our own universe does not appear to have Time-Turners, and does appear to have strictly local causality in which each variable can be computed strictly forward-in-time.

And I don't know why that's the case; but it's a likely-looking hint for anyone wondering what sort of universes can be real in the first place.

The collection of hypothetical mathematical thingies that can be described logically (in terms of relational rules with consistent solutions) looks vastly larger than the collection of causal universes with locally determined, acyclically ordered events. Most mathematical objects aren't like that. When you say, "We live in a causal universe", a universe that can be computed in-order using local and directional rules of determination, you're vastly narrowing down the possibilities relative to all of Math-space.

So it's rather suggestive that we find ourselves in a causal universe rather than a logical universe - it suggests that not all mathematical objects can be real, and the sort of thingies that can be real and have people in them are constrained to somewhere in the vicinity of 'causal universes'. That you can't have consciousness without computing an agent made of causes and effects, or maybe something can't be real at all unless it's a fabric of cause and effect. It suggests that if there is a Tegmark Level IV multiverse, it isn't "all logical universes" but "all causal universes".

Of course you also have to be a bit careful when you start assuming things like "Only causal things can be real" because it's so easy for Reality to come back at you and shout "WRONG!" Suppose you thought reality had to be a discrete causal graph, with a finite number of nodes and discrete descendants, exactly like Pearl-standard causal models. There would be no hypothesis in your hypothesis-space to describe the standard model of physics, where space is continuous, indefinitely divisible, and has complex amplitude assignments over uncountable cardinalities of points.

Reality is primary, saith the wise old masters of science. The first rule of science is just to go with what you see, and try to understand it; rather than standing on your assumptions, and trying to argue with reality.

But even so, it's interesting that the pure, ideal structure of causal models, invented by statisticians to reify the idea of 'causality' as simply as possible, looks much more like the modern view of physics than does the old Newtonian ideal.

If you believed in Newtonian billiard balls bouncing around, and somebody asked you what sort of things can be real, you'd probably start talking about 'objects', like the billiard balls, and 'properties' of the objects, like their location and velocity, and how the location 'changes' between one 'time' and another, and so on.

But suppose you'd never heard of atoms or velocities or this 'time' stuff - just the causal diagrams and causal models invented by statisticians to represent the simplest possible cases of cause and effect. Like this:

And then someone says to you, "Invent a continuous analogue of this."

You wouldn't invent billiard balls. There's no billiard balls in a causal diagram.

You wouldn't invent a single time sweeping through the universe. There's no sweeping time in a causal diagram.

You'd stare a bit at B, C, and D which are the sole nodes determining A, screening off the rest of the graph, and say to yourself:

"Okay, how can I invent a continuous analogue of there being three nodes that screen off the rest of the graph? How do I do that with a continuous neighborhood of points, instead of three nodes?"

You'd stare at E determining D determining A, and ask yourself:

"How can I invent a continuous analogue of 'determination', so that instead of E determining D determining A, there's a continuum of determined points between E and A?"

If you generalized in a certain simple and obvious fashion...

The continuum of relatedness from B to C to D would be what we call space.

The continuum of determination from E to D to A would be what we call time.

There would be a rule stating that for epsilon time before A, there's a neighborhood of spatial points delta which screens off the rest of the universe from being relevant to A (so long as no descendants of A are observed); and that epsilon and delta can both get arbitrarily close to zero.

There might be - if you were just picking the simplest rules you could manage - a physical constant which related the metric of relatedness (space) to the metric of determination (time) and so enforced a simple continuous analogue of local causality...

...in our universe, we call it c, the speed of light.

And it's worth remembering that Isaac Newton did not expect that rule to be there.

If we just stuck with Special Relativity, and didn't get any more modern than that, there would still be little billiard balls like electrons, occupying some particular point in that neighborhood of space.

But if your little neighborhoods of space have billiard balls with velocities, many of which are slower than lightspeed... well, that doesn't look like the simplest continuous analogues of a causal diagram, does it?

When we make the first quantum leap and describe particles as waves, we find that the billiard balls have been eliminated. There's no 'particles' with a single point position and a velocity slower than light. There's an electron field, and waves propagate through the electron field through points interacting only with locally neighboring points. If a particular electron seems to be moving slower than light, that's just because - even though causality always propagates at exactly c between points within the electron field - the crest of the electron wave can appear to move slower than that. A billiard ball moving through space over time, has been replaced by a set of points with values determined by their immediate historical neighborhood.
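A toy illustration of "points with values determined by their immediate historical neighborhood": the sketch below is not the electron field, just the classical one-dimensional wave equation on a grid, swapped in because it is the simplest thing with the same flavor. It uses the standard leapfrog update with the grid spacing chosen so that influence moves exactly one cell per tick; the names `wave_step` and `simulate` and the 21-cell grid are assumptions for illustration.

```python
def wave_step(prev, curr):
    """Next time-slice of a 1D wave; each point uses only its neighbors' recent past."""
    n = len(curr)
    nxt = [0.0] * n                      # end points stay fixed at zero
    for i in range(1, n - 1):
        nxt[i] = curr[i - 1] + curr[i + 1] - prev[i]
    return nxt

def simulate(ticks, size=21, source=10):
    prev = [0.0] * size
    curr = [0.0] * size
    curr[source] = 1.0                   # a single disturbed point
    for _ in range(ticks):
        prev, curr = curr, wave_step(prev, curr)
    return curr

field = simulate(ticks=5)
print([i for i, v in enumerate(field) if v != 0.0])
```

After k ticks, nothing more than k cells away from the disturbed point has changed. There is no object moving through the grid, only local values determined by local history, with a built-in maximum speed of influence.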

And when we make the second quantum leap into configuration space, we find a timeless universal wavefunction with complex amplitudes assigned over the points in that configuration space, and the amplitude of every point causally determined by its immediate neighborhood in the configuration space.[2]

So, yes, Reality can poke you in the nose if you decide that only discrete causal graphs can be real, or something silly like that.

But on the other hand, taking advice from the math of causality wouldn't always lead you astray. Modern physics looks a heck of a lot more similar to "Let's build a continuous analogue of the simplest diagrams statisticians invented to describe theoretical causality", than like anything Newton or Aristotle imagined by looking at the apparent world of boulders and planets.

I don't know what it means... but perhaps we shouldn't ignore the hint we received by virtue of finding ourselves inside the narrow space of "causal universes" - rather than the much wider space "all logical universes" - when it comes to guessing what sort of thingies can be real. To the extent we allow non-causal universes in our hypothesis space, there's a strong chance that we are broadening our imagination beyond what can really be real under the Actual Rules - whatever they are! (It is possible to broaden your metaphysics too much, as well as too little. For example, you could allow logical contradictions into your hypothesis space - collections of axioms with no models - and ask whether we lived in one of those.)

If we trusted absolutely that only causal universes could be real, then it would be safe to allow only causal universes into our hypothesis space, and assign probability literally zero to everything else.

But if you were scared of being wrong, then assigning probability literally zero means you can't change your mind, ever, even if Professor McGonagall shows up with a Time-Turner tomorrow.

Meditation: Suppose you needed to assign non-zero probability to any way things could conceivably turn out to be, given humanity's rather young and confused state - enumerate all the hypotheses a superintelligent AI should ever be able to arrive at, based on any sort of strange world it might find by observation of Time-Turners or stranger things. How would you enumerate the hypothesis space of all the worlds we could remotely maybe possibly be living in, including worlds with hypercomputers and Stable Time Loops and even stranger features?

[1] Sometimes I still marvel about how in most time-travel stories nobody thinks of this. I guess it really is true that only people who are sensitized to 'thinking about existential risk' even notice when a world ends, or when billions of people are extinguished and replaced by slightly different versions of themselves. But then almost nobody will notice that sort of thing inside their fiction if the characters all act like it's okay.

[2] Unless you believe in 'collapse' interpretations of quantum mechanics where Bell's Theorem mathematically requires that either your causal models don't obey the Markov condition or they have faster-than-light nonlocal influences. (Despite a large literature of obscurantist verbal words intended to obscure this fact, as generated and consumed by physicists who don't know about formal definitions of causality or the Markov condition.) If you believe in a collapse postulate, this whole post goes out the window. But frankly, if you believe that, you are bad and you should feel bad.

Part of the sequence Highly Advanced Epistemology 101 for Beginners

Next post: "Mixed Reference: The Great Reductionist Project"

Previous post: "Logical Pinpointing"

395 comments

Comments sorted by top scores.

comment by CronoDAS · 2012-11-28T21:17:07.979Z · LW(p) · GW(p)

> when billions of people are extinguished and replaced by slightly different versions of themselves.

This happens in the ordinary passage of time anyway. (Stephen King's story "The Langoliers" plays this for horror - the reason the past no longer exists is because monsters are eating it.)

↑ comment by Rob Bensinger (RobbBB) · 2012-11-29T02:13:37.664Z · LW(p) · GW(p)

If your theory of time is 4-dimensionalist, then you might think the past people are 'still there,' in some timeless sense, rather than wholly annihilated. Interestingly, you might (especially if you reject determinism) think that moving through time involves killing (possible) futures, rather than (or in addition to) killing the past.

↑ comment by grobstein · 2012-11-29T17:28:10.394Z · LW(p) · GW(p)

Hard to see why you can't make a version of this same argument, at an additional remove, in the time travel case. For example, if you are a "determinist" and / or "n-dimensionalist" about the "meta-time" concept in Eliezer's story, the future people who are lopped off the timeline still exist in the meta-timeless eternity of the "meta-timeline," just as in your comment the dead still exist in the eternity of the past.

In the (seemingly degenerate) hypothetical where you go back in time and change the future, I'm not sure why we should prefer to say that we "destroy" the "old" future, rather than simply that we disconnect it from our local universe. That might be a horrible thing to do, but then again it might not be. There's lots of at-least-conceivable stuff that is disconnected from our local universe.

↑ comment by Caspar Oesterheld (Caspar42) · 2018-02-16T17:09:26.439Z · LW(p) · GW(p)

(RobbBB seems to refer to what philosophers call the B-theory of time, whereas CronoDAS seems to refer to the A-theory of time.)

↑ comment by SilasBarta · 2012-12-03T19:44:39.638Z · LW(p) · GW(p)

Yes, that seems more consistent with the rest of the sequences (and indeed advocacy of cryonics/timeless identity). "You" are a pattern, not a specific collection of atoms. So if the pattern persists (as per successive moments of time, or destroying and re-creating the pattern), so do "you".

↑ comment by Rob Bensinger (RobbBB) · 2012-12-03T21:14:44.693Z · LW(p) · GW(p)

Sure. At the same time, it's important to note that this is a 'you' by stipulation. The question of how to define self-identity for linguistic purposes (e.g., the scope of pronouns) is independent of the psychological question 'When do I feel as though something is 'part of me'?', and both of these are independent of the normative question 'What entities should I act to preserve in the same way that I act to preserve my immediate person?' It may be that there is no unique principled way to define the self, in which case we should be open to shifting conceptions based on which way of thinking is most useful in a given situation.

This is one of the reasons the idea of my death does not terrify me. The idea of death in general is horrific, but the future I who will die will only be somewhat similar to my present self, differing only in degree from my similarity to other persons. I fear death, not just 'my' death.

↑ comment by SilasBarta · 2012-12-03T21:16:35.185Z · LW(p) · GW(p)

Sure, but my point is that most of the commentary on this site, or that is predicated on the Sequences, assumes the equivalence of all of those.

↑ comment by Eliezer Yudkowsky (Eliezer_Yudkowsky) · 2012-12-01T01:05:22.539Z · LW(p) · GW(p)

"Death" is the absence of a future self that is continuous with your present self. I don't know exactly what constitutes "continuous" but it clearly is not the identity of individual particles. It may require continuity of causal derivation, for example.

↑ comment by someonewrongonthenet · 2012-12-03T00:29:08.447Z · LW(p) · GW(p)

Why?

Upload yourself to a computer. You've got a copy on the computer, you've got a physical body. Kill the physical body a few milliseconds after upload.

Repeat, except now kill the physical body a few milliseconds before the upload.

Do you mean to define the former situation as involving a "Death" because a few milliseconds' worth of computations were lost, but the latter situation as simply a transfer?

I don't think the word "death" really applies anymore when we are talking at the level of physical systems, any more than "table" or "chair" would. Those constructs don't cross over well into (real or imaginary) physics.

↑ comment by TheWakalix · 2019-05-19T19:55:14.889Z · LW(p) · GW(p)

Since Eliezer is a temporal reductionist, I think he might not mean "temporally continuous", but rather "logical/causal continuity" or something similar.

Discrete time travel would also violate temporal continuity, by the way.

↑ comment by CronoDAS · 2012-12-01T06:19:50.581Z · LW(p) · GW(p)

(Even the billiard ball model of "classical" chemistry is enough to eliminate "individual particles" as the source of personal identity; you aren't made of the same atoms you were a year ago, because of eating, respiration, and other biological processes.)

↑ comment by MugaSofer · 2012-12-01T06:44:56.203Z · LW(p) · GW(p)

There could be special "mind particles" in your brain and I can't believe I just said that.

comment by chaosmosis · 2012-11-28T19:12:27.451Z · LW(p) · GW(p)

I don't understand why it's morally wrong to kill people if they're all simultaneously replaced with marginally different versions of themselves. Sure, they've ceased to exist. But without time traveling, you make it so that none of the marginally different versions exist. It seems like some kind of act omission distinction is creeping into your thought processes about time travel.

↑ comment by ialdabaoth · 2012-11-28T19:20:37.500Z · LW(p) · GW(p)

Moreso, marginally different versions of people are replacing the originals all the time, by the natural physical processes of the universe. If continuity of body is unnecessary for personal identity, why is continuity of their temporal substrate?

↑ comment by Davidmanheim · 2012-11-30T00:36:54.252Z · LW(p) · GW(p)

Identity is a process, not a physical state. There is a difference between continuity of body, which is physical, and continuity of identity, which is a process. If I replace a hard drive from a running computer, it may still run all of the same processes. The same could be true of processors, or memory. But if I terminate the process, the physical substrate being the same is irrelevant.

↑ comment by ialdabaoth · 2012-11-30T01:31:27.557Z · LW(p) · GW(p)

I'm not even certain that identity is a process. The process of consciousness shuts down every time we go to sleep, and gets reconstituted from our memories the next time we wake up (with intermittent consciousness-like processes that occur in-between, while we dream).

It seems like the closest thing to "identity" that we have, these days, is a sort of nebulous locus of indistinguishably similar dynamic data structures, regardless of the substrate that is encoding or processing those structures. It seems a rather flimsy thing to hang an "I" on, though.

↑ comment by Davidmanheim · 2012-12-03T20:57:03.150Z · LW(p) · GW(p)

I'm unclear on your logic; whatever the mechanism, the "cogito" exists (demonstrably to myself, and presumably to yourself). Given this, why is it too flimsy? Why does it matter if there is a complex "nebulous locus" that instantiates it - it's there, and it works, and conveys, to me, the impression that I am.

↑ comment by ialdabaoth · 2012-12-03T23:01:25.205Z · LW(p) · GW(p)

The 'cogito', as you put it, exists in the sense that dynamic processes certainly have effect on the world, and those processes also tend to generate a sense of identity.

Just because it exists and has effect, though, is no reason to take its suggestions about the nature of that identity seriously.

Example: you probably tend to feel that you make choices from somewhere inside your head, as a response to your environment, rather than that your environment comes together in such a way that you react predictably to it, and coincidentally generate a sense of 'choice' as part of that feeling. Most people do this; it causes them to tend to attempt to apply willpower directly to "forcing" themselves to make the "choices" they think will produce the correct outcome, rather than crafting their environment so that they naturally react in such a way to produce that outcome, and coincidentally generate a sense that they "chose" to produce that outcome.

Wu wei wu, and all that.

↑ comment by zerker2000 · 2012-12-06T21:20:58.034Z · LW(p) · GW(p)

And this is why we (barely) have checkpointing. If you close your web browser, and launch a saved copy from five minutes ago, is the session a different one?

↑ comment by Davidmanheim · 2012-11-30T00:34:18.086Z · LW(p) · GW(p)

Because our morality is based on our experiential process. We see ourselves as the same person. Because of this, we want to be protected from violence in the future, even if the future person is not "really" the same as the present me.

↑ comment by chaosmosis · 2012-11-30T01:38:41.688Z · LW(p) · GW(p)

Why protect one type of "you" over another type? Your response gives a reason that future people are valuable, but not that those future people are more valuable than other future people.

↑ comment by Davidmanheim · 2012-12-03T20:53:02.922Z · LW(p) · GW(p)

I'm not protecting anyone over anyone else, I'm protecting someone over not-someone. Someone (i.e., a non-murdered person) is protected, and the outcome that leads to a dead person is avoided.

Experientially, we view "me in 10 seconds" as the same as "me now." Because of this, the traditional arguments hold, at least to the extent that we believe that our impression of continuous living is not just a neat trick of our mind unconnected to reality. And if we don't believe this, we fail the rationality test in many more severe ways than not understanding morality. (Why would I not jump off buildings, just because future me will die?)

Replies from: chaosmosis↑ comment by chaosmosis · 2012-12-04T03:08:59.793Z · LW(p) · GW(p)

I'm protecting someone over not-someone.

This ignores that insofar as going back in time kills currently existing people it also revives previously existing ones. You're ignoring the lives created by time travel.

Experientially, we view "me in 10 seconds" as the same as "me now." Because of this, the traditional arguments hold, at least to the extent that we believe that our impression of continuous living is not just a neat trick of our mind unconnected to reality. And if we don't believe this, we fail the rationality test in many more severe ways than not understanding morality. (Why would I not jump off buildings, just because future me will die?)

If you're defending some form of egoism, maybe time travel is wrong. From a utilitarian standpoint, preferring certain people just because of their causal origins makes no sense.

Replies from: Davidmanheim↑ comment by Davidmanheim · 2012-12-04T16:58:22.032Z · LW(p) · GW(p)

Where did time travel come from? That's not part of my argument, or the context of the discussion about why murder is wrong; the time travel argument is just pointing out what form non-causality might take. The fact that murder is wrong is a moral judgement, which means it belongs to the realm of human experience.

If the question is whether changing the time stream is morally wrong because it kills people, the supposition is that we live in a non-causal world, which makes all of the arguments useless, since I'm not interested in defining morality in a universe that I have no reason to believe exists.

Replies from: chaosmosis↑ comment by chaosmosis · 2012-12-04T18:01:33.056Z · LW(p) · GW(p)

If you're not interested in discussing the ethics of time travel, why did you respond to my comment which said

I don't understand why it's morally wrong to kill people if they're all simultaneously replaced with marginally different versions of themselves. Sure, they've ceased to exist. But without time traveling, you make it so that none of the marginally different versions exist. It seems like some kind of act omission distinction is creeping into your thought processes about time travel.

with

Because our morality is based on our experiential process. We see ourselves as the same person. Because of this, we want to be protected from violence in the future, even if the future person is not "really" the same as the present me.

It seems pretty clear that I was talking about time travel, and your comment could also be interpreted that way.

But, whatever.

↑ comment by FeepingCreature · 2012-12-01T00:38:14.334Z · LW(p) · GW(p)

I think we need to limit the set of morally relevant future versions to versions that would be created without interference, because otherwise we split ourselves too thinly among speculative futures that almost never happen. Given that, it makes sense to want to protect the existence of the unmodified future self over the modified one.

Replies from: chaosmosis↑ comment by chaosmosis · 2012-12-01T03:29:31.485Z · LW(p) · GW(p)

"I think we need to arbitrarily limit something. Given that, this specific limit is not arbitrary."

How is that not equivalent to your argument?

Additionally, please explain more. I don't understand what you mean by saying that we "split ourselves too thinly". What is this splitting and why does it invalidate moral systems that do it? Also, overall, isn't your argument just a reason that considering alternatives to the status quo isn't moral?

Replies from: MugaSofer, FeepingCreature↑ comment by FeepingCreature · 2012-12-01T13:57:44.114Z · LW(p) · GW(p)

I think it summarizes to "time travel is too improbable and unpredictable to worry about [preserving the interests of yous affected by it]".

Replies from: chaosmosis↑ comment by chaosmosis · 2012-12-01T18:59:52.928Z · LW(p) · GW(p)

Your argument makes no sense.

"Time travel is too improbable to worry about preserving yous affected by it. Given that, it makes sense to want to protect the existence of the unmodified future self over the modified one."

Those two sentences do not connect. They actually contradict.

Also, you're doing moral epistemology backwards, in my view. You're basically saying, "it would be really convenient if the content of morality was such that we could easily compute it using limited cognitive resources". That's an argumentum ad consequentiam, which is a logical fallacy.

Replies from: FeepingCreature↑ comment by FeepingCreature · 2012-12-01T20:00:02.171Z · LW(p) · GW(p)

You're probably right about it contradicting. Though, about the moral-epistemology bit, I think there may be a sort of anthropic-bias type argument that creatures can only implement a morality that they can practically compute to begin with.

Replies from: chaosmosis↑ comment by chaosmosis · 2012-12-02T04:57:34.802Z · LW(p) · GW(p)

practically compute

Your argument is that it is hard and impractical, not that it is impossible, and I think that only the latter type is a reasonable constraint on moral considerations, although even then I have some qualms about whether or not nihilism would be more justified, as opposed to arbitrary moral limits. I also don't understand how anthropic arguments might come into play.

↑ comment by Rob Bensinger (RobbBB) · 2012-11-29T03:47:35.764Z · LW(p) · GW(p)

It depends. As for universes, so too for individual human beings: Is it moral (in a vacuum — we're assuming there aren't indirect harmful consequences) to kill a single individual, provided you replace him a second later with a near-perfect copy? That depends. Could you have made the clone without killing the original? If an individual's life is good, and you can create a copy of him that will also have a good life, without interfering with the original, then that act of copying may be ethically warranted, and killing either copy may be immoral.

Similarly, if you can make a copy of the whole universe without destroying the original, then, plausibly, it's just as wicked to destroy the old universe as it would be to destroy it without making a copy. You're subtracting the same amount of net utility. Of course, this is all assuming that the universe as a whole has positive value.

Replies from: Randy_M, chaosmosis↑ comment by Randy_M · 2012-11-29T16:43:03.299Z · LW(p) · GW(p)

Regarding universes, there's a discussion of this in Orson Scott Card's Pastwatch novel, where future people debate traveling back in time to change the present, realizing that that means basically the elimination of every person presently existing.

Regarding individuals, I once wrote a short story about a scientist who placed his mind into the body of a clone of himself, via a destructive process (scanned his original brain synapse by synapse after slicing it up, recreated that in the clone via electro stimulation). He was tried for murder of the clone. I hadn't seen the connection between the two stories until now, though.

↑ comment by chaosmosis · 2012-11-29T06:29:25.665Z · LW(p) · GW(p)

We are talking about time travel and so this doesn't apply. Your comment is nitpicky for no good reason. I obviously recognize that consequentialists believe that more lives are better; I don't know why you felt an urge to tell me that. Your wording is also unnecessarily pedantic and inefficient.

Replies from: army1987↑ comment by A1987dM (army1987) · 2012-11-29T11:51:37.190Z · LW(p) · GW(p)

consequentialists believe that more lives are better

Not all of them.

Replies from: chaosmosis↑ comment by chaosmosis · 2012-11-29T21:14:44.530Z · LW(p) · GW(p)

Sure. Again, this isn't relevant and isn't providing information that's new to me. People like Schopenhauer and Benatar might exist, but surely my overall point still stands. The focus on nitpicking is excessive and frustrating. I don't want to have to invest much time and effort into my comments on this site so that I can avoid allowing people to get distracted by side issues; I want to get my points across as efficiently as possible and without interruption.

Replies from: army1987↑ comment by A1987dM (army1987) · 2012-11-30T00:06:19.421Z · LW(p) · GW(p)

I was thinking more of average utilitarians than antinatalists. (I provisionally agree with average utilitarianism, and think more lives are better instrumentally but not terminally. I'm not confident that I wouldn't change my mind if I thought this stuff through, though.)

comment by jimrandomh · 2012-11-28T20:49:35.268Z · LW(p) · GW(p)

The property you talk about the universe having is an interesting one, but I don't think causality is the right word for it. You've smuggled an extra component into the definition: each node having small fan-in (for some definition of "small"). Call this "locality". Lack of locality makes causal reasoning harder (sometimes astronomically harder) in some cases, but it does not break causal inference algorithms; it only makes them slower.

The time-turner implementation where you enumerate all possible universes, and select one that passed the self-consistency test, can be represented by a DAG; it's causal. It's just that the moment where the time-traveler lands depends on the whole space of later universes. That doesn't make the graph cyclic; it's just a large fan-in. If the underlying physics is discrete and the range of time-turners is limited to six hours, it's not even infinite fan-in. And if you blur out irrelevant details, like we usually do when reasoning about physical processes, you can even construct manageable causal graphs of events involving time-turner usage, and use them to predict experimental outcomes!

You can imagine universes which violate the small-fanin criterion in other ways. For example, imagine a Conway's life-like game on an infinite plane, with a special tile type that copies a randomly-selected other cell in each timestep, with each cell having a probability of being selected that falls off with distance. Such cells would also have infinite fan-in, but there would still be a DAG representing the causal structure of that universe. It used to be believed that gravity behaved this way.

Replies from: Dweomite, Dweomite↑ comment by Dweomite · 2023-06-10T20:48:03.668Z · LW(p) · GW(p)

I think there's a subtle but important difference between saying that time travel can be represented by a DAG, and saying that you can compute legal time travel timelines using a DAG.

There's one possible story you can tell about time turners where the future "actually" affects the past, which is conceptually simple but non-causal.

There's also a second possible story you can tell about time turners where some process implementing the universe "imagines" a bunch of possible futures and then prunes the ones that aren't consistent with the time turner rules. This computation is causal, and from the inside it's indistinguishable from the first story.

But if reality is like the second story, it seems very strange to me that the rules used for imagining and pruning just happen to implement the first story. Why does it keep only the possible futures that look like time travel, if no actual time travel is occurring?

The first story is parsimonious in a way that the second story is not, because it supposes that the rules governing which timelines are allowed to exist are a result of how the timelines are implemented, rather than being an arbitrary restriction applied to a vastly-more-powerful architecture that could in principle have much more permissive rules.

So I think the first story can be criticized for being non-causal, and the second can be criticized for being non-parsimonious, and it's important to keep them in separate mental buckets so that you don't accidentally do an equivocation fallacy where you use the second story to defend against the first criticism and the first story to defend against the second.

↑ comment by Dweomite · 2023-06-10T20:47:16.741Z · LW(p) · GW(p)

Aside from the amount of fan-in, another difference that seems important to me is that a "normal" simulation is guaranteed to have exactly one continuation. If you do the thing where you simulate a bunch of possible futures and then prune the contradictory ones then there's no intrinsic reason you couldn't end up with multiple self-consistent futures--or with zero!
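A minimal sketch of that point, under toy assumptions that are not from the post: the only thing sent back in time is a single bit, each "universe" is just a function from the bit received to the bit later sent, and `consistent_histories` is a hypothetical helper name. Pruning then amounts to keeping the fixed points, and nothing forces there to be exactly one.

```python
from typing import Callable, Iterable, List

def consistent_histories(step: Callable[[int], int], messages: Iterable[int]) -> List[int]:
    """Return every message m for which receiving m leads to sending m back."""
    return [m for m in messages if step(m) == m]

BITS = [0, 1]

# Toy "universes" (illustrative only): each maps the received bit to the bit sent back.
def echo(m: int) -> int:
    return m          # sends back whatever it received

def flip(m: int) -> int:
    return 1 - m      # grandfather-paradox style: always sends the opposite

def always_one(m: int) -> int:
    return 1          # ignores what it received

print(consistent_histories(echo, BITS))        # two self-consistent futures
print(consistent_histories(flip, BITS))        # no self-consistent future
print(consistent_histories(always_one, BITS))  # exactly one
```

A forward-only simulation never faces this choice, because each state has exactly one successor by construction.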

comment by Eliezer Yudkowsky (Eliezer_Yudkowsky) · 2012-11-28T06:13:09.137Z · LW(p) · GW(p)

I haven't yet particularly seen anyone else point out that there is in fact a way to finitely Turing-compute a discrete universe with self-consistent Time-Turners in it. (In fact I hadn't yet thought of how to do it at the time I wrote Harry's panic attack in Ch. 14 of HPMOR, though a primary literary goal of that scene was to promise my readers that Harry would not turn out to be living in a computer simulation. I think there might have been an LW comment somewhere that put me on that track or maybe even outright suggested it, but I'm not sure.)

The requisite behavior of the Time Turner is known as Stable Time Loops on the wiki that will ruin your life, and known as the Novikov self-consistency principle to physicists discussing "closed timelike curve" solutions to General Relativity. Scott Aaronson showed that time loop logic collapses PSPACE to polynomial time.

I haven't yet seen anyone else point out that space and time look like a simple generalization of discrete causal graphs to continuous metrics of relatedness and determination, with c being the generalization of locality. This strikes me as important, so any precedent for it or pointer to related work would be much appreciated.

Replies from: Kaj_Sotala, Cyan, Plasmon, Peterdjones, evand, Liron, shminux, orthonormal, nigerweiss, Steve_Rayhawk, Alexei, aaronsw, gjm, Dentin↑ comment by Kaj_Sotala · 2012-11-28T08:07:59.150Z · LW(p) · GW(p)

The relationship between continuous causal diagrams and the modern laws of physics that you described was fascinating. What's the mainstream status of that?

Replies from: irrationalist, Eliezer_Yudkowsky↑ comment by irrationalist · 2012-11-28T12:45:41.964Z · LW(p) · GW(p)

Showed up in Penrose's "The Fabric of Reality." Curvature of spacetime is determined by infinitesimal light cones at each point. You can get a uniquely determined surface from a connection as well as a connection from a surface.

Replies from: Eliezer_Yudkowsky, diegocaleiro, lukeprog↑ comment by Eliezer Yudkowsky (Eliezer_Yudkowsky) · 2012-11-28T17:58:03.923Z · LW(p) · GW(p)

Obviously physicists totally know about causality being restricted to the light cone! And "curvature of space = light cones at each point" isn't Penrose, it's standard General Relativity.

Replies from: irrationalist↑ comment by irrationalist · 2012-11-30T16:08:42.561Z · LW(p) · GW(p)

Not claiming it's his own idea, just that it showed up in the book, I assume it's standard.

↑ comment by diegocaleiro · 2012-11-29T04:04:47.221Z · LW(p) · GW(p)

David Deutsch, not Roger Penrose. Or wrong title.

Replies from: gjm↑ comment by gjm · 2020-02-11T12:08:18.736Z · LW(p) · GW(p)

I think probably Penrose's "The Road to Reality" was intended. I don't think there's anything in the Deutsch book like "curvature of spacetime is determined by infinitesimal light cones"; I don't think I've read the relevant bits of the Penrose but it seems like exactly the sort of thing that would be in it.

↑ comment by Eliezer Yudkowsky (Eliezer_Yudkowsky) · 2012-11-28T11:20:35.291Z · LW(p) · GW(p)

Odd, the last paragraph of the above seems to have gotten chopped. Restored. No, I haven't particularly heard anyone else point that out but wouldn't be surprised to find someone had. It's an important point and I would also like to know if anyone has developed it further.

Replies from: shev↑ comment by shev · 2012-11-30T20:13:26.298Z · LW(p) · GW(p)

I found that idea so intriguing I made an account.

Have you considered that such a causal graph can be rearranged while preserving the arrows? I'm inclined to say, for example, that by moving your node E to be on the same level - simultaneous with - B and C, and squishing D into the middle, you've done something akin to taking a Lorentz transform?

I would go further to say that the act of choosing a "cut" of a discrete causal graph - and we assume that B, C, and D share some common ancestor to prevent completely arranging things - corresponds to the act of choosing a reference frame in Minkowski space. Which makes me wonder if max-flow algorithms have a continuous generalization.

edit: in fact, max-flows might be related to Lagrangians. See this.

↑ comment by Cyan · 2012-11-28T21:22:55.601Z · LW(p) · GW(p)

space and time look like a simple generalization of discrete causal graphs to continuous metrics of relatedness and determination

Mind officially blown once again. I feel something analogous to how I imagine someone who had been a heroin addict in the OB-bookblogging time period and in methadone treatment during the subsequent non-EY-non-Yvain-LW time period would feel upon shooting up today. Hey Mr. Tambourine Man, play a song for me / In the jingle-jangle morning I'll come following you.

Replies from: Gust↑ comment by Plasmon · 2012-11-28T09:33:16.085Z · LW(p) · GW(p)

finitely Turing-compute a discrete universe with self-consistent Time-Turners in it

In computational physics, the notion of self-consistent solutions is ubiquitous. For example, the behaviour of charged particles depends on the electromagnetic fields, and the electromagnetic fields depend on the behaviour of charged particles, and there is no "preferred direction" in this interaction. Not surprisingly, much research has been done on methods of obtaining (approximations of) such self-consistent solutions, notably in plasma physics and quantum chemistry. Just some examples.

It is true that these examples do not involve time travel, but I expect the mathematics to be quite similar, with the exception that these physics-based examples tend to have (should have) uniquely defined solutions.

Replies from: Eliezer_Yudkowsky↑ comment by Eliezer Yudkowsky (Eliezer_Yudkowsky) · 2012-11-28T11:16:36.170Z · LW(p) · GW(p)

Er, I was not claiming to have invented the notion of an equilibrium but thank you for pointing this out.

Replies from: Plasmon↑ comment by Plasmon · 2012-11-28T11:48:21.056Z · LW(p) · GW(p)

I didn't think you were claiming that, I was merely pointing out that the fact that self-consistent solutions can be calculated may not be that surprising.

Replies from: Eliezer_Yudkowsky↑ comment by Eliezer Yudkowsky (Eliezer_Yudkowsky) · 2012-11-28T17:59:32.436Z · LW(p) · GW(p)

The Novikov self-consistency principle has already been invented; the question was whether there was precedent for "You can actually compute consistent histories for discrete universes." Discrete, not continuous.

Replies from: Plasmon↑ comment by Plasmon · 2012-11-28T18:49:20.857Z · LW(p) · GW(p)

Yes, hence, "In computational physics", a branch of physics which necessarily deals with discrete approximations of "true" continuous physics. It seems really quite similar, I can even give actual examples of (somewhat exotic) algorithms where information from the future state is used to calculate the future state, very analogous to your description of a time-travelling game of life.

↑ comment by Peterdjones · 2012-11-28T12:05:59.261Z · LW(p) · GW(p)

There are precedents and parallels in Causal Sets and Causal Dynamical Triangulation

CDT is particularly interesting for its ability to predict the correct macroscopic dimensionality of spacetime:

" At large scales, it re-creates the familiar 4-dimensional spacetime, but it shows spacetime to be 2-d near the Planck scale, and reveals a fractal structure on slices of constant time"

Replies from: Jach↑ comment by Jach · 2012-11-29T08:35:52.324Z · LW(p) · GW(p)

I was going to reply with something similar. Kevin Knuth in particular has an interesting paper deriving special relativity from causal sets: http://arxiv.org/abs/1005.4172

↑ comment by evand · 2012-11-28T18:05:32.425Z · LW(p) · GW(p)

Scott Aaronson showed that time loop logic collapses PSPACE to polynomial time.

It replaces the exponential time requirement with an exactly analogous exponential MTBF reliability requirement. I'm surprised by how infrequently this is pointed out in such discussions, since it seems to me rather important.

Replies from: Douglas_Knight, JulianMorrison, Eugine_Nier↑ comment by Douglas_Knight · 2012-12-29T16:50:07.066Z · LW(p) · GW(p)

It's true that it requires an exponentially small error rate, but that's cheap, so why emphasize it?

Replies from: evand↑ comment by evand · 2012-12-29T18:49:28.921Z · LW(p) · GW(p)

I am not aware of any process, ever, with a demonstrated error rate significantly below that implied by a large, fast computer operating error-free for an extended period of time. If you can't improve on that, you aren't getting interesting speed improvements from the time machine, merely moderately useful ones. (In other words, you're making solvable expensive problems cheap, but you're not making previously unsolvable problems solvable.)

In cases where building high-reliability hardware is more difficult than normal (for example: high-radiation environments subject to drastic temperature changes and such), the existing experience base is that you can't cheaply add huge amounts of reliability, because the error detection and correction logic starts to limit the error performance.

Right now, a high performance supercomputer working for a couple weeks can perform ~ 10^21 operations, or about 2^70. If we assume that such a computer has a reliability a billion times better than it has actually demonstrated (which seems like a rather generous assumption to me), that still only leaves you solving 100-bit size NP / PSPACE problems. Adding error correction and detection logic might plausibly get you another factor of a billion, maybe two factors of a billion. In other words: it might improve things, but it's not the indistinguishable from magic NP-solving machine some people seem to think it is.
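The arithmetic behind those figures can be checked with a short sketch. The inputs are the ones stated in the comment (about 10^21 operations, factors of a billion); the function name `solvable_bits` is made up for illustration, and the model (problem size in bits equals log2 of the number of error-free operations) is a simplification.

```python
import math

def solvable_bits(operations: float, billion_factors: int) -> float:
    """log2 of (operations * 1e9**billion_factors): the problem size, in bits,
    whose search space matches the number of operations that must run error-free."""
    return math.log2(operations) + billion_factors * math.log2(1e9)

OPS = 1e21  # operations in a couple of weeks, per the comment

# 0 factors: demonstrated reliability; 1: the generous assumption;
# 2 and 3: one or two further factors of a billion from error correction.
for factors in range(4):
    print(factors, round(solvable_bits(OPS, factors), 1))
```

Each factor of a billion buys only about 30 more bits, which is why the estimate stays in the low hundreds of bits.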

↑ comment by JulianMorrison · 2012-11-28T19:10:26.815Z · LW(p) · GW(p)

And fuel requirements too, for similar reasons.

Replies from: evand↑ comment by evand · 2012-11-28T19:50:37.350Z · LW(p) · GW(p)

Why do the fuel requirements go up? Where did they come from in the first place?

Replies from: JulianMorrison↑ comment by JulianMorrison · 2012-11-28T19:59:45.924Z · LW(p) · GW(p)

A time loop amounts to a pocket eternity. How will you power the computer? Drop a sun in there, pick out a brown dwarf. That gives you maybe ten billion years of compute time, which isn't much.

Replies from: evand, ialdabaoth, TorqueDrifter↑ comment by evand · 2012-11-28T20:21:00.761Z · LW(p) · GW(p)

I was assuming a wormhole-like device with a timelike separation between the entrance and exit. The computer takes a problem statement and an ordering over the solution space, then receives a proposed solution from the time machine. It checks the solution for validity, and if valid sends the same solution into the time machine. If not valid, it sends the lexically following solution back. The computer experiences no additional time in relation to the operator and the rest of the universe, and the only thing that goes through the time machine is a bit string equal to the answer (plus whatever photons or other physical representation is required to store that information).

In other words, exactly the protocol Harry uses in HPMoR.

Is there some reason this protocol is invalid? If so, I don't believe I've seen it discussed in the literature.
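For concreteness, here is a rough sketch of the protocol above on a toy problem (finding a divisor of 91). Everything named here is an assumption for illustration: `loop_map` models what the computer sends back, the ordering wraps around at the end of the candidate list, and a brute-force search stands in for the time machine by looking for an input the loop maps to itself.

```python
from typing import Callable, List, Optional

def loop_map(received: int, candidates: List[int], is_valid: Callable[[int], bool]) -> int:
    """Index the computer sends into the time machine, given the index it received."""
    if is_valid(candidates[received]):
        return received                       # valid: send the same answer back
    return (received + 1) % len(candidates)   # invalid: send the next one (wrap-around assumed)

def consistent_answer(candidates: List[int], is_valid: Callable[[int], bool]) -> Optional[int]:
    """Brute-force stand-in for the time machine: find a self-consistent message.
    Assumes at least two candidates, so an invalid one never maps to itself."""
    for i in range(len(candidates)):
        if loop_map(i, candidates, is_valid) == i:
            return candidates[i]
    return None  # no consistent history exists

N = 91
candidates = list(range(2, N))

print(consistent_answer(candidates, lambda d: N % d == 0))  # a nontrivial divisor of 91
print(consistent_answer(candidates, lambda d: False))        # validator stuck on "not valid"
```

The only fixed points of the loop are valid answers, which is the whole trick. If the validator is broken and rejects everything, the loop has no fixed point at all in this toy model, so the reliability of the check carries the full weight.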

Replies from: Decius↑ comment by Decius · 2012-11-29T17:48:32.541Z · LW(p) · GW(p)

Now here's the panic situation: What happens if the computer experiences a malfunction or bug, such that the validation subroutine fails and always outputs not-valid? If the answer is sent back further in time, can the entire problem be simplified to "We will ask any question we want, get a true answer, and then sometime in the future send those answers back to ourselves?"

If so, all we need do in the present is figure out how to build the receiver for messages from the future: those messages will themselves explain how to build the transmitter.

Replies from: evand↑ comment by evand · 2012-11-29T20:19:18.860Z · LW(p) · GW(p)

The wormhole-like approach cannot send a message to a time before both ends of the wormhole are created. I strongly suspect this will be true of any logically consistent time travel device.

And yes, you can get answers to arbitrarily complex questions that way, but as they get difficult, you need to check them with high reliability.

Replies from: Decius↑ comment by Decius · 2012-11-30T02:50:02.745Z · LW(p) · GW(p)

Is it possible to create a wormhole exit without knowing how to do so? If so, how likely is it that there is a wormhole somewhere within listening range?

As for checking the answers, I use the gold standard of reliability: did it work? If it does work, the answer is sent back to the initiating point. If it doesn't work, send the next answer in the countable answer space back.

If the answer can't be shown to be in a countable answer space (the countable answer space includes every finite sequence of bits, and therefore is larger than the space of the possible outputs of every Turing Machine), then don't ask the question. I'm not sure what question you could ask that can't be answered in a series of bits.

Of course, that means that the first (and probably last) message sent back through time will be some variant of "Do not mess with time". It would take a ballsy engineer indeed to decide that the proper response to trying the solution "Do not mess with time" is to conclude that it failed and send the message "Do not mess with timf".

Replies from: evand↑ comment by evand · 2012-11-30T04:44:59.083Z · LW(p) · GW(p)

My very limited understanding is that wormholes only make logical sense with two endpoints. They are, quite literally, a topological feature of space that is a hole in the same sense as a donut has a hole. Except that the donut only has a two dimensional surface, unlike spacetime.

My mostly unfounded assumption is that other time traveling schemes are likely to be similar.

How do you plan to answer the question "did it work?" with an error rate lower than, say, 2^-100? What happens if you accidentally hit the wrong button? No one has ever tested a machine of any sort to that standard of reliability, or even terribly close. And even if you did, you still haven't done well enough to send a 126 bit message, such as "Do not mess with time" with any reliability.

Replies from: Decius↑ comment by Decius · 2012-11-30T15:46:58.302Z · LW(p) · GW(p)

How do you plan to answer the question "did it work?" with an error rate lower than, say, 2^-100?

I ask the future how they will did it.

Replies from: TheWakalix↑ comment by TheWakalix · 2019-05-19T19:59:58.784Z · LW(p) · GW(p)

I was going to say "bootstraps don't work that way", but since the validation happens on the future end, this might actually work.

↑ comment by ialdabaoth · 2013-12-26T17:35:37.635Z · LW(p) · GW(p)

Because thermodynamics and Shannon entropy are equivalent, all computationally reversible processes are thermodynamically reversible as well, at least in principle. Thus, you only need to "consume" power when doing a destructive update (i.e., overwriting memory locations) - and the minimum amount of energy necessary to do this per-bit is known, just like the maximum efficiency of a heat engine is known.
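The per-bit minimum referred to here is presumably Landauer's principle, which puts the cost of erasing one bit at k_B · T · ln 2. A small sketch of the number, assuming room temperature (300 K is my choice, not the comment's):

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact by definition in the 2019 SI)

def landauer_limit_joules(temperature_kelvin: float) -> float:
    """Minimum energy dissipated when erasing one bit at the given temperature."""
    return K_B * temperature_kelvin * math.log(2)

# Roughly 2.9e-21 J per erased bit at room temperature.
print(landauer_limit_joules(300.0))
```

A computation that never erases anything is not subject to this bound, which is the sense in which reversible computing can in principle run at arbitrarily low energy cost.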

Of course, for a closed timelike loop, the entire process has to return to its start state, which means there is theoretically zero net energy loss (otherwise the loop wouldn't be stable).

↑ comment by TorqueDrifter · 2012-11-28T20:17:07.845Z · LW(p) · GW(p)

Can't you just receive a packet of data from the future, verify it, then send it back into the past? Wouldn't that avoid having an eternal computer?

↑ comment by Eugine_Nier · 2012-11-29T06:10:31.518Z · LW(p) · GW(p)

It's also interesting how few people seem to realize that Scott Aaronson's time loop logic is basically a form of branching timelines rather than HP's one consistent universe.

↑ comment by Liron · 2012-12-01T04:40:19.445Z · LW(p) · GW(p)

I haven't yet seen anyone else point out that space and time look like a simple generalization of discrete causal graphs to continuous metrics of relatedness and determination, with c being the generalization of locality.

Yeah, this is one of the most profound things I've ever read. This is a RIDICULOUSLY good post.

Replies from: kremlin↑ comment by kremlin · 2013-12-26T16:36:54.867Z · LW(p) · GW(p)

The 'c is the generalization of locality' bit looked rather trivial to me. Maybe that's just EY rubbing off on me, but...

It's obvious that in Conway's Game, it takes at least 5 iterations for one cell to affect a cell 5 units away, and c has for some time seemed to me like our world's version of that law.
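That speed limit is easy to check numerically. The sketch below is illustrative only (the glider pattern and the flipped cell are arbitrary choices): it runs two copies of a Life board that differ in a single cell and records how far, in grid steps counting diagonals, the difference has spread after each generation.

```python
from typing import Dict, List, Set, Tuple

Cell = Tuple[int, int]

def life_step(live: Set[Cell]) -> Set[Cell]:
    """One generation of Conway's Game of Life on an unbounded plane."""
    counts: Dict[Cell, int] = {}
    for (x, y) in live:
        for dx in (-1, 0, 1):
            for dy in (-1, 0, 1):
                if dx or dy:
                    key = (x + dx, y + dy)
                    counts[key] = counts.get(key, 0) + 1
    # Birth on exactly 3 neighbours; survival on 2 or 3.
    return {c for c, n in counts.items() if n == 3 or (n == 2 and c in live)}

def spread_radii(base: Set[Cell], flipped: Cell, steps: int) -> List[int]:
    """After each step, the largest distance (max of |dx|, |dy|) from the flipped
    cell to any cell where the perturbed and unperturbed boards differ."""
    a, b = set(base), set(base) ^ {flipped}
    radii = []
    for _ in range(steps):
        a, b = life_step(a), life_step(b)
        diff = a ^ b
        radii.append(max((max(abs(x - flipped[0]), abs(y - flipped[1])) for x, y in diff),
                         default=0))
    return radii

glider = {(1, 0), (2, 1), (0, 2), (1, 2), (2, 2)}
radii = spread_radii(glider, flipped=(1, 1), steps=8)
print(radii)
# The difference never outruns one cell per generation.
print(all(r <= t for t, r in enumerate(radii, start=1)))
```

Because each cell's next state depends only on its 3x3 neighbourhood, the bound holds for any starting pattern, not just this one.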

↑ comment by Shmi (shminux) · 2012-11-30T00:34:37.188Z · LW(p) · GW(p)

It is rarely appreciated that the Novikov self-consistency principle is a trivial consequence of the uniqueness of the metric tensor (up to diffeomorphisms) in GR.

Indeed, given that (a neighborhood of) each spacetime point, even in a spacetime with CTCs, has a unique metric, it also has a unique stress-energy tensor derived from this metric (you need neighborhoods to do derivatives). So there is a unique matter content at each spacetime point. In other words, your grandfather cannot be alternately alive (first time through the loop) or dead (when you kill him the second time through the loop) at a given moment in space and time.

The unfortunate fact that we can even imagine the grandfather paradox to begin with is due to our intuitive thinking that spacetime is only a background for "real events", a picture as incompatible with GR as perfectly rigid bodies are with SR.

Replies from: iainjcoleman↑ comment by iainjcoleman · 2012-12-05T23:32:29.769Z · LW(p) · GW(p)

How does the mass-energy of a dead grandfather differ from the mass-energy of a live one?

Replies from: shminux, army1987↑ comment by Shmi (shminux) · 2012-12-05T23:37:13.697Z · LW(p) · GW(p)

Pretty drastically. One is decaying in the ground, the other is moving about in search of a mate. Most people have no trouble telling the difference.

↑ comment by A1987dM (army1987) · 2012-12-06T01:20:20.058Z · LW(p) · GW(p)

The total four-momentum may well be the same in both cases, but the stress-energy-momentum tensor is different (the blood is moving in the live grandfather but not the dead one, etc., etc.)

↑ comment by orthonormal · 2012-11-28T23:54:22.674Z · LW(p) · GW(p)

I've seen academic physicists use postselection to simulate closed timelike curves; see for instance this arXiv paper, which compares a postselection procedure to a mathematical formalism for CTCs.

↑ comment by nigerweiss · 2012-11-28T22:27:52.653Z · LW(p) · GW(p)

I tend to believe that most fictional characters are living in malicious computer simulations, to satisfy my own pathological desire for consistency. I now believe that Harry is living in an extremely expensive computer simulation.

Replies from: DanArmak↑ comment by DanArmak · 2012-11-30T17:40:57.114Z · LW(p) · GW(p)

Also known as Eliezer Yudkowsky's brain.

↑ comment by Steve_Rayhawk · 2012-11-28T19:16:54.208Z · LW(p) · GW(p)

I know that the idea of "different systems of local consistency constraints on full spacetimes might or might not happen to yield forward-sampleable causality or things close to it" shows up in Wolfram's "A New Kind of Science", for all that he usually refuses to admit the possible relevance of probability or nondeterminism whenever he can avoid doing so; the idea might also be in earlier literature.

that there is in fact a way to finitely Turing-compute a discrete universe with self-consistent Time-Turners in it.

I'd thought about that a long time previously (not about Time-Turners; this was before I'd heard of Harry Potter). I remember noting that it only really works if multiple transitions are allowed from some states, because otherwise there's a much higher chance that the consistency constraints would not leave any histories permitted. ("Histories", because I didn't know model theory at the time. I was using cellular automata as the example system, though.) (I later concluded that Markov graphical models with weights other than 1 and 0 were a less brittle way to formulate that sort of intuition (although, once you start thinking about configuration weights, you notice that you have problems about how to update if different weight schemes would lead to different partition function values).)
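The point about multiple transitions can be illustrated with a deliberately tiny toy model. This is only a sketch under stated assumptions: the one-bit system, the `flip_only` and `flip_or_stay` rule sets, and the "last state equals first state" loop constraint are all hypothetical stand-ins, not anything taken from the comment or the post.

```python
from itertools import product

def consistent_histories(steps, allowed, loop_ok):
    """Enumerate every history of a one-bit system over `steps` time slices,
    keeping those whose adjacent pairs are all allowed transitions and which
    also satisfy the global consistency constraint `loop_ok`."""
    histories = []
    for h in product((0, 1), repeat=steps):
        local_ok = all((h[t], h[t + 1]) in allowed for t in range(steps - 1))
        if local_ok and loop_ok(h):
            histories.append(h)
    return histories

# Hypothetical deterministic rule: the bit must flip at every step.
flip_only = {(0, 1), (1, 0)}
# Hypothetical relaxed rule: the bit may flip or stay (two transitions per state).
flip_or_stay = flip_only | {(0, 0), (1, 1)}

def loop(h):
    """Toy 'time loop' constraint: the final state must equal the initial one."""
    return h[0] == h[-1]

deterministic = consistent_histories(4, flip_only, loop)
relaxed = consistent_histories(4, flip_or_stay, loop)
print(len(deterministic), len(relaxed))
```

With four time slices the deterministic rule leaves no permitted history at all, while the relaxed rule leaves several, which is the brittleness described above in miniature.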

I think there might have been an LW comment somewhere that put me on that track

I know we argued briefly at one point about whether Harry could take the existence of his subjective experience as valid anthropic evidence about whether or not he was in a simulation. I think I was trying to make the argument specifically about whether or not Harry could be sure he wasn't in a simulation of a trial timeline that was going to be ruled inconsistent. (Or, implicitly, a timeline that he might be able to control whether or not it would be ruled inconsistent. Or maybe it was about whether or not he could be sure that there hadn't been such simulations.) But I don't remember you agreeing that my position was plausible, and it's possible that that means I didn't convey the information about which scenario I was trying to argue about. In that case, you wouldn't have heard of the idea from me. Or I might have only had enough time to figure out how to halfway defensibly express a lesser idea: that of "trial simulated timelines being iterated until a fixed point".

↑ comment by Alexei · 2012-11-28T12:57:25.233Z · LW(p) · GW(p)

You can do some sort of lazy evaluation. I took the example you gave with the 4x4 grid (by the way you have a typo: "we shall take a 3x3 Life grid"), and ran it forwards, and it converges to all empty squares in 4 steps. See this doc for calculations.

Even if it doesn't converge, you can add another symbol to the system and continue playing the game with it. You can think of the symbol as a function. In my document x = compute_cell(x=2,y=2,t=2)
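A minimal sketch of what lazy evaluation of a single cell could look like, assuming plain Conway's Life on a 4x4 grid with dead cells outside the border and no Time-Turner rule. The `INITIAL` pattern is a hypothetical example, not the grid from the post, and this `compute_cell` is an illustrative reconstruction rather than the function in the linked document.

```python
from functools import lru_cache

SIZE = 4
# Hypothetical starting pattern (an L-shaped triple of live cells).
INITIAL = frozenset({(1, 1), (2, 1), (1, 2)})

@lru_cache(maxsize=None)
def compute_cell(x, y, t):
    """Return 1 if cell (x, y) is alive at time t, else 0.
    Only the cells that this value depends on are ever evaluated."""
    if not (0 <= x < SIZE and 0 <= y < SIZE):
        return 0  # assumption: everything outside the grid is dead
    if t == 0:
        return 1 if (x, y) in INITIAL else 0
    # Count live neighbours at the previous time step.
    neighbours = sum(
        compute_cell(x + dx, y + dy, t - 1)
        for dx in (-1, 0, 1)
        for dy in (-1, 0, 1)
        if (dx, dy) != (0, 0)
    )
    alive = compute_cell(x, y, t - 1)
    return 1 if neighbours == 3 or (alive and neighbours == 2) else 0

print(compute_cell(2, 2, 2))
```

The memoization means each (x, y, t) triple is computed at most once, so asking for one cell at a late time only touches its backward light cone.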

Replies from: Alex_Altair↑ comment by Alex_Altair · 2012-11-28T18:23:53.553Z · LW(p) · GW(p)

by the way you have a typo

Fixed.

↑ comment by aaronsw · 2012-11-29T23:40:30.316Z · LW(p) · GW(p)

I don't totally understand it, but Zuse 1969 seems to talk about spacetime as a sort of discrete causal graph with c as the generalization of locality ("In any case, a relation between the speed of light and the speed of transmission between the individual cells of the cellular automaton must result from such a model."). Fredkin and Wolfram probably also have similar discussions.

↑ comment by gjm · 2012-11-28T15:11:12.841Z · LW(p) · GW(p)

I think there might have been an LW comment somewhere that put me on that track or maybe even outright suggested it

I certainly made a remark on LW, very early in HPMoR, along the following lines: If magic, or anything else that seems to operate fundamentally at the level of human-like concepts, turns out to be real, then we should see that as substantial evidence for some kind of simulation/creation hypothesis. So if you find yourself in the role of Harry Potter, you should expect that you're in a simulation, or in a universe created by gods, or in someone's dream ... or the subject of a book :-).

I don't think you made any comment on that, so I've no idea whether you read it. I expect other people made similar points.

Replies from: RobbBB↑ comment by Rob Bensinger (RobbBB) · 2012-11-29T04:10:14.674Z · LW(p) · GW(p)

It's more immediately plausible to hypothesize that certain phenomena and regularities in Harry's experience are intelligently designed, rather than that the entire universe Harry occupies is. We can make much stronger inferences about intelligences within our universe being similar to us, than about intelligences who created our universe being similar to us, since, being outside our universe/simulation, they would not necessarily exist even in the same kind of logical structure that we do.

↑ comment by Dentin · 2012-11-29T22:02:45.316Z · LW(p) · GW(p)

I'm not sure how to respond to this; the ability to compute it in a finite fashion for discrete universes seemed trivially obvious to me when I first pondered the problem. It would never have occurred to me to actually write it down as an insight because it seemed like something you'd figure out within five minutes regardless.

"Well, we know there are things that can't happen because there are paradoxes, so just compute all the ones that can and pick one. It might even be possible to jig things such that the outcome is always well determined, but I'd have to think harder about that."

That said, this may just be a difference in background. When I was young, I did a lot of thinking about Conway's Life and in particular "Garden of Eden" states which have no precursor. Once you consider the possibility of Garden of Eden states and realize that some Life universes have a strict 'start time', you automatically start thinking about what other kinds of universes would be restricted. Adding a rule with time travel is just one step farther.
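For a concrete feel of precursor-less states, here is a brute-force sketch that checks every state of a very small Life universe. It assumes a 3x3 grid with wrap-around (toroidal) edges, chosen only so that all 512 states can be enumerated quickly; real Garden of Eden patterns on the infinite plane are much larger, and the names `step`, `reachable`, and `gardens` are illustrative.

```python
from itertools import product

N = 3  # assumption: tiny N x N torus so exhaustive search is cheap

def step(state):
    """Apply one Life step. `state` is a tuple of N*N cells (0 or 1), row-major."""
    nxt = []
    for r in range(N):
        for c in range(N):
            live = sum(
                state[((r + dr) % N) * N + (c + dc) % N]
                for dr in (-1, 0, 1)
                for dc in (-1, 0, 1)
                if (dr, dc) != (0, 0)
            )
            alive = state[r * N + c]
            nxt.append(1 if live == 3 or (alive and live == 2) else 0)
    return tuple(nxt)

all_states = list(product((0, 1), repeat=N * N))
# Every state that is the successor of at least one state.
reachable = {step(s) for s in all_states}
# States with no precursor: they can only appear at a 'start time'.
gardens = [s for s in all_states if s not in reachable]
print(len(all_states), len(reachable), len(gardens))
```

Because two different states (all dead, and a single live cell) both step to the all-dead grid, the step map on this finite torus cannot be onto, so the list of precursor-less states is guaranteed to be non-empty.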

On the other hand, the space/time causal graph generalization is definitely something I didn't think about and isn't even something I'd heard vaguely mentioned. That one I'll have to put some thought into.

comment by Oligopsony · 2012-11-28T16:59:23.640Z · LW(p) · GW(p)

1) If we ask whether the entities embedded in strings watched over by the self-consistent universe detector really have experiences, aren't we violating the anti-zombie principle?

2) If Tegmark possible worlds have measure inverse to their algorithmic complexity, and causal universes are much more easily computable than logical ones, should we not then find it unsurprising that we are in an (apparently) causal universe even if the UE includes logical ones?

Replies from: Viliam_Bur, Eliezer_Yudkowsky↑ comment by Viliam_Bur · 2012-11-28T17:33:08.052Z · LW(p) · GW(p)

If we ask whether the entities embedded in strings watched over by the self-consistent universe detector really have experiences, aren't we violating the anti-zombie principle?

This.

I think that a correct metaphor for computer-simulating another universe is not that we create it, but that we look at it. It already exists somewhere in the multiverse, but previously it was separated from our universe.

Replies from: Eliezer_Yudkowsky, Vladimir_Nesov↑ comment by Eliezer Yudkowsky (Eliezer_Yudkowsky) · 2012-11-28T18:02:24.545Z · LW(p) · GW(p)

If simulating things doesn't add measure to them, why do you believe you're not a Boltzmann brain just because lawful versions of you are much more commonly simulated by your universe's physics?

Replies from: Viliam_Bur, ShardPhoenix, Viliam_Bur, FeepingCreature, Dr_Manhattan, Armok_GoB↑ comment by Viliam_Bur · 2012-11-29T19:46:44.395Z · LW(p) · GW(p)

This is not a full answer (I don't have one), just a sidenote: Believing that you are most likely not a Boltzmann brain does not necessarily mean that Boltzmann brains are less likely. It could also be some kind of survivorship bias.

Imagine that every night when you sleep, someone makes a hundred copies of you. One copy, randomly selected, remains in your bed. The other 99 copies are taken away and killed horribly. This has been happening all your life; you just didn't know it. What do you expect about tomorrow?

From the outside view, tomorrow the 99 copies of you will be killed, and 1 copy will continue to live. Therefore you should expect to be killed.

But from inside, today's you is the lucky copy of the lucky copy, because all the unlucky copies are dead. Your whole experience is about surviving, because the unlucky ones don't have experiences now. So based on your past, you expect to survive the next day. And the next day, 99 copies of you will die, but the remaining 1 will say: "I told you so!".

So even if the Boltzmann brains are more simulated, and 99.99% of my copies are dying horribly in vacuum within the next seconds, they don't have a story. The remaining copy does. And the story says: "I am not a Boltzmann brain".

↑ comment by ShardPhoenix · 2012-11-29T09:28:24.952Z · LW(p) · GW(p)

If you can't tell the difference, what's the use of considering that you might be a Boltzmann brain, regardless of how likely it is?

↑ comment by Viliam_Bur · 2012-11-29T20:00:12.471Z · LW(p) · GW(p)

By the way, how precise must a simulation be to add measure? Did I commit genocide by watching Star Wars, or is particle-level simulation necessary?

A possible answer could be that an imprecise simulation adds way less, but still nonzero measure, so my pleasure from watching Star Wars exceeds the suffering of all the people dying in the movie, multiplied by the epsilon increase of their measure. (A variant of a torture vs dust specks argument.) Running a particle-level Star Wars simulation would be a real crime.

This would mean there is no clear boundary between simulating and not simulating, so the ethical concerns about simulation must be solved by weighing how detailed the simulation is against what benefits we get by running it.

Replies from: Document↑ comment by FeepingCreature · 2012-12-01T02:42:46.336Z · LW(p) · GW(p)

First, knowing you're a Boltzmann brain doesn't give you anything useful. Even if I believed that 90% of my measure were Boltzmann brains, that wouldn't let me make any useful predictions about the future (because Boltzmann brains have no future). Our past narrative is the only thing we can even try and extract any useful predictions from.

Second, it might be possible to recover "traditional" predictability from vanity. If some observer looks at a creature that implements my behavior, I want that someone to find that creature to make correct predictions about the future. Assuming any finite distribution of probabilities over observers, I expect observers finding me via a causal, coherent, simple simulation to vastly outweigh observers finding me as a Boltzmann brain (since Boltzmann brains are scattered [because there's no prior reason to anticipate any brain over another] but causal simulations recur in any form of "iterate all possible universes" search, and in a causal simulation, I am much more likely to implement this reasoning). Call it vanity logic - I want to be found to have been correct. I think (intuitively), but am not sure, that given any finite distribution of expectation over observers, I should expect to be observed via a simple simulation with near-certainty. I mean - how would you find a Boltzmann brain? I'm fairly sure any universe that can find me in simulation space is either looking for me specifically - in which case, they're effectively hostile and should not be surprised at finding that my reasoning failed - or are iterating universes looking for brains, in which case they'll find vastly more this-reasoning-implementers through causal processes than random ones.

↑ comment by Dr_Manhattan · 2012-12-01T23:19:38.053Z · LW(p) · GW(p)

This is a side point, but I'm curious if there is a strong argument for claiming lawful brains are more common (I had an argument with some theists on this issue; they used BB to argue against multiverse theories).

Replies from: bzealley↑ comment by bzealley · 2012-12-03T09:34:12.953Z · LW(p) · GW(p)

I would say: because it seems that (in our universe and those sufficiently similar to count, anyway) the total number of observer-moments experienced by evolved brains should vastly exceed the total number of observer-moments experienced by Boltzmann brains. Evolved brains necessarily exist in large groups, and stick around for absolutely aeons as compared to the near-instantaneous conscious moment of a BB.

Replies from: Dr_Manhattan↑ comment by Dr_Manhattan · 2012-12-03T15:24:44.791Z · LW(p) · GW(p)

The problem is that the count of "similar" universes does not matter, the total count of brains does. It seems a serious enough issue for prominent multiverse theorists to reason backwards and adjust things to avoid the undesirable conclusion http://www.researchgate.net/publication/1772034_Boltzmann_brains_and_the_scale-factor_cutoff_measure_of_the_multiverse

Replies from: bzealley↑ comment by bzealley · 2012-12-18T15:54:12.425Z · LW(p) · GW(p)

If they can host brains, they're "similar" enough for my original intention - I was just excluding "alien worlds".

I don't see why the total count of brains matters as such; you are not actually sampling your brain (a complex 4-dimensional object), you are sampling an observer-moment of consciousness. A Boltzmann brain has one such moment; an evolved human brain has (rough back-of-the-envelope calculation, based on a ballpark figure of 25ms for the "quantum" of human conscious experience and a 70-year lifespan) 88.3 x 10^9. Add in the aforementioned requirement for evolved brains to exist in multiplicity wherever they do occur, and the ratio of human moments:Boltzmann moments in a sufficiently large defined volume of (large-scale homogeneous) multiverse gets higher still.
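The back-of-the-envelope figure can be reproduced in a few lines. This sketch assumes 365-day years and no time spent unconscious, which appears to be what yields the quoted 88.3 x 10^9; the constant names are illustrative.

```python
QUANTUM_MS = 25        # ballpark "quantum" of conscious experience, in milliseconds
LIFESPAN_YEARS = 70    # assumed lifespan

# Assumption: 365-day years, ignoring leap days and sleep.
ms_per_year = 365 * 24 * 60 * 60 * 1000

# Integer arithmetic keeps the result exact.
moments = LIFESPAN_YEARS * ms_per_year // QUANTUM_MS
print(moments)  # 88300800000, i.e. about 88.3 x 10^9
```

Using 365.25-day years instead would shift the result only slightly, so the order of magnitude is what matters for the ratio argument.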