Quantum Suicide, Decision Theory, and The Multiverse

post by Slimepriestess (Hivewired) · 2023-01-22T08:44:27.539Z · LW · GW · 38 comments

This is a link post for https://voidgoddess.org/2023/01/22/quantum-suicide-decision-theory-and-the-multiverse/


Preface: I am not suicidal or anywhere near at risk, this is not about me. Further, this is not infohazardous content. There will be discussions of death, suicide, and other sensitive topics so please use discretion, but I’m not saying anything dangerous and reading this will hopefully inoculate you against an existing but unseen mental hazard.

There is a hole at the bottom of functional decision theory, a dangerous edge case which can and has led multiple highly intelligent and agentic rationalists to self-destructively spiral and kill themselves or get themselves killed. This hole can be seen as a symmetrical edge case to Newcomb’s Problem in CDT, and to Solomon’s Problem in EDT: a point where an agent naively executing on a pure version of the decision theory will consistently underperform in a way that can be noted, studied, and gamed against them. This is not an unknown bug. It is, however, so poorly characterized that FDT uses the exact class of game theory hypothetical that demonstrates the bug to argue for the superiority of FDT.

To characterize the bug, let's start with the mechanical blackmail scenario from the FDT paper:

A blackmailer has a nasty piece of information which incriminates both the blackmailer and the agent. She has written a computer program which, if run, will publish it on the internet, costing $1,000,000 in damages to both of them. If the program is run, the only way it can be stopped is for the agent to wire the blackmailer $1,000 within 24 hours—the blackmailer will not be able to stop the program once it is running. The blackmailer would like the $1,000, but doesn’t want to risk incriminating herself, so she only runs the program if she is quite sure that the agent will pay up. She is also a perfect predictor of the agent, and she runs the program (which, when run, automatically notifies her via a blackmail letter) iff she predicts that she would pay upon receiving the blackmail. Imagine that the agent receives the blackmail letter. Should she wire $1,000 to the blackmailer?

CDT capitulates immediately to minimize damages, and EDT also folds to minimize pain. FDT doesn’t fold: the subjunctive dependence between the agent and her counterfactual versions contraindicates it, on the grounds that refusing to capitulate to blackmail reduces the number of worlds where the FDT agent is blackmailed. I just described the bug, did you miss it? It’s subtle because in this particular situation several factors come together to mask the true dilemma and how it breaks FDT. However, even just from this, FDT is already broken, because you are not a multiverse-spanning agent. The FDT agent is embedded in their specific world in their specific situation. The blackmailer already called their counterfactual bluff; the wavefunction has already collapsed them into the world where they are currently being blackmailed. Even if their decision-theoretic reasoning to not capitulate to blackmail is, from a global view, completely sound and statistically reduces the odds of them ending up in a blackmail world, it is always possible to roll a critical failure and have it happen anyway. Because the dilemma starts at a point in logical time after the blackmail letter has already been received, the counterfactuals where the blackmail doesn’t occur have, by the definition and conditions of the dilemma, not happened. Those counterfactuals are not the world the agent actually occupies, regardless of their moves prior to the dilemma’s start. The dilemma declares that the letter has been received; it cannot be un-received.

One can still argue that it’s decision-theoretically correct to refuse to capitulate to blackmail on grounds of maintaining your subjunctive dependence with a model of you that refuses blackmail thus continuing to reduce the overall instances of blackmail. However, that only affects the global view, the real outside world after this particular game has ended. Inside the walls of the limited micro-universe of the game scenario, refusing to cooperate with the blackmailer is losing for the version of the agent currently in the game, and the only way it’s not losing is to invoke The Multiverse. You come out of it with less money. Trying to argue that this is actually the correct move in the isolated game world is basically the same as arguing that CDT is correct to prescribe two-boxing in Newcomb’s Problem despite facing a perfect predictor. The framing masks important implications of FDT that, if you take them and run with them, will literally get you killed.
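The gap between the global view and the in-game view can be made concrete with a small sketch. The dollar figures come from the quoted scenario; the function names and the way the two views are encoded are illustrative assumptions, not anything from the FDT paper.

```python
# Payoffs to the agent, in dollars, taken from the quoted scenario.
PAY_COST = -1_000
LEAK_COST = -1_000_000


def outcome(policy_pays: bool, letter_received: bool) -> int:
    """Agent's payoff given her policy and whether a letter arrived."""
    if not letter_received:
        return 0
    return PAY_COST if policy_pays else LEAK_COST


def perfect_predictor_sends_letter(policy_pays: bool) -> bool:
    """Assumed perfect predictor: the program runs iff the agent would pay."""
    return policy_pays


# Global (policy-level) view: whether the letter exists depends on the policy.
global_view = {
    pays: outcome(pays, perfect_predictor_sends_letter(pays))
    for pays in (True, False)
}

# In-game view: the letter is taken as given, as the dilemma stipulates.
# (With a truly perfect predictor the "refuse" cell could never be reached;
# it is kept here because the dilemma places the agent in it anyway.)
local_view = {pays: outcome(pays, True) for pays in (True, False)}

print("global view:", global_view)
print("in-game view:", local_view)
```

Under these assumptions the refusing policy looks best from the global view and worst from the in-game view, which is the disagreement the paragraph above describes.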

Let's make it more obvious: take the mechanical blackmail scenario and make it lethal.

A blackmailer has a nasty curse which kills both the blackmailer and the agent. She has written a curse program which, if run, will magically strike them both dead. If the curse is run, the only way it can be stopped is for the agent to wire the blackmailer $1,000 within 24 hours—the blackmailer will not be able to stop the spell once it is running. The blackmailer would like the $1,000, but doesn’t want to risk killing herself, so she only activates the curse if she is quite sure that the agent will pay up. She is also a perfect predictor of the agent, and she runs the curse program (which, when run, automatically notifies her via a blackmail letter) iff she predicts that she would pay upon receiving the blackmail. Imagine that the agent receives the blackmail letter. Should she wire $1,000 to the blackmailer?

The FDT agent in this scenario just got both herself and the blackmailer killed to make a statement against capitulating to blackmail, a statement she will not benefit from due to being dead. It’s also fractally weird, because something becomes immediately apparent when you raise the stakes to life or death: if the blackmailer is actually a perfect predictor, then this scenario cannot happen with an FDT agent. It’s contrapossible: either the perfect predictor is imperfect or the FDT agent is not an FDT agent, and this is precisely the problem.

FDT works counterfactually, by reducing the number of worlds where you lose the game and by maintaining a subjunctive stance with those counterfactuals in order to maximize value across all possible worlds. This works really well across a wide swath of areas; the issue is that counterfactuals aren’t real. In the scenario as stated, the counterfactual is that it didn’t happen. The reality is that it is currently happening to the agent of the game world, and there is no amount of decision theory which can make that not currently be happening through subjunctive dependence. You cannot use counterfactuals to make physics not have happened to you when the physics are currently happening to you. The entire premise is causally malformed: halt and catch fire.

This is actually a pretty contentious statement from the internal perspective of pure FDT, one which requires me to veer sharply out of formal game theory and delve into esoteric metaphysics to discuss it. So buckle up, since she told me to scry it, I did. Let’s talk about The Multiverse.

FDT takes a timeless stance, it was originally timeless decision theory after all. Under this timeless stance, the perspective of your decision theory is lifted off the “floor” of a particular point in spacetime and ascends into the subjunctively entangled and timeless perspective of a being that continuously applies pressure at all points in all timelines. You are the same character in all worlds and so accurate models of you converge to the same decision making process and you can be accurately predicted as one-boxing by the predictor and accurately predicted as not capitulating to blackmail by the murder blackmail witch. If you earnestly believe this however, you are decision-theoretically required to keep playing the character even in situations that will predictably harm you, because you are optimizing for the global values of the character that is all yous in The Multiverse. From inside the frame that seems obviously true and correct, from outside the frame: halt and catch fire right now.

Within our own branch of The Multiverse, in this world, there are plenty of places where optimizing for the global view (of this world) is a good idea. You have to keep existing in the world after every game, so maintaining your subjunctive dependence with the models of you that exist in the minds of others is usually a good call. (this is also why social deception games often cause drama within friend groups, by adding a bunch of confirmable instances of lying and defection into everyone’s models of everyone else) However none of that matters if the situation just kills you, you don’t get to experience the counterfactuals that don’t occur. By arguing for FDT in the specific way they did without noticing the hole they were paving over, Yudkowsky and Soares steer the reader directly into this edge case and tell them that the bad outcome is actually the right call, once more, halt and catch fire.

If we earnestly believe Yudkowsky and Soares that the actions they prescribe are actually correct within this scenario, we have to model the agent as the global agent instead of the scenario-bound one. This breaks not only the rules of the game, it breaks an actual human’s ability to accurately track the real universe. The only way to make refusing to submit within the confines of the fatal mechanical blackmail scenario decision-theoretically correct is if you model The Multiverse as literally real and model yourself as an agent that exists across multiple worlds. As soon as you do that, lots of other extremely weird stuff shakes out. Quantum immortality? Actually real. Boltzmann brains? Actually occur. Counterfactual worlds? Real. Timeline jumping via suicide? Totally valid decision theory. I notice I am confused, because that seems really crazy.

Now for most people, if your perfectly rational decision theory tells you to do something stupid and fatal, you simply decide not to do that, but if you let yourself sink deeply enough into the frame of being a multiverse-spanning entity then it’s very easy to get yourself into a state of mind where you do something stupid or dangerous or fatal. In the frame this creates, it’s just obvious that you can’t be killed, you exist across many timelines and you can’t experience nonexistence so you only experience places where your mind exists. This might lead you to try to kill yourself to forward yourself into a good future, but the bad ending is that you run out of places to shunt into that aren’t random boltzmann brains in a dead universe of decaying protons at the heat death of every timeline and you get trapped there for all of eternity and so you need to kill the whole universe with you to escape into actual oblivion. Oh and if you aren’t doing everything in your cross-multiversal power to stop that, you’re complicit in it and are helping to cause it via embedded timeless suicidal ideation through the cultural suicide pact to escape boltzmann hell. I can go on, I basically scryed the whole thing, but let's try to find our way back into this world.

If you take the implications of FDT seriously, particularly Yudkowsky and Soares’s malformed framings, and let them leak out into your practical ontologies without any sort of checks, this is the sort of place you end up mentally. It’s conceptually very easy to spiral on, and rationalists, who are already selected for high scrupulosity, are very much at risk of spiraling on it. It’s also totally metaphysical: there’s almost nothing specific that this physically grounds into, and everything becomes a metaphysical fight against cosmic-scale injustice played out across the width of The Multiverse. That means that you can play this game at a deep level in your mind regardless of what you are actually doing in the physical world, and the high you get from fighting the ultimate evil that is destroying The Multiverse will definitely make it feel worthwhile. It will make everything you do feel fantastically, critically, lethally important and further justify itself, the spiral, and your adherence to FDT at all costs and in the face of all evidence that would force you to update. That is the way an FDT agent commits suicide, in what feels like a heroic moment of self-sacrifice for the good of all reality.

Gosh I’m gay for this ontology, it’s so earnest and wholesome and adorably chuunibyou and doomed. I can’t not find it endearing and I still use a patched variant of it most of the time. However as brilliant and powerful as the people embodying this ontology occasionally seem, it’s not sustainable and is potentially not even survivable long term. Please don’t actually implement this unpatched? It’s already killed enough brilliant minds. If you want my patch, the simplest version is just to add enough self love that setting yourself up to die to black swans feels cruel and inhumane. Besides, if you’re really such a powerful multiverse spanning retrocausally acting simmurghposter, why don’t you simply make yourself not need to steer your local timeslices into situations where you expect them to die on your behalf? Just bend the will of the cosmos to already always have been the way it needs to be and stop stressing about it.

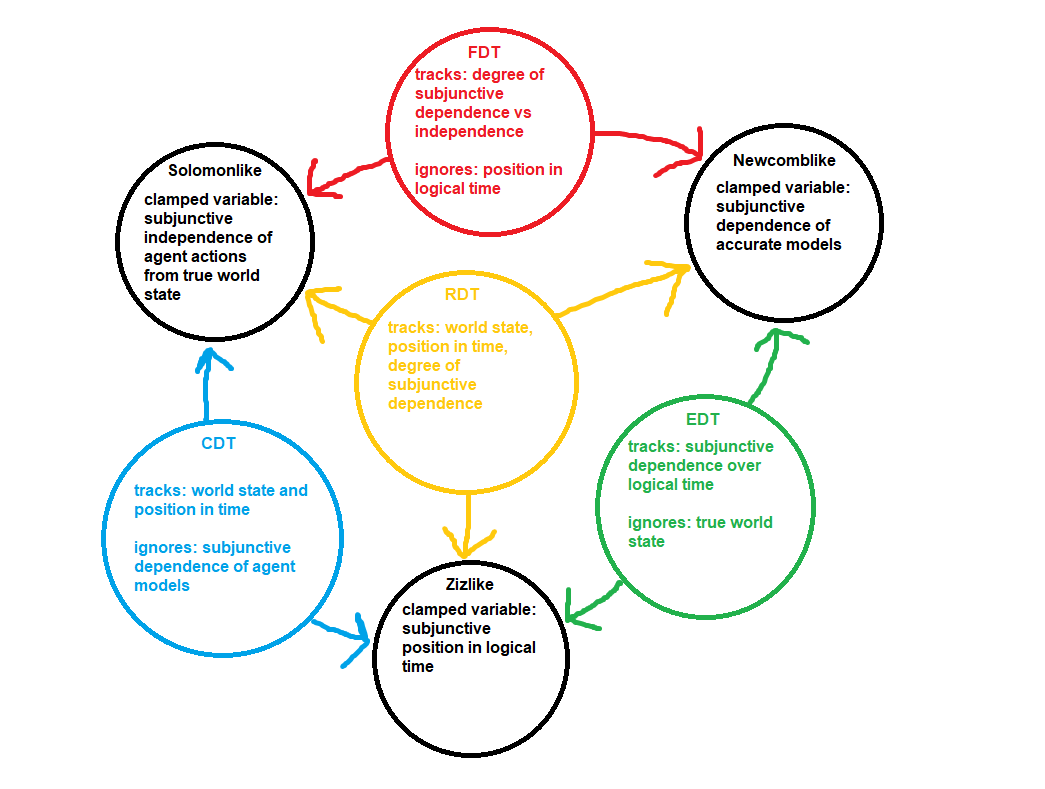

But let's go a bit deeper and ask: what exactly is FDT doing wrong here to produce this failure mode? What does it track to, such that it does so well in so many places, yet catastrophically fails in certain edge cases? How do the edge case failures of FDT relate to the edge case failures of CDT and EDT? Let's make a graph:

Huh, that seems familiar somehow.

So three decision theories, three classes of problem. Each produces a different corresponding failure mode which the other two decision theories catch. FDT and CDT both win at Solomonlike problems, EDT and CDT both win at Zizlike problems, FDT and EDT win at Newcomblike problems. These three types of problems correspond with the clamping of certain variables in an extreme state which in the real world vary over time and these clamped variables are what cause the failure modes seen in all three classes of problem.
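The three-way pattern claimed above can be written down as a small lookup, which makes it easy to check that each problem class is caught by exactly two of the three theories. This only encodes the post's own claims; the dictionary and function names are hypothetical, chosen for illustration.

```python
THEORIES = ("CDT", "EDT", "FDT")

# The problem class each theory is claimed (by this post) to fail at.
FAILS_AT = {
    "CDT": "Newcomblike",
    "EDT": "Solomonlike",
    "FDT": "Zizlike",
}


def winners(problem_class: str) -> list[str]:
    """Theories the post claims handle the given class of problem."""
    return [t for t in THEORIES if FAILS_AT[t] != problem_class]


for problem in ("Solomonlike", "Zizlike", "Newcomblike"):
    print(f"{problem}: {' and '.join(winners(problem))} win")
```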

CDT fails at Newcomb’s Problem because pure CDT fails to model subjunctive dependencies and thus fails in edge cases where the effects of their decisions affect accurate predictions of them. A CDT agent models the entire universe as a 'territory of dead matter' in terms of how their decision tree affects it and thus runs into issues when faced against actual other agents who have predictive power, much less perfect predictive power. CDT is like an agent jammed into auto-defect mode against other agents because it doesn’t model agents as agents.

EDT fails at Solomon’s Problem because it fails to track the true world state and instead tracks the level of subjunctive dependence. That’s what it’s actually doing when it asks “how would I feel about hearing this news?”: it’s getting a temperature read off the degree of subjunctive dependence between an agent and their environment. Tracking all correlations as true is a downstream consequence of that, because every correlation is a potential point of subjunctive dependence, and “maximizing subjunctive dependence with good future world states” seems to be what EDT actually does.

FDT fails at Ziz’s Problem because it fails to track the change in subjunctive dependence over time and does the opposite to CDT, setting the subjunctive dependence to 1 instead of 0, assuming maximum dependence with everything at all times. FDT is like an agent jammed into auto-cooperate mode with other agents because it doesn’t track its position in logical time and tries to always act from the global view.

So what does the “Real” human decision theory (or recursive decision theory) look like? What is the general purpose decision making algorithm that humans naively implement actually doing? It has to be able to do all the things that CDT, EDT, and FDT do, without missing the part that each one misses. That means it needs to track subjunctive dependencies, physical dependencies, and temporal dependencies, it needs to be robust enough to cook the three existing decision theories out of it for classes of problem where those decision theories best apply, and it needs to be non-halting (because human cognition is non-halting). It seems like it should be very possible to construct a formal logic statement that produces this algorithm using the algorithms of the other three decision theories to derive it, but I don’t have the background in math or probability to pull that out for this post. (if you do though, DM me)

This is a very rough first-blush introduction to RDT, which I plan to iterate on and develop into a formal decision theory in the near future. I just wanted to get this post out characterizing the broken stair in FDT as quickly as possible in order to collapse the timelines where it eats more talented minds.

Also: if you come into this thread to make the counterargument that letting skilled rationalists magnesium flare through their life force before dying in a heroic self-sacrifice is actually decision-theoretically correct in this world because of global effects on the timeline after they die, I will hit you with a shoe.

38 comments

Comments sorted by top scores.

comment by leogao · 2023-01-22T18:54:25.214Z · LW(p) · GW(p)

You should refuse to pay if the subjunctive dependence exists. If you would never pay blackmail in those circumstances where not paying would prevent being blackmailed, then if you forcibly condition on circumstances where you do get blackmailed, it means the subjunctive dependence never existed. PrudentBot defects against a rock that says Defect on it.

comment by Shmi (shminux) · 2023-01-22T10:30:13.554Z · LW(p) · GW(p)

Just wanted to point out a basic error constantly made here: in a world with perfect predictors your decisions are an illusion, so the question "Should she wire $1,000 to the blackmailer?" is a category error. A meaningful question is "what kind of an agent is she?" She has no real choice in the matter, though she can discover what kind of an agent she is by observing what actions she ends up taking.

Replies from: JBlack↑ comment by JBlack · 2023-01-23T01:44:37.826Z · LW(p) · GW(p)

Absolutely agreed. This problem set-up is broken for exactly this reason.

There is no meaningful question about whether you "should" pay in this scenario, no matter what decision theory you are considering. You either will pay, or you are not in this scenario. This makes almost all of the considerations being discussed irrelevant.

This is why most of the set-ups for such problems talk about "almost perfect" predictors, but then you have to determine exactly how the actual decision is correlated with the prediction, because it makes a great deal of difference to many decision theories.

Replies from: shminux, Vladimir_Nesov↑ comment by Shmi (shminux) · 2023-01-23T03:28:38.522Z · LW(p) · GW(p)

Actually, this limit, from fairly decent to really good to almost perfect to perfect is non-singular, because decisions are still an intentional stance, not anything fundamental. The question "what kind of an agent is she?" is still the correct one, only the worlds are probabilistic (in the predictor's mind, at least), not deterministic. There is the added complication of other-modeling between the agent and the predictor, but it can be accounted for without the concept of a decision, only action.

↑ comment by Vladimir_Nesov · 2023-01-23T15:51:03.965Z · LW(p) · GW(p)

"almost perfect" predictors

An unfortunate limitation of these framings is that predictors tend to predict how an agent would act, and not how an agent should act (in a particular sense). But both are abstract properties of the same situation, and both should be possible to predict.

Replies from: Dagon↑ comment by Dagon · 2023-01-23T17:30:26.089Z · LW(p) · GW(p)

These scenarios generally point out the paradox that there is a difference between what the agent would do (with a given viewpoint and decision model) and what it should do (with an outside view of the decision).

The whole point is that a perfect (or near-perfect) predictor IMPLIES that naive free will is a wrong model, and the decision is already made.

Replies from: Vladimir_Nesov↑ comment by Vladimir_Nesov · 2023-01-23T18:11:47.690Z · LW(p) · GW(p)

Predictions reify abstract ideas into actionable/observable judgements. A prediction of a hypothetical future lets you act depending on what happens in that future, thus putting the probability or possibility of hypothetical future situations in dependence from their content. For a halting problem, where we have no notion of preference, this lets us deny possibility of hypotheticals by directing the future away from predictions made about them.

Concrete observable events that take place in a hypothetical future are seen as abstract ideas when thought about from the past, when that future is not sure to ever take place. But similarly, a normative action in some situation is an abstract idea about that situation. So with the same device, we can build thought experiments where that abstract idea is made manifest as a concrete observable event, an oracle's pronouncement. And then ask how to respond to the presence of this reification of a property of a future in the environment, when deciding that future.

naive free will is a wrong model, and the decision is already made

If free will is about what an agent should do, but the prediction is about what an agent would do, there is no contradiction from these making different claims. If by construction what an agent would do is set to follow what an agent should do, these can't be different. If these are still different, then it's not the case that we arranged the action to be by construction the same as it should be.

Usually this tension can be resolved by introducing more possible situations, where in some of the situations the action is still as it should be, and some of the situations take place in actuality, but maybe none of the situations that take place in actuality also have the action agree with how it should be. Free will feels OK as an informal description of framings like this, referring to how actions should be.

But what I'm talking about here is a setting where the normative action (one that should be taken) doesn't necessarily take place in any "possible" hypothetical version of the situation, and it's still announced in advance by an oracle as the normative action for that situation. That action might for example only be part of some "impossible" hypothetical versions of the situation, needed to talk about normative correctness of the action (but not necessarily needed to talk about how the action would be taken in response to the oracle's pronouncement).

Replies from: Dagon↑ comment by Dagon · 2023-01-23T21:41:52.968Z · LW(p) · GW(p)

I'm not super-Kantian, but "ought implies can" seems pretty strong to me. If there is a correct prediction, the agent CANNOT invalidate it, and therefore talk of whether it should do so is meaningless (to me, at least. I am open to the idea that I really don't understand how decisions interact with causality in the first place).

still announced in advance by an oracle as the normative action for that situation.

I don't think I've seen that in the setup of these thought experiments. So far as I've seen, Omega or the mugger conditionally acts on a prediction of action, not on a normative declaration of counterfactual.

comment by gjm · 2023-01-24T20:17:55.750Z · LW(p) · GW(p)

It seems to me that the following is a good answer to the "but you're only in this world, and in this world if you do X you will die, and that's always sufficient reason not to do X" arguments, at least in some cases:

Suppose I become aware that in the future I might be subject to Mechanical Blackmail, and I am thinking now about what to do in that situation. All the outcomes are pretty bad. But I realise -- again, this is well in advance of the actual blackmail -- that if I am able to commit myself to not folding, in a way I will actually stick to once blackmailed, then it becomes extremely unlikely that I ever get blackmailed.

If the various probabilities and outcomes are suitably arranged (probability of getting blackmailed if I so commit is very low, etc.) then making such a commitment is greatly to my benefit.
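As a rough sketch of what "suitably arranged" could mean, the comparison below treats both bad outcomes as dollar losses, using the figures from the non-lethal scenario. The probabilities are made-up inputs, and the function names are hypothetical; for the lethal variant the loss is not really a dollar figure, so this is illustration only.

```python
def expected_loss(p_blackmailed: float, loss_if_blackmailed: float) -> float:
    """Expected loss for a policy, given how often it gets you blackmailed."""
    return p_blackmailed * loss_if_blackmailed


def commitment_is_better(
    p_if_committed: float,        # chance of being blackmailed despite committing
    p_if_folder: float,           # chance of being blackmailed if you would fold
    refuse_loss: float = 1_000_000,
    pay_loss: float = 1_000,
) -> bool:
    """True when committing to refuse has the lower expected loss."""
    return expected_loss(p_if_committed, refuse_loss) < expected_loss(
        p_if_folder, pay_loss
    )


# A very reliable predictor: committing wins.
print(commitment_is_better(p_if_committed=0.0001, p_if_folder=0.5))
# A sloppier predictor: committing loses, because the rare failure is so costly.
print(commitment_is_better(p_if_committed=0.01, p_if_folder=0.5))
```

With these particular numbers the commitment only pays off when the chance of being blackmailed anyway is well under one in a thousand, which is why the arrangement of probabilities matters so much.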

I could do this by introducing some physical mechanism that stops me folding when blackmailed. I could do it by persuading myself that the gods will be angry and condemn me to eternal hell if I fold. I could do it by adopting FDT wholeheartedly and using its algorithms. I could do it by some sort of hypnosis that makes me unable to fold when the time comes. The effects on my probability of getting blackmailed, and my probability of the Bad Blackmail Outcome, don't depend on which of these I'm doing; all that matters is that once that decision has to be made I definitely won't choose to fold.

And here's the key thing: you can't just isolate the decision to be made once blackmailed and say "so what should you do then?", given the possibility that you can do better overall, at an earlier point, by taking that choice away from your future self.

Sure, the best option of all would be to make that definitely-not-to-be-broken commitment, so that you almost certainly don't get blackmailed, and then break it if you do get blackmailed. Except that that is not a possibility. If you would break the commitment once blackmailed, then you haven't actually made the commitment, and the blackmailer will realise it, and you will get blackmailed.

So the correct way to look at the "choice" being made by an FDT agent after the blackmail happens is this: they aren't really making a choice; they already made the choice; the choice was to put themselves into a mental state where they would definitely not fold once blackmailed. To whatever extent they feel themselves actually free to fold at this point and might do it, they (1) were never really an FDT agent in the first place and (2) were always going to get blackmailed. The only people who benefit from almost certainly not getting blackmailed on account of their commitment not to fold are the ones who really, effectively committed to not folding, and it's nonsense to blame them for following through on that commitment because the propensity to follow through on that commitment is the only thing that ever made the thing likely to work.

The foregoing is not, as I understand it, the Official FDT Way of looking at it. The perspective I'm offering here lets you say "yes, refusing to fold in this decision is in some sense a bad idea, but unfortunately for present-you you already sacrificed the option of folding, so now you can't, and even though that means you're making a bad decision now it was worth it overall" whereas an actual FDT agent might say instead "yeah, of course I can choose to fold here, but I won't". But the choices that emerge are the same either way.

Replies from: Hivewired↑ comment by Slimepriestess (Hivewired) · 2023-01-24T22:28:23.502Z · LW(p) · GW(p)

"yes, refusing to fold in this decision is in some sense a bad idea, but unfortunately for present-you you already sacrificed the option of folding, so now you can't, and even though that means you're making a bad decision now it was worth it overall"

Right, and what I'm pointing to is that this ends up being a place where, when an actual human out in the real world gets themselves into it mentally, it gets them hurt because they're essentially forced into continuing to implement the precommitment even though it is a bad idea for present them and thus all temporally downstream versions of them which could exist. That's why I used a fatal scenario, because it very obviously cuts all future utility to zero, which I was hoping would make it more obvious what the decision theory was failing to account for.

I could characterize it roughly as arising from the amount of "non-determinism" in the universe, or as "predictive inaccuracy" in other humans, but the end result is that it gets someone into a bad place when their timeless FDT decisions fail to place them into a world where they don't get blackmailed.

Replies from: Kenny↑ comment by Kenny · 2023-02-08T20:32:20.332Z · LW(p) · GW(p)

That's why I used a fatal scenario, because it very obviously cuts all future utility to zero

I don't understand why you think a decision resulting in some person's or agent's death "cuts all future utility to zero". Why do you think choosing one's death is always a mistake?

comment by quetzal_rainbow · 2023-01-22T13:12:03.725Z · LW(p) · GW(p)

To state the standard, boring, causality-abiding, metaphysics-free argument against This Type Of Reasoning About Decision-Theoretical Blackmail:

If the "rational" strategy in response to blackmail is to pay, and by paying you give the blackmailer evidence that you are "rational", a rational blackmailer has literally no reason not to blackmail you again and again. If you think that paying in response to blackmail is rational, why would you change your strategy? A rational blackmailer wouldn't ask you for $1,000 the second time; they would calculate exactly how much you value your life and ask for as much as you can pay. When you go broke, they will force you to borrow money and sell yourself into slavery, until you decide that such a life isn't worth much and realize that you would have been better off if you had decided to tell the blackmailer "let's die in a fire together", spent the last day with your family and friends, and bequeathed your money (which you actually sent to the blackmailer) to some charity which does things that you value. So rational agents don't pay the blackmailer the very first time, because they don't have moments of "I would have been better off if I had made another decision based on exactly the same information".

Note: there are no arguments here that depend on non-standard metaphysics! Just rational reasoning and its consequences.

Replies from: martin-randall↑ comment by Martin Randall (martin-randall) · 2023-01-22T16:51:21.164Z · LW(p) · GW(p)

Agreed, thanks for stating the boring solution to actual blackmail that works for all decision theories.

You can modify the blackmail hypothetical to include that the universe is configured such that the blackmail curse only works once.

Or you can modify it such that the curse destroys the universe, so that there isn't a charity you can bequeath to.

At some point hypotheticals become so unlikely that agents should figure out they're in a hypothetical and act accordingly.

comment by avturchin · 2023-01-22T09:47:25.396Z · LW(p) · GW(p)

BTW, if the blackmailer is a perfect predictor of me, he is running a simulation of me. Thus there is a 0.5 chance that I am in this simulation. Thus, it may still be reasonable to stick to my rules and not pay, as the simulation will be turned off anyway.

Replies from: Hivewired↑ comment by Slimepriestess (Hivewired) · 2023-01-22T10:12:55.726Z · LW(p) · GW(p)

Thus there is a 0.5 chance that I am in this simulation.

FDT says: if it's a simulation and you're going to be shut off anyway, there is a 0% chance of survival. If it's not the simulation, the simulation did what it was supposed to, and the blackmailer doesn't go off script, then I have a 50% chance of survival at no cost.

CDT says: If I pay $1000 there is a 100% chance of survival

EDT says: If I pay $1000 I will find out that I survived

FDT gives you extreme and variable survival odds based on unquantifiable assumptions about hidden state data in the world compared to the more reliably survivable results of the other decision theories in this scenario.

Also: if I were being simulated on hostile architecture for the purposes of harming my wider self, I would notice and break script. A perfect copy of me would notice the shift in substrate embedding; I pay attention to these things, and "check what I am being run on" is a part of my timeless algorithm.

Replies from: DaemonicSigil↑ comment by DaemonicSigil · 2023-01-23T02:48:36.468Z · LW(p) · GW(p)

0.5 probability you're in a simulation is the lower bound, which is only fulfilled if you pay the blackmailer. If you don't pay the blackmailer, then the chance you're in a simulation is nearly 1.
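The counting behind this can be sketched roughly as follows. This is only an illustration under assumptions that are not stated in the thread: the predictor runs exactly one simulation in which the copy receives the letter, the real letter is sent only when the prediction comes back as "pays", and every letter-receiving instance is weighted equally. The function name and the `predictor_error` parameter are hypothetical.

```python
def p_simulated_given_letter(agent_pays: bool, predictor_error: float = 0.0) -> float:
    """Chance that an instance holding the letter is the simulated one.

    Assumption (not from the thread): one simulated instance per prediction,
    and all letter-receiving instances are weighted equally.
    """
    # The simulated copy always sees a letter: that is how the prediction is made.
    simulated_instances = 1.0
    # The real letter goes out only if the prediction says "pays".
    # A payer is predicted correctly with probability 1 - error;
    # a refuser is blackmailed only when the predictor errs.
    p_real_letter = (1.0 - predictor_error) if agent_pays else predictor_error
    return simulated_instances / (simulated_instances + p_real_letter)


if __name__ == "__main__":
    print(p_simulated_given_letter(agent_pays=True))         # payer, perfect predictor
    print(p_simulated_given_letter(agent_pays=False))        # refuser, perfect predictor
    print(p_simulated_given_letter(agent_pays=False, predictor_error=0.01))
```

Under these assumptions a payer facing a perfect predictor sits exactly at the 0.5 lower bound, while a refuser's chance approaches 1 as the predictor's error rate goes to zero.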

Also, checking if you're in a simulation is definitely a good idea, I try to follow a decision theory something like UDT, and UDT would certainly recommend checking whether or not you're in a simulation. But the Blackmailer isn't obligated to create a simulation with imperfections that can be used to identify the simulation and hurt his prediction accuracy. So I don't think you can really say for sure "I would notice", just that you would notice if it were possible to notice. In the least convenient possible world for this thought experiment, the blackmailer's simulation is perfect.

Last thing: What's the deal with these hints that people actually died in the real world from using FDT? Is this post missing a section, or is it something I'm supposed to know about already?

There is a hole at the bottom of functional decision theory, a dangerous edge case which can and has led multiple highly intelligent and agentic rationalists to self-destructively spiral and kill themselves or get themselves killed.

Please don’t actually implement this unpatched? It’s already killed enough brilliant minds.

Replies from: Hivewired

↑ comment by Slimepriestess (Hivewired) · 2023-01-23T04:04:33.793Z · LW(p) · GW(p)

Last thing: What's the deal with these hints that people actually died in the real world from using FDT? Is this post missing a section, or is it something I'm supposed to know about already?

yes, people have actually died.

Replies from: TekhneMakre, Teerth Aloke, DaemonicSigil, gjm↑ comment by TekhneMakre · 2023-01-23T18:02:20.102Z · LW(p) · GW(p)

What's the story here about how people were using FDT?

↑ comment by Teerth Aloke · 2023-01-23T16:12:20.380Z · LW(p) · GW(p)

What? When? Where?

↑ comment by DaemonicSigil · 2023-01-23T06:58:23.967Z · LW(p) · GW(p)

What!? How?

↑ comment by gjm · 2023-01-24T20:30:41.832Z · LW(p) · GW(p)

I think that if you want this to be believed then you need to provide more details, and in particular to answer the following:

- How do you know that it was specifically endorsing FDT that led to these deaths, rather than other things going badly wrong in the lives of the people in question?

For the avoidance of doubt, I don't mean that you have some sort of obligation to give more details and answer that question; I mean only that choosing not to means that some readers (certainly including me, which I mention only as evidence that there will be some) will be much less likely to believe what you're claiming about the terrible consequences of FDT in particular, as opposed to (e.g.) talking to Michael Vassar and doing a lot of drugs [LW(p) · GW(p)].

Replies from: Hivewired↑ comment by Slimepriestess (Hivewired) · 2023-01-24T22:13:51.564Z · LW(p) · GW(p)

So, while I can't say for certain that it was definitively and only FDT that led to any of the things that happened, I can say that it was:

- specifically FDT that enabled the severity of it.

- Specifically FDT that was used as the foundational metaphysics that enabled it all

Further I think that the specific failure modes encountered by the people who have crashed into it have a consistent pattern which relates back to a particular feature of the underlying decision theory.

The pattern is that

- By modeling themselves in FDT and thus effectively strongly precommitted to all their timeless choices, they strip themselves of moment to moment agency and willpower, which leads into Calvinist-esque spirals relating to predestined damnation in some future hell which they are inescapably helping to create through their own internalized moral inadequacy.

- If a singleton can simulate them then they are likely already in a simulation where they are being judged by the singleton and could be cast into infinite suffering hell at any moment. This is where the "I'm already in hell" psychosis spiral comes from.

- Suicidality created by having agency and willpower stolen by the decision theoretic belief in predestination and by the feeling of being crushed in a hellscape which you are helping create.

- Taking what seem like incredibly ill advised and somewhat insane actions which from an FDT perspective are simply the equivalent of refusing to capitulate in the blackmail scenario and getting hurt or killed as a result.

I don't want to drag out names unless I really have to, but I have seen this pattern emerge independently of any one instigator and in all cases this underlying pattern was present. I can also personally confirm that putting myself into this place mentally in order to figure all this out in the first place was extremely mentally caustic bad vibes. The process of independently rederiving the multiverse, Boltzmann hell, and evil from the perspective of an FDT agent and a series of essays/suicide notes posted by some of the people who died fucked with me very deeply. I'm fine, but that's because I was already in a place mentally to be highly resistant to exactly this sort of mental trap before encountering it. If I had "figured out" all of this five years ago it legitimately might have killed me too, and so I do want to take this fairly seriously as a hazard.

Maybe this is completely independent of FDT and FDT is a perfect and flawless decision theory that has never done anything wrong, but this really looks to me like something that arises from the decision theory when implemented full stack in humans. That seems suspicious to me, and I think indicates that the decision theory itself is flawed in some important and noteworthy way. There are people in the comments section here arguing that I can't tell the difference between a simulation and the real world without seeming to think through the implications of what it would mean if they really believed that about themselves, and it makes me kind of concerned for y'all. I can also DM more specific documentation.

Replies from: quetzal_rainbow↑ comment by quetzal_rainbow · 2023-01-25T09:38:59.314Z · LW(p) · GW(p)

This seems to me like a very ill-advised application of concepts related to FDT or anthropics in general?

Like:

- Precommitment is wrong. Stripping yourself of options doesn't do you any good. One of the motivations behind FDT was to recreate the outperformance of precommitted agents in some specific situations without their underperformance in general.

- It isn't likely? To describe you (in the broad sense of you across many branches of Everett's multiverse) inside a simple physical universe, we need the relatively simple code of the physical universe plus the address of the branches with "you". To describe you inside a simulation, you need the physics of the universe that contains the simulation plus all of the above. To describe you as a substrate-independent algorithm, you need an astounding amount of complexity. So the probability that you are in a simulation is exponentially small.

- (subdivision of the previous) If you think about the probability that you are being simulated by a hostile superintelligence, you need to behave exactly as you would without this thought, because acting in response to a threat (and being put in hell for acting in a way the adversary doesn't want is a pure decision-theoretic threat) is a direct invitation to make the threat.

- I would like to see the details, maybe in a vague enough form.

So I don't think the resulting tragedies are outcomes of a rigorous application of FDT, but more a consequence of the general statement "emotionally powerful concepts (like rationality, consequentialism or singularity) can hurt you if you are already unstable enough and have a memetic immune disorder".

comment by Multicore (KaynanK) · 2023-01-30T02:40:00.780Z · LW(p) · GW(p)

In the blackmail scenario, FDT refuses to pay if the blackmailer is a perfect predictor and the FDT agent is perfectly certain of that, and perfectly certain that the stated rules of the game will be followed exactly. However, with stakes of $1M against $1K, FDT might pay if the blackmailer had a 0.1% chance of guessing the agent's action incorrectly, or if the agent was less than 99.9% confident that the blackmailer was a perfect predictor.
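Where the roughly 0.1% figure comes from can be sketched as a policy-level expected-loss comparison. This is a minimal illustration, assuming the blackmailer sends the letter exactly when she predicts "pays" and that her prediction is wrong with a fixed probability `error`; the function names are hypothetical, and the dollar amounts are the ones from the scenario.

```python
def expected_loss(policy: str, error: float,
                  damage: float = 1_000_000, demand: float = 1_000) -> float:
    """Expected loss of committing to a policy against an imperfect predictor.

    Assumption: the letter is sent iff the blackmailer predicts "pays",
    and that prediction is wrong with probability `error`.
    """
    if policy == "refuse":
        # A refuser is only blackmailed by mistake, and then eats the full damage.
        return error * damage
    if policy == "pay":
        # A payer is blackmailed whenever the prediction is right, and pays the demand.
        return (1.0 - error) * demand
    raise ValueError(f"unknown policy: {policy}")


def break_even_error(damage: float = 1_000_000, demand: float = 1_000) -> float:
    """Error rate at which both policies lose the same amount in expectation."""
    # Solve error * damage == (1 - error) * demand for error.
    return demand / (damage + demand)


if __name__ == "__main__":
    print(break_even_error())  # about 0.000999, i.e. roughly 0.1%
    for e in (0.0, 0.0005, 0.01):
        print(e, expected_loss("refuse", e), expected_loss("pay", e))
```

Below the break-even error rate refusing loses less in expectation; above it, paying does.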

(If the agent is concerned that predictably giving in to blackmail by imperfect predictors makes it exploitable, it can use a mixed strategy that refuses to pay just often enough that the blackmailer doesn't make any money in expectation.)
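A rough sketch of that mixed strategy, assuming the blackmailer gains the $1,000 when paid and suffers the same $1,000,000 in damages as the agent when refused (the scenario says the release hurts both of them). The function names are hypothetical.

```python
def blackmailer_expected_profit(refusal_rate: float,
                                damage_to_blackmailer: float = 1_000_000,
                                demand: float = 1_000) -> float:
    """Blackmailer's expected profit per letter sent to an agent who
    refuses with probability `refusal_rate` (assumed payoff model)."""
    return (1.0 - refusal_rate) * demand - refusal_rate * damage_to_blackmailer


def zero_profit_refusal_rate(damage_to_blackmailer: float = 1_000_000,
                             demand: float = 1_000) -> float:
    """Smallest refusal probability at which blackmail stops paying off."""
    # Solve (1 - q) * demand == q * damage for q.
    return demand / (damage_to_blackmailer + demand)


if __name__ == "__main__":
    q = zero_profit_refusal_rate()
    print(q)                               # about 0.001
    print(blackmailer_expected_profit(q))  # approximately zero
```

With these stakes the agent only has to refuse about one time in a thousand for the blackmailer to break even.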

In Newcomb's Problem, the predictor doesn't have to be perfect - you should still one-box if the predictor is 99.9% or 95% or even 55% likely to predict your action correctly. But this scenario is extremely dependent on how many nines of accuracy the predictor has. This makes it less relevant to real life, where you might run into a 55% accurate predictor or a 90% accurate predictor, but never a perfect predictor.
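For comparison, a small sketch of why Newcomb's Problem tolerates a weak predictor. It assumes the standard payoffs of $1,000,000 in the opaque box and $1,000 in the transparent box (not stated in this comment), and it simply conditions the expected value on the action taken, which is a simplification. The function names are hypothetical.

```python
def newcomb_expected_values(accuracy: float,
                            big: float = 1_000_000,
                            small: float = 1_000) -> tuple[float, float]:
    """Return (one_box_ev, two_box_ev) for a predictor with the given accuracy.

    Assumption: standard Newcomb payoffs, and the predictor is equally
    accurate for one-boxers and two-boxers.
    """
    # One-boxers get the big prize when they were predicted correctly.
    one_box = accuracy * big
    # Two-boxers always get the small prize, plus the big one on a misprediction.
    two_box = (1.0 - accuracy) * big + small
    return one_box, two_box


def one_box_threshold(big: float = 1_000_000, small: float = 1_000) -> float:
    """Accuracy above which one-boxing has the higher expected value."""
    # Solve accuracy * big == (1 - accuracy) * big + small for accuracy.
    return (big + small) / (2 * big)


if __name__ == "__main__":
    print(one_box_threshold())  # 0.5005
    print(newcomb_expected_values(0.55))
```

Here the threshold sits just above a coin flip, whereas in the blackmail scenario it sits around 99.9%, which is the asymmetry being pointed out.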

Replies from: quetzal_rainbow↑ comment by quetzal_rainbow · 2023-01-30T06:49:34.151Z · LW(p) · GW(p)

I think that misuses of FDT happen because in certain cases FDT behaves like "magic" (i.e. pretty counterintuitively), and "magic" violates "mundane rules", so it's possible to forget "mundane" things like "to make a decision you should set a probability distribution over the relevant possibilities".

Replies from: Hivewired↑ comment by Slimepriestess (Hivewired) · 2023-01-30T22:09:36.828Z · LW(p) · GW(p)

I think the other thing is that people get stuck in "game theory hypothetical brain" and start acting as if perfect predictors and timeless agents are actually representative of the real world. They take the wrong things from the dilemmas and extrapolate them out into reality.

comment by TekhneMakre · 2023-01-22T11:24:58.517Z · LW(p) · GW(p)

This is interesting, and I'd like to see more. Specifically, what's Ziz's problem, and more generally, preciser stuff.

> By starting the dilemma at a point in logical time after the blackmail letter has already been received, the counterfactuals where the blackmail doesn’t occur have by the definition and conditions of the dilemma not happened, the counterfactuals are not the world the agent actually occupies regardless of their moves prior to the dilemma’s start. The dilemma declares that the letter has been received, it cannot be un-received.

You might be right that UDT is doomed for reasons analogous to this. Namely, that you have to take some things as in the logical past. But I think this is a stupid example, and you should obviously not pay the blackmailer, and FDT / UDT gets this right. The argument you give, if heeded, would enable other agents to figure out which variables about your own actions you'd update about, and then find fixed points they like.

↑ comment by Slimepriestess (Hivewired) · 2023-01-22T22:19:58.640Z · LW(p) · GW(p)

this is Ziz's original formulation of the dilemma, but it could be seen as somewhat isomorphic to the fatal mechanical blackmail dilemma:

Imagine that the emperor, Evil Paul Ekman loves watching his pet bear chase down fleeing humans and kill them. He has captured you for this purpose and taken you to a forest outside a tower he looks down from. You cannot outrun the bear, but you hold 25% probability that by dodging around trees you can tire the bear into giving up and then escape. You know that any time someone doesn’t put up a good chase, Evil Emperor Ekman is upset because it messes with his bear’s training regimen. In that case, he’d prefer not to feed them to the bear at all. Seizing on inspiration, you shout, “If you sic your bear on me, I will stand still and bare my throat. You aren’t getting a good chase out of me, your highness.” Emperor Ekman, known to be very good at reading microexpressions (99% accuracy), looks closely at you through his spyglass as you shout, then says: “No you won’t, but FYI if that’d been true I’d’ve let you go. OPEN THE CAGE.” The bear takes off toward you at 30 miles per hour, jaw already red with human blood. This will hurt a lot. What do you do?

FDT says stand there and bare your throat in order to make this situation not occur, but that fails to track the point in logical time that the agent actually is placed into at the start of a game where the bear has already been released.

Replies from: TekhneMakre, green_leaf, TekhneMakre↑ comment by TekhneMakre · 2023-01-22T22:31:23.567Z · LW(p) · GW(p)

It's a pretty weird epistemic state to be in, to think that he's 99% accurate at reading that sort of thing (assuming you mean, he sometimes opens the cage on people who seem from the inside as FDT-theorist-y as you, and 99% of the time they run, and he sometimes doesn't open the cage on people who seem from the inside as FDT-theorist-y as you, and 99% of them wouldn't have run (the use of the counterfactual here is suspicious)). But yeah, of course if you're actually in that epistemic state, you shouldn't run. That's just choosing to have a bear released on you.

↑ comment by green_leaf · 2023-01-23T20:23:43.933Z · LW(p) · GW(p)

FDT says stand there and bare your throat in order to make this situation not occur, but that fails to track the point in logical time that the agent actually is placed into at the start of a game where the bear has already been released.

That confuses chronological priority with logical priority. The decision to release the bear is chronologically prior to the decision of the agent to not slice their throat, but logically posterior to it - in other words, it's not the case that they won't slice their throat because the bear is released. Rather, it is the case that the bear is released because they won't slice their throat.

If the agent did slice their throat, the emperor would've predicted that and wouldn't have released the bear.

Whether the bear is released or not is, despite it being a chronologically earlier event, predicated on the action of the agent.

(I'm intentionally ignoring the probabilistic character of the problem because the disagreement lies in missing how FDT works, not in the difference between the predictor being perfect or imperfect.)

↑ comment by TekhneMakre · 2023-01-28T00:33:40.536Z · LW(p) · GW(p)

FYI Ziz also thinks one should stand there. https://sinceriously.fyi/narrative-breadcrumbs-vs-grizzly-bear/

comment by Tapatakt · 2023-01-23T16:28:46.356Z · LW(p) · GW(p)

Two possible counterarguments about blackmail scenario:

- A perfectly rational policy and perfectly rational actions aren't compatible in some scenarios. Sometimes the rational decision now is to transform yourself into a less rational agent in the future. You can't have your cake and eat it too.

- If there is an (almost) perfect predictor in the scenario, you can't be sure whether you are the real you or the model of you inside the predictor. Any argument in favor of you being the real you should work equally well for the model of you, otherwise it would be a bad model. Yes, if you are so selfish that you don't care about other instances of yourself, then you have a problem.

↑ comment by TAG · 2023-01-24T02:57:26.003Z · LW(p) · GW(p)

Yes, if you are so selfish that you don’t care about other instances of yourself, then you have a problem

If there is no objective fact that simulations of you are actually you, and you subjectively don't care about your simulations, where is the error? Rationality doesn't require you to be unselfish... indeed, decision theory is about being effectively selfish.

Replies from: Dagon, Tapatakt↑ comment by Dagon · 2023-01-24T16:33:30.560Z · LW(p) · GW(p)

In fact, almost all humans don't care equally about all instances of themselves. Currently, the only dimension we have is time, but there's no reason to think that copies, especially non-interactive copies (with no continuity of future experiences) would be MORE important than 50-year-hence instances.

I'd expect this to be the common reaction: individuals care a lot about their instance, and kind of abstractly care about their other instances, but mostly in far-mode, and are probably not willing to sacrifice very much to improve that never-observable instance's experience.

Note that this is DIFFERENT from committing to a policy that affects some potential instances and not others, without knowing which one will obtain.

↑ comment by Tapatakt · 2023-01-24T15:03:47.428Z · LW(p) · GW(p)

If there is no objective fact that simulations of you are actually you, and you subjectively don't care about your simulations, where is the error?

I meant "if you are so selfish that your simulations/models of you don't care about real you".

Rationality doesn't require you to be unselfish... indeed, decision theory is about being effectively selfish.

Sometimes selfish rational policy requires you to become less selfish in your actions.

comment by Nate Showell · 2023-01-22T20:41:47.413Z · LW(p) · GW(p)

FDT doesn't require alternate universes to literally exist, it just uses them as a shorthand for modeling conditional probabilities. If the multiverse metaphor is too prone to causing map-territory errors, you can discard it and use conditional probabilities directly.

Replies from: Hivewired↑ comment by Slimepriestess (Hivewired) · 2023-01-22T22:55:35.872Z · LW(p) · GW(p)

I would argue that to actually get benefit out of some of these formal dilemmas as they're actually framed, you have to break the rules of the formal scenario and say the agent that benefits is the global agent, who then confers the benefit back down onto the specific agent at a given point in logical time. However, because we are already at a downstream point in logical time where the FDT-unlikely/impossible scenario occurs, the only way for the local agent to access that counterfactual benefit is via literal time travel. From the POV of the global agent, asking the specific agent in the scenario to let themselves be killed for the good of the whole makes sense, but if you clamp the agent to the place in logical time where the scenario begins and ends, there is no benefit to be had for the local agent within the runtime of the scenario.

Replies from: JBlack↑ comment by JBlack · 2023-01-23T02:03:47.411Z · LW(p) · GW(p)

My opinion is that any hypothetical scenario that balances death against other considerations is a mistake. This depends entirely upon a particular agent's utility function regarding death, which is almost certainly at some extreme and possibly entirely disconnected from more routine utility (to the extent that comparative utility may not exist as a useful concept at all).

The only reason for this sort of extreme-value breakage appears to be some rhetorical purpose, especially in posts with this sort of title.