Improving on the Karma System

post by Raelifin · 2021-11-14T18:01:30.049Z · 36 comments

TL;DR: I think it would improve LW to switch from the current karma system to one that distinguishes [social reward] from [quality of writing/thought] by adding a 5-star scale to posts and comments. I also explore other options.

Karma

Back in 2009, LessWrong was created by forking the codebase for Reddit. This was pretty successful, and served the community for a few years, but ultimately it became clear that Reddit's design wasn't a great fit for our community. In 2017 Habryka and others at (what is now) Lightcone rebuilt the site from scratch, improving not only the code and aesthetic, but also the basic design. The Sequences were prioritized, shortform posts and question posts were invented, and the front page was made into a curated collection of (ideally) high-quality writing.

But the primary mechanism that Reddit uses to promote content was kept: Karma.

Karma is a fairly easy system to understand: A post (or a comment, which I'll be lumping in with top-level posts for brevity) has a score that starts around zero. Users can upvote a post to increase its score, or downvote it to decrease the score. Posts with a high score are promoted to other users' attention: by algorithms that decide the order of posts (often taking into account other factors, such as when the post was made), by auto-minimizing low-scoring posts, and simply by the social proof of big-number-means-good drawing the eye.

The simplicity/familiarity of karma is one of its major selling points. As Raemon points out, it takes work to evaluate a new system, and the weirder that system is, the more suspicious you should be that it's a bad scheme. Voting is familiar enough that new users (especially in 2021!) will have no difficulty in understanding how to engage with the content on the site.

Giving positive/negative karma is also very low-effort, which is good in many circumstances! Low-effort engagement is a way to draw passive readers into participating in the community and feeling some investment in what they're reading. I may not be able or willing to write a five-page rebuttal to someone's sloppy argument, but I can sure as heck downvote it. Similarly, being able to simply hit a button to approve of quality content cuts down on the noise of people patting each other on the back.

It feels good to get karma, despite the fake-internet-points thing. This encourages people to post and Make Number Get Big.

And perhaps most of all, karma serves as a quantitative way of tracking what's good and what's not, both to create common knowledge across the site/community and to help the site promote good content and suppress bad content.

These are the primary reasons Habryka gave for carrying the karma system over to the new LessWrong.

We can do better.

The Problems of Karma

Ben Pace recently said to me that karma is usually seen as pretty good, but not perfect. And "in a world where everyone agrees karma isn't perfect, they're less upset when it's wrong."

Karma is a popularity contest. It's essentially democratic. LessWrongers understand that democracy isn't perfect, but (in principle) the glaring imperfection of ranking things purely on popularity means that when a user sees a karma score they'll understand it as only a very rough measure of quality.

But I don't buy that this is the best we can do.

And I certainly don't buy that we shouldn't try to improve things.

The problems with karma, as far as I can see, are:

- The popularity of a post is not the same as its quality.

- A movie star might be naturally more popular than a curmudgeonly economist, but that doesn't mean they'll be better at running a country.

- Some people are better at voting thanks to knowledge, wisdom, etc., and an egalitarian system suppresses that advantage.

- An investment fund democratically managed by eight random people and Peter Thiel will vastly under-perform compared to one managed solely by Thiel. Sometimes a crowd is less wise than an expert.

- Karma is one-dimensional.

- If a post is informative but poorly written, should I upvote it? If it's adding to the discourse, but also spreading misinformation, should I downvote it?

- Karma is opaque.

- Something being highly up-voted doesn't actually tell me much about it. It could be informative, funny, or simply be preaching to the choir.

- The guidelines about what to vote for (i.e. "vote for what you want promoted") are very generic.

- Karma score treats controversy as equivalent to ambivalence.

- This could be seen as a specific case of opacity being bad, but I think it's worth calling out how a karma score doesn't cleanly highlight controversy.

- It can feel bad to be downvoted, discouraging users from posting.

- This may seem like a non-issue to frequent users of the site, especially those who frequently post. But I hope it's easy to see the selection pressure there. LessWrong has a reputation as a place where people are quite harsh, and I think this repels some (good) people, and the karma system contributes to this reputation.

- Voting's value is external/communal, with very little value captured directly by the voter.

- I think this problem isn't very big in our case (for a variety of reasons), but it still seems worth listing.

- Karma is prone to bandwagoning.

- Humans are biased to want to support the most popular/powerful/influential people in the tribe, and the karma signal reinforces this. I suspect that highly-upvoted posts become more highly upvoted by the nature of having lots of upvotes, even independent of all the other confounders (e.g. being prioritized by the algorithms).

- I'm less confident here when I think about how many contrarians we have, but on net I think I still believe this.

- The same number means very different things depending on readership.

- Joe Biden received more votes during 2020 than any American politician ever. Does this mean he's the most popular politician in history? No! He got more votes because there were more voters and high turnout. The raw number is far less informative than one might assume.

- Sudden influxes of readers can distort things even on a day-to-day basis.

- Karma rewards fast-thoughts, and punishes challenging ideas.

- In a long video (relevant section), Vihart makes the point that if there are two types of readers on a site--one that skims and makes snap votes on everything, and one that carefully considers each point while only handing out votes after careful consideration--then because the skimmer can vote orders of magnitude more, they have (by default) orders of magnitude more influence on the reaction around a post. Things which immediately appeal to the reader and offer no challenge get a quick upvote, while subtle points that require deep thought can get skipped for being too hard.

- This phenomenon is why, I think, most comment sections on the web are trash. But it goes beyond just having fast thinkers be the lion's share of respondents, because it also pressures creators to appeal to existing biases and surface-level thoughts. I've certainly skimmed more than a few egregiously long LW comments, and when I write in public some part of my mind keeps saying "No. You need to make it shorter and snappier. People are just going to skim this if it's a wall of text, even if that wall of text is more nuanced and correct."
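The controversy-versus-ambivalence problem is easy to see with unweighted vote counts. Below is a minimal Python sketch; the `VoteTally` class and its controversy measure are illustrative assumptions (real LessWrong votes are also weighted by the voter's karma). A post nobody voted on and a post split 50/50 show the same net score, while a separate spread measure tells them apart.

```python
from dataclasses import dataclass


@dataclass
class VoteTally:
    """Unweighted up/down vote counts for one post (real LW votes are karma-weighted)."""
    upvotes: int
    downvotes: int

    @property
    def net_score(self) -> int:
        # The single number a karma display shows.
        return self.upvotes - self.downvotes

    @property
    def controversy(self) -> float:
        # Hypothetical measure: 0.0 = unanimous or no votes, 1.0 = evenly split.
        total = self.upvotes + self.downvotes
        if total == 0:
            return 0.0
        return 2 * min(self.upvotes, self.downvotes) / total


ignored = VoteTally(upvotes=0, downvotes=0)
divisive = VoteTally(upvotes=50, downvotes=50)
print(ignored.net_score, divisive.net_score)      # 0 0
print(ignored.controversy, divisive.controversy)  # 0.0 1.0
```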

If there are other problems that I've missed, please let me know in a comment.

Other Options

Many people have written about other systems for doing the things that karma is meant to do with fewer (or better) problems.

Eigenkarma

Habryka wrote in 2017:

I am currently experimenting with a karma system based on the concept of eigendemocracy by Scott Aaronson, which you can read about here, but which basically boils down to applying Google’s PageRank algorithm to karma allocation. How trusted you are as a user (your karma) is based on how much trusted users upvote you, and the circularity of this definition is solved using linear algebra.

And then in a 2019 update:

There are some problems with this. The first one is whether to assign any voting power to new users. If you don't you remove a large part of the value of having a low-effort way of engaging with your site.

It also forces you to separate the points that you get on your content, from your total karma score, from your "karma-trust score" which introduces some complexity into the system. It also makes it so that increases in the points of your content, no longer neatly correspond to voting events, because the underlying reputation graph is constantly shifting and changing, making the social reward signal a lot weaker.

In exchange for this, you likely get a system that is better at filtering content, and probably has better judgement about what should be made common-knowledge or not.
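As a rough illustration of the eigendemocracy idea in the first quote, here is a minimal PageRank-style power iteration in Python with NumPy. The user-to-user upvote matrix, the conventional 0.85 damping factor, and the `eigenkarma` name are assumptions for illustration; this is not Habryka's experimental code.

```python
import numpy as np


def eigenkarma(upvotes: np.ndarray, damping: float = 0.85, iters: int = 100) -> np.ndarray:
    """PageRank-style trust scores; upvotes[i, j] = times user i upvoted user j."""
    n = upvotes.shape[0]
    cast = upvotes.sum(axis=1, keepdims=True)  # votes cast by each user
    # Each voter splits their trust across the users they upvoted; users who
    # never voted spread theirs uniformly (the standard PageRank fix).
    safe_cast = np.where(cast == 0, 1, cast)
    transition = np.where(cast > 0, upvotes / safe_cast, 1.0 / n)
    trust = np.full(n, 1.0 / n)
    for _ in range(iters):
        # Trust flows from voters to the users they upvoted, plus a small uniform share.
        trust = (1 - damping) / n + damping * (transition.T @ trust)
    return trust


# Users 0 and 1 upvote user 2; user 2 upvotes user 0.
votes = np.array([[0, 0, 1],
                  [0, 0, 1],
                  [1, 0, 0]], dtype=float)
trust = eigenkarma(votes)
print(trust.round(3))
```

In the example, user 1 receives no upvotes and ends up with only the uniform baseline share. That is the new-user problem from the 2019 quote in miniature: without that baseline, a newcomer's votes would carry no weight at all.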

Predicting/Modeling the Reader

Also from Habryka (2019):

[...]The basic idea is to just have a system that tries to do its best to predict what rating you are likely to give to a post, based on your voting record, the post, and other people's votes.

In some sense this is what Youtube and Facebook are doing in their systems[...]

And problems:

The biggest sacrifice I see in creating this system, is the loss in the ability to create common knowledge, since now all votes are ultimately private, and the ability for karma to establish social norms, or just common knowledge about foundational facts that the community is built around, is greatly diminished.

I also think it diminishes the degree to which votes can serve as a social reward signal, since there is no obvious thing to inform the user of when their content got votes on. No number that went up or down, just a few thousand weights in some distant predictive matrix, or neural net.

These systems can also be frustrating to users because it's unclear why something is being recommended, regardless of the common-knowledge issues.
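To make the reader-modeling idea concrete, here is a minimal sketch using user-based collaborative filtering, a standard textbook technique swapped in for illustration; it is not what YouTube, Facebook, or LessWrong actually run. A user's predicted vote on a post is the average of other users' votes on it, weighted by the cosine similarity of their past votes on posts both have voted on. The `Votes` layout and function names are hypothetical.

```python
import math
from typing import Dict, Optional

Votes = Dict[str, Dict[str, int]]  # user -> {post_id: +1 or -1}


def similarity(a: Dict[str, int], b: Dict[str, int]) -> float:
    """Cosine similarity of two users' votes on the posts both voted on."""
    shared = a.keys() & b.keys()
    if not shared:
        return 0.0
    dot = sum(a[p] * b[p] for p in shared)
    norm_a = math.sqrt(sum(a[p] ** 2 for p in shared))
    norm_b = math.sqrt(sum(b[p] ** 2 for p in shared))
    return dot / (norm_a * norm_b)


def predict_vote(user: str, post: str, votes: Votes) -> Optional[float]:
    """Predicted vote in [-1, 1]: others' votes on `post`, weighted by similarity to `user`."""
    num = den = 0.0
    for other, their_votes in votes.items():
        if other == user or post not in their_votes:
            continue
        sim = similarity(votes.get(user, {}), their_votes)
        num += sim * their_votes[post]
        den += abs(sim)
    return num / den if den else None


votes = {
    "alice": {"p1": 1, "p2": -1},
    "bob": {"p1": 1, "p2": -1, "p3": 1},      # agrees with alice so far
    "carol": {"p1": -1, "p2": 1, "p3": -1},   # disagrees with alice so far
}
print(predict_vote("alice", "p3", votes))
```

Note that the prediction is specific to one reader: nothing here produces a shared number everyone sees, which is the common-knowledge loss described in the quote.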

FB/Discord Style Reacts

Both Habryka and Raemon have expressed interest in augmenting the karma system with a secondary tier of low-effort responses like angry, sad, heart, etc. These could be drawn from a small pool, like on Facebook, or a very broad pool, like Discord.

A major motivator here would be the idea that disentangling approval from all sorts of other nuanced responses would allow people to flag things like "I disagree with this, but am upvoting it because I think it's bringing an important perspective" without adding the noise or effort of commenting.

A "This changed my mind" button or the like would also allow more common-knowledge about what's important in LW culture.

Major issues with this proposal include:

- Added complexity, both for the UI and the learning curve for newbies

- Rounding off reactions to an oversimplified system of labels

- It doesn't actually solve many of the problems with karma, since it'd need to be in addition to a karma system

My Proposal

All the above stuff seems pretty good in some ways, but ultimately I think those solutions aren't where we should go. Instead, let me introduce how I think LessWrong should change.

(To start, this could be implemented on a select few posts, and gradually rolled out. I'll talk more about this and other details in the last section, but I wanted to frame this as a proposed experiment. There's no need to change everything all at once!)

Here's the picture:

Each post (including comments) would have a "quality rating" instead of a karma score. This rating would be a 5-star scale, ranging from 0.5 to 5 stars in half-star increments. All users can rate posts just like they'd rate a product on Amazon or whatever. On the front page, and other places where posts are listed but not intended to be voted on/rated, only the star rating is displayed.

In addition to the stars, on the post itself (where rating happens) would be a plus (+) button and a "gratitude number". Pushing that plus button makes the number go up and is a way of simply saying "thank you for writing this". On hover/longpress, there's a tooltip that says "Gratitude from: ..." and then a list of people who pressed the (+) button.

A hover/longpress on the star rating would give a barchart showing the distribution of ratings, a link with the text "(Quality Guidelines)", and either text that says "This comment has not been reviewed." or a link saying "This comment was reviewed by [Moderator]" that goes to a special comment by a moderator discussing the quality of the post.

To assist with clarity/transparency/objectivity, there would be a set of guidelines (linked to on the hover) as to how people should assess quality. I'm not going to presume to be able to name the exact guidelines in this post, but my suggestion is something like picking 5 main "targets" and assessing posts on each target. For example: Clarity (easy to read, not verbose, etc), Interestingness (pointing in novel and useful directions), Validity/Correctness (possessing truth, good logic, and being free from bias), Informativeness (contributing meaningfully, citing sources, etc), and Friendliness (being anti-inflammatory, charitable, fun, kind, etc). Then, each post can score up to one star for each, and the total rating is the sum of the score for each target.
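A minimal Python sketch of the five-target scoring, under two assumptions not stated in the proposal: per-target scores are given as fractions of a star, and the sum is rounded half-up to the nearest half star and floored at 0.5, since five 0-to-1 scores can sum to 0 while the proposed scale starts at 0.5. The target names come from the example list; the function name is hypothetical.

```python
import math
from typing import Dict

# The five example targets; each contributes up to one star.
TARGETS = ("clarity", "interestingness", "validity", "informativeness", "friendliness")


def quality_rating(scores: Dict[str, float]) -> float:
    """Sum per-target scores (0..1 each) and snap to the 0.5-5 half-star scale."""
    for target in TARGETS:
        if not 0.0 <= scores[target] <= 1.0:
            raise ValueError(f"{target} must be between 0 and 1 stars")
    total = sum(scores[target] for target in TARGETS)
    # Round half-up to the nearest half star (Python's round() rounds ties to even).
    half_stars = math.floor(total * 2 + 0.5) / 2
    # Assumption: the proposed scale has no 0-star option, so clamp to 0.5.
    return max(0.5, half_stars)


example = {"clarity": 0.9, "interestingness": 0.6, "validity": 1.0,
           "informativeness": 0.7, "friendliness": 0.5}
print(quality_rating(example))  # 3.5
```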

My inner Ben Pace is worried that trying to make an explicit guide for measuring quality will be inflammatory and divisive. I suspect that it's better to have a guide than no guide, and that this will decrease flame wars by creating common knowledge about what's desired. I'm curious what others think (including the real Ben).

Now, the last major aspect of the proposal is that users don't have equal weight in contributing to the quality rating. Instead, each user has a "quality judge reputation" (QJR) number (better names are welcome), which gives their ratings more weight, akin to how on the current LW a user's votes are weighted by their karma. Notably, however, QJR does not go up from posting, but only increases as the user gives good ratings.

A rating is good if it's close to what a moderator would give, if that moderator was tasked with carefully evaluating the quality of a post. In this way, my proposal is to move towards a system of augmented experts, where the users of the site are trying to predict what a moderator would judge. As long as the mods are fair and stick to the guidelines, this anchors the system towards the desired criteria for quality, and de-anchors popularity.

I'll cover the details in the final section, but the basic idea for allocating QJR is that moderators have a button that says "give me a post to evaluate" which samples randomly across the site, with a weighting towards a-couple-days-old and highly-controversial. Once given that post, the moderator then evaluates its quality for themselves, writing up an explanation of their rating for the public. Then, the site looks at all the ratings given by users, and moves QJR from those whose ratings didn't match the mod, to those whose ratings did. The nitty-gritty dynamics of this movement are specified in detail at the end of the post.

The Benefits and Costs

If the details of QJR and how it moves still don't quite make sense, let me compare it with something that I think is very analogous: a prediction market. In a way, I am suggesting a system similar to that proposed by Vitalik Buterin and Robin Hanson. When a user rates a post, they are essentially making a bet as to how a reasonable person (the moderator) would assess the quality of that post. The star rating that a post currently has is something like a market price for futures on the assessment. If I think a post is being undervalued, I can make expected profit (in QJR, anyway) by rating it higher.

As a result, we can use the collective predictions/bets of users of the site to promote content, even though moderators will only be able to carefully assess a small minority of posts.

But, if all that sounds too complicated and abstract: I'm basically just saying that people should judge post quality on a 5-star scale and have their rating weighted by (roughly) how much time they've actively spent on the site and how similar their views are to the moderators.

The primary reason not to make a change like this, I think, is that UI clutter and new systems are really expensive to a userbase. Websites, in my experience, tend to rot as companies jam in feature after feature until they're bloated with junk. But I expect this change to be pretty simple. Everyone knows how to rate something on a 5-star scale, and hitting a big plus symbol when you like something seems just as intuitive as upvoting.

There is some concern that making a quality assessment stops being low-effort, as users would need to think about the guidelines and the judgment of the average moderator. But I think in practice most people won't bend over backwards to do the social modeling, and will instead gain an intuition for "LW quality" that they can then use to spot posts that are over/undervalued. (Again, I suggest simply running the experiment and seeing.)

Ok, so how well does this handle the primary goals of karma?

- Serves as a good content filter? Yes!

- Social reward? Yes!

- I recommend making the gratitude number the most prominent number attached to a person's profile, followed by their post count (with average post quality in parens), comment count (again with parenthetical quality), and then QJR and number of wiki edits. I think the gratitude increase should be what a user sees on the top-bar of the site when they log back in, etc.

- Gratitude only goes up, and I think even if someone writes something that is judged to be low-quality, they'll still get some warm-fuzzies from seeing a list of people who liked their post, and seeing Number Go Up.

- Easy to understand? Yep!

- 5-star ratings and "Like" buttons are everywhere nowadays.

- Low effort? I think so.

- It takes two clicks to both rate the quality and give thanks, rather than just one click for voting up/down, but mostly I expect to be able to give ratings and thanks without much effort.

- Common Knowledge? Yes.

- By disentangling quality from appreciation we move towards a better signal of what ideal LessWrong content looks like.

Now let's return to the ten problems identified earlier and see how this system fares.

- Popularity =/= Quality? Yes!

- The gratitude button lets me say "Yay! You rock! I like you!" in a quantitative way, without giving the false impression that my endorsement means that the post was well-created.

- Differential voting power? Yes!

- Thanks to QJR weighting ratings, veteran users who consistently make good bets will have a great deal of sway on which posts are prioritized.

- Multi-dimensionality? Better than Karma, but still not great.

- Pulling out gratitude into its own number is a good start.

- Transparency/opacity? Better than Karma, but still not great.

- Having a set of guidelines and an explanation of moderator decisions seems good for having a sense of why things have the quality rating that they do.

- Having gratitude be public also probably matters here, but I don't have a good story for it.

- Controversy =/= Ambivalence? Just as bad as Karma.

- Risk of bad feels? Different than Karma, and potentially worse.

- I expect users, including new users, to enjoy seeing their gratitude number go up. But I expect many people to hate the idea of having a low-rated post.

- Ultimately I fear that having high community standards is just naturally linked to people fearing the judgment of the crowd. Gratitude is an attempt to soften that.

- Externalities are internalized? Yes.

- "Voters" gain a resource by making good bets, so the same capturing-externalities dynamics as Futarchy apply.

- Risk of bandwagoning? Likely about as bad as Karma.

- Post score is timeless? Yes! Or at least much better than Karma.

- Because things are rated on a consistent scale, 5-star posts can theoretically be compared across time more consistently than high-karma posts.

- Gratitude will be apples-and-oranges, however.

- Rewarding deep thoughts? Yes. Or at least much better than Karma.

- Because users are punished for giving bad ratings to posts, there is a natural pressure to keep people from skimming-and-rating quickly. Those people will naturally lose their QJR.

- The system still encourages people to write posts that get them a lot of gratitude, but low-quality + high-gratitude posts will be naturally de-prioritized by the site in ways I think are healthy.

I somewhat worry that the system will put too much pressure on posts to be high-quality. Throwaway comments on obscure posts become much more expensive when they lower an average score. Not sure what to do about that; suggestions are welcome.

In general, however, it seems to me that a system like this could be a massive improvement to how things are done now. In particular, I think it could make our community much better at concentrating our mental force towards Scout-Mindset stances over Soldier-Mindset voting when things get hard, thanks to the disproportionate way in which individuals can stake their reputation on a post being high/low-quality, and how the incentives should significantly reduce Soldier-ish pressures on writers and voters.

I recommend you skip/skim the next section if you're not interested in the nitty-gritty. Thanks for reading! Tell me what you think in the comments, and upvote me if you either want to signal appreciation for the brainstorming or you approve of the idea. Surely nothing will go wrong, common-knowledge wise. 😛

Monotonous Details

To start, users with over 100 karma who have opted into experimental features on their settings page gain a checkbox when they create a new post. This checkbox defaults to empty and has a label that says "Use quality rating system instead of karma". Those posts use stars+gratitude instead of karma, including for the comments. Once a post is published, the checkbox is locked.

All users gain a box on the settings page labeled as "Make my reactions anonymous" that defaults to unchecked. When checked, hitting the plus button adds to the gratitude score, but doesn't reveal the user who pressed it.

Old posts don't get ported over, and my proposal is simply to leave things heterogeneous for now. Down the line we could try estimating the quality of posts based on their karma, and move karma score into gratitude to make a full migration, but I also just don't see that as necessary.

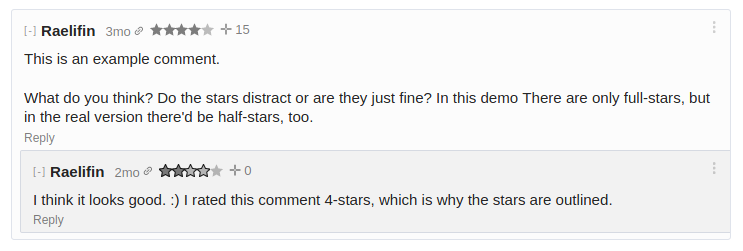

I think it's important that the UI continue to communicate the rating of the broader community even after a user has rated a post. That's why in my mockup I chose full star color for community rating and outlines for the user rating.

New users start with no QJR. This prevents people from creating new accounts for the purpose of abusing the rating system. Users accumulate QJR by rating posts (keep reading for details on that). Existing users are given QJR retroactively based on the logarithm of how many posts they've up/downvoted.

Comments are by default ordered by quality, but can be reordered by time or by gratitude. I'm not sure exactly how the site should compare karma scores with quality ratings for ordering top-level posts "by Magic". Possibly this would require creating an estimator for karma-given-quality or vice-versa.

When a post is made, the site automatically submits two "fake" ratings of 1.75 and 3.75 stars with a weight of 1 QJR each. (These average 2.75, which is the mean of the possible ratings on a 0.5-to-5-star scale with no 0-star option, and which displays as 3 stars after rounding.) These fake ratings help to prevent the first rating from having undue influence, and also make things nice for the edge cases. They are not shown on the rating histogram, but otherwise count as ratings when computing the community's weighted average.

The community rating for a post is the QJR-weighted arithmetic mean: the sum of each rating multiplied by its rater's current QJR, divided by the total QJR of all participating users. Community ratings are rounded to the nearest half-star for display.
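A minimal Python sketch of the fake-rating prior and the weighted mean, with ratings as hypothetical `(stars, QJR)` pairs. The half-up tie-breaking in `displayed_stars` is an assumption, chosen so that 2.75 displays as 3 stars as described.

```python
import math
from typing import List, Tuple

Rating = Tuple[float, float]  # (stars, rater's QJR)

# The two auto-submitted "fake" ratings, each weighted as 1 QJR.
PRIOR_RATINGS: List[Rating] = [(1.75, 1.0), (3.75, 1.0)]


def community_rating(ratings: List[Rating]) -> float:
    """QJR-weighted arithmetic mean, including the two fake prior ratings."""
    pairs = PRIOR_RATINGS + list(ratings)
    total_qjr = sum(qjr for _, qjr in pairs)
    return sum(stars * qjr for stars, qjr in pairs) / total_qjr


def displayed_stars(rating: float) -> float:
    """Round to the nearest half star for display (ties round up)."""
    return math.floor(rating * 2 + 0.5) / 2


print(community_rating([]), displayed_stars(community_rating([])))  # 2.75 3.0
print(community_rating([(4.0, 3.0)]))                              # 3.5
```

A rater with 0 QJR contributes nothing to either sum, so their rating leaves the community rating unchanged.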

EDIT: GuySrinivasan points out in the comments that many people are tempted to put down as extreme a rating as they can in order to sway the average more, even if their rating is more extreme than they truly believe. In light of this, I now think that the system would be best as a weighted median, rather than a weighted mean. The consequence of this would be that 1-star ratings are exactly as strong as 3-star ratings at moving the community rating, assuming the current community rating is above 3 stars.
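To see the EDIT's point, here is a minimal Python sketch comparing the two aggregations on hypothetical ratings. It uses a lower weighted median (the first rating, in star order, at which cumulative QJR reaches half the total); other weighted-median conventions exist. A new 2-QJR rater drags the mean much further with 0.5 stars than with 3 stars, but moves the median by the same amount either way.

```python
from typing import List, Tuple

Rating = Tuple[float, float]  # (stars, rater's QJR)
PRIOR_RATINGS: List[Rating] = [(1.75, 1.0), (3.75, 1.0)]


def weighted_mean(ratings: List[Rating]) -> float:
    pairs = PRIOR_RATINGS + ratings
    return sum(s * w for s, w in pairs) / sum(w for _, w in pairs)


def weighted_median(ratings: List[Rating]) -> float:
    """Lower weighted median: first rating (in star order) covering half the total QJR."""
    pairs = sorted(PRIOR_RATINGS + ratings)
    half = sum(w for _, w in pairs) / 2
    cumulative = 0.0
    for stars, qjr in pairs:
        cumulative += qjr
        if cumulative >= half:
            return stars
    return pairs[-1][0]  # not reached when all weights are non-negative


existing = [(4.0, 2.0), (4.5, 1.0)]
for new in [(3.0, 2.0), (0.5, 2.0)]:
    both = existing + [new]
    print(new[0], round(weighted_mean(both), 3), weighted_median(both))
# 3.0 3.429 3.75
# 0.5 2.714 3.75
```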

QJR Gains/Losses

Users do not gain or lose QJR by rating posts unless a post they've rated is reviewed by a moderator. This prevents users who rate lots of posts, especially obscure or old ones, from accumulating QJR through sheer volume.

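When a moderator submits a review score, the site computes a 1:1 bet with each user who rated the post. To do so, it models two normal distributions based on the ratings that the post had gotten when the user rated it. One, $\mathcal{N}(\mu_0, \sigma_0^2)$, has the mean and variance of the community rating not including the user's vote; the other, $\mathcal{N}(\mu_1, \sigma_1^2)$, has the mean and variance including the user's vote. The bet is on whether the moderator's review is above or below the point $x^*$ where the densities of $\mathcal{N}(\mu_0, \sigma_0^2)$ and $\mathcal{N}(\mu_1, \sigma_1^2)$ intersect. (Technically there are two intersections; it's the one in between the means.)

Setting $\frac{1}{\sigma_0}e^{-(x-\mu_0)^2/2\sigma_0^2} = \frac{1}{\sigma_1}e^{-(x-\mu_1)^2/2\sigma_1^2}$, taking logs, and multiplying through by $2\sigma_0^2\sigma_1^2$ gives, in standard quadratic form:

$$(\sigma_0^2 - \sigma_1^2)\,x^2 + 2\left(\sigma_1^2\mu_0 - \sigma_0^2\mu_1\right)x + \sigma_0^2\mu_1^2 - \sigma_1^2\mu_0^2 - 2\sigma_0^2\sigma_1^2\ln\frac{\sigma_0}{\sigma_1} = 0$$

For example, let's say that a user with 3 QJR makes a post and offers a 4-star rating as the first real rating of their post. The community rating for the post prior to the user's rating (i.e. just the two fake ratings) was $\mu_0 = 2.75$ and it had a variance of $\sigma_0^2 = 1$. After the user's rating, the QJR-weighted mean has shifted to $\mu_1 = 3.5$ and the variance has become $\sigma_1^2 = 0.775$. We can then calculate $x^* \approx 3.00$. Thus the site makes a bet with the user that says "I bet you that the moderator gives this post a rating of 3-stars or less" and the user takes them up on it.

The site then calculates how much of the user's QJR to wager based on the Kelly criterion, where we estimate the odds of (user) success by approximating the fraction of the area of $\mathcal{N}(\mu_1, \sigma_1^2)$ that "is a win". (In our example, $\mu_1 > \mu_0$, so we find the probability of winning by taking $p = 1 - \Phi\left(\frac{x^* - \mu_1}{\sigma_1}\right)$, where $\Phi$ is the standard normal CDF. If $\mu_1 < \mu_0$, then we instead approximate $p = \Phi\left(\frac{x^* - \mu_1}{\sigma_1}\right)$.) The details of taking approximations here and getting a probability out are messy and dumb, so I'm skipping them. In our example the modeled probability turns out to be approximately 71.5%. Thus the Kelly criterion for an even-odds bet says the optimal wager is the fraction $f^* = p - (1 - p) = 2p - 1 \approx 0.43$ of the user's QJR, which would be 1.29 QJR, since the user currently has 3 QJR.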

The minimum a user can wager is 1 QJR; if they don't have enough, they are gifted however much QJR is needed to make the bet. In this way, a user with 0 QJR should make as many ratings as they can, because even though they have no weight, and thus don't impact the community rating, they only have upside in expected winnings. (With the exception that if they win some QJR, then poor bets that have already been made will expose them to risk.)
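The bet computation described in the last few paragraphs can be sketched in Python with the standard library's `statistics.NormalDist`. Assumptions: variances are QJR-weighted population variances (this reproduces the $\mu$ and $\sigma^2$ values in the worked example), the rare case where neither intersection lies between the means falls back to the root nearest their midpoint, and the "gift" top-up for users below the 1 QJR minimum is left to the caller. All function names are hypothetical.

```python
import math
from statistics import NormalDist
from typing import List, Tuple

Rating = Tuple[float, float]  # (stars, rater's QJR)
PRIOR_RATINGS: List[Rating] = [(1.75, 1.0), (3.75, 1.0)]


def weighted_stats(pairs: List[Rating]) -> Tuple[float, float]:
    """QJR-weighted mean and population variance of a list of ratings."""
    total = sum(w for _, w in pairs)
    mean = sum(s * w for s, w in pairs) / total
    var = sum(w * (s - mean) ** 2 for s, w in pairs) / total
    return mean, var


def intersection(mu0: float, var0: float, mu1: float, var1: float) -> float:
    """Root of the quadratic where the two normal densities are equal, between the means."""
    a = var0 - var1
    b = 2 * (var1 * mu0 - var0 * mu1)
    c = var0 * mu1 ** 2 - var1 * mu0 ** 2 - var0 * var1 * math.log(var0 / var1)
    if abs(a) < 1e-12:  # equal variances: the crossing is the midpoint
        return (mu0 + mu1) / 2
    root = math.sqrt(max(0.0, b * b - 4 * a * c))
    candidates = [(-b - root) / (2 * a), (-b + root) / (2 * a)]
    lo, hi = min(mu0, mu1), max(mu0, mu1)
    between = [x for x in candidates if lo <= x <= hi]
    # Assumed fallback: if neither root lies between the means, use the one nearest the midpoint.
    return min(between or candidates, key=lambda x: abs(x - (mu0 + mu1) / 2))


def win_probability(threshold: float, mu0: float, mu1: float, var1: float) -> float:
    """Share of N(mu1, var1) on the side of the threshold the user is betting on."""
    dist = NormalDist(mu1, math.sqrt(var1))
    if mu1 > mu0:  # user pulled the rating up: they win if the moderator rates higher
        return 1.0 - dist.cdf(threshold)
    return dist.cdf(threshold)


def stake(p: float, qjr: float, minimum: float = 1.0) -> float:
    """Kelly stake for an even-odds bet, floored at the 1 QJR minimum."""
    kelly_fraction = max(0.0, 2 * p - 1)  # f* = p - (1 - p) when the payout is 1:1
    return max(minimum, kelly_fraction * qjr)


# The worked example: a 3-QJR user's 4-star rating on a fresh post.
mu0, var0 = weighted_stats(PRIOR_RATINGS)                 # 2.75, 1.0
mu1, var1 = weighted_stats(PRIOR_RATINGS + [(4.0, 3.0)])  # 3.5, ~0.775
x_star = intersection(mu0, var0, mu1, var1)
p = win_probability(x_star, mu0, mu1, var1)
print(round(x_star, 3), round(p, 3), round(stake(p, qjr=3.0), 2))
```

With the unrounded threshold $x^* \approx 3.003$ this gives $p \approx 71.4\%$ and a stake of about 1.28 QJR; evaluating at exactly 3 stars, as the bet's wording does, gives the 71.5% and 1.29 QJR quoted above.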

QJR is not conserved. The site has an unlimited supply, and because many comments will obviously be higher quality than 3-stars, users will be able to earn easy QJR by making posts and rating them. This is a feature meant to encourage participation on the site, and reward long-term judges. There's also a bias where the people who make posts and rate them highly get more QJR the higher their quality is, which is obviously good. The flip side is that a person can theoretically game the system by making low quality posts and immediately rating them low, but this has a self-sabotaging protective effect where the site will de-prioritize such posts and make others unlikely to read them, thus unlikely to rate them, thus unlikely to have those posts selected for moderator review.

If a user changes their rating before another user has rated a post, only the second rating is used. If a user changes their rating on a post after others have also rated it, the user that changed their mind makes both bets, but their net QJR gain/loss is capped at the maximum gain/loss of any particular bet. This is to prevent gaming the system by re-rating the same post many times. Only the most recent rating is counted for the purposes of calculating the (current) community rating, however.

Posts can be reviewed by (different) moderators multiple times. When two moderators review a post, both of their review comments are linked to in the star-rating hover/longpress tooltip. When a new moderator reviews a post that has already been reviewed by a mod, only the ratings made since the last mod review are turned into bets. This means that even after a moderator has reviewed a post, there is still incentive for users to rate it.

I picked the above system of making bets so as to incentivize users to rate a post with their true expectation of what a moderator would say. I think if a user expects moderator judgments to be non-Gaussian, they can rate things strategically and gain some QJR over the desired strategy, but I personally expect moderator decisions to be approximately Gaussian and don't think this is much of an issue.

I also don't think that one needs to clip the distributions around 0.5 and 5 in order for things to work right or to model the way that moderators will only rate in half-star increments, but I haven't run exhaustive proofs that things actually work as they should. If someone wants to try and prove things about the system, I'd be grateful.

Moderator Interface

Moderators are just users, most of the time. They have QJR and can rate posts, as normal.

Moderators are also encouraged to interface with a specific part of the site that lets them do quality reviews. In this interface they are automatically served a post on LessWrong, sampled randomly with a bias towards posts that have been rated and whose ratings differ from one another, and a mild bias towards posts made a few days ago. I think it is important that moderators don't choose which posts to review themselves, to reduce bias.

As part of a review, a moderator has to give a star-rating to a post, and explain their judgment in a special comment. If a moderator has already given a quality rating to a post, the moderator only needs to write the comment. When giving a review, the moderator's QJR doesn't change.

By default I think moderator quality-comments should be minimized, with the moderator able to check a box when they make their comment that says something like "Don't minimize this quality review; it contributes to the discussion." Moderator comments explaining their quality review should be understood to be an objective evaluation of the post, rather than an object-level response to the post's content.

Moderator quality reviews don't disproportionately influence the rating of a post--the overall star rating of a post is purely a measure of what the community as a whole has said. (Moderators still have QJR, and their review still contributes, as weighted by their QJR.)

The sampling system ignores posts where the moderator has a conflict of interest. This defaults to only posts written by the mod, but can be extended to blacklisting the moderator from reviewing posts by a set of other users (e.g. romantic partners, bosses, etc).
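A minimal Python sketch of the review sampler with conflict-of-interest filtering, using the standard library's `random.choices`. The weights (0.1 for unrated posts, population variance of the ratings as the disagreement measure, a 2x bonus for posts 2 to 4 days old) are hypothetical placeholders, not part of the proposal; only the direction of each bias comes from the text.

```python
import random
import statistics
from dataclasses import dataclass, field
from typing import AbstractSet, List, Optional


@dataclass
class Post:
    post_id: str
    author: str
    age_days: float
    ratings: List[float] = field(default_factory=list)  # star ratings from users


def review_weight(post: Post) -> float:
    """Hypothetical sampling weight: favor rated, contested, few-days-old posts."""
    if not post.ratings:
        return 0.1  # unrated posts create no bets, so review them rarely
    disagreement = statistics.pvariance(post.ratings)
    age_bonus = 2.0 if 2.0 <= post.age_days <= 4.0 else 1.0  # mild "a few days old" bias
    return (1.0 + disagreement) * age_bonus


def sample_post_for_review(posts: List[Post], moderator: str,
                           blocked_authors: AbstractSet[str] = frozenset(),
                           rng: Optional[random.Random] = None) -> Optional[Post]:
    """Draw one post at random, skipping posts where the moderator has a conflict of interest."""
    eligible = [p for p in posts
                if p.author != moderator and p.author not in blocked_authors]
    if not eligible:
        return None
    rng = rng if rng is not None else random.Random()
    weights = [review_weight(p) for p in eligible]
    return rng.choices(eligible, weights=weights, k=1)[0]


posts = [
    Post("a", author="mod", age_days=3, ratings=[1.0, 5.0]),
    Post("b", author="alice", age_days=3, ratings=[2.0, 4.0]),
    Post("c", author="bob", age_days=10),
]
print(sample_post_for_review(posts, "mod", blocked_authors={"bob"}).post_id)  # b
```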

That's All, Folks

If there are details that I've omitted that you are curious about, please leave a comment. I also welcome criticism of all kinds, but don't forget that the perfect can be the enemy of the good! My stance is that this proposed system isn't perfect, but it's superior to where we currently are.

Oh, also, I'm a fairly competent web developer/software engineer, and I potentially have room for some side-work. If we're bottlenecked on developer time, I can probably fix that. ¯\_(ツ)_/¯

36 comments

Comments sorted by top scores.

comment by jimrandomh · 2021-11-14T21:09:19.092Z

We're thinking a lot about this! Probably what's going to happen next is that we're going to implement more than one variation on voting, and pick a few posts where comments will use alternate voting systems. This will be separated out by post, not by user, since having users using a mix of voting systems on the same comments introduces a bunch of problems.

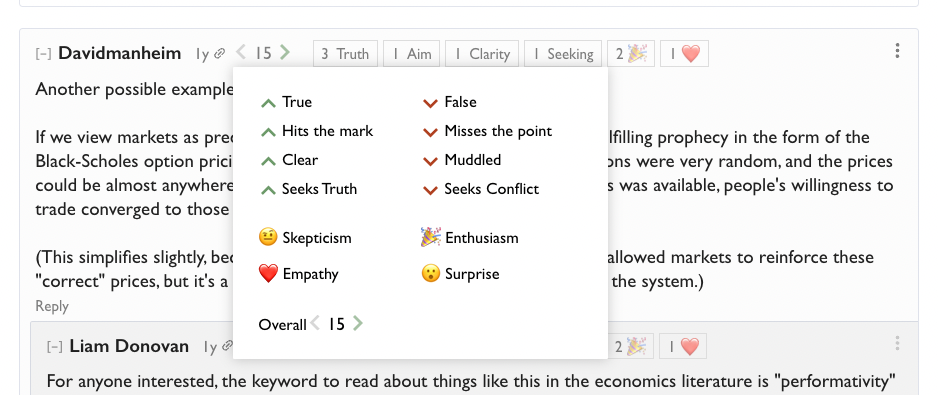

Ideally we can both improve sorting/evaluation, and also use aspects of the voting system itself as a culture-shaping tool to remind people of what sort of comments we're hoping for. Here's a mockup I posted in the LW development slack last week (one idea among multiple, and definitely not the final form):

(In this mockup, which currently is just a fake screenshot and not any real wired-up code, the way it works is that you have an overall vote which works like existing karma, but you can also pick any subset of the 12 things on the form that appears on hover-over. If you pick one of the positive or negative adjectives, it sets your overall vote to match the valence, but you can then override the overall vote if you want to say things like False/Upvote or True/Downvote.)

I think we're unlikely to settle on anything involving ratings-out-of-five-stars, mainly because a significant subset of users have preexisting associations with five-star scales which would make them overuse the top rating (and misinterpret non-5 ratings from others).

We're also considering making users able to (optionally) make their votes public.

↑ comment by Vladimir_Nesov · 2021-11-14T23:11:25.758Z

A fixed set of tags turns this into multiple-choice questions where all answers are inaccurate, and most answers are irrelevant. Write-in tags could be similar to voting on replies to a comment that evaluate it in some respect. Different people pay attention to different aspects, so the flexibility to vote on multiple aspects at once or differently from overall vote is unnecessary.

↑ comment by jimrandomh · 2021-11-14T23:58:52.900Z

> Different people pay attention to different aspects, so the flexibility to vote on multiple aspects at once or differently from overall vote is unnecessary.

There's a limited sense in which this is true - the adjective voting on Slashdot wouldn't benefit from allowing people to pick multiple adjectives, for example. But being able to express a mismatch between overall upvote/downvote and true/false or agree/disagree may be important; part of the goal is to nudge people's votes away from being based on agreement, and towards being based on argument quality.

↑ comment by delton137 · 2021-11-15T13:36:44.709Z

For what it's worth - I see value in votes being public by default. It can be very useful to see who upvoted or downvoted your comment. Of course then people will use the upvote feature just to indicate they read a post, but that's OK (we are familiar with that system from Facebook, Twitter, etc).

I'm pretty apathetic about all the other proposals here. Reactions seem to me to be unnecessary distractions. [side note - emojis are very ambiguous so it's good you put words next to each one to explain what they are supposed to mean]. The way I would interpret reactions would be as a poll of people's system 1 snap judgements. That is arguably useful/interesting information in many contexts but also distracting in other contexts.

↑ comment by ozziegooen · 2021-11-15T10:12:42.926Z

Just want to say: I'm really excited to see this.

I might suggest starting with an "other" list that can be pretty long. With Slack, different subcommunities focus heavily on different emojis for different functional things. Users sometimes figure out neat innovations and those proliferate. So if it's all designed by the LW team, you might be missing out.

That said, I'd imagine 80% of the benefit is just having anything like this, so I'm happy to see that happen.

comment by Dagon · 2021-11-14T20:48:54.252Z

I'd like to support the "do nothing" proposal.

Karma is destined to be imperfect - there's no way to motivate any particular use of it when there's no mechanism to limit invalid use, and no actual utility to the points. Its current, very simple implementation provides a little bit of benefit in guiding attention to popular posts, and that's enough.

Anything more complex and it will distract people from the content of the site. Or at least, it'll distract and annoy me, and I'd prefer not to add unnecessary complexity to a site I enjoy.

↑ comment by lsusr · 2021-11-14T22:02:23.622Z

Nitpick: accumulating karma is useful in one respect. High-karma users get more automatic karma on our posts, which draws more attention to them.

I agree with the do nothing proposal, by the way. The current system, while imperfect, is simple and effective.

comment by SarahNibs (GuySrinivasan) · 2021-11-14T22:46:37.317Z

Very well thought out. I think the two biggest things missing from your analysis are:

- 5-star ratings have become corrupted in the wild. Small-time authors get legitimately angered when a fan rates their work as 4-stars on Amazon, because anything other than 5 stars is very damaging. We don't want to port this behavior/intuition to LW, but by default that's what we'd do. jimrandomh mentions this in their comment. I don't know how to overcome this problem while retaining 5-star ratings.

- Users like to "correct" a post/comment's rating. Personally I hate this behavior, but after ranting about it several times over the years I've learned that I don't represent everyone. :D So if they see a comment with average 2-stars, which they think should be 3.5, they will not want to rate it 3.5. Instead they will want to rate it 5.0 to "make up for" the other "wrongheaded" views. Maybe one way to overcome this problem is to allow a lightweight way to say "I think this is 3.5. I want to use my QJR to fix the current rating so I'm voting 5.0. Please increase the chance a mod sees this, double down on my bet, and maybe even change my rating back to 3.5 if the post becomes 3.5?? And also if a mod rates a post maybe this should drastically reduce the effective QJR of people contradicting the mod... or something, if a mod says 3.5 and the weighted avg is still only 2.5 I won't be happy".

↑ comment by Raelifin · 2021-11-14T23:00:56.222Z

I suggested the 5-star interface because it's the most common way of giving things scores on a fixed scale. We could easily use a slider, or a number between 0 and 100 from my perspective. I think we want to err towards intuitive/easy interfaces even if it means porting over some bad intuitions from Amazon or whatever, but I'm not confident on this point.

I toyed with the idea of having a strong-bet option, which lets a user put down a stronger QJR bet than normal, and thus influence the community rating more than they would by default (albeit exposing them to higher risk). I mainly avoided it in the above post because it seemed like unnecessary complexity, although I appreciate the point about people overcompensating in order to have more influence.

One idea that I just had is that instead of having the community rating set by the weighted mean, perhaps it should be the weighted median. The effect would be that voting 5 stars on a 2-star post has exactly the same sway as voting 3.5, right up until the median crosses the 3.5 line. I really like this idea, and will edit the post body to mention it. Thanks!
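To make the mean-versus-median point concrete, here is a small Python sketch. Each vote is a `(stars, weight)` pair where the weight stands in for the voter's QJR; the function names and the choice of a lower weighted median (ties resolve to the smaller rating) are assumptions, not details from the post.

```python
def weighted_mean(votes):
    """QJR-weighted mean of (stars, weight) pairs."""
    if not votes:
        raise ValueError("no votes")
    total = sum(w for _, w in votes)
    return sum(s * w for s, w in votes) / total


def weighted_median(votes):
    """Lower weighted median: smallest rating at which cumulative weight reaches half."""
    if not votes:
        raise ValueError("no votes")
    ordered = sorted(votes)
    half = sum(w for _, w in ordered) / 2
    cumulative = 0.0
    for stars, weight in ordered:
        cumulative += weight
        if cumulative >= half:
            return stars
```

With existing votes of 1.5, 2 and 4 stars at equal weight, adding either a 5-star vote or a 3.5-star vote leaves the weighted median at 2, while the weighted mean rises to 3.125 versus 2.75. Under the median, exaggerating one's vote buys no extra sway.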

↑ comment by aphyer · 2021-11-15T00:48:09.615Z

Another issue I'd highlight is one of complexity. When I consider how much math is involved:

This post involves Gaussians, logarithms, weighted means, integration, and probably a few other things I missed.

The current karma system uses...addition? Sometimes subtraction?

One of these things is much more transparent to new users.

↑ comment by SarahNibs (GuySrinivasan) · 2021-11-15T01:07:26.574Z

I am a huge fan of tiered-complexity views on complex underlying systems. The description to new users would be:

- Ratings are a magic median-like combination of how users rated a post. Click through for more details...

- Displayed ratings are the median of how users have rated the post/comment. Smoothed. Weighted by how LessWrongy the rater has been. Your own rating will have more effect when your historical ratings are good predictions of how trusted moderators end up rating. Click through for more details...

- Sometimes mods will rate posts/comments, after careful reflection on how they want LessWrong in general to rate. When they do, everyone who previously rated will be awarded additional weight to their future votes if their ratings were similar to what the mod decided, or penalized with less future vote weight if their ratings were pretty far off. That's how the weights are determined when aggregating people's votes on comments. Of course, it's more complicated than that. Folks were grandfathered in. New folks [behavior]. Mods who are regularly different than other mods and high-weight voters trigger investigation into whether they should be mods anymore, or whether everyone is getting something wrong. Multiple mod votes are a thing, as is voting similar to high-weight voters (?? maybe ?? is it ??), as is promoting high-weight voters to mods, as is etc etc. Click through for more details, including math...

- [treatise]

- [link to the documented code]
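The third tier above describes mod ratings adjusting each rater's future vote weight. The post's actual formula is not in this section (aphyer's comment mentions Gaussians and logarithms), so the following is only a toy multiplicative rule in Python; `update_weights`, `tolerance`, `reward`, `penalty` and `floor` are all invented for illustration.

```python
def update_weights(ratings, mod_rating, tolerance=1.0, reward=1.1, penalty=0.9, floor=0.1):
    """Toy rule: nudge each rater's vote weight up or down after a mod rates a post.

    ratings: dict mapping username -> (their star rating, their current weight)
    Returns a new dict mapping username -> updated weight.
    """
    updated = {}
    for user, (rating, weight) in ratings.items():
        if abs(rating - mod_rating) <= tolerance:
            new_weight = weight * reward   # close to the mod: more weight next time
        else:
            new_weight = weight * penalty  # far from the mod: less weight next time
        updated[user] = max(floor, new_weight)  # never drop a voter to zero
    return updated
```

A floor keeps a few bad calls from silencing a voter entirely; a real scheme would also need the grandfathering and mod-disagreement checks the comment lists.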

comment by Shmi (shminux) · 2021-11-15T03:33:09.132Z

My gut feeling is that attracting more attention to a metric, no matter how good, will inevitably Goodhart it. The current karma system lives happily in the background, and people have not attempted to game it much since the days of Eugine_Nier. I am not sure what problem you are trying to solve, and whether your cure will not be worse than the disease.

↑ comment by alkexr · 2021-11-15T19:35:57.236Z

> My gut feeling is that attracting more attention to a metric, no matter how good, will inevitably Goodhart it.

That is a good gut feeling to have, and Goodhart certainly does need to be invoked in the discussion. But the proposal is about using a different metric with a (perhaps) higher level of attention directed towards it, not just directing more attention to the same metric. Different metrics create different incentive landscapes to optimizers (LessWrongers, in this case), and not all incentive landscapes are equal relative to the goal of a Good LessWrong Community (whatever that means).

> I am not sure what problem you are trying to solve, and whether your cure will not be worse than the disease.

This last sentence comes across as particularly low-effort, given that the post lists 10 dimensions along which it claims karma has problems, and then evaluates the proposed system relative to karma along those same dimensions.

↑ comment by Yoav Ravid · 2021-11-15T05:51:34.742Z

I don't think the problem is that people try to game it, but that it's flawed (in the many ways the post describes) even when people try to be honest.

comment by Elizabeth (pktechgirl) · 2021-11-14T20:01:54.395Z

> Some people are better at voting thanks to knowledge/wisdom/etc; which an egalitarian system suppresses.

Double checking that you are aware that voting is weighted, with higher karma users having the option to give much stronger votes?

↑ comment by Bezzi · 2021-11-14T20:18:30.602Z

Just for convenience, I think the relevant piece of code is this.

Also, from what I read in that file, even normal votes are weighted. A regular upvote/downvote counts double if the user has at least 1000 karma (right?).

↑ comment by Yoav Ravid · 2021-11-14T20:28:17.025Z

Yes, that's right.

comment by Filipe Marchesini (filipe-marchesini) · 2021-11-16T11:19:05.354Z

I would like to see different voting systems on different posts, so we could try them out and report back on how each one allows us to express what we think about those posts.

I don't like a single 5-star rating system, but I would love multiple 5-stars in different categories. For example, we could choose to give 5 stars for each of these categories you pointed out: clarity, interestingness, validity/correctness, informativeness, friendliness.

If we had a single 5-star rating system, and suppose I read a post that was completely clear and interesting, but with a wrong conclusion, I just don't know how many stars I would give it. But if I could give 5 stars for clarity and interest, I might give 0~3 stars for correctness (depending on how wrong it was).

Suppose there's a post with 2-star rating on its clarity and I believe the fair rating should be 3-star; I think I would click on 4~5 stars so that I could steer the rating to 3-star. I am not sure how to fix this behaviour, but the rating could say something like "select the final rating you think this post should have", and then I would click on 3-star, if the calculations somehow took care of that.

I completely endorse experimenting with different voting systems; we can simply not use them if we realize they don't work well. We should be open to experimentation, and obviously the current system is not perfect and can be improved, and if you are willing to put time and effort into this, I will support and participate and help on discovering if they work better than the current system.

↑ comment by Raelifin · 2021-11-16T19:46:27.298Z

If you have multiple quality metrics then you need a way to aggregate them (barring more radical proposals). Let's say you sum them (the specifics of how they combine are irrelevant here). The result is effectively a 25-star system with a more explicit breakdown, which is essentially what I was suggesting. Rate each post on 5 dimensions from 0 to 2, add the values together, divide by two (with a minimum of 0.5), and you have my proposed system. Perhaps you think the interface should clarify the distinct dimensions of quality, but I think UI simplicity is pretty important, and am wary of suggesting having to click 5+ times to rate a post.
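The equivalence can be checked with a few lines of Python. The dimension names come from Filipe's comment; reading "minimum of 0.5" as a floor of half a star, so the scale has ten half-star steps, is an interpretation rather than something the comment spells out.

```python
DIMENSIONS = ("clarity", "interestingness", "correctness", "informativeness", "friendliness")


def stars_from_dimensions(scores: dict) -> float:
    """Sum five 0-2 dimension scores and halve the total into a half-star rating."""
    for name in DIMENSIONS:
        if scores[name] not in (0, 1, 2):
            raise ValueError(f"{name} must be 0, 1 or 2")
    total = sum(scores[name] for name in DIMENSIONS)  # ranges 0..10
    return max(0.5, total / 2)  # 0.5, 1.0, ..., 5.0
```

For example, 2 for clarity and interestingness, 0 for correctness and 1 for the other two sums to 6, i.e. 3 stars.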

I addressed the issue of overcompensating in an edit: if the weighting is a median then users are incentivized to select their true rating. Good thought. ☺️

Thanks for your support and feedback!

comment by tivelen · 2021-11-16T01:34:26.745Z

I appreciate the benefits of the karma system as a whole (sorting, hiding, and recommending comments based on perceived quality, as voted on by users and weighted by their own karma), but what are the benefits of specifically having the exact karma of comments be visible to anyone who reads them?

Some people in this thread have mentioned that they like that karma chugs along in the background: would it be even better if it were completely in the background, and stopped being an "Internet points" sort of thing like on all other social media? We are not immune to the effects of such things on rational thinking.

Sometimes in a discussion in comments, one party will be getting low karma on their posts, and the other high karma, and once you notice that you'll be subject to increased bias when reading the comments. Unless we're explicitly trying to bias ourselves towards posts others have upvoted, this seems to be operating against rationality.

Comments seem far more useful in helping writers make good posts. The "score" aspect of karma adds distracting social signaling, beyond what is necessary to keep posts prioritized properly. If I got X karma instead of Y karma for a post, it would tell me nothing about what I got right or wrong, and therefore wouldn't help me make better posts in the future. It would only make me compare myself to everyone else and let my biases construct reasoning for the different scores.

A sort of "Popular Comment" badge could still automatically be applied to high-karma comments, if indicating that is considered valuable, but I'm not sure that it would be.

TL;DR: Hiding the explicit karma totals of comments would keep all the benefits of karma for the health of the site, reduce cognitive load on readers and writers, and reduce the impact of groupthink, with no apparent downsides. Are there any benefits to seeing such totals that I've overlooked?

↑ comment by Raelifin · 2021-11-16T19:34:03.744Z

I agree that there are benefits to hiding karma, but it seems like there are two major costs. The first is reduced transparency; I claim that people like knowing why something is selected for them, and if karma becomes invisible the information becomes hidden in a way that people won't like. (One could argue it should be hidden despite people's desires, but that seems less obvious.) The second major cost is one cited by Habryka: losing common knowledge. Visible karma scores help people gain a shared understanding of what's valued across the site. Rankings aren't sufficient for this, because they can't distinguish relative quality from absolute quality (e.g. I'm much more likely to read a post with 200 karma than one with 50, even if the former is ranked lower due to staleness).

comment by Patodesu · 2024-12-31T08:28:12.453Z

If a 5-star system of voting were to be implemented, the voting UI could stay the same, and the existing vote levels could be reused but interpreted as ratings one star apart: strong downvote, downvote, no vote, upvote, strong upvote.

And a middle (3 stars) vote could be added.

I know that people don't think of the two ways of voting as equivalent, and that under this scheme a regular "upvote" could reduce the score of a comment/post.

But they are similar enough, and the UI would be much simpler and not discourage people from voting.
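A sketch of this mapping in Python, assuming strong downvote through strong upvote map to 1 through 5 stars with the proposed middle vote at 3, and ignoring vote weights for simplicity. `VOTE_TO_STARS` and `star_score` are hypothetical names. It reproduces the caveat above: on a comment averaging 4.5 stars, a regular upvote (4 stars) pulls the average down to about 4.33.

```python
VOTE_TO_STARS = {
    "strong_downvote": 1,
    "downvote": 2,
    "neutral": 3,  # the proposed new middle vote
    "upvote": 4,
    "strong_upvote": 5,
}


def star_score(votes):
    """Unweighted average star rating of legacy-style votes (illustration only)."""
    if not votes:
        raise ValueError("no votes")
    stars = [VOTE_TO_STARS[v] for v in votes]
    return sum(stars) / len(stars)
```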

comment by Jesse Kanner (jesse-kanner) · 2022-08-14T12:05:55.658Z

TL;DR - probably best to scrap rating people's posts and comments altogether. At very least change the name.

I'm not fond of the label "Karma". It suggests universal and hermetic moral judgement when in the context of this blog it's just, you know, people's impulsive opinion in the moment. It also suggests persistence - as Karma supposedly spills over into the next iteration.

My very first comment on LW garnered a -36 Karma score. It was thoughtful and carefully argued - and, yes, a bit spicy. But regardless, the community decided to just shun me with a click without actually engaging in the ideas I proposed. I feel ganged up upon and not taken seriously.

I still trudge ahead though with other comments but regrettably I feel compelled to adopt a "me versus you all" stance. It's saddening and anti-intellectual (and a direct violation of the LW ethic of "A community blog devoted to refining the art of rationality.")

Viewpoint diversity is vitally important for deep learning. I suggest dropping Karma altogether, or at least using simple up-down voting to shift comments to the bottom. But even that doesn't really feel right. What you have now is a Milgram machine.

comment by hath · 2021-11-14T19:58:07.988Z

What if we could score on a power-law level of quality, instead of just five stars for fulfilling each of five categories? There could be one order of magnitude for "well written/thought out", the next order of magnitude higher meaning "has become part of my world model" and one higher of "implemented the recommendations in this post, positively improved my life". The potential issue I see in the 5 star rating system is that it doesn't have enough variance; probably 95% of the posts I've read on here would be either 4 or 5 stars. Being able to rate posts with a decimal, so you can rate a post 4.5 instead of just 4 or 5, would also help, though it'd clutter the UI and make voting cost more spoons.
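One possible reading of the power-law idea, sketched in Python: each tier is worth ten times the one below, and the display shows the base-10 logarithm of the total. The tier keys paraphrase hath's categories, and both functions are assumptions rather than a worked-out design. Under this reading it amounts to linear voting with very steep weights: ten "well written" ratings equal one "became part of my world model".

```python
import math

# Hypothetical tiers, one order of magnitude apart.
TIERS = {
    "well_written": 0,         # 10**0 = 1 point
    "changed_world_model": 1,  # 10**1 = 10 points
    "improved_my_life": 2,     # 10**2 = 100 points
}


def power_law_score(reactions):
    """Total points from a list of tier names."""
    return sum(10 ** TIERS[r] for r in reactions)


def display_level(score):
    """Show the total as an order of magnitude."""
    return math.log10(score) if score > 0 else 0.0
```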

↑ comment by Raelifin · 2021-11-14T22:50:31.774Z

I agree with the expectation that many posts/comments would be nearly indistinguishable on a five-star scale. I'm not sure there's a way around this while keeping most of the desirable properties of having a range of options, though perhaps increasing it from 10 options (half-stars) to 14 or 18 options would help.

My basic thought is that if I can see a bunch of 4.5 star posts, I don't really need the signal as to whether one is 4.3 stars vs 4.7 stars, even if 4.7 is much harder to achieve. I, as a reader, mostly just want a filter for bad/mediocre posts, and the high-end of the scale is just "stuff I want to read". If I really want to measure difference, I can still see which are more uncontroversially good, and also which has more gratitude.

I'm not sure how a power-law system would work. It seems like if there's still a fixed scale, you're marking down a number of zeroes instead of a number of stars. ...Unless you're just suggesting linear voting (ie karma)?

↑ comment by habryka (habryka4) · 2021-11-15T03:01:53.186Z

One of my ideas for this (when thinking about voting systems in general) is to have a rating that is trivially inconvenient to access. Like, you have a ranking system from F to A, but then you can also hold the A button for 10 seconds, and then award an S rank, and then you can hold the S button for 30 seconds, and award a double S rank, and then hold it for a full minute, and then award a triple S rank.
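A sketch of the hold-to-upgrade idea in Python. It assumes each hold is timed separately after picking A (10 s for S, then 30 s for SS, then 60 s for SSS, matching the numbers above) and that a too-short hold simply stops the climb; `HOLD_STEPS` and `rank_from_holds` are hypothetical.

```python
# Each step beyond A: (rank awarded, seconds the button must be held).
HOLD_STEPS = [("S", 10.0), ("SS", 30.0), ("SSS", 60.0)]


def rank_from_holds(hold_seconds):
    """hold_seconds: durations of successive holds after choosing A.

    Returns the highest rank reached; the first too-short hold ends the climb.
    """
    rank = "A"
    for (next_rank, required), held in zip(HOLD_STEPS, hold_seconds):
        if held < required:
            break
        rank = next_rank
    return rank
```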

The only instance I've seen of something like this implemented is Medium's clap system, which allows you to give up to 50 claps, but you do have to click 50 times to actually give those claps.

↑ comment by Yoav Ravid · 2021-11-15T05:55:21.061Z

If we were making just a small change to voting, then the one I would have liked to make is having something like the clap system instead of weakvotes and strongvotes, and have the cap decided by karma score (as it is now, if your strongvote is X, your cap would be X).
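A minimal Python sketch of this variant: every click adds one point, up to a per-user cap equal to that user's current strong-vote strength. How strong-vote strength is derived from karma is taken as an input here rather than reproduced, and `ClapTracker` is a hypothetical name.

```python
class ClapTracker:
    """Clap-style votes: one point per click, capped per user and post."""

    def __init__(self) -> None:
        self.points: dict[tuple[str, str], int] = {}

    def clap(self, user: str, post_id: str, cap: int) -> int:
        """Register one click; return the user's total points on this post."""
        key = (user, post_id)
        current = self.points.get(key, 0)
        if current < cap:  # clicks past the cap are ignored
            current += 1
            self.points[key] = current
        return current

    def total(self, post_id: str) -> int:
        """Sum of all users' points on a post."""
        return sum(p for (_, pid), p in self.points.items() if pid == post_id)
```

Compared with a separate strongvote button, this makes a large vote cost several deliberate clicks, which is the trivial inconvenience habryka's comment is after.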