Brain Efficiency: Much More than You Wanted to Know

post by jacob_cannell · 2022-01-06T03:38:00.320Z


What if the brain is highly efficient? To be more specific, there are several interconnected key measures of efficiency for physical learning machines:

- energy efficiency in ops/J

- spatial efficiency in ops/mm^2 or ops/mm^3

- speed efficiency in time/delay for key learned tasks

- circuit/compute efficiency in size and steps for key low level algorithmic tasks [1]

- learning/data efficiency in samples/observations/bits required to achieve a level of circuit efficiency, or per unit thereof

- software efficiency in suitability of learned algorithms to important tasks (not directly addressed in this article)[2]

Why should we care? Brain efficiency matters a great deal for AGI timelines and takeoff speeds, as AGI is implicitly/explicitly defined in terms of brain parity. If the brain is about 6 OOM away from the practical physical limits of energy efficiency, then roughly speaking we should expect about 6 OOM of further Moore's Law hardware improvement past the point of brain parity: perhaps two decades of progress at current rates, which could be compressed into a much shorter time period by an intelligence explosion - a hard takeoff.

But if the brain is already near said practical physical limits, then merely achieving brain parity in AGI at all will already require using up most of the optimizational slack, leaving not much left for a hard takeoff - thus a slower takeoff.

In worlds where brains are efficient, AGI is first feasible only near the end of Moore's Law (for non-exotic, irreversible computers), whereas in worlds where brains are highly inefficient, AGI's arrival is more decorrelated, but would probably come well before any Moore's Law slowdown.

In worlds where brains are ultra-efficient, AGI necessarily becomes neuromorphic or brain-like, as brains are then simply what economically efficient intelligence looks like in practice, as constrained by physics. This has important implications for AI-safety: it predicts/postdicts the success of AI approaches based on brain reverse engineering (such as DL) and the failure of non-brain like approaches, it predicts that AGI will consume compute & data in predictable brain like ways, and it suggests that AGI will be far more like human simulations/emulations than you'd otherwise expect and will require training/education/raising vaguely like humans, and thus that neuroscience and psychology are perhaps more useful for AI safety than abstract philosophy and mathematics.

If we live in such a world where brains are highly efficient, those of us interested in creating benevolent AGI should immediately drop everything and learn how brains work.

Energy

Computation is an organization of energy in the form of ordered state transitions transforming physical information towards some end. Computation requires an isolation of the computational system and its stored information from the complex noisy external environment. If state bits inside the computational system are unintentionally affected by the external environment, we call those bit errors due to noise: errors which must be prevented by significant noise barriers and/or potentially costly error correction techniques.

Thermodynamics

Information is conserved under physics, so logical erasure of a bit from the computational system entails transferring said bit to the external environment, necessarily creating waste heat. This close connection between physical bit erasure and thermodynamics is expressed by the Landauer Limit[3], which is often quoted as

$$E_b > k_B T \ln 2$$

However the full minimal energy barrier analysis involves both transition times and transition probability, and this minimal simple lower bound only applies at the useless limit of 50% success/error probability or infinite transition time.

The key transition error probability $\alpha$ is constrained by the bit energy $E_b$:

$$\alpha = e^{-E_b / k_B T}$$

Here's a range of bit energies and corresponding minimal room temp switch error rates (in electronvolts):
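Rates of this kind can be roughly reproduced with a few lines of Python. This is a minimal sketch, assuming the error probability takes the Boltzmann-factor form $\alpha = e^{-E_b / k_B T}$ (which gives 50% at the Landauer energy, as described above) and assuming "room temperature" means 300 K. The function names and the sample energies are illustrative choices, not values taken from the article's own table.

```python
import math

K_B_EV_PER_K = 8.617333262e-5  # Boltzmann constant in eV/K
ROOM_TEMP_K = 300.0            # assumed value for "room temperature"


def landauer_limit_ev(temp_k: float = ROOM_TEMP_K) -> float:
    """Minimal bit energy k_B * T * ln(2), in electronvolts."""
    return K_B_EV_PER_K * temp_k * math.log(2)


def switch_error_probability(bit_energy_ev: float,
                             temp_k: float = ROOM_TEMP_K) -> float:
    """Assumed Boltzmann-factor error probability exp(-E_b / (k_B * T))."""
    return math.exp(-bit_energy_ev / (K_B_EV_PER_K * temp_k))


if __name__ == "__main__":
    # Sample bit energies (illustrative): the Landauer energy itself,
    # a brain-like ~70 mV level, and the 0.1 eV / 1 eV reliability range.
    for e_b in (landauer_limit_ev(), 0.07, 0.1, 1.0):
        alpha = switch_error_probability(e_b)
        print(f"E_b = {e_b:.4f} eV -> error probability ~ {alpha:.3g}")
```

At the Landauer energy itself the error probability is exactly one half under this form, which is why that bound is useless as a practical operating point.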

All computers (including brains) are ultimately built out of fundamental indivisible quantal elements in the form of atoms/molecules, each of which is also a computational device to which the Landauer Limit applies[6]. The combination of this tile/lego decomposition and the thermodynamic bit/energy relationship is a simple but powerful physics model that can predict a wide variety of micro and macro-scale computational thermodynamic measurements. Using this simple model one can predict minimal interconnect wire energy, analog or digital compute energy, and analog or digital device sizes in both brains and electronic computers.

Time and time again while writing this article, the simple first-principles physics model correctly predicted relevant OOM measurements well in advance of finding the known values in literature.

Interconnect

We can estimate a bound for brain compute energy via interconnect requirements, as interconnect tends to dominate energy costs at high device densities (when devices approach the size of wire segments). Both brains and current semiconductor chips are built on dissipative/irreversible wire signaling, and are mostly interconnect by volume.

Brains are mostly interconnect.

CPUs/GPUs are mostly interconnect.

A non-superconducting electronic wire (or axon) dissipates energy according to the same Landauer limit per minimal wire element. Thus we can estimate a bound on wire energy based on the minimal assumption of 1 minimal energy unit per bit per fundamental device tile, where the tile size for computation using electrons is simply the probabilistic radius or De Broglie wavelength of an electron[7:1], which is conveniently ~1nm for 1eV electrons, or about ~3nm for 0.1eV electrons. Silicon crystal spacing is about ~0.5nm and molecules are around ~1nm, all on the same scale.

Thus the fundamental baseline irreversible (nano) wire energy is: ~1 $E_b$/bit/nm, with $E_b$ in the range of 0.1eV (low reliability) to 1eV (high reliability).

The predicted wire energy is ~$10^{-19}$ J/bit/nm or ~100 fJ/bit/mm for semi-reliable signaling at 1V with $E_b$ = 1eV, down to ~10 fJ/bit/mm at 100mV with complex error correction, which is an excellent fit for actual interconnect wire energy[8][9][10][11], which only improves marginally through Moore's Law (mainly through complex sub-threshold signaling and associated additional error correction and decoding logic, again most viable for longer ranges).

For long distance interconnect or communication, reversible (i.e. optical) signaling is obviously vastly superior in asymptotic energy efficiency, but photons and photonics are simply fundamentally too big/bulky/costly due to their ~1000x greater wavelength and thus largely impractical for the dominant on-chip short range interconnects[12]. Reversible signaling for electronic wires requires superconductance, which is even more impractical for the foreseeable future.

The brain has an estimated ~$10^9$ meters of total axon/dendrite wiring length. Using an average wire data rate of 10 bit/s[13][14][15][16] (although some neurons transmit up to 90 bits/s[17]) implies an interconnect energy use of ~1W for reliable signaling (10 bit/s * $10^{18}$ nm * $10^{-19}$ J/bit/nm), or ~0.1W for lower bit rates and/or reliability.[18]
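The interconnect arithmetic is easy to check numerically. The sketch below assumes one bit energy per bit per 1 nm wire tile, as in the model above, and a total wiring length of 1e9 m (1e18 nm), which is the length implied by the ~1W result. The function names are hypothetical.

```python
EV_IN_JOULES = 1.602176634e-19  # one electronvolt in joules
NM_PER_M = 1e9
NM_PER_MM = 1e6


def wire_energy_j_per_bit_nm(bit_energy_ev: float) -> float:
    """Energy to move one bit across one ~1 nm tile: one E_b per tile."""
    return bit_energy_ev * EV_IN_JOULES


def interconnect_power_w(wire_length_m: float, bits_per_s: float,
                         bit_energy_ev: float) -> float:
    """Total signaling power if every nm of wire carries bits_per_s."""
    length_nm = wire_length_m * NM_PER_M
    return bits_per_s * length_nm * wire_energy_j_per_bit_nm(bit_energy_ev)


if __name__ == "__main__":
    fj_per_bit_mm = wire_energy_j_per_bit_nm(1.0) * NM_PER_MM / 1e-15
    print(f"1 eV signaling: ~{fj_per_bit_mm:.0f} fJ/bit/mm")
    for e_b in (1.0, 0.1):
        power = interconnect_power_w(1e9, 10.0, e_b)  # assumed 1e9 m, 10 bit/s
        print(f"E_b = {e_b} eV -> brain interconnect ~{power:.2f} W")
```

Using the exact electronvolt value gives about 1.6W and 0.16W rather than the rounded 1W and 0.1W, which is well inside the order-of-magnitude precision the argument relies on.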

Estimates of actual brain wire signaling energy are near this range or within an OOM[19][20], so brain interconnect is within an OOM or so of energy efficiency limits for signaling, given its interconnect geometry (efficiency of interconnect geometry itself is a circuit/algorithm level question).

GPUs

A modern GPU has ~$10^{10}$ transistors, with about half the transistors switching per cycle (CMOS logic is dense) at a rate of ~$10^9$ Hz[21], and so would experience bit logic errors at a rate of about two per month if operating near typical voltages of 1V (for speed) and using theoretically minimal single electron transistors[22]. The bit energy in 2021 GPUs corresponds to on order a few hundred electrons per transistor ($10^{19}$ transistor switches per second using ~100 watts instead of the minimal 1W for theoretical semi-reliable single electron transistors, as 1eV ≈ $10^{-19}$ J), and thus current GPUs are only about 2 OOM away from thermodynamic limits. That is probably an overestimate, as each hypothetical single-electron transistor needs perhaps 10 single-electron minimal interconnect segments, so GPUs are probably closer to 1 OOM from their practical thermodynamic limits (for any equivalent irreversible device doing all the same logic at the same speed and error rates)[23]. Interconnect energy dominates at the highest densities.

The distance to off chip VRAM on a large GPU is ~3 cm, so just reading $10^{15}$ bits to simulate one cycle of a brain-size ANN will cost almost 3kJ ($10^{15}$ bits * $10^{-19}$ J/bit/nm * $10^7$ nm/cm * 3 cm), so 300kW to run at 100Hz. The brain instead only needs to move per-neuron values over similar long distances per cycle, which is ~10,000x more efficient than moving around the ~10,000x more numerous connection weights every cycle.
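The same wire-energy model gives the VRAM numbers directly. This is a sketch under the article's stated assumptions (about 1e-19 J per bit per nm, a 3 cm path, 1e15 weight bits per cycle, 100 cycles per second); the function names are made up for illustration.

```python
WIRE_ENERGY_J_PER_BIT_NM = 1e-19  # ~1 eV per bit per nm of wire
NM_PER_CM = 1e7


def transfer_energy_j(bits: float, distance_cm: float) -> float:
    """Energy to move `bits` over `distance_cm` of irreversible wire."""
    return bits * WIRE_ENERGY_J_PER_BIT_NM * distance_cm * NM_PER_CM


def transfer_power_w(bits_per_cycle: float, distance_cm: float,
                     cycles_per_s: float) -> float:
    """Sustained power when that transfer repeats every cycle."""
    return transfer_energy_j(bits_per_cycle, distance_cm) * cycles_per_s


if __name__ == "__main__":
    weight_bits = 1e15
    print(f"weights, one cycle: {transfer_energy_j(weight_bits, 3.0):.3g} J")
    print(f"weights at 100 Hz:  {transfer_power_w(weight_bits, 3.0, 100.0):.3g} W")
    # Moving ~10,000x fewer values (per-neuron rather than per-connection):
    neuron_bits = weight_bits / 1e4
    print(f"neuron values, one cycle: {transfer_energy_j(neuron_bits, 3.0):.3g} J")
```

The point of the comparison is that the cost is linear in bits moved, so the 10,000x gap between weights and activations carries over unchanged into energy.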

Current GPUs also provide op throughput (for matrix multiplication) up to $10^{14}$ flops/s or $10^{15}$ ops/s (for lower bit integer), which is close to current informed estimates for equivalent brain compute ops/s[24]. So that alone provides an indirect estimate that brains are within an OOM or two of thermodynamic limits - as current GPUs with equivalent throughput are within 1 to 2 OOM of their limits, and brains use 30x less energy for similar compute throughput (~10 watts vs ~300).

Synapses

The adult brain has on the order of ~$2 \times 10^{14}$ synapses, which perform a synaptic computation at on order 0.5Hz[25]. Each synaptic computation is something equivalent to a single analog multiplication op, or a small handful of ops (< 10). Neuron axon signals are binary, but single spikes are known to encode the equivalent of higher dynamic range values through various forms of temporal coding, and spike train pulses can also extend the range through nonlinear exponential coding - as synapses are known to have the short term non-linear adaptive mechanisms that implement non-linear signal decoding[26][27]. Thus the brain is likely doing on order $10^{14}$ to $10^{15}$ low-medium precision multiply-adds per second.

Analog operations are implemented by a large number of quantal/binary carrier units, with the binary precision equivalent to the signal to noise ratio where the noise follows a binomial distribution. The equivalent bit precision $\beta$ of an analog operation with $N$ quantal carriers is the log of $N$ (maximum signal information) minus the binomial noise entropy:

$$\beta \approx \log_2(N) - 0.5 \log_2\left(2 \pi e N \alpha (1 - \alpha)\right)$$

Where $\alpha$ is the individual carrier switch transition error probability. If the individual carrier transitions are perfectly reliable then the entropy term is zero, but that would require unrealistically high reliability and interconnect energy. In the brain the switch transition error probability will be at least 0.06 for a single electron carrier at minimal useful Landauer Limit voltage of ~70mV like the brain uses (which also happens to simplify the math, since $2 \pi e \alpha (1 - \alpha) \approx 1$):

$$\beta \approx 0.5 \log_2(N)$$

So true 8-bit equivalent analog multiplication requires about 100k carriers/switches and thus ~$10^{-15}$ J/op using noisy subthreshold ~0.1eV per carrier, for a minimal energy consumption on order 0.1W to 1W for the brain's estimated $10^{14}$ to $10^{15}$ synaptic ops/s. There is some room for uncertainty here, but not room for many OOM uncertainty. It does suggest that the wiring interconnect and synaptic computation energy costs are of nearly the same OOM. I take this as some evidence favoring the higher op/s number, as computation energy use below that of interconnect requirements is cheap/free.
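The carrier count and power figures can be checked with a short sketch. It assumes the precision formula is log2(N) minus the Gaussian approximation to the binomial entropy, 0.5 * log2(2 * pi * e * N * alpha * (1 - alpha)), and that each carrier costs ~0.1eV; the symbol choices and function names are illustrative.

```python
import math

EV_IN_JOULES = 1.602176634e-19


def analog_bit_precision(n_carriers: float, alpha: float) -> float:
    """log2(N) minus the (Gaussian-approximated) binomial noise entropy."""
    noise_entropy = 0.5 * math.log2(
        2 * math.pi * math.e * n_carriers * alpha * (1 - alpha))
    return math.log2(n_carriers) - noise_entropy


def carriers_for_precision(bits: float, c: float = 2.0) -> float:
    """Carrier count N ~ 2**(c * bits); c ~ 2 when alpha is near 0.06."""
    return 2.0 ** (c * bits)


def synaptic_power_w(ops_per_s: float, bits: float = 8.0,
                     carrier_energy_ev: float = 0.1) -> float:
    """Minimal power if every op uses carriers_for_precision(bits) carriers."""
    joules_per_op = carriers_for_precision(bits) * carrier_energy_ev * EV_IN_JOULES
    return ops_per_s * joules_per_op


if __name__ == "__main__":
    n = carriers_for_precision(8.0)
    print(f"carriers for 8 bits: {n:.0f}")
    print(f"precision check at alpha=0.06: {analog_bit_precision(n, 0.06):.2f} bits")
    for ops in (1e14, 1e15):
        print(f"{ops:.0e} ops/s -> ~{synaptic_power_w(ops):.2f} W")
```

Note that 2**16 is about 65k rather than 100k carriers; at this level of approximation the two are the same order of magnitude.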

Note that synapses occupy a full range of sizes and corresponding precisions, with most considerably lower than 8-bit precision (ranging down to 1-bit), which could significantly reduce the median minimal energy by multiple OOM, but wouldn't reduce the mean nearly as much, as the latter is dominated by the higher precision synapses because energy scales exponentially with precision, as $N \propto 2^{2\beta}$.

The estimate/assumption of 8-bit equivalence for the higher precision range may seem arbitrary, but I picked that value based on 1.) DL research indicating the need for around 5 to 8 bits per param for effective learning[29][30] (not to be confused with the bits/param for effective forward inference sans-learning, which can be much lower), 2.) direct estimates/measurements of (hippocampal) mean synaptic precisions around 5 bits[31][32], and 3.) the fact that 8-bit precision happens to be near the threshold where digital multipliers begin to dominate (a minimal digital 8-bit multiplier requires on order $10^4$ minimal transistors/devices and thus roughly $10^5$ minimal wire segments connecting them, vs around $10^5$ carriers for the minimal 8-bit analog multiplier). A synapse is also an all-in-one highly compact computational device, memory store, and learning device capable of numerous possible neurotransmitter specific subcomputations.

The predicted involvement of ~$10^5$ charge carriers then just so happens to match estimates of the mean number of ion carriers crossing the postsynaptic membrane during typical synaptic transmission[33]. This is ~10x the number of involved presynaptic neurotransmitter carrier molecules from a few released presynaptic vesicles, but synapses act as repeater amplifiers.

We can also compare the minimal energy prediction of ~$10^{-15}$ J/op for 8-bit equivalent analog multiply-add to the known and predicted values for upcoming efficient analog accelerators, which mostly have energy efficiency in the $10^{-14}$ J/op range[34][35][36][37] for < 8 bit, with the higher reported values around $10^{-15}$ J/op similar to the brain estimate here, but only for < 4-bit precision[38]. Analog devices can not be shrunk down to few nm sizes without sacrificing SNR and precision; their minimal size is determined by the need for a large number of carriers on order $2^{c\beta}$ for equivalent bit precision $\beta$, and c ~ 2, as discussed earlier.

Conclusion: The brain is probably at or within an OOM or so of fundamental thermodynamic/energy efficiency limits given its size, and also within a few OOM of more absolute efficiency limits (regardless of size), which could only be achieved by shrinking its radius/size in proportion (to reduce wiring length energy costs).

Space

The brain has about $10^{14}$ total synapses in a volume of 1000 $cm^3$, or $10^{24}$ $nm^3$, so around $10^{10}$ $nm^3$ volume / synapse. The brain's roughly 8-bit precision synapses require on order $10^5$ electron carriers and thus on same order number of minimal 1 $nm^3$ molecules. Actual synapses are flat disc shaped and only modestly larger than this predicts - with mean surface areas around . [39][40][41].

So even if we assume only 10% of synapses are that large, the minimal brain synaptic volume is about $10^{18}$ $nm^3$. Earlier we estimated around $10^{18}$ nm of total wiring length, and thus at least an equivalent or greater total wiring volume (in practice far more due to the need for thick low resistance wires for fast long distance transmission), but wire volume requirements scale linearly with dimension. So if we ignore all the machinery required for cellular maintenance and cooling, this indicates the brain is at most about 100x larger than strictly necessary (in radius), and more likely only 10x larger.
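A rough numerical version of this volume argument is below. It is only a sketch: it assumes ~1e5 carriers of ~1 nm^3 each per large synapse, a minimal wire cross-section of ~1 nm^2, 1e14 synapses, and 1e18 nm of wiring. All of these are order-of-magnitude inputs, and the function names are hypothetical.

```python
NM_PER_CM = 1e7


def brain_volume_nm3(volume_cm3: float = 1000.0) -> float:
    """Convert brain volume from cm^3 to nm^3."""
    return volume_cm3 * NM_PER_CM ** 3


def minimal_volume_nm3(num_synapses: float = 1e14,
                       large_fraction: float = 0.1,
                       carriers_per_synapse: float = 1e5,
                       wire_length_nm: float = 1e18,
                       wire_cross_section_nm2: float = 1.0) -> float:
    """Synapse volume (1 nm^3 per carrier) plus minimal wiring volume."""
    synapse_volume = num_synapses * large_fraction * carriers_per_synapse
    wire_volume = wire_length_nm * wire_cross_section_nm2
    return synapse_volume + wire_volume


def radius_ratio() -> float:
    """How many times larger the actual brain is, in linear dimension."""
    return (brain_volume_nm3() / minimal_volume_nm3()) ** (1.0 / 3.0)


if __name__ == "__main__":
    print(f"actual volume:  {brain_volume_nm3():.1e} nm^3")
    print(f"minimal volume: {minimal_volume_nm3():.1e} nm^3")
    print(f"linear size ratio: ~{radius_ratio():.0f}x")
```

Because the ratio enters through a cube root, even a 10x error in the minimal volume shifts the linear size ratio by only about 2x.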

Density & Temperature

However, even though the wiring energy scales linearly with radius, the surface area power density which crucially determines temperature scales with the inverse squared radius, and the minimal energy requirements for synaptic computation are radius invariant.

The black body temperature of the brain scales with energy and surface area according to the Stefan-Boltzmann Law:

$$T = \left(\frac{M_e}{\sigma}\right)^{1/4}$$

Where $M_e$ is the power per unit surface area in W/$m^2$, and $\sigma$ is the Stefan-Boltzmann constant. The human brain's output of 10W in 0.01 $m^2$ results in a power density of 1000 W/$m^2$, very similar to that of the solar flux on the surface of the earth, which would result in an equilibrium temperature of ~373K or ~100C, sufficient to boil the blood, if it wasn't actively cooled. Humans have evolved exceptional heat dissipation capability using the entire skin surface for evaporative cooling[42]: a key adaptation that supports both our exceptional long distance running ability, and our oversized brains (3X larger than expected for the default primate body plan, and brain tissue has 10x the power density of the rest of the body).

Shrinking the brain by a factor of 10 at the same power output would result in a ~3.16x temp increase to around 1180K; shrinking the brain minimally by a factor of 100 would result in a power density of $10^7$ W/$m^2$ and a local temperature of around 3,750K - similar to that of the surface of the sun.
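These temperatures follow from the Stefan-Boltzmann relation T = (M / sigma)^(1/4), where M is power per unit area. The sketch below assumes an ideal black body with no active cooling and treats "shrinking by a factor k" as dividing the surface area by k^2; the function names are illustrative.

```python
STEFAN_BOLTZMANN = 5.670374419e-8  # W / (m^2 K^4)


def blackbody_temp_k(power_w: float, area_m2: float) -> float:
    """Equilibrium temperature of an ideal black body radiating power_w."""
    return (power_w / area_m2 / STEFAN_BOLTZMANN) ** 0.25


def shrunk_temp_k(power_w: float, area_m2: float, linear_factor: float) -> float:
    """Temperature after shrinking linear size by linear_factor (area / factor^2)."""
    return blackbody_temp_k(power_w, area_m2 / linear_factor ** 2)


if __name__ == "__main__":
    for factor in (1.0, 10.0, 100.0):
        temp = shrunk_temp_k(10.0, 0.01, factor)
        density = 10.0 / (0.01 / factor ** 2)
        print(f"shrink {factor:>5.0f}x: {density:.0e} W/m^2 -> ~{temp:.0f} K")
```

With the exact constant the results come out a few percent below the rounded figures quoted in the text, and the temperature rises as the square root of the linear shrink factor, which is where the ~3.16x per 10x step comes from.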

Current 2021 GPUs have a power density approaching $10^6$ W/$m^2$, which severely constrains the design to that of a thin 2D surface to allow for massive cooling through large heatsinks and fans. This in turn constrains off-chip memory bandwidth to scale poorly: shrinking feature sizes with Moore's Law by a factor of D increases transistor density by a factor of $D^2$, but at best only increases 2D off-chip wire density by a factor of D, and doesn't directly help reduce wire energy cost at all.

A 2021 GPU with $10^{10}$ transistors has a surface area of about $10^{14}$ $nm^2$ and so also potentially has room for at most 100x further density scaling, which would result in 10,000x higher transistor count, but given that it only has 1 or 2 OOM potential improvement in thermodynamic energy efficiency, significant further scaling of existing designs would result in untenable power consumption and surface temperature. In practice I expect around only 1 more OOM in dimension scaling (2 OOM in transistor density), with less than an OOM in energy scaling, resulting in dark silicon and/or crazy cooling designs[23:1].

Conclusion: The brain is perhaps 1 to 2 OOM larger than the physical limits for a computer of equivalent power, but is constrained to its somewhat larger than minimal size due in part to thermodynamic cooling considerations.

Speed

Brain computation speed is constrained by upper neuron firing rates of around 1 kHz and axon propagation velocity of up to 100 m/s[43], which are both about a million times slower than current computer clock rates of near 1 GHz and wire propagation velocity at roughly half the speed of light. Interestingly, since both the compute frequency and signal velocity scale together at the same rate, computers and brains both are optimized to transmit fastest signals across their radius on the time scale of their equivalent clock frequency: the fastest axon signals can travel about 10 cm per spike timestep in the brain, and also up to on order 10 cm per clock cycle in a computer.

So why is the brain so slow? The answer is again probably energy efficiency.

The maximum frequency of a CMOS device is constrained by the voltage, and scales approximately with[44][45]:

$$f_{max} \propto \frac{(V_{dd} - V_t)^2}{V_{dd}}$$

With typical current values in the range of 1.0 for $V_{dd}$ and perhaps 0.5 for $V_t$. The equivalent values for neural circuits are 0.070 for $V_{dd}$ and around 0.055 for $V_t$, which would still support clock frequencies in the MHz range. So a digital computer operating at the extreme subthreshold voltages the brain uses could still switch a thousand times faster.
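The MHz figure can be sanity-checked with a small sketch. It assumes the scaling takes the common form f proportional to (V_dd - V_t)^2 / V_dd, and that a conventional chip at 1.0V supply and 0.5V threshold runs at about 1 GHz. Both are assumptions for illustration, as are the function names.

```python
def relative_max_frequency(v_dd: float, v_t: float) -> float:
    """Unnormalized frequency scale, assumed form (V_dd - V_t)^2 / V_dd."""
    return (v_dd - v_t) ** 2 / v_dd


def scaled_frequency_hz(v_dd: float, v_t: float,
                        ref_v_dd: float = 1.0, ref_v_t: float = 0.5,
                        ref_freq_hz: float = 1e9) -> float:
    """Frequency at (v_dd, v_t), scaled from an assumed 1 GHz reference."""
    ratio = relative_max_frequency(v_dd, v_t) / relative_max_frequency(ref_v_dd, ref_v_t)
    return ref_freq_hz * ratio


if __name__ == "__main__":
    neural = scaled_frequency_hz(0.070, 0.055)
    print(f"neural-voltage CMOS: ~{neural / 1e6:.1f} MHz")
    print(f"vs 1 kHz neuron firing: ~{neural / 1e3:.0f}x faster")
```

Under these assumptions the brain-voltage device lands around ten MHz, roughly two orders of magnitude below the 1 GHz reference and still several thousand times above neural firing rates.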

However, as the minimal total energy usage also scales linearly with switch frequency, and the brain is already operating near thermodynamic efficiency limits at slow speeds, a neuromorphic computer equivalent to the brain, with equivalent synapses (functioning simultaneously as both memory and analog compute elements), would also consume around 10W operating at brain speeds at 1kHz. Scaling a brain to MHz speeds would increase energy and thermal output into the 10kW range and thus surface power density into the $10^6$ W/$m^2$ range, similar to current GPUs. Scaling a brain to GHz speeds would increase energy and thermal output into the 10MW range, and surface power density to $10^9$ W/$m^2$, with temperatures well above the surface of the sun.

So in the same brain budget of 10W power and thermodynamic size constraints, one can choose between a computer/circuit with bytes of param memory and byte/s of local memory bandwidth but low sub kHZ speed, or a system with up to bytes/s of local memory bandwidth and gHZ speed, but only bytes of local param memory. The most powerful GPUs or accelerators today achieve around bytes/s of bandwidth from only the register file or lowest level cache, the total size of which tends to be on order bytes or less.

For any particular energy budget there is a Landauer Limit imposed maximum net communication flow rate through the system and a direct tradeoff between clock speed and accessible memory size at that flow rate.

A single 2021 GPU has the compute power to evaluate a brain sized neural circuit running at low brain speeds, but it has less than 1/1000th of the required RAM. So you then need about 1000 GPUs to fit the neural circuit in RAM, at which point you can then run 1000 copies of the circuit in parallel, but using multiple OOMs more energy per agent/brain for all the required data movement.

It turns out that spreading out the communication flow rate budget over a huge memory store with a slow clock rate is fundamentally more powerful than a fast clock rate over a small memory store. One obvious reason: learning machines have a need to at least store their observational history. A human experiences a sensory input stream at a bitrate of about $10^6$ bps (assuming maximal near-lossless compression) for about $10^9$ seconds over typical historical lifespan, for a total of about $10^{15}$ bits. The brain has about $2 \times 10^{14}$ synapses that store roughly 5 bits each, for about $10^{15}$ bits of storage. This is probably not a coincidence.

In three separate lineages - primates, cetaceans, and proboscideans - brains evolved to large sizes of on order $10^{10}$ neocortical neurons and $10^{14}$ synapses (humans: ~20B neocortical neurons, ~80B total, elephants: ~6B neocortical neurons[46], ~250B total, long-finned pilot whale: ~37B neocortical neurons[47], unknown total), concomitant with long (40+) year lifespans. Humans are unique only in having a brain several times larger than normal for our total energy budget, probably due to the unusually high energy payoff for linguistic/cultural intelligence.

Conclusion: The brain is a million times slower than digital computers, but its slow speed is probably efficient for its given energy budget, as it allows for a full utilization of an enormous memory capacity and memory bandwidth. As a consequence of being very slow, brains are enormously circuit cycle efficient. Thus even some hypothetical superintelligence, running on non-exotic hardware, will not be able to think much faster than an artificial brain running on equivalent hardware at the same clock rate.

Circuits

Measuring circuit efficiency - as a complex high level and task dependent metric - is naturally far more challenging than measuring simpler low level physical metrics like energy efficiency. We first can establish a general model of the asymptotic efficiency of three broad categories of computers: serial, parallel, and neuromorphic (processor in memory). Then we can analyze a few example brain circuits that are reasonably well understood, and compare their size and delay to known bounds or rough estimates thereof.

Serial vs Parallel vs Neuromorphic

A pure serial (Von Neumann architecture) computer is one that executes one simple instruction per clock cycle, fetching opcodes and data from a memory hierarchy. A pure serial computer of size $d$ and clock frequency $f$ can execute up to only ~$f$ low level instructions per second over a memory of size at most ~$d^2$ for a 2D system (as in modern CPUs/GPUs, constrained to 2D by heat dissipation requirements). In the worst case when each instruction accesses a random memory value the processor stalls; the worst case performance is thus bound by ~$c/d$, where $d$ is the device size and $c \sim 10^8$ m/s is the speed of light bound signal speed. So even a perfectly dense (nanometer scale transistors) 10cm x 10cm pure serial CPU+RAM has performance of only a few billion ops/s when running any algorithms that access memory randomly or perform only few ops per access.

A fully parallel (Von Neumann architecture) computer can execute up to $d^2$ instructions per clock, and so has a best case performance that scales as $d^2 f$ and a worst case of ~$d^2 \cdot c/d = d c$. The optimal parallel 10cm x 10cm computational device thus has a maximum potential that is about 16 orders of magnitude greater than the pure serial device (10 cm is $10^8$ nm, so $d^2 \sim 10^{16}$ nanometer-scale devices).

An optimal neuromorphic computer then simply has a worst and best case performance that is $d^2 f$ for a 2D device, or $d^3 f$ for a 3D device like the brain, as its processing units and memory units (synapses) are the same.
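The scaling gap between the three categories can be illustrated with a toy calculation. This sketch assumes a signal speed of 1e8 m/s, a 1 nm device pitch, and a 1 GHz clock, and it counts parallel units as the number of minimal devices that tile the area; all names are hypothetical.

```python
import math

SIGNAL_SPEED_M_PER_S = 1e8  # assumed speed-of-light-bound signal speed
DEVICE_PITCH_M = 1e-9       # assumed nanometer-scale devices


def serial_worst_case_ops(size_m: float, clock_hz: float) -> float:
    """One op per clock, or one op per cross-chip round of ~size/c if slower."""
    return min(clock_hz, SIGNAL_SPEED_M_PER_S / size_m)


def device_count(size_m: float, dims: int = 2) -> float:
    """Number of minimal devices tiling a square (dims=2) or cube (dims=3)."""
    return (size_m / DEVICE_PITCH_M) ** dims


def neuromorphic_ops(size_m: float, clock_hz: float, dims: int = 2) -> float:
    """Every device computes on its own local memory each clock."""
    return device_count(size_m, dims) * clock_hz


if __name__ == "__main__":
    size, clock = 0.1, 1e9  # a 10 cm device clocked at 1 GHz
    serial = serial_worst_case_ops(size, clock)
    parallel = neuromorphic_ops(size, clock, dims=2)
    print(f"serial worst case:   {serial:.1e} ops/s")
    print(f"2D fully parallel:   {parallel:.1e} ops/s")
    print(f"gap: ~{math.log10(parallel / serial):.0f} orders of magnitude")
```

The 16 OOM gap is simply the device count: one processing element versus one per square nanometer of a 10cm x 10cm surface.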

Physics is inherently parallel, and thus serial computation simply doesn't scale. The minor big O analysis asymptotic advantages of serial algorithms are completely dominated by the superior asymptotic physical scaling of parallel computation. In other words, big O analysis is wrong, as it naively treats computation and memory access as the same thing, when in fact the cost of memory access is not constant, and scales up poorly with memory/device size.

The neuromorphic (processor in memory) computational paradigm is asymptotically optimal scaling wise, but within that paradigm we can then further differentiate circuit efficiency in terms of width/size and delay.

Vision

In terms of circuit depth/delay, humans/primates can perform complex visual recognition and other cognitive tasks in around 100ms to a second, which translates to just a dozen to a hundred inter-module compute steps (each of which takes about 10ms to integrate a few spikes, transmit to the next layer, etc). This naturally indicates learned cortical circuits are near depth optimal, in terms of learning minimal depth circuits for complex tasks, when minimal depth is task useful. As the cortex/cerebellum/BG/thalamus system is a generic universal learning system, showing evidence for efficiency in the single well understood task of vision suffices to show evidence for general efficiency; the 'visual' cortical modules are just generic cortical modules that only happen to learn vision when wired to visual inputs, and will readily learn audio or complex sonar processing with appropriate non-standard input wiring.

A consequence of near-optimal depth/delay implies that the fastest possible thinking minds will necessarily be brain-like, as brains use the near-optimal minimal number of steps to think. So any superintelligence running on any non-exotic computer will not be able to think much faster than an artificial brain running on the same equivalent hardware and clock speeds.

In terms of circuit width/size the picture is more complex, but vision circuits are fairly well understood.

The retina not only collects and detects light, it also performs early image filtering/compression with a compact few-layer network. Most vertebrates have a retina network, and although there is considerable variation it is mostly in width, distribution, and a few other hyperparams. The retina performs a reasonably simple well known function (mostly difference of gaussian style filters to exploit low frequency spatio-temporal correlations - the low hanging statistical fruit of natural images), and seems reasonably near-optimal for this function given its stringent energy, area, and latency constraints.

The first layer of vision in the cortex - V1 - is a more massively scaled up early visual layer (esp. in primates/humans), and is also apparently highly efficient given its role to extract useful low-order spatio-temporal correlations for compression and downstream recognition. Extensive experiments in DL on training a variety of visual circuits with similar structural constraints (local receptive field connectivity, etc) on natural image sequences all typically learn V1 like features in first/early layers, such that failure to do so is often an indicator of some error. Some of the first successful learned vision feature extractors were in fact created as a model of V1[48], and modern DL systems with local connectivity still learn similar low level features. As a mathematical theory, sparse coding explains why such features are optimal, as a natural overcomplete/sparse generalization of PCA.

Vector/Matrix Multiplication

We know that much if not most of the principal computations the brain must perform map to the well studied problem of vector matrix multiplication.

Multiplication of an input vector X and a weight matrix W has a known optimal form in maximally efficient 2D analog circuitry: the crossbar architecture. The input vector X of size M is encoded along a simple uniform vector of wires traversing the structure left to right. The output vector Y of size N is also encoded as another uniform wire vector, but traversing in a perpendicular direction from top to bottom. The weight matrix W is then implemented with analog devices on each of the MxN wire crossings.

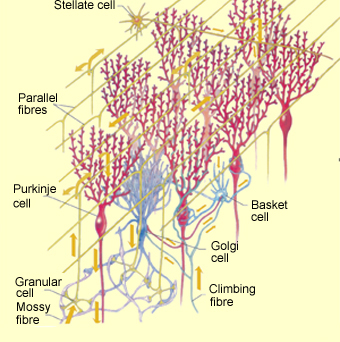
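In software terms, the crossbar computes an ordinary vector-matrix product, with one multiply per wire crossing and one running sum per output wire. The following is a plain-Python sketch of that dataflow, not a model of any analog device; the function name is made up.

```python
from typing import List, Sequence


def crossbar_multiply(x: Sequence[float],
                      w: Sequence[Sequence[float]]) -> List[float]:
    """Compute y = x W for an M-vector x and an M x N weight matrix w.

    Row i of w corresponds to input wire i; column j to output wire j.
    """
    if not w or len(x) != len(w):
        raise ValueError("x must have one entry per row of w")
    n_outputs = len(w[0])
    y = [0.0] * n_outputs
    for i, x_i in enumerate(x):          # input wire i, running left to right
        for j in range(n_outputs):       # output wire j, running top to bottom
            y[j] += x_i * w[i][j]        # the device at crossing (i, j)
    return y


if __name__ == "__main__":
    weights = [[1.0, 0.0, 2.0],
               [0.0, 1.0, 3.0]]
    print(crossbar_multiply([1.0, 2.0], weights))
```

In the physical crossbar all M x N multiplies happen at once and the sums form along the output wires, so the nested loops here stand in for what is a single parallel step in hardware.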

In one natural extension of this crossbar architecture to 3 dimensions, the input vector X becomes a 2D array of wires of dimension $\sqrt{M}$ x $\sqrt{M}$, and each output vector Y becomes a flat planar structure (reduction tree), with a potential connection to every input wire. This 3D structure then has a depth of order N, for the N output summation planes. This particular structure is optimal for M ~ $N^2$, with other variations optimal for M ~ N. This is a simplified description of the geometric structure of the cerebellum:

Deep Learning

Deep learning systems trained with brain-like architectural/functional constraints (recurrence[49][50], local sparse connectivity, etc) on naturalistic data[51] with generic multi-task and/or self-supervised objectives are in fact our very best models of relevant brain circuits[52][53][54]; developing many otherwise seemingly brain-specific features such as two specialized processing streams[55][56], categorical specialization[57], etc., and can explain brain limitations[58][59]. Likewise, DL evolving towards AGI converges on brain reverse engineering[60][61], especially when optimizing towards maximal energy efficiency for complex real world tasks.

The spectacular success of brain reverse engineering aka DL - and its complete dominance in modern AI - is strong evidence for brain circuit efficiency, as both biological and technological evolution, although very different processes, both converge on similar solutions given the same constraints.

Conclusion: It's difficult to make strong definitive statements about circuit efficiency, but current evidence is most compatible with high brain circuit efficiency, and I'm not aware of any significant evidence against.

Data

Data efficiency is a common (although perhaps unfounded) critique of DL. Part of this disadvantage could simply be due to economics: large scale DL systems can take advantage of huge datasets, so there is little immediate practical need to focus on learning from limited datasets. But in the longer term as we approach AGI, learning quickly from limited data becomes increasingly important: it is much of what we mean when we say a human is smart or quick or intelligent.

We can analyze data/learning efficiency on two levels: asymptotic learning efficiency, and practical larger-scale system level data efficiency.

Asymptotic

In terms of known algorithmic learning theory, a data-optimal learning machine with memory O(M) can store/evaluate up to M unique models in parallel per circuit timestep, and can prune about half of said virtual models per observational bit per timestep - as in well known Solomonoff Induction, full Bayesian Inference, or prediction through expert selection[62]. The memory freed can then be recycled to evaluate new models the next timestep, so at the limit such a machine can evaluate O(M*T) models in T timesteps. Thus any practical learning machine can evaluate at most O(N) models and same order data observations, where N is the net compute expended for training (nearly all virtual models are discarded at an average evaluation cost of only O(C)). Assuming that 'winning' predictive models are distributed uniformly over model-space, this implies a power law relationship between predictive entropy (log predictive error), and the entropy of model space explored (and thus log compute for training). Deep learning systems are already in this power-law regime[63][64], thus so is the brain, and they are both already in the optimal broad asymptotic complexity class.
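The "prune about half the models per observed bit" idea can be shown with a toy hypothesis-elimination loop. This is a deliberately simplified stand-in, not Solomonoff induction or a real expert-selection algorithm: the model pool is assumed to be every binary sequence of a fixed length, and a model is discarded the first time it mispredicts.

```python
from itertools import product
from typing import List, Sequence, Tuple


def prune(models: List[Tuple[int, ...]], t: int,
          observed_bit: int) -> List[Tuple[int, ...]]:
    """Keep only the models whose prediction at step t matched the observation."""
    return [m for m in models if m[t] == observed_bit]


def run_elimination(observations: Sequence[int]):
    """Start from all 2**n candidate sequences and prune after each bit."""
    n = len(observations)
    models = list(product((0, 1), repeat=n))
    pool_sizes = [len(models)]
    for t, bit in enumerate(observations):
        models = prune(models, t, bit)
        pool_sizes.append(len(models))
    return models, pool_sizes


if __name__ == "__main__":
    survivors, sizes = run_elimination((1, 0, 1, 1))
    print("pool size after each observed bit:", sizes)
    print("surviving model:", survivors)
```

Because the toy pool is uniform over all sequences, each observed bit removes exactly half of it; a practical learner would refill the freed memory with new candidate models rather than letting the pool shrink to one.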

In terms of tighter bounds on practical large scale data efficiency, we do not have direct apples-to-apples comparisons as humans and current DL systems are trained on different datasets. But some DL systems are trained on datasets that could be considered a relevant subset of the human training dataset.

Vision

DL vision systems can achieve mildly superhuman performance on specific image recognition games like Imagenet, but these systems are trained on a large labeled dataset of 1M images, whereas humans are first pretrained unsupervised on a larger mostly unlabeled dataset of perhaps 1B images (1 image/s for 32 years), with a tiny fraction of linguistically labeled images (or perhaps none for very specific dog breed categories).

If you look at Imagenet labels, they range from the obvious: syringe, to the obscure: gyromitra. Average untrained human performance of around 75% top-5 is reasonably impressive considering that untrained humans have 0 labels for many categories. Trained humans can achieve up to 95% top-5 accuracy, comparable to DL SOTA from 2017. Now 2021 DL SOTA is around 99% top-5 using all labels, and self-supervised SOTA (using a big model) matches human expert ability using 10% of labels (about 100 labels per category),[65] but using multiple data passes. Assuming a human expert takes a second or two to evaluate an image, a single training pass on 10% of the imagenet labels would take about 40 hours: a full time work week, perhaps a month for multiple passes. It's unclear at this point if humans could approach the higher 99% score if only some were willing to put in months or years of training, but it seems plausible.
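As a rough check of the labeling-time estimate above, here is a small back-of-envelope calculation. It assumes the round figure of 1M training images used in this section and the standard 1000 ImageNet classes; the function name is just for illustration.

```python
IMAGENET_TRAIN_IMAGES = 1_000_000  # round figure used in the text
LABEL_FRACTION = 0.10              # self-supervised SOTA uses 10% of labels
NUM_CLASSES = 1000                 # ImageNet-1k class count


def labeling_hours(seconds_per_image):
    """Hours for one human pass over the labeled subset."""
    labeled_images = IMAGENET_TRAIN_IMAGES * LABEL_FRACTION
    return labeled_images * seconds_per_image / 3600


labels_per_class = IMAGENET_TRAIN_IMAGES * LABEL_FRACTION / NUM_CLASSES
low_hours = labeling_hours(1.0)   # one second per image
high_hours = labeling_hours(2.0)  # two seconds per image
print(f"~{labels_per_class:.0f} labels/class, "
      f"{low_hours:.0f} to {high_hours:.0f} hours per pass")
```

At one to two seconds per image the single pass lands between roughly 28 and 56 hours, which brackets the "about 40 hours" figure.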

DL visual systems take advantage of spatial (ie convolutional) weight sharing to reduce/compress parameters and speed up learning. This is difficult/impossible for slow neuromorphic processors like the brain, so this handicap makes brain data efficiency somewhat less directly comparable and somewhat more impressive.

GPT-N

OpenAI's GPT-3 is a 175B param model (or 1e12 bits at 5.75 bits/param) trained on a corpus of about 400B BPE tokens, or roughly 100B words (or 1e12 bits at 10 bits/word), whereas older humans are 'trained' on perhaps 10B words (about 5 per second for 64 years), or more generally about 10B timesteps of about 200ms each, corresponding roughly to one saccadic image, one word, one percept, etc. A single saccadic image has around 1M pixels compressible to about 0.1bpp, suggesting a human experiences on order 1e15 bits per lifetime, on par with up to 1e15 bits of synaptic information (2e14 synapses * 5 bit/synapse).
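The order-of-magnitude figures in this paragraph can be reproduced with a few lines of arithmetic. All inputs below are the estimates quoted in the text; the variable names are only for illustration.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600

# GPT-3 side
gpt3_model_bits = 175e9 * 5.75   # params * bits/param, ~1e12
gpt3_data_bits = 100e9 * 10      # words * bits/word, 1e12

# Human side
human_steps = 5 * 64 * SECONDS_PER_YEAR        # 5 steps/s for 64 years, ~1e10
human_sensory_bits = human_steps * 1e6 * 0.1   # 1M pixels at 0.1 bpp, ~1e15
human_synapse_bits = 2e14 * 5                  # synapses * bits/synapse, 1e15

print(f"GPT-3 model bits:   {gpt3_model_bits:.2e}")
print(f"GPT-3 data bits:    {gpt3_data_bits:.2e}")
print(f"human steps:        {human_steps:.2e}")
print(f"human sensory bits: {human_sensory_bits:.2e}")
print(f"human synapse bits: {human_synapse_bits:.2e}")
```

On these estimates both systems have roughly matched model and data capacity, but the human figures sit about 3 OOM above GPT-3's.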

Scaling analysis of GPT-N [LW · GW] suggests high benchmark performance (vague human parity) will require scaling up to a brain size model a bit above 1e14 params and a similar size dataset. This is interesting because it suggests that current DL models (or at least transformers), are perhaps as parameter efficient as the brain, but are far less data efficient in terms of consumed words/tokens. This may not be surprising if we consider the difficulty of the grounding problem: GPT is trying to learn the meaning of language without first learning the grounding of these symbols in a sensorimotor model of the world.

These scaling laws indicate GPT-N would require about 3 to 4 OOM more word data than humans to match human performance, but GPT-3 already trains on a large chunk of the internet. However most of this data is highly redundant. Humans don't train by reading paragraphs drawn uniformly at random from the entire internet - as the vast majority of such data is near worthless. GPT-N models could be made more data efficient through brain inspired active learning (using a smaller net to predict gradient magnitudes to select informative text to train the larger model), and then multi-modal curriculum training for symbol grounding, more like the human education/training process.

AlphaX

AlphaGo achieved human champion performance after training on about 40 million positions, equivalent to about 400k games, which is roughly an OOM more games than a human professional will play during lifetime training (4k games/year * 10 years)[66].

AlphaZero matched human champion performance after training on only about 4 million positions (~100k updates of 4k positions each) and thus 40k games - matching my estimated human data efficiency.

However AlphaX models learn their action-value prediction functions from each MCTS state evaluation, just as human brains probably learn the equivalent from imaginative planning state evaluations. But human brains - being far slower - perform at least one OOM fewer imagined state evaluation rollouts per board move evaluation than AlphaX models, which implies the brain is learning more per imagined state evaluation. The same naturally applies to the newer EfficientZero - which learns human-level Atari in only 2 hours realtime[67], but this corresponds to a huge number of imagined internal state evaluations, on the same order as similar model-free Atari agents.

Another way of looking at it: if AlphaX models really were fully as data efficient as the human brain in terms of learning speed per evaluation step and equivalent clock cycle, then we'd expect them to achieve human level play a million times faster than the typical human 10 years: ie in about 5 minutes (vs ~2 hours for EfficientZero, or ~30 hours for AlphaZero). Some component of this is obviously inefficiency in GPU clock cycles per evaluation step, but to counter that AlphaX models are tiny and often trained in parallel on many GPUs/TPUs.
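The "about 5 minutes" figure follows directly from dividing ten years by the assumed million-fold speed advantage. The sketch below reproduces it and shows how far the quoted training times are from that ideal; the 1e6 speedup and the training times are the text's own figures, not independent measurements.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600

human_training_seconds = 10 * SECONDS_PER_YEAR  # typical human: ~10 years
assumed_speedup = 1e6                           # clock-rate advantage assumed in the text

ideal_minutes = human_training_seconds / assumed_speedup / 60

# How many times slower than the ideal the quoted systems are
efficientzero_gap = (2 * 60) / ideal_minutes    # ~2 hours of training
alphazero_gap = (30 * 60) / ideal_minutes       # ~30 hours of training

print(f"ideal: {ideal_minutes:.1f} min")
print(f"EfficientZero is ~{efficientzero_gap:.0f}x slower than ideal")
print(f"AlphaZero is ~{alphazero_gap:.0f}x slower than ideal")
```

So on this crude measure the gap is between one and three orders of magnitude, before accounting for GPU cycles per evaluation step or parallel training.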

Conclusion: SOTA DL systems have arguably matched the brain's data learning efficiency in the domain of vision - albeit with some artificial advantages like weight-sharing countering potential brain advantages. DL RL systems have also arguably matched brain data efficiency in games such as Go, but only in terms of physical move evaluations; there still appears to be a non-trivial learning gap where the brain learns much more per virtual move evaluation, which DL systems compensate for by rapidly evaluating far more virtual moves during MCTS rollouts. There is still a significant data efficiency gap in natural language, but training datasets are very different and almost certainly favor the brain (multimodal curriculum training and active learning).

Thus there is no evidence here of brain learning inefficiency (for systems of similar size/power). Instead DL still probably has more to learn from the brain on how to learn efficiently beyond SGD, and the probable convergence of biological and technological evolution to what appears to be the same fundamental data efficiency scaling laws is evidence for brain efficiency.

Conclusions

The brain is about as efficient as any conventional learning machine[68] can be given:

- An energy budget of 10W

- A thermodynamic cooling constrained surface power density similar to that of earth's surface (1kW/m^2), and thus a 10cm radius.

- A total training dataset of about 10 billion percepts or 'steps'

If we only knew the remaining secrets of the brain today, we could train a brain-sized model consisting of a small population of about 1000 agents/sims, running on about as many GPUs, in probably about a month or less, for about $1M. This would require only about 1kW per agent or less, and so if the world really desired it, we could support a population of billions of such agents without dramatically increasing total world power production.

Nvidia - the single company producing most of the relevant flops today - produced roughly 5e21 flops of GPU compute in 2021, or the equivalent of about 5 million brains [69], perhaps surpassing the compute of the 3.6 million humans born in the US. With 200% growth in net flops output per year from all sources it will take about a decade for net GPU compute to exceed net world brain compute.[70]
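A quick sanity check of the "about a decade" estimate, under loudly stated simplifications: it compares annual GPU output directly against world brain compute (ignoring the accumulating installed base), assumes a world population of roughly 8 billion (not a figure from this article), and evaluates both readings of "200% growth" (doubling or tripling per year).

```python
import math

nvidia_flops_2021 = 5e21  # from the text
brain_equivalents = 5e6   # from the text
flops_per_brain = nvidia_flops_2021 / brain_equivalents  # implied ~1e15 flops/brain

world_population = 8e9    # assumption, not from the article
gap = world_population / brain_equivalents  # factor still to be closed

years_if_doubling = math.log(gap, 2)  # growth factor 2x per year
years_if_tripling = math.log(gap, 3)  # growth factor 3x per year

print(f"implied flops per brain: {flops_per_brain:.0e}")
print(f"gap to world brain compute: {gap:.0f}x")
print(f"years at 2x/yr: {years_if_doubling:.1f}, at 3x/yr: {years_if_tripling:.1f}")
```

Reading the growth rate as a yearly doubling gives a little over ten years, which matches the decade quoted above; a yearly tripling would shorten that to roughly seven.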

Eventually advances in software and neuromorphic computing should reduce the energy requirement down to brain levels of 10W or so, allowing for up to a trillion brain-scale agents at near future world power supply, with at least a concomitant 100x increase in GDP[71]. All of this without any exotic computing.
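The population figures in these conclusions can be checked against world power use. The per-agent wattages are from the text; the world power figure of roughly 18 TW (primary energy) is my own rough assumption for comparison, not a number from this article.

```python
TERAWATT = 1e12

gpu_era_watts_per_agent = 1e3    # ~1kW per agent on GPUs (from the text)
brain_level_watts_per_agent = 10 # ~10W per agent (from the text)
world_power_tw = 18.0            # rough assumption: world primary power use


def fleet_power_tw(num_agents, watts_per_agent):
    """Total power in terawatts for a population of agents."""
    return num_agents * watts_per_agent / TERAWATT


billion_gpu_agents_tw = fleet_power_tw(1e9, gpu_era_watts_per_agent)
trillion_brainlike_tw = fleet_power_tw(1e12, brain_level_watts_per_agent)

print(f"1e9 agents at 1kW:  {billion_gpu_agents_tw:.0f} TW "
      f"({billion_gpu_agents_tw / world_power_tw:.0%} of assumed world power)")
print(f"1e12 agents at 10W: {trillion_brainlike_tw:.0f} TW "
      f"({trillion_brainlike_tw / world_power_tw:.0%} of assumed world power)")
```

Under that assumption a billion GPU-era agents would draw a small fraction of world power, while a trillion brain-efficiency agents would need a large share of it, consistent with the "near future world power supply" framing.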

Achieving those levels of energy efficiency will probably require brain-like neuromorphic-ish hardware, circuits, and learned software via training/education. The future of AGI is to become more like the brain, not less.

Here we focus on ecologically important tasks like visual inference - how efficient are brain circuits for evolutionarily important tasks? For more recent economically important tasks such as multiplying large numbers the case for brain circuit inefficiency is quite strong (although there are some potential exceptions - human mentats such as Von Neumann). ↩︎

Obviously the brain's software (the mind) is still rapidly evolving with cultural/technological evolution. The efficiency of learned algorithms (as complex multi-step programs) that humans use to discover new theories of physics, create new DL algorithms, think more rationally about investing, or the said theories or algorithms themselves, are not considered here. ↩︎

Landauer, Rolf. "Irreversibility and heat generation in the computing process." IBM journal of research and development 5.3 (1961): 183-191. gs-link ↩︎

Zhirnov, Victor V., et al. "Limits to binary logic switch scaling-a gedanken model." Proceedings of the IEEE 91.11 (2003): 1934-1939. gs-link ↩︎

Frank, Michael P. "Approaching the Physical Limits of Computing." gs-link ↩︎

The tile/lego model comes from Cavin/Zhirnov et al in "Science and engineering beyond Moore's law"[7] and related publications. ↩︎

Cavin, Ralph K., Paolo Lugli, and Victor V. Zhirnov. "Science and engineering beyond Moore's law." Proceedings of the IEEE 100.Special Centennial Issue (2012): 1720-1749. gs-link ↩︎ ↩︎ ↩︎

Postman, Jacob, and Patrick Chiang. "A survey addressing on-chip interconnect: Energy and reliability considerations." International Scholarly Research Notices 2012 (2012). gs-link ↩︎

Das, Subhasis, Tor M. Aamodt, and William J. Dally. "SLIP: reducing wire energy in the memory hierarchy." Proceedings of the 42nd Annual International Symposium on Computer Architecture. 2015. gs-link ↩︎

Zhang, Hang, et al. "Architecting energy-efficient STT-RAM based register file on GPGPUs via delta compression." 2016 53nd ACM/EDAC/IEEE Design Automation Conference (DAC). IEEE, 2016. link gs-link ↩︎

Park, Sunghyun, et al. "40.4 fJ/bit/mm low-swing on-chip signaling with self-resetting logic repeaters embedded within a mesh NoC in 45nm SOI CMOS." 2013 Design, Automation & Test in Europe Conference & Exhibition (DATE). IEEE, 2013. gs-link ↩︎

As a recent example, TeraPHY offers apparently SOTA electrical to optical interconnect with power efficiency of 5pJ/bit, which surpasses irreversible wire energy of ~100fJ/bit/mm only at just beyond GPU die-size distances of 5cm, and would only just match SOTA electrical interconnect for communication over a full cerebras wafer-scale device. ↩︎

Reich, Daniel S., et al. "Interspike intervals, receptive fields, and information encoding in primary visual cortex." Journal of Neuroscience 20.5 (2000): 1964-1974. gs-link ↩︎

Singh, Chandan, and William B. Levy. "A consensus layer V pyramidal neuron can sustain interpulse-interval coding." PloS one 12.7 (2017): e0180839. gs-link ↩︎

Individual spikes carry more information at lower spike rates (longer interspike intervals), making sparse low spike rates especially energy efficient, but high total bandwidth, low signal latency, and high area efficiency all require higher spike rates. ↩︎

Koch, Kristin, et al. "How much the eye tells the brain." Current Biology 16.14 (2006): 1428-1434. gs-link ↩︎

Strong, Steven P., et al. "Entropy and information in neural spike trains." Physical review letters 80.1 (1998): 197. gs-link ↩︎

There are more complex physical tradeoffs between wire diameter, signal speed, and energy, such that minimally energy efficient signalling is probably too costly in other constrained dimensions. ↩︎

Lennie, Peter. "The cost of cortical computation." Current biology 13.6 (2003): 493-497. gs-link ↩︎

Ralph Merkle estimated the energy per 'Ranvier op' - per spike energy along the distance of 1mm jumps between nodes of Ranvier - at 5 x 10^-15 J, which at 5 x 10^-21 J/nm is only ~2x the Landauer Limit, corresponding to single electron devices per nm operating at around 40 mV. He also estimates an average connection distance of 1mm and uses that to directly estimate about 1 synaptic op per 1mm 'Ranvier op', and thus about 10^15 ops/s, based on this energy constraint. ↩︎

Wikipedia, RTX 3090 stats ↩︎

The minimal Landauer bit error rate for 1eV switches is 1e-25, vs 1e10 transistors at 1e9 hz for 1e6 seconds (2 weeks). ↩︎

Cavin et al estimate end of Moore's Law CMOS device characteristics from a detailed model of known physical limits[7:2]. A GPU at these limits could have 10x feature scaling vs 2021 and 100x transistor density, but only about 3x greater energy efficiency, so a GPU of this era could have 3 trillion transistors, but would use/burn an unrealistic 10kW to run all those transistors at GHz speed. ↩︎ ↩︎

Carlsmith at Open Philanthropy produced a huge report resulting in a wide distribution over brain compute power, with a median/mode around 10^15 ops/s. Although the median/mode is reasonable, this report includes too many poorly informed estimates, resulting in an unnecessarily high variance distribution. The simpler estimate of ~10^14 synapses switching at around ~0.5hz, with 1 synaptic op equivalent to at least one but up to ten low precision flops or analog multiply-adds, should result in most mass concentrated between 10^14 ops/s and 10^15 ops/s. There is little uncertainty in the synapse count, not much in the average synaptic firing rate, and the evidence from neuroscience provides fairly strong support, but ultimately the Landauer Limit as analyzed here rules out much more than 10^15 ops/s, and Carlsmith's report ignores interconnect energy and is confused about the actual practical thermodynamic limits of analog computation. ↩︎

Mean of Neuron firing rates in humans ↩︎

In some synapses synaptic facilitation acts very much like an exponential decoder, where the spike train sequence 11 has a postsynaptic potential that is 3x greater than the sequence 10, the sequence 111 is 9x greater than 100, etc. - see the reference below. ↩︎

Jackman, Skyler L., and Wade G. Regehr. "The mechanisms and functions of synaptic facilitation." Neuron 94.3 (2017): 447-464. gs-link ↩︎

See the following article for a completely different approach resulting in the same SNR relationship following 3.16 in Sarpeshkar, Rahul. "Analog versus digital: extrapolating from electronics to neurobiology." Neural computation 10.7 (1998): 1601-1638. gs-link ↩︎

Miyashita, Daisuke, Edward H. Lee, and Boris Murmann. "Convolutional neural networks using logarithmic data representation." arXiv preprint arXiv:1603.01025 (2016). gs-link ↩︎

Wang, Naigang, et al. "Training deep neural networks with 8-bit floating point numbers." Proceedings of the 32nd International Conference on Neural Information Processing Systems. 2018. gs-link ↩︎

Bartol Jr, Thomas M., et al. "Nanoconnectomic upper bound on the variability of synaptic plasticity." Elife 4 (2015): e10778. gs-link ↩︎

Bartol, Thomas M., et al. "Hippocampal spine head sizes are highly precise." bioRxiv (2015): 016329. gs-link ↩︎

Attwell, David, and Simon B. Laughlin. "An energy budget for signaling in the grey matter of the brain." Journal of Cerebral Blood Flow & Metabolism 21.10 (2001): 1133-1145. gs-link ↩︎

Bavandpour, Mohammad, et al. "Mixed-Signal Neuromorphic Processors: Quo Vadis?" 2019 IEEE SOI-3D-Subthreshold Microelectronics Technology Unified Conference (S3S). IEEE, 2019. gs-link ↩︎

Chen, Jia, et al. "Multiply accumulate operations in memristor crossbar arrays for analog computing." Journal of Semiconductors 42.1 (2021): 013104. gs-link ↩︎

Li, Huihan, et al. "Memristive crossbar arrays for storage and computing applications." Advanced Intelligent Systems 3.9 (2021): 2100017. gs-link ↩︎

Li, Can, et al. "Analogue signal and image processing with large memristor crossbars." Nature electronics 1.1 (2018): 52-59. gs-link ↩︎

Mahmoodi, M. Reza, and Dmitri Strukov. "Breaking POps/J barrier with analog multiplier circuits based on nonvolatile memories." Proceedings of the International Symposium on Low Power Electronics and Design. 2018. gs-link ↩︎

Montero-Crespo, Marta, et al. "Three-dimensional synaptic organization of the human hippocampal CA1 field." Elife 9 (2020): e57013. gs-link ↩︎

Santuy, Andrea, et al. "Study of the size and shape of synapses in the juvenile rat somatosensory cortex with 3D electron microscopy." Eneuro 5.1 (2018). gs-link ↩︎

Brengelmann, George L. "Specialized brain cooling in humans?." The FASEB Journal 7.12 (1993): 1148-1153. gs-link ↩︎

Wikipedia: Nerve Conduction Velocity ↩︎

ScienceDirect: Dynamic power dissipation, EQ Ov.10 ↩︎

Gonzalez, Ricardo, Benjamin M. Gordon, and Mark A. Horowitz. "Supply and threshold voltage scaling for low power CMOS." IEEE Journal of Solid-State Circuits 32.8 (1997): 1210-1216. gs-link ↩︎

Herculano-Houzel, Suzana, et al. "The elephant brain in numbers." Frontiers in neuroanatomy 8 (2014): 46. gs-link ↩︎

Mortensen, Heidi S., et al. "Quantitative relationships in delphinid neocortex." Frontiers in Neuroanatomy 8 (2014): 132. gs-link ↩︎

Olshausen, Bruno A., and David J. Field. "Sparse coding with an overcomplete basis set: A strategy employed by V1?." Vision research 37.23 (1997): 3311-3325. gs-link ↩︎

Kar, Kohitij, et al. "Evidence that recurrent circuits are critical to the ventral stream’s execution of core object recognition behavior." Nature neuroscience 22.6 (2019): 974-983. gs-link ↩︎

Nayebi, Aran, et al. "Task-driven convolutional recurrent models of the visual system." arXiv preprint arXiv:1807.00053 (2018). gs-link ↩︎

Mehrer, Johannes, et al. "An ecologically motivated image dataset for deep learning yields better models of human vision." Proceedings of the National Academy of Sciences 118.8 (2021). gs-link ↩︎

Yamins, Daniel LK, and James J. DiCarlo. "Using goal-driven deep learning models to understand sensory cortex." Nature neuroscience 19.3 (2016): 356-365. gs-link ↩︎

Zhang, Richard, et al. "The unreasonable effectiveness of deep features as a perceptual metric." Proceedings of the IEEE conference on computer vision and pattern recognition. 2018. gs-link ↩︎

Cichy, Radoslaw M., and Daniel Kaiser. "Deep neural networks as scientific models." Trends in cognitive sciences 23.4 (2019): 305-317. gs-link ↩︎

Bakhtiari, Shahab, et al. "The functional specialization of visual cortex emerges from training parallel pathways with self-supervised predictive learning." (2021). gs-link ↩︎

Mineault, Patrick, et al. "Your head is there to move you around: Goal-driven models of the primate dorsal pathway." Advances in Neural Information Processing Systems 34 (2021). gs-link ↩︎

Dobs, Katharina, et al. "Brain-like functional specialization emerges spontaneously in deep neural networks." bioRxiv (2021). gs-link ↩︎

Elsayed, Gamaleldin F., et al. "Adversarial examples that fool both computer vision and time-limited humans." arXiv preprint arXiv:1802.08195 (2018). gs-link ↩︎

Nicholson, David A., and Astrid A. Prinz. "Deep Neural Network Models of Object Recognition Exhibit Human-like Limitations When Performing Visual Search Tasks." bioRxiv (2021): 2020-10. gs-link ↩︎

Hassabis, Demis, et al. "Neuroscience-inspired artificial intelligence." Neuron 95.2 (2017): 245-258. gs-link ↩︎

Zador, Anthony M. "A critique of pure learning and what artificial neural networks can learn from animal brains." Nature communications 10.1 (2019): 1-7. gs-link ↩︎

Haussler, David, Jyrki Kivinen, and Manfred K. Warmuth. "Tight worst-case loss bounds for predicting with expert advice." European Conference on Computational Learning Theory. Springer, Berlin, Heidelberg, 1995. gs-link ↩︎

Hestness, Joel, et al. "Deep learning scaling is predictable, empirically." arXiv preprint arXiv:1712.00409 (2017). gs-link ↩︎

Rosenfeld, Jonathan S., et al. "A constructive prediction of the generalization error across scales." arXiv preprint arXiv:1909.12673 (2019). gs-link ↩︎

Chen, Ting, et al. "Big self-supervised models are strong semi-supervised learners." arXiv preprint arXiv:2006.10029 (2020). gs-link ↩︎

Silver, David, et al. "Mastering the game of Go with deep neural networks and tree search." nature 529.7587 (2016): 484-489. ↩︎

Ye, Weirui, et al. "Mastering atari games with limited data." Advances in Neural Information Processing Systems 34 (2021). ↩︎

Practical here implies irreversible - obviously an exotic reversible or quantum computer could potentially do much better in terms of energy efficiency, but all evidence suggests brain size exotic computers are still far in the future, after the arrival of AGI on conventional computers. ↩︎

Nvidia's 2021 revenue is about $25B, about half of which is from consumer GPUs which provide near brain level ops/s for around $2,000. The other half of revenue for data-center GPUs is around 5x more expensive per flop. ↩︎

Without any further progress in flops/s/$ from Moore's Law, this would entail Nvidia's revenue exceeding United States GDP in a decade. More realistically, even if Nvidia retains a dominant lead, it seems much more likely to arrive from an even split: 30x increase in revenue, 30x increase in flops/s/$. But as this article indicates, there is limited further slack in Moore's Law, so some amount of growth must come from economic scaling up the fraction of GDP going into compute. ↩︎

Obviously neuromorphic AGI or sims/uploads will have numerous transformative advantages over humans: ability to copy/fork entire minds, share modules, dynamically expand modules beyond human brain limits, run at variable speeds far beyond 100hz, interface more directly with computational systems, etc. ↩︎

103 comments

Comments sorted by top scores.

comment by Vaniver · 2022-01-07T19:24:49.466Z · LW(p) · GW(p)

But if the brain is already near said practical physical limits, then merely achieving brain parity in AGI at all will already require using up most of the optimizational slack, leaving not much left for a hard takeoff - thus a slower takeoff.

While you do talk about stuff related to this in the post / I'm not sure you disagree about facts, I think I want to argue about interpretation / frame.

That is, efficiency is a numerator over a denominator; I grant that we're looking at the right numerator, but even if human brains are maximally efficient by denominator 1, they might be highly inefficient by denominator 2, and the core value of AI may be being able to switch from denominator 1 to denominator 2 (rather than being a 'straightforward upgrade').

The analogy between birds and planes is probably useful here; birds are (as you would expect!) very efficient at miles flown per calorie, but if it's way easier to get 'calories' through chemical engineering on petroleum, then a less efficient plane that consumes jet fuel can end up cheaper. And if what's economically relevant is "top speed" or "time it takes to go from New York to London", then planes can solidly beat birds. I think we were living in the 'fast takeoff' world for planes (in a technical instead of economic sense), even tho this sort of reasoning would have suggested there would be slow takeoff as we struggled to reach bird efficiency.

The easiest disanalogy between humans and computers is probably "ease of adding more watts"; my brain is running at ~10W because it was 'designed' in an era when calories were super-scarce and cooling was difficult. But electricity is super cheap, and putting 200W through my GPU and then dumping it into my room costs basically nothing. (Once you have 'datacenter' levels of compute, electricity and cooling costs are significant; but again substantially cheaper than the costs of feeding similar numbers of humans.)

A second important disanalogy is something like "ease of adding more compute in parallel"; if I want to add a second GPU to my computer, this is a mild hassle and only takes some tweaks to work; if I want to add a second brain to my body, this is basically impossible. [This is maybe underselling humans, who make organizations to 'add brains' in this way, but I think this is still probably quite important for timeline-related concerns.]

Replies from: jacob_cannell↑ comment by jacob_cannell · 2022-01-07T20:09:45.051Z · LW(p) · GW(p)

I discuss some of that in [this comment] in reply to Steven Byrnes. I agree electricity is cheap, and discuss that. But electricity is not free, and still becomes a constraint.

In the end of the article I discuss/estimate near future brain-scale AGI requiring 1000 GPUs for 1000 brain size agents in parallel, using roughly 1MW total or 1kW per agent instance. That works out to about $2,000/yr per agent for the power&cooling cost. Or if we just estimate directly based on vast.ai prices it's more like $5,000/yr per agent total for hardware rental (including power costs). The rental price using enterprise GPUs is at least 4x as much, so more like $20,000/yr per agent. So the potential economic advantage is not yet multiple OOM. It's actually more like little to no advantage for low-end robotic labor, or perhaps 1 OOM advantage for programmers/researchers/etc. But if we had AGI today GPU prices would just skyrocket to arbitrage that advantage, at least until foundries could ramp up GPU production.

So anyway given some bound/estimate for power cost per agent, this does allow us to roughly bound the total amount of AGI compute near term achievable, as both world power production and foundry output is difficult to ramp up rapidly.

Replies from: daniel-kokotajlo↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2022-01-09T01:30:35.201Z · LW(p) · GW(p)

$2,000/yr per agent is nothing, when we are talking about hypothetical AGI. This seems to be evidence against your claim that energy is a taut constraint.

Sure, the actual price of compute would be more, because of the hardware and facilities etc. But that doesn't change the bottom line that energy is not a taut constraint.

Maybe you are saying that in the future energy will become a taut constraint because we can't make chips significantly more energy efficient but we can make them significantly cheaper in every other way, so energy will become the dominant part of the cost of compute?

Replies from: jacob_cannell, daniel-kokotajlo↑ comment by jacob_cannell · 2022-01-09T15:37:28.809Z · LW(p) · GW(p)

Energy is always an engineering constraint: it's a primary constraint on Moore's Law, and thus also a primary limiter on a fast takeoff with GPUs (because world power supply isn't enough to support net ANN compute much larger than current brain population net compute).

But again I already indicated it's probably not a 'taut constraint' on early AGI in terms of economic cost - at least in my model of likely requirements for early not-smarter-than-human AGI.

Also yes additionally longer term we can expect energy to become a larger fraction of economic cost - through some combination of more efficient chip production, or just the slowing of moore's law itself (which implies chips holding value for much longer, thus reducing the dominant hardware depreciation component of rental costs)

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2022-01-09T02:02:57.904Z · LW(p) · GW(p)

Or maybe you aren't saying energy is a taut constraint at all? It sure sounded like you did but maybe I misinterpreted you.

comment by Steven Byrnes (steve2152) · 2022-01-06T16:26:17.408Z · LW(p) · GW(p)

Nice post!

Brain efficiency matters a great deal for AGI timelines and takeoff speeds, as AGI is implicitly/explicitly defined in terms of brain parity. If the brain is about 6 OOM away from the practical physical limits of energy efficiency, then roughly speaking we should expect about 6 OOM of further Moore's Law hardware improvement past the point of brain parity: perhaps two decades of progress at current rates, which could be compressed into a much shorter time period by an intelligence explosion - a hard takeoff.

But if the brain is already near said practical physical limits, then merely achieving brain parity in AGI at all will already require using up most of the optimizational slack, leaving not much left for a hard takeoff - thus a slower takeoff.

I guess your model is something like

Step 1: hardware with efficiency similar to the brain,

Step 2: recursive self-improvement but only if Moore's law hasn't topped out yet by that point.

And therefore (on this model) it's important to know if "efficiency similar to the brain" is close to the limits.

If that's the model, I have some doubts. My model would be more like:

Step 1: algorithms with capability similar to the brain in some respects (which could have efficiency dramatically lower than the brain, because people are perfectly happy to run algorithms on huge banks of GPUs sucking down 100s of kW of electricity, etc.).

Step 2: fast improvement of capability (maybe) via any of:

2A: Better code (vintage Recursive Self-Improvement, or "we got the secret sauce, now we pick the low-hanging fruit of making it work better")

2B: More or better training (or "more time to learn and think" in the brain-like context [a.k.a. online-learning])

2C: More hardware resources (more parameters, more chips, more instances, either because the programmers decide to after having promising results, or because the AGI is hacking into cloud servers or whatever).

Each of these might or might not happen, depending on whether there is scope for improvement that hasn't already been squeezed out before step 1, which in turn depends on lots of things.

I didn't even mention "2D: Better chips", because it seems much slower than A,B,C. Normally I think of "hard takeoff" as being defined as "days, weeks, or at most months", in which case fabricating new better chips seems unlikely to contribute.

I also agree with FeepingCreature's comment that "the brain and today's deep neural nets are comparably efficient at thus-and-such task" is pretty weak evidence that there isn't some "neither-of-the-above" algorithm waiting to be discovered which is much more efficient than either. There might or might not be, I think it's hard to say.

Replies from: jacob_cannell, donald-hobson↑ comment by jacob_cannell · 2022-01-06T19:21:19.601Z · LW(p) · GW(p)

Well at the end I said "If we only knew the remaining secrets of the brain today, we could train a brain-sized model consisting of a small population of about 1000 agents/sims, running on about as many GPUs"

So my model absolutely is that we are limited by algorithmic knowledge. If we had that knowledge today we would be training AGI right now, because as this article indicates 1000 GPUs are already roughly powerful enough to simulate 1000 instances of a single shared brain-size ANN. Sure it may use a MW of power, or 1 kW per agent-instance, so 100x less efficient than the brain, but only 10x less efficient than the whole attached human body, and regardless that doesn't matter much as human workers are 4 or 5 OOM more expensive than their equivalent raw energy cost.

I also agree with FeepingCreature's comment that "the brain and today's deep neural nets are comparably efficient at thus-and-such task" is pretty weak evidence that there isn't some "neither-of-the-above" algorithm waiting to be discovered which is much more efficient than either. There might or might not be, I think it's hard to say.

I don't think it's weak evidence at all, because of all the evidence we have of biological evolution achieving near optimality in so many other key efficiency metrics - at some point you just have to concede and update that biological evolution finds highly efficient or near-optimal solutions. The DL comparisons then show such and such amounts of technological evolution - a different search process - is converging on similar algorithms and limits. I find this rather compelling - and I'm genuinely curious as to why you don't? (Perhaps one could argue that DL is too influenced by the brain? But we really did try many other approaches) I found FeepingCreature's comment to be confused - as if he didn't read the article (but perhaps I should make some sections more clear?).

Replies from: maks-stachowiak, steve2152↑ comment by Maks Stachowiak (maks-stachowiak) · 2022-01-06T20:45:39.247Z · LW(p) · GW(p)

About your intuition that evolution made brains optimal... well but then there are people like John von Neumann who clearly demonstrate that the human brain can be orders of magnitude more productive without significantly higher energy costs.

My model of the human brain isn't that it's the most powerful biological information processing organ possible - far from it. In my view of the world we are merely the first species that passed an intelligence threshold allowing it to produce a technological civilisation. As soon as a species passed that threshold civilisation popped into existence.

We are the dumbest species possible that still manages to coordinate and accumulate technology. This doesn't tell you much about what the limits of biology are.

Replies from: jacob_cannell↑ comment by jacob_cannell · 2022-01-06T21:10:35.412Z · LW(p) · GW(p)

About your intuition that evolution made brains optimal... well but then there are people like John von Neumann who clearly demonstrate that the human brain can be orders of magnitude more productive without significantly higher energy costs.

Optimal is a word one should use with caution and always with respect to some measure, and I use it selectively, usually as 'near-optimal' or some such. The article does not argue that brains are 'optimal' in some generic sense. I referenced JVN just as an example of a mentat - that human brains are capable of learning more reasonably efficient numeric circuits, even though that's well outside of evolutionary objectives. JVN certainly isn't the only example of a human mentat like that, and he certainly isn't evidence "that the human brain can be orders of magnitude more productive".

We are the dumbest species possible that still manages to coordinate and accumulate technology. This doesn't tell you much about what the limits of biology are.

Sure, I agree your stated "humans first to cross the finish line" model (or really EY's) doesn't tell you much about the limits of biology. To understand the actual limits of biology, you have to identify what those actual physical limits are, and then evaluate how close brains are to said limits. That is in fact what this article does.

In my view of the world we are merely the first species that passed an intelligence threshold allowing it to produce a technological civilisation. As soon as a species passed that threshold civilisation popped into existence.

We passed the threshold for language. We passed the threshold from evolutionarily specific intelligence to universal Turing machine-style intelligence through linguistic mental programs/programming. Before that, everything a big brain learned during a lifetime was lost; after that, immortal substrate-independent mental programs could evolve separately from the disposable brain soma: cultural/memetic evolution. This is a one-time major phase shift in evolution, not some specific brain adaptation (even though some of the latter obviously enables the former).

↑ comment by Steven Byrnes (steve2152) · 2022-01-06T19:57:25.373Z · LW(p) · GW(p)

For example, if there were an image-processing algorithm that used many fewer operations overall, but where those operations were more serial and less parallel—e.g. it required 1000 sequential steps for each image—then I think evolution would not have found it, because brains are too slow.

So then you need a different reason to think that such an algorithm doesn't exist.

Maybe you can say "If such an algorithm existed, AI researchers would have found it by now." But would they really? If AI researchers hadn't been stealing ideas from the brain, would they have even invented neural nets by now? I dunno.

Or you can say "Something about the nature of image processing is that doing 1000 sequential steps just isn't that useful for the task." I guess I find that claim kinda plausible, but I'm just not very confident, I don't feel like I have such a deep grasp of the fundamental nature of image processing that I can make claims like that.

In other domains besides image processing, I'd be even less confident. For example, I can kinda imagine some slightly-alien form of "reasoning" or "planning" that was mostly like human "reasoning" or "planning" but sometimes involved fast serial operations. After all, I find it very handy to have a fast serial laptop. If access to fast serial processing is useful for "me", maybe it would be also useful for the low-level implementation of my brain algorithms. I dunno. Again, I think it's hard to say either way.

Replies from: gwern, jacob_cannell↑ comment by gwern · 2022-01-07T00:19:05.269Z · LW(p) · GW(p)

For example, if there were an image-processing algorithm that used many fewer operations overall, but where those operations were more serial and less parallel—e.g. it required 1000 sequential steps for each image—then I think evolution would not have found it, because brains are too slow.

Peter Watts would like you to ponder how Portia spiders think about what they see. :)

Replies from: Ansel↑ comment by Ansel · 2022-01-07T21:00:52.161Z · LW(p) · GW(p)

Is that link safe to click for someone with arachnophobia?

Replies from: Gunnar_Zarncke, gwern↑ comment by Gunnar_Zarncke · 2022-01-07T23:07:50.236Z · LW(p) · GW(p)

no pictures

↑ comment by jacob_cannell · 2022-01-06T20:19:45.977Z · LW(p) · GW(p)

EDIT: I updated the circuits section of the article with an improved model of Serial vs Parallel vs Neuromorphic (PIM) scalability, which better illustrates how serial computation doesn't scale.

Yes you bring up a good point, and one I should have discussed in more detail (but the article is already pretty long). However the article does provide part of the framework to answer this question.

There definitely are serial/parallel tradeoffs where the parallel version of an algorithm tends to use marginally more compute asymptotically. However these simple big O asymptotic models do not consider the fundamental costs of wire energy transit for remote memory accesses, which actually scale as M^(1/2) for 2D memory (where M is memory size). So in that sense the simple big O models are asymptotically wrong. If you use the correct more detailed models which account for the actual wire energy costs, everything changes, and the parallel versions leveraging distributed local memory and thus avoiding wire energy transit are generally more energy efficient - but through using a more memory heavy algorithmic approach.

Another way of looking at it is to compare serial-optimized VN processors (CPUs) vs parallel-optimized VN processors (GPUs), vs parallel processor-in-memory (brains, neuromorphic).

Pure serial CPUs (ignoring parallel/vector instructions) with tens of billions of transistors have only on the order of a few dozen cores but not much higher clock rates than GPUs, despite using all that die space for marginal serial speed increase - serial speed scales extremely poorly with transistor density, the end of Dennard scaling, etc. A GPU with tens of billions of transistors instead has tens of thousands of ALU cores, but is still ultimately limited by the very poor scaling of off-chip RAM bandwidth, proportional to N^(1/2) (where N is device area), and by wire energy that doesn't scale at all. The neuromorphic/PIM machine has perfect memory bandwidth scaling at a 1:1 ratio - it can access all of its RAM per clock cycle, pays near zero energy to access RAM (as memory and compute are unified), and everything scales linearly with N.
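The scaling contrast can be illustrated with a toy model. This is a rough sketch, not the article's model: it assumes off-chip bandwidth grows like the chip perimeter (the square root of device area N), that PIM bandwidth and compute both grow linearly with N, and all function names are hypothetical.

```python
import math

def offchip_bandwidth(n_area: float) -> float:
    """Toy GPU-like model: bandwidth limited by chip perimeter, ~ sqrt(area)."""
    return math.sqrt(n_area)

def pim_bandwidth(n_area: float) -> float:
    """Toy PIM-like model: all memory reachable each cycle, ~ area."""
    return n_area

def bandwidth_per_unit_compute(bandwidth: float, n_area: float) -> float:
    """Bandwidth available per unit of compute, assuming compute ~ area."""
    return bandwidth / n_area

for n in (1.0, 100.0, 10_000.0):
    gpu = bandwidth_per_unit_compute(offchip_bandwidth(n), n)
    pim = bandwidth_per_unit_compute(pim_bandwidth(n), n)
    print(f"N={n:>8.0f}  GPU-like: {gpu:.4f}  PIM-like: {pim:.4f}")
```

Under these assumptions the GPU-like device gets relatively more starved for bandwidth as it grows, while the PIM-like ratio stays constant.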

Physics is fundamentally parallel, not serial, so the latter just doesn't scale.

But of course on top of all that there is latency/delay - so for example the brain is also strongly optimized for minimal depth for minimal delay, and to some extent that may compete with optimizing for energy. Ironically delay is also a problem in GPU ANNs - a huge problem for Tesla's self-driving cars for example - because GPUs need to operate on huge batches to amortize their very limited/expensive memory bandwidth.

Replies from: steve2152↑ comment by Steven Byrnes (steve2152) · 2022-01-10T15:13:43.432Z · LW(p) · GW(p)

Yeah, latency / depth is the main thing I was thinking of.

If my boss says "You must calculate sin(x) in 2 clock cycles", I would have no choice but to waste a ton of memory on a giant lookup table. (Maybe "2" is the wrong number of clock cycles here, but you get the idea.) If I'm allowed 10 clock cycles, maybe I can reduce x mod 2π first, and thus use a much smaller lookup table, thus waste a lot less memory. If I'm allowed 200 clock cycles to calculate sin(x), I can use C code that has no lookup table at all, and thus roughly zero memory and communications. (EDIT: Oops, LOL, the C code I linked uses a lookup table. I could have linked this one instead.)
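The memory-versus-depth tradeoff in the sin(x) example can be sketched as follows. This is an illustration only, not the linked C code: the table size and term count are arbitrary assumptions, and the functions are hypothetical. It shows the two later cases (range reduction plus a small table, and a table-free series), not the giant unreduced table.

```python
import math

TABLE_SIZE = 1024  # hypothetical table resolution
# Table covers one period [0, 2*pi); range reduction makes this sufficient.
SIN_TABLE = [math.sin(2 * math.pi * i / TABLE_SIZE) for i in range(TABLE_SIZE)]

def sin_table(x: float) -> float:
    """Few steps, more memory: reduce x mod 2*pi, then one table lookup."""
    reduced = x % (2 * math.pi)
    index = int(reduced / (2 * math.pi) * TABLE_SIZE) % TABLE_SIZE
    return SIN_TABLE[index]

def sin_series(x: float, terms: int = 12) -> float:
    """Many sequential steps, no table: Taylor series after range reduction."""
    # Map x into [-pi, pi) so the series converges quickly.
    reduced = (x + math.pi) % (2 * math.pi) - math.pi
    term = reduced
    total = reduced
    for k in range(1, terms):
        # Each term is derived from the previous one, so the loop is serial.
        term *= -reduced * reduced / ((2 * k) * (2 * k + 1))
        total += term
    return total
```

The table version does a constant amount of work per call but holds 1024 floats; the series version holds no table but needs a chain of dependent multiply-adds.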

So I still feel like I don't want to take it for granted that there's a certain amount of "algorithmic work" that needs to be done for "intelligence", and that amount of "work" is similar to what the human brain uses. I feel like there might be potential algorithmic strategies out there that are just out of the question for the human brain, because of serial depth. (Among other reasons.)

Also, it's not all-or-nothing: I can imagine an AGI that involves a big parallel processor, and a small fast serial coprocessor. Maybe there are little pieces of the algorithm that would massively benefit from serialization, and the brain is bottlenecked in capability (or wastes memory / resources) by the need to find workarounds for those pieces. Or maybe not, who knows.

↑ comment by Donald Hobson (donald-hobson) · 2022-01-10T17:44:25.298Z · LW(p) · GW(p)

in which case fabricating new better chips seems unlikely to contribute.

Fabricating new better chips will be part of a Foom once the AI has nanotech. This might be because humans had already made nanotech by this point, or it might involve using a DNA printer to make nanotech in a day. (The latter requires a substantial amount of intelligence already, so this is a process that probably won't kick in the moment the AI gets to about human level.)

comment by Charlie Steiner · 2022-01-07T06:02:00.316Z · LW(p) · GW(p)

That model of wire energy sounds so non-physical I had to look it up.

(It reminds me of the billiard ball model of electrons. If you think of electrons as billiard balls, it's hard to figure out how metals work, because it seems like the electrons will have a hard time getting through all the atoms that are in the way - there's too much bouncing and jostling. But if electrons are waves, they can flow right past all the atoms as long as they're a crystal lattice, and suddenly it's dissipation that becomes the unusual process that needs explanation.)

So I looked through your references but I couldn't find any mention of this formula. Not that I would have been shocked if I did - semiconductor engineers do all sorts of weird stuff that condensed matter physicists wouldn't. But anyhow, I'm pretty sure that there's no way the minimum energy dissipation in wires scales the way you say it does, and I'm curious if you have some authoritative source.

We can imagine several kinds of losses: radiative losses from high-frequency activity, resistive losses from moving lots of current, and irreversible capacitive losses from charging and discharging wires. I actually am pretty sure that the first two are smaller than the irreversible capacitive loss, and there are some nice excuses to ignore them: radiative losses might affect chips a little but there's no way the brain cares about them, and there's no way that resistive losses are going to have a basis in information theory because superconductors exist.

So, capacitance of wires! Capacitor energy is QV/2, or CV^2/2. Let's make a spherical cow assumption that all wires in a chip are half as capacitive as ideal coax cables, and the dielectric is the same thickness as the wires. Then the capacitance is about 1.3*10^-10 Farads/m (note: this drops as you make chips bigger, but only logarithmically). So for 1V wires, capacitive energy is about 7*10^-11 J/m per discharge (70 fJ/mm, a close match to the number you cite!).

But look at the scaling - it's V^2! Not controlled by Landauer.
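The arithmetic above is easy to reproduce. A minimal sketch, taking the 1.3*10^-10 F/m capacitance estimate as given rather than deriving it (the function name is hypothetical):

```python
CAPACITANCE_PER_M = 1.3e-10  # F/m, the estimate used in the comment above

def wire_energy_per_mm(voltage: float, cap_per_m: float = CAPACITANCE_PER_M) -> float:
    """Energy in joules to charge 1 mm of wire once: C * V^2 / 2."""
    joules_per_m = 0.5 * cap_per_m * voltage ** 2
    return joules_per_m / 1000.0

for v in (1.0, 0.5, 0.1):
    print(f"V={v:.1f} V  ->  {wire_energy_per_mm(v) * 1e15:.2f} fJ/mm")
```

At 1 V this gives 65 fJ/mm, which rounds to the roughly 70 fJ/mm quoted, and halving the voltage cuts the energy to a quarter.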

Anyhow I felt like there were several things like this in the post, but this is the one I decided to do a relatively deep dive on.

Replies from: steve2152, jacob_cannell, obserience↑ comment by Steven Byrnes (steve2152) · 2022-01-10T14:37:09.419Z · LW(p) · GW(p)

FWIW I am also a physicist and the interconnect energy discussion also seemed wrong to me, but I hadn't bothered to look into it enough to comment.

I attended a small conference on energy-efficient electronics a decade ago. My memory is a bit hazy, but I believe Eli Yablonovitch (who I tend to trust on these kinds of questions) kicked off with an overview of interconnect losses (and paths forward), and for normal metal wire losses he wrote down the formula derived from charging and discharging the (unintentional / stray) capacitors between the wires and other bits of metal in their vicinity. Then he talked about various solutions like various kinds of low-V switches (negative-capacitance voltage-amplifying transistors, NEMS mechanical switches, quantum tunneling transistors, etc.), and LED+waveguide optical interconnects (e.g. this paper).