[Book Review] "The Bell Curve" by Charles Murray

post by lsusr · 2021-11-02


Factor analysis is a statistical method for inferring a small number of underlying factors that explain the correlations among many observed variables. It's the foundation of the Big Five personality traits. It's also behind how we define intelligence.

A person's ability to perform one cognitive task is positively correlated with their ability to perform basically every other cognitive task. If you collect a variety of cognitive measures, you can use linear algebra to extract a single measure which we call g. Intelligence quotient (IQ) tests are specifically designed to measure g. IQ isn't a perfect measure of g, but it's convenient and robust.
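To make the linear-algebra step concrete, here is a minimal Python sketch. It simulates hypothetical test scores that all depend on one latent general factor plus noise, builds their correlation matrix, and extracts the first principal component by power iteration. Strictly speaking this is principal component analysis, a common stand-in for factor analysis rather than the exact procedure psychometricians use; the loadings, sample size and function names are illustrative assumptions.

```python
import math
import random


def simulate_scores(n_people, loadings, seed=0):
    """Hypothetical data: score_j = loading_j * g + independent noise (unit variance overall)."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n_people):
        g = rng.gauss(0, 1)
        rows.append([l * g + math.sqrt(1 - l * l) * rng.gauss(0, 1) for l in loadings])
    return rows


def correlation_matrix(rows):
    """Pearson correlations between every pair of tests (columns)."""
    n, k = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(k)]
    sds = [math.sqrt(sum((r[j] - means[j]) ** 2 for r in rows) / n) for j in range(k)]
    return [
        [
            sum((r[a] - means[a]) * (r[b] - means[b]) for r in rows) / (n * sds[a] * sds[b])
            for b in range(k)
        ]
        for a in range(k)
    ]


def first_component(corr, iters=200):
    """Leading eigenvalue and loadings of the correlation matrix via power iteration."""
    k = len(corr)
    v = [1.0] * k  # all-positive start; fine here because every correlation is positive
    for _ in range(iters):
        w = [sum(corr[i][j] * v[j] for j in range(k)) for i in range(k)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    eigenvalue = sum(v[i] * sum(corr[i][j] * v[j] for j in range(k)) for i in range(k))
    # Component loadings = eigenvector scaled by the square root of its eigenvalue
    return eigenvalue, [x * math.sqrt(eigenvalue) for x in v]


true_loadings = [0.8, 0.7, 0.6, 0.5, 0.4]  # hypothetical g-loadings of five tests
rows = simulate_scores(2000, true_loadings)
eigenvalue, estimated = first_component(correlation_matrix(rows))
print(f"share of total variance on the first component: {eigenvalue / len(true_loadings):.2f}")
print("estimated loadings:", [round(x, 2) for x in estimated])
```

The estimated loadings come out in the same order as the made-up true ones. Principal-component loadings tend to run somewhat higher than common-factor loadings, which is one reason psychometricians usually prefer proper factor analysis.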

Here are six conclusions regarding tests of cognitive ability, drawn from the classical tradition, that are now beyond significant technical dispute:

1. There is such a thing as a general factor of cognitive ability on which human beings differ.
2. All standardized tests of academic aptitude or achievement measure this general factor to some degree, but IQ tests expressly designed for that purpose measure it most accurately.
3. IQ scores match, to a first degree, whatever it is that ordinary people mean when they use the word intelligent or smart in ordinary language.
4. IQ scores are stable, although not perfectly so, over much of a person's life.
5. Properly administered IQ tests are not demonstrably biased against social, economic, ethnic, or racial groups.
6. Cognitive ability is substantially heritable, apparently no less than 40 percent and no more than 80 percent.

Charles Murray doesn't bother proving the above points. These facts are well established among scientists. Instead, The Bell Curve: Intelligence and Class Structure in American Life is about what g means to American society.

Stratification

Educational Stratification

Smarter people have always had an advantage. The people who go to college have always been smarter than average. The correlation between college and intelligence increased after WWII. Charles Murray argues that the competitive advantage of intelligence is magnified in a technological society. I agree that this has been the case so far and that the trend has continued between 1994 when Murray published his book and 2021 when I am writing this review.

SAT scores can be mapped to IQ. The entering class of Harvard in 1926 had a mean IQ of about 117. IQ is defined to have an average of 100 and a standard deviation of 15. Harvard in 1926 thus hovered around the 88th percentile of the nation's youths. Other colleges got similar scores. The average Pennsylvania college was lower with an IQ of 107 (68th percentile). Elite Pennsylvania colleges had students between the 75th and 90th percentiles.

By 1964, the average student of a Pennsylvania college had an IQ in the 89th percentile. Elite colleges' average freshmen were in the 99th percentile.
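The percentile figures above follow from treating IQ as normally distributed with a mean of 100 and a standard deviation of 15. Here is a quick way to check such conversions, sketched with Python's standard-library `statistics.NormalDist`; the normality assumption is the only modeling choice, and the helper names are my own.

```python
from statistics import NormalDist

# IQ scale: mean 100, standard deviation 15, assumed normal.
IQ = NormalDist(mu=100, sigma=15)


def iq_to_percentile(iq):
    """Percentage of the population scoring below the given IQ."""
    return 100 * IQ.cdf(iq)


def percentile_to_iq(percentile):
    """IQ score sitting at the given population percentile."""
    return IQ.inv_cdf(percentile / 100)


for label, iq in [("Harvard entering class, 1926", 117), ("Average Pennsylvania college, 1926", 107)]:
    print(f"{label}: IQ {iq} -> {iq_to_percentile(iq):.0f}th percentile")

for label, pct in [("Average Pennsylvania college, 1964", 89), ("Elite college freshmen, 1964", 99)]:
    print(f"{label}: {pct}th percentile -> IQ {percentile_to_iq(pct):.0f}")
```

Under this assumption an IQ of 117 lands at roughly the 87th to 88th percentile, and the 89th and 99th percentiles correspond to IQs of about 118 and 135.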

Charles Murray uses a measure called median overlap to quantify social stratification. Median overlap is the proportion of the lower-scoring group whose IQ scores matched or exceeded the median score of the higher-scoring group. Two identical groups would have a median overlap of 50%.

| Groups Being Compared | Median Overlap |
|---|---|
| High school graduates with college graduates | 7% |
| High school graduates with Ph.D.s, M.D.s, or LL.B.s | 1% |
| College graduates with Ph.D.s, M.D.s, and LL.B.s | 21% |
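Here is a minimal Python sketch of median overlap as defined above. The sample scores and the 22-point gap are hypothetical, and the second function adds an assumption the book's table does not depend on: that the lower group's scores are normally distributed with a standard deviation of 15.

```python
from statistics import NormalDist, median


def median_overlap(lower_group, higher_group):
    """Fraction of the lower-scoring group at or above the higher-scoring group's median."""
    cutoff = median(higher_group)
    return sum(score >= cutoff for score in lower_group) / len(lower_group)


def median_overlap_normal(mean_lower, mean_higher, sd_lower=15.0):
    """Same statistic assuming the lower group's scores are normal (so median = mean)."""
    return 1 - NormalDist(mean_lower, sd_lower).cdf(mean_higher)


same = [90, 100, 110, 120]
print(median_overlap(same, same))                # identical groups: 0.5
print(median_overlap_normal(100, 100))           # identical groups, normal model: 0.5
print(f"{median_overlap_normal(100, 122):.2f}")  # hypothetical 22-point gap
```

Under that normal assumption, a median overlap of about 7% (the high school vs. college graduate figure) corresponds to a gap of roughly one and a half standard deviations.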

College graduates are not representative of the population. If most of your social circle consists (or will consist) of college graduates, then your social circle is smarter than the population mean.

The national percentage of 18-year-olds with the ability to get a score of 700 or above on the SAT-Verbal test is in the vicinity of one in three hundred. Think about the consequences when about half of these students are going to universities in which 17 percent of their classmates also had SAT-Vs in the 700s and another 48 percent had scores in the 600s. It is difficult to exaggerate how different the elite college population is from the population at large—first in its level of intellectual talent, and correlatively in its outlook on society, politics, ethics, religion, and all the other domains in which intellectuals, especially intellectuals concentrated into communities, tend to develop their own conventional wisdoms.

Occupational Stratification

You can arrange jobs by their relative status. Job status tends to run in families. This could be because of social forces or it could be because of heritable g. We can test which hypothesis is true via an adoption study. A study in Denmark tracked several hundred men and women adopted before they were one year old. "In adulthood, they were compared with both their biological siblings and their adoptive siblings, the idea being to see whether common genes or common home life determined where they landed on the occupational ladder. The biologically related siblings resembled each other in job status, even though they grew up in different homes. And among them, the full siblings had more similar job status than the half siblings. Meanwhile, adoptive siblings were not significantly correlated with each other in job status."

High-status jobs have become much more cognitively demanding over the last hundred years. Charles Murray uses a bunch of data to prove this. I'll skip over his data because the claim is so obvious to anyone living in the Internet age. Even being a marketer is complicated these days.

Credentialism is a real thing. Could it be that IQ causes education, which causes high-status jobs, but cognitive ability doesn't actually increase job performance? Or does sheer intellectual horsepower have market value? We have data to answer this question.

The most comprehensive modern surveys of the use of tests for hiring, promotion, and licensing, in civilian, military, private, and government occupations, repeatedly point to three conclusions about worker performance, as follows.

1. Job training and job performance in many common occupations are well predicted by any broadly based test of intelligence, as compared to narrower tests more specifically targeted to the routines of the job. As a corollary: Narrower tests that predict well do so largely because they happen themselves to be correlated with tests of general cognitive ability.
2. Mental tests predict job performance largely via their loading on g.
3. The correlations between tested intelligence and job performance are higher than had been estimated prior to the 1980s. They are high enough to have economic consequences.

IQ tests frequently measure one's ability to solve abstract puzzles. Programming interview algorithm puzzles are tests of a person's abstract problem-solving ability. I wonder how much of the predictive power of Google's algorithm interviews comes from the g factor. Some of it must. The question is: How much? If the answer is "a lot" then these tests could be a de facto workaround for the 1971 Supreme Court case Griggs v. Duke Power Co., which found that IQ-based hiring constituted employment discrimination under disparate impact theory.

An applicant for a job as a mechanic should be judged on how well he does on a mechanical aptitude test while an applicant for a job as a clerk should be judged on tests measuring clerical skills, and so forth. So decreed the Supreme Court, and why not? In addition to the expert testimony before the Court favoring it, it seemed to make good common sense…. The problem is that common sense turned out to be wrong.

The best experiments compel lots of people to do things. The US military compels lots of people to do things. Thus, some of our best data on g's relationship to job performance comes from the military.

| Enlisted Military Skill Category | Percentage of Training Success Explained by g | Percentage of Training Success Explained by Everything Else |
|---|---|---|
| Nuclear weapons specialist | 77.3 | 0.8 |
| Air crew operations specialist | 69.7 | 1.8 |
| Weather specialist | 68.7 | 2.6 |
| Intelligence specialist | 66.7 | 7.0 |
| Fireman | 59.7 | 0.6 |
| Dental assistant | 55.2 | 1.0 |
| Security police | 53.6 | 1.4 |
| Vehicle maintenance | 49.3 | 7.7 |
| Maintenance | 28.4 | 2.7 |

"[T]he explanatory power of g was almost thirty times greater than of all other cognitive factors in ASVAB combined." In addition, the importance of g was greater for more complicated tasks. Other military studies find results similar to this one.

There's no reason to believe civilian jobs are any less dependent on g than military jobs. For cognitively demanding jobs like law, neurology and research in the hard sciences, we should expect the percentage of training success explained by g to be well over 70%. Similar results appear for civilian jobs.

If we measure civilian job performance instead of military training success, we get a smaller (but still large) impact of g. Note that the measures below probably overlap significantly. Part of college grades' predictive power comes from their being an imperfect measure of g.

| Predictor | Validity Predicting Job Performance Ratings |
|---|---|
| Cognitive test score | .53 |
| Biographical data | .37 |
| Reference checks | .26 |
| Education | .22 |
| Interview | .14 |
| College grades | .11 |
| Interest | .10 |
| Age | -.01 |
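The validities in this table are correlation coefficients. Squaring a correlation gives the share of variance in job performance ratings that the predictor accounts for on its own, which is comparable to the "percentage explained" figures in the military table. Here is a short Python sketch of that arithmetic using the table's values. Because the predictors overlap, these shares cannot simply be added together.

```python
# Validity coefficients (correlations with job performance ratings) from the table above.
validities = {
    "Cognitive test score": 0.53,
    "Biographical data": 0.37,
    "Reference checks": 0.26,
    "Education": 0.22,
    "Interview": 0.14,
    "College grades": 0.11,
    "Interest": 0.10,
    "Age": -0.01,
}

for predictor, r in validities.items():
    # r squared = share of variance in ratings accounted for by this predictor alone
    print(f"{predictor:<22} r = {r:+.2f}   variance explained = {r * r:.1%}")
```

A cognitive test with validity .53 accounts for about 28% of the variance in ratings, while an interview at .14 accounts for about 2%.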

Charles Murray's data shows that a secretary or a dentist who is one standard deviation better than average is worth a 40% premium in salary. Such jobs understate the impact of variation among workers. Jobs with leverage have a disproportionate impact on society. Anyone who has worked in a highly technical field with leverage (like software development, science or business management) knows that someone one standard deviation above average is worth much more than 40% more.

As technology advances, the number of highly technical jobs with leverage increases. This drives up the value of g, which increases income inequality.

Social Partitioning

We in the cognitive elite usually partition ourselves off into specialized neighborhoods. For example, I live in Seattle. Seattle is one of the most software-heavy cities in the world. The Seattle area is home to the headquarters of Microsoft and Amazon. You can barely throw a router without hitting a programmer. You'd expect high schools to be full of technical volunteers. But that's only in the rich neighborhoods. I, weirdly, live in a poor, dangerous[1] neighborhood where I volunteer as a coach for the local high school's robotics club. If I weren't around, there would be no engineers teaching or coaching at the high school. None of my friends live here. They all live in the rich, safe neighborhoods.

Heritability of Intelligence

The most modern study of identical twins reared in separate homes suggests a heritability for general intelligence of between .75 and .80, a value near the top of the range found in contemporary technical literature. Other direct estimates use data on ordinary siblings who were raised apart or on parents and their adopted-away children. Usually the heritability estimates from such data are lower but rarely below .4.

The heritability of intelligence combines with cognitive stratification to increase IQ variance. The average husband-wife IQ correlation is between .2 and .6. Whatever the number used to be, I expect it has increased in the 27 years since The Bell Curve was published. Technically speaking, elite graduates have always married each other. However, the concentration of cognitive ability among elites increases the genetic impact of this phenomenon.
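One way to see why assortative mating matters is to look at the average of two parents' scores (the "midparent" value). If each parent's IQ has standard deviation σ = 15 and spouses' scores correlate at r, the midparent value has standard deviation σ·√((1 + r)/2), so a higher spousal correlation spreads midparent values further apart. The Python sketch below checks that formula by simulation. It assumes normally distributed scores and deliberately ignores how much of the midparent value is actually passed on to children; the function names are hypothetical.

```python
import math
import random


def midparent_sd(r, sd=15.0):
    """Standard deviation of (mother + father) / 2 when spouses correlate at r."""
    return sd * math.sqrt((1 + r) / 2)


def simulated_midparent_sd(r, n=100_000, sd=15.0, seed=1):
    """Monte Carlo check: draw correlated spouse pairs and measure the spread of their average."""
    rng = random.Random(seed)
    mids = []
    for _ in range(n):
        a = rng.gauss(0, 1)
        b = r * a + math.sqrt(1 - r * r) * rng.gauss(0, 1)  # corr(a, b) = r
        mids.append(100 + sd * (a + b) / 2)
    mean = sum(mids) / n
    return math.sqrt(sum((m - mean) ** 2 for m in mids) / n)


for r in (0.0, 0.2, 0.6):  # 0.2 and 0.6 bracket the husband-wife range quoted above
    print(f"r = {r:.1f}: formula {midparent_sd(r):.2f}, simulated {simulated_midparent_sd(r):.2f}")
```

Going from r = 0.2 to r = 0.6 raises the midparent standard deviation from about 11.6 to about 13.4 IQ points in this toy model.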

Negative Effects of Low Intelligence

All the graphs in this section control for race by including only white people.

Poverty

Is poverty caused by IQ or by one's parents' social class? What answer would you bet money on?

Parental socioeconomic status matters, but its impact is small compared to IQ's.

The black lines intersect at an IQ of 130. I think that once you pass a high enough threshold of intelligence, school stops mattering because you can teach yourself things faster than schools can teach you. Credentials don't matter either because exceptional people are wasted in cookie-cutter roles.

High School Graduation

There was no IQ gap between high school dropouts and graduates in the first half of the 20th century, before graduating from high school became the norm. After it became the norm, dropouts tended to have low IQs.

| IQ | Percentage of Whites Who Did Not Graduate or Pass a High School Equivalency Exam |
|---|---|
| >125 | 0 |
| 110-125 | 0 (actually 0.4) |
| 90-110 | 6 |
| 75-90 | 35 |
| <75 | 55 |

In this case, IQ is even more predictive than parental socioeconomic status. However, for temporary dropouts, socioeconomic status matters a lot. (In terms of life outcomes, youths with a GED look more like dropouts than high school graduates.)

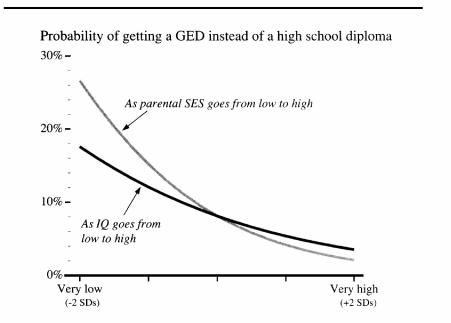

The image I (and Charles Murray) get is of dumb rich kids who get therapists, private tutors, special schools, the works. High school is easier to hack than college (and work, as we'll get to later). The following graph, once again, shows white youths only.

Labor Force Participation

Being smart causes you to work more. Being born rich causes you to work less.

Being smart reduces the likelihood of a work-inhibiting disability.

| IQ | No. of White Males per 1,000 Who Reported Being Prevented from Working by Health Problems | No. of White Males per 1,000 Who Reported Limits in Amount or Kind of Work Due to Health Problems |
|---|---|---|
| >125 | 0 | 13 |
| 110-125 | 5 | 21 |
| 90-110 | 5 | 37 |
| 75-90 | 36 | 45 |
| <75 | 78 | 62 |

Lower-intelligence jobs tend to involve physical objects which can injure you. However, this fails to account for the whole situation. "[G]iven that both men have blue-collar jobs, the man with an IQ of 85 has double the probability of a work disability of a man with an IQ of 115…the finding seems to be robust." It could be that dumb people are more likely to injure themselves, that they misrepresent their reasons for not working, or both.

Technically, unemployment is different from being out of the labor force. The unemployment data show the same pattern: in 1989, being smart was negatively correlated with being unemployed.

Parental socioeconomic status had no measurable effect on unemployment. All that money spent on buying a high school diploma does not transfer to increased employment status. The following graph is of white men.

Family

Young white women with lower IQ are much more likely to give birth to an illegitimate baby in absolute terms and relative to legitimate births. How much more?

| IQ | Percentage of Young White Women Who Have Given Birth to an Illegitimate Baby | Percentage of Births that are Illegitimate |
|---|---|---|
| >125 | 2 | 7 |
| 110-125 | 4 | 7 |
| 90-110 | 8 | 13 |
| 75-90 | 75-90 | 17 |
| <75 | 32 | 42 |

Not only are children of mothers in the top quartile of intelligence…more likely to be born within marriage, they are more likely to have been conceived within marriage (no shotgun wedding).

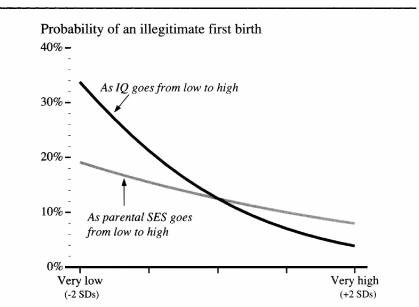

As usual, IQ outweighs parental socioeconomic status. The following graph is for white women.

Remember that IQ correlates with socioeconomic status. "High socioeconomic status offered weak protection against illegitimacy once IQ had been taken into account."

Welfare Dependency

Charles Murray gives a bunch of graphs and charts about how IQ affects welfare dependency. I bet you can guess what kind of a relationship they show.

Parenting

A low IQ [of the mother] is a major risk factor [for a low birth weight baby], whereas the mother's socioeconomic background is irrelevant.

Surprisingly to me, the mother's age at the birth of the child did not affect her chances of giving birth to a low-birth-weight baby. Poverty didn't matter either. I suspect this is because America has a calorie surplus. I predict poverty was a very important factor in extremely poor pre-industrial societies.

A mother's socioeconomic background does have a large effect (independent of the mother's IQ) on her child's chances of spending the first years of its life in poverty. This isn't to say IQ doesn't matter. It's just the first result in our entire analysis where IQ doesn't dominate all other factors.

A mother's IQ does have a big impact on the quality of her children's home life.

| Mother's IQ | Percentage of Children Growing Up in Homes in the Bottom Decile of the HOME Index |
|---|---|
| >125 | 0 |
| 110-125 | 2 |
| 90-110 | 6 |
| 75-90 | 11 |
| <75 | 24 |

The children of mothers with low IQs have worse temperaments (more difficulty and less friendliness), worse motor and social development, and more behavior problems. (There's a bump in some bad outcomes for the smartest mothers, but this might just be an artifact of the small sample size.) The mother's socioeconomic background has a large effect on children's developmental problems, though not quite as large as the mother's IQ.

If you want smart kids then a smart mother is way more important than the mother's socioeconomic background. By now, this should come as no surprise.

Crime

High IQ correlates with not getting involved with the criminal justice system. Move along.

Ethnicity and Cognition

Different ethnic groups vary on cognitive ability.

Jews—specifically, Ashkenazi Jews of European origins—test higher than any other ethnic group…. These tests results [sic] are matched by analyses of occupational and scientific attainment by Jews, which consistently show their disproportionate level of success, usually by orders of magnitude, in various inventories of scientific and artistic achievement.

"Do Asians Have Higher IQs than Whites? Probably yes, if Asian refers to the Japanese and Chinese (and perhaps also Koreans), whom we will refer to here as East Asians." Definitely yes if "Asian" refers to Chinese-Americans. This can be entirely explained by US immigration policy. It is hard to get into the USA if you are East Asian. The United States has discriminated against Asian immigrants for most of its history and continues to do so. The United States is a desirable place to live. If you're Asian and you want to get into the US, then it helps to be smart. It would be weird if Asian-Americans weren't smarter than other immigrants. ("Other immigrants" includes all non-Asian, non-Native Americans.) Since intelligence is significantly heritable and people tend to marry within their own ethnic groups (often because the alternative was illegal[2]), a founder effect can be expected to persist across the handful of generations the United States has existed for.

The Bell Curve is mostly about America. It's disconcerting to me when Murray suddenly compares American students to students from Japan and Hong Kong. When he says "black" he uses a sample of African-Americans (and not Africa-Africans) but when he says "Japanese" he uses a sample of Japan-Japanese (and not Japanese-Americans). When he says "Jews" he includes the whole global diaspora and not (I presume) Latino converts.

I think Charles Murray fails to realize that Asian-Americans are such a biased sample of Asians that the two must be separated when you're studying g. Fortunately, Asia-Asians are not a critical pillar of Murray's argument. Charles Murray tends to bucket Americans into black and white and sometimes Latino.

Black and White Americans

These differences are statistical. They apply to populations.

People frequently complain of IQ tests being biased. It is possible to determine whether a test is biased.

"If the SAT is biased against blacks, it will underpredict their college performance. If tests were biased in this way, blacks as a group would do better in college than the admissions office expected based on just their SATs." In either case "[a] test biased against blacks does not predict black performance in the real world in the same way that it predicts white performance in the real world. The evidence of bias is external in the sense that it shows up in differing validities for blacks and whites. External evidence of bias has been sought in hundreds of studies. It has been evaluated relative to performance in elementary school, in secondary school, in the university, in the armed forces, in unskilled and skilled jobs, in the professions. Overwhelmingly, the evidence is that the major standardized tests used to help make school and job decisions do not underpredict black performance, nor does the expert community find any other general or systematic difference in the predictive accuracy of tests for blacks and whites."
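The external check for bias described in this quote is usually called differential prediction: fit one regression of the outcome (say, college grades) on the test score for everyone, then see whether one group's actual outcomes sit systematically above the line. The Python sketch below runs that check on simulated data for two hypothetical groups, A and B. The group means, slope, noise level and size of the built-in bias are all made-up parameters, and real studies use more careful methods (for example, comparing separate regression slopes and intercepts per group).

```python
import random


def fit_line(xs, ys):
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx


def mean_residual(xs, ys, slope, intercept):
    """Average of actual minus predicted; positive means the test underpredicts the group."""
    return sum(y - (slope * x + intercept) for x, y in zip(xs, ys)) / len(xs)


def simulate_group(n, mean_score, hidden_bonus, rng):
    """Hypothetical group: outcome depends on the score plus a bonus the test fails to capture."""
    xs = [rng.gauss(mean_score, 15) for _ in range(n)]
    ys = [0.5 * x + hidden_bonus + rng.gauss(0, 10) for x in xs]
    return xs, ys


def underprediction(bias_against_b, seed=0):
    """Mean residual for group B under a single regression line fitted to both groups."""
    rng = random.Random(seed)
    xa, ya = simulate_group(2000, 100, 0.0, rng)
    xb, yb = simulate_group(2000, 85, bias_against_b, rng)
    slope, intercept = fit_line(xa + xb, ya + yb)
    return mean_residual(xb, yb, slope, intercept)


print(f"unbiased test: group B mean residual = {underprediction(0.0):+.2f}")
print(f"biased test:   group B mean residual = {underprediction(5.0):+.2f}")
```

In this toy setup the unbiased test leaves group B's mean residual near zero, while the version with a hidden 5-point bonus produces a clearly positive residual, which is the underprediction signature the quote describes.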

IQ tests often involve language. A Russia-Russian genius who does not speak English would fail an IQ test given in English. "For groups that have special language considerations—Latinos and American Indians, for example—some internal evidence of bias has been found, unless English is their native language." Native language is not an issue for African-Americans because African-Americans are native English speakers.

What about cultural knowledge? "The [black-white] difference is wider on items that appear to be culturally neutral than on items that appear to be culturally loaded. We italicise this point because it is both so well established empirically yet comes as such a surprise to most people who are new to this topic."

What about test-taking ability and motivation? We can test whether testing itself is behind a black-white difference by comparing standard IQ tests to tests of memorizing digits. Reciting digits backwards takes twice as much g as reciting them forward. This experiment controls for test-taking ability and motivation because the forward and backward recitations are given under identical conditions. The black-white difference is about twice as great for reciting digits backwards as for reciting them forwards.

Reaction time correlates strongly with g, but movement time is less correlated. Whites consistently beat blacks on reaction time even though black movement time is faster than white movement time.

Any explanation for the black-white IQ difference based on culture and society must explain the IQ difference, the digit recitation difference, the reaction time difference, the movement time similarity and the difference in every other cognitive measure of performance and achievement.

Lead in the water or epigenetic effects of slavery would constitute such an explanation. Such explanations would throw into doubt whether the difference is genetic but would also prove biological determinism.

What about socioeconomic status? The black-white IQ gap shrinks when socioeconomic status is controlled for. However, socioeconomic status is at least partially a result of cognitive ability. "In terms of the numbers, a reasonable rule of thumb is that controlling for socioeconomic status reduces the overall B/W difference by about a third."

We can test whether socioeconomic status causes the IQ difference by comparing blacks and whites of equal socioeconomic status. If the black-white IQ difference were caused by socioeconomic status, then blacks and whites of equal socioeconomic status would have similar IQs. This is not what we observe.

It might be that the black-white difference comes from a mix of socioeconomic status plus systemic racism.

Africa

Charles Murray's analysis of Africa-Africans bothers me for the same reason his analysis of Asians bothers me. In this case, he assumes African-Americans are representative of Africa-Africans. For instance, he discusses how difficult it is "to assemble data on the average African black" even though African-Americans are mostly from West Africa. Given pre-historical human migration patterns, it is my understanding that West Africans are more genetically distant from East Africans than white people are from Asians. If I am right about Africa-African diversity, then Africa-Africans are too broad a reference class. He should be comparing African-Americans to West Africans[3].

Charles Murray believes scholars are reluctant to discuss Africa-African IQ scores because they are so low. I think he means to imply that Africa-African and African-American IQs are genetically connected. I think such a juxtaposition undersells the Flynn Effect. Industrialization improves the kind of abstract reasoning measured by IQ tests. Fluid and crystallized intelligence have both increased in the rich world in the decades following WWII. The increase happened too fast for it to be because of evolution. It might be due to better health or it could be because our environment is more conducive to abstract thought. I suspect the Flynn Effect comes from a mix of both. The United States and Africa are on opposite ends of the prosperity spectrum. Charles Murray is careful to write "ethnicity" instead of "race", but his classification system is closer to how I think about race than how I think about ethnicity. African-Americans and Africa-Africans are of the same race but different ethnicities.

African blacks are, on average, substantially below African-Americans in intelligence scores. Psychometrically, there is little reason to think that these results mean anything different about cognitive functioning than they mean in non-African populations. For our purposes, the main point is that the hypothesis about the special circumstances of American blacks depressing their test scores is not substantiated by the African data.

I disagree with Charles Murray's logic here. Suppose (in contradiction to first-order genetic pre-history) that Africa-Africans and the African diaspora were genetically homogeneous. A difference in IQ between African-Americans and Africa-Africans would imply that which society you live in substantially influences IQ. If America were segregated in a way that kept African-Americans living in awful conditions, then we would expect African-Americans' IQs to be depressed. Jim Crow laws were enforced until 1965. Martin Luther King Jr. was shot in 1968, a mere 26 years before the publication of The Bell Curve. Blacks and whites continue to be de facto racially segregated today in 2021. Even if racism ended in 1965 (it didn't), 29 years is not enough time to completely erase the damage caused by centuries of slavery and Jim Crow.

Charles Murray does acknowledge the possible effect of systemic racism. "The legacy of historic racism may still be taking its toll on cognitive development, but we must allow the possibility that it has lessened, at least for new generations. This too might account for some narrowing of the black-white gap."

Black-White Trends

The black-white gap narrowed in the years leading up to the publication of The Bell Curve. This is exactly what we would expect to observe if IQ differences are caused by social conditions because racism has been decreasing over the decades.

Charles Murray acknowledges that rising standards of living increase the intelligence of the economically disadvantaged because improved nutrition, shelter and health care directly remove impediments to brain development. The biggest increase in black scores happened at the low end of the range. This is evidence that improved living conditions raised IQ, because the lowest-hanging fruit hangs from the bottom end of the socioeconomic ladder.

How much is genetic?

Just because something is heritable does not mean the observed differences between groups are genetic in origin. "This point is so basic, and so commonly misunderstood, that it deserves emphasis: That a trait is genetically transmitted in individuals does not mean that group differences in that trait are also genetic in origin." For example, being skinny can be caused by genetics or by liposuction. The fact that one population is fat and another is skinny does not mean that the difference was caused by genetics. It could just be that one group has better access to liposuction.
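The distinction between within-group and between-group heritability can be illustrated with a toy Python simulation. Both hypothetical groups draw genetic values from the same distribution, so by construction none of the gap between them is genetic; one group simply gets a uniform environmental penalty. Within each group, genes still explain most of the variance. The 80/20 variance split and the size of the penalty are arbitrary assumptions chosen for illustration, not estimates for any real population.

```python
import random


def variance(values):
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values) / len(values)


def simulate_group(n, environmental_shift, rng):
    """Phenotype = genetic value + individual environment + a shift shared by the whole group."""
    genes = [rng.gauss(0, 0.8 ** 0.5) for _ in range(n)]  # genetic variance 0.8
    phenotypes = [g + rng.gauss(0, 0.2 ** 0.5) + environmental_shift for g in genes]  # env. variance 0.2
    return genes, phenotypes


def within_group_heritability(genes, phenotypes):
    """Share of the group's phenotypic variance attributable to genetic variance."""
    return variance(genes) / variance(phenotypes)


rng = random.Random(42)
genes_1, pheno_1 = simulate_group(10_000, 0.0, rng)
genes_2, pheno_2 = simulate_group(10_000, -1.0, rng)  # same gene distribution, worse shared environment

genetic_gap = sum(genes_1) / len(genes_1) - sum(genes_2) / len(genes_2)
observed_gap = sum(pheno_1) / len(pheno_1) - sum(pheno_2) / len(pheno_2)

print(f"heritability within group 1: {within_group_heritability(genes_1, pheno_1):.2f}")
print(f"heritability within group 2: {within_group_heritability(genes_2, pheno_2):.2f}")
print(f"observed gap: {observed_gap:.2f}, of which genetic: {genetic_gap:.2f}")
```

This is the liposuction example in numerical form: high heritability inside each group is compatible with a between-group gap that is entirely environmental, so heritability alone cannot settle where a group difference comes from.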

As demonstrated earlier, socioeconomic factors do not influence IQ much. For the black-white difference to be explained by social factors, those factors would have to exclude socioeconomic status.

Recall further that the B/W difference (in standardized units) is smallest at the lowest socioeconomic levels. Why, if the B/W difference is entirely environmental, should the advantage of the "white" environment compared to the "black" be greater among the better-off and better-educated blacks and whites? We have not been able to think of a plausible reason. An appeal to the effects of racism to explain ethnic differences also requires explaining why environments poisoned by discrimination and racism for some other groups—against the Chinese or the Jews in some regions of America, for example—have left them with higher scores than the national average.

One plausible reason is that Chinese-Americans and Jews value academic success more strongly than whites and blacks do. African-Americans' African culture was systematically destroyed by slavery. They never got the academic cultural package. We could test the cultural values hypothesis by examining what happens when Chinese or Jewish kids are raised by white families and vice versa. The Bell Curve doesn't have this particular data but it does have white-black data. An examination of 100 adopted children of black, white and mixed racial ancestry found that "[t]he bottom line is that the gap between the adopted children with two black parents and the adopted children with two white parents was seventeen points, in line with the B/W difference customarily observed. Whatever the environmental impact may have been, it cannot have been large." This is evidence against the cultural transmission hypothesis, at least when comparing blacks and whites. Several other studies "tipped toward some sort of mixed gene-environment explanation of the B/W difference without saying how much of the difference is genetic and how much environmental…. It seems highly likely to us that both genes and the environment have something to do with racial differences. What might the mix be? We are resolutely agnostic on that issue; as far as we can determine, the evidence does not yet justify an estimate…. In any case, you are not going to learn tomorrow that all the cognitive differences between races are 100 percent genetic in origin, because the scientific state of knowledge, unfinished as it is, already gives ample evidence that environment is part of the story."

For Japanese living in Asia, a 1987 review of the literature demonstrated without much question that the verbal-visuospatial difference persists even in examinations that have been thoroughly adapted to the Japanese language and, indeed, in tests developed by the Japanese themselves. A study of a small sample of Korean infants adopted into white families in Belgium found the familiar elevated visuospatial scores.

The study of Korean infants seems like the right way to answer this question. The only issue is the small sample size.

What's especially interesting to me, personally, is that "East Asians living overseas score about the same or slightly lower than whites on verbal IQ and substantially higher on visuospatial IQ." This suggests to me that the stereotype of white managers supervising Asian engineers might reflect an actual difference in abilities. (If anyone has updated evidence which contradicts this, please put it in the comments.)

"This finding has an echo in the United States, where Asian-American students abound in engineering, in medical schools, and in graduate programs in the sciences, but are scarce in law schools and graduate programs in the humanities and the social sciences." I agree that unfamiliarity with English and American culture is not a plausible explanation for relatively subpar Asian-American linguistic performance. Asian-Americans born in the United States are fluent English speakers. However, I offer an alternative explanation. It could be that engineering, medicine and the sciences are simply more meritocratic than law, the humanities and the social sciences.

Interestingly, "American Indians and Inuit similarly score higher visuospatially than verbally; their ancestors migrated to the Americas from East Asia hundreds of centuries ago. The verbal-visuospatial discrepancy goes deeper than linguistic background." This surprised me since the Inuit are descended from the Aleut, who migrated to America around 10,000 years ago, well before East Asian civilization. It's not obvious to me what environmental pressures would encourage higher visuospatial ability for Arctic Native Americans compared to Europeans.

Charles Murray dismisses the hypothesis that East Asian culture improves East Asians' visuospatial abilities.

Why do visuospatial abilities develop more than verbal abilities in people of East Asian ancestry in Japan, Hong Kong, Taiwan, mainland China, and other Asian countries and in the United States and elsewhere, despite the differences among the cultures and languages in all of those countries? Any simple socioeconomic, cultural, or linguistic explanation is out of the question, given the diversity of living conditions, native languages, educational resources, and cultural practices experienced by Hong Kong Chinese, Japanese in Japan or the United States, Koreans in Korea or Belgium, and Inuit or American Indians.

I don't know what's going on with the Native Americans or exactly what "other Asian countries" includes (I'm betting it doesn't include Turks), but people from East Asia and the East Asian diaspora have cultures that consistently value book learning. Japan, Korea, Hong Kong, Taiwan and mainland China eat similar foods, write in similar ways (except, perhaps, for Korea), and are all cultural descendants of the Tang Dynasty.

If Native Americans have high IQ and high IQ improves life outcomes, then why aren't Native Americans overrepresented in the tech sector? I was so suspicious of the Native American connection that I looked up their IQ test scores. According to this website, Native American IQ is below average for the US and Canada. Native Americans seem to me like the odd ones out of this group. Sure, they might have relatively high visuospatial abilities compared to linguistic abilities. But Native American IQs are below East Asians'. I think Charles Murray is once again using too big a bucket. East Asians and Native Americans should not be lumped together.

We are not so rash as to assert that the environment or the culture is wholly irrelevant to the development of verbal and visuospatial abilities, but the common genetic history of racial East Asians and their North American or European descendants on the one hand, and the racial Europeans and their North American descendants, on the other, cannot plausibly be dismissed as irrelevant.

I think the common history of East Asians and Native Americans can (in this context[4]) be totally dismissed as irrelevant. Just look at alcohol tolerance. Native Americans were decimated when Europeans introduced alcohol. Meanwhile, East Asians have been drinking alcohol long enough to evolve the Asian flush. These populations have been separate for so long that one of them adapted to civilization in a way the other one didn't. Charles Murray proved that high visuospatial abilities help people rise to the top of a technically-advanced civilization. It would not surprise me if one group that has competed against itself inside the world's most technologically-advanced civilization for hundreds of generations had a higher visuospatial ability than another group which hasn't.

Race and Employment

Lots of (but not all) racial differences in life outcomes can be explained by controlling for IQ.

Projecting Demography

The higher the education, the fewer the babies.

Different immigrant populations have different IQs. Richard Lynn assigned "means of 105 to East Asians, 91 to Pacific populations, 84 to blacks, and 100 to whites. We assign 91 to Latinos. We know of no data for Middle East or South Asian populations that permit even a rough estimate." I like how the data here breaks Asians down into smaller groups. The average "works out to about 95", which seems like a bad omen, but immigrants tend to come from worse places than the United States. I expect the Flynn effect will bring their descendants' average up.

So what if the mean IQ is dropping by a point or two per generation? One reason to worry is that the drop may be enlarging ethnic differences in cognitive ability at a time when the nation badly needs narrowing differences. Another reason to worry is that when the mean shifts a little, the size of the tails of the distribution changes a lot.

While this makes sense on paper, we need to acknowledge a technical point about statistics. The Bell Curve is named after the Gaussian distribution. IQ scores follow a Gaussian distribution. But that doesn't necessarily reflect a natural phenomenon. IQ tests are mapped to a Gaussian distribution by fiat. Charles Murray never proved that IQ is actually Gaussian distributed. Many real-world phenomena are long-tailed. (Though biological phenomena like height are often Gaussian.) It is a perfectly reasonable prior that small changes to the mean could result in large effects at the tails. In Ashkenazi Jewish history, small changes to the mean do cause a massive impact on the tails. But I don't think the evidence presented in The Bell Curve is adequate to prove that g is Gaussian distributed.
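To make the tail argument concrete, here is a minimal Python sketch that takes the normality assumption at face value (the very assumption questioned above) and uses the standard library's `statistics.NormalDist`. The standard deviation of 15, the 2-point drop from 100 to 98, and the cutoffs of 115, 130 and 145 are illustrative choices, not figures taken from the book.

```python
from statistics import NormalDist

SD = 15  # conventional IQ-scale standard deviation (assumed for illustration)


def share_above(mean, threshold, sd=SD):
    """Fraction of a normal(mean, sd) population scoring above `threshold`."""
    return 1.0 - NormalDist(mu=mean, sigma=sd).cdf(threshold)


def tail_ratios(old_mean=100, new_mean=98, thresholds=(115, 130, 145)):
    """Ratio of the tail share after a mean shift to the share before it,
    computed separately for each cutoff."""
    return {
        t: share_above(new_mean, t) / share_above(old_mean, t)
        for t in thresholds
    }


if __name__ == "__main__":
    for t, r in tail_ratios().items():
        print(f"above {t}: {r:.2f}x the original share ({r - 1:+.0%})")
```

Under a normal model the relative loss grows the further out the cutoff sits, which is the mechanism the quoted passage relies on. If the underlying trait were distributed differently, the ratios would change, so this conclusion inherits whatever doubts apply to the Gaussian assumption.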

Raising Cognitive Ability

Recent studies have uncovered other salient facts about the way IQ scores depend on genes. They have found, for example, that the more general the measure of intelligence—the closer it is to g—the higher is the heritability. Also, the evidence seems to say that the heritability of IQ rises as one ages, all the way from early childhood to late adulthood…. Most of the traditional estimates of heritability have been based on youngsters, which means that they are likely to underestimate the role of genes later in life.

If better measures of g have higher heritability, that's a sign that the worse measures of g are easier to hack. If the heritability of IQ goes up as one ages, that suggests youth interventions are just gaming the metrics, especially when youth interventions frequently produce only short-term increases in measured IQ.

Once a society has provided basic schooling and eliminated the obvious things like malnutrition and lead in the water, the best way to increase g will be eugenics. (I am bearish on AI parenting.) I am not advocating a return to the unscientific policies of the 20th century. Forcibly imposing eugenic policies is horrific and counterproductive. Rather, I predict that once good genetic editing technology is available, parents will voluntarily choose the best genes for their children. There will[5] come a day when not giving your kids the best genes will be seen by civilized people as backwards and reactionary. The shift in societal ethics will happen no later than two generations (forty years) after the genetic editing of human zygotes becomes safe and affordable.

Besides cherry-picking our descendants' genotypes, is there anything else we can do? Improved nutrition definitely increases cognitive ability, but there are diminishing returns. Once you have adequate nutrition, getting even more nutrition doesn't do anything.

Having school (versus no school) does raise IQ. Thus, "some of the Flynn effect around the world is explained by the upward equalization of schooling, but a by-product is that schooling in and of itself no longer predicts adult intelligence as strongly…. The more uniform a country's schooling is, the more correlated the adult IQ is with childhood IQ." Increasing access to schooling increases the relative influence of natural differences on IQ, because when you eliminate societally-imposed inequality all that's left is natural variation.
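The reasoning in the last sentence can be illustrated with a deliberately simple additive model, which is an assumption of this sketch rather than anything the book states: a measured score is a "natural" component plus an independent schooling component, so the natural share of score variance is var_n / (var_n + var_s). The standard deviations below are hypothetical numbers chosen only to show the direction of the effect.

```python
def natural_share(natural_sd: float, schooling_sd: float) -> float:
    """Share of total score variance due to the natural component, assuming
    (hypothetically) score = natural + schooling with independent parts."""
    var_n = natural_sd ** 2
    var_s = schooling_sd ** 2
    return var_n / (var_n + var_s)


if __name__ == "__main__":
    # Hypothetical values: natural spread held fixed while schooling
    # becomes progressively more uniform (smaller schooling SD).
    for schooling_sd in (10.0, 5.0, 1.0):
        share = natural_share(natural_sd=10.0, schooling_sd=schooling_sd)
        print(f"schooling SD {schooling_sd:>4}: natural share {share:.2f}")
```

Holding the natural spread fixed, shrinking the schooling SD pushes the natural share toward 1, even though nothing about the natural component itself changed.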

A whole bunch of programs purport to increase IQ but none of them show a significant long-term effect after many years. It seems to me like they're just gaming the short-term metrics. "An inexpensive, reliable method of raising IQ is not available."

Affirmative Action

College Affirmative Action

I'm not going to dive deep into Charles Murray's thoughts on affirmative action because they're incontrovertible. Affirmative action in college admissions prioritizes affluent blacks over disadvantaged whites. It's also anti-Asian.

The edge given to minority applicants to college and graduate school is not a nod in their favor in the case of a close call but an extremely large advantage that puts black and Latino candidates in a separate admissions competition. On elite campuses, the average black freshman is in the region of the 10th to 15th percentile of the distribution of cognitive ability among white freshmen. Nationwide, the gap seems to be at least that large, perhaps larger. The gap does not diminish in graduate school. If anything, it may be larger.

In the world of college admissions, Asians are a conspicuously unprotected minority. At the elite schools, they suffer a modest penalty, with the average Asian freshman being at about the 60th percentile of the white cognitive ability distribution. Our data from state universities are too sparse to draw conclusions. In all of the available cases, the difference between white and Asian distributions is small (either plus or minus) compared to the large differences separating blacks and Latinos from whites.

The edge given to minority candidates could be more easily defended if the competition were between disadvantaged minority youths and privileged white youths. But nearly as large a cognitive difference separates disadvantaged black freshmen from disadvantaged white freshmen. Still more difficult to defend, blacks from affluent socioeconomic backgrounds are given a substantial edge over disadvantaged whites.

Racist admissions harm smart blacks and Latinos.

In society at large, a college degree does not have the same meaning for a minority graduate and a white one, with consequences that reverberate in the workplace and continue throughout life.

Workplace Affirmative Action

[A]fter controlling for IQ, it is hard to demonstrate that the United States still suffers from a major problem of racial discrimination in occupations and pay.

Conclusion

Charles Murray ends with a chapter on where we're going, which he later followed up with an entire book on class stratification among white Americans.

What worries us first about the emerging cognitive elite is its coalescence into a class that views American society increasingly through a lens of its own.

⋮

The problem is not simply that smart people rise to the top more efficiently these days. If the only quality that CEOs of major corporations and movie directors and the White House inner circle had in common were their raw intelligence, things would not be so much different now than they have always been, for to some degree the most successful have always been drawn disproportionately from the most intelligent. But the invisible migration of the twentieth century has done much more than let the most intellectually able succeed more easily. It has also segregated them and socialized them. The members of the cognitive elite are likely to have gone to the same kinds of schools, live in similar neighborhoods, go to the same kinds of theaters and restaurants, read the same magazines and newspapers, watch the same television programs, even drive the same makes of cars.

They also tend to be ignorant of the same things.

I was robbed at gunpoint last weekend.

Interracial marriage was illegal in nearly every state before 1888. It remained illegal in 15 states until 1967, when the Supreme Court struck those laws down in Loving v. Virginia.

Unless Charles Murray believes that forces outlined in Jared Diamond's Guns, Germs and Steel (which, ironically, was written in opposition to race-based heritable theories of achievement differences) caused Eurasians to evolve higher g than their African forebears. While writing this footnote, I realized that the hypothesis is worth considering. Rice-based peoples evolved alcohol intolerance. Indian, Iraqi, Chinese and Japanese men evolved small penises. Software advances faster than hardware. It would be weird if civilization didn't cause cognitive adaptations too. I want to predict that cognitive adaptations happen faster than physiological adaptations, but I don't know how they can be compared.

In the context of Jared Diamond's Guns, Germs, and Steel, Native Americans' sister relationship to East Asians does matter.

As usual, this prediction is conditional on neither the singularity nor a civilizational collapse occurring.

134 comments

comment by JenniferRM · 2021-11-02T17:01:39.313Z

I read the book years ago "to find out what all the fuss was about" and I was surprised to find that the book was only about white America for the most part.

After thinking about it, my opinion was that Murray should have left out the one chapter about race, because that discussion consumed all the oxygen, and because the thing I was very surprised by, which seemed like a big deal, which potentially could be changed via policy, and which thus probably deserved most of the oxygen, was the story where:

the invisible migration of the twentieth century has done much more than let the most intellectually able succeed more easily. It has also segregated them and socialized them.

The story I remember from the book was that college entrance processes had become a sieve that retained high IQ whites while letting low IQ whites pass through and fall away.

Then there are low-IQ societies where an underclass lives with nearly no opportunity to see role models doing similar things in substantially more clever ways.

My memory is that the book focused quite a bit on how this is not how college used to work in the 1930s or so, and that it was at least partly consciously set up through the adoption of standardized testing like the SAT and ACT as a filter to use government subsidies to pull people out of their communities and get them into college in the period from 1950 to 1980 or so.

Prior to this, the primary determinant of college entry was parental SES and wealth, as the "economic winners with mediocre children" tried to pass on their social position to their children via every possible hack they could cleverly think to try.

(At a local personal level, I admire this cleverness, put in service first to their own children, where it properly belongs, but I worry that they had no theory of a larger society, and how that might be structured for the common good, and I fear that their local virtue was tragically and naively magnified into the larger structure of society to create a systematic class benefit that is not healthy for the whole of society, or even for the "so-called winners" of the dynamic...)

My memory of Murray's book is that he pointed out that if you go back to the 1940s, and look at the IQ distribution of the average carpenter, you'd find a genius here or there, and these were often the carpenters who the other carpenters looked up to, and learned carpentry tricks from...

...but now, since nearly all people have taken the SAT or ACT in high school, the smart "potential carpenters" all get snatched up and taken away from the community that raised them. This levels out the carpentry IQ distribution, by putting a ceiling on it, basically.

If you think about US immigration policy, "causing brain drain from other countries" is a built in part of the design. (Hence student visas for example.)

If this is helpful for the US, then it seems reasonable that it would be harmful to the other countries...

...but international policy is plausibly a domain where altruism should have less sway, unless mechanisms to ensure reciprocity exist...

...and once you have mechanistically ensured reciprocity are you even actually in different legal countries anymore? It is almost a tautology then, that "altruism 'should' be less of a factor for an agent in a domain where reciprocal altruism can't be enforced".

So while I can see how "brain drain vs other countries" makes some sense as a semi-properly-selfish foreign policy (until treaties equalize things perhaps) it also makes sense to me that enacting a policy of subsidized brain drain on "normal america" by "the parts of america in small urban bubbles proximate to competitive universities" seems like... sociologically bad?

So it could be that domestic brain drain is maybe kind of evil? Also, if it is evil then the beneficiaries might have some incentives to try to deny it is happening by denying that IQ is even real?

Then it becomes interesting to notice that domestic neighborhood level brain drain could potentially be stopped by changing laws.

I think Murray never called for this because there wasn't strong data to back it up, but following the logic to the maximally likely model of the world, then thinking about how to get "more of what most people in America" (probably) want based on that model (like a live player would)...

...the thing I found myself believing at the end of the book is that The Invisible Migration Should Be Stopped.

The natural way to do this doesn't even seem that complicated, and it might even work, and all it seems like you'd have to do is:

(1) make it illegal for universities to use IQ tests (so they can go back to being a way for abnormally successful rich parents to try to transmit their personal success to their mediocre children who have regressed to the mean) but

(2) make it legal for businesses to use IQ tests directly, and maybe even

(3) tax businesses for hogging up all the smart people, if they try to brain drain into their own firm?

If smart people were intentionally "spread around" (instead of "bunched up"), I think a lot fewer of them would be walking around worried about everything... I think they would feel less pinched and scared, and less "strongly competed with on all sides".

Also, they might demand a more geographically even distribution of high quality government services?

And hopefully, over time, they would be less generally insane, because maybe the insanity comes from being forced into brutal "stacked ranking" competition with so many other geniuses, so that their oligarchic survival depends on inventing fake (and thus arbitrarily controllable) reasons to fire co-workers?

Then... if this worked... maybe they would be more able to relax and focus on teaching and play?

And I think this would be good for the more normal people who (if the smarties were more spread out) would have better role models for the propagation of a more adaptively functional culture throughout society.

Relevantly, as a tendency-demonstrating exceptional case, presumably caused by unusual local factors:

I, weirdly, live in a poor, dangerous[1] neighborhood where I volunteer as a coach for the local high school's robotics club. If I wasn't around there would be no engineers teaching or coaching at the high school.

Nice! I admire your willingness and capacity to help others who are local to you <3

[1] I was robbed at gunpoint last weekend.

You have my sympathy. I hope you are personally OK. Also, I hope, for the sake of that whole neighborhood, that the criminal is swiftly captured and justly punished. I fear there is little I can do to help you or your neighborhood from my own distant location, but if you think of something, please let me know.

↑ comment by cousin_it · 2021-11-03T07:59:20.358Z

(3) tax businesses for hogging up all the smart people, if they try to brain drain into their own firm?

Due to tax incidence, that's the same as taxing smart people for getting together. I don't like that for two reasons. First, people should be free to get together. Second, the freedom of smart people to get together could be responsible for large economic gains, so we should be careful about messing with it.

↑ comment by Said Achmiz (SaidAchmiz) · 2021-11-02T20:21:04.664Z

See also: “The Problem Isn’t the ‘Merit,’ It’s the ‘Ocracy’” (The Scholar’s Stage).

↑ comment by lsusr · 2021-11-05T01:25:27.664Z

You have my sympathy. I hope you are personally OK. Also, I hope, for the sake of that whole neighborhood, that the criminal is swiftly captured and justly punished. I fear there is little I can do to help you or your neighborhood from my own distant location, but if you think of something, please let me know.

I'm totally unharmed. I didn't even lose my phone. There is absolutely nothing you can do, but I appreciate the offer and the well wishes.

↑ comment by JenniferRM · 2021-11-05T16:50:41.392Z

I'm glad you are unharmed and that my well wishes were welcome :-)

comment by Zack_M_Davis · 2021-11-03T02:48:55.693Z

What's with the neglect of Richard J. Herrnstein?! His name actually comes first on the cover!

↑ comment by lsusr · 2021-11-05T01:29:38.431Z

In retrospect, I wish I had titled this [Book Review] "The Bell Curve" by Richard Herrnstein instead. That would have been funny.

I have read two other books by Charles Murray and zero other books by Richard Herrnstein. In my head, I think of all of them as "Charles Murray books", which is unfair to Richard Herrnstein.

↑ comment by Ben Pace (Benito) · 2021-11-05T02:13:34.847Z

+1 it would have been funny, especially if you'd opened by lampshading it.

comment by PeterMcCluskey · 2021-11-03T19:59:09.941Z

I mostly agree with this review, but it endorses some rather poor parts of the book.

Properly administered IQ tests are not demonstrably biased against social, economic, ethnic, or racial groups. ... Charles Murray doesn’t bother proving the above points. These facts are well established among scientists.

Cultural neutrality is not well established. The Bell Curve's claims here ought to be rephrased as something more like "the cultural biases of IQ tests are equivalent to the biases that 20th century academia promoted". I've written about this here and here.

the gap between the adopted children with two black parents and the adopted children with two white parents was seventeen points, in line with the B/W difference customarily observed. Whatever the environmental impact may have been, it cannot have been large.

This seems to assume that parental impact constitutes most of environmental impact. Books such as The Nurture Assumption and WEIRDest People have convinced me that this assumption is way off. The Bell Curve has a section on malparenting that seemed plausible to me at the time it was written, but which now looks pretty misguided (in much the same way as mainstream social science was/is misguided).

comment by Rafael Harth (sil-ver) · 2021-11-02T20:59:16.792Z

Imagine a world where having [a post mentioning the bell curve] visible on the frontpage runs a risk of destroying a lot of value. This could be through any number of mechanisms like

- The site is discussed somewhere, someone claims that it's a home for racism and points to this post as evidence. [Someone who in another universe would have become a valuable contributor to LW] sees this (but doesn't read the post) and decides not to check LW out.

- A woke and EA-aligned person gets wind of it and henceforth thinks all x-risk related causes are unworthy of support

- Someone links the article from somewhere, it gets posted on a far-right Reddit board, a bunch of people make accounts on LessWrong to make dumb comments, someone from the NYT sees it and writes a hit piece. By this time all of the dumb comments are downvoted into invisibility (and none of them ever had high karma to begin with), but the NYT reporter just deals with this by writing that the mods had to step in and censor the most outrageous comments or something.

Question: If you think this is not worth worrying about -- why? What do you know, and how do you think you know it? And in what way would a world-where-it-is-worth-worrying-about look different?

To avoid repeating arguments, there have been discussions similar to this before. Here are the arguments that I remember (I'm sure this is not exhaustive).

Pro: Not allowing such posts poisons our epistemic discourse; saying 'let's be systematically correct about everything but X' is a significantly worse algorithm than 'let's be systematically correct', and this can have wide-ranging effects. (Zack_M_Davis strongly argued for this point, e.g. here.) (This is also on one of the posts where the discussion has happened before, in this case because I made a comment arguing the post shouldn't be on LW.)

- Contra: But we could take it offline. (Evan Hubinger, e.g., here.)

- Contra: I've thought about this a lot since the discussion happened, and I increasingly just don't buy that the negative effects are real. Especially not in this case, which seems more clear-cut than the dating post. The Bell Curve seems to be just about the single most controversial book in the world for a good chunk of people, just about any other book would be less of an issue. I assume the argument is that censorship is not proportional to the amount that is censored, but I don't understand the mechanism here. How does this hurt discourse?

Pro: LessWrong obviously isn't about this kind of stuff and anyone who takes an honest look at the site will notice that immediately. (Ben Pace argued this here.) He also said that he's "pretty pro just fighting those fights, rather than giving in and letting people on the internet who use the representativeness heuristic to attack people decide what we get to talk about."

- I'm unconvinced by this for the same reasons I was then. I agree with the claim, but I don't think assuming people are reasonable is realistic, and I don't understand why we should just fight those fights. Where's the cost-benefit calculation?

- Follow the above links for arguments from Ben against the above.

Pro: LessWrong will get politicized anyway and we should start to practice. (Wei Dai, e.g. here.)

- This makes a lot more sense to me, but starting with a post on the Bell Curve is not the right way to do it. I would welcome some kind of actual plan for how this can be done from the moderators.

Until then, my position is that this post shouldn't be on LessWrong. I've strong-downvoted it and would ultra-strong-downvote it if I could. However, I do think I'm open to evidence for the contrary. I would much welcome some kind of a cost-benefit calculation that concludes that this is a good idea. If it's worth doing, it's worth doing with made-up statistics. If I were to do such a calculation, it would get a bunch of negative numbers for things like what I mentioned at the top of this comment, and almost nothing positive because the benefit of allowing this seems genuinely negligible to me.

↑ comment by Ruby · 2021-11-03T03:46:06.505Z

In my capacity as moderator, I saw this post this morning and decided to leave it posted (albeit as Personal blog with reduced visibility).

I think limiting the scope of what can be discussed is costly for our ability to think about the world and figure out what's true (a project that is overall essential to AGI outcomes, I believe) and therefore I want to minimize such limitations. That said, there are conversations that wouldn't be worth having on LessWrong, topics that I expect would attract attention that just isn't worth it; those I would block. However, this post didn't feel like where I wanted to draw the line. Blocking this post feels like it would be cutting out too much for the sake of safety and giving the fear of adversaries too much control over us and our inquiries. I liked how this post gave me a great summary of controversial material so that I now know what the backlash was in response to. I can imagine other posts where I feel differently (in fact, there was a recent post I told an author it might be better to leave off the site, though they missed my message and posted anyway, which ended up being fine).

It's not easy to articulate where I think the line is or why this post seemed on the left of it, but it was a deliberate judgment call. I appreciate others speaking up with their concerns and their own judgment calls. If anyone ever wants to bring these up with me directly (not to say that comment threads aren't okay), feel free to DM me or email me: ruby@lesswrong.com

To address something that was mentioned, I expect to change my response in the face of posting trends, if they seemed fraught. There are a number of measures we could potentially take then.

↑ comment by Rafael Harth (sil-ver) · 2021-11-03T11:13:43.786Z

Thanks for being transparent. I'm very happy to see that I was wrong in saying no-one else is taking it seriously. (I didn't notice that the post wasn't on the frontpage, which I think proves that you did take it seriously.)

I think limiting the scope of what can be discussed is costly for our ability to think about the world and figure out what's true (a project that is overall essential to AGI outcomes, I believe) and therefore I want to minimize such limitations.

I don't understand this concern (which I classify as the same kind of thing voiced by Zack many times and AAB just a few comments up). We've had a norm against discussing politics since before LessWrong 2.0, which doesn't seem to have had any noticeable negative effects on our ability to discuss other topics. I think what I'm advocating for is to extend this norm by a pretty moderate amount? Like, the set of interesting topics in politics seems to me to be much larger than the set of interesting [topics with the property that they risk significant backlash from people who are concerned about social justice]. (I do see how this post is useful, but the bell curve is literally in a class that contains a single element. There seem to be < 5 posts per year which I don't want to have on LW for these kinds of reasons, and most of them are less useful than this one.) My gears-level prediction for how much that would degrade discussion in other areas is basically zero, but at this point I must be missing something?

A difference I can see is that disallowing this post would be done explicitly out of fear of backlash whereas the norm against politics is because politics is the mind killer, but I guess I don't see why that makes a difference (and doesn't the mind killer argument extend to these kinds of topics anyway?)

It's not easy to articulate where I think the line is or why this post seemed on the left of it, but it was a deliberate judgment call. I appreciate others speaking up with their concerns and their own judgment calls. If anyone ever wants to bring these up with me directly (not to say that comment threads aren't okay), feel free to DM me or email me: ruby@lesswrong.com

I do think that if we order all posts by where they appear on this spectrum, I would put this farther to the right than any other post I remember, so we genuinely seem to differ in our judgment here.

I echo anon03 in that the title is extremely provocative, but minus the claim that this is only a descriptive statement. I think it's obviously intentionally provocative (though I will take this back if the author says otherwise), given that the author wrote this four days ago:

My favorite thing about living in the 21ˢᵗ century is that nobody can stop me from publishing whatever I want. [...] People tell me they're worried of being cancelled by woke culture. I think this is just a convenient excuse for laziness and cowardice. What are you afraid of saying? [...] Are you afraid to say that there are significant heritable intelligence disparities between ethnic groups? It's the obvious conclusion if you think critically about US immigration policy.

I think condemning TBC has become one of the most widely agreed-on loyalty tests for many people who care about social justice. It seems clear to me that lsusr intended this post to have symbolic value, so that being provocative was an intended property. If their goal had been to review this book because it's very useful while minimizing risk, a very effective way to do this would have been to exclude the name from the title.

↑ comment by Vaniver · 2021-11-03T19:40:59.112Z

Elsewhere you write (and also ask to consolidate, so I'm responding here):

The main disagreement seems to come down to how much we would give up when disallowing posts like this. My gears model still says 'almost nothing' since all it would take is to extend the norm "let's not talk about politics" to "let's not talk about politics and extremely sensitive social-justice adjacent issues", and I feel like that would extend the set of interesting taboo topics by something like 10%.

I think I used to endorse a model like this much more than I do now. A particular thing that I found sort of radicalizing was the "sexual preference" moment, in which a phrase that I had personally used and wouldn't have associated with malice was overnight retconned to be a sign of bigotry, as far as I can tell primarily to score points during the nomination hearings for Amy Coney Barrett. (I don't know anything special about Barrett's legal decisions or whether or not she's a bigot; I also think that sexual orientation isn't a choice for basically anyone at the moment; I also don't think 'preference' implies that it was a choice, any more than my 'flavor preferences' are my choice instead of being an uncontrollable fact about me.)

Supposing we agree that the taboo only covers ~10% more topics in 2020, I'm not sure I expect it will only cover 10% more topics in 2025, or 2030, or so on? And so you need to make a pitch not just "this pays for itself now" but instead something like "this will pay for itself for the whole trajectory that we care about, or it will be obvious when we should change our policy and it no longer pays for itself."

↑ comment by DirectedEvolution (AllAmericanBreakfast) · 2021-11-04T04:28:59.613Z

This is a helpful addendum. I didn't want to bust out the slippery slope argument because I didn't have clarity on the gears-level mechanism. But in this case, we seem to have a ratchet in which X is deemed newly offensive, and a lot of attention is focused on just this particular word or phrase X. Because "it's just this one word," resisting the offensive-ization is made to seem petty: wouldn't it be such a small thing to give up, in exchange for inflicting a whole lot less suffering on others?

Next week it'll be some other X though, and the only way this ends is if you can re-establish some sort of Schelling Fence of free discourse and resist any further calls to expand censorship, even if they're small and have good reasons to back them up.

Someone who disagrees with me might say that what's in fact happening is an increase in knowledge and an improvement in culture, reflected in language. In the same way that I expect to routinely update my picture of the world when I read the newspaper, why shouldn't I expect to routinely update my language to reflect evolving cultural understandings of how to treat other people well?

My response to this objection would be that, in much the same way as phrases like "sexual preference" can be seen as offensive for their implications, or a book can be objected to for its symbolism, mild forms of censorship or "updates" in speech codes can provoke anxiety, induce fear, and restrain thought. This may not be their intention, but it is their effect, at least at times and in the present cultural climate.

So a standard of free discourse and a Schelling Fence against expansion of censorship is justified not (just) to avoid a slippery slope of ever-expanding censorship, or to attract people with certain needs or to establish a pipeline into certain roles or jobs. Its purpose is also to create a space in which we have declared that we will strive to be less timid, not just less wrong.

We might not always prioritize or succeed in that goal, but establishing that this is a space where we are giving ourselves permission to try is a feature of explicit anti-censorship norms.

Prioritizing freedom of thought and lessening timidity isn't always the right goal. Sometimes, inclusivity, warmth, and a sense of agreeableness and safety is the right way to organize certain spaces. Different cultural moments, or institutions, might need marginally more safe spaces. Sometimes, though, they need more risky spaces. My observation tells me that our culture is currently in need of marginally more risky spaces, even if the number of safe spaces remains the same. A way to protect LW's status as a risky space is to protect our anti-censorship norms, and sometimes to exercise our privilege to post risky material such as this post.

↑ comment by steven0461 · 2021-11-04T05:45:19.286Z

My observation tells me that our culture is currently in need of marginally more risky spaces, even if the number of safe spaces remains the same.

Our culture is desperately in need of spaces that are correct about the most important technical issues, and insisting that the few such spaces that exist have to also become politically risky spaces jeopardizes their ability to function for no good reason given that the internet lets you build as many separate spaces as you want elsewhere.

↑ comment by DirectedEvolution (AllAmericanBreakfast) · 2021-11-04T05:59:38.218Z

I’m going to be a little nitpicky here. LW is not “becoming” a politically risky space; it already is one, and has been for a long time. There are several good reasons for this, which I and others have discussed elsewhere here. They may not be persuasive to you, and that’s OK, but they do exist as reasons. Finally, the internet may let you build a separate forum elsewhere and try to attract participants, but that is a non-trivial ask.

My position is that accepting intellectual risk is part and parcel of creating an intellectual environment capable of maintaining the epistemic rigor that we both think is necessary.

It is you, and others here, who are advocating a change of the status quo to create a bigger wall between x-risk topics and political controversy. I think that this would harm the goal of preventing x-risk, on current margins, as I’ve argued elsewhere here. We both have our reasons, and I’ve written down the sort of evidence that would cause me to change my point of view.

Fortunately, I enjoy the privilege of being the winner by default in this contest, since the site’s current norms already accord with my beliefs and preferences. So I don’t feel the need to gather evidence to persuade you of my position, assuming you don’t find my arguments here compelling. However, if you do choose to make the effort to gather some of the evidence I’ve elsewhere outlined, I not only would eagerly read it, but would feel personally grateful to you for making the effort. I think those efforts would be valuable for the health of this website and also for mitigating X-risk. However, they would be time-consuming, effortful, and may not pay off in the end.

↑ comment by Vaniver · 2021-11-04T15:41:39.462Z

Our culture is desperately in need of spaces that are correct about the most important technical issues

I also care a lot about this; I think there are three important things to track.

First is that people might have reputations to protect or purity to maintain, and so want to be careful about what they associate with. (This is one of the reasons behind the separate Alignment Forum URL; users who wouldn't want to post something to Less Wrong can post someplace classier.)

Second is that people might not be willing to pay costs to follow taboos. The more politically safe a space is, the less people like Robin Hanson will want to be there, because many of their ideas are easier to think of if you're not spending any of your attention on political safety.

Third is that the core topics you care about might, at some point, become political. (Certainly AI alignment was 'political' for many years before it became mainstream, and will become political again as soon as it stops being mainstream, or if it becomes partisan.)

The first is one of the reasons why LW isn't a free speech absolutist site, even though with a fixed population of posters that would probably help us be more correct. But the second and third are why LW isn't a zero-risk space either.

↑ comment by steven0461 · 2021-11-04T21:22:41.319Z

Some more points I want to make:

- I don't care about moderation decisions for this particular post, I'm just dismayed by how eager LessWrongers seem to be to rationalize shooting themselves in the foot, which is also my foot and humanity's foot, for the short term satisfaction of getting to think of themselves as aligned with the forces of truth in a falsely constructed dichotomy against the forces of falsehood.

- On any sufficiently controversial subject, responsible members of groups with vulnerable reputations will censor themselves if they have sufficiently unpopular views, which makes discussions on sufficiently controversial subjects within such groups a sham. The rationalist community should oppose shams instead of encouraging them.

- Whether political pressure leaks into technical subjects mostly depends on people's meta-level recognition that inferences subject to political pressure are unreliable, and hosting sham discussions makes this recognition harder.

- The rationalist community should avoid causing people to think irrationally, and a very frequent type of irrational thinking (even among otherwise very smart people) is "this is on the same website as something offensive, so I'm not going to listen to it". "Let's keep putting important things on the same website as unimportant and offensive things until they learn" is not a strategy that I expect to work here.

- It would be really nice to be able to stand up to left wing political entryism, and the only principled way to do this is to be very conscientious about standing up to right wing political entryism, where in this case "right wing" means any politics sufficiently offensive to the left wing, regardless of whether it thinks of itself as right wing.

I'm not as confident about these conclusions as it sounds, but my lack of confidence comes from seeing that people whose judgment I trust disagree, and it does not come from the arguments that have been given, which have not seemed to me to be good.

↑ comment by jessicata (jessica.liu.taylor) · 2021-11-04T23:29:10.058Z

It would be really nice to be able to stand up to left wing political entryism, and the only principled way to do this is to be very conscientious about standing up to right wing political entryism, where in this case “right wing” means any politics sufficiently offensive to the left wing, regardless of whether it thinks of itself as right wing.

"Stand up to X by not doing anything X would be offended by" is obviously an unworkable strategy: it takes a negotiating stance that is maximally yielding in the ultimatum game, so one should expect to receive as little surplus utility as possible in negotiation.

(Not doing anything X would be offended by is generally a strategy for working with X, not standing up to X; it could work if interests are aligned enough that it isn't necessary to demand much in negotiation. But given your concern about "entryism" that doesn't seem like the situation you think you're in.)

↑ comment by gjm · 2021-11-05T00:17:33.438Z

steven0461 isn't proposing standing up to X by not doing things that would offend X.

He is proposing standing up to the right by not doing things that would offend the left, and standing up to the left by not doing things that would offend the right. Avoiding posts like the OP here is intended to be an example of the former, which (steven0461 suggests) has value not only for its own sake but also because it lets us also stand up to the left by avoiding things that offend the right, without being hypocrites.