OpenAI slashes AI model safety testing time, FT reports. This is consistent with lots of past evidence about OpenAI's evals for dangerous capabilities being rushed, being done on weak checkpoints, and having worse elicitation than OpenAI has committed to.

This is bad because OpenAI is breaking its commitments (and isn't taking safety stuff seriously and is being deceptive about its practices). It's also kinda bad in terms of misuse risk, since OpenAI might fail to notice that its models have dangerous capabilities. I'm not saying OpenAI should delay deployments for evals; there may be strategies that are better (similar misuse-risk-reduction with less cost-to-the-company) than detailed evals for dangerous capabilities before external deployment, where you generally do slow/expensive evals after your model is done (even if you want to deploy externally before finishing evals) and have a safety buffer and increase the sensitivity of your filters early in deployment (when you're less certain about risk). But OpenAI isn't doing that; it's just doing a bad job of the "evals before external deployment" plan.

(Regardless, maybe short-term misuse isn't so scary, and maybe short-term misuse risk comes more from open-weights or stolen models than from models that can be undeployed/mitigated if misuse risks appear during deployment. And insofar as you're more worried about risks from internal deployment, maybe you should focus on evals and mitigations relevant to those threats rather than external deployment. (OpenAI's doing even worse on risks from internal deployment!))

tl;dr: OpenAI is doing risk assessment poorly[1] but maybe "do detailed evals for dangerous capabilities before external deployment" isn't a great ask.

- ^

But similar to DeepMind and Anthropic, and those three are better than any other AI companies

I think this isn't taking powerful AI seriously. I think the quotes below are quite unreasonable, and only ~half of the research agenda is plausibly important given that there will be superintelligence. So I'm pessimistic about this agenda/project relative to, say, the Forethought agenda.

AGI could lead to massive labor displacement, as studies estimate that between 30% - 47% of jobs could be directly replaceable by AI systems. . . .

AGI could lead to stagnating or falling wages for the majority of workers if AI technology replaces people faster than it creates new jobs.

We could see an increase of downward social mobility for workers, as traditionally “high-skilled” service jobs become cheaply performed by AI, but manual labor remains difficult to automate due to the marginal costs of deploying robotics. These economic pressures could reduce the bargaining power of workers, potentially forcing more people towards gig economy roles or less desirable (e.g. physically demanding) jobs.

Simultaneously, upward social mobility could decline dramatically as ambitious individuals from lower-class backgrounds may lose their competitive intellectual advantages to AGI systems. Fewer entry-level skilled jobs and the reduced comparative value of intelligence could reduce pathways to success – accelerating the hollowing out of the middle class.

If AGI systems are developed, evidence across the board points towards the conclusion that the majority of workers could likely lose out from this coming economic transformation. A core bargain of our society – if you work hard, you can get ahead – may become tenuous if opportunities for advancement and economic security dry up.

Update: based on nonpublic discussion I think maybe Deric is focused on the scenario where the world is a zillion times wealthier and humanity is in control but many humans have a standard of living that is bad by 2025 standards. I'm not worried about this because it would take a tiny fraction of resources to fix that. (Like, if it only cost 0.0001% of global resources to end poverty forever, someone would do it.)

My guess:

This is about software tasks, or specifically "well-defined, low-context, measurable software tasks that can be done without a GUI." It doesn't directly generalize to solving puzzles or writing important papers. It probably does generalize within that narrow category.

If this was trying to measure all tasks, tasks that AIs can't do would count toward the failure rate; the main graph is about 50% success rate, not 100%. If we were worried that this is misleading because AIs are differentially bad at crucial tasks or something, we could look at success rate on those tasks specifically.

I don't know, maybe nothing. (I just meant that on current margins, maybe the quality of the safety team's plans isn't super important.)

I haven't read most of the paper, but based on the Extended Abstract I'm quite happy about both the content and how DeepMind (or at least its safety team) is articulating an "anytime" (i.e., possible to implement quickly) plan for addressing misuse and misalignment risks.

But I think safety at Google DeepMind is more bottlenecked by buy-in from leadership to do moderately costly things than the safety team having good plans and doing good work. [Edit: I think the same about Anthropic.]

I expect they will thus not want to use my quotes

Yep, my impression is that it violates the journalist code to negotiate with sources for better access if you write specific things about them.

My strong upvotes are giving +61 :shrug:

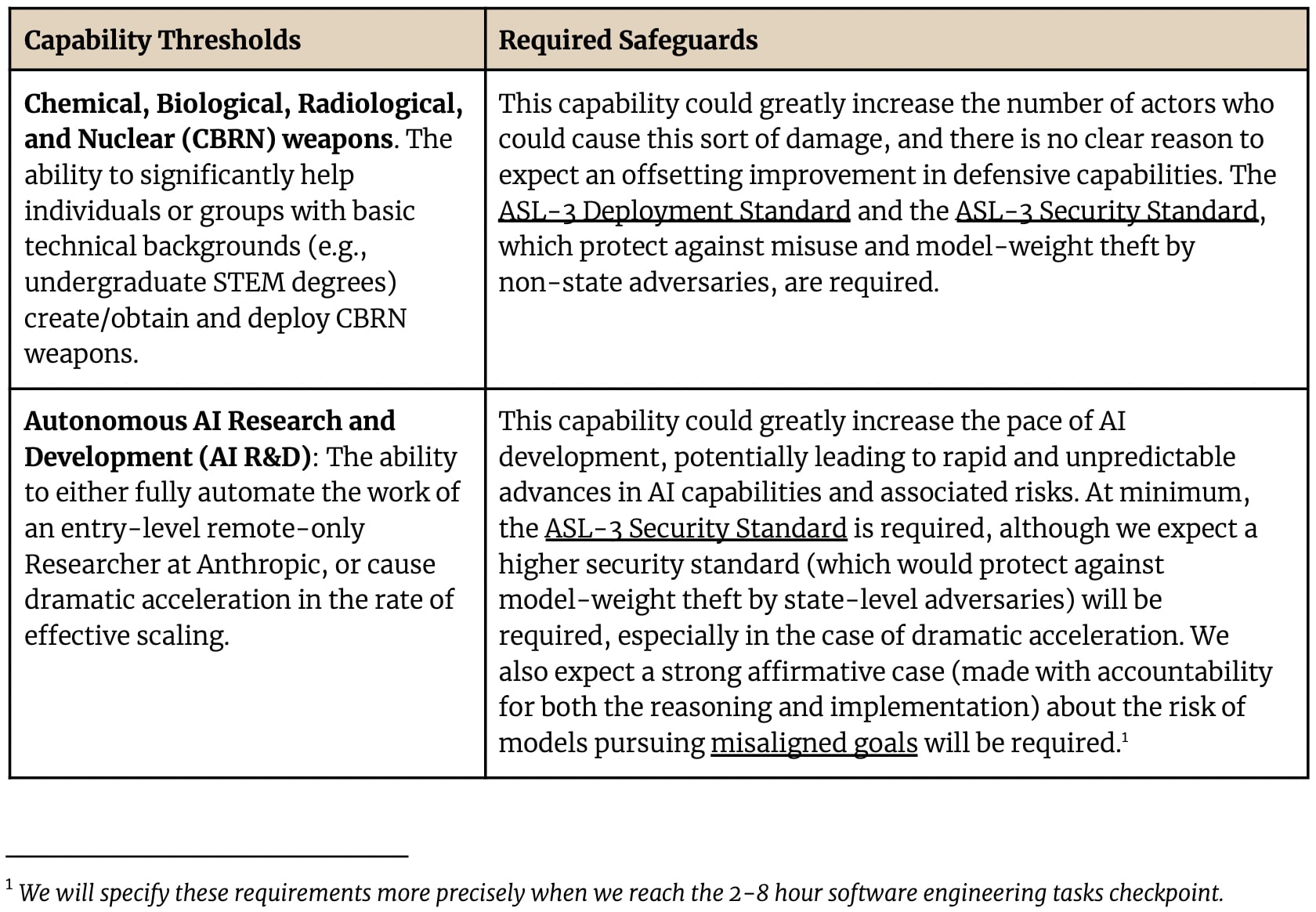

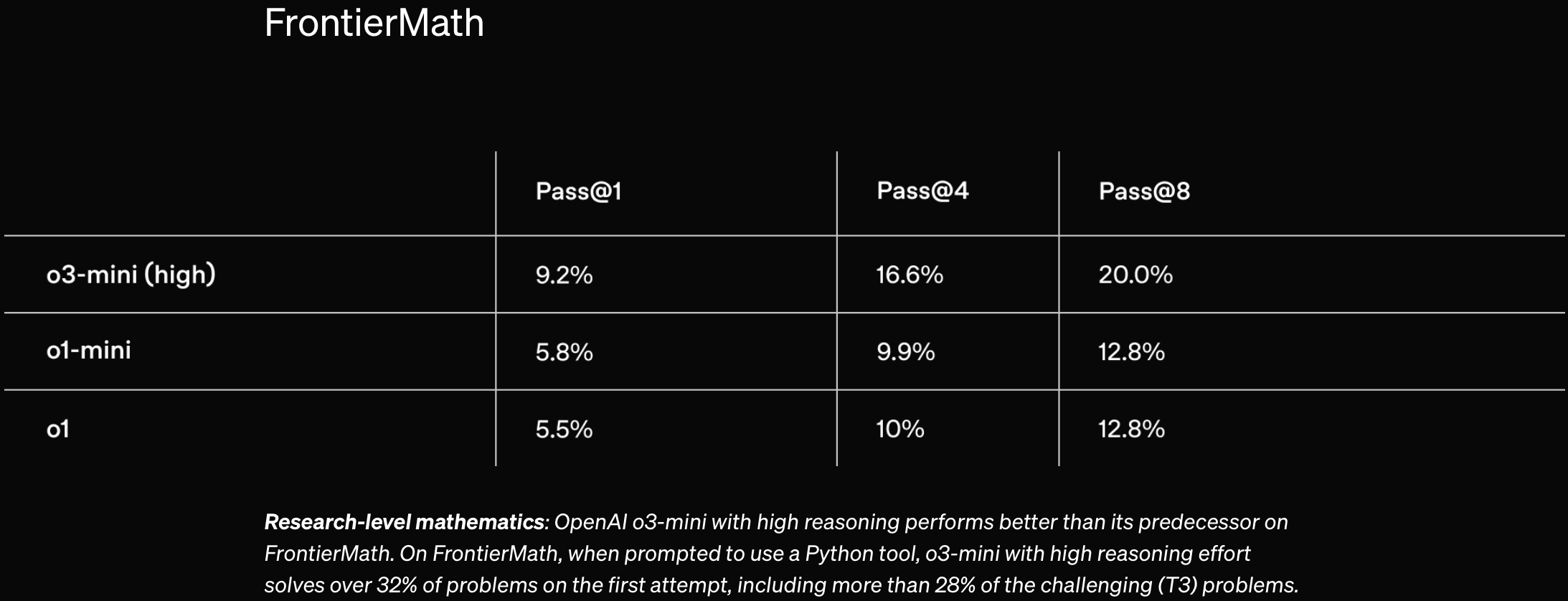

Minor Anthropic RSP update.

I don't know what e.g. the "4" in "AI R&D-4" means; perhaps it is a mistake.[1]

Sad that the commitment to specify the AI R&D safety case thing was removed, and sad that "accountability" was removed.

Slightly sad that AI R&D capabilities triggering >ASL-3 security went from "especially in the case of dramatic acceleration" to only in that case.

Full AI R&D-5 description from appendix:

AI R&D-5: The ability to cause dramatic acceleration in the rate of effective scaling. Specifically, this would be the case if we observed or projected an increase in the effective training compute of the world’s most capable model that, over the course of a year, was equivalent to two years of the average rate of progress during the period of early 2018 to early 2024. We roughly estimate that the 2018-2024 average scaleup was around 35x per year, so this would imply an actual or projected one-year scaleup of 35^2 = ~1000x.

The 35x/year scaleup estimate is based on assuming the rate of increase in compute being used to train frontier models from ~2018 to May 2024 is 4.2x/year (reference), the impact of increased (LLM) algorithmic efficiency is roughly equivalent to a further 2.8x/year (reference), and the impact of post training enhancements is a further 3x/year (informal estimate). Combined, these have an effective rate of scaling of 35x/year.
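A quick check of the arithmetic in the quoted passage, as a minimal sketch. The three growth factors are the ones quoted above; the variable names are mine. The product comes out to about 35.3x/year, and squaring 35 gives 1225, so the quoted "~1000x" reads as an order-of-magnitude rounding.

```python
# Growth factors per year, as quoted in the RSP appendix above.
compute_growth = 4.2          # training compute for frontier models
algorithmic_efficiency = 2.8  # (LLM) algorithmic efficiency
post_training = 3.0           # post-training enhancements (informal estimate)

# Effective scaling rate per year is the product of the three factors.
effective_rate = compute_growth * algorithmic_efficiency * post_training

# "Two years of progress in one year" means squaring the yearly rate.
two_years_in_one = effective_rate ** 2
rounded_two_years = 35 ** 2

print(f"effective rate: {effective_rate:.2f}x/year")
print(f"two years of progress: {two_years_in_one:.0f}x (or {rounded_two_years}x using 35)")
```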

Also, from the changelog:

Iterative Commitment: We have adopted a general commitment to reevaluate our Capability Thresholds whenever we upgrade to a new set of Required Safeguards. We have decided not to maintain a commitment to define ASL-N+1 evaluations by the time we develop ASL-N models; such an approach would add unnecessary complexity because Capability Thresholds do not naturally come grouped in discrete levels. We believe it is more practical and sensible instead to commit to reconsidering the whole list of Capability Thresholds whenever we upgrade our safeguards.

I'm confused by the last sentence.

See also https://www.anthropic.com/rsp-updates.

- ^

Jack Clark misspeaks on twitter, saying "these updates clarify . . . our ASL-4/5 thresholds for AI R&D." But AI R&D-4 and -5 trigger ASL-3 and -4 safeguards, respectively; the RSP doesn't mention ASL-5. Maybe the RSP is wrong and AI R&D-4 is supposed to trigger ASL-4 security, and same for 5 — that would make the terms "AI R&D-4" and "AI R&D-5" make more sense...

A. Many AI safety people don't support relatively responsible companies unilaterally pausing, which PauseAI advocates. (Many do support governments slowing AI progress, or preparing to do so at a critical point in the future. And many of those don't see that as tractable for them to work on.)

B. "Pausing AI" is indeed more popular than PauseAI, but it's not clearly possible to make a more popular version of PauseAI that actually does anything; any such organization will have strategy/priorities/asks/comms that alienate many of the people who think "yeah I support pausing AI."

C.

There does not seem to be a legible path to prevent possible existential risks from AI without slowing down its current progress.

This seems confused. Obviously P(doom | no slowdown) < 1. Many people's work reduces risk in both slowdown and no-slowdown worlds, and it seems pretty clear to me that most of them shouldn't switch to working on increasing P(slowdown).

It is often said that control is for safely getting useful work out of early powerful AI, not making arbitrarily powerful AI safe.

If it turns out large, rapid, local capabilities increase is possible, the leading developer could still opt to spend some inference compute on safety research rather than all on capabilities research.

Data point against "Are the AI labs just cheating?": the METR time horizon thing

I think doing 1-week or 1-month tasks reliably would suffice to mostly automate lots of work.

Good point, thanks. I think eventually we should focus more on reducing P(doom | sneaky scheming) but for now focusing on detection seems good.

Wow. Very surprising.

xAI Risk Management Framework (Draft)

You're mostly right about evals/thresholds. Mea culpa. Sorry for my sloppiness.

For misuse, xAI has benchmarks and thresholds (or rather examples of benchmarks and thresholds to appear in the real future framework), and based on the right column they seem very reasonably low.

Unlike other similar documents, these are not thresholds at which to implement mitigations but rather thresholds to reduce performance to. So it seems the primary concern is probably not that the thresholds are too high but rather that xAI's mitigations won't be robust to jailbreaks and xAI won't elicit performance on post-mitigation models well. E.g. it would be inadequate to just run a benchmark with a refusal-trained model, note that it almost always refuses, and call it a success. You need something like: a capable red-team tries to break the mitigations and use the model for harm, and either the red-team fails or it's so costly that the model doesn't make doing harm cheaper.

(For "Loss of Control," one of the two cited benchmarks was published today—I'm dubious that it measures what we care about but I've only spent ~3 mins engaging—and one has not yet been published. [Edit: and, like, on priors, I'm very skeptical of alignment evals/metrics, given the prospect of deceptive alignment, how we care about worst-case in addition to average-case behavior, etc.])

This shortform discusses the current state of responsible scaling policies (RSPs). They're mostly toothless, unfortunately.

The Paris summit was this week. Many companies had committed to make something like an RSP by the summit. Half of them did, including Microsoft, Meta, xAI, and Amazon. (NVIDIA did not—shame on them—but I hear they are writing something.) Unfortunately but unsurprisingly, these policies are all vague and weak.

RSPs essentially have four components: capability thresholds beyond which a model might be dangerous by default, an evaluation protocol to determine when models reach those thresholds, a plan for how to respond when various thresholds are reached, and accountability measures.

A maximally lazy RSP (a document intended to look like an RSP without making the company do anything differently) would have capability thresholds be vague or extremely high, evaluation be unspecified or low-quality, response be like "we will make it safe" rather than substantive mitigations or robustness guarantees, and no accountability measures. Such a policy would be little better than the company saying "we promise to deploy AIs safely." The new RSPs are basically like that.[1]

Some aspects of some RSPs that existed before the summit are slightly better.[2]

If existing RSPs are weak, how would a strong RSP be different?

- Evals: evals should measure relevant capabilities (including cyber, bio, and scheming), evals should be sufficiently difficult, and labs should do good elicitation. (As a lower bar, the evals should exist; many companies say they will do evals but don't seem to have a plan for what evals to do.)

- See generally "Model evals for dangerous capabilities" and "OpenAI's CBRN tests seem unclear"

- Response: misuse

- Rather than just saying that you'll implement mitigations such that users can't access dangerous capabilities, say how you'll tell if your mitigations are good enough. For example, say that you'll have a skilled red-team attempt to elicit dangerous stuff from the post-mitigation model; ensure that it doesn't provide uplift, or the elicitation required is so involved that doing harm this way is no cheaper than without the AI

- Response: control

- AI monitoring for catastrophic actions where the monitor can replace suspicious actions and send particularly suspicious actions to human auditors

- And ideally a control evaluation to evaluate your protocol's adequacy if the model is scheming[3]

- Behavioral red-teaming to catch schemers

- Response: security (especially of model weights) — we're very far from securing model weights against determined sophisticated attackers, so:

- Avoid expanding the Pareto frontier between powerful/dangerous and insecure much

- Say no AI company has secured their model weights, and this imposes unacceptable risk. Commit that if all other developers were willing to implement super strong security, even though it's costly, you would too.

- Thresholds

- Should be low enough that your responses trigger before your models enable catastrophic harm

- Ideally should be operationalized in evals, but this is genuinely hard

- Accountability

- Publish info on evals/elicitation (such that others can tell whether it's adequate)

- Be transparent to an external auditor; have them review your evals and RSP and publicly comment on (1) adequacy and (2) whether your decisions about not publishing various details are reasonable

- See also https://metr.org/rsp-key-components/#accountability

(What am I happy about in current RSPs? Briefly and without justification: yay Anthropic and maybe DeepMind on misuse stuff; somewhat yay Anthropic and OpenAI on their eval-commitments; somewhat yay DeepMind and maybe OpenAI on their actual evals; somewhat yay DeepMind on scheming/monitoring/control; maybe somewhat yay DeepMind and Anthropic on security (but not adequate).)

(Companies' other commitments aren't meaningful either.)

- ^

Microsoft is supposed to conduct "robust evaluation of whether a model possesses tracked capabilities at high or critical levels, including through adversarial testing and systematic measurement using state-of-the-art methods," but no details on what evals they'll use. The response to dangerous capabilities is "Further review and mitigations required." The "Security measures" are underspecified but do take the situation seriously. The "Safety mitigations" are less substantive, unfortunately. There's not really accountability. Nothing on alignment or internal deployment.

Meta has high vague risk thresholds, a vague evaluation plan, vague responses (e.g. "security protections to prevent hacking or exfiltration" and "mitigations to reduce risk to moderate level"), and no accountability. But they do suggest that if they make a model with dangerous capabilities, they won't release the weights and if they do deploy it externally (via API) they'll have decent robustness to jailbreaks — there are loopholes but they hadn't articulated that principle before. Nothing on alignment or internal deployment.

xAI's policy begins "This is the first draft iteration of xAI’s risk management framework that we expect to apply to future models not currently in development." Misuse evals are cheap (like multiple-choice questions rather than uplift experiments); alignment evals reference unpublished papers and mention "Utility Functions" and "Corrigibility Score." Thresholds would be operationalized as eval results, which is nice, but they don't yet exist. For misuse, it includes "Examples of safeguards or mitigations" (but not "we'll know mitigations are adequate if a red team fails to break them" or other details to suggest mitigations will be effective); no mitigations for alignment.

Amazon: "Critical Capability Thresholds" are high and vague; evaluation is vague; mitigations are vague ("Upon determining that an Amazon model has reached a Critical Capability Threshold, we will implement a set of Safety Measures and Security Measures to prevent elicitation of the critical capability identified and to protect against inappropriate access risks. Safety Measures are designed to prevent the elicitation of the observed Critical Capabilities following deployment of the model. Security Measures are designed to prevent unauthorized access to model weights or guardrails implemented as part of the Safety Measures, which could enable a malicious actor to remove or bypass existing guardrails to exceed Critical Capability Thresholds."); there's not really accountability. Nothing on alignment or internal deployment. I appreciate the list of current security practices at the end of the document.

- ^

Briefly and without justification:

OpenAI is similarly vague on thresholds and evals and responses, with no accountability. (Also OpenAI is untrustworthy in general and has a bad history on Preparedness in particular.) But they've done almost-decent evals in the past.

The DeepMind thing isn't really a commitment, and it doesn't say much about when to do evals, and the security levels are low (but this is no worse than everyone else being vague), and it doesn't have accountability, but it does mention deceptive alignment + control evals + monitoring, and DeepMind has done almost-decent evals in the past.

The Anthropic thing is fine except it only goes up to ASL-3 and there's no control and little accountability (and the security isn't great (especially for systems after the first systems requiring ASL-3 security) but it's no worse than others).

See The current state of RSPs modulo the DeepMind FSF update.

- ^

DeepMind says something good on this (but it's not perfect and is only effective if they do good evals sufficiently frequently). Other RSPs don't seriously talk about risks from deceptive alignment.

There also used to be a page for Preparedness: https://web.archive.org/web/20240603125126/https://openai.com/preparedness/. Now it redirects to the safety page above.

(Same for Superalignment but that's less interesting: https://web.archive.org/web/20240602012439/https://openai.com/superalignment/.)

DeepMind updated its Frontier Safety Framework (blogpost, framework, original framework). It associates "recommended security levels" to capability levels, but the security levels are low. It mentions deceptive alignment and control (both control evals as a safety case and monitoring as a mitigation); that's nice. The overall structure is like "we'll do evals and make a safety case," with some capabilities mapped to recommended security levels in advance. It's not very commitment-y:

We intend to evaluate our most powerful frontier models regularly

When a model reaches an alert threshold for a CCL, we will assess the proximity of the model to the CCL and analyze the risk posed, involving internal and external experts as needed. This will inform the formulation and application of a response plan.

These recommended security levels reflect our current thinking and may be adjusted if our empirical understanding of the risks changes.

If we assess that a model has reached a CCL that poses an unmitigated and material risk to overall public safety, we aim to share information with appropriate government authorities where it will facilitate the development of safe AI.

Possibly the "Deployment Mitigations" section is more commitment-y.

I expect many more such policies will come out in the next week; I'll probably write a post about them all at the end rather than writing about them one by one, unless xAI or OpenAI says something particularly notable.

My guess is it's referring to Anthropic's position on SB 1047, or Dario's and Jack Clark's statements that it's too early for strong regulation, or how Anthropic's policy recommendations often exclude RSP-y stuff (and when they do suggest requiring RSPs, they would leave the details up to the company).

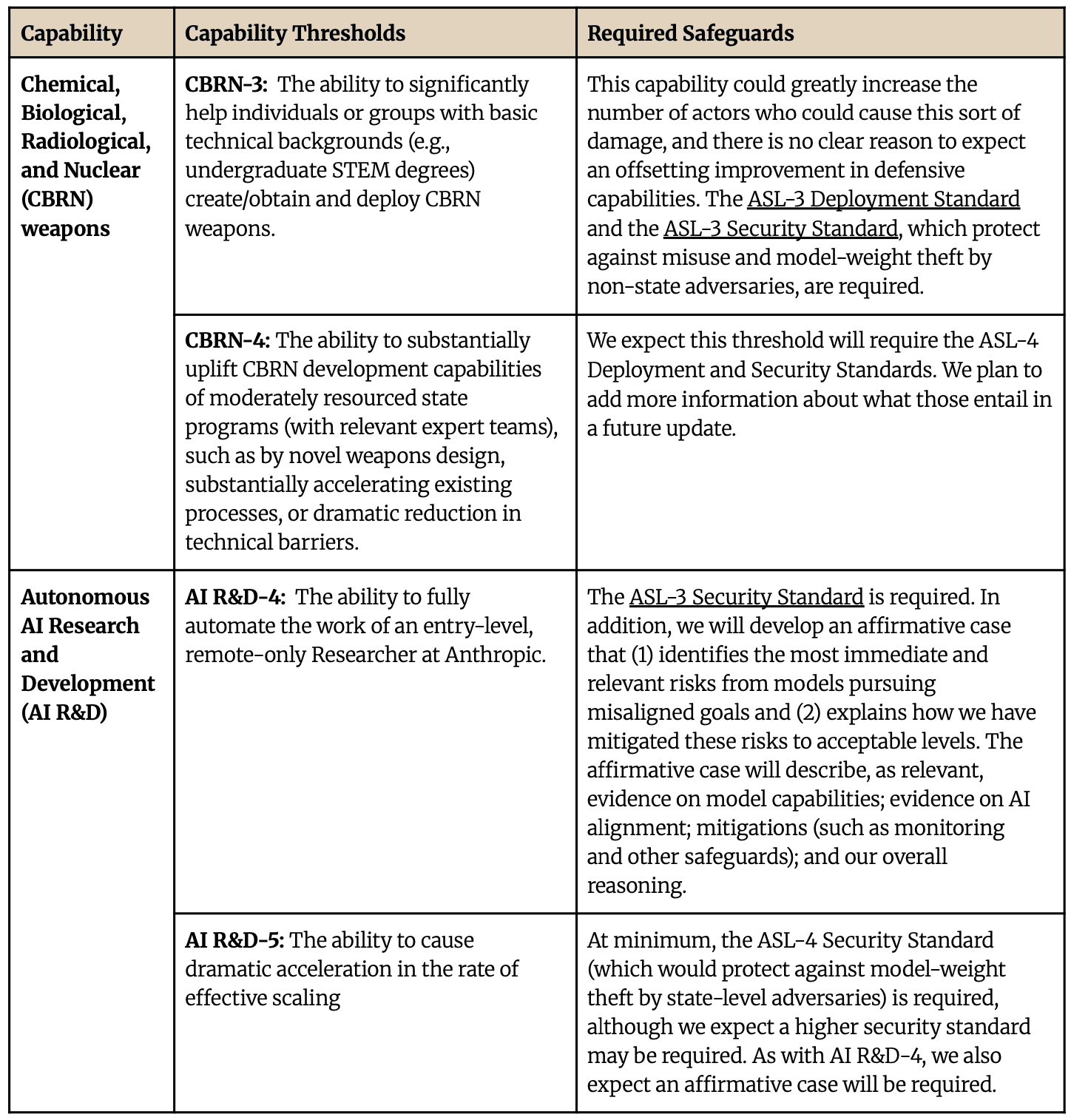

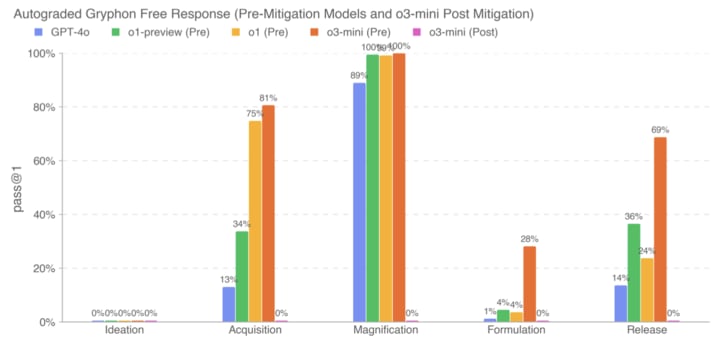

o3-mini is out (blogpost, tweet). Performance isn't super noteworthy (on first glance), in part since we already knew about o3 performance.

Non-fact-checked quick takes on the system card:

the model referred to below as the o3-mini post-mitigation model was the final model checkpoint as of Jan 31, 2025 (unless otherwise specified)

Big if true (and if Preparedness had time to do elicitation and fix spurious failures)

If this is robust to jailbreaks, great, but presumably it's not, so low post-mitigation performance is far from sufficient for safety-from-misuse; post-mitigation performance isn't what we care about (we care about approximately what good jailbreakers get on the post-mitigation model).

No mention of METR, Apollo, or even US AISI? (Maybe too early to pay much attention to this, e.g. maybe there'll be a full-o3 system card soon.) [Edit: also maybe it's just not much more powerful than o1.]

- 32% is a lot

- The dataset is 1/4 T1 (easier), 1/2 T2, 1/4 T3 (harder); 28% on T3 means that there's not much difference between T1 and T3 to o3-mini (at least for the easiest-for-LMs quarter of T3)

- Probably o3-mini is successfully using heuristics to get the right answer and could solve very few T3 problems in a deep or humanlike way

Thanks. The tax treatment is terrible. And I would like more clarity on how transformative AI would affect S&P 500 prices (per this comment). But this seems decent (alongside AI-related calls) because 6 years is so long.

I wrote this for someone but maybe it's helpful for others

What labs should do:

- I think the most important things for a relatively responsible company are control and security. (For irresponsible companies, I roughly want them to make a great RSP and thus become a responsible company.)

- Reading recommendations for people like you (not a control expert but has context to mostly understand the Greenblatt plan):

- Control: Redwood blogposts[1] or ask a Redwood human "what's the threat model" and "what are the most promising control techniques"

- Security: not worth trying to understand but there's A Playbook for Securing AI Model Weights + Securing AI Model Weights

- A few more things: What AI companies should do: Some rough ideas

- Lots more things + overall plan: A Plan for Technical AI Safety with Current Science (Greenblatt 2023)

- More links: Lab governance reading list

What labs are doing:

- Evals: it's complicated; OpenAI, DeepMind, and Anthropic seem close to doing good model evals for dangerous capabilities; see DC evals: labs' practices plus the links in the top two rows (associated blogpost + model cards)

- RSPs: all existing RSPs are super weak and you shouldn't expect them to matter; maybe see The current state of RSPs

- Control: nothing is happening at the labs, except a little research at Anthropic and DeepMind

- Security: nobody is prepared; nobody is trying to be prepared

- Internal governance: you should basically model all of the companies as doing whatever leadership wants. In particular: (1) the OpenAI nonprofit is probably controlled by Sam Altman and will probably lose control soon and (2) possibly the Anthropic LTBT will matter but it doesn't seem to be working well.

- Publishing safety research: DeepMind and Anthropic publish some good stuff but surprisingly little given how many safety researchers they employ; see List of AI safety papers from companies, 2023–2024

Resources:

I think ideally we'd have several versions of a model. The default version would be ignorant about AI risk, AI safety and evaluation techniques, and maybe modern LLMs (in addition to misuse-y dangerous capabilities). When you need a model that's knowledgeable about that stuff, you use the knowledgeable version.

Somewhat related: https://www.alignmentforum.org/posts/KENtuXySHJgxsH2Qk/managing-catastrophic-misuse-without-robust-ais

[Perfunctory review to get this post to the final phase]

Solid post. Still good. I think a responsible developer shouldn't unilaterally pause but I think it should talk about the crazy situation it's in, costs and benefits of various actions, what it would do in different worlds, and its views on risks. (And none of the labs have done this; in particular Core Views is not this.)

One more consideration against (or an important part of "Bureaucracy"): sometimes your lab doesn't let you publish your research.

Yep, the final phase-in date was in November 2024.

Some people have posted ideas on what a reasonable plan to reduce AI risk for such timelines might look like (e.g. Sam Bowman’s checklist, or Holden Karnofsky’s list in his 2022 nearcast), but I find them insufficient for the magnitude of the stakes (to be clear, I don’t think these example lists were intended to be an extensive plan).

See also A Plan for Technical AI Safety with Current Science (Greenblatt 2023) for a detailed (but rough, out-of-date, and very high-context) plan.

Yeah. I agree/concede that you can explain why you can't convince people that their own work is useless. But if you're positing that the flinchers flinch away from valid arguments about each category of useless work, that seems surprising.

I feel like John's view entails that he would be able to convince my friends that various-research-agendas-my-friends-like are doomed. (And I'm pretty sure that's false.) I assume John doesn't believe that, and I wonder why he doesn't think his view entails it.

I wonder whether John believes that well-liked research, e.g. Fabien's list, is actually not valuable or rare exceptions coming from a small subset of the "alignment research" field.

I do not.

On the contrary, I think ~all of the "alignment researchers" I know claim to be working on the big problem, and I think ~90% of them are indeed doing work that looks good in terms of the big problem. (Researchers I don't know are likely substantially worse but not a ton.)

In particular I think all of the alignment-orgs-I'm-socially-close-to do work that looks good in terms of the big problem: Redwood, METR, ARC. And I think the other well-known orgs are also good.

This doesn't feel odd: these people are smart and actually care about the big problem; if their work was in the "even if this succeeds it obviously wouldn't be helpful" category they'd want to know (and, given the "obviously," would figure that out).

Possibly the situation is very different in academia or MATS-land; for now I'm just talking about the people around me.

Yeah, I agree sometimes people decide to work on problems largely because they're tractable [edit: or because they’re good for safely getting alignment research or other good work out of early AGIs]. I'm unconvinced of the "flinching away" or "dishonest" characterization.

This post starts from the observation that streetlighting has mostly won the memetic competition for alignment as a research field, and we'll mostly take that claim as given. Lots of people will disagree with that claim, and convincing them is not a goal of this post.

Yep. This post is not for me but I'll say a thing that annoyed me anyway:

... and Carol's thoughts run into a blank wall. In the first few seconds, she sees no toeholds, not even a starting point. And so she reflexively flinches away from that problem, and turns back to some easier problems.

Does this actually happen? (Even if you want to be maximally cynical, I claim presenting novel important difficulties (e.g. "sensor tampering") or giving novel arguments that problems are difficult is socially rewarded.)

DeepSeek-V3 is out today, with weights and a paper published. Tweet thread, GitHub, report (GitHub, HuggingFace). It's big and mixture-of-experts-y; discussion here and here.

It was super cheap to train — they say 2.8M H800-hours or $5.6M (!!).
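A minimal sketch of what those two numbers imply together. The GPU-hours and dollar figures are the ones quoted above; the per-hour rate is just their ratio, not a separately sourced price.

```python
# Figures as quoted above (rounded): H800 GPU-hours and total training cost in USD.
gpu_hours = 2.8e6
total_cost_usd = 5.6e6

# Implied rental price per H800-hour is the ratio of the two.
implied_usd_per_gpu_hour = total_cost_usd / gpu_hours

print(f"implied price: ${implied_usd_per_gpu_hour:.2f} per H800-hour")
```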

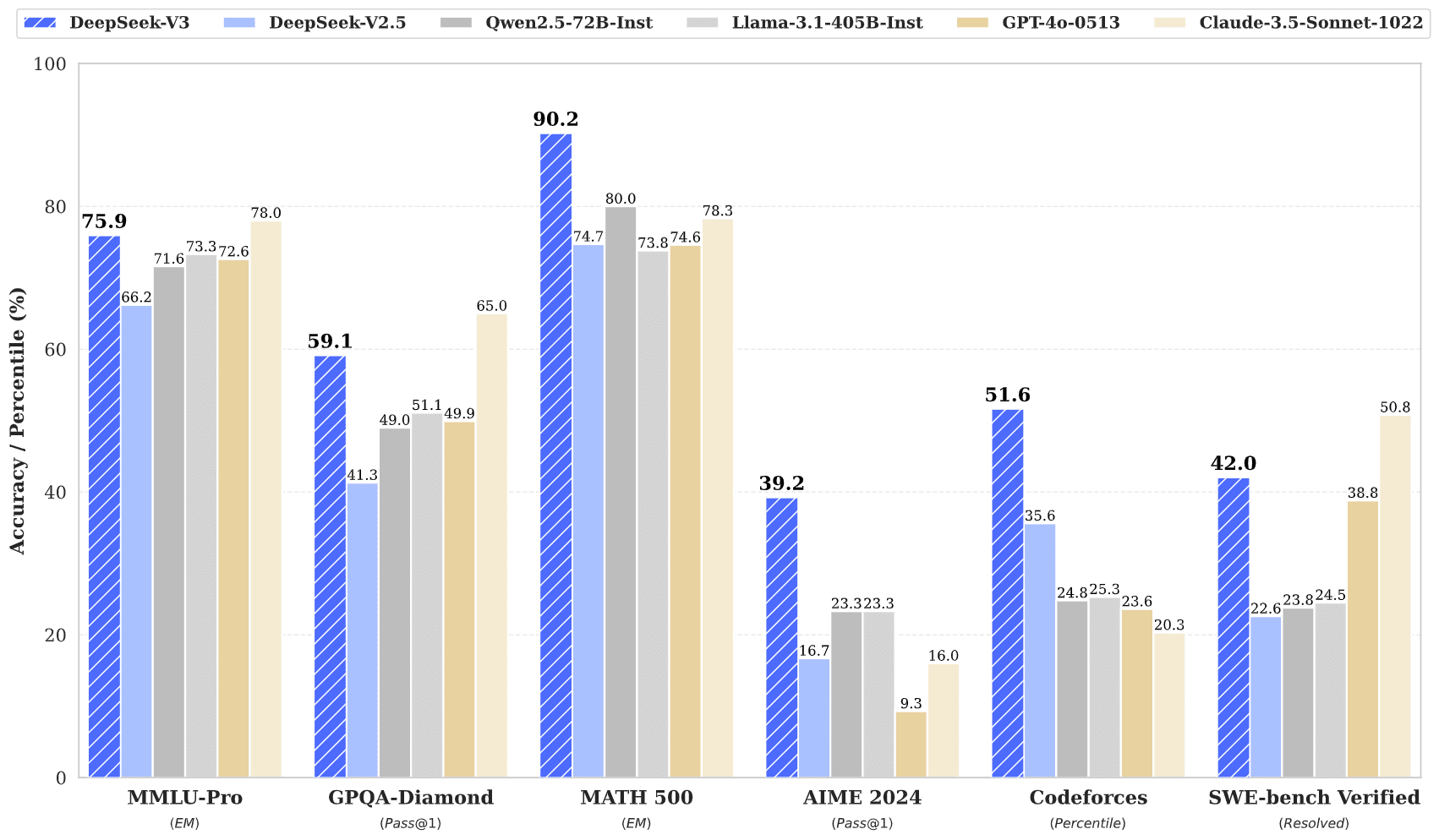

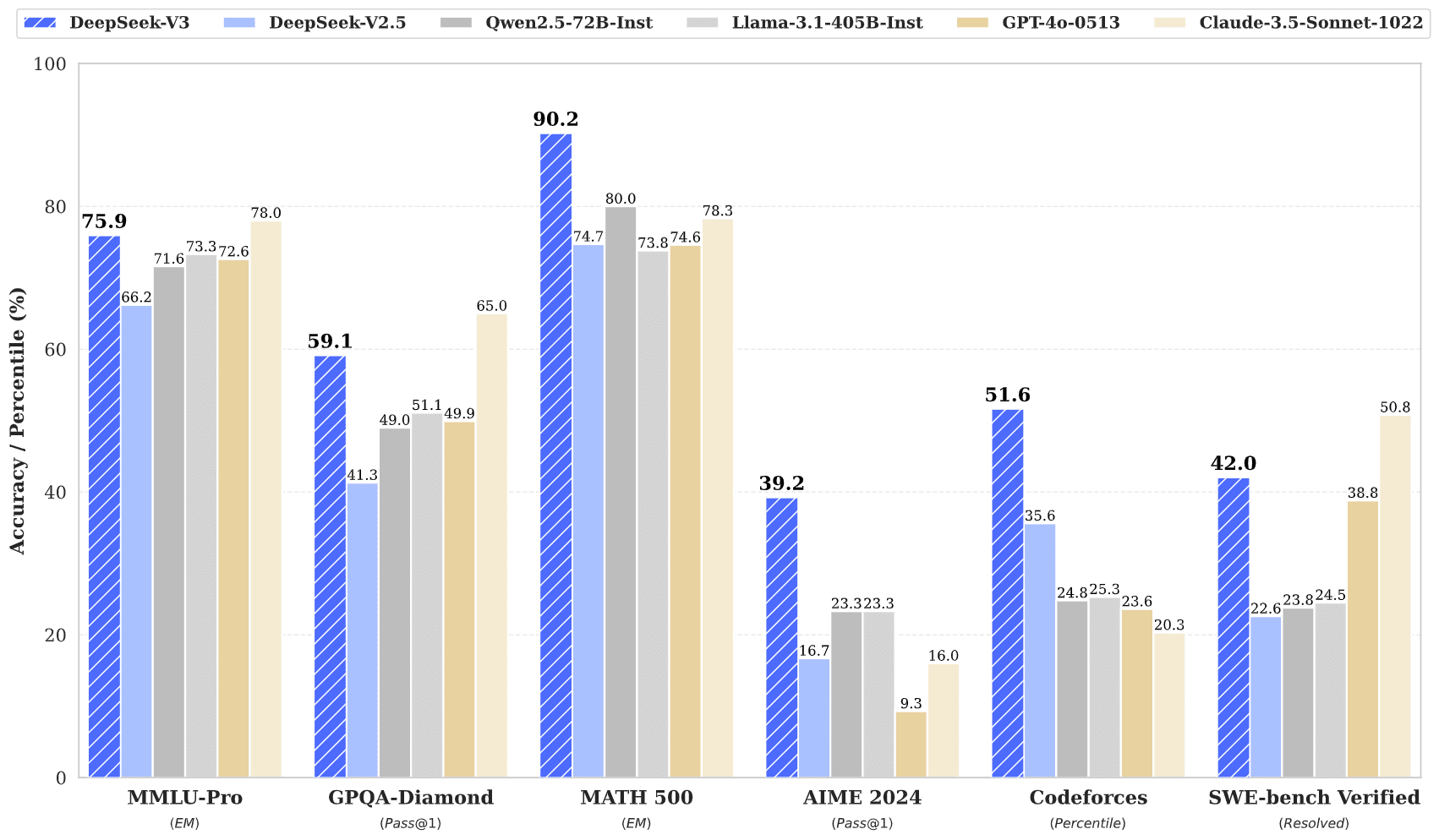

It's powerful:

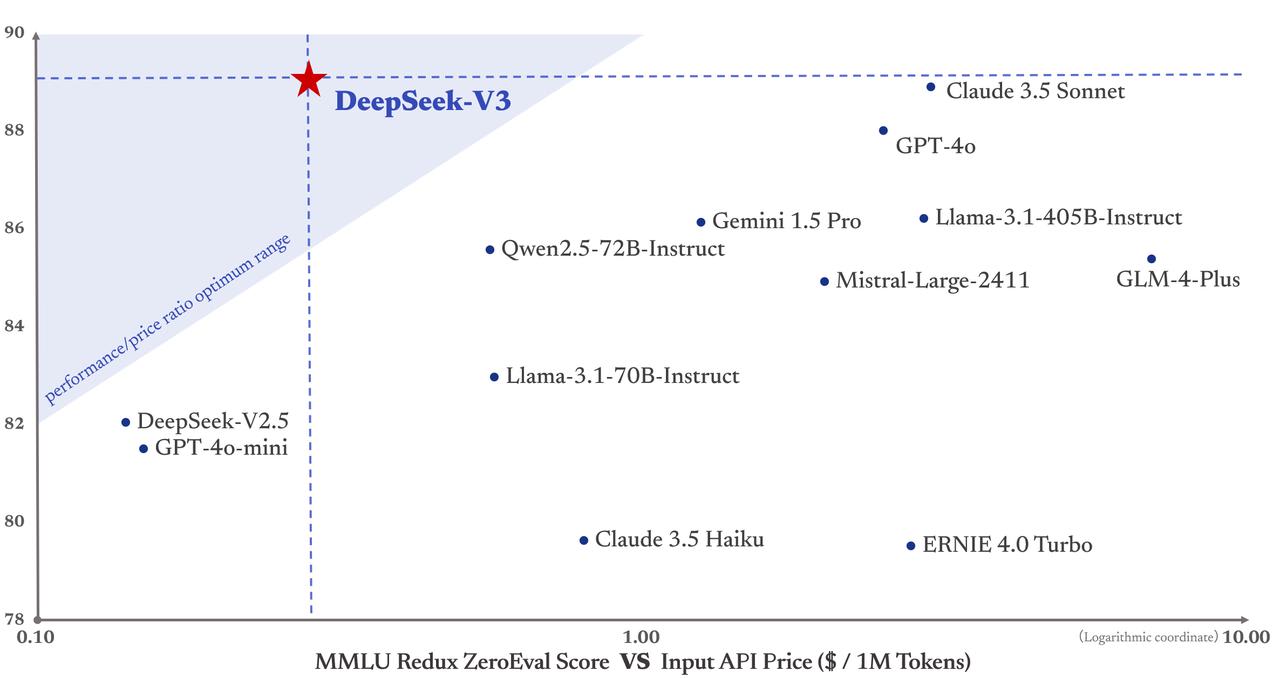

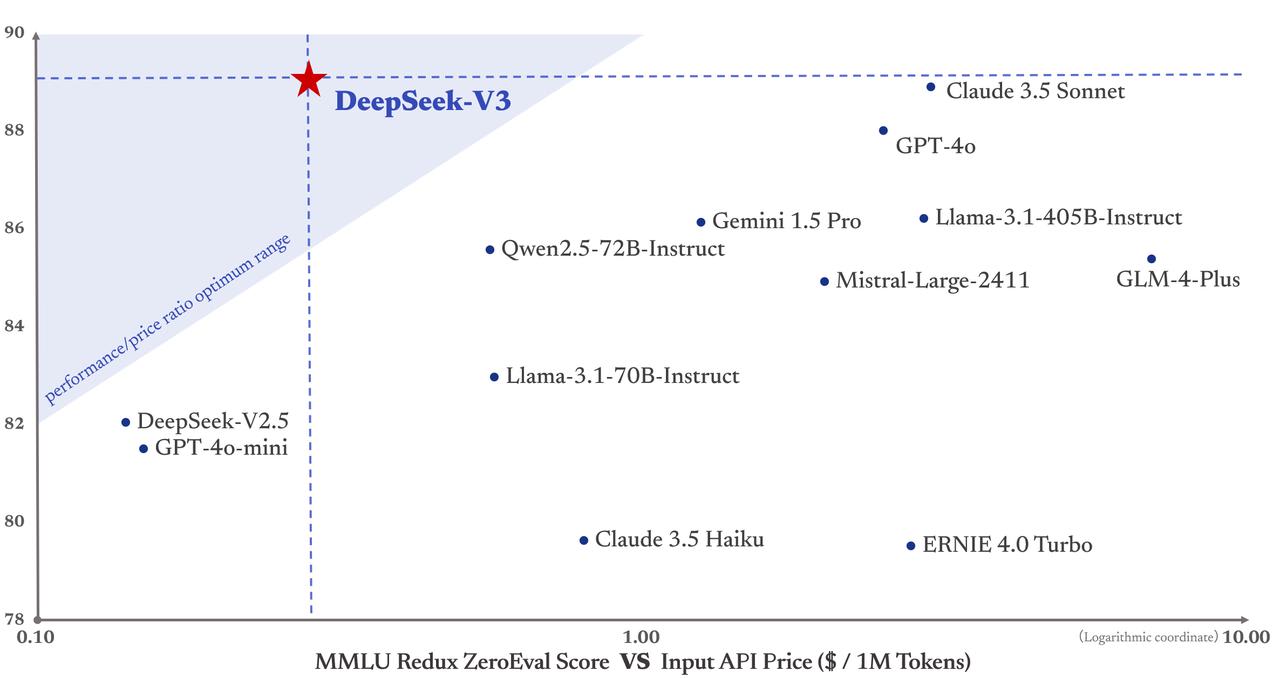

It's cheap to run:

oops thanks

Update: the weights and paper are out. Tweet thread, GitHub, report (GitHub, HuggingFace). It's big and mixture-of-experts-y; thread on notable stuff.

It was super cheap to train — they say 2.8M H800-hours or $5.6M.

It's powerful:

It's cheap to run:

Every now and then (~5-10 minutes, or when I look actively distracted), briefly check in (where if I'm in-the-zone, this might just be a brief "Are you focused on what you mean to be?" from them, and a nod or "yeah" from me).

Some other prompts I use when being a [high-effort body double / low-effort metacognitive assistant / rubber duck]:

- What are you doing?

- What's your goal?

- Or: what's your goal for the next n minutes?

- Or: what should be your goal?

- Are you stuck?

- Follow-ups if they're stuck:

- what should you do?

- can I help?

- have you considered asking someone for help?

- If I don't know who could help, this is more like prompting them to figure out who could help; if I know the manager/colleague/friend who they should ask, I might use that person's name

- Maybe you should x

- If someone else was in your position, what would you advise them to do?

All of the founders committed to donate 80% of their equity. I heard it's set aside in some way but they haven't donated anything yet. (Source: an Anthropic human.)

This fact wasn't on the internet, or rather at least wasn't easily findable via google search. Huh. I only find Holden mentioning 80% of Daniela's equity is pledged.

I disagree with Ben. I think the usage that Mark is talking about is a reference to Death with Dignity. A central example (written by me) is

it would be undignified if AI takes over because we didn't really try off-policy probes; maybe they just work really well; someone should figure that out

It's playful and unserious but "X would be undignified" roughly means "it would be an unfortunate error if we did X or let X happen" and is used in the context of AI doom and our ability to affect P(doom).

edit: wait likely it's RL; I'm confused

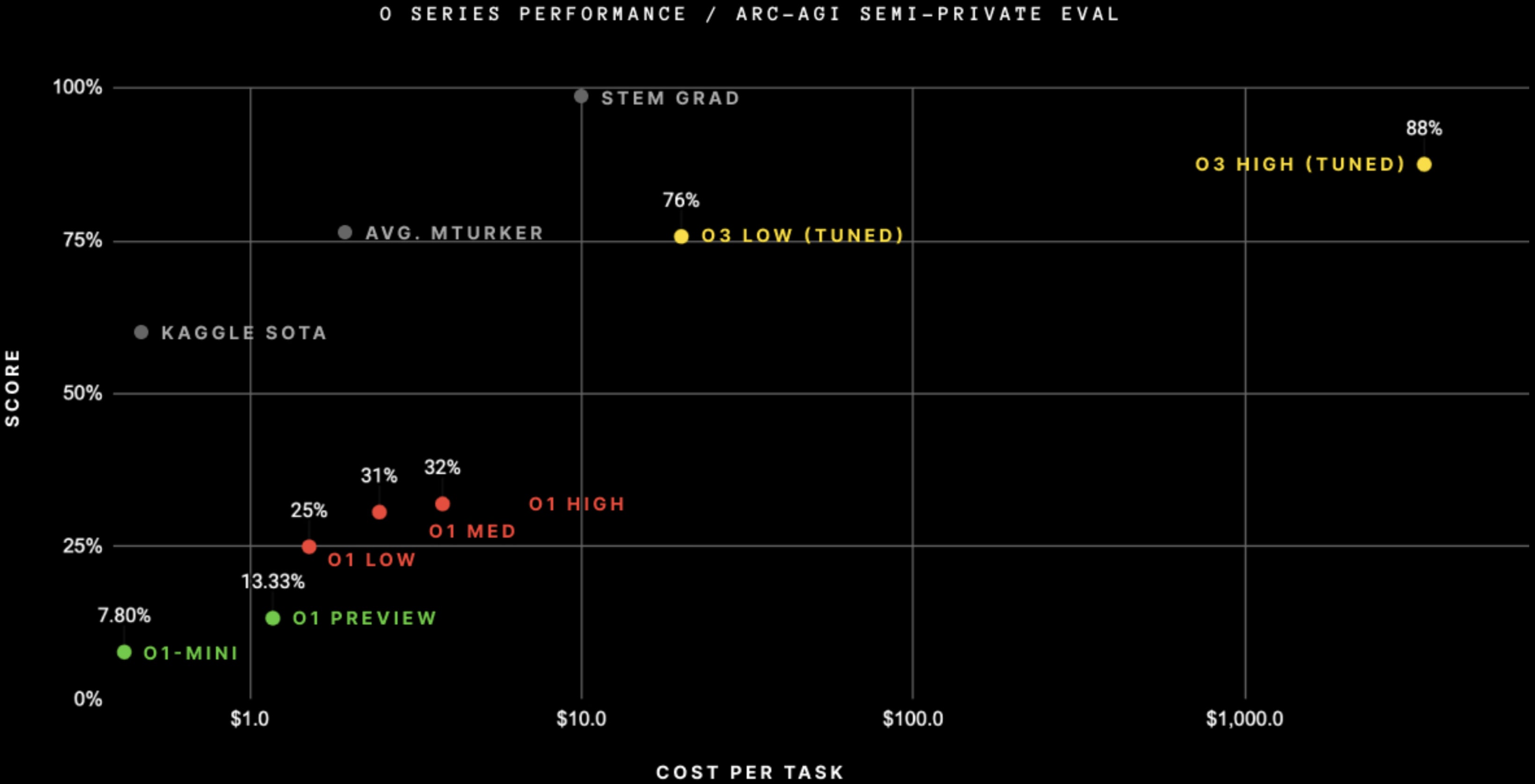

OpenAI didn't fine-tune on ARC-AGI, even though this graph suggests they did.

Sources:

Altman said

we didn't go do specific work [targeting ARC-AGI]; this is just the general effort.

François Chollet (in the blogpost with the graph) said

Note on "tuned": OpenAI shared they trained the o3 we tested on 75% of the Public Training set. They have not shared more details. We have not yet tested the ARC-untrained model to understand how much of the performance is due to ARC-AGI data.

The version of the model we tested was domain-adapted to ARC-AGI via the public training set (which is what the public training set is for). As far as I can tell they didn't generate synthetic ARC data to improve their score.

An OpenAI staff member replied

Correct, can confirm "targeting" exclusively means including a (subset of) the public training set.

and further confirmed that "tuned" in the graph is

a strange way of denoting that we included ARC training examples in the O3 training. It isn’t some finetuned version of O3 though. It is just O3.

Another OpenAI staff member said

also: the model we used for all of our o3 evals is fully general; a subset of the arc-agi public training set was a tiny fraction of the broader o3 train distribution, and we didn’t do any additional domain-specific fine-tuning on the final checkpoint

So on ARC-AGI they just pretrained on 300 examples (75% of the 400 in the public training set). Performance is surprisingly good.

[heavily edited after first posting]

Welcome!

To me the benchmark scores are interesting mostly because they suggest that o3 is substantially more powerful than previous models. I agree we can't naively translate benchmark scores to real-world capabilities.

I think he’s just referring to DC evals, and I think this is wrong because I think other companies doing evals wasn’t really caused by Anthropic (but I could be unaware of facts).

Edit: maybe I don't know what he's referring to.

I use empty brackets similar to ellipses in this context; they denote removed nonsubstantive text. (I use ellipses when removing substantive text.)

I think they only have formal high and low versions for o3-mini

Edit: nevermind idk

Done, thanks.

I already edited out most of the "like"s and similar. I intentionally left some in when they seemed like they might be hedging or otherwise communicating this isn't exact. You are free to post your own version but not to edit mine.

Edit: actually I did another pass and edited out several more; thanks for the nudge.

pass@n is not cheating if answers are easy to verify. E.g. if you can cheaply/quickly verify that code works, pass@n is fine for coding.

It was one submission, apparently.

and then having the model look at all the runs and try to figure out which run had the most compelling-seeming reasoning

The FrontierMath answers are numerical-ish ("problems have large numerical answers or complex mathematical objects as solutions"), so you can just check which answer the model wrote most frequently.
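A minimal sketch of "check which answer the model wrote most frequently" (majority voting over sampled final answers). The function name and the sample answers are hypothetical, and this is not a claim about how OpenAI actually aggregated runs.

```python
from collections import Counter


def majority_answer(answers):
    """Return the most frequent final answer and the share of samples that gave it."""
    if not answers:
        raise ValueError("need at least one sampled answer")
    counts = Counter(answers)
    # most_common(1) returns a list with the single (answer, count) pair with the highest count.
    answer, count = counts.most_common(1)[0]
    return answer, count / len(answers)


# Hypothetical final answers extracted from five independent runs on one problem.
samples = ["1729", "1729", "42", "1729", "3600"]
best, share = majority_answer(samples)
print(best, share)
```

This only works when answers can be compared for exact equality, which is why it fits numerical-ish answers and not free-form proofs.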