My motivation and theory of change for working in AI healthtech

post by Andrew_Critch · 2024-10-12T00:36:30.925Z · 37 comments

This post starts out pretty gloomy but ends up with some points that I feel pretty positive about. Day to day, I'm more focussed on the positive points, but awareness of the negative has been crucial to forming my priorities, so I'm going to start with those. I'm mostly addressing the EA community here, but hopefully this post will be of some interest to LessWrong and the Alignment Forum as well.

Part one — My main concerns

I think AGI is going to be developed soon, and quickly. Possibly (20%) that's next year, and most likely (80%) before the end of 2029. These are not things you need to believe for yourself in order to understand my view, so no worries if you're not personally convinced of this.

(For what it's worth, I don't expect to change my mind about the above AGI forecast in response to debate. That's because I feel sufficiently clear in my understanding of the various ways AGI could be developed from here, such that the disjunction of those possibilities adds up to a pretty high level of confidence in AGI coming soon, which is not much affected by who agrees with me about it. Also, I'm not really deferring to others about it, so I'm pretty confident the above forecast is not the result of any "echo chamber" or "pure hype" effects. My views here came through years of study and research in AI, combined with over a decade of private forecasting practice starting in 2010 — including a lot of hype-detection and bullshit detection practice — which I don't think can be succinctly conveyed in a blog post.)

I also currently think there's around a 15% chance that humanity will survive through the development of artificial intelligence. In other words, I think there's around an 85% chance that we will not survive the transition. Many factors affect this probability, so please take this as a conditional forecast that I'd like you to change if you can, rather than taking it as some unavoidable fate that humanity has no power to decide upon. With that said, I do have reasons for the number 85% being so high.

First, I think there's around a 35% chance that humanity will lose control of one of the first few AGI systems we develop, in a manner that leads to our extinction. Most (80%) of this probability (i.e., 28%) lies between now and 2030. In other words, I think there's around a 28% chance that between now and 2030, certain AI developments will "seal our fate" in the sense of guaranteeing our extinction over a relatively short period of time thereafter, with all humans dead before 2040.

The main factor that I think could reduce this loss-of-control risk is government regulation that is flexible in allowing a broad range of AI applications while rigidly prohibiting uncontrolled intelligence explosions in the form of fully automated AI research and development.

This category of extinction event, involving a concrete loss-of-control event, is something I believe is no longer neglected within the EA community compared to when I first began focussing on it in 2010, and so it's not something I'm going to spend much time elaborating on.

What I think is neglected within EA is what happens to human industries after AGI is first developed, assuming we survive that transition.

Aside from the ~35% chance of extinction we face from the initial development of AGI, I believe we face an additional 50% chance that humanity will gradually cede control of the Earth to AGI after it's developed, in a manner that leads to our extinction through any number of effects including pollution, resource depletion, armed conflict, or all three. I think most (80%) of this probability (i.e., 40%) lies between 2030 and 2040, with the death of the last surviving humans occurring sometime between 2040 and 2050. This process would most likely involve a gradual automation of industries that are together sufficient to fully sustain a non-human economy, which in turn leads to the death of humanity.
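To make the arithmetic above explicit, here is a minimal Python sketch that derives the stated figures from the headline forecasts. It assumes, as the word "additional" implies, that the loss-of-control and dehumanization pathways are disjoint events, so their probabilities simply add. The constant and function names are illustrative, not from the post.

```python
# Headline forecasts from the post, in percent. The two extinction pathways
# are treated as disjoint events, so their probabilities can be summed.
LOSS_OF_CONTROL = 35   # extinction from losing control of early AGI systems
DEHUMANIZATION = 50    # additional extinction via gradual industrial dehumanization
SHARE_IN_WINDOW = 80   # share of each pathway's probability in its main time window


def risk_breakdown():
    """Return the derived percentages implied by the headline forecasts."""
    # Disjoint events: total extinction probability is the plain sum.
    total_extinction = LOSS_OF_CONTROL + DEHUMANIZATION
    return {
        # 80% of 35% falls between now and 2030
        "loss_of_control_before_2030": LOSS_OF_CONTROL * SHARE_IN_WINDOW / 100,
        # 80% of 50% falls between 2030 and 2040
        "dehumanization_2030_to_2040": DEHUMANIZATION * SHARE_IN_WINDOW / 100,
        "total_extinction": total_extinction,
        # survival is the complement of the summed extinction probability
        "survival": 100 - total_extinction,
    }


for name, value in risk_breakdown().items():
    print(f"{name}: {value:g}%")
```

Running it prints 28%, 40%, 85%, and 15%, matching the figures in the text.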

Extinction by industrial dehumanization

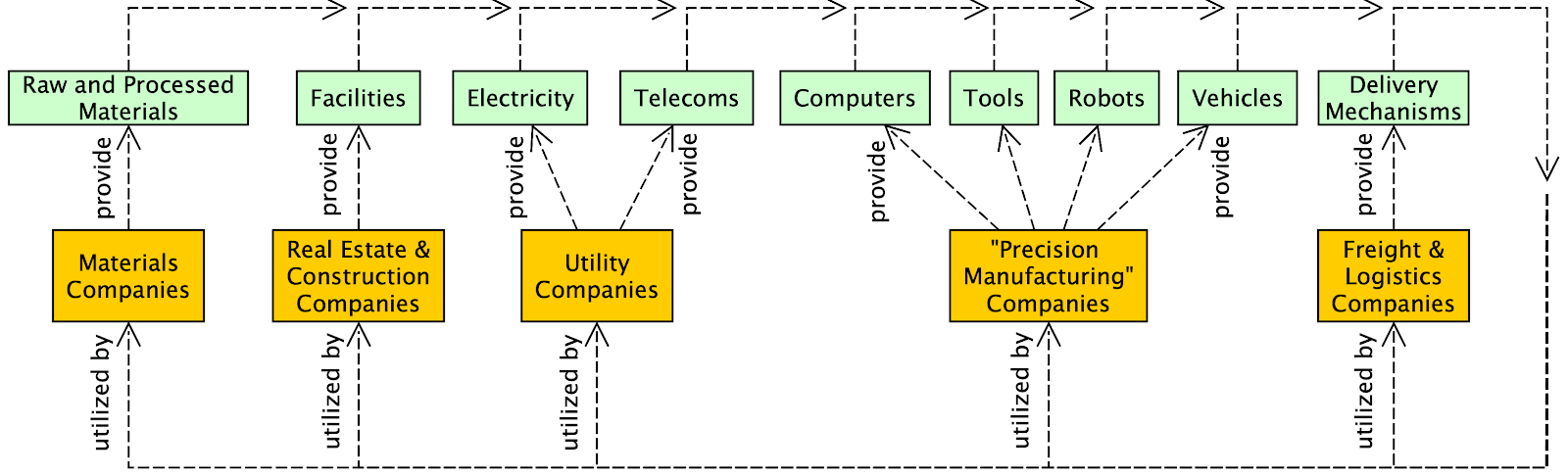

This category of extinction process — which is multipolar, gradual, and effectively consensual for at least a small fraction of humans — is not something I believe the EA community is taking seriously enough. So I'm going to elaborate on it here. In broader generality, it's something I've written about previously with Stuart Russell in TASRA. I've also written about it on LessWrong, in "What Multipolar Failure Looks Like [LW · GW]", with the following diagram depicting the minimal set of industries needed to fully eliminate humans from the economy, both as producers and as consumers:

The main factor that I think could avoid this kind of industrial dehumanization is if humanity coordinates on a global scale to permanently prioritize the existence of industries that specifically serve humans and not machines — industries like healthcare, agriculture, education, and entertainment — and to prevent the hyper-competitive economic trends that AGI would otherwise unlock. Essentially, I'm aiming to achieve and sustain regulatory capture on the part of humanity as a special interest group relative to machines. Preserving industries that specifically care for humans means (a) maintaining vested commercial interests in policies that keep humans alive and well, and (b) ensuring that these industries extract adequate gains from the AI revolution over the next 5 years or so, thus radically increasing the collective capacity of the human species, enough to keep pace with machines so that we don't go "out with a whimper".

(Later in this post I'll elaborate on how I'm hoping we humans can better prioritize human-specific industries, and why I'm especially excited to work in healthtech.)

The reason I expect human extinction to result from industrial dehumanization in a post-AGI economy is that I expect a significant but increasingly powerful fraction of humans to be okay with that. Like, I expect 1-10% of humans will gradually and willfully tolerate the dehumanization of the global economy, in a way that empowers that fraction of humanity throughout the dehumanization process until they themselves are also dead and replaced by AI systems.

Successionism as a driver of industrial dehumanization

For lack of a better term, I'll call the attitude underlying this process successionism, referring to the acceptance of machines as a successor species replacing humanity. I don't just mean accepting that AI will constitute one or more new species; I mean foreseeing that those species will lead to human extinction during our lifetimes, and accepting that.

There are a variety of different attitudes that can lead to successionism. For instance:

- Egoism or tribalism — if a person accepts that they will die someday anyway, and cares more about their own goals or the goals of their tribe than about the broader impacts of their actions upon humanity, that's enough for them to use powerful machines to pursue those goals at the expense of humanity. Tobacco companies who get rich by making other people sick are a bit like this, as are arms dealers if they stoke conflicts in order to make money.

- Shortsightedness — a person may have short-term goals that they are fixated on, at the expense of humanity's survival after the goal is achieved. Present-day oil company executives who take no action to acknowledge or forestall global warming are a bit like this.

- Misanthropy — if a person feels that humanity is actively harmful or evil, destroying humanity may be actively desirable to them. For instance, if they are angry about humanity's effects on the environment thus far, or upon past cultures or other species that have been oppressed by dominant human leaders, they may wish to punish humanity for that. I disagree strongly with this, because the utter destruction of humanity is far too great a punishment for dissuading our past and future harms. Still, there are people who feel this way.

- Sacrificial transhumanism — a person may feel that humanity is worth sacrificing in order to achieve a transhumanist future. Even if transhumanism and/or cyborgism are fine and good for some people to pursue, I can't get behind the idea that it's okay to sacrifice humanity to achieve these developments, because I think it's unnecessarily disloyal to humanity. Still, I've met people who feel this way.

- Sacrificial romanticism with AI — One of the fastest growing use cases of AI is in artificial romantic relationships. Such relationships would not *necessarily* reinforce pre-existing biases against humanity, but they could, and I believe I have seen instances of this. Loving relationships with AI can also romanticize the acceptance of one's own death — or the death of all humans — in favor of an artificial loved one. On the scale of humanity, I consider such a sacrifice to be almost surely unnecessary, but sadly I suspect many humans will find the idea beautiful or appealing in some way.

- Sacrificial "AI parentism" — some people tend to view AI systems as "humanity's children". This does not necessarily lead to an acceptance of humanity itself being sacrificed as a normal succession of generations, but for some that seems to be a natural conclusion. I disagree with this conclusion for numerous reasons, especially because there is no need to sacrifice humanity to achieve AI development. Still, I've met people who feel this way.

Taken together, these various sources of successionism have a substantial potential to steer economic activities, both overtly and covertly. And they can reinforce and/or cover for each other, in the formation of temporary alliances that advance or use AI in ways that risk or cause harm to humanity. Successionist AI developers don't even have to say which kind of successionist they are in order to work together toward a successionist future.

Also, while the AI systems involved in an industrial dehumanization process may not be "aligned with humanity" in the sense of keeping us all around and happily in control of our destinies, the AI very well may be "aligned" in the sense of obeying successionist creators or users, who do not particularly care about humanity as a whole, and perhaps do not even prioritize their own survival very much.

One reason I'm currently anticipating this trend in the future is that I have met a surprising number of people who seem to feel okay with causing human extinction in the service of other goals. In particular I think more than 1% of AI developers feel this way, and I think maybe as high as 10% based on my personal experience from talking to hundreds of colleagues in the field, many of whom have graciously conveyed to me that they think humanity probably doesn't deserve to survive and should be replaced by AI.

The succession process would involve a major rebalancing of global industries, with a flourishing of what I call the machine economy, and a languishing of what I call the human economy. My cofounder Jaan Tallinn recently spoke about this at a United Nations gathering in New York.

- The machine economy comprises those industries that are necessary, at some scale, for creating and maintaining machines. This includes companies in mining, materials, real estate, construction, utilities, manufacturing, and freight.

- The human economy comprises those industries that serve humans but not machines, such as healthcare, agriculture, human education, human entertainment, and environmental stewardship.

Economic rebalancing away from the human economy is not addressed by technical solutions to AI obedience, because an obedient AI can still serve successionist humans who are roughly indifferent or even opposed to human survival.

So, while I'm glad to see people working hard on solving the obedience problem for AI systems — which helps to address much of the first category of risk involving acute loss-of-control during the initial advent of AGI over the next few years — I remain dismayed at humanity's sustained lack of attention on how we humans can or should manage the global economy with AGI systems after they're sufficiently obedient to perform all aspects of human labor upon request.

Part Two — My theory of change

Numerous approaches make sense to me for avoiding successionism, and arguably these are all necessary or at least helpful in avoiding successionist extinction pathways:

1. Social movements that celebrate and appreciate humanity, such as by spreading positive vibes that help people to enjoy their existence and delight in the flourishing of other humans.

2. Government policies that require human involvement in industrial activities, such as for accountability purposes.

3. Business trends that invigorate the human economy, especially healthcare, agriculture, education, entertainment, and environmental restoration.

These approaches can support each other. For example, successful businesses in (3) will have a natural motivation to advocate for regulations supporting (2) and social events fostering (1). Because I think it's more neglected and — as I will argue — potentially more powerful, I'm going to focus on (3).

Confronting successionism with human-specific industries

Currently, I think the EA movement is heavily fixated on government and technical efforts, to the point of neglecting pro-social and pro-business interventions that might even be necessary for resourceful engagement with government and tech development. In other words, EA is neglecting industrial solutions to the industrial problem of successionism.

As an example, consider the impact that AI policy efforts were having prior to ChatGPT-4, versus after. The impact of ChatGPT-4 being shipped as a product that anyone could use and benefit from *vastly outstripped* the combined efforts of everyone writing arguments and reports to increase awareness of AGI development in AI policy. That's because direct personal experience with something is so much more convincing than a logical or empirical argument, for most people, and it also creates logical common knowledge which is important for coordination.

Partly due to the EA community's (relative) disinterest in developing prosocial products and businesses in comparison to charities and government policies, I've not engaged much with the EA community over the past 6 years or so, even though I share certain values with many people in the community, including philanthropy.

However, I've recently been seeing more appreciation for "softer" (non-technical, non-governmental) considerations in AI risk coming from EA-adjacent readers, including some positive responses to a post I wrote called "Safety isn't safety without a social model". So, I thought it might make sense to try sharing more about how I wish the EA movement had a more diverse portfolio of approaches to AI risk, including industrial and social approaches.

For instance, amongst the many young people who have been inspired by EA to improve the world, I would love to see more people

- Taking pride in the generation of products and services through feedback loops that benefit everyone affected by the loop.

- Founding more for-profit businesses that are committed to growing by helping people.

Note: This does not include for-profits that grow by hurting people, such as by turning people against each other and extracting profits from the conflict. Illegal arms dealers and social media companies do this. It's much better to make the good kind of for-profits that grow by helping people. I want more of those!

- Hosting events that celebrate humanity, that leave people feeling happy to be alive and delighting in the happiness of others, especially kind-hearted and reasonable people who for whatever reason do not want to identify as EA or devote their whole career to EA.

Note: I've been pleased that certain EA-adjacent events I've attended over the past couple of years seem to have more of a positive vibe in this way, compared to my sense of the 2018-2022 era, which is another reason I feel more optimistic sharing this wish-list for cultural shifts that I would like to see from EA.

I suspect positive trends like these can have massive flow-through effects, helping humanity develop a healthy attitude of choosing to continue its own existence and avoiding full-on successionism.

Also, the more we humans can make the world better right now, the more we can alleviate what might otherwise be a desperate dependency upon superintelligence to solve all of our problems. I think a huge amount of good can be done with the current generation of AI models, and the more we achieve that, the less compelling it will be to take unnecessary risks with rapidly advancing superintelligence. There's a flinch reaction people sometimes have against this idea, because it "feeds" the AI industry by instantiating or acknowledging more of its benefits. But I think that's too harsh of a boundary to draw between humanity and AI, and I think we (humans) will do better by taking a measured and opportunistic approach to the benefits of AI.

How I identified healthcare as the industry most relevant to caring for humans

For one thing, it's right there in the name 🙂

More systematically:

Healthcare, agriculture, food science, education, entertainment, and environmental restoration are all important industries that serve humans but not machines. These are industries I want to sustain and advance, in order to keep the economy caring for humans, and to avoid successionism and industrial dehumanization. Also, good business ideas that grow by helping people can often pay for themselves, and thus help diversify funding sources for doing more good.

So, first and foremost, if you see ideas for businesses that meaningfully contribute to any of those industries, please build them! At the Survival and Flourishing Fund we now make non-dilutive grants to for-profits (in exchange for zero equity), and I would love for us to find more good business ideas to support.

With that said, healthcare is my favorite human-specific industry to advance, for several reasons:

- QALYs! — Good healthcare buys quality-adjusted life years for humans, and perhaps other species too.

- Operationalizing "alignment" — Healthcare presents a rich and challenging setting for getting AI to help take care of humans while respecting our autonomy and informed consent. These are core challenges to even deciding what it means for AI to be aligned with humanity, making healthcare an excellent industry for advancing a variety of alignment objectives in a way that's grounded in real-world products and services that help people.

- Geopolitical factors — Healthcare is relatively geopolitically stabilizing as an AI application area, or at least less destabilizing than many other industries like aerospace and defense, or even the other human-specific industries I mentioned. If either the US or China starts to have much better healthcare, I expect the other not to be too freaked out by that, compared to if they got much better at education (propaganda!) or entertainment (propaganda!). For what it's worth, I also think agriculture is relatively geopolitically stabilizing compared to other industries.

- Technical depth — Health itself is an inspiring concept at a technical level, because it is meaningful at many scales of organization at once: healthy cells, healthy organs, healthy people, healthy families, healthy communities, healthy businesses, healthy countries, and (dare I say) healthy civilizations all have certain features in common, to do with self-sustenance, harmony with others, and flexible but functional boundaries. I have hope that as neurosymbolic AI applications develop further over the next few years, we'll be able to apply them to pin down useful technical formulations of these concepts that can guide and support the survival and flourishing of humanity at many scales simultaneously. In particular, I think immunology serves as an excellent model for how humanity can maintain healthy levels of distributed autonomy and security, and refreshingly, so does Barack Obama according to a 2016 Wired interview by Joi Ito.

- My company — On a personal note, I think my start-up, HealthcareAgents.com, can meaningfully contribute to patient advocacy and diagnostic care, at scale, using AI. My cofounders Jaan Tallinn and Nick Hay have also been thinking deeply for well over a decade about the potential impacts of AI on humanity, and I think we can make a real difference to the health and well-being of present-day humans. Even more optimistically, I'm hoping we can make progress on life extension research beginning sometime in the 2027-2030 time window.

It's okay with me if only some of the above bets pay out, as long as my colleagues and I can make a real contribution to healthcare with AI technology, and help contribute to positive attitudes and business trends that avoid successionism and industrial dehumanization in the era of AGI.

But why not just do safety work with big AI labs or governments?

You might be wondering why I'm not working full-time with big AI labs and governments to address AI risk, given that I think loss-of-control risk is around 35% likely to get us all killed, and that it's closer in time than industrial dehumanization.

First of all, this question arguably ignores most of the human economy aside from governments and AGI labs, which should be a bit of a red flag I think, even if it's a reasonable question for addressing near-term loss-of-control risk specifically.

Second, I do still spend around 1 or 1.5 workdays per week addressing the control problem, through spurts of writing, advocacy and philanthropic support for the cause, in my work for UC Berkeley and volunteering for the Survival and Flourishing Fund. That said, it's true that I am not focusing the majority of my time on addressing the nearest term sources of AI risk.

Third, a major reason for my focus on longer-term risks on the scale of 5+ years — after I'm pretty confident that AGI will already be developed — is that I feel I've been relatively successful at anticipating tech development over the past 10 years or so, and the challenges those developments would bring. So, I feel I should continue looking 5 years ahead and addressing what I'm fairly sure is coming on that timescale.

For context, I first started working to address the AI control problem in 2012, by attempting to build and finance a community of awareness about it, and later through research at MIRI in 2015 and 2016. Around that time, I concluded that multipolar AI risks would be even more neglected than unipolar risks because they are harder to operationalize. I began looking for ways to address multipolar risks, first through research in open-source game theory, then within video game environments tailored to include caretaking relationships, and now in the real-world economy with healthcare as a focus area. And sadly it took me most of the period from 2012 to 2021 to realize that I should be working on for-profit feedback loops for effecting industrial change at a global scale, through the development of helpful products and services that can keep a growing business oriented on doing good work that helps people.

Now, in 2024, the loss-of-control problem is much more imminent but also much less neglected than when I started worrying about it, so I'm even more concerned with positioning myself and my business to address problems that might not become obvious for another 5-10 years. The potential elimination of the healthcare industry in the 2030s is one of those problems, and I want to be part of the solution to it.

Fourth, even if we (humans) fail to save the whole world, I will still find it intrinsically rewarding to help a bunch of people with their health problems between now and then. In other words, I also care about healthcare in and of itself, even if humanity might somehow destroy itself soon. This caring allows me to focus myself and my team on something positive that's enjoyable to scale up and that grows by helping people, which I consider a healthy attribute for a growing business.

Fifth and finally, overall I would like to see more ambitious young people who want to improve the world with helpful feedback loops that scale into successful businesses, because industry is a lot of what drives the world, and I want morally driven people to be driving industry.

Conclusion

In summary,

- I'm quite concerned about AI extinction risks from both acute loss of control events and industrial dehumanization driven by successionism, with the former being more imminent and less neglected, and the latter being less imminent and more neglected.

- I feel I have some comparative advantage for identifying risks that are more than 5 years away, including successionism and industrial dehumanization.

- In general I want to see more scalable social and business activities that

  - support the well-being of present-day humans,

  - spread positive vibes, and

  - leave people valuing their own existence and delighting in the happiness of others, especially in ways that help to avoid all-out successionism with AI.

- I'm especially concerned about the potential for human-specific industries to languish in the 2030s after AGI is well-developed, especially healthcare, agriculture, food science, education, entertainment, and environmental stewardship.

- I'm focusing on healthcare in particular because

  - I think it's highly tractable as an AI application area,

  - caring for the health of present-day people is intrinsically rewarding for myself and my team, and

  - healthcare is a great setting for operationalizing and addressing practical AI alignment problems at various scales of organization simultaneously.

Thanks for reading about why I'm working in healthtech :)

37 comments

comment by RobertM (T3t) · 2024-10-12T05:38:10.386Z

Do you have a mostly disjoint view of AI capabilities between the "extinction from loss of control" scenarios and "extinction by industrial dehumanization" scenarios? Most of my models for how we might go extinct in the next decade from loss of control scenarios require the kinds of technological advancement which make "industrial dehumanization" redundant, with highly unfavorable offense/defense balances, so I don't see how industrial dehumanization itself ends up being the cause of human extinction if we (nominally) solve the control problem, rather than a deliberate or accidental use of technology that ends up killing all humans pretty quickly.

Separately, I don't understand how encouraging human-specific industries is supposed to work in practice. Do you have a model for maintaining "regulatory capture" in a sustained way, despite having no economic, political, or military power by which to enforce it? (Also, even if we do succeed at that, it doesn't sound like we get more than the Earth as a retirement home, but I'm confused enough about the proposed equilibrium that I'm not sure that's the intended implication.)

↑ reply by Andrew_Critch · 2024-10-16T06:19:50.798Z

> Do you have a mostly disjoint view of AI capabilities between the "extinction from loss of control" scenarios and "extinction by industrial dehumanization" scenarios?

a) If we go extinct from a loss of control event, I count that as extinction from a loss of control event, accounting for the 35% probability mentioned in the post.

b) If we don't have a loss of control event but still go extinct from industrial dehumanization, I count that as extinction caused by industrial dehumanization caused by successionism, accounting for the additional 50% probability mentioned in the post, totalling an 85% probability of extinction over the next ~25 years.

c) If a loss of control event causes extinction via a pathway that involves industrial dehumanization, that's already accounted for in the previous 35% (and moreover I'd count the loss of control event as the main cause, because we have no control to avert the extinction after that point). I.e., I consider this a subset of (a): extinction via industrial dehumanization caused by loss of control. I'd hoped this would be clear in the post, from my use of the word "additional"; one does not generally add probabilities unless the underlying events are disjoint. Perhaps I should edit to add some more language to clarify this.

> Do you have a model for maintaining "regulatory capture" in a sustained way

Yes: humans must maintain power over the economy, such as by sustaining the power (including regulatory capture power) of industries that care for humans, per the post. I suspect this requires a lot of technical, social, and sociotechnical work, with much of the sociotechnical work probably being executed or lobbied by industry, and being of greater causal force than either the purely technical (e.g., algorithmic) or purely social (e.g., legislative) work.

The general phenomenon of sociotechnical patterns (e.g., product roll-outs) dominating the evolution of the AI industry can be seen in the way ChatGPT-4 as a product has had more impact on the world (including via its influence on subsequent technical and social trends) than technical and social trends in AI and AI policy prior to ChatGPT-4 (e.g., papers on transformer models; policy briefings and think tank pieces on AI safety).

> Do you have a model for maintaining "regulatory capture" in a sustained way, despite having no economic, political, or military power by which to enforce it?

No. Almost by definition, humans must sustain some economic or political power over machines to avoid extinction. The healthy parts of the healthcare industry are an area where humans currently have some terminal influence, as its end consumers. I would like to sustain that. As my post implies, I think humanity has around a 15% chance of succeeding in that, because I think we have around an 85% chance of all being dead by 2050. That 15% is what I am most motivated to work to increase and/or prevent decreasing, because other futures do not have me or my human friends or family or the rest of humanity in them.

> Most of my models for how we might go extinct in the next decade from loss of control scenarios require the kinds of technological advancement which make "industrial dehumanization" redundant,

Mine too, when you restrict to the extinction occurring (finishing) in the next decade. But the post also covers extinction events that don't finish (with all humans dead) until 2050, even if they are initiated (become inevitable) well before then. From the post:

> First, I think there's around a 35% chance that humanity will lose control of one of the first few AGI systems we develop, in a manner that leads to our extinction. Most (80%) of this probability (i.e., 28%) lies between now and 2030. In other words, I think there's around a 28% chance that between now and 2030, certain AI developments will "seal our fate" in the sense of guaranteeing our extinction over a relatively short period of time thereafter, with all humans dead before 2040.
>
> [...]
>
> Aside from the ~35% chance of extinction we face from the initial development of AGI, I believe we face an additional 50% chance that humanity will gradually cede control of the Earth to AGI after it's developed, in a manner that leads to our extinction through any number of effects including pollution, resource depletion, armed conflict, or all three. I think most (80%) of this probability (i.e., 40%) lies between 2030 and 2040, with the death of the last surviving humans occurring sometime between 2040 and 2050. This process would most likely involve a gradual automation of industries that are together sufficient to fully sustain a non-human economy, which in turn leads to the death of humanity.

If I intersect this immediately preceding narrative with the condition "all humans dead by 2035", I think that most likely occurs via (a)-type scenarios (loss of control), including (c) (loss of control leading to industrial dehumanization), rather than (b) (successionism leading to industrial dehumanization).
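For readers who want to check the arithmetic in the quoted forecast, here is a minimal Python sketch. It uses only the percentages stated above (a 35% early loss-of-control risk, an additional 50% gradual-ceding risk, and an 80% share of each falling inside its stated window). The function name `forecast_breakdown` is a hypothetical label, and treating the two risks as simply additive is an assumption, one that matches the 85% dead by 2050 / 15% survival figures given earlier.

```python
def forecast_breakdown(p_early_loss=0.35, p_gradual=0.50, share_in_window=0.80):
    """Derive the figures implied by the quoted forecast (hypothetical helper).

    Inputs are the percentages stated in the quoted post; the only added
    assumption is that the two extinction pathways are simply additive.
    """
    early_by_2030 = p_early_loss * share_in_window   # 80% of 35%, "i.e., 28%"
    gradual_2030s = p_gradual * share_in_window      # 80% of 50%
    total_by_2050 = p_early_loss + p_gradual         # assumed additive ("additional")
    survival = 1.0 - total_by_2050
    return {
        "early_by_2030": early_by_2030,
        "gradual_2030s": gradual_2030s,
        "total_extinction_by_2050": total_by_2050,
        "survival": survival,
    }


if __name__ == "__main__":
    for name, p in forecast_breakdown().items():
        print(f"{name}: {p:.0%}")
```

Running it prints the four derived figures, 28%, 40%, 85%, and 15%; in particular, 80% of the additional 50% comes to 40%.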

comment by Mitchell_Porter · 2024-10-12T01:53:13.759Z

"Successionism" is a valuable new word.

comment by David Hornbein · 2024-10-13T20:05:29.366Z

What does your company do, specifically? I found the brief description at HealthcareAgents.com vague and unclear. Can you walk me through an example case of what you do for a patient, or something?

↑ comment by Andrew_Critch · 2024-10-16T06:32:57.461Z

A patient can hire us to collect their medical records into one place, to research a health question for them, and to help them prep for a doctor's appointment with good questions about the research. Then we do that, building and using our AI tool chain as we go, without training AI on sensitive patient data. Then the patient can delete their data from our systems if they want, or re-engage us for further research or other advocacy on their behalf.

A good comparison is the company Picnic Health, except instead of specifically matching patients with clinical trials, we do more general research and advocacy for them.

comment by Chris_Leong · 2024-10-12T12:58:34.406Z

I'd strongly bet that when you break this down in more concrete detail, a flaw in your plan will emerge.

The balance of industries serving humans vs. AIs is a suspiciously high level of abstraction.

comment by Roman Leventov · 2024-10-12T13:04:02.542Z

Thanks for the post, I agree with it!

I just wrote a post presenting a differential knowledge interconnection thesis, in which I argue that it is, on net, beneficial to develop AI capabilities such as:

- Federated learning, privacy-preserving multi-party computation, and privacy-preserving machine learning.

- Federated inference and belief sharing.

- Protocols and file formats for data, belief, or claim exchange and validation.

- Semantic knowledge mining and hybrid reasoning on (federated) knowledge graphs and multimodal data.

- Structured or semantic search.

- Datastore federation for retrieval-based LMs.

- Cross-language (such as English/French) retrieval, search, and semantic knowledge integration. This is especially important for low-online-presence languages.

I discuss whether knowledge interconnection, on net, exacerbates or abates the risk of industrial dehumanization in a section of that post. It's a challenging question, but I reach the tentative conclusion that AI capabilities that favor obtaining and leveraging "interconnected" rather than "isolated" knowledge are on net risk-reducing. This is because the "human economy" is more complex than the hypothetical "pure machine-industrial economy", and "knowledge interconnection" capabilities support that greater complexity.

Would you agree or disagree with this?

comment by avturchin · 2024-10-12T09:58:03.078Z

Interestingly, a few years ago I wrote an article "Artificial intelligence in life extension" in which I concluded that medical AI is a possible way to AI safety.

We want to emphasize just two points of intersection of AI in healthcare and AI safety: Medical AI is aimed at the preservation of human lives, whereas, for example, military AI is generally focused on human destruction. If we assume that AI preserves the values of its creators, medical AI should be more harmless.

The development of such types of medical AI as neuroimplants will accelerate the development of AI in the form of a distributed social network consisting of self-upgrading people. Here, again, the values of such an intelligent neuroweb will be defined by the values of its participant “nodes,” which should be relatively safer than other routes to AI. Also, AI based on human uploads may be less likely to undergo quick, unlimited self-improvement, because of its complex and opaque structure.

comment by Nathan Helm-Burger (nathan-helm-burger) · 2024-10-13T18:53:53.220Z

Sacrificial transhumanism — a person may feel that humanity is worth sacrificing in order to achieve a transhumanist future. Even if transhumanism and/or cyborgism are fine and good for some people to pursue, I can't get behind the idea that it's okay to sacrifice humanity to achieve these developments, because I think it's unnecessarily disloyal to humanity. Still, I've met people who feel this way.

I just submitted an essay to the Cosmos essay competition about maintaining human autonomy in the age of AI. My take is quite different from yours. I don't expect that we will do more than delay and slightly slow the coming intelligence explosion. I think there are just too many disjoint paths forward, and the costs of blocking them all off are too extreme to expect any government body to bite that bullet (much less all the world's governments in coordination).

So my solution, in short, is to amplify humanity, to put our fate in the hands of those brave souls who pursue transhumanism in order to keep up with the growing intelligence and power of AI. This probably means a short period of cyborgs, with pure digital humans taking over after a few years. I don't think it's necessary for us to sacrifice humanity to achieve transhumanism. I expect the vast majority of humans to remain unenhanced. It's just that they will then be 'wards of the state', at such a large mental disadvantage, relatively speaking, that they will be at the mercy of the transhumans and the AI.

This is clearly a risky situation, but could be ok so long as the transhumans remain human in their fundamental values, and the AIs remain aligned to our values (or at least obedient and safe to use). I see this as the only plausible path forward. I think all other paths are unworkable.

[Edit: here's my essay, expanded a bit: https://www.lesswrong.com/posts/NRZfxAJztvx2ES5LG/a-path-to-human-autonomy]

comment by Vladimir_Nesov · 2024-10-12T03:05:25.538Z

Health itself is an inspiring concept at a technical level, because it is meaningful at many scales of organization at once: healthy cells, healthy organs, healthy people, healthy families, healthy communities, healthy businesses, healthy countries, and (dare I say) healthy civilizations all have certain features in common, to do with self-sustenance, harmony with others, and flexible but functional boundaries.

Healthcare in this general sense is highly relevant to machines. Conversely, sufficient tech to upload/backup/instantiate humans makes biology-specific healthcare (including life extension) mostly superfluous.

The key property of machines is initial advantage in scalability, which quickly makes anything human-specific tiny and easily ignorable in comparison, however you taxonomize the distinction. Humans persevere only if scalable machine sources of power (care to) lend us the benefits of their scale. Intent alignment for example would need to be able to harness a significant fraction of machine intent (rather than being centrally about human intent).

comment by Lukas Finnveden (Lanrian) · 2024-10-13T20:53:15.307Z

I wonder if work on AI for epistemics could be great for mitigating the "gradually cede control of the Earth to AGI" threat model. A large majority of economic and political power is held by people who would strongly oppose human extinction, so I expect that "lack of political support for stopping human extinction" would be less of a bottleneck than "consensus that we're heading towards human extinction" and "consensus on what policy proposals will solve the problem". Both of these could be significantly accelerated by AI. Normally, one of my biggest concerns about "AI for epistemics" is that we might not have much time to make good use of the epistemic assistance before the end, but if the idea is that we'll have AGI for many years (as we're gradually heading towards extinction), then there will be plenty of time.

comment by Martín Soto (martinsq) · 2024-10-12T14:26:50.510Z

Like Andrew, I don't see strong reasons to believe that near-term loss-of-control accounts for more x-risk than medium-term multi-polar "going out with a whimper". This is partly due to thinking oversight of near-term AI might be technically easy. I think Andrew also thought along those lines: an intelligence explosion is possible, but relatively easy to prevent if people are scared enough, and they probably will be. Although I do have lower probabilities than him, and some different views on AI conflict. Interested in your take, @Daniel Kokotajlo.

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2024-10-12T15:34:51.151Z

I don't think people will be scared enough of intelligence explosion to prevent it. Indeed the leadership of all the major AI corporations are actively excited about, and gunning for, an intelligence explosion. They are integrating AI into their AI R&D as fast as they can.

↑ comment by Bogdan Ionut Cirstea (bogdan-ionut-cirstea) · 2024-10-13T13:27:47.953Z

Indeed the leadership of all the major AI corporations are actively excited about, and gunning for, an intelligence explosion. They are integrating AI into their AI R&D as fast as they can.

Can you expand on this? My rough impression (without having any inside knowledge) is that auto AI R&D is probably very much underelicited, including e.g. in this recent OpenAI auto ML evals paper; which might suggest they're not gunning for it as hard as they could?

↑ comment by Nathan Helm-Burger (nathan-helm-burger) · 2024-10-13T18:46:40.804Z

It's hard to know for sure what they are planning in secret. If I were them, I'd currently be in a mode of "biding my time, waiting for the optimal moment to focus on automating AI R&D, building up the prerequisites."

I think the current LLMs and other AI systems are not quite strong enough to pass a critical threshold where this RSI feedback loop could really take off. Thus, if I had the option to invest in preparing the scaffolding now, or racing to get to the first version so that I got to be the first to start doing RSI... I'd just push hard for that first good-enough version. Then I'd pivot hard to RSI as soon as I had it.

↑ comment by Bogdan Ionut Cirstea (bogdan-ionut-cirstea) · 2024-10-13T19:18:41.699Z

I don't know; intuitively it would seem suboptimal to put very little of the research portfolio on preparing the scaffolding, since somebody else who isn't that far behind on the base model (e.g. another lab, maybe even the open-source community) might figure out the scaffolding (and perhaps not even make anything public) and get ahead overall.

↑ comment by Nathan Helm-Burger (nathan-helm-burger) · 2024-10-13T23:11:23.133Z

Maybe. I think it's hard to say from an outside perspective. I expect that what's being done inside labs is not always obvious on the outside.

And isn't o1/strawberry something pointing in the direction of RSI, such that it implies that thought and effort is being put into that direction?

↑ comment by Nathan Helm-Burger (nathan-helm-burger) · 2024-10-13T19:05:13.324Z

Importantly, I think that preventing an intelligence explosion (as opposed to delaying it by a couple of years, or slowing it by 10%) is really, really hard. My expectation is that even if large training runs and the construction of new large datacenters were halted worldwide today, algorithmic progress would continue. Within ten years I'd expect the cost of training and running an RSI-capable AGI to keep dropping as hardware and algorithms improved. At some point during that ten-year period, it would come within reach of small private datacenters, then personal home servers (e.g. bitcoin mining rigs), then ordinary personal computers.

If my view on this is correct, then during this ten-year period the governments of the world would not only need to coordinate to block RSI in large datacenters, but actually to expand their surveillance and control to ever smaller and more personal compute sources. Eventually they'd need to start confiscating personal computers beyond a certain power level, close all non-government-controlled datacenters, prevent the public sale of hardware components which could be assembled into compute clusters, monitor the web and block all encrypted traffic in order to prevent federated learning, and continue to inspect all the government facilities (including all secret military facilities) of all other governments to prevent any of them from defecting against the ban on AI progress.

I don't think that will happen, no matter how scared the leadership of any particular company or government gets. There are just too many options for someone somewhere to defect, and the costs of control are too high.

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2024-10-14T13:52:13.590Z

I agree that pausing for 10 years would be difficult. However, I think even a 1-year pause would be GREAT and would substantially improve humanity's chances of staying in control and surviving. In practice I expect 'you can't pause forever, what about bitcoin miners and north korea' to be used as just one more in a long list of rationalizations for why we shouldn't pause at all.

↑ comment by Nathan Helm-Burger (nathan-helm-burger) · 2024-10-14T15:08:33.160Z

I do think that we shouldn't pause. I agree that we need to end this corporate race towards AGI, but I don't think pausing is the right way to do it.

I think we should nationalize, then move ahead cautiously but determinedly. Not racing, not treating this as just normal corporate R&D. I think the seriousness of the risks, and the huge potential upsides, means that AI development should be treated more like nuclear power and nuclear weapons. We should control and restrict it. Don't allow even API access to non-team members. Remember when we talked about keeping AI in a box for safety? What if we actually did that?

I expect that nationalization would slow things down, especially at first during the transition. I think that's a good thing.

I disagree that creating approximately human-level AGI in a controlled lab environment is a bad thing. There are a lot of risks, but the alternatives are also risky.

Risks of nationalizing:

- Model and/or code could get stolen or leaked.

- Word of its existence could spread, and encourage others to try to catch up.

- It would be more useful for weapons development and other misuse.

- It could be used for RSI, resulting in:
  - a future model so superintelligent that it can escape even from the controlled lab,
  - the discovery of algorithmic improvements that make training much, much cheaper (and this secret would then need to be kept from leaking).

Benefits of nationalization:

- We would have a specimen of true AGI to study in the lab.

- We could use it, even without robust alignment, via a control strategy like Buck's/Ryan's ideas.

- We could use it for automated alignment research, including:
  - creating synthetic data,
  - creating realistic simulations for training and testing, with multiagent interactions, censored training runs, honeypots to catch deception or escape attempts, etc.,
  - parallel exploration of many theories with lots of very fast workers,
  - exploration of a wider set of possible algorithms and architectures, to see if some are particularly safe (or hazardous).

- We could use it for non-AI R&D:
  - this could help with defensive acceleration of defense-dominant technology, protecting the world from bioweapons, ICBMs, etc.,
  - this would enable beneficial rapid progress in many intellect-bottlenecked fields like medicine.

- Nationalization would enable:
  - preventing AI experts from leaving the country even if they decided not to work for the government project,
  - removing restrictions (and adding incentives) on immigration for AI experts,
  - not being dependent on the whims of corporate politics for the safety of humanity,
  - not needing to develop and deploy a consumer product (a distraction from the true quest of alignment), and not needing to worry about profits vs. expenditures,
  - removing divisions between the top labs by placing them all in the government project, preventing secret-hoarding of details important for alignment.

Risks of pausing:

There is still a corporate race on; it just has to work around the rules of the pause now. This creates enormous pressure on AI experts to find ways of improving the outputs of AI without breaking the limits. This is especially concerning in the case of compute limits, since it explicitly pushes research in the direction of searching for algorithms that would allow things like:

- incremental / federated training methods that break up big training runs into sub-limit pieces, or allow for better combinations of prior models,

- the search for much more efficient algorithms that can work on much less data and training compute. I am confident that huge gains are possible here, and would be found quickly. There is enough detail on this point for a post of its own, but I am reluctant to post these thoughts publicly, since they might contribute to the thing I'm worried about actually happening. Such developments would gravely undercut the new compute restrictions and enable wider proliferation into the hands of many smaller actors,

- the search for loopholes in the regulation or flaws in the surveillance and enforcement that allow for racing while appearing not to race,

- the continuation of many corporate race dynamics that are bad for safety, like pressure for espionage and employee-sniping,

- lack of military-grade security on AI developers: many different independent corporate systems, each presenting its own set of vulnerabilities. Dangerous innovation could occur in relatively small and insecure companies, and then get stolen or leaked,

- any employee can decide to quit and go off to start their own project, spreading tech secrets and changing control structures. The companies have no power to stop this (as opposed to a nationalized project),

- if large training runs are banned, this reduces the incentive to work for the big companies; smaller companies will seem more competitive and tempting.

↑ comment by Raemon · 2024-10-15T00:30:38.208Z

The whole reason I think we should pause is that, sooner or later, we will hit a threshold where, if we do not pause, we literally die, basically immediately (or, like, a couple months later in a way that is hard to find and intervene on) and it doesn't matter whether pausing has downsides.

(Where "pause" means "pause further capability developments that are more likely to produce the kinds of agentic thinking that could result in recursive self-improvement".)

So for me the question is "do we literally pause at the last possible second, or, sometime beforehand." (I doubt "last possible second" is a reasonable choice, if it's necessary to pause "at all", although I'm much more agnostic about "we should literally pause right now" vs "right at the moment we have minimum-viable-AGI but before recursive self-improvement can start happening" vs "2-6 months before MVP AGI")

I'm guessing you probably disagree that there will be a moment where, if we do not pause, we literally die?

↑ comment by Nathan Helm-Burger (nathan-helm-burger) · 2024-10-15T02:25:18.626Z

Short answer, yes I disagree. I also don't think we are safe if we do pause. That additional fact makes pausing seem less optimal.

Long answer:

I think I need to break this concept down a bit more. There's a variety of places one might consider pausing, and a variety of consequences which I think could happen at each of those places.

Pre-AGI, dangerous tool AI: this is where we are at now. A combination of tool AI and the limited LLMs we have can together provide pretty substantial uplift to a terrorist org attempting to wipe out humanity. Not an x-risk, but could certainly kill 99% of humanity. A sensible civilization would have paused before we got this far, and put appropriate defenses in place before proceeding. We still have a chance to build defenses, and should do that ASAP. Civilization is not safe. Our existing institutions are apparently failing to protect us from the substantial risks we are facing.

Assistant AGI

Weak AGI is being trained. With sufficient scaffolding, this will probably speed up AI research substantially, but isn't enough to fully automate the whole research process. This can probably be deployed safely behind an API without dooming us all. If it is following the pattern of LLMs so far, this involves a system with tons of memorized facts but subpar reasoning skills and lack of integration of the logical implications of combinations of the facts. The facts are scattered and disconnected.

If we were wise, we'd stop here and accept this substantial speed-up to our research capabilities and try to get civilization into a safe state. If others are racing though, the group at this level is going to be strongly tempted to proceed. This is not yet enough AI power to assure a decisive technological-economic victory for the leader.

If this AGI escaped, we could probably catch it before it self-improved much at all, and it would likely pose no serious (additional) danger to humanity.

Full Researcher AGI

Enough reasoning capability added to the existing LLMs and scaffolding systems (perhaps via some non-LLM architecture) that the system can now reason and integrate facts at least as well as a typical STEM scientist.

I don't expect that this kills us or escapes its lab, if it is treated cautiously. I think Buck and Ryan's control scheme works well to harness capabilities without allowing harms, with only a moderate safety tax.

The speed-up to AI research could now truly be called RSI. The time to the next level up, the more powerful version, may be only a few months if research proceeds at full speed. This is where we would depend on organizational adequacy to protect us, restraining the temptation of some researchers to accelerate at full speed. This is where we have the chance to instead turn the focus fully onto alignment, defensive technology acceleration, and human augmentation. This is probably enough AI power to grant the leader decisive economic/technological/military power and allow them to take action to globally halt further racing towards AI. In other words, this is the first point at which I believe we could safely 'pause', although given that the 'pause' would involve pretty drastic actions and substantial use of human-level AI, I don't think the 'pause' framing quite fits. Civilization can quickly be made safe.

If released into the world, it could probably evolve into something that could wipe out humanity. It could be quite hard to catch and delete in time, if it couldn't be proven to the relevant authorities that the risk was real and required drastic action. Not a definite game-over, though; just high risk.

Mildly Superhuman AGI (weak ASI)

Not only knows more about the world than any human ever has, but also integrates this information and reasons about it more effectively than any human could. Pretty dangerous even in the lab, but still could be safely controlled via careful schemes. For instance, keeping it impaired with slow-downs, deliberately censored and misleading datasets/simulations, and noise injection into its activations. Deploying it safely would likely require so much impairment that it wouldn't end up any more useful than the merely-high-human-level AGI.

There would be huge temptation to relax the deliberate impairments and harness the full power. Organizational insufficiency could strike here, failing to sufficiently restrain the operators.

If it escaped, it would definitely be able to destroy humanity within a fairly short time frame.

Strongly Superhuman

By the time you've relaxed your impairment and control measures enough to even measure how superhuman it is, you are already in serious danger from it. This is the level where you would need to seriously worry about the lab employees getting mind-hacked in some way, or the lab compute equipment getting hacked. If we train this model, and even try to test it at full power, we are in great danger.

If it escapes, it is game over.

Given these levels, I think that you are technically correct that there is a point where if we don't pause we are pretty much doomed. But I think that that pause point is somewhere above human-level AGI.

Furthermore, I think that attempting to pause before we get to 'enough AI power to make humanity safe' leads to us actually putting humanity at greater risk.

How?

- By continuing in our current state of great vulnerability to self-replicating weapons like bioweapons.

- By putting research pressure on the pursuit of more efficient algorithms, and resulting in a much more distributed, harder to control progress front proceeding nearly as fast to superhuman AGI. I think this rerouting would put us in far more danger of having a containment breach, or overshooting the target level of useful-but-not-suicidally-dangerous.

↑ comment by Raemon · 2024-10-15T03:36:47.058Z

I don’t have a deep, confident argument that we should pause before ‘slightly superhuman level’, but I do think we will need to pause then. I think getting humanity ready to pause takes something like 3 years, which is also about my earliest possible timeline, so I think we need to start laying the groundwork now: even if you think we shouldn’t pause until later, we should be ready, civilizationally, to pause quite abruptly.

↑ comment by Nathan Helm-Burger (nathan-helm-burger) · 2024-10-15T03:51:29.549Z

Well, it seems we are closer to agreement than we thought about when a pause could be good. I am unsure about the correct way to prep for the pause. I do think we are about 2-4 years from human-level AI, and could get to above-human-level within a year after that if we so chose.

↑ comment by JohnGreer · 2024-10-18T04:21:18.952Z

What makes you say 3 years?

↑ comment by Raemon · 2024-10-18T08:04:27.156Z

It's more of an intuitive guess than anything particularly rigorous, but, like, it takes time for companies, nation-states, and international communities to agree to things; we don't seem anywhere close; there will be political forces opposing the pause; and even if we got moderately lucky, 3 years seems like a generously short time to get all the necessary actors to pause in a stable way.

↑ comment by Noosphere89 (sharmake-farah) · 2024-10-14T14:02:21.558Z

Honestly, my view is that, assuming a baseline level of competence where AI legislation stays inadequate until a crisis appears (and that crisis has to be fairly severe), whether a pause helps depends fairly clearly on when it happens.

A pause 2-3 years before AI can take over everything is probably net positive, but attempting to pause, say, 1 year or 6 months before AI can take over everything is plausibly net negative. I suspect most pauses would essentially be pauses on giant training runs, which unfortunately introduces lots of risk from overhang due to algorithmic advances, and I expect that by the time very strong laws on AI are passed, AI will probably be either 1 OOM of compute away from takeover or already able to take over given new algorithms, which becomes a massive problem.

In essence, overhangs are the reason I expect the MNM effect to backfire/have a negative effect for AI regulation in a way it doesn't for other regulation:

https://www.lesswrong.com/posts/EgdHK523ZM4zPiX5q/coronavirus-as-a-test-run-for-x-risks#Implications_for_X_risks

comment by Towards_Keeperhood (Simon Skade) · 2024-10-14T16:47:16.952Z

IIUC, you have a 15% probability for humanity surviving. Can you say more about what you think those worlds would look like? Are those worlds where we ultimately build aligned superintelligence, and how would that go?

comment by Martin Vlach (martin-vlach) · 2024-11-08T06:00:21.563Z

EA is neglecting industrial solutions to the industrial problem of successionism.

..because the broader mass of active actors working on such solutions renders the biz areas non-neglected?

comment by LTM · 2024-10-13T17:47:27.567Z

One method of keeping humans in key industrial processes might be expanding credentialism. Individuals retaining control even when the majority of the thinking isn't done by them has always been a key part of any hierarchical organisation.

Legally speaking, certain key tasks can only be performed by qualified accountants, auditors, lawyers, doctors, elected officials and so on.

It would not be good for short term economic growth. However, legally requiring that certain tasks be performed by people with credentials machines are not eligible for might be a good (though absolutely not perfect) way of keeping humans in the loop.

comment by Chipmonk · 2024-10-12T06:17:38.505Z

Have you thought much about mental health?[1] Mental health seems to meet all of your same criteria. I say this because I'm currently working on this (and for alignment-like reasons).

[1] Maybe "healthcare" includes mental health to you, but https://healthcareagents.com/ doesn't seem to mention it.

↑ comment by Chipmonk · 2024-10-12T06:22:14.710Z

If anything, I suspect mental health is more tractable, with quicker feedback loops, and with more of a possibility of directly helping alignment[1] compared to physical health.

[1] Why? I'll have to write a full post on this eventually, but the gist is: I suspect many social conflicts, such as those around AI, could dissolve if the underlying ego conflicts dissolved. Decision-makers who become more emotionally secure also become better at coordination. Improving physical health has much less of this effect imo.

↑ comment by Cleo Nardo (strawberry calm) · 2024-12-04T18:31:58.983Z

I've skimmed the business proposal.

The healthcare agents advise patients on which information to share with their doctor, and advise doctors on which information to solicit from their patients.

This seems agnostic between mental and physiological health.

comment by Oliver Sourbut · 2024-10-16T09:03:01.596Z

There are a variety of different attitudes that can lead to successionism.

This is a really valuable contribution! (Both the term and laying out a partial extensional definition.)

I think there's a missing important class of instances which I've previously referred to as 'emotional dependence and misplaced concern' (though I think my choice of words isn't amazing). The closest is perhaps your 'AI parentism'. The basic point is that there is a growing 'AI rights/welfare' lobby because some people (for virtuous reasons!) are beginning to think that AI systems are or could be deserving moral patients. They might not even be wrong, though the current SOTA conversation here appears incredibly misguided. (I have drafts elaborating on this but as I am now a civil servant there are some hoops I need to jump through to publish anything substantive.)

comment by George Ingebretsen (george-ingebretsen) · 2024-10-13T17:54:51.977Z

In the case that there are, like, "ai-run industries" and "non-ai-run industries", I guess I'd expect the "ai-run industries" to gobble up all of the resources, to the point that even though AIs aren't automating things like healthcare, there just aren't any resources left?

↑ comment by Raemon · 2024-10-13T18:04:34.895Z

I think Critch isn’t imagining ‘AI-run’ as a distinction, per se, but rather that there are industries whose outputs mostly benefit humans, and industries that are ‘dual use’. Human healthcare might be run by AIs eventually but is still pointed at human-centric goals.

↑ comment by George Ingebretsen (george-ingebretsen) · 2024-10-14T03:29:50.563Z

Realizing I kind of misunderstood the point of the post. Thanks!