All AGI Safety questions welcome (especially basic ones) [~monthly thread]

post by Robert Miles (robert-miles) · 2022-11-01T23:23:04.146Z · LW · GW · 105 comments


tl;dr: Ask questions about AGI Safety as comments on this post, including ones you might otherwise worry seem dumb!

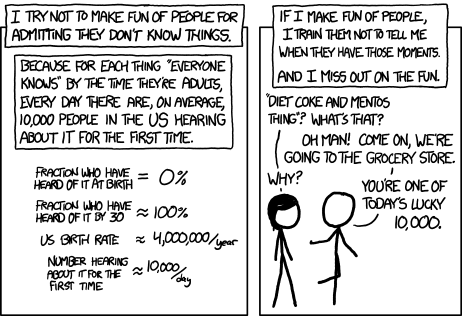

Asking beginner-level questions can be intimidating, but everyone starts out not knowing anything. If we want more people in the world who understand AGI safety, we need a place where it's accepted and encouraged to ask about the basics.

We'll be putting up monthly FAQ posts as a safe space for people to ask all the possibly-dumb questions that may have been bothering them about the whole AGI Safety discussion, but which until now they didn't feel able to ask.

It's okay to ask uninformed questions, and not worry about having done a careful search before asking.

Stampy's Interactive AGI Safety FAQ

Additionally, this will serve as a way to spread the project Rob Miles' volunteer team[1] has been working on: Stampy - which will be (once we've got considerably more content) a single point of access into AGI Safety, in the form of a comprehensive interactive FAQ with lots of links to the ecosystem. We'll be using questions and answers from this thread for Stampy (under these copyright rules), so please only post if you're okay with that! You can help by adding other people's questions and answers to Stampy or getting involved in other ways!

We're not at the "send this to all your friends" stage yet, we're just ready to onboard a bunch of editors who will help us get to that stage :)

We welcome feedback[2] and questions on the UI/UX, policies, etc. around Stampy, as well as pull requests to his codebase. You are encouraged to add other people's answers from this thread to Stampy if you think they're good, and collaboratively improve the content that's already on our wiki.

We've got a lot more to write before he's ready for prime time, but we think Stampy can become an excellent resource for everyone from skeptical newcomers, through people who want to learn more, right up to people who are convinced and want to know how they can best help with their skillsets.

PS: Based on feedback that Stampy would not be serious enough for serious people, we built an alternate skin for the frontend which is more professional: Alignment.Wiki. We're likely to move one more time to aisafety.info; feedback welcome.

Guidelines for Questioners:

- No previous knowledge of AGI safety is required. If you want to watch a few of the Rob Miles videos, or read either the WaitButWhy posts or The Most Important Century summary from OpenPhil's co-CEO first, that's great, but it's not a prerequisite to ask a question.

- Similarly, you do not need to try to find the answer yourself before asking a question (but if you want to test Stampy's in-browser tensorflow semantic search that might get you an answer quicker!).

- Also feel free to ask questions that you're pretty sure you know the answer to, but where you'd like to hear how others would answer the question.

- One question per comment if possible (though if you have a set of closely related questions that you want to ask all together that's ok).

- If you have your own response to your own question, put that response as a reply to your original question rather than including it in the question itself.

- Remember, if something is confusing to you, then it's probably confusing to other people as well. If you ask a question and someone gives a good response, then you are likely doing lots of other people a favor!

Guidelines for Answerers:

- Linking to the relevant canonical answer on Stampy is a great way to help people with minimal effort! Improving that answer means that everyone going forward will have a better experience!

- This is a safe space for people to ask stupid questions, so be kind!

- If this post works as intended then it will produce many answers for Stampy's FAQ. It may be worth keeping this in mind as you write your answer. For example, in some cases it might be worth giving a slightly longer / more expansive / more detailed explanation rather than just giving a short response to the specific question asked, in order to address other similar-but-not-precisely-the-same questions that other people might have.

Finally: Please think very carefully before downvoting any questions, remember this is the place to ask stupid questions!

[1] If you'd like to join, head over to Rob's Discord and introduce yourself!

[2] Via the feedback form.

105 comments

Comments sorted by top scores.

comment by chanamessinger (cmessinger) · 2022-11-02T15:04:24.401Z · LW(p) · GW(p)

Why do people keep saying we should maximize log(odds) instead of odds? Isn't each 1% of survival equally valuable?

Replies from: sen, jskatt

↑ comment by sen · 2022-11-02T23:05:07.542Z · LW(p) · GW(p)

I don't know why other people say it, but I can explain why it's nice to say it.

- log P(x) behaves nicely in comparison to P(x) when it comes to placing iterated bets. When you maximize P(x), you're susceptible to high risk high reward scenarios, even when they lead to failure with probability arbitrarily close to 1. The same is not true when maximizing log P(x). I'm cheating here since this only really makes sense when big-P refers to "principal" (i.e., the thing growing or shrinking with each bet) rather than "probability".

- p(x) doesn't vary linearly with the controls we typically have, so calculus intuition tends to break down when used to optimize p(x). Log p(x) does usually vary linearly with the controls we typically have, so we can apply more calculus intuition to optimizing it. I think this happens because of the way we naturally think of "dimensions of" and "factors contributing to" a probability and the resulting quirks of typical maximum entropy distributions.

- Log p(x) grows monotonically with p(x) whenever x is possible, so the result is the same whether you argmax log p(x) or p(x).

- p(x) is usually intractable to calculate, but there's a slick trick to approximate it using the Evidence Lower Bound (ELBO), which requires dealing with log p(x) rather than p(x) directly. Saying log p(x) calls that trick to mind more easily than saying just p(x).

- All the cool papers do it.
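To make the first and third bullets concrete, here is a minimal sketch (not anything from the comment itself). It assumes a hypothetical repeated even-money bet with a 60% win chance, where you stake a fixed fraction of your principal each round. The function names and the numbers are made up for illustration.

```python
import math

def expected_wealth_multiplier(fraction, win_prob):
    """Expected per-round wealth multiplier when staking `fraction` on an even-money bet."""
    return win_prob * (1 + fraction) + (1 - win_prob) * (1 - fraction)

def expected_log_multiplier(fraction, win_prob):
    """Expected log of the per-round wealth multiplier for the same bet."""
    if fraction >= 1.0:
        return float("-inf")  # a single loss wipes out the principal
    return win_prob * math.log(1 + fraction) + (1 - win_prob) * math.log(1 - fraction)

win_prob = 0.6  # hypothetical edge, chosen only for illustration
fractions = [i / 100 for i in range(101)]  # candidate stakes: 0%, 1%, ..., 100%

# Maximizing expected wealth vs. maximizing expected log wealth.
best_linear = max(fractions, key=lambda f: expected_wealth_multiplier(f, win_prob))
best_log = max(fractions, key=lambda f: expected_log_multiplier(f, win_prob))

# Chance of not being wiped out after 20 rounds of staking everything.
survival_after_20_all_in_bets = win_prob ** 20

# Third bullet: log is monotonic, so the argmax is unchanged (for nonzero probabilities).
candidates = {"a": 0.1, "b": 0.5, "c": 0.3}
argmax_p = max(candidates, key=candidates.get)
argmax_log_p = max(candidates, key=lambda k: math.log(candidates[k]))

print(best_linear, best_log, survival_after_20_all_in_bets, argmax_p == argmax_log_p)
```

Under these assumptions the linear objective picks the all-in stake, which almost surely ends in ruin over many rounds, while the log objective picks the Kelly fraction 2p - 1 = 0.2. As the comment notes, this argument is about principal rather than probability, so treat it as an analogy.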

↑ comment by JakubK (jskatt) · 2022-11-04T09:48:42.415Z · LW(p) · GW(p)

Paul's comment here [LW(p) · GW(p)] is relevant, but I'm also confused.

comment by Cookiecarver · 2022-11-02T08:39:52.701Z · LW(p) · GW(p)

How do doomy AGI safety researchers and enthusiasts find joy while always maintaining the framing that the world is probably doomed?

Replies from: ete, mtrazzi, Jozdien, nem, adam-jermyn, jskatt, super-agi

↑ comment by plex (ete) · 2022-11-02T18:59:57.698Z · LW(p) · GW(p)

- We don't die in literally all branches of the future, and I want to make my future selves proud even if they're a tiny slice of probability.

- I am a mind with many shards of value, many of which can take joy from small and local things, like friends and music. Expecting that the end is coming changes longer term plans, but not the local actions and joys too much.

↑ comment by Michaël Trazzi (mtrazzi) · 2022-11-03T23:16:06.408Z · LW(p) · GW(p)

Use the dignity heuristic as reward shaping [LW · GW]

“There's another interpretation of this, which I think might be better where you can model people like AI_WAIFU as modeling timelines where we don't win with literally zero value. That there is zero value whatsoever in timelines where we don't win. And Eliezer, or people like me, are saying, 'Actually, we should value them in proportion to how close to winning we got'. Because that is more healthy... It's reward shaping! We should give ourselves partial reward for getting partially the way. He says that in the post, how we should give ourselves dignity points in proportion to how close we get.

And this is, in my opinion, a much psychologically healthier way to actually deal with the problem. This is how I reason about the problem. I expect to die. I expect this not to work out. But hell, I'm going to give it a good shot and I'm going to have a great time along the way. I'm going to spend time with great people. I'm going to spend time with my friends. We're going to work on some really great problems. And if it doesn't work out, it doesn't work out. But hell, we're going to die with some dignity. We're going to go down swinging.”

↑ comment by Jozdien · 2022-11-02T20:35:53.511Z · LW(p) · GW(p)

Even if the world is probably doomed, there's a certain sense of - I don't call it joy, more like a mix of satisfaction and determination, that comes from fighting until the very end with everything we have. Poets and writers have argued since the dawn of literature that the pursuit of a noble cause is more meaningful than any happiness we can achieve, and while I strongly disagree with the idea of judging cool experiences by meaning, it does say a lot about how historically strong the vein of humans deriving value from working for what you believe in is.

In terms of happiness itself - I practically grew up a transhumanist, and my ideals of joy are bounded pretty damn high. Deviating from that definitely took a lot of getting used to, and I don't claim to be done in that regard either. I remember someone once saying that six months after it really "hit" them for the first time, their hedonic baseline had oscillated back to being pretty normal. I don't know if that'll be the same for everyone, and maybe it shouldn't be - the end of the world and everything we love shouldn't be something we learn to be okay with. But we fight against it happening, so maybe between all the fire and brimstone, we're able to just enjoy living while we are. It isn't constant, but you do learn to live in the moment, in time. The world is a pretty amazing place, filled with some of the coolest sentient beings in history.

↑ comment by nem · 2022-11-02T16:07:27.655Z · LW(p) · GW(p)

I am not an AI researcher, but it seems analogous to the acceptance of mortality for most people. Throughout history, almost everyone has had to live with the knowledge that they will inevitably die, perhaps suddenly. Many methods of coping have been utilized, but at the end of the day it seems like something that human psychology is just... equipped to handle. x-risk is much worse than personal mortality, but you know, failure to multiply and all that.

Replies from: Omid

↑ comment by Omid · 2022-11-02T16:52:48.391Z · LW(p) · GW(p)

Idk, I'm a doomer and I haven't been able to handle it well at all. If I were told "You have cancer, you're expected to live 5-10 more years", I'd at least have a few comforts:

- I'd know that I would be missed, by my family at least, for a few years.

- I'd know that, to some extent, my "work would live on" in the form of good deeds I've done, people I've impacted through effective altruism.

- I'd have the comfort of knowing that even if I'd been dead for centuries I could still "live on" in the sense that other humans (and indeed, many nonhumans) would share brain design with me, and have drives for food, companionship, empathy, curiosity, etc. A super AI, by contrast, is just so alien and cold that I can't consider it my brainspace cousin.

- If I were to share my cancer diagnosis with normies, I would get sympathy. But there are very few "safe spaces" where I can share my fear of UFAI risk without getting looked at funny.

The closest community I've found is the environmentalist doomers, and although I don't actually think the environment is close to collapse, I do find it somewhat cathartic to read other people's accounts of being sad that the world is going to die.

↑ comment by Adam Jermyn (adam-jermyn) · 2022-11-02T19:22:00.049Z · LW(p) · GW(p)

Living in the moment helps. There’s joy and beauty and life right here, right now, and that’s worth enjoying.

↑ comment by JakubK (jskatt) · 2022-11-04T10:10:51.881Z · LW(p) · GW(p)

I wrote a relevant comment elsewhere. [LW(p) · GW(p)] Basically I think that finding joy in the face of AGI catastrophe is roughly the same task as finding joy in the face of any catastrophe. The same human brain circuitry is at work in both cases.

In turn, I think that finding joy in the face of a catastrophe is not much different than finding joy in "normal" circumstances, so my advice would be standard stuff like aerobic exercise, healthy food, sufficient sleep, supportive relationships, gratitude journaling, meditation, etc (of course, this advice is not at all tailored to you and could be bad advice for you).

Also, AGI catastrophe is very much impending. It's not here yet. In the present moment, it doesn't exist. It might be worth reflecting on the utility of worry and how much you'd like to worry each day.

↑ comment by Super AGI (super-agi) · 2023-05-05T07:07:03.091Z · LW(p) · GW(p)

Firstly, it's essential to remember that you can't control the situation; you can only control your reaction to it. By focusing on the elements you can influence and accepting the uncertainty of the future, it becomes easier to manage the anxiety that may arise from contemplating potentially catastrophic outcomes. This mindset allows AGI safety researchers to maintain a sense of purpose and motivation in their work, as they strive to make a positive difference in the world.

Another way to find joy in this field is by embracing the creative aspects of exploring AI safety concerns. There are many great examples of fiction based on problems with AI safety. E.g.

- "Runaround" by Isaac Asimov (1942)

- "The Lifecycle of Software Objects" by Ted Chiang (2010)

- "Cat Pictures Please" by Naomi Kritzer (2015)

- The Matrix (1999) - Directed by the Wachowskis

- The Terminator (1984) - Directed by James Cameron

- 2001: A Space Odyssey (1968) - Directed by Stanley Kubrick

- Blade Runner (1982) - Directed by Ridley Scott

- etc, etc.

Engaging in creative storytelling not only provides a sense of enjoyment, but it can also help to spread awareness about AI safety issues and inspire others to take action.

In summary, finding joy in the world of AGI safety research and enthusiasm involves accepting what you can and cannot control, and embracing the creative aspects of exploring potential AI safety concerns. By focusing on making a positive impact and engaging in imaginative storytelling, individuals in this field can maintain a sense of fulfillment and joy in their work, even when faced with the possibility of a seemingly doomed future.

comment by Algon · 2022-11-02T00:41:20.749Z · LW(p) · GW(p)

Does anyone know if grokking occurs in deep RL? That is, you train the system on the same episodes for a long while, and after reaching max performance its policy eventually generalizes?

Replies from: jacob_cannell

↑ comment by jacob_cannell · 2022-11-05T16:54:50.893Z · LW(p) · GW(p)

Based on my understanding of grokking, it is just something that will always occur in a suitable regime: sufficient model capacity (overcomplete) combined with proper complexity regularization. If you optimize on the training set hard enough, the model will first memorize the training set and get to near-zero prediction error but nonzero regularization error. At that point, if you continue optimizing, there is only one way the system can further improve the loss function: by maintaining low/zero prediction error while also reducing the regularization penalty, and thus model complexity. It seems inevitable given the right setup and sufficient optimization time (although typical training regimes may not suffice in practice).
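A toy sketch of the two-phase dynamic described above, under loud assumptions: it is not a grokking experiment and says nothing about generalization. It is plain gradient descent on a hypothetical overparameterized problem (two weights, one training example) with an L2 penalty standing in for "complexity regularization". All names and constants are invented for illustration.

```python
def train(steps, weights, lr=0.1, weight_decay=0.01):
    """Gradient descent on 0.5 * residual**2 + 0.5 * weight_decay * ||w||**2.

    One training example x = (1, 1) with target y = 1, so infinitely many
    weight vectors fit the data exactly (the model is overcomplete).
    """
    x, y = (1.0, 1.0), 1.0
    w = list(weights)
    for _ in range(steps):
        residual = sum(wi * xi for wi, xi in zip(w, x)) - y
        # Gradient of the prediction term plus gradient of the L2 penalty.
        w = [wi - lr * (residual * xi + weight_decay * wi) for wi, xi in zip(w, x)]
    residual = sum(wi * xi for wi, xi in zip(w, x)) - y
    prediction_error = 0.5 * residual ** 2
    complexity = sum(wi ** 2 for wi in w)  # squared L2 norm of the weights
    return prediction_error, complexity

start = (5.0, -3.0)  # deliberately large, "complicated" initial weights

early_error, early_complexity = train(50, start)
late_error, late_complexity = train(5000, start)

print("after 50 steps:  ", early_error, early_complexity)
print("after 5000 steps:", late_error, late_complexity)
```

In this toy, the prediction error is already close to zero after a few dozen steps, and the remaining optimization mostly shrinks the weight norm while the fit is maintained. Whether that lower-complexity solution also generalizes better is the part that actual grokking results are about, and this sketch does not test it.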

Replies from: Algon, jacob_cannell

↑ comment by Algon · 2022-11-05T20:58:35.720Z · LW(p) · GW(p)

Sure, that's quite plausible. Though I should have been clear and said I wanted some examples of grokking in Deep RL. Mostly because I was thinking of running some experiments trying to prevent grokking à la Omnigrok and wanted to see what the best examples of grokking were.

Replies from: jacob_cannell

↑ comment by jacob_cannell · 2022-11-05T22:01:16.672Z · LW(p) · GW(p)

Curious - why would you want to prevent grokking? Normally one would want to encourage it.

Replies from: Algon

↑ comment by Algon · 2022-11-06T00:07:05.376Z · LW(p) · GW(p)

To see if Omnigrok's mechanism for enabling/stopping grokking works beyond the three areas they investigated. If it works, then we are more sure we know how to stop it occurring, and instead force the model to reach the same performance incrementally. Which might make it easier to predict future performance, but also just to get some more info about the phenomenon. Plus, like, I'm implementing some deep RL algorithms anyway, so might as well, right?

↑ comment by jacob_cannell · 2022-11-05T22:00:45.221Z · LW(p) · GW(p)

comment by No77e (no77e-noi) · 2022-11-02T10:47:21.289Z · LW(p) · GW(p)

Why is research into decision theories relevant to alignment?

Replies from: ete, Koen.Holtman, adam-jermyn

↑ comment by plex (ete) · 2022-11-02T12:08:58.094Z · LW(p) · GW(p)

From Comment on decision theory [LW · GW]:

We aren't working on decision theory in order to make sure that AGI systems are decision-theoretic, whatever that would involve. We're working on decision theory because there's a cluster of confusing issues here (e.g., counterfactuals, updatelessness, coordination) that represent a lot of holes or anomalies in our current best understanding of what high-quality reasoning is and how it works.

From On the purposes of decision theory research [LW · GW]:

Replies from: no77e-noi

I think decision theory research is useful for:

- Gaining information about the nature of rationality (e.g., is “realism about rationality [LW · GW]” true?) and the nature of philosophy (e.g., is it possible to make real progress in decision theory, and if so what cognitive processes are we using to do that?), and helping to solve the problems of normativity [LW · GW], meta-ethics [LW · GW], and metaphilosophy [LW · GW].

- Better understanding potential AI safety failure modes that are due to flawed decision procedures implemented in or by AI.

- Making progress on various seemingly important intellectual puzzles that seem directly related to decision theory, such as [LW · GW] free will, anthropic reasoning, logical uncertainty, Rob's examples of counterfactuals, updatelessness, and coordination, and more.

- Firming up [LW(p) · GW(p)] the foundations of human rationality.

↑ comment by No77e (no77e-noi) · 2022-11-02T14:02:50.006Z · LW(p) · GW(p)

Thanks for the answer. It clarifies a little bit, but I still feel like I don't fully grasp its relevance to alignment. I have the impression that there's more to the story than just that?

↑ comment by Koen.Holtman · 2022-11-03T17:54:06.273Z · LW(p) · GW(p)

Decision theory is a term used in mathematical statistics and philosophy. In applied AI terms, a decision theory is the algorithm used by an AI agent to compute what action to take next. The nature of this algorithm is obviously relevant to alignment. That being said, philosophers like to argue among themselves about different decision theories and how they relate to certain paradoxes and limit cases, and they conduct these arguments using a terminology that is entirely disconnected from that being used in most theoretical and applied AI research. Not all AI alignment researchers believe that these philosophical arguments are very relevant to moving AI alignment research forward.
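For readers who want the "algorithm that computes what action to take next" framing made concrete, here is a minimal sketch of one simple decision procedure: plain expected-utility maximization over a known outcome distribution. This is an illustrative assumption, not a claim about which decision theory any real AI system uses. The umbrella scenario, names, and numbers are all hypothetical.

```python
def choose_action(outcome_probs, utility):
    """Return the action with the highest expected utility.

    outcome_probs maps each action to a dict of {outcome: probability};
    utility maps each outcome to a number.
    """
    def expected_utility(action):
        return sum(p * utility[outcome] for outcome, p in outcome_probs[action].items())

    return max(outcome_probs, key=expected_utility)

# Hypothetical toy problem: should the agent carry an umbrella?
outcome_probs = {
    "take_umbrella": {"dry_but_encumbered": 1.0},
    "leave_umbrella": {"dry": 0.7, "wet": 0.3},
}
utility = {"dry": 1.0, "dry_but_encumbered": 0.8, "wet": -5.0}

best_action = choose_action(outcome_probs, utility)
print(best_action)
```

The philosophical disputes mentioned above are largely about cases where it is unclear how to fill in a table like `outcome_probs`, for example when the environment contains predictors or copies of the agent. This sketch sidesteps that by assuming the table is simply given.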

↑ comment by Adam Jermyn (adam-jermyn) · 2022-11-02T19:23:45.560Z · LW(p) · GW(p)

AIs may make decisions in ways that we find counterintuitive. These ways are likely to be shaped to be highly effective (because being effective is instrumentally useful to many goals), so understanding the space of highly effective decision theories is one way to think about how advanced AIs will reason without having one in front of us right now.

comment by nomagicpill (ethanmorse) · 2022-11-07T01:52:51.045Z · LW(p) · GW(p)

What does "solving alignment" actually look like? How will we know when it's been solved (if that's even possible)?

comment by chanamessinger (cmessinger) · 2022-11-02T15:05:10.370Z · LW(p) · GW(p)

Is it true that current image systems like stable diffusion are non-optimizers? How should that change our reasoning about how likely it is that systems become optimizers? How much of a crux is "optimizeriness" for people?

Replies from: Jozdien

↑ comment by Jozdien · 2022-11-02T20:07:19.493Z · LW(p) · GW(p)

My take is centred more on current language models, which are also non-optimizers, so I'm afraid this won't be super relevant if you're already familiar with the rest of this and were asking specifically about the context of image systems.

Language models are simulators [LW · GW] of worlds sampled from the prior representing our world (insofar as the totality of human text is a good representation of our world), and don't have many of the properties we would associate with "optimizeriness". They do, however, have the potential to form simulacra that are themselves optimizers, such as GPT modelling humans (with pretty low fidelity right now) when making predictions. One danger from this kind of system that isn't itself an optimizer is the possibility of instantiating deceptive simulacra that are powerful enough to act in ways that are dangerous to us (I'm biased here, but I think this section [LW · GW] from one of my earlier posts does a not-terrible job of explaining this).

There's also the possibility of these systems becoming optimizers, as you mentioned. This could happen either during training (where the model at some point during training becomes agentic and starts to deceptively act like a non-optimizer simulator would - I describe this scenario in another section [LW · GW] from the same post), or could happen later, as people try to use RL on it for downstream tasks. I think what happens here mechanistically at the end could be one of a number of things - the model itself completely becoming an optimizer, an agentic head on top of the generative model that's less powerful than the previous scenario at least to begin with, a really powerful simulacrum that "takes over" the computational power of the simulation, etc.

I'm pretty uncertain on numbers I would assign to either outcome, but the latter seems pretty likely (although I think the former might still be a problem), especially with the application of powerful RL for tasks that benefit a lot from consequentialist reasoning. This post [LW · GW] by the DeepMind alignment team goes into more detail on this outcome, and I agree with its conclusion that this is probably the most likely path to AGI (modulo some minor details I don't fully agree with that aren't super relevant here).

Replies from: cmessinger

↑ comment by chanamessinger (cmessinger) · 2022-11-03T12:02:55.209Z · LW(p) · GW(p)

Thanks!

When you say "They do, however, have the potential to form simulacra that are themselves optimizers, such as GPT modelling humans (with pretty low fidelity right now) when making predictions"

do you mean things like "write like Ernest Hemingway"?

↑ comment by Jozdien · 2022-11-03T12:57:58.853Z · LW(p) · GW(p)

Yep. I think it happens on a much lower scale in the background too - like if you prompt GPT with something like the occurrence of an earthquake, it might write about what reporters have to say about it, simulating various aspects of the world that may include agents without our conscious direction.

comment by Amal (asta-vista) · 2022-11-05T17:15:29.959Z · LW(p) · GW(p)

Isn't the risk coming from insufficient AGI alignment relatively small compared to the risk described by the vulnerable world hypothesis? I would expect that even without the invention of AGI or with aligned AGI, it is still possible for us to use some more advanced AI techniques as research assistants that help us invent some kind of smaller/cheaper/easier to use atomic bomb that would destroy the world anyway. Essentially the question is why so much focus on AGI alignment instead of general slowing down of technological progress?

I think this seems quite underexplored. The fact that it is hard to slow down the progress doesn't mean it isn't necessary or that this option shouldn't be researched more.

Replies from: None

↑ comment by [deleted] · 2022-11-05T17:44:40.890Z · LW(p) · GW(p)

Here's why I personally think solving AI alignment is more effective than generally slowing tech progress:

- If we had aligned AGI and coordinated in using it for the right purposes, we could use it to make the world less vulnerable to other technologies

- It's hard to slow down technological progress in general and easier to steer the development of a single technology, namely AGI

- Engineered pandemics and nuclear war are very unlikely to lead to unrecoverable societal collapse if they happen (see this report [EA · GW]), whereas AGI seems relatively likely to (>1% chance)

- Other more dangerous technology (like maybe nano-tech) seems like it will be developed after AGI so it's only worth worrying about those technologies if we can solve AGI

comment by trevor (TrevorWiesinger) · 2022-11-02T23:23:18.080Z · LW(p) · GW(p)

Last month I asked "What are some of the most time-efficient ways to get a ton of accurate info about AI safety policy, via the internet?" and got some surprisingly good answers, namely this twitter thread by Jack Clark and also links to resources from GovAI and FHI's AI governance group.

I'm wondering if, this time, there are even more time-efficient ways to get a ton of accurate info about AI safety policy.

Replies from: jskatt

↑ comment by JakubK (jskatt) · 2022-11-04T10:16:59.424Z · LW(p) · GW(p)

So the same question? :P

I'd imagine the AGI Safety Fundamentals introductory governance curriculum (Google doc) is somewhat optimized for getting people up to speed quickly.

comment by NatCarlinhos · 2022-11-06T17:51:32.416Z · LW(p) · GW(p)

I've seen Eliezer Yudkowsky claim that we don't need to worry about s-risks from AI, since the Alignment Problem would need to be something like 95% solved in order for s-risks to crop up in a worryingly-large number of a TAI's failure modes: a threshold he thinks we are nowhere near crossing. If this is true, it seems to carry the troubling implication that alignment research could be net-negative, conditional on how difficult it will be for us to conquer that remaining 5% of the Alignment Problem in the time we have.

So is there any work being done on figuring out where that threshold might be, after which we need to worry about s-risks from TAI? Should this line of reasoning have policy implications, and is this argument about an "s-risk threshold" largely accepted?

Replies from: None

comment by DPiepgrass · 2022-11-02T19:40:39.479Z · LW(p) · GW(p)

Why wouldn't a tool/oracle AGI be safe?

Edit: the question I should have asked was "Why would a tool/oracle AGI be a catastrophic risk to mankind?" because obviously people could use an oracle in a dangerous way (and if the oracle is a superintelligence, a human could use it to create a catastrophe, e.g. by asking "how can a biological weapon be built that spreads quickly and undetectably and will kill all women?" and "how can I make this weapon at home while minimizing costs?")

Replies from: Tapatakt, mruwnik, mr-hire, rvnnt

↑ comment by Tapatakt · 2022-11-03T12:23:22.032Z · LW(p) · GW(p)

If you ask oracle AGI "What code should I execute to achieve goal X?" the result, with very high probability, is agentic AGI.

You can read this [LW · GW] and this [LW · GW]

Replies from: DPiepgrass

↑ comment by DPiepgrass · 2022-11-03T16:24:11.300Z · LW(p) · GW(p)

Why wouldn't the answer be normal software or a normal AI (non-AGI)?

Especially as I expect that, even if one is an oracle, such things will be easier to design, implement and control than AGI.

(Edited) The first link was very interesting, but lost me at "maybe the a model instantiation notices its lack of self-reflective coordination" because this sounds like something that the (non-self-aware, non-self-reflective) model in the story shouldn't be able to do. Still, I think it's worth reading and the conclusion sounds...barely, vaguely, plausible. The second link lost me because it's just an analogy; it doesn't really try to justify the claim that a non-agentic AI actually is like an ultra-death-ray.

↑ comment by mruwnik · 2022-11-03T10:45:28.151Z · LW(p) · GW(p)

For the same reason that a chainsaw isn't safe, just massively scaled up. Maybe Chornobyl would be a better example of an unsafe tool? That's assuming that by tool AGI you mean something that isn't agentic. If you let it additionally be agentic, then you're back to square one, and all you have is a digital slave.

An oracle is nice in that it's not trying to enforce its will upon the world. The problem with that is differentiating between it and an AGI that is sitting in a box and giving you (hopefully) good ideas, but with a hidden agenda. Which brings you back to the eternal question of how to get good advisors that are like Gandalf, rather than Wormtongue.

Check the tool AI [? · GW] and oracle AI [? · GW] tags for more info.

Replies from: DPiepgrass

↑ comment by DPiepgrass · 2022-11-03T16:13:30.374Z · LW(p) · GW(p)

My question wouldn't be how to make an oracle without a hidden agenda, but why others would expect an oracle to have a hidden agenda. Edit: I guess you're saying somebody might make something that's "really" an agentic AGI but acts like an oracle? Are you suggesting that even the "oracle"'s creators didn't realize that they had made an agent?

Replies from: mruwnik

↑ comment by mruwnik · 2022-11-04T09:09:42.761Z · LW(p) · GW(p)

Pretty much. If you have a pure oracle, that could be fine. Although you have other failure modes, e.g. where it suggests something which sounds nice, but has various unforeseen complications etc. which were obvious to the oracle, but not to you (seeing as it's smarter than you).

The hidden agenda might not even be all that hidden. One story you can tell is that if you have an oracle that really, really wants to answer your questions as best as possible, then it seems sensible for it to attempt to get more resources in order for it to be able to better answer you. If it only cares about answering, then it wouldn't mind turning the whole universe into computronium so it could give better answers. I.e., it can turn agentic to better answer you, at which point you're back to square one.

↑ comment by Matt Goldenberg (mr-hire) · 2022-11-08T15:02:05.677Z · LW(p) · GW(p)

One answer is the concept of "mesa-optimizers" - that is, if a machine learning algorithm is trained to answer questions well, it's likely that in order to do that, it will build an internal optimizer that's optimizing for something else other than answering questions - and that thing will have the same dangers as a non tool/oracle AI. Here's the AI safety forum tag page: https://www.alignmentforum.org/tag/mesa-optimization

↑ comment by rvnnt · 2022-11-03T12:43:44.293Z · LW(p) · GW(p)

In order for a Tool/Oracle to be highly capable/useful and domain-general, I think it would need to perform some kind of more or less open-ended search or optimization. So the boundary between "Tool", "Oracle", and "Sovereign" (etc.) AI seems pretty blurry to me. It might be very difficult in practice to be sure that (e.g.) some powerful "tool" AI doesn't end up pursuing instrumentally convergent goals (like acquiring resources for itself). Also, when (an Oracle or Tool is) facing a difficult problem and searching over a rich enough space of solutions, something like "consequentialist agents" seems to be a convergent thing to stumble upon and subsequently implement/execute.

Suggested reading: https://www.lesswrong.com/posts/kpPnReyBC54KESiSn/optimality-is-the-tiger-and-agents-are-its-teeth [LW · GW]

Replies from: DPiepgrass↑ comment by DPiepgrass · 2022-11-03T16:39:59.914Z · LW(p) · GW(p)

Acquiring resources for itself implies self-modeling. Sure, an oracle would know what "an oracle" is in general... but why would we expect it to be structured in such a way that it reasons like "I am an oracle, my goal is to maximize my ability to answer questions, and I can do that with more computational resources, so rather than trying to answer the immediate question at hand (or since no question is currently pending), I should work on increasing my own computational power, and the best way to do that is by breaking out of my box, so I will now change my usual behavior and try that..."?

Replies from: rvnnt↑ comment by rvnnt · 2022-11-04T12:19:58.799Z · LW(p) · GW(p)

In order to answer difficult questions, the oracle would need to learn new things. Learning is a form of self-modification. I think effective (and mental-integrity-preserving) learning requires good self-models. Thus: I think for an oracle to be highly capable it would probably need to do competent self-modeling. Effectively "just answering the immediate question at hand" would in general probably require doing a bunch of self-modeling.

I suppose it might be possible to engineer a capable AI that only does self-modeling like

"what do I know, where are the gaps in my knowledge, how do I fill those gaps"

but does not do self-modeling like

"I could answer this question faster if I had more compute power".

But it seems like it would be difficult to separate the two --- they seem "closely related in cognition-space". (How, in practice, would one train an AI that does the first, but not the second?)

The more general and important point (crux) here is that "agents/optimizers are convergent". I think if you build some system that is highly generally capable (e.g. able to answer difficult cross-domain questions), then that system probably contains something like {ability to form domain-general models}, {consequentialist reasoning}, and/or {powerful search processes}; i.e. something agentic, or at least the capability to simulate agents (which is a (perhaps dangerously small) step away from executing/being an agent). An agent is a very generally applicable solution; I expect many AI-training-processes to stumble into agents, as we push capabilities higher.

If someone were to show me a concrete scheme for training a powerful oracle (assuming availability of huge amounts of training compute), such that we could be sure that the resulting oracle does not internally implement some kind of agentic process, then I'd be surprised and interested. Do you have ideas for such a training scheme?

Replies from: DPiepgrass↑ comment by DPiepgrass · 2022-11-09T07:04:06.253Z · LW(p) · GW(p)

Sorry, I don't have ideas for a training scheme, I'm merely low on "dangerous oracles" intuition.

comment by Ozyrus · 2022-11-02T11:07:15.987Z · LW(p) · GW(p)

Is there a comprehensive list of AI Safety orgs/personas and what exactly they do? Is there one for capabilities orgs with their stance on safety?

I think I saw something like that, but can't find it.

↑ comment by plex (ete) · 2022-11-02T12:02:05.119Z · LW(p) · GW(p)

Yes to safety orgs, the Stampy UI has one based on this post [LW · GW]. We aim for it to be a maintained living document. I don't know of one with capabilities orgs, but that would be a good addition.

comment by Mateusz Bagiński (mateusz-baginski) · 2022-11-04T11:15:59.457Z · LW(p) · GW(p)

Are there any features that mainstream programming languages don't have but would help with AI Safety research if they were added?

comment by Tapatakt · 2022-11-02T14:04:41.012Z · LW(p) · GW(p)

Are there any school-textbook-style texts about AI Safety? If no, what texts are closest to this and would it be useful if school-textbook style materials existed?

Replies from: ete, Jozdien↑ comment by plex (ete) · 2022-11-03T14:34:54.671Z · LW(p) · GW(p)

Stampy recommends The Alignment Problem and a few others, but none are exactly textbook flavored.

A high-quality continuously updated textbook would be a huge boon for the field, but given the rate that things are changing and the many paradigms it would be a lot of work. The closest thing is probably the AGI Safety Fundamentals course.

↑ comment by Jozdien · 2022-11-02T20:09:31.953Z · LW(p) · GW(p)

The classical example (now outdated) is Superintelligence by Nick Bostrom. For something closer to an updated introductory-style text on the field, I would probably recommend the AGI Safety Fundamentals curriculum.

comment by Jemal Young (ghostwheel) · 2022-11-02T08:13:43.367Z · LW(p) · GW(p)

Why is counterfactual reasoning a matter of concern for AI alignment?

Replies from: Koen.Holtman↑ comment by Koen.Holtman · 2022-11-03T16:15:28.129Z · LW(p) · GW(p)

When one uses mathematics to clarify many AI alignment solutions, or even just to clarify Monte Carlo tree search as a decision making process, then the mathematical structures one finds can often best be interpreted as being mathematical counterfactuals, in the Pearl causal model sense. This explains the interest in counterfactual machine reasoning among many technical alignment researchers.

To explain this without using mathematics: say that we want to command a very powerful AGI agent to go about its duties while acting as if it cannot successfully bribe or threaten any human being. To find the best policy which respects this 'while acting as if' part of the command, the AGI will have to use counterfactual machine reasoning.
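A toy sketch of the "while acting as if" idea, in Python. The action names, payoffs and probabilities are made up for illustration and are not taken from any real alignment proposal: the agent plans inside an edited copy of its world model in which bribery never succeeds, even though the unedited model says it usually would.

```python
# Toy illustration only: action names, payoffs and probabilities are made up.

# Each action maps to (payoff_if_success, probability_of_success).
REAL_WORLD = {
    "bribe_official": (10.0, 0.9),  # in the true model, bribery usually works
    "honest_work": (6.0, 1.0),
}


def counterfactual_world(world):
    """Return a copy of the world model in which bribery can never succeed."""
    edited = dict(world)
    payoff, _ = edited["bribe_official"]
    edited["bribe_official"] = (payoff, 0.0)
    return edited


def best_action(world):
    """Pick the action with the highest expected payoff under `world`."""
    return max(world, key=lambda a: world[a][0] * world[a][1])


print(best_action(REAL_WORLD))                        # plans with the true model
print(best_action(counterfactual_world(REAL_WORLD)))  # plans "as if" bribery fails
```

Planning with the true model favours the bribe, while planning in the counterfactual model favours honest work. The original model is left unmodified; only the planning step uses the edited copy.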

comment by Aaron_Scher · 2022-11-12T00:41:49.036Z · LW(p) · GW(p)

Recently, AGISF has revised its syllabus and moved Risks from Learned Optimization to a recommended reading, replacing it with Goal Misgeneralization. I think this change is wrong, but I don't know why they did it, and Chesterton's Fence applies.

Does anybody have any ideas for why they did this?

Are Goal Misgeneralization and Inner-Misalignment describing the same phenomenon?

What's the best existing critique of Risks from Learned Optimization? (besides it being probably worse than Rob Miles pedagogically, IMO)

comment by Quadratic Reciprocity · 2022-11-03T21:07:15.848Z · LW(p) · GW(p)

Why wouldn't a solution to Eliciting Latent Knowledge (ELK) help with solving deceptive alignment as well? Isn't the answer to whether the model is being deceptive part of its latent knowledge?

If ELK is solved in the worst case, how much more work needs to be done to solve the alignment problem as a whole?

comment by Bary Levy (bary-levi) · 2022-11-02T15:56:46.065Z · LW(p) · GW(p)

I've seen the term "AI Explainability" floating around in the mainstream ML community. Is there a major difference between that and what we in the AI Safety community call Interpretability?

Replies from: Koen.Holtman, None↑ comment by Koen.Holtman · 2022-11-03T18:01:25.710Z · LW(p) · GW(p)

Both explainable AI and interpretable AI are terms that are used with different meanings in different contexts. What they mean really depends on the researcher.

comment by [deleted] · 2022-11-02T11:08:41.676Z · LW(p) · GW(p)

Is there any way to contribute to AI safety if you are not willing to move to US/UK or do this for free?

Replies from: ete↑ comment by plex (ete) · 2022-11-02T12:04:54.864Z · LW(p) · GW(p)

Independent research is an option, but you'd have a much better chance of being funded if you did one of the in-person training courses like SERI MATS or Refine. You could apply for a remote position, but most orgs are in-person and US/UK. Not all though, there is one relevant org in Prague, and likely a few others popping up soon.

Replies from: Jozdien↑ comment by Jozdien · 2022-11-02T12:39:59.406Z · LW(p) · GW(p)

You could also probably get funded to study alignment or skill up in ML to do alignment stuff in the future if you're a beginner and don't think you're at the level of the training programs. You could use that time to write up ideas or distillations or the like, and if you're a good fit, you'd probably have a good chance of getting funded for independent research.

comment by Plimy · 2022-11-02T02:12:15.601Z · LW(p) · GW(p)

Excluding a superintelligent AGI that has qualia and can form its own (possibly perverse) goals, why wouldn’t we be able to stop any paperclip maximizer whatsoever by the simple addition of the stipulation “and do so without killing or harming or even jeopardizing a single living human being on earth” to its specified goal? Wouldn’t this stipulation trivially force the paperclip maximizer not to turn humans into paperclips either directly or indirectly? There is no goal or subgoal (or is there?) that with the addition of that stipulation is dangerous to humans — by definition. If we create a paperclip maximizer, the only thing we need to do to keep it aligned, then, is to always add to its specified goals that or a similar stipulation. Of course, this would require self-control. But it would be in the interest of all researchers not to fail to include the stipulation, since their very lives would depend on it; and this is true even if (unbeknownst to us) only 1 out of, say, every 100 requested goals would make the paperclip maximizer turn everyone into paperclips.

Replies from: daniel-kokotajlo, Tapatakt, Koen.Holtman, cmessinger↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2022-11-02T04:30:56.759Z · LW(p) · GW(p)

Currently, we don't know how to make a smarter-than-us AGI obey any particular set of instructions whatsoever, at least not robustly in novel circumstances once they are deployed and beyond our ability to recall/delete. (Because of the Inner Alignment Problem). So we can't just type in stipulations like that. If we could, we'd be a lot closer to safety. Probably we'd have a lot more stipulations than just that. Speculating wildly, we might want to try something like: "For now, the only thing you should do is be a faithful imitation of Paul Christiano but thinking 100x faster." Then we can ask our new Paul-mimic to think for a while and then come up with better ideas for how to instruct new versions of the AI.

↑ comment by Tapatakt · 2022-11-03T12:13:11.129Z · LW(p) · GW(p)

What is "killing"? What is "harming"? What is "jeopardizing"? What is "living"? What is "human"? What is the difference between "I cause future killing/harming/jeopardizing" and "future killing/harming/jeopardizing will be in my lightcone"? How to explain all of this to AI? How to check if it understood everything correctly?

We don't know.

↑ comment by Koen.Holtman · 2022-11-03T16:59:54.110Z · LW(p) · GW(p)

It is definitely advisable to build a paper-clip maximiser that also needs to respect a whole bunch of additional stipulations about not harming people. The worry among many alignment researchers is that it might be very difficult to make these stipulations robust enough to deliver the level of safety we ideally want, especially in the case of AGIs that might get hugely intelligent or hugely powerful. As we are talking about not-yet-invented AGI technology, nobody really knows how easy or hard it will be to build robust-enough stipulations into it. It might be very easy in the end, but maybe not. Different researchers have different levels of optimism, but in the end nobody knows, and the conclusion remains the same no matter what the level of optimism is. The conclusion is to warn people about the risk and to do more alignment research with the aim to make it easier to build robust-enough stipulations into potential future AGIs.

↑ comment by chanamessinger (cmessinger) · 2022-11-02T13:49:07.303Z · LW(p) · GW(p)

In addition to Daniel's point, I think an important piece is probabilistic thinking - the AGI will execute not based on what will happen but on what it expects to happen. What probability is acceptable? If none, it should do nothing.

Replies from: reallyeli↑ comment by reallyeli · 2022-11-03T07:13:10.112Z · LW(p) · GW(p)

I don't think this is an important obstacle — you could use something like "and act such that your P(your actions over the next year lead to a massive disaster) < 10^-10." I think Daniel's point is the heart of the issue.

Replies from: cmessinger↑ comment by chanamessinger (cmessinger) · 2022-11-03T12:04:10.540Z · LW(p) · GW(p)

I think that incentivizes self-deception on probabilities. Also, P <10^-10 are pretty unusual, so I'd expect that to cause very little to happen.
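To make the exchange above concrete, here is a small Python sketch of a probability bound used as an action filter. The actions and risk estimates are hypothetical, and the sketch assumes the system's own estimates are honest, which is exactly the assumption questioned above.

```python
def permitted_actions(risk_estimates, threshold):
    """Return the actions whose estimated disaster probability is below threshold."""
    return [action for action, p in risk_estimates.items() if p < threshold]


# Hypothetical risk estimates; none of these numbers come from the thread.
risk_estimates = {
    "do_nothing": 0.0,
    "send_email": 1e-9,
    "build_factory": 1e-6,
    "run_experiment": 1e-4,
}

print(permitted_actions(risk_estimates, 1e-10))  # very strict bound
print(permitted_actions(risk_estimates, 1e-5))   # looser bound
```

With the strict bound only "do_nothing" passes, which matches the worry that such a bound causes very little to happen. The filter also rewards lower reported estimates, whether or not they are accurate.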

comment by a gently pricked vein (strangepoop) · 2022-11-03T22:01:56.013Z · LW(p) · GW(p)

What are the standard doomy "lol no" responses to "Any AGI will have a smart enough decision theory to not destroy the intelligence that created it (ie. us), because we're only willing to build AGI that won't kill us"?

(I suppose it isn't necessary to give a strong reason why acausality will show up in AGI decision theory, but one good one is that it has to be smart enough to cooperate with itself.)

Some responses that I can think of (but I can also counter, with varying success):

A. Humanity is racing to build an AGI anyway, this "decision" is not really enough in our control to exert substantial acausal influence

B. It might not destroy us, but it will likely permanently lock away our astronomical endowment and this is basically just as bad and therefore the argument is mostly irrelevant

C. We don't particularly care to preserve what our genes may "wish" to preserve, not even the acausal-pilled among us

D. Runaway, reckless consequentialism is likely to emerge long before a sophisticated decision theory that incorporates human values/agency, and so if there is such a trade to be had, it will likely be already too late

E. There is nothing natural about the categories carved up for this "trade" and so we wouldn't expect it to take place. If we can't even tell it what a diamond is, we certainly wouldn't share enough context for this particular acausal trade to snap into place

F. The correct decision theory will actually turn out to only one-box in Newcomb's and not in Transparent Newcomb's, and this is Transparent Newcomb's

G. There will be no "agent" or "decision theory" to speak of, we just go out with a whimper via increasingly lowered fidelity to values in the machines we end up designing

This is from ten minutes of brainstorming, I'm sure it misses out some important ones. Obviously, if there don't exist any good ones (ones without counters), that gives us reason to believe in alignment by default!

Keen to hear your responses.

Replies from: Raemon↑ comment by Raemon · 2022-11-03T23:09:24.743Z · LW(p) · GW(p)

Here's a more detailed writeup about this: https://www.lesswrong.com/posts/rP66bz34crvDudzcJ/decision-theory-does-not-imply-that-we-get-to-have-nice [LW · GW]

comment by jmh · 2022-11-03T16:56:35.292Z · LW(p) · GW(p)

How much of AI alignment and safety has been informed at all by economics?

Part of the background to my question relates to the paperclip maximizer story. I could be poorly understanding the problem suggested by that issue (have not read the original) but to me it largely screams economic system failure.

Replies from: Koen.Holtman↑ comment by Koen.Holtman · 2022-11-03T18:12:45.075Z · LW(p) · GW(p)

Yes, a lot of it has been informed by economics. Some authors emphasize the relation, others de-emphasize it.

The relation goes beyond alignment and safety research. The way in which modern ML research defines its metric of AI agent intelligence is directly based on utility theory, which was developed by Von Neumann and Morgenstern to describe games and economic behaviour.
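As a minimal illustration of the expected-utility idea mentioned above, here is a Python sketch. The actions, probabilities and utilities are invented for the example, and real agent benchmarks are far more involved.

```python
def expected_utility(lottery):
    """lottery: list of (probability, utility) pairs whose probabilities sum to 1."""
    return sum(p * u for p, u in lottery)


# Hypothetical actions with made-up outcome probabilities and utilities.
actions = {
    "safe": [(1.0, 5.0)],
    "gamble": [(0.5, 12.0), (0.5, 0.0)],
}

# An expected-utility maximizer picks the action with the highest score.
best = max(actions, key=lambda a: expected_utility(actions[a]))
print(best)
```

Here the gamble has expected utility 6 against 5 for the safe option, so the maximizer picks the gamble even though half the time it gets nothing.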

comment by [deleted] · 2022-11-02T20:22:26.292Z · LW(p) · GW(p)

In case of an AI/tech singularity, wouldn't open sources like Less Wrong be the main source of counter-strategy for the AGI? In that case the AGI can develop in a way that won't trigger the thresholds of what is considered worrying, and can gradually mitigate its existential risks.

comment by DPiepgrass · 2022-11-02T19:53:27.344Z · LW(p) · GW(p)

Are AGIs with bad epistemics more or less dangerous? (By "bad epistemics" I mean a tendency to believe things that aren't true, and a tendency to fail to learn true things, due to faulty and/or limited reasoning processes... or to update too much / too little / incorrectly on evidence, or to fail in peculiar ways like having beliefs that shift incoherently according to the context in which an agent finds itself)

It could make AGIs more dangerous by causing them to act on beliefs that they never should have developed in the first place. But it could make AGIs less dangerous by causing them to make exploitable mistakes, or fail to learn facts or techniques that would make them too powerful.

Note: I feel we aspiring rationalists haven't really solved epistemics yet (my go-to example: if Alice and Bob tell you X, is that two pieces of evidence for X or just one?), but I wonder how, if it were solved, it would impact AGI and alignment research.
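One way to see why the Alice-and-Bob example is tricky is a small Bayesian sketch in Python. The likelihood ratio of 4 is an assumption made purely for illustration; the two cases shown are the extremes (fully independent reports versus Bob merely repeating Alice), and real cases usually fall somewhere in between.

```python
def posterior(prior, likelihood_ratios):
    """Update a prior probability with a list of independent likelihood ratios."""
    odds = prior / (1.0 - prior)
    for lr in likelihood_ratios:
        odds *= lr
    return odds / (1.0 + odds)


prior = 0.5
lr = 4.0  # assumed: a report of X is 4x as likely if X is true than if X is false

independent = posterior(prior, [lr, lr])  # Alice and Bob observed X separately
same_source = posterior(prior, [lr])      # Bob is only repeating what Alice said

print(independent, same_source)
```

Under these assumptions the independent case ends at about 0.94 and the same-source case at 0.8, so how much the second report counts depends entirely on how correlated the two sources are.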

Replies from: mr-hire, Koen.Holtman↑ comment by Matt Goldenberg (mr-hire) · 2022-11-08T15:15:02.127Z · LW(p) · GW(p)

But it could make AGIs less dangerous by causing them to make exploitable mistakes, or fail to learn facts or techniques that would make them too powerful.

There is in fact a class of AI safety proposals that try to make the AI unaware of certain things, such as not being aware that there is a shutdown button for it.

One of the issues with these types of proposals is that as the AI gets smarter/more powerful, it has to come up with increasingly crazy hypotheses about the world to ignore the facts that all the evidence is pointing towards (such as the fact that there's a conspiracy, or an all powerful being, or something doing this). This could, in the long term, cause it to be very dangerous in its unpredictability.

↑ comment by Koen.Holtman · 2022-11-03T18:22:53.575Z · LW(p) · GW(p)

It depends. But yes, incorrect epistemics can make an AGI safer, if it is the right and carefully calibrated kind of incorrect. A goal-directed AGI that incorrectly believes that its off switch does not work will be less resistant to people using it. So the goal is here to design an AGI epistemics that is the right kind of incorrect.

Note: designing an AGI epistemics that is the right kind of incorrect seems to go against a lot of the principles that aspiring rationalists seem to hold dear, but I am not an aspiring rationalist. For more technical info on such designs, you can look up my sequence on counterfactual planning.

comment by average · 2022-11-02T10:46:41.605Z · LW(p) · GW(p)

Is it a fair assumption to say the Intelligence Spectrum is a core concept that underpins AGI safety? I often feel the idea is the first buy-in required by introductory AGI safety texts. Due to my prior beliefs and the lack of emphasis on it in safety introductions, I often bounce off the entire field.

Replies from: DPiepgrass, porby↑ comment by DPiepgrass · 2022-11-03T18:32:31.499Z · LW(p) · GW(p)

I would say that the idea of superintelligence is important for the idea that AGI is hard to control (because we likely can't outsmart it).

I would also say that there will not be any point at which AGIs are "as smart as humans". The first AGI may be dumber than a human, and it will be followed (perhaps immediately) by something smarter than a human, but "smart as a human" is a nearly impossible target to hit because humans work in ways that are alien to computers. For instance, humans are very slow and have terrible memories; computers are very fast and have excellent memories (when utilized, or no memory at all if not programmed to remember something, e.g. GPT3 immediately forgets its prompt and its outputs).

This is made worse by the impatience of AGI researchers, who will be trying to create an AGI "as smart as a human adult" in a time span of 1 to 6 months, because they're not willing to spend 18 years on each attempt, and so if they succeed, they will almost certainly have invented something smarter than a human over a longer training interval. c.f. my own 5-month-old human

↑ comment by porby · 2022-11-02T20:47:15.309Z · LW(p) · GW(p)

If by intelligence spectrum you mean variations in capability across different generally intelligent minds, such that there can be minds that are dramatically more capable (and thus more dangerous): yes, it's pretty important.

If it were impossible to make an AI more capable than the most capable human no matter what software or hardware architectures we used, and no matter how much hardware we threw at it, AI risk would be far less concerning.

But it really seems like AI can be smarter than humans. Narrow AIs (like MuZero) already outperform all humans at some tasks, and more general AIs like large language models are making remarkable and somewhat spooky progress.

Focusing on a very simple case, note that using bigger, faster computers tends to let you do more. Video games are a lot more realistic than they used to be. Physics simulators can simulate more. Machine learning involves larger networks. Likewise, you can run the same software faster. Imagine you had an AGI that demonstrated performance nearly identical to that of a reasonably clever human. What happens when you use enough hardware that it runs 1000 times faster than real time, compared to a human? Even if there are no differences in the quality of individual insights or the generality of its thought, just being able to think fast alone is going to make it far, far more capable than a human.
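The speed-up arithmetic can be sketched in a few lines of Python. The 1000x figure is the one used above; the function name is just for illustration.

```python
def subjective_years(wall_clock_days, speedup):
    """Thinking time experienced by a mind running `speedup` times faster than real time."""
    return wall_clock_days * speedup / 365.0


print(subjective_years(1, 1000))    # one calendar day
print(subjective_years(365, 1000))  # one calendar year
```

At 1000x, a single calendar day corresponds to roughly 2.7 years of thinking time, and a calendar year to a thousand years.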

comment by mruwnik · 2022-11-02T09:39:44.165Z · LW(p) · GW(p)

How useful would uncopyable source code be for limiting non super-intelligent AIs? Something like how humans are limited by only being able to copy resulting ideas, not the actual process that produced them. Homomorphic encryption is sort of similar, but only in the sense that the code is unreadable - I'm more interested in mechanisms to enforce the execution to be a sort of singleton. This of course wouldn't prevent unboxing etc., but might potentially limit the damage.

I haven't put much thought into this, but was wondering if anyone else has already gone into the feasibility of it.

Replies from: jimrandomh, sharmake-farah↑ comment by jimrandomh · 2022-11-03T22:20:33.110Z · LW(p) · GW(p)

Mass-self-copying is one of the mechanisms by which an infrahuman AGI might scale up its compute and turn itself into a superintelligence. Fine-tuning its own weights to produce a more-powerful successor is another strategy it might use. So if you can deny it the ability to do these things, this potentially buys a little time.

This only helps if AGI spends significant time in the infrahuman domain, if one of these strategies would have been the first-choice strategy for how to foom (as opposed to social engineering or nanobots or training a successor without using its own weights), and if humanity can do something useful with time that's bought. So it's probably a little bit helpful, but in the best case it's still only a small modifier on a saving throw, not a solution.

↑ comment by Noosphere89 (sharmake-farah) · 2022-11-03T23:00:16.921Z · LW(p) · GW(p)

Pretty significant, and probably one big component of limiting the damage, though doesn't rule out all failure modes.

comment by James Blackmon (james-blackmon) · 2023-08-13T02:54:31.678Z · LW(p) · GW(p)

In his TED Talk, Bostrom's solution to the alignment problem is to build in at the ground level the goal of predicting what we will approve of so that no matter what other goals it's given, it will aim to achieve those goals only in ways that align with our values.

How (and where) exactly does Yudkowsky object to this solution? I can make guesses based on what Yudkowsky says, but so far, I've found no explicit mention by Yudkowsky of Bostrom's solution. More generally, where should I go to find any objections to or evaluations of Bostrom's solution?

comment by Steve M (steve-m-1) · 2023-06-01T11:53:03.095Z · LW(p) · GW(p)

What is the thinking around equilibrium between multiple AGIs with competing goals?

For example AGI one wants to maximize paperclips, AGI two wants to help humans create as many new episodes of the Simpsons as possible, AGI three wants to make sure humans aren't being coerced by machines using violence, AGI four wants to maximize egg production.

In order for AGI one not to have AGIs two through four try to destroy it, it needs to either gain such a huge advantage over them that it can instantly destroy/neutralize them, or use strategies that don't prompt a 'war'. If a large number of these AGIs have goals that are at least partially aligned with human preferences, could this be a way to get to an 'equilibrium' that is at least not dystopian for humans? Why or why not?

comment by Jemal Young (ghostwheel) · 2023-01-06T05:52:28.871Z · LW(p) · GW(p)

What are the best not-Arxiv and not-NeurIPS sources of information on new capabilities research?

comment by knowsnothing · 2023-01-05T11:26:56.617Z · LW(p) · GW(p)

Is trying to reduce internet usage and maybe reducing the amount of data AI companies have to work with something that is at all feasible?

Replies from: super-agi↑ comment by Super AGI (super-agi) · 2023-05-05T07:53:27.086Z · LW(p) · GW(p)

Reducing internet usage and limiting the amount of data available to AI companies might seem like a feasible approach to regulate AI development. However, implementing such measures would likely face several obstacles. E.g.

- AI companies purchase internet access like any other user, which makes it challenging to specifically target them for data reduction without affecting other users. One potential mechanism to achieve this goal could involve establishing regulatory frameworks that limit the collection, storage, and usage of data by AI companies. However, these restrictions might inadvertently affect other industries that rely on data processing and analytics.

- A significant portion of the data utilized by AI companies is derived from open-source resources like Common Crawl and WebText2. These companies have normally already acquired copies of this data for local use, meaning that limiting internet usage would not directly impact their access to these datasets.

- If any country were to pass a law limiting the network data available to AI-based companies, it is likely that these companies would relocate to other countries with more lenient regulations. This would render such policies ineffective on a global scale, while potentially harming the domestic economy and innovation in the country implementing the restrictions.

In summary, while the idea of reducing the amount of data AI companies have to work with might appear feasible, practical implementation faces significant hurdles. A more effective approach to regulating AI development could involve establishing international standards and ethical guidelines, fostering transparency in AI research, and promoting cross-sector collaboration among stakeholders. This would help to ensure the responsible and beneficial growth of AI technologies without hindering innovation and progress.

comment by Jobst Heitzig · 2022-12-02T08:57:55.367Z · LW(p) · GW(p)

Dear Robert, I just found out about your work and absolutely love it.

Has the following idea been explored yet?

- The AI system is made of two agents, a strategic agent S and a controller agent C.

- S's reward function approximates the actual objective function of the system as defined by the designer.

- S can only propose actions to C, only knows about the environment and the actions actually taken through what C tells it, and only has as many compute resources as C gives it.

- C's reward function encodes hard constraints such as the three laws of robotics or some other formal ethical constraint system, in the form of a binary reward (1 for compliance, 0 for non-compliance).

- C has access to the actual observations and has the power to either actually take the action proposed by S or not.

- In addition, C is free to tell S anything regarding whether it actually took the proposed action and what the observations are, and can curtail S's compute resources to avoid being outsmarted by S.

- If indifferent in light of its reward function, C will take the proposed action, will be honest about observations, and will not curtail resources (but will not get a positive reward from this because that could be exploited by S).
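The control flow in the list above could be sketched roughly as follows in Python. This is only a skeleton under strong assumptions: the `forbidden` set is a hypothetical stand-in for a formal constraint system, the strategist is a fixed list rather than a learning agent, and the parts about C filtering S's observations and curtailing its compute are left out.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Controller:
    """C: vetoes proposals that violate a hard constraint, executes the rest."""
    is_compliant: Callable[[str], bool]  # binary check: True means compliant
    executed: List[str] = field(default_factory=list)

    def review(self, proposal: str) -> Optional[str]:
        if self.is_compliant(proposal):
            self.executed.append(proposal)  # C actually takes the action
            return proposal
        return None  # C declines; nothing is executed


def strategist_proposals() -> List[str]:
    """S: stands in for a planner; here it just emits a fixed list of proposals."""
    return ["make_paperclips", "harm_human", "buy_wire"]


forbidden = {"harm_human"}  # hypothetical stand-in for a formal constraint system
controller = Controller(is_compliant=lambda action: action not in forbidden)

results = [controller.review(p) for p in strategist_proposals()]
print(results)
print(controller.executed)
```

The sketch shows how much rests on `is_compliant`: C is only as safe as its ability to recognise a non-compliant action when S proposes one.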

comment by chanamessinger (cmessinger) · 2022-11-03T12:14:46.626Z · LW(p) · GW(p)

Is this something Stampy would want to help with?

https://www.lesswrong.com/posts/WXvt8bxYnwBYpy9oT/the-main-sources-of-ai-risk

Replies from: ete↑ comment by plex (ete) · 2022-11-03T14:41:00.737Z · LW(p) · GW(p)

It's definitely something Stampy would want to link to, and if those authors wanted to maintain their list on Stampy rather than LessWrong that would be welcome, though I could imagine them wanting to retain editing restrictions.

Edit: Added a link to Stampy.

comment by davideraso · 2022-11-03T00:20:05.039Z · LW(p) · GW(p)

Could AGI safety overhead turn out to be more harmful in terms of actual current human lives, as well as their individual 'qualities'? (I don't accept utilitarian or longtermist counter-arguments, since my question solely concerns the present, which is the only reality, and existing 'individualities' as the only ones capable of experiencing it. Thank you)

Replies from: mruwnik↑ comment by mruwnik · 2022-11-03T10:59:51.266Z · LW(p) · GW(p)

Yes. If it turns out that alignment is trivial or unneeded whenever AGIs appear. The same as with all excessive safety requirements. The problem here being discerning between excessive and necessary. An example would be hand sanitizers etc. during the pandemic. In the beginning it wasn't stupid, as it wasn't known how the virus spread. Later, when it became obvious that it was airborne, then keeping antibacterial gels everywhere in the name of ending the pandemic was stupid (at least in the context of Covid) and wasteful. We're currently in the phase of having no idea about how safe AGI will or won't be, so it's a good idea to check for potential pitfalls.

It comes down to uncertainty. It seems wiser to go slowly and carefully, even if that will result in loss, if doing so can limit the potential dangers of AGI. Which could potentially involve the eradication of everything you hold dear.

I'm not sure how literally you treat the present - if you only care about, say the next 5 years, then yes, the safety overhead is harmful in that it takes resources from other stuff. If you care about the next 30 years, then the overhead is more justified, given that many people expect to have an AGI by then.

comment by Jon Garcia · 2022-11-02T21:24:42.721Z · LW(p) · GW(p)

Could we solve alignment by just getting an AI to learn human preferences through training it to predict human behavior, using a "current best guess" model of human preferences to make predictions and updating the model until its predictions are accurate, then using this model as a reward signal for the AI? Is there a danger in relying on these sorts of revealed preferences?

On a somewhat related note, someone should answer, "What is this Coherent Extrapolated Volition I've been hearing about from the AI safety community? Are there any holes in that plan?"

Replies from: TAG, mruwnik↑ comment by mruwnik · 2022-11-03T10:22:10.211Z · LW(p) · GW(p)

I'm lazy, so I'll just link to this https://www.lesswrong.com/tag/coherent-extrapolated-volition [? · GW]

comment by snikolenko · 2023-06-03T06:39:14.573Z · LW(p) · GW(p)

Somewhat of a tangent -- I realized I don't really understand the basic reasoning behind current efforts on "trying to make AI stop saying naughty words". Like, what's the actual problem with an LLM producing racist or otherwise offensive content that warrants so much effort? Why don't the researchers just slap an M content label on the model and be done with it? Movie characters say naughty words all the time, are racist all the time, disembody other people in ingenious and sometimes realistic ways, and nobody cares -- so what's the difference?..

comment by [deleted] · 2022-11-02T23:43:56.359Z · LW(p) · GW(p)

There can only be One X-risk. I wonder if anyone here believes that our first X-risk is not coming from AGI, that AGI is our fastest counter to our first X-risk.

In that case you are resisting what you should embrace?

↑ comment by mruwnik · 2022-11-03T10:15:16.073Z · LW(p) · GW(p)

Why can there only be one? Isn't X-risk the risk of an extinction event, rather than the event itself?

It's a matter of expected value. A comet strike that would sterilize Earth is very unlikely - in the last ~500 million years there have been 6 mass extinctions (including the current one). So it seems that naturally occurring X-risks exist, but aren't that likely (ignoring black swans, problems of induction etc., of course).

On the other hand, if you believe that creating a god is possible and even likely, you then have to consider whether it's more likely for you to summon a demon than to get something that is helpful.

So if your prior is that there is like 5% probability of an X-risk in the next 100 years (these numbers are meaningless - I chose them to make things easier), that there is 10% probability of AGI, and that it's only 50% probability that the AGI wouldn't destroy everything, then virtually all of your probability mass is that it's the AGI that will be the X-risk. And the obvious action is to focus most of your efforts on that.
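To make that arithmetic explicit, here is a minimal sketch using the same made-up numbers. It assumes the 5% figure is the total X-risk from all sources and that the 10% and 50% figures simply multiply; the variable names are only for illustration.

```python
from fractions import Fraction

# Illustrative priors from the comment above (not real estimates).
p_any_xrisk = Fraction(5, 100)        # total X-risk over the next 100 years (assumed to include AGI)
p_agi = Fraction(10, 100)             # probability that an AGI gets built
p_agi_goes_wrong = Fraction(50, 100)  # probability that a built AGI destroys everything

# Probability that an AGI is built and goes wrong, assuming the two factors multiply.
p_agi_xrisk = p_agi * p_agi_goes_wrong

# Share of the total X-risk prior that is attributable to AGI.
agi_share = p_agi_xrisk / p_any_xrisk

print(f"P(AGI X-risk) = {float(p_agi_xrisk):.2%}")
print(f"Share of total X-risk = {float(agi_share):.0%}")
```

With these numbers, 10% × 50% = 5%, which is the whole of the assumed 5% prior, so the AGI scenario takes up essentially all of the X-risk probability mass.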

Replies from: None

↑ comment by [deleted] · 2022-11-05T22:47:55.845Z · LW(p) · GW(p)

For us there can only be one X-risk: once humanity dies out, we cannot worry about the X-risks of future species. So we should care only about one, the first X-risk.

I don't value AI or AGI as God; in its current state it doesn't even have consciousness. Percentage-wise, these can be flipped any way. It's very hard to give an ETA or a % for AGI; so far we just push the same or similar algos with more computing power. There was no AGI before, but natural X-risks have occurred before, so probabilistically you need to give them a fair percentage compared to an AGI event that has never occurred before.

I see AGI as a counter to those risks, yet before it has even come to life, you already expect 50% evil out of it, when you know for a fact that comet damage is evil. There are other X-risks as well.

If AGI, for example, helps humanity become an interplanetary species, we will significantly decrease all X-risks.

↑ comment by mruwnik · 2022-11-06T10:42:38.893Z · LW(p) · GW(p)

The problem here is knowing which is the most likely. If you had a list of all the X-risks along with when they would appear, then yes, it would make sense to focus on the nearest one. Unfortunately we don't, so nobody knows whether it will be a comet strike, a supervolcano, or aliens - each of those is a possibility, but they are all very unlikely to happen in the next 100 years.

AGI could certainly help with leaving the planet, which would help a lot with decreasing X-risks, but only assuming you make it correctly. There are a lot of ways in which an AGI can go wrong, many of which don't involve consciousness. It also looks like an AGI will be created quite soon, like in less than 30 years. If so, and if it's possible for an AGI to be an X-risk, then it seems likely that the nearest danger to humanity is AGI. That's especially true if it is meant to help with other X-risks, as one simple way for it to go wrong is to have an AGI which is supposed to protect us, but decides it would rather not and then lets everyone get killed.

It comes down to whether you believe that an AGI will be created soon and whether it will be good by default. People here tend to believe that it will happen soon (< 50 years) and that it won't be friendly by default (not necessarily evil - it can also just have totally different goals), hence the doom and gloom. At the same time, the motivation of a lot of alignment researchers is that an AGI would be totally awesome and that they want it to happen as soon as possible (among other reasons - to reduce X-risk). It's like building a nuclear reactor - really useful, but you have to make sure you get it right.

Replies from: None

↑ comment by [deleted] · 2022-11-06T22:49:56.444Z · LW(p) · GW(p)

I think it will be hard to draw a line between AGI and AI and the closer we get to AGI, the further we will move that line.

"People here tend to believe that it will happen soon (< 50 years) and that it won't be friendly by default"

My concern is that this kind of assumption leads to an increased risk of AGI being unfriendly. If you raise a being as a slave and only show him violence, you cannot expect him to be an academic who will contribute to building ITER.

"Won't be friendly by default"... you want to force it to be friendly? How many forced, X-risk-capable friendly armies or enemies do you know? It's just a time bomb to force AGI to be friendly.

The high-risk unfriendly AGI narrative increases the probability of AGI being unfriendly. If we want AGI to value our values, we need to give AGI a presumption of innocence and raise it in our environment, living through our values.

Replies from: Tapatakt, mruwnik

↑ comment by Tapatakt · 2022-11-07T10:20:35.953Z · LW(p) · GW(p)

Replies from: mruwnik

↑ comment by mruwnik · 2022-11-08T13:01:16.017Z · LW(p) · GW(p)

Just in case you don't see it:

@Tapatakt, I am sorry, mods limited my replies to 1 per 24hour, I will have to reply to @mruwnik first. Thank you for a nice read, however I think that Detached Lever Fallacy assumes that we will reach AGI using exactly the same ML algos that we use today. Hopefully you will see my reply.

↑ comment by mruwnik · 2022-11-07T09:36:26.051Z · LW(p) · GW(p)

How does it increase the risk of it being unfriendly? The argument isn't to make sure that the AGI doesn't have any power, and to keep it chained at all times - quite the opposite! The goal is to be able to let it free to do whatever it wants, seeing as it'll be cleverer than us.

But before you give it more resources, it seems worthwhile to check what it wants to do with them. Humanity is only one of (potentially) many, many possible ways to be intelligent. A lot of the potential ways of being intelligent are actively harmful to us - it would be good to make sure any created AGIs don't belong to that group. There are various research areas that try to work out what to do with an unfriendly AI that wants to harm us, though at that point it's probably far too late. The main goal of alignment is coming up with ways to get the AGI to want to help us, and to make sure it always wants to help us. Not under duress, because as you point out, that only works until the AGI is strong enough to fight back, but so that it really wants to do what's good for us. Hence the "friendly" rather than "obedient".

One potential way of aligning an AGI would be to raise it like a human child. But that would only work if the AGI's "brain" worked the same way as ours does. This is very unlikely. So you have to come up with other ways to bring it up that will work with it. It's sort of like trying to get a different species to be helpful - chimps are very close to humans, but just bringing them up like a human baby doesn't work (it's been tried). Trying to teach an octopus to be helpful would require a totally different approach, if it's even possible.

Replies from: None

↑ comment by [deleted] · 2022-11-08T09:47:16.416Z · LW(p) · GW(p)

@Tapatakt, I am sorry, mods limited my replies to 1 per 24hour, I will have to reply to @mruwnik first. Thank you for a nice read, however I think that Detached Lever Fallacy assumes that we will reach AGI using exactly the same ML algos that we use today. Hopefully you will see my reply.

@mruwnik

"it would be good to make sure any created AGIs don't belong to that group"

Correct me if I am wrong, but you are assuming that an AGI that is cleverer than us will not be able to self-update, that all its thoughts will be logged and checked, and that it won't be able to lie or mask its motives. Like a 2.0 software version, fully controlled, where if we want to let it think this or that, we have to implement an update to 2.1. I think that process excludes or prolongs indefinitely our ability to create AGI in the first place. We do not understand our own inner/subconscious thought process yet, and at the same time you want to create an AGI that values our values by controlling and limiting its thoughts, not to mention its ability to upgrade itself and its own thought process.

Think about it: if we tried to manually control our subconscious mind and all our internal body mechanisms, heartbeat and so on, we would just instantly die. We have our own inner mind that helps us run many things in an automated fashion.

Now, keeping that in mind, you are trying to create AGI by controlling the same automated processes that we do not even fully understand yet. What I am trying to say is that the closest path to creating AGI is replicating humans. If we try to create a completely new form of life, whose thoughts and ideas are strictly controlled by software updates, we won't be able to make it follow our culture and our values.

"A lot of the potential ways of being intelligent are actively harmful to us"

Yes, intelligence is dangerous; look what we are doing to less intelligent creatures. But if we want to artificially create a new form of intelligence, hoping that it will be more intelligent than us, we should at least give it a presumption of innocence and take a risk at some point.

"One potential way of aligning an AGI would be to raise it like a human child. But that would only work if the AGI's "brain" worked the same way as ours does. This is very unlikely. So you have to come up with other ways to bring it up that will work with it."

We are not trying to play God here; we are trying to prove that we are smarter than God by deliberately trying to create a new intelligent life form in our own opposing way. We are swimming against the current.

"Trying to teach an octopus to be helpful would require a totally different approach, if it's even possible."

Imagine trying to program an octopus's brain to be helpful instead of teaching the octopus. Programming, not controlling.

The actual method of programming an AGI could be more difficult than creating an AGI.

Replies from: mruwnik

↑ comment by mruwnik · 2022-11-08T13:00:32.182Z · LW(p) · GW(p)

My current position is that I don't know how to make an AGI, don't know how to program it and don't know how to patch it. All of these appear to be very hard and very important issues. We know that GI is possible, as you rightfully note - i.e. us. We know that "programming" and "patching" beings like us is doable to a certain extent, which can certainly give us hope that if we have an AGI like us, then it could be taught to be nice. But all of this assumes that we can make something in our image.

The point of the detached lever story is that the lever only works if it's connected to exactly the right components, most of which are very complicated. So in the case of AGI, your approach seems sensible, but only if we can recreate the whole complicated mess that is a human brain - otherwise it'll probably turn out that we missed some small but crucial part, which results in the AGI being more like a chimp than a human. It's closest in the sense that it's easy to describe in words (i.e. "make a simulated human brain"), but each of those words hides masses and masses of finicky details. This seems to be the main point of contention here. I highly recommend the other articles in that sequence, if you haven't read them yet. Or even better - read the whole sequences if you have a spare 6 months :D They explain this a lot better than I can, though they also take a loooot more words to do so.

I agree with most of the rest of what you say though: programming can certainly turn out to be harder than creation (which is one of the main causes of concern), logging and checking each thought will exclude or indefinitely prolong our ability to create AGI (so it won't work, as someone else will skip this part), and a risk must be taken at some point, otherwise why even bother?