shortform

post by Bird Concept (jacobjacob) · 2019-07-23T02:56:35.132Z · LW · GW · 80 comments

What it says on the tin.

80 comments

Comments sorted by top scores.

comment by Bird Concept (jacobjacob) · 2024-07-23T06:24:27.592Z · LW(p) · GW(p)

Someone posted these quotes in a Slack I'm in... what Ellsberg said to Kissinger:

“Henry, there’s something I would like to tell you, for what it’s worth, something I wish I had been told years ago. You’ve been a consultant for a long time, and you’ve dealt a great deal with top secret information. But you’re about to receive a whole slew of special clearances, maybe fifteen or twenty of them, that are higher than top secret.

“I’ve had a number of these myself, and I’ve known other people who have just acquired them, and I have a pretty good sense of what the effects of receiving these clearances are on a person who didn’t previously know they even existed. And the effects of reading the information that they will make available to you.

[...]

“In the meantime it will have become very hard for you to learn from anybody who doesn’t have these clearances. Because you’ll be thinking as you listen to them: ‘What would this man be telling me if he knew what I know? Would he be giving me the same advice, or would it totally change his predictions and recommendations?’ And that mental exercise is so torturous that after a while you give it up and just stop listening. I’ve seen this with my superiors, my colleagues….and with myself.

“You will deal with a person who doesn’t have those clearances only from the point of view of what you want him to believe and what impression you want him to go away with, since you’ll have to lie carefully to him about what you know. In effect, you will have to manipulate him. You’ll give up trying to assess what he has to say. The danger is, you’ll become something like a moron. You’ll become incapable of learning from most people in the world, no matter how much experience they may have in their particular areas that may be much greater than yours.”

(link)

Replies from: Jonas Vollmer, TsviBT

↑ comment by Jonas V (Jonas Vollmer) · 2024-07-23T18:18:25.725Z · LW(p) · GW(p)

Someone else added these quotes from a 1968 article about how the Vietnam war could go so wrong:

Replies from: daniel-kokotajlo, kave, habryka4, TsviBT

Despite the banishment of the experts, internal doubters and dissenters did indeed appear and persist. Yet as I watched the process, such men were effectively neutralized by a subtle dynamic: the domestication of dissenters. Such "domestication" arose out of a twofold clubbish need: on the one hand, the dissenter's desire to stay aboard; and on the other hand, the nondissenter's conscience. Simply stated, dissent, when recognized, was made to feel at home. On the lowest possible scale of importance, I must confess my own considerable sense of dignity and acceptance (both vital) when my senior White House employer would refer to me as his "favorite dove." Far more significant was the case of the former Undersecretary of State, George Ball. Once Mr. Ball began to express doubts, he was warmly institutionalized: he was encouraged to become the inhouse devil's advocate on Vietnam. The upshot was inevitable: the process of escalation allowed for periodic requests to Mr. Ball to speak his piece; Ball felt good, I assume (he had fought for righteousness); the others felt good (they had given a full hearing to the dovish option); and there was minimal unpleasantness. The club remained intact; and it is of course possible that matters would have gotten worse faster if Mr. Ball had kept silent, or left before his final departure in the fall of 1966. There was also, of course, the case of the last institutionalized doubter, Bill Moyers. The President is said to have greeted his arrival at meetings with an affectionate, "Well, here comes Mr. Stop-the-Bombing...." Here again the dynamics of domesticated dissent sustained the relationship for a while.

A related point—and crucial, I suppose, to government at all times—was the "effectiveness" trap, the trap that keeps men from speaking out, as clearly or often as they might, within the government. And it is the trap that keeps men from resigning in protest and airing their dissent outside the government. The most important asset that a man brings to bureaucratic life is his "effectiveness," a mysterious combination of training, style, and connections. The most ominous complaint that can be whispered of a bureaucrat is: "I'm afraid Charlie's beginning to lose his effectiveness." To preserve your effectiveness, you must decide where and when to fight the mainstream of policy; the opportunities range from pillow talk with your wife, to private drinks with your friends, to meetings with the Secretary of State or the President. The inclination to remain silent or to acquiesce in the presence of the great men—to live to fight another day, to give on this issue so that you can be "effective" on later issues—is overwhelming. Nor is it the tendency of youth alone; some of our most senior officials, men of wealth and fame, whose place in history is secure, have remained silent lest their connection with power be terminated. As for the disinclination to resign in protest: while not necessarily a Washington or even American specialty, it seems more true of a government in which ministers have no parliamentary backbench to which to retreat. In the absence of such a refuge, it is easy to rationalize the decision to stay aboard. By doing so, one may be able to prevent a few bad things from happening and perhaps even make a few good things happen. To exit is to lose even those marginal chances for "effectiveness."

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2024-07-23T19:27:29.755Z · LW(p) · GW(p)

Wow, yeah. This is totally going on at OpenAI, and I expect at other AGI corporations also.

Replies from: Raemon

↑ comment by Raemon · 2024-07-23T21:39:29.130Z · LW(p) · GW(p)

I'd be interested in a few more details/gears. (Also, are you primarily replying about the immediate parent, i.e. domestication of dissent, or also about the previous one?)

Two different angles of curiosity I have are:

- what sort of things might you look out for, in particular, to notice if this was happening to you at OpenAI or similar?

- something like... what's your estimate of the effect size here? Do you have personal experience feeling captured by this dynamic? If so, what was it like? Or did you observe other people seeming to be captured, and what was your impression (perhaps in vague terms) of the diff that the dynamic was producing?

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2024-07-24T04:26:17.904Z · LW(p) · GW(p)

I was talking about the immediate parent, not the previous one. Though as secrecy gets ramped up, the effect described in the previous one might set in as well.

I have personal experience feeling captured by this dynamic, yes, and from conversations with other people I get the impression that it was even stronger for many others.

Hard to say how large of an effect it has. It definitely creates a significant chilling effect on criticism/dissent. (I think people who were employees alongside me while I was there will attest that I was pretty outspoken... yet I often found myself refraining from saying things that seemed true and important, due to not wanting to rock the boat / lose 'credibility' etc.)

The point about salving the consciences of the majority is interesting and seems true to me as well. I feel like there's definitely a dynamic of 'the dissenters make polite reserved versions of their criticisms, and feel good about themselves for fighting the good fight, and the orthodox listen patiently and then find some justification to proceed as planned, feeling good about themselves for hearing out the dissent.'

I don't know of an easy solution to this problem. Perhaps something to do with regular anonymous surveys? idk.

↑ comment by kave · 2024-07-26T19:01:02.350Z · LW(p) · GW(p)

I wish this quote were a little more explicit about what's going wrong. On a literal reading it's saying that some people who disagreed attended meetings and were made to feel comfortable. I think it's super plausible that this leads to some kind of pernicious effect, but I wish it spelt out more what.

I guess the best thing I can infer is that the author thinks public resignations and dissent would have been somewhat effective and the domesticated dissenters were basically ineffective?

Or is the context of the piece just that he's explaining the absence of prominent public dissent?

↑ comment by Viliam · 2024-07-27T10:53:32.665Z · LW(p) · GW(p)

My understanding is that institutions can create an internal "game of telephone" while believing that they are listening to the opposing opinions.

Basically, information gets diluted at each step:

- you tell someone that "X is a problem", but they understand it merely as "we need to provide some verbal justification why we are going to do X anyway", i.e. they don't treat it as an actual problem, but as a mere bureaucratic obstacle to be navigated around;

- your friend tells you that "X is a huge problem", but your takeaway is merely that "X is a problem", because on one hand you trust your friend, on the other hand you think your friend is too obsessed with X and exaggerates the impact;

- your friend had inside information (which is the reason you actually decided to listen to him in the first place) that X will probably kill everyone, but he realizes that if he tells it this way, you will probably decide that he is insane, so he instead chooses to put it diplomatically as "X is a huge problem".

Taken together... you hired someone as an expert to provide important information (and you congratulate yourself for doing it), but ultimately you ignored everything he said. And everyone felt happy in the process... your friend felt happy that the people making the important decisions were listening to him... your organization felt happy for having an ISO-certified process to listen to outside critics... except that ultimately nothing happened.

I am not saying that a parallel reality where your friend instead wrote a Facebook post "we are all going to die" (and was ignored by everyone who matters) would have had a better outcome. But there would have been less self-deception by everyone involved.

↑ comment by habryka (habryka4) · 2024-07-23T18:37:28.965Z · LW(p) · GW(p)

Huh, this is a good quote.

comment by Bird Concept (jacobjacob) · 2019-12-12T12:34:36.262Z · LW(p) · GW(p)

Rationality has pubs; we need gyms

Consider the difference between a pub and a gym.

You go to a pub with your rationalist friends to:

- hang out

- discuss interesting ideas

- maybe maths a bit in a notebook someone brought

- gossip

- get inspired about the important mission you're all on

- relax

- brainstorm ambitious plans to save the future

- generally have a good time

You go to a gym to:

- exercise

- that is, repeat a particular movement over and over, paying attention to the motion as you go, being very deliberate about using it correctly

- gradually trying new or heavier moves to improve in areas you are weak in

- maybe talk and socialise -- but that is secondary to your primary focus of becoming stronger

- in fact, it is common knowledge that the point is to practice, and you will not get socially punished for trying really hard, or stopping a conversation quickly and then just focusing on your own thing in silence, or making weird noises or grunts, or sweating... in fact, this is all expected

- not necessarily have a good time, but invest in your long-term health, strength and flexibility

One key distinction here is effort.

Going to a pub is low effort. Going to a gym is high effort.

In fact, going to the gym requires such high effort that most people have a constant nagging guilt about doing it. They proceed to set up accountability systems with others, hire personal trainers, use habit installer apps, buy gym memberships as commitment devices, use clever hacks to always have their gym bag packed and ready to go, introspect on their feelings of anxiety about it and try to find work-arounds or sports which suit them, and so forth...

People know gyms are usually a schlep, yet they also know going there is important, so they accept that they'll have to try really hard to build systems which get them exercising.

However, things seem different for rationality. I've often heard people go "this rationality stuff doesn't seem very effective, people just read some blog posts and go to a workshop or two, but don't really seem more effective than other mortals".

But we wouldn't be surprised if someone said "this fitness stuff doesn't seem very effective, some of my friends just read some physiology bloggers and then went to a 5-day calisthenics bootcamp once, but they're not in good shape at all". Of course they aren't!

I think I want to suggest two improvements:

1) On the margin, we should push more for a cultural norm of deliberate practice in the art of rationality.

It should be natural to get together with your friends once a week and use OpenPhil's calibration app, do Thinking Physics problems, practice CFAR techniques, etc...

2) But primarily: we build gyms.

Gyms are places where hundreds of millions of dollars of research have gone into designing equipment specifically allowing you to exercise certain muscles. There are also changing rooms to help you get ready, norms around how much you talk (or not) to help people focus, personal trainers who can give you advice, saunas and showers to help you relax afterwards...

For rationality, we basically have nothing like this [1]. Each time you want to practice rationality, you basically have to start by inventing your own exercises.

[1] The only example I know of is Kocherga, which seems great. But I don't know a lot about what they're doing, and ideally we should have rationality gyms either online or in every major hub, not just Moscow.

Replies from: ozziegooen, gworley, ChristianKl, ioannes_shade

↑ comment by ozziegooen · 2019-12-12T22:17:04.064Z · LW(p) · GW(p)

A few small points:

- I personally find the word "need" (like in the title) a bit aversive. I think it's generally meant as, "the benefit is higher than the opportunity cost"; but even that is a difficult statement. The word itself seems to imply necessity, and my guess is that some people would read "need" thinking it's "highly certain."

- While I obviously have hesitation with religious groups, they have figured out a bunch of good things. Personally my gym is a very solo experience; I think that the community in churches and monasteries may make them better as a thing to learn from. I thought Sunday Assembly seemed interesting, though when I attended one, it kind of had a "Sunday School" vibe, which turned me off. I think I like the futurist/hackerspace/unconference/EA/philosopher combination personally, if such a combination could exist.

↑ comment by Bird Concept (jacobjacob) · 2019-12-13T08:24:18.201Z · LW(p) · GW(p)

1. I did think about that when I wrote it, and it's a bit strong. (I set myself a challenge to write and publish this in 15 min, so didn't spend any more time optimising the title.) Other recommendations welcome. Thinking about the actual claim though, I find myself quite confident that something in this direction is right. (A larger uncertainty would be if it is the best thing for us to sink resources into, compared to other interventions).

2. Agree that there seems to be lots of black-box wisdom embedded in the institutions and practices of religions, and could be cool to try to unwrap it and import some good lessons.

I will note though that there's a difference between:

- the Sunday sermon thing (which to me seems more useful for building common knowledge, community, and a sense of mission and virtue).

- the gym idea, which is much more about deliberate practice, starting from wherever you're currently at

↑ comment by ozziegooen · 2019-12-16T18:04:47.172Z · LW(p) · GW(p)

My main issue with "need" isn't really that it's strong, but that I predict it's often misunderstood; people use it for all different levels of strength.

Fair point about the Sunday sermon thing. Some religious groups do encourage lots of practice in religious settings, though. (Like prayer in Islam)

↑ comment by Gordon Seidoh Worley (gworley) · 2019-12-12T18:45:00.709Z · LW(p) · GW(p)

I agree with the spirit of your point, but I think we would be better served by a category anchored by an example other than a modern gym.

To me the problem is that the modern gym is atomized and transactional: going to the gym is generally a solitary activity, even when you take a class or go with friends, because it's about your workout and not collaboratively achieving something. There are notable exceptions, but most of the time when I think of people going to the gym I imagine them working out as individuals for individual purposes.

Rationality training takes more. It requires bumping up against other people to see what happens when you "meet the enemy" of reality, and doing that in a productive way requires a kind of collective safety or trust in your fellow participants to both meet you fairly and to support you even while correcting you. Maybe this was a feature of the classic Greek gymnasium, but I find it lacking from most modern gyms.

We do have another kind of place that does regularly engage in this kind of mutual engagement in practice that is not atomized or transactional, and that's the dojo. The salient example to most people will be the dojo for practicing a martial art, and that's a place where trust and shared purpose exist. Sure, you might spend time on your own learning forms, but once you have mastered the basics you'll be engaged with other students head on in situations where, if one of you doesn't do what you should, one or both of you can get seriously injured. Thus it is with rationality training, although there the injuries are emotional or mental rather than physical.

Replies from: jacobjacob, Hazard

↑ comment by Bird Concept (jacobjacob) · 2019-12-13T08:18:55.928Z · LW(p) · GW(p)

I haven't been to a dojo (except briefly as a kid) so don't have a clear model what it's about.

Not sure how I feel about the part on "you must face off against an opponent, and you run the risk of getting hurt". I think I disagree, and might write up why later.

↑ comment by ChristianKl · 2019-12-12T14:39:38.094Z · LW(p) · GW(p)

I'm not sure we have any rationalist pubs. We don't have much physical space that's dedicated to rationality.

We do have plenty of events and while some events are more about social interaction we also have events that are more about training skills. CFAR has (or had?) their weekly dojo and many other cities like Berlin (where I live) have their own weekly dojo event.

Replies from: jacobjacob

↑ comment by Bird Concept (jacobjacob) · 2019-12-12T16:02:44.653Z · LW(p) · GW(p)

I didn't know about weekly dojos and have never attended any, that sounds very exciting. Tell me more about what happens at the Berlin weekly dojo events?

Also, to clarify, I meant both "pubs" and "gyms" metaphorically -- i.e. lots of what happens on LessWrong is like a pub in the above sense, whereas other things, like the recent exercise prize, are like a gym.

Replies from: ChristianKl

↑ comment by ChristianKl · 2019-12-12T17:03:54.135Z · LW(p) · GW(p)

The overall structure we have in Berlin is at the moment every Monday:

19:00-19:10 Meditation (Everyone that's late can only enter after the meditation is finished)

19:10-19:20 Check commitments that were made last week and allow people to brag about other accomplishments

19:20-19:30 Two sessions of Resolve Cycles (we still use the Focused Grit name)

19:30-19:40 Session Planning

19:40-20:05 First Session

20:05-20:10 Break

20:10-20:35 Second Session

20:35-20:40 Break

20:40-21:05 Third Session

21:05-21:10 Break

21:10-21:15 Set commitments for the next week

Individual sessions are proposed in the session planning and everybody can go to sessions they find interesting. Sometimes there are sessions in parallel.

Some sessions are about practicing CFAR techniques, some about Hamming Circles for individual issues of a person. Some are also about information exchanges like exchanging ways to use Anki.

Our dojo is invite-only and people who are members are expected to attend regularly. Every dojo session is hosted by one of the dojo members and the person who hosts the session is supposed to bring at least one session. Everyone is expected to host in a given time frame.

We have now had the dojo for, I think, over a year, and that's the structure which evolved. It has the advantage that there's no need for one person to do a huge amount of preparation. In the CFAR version Valentine used to present new techniques every week, but that was part of the job of being in charge of curriculum development at CFAR.

We also have a monthly meetup that usually mixes some rationality technique with social hangout at a 50:50 ratio.

https://www.lesswrong.com/posts/Rxz6uvTayTjDGk3Rq/personal-story-about-benefits-of-rationality-dojo-and is a story from a dojo that happened in Ohio. Given that the link to the dojo website is dead, it might be that the dojo died.

Replies from: jacobjacob

↑ comment by Bird Concept (jacobjacob) · 2019-12-12T18:08:42.097Z · LW(p) · GW(p)

Thanks for describing that! Some questions:

1) What are some examples of what "practicing CFAR techniques" looks like?

2) To what extent are dojos expected to do "new things" vs. repeated practice of a particular thing?

For example, I'd say there's a difference between a gym and a... marathon? match? I think there's more of the latter in the community at the moment: attempting to solve particular bugs using whatever means are necessary.

↑ comment by ioannes (ioannes_shade) · 2019-12-12T15:53:25.922Z · LW(p) · GW(p)

Rationality gym (of a certain flavor): https://www.monasticacademy.com

comment by Bird Concept (jacobjacob) · 2019-08-01T10:57:47.325Z · LW(p) · GW(p)

I edited a transcript of a 1.5h conversation, and it was 10,000 words. That's roughly 1/8 of a book. Realising that a day of conversations can have more verbal content than half a book(!) seems to say something about how useless many conferences are, and how incredibly useful they could be.
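As a rough check of the arithmetic in this comment, here is a minimal sketch. It assumes the speaking rate of the transcript holds all day, and it infers a book length of about 80,000 words from the "1/8 of a book" remark; the function names are illustrative only.

```python
# Figures taken from the comment above.
TRANSCRIPT_WORDS = 10_000
TRANSCRIPT_HOURS = 1.5

# Assumption: "roughly 1/8 of a book" implies a book of about 80,000 words.
BOOK_WORDS = 8 * TRANSCRIPT_WORDS


def words_spoken(hours):
    """Words produced in `hours` of conversation at the transcript's rate."""
    return TRANSCRIPT_WORDS * hours / TRANSCRIPT_HOURS


def hours_to_match(book_fraction):
    """Hours of conversation needed to equal a given fraction of a book."""
    return book_fraction * BOOK_WORDS * TRANSCRIPT_HOURS / TRANSCRIPT_WORDS


print(hours_to_match(0.5))  # 6.0
```

Under these assumptions, six hours of conversation already equals half a book, so a full conference day of talking would exceed it.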

Replies from: Viliam, Raemon

↑ comment by Viliam · 2019-08-08T20:37:13.140Z · LW(p) · GW(p)

In conversation, you can adjust your speech to your partner. Don't waste time explaining things they already know; but don't skip over things they don't. Find examples and metaphors relevant to their work. Etc.

When talking to a group of people, everyone is at a different place, so no matter how you talk, it will be suboptimal for some of them. Some will be bored, some will misunderstand parts. Some parts will be uninteresting for some of them.

Replies from: jacobjacob

↑ comment by Bird Concept (jacobjacob) · 2019-08-09T10:56:01.318Z · LW(p) · GW(p)

Yup. I think it follows that when people do talk to groups in that way, they're not primarily aiming to communicate useful information in an optimal manner (if they were, there'd be better ways). They must be doing something else.

Plausible candidates are: building common knowledge and signalling of various kinds.

Replies from: Viliam

↑ comment by Viliam · 2019-08-14T21:39:38.369Z · LW(p) · GW(p)

Just because talking to a group is not optimal for an average group member, it could still be close to optimal for some of them (the audience that the speaker had in mind when preparing the speech), and could maximize the total knowledge -- as a toy model, instead of one person getting 10 points of knowledge, there are ten people getting 5, 4, 3, 3, 2, 2, 2, 1, 1, 1 points of knowledge, each number smaller than 10 but their sum 24 is greater than 10; in other words, we are not optimizing for the individual's input, but for the speaker's output.

But building common knowledge seems more likely. The advantage of speech is that everyone knows that everyone at the same lecture heard the same explanation; which allows later debates among the audience. If you read a book alone, you don't know who else did.

And of course, signaling such as "this person is considered an expert (or has sufficiently high status so that everyone pretends they are an expert) by both the organizers and the audience". I would assume that the less information people get from the lecture, the stronger the status signaling.
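The toy model from the first paragraph of this comment can be written out directly. This is only a sketch of the numbers given there; the variable names are made up for illustration.

```python
# One-on-one conversation: a single listener gets the full 10 points.
one_on_one_gains = [10]

# Lecture: ten listeners each get less than 10 points (numbers from the comment).
lecture_gains = [5, 4, 3, 3, 2, 2, 2, 1, 1, 1]

# Every individual in the lecture learns less than the one-on-one listener...
assert all(gain < 10 for gain in lecture_gains)

# ...but the total knowledge transferred by the speaker is larger.
print(sum(one_on_one_gains))  # 10
print(sum(lecture_gains))     # 24
```

The comparison depends entirely on which quantity is being optimised: per-listener gain favours the conversation, total output favours the lecture.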

↑ comment by Raemon · 2019-08-01T19:14:34.456Z · LW(p) · GW(p)

Is the implied background claim that conferences are useless, and with this comment, you're remarking that it's sunk in how especially sad that is?

Something something about how many words you have if you're doing in-person communication.

Replies from: jacobjacob

↑ comment by Bird Concept (jacobjacob) · 2019-08-02T11:50:06.984Z · LW(p) · GW(p)

No, it's just me being surprised about how much information conversation can contain (I expected the bandwidth to be far lower, and the benefits mostly to be due to quick feedback loops). Combine that with personal experience of attending bad conferences and lame conversations, and things seem sad. But that's not the key point.

Really like your post. (Also it's crazy if true that the conversational upper bound decreases by 1 if people are gossiping, since they have to model the gossip subject.)

comment by Bird Concept (jacobjacob) · 2024-12-20T23:19:58.834Z · LW(p) · GW(p)

It is January 2020.

Replies from: keltan, Mitchell_Porter, yanni, james-oofou

↑ comment by keltan · 2024-12-21T05:26:01.378Z · LW(p) · GW(p)

In reference to o3 right? Comparing it to just before the 2020 pandemic started?

As in “Something large is about to happen and we are unprepared”?

Replies from: niplav

↑ comment by niplav · 2024-12-21T05:48:51.297Z · LW(p) · GW(p)

It's funny, you're right. But I intuitively read it as "it's January 2020—GPT-3 is not yet released, but it will be in a couple of months. Get ready."

2020 is firmly in my head as the year the scaling revolution started, and only secondarily as the year the COVID-19 pandemic started.

↑ comment by Mitchell_Porter · 2024-12-22T09:26:24.546Z · LW(p) · GW(p)

By the start of April half the world was locked down, and Covid was the dominant factor in human affairs for the next two years or so. Do you think that issues pertaining to AI agents are going to be dominating human affairs so soon and so totally?

Replies from: jacobjacob

↑ comment by Bird Concept (jacobjacob) · 2024-12-23T22:43:19.665Z · LW(p) · GW(p)

I don't expect it that soon, but I do expect, more likely than not, that there's a covid-esque fire alarm + rapid upheaval moment.

↑ comment by james oofou (james-oofou) · 2025-04-07T03:43:45.321Z · LW(p) · GW(p)

This aged amusingly.

comment by Bird Concept (jacobjacob) · 2019-08-14T00:28:29.812Z · LW(p) · GW(p)

What important book that needs fact-checking is nobody fact-checking?

Replies from: jp, mr-hire, MathieuRoy

↑ comment by jp · 2019-08-14T04:14:12.824Z · LW(p) · GW(p)

The Sequences could use a refresher possibly.

Deep Work was my immediate thought after Elizabeth's spot check.

↑ comment by Matt Goldenberg (mr-hire) · 2019-10-30T15:22:42.555Z · LW(p) · GW(p)

Superforecasting.

↑ comment by Mati_Roy (MathieuRoy) · 2019-11-02T11:45:54.300Z · LW(p) · GW(p)

Maybe "The End of Banking: Money, Credit, and the Digital Revolution"

comment by Bird Concept (jacobjacob) · 2019-09-04T20:24:59.987Z · LW(p) · GW(p)

Someone tried to solve a big schlep of event organizing.

Through this app, you:

- Pledge money when signing up to an event

- Lose it if you don't attend

- Get it back if you attend + a share of the money from all the no-shows

For some reason it uses crypto as the currency. I'm also not sure about the third clause, which seems to incentivise you to want others to no-show to get their deposits.
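The three rules above, and the incentive worry about the third, can be sketched as a small payout function. This is an illustration under stated assumptions, not the app's actual logic: it assumes every participant pledges the same deposit, that forfeited deposits are split equally among attendees, and that the pot goes undistributed if nobody attends. The name `settle` is hypothetical.

```python
def settle(deposit, attended):
    """Return each person's payout, given {name: True if they attended}.

    Assumptions (not from the app itself): equal deposits, and forfeited
    deposits are shared equally among those who showed up.
    """
    attendees = [name for name, came in attended.items() if came]
    no_shows = len(attended) - len(attendees)
    if not attendees:
        # Nobody showed up: everyone forfeits, nothing is redistributed.
        return {name: 0.0 for name in attended}
    bonus = deposit * no_shows / len(attendees)
    return {
        name: (deposit + bonus if came else 0.0)
        for name, came in attended.items()
    }


everyone_came = settle(10, {"a": True, "b": True, "c": True, "d": True, "e": True})
one_no_show = settle(10, {"a": True, "b": True, "c": True, "d": True, "e": False})

print(everyone_came["a"])  # 10.0
print(one_no_show["a"])    # 12.5
print(one_no_show["e"])    # 0.0
```

Under these assumptions an attendee's payout rises with every additional no-show, which is the perverse incentive mentioned above.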

Anyway, I've heard people wanting something like this to exist and might try it myself at some future event I'll organize.

H/T Vitalik Buterin's Twitter

Replies from: Raemon, capybaralet, melanie-heisey

↑ comment by Raemon · 2019-09-04T20:29:30.355Z · LW(p) · GW(p)

That's an interesting idea. The crypto thing I'm sure gets some niche market more easily but does make it so that I have less interest in it.

Replies from: mr-hire

↑ comment by Matt Goldenberg (mr-hire) · 2019-09-06T17:08:29.728Z · LW(p) · GW(p)

I'm not even sure if it gets the niche market easily. Most crypto people don't use their crypto for dapps or payments.

↑ comment by David Scott Krueger (formerly: capybaralet) (capybaralet) · 2019-09-07T05:38:22.224Z · LW(p) · GW(p)

I had that idea!

Assurance contracts are going to turn us into a superorganism, for better or worse. You heard it here first.

↑ comment by Melanie Heisey (melanie-heisey) · 2019-09-05T17:19:21.885Z · LW(p) · GW(p)

Does seem promising

comment by Bird Concept (jacobjacob) · 2019-10-23T18:59:35.570Z · LW(p) · GW(p)

Blackberries and bananas

Here's a simple metaphor I've recently been using in some double-cruxes about intellectual infrastructure and tools, with Eli Tyre, Ozzie Gooen, and Richard Ngo.

Short people can pick blackberries. Tall people can pick both blackberries and bananas. We can give short people lots of training to make them able to pick blackberries faster, but no amount of blackberries can replace a banana if you're trying to make banana split.

Similarly, making progress in a pre-paradigmatic field might require x number of key insights. But are those insights bananas, which can only be picked by geniuses, whereas ordinary researchers can only get us blackberries?

Or is this metaphor false, such that with a certain number of non-geniuses plus excellent tools, we can actually replicate geniuses?

This has implications for the impact of rationality training and internet intellectual infrastructure, as well as what versions of those endeavours are most promising to focus on.

comment by Bird Concept (jacobjacob) · 2019-07-23T03:00:29.143Z · LW(p) · GW(p)

Had a good conversation with elityre today. Two nuggets of insight:

- If you want to level up, find people who seem mysteriously better than you at something, and try to learn from them.

- Work on projects which are plausibly crucial. That is, projects such that if we look back from a win scenario, it's not too implausible we'd say they were part of the reason.

comment by Bird Concept (jacobjacob) · 2019-12-27T14:37:29.275Z · LW(p) · GW(p)

I made a Foretold notebook for predicting which posts will end up in the Best of 2018 book, following the LessWrong review.

You can submit your own predictions as well.

At some point I might write a longer post explaining why I think having something like "futures markets" on these things can create a more "efficient market" for content.

comment by Bird Concept (jacobjacob) · 2020-10-30T23:04:34.357Z · LW(p) · GW(p)

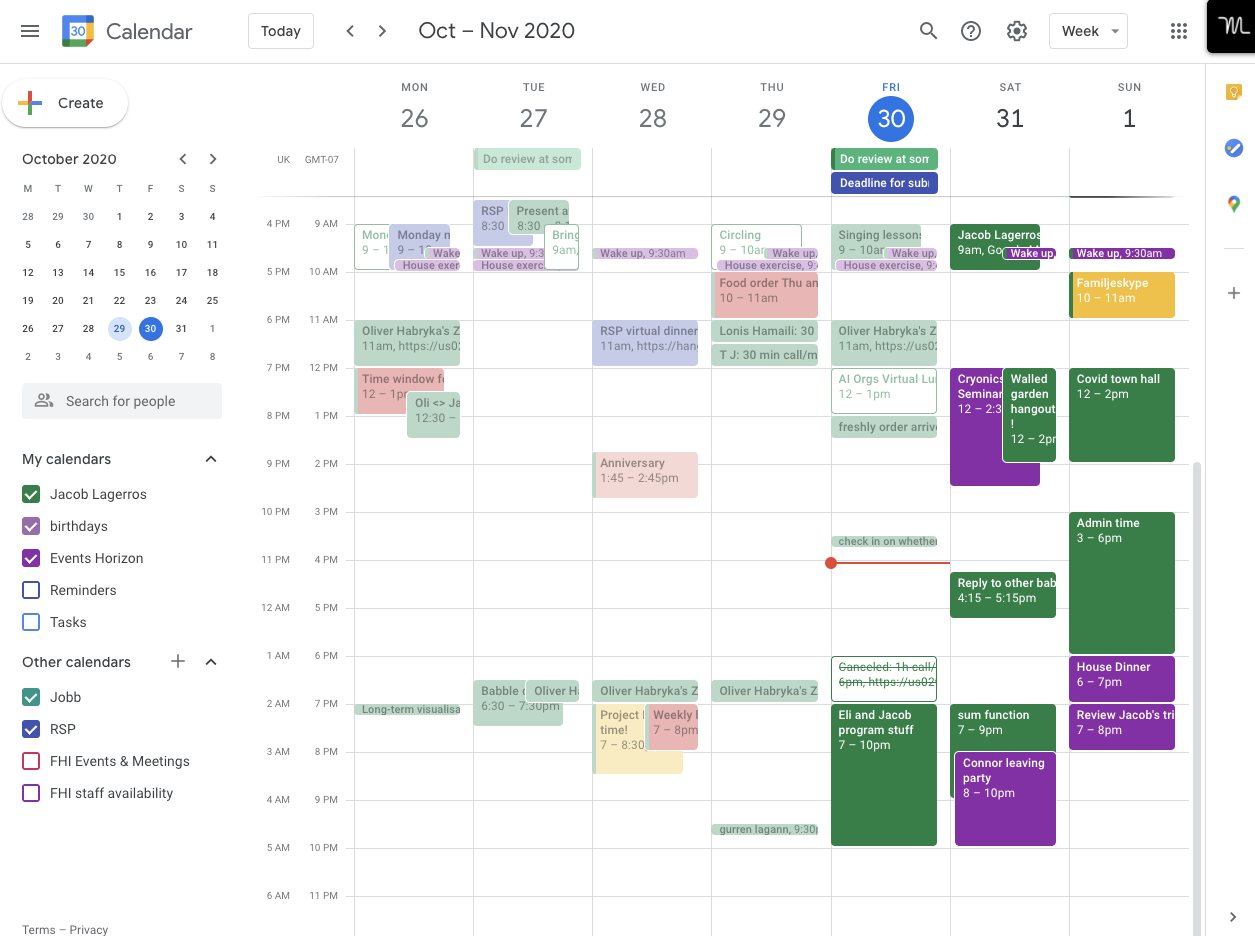

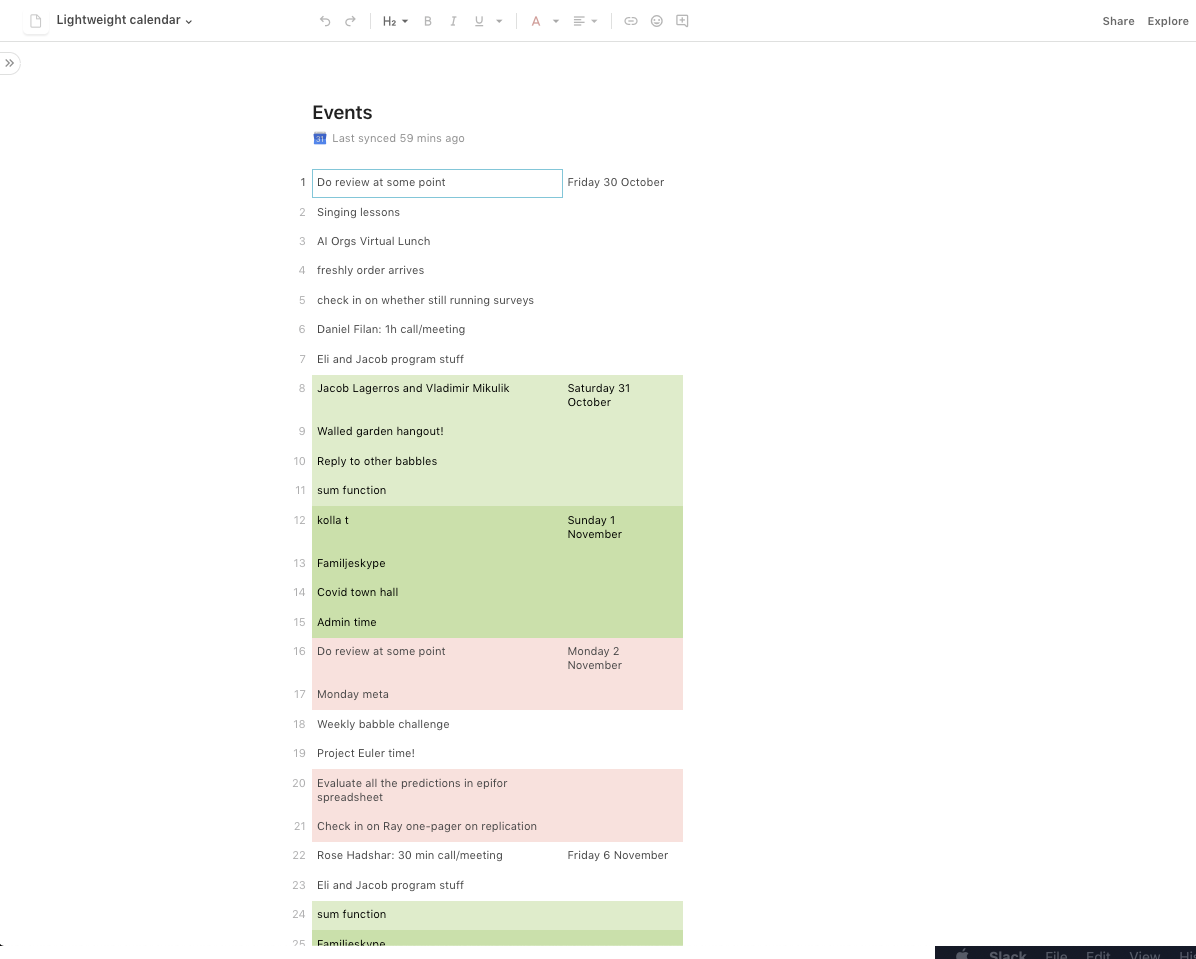

Anyone have good recommendations for a lightweight, minimalist calendar app?

Google calendar is feeling so bloated.

I had a quick stab at building something more lightweight in Coda, just for myself. Compare these two:

I get that G Calendar is trying to solve a bunch of problems for lots of different users. But I feel I can't be the only one with who'd want something more spacious and calm.

↑ comment by Matt Goldenberg (mr-hire) · 2020-11-01T03:11:14.639Z · LW(p) · GW(p)

Google calendar agenda view looks much more like your coda doc. Have you tried setting it as your default view?

Replies from: jacobjacob

↑ comment by Bird Concept (jacobjacob) · 2020-11-02T20:09:23.559Z · LW(p) · GW(p)

No, I looked around for a bit and am not sure how to do that.

Replies from: mr-hire↑ comment by Matt Goldenberg (mr-hire) · 2020-11-02T20:25:52.109Z · LW(p) · GW(p)

Press A to get to the Agenda view. I think Gcal remembers where you last were, so you should automatically end up on that view next time.

comment by Bird Concept (jacobjacob) · 2020-01-14T21:08:48.584Z · LW(p) · GW(p)

I saw an ad for a new kind of pant: stylish as suit pants, but flexible as sweatpants. I didn't have time to order them now. But I saved the link in a new tab in my clothes database -- an Airtable that tracks all the clothes I own.

This crystallised some thoughts about external systems that have been brewing at the back of my mind. In particular, about the gears-level principles that make some of them useful and powerful.

When I say "external", I am pointing to things like spreadsheets, apps, databases, organisations, notebooks, institutions, room layouts... and distinguishing those from minds, thoughts and habits. (Though this distinction isn't exact, as will be clear below, and some of these ideas are at an early stage.)

Externalising systems allows the following benefits...

1. Gathering answers to unsearchable queries

There are often things I want lists of, which are very hard to Google or research. For example:

- List of groundbreaking discoveries that seem trivial in hindsight

- List of different kinds of confusion, identified by their phenomenological qualia

- List of good-faith arguments which are elaborate and rigorous, though uncertain, and which turned out to be wrong

etc.

Currently there is no search engine (but the human mind) capable of finding many of these answers (if I am expecting a certain level of quality). But for that reason researching the lists is also very hard.

The only way I can build these lists is by accumulating those nuggets of insight over time.

And the way I make that happen, is to make sure to have external systems which are ready to capture those insights as they appear.

2. Seizing serendipity

Luck favours the prepared mind.

Consider the following anecdote:

Richard Feynman was fond of giving the following advice on how to be a genius. [As an example, he said that] you have to keep a dozen of your favorite problems constantly present in your mind, although by and large they will lay in a dormant state. Every time you hear or read a new trick or a new result, test it against each of your twelve problems to see whether it helps. Every once in a while there will be a hit, and people will say: "How did he do it? He must be a genius!"

I think this is true far beyond intellectual discovery. In order for the most valuable companies to exist, there must be VCs ready to fund those companies when their founders are toying with the ideas. In order for the best jokes to exist, there must be audiences ready to hear them.

3. Collision of producers and consumers

Wikipedia has a page on "Bayes' theorem".

But it doesn't have a page on things like "The particular confusion that many people feel when trying to apply conservation of expected evidence to scenario X".

Why?

One answer is that more detailed pages aren't as useful. But I think that can't be the entire truth. Some of the greatest insights in science take a lot of sentences to explain (or, even if they have catchy conclusions, they depend on sub-steps which are hard to explain).

Rather, the survival of Wikipedia pages depends on both those who want to edit and those who want to read the page being able to find it. It depends on collisions, the emergence of natural Schelling points for accumulating content on a topic. And that's probably something like exponentially harder to accomplish the longer your thing takes to describe and search for.

Collisions don't just have to occur between different contributors. They must also occur across time.

For example, sometimes when I've had 3 different task management systems going, I end up just using a document at the end of the day. Because I can't trust that if I leave a task in any one of the systems, future Jacob will return to that same system to find it.

4. Allowing collaboration

External systems allow multiple people to contribute. This usually requires some formalism (a database, mathematical notation, lexicons, ...), and some sacrifice of flexibility (which grows superlinearly as the number of contributors grows).

5. Defining systems extensionally rather than intensionally

These are terms from analytic philosophy. Roughly, the "intension" of the concept "dog" is a furry, four-legged mammal which evolved to be friendly and cooperative with a human owner. The "extension" of "dog" is simply the set of all dogs: {Goofy, Sunny, Bo, Beast, Clifford, ...}

If you're defining a concept extensionally, you can simply point to examples as soon as you have some fleeting intuitive sense of what you're after, but long before you can articulate explicit necessary and sufficient conditions for the concept.

Similarly, an externalised system can grow organically, before anyone knows what it is going to become.
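As a rough illustration of the distinction, here is a minimal Python sketch. The names `dogs_by_extension` and `is_dog_by_intension`, the dictionary keys, and the example animals "Lassie" and "Tripod" are all hypothetical, chosen only for this example.

```python
# Extensional definition: a collection of examples that can grow over time,
# before anyone has written down a rule.
dogs_by_extension = {"Goofy", "Sunny", "Bo", "Beast", "Clifford"}

# Intensional definition: an explicit rule that has to be articulated up front.
def is_dog_by_intension(animal: dict) -> bool:
    return (
        animal.get("furry") is True
        and animal.get("legs") == 4
        and animal.get("mammal") is True
        and animal.get("friendly_to_owner") is True
    )

# Growing the extensional system only requires pointing at a new example.
dogs_by_extension.add("Lassie")

# The explicit rule is brittle: a three-legged dog fails it.
tripod = {"furry": True, "legs": 3, "mammal": True, "friendly_to_owner": True}
print(is_dog_by_intension(tripod))
```

The set can absorb new cases one at a time, while the rule needs revising whenever an example turns up that it did not anticipate.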

6. Learning from mistakes

I have a packing list database, that I use when I travel. I input some parameters about how long I'll be gone and how warm the location is, and it'll output a checklist for everything I need to bring.

It's got at least 30 items per trip.

One unexpected benefit from this, is that whenever I forget something -- sunglasses, plug converters, snacks -- I have a way to ensure I never make that mistake again. I simply add it to my database, and as long as future Jacob uses the database, he'll avoid repeating my error.
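A minimal sketch of how a packing list like this could work, written in Python rather than the actual database tool. The class names, the `min_days` and `climates` fields, and the example items are assumptions made for illustration, not a description of the real setup.

```python
from dataclasses import dataclass, field

@dataclass
class PackingItem:
    name: str
    min_days: int = 0  # only pack for trips at least this long
    climates: frozenset = frozenset({"cold", "mild", "warm"})

@dataclass
class PackingList:
    items: list = field(default_factory=list)

    def add(self, name, min_days=0, climates=("cold", "mild", "warm")):
        """Record an item once, e.g. right after a trip where it was forgotten."""
        self.items.append(PackingItem(name, min_days, frozenset(climates)))

    def checklist(self, days, climate):
        """Return the names of all items that apply to this trip."""
        return [
            item.name
            for item in self.items
            if days >= item.min_days and climate in item.climates
        ]

packing = PackingList()
packing.add("passport")
packing.add("sunglasses", climates=("warm",))
packing.add("laundry bag", min_days=5)

print(packing.checklist(days=7, climate="warm"))
# ['passport', 'sunglasses', 'laundry bag']

# After a trip where something was forgotten, add it once;
# every future checklist will include it.
packing.add("plug converter")
```

The point of the design is that the fix for a mistake lives in the data, not in anyone's memory.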

This is similar to Ray Dalio's Principles. I recall him suggesting that the act of writing down and reifying his guiding wisdom gave him a way to seize mistakes and turn them into a stronger future self.

This is also true for the GitHub repo of the current project I'm working on. Whenever I visit our site and find a bug, I have a habit of immediately filing an issue, for it to be solved later. There is a pipeline whereby these real-world nuggets of user experience -- hard-won lessons from interacting with the app "in-the-field", that you couldn't practically have predicted from first principles -- get converted into a better app. So, whenever a new bug is picked up by me or a user, in addition to annoyance, it causes a little flinch of excitement (though the same might not be true for our main developer...). This also relates to the fact that we're dealing in code. Any mistake can be improved in such a way that no future user will experience it.

comment by Bird Concept (jacobjacob) · 2019-07-23T03:16:50.351Z · LW(p) · GW(p)

I'm confused about the effects of the internet on social groups. [1]

On the one hand... the internet enables much larger social groups (e.g. reddit communities with tens of thousands of members) and much larger circles of social influence (e.g. instagram celebrities having millions of followers). Both of these will tend to display network effects. However, A) social status is zero-sum, and B) there are diminishing returns to the health benefits of social status [2]. This suggests that on the margin moving from a very large number of small communities (e.g. bowling clubs) to a smaller number of larger communities (e.g. bowling YouTubers) will be net negative, because the benefits of status gains at the top will diminish faster than the harms from losses at the median.

On the other hand... the internet enables much more niched social groups. Ceteris paribus, this suggests it should enable more groups and higher quality groups.

I don't know how to weigh these effects, but currently expect the former to be a fair bit larger.

[1] In addition to being confused I'm also uncertain, due to lack of data I could obtain in a few hours, probably.

[2] In contrast to the psychological benefits of status, I think social capital can sometimes have increasing returns. One mechanism: if you grow your network, the number of connections between units in your network grows even faster.
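One way to make the mechanism in [2] concrete, under the simplifying assumption that any two people in a network could be connected: the number of possible pairwise connections among n people is n(n-1)/2, which grows roughly with the square of n. The function name below is hypothetical.

```python
def possible_connections(n: int) -> int:
    """Number of distinct pairs among n people, assuming any two could connect."""
    return n * (n - 1) // 2

for n in (10, 20, 40):
    print(n, possible_connections(n))
# 10 45
# 20 190
# 40 780
```

Doubling the size of the network roughly quadruples the number of possible connections.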

Replies from: mr-hire, Pattern↑ comment by Matt Goldenberg (mr-hire) · 2019-07-27T22:26:55.538Z · LW(p) · GW(p)

My recent thinking about this in relation to creative content is this:

1. The middle has gotten much narrower. That is, because news can travel fast, and we can see what people's opinions are, and high status people have more reach, the "average person's aesthetic loves" are now much closer to the typical person. People who make content for a mainstream audience now have to contend with this mega-tastemaking machine, and there's not as much space to go around because EVERYBODY is watching Game of Thrones and reading Harry Potter.

2. The tails have gotten much wider. That is, because self-publishing is easy, and searching is easy, and SOOO much content is at our fingertips, there are way more and way more varied fringe tastes than ever before, and people who make weird or out there content now have the option of making a living with 1000 true fans.

I haven't thought about it too much, but it's possible the internet has had the same effect on groups in general, with mainstream groups being bigger and there being more room for niche groups. In general, if we're talking about people's wellbeing, I suspect the first effect (of seeing how little status you have relative to the megastars) to overwhelm the effect of being able to find niche groups to be a part of.

↑ comment by Pattern · 2019-07-23T04:17:32.885Z · LW(p) · GW(p)

So whether the internet is good or bad hinges on 1) whether (or to what degree) the health benefits of social status can be imparted via the internet, and 2) knowledge about social graphs that might be obtained from Facebook, or possessed by the NSA?

Replies from: jacobjacob↑ comment by Bird Concept (jacobjacob) · 2019-07-23T04:23:53.478Z · LW(p) · GW(p)

If you're asking whether your paraphrase actually captures my model, it doesn't. If you're making a point, I'm afraid I don't get it.

Replies from: Pattern

comment by Bird Concept (jacobjacob) · 2019-10-29T20:38:53.828Z · LW(p) · GW(p)

Something interesting happens when one draws on a whiteboard ⬜✍️while talking.

Even drawing 🌀an arbitrary squiggle while making a point makes me more likely to remember it, whereas points made without squiggles are more easily forgotten.

This is a powerful observation.

We can chunk complex ideas into simple pointers.

This means I can use 2d surfaces as a thinking tool in a new way. I don't have to process content by extending strings over time, and forcibly feeding an exact trail of thought into my mind by navigating with my eyes. Instead I can distill the entire scenario into 🔭a single, manageable, overviewable whole -- and do so in a way which leaves room for my own trails and 🕸️networks of thought.

At a glance I remember what was said, without having to spend mental effort keeping track of that. This allows me to focus more fully on what's important.

In the same way, I've started to like using emojis in 😃📄essays and other documents. They feel like a spiritual counterpart of whiteboard squiggles.

I'm quite excited about this. In future I intend to 🧪experiment more with it.

Replies from: KatjaGrace, habryka4

↑ comment by KatjaGrace · 2019-10-31T17:08:55.828Z · LW(p) · GW(p)

I think random objects might work in a similar way. e.g. if talking in a restaurant, you grab the ketchup bottle and the salt to represent your point. I've only experimented with this once, with ultimately quite an elaborate set of condiments, tableware and fries involved. It seemed to make things more memorable and followable, but I wasn't much inclined to do it more for some reason. Possibly at that scale it was a lot of effort beyond the conversation.

Things I see around me sometimes get involved in my thoughts in a way that seems related. For instance, if I'm thinking about the interactions of two orgs while I'm near some trees, two of the trees will come to represent the two orgs, and my thoughts about how they should interact will echo ways that the trees are interacting, without me intending this.

↑ comment by habryka (habryka4) · 2019-10-29T21:18:54.614Z · LW(p) · GW(p)

I... am surprised by how much I hate this comment. I very likely don't endorse it, but I sure seem to have some kind of adverse reaction to emojis.

Replies from: jacobjacob↑ comment by Bird Concept (jacobjacob) · 2019-10-30T13:21:27.100Z · LW(p) · GW(p)

Well, I strong downvoted because adversarial tone, though I'd be pretty excited about fighting about this in the right kind of way.

Curious if you could introspect/tell me more about the aversion?

Replies from: habryka4↑ comment by habryka (habryka4) · 2019-10-30T16:26:58.918Z · LW(p) · GW(p)

Oh, I totally didn't mean it in an adversarial tone, only in a playful tone. I am generally in favor of people experimenting with weird content formats, I just had an unusually strong emotional reaction to this one, which seemed good to share without trying to imply any kind of judgement.

Can do a more detailed introspective pass later, just seemed good to clear up the tone.

Replies from: Lanrian, jacobjacob↑ comment by Lukas Finnveden (Lanrian) · 2019-10-30T22:50:02.880Z · LW(p) · GW(p)

(Potential reason for confusion: "don't endorse it" in habryka's first comment could be interpreted as not endorsing "this comment", when habryka actually said he didn't endorse his emotional reaction to the comment.)

Replies from: habryka4↑ comment by habryka (habryka4) · 2019-10-30T23:34:56.041Z · LW(p) · GW(p)

Oh, that would make a bunch of sense. Yes, the "it" was referring to my emotional reaction, not the comment.

Replies from: jacobjacob↑ comment by Bird Concept (jacobjacob) · 2019-10-31T12:24:25.566Z · LW(p) · GW(p)

Ah! I read "it" as the comment. That does change my mind about how adversarial it was.

↑ comment by Bird Concept (jacobjacob) · 2019-10-31T12:29:45.367Z · LW(p) · GW(p)

I am quite uncertain but have an intuition that there should be an expectation of more justification accompanying stronger negative aversions (and "hate" is about as strong as it gets).

(Naturally not everything has to be fully justified, that's an unbearable demand which will stifle lots of important discourse. This is rather a point about the degree to which different things should be, and how communities should make an unfortunate trade-off to avoid Moloch when communicating aversions.)

comment by Bird Concept (jacobjacob) · 2019-08-08T15:04:01.840Z · LW(p) · GW(p)

If you're building something and people's first response is "Doesn't this already exist?" that might be a good sign.

It's hard to find things that actually are good (as opposed to just seeming good), and if you succeed, there ought to be some story for why no one else got there first.

Sometimes that story might be:

People want X. A thing existed which called itself "X", so everyone assumed X already existed. If you look close enough, "X" is actually pretty different from X, but from a distance they blend together; causing people looking for ideas to look elsewhere.

comment by Bird Concept (jacobjacob) · 2019-08-02T12:10:16.753Z · LW(p) · GW(p)

There are different kinds of information institutions.

- Info-generating (e.g. Science, ...)

- Info-capturing (e.g. short-form? prediction markets?)

- Info-sharing (e.g. postal services, newspapers, social platforms, language regulators, ...)

- Info-preserving (e.g. libraries, archives, religions, Chesterton's fence-norms, ...)

- Info-aggregating (e.g. prediction markets, distill.pub...)

I hadn't considered the use of info-capturing institutions until recently, and in particular how prediction sites might help with this. If you have an insight or an update, there is a cheap and standardised way to make it part of the world's shared knowledge.

The cheapness means you might actually do it. And the standardisation means it will interface more easily with the other parts of the info pipeline (easier to share, easier to preserve, easier to aggregate into established wisdom...)

Replies from: abe-dillon↑ comment by Abe Dillon (abe-dillon) · 2019-08-02T22:05:46.297Z · LW(p) · GW(p)

According to the standard model of physics: information can't be created or destroyed. I don't know if science can be said to "generate" information rather than capturing it. It seems like you might be referring to a less formal notion of information, maybe "knowledge".

Are short-forms really about information and knowledge? It's my understanding that they're about short thoughts and ideas.

I've been contemplating the value alignment problem and have come to the idea that the "telos" of life is to capture and preserve information. This seemingly implies some measure of the utility of information, because information that's more relevant to the problem of capturing and preserving information is more important to capture and preserve than information that's irrelevant to capturing and preserving information. You might call such a measure "knowledge", but there's probably already an information theoretic formalization of that word.

I have to admit, I don't have a strong background in information theory. I'm not really sure if it even makes sense to discuss what some information is "about". I think there's something called the Data-Information-Knowledge-Wisdom (DIKW) hierarchy which may help sort that out. I think data is the bits used to store information. For example, the information content of an uncompressed Word document might be the same after compressing said document; it just takes up less data. Knowledge might be how information relates to other information. For example, you might think it takes one bit of information to convey whether the British are invading by land or by sea, but if you have more information about what factors into that decision, like the weather, then the signal conveys less than one bit of information, because you can make a pretty good prediction without it. In other words: our universe follows some rules and causal relationships, so treating events as independent random occurrences is rarely correct. Wisdom, I believe, is about using the knowledge and information you have to make decisions.
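The "less than one bit" point can be checked with Shannon entropy. This is a small sketch; the 90/10 split is a made-up number standing in for "the weather makes one route much more likely", and the function name is hypothetical.

```python
import math

def entropy_bits(probabilities):
    """Shannon entropy in bits of a discrete distribution."""
    return -sum(p * math.log2(p) for p in probabilities if p > 0)

# With no prior knowledge, land vs. sea is a coin flip: the signal carries 1 bit.
print(entropy_bits([0.5, 0.5]))  # 1.0

# Assumed prior: the weather makes "by land" 90% likely.
# The same signal now carries about 0.47 bits on average.
print(round(entropy_bits([0.9, 0.1]), 3))  # 0.469
```

The message is the same in both cases; what changes is how much of it the receiver could already have predicted.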

Take all that with a grain of salt.

comment by Bird Concept (jacobjacob) · 2024-05-08T07:54:31.698Z · LW(p) · GW(p)

Anyone know folks working on semiconductors in Taiwan and Abu Dhabi, or on fiber at Tata Industries in Mumbai?

I'm currently travelling around the world and talking to folks about various kinds of AI infrastructure, and looking for recommendations of folks to meet!

If so, feel free to DM me!

(If you don't know me, I'm a dev here on LessWrong and was also part of founding Lightcone Infrastructure.)

Replies from: mesaoptimizer↑ comment by mesaoptimizer · 2024-05-08T08:19:27.767Z · LW(p) · GW(p)

fiber at Tata Industries in Mumbai

Could you elaborate on how Tata Industries is relevant here? Based on a DDG search, the only news I find involving Tata and AI infrastructure is one where a subsidiary named TCS is supposedly getting into the generative AI gold rush.

Replies from: jacobjacob↑ comment by Bird Concept (jacobjacob) · 2024-05-08T08:30:34.408Z · LW(p) · GW(p)

That's more about me being interested in key global infrastructure, I've been curious about them for quite a lot of years after realising the combination of how significant what they're building is vs how few folks know about them. I don't know that they have any particularly generative AI related projects in the short term.

comment by Bird Concept (jacobjacob) · 2021-05-02T12:33:31.995Z · LW(p) · GW(p)

Have you been meaning to buy the LessWrong Books, but not been able to due to financial reasons?

Then I might have a solution for you.

Whenever we do a user interview with someone, they get a book set for free. Now one of our user interviewees asked that instead their set be given to someone who otherwise couldn't afford it.

So, well, we've got one free set up for grabs!

If you're interested, just send me a private message and briefly describe why getting the book was financially prohibitive to you, and I might be able to send a set your way.

comment by Bird Concept (jacobjacob) · 2020-11-28T03:44:37.290Z · LW(p) · GW(p)

Very uncertain about this, but makes an interesting claim about a relation between temperature, caffeine and RSI: https://codewithoutrules.com/2016/11/18/rsi-solution/

comment by Bird Concept (jacobjacob) · 2020-10-14T01:48:35.002Z · LW(p) · GW(p)

Testing whether images work in spoiler tags

↑ comment by Bird Concept (jacobjacob) · 2020-10-14T01:48:47.739Z · LW(p) · GW(p)

Apparently they don't.

Replies from: mr-hire↑ comment by Matt Goldenberg (mr-hire) · 2020-10-14T02:05:49.825Z · LW(p) · GW(p)

Alternative hypothesis: His smile lights up even the darkest spoiler tag.

Replies from: Gurkenglas↑ comment by Gurkenglas · 2020-10-15T22:30:34.475Z · LW(p) · GW(p)

then it would be

↑ comment by Ben Pace (Benito) · 2020-10-15T23:03:44.790Z · LW(p) · GW(p)

Take my upvote.