Open thread, August 19-25, 2013

post by David_Gerard · 2013-08-19T06:58:15.174Z · LW · GW · Legacy · 326 comments

If it's worth saying, but not worth its own post (even in Discussion), then it goes here.

326 comments

Comments sorted by top scores.

comment by Omid · 2013-08-19T16:28:21.937Z · LW(p) · GW(p)

Commercials sound funnier if you mentally replace "up to" with "no more than."

Replies from: bbleeker↑ comment by Sabiola (bbleeker) · 2013-08-20T14:56:22.433Z · LW(p) · GW(p)

Also easier to translate. In fact, we often translate "up to" with "maximaal", the equivalent of "up to a maximum of" in Dutch. But of course that only translates the practical sense, and leaves out the implication of "up to a maximum of xx (and that is a LOT)". We could translate it with "wel" ("wel xx" ~ "even as much as xx"), but in most contexts, that sounds really... American, over the top, exaggerated. And also it doesn't sound exact enough, when it clearly is intended to be a hard limit.

comment by pan · 2013-08-19T22:12:51.663Z · LW(p) · GW(p)

Why doesn't CFAR just tape record one of the workshops and throw it on youtube? Or at least put the notes online and update them each time they change for the next workshop? It seems like these two things would take very little effort, and while not perfect, would be a good middle ground for those unable to attend a workshop.

I can definitely appreciate the idea that person to person learning can't be matched with these, but it seems to me if the goal is to help the world through rationality, and not to make money by forcing people to attend workshops, then something like tape recording would make sense. (not an attack on CFAR, just a question from someone not overly familiar with it).

Replies from: sixes_and_sevens, ChristianKl, Benito, somervta↑ comment by sixes_and_sevens · 2013-08-21T11:39:43.494Z · LW(p) · GW(p)

I'm a keen swing dancer. Over the past year or so, a pair of internationally reputable swing dance teachers have been running something called "Swing 90X", (riffing off P90X). The idea is that you establish a local practice group, film your progress, submit your recordings to them, and they give you exercises and feedback over the course of 90 days. By the end of it, you're a significantly more badass dancer.

It would obviously be better if everything happened in person, (and a lot does happen in person; there's a massive international swing dance scene), but time, money and travel constraints make this prohibitively difficult for a lot of people, and the whole Swing 90X thing is a response to this, which is significantly better than the next best thing.

It's worth considering if a similar sort of model could work for CFAR training.

↑ comment by ChristianKl · 2013-08-19T22:28:05.215Z · LW(p) · GW(p)

One of the core ideas of CFAR is to develop tools to teach rationality. For that purpose it's useful to avoid making the course material completely open at this point in time. CFAR wants to publish scientific papers that validate their ideas about teaching rationality.

Doing things in person helps with running experiments and those experiments might be less clear when some people already viewed the lectures online.

Replies from: pan, Frood↑ comment by Frood · 2013-08-20T06:07:16.772Z · LW(p) · GW(p)

I'm guessing that the goal here is to gather information on how to teach rationality to the 'average' person? As in, the person off of the street who's never asked themselves "what do I think I know and how do I think I know it?". But as far as I can tell, LWers make up a large portion of the workshop attendees. Many of us will have already spent enough time reading articles/sequences about related topics that it's as if we've "already viewed the lectures online".

Also, it's not as if the entire internet is going to flock to the content the second that it gets posted. There will still be an endless pool of people to use in the experiments. And wouldn't the experiments be more informative if the data points weren't all paying participants with rationality as a high priority? Shouldn't the experiments involve trying to teach a random class of high-schoolers or something?

What am I missing?

Replies from: ChristianKl↑ comment by ChristianKl · 2013-08-26T17:26:30.315Z · LW(p) · GW(p)

And wouldn't the experiments be more informative if the data points weren't all paying participants with rationality as a high priority?

As far as I understand that isn't the case. They do give out scholarships, so not everyone pays. I also think that they test the techniques outside of the workshops.

Shouldn't the experiments involve trying to teach a random class of high-schoolers or something?

Doing research costs money and CFAR seems to want to fund itself through workshop fees. If they would focus on high school classes they would need a different source of funding.

↑ comment by Ben Pace (Benito) · 2013-08-21T13:59:35.837Z · LW(p) · GW(p)

Is a CFAR workshop like a lecture? I thought it would be closer to a group discussion, and perhaps subgroups within. This would make a recording highly unfocused and difficult to follow.

Replies from: somervta↑ comment by somervta · 2013-08-22T09:25:36.554Z · LW(p) · GW(p)

Any one unit in the workshop is probably something in between a lecture, a practice session and a discussion between the instructor and the attendees. Each unit is different in this respect. For most of the units, a recording of a session would probably not be very useful on its own.

↑ comment by somervta · 2013-08-21T01:51:24.072Z · LW(p) · GW(p)

(April 2013 Workshop Attendee)

(The argument is that) A lot of the CFAR workshop material is very context dependent, and would lose significant value if distilled into text or video. Personally speaking, a lot of what I got out of the workshop was only achievable in the intensive environment - the casual discussion about the material, the reasons behind why you might want to do something, etc - a lot of it can't be conveyed in a one hour video. Now, maybe CFAR could go ahead and try to get at least some of the content value into videos, etc, but that has two concerns. One is the reputational problem with 'publishing' lesser-quality material, and the other is sorta-almost akin to the 'valley of bad rationality'. If you teach someone, say, the mechanics of aversion therapy, but not when to use it, or they learn a superficial version of the principle, that can be worse than never having learned it at all, and it seems plausible that this is true of some of the CFAR material also.

Replies from: pan↑ comment by pan · 2013-08-21T15:33:22.306Z · LW(p) · GW(p)

I agree that there are concerns, and you would lose a lot of the depth, but my real concern is with how this makes me perceive CFAR. When I am told that there are things I can't see/hear until I pay money, it makes me feel like it's all some sort of money making scheme, and question whether the goal is actually just to teach as many people as much as possible, or just to maximize revenue. Again, let me clarify that I'm not trying to attack CFAR, I believe that they probably are an honest and good thing, but I'm trying to convey how I initially feel when I'm told that I can't get certain material until I pay money.

It's akin to my personal heuristic of never taking advice from anyone who stands to gain from my decision. Being told by people at CFAR that I can't see this material until I pay the money is the opposite of how I want to decide to attend a workshop. I would rather see the tapes or read the raw material and decide on my own that I would benefit from attending in person.

Replies from: metastable, palladias, tgb, somervta↑ comment by metastable · 2013-08-21T19:16:22.074Z · LW(p) · GW(p)

Yeah, I feel these objections, and I don't think your heuristic is bad. I would say, though, and I hold no brief for CFAR, never having donated or attended a workshop, that there is another heuristic possibly worth considering: generally more valuable products are not free. There are many exceptions to this, and it is possible for sellers to counterhack this common heuristic by using higher prices to falsely signal higher quality to consumers. But the heuristic is not worthless, it just has to be applied carefully.

↑ comment by palladias · 2013-08-25T18:23:24.412Z · LW(p) · GW(p)

We do offer some free classes in the Bay Area. As we beta-test tweaks or work on developing new material, we invite people in to give us feedback on classes in development. We don't charge for these test sessions, and, if you're local, you can sign up here. Obviously, this is unfortunately geographically limited. We do have a sample workshop schedule up, so you can get a sense of what we teach.

If the written material online isn't enough, you can try to chat with one of us if we're in town (I dropped in on a NYC group at the beginning of August). Or you can drop in an application, and you'll automatically be chatting with one of us and can ask as many questions as you like in a one-on-one interview. Applying doesn't create any obligation to buy; the skype interview is meant to help both parties learn more about each other.

↑ comment by tgb · 2013-08-21T18:28:37.815Z · LW(p) · GW(p)

While you have good points, I would like to say that making money is not unaligned with the goal of teaching as many people as possible. It seems like a good strategy is to develop high-quality material by starting off teaching only those able to pay. This lets some subsidize the development of more open course material. If they haven't gotten to the point where they have released the subsidized material, then I'd give them some more time and judge them again in some years. It's a young organization trying to create material from scratch in many areas.

↑ comment by somervta · 2013-08-22T09:21:09.043Z · LW(p) · GW(p)

I feel your concerns, but tbh I think the main disconnect is the research/development vs teaching dichotomy, not (primarily) the considerations I mentioned. The volunteers at the workshop (who were previous attendees) were really quite emphatic about how much they had improved, including content and coherency as well as organization.

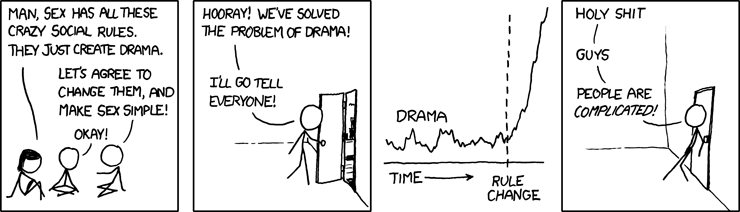

(Relevant)

comment by drethelin · 2013-08-22T19:00:12.299Z · LW(p) · GW(p)

I think one of my very favorite things about commenting on Lesswrong is that usually when you make a short statement or ask a question people will just respond to what you said rather than taking it as a sign to attack what they think that question implies is your tribe.

comment by Omid · 2013-08-20T16:02:11.575Z · LW(p) · GW(p)

This article, written by dreeves's wife, has displaced Yvain's polyamory essay as the most interesting relationships article I've read this year. The basic idea is that instead of trying to split chores or common goods equally, you use auctions. For example, if the bathroom needs to be cleaned, each partner says how much they'd be willing to clean it for. The person with the higher bid pays what the other person bid, and the lower bidder does the cleaning.

It's easy to see why commenters accused them of being libertarian. But I think egalitarians should examine this system too. Most couples agree that chores and common goods should be split equally. But what does "equally" mean? It's hard to quantify exactly how much each person contributes to a relationship. This allows the more powerful person to exaggerate their contributions and pressure the weaker person into doing more than their fair share. But auctions safeguard against this abuse by requiring participants to quantify how much they value each task.

For example, feminists argue that women do more domestic chores than men, and that these chores go unnoticed by men. Men do a little bit, but because men don't see all the work women do, they end up thinking that they're doing their share when they aren't. Auctions safeguard against this abuse. Instead of the wife just cleaning the bathroom, she and her husband each bid how much they'd be willing to clean the bathroom for. The lower bid is considered the fair market price of cleaning the bathroom. Then she and her husband engage in a joint-purchase auction to decide if the bathroom will be cleaned at all. Either the bathroom gets cleaned and the cleaner gets fairly compensated, or the bathroom doesn't get cleaned because the total utility of cleaning the bathroom is less than the disutility of cleaning the bathroom.

And that's it. No arguing about who cleaned it last. No debating whether it really needs to be cleaned. No room for misogynist cultural machines to pressure the wife into doing more than her fair share. Just a market transaction that is efficient and fair.
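A minimal sketch of the two-person auction described above, in Python. The function names, the dictionary layout, and the tie-breaking rule are illustrative assumptions, not anything taken from the linked article. The joint-purchase check only encodes the stated condition that the chore is skipped when its total value is less than its price; how the cost would be shared is not specified above, so it is not modelled.

```python
def chore_auction(bids):
    """Two-person chore auction as described in the comment above.

    bids maps each partner's name to the amount they would accept to do
    the chore. The lower bidder does the chore; the higher bidder pays
    the lower bid.
    """
    if len(bids) != 2:
        raise ValueError("this sketch handles exactly two bidders")
    # Sort by bid. On a tie, the partner listed first cleans (an assumption).
    (cleaner, low_bid), (payer, _) = sorted(bids.items(), key=lambda kv: kv[1])
    return {"cleaner": cleaner, "payer": payer, "payment": low_bid}


def worth_cleaning(values, price):
    """Joint-purchase check: clean only if the partners' combined value
    for a clean bathroom is at least the price of getting it cleaned."""
    return sum(values) >= price


# Hypothetical bids, chosen only for illustration.
result = chore_auction({"wife": 15, "husband": 25})
```

With these made-up bids the wife cleans and the husband pays her 15, her own bid.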

Replies from: kalium, Manfred, passive_fist, knb, Luke_A_Somers, Multiheaded, NancyLebovitz, maia, shminux↑ comment by kalium · 2013-08-21T05:46:58.696Z · LW(p) · GW(p)

This sounds interesting for cases where both parties are economically secure.

However I can't see it working in my case since my housemates each earn somewhere around ten times what I do. Under this system, my bids would always be lowest and I would do all the chores without exception. While I would feel unable to turn down this chance to earn money, my status would drop from that of an equal to that of a servant. I would find this unacceptable.

Replies from: Viliam_Bur, Fronken↑ comment by Viliam_Bur · 2013-08-31T10:58:41.466Z · LW(p) · GW(p)

my housemates each earn somewhere around ten times what I do. Under this system, my bids would always be lowest and I would do all the chores without exception.

I believe you are wrong. (Or I am; in which case please explain to me how.) Here is what I would do if I lived with a bunch of millionaires, assuming my money is limited:

The first time, I would ask a realistic price X. And I would do the chores. I would set the money I earned aside in a "money I don't really own, because I will use it in the future to get my status back" budget.

The second time, I would ask 1.5 × X. The third time, 2 × X. The fourth time, 3 × X. If asked, I would explain the change by saying: "I guess I was totally miscalibrated about how I value my time. Well, I'm learning. Sorry, this bidding system is so new and confusing to me." But I would act like I am not really required to explain anything.

Let's assume I always do the chores. Then my income grows exponentially, which is a nice thing per se, but most importantly, it cannot continue forever. At some moment, my bid would be so insanely high, that even Bill Gates would volunteer to do the chores instead. -- Which is completely okay for me, because I would pay him the $1000000000 per hour from my "get the status back" budget, which at the given time already contains the money.

That's it. Keep your money from chores in a separate budget and use it only to pay others for doing the chores. Increase or decrease the bids depending on the state of that budget. If the price becomes relatively stable, there is no way you would do more chores than the other people around you.

The only imbalance I can imagine is if you have a housemate A who always bids more than a housemate B, in which case you will end up between them, always doing more chores than A but fewer than B. Assuming there are 10 A's and 1 B, and the B is considered very low status, this might result in a rather low status for you, too. -- The system merely guarantees you won't get the lowest status, even if you are the least wealthy person in the house; but you can still get the second-lowest place.

↑ comment by Manfred · 2013-08-20T17:16:54.578Z · LW(p) · GW(p)

Wasn't it Ariely's Predictably Irrational that went over market norms vs. tribe norms? If you just had ordinary people start doing this, I would guess it would crash and burn for the obvious market-norm reasons (the urge to game the system, basically). And some ew-squick power disparity stuff if this is ever enforced by a third party or even social pressure.

Replies from: maia↑ comment by maia · 2013-08-20T18:16:35.741Z · LW(p) · GW(p)

Empirically speaking, this system has worked in our house (of 7 people, for about 6 months so far). What kind of gaming the system were you thinking of?

We do use social pressure: there is social pressure to do your contracted chores, and keep your chore point balance positive. This hasn't really created power disparities per se.

Replies from: someonewrongonthenet, Manfred↑ comment by someonewrongonthenet · 2013-08-20T20:36:39.029Z · LW(p) · GW(p)

What kind of gaming the system were you thinking of?

If the idea is to say exactly how much you are willing to pay, there would be an incentive to:

1) Broadcast that you find all labor extra unpleasant and all goods extra valuable, to encourage people to bid high

2) Bid artificially lower values when you know someone enjoys a labor / doesn't mind parting with a good and will bid accordingly.

In short, optimal play would involve deception, and it happens to be a deception of the sort that might not be difficult to commit subconsciously. You might deceive yourself into thinking you find a chore unpleasant - I have read experimental evidence to support the notion that intrinsically rewarding tasks lose some of their appeal when paired with extrinsic rewards.

No comment on whether the traditional way is any better or worse - I think these two testimonials are sufficient evidence for this to be worth people who have a willing human tribe handy to try it, despite the theoretical issues. After all,

we trust each other not to be cheats and jerks. That’s true love, baby

Edit: There is another, more pleasant problem: If you and I are engaged in trade, and I actually care about your utility function, that's going to affect the price. The whole point of this system is to communicate utility evenly after subtracting for the fact that you care about each other (otherwise why bother with a system?)

Concrete example: We are trying to transfer ownership of a computer monitor, and I'm willing to give it to you for free because I care about you. But if I were to take that into account, then we are essentially back to the traditional method. I'd have to attempt to conjure up the value at which I'd sell the monitor to someone I was neutral towards.

Of course, you could just use this as an argument stopper - whenever there is real disagreement, you use money to effect an easy compromise. But then there is monetary pressure to be argumentative and difficult, and social pressure not to be - it would be socially awkward and monetarily advantageous if you were constantly the one who had a problem with unmet needs.

Replies from: maia↑ comment by maia · 2013-08-21T02:51:59.833Z · LW(p) · GW(p)

1) Broadcast that you find all labor extra unpleasant and all goods extra valuable, to encourage people to bid high

But if other people bid high, then you have to pay more. And they will know if you bid lower, because the auctions are public. How does this help you?

2) Bid artificially lower values when you know someone enjoys a labor / doesn't mind parting with a good and will bid accordingly.

I don't understand how this helps you either; if you bid lower and therefore win the auction, then you have to do the chore for less than you value it at. That's no fun.

The way our system works, it actually gives the lowest bidder, not their actual bid, but the second lowest bid minus 1; that way you don't have to do bidding wars, and can more or less just bid what you value it at. It does create the issue that you mention - bid sniping, if you know what the lowest bidder will bid you can bid just above it so they get as little as possible - but this is at the risk of having to actually do the chore for that little, because bids are binding.
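A rough sketch of the rule just described (lowest bidder does the chore and is paid the second lowest bid minus 1), in Python. The function name and data layout are hypothetical and this is not Choron's actual code. Who pays the winner is not stated here, so the sketch only returns the winner and the payment.

```python
def award_chore(bids):
    """Award a chore to the lowest bidder, paid (second lowest bid - 1).

    bids maps each housemate's name to the number of chore points they
    would accept to do the chore. Returns (winner, payment).
    """
    if len(bids) < 2:
        raise ValueError("need at least two bids to have a second lowest bid")
    # Ties are not handled specially (an assumption of this sketch), so on
    # an exact tie the payment ends up one point below the winner's own bid.
    ranked = sorted(bids.items(), key=lambda kv: kv[1])
    winner = ranked[0][0]
    second_lowest = ranked[1][1]
    return winner, second_lowest - 1


# Hypothetical bids, for illustration only.
honest = award_chore({"a": 10, "b": 30, "c": 40})     # a is paid 29
sniped = award_chore({"a": 10, "b": 30, "c": 11})     # c snipes: a is paid 10
backfired = award_chore({"a": 12, "b": 30, "c": 11})  # c wins and is paid 11
```

The third call shows the risk mentioned above: if the sniper misjudges the lowest bid, they are bound to do the chore for the low price they named.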

I'd very much like to understand the issues you bring up, because if they are real problems, we might be able to take some stabs at solving them.

whenever there is real disagreement, you use money to effect an easy compromise.

This has become somewhat of a norm in our house. We can pass around chore points in exchange for rides to places and so forth; it's useful, because you can ask for favors without using up your social capital. (Just your chore points capital, which is easier to gain more of and more transparent.)

Replies from: someonewrongonthenet↑ comment by someonewrongonthenet · 2013-08-21T13:19:24.151Z · LW(p) · GW(p)

if you bid lower and therefore win the auction, then you have to do the chore for less than you value it at. That's no fun.

You only do this when you plan to be the buyer. The idea is to win the auction and become the buyer, but putting up as little money as possible. If you know that the other guy will do it for $5, you bid $6, even if you actually value it at $10. As you said, I'm talking about bid sniping.

But if other people bid high, then you have to pay more.

Ah, I should have written "broadcast that you find all labor extra unpleasant and all goods extra valuable when you are the seller (giving up a good or doing a labour) so that people pay you more to do it."

If you're willing to do a chore for $10, but you broadcast that you find it more than -$10 of unpleasantness, the other party will be influenced to bid higher - say, $40. Then, you can bid $30, and get paid more. It's just price inflation - in a traditional transaction, a seller wants the buyer to pay as much as they are willing to pay. To do this, the seller must artificially inflate the buyer's perception of how much the item is worth to the seller. The same holds true here.

When you intend to be the buyer you do the opposite - broadcast that you're willing to do the labor for cheap to lower prices, then bid snipe. As in a traditional transaction, the buyer wants the seller to believe that the item is not of much worth to the buyer. The buyer also has to try to guess the minimum amount that the seller will part with the item.

it actually gives the lowest bidder, not their actual bid, but the second lowest bid minus 1

So what I wrote above was assuming the price was a midpoint between the buyer's and seller's bid, which gives them both equal power to set the price. This rule slightly alters things, by putting all the price setting power in the buyer's hands.

Under this rule, after all the deceptive price inflation is said and done you should still bid an honest $10 if you are only playing once - though since this is an iterated case, you probably want to bid higher just to keep up appearances if you are trying to be deceptive.

One of the nice things about this rule is that there is no incentive to be deceptive unless other people are bid sniping. The weakness of this rule is that it creates a stronger incentive to bid snipe.

Price inflation (seller's strategy) and bid sniping (buyer's strategy) are the two basic forms of deception in this game. Your rule empowers the buyer to set the price, thereby making price inflation harder at the cost of making bid sniping easier. I don't think there is a way around this - it seems to be a general property of trading. Finding a way around it would probably solve some larger scale economic problems.

Replies from: rocurley↑ comment by rocurley · 2013-08-21T19:36:18.221Z · LW(p) · GW(p)

(I'm one of the other users/devs of Choron)

There are two ways I know of that the market can try to defeat bid sniping, and one way a bidder can (that I know of).

Our system does not display the lowest bid, only the second lowest bid. For a one-shot auction where you had poor information about the others' preferences, this would solve bid sniping. However, in our case, chores come up multiple times, and I'm pretty sure that it's public knowledge how much I bid on shopping, for example.

If you're in a situation where the lowest bid is hidden, but your bidding is predictable, you can sometimes bid higher than you normally would. This punishes people who bid less than they're willing to actually do the chore for, but imposes costs on you and the market as a whole as well, in the form of higher prices for the chore.

A third option, which we do not implement (credit to Richard for this idea), is to randomly award the auction to one of the two (or n) lowest bidders, with probability inversely related to their bid. In particular, if you pick between the lowest 2 bidders, both have claimed to be willing to do the job for the 2nd bidder's price (so the price isn't higher and no one can claim they were forced to do something for less than they wanted). This punishes bid-snipers by taking them at their word that they're willing to do the chore for the reduced price, at the cost of determinism, which allows better planning.
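One way the randomized award could look, as a hedged Python sketch. The weighting of 1/bid is just one reading of "inversely related to their bid", the "minus 1" adjustment mentioned earlier in the thread is left out, and the function name is made up. As noted above, this option is not implemented in Choron.

```python
import random


def randomized_award(bids, rng=random):
    """Pick one of the two lowest bidders at random; pay the second lowest bid.

    bids maps names to positive bids. Each of the two lowest bidders is
    chosen with weight 1 / bid (an assumed weighting), so the lower bidder
    is more likely to win. Returns (winner, price).
    """
    if len(bids) < 2:
        raise ValueError("need at least two bids")
    (name1, bid1), (name2, bid2) = sorted(bids.items(), key=lambda kv: kv[1])[:2]
    if bid1 <= 0:
        raise ValueError("bids must be positive for 1 / bid weighting")
    winner = rng.choices([name1, name2], weights=[1 / bid1, 1 / bid2], k=1)[0]
    # Both candidates bid at most bid2, so neither is paid less than they asked.
    return winner, bid2


# Hypothetical bids; a seeded generator keeps the example reproducible.
winner, price = randomized_award({"a": 10, "b": 30, "c": 40}, random.Random(0))
```

With bids of 10 and 30 the weights are 1/10 and 1/30, so the lowest bidder wins about three times out of four, and the price is 30 either way.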

Replies from: someonewrongonthenet↑ comment by someonewrongonthenet · 2013-08-23T01:22:00.410Z · LW(p) · GW(p)

at the cost of determinism

And market efficiency.

Plus, I think it doesn't work when there are only two players? If I honestly bid $30, and you bid $40 and randomly get awarded the auction, then I have to pay you $40. And that leaves me at -$10 disutility, since the task was only -$30 to me.

Replies from: rocurley↑ comment by rocurley · 2013-08-23T03:44:30.858Z · LW(p) · GW(p)

To be sure I'm following you: If the 2nd bidder gets it (for the same price as the first bidder), the market efficiency is lost because the 2nd person is indifferent between winning and not, while the first would have liked to win it? If so, I think that's right.

If there are two players... I agree the first bidder is worse off than they would be if they had won. This seems like a special case of the above though: why is it more broken with 2 players?

Replies from: someonewrongonthenet↑ comment by someonewrongonthenet · 2013-08-25T17:05:03.172Z · LW(p) · GW(p)

To be sure I'm following you...

Yes, that's one of the inefficiencies. The other inefficiency is that whenever the 2nd player wins, the service gets more expensive.

If there are two players... I agree the first bidder is worse off than they would be if they had won. This seems like a special case of the above though: why is it more broken with 2 players?

Because of the fact that the service gets more expensive. When there are multiple players, this might not seem like such a big deal - sure, you might pay more than the cheapest possible price, but you are still ultimately all benefiting (even if you aren't maximally benefiting). Small market inefficiencies are tolerable.

It's not so bad with 3 players who bid 20, 30, 40, since even if the 30-bidder wins, the other two players only have to pay 15 each. It's still inefficient, but it's not worse than no trade.

However, when your economy consists of two people, market inefficiency is felt more keenly. Consider the example I gave earlier once more:

I bid 30. You bid 40. So I can sell you my service for $30-$40, and we both benefit. But wait! The coin flip makes you win the auction. So now I have to pay you $40.

My stated preference is that I would not be willing to pay more than $30 for this service. But I am forced to do so. The market inefficiency has not merely resulted in a sub-optimal outcome - it's actually worse than if I had not traded at all!
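The arithmetic behind the three-player and two-player examples can be checked with a short Python sketch. It assumes the winner's payment is split evenly among everyone else (which is what the "15 each" figure implies) and that each person's bid is what doing the chore themselves would cost them. The function name is hypothetical.

```python
def non_winner_net(own_cost, price, n_players):
    """Net result for someone who did not win the chore.

    own_cost: what doing the chore themselves would have cost them (their bid).
    price: what the winner is paid in total.
    Assumes the price is split evenly among the other n_players - 1 people.
    A negative result means they are worse off than doing the chore themselves.
    """
    if n_players < 2:
        raise ValueError("need at least two players")
    share = price / (n_players - 1)
    return own_cost - share


# Three players bid 20, 30, 40 and the 30-bidder wins at a price of 30.
low_bidder = non_winner_net(20, 30, 3)
high_bidder = non_winner_net(40, 30, 3)
# Two players bid 30 and 40, and the 40-bidder wins at a price of 40.
two_player = non_winner_net(30, 40, 2)
```

Under these assumptions the three-player results are 5 and 25, both still positive, while the two-player result is -10, which is the "worse than no trade" case.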

Edit: What's worse is that you can name any price. So suppose it's just us two, I bid $10 and you bid $100, and it goes to the second bidder...

Replies from: rocurley↑ comment by rocurley · 2013-08-27T01:28:00.002Z · LW(p) · GW(p)

I don't think that the service gets more expensive under a second price auction (which Choron uses). If you bid $10 and I bid $100, normally it would go to you for $100. In the randomized case, it might go to me for $100.

I think I agree with you about the possibility of harm in the 2 person case.

Replies from: someonewrongonthenet↑ comment by someonewrongonthenet · 2013-08-27T15:59:13.310Z · LW(p) · GW(p)

I don't think that the service gets more expensive under a second price auction (which Choron uses). If you bid $10 and I bid $100, normally it would go to you for $100. In the randomized case, it might go to me for $100.

Oh yes, that's right. I think I initially misunderstood the rules of the second price - I thought it would be $10 to me or $100 to you, randomly chosen.

↑ comment by Manfred · 2013-08-20T20:54:50.992Z · LW(p) · GW(p)

What kind of gaming the system were you thinking of?

Yeah, bidding = deception. But in addition to someonewrong's answer, I was thinking you could just end up doing a shitty job at things (e.g. cleaning the bathroom). Which is to say, if this were an actual labor market, and not a method of communicating between people who like each other and have outside-the-market reasons to cooperate, the market doesn't have much competition.

Replies from: maia, juliawise↑ comment by maia · 2013-08-21T02:42:28.487Z · LW(p) · GW(p)

Yeah, that's unfortunately not something we can really handle other than decreeing "Doing this chore entails doing X and it doesn't count if you don't do X." Enforcing the system isn't solved by the system itself.

a method of communicating between people who like each other and have outside-the-market reasons to cooperate

Good way to describe it.

↑ comment by juliawise · 2013-10-13T15:00:26.144Z · LW(p) · GW(p)

Except she specifies that if they're bidding above market wages for a task (cleaning the bathroom would work fine), they'll just pay someone else to do it. Of course, chores like getting up to deal with a sick child are not so outsourceable.

↑ comment by passive_fist · 2013-08-22T08:06:30.665Z · LW(p) · GW(p)

Most couples agree that chores and common goods should be split equally.

I'm skeptical that most couples agree with this.

Anyway, all of these types of 'chore division' systems that I've seen so far totally disregard human psychology. Remember that the goal isn't to have a fair chore system. The goal is to have a system that preserves a happy and stable relationship. If the resulting system winds up not being 'fair', that's ok.

Replies from: army1987↑ comment by A1987dM (army1987) · 2013-08-22T21:20:14.543Z · LW(p) · GW(p)

I'm skeptical that most couples agree with this.

Most couples worldwide, or most couples in W.E.I.R.D. societies?

Replies from: passive_fist↑ comment by passive_fist · 2013-08-23T02:12:41.998Z · LW(p) · GW(p)

Both.

↑ comment by knb · 2013-08-20T22:39:06.201Z · LW(p) · GW(p)

Wow someone else thought of doing this too!

My roommate and I started doing this a year ago. It went pretty well for the first few months. Then our neighbor heard about how much we were paying each other for chores and started outbidding us.

Replies from: Vaniver↑ comment by Vaniver · 2013-08-22T23:54:58.887Z · LW(p) · GW(p)

Then our neighbor heard about how much we were paying each other for chores and started outbidding us.

This is one of the features of this policy, actually- you can use this as a natural measure of what tasks you should outsource. If a maid would cost $20 to clean the apartment, and you and your roommates all want at least $50 to do it, then the efficient thing to do is to hire a maid.

Replies from: Viliam_Bur↑ comment by Viliam_Bur · 2013-08-31T10:44:37.280Z · LW(p) · GW(p)

The problem could be that they actually are willing to do it for $10, but it's a low-status thing to admit.

If we both lived in the same apartment, and we both pretended that our time is so precious that we are only willing to clean the apartment for $1000... and I do it 50% of the time, and you do it 50% of the time, at the end neither of us gets poor despite the unrealistic prices, because each of us gets all the money back.

Now when the third person comes and cares about money more than about status (which is easier for them, because they don't live in the same apartment with us), our pretending is exposed and we become either more honest or poor.

↑ comment by Luke_A_Somers · 2013-08-20T18:04:41.267Z · LW(p) · GW(p)

I can see this working better than a dysfunctional household, but if you're both in the habit of just doing things, this is going to make everything worse.

Replies from: dreeves↑ comment by dreeves · 2013-09-23T01:37:26.029Z · LW(p) · GW(p)

Very fair point! Just like with Beeminder, if you're lucky enough to simply not suffer from akrasia then all the craziness with commitment devices is entirely superfluous. I liken it to literal myopia. If you don't have the problem then more power to you. If you do then apply the requisite technology to fix it (glasses, commitment devices, decision auctions).

But actually I think decision auctions are different. There's no such thing as not having the problem they solve. Preferences will conflict sometimes. Just that normal people have perfectly adequate approximations (turn taking, feeling each other out, informal mental point systems, barter) to what we've formalized and nerded up with our decision auctions.

↑ comment by Multiheaded · 2013-08-22T17:33:39.360Z · LW(p) · GW(p)

And that's it. No arguing about who cleaned it last. No debating whether it really needs to be cleaned. No room for misogynist cultural machines to pressure the wife into doing more than her fair share. Just a market transaction that is efficient and fair.

P.S.: those last two sentences ("No room for misogynist cultural machines to pressure the wife into doing more than her fair share. Just a market transaction that is efficient and fair.") also remind me of "If those women were really oppressed, someone would have tended to have freed them by then."

Replies from: Omid↑ comment by Omid · 2013-08-23T00:59:22.034Z · LW(p) · GW(p)

The polyamory and BDSM subcultures prove that nerds can create new social rules that improve sex. Of course, you can't just theorize about what the best social rules would be and then declare that you've "solved the problem." But when you see people living happier lives as a result of changing their social rules, there's nothing wrong with inviting other people to take a look.

I don't understand your postscript. I didn't say there is no inequality in chore division because, if there were, a chore market would have removed it. I said a chore market would have more equality than the standard each-person-does-what-they-think-is-fair system. Your response seems like a fully general counterargument: anyone who proposes a way to reduce inequality can be accused of denying that the inequality exists.

Replies from: Nornagest, fubarobfusco↑ comment by Nornagest · 2013-08-26T00:37:46.504Z · LW(p) · GW(p)

The polyamory and BDSM subcultures prove that nerds can create new social rules that improve sex

The modern BDSM culture's origins are somewhat obscure, but I don't think I'd be comfortable saying it was created by nerds despite its present demographics. The leather scene is only one of its cultural poles, but that's generally thought to have grown out of the post-WWII gay biker scene: not the nerdiest of subcultures, to say the least.

I don't know as much about the origins of poly, but I suspect the same would likely be true there.

↑ comment by fubarobfusco · 2013-08-25T23:48:03.857Z · LW(p) · GW(p)

The polyamory and BDSM subcultures prove that nerds can create new social rules that improve sex.

Hmm, I don't know that I would consider those rules overall to be clearly superior for everyone, although they do reasonably well for me. Rather, I value the existence of different subcultures with different norms, so that people can choose those that suit their predilections and needs.

(More politically: A "liberal" society composed of overlapping subcultures with different norms, in a context of individual rights and social support, seems to be almost certain to meet more people's needs than a "totalizing" society with a single set of norms.)

There are certain of those social rules that seem to be pretty clear improvements to me, though — chiefly the increased care on the subject of consent. That's an improvement in a vanilla-monogamous-heteronormative subculture as well as a kink-poly-genderqueer one.

Replies from: Viliam_Bur↑ comment by Viliam_Bur · 2013-08-31T11:34:48.501Z · LW(p) · GW(p)

(More politically: A "liberal" society composed of overlapping subcultures with different norms, in a context of individual rights and social support, seems to be almost certain to meet more people's needs than a "totalizing" society with a single set of norms.)

This works best if none of the "subcultures with different norms" creates huge negative externalities for the rest of the society. Otherwise, some people get angry. -- And then we need to go meta and create some global rules that either prevent the former from creating the externalities, or the latter from expressing their anger.

I guess in the case of the BDSM subculture this works without problems. And I guess the test of the polyamorous community will be how well they treat their children (hopefully better than polygamous Mormons treat their sons), or perhaps how they will handle the poly- equivalents of divorce, especially the economic aspects of it (if there is significant shared property).

↑ comment by NancyLebovitz · 2013-08-24T10:06:04.357Z · LW(p) · GW(p)

One datapoint: I know of one household (two adults, one child) which worked out chores by having people list which chores they liked, which they tolerated, and which they hated. It turned out that there was enough intrinsic motivation to make taking care of the house work.

↑ comment by maia · 2013-08-20T18:14:14.661Z · LW(p) · GW(p)

Roger and I wrote a web app for exactly this purpose - dividing chores via auction. This has worked well for chore management for a house of 7 roommates, for about 6 months so far.

The feminism angle didn't even occur to us! It's just been really useful for dividing chores optimally.

↑ comment by Shmi (shminux) · 2013-08-20T18:26:36.261Z · LW(p) · GW(p)

I can see it working when all parties are trustworthy and committed to fairness, which is a high threshold to begin with. Also, everyone has to buy into the idea of other people being autonomous agents, with no shoulds attached. Still, this might run into trouble when one party badly wants something flatly unacceptable to the other, cannot afford it, and ends up feeling resentful.

One (unrelated) interesting quote:

my womb is worth about the cost of one graduate-level course at Columbia, assuming I’m interested in bearing your kid to begin with.

comment by David_Gerard · 2013-08-19T06:59:14.080Z · LW(p) · GW(p)

Weekly open threads - how do you think it's working?

Replies from: Emile, RolfAndreassen↑ comment by Emile · 2013-08-19T07:22:52.887Z · LW(p) · GW(p)

I think it's much better than monthly open threads - back then, I would sometimes think "Hmm, I'd like to ask this in an open thread, but the last one is too old, nobody's looking at it any more".

Replies from: Manfred↑ comment by Manfred · 2013-08-19T12:49:16.086Z · LW(p) · GW(p)

You haven't ever posted a top-level comment in a weekly open thread.

Replies from: Kaj_Sotala, Tenoke↑ comment by Kaj_Sotala · 2013-08-19T18:18:08.769Z · LW(p) · GW(p)

I have, and I agree with Emile's assessment.

↑ comment by Tenoke · 2013-08-19T13:10:51.404Z · LW(p) · GW(p)

What has that to do with it?

Replies from: Manfred, Kawoomba↑ comment by Manfred · 2013-08-19T13:57:56.251Z · LW(p) · GW(p)

Suppose we were wondering about changing the flavor of our pizza. Someone says "Yeah, I'm really glad you've got these new flavors on your menu, I used to think the old recipe was boring and didn't order it much."

And then it turns out that this person hasn't ever actually tried any of your new flavors of pizza.

Sort of sets an upper bound on how much the introduction of new flavors has impacted this person's behavior.

Replies from: Tenoke, bogdanb, Emile↑ comment by Tenoke · 2013-08-19T14:16:31.389Z · LW(p) · GW(p)

You can judge a lot more about a thread than about a pizza by just looking at it.

Also, if you seriously think that Open Threads can only be evaluated by people with top-level comments in them you probably misunderstand both how most people use the Open Threads and what is required to judge them.

Replies from: Manfred↑ comment by bogdanb · 2013-08-19T19:34:42.322Z · LW(p) · GW(p)

Note that he didn’t say “I didn’t post much”, he just said that there existed times when he thought about posting but didn’t because of the age of the thread. That is useful evidence, you can’t just ignore it if it so happens that there are no instances of posting at all.

(In pizza terms, Emile said “I used to think the old recipe was bad and I never ordered it.” It’s not that surprising in that case that there are no instances of ordering.)

↑ comment by Emile · 2013-08-19T16:26:35.619Z · LW(p) · GW(p)

Sure!

Though here it's more of a case of "once in a blue moon I go to the pizza place ... and I'm bored and tired of life ... and want to try something crazy for a change ... but then I see the same old stuff on the menu, I think man, this world sucks ... but now they have the Sushi-Harissa-Livarot pizza, I know next time I'm going to feel better!"

I agree it's a bit weird that I say that p(post|weekly thread) > p(post| monthly thread) when so far there are no instances of post|weekly thread.

↑ comment by RolfAndreassen · 2013-08-19T16:09:41.883Z · LW(p) · GW(p)

I prefer it to the old format; once a month is too clumpy for an open thread. It was fine when this was a two-man blog, but not for a discussion forum.

comment by Anders_H · 2013-08-19T21:13:31.694Z · LW(p) · GW(p)

Last week, I gave a presentation at the Boston meetup, about using causal graphs to understand bias in the medical literature. Some of you requested the slides, so I have uploaded them at http://scholar.harvard.edu/files/huitfeldt/files/using_causal_graphs_to_understand_bias_in_the_medical_literature.pptx

Note that this is intended as a "Causality for non-majors" type presentation. If you need a higher level of precision, and are able to follow the maths, you would be much better off reading Pearl's book.

(Edited to change file location)

Replies from: Adele_L↑ comment by Adele_L · 2013-08-19T22:35:49.752Z · LW(p) · GW(p)

Thanks for making these available.

Even if you can follow the math, these sorts of things can be useful for orienting someone new to the field, or laying a conceptually simple map of the subject that can be elaborated on later. Sometimes, it's easier to use a map to get a feel for where things are than it is to explore directly.

comment by mstevens · 2013-08-20T10:39:18.187Z · LW(p) · GW(p)

I want to know more (i.e. anything) about game theory. What should I read?

Replies from: sixes_and_sevens, Manfred, None↑ comment by sixes_and_sevens · 2013-08-20T11:30:13.921Z · LW(p) · GW(p)

If you have the time, I heartily recommend Ben Polak's Introduction to Game Theory lectures. They are highly watchable and give a very solid introduction to the topic.

In terms of books, The Strategy of Conflict is the classic popular work, and it's good, but it's very much a product of its time. I imagine there are more accessible books out there. Yvain recommends The Art of Strategy, which I haven't read.

Replies from: mstevens↑ comment by mstevens · 2013-08-20T13:09:01.068Z · LW(p) · GW(p)

I hate trying to learn things from videos, but the books look interesting.

Replies from: sixes_and_sevens, sixes_and_sevens↑ comment by sixes_and_sevens · 2013-08-20T16:22:41.177Z · LW(p) · GW(p)

(If you want a specific link, here is Yvain's introduction to game theory sequence. There are some problems and inaccuracies with it which are generally discussed in comments, but as a quick overview aimed at a LW audience it should serve pretty well.)

↑ comment by sixes_and_sevens · 2013-08-20T15:00:21.435Z · LW(p) · GW(p)

What are your motives for learning about it? If it's to gain a bare-bones understanding sufficient for following discussion in Less Wrong, existing Less Wrong articles would probably equip you well enough.

Replies from: mstevens↑ comment by mstevens · 2013-08-21T07:51:51.470Z · LW(p) · GW(p)

My possibly crazy theory is that game theory would be a good way to understand feminism.

Replies from: sixes_and_sevens↑ comment by sixes_and_sevens · 2013-08-21T09:36:17.362Z · LW(p) · GW(p)

OK, I'm interested. Can you explain a little more?

Replies from: mstevens↑ comment by mstevens · 2013-08-21T10:20:00.281Z · LW(p) · GW(p)

It's a little bit intuition and might turn out to be daft, but

a) I've read just enough about game theory in the past to know what the prisoner's dilemma is

b) I was reading an argument/discussion on another blog about the scenario of men chatting up women who may or may not be interested, and various discussions on IRC with MixedNuts have given me the feeling that male/female interactions (which are obviously an area of central interest to feminism) are a similar class of thing, and that game theory might help me understand said feminism and/or opposition to it.

Replies from: sixes_and_sevens, JQuinton↑ comment by sixes_and_sevens · 2013-08-21T11:00:57.997Z · LW(p) · GW(p)

A word of warning: you will probably draw all sorts of wacky conclusions about human interaction when first dabbling with game theory. There is huge potential for hatching beliefs that you may later regret expressing, especially on politically-charged subjects.

↑ comment by JQuinton · 2013-08-23T17:14:48.032Z · LW(p) · GW(p)

I also had the same intuition about male/female dynamics and the prisoner's dilemma. It also seems like a lot of men's behavior towards women is a result of a scarcity mentality. Surely there are some economic models that explain how people behave -- especially their bad behavior -- when they feel some product is scarce, and if these models were applied to male/female dynamics it might predict some behavior.

But since feminism is such a mind-killing topic, I wouldn't feel too comfortable expressing alternative explanations (especially among non-rationalists) since people tend to feel that if you disagree with the explanation then you disagree with the normative goals.

Replies from: satt↑ comment by satt · 2013-08-24T15:36:00.444Z · LW(p) · GW(p)

It also seems like a lot of men's behavior towards women is a result of a scarcity mentality. Surely there are some economic models that explain how people behave -- especially their bad behavior -- when they feel some product is scarce, and if these models were applied to male/female dynamics it might predict some behavior.

One model which I've seen come up repeatedly in the humanities is the "marriage market". Unsurprisingly, economists seem to use this idea most often in the literature, but peeking through the Google Scholar hits I see demographers, sociologists, and historians too. (At least one political philosopher uses the idea too.)

I don't know how predictive these models are. I haven't done a systematic review or anything remotely close to one, but when I've seen the marriage market metaphor used it's usually to explain an observation after the fact. Here is a specific example I spotted in Randall Collins's book Violence: A Micro-sociological Theory. On pages 149 & 150 Collins offers this gloss on an escalating case of domestic violence:

It appears that the husband's occupational status is rising relative to his wife's; in this social class, their socializing is likely to be with the man's professional associates (Kanter 1977), and thus it is when she is in the presence of his professional peers that he belittles her, and it is in regard to what he perceives as her faulty self-presentation in these situations that he begins to engage in tirades at home. He is becoming relatively stronger socially, and she is coming to accept that relationship. Then he escalates his power advantage, as the momentum of verbal tirades flows into physical violence.

A sociological interpretation of the overall pattern is that within the first two years of their marriage, the man has discovered that he is in an improving position on the interactional market relative to his wife; since he apparently does not want to leave his wife, or seek additional partners, he uses his implicit market power to demand greater subservience from his wife in their own personal and sexual relationships. Blau's (1964) principle applies here: the person with a weaker exchange position can compensate by subservience. [...] In effect, they are trying out how their bargaining resources will be turned into ongoing roles: he is learning techniques of building his emotional momentum as dominator, she is learning to be a victim.

(Digression: Collins calls this a sociological interpretation, but I usually associate this kind of bargaining power-based explanation with microeconomics or game theory, not sociology. Perhaps I should expand my idea of what constitutes sociology. After all, Collins is a sociologist, and he has partly melded the bargaining power-based explanation with his own micro-sociological theory of violence.)

Replies from: Viliam_Bur↑ comment by Viliam_Bur · 2013-08-31T11:59:05.547Z · LW(p) · GW(p)

Collins calls this a sociological interpretation, but I usually associate this kind of bargaining power-based explanation with microeconomics or game theory, not sociology. Perhaps I should expand my idea of what constitutes sociology.

All sciences are describing various aspects of the reality, but there is one reality, and all these aspects are connected. Asking whether some explanation belongs to science X or science Y is useful when we want to find the best tools to deal with it; but the more important question is whether the explanation is true or false; how well it predicts reality.

Some applied topics may be considered by various sciences to be in their (extended) territory. For example, I have seen game theory considered a part of a) mathematics, b) economics, and c) psychology. I guess the mechanism itself is mathematical, and it has important economic and psychological consequences, so it is useful for all of them to know about it.

It may be the case that one outcome is influenced by many factors, and the different factors are best explained by different sciences. For example, some aspects of relationships in marriage can be explained by biology, psychology, economics, sociology, perhaps even theology when the people are religious. Then it is good to check across all sciences to see whether we missed some important factor. But the goal would be to create the best model, not to pick the favourite explanation. (The best model would include all relevant factors, weighted by their strength.)

Trying to focus on one science only... I guess it is trying to influence the outcome; motivated thinking. For example if someone decides to ignore the biology and only focus on sociology, that already makes it obvious what kind of answer they want to get. And if someone decides to ignore the sociology and only focus on biology, that also makes it obvious. But the real question should be how specifically both the biological and the sociological aspects influence the result.

Replies from: satt↑ comment by satt · 2013-09-02T01:55:40.314Z · LW(p) · GW(p)

Asking whether some explanation belongs to science X or science Y is useful when we want to find the best tools to deal with it; but the more important question is whether the explanation is true or false; how well it predicts reality.

Indeed. Still, I want my mental models/stereotypes of different sciences to roughly match what scientists in those different fields are actually doing.

comment by Dorikka · 2013-08-19T19:32:07.065Z · LW(p) · GW(p)

Open comment thread:

If it's worth saying, but not worth its own top-level comment in the open thread, it goes here.

(Copied since it was well received last time.)

Replies from: shminux, Armok_GoB↑ comment by Shmi (shminux) · 2013-08-19T20:22:55.213Z · LW(p) · GW(p)

What's the name of the bias/fallacy/phenomenon where you learn something (new information, approach, calculation, way of thinking, ...) but after awhile revert to the old ideas/habits/views etc.?

Replies from: RobbBB, moreati↑ comment by Rob Bensinger (RobbBB) · 2013-08-20T06:12:53.188Z · LW(p) · GW(p)

Relapse? Backsliding? Recidivism? Unstickiness? Retrogression? Downdating?

Replies from: shminux↑ comment by Shmi (shminux) · 2013-08-20T17:40:52.066Z · LW(p) · GW(p)

Hmm, some of these are good terms, but the issue is so common, I assumed there would be a standard term for it, at least in the education circles.

↑ comment by moreati · 2013-08-19T21:48:41.211Z · LW(p) · GW(p)

I can't think of an academic name; the common phrases in Britain are 'stuck in your ways', 'bloody minded', 'better the devil you know'.

Replies from: army1987↑ comment by A1987dM (army1987) · 2013-08-21T18:09:19.347Z · LW(p) · GW(p)

Depending on what timescales shminux is thinking of as “awhile” (hours or months?), RobbBB's suggestions may be better.

↑ comment by Armok_GoB · 2013-08-27T19:49:08.187Z · LW(p) · GW(p)

Open subcomment subthread:

If it's not worth saying anywhere, it goes here.

Replies from: Dorikka

comment by knb · 2013-08-20T01:15:13.017Z · LW(p) · GW(p)

I don't know how technically viable hyperloop is, but it seems especially well suited for the United States.

Investing in a hyperloop system doesn't make as much sense in Europe or Japan for a number of reasons:

European/Japanese cities are closer together, so Hyperloop's long acceleration times are a larger relative penalty in terms of speed. The existing HSR systems reach their lower top speeds more quickly.

Most European countries and Japan already have decent HSR systems and are set to decline in population. Big new infrastructure projects tend not to make as much sense when populations are declining and the infrastructure cost : population ratio is increasing by default.

Existing HSR systems create a natural political enemy for Hyperloop proposals. For most countries, having HSR and Hyperloop doesn't make sense.

In contrast, the US seems far better suited:

The US is set for a massive population increase, requiring large new investments in transportation infrastructure in any case.

The US has lots of large but far-flung cities, so long acceleration times are not as much of a relative penalty.

The US has little existing HSR to act as a competitor. The political class has expressed interest in increasing passenger rail infrastructure.

Hyperloop is proposed to carry automobiles. Low walkability of US towns is the big killer of intercity passenger rail in the US. Taking HSR might be faster than driving, but in addition to other benefits, driving saves money on having to rent a car when you reach the destination city.

Another possible early adopter is China (because they still need more transport infrastructure, land acquisition is a trivial problem for the Communist party, and they have a larger area, mitigating the slow acceleration problem.) I see China as less likely than the US because they do have a fairly large HSR system and it is expanding quickly. Also, China is set for population decline within a few decades, although they have some decades of slow growth left.

Russia is another possible candidate. Admittedly they have the declining population problem, but they still need more transport infrastructure and they have several big, far-flung cities. The current Russian transportation system is quite unsafe, so they could be expected to be willing to invest in big new projects. The slow acceleration problem would again be mitigated by Russia's large size.

Replies from: luminosity, None, CAE_Jones, DanielLC, metastable↑ comment by luminosity · 2013-08-20T11:24:09.526Z · LW(p) · GW(p)

Don't forget Australia. We have a few, large cities separated by long distances. In particular, Melbourne to Sydney is one of the highest traffic air routes in the world, roughly the same distance as the proposed Hyperloop, and there has been on and off talk of high speed rail links. Additionally, Sydney airport has a curfew, and is more or less operating at capacity. Offloading Melbourne-bound passengers to a cheaper, faster option would free up more flights for other destinations.

↑ comment by [deleted] · 2013-08-20T04:29:09.885Z · LW(p) · GW(p)

In theory there is no difference between theory and practice. In practice, there is.

I continue to fail to see how this is anything more than a cool idea that would need huge amounts of testing, and would have to clear many engineering hurdles, to get going, if it proved viable at all. Nothing is ever as simple as its untested dream.

Not hating on it, but seriously, hold your horses...

Replies from: knb↑ comment by knb · 2013-08-21T09:48:28.736Z · LW(p) · GW(p)

I feel like I covered this in the first sentence with, "I don't know how technically viable hyperloop is." My point is just to argue that the US would be especially well-suited for hyperloop if it turns out to be viable. My goal was mainly to try to argue against the apparent popular wisdom that hyperloop would never be built in the US for the same reason HSR (mostly) wasn't.

↑ comment by CAE_Jones · 2013-08-20T02:30:06.261Z · LW(p) · GW(p)

I was only vaguely following the Hyperloop thread on Lesswrong, but this analysis convinced me to Google it to learn more. I was immediately bombarded with a page full of search results that were pessimistic at best (mocking, pretending at fallacy of gray but still patronizing, and politically indignant (the LA Times) were among the results on the first page)[1]. I was actually kinda hopeful about the concept, since America desperately needs better transit infrastructure, and knb's analysis of it being best suited for America makes plenty of sense so far as I can tell.

[1] I didn't actually open any of the results, just read the titles and descriptions. The tone might have been exaggerated or even completely mutated by that filter, but that seems unlikely for the titles and excerpts I read.

Replies from: RolfAndreassen↑ comment by RolfAndreassen · 2013-08-20T19:11:32.874Z · LW(p) · GW(p)

I suggest that this is very weak evidence against the viability, either political, economic, or technical, of the Hyperloop. Any project that is obviously viable and useful has been done already; consequently, both useful and non-useful projects get the same amount of resistance of the form "Here's a problem I spent at least ten seconds thinking up, now you must take three days to counter it or I will pout. In public. Thus spoiling all your chances of ever getting your pet project accepted, hah!"

↑ comment by DanielLC · 2013-08-20T05:57:51.665Z · LW(p) · GW(p)

I've been told that railways primarily get money from freight, and nobody cares that much about freight getting there immediately. As such, high speed railways are not a good idea.

I know you can't leave this to free enterprise per se. If someone doesn't want to sell their house, you can't exactly steer a railroad around it. However, if eminent domain is used, then if it's worth building, the market will build it. Let the government offer eminent domain use for railroads, and let them be built if they're truly needed.

Replies from: kalium, knb↑ comment by kalium · 2013-08-20T17:19:41.786Z · LW(p) · GW(p)

Much of Amtrak uses tracks owned by freight companies, and this is responsible for a good chunk of Amtrak's poor performance. However, high-speed rail on non-freight-owned tracks works pretty well in the rest of the world; it just needs its own right-of-way (in some cases running freight at night when the high-speed trains aren't running, but still having priority over freight traffic).

Replies from: DanielLC↑ comment by DanielLC · 2013-08-20T23:04:15.673Z · LW(p) · GW(p)

Are high speed trains profitable enough for people to build them without government money? I'm not sure how to look that up.

Replies from: knb, kalium, fubarobfusco↑ comment by knb · 2013-08-21T09:40:12.964Z · LW(p) · GW(p)

Many of the private passenger rail companies were losing money before they were nationalized, but that was under heavy regulation and price controls. The freight rail companies were losing money before they were deregulated as well. These days they are quite profitable.

A lot of the old right-of-way has been lost so they would certainly need government help to overcome the tragedy-of-the-anticommons problem.

Replies from: DanielLC↑ comment by DanielLC · 2013-08-21T22:55:28.246Z · LW(p) · GW(p)

A lot of the old right-of-way has been lost so they would certainly need government help to overcome the tragedy-of-the-anticommons problem.

You mean the problem that someone isn't going to be willing to sell their property? Eminent domain is certainly necessary. I'm just wondering if it's sufficient.

↑ comment by kalium · 2013-08-20T23:34:34.027Z · LW(p) · GW(p)

That's not at all the same question as "Are high-speed trains a good idea?"

Any decent HSR would generate quite a lot of value not captured by fares. It would be more informative to compare the economic development of regions that have built high-speed rail against that of similar regions which haven't or which did so later.

France's TGV is profitable. Do you think that because it might not have been built without government funding it was a bad idea to build?

↑ comment by DanielLC · 2013-08-21T03:36:40.482Z · LW(p) · GW(p)

It would be more informative to compare the economic development of regions that have built high-speed rail against that of similar regions which haven't or which did so later.

If the HSR charges based on marginal cost, and marginal and average cost are significantly different, then this could be a problem. I intuitively assumed they'd be fairly close. Thinking about it more, I've heard that airports charge vastly more for people who are flying for business than for pleasure, which suggests there is a significant difference. Of course, it also suggests that they might be able to capture it through price discrimination, since the airports seem to manage.

How much government help is necessary for a train to be built?

It would be more informative to compare the economic development of regions that have built high-speed rail against that of similar regions which haven't or which did so later.

The economics of a train is not comparable to the economics of a city. If you can actually notice the difference in economic development caused by the train, then the train is so insanely valuable that it would be blindingly obvious from looking at how often they're built by the private sector.

France's TGV is profitable. Do you think that because it might not have been built without government funding it was a bad idea to build?

Making a profit is not a sufficient condition for it to be worth while to build. It has to make enough profit to make up for the capital cost. It might well do that, and it is possible to check, but it's a lot easier to ask if one has been built without government funding.

If it is worth while to build trains in general, and the government doesn't always fund them, then someone will build one without the government funding them.

Replies from: kalium, kalium↑ comment by kalium · 2013-08-21T04:50:57.598Z · LW(p) · GW(p)

If you can actually notice the difference in economic development caused by the train, then the train is so insanely valuable that it would be blindingly obvious from looking at how often they're built by the private sector.

I don't understand the reasoning by which you conclude that if an effect is measurable it must be so overwhelmingly huge that you wouldn't have to measure it.

On a much smaller scale, property values rise substantially in the neighborhood of light rail stations, but this value is not easily captured by whoever builds the rails. Despite the measurability of this created value, we do not find that "[light rail] is so insanely valuable that it would be blindingly obvious from looking at how often they're built by the private sector."

Replies from: DanielLC↑ comment by DanielLC · 2013-08-21T05:06:03.346Z · LW(p) · GW(p)

If the effect is measurable on an accurate but imprecise scale (such as the effect of a train on the economy), then it will be overwhelming on an inaccurate but precise scale (such as ticket sales).

You are suggesting we measure the utility of a single business by its effect on the entire economy. Unless my guesses of the relative sizes are way off, the cost of a train is tiny compared to the normal variation of the economy. In order for the effect to be noticeable, the train would have to pay for itself many, many times over. Ticket sales, and by extension the free market, might not be entirely accurate in judging the value of a train. But it's not so inaccurate that an effect of that magnitude will go unnoticed.

Am I missing something? Are trains really valuable enough that they'd be noticed on the scale of cities?

Replies from: kalium↑ comment by kalium · 2013-08-21T05:34:05.118Z · LW(p) · GW(p)

Are you claiming that a scenario in which

Fares cover 90% of (construction + operating costs)

Faster, more convenient transportation creates non-captured value worth 20% of (construction + operating costs)

is impossible? You seem to be looking at this from a very all-or-nothing point of view.
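To make the arithmetic of that scenario concrete, here is a minimal sketch. The cost of 100 units and the function name `assess` are illustrative assumptions; only the 90% and 20% figures come from the scenario above.

```python
def assess(cost, fare_pct, external_pct):
    """Compare the builder's private return with the total social return.

    cost         -- construction + operating costs (hypothetical units)
    fare_pct     -- fares as a percentage of cost
    external_pct -- non-captured value as a percentage of cost
    """
    fares = cost * fare_pct / 100
    external = cost * external_pct / 100
    return {
        # What a private builder sees: fares minus costs.
        "private_profit": fares - cost,
        # What society sees: fares plus non-captured value, minus costs.
        "social_surplus": fares + external - cost,
    }


result = assess(cost=100, fare_pct=90, external_pct=20)
print(result)
```

Under these assumed numbers the private builder loses 10 units while society gains 10, so the line is worth building but would not be built on fares alone.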

Replies from: DanielLC↑ comment by DanielLC · 2013-08-21T23:02:00.038Z · LW(p) · GW(p)

Faster, more convenient transportation is what fares are charging for. Non-captured value is more complicated than that.

If the non-captured value is 20% of the captured value, it's highly unlikely that trains will frequently be worth building, but rarely capture enough value. That would require that the true value stay within a very narrow area.

If it's not a monopoly good, and marginal costs are close to average costs, then captured value will only go down as people build more trains, so that value not being captured doesn't prevent trains from being built. If it is a monopoly good (I think it is, but I would appreciate it if someone who actually knows tells me), and marginal costs are much lower than average costs, then a significant portion of the value will not be captured. Much more than 20%. It's not entirely unreasonable that the true value is such that trains are rarely built when they should often be built.

That's part of why I asked:

How much government help is necessary for a train to be built?

If the government is subsidizing it by, say, 20%, then the trains are likely worth while. If the government practically has to pay for the infrastructure to get people to operate trains, not so much.

Also, that comment isn't really applicable to what you just posted it as a response to. It would fit better as a response to my last comment. The comment you responded to was just saying that unless the value of trains is orders of magnitude more than the cost, you'd never notice by looking at the economy.

↑ comment by kalium · 2013-08-21T04:43:06.751Z · LW(p) · GW(p)

If the HSR charges based on marginal cost, and marginal and average cost are significantly different, then this could be a problem. I intuitively assumed they'd be fairly close. Thinking about it more, I've heard that airports charge vastly more for people who are flying for business than for pleasure, which suggests there is a significant difference.

Marginal and average cost are obviously different, but your example of business fliers is not relevant. Business fliers aren't paying for their flights, but do often get to choose which airline they take. If there is one population that pays for their own flights and another population that does not even consider cost, it would be silly not to discriminate whatever the relation between marginal and average cost.

Replies from: DanielLC↑ comment by DanielLC · 2013-08-21T05:13:11.803Z · LW(p) · GW(p)

The businesses are perfectly capable of choosing not to pay for their employees' flights. The fact that they do, and that they don't consider the costs, shows that their willingness to pay is much higher than the marginal cost. If it weren't for price discrimination, consumer surplus would be high, and a large amount of value produced by the airlines would go towards the consumers.

Are high-speed trains natural monopolies? That is, are the capital costs (e.g. rail lines) much higher than the marginal costs (e.g. train cars)? I think they are, and if they are considering the consumer surplus is important, but if they're not, then it doesn't matter.

Replies from: kalium↑ comment by kalium · 2013-08-21T05:20:06.671Z · LW(p) · GW(p)

The fact that they do, and that they don't consider the costs, shows that their willingness to pay is much higher than the marginal cost.

What marginal cost are you referring to here? If it's the cost to the airline of one butt-in-seat, we know it's less than one fare because the airline is willing to sell that ticket. And this has nothing to do with average cost. I think you've lost the thread a bit.

Replies from: DanielLC↑ comment by DanielLC · 2013-08-21T22:59:55.115Z · LW(p) · GW(p)

What I mean is that, if everyone paid what people who travel for pleasure pay, then people travelling for business would pay much less than they're willing to, so the amount of value airports produce would be a lot more than what they'd get. If they charged everyone the same, either it would get so expensive that people would only travel for business, even though it's worthwhile for people to travel for pleasure, or it would be cheap enough that people travelling for business would fly for a fraction of what they're willing to pay. Either way, airports that are worth building would go unbuilt, since the airport wouldn't actually be able to make enough money to pay for its construction.
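A toy calculation shows how this argument can work. Every number here (traveler counts, willingness to pay, fixed and marginal costs) is a made-up assumption chosen for illustration, not data about any real airport or airline.

```python
# Hypothetical figures, chosen only to illustrate the argument.
FIXED_COST = 70_000     # cost of building the airport
MARGINAL_COST = 20      # cost of serving one additional traveler

# group name -> (number of travelers, willingness to pay per trip)
GROUPS = {"business": (100, 500), "leisure": (400, 100)}


def profit(prices):
    """Operator profit when each group is charged prices[group]."""
    total = -FIXED_COST
    for name, (count, willingness) in GROUPS.items():
        price = prices[name]
        # A group only travels if the price does not exceed what it will pay.
        if price <= willingness:
            total += count * (price - MARGINAL_COST)
    return total


uniform_low = profit({"business": 100, "leisure": 100})
uniform_high = profit({"business": 500, "leisure": 500})
discriminating = profit({"business": 500, "leisure": 100})

print(uniform_low, uniform_high, discriminating)
```

With these assumed figures, both uniform prices lose money (-30,000 and -22,000), while charging each group its own price yields +10,000. Total willingness to pay is 90,000 against total costs of 80,000, so the airport is worth building but only gets built if price discrimination is possible.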

↑ comment by fubarobfusco · 2013-08-20T23:49:04.408Z · LW(p) · GW(p)

Are high speed trains profitable enough for people to build them without government money?

Are highways?

Replies from: DanielLC↑ comment by DanielLC · 2013-08-21T03:23:34.665Z · LW(p) · GW(p)

Some roads do collect tolls. Again, I don't know how to look it up, but I don't think they have government help. They're in the minority, but they show that having roads is socially optimal. Similarly, if there are high-speed trains that operate without government help, we know that it's good to have high-speed trains, and while it may be that government encouragement is resulting in too many of them being built, we should still build some.

↑ comment by knb · 2013-08-21T09:36:05.711Z · LW(p) · GW(p)

I'm not sure what your point is here. Passenger rail and freight rail are usually decoupled. Amtrak operates on freight rail in most places because the government orders the rail companies to give preference to passenger rail (at substantial cost to the private freight railways).

Hyperloop would help out a lot, since it takes the burden off of freight rail. I suppose hyperloop could be privately operated (that would be my preference, so long as there was commonsense regulation against monopolistic pricing).

Replies from: DanielLC↑ comment by DanielLC · 2013-08-21T23:04:44.520Z · LW(p) · GW(p)

so long as there was commonsense regulation against monopolistic pricing

If competitors can simply build more hyperloops, monopolistic pricing won't be a problem. If you only need one hyperloop, then monopolistic pricing is insufficient. They will still make less money than they produce. Getting rid of monopolistic pricing runs the risk of keeping anyone from building the hyperloops.

↑ comment by metastable · 2013-08-20T05:21:15.343Z · LW(p) · GW(p)

I'd like to hear more about possibilities in China, if you've got more. Everything I've read lately suggests that they've extensively overbuilt their infrastructure, much of it with bad debt, in the rush to create urban jobs. And it seems like they're teetering on the edge of a land-development bubble, and that urbanization has already started slowing. But they do get rights-of-way trivially, as you say, and they're geographically a lot more like the US than Europe.

Replies from: Eliezer_Yudkowsky↑ comment by Eliezer Yudkowsky (Eliezer_Yudkowsky) · 2013-08-20T06:18:18.947Z · LW(p) · GW(p)

(The Money Illusion would like to dispute this view of China. Not sure how much to trust Sumner on this but he strikes me as generally smart.)

Replies from: gattsuru↑ comment by gattsuru · 2013-08-20T17:01:31.652Z · LW(p) · GW(p)

Mr. Sumner has some pretty clear systemic assumptions toward government spending on infrastructure. This article seems to agree with both aspects, without conflicting with either, however.

The Chinese government /is/ opening up new opportunities for non-Chinese companies to provide infrastructure, in order to further cover land development. But they're doing so at least in part because urbanization is slowing and these investments are perceived locally as higher-risk to already risk-heavy banks, and foreign investors are likely to be more adventurous or to lack information.

comment by Tenoke · 2013-08-30T13:33:04.046Z · LW(p) · GW(p)

I lost an AI box experiment against PatrickRobotham with me as the AI today on irc. If anyone else wants to play against me then PM me here or contact me on #lesswrong.

Replies from: Kawoomba, shminux↑ comment by Shmi (shminux) · 2013-08-30T18:14:44.231Z · LW(p) · GW(p)

Failing to convince your jailer to let you out is the highly likely outcome, so it is not very interesting. I would love to hear about any simulated AI winning against an informed opponent.

Replies from: Tenoke

comment by David_Gerard · 2013-08-20T17:31:36.503Z · LW(p) · GW(p)

When you're trying to raise the sanity waterline, dredging the swamps can be a hazardous occupation. Indian rationalist skeptic Narendra Dabholkar was assassinated this morning.

Replies from: shminux, knb↑ comment by Shmi (shminux) · 2013-08-20T17:45:47.312Z · LW(p) · GW(p)

Political activism, especially in the third world, is inherently dangerous, whether or not it is rationality-related.

↑ comment by knb · 2013-08-20T22:11:02.079Z · LW(p) · GW(p)

He was trying to pass a law to suppress religious freedoms of small sects. That doesn't raise the sanity waterline, it just increases tensions and hatred between groups.

Replies from: David_Gerard↑ comment by David_Gerard · 2013-08-21T11:44:06.646Z · LW(p) · GW(p)

That's a ludicrously forgiving reading of what the bill (which looks like going through) is about. Steelmanning is an exercise in clarifying one's own thoughts, not in justifying fraud and witch-hunting.

Replies from: fubarobfusco, knb↑ comment by fubarobfusco · 2013-08-22T03:58:48.053Z · LW(p) · GW(p)

I haven't been able to find the text of the bill — only summaries such as this one. Do you have a link?

↑ comment by knb · 2013-08-21T19:56:27.857Z · LW(p) · GW(p)

Did you even read my comment?

Replies from: David_Gerard↑ comment by David_Gerard · 2013-08-21T22:22:14.768Z · LW(p) · GW(p)

Yes, I did. Your characterisation of the new law is factually ridiculous.

Replies from: knb

comment by David_Gerard · 2013-08-30T22:49:40.044Z · LW(p) · GW(p)

So, are $POORETHNICGROUP so poor, badly off and socially failed because they are about 15 IQ points stupider than $RICHETHNICGROUP? No, it may be the other way around: poverty directly loses you around 15 IQ points on average.

Or so say Anandi Mani et al. "Poverty Impedes Cognitive Function" Science 341, 976 (2013); DOI: 10.1126/science.1238041. A PDF while it lasts (from the nice person with the candy on /r/scholar) and the newspaper article I first spotted it in. The authors have written quite a lot of papers on this subject.

Replies from: Transfuturist, Vaniver↑ comment by Transfuturist · 2013-08-31T01:52:41.047Z · LW(p) · GW(p)

The biggest problem I have with racists claiming racial realism is this.

Replies from: Protagoras, David_Gerard↑ comment by Protagoras · 2013-08-31T03:13:09.708Z · LW(p) · GW(p)

The racists claim that this is irrelevant because of research that corrects for socioeconomic status and still finds IQ differences. Of course, researchers have found plenty of evidence of important environmental influences on IQ not measured by SES. It seems especially bad for the racial realist hypothesis that people who, for example, identify as "black" in America have the same IQ disadvantage compared to whites whether their ancestry is 4% European or 40% European; how much African vs. European ancestry someone has seems to matter only indirectly to the IQ effects, which seem to directly follow whichever artificial simplified category someone is identified as belonging to.

Replies from: Viliam_Bur, Vaniver, David_Gerard↑ comment by Viliam_Bur · 2013-08-31T12:30:27.594Z · LW(p) · GW(p)

Not completely serious, just wondering about possible implications, for sake of munchkinism:

Would it be possible to invent some new color, for example "purple", so that identifying with that color would increase someone's IQ?

I guess it would first require the rest of the society accepting the superiority (at least in intelligence) of the purple people, and their purpleness being easy to identify and difficult for others to fake. (Possible to achieve with some genetic manipulation.)

Also, could this mechanism possibly explain the higher intelligence of Jews? I mean, if we stopped suspecting them of making international conspiracies and secretly ruling the world (which obviously requires a lot of intelligence), would their IQs consequently drop to the average level?

Also... what about Asians? Is it the popularity of anime that increases their IQ, or what?

Replies from: Protagoras, bogus↑ comment by Protagoras · 2013-08-31T15:35:09.713Z · LW(p) · GW(p)