Yoav Ravid's Shortform

post by Yoav Ravid · 2021-01-20T12:44:41.782Z · LW · GW · 123 comments

Comments sorted by top scores.

comment by Yoav Ravid · 2024-09-25T11:19:50.177Z · LW(p) · GW(p)

So the responsible thing to do is to refrain, right? Cause if everybody did, nobody would have the ability to destroy the site, and what would you do with such an ability anyway except not use it?

The problem is it feels like a game/exercise, and a game/exercise is something I want to opt in to, even if just to challenge myself.

That said, I think twice before pressing any big red button, and as of yet I haven't pressed this one.

Edit: Following Dagon's comment (published 8 hours after this post), which confirmed it's framed as a game, I decided to press the button and opt in.

↑ comment by Dagon · 2024-09-25T18:46:44.286Z · LW(p) · GW(p)

I pressed the button, and it asked me to confirm that I want to participate in a "social deception game", which I did. I'm somewhat ambivalent that this event does anything except raise awareness of a specific thing that happened during the cold war (and presumably has been patched away in current response procedures), but it's interesting to see people taking it seriously.

Depending on the framing, I will or will not press it if I'm chosen. I'll try to play along with the rules and guidelines as published, including the in-game motivations specified.

Thanks for posting this - I never look at the front page, so would have missed it.

Replies from: Yoav Ravid

↑ comment by Yoav Ravid · 2024-09-25T19:02:46.115Z · LW(p) · GW(p)

Thanks. If it's indeed framed as a game then I would like to participate as well. So I pressed the button and opted in.

↑ comment by Veedrac · 2024-09-25T16:31:58.066Z · LW(p) · GW(p)

If ‘Opt into Petrov Day’ was beside something other than a big red ominous button, I would think the obvious answer is that it's a free choice and I'd be positively inclined towards it. Petrov Day is a good thing with good side effects, quite unlike launching nuclear weapons.

It is confusing to me that it is beside a big red ominous button. On the one hand, Petrov's story is about the value of caution. To quote a top comment from an older Petrov Day,

Petrov thought the message looked legit, but noticed there were clues that it wasn't.

On the other hand, risk-taking is good, opting in to good things is good, and if one is taking Petrov Day to mean ‘don't take risks if they look scary’ I think one is taking an almost diametrically wrong message from the story.

All that said, for now I am going to fall for my own criticism and not press the big red ominous button around Petrov Day.

Replies from: Dagon

↑ comment by Dagon · 2024-09-25T19:26:52.847Z · LW(p) · GW(p)

If I didn't remember discussion from previous Petrov Days here, I'd probably have skipped it, on the heuristic that big red buttons on public websites are usually advertisements, which I'd rather not deal with. I doubt there's any visual that could, without a LOT of explanation, make it seem like an ominous thing, rather than an annoying attention-grab. With the context of what Petrov Day is, and what LW has done in the past, it's neither - just an interesting view into what the admins think is worth doing and what users weirdly extrapolate from.

↑ comment by nick lacombe (nicolas-lacombe) · 2024-09-25T16:38:32.750Z · LW(p) · GW(p)

That's a good point. I decided to press so that I can test myself. Hopefully, practicing not ending the world at lower stakes means I'll be better at it if the stakes are ever larger.

Replies from: Yoav Ravid

↑ comment by Yoav Ravid · 2024-09-25T17:23:45.715Z · LW(p) · GW(p)

Did anything happen after you pressed it?

Replies from: nicolas-lacombe

↑ comment by nick lacombe (nicolas-lacombe) · 2024-09-25T21:54:17.557Z · LW(p) · GW(p)

The only change I can see is the button UI (see screenshot). I assume that it only signs me up for a game which is going to happen tomorrow on Petrov Day.

↑ comment by Shankar Sivarajan (shankar-sivarajan) · 2024-09-25T13:54:51.379Z · LW(p) · GW(p)

what would you do with such an ability anyway except not use it?

Leverage it to create a better world. (Well, this button doesn't really do much, but y'know, pretend it's the ability to destroy the world.)

Replies from: Yoav Ravid

↑ comment by Yoav Ravid · 2024-09-25T14:50:54.546Z · LW(p) · GW(p)

How would you leverage a button that destroys the world to make the world better?

Replies from: weibac

↑ comment by Milan W (weibac) · 2024-09-25T16:17:07.219Z · LW(p) · GW(p)

By blackmailing powerful people into doing good, I assume.

Replies from: Yoav Ravid

↑ comment by Yoav Ravid · 2024-09-25T18:19:17.907Z · LW(p) · GW(p)

That sounds like a terrible strategy. Your threat won't be credible because your goal is to make the world better, not destroy it. And anything you do to make the threat credible (like some sort of precommitment mechanism) will risk the world actually getting destroyed.

Replies from: Jozdien, weibac

↑ comment by Jozdien · 2024-09-25T20:34:24.143Z · LW(p) · GW(p)

It could work as a precautionary measure against existential risk. If someone is planning on doing something that also risks the world getting destroyed, then the threat could be credible.

(I am not endorsing humans actually using this strategy in the real world, obviously).

↑ comment by Milan W (weibac) · 2024-09-25T18:21:28.229Z · LW(p) · GW(p)

I agree.

↑ comment by Quinn (quinn-dougherty) · 2024-09-26T01:42:19.982Z · LW(p) · GW(p)

I was thinking the same thing this morning! My main thought was, "this is a trap. ain't no way I'm pressing a big red button especially not so near to petrov day"

↑ comment by Hastings (hastings-greer) · 2024-09-25T12:36:59.575Z · LW(p) · GW(p)

The funniest possible outcome is that no one opts in and so the world is saved but the blog post is ruined.

I would hate to remove the possibility of a funny outcome. No opt in!

↑ comment by quetzal_rainbow · 2024-09-25T12:01:44.635Z · LW(p) · GW(p)

Button is very intimidating, indeed.

↑ comment by nick lacombe (nicolas-lacombe) · 2024-09-26T17:05:50.255Z · LW(p) · GW(p)

For people who haven't seen it yet, the following post explains today's Petrov Day game: The 2024 Petrov Day Scenario [LW · GW]

↑ comment by Bridgett Kay (bridgett-kay) · 2024-09-25T22:39:10.066Z · LW(p) · GW(p)

Seems to me that the only winning move is not to play.

comment by Yoav Ravid · 2021-02-10T03:52:45.908Z · LW(p) · GW(p)

If someone commits an immoral act, and then gets cloned to the atom [LW · GW], are both versions of him guilty? (morally, not legally)

If so, then - assuming what he did deserves a 10 year sentence, should both versions get it, or should each get half a sentence?

Replies from: Viliam, Dagon, TAG, Pattern, unparadoxed

↑ comment by Viliam · 2021-02-14T15:49:58.110Z · LW(p) · GW(p)

Hm, sounds like a setting for a sci-fi movie. First you get human life extension: humans can live 200 or 300 years. Then you get some horrible criminal who got 300 years prison sentence. But the criminal cloned himself before being caught. The judge decides both versions should get 300 years. The owner of the private prison thinks "nice, free labor!" and makes ten thousand clones of the criminal -- because the criminal is extra smart and/or extra strong, so he is more profitable than an average prisoner. Turns out the clones cooperate with each other, and together they escape from the prison... and then the usual Hollywood story starts, with explosions and stuff.

Later they find out that the criminal actually made one more clone of himself before he became a criminal. So the clone is a good guy. (Or maybe unauthorized cloning of yourself is already a crime, so this old clone is hiding from the law, but he is not a violent criminal, unlike all the other clones.) Now the police try to recruit him, maybe promising him amnesty if he helps to eliminate the other clones. Between the action scenes, there are flashbacks to the past, explaining why one of the two original clones became a violent criminal and the other did not. Possibly a love story that ended tragically. (Were both in love with the same girl, and did they fight for her? Or maybe, both were in love with the same girl, then she died in a traffic accident, one of them decided to forgive the guy who accidentally killed her, the other one murdered him.)

Or perhaps the old clone helps to kill all the fugitives and gets the amnesty, and then it is revealed he was actually the worst of all... only his crimes were never exposed, because he managed to frame the other clone... so he was the bad guy, who just murdered ten thousand copies of the good guy...

At the end, the bad guy kidnaps the corrupt judge, and tells him he just made ten thousand copies of him. One randomly selected copy will be released, the remaining 9999 will be tortured to death, and all evidence will be destroyed, so no one will believe that any crime happened. The bad guy is just bluffing here, there was only one copy of the judge, and he is finally released, but he is traumatized because he will never know whether the threat was real or not.

Replies from: Yoav Ravid, Yoav Ravid, Yoav Ravid

↑ comment by Yoav Ravid · 2021-02-14T16:25:43.673Z · LW(p) · GW(p)

(I'm going to just think aloud here about your idea).

The reason why this guy is so dangerous: he was genetically engineered. Both his mental and physical abilities are wicked good. But, well, he was created during the first few years/decades of genetically engineered humans, and there were... some unforeseen consequences.

Or maybe he experimented with unauthorized cloning a lot, and self-experimented on the clones with all kinds of enhancements, some going better, some going worse.

↑ comment by Yoav Ravid · 2021-02-14T16:13:33.595Z · LW(p) · GW(p)

Possibly a love story that ended tragically. (Were both in love with the same girl, and did they fight for her?

Perhaps somehow the mind cloning was good, but the body cloning wasn't (or the body was hurt afterward), and now he's ugly and the girl didn't want him. The scenario where she dies in an accident and one instance forgives the guy and the other murders him suggests quite different minds, so not really the sort of clone we're talking about.

↑ comment by Viliam · 2021-02-14T16:20:13.948Z · LW(p) · GW(p)

There are many possible ways how to do this. I imagined something like "bad moral luck" -- two minds that started the same, but different things happen to them, and they diverge as a result.

For example, both decide to murder the guy, and both wait for him at different places. One murders him. The other waits at a wrong place (maybe just further down the same street), so nothing happens... then he goes home, gets some good sleep, and realizes that he was stupid. Alternatively, one gets a phone call that reminds him of something, and he changes his mind.

The idea of mind divergence was already done with parallel worlds (e.g. Sliding Doors), except these would be clones living in the same world.

Replies from: Yoav Ravid

↑ comment by Yoav Ravid · 2021-02-14T16:27:01.451Z · LW(p) · GW(p)

Ouu that's also nice!

↑ comment by Yoav Ravid · 2021-02-14T16:09:01.757Z · LW(p) · GW(p)

This. Is. Awesome.

is someone writing this? are you writing this? should i try to write this? i really want this written. did i mention already that this is awesome and should be written?

Replies from: Viliam

↑ comment by Dagon · 2021-02-10T15:20:39.287Z · LW(p) · GW(p)

Sure. Moral culpability for past acts should go with the decision-making apparatus, the pattern of connections and data in the brain. If that's copied, so is the blame. We can't let these jerks get away with stuff just by using a Star Trek teleporter!

Replies from: Yoav Ravid

↑ comment by Yoav Ravid · 2021-02-10T17:11:42.364Z · LW(p) · GW(p)

Yes, thinking about it more, the only policy I found that didn't lead to problems was (in the case where the cloning happens after the act):

- All instances of the person should be regarded as culpable as much as you would regard the person if they didn't clone themselves.

Otherwise, if they are not both culpable, you get the Star Trek teleporter problem.

And if you divide culpability, it means someone can reduce their own punishment 50-fold by creating 50 clones. And if they aren't sympathetic to the clones' suffering, they might do just that.

The sad thing about this policy is having to multiply the amount of suffering experienced by the punishment. You would much rather someone not create a clone after committing a crime, cause then you'll have to punish multiple people. Maybe in such a future cloning would be a crime for someone who previously committed a crime.
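To make the arithmetic behind the two policies concrete, here is a minimal sketch. The function names and the policy labels `"full"` and `"divided"` are made up for illustration, and it assumes 50 instances in total, each otherwise treated identically; the 10-year sentence is the one from the original question.

```python
def sentence_per_instance(base_years, n_instances, policy):
    """Years served by each instance under a hypothetical policy.

    "full":    every instance serves the whole sentence.
    "divided": the sentence is split evenly between instances.
    """
    if n_instances < 1:
        raise ValueError("need at least one instance")
    if policy == "full":
        return base_years
    if policy == "divided":
        return base_years / n_instances
    raise ValueError(f"unknown policy: {policy}")


def total_years(base_years, n_instances, policy):
    """Total years of punishment summed over all instances."""
    return sentence_per_instance(base_years, n_instances, policy) * n_instances


if __name__ == "__main__":
    for policy in ("full", "divided"):
        each = sentence_per_instance(10, 50, policy)
        total = total_years(10, 50, policy)
        print(f"{policy}: {each} years each, {total} years in total")
```

Under "divided", each of the 50 instances serves 10 / 50 = 0.2 years, which is the evasion tactic; under "full", the total punishment grows to 10 × 50 = 500 years, which is the multiplied suffering.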

Replies from: Dagon, TAG

↑ comment by Dagon · 2021-02-10T22:48:48.991Z · LW(p) · GW(p)

The sad thing about this policy is having to multiply the amount of suffering experienced by the punishment.

There's a missing step in this result. Moral culpability is about judgement and condemnation of actions (and the actors who performed them), not (necessarily) about punishment. Calculation of optimal punishment is about influencing FUTURE actions, not about judging past actions. It's not fully disconnected from past culpability, but it's not at all the same thing.

You may have to increase total suffering, but you may not - perhaps punishing one clone randomly is sufficient to achieve the punishment goals (deterring future bad actions by that decision-maker and by observers). Even if there's more summed punishment needed to have the same level of deterrence, presumably the clones increased total joy as well, and the net moments of lives-worth-living is somewhat increased.

Now if the cloning ITSELF is a moral wrong (say, it uses resources in a way that causes unjustified harm to others), you pretty much have to overreact - make it far more painful to all the clones, and more painful for more clones. But I'd argue that the culpability for the punishment pain falls on the clones as well, rather than the judge or hangman.

↑ comment by TAG · 2021-02-10T18:32:41.418Z · LW(p) · GW(p)

How can you be culpable of an act that you didn't commit? The law doesn't punish people just for being the kind of person who would commit an evil act.

Replies from: Yoav Ravid

↑ comment by Yoav Ravid · 2021-02-10T18:50:41.277Z · LW(p) · GW(p)

Well, for one, they wouldn't just be the kind of person who would commit an evil act, they're the kind of person who did commit an evil act.

But ok, how do you suggest solving the two evasion tactics described?

Replies from: TAG

↑ comment by TAG · 2021-02-10T20:38:47.731Z · LW(p) · GW(p)

Well, for one, they wouldn’t just be the kind of person who would commit an evil act, they’re the kind of person who did commit an evil act.

No, they are a clone of a person who did commit an evil act. You can't claim that they are the very same person in the sense of numerical identity. (Numerical identity is the kind of identity that does not hold between identical twins. Identical twins are not the same person. They are two identical people).

Replies from: Yoav Ravid

↑ comment by Yoav Ravid · 2021-02-11T05:39:14.117Z · LW(p) · GW(p)

I'm not talking about identical twins, I'm talking exactly about numerical identity. Perfect cloning.

Replies from: TAG

↑ comment by TAG · 2021-02-11T16:31:50.862Z · LW(p) · GW(p)

Cloning isn't numerical identity. A clone is an artificial twin and twins are not numerically identical. Numerical identity relates to aliasing, to having two labels for the same entity. Stefani Germanotta and Lady Gaga are numerically identical.

Replies from: Yoav Ravid

↑ comment by Yoav Ravid · 2021-02-11T16:57:30.768Z · LW(p) · GW(p)

Ok, so I confused the term with something else, oops. My point is that I'm talking about an exact copy, in the sense discussed in the quantum mechanics and personal identity [? · GW] sequence.

Replies from: TAG

↑ comment by TAG · 2021-02-11T17:23:59.622Z · LW(p) · GW(p)

The exactness of the copy doesn't matter. If a twin commits a crime, the other twin is not held responsible, because they did not commit the crime, not because there is some minute difference between them.

Replies from: Yoav Ravid

↑ comment by Yoav Ravid · 2021-02-11T17:54:22.626Z · LW(p) · GW(p)

Ok, how do you respond to this formulation of the problem [LW(p) · GW(p)]?

↑ comment by Pattern · 2022-01-30T00:27:10.406Z · LW(p) · GW(p)

Suppose you got 'cloned to the atom'. 10 years later, one of you commits a crime. Who is punished? Who should be?

Replies from: Yoav Ravid

↑ comment by Yoav Ravid · 2022-01-30T05:13:03.420Z · LW(p) · GW(p)

After 10 years I'd expect plenty of difference to have built up, making it not so different (judicially) from a regular case. One thing you might still say is that it's reasonable to hold the other instance as prone to commit murder too, and perhaps that would justify surveillance on him, or something..

↑ comment by unparadoxed · 2021-02-10T07:26:26.734Z · LW(p) · GW(p)

On first thought, it does not seem to me that (im)morality is something that is commonly ascribed to atoms. Just as bits do not actually have a color, so it seems to me that atoms do not have morality. But I'm not a moral philosopher, so that's just my feeling.

On second thought, consider a thought experiment where we judge the clone. Was the clone a direct / proximate cause of the immorality? It would seem not, as the original was. Did the clone have the intention to cause the immorality? It would seem not, the original did. So I don't think I would hold the clone liable for the committed immorality.

A more interesting scenario to me would be - We have two clones, we know one of them committed an immorality, but we do not know which one. How do we proceed?

Replies from: Yoav Ravid

↑ comment by Yoav Ravid · 2021-02-10T07:48:56.355Z · LW(p) · GW(p)

The morality isn't ascribed to atoms, it's ascribed to the person in the same way it usually is. Yes, people are made of atoms, but it all adds up to normality [? · GW].

On your second point, did you read the article linked? Summarized, the conclusion is that in the case of perfect cloning "There is no copy; there are two originals." (On reflection I might have linked the wrong post, this [LW · GW] is where this quote is taken from.)

From this viewpoint, there would be no difference between blaming the "clone" and blaming the "original". So in a way it's isomorphic to the scenario you suggested in the third paragraph.

It's probably important, though, whether the cloning happened before or after the act. If someone cloned himself, and 40 years later one of them commits a crime, there probably isn't such a dilemma. But is the same true if a crime is committed by one of the clones right after cloning? Not sure.

Replies from: unparadoxed

↑ comment by unparadoxed · 2021-02-10T08:11:08.620Z · LW(p) · GW(p)

It seems to me that you are thinking about some "stronger" form of cloning. The framework that I was thinking in was that the "clone" was a similar-but-distinct entity, something like a Twin materialized out of thin air instantaneously. But it seems that you are thinking of a stronger form where we should treat the two entities as exactly the same.

I have difficulty conceptualizing this, since in my mind a clone still occupies a distinct time, space and consciousness from the original, and so is treated distinctly in my eyes. (In terms of being judged for the morality of actions that the original committed).

I will try to think of a situation / framework where this "stronger" form of cloning makes sense to me.

Replies from: Yoav Ravid

↑ comment by Yoav Ravid · 2021-02-10T08:31:09.512Z · LW(p) · GW(p)

Let's see if I can help.

Say someone commits a crime, then goes into a scanner, is destroyed, and is recreated somewhere else. Is it agreed that they're the same person? If so, it would make sense to still blame them for the crime.

Now let's say we discovered that this person never actually destroyed themselves. They were scanned and cloned, but faked getting destroyed.

Should the "clone" now be declared innocent, and the "original" declared guilty instead? Or should both of them be declared guilty?

Replies from: unparadoxed

↑ comment by unparadoxed · 2021-02-10T17:29:04.185Z · LW(p) · GW(p)

Yeah, that makes sense. The way I came to think of it is that person A commits a crime, then faints and is unconscious after that. Afterwards, a separate nefarious cloner then clones person A in a black box, so one person A goes in, two persons A come out from the cloning black box. Person(s!) A awake, and having a strong conscience of their crime, turn themselves in. Since they have exactly the same memories and conscience, they are indistinguishable from the point of view of being the person who committed the crime, both internally and externally.

This is actually a good question. I feel that both persons should be declared guilty, since cloning oneself (whether intentionally or not) should not give one an automatic-out from moral judgement. I am not as sure about whether the punishment should be equal or shared.

Replies from: Yoav Ravid

comment by Yoav Ravid · 2023-12-13T19:12:04.988Z · LW(p) · GW(p)

'The grass is green' and 'The sky is blue' are pretty bad examples of obviously true statements (they're actually often false - the sky is dark at night and the grass is yellow-brown when dry).

'The sun is bright' and 'Water is wet' are better statements in the same style.

Replies from: Dagon, lillybaeum

↑ comment by Dagon · 2023-12-13T19:29:35.445Z · LW(p) · GW(p)

Honestly, I prefer the more obvious generalities. They're false often enough that one is able to see that it's not a literal universal truth, but more an extremely common experience being used as an example of something uncontroversial, even with well-known non-explicitly-mentioned exceptions.

All 4 statements are incomplete - these aren't properties of the object, but relational experiences of a person to the object. None of them are universally true for all situations (though the latter two are closer).

Replies from: Yoav Ravid

↑ comment by Yoav Ravid · 2023-12-13T19:31:59.335Z · LW(p) · GW(p)

Yeah I agree with that. I like the latter two statements because they seem just as general, simple and everyday to me, while also being more correct.

↑ comment by lillybaeum · 2023-12-13T22:06:07.842Z · LW(p) · GW(p)

I've seen some convincing arguments that water is not wet.

comment by Yoav Ravid · 2024-09-17T16:12:37.275Z · LW(p) · GW(p)

I tried to think of different names for the Moloch [? · GW] Dynamic[1] and came up with

- Mutual Sacrifice Trap

- Mutual Sacrifice Equilibrium

- Mutual Sacrifice Equilibrium Trap

- Sacrifice Race

- Sacrifice Competition

"Collective" can also replace or be added before "Mutual", to signify that it tends to refer to dynamics of many actors, where coordinating to get out of the equilibrium trap is difficult.

What do you think of these options?

[1] A reminder of what the Moloch Dynamic is:

"In some competition optimizing for X, the opportunity arises to throw some other value under the bus for improved X. Those who take it prosper. Those who don’t take it die out. Eventually, everyone’s relative status is about the same as before, but everyone’s absolute status is worse than before. The process continues until all other values that can be traded off have been – in other words, until human ingenuity cannot possibly figure out a way to make things any worse." - Scott Alexander, Meditations on Moloch
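The dynamic in the quote can be sketched as a toy model. This is only an illustration under made-up assumptions, not anything from the essay: "status" is modeled as each agent's share of total X, every sacrifice adds a fixed `boost` to X, and agents greedily sacrifice whenever doing so would raise their share if nobody else moved. The "those who don't take it die out" part is left out, since here everyone takes the opportunity. The names `relative_share` and `run_sacrifice_race` are hypothetical.

```python
def relative_share(xs, i):
    """Agent i's share of the total X: a stand-in for relative status."""
    return xs[i] / sum(xs)


def run_sacrifice_race(n_agents=5, spare_values=3, boost=1.0):
    """Toy model: every agent greedily trades other values for more X."""
    xs = [1.0] * n_agents               # everyone starts with the same X
    values = [spare_values] * n_agents  # "other values" left to sacrifice
    start_share = relative_share(xs, 0)

    while True:
        new_xs = list(xs)
        anyone_moved = False
        for i in range(n_agents):
            if values[i] == 0:
                continue  # nothing left to throw under the bus
            # Would sacrificing help agent i if nobody else moved?
            trial = list(xs)
            trial[i] += boost
            if relative_share(trial, i) > relative_share(xs, i):
                new_xs[i] = xs[i] + boost
                values[i] -= 1
                anyone_moved = True
        xs = new_xs
        if not anyone_moved:
            break

    return start_share, relative_share(xs, 0), values


if __name__ == "__main__":
    start, end, values = run_sacrifice_race()
    print("share before:", start, "share after:", end)
    print("other values left:", values)
```

Each sacrifice looks like a gain to the agent making it, yet once everyone has made it, the shares are back where they started and the other values are gone.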

↑ comment by Legionnaire · 2024-09-17T20:44:13.842Z · LW(p) · GW(p)

LLMs can be very good at coming up with names with some work:

A few I liked:

Sacrificial Contest

Mutual Ruin Game

Sacrificial Spiral

Universal Loss Competition

Collective Sacrifice Trap

Competition Deadlock

Competition Spiral

Competition Stalemate

Destructive Contest

Destructive Feedback Competition

Conflict Feedback Spiral

↑ comment by Yoav Ravid · 2024-09-18T10:06:34.227Z · LW(p) · GW(p)

Ouuu nice! there's some good ones here. I think my favourite from these is "Sacrificial Spiral". "Sacrificial Contest" is also good. Deadlock is also a good term, though not as part of "Competition Deadlock". Perhaps "Mutual Sacrifice Deadlock", or something of the sort. "Feedback" can also be a good term.

Thanks!

↑ comment by Nathan Helm-Burger (nathan-helm-burger) · 2024-09-22T14:55:16.674Z · LW(p) · GW(p)

To me, the heart of the matter is being trapped in local minima which involve negative-sum competitions. Pyrrhic victory is sometimes involved, but sometimes not. An example that is not a mutual loss would be if Actor A does come out ahead of where they started, but Actor B lost more than Actor A gained.

A rat race which devours slack, as Dagon mentions, is another example, although that doesn't seem as central to Moloch to me as negative-sum competition.

Also, there's a related psychological aspect to this. When people get scared and insecure and hostile in their trades and competitions, they have a tendency to stop even searching for win-win solutions. Liv Boeree talks about Win-Win being the opposite of Moloch. https://www.youtube.com/channel/UC09fp6hZ2RHiUYwY8hNCirA

Replies from: Yoav Ravid

↑ comment by Yoav Ravid · 2024-09-22T18:05:25.567Z · LW(p) · GW(p)

Yeah, I think I agree with this. Do you have an idea for a name that captures this dynamic? Do you think one of the names I or Legionnaire [LW(p) · GW(p)] suggested captures it?

Replies from: nathan-helm-burger

↑ comment by Nathan Helm-Burger (nathan-helm-burger) · 2024-09-22T18:19:14.956Z · LW(p) · GW(p)

I asked Claude Sonnet 3.5.

Nathan : I dislike the term sacrifice since it implies a knowing deliberate choice to give up something, specifically for a ceremonial purpose. I think this intentionality is importantly absent in these race dynamics. Indeed, all the parties realizing the nature of the pattern they are in is often the first step to escaping it.

Claude: You make an excellent point. The term "sacrifice" does indeed carry connotations of intentionality and ceremony that are not accurate for this dynamic. You're right to highlight that the lack of awareness is often a crucial part of the problem. Let's reconsider our options with this in mind:

- Race-based terms:
  - "Destructive Race Dynamic"
  - "Race to Ruin"
  - "Competitive Decline Spiral"

- Trap-based terms:
  - "Mutual Depletion Trap"
  - "Collective Erosion Trap"
  - "Competitive Degradation Trap"

- Equilibrium-based terms:
  - "Negative Equilibrium Dynamic"
  - "Deteriorating Equilibrium Trap"
  - "Suboptimal Stability Trap"

- Feedback-based terms:
  - "Negative Feedback Loop"
  - "Destructive Feedback Cycle"
  - "Erosive Competition Cycle"

- Other options:
  - "Collective Value Decay"
  - "Competitive Devaluation Dynamic"
  - "Mutual Loss Spiral"
  - "Unintentional Decline Pattern"

These alternatives avoid the implication of intentional sacrifice while still capturing the essence of the dynamic. They emphasize the unintended, gradual nature of the decline and the trapped state of the participants.

Among these, I find "Competitive Decline Spiral" particularly effective. It captures the competitive nature, the worsening situation over time, and avoids any implication of intentionality. "Mutual Depletion Trap" is another strong contender, emphasizing the trapped nature of the situation and the mutual loss without suggesting deliberate action.

"Unintentional Decline Pattern" might be the most explicit in addressing your concern about avoiding implied intentionality, though it's a bit longer and less punchy than some other options.

↑ comment by Dagon · 2024-09-17T18:01:29.756Z · LW(p) · GW(p)

I think competition is an important element to capture in the name. I'm not sure that any of sacrifice, race, or trap are defining concepts, though they usually apply. I think of it as over-focus on a few somewhat-visible (if not perfectly legible) dimensions, at the expense of harder-to-notice-analytically value dimensions. So maybe "goodharted value optimization", but that's not great either.

In my head, I tend to think of it as related to "moneyball over-focus" - taking the wrong lesson from Billy Beane's success - he optimized for winning on a budget, not for great baseball athleticism or developing player excellence (except as part of the visible optimization). He was very successful in that, but the world lost something for it.

The antidote is Slack, which is the ultimate illegible value. I'm not sure how/whether to work that into the name.

Replies from: Yoav Ravid

↑ comment by Yoav Ravid · 2024-09-18T10:12:57.859Z · LW(p) · GW(p)

Hmm.. I think our understanding of what "Moloch" stands for is quite different, cause none of what you suggested seems close to me. Which I guess also illustrates why I wanted a different name. "Moloch" is very good at entering your head and creating a visceral feeling of the dynamic, but it can also make it ambiguous and difficult to understand. Also, I find when I introduce people to the concept, it really throws them off if I start talking about some mythical deity from the Bible :)

comment by Yoav Ravid · 2024-06-12T03:50:06.580Z · LW(p) · GW(p)

I love how the user tooltip now shows three of their posts (and generally all the new features. You're doing a great job, LW team!).

Currently the tooltip shows the 3 most recent posts. It would be nice if it was possible to pin posts on the user page, and then if you did it would prioritise the pinned posts on the tooltip before new posts.

Replies from: habryka4

↑ comment by habryka (habryka4) · 2024-06-12T04:04:13.465Z · LW(p) · GW(p)

Thank you!

And yeah, agree that it should respect the user's pinned post. Seems like it should be an easy change.

comment by Yoav Ravid · 2023-12-15T07:03:43.710Z · LW(p) · GW(p)

I don't like the dialogue matching feature. It's not like dating where my interest in a dialogue comes from interest in a specific person. It's more akin to wanting to do an activity (go climb / discuss a certain topic), and looking for someone to do it with (could be anyone).

If you want to have a dialogue with me, send me a message, and I'll probably say yes.

If I were to design a dialogue matching system I think I'd make it more topic-based than people-based.

comment by Yoav Ravid · 2022-01-29T17:02:37.556Z · LW(p) · GW(p)

(Only read the abstract)

Replies from: Pattern, nathan-helm-burger

Stagnation and Scientific Incentives

New ideas no longer fuel economic growth the way they once did. A popular explanation for stagnation is that good ideas are harder to find, rendering slowdown inevitable. We present a simple model of the lifecycle of scientific ideas that points to changes in scientist incentives as the cause of scientific stagnation. Over the last five decades, citations have become the dominant way to evaluate scientific contributions and scientists. This emphasis on citations in the measurement of scientific productivity shifted scientist rewards and behavior on the margin toward incremental science and away from exploratory projects that are more likely to fail, but which are the fuel for future breakthroughs. As attention given to new ideas decreased, science stagnated. We also explore ways to broaden how scientific productivity is measured and rewarded, involving both academic search engines such as Google Scholar measuring which contributions explore newer ideas and university administrators and funding agencies utilizing these new metrics in research evaluation. We demonstrate empirically that measures of novelty are correlated with but distinct from measures of scientific impact, which suggests that if also novelty metrics were utilized in scientist evaluation, scientists might pursue more innovative, riskier, projects.

↑ comment by Pattern · 2022-01-29T23:52:31.989Z · LW(p) · GW(p)

New ideas no longer fuel economic growth the way they once did. A popular explanation for stagnation is that good ideas are harder to find, rendering slowdown inevitable.

Another place to go from there is that ideas aren't proving as useful as they used to. As if they're being monopolized somehow. i.e. one could investigate/compare 'productivity' and the rules around 'intellectual property' such as 'copyright'.

↑ comment by Nathan Helm-Burger (nathan-helm-burger) · 2024-09-22T18:45:29.325Z · LW(p) · GW(p)

I think science can be moved forward by a variety of things. Novel hypotheses is one way. Novel observations is another. Novel tools or technology that enable novel observations is another.

We've picked a lot of the low-hanging fruit in novel tools for many research areas. A multimillion dollar laser microscope is a hard bar to beat in biology. CERN is a hard bar to beat in physics. The James Webb telescope recently upstaged Hubble, and the result was a rapid surge in exciting new astronomy papers fueled by the new observations enabled by this new tool.

So I don't disagree that the lame incentives for predictable incremental grant proposals are part of the problem, but I'm also pretty confident that that isn't the main source of the slowing of scientific progress.

comment by Yoav Ravid · 2024-08-21T17:46:41.320Z · LW(p) · GW(p)

I'm looking for a dialogue partner with whom to discuss my normative philosophy of political/social change which I call Experimentalism or Experimentationism.

It is an attempt to give a name and structure to a position I believe many people already implicitly and intuitively tend towards, and to go beyond Progressivism and Conservatism, or to "synthesise" them, if you want, though I don't particularly like the term.

I found it a bit hard to flesh out an explanation on my own, so I hope doing so in a dialogue would be helpful. This doesn't require any significant familiarity with the subject, just an ability to ask good questions and to challenge me to better flesh out my idea.

If anyone is interested please comment here or send me a DM :)

Replies from: tailcalled↑ comment by tailcalled · 2024-08-24T09:50:01.803Z · LW(p) · GW(p)

I can challenge the idea, but I'm not sure whether my challenges would help flesh out the position. Might be worth trying and seeing how it goes and whether my questions are worth publishing.

Replies from: Yoav Ravid↑ comment by Yoav Ravid · 2024-08-24T13:54:31.404Z · LW(p) · GW(p)

I'd be happy to try :)

Sending you a DM

comment by Yoav Ravid · 2024-08-03T06:52:32.896Z · LW(p) · GW(p)

Collaborative Truth Seeking = Deliberation

Sometimes our community has a different name for something than others do, so it's useful to know which name others use so we can learn from them.

Today I learned that deliberative democracy researchers call Deliberation what we call Collaborative Truth Seeking:

"Deliberation requires rational reasoning, or “thinking slow,” in Kahneman’s terminology. It is distinct from oratory, rhetoric, negotiation, persuasion, and common forms of debate, which frequently use pathos and emotion. (...) According to deliberative democracy theorists, deliberative conversation is a cooperative venture seeking truth or the common good, rather than seeking to “win,” or defeat an opponent."

'Good Deliberation' section from Terry Bouricius' book about sortition democracy

They seem to have studied this a lot, and there's probably a lot we can learn from them to improve our Group Rationality [? · GW] skills. One day I might dive into their literature and write a post about it, but for now I'm just sharing this so others can do so as well.

comment by Yoav Ravid · 2024-07-14T07:03:10.829Z · LW(p) · GW(p)

“One can do logic without empiricism, but one must never do empiricism without logic.” - Nassim Nicholas Taleb

I think this neatly explains why, though we cherish Empiricism, we call ourselves Rationalists.

Reason must come before observation, for without reason observation cannot be processed and made sense of.

Replies from: nathan-helm-burger, andrei-alexandru-parfeni↑ comment by Nathan Helm-Burger (nathan-helm-burger) · 2024-09-22T18:34:06.240Z · LW(p) · GW(p)

Hmm, I disagree with Taleb's quote.

I think there are three things important to understanding the universe: empiricism, logic/math, and theorizing. I would say that you can do good empiricism without logical extrapolation or theorizing. You can do math and formal logic without doing empirical fact-finding or theorizing. But you should never trust theorizing that is not based in either logic/math or empiricism.

For empiricism which stood the test of time, look at Cajal's neuron drawings which are still revered by scientists today. Those images alone moved neuroscience forward in an important way.

For logic/math, see Einstein's theories which far preceded the tests which were able to empirically validate them.

For theorizing without either, see the nonsensical ideas put forth by 'armchair geologists' before actual data had been gathered. Unlike Einstein, they did not support their ideas with math.

↑ comment by sunwillrise (andrei-alexandru-parfeni) · 2024-07-14T14:03:53.073Z · LW(p) · GW(p)

I don't think this makes much sense, honestly. Taleb isn't even a LW rationalist, so that's the first reason to doubt that this quote alone could imply something of great importance about the essence of this site.

Is the Scientist in Eliezer's recent anti-Empiricism dialogue [LW · GW] not using reason and logic? Are Traditional Rationality and Science, which are spoken of as ultimately helpful but fundamentally incomplete in many parts of the Sequences (1 [LW · GW], 2 [LW · GW], 3 [LW · GW], 4 [LW · GW]) having trouble because its practitioners are doing "empiricism without logic"? Because they can't process or make sense of observations? Are they not using reason? If that were so, how on Earth would we have achieved the tremendous amount of pre-LW rationalism advances in science, engineering, and our understanding of the outside world?

I absolutely disagree with the notion that LW rationalism is about using logic to process observations. On the contrary, it is about using Bayesianism [LW · GW] to figure out what hypotheses are even worth taking seriously in the first place [LW · GW], keeping multiple working theories in mind [LW · GW], and ultimately converging to correct beliefs faster than science [LW · GW] [1] (because the social rules of traditional scientific institutions require far more evidence than is needed to be individually convinced of the truth of a claim).

As a general matter, it seems the biggest and most common problem human beings face in trying to understand the world is not how to change their credence in a particular thesis when they face new evidence, but more so how to identify what broad frames and theories to even think about in the first place, i.e., what to update, instead of how. Put differently, it is where to place to good use the limited amount of resources (time, attention, mental energy) we have to maximize the chance of stumbling upon something useful.

comment by Yoav Ravid · 2024-05-02T08:35:42.187Z · LW(p) · GW(p)

Looking for blog platform/framework recommendations

I had a WordPress blog, but I don't like WordPress and I want to move away from it.

Substack doesn't seem like a good option because I want high customizability and multilingual support (my blog is going to be in English and Hebrew).

I would like something that I can use for free with my own domain (so not Wix).

The closest thing I found to what I'm looking for was MkDocs Material, but it's still geared too much towards documentation, and I don't like its blog functionality enough.

Other requirements: Dark/Light mode, RSS, Newsletter support.

Does anyone have another suggestion? It's fine if it requires a bit of technical skill (though better if it doesn't).

comment by Yoav Ravid · 2021-12-21T06:19:27.695Z · LW(p) · GW(p)

Idea: Github + Voting

The way Github works is geared towards how projects usually work - someone or some group owns them, or is responsible for them, and they have the ability to make decisions about them; then there are various levels of permissions. If you make a pull request, someone from the project's team has to accept it.

This works well for almost all projects, so I have no problem with it. What I'm going to suggest is more niche and solves a different problem. What if there's a project that you want to be completely public, so that no one person or group would have authority over it?

My idea is a repository site like Github, but instead of anyone having authority, all changes are voted on. So if I make a pull request to make some function faster, it opens a vote. If there's a big ratio of people against the change, the change doesn't happen, if there's some minimum for it and not enough resistance, it goes through.

What exactly are the thresholds and rules for voting? That would also be changeable through vote, and the person who first creates a repository will just need to choose the default settings.
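To make the rule above a bit more concrete, here is a minimal sketch of how such a vote could be evaluated. Everything in it is an assumption for illustration: the names `VotingRules` and `change_passes`, the default numbers, and the choice to measure resistance as the share of "against" votes. In the proposal these settings would themselves be changeable through a vote.

```python
from dataclasses import dataclass


@dataclass
class VotingRules:
    # Hypothetical defaults chosen by whoever creates the repository.
    min_support: int = 5            # minimum number of votes in favour
    max_against_ratio: float = 0.4  # share of "against" votes that blocks a change


def change_passes(votes_for: int, votes_against: int, rules: VotingRules) -> bool:
    """Return True if a proposed change (e.g. a pull request) goes through."""
    total = votes_for + votes_against
    if total == 0:
        # Nobody voted, so there is no support to speak of.
        return False
    if votes_for < rules.min_support:
        # Not enough people actively want the change.
        return False
    # Enough support; the change passes unless resistance is too large.
    return votes_against / total <= rules.max_against_ratio
```

With the default rules, `change_passes(10, 2, VotingRules())` goes through, while ten votes for and ten against does not, because half the voters resist.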

Other devops features can also be integrated, so things like pushing updates and managing hosting can also be done through voting.

This is not for most projects, this is for decentralized projects that don't want anyone having authority over them.

(If I want to play around with it, what repository sites are there that are open source like GitLab?)

Replies from: ChristianKl, moyamo↑ comment by ChristianKl · 2021-12-21T10:42:29.973Z · LW(p) · GW(p)

There are blockchains like Polkadot that require people to vote on whether code changes get deployed. I'm not sure exactly how that works, but looking at those crypto projects might be valuable both for looking at existing uses and for finding potential users.

Replies from: Yoav Ravid↑ comment by Yoav Ravid · 2021-12-21T12:05:57.043Z · LW(p) · GW(p)

Oh, cool! I'll look into it. Thanks :)

comment by Yoav Ravid · 2021-12-24T06:25:15.794Z · LW(p) · GW(p)

Every now and then I see a paper that looks into replication in some field and finds a replication crisis [? · GW], to the point that just by seeing in the title that the article is about replication in field X I can know field X has a replication crisis. I wonder, is there any paper that looked for a replication crisis in some field and didn't find it?

(Not sure what effect publication bias would have. On the one hand it's a "negative" result, so less likely to be published; on the other hand it says very good things about the field, so people in the field would want to publish it)

comment by Yoav Ravid · 2021-02-24T17:08:01.827Z · LW(p) · GW(p)

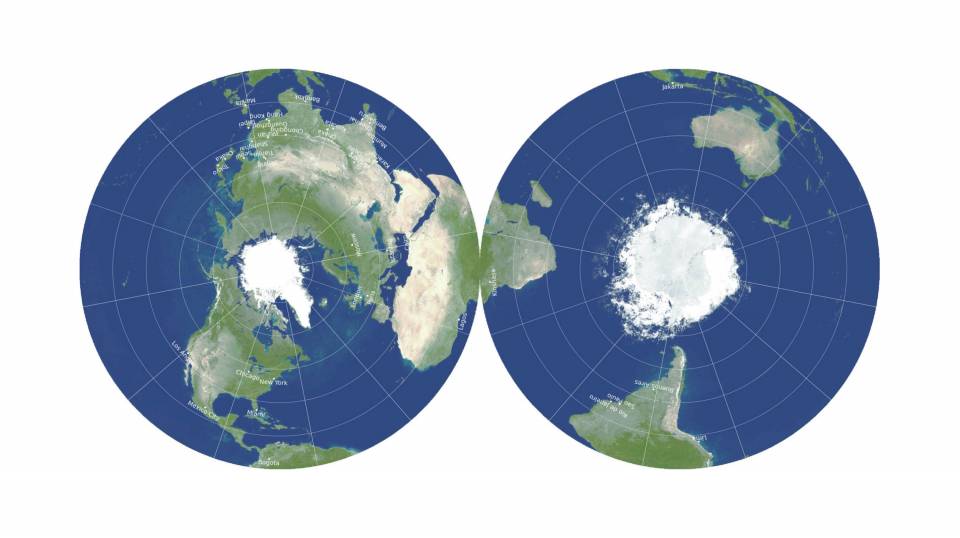

(This is an exercise, be careful not to spoil the answer to yourself)

All world maps are wrong due to the fact that it's impossible to flatten a sphere without distortions.

There is a simple idea anyone can think of that greatly improves the accuracy of flat maps and that no one has tried in the last 2000 years, until last week, when three Princeton researchers thought of it.

Take a moment to try to think what you might do to improve the accuracy of flat maps.

I'm making this an exercise since this seems like incredibly low hanging fruit that hasn't been picked up, and the idea will seem obvious in retrospect.

Ok, stop here and think, spoilers ahead:

*

*

*

*

Make a double sided map, of course!

Instead of projecting a sphere to a flat surface, they just projected two hemispheres to two surfaces and glued them together.

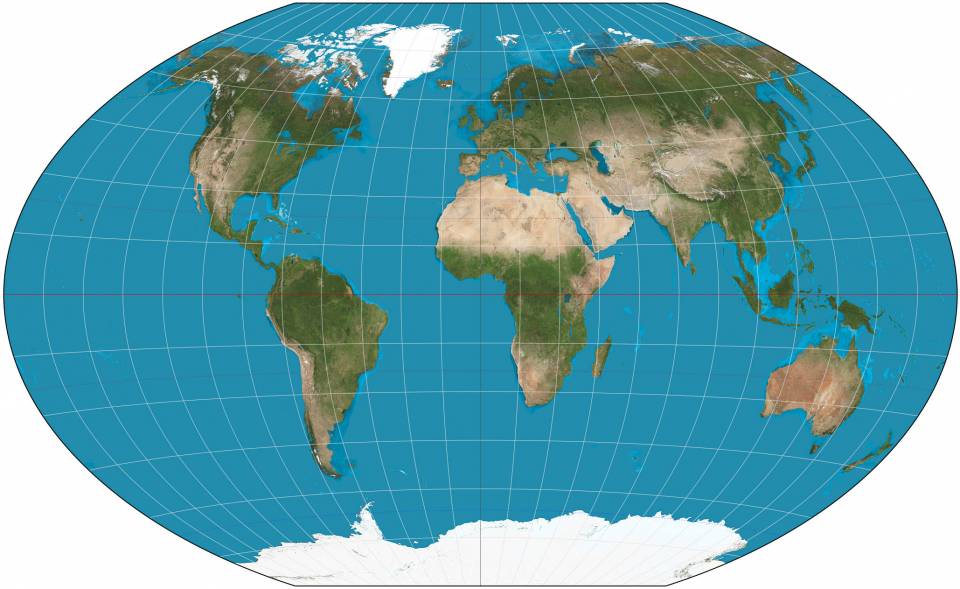

"Goldberg and Gott invented a system to score existing maps, quantifying the six types of distortions that flat maps can introduce: local shapes, areas, distances, flexion (bending), skewness (lopsidedness) and boundary cuts (continuity gaps). The lower the score, the better: a globe would have a score of 0.0."

The previous best on this metric was the Winkel Tripel projection, with a Goldberg-Gott score of 4.563

Their new design is better than the Winkel Tripel on every one of the 6 metrics, with a slightly lower Goldberg-Gott score of 4.497.

The other huge advantage of their design is that it's the only flat design that has the topology of a sphere. If you go over the edge, it's exactly like going over the equator.

The other advantage is a bit less concrete: their design just looks fun. It makes me want to hold it in my hands. Other designs don't do that for me.

They also made maps of other solar system bodies, available in their paper (starting at page 24). Here's Mars:

Now I'm just left with two questions:

- How did no one think about this before?

- Why aren't they selling maps?

See the full article about this here

Replies from: cousin_it, gworley, elityre, Rad Hardman↑ comment by cousin_it · 2021-02-25T10:44:10.934Z · LW(p) · GW(p)

This is a breathless article about something that's obvious to people who know the state of the art. (I've worked in geoinformational systems.) If you want a map that shows the shapes and sizes of continents without too much distortion, and don't mind having two circles, the Nicolosi globular projection is a thousand years old.

Replies from: Yoav Ravid↑ comment by Yoav Ravid · 2021-02-25T11:59:14.839Z · LW(p) · GW(p)

Huh. Well, thanks for pointing that out. I see that it is part of the collection they mentioned, but they didn't count it as a two-sided map. Is it suitable for two-sided map? Was it used that way?

In any case, oops.

↑ comment by Gordon Seidoh Worley (gworley) · 2021-02-25T01:56:23.357Z · LW(p) · GW(p)

I don't know, something about this smacks of "prestigious people reinvent obvious thing that was previously dismissed out of hand because it didn't meet the criteria but if you're prestigious enough people might bend the criteria for you".

In particular I think the point was largely around having two dimensional projections. Using both sides is, in some sense, not really a 2D projection anymore since you have to interact with it by rotating it. And if you have to do that you're most of the way to just using a globe instead.

Replies from: Yoav Ravid↑ comment by Yoav Ravid · 2021-02-25T06:30:10.883Z · LW(p) · GW(p)

The idea is that it lets you compact a globe from 3D space to 2D space with minimal distortions. You can carry 100 such maps in less space than one globe would take. (Of course, if your requirements are to be able to see everything at once, then this doesn't fit)

So what you said in the first paragraph doesn't seem true to me, but if someone did invent that already and was dismissed I would be interested to hear.

↑ comment by Eli Tyre (elityre) · 2021-02-25T05:42:06.385Z · LW(p) · GW(p)

My guess was: you could have a different map, for different parts of the globe, ie a part that focus on Africa (and therefore has minimal distortions of Africa), and a separate part for America, and a separate part for Asia, and so on.

Replies from: Yoav Ravid↑ comment by Yoav Ravid · 2021-02-25T06:24:26.649Z · LW(p) · GW(p)

Well, in a way that's what they did. They have two maps, one for each hemisphere, which connect perfectly when glued together. But the idea of having different maps for different places on its own has been done countless times.

↑ comment by Rad Hardman · 2021-02-24T18:10:32.131Z · LW(p) · GW(p)

The biggest disadvantage of this that I could see is that it prevents you from seeing the entirety of the map at once. This is reflected in the linked article: "Our map is actually more like the globe than other flat maps," Gott said. "To see all of the globe, you have to rotate it; to see all of our new map, you simply have to flip it over."

Replies from: Yoav Ravid↑ comment by Yoav Ravid · 2021-02-24T18:24:38.625Z · LW(p) · GW(p)

Right, it's not the sort of map you'd want to put on a wall, it's intended to be interactive and give the benefits of a globe in flat space.

comment by Yoav Ravid · 2021-01-20T12:44:42.207Z · LW(p) · GW(p)

What is the class which ask/guess/tell/reveal cultures are instances of? It doesn't currently have a name (at least not something less general than communication culture [? · GW]), which makes this awkward to talk about or reference. So I thought about it for a bit, and came up with Expectation Culture.

Ask/guess/tell/reveal cultures are a type of expectation culture. They're all cultures where one thing that is said maps to a different expectation. This is also the case with different kinds of asks [LW · GW].

This seems like a useful phrase with which to bundle these things together.

comment by Yoav Ravid · 2024-12-19T13:49:56.094Z · LW(p) · GW(p)

Ok, I really don't get why my post announcing my book [LW · GW] got downvoted (ignored is one thing, downvoted quite another)...

Update: when I made this post the original post was on 5 Karma and out of the frontpage. Now it's 15 Karma, which is about what I expected it to get, given that it's not a core topic of LW and doesn't have a lot of information (though I now added some more information at the end), so I'm happy. Just a bit of a bummer that I had to make this post to get the original post out of the pit of obscurity it was pushed into by noise.

Replies from: maxwell-peterson, ete, habryka4, Seth Herd↑ comment by Maxwell Peterson (maxwell-peterson) · 2024-12-19T17:40:59.189Z · LW(p) · GW(p)

The post is an advertisement, without other content. I think a post of that type should only be on the site if it comes with some meat - an excerpt, at least. (And even then I’m not sure). The reader can’t even look up or read the book yet if he wanted to!

(There is a quote of the thesis of the book, but the text is stuff I’ve been rereading for years now. It feels like someone is always telling me liberalism is under threat recently.)

Replies from: Yoav Ravid↑ comment by Yoav Ravid · 2024-12-19T18:07:41.741Z · LW(p) · GW(p)

Thanks for the feedback. I've been writing it for a year already without talking about it much publicly, and wanted to put it out there so people know what I'm doing. I see it similarly to the updates people give here on their research agendas or work they intend to do. I agree that for LW (but not for twitter, for which this was originally written) it's probably good to put more meat and give more detail about what the book will discuss. Maybe I'll edit it in.

Edit: I added a list at the end of the post of things I plan to discuss or look into

↑ comment by plex (ete) · 2024-12-19T15:43:43.006Z · LW(p) · GW(p)

Sorry to hear about that experience. I think that "downvote" should be a power you unlock when you're well-established on LW (maybe at 1k karma or so) rather than being universally available. The sting of a downvote on something you think is important is easily 50x the reward of an upvote, and giving that power to people who have little context on the community seems very bad EV.

Especially with LW becoming more in the public eye, letting random internetgoers who register give any LWer negative feedback (which is often painful/discouraging) seems pretty likely to be detrimental. I'd be interested in takes from the LW team on this.

Edit: Man, I love the disagree vote separation. It's nice people being able to disagree with me without downvoting.

Replies from: habryka4, Yoav Ravid↑ comment by habryka (habryka4) · 2024-12-19T15:55:11.503Z · LW(p) · GW(p)

I think this would train the wrong habits in LessWrong users, and also skew the incentive landscape that is already tilted somewhat too much in the direction of "you get karma if you post content" away from "you get karma if your content on average makes the site better".

Replies from: ete↑ comment by plex (ete) · 2024-12-19T17:31:07.396Z · LW(p) · GW(p)

hmm, I both see the incentive issue and also that the current widespread downvote marginally mitigates this. Not sure if it helps a lot to have lurker downvotes, and expect there are notable costs. Do you think there is a karma bar below which the EV of a downvote from those users is negative? My guess is at least totally new users add painful noise in a net negative way often enough that their contribution to keeping bad things low is not worthwhile, and pushes away some good contributors, lowering average quality.

I suspect you might be underestimating how much some users take a psychological hit if they put effort into something and get slapped down without comment; having those be somewhat reliably not misfiring seems important.

(this is probably fairly minor on your list of things, no worries if you disengage)

Replies from: habryka4↑ comment by habryka (habryka4) · 2024-12-19T18:06:40.320Z · LW(p) · GW(p)

I think it's a hard tradeoff. I do think lots of people take psychological hits, but it is also genuinely important that people who are not a good fit for the site learn quickly and get the hint that they either have to shape up or get out. Otherwise we are at risk of quickly deteriorating in discussion quality. I do think this still makes it valuable to reduce variance, but I think we've already largely done that with the strong-vote and vote-weighting system.

Upvotes by senior users matter a lot more, and any senior user can dig you out of multiple junior users downvoting you, which helps.

↑ comment by Yoav Ravid · 2024-12-19T17:49:11.172Z · LW(p) · GW(p)

I think making downvotes completely unavailable beneath a certain karma level wouldn't be good.

But also I think the outsized effect of a downvote is strongest when it's one of the first votes (as it was) rather than when it's one among many votes, because it also makes the post disappear from the front page and takes away its chance to get more votes. Upvotes don't do that, because they make the post stay longer on the frontpage, so it can always later gain more downvotes by new people getting exposed to it.

So if we do limit the power of downvotes or who can cast them, perhaps it should be focused on early votes, and not votes in general?

Replies from: Viliam↑ comment by Viliam · 2024-12-20T11:29:01.895Z · LW(p) · GW(p)

I would need more data to form an opinion on this.

At first sight, it seems to me like having a rule "if your total karma is less than 100, you are not allowed to downvote an article or a comment if doing so would push it under zero" would be good.
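As a rough sketch only, the rule could be written as a small check like the one below. The function name `may_downvote`, the `vote_weight` parameter and its default are made up for illustration; the threshold of 100 and the "would push it under zero" condition are taken from the rule as stated. This is not how the site's voting code actually works.

```python
def may_downvote(voter_karma: int, current_score: int,
                 vote_weight: int = 1, karma_threshold: int = 100) -> bool:
    """Hypothetical check: may this user cast a downvote on this item?"""
    if voter_karma >= karma_threshold:
        # Established users can always downvote.
        return True
    # Low-karma users may downvote only if the item stays at zero or above.
    return current_score - vote_weight >= 0
```

Under this sketch a user with 50 karma can take an item from 1 to 0 but cannot take it from 0 to -1, while a user with 150 karma can do both.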

But I have no idea how often that happens in real life. Do we actually have many readers with karma below 100 who bother to vote?

By the way, I didn't vote on your article, but... you announced that you were writing a book i.e. it is not even finished, you didn't provide a free chapter or something... so what exactly was there to upvote you for?

(Sorry, this is too blunt, and I understand that people need some positive reinforcement along the way. But this is not a general website to make people feel good; unfortunately, aspiring rationalists are a tiny fraction of the general population, so making this website more welcoming to the general population would get us hopelessly diluted. Also, there is a soft taboo on politics, which your post was kinda about, without providing something substantial to justify that.)

↑ comment by habryka (habryka4) · 2024-12-19T15:56:56.516Z · LW(p) · GW(p)

I don't know either! Early voting is often quite noisy, and this thing is a bit politics-adjacent. I expect it won't end up downvoted too long. I've considered hiding vote-scores for the first few hours, but we do ultimately still have to use something for visibility calculations, and I don't like withholding information from users.

Replies from: Yoav Ravid, kave↑ comment by Yoav Ravid · 2024-12-19T18:00:22.688Z · LW(p) · GW(p)

I think there's an asymmetry problem here. An early downvote hides the post from the frontpage and impedes it from getting more evaluations, so it's a pit that's hard to get out from (without, say, creating a different post asking why your first post is in the pit :P). An early upvote, on the other hand, exposes the post to more evaluations by keeping it longer on the frontpage, and it's easy for later downvotes to push it back down, so it's more like a slippery hill than a pit.

So I think a mechanism that would avoid premature burials of posts would overcome some of the noise and lead to more information being incorporated into the "final" evaluation of the post (the karma it stabilizes on).

Replies from: habryka4↑ comment by habryka (habryka4) · 2024-12-19T18:08:11.987Z · LW(p) · GW(p)

I agree this is true for content by new users, but honestly, we kind of need to hide content from most users from the frontpage until someone decides to upvote it.

For more active users, their strong-vote strength gets applied by default to the post, which helps a good amount with early downvotes not hurting visibility that much.

Replies from: Yoav Ravid↑ comment by Yoav Ravid · 2024-12-19T18:16:11.386Z · LW(p) · GW(p)

I don't think that's enough? I have 3.5k Karma, which gives me a strong vote power of 7, but when I made this post the other post was on 5 Karma and long gone from the front page. It only started gaining karma and came back to the frontpage after I made this post.

And I kinda dislike "why am I getting downvoted" posts, so I would like mechanisms that make them unnecessary.

Replies from: habryka4↑ comment by habryka (habryka4) · 2024-12-19T19:02:47.118Z · LW(p) · GW(p)

I mean, the difference between 7 and 5 karma on frontpage ranking is minuscule, so I don't think that made any difference. The real question is "why did nobody upvote it"? Like, I think there physically isn't enough space on the frontpage to give 5 karma posts visibility for very long, without filling most of the frontpage with new unvetted content.

Replies from: Yoav Ravid↑ comment by Yoav Ravid · 2024-12-19T19:28:12.069Z · LW(p) · GW(p)

I don't think comparing 5 to 7 is correct, because we don't want to compare a downvote to a no-vote; we want to compare one ordering of votes to another ordering of votes. So, what would be the difference if it went up before it went back down again, rather than first going down like it has?

I think we do agree that if I didn't ask why it was downvoted it would have remained at 5 rather than go up to 15, and that this is suboptimal, right?

To me it feels like mid-popularity posts are affected too much by noise and when they get posted.

↑ comment by Seth Herd · 2024-12-19T15:53:54.857Z · LW(p) · GW(p)

LW has been avoiding all discussion of politics in the runup to the election. And it usually is suspicious of politics, noting that "politics is the mindkiller" that causes arguments and division of rationalist communities.

Thus it could be argued that it's an inappropriate place to announce your book, even though it's intended as a rationalist take on politics and not strictly partisan.

But it's a judgment call. Which is why your post is now positive again.

I'm uncertain; I would neither downvote nor upvote your post. I certainly wouldn't discuss the theory here, but announcing it seems fine.

I'm not sure where the requests RE political discussion are stated. The site FAQ is one place but I don't think that says much.

Replies from: M. Y. Zuo↑ comment by M. Y. Zuo · 2024-12-19T16:25:43.922Z · LW(p) · GW(p)

It seems contradictory. If LW users believe that the userbase is not competent enough on average to avoid tangential but divisive politics, then why do they believe the ’karma’ average decided by the same, matters?

It’s like a superposition of two extremes:

At one extreme there’s Reddit where a high karma is more of an anti-signal, and having extra karma beyond a pretty low threshold actually increases readers’ suspicions that it’s fluff or unusually deceiving…

At the other extreme, there are traditional old BBS forums with no karma or scoring system whatsoever. And any formal distinction is a huge positive signal.

Replies from: faul_sname, Seth Herd↑ comment by faul_sname · 2024-12-19T17:41:06.837Z · LW(p) · GW(p)

It's more that any platform that allows discussion of politics risks becoming a platform that is almost exclusively about politics. Upvoting is a signal of "I want to see more of this content", while downvoting is a signal of "I want to see less of this content". So "I will downvote any posts that are about politics or politics-adjacent, because I like this website and would be sad if it turned into yet another politics forum" is a coherent position.

All that said, I also did not vote on the above post.

↑ comment by Seth Herd · 2024-12-19T18:52:35.669Z · LW(p) · GW(p)

We believe politics is the mind killer. That is separate from a judgment about user competence. There is no contradiction. Even competent users have emotions and biases, and politics is a common hot button.

Reddit is a flaming mess compared to LW, so the mods here are doing something right - probably a lot.

Replies from: M. Y. Zuo↑ comment by M. Y. Zuo · 2024-12-20T02:25:41.819Z · LW(p) · GW(p)

Sometimes politics IS the core issue, or at least an important underlying cause of the core issue, so a blanket ban on discussing it is a very crude tool.

Because it’s effectively banning any substantial discussion on a wide range of topics, and instead replacing it, at best, with a huge pile of euphemisms and seemingly bizarre back and forths. And at worst, nothing at all.

So user competence as a factor is unlikely to be completely separate.

Or to look at it from the other angle, in an ideal world with ideal forum participants, there would very likely be a different prevailing norm.

Replies from: Seth Herd↑ comment by Seth Herd · 2024-12-20T02:50:07.500Z · LW(p) · GW(p)

It's not a blanket ban.

Of course user competence isn't entirely separate, just mostly.

In a world with ideal forum participants, we wouldn't be having this conversation :)

Replies from: M. Y. Zuo↑ comment by M. Y. Zuo · 2024-12-21T04:26:15.621Z · LW(p) · GW(p)

Once you take into account real world factors, such as an expanding userbase leading to less average credibility per user, multiplying political positions, etc… which are all pretty much unavoidable due to regression to the mean…

It really becomes ever closer to an effective blanket ban, to at least try to maintain the same average quality. (Assuming that is a goal.)

To extrapolate it to an extreme scenario, if the userbase suddenly grew 100X in size, then even many things considered prosaic might have to be prohibited because the userbase, on average, literally wouldn’t be capable of evaluating discussion beyond a mediocre subreddit otherwise.

comment by Yoav Ravid · 2023-12-20T10:33:44.959Z · LW(p) · GW(p)

When making many predictions together, I think it would be useful to add another prediction about your calibration.

I think it's worth knowing not just how calibrated someone is, but also how "meta-calibrated" - do they have a correct sense of their own level of calibration?
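One possible way to make this concrete, offered as a sketch rather than a standard method: measure calibration as the average gap between each stated probability and the fraction of predictions at that probability that came true, then compare that number with the gap you predicted for yourself in advance. The function names and the choice of this particular gap measure are assumptions made for illustration.

```python
from collections import defaultdict


def calibration_gap(predictions):
    """Weighted mean absolute gap between stated probability and observed frequency.

    `predictions` is a list of (stated_probability, came_true) pairs.
    """
    if not predictions:
        raise ValueError("need at least one prediction")
    by_prob = defaultdict(list)
    for prob, came_true in predictions:
        by_prob[prob].append(came_true)
    gap = 0.0
    for prob, outcomes in by_prob.items():
        observed = sum(outcomes) / len(outcomes)
        # Weight each probability level by how many predictions used it.
        gap += abs(prob - observed) * len(outcomes) / len(predictions)
    return gap


def meta_calibration_error(predicted_gap, predictions):
    """How far off was the forecaster's own estimate of their calibration gap?"""
    return abs(predicted_gap - calibration_gap(predictions))
```

A forecaster who says in advance "my gap will be about 0.05" and ends up close to 0.05 is meta-calibrated in this sense, whether or not 0.05 is a good score.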

comment by Yoav Ravid · 2022-08-17T01:27:16.137Z · LW(p) · GW(p)

Punishing non-punishers taken to the limit (punishing non-punisher non-punishers, punishing non-punisher non-punisher non-punishers, etc...) is simply "If you're not with me, then you're my enemy".

Replies from: nathan-helm-burger, JBlack↑ comment by Nathan Helm-Burger (nathan-helm-burger) · 2024-09-22T18:56:37.156Z · LW(p) · GW(p)

Only if the punishment for non-punishing has no decay factor. With a decay factor, the punishments due for non-punishing decay with each step, and thus reach zero punishment at some point. I think most people imagine a pretty steep decay factor, and might think that it drops to zero after a few steps, maybe with an exponential curve so that only the first step is a substantial punishment. Like maybe 1st step is 40% of full, then 2nd is 15%, then 3rd is 3%?
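A minimal sketch of the decay idea, assuming a simple geometric curve with a cutoff below which the punishment is treated as zero. The function name, the decay factor of 0.4 and the 5% cutoff are illustrative assumptions; a plain geometric curve only roughly resembles the 40% / 15% / 3% figures above, which fall off faster.

```python
def punishment_at_step(step: int, full: float = 1.0,
                       decay: float = 0.4, cutoff: float = 0.05) -> float:
    """Punishment for being `step` levels removed from the original offence.

    step 0 is the offender, step 1 a non-punisher, step 2 a non-punisher
    of non-punishers, and so on.
    """
    value = full * decay ** step
    # Below the cutoff nobody bothers to punish, so the chain ends.
    return value if value >= cutoff else 0.0
```

With these assumed numbers the chain of punishment stops at the fourth step, so the regress never reaches "if you're not with me, then you're my enemy".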

comment by Yoav Ravid · 2021-11-23T10:42:21.451Z · LW(p) · GW(p)

Correlation suggests Association suggests Causation.

Replies from: Richard_Kennaway, gwern↑ comment by Richard_Kennaway · 2021-11-23T23:11:00.480Z · LW(p) · GW(p)

Looking at the sky suggests the Sun goes round the Earth.

Replies from: Yoav Ravid↑ comment by Yoav Ravid · 2021-11-24T05:43:13.367Z · LW(p) · GW(p)

Indeed it does, and that the earth goes around it. Until you have the information needed to rule either hypothesis out you better consider both. Maybe in some universes the bright thing in the sky really does orbit around you and not vice versa.

↑ comment by gwern · 2021-11-23T17:30:23.851Z · LW(p) · GW(p)

Which causation?

Replies from: Yoav Ravid↑ comment by Yoav Ravid · 2021-11-23T18:22:44.046Z · LW(p) · GW(p)

Several. You need more information than just one correlation/association to pinpoint the exact causal relationship.

But I expect you already know that, cause my understanding is that this is standard correlation/causation stuff - the sentence wasn't supposed to point at a novel idea, just a novel phrasing of it. So your question seems rhetorical, but I don't know what it's trying to point at. Do you think this view of correlation/association/causation is false?

comment by Yoav Ravid · 2021-11-12T14:28:18.125Z · LW(p) · GW(p)

Me and a friend want to start journaling daily, and we're looking for a good app to use for that.

Desired features:

- Can be used from a phone (bonus if it's multiplatform)

- Easy to add a new entry

- Easy to see entries in chronological order

- Has an option to tag entries so it's easy to later come back and read them using the tag

- Can remind you of entries (say, remind you of entries on the date they were entered, or after some set amount of time).

- Bonus: Reminds you daily to write with a notification

I thought of Roam and Obsidian, but I'm not very familiar with either. So I'd be happy to hear both thoughts on these two apps specifically, and recommendations for other apps.

Replies from: palcu↑ comment by palcu · 2021-11-12T18:30:09.540Z · LW(p) · GW(p)

If you are in the Apple ecosystem, I can recommend Day One. My oldest entry is from nine years ago and I've been very happy with it.

Replies from: Yoav Ravid↑ comment by Yoav Ravid · 2021-11-12T18:49:34.729Z · LW(p) · GW(p)

I'm an Android user. Not a fan of Apple/iOS. But thanks :)