The Power to Demolish Bad Arguments

post by Liron · 2019-09-02T12:57:23.341Z · 84 comments

This is Part I of the Specificity Sequence

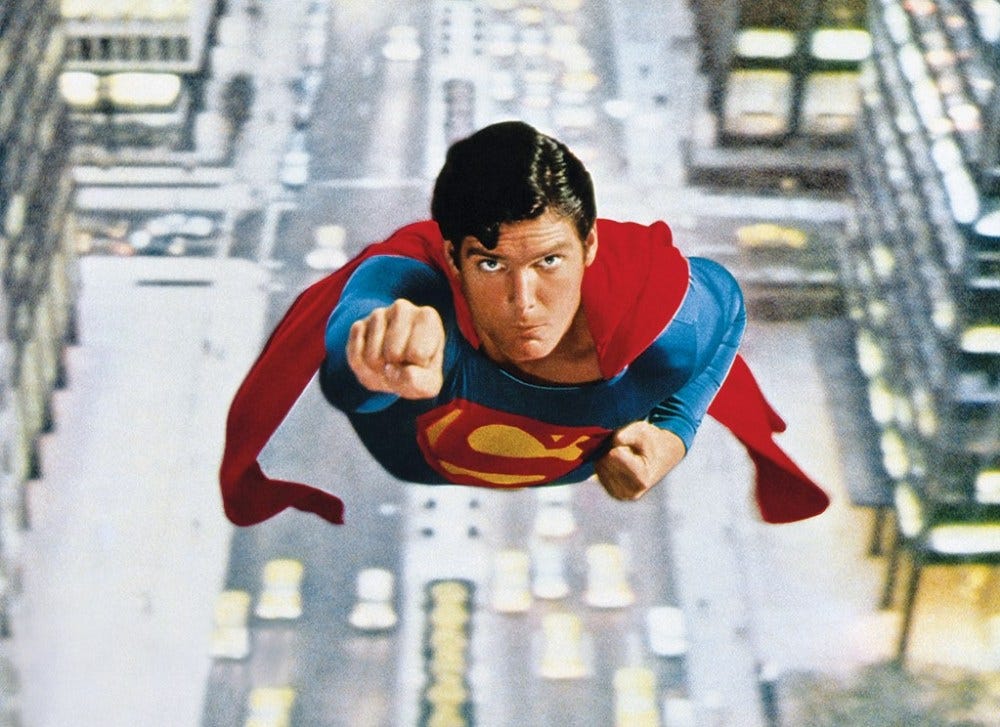

Imagine you've played ordinary chess your whole life, until one day the game becomes 3D. That's what unlocking the power of specificity feels like: a new dimension you suddenly perceive all concepts to have. By learning to navigate the specificity dimension, you'll be training a unique mental superpower. With it, you'll be able to jump outside the ordinary course of arguments and fly through the conceptual landscape. Fly, I say!

"Acme exploits its workers!"

Want to see what a 3D argument looks like? Consider a conversation I had the other day when my friend “Steve” put forward a claim that seemed counter to my own worldview:

Steve: Acme exploits its workers by paying them too little!

We were only one sentence into the conversation and my understanding of Steve’s point was high-level, devoid of specific detail. But I assumed that whatever his exact point was, I could refute it using my understanding of basic economics. So I shot back with a counterpoint:

Liron: No, job creation is a force for good at any wage. Acme increases the demand for labor, which drives wages up in the economy as a whole.

I injected principles of Econ 101 into the discussion because I figured they could help me expose that Steve misunderstood Acme's impact on its workers.

My smart-sounding response might let me pass for an intelligent conversationalist in non-rationalist circles. But my rationalist friends wouldn't have been impressed at my 2D tactics, parrying Steve's point with my own counterpoint. They'd have sensed that I'm not progressing toward clarity and mutual understanding, that I'm ignorant of the way of Double Crux.

If I were playing 3D chess, my opening move wouldn't be to slide a defensive piece (the Econ 101 Rook) across the board. It would be to... shove my face at the board and stare at the pieces from an inch away.

Here's what an attempt to do this might look like:

Steve: Acme exploits its workers by paying them too little!

Liron: What do you mean by “exploits its workers”?

Steve: Come on, you know what “exploit” means… Dictionary.com says it means “to use selfishly for one’s own ends”.

Liron: You’re saying you have a beef with any company that acts “selfish”? Doesn’t every company under capitalism aim to maximize returns for its shareholders?

Steve: Capitalism can be good sometimes, but Acme has gone beyond the pale with their exploitation of workers. They’re basically ruining capitalism.

No, this is still not the enlightening conversation we were hoping for.

But where did I go wrong? Wasn't I making a respectable attempt to lead the conversation toward clear and precise definitions? Wasn't I navigating the first waypoint on the road to Double Crux?

Can you figure out where I went wrong?

…

…

…

It was a mistake for me to ask Steve for a mere definition of the term “exploit”. I should have asked for a specific example of what he imagines “exploit” to mean in this context. What specifically does it mean—actually, forget "mean"—what specifically does it look like for Acme to "exploit its workers by paying them too little"?

When Steve explained that "exploit" means "to use selfishly", he fulfilled my request for a definition, but the whole back-and-forth didn't yield any insight for either of us. In retrospect, it was a wasted motion for me to ask, "What do you mean by 'exploits its workers'?"

Then, instead of making another attempt to shove my face at the board and stare at the pieces up close, I couldn't help myself: I went back to sliding my pieces around. I set out to rebut the claim that "Acme uses its workers selfishly" by tossing the big abstract concept of “capitalism” into the discussion.

At this point, imagine that Steve were a malicious actor whose only goal was to score rhetorical points on me. He'd be thrilled to hear me say the word “capitalism”. "Capitalism" is a nice high-level concept for him to build an infinite variety of smart-sounding defenses out of, together with other high-level concepts like “exploitation” and “selfishness”.

A malicious Steve can be rhetorically effective against me even without possessing a structured understanding of the subject he’s making a claim about. His mental model of the subject could just be a ball pit of loosely-associated concepts. He can hold up his end of the conversation surprisingly well by just snatching a nearby ball and flinging it at me. And what have I done by mentioning “capitalism”? I’ve gone and tossed in another ball.

I'd like to think that Steve isn't malicious, that he isn't trying to score rhetorical points on me, and that the point he's trying to make has some structure and depth to it. But there's only one way to be sure: by using the power of specificity to get a closer look! Here's how it's done:

Steve: Acme exploits its workers by paying them too little!

Liron: Can you help me paint a specific mental picture of a worker being exploited by Acme?

Steve: Ok… A single dad who works at Acme and never gets to spend time with his kids because he works so much. He's living paycheck to paycheck and he doesn't get any paid vacation days. The next time his car breaks down, he won’t even be able to fix it because he barely makes minimum wage. You should try living on minimum wage so you can see how hard it is!

Liron: You’re saying Acme should be blamed for this specific person’s unpleasant life circumstances, right?

Steve: Yes, because they have thousands of workers in these kinds of circumstances, and meanwhile their stock is worth $80 billion.

Steve doesn’t realize this yet, but by coaxing out a specific example of his claim, I've suddenly made it impossible for him to use a ball pit of loosely-associated concepts to score rhetorical points on me. From this point on, the only way he can win the argument with me is by clarifying and supporting his claim in a way that helps me update my mental model of the subject. This isn’t your average 2D argument anymore. We’re now flying like Superman.

Liron: Ok, sticking with this one specific worker's hypothetical story — what would they be doing if Acme didn’t exist?

Steve: Getting a different job.

Liron: Ok, what specific job?

Steve: I don’t know, depends what their skills are.

Liron: This is your specific story Steve, you get to pick any specific plausible details you want in order to support any point you want!

I have to stop and point out how crazy this is.

You’d think the way smart people argue is by supporting their claims with evidence, right? But here I’m giving Steve a handicap where he gets to make up fake evidence (telling me any hypothetical specific story) just to establish that his argument is coherent by checking whether empirical support for it ever could meaningfully exist. I'm asking Steve to step over a really low bar here.

Surprisingly, in real-world arguments, this lowly bar often stops people in their tracks. The conversation often goes like this:

Steve: I guess he could instead be a cashier at McDonald’s. Because then he’d at least get three weeks per year of paid vacation time.

Liron: In a world where Acme exists, couldn’t this specific guy still go get a job as a cashier at McDonald’s? Plus, wouldn’t he have less competition for that McDonald's cashier job because some of the other would-be applicants got recruited to be Acme workers instead? Can we conclude that the specific person who you chose to illustrate your point is actually being *helped* by the existence of Acme?

Steve: No, because he’s an Acme worker, not a McDonald’s cashier.

Liron: So doesn’t that mean Acme offered him a better deal than McDonald’s, thereby improving his life?

Steve: No, Acme just tricked him into thinking that it’s a better deal because they run ads saying how flexible their job is, and how you can set your own hours. But it’s actually a worse deal for the worker.

Liron: So like, McDonald’s offered him $13/hr plus three weeks per year of paid vacation, while Acme offered $13/hr with no paid vacation time, but more flexibility to set his own hours?

Steve: Um, ya, something like that.

Liron: So if Acme did a better job of educating prospective workers that they don't offer the same paid vacation time that some other companies do, then would you stop saying that Acme is “exploiting its workers by paying them too little”?

Steve: No, because Acme preys on uneducated workers who need quick access to cash, and they also intend to automate away the workers’ jobs as soon as they can.

Liron: It looks like you’re now making new claims that weren’t represented in the specific story you chose, right?

Steve: Yes, but I can tell other stories

Liron: But for the specific story you chose to tell that was supposed to best illustrate your claim, the “exploitation” you’re referring to only deprived the worker of the value of a McDonald’s cashier’s paid vacation time, which might be like a 5% difference in total compensation value? And since his work schedule is much more flexible as an Acme worker, couldn’t that easily be worth the 5% to him, so that he wasn’t “tricked” into joining Acme but rather made a decision in rational self-interest?

Steve: Yeah maybe, but anyway that’s just one story.

Liron: No worries, we can start over and talk about a specific story that you think would illustrate your main claim. Who knows, I might even end up agreeing with your claim once I understand it. It's just hard to understand what you're saying about Acme without having at least one example in mind, even if it's a hypothetical one.

Steve thinks for a little while...

Steve: I don't know all the exploitative shit Acme does ok? I just think Acme is a greedy company.

When someone makes a claim you (think you) disagree with, don't immediately start gaming out which 2D moves you'll counterargue with. Instead, start by drilling down in the specificity dimension: think through one or more specific scenarios to which their claim applies.

If you can't think of any specific scenarios to which their claim applies, maybe it's because there are none. Maybe the thinking behind their original claim is incoherent.

In complex topics such as politics and economics, the sad reality is that people who think they’re arguing for a claim are often not even making a claim. In the above conversation, I never got to a point where I was trying to refute Steve’s argument, I was just trying to get specific clarity on what Steve’s claim is, and I never could. We weren't able to discuss an example of what specific world-state constitutes, in his judgment, a referent of the statement “Acme exploits its workers by paying them too little”.

Zooming Into the Claim

Imagine Steve shows you this map and says, “Oregon’s coastline is too straight. I wish all coastlines were less straight so that they could all have a bay!”

Resist the temptation to argue back, “You’re wrong, bays are stupid!” Hopefully, you’ve built up the habit of nailing down a claim’s specific meaning before trying to argue against it.

Steve is making a claim about “Oregon’s coastline”, which is a pretty abstract concept. In order to unpack the claim’s specific meaning, we have to zoom into the concept of a “coastline” and see it in more detail as this specific configuration of land and water:

From this perspective, a good first reply would be, “Well, Steve, what about Coos Bay over here? Are you happy with Oregon’s coastline as long as Coos Bay is part of it, or do you still think it’s too straight even though it has this bay?”

Notice that we can’t predict how Steve will answer our specific clarifying question. So we never knew what Steve’s words meant in the first place, did we? Now you can see why it wasn’t yet productive for us to start arguing against him.

When you hear a claim that sounds meaningful, but isn’t 100% concrete and specific, the first thing you want to do is zoom into its specifics. In many cases, you’ll then find yourself disambiguating between multiple valid specific interpretations, like for Steve’s claim that “Oregon’s coastline is too straight”.

In other cases, you’ll discover that there was no specific meaning in the mind of the speaker, like in the case of Steve’s claim that “Acme exploits its workers by paying them too little” — a staggering thing to discover.

TFW a statement unexpectedly turns out to have no specific meaning

“Startups should have more impact!”

Consider this excerpt from a recent series of tweets by Michael Seibel, CEO of the Y Combinator startup accelerator program:

> Successful tech founders would have far better lives and legacies if they competed for happiness and impact instead of wealth and users/revenue.
>
> We need to change [the] model from build a big company, get rich, and then starting a foundation...
>
> To build a big company, get rich, and use the company's reach and power to make the world a better place.

When I first read these tweets, my impression was that Michael was providing useful suggestions that any founder could act on to make their startup more of a force for good. But then I activated my specificity powers…

Before elaborating on what I think is the failure of specificity on Michael’s part, I want to say that I really appreciate Michael and Y Combinator engaging with this topic in the first place. It would be easy for them to keep their head down and stick to their original wheelhouse of funding successful startups and making huge financial returns, but instead, YC repeatedly pushes the envelope into new areas such as founding OpenAI and creating their Request for Carbon Removal Technologies. The Y Combinator community is an amazing group of smart and morally good people, and I’m proud to call myself a YC founder (my company Relationship Hero was in the YC Summer 2017 batch). Michael’s heart is in the right place to suggest that startup founders may have certain underused mechanisms by which to make the world a better place.

That said… is there any coherent takeaway from this series of tweets, or not?

The key phrases seem to be that startup founders should “compete for happiness and impact” and “use the company’s reach and power to make the world a better place”.

It sounds meaningful, doesn’t it? But notice that it’s generically worded and lacks any specific examples. This is a red flag.

Remember when you first heard Steve’s claim that “Acme exploits its workers by paying them too little”? At first, it sounded like a meaningful claim. But as we tried to nail down what it meant, it collapsed into nothing. Will the same thing happen here?

Specificity powers, activate! Form of: Tweet reply

> What's a specific example, real or hypothetical, of a $1B+ founder trading off less revenue for more impact?
>
> Cuz at the $1B+ level, competing for impact may look indistinguishable from competing for revenue.
>
> E.g. Elon Musk companies have huge impact and huge valuations.

Let’s consider a specific example of a startup founder who is highly successful: Elon Musk and his company SpaceX, currently valued at $33B. The company’s mission statement is proudly displayed at the top of their about page:

> SpaceX designs, manufactures and launches advanced rockets and spacecraft. The company was founded in 2002 to revolutionize space technology, with the ultimate goal of enabling people to live on other planets.

What I love about SpaceX is that everything they do follows from Elon Musk’s original goal of making human life multiplanetary. Check out this incredible post by Tim Urban to understand Elon’s plan in detail. Elon’s 20-year playbook is breathtaking:

- Identify a major problem in the world
  - A single catastrophic event on Earth can permanently wipe out the human species
- Propose a method of fixing it
  - Colonize other planets, starting with Mars
- Design a self-sustaining company or organization to get it done
  - Invent reusable rockets to drop the price per launch, then dominate the $27B/yr market for space launches

I would enthusiastically advise any founder to follow Elon’s playbook, as long as they have the stomach to commit to it for 20+ years.

So how does this relate to Michael’s tweets? I believe my advice to “follow Elon’s playbook” constitutes a specific example of Michael’s suggestion to “use the company’s reach and power to make the world a better place”.

But here’s the thing: Elon’s playbook is something you have to do before you found the company. First you have to identify a major problem in the world, then you come up with a plan to start a certain type of company. How do you apply Michael’s advice once you’ve already got a company?

To see what I mean, let’s pick another specific example of a successful founder: Drew Houston and Dropbox ($11B market cap). We know that Michael wants Drew to “compete for happiness and impact” and to “use the company’s reach and power to make the world a better place”. But what does that mean here? What specific advice would Michael have for Drew?

Let’s brainstorm some possible ideas for specific actions that Michael might want Drew to take:

- Change Dropbox’s mission to something that has more impact on happiness

- Donate 10% of Dropbox’s profits to efforts to reduce world hunger

- Give all Dropbox employees two months of paid vacation each year

I know, these are just stabs in the dark, because we need to talk about specifics somehow. Did Michael really mean any of these? The ones about charity and employee benefits seem too obvious. Let’s explore the possibility that Michael might be recommending that Dropbox change its mission.

Here’s Dropbox’s current mission from their about page:

> We’re here to unleash the world’s creative energy by designing a more enlightened way of working.

Seems like a nice mission that helps the world, right? I use Dropbox myself and can confirm that the product makes my life a little better. So would Michael say that Dropbox is an example of “competing for happiness and impact”?

If so, then it would have been really helpful if Michael had written in one of his tweets, “I mean like how Dropbox is unleashing the world’s creative energy”. Mentioning Dropbox, or any other specific example, would have really clarified what Michael is talking about.

And if Dropbox’s current mission isn’t what Michael is calling for, then how would Dropbox need to change it in order to better “compete for happiness and impact”? For instance, would it help if they tack on “and we guarantee that anyone can have access to cloud storage regardless of their ability to pay for it”, or not?

Notice how this parallels my conversation with Steve about Acme. We begin with what sounds like a meaningful exhortation: Companies should compete for happiness and impact instead of wealth and users/revenue! Acme shouldn’t exploit its workers! But when we reach for specifics, we suddenly find ourselves grasping at straws. I showed three specific guesses of what Michael’s advice could mean for Drew, but we have no idea what it does mean, if anything.

Imagine that the CEO of Acme wanted to take Steve’s advice about how not to exploit workers. He’d be in the same situation as Drew from Dropbox: confused about what, specifically, his company was doing wrong in the first place.

Once you’ve mastered the power of specificity, you’ll see this kind of thing everywhere: a statement that at first sounds full of substance, but then turns out to actually be empty. And the clearest warning sign is the absence of specific examples.

Next post: How Specificity Works

84 comments

comment by JenniferRM · 2019-09-02T18:09:35.915Z

I have a strong appreciation for the general point that "specificity is sometimes really great", but I'm wondering if this point might miss the forest for the trees with some large portion of its actual audience?

If you buy that in some sense all debates are bravery debates, then audience can matter a lot, and perhaps this point addresses central tendencies in "global English internet discourse" while failing to address central tendencies on LW?

There is a sense in which nearly all highly general statements are technically false, because they admit of at least some counterexamples.

However, any such statement might still be useful in a structured argument of very high quality, perhaps as an illustration of a troubling central tendency, or a "lemma" in a multi-part probabilistic argument.

It might even be the case that the MEDIAN EXAMPLE of a real tendency is highly imperfect without that "demolishing" the point.

Suppose, for example, that someone has focused a lot on higher-level structural truths whose evidential basis was, say, a thorough exploration of many meta-analyses about a given subject.

"Mel the meta-meta-analyst" might be communicating summary claims that are important and generally true that "Sophia the specificity demander" might rhetorically "win against" in a way that does not structurally correspond to the central tendencies of the actual world.

Mel might know things about medical practice without ever having treated a patient or even talked to a single doctor or nurse. Mel might understand something about how classrooms work without being a teacher or ever having visited a classroom. Mel might know things about the behavior of congressional representatives without ever working as a congressional staffer. If forced to confabulate an exemplar patient, or exemplar classroom, or an exemplar political representative, the details might be easy to challenge even as a claim about the central tendencies is correct.

Naively, I would think that for Mel to be justified in his claims (even WITHOUT having exemplars ready-to-hand during debate) Mel might need to be moderately scrupulous in his collection of meta-analytic data, and know enough about statistics to include and exclude studies or meta-analyses in appropriately weighted ways. Perhaps he would also need to be good at assessing the character of authors and scientists to be able to predict which ones are outright faking their data, or using incredibly sloppy data collection?

The core point here is that Sophia might not be led to the truth SIMPLY by demanding specificity without regard to the nature of the claims of her interlocutor.

If Sophia thinks this tactic gives her "the POWER to DEMOLISH arguments" in full generality, that might not actually be true, and it might even lower the quality of her beliefs over time, especially if she mostly converses with smart people (worth learning from, in their area(s) of expertise) rather than idiots (nearly all of whose claims might perhaps be worth demolishing on average).

It is totally possible that some people are just confused and wrong (as, indeed, many people seem to be, on many topics... which is OK because ignorance is the default and there is more information in the world now than any human can integrate within a lifetime of study). In that case, demanding specificity to demolish confused and wrong arguments might genuinely and helpfully debug many low quality abstract claims.

However, I think there's a lot to be said for first asking someone about the positive rigorous basis of any new claim, to see if the person who brought it up can articulate a constructive epistemic strategy.

If they have a constructive epistemic strategy that doesn't rely on personal knowledge of specific details, that would be reasonable, because I think such things ARE possible.

A culturally local example might be Hanson's general claim that medical insurance coverage does not appear to "cause health" on average. No single vivid patient generates this result. Vivid stories do exist here, but they don't adequately justify the broader claim. Rather, the substantiation arises from tallying many outcomes in a variety of circumstances and empirically noticing relations between circumstances and tallies.

If I was asked to offer a single specific positive example of "general arguments being worthwhile", I might nominate Visible Learning by John Hattie as a fascinating and extremely abstract synthesis of >1M students participating in >50k studies of K-12 learning. In this case a core claim of the book is that mindless teaching happens sometimes, nearly all mindful attempts to improve things work a bit, and very rarely a large number of things "go right" and unusually large effect sizes can be observed. I've never seen one of these ideal classrooms, I think, but the arguments that they have a collection of general characteristics seem solid so far.

Maybe I'll change my mind by the end? I'm still in progress on this particular book, which makes it sort of "top of mind" for me, but the lack of specifics in the book presents a readability challenge rather than an epistemic challenge ;-P

The book Made to Stick, by contrast, uses Stories that are Simple, Surprising, Emotional, Concrete, and Credible to argue that the best way to convince people of something is to tell them Stories that are Simple, Surprising, Emotional, Concrete, and Credible.

As near as I can tell, Made to Stick describes how to convince people of things whether or not the thing is true, which means that if these techniques work (and can in fact cause many false ideas to spread through speech communities with low epistemic hygiene, which the book arguably did not really "establish") then a useful epistemic heuristic might be to give a small evidential PENALTY to all claims illustrated merely via vivid example.

I guess one thing I would like to say here at the end is that I mean this comment in a positive spirit. I upvoted this article and the previous one, and if the rest of the sequence has similar quality I will upvote those as well.

I'm generally IN FAVOR of writing imperfect things and then unpacking and discussing them. This is a better-than-median post in my opinion, and deserved discussion, rather than deserving to be ignored :-)

↑ comment by Liron · 2019-09-02T19:11:17.937Z

> A culturally local example might be Hanson's general claim that medical insurance coverage does not appear to "cause health" on average. No single vivid patient generates this result. Vivid stories do exist here, but they don't adequately justify the broader claim. Rather, the substantiation arises from tallying many outcomes in a variety of circumstances and empirically noticing relations between circumstances and tallies.

I don't see how this is an example of a time when my specificity test shouldn't be used, because Robin Hanson would simply pass my specificity test. It's safe to say that Robin has thought through at least one specific example of what the claim that "medical insurance doesn't cause health" means. The sample dialogue between Robin and me would look like this:

Robin: Medical insurance coverage doesn't cause health on average!

Liron: What's a specific example (real or hypothetical) of someone who seeks medical insurance coverage because they think they're improving their health outcome, but who actually would have had the same health outcome without insurance?

Robin: A 50-year-old man opts for a job that has insurance benefits over one that doesn't, because he believes it will improve his expected health outcome. For the next 10 years, he's healthy, so the insurance didn't improve his outcome there, but he wouldn't expect it to. Then at age 60, he has a heart attack and goes to the hospital and gets double bypass surgery. But in the hypothetical where this same person hadn't had health insurance, he would have had that same bypass surgery anyway, and then paid off the cost over the next few years. And then later he dies of old age. The data shows that this example I made up is a representative one: definitely not in every sense, but just in the sense that there's no delta between health outcomes in counterfactual world-states where people either have or don't have health insurance.

Liron: Okay, I guess your claim is coherent. I have no idea if it's true or false, but I'm ready to start hearing your evidence, now that you've cleared this low bar of having a coherent claim.

Robin: It was such an easy exercise, though; it seemed like a pointless formality.

Liron: Right. The exercise was designed to be the kind of basic roadblock that's a pointless formality for Robin Hanson while being surprisingly difficult for the majority of humans.

> Mel might know things about medical practice without ever having treated a patient or even talked to a single doctor or nurse. Mel might understand something about how classrooms work without being a teacher or ever having visited a classroom. Mel might know things about the behavior of congressional representatives without ever working as a congressional staffer. If forced to confabulate an exemplar patient, or exemplar classroom, or an exemplar political representative, the details might be easy to challenge even as a claim about the central tendencies is correct.

Since I don't think the Robin Hanson example was enough to change my mind, is there another example of a general claim Mel might make where we can agree he's fundamentally right to make that claim but shouldn't expect him to furnish a specific example of it?

If he's been reading meta-analyses, couldn't he just follow their references to analyses that contain specific object-level experiments that were done, and furnish those as his examples?

I wouldn't insist that he has an example "ready to hand during debate"; it's okay if he says "if you want an example, here's where we can pull one up". I agree we should be careful to make sure that the net effect of asking for examples is to raise the level of discourse, but I don't think it's a hard problem.

I appreciate your high-quality comment. What I've done in this comment, obviously, is activated my specificity powers. I won't claim that I've demolished your argument, I just hope readers will agree that my approach was fair, respectful, productive, and not unnecessarily "adversarial"!

↑ comment by JenniferRM · 2019-09-03T01:01:07.927Z

> I appreciate your high-quality comment.

I likewise appreciate your prompt and generous response :-)

I think I see how you imagine a hypothetical example of "no net health from insurance" might work as a filter that "passes" Hanson's claim.

In this case, I don't think your example works super well, and it might almost cause more problems than not?

Differences of detail in different people's examples might SUBTRACT from attention to key facts relevant to a larger claim because people might propose different examples that hint at different larger causal models.

Like, if I was going to give the strongest possible hypothetical example to illustrate the basic idea of "no net health from insurance", I'd offer something like:

EXAMPLE: Alice has some minor symptoms of something that would clear up by itself and because she has health insurance she visits a doctor. ("Doctor visits" is one of the few things that health insurance strongly and reliably causes in many people.) While there she gets a nosocomial infection that is antibiotic resistant, lowering her life expectancy. This is more common than many people think. Done.

This example is quite different from your example. In your example medical treatment is good, and the key difference is basically just "pre-pay" vs "post-pay".

(Also, neither of our examples covers the issue where many innovative medical treatments often lower mortality due to the disease they aim at while, somehow (accidentally?) RAISING all cause mortality...)

In my mind, the substantive big-picture claim rests ultimately on the sum of many positive and negative factors, each of which arguably deserves "an example of its own". (One thing that raises my confidence quite a lot is hearing the person's own best argument AGAINST their own conclusion, and then hearing an adequate argument against that critique. I trust the winning mind quite a bit more when someone is of two minds.)

No example is going to JUSTIFIABLY convince me, and the LACK of an example for one or all of the important factors wouldn't prevent me from being justifiably convinced by other methods that don't route through "specific examples".

ALSO: For that matter, I DO NOT ACTUALLY KNOW if Robin Hanson is actually right about medical insurance's net results, in the past or now. I vaguely suspect that he is right, but I'm not strongly confident. Real answers might require studies that haven't been performed? In the meantime I have insurance because "what if I get sick?!" and because "don't be a weirdo".

---

I think my key crux here has something to do with the rhetorical standards and conversational norms that "should" apply to various conversations between different kinds of people.

I assumed that having examples "ready-to-hand" (or offered early in a written argument) was something that you would actually be strongly in favor of (and below I'll offer a steelman in defense of), but then you said:

> I wouldn't insist that he has an example "ready to hand during debate"; it's okay if he says "if you want an example, here's where we can pull one up".

So for me it would ALSO BE OK to say "If you want an example I'm sorry. I can't think of one right now. As a rule, I don't think in terms of fictional stories. I put effort into thinking in terms of causal models and measurables and authors with axes to grind and bridging theories and studies that rule out causal models and what observations I'd expect from differently weighed ensembles of the models not yet ruled out... Maybe I can explain more of my current working causal model and tell you some authors that care about it, and you can look up their studies and try to find one from which you can invent stories if that helps you?"

If someone said that TO ME I would experience it as a sort of a rhetorical "fuck you"... but WHAT a fuck you! {/me kisses her fingers} Then I would pump them for author recommendations!

My personal goal is often just to find out how the OTHER person feels they do their best thinking, run that process under emulation if I can, and then try to ask good questions from inside their frames. If they have lots of examples there's a certain virtue to that... but I can think of other good signs of systematically productive thought.

---

If I was going to run "example based discussion" under emulation to try to help you understand my position, I would offer the example of John Hattie's "Visible Learning".

It is literally a meta-meta-analysis of education.

It spends the first two chapters just setting up the methodology and responding preemptively to quibbles that will predictably come when motivated thinkers (like classroom teachers whom the theory says are teaching suboptimally) try to hear what Hattie has to say.

Chapter 3 finally lays out an abstract architecture of principles for good teaching, by talking about six relevant factors and connecting them all (very very abstractly and loosely) to: tight OODA loops (though not under that name) and Popperian epistemology (explicitly).

I'll fully grant that it can take me an hour to read 5 pages of this book, and I'm stopping a lot and trying to imagine what Hattie might be saying at each step. The key point for me is that he's not filling the book with examples, but with abstract empirically authoritative statistical claims about a complex and multi-faceted domain. It doesn't feel like bullshit, it feels like extremely condensed wisdom.

Because of academic citation norms, in some sense his claims ultimately ground out in studies that are arguably "nothing BUT examples"? He's trying to condense >800 meta-analyses that cover >50k actual studies that cover >1M observed children.

I could imagine you arguing that this proves how useful examples are, because his book is based on over a million examples, but he hasn't talked about an example ONCE so far. He talks about methods and subjectively observed tendencies in meta-analyses mostly, trying to prepare the reader with a schema in which later results can land.

Plausibly, anyone could follow Hattie's citations back to an interesting meta-analysis, look at its references, track back to a likely study, look in its methods section, find its questionnaires, track back to the methods paper validating the questionnaire, then look in the supplementary materials to get specific questionnaire items... Then someone could create an imaginary kid in their head who answered that questionnaire some way (like in the study) and then imagine them getting the outcome (like in the study) and use that scenario as "the example"?

I'm not doing that as I read the book. I trust that I could do the above, "because scholarship" but I'm not doing it. When I ask myself why, it seems like it is because it would make reading the (valuable seeming) book EVEN SLOWER?

---

I keep looping back in my mind to the idea that a lot of this strongly depends on which people are talking and what kinds of communication norms are even relevant, and I'm trying to find a place where I think I strongly agree with "looking for examples"...

It makes sense to me that, if I were in the role of an angel investor, and someone wanted $200k from me, and offered 10% of their 2-month-old garage/hobby project, then asking for examples of various of their business claims would be a good way to move forward.

They might not be good at causal modeling, or good at stats, or good at scholarship, or super verbal, but if they have a "native faculty" for building stuff, and budgeting, and building things that are actually useful to actual people... then probably the KEY capacities would be detectable as a head full of examples to various key questions that could be strongly dispositive.

Like... a head full of enough good examples could be sufficient for a basically neurotypical person to build a valuable company, especially if (1) they were examples that addressed key tactical/strategic questions, and (2) no intervening bad examples were ALSO in their head?

(Like if they had terrible examples of startup governance running around in their heads, these might eventually interfere with important parts of being a functional founder down the road. Detecting the inability to give bad examples seems naively hard to me...)

As an investor, I'd be VERY interested in "pre-loaded ready-to-hand theories" that seem likely to actually work. Examples are kinda like "pre-loaded ready-to-hand theories"? Possession of these theories in this form would be a good sign in terms of the founder's readiness to execute very fast, which is a virtue in startups.

A LACK of ready-to-hand examples would suggest that even a good and feasible idea whose premises were "merely scientifically true" might not happen very fast if an angel funded it and the founder had to instantly start executing on it full time.

I would not be offended if you want to tap out. I feel like we haven't found a crux yet. I think examples and specificity are interesting and useful and important, but I merely have intuitions about why, roughly like "duh, of course you need data to train a model", not any high church formal theory with a fancy name that I can link to in wikipedia :-P

↑ comment by Liron · 2019-09-03T01:50:39.457Z

I agree that this section of your comment is the most cruxy:

> So for me it would ALSO BE OK to say "If you want an example I'm sorry. I can't think of one right now. As a rule, I don't think in terms of fictional stories. I put effort into thinking in terms of causal models and measurables and authors with axes to grind and bridging theories and studies that rule out causal models and what observations I'd expect from differently weighed ensembles of the models not yet ruled out... Maybe I can explain more of my current working causal model and tell you some authors that care about it, and you can look up their studies and try to find one from which you can invent stories if that helps you?"

Yes. Then I would say, "Ok, I've never encountered a coherent generalization for which I couldn't easily generate an example, so go ahead and tell me your causal model and I'll probably cook up an obvious example to satisfy myself in the first minute of your explanation."

Anyone talking about having a "causal model" is probably beyond the level that my specificity trick is going to demolish. The specificity trick I focus on in this post is for demolishing the coherence of the claims of the average untrained arguer, or occasionally catching oneself at thinking overly vaguely. That's it.

↑ comment by JenniferRM · 2019-09-03T02:44:58.049Z

> "...go ahead and tell me your causal model and I'll probably cook up an obvious example to satisfy myself in the first minute of your explanation."

I think maybe we agree... verbosely... with different emphasis? :-)

At least I think we could communicate reasonably well. I feel like the danger, if any, would arise from playing example ping pong and having the serious disagreements arise from how we "cook (instantiate?)" examples into models, and "uncook (generalize?)" models into examples.

When people just say what their model "actually is", I really like it.

When people only point to instances I feel like the instances often under-determine the hypothetical underlying idea and leave me still confused as to how to generate novel instances for myself that they would assent to as predictions consistent with the idea that they "meant to mean" with the instances.

Maybe: intensive theories > extensive theories?

↑ comment by Slider · 2019-09-02T22:51:13.246Z

I feel like the same scrutiny standard is not being applied. Doesn't a guy with health insurance check his health more often, catching diseases earlier? Doesn't uncertainty cause stress and workload on the circulatory system? Why are these not holes that prevent it from being coherent? Why can't Steve claim he has a friend that can be called that can exemplify exploitation?

If the bar is in fact low, Steve passed it upon positing McDonald's as a relevant alternative, and the argument went on to actually argue the point. Alternatively, it takes a judgment call to consider Robin's specification coherent, and a reasonable arguer could try to hold it to be incoherent.

I feel like this is a case where epistemic status breaks symmetry. When a white-coat doctor and a witch doctor make the same claims, the witch doctor is required to show more evidence to reach the same credibility level. If argument truly screens off authority, the problems need to be in the argument. Steve is required to have the specification ready on hand during debate.

↑ comment by Liron · 2019-09-02T23:03:48.839Z

The difference is that we all have a much clearer picture of what Robin Hanson's claim means than what Steve means, so Robin's claim is sufficiently coherent and Steve's isn't. I agree there's a gray area on what is "sufficiently coherent", but I think we can agree there's a significant difference on this coherence spectrum between Steve's claim and Robin's claim.

For example, any listener can reasonably infer from Robin's claim that someone on medical insurance who gets cancer shouldn't be expected to survive with a higher probability than someone without medical insurance. But reasonable listeners can have differing guesses about whether or not Steve's claim would also describe a world where Uber institutes a $15/hr flat hourly wage for all drivers.

> Why can't Steve claim he has a friend that can be called that can exemplify exploitation?

Sure, then I'd just try to understand what specific property of the friend counts as exploitation. In Robin's case, I already have good reasonable guesses about the operational definitions of "health". Yes, I can try to play "gotcha" and argue that Robin hasn't nailed down his claim, and in some cases that might actually be the right thing to do - it's up to me to determine what's sufficiently coherent for my own understanding, and nail down the claim to that standard, before moving on to arguing about the truth-value of the claim.

> If the bar is in fact low, Steve passed it upon positing McDonald's as a relevant alternative, and the argument went on to actually argue the point

Ah, if Steve really meant "Yes Uber screws the employee out of $1/hr compared to McDonald's", then he would have passed the specificity bar. But the specific Steve I portray in the dialogue didn't pass the bar because that's not really the point he felt he wanted to make. The Steve that I portray found himself confused because his claim really was insufficiently specific, and therefore really was demolished, unexpectedly to him.

↑ comment by Slider · 2019-09-03T00:30:53.675Z

Well, I am more familiar with settings where I have a duty to understand the world rather than the world having a duty to explain itself to me. I also hold that making unfamiliar things meet higher standards creates epistemic xenophobia. I would hold it important that one doesn't assign falsehood to a claim one doesn't understand, although it is also true that assigning truth to a claim one doesn't understand is dangerous to a comparable degree.

My go-to assumption would be that Steve means something different by the word and might be running some sort of moon logic in his head. Rather than declaring the "moon proof" invalid, it's more important that the translation between moon logic and my planet logic interfaces without confusion. Instead of misusing a word or concept I do know, he is using a word or concept I do not know.

"Coherent" usually points to a concept where a sentence is judged on its home logic's terms. But as used here, it's clearly in the eye of the beholder. So it's less "makes objective sense" and more "makes sense to whom?". The shared reality you create in a discussion or debate would be the arbiter, but if the argument relies too much on those mechanics, it doesn't generalise to contexts outside of that.

↑ comment by Pongo · 2020-05-03T04:33:34.707Z

Related: it's tempting to interpret Ignorance, a skilled practice as pushing for the epistemological stance that specificity should overwhelm Mel the meta meta-analyst.

↑ comment by Liron · 2019-09-02T19:41:19.806Z

> There is a sense in which nearly all highly general statements are technically false, because they admit of at least some counter examples.

I think this is a common misunderstanding that people are having about what I'm saying. I'm not saying to hunt for a counterexample that demolishes a claim. I'm saying to ask the person making the claim for a single specific example that's consistent with the general claim.

Imagine that a general claim has 900 examples and 100 counterexamples. Then I'm just asking to see one of the 900 examples :)

comment by Liron · 2021-01-12T02:54:56.868Z

I <3 Specificity

For years, I've been aware of myself "activating my specificity powers" multiple times per day, but it's kind of a lonely power to have. "I'm going to swivel my brain around and ride it in the general→specific direction. Care to join me?" is not something you can say in most group settings. It's hard to explain to people that I'm not just asking them to be specific right now, in this one context. I wish I could make them see that specificity is just this massively under-appreciated cross-domain power. That's why I wanted this sequence to exist.

I gratuitously violated a bunch of important LW norms

- As Kaj insightfully observed last year, choosing Uber as the original post's object-level subject made it a political mind-killer.

- On top of that, the original post's only role model of a specificity-empowered rationalist was this repulsive "Liron" character who visibly got off on raising his own status by demolishing people's claims.

Many commenters took me to task on the two issues above, as well as raising other valid issues, like whether the post implies that specificity is always the right power to activate in every situation.

The voting for this post was probably a rare combination: many upvotes, many downvotes, and presumably many conflicted non-voters who liked the core lesson but didn't want to upvote the norm violations. I'd love to go back in time and launch this again without the double norm violation self-own.

I'm revising it

Today I rewrote a big chunk of my dialogue with Steve, with the goal of making my character a better role model of a LessWrong-style rationalist, and just being overall more clearly explained. For example, in the revised version I talk about how asking Steve to clarify his specific point isn't my sneaky fully-general argument trick to prove that Steve's wrong and I'm right, but rather, it's taking the first step on the road to Double Crux.

I also changed Steve's claim to be about a fictional company called Acme, instead of talking about the politically-charged Uber.

I think it's worth sharing

Since writing this last year, I've received a dozen or so messages from people thanking me and remarking that they think about it surprisingly often in their daily lives. I'm proud to help teach the world about specificity on behalf of the LW community that taught it to me, and I'm happy to revise this further to make it something we're proud of.

comment by MondSemmel · 2021-01-10T22:39:41.684Z

The notion of specificity may be useful, but to me its presentation in terms of tone (beginning with the title "The Power to Demolish Bad Arguments") and examples seemed rather antithetical to the Less Wrong philosophy of truth-seeking.

For instance, I read the "Uber exploits its drivers" example discussion as follows: the author already disagrees with the claim as their bottom line, then tries to win the discussion by picking their counterpart's arguments apart, all the while insulting this fictitious person with asides like "By sloshing around his mental ball pit and flinging smart-sounding assertions about “capitalism” and “exploitation”, he just might win over a neutral audience of our peers.".

In contrast to e.g. Double Crux, that seems like an unproductive and misguided pursuit - reversed stupidity is not intelligence, and hence even if we "demolish" our counterpart's supposedly bad arguments, at best we discover that they could not shift our priors.

And more generally, the essay gave me a yucky sense of "rationalists try to prove their superiority by creating strawmen and then beating them in arguments", sneer culture, etc. It doesn't help that some of its central examples involve hot-button issues on which many readers will have strong and yet divergent opinions, which imo makes them rather unsuited as examples for teaching most rationality techniques or concepts.

↑ comment by Liron · 2021-01-11T01:40:56.362Z

Meta-level reply

> The essay gave me a yucky sense of "rationalists try to prove their superiority by creating strawmen and then beating them in arguments", sneer culture, etc. It doesn't help that some of its central examples involve hot-button issues on which many readers will have strong and yet divergent opinions, which imo makes them rather unsuited as examples for teaching most rationality techniques or concepts.

Yeah, I take your point that the post's tone and political-ish topic choice undermine the ability of readers to absorb its lessons about the power of specificity. This is a clear message I've gotten from many commenters, whether explicitly or implicitly. I shall edit the post.

Update: I've edited the post to remove a lot of parts that I recognized as gratuitous yuckiness.

Object-level reply

In the meantime, I still think it's worth pointing out where I think you are, in fact, analyzing the content wrong and not absorbing its lessons :)

> For instance, I read the "Uber exploits its drivers" example discussion as follows: the author already disagrees with the claim as their bottom line, then tries to win the discussion by picking their counterpart's arguments apart

My dialogue character has various positive-affect a-priori beliefs about Uber, but having an a-priori belief state isn't the same thing as having an immutable bottom line. If Steve had put forth a coherent claim, and a shred of support for that claim, then the argument would have left me with a modified a-posteriori belief state.

> In contrast to e.g. Double Crux, that seems like an unproductive and misguided pursuit

My character is making a good-faith attempt at Double Crux. It's just impossible for me to ascertain Steve's claim-underlying crux until I first ascertain Steve's claim.

> even if we "demolish" our counterpart's supposedly bad arguments, at best we discover that they could not shift our priors.

You seem to be objecting that selling "the power to demolish bad arguments" means that I'm selling a Fully General Counterargument, but I'm not. The way this dialogue goes isn't representative of every possible dialogue where the power of specificity is applied. If Steve's claim were coherent, then asking him to be specific would end up helping me change my own mind faster and demolish my own a-priori beliefs.

> reversed stupidity is not intelligence

It doesn't seem relevant to mention this. In the dialogue, there's no instance of me creating or modifying my beliefs about Uber by reversing anything.

> all the while insulting this fictitious person with asides like "By sloshing around his mental ball pit and flinging smart-sounding assertions about “capitalism” and “exploitation”, he just might win over a neutral audience of our peers.".

I'm making an example out of Steve because I want to teach the reader about an important and widely-applicable observation about so-called "intellectual discussions": that participants often win over a crowd by making smart-sounding general assertions whose corresponding set of possible specific interpretations is the empty set.

↑ comment by Fritz Iversen (fritz-iversen) · 2021-11-27T16:51:40.089Z

I think you are on the right track.

The problem is, "specificity" has to be handled in a really specific way, and the intention has to be the desire to get from the realm of unclear arguments to clear insight.

If you see discussions as a chess game, you're already sending your brain in the wrong direction, to the goal of "winning" the conversation, which is something fundamentally different than the goal of clarity.

Just as specificity remains abstract here and is therefore misunderstood, one would have to ask: What exactly is specificity supposed to be?

Linguistics would help here. For the problem that is negotiated grows out of the deficiencies of language, namely that language is contaminated with ambiguities. Linguistically specific is when numbers and entities (names) come in.

With "Acme" there is already an entity - otherwise everything, even the so-called specific argument, remains highly abstract. Therefore, the specificity trick in the dialogs remains just that - a manipulative trick. And tricks don't lead to clarity.

Specificity would be possible here only by injecting numbers: "How many dollars does Acme extract in surplus value per hour worked by their workers?"

After that, the exploitation would have been specifically quantified, and one could talk about whether Acme is brutally or only somewhat unjustly exploiting the workers' bad situation, or whether the wages are fair.

The specific economics of Acme would, of course, be even more complicated, insofar as one would have to ask whether much of the added value is already being absorbed by overpaid senior executives.

At the end of any specific discussion, however, the panelists must ask themselves what they want to be: fair or unfair? Those who want to gain clarity about this have to answer it for themselves.

Then briefly on Uber: Uber is a bad business idea. It's bad because it can only become profitable if Uber dominates its markets to the point that it has no competition anymore. Its costs are too high. A simple service is burdened with huge overhead costs (this would have to be researched specifically, I know), and these overhead costs are then imposed partly on the service users when they are in desperate need, and partly on the service providers.

Even with Uber, you can debate back and forth the specific figures for a long time. In the end, users have to ask themselves: Do I want to use a business model that is so bad that it can only exist as a quasi-monopolist?

I don't do that because I don't want to.

If someone like Peter Thiel, for example, is such a bad businessman that he can only survive in non-competitive situations, then he might say: Zero competition is my way of succeeding, since I can't make money as soon as there is some competition. Fairness doesn't matter to me.

However, specificity is healing. That's right. When one talks, one can never talk specifically enough. However, many ideological debates suffer not from too many abstract concepts, but often from false specificities. Specificities, after all, are always popular for setting false frames. In the end, clarity is only achieved by those who really want clarity, and not simply by those who want to win.

comment by Zvi · 2021-01-11T21:48:00.958Z

Echoing previous reviews (it's weird to me the site still suggested this to review anyway, seems like it was covered already?) I would strongly advise against including this. While it has a useful central point - that specificity is important and you should look for and request it - I agree with other reviewers that the style here is very much the set of things LW shouldn't be about, and LWers shouldn't be about, but that others think LW-style people are about, and it's structuring all these discussions as if arguments are soldiers and the goal is to win while being snarky about it.

Frankly, I found the whole thing annoying and boring, and wouldn't have finished it if I wasn't reviewing.

I don't think changing the central example would overcome any of my core objections to the post, although it would likely improve it.

There's a version of this post that is much shorter, makes the central point quickly, and is something I would link to occasionally, but this very much isn't it.

↑ comment by Liron · 2021-01-11T22:26:03.955Z

Zvi, I respect your opinion a lot and I've come to accept that the tone disqualifies the original version from being a good representation of LW. I'm working on a revision now.

Update: I've edited the post to remove a lot of parts that I recognized as gratuitous yuckiness.

comment by clone of saturn · 2020-12-18T09:36:27.258Z

I really dislike the central example used in this post, for reasons explained in this article. I hope it isn't included in the next LW book series without changing to a better example.

↑ comment by frontier64 · 2020-12-26T00:33:44.905Z

This comment leads me to believe that you misunderstand the point of the example. Demonstrating that an arguer doesn't have a coherent understanding of their claim doesn't mean that the claim itself is incoherent. It just means that if you argue against that particular person on that particular claim nobody is likely to gain anything out of it[1]. The validity of the example does not correlate to whether "Uber exploits its drivers!" or not.

You agree with Steve in the example and because the example shows Steve being unable to defend his point you don't like it. You should strive to understand however that Steve's incoherent defense of his claim has nothing to do with your very coherent reasons for believing the same claim.

I think that the example is strengthened if Steve's central claim is correct despite the fact that he can't defend it coherently.

[1] At least, that's my take. I haven't read the rest of this sequence yet, so I don't know if Liron explains what you gain out of discovering that somebody's argument is incoherent.

↑ comment by Ericf · 2020-12-26T02:08:01.124Z

This looks like a case where hanging a lampshade would be useful. A footnote on the original claim by Steve saying: "The Steve character here does not have the eloquence to express this argument, but <source> and <source> present the case that Uber does, in fact, exploit its workers"

↑ comment by frontier64 · 2020-12-26T04:32:10.956Z

Making an unnecessary and possibly false object-level claim would only hurt the post. It's irrelevant to Liron's discussion whether Steve's claim is right or wrong, and getting sidetracked by its potential truthfulness would muddy the point.

↑ comment by Ericf · 2020-12-26T16:42:20.270Z

Yeah, that's why I didn't advocate making an argument, just acknowledging that such arguments exist, and linking to a couple of reasonable ones.

If an author chooses to use a real-world example, and uses a straw man as one side, that is automatically going to generate bad feelings in people who know better the better arguments. By hanging a lampshade on the straw man, the author acknowledges those feelings, explicitly sets them aside, and the discussion of debate technique (or other meta-level review) can proceed from there without getting sidetracked.

And yes, the reader could make that inference, but

- Don't make the reader do more work than necessary.

- It may be epistemically damaging to expose people to bad arguments: https://en.m.wikipedia.org/wiki/Inoculation_theory

- Not all readers implicitly trust the storyteller, and by avoiding plot holes, the author can avoid knocking the narrative train onto a sidetrack. https://www.shamusyoung.com/twentysidedtale/?p=17692

↑ comment by frontier64 · 2020-12-26T22:11:43.413Z

I don't understand your usage of the term "hanging a lampshade" in this context. I don't think either Steve's or Liron's behavior in the hypothetical is unrealistic or unreasonable. I have seen similar conversations before. Liron even stated that Steve was basically him from some time ago. I thought hanging a lampshade is when the fictional scenario is unrealistic or overly coincidental and the author wants to alleviate reader ire by letting them know that he thinks the situation is unlikely as well. Since the situation here isn't unrealistic, I don't see the relevance of hanging a lampshade.

If the article should be amended to include pro-"Uber exploits drivers" arguments it should also include contra arguments to maintain parity. Otherwise we have the exact same scenario but in reverse, as including only pro-"Uber exploits drivers" arguments will "automatically [...] generate bad feelings in people who know better the better arguments". This is why getting into the object-level accuracy of Steve's claim has negative value. Trying to do so will bloat the article and muddy the waters.

↑ comment by Ericf · 2020-12-27T05:21:09.177Z

Steve's argument is explicitly bad, but the original post doesn't say that better arguments exist. And "Uber exploits workers?" isn't a settled question like, say, "vaccines cause autism?" or "new species develop from existing species?", so the author shouldn't presume that the audience is totally familiar with the relative merits of both (or either) side(s) and can recognize that Steve is making a relatively poor argument for his point. And the overall structure pattern-matches to the straw man fallacy. Perhaps that's the only thing that needs to be lampshaded? What if, instead of "Steve", the character were called "Scarecrow" (and the other one "Dorothy", for narrative consistency/humor)?

↑ comment by Ben Pace (Benito) · 2020-12-19T05:34:16.980Z · LW(p) · GW(p)

Alas, paywall. Summary?

↑ comment by habryka (habryka4) · 2020-12-19T06:07:41.072Z · LW(p) · GW(p)

It worked straightforwardly in an incognito window for me.

Only section I could find that seemed relevant to the example is this:

Uber exploited artificial market power to subvert normal market dynamics. Its extensive driver recruitment programs used gross dishonesty to deceive drivers, including ongoing misrepresentations of gross pay (prior to deducting vehicle costs) as net pay, and at one point the company claimed that Uber drivers in New York averaged $90,000 in annual earnings. Uber’s shift of the full vehicle burden onto drivers created additional artificial power. Traditional cab drivers could easily move to other jobs if they were unhappy, but Uber’s drivers were locked into vehicle financial obligations that made it much more difficult to leave once they discovered how poor actual pay and conditions were.

Overall, I do think the article makes some decent points, but I am not particularly compelled by it. The document seems to try to argue that Uber cannot possibly become profitable. I would be happy to take a bet that Uber will become profitable within the next 5 years.

It also makes some weak arguments that drivers are worse off working for Uber, but doesn't really back them up beyond saying that "Uber pushed them down to minimum wage". It also seems to completely ignore the value of the flexibility of working for Uber, which (from talking to many drivers over the years, as well as friends who temporarily drove for Uber) is one of the biggest value-adds for drivers.

comment by Gurkenglas · 2019-09-02T13:47:10.018Z · LW(p) · GW(p)

By telling Steve to be specific, you are trying to trick him into adopting an incoherent position. You should be trying to argue against your opponent at his strongest, in this case the general case that he has thought the most about. If you lay out your strategy before going specific, he can choose an example that is more resilient to it. In your example, if Uber didn't exist, that job might have been a taxi-driver job instead, which pays more, because there's less rent-seeking stacked against you.

↑ comment by Liron · 2019-09-02T13:53:32.903Z · LW(p) · GW(p)

It's not a trick, he's allowed to backtrack and amend his choice of specific example and have a re-do of my response. In this dialogue, I chose the underlying reality to be that Steve's "point" really is incoherent, because a Monte Carlo simulation of real-world arguers has a high probability of landing on this scenario.

↑ comment by Gurkenglas · 2019-09-02T14:26:10.468Z · LW(p) · GW(p)

You saw coming that his position would be temporarily incoherent, that's why you went there. I expect Steve to be aware of this at some level, and update on how hostile the debate is. Minimize the amount of times you have to prove him wrong.

↑ comment by Slider · 2019-09-02T16:09:52.507Z · LW(p) · GW(p)

This relies very heavily on the assumption that, in an adversarial context, a free pick should be an optimal pick. The other arguer demonstrated that he didn't even realise that he could pick, so it is reasonable to assume he doesn't know the pick should be optimal.

Doing re-dos without preannouncing them gives yourself free mulligans. I think he would have been safe in saying that he did not make new claims, denying the mulligan. There could have been 10 facets of the exploitation in the scenario, and fixing one of them would still leave 9 open. You can't say that a forest doesn't exist just because it is not any of the individual trees.

The new claim is also not contradictory with the old story. It could also be taken as a further specification of it.

↑ comment by Liron · 2019-09-02T16:45:01.803Z · LW(p) · GW(p)

Ok but what if he truly doesn't have a point? Because as the author of the fictional situation, I'm saying that's the premise. Under these circumstances, isn't it right and proper to play the kind of "adversarial game" with him that he's likely to "lose"?

↑ comment by assignvaluetothisb · 2019-09-06T12:00:06.667Z · LW(p) · GW(p)

It is pretty easy to 'win' any argument that the other side has to take a strong stance behind.

Go ahead, ask something where you take a strong stance.

I was going to say Uber has definitely done 'blank', but I'm not very Steve, and saying that Uber isn't as 'fair' as Amazon or Walmart is a position that is easy to agree or disagree with. Whoever makes out the best is going to agree with this adversarial gain.

↑ comment by Slider · 2019-09-02T20:26:08.177Z · LW(p) · GW(p)

Relying on your opponent making a mistake is not a super reliable strategy. If someone reads your story and uses it as inspiration to start an argument, they might end up in a situation where the actual person doesn't make that mistake. That could feel a lot more like "shooting yourself in the face in an argument" than "demolishing an argument".

Argument methods that work because of misdirection arguably don't serve truth very well or work very indirectly (being deceptive makes it rewarding for the other to be keen).

Most people have reasons for their stances. Their point might be lousy or unimportant, but usually one exists. If he truly doesn't have a point, then there is no specific story to tell. As the author, you can have him either have a story or mean nothing by his words, but not both.

↑ comment by Citrus · 2019-09-02T18:34:40.466Z · LW(p) · GW(p)

I guess the point of humanity is to achieve as much prosperity as possible. Adversarial techniques help when competition improves our chances -- helpful in physical activities, when groups compete, in markets generally. But in a conversation with someone your best bet to help humanity is to help them come around to your superior understanding, and adversarial conversation won't achieve that.

The ideal strategy looks something like the best path along which you can lead them, where you can demonstrate to them they are wrong and they will believe you, which usually involves you demonstrating a very clear and comprehensive understanding, citing information, but doing it all in a way that seems collaborative.

↑ comment by Liron · 2019-09-02T18:18:40.367Z · LW(p) · GW(p)

Asking for specific examples is not a rhetorical device, it's a tool for clear thinking. What I'm illustrating with Steve is a productive approach that raises the standard of discourse. IMO.

I've personally been in the Steve role many times: I used to hang out a lot with Anna Salamon when I was still new to LessWrong-style rationality, and I distinctly remember how I would make statements that Anna would then basically just ask me to clarify, and in attempting to do so I would realize I probably don't have a coherent point, and this is what talking to a smarter person than me feels like. She was being empathetic and considerate to me like she always is, not adversarial at all, but it's still accurate to say she demolished my arguments.

↑ comment by Kaj_Sotala · 2019-09-16T05:59:47.690Z · LW(p) · GW(p)

I believe that the thing which is making many of your commenters misinterpret the post is that you chose a political example [LW · GW] for the dialogue. That gives people the (reasonable, as this is a common move) suspicion that you have a motive of attacking your political enemy while disguising it as rationality advice.

Even if they don't think that, if they have any sympathies towards the side that you seem to be attacking, they will still feel it necessary to defend that side. To do otherwise would risk granting the implicit notion that the "Uber exploits its drivers" side would have no coherent arguments in general, regardless of whether or not you meant to send that message.

You mentioned that you have personal examples where Anna pointed out to you that your position was incoherent. Something like that would probably have been a better example to use: saying "here's an example of how I made this mistake" won't be suspected of having ulterior motives the way that "here's an example of a mistake made by someone who I might reasonably be suspected to consider a political opponent" will.

↑ comment by Liron · 2019-09-16T10:57:44.563Z · LW(p) · GW(p)

Ahhh right, you got me, I was unnecessarily political! It didn't pattern match to the kind of political arguing that I see in my bubble, but I get why anyone who feels skeptical or unhappy about Uber's practices won't be maximally receptive to learning about specificity using this example, and why even people who don't have an opinion about Uber have expressed feeling "uncomfortable" with the example. Thanks!

At some point I may go back and replace with another example. I'm open to ideas.

comment by Matt Goldenberg (mr-hire) · 2021-01-11T20:16:25.495Z · LW(p) · GW(p)

I really liked this sequence. I agree that specificity is important, and think this sequence does a great job of illustrating many scenarios in which it might be useful.

However, I believe that there are a couple of implicit frames that permeate the entire sequence, alongside the call for specificity. I believe that these frames together can create a "valley of bad rationality" in which calls for specificity can actually make you worse at reasoning than the default.

------------------------------------

The first of these frames is not just that being specific can be useful, but that it's ALWAYS the right thing, and that being non-specific is a sign of sloppy thinking or expression.

Again, nowhere in the sequence is this outright stated. Rather, it's implied through word choice, tone, and focus. Here are a few passages from this article that I believe showcase this implicit belief:

Nooooo, this is not the enlightening conversation we were hoping for. You can sense that I haven’t made much progress “pinning him down”.

By sloshing around his mental ball pit and flinging smart-sounding assertions about “capitalism” and “exploitation”, he just might win over a neutral audience of our peers.

by making him flesh out a specific example of his claim, I’ve now pulled him out of his ball pit of loosely-associated concepts.

It sounds meaningful, doesn’t it? But notice that it’s generically-worded and lacks any specific examples. This is a red flag.

All of these frame non-specificity and genericness as almost certainly wrong or bad, and even potentially dumb or low status. So why do I believe this belief is problematic? A number of reasons.

Firstly, I believe that not all knowledge is propositional. Much knowledge is encoded in the brain as a series of loose associations leading to intuitions honed from lots of tight feedback loops.

Holding this sort of knowledge is in fact often actually a sign of deep expertise in someone, rather than sloppy thinking. It's just expertise that came through lots of repetitions rather than reading, discussing, or explicit logical thinking.

When someone has this type of knowledge, the right move is often NOT to get more specific (as I discovered over the past year in 20 or so interviews with people who have this type of knowledge [LW · GW]).

Often, the right move is to ask them to get in touch with some of these intuitions, and just see what comes up. To free associate, or express in vague ways.

For instance, when working with Malcolm Ocean on his implicit understanding of design, rather than first asking him about the aspects of good design, we worked on an "expressive sentence": "Design is not just for unlocking doors, but for creating new doorways." This is still a very vague sentence, but it was able to evoke many aspects of his intuitions. Only once we had a few of these vague sentences that came at the problem from different angles did we use them to get specific about the different elements of good design.

The second reason why I think the belief that "being non-specific is sloppy thinking" is bad is that there are several types of rationality. "Predictive Rationality", that is, instrumental rationality that focuses on whether something will happen or whether something is true, will often benefit from specificity.

However, there's also "Generative Rationality", that is, generating useful ideas. As alkjash notes in his Babble and Prune sequence, Babbling (generating useful thoughts) often benefits from not having to make ideas very specific in the early stage, and may in fact be stymied by that. If you have the kneejerk reaction of always asking for more specificity, it may lead to worse ideas in yourself or your culture.

In addition to "Generative Rationality" (which often comes before predictive rationality), you can also talk about "Effectuative Rationality", getting people to take action on their ideas (which often comes after predictive rationality).

Here too, specificity can often be harmful. Much management research finds that to create proactive employees, you want to be quite specific about what you want, but very vague about "how", in order to let your underlings feel ownership and initiative. If you believe that you must always be specific, you may screw this up.

----------------------------------------

The second implicit belief is one of "default to Combat Culture [LW · GW]".

Once again, it's not explicitly stated. However, here are some passages that I believe point to this implicit belief:

The Power to Demolish Bad Arguments

Want to see what a 3D Chess argument looks like? Behold the conversation I had the other day with my friend “Steve”: