LessWrong Has Agree/Disagree Voting On All New Comment Threads

post by Ben Pace (Benito) · 2022-06-24T00:43:17.136Z · LW · GW · 217 comments

Starting today we're activating two-factor voting on all new comment threads.

Now there are two axes on which you can vote on comments: the standard karma axis remains on the left, and the new axis on the right lets you show how much you agree or disagree with the content of a comment.

How the system works

For the pre-existing voting system, the most common interpretation of up/down-voting is "Do I want to see more or less of this content on the site?" As an item gets more/fewer votes, the item changes in visibility, and the karma-weighting of the author is eventually changed as well.

Agree/disagree is just added on to this system. Here's how it all hooks up.

- Agree/disagree voting does not translate into a user's or post's karma — its sole function is to communicate agreement/disagreement. It has no other direct effects on the site or content visibility (i.e. no effect on sorting algorithms).

- For both regular voting and the new agree/disagree voting, you have the ability to normal-strength vote and strong-vote. Click once for normal-strength vote. For strong-vote, click-and-hold on desktop or double-tap on mobile. The weight of your strong-vote is approximately proportional to your karma on a log-scale (exact numbers here [LW · GW]).
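One way to picture the rules above is as two independent tallies per comment, where only the karma tally feeds into sorting. The sketch below is illustrative only: the `Vote` record, the `strong_weight` formula, and the unit weight for normal votes are assumptions made up for this example, not the site's actual numbers (the real strong-vote table is in the post linked above).

```python
import math
from dataclasses import dataclass


@dataclass
class Vote:
    """One user's vote on one comment. Directions are -1, 0, or +1."""
    voter_karma: int
    karma_dir: int = 0
    karma_strong: bool = False
    agree_dir: int = 0
    agree_strong: bool = False


def strong_weight(voter_karma: int) -> int:
    # Hypothetical log-scale weight; NOT the site's real table.
    return 2 + int(math.log10(max(voter_karma, 1)))


def signed_weight(direction: int, strong: bool, voter_karma: int) -> int:
    # Assumption: a normal-strength vote always counts as 1.
    if direction == 0:
        return 0
    return direction * (strong_weight(voter_karma) if strong else 1)


def tally(votes: list[Vote]) -> tuple[int, int]:
    """Return (karma, agreement) as two separate sums."""
    karma = sum(signed_weight(v.karma_dir, v.karma_strong, v.voter_karma) for v in votes)
    agreement = sum(signed_weight(v.agree_dir, v.agree_strong, v.voter_karma) for v in votes)
    return karma, agreement


def sort_comments(comments: dict[str, list[Vote]]) -> list[str]:
    # Only the karma tally affects ordering; agreement is ignored here.
    return sorted(comments, key=lambda name: tally(comments[name])[0], reverse=True)
```

In this sketch a comment can be heavily disagreed with and still sort first, because `sort_comments` never looks at the agreement sum.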

Ben's personal reasons for being excited about this split

Here's a couple of reasons that are alive for me.

- I personally feel much more comfortable upvoting good comments that I disagree with or whose truth value I am highly uncertain about, because I don’t feel that my vote will be mistaken as setting the social reality of what is true.

- I also feel very comfortable strong-agreeing with things while not up/downvoting on them, so as to indicate which side of an argument seems true to me without my voting being read as “this person gets to keep accruing more and more social status for just repeating a common position at length”.

- Similarly to the first bullet, I think that many writers have interesting and valuable ideas whose truth-value I am quite unsure about or even disagree with. This split allows voters to repeatedly signal that a given writer's comments are of high value, without building a false-consensus that LessWrong has high confidence that the ideas are true. (For example, many people have incompatible but valuable ideas about how AGI development will go, and I want authors to get lots of karma and visibility for excellent contributions without this ambiguity.)

- There are many comments I think are bad but am averse to downvoting, because I feel that it is ambiguous whether the person is being downvoted because everyone thinks their take is unfashionable or whether it's because the person is wasting the commons with their behavior (e.g. belittling, starting bravery debates, not doing basic reading comprehension, etc). With this split I feel more comfortable downvoting bad comments without worrying that everyone else who states the position will worry if they'll also be downvoted.

- I have seen some comments that previously would have been "downvoted to hell" but are now on positive karma and are instead "disagreed to hell". I won't point them out to avoid focusing on individuals, but this seems like an obvious improvement in communication ability.

I could go on but I'll stop here.

Please give us feedback

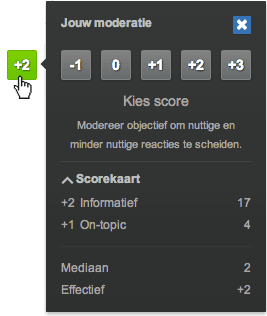

This is one of the main voting experiments we've tried on the site (here's the other one [LW · GW]). We may try more changes and improvements in the future. Please let us know about your experience with this new voting axis, especially in the next 1-2 weeks.

If you find it concerning/invigorating/confusing/clarifying/other, we'd like to know about it. Comment on this post with feedback and I'll give you an upvote (and maybe others will give you an agree-vote!) or let us know in the intercom button in the bottom right of the screen.

We've rolled it out on many (15+) threads now (example [LW · GW]), and my impression is that it's worked as hoped and allowed for better communication about the truth.

appreciation for high-quality comments that many users disagree with.

217 comments

Comments sorted by top scores.

comment by Duncan Sabien (Deactivated) (Duncan_Sabien) · 2022-06-24T17:30:33.605Z · LW(p) · GW(p)

Still somewhat sad about this, as it feels to me like a half-solution that's pumping against human nature.

My claims in previous discussions about alternate voting systems were that:

- It was going to be really important to have a single click, not multiple clicks (i.e. any two-separate-votes system was going to have people overwhelmingly just using one of the votes and largely ignoring the second one)

- It was going to be really important to use visual cues and directionality and not just have two things side by side

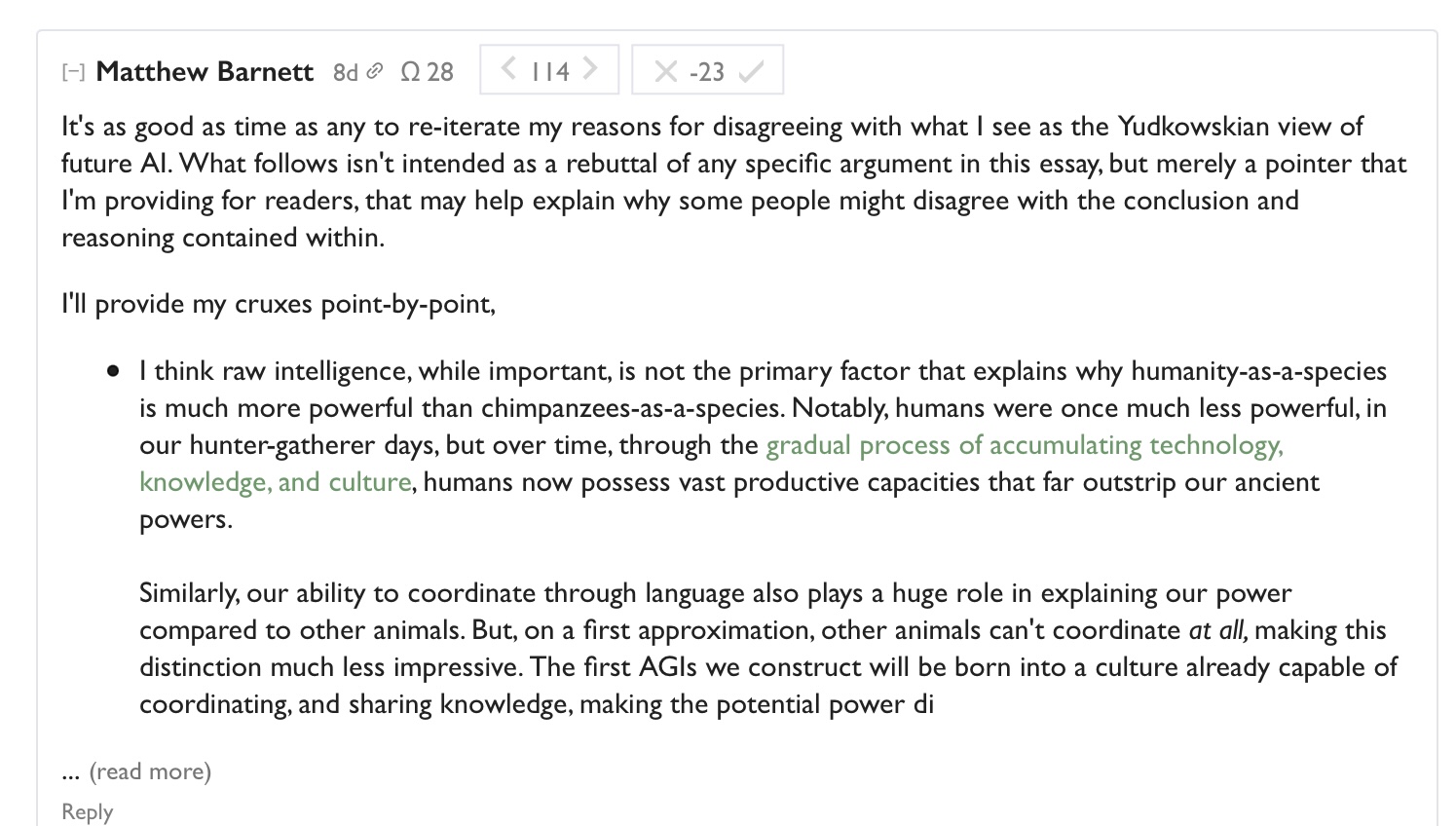

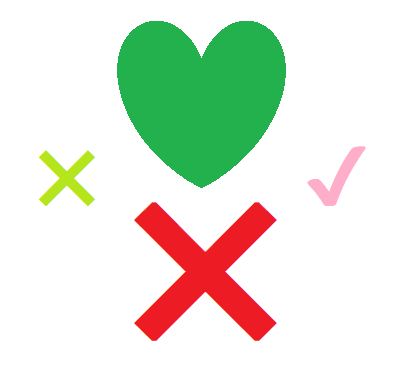

I wanted something like the following:

... where users would single-click one of the four buttons and could click-hold to strong vote, but would with a single click show:

- Upvote this and also it's true (the dark blue one)

- This seems true but slight downvote/it's not helping/it's making things worse (the light blue one)

- This seems false or sketchy but slight upvote/it's helping/I'm glad it's here (the light orange one)

- Downvote this and also it's false (the dark orange one).

True-false is on the forward/backward axis, in other words, and good/bad is on the vertical axis, as usual.

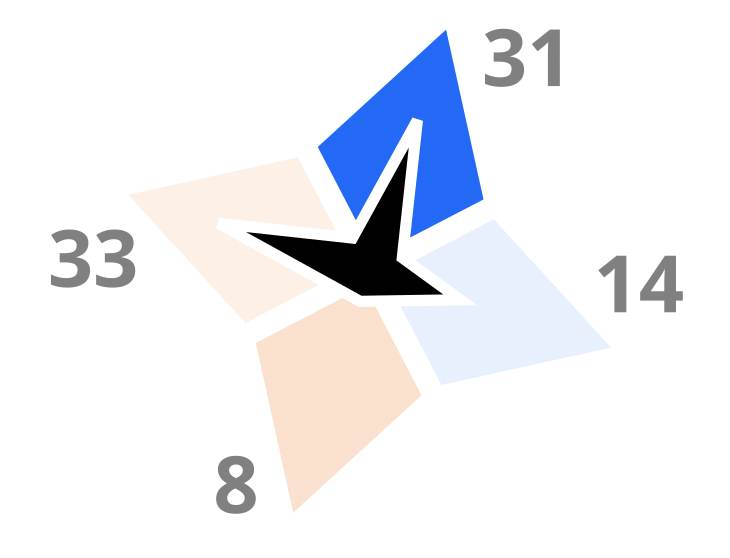

The display for aggregation could look a lot of different ways; please don't hate the below for its ugly first-draft nature:

For example, maybe the numbers are invisible unless you hover, or something. In this example, the blue shows which one I clicked.

This would also allow you (if you wanted) to display an aggregated "what's this user's rep" function—you could make some hover-over thing or put a place on people's profile pages where you could see whether a given user was generally given dark blue, light blue, light orange, or dark orange feedback.

The reason I'm sad about the thing that's happening here is that:

- I suspect it's a half-solution that will decay back to mostly-people-just-use-the-first-vote

- I don't think it lets me grok the quality of the reaction to a comment at a glance; I keep having to effortfully process "okay, what does—okay, this means that people like it but think it's slightly false, unless they—hmm, a lot more people voted up-down than true-false, unless they all strong voted up-down but weak-voted tru—you know what, I can't get any meaningful info out of this."

- I suspect it will sap whatever momentum there was for a truly better voting system, such that if it turns out I'm right and a year from now this didn't really help, it'll be even less likely that we can muster for another attempt.

↑ comment by Vladimir_Nesov · 2022-06-24T18:00:42.510Z · LW(p) · GW(p)

display an aggregated "what's this user's rep" function

Agreement votes must never be aggregated, otherwise there is incentive for uncontroversial commenting.

Replies from: Andrew_Critch, sean-h

↑ comment by Andrew_Critch · 2022-07-02T14:10:17.607Z · LW(p) · GW(p)

I agree with the sentiment here, but I think you have too little faith in some people's willingness to be disagreeable... especially on LessWrong! Personally I'd feel fine/great about having a high karma and a low net-agreement score, because it means I'm adding a unique perspective to the community that people value.

Replies from: Andrew_Critch

↑ comment by Andrew_Critch · 2022-07-02T14:10:53.706Z · LW(p) · GW(p)

... and, I'd go so far as to bet that the large amount of agreement with your comment here is representative of a bunch of users that would feel similarly, but I'm putting this in a separate comment so it accrues a separate agree/disagree score. If lots of people disagree, I'll update :)

↑ comment by Sean H (sean-h) · 2022-06-27T20:11:45.248Z · LW(p) · GW(p)

Absolutely! Agree 100%.

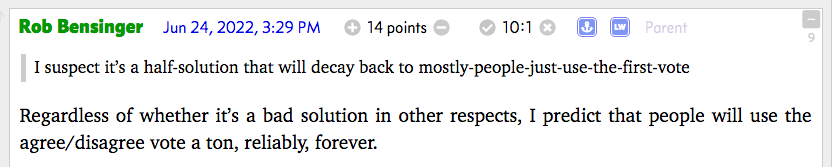

↑ comment by Rob Bensinger (RobbBB) · 2022-06-24T19:29:42.160Z · LW(p) · GW(p)

I suspect it's a half-solution that will decay back to mostly-people-just-use-the-first-vote

Regardless of whether it's a bad solution in other respects, I predict that people will use the agree/disagree vote a ton, reliably, forever.

I don't think it lets me grok the quality of the reaction to a comment at a glance; I keep having to effortfully process "okay, what does—okay, this means that people like it but think it's slightly false, unless they—hmm, a lot more people voted up-down than true-false, unless they all strong voted up-down but weak-voted tru—you know what, I can't get any meaningful info out of this."

I mostly care about agree/disagree votes (especially when it comes to specifics). From my perspective, the upvotes/downvotes are less important info; they're mostly there to reward good behavior and make it easier to find the best content fast.

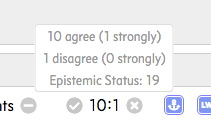

In that respect, the thing that annoys me about agree/disagree votes isn't any particular relationship to the upvotes/downvotes; it's that there isn't a consistent way to distinguish 'a few people agreeing strongly' from 'a larger number of people agreeing weakly', 'everyone agrees with this but weakly' from 'some agree strongly but they're being partly offset by others who disagree', or 'this is the author agreeing with their own comment' from 'this is a peer independently vouching for the comment's accuracy'.

I think all of those things would ideally be distinguishable, at least on hover. (Or the ambiguity would be eliminated by changing how the feature works -- e.g., get rid of the strong/weak distinction for agreevotes, get rid of the thing where users can agreevote their own comments, etc.)

The specific thing I'd suggest is to get rid of 'authors can agree/disagree vote on their own comments' (LW already has a 'disendorse' feature), and to replace the current UI with a tiny bar graph showing the rough relative number of strong agree, weak agree, strong disagree, and weak disagree votes (at least on hover).
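To make the ambiguity concrete, here is a rough sketch of the per-category breakdown such a bar graph would need. Everything in it is hypothetical: votes are encoded as `2`, `1`, `-1`, `-2` for strong agree, weak agree, weak disagree, and strong disagree, and the default `strong_weight=3` is an arbitrary stand-in, not the site's real weighting.

```python
from collections import Counter

# Hypothetical encoding of a single agreement vote.
LABELS = {
    2: "strong agree",
    1: "weak agree",
    -1: "weak disagree",
    -2: "strong disagree",
}


def net_agreement(votes: list[int], strong_weight: int = 3) -> int:
    """Collapse votes into the single number the current UI shows."""
    total = 0
    for v in votes:
        sign = 1 if v > 0 else -1
        total += sign * (strong_weight if abs(v) == 2 else 1)
    return total


def breakdown(votes: list[int]) -> dict[str, int]:
    """Count votes per category, which is what a tiny bar graph would plot."""
    counts = Counter(votes)
    return {label: counts.get(value, 0) for value, label in LABELS.items()}


def text_bars(counts: dict[str, int]) -> str:
    """Render the breakdown as a crude text bar chart."""
    return "\n".join(f"{label:>15} {'#' * n} ({n})" for label, n in counts.items())
```

Under these assumed weights, two strong agree votes and six weak agree votes collapse to the same net score, while `breakdown` keeps them apart.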

Replies from: Duncan_Sabien, Vladimir_Nesov

↑ comment by Duncan Sabien (Deactivated) (Duncan_Sabien) · 2022-06-24T20:19:59.790Z · LW(p) · GW(p)

I predict that people will use the agree/disagree vote a ton, reliably, forever.

I feel zero motivation to use it. I feel zero value gained from it, in its current form. I actually find it a deterrent, e.g. looking at the information coming in on my comment above gave me a noticeable "ok just never comment on LW again" feeling.

(I now fear social punishment for admitting this fact, like people will decide that me having detected such an impulse means I'm some kind of petty or lame or bad or whatever, but eh, it's true and relevant. I don't find downvotes motivationally deterring in the same fashion, at all.)

EDIT: this has been true in other instances of looking at these numbers on my other comments in the past; not an isolated incident.

↑ comment by Duncan Sabien (Deactivated) (Duncan_Sabien) · 2022-06-24T20:30:03.222Z · LW(p) · GW(p)

More detail on the underlying emotion:

"Okay, so it's ... it's plus eight, on some karma meaning ... something, but negative nine on agreement? What the heck does this even mean, do people think it's good but wrong, are some people upvoting but others downvoting in a different place—I hate this. I hate everything about this. Just give up and go somewhere where the information is clear and parse-able."

Like, maybe it would feel better if I could see something that at least confirmed to me how many people voted in both places? So I'm not left with absolutely no idea how to compare the +8 to the -9?

But overall it just hurts/confuses and I'm having to actively fight my own you'd-be-happier-not-being-here feelings, which are very strong in a way that they aren't in the one-vote system, and wouldn't be in either my compass rose system or Rob's heart/X system.

Replies from: Vladimir_Nesov

↑ comment by Vladimir_Nesov · 2022-06-24T20:55:30.981Z · LW(p) · GW(p)

do people think it's good but wrong [...] I hate this

The parent comment serves as a counterexample to this interpretation: It seems natural to agreement-downvote your comment to indicate that I don't share this feeling/salient-impression, without meaning to communicate that I believe your feeling-report to be false (about your own impression). And to karma-upvote it to indicate that I care for existence of this feeling to become a known issue and to incentivise corroboration from others (with visibility given by karma-upvoting) who feel similarly (which might in part be communicated with agreement-upvoting).

Replies from: Duncan_Sabien

↑ comment by Duncan Sabien (Deactivated) (Duncan_Sabien) · 2022-06-24T20:58:00.531Z · LW(p) · GW(p)

I think you're confusing "this should make sense to you, Duncan" with "therefore this makes sense to you, Duncan"

(or more broadly, "this should make sense to people" with "therefore, it will/will be good.")

I agree that there is some effortful, System-2 processing that I could do, to draw out the meaning that you have spelled out above.

Replies from: Vladimir_Nesov

↑ comment by Vladimir_Nesov · 2022-06-24T21:08:23.772Z · LW(p) · GW(p)

there is some effortful, System-2 processing that I could do

The important distinction is about existence of System-1 distillation that enables ease, which develops with a bit of exposure, and of the character of that distillation. (Is it ugly/ruinous/not-forming, despite the training data being fine?) Whether a new thing is immediately familiar is much less strategically relevant.

Replies from: Duncan_Sabien

↑ comment by Duncan Sabien (Deactivated) (Duncan_Sabien) · 2022-06-24T21:22:33.202Z · LW(p) · GW(p)

This function has been available, and I've encountered it off and on, for months. This isn't a case of "c'mon, give it a few tries before you judge it." I've had more than a bit of exposure.

↑ comment by M. Y. Zuo · 2022-06-27T02:55:28.229Z · LW(p) · GW(p)

If being highly upvoted yet highly disagreed with makes you feel deterred and never want to comment again, wouldn't that also be the case if you see a lot of light orange beside your comments?

Since it seems unlikely you'll forget your own proposal or what the colours correspond to.

In fact it may hasten your departure since bright colours are a lot more difficult to ignore than a grey number.

Replies from: Duncan_Sabien

↑ comment by Duncan Sabien (Deactivated) (Duncan_Sabien) · 2022-06-27T04:06:04.242Z · LW(p) · GW(p)

I do not have a model/explanation for why, but no, apparently not. I've got pretty decent introspection and very good predicting-future-Duncan's-responses skill and the light orange does not produce the same demoralization as negative numbers.

Though the negative numbers also produce less demoralization if the prompt is changed in accordance with some suggestions to something like "I could truthfully say this or something close to it from my own beliefs and experience."

↑ comment by Vladimir_Nesov · 2022-06-24T19:58:55.356Z · LW(p) · GW(p)

From my perspective, the upvotes/downvotes are less important info

Their role is different: it's about quality/incentives, so the appropriate way of deciding visibility (comment ordering) and aggregating into user's overall footprint/contribution. Agreement clarifies attitude to individual comments without compromising the quality vote, in particular making it straightforward/convenient to express approval/incentivization of disagreed-with comments. In this way agreement vote improves fidelity of the more strategic quality/incentives vote, while communicating an additional tactical fact about each particular comment.

↑ comment by Kaj_Sotala · 2022-06-26T21:37:17.978Z · LW(p) · GW(p)

It was going to be really important to have a single click, not multiple clicks (i.e. any two-separate-votes system was going to have people overwhelmingly just using one of the votes and largely ignoring the second one)

I feel like it's slightly less work for me to consider one axis and up/downvote it, and then consider the second axis and up/downvote it, than it'd be to vote on two axes with a single click. The former lets me consider the two separately, "make one decision and then forget about it", whereas the latter requires me to think about both at the same time. That means that I'm (slightly) more likely to cast two votes on a multiple-click system than on a single-click system.

Though I do also consider it a feature if the system allows me to only cast one vote rather than forcing me to do both. E.g. in situations where I want to upvote a domain expert's comment giving an explanation about a domain that I'm not familiar with, so don't feel qualified to cast a vote on its truth even though I want to indicate that I appreciate having the explanation.

↑ comment by Said Achmiz (SaidAchmiz) · 2022-06-29T23:11:23.558Z · LW(p) · GW(p)

After using the new system for a couple of days, I now believe that a single-click[1] system, like the one Duncan describes, would probably be preferable for interaction efficiency / satisfaction reasons. (Having to click on two different UI widgets in two different screen locations—i.e., mouse move, click, another mouse move, click—is an annoyance.)

One downside of Duncan’s proposed widget design would be that it cannot accommodate the full range of currently permissible input values. The current two-widget system has 25 possible states (karma and agreement can each independently take on any of five values: ++, +, 0, −, −−), while the proposed “blue and orange compass rose” single-widget system has only 9 possible states (the neutral state, plus two strengths of vote × four directions).

It is not immediately obvious to me what an ideal solution would look like. The obvious solution (in terms of interaction design) would be to construct a mapping from the 25 states to the 9, wherein some of the 25 currently available input states should be impermissible, and some sets of the remainder of the 25 should each be collapsed into one of the 9 input states of the proposed widget. (I haven’t thought about the problem enough to know if a satisfactory such mapping exists.)
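The state counts above can be checked by enumeration. This is only a sketch under one assumed reading of the single-widget design: each of its eight non-neutral states is taken to correspond to a two-widget state where both axes are non-zero and share the same strength. That correspondence is an assumption for illustration, not something the proposal specifies.

```python
from itertools import product

# Vote levels on one axis: --, -, 0, +, ++
LEVELS = (-2, -1, 0, 1, 2)

# Two-widget system: karma and agreement vary independently.
two_widget = set(product(LEVELS, LEVELS))

# Single-widget system: neutral, plus two strengths x four directions.
STRENGTHS = (1, 2)
DIRECTIONS = ((1, 1), (1, -1), (-1, 1), (-1, -1))  # (karma sign, agreement sign)
single_widget = {(0, 0)} | {
    (s * k, s * a) for s, (k, a) in product(STRENGTHS, DIRECTIONS)
}

# States the single widget cannot express directly (under the assumed reading).
unrepresented = two_widget - single_widget

# Votes on exactly one axis, e.g. "upvote, no opinion on truth".
one_axis_only = {state for state in unrepresented if 0 in state}

# Votes with different strengths on the two axes, e.g. ++/-.
mixed_strength = unrepresented - one_axis_only
```

On this reading the 16 leftover states split evenly: 8 are single-axis votes and 8 pair a strong vote on one axis with a weak vote on the other. Any mapping from 25 to 9 has to either forbid these or fold them into a neighbouring state.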

[1] Or, to be more precise, a “single-click / double-click / click-and-hold, depending on implementation details and desired outcome, but in all cases a pointer interaction with only one UI widget in one location on the screen” system.

↑ comment by gwern · 2023-04-20T19:41:01.793Z · LW(p) · GW(p)

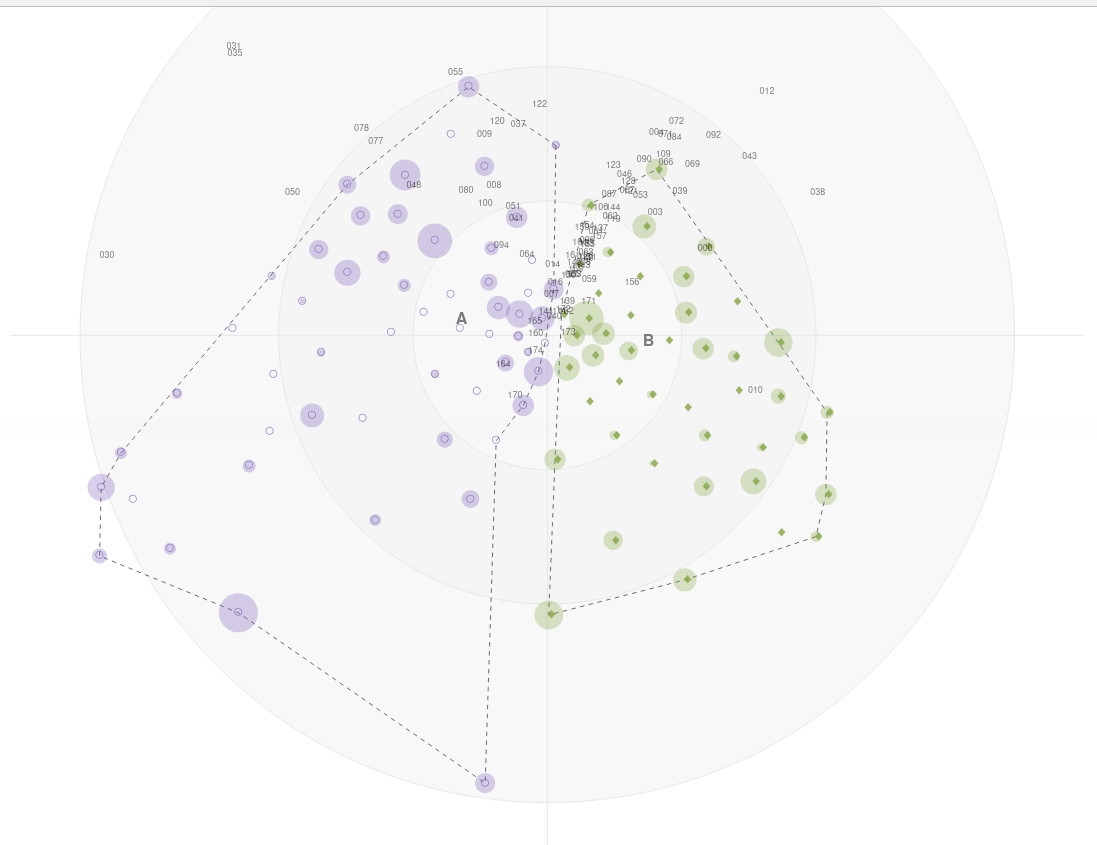

Hypothetically, you could represent all of the states by using the diamond, but adding a second 'diamond' or 'shell' around it, and making all of the vertexes and regions clickable. To express a +/+ you click in the upper right region; to express ++/++, the uppermost right region; to express 0/++, you click on the right-most tip; to express ++/0, you click on the bottom tip; and so on. The regions can be colored. (And for users who don't get strong votes, it degrades nicely: you should omit the outer shell corresponding to the strong votes.) I'm sure I've seen this before in video games or something, but I'm not sure where or what it may be called (various search queries for 'diamond' don't pull up anything relevant). It's a bit like a radar chart, but discretized.

This would be easy to use (as long as the vertexes have big hit boxes) since you make only 1 click (rather than needing to click 4 times or hold long twice for a ++/++) and use the mouse to choose what the pair is (which is a good use of mice), and could be implemented even as far back as Web 1.0 with imagemaps, but somewhat hard to explain - however, that's what tooltips are for, and this is for power-users in the first place, so some learning curve is tolerable.

↑ comment by Rana Dexsin · 2022-06-24T21:55:40.273Z · LW(p) · GW(p)

I find all of the four-way graphical depictions in this subthread to be horribly confusing at an immediate glance; indeed I had to fight against reversing the axes on the one you showed. I already know what a karma system is, as imperfect as that is, and I already know what agreement and disagreement are—and being able to choose to only use one of the axes at any given moment is an engagement win for me, because (for instance) if I start out wanting to react “yes, I agree” but then have to think about “but also was the post good in a different sense” before I can record my answer, or vice versa, that means I have to perform the entire other thought-train with the first part in my working-memory stack. And my incentive and inclination to vote at all doesn't start out very high. It's like replacing two smaller stairs with one large stair of combined height.

A more specific failure mode there is lack of representation for null results on either axis, especially “I think this was a good contribution, but it makes no specific claims to agree or disagree with and instead advances the conversation some other way” and “I think this was a good contribution, but I will have to think for another week to figure out whether I agree or disagree with it on the object level, and vaguely holding onto the voting-intention in the back of my mind for a week gives a horrible feeling of cruftifying my already-strained attention systems”.

To try to expand the separate-axes system in this exact case, I have upvoted your comment here on the quality axis and also marked my disagreement (which I don't like the term “downvote” for, as described elsewhere), because I think it's a good thing that you went to the effort of thinking about this and posting about it, and I think the explanation is coherent and reasonable, but I also think the suggestion itself would be more complicated and difficult and overall worse than what's been implemented, largely because of differing impressions of the actual world. I think this is much better than having the choice of downvoting the comment and thus indicating that I wish you hadn't posted this, which is false, or upvoting it and risking a perception that I wanted the proposal to be implemented, which is also false.

I have meta-difficulty grasping why you find the at-a-glance compounding of info difficult under the separate-axes system. Do you feel inclined to try to explain it differently? In particular, I do not understand why “a lot more people” and “strong/weak votes” play so heavily into your reported thought process. I process the numbers as pre-aggregated information with a stock “congealed” (one-mental-step access) approximate correction-and-blurring model of the imperfections of the aggregation and selection most of the time. Trying to dig further is rare and mainly happens if I'm quite surprised by what I see initially.

↑ comment by Raemon · 2022-06-26T05:17:02.906Z · LW(p) · GW(p)

I think many of the UI ideas here are potentially interesting, but one major issue is the amount of space we have to work with. The design here is particularly cool because it's a compass rose which matches the LW logo, but... I don't see how we could fit a version into every comment that actually worked. (Maybe if it was little but got big when you hovered over it?)

(to be clear these seem potentially fixable, just noting that that's where my attention goes next in a problem-solving-y way)

FYI, in my mind there are still some radically different solutions that might be worth trying for agree/disagree; I'm still pretty uncertain about the whole thing.

Replies from: Duncan_Sabien

↑ comment by Duncan Sabien (Deactivated) (Duncan_Sabien) · 2022-06-26T06:55:05.740Z · LW(p) · GW(p)

Yeah, the UI issues seem real and substantial.

In my mind, the thing is roughly as tall as the entire box holding the current vote buttons.

Replies from: Duncan_Sabien

↑ comment by Duncan Sabien (Deactivated) (Duncan_Sabien) · 2022-06-26T23:56:41.996Z · LW(p) · GW(p)

↑ comment by Rob Bensinger (RobbBB) · 2022-06-24T19:48:41.562Z · LW(p) · GW(p)

I found the current UI intuitive. I find the four-pointed star you suggested confusing (though mayyyybe I'd like it if I got used to it?). I tend to mix up my left and my right, and I don't associate left/right with false/true at all, nor do I associate blue with "truth". (if anything, I associate blue more with goodness, so I might have guessed dark-blue was 'good and true' and light-blue was 'good and false')

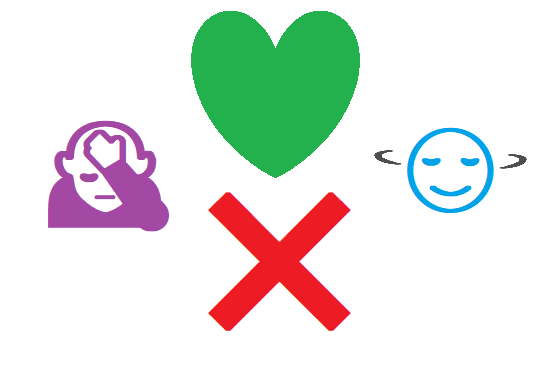

A version of this I'm confident would be easier for me to track is, e.g.:

It's less pretty, but:

- The shapes give me an indication of what each direction means. ✔ and ✖ I think are very useful and clear in that respect: to me, they're obviously about true/false rather than good/bad.

- Green vs. red still isn't super clear. But it's at least clearer than blue vs. red, to me; and if I forget what the colors mean, I have clear indicators via 'ah, there's a green X, but no green checkmark, because the heart is the special "good on all dimensions" symbol, and because green means "good" (so it would be redundant to have a green heart and a green checkmark)'.

- The left and right options are smaller and more faded. Some consequences:

- (a) This makes the image as a whole feel less overwhelming, because there's a clear hierarchy that encourages me to first pay attention to one thing, then only consider the other thing as an afterthought. In this case, I first notice the heart and X, which give me an anchor for what green, red, and X mean. Then I notice the smaller symbols, which I can then use my anchors to help interpret. This is easier than trying to parse four symbols at the exact same moment, especially when those symbols have complicated interactions rather than being primitives.

- I think this points at the core reason Duncan's proposal is harder for me to fit in my head than the status quo: my working memory can barely handle four things at once, and the four options here are really ordered pairs. At least, my brain thinks of them as ordered pairs rather than as primitives: I don't have four distinct qualia or images or concepts for (true, good), (true, bad), (false, good), and (false,bad), I just have the dichotomies "true v. false" and "good v. bad". Trying to compare all four options at once overloads my brain, whereas trying to compare two things (good v. bad) and then two other things (true v. false) is a lot easier for me.

- Having a "heart" symbol is a step in the right direction in that respect, because it's closer to "a unitary concept" in my mind rather than "an ordered pair". If I had four very clearly distinct symbols for the four options, and they all made sense to me and were hard to confuse for each other, then that might more-or-less solve the problem for me.

- (b) This makes it easier for me to chunk the two faded options as a separate category, and to think my way to 'what do these mean?' hierarchically: first I notice that these are the two 'mixed' options (because they're small and faded and off to the sides), then I notice which one is 'true mixed' versus 'false mixed' (because true mixed will have a check, while false mixed has an X).

↑ comment by Rob Bensinger (RobbBB) · 2022-06-24T20:12:45.420Z · LW(p) · GW(p)

Here's a version that's probably closer to what would actually work for me:

Now all four are closer to being conceptual primitives for me. 💚 is 'good on all the dimensions'; ❌ is 'bad on all the dimensions'.

The facepalm emoji is meant to evoke a specific emotional reaction: that exasperated feeling I get when I see someone saying a thing that's technically true but is totally irrelevant, or counter-productive. (Colored purple because purple is an 'ambiguous but bad-leaning' color, e.g., in Hollywood movies, and is associated with villainy and trolling.)

The shaking-head icon is meant to evoke another emotional reaction: the feeling of being a teacher who's happy with their student's performance, but is condescendingly shaking their head to say "No, you got the wrong answer". (Colored blue because blue is 'ambiguous but good-leaning' and is associated with innocence and youthful naïveté.)

Neither of these emotional reactions captures the range of situations where I'd want to vote (true, bad) or (false, good). But my goal is to give me a vivid, salient handle at all on what the symbols might mean, at a glance; I think the hard part for me is rapidly distinguishing the symbols at all when there are so many options, not so much 'figuring out the True Meaning of the symbol once I've distinguished it from the other three'.

Replies from: RobbBB, gjm

↑ comment by Rob Bensinger (RobbBB) · 2022-06-25T08:04:52.644Z · LW(p) · GW(p)

I don't like my own proposals, so do the disagree-votes mean that you agree with me that these are bad proposals, or do they mean you disagree with me and think they're good? :P

Replies from: RobbBB, habryka4

↑ comment by Rob Bensinger (RobbBB) · 2022-06-25T08:05:39.882Z · LW(p) · GW(p)

(I should have phrased this as a bald assertion rather than a question, so people could (dis)agree with it to efficiently reply. :P)

↑ comment by habryka (habryka4) · 2022-06-25T17:20:34.672Z · LW(p) · GW(p)

For me it meant "I think this is a bad proposal".

↑ comment by Richard_Kennaway · 2022-06-27T08:34:46.172Z · LW(p) · GW(p)

With two axes, each on a scale -strong/-weak/null/weak/strong, there are 24 non-trivial possibilities. Why have you chosen these four, excluding such things as "this is an important contribution that I completely agree with", or "this is balderdash on both axes"?

Replies from: Duncan_Sabien

↑ comment by Duncan Sabien (Deactivated) (Duncan_Sabien) · 2022-06-27T19:15:08.956Z · LW(p) · GW(p)

Subjective sense of what would make LessWrong both a) more a place I'm excited to be, and b) (not unrelatedly) more of a place that helps me be better according to my own goals and values.

↑ comment by MondSemmel · 2022-06-25T00:10:44.152Z · LW(p) · GW(p)

I'm also struggling to interpret cases where karma & agreement diverge, and would also prefer a system that lets me understand how individuals have voted. E.g. Duncan's comment above currently has positive karma but negative agreement, with different numbers of upvotes and agreement votes. There are many potential voting patterns that can have such a result, so it's unclear how to interpret it.

Whereas in Duncan's suggestion, a) all votes contain two bits of information and hence take a stand on something like agreement (so there's never a divergence between numbers of votes on different axes), and b) you can tell if e.g. your score is the result of lots of voters with "begrudging upvotes", or "conflicted downvotes" or something.
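A small sketch of why the two displayed totals underdetermine what voters did. It assumes every vote has weight 1 (no strong votes) and represents each voter as a hypothetical `(karma, agreement)` pair, reusing the +8 / -9 figures quoted earlier in the thread.

```python
def totals(votes: list[tuple[int, int]]) -> tuple[int, int]:
    """Sum the karma and agreement axes separately, as the UI displays them."""
    return (sum(k for k, _ in votes), sum(a for _, a in votes))


def voters_per_axis(votes: list[tuple[int, int]]) -> tuple[int, int]:
    """Count how many people voted on each axis at all."""
    return (sum(1 for k, _ in votes if k), sum(1 for _, a in votes if a))


# Pattern A: two disjoint groups. Eight people upvote without taking a
# stand on agreement; nine other people disagree without touching karma.
pattern_a = [(1, 0)] * 8 + [(0, -1)] * 9

# Pattern B: nine people upvote AND disagree ("good but wrong"),
# and one more person downvotes without an agreement vote.
pattern_b = [(1, -1)] * 9 + [(-1, 0)]
```

Both patterns display as +8 karma and -9 agreement, yet they describe quite different reactions. Showing the per-axis voter counts would separate them in this case, though not in general.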

Replies from: Benito

↑ comment by Ben Pace (Benito) · 2022-06-25T00:41:42.449Z · LW(p) · GW(p)

Whereas in Duncan's suggestion, a) all votes contain two bits of information and hence take a stand on something like agreement

I didn't notice that! I don't want to have to decide on whether to reward or punish someone every time I figure out whether they said a true or false thing. Seems like it would also severely enhance the problem of "people who say things that most people believe get lots of karma".

↑ comment by Vladimir_Nesov · 2022-06-24T17:57:34.766Z · LW(p) · GW(p)

The alternative solutions you are gesturing at do communicate the problems of the current solution, but I think they are worse than the current solution, and I'm not sure there is a feasible UI change that's significantly better than the current solution (among methods for collecting the data with the same meaning, quality/agreement score). Being convenient to use and not using up too much space are harsh constraints.

comment by Adam Scholl (adam_scholl) · 2022-06-24T07:04:09.144Z · LW(p) · GW(p)

For what it's worth, I quite dislike this change. Partly because I find it cluttered and confusing, but also because I think audience agreement/disagreement should in fact be a key factor influencing comment rankings.

In the previous system, my voting strategy roughly reflected the product of (how glad I was some comment was written) and (how much I agreed with it). I think this product better approximates my overall sense of how much I want to recommend people read the comment—since all else equal, I do want to recommend comments more insofar as I agree with them more.

Replies from: Benito, Benito, pktechgirl, Vladimir_Nesov↑ comment by Ben Pace (Benito) · 2022-06-24T07:26:40.817Z · LW(p) · GW(p)

all else equal, I do want to recommend comments more insofar as I agree with them more

It's a fair point. Sometimes the point of a thread is to discuss and explore a topic, and sometimes the point of a thread is to locally answer a question. In the former I want to reward the most surprising and new marginal information over the most obvious info. In the latter I just want to see the answer.

I'll definitely keep my eye out for whether this system breaks some threads, though it seems likely to me that "producing the right answer in a thread about answering a question" will be correctly upvoted in that context.

Replies from: RobbBB↑ comment by Rob Bensinger (RobbBB) · 2022-06-24T18:44:25.711Z · LW(p) · GW(p)

I almost wonder if there should be a slider bar for post authors to set how much they want to incentivize truth-as-evaluated-by-LWers vs. incentivizing debate / spitballing / brainstorming / devil's advocacy / diversity of opinion / uncommon or nonstandard views / etc. in their post's comment section.

Setting the slider all the way toward Non-Truth would result in users getting 0 karma for agree-votes. Setting the slider all the way toward Truth would result in users getting lots of karma (and would reduce the amount of karma users get from normal Upvotes a bit, so people are less inclined to just pick the 'Truth' option in order to maximize karma). Nice consequences of this:

- It gives users more control over what they want to see in their comment section. (Similar to how users get to decide their posts' moderation policies.)

- Over time, we'd get empirical evidence about which system is better overall, or better for certain use cases. If the results are sufficiently clear and consistent, admins could then get rid of the slider and lock in the whole site at the known-to-be-best level.
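As a rough illustration of the slider idea above, here is a minimal sketch. The function name, the 0-to-1 slider range, and both weight constants are assumptions made up for this example, not anything the site implements.

```python
def karma_from_votes(upvote_score, agree_score, slider,
                     max_agree_weight=1.0, upvote_discount=0.25):
    """Hypothetical karma formula for a per-post Truth slider.

    slider = 0.0 means "Non-Truth": agree-votes give no karma.
    slider = 1.0 means "Truth": agree-votes give full karma, and
    normal upvotes are discounted a bit (assumed 25% here).
    """
    if not 0.0 <= slider <= 1.0:
        raise ValueError("slider must be between 0 and 1")
    agree_weight = slider * max_agree_weight
    upvote_weight = 1.0 - slider * upvote_discount
    return upvote_weight * upvote_score + agree_weight * agree_score


# A comment with +10 from normal votes and +6 from agree-votes:
non_truth = karma_from_votes(10, 6, slider=0.0)
truth = karma_from_votes(10, 6, slider=1.0)
```

With these assumed constants, the same comment earns karma only from upvotes at the Non-Truth end, and earns slightly less per upvote but additional karma per agree-vote at the Truth end.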

↑ comment by MikkW (mikkel-wilson) · 2022-06-25T08:02:55.912Z · LW(p) · GW(p)

I agree that having such a slider could be good, but I think it should only impact visibility of comments in that post's comments section, and shouldn't impact karma (only quality-axis votes should impact karma even if the slider is set to give maximum visibility to high-'agree' comments).

Replies from: RobbBB↑ comment by Rob Bensinger (RobbBB) · 2022-06-25T20:34:50.855Z · LW(p) · GW(p)

Hm, I'd have guessed the opposite was better.

↑ comment by Ben Pace (Benito) · 2022-06-24T07:26:34.084Z · LW(p) · GW(p)

Partly because I find it cluttered and confusing, but also because I think audience agreement/disagreement should in fact be a key factor influencing comment rankings.

I have a different ontology here. I'd say that "truth-tracking" is pretty different from "true". A comment section with just the audience's main beliefs highly upvoted is different from one where the conversational moves that seem truth-tracking are highly upvoted. The former leans more easily into an echo-chamber than the latter, which better rewards side-ways moves and thoughtful arguments for positions most people disagree with.

Replies from: Andrew_Critch↑ comment by Andrew_Critch · 2022-07-02T13:41:13.644Z · LW(p) · GW(p)

I mostly agree with Ben here, though I think Adam's preference could be served by having a few optional sorting options available to the user on a given page, like "Sort by most agreement" or "sort by most controversial". Without changing the semantics of what you have now, you could even allow the user to enter a custom sorting function (air-table style), like "2*karma + 3*(agreement + disagreement)" and sort by that. These could all be hidden under a three-dots menu dropdown to avoid clutter.
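A minimal sketch of what such optional sort modes could look like. The `Comment` fields and the exact definitions of "most agreement" and "most controversial" are assumptions for illustration; only the custom formula is taken from the suggestion above.

```python
from dataclasses import dataclass


@dataclass
class Comment:
    id: str
    karma: int
    agreement: int      # assumed: total strength of agree-votes
    disagreement: int   # assumed: total strength of disagree-votes (non-negative)


# Each sort mode is just a scoring function over a comment.
SORTERS = {
    "karma": lambda c: c.karma,
    "most_agreement": lambda c: c.agreement - c.disagreement,
    # Assumed definition: controversial means both sides are large.
    "most_controversial": lambda c: min(c.agreement, c.disagreement),
    # The custom formula from the comment above.
    "custom": lambda c: 2 * c.karma + 3 * (c.agreement + c.disagreement),
}


def sort_comments(comments, mode):
    """Return comments sorted best-first under the chosen mode."""
    return sorted(comments, key=SORTERS[mode], reverse=True)


comments = [
    Comment("a", karma=10, agreement=1, disagreement=0),
    Comment("b", karma=4, agreement=5, disagreement=5),
    Comment("c", karma=6, agreement=8, disagreement=1),
]
order_by_custom = [c.id for c in sort_comments(comments, "custom")]
```

Because every mode is only a different key over the same stored numbers, none of this would change the semantics of the votes themselves.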

Replies from: adam_scholl↑ comment by Adam Scholl (adam_scholl) · 2022-07-05T20:12:38.728Z · LW(p) · GW(p)

I could imagine this sort of fix mostly solving the problem for readers, but so far at least I've been most pained by this while voting. The categories "truth-tracking" and "true" don't seem cleanly distinguishable to me—nor do e.g. "this is the sort of thing I want to see on LW" and "I agree"—so now I experience type error-ish aversion and confusion each time I vote.

Replies from: Benito↑ comment by Ben Pace (Benito) · 2022-07-05T20:14:51.338Z · LW(p) · GW(p)

I see. I'd be interested in chatting about your experience with you offline, sometime this week.

↑ comment by Elizabeth (pktechgirl) · 2022-06-24T17:53:13.730Z · LW(p) · GW(p)

I would be extremely surprised if karma does not track with agreement votes in the majority of cases. I only expect them to diverge in a narrow range of cases like excellently stated arguments people disagree with, extremely banal comments that are true but don't really add anything, actual voting [LW · GW], and high social conflict posts. If we can operationalize this prediction I'm interested in a bet.

Replies from: habryka4, Alex Caswen↑ comment by habryka (habryka4) · 2022-06-24T21:46:46.116Z · LW(p) · GW(p)

I used to think this and now disagree! (See e.g. the karma vs. agree/disagree on this post)

Would be open to operationalizing this (just to be clear, I of course still expect them to be correlated).

↑ comment by Alex Caswen · 2023-04-19T05:17:32.945Z · LW(p) · GW(p)

I agree or disagree based on content, & UpVote or Downvote based on vibes.

Historically socially toxic nerds have been excused for being assholes if they were smart, knowledgeable, and always right. With this system, I can agree with what they say, but downvote how they say it.

In my opinion LW ironically is the community that needs this the least but YMMV. I am not surprised it is the community that has implemented it.

↑ comment by Vladimir_Nesov · 2022-06-24T16:10:50.468Z · LW(p) · GW(p)

Even a completely wrong claim occasionally contributes relevant ideas to the discussion. A comment can contain many claims and ideas, and salient wrongness of some of the claims (or subjective opinions not shared by the voter) can easily coexist with correctness/relevance of other statements in the same comment. So upvote/disagree is a natural situation. Downvote/correct corresponds to something true that's trivial/irrelevant/inappropriate/unkind. Being forced to collapse such cases into a single scale is painful, and the resulting ranking is ambiguous to the point of uselessness.

Replies from: Vladimir_Nesov↑ comment by Vladimir_Nesov · 2022-06-24T17:39:26.621Z · LW(p) · GW(p)

Bug report: At the moment, the parent comment says that it has 2 votes on the karma box for the total score of +2 (the karma self-vote is the default +2), and 1 vote on the agreement box for the total score of +2 (there is no agreement self-vote). When I remove the default self-upvote, it still says that there are 2 votes on the karma box (for the total score of 0). For the old karma-only comments removing the self-vote results in decrementing the number of votes displayed, and a comment with removed self-upvote that nobody else voted on says that it has 0 votes.

I believe here one other user agreement-upvoted the comment with strength +2, and nobody karma-voted except for the default +2 self-karma-upvote. So in this example I expect to see that the number of karma votes displayed after removal of the default self-upvote is 0, not 2. And I expect to see that the number of karma votes when self-upvote remains is 1, not 2. (I did reload the page in both voting states in a logged-off context to check that it's not just a local javascript or same-user-observation issue.)
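To make the expected counts concrete, here is a small sketch of the tallying I would expect. The `Vote` record and `tally` function are hypothetical and say nothing about how the site actually stores votes; the point is only that a vote should count toward an axis when it has nonzero strength on that axis.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Vote:
    voter: str
    karma: int = 0       # strength on the karma axis (0 = no vote)
    agreement: int = 0   # strength on the agreement axis (0 = no vote)


def tally(votes, axis):
    """Return (number of votes, total score) for one axis.

    A vote counts toward an axis only if its strength there is nonzero.
    """
    powers = [getattr(v, axis) for v in votes if getattr(v, axis) != 0]
    return len(powers), sum(powers)


# The situation described above: a default +2 karma self-vote,
# plus one other user who only agreement-voted with strength +2.
votes = [Vote("author", karma=2), Vote("other_user", agreement=2)]
with_self_vote = tally(votes, "karma")
without_self_vote = tally(votes[1:], "karma")
agreement = tally(votes, "agreement")
```

Under this counting, the karma box would show 1 vote with the self-upvote in place and 0 votes after removing it, while the agreement box shows 1 vote in both states.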

Replies from: habryka4↑ comment by habryka (habryka4) · 2022-06-24T22:13:28.271Z · LW(p) · GW(p)

Yeah, I noticed this myself. We should fix this.

comment by Raemon · 2022-06-24T01:13:02.250Z · LW(p) · GW(p)

I'm currently pretty dissatisfied with the icons for Agree/Disagree. They look ugly and cluttered to me. Unfortunately all the other icons I can think of ("thumbs up?", "+ / - "?) come with an implication of general positive affect that's hard to distinguish from upvote/downvote.

Curious if anyone has ideas for alternate icons or UI stylings here.

Replies from: Dagon, MondSemmel, Dustin, Raemon↑ comment by Dagon · 2022-06-24T02:19:52.261Z · LW(p) · GW(p)

I think I'd change the left/right for regular karma to up/down, to match common usage. I agree with dissatisfaction for the agree/dis icons, but I'm not sure what's better. Perhaps = and ≠, but that's not perfect either. Perhaps a handshake for agree, but I don't know the opposite for disagree.

edit: I'd also swap the icons. Good on the left, bad on the right. Only works if the votes are no longer less-than/greater-than symbols, though.

Replies from: habryka4, RyanCarey, RobbBB↑ comment by habryka (habryka4) · 2022-06-24T17:06:25.423Z · LW(p) · GW(p)

The problem with doing up/down is mostly just that this is hard to combine with the bigger arrows we use for strong-votes. If you just rotate them naively, the arrows stick out from the comment when strong-voted, or we have to add a bunch of padding to the comment to make it fit, which looks ugly and reduces information density.

Replies from: lahwran↑ comment by the gears to ascension (lahwran) · 2022-06-24T23:59:53.102Z · LW(p) · GW(p)

what if you rotate the arrows' icons to icon-up, icon-down, but don't move them into a vertical column?

↑ comment by Rob Bensinger (RobbBB) · 2022-06-24T09:34:01.261Z · LW(p) · GW(p)

I think I'd change the left/right for regular karma to up/down, to match common usage.

Weak disagree

I agree with dissatisfaction for the agree/dis icons

Disagree, they don't bother me

edit: I'd also swap the icons. Good on the left, bad on the right. Only works if the votes are no longer less-than/greater-than symbols, though.

Yeah plausibly, if the switch is made

Replies from: RobbBB↑ comment by Rob Bensinger (RobbBB) · 2022-06-24T09:47:41.663Z · LW(p) · GW(p)

I kinda like that the site 'LessWrong' uses a 'less' symbol for downvotes, and 'more' for upvotes.

I also like how this gestures at the intended interpretation of voting (an indication of whether you want less or more of the thing, not necessarily of the comment's inherent goodness or badness).

I think the current symbols for agree / disagree are fine. Maybe there's a version that does the 'less vs. more' thing too, though. (Here referring to 'less true/probable' vs. 'more true/probable'.) E.g., ⩤ and ⩥, or ⧏ and ⧐, or ◀ and ▶.

↑ comment by MondSemmel · 2022-06-24T10:35:28.357Z · LW(p) · GW(p)

Aesthetically speaking, this current implementation still looks rather ugly to me. Specific things I find ugly:

- Left-right arrows in the comments vs. down-up arrows on LW posts.

- The visible boundary box around normal votes & agree-disagree votes.

- I might understand vertical lines between date & normal upvotes, and between normal upvotes & agree-disagree votes. But why do we need boundary lines at the top & bottom?

- And rather than even vertical lines, maybe just extra whitespace between the various votes might already be enough?

- The boundary boxes even seem to push some of the other UI elements around by a few pixels:

- See this screenshot from desktop Firefox: the boundary box creates a few pixels of extra whitespace above and below the comment headline. This creates undesirable wasted space.

- Also, the comment menu button on the right (the three vertical dots) is not aligned with the text on the left, but rather with the upper line of the boundary box.

- None of the comment hover tooltips are aligned: That is, when hovering over comment username, date, normal downvote & upvote button, normal karma, agree & disagree vote button, and agreement karma, the tooltips just seem to pop up at semi-random but inconsistent positions.

↑ comment by MondSemmel · 2022-06-24T10:55:31.075Z · LW(p) · GW(p)

And while I'm already in my noticing-tiny-things perfectionist mode: The line spacings between paragraphs and bulleted lists of various indentation levels seem inconsistent. Though maybe that's good typographical practice?

See this screenshot from desktop Firefox: there seem to be 3+ different line spacings with little consistency. For example:

- big spacing between an unindented paragraph and a bullet point

- medium spacing between bullet points of the same indentation level

- medium spacing between a bullet point of a higher indentation level, followed by one with a lower indentation level

- tiny spacing between a bullet point of a lower indentation level, followed by one with a higher indentation level

- big spacing between the end of a comment and the "Reply" button

↑ comment by Dustin · 2022-06-24T02:58:50.600Z · LW(p) · GW(p)

What about something like text buttons?

When I'm designing a UI, I try to use text if there is not a good iconographic way of representing a concept.

Something like:

AGREE (-12) DISAGREE

I'm not sure how that would look with the current karma widget. Would require some experimentation.

↑ comment by Raemon · 2022-06-24T22:56:34.693Z · LW(p) · GW(p)

Huh, are all the disagreement votes here meaning "the current icons are not cluttered looking?" I'm hella surprised, I was not expecting this to be a controversial take since the current UI was whipped up really quickly.

Replies from: Benito, RobbBB↑ comment by Ben Pace (Benito) · 2022-06-25T00:32:06.185Z · LW(p) · GW(p)

My upvote-disagree meant "Current UI is not that bad, though am supportive of a thread of dissatisfied folks exploring alts".

↑ comment by Rob Bensinger (RobbBB) · 2022-06-25T07:58:31.599Z · LW(p) · GW(p)

UI looks fine to me! There might be improvements available, but I'd need to see the alternatives to know whether I think they're better.

comment by Dagon · 2022-06-24T14:20:58.931Z · LW(p) · GW(p)

Should we show the agreement number as a ratio rather than a sum? Regular votes can be summed, because for a low total it doesn't matter much whether it's a mix of up and down, or just low engagement overall. But for agreement, I want to know how agreed it was among those who bothered to have an opinion. Not having an opinion is not a negative on agreement.

I think I'd either show total and number of votes (as 20 / 12), or just the ratio (1.66).

edit: I may get this in the current setup from looking at agreement compared to karma, once I get used to it. But that makes it worth aligning the default self-votes for the two, so comments don't start out controversial.
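A quick sketch of the two display options. The function name and the example vote strengths are made up for illustration; the only thing taken from above is the idea of showing total and vote count, or their ratio.

```python
def agreement_summary(vote_strengths):
    """Summarize agreement votes as (total, count, ratio).

    vote_strengths holds one signed strength per voter who actually
    voted on agreement; people with no opinion are simply absent.
    ratio is None when nobody has voted.
    """
    count = len(vote_strengths)
    total = sum(vote_strengths)
    ratio = total / count if count else None
    return total, count, ratio


# Hypothetical example: eight strong agrees (+2) and four weak agrees (+1).
total, count, ratio = agreement_summary([2] * 8 + [1] * 4)
label_pair = f"{total} / {count}"
label_ratio = f"{ratio:.2f}"
```

Here the pair reads as 20 / 12 and the ratio as about 1.67. A comment with no agreement votes has no ratio at all, which keeps "nobody had an opinion" visibly distinct from "people were split".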

Replies from: habryka4, RobbBB↑ comment by habryka (habryka4) · 2022-06-24T21:51:04.620Z · LW(p) · GW(p)

I think this is worth some experiments at least. I do think any number that is visible on every comment really needs to pass a very high bar, though this one seems like it could plausibly pass it.

↑ comment by Rob Bensinger (RobbBB) · 2022-06-24T20:24:35.588Z · LW(p) · GW(p)

+1

I may get this in the current setup from looking at agreement compared to karma

I don't think this is reliable enough.

comment by Duncan Sabien (Deactivated) (Duncan_Sabien) · 2022-06-24T21:44:40.389Z · LW(p) · GW(p)

Pulling together thoughts from a variety of subthreads:

I expect this to meaningfully deter me/create substantial demoralization and bad feelings when I attempt to participate in comment threads, and therefore cause me to do so even less than I currently do.

This impression has been building across all the implementations of the two-factor voting over the past few months.

In particular: the thing I wanted and was excited about from a novel or two-factor voting system was a distinction between what's overall approved or disapproved (i.e. I like or dislike the addition to the conversation, think it was productive or counterproductive) and what's true or false (i.e. I endorse the claims or reasoning and think that more people should believe them to be true).

I very much do not believe that "agree or disagree" is a good proxy for that/tracks that. I think that it doesn't train LWers to distinguish their sense of truth or falsehood from how much their monkey brain wants to signal-boost a given contribution. I don't think it is going to nudge us toward better discourse and clearer separation of [truth] and [value].

It feels like it's an active step away from that, and therefore it makes me sad. It's signal-boosting mob agreement and mob disagreement in a way that feels like more unthinking subjectivity, rather than less.

I think this would not be true if I had faith in the userbase, i.e. in a group composed entirely of Oli, Vaniver, Said, Logan, and Eliezer, I would trust the agreement/disagreement button.

But with LW writ large, I think it's sort of ... halfway pretending to be a signal of truth while secretly just being more-of-the-thing-karma-was-already-doing, i.e. popularity contest made slightly better by the fact that the people judging popularity are trying a little to make actually-good things popular.

(This impression based on scattered assessments of the second vote on various comments over the past few months.)

Replies from: habryka4, Duncan_Sabien, gjm, ambigram↑ comment by habryka (habryka4) · 2022-06-24T22:00:25.216Z · LW(p) · GW(p)

I very much do not believe that "agree or disagree" is a good proxy for that/tracks that. I think that it doesn't train LWers to distinguish their sense of truth or falsehood from how much their monkey brain wants to signal-boost a given contribution. I don't think it is going to nudge us toward better discourse and clearer separation of [truth] and [value].

See my other comment. I don't think agree/disagree is much different from true/false, and am confused about the strength of your reaction here. I personally don't have a strong preference, and only mildly prefer "agree/disagree" because it is more clearly in the same category as "approve/disapprove", i.e. an action, instead of a state.

I think the hover-over text needs tweaking anyways. If other people also have a preference for saying something like "Agree: Do you think the content of this comment is true?" and "Disagree: Do you think the content of this comment is false?", then that seems good to me. Having "approve/disapprove" and "true/false" as the top-level distinction does sure parse as a type error to me (why is one an action, and the other one an adjective?).

I also think we should definitely change the hover for the karma-vote dimension to say "approve" and "disapprove", instead of "like" and "dislike", which I think captures the dimensions here better.

Replies from: Vladimir_Nesov↑ comment by Vladimir_Nesov · 2022-06-24T22:29:29.974Z · LW(p) · GW(p)

Agree: Do you think the content of this comment is true?

Apart from equivocation [LW(p) · GW(p)] of words with usefully different meanings, I think it's less useful to extract truth-dimension than agreement-dimension, since truth-dimension is present less often, doesn't help with improving approval-dimension [LW(p) · GW(p)], and agreement-dimension becomes truth-dimension for objective claims, so truth-dimension is a special case of the more-useful-for-other-things agreement-dimension.

Replies from: Duncan_Sabien, habryka4↑ comment by Duncan Sabien (Deactivated) (Duncan_Sabien) · 2022-06-24T22:33:04.504Z · LW(p) · GW(p)

I think the karma dimension already captures the-parts-of-the-agreement-dimension-that-aren't-truth.

Replies from: Vladimir_Nesov, Rana Dexsin↑ comment by Vladimir_Nesov · 2022-06-24T22:46:50.877Z · LW(p) · GW(p)

I think this is false. Subjective disagreement shouldn't imply disapproval; capturing subjective-disagreement by disapproval rounds it off to disincentivization of non-conformity, which is a problem. Extracting it into a separate dimension solves this karma-problem.

It is less useful for what you want because it's contextually-more-ambiguous than the truth-verdict. So I think the meaningful disagreement between me and you/habryka(?) might be in which issue is more important (to spend the second-voting-dimension slot on). I think the large quantity of karma-upvoted/agreement-downvoted comments to this post is some evidence for the importance of the idea I'm professing.

↑ comment by Rana Dexsin · 2022-06-24T22:55:43.984Z · LW(p) · GW(p)

To derive from something I said as a secondary part of another comment, possibly more clearly: I think that extracting “social approval that this post was a good idea and should be promoted” while conflating other forms of “agreement” is a better choice of dimensionality reduction than extracting “objective truth of the statements in this post” while conflating other forms of “approval”. Note that the former makes this change kind of a “reverse extraction” where the karma system was meant to be centered around that one element to begin with and now has some noise removed, while the other elements now have a place to be rather than vanishing. The last part of that may center some disapprovals of the new system, along the lines of “amplifying the rest of it into its own number (rather than leaving it as an ambiguous background presence) introduces more noise than is removed by keeping the social approval axis ‘clean’” (which I don't believe, but I can partly see why other people might believe).

Of Strange Loop relevance: I am treating most of the above beliefs of mine here as having primarily intersubjective truth value, which is similar in a lot of relevant ways to an objective truth value but only contextually interconvertible.

↑ comment by habryka (habryka4) · 2022-06-24T22:31:54.308Z · LW(p) · GW(p)

Hmm, what about language like

"Agree: Do you think the content of this comment is true? (Or if the comment is about an emotional reaction or belief of the author, does that statement resonate with you?)"

It sure is a mouthful, but it feels like it points towards a coherent cluster.

Replies from: Vladimir_Nesov↑ comment by Vladimir_Nesov · 2022-06-24T23:13:14.486Z · LW(p) · GW(p)

I think the thing Duncan wants is harder to formulate than this, it has to disallow [LW(p) · GW(p)] voting on aspects of the comment that are not about factual claims whose truth is relevant. And since most claims are true, it somehow has to avoid everyone-truth-upvotes-everything default in a way that retains some sort of useful signal instead of deciding the number of upvotes based on truth-unrelated selection effects. I don't see what this should mean for comments-in-general, carefully explained, and I don't currently have much hope that it can be operationalized into something more useful [LW(p) · GW(p)] than agreement.

↑ comment by Duncan Sabien (Deactivated) (Duncan_Sabien) · 2022-06-24T21:47:30.266Z · LW(p) · GW(p)

I am self-aware about the fact that this might just mean "this isn't your scene, Duncan; you don't belong" more than "this group is doing something wrong for this group's goals and values."

Like, the complaint here is not necessarily "y'all're doing it Wrong" with a capital W so much as "y'all're doing it in a way that seems wrong to me, given what I think 'wrong' is," and there might just be genuine disagreement about wrongness.

But I think "agree/disagree" points people toward yet more of the same social junk that we're trying to bootstrap out of, in a way that "true/false" does not. It feels like that's where this went wrong/that's what makes this seem doomed-from-the-start and makes me really emotionally resistant to it.

I do not trust the aggregated agreement or disagreement of LW writ large to help me see more clearly or be a better reasoner, and I do not expect it to identify and signal-boost truth and good argument for e.g. young promising new users trying to become less wrong.

Replies from: Duncan_Sabien, Rana Dexsin↑ comment by Duncan Sabien (Deactivated) (Duncan_Sabien) · 2022-06-24T21:53:59.816Z · LW(p) · GW(p)

e.g. a -1 just appeared on the top-level comment in the "agree/disagree" category and it makes me want to take my ball and go home and never come back.

I'm taking that feeling as object, rather than being fully subject to it, but when I anticipate fighting against that feeling every time I leave a comment, I conclude "this is a bad place for me to be."

EDIT: it's now -3. Is the takeaway "this comment is substantially more false than true"?

EDIT: now at -5, and yes, indeed, it is making me want to LEAVE LESSWRONG.

Replies from: Valentine, habryka4, Duncan_Sabien↑ comment by Valentine · 2022-06-25T21:45:49.425Z · LW(p) · GW(p)

This means you're using others' reactions to define what you are or are not okay with.

I mean, if you think this -1 -3 -5 is reflecting something true, are you saying you would rather keep that truth hidden so you can keep feeling good about posting in ignorance?

And if you think it's not reflecting something true, doesn't your reaction highlight a place where your reactions need calibrating?

I'm pretty sure you're actually talking about collective incentives and you're just using yourself as an example to point out the incentive landscape.

But this is a place where a collective culture of emotional codependence actively screws with epistemics.

Which is to say, I disagree in a principled way with your sense of "wrongness" here, in the sense you name in your previous comment [LW(p) · GW(p)]:

Like, the complaint here is not necessarily "y'all're doing it Wrong" with a capital W so much as "y'all're doing it in a way that seems wrong to me, given what I think 'wrong' is," and there might just be genuine disagreement about wrongness.

I think a good truth-tracking culture acknowledges, but doesn't try to ameliorate, the discomfort you're naming in the comment I'm replying to.

(Whether LW agrees with me here is another matter entirely! This is just me.)

Replies from: Duncan_Sabien↑ comment by Duncan Sabien (Deactivated) (Duncan_Sabien) · 2022-06-25T23:25:40.713Z · LW(p) · GW(p)

I mean, if you think this -1 -3 -5 is reflecting something true, are you saying you would rather keep that truth hidden so you can keep feeling good about posting in ignorance?

No, not quite.

There's a difference (for instance) between knowledge and common knowledge, and there's a difference (for instance) between animosity and punching.

Or maybe this is what you meant with "actually talking about collective incentives and you're just using yourself as an example to point out the incentive landscape."

A bunch of LWers can be individually and independently wrong about matters of fact, and this is different from them creating common knowledge that they all disagree with a thing (wrongly).

It's better in an important sense for ten individually wrong people to each not have common knowledge that the other nine also are wrong about this thing, because otherwise they come together and form the anti-vax movement.

Similarly, a bunch of LWers can be individually in grumbly disagreement with me, and this is different from there being a flag for the grumbly discontent to come together and form SneerClub.

(It's worth noting here that there is a mirror to all of this, i.e. there's the world in which people are quietly right or in which their quiet discontent is, like, a Correct Moral Objection or something. But it is an explicit part of my thesis here that I do not trust LWers en-masse. I think the actual consensus of LWers is usually hideously misguided, and that a lot of LW's structure (e.g. weighted voting) helps to correct and ameliorate this fact, though not perfectly (e.g. Ben Hoffman's patently-false slander of me being in positive vote territory for over a week with no one speaking in objection to it, which is a feature of Old LessWrong A Long Time Ago but it nevertheless still looms large in my model because I think New LessWrong Today is more like the post-Civil-War South (i.e. not all that changed) than like post-WWII-Japan (i.e. deeply restructured)).)

What I want is for Coalitions of Wrongness to have a harder time forming, and Coalitions of Rightness to have an easier time forming.

It is up in the air whether RightnessAndWrongnessAccordingToDuncan is closer to actually right than RightnessAndWrongnessAccordingToTheLWMob.

But it seems to me that the vote button in its current implementation, and evaluated according to the votes coming in, was more likely to be in the non-overlap between those two, and in the LWMob part, which means an asymmetric weapon in the wrong direction.

Sorry, this comment is sort of quickly tossed off; please let me know if it doesn't make sense.

Replies from: Valentine↑ comment by Valentine · 2022-06-26T01:59:50.289Z · LW(p) · GW(p)

Mmm. It makes sense. It was a nuance I missed about your intent. Thank you.

What I want is for Coalitions of Wrongness to have a harder time forming, and Coalitions of Rightness to have an easier time forming.

Abstractly that seems maybe good.

My gut sense is you can't do that by targeting how coalitions form. That engenders Goodhart drift. You've got to do it by making truth easier to notice in some asymmetric way.

I don't know how to do that.

I agree that this voting system doesn't address your concern.

It's unclear to me how big a problem it is though. Maybe it's huge. I don't know.

↑ comment by habryka (habryka4) · 2022-06-24T22:08:10.259Z · LW(p) · GW(p)

EDIT: it's now -3. Is the takeaway "this comment is substantially more false than true"?

I think other people are saying "the sentences that Duncan says about himself are not true for me" while also saying "I am nevertheless glad that Duncan said it". This seems like great information for me, and is like, quite important for me getting information from this thread about how people want us to change the feature.

Replies from: Vladimir_Nesov, Duncan_Sabien↑ comment by Vladimir_Nesov · 2022-06-24T22:35:03.938Z · LW(p) · GW(p)

And if you change agreement-dimension to truth-dimension, this data will no longer be possible to express in terms of voting, because it's not the case that Duncan-opinion is false.

↑ comment by Duncan Sabien (Deactivated) (Duncan_Sabien) · 2022-06-24T22:12:54.938Z · LW(p) · GW(p)

The distinction between "not true for me, the reader" and "not true at all" is not clear.

And that is the distinction between "agree/disagree" and "true/false."

Replies from: habryka4, Kaj_Sotala↑ comment by habryka (habryka4) · 2022-06-24T22:16:54.254Z · LW(p) · GW(p)

Hmm, I do sure find the first one more helpful when people talk about themselves. Like, if someone says "I think X", I want to know when other people would say "I think not X". I don't want people to tell me if they really think whether the OP accurately reported on their own beliefs and really believes X.

Replies from: Duncan_Sabien, Rana Dexsin↑ comment by Duncan Sabien (Deactivated) (Duncan_Sabien) · 2022-06-24T22:22:54.362Z · LW(p) · GW(p)

Yeah. Both are useful, and each is more useful in some context or other. I just want it to be relatively unambiguous which is happening—I really felt like I was being told I was wrong in my top-level comment. That was the emotional valence.

Replies from: RobbBB↑ comment by Rob Bensinger (RobbBB) · 2022-06-25T22:03:32.519Z · LW(p) · GW(p)

I'm sad this is your experience!

I interpret "agree/disagree" in this context as literally 'is this comment true, as far as you can tell, or is it false?', so when I imagine changing it to "true/false" I don't imagine it feeling any different to me. (Which also means I'm not personally opposed to such a change. 🤷)

Maybe relevant that I'm used to Arbital's 'assign a probability to this claim' feature. I just think of this as a more coarse-grained, fast version of Arbital's tool for assigning probabilities to claims.

When I see disagree-votes on my comments, I think I typically feel bad about it if it's also downvoted (often some flavor of 'nooo you're not fully understanding a thing I was trying to communicate!'), but happy about it if it's upvoted. Something like:

- Amusement at the upvote/agreevote disparity, and warm feelings toward LW that it was able to mentally separate its approval for the comment from how much probability it assigns to the comment being true.

- Pride in LW for being one of the rare places on the Internet that cares about the distinction between 'I like this' and 'I think this is true'.

- I mostly don't perceive the disagreevotes as 'you are flatly telling me to my face that I'm wrong'. Rather, I perceive it more like 'these people are writing journal entries to themselves saying "Dear Diary, my current belief is X"', and then LW kindly records a bunch of these diary entries in a single centralized location so we can get cool polling data about where people are at. It feels to me like a side-note.

Possibly I was primed to interpret things this way by Arbital? On Arbital, probability assignments get their own page you can click through to; and Arbital pages are timeless, so people often go visit a page and vote on it years after the post was originally created, with (I think?) no expectation the post author will ever see that they voted. And their names and specific probabilities are attached. All of which creates a sense of 'this isn't a response to the post, it's just a tool for people to keep track of what they think of things'.

Maybe that's the crux? I might be totally wrong, but I imagine you seeing a disagree-vote and reading it instead as 'a normal downvote, but with a false pretension of being somehow unusually Epistemic and Virtuous', or as an attempt to manipulate social reality and say 'we, the elite members of the Community of Rationality, heirs to the throne of LessWrong, hereby decree (from behind our veil of anonymity) (with zero accountability or argumentation) that your view is False; thus do we bindingly affirm the Consensus Position of this site'.

I think I can also better understand your perspective (though again, correct me if I'm wrong) if I imagine I'm in hostile territory surrounded by enemies.

Like, maybe you imagine five people stalking you around LW downvoting-and-disagreevoting on everything you post, unfairly strawmanning you, etc.; and then there's a separate population of LWers who are more fair-minded and slower to rush to judgment.

But if the latter group of people tends to upvote you and humbly abstain from (dis)agreevoting, then the pattern we'll often see is 'you're being upvoted and disagreed with', as though the latter fair-minded population were doing both the upvoting and the disagreevoting. (Or as though the site as a whole were virtuously doing the support-and-defend-people's-right-to-say-unpopular-things thing.) Which is in fact wildly different from a world where the fair-minded people are neutral or positive about the truth-value of your comments, while the Duncan-hounding trolls supply all the disagreevotes.

And even if the probability of the 'Duncan-hounding trolls' thing is low, it's maddening to have so much uncertainty about which of those scenarios (or other scenarios) is occurring. And it's doubly maddening to have to worry that third parties might assign unduly low probability to the 'Duncan-hounding trolls' thing, and to related scenarios. And that they might prematurely discount Duncan's view, or be inclined to strawman it, after seeing a -8 or whatever that tells them 'social reality is that this comment is Wrong'.

Again, tell me if this is all totally off-base. This is me story-telling so you can correct my models; I don't have a crystal ball. But that's an example of a scenario where I'd feel way more anxious about the new system, and where I'd feel very happy to have a way of telling how many people are agreeing and upvoting, versus agreeing and downvoting, versus disagreeing and upvoting, versus disagreeing and downvoting.
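To make the ambiguity concrete, here is a minimal sketch of the cross-tabulation described above. Everything in it is hypothetical: the `Ballot` type, the function names, and the vote counts are invented for illustration, and it ignores strong-votes and karma weighting entirely. It only shows that two very different voter populations can produce the same displayed totals.

```python
from collections import Counter
from typing import NamedTuple


class Ballot(NamedTuple):
    """One voter's pair of votes on a single comment (hypothetical model)."""
    karma: int      # +1 upvote, -1 downvote, 0 no karma vote
    agreement: int  # +1 agree, -1 disagree, 0 no agreement vote


KARMA_LABELS = {1: "upvote", -1: "downvote", 0: "no karma vote"}
AGREEMENT_LABELS = {1: "agree", -1: "disagree", 0: "no agreement vote"}


def totals(ballots):
    """Return the two aggregate scores a reader currently sees."""
    return (sum(b.karma for b in ballots), sum(b.agreement for b in ballots))


def cross_tabulate(ballots):
    """Count how many voters fall into each (agreement, karma) combination."""
    return Counter(
        (AGREEMENT_LABELS[b.agreement], KARMA_LABELS[b.karma]) for b in ballots
    )


# World A: the same fair-minded people both upvote and disagree.
one_population = [Ballot(1, -1)] * 2 + [Ballot(1, 0)]

# World B: five fair-minded people upvote and abstain on agreement,
# while two hostile voters downvote and disagree.
two_populations = [Ballot(1, 0)] * 5 + [Ballot(-1, -1)] * 2

print(totals(one_population), totals(two_populations))
print(cross_tabulate(one_population))
print(cross_tabulate(two_populations))
```

Both worlds show the same pair of totals, but only the cross-tabulation reveals whether the disagreement comes from the people who upvoted or from the people who downvoted.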

Plausibly a big part of why we feel differently about the system is that you've had lots of negative experiences on LW and don't trust the consensus here, while I feel more OK about it?

Like, I don't think LW is reliably correct, and I don't think of 'people who use LW' as the great-at-epistemics core of the rationalescent community. But I feel fine about the site, and able to advocate my views, be heard, persuade people, etc. If your experience is instead one of constantly having to struggle to be understood at all, fighting your way to not be strawmanned, having a minority position that's constantly under siege, etc., then I could imagine having a totally different experience.

Replies from: Duncan_Sabien↑ comment by Duncan Sabien (Deactivated) (Duncan_Sabien) · 2022-06-26T02:56:08.654Z · LW(p) · GW(p)

It is not totally off-base; these hypotheses above plus my reply to Val pretty much cover the reaction.

I imagine you seeing a disagree-vote and reading it instead as 'a normal downvote, but with a false pretension of being somehow unusually Epistemic and Virtuous', or as an attempt to manipulate social reality and say 'we, the elite members of the Community of Rationality, heirs to the throne of LessWrong, hereby decree (from behind our veil of anonymity) (with zero accountability or argumentation) that your view is False; thus do we bindingly affirm the Consensus Position of this site'.

... resonated pretty strongly.

But that's an example of a scenario where I'd feel way more anxious about the new system, and where I'd feel very happy to have a way of telling how many people are agreeing and upvoting, versus agreeing and downvoting, versus disagreeing and upvoting, versus disagreeing and downvoting.

Yes.

Plausibly a big part of why we feel differently about the system is that you've had lots of negative experiences on LW and don't trust the consensus here, while I feel more OK about it?

If your experience is instead one of constantly having to struggle to be understood at all, fighting your way to not be strawmanned, having a minority position that's constantly under siege, etc., then I could imagine having a totally different experience.

Yes. In particular, I feel I have been, not just misunderstood, but something-like attacked or willfully misinterpreted, many times, and usually I am wanting someone, anyone, to come to my defense, and I only get that defense perhaps one such time in three.

Worth noting that I was on board with the def of approve/disapprove being "I could truthfully say this or something close to it from my own beliefs and experience."

Replies from: SaidAchmiz↑ comment by Said Achmiz (SaidAchmiz) · 2022-06-26T06:39:18.627Z · LW(p) · GW(p)

Worth noting that I was on board with the def of approve/disapprove being “I could truthfully say this or something close to it from my own beliefs and experience.”

It seems to me (and really, this doubles as a general comment on the pre-existing upvote/downvote system, and almost all variants of the UI for this one, etc.) that… a big part of the problem with a system like this, is that… “what people take to be the meaning of a vote (of any kind and in any direction)” is not something that you (as the hypothetical system’s designer) can control, or determine, or hold stable, or predict, etc.

Indeed it’s not only possible, but likely, that:

- different people will interpret votes differently;

- people who cast the votes will interpret them differently from people who use the votes as readers;

- there will be difficult-to-predict patterns in which people interpret votes how;

- how people interpret votes, and what patterns there are in this, will drift over time;

- how people think about the meaning of the votes (when explicitly thinking about them) differs from how people’s usage of the votes (from either end) maps to their cognitive and affective states (i.e., people think they think about votes one way, but they actually think about votes another way);

… etc., etc.

So, to be frank, I think that any such voting system is doomed to be useless for measuring anything more subtle or nuanced than the barest emotivism (“boo”/“yay”), simply because it’s not possible to consistently and with predictable consequences dictate an interpretation for the votes, to be reliably and stably adhered to by all users of the site.

Replies from: TekhneMakre, Duncan_Sabien↑ comment by TekhneMakre · 2022-06-26T07:10:47.759Z · LW(p) · GW(p)

If true, that would imply an even higher potential value of meta-filtering (users can choose which other users' feedback they want to modulate their experience).

Replies from: SaidAchmiz↑ comment by Said Achmiz (SaidAchmiz) · 2022-06-26T07:46:25.626Z · LW(p) · GW(p)

I don’t think this follows… after all, once you’re whitelisting a relatively small set of users you want to hear from, why not just get those users’ comments, and skip the voting?

(And if you’re talking about a large set of “preferred respondents”, then… I’m not sure how this could be managed, in a practical sense?)

Replies from: TekhneMakre↑ comment by TekhneMakre · 2022-06-26T08:11:02.085Z · LW(p) · GW(p)

That's why it's a hard problem. The idea would be to get leverage by letting you say "I trust this user's judgement, including about whose judgement to trust". Then you use something like (personalized) PageRank / eigenmorality https://scottaaronson.blog/?p=1820 to get useful information despite the circularity of "trusting who to trust about who to trust about ...", and which leverages all the users' ratings of trust.
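A rough sketch of what the personalized PageRank idea could look like, assuming each user simply lists the users whose judgement they trust. The function name, the `trust` dictionary, and the user names are all made up for illustration; nothing here reflects how LessWrong actually works. The circularity is handled by power iteration: trust flows outward along "I trust X" edges, and a fixed fraction of it restarts at the viewing user on every step.

```python
def personalized_trust(trust, me, damping=0.85, iterations=100):
    """Personalized PageRank over a 'who trusts whom' graph.

    trust: dict mapping each user to a list of users they trust (hypothetical input).
    me: the user whose point of view the scores are computed from.
    Returns a dict of user -> score; the scores sum to 1.
    """
    users = {me} | set(trust) | {v for targets in trust.values() for v in targets}
    score = {u: 0.0 for u in users}
    score[me] = 1.0  # all trust starts with the viewing user

    for _ in range(iterations):
        nxt = {u: 0.0 for u in users}
        nxt[me] += 1.0 - damping  # restart mass always returns to `me`
        for u in users:
            targets = trust.get(u, [])
            if targets:
                # Split this user's damped score evenly among those they trust.
                share = damping * score[u] / len(targets)
                for v in targets:
                    nxt[v] += share
            else:
                # Users who trust nobody hand their score back to `me`.
                nxt[me] += damping * score[u]
        score = nxt
    return score


# Hypothetical trust graph: I trust alice, alice trusts bob,
# and carol/dave form a cluster nobody I trust has vouched for.
trust = {"me": ["alice"], "alice": ["bob"], "carol": ["dave"]}
scores = personalized_trust(trust, "me")
for user, value in sorted(scores.items(), key=lambda kv: -kv[1]):
    print(f"{user}: {value:.3f}")
```

In this toy graph, bob ends up with a nonzero score purely because alice vouches for him, while carol and dave score zero because no chain of trust reaches them from the viewer. The scores could then weight each user's votes for that particular viewer, which is one possible reading of "meta-filtering".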

↑ comment by Duncan Sabien (Deactivated) (Duncan_Sabien) · 2022-06-26T06:56:37.174Z · LW(p) · GW(p)

I agree, but I find something valuable about, like, unambiguous labels anyway?

Like it's easier for me to metabolize "fine, these people are using the button 'wrong' according to the explicit request made by the site" somehow, than it is to metabolize the confusingly ambiguous open-ended "agree/disagree" which, from comments all throughout this post, clearly means like six different clusters of Thing.

Replies from: SaidAchmiz↑ comment by Said Achmiz (SaidAchmiz) · 2022-06-26T07:44:44.285Z · LW(p) · GW(p)

Did you mean “confusingly ambiguous”? If not, then could you explain that bit?

Replies from: Duncan_Sabien↑ comment by Duncan Sabien (Deactivated) (Duncan_Sabien) · 2022-06-26T21:38:02.763Z · LW(p) · GW(p)

I did mean confusingly ambiguous, which is an ironic typo. Thanks.

I think we should be in the business of not setting up brand-new motte-and-baileys, and enshrining them in site architecture.

Replies from: SaidAchmiz↑ comment by Said Achmiz (SaidAchmiz) · 2022-06-26T22:34:27.482Z · LW(p) · GW(p)

Yes, I certainly agree with this.

(I do wonder whether the lack of agreement on the unwisdom of setting up new motte-and-baileys comes from the lack of agreement that the existing things are also motte-and-baileys… or something like them, anyway—is there even an “official” meaning of the karma vote buttons? Probably there is, but it’s not well-known enough to even be a “motte”, it seems to me… well, anyhow, as I said—maybe some folks think that the vote buttons are good and work well and convey useful info, and accordingly they also think that the agreement vote buttons will do likewise?)

↑ comment by Rana Dexsin · 2022-06-24T22:28:03.977Z · LW(p) · GW(p)

I think an expansion of that subproblem is that “agreement” is determined in more contexts and modalities depending on the context of the comment. Having only one axis for it means the context can be chosen implicitly, which (to my mind) sort of happens anyway. Modes of agreement include truth in the objective sense but also observational (we see the same thing, not quite the same as what model-belief that generates), emotional (we feel the same response), axiological (we think the same actions are good), and salience-based (we both think this model is relevant—this is one of the cases where fuzziness versus the approval axis might come most into play). In my experience it seems reasonably clear for most comments which axis is “primary” (and I would just avoid indicating/interpreting on the “agreement” axis in case of ambiguity), but maybe that's an illusion? And separating all of those out would be a much more radical departure from a single-axis karma system, and impose even more complexity (and maybe rigidity?), but it might be worth considering what other ideas are around that.

More narrowly, I think having only the “objective truth” axis as the other axis might be good in some domains but fails badly in a more tangled conversation, and especially fails badly while partial models and observations are being thrown around, and that's an important part of group rationality in practice.

↑ comment by Kaj_Sotala · 2022-06-26T21:46:13.046Z · LW(p) · GW(p)

And that is the distinction between "agree/disagree" and "true/false."

If the labels were "true/false", wouldn't it still be unclear when people meant "not true for me, the reader" and when they meant "not true at all"?

Replies from: Duncan_Sabien↑ comment by Duncan Sabien (Deactivated) (Duncan_Sabien) · 2022-06-26T23:33:23.566Z · LW(p) · GW(p)

I've gone into this in more detail elsewhere. Ultimately, the solution I like best is "Upvoting on this axis means 'I could truthfully say this or something close to it from my own beliefs and experience.'"