Birds, Brains, Planes, and AI: Against Appeals to the Complexity/Mysteriousness/Efficiency of the Brain

post by Daniel Kokotajlo (daniel-kokotajlo) · 2021-01-18T12:08:13.418Z · LW · GW · 86 comments

[Epistemic status: Strong opinions lightly held, this time with a cool graph.]

I argue that an entire class of common arguments against short timelines is bogus, and provide weak evidence that anchoring to the human-brain-human-lifetime milestone is reasonable.

In a sentence, my argument is that the complexity and mysteriousness and efficiency of the human brain (compared to artificial neural nets) is almost zero evidence that building TAI will be difficult, because evolution typically makes things complex and mysterious and efficient, even when there are simple, easily understood, inefficient designs that work almost as well (or even better!) for human purposes.

In slogan form: If all we had to do to get TAI was make a simple neural net 10x the size of my brain, my brain would still look the way it does.

The case of birds & planes illustrates this point nicely. Moreover, it is also a precedent for several other short-timelines talking points, such as the human-brain-human-lifetime (HBHL) anchor.

Plan:

- Illustrative Analogy

- Exciting Graph

- Analysis

- Extra brute force can make the problem a lot easier

- Evolution produces complex mysterious efficient designs by default, even when simple inefficient designs work just fine for human purposes.

- What’s bogus and what’s not

- Example: Data-efficiency

- Conclusion

- Appendix

1909 French military plane, the Antoinette VII.

By Deep silence (Mikaël Restoux) - Own work (Bourget museum, in France), CC BY 2.5, https://commons.wikimedia.org/w/index.php?curid=1615429

Illustrative Analogy

| AI timelines, from our current perspective | Flying machine timelines, from the perspective of the late 1800’s |
| --- | --- |
| Shorty: Human brains are giant neural nets. This is reason to think we can make human-level AGI (or at least AI with strategically relevant [AF · GW] skills, like politics and science [LW · GW]) by making giant neural nets. | Shorty: Birds are winged creatures that paddle through the air. This is reason to think we can make winged machines that paddle through the air. |
| Longs: Whoa whoa, there are loads of important differences between brains and artificial neural nets: [what follows is a direct quote from the objection a friend raised when reading an early draft of this post!] - It's at least possible that the wiring diagram of neurons plus weights is too coarse-grained to accurately model the brain's computation, but it's all there is in deep neural nets. If we need to pay attention to glial cells, intracellular processes, different neurotransmitters etc., it's not clear how to integrate this into the deep learning paradigm. - My impression is that several biological observations on the brain don't have a plausible analog in deep neural nets: growing new neurons (though unclear how important it is for an adult brain), "repurposing" in response to brain damage, … | Longs: Whoa whoa, there are loads of important differences between birds and flying machines: - Birds paddle the air by flapping, whereas current machine designs use propellers and fixed wings. - It’s at least possible that the anatomical diagram of bones, muscles, and wing surfaces is too coarse-grained to accurately model how a bird flies, but that’s all there is to current machine designs (replacing bones with struts and muscles with motors, that is). If we need to pay attention to the percolation of air through and between feathers, micro-eddies in the air sensed by the bird and instinctively responded to, etc. it’s not clear how to integrate this into the mechanical paradigm. - My impression is that several biological observations of birds don’t have a plausible analog in machines: Growing new feathers and flesh (though unclear how important this is for adult birds), “repurposing” in response to damage ... |
| Shorty: The key variables seem to be size and training time. Current neural nets are tiny; the biggest one is only one-thousandth the size of the human brain. But they are rapidly getting bigger. Once we have enough compute to train neural nets as big as the human brain for as long as a human lifetime (HBHL), it should in principle be possible for us to build HLAGI. No doubt there will be lots of details to work out, of course. But that shouldn’t take more than a few years. | Shorty: The key variables seem to be engine-power and engine weight. Current motors are not strong & light enough, but they are rapidly getting better. Once the power-to-weight ratio of our motors surpasses the power-to-weight ratio of bird muscles, it should be in principle possible for us to build a flying machine. No doubt there will be lots of details to work out, of course. But that shouldn’t take more than a few years. |
| Longs: Bah! I don’t think we know what the key variables are. For example, biological brains seem to be able to learn faster, with less data, than artificial neural nets. And we don’t know why. | Longs: Bah! I don’t think we know what the key variables are. For example, birds seem to be able to soar long distances without flapping their wings at all, and we still haven’t figured out how they do it. Another example: We still don’t know how birds manage to steer through the air without crashing (flight stability & control). Besides, “there will be lots of details to work out” is a huge understatement. It took evolution billions of generations of billions of individuals to produce birds. What makes you think we’ll be able to do it quickly? It’s plausible that actually we’ll have to do it the way evolution did it, i.e. meta-design, i.e. evolve a large population of flying machines, tweaking our blueprints each generation of crashed machines to grope towards better designs. And even if you think we’ll be able to do it substantially quicker than evolution did, it’s pretty presumptuous to think we could do it quickly enough that the date our engines achieve power/weight parity with bird muscle is relevant for forecasting. |

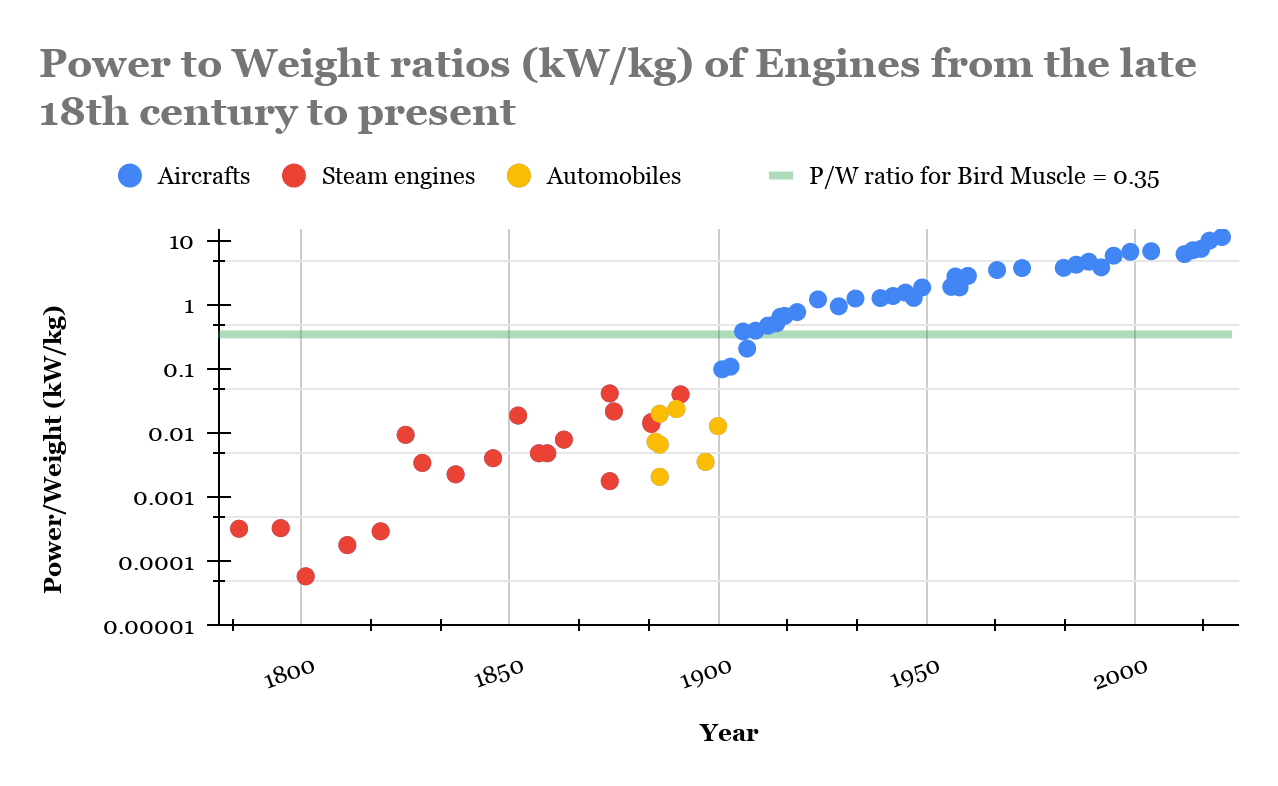

Exciting Graph

This data shows that Shorty was entirely correct about forecasting heavier-than-air flight. (For details about the data, see appendix.) Whether Shorty will also be correct about forecasting TAI remains to be seen.

In some sense, Shorty has already made two successful predictions: I started writing this argument before having any of this data; I just had an intuition that power-to-weight is the key variable for flight and that therefore we probably got flying machines shortly after having comparable power-to-weight as bird muscle. Halfway through the first draft, I googled and confirmed that yes, the Wright Flyer’s motor was close to bird muscle in power-to-weight. Then, while writing the second draft, I hired an RA, Amogh Nanjajjar, to collect more data and build this graph. As expected, there was a trend of power-to-weight improving over time, with flight happening right around the time bird-muscle parity was reached.

I had previously heard from a friend, who read a book about the invention of flight, that the Wright brothers were the first because they (a) studied birds and learned some insights from them, and (b) did a bunch of trial and error, rapid iteration, etc. (e.g. in wind tunnels). The story I heard was all about the importance of insight and experimentation--but this graph seems to show that the key constraint was engine power-to-weight. Insight and experimentation were important for determining who invented flight, but not for determining which decade flight was invented in.
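The kind of check the graph performs can be sketched in a few lines: fit a straight line to the logarithm of power-to-weight over time, then ask when that trend reaches a fixed benchmark such as bird muscle. This is only a minimal sketch under stated assumptions. The function names are hypothetical, and the numbers are made-up toy values in arbitrary units, not the engine data behind the graph.

```python
import math

def fit_log_linear(times, ratios):
    """Least-squares fit of log10(ratio) = slope * time + intercept."""
    n = len(times)
    logs = [math.log10(r) for r in ratios]
    mean_t = sum(times) / n
    mean_log = sum(logs) / n
    sxx = sum((t - mean_t) ** 2 for t in times)
    sxy = sum((t - mean_t) * (y - mean_log) for t, y in zip(times, logs))
    slope = sxy / sxx
    intercept = mean_log - slope * mean_t
    return slope, intercept

def parity_time(slope, intercept, benchmark):
    """Time at which the fitted trend reaches the benchmark ratio."""
    return (math.log10(benchmark) - intercept) / slope

# Toy placeholder values (NOT the post's dataset): the ratio grows 10x
# every 10 time units, and the benchmark is set at 100 in the same units.
toy_times = [0, 10, 20, 30]
toy_ratios = [1.0, 10.0, 100.0, 1000.0]
toy_benchmark = 100.0

slope, intercept = fit_log_linear(toy_times, toy_ratios)
# The toy trend reaches the benchmark at about t = 20.
print(parity_time(slope, intercept, toy_benchmark))
```

With real data, the interesting comparison would be between the fitted parity date and the date of the first successful flight; the sketch only shows the mechanics of that comparison.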

Analysis

Part 1: Extra brute force can make the problem a lot easier

One way in which compute can substitute for insights/algorithms/architectures/ideas is that you can use compute to search for them. But there is a different and arguably more important way in which compute can substitute for insights/etc.: Scaling up the key variables, so that the problem becomes easier, so that fewer insights/etc. are needed.

For example, with flight, the problem becomes easier the higher your motors’ power-to-weight ratio. Even if the Wright brothers didn’t exist and nobody else had their insights, eventually we would have achieved powered flight anyway, because when our engines are 100x more powerful for the same weight, we can use extremely simple, inefficient designs. (For example, imagine a u-shaped craft with a low center of gravity and helicopter-style rotors on each tip. Add a third, smaller propeller on a turret somewhere for steering. EDIT: Oops, lol, I'm actually wrong about this. Keeping center of gravity low doesn't help. Welp, this is embarrassing.)

With neural nets, we have plenty of evidence now that bigger = better, with theory to back it up. Suppose the problem of making human-level AGI with HBHL levels of compute is really difficult. OK, 10x the parameter count and 10x the training time and try again. Still too hard? Repeat.
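To get a feel for what each "10x and try again" round costs, here is a rough arithmetic sketch. It assumes, purely for illustration, that training compute is proportional to parameter count times training time; the post deliberately leaves "size" and "training time" vague, so this proportionality and the function names are assumptions rather than anything the argument depends on.

```python
import math

def compute_multiplier(rounds, size_factor=10.0, time_factor=10.0):
    """Total compute multiplier after `rounds` retries.

    Assumes compute is proportional to (size * training time), so each
    retry multiplies compute by size_factor * time_factor.
    """
    return (size_factor * time_factor) ** rounds

def orders_of_magnitude(multiplier):
    """Express a multiplier as a number of orders of magnitude."""
    return math.log10(multiplier)

for rounds in range(4):
    m = compute_multiplier(rounds)
    print(rounds, m, orders_of_magnitude(m))
```

Under this assumption each retry costs two orders of magnitude of compute, which is why "passed by several orders of magnitude" corresponds to only a handful of such rounds.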

Note that I’m not saying that if you take a particular design that doesn’t work, and make it bigger, it’ll start working. (If you took Da Vinci’s flying machine and made the engine 100x more powerful, it would not work). Rather, I’m saying that the problem of finding a design that works gets qualitatively easier the more parameters and training time you have to work with.

Finally, remember that human-level AGI is not the only kind of TAI. Sufficiently powerful R&D tools would work, as would sufficiently powerful persuasion tools [AF · GW], as might something that is agenty and inferior to humans in some ways but vastly superior in others.

Part 2: Evolution produces complex mysterious efficient designs by default, even when simple inefficient designs work just fine for human purposes.

Suppose that actually all we have to do to get TAI is something fairly simple and obvious, but with a neural net 10x the size of my (actual) brain and trained for 10x longer. In this world, does the human brain look any different than it does in the actual world?

No. Here is a nonexhaustive list of reasons why evolution would evolve human brains to look like they do, with all their complexity and mysteriousness and efficiency, even if the same capability levels could be reached with 10x more neurons and a very simple architecture. Feel free to skip ahead if you think this is obvious.

- In general, evolved creatures are complex and mysterious to us, even when simple and human-comprehensible architectures work fine. Take birds, for example: As mentioned before, all the way up to the Wright brothers there were a lot of very basic things about birds that were still not understood. From this article: “They watched buzzards glide from horizon to horizon without moving their wings, and guessed they must be sucking some mysterious essence of upness from the air. Few seemed to realize that air moves up and down as well as horizontally.” I don’t know much about ornithology but I’d be willing to bet that there were lots of important things discovered about birds after airplanes already existed, and that there are still at least a few remaining mysteries about how birds fly. (Spot check: Yep, the history of ornithopters page says “...the development of comprehensive aerodynamic theory for flapping remains an outstanding problem...”). And of course evolved creatures are often more efficient in various ways than their still-useful engineered counterparts.

- Making the brain 10x bigger would be enormously costly to fitness, because it would cost 10x more energy and restrict mobility (not to mention the difficulties of getting through the birth canal!) Much better to come up with clever modules, instincts, optimizations, etc. that achieve the same capabilities in a smaller brain.

- Evolution is heavily constrained on training data, perhaps even more than on brain size. It can’t just evolve the organism to have 10x more training data, because longer-lived organisms have more opportunities to be eaten or suffer accidents, especially in their 10x-longer childhoods. Far better to hard-code some behaviors as instincts.

- Evolution gets clever optimizations and modules and such “for free” in some sense. Since it is evolving millions of individuals for millions of generations anyway, it’s not a big deal for it to perform massive search and gradient descent through architecture-space.

- Completely blank slate brains (i.e. extremely simple architecture, no instincts or finely tuned priors) would be unfit even if they were highly capable because they wouldn’t be aligned to evolution’s values (i.e. reproduction.) Perhaps most of the complexity in the human brain--the instincts, inbuilt priors, and even most of the modules--isn’t for capabilities at all, but rather for alignment [LW · GW].

Part 3: What’s bogus and what’s not

The general pattern of argument I think is bogus is:

The brain has property X, which seems to be important to how it functions. We don’t know how to make AI’s with property X. It took evolution a long time to make brains have property X. This is reason to think TAI is not near.

As argued above, if TAI is near, there should still be many X which are important to how the brain functions, which we don’t know how to reproduce in AI, and which it took evolution a long time to produce. So rattling off a bunch of X’s is basically zero evidence against TAI being near.
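One way to read "basically zero evidence" is in odds form: if a list of hard-to-reproduce X’s is about equally likely to be observed whether TAI is near or far, the likelihood ratio is close to 1 and the update is negligible. The sketch below is an illustrative gloss, not a formalism used in this post; the function name and the example numbers are assumptions chosen only to show the mechanics.

```python
def posterior_probability(prior, likelihood_ratio):
    """Bayesian update in odds form.

    prior: P(TAI is far) before hearing the argument, strictly between 0 and 1.
    likelihood_ratio: P(evidence | TAI far) / P(evidence | TAI near).
    Returns P(TAI is far | evidence).
    """
    prior_odds = prior / (1.0 - prior)
    posterior_odds = prior_odds * likelihood_ratio
    return posterior_odds / (1.0 + posterior_odds)

# Hypothetical numbers: if the X's are equally expected in both worlds,
# the likelihood ratio is 1 and the probability does not move.
print(posterior_probability(0.5, 1.0))

# An argument that X is necessary AND hard to get would be more expected
# in the "TAI far" world, so it would carry a ratio above 1 and shift the estimate.
print(posterior_probability(0.5, 3.0))
```

This is the sense in which the reformulated arguments can be non-bogus: they aim to supply evidence whose likelihood ratio actually differs from 1.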

Put differently, here are two objections any particular argument of this type needs to overcome:

- TAI does not actually require X (analogous to how airplanes didn’t require anywhere near the energy-efficiency of birds, nor the ability to soar, nor the ability to flap their wings, nor the ability to take off from unimproved surfaces… the list goes on)

- We’ll figure out how to get property X in AIs soon after we have the other key properties (size and training time), because (a) we can do search, like evolution did but much more efficient, (b) we can increase the other key variables to make our design/search problem easier, and (c) we can use human ingenuity & biological inspiration. Historically there is plenty of precedent for the previous three factors being strong enough; see e.g. the case of powered flight.

This reveals how the arguments could be reformulated to become non-bogus! They need to argue (a) that X is probably necessary for TAI, and (b) that X isn’t something that we’ll figure out fairly quickly once the key variables of size and training time are surpassed.

In some cases there are decent arguments to be made for both (a) and (b). I think data-efficiency is one of them, so I’ll use that as my example below.

Part 4: Example: Data-efficiency

Let’s work through the example of data-efficiency. A bad version of this argument would be:

Humans are much more data-efficient learners than current AI systems. Data-efficiency is very important; any human who learned as inefficiently as current AI would basically be mentally disabled. This is reason to think TAI is not near.

The rebuttal to this bad argument is:

If birds were as energy-inefficient as planes, they’d be disabled too, and would probably die quickly. Yet planes work fine. (See Table 1 from this AI Impacts page) Even if TAI is near, there are going to be lots of X’s that are important for the brain, that we don’t know how to make in AI yet, but that are either unnecessary for TAI or not too difficult to get once we have the other key variables. So even if TAI is near, I should expect to hear people going around pointing out various X’s and claiming that this is reason to think TAI is far away. You haven’t done anything to convince me that this isn’t what’s happening with X = data-efficiency.

However, I do think the argument can be reformulated and expanded to become good. Here’s a sketch, inspired by Ajeya Cotra’s argument here.

We probably can’t get TAI without figuring out how to make AIs that are as data-efficient as humans. It’s true that there are some useful tasks for which there is plenty of data--like call center work, or driving trucks--but AIs that can do these tasks won’t be transformative. Transformative AI will be doing things like managing corporations, leading armies, designing new chips, and writing AI theory publications. Insofar as AI learns more slowly than humans, by the time it accumulates enough experience doing one of these tasks, (a) the world would have changed enough that its skills would be obsolete, and/or (b) it would have made a lot of expensive mistakes in the meantime.

Moreover, we probably won’t figure out how to make AIs that are as data-efficient as humans for a long time--decades at least. This is because 1. We’ve been trying to figure this out for decades and haven’t succeeded, and 2. Having a few orders of magnitude more compute won’t help much. Now, to justify point #2: Neural nets actually do get more data-efficient as they get bigger, but we can plot the trend and see that they will still be less data-efficient than humans when they are a few orders of magnitude bigger. So making them bigger won’t be enough, we’ll need new architectures/algorithms/etc. As for using compute to search for architectures/etc., that might work, but given how long evolution took, we should think it’s unlikely that we could do this with only a few orders of magnitude of searching—probably we’d need to do many generations of large population size. (We could also think of this search process as analogous to typical deep learning training runs, in which case we should expect it’ll take many gradient updates with large batch size.) Anyhow, there’s no reason to think that data-efficient learning is something you need to be human-brain-sized to do. If we can’t make our tiny AIs learn efficiently after several decades of trying, we shouldn’t be able to make big AIs learn efficiently after just one more decade of trying.

I think this is a good argument. Do I buy it? Not yet. For one thing, I haven’t verified whether the claims it makes are true, I just made them up as plausible claims which would be persuasive to me if true. For another, some of the claims actually seem false to me. Finally, I suspect that in 1895 someone could have made a similarly plausible argument about energy efficiency, and another similarly plausible argument about flight control, and both arguments would have been wrong: Energy efficiency turned out to be insufficiently necessary, and flight control turned out to be insufficiently difficult!

Conclusion

What I am not saying: I am not saying that the case of birds and planes is strong evidence that TAI will happen once we hit the HBHL milestone. I do think it is evidence, but it is weak evidence. (For my all-things-considered view of how many orders of magnitude of compute it’ll take to get TAI, see future posts, or ask me.) I would like to see a more thorough investigation of cases in which humans attempt to design something that has an obvious biological analogue. It would be interesting to see if the case of flight was typical. Flight being typical would be strong evidence for short timelines, I think.

What I am saying: I am saying that many common anti-short-timelines arguments are bogus. They need to do much more than just appeal to the complexity/mysteriousness/efficiency of the brain; they need to argue that some property X is both necessary for TAI and not about to be figured out for AI anytime soon, not even after the HBHL milestone is passed by several orders of magnitude.

Why this matters: In my opinion the biggest source of uncertainty about AI timelines has to do with how much “special sauce” is necessary for making transformative AI. As jylin04 puts it [LW · GW],

A first and frequently debated crux is whether we can get to TAI from end-to-end training of models specified by relatively few bits of information at initialization, such as neural networks initialized with random weights. OpenAI in particular seems to take the affirmative view[^3], while people in academia, especially those with more of a neuroscience / cognitive science background, seem to think instead that we'll have to hard-code in lots of inductive biases from neuroscience to get to AGI [^4].

In my words: Evolution clearly put lots of special sauce into humans, and took millions of generations of millions of individuals to do so. How much special sauce will we need to get TAI?

Shorty is one end of a spectrum of disagreement on this question. Shorty thinks the amount of special sauce required is small enough that we’ll “work out the details” within a few years of having the key variables (size and training time). At the other end of the spectrum would be someone who thought that the amount of special sauce required is similar to the amount found in the brain. Longs is in the middle. Longs thinks the amount of special sauce required is large enough that the HBHL milestone isn’t particularly relevant to timelines; we’ll either have to brute-force search for the special sauce like evolution did, or have some brilliant new insights, or mimic the brain, etc.

This post rebutted common arguments against Shorty’s position. It also presented weak evidence in favor of Shorty’s position: the precedent of birds and planes. In future posts I’ll say more about what I think the probability distribution over amount-of-special-sauce-needed should be and why.

Acknowledgements: Thanks to my RA, Amogh Nanjajjar, for compiling the data and building the graph. Thanks to Kaj Sotala, Max Daniel, Lukas Gloor, and Carl Shulman for comments on drafts. This research was conducted at the Center on Long-Term Risk and the Polaris Research Institute.

Appendix

Some footnotes:

- I didn’t say anything about why we might think size and training time are the key variables, or even what “key variables” means. Hopefully I’ll get a chance in the comments or in subsequent posts.

- I deliberately left vague what “training time” means and what “size” means. Thus, I’m not committing myself to any particular way of calculating the HBHL milestone yet. I’m open to being convinced that the HBHL milestone is farther in the future than it might seem.

- Persuasion tools, even very powerful ones, wouldn’t be TAI by the standard definition. However they would constitute a potential-AI-induced-point-of-no-return [LW · GW], so they still count for timelines purposes.

- This "How much special sauce is needed?" variable is very similar to Ajeya Cotra's variable "how much compute would lead to TAI given 2020's algorithms."

Some bookkeeping details about the data:

- This dataset is not complete. Amogh did a reasonably thorough search for engines throughout the period (with a focus on stuff before 1910) but was unable to find power or weight stats for many of the engines we heard about. Nevertheless I am reasonably confident that this dataset is representative; if an engine was significantly better than the others of its time, probably this would have been mentioned and Amogh would have flagged it as a potential outlier.

- Many of the points for steam engine power/weight should really be bumped up slightly. This is because most of the data we had was for the weight of the entire locomotive of a steam-powered train, rather than just the steam engine part. I don’t know what fraction of a locomotive is non-steam-engine but 50% seems like a reasonable guess. I don’t think this changes the overall picture much; in particular, the two highest red dots do not need to be bumped up at all (I checked).

- The birds bar is the muscle power/weight ratio for one particular species of bird, as reported by this source. Amogh has done a bit of searching and doesn’t think muscle power/weight is significantly different for other species of bird. Seems plausible to me; even if the average bird has muscles that are twice (or half) as powerful-per-kilogram, the overall graph would look basically the same.

- I attempted to find estimates of human muscle power-to-weight ratio; it gets smaller the more tired the muscles get, but at peak performance for fit individuals it seems to be about an order of magnitude less than bird muscle. (This chart lists power-to-weight ratio for human cyclists, which according to this are probably about half muscle, so look at the left-hand column and double it.) Interestingly, this means that the engines of the first flying machines were possibly the first engines to be substantially better than human flapping/pedaling as a source of flying-machine power.

- EDIT Gaaah I forgot to include a link to the data! Here's the spreadsheet.
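Two of the bookkeeping notes above apply the same correction: when a power/weight figure was computed against the weight of a whole system (a locomotive, a cyclist), dividing by the fraction of that weight taken up by the component of interest (the steam engine, the muscle) gives the component-only ratio. A minimal sketch follows, with a hypothetical function name and placeholder input values; the 50% fractions are the rough guesses stated in the notes, not measured values.

```python
def rescale_to_component(whole_system_ratio, component_weight_fraction):
    """Convert power per unit of whole-system weight into power per unit
    of component weight.

    component_weight_fraction: share of total weight that is the component
    of interest, in the range (0, 1].
    """
    if not 0.0 < component_weight_fraction <= 1.0:
        raise ValueError("component_weight_fraction must be in (0, 1]")
    return whole_system_ratio / component_weight_fraction

# Placeholder input (not from the spreadsheet): a locomotive-based figure
# of 10 units, with the engine guessed at 50% of locomotive weight,
# corresponds to 20 units for the engine alone.
print(rescale_to_component(10.0, 0.5))

# Same correction for cyclists guessed to be about half muscle:
# doubling the whole-body figure gives the per-muscle figure.
print(rescale_to_component(6.0, 0.5))
```

On a logarithmic axis a factor of two is a small shift, which is consistent with the note that these corrections do not change the overall picture much.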

86 comments


comment by Steven Byrnes (steve2152) · 2021-01-18T16:14:12.637Z · LW(p) · GW(p)

Related to one aspect of this: my post Building brain-inspired AGI is infinitely easier than understanding the brain [LW · GW]

Replies from: daniel-kokotajlo↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2021-01-18T16:30:47.554Z · LW(p) · GW(p)

Ah! If I had read that before, I had forgotten about it, sorry. This is indeed highly relevant. Strong-upvoted to signal boost.

comment by Bucky · 2021-01-21T10:47:48.386Z · LW(p) · GW(p)

Flying machines are one example but can we choose other examples which would teach the opposite lesson?

Nuclear Fusion Power Generation

Longs: The only way we know sustained nuclear fusion can be achieved is in stars. If we are confined to things less big than the sun then sustaining nuclear fusion to produce power will be difficult and there are many unknown unknowns.

Shorty: The key parameters are temperature and pressure and then controlling the plasma. A Tokamak design should be sufficient to achieve this - if we lose control it just means we need stronger / better magnets.

Replies from: Veedrac, daniel-kokotajlo↑ comment by Veedrac · 2021-01-24T20:23:42.566Z · LW(p) · GW(p)

The appeal-to-nature's-constants argument doesn't work great in this context because the sun actually produces fairly low power per unit volume. Nuclear fusion on Earth requires vastly higher power density to be practical.

That said, I think it is correct that temperature and pressure are the key factors. I just don't think the factors map on to the natural equivalents, as much as onto some physical equations that give us the Q factor.

In the context of the article, controlling the plasma is an appeal to complexity; if it turns out to be a rate limiter even after temperature and pressure suffice, then it would be evidence against the argument, but if it turns out not to matter that much, it would be evidence for.

Replies from: daniel-kokotajlo↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2021-01-25T10:19:43.348Z · LW(p) · GW(p)

Controlling the plasma is an appeal to complexity, but it isn't an appeal to the complexity of the natural design. The natural design is super simple in this case. So it's not analogous to the types of arguments I think are bogus.

Replies from: Veedrac↑ comment by Veedrac · 2021-01-25T11:30:00.515Z · LW(p) · GW(p)

OK, but doesn't this hurt the point in the post? Shorty's claim that the key variables for AI ‘seem to be size and training time’ and not other measures of complexity seems no stronger (and actually much weaker) than the analogous claim that the key variables for fusion seem to be temperature and pressure, and not other measures of complexity like plasma control.

If the point of the post is only to argue against one specific framing for introducing appeals to complexity, rather than advocate for the simpler models, it seems to lose most of its predictive power for AI, since most of those appeals to complexity can be easily rephrased.

Replies from: daniel-kokotajlo↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2021-01-25T12:00:24.971Z · LW(p) · GW(p)

Thanks for these questions and arguments, they've given me something to think about. Here's my current take:

The point of this post was to argue against a common type of argument I heard. I agree that some of these appeals can be rephrased to become non-bogus, and indeed I sketched an account of how they need to rephrase in order to become non-bogus: They need to argue that a.) X is probably necessary for TAI, and b.) X probably won't arrive shortly after the other variables are achieved. I think most of the arguments I am calling bogus cannot be rephrased in this way to achieve a and b, or if they can, I haven't seen it done yet.

The secondary point of this post was to provide evidence for the HBHL milestone, basically "Hey, the case of flight seems analogous in a bunch of ways to the case of AI, and if AI goes the way flight went, it'll happen around the HBHL milestone." This point is much weaker for the obvious reason that flight is just one case-study and we can think of others (like maybe fusion?) that yield the opposite lessons. I think flight is more analogous to AI than fusion, but I'm not sure.

Thus, to people who already assigned non-negligible weight to the HBHL and who didn't put much stock in the bogus arguments, my post is just preaching to the choir and provides no further evidence. My post should only cause a big update in people who either bought the bogus arguments, or who assigned such a low probability to the HBHL milestone that a single historical case study is enough to make them feel like their probability was too low.

Shorty's claim that the key variables for AI ‘seem to be size and training time’ and not other measures of complexity seems no stronger (and actually much weaker) than the analogous claim that the key variables for fusion seem to be temperature and pressure, and not other measures of complexity like plasma control.

I agree that it's unclear whether "size and training time" are the key variables; maybe we need to add "control" to the list of key variables. In the case of fusion, it certainly seems that control is a key variable, at least in retrospect -- since we've had temperature and pressure equal to the sun for a while. In the case of flight, one could probably have made a convincing argument that control was a key variable, a major constraint that would take a long time to be overcome... but you would have been totally wrong; control was figured out very quickly once the other variables were in place (but not before!). Moreover, for flight at least, the control problem becomes easier and easier the more power-to-weight you have. For fusion, in my naive guessing opinion the control problem does not become easier the more temperature and pressure you have. For AI, the control problem (not the alignment kind, the capabilities kind) does become easier the more compute you have, because you can use compute to search over architectures, and because you can use parameter count and training time to compensate for other failings like data-inefficiency or whatever. So this argument which I just gave (and which I hinted at in the OP) does seem to suggest that AI will be more like flight than like fusion, but I don't by any means think this is a knock-down argument!

Replies from: Veedrac↑ comment by Veedrac · 2021-01-25T15:39:22.142Z · LW(p) · GW(p)

In the case of fusion, it certainly seems that control is a key variable, at least in retrospect -- since we've had temperature and pressure equal to the sun for a while.

To get this out of the way, I expect that fusion progress is in fact predominantly determined by temperature and pressure (and factors like that that go into the Q factor), and expect that issues with control won't seem very relevant to long-run timelines in retrospect. It's true that we've had temperature and pressure equal to the sun for a while, but it's also true that low-yield fusion is pretty easy. The missing piece to that cannot simply be control, since even a perfectly controlled ounce of a replica sun is not going to produce much energy. Rather, we just have a higher bar to cross before we get yield.

In fusion, you can use temperature and pressure to trade off against control issues. This is most clearly illustrated in hydrogen bombs. In fact, there is little in-principle reason you couldn't use hydrogen bombs to heat water to power a turbine, even if it's not the most politically or economically sensible design.

They need to argue that a.) X is probably necessary for TAI, and b.) X probably won't arrive shortly after the other variables are achieved. I think most of the arguments I am calling bogus cannot be rephrased in this way to achieve a and b, or if they can, I haven't seen it done yet.

While I've seen arguments about the complexity of neuron wiring and function, the argument has rarely been ‘and therefore we need a more exact diagram to capture the human thought processes so we can replicate it’, as much as ‘and therefore intelligence is likely to rely on a lot of specialized machinery and hardcoded knowledge.’

This argument refutes that in its naïve direct form, because, as you say, nature would add complexity irrespective of necessity, even for marginal gains. But if you allow for fusion to say, well, the simple model isn't working out, so let's add [miscellaneous complexity term], as long as it's not directly in analogy to nature, then why can't AI Longs say, well, GPT-3 clearly isn't capturing certain facets of cognition, and scaling doesn't immediately seem to be fixing that, so let's add [miscellaneous complexity term] too? Hence, ‘and therefore intelligence is likely to rely on a lot of specialized machinery and hardcoded knowledge.’

I don't think we necessarily disagree on much wrt. grounded arguments about AI, but I think if one of the key arguments (‘Part 1: Extra brute force can make the problem a lot easier’) is that certain driving forces are fungible, and can trade-off for complexity, then it seems like cases where that doesn't hold (eg. your model of fusion) would be evidence against the argument's generality. Because we don't really know how intelligence works, it seems that either you need to have a lot of belief in this class of argument (which is the case for me), or you need to be very careful applying it to this domain.

Replies from: daniel-kokotajlo↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2021-01-25T17:12:23.442Z · LW(p) · GW(p)

I expect that fusion progress is in fact predominantly determined by temperature and pressure (and factors like that that go into the Q factor), and expect that issues with control won't seem very relevant to long-run timelines in retrospect. It's true that we've had temperature and pressure equal to the sun for a while, but it's also true that low-yield fusion is pretty easy. The missing piece to that cannot simply be control, since even a perfectly controlled ounce of a replica sun is not going to produce much energy. Rather, we just have a higher bar to cross before we get yield. In fusion, you can use temperature and pressure to trade off against control issues. This is most clearly illustrated in hydrogen bombs. In fact, there is little in-principle reason you couldn't use hydrogen bombs to heat water to power a turbine, even if it's not the most politically or economically sensible design.

OK, then in that case I feel like the case of fusion is totally not a counterexample-precedent to Shorty's methodology, because the Sun is just not at all analogous to what we are trying to do with fusion power generation. I'm surprised and intrigued to hear that control isn't a big deal. I assume you know more about fusion than me so I'm deferring to you.

While I've seen arguments about the complexity of neuron wiring and function, the argument has rarely been ‘and therefore we need a more exact diagram to capture the human thought processes so we can replicate it’, as much as ‘and therefore intelligence is likely to rely on a lot of specialized machinery and hardcoded knowledge.’

This argument refutes that in its naïve direct form, because, as you say, nature would add complexity irrespective of necessity, even for marginal gains.

Then we agree, at least on the main point of this paper, which was indeed just to refute this sort of argument, which I heard surprisingly often. Just because the brain is complex mysterious etc. doesn't mean 'therefore intelligence is likely to rely on a lot of specialized machinery and hardcoded knowledge.'

But if you allow for fusion to say, well, the simple model isn't working out, so let's add [miscellaneous complexity term], as long as it's not directly in analogy to nature, then why can't AI Longs say, well, GPT-3 clearly isn't capturing certain facets of cognition, and scaling doesn't immediately seem to be fixing that, so let's add [miscellaneous complexity term] too? Hence, ‘and therefore intelligence is likely to rely on a lot of specialized machinery and hardcoded knowledge.’

I called that complexity term "Special sauce." I have not in this post argued that the amount of special sauce needed is small; I left open the possibility that it might be large. The precedent of birds and planes is evidence that necessary special sauce can be small even in situations where one might think it is large, but like I said, it's just one case, so we shouldn't update too strongly based on it. Maybe we can find other cases in which necessary special sauce does seem to be big. Maybe fusion is such a case, though as described above, it's unclear -- it seems like you are saying that we just haven't reached enough temperature and pressure yet to get viable fusion? In which case fusion isn't an example of lots of special sauce being needed after all.

I don't think we necessarily disagree on much wrt. grounded arguments about AI, but I think if one of the key arguments (‘Part 1: Extra brute force can make the problem a lot easier’) is that certain driving forces are fungible, and can trade-off for complexity, then it seems like cases where that doesn't hold (eg. your model of fusion) would be evidence against the argument's generality. Because we don't really know how intelligence works, it seems that either you need to have a lot of belief in this class of argument (which is the case for me), or you need to be very careful applying it to this domain.

I'm not sure I followed this paragraph. Are you saying that you think that, in general, there are key variables for any particular design problem which make the problem easier as they are scaled up? But that I shouldn't think that, given what I erroneously thought about fusion?

Replies from: Veedrac↑ comment by Veedrac · 2021-01-26T12:30:36.172Z · LW(p) · GW(p)

I am by no means an expert on fusion power, I've just been loosely following the field after the recent bunch of fusion startups, a significant fraction of which seem to have come about precisely because HTS magnets significantly shifted the field strength you can achieve at practical sizes. Control and instabilities are absolutely a real practical concern, as are a bunch of other things like neutron damage; my expectation is only that they are second-order difficulties in the long run, much like wing shape was a second-order difficulty for flight. My framing is largely shaped by this MIT talk (here's another, here's their startup).

I called that complexity term "Special sauce." I have not in this post argued that the amount of special sauce needed is small; I left open the possibility that it might be large.

I'm probably just wanting the article to be something it's not then!

I'll try to clarify my point about key variables. The real-world debate of short versus long AI timelines pretty much boils down to the question of whether the techniques we have for AI capture enough of cognition, that short-term future prospects (scaling and research both) end up capturing enough of the important ones for TAI.

It's pretty obvious that GPT-3 doesn't do some things we'd expect a generally intelligent agent to do, and it also seems to me (and seems to be a commonality among skeptics) that we don't have enough of a grounded understanding of intelligence to expect to fill in these pieces from first principles, at least in the short term. Which means the question boils down to ‘can we buy these capabilities with other things we do have, particularly the increasing scale of computation, and by iterating on ideas?’

Flight is a clear case where, as you've said, you can trade the one variable (power-to-weight) to make up for inefficiencies and deficiencies in the other aspects. I expect fusion is another. A case where this doesn't seem to be clearly the case is in building useful, self-replicating nanoscale robots to manufacture things, in analogy to cells and microorganisms. Lithography and biotech have given us good tools for building small objects with defined patterns, but there seems to be a lot of fundamental complexity to the task that can't easily be solved by this. Even if we could fabricate a cubic millimeter of matter with every atom precisely positioned, it's not clear how much of the gap this would close. There is an issue here with trading off scale and manufacturing to substitute for complexity and the things we don't understand.

‘Part 1: Extra brute force can make the problem a lot easier’ says that you can do this sort of trade for AI, and it justifies this in part by drawing analogy to flight. But it's hard to see what intrinsically motivates this comparison specifically, because trading off a motor's power-to-weight ratio for physical upness is very different to trading off a computer's FLOP rate for abstract thinkingness. I assumed you did this because you believed (as I do) that this sort of argument is general. Hence, a general argument should apply generally, so unless there's something special about fusion, it should apply there too. If you don't believe it's a general sort of argument, then why the comparison to flight, rather than to useful, self-replicating nanoscale robots?

If instead you're just drawing comparison to flight to say it's potentially possible that compute is fungible with complexity, rather than it being likely, then it just seems like not a very impactful argument.

Replies from: daniel-kokotajlo↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2021-01-26T14:19:14.608Z · LW(p) · GW(p)

Thanks again for the detailed reply; I feel like I'm coming to understand you (and fusion!) much better.

You may indeed be hoping the OP is something it's not.

That said, I think I have more to say in agreement with your strong position:

There is an issue here with trading off scale and manufacturing to substitute for complexity and the things we don't understand.

‘Part 1: Extra brute force can make the problem a lot easier’ says that you can do this sort of trade for AI, and it justifies this in part by drawing analogy to flight. But it's hard to see what intrinsically motivates this comparison specifically, because trading off a motor's power-to-weight ratio for physical upness is very different to trading off a computer's FLOP rate for abstract thinkingness. I assumed you did this because you believed (as I do) that this sort of argument is general. Hence, a general argument should apply generally, so unless there's something special about fusion, it should apply there too. If you don't believe it's a general sort of argument, then why the comparison to flight, rather than to useful, self-replicating nanoscale robots?

If instead you're just drawing comparison to flight to say it's potentially possible that compute is fungible with complexity, rather than it being likely, then it just seems like not a very impactful argument.

1. I don't know enough about nanotech to say whether it's a counterexample to Shorty's position. Currently I suspect it isn't. This is a separate issue from the issue you raise, which is whether it's a counterexample to the position "In general, you can substitute brute force in some variables for special sauce." Call this position the strong view.

2. I'm not sure whether I hold the strong view. I certainly didn't try to argue for it in the OP (though I did present a small amount of evidence for it I suppose.)

3. I do hold the strong-view-applied-to-AI. That is, I do think we can make the problem of building TAI easier by using more compute. (As you say, compute is fungible with complexity). I gave two reasons for this in the OP: Can scale up the key variables, and can use compute to automate the search for special sauce. I think both of these reasons are solid on their own; I don't need to appeal to historical case studies to justify them.

4. I am happy to expand on both arguments if you like. I think the "can use compute to automate search for special sauce" is pretty self-explanatory. The "can scale up the key variables" thing is based on deep learning theory as I understand it, which is that bigger neural nets work by containing more and better lottery tickets (and you need longer to train to isolate and promote those tickets from the sludge of competitor subnetworks?). And neural networks are universal function approximators. So whatever skill it is that humans do and that you are trying to get an AI to do, with a big enough neural net trained on enough data, you'll succeed. And "big enough" means probably about the size of the human brain. This is just the sketch of a skeleton of an argument of course, but I could go on...

Replies from: Veedrac↑ comment by Veedrac · 2021-01-26T23:19:18.450Z · LW(p) · GW(p)

Thanks, I think I pretty much understand your framing now.

I think the only thing I really disagree with is that “"can use compute to automate search for special sauce" is pretty self-explanatory.” I think this heavily depends on what sort of variable you expect the special sauce to be. Eg. for useful, self-replicating nanoscale robots, my hypothetical atomic manufacturing technology would enable rapid automated iteration, but it's unclear how you could use that to automatically search for a solution in practice. It's an enabler for research, moreso than a substitute. Personally I'm not sure how I'd justify that claim for AI without importing a whole bunch of background knowledge of the generality of optimization procedures!

IIUC this is mostly outside the scope of what your article was about, and we don't disagree on the meat of the matter, so I'm happy to leave this here.

Replies from: daniel-kokotajlo↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2021-01-27T08:23:25.856Z · LW(p) · GW(p)

I think I agree that it's not clear compute can be used to search for special sauce in general, but in the case of AI it seems pretty clear to me: AIs themselves run in computers, and the capabilities we are interested in (some of them, at least) can be detected on AIs in simulations (no need for e.g. robotic bodies) and so we can do trial-and-error on our AI designs in proportion to how much compute we have. More compute, more trial-and-error. (Except it's more efficient than mere trial-and-error, we have access to all sorts of learning and meta-learning and architecture search algorithms, not to mention human insight). If you had enough compute, you could just simulate the entire history of life evolving on an earth-sized planet for a billion years, in a very detailed and realistic physics environment!

Replies from: Veedrac↑ comment by Veedrac · 2021-01-27T12:44:41.929Z · LW(p) · GW(p)

Eventually the conclusion holds trivially, sure, but that takes us very far from the HBHL anchor. Most evolutionary algorithms we do today are very constrained in what programs they can generate, and are run over small models for a small number of iteration steps. A more general search would be exponentially slower, and even more disconnected from current ML. If you expect that sort of research to be pulling a lot of weight, you probably shouldn't expect the result to look like large connectionist models trained on lots of data, and you lose most of the argument for anchoring to HBHL.

A more standard framing is that ‘we can do trial-and-error on our AI designs’, but there we're again in a regime where scale is an enabler for research, moreso than a substitute for it. Architecture search will still fine-tune and validate these ideas, but is less likely to drive them directly in a significant way.

Replies from: daniel-kokotajlo↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2021-01-27T13:13:18.147Z · LW(p) · GW(p)

Eventually the conclusion holds trivially, sure, but that takes us very far from the HBHL anchor.

It takes us about 17 orders of magnitude away from the HBHL anchor, in fact. Which is not very far, when you think about it. Divide 100 percentage points of probability mass evenly across those 17 orders of magnitude, and you get almost 6% per OOM, which means something like 4x as much probability mass on the HBHL anchor as Ajeya puts on it in her report!

If you expect that sort of research to be pulling a lot of weight, you probably shouldn't expect the result to look like large connectionist models trained on lots of data, and you lose most of the argument for anchoring to HBHL.

I don't follow this argument. It sounds like double-counting to me, like: "If you put some of your probability mass away from HBHL, that means you are less confident that AI will be made in the HBHL-like way, which means you should have even less of your probability mass on HBHL."

A more standard framing is that ‘we can do trial-and-error on our AI designs’, but there we're again in a regime where scale is an enabler for research, moreso than a substitute for it. Architecture search will still fine-tune and validate these ideas, but is less likely to drive them directly in a significant way.

I'm not sure I get the distinction between enabler and substitute, or why it is relevant here. The point is that we can use compute to search for the missing special sauce. Maybe humans are still in the loop; sure.

Replies from: Veedrac↑ comment by Veedrac · 2021-01-27T15:47:10.728Z · LW(p) · GW(p)

It takes us about 17 orders of magnitude away from the HBHL anchor, in fact. Which is not very far, when you think about it. Divide 100 percentage points of probability mass evenly across those 17 orders of magnitude, and you get almost 6% per OOM, which means something like 4x as much probability mass on the HBHL anchor as Ajeya puts on it in her report!

I don't understand what you're doing here. Why 17 orders of magnitude, and why would I split 100% across each order?

I don't follow this argument. It sounds like double-counting to me

Read ‘and therefore’, not ‘and in addition’. The point is that the more you spend your compute on search, the less directly your search can exploit computationally expensive models.

Put another way, if you have HBHL compute but spend nine orders of magnitude on search, then the per-model compute is much less than HBHL, so the reasons to argue for HBHL don't apply to it. Equivalently, if your per-model compute estimate is HBHL, then the HBHL metric is only relevant for timelines if search is fairly limited.

I'm not sure I get the distinction between enabler and substitute, or why it is relevant here. The point is that we can use compute to search for the missing special sauce. Maybe humans are still in the loop; sure.

Motors are an enabler in the context of flight research because they let you build and test designs, learn what issues to solve, build better physical models, and verify good ideas.

Motors are a substitute in the context of flight research because a better motor means more, easier, and less optimal solutions become viable.

Replies from: daniel-kokotajlo↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2021-01-27T16:48:59.734Z · LW(p) · GW(p)

Ajeya estimates (and I agree with her) how much compute it would take to recapitulate evolution, i.e. simulate the entire history of life on earth evolving for a billion years etc. The number she gets is 10^41 FLOP give or take a few OOMs. That's 17 OOMs away from where we are now. So if you take 10^41 as an upper bound, and divide up the probability evenly across the OOMs... Of course it probably shouldn't be a hard upper bound, so instead of dividing up 100 percentage points you should divide up 95 or 90 or whatever your credence is that TAI could be achieved for 10^41 or less compute. But that wouldn't change the result much, which is that a naive, flat-across-orders-of-magnitude-up-until-the-upper-bound-is-reached distribution would assign substantially higher probability to Shorty's position than Ajeya does.
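To make the arithmetic concrete, here is a minimal sketch of the flat-across-OOMs calculation. The function name and constants are illustrative assumptions; the only inputs taken from the discussion are the 10^41 FLOP upper bound, the 17-OOM gap, and the candidate credences of 100, 95, and 90 percentage points.

```python
# Hypothetical helper (not from the report): spread a total credence
# evenly across a number of orders of magnitude (OOMs) of compute.
def flat_mass_per_oom(total_percent: float, n_ooms: int) -> float:
    return total_percent / n_ooms

UPPER_BOUND_OOM = 41  # 10^41 FLOP, the evolution estimate quoted above
GAP_OOMS = 17         # distance from "where we are now", per the comment
CURRENT_OOM = UPPER_BOUND_OOM - GAP_OOMS  # implied starting point: 10^24 FLOP

# Try the three credences mentioned in the comment.
for credence in (100, 95, 90):
    per_oom = flat_mass_per_oom(credence, GAP_OOMS)
    print(f"{credence}% total -> {per_oom:.2f}% per OOM")
```

Lowering the total from 100 to 90 points moves the per-OOM figure from about 5.9% to about 5.3%, which is the sense in which the result does not change much.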

I'm still not following the argument. I agree that you won't be able to use your HBHL compute to do search over HBHL-sized brains+childhoods, because if you only have HBHL compute, you can only do one HBHL-sized brain+childhood. But that doesn't undermine my point, which is that as you get more compute, you can use it to do search. So e.g. when you have 3 OOMs more compute than the HBHL milestone, you can do automated search over 1000 HBHL-sized brains+childhoods. (Also I suppose even when you only have HBHL compute you could do search over architectures and childhoods that are a little bit smaller and hope that the lessons generalize)
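The trade-off between total compute and per-candidate compute can be sketched as follows. This is only a toy accounting model under the assumption that search cost is simply (number of candidates) x (cost per candidate); the function name is hypothetical, and all quantities are expressed in OOMs relative to the HBHL milestone (0 means exactly HBHL).

```python
# Toy model: how many candidate designs can be trained for a given budget?
# Both arguments are in orders of magnitude relative to HBHL compute.
def candidates_affordable(budget_ooms: int, per_candidate_ooms: int) -> int:
    gap = budget_ooms - per_candidate_ooms
    if gap < 0:
        # The budget cannot cover even one candidate of this size.
        return 0
    return 10 ** gap

# 3 OOMs beyond HBHL, HBHL-sized candidates: 1000 brains+childhoods.
print(candidates_affordable(3, 0))
# Exactly HBHL compute, HBHL-sized candidates: a single run, no search.
print(candidates_affordable(0, 0))
# Exactly HBHL compute spread over 10^9 candidates: each is 9 OOMs below HBHL.
print(candidates_affordable(0, -9))
```

The last case is the scenario raised earlier in the thread: with a fixed HBHL budget, nine OOMs of search leaves each candidate far below HBHL scale.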

I think part of what might be going on here is that since Shorty's position isn't "TAI will happen as soon as we hit HBHL" but rather "TAI will happen shortly after we hit HBHL" there's room for an OOM or three of extra compute beyond the HBHL to be used. (Compute costs decrease fairly quickly, and investment can increase much faster, and probably will when TAI is nigh) I agree that we can't use compute to search for special sauce if we only have exactly HBHL compute (setting aside the parenthetical in the previous paragraph, which suggests that we can).

Replies from: Veedrac↑ comment by Veedrac · 2021-01-27T19:13:11.352Z · LW(p) · GW(p)

Well I understand now where you get the 17, but I don't understand why you want to spread it uniformly across the orders of magnitude. Shouldn't you put all the probability mass for the brute-force evolution approach on some gaussian around where we'd expect that to land, and only have probability elsewhere to account for competing hypotheses? Like I think it's fair to say the probability of a ground-up evolutionary approach only using 10-100 agents is way closer to zero than to 4%.

I'm still not following the argument. [...] So e.g. when you have 3 OOMs more compute than the HBHL milestone

I think you're mixing up my paragraphs. I was referring here to cases where you're trying to substitute searching over programs for the AI special sauce.

If you're in the position where searching 1000 HBHL hypotheses finds TAI, then the implicit assumption is that model scaling has already substituted for the majority of AI special sauce, and the remaining search is just an enabler for figuring out the few remaining details. That or that there wasn't much special sauce in the first place.

To maybe make my framing a bit more transparent, consider the example of a company trying to build useful, self-replicating nanoscale robots using an atomically precise 3D printer under the conditions where 1) nobody there has a good idea of how to go about doing this, and 2) you have 1000 tries.

Replies from: daniel-kokotajlo↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2021-03-14T17:09:22.941Z · LW(p) · GW(p)

Sorry I didn't see this until now!

--I agree that for the brute-force evolution approach, we should have a gaussian around where we'd expect that to land. My "Let's just do evenly across all the OOMs between now and evolution" is only a reasonable first-pass approach to what our all-things-considered distribution should be like, including evolution but also various other strategies. (Even better would be having a taxonomy of the various strategies and a gaussian for each; this is sorta what Ajeya does. The problem is that insofar as you don't trust your taxonomy to be exhaustive, the resulting distribution is untrustworthy as well.) I think it's reasonable to extend the probability mass down to where we are now, because we are currently at the HBHL milestone pretty much, which seems like a pretty relevant milestone to say the least.

If you're in the position where searching 1000 HBHL hypotheses finds TAI, then the implicit assumption is that model scaling has already substituted for the majority of AI special sauce, and the remaining search is just an enabler for figuring out the few remaining details. That or that there wasn't much special sauce in the first place.

This seems right to me.

To maybe make my framing a bit more transparent, consider the example of a company trying to build useful, self-replicating nanoscale robots using an atomically precise 3D printer under the conditions where 1) nobody there has a good idea of how to go about doing this, and 2) you have 1000 tries.

I like this analogy. I think our intuitions about how hard it would be might differ though. Also, our intuitions about the extent to which nobody has a good idea of how to make TAI might differ too.

Replies from: Veedrac↑ comment by Veedrac · 2021-03-17T20:19:54.535Z · LW(p) · GW(p)

Also, our intuitions about the extent to which nobody has a good idea of how to make TAI might differ too.

To be clear I'm not saying nobody has a good idea of how to make TAI. I expect pretty short timelines, because I expect the remaining fundamental challenges aren't very big.

What I don't expect is that the remaining fundamental challenges go away through small-N search over large architectures, if the special sauce does turn out to be significant.

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2021-01-21T11:17:50.307Z · LW(p) · GW(p)

Good point! I'd love to see a more thorough investigation into cases like this. This is the best comment so far IMO; strong-upvoted.

My immediate reply would be: Shorty here is just wrong about what the key parameters are; as Longs points out, size seems pretty important, because it means you don't have to worry about control. Trying to make a fusion reactor much smaller than a star seems to me to be analogous to trying to make a flying machine with engines much weaker than bird muscle, or an AI with neural nets much smaller than human brains. Yeah, maybe it's possible in principle, but in practice we should expect it to be very difficult. But I'm not sure, I'd want to think about this more.

Replies from: daniel-kokotajlo↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2021-01-21T11:32:03.959Z · LW(p) · GW(p)

Update: Actually, I think I analyzed that wrong. Shorty did mention "controlling the plasma" as a key variable; in that case, I agree that Shorty got the key variables correct. Shorty's methodology is to plot a graph with the key variables and say "We'll achieve it when our variables reach roughly the same level as they are in nature's equivalent." But how do we measure level of control? How can we say that we've reached the same level of control over the plasma as the Sun has? This bit seems implausible. So I think a steelman Shorty would either say that it's unknown whether we've reached the key variables yet (because we don't know how good tokamaks are at controlling plasma) or that control isn't a key variable (because it can be compensated for by other things, like temperature and pressure.) (Though in this case if Shorty went that second route, they'd probably just be wrong? Compare to the case of flight, where the problem of controlling the craft really does become a lot easier when you have access to more powerful and lighter engines. I don't know much about fusion designs but I suspect that cranking up temperature and pressure doesn't, in fact, make controlling the reaction easier. Am I wrong?)

Replies from: Bucky↑ comment by Bucky · 2021-01-22T08:45:36.859Z · LW(p) · GW(p)

Probably nowadays what Shorty missed was the difficulty in dealing with the energetic neutrons being created and associated radiation. Then associated maintenance costs etc and therefore price-competitiveness. I chose nuclear fusion purely because it was the most salient example of project-that-always-misses-its-deadlines.

(I did my university placement year in nuclear fusion research but still don't feel like I properly understand it! I'm pretty sure you're right though about temperature, pressure and control.)

In theory a steelman Shorty could have thought of all of these things but in practice it's hard to think of everything. I find myself in the weird position of agreeing with you but arguing in the opposite direction.

For a random large project X, which is more likely to be true:

- Project X took longer than expert estimates because of failure to account for Y

- Project X was delivered approximately on time

In general I suspect that it is the former (1). In that case the burden of evidence is on Shorty to show why project X is outside of the reference class of typical-large-projects and maybe in some subclass where accurate predictions of timelines are more achievable.

Maybe what is required is to justify TAI as being in the subclass

- projects-that-are-mainly-determined-by-a-single-limiting-factor

or

- projects-whose-key-variables-are-reliably-identifiable-in-advance

I think this is essentially the argument the OP is making in Analysis Part 1?

***

I notice in the above I've probably gone beyond the original argument - the OP was arguing specifically against using the fact that natural systems have such properties to say that they're required. I'm talking about something more general - systems generally have more complexity than we realize. I think this is importantly different.

It may be the case that Longs' argument about brains having such properties is based on an intuition from the broader argument. I think that the OP is essentially correct in saying that adding examples from the human brain into the argument does little to make such an argument stronger (Analysis part 2).

***

(1) Although there is also the question of how much later counts as a failure of prediction. I guess Shorty is arguing for TAI in the next 20 years, Longs is arguing 50-100 years?

Replies from: daniel-kokotajlo↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2021-01-22T11:20:58.139Z · LW(p) · GW(p)

I still prefer my analysis above: Fusion is not a case of Shorty being wrong, because a steelman Shorty wouldn't have predicted that we'd get fusion soon. Why? Because we don't have the key variables. Why? Because controlling the plasma is one of the key variables, and the sun has near-perfect control, whereas we are trying to substitute with various designs which may or may not work.

Shorty is actually arguing for TAI much sooner than 20 years from now; if TAI comes around the HBHL milestone then it could happen any day now, it's just a matter of spending a billion dollars on compute and then iterating a few times to work out the details, Wright-brothers style. Of course we shouldn't think Shorty is probably correct here; the truth is probably somewhere in between. (Unless we do more historical analyses and find that the case of flight is truly representative of the reference class AI fits in, in which case ho boy singularity here we come)

And yeah, the main purpose of the OP was to argue that certain anti-short-timelines arguments are bogus; this issue of whether timelines are actually short or long is secondary and the case of flight is just one case study, of limited evidential import.

I do take your point that maybe Longs' argument was drawing on intuitions of the sort you are sketching out. In other words, maybe there's a steelman of the arguments I think are bogus, such that they become non-bogus. I already agree this is true in at least one way (see Part 3). I like your point about large projects -- insofar as we think of AI in that reference class, it seems like our timelines should be "Take whatever the experts say and then double it." But if we had done this for flight we would have been disastrously wrong. I definitely want to think, talk, and hear more about these issues... I'd like to have a model of what sorts of technologies are like fusion and what sort are like flight, and why.

I like your suggestions:

projects-that-are-mainly-determined-by-a-single-limiting-factor

projects-whose-key-variables-are-reliably-identifiable-in-advance

My own (hinted at in the OP) was going to be something like "When your basic theory of a design problem is developed enough that you have identified the key variables, and there is a natural design that solves the problem in a similar way to the thing you are trying to build, then you can predict roughly when the problem will be solved by saying that it'll happen around the time that parity-with-the-natural-design is reached in the key variables. What are key variables? I'm not sure how to define them, but one property that seems maybe important is that the design problem becomes easier when you have more of the key variables."

Another thing worth mentioning is that having a healthy competition between different smart people is probably important. The Wright brothers succeeded, but there were several other groups around the same time also trying to build flying machines, who were less successful (or who took longer to succeed). If instead there had been one big government-funded project, there would have been more room for human error and the usual failures to cause cost overruns and delays. (OTOH having more funding might have made it happen sooner? IDK). In the case of AI, there are enough different projects full of enough smart people working on the problem that I don't think this is a major constraint. I'd be curious to hear more about the case of fusion. I've heard some people say that actually it could have been achieved by now if only it had more funding, and I think I've heard other people say that it could have been achieved by now if it was handled by a competitive market instead of a handful of bureaucracies (though I may be misremembering that, maybe no one said that).

comment by Thomas Kwa (thomas-kwa) · 2021-01-28T19:29:58.253Z · LW(p) · GW(p)

(For example, imagine a u-shaped craft with a low center of gravity and helicopter-style rotors on each tip. Add a third, smaller propeller on a turret somewhere for steering.)

Extremely minor nitpick: the low center of gravity wouldn't stabilize the craft. Helicopters are unstable regardless of where the rotors are relative to the center of gravity; the belief that a low center of gravity helps is the pendulum rocket fallacy.

Replies from: robert-miles, daniel-kokotajlo↑ comment by Robert Miles (robert-miles) · 2021-03-04T10:32:18.658Z · LW(p) · GW(p)

I came here to say this :)

If you do the stabilisation with the rotors in the usual helicopter way, you basically have a Chinook (though you don't need the extra steering propeller because you can control the rotors well enough).

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2021-03-04T11:29:21.917Z · LW(p) · GW(p)

A Chinook was basically what I was envisioning... what does a Chinook do that my U-shaped proposal wouldn't do? How does stabilization with rotors work? EDIT: Ok, so helicopters use some sort of weighted balls attached to their rotors, and maybe some flexibility in the rotors also... I still don't fully understand how it works but it seems like there are probably explainer videos somewhere.

Replies from: robert-miles↑ comment by Robert Miles (robert-miles) · 2021-03-15T12:14:41.760Z · LW(p) · GW(p)

Yeah, the mechanics of helicopter rotors are pretty complex and a bit counter-intuitive; Smarter Every Day has a series on them.

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2021-03-04T11:28:04.167Z · LW(p) · GW(p)

Damn! I feel foolish, should have looked this up first. Thanks!

EDIT: OK, so simple design try #2: What about a quadcopter (with counter-rotating propellers of course, to cancel out torque) but where the propellers are angled away from the center of mass instead of just pointing straight down--that way if the craft starts tilting in some direction, it will have an imbalance of forces such that more of the upward component comes from the side that is tilting down, and less from the side that is tilting up, and so the former side will rise and the latter side will fall, and it'll be not-tilted again. This was the other idea I had, but I wrote the U-shaped thing because it took fewer words to explain. ... is this wrong too? EDIT: Now I'm worried this is wrong too for the same reason... damn... I guess I'm still just very confused about the pendulum rocket fallacy and why it's a fallacy. I should go read more.
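One way to check the angled-propeller idea is a toy rigid-body calculation. The sketch below is only an idealized 2D model, and every number in it (arm length, mounting height, cant angle, thrust values) is a made-up assumption: two propellers are bolted to the frame, so both their mounting points and their thrust directions rotate with the craft, and gravity acts at the center of mass and so contributes no torque about it. Under those assumptions the net torque about the center of mass comes out the same at every tilt angle, i.e. there is no restoring torque, even though the vertical components of the two thrusts do change with tilt. Aerodynamic effects are deliberately left out.

```python
import math

def rotate(v, angle):
    """Rotate a 2D vector counter-clockwise by `angle` radians."""
    c, s = math.cos(angle), math.sin(angle)
    return (c * v[0] - s * v[1], s * v[0] + c * v[1])

def cross2d(a, b):
    """z-component of the cross product of two 2D vectors (a torque in 2D)."""
    return a[0] * b[1] - a[1] * b[0]

def thrust_vectors(tilt, thrusts=(1.0, 1.0), arm=1.0, height=0.5,
                   cant=math.radians(15)):
    """Return [(mount_position, thrust_force), ...] in world coordinates.

    Hypothetical geometry: propellers sit `arm` to the left and right of the
    center of mass and `height` above it, each canted outward by `cant`.
    Everything is fixed to the frame, so it all rotates together by `tilt`.
    """
    mounts_body = [(-arm, height), (arm, height)]
    dirs_body = [(-math.sin(cant), math.cos(cant)),
                 (math.sin(cant), math.cos(cant))]
    result = []
    for r, d, t in zip(mounts_body, dirs_body, thrusts):
        result.append((rotate(r, tilt), rotate((t * d[0], t * d[1]), tilt)))
    return result

def net_torque_about_com(tilt, **kwargs):
    """Sum of r x F over both propellers; gravity adds nothing at the CoM."""
    return sum(cross2d(r, f) for r, f in thrust_vectors(tilt, **kwargs))

if __name__ == "__main__":
    for degrees in (0, 10, 30, -45):
        tilt = math.radians(degrees)
        verticals = [f[1] for _, f in thrust_vectors(tilt)]
        print(degrees, [round(v, 3) for v in verticals],
              round(net_torque_about_com(tilt), 12))
```

The vertical thrust components printed for each tilt differ between the two sides, which is the imbalance the proposal relies on, but the torque column does not move. In this model a correcting torque only appears if something changes the thrusts themselves, which is what active control does.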

comment by Steven Byrnes (steve2152) · 2021-01-18T16:30:15.856Z · LW(p) · GW(p)

Moreover, we probably won’t figure out how to make AIs that are as data-efficient as humans for a long time--decades at least.

I know you weren't endorsing this claim as definitely true, but FYI my take is that other families of learning algorithms besides deep neural networks are in fact as data-efficient as humans, particularly those related to probabilistic programming and analysis-by-synthesis, see examples here [LW · GW].

comment by Rohin Shah (rohinmshah) · 2021-01-20T18:56:26.136Z · LW(p) · GW(p)

Planned summary for the Alignment Newsletter:

This post argues against a particular class of arguments about AI timelines. These arguments have the form: “The brain has property X, but we don’t know how to make AIs with property X. Since it took evolution a long time to make brains with property X, we should expect it will take us a long time as well”. These arguments are not compelling because humans often use different approaches to solve problems than evolution did, and so humans might solve the overall problem without ever needing to have property X. To make these arguments more convincing, you need to argue 1) why property X really is _necessary_ and 2) why property X won’t follow quickly once everything else is in place.

This is illustrated with a hypothetical example of someone trying to predict when humans would achieve heavier-than-air flight: in practice, you could have made decent predictions just by looking at the power to weight ratios of engines vs. birds. Someone who argued that we were far away because “we don’t know how to make wings that flap” would have made incorrect predictions.

Planned opinion:

This all seems generally right to me, and is part of the reason I like the <@biological anchors approach@>(@Draft report on AI timelines@) to forecasting transformative AI.

Replies from: daniel-kokotajlo↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2021-01-20T19:23:14.579Z · LW(p) · GW(p)

Sounds good to me! I suggest you replace "we don't know how to make wings that flap" with "we don't even know how birds stay up for so long without flapping their wings," because IMO it's a more compelling example. But it's not a big deal either way.

As an aside, I'd be interested to hear your views given this shared framing. Since your timelines are much longer than mine, and similar to Ajeya's, my guess is that you'd say TAI requires data-efficiency and that said data-efficiency will be really hard to get, even once we are routinely training AIs the size of the human brain for longer than a human lifetime. In other words, I'd guess that you would make some argument like the one I sketched in Part 3. Am I right? If so, I'd love to hear a more fleshed-out version of that argument from someone who endorses it -- I suppose there's what Ajeya has in her report...

Replies from: rohinmshah↑ comment by Rohin Shah (rohinmshah) · 2021-01-20T21:14:19.674Z · LW(p) · GW(p)

Sorry, what in this post contradicts anything in Ajeya's report? I agree with your headline conclusion of

If all we had to do to get TAI was make a simple neural net 10x the size of my brain, my brain would still look the way it does.

This also seems to be the assumption that Ajeya uses. I actually suspect we could get away with a smaller neural net that is similar in size to, or somewhat smaller than, the brain.

I guess the report then uses existing ML scaling laws to predict how much compute we need to train a neural net the size of a brain, whereas you prefer to use the human lifetime to predict it instead? From my perspective, the former just seems way more principled / well-motivated / likely to give you the right answer, given that the scaling laws seem to be quite precise and reasonably robust.

I would predict that we won't get human-level data efficiency for neural net training, but that's a consequence of my trust in scaling laws (+ a simple model for why that would be the case, namely that evolution can bake in some prior knowledge, which will be harder for humans to do, and so you need more data to compensate).

I suggest you replace "we don't know how to make wings that flap" with "we don't even know how birds stay up for so long without flapping their wings,"

Done.

Replies from: daniel-kokotajlo, daniel-kokotajlo↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2021-01-21T11:47:02.023Z · LW(p) · GW(p)

OK, so here is a fuller response:

First of all, yeah, as far as I can tell you and I agree on everything in the OP. Like I said, this disagreement is an aside.

Now that you mention it / I think about it more, there's another strong point to add to the argument I sketched in part 3: Insofar as our NN's aren't data-efficient, it'll take more compute to train them, and so even if TAI need not be data-efficient, short-timelines-TAI must be. (Because in the short term, we don't have much more compute. I'm embarrassed I didn't notice this earlier and include it in the argument.) That helps the argument a lot; it means that all the argument has to do is establish that we aren't going to get more data-efficient NN's anytime soon.

And yeah, I agree the scaling laws are a great source of evidence about this. I had them in mind when I wrote the argument in part 3. I guess I'm just not as convinced as you (?) that (a) when we are routinely training NN's with 10e15 params, it'll take roughly 10e15 data points to get to a useful level of performance, and (b) average horizon length for the data points will need to be more than short.

Some reasons I currently doubt (a):

--A bunch of people I talk to, who know more about AI than me, seem confident that we can get several OOMs more data-efficient training than the GPT's had using various already-developed tricks and techniques.

--The scaling laws, IIRC, don't tell us how much data is needed to reach a useful level of performance. Rather, they tell us how much data is needed if you want to use your compute budget optimally. It could be that at 10e15 params and 10e15 data points, performance is actually much higher than merely useful; maybe 10e13 params and 10e13 data points would already be enough to cross the usefulness threshold. (Counterpoint: Extrapolating GPT performance trends on text prediction suggests it wouldn't be human-level at text prediction until about 10e15 params and 10e15 data points, according to data I got from Lanrian. Countercounterpoint: Extrapolating GPT performance trends on tasks other than text prediction makes it seem to me that it could be pretty useful well before then; see these figures [LW · GW], in which I think 10e15/10e15 would be the far-right edge of the graph.)

Some reasons I currently doubt (b):

--I've been impressed with how much GPT-3 has learned despite having a very short horizon length, very limited data modality, very limited input channel, very limited architecture, very small size, etc. This makes me think that yeah, if we improve on GPT-3 in all of those dimensions, we could get something really useful for some transformative tasks, even if we keep the horizon length small.

--I think that humans have a tiny horizon length -- our brains are constantly updating, right? I guess it's hard to make the comparison, given how it's an analog system etc. But it sure seems like the equivalent of the average horizon length for the brain is around a second or so. Now, it could be that humans get away with such a small horizon length because of all the fancy optimizations evolution has done on them. But it also could just be that that's all you need.

--Having a small average horizon length doesn't preclude also training lots on long-horizon tasks. It just means that on average your horizon length is small. So e.g. if the training process involves a bit of predict-the-next-input, and also a bit of make-and-execute-plans-actions-over-the-span-of-days, you could get quite a few data points of the latter variety and still have a short average horizon length.
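To make that last point concrete, here is a back-of-the-envelope sketch. All the numbers are illustrative assumptions, not figures from the report: horizons are measured in seconds, short-horizon data points last 1 second, long-horizon ones last a day, and "short on average" is taken to mean an average of at most 2 seconds. The helper names are hypothetical.

```python
SECONDS_PER_DAY = 24 * 60 * 60

def average_horizon(mix):
    """Mean horizon length of a data mix given as [(count, horizon), ...]."""
    total_points = sum(count for count, _ in mix)
    return sum(count * horizon for count, horizon in mix) / total_points

def max_long_horizon_points(n_short, h_short, h_long, avg_cap):
    """Largest number of long-horizon points that keeps the mean <= avg_cap.

    Solves (n_short*h_short + n_long*h_long) / (n_short + n_long) = avg_cap
    for n_long.
    """
    if not h_short <= avg_cap < h_long:
        raise ValueError("need h_short <= avg_cap < h_long")
    return n_short * (avg_cap - h_short) / (h_long - avg_cap)

if __name__ == "__main__":
    n_short = 1e15  # assumed number of 1-second data points
    n_long = max_long_horizon_points(n_short, 1.0, SECONDS_PER_DAY, 2.0)
    mix = [(n_short, 1.0), (n_long, SECONDS_PER_DAY)]
    print(f"day-long data points allowed: {n_long:.3g}")
    print(f"resulting average horizon: {average_horizon(mix):.3f} s")
```

Under these assumptions the mix can include on the order of 10^10 day-long data points while the average horizon stays at 2 seconds, because the long-horizon points are still only about one in 10^5 of the total.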

I'm very uncertain about all of this and would love to hear your thoughts, which is why I asked. :)

Replies from: rohinmshah, nostalgebraist, steve2152↑ comment by Rohin Shah (rohinmshah) · 2021-01-21T17:39:31.270Z · LW(p) · GW(p)

Now that you mention it / I think about it more, there's another strong point to add to the argument I sketched in part 3: Insofar as our NN's aren't data-efficient, it'll take more compute to train them, and so even if TAI need not be data-efficient, short-timelines-TAI must be.

Yeah, this is (part of) why I put compute + scaling laws front and center and make inferences about data efficiency; you can have much stronger conclusions when you start reasoning from the thing you believe is the bottleneck.

--A bunch of people I talk to, who know more about AI than me, seem confident that we can get several OOMs more data-efficient training than the GPT's had using various already-developed tricks and techniques.

Note that Ajeya's report does have a term for "algorithmic efficiency", which has a doubling time of 2-3 years.
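For a sense of scale, the arithmetic relating a doubling time to orders of magnitude can be sketched as follows. Reading "several OOMs" as three is purely an illustrative assumption, and the function name is hypothetical; the only inputs taken from the discussion are the 2-3 year doubling times.

```python
import math

def years_to_gain(ooms, doubling_time_years):
    """Years needed to improve efficiency by `ooms` orders of magnitude
    when efficiency doubles every `doubling_time_years` years."""
    doublings_needed = ooms * math.log2(10)  # about 3.32 doublings per OOM
    return doublings_needed * doubling_time_years

if __name__ == "__main__":
    for doubling_time in (2, 3):
        years = years_to_gain(3, doubling_time)
        print(f"3 OOMs at a {doubling_time}-year doubling time: "
              f"about {years:.0f} years")
```

At those doubling times, three orders of magnitude take roughly 20 to 30 years, so an algorithmic-efficiency trend of this kind and a claim that several OOMs are available from already-developed techniques are quite different assumptions.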