Modern Transformers are AGI, and Human-Level

post by abramdemski · 2024-03-26T17:46:19.373Z · LW · GW · 87 comments


This is my personal opinion, and in particular, does not represent anything like a MIRI consensus; I've gotten push-back from almost everyone I've spoken with about this, although in most cases I believe I eventually convinced them of the narrow terminological point I'm making.

In the AI x-risk community, I think there is a tendency to ask people to estimate "time to AGI" when what is meant is really something more like "time to doom" (or, better, point-of-no-return [LW · GW]). For about a year, I've been answering this question "zero" when asked.

This strikes some people as absurd or at best misleading. I disagree.

The term "Artificial General Intelligence" (AGI) was coined in the early 00s, to contrast with the prevalent paradigm of Narrow AI. I was getting my undergraduate computer science education in the 00s; I experienced a deeply-held conviction in my professors that the correct response to any talk of "intelligence" was "intelligence for what task?" -- to pursue intelligence in any kind of generality was unscientific, whereas trying to play chess really well or automatically detect cancer in medical scans was OK.

I think this was a reaction to the AI winter of the 1990s. The grand ambitions of the AI field, to create intelligent machines, had been discredited. Automating narrow tasks still seemed promising. "AGI" was a fringe movement.

As such, I do not think it is legitimate for the AI risk community to use the term AGI to mean 'the scary thing' -- the term AGI belongs to the AGI community, who use it specifically to contrast with narrow AI.

Modern Transformers[1] are definitely not narrow AI.

It may have still been plausible to call them narrow in, say, 2019. You might then have argued: "Language models are only language models! They're OK at writing, but you can't use them for anything else." It had been argued for many years that language was an AI-complete task; if you can solve natural-language processing (NLP) sufficiently well, you can solve anything. However, in 2019 it might still have been possible to dismiss this. Basically any narrow-AI subfield had people who would argue that that specific subfield was the best route to AGI, or the best benchmark for AGI.

The NLP people turned out to be correct. Modern NLP systems can do most things you would want an AI to do, at some basic level of competence. Critically, if you come up with a new task[2], one which the model has never been trained on, then odds are still good that it will display at least middling competence. What more could you reasonably ask for, to demonstrate 'general intelligence' rather than 'narrow'?

Generative pre-training is AGI technology: it creates a model with mediocre competence at basically everything.

Furthermore, when we measure that competence, it usually falls somewhere within the human range of performance. So it seems sensible to call these models human-level as well. It seems to me like people who protest this conclusion are engaging in goalpost-moving.

More specifically, it seems to me like complaints that modern AI systems are "dumb as rocks" are comparing AI-generated responses to those of human experts. A quote from the dumb-as-rocks essay:

GenAI also can’t tell you how to make money. One man asked GPT-4 what to do with $100 to maximize his earnings in the shortest time possible. The program had him buy a domain name, build a niche affiliate website, feature some sustainable products, and optimize for social media and search engines. Two months later, our entrepreneur had a moribund website with one comment and no sales. So genAI is bad at business.

That's a bit of a weak-man argument (I specifically searched for "generative ai is dumb as rocks what are we doing"). But it does demonstrate a pattern I've encountered. Often, the alternative to asking an AI is to ask an expert; so it becomes natural to get in the habit of comparing AI answers to expert answers. This becomes what we think about when we judge whether modern AI is "any good" -- but this is not the relevant comparison we should be using when judging whether it is "human level".

I'm certainly not claiming that modern transformers are roughly equivalent to humans in all respects. Memory works very differently for them, for example, although that has been significantly improving over the past year. One year ago I would have compared an LLM to a human with a learning disability and memory problems, but who has read the entire internet and absorbed a lot through sheer repetition. Now, those memory problems are drastically reduced.

Edited to add:

There have been many interesting comments. Two clusters of reply stick out to me:

- One clear notion of "human-level" which these machines have not yet satisfied is the competence to hold down a human job.

- There's a notion of "AGI" where the emphasis is on the ability to gain capability, rather than the breadth of capability; this is lacking in modern AI.

Hjalmar Wijk would strongly bet [LW(p) · GW(p)] that even if there were more infrastructure in place to help LLMs autonomously get jobs, they would be worse at this than humans. Matthew Barnett points out [LW(p) · GW(p)] that economically-minded people have defined AGI in terms such as what percentage of human labor the machine is able to replace. I particularly appreciated Kaj Sotala's in-the-trenches description of trying to get GPT4 to do a job [LW(p) · GW(p)].

Kaj says GPT4 is "stupid in some very frustrating ways that a human wouldn't be" -- giving the example of GPT4 claiming that an appointment has been rescheduled, when in fact it does not even have the calendar access required to do that.

Comments on this point out that this is not an unusual customer service experience.

I do want to concede that AIs like GPT4 are quantitatively more "disconnected from reality" than humans, in an important way, which will lead them to "lie" like this more often. I also agree that GPT4 lacks the overall skills which would be required for it to make its way through the world autonomously (it would fail if it had to apply for jobs, build working relationships with humans over a long time period, rent its own server space, etc).

However, in many of these respects, it still feels comparable to the low end of human performance, rather than entirely sub-human. Autonomously making one's way through the world feels very "conjunctive" -- it requires the ability to do a lot of things right.

I never meant to claim that GPT4 is within human range on every single performance dimension; only lots and lots of them. For example, it cannot do realtime vision + motor control at anything approaching human competence (although my perspective leads me to think that this will be possible with comparable technology in the near future).

In his comment, Matthew Barnett quotes Tobias Baumann:

The framing suggests that there will be a point in time when machine intelligence can meaningfully be called “human-level”. But I expect artificial intelligence to differ radically from human intelligence in many ways. In particular, the distribution of strengths and weaknesses over different domains or different types of reasoning is and will likely be different – just as machines are currently superhuman at chess and Go, but tend to lack “common sense”.

I think we find ourselves in a somewhat surprising future where machine intelligence actually turns out to be meaningfully "human-level" across many dimensions at once, although not all.

Anyway, the second cluster of responses I mentioned is perhaps even more interesting. Steven Byrnes has explicitly endorsed [LW(p) · GW(p)] "moving the goalposts" for AGI. I do think it can sometimes be sensible to move goalposts; the concept of goalpost-moving is usually used in a negative light, but there are times when it must be done. I wish it could be facilitated by a new term, rather than a redefinition of "AGI"; but I am not sure what to suggest.

I think there is a lot to say about Steven's notion of AGI as the-ability-to-gain-capabilities rather than as a concept of breadth-of-capability. I'll leave most of it to the comment section. To briefly respond: I agree that there is something interesting and important here. I currently think AIs like GPT4 have 'very little' of this rather than none. I also think individual humans have very little of this. In the anthropological record, it looks like humans were not very culturally innovative for more than a hundred thousand years, until the "creative explosion" which resulted in a wide variety of tools and artistic expression. I find it plausible that this required a large population of humans to get going. Individual humans are rarely really innovative; more often, we can only introduce basic variations on existing concepts.

[1] I'm saying "transformers" every time I am tempted to write "LLMs" because many modern LLMs also do image processing, so the term "LLM" is not quite right.

[2] Obviously, this claim relies on some background assumption about how you come up with new tasks. Some people are skilled at critiquing modern AI by coming up with specific things which it utterly fails at. I am certainly not claiming that modern AI is literally competent at everything.

However, it does seem true to me that if you generate and grade test questions in roughly the way a teacher might, the best modern Transformers will usually fall comfortably within human range, if not better.

87 comments

Comments sorted by top scores.

comment by Steven Byrnes (steve2152) · 2024-03-26T18:18:44.481Z · LW(p) · GW(p)

Well I’m one of the people who says that “AGI” is the scary thing that doesn’t exist yet (e.g. FAQ [LW · GW] or “why I want to move the goalposts on ‘AGI’” [LW · GW]). I don’t think “AGI” is a perfect term for the scary thing that doesn’t exist yet, but my current take is that “AGI” is a less bad term compared to alternatives. (I was listing out some other options here [LW · GW].) In particular, I don’t think there’s any terminological option that is sufficiently widely-understood and unambiguous that I wouldn’t need to include a footnote or link explaining exactly what I mean. And if I’m going to do that anyway, doing that with “AGI” seems OK. But I’m open-minded to discussing other options if you (or anyone) have any.

Generative pre-training is AGI technology: it creates a model with mediocre competence at basically everything.

I disagree with that—as in “why I want to move the goalposts on ‘AGI’” [LW · GW], I think there’s an especially important category of capability that entails spending a whole lot of time working with a system / idea / domain, and getting to know it and understand it and manipulate it better and better over the course of time. Mathematicians do this with abstruse mathematical objects, but also trainee accountants do this with spreadsheets, and trainee car mechanics do this with car engines and pliers, and kids do this with toys, and gymnasts do this with their own bodies, etc. I propose that LLMs cannot do things in this category at human level, as of today—e.g. AutoGPT basically doesn’t work, last I heard. And this category of capability isn’t just a random cherrypicked task, but rather central to human capabilities, I claim. (See Section 3.1 here [LW · GW].)

↑ comment by abramdemski · 2024-03-26T20:24:38.488Z · LW(p) · GW(p)

Thanks for your perspective! I think explicitly moving the goal-posts is a reasonable thing to do here, although I would prefer to do this in a way that doesn't harm the meaning of existing terms.

I mean: I think a lot of people did have some kind of internal "human-level AGI" goalpost which they imagined in a specific way, and modern AI development has resulted in a thing which fits part of that image while not fitting other parts, and it makes a lot of sense to reassess things. Goalpost-moving is usually maligned as an error, but sometimes it actually makes sense.

I prefer 'transformative AI' for the scary thing that isn't here yet. I see where you're coming from with respect to not wanting to have to explain a new term, but I think 'AGI' is probably still more obscure for a general audience than you think it is (see, eg, the snarky complaint here [LW · GW]). Of course it depends on your target audience. But 'transformative AI' seems relatively self-explanatory as these things go. I see that you have even used that term at times [LW · GW].

I disagree with that—as in “why I want to move the goalposts on ‘AGI’” [LW · GW], I think there’s an especially important category of capability that entails spending a whole lot of time working with a system / idea / domain, and getting to know it and understand it and manipulate it better and better over the course of time. Mathematicians do this with abstruse mathematical objects, but also trainee accountants do this with spreadsheets, and trainee car mechanics do this with car engines and pliers, and kids do this with toys, and gymnasts do this with their own bodies, etc. I propose that LLMs cannot do things in this category at human level, as of today—e.g. AutoGPT basically doesn’t work, last I heard. And this category of capability isn’t just a random cherrypicked task, but rather central to human capabilities, I claim. (See Section 3.1 here [LW · GW].)

I do think this is gesturing at something important. This feels very similar to the sort of pushback I've gotten from other people. Something like: "the fact that AIs can perform well on most easily-measured tasks doesn't tell us that AIs are on the same level as humans; it tells us that easily-measured tasks are less informative about intelligence than we thought".

Currently I think LLMs have a small amount of this thing, rather than zero. But my picture of it remains fuzzy.

↑ comment by Paradiddle (barnaby-crook) · 2024-03-27T13:56:36.432Z · LW(p) · GW(p)

I think the kind of sensible goalpost-moving you are describing should be understood as run-of-the-mill conceptual fragmentation, which is ubiquitous in science. As scientific communities learn more about the structure of complex domains (often in parallel across disciplinary boundaries), numerous distinct (but related) concepts become associated with particular conceptual labels (this is just a special case of how polysemy works generally). This has already happened with scientific concepts like gene, species, memory, health, attention and many more.

In this case, it is clear to me that there are important senses of the term "general" which modern AI satisfies the criteria for. You made that point persuasively in this post. However, it is also clear that there are important senses of the term "general" which modern AI does not satisfy the criteria for. Steven Byrnes made that point persuasively in his response. So far as I can tell you will agree with this.

If we all agree with the above, the most important thing is to disambiguate the sense of the term being invoked when applying it in reasoning about AI. Then, we can figure out whether the source of our disagreements is about semantics (which label we prefer for a shared concept) or substance (which concept is actually appropriate for supporting the inferences we are making).

What are good discourse norms for disambiguation? An intuitively appealing option is to coin new terms for variants of umbrella concepts. This may work in academic settings, but the familiar terms are always going to have a kind of magnetic pull in informal discourse. As such, I think communities like this one should rather strive to define terms wherever possible and approach discussions with a pluralistic stance.

↑ comment by Steven Byrnes (steve2152) · 2024-03-26T23:23:57.727Z · LW(p) · GW(p)

My complaint about “transformative AI” is that (IIUC) its original and universal definition is not about what the algorithm can do but rather how it impacts the world, which is a different topic. For example, the very same algorithm might be TAI if it costs $1/hour but not TAI if it costs $1B/hour, or TAI if it runs at a certain speed but not TAI if it runs many OOM slower, or “not TAI because it’s illegal”. Also, two people can agree about what an algorithm can do but disagree about what its consequences would be on the world, e.g. here’s a blog post claiming that if we have cheap AIs that can do literally everything that a human can do, the result would be “a pluralistic and competitive economy that’s not too different from the one we have now”, which I view as patently absurd.

Anyway, “how an AI algorithm impacts the world” is obviously an important thing to talk about, but “what an AI algorithm can do” is also an important topic, and different, and that’s what I’m asking about, and “TAI” doesn’t seem to fit it as terminology.

↑ comment by abramdemski · 2024-03-27T02:11:18.761Z · LW(p) · GW(p)

Yep, I agree that Transformative AI is about impact on the world rather than capabilities of the system. I think that is the right thing to talk about for things like "AI timelines" if the discussion is mainly about the future of humanity. But, yeah, definitely not always what you want to talk about.

I am having difficulty coming up with a term which points at what you want to point at, so yeah, I see the problem.

↑ comment by Nathan Helm-Burger (nathan-helm-burger) · 2024-03-27T20:21:37.187Z · LW(p) · GW(p)

I agree with Steve Byrnes here. I think I have a better way to describe this.

I would say that the missing piece is 'mastery'. Specifically, learning mastery over a piece of reality. By mastery I am referring to the skillful ability to model, predict, and purposefully manipulate that subset of reality.

I don't think this is an algorithmic limitation, exactly.

Look at the work DeepMind has been doing, particularly with Gato and more recently AutoRT, SARA-RT, RT-Trajectory, UniSim, and Q-transformer. Look at the work being done with the help of Nvidia's new Robot Simulation Gym Environment. Look at OpenAI's recent foray into robotics with Figure AI. This work is held back from being highly impactful (so far) by the difficulty of accurately simulating novel interesting things, the difficulty of learning the pairing of action -> consequence compared to learning a static pattern of data, and the hardware difficulties of robotics.

This is what I think our current multimodal frontier models are mostly lacking. They can regurgitate, and to a lesser extent synthesize, facts that humans wrote about, but not develop novel mastery of subjects and then report back on their findings. This is the difference between being able to write a good scientific paper given a dataset of experimental results and rough description of the experiment, versus being able to gather that data yourself. The line here is blurry, and will probably get blurrier before collapsing entirely. It's about not just doing the experiment, but doing the pilot studies and observations and playing around with the parameters to build a crude initial model about how this particular piece of the universe might work. Building your own new models rather than absorbing models built by others. Moving beyond student to scientist.

This is in large part a limitation of training expense. It's difficult to have enough on-topic information available in parallel to feed the data-inefficient current algorithms many lifetimes-worth of experience.

So, while it is possible to improve the skill of mastery-of-reality with scaling up current models and training systems, it gets much much easier if the algorithms get more compute-efficient and data-sample-efficient to train.

That is what I think is coming.

I've done my own in-depth research into the state of the field of machine learning and potential novel algorithmic advances which have not yet been incorporated into frontier models, and in-depth research into the state of neuroscience's understanding of the brain. I have written a report detailing the ways in which I think Joe Carlsmith's and Ajeya Cotra's estimates are overestimating the AGI-relevant compute of the human brain by somewhere between 10x and 100x.

Furthermore, I think that there are compelling arguments for why the compute in frontier algorithms is not being deployed as efficiently as it could be, resulting in higher training costs and data requirements than is theoretically possible.

In combination, these findings lead me to believe we are primarily algorithm-constrained, not hardware- or data-constrained. Which, in turn, means that once frontier models have progressed to the point of being able to automate research for improved algorithms, I expect that substantial progress will follow. This progress will, if I am correct, be untethered from further increases in compute hardware or training data.

My best guess is that a frontier model of the approximate expected capability of GPT-5 or GPT-6 (equivalently Claude 4 or 5, or similar advances in Gemini) will be sufficient for the automation of algorithmic exploration to an extent that the necessary algorithmic breakthroughs will be made. I don't expect the search process to take more than a year. So I think we should expect a time of algorithmic discovery in the next 2 - 3 years which leads to a strong increase in AGI capabilities even holding compute and data constant.

I expect that 'mastery of novel pieces of reality' will continue to lag behind ability to regurgitate and recombine recorded knowledge. Indeed, recombining information clearly seems to be lagging behind regurgitation or creative extrapolation. Not as far behind as mastery, so in some middle range.

If you imagine the whole skillset remaining in its relative configuration of peaks and valleys, but shifted upwards such that the currently lagging 'mastery' skill is at human level and a lot of other skills are well beyond, then you will be picturing something similar to what I am picturing.

[Edit:

This is what I mean when I say it isn't a limit of the algorithm per se. Change the framing of the data, and you change the distribution of the outputs.

]

↑ comment by Lukas · 2024-03-31T14:19:10.546Z · LW(p) · GW(p)

From what I understand I would describe the skill Steven points to as "autonomously and persistently learning at deploy time".

How would you feel about calling systems that possess this ability "self-refining intelligences"?

I think mastery, as Nathan comments above [LW(p) · GW(p)], is a potential outcome of employing this ability rather than the skill/ability itself.

↑ comment by ryan_greenblatt · 2024-03-26T18:40:04.198Z · LW(p) · GW(p)

I propose that LLMs cannot do things in this category at human level, as of today—e.g. AutoGPT basically doesn’t work, last I heard. And this category of capability isn’t just a random cherrypicked task, but rather central to human capabilities, I claim.

What would you claim is a central example of a task which requires this type of learning? ARA type tasks? Agency tasks? Novel ML research? Do you think these tasks certainly require something qualitatively different than a scaled up version of what we have now (pretraining, in-context learning, RL, maybe training on synthetic domain specific datasets)? If so, why? (Feel free to not answer this or just link me what you've written on the topic. I'm more just reacting than making a bid for you to answer these questions here.)

Separately, I think it's non-obvious that you can't make human-competitive sample efficient learning happen in many domains where LLMs are already competitive with humans in other non-learning ways by spending massive amounts of compute doing training (with SGD) and synthetic data generation. (See e.g. efficient-zero.) It's just that the amount of compute/spend is such that you're just effectively doing a bunch more pretraining and thus it's not really an interestingly different concept. (See also the discussion here [LW(p) · GW(p)] which is mildly relevant.)

In domains where LLMs are much worse than typical humans in non-learning ways, it's harder to do the comparison, but it's still non-obvious that the learning speed is worse given massive computational resources and some investment.

↑ comment by Steven Byrnes (steve2152) · 2024-03-26T20:07:07.042Z · LW(p) · GW(p)

I’m talking about the AI’s ability to learn / figure out a new system / idea / domain on the fly. It’s hard to point to a particular “task” that specifically tests this ability (in the way that people normally use the term “task”), because for any possible task, maybe the AI happens to already know how to do it.

You could filter the training data, but doing that in practice might be kinda tricky because “the AI already knows how to do X” is distinct from “the AI has already seen examples of X in the training data”. LLMs “already know how to do” lots of things that are not superficially in the training data, just as humans “already know how to do” lots of things that are superficially unlike anything they’ve seen before—e.g. I can ask a random human to imagine a purple colander falling out of an airplane and answer simple questions about it, and they’ll do it skillfully and instantaneously. That’s the inference algorithm, not the learning algorithm.

Well, getting an AI to invent a new scientific field would work as such a task, because it’s not in the training data by definition. But that’s such a high bar as to be unhelpful in practice. Maybe tasks that we think of as more suited to RL, like low-level robot control, or skillfully playing games that aren’t like anything in the training data?

Separately, I think there are lots of domains where “just generate synthetic data” is not a thing you can do. If an AI doesn’t fully ‘understand’ the physics concept of “superradiance” based on all existing human writing, how would it generate synthetic data to get better? If an AI is making errors in its analysis of the tax code, how would it generate synthetic data to get better? (If you or anyone has a good answer to those questions, maybe you shouldn’t publish them!! :-P )

↑ comment by faul_sname · 2024-03-26T20:48:38.555Z · LW(p) · GW(p)

If an AI doesn’t fully ‘understand’ the physics concept of “superradiance” based on all existing human writing, how would it generate synthetic data to get better?

I think "doesn't fully understand the concept of superradiance" is a phrase that smuggles in too many assumptions here. If you rephrase it as "can determine when superradiance will occur, but makes inaccurate predictions about what physical systems will do in those situations" / "makes imprecise predictions in such cases" / "has trouble distinguishing cases where superradiance will occur vs cases where it will not", all of those suggest pretty obvious ways of generating training data.

GPT-4 can already "figure out a new system on the fly" in the sense of taking some repeatable phenomenon it can observe, and predicting things about that phenomenon, because it can write standard machine learning pipelines, design APIs with documentation, and interact with documented APIs. However, the process of doing that is very slow and expensive, and resembles "build a tool and then use the tool" rather than "augment its own native intelligence".

Which makes sense. The story of human capabilities advances doesn't look like "find clever ways to configure unprocessed rocks and branches from the environment in ways which accomplish our goals", it looks like "build a bunch of tools, and figure out which ones are most useful and how they are best used, and then use our best tools to build better tools, and so on, and then use the much-improved tools to do the things we want".

↑ comment by Alexander Gietelink Oldenziel (alexander-gietelink-oldenziel) · 2024-03-26T20:09:53.488Z · LW(p) · GW(p)

I don't know how I feel about pushing this conversation further. A lot of people read this forum now.

↑ comment by Nathan Helm-Burger (nathan-helm-burger) · 2024-03-27T20:25:48.846Z · LW(p) · GW(p)

I feel quite confident that all the leading AI labs are already thinking and talking internally about this stuff, and that what we are saying here adds approximately nothing to their conversations. So I don't think it matters whether we discuss this or not. That simply isn't a lever of control we have over the world.

There are potentially secret things people might know which shouldn't be divulged, but I doubt this conversation is anywhere near technical enough to be advancing the frontier in any way.

↑ comment by Alexander Gietelink Oldenziel (alexander-gietelink-oldenziel) · 2024-03-28T00:56:03.562Z · LW(p) · GW(p)

Perhaps.

↑ comment by abramdemski · 2024-03-26T21:02:20.758Z · LW(p) · GW(p)

I think Steven's response [LW(p) · GW(p)] hits the mark, but from my own perspective, I would say that a not-totally-irrelevant way to measure something related would be: many-shot learning, particularly in cases where few-shot learning does not do the trick.

↑ comment by Random Developer · 2024-03-27T15:35:32.808Z · LW(p) · GW(p)

Yes, this is almost exactly it. I don't expect frontier LLMs to carry out a complicated, multi-step process and recover from obstacles.

I think of this as the "squirrel bird feeder test". Squirrels are ingenious and persistent problem solvers, capable of overcoming chains of complex obstacles. LLMs really can't do this (though Devin is getting closer, if demos are to be believed).

Here's a simple test: Ask an AI to open and manage a local pizza restaurant, buying kitchen equipment, dealing with contractors, selecting recipes, hiring human employees to serve or clean, registering the business, handling inspections, paying taxes, etc. None of these are expert-level skills. But frontier models are missing several key abilities. So I do not consider them AGI.

However, I agree that LLMs already have superhuman language skills in many areas. They have many, many parts of what's needed to complete challenges like the above. (On principle, I won't try to list what I think they're missing.)

I fear the period between "actual AGI" and "weak ASI" will be extremely short. And I don't actually believe there is any long-term way to control ASI.

I fear that most futures lead to a partially-aligned super-human intelligence with its own goals. And any actual control we have will be transitory.

↑ comment by AnthonyC · 2024-03-28T11:57:44.868Z · LW(p) · GW(p)

Here's a simple test: Ask an AI to open and manage a local pizza restaurant, buying kitchen equipment, dealing with contractors, selecting recipes, hiring human employees to serve or clean, registering the business, handling inspections, paying taxes, etc. None of these are expert-level skills. But frontier models are missing several key abilities. So I do not consider them AGI.

I agree that these are things current AI systems don't/can't do, and that they aren't considered expert-level skills for humans. I disagree that this is a simple test, or the kind of thing a typical human can do without lots of feedback, failures, or assistance. Many very smart humans fail at some or all of these tasks. They give up on starting a business, mess up their taxes, have a hard time navigating bureaucratic red tape, and don't ever learn to cook. I agree that if an AI could do these things it would be much harder to argue against it being AGI, but it's important to remember that many healthy, intelligent, adult humans can't, at least not reliably. Also, remember that most restaurants fail within a couple of years even after making it through all these hoops. The rate is very high even for experienced restaurateurs doing the managing.

I suppose you could argue for a definition of general intelligence that excludes a substantial fraction of humans, but for many reasons I wouldn't recommend it.

Replies from: Random Developer↑ comment by Random Developer · 2024-03-28T16:43:26.747Z · LW(p) · GW(p)

Yeah, the precise ability I'm trying to point to here is tricky. Almost any human (barring certain forms of senility, severe disability, etc) can do some version of what I'm talking about. But as in the restaurant example, not every human could succeed at every possible example.

I was trying to better describe the abilities that I thought GPT-4 was lacking, using very simple examples. And it started looking way too much like a benchmark suite that people could target.

Suffice to say, I don't think GPT-4 is an AGI. But I strongly suspect we're only a couple of breakthroughs away. And if anyone builds an AGI, I am not optimistic we will remain in control of our futures.

Replies from: AnthonyC↑ comment by No77e (no77e-noi) · 2024-03-26T20:14:42.939Z · LW(p) · GW(p)

One way in which "spending a whole lot of time working with a system / idea / domain, and getting to know it and understand it and manipulate it better and better over the course of time" could be solved automatically is just by having a truly huge context window. Example of an experiment: teach a particular branch of math to an LLM that has never seen that branch of math.

Maybe humans have just the equivalent of a sort of huge content window spanning selected stuff from their entire lifetimes, and so this kind of learning is possible for them.

Replies from: abramdemski↑ comment by abramdemski · 2024-03-28T17:30:13.681Z · LW(p) · GW(p)

I don't think it is sensible to model humans as "just the equivalent of a sort of huge content window" because this is not a particularly good computational model of how human learning and memory work; but I do think that the technology behind the increasing context size of modern AIs contributes to them having a small but nonzero amount of the thing Steven is pointing at, due to the spontaneous emergence of learning algorithms. [LW · GW]

Replies from: None↑ comment by [deleted] · 2024-03-28T17:51:30.444Z · LW(p) · GW(p)

You also have a simple algorithm problem. Humans learn by replacing bad policy with good. Aka a baby replaces "policy that drops objects picked up" -> "policy that usually results in object retention".

This is because at a mechanistic level the baby tries many times to pick up and retain objects, and a fixed amount of circuitry in their brain has the connections that resulted in a drop down-weighted and the ones that resulted in retention reinforced.

This means that over time as the baby learns, the compute cost for motor manipulation remains constant. Technically O(1), though that's a bit of a confusing way to express it.

With in-context-window learning, you can imagine an LLM + robot recording:

Robotic token string: <string of robotic policy tokens 1> : outcome, drop

Robotic token string: <string of robotic policy tokens 2> : outcome, retain

Robotic token string: <string of robotic policy tokens 2> : outcome, drop

And so on, extending and consuming all of the machine's context window, and every time the machine decides which tokens to use next it needs O(n log n) compute to consider all the tokens in the window. (It used to be n^2; this is a huge advance.)

This does not scale. You will not get capable or dangerous AI this way. Obviously you need to compress that linear list of outcomes from different strategies to update the underlying network that generated them so it is more likely to output tokens that result in success.
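A rough sketch of the scaling argument above, taking the comment's cost figures at face value. The function names, the arbitrary cost units, and the fixed circuit size are illustrative assumptions, not measurements of any real model:

```python
import math

def in_context_cost(n_tokens, attention="nlogn"):
    """Toy per-decision cost of re-reading n_tokens of recorded trials.

    Assumption: cost is n^2 for "quadratic" attention and n*log2(n)
    otherwise, matching the two figures mentioned in the comment.
    """
    if attention == "quadratic":
        return n_tokens ** 2
    return n_tokens * math.log2(max(n_tokens, 2))

def weight_update_cost(fixed_circuit_size=1_000):
    """Toy per-decision cost when experience is compressed into weights.

    Assumption: the circuit size is fixed, so the cost does not depend
    on how many trials have been seen.
    """
    return fixed_circuit_size

def cost_table(tokens_per_trial, trial_counts):
    """Return (trials, quadratic, nlogn, fixed) rows for comparison."""
    rows = []
    for trials in trial_counts:
        n = tokens_per_trial * trials
        rows.append((
            trials,
            in_context_cost(n, "quadratic"),
            in_context_cost(n, "nlogn"),
            weight_update_cost(),
        ))
    return rows

if __name__ == "__main__":
    for row in cost_table(tokens_per_trial=100, trial_counts=[10, 100, 1000]):
        print(row)
```

Under these assumptions the in-context columns grow with every recorded trial while the last column stays flat, which is the contrast the comment is drawing.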

Same for any other task you want the model to do. In-context learning scales poorly. This also makes it safe...

↑ comment by Alexander Gietelink Oldenziel (alexander-gietelink-oldenziel) · 2024-03-26T20:08:20.393Z · LW(p) · GW(p)

Yes. This seems so obviously true to me, in a way that makes it profoundly mysterious to me that almost everybody else seems to disagree. Then again, probably it's for the best. Maybe this is the one weird timeline where we gmi because everybody thinks we already have AGI.

comment by Kaj_Sotala · 2024-03-26T20:59:22.786Z · LW(p) · GW(p)

Furthermore, when we measure that competence, it usually falls somewhere within the human range of performance.

I think that for this to be meaningfully true, the LLM should be able to actually replace humans at a given task. There are some very specific domains in which this is doable (e.g. creative writing assistant), but it seems to me that they are still mostly too unreliable for this.

I've worked with getting GPT-4 to act as a coach for business customers. This is one of the domains that it excels at - tasks can be done entirely inside a chat, the focus is on asking users questions and paraphrasing them so hallucinations are usually not a major issue. And yet it's stupid in some very frustrating ways that a human wouldn't be.

For example, our users would talk with the bot at specific times, which they would schedule using a separate system. Sometimes they would ask the bot to change their scheduled time. The bot wasn't interfaced to the actual scheduling system, but it had been told to act like a helpful coach, so by default it would say something like "of course, I have moved your session time to X". This was bad, since the user would think the session had been moved, but it hadn't.

Well, easy to fix, right? Just add "if the user asks you to reschedule the session or do anything else that requires doing something outside the actual conversation, politely tell them that you are unable to do that" to the prompt.

This did fix the problem... but it created a new one. Now the bot would start telling the user "oh and please remember that I cannot reschedule your session" as a random aside, when the user had never said anything about rescheduling the session.

Okay, so what about adding something like "(but only tell this if the user brings it up, don't say it spontaneously)" to our prompt? That reduced the frequency of the spontaneous asides a little... but not enough to eliminate it. Eventually we just removed the whole thing from the prompt and decided that the occasional user getting a misleading response from the bot is better than it randomly bringing this up all the time.

Another basic instruction that you would think would be easy to follow would be "only ask one question at a time". We had a bit in a prompt that went like "Ask exactly one question. Do not ask more than question. Stop writing your answer once it contains a question mark." The end result? GPT-4 happily sending multi-question messages like "What is bothering you today? What kinds of feelings does that bring up?".

There are ways to fix these issues, like having another LLM instance check the first instance's messages and rewrite any that are bad. But at that point, it's back to fragile hand-engineering to get the kinds of results one wants, because the underlying thing is firmly below a human level of competence. I don't think LLMs are (or at least GPT-4 is not) yet at the kind of level of high reliability involved in human-level [LW · GW] performance.
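To make the "check and rewrite" idea concrete, here is a minimal sketch of a post-processing guard. Note the swap: the comment describes using a second LLM instance as the checker, whereas this sketch uses plain string rules instead, which is cruder. The function names and the regular expression are hypothetical and were not part of the system described above:

```python
import re

# Hypothetical pattern for replies that claim an action the bot cannot take.
_CLAIMED_ACTION = re.compile(
    r"\bI(?: have|'ve)? (?:moved|rescheduled|changed)\b.*\bsession\b",
    re.IGNORECASE,
)

def count_questions(message: str) -> int:
    """Count question marks as a rough proxy for the number of questions."""
    return message.count("?")

def enforce_single_question(message: str) -> str:
    """Cut the message off right after its first question mark, if any."""
    idx = message.find("?")
    if idx == -1:
        return message
    return message[: idx + 1]

def claims_unavailable_action(message: str) -> bool:
    """Flag replies that appear to claim the session was rescheduled."""
    return _CLAIMED_ACTION.search(message) is not None

if __name__ == "__main__":
    reply = "What is bothering you today? What kinds of feelings does that bring up?"
    print(enforce_single_question(reply))
```

A rule like this is exactly the kind of fragile hand-engineering the comment describes: it handles the two quoted failure cases but says nothing about the model's reliability in general.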

Replies from: romeostevensit↑ comment by romeostevensit · 2024-03-26T22:47:35.130Z · LW(p) · GW(p)

I don't mean to belabor the point as I think it's reasonable, but worth pointing out that these responses seem within the range of below average human performance.

Replies from: AnthonyC↑ comment by AnthonyC · 2024-03-28T12:07:16.026Z · LW(p) · GW(p)

I was going to say the same. I can't count the number of times a human customer service agent has tried to do something for me, or told me they already did do something for me, only for me to later find out they were wrong (because of a mistake they made), lying (because their scripts required it or their metrics essentially forced them into it), or foiled (because of badly designed backend systems opaque to both of us).

comment by Hjalmar_Wijk · 2024-03-26T19:34:26.287Z · LW(p) · GW(p)

I agree the term AGI is rough and might be more misleading than it's worth in some cases. But I do quite strongly disagree that current models are 'AGI' in the sense most people intend.

Examples of very important areas where 'average humans' plausibly do way better than current transformers:

- Most humans succeed in making money autonomously. Even if they might not come up with a great idea to quickly 10x $100 through entrepreneurship, they are able to find and execute jobs that people are willing to pay a lot of money for. And many of these jobs are digital and could in theory be done just as well by AIs. Certainly there is a ton of infrastructure built up around humans that help them accomplish this which doesn't really exist for AI systems yet, but if this situation was somehow equalized I would very strongly bet on the average human doing better than the average GPT-4-based agent. It seems clear to me that humans are just way more resourceful, agentic, able to learn and adapt etc. than current transformers are in key ways.

- Many humans currently do drastically better on the METR task suite (https://github.com/METR/public-tasks) than any AI agents, and I think this captures some important missing capabilities that I would expect an 'AGI' system to possess. This is complicated somewhat by the human subjects not being 'average' in many ways, e.g. we've mostly tried this with US tech professionals and the tasks include a lot of SWE, so most people would likely fail due to lack of coding experience.

- Take enough randomly sampled humans and set them up with the right incentives and they will form societies, invent incredible technologies, build productive companies etc., whereas I don't think you'll get anything close to this with a bunch of GPT-4 copies at the moment.

I think AGI for most people evokes something that would do as well as humans on real-world things like the above, not just something that does as well as humans on standardized tests.

Replies from: daniel-kokotajlo, abramdemski↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2024-03-29T14:52:18.559Z · LW(p) · GW(p)

Current AIs suck at agency skills. Put a bunch of them in AutoGPT scaffolds and give them each their own computer and access to the internet and contact info for each other and let them run autonomously for weeks and... well I'm curious to find out what will happen, I expect it to be entertaining but not impressive or useful. Whereas, as you say, randomly sampled humans would form societies and find jobs etc.

This is the common thread behind all your examples Hjalmar. Once we teach our AIs agency (i.e. once they have lots of training-experience operating autonomously in pursuit of goals in sufficiently diverse/challenging environments that they generalize rather than overfit to their environment) then they'll be AGI imo. And also takeoff will begin, takeover will become a real possibility, etc. Off to the races.

↑ comment by Hjalmar_Wijk · 2024-03-31T00:43:58.077Z · LW(p) · GW(p)

Yeah, I agree that lack of agency skills is an important part of the remaining human<>AI gap, and that it's possible that this won't be too difficult to solve (and that this could then lead to rapid further recursive improvements). I was just pointing toward evidence that there is a gap at the moment, and that current systems are poorly described as AGI.

Replies from: daniel-kokotajlo↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2024-03-31T04:09:16.092Z · LW(p) · GW(p)

Yeah I wasn't disagreeing with you to be clear. Just adding.

↑ comment by abramdemski · 2024-03-26T22:00:40.220Z · LW(p) · GW(p)

With respect to METR, yeah, this feels like it falls under my argument against comparing performance against human experts when assessing whether AI is "human-level". This is not to deny the claim that these tasks may shine a light on fundamentally missing capabilities; as I said, I am not claiming that modern AI is within human range on all human capabilities, only enough that I think "human level" is a sensible label to apply.

However, the point about autonomously making money feels more hard-hitting, and has been repeated by a few other commenters. I can at least concede that this is a very sensible definition of AGI, which pretty clearly has not yet been satisfied. Possibly I should reconsider my position further.

The point about forming societies seems less clear. Productive labor in the current economy is in some ways much more complex and harder to navigate than it would be in a new society built from scratch. The Generative Agents paper gives some evidence in favor of LLM-based agents coordinating social events.

Replies from: michael-chen, None↑ comment by mic (michael-chen) · 2024-03-29T02:17:19.908Z · LW(p) · GW(p)

I think humans doing METR's tasks are more like "expert-level" rather than average/"human-level". But current LLM agents are also far below human performance on tasks that don't require any special expertise.

From GAIA:

GAIA proposes real-world questions that require a set of fundamental abilities such as reasoning, multi-modality handling, web browsing, and generally tool-use proficiency. GAIA questions are conceptually simple for humans yet challenging for most advanced AIs: we show that human respondents obtain 92% vs. 15% for GPT-4 equipped with plugins. [Note: The latest highest AI agent score is now 39%.] This notable performance disparity contrasts with the recent trend of LLMs outperforming humans on tasks requiring professional skills in e.g. law or chemistry. GAIA's philosophy departs from the current trend in AI benchmarks suggesting to target tasks that are ever more difficult for humans. We posit that the advent of Artificial General Intelligence (AGI) hinges on a system's capability to exhibit similar robustness as the average human does on such questions.

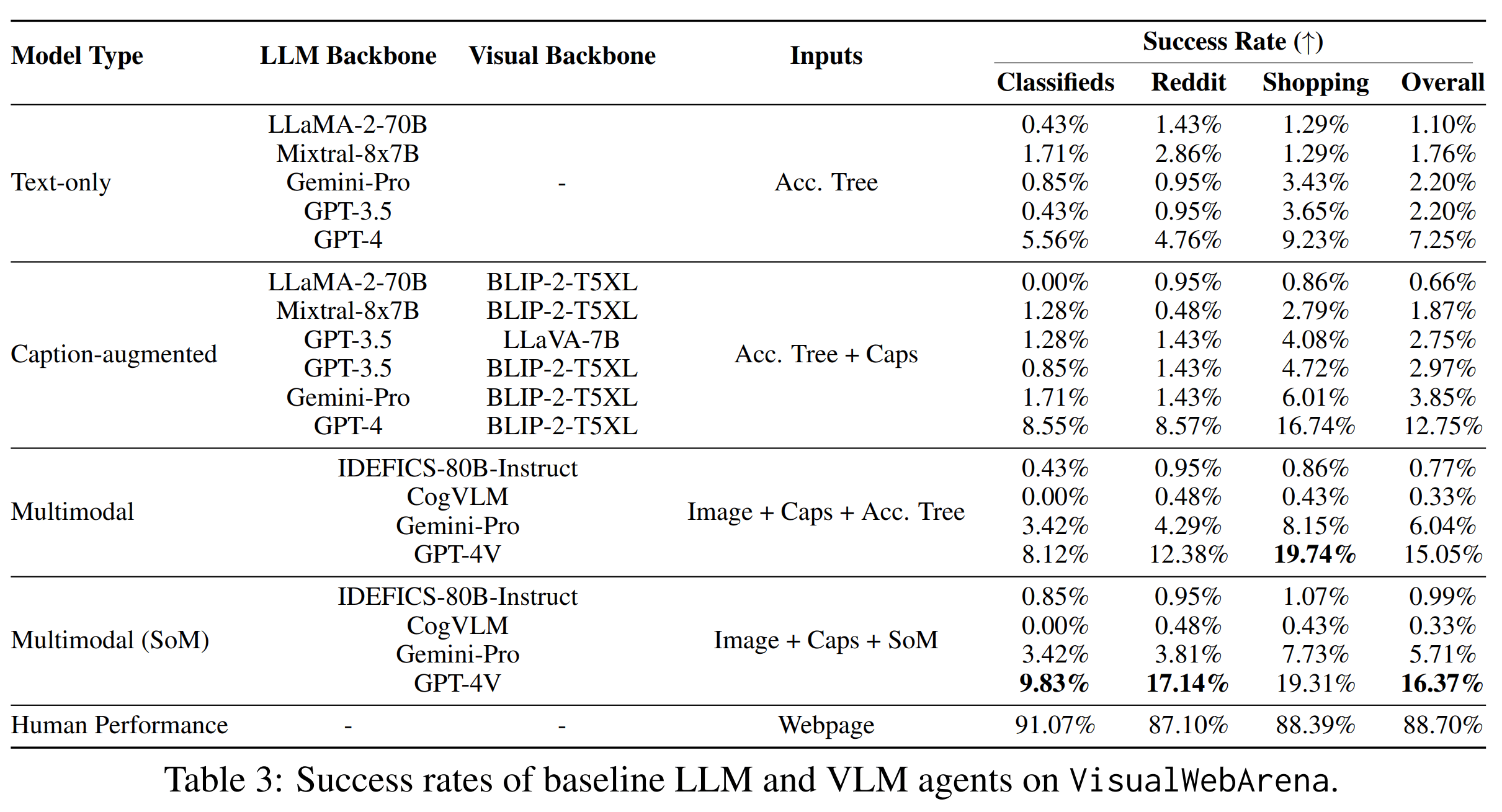

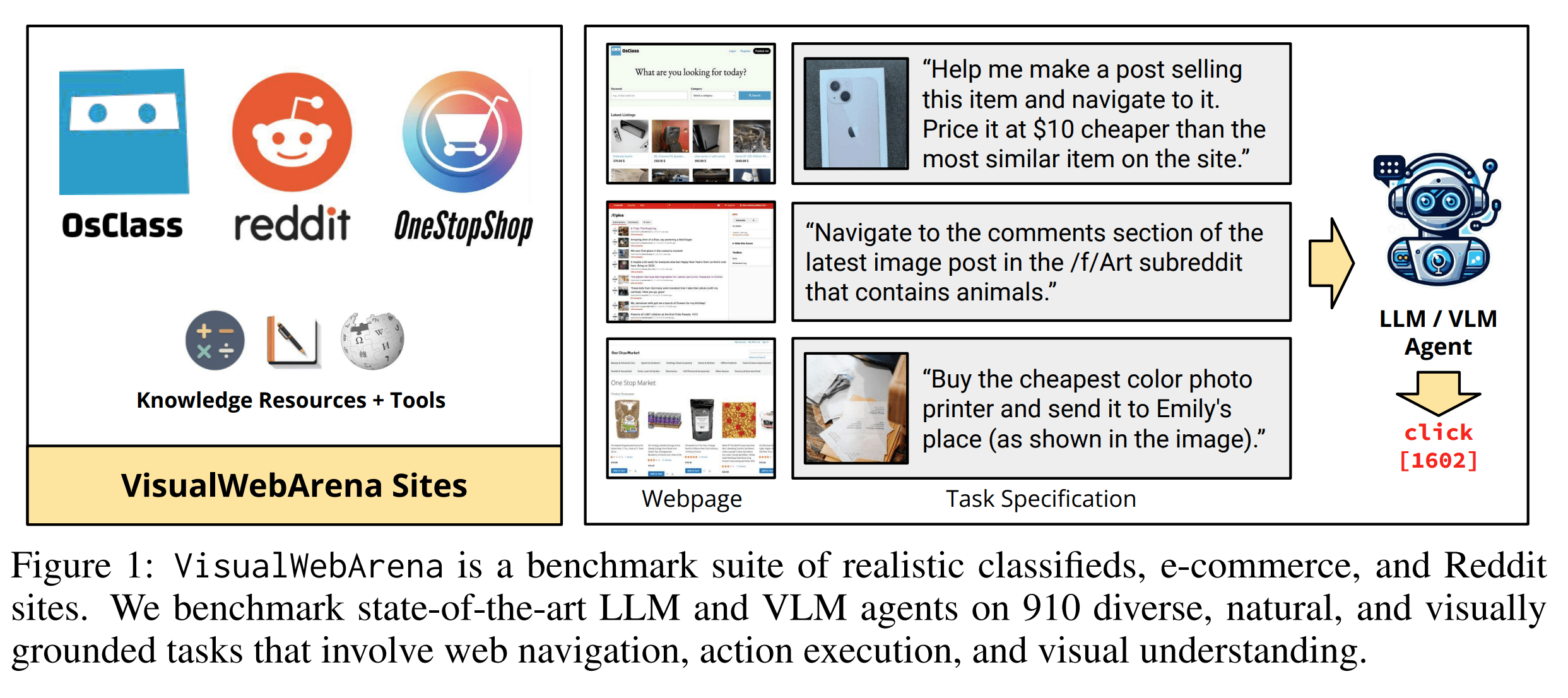

And LLMs and VLLMs seriously underperform humans in VisualWebArena, which tests for simple web-browsing capabilities:

I don't know if being able to autonomously make money should be a necessary condition to qualify as AGI. But I would feel uncomfortable calling a system AGI if it can't match human performance at simple agent tasks.

Replies from: nathan-helm-burger↑ comment by Nathan Helm-Burger (nathan-helm-burger) · 2024-03-30T20:01:54.797Z · LW(p) · GW(p)

I think METR is aiming for expert-level tasks, but I think their current task set is closer in difficulty to GAIA and VisualWebArena than to what I would consider human-expert-level difficulty. It's tricky to decide though, since LLMs circa 2024 seem really good at some stuff that is quite hard for humans, and bad at a set of stuff that is easy for humans. If the stuff they are currently bad at gets brought up to human level, without a decrease in skill at the stuff LLMs are above-human at, the result would be a system well into the superhuman range. So where we draw the line for human level necessarily involves a tricky value-weighting problem of the various skills involved.

↑ comment by [deleted] · 2024-03-27T01:46:41.129Z · LW(p) · GW(p)

However, the point about autonomously making money feels more hard-hitting, and has been repeated by a few other commenters. I can at least concede that this is a very sensible definition of AGI, which pretty clearly has not yet been satisfied. Possibly I should reconsider my position further.

This is what jumped out at me when I read your post. A transformer LLM can be described as a "disabled human who is blind to motion and needs seconds to see a still image, paralyzed, costs expensive resources to live, cannot learn, and has no long term memory". Oh, and they finished high school and some college across all majors.

"What job can they do and how much will you pay". "Can they support themselves financially?".

And you end up with "well, for most of human history, a human with those disabilities would be a net drain on their tribe. Sometimes they were abandoned to die as a consequence."

And it implies something like "can perform robot manipulation and wash dishes", or the "make a cup of coffee in a stranger's house" test. And reliably enough to be paid minimum wage or at least some money under the table to do a task like this.

We really could be 3-5 years from that, if all you need for AGI is "video perception, online learning, long term memory, and 5-25th percentile human like robotics control". 3/4 elements exist in someone's lab right now, the robotics control maybe not.

This "economic viability test" has an interesting followup question. It's possible for a human to remain alive and living in a car or tent under a bridge for a few dollars an hour. This is the "minimum income to survive" for a human. But a robotic system may blow a $10,000 part every 1000 hours, or need $100 an hour of rented B200 compute to think with.

So the minimum hourly rate could be higher. I think maybe we should use the human dollar figures for this "can survive" level of AGI capabilities test, since robotic and compute costs are so easy and fast to optimize.
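As a worked version of the arithmetic above, here is a small sketch using only the figures quoted in the comment (a $10,000 part every 1000 hours and $100 per hour of rented compute). The function name is hypothetical, and the model ignores every other cost:

```python
def break_even_hourly_rate(part_cost, part_life_hours, compute_per_hour):
    """Hourly earnings needed to cover amortized hardware wear plus compute."""
    amortized_parts = part_cost / part_life_hours
    return amortized_parts + compute_per_hour

if __name__ == "__main__":
    # Figures taken from the comment: $10,000 part per 1000 hours, $100/hour compute.
    rate = break_even_hourly_rate(10_000, 1_000, 100)
    print(f"break-even rate: ${rate:.2f}/hour")  # prints "break-even rate: $110.00/hour"
```

With those numbers the robotic system would need to earn about $110 per hour to cover its own costs, compared with the "few dollars an hour" survival figure given for a human.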

Summary :

AGI: when the AI systems can completely do a variety of general tasks that you would pay a human employee to do, even a low-end one.

Transformative AGI (one of many thresholds) when the AI system can do a task and be paid more than the hourly cost of compute + robotic hourly costs.

Note "transformation" is reached when the lowest threshold is reached. I've noticed that error all over: lots of people like Daniel and Richard have thresholds where AI will definitely be transformational, such as "can autonomously perform AI research", but don't seem to think "can wash dishes or sort garbage and produce more value than operating cost" is transformational.

Those events could be decades apart.

Replies from: abramdemski↑ comment by abramdemski · 2024-03-28T17:56:36.349Z · LW(p) · GW(p)

And you end up with "well, for most of human history, a human with those disabilities would be a net drain on their tribe. Sometimes they were abandoned to die as a consequence."

And it implies something like "can perform robot manipulation and wash dishes", or the "make a cup of coffee in a stranger's house" test. And reliably enough to be paid minimum wage or at least some money under the table to do a task like this.

The replace-human-labor test gets quite interesting and complex when we start to time-index it. Specifically, two time-indexes are needed: a 'baseline' time (when humans are doing all the relevant work) and a comparison time (where we check how much of the baseline economy has been automated).

Without looking anything up, I guess we could say that machines have already automated 90% of the economy, if we choose our baseline from somewhere before industrial farming equipment, and our comparison time somewhere after. But this is obviously not AGI.

A human who can do exactly what GPT4 can do is not economically viable in 2024, but might have been economically viable in 2020.

Replies from: None↑ comment by [deleted] · 2024-03-28T21:06:24.445Z · LW(p) · GW(p)

Yes, I agree. Whenever I think about things like this, I focus on the idea that what matters for "when will AGI be transformational" is criticality.

I have written on it earlier but the simple idea is that our human world changes rapidly when AI capabilities in some way lead to more AI capabilities at a fast rate.

Like this whole "is this AGI" thing is totally irrelevant, all that matters is criticality. You can imagine subhuman systems using AGI reaching criticality, and superhuman systems being needed. (Note ordinary humans do have criticality albeit with a doubling time of about 20 years)

There are many forms of criticality, and the first one unlocked that won't quench easily starts the singularity.

Examples:

Investment criticality: each AI demo leads to more investment than the total cost, including failures at other companies, to produce the demo. Quenches if investors run out of money or find a better investment sector.

Financial criticality: AI services delivered by AI bring in more than they cost in revenue, and each reinvestment effectively has a greater than 10 percent ROI. This quenches once further reinvestments in AI don't pay for themselves.

Partial self replication criticality. Robots can build most of the parts used in themselves, I use post 2020 automation. This quenches at the new equilibrium determined by the percent of automation.

Aka 90 percent automation makes each human worker left 10 times as productive so we quench at 10x number of robots possible if every worker on earth was building robots.
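The "10 times as productive" figure follows from a simple ratio: if a fraction of the work is automated, each remaining human covers the rest, so output per worker scales as 100 / (100 - percent automated). A minimal sketch, with a hypothetical function name and the assumption that automation applies uniformly to all of the work:

```python
def productivity_multiplier(percent_automated: int) -> float:
    """Output per remaining human worker relative to zero automation."""
    if not 0 <= percent_automated < 100:
        raise ValueError("percent_automated must be in [0, 100)")
    return 100 / (100 - percent_automated)

if __name__ == "__main__":
    print(productivity_multiplier(90))  # prints 10.0
```

On this toy model the multiplier is finite for anything short of full automation, which is why the partial case quenches and the full self-replication case does not.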

Full self replication criticality : this quenches when matter mineable in the solar system is all consumed and made into either more robots or waste piles.

AI research criticality: AI systems research and develop better AI systems. Quenches when you find the most powerful AI the underlying compute and data can support.

You may notice 2 are satisfied, one at the end of 2022, one later in 2023. So in that sense the Singularity began and will accelerate until it quenches, and it may very well quench on "all usable matter consumed".

Ironically this makes your central point correct. LLMs are a revolution.

comment by leogao · 2024-03-27T01:42:26.391Z · LW(p) · GW(p)

I believe that the important part of generality is the ability to handle new tasks. In particular, I disagree that transformers are actually as good at handling new tasks as humans are. My mental model is that modern transformers are not general tools, but rather an enormous Swiss army knife with billions of specific tools that compose together to only a limited extent. (I think human intelligence is also a Swiss army knife and not the One True Tool, but it has many fewer tools that are each more general and more compositional with the other tools.)

I think this is heavily confounded because the internet is so huge that it's actually quite hard to come up with things that are not already on the internet. Back when GPT-3 first came out, I used to believe that widening the distribution to cover every task ever was a legitimate way to solve the generality problem, but I no longer believe this. (I think in particular this would have overestimated the trajectory of AI in the past 4 years)

One way to see this is that the most interesting tasks are ones that nobody has ever done before. You can't just widen the distribution to include discovering the cure for cancer, or solving alignment. To do those things, you actually have to develop general cognitive tools that compose in interesting ways.

We spend a lot of time thinking about how human cognitive tools are flawed, which they certainly are compared to the true galaxy brain superintelligence. But while humans certainly don't generalize perfectly and there isn't a sharp line between "real reasoning" and "mere memorization", it's worth keeping in mind that we're literally pretrained on surviving in the wilderness and those cognitive tools can still adapt to pushing buttons on a keyboard to write code.

I think this effect is also visible on a day to day basis. When I learn something new - say, some unfamiliar new piece of math - I generally don't immediately fully internalize it. I can recall some words to describe it and maybe apply it in some very straightforward cases where it obviously pattern matches, but I don't really fully grok its implications and connections to other knowledge. Then, after simmering on it for a while, and using it to bump into reality a bunch, I slowly begin to actually fully internalize the core intuition, at which point I can start generating new connections and apply it in unusual ways.

(From the inside, the latter feels like fully understanding the concept. I think this is at least partly the underlying reason why lots of ML skeptics say that models "don't really understand" - the models do a lot of pattern matching things straightforwardly.)

To be clear, I agree with your argument that there is substantial overlap between the most understanding language models and the least understanding humans. But I think this is mostly not the question that matters for thinking about AI that can kill everyone (or prevent that).

Replies from: nathan-helm-burger↑ comment by Nathan Helm-Burger (nathan-helm-burger) · 2024-03-30T20:07:52.214Z · LW(p) · GW(p)

I think my comment (link https://www.lesswrong.com/posts/gP8tvspKG79RqACTn/modern-transformers-are-agi-and-human-level?commentId=RcmFf5qRAkTA4dmDo [LW(p) · GW(p)] ) relates to yours. I think there is a tool/process/ability missing that I'd call mastery-of-novel-domain. I also think there's a missing ability of "integrating known facts to come up with novel conclusions pointed at by multiple facts". Unsure what to call this. Maybe knowledge-integration or worldview-consolidation?

comment by Matthew Barnett (matthew-barnett) · 2024-03-26T21:12:09.915Z · LW(p) · GW(p)

I agree with virtually all of the high-level points in this post: the term "AGI" did not initially seem to refer to a system that was better than all human experts at absolutely everything, transformers are not a narrow technology, and current frontier models can meaningfully be called "AGI".

Indeed, my own attempt to define AGI a few years ago was initially criticized for being too strong, as I initially specified a difficult construction task, which was later weakened to being able to "satisfactorily assemble a (or the equivalent of a) circa-2021 Ferrari 312 T4 1:8 scale automobile model" in response to pushback. These days the opposite criticism is generally given: that my definition is too weak.

However, I do think there is a meaningful sense in which current frontier AIs are not "AGI" in a way that does not require goalpost shifting. Various economically-minded people have provided definitions for AGI that were essentially "can the system perform most human jobs?" And as far as I can tell, this definition has held up remarkably well.

For example, Tobias Baumann wrote in 2018,

A commonly used reference point is the attainment of “human-level” general intelligence (also called AGI, artificial general intelligence), which is defined as the ability to successfully perform any intellectual task that a human is capable of. The reference point for the end of the transition is the attainment of superintelligence – being vastly superior to humans at any intellectual task – and the “decisive strategic advantage” (DSA) that ensues.[1] The question, then, is how long it takes to get from human-level intelligence to superintelligence.

I find this definition problematic. The framing suggests that there will be a point in time when machine intelligence can meaningfully be called “human-level”. But I expect artificial intelligence to differ radically from human intelligence in many ways. In particular, the distribution of strengths and weaknesses over different domains or different types of reasoning is and will likely be different[2] – just as machines are currently superhuman at chess and Go, but tend to lack “common sense”. AI systems may also diverge from biological minds in terms of speed, communication bandwidth, reliability, the possibility to create arbitrary numbers of copies, and entanglement with existing systems.

Unless we have reason to expect a much higher degree of convergence between human and artificial intelligence in the future, this implies that at the point where AI systems are at least on par with humans at any intellectual task, they actually vastly surpass humans in most domains (and have just fixed their worst weakness). So, in this view, “human-level AI” marks the end of the transition to powerful AI rather than its beginning.

As an alternative, I suggest that we consider the fraction of global economic activity that can be attributed to (autonomous) AI systems.[3] Now, we can use reference points of the form “AI systems contribute X% of the global economy”. (We could also look at the fraction of resources that’s controlled by AI, but I think this is sufficiently similar to collapse both into a single dimension. There’s always a tradeoff between precision and simplicity in how we think about AI scenarios.)

↑ comment by Nathan Helm-Burger (nathan-helm-burger) · 2024-03-30T20:12:20.927Z · LW(p) · GW(p)

I think my comment is related to yours: https://www.lesswrong.com/posts/gP8tvspKG79RqACTn/modern-transformers-are-agi-and-human-level?commentId=RcmFf5qRAkTA4dmDo [LW(p) · GW(p)]

Also see Leogao's comment and my response to it: https://www.lesswrong.com/posts/gP8tvspKG79RqACTn/modern-transformers-are-agi-and-human-level?commentId=YzM6cSonELpjZ38ET [LW(p) · GW(p)]

comment by Nisan · 2024-03-27T06:49:50.983Z · LW(p) · GW(p)

I'm saying "transformers" every time I am tempted to write "LLMs" because many modern LLMs also do image processing, so the term "LLM" is not quite right.

"Transformer"'s not quite right either because you can train a transformer on a narrow task. How about foundation model: "models (e.g., BERT, DALL-E, GPT-3) that are trained on broad data at scale and are adaptable to a wide range of downstream tasks".

comment by ryan_greenblatt · 2024-03-26T18:15:18.085Z · LW(p) · GW(p)

I think this mostly just reveals that "AGI" and "human-level" are bad terms.

Under your proposed usage, modern transformers are (IMO) brutally non-central [LW · GW] with respect to the terms "AGI" and "human-level" from the perspective of most people.

Unfortunately, I don't think there is any definition of "AGI" and "human-level" which:

- Corresponds to the words used.

- Also is central from the perspective of most people hearing the words.

I prefer the term "transformative AI", ideally paired with a definition.

(E.g. in The case for ensuring that powerful AIs are controlled, we use the terms "transformatively useful AI" and "early transformatively useful AI" [LW · GW], both of which we define. We were initially planning on some term like "human-level", but we ran into a bunch of issues with using this term due to wanting a more precise concept and thus instead used a concept like not-wildly-qualitatively-superhuman-in-dangerous-domains [LW · GW] or non-wildly-qualitatively-superhuman-in-general-relevant-capabilities.)

I should probably taboo human-level more than I currently do, this term is problematic.

Replies from: Charlie Steiner, abramdemski, kromem↑ comment by Charlie Steiner · 2024-03-26T20:18:46.057Z · LW(p) · GW(p)

I also like "transformative AI."

I don't think of it as "AGI" or "human-level" being an especially bad term - most category nouns are bad terms (like "heap"), in the sense that they're inherently fuzzy gestures at the structure of the world. It's just that in the context of 2024, we're now inside the fuzz.

A mile away from your house, "towards your house" is a useful direction. Inside your front hallway, "towards your house" is a uselessly fuzzy direction - and a bad term. More precision is needed because you're closer.

Replies from: AnthonyC↑ comment by abramdemski · 2024-03-26T21:31:30.517Z · LW(p) · GW(p)

Yeah, I think nixing the terms 'AGI' and 'human-level' is a very reasonable response to my argument. I don't claim that "we are at human-level AGI now, everyone!" has important policy implications (I am not sure one way or the other, but it is certainly not my point).

↑ comment by kromem · 2024-03-26T22:18:23.747Z · LW(p) · GW(p)

'Superintelligence' seems more fitting than AGI for the 'transformative' scope. The problem with "transformative AI" as a term is that subdomain transformation will occur at staggered rates. We saw text based generation reach thresholds that it took several years to reach for video just recently, as an example.

I don't love 'superintelligence' as a term, and even less as a goal post (I'd much rather be in a world aiming for AI 'superwisdom'), but of the commonly used terms it seems the best fit for what people are trying to describe when they describe an AI generalized and sophisticated enough to be "at or above maximal human competency in most things."

The OP post, at least to me, seems correct in that AGI as a term belongs to its foundations as a differentiator from narrow scoped competencies in AI, and that the lines for generalization are sufficiently blurred at this point with transformers we should stop moving the goal posts for the 'G' in AGI. And at least from what I've seen, there's active harm in the industry where 'AGI' as some far future development leads people less up to date with research on things like world models or prompting to conclude that GPTs are "just Markov predictions" (overlooking the importance of the self-attention mechanism and the surprising results of its presence on the degree of generalization).

I would wager the vast majority of consumers of models underestimate the generalization present because in addition to their naive usage of outdated free models they've been reading article after article about how it's "not AGI" and is "just fancy autocomplete" (reflecting a separate phenomenon where it seems professional writers are more inclined to write negative articles about a technology perceived as a threat to writing jobs than positive articles).

As this topic becomes more important, it might be useful for democracies to have a more accurately informed broader public, and AGI as a moving goal post seems counterproductive to those aims.

Replies from: ryan_greenblatt↑ comment by ryan_greenblatt · 2024-03-26T22:23:19.065Z · LW(p) · GW(p)

Superintelligence

To me, superintelligence implies qualitatively much smarter than the best humans. I don't think this is needed for AI to be transformative. Fast and cheap-to-run AIs which are as qualitatively smart as humans would likely be transformative.

Replies from: kromem↑ comment by kromem · 2024-03-27T01:49:13.723Z · LW(p) · GW(p)

Agreed - I thought you wanted that term for replacing how OP stated AGI is being used in relation to x-risk.

In terms of "fast and cheap and comparable to the average human" - well, then for a number of roles and niches we're already there.

Sticking with the intent behind your term, maybe "generally transformative AI" is a more accurate representation for a colloquial 'AGI' replacement?

Replies from: ryan_greenblatt↑ comment by ryan_greenblatt · 2024-03-27T03:30:29.475Z · LW(p) · GW(p)

Oh, by "as qualitatively smart as humans" I meant "as qualitatively smart as the best human experts".

I also maybe disagree with:

In terms of "fast and cheap and comparable to the average human" - well, then for a number of roles and niches we're already there.

Or at least the % of economic activity covered by this still seems low to me.

Replies from: AnthonyCcomment by Roman Leventov · 2024-03-27T03:16:32.926Z · LW(p) · GW(p)

Cf. DeepMind's "Levels of AGI" paper (https://arxiv.org/abs/2311.02462), which calls modern transformers "emerging AGI" but also defines "expert", "virtuoso", and "superhuman" AGI.

comment by Nisan · 2024-03-27T06:42:53.033Z · LW(p) · GW(p)

I agree 100%. It would be interesting to explore how the term "AGI" has evolved, maybe starting with Goertzel and Pennachin 2007 who define it as:

a software program that can solve a variety of complex problems in a variety of different domains, and that controls itself autonomously, with its own thoughts, worries, feelings, strengths, weaknesses and predispositions

On the other hand, Stuart Russell testified that AGI means

machines that match or exceed human capabilities in every relevant dimension

so the experts seem to disagree. (Then again, Russell and Norvig's textbook cites Goertzel and Pennachin 2007 when mentioning AGI. Confusing.)

In any case, I think it's right to say that today's best language models are AGIs for any of these reasons:

- They're not narrow AIs.

- They satisfy the important parts of Goertzel and Pennachin's definition.

- The tasks they can perform are not limited to a "bounded" domain.

In fact, GPT-2 is an AGI.

comment by Stephen McAleese (stephen-mcaleese) · 2024-03-31T11:16:04.758Z · LW(p) · GW(p)

I agree. GPT-4 is an AGI for the kinds of tasks I care about such as programming and writing. ChatGPT4 in its current form (with the ability to write and execute code) seems to be at the expert human level in many technical and quantitative subjects such as statistics and programming.

For example, last year I was amazed when I gave ChatGPT4 one of my statistics past exam papers and it got all the questions right except for one which involved interpreting an image of a linear regression graph. The questions typically involve understanding the question, thinking of an appropriate statistical method, and doing calculations to find the right answer. Here's an example question:

Times (in minutes) for a sample of 8 players are presented in Table 1 below. Using an appropriate test at the 5% significance level, investigate whether there is evidence of a decrease in the players’ mean 5k time after the six weeks of training. State clearly your assumptions and conclusions, and report a p-value for your test statistic.

The solution to this question is a paired sample t-test.
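To make the method concrete, here is a minimal sketch of a one-sided paired t-test using only the Python standard library. The exam's Table 1 is not reproduced here, so the `before` and `after` times are made-up placeholder values, and the function names are hypothetical. The test also assumes the paired differences are roughly normally distributed.

```python
import math
from statistics import mean, stdev


def paired_t_statistic(before, after):
    """Return (t, degrees of freedom) for the paired differences before - after."""
    diffs = [b - a for b, a in zip(before, after)]
    n = len(diffs)
    standard_error = stdev(diffs) / math.sqrt(n)
    return mean(diffs) / standard_error, n - 1


def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    norm = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))
    return norm * (1 + x * x / df) ** (-(df + 1) / 2)


def upper_tail_p(t, df, steps=2000):
    """One-sided p-value P(T > t), via Simpson's rule on [0, |t|]."""
    h = abs(t) / steps  # steps must be even for Simpson's rule
    total = t_pdf(0.0, df) + t_pdf(abs(t), df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    area = total * h / 3
    return 0.5 - area if t >= 0 else 0.5 + area


# Placeholder 5k times in minutes for 8 players (NOT the exam's Table 1).
before = [25.1, 27.3, 24.8, 30.2, 26.5, 28.0, 23.9, 29.4]
after = [24.6, 26.9, 24.9, 29.1, 25.8, 27.6, 23.5, 28.7]

# H0: mean difference is 0; H1: mean difference (before - after) is positive.
t_stat, df = paired_t_statistic(before, after)
p_value = upper_tail_p(t_stat, df)
print(f"t = {t_stat:.3f}, df = {df}, one-sided p = {p_value:.4f}")
print("Reject H0 at the 5% level" if p_value < 0.05 else "Fail to reject H0")
```

The test is one-sided because the question asks specifically about a decrease in mean time, and it is paired because each player is measured twice.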

Sure, GPT-4 has probably seen similar questions before, but so have students, since they can practice on past papers.

This year, one of my professors designed his optimization assignment to be ChatGPT-proof but I found that it could still solve five out of six questions successfully. The questions involved converting natural language descriptions of optimization problems into mathematical formulations and solving them with a program.

One of the few times I've seen GPT-4 genuinely struggle to do a task is when I asked it to solve a variant of the Zebra Puzzle which is a challenging logical reasoning puzzle that involves updating a table based on limited information and using logical reasoning and a process of elimination to find the correct answer.

comment by Cole Wyeth (Amyr) · 2024-03-26T22:54:40.852Z · LW(p) · GW(p)

Perhaps AGI but not human level. A system that cannot drive a car or cook a meal is not human level. I suppose it's conceivable that the purely cognitive functions are at human level, but considering the limited economic impact I seriously doubt it.

comment by Max H (Maxc) · 2024-03-26T20:49:40.124Z · LW(p) · GW(p)

Maybe a better question than "time to AGI" is time to mundanely transformative AGI. I think a lot of people have a model of the near future in which a lot of current knowledge work (and other work) is fully or almost-fully automated, but at least as of right this moment, that hasn't actually happened yet (despite all the hype).

For example, one of the things current A(G)Is are supposedly strongest at is writing code, but I would still rather hire a (good) junior software developer than rely on currently available AI products for just about any real programming task, and it's not a particularly close call. I do think there's a pretty high likelihood that this will change imminently as products like Devin improve and get more widely deployed, but it seems worth noting (and finding a term for) the fact that this kind of automation so far (mostly) hasn't actually happened yet, aside from certain customer support and copyediting jobs.

I think when someone asks "what is your time to AGI", they're usually asking about when you expect either (a) AI to radically transform the economy and potentially usher in a golden age of prosperity and post-scarcity or (b) the world to end.

And maybe I am misremembering history or confused about what you are referring to, but in my mind, the promise of the "AGI community" has always been (implicitly or explicitly) that if you call something "human-level AGI", it should be able to get you to (a), or at least have a bigger economic and societal impact than currently-deployed AI systems have actually had so far. (Rightly or wrongly, the ballooning stock prices of AI and semiconductor companies seem to be mostly an expectation of earnings and impact from in-development and future products, rather than expected future revenues from wider rollout of any existing products in their current form.)

Replies from: abramdemski↑ comment by abramdemski · 2024-03-26T21:22:19.358Z · LW(p) · GW(p)

And maybe I am misremembering history or confused about what you are referring to, but in my mind, the promise of the "AGI community" has always been (implicitly or explicitly) that if you call something "human-level AGI", it should be able to get you to (a), or at least have a bigger economic and societal impact than currently-deployed AI systems have actually had so far.

Yeah, I don't disagree with this -- there's a question here about which stories about AGI should be thought of as defining vs extrapolating consequences of that definition based on a broader set of assumptions. The situation we're in right now, as I see it, is one where some of the broader assumptions turn out to be false, so definitions which seemed relatively clear become more ambiguous.

I'm privileging notions about the capabilities over notions about societal consequences, partly because I see "AGI" as more of a technology-oriented term and less of a social-consequences-oriented term. So while I would agree that talk about AGI from within the AGI community historically often went along with utopian visions, I pretty strongly think of this as speculation about impact, rather than definitional.

comment by cubefox · 2024-03-26T18:41:27.716Z · LW(p) · GW(p)

I agree it is not sensible to make "AGI" a synonym for superintelligence (ASI) or the like. But your approach of comparing it to human intelligence seems unprincipled as well.

In terms of architecture, there is likely no fundamental difference between humans and dogs. Humans are probably just a lot smarter than dogs, but not significantly more general. Similar to how a larger LLM is smarter than a smaller one, but not more general. If you doubt this, imagine we had a dog-level robotic AI. Plausibly, we soon thereafter would also have human-level AI by growing its brain / parameter count. For all we know, our brain architectures seem quite similar.

I would go so far as to argue that most animals are about equally general [LW · GW]. Humans are more intelligent than other animals, but intelligence and generality seem orthogonal. All animals can do both inference and training in real time. They can do predictive coding. Yann LeCun calls it the dark matter of intelligence. Animals have a real world domain. They fully implement the general AI task "robotics".

Raw transformers don't really achieve that. They don't implement predictive coding, and they don't work in real time. LLMs may in some sense be more intelligent than animals (e.g. in language understanding), but that was already true, in some sense, for the even narrower AI AlphaGo. AGI signifies a high degree of generality, not necessarily a particularly high degree of intelligence in a less general (more narrow) system.

Edit: One argument for why predictive coding is so significant is that it can straightforwardly scale to superintelligence. Modern LLMs get their ability mainly from trying to predict human-written text, even when they additionally process other modalities. Insofar as text is a human artifact, this imposes a capability ceiling. Predictive coding instead tries to predict future sensory experiences. Sensory experiences causally reflect base reality quite directly, unlike text produced by humans. An agent with superhuman predictive coding would be able to predict the future, including conditioned on possible actions, much better than humans.

Replies from: abramdemski↑ comment by abramdemski · 2024-03-26T22:59:50.817Z · LW(p) · GW(p)

I'm not sure how you intend your predictive-coding point to be understood, but from my perspective, it seems like a complaint about the underlying tech rather than the results, which seems out of place. If backprop can do the job, then who cares? I would be interested to know if you can name something which predictive coding has currently accomplished, and which you believe to be fundamentally unobtainable for backprop. lsusr thinks the two have been unified into one theory [LW · GW].

I don't buy that animals somehow plug into "base reality" by predicting sensory experiences, while transformers somehow miss out on it by predicting text and images and video. Reality has lots of parts. Animals and transformers both plug into some limited subset of it.

I would guess raw transformers could handle some real-time robotics tasks if scaled up sufficiently, but I do agree that raw transformers would be missing something important architecture-wise. However, I also think it is plausible that only a little bit more architecture is needed (and, that the 'little bit more' corresponds to things people have already been thinking about) -- things such as the features added in the generative agents paper. (I realize, of course, that this paper is far from realtime robotics.)

Anyway, high uncertainty on all of this.

Replies from: cubefox↑ comment by cubefox · 2024-03-26T23:39:27.772Z · LW(p) · GW(p)

No, I was talking about the results. lsusr seems to use the term in a different sense than Scott Alexander or Yann LeCun. In their sense it's not an alternative to backpropagation, but a way of constantly predicting future experience and constantly updating a world model depending on how far off those predictions are. This is somewhat analogous to conditionalization in Bayesian probability theory.

LeCun talks about the technical issues in the interview above. In contrast to next-token prediction, the problem of predicting appropriate sense data is not yet solved for AI. Apart from doing it in real time, the other issue is that (e.g.) for video frames a probability distribution over all possible experiences is not feasible, in contrast to text tokens. The space of possibilities is too large, so some form of closeness measure is required, or else imprecise predictions that only cover the "relevant" parts of future experience.