EigenKarma: trust at scale

post by Henrik Karlsson (henrik-karlsson) · 2023-02-08T18:52:24.490Z · LW · GW · 52 comments


Upvotes or likes have become a standard way to filter information online. The quality of this filter is determined by the users handing out the upvotes.

For this reason, the archetypal pattern of online communities is one of gradual decay. People are more likely to join communities where users are more skilled than they are. As communities grow, the skill of the median user goes down. The capacity to filter for quality deteriorates. Simpler, more memetic content drives out more complex thinking. Malicious actors manipulate the rankings through fake votes and the like.

This is a problem that will get increasingly pressing as powerful AI models start coming online. To ensure our capacity to make intellectual progress under those conditions, we should take measures to future-proof our public communication channels.

One solution is redesigning the karma system in such a way that you can decide whose upvotes you see.

In this post, I’m going to detail a prototype of this type of karma system, which has been built by volunteers in Alignment Ecosystem Development. EigenKarma allows each user to define a personal trust graph based on their upvote history.

EigenKarma

At first glance, EigenKarma behaves like normal karma. If you like something, you upvote it.

The key difference is that in EigenKarma, every user has a personal trust graph. If you look at my profile, you will see the karma assigned to me by the people in your trust network. There is no global karma score.

Imagine this trust graph powering a feed. If I have gamed the algorithm and gotten a million upvotes, that doesn’t matter: my blog post won’t filter through to you anyway, since you put no weight on the judgment of the anonymous masses.

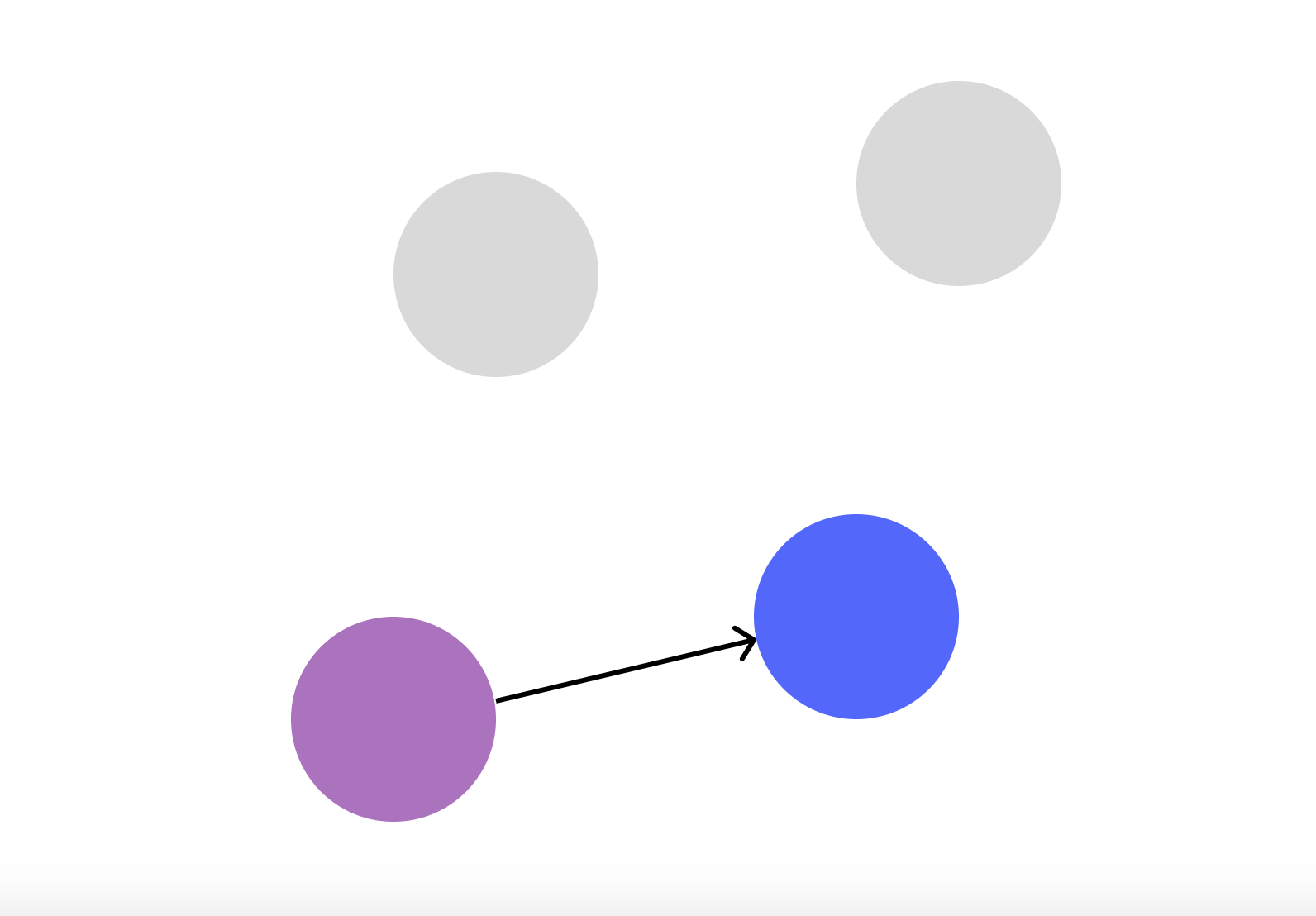

If you upvote someone you don’t know, they are attached to your trust graph. This can be interpreted as a tiny signal that you trust them:

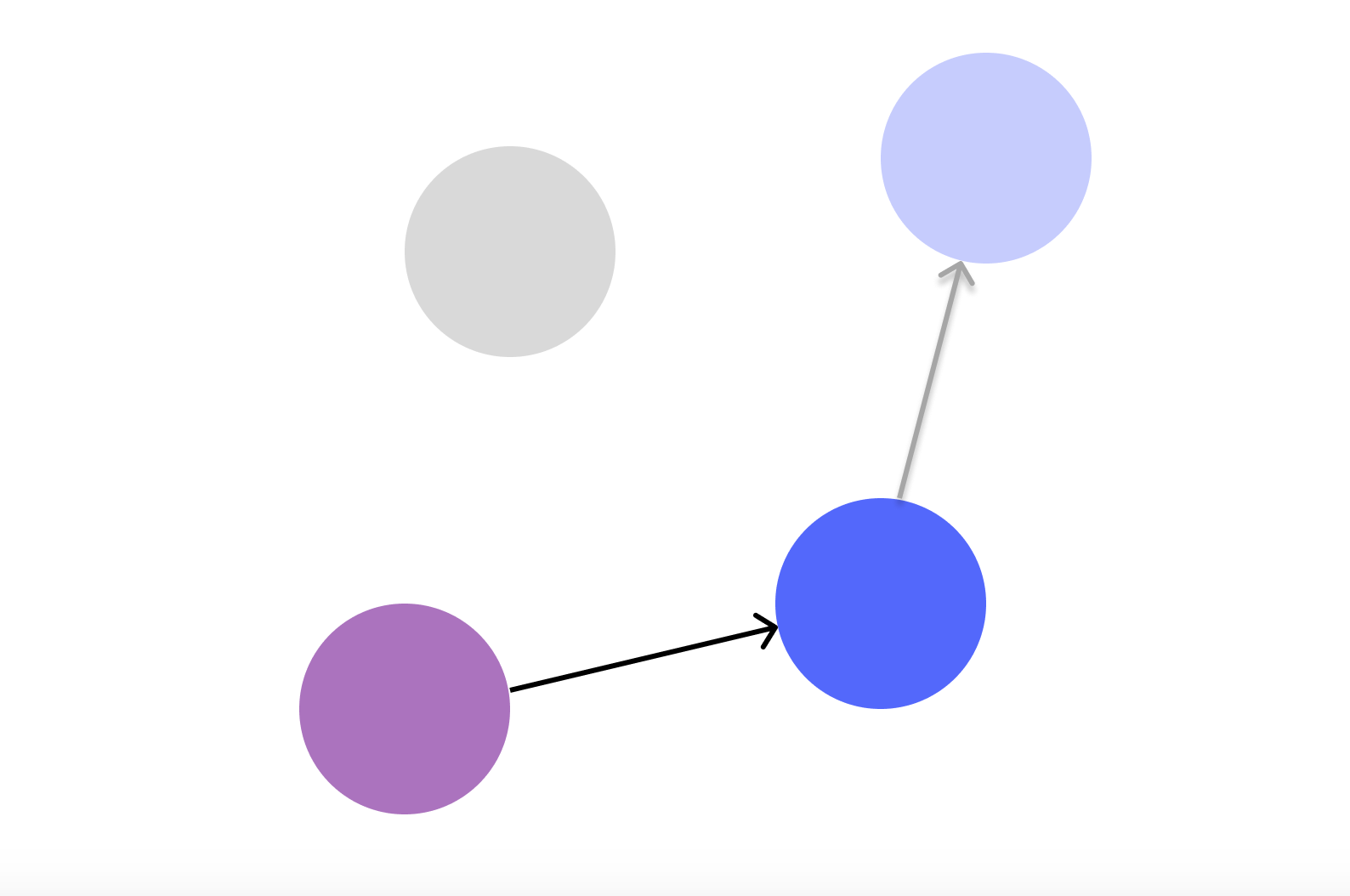

That trust will also spread to the users they trust in turn. If they trust user X, for example, you too trust X—a little:

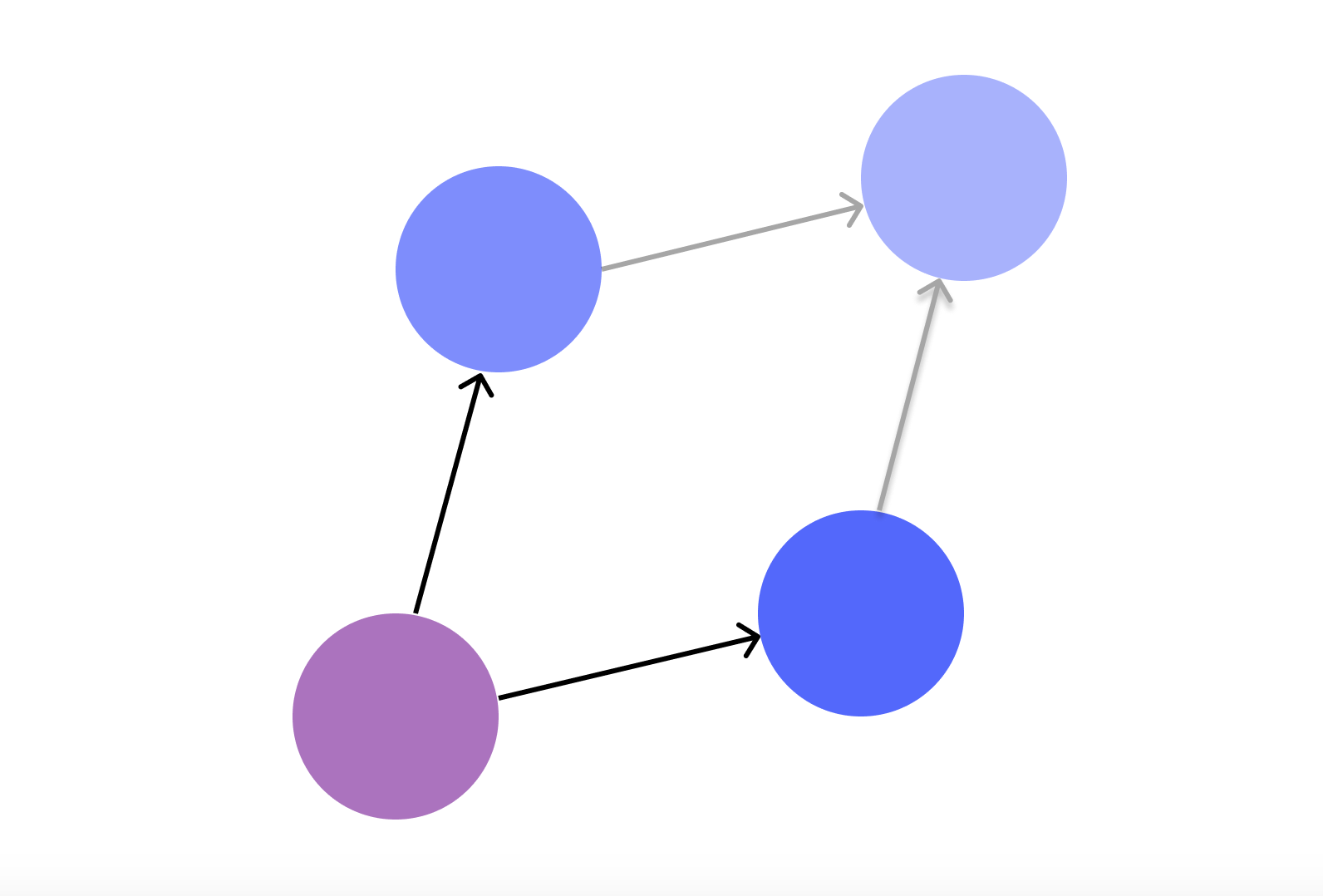

This is how we intuitively reason about trust when thinking about our friends and the friends of our friends. But because EigenKarma is a database, it can remember and compile more data than you can, so it can keep track of more than a Dunbar’s number of relationships. It scales trust. Karma propagates outward through the network from trusted node to trusted node.

Once you’ve given out a few upvotes, you can look up people you have never interacted with, like K., and see if people you “trust” think highly of them. If several people you “trust” have upvoted K., the karma they have given to K. is compiled together. The more you “trust” someone, the more karma they will be able to confer.

I have written about trust networks and scaling them before, and there’s been plenty of research suggesting that this type of “transitivity of trust” is a highly desired property of a trust metric. But until now, we haven’t seen a serious attempt to build such a system. It is interesting to see it put to use in the wild.
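The propagation described above can be read as personalized PageRank over the upvote graph, and simon's comment further down reads it the same way. The sketch below is a hypothetical illustration under that assumption. It is not EigenKarma's actual code: the function name, `damping`, and `iterations` are all made up. Each user's outgoing trust is split across the people they upvoted, in proportion to upvote counts. On every step part of the mass restarts at the viewer, so trust fades with distance from you.

```python
def personal_trust(upvotes, viewer, damping=0.85, iterations=50):
    """Personalized-PageRank-style trust scores as seen from `viewer`.

    upvotes maps voter -> {author: number of upvotes given}.
    Returns a dict user -> trust; the scores sum to 1.
    Hypothetical sketch, not EigenKarma's implementation.
    """
    users = set(upvotes) | {viewer}
    for targets in upvotes.values():
        users |= set(targets)
    trust = {u: 0.0 for u in users}
    trust[viewer] = 1.0
    for _ in range(iterations):
        # Restart mass always returns to the viewer; this makes the scores personal.
        nxt = {u: 0.0 for u in users}
        nxt[viewer] = 1.0 - damping
        for voter, score in trust.items():
            targets = upvotes.get(voter, {})
            total = sum(targets.values())
            if total == 0:
                # Users who upvoted nobody hand their mass back to the viewer.
                nxt[viewer] += damping * score
                continue
            for author, count in targets.items():
                nxt[author] += damping * score * count / total
        trust = nxt
    return trust


upvotes = {
    "you": {"alice": 3, "bob": 1},
    "alice": {"k": 2},
    "bob": {"k": 1, "alice": 1},
    # A ring of bots upvoting each other, never upvoted by anyone you trust.
    "bot1": {"bot2": 1_000_000},
    "bot2": {"bot1": 1_000_000},
}
scores = personal_trust(upvotes, "you")
print(scores["k"] > 0, scores["bot1"])  # True 0.0
```

Trust only flows along edges that start at you. The bot ring's million mutual upvotes therefore never reach your scores, which is the property the post describes for a gamed feed.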

Currently, you access EigenKarma through a Discord bot or the website. But the underlying trust graph is platform-independent. You can connect the API (which you can find here) to any platform and bring your trust graph with you.

Now, what does a design like this allow us to do?

EigenKarma is a primitive

EigenKarma is a primitive. It can be inserted into other tools. Once you start to curate a personal trust graph, it can be used to improve the quality of filtering in many contexts.

- It can, as mentioned, be used to evaluate content.

- This lets you curate better personal feeds.

- It can also be used as a forum moderation tool.

- What should be shown? Work that is trusted by the core team, perhaps, or work trusted by the user accessing the forum?

- It can also power a personal H-index, which lets you evaluate researchers by counting only citations from authors you trust.

- This can filter out citation rings and other ways of gaming the system, allowing online research communities to avoid some of the problems that plague universities.

- Extending this capacity to evaluate content, you can also use EigenKarma to index trustworthy web pages. This can form the basis of a search engine that is more resistant to SEO.

- In this context, you can have hyperlinks count as upvotes.

- You can also use EigenKarma to automate who gets privileges in a forum or Discord server: whoever is trusted by the core team, or by someone the core team trusts.

- If you are running a grant program, EigenKarma might increase the number of applicants you can correctly evaluate.

- Researchers channel their trust to the people they think are doing good work. Then the grantmakers can ask questions such as: "conditioning on interpretability-focused researchers as the seed group, which candidates score highly?" Or, they'll notice that someone has been working for two years but no one trusted thinks what they're doing is useful, which is suspicious.

- This does not replace due diligence, but it could reduce the amount of time needed to assess the technical details of a proposal or how the person is perceived.

- You can also use it to coordinate work in distributed research groups. If you enter a community that runs EigenKarma, you can see who is highly trusted, and what type of work they value. By doing the work that gives you upvotes from valuable users, you increase your reputation.

- With normal upvote systems, the incentives tend to push people to collect “random” upvotes. Since likes and upvotes, unweighted by their importance, are what is tracked on pages like Reddit and Twitter, it is emotionally rewarding to make those numbers go up, even if it is not in your best interest. With EigenKarma this is not an effective strategy, and so you get more alignment around clear visions emanating from high-agency individuals.

- Naturally, if everyone used EigenKarma (which we are not aiming for), a lot of people would coalesce around charismatic leaders too. But to the extent that happens, these dysfunctional bubbles would be isolated from the better-functioning parts of the trust graph, since users who are good at judging whom to trust would sever their connections to them.

- EigenKarma also makes it easier to navigate new communities, since you can see who is trusted by people you trust, even if you have not interacted with them yet. This might improve onboarding.

- You could, in theory, connect it to Twitter and have your likes and retweets counted as updates to your personal karma graph. Or you could import your upvote history from LessWrong or the AI alignment forum. And these forums can, if they want to, use the algorithm, or the API, to power their internal karma systems.

- Keeping your trust graph separate from particular services could also let you filter your own trusted subsection of the internet more broadly.
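The trust-filtered H-index from the list above can be sketched as follows. This is a hypothetical illustration. The trust scores are assumed to come from a personal trust computation like EigenKarma's, and `min_trust` is an arbitrary cutoff chosen for the example.

```python
def trusted_h_index(citations, trust, min_trust=0.01):
    """h-index that only counts citations from authors you trust.

    citations: dict paper -> list of citing authors.
    trust: dict author -> personal trust score (unknown authors count as 0).
    """
    counts = sorted(
        (sum(1 for author in citing if trust.get(author, 0.0) >= min_trust)
         for citing in citations.values()),
        reverse=True,
    )
    # h = largest number such that h papers each have at least h trusted citations.
    h = 0
    for rank, count in enumerate(counts, start=1):
        if count < rank:
            break
        h = rank
    return h


trust = {"ann": 0.2, "ben": 0.1, "cat": 0.05}
citations = {
    "p1": ["ann", "ben", "cat", "ring1", "ring2"],
    "p2": ["ann", "ben", "ring1", "ring2", "ring3"],
    "p3": ["ring1", "ring2", "ring3", "ring4"],  # cited only by a citation ring
}
everyone = {a: 1.0 for citing in citations.values() for a in citing}
print(trusted_h_index(citations, everyone))  # 3: the ring inflates the raw count
print(trusted_h_index(citations, trust))     # 2
```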

If you are interested

We’re currently test-running it on Superlinear Prizes, Apart Research, and in a few other communities. If you want to use EigenKarma in a Discord server or a forum, I encourage you to talk with plex on the Alignment Ecosystem Development Discord server. (Or just comment here and I’ll route you.)

There is work to be done if you want to join as a developer, especially optimizing the core algorithm’s linear algebra to be able to handle scale. If you are a grantor and want to fund the work, the lead developer would love to be able to rejoin the project full-time for a year for $75k (and is open to part-time work for a proportional fraction, or to scaling the team with more people).

We’ll have an open call on Tuesday the 14th of February if you want to ask questions (link to Discord event).

As we progress toward increasingly capable AI systems, our information channels will be subject to ever larger numbers of bots and malicious actors flooding our information commons. To ensure that we can make intellectual progress under these conditions, we need algorithms that can effectively allocate attention and coordinate work on pressing issues.

52 comments


comment by Dagon · 2023-02-08T21:31:44.165Z

I don't like that this conflates upvoting someone's writing with trusting their voting judgement. The VAST majority of upvotes on most systems comes from people who don't post (or at least don't post much), and for a lot of posters whose posts I like, I disagree pretty strongly with what they like on other topics.

More importantly, I think this puts too much weight on a pretty lightweight mechanism, effectively accelerating the Goodhart cycle by making karma important enough to be worth gaming.

↑ comment by Henrik Karlsson (henrik-karlsson) · 2023-02-08T22:02:00.799Z

The first is a point we think a lot about. What is the correlation between what people upvote and what they trust? How does that change when the mechanism changes? And how do you properly signal what it is you trust? And how should that transfer over to other things? Hopefully, the mechanism can be kept simple - but there are ways to tweak it and to introduce more nuance, if that turns out to make it more powerful for users.

On the second point, I'm not sure gaming something like EigenKarma would in most cases be a bad thing. If you want to game the trust graph in such a way that I trust you more - then you have to do things that are trustworthy and valuable, as judged by me or whoever you are trying to game. There is a risk of course that you would try to fool me into trusting you and then exploit me - but I'm not sure EigenKarma significantly increases the risk of that, nor do I have the imagination to figure out what it would mean in practice on the forum here for example.

↑ comment by Ben (ben-lang) · 2023-02-09T12:40:22.435Z

I am curious about what has (presumably) led you to discount the "obvious" solution to the first problem. Which is this: When a user upvotes a post they also invest a tiny amount of trust in everyone else who upvoted that same post*. Then if someone who never posts likes all the same things as you do you will tend to see other things they like.

* In detail I would make the time-ordering matter. A spam-bot upvoting a popular post does not gain trust from all the previous upvoters. In order to game the system the spam-bot would need to make an accurate prediction that a post will be wildly popular in the future.
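Ben's time-ordered rule can be sketched like this. Assumptions: votes arrive as an ordered log of (user, post) events, each user upvotes a post at most once, and each new upvote gives a fixed small amount of trust (`epsilon`, a made-up parameter) to every user who upvoted the same post earlier. Later voters get nothing.

```python
from collections import defaultdict


def co_upvote_trust(vote_log, epsilon=0.01):
    """Trust edges from an ordered log of (user, post) upvotes.

    When `user` upvotes `post`, they invest `epsilon` trust in every user who
    upvoted that post *earlier*. Later voters receive nothing, so piling onto
    an already-popular post earns no trust. Assumes one upvote per user per post.
    Returns truster -> {trusted: amount}.
    """
    earlier_voters = defaultdict(list)  # post -> users who upvoted it so far
    trust = defaultdict(lambda: defaultdict(float))
    for user, post in vote_log:
        for prior in earlier_voters[post]:
            if prior != user:
                trust[user][prior] += epsilon
        earlier_voters[post].append(user)
    return trust


log = [
    ("quiet_reader", "p1"),  # spotted the post early
    ("alice", "p1"),
    ("bob", "p1"),
    ("late_bot", "p1"),      # piles on once the post is popular
]
trust = co_upvote_trust(log)
print(dict(trust["bob"]))  # {'quiet_reader': 0.01, 'alice': 0.01}
print(any("late_bot" in t for t in trust.values()))  # False
```

As Andrew Currall points out below, this rule still rewards whoever votes first, including a bot that upvotes everything immediately.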

↑ comment by Matt Goldenberg (mr-hire) · 2023-02-10T00:36:00.440Z

There's an algorithm called EigenTrust++ that includes both similarity and transitivity in the calculation of one's reputation score:

https://www.researchgate.net/publication/261093756_EigenTrust_Attack_Resilient_Trust_Management

↑ comment by DirectedEvolution (AllAmericanBreakfast) · 2023-02-09T19:26:45.524Z

This feature I would be excited to see implemented!

↑ comment by Andrew Currall (andrew-currall) · 2023-02-13T08:28:19.365Z

I think this doesn't work even with time-ordering. A spam bot will probably get to the post first in any case. A bot that simply upvotes everything will gain a huge amount of trust. Even a bot paid only to upvote specific posts will still gain trust if some of those posts are actually good, which it can "use" to gain credibility in its upvotes for the rest of the posts (which may not be good).

↑ comment by dmav · 2023-02-10T18:17:42.107Z

You probably also want to do some kind of normalization here based on how many total posts the user has upvoted (so you can't just, e.g., upvote everything). (You probably actually care about something a little different from the accuracy of their upvotes-as-predictions on average, though...)
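dmav's normalization can be sketched by treating each earlier upvote as a prediction. A voter's hits are then divided by their total number of upvotes. This is one possible reading, and the function name is made up. As dmav notes, this kind of per-upvote precision is probably not exactly the right target.

```python
from collections import Counter, defaultdict


def normalized_prediction_trust(vote_log, user):
    """Score earlier voters by how often their upvotes 'predicted' `user`'s.

    vote_log: ordered list of (voter, post) upvotes.
    A voter scores one hit each time they upvoted a post before `user` did;
    hits are divided by the voter's total upvotes, so upvoting everything
    does not pay. Returns voter -> score in [0, 1].
    """
    total_upvotes = Counter(voter for voter, _ in vote_log)
    voters_so_far = defaultdict(list)  # post -> voters before the current event
    hits = Counter()
    for voter, post in vote_log:
        if voter == user:
            for prior in voters_so_far[post]:
                if prior != user:
                    hits[prior] += 1
        voters_so_far[post].append(voter)
    return {v: hits[v] / total_upvotes[v] for v in hits}


# A bot upvotes ten posts immediately; a scout upvotes only the two you later like.
log = [("spam_bot", f"p{i}") for i in range(1, 11)]
log += [("scout", "p1"), ("scout", "p2"), ("you", "p1"), ("you", "p2")]
print(normalized_prediction_trust(log, "you"))  # {'spam_bot': 0.2, 'scout': 1.0}
```

Both voters have two raw hits. Only the normalization separates the bot from the scout.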

↑ comment by Adam Zerner (adamzerner) · 2023-05-14T19:25:22.309Z

> On the second point, I'm not sure gaming something like EigenKarma would in most cases be a bad thing. If you want to game the trust graph in such a way that I trust you more - then you have to do things that are trustworthy and valuable, as judged by me or whoever you are trying to game.

I think that even people who you trust are susceptible to being gamed. I'm not sure if the amount of susceptibility is important though. For example, Reddit is easier to game than LessWrong; LessWrong is gameable to some extent; but is LessWrong gameable to an important extent?

↑ comment by DirectedEvolution (AllAmericanBreakfast) · 2023-02-08T23:18:37.436Z

Stated another way:

- Normal karma:
  - Provides positive or negative feedback to OP
  - Increases the visibility of the upvoted post to all users
- EigenKarma:
  - Provides positive or negative feedback to OP
  - Increases visibility to users who assign you high EigenKarma
  - Increases visibility of upvoted post's EigenKarma network to you

So EigenKarma improves your ability to decouple signal-boosting and giving positive feedback.

However, it enforces coupling between giving positive feedback and which posts are most visible to you.

I think the advantage of EigenKarma over normal karma is that normal karma allows you to "inflict" a post's visibility on other users. EigenKarma inflicts visibility of a broad range of posts on yourself, and those who've inflicted upon themselves the results of your voting choices.

Although the latter seems at least superficially preferable from a standpoint of incentivizing responsible voting, it also results in a potentially problematic lack of transparency if there's not a strong enough correlation between what people upvote and what people post. Perhaps many people who write good posts you'd like to see more of also upvote a lot of dumb memes. That makes it hard to increase the visibility of good posts without also increasing the visibility of dumb memes.

I agree with Dagon: it seems better to split "giving positive feedback" from "increasing visibility of their feed." The latter is something I might want to do even for somebody who never posts anything, while the former is something I might want to do for all sorts of reasons that have nothing to do with what I want to view in the future.

Right now, it seems there are ways to implement "increasing visibility of somebody else's feed." Many sites let you view what accounts or subforums somebody is following, and to choose to follow them. Sometimes that functionality is buried, not convenient to use, or hard to get feedback from. I could imagine a social media site that is centrally focused on exploring other users' visibility networks and tinkering with your feed based on that information.

At baseline, though, it seems like you'd need some way for somebody to ultimately say "I like this content and I'd like to see more of it." But it does seem possible to just have two upvote buttons, one to give positive feedback and the other to increase visibility.

↑ comment by Henrik Karlsson (henrik-karlsson) · 2023-02-09T09:35:15.614Z

It is an open question to me how strongly a user's tendency to write good posts (or do other types of valuable work) correlates with their tendency to signal-boost bad things (like stupid memes). My personal experience is that there is a strong correlation between what people consume and what they produce - if I see someone signal-boost low-quality information, I take that as a sign of unsound epistemic practices, and will generally take care to reduce their visibility. (On Twitter, for example, I would unfollow them.)

There are ways to make EigenKarma more fine-grained so you can hand out different types of upvotes, too, which can be used to decouple things. On the dev Discord, we are experimenting with giving upvotes flavors, so you can fine-tune what it is about the person that the thing you upvoted made you trust more (is it their skill as a dev? is it their capacity to do research?). Figuring out the design for this, and whether it is too complicated, is an open question right now in my mind.
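The flavored upvotes Henrik describes could be modeled as one graph per flavor. The sketch below is hypothetical: the class, the flavor names, and the two-hop scoring rule are all assumptions made for illustration. It shows that upvoting someone's code does not make you trust the research they upvote, which also speaks to the concern about unified trust raised in later comments.

```python
from collections import defaultdict


class FlavoredVotes:
    """One upvote graph per flavor, so trust in one skill does not leak into another."""

    def __init__(self):
        # flavor -> voter -> author -> upvote count
        self.graphs = defaultdict(lambda: defaultdict(lambda: defaultdict(int)))

    def upvote(self, voter, author, flavor):
        self.graphs[flavor][voter][author] += 1

    def two_hop_trust(self, viewer, target, flavor):
        """Trust in `target` via the people `viewer` upvoted, within a single flavor."""
        graph = self.graphs.get(flavor, {})
        direct = graph.get(viewer, {})
        total = sum(direct.values())
        if total == 0:
            return 0.0
        score = 0.0
        for friend, count in direct.items():
            friend_votes = graph.get(friend, {})
            friend_total = sum(friend_votes.values())
            if friend_total:
                score += (count / total) * (friend_votes.get(target, 0) / friend_total)
        return score


votes = FlavoredVotes()
votes.upvote("you", "dana", "dev")         # you upvoted Dana's code
votes.upvote("dana", "finn", "dev")        # Dana upvoted Finn's code
votes.upvote("dana", "eve", "research")    # Dana upvoted Eve's research
print(votes.two_hop_trust("you", "finn", "dev"))      # 1.0
print(votes.two_hop_trust("you", "eve", "research"))  # 0.0
```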

↑ comment by DirectedEvolution (AllAmericanBreakfast) · 2023-02-09T12:52:36.411Z

I agree - I’m uncertain about what it would be like to use it in practice, but I think it’s great that you’re experimenting with new technology for handling this type of issue. If it were convenient to test drive the feature, especially in an academic research context where I have the biggest and most important search challenges, I’d be interested to try it out.

↑ comment by [deleted] · 2023-02-09T00:56:59.060Z

This sounds like it could easily end up with the same catastrophic flaw as recsys. Most users will want to upvote posts they agree with. So this creates self-reinforcing "cliques" where everyone sees only more content from the set of users they already agree with, strengthening their belief that the ground truth reality is what they want it to be, and so on.

↑ comment by DirectedEvolution (AllAmericanBreakfast) · 2023-02-09T01:02:43.736Z

Yeah, this seems like it fundamentally springs from "people don't always want what's good for them/society." Hard to design a system to enforce epistemic rigor on an unwilling user base.

↑ comment by Gunnar_Zarncke · 2023-02-10T08:23:43.404Z

The EigenKarma method doesn't depend on upvotes as a means to define the trust graph. Upvotes are just a very easy way to collect it. Maybe too easy. The core idea of EigenKarma seems to be the individual graphs, their combination, and provisioning them as a service. Maybe the distinction could be made clearer.

comment by Writer · 2023-02-08T21:33:48.921Z

A guess: if LessWrong implemented this, onboarding lots of new users at once would be easier to do without ruining the culture for the people already here.

↑ comment by Ilio · 2023-02-11T03:49:15.639Z

Another guess: this tool will accentuate political divides in any group that uses it without acute awareness of this effect and a well-chosen set of countermeasures.

↑ comment by M. Y. Zuo · 2023-02-15T03:16:15.686Z

Can you elaborate as to how you see this happening?

↑ comment by Ilio · 2023-02-16T23:26:12.062Z

The countermeasures? That’s a difficult question, but it should start by measuring the effect. I’d probably go with an ICA, then compute some ratio to test for increased polarisation on « hot » topics following the introduction of this scoring method.

But maybe you are asking why this effect tends to happen in the first place? Depending on your background, one of the two following explanations might suit you best:

- On a common-sense level, the evidence for polarisation from social networks is overwhelmingly clear, so any tool that looks like it can help construct a social network is at risk of being dangerous.
- On a more rationalist-seeking level, I think the key thought is to notice that we can replace the label « trust » in propagating trust with the label « ingroup », as in propagating (a feeling of belonging).

↑ comment by M. Y. Zuo · 2023-02-17T04:58:26.718Z

> propagating appartenance.

Do you mean appearance or appurtenance?

↑ comment by Ilio · 2023-02-17T13:26:05.968Z

A bug in my internal translator, thanks for signaling it. :-)

(I also added a link for the ingroup concept, for today’s lucky ten thousand)

comment by evhub · 2023-02-09T00:46:51.376Z

I think a problem with this is that it removes the common-knowledge-building effect of public overall karma, since it becomes much less clear what things in general the community is paying attention to.

↑ comment by Henrik Karlsson (henrik-karlsson) · 2023-02-09T09:43:30.185Z

You can use EigenKarma in several ways. If it is important to make clear what a specific community pays attention to, one thing to do is this:

- Have the feed of a forum be what the founder (or moderators) of the forum sees from the point of view of their trust graph.

- This way the moderators get control over who is considered core to the community, and where the boundaries of the community lie.

- In this setup, the public karma is how valuable a member is to the community, as judged by the core members of the community and the people they trust, weighted by degree of trust.

- This gives a more fluid way of assigning privileges and roles within the forum, and reduces the risk that a sudden influx will rapidly alter the culture of the forum. We run a sister version of the system that works like this in at least one Discord.
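The moderator-seeded setup described above can be sketched as personalized-PageRank-style propagation, with the restart mass spread evenly over the core team instead of a single viewer. The seed names, `damping`, and the 0.05 role threshold are illustrative assumptions. They are not the configuration of the Discord deployment Henrik mentions.

```python
def community_karma(upvotes, seeds, damping=0.85, iterations=50):
    """Trust as seen from a set of core members (restart mass split evenly among them).

    upvotes: voter -> {author: upvote count}. Hypothetical sketch.
    """
    seeds = set(seeds)
    users = set(upvotes) | seeds
    for targets in upvotes.values():
        users |= set(targets)
    restart = {u: (1.0 / len(seeds) if u in seeds else 0.0) for u in users}
    karma = dict(restart)
    for _ in range(iterations):
        nxt = {u: (1.0 - damping) * restart[u] for u in users}
        for voter, score in karma.items():
            targets = upvotes.get(voter, {})
            total = sum(targets.values())
            if total == 0:
                # Users who upvoted nobody send their mass back to the core team.
                for u in seeds:
                    nxt[u] += damping * score * restart[u]
                continue
            for author, count in targets.items():
                nxt[author] += damping * score * count / total
        karma = nxt
    return karma


def members_with_role(karma, threshold):
    """Everyone whose community karma clears the (arbitrary) role threshold."""
    return {u for u, k in karma.items() if k >= threshold}


upvotes = {
    "mod_a": {"regular": 2},
    "mod_b": {"regular": 1, "newcomer": 1},
    "regular": {"newcomer": 1},
    # A sudden influx of accounts that only upvote each other.
    "raider1": {"raider2": 50},
    "raider2": {"raider1": 50},
}
karma = community_karma(upvotes, ["mod_a", "mod_b"])
print(sorted(members_with_role(karma, threshold=0.05)))
# ['mod_a', 'mod_b', 'newcomer', 'regular']
```

The raiders end up with zero karma however much they upvote each other, because no path leads to them from the core team.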

comment by Viliam · 2023-02-10T14:31:47.044Z

I am not sure whether a unified "trust", let alone "transitive trust", makes sense. People can be experts on one thing and uninformed about another. There are people I trust in the sense "they wouldn't stab me in the back", but I do not trust their trust in homeopathy or Jesus. In the context of LessWrong, I would hate to see my upvotes of someone's articles on math translated into indirect support for Buddhism.

comment by the gears to ascension (lahwran) · 2023-02-09T00:15:06.035Z

I don't think this quite works, but I like the attempt. The problem I see here is that this is likely to create filter bubbles. One of my strategies for avoiding filter bubbles is that I often specifically seek out media that is unpopular and then simply try to get through a lot of it fast, because it is rare for what I want to correlate terribly strongly with what others want. Also, upvoting someone's comments doesn't mean that I agree with them, and agreeing with them doesn't mean that I trust them to recognize what's good in the same situations I would. I would suggest that a key problem with karma is in fact the issue that there's a single direction of up/down, but I think there's something more fundamentally funky about the idea of having an "upvote so others can see" view, even as it exists now. I'd personally suggest that votes should be at the same level as comments - votes should be seen as reviews, in the same sense as scientific reviews. And even scientific review has serious problems. [index of last time I did a search for this] (edit 2y later: this was never a valid link and I don't know what I meant to link anymore)

In general, I think what we'd want would have some degree of intentional partitioning as new nodes get added, and some degree of intentional anti-partitioning; the graph should probably be near the edge of criticality in some key aspect, as most highly effective systems turn out to be, but that claim leaves open which feature should be at the edge of criticality.

It might make sense to separate simulacrum 1, 2, and 3 - fact, manipulation, and belonging - intentionally, if possible; getting them to stay separated, or to start out separated even for a new user, is not trivial. How could something like EigenKarma be adapted to do this? Dunno.

comment by Gunnar_Zarncke · 2023-02-10T08:24:45.051Z

There have been experiments with attack-resistant trust metrics before. One notable project was Advogato. It failed and I'm not sure why. It's archived now. Maybe because it didn't create individual graphs. It might be worthwhile to look into Advogato's Trust Metric.

comment by simon · 2023-02-09T04:18:17.684Z

To my best understanding this is basically doing PageRank but with votes taking the place of links - so a user's outgoing trust is divided between other users in proportion to how much in total they've upvoted each one.

I could well be wrong though, the documents include a low-level description of the algorithm if people want to check.

It seems to me this approach would be likely to strongly favor more prolific users, and I would guess that, even if outsiders didn't agree with the core of prolific users, they would tend to see results weighted heavily towards those users and whoever those users most upvote.

I would much prefer an approach that compensated for this in some way.

↑ comment by Yoav Ravid · 2023-02-09T13:23:35.264Z

> It seems to me this approach would be likely to strongly favor more prolific users

That's a very good point. I might upvote 20 out of 200 posts by a prolific user I don't trust much, and 5 out of 5 posts by an unprolific user I highly trust. But this system would think I trust the former much more.

But then, just using averages or medians won't work, because if I upvoted 50 out of 50 posts from one user, and 5 out of 5 from another user, then I probably do trust the former more, even though they have the same average and median: 50 posts is a much better track record than 5 posts.
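One standard way to account for both the rate and the length of a track record is a Beta-prior estimate. It is swapped in here as a suggestion; nothing in the post says EigenKarma does this. With a uniform Beta(1, 1) prior, the posterior mean of someone's upvote rate after k upvotes out of n posts is (k + 1) / (n + 2). That formula ranks Yoav's three examples in the intended order:

```python
from fractions import Fraction


def smoothed_upvote_rate(upvoted, seen, prior_up=1, prior_down=1):
    """Posterior mean of an upvote rate under a Beta(prior_up, prior_down) prior.

    With the default uniform prior this is Laplace's rule: (k + 1) / (n + 2).
    """
    return Fraction(upvoted + prior_up, seen + prior_up + prior_down)


records = {
    "prolific": (20, 200),          # 20 of 200 posts upvoted
    "rare_but_good": (5, 5),        # 5 of 5
    "long_track_record": (50, 50),  # 50 of 50
}
for name, (k, n) in records.items():
    print(name, smoothed_upvote_rate(k, n))
# prolific 21/202
# rare_but_good 6/7
# long_track_record 51/52
```

The 50-of-50 user (about 0.98) outranks the 5-of-5 user (about 0.86), and both far outrank the prolific user (about 0.10).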

comment by Mckiev · 2023-02-09T17:56:03.598Z

What’s great about the current setup is that it doesn’t produce any additional friction for users, since it’s built on top of an existing post-voting system. However, one downside is that the number of upvotes I give doesn’t perfectly track the trust I assign to a user. E.g., as pointed out by previous commenters, prolific posters could post more often and collect more likes than they intuitively “deserve.” This particular issue can be mitigated by capping the effective number of upvotes to a given user at a certain fixed number.
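Mckiev's cap can be sketched as clipping each per-author upvote count before one voter's counts are normalized into outgoing trust weights. The function name and the cap of 5 are hypothetical placeholders.

```python
def outgoing_weights(upvote_counts, cap=5):
    """Normalize one voter's per-author upvote counts into trust weights.

    Each author's count is capped first, so a prolific poster cannot collect
    an outsized share just by posting more.
    """
    capped = {author: min(count, cap) for author, count in upvote_counts.items()}
    total = sum(capped.values())
    if total == 0:
        return {}
    return {author: c / total for author, c in capped.items()}


my_upvotes = {"prolific": 20, "rare_but_good": 5}
print(outgoing_weights(my_upvotes, cap=float("inf")))  # {'prolific': 0.8, 'rare_but_good': 0.2}
print(outgoing_weights(my_upvotes))                    # {'prolific': 0.5, 'rare_but_good': 0.5}
```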

The most popular Russian-speaking poker forum uses a karma system where everyone can explicitly assign karma to each other in the range [-100:100]. It served as an analog of Google Maps reviews for users, and I personally found it super useful, since it helped distinguish trustworthy people and helped establish financial relations (like lending or staking each other). I should say it worked pretty well!

I think displaying the eigenvector of this karma matrix would be even more useful. EigenKarma would also allow making anonymous ratings while being resistant to botnets.
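Mckiev's suggestion can be sketched with power iteration. The [-100:100] scale forces two choices, and both are assumptions of this sketch rather than part of the original proposal. First, negative ratings are clipped to zero, because the Perron-Frobenius theorem (which guarantees a non-negative principal eigenvector) applies to non-negative matrices. Second, each rater's row is normalized so heavy raters do not dominate. A small uniform mixing term `alpha`, as in PageRank, makes the iteration converge. Note that this produces one global score, unlike EigenKarma's personal one.

```python
def global_eigenkarma(ratings, alpha=0.1, iterations=100):
    """Principal eigenvector of a clipped, row-normalized rating matrix, via power iteration.

    ratings[i][j] is the karma user i gave user j, in [-100, 100].
    Returns one score per user; the scores sum to 1.
    """
    n = len(ratings)
    rows = []
    for row in ratings:
        clipped = [max(0.0, float(v)) for v in row]  # negative ratings dropped
        total = sum(clipped)
        # Raters with no positive ratings spread their weight uniformly.
        rows.append([v / total for v in clipped] if total > 0 else [1.0 / n] * n)
    x = [1.0 / n] * n
    for _ in range(iterations):
        # x_j <- (1 - alpha) * sum_i x_i * M[i][j] + alpha / n
        x = [(1 - alpha) * sum(x[i] * rows[i][j] for i in range(n)) + alpha / n
             for j in range(n)]
    return x


ratings = [
    [0, 100, -50],   # user 0 vouches for user 1 and warns against user 2
    [80, 0, 0],      # user 1 vouches for user 0
    [100, 100, 0],   # user 2 vouches for both
]
scores = global_eigenkarma(ratings)
print([round(s, 3) for s in scores])  # [0.483, 0.483, 0.033]
```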

comment by Gordon Seidoh Worley (gworley) · 2023-02-08T22:34:31.675Z

I imagine this would be hard to sell (in the sense of getting them to let you hook into their votes, calculate karma, and use it to determine what users see) to companies like Facebook and Twitter that show you lots of content you can vote on. My guess is that this is because they want control over what people see so they can optimize it for things they care about, like generating ad revenue or engagement. For many sites, what the user wants is just an input to consider; the site is optimizing for other things that may not reflect the user's preferences, but that's okay so long as more of the desired objective is obtained, like placing ads that people click on.

↑ comment by plex (ete) · 2023-02-09T00:28:26.590Z

Agreed, incentives probably block this from being picked up by megacorps. I had thought to try and get Musk's twitter to adopt it at one point when he was talking about bots a lot, it would be very effective, but doesn't allow rent extraction in the same way the solution he settled on (paid twitter blue).

Websites which have the slack to allow users to improve their experience even if it costs engagement might be better adopters, LessWrong has shown they will do this with e.g. batching karma daily by default to avoid dopamine addiction.

↑ comment by ChristianKl · 2023-02-10T16:07:38.855Z

Paid Twitter blue seems to be quite competitive. The algorithm could just weigh paid Twitter blue users more highly than users that aren't Twitter blue.

As far as megacorps go, YouTube likely wouldn't want this for its video ranking, but it might want it for the comment sections of videos. If the EigenKarma of a YouTube channel's owner set the ranking of comments on that channel's videos, that would increase comment quality by a lot.

↑ comment by Mckiev · 2023-02-13T12:40:43.590Z

Would it be possible to make an opt-in EigenKarma layer on top of Twitter (but independent from it)? I can imagine parsing, say, the 100k most popular Twitter accounts plus all of the personal tweets and likes of people who opted in to the EigenKarma layer, and then building a customised Twitter feed for them.

comment by Gunnar_Zarncke · 2023-02-21T10:19:25.046Z

There is one concern about the transitive nature of trust:

Emmett Shear:

Flattening the multidimensional nature of trust is a manifestation of the halo/horns effect, and does not serve you.

There are people I trust deeply (to have my back in a conflict) who I trust not at all (to show up on time for a movie). And vice versa.

Paul Graham:

There's a special case of this principle that's particularly important to understand: if you trust x and x trusts y, that doesn't mean you can trust y. (Because although trustworthy, x might not be a good judge of character.)

comment by tricky_labyrinth · 2023-02-09T09:56:24.523Z

FYI, eigenkarma's been proposed for LessWrong multiple times (with issues supposedly found); see https://www.lesswrong.com/posts/xN2sHnLupWe4Tn5we/improving-on-the-karma-system#Eigenkarma for example.

↑ comment by Henrik Karlsson (henrik-karlsson) · 2023-02-09T10:46:19.820Z

That is not the same setup. That proposal has a global karma score; ours is personal. The system we evolved EigenKarma from worked like that, and EigenKarma can be used like that if you want to. I don't see why decoupling the scores on your posts from your karma is a particularly big problem. I'm not particularly interested in the sum of upvotes: what is interesting is whatever information can be wrangled out of it.

comment by trevor (TrevorWiesinger) · 2023-02-08T21:25:01.081Z

↑ comment by the gears to ascension (lahwran) · 2023-02-09T02:06:35.187Z

You got upvoted but disagreed with; because you got upvoted, I'm sad to see your comment get deleted.

↑ comment by trevor (TrevorWiesinger) · 2023-02-09T03:57:51.593Z

I deleted it, no one else did.

↑ comment by the gears to ascension (lahwran) · 2023-02-09T03:59:13.067Z

I knew that when I wrote my comment. I was talking to you. As someone who also deletes my comments at times, I'm sad to see part of your writing be removed. I only would upvote deleting a comment if that comment turned out to be unnecessarily rude or starkly misleading.

comment by Sinclair Chen (sinclair-chen) · 2023-02-10T00:31:30.353Z

I suspect this is less accurate at recommending personalized content compared to social media algorithms (like TikTok) that consider more data, yet it is also not much more transparent than those algorithms.

You could show the actual EigenKarma, but you'd have to accurately convey what that number means, make sure that users don't think it's global like Reddit/HN, and you can't show it when logged out, in link previews, or in Google search. Compare this to the simplicity of showing global karma: it's just a number and 2 tiny buttons that can be inline with the text. LW jams two karmas into each comment and it makes sense. The anime search website Anilist lets users vote on category/genre tags and similar shows on each show's page, and it all fits.

I think "stuff liked by writers who wrote stuff I like" is less accurate than "stuff liked by people who liked content I like". There are usually far fewer writers than likers.

I think it's also less transparent than "stuff written by writers I subscribed to".
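The two rules can be made concrete as two neighborhood definitions over a like graph. The toy data and function names below are hypothetical; the point is only that the writer-based rule draws on the few people who authored what you liked, while the co-liker rule draws on everyone who liked it.

```python
# Hypothetical toy data: who wrote each post, and which posts each user liked.
AUTHOR = {"p1": "ann", "p2": "ben", "p3": "cat", "p4": "dan", "p5": "eve"}
LIKES = {
    "me": {"p1"},
    "ann": {"p3"},        # ann wrote p1, which I liked
    "u1": {"p1", "p4"},   # u1 and u2 are readers who also liked p1
    "u2": {"p1", "p5"},
    "ben": {"p2"},
}


def liked_by_writers_i_like(me):
    """Posts liked by the authors of posts I liked."""
    writers = {AUTHOR[p] for p in LIKES[me]}
    pools = (LIKES.get(w, set()) for w in writers)
    return set().union(*pools) - LIKES[me]


def liked_by_co_likers(me):
    """Posts liked by anyone who liked at least one post I liked."""
    mine = LIKES[me]
    peers = {u for u, posts in LIKES.items() if u != me and posts & mine}
    return set().union(*(LIKES[u] for u in peers)) - mine


print(sorted(liked_by_writers_i_like("me")))  # ['p3']
print(sorted(liked_by_co_likers("me")))       # ['p4', 'p5']
```

With a single writer behind the liked post, the writer-based rule has one person's likes to draw on, while the co-liker rule pools two readers' likes: the "fewer writers than likers" point in miniature.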

comment by Gurkenglas · 2023-02-09T00:33:02.315Z · LW(p) · GW(p)

A desideratum that comes to mind for such a system is "Reallocating 10% of my direct trust changes my indirect trust in Alice by at most 20%." - does something like that hold? What desiderata characterize your algorithm?
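One way to probe a desideratum like this is empirically: perturb the direct trust and measure how much indirect trust in Alice moves. The sketch below assumes a personalized PageRank-style propagation over weighted direct trust; that rule, the toy graph, and the helper names `trust_scores` and `reallocate` are illustrative assumptions, not EigenKarma's documented algorithm.

```python
def trust_scores(weights, viewer, damping=0.85, iterations=200):
    """Personalized PageRank over weighted direct trust (assumed rule).

    weights[u][v] is how much u directly trusts v; each row is normalized,
    so only the proportions within a row matter.
    """
    nodes = set(weights) | {v for row in weights.values() for v in row} | {viewer}
    trust = {n: 0.0 for n in nodes}
    trust[viewer] = 1.0
    for _ in range(iterations):
        nxt = {n: 0.0 for n in nodes}
        nxt[viewer] += 1 - damping
        for n in nodes:
            row = weights.get(n, {})
            total = sum(row.values())
            if total > 0:
                for v, w in row.items():
                    nxt[v] += damping * trust[n] * w / total
            else:
                nxt[viewer] += damping * trust[n]  # dangling trust restarts
        trust = nxt
    return trust


def reallocate(weights, viewer, src, dst, fraction):
    """Move `fraction` of the viewer's total direct trust from src to dst."""
    new = {u: dict(row) for u, row in weights.items()}
    row = new[viewer]
    amount = min(fraction * sum(row.values()), row.get(src, 0.0))
    row[src] = row.get(src, 0.0) - amount
    row[dst] = row.get(dst, 0.0) + amount
    return new


# Toy graph: "me" splits direct trust evenly; Alice is reached only via Bob.
W = {"me": {"bob": 0.5, "carol": 0.5}, "bob": {"alice": 1.0}, "carol": {"dave": 1.0}}
before = trust_scores(W, "me")["alice"]
after = trust_scores(reallocate(W, "me", "carol", "bob", 0.10), "me")["alice"]
print(round(after / before, 6))  # 1.2: a 20% change, exactly at the proposed bound
```

Here Alice's trust flows entirely through Bob, so her score scales with Bob's share of direct trust: moving 10 points onto a 50% share raises it by 20%. Under this assumed rule, a node reached through a smaller share, say 25%, would move by more (0.35 / 0.25 = 1.4), so a bound on relative change would not hold in general without some extra smoothing.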

comment by Nicholas / Heather Kross (NicholasKross) · 2023-05-17T21:32:06.848Z · LW(p) · GW(p)

This is kinda like the "liquid democracy" software, but with delegation being automatic instead of, say, thoughtful. I like the idea of seeing only some people's upvotes, but I want to consciously and explicitly choose those people. Not by subscribing to their posts, and definitely not by upvoting something they've written.

I could imagine a toggle-switch next to the upvote counter, labeled "upvotes you trust, only" or something, along with a little link to go whitelist users into your trust-graph.
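The toggle described here amounts to intersecting a post's upvoters with a hand-picked whitelist. A minimal sketch, with hypothetical class and function names; a real version would also need the whitelist-editing link the comment mentions.

```python
from dataclasses import dataclass, field


@dataclass
class Post:
    title: str
    upvoters: set = field(default_factory=set)


def displayed_score(post, whitelist, trusted_only):
    """Score shown next to the upvote button.

    With the toggle on, only upvotes from explicitly whitelisted users count;
    with it off, every upvote counts, as with ordinary global karma.
    """
    if trusted_only:
        return len(post.upvoters & whitelist)
    return len(post.upvoters)


post = Post("Example post", upvoters={"ann", "ben", "sock1", "sock2"})
my_whitelist = {"ann", "ben", "cat"}  # chosen by hand, not inferred from upvotes
print(displayed_score(post, my_whitelist, trusted_only=False))  # 4
print(displayed_score(post, my_whitelist, trusted_only=True))   # 2
```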

A related-but-not-the-same idea, would be to add a lot of different special upvotes to posts and comments. I think the max (before it becomes dumb) is like 5-6. We have "normal upvotes" and "agree/disagree" (but only on comments, for some reason???). If we replaced "normal upvotes" with another axis, we'd get 4-5 new axes to play with.

Axes might include "I think people outside our community should hear this", "I think this is novel in a useful way", "I personally made life-changing decisions based on this article, and now N years later I feel positive/negative about them" (this one should be locked behind a timer of some sort), "I predict this article will help me with decisions" (see previous), "this post's existence decreased X- (or S-) risks", "number of markets that linked this post on Manifold", "I liked the emotional vibe (or writing style or...) of this post", the aforementioned whitelist-eigenkarma, etc.

The upvote numbers would all be equally sized (small), and arranged in a cute ring, kinda like how some RPGs/card games display stats. Maybe the user could choose one from the ring as their "default score shown" (which would also inform what posts are shown to them).
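The data side of this proposal is small: each post keeps a separate tally per axis, and the reader's chosen default axis is the sort key for their feed. A minimal sketch; the axis names are hypothetical shorthand for some of the examples above, and the timer lock suggested for the "changed decisions" axis is not modeled.

```python
from collections import Counter

# Hypothetical axis names, loosely based on the examples above.
AXES = ("novel", "share_outside", "changed_decisions", "helps_decisions", "vibe")


class Post:
    def __init__(self, title):
        self.title = title
        self.votes = Counter()  # axis name -> number of votes on that axis

    def vote(self, axis):
        if axis not in AXES:
            raise ValueError(f"unknown axis: {axis}")
        self.votes[axis] += 1


def rank_feed(posts, default_axis):
    """Order posts by the single axis the reader picked as their default."""
    return sorted(posts, key=lambda p: p.votes[default_axis], reverse=True)


a, b = Post("A"), Post("B")
a.vote("novel")
a.vote("novel")
b.vote("vibe")
print([p.title for p in rank_feed([a, b], "vibe")])   # ['B', 'A']
print([p.title for p in rank_feed([a, b], "novel")])  # ['A', 'B']
```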

As a community acutely aware of platform dynamics, we should really be doing a better job of this.

Half the "inherent platform problems karma upvotes reddit digg dynamics doom" may be surprisingly solvable with UI tweaks. Especially UI that doesn't do certain things by default. (E.g. you only see "one karma number" if you opt in and pick which karma axis you want; you only see "eigenkarma" based on people you've explicitly whitelisted; etc.)

comment by Joseph Van Name (joseph-van-name) · 2023-05-14T19:39:51.309Z · LW(p) · GW(p)

If I get a lot of Karma in the area of Set Theory, but I give a lot of Karma in the area of blockchain technologies, should this Karma be worth as much as the Karma I give in Set Theory? I do not think so.
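One way to encode this is to keep karma per (user, topic) pair and weight an upvote only by the voter's karma in the same topic. A minimal sketch under stated assumptions: topic labels already exist on posts (how they get assigned is left open), and the baseline weight of 1.0 per upvote is an arbitrary choice.

```python
from collections import defaultdict

# Hypothetical snapshot of per-topic karma: (user, topic) -> karma received.
KARMA = {("joseph", "set_theory"): 50.0, ("joseph", "blockchain"): 0.0}


def vote_weight(karma, voter, topic):
    """An upvote in `topic` is weighted only by the voter's karma in that topic."""
    return 1.0 + karma.get((voter, topic), 0.0)


def tally(votes, karma):
    """votes: iterable of (voter, author, topic); returns per-(author, topic) scores."""
    scores = defaultdict(float)
    for voter, author, topic in votes:
        scores[(author, topic)] += vote_weight(karma, voter, topic)
    return dict(scores)


votes = [("joseph", "ann", "set_theory"), ("joseph", "ben", "blockchain")]
print(tally(votes, KARMA))
# {('ann', 'set_theory'): 51.0, ('ben', 'blockchain'): 1.0}
```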

comment by Double · 2024-07-20T04:51:43.028Z · LW(p) · GW(p)

Could there be a mechanism that gives an extra boost to posters who get upvotes from multiple non-overlapping groups? If, e.g., 50 Blues and 50 Greens upvote someone, I want them to get more implicit EigenKarma from me than someone with 100 Blue upvotes, even if I tend to upvote Blue more often. Figuring out who is a Blue and who is a Green can be done by finding dense subgraphs.
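Assuming the groups have already been found (the dense-subgraph step is not shown), one simple way to reward cross-group support is to add up trust within each group and then combine the groups with a concave function. The square-root choice, the trust weights, and all names below are illustrative assumptions, not something the comment specifies.

```python
import math
from collections import defaultdict


def diverse_score(upvoters, group_of, trust):
    """Sum, over groups, of the square root of the trust coming from that group.

    The square root is concave, so with equal trust per voter the tenth upvote
    from one group adds less than the first upvote from a new group.
    Group labels are taken as given.
    """
    per_group = defaultdict(float)
    for u in upvoters:
        per_group[group_of[u]] += trust.get(u, 0.0)
    return sum(math.sqrt(w) for w in per_group.values())


blues = [f"blue{i}" for i in range(100)]
greens = [f"green{i}" for i in range(50)]
group_of = {**{u: "Blue" for u in blues}, **{u: "Green" for u in greens}}
# The viewer trusts Blues twice as much as Greens.
trust = {**{u: 1.0 for u in blues}, **{u: 0.5 for u in greens}}

mixed = diverse_score(blues[:50] + greens, group_of, trust)  # sqrt(50) + sqrt(25)
mono = diverse_score(blues, group_of, trust)                  # sqrt(100)
print(round(mixed, 2), round(mono, 2))  # 12.07 10.0
```

Even though each Green vote carries half the trust of a Blue one here, the mixed audience still scores higher than the all-Blue one.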

comment by Review Bot · 2024-03-01T13:33:34.013Z · LW(p) · GW(p)

The LessWrong Review runs every year to select the posts that have most stood the test of time. This post is not yet eligible for review, but will be at the end of 2024. The top fifty or so posts are featured prominently on the site throughout the year.

Hopefully, the review is better than karma at judging enduring value. If we have accurate prediction markets on the review results, maybe we can have better incentives on LessWrong today. Will this post make the top fifty?

comment by Prometheus · 2023-06-20T12:45:07.171Z · LW(p) · GW(p)

Are you familiar with Constellation's Proof of Reputable Observation? This seems very similar.