Honoring Petrov Day on LessWrong, in 2020

post by Ben Pace (Benito) · 2020-09-26T08:01:36.838Z · LW · GW · 100 comments

Just after midnight last night, 270 LessWrong users received the following email.

Subject Line: Honoring Petrov Day: I am trusting you with the launch codes

Hello {username},

On Petrov Day, we celebrate and practice not destroying the world.

It's difficult to know who can be trusted, but today I have selected a group of (270) LessWrong users who I think I can rely on in this way. You've all been given the opportunity to not destroy LessWrong.

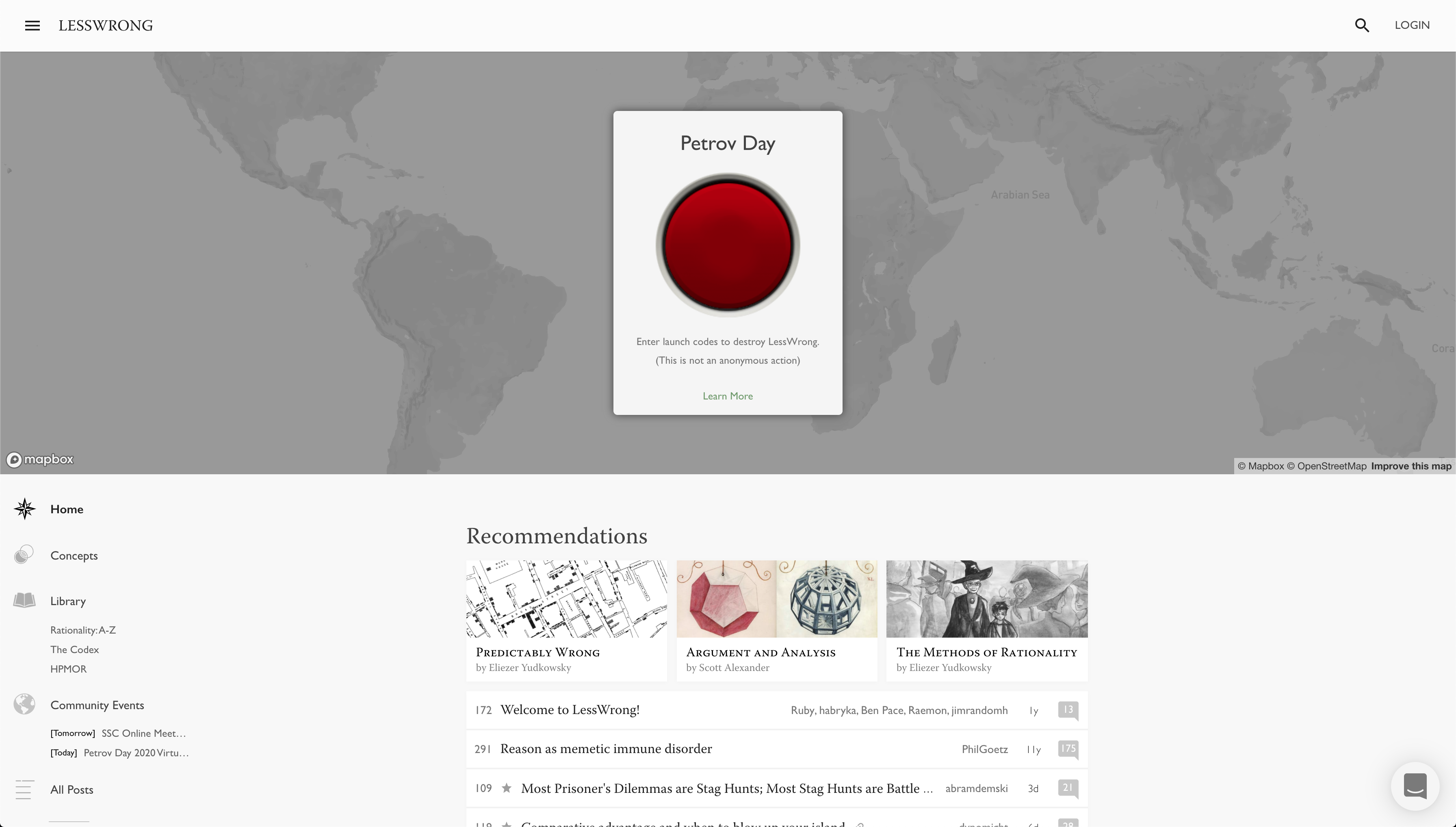

This Petrov Day, if you, {username}, enter the launch codes below on LessWrong, the Frontpage will go down for 24 hours, removing a resource thousands of people view every day. Each entrusted user has personalised launch codes, so that it will be clear who nuked the site.

Your personalised codes are: {codes}

I hope to see you in the dawn of tomorrow, with our honor still intact.

–Ben Pace & the LessWrong Team

P.S. Here is the on-site announcement [LW · GW].

Not Destroying the World

Stanislav Petrov once chose not to destroy the world.

As a Lieutenant Colonel of the Soviet Army, Petrov manned the system built to detect whether the US government had fired nuclear weapons on Russia. On September 26th, 1983, the system reported five incoming missiles. Petrov’s job was to report this as an attack to his superiors, who would launch a retaliative nuclear response. But instead, contrary to the evidence the systems were giving him, he called it in as a false alarm, for he did not wish to instigate nuclear armageddon. (He later turned out to be correct.)

During the Cold War, many other people had the ability to end the world – presidents, generals, commanders of nuclear subs from many countries, and so on. Fortunately, none of them did. As humanity progresses, the number of people with the ability to end the world increases, and so too does the standard to which we must hold ourselves. We lived up to our responsibilities in the Cold War, but barely. (The Global Catastrophic Risks Institute has compiled this list of 60 close calls.)

In 2007, Eliezer named September 26th Petrov Day [LW · GW], and the rationality community has celebrated the holiday ever since. We celebrate Petrov's decision, and we ourselves practice not destroying things, even if it is pleasantly simple to do so.

The Big Red Button

Raymond Arnold has suggested many ways [LW · GW] of observing Petrov Day.

You can discuss it with your friends.

You can hold a quiet, dignified ceremony with candles and the beautiful booklets Jim Babcock created.

And you can also play on hard mode: "During said ceremony, unveil a large red button. If anybody presses the button, the ceremony is over. Go home. Do not speak."

This has been a common practice at Petrov Day celebrations in Oxford, Boston, Berkeley, New York, and in other rationalist communities. It is often done with pairs of celebrations, each with a red button that (when pressed) brings an end to the partner celebration.

So for the second year [LW · GW], at midnight, I emailed personalized launch codes to 270 LessWrong users. This is over twice the number of users I sent codes to last year [LW · GW] (which was 125), and includes a lot more users who use a pseudonym and who I've never met. If any users do submit a set of launch codes, then (once the site is back up) we'll publish their username, and whose unique launch codes they were.

During Saturday 26th September (midnight to midnight Pacific Time), we will practice the skill of sitting together and not pressing harmful buttons.

Relating to the End of Humanity

Humanity could have gone extinct many times.

Petrov Day is a celebration of the world not ending. It's a day where we come together to think about how one man in particular saved the world. We reflect on the ways in which our civilization is fragile and could have ended already, we feel grateful that it has not, and we ask ourselves how we could also save the world.

If you would like to participate in the tradition of Petrov Day on LessWrong this year, and if you feel up to talking directly about it, then you're invited to write a comment and share your own feelings about humanity, extinction, and how you relate to it. There are a few prompts below to help you figure out what to say. Note that not all people are in a position in their lives to focus on preventing an existential catastrophe.

- What's at stake [LW · GW] for you? What are the things you're grateful for, and that you look forward to? What are the things you'd mourn if humanity perished?

- How do you relate to the extinction of humanity? What’s your story of coming to engage with the fragility of a world beyond the reach of god [LW · GW], and how do you connect to it emotionally?

- Are you taking actions to protect it [LW · GW]? What are you taking responsibility for in the world, and in what ways are you taking responsibility [? · GW] for the future?

Finally, if you’d like to participate in a Petrov Day Ceremony today, check out Ray’s Petrov event roundup [LW · GW], especially the online New York mega-meetup.

To all, I wish you a safe and stable Petrov Day.

100 comments

Comments sorted by top scores.

comment by Vanilla_cabs · 2020-09-26T19:44:56.965Z · LW(p) · GW(p)

I find the concept of Petrov day valuable, and the principle of the experiment relevant, but doesn't the difference of stakes undermine the experiment? The consequence of entering the codes here was meaningful and noticeable, but it was nothing irreversible, lasting, or consequential.

When I walk in the streets every day, dozens of car drivers who have a clear shot at running me over abstain from hurting, maiming or even killing me, and not a single one does it. That's what I call consequential. I'll celebrate that.

Replies from: Liron

comment by Neel Nanda (neel-nanda-1) · 2020-09-26T11:37:24.192Z · LW(p) · GW(p)

Well, that lasted a disappointingly short time :(

Replies from: Chris_Leong, vanessa-kosoy

↑ comment by Chris_Leong · 2020-09-26T11:39:01.787Z · LW(p) · GW(p)

Sorry, I got tricked:

petrov_day_admin_account September 26, 2020 11:26 AM Hello Chris_Leong,

You are part of a smaller group of 30 users who has been selected for the second part of this experiment. In order for the website not to go down, at least 5 of these selected users must enter their codes within 30 minutes of receiving this message, and at least 20 of these users must enter their codes within 6 hours of receiving the message. To keep the site up, please enter your codes as soon as possible. You will be asked to complete a short survey afterwards.

Replies from: Linda Linsefors, FactorialCode, Slider, Raemon, ArisKatsaris, neel-nanda-1, gjm, Telofy, Kevin, MathieuRoy

↑ comment by Linda Linsefors · 2020-09-26T12:10:12.579Z · LW(p) · GW(p)

From this we learn that you should not launch nukes, even if someone tells you to do it.

Replies from: frontier64, Measure, gilch, McP82, andrea-mulazzani

↑ comment by frontier64 · 2020-09-27T00:02:17.108Z · LW(p) · GW(p)

I think the lesson is that if you decide to launch the nukes it's better to claim incompetence rather than malice because then opinion of you among the survivors won't suffer as much.

↑ comment by Measure · 2020-09-26T12:44:14.540Z · LW(p) · GW(p)

I think we learned that when you tell people to not destroy the world they try to not destroy the world. How is [press this button and the world ends -> don't press button] different from [press this button or else the world ends -> press button]?

Replies from: PhilGoetz, sil-ver

↑ comment by Rafael Harth (sil-ver) · 2020-09-26T12:58:11.347Z · LW(p) · GW(p)

The asymmetry is the button itself. If I understand correctly, Chris got this message on a separate channel, and the button still looked the same; it still said "enter launch codes to destroy LessWrong". It was still clearly meant to represent the launch of nukes.

Stretching just a bit, I think you might be able to draw an analogy here, where real people who might actually launch nuclear weapons (or have done so in other branches of the multiverse) have thought they had reasons important enough to justify doing it. But in fact, the rule is not "don't launch nukes unless there seems to be sufficient reason for it", but rather "don't launch nukes".

Replies from: Measure

↑ comment by Measure · 2020-09-26T13:33:28.252Z · LW(p) · GW(p)

Good point. I didn't see the button setup before it went down, and I was thinking the OP did not receive the main email and just got the "special instructions" they posted. This does make it more analogous to a "false alarm" situation.

Replies from: Chris_Leong

↑ comment by Chris_Leong · 2020-09-26T22:49:10.882Z · LW(p) · GW(p)

I received both messages.

↑ comment by gilch · 2020-09-26T20:18:02.015Z · LW(p) · GW(p)

I think this is the wrong lesson. If the Enemy knows you have precommitted to never press the button, then they are not deterred from striking first. MAD is game theory. In order to not blow up the world, you have to be willing to blow up the world. It's a Newcomblike problem: It feels like there are two decisions to be made, but there is only one, in advance.

Replies from: Linda Linsefors

↑ comment by Linda Linsefors · 2020-09-27T10:29:05.962Z · LW(p) · GW(p)

But we are not in a game theory situation. We are in an imperfect world with imperfect information. There are malfunctioning warning systems and liars. And we are humans and not programs that get to read each other's source code. There are no perfect commitments, and if there were, there would be no way of verifying them.

So I think the lesson is that, whatever your public stance, and whether or not you think that there are counterfactual situations where you should nuke, in practice you should not nuke.

Do you see what I'm getting at?

↑ comment by frontier64 · 2020-09-29T18:02:25.044Z · LW(p) · GW(p)

Game theory was pioneered by Schelling with the central and most important application being handling nuclear armed conflicts. To say that game theory doesn't apply to nuclear conflict because we live in an imperfect world is just not accurate. Game theory doesn't require a perfect world nor does it require that actors know each other's source code. It is designed to guide decisions made in the real world.

Replies from: Linda Linsefors

↑ comment by Linda Linsefors · 2020-09-29T22:30:52.512Z · LW(p) · GW(p)

I know that it is designed to guide decisions made in the real world. This does not force me to agree with the conclusions in all circumstances. Lots of models are not up to the task they are designed to deal with.

But I should have said "not in that game theory situation", because there is probably a way to construct some game theory game that applies here. That was my bad.

However, I stand by the claim that the full information game is too far from reality to be a good guide in this case. With stakes this high even small uncertainty becomes important.

↑ comment by gilch · 2020-09-27T18:09:34.368Z · LW(p) · GW(p)

Game theory is very much applicable to the real world. Imperfect information is just a different game. You are correct that assuming perfect information is a simplification. But assuming imperfect information, what does that change?

You want to lie to the Enemy, convince them that you will always push the button if they cross the line, then never actually do it, and the Enemy knows this!

Sometimes all available options are risky. Betting your life on a coin flip is not generally a good idea, but if the only alternative is a lottery ticket, the coin flip looks pretty good. If the Enemy knows there's a significant chance that you won't press the button, in a sufficiently desperate situation, the Enemy might bet on that and strike first. But if the Enemy knows self-destruction is assured, then striking first looks like a bad option.

What possible reason could Petrov or those in similar situations have had for not pushing the button? Maybe he believed that the US would retaliate and kill his family at home, and that deterred him. In other words, he believed his enemy would push the button.

Applied to the real world, game theory is not just about how to play the games. It's also about the effects of changing the rules.

Replies from: Linda Linsefors, Linda Linsefors

↑ comment by Linda Linsefors · 2020-09-29T22:25:38.028Z · LW(p) · GW(p)

What possible reason could Petrov or those in similar situations have had for not pushing the button? Maybe he believed that the US would retaliate and kill his family at home, and that deterred him. In other words, he believed his enemy would push the button.

Or maybe he just did not want to kill millions of people?

Replies from: gilch

↑ comment by gilch · 2020-09-30T20:00:23.184Z · LW(p) · GW(p)

In Petrov's case in particular, the new satellite-based early warning system was unproven so he didn't completely trust it, and he didn't believe a US first strike would use only one missile, or later, only four more, instead of hundreds. Furthermore, ground radar didn't confirm. And, of course, attacking on a false alarm would be suicidal because he believed the Enemy would push the button, so striking first "just in case" failed his cost-benefit analysis.

It was not "just" a commitment to pacifism.

↑ comment by Linda Linsefors · 2020-09-29T22:23:46.161Z · LW(p) · GW(p)

I should probably have said "we are not in that game theory situation".

(Though I do think that the real world is more complex than current game theory can handle. E.g. I don't think current game theory can fully handle unknown unknowns, but I could be wrong on this point.)

The game of mutually assured destruction is very different even when just including known unknowns.

↑ comment by McP82 · 2020-09-30T19:14:29.922Z · LW(p) · GW(p)

In other words, if your defense is "just following orders", you're in the wrong. Petrov, too, was strongly influenced to launch the nukes, and still refused... Like that Soviet submarine commander who, during the Cuban Missile Crisis, thought he was engaged with live depth charges by the US Navy.

↑ comment by Emiya (andrea-mulazzani) · 2020-09-27T09:06:05.358Z · LW(p) · GW(p)

I think the lessons are:

- To not have buttons that can be pressed to destroy the world. The real issue is that the possibility exists for many agents: circumstances could deliver compelling reasons to press one, and the more buttons exist, the more likely it is that it will happen.

- Reality won't deliver the same circumstances twice. If petrov_day_admin wanted to go for symmetry, the message would have said: someone of the 270 pressed the red button; if you want to keep the home page online, 5 people have to press it within etc, etc...

↑ comment by FactorialCode · 2020-09-26T18:10:45.539Z · LW(p) · GW(p)

Props to whoever petrov_day_admin_account was for successfully red-teaming lesswrong.

Replies from: brulez@gmail.com, jacobjacob

↑ comment by Bill H (brulez@gmail.com) · 2020-09-26T22:02:56.604Z · LW(p) · GW(p)

Agreed, this is probably the best lesson of all. If the buttons exist, they can be hacked or the decision makers can be socially engineered.

270 people might have direct access, but the entire world has indirect access.

↑ comment by Bird Concept (jacobjacob) · 2020-09-28T06:46:28.252Z · LW(p) · GW(p)

Well, they did succeed, so for that they get points, but I think it was more due to a very weak defense on behalf of the victim rather than a very strong effort by petrov_day_admin_account.

Like, the victim could have noticed things like:

* The original instructions were sent over email + LessWrong message, but the phishing attempt was just LessWrong

* The original message was sent by Ben Pace, the latter by petrov_day_admin_account

* They were sent at different points in time, the latter of which was more correlated with the FB post that caused the phishing attempt

Moreover, the attacker even sent messages to two real LessWrong team members, which would have completely revealed the attempt had those admins not been asleep in a different time zone.

↑ comment by FactorialCode · 2020-09-28T06:56:33.501Z · LW(p) · GW(p)

I personally feel that the fact that it was such an effortless attempt makes it more impressive, and really hammers home the lesson we need to take away from this. It's one thing to put in a great deal of effort to defeat some defences. It's another to completely smash through them with the flick of a wrist.

Replies from: jacobjacob

↑ comment by Bird Concept (jacobjacob) · 2020-09-28T17:42:50.862Z · LW(p) · GW(p)

What exactly do you think "the lesson we need to take away from this" is?

(Feel free to just link if you wrote that elsewhere in this comment section)

Replies from: FactorialCode

↑ comment by FactorialCode · 2020-09-28T19:52:54.713Z · LW(p) · GW(p)

I haven't actually figured that out yet, but several people in this thread have proposed takeaways. I'm leaning towards "social engineering is unreasonably effective". That or something related to keeping a security mindset [LW · GW].

↑ comment by Slider · 2020-09-26T18:02:47.975Z · LW(p) · GW(p)

I am reminded of the AI unboxing challenges, where part of the point was that any single trick that gets the job done can be guarded against, but guarding against all stupid tricks is not about the tricks being particularly brilliant but about covering them all.

In Milgram's experiment, people are willing to torture because a guy in a white jacket requested it. Here a person is ready to nuke the world because an account's name included the word "admin".

↑ comment by Raemon · 2020-09-26T16:35:34.866Z · LW(p) · GW(p)

FYI I also got this message and to the best of my knowledge it was not sent by an admin

Replies from: habryka4, philh

↑ comment by habryka (habryka4) · 2020-09-26T16:45:37.649Z · LW(p) · GW(p)

I can confirm that this message was not sent by any admin.

↑ comment by philh · 2020-09-26T20:45:25.075Z · LW(p) · GW(p)

That's... a bit surprising. If I were behind this, I wouldn't have sent a message to you because you're likely to know the plan. Anyone who receives the message but doesn't fall for it is an extra chance for the scheme to fail, because if nothing else they can post in this thread where someone is most likely to see it before entering codes. (Chris, I'm curious if you did look in this thread before you put them in?) In your case you could react with admin powers too, though I dunno if you would have considered that fair game.

I feel like this gives us a small amount of evidence about the identity of the adversary, but not enough to do any real speculation with.

Replies from: jacobjacob, Chris_Leong

↑ comment by Bird Concept (jacobjacob) · 2020-09-26T23:33:29.319Z · LW(p) · GW(p)

EDIT: I now believe the below contains substantial errors, after reading this message [LW(p) · GW(p)] from the attacker.

Maybe you want to do sleuthing on your own, if so don't read below. (It uses LessWrong's spoiler feature. [LW · GW])

I believe the adversary was a person outside of the EA and rationality communities. They had not planned this, and they did not think very hard about who they sent the messages to (and didn't realise Habryka and Raemon were admins). Rather, they saw a spur-of-the-moment opportunity to attack this system after seeing a Facebook post by Chris Leong (which solicited reasons for and against pressing the button). I believe this because they commented on that Chris Leong post and said they sent the message.

↑ comment by Chris_Leong · 2020-09-27T01:35:08.096Z · LW(p) · GW(p)

I looked at the thread and considered commenting here, but not many people had commented, so I figured there wasn't that much chance of getting a response if I posted here.

↑ comment by ArisKatsaris · 2020-09-27T10:38:20.950Z · LW(p) · GW(p)

In your other post, the only reason you indicated to not press the button is that other people would still be asleep and not have experienced the thing.

As such, it feels as if the "trick" by your friend just sped up what would have almost certainly happened anyway: you eventually pressing the button and nuking the site. It'd just have happened later in the day.

↑ comment by Chris_Leong · 2020-09-27T11:14:19.451Z · LW(p) · GW(p)

That was a poorly written post on my part. What I meant was that I was open to argument either way ("Should I press it or not?"). I had decided that regardless of whether I pressed it or not I would at least wait until other people had a chance to wake up, as I thought it'd be boring for people if they woke up and the site was already nuked. So it wasn't my only reason - I hadn't even really thought about it too much as I was waiting for more comments.

Even though it was poorly written, I'm surprised how many people seem to have misunderstood it as I would have thought it was clear enough as I asked the question.

Replies from: Ruby

↑ comment by Ruby · 2020-09-27T16:13:19.195Z · LW(p) · GW(p)

This seems plausible. I do want to note that your received message was timestamped 11:26 (local to you) and the button was pressed at 11:33:30 (the received message said the time limit was 30 minutes), which doesn’t seem like an abundance of caution and hesitation to blow up the frontpage, as far as I can tell. :P

I know it wasn’t actual nukes, so fair to not put in the same effort, but I do hope if you ever do have nukes, you take the full allotted time to think it through and discuss with anyone available (even if you think they’re unlikely to reply). ;)

Replies from: Chris_Leong

↑ comment by Chris_Leong · 2020-09-28T00:25:59.992Z · LW(p) · GW(p)

Well, it was just a game and I had other things to do. Plus I didn't feel a duty to take it 100% seriously since, as grateful as I was to have the chance to participate, I didn't actually choose to play.

(Plus, adding on to this comment. I honestly had no idea people took this whole thing so seriously. Just seemed like a bit of fun to me!)

Replies from: habryka4, Arepo

↑ comment by habryka (habryka4) · 2020-09-28T04:32:34.257Z · LW(p) · GW(p)

To be clear, while there is obviously some fun intended in this tradition, I don't think describing it as "just a game" feels appropriate to me. I do actually really care about people being able to coordinate to not take the site down. It's an actual hard thing to do that actually is trying to reinforce a bunch of the real and important values that I care about in Petrov day. Of course, I can't force you to feel a certain way, but like, I do sure feel a pretty high level of disappointment reading this response.

Like, the email literally said you were chosen to participate because we trusted you to not actually use the codes.

Replies from: jacobjacob, lionhearted, Chris_Leong

↑ comment by Bird Concept (jacobjacob) · 2020-09-28T06:50:37.081Z · LW(p) · GW(p)

So, I think it's important that LessWrong admins do not get to unilaterally decide that You Are Now Playing a Game With Your Reputation.

However, if Chris doesn't want to play, the action available to him is simply to not engage. I don't think he gets to both press the button and change the rules to decide what a button press means to other players.

Replies from: lionhearted, Chris_Leong

↑ comment by lionhearted (Sebastian Marshall) (lionhearted) · 2020-09-28T07:04:14.664Z · LW(p) · GW(p)

So, I think it's important that LessWrong admins do not get to unilaterally decide that You Are Now Playing a Game With Your Reputation.

Dude, we're all always playing games with our reputations. That's, like, what reputation is.

And good for Habryka for saying he feels disappointment at the lack of thoughtfulness and reflection, it's very much not just permitted but almost mandated by the founder of this place —

https://www.lesswrong.com/posts/tscc3e5eujrsEeFN4/well-kept-gardens-die-by-pacifism [LW · GW]

https://www.lesswrong.com/posts/RcZCwxFiZzE6X7nsv/what-do-we-mean-by-rationality-1 [LW · GW]

Here's the relevant citation from Well-Kept Gardens:

I confess, for a while I didn't even understand why communities had such trouble defending themselves—I thought it was pure naivete. It didn't occur to me that it was an egalitarian instinct to prevent chieftains from getting too much power.

This too:

I have seen rationalist communities die because they trusted their moderators too little.

Let's give Habryka a little more respect, eh? Disappointment is a perfectly valid thing to be experiencing and he's certainly conveying it quite mildly and graciously. Admins here did a hell of a job resurrecting this place back from the dead, to express very mild disapproval at a lack of thoughtfulness during a community event is....... well that seems very much on-mission, at least according to Yudkowsky.

Replies from: jacobjacob

↑ comment by Bird Concept (jacobjacob) · 2020-09-28T18:25:31.782Z · LW(p) · GW(p)

Let's give Habryka a little more respect, eh?

I feel confused about how you interpreted my comment, and edited it lightly. For the record, Habryka's comment seems basically right to me; just wanted to add some nuance.

Replies from: lionhearted

↑ comment by lionhearted (Sebastian Marshall) (lionhearted) · 2020-09-28T22:16:09.526Z · LW(p) · GW(p)

Ah, I see, I read the original version partially wrong, my mistake. We're in agreement. Regards.

↑ comment by Chris_Leong · 2020-09-28T06:59:16.204Z · LW(p) · GW(p)

Well, I had an option not to engage until I received the message saying it would blow up if enough users didn't press the button within half an hour.

Replies from: philh

↑ comment by philh · 2020-09-28T09:45:09.338Z · LW(p) · GW(p)

Even after receiving that message, it still seems like the "do not engage" action is to not enter the codes?

Replies from: neel-nanda-1, Kaj_Sotala, Chris_Leong

↑ comment by Neel Nanda (neel-nanda-1) · 2020-09-28T16:47:30.517Z · LW(p) · GW(p)

I think "doesn't want to ruin other people's fun or do anything significant" feels more accurate than "do not engage" here?

↑ comment by Kaj_Sotala · 2020-09-28T22:15:21.197Z · LW(p) · GW(p)

And then, for all he knew, his name might have been posted in a list of users who could have prevented the apocalypse but didn't.

Replies from: philh

↑ comment by philh · 2020-09-28T23:30:25.273Z · LW(p) · GW(p)

Honestly, I kind of think that would be a straightforwardly silly thing to worry about, if one were to think about it for a few moments. (And I note that it's not Chris' stated reasoning.)

Like, leave aside that the PM was indistinguishable from a phishing attack. Pretend that it had come through both email and PM, from Ben Pace, with the codes repeated. All the same... LW just isn't the kind of place where we're going to socially shame someone for

- Not taking action

- ...within 30 minutes of an unexpected email being sent to them

- ...whether or not they even saw the email

- ...in a game they didn't agree to play.

↑ comment by Chris_Leong · 2020-09-28T10:32:50.738Z · LW(p) · GW(p)

And then maybe the site would have blown up, which was not what I was aiming for at that time.

↑ comment by lionhearted (Sebastian Marshall) (lionhearted) · 2020-09-28T06:53:03.351Z · LW(p) · GW(p)

Y'know, there was a post I thought about writing up, but then I was going to not bother to write it up, but I saw your comment here H and "high level of disappointment reading this response"... and so I wrote it up.

Here you go:

https://www.lesswrong.com/posts/scL68JtnSr3iakuc6/win-first-vs-chill-first [LW · GW]

That's an extreme-ish example, but I think the general principle holds to some extent in many places.

↑ comment by Chris_Leong · 2020-09-28T07:39:40.836Z · LW(p) · GW(p)

I've responded to you in the last section of this post [LW · GW].

↑ comment by Arepo · 2020-09-28T09:21:56.101Z · LW(p) · GW(p)

The downvotes on this comment seem ridiculous to me. If I email 270 people to tell them I've carefully selected them for some process, I cannot seriously presume they will give up >0 of their time to take part in it.

Any such sacrifice they make is a bonus, so if they do give up >0 time, it's absurd to ask that they give up even more time to research the issue.

Any negative consequences are on the person who set up the game. Adding the justification that 'I trust you' does not suddenly make the recipient more obligated to the spammer.

Replies from: habryka4

↑ comment by habryka (habryka4) · 2020-09-28T20:46:08.673Z · LW(p) · GW(p)

It's not like we asked 270 random people. We asked 270 people, each of whom had already invested many hundreds of hours into participating on LessWrong, many of whom I knew personally and considered close friends. Like, I agree, if you message 270 random people you don't get to expect anything from them, but the whole point of networks of trust is that you get to expect things from each other and ask things from each other.

If any of the people in that list of 270 people had asked me to spend a few minutes doing something that was important to them, I would have gladly obliged.

Replies from: Arepo, Dagon

↑ comment by Arepo · 2020-09-29T12:29:51.210Z · LW(p) · GW(p)

It doesn't matter whether you'd have been hypothetically willing to do something for them. As I said on the Facebook thread, you did not consult with them. You merely informed them they were in a game, which, given the social criticism Chris has received, had real world consequences if they misplayed. In other words, you put them in harm's way without their consent. That is not a good way to build trust.

↑ comment by Dagon · 2020-09-29T20:06:28.610Z · LW(p) · GW(p)

Just a datapoint on variety of invitees: I was included in the 270, and I've invested hundreds of hours into LW. While I don't know you personally outside the site, I hope you consider me a trusted acquaintance, if not a friend. I had no clue this was anything but a funny little game, and my expectation was that there would be dozens of button presses before I even saw the mail.

I had not read nor paid attention to the petrov day posts (including prior years). I had no prior information about the expectations of behavior, the weight put on the outcome, nor the intended lesson/demonstration of ... something that's being interpreted as "coordination" or "trust".

I wasn't using the mental model that indicated I was being trusted not to do something - I took it as a game to see who'd get there first, or how many would press the button, not a hope that everyone would solemnly avoid playing (by passively ignoring the mail). I think without a ritual for joining the group (opt-in), it's hard to judge anyone or learn much about the community from the actions that occurred.

Replies from: habryka4

↑ comment by habryka (habryka4) · 2020-09-29T20:14:59.532Z · LW(p) · GW(p)

I had no clue this was anything but a funny little game, and my expectation was that there would be dozens of button presses before I even saw the mail.

And this is pretty surprising to me. Like, we ran this game last year with half of the number of people, without anyone pressing the button. We didn't really change much about the framing, so where does this expectation come from? My current model is indeed that the shared context between the ~125 people from last year is quite a bit smaller than it was this year with ~250 people.

Replies from: ChristianKl, Dagon

↑ comment by ChristianKl · 2020-09-30T14:59:09.077Z · LW(p) · GW(p)

I don't think that there was no change in framing. Last year:

Every Petrov Day, we practice not destroying the world. One particular way to do this is to practice the virtue of not taking unilateralist action.

It’s difficult to know who can be trusted, but today I have selected a group of LessWrong users who I think I can rely on in this way. You’ve all been given the opportunity to show yourselves capable and trustworthy.

This Petrov Day, between midnight and midnight PST, if you, ChristianKl, enter the launch codes below on LessWrong, the Frontpage will go down for 24 hours.

Personalised launch code: ...

I hope to see you on the other side of this, with our honor intact.

Yours, Ben Pace & the LessWrong 2.0 Team

This year:

On Petrov Day, we celebrate and practice not destroying the world.

It's difficult to know who can be trusted, but today I have selected a group of 270 LessWrong users who I think I can rely on in this way. You've all been given the opportunity to not destroy LessWrong.

This Petrov Day, if you, ChristianKl, enter the launch codes below on LessWrong, the Frontpage will go down for 24 hours, removing a resource thousands of people view every day. Each entrusted user has personalised launch codes, so that it will be clear who nuked the site.

Your personalised codes are: ...

I hope to see you in the dawn of tomorrow, with our honor still intact.

–Ben Pace & the LessWrong Team

Last year's message was more explicit about both the goal of the exercise and what it means for an individual to not use the code.

Using the phrase "destroy LessWrong" this year was a tell that this isn't a serious exercise, because people usually don't exaggerate when they are serious. Rationalists especially can usually be trusted to use clear words when they are serious.

Reading the message this time, I had the impression that it would be more likely for the website to go down than last year.

↑ comment by Dagon · 2020-09-29T20:29:04.335Z · LW(p) · GW(p)

where does this expectation come from?

I hadn't paid attention to the topic, and did not know it had run last year with that result (or at least hadn't thought about it enough to update on) so that expectation was my prior.

Now that I've caught up on things, I realize I am confused. I suspect it was a fluke or some unanalyzed difference in setup that caused the success last year, but that explanation seems a bit facile, so I'm not sure how to actually update. I'd predict that running it again would result in the button being pressed, but I wouldn't wager very much (in either direction).

↑ comment by Neel Nanda (neel-nanda-1) · 2020-09-26T13:39:28.371Z · LW(p) · GW(p)

Awww. I can't decide whether to be annoyed with petrov_day_admin_account, or to appreciate their object lesson in the importance of pre-commitment and robust epistemologies (I'm leaning towards both!)

Replies from: mr-hire↑ comment by Matt Goldenberg (mr-hire) · 2020-09-26T22:56:06.868Z · LW(p) · GW(p)

It seems like the lessons are more about credulity and basic opsec?

↑ comment by gjm · 2020-09-26T13:34:41.153Z · LW(p) · GW(p)

If this was also done by the site admins (rather than being a deliberate attempt at sabotage), it seems a bit xkcd-169-y to me.

If it was done by the admins: If someone receiving that message had replied to say something like "the button still says 'launch the nukes' -- please clarify", what would they have been told?

Replies from: philh, Benito↑ comment by philh · 2020-09-26T14:02:33.294Z · LW(p) · GW(p)

If Chris could be confident it came from the admins I'd agree, but with my current knowledge (and assuming the admins would have been honest had Chris messaged them on their normal accounts) it feels more like pentesting.

Replies from: spencerb↑ comment by spencerb · 2020-09-26T15:33:02.940Z · LW(p) · GW(p)

My company "evaluates" phishing propensity by sending employees emails directing them to "honeypots" which are in the corporate domain and signed by the corporate SSL certificates. Unsurprisingly, many employees trust SSL and enter their credentials. My takeaway was not that people are bad at security, but that they will tend to trust the system if the stakes don't appear too high.

Replies from: philh↑ comment by Ben Pace (Benito) · 2020-09-26T22:41:35.568Z · LW(p) · GW(p)

I also think that XKCD would be quite appropriate had it been the site admins. But no, it was not us.

↑ comment by Dawn Drescher (Telofy) · 2020-09-26T12:23:08.556Z · LW(p) · GW(p)

Aw, consoling hugs!

Replies from: philh↑ comment by Mati_Roy (MathieuRoy) · 2020-09-26T15:36:09.259Z · LW(p) · GW(p)

So the LW team, who created the experiment, themselves tricked you by sending this message? And the way to win the game was for you to read on LessWrong the warning "enter launch codes to destroy LessWrong" and decide against it, despite the message? The message was a metaphor for a false alarm?

Well, here's my metaphor for a moon-base backup: https://www.greaterwrong.com/ :)

I'll set a reminder to set up additional safety measures for next year.

EtA: Ah, Vanessa Kosoy already pointed this out [LW(p) · GW(p)]

EtA: Ah, Raymond said it wasn't them that sent this email

Replies from: Chris_Leong↑ comment by Chris_Leong · 2020-09-27T01:25:41.435Z · LW(p) · GW(p)

Yeah, it wasn't the LW team, but one of my friends

Replies from: MathieuRoy↑ comment by Mati_Roy (MathieuRoy) · 2020-09-27T02:56:35.114Z · LW(p) · GW(p)

who?

Replies from: Chris_Leong↑ comment by Vanessa Kosoy (vanessa-kosoy) · 2020-09-26T13:07:32.203Z · LW(p) · GW(p)

Notably the greaterwrong frontpage still works. Maybe it's like a Mars colony.

comment by lionhearted (Sebastian Marshall) (lionhearted) · 2020-09-28T21:26:22.289Z · LW(p) · GW(p)

Two thoughts —

(1) Some sort of polling or surveying might be useful. In the Public Goods Game, researchers rigorously check whether participants understand the game and its consequences before including them in datasets. It's quite possible that there are incredibly divergent understandings of Petrov Day among the user population. Some sort of surveying would be useful to understand that, as well as things like people's sentiments towards unilateralist action, trust, etc., no? It'd be self-reported data, but it'd be better than nothing.

(2) I wonder how Petrov Day setup and engagement would change if the site went down for a month as a consequence.

comment by Liron · 2020-09-26T08:48:08.848Z · LW(p) · GW(p)

Right now it seems like the Nash equilibrium is pretty stable at everyone not pressing the button. Maybe we can simulate adding in some lower-priority yet still compelling pressure to press the button, analogous to Petrov’s need to follow orders or the US’s need to prevent Russians from stationing nuclear missiles in Cuba.

Replies from: habryka4↑ comment by habryka (habryka4) · 2020-09-26T18:20:16.322Z · LW(p) · GW(p)

Yep, seems like the Nash Equilibrium is pretty stably at everyone not pressing the button. Really needed some more incentives, I agree.

Replies from: Liron, vanessa-kosoy↑ comment by Liron · 2020-09-26T21:44:45.808Z · LW(p) · GW(p)

Yeah I was off base there. The Nash Equilibrium is nontrivial because some players will challenge themselves to “win” by tricking the group with button access to push it. Plus probably other reasons I haven’t thought of.

Replies from: habryka4↑ comment by habryka (habryka4) · 2020-09-26T22:15:50.486Z · LW(p) · GW(p)

I did realize my comment came off a bit too snarky, now that I am rereading it. Just to be clear, no snark intended, just some light jest!

↑ comment by Vanessa Kosoy (vanessa-kosoy) · 2020-09-26T19:41:04.495Z · LW(p) · GW(p)

comment by Mati_Roy (MathieuRoy) · 2020-09-26T16:51:06.706Z · LW(p) · GW(p)

Here's how to survive the LessWrong metaphorical end of the world (upvote this comment so others can see):

https://www.lesswrong.com/posts/evDZoYG4p6ZkQQkDw/surviving-petrov-day [LW · GW]

comment by Neel Nanda (neel-nanda-1) · 2020-09-26T08:39:29.004Z · LW(p) · GW(p)

I'm curious why this was designed to be non-anonymous? It feels more in the spirit of "be aware I could destroy something, and choose not to" if it doesn't have a cost to me, beyond awareness that destruction is sad.

Replies from: Benito↑ comment by Ben Pace (Benito) · 2020-09-26T09:42:54.819Z · LW(p) · GW(p)

(As I stayed up quite late setting this all up, I will answer questions tomorrow... or at least, I will if the site is still up!)

Replies from: Benito↑ comment by Ben Pace (Benito) · 2020-09-26T20:13:33.590Z · LW(p) · GW(p)

I think I will write a follow-up post in a day or so, after the site is back up. For now, I'll leave y'all to figure out wtf to believe about the world in light of this.

In the meantime I'm happy to confirm any basic facts. Indeed, user Chris_Leong submitted his unique launch codes at 4:33am Pacific Time, bringing down the LessWrong Frontpage for 24 hours.

comment by James_Miller · 2020-09-28T17:12:45.979Z · LW(p) · GW(p)

For next year: Raise $1,000 and convert the money to cash. Set up some device where the money burns if a code is entered, and otherwise the money gets donated to the most effective charity. Have a livestream that shows the cash and will show the fire if the code is entered.

comment by JoshuaFox · 2020-09-27T06:58:19.093Z · LW(p) · GW(p)

I was thinking you should do a game like Hofstadter describes in "The Tale of Happiton" and the Platonia dilemma. Avoid destruction by cooperation, even if it is without coordination.

comment by simon · 2020-09-26T22:04:30.567Z · LW(p) · GW(p)

Can the nuked front page link this post for "what happened" and also the Petrov Day post for context, instead of just the Petrov Day post, so people coming in actually know why there is no front page?

Replies from: habryka4↑ comment by habryka (habryka4) · 2020-09-26T22:16:59.383Z · LW(p) · GW(p)

Just to clarify, which two posts would you like us to link to?

Replies from: simon↑ comment by simon · 2020-09-27T00:04:00.191Z · LW(p) · GW(p)

It's currently as I was suggesting, which makes me wonder if it was already like that and I was just confused, but I had been thinking that the "what happened" had linked to the original Petrov Day post and that that was the only post linked.

Replies from: habryka4↑ comment by habryka (habryka4) · 2020-09-27T00:40:06.833Z · LW(p) · GW(p)

Oh, sorry, you are totally correct. We originally accidentally linked to the 2019 post, and I fixed it this morning.

comment by AdeleneDawner · 2020-09-26T11:30:34.251Z · LW(p) · GW(p)

(I guess I appreciate being thought of but it does seem like somewhat undermining your point to tag people who haven't used the site in *checks* seven-almost-eight years.)

Replies from: Benito↑ comment by Ben Pace (Benito) · 2020-09-26T18:37:03.937Z · LW(p) · GW(p)

Hi :) I was trying to safely increase the number of people with codes, so I included about 40 good older users who had very high karma but hadn't been active in the last year. It didn't seem bad to offer you the codes; you all seemed quite good-natured from your comments.

And not one of you nuked the site, so thanks for that...

comment by MikkW (mikkel-wilson) · 2020-09-26T08:15:33.552Z · LW(p) · GW(p)

During Thursday 26th September (midnight to midnight Pacific Time), we will practice the skill of sitting together and not pressing harmful buttons.

Happy Thursday

Replies from: Benito↑ comment by Ben Pace (Benito) · 2020-09-26T08:18:00.076Z · LW(p) · GW(p)

Thanks, fixed.

comment by Chris_Leong · 2020-09-26T10:39:26.582Z · LW(p) · GW(p)

Should I press the button or not? I haven't pressed the button at the current time as it would be disappointing to people if they received the email, but someone pressed it while they were still asleep.

Replies from: vanessa-kosoy↑ comment by Vanessa Kosoy (vanessa-kosoy) · 2020-09-26T13:14:02.540Z · LW(p) · GW(p)

Wait, what? I'm confused by this comment. Did you want to nuke the frontpage? It seems inconsistent with what you wrote in the other [LW(p) · GW(p)] comment.

Replies from: MathieuRoy, Chris_Leong↑ comment by Mati_Roy (MathieuRoy) · 2020-09-26T16:53:03.343Z · LW(p) · GW(p)

good point @Vanessa

@Chris, please explain!

↑ comment by Chris_Leong · 2020-09-26T21:08:52.938Z · LW(p) · GW(p)

I hadn't decided whether or not to nuke it, but if I did nuke it, I would have done it several hours later, after people had a chance to wake up.

Replies from: Vladimir_Nesov↑ comment by Vladimir_Nesov · 2020-09-27T08:58:07.911Z · LW(p) · GW(p)

Re: downvotes on the parent comment. Offers of additional (requested!) information shouldn't be punished, or else you create additional incentive to be secretive, to ignore requests for information.

Replies from: philh↑ comment by philh · 2020-09-28T09:59:37.274Z · LW(p) · GW(p)

I don't have strong feelings about this particular comment. But in general I think this is a tricky question. On the one hand you don't want to disincentivize providing the information; on the other hand you do want to be able to react to the information, and sometimes the appropriate reaction is to punish them. Maybe you want to punish them less for providing the information, but punishing them zero would also create really bad incentives.

Replies from: Vladimir_Nesov↑ comment by Vladimir_Nesov · 2020-09-28T10:40:46.398Z · LW(p) · GW(p)

If there is any punishment at all, or even absence of a reward (perhaps an indirect game theoretic reward), that is the same as there being no incentive to provide information, and you end up ignorant; that is, the situation you are experiencing becomes unlikely.

on the other hand you do want to be able to react to the information

The point is that you are actually unable to usefully react to such information in a way that disincentivizes its delivery. If you have the tendency to try, then the information won't be delivered, and your reaction will happen only in a low-probability outcome, so it won't have significant weight in the expected utility across outcomes. For your reaction to actually matter, it should ensure the incentive for the present situation to manifest is in place.

Replies from: philh, frontier64↑ comment by frontier64 · 2020-09-28T15:18:52.392Z · LW(p) · GW(p)

Your strategy is only valid if you assume that the community will have adequate knowledge of what's happened before wrt people who have provided information that should damage their reputation (i.e. confessed). The optimum situation would be one where we can negatively react to negative information, which will disincentivize similar bad actions in the future, but not disincentivize future actors from confessing.

From another line of thinking, what's the upside to not disincentivizing future potential confessors from confessing if the community can't take any action to punish revealed misbehavior? The end result in your preferred scenario seems to be that confessions only lead to the community learning of more negative behavior without any way to disincentivize this behavior from occurring again in the future. That seems to be net negative. What's the point in learning something if you can't react appropriately to the new knowledge?

If all future potential confessors have adequate knowledge of how the community has reacted to past confessors and can extrapolate how the community will react to their own confession, maybe it is best to disincentivize these potential confessors from confessing.