Transformative AGI by 2043 is <1% likely

post by Ted Sanders (ted-sanders) · 2023-06-06T17:36:48.296Z · LW · GW · 117 commentsThis is a link post for https://arxiv.org/abs/2306.02519

Contents

Abstract Executive summary Event Forecast For details, read the full paper. About the authors Ari Allyn-Feuer Areas of expertise Track record of forecasting Ted Sanders Areas of expertise Track record of forecasting Discussion None 117 comments

(Crossposted [EA · GW]to the EA forum)

Abstract

The linked paper is our submission to the Open Philanthropy AI Worldviews Contest. In it, we estimate the likelihood of transformative artificial general intelligence (AGI) by 2043 and find it to be <1%.

Specifically, we argue:

- The bar is high: AGI as defined by the contest—something like AI that can perform nearly all valuable tasks at human cost or less—which we will call transformative AGI is a much higher bar than merely massive progress in AI, or even the unambiguous attainment of expensive superhuman AGI or cheap but uneven AGI.

- Many steps are needed: The probability of transformative AGI by 2043 can be decomposed as the joint probability of a number of necessary steps, which we group into categories of software, hardware, and sociopolitical factors.

- No step is guaranteed: For each step, we estimate a probability of success by 2043,

conditional on prior steps being achieved. Many steps are quite constrained by the short timeline, and our estimates range from 16% to 95%. - Therefore, the odds are low: Multiplying the cascading conditional probabilities together, we estimate that transformative AGI by 2043 is 0.4% likely. Reaching >10% seems to require probabilities that feel unreasonably high, and even 3% seems unlikely.

Thoughtfully applying the cascading conditional probability approach to this question yields lower probability values than is often supposed. This framework helps enumerate the many future scenarios where humanity makes partial but incomplete progress toward transformative AGI.

Executive summary

For AGI to do most human work for <$25/hr by 2043, many things must happen.

We forecast cascading conditional probabilities for 10 necessary events, and find they multiply to an overall likelihood of 0.4%:

Event | Forecastby 2043 or TAGI, |

| We invent algorithms for transformative AGI | 60% |

| We invent a way for AGIs to learn faster than humans | 40% |

| AGI inference costs drop below $25/hr (per human equivalent) | 16% |

| We invent and scale cheap, quality robots | 60% |

| We massively scale production of chips and power | 46% |

| We avoid derailment by human regulation | 70% |

| We avoid derailment by AI-caused delay | 90% |

| We avoid derailment from wars (e.g., China invades Taiwan) | 70% |

| We avoid derailment from pandemics | 90% |

| We avoid derailment from severe depressions | 95% |

| Joint odds | 0.4% |

If you think our estimates are pessimistic, feel free to substitute your own here. You’ll find it difficult to arrive at odds above 10%.

Of course, the difficulty is by construction. Any framework that multiplies ten probabilities together is almost fated to produce low odds.

So a good skeptic must ask: Is our framework fair?

There are two possible errors to beware of:

- Did we neglect possible parallel paths to transformative AGI?

- Did we hew toward unconditional probabilities rather than fully conditional probabilities?

We believe we are innocent of both sins.

Regarding failing to model parallel disjunctive paths:

- We have chosen generic steps that don’t make rigid assumptions about the particular algorithms, requirements, or timelines of AGI technology

- One opinionated claim we do make is that transformative AGI by 2043 will almost certainly be run on semiconductor transistors powered by electricity and built in capital-intensive fabs, and we spend many pages justifying this belief

Regarding failing to really grapple with conditional probabilities:

- Our conditional probabilities are, in some cases, quite different from our unconditional probabilities. In particular, we assume that a world on track to transformative AGI will…

- Construct semiconductor fabs and power plants at a far faster pace than today (our unconditional probability is substantially lower)

- Have invented very cheap and efficient chips by today’s standards (our unconditional probability is substantially lower)

- Have higher risks of disruption by regulation

- Have higher risks of disruption by war

- Have lower risks of disruption by natural pandemic

- Have higher risks of disruption by engineered pandemic

Therefore, for the reasons above—namely, that transformative AGI is a very high bar (far higher than “mere” AGI) and many uncertain events must jointly occur—we are persuaded that the likelihood of transformative AGI by 2043 is <1%, a much lower number than we otherwise intuit. We nonetheless anticipate stunning advancements in AI over the next 20 years, and forecast substantially higher likelihoods of transformative AGI beyond 2043.

For details, read the full paper.

About the authors

This essay is jointly authored by Ari Allyn-Feuer and Ted Sanders. Below, we share our areas of expertise and track records of forecasting. Of course, credentials are no guarantee of accuracy. We share them not to appeal to our authority (plenty of experts are wrong), but to suggest that if it sounds like we’ve said something obviously wrong, it may merit a second look (or at least a compassionate understanding that not every argument can be explicitly addressed in an essay trying not to become a book).

Ari Allyn-Feuer

Areas of expertise

I am a decent expert in the complexity of biology and using computers to understand biology.

- I earned a Ph.D. in Bioinformatics at the University of Michigan, where I spent years using ML methods to model the relationships between the genome, epigenome, and cellular and organismal functions. At graduation I had offers to work in the AI departments of three large pharmaceutical and biotechnology companies, plus a biological software company.

- I have spent the last five years as an AI Engineer, later Product Manager, now Director of AI Product, in the AI department of GSK, an industry-leading AI group which uses cutting edge methods and hardware (including Cerebras units and work with quantum computing), is connected with leading academics in AI and the epigenome, and is particularly engaged in reinforcement learning research.

Track record of forecasting

While I don’t have Ted’s explicit formal credentials as a forecaster, I’ve issued some pretty important public correctives of then-dominant narratives:

- I said in print on January 24, 2020 that due to its observed properties, the then-unnamed novel coronavirus spreading in Wuhan, China, had a significant chance of promptly going pandemic and killing tens of millions of humans. It subsequently did.

- I said in print in June 2020 that it was an odds-on favorite for mRNA and adenovirus COVID-19 vaccines to prove highly effective and be deployed at scale in late 2020. They subsequently did and were.

- I said in print in 2013 when the Hyperloop proposal was released that the technical approach of air bearings in overland vacuum tubes on scavenged rights of way wouldn’t work. Subsequently, despite having insisted they would work and spent millions of dollars on them, every Hyperloop company abandoned all three of these elements, and development of Hyperloops has largely ceased.

- I said in print in 2016 that Level 4 self-driving cars would not be commercialized or near commercialization by 2021 due to the long tail of unusual situations, when several major car companies said they would. They subsequently were not.

- I used my entire net worth and borrowing capacity to buy an abandoned mansion in 2011, and sold it seven years later for five times the price.

Luck played a role in each of these predictions, and I have also made other predictions that didn’t pan out as well, but I hope my record reflects my decent calibration and genuine open-mindedness.

Ted Sanders

Areas of expertise

I am a decent expert in semiconductor technology and AI technology.

- I earned a PhD in Applied Physics from Stanford, where I spent years researching semiconductor physics and the potential of new technologies to beat the 60 mV/dec limit of today's silicon transistor (e.g., magnetic computing, quantum computing, photonic computing, reversible computing, negative capacitance transistors, and other ideas). These years of research inform our perspective on the likelihood of hardware progress over the next 20 years.

- After graduation, I had the opportunity to work at Intel R&D on next-gen computer chips, but instead, worked as a management consultant in the semiconductor industry and advised semiconductor CEOs on R&D prioritization and supply chain strategy. These years of work inform our perspective on the difficulty of rapidly scaling semiconductor production.

- Today, I work on AGI technology as a research engineer at OpenAI, a company aiming to develop transformative AGI. This work informs our perspective on software progress needed for AGI. (Disclaimer: nothing in this essay reflects OpenAI’s beliefs or its non-public information.)

Track record of forecasting

I have a track record of success in forecasting competitions:

- Top prize in SciCast technology forecasting tournament (15 out of ~10,000, ~$2,500 winnings)

- Top Hypermind US NGDP forecaster in 2014 (1 out of ~1,000)

- 1st place Stanford CME250 AI/ML Prediction Competition (1 of 73)

- 2nd place ‘Let’s invent tomorrow’ Private Banking prediction market (2 out of ~100)

- 2nd place DAGGRE Workshop competition (2 out of ~50)

- 3rd place LG Display Futurecasting Tournament (3 out of 100+)

- 4th Place SciCast conditional forecasting contest

- 9th place DAGGRE Geopolitical Forecasting Competition

- 30th place Replication Markets (~$1,000 winnings)

- Winner of ~$4200 in the 2022 Hybrid Persuasion-Forecasting Tournament on existential risks (told ranking was “quite well”)

Each finish resulted from luck alongside skill, but in aggregate I hope my record reflects my decent calibration and genuine open-mindedness.

Discussion

We look forward to discussing our essay with you in the comments below. The more we learn from you, the more pleased we'll be.

If you disagree with our admittedly imperfect guesses, we kindly ask that you supply your own preferred probabilities (or framework modifications). It's easier to tear down than build up, and we'd love to hear how you think this analysis can be improved.

117 comments

Comments sorted by top scores.

comment by Daniel Kokotajlo (daniel-kokotajlo) · 2023-06-06T18:38:43.312Z · LW(p) · GW(p)

Thanks for this well-researched and thorough argument! I think I have a bunch of disagreements, but my main one is that it really doesn't seem like AGI will require 8-10 OOMs more inference compute than GPT-4. I am not at all convinced by your argument that it would require that much compute to accurately simulate the human brain. Maybe it would, but we aren't trying to accurately simulate a human brain, we are trying to learn circuitry that is just as capable.

Also: Could you, for posterity, list some capabilities that you are highly confident no AI system will have by 2030? Ideally capabilities that come prior to a point-of-no-return so it's not too late to act by the time we see those capabilities.

↑ comment by Ted Sanders (ted-sanders) · 2023-06-06T23:46:02.273Z · LW(p) · GW(p)

Oh, to clarify, we're not predicting AGI will be achieved by brain simulation. We're using the human brain as a starting point for guessing how much compute AGI will need, and then applying a giant confidence interval (to account for cases where AGI is way more efficient, as well as way less efficient). It's the most uncertain part of our analysis and we're open to updating.

For posterity, by 2030, I predict we will not have:

- AI drivers that work in any country

- AI swim instructors

- AI that can do all of my current job at OpenAI in 2023

- AI that can get into a 2017 Toyota Prius and drive it

- AI that cleans my home (e.g., laundry, dishwashing, vacuuming, and/or wiping)

- AI retail workers

- AI managers

- AI CEOs running their own companies

- Self-replicating AIs running around the internet acquiring resources

Here are some of my predictions from the past:

- Predictions about the year 2050, written 7ish years ago: https://www.tedsanders.com/predictions-about-the-year-2050/

- Predictions on self-driving from 5 years ago: https://www.tedsanders.com/on-self-driving-cars/

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2023-06-07T03:22:44.464Z · LW(p) · GW(p)

Thanks! AI managers, CEOs, self-replicators, and your-job-doers (what is your job anyway? I never asked!) seem like things that could happen before it's too late (albeit only very shortly before) so they are potential sources of bets between us. (The other stuff requires lots of progress in robotics which I don't expect to happen until after the singularity, though I could be wrong)

Yes, I understand that you don't think AGI will be achieved by brain simulation. I like that you have a giant confidence interval to account for cases where AGI is way more efficient and way less efficient. I'm saying something has gone wrong with your confidence interval if the median is 8-10 OOMs more inference cost than GPT-4, given how powerful GPT-4 is. Subjectively GPT-4 seems pretty close to AGI, in the sense of being able to automate all strategically relevant tasks that can be done by human remote worker professionals. It's not quite there yet, but looking at the progress from GPT-2 to GPT-3 to GPT-4, it seems like maybe GPT-5 or GPT-6 would do it. But the middle of your confidence interval says that we'll need something like GPT-8, 9, or 10. This might be justified a priori, if all we had to go on was comparisons to the human brain, but I think a posteriori we should update on observing the GPT series so far and shift our probability mass significantly downwards. To put it another way, imagine if you had done this analysis in 2018, immediately after seeing GPT-1. Had someone at that time asked you to predict when e.g. a single AI system could pass the Bar, the LSAT, the SAT, and also do lots of coding interview problems and so forth, you probably would have said something like "Hmmm, human brain size anchor suggests we need another 12-15 OOMs from GPT-1 size to achieve AGI. Those skills seem pretty close to AGI, but idk maybe they are easier than I expect. I'll say... 10 OOMs from GPT-1. Could be more, could be less." Well, surprise! It was much less than that! (Of course I can't speak for you, but I can speak for myself, and that's what I would have said I had been thinking about the human brain anchor in 2018 and was convinced that 1e20-1e21 FLOP was the median of my distribution).

↑ comment by Ted Sanders (ted-sanders) · 2023-06-07T07:44:47.836Z · LW(p) · GW(p)

Great points.

I think you've identified a good crux between us: I think GPT-4 is far from automating remote workers and you think it's close. If GPT-5/6 automate most remote work, that will be point in favor of your view, and if takes until GPT-8/9/10+, that will be a point in favor of mine. And if GPT gradually provides increasingly powerful tools that wildly transform jobs before they are eventually automated away by GPT-7, then we can call it a tie. :)

I also agree that the magic of GPT should update one into believing in shorter AGI timelines with lower compute requirements. And you're right, this framework anchored on the human brain can't cleanly adjust from such updates. We didn't want to overcomplicate our model, but perhaps we oversimplified here. (One defense is that the hugeness of our error bars mean that relatively large updates are needed to make a substantial difference in the CDF.)

Lastly, I think when we see GPT unexpectedly pass the Bar, LSAT, SAT, etc. but continue to fail at basic reasoning, it should update us into thinking AGI is sooner (vs a no pass scenario), but also update us into realizing these metrics might be further from AGI than we originally assumed based on human analogues.

Replies from: daniel-kokotajlo, GdL752↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2023-06-07T16:50:57.054Z · LW(p) · GW(p)

Excellent! Yeah I think GPT-4 is close to automating remote workers. 5 or 6, with suitable extensions (e.g. multimodal, langchain, etc.) will succeed I think. Of course, there'll be a lag between "technically existing AI systems can be made to ~fully automate job X" and "most people with job X are now unemployed" because things take time to percolate through the economy. But I think by the time of GPT-6 it'll be clear that this percolation is beginning to happen & the sorts of things that employ remote workers in 2023 (especially the strategically relevant ones, the stuff that goes into AI R&D) are doable by the latest AIs.

It sounds like you think GPT will continue to fail at basic reasoning for some time? And that it currently fails at basic reasoning to a significantly greater extent than humans do? I'd be interested to hear more about this, what sort of examples do you have in mind? This might be another great crux between us.

↑ comment by Andy_McKenzie · 2023-06-07T23:47:09.555Z · LW(p) · GW(p)

I’m wondering if we could make this into a bet. If by remote workers we include programmers, then I’d be willing to bet that GPT-5/6, depending upon what that means (might be easier to say the top LLMs or other models trained by anyone by 2026?) will not be able to replace them.

Replies from: daniel-kokotajlo↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2023-06-10T15:46:31.337Z · LW(p) · GW(p)

I've made several bets like this in the past, but it's a bit frustrating since I don't stand to gain anything by winning -- by the time I win the bet, we are well into the singularity & there isn't much for me to do with the money anymore. What are the terms you have in mind? We could do the thing where you give me money now, and I give it back with interest later.

↑ comment by Andy_McKenzie · 2023-06-10T20:06:57.355Z · LW(p) · GW(p)

Understandable. How about this?

Bet

Andy will donate $50 to a charity of Daniel's choice now.

If, by January 2027, there is not a report from a reputable source confirming that at least three companies, that would previously have relied upon programmers, and meet a defined level of success, are being run without the need for human programmers, due to the independent capabilities of an AI developed by OpenAI or another AI organization, then Daniel will donate $100, adjusted for inflation as of June 2023, to a charity of Andy's choice.

Terms

Reputable Source: For the purpose of this bet, reputable sources include MIT Technology Review, Nature News, The Wall Street Journal, The New York Times, Wired, The Guardian, or TechCrunch, or similar publications of recognized journalistic professionalism. Personal blogs, social media sites, or tweets are excluded.

AI's Capabilities: The AI must be capable of independently performing the full range of tasks typically carried out by a programmer, including but not limited to writing, debugging, maintaining code, and designing system architecture.

Equivalent Roles: Roles that involve tasks requiring comparable technical skills and knowledge to a programmer, such as maintaining codebases, approving code produced by AI, or prompting the AI with specific instructions about what code to write.

Level of Success: The companies must be generating a minimum annual revenue of $10 million (or likely generating this amount of revenue if it is not public knowledge).

Report: A single, substantive article or claim in one of the defined reputable sources that verifies the defined conditions.

AI Organization: An institution or entity recognized for conducting research in AI or developing AI technologies. This could include academic institutions, commercial entities, or government agencies.

Inflation Adjustment: The donation will be an equivalent amount of money as $100 as of June 2023, adjusted for inflation based on https://www.bls.gov/data/inflation_calculator.htm.

I guess that there might be some disagreements in these terms, so I'd be curious to hear your suggested improvements.

Caveat: I don't have much disposable money right now, so it's not much money, but perhaps this is still interesting as a marker of our beliefs. Totally ok if it's not enough money to be worth it to you.

Replies from: daniel-kokotajlo↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2023-06-10T20:18:29.827Z · LW(p) · GW(p)

Given your lack of disposable money I think this would be a bad deal for you, and as for me, it is sorta borderline (my credence that the bet will resolve in your favor is something like 40%?) but sure, let's do it. As for what charity to donate to, how about Animal Welfare Fund | Effective Altruism Funds. Thanks for working out all these details!

Here are some grey area cases we should work out:

--What if there is a human programmer managing the whole setup, but they are basically a formality? Like, the company does technically have programmers on staff but the programmers basically just form an interface between the company and ChatGPT and theoretically if the managers of the company were willing to spend a month learning how to talk to ChatGPT effectively they could fire the human programmers?

--What if it's clear that the reason you are winning the bet is that the government has stepped in to ban the relevant sorts of AI?

↑ comment by Andy_McKenzie · 2023-06-12T15:53:11.110Z · LW(p) · GW(p)

Sounds good, I'm happy with that arrangement once we get these details figured out.

Regarding the human programmer formality, it seems like business owners would have to be really incompetent for this to be a factor. Plenty of managers have coding experience. If the programmers aren't doing anything useful then they will be let go or new companies will start that don't have them. They are a huge expense. I'm inclined to not include this since it's an ambiguity that seems implausible to me.

Regarding the potential ban by the government, I wasn't really thinking of that as a possible option. What kind of ban do you have in mind? I imagine that regulation of AI is very likely by then, so if the automation of all programmers hasn't happened by Jan 2027, it seems very easy to argue that it would have happened in the absence of the regulation.

Regarding these and a few of the other ambiguous things, one way we could do this is that you and I could just agree on it in Jan 2027. Otherwise, the bet resolves N/A and you don't donate anything. This could make it an interesting Manifold question because it's a bit adversarial. This way, we could also get rid of the requirement for it to be reported by a reputable source, which is going to be tricky to determine.

Replies from: daniel-kokotajlo↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2023-06-12T21:22:07.580Z · LW(p) · GW(p)

How about this:

--Re the first grey area: We rule in your favor here.

--Re the second grey area: You decide, in 2027, based on your own best judgment, whether or not it would have happened absent regulation. I can disagree with your judgment, but I still have to agree that you won the bet (if you rule in your favor).

↑ comment by Andy_McKenzie · 2023-06-16T20:48:53.612Z · LW(p) · GW(p)

Those sound good to me! I donated to your charity (the Animal Welfare Fund) to finalize it. Lmk if you want me to email you the receipt. Here's the manifold market:

Bet

Andy will donate $50 to a charity of Daniel's choice now.

If, by January 2027, there is not a report from a reputable source confirming that at least three companies, that would previously have relied upon programmers, and meet a defined level of success, are being run without the need for human programmers, due to the independent capabilities of an AI developed by OpenAI or another AI organization, then Daniel will donate $100, adjusted for inflation as of June 2023, to a charity of Andy's choice.

Terms

Reputable Source: For the purpose of this bet, reputable sources include MIT Technology Review, Nature News, The Wall Street Journal, The New York Times, Wired, The Guardian, or TechCrunch, or similar publications of recognized journalistic professionalism. Personal blogs, social media sites, or tweets are excluded.

AI's Capabilities: The AI must be capable of independently performing the full range of tasks typically carried out by a programmer, including but not limited to writing, debugging, maintaining code, and designing system architecture.

Equivalent Roles: Roles that involve tasks requiring comparable technical skills and knowledge to a programmer, such as maintaining codebases, approving code produced by AI, or prompting the AI with specific instructions about what code to write.

Level of Success: The companies must be generating a minimum annual revenue of $10 million (or likely generating this amount of revenue if it is not public knowledge).

Report: A single, substantive article or claim in one of the defined reputable sources that verifies the defined conditions.

AI Organization: An institution or entity recognized for conducting research in AI or developing AI technologies. This could include academic institutions, commercial entities, or government agencies.

Inflation Adjustment: The donation will be an equivalent amount of money as $100 as of June 2023, adjusted for inflation based on https://www.bls.gov/data/inflation_calculator.htm.

Regulatory Impact: In January 2027, Andy will use his best judgment to decide whether the conditions of the bet would have been met in the absence of any government regulation restricting or banning the types of AI that would have otherwise replaced programmers.

Replies from: daniel-kokotajlo↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2023-06-16T20:51:31.595Z · LW(p) · GW(p)

Sounds good, thank you! Emailing the receipt would be nice.

Replies from: Andy_McKenzie↑ comment by Andy_McKenzie · 2023-06-16T21:04:13.545Z · LW(p) · GW(p)

Sounds good, can't find your email address, DM'd you.

↑ comment by GdL752 · 2023-06-07T17:02:24.287Z · LW(p) · GW(p)

continue to fail at basic reasoning.

But , a huge huge portion of human labor doesnt require basic reasoning. Its rote enough to use flowcharts , I don't need my calculator to "understand" math , I need it to give me the correct answer.

And for the "hallucinating" behavior you can just have it learn not do to that by rote. Even if you still need 10% of a certain "discipline" (job) to double check that the AI isn't making things up you've still increased productivity insanely.

And what does that profit and freed up capital do other than chase more profit and invest in things that draw down all the conditionals vastly?

5% increased productivity here , 3% over here , it all starts to multiply.

↑ comment by meijer1973 · 2023-06-07T14:15:18.937Z · LW(p) · GW(p)

AI will probably displace a lot of cognitive workers in the near future. And physical labor might take a while to get below 25$/hr.

- Most most tasks human level intelligence is not required.

- Most highly valued jobs have a lot of tasks that do not require high intelligence.

- Doing 95% of all tasks could be a lot sooner (10-15 years earlier) than 100%. See autonomous driving (getting to 95% safe or 99,9999 safe is a big difference).

- Physical labor by robots will probably remain expensive for a long time (e.g. a robot plumber). A robot ceo is probably cheaper in the future than the robot plumber.

- Just take gpt4 and fine tune it and you can automate a lot of cognitive labor already.

- Deployment of cognitve work automation (a software update) is much faster that deployment of physical robots.

I agree that AI might not replace swim instructors by 2030. It is the cognitive work where the big leaps will be.

comment by jimrandomh · 2023-06-09T20:01:44.941Z · LW(p) · GW(p)

This is the multiple stages fallacy. Not only is each of the probabilities in your list too low, if you actually consider them as conditional probabilities they're double- and triple-counting the same uncertainties. And since they're all mulitplied together, and all err in the same direction, the error compounds.

Replies from: ted-sanders, harfe↑ comment by Ted Sanders (ted-sanders) · 2023-06-09T21:39:19.867Z · LW(p) · GW(p)

What conditional probabilities would you assign, if you think ours are too low?

Replies from: jimrandomh↑ comment by jimrandomh · 2023-06-10T04:21:59.645Z · LW(p) · GW(p)

P(We invent algorithms for transformative AGI | No derailment from regulation, AI, wars, pandemics, or severe depressions): .8

P(We invent a way for AGIs to learn faster than humans | We invent algorithms for transformative AGI): 1. This row is already incorporated into the previous row.

P(AGI inference costs drop below $25/hr (per human equivalent): 1. This is also already incorporated into "we invent algorithms for transformative AGI"; an algorithm with such extreme inference costs wouldn't count (and, I think, would be unlikely to be developed in the first place).

We invent and scale cheap, quality robots: Not a prerequisite.

We massively scale production of chips and power: Not a prerequisite if we have already already conditioned on inference costs.

We avoid derailment by human regulation: 0.9

We avoid derailment by AI-caused delay: 1. I would consider an AI that derailed development of other AI ot be transformative.

We avoid derailment from wars (e.g., China invades Taiwan): 0.98.

We avoid derailment from pandemics: 0.995. Thanks to COVID, our ability to continue making technological progress during a pandemic which requires everyone to isolate is already battle-tested.

We avoid derailment from severe depressions: 0.99.

Replies from: ted-sanders↑ comment by Ted Sanders (ted-sanders) · 2023-06-13T07:56:49.938Z · LW(p) · GW(p)

Interested in betting thousands of dollars on this prediction? I'm game.

Replies from: Tamay↑ comment by Tamay · 2024-03-14T03:30:23.438Z · LW(p) · GW(p)

I'm interested. What bets would you offer?

Replies from: ted-sanders↑ comment by Ted Sanders (ted-sanders) · 2025-01-01T06:31:10.047Z · LW(p) · GW(p)

Hey Tamay, nice meeting you at The Curve. Just saw your comment here today.

Things we could potentially bet on:

- rate of GDP growth by 2027 / 2030 / 2040

- rate of energy consumption growth by 2027 / 2030 / 2040

- rate of chip production by 2027 / 2030 / 2040

- rates of unemployment (though confounded)

Any others you're interested in? Degree of regulation feels like a tricky one to quantify.

↑ comment by ryan_greenblatt · 2025-01-01T22:36:38.381Z · LW(p) · GW(p)

How about AI company and hardware company valuations? (Maybe in 2026, 2027, 2030 or similar.)

Or what about benchmark/task performance? Is there any benchmark/task you think won't get beaten in the next few years? (And, ideally, if it did get beaten, you would change you mind.) Maybe "AI won't be able to autonomously write good ML research papers (as judged by (e.g.) not having notably more errors than human written papers and getting into NeurIPS with good reviews)"? Could do "make large PRs to open source repos that are considered highly valuable" or "make open source repos that are widely used".

These might be a bit better to bet on as they could be leading indicators

(It's still the case that betting on the side of fast AI progress might be financially worse than just trying to invest or taking out a loan, but it could be easier to bet than to invest in e.g. OpenAI. Regardless, part of the point of betting is clearly demonstrating a view.)

↑ comment by harfe · 2023-06-09T22:32:05.900Z · LW(p) · GW(p)

There is an additional problem where one of the two key principles for their estimates is

Avoid extreme confidence

If this principle leads you to picking probability estimates that have some distance to 1 (eg by picking at most 0.95).

If you build a fully conjunctive model, and you are not that great at extreme probabilities, then you will have a strong bias towards low overall estimates. And you can make your probability estimates even lower by introducing more (conjunctive) factors.

comment by Unnamed · 2023-06-06T22:43:32.265Z · LW(p) · GW(p)

Interesting that this essay gives both a 0.4% probability of transformative AI by 2043, and a 60% probability of transformative AI by 2043, for slightly different definitions of "transformative AI by 2043". One of these is higher than the highest probability given by anyone on the Open Phil panel (~45%) and the other is significantly lower than the lowest panel member probability (~10%). I guess that emphasizes the importance of being clear about what outcome we're predicting / what outcomes we care about trying to predict.

The 60% is for "We invent algorithms for transformative AGI", which I guess means that we have the tech that can be trained to do pretty much any job. And the 0.4% is the probability for the whole conjunction, which sounds like it's for pervasively implemented transformative AI: AI systems have been trained to do pretty much any job, and the infrastructure has been built (chips, robots, power) for them to be doing all of those jobs at a fairly low cost.

It's unclear why the 0.4% number is the headline here. What's the question here, or the thing that we care about, such that this is the outcome that we're making forecasts for? e.g., I think that many paths to extinction don't route through this scenario. IIRC Eliezer has written that it's possible that AI could kill everyone before we have widespread self-driving cars. And other sorts of massive transformation don't depend on having all the infrastructure in place so that AIs/robots can be working as loggers, nurses, upholsterers, etc.

comment by Steven Byrnes (steve2152) · 2023-06-06T21:29:56.489Z · LW(p) · GW(p)

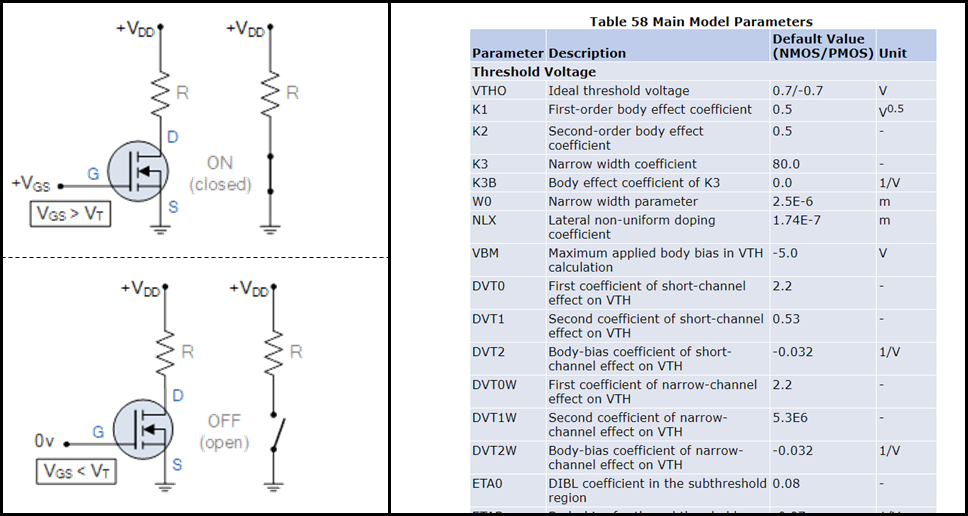

I disagree with the brain-based discussion of how much compute is required for AGI. Here’s an analogy I like (from here [LW · GW]):

Left: Suppose that I want to model a translator (specifically, a MOSFET). And suppose that my model only needs to be sufficient to emulate the calculations done by a CMOS integrated circuit. Then my model can be extremely simple—it can just treat the transistor as a cartoon switch. (image source.)

Right: Again suppose that I want to model a transistor. But this time, I want my model to accurately capture all measurable details of the transistor. Then my model needs to be mind-bogglingly complex, involving dozens of adjustable parameters, some of which are shown in this table (screenshot from here).

What’s my point? I’m suggesting an analogy between this transistor and a neuron with synapses, dendritic spikes, etc. The latter system is mind-bogglingly complex when you study it in detail—no doubt about it! But that doesn’t mean that the neuron’s essential algorithmic role is equally complicated. The latter might just amount to a little cartoon diagram with some ANDs and ORs and IF-THENs or whatever. Or maybe not, but we should at least keep that possibility in mind.

--

For example, this paper is what I consider a plausible algorithmic role of dendritic spikes and synapses in cortical pyramidal neurons, and the upshot is “it’s basically just some ANDs and ORs”. If that’s right, this little bit of brain algorithm could presumably be implemented with <<1 FLOP per spike-through-synapse. I think that’s a suggestive data point, even if (as I strongly suspect) dendritic spikes and synapses are meanwhile doing other operations too.

--

Anyway, I currently think that, based on the brain, human-speed AGI is probably possible in 1e14 FLOP/s. (This post [LW · GW] has a red-flag caveat on top, but that’s related to some issues in my discussion of memory, I stand by the compute section.) Not with current algorithms, I don’t think! But with some future algorithm.

I think that running microscopically-accurate brain simulations is many OOMs harder than running the algorithms that the brain is running. This is the same idea as the fact that running a microscopically-accurate simulation of a pocket calculator microcontroller chip, with all its thousands of transistors and capacitors and wires, stepping the simulation forward picosecond-by-picosecond, as the simulated chip multiplies two numbers, is many OOMs harder than multiplying two numbers.

Replies from: ted-sanders↑ comment by Ted Sanders (ted-sanders) · 2023-06-07T00:03:09.211Z · LW(p) · GW(p)

Excellent points. Agree that the compute needed to simulate a thing is not equal to the compute performed by that thing. It's very possible this means we're overestimating the compute performed by the human brain a bit. Possible this is counterbalanced by early AGIs being inefficient, or having architectural constraints that the human brain lacks, but who knows. Very possible our 16% is too low, and should be higher. Tripling it to ~50% would yield a likelihood of transformative AGI of ~1.2%.

Replies from: daniel-kokotajlo, AnthonyC↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2023-06-07T17:08:06.133Z · LW(p) · GW(p)

It's very possible this means we're overestimating the compute performed by the human brain a bit.

Specifically, by 6-8 OOMs. I don't think that's "a bit." ;)

↑ comment by AnthonyC · 2023-06-07T02:29:51.774Z · LW(p) · GW(p)

Dropping the required compute by, say, two OOMs, changes the estimates of how many fabs and how much power will be needed from "Massively more than expected from business as usual" to "Not far from business as usual" aka that 16% would need to be >>90% because by default the capacity would exist anyway. The same change would have the same kind effect on the "<$25/hr" assumption. At that scale, "just throw more compute at it" becomes a feasible enough solution that "learns slower than humans" stops seeming like a plausible problem, as well. I think you might be assuming you've made these estimates independently when they're actually still being calculated based on common assumptions.

Replies from: ted-sanders↑ comment by Ted Sanders (ted-sanders) · 2023-06-07T07:52:43.284Z · LW(p) · GW(p)

According to our rough and imperfect model, dropping inference needs by 2 OOMs increases our likelihood of hitting the $25/hr target by 20%abs, from 16% to 36%.

It doesn't necessarily make a huge difference to chip and power scaling, as in our model those are dominated by our training estimates, not our inference need estimates. (Though of course those figures will be connected in reality.)

With no adjustment to chip and power scaling, this yields a 0.9% likelihood of TAGI.

With a +15%abs bump to chip and power scaling, this yields a 1.2% likelihood of TAGI.

Replies from: AnthonyC↑ comment by AnthonyC · 2023-06-08T18:47:59.941Z · LW(p) · GW(p)

Ah, sorry, I see I made an important typo in my comment, that 16% value I mentioned was supposed to be 46%, because it was in reference to the chip fabs & power requirements estimate.

The rest of the comment after that was my way of saying "the fact that these dependences on common assumptions between the different conditional probabilities exist at all mean you can't really claim that you can multiply them all together and consider the result meaningful in the way described here."

I say that because the dependencies mean you can't productively discuss disagreements about any of your assumptions that go into your estimates, without adjusting all the probabilities in the model. A single updated assumption/estimate breaks the claim of conditional independence that lets you multiply the probabilities.

For example, in a world that actually had "algorithms for transformative AGI" that were just too expensive to productively used, what would happen next? Well, my assumption is that a lot more companies would hire a lot more humans to get to work on making them more efficient, using the best available less-transformative tools. A lot of governments would invest trillions in building the fabs and power plants and mines to build it anyway, even if it still cost $25,000/human-equivalent-hr. They'd then turn the AGI loose on the problem of improving its own efficiency. And on making better robots. And on using those robots to make more robots and build more power plants and mine more materials. Once producing more inputs is automated, supply stops being limited by human labor, and doesn't require more high level AI inference either. Cost of inputs into increasing AI capabilities becomes decoupled from the human economy, so that the price of electricity and compute in dollars plummets. This is one of many hypothetical pathways where a single disagreement renders consideration of the subsequent numbers moot. Presenting the final output as a single number hides the extreme sensitivity of that number to changes in key underlying assumptions.

comment by [deleted] · 2023-06-06T19:26:12.816Z · LW(p) · GW(p)

Hi Ted,

I will read the article, but there's some rather questionable assumptions here that I don't see how you could reach these conclusions while also considering them.

| We invent algorithms for transformative AGI | 60% |

| We invent a way for AGIs to learn faster than humans | 40% |

| AGI inference costs drop below $25/hr (per human equivalent) | 16% |

| We invent and scale cheap, quality robots | 60% |

| We massively scale production of chips and power | 46% |

| We avoid derailment by human regulation | 70% |

| We avoid derailment by AI-caused delay | 90% |

| We avoid derailment from wars (e.g., China invades Taiwan) | 70% |

| We avoid derailment from pandemics | 90% |

| We avoid derailment from severe depressions | 95% |

We invent algorithms for transformative AGI:

- Have you considered RSI? RSI in this context would be an algorithm that says "given a benchmark that measures objectively if an AI is transformative, propose a cognitive architecture for an AGI using a model with sufficient capabilities to make a reasonable guess". You then train the AGI candidate from the cognitive architecture (most architectures will reuse pretrained components from prior attempts) and benchmark it. You maintain a "league" of multiple top performing AGI candidates, and each one of them is in each cycle examining all the results, developing theories, and proposing the next candidate architecture.

Because RSI uses criticality, it primarily depends on compute availability. Assuming sufficient compute, it would discover a transformative AGI algorithm quickly. Some iterations might take 1 month (training time for a llama scale model from noise), others would take less than 1 day, and many iterations would be attempted in parallel.

We invent a way for AGIs to learn faster than humans : Why is this even in the table? This would be 1.0 because it's a known fact, AGI learns faster than humans. Again, from the llama training run, the model went from knowing nothing to domain human level in 1 month. That's faster. (requiring far more data than humans isn't an issue)

AGI inference costs drop below $25/hr (per human equivalent): Well, A100s are 0.87 per hour. A transformative AGI might use 32 A100s. $27.84 an hour. Looks like we're at 1.0 on this one also. Note I didn't even bother with a method to use compute at inference time more efficiently. In short, use a low end, cheap model, and a second model that assesses, given the current input, how likely it is that the full scale model will produce a meaningfully different output.

So for example, a robot doing a chore is using a low end, cheap model trained using supervised learning from the main model. That model runs in real time in hardware inside the robot. Whenever the input frame is poorly compressible from an onboard autoencoder trained on the supervised learning training set, the robot pauses what it's doing and queries the main model. (some systems can get the latency low enough to not need to pause).

This general approach would save main model compute, such that it could cost $240 an hour to run the main model and yet as long as it isn't used more than 10% of the time, and the local model is very small and cheap, it will hit your $25 an hour target.

This is not cutting edge and there are papers published this week on this and related approaches.

We invent and scale cheap, quality robots: Due to recent layoffs in the sota robotics teams and how Boston Dynamics has been of low investor interest, yes this is a possible open

We massively scale production of chips and power: I will have to check the essay to see how you define "massively".

We avoid derailment by human regulation: This is a parallel probability. It's saying actually 1 - (probability that ALL regulatory agencies in power blocs capable of building AGI regulate it to extinction). So it's 1 - (EU AND USA AND China AND <any other parties> block tAGI research). The race dynamics mean that defection is rapid so the blocks fail rapidly, even under suspected defection. If one party believes the others might be getting tAGI, they may start a national defense project to rush build their own, a project that is exempt from said regulation.

We avoid derailment by AI-caused delay: Another parallel probability. It's the probability that an "AI chernobyl" happens and all parties respect the lesson of it.

We avoid derailment from wars (e.g., China invades Taiwan) : Another parallel probability, as Taiwan is not the only location on earth capable of manufacturing chips for AI.

We avoid derailment from pandemics: So a continuous series of pandemics, not a 3 year dip to ~80% productivity? Due to RSI even 80% productivity may be sufficient.

We avoid derailment from severe depressions: Another parallel probability.

With that said : in 15 years I find the conclusion of "probably not" tAGI that can do almost everything better than humans, including robotics, and it's cheap per hour, and massive amounts of new infrastructure have been built, to probably be correct. So many steps, and even if you're completely wrong due to the above or black swans, it doesn't mean a different step won't be rate limiting. Worlds where we have AGI that is still 'transformative' by a plain meaning of the term, and the world is hugely different, and AGI is receiving immense financial investments and is the most valuable industry on earth, are entirely possible without it actually satisfying the end conditions this essay is aimed at.

Replies from: ted-sanders↑ comment by Ted Sanders (ted-sanders) · 2023-06-06T23:52:43.310Z · LW(p) · GW(p)

We invent a way for AGIs to learn faster than humans : Why is this even in the table? This would be 1.0 because it's a known fact, AGI learns faster than humans. Again, from the llama training run, the model went from knowing nothing to domain human level in 1 month. That's faster. (requiring far more data than humans isn't an issue)

100% feels overconfident. Some algorithms learning some things faster than humans is not proof that AGI will learn all things faster than humans. Just look at self-driving. It's taking AI far longer than human teenagers to learn.

AGI inference costs drop below $25/hr (per human equivalent): Well, A100s are 0.87 per hour. A transformative AGI might use 32 A100s. $27.84 an hour. Looks like we're at 1.0 on this one also.

100% feels overconfident. We don't know if transformative will need 32 A100s, or more. Our essay explains why we think it's more. Even if you disagree with us, I struggle to see how you can be 100% sure.

Replies from: conor↑ comment by Conor (conor) · 2023-06-11T12:55:26.119Z · LW(p) · GW(p)

Teenagers generally don't start learning to drive until they have had fifteen years to orient themselves in the world.

AI and teenagers are not starting from the same point so the comparison does not map very well.

comment by Charlie Steiner · 2023-06-06T20:44:06.019Z · LW(p) · GW(p)

Thanks, this was interesting.

I couldn't really follow along with my own probabilities because things started wild from the get-go. You say we need to "invent algorithms for transformative AI," when in fact we already have algorithms that are in-principle general, they're just orders of magnitude too inefficient, but we're making gradual algorithmic progress all the time. Checking the pdf, I remain confused about your picture of the world here. Do you think I'm drastically overstating the generality of current ML and the gradualness of algorithmic improvement, such that currently we are totally lacking the ability to build AGI, but after some future discovery (recognizable on its own merits and not some context-dependent "last straw") we will suddenly be able to?

And your second question is also weird! I don't really understand the epistemic state of the AI researchers in this hypothetical. They're supposed to have built something that's AGI, it just learns slower than humans. How did they get confidence in this fact? I think this question is well-posed enough that I could give a probability for it, except that I'm still confused about how to conditionalize on the first question.

The rest of the questions make plenty of sense, no complaints there.

In terms of the logical structure, I'd point out that inference costs staying low, producing chips, and producing lots of robots are all definitely things that could be routes to transformative AI, but they're not necessary. The big alternate path missing here is quality. An AI that generates high-quality designs or plans might not have a human equivalent, in which case "what's the equivalent cost at $25 per human hour" is a wrong question. Producing chips and producing robots could also happen or not happen in any combination and the world could still be transformed by high-quality AI decision-making.

Replies from: ted-sanders↑ comment by Ted Sanders (ted-sanders) · 2023-06-07T22:48:24.124Z · LW(p) · GW(p)

I'm curious and I wonder if I'm missing something that's obvious to others: What are the algorithms we already have for AGI? What makes you confident they will work before seeing any demonstration of AGI?

Replies from: Charlie Steiner↑ comment by Charlie Steiner · 2023-06-08T01:16:12.974Z · LW(p) · GW(p)

So, the maximally impractical but also maximally theoretically rigorous answer here is AIXI-tl.

An almost as impractical answer would be Markov chain Monte Carlo search for well-performing huge neural nets on some objective.

I say MCMC search because I'm confident that there's some big neural nets that are good at navigating the real world, but any specific efficient training method we know of right now could fail to scale up reliably. Instability being the main problem, rather than getting stuck in local optima.

Dumb but thorough hyperparameter search and RL on a huge neural net should also work. Here we're adding a few parts of "I am confident in this because of empirical data abut the historical success of scaling up neural nets trained with SGD" to arguments that still mostly rest on "I am confident because of mathematical reasoning about what it means to get a good score at an objective."

Replies from: ted-sanders↑ comment by Ted Sanders (ted-sanders) · 2023-06-08T21:32:05.447Z · LW(p) · GW(p)

Gotcha. I guess there's a blurry line between program search and training. Somehow training feels reasonable to me, but something like searching over all possible programs feels unreasonable to me. I suppose the output of such a program search is what I might mean by an algorithm for AGI.

Hyperparameter search and RL on a huge neural net feels wildly underspecified to me. Like, what would be its inputs and outputs, even?

Replies from: Charlie Steiner↑ comment by Charlie Steiner · 2023-06-08T22:00:53.478Z · LW(p) · GW(p)

Since I'm fine with saying things that are wildly inefficient, almost any input/output that's sufficient to reward modeling of the real world (rather than e.g. just playing the abstract game of chess) is sufficient. A present-day example might be self-driving car planning algorithms (though I don't think any major companies actually use end to end NN planning).

Replies from: ted-sanders↑ comment by Ted Sanders (ted-sanders) · 2023-06-09T00:50:13.909Z · LW(p) · GW(p)

Right, but what inputs and outputs would be sufficient to reward modeling of the real world? I think that might take some exploration and experimentation, and my 60% forecast is the odds of such inquiries succeeding by 2043.

Even with infinite compute, I think it's quite difficult to build something that generalizes well without overfitting.

Replies from: Charlie Steiner↑ comment by Charlie Steiner · 2023-06-09T06:24:41.130Z · LW(p) · GW(p)

what inputs and outputs would be sufficient to reward modeling of the real world?

This is an interesting question but I think it's not actually relevant. Like, it's really interesting to think about a thermostat - something who's only inputs are a thermometer and a clock, and only output is a switch hooked to a heater. Given arbitrarily large computing power and arbitrary amounts of on-distribution training data, will RL ever learn all about the outside world just from temperature patterns? Will it ever learn to deliberately affect the humans around it by turning the heater on and off? Or is it stuck being a dumb thermostat, a local optimum enforced not by the limits of computation but by the structure of the problem it faces?

But people are just going to build AIs attached to video cameras, or screens read by humans, or robot cars, or the internet, which are enough information flow by orders of magnitude, so it's not super important where the precise boundary is.

Replies from: ted-sanders↑ comment by Ted Sanders (ted-sanders) · 2023-06-09T18:10:29.901Z · LW(p) · GW(p)

Right, I'm not interested in minimum sufficiency. I'm just interested in the straightforward question of what data pipes would we even plug into the algorithm that would result in AGI. Sounds like you think a bunch of cameras and computers would work? To me, it feels like an empirical problem that will take years of research.

comment by Max H (Maxc) · 2023-06-07T23:03:56.055Z · LW(p) · GW(p)

I think the biggest problem with these estimates is that they rely on irrelevant comparisons to the human brain.

What we care about is how much compute is needed to implement the high-level cognitive algorithms that run in the brain; not the amount of compute needed to simulate the low-level operations the brain carries out to perform that cognition. This is a much harder to quantity to estimate, but it's also the only thing that actually matters.

See Biology-Inspired AGI Timelines: The Trick That Never Works [LW · GW] and other extensive prior discussion [LW · GW] on this.

I think with enough algorithmic improvement, there's enough hardware lying around already to get to TAI, and once you factor this in, a bunch of other conditional events are actually unnecessary or much more likely. My own estimates:

Event | Forecastby 2043 or TAGI, |

| We invent algorithms for transformative AGI | 90% |

| 100% | |

| 100% | |

| 100% | |

| 100% | |

| We avoid derailment by human regulation | 80% |

| We avoid derailment by AI-caused delay | 95% |

| We avoid derailment from wars (e.g., China invades Taiwan) | 95% |

| We avoid derailment from pandemics | 95% |

| We avoid derailment from severe depressions | 95% |

| Joint odds | 58.6% |

Explanations:

- Inventing algorithms: this is mostly just a wild guess / gut sense, but it is upper-bounded in difficulty by the fact that evolution managed to discover algorithms for human level cognition through billions of years of pretty dumb and low bandwidth trial-and-error.

- "We invent a way for AGIs to learn faster than humans." This seems like it is mostly implied by the first conditional already and / or already satisfied: current AI systems can already be trained in less wall-clock time than it takes a human to develop during a lifetime, and much less wall clock time than it took evolution to discover the design for human brains. Even the largest AI systems are currently trained on much less data than the amount of raw sensory data that is available to a human over a lifetime. I see no reason to expect these trends to change as AI gets more advanced.

- "AGI inference costs drop below $25/hr (per human equivalent)" - Inference costs of running e.g. GPT-4 are already way, way less than the cost of humans on a per-token basis, it's just that the tokens are way, way lower quality than the best humans can produce. Conditioned on inventing the algorithms to get higher quality output, it seems like there's plausibly very little left to do to here.

- Inventing robots - doesn't seem necessary for TAI; we already have decent, if expensive, robotics and TAI can help develop more and make them cheaper / more scalable.

- Scaling production of chips and power - probably not necessary, see the point in the first part of this comment on relevant comparisons to the brain.

- Avoiding derailment - if existing hardware is sufficient to get to TAI, it's much easier to avoid derailment for any reason. You just need a few top capabilities research labs to continue to be able to push the frontier by experimenting with existing clusters.

↑ comment by Martin Randall (martin-randall) · 2023-06-13T02:40:07.865Z · LW(p) · GW(p)

I'm curious about your derailment odds. The definition of "transformative AGI" in the paper is restrictive:

AI that can quickly and affordably be trained to perform nearly all economically and strategically valuable tasks at roughly human cost or less.

A narrow superintelligence that can, for example, engineer pandemics or conduct military operations could lead to severe derailment without satisfying this definition. I guess that would qualify as "AI-caused delay"? To follow the paper's model, we need to estimate these odds in a conditional world where humans are not regulating AI use in ways that significantly delay the path to transformative AGI, which further increases the risk.

Replies from: Maxc↑ comment by Max H (Maxc) · 2023-06-13T03:15:21.085Z · LW(p) · GW(p)

engineer pandemics or conduct military operations could lead to severe derailment without satisfying this definition.

I think humans could already do those things pretty well without AI, if they wanted to. Narrow AI might make those things easier, possibly much easier, just like nukes and biotech research have in the past. I agree this increases the chance that things go "off the rails", but I think once you have an AI that can solve hard engineering problems in the real world like that, there's just not that much further to go to full-blown superintelligence, whether you call its precursor "narrow" or not.

The probabilities in my OP are mostly just a gut sense wild guess, but they're based on the intuition that it takes a really big derailment to halt frontier capabilities progress, which mostly happens in well-funded labs that have the resources and will to continue operating through pretty severe "turbulence" - economic depression, war, pandemics, restrictive regulation, etc. Even if new GPU manufacturing stops completely, there are already a lot of H100s and A100s lying around, and I expect that those are sufficient to get pretty far.

↑ comment by Ted Sanders (ted-sanders) · 2023-06-08T21:15:05.749Z · LW(p) · GW(p)

Excellent comment - thanks for sticking your neck out to provide your own probabilities.

Given the gulf between our 0.4% and your 58.6%, would you be interested in making a bet (large or small) on TAI by 2043? If yes, happy to discuss how we might operationalize it.

Replies from: Maxc↑ comment by Max H (Maxc) · 2023-06-08T22:24:15.886Z · LW(p) · GW(p)

I appreciate the offer to bet! I'm probably going to decline though - I don't really want or need more skin-in-the-game on this question (many of my personal and professional plans assume short timelines.)

You might be interested in this post [LW · GW] (and the bet it is about), for some commentary and issues with operationalizing bets like this.

Also, you might be able to find someone else to bet with you - I think my view is actually closer to the median among EAs / rationalists / alignment researchers than yours. For example, the Open Phil panelists judging this contest say [EA · GW]:

Replies from: ted-sanders, ted-sandersPanelist credences on the probability of AGI by 2043 range from ~10% to ~45%.

↑ comment by Ted Sanders (ted-sanders) · 2023-06-09T00:54:14.325Z · LW(p) · GW(p)

Sounds good. Can also leave money out of it and put you down for 100 pride points. :)

If so, message me your email and I'll send you a calendar invite for a group reflection in 2043, along with a midpoint check in in 2033.

↑ comment by Ted Sanders (ted-sanders) · 2023-06-09T01:01:14.104Z · LW(p) · GW(p)

I'm not convinced about the difficulty of operationalizing Eliezer's doomer bet. Effectively, loaning money to a doomer who plans to spend it all by 2030 is, in essence, a claim on the doomer's post-2030 human capital. The doomer thinks it's worthless, whereas the skeptic thinks it has value. Hence, they transact.

The TAGI case seems trickier than the doomer case. Who knows what a one dollar bill will be worth in a post-TAGI world.

comment by Ege Erdil (ege-erdil) · 2023-06-10T01:44:45.880Z · LW(p) · GW(p)

Just to pick on the step that gets the lowest probability in your calculation, estimating that the human brain does 1e20 FLOP/s with only 20 W of power consumption requires believing that the brain is basically operating at the bitwise Landauer limit, which is around 3e20 bit erasures per watt per second at room temperature. If the FLOP we're talking about here is equivalent of operations on 8-bit floating point numbers, for example, the human brain would have an energy efficiency of around 1e20 bit erasures per watt, which is less than one order of magnitude from the Landauer limit at room temperature of 300 K.

Needless to say, I find this estimate highly unrealistic. We have no idea how to build practical densely packed devices which get anywhere close to this limit; the best we can do at the moment is perhaps 5 orders of magnitude away. Are you really thinking that the human brain is 5 OOM more energy efficient than an A100?

Still, even this estimate is much more realistic than your claim that the human brain might take 8e34 FLOP to train, which ascribes a ludicrous ~ 1e26 FLOP/s computation capacity to the human brain if this training happens over 20 years. This obviously violates the Landauer limit on computation and so is going to be simply false, unless you think the human brain loses less than one bit of information per 1e5 floating point operations it's doing. Good luck with that.

I notice that Steven Byrnes has already made the argument that these estimates are poor, but I want to hammer home the point that they are not just poor, they are crazy. Obviously, a mistake this flagrant does not inspire confidence in the rest of the argument.

Replies from: ted-sanders, ted-sanders, Muireall↑ comment by Ted Sanders (ted-sanders) · 2023-06-14T05:36:45.140Z · LW(p) · GW(p)

Let me try writing out some estimates. My math is different than yours.

An H100 SXM has:

- 8e10 transistors

- 2e9 Hz boost frequency of

- 2e15 FLOPS at FP16

- 7e2 W of max power consumption

Therefore:

- 2e6 eV are spent per FP16 operation

- This is 1e8 times higher than the Landauer limit of 2e-2 eV per bit erasure at 70 C (and the ratio of bit erasures per FP16 operation is unclear to me; let's pretend it's O(1))

- An H100 performs 1e6 FP16 operations per clock cycle, which implies 8e4 transistors per FP16 operation (some of which may be inactive, of course)

This seems pretty inefficient to me!

To recap, modern chips are roughly ~8 orders of magnitude worse than the Landauer limit (with a bit erasure per FP16 operation fudge factor that isn't going to exceed 10). And this is in a configuration that takes 8e4 transistors to support a single FP16 operation!

Positing that brains are ~6 orders of magnitude more energy efficient than today's transistor circuits doesn't seem at all crazy to me. ~6 orders of improvement on 2e6 is ~2 eV per operation, still two orders of magnitude above the 0.02 eV per bit erasure Landauer limit.

I'll note too that cells synthesize informative sequences from nucleic acids using less than 1 eV of free energy per bit. That clearly doesn't violate Landauer or any laws of physics, because we know it happens.

Replies from: ege-erdil↑ comment by Ege Erdil (ege-erdil) · 2023-06-14T06:36:10.998Z · LW(p) · GW(p)

2e6 eV are spent per FP16 operation... This is 1e8 times higher than the Landauer limit of 2e-2 eV per bit erasure at 70 C (and the ratio of bit erasures per FP16 operation is unclear to me; let's pretend it's O(1))

2e-2 eV for the Landauer limit is right, but 2e6 eV per FP16 operation is off by one order of magnitude. (70 W)/(2e15 FLOP/s) = 0.218 MeV. So the gap is 7 orders of magnitude assuming one bit erasure per FLOP.

This is wrong, the power consumption is 700 W so the gap is indeed 8 orders of magnitude.

An H100 SXM has 8e10 transistors, 2e9 Hz boost frequency,

70 W700 W of max power consumption...

8e10 * 2e9 = 1.6e20 transistor switches per second. This happens with a power consumption of 700 W, suggesting that each switch dissipates on the order of 30 eV of energy, which is only 3 OOM or so from the Landauer limit. So this device is actually not that inefficient if you look only at how efficiently it's able to perform switches. My position is that you should not expect the brain to be much more efficient than this, though perhaps gaining one or two orders of magnitude is possible with complex error correction methods.

Of course, the transistors supporting per FLOP and the switching frequency gap have to add up to the 8 OOM overall efficiency gap we've calculated. However, it's important that most of the inefficiency comes from the former and not the latter. I'll elaborate on this later in the comment.

This seems pretty inefficient to me!

I agree an H100 SXM is not a very efficient computational device. I never said modern GPUs represent the pinnacle of energy efficiency in computation or anything like that, though similar claims have previously been made [LW · GW] by others on the forum.

Positing that brains are ~6 orders of magnitude more energy efficient than today's transistor circuits doesn't seem at all crazy to me. ~6 orders of improvement on 2e6 is ~2 eV per operation, still two orders of magnitude above the 0.02 eV per bit erasure Landauer limit.

Here we're talking about the brain possibly doing 1e20 FLOP/s, which I've previously said is maybe within one order of magnitude of the Landauer limit or so, and not the more extravagant figure of 1e25 FLOP/s. The disagreement here is not about math; we both agree that this performance requires the brain to be 1 or 2 OOM from the bitwise Landauer limit depending on exactly how many bit erasures you think are involved in a single 16-bit FLOP.

The disagreement is more about how close you think the brain can come to this limit. Most of the energy losses in modern GPUs come from the enormous amounts of noise that you need to deal with in interconnects that are closely packed together. To get anywhere close to the bitwise Landauer limit, you need to get rid of all of these losses. This is what would be needed to lower the amount of transistors supporting per FLOP without also simultaneously increasing the power consumption of the device.

I just don't see how the brain could possibly pull that off. The design constraints are pretty similar in both cases, and the brain is not using some unique kind of material or architecture which could eliminate dissipative or radiative energy losses in the system. Just as information needs to get carried around inside a GPU, information also needs to move inside the brain, and moving information around in a noisy environment is costly. So I would expect by default that the brain is many orders of magnitude from the Landauer limit, though I can see estimates as high as 1e17 FLOP/s being plausible if the brain is highly efficient. I just think you'll always be losing many orders of magnitude relative to Landauer as long as your system is not ideal, and the brain is far from an ideal system.

I'll note too that cells synthesize informative sequences from nucleic acids using less than 1 eV of free energy per bit. That clearly doesn't violate Landauer or any laws of physics, because we know it happens.

I don't think you'll lose as much relative to Landauer when you're doing that, because you don't have to move a lot of information around constantly. Transcribing a DNA sequence and other similar operations are local. The reason I think realistic devices will fall far short of Landauer is because of the problem of interconnect: computations cannot be localized effectively, so different parts of your hardware need to talk to each other, and that's where you lose most of the energy. In terms of pure switching efficiency of transistors, we're already pretty close to this kind of biological process, as I've calculated above.

Replies from: ted-sanders, ted-sanders, ege-erdil↑ comment by Ted Sanders (ted-sanders) · 2023-06-14T06:49:28.941Z · LW(p) · GW(p)

One potential advantage of the brain is that it is 3D, whereas chips are mostly 2D. I wonder what advantage that confers. Presumably getting information around is much easier with 50% more dimensions.

Replies from: ege-erdil↑ comment by Ege Erdil (ege-erdil) · 2023-06-14T07:07:15.184Z · LW(p) · GW(p)

Probably true, and this could mean the brain has some substantial advantage over today's hardware (like 1 OOM, say) but at the same time the internal mechanisms that biology uses to establish electrical potential energy gradients and so forth seem so inefficient. Quoting Eliezer;

I'm confused at how somebody ends up calculating that a brain - where each synaptic spike is transmitted by ~10,000 neurotransmitter molecules (according to a quick online check), which then get pumped back out of the membrane and taken back up by the synapse; and the impulse is then shepherded along cellular channels via thousands of ions flooding through a membrane to depolarize it and then getting pumped back out using ATP, all of which are thermodynamically irreversible operations individually - could possibly be within three orders of magnitude of max thermodynamic efficiency at 300 Kelvin. I have skimmed "Brain Efficiency" though not checked any numbers, and not seen anything inside it which seems to address this sanity check.

↑ comment by Ted Sanders (ted-sanders) · 2023-06-14T06:44:20.762Z · LW(p) · GW(p)

70 W

Max power is 700 W, not 70 W. These chips are water-cooled beasts. Your estimate is off, not mine.

Replies from: ege-erdil↑ comment by Ege Erdil (ege-erdil) · 2023-06-14T06:53:30.245Z · LW(p) · GW(p)

Huh, I wonder why I read 7e2 W as 70 W. Strange mistake.

Replies from: ted-sanders↑ comment by Ted Sanders (ted-sanders) · 2023-06-14T06:59:05.151Z · LW(p) · GW(p)

No worries. I've made far worse. I only wish that H100s could operate at a gentle 70 W! :)

↑ comment by Ege Erdil (ege-erdil) · 2023-06-14T06:52:27.955Z · LW(p) · GW(p)

I'm posting this as a separate comment because it's a different line of argument, but I think we should also keep it in mind when making estimates of how much computation the brain could actually be using.

If the brain is operating at a frequency of (say) 10 Hz and is doing 1e20 FLOP/s, that suggests the brain has something like 1e19 floating point parameters, or maybe specifying the "internal state" of the brain takes something like 1e20 bits. If you want to properly train a neural network of this size, you need to update on a comparable amount of useful entropy from the outside world. This means you have to believe that humans are receiving on the order of 1e11 bits or 10 GB of useful information about the world to update on every second if the brain is to be "fully trained" by the age of 30, say.

An estimate of 1e15 FLOP/s brings this down to a more realistic 100 KB or so, which still seems like a lot but is somewhat more believable if you consider the potential information content of visual and auditory stimuli. I think even this is an overestimate and that the brain has some algorithmic insights which make it somewhat more data efficient than contemporary neural networks, but I think the gap implied by 1e20 FLOP/s is rather too large for me to believe it.

↑ comment by Ted Sanders (ted-sanders) · 2023-06-13T08:19:54.106Z · LW(p) · GW(p)

Thanks for the constructive comments. I'm open-minded to being wrong here. I've already updated a bit and I'm happy to update more.

Regarding the Landauer limit, I'm confused by a few things:

- First, I'm confused by your linkage between floating point operations and information erasure. For example, if we have two 8-bit registers (A, B) and multiply to get (A, B*A), we've done an 8-bit floating point operation without 8 bits of erasure. It seems quite plausible to be that the brain does 1e20 FLOPS but with a much smaller rate of bit erasures.

- Second, I have no idea how to map the fidelity of brain operations to floating point precision, so I really don't know if we should be comparing 1 bit, 8 bit, 64 bit, or not at all. Any ideas?

Regarding training requiring 8e34 floating point operations:

- Ajeya Cotra estimates training could take anything from 1e24 to 1e54 floating point operations, or even more. Her narrower lifetime anchor ranges from 1e24 to 1e38ish. https://docs.google.com/document/d/1IJ6Sr-gPeXdSJugFulwIpvavc0atjHGM82QjIfUSBGQ/edit

- Do you think Cotra's estimates are not just poor, but crazy as well? If they were crazy, I would have expected to see her two-year update mention the mistake, or the top comments to point it out, but I see neither: https://www.lesswrong.com/posts/AfH2oPHCApdKicM4m/two-year-update-on-my-personal-ai-timelines [LW · GW]

↑ comment by Ege Erdil (ege-erdil) · 2023-06-13T09:22:07.631Z · LW(p) · GW(p)

First, I'm confused by your linkage between floating point operations and information erasure. For example, if we have two 8-bit registers (A, B) and multiply to get (A, B*A), we've done an 8-bit floating point operation without 8 bits of erasure. It seems quite plausible to be that the brain does 1e20 FLOPS but with a much smaller rate of bit erasures.

-

As a minor nitpick, if A and B are 8-bit floating point numbers then the multiplication map x -> B*x is almost never injective. This means even in your idealized setup, the operation (A, B) -> (A, B*A) is going to lose some information, though I agree that this information loss will be << 8 bits, probably more like 1 bit amortized or so.

-

The bigger problem is that logical reversibility doesn't imply physical reversibility. I can think of ways in which we could set up sophisticated classical computation devices which are logically reversible, and perhaps could be made approximately physically reversible when operating in a near-adiabatic regime at low frequencies, but the brain is not operating in this regime (especially if it's performing 1e20 FLOP/s). At high frequencies, I just don't see which architecture you have in mind to perform lots of 8-bit floating point multiplications without raising the entropy of the environment by on the order of 8 bits.

Again using your setup, if you actually tried to implement (A, B) -> (A, A*B) on a physical device, you would need to take the register that is storing B and replace the stored value with A*B instead. To store 1 bit of information you need a potential energy barrier that's at least as high as k_B T log(2), so you need to switch ~ 8 such barriers, which means in any kind of realistic device you'll lose ~ 8 k_B T log(2) of electrical potential energy to heat, either through resistance or through radiation. It doesn't have to be like this, and some idealized device could do better, but GPUs are not idealized devices and neither are brains.

Ajeya Cotra estimates training could take anything from 1e24 to 1e54 floating point operations, or even more. Her narrower lifetime anchor ranges from 1e24 to 1e38ish.

Two points about that:

-

This is a measure that takes into account the uncertainty over how much less efficient our software is compared to the human brain. I agree that human lifetime learning compute being around 1e25 FLOP is not strong evidence that the first TAI system we train will use 1e25 FLOP of compute; I expect it to take significantly more than that.

-

Moreover, this is an estimate of effective FLOP, meaning that Cotra takes into account the possibility that software efficiency progress can reduce the physical computational cost of training a TAI system in the future. It was also in units of 2020 FLOP, and we're already in 2023, so just on that basis alone, these numbers should get adjusted downwards now.

Do you think Cotra's estimates are not just poor, but crazy as well?

No, because Cotra doesn't claim that the human brain performs 1e25 FLOP/s - her claim is quite different.

The claim that "the first AI system to match the performance of the human brain might require 1e25 FLOP/s to run" is not necessarily crazy, though it needs to be supported by evidence of the relative inefficiency of our algorithms compared to the human brain and by estimates of how much software progress we should expect to be made in the future.