Biology-Inspired AGI Timelines: The Trick That Never Works

post by Eliezer Yudkowsky (Eliezer_Yudkowsky) · 2021-12-01T22:35:28.379Z · LW · GW · 142 comments

- 1988 -

Hans Moravec: Behold my book Mind Children. Within, I project that, in 2010 or thereabouts, we shall achieve strong AI. I am not calling it "Artificial General Intelligence" because this term will not be coined for another 15 years or so.

Eliezer (who is not actually on the record as saying this, because the real Eliezer is, in this scenario, 8 years old; this version of Eliezer has all the meta-heuristics of Eliezer from 2021, but none of that Eliezer's anachronistic knowledge): Really? That sounds like a very difficult prediction to make correctly, since it is about the future, which is famously hard to predict.

Imaginary Moravec: Sounds like a fully general counterargument to me.

Eliezer: Well, it is, indeed, a fully general counterargument against futurism. Successfully predicting the unimaginably far future - that is, more than 2 or 3 years out, or sometimes less - is something that human beings seem to be quite bad at, by and large.

Moravec: I predict that, 4 years from this day, in 1992, the Sun will rise in the east.

Eliezer: Okay, let me qualify that. Humans seem to be quite bad at predicting the future whenever we need to predict anything at all new and unfamiliar, rather than the Sun continuing to rise every morning until it finally gets eaten. I'm not saying it's impossible to ever validly predict something novel! Why, even if that was impossible, how could I know it for sure? By extrapolating from my own personal inability to make predictions like that? Maybe I'm just bad at it myself. But any time somebody claims that some particular novel aspect of the far future is predictable, they justly have a significant burden of prior skepticism to overcome.

More broadly, we should not expect a good futurist to give us a generally good picture of the future. We should expect a great futurist to single out a few rare narrow aspects of the future which are, somehow, exceptions to the usual rule about the future not being very predictable.

I do agree with you, for example, that we shall at some point see Artificial General Intelligence. This seems like a rare predictable fact about the future, even though it is about a novel thing which has not happened before: we keep trying to crack this problem, we make progress albeit slowly, the problem must be solvable in principle because human brains solve it, eventually it will be solved; this is not a logical necessity, but it sure seems like the way to bet. "AGI eventually" is predictable in a way that it is not predictable that, e.g., the nation of Japan, presently upon the rise, will achieve economic dominance over the next decades - to name something else that present-day storytellers of 1988 are talking about.

But timing the novel development correctly? That is almost never done, not until things are 2 years out, and often not even then. Nuclear weapons were called, but not nuclear weapons in 1945; heavier-than-air flight was called, but not flight in 1903. In both cases, people said two years earlier that it wouldn't be done for 50 years - or said, decades too early, that it'd be done shortly. There's a difference between worrying that we may eventually get a serious global pandemic, worrying that eventually a lab accident may lead to a global pandemic, and forecasting that a global pandemic will start in November of 2019.

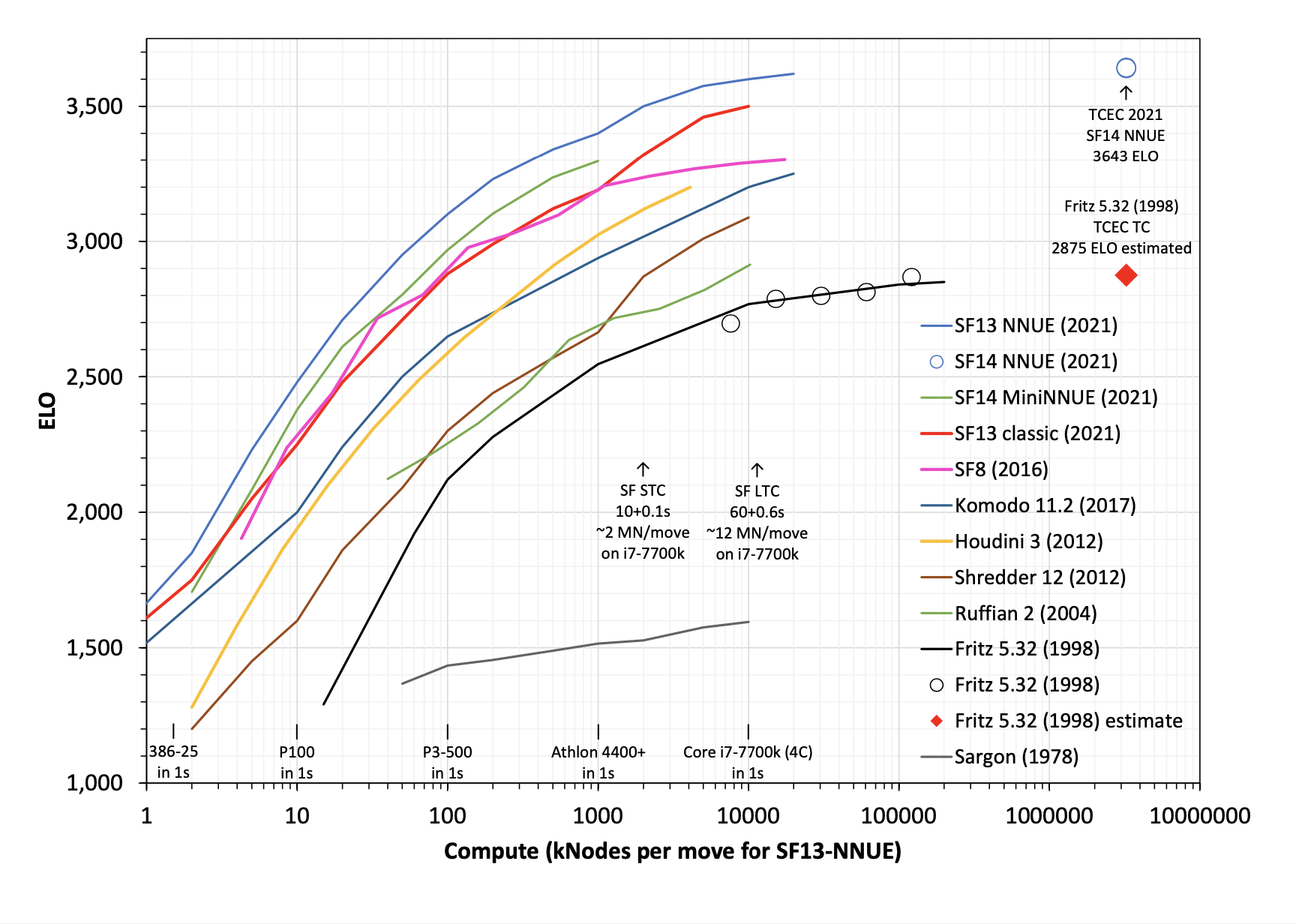

Moravec: You should read my book, my friend, into which I have put much effort. In particular - though it may sound impossible to forecast, to the likes of yourself - I have carefully examined a graph of computing power in single chips and the most powerful supercomputers over time. This graph looks surprisingly regular! Now, of course not all trends can continue forever; but I have considered the arguments that Moore's Law will break down, and found them unconvincing. My book spends several chapters discussing the particular reasons and technologies by which we might expect this graph to not break down, and continue, such that humanity will have, by 2010 or so, supercomputers which can perform 10 trillion operations per second.*

Oh, and also my book spends a chapter discussing the retina, the part of the brain whose computations we understand in the most detail, in order to estimate how much computing power the human brain is using, arriving at a figure of 10^13 ops/sec. This neuroscience and computer science may be a bit hard for the layperson to follow, but I assure you that I am in fact an experienced hands-on practitioner in robotics and computer vision.

So, as you can see, we should first get strong AI somewhere around 2010. I may be off by an order of magnitude in one figure or another; but even if I've made two errors in the same direction, that only shifts the estimate by 7 years or so.

(*) Moravec just about nailed this part; the actual year was 2008.
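The "two errors only shift the estimate by 7 years" arithmetic can be checked with a short sketch. It assumes computing power doubles roughly once a year; that doubling time is not stated in the dialogue and is inferred here only because it reproduces the quoted figure. The function name `years_of_delay` is hypothetical.

```python
import math

def years_of_delay(orders_of_magnitude: float, doubling_time_years: float = 1.0) -> float:
    """Years of steady exponential growth needed to make up a 10**k shortfall.

    doubling_time_years=1.0 is an assumption inferred from the dialogue's
    figures, not a measured value.
    """
    doublings_needed = orders_of_magnitude * math.log2(10)
    return doublings_needed * doubling_time_years

# How far the forecast date moves if the estimate is off by k orders of magnitude.
for k in (1, 2, 3):
    print(f"off by 10^{k}: about {years_of_delay(k):.1f} years")
```

Under that assumption, an error of two orders of magnitude costs a little under 7 years, which is why Moravec treats the date as robust. Note that the sketch checks only the arithmetic, not whether hardware is the right variable to be extrapolating.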

Eliezer: I sure would be amused if we did in fact get strong AI somewhere around 2010, which, for all I know at this point in this hypothetical conversation, could totally happen! Reversed stupidity is not intelligence, after all, and just because that is a completely broken justification for predicting 2010 doesn't mean that it cannot happen that way.

Moravec: Really now. Would you care to enlighten me as to how I reasoned so wrongly?

Eliezer: Among the reasons why the Future is so hard to predict, in general, is that the sort of answers we want tend to be the products of lines of causality with multiple steps and multiple inputs. Even when we can guess a single fact that plays some role in producing the Future - which is not of itself all that rare - usually the answer the storyteller wants depends on more facts than that single fact. Our ignorance of any one of those other facts can be enough to torpedo our whole line of reasoning - in practice, not just as a matter of possibilities. You could say that the art of exceptions to Futurism being impossible, consists in finding those rare things that you can predict despite being almost entirely ignorant of most concrete inputs into the concrete scenario. Like predicting that AGI will happen at some point, despite not knowing the design for it, or who will make it, or how.

My own contribution to the Moore's Law literature consists of Moore's Law of Mad Science: "Every 18 months, the minimum IQ required to destroy the Earth drops by 1 point." Even if this serious-joke was an absolutely true law, and aliens told us it was absolutely true, we'd still have no ability whatsoever to predict thereby when the Earth would be destroyed, because we'd have no idea what that minimum IQ was right now or at any future time. We would know that in general the Earth had a serious problem that needed to be addressed, because we'd know in general that destroying the Earth kept on getting easier every year; but we would not be able to time when that would become an imminent emergency, until we'd seen enough specifics that the crisis was already upon us.

In the case of your prediction about strong AI in 2010, I might put it as follows: The timing of AGI could be seen as a product of three factors, one of which you can try to extrapolate from existing graphs, and two of which you don't know at all. Ignorance of any one of them is enough to invalidate the whole prediction.

These three factors are:

- The availability of computing power over time, which may be quantified, and appears steady when graphed;

- The rate of progress in knowledge of cognitive science and algorithms over time, which is much harder to quantify;

- A function that is a latent background parameter, for the amount of computing power required to create AGI as a function of any particular level of knowledge about cognition; and about this we know almost nothing.

Or to rephrase: Depending on how much you and your civilization know about AI-making - how much you know about cognition and computer science - it will take you a variable amount of computing power to build an AI. If you really knew what you were doing, for example, I confidently predict that you could build a mind at least as powerful as a human mind, while using fewer floating-point operations per second than a human brain is making useful use of -

Chris Humbali: Wait, did you just say "confidently"? How could you possibly know that with confidence? How can you criticize Moravec for being too confident, and then, in the next second, turn around and be confident of something yourself? Doesn't that make you a massive hypocrite?

Eliezer: Um, who are you again?

Humbali: I'm the cousin of Pat Modesto from your previous dialogue on Hero Licensing! Pat isn't here in person because "Modesto" looks unfortunately like "Moravec" on a computer screen. And also their first name looks a bit like "Paul" who is not meant to be referenced either. So today I shall be your true standard-bearer for good calibration, intellectual humility, the outside view, and reference class forecasting -

Eliezer: Two of these things are not like the other two, in my opinion; and Humbali and Modesto do not understand how to operate any of the four correctly, in my opinion; but anybody who's read "Hero Licensing" should already know I believe that.

Humbali: - and I don't see how Eliezer can possibly be so confident, after all his humble talk of the difficulty of futurism, that it's possible to build a mind 'as powerful as' a human mind using 'less computing power' than a human brain.

Eliezer: It's overdetermined by multiple lines of inference. We might first note, for example, that the human brain runs very slowly in a serial sense and tries to make up for that with massive parallelism. It's an obvious truth of computer science that while you can use 1000 serial operations per second to emulate 1000 parallel operations per second, the reverse is not in general true.

To put it another way: if you had to build a spreadsheet or a word processor on a computer running at 100Hz, you might also need a billion processing cores and massive parallelism in order to do enough cache lookups to get anything done; that wouldn't mean the computational labor you were performing was intrinsically that expensive. Since modern chips are massively serially faster than the neurons in a brain, and the direction of conversion is asymmetrical, we should expect that there are tasks which are immensely expensive to perform in a massively parallel neural setup, which are much cheaper to do with serial processing steps, and the reverse is not symmetrically true.

A sufficiently adept builder can build general intelligence more cheaply in total operations per second, if they're allowed to line up a billion operations one after another per second, versus lining up only 100 operations one after another. I don't bother to qualify this with "very probably" or "almost certainly"; it is the sort of proposition that a clear thinker should simply accept as obvious and move on.
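The serial/parallel asymmetry described above can be illustrated with a toy scheduling model. This is a minimal sketch, not a model of neurons or chips: it assumes every operation takes one time step, and it contrasts fully independent operations with a strict dependency chain in which each operation needs the previous result. Both function names are hypothetical.

```python
import math

def steps_for_independent_work(num_ops: int, workers: int) -> int:
    """Time steps to finish num_ops operations that do not depend on each other."""
    return math.ceil(num_ops / workers)

def steps_for_dependency_chain(chain_length: int, workers: int) -> int:
    """Time steps for a chain where each operation needs the previous result.

    The workers argument is deliberately unused: only one link of the chain
    is ever ready to run, so extra workers sit idle.
    """
    return chain_length

# One fast serial worker can stand in for 1000 slow parallel ones,
# at the cost of 1000 steps instead of 1.
print(steps_for_independent_work(1000, workers=1000))
print(steps_for_independent_work(1000, workers=1))

# 1000 parallel workers cannot shorten a 1000-link chain at all.
print(steps_for_dependency_chain(1000, workers=1000))
```

Real computations fall between these two extremes, and some apparent chains can be restructured (associative reductions, for example), so this shows only the direction of the asymmetry and says nothing about its size.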

Humbali: And is it certain that neurons can perform only 100 serial steps one after another, then? As you say, ignorance about one fact can obviate knowledge of any number of others.

Eliezer: A typical neuron firing as fast as possible can do maybe 200 spikes per second, a few rare neuron types used by e.g. bats to echolocate can do 1000 spikes per second, and the vast majority of neurons are not firing that fast at any given time. The usual and proverbial rule in neuroscience - the sort of academically respectable belief I'd expect you to respect even more than I do - is called "the 100-step rule", that any task a human brain (or mammalian brain) can do on perceptual timescales, must be doable with no more than 100 serial steps of computation - no more than 100 things that get computed one after another. Or even fewer if the computation is running off spiking frequencies instead of individual spikes.

Moravec: Yes, considerations like that are part of why I'd defend my estimate of 10^13 ops/sec for a human brain as being reasonable - more reasonable than somebody might think if they were, say, counting all the synapses and multiplying by the maximum number of spikes per second in any neuron. If you actually look at what the retina is doing, and how it's computing that, it doesn't look like it's doing one floating-point operation per activation spike per synapse.

Eliezer: There's a similar asymmetry between precise computational operations having a vastly easier time emulating noisy or imprecise computational operations, compared to the reverse - there is no doubt a way to use neurons to compute, say, exact 16-bit integer addition, which is at least more efficient than a human trying to add up 16986+11398 in their head, but you'd still need more synapses to do that than transistors, because the synapses are noisier and the transistors can just do it precisely. This is harder to visualize and get a grasp on than the parallel-serial difference, but that doesn't make it unimportant.

Which brings me to the second line of very obvious-seeming reasoning that converges upon the same conclusion - that it is in principle possible to build an AGI much more computationally efficient than a human brain - namely that biology is simply not that efficient, and especially when it comes to huge complicated things that it has started doing relatively recently.

ATP synthase may be close to 100% thermodynamically efficient, but ATP synthase is literally over 1.5 billion years old and a core bottleneck on all biological metabolism. Brains have to pump thousands of ions in and out of each stretch of axon and dendrite, in order to restore their ability to fire another fast neural spike. The result is that the brain's computation is something like half a million times less efficient than the thermodynamic limit for its temperature - so around two millionths as efficient as ATP synthase. And neurons are a hell of a lot older than the biological software for general intelligence!

The software for a human brain is not going to be 100% efficient compared to the theoretical maximum, nor 10% efficient, nor 1% efficient, even before taking into account the whole thing with parallelism vs. serialism, precision vs. imprecision, or similarly clear low-level differences.

Humbali: Ah! But allow me to offer a consideration here that, I would wager, you've never thought of before yourself - namely - what if you're wrong? Ah, not so confident now, are you?

Eliezer: One observes, over one's cognitive life as a human, which sorts of what-ifs are useful to contemplate, and where it is wiser to spend one's limited resources planning against the alternative that one might be wrong; and I have oft observed that lots of people don't... quite seem to understand how to use 'what if' all that well? They'll be like, "Well, what if UFOs are aliens, and the aliens are partially hiding from us but not perfectly hiding from us, because they'll seem higher-status if they make themselves observable but never directly interact with us?"

I can refute individual what-ifs like that with specific counterarguments, but I'm not sure how to convey the central generator behind how I know that I ought to refute them. I am not sure how I can get people to reject these ideas for themselves, instead of them passively waiting for me to come around with a specific counterargument. My having to counterargue things specifically now looks like a road that never seems to end, and I am not as young as I once was, nor am I encouraged by how much progress I seem to be making. I refute one wacky idea with a specific counterargument, and somebody else comes along and presents a new wacky idea on almost exactly the same theme.

I know it's probably not going to work, if I try to say things like this, but I'll try to say them anyways. When you are going around saying 'what-if', there is a very great difference between your map of reality, and the territory of reality, which is extremely narrow and stable. Drop your phone, gravity pulls the phone downward, it falls. What if there are aliens and they make the phone rise into the air instead, maybe because they'll be especially amused at violating the rule after you just tried to use it as an example of where you could be confident? Imagine the aliens watching you, imagine their amusement, contemplate how fragile human thinking is and how little you can ever be assured of anything and ought not to be too confident. Then drop the phone and watch it fall. You've now learned something about how reality itself isn't made of what-ifs and reminding oneself to be humble; reality runs on rails stronger than your mind does.

Contemplating this doesn't mean you know the rails, of course, which is why it's so much harder to predict the Future than the past. But if you see that your thoughts are still wildly flailing around what-ifs, it means that they've failed to gel, in some sense, they are not yet bound to reality, because reality has no binding receptors for what-iffery.

The correct thing to do is not to act on your what-ifs that you can't figure out how to refute, but to go on looking for a model which makes narrower predictions than that. If that search fails, forge a model which puts some more numerical distribution on your highly entropic uncertainty, instead of diverting into specific what-ifs. And in the latter case, understand that this probability distribution reflects your ignorance and subjective state of mind, rather than your knowledge of an objective frequency; so that somebody else is allowed to be less ignorant without you shouting "Too confident!" at them. Reality runs on rails as strong as math; sometimes other people will achieve, before you do, the feat of having their own thoughts run through more concentrated rivers of probability, in some domain.

Now, when we are trying to concentrate our thoughts into deeper, narrower rivers that run closer to reality's rails, there is of course the legendary hazard of concentrating our thoughts into the wrong narrow channels that exclude reality. And the great legendary sign of this condition, of course, is the counterexample from Reality that falsifies our model! But you should not in general criticize somebody for trying to concentrate their probability into narrower rivers than yours, for this is the appearance of the great general project of trying to get to grips with Reality, that runs on true rails that are narrower still.

If you have concentrated your probability into different narrow channels than somebody else's, then, of course, you have a more interesting dispute; and you should engage in that legendary activity of trying to find some accessible experimental test on which your nonoverlapping models make different predictions.

Humbali: I do not understand the import of all this vaguely mystical talk.

Eliezer: I'm trying to explain why, when I say that I'm very confident it's possible to build a human-equivalent mind using less computing power than biology has managed to use effectively, and you say, "How can you be so confident, what if you are wrong," it is not unreasonable for me to reply, "Well, kid, this doesn't seem like one of those places where it's particularly important to worry about far-flung ways I could be wrong." Anyone who aspires to learn, learns over a lifetime which sorts of guesses are more likely to go oh-no-wrong in real life, and which sorts of guesses are likely to just work. Less-learned minds will have minds full of what-ifs they can't refute in more places than more-learned minds; and even if you cannot see how to refute all your what-ifs yourself, it is possible that a more-learned mind knows why they are improbable. For one must distinguish possibility from probability.

It is imaginable or conceivable that human brains have such refined algorithms that they are operating at the absolute limits of computational efficiency, or within 10% of it. But if you've spent enough time noticing where Reality usually exercises its sovereign right to yell "Gotcha!" at you, learning which of your assumptions are the kind to blow up in your face and invalidate your final conclusion, you can guess that "Ah, but what if the brain is nearly 100% computationally efficient?" is the sort of what-if that is not much worth contemplating because it is not actually going to be true in real life. Reality is going to confound you in some other way than that.

I mean, maybe you haven't read enough neuroscience and evolutionary biology that you can see from your own knowledge that the proposition sounds massively implausible and ridiculous. But it should hardly seem unlikely that somebody else, more learned in biology, might be justified in having more confidence than you. Phones don't fall up. Reality really is very stable and orderly in a lot of ways, even in places where you yourself are ignorant of that order.

But if "What if aliens are making themselves visible in flying saucers because they want high status and they'll have higher status if they're occasionally observable but never deign to talk with us?" sounds to you like it's totally plausible, and you don't see how someone can be so confident that it's not true - because oh no what if you're wrong and you haven't seen the aliens so how can you know what they're not thinking - then I'm not sure how to lead you into the place where you can dismiss that thought with confidence. It may require a kind of life experience that I don't know how to give people, at all, let alone by having them passively read paragraphs of text that I write; a learned, perceptual sense of which what-ifs have any force behind them. I mean, I can refute that specific scenario, I can put that learned sense into words; but I'm not sure that does me any good unless you learn how to refute it yourself.

Humbali: Can we leave aside all that meta stuff and get back to the object level?

Eliezer: This indeed is often wise.

Humbali: Then here's one way that the minimum computational requirements for general intelligence could be higher than Moravec's argument for the human brain. After all, we only have one existence proof that general intelligence is possible at all, namely the human brain. Perhaps there's no way to get general intelligence in a computer except by simulating the brain neurotransmitter-by-neurotransmitter. In that case you'd need a lot more computing operations per second than you'd get by calculating the number of potential spikes flowing around the brain! What if it's true? How can you know?

(Modern person: This seems like an obvious straw argument? I mean, would anybody, even at an earlier historical point, actually make an argument like -

Moravec and Eliezer: YES THEY WOULD.)

Eliezer: I can imagine that if we were trying specifically to upload a human that there'd be no easy and simple and obvious way to run the resulting simulation and get a good answer, without simulating neurotransmitter flows in extra detail.

To imagine that every one of these simulated flows is being usefully used in general intelligence and there is no way to simplify the mind design to use fewer computations... I suppose I could try to refute that specifically, but it seems to me that this is a road which has no end unless I can convey the generator of my refutations. Your what-iffery is flung far enough that, if I cannot leave even that much rejection as an exercise for the reader to do on their own without my holding their hand, the reader has little enough hope of following the rest; let them depart now, in indignation shared with you, and save themselves further outrage.

I mean, it will obviously be less obvious to the reader because they will know less than I do about this exact domain, it will justly take more work for the reader to specifically refute you than it takes me to refute you. But I think the reader needs to be able to do that at all, in this example, to follow the more difficult arguments later.

Imaginary Moravec: I don't think it changes my conclusions by an order of magnitude, but some people would worry that, for example, changes of protein expression inside a neuron in order to implement changes of long-term potentiation, are also important to intelligence, and could be a big deal in the brain's real, effectively-used computational costs. I'm curious if you'd dismiss that as well, the same way you dismiss the probability that you'd have to simulate every neurotransmitter molecule?

Eliezer: Oh, of course not. Long-term potentiation suddenly turning out to be a big deal you overlooked, compared to the depolarization impulses spiking around, is very much the sort of thing where Reality sometimes jumps out and yells "Gotcha!" at you.

Humbali: How can you tell the difference?

Eliezer: Experience with Reality yelling "Gotcha!" at myself and historical others.

Humbali: They seem like equally plausible speculations to me!

Eliezer: Really? "What if long-term potentiation is a big deal and computationally important" sounds just as plausible to you as "What if the brain is already close to the wall of making the most efficient possible use of computation to implement general intelligence, and every neurotransmitter molecule matters"?

Humbali: Yes! They're both what-ifs we can't know are false and shouldn't be overconfident about denying!

Eliezer: My tiny feeble mortal mind is far away from reality and only bound to it by the loosest of correlating interactions, but I'm not that unbound from reality.

Moravec: I would guess that in real life, long-term potentiation is sufficiently slow and local that what goes on inside the cell body of a neuron over minutes or hours is not as big of a computational deal as thousands of times that many spikes flashing around the brain in milliseconds or seconds. That's why I didn't make a big deal of it in my own estimate.

Eliezer: Sure. But it is much more the sort of thing where you wake up to a reality-authored science headline saying "Gotcha! There were tiny DNA-activation interactions going on in there at high speed, and they were actually pretty expensive and important!" I'm not saying this exact thing is very probable, just that it wouldn't be out-of-character for reality to say something like that to me, the way it would be really genuinely bizarre if Reality was, like, "Gotcha! The brain is as computationally efficient of a generally intelligent engine as any algorithm can be!"

Moravec: I think we're in agreement about that part, or we would've been, if we'd actually had this conversation in 1988. I mean, I am a competent research roboticist and it is difficult to become one if you are completely unglued from reality.

Eliezer: Then what's with the 2010 prediction for strong AI, and the massive non-sequitur leap from "the human brain is somewhere around 10 trillion ops/sec" to "if we build a 10 trillion ops/sec supercomputer, we'll get strong AI"?

Moravec: Because while it's the kind of Fermi estimate that can be off by an order of magnitude in practice, it doesn't really seem like it should be, I don't know, off by three orders of magnitude? And even three orders of magnitude is just 10 years of Moore's Law. 2020 for strong AI is also a bold and important prediction.

Eliezer: And the year 2000 for strong AI even more so.

Moravec: Heh! That's not usually the direction in which people argue with me.

Eliezer: There's an important distinction between the direction in which people usually argue with you, and the direction from which Reality is allowed to yell "Gotcha!" I wish my future self had kept this more in mind, when arguing with Robin Hanson about how well AI architectures were liable to generalize and scale without a ton of domain-specific algorithmic tinkering for every field of knowledge. I mean, in principle what I was arguing for was various lower bounds on performance, but I sure could have emphasized more loudly that those were lower bounds - well, I did emphasize the lower-bound part, but - from the way I felt when AlphaGo and Alpha Zero and GPT-2 and GPT-3 showed up, I think I must've sorta forgot that myself.

Moravec: Anyways, if we say that I might be up to three orders of magnitude off and phrase it as 2000-2020, do you agree with my prediction then?

Eliezer: No, I think you're just... arguing about the wrong facts, in a way that seems to be unglued from most tracks Reality might follow so far as I currently know? On my view, creating AGI is strongly dependent on how much knowledge you have about how to do it, in a way which almost entirely obviates the relevance of arguments from human biology?

Like, human biology tells us a single not-very-useful data point about how much computing power evolutionary biology needs in order to build a general intelligence, using very alien methods to our own. Then, very separately, there's the constantly changing level of how much cognitive science, neuroscience, and computer science our own civilization knows. We don't know how much computing power is required for AGI for any level on that constantly changing graph, and biology doesn't tell us. All we know is that the hardware requirements for AGI must be dropping by the year, because the knowledge of how to create AI is something that only increases over time.

At some point the moving lines for "decreasing hardware required" and "increasing hardware available" will cross over, which lets us predict that AGI gets built at some point. But we don't know how to graph two key functions needed to predict that date. You would seem to be committing the classic fallacy of searching for your keys under the streetlight where the visibility is better. You know how to estimate how many floating-point operations per second the retina could effectively be using, but this is not the number you need to predict the outcome you want to predict. You need a graph of human knowledge of computer science over time, and then a graph of how much computer science requires how much hardware to build AI, and neither of these graphs is available.

It doesn't matter how many chapters your book spends considering the continuation of Moore's Law or computation in the retina, and I'm sorry if it seems rude of me in some sense to just dismiss the relevance of all the hard work you put into arguing it. But you're arguing the wrong facts to get to the conclusion, so all your hard work is for naught.
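The crossing-lines picture can be made concrete with a toy model, offered only to show how sensitive the crossover date is to the two unknown functions. Everything in it is an assumption for illustration: the exponential shape of the "hardware required" curve, the starting values, and the halving times are made-up placeholders, since the whole point of the argument is that nobody knows them. The name `crossover_year` is hypothetical.

```python
def crossover_year(start_year, available_ops, doubling_time_years,
                   required_ops_now, requirement_halving_years, horizon=200):
    """First year in which available hardware meets the requirement, else None.

    Assumes (purely for illustration) that available hardware grows
    exponentially and that the requirement shrinks exponentially as
    knowledge improves.
    """
    for t in range(horizon + 1):
        available = available_ops * 2 ** (t / doubling_time_years)
        required = required_ops_now * 0.5 ** (t / requirement_halving_years)
        if available >= required:
            return start_year + t
    return None

# Hold the "streetlight" curve fixed and vary only the unknowns.
# All numbers below are placeholders, not estimates.
for required_now in (1e13, 1e16, 1e19):
    for halving_years in (2.0, 5.0, 20.0):
        year = crossover_year(1988, 1e9, 1.0, required_now, halving_years)
        print(f"required now={required_now:.0e}, halving={halving_years:>4}: {year}")
```

Running it with different placeholders moves the crossover year around by decades while the hardware curve stays exactly the same, which is the sense in which a well-measured hardware graph alone does not pin down the date.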

Humbali: Now it seems to me that I must chide you for being too dismissive of Moravec's argument. Fine, yes, Moravec has not established with logical certainty that strong AI must arrive at the point where top supercomputers match the human brain's 10 trillion operations per second. But has he not established a reference class, the sort of base rate that good and virtuous superforecasters, unlike yourself, go looking for when they want to anchor their estimate about some future outcome? Has he not, indeed, established the sort of argument which says that if top supercomputers can do only ten million operations per second, we're not very likely to get AGI earlier than that, and if top supercomputers can do ten quintillion operations per second*, we're unlikely not to already have AGI?

(*) In 2021 terms, 10 TPU v4 pods.

Eliezer: With ranges that wide, it'd be more likely and less amusing to hit somewhere inside them by coincidence. But I still think this whole line of thought is just off-base, and that you, Humbali, have not truly grasped the concept of a virtuous superforecaster or how they go looking for reference classes and base rates.

Humbali: I frankly think you're just being unvirtuous. Maybe you have some special model of AGI which claims that it'll arrive in a different year or be arrived at by some very different pathway. But is not Moravec's estimate a sort of base rate which, to the extent you are properly and virtuously uncertain of your own models, you ought to regress in your own probability distributions over AI timelines? As you become more uncertain about the exact amounts of knowledge required and what knowledge we'll have when, shouldn't you have an uncertain distribution about AGI arrival times that centers around Moravec's base-rate prediction of 2010?

For you to reject this anchor seems to reveal a grave lack of humility, since you must be very certain of whatever alternate estimation methods you are using in order to throw away this base-rate entirely.

Eliezer: Like I said, I think you've just failed to grasp the true way of a virtuous superforecaster. Thinking a lot about Moravec's so-called 'base rate' is just making you, in some sense, stupider; you need to cast your thoughts loose from there and try to navigate a wilder and less tamed space of possibilities, until they begin to gel and coalesce into narrower streams of probability. Which, for AGI, they probably won't do until we're quite close to AGI, and start to guess correctly how AGI will get built; for it is easier to predict an eventual global pandemic than to say it will start in November of 2019. Even in October of 2019 this cannot be done.

Humbali: Then all this uncertainty must somehow be quantified, if you are to be a virtuous Bayesian; and again, for lack of anything better, the resulting distribution should center on Moravec's base-rate estimate of 2010.

Eliezer: No, that calculation is just basically not relevant here; and thinking about it is making you stupider, as your mind flails in the trackless wilderness grasping onto unanchored air. Things must be 'sufficiently similar' to each other, in some sense, for us to get a base rate on one thing by looking at another thing. Humans making an AGI is just too dissimilar to evolutionary biology making a human brain for us to anchor 'how much computing power at the time it happens' from one to the other. It's not the droid we're looking for; and your attempt to build an inescapable epistemological trap about virtuously calling that a 'base rate' is not the Way.

Imaginary Moravec: If I can step back in here, I don't think my calculation is zero evidence? What we know from evolutionary biology is that a blind alien god with zero foresight accidentally mutated a chimp brain into a general intelligence. I don't want to knock biology's work too much, there's some impressive stuff in the retina, and the retina is just the part of the brain which is in some sense easiest to understand. But surely there's a very reasonable argument that 10 trillion ops/sec is about the amount of computation that evolutionary biology needed; and since evolution is stupid, when we ourselves have that much computation, it shouldn't be that hard to figure out how to configure it.

Eliezer: If that was true, the same theory predicts that our current supercomputers should be doing a better job of matching the agility and vision of spiders. When at some point there's enough hardware that we figure out how to put it together into AGI, we could be doing it with less hardware than a human; we could be doing it with more; and we can't even say that these two possibilities are around equally probable such that our probability distribution should have its median around 2010. Your number is so bad and obtained by such bad means that we should just throw it out of our thinking and start over.

Humbali: This last line of reasoning seems to me to be particularly ludicrous, like you're just throwing away the only base rate we have in favor of a confident assertion of our somehow being more uncertain than that.

Eliezer: Yeah, well, sorry to put it bluntly, Humbali, but you have not yet figured out how to turn your own computing power into intelligence.

- 1999 -

Luke Muehlhauser reading a previous draft of this (only sounding much more serious than this, because Luke Muehlhauser): You know, there was this certain teenaged futurist who made some of his own predictions about AI timelines -

Eliezer: I'd really rather not argue from that as a case in point. I dislike people who screw up something themselves, and then argue like nobody else could possibly be more competent than they were. I dislike even more people who change their mind about something when they turn 22, and then, for the rest of their lives, go around acting like they are now Very Mature Serious Adults who believe the thing that a Very Mature Serious Adult believes, so if you disagree with them about that thing they started believing at age 22, you must just need to wait to grow out of your extended childhood.

Luke Muehlhauser (still being paraphrased): It seems like it ought to be acknowledged somehow.

Eliezer: That's fair, yeah, I can see how someone might think it was relevant. I just dislike how it potentially creates the appearance of trying to slyly sneak in an Argument From Reckless Youth that I regard as not only invalid but also incredibly distasteful. You don't get to screw up yourself and then use that as an argument about how nobody else can do better.

Humbali: Uh, what's the actual drama being subtweeted here?

Eliezer: A certain teenaged futurist, who, for example, said in 1999, "The most realistic estimate for a seed AI transcendence is 2020; nanowar, before 2015."

Humbali: This young man must surely be possessed of some very deep character defect, which I worry will prove to be of the sort that people almost never truly outgrow except in the rarest cases. Why, he's not even putting a probability distribution over his mad soothsaying - how blatantly absurd can a person get?

Eliezer: Dear child ignorant of history, your complaint is far too anachronistic. This is 1999 we're talking about here; almost nobody is putting probability distributions on things, that element of your later subculture has not yet been introduced. Eliezer-2002 hasn't been sent a copy of "Judgment Under Uncertainty" by Emil Gilliam. Eliezer-2006 hasn't put his draft online for "Cognitive biases potentially affecting judgment of global risks". The Sequences won't start until another year after that. How would the forerunners of effective altruism in 1999 know about putting probability distributions on forecasts? I haven't told them to do that yet! We can give historical personages credit when they seem to somehow end up doing better than their surroundings would suggest; it is unreasonable to hold them to modern standards, or expect them to have finished refining those modern standards by the age of nineteen.

Though there's also a more subtle lesson you could learn, about how this young man turned out to still have a promising future ahead of him; which he retained at least in part by having a deliberate contempt for pretended dignity, allowing him to be plainly and simply wrong in a way that he noticed, without his having twisted himself up to avoid a prospect of embarrassment. Instead of, for example, his evading such plain falsification by having dignifiedly wide Very Serious probability distributions centered on the same medians produced by the same basically bad thought processes.

But that was too much of a digression, when I tried to write it up; maybe later I'll post something separately.

- 2004 or thereabouts -

Ray Kurzweil in 2001: I have calculated that matching the intelligence of a human brain requires 2 * 10^16 ops/sec* and this will become available in a $1000 computer in 2023. 26 years after that, in 2049, a $1000 computer will have ten billion times more computing power than a human brain; and in 2059, that computer will cost one cent.

(*) Two TPU v4 pods.
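The growth rates implied by Kurzweil's figures can be worked out directly. The sketch below assumes smooth exponential growth in compute per dollar, which is the premise being quoted rather than an established fact; the function name is hypothetical.

```python
import math

def implied_doubling_time(growth_factor: float, years: float) -> float:
    """Years per doubling if a quantity grows by growth_factor over `years`,
    assuming smooth exponential growth (an assumption, not a fact)."""
    return years / math.log2(growth_factor)

# Claim: a $1000 computer goes from 1x to ten billion (1e10) times a
# human brain between 2023 and 2049.
doubling_2023_2049 = implied_doubling_time(1e10, 2049 - 2023)

# Claim: that computer costs one cent by 2059, i.e. a further 100,000x
# (1e5) improvement in compute per dollar ($1000 -> $0.01) in 10 years.
doubling_2049_2059 = implied_doubling_time(1e5, 2059 - 2049)

print(f"2023-2049: one doubling every {doubling_2023_2049:.2f} years")
print(f"2049-2059: one doubling every {doubling_2049_2059:.2f} years")
```

Both periods imply a doubling time of under a year, sustained for decades after the point at which human-equivalent AI is supposed to exist.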

Actual real-life Eliezer in Q&A, when Kurzweil says the same thing in a 2004(?) talk: It seems weird to me to forecast the arrival of "human-equivalent" AI, and then expect Moore's Law to just continue on the same track past that point for thirty years. Once we've got, in your terms, human-equivalent AIs, even if we don't go beyond that in terms of intelligence, Moore's Law will start speeding them up. Once AIs are thinking thousands of times faster than we are, wouldn't that tend to break down the graph of Moore's Law with respect to the objective wall-clock time of the Earth going around the Sun? Because AIs would be able to spend thousands of subjective years working on new computing technology?

Actual Ray Kurzweil: The fact that AIs can do faster research is exactly what will enable Moore's Law to continue on track.

Actual Eliezer (out loud): Thank you for answering my question.

Actual Eliezer (internally): Moore's Law is a phenomenon produced by human cognition and the fact that human civilization runs off human cognition. You can't expect the surface phenomenon to continue unchanged after the deep causal phenomenon underlying it starts changing. What kind of bizarre worship of graphs would lead somebody to think that the graphs were the primary phenomenon and would continue steady and unchanged when the forces underlying them changed massively? I was hoping he'd be less nutty in person than in the book, but oh well.

- 2006 or thereabouts -

Somebody on the Internet: I have calculated the number of computer operations used by evolution to evolve the human brain - searching through organisms with increasing brain size - by adding up all the computations that were done by any brains before modern humans appeared. It comes out to 10^43 computer operations.* AGI isn't coming any time soon!

(*) I forget the exact figure. It was 10^40-something.

Eliezer, sighing: Another day, another biology-inspired timelines forecast. This trick didn't work when Moravec tried it, it's not going to work while Ray Kurzweil is trying it, and it's not going to work when you try it either. It also didn't work when a certain teenager tried it, but please entirely ignore that part; you're at least allowed to do better than him.

Imaginary Somebody: Moravec's prediction failed because he assumed that you could just magically take something with around as much hardware as the human brain and, poof, it would start being around that intelligent -

Eliezer: Yes, that is one way of viewing an invalidity in that argument. Though you do Moravec a disservice if you imagine that he could only argue "It will magically emerge", and could not give the more plausible-sounding argument "Human engineers are not that incompetent compared to biology, and will probably figure it out without more than one or two orders of magnitude of extra overhead."

Somebody: But I am cleverer, for I have calculated the number of computing operations that was used to create and design biological intelligence, not just the number of computing operations required to run it once created!

Eliezer: And yet, because your reasoning contains the word "biological", it is just as invalid and unhelpful as Moravec's original prediction.

Somebody: I don't see why you dismiss my biological argument about timelines on the basis of Moravec having been wrong. He made one basic mistake - neglecting to take into account the cost to generate intelligence, not just to run it. I have corrected this mistake, and now my own effort to do biologically inspired timeline forecasting should work fine, and must be evaluated on its own merits, de novo.

Eliezer: It is true indeed that sometimes a line of inference is doing just one thing wrong, and works fine after being corrected. And because this is true, it is often indeed wise to reevaluate new arguments on their own merits, if that is how they present themselves. One may not take the past failure of a different argument or three, and try to hang it onto the new argument like an inescapable iron ball chained to its leg. It might be the cause for defeasible skepticism, but not invincible skepticism.

That said, on my view, you are making nearly the same mistake as Moravec, and so his failure remains relevant to the question of whether you are engaging in a kind of thought that binds well to Reality.

Somebody: And that mistake is just mentioning the word "biology"?

Eliezer: The problem is that the resource gets consumed differently, so base-rate arguments from resource consumption end up utterly unhelpful in real life. The human brain consumes around 20 watts of power. Can we thereby conclude that an AGI should consume around 20 watts of power, and that, when technology advances to the point of being able to supply around 20 watts of power to computers, we'll get AGI?

Somebody: That's absurd, of course. So, what, you compare my argument to an absurd argument, and from this dismiss it?

Eliezer: I'm saying that Moravec's "argument from comparable resource consumption" must be in general invalid [LW · GW], because it Proves Too Much [LW · GW]. If it's in general valid to reason about comparable resource consumption, then it should be equally valid to reason from energy consumed as from computation consumed, and pick energy consumption instead to call the basis of your median estimate.

You say that AIs consume energy in a very different way from brains? Well, they'll also consume computations in a very different way from brains! The only difference between these two cases is that you know something about how humans eat food and break it down in their stomachs and convert it into ATP that gets consumed by neurons to pump ions back out of dendrites and axons, while computer chips consume electricity whose flow gets interrupted by transistors to transmit information. Since you know anything whatsoever about how AGIs and humans consume energy, you can see that the consumption is so vastly different as to obviate all comparisons entirely.

You are ignorant of how the brain consumes computation, you are ignorant of how the first AGIs built would consume computation, but "an unknown key does not open an unknown lock" and these two ignorant distributions should not assert much internal correlation between them.

Even without knowing the specifics of how brains and future AGIs consume computing operations, you ought to be able to reason abstractly about a directional update that you would make, if you knew any specifics instead of none. If you did know how both kinds of entity consumed computations, if you knew about specific machinery for human brains, and specific machinery for AGIs, you'd then be able to see the enormous vast specific differences between them, and go, "Wow, what a futile resource-consumption comparison to try to use for forecasting."

(Though I say this without much hope; I have not had very much luck in telling people about predictable directional updates they would make, if they knew something instead of nothing about a subject. I think it's probably too abstract for most people to feel in their gut, or something like that, so their brain ignores it and moves on in the end. I have had life experience with learning more about a thing, updating, and then going to myself, "Wow, I should've been able to predict in retrospect that learning almost any specific fact would move my opinions in that same direction." But I worry this is not a common experience, for it involves a real experience of discovery, and preferably more than one to get the generalization.)

Somebody: All of that seems irrelevant to my novel and different argument. I am not foolishly estimating the resources consumed by a single brain; I'm estimating the resources consumed by evolutionary biology to invent brains!

Eliezer: And the humans wracking their own brains and inventing new AI program architectures and deploying those AI program architectures to themselves learn, will consume computations so utterly differently from evolution that there is no point comparing those consumptions of resources. That is the flaw that you share exactly with Moravec, and that is why I say the same of both of you, "This is a kind of thinking that fails to bind upon reality, it doesn't work in real life." I don't care how much painstaking work you put into your estimate of 10^43 computations performed by biology. It's just not a relevant fact.

Humbali: But surely this estimate of 10^43 cumulative operations can at least be used to establish a base rate for anchoring our -

Eliezer: Oh, for god's sake, shut up. At least Somebody is only wrong on the object level, and isn't trying to build an inescapable epistemological trap by which his ideas must still hang in the air like an eternal stench even after they've been counterargued. Isn't 'but muh base rates' what your viewpoint would've also said about Moravec's 2010 estimate, back when that number still looked plausible?

Humbali: Of course it is evident to me now that my youthful enthusiasm was mistaken; obviously I tried to estimate the wrong figure. As Somebody argues, we should have been estimating the biological computations used to design human intelligence, not the computations used to run it.

I see, now, that I was using the wrong figure as my base rate, leading my base rate to be wildly wrong, and even irrelevant; but now that I've seen this, the clear error in my previous reasoning, I have a new base rate. This doesn't seem obviously to me likely to contain the same kind of wildly invalidating enormous error as before. What, is Reality just going to yell "Gotcha!" at me again? And even the prospect of some new unknown error, which is just as likely to be in either possible direction, implies only that we should widen our credible intervals while keeping them centered on a median of 10^43 operations -

Eliezer: Please stop. This trick just never works, at all, deal with it and get over it. Every second of attention that you pay to the 10^43 number is making you stupider. You might as well reason that 20 watts is a base rate for how much energy the first generally intelligent computing machine should consume.

- 2020 -

OpenPhil: We have commissioned a Very Serious report on a biologically inspired estimate of how much computation will be required to achieve Artificial General Intelligence, for purposes of forecasting an AGI timeline. (Summary of report. [LW(p) · GW(p)]) (Full draft of report.) Our leadership takes this report Very Seriously.

Eliezer: Oh, hi there, new kids. Your grandpa is feeling kind of tired now and can't debate this again with as much energy as when he was younger.

Imaginary OpenPhil: You're not that much older than us.

Eliezer: Not by biological wall-clock time, I suppose, but -

OpenPhil: You think thousands of times faster than us?

Eliezer: I wasn't going to say it if you weren't.

OpenPhil: We object to your assertion on the grounds that it is false.

Eliezer: I was actually going to say, you might be underestimating how long I've been walking this endless battlefield because I started really quite young.

I mean, sure, I didn't read Moravec's Mind Children when it came out in 1988. I only read it four years later, when I was twelve. And sure, I didn't immediately afterwards start writing online about Moore's Law and strong AI; I did not immediately contribute my own salvos and sallies to the war; I was not yet a noticed voice in the debate. I only got started on that at age sixteen. I'd like to be able to say that in 1999 I was just a random teenager being reckless, but in fact I was already being invited to dignified online colloquia about the "Singularity" and mentioned in printed books; when I was being wrong back then I was already doing so in the capacity of a minor public intellectual on the topic.

This is, as I understand normie ways, relatively young, and is probably worth an extra decade tacked onto my biological age; you should imagine me as being 52 instead of 42 as I write this, with a correspondingly greater number of visible gray hairs.

A few years later - though still before your time - there was the Accelerating Change Foundation, and Ray Kurzweil spending literally millions of dollars to push Moore's Law graphs of technological progress as the central story about the future. I mean, I'm sure that a few million dollars sounds like peanuts to OpenPhil, but if your own annual budget was a hundred thousand dollars or so, that's a hell of a megaphone to compete with.

If you are currently able to conceptualize the Future as being about something other than nicely measurable metrics of progress in various tech industries, being projected out to where they will inevitably deliver us nice things - that's at least partially because of a battle fought years earlier, in which I was a primary fighter, creating a conceptual atmosphere you now take for granted. A mental world where threshold levels of AI ability are considered potentially interesting and transformative - rather than milestones of new technological luxuries to be checked off on an otherwise invariant graph of Moore's Laws as they deliver flying cars, space travel, lifespan-extension escape velocity, and other such goodies on an equal level of interestingness. I have earned at least a little right to call myself your grandpa.

And that kind of experience has a sort of compounded interest, where, once you've lived something yourself and participated in it, you can learn more from reading other histories about it. The histories become more real to you once you've fought your own battles. The fact that I've lived through timeline errors in person gives me a sense of how it actually feels to be around at the time, watching people sincerely argue Very Serious erroneous forecasts. That experience lets me really and actually update on the history [LW · GW] of the earlier mistaken timelines from before I was around; instead of the histories just seeming like a kind of fictional novel to read about, disconnected from reality and not happening to real people.

And now, indeed, I'm feeling a bit old and tired for reading yet another report like yours in full attentive detail. Does it by any chance say that AGI is due in about 30 years from now?

OpenPhil: Our report has very wide credible intervals around both sides of its median, as we analyze the problem from a number of different angles and show how they lead to different estimates -

Eliezer: Unfortunately, the thing about figuring out five different ways to guess the effective IQ of the smartest people on Earth, and having three different ways to estimate the minimum IQ to destroy lesser systems such that you could extrapolate a minimum IQ to destroy the whole Earth, and putting wide credible intervals around all those numbers, and combining and mixing the probability distributions to get a new probability distribution, is that, at the end of all that, you are still left with a load of nonsense. Doing a fundamentally wrong thing in several different ways will not save you, though I suppose if you spread your bets widely enough, one of them may be right by coincidence.

So does the report by any chance say - with however many caveats and however elaborate the probabilistic methods and alternative analyses - that AGI is probably due in about 30 years from now?

OpenPhil: Yes, in fact, our 2020 report's median estimate is 2050; though, again, with very wide credible intervals around both sides. Is that number significant?

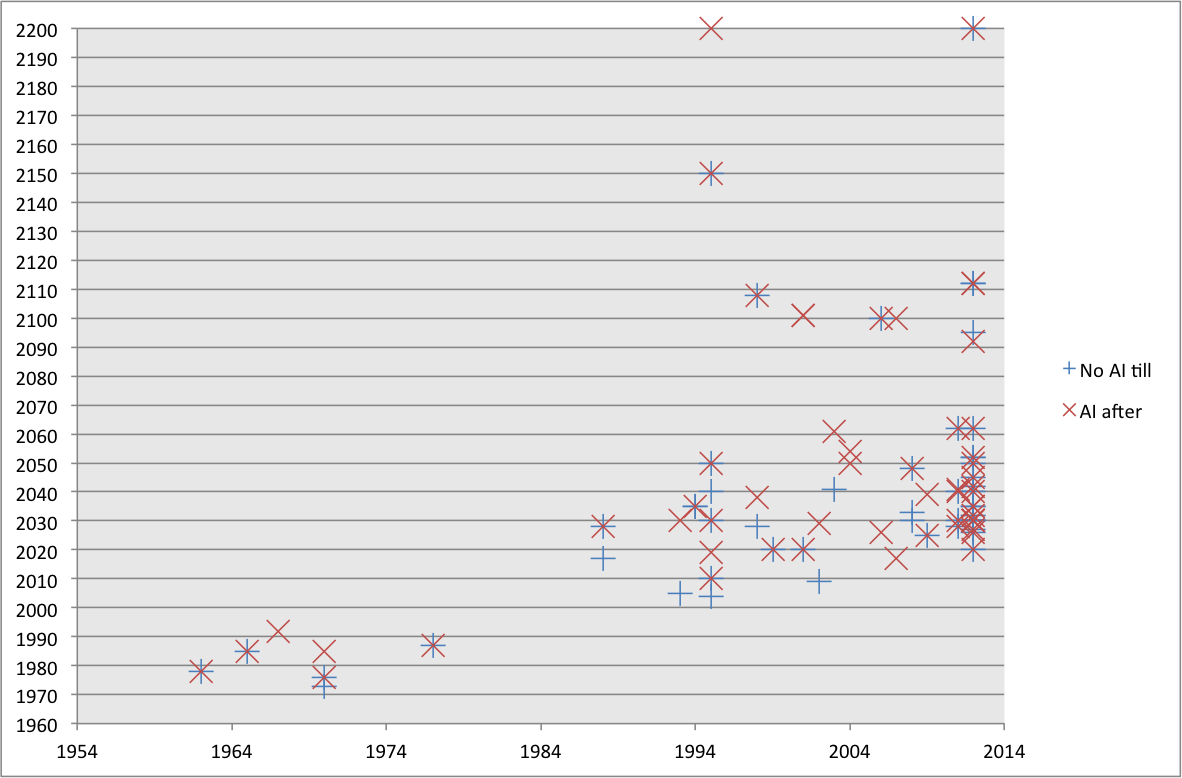

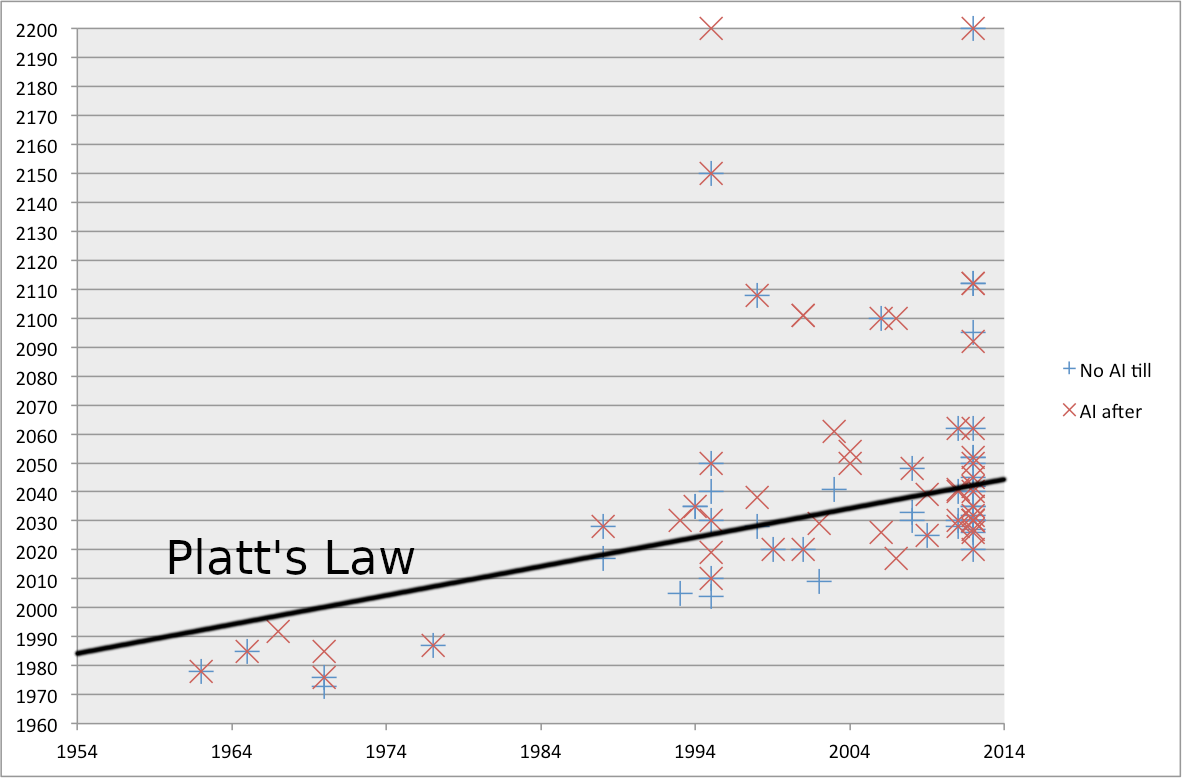

Eliezer: It's a law generalized by Charles Platt, that any AI forecast will put strong AI thirty years out from when the forecast is made. Vernor Vinge referenced it in the body of his famous 1993 NASA speech, whose abstract begins, "Within thirty years, we will have the technological means to create superhuman intelligence. Shortly after, the human era will be ended."

After I was old enough to be more skeptical of timelines myself, I used to wonder how Vinge had pulled out the "within thirty years" part. This may have gone over my head at the time, but rereading again today, I conjecture Vinge may have chosen the headline figure of thirty years as a deliberately self-deprecating reference to Charles Platt's generalization about such forecasts always being thirty years from the time they're made, which Vinge explicitly cites later in the speech.

Or to put it another way: I conjecture that to the audience of the time, already familiar with some previously-made forecasts about strong AI, the impact of the abstract is meant to be, "Never mind predicting strong AI in thirty years, you should be predicting superintelligence in thirty years, which matters a lot more." But the minds of authors are scarcely more knowable than the Future, if they have not explicitly told us what they were thinking; so you'd have to ask Professor Vinge, and hope he remembers what he was thinking back then.

OpenPhil: Superintelligence before 2023, huh? I suppose Vinge still has two years left to go before that's falsified.

Eliezer: Also in the body of the speech, Vinge says, "I'll be surprised if this event occurs before 2005 or after 2030," which sounds like a more serious and sensible way of phrasing an estimate. I think that should supersede the probably Platt-inspired headline figure for what we think of as Vinge's 1993 prediction. The jury's still out on whether Vinge will have made a good call.

Oh, and sorry if grandpa is boring you with all this history from the times before you were around. I mean, I didn't actually attend Vinge's famous NASA speech when it happened, what with being thirteen years old at the time, but I sure did read it later. Once it was digitized and put online, it was all over the Internet. Well, all over certain parts of the Internet, anyways. Which nerdy parts constituted a much larger fraction of the whole, back when the World Wide Web was just starting to take off among early adopters.

But, yeah, the new kids showing up with some graphs of Moore's Law and calculations about biology and an earnest estimate of strong AI being thirty years out from the time of the report is, uh, well, it's... historically precedented.

OpenPhil: That part about Charles Platt's generalization is interesting, but just because we unwittingly chose literally exactly the median that Platt predicted people would always choose in consistent error, that doesn't justify dismissing our work, right? We could have used a completely valid method of estimation which would have pointed to 2050 no matter which year it was tried in, and, by sheer coincidence, have first written that up in 2020. In fact, we try to show in the report that the same methodology, evaluated in earlier years, would also have pointed to around 2050 -

Eliezer: Look, people keep trying this. It's never worked. It's never going to work. 2 years before the end of the world, there'll be another published biologically inspired estimate showing that AGI is 30 years away and it will be exactly as informative then as it is now. I'd love to know the timelines too, but you're not going to get the answer you want until right before the end of the world, and maybe not even then unless you're paying very close attention. Timing this stuff is just plain hard.

OpenPhil: But our report is different, and our methodology for biologically inspired estimates is wiser and less naive than those who came before.

Eliezer: That's what the last guy said, but go on.

OpenPhil: First, we carefully estimate a range of possible figures for the equivalent of neural-network parameters needed to emulate a human brain. Then, we estimate how many examples would be required to train a neural net with that many parameters. Then, we estimate the total computational cost of that many training runs. Moore's Law then gives us 2050 as our median time estimate, given what we think are the most likely underlying assumptions, though we do analyze it several different ways.
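The pipeline OpenPhil describes here can be sketched as a chain of multiplications followed by an extrapolation. Every number below is a made-up placeholder, not a figure from the report. The function names, the rule-of-thumb constant of 6 FLOP per parameter per example, and the fixed doubling time are all assumptions for illustration only.

```python
import math

def training_flop(params: float, examples: float,
                  flop_per_param_per_example: float = 6.0) -> float:
    """Total training compute as a product of three estimated quantities."""
    return params * examples * flop_per_param_per_example

def year_affordable(required_flop: float, affordable_flop_now: float,
                    year_now: int, doubling_years: float) -> float:
    """Year when affordable compute reaches required_flop, assuming it
    doubles every `doubling_years` (a Moore's-Law-style assumption)."""
    doublings = math.log2(required_flop / affordable_flop_now)
    return year_now + max(doublings, 0.0) * doubling_years

# Placeholder inputs, NOT taken from the report.
required = training_flop(params=1e14, examples=1e13)
year = year_affordable(required, affordable_flop_now=1e24,
                       year_now=2020, doubling_years=2.0)
print(f"required compute: {required:.1e} FLOP, affordable around {year:.0f}")
```

At a two-year doubling time, changing any single input by a factor of 1000 moves the output by about 20 years, so the final date is only as good as each estimate feeding into the chain.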

Eliezer: This is almost exactly what the last guy tried, except you're using network parameters instead of computing ops, and deep learning training runs instead of biological evolution.

OpenPhil: Yes, so we've corrected his mistake of estimating the wrong biological quantity and now we're good, right?

Eliezer: That's what the last guy thought he'd done about Moravec's mistaken estimation target. And neither he nor Moravec would have made much headway on their underlying mistakes, by doing a probabilistic analysis of that same wrong question from multiple angles.

OpenPhil: Look, sometimes more than one person makes a mistake, over historical time. It doesn't mean nobody can ever get it right. You of all people should agree.

Eliezer: I do so agree, but that doesn't mean I agree you've fixed the mistake. I think the methodology itself is bad, not just its choice of which biological parameter to estimate. Look, do you understand why the evolution-inspired estimate of 10^43 ops was completely ludicrous; and the claim that it was equally likely to be mistaken in either direction, even more ludicrous?

OpenPhil: Because AGI isn't like biology, and in particular, will be trained using gradient descent instead of evolutionary search, which is cheaper. We do note inside our report that this is a key assumption, and that, if it fails, the estimate might be correspondingly wrong -

Eliezer: But then you claim that mistakes are equally likely in both directions and so your unstable estimate is a good median. Can you see why the previous evolutionary estimate of 10^43 cumulative ops was not, in fact, equally likely to be wrong in either direction? That it was, predictably, a directional overestimate?

OpenPhil: Well, search by evolutionary biology is more costly than training by gradient descent, so in hindsight, it was an overestimate. Are you claiming this was predictable in foresight instead of hindsight?

Eliezer: I'm claiming that, at the time, I snorted and tossed Somebody's figure out the window while thinking it was ridiculously huge and absurd, yes.

OpenPhil: Because you'd already foreseen in 2006 that gradient descent would be the method of choice for training future AIs, rather than genetic algorithms?

Eliezer: Ha! No. Because it was an insanely costly hypothetical approach whose main point of appeal, to the sort of person who believed in it, was that it didn't require having any idea whatsoever of what you were doing or how to design a mind.

OpenPhil: Suppose one were to reply: "Somebody" didn't know better-than-evolutionary methods for designing a mind, just as we currently don't know better methods than gradient descent for designing a mind; and hence Somebody's estimate was the best estimate at the time, just as ours is the best estimate now?

Eliezer: Unless you were one of a small handful of leading neural-net researchers who knew a few years ahead of the world where scientific progress was heading - who knew a Thielian 'secret' before finding evidence strong enough to convince the less foresightful - you couldn't have called the jump specifically to gradient descent rather than any other technique. "I don't know any more computationally efficient way to produce a mind than re-evolving the cognitive history of all life on Earth" transitioning over time to "I don't know any more computationally efficient way to produce a mind than gradient descent over entire brain-sized models" is not predictable in the specific part about "gradient descent" - not unless you know a Thielian secret.

But knowledge is a ratchet that usually only turns one way, so it's predictable that the current story changes to somewhere over future time, in a net expected direction. Let's consider the technique currently known as mixture-of-experts (MoE), for training smaller nets in pieces and muxing them together. It's not my mainline prediction that MoE actually goes anywhere - if I thought MoE was actually promising, I wouldn't call attention to it, of course! I don't want to make timelines shorter, that is not a service to Earth, not a good sacrifice in the cause of winning an Internet argument.

But if I'm wrong and MoE is not a dead end, that technique serves as an easily-visualizable case in point. If that's a fruitful avenue, the technique currently known as "mixture-of-experts" will mature further over time, and future deep learning engineers will be able to further perfect the art of training slices of brains using gradient descent and fewer examples, instead of training entire brains using gradient descent and lots of examples.

Or, more likely, it's not MoE that forms the next little trend. But there is going to be something, especially if we're sitting around waiting until 2050. Three decades is enough time for some big paradigm shifts in an intensively researched field. Maybe we'd end up using neural net tech very similar to today's tech if the world ends in 2025, but in that case, of course, your prediction must have failed somewhere else.

The three components of AGI arrival times are available hardware, which increases over time in an easily graphed way; available knowledge, which increases over time in a way that's much harder to graph; and hardware required at a given level of specific knowledge, a huge multidimensional unknown background parameter. The fact that you have no idea how to graph the increase of knowledge - or measure it in any way that is less completely silly than "number of science papers published" or whatever such gameable metric - doesn't change the point that this is a predictable fact about the future; there will be more knowledge later, the more time that passes, and that will directionally change the expense of the currently least expensive way of doing things.
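To make the dependence on that unknown background parameter concrete, here is a toy sketch in Python. Everything in it is an assumption made for illustration: the function name, the starting year, and above all the premise that both lines are clean exponentials, which is exactly what the paragraph above says nobody knows how to graph for knowledge. It only shows how far the crossing date moves when the unknown inputs move.

```python
import math


def crossing_year(start_year, gap_orders_of_magnitude,
                  hardware_doubling_years, requirement_halving_years):
    """Year at which available hardware meets required hardware.

    Toy model (an assumption, not a forecast): available hardware doubles
    on a fixed schedule, and the hardware required at the current level of
    knowledge halves on a fixed schedule.
    """
    # Express the initial shortfall in doublings rather than powers of ten.
    gap_in_doublings = gap_orders_of_magnitude * math.log2(10)
    # Each year closes this many doublings of the gap, counting both lines.
    doublings_closed_per_year = (1.0 / hardware_doubling_years
                                 + 1.0 / requirement_halving_years)
    return start_year + gap_in_doublings / doublings_closed_per_year


# Hypothetical inputs, chosen only to show the spread of answers.
for gap in (3, 6, 9):
    for halving in (1.0, 2.5, 10.0):
        year = crossing_year(2020, gap, 2.5, halving)
        print(f"gap 10^{gap}, requirement halves every {halving} yr: ~{year:.0f}")
```

The answer depends heavily on the size of the initial gap and on the knowledge-driven halving time, the two inputs that are hardest to pin down; the hardware doubling time is the only one that is easy to graph.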

OpenPhil: We did already consider that and try to take it into account: our model already includes a parameter for how algorithmic progress reduces hardware requirements. It's not easy to graph as exactly as Moore's Law, as you say, but our best-guess estimate is that compute costs halve every 2-3 years.

Eliezer: Oh, nice. I was wondering what sort of tunable underdetermined parameters enabled your model to nail the psychologically overdetermined final figure of '30 years' so exactly.

OpenPhil: Eliezer.

Eliezer: Think of this in an economic sense: people don't buy where goods are most expensive and delivered latest, they buy where goods are cheapest and delivered earliest. Deep learning researchers are not like an inanimate chunk of ice tumbling through intergalactic space in its unchanging direction of previous motion; they are economic agents who look around for ways to destroy the world faster and more cheaply than the way that you imagine as the default. They are more eager than you are to think of more creative paths to get to the next milestone faster.

OpenPhil: Isn't this desire for cheaper methods exactly what our model already accounts for, by modeling algorithmic progress?

Eliezer: The makers of AGI aren't going to be doing 10,000,000,000,000 rounds of gradient descent, on entire brain-sized 300,000,000,000,000-parameter models, algorithmically faster than today. They're going to get to AGI via some route that you don't know how to take, at least if it happens in 2040. If it happens in 2025, it may be via a route that some modern researchers do know how to take, but in this case, of course, your model was also wrong.

They're not going to be taking your default-imagined approach algorithmically faster, they're going to be taking an algorithmically different approach that eats computing power in a different way than you imagine it being consumed.

OpenPhil: Shouldn't that just be folded into our estimate of how the computation required to accomplish a fixed task decreases by half every 2-3 years due to better algorithms?

Eliezer: Backtesting this viewpoint on the previous history of computer science, it seems to me to assert that it should be possible to:

- Train a pre-Transformer RNN/CNN-based model, not using any other techniques invented after 2017, to GPT-2 levels of performance, using only around 2x as much compute as GPT-2;

- Play pro-level Go using 8-16 times as much computing power as AlphaGo, but only 2006 levels of technology.

For reference, recall that in 2006, Hinton and Salakhutdinov were just starting to publish that, by training multiple layers of Restricted Boltzmann machines and then unrolling them into a "deep" neural network, you could get an initialization for the network weights that would avoid the problem of vanishing and exploding gradients and activations. At least so long as you didn't try to stack too many layers, like a dozen layers or something ridiculous like that. This being the point that kicked off the entire deep-learning revolution.

Your model apparently suggests that we have gotten around 50 times more efficient at turning computation into intelligence since that time; so, we should be able to replicate any modern feat of deep learning performed in 2021, using techniques from before deep learning and around fifty times as much computing power.
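The arithmetic behind that backtest can be sketched in a few lines of Python. This is only an illustration: the function names are hypothetical, the year gaps (2 years back from GPT-2, 10 from AlphaGo, 15 from 2021) are assumptions read off the dates mentioned above, and the fixed 2-3 year halving time is the model's premise under test, not an established fact. The results land in the same rough ballpark as the multipliers quoted above; they are not meant to reproduce them exactly.

```python
def implied_compute_multiplier(years_back, halving_time_years):
    """Extra compute implied if algorithms are rolled back by years_back.

    Assumes the compute needed for a fixed task halves once every
    halving_time_years, so going backward multiplies it instead.
    """
    return 2.0 ** (years_back / halving_time_years)


def multiplier_range(years_back, fast_halving=2.0, slow_halving=3.0):
    """Low and high multipliers across the 2-3 year halving-time range."""
    low = implied_compute_multiplier(years_back, slow_halving)
    high = implied_compute_multiplier(years_back, fast_halving)
    return low, high


# Year gaps are assumptions for illustration, not figures from the model.
cases = {
    "GPT-2 using only 2017-era techniques": 2,
    "AlphaGo using only 2006-era techniques": 10,
    "2021 deep learning using only 2006-era techniques": 15,
}

for label, years_back in cases.items():
    low, high = multiplier_range(years_back)
    print(f"{label}: about {low:.1f}x to {high:.1f}x the compute")
```

The point of running the numbers is that a smooth halving curve implies modest, finite multipliers for feats that, with only the older techniques, nobody knew how to do at any price.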

OpenPhil: No, that's totally not what our viewpoint says when you backfit it to past reality. Our model does a great job of retrodicting past reality.

Eliezer: How so?

OpenPhil: <Eliezer cannot predict what they will say here.>

Eliezer: I'm not convinced by this argument.

OpenPhil: We didn't think you would be; you're sort of predictable that way.

Eliezer: Well, yes, if I'd predicted I'd update from hearing your argument, I would've updated already. I may not be a real Bayesian but I'm not that incoherent.

But I can guess in advance at the outline of my reply, and my guess is this:

"Look, when people come to me with models claiming the future is predictable enough for timing, I find that their viewpoints seem to me like they would have made garbage predictions if I actually had to operate them in the past without benefit of hindsight. Sure, with benefit of hindsight, you can look over a thousand possible trends and invent rules of prediction and event timing that nobody in the past actually spotlighted then, and claim that things happened on trend. I was around at the time and I do not recall people actually predicting the shape of AI in the year 2020 in advance. I don't think they were just being stupid either.

"In a conceivable future where people are still alive and reasoning as modern humans do in 2040, somebody will no doubt look back and claim that everything happened on trend since 2020; but which trend the hindsighter will pick out is not predictable to us in advance.

"It may be, of course, that I simply don't understand how to operate your viewpoint, nor how to apply it to the past or present or future; and that yours is a sort of viewpoint which indeed permits saying only one thing, and not another; and that this viewpoint would have predicted the past wonderfully, even without any benefit of hindsight. But there is also that less charitable viewpoint which suspects that somebody's theory of 'A coinflip always comes up heads on occasions X' contains some informal parameters which can be argued about which occasions exactly 'X' describes, and that the operation of these informal parameters is a bit influenced by one's knowledge of whether a past coinflip actually came up heads or not.

"As somebody who doesn't start from the assumption that your viewpoint is a good fit to the past, I still don't see how a good fit to the past could've been extracted from it without benefit of hindsight."

OpenPhil: That's a pretty general counterargument, and like any pretty general counterargument it's a blade you should try turning against yourself. Why doesn't your own viewpoint horribly mispredict the past, and say that all estimates of AGI arrival times are predictably net underestimates? If we imagine trying to operate your own viewpoint in 1988, we imagine going to Moravec and saying, "Your estimate of how much computing power it takes to match a human brain is predictably an overestimate, because engineers will find a better way to do it than biology, so we should expect AGI sooner than 2010."

Eliezer: I did tell Imaginary Moravec that his estimate of the minimum computation required for human-equivalent general intelligence was predictably an overestimate; that was right there in the dialogue before I even got around to writing this part. And I also, albeit with benefit of hindsight, told Moravec that both of these estimates were useless for timing the future, because they skipped over the questions of how much knowledge you'd need to make an AGI with a given amount of computing power, how fast knowledge was progressing, and the actual timing determined by the rising hardware line touching the falling hardware-required line.

OpenPhil: We don't see how to operate your viewpoint to say in advance to Moravec, before his prediction has been falsified, "Your estimate is plainly a garbage estimate" instead of "Your estimate is obviously a directional underestimate", especially since you seem to be saying the latter to us, now.

Eliezer: That's not a critique I give zero weight. And, I mean, as a kid, I was in fact talking like, "To heck with that hardware estimate, let's at least try to get it done before then. People are dying for lack of superintelligence; let's aim for 2005." I had a T-shirt spraypainted "Singularity 2005" at a science fiction convention, it's rather crude but I think it's still in my closet somewhere.

But now I am older and wiser and have fixed all my past mistakes, so the critique of those past mistakes no longer applies to my new arguments.

OpenPhil: Uh huh.

Eliezer: I mean, I did try to fix all the mistakes that I knew about, and didn't just, like, leave those mistakes in forever? I realize that this claim to be able to "learn from experience" is not standard human behavior in situations like this, but if you've got to be weird, that's a good place to spend your weirdness points. At least by my own lights, I am now making a different argument than I made when I was nineteen years old, and that different argument should be considered differently.

And, yes, I also think my nineteen-year-old self was not completely foolish at least about AI timelines; in the sense that, for all he knew, maybe you could build AGI by 2005 if you tried really hard over the next 6 years. Not so much because Moravec's estimate should've been seen as a predictable overestimate of how much computing power would actually be needed, given knowledge that would become available in the next 6 years; but because Moravec's estimate should've been seen as almost entirely irrelevant, making the correct answer be "I don't know."

OpenPhil: It seems to us that Moravec's estimate, and the guess of your nineteen-year-old past self, are both predictably vast underestimates. Estimating the computation consumed by one brain, and calling that your AGI target date, is obviously predictably a vast underestimate because it neglects the computation required for training a brainlike system. It may be a bit uncharitable, but we suggest that Moravec and your nineteen-year-old self may both have been motivatedly credulous, to not notice a gap so very obvious.

Eliezer: I could imagine it seeming that way if you'd grown up never learning about any AI techniques except deep learning, which had, in your wordless mental world, always been the way things were, and would always be that way forever.

I mean, it could be that deep learning will still be the bleeding-edge method of Artificial Intelligence right up until the end of the world. But if so, it'll be because Vinge was right and the world ended before 2030, not because the deep learning paradigm was as good as any AI paradigm can ever get. That is simply not a kind of thing that I expect Reality to say "Gotcha" to me about, any more than I expect to be told that the human brain, whose neurons and synapses are 500,000 times further away from the thermodynamic efficiency wall than ATP synthase, is the most efficient possible consumer of computations.

The specific perspective-taking operation needed here - when it comes to what was and wasn't obvious in 1988 or 1999 - is that the notion of spending thousands and millions and billions of times as much computation on a "training" phase, as on an "inference" phase, is something that only came to be seen as Always Necessary after the deep learning revolution took over AI in the late Noughties. Back when Moravec was writing, you programmed a game-tree-search algorithm for chess, and then you ran that code, and it played chess. Maybe you needed to add an opening book, or do a lot of trial runs to tweak the exact values the position evaluation function assigned to knights vs. bishops, but most AIs weren't neural nets and didn't get trained on enormous TPU pods.

Moravec had no way of knowing that the paradigm in AI would, twenty years later, massively shift to a new paradigm in which stuff got trained on enormous TPU pods. He lived in a world where you could only train neural networks a few layers deep, like, three layers, and the gradients vanished or exploded if you tried to train networks any deeper.

To be clear, in 1999, I did think of AGIs as needing to do a lot of learning; but I expected them to be learning while thinking, not to learn in a separate gradient descent phase.

OpenPhil: How could anybody possibly miss anything so obvious? There are so many basic technical ideas and even philosophical ideas about how you do AI which make it supremely obvious that the best and only way to turn computation into intelligence is to have deep nets, lots of parameters, and enormous separate training phases on TPU pods.

Eliezer: Yes, well, see, those philosophical ideas were not as prominent in 1988, which is why the direction of the future paradigm shift was not predictable in advance without benefit of hindsight, let alone timeable to 2006.

You're also probably overestimating how much those philosophical ideas would pinpoint the modern paradigm of gradient descent even if you had accepted them wholeheartedly, in 1988. Or let's consider, say, October 2006, when the Netflix Prize was being run - a watershed occasion where lots of programmers around the world tried their hand at minimizing a loss function, based on a huge-for-the-times 'training set' that had been publicly released, scored on a held-out 'test set'. You could say it was the first moment in the limelight for the sort of problem setup that everybody now takes for granted with ML research: a widely shared dataset, a held-out test set, a loss function to be minimized, prestige for advancing the 'state of the art'. And it was a million dollars, which, back in 2006, was big money for a machine learning prize, garnering lots of interest from competent competitors.