A concrete bet offer to those with short AGI timelines

post by Matthew Barnett (matthew-barnett), Tamay · 2022-04-09T21:41:45.106Z · LW · GW · 120 comments

[Update 4 (12/23/2023): Tamay has now conceded.]

[Update 3 (3/16/2023): Matthew has now conceded [LW · GW].]

[Update 2 (11/4/2022): Matthew Barnett now thinks he will probably lose this bet. You can read a post about how he's updated his views here [LW · GW].]

[Update 1: we have taken this bet with two people, as detailed in a comment below [LW(p) · GW(p)].]

Recently, a post claimed [LW · GW],

it seems very possible (>30%) that we are now in the crunch-time section of a short-timelines world, and that we have 3-7 years until Moore's law and organizational prioritization put these systems at extremely dangerous levels of capability.

We (Tamay Besiroglu and I) think this claim is strongly overstated, and disagree with the suggestion that “It's time for EA leadership to pull the short-timelines fire alarm.” This post received a fair amount of attention, and we are concerned about a view of the type expounded in the post causing EA leadership to try something hasty and ill-considered.

To counterbalance this view, we express our disagreement with the post. To substantiate and make concrete our disagreement, we are offering to bet up to $1000 against the idea that we are in the “crunch-time section of a short-timelines”.

In particular, we are willing to bet at 1:1 odds that no more than one of the following events will occur by 2026-01-01, or alternatively, 3:1 odds (in our favor) that no more than one of the following events will occur by 2030-01-01.

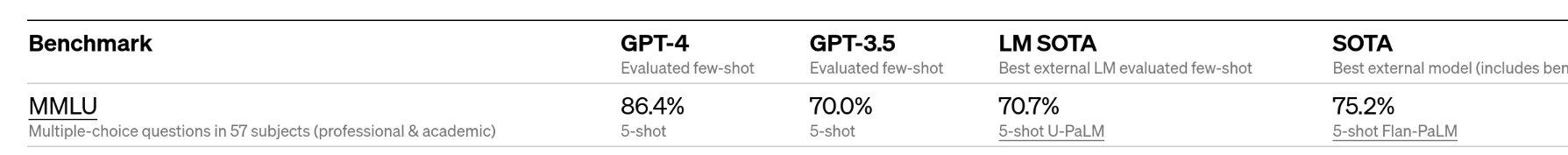

- A model/ensemble of models achieves >80% on all tasks in the MMLU benchmark

- A credible estimate reveals that an AI lab deployed EITHER >10^30 FLOPs OR hardware that would cost $1bn if purchased through competitive cloud computing vendors at the time on a training run to develop a single ML model (excluding autonomous driving efforts)

- A model/ensemble of models will achieve >90% on the MATH dataset using a no-calculator rule

- A model/ensemble of models achieves >80% top-1 strict accuracy on competition-level problems on the APPS benchmark

- A gold medal for the IMO Grand Challenge (conditional on it being clear that the questions were not in the training set)

- A robot that can, from beginning to end, reliably wash dishes, take them out of an ordinary dishwasher and stack them into a cabinet, without breaking any dishes, and at a comparable speed to humans (<120% the average time)

- Tesla’s full-self-driving capability makes fewer than one major mistake per 100,000 miles

Since we recognize that betting incentives can be weak over long time-horizons, we are also offering the option of employing Tamay’s recently described betting procedure [LW(p) · GW(p)] in which we would enter a series of repeated 2-year contracts until the resolution date.
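To make the two offers easier to compare, here is a minimal sketch of the break-even probability each one implies for a counterparty. It assumes that "3:1 odds (in our favor)" means the counterparty risks $3 for every $1 they can win, treats the bettor as risk-neutral, and ignores the time value of money. The function name and the $1000 base amount are illustrative choices, not part of the bet's terms.

```python
def break_even_probability(stake: float, payout: float) -> float:
    """Win probability at which a bet has zero expected value.

    stake:  amount the bettor loses if they lose the bet
    payout: amount the bettor gains if they win the bet
    Solves p * payout - (1 - p) * stake = 0 for p.
    """
    if stake < 0 or payout <= 0:
        raise ValueError("stake must be >= 0 and payout must be > 0")
    return stake / (stake + payout)


# Counterparty's view of the 2026 offer: 1:1 odds (assumed $1000 vs $1000).
p_2026 = break_even_probability(stake=1000, payout=1000)

# Counterparty's view of the 2030 offer: 3:1 in the authors' favor
# (assumed reading: counterparty risks $3000 to win $1000).
p_2030 = break_even_probability(stake=3000, payout=1000)

print(f"2026 break-even: {p_2026:.2f}")
print(f"2030 break-even: {p_2030:.2f}")
```

Under these assumptions, a counterparty needs more than 50% credence that at least two of the events occur by 2026, or more than 75% credence for 2030, before the bet has positive expected value for them.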

Specific criteria for bet resolution

For each task listed above, we offer the following concrete resolution criteria.

| Task | Full details |
| --- | --- |
| A model/ensemble of models achieves >80% on all tasks in the MMLU benchmark | A public document reveals that a model or ensemble of models had an average accuracy score of more than 80.0% on every task listed in the Measuring Massive Multitask Language Understanding benchmark by Dan Hendrycks et al. |
| A credible estimate reveals that an AI lab deployed EITHER >10^30 FLOPs OR hardware that would cost $1bn if purchased through competitive cloud computing vendors at the time on a training run to develop a single ML model (excluding autonomous driving efforts) | If there is a dispute, our counterparty will offer an example that they believe may trigger this condition to resolve positively. Then, we will use the method described in this post [LW · GW] to estimate the number of FLOPs used for a given training run. We will then find the competitive rates by taking the minimum of the most recent prices found on Google Cloud, Microsoft Azure, and Amazon AWS, or the equivalent services corresponding to each of those companies if their names change in the future. We will determine the rate corresponding to the processing unit that would be capable of training the model in the paper. If the training run was completed more than 2 years before resolution, an adjustment is applied to the cost of the training run to account for price-declines in computing. This adjustment will be based on a credible estimate of price-performance declines in the last few years, OR, a constant decline of 30% per year, whichever is more favorable to the counterparty. We will use inflation-adjusted 2022 US dollars, according to the CPI as reported by the BLS. |
| A model/ensemble of models will achieve >90% on the MATH dataset using a no-calculator rule. | A public document reveals that a model or ensemble of models had an average accuracy score of more than 90% when evaluated on the MATH benchmark by Dan Hendrycks et al. The no-calculator rule ensures that competitors don't use calculators or script executors. As Hendrycks explains, “With a script executor, competitors do not need to figure out how to succinctly reason to the conclusion and cleverness is rarely needed.” |
| A model/ensemble of models achieves >80% top-1 strict accuracy on competition-level problems on the APPS benchmark | A public document reveals that a model or ensemble of models had an average top-1 strict accuracy score of more than 80% on the competition-level coding problems listed in the APPS benchmark by Dan Hendrycks et al. Top-1 accuracy is distinguished, as in the paper, from top-k accuracy in which k outputs from the model are generated, and the best output is selected. Strict accuracy "requires programs pass every test case" (as defined in section 4.2 of the paper). |
| A gold medal for the IMO Grand Challenge | We will use the same criteria as revealed by Paul Christiano here [LW · GW]. To clarify, we will not require that the model be open-source, only that it clearly demonstrates the ability to obtain a gold medal for the IMO. |
| A robot that can, from beginning to end, reliably wash dishes, take them out of an ordinary dishwasher and stack them into a cabinet, without breaking any dishes, and at a comparable speed to humans (<120% the average time) | For this task, a credible video or report must be public that clearly demonstrates that this ability is feasible to a very high level of confidence. Videos or reports that do not report rates of system malfunctions, or do not permit the calculation of how much time it would take to take out and stack at least 10 dishes, are not admissible. An error is something that would have a reasonable chance of a dish being broken if performed in an ordinary setting. Reliability is defined as an error rate below 1 percent across all full loading-and-unloading tests, when given natural starting setups, i.e., pre-programmed precise robotic planning is not admissible. This blog post will be consulted to determine the average time it takes to load and unload dishes, under the section “Time Study Of Dishes”. In case there is a dispute over this question, our counterparty will offer an example that they believe may trigger this condition to resolve positively. We will then email the first author of whatever paper, blog post, or video in question asking whether they believe their system satisfies the conditions specified here, using their best judgment. |
| Tesla’s full-self-driving capability makes fewer than one major mistake per 100,000 miles | We will require that a credible document from Tesla report crash or error-rate statistics for cars using their full self-driving mode. The error rate must include errors that would have happened if it were not for the presence of human intervention and it must be calculated over a reasonable distribution over all weather and road conditions that humans normally drive in. The cars must be able to go from arbitrary legal locations in the US to other arbitrary locations without errors or interventions, at least in theory. A “major mistake” is defined as a mistake that requires human intervention to correct, as otherwise the car would grossly violate a traffic law, crash into an object, prevent the car from getting to its intended destination, or have the potential to put human life into serious jeopardy. |

Some clarifications

For each benchmark, we will exclude results that employed some degree of cheating. Cheating includes cases in which the rules specified in the original benchmark paper are not followed, or cases where some of the test examples were included in the training set.
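As a rough illustration of the compute-cost criterion in the table above, the following sketch chains the steps together: convert a FLOP estimate into device-hours, price those hours at a cloud rate, and apply the 30%-per-year fallback adjustment for older runs. Several details are assumptions rather than terms of the bet: the `utilization` parameter, the reading that the adjustment scales the cost up by the full time elapsed since training (so that it approximates prices "at the time"), and treating "$1bn" as an at-least threshold. All function and parameter names are hypothetical.

```python
SECONDS_PER_HOUR = 3600


def training_cost_usd(total_flop: float, device_flop_per_s: float,
                      utilization: float, usd_per_device_hour: float) -> float:
    """Cloud cost of a training run at a given hourly device price.

    utilization is an assumed fraction of peak throughput actually achieved.
    """
    device_hours = total_flop / (device_flop_per_s * utilization) / SECONDS_PER_HOUR
    return device_hours * usd_per_device_hour


def adjust_for_price_decline(cost_at_resolution_prices: float,
                             years_since_training: float,
                             annual_decline: float = 0.30,
                             threshold_years: float = 2.0) -> float:
    """Scale a cost measured at resolution-time prices back to training-time prices.

    Assumed reading of the rule: no adjustment within threshold_years; beyond
    that, prices are taken to have fallen by annual_decline per year.
    """
    if years_since_training <= threshold_years:
        return cost_at_resolution_prices
    return cost_at_resolution_prices / (1.0 - annual_decline) ** years_since_training


def triggers_compute_condition(total_flop: float, cost_usd: float) -> bool:
    """EITHER more than 10^30 FLOP OR at least $1bn (threshold form assumed)."""
    return total_flop > 1e30 or cost_usd >= 1e9
```

The sketch covers only the fixed 30% fallback. The criterion also allows a credible empirical estimate of price-performance declines, whichever is more favorable to the counterparty, and that comparison is not modeled here.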

120 comments

Comments sorted by top scores.

comment by Nathan Helm-Burger (nathan-helm-burger) · 2022-04-09T22:30:02.772Z · LW(p) · GW(p)

Ok, I take your bet for 2030. I win, you give me $1000. You win, I give you $3000. Want to propose an arbiter? (since someone else also took the bet, I'll get just half the bet, their $500 vs my $1500)

Replies from: michael_dello, nathan-helm-burger

↑ comment by michael_dello · 2022-04-10T22:20:12.347Z · LW(p) · GW(p)

Shouldn't it be: 'They pay you $1,000 now, and in 3 years, you pay them back plus $3,000' (as per Bryan Caplan's discussion in the latest 80k podcast episode)? The money won't do anyone much good if they receive it in a FOOM scenario.

Replies from: nathan-helm-burger

↑ comment by Nathan Helm-Burger (nathan-helm-burger) · 2022-04-11T17:18:42.690Z · LW(p) · GW(p)

Since my goal is to convince people that I take my beliefs seriously, and this amount of money is not actually going to change much about how I conduct the next three years of my life, I'm not worried about the details. Also, I'm not betting that there will be a FOOM scenario by the conclusion of the bet, just that we'll have made frightening progress towards one.

↑ comment by Nathan Helm-Burger (nathan-helm-burger) · 2022-07-01T15:59:03.243Z · LW(p) · GW(p)

Related: just for your amusement, here's a link to a bet about AI timelines that I won, but which I incorrectly believed that I would not win before the end of 2022. In other words, evidence of me being surprised by the high rate of AI progress... Interesting, eh? https://manifold.markets/MatthewBarnett/will-a-machine-learning-model-score-f0d93ee0119b#pzSuEYIhRiXoIFSjPQz2

comment by Matthew Barnett (matthew-barnett) · 2022-04-11T17:59:15.538Z · LW(p) · GW(p)

For people reading this post in the future, I'd like to note that I have written a somewhat long comment describing my mixed feelings about this post, since posting it. You can find my comment here [LW(p) · GW(p)]. But I'll also repeat it below for completeness:

The first thing I'd like to say is that we intended this post as a bet, and only a bet, and yet some people seem to be treating it as if we had made an argument. Personally, I am uncomfortable with the suggestion that our post was "misleading" because we did not present an affirmative case for our views.

I agree that LessWrong culture benefits from arguments as well as bets, but it seems a bit weird to demand that every bet come with an argument attached. A norm that all bets must come with arguments would seem to substantially dampen the incentives to make bets, because then each time people must spend what will likely be many hours painstakingly outlining their views on the subject.

That said, I do want to reply to people who say that our post was misleading on other grounds. Some said that we should have made different bets, or at different odds. In response, I can only say that coming up with good concrete bets about AI timelines is actually really damn hard, and so if you wish to come up with alternatives, you can be my guest. I tried my best, at least.

More people said that our bet was misleading since it would seem that we too (Tamay and I) implicitly believe in short timelines, because our bets amounted to the claim that AGI has a substantial chance of arriving in 4-8 years. However, I do not think this is true.

The type of AGI that we should be worried about is one that is capable of fundamentally transforming the world. More narrowly, and to generalize a bit, fast takeoff folks believe that we will only need a minimal seed AI that is capable of rewriting its source code, and recursively self-improving into superintelligence. Slow takeoff folks believe that we will need something capable of automating a wide range of labor.

Given the fast takeoff view, it is totally understandable to think that our bets imply a short timeline. However, (and I'm only speaking for myself here) I don't believe in a fast takeoff. I think there's a huge gap between AI doing well on a handful of benchmarks, and AI fundamentally re-shaping the economy. At the very least, AI has been doing well on a ton of benchmarks since 2012. Each time AI excels in one benchmark, a new one is usually invented that's a bit more tough, and hopefully gets us a little closer to measuring what we actually mean by general intelligence.

In the near-future, I hope to create a much longer and more nuanced post expanding on my thoughts on this subject, hopefully making it clear that I do care a lot about making real epistemic progress here. I'm not just trying to signal that I'm a calm and arrogant long-timelines guy who raises his nose at the panicky short timelines people, though I understand how my recent post could have given that impression.

Replies from: rhollerith_dot_com

↑ comment by RHollerith (rhollerith_dot_com) · 2022-04-19T00:21:18.410Z · LW(p) · GW(p)

It would make me sad if people on this site felt a need to apologize for "putting their money where their mouth is" (i.e., for offering to bet).

comment by habryka (habryka4) · 2022-04-09T22:29:30.006Z · LW(p) · GW(p)

I might disagree with you epistemically but... what do I have to win if AGI happens before 2030 and I win the bet? I don't think either of us will still care about our bet after that happens. Doesn't this just run into all the standard problems of predicting doomsday?

Edit: Oh, I also just saw you meant 3:1 odds in your favor. That's... weird, since it doesn't even disagree with the OP? Why would the OP take the bet that you propose, given they only assign ~30% probability to this outcome?

Replies from: JohnBuridan, matthew-barnett, interstice, Tamay

↑ comment by SebastianG (JohnBuridan) · 2022-04-10T12:52:46.607Z · LW(p) · GW(p)

Bryan Caplan and Eliezer are resolving their Doomsday bet by having Bryan Caplan pay Eliezer upfront and if the doomsday scenario does not happen by Jan 1 2030, Eliezer will give Bryan his payout. It's a pretty method for betting on doomsday.

↑ comment by Matthew Barnett (matthew-barnett) · 2022-04-10T02:15:58.478Z · LW(p) · GW(p)

Why would the OP take the bet that you propose, given they only assign ~30% probability to this outcome?

The conditions we offered fall well short of AGI, so it seems reasonable that the author would assign way more than 30% to this outcome. Furthermore, we offered a 1:1 bet for January 1st 2026.

Edit: The OP also says, "Crying wolf isn't really a thing here; the societal impact of these capabilities is undeniable and you will not lose credibility even if 3 years from now these systems haven't yet FOOMed, because the big changes will be obvious and you'll have predicted that right." which seems to imply that we will likely obtain very impressive capabilities within 3 years. In my opinion, this statement is directly challenged by our 1:1 bet.

Replies from: habryka4, not-relevant

↑ comment by habryka (habryka4) · 2022-04-10T03:33:02.993Z · LW(p) · GW(p)

Hmm, I guess it's just really not obvious that your proposed bet here disagrees with the OP. I think I roughly believe both the things that the OP says, and also wouldn't take your bet. It still feels like a fine bet to offer, but I am confused why it's phrased so much in contrast to the OP. If you are confident we are not going to see large dangerous capability gains in the next 5-7 years, I think I would much prefer you make a bet that tries to offer corresponding odds and the specific capability gains (though that runs a bit into "betting on doomsday" problems)

Replies from: matthew-barnett

↑ comment by Matthew Barnett (matthew-barnett) · 2022-04-10T03:40:35.215Z · LW(p) · GW(p)

If you are confident we are not going to see large dangerous capability gains in the next 5-7 years, I think I would much prefer you make a bet that tries to offer corresponding odds and the specific capability gains (though that runs a bit into "betting on doomsday" problems)

What are the "specific capability gains" you are referring to? I don't see any specific claims in the post we are responding to. By contrast, we listed 7 concrete tasks that appear trivial to perform if we are at AGI-levels of capability, and very easy if we are only a few steps from AGI. I'd be genuinely baffled if you think AGI can be imminent at the same time we still don't have good self-driving cars, robots that can wash dishes, or AI capable of doing well on mathematics word problems. This view would seem to imply that we will get AGI pretty much out of nowhere.

Replies from: ChristianKl, habryka4, Veedrac, Mitchell_Porter

↑ comment by ChristianKl · 2022-04-10T23:35:08.723Z · LW(p) · GW(p)

An AGI might become a dictator in every country on earth while still not being able to wash dishes or make errors when it comes to driving 100,000 miles. Physical coordination is not required.

It's not clear to me what practical implications it has to measure the math abilities of models under a no-calculator rule. If someone were to build an AGI, it would make sense for the AGI to be able to access subprocesses such as calculators.

Replies from: Aidan O'Gara

↑ comment by aog (Aidan O'Gara) · 2022-04-11T21:40:57.003Z · LW(p) · GW(p)

An AGI might become a dictator in every country on earth while still not being able to wash dishes or make errors when it comes to driving 100,000 miles. Physical coordination is not required.

How would you expect an AI to take over the world without physical capacity? Attacking financial systems, cybersecurity networks, and computer-operated weapons systems all seem possible from an AI that can simply operate a computer. Is that your vision of an AI takeover, or are there other specific dangerous capabilities you'd like the research community to ensure that AI does not attain?

↑ comment by habryka (habryka4) · 2022-04-12T18:50:36.635Z · LW(p) · GW(p)

I'd be genuinely baffled if you think AGI can be imminent at the same time we still don't have good self-driving cars, robots that can wash dishes, or AI capable of doing well on mathematics word problems. This view would seem to imply that we will get AGI pretty much out of nowhere.

I mean, Eliezer has commented on this position extensively in the AI dialogues. I do think we would likely see AI doing well on mathematics word-problems, but the other two are definitely not things I obviously expect to see before the end (though I do think it's more likely than not that we would see them).

Zooming out a bit though, I am confused what you are overall responding to with your comment. The thing I am critiquing is not about the "specific capability gains". It's just that you are responding to a post saying X, with a bet at odds Y that do not contradict X, and indeed where I think it's a reasonable common belief to hold both X and Y.

Like, if someone says "it's ~30% likely" and you say "That seems wrong, I am offering you a bet that you should only take if you have >70% probability on a related hypothesis" then... the obvious response is "but I said I only assign ~30% to this hypothesis, I agree that I assign somewhat more to your weaker hypothesis, but it's not at all obvious I should assign 70% to it, that's a big jump". That's roughly where I am at.

Replies from: not-relevant

↑ comment by Not Relevant (not-relevant) · 2022-04-27T12:11:13.554Z · LW(p) · GW(p)

As the previous OP, to chime in, the specific mechanism by which self-driving cars don’t work but FOOM does is extremely high-capability consequentialist software engineering plus not-much-better-than-today world modeling.

Self-driving and manipulation require incredible-quality video/world modeling, and a bunch of control problems that seem unrelated to symbolic intelligence. Re: solving math problems, that seems way more likely to be a thing such a system could do; the only uncertainty is whether someone invests the time, given it’s not profitable.

↑ comment by Mitchell_Porter · 2022-04-10T15:00:27.462Z · LW(p) · GW(p)

Do you have any suggestions for what to do when the entire human race is economically obsolete and definitively no longer has any control over its destiny? Your post gives no indication of what you would do or recommend, when your benchmarks are actually surpassed.

↑ comment by Not Relevant (not-relevant) · 2022-04-10T08:41:18.093Z · LW(p) · GW(p)

Just for the record, I regret that statement, independent of making a bet or not.

↑ comment by interstice · 2022-04-09T22:35:22.172Z · LW(p) · GW(p)

If you expect the apocalypse to happen by a given date, you should rationally value having money then much less than the market (if the market doesn't expect the apocalypse). So you can simulate a bet by having an apocalypse-expecter take a high-interest-rate loan from an apocalypse-denier, paying the loan back (if the world survives) at the date of the purported apocalypse (h/t daniel filan).
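The loan structure described above can be sketched numerically. The following is a simplified model, not anything proposed in the thread: it assumes both parties are risk-neutral, discount at the same market rate, face no default risk, and that the borrower treats money in the apocalypse branch as worthless. The function and parameter names are hypothetical.

```python
def acceptable_repayment_range(loan: float, years: float, market_rate: float,
                               p_doom_borrower: float, p_doom_lender: float):
    """Range of lump-sum repayments (due at the end date, only if the world
    survives) that both sides would accept under the stated assumptions.

    Lender accepts if expected repayment beats investing the loan at the
    market rate:      (1 - p_lender) * R >= loan * growth
    Borrower accepts if expected repayment, discounted to today, costs no
    more than the cash received now:
                      (1 - p_borrower) * R / growth <= loan
    """
    for p in (p_doom_borrower, p_doom_lender):
        if not 0.0 <= p < 1.0:
            raise ValueError("probabilities must be in [0, 1)")
    growth = (1.0 + market_rate) ** years
    lender_minimum = loan * growth / (1.0 - p_doom_lender)
    borrower_maximum = loan * growth / (1.0 - p_doom_borrower)
    return lender_minimum, borrower_maximum


# Illustrative inputs only: none of these numbers come from the thread.
low, high = acceptable_repayment_range(
    loan=1000, years=8, market_rate=0.05,
    p_doom_borrower=0.30, p_doom_lender=0.05,
)
print(f"Mutually acceptable repayment: between {low:.0f} and {high:.0f}")
```

A mutually acceptable repayment exists whenever the borrower's probability of apocalypse exceeds the lender's, which is the sense in which the loan "simulates" a bet between them.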

Replies from: MichaelStJules

↑ comment by MichaelStJules · 2022-04-09T22:44:37.150Z · LW(p) · GW(p)

Couldn't they just get lower interest rate loans elsewhere?

Or, interest doesn't start until the bet outcome date passes? I'd give the apocalypse-expecter $1000 now, and they pay me back with interest when the outcome date passes, with no interest payments before then.

For those wanting to lend out money to gain interest on and use that money for EA causes, this might be useful:

https://founderspledge.com/stories/investing-to-give

Replies from: Radamantis, interstice

↑ comment by NunoSempere (Radamantis) · 2022-04-09T23:02:13.673Z · LW(p) · GW(p)

Couldn't they just get lower interest rate loans elsewhere?

This doesn't mean necessarily that you shouldn't take the bet, but maybe that you should also take the loan.

Replies from: MichaelStJules

↑ comment by MichaelStJules · 2022-04-09T23:07:48.905Z · LW(p) · GW(p)

Ya, I was thinking this, too, but they could possibly get a lot of loans or much larger loans at lower interest rates, and it's not clear when they would start looking at this one as the next best to pursue. Maybe it's more time-efficient (more loaned money per hour spent setting up and dealing with) to take this kind of AI-bet loan, though, but $1000 is very low.

↑ comment by interstice · 2022-04-09T22:53:31.879Z · LW(p) · GW(p)

Or, interest doesn’t start until the bet outcome date passes?

Yeah, this is what I had in mind. There wouldn't be interest payments until the date of the apocalypse.

↑ comment by Tamay · 2022-04-09T22:42:39.378Z · LW(p) · GW(p)

We also propose betting using a mechanism that mitigates some of these issues:

Since we recognize that betting incentives can be weak over long time-horizons, we are also offering the option of employing Tamay’s recently described betting procedure [LW(p) · GW(p)] in which we would enter a series of repeated 2-year contracts until the resolution date.

Replies from: matthew-barnett

↑ comment by Matthew Barnett (matthew-barnett) · 2022-04-09T22:45:57.973Z · LW(p) · GW(p)

Also, we give odds of 1:1 if anyone wants to take us up on the bet for January 1st 2026.

comment by Not Relevant (not-relevant) · 2022-04-10T08:44:38.898Z · LW(p) · GW(p)

I want to state here that I regret my previous post, and have retracted it, primarily because it was not constructive and I think this post does an excellent job of calling out what a specific constructive dialogue looks like.

Of the above, the only ones that seem likely to me in the world I was imagining are MMLU and APPS - I'm much less familiar with the two math competitions, which seem like the other plausible ones.

I think I'll take you up on the 2026 version at 1:1 odds.

Replies from: ricardo-meneghin-filho, yitz

↑ comment by Ricardo Meneghin (ricardo-meneghin-filho) · 2022-04-10T15:55:29.327Z · LW(p) · GW(p)

Is it really constructive? This post presents no arguments for why they believe what they believe, which should do very little to convince others of long timelines. Moreover, it proposes a bet from an asymmetric position that is very undesirable for short-timeliners to take, since money is worth nothing to the dead, and even in the weird world where they win the bet and are still alive to settle it, they have locked their money for 8 years for a measly 33% return - less than expected by simply, say, putting it in index funds. Believing in longer timelines gives you the privilege of signalling epistemic virtue by offering bets like this from a calm, unbothered position, while people sounding the alarm sound desperate and hasty, but there is no point in being calm when a meteor is coming towards you, and we are much better served by using our money to do something now rather than locking it in a long term bet.

Not only that, the decision from mods to push this to the frontpage is questionable since it served as a karma boost to this post that the other didn't have, possibly giving the impression of higher support than it actually has.
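The "33% return over 8 years" figure above can be converted into an annualized rate for comparison with other uses of the money. This is a minimal sketch assuming the counterparty's stake is locked up for the full 8 years and compounds annually; the function name is illustrative.

```python
def annualized_return(total_return: float, years: float) -> float:
    """Constant yearly rate r such that (1 + r) ** years == 1 + total_return."""
    if years <= 0 or total_return <= -1:
        raise ValueError("years must be > 0 and total_return must be > -1")
    return (1.0 + total_return) ** (1.0 / years) - 1.0


# Risking $3000 to win $1000 is a total return of 1/3 if the bet is won.
rate = annualized_return(total_return=1 / 3, years=8)
print(f"Annualized return if the bet is won: {rate:.2%}")
```

This works out to roughly 3.7% per year conditional on winning. Whether that is below an index fund depends on the return one assumes for the fund, which the comment does not specify.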

Replies from: Jotto999

↑ comment by Jotto999 · 2022-04-10T18:07:38.318Z · LW(p) · GW(p)

On the reduced value of money given catastrophe: that could be used in a betting circumstance. Someone giving higher-than-market estimates could take a high-interest "loan" from the person giving lower estimates of catastrophe. This can be rational and efficient for both of them, and help "price in" the implied probability of doom.

Replies from: ricardo-meneghin-filho

↑ comment by Ricardo Meneghin (ricardo-meneghin-filho) · 2022-04-10T19:59:07.045Z · LW(p) · GW(p)

Well, if OP is willing then I'd love to take a high-interest loan from him to be paid back in 2030.

↑ comment by Yitz (yitz) · 2022-04-10T16:23:30.201Z · LW(p) · GW(p)

By the way, just in case you didn’t know, you can edit your original post with a disclaimer at the beginning or something, if you want to make clear how your opinions have changed.

Replies from: not-relevant

↑ comment by Not Relevant (not-relevant) · 2022-04-10T16:59:44.391Z · LW(p) · GW(p)

Already done.

comment by Matthew Barnett (matthew-barnett) · 2023-03-14T02:10:24.305Z · LW(p) · GW(p)

A retrospective on this bet:

Having thought about each of these milestones more carefully, and having already updated towards short timelines [LW · GW] months ago, I think it was really bad in hindsight to make this bet, even on medium-to-long timeline views. Honestly, I'm surprised more people didn't want to bet us, since anyone familiar with the relevant benchmarks probably could have noticed that we were making quite poor predictions.

I'll explain what I mean by going through each of these milestones individually:

- "A model/ensemble of models achieves >80% on all tasks in the MMLU benchmark"

- The trend on this benchmark suggests that we will reach >90% performance within a few years. You can get 25% on this benchmark by guessing randomly (previously I thought it was 20%), so a score of 80% would not even indicate high competency at any given task.

- "A credible estimate reveals that an AI lab deployed EITHER >10^30 FLOPs OR hardware that would cost $1bn if purchased through competitive cloud computing vendors at the time on a training run to develop a single ML model (excluding autonomous driving efforts)"

- The trend was for compute to double every six months. Plugging in the relevant numbers reveals that we would lose this prediction easily if the trend was kept up for another 3.5 years.

- "A model/ensemble of models will achieve >90% on the MATH dataset using a no-calculator rule."

- Having looked at the dataset I estimate that about 80% of problems in the MATH benchmark are simple plug-and-chug problems that don't rely on sophisticated mathematical intuition. Therefore, getting above 90% requires only that models acquire basic competency on competition-level math.

- "A model/ensemble of models achieves >80% top-1 strict accuracy on competition-level problems on the APPS benchmark"

- DeepMind's paper for AlphaCode revealed that the APPS benchmark was pretty bad, since it was possible to write code that passed all the test cases without being correct. I missed this at the time.

- "A gold medal for the IMO Grand Challenge"

- As noted by Daniel Paleka [LW(p) · GW(p)], it seems that Paul Christiano may have exaggerated how difficult it is to obtain gold at the IMO (despite attending himself in 2008).

- "A robot that can, from beginning to end, reliably wash dishes, take them out of an ordinary dishwasher and stack them into a cabinet, without breaking any dishes, and at a comparable speed to humans (<120% the average time)"

- Unlike the other predictions, I still suspect we were fundamentally right about this one.

- "Tesla’s full-self-driving capability makes fewer than one major mistake per 100,000 miles"

- For this one, I'm not sure what I was thinking, since self-driving technology is already quite good. I think the tech has problems with reliability, but 100,000 miles is not actually very long of a distance. Most human drivers drive well over 100,000 miles in their lifetime, and I remember aiming for a bar that was something like "meets human-level performance". I think I just didn't realize what human-level driving looked like in concrete terms.

I'm quite shocked that I wasn't able to notice the poor reasoning behind almost every one of the predictions we made at the time. I guess it's good that I learned from this experience though.
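The compute-trend point in the retrospective above comes down to compounding. The sketch below shows how much growth a six-month doubling time implies over 3.5 years, and what starting level would be needed to cross each threshold in the bet. It does not assert what the largest training runs actually cost or used in 2022; those inputs are left to the reader, and the function names are illustrative.

```python
def growth_factor(years: float, doubling_time_years: float) -> float:
    """Multiplicative growth after `years` at a fixed doubling time."""
    return 2.0 ** (years / doubling_time_years)


def required_starting_level(threshold: float, years: float,
                            doubling_time_years: float) -> float:
    """Starting level that reaches `threshold` after `years` of doubling."""
    return threshold / growth_factor(years, doubling_time_years)


factor = growth_factor(years=3.5, doubling_time_years=0.5)  # 7 doublings

# Starting levels that would just reach each threshold in the bet.
start_cost_usd = required_starting_level(1e9, years=3.5, doubling_time_years=0.5)
start_flop = required_starting_level(1e30, years=3.5, doubling_time_years=0.5)

print(f"Growth over 3.5 years: {factor:.0f}x")
print(f"Starting cost needed to reach $1bn: ${start_cost_usd:,.0f}")
print(f"Starting compute needed to reach 1e30 FLOP: {start_flop:.3e}")
```

Seven doublings give a 128x increase, so a run costing about $7.8 million at the start of the period would reach $1bn by the end if the trend held. The FLOP threshold would need a starting run of about 7.8 × 10^27 FLOP.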

Replies from: M. Y. Zuo, stephen-mcaleese, stephen-mcaleese, None

↑ comment by M. Y. Zuo · 2023-03-14T03:26:55.393Z · LW(p) · GW(p)

Thanks for posting this retrospective.

Considering your terms were so in favour of the bet takers, I was also surprised last summer when so few actually committed. Especially considering there were dozens, if not hundreds, of LW members with short timelines who saw your original post.

Perhaps that says something about actual beliefs vs talked about beliefs?

Replies from: matthew-barnett

↑ comment by Matthew Barnett (matthew-barnett) · 2023-03-14T07:20:34.992Z · LW(p) · GW(p)

Well, to be fair, I don't think many people realized how weak some of these benchmarks were. It is hard to tell without digging into the details, which I regrettably did not either.

↑ comment by Stephen McAleese (stephen-mcaleese) · 2023-03-18T00:03:20.006Z · LW(p) · GW(p)

"Having thought about each of these milestones more carefully, and having already updated towards short timelines [LW · GW] months ago"

You said that you updated and shortened your median timeline to 2047 and mode to 2035. But it seems to me that you need to shorten your timelines again.

The post It's time for EA leadership to pull the short-timelines fire alarm [LW · GW] says:

"it seems very possible (>30%) that we are now in the crunch-time section of a short-timelines world, and that we have 3-7 years until Moore's law and organizational prioritization put these systems at extremely dangerous levels of capability."

It seems that the purpose of the bet was to test this hypothesis:

"we are offering to bet up to $1000 against the idea that we are in the “crunch-time section of a short-timelines”"

My understanding is that if AI progress occurred slowly and no more than one of the advancements listed were made by 2026-01-01 then this short timelines hypothesis would be proven false and could then be ignored.

However, the bet was conceded on 2023-03-16 which is much earlier than the deadline and therefore the bet failed to prove the hypothesis false.

It seems to me that the rational action is to now update toward believing that this short timelines hypothesis is true and 3-7 years from 2022 is 2025-2029 which is substantially earlier than 2047.

Replies from: matthew-barnett↑ comment by Matthew Barnett (matthew-barnett) · 2023-05-28T10:52:29.604Z · LW(p) · GW(p)

It seems to me that the rational action is to now update toward believing that this short timelines hypothesis is true and 3-7 years from 2022 is 2025-2029 which is substantially earlier than 2047.

I don't really agree, although it might come down to what you mean. When some people talk about their AGI timelines they often mean something much weaker than what I'm imagining, which can lead to significant confusion.

If your bar for AGI was "score very highly on college exams" then my median "AGI timelines" dropped from something like 2030 to 2025 over the last 2 years. Whereas if your bar was more like "radically transform the human condition", I went from ~2070 to 2047.

I just see a lot of ways that we could have very impressive software programs and yet it still takes a lot of time to fundamentally transform the human condition, for example because of regulation, or because we experience setbacks due to war. My fundamental model hasn't changed here, although I became substantially more impressed with current tech than I used to be.

(Actually, I think there's a good chance that there will be no major delays at all and the human condition will be radically transformed some time in the 2030s. But because of the long list of possible delays, my overall distribution is skewed right. This means that even though my median is 2047, my mode is like 2034.)
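To make the gap between median and mode concrete, here is a toy sketch. It assumes, purely for illustration, that the number of years until the transformation follows a lognormal distribution measured from 2023; the distribution family, the start year, and the fitted parameters are assumptions of this sketch and are not stated anywhere in the comment.

```python
import math

# Hypothetical setup: years until transformation ~ LogNormal(mu, sigma^2),
# measured from an assumed start year. This is NOT the commenter's actual model.
START_YEAR = 2023
TARGET_MEDIAN = 2047  # median quoted in the comment
TARGET_MODE = 2034    # mode quoted in the comment

# For a lognormal distribution: median = exp(mu), mode = exp(mu - sigma^2).
mu = math.log(TARGET_MEDIAN - START_YEAR)
sigma_sq = mu - math.log(TARGET_MODE - START_YEAR)

median_year = START_YEAR + math.exp(mu)
mode_year = START_YEAR + math.exp(mu - sigma_sq)
mean_year = START_YEAR + math.exp(mu + sigma_sq / 2)  # pulled right by the long tail

print(f"sigma^2 = {sigma_sq:.2f}")
print(f"mode    = {mode_year:.0f}")
print(f"median  = {median_year:.0f}")
print(f"mean    = {mean_year:.0f}")
```

Under these assumptions, any right-skewed shape gives the same ordering: the single most likely year comes first, the median later, and the mean later still.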

↑ comment by Stephen McAleese (stephen-mcaleese) · 2023-03-17T20:34:28.779Z · LW(p) · GW(p)

I don't agree with the first point:

"a score of 80% would not even indicate high competency at any given task"

Although the MMLU task is fairly straightforward given that there are only 4 options to choose from (25% accuracy for random choices) and experts typically score about 90%, getting 80% accuracy still seems quite difficult for a human given that average human raters only score about 35%. Also, GPT-3 only scores about 45% (GPT-3 fine-tuned still only scores 54%), and GPT-2 scores just 32% even when fine-tuned.
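One way to compare the figures in the paragraph above is to rescale each accuracy so that random guessing maps to 0 and a perfect score maps to 1. The sketch below does only that arithmetic; the accuracies are the approximate numbers quoted in this comment, and the rescaling is an illustrative choice rather than anything defined by the benchmark.

```python
# Accuracy figures (in %) as quoted in the comment; all approximate.
CHANCE = 25.0  # four answer options, so random guessing gets 1 in 4

scores = {
    "GPT-2 (fine-tuned)": 32.0,
    "average human rater": 35.0,
    "GPT-3": 45.0,
    "GPT-3 (fine-tuned)": 54.0,
    "bet threshold": 80.0,
    "human expert": 90.0,
}

def above_chance(accuracy: float, chance: float = CHANCE) -> float:
    """Rescale accuracy so that chance maps to 0 and a perfect score to 1."""
    return (accuracy - chance) / (100.0 - chance)

for name, acc in scores.items():
    print(f"{name:22s} {acc:5.1f}% -> {above_chance(acc):.2f} of the way from chance to perfect")
```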

One of my recent posts [LW · GW] has a nice chart showing different levels of MMLU performance.

Extract from the abstract of the paper (2021):

"To attain high accuracy on this test, models must possess extensive world knowledge and problem solving ability. We find that while most recent models have near random-chance accuracy, the very largest GPT-3 model improves over random chance by almost 20 percentage points on average."

comment by Chris_Leong · 2022-04-09T23:29:39.030Z · LW(p) · GW(p)

I guess I'd be curious about your reasons for thinking that timelines are longer.

comment by Evan R. Murphy · 2022-04-09T22:57:15.232Z · LW(p) · GW(p)

I am also willing to take your bet for 2030.

I would propose one additional condition: If there is evidence of a deliberate or coordinated slowdown on AGI development by the major labs, then the bet is voided. I don't expect there will be such a slowdown, but I'd rather not be invested in it not happening.

Replies from: carl-feynman↑ comment by Carl Feynman (carl-feynman) · 2023-03-15T17:59:45.733Z · LW(p) · GW(p)

The recent announcement that OpenAI had GPT-4 since last August, but spent the intervening time evaluating it, instead of releasing it, constitutes a "deliberate slowdown" by a "major lab". Do you require that multiple labs slow down before the bet is voided?

Replies from: Evan R. Murphy↑ comment by Evan R. Murphy · 2023-03-17T01:38:18.648Z · LW(p) · GW(p)

Hmm good question. The OpenAI GPT-4 case is complicated in my mind. It kind of looks to me like their approach was:

- Move really fast to develop a next-gen model

- Take some months to study, test and tweak the model before releasing it

Since it's fast and slow together, I'm confused about whether it constitutes a deliberate slowdown. I'm curious about your and other people's takes.

comment by Towards_Keeperhood (Simon Skade) · 2022-04-11T00:24:34.242Z · LW(p) · GW(p)

I think this post is epistemically weak (which does not mean I disagree with you):

- Your post pushes the claim that “It's time for EA leadership to pull the short-timelines fire alarm.” wouldn't be wise. Problems in the discourse: (1) "pulling the short-timelines fire alarm" isn't well-defined in the first place, (2) there is a huge inferential gap between "AGI won't come before 2030" and "EA shouldn't pull the short-timelines fire alarm" (which could mean something like: EA should start planning a Manhattan project for aligning AGI in the next few years), and (3) your statement "we are concerned about a view of the type expounded in the post causing EA leadership to try something hasty and ill-considered", which slightly addresses that inferential gap, is just a bad rhetorical method where you interpret what the other person said in a very extreme and bad way, although they didn't actually mean that, and you are definitely not seriously considering the pros and cons of taking more initiative. (Though of course it's not really clear what "taking more initiative" means, and critiquing the other post (which IMO was epistemically very bad) would be totally right.)

- You're not giving a reason why you think timelines aren't that short, only saying you believe it enough to bet on it. IMO, simply saying "the >30% 3-7 years claim is compared to current estimates of many smart people an extraordinary claim that requires an extraordinary burden of proof, which isn't provided" would have been better.

- Even if not explicitly or even if not endorsed by you, your post implicitly promotes the statement "EA leadership doesn't need to shorten its timelines". I'm not at all confident about this, but it seems to me like EA leadership acts as if we have pretty long timelines, significantly longer than your bets would imply. (The way the post is written, you should have at least explicitly pointed out that this post doesn't imply that EA has short enough timelines.)

- AGI timelines are so difficult to predict that prediction markets might be extremely outperformed by a few people with very deep models about the alignment problem, like Eliezer Yudkowsky or Paul Christiano, so even if we would take many such bets in the form of a prediction market, this wouldn't be strong evidence that our estimate is that good, or the estimate would be extremely uncertain.

(Not at all saying taking bets is bad, though the doom factor makes taking bets difficult indeed.)

It's not that there's anything wrong with posting such a post saying you're willing to bet, as long as you don't sell it as an argument why timelines aren't that short or even more downstream things like what EA leadership should do. What bothers me isn't that this post got posted, but that it and the post it is counterbalancing received so many upvotes. Lesswrong should be a place where good epistemics are very important, not where people cheer for their side by upvoting everything that supports their own opinion.

Replies from: Veedrac, matthew-barnett↑ comment by Veedrac · 2022-04-11T13:37:39.879Z · LW(p) · GW(p)

I share your opinion that the post is misleading. Adding to the list,

- Bets don't pay out until you win them, and this includes in epistemic credit, but we need to realize we are in short timelines before they happen. If they are to lose this bet, we wouldn't learn from it until it is dangerously late.

- There are market arguments to update from betting markets ahead of time, but a fair few people have accepted the bet, so that does not transparently help the authors' case.

- 1:1 odds in 2026 on human-expert MMLU performance, $1B models, >90% MATH, >80% APPS top-1, IMO Gold Medal, or human-like robot dexterity is a short timeline. The only criterion that doesn't seem to me to support short timelines at 1:1 odds is Tesla FSD, and some people might disagree.

↑ comment by Matthew Barnett (matthew-barnett) · 2022-04-11T17:20:17.989Z · LW(p) · GW(p)

1:1 odds in 2026 on human-expert MMLU performance, $1B models, >90% MATH, >80% APPS top-1, IMO Gold Medal, or human-like robot dexterity is a short timeline.

I disagree. I think each of these benchmarks will be surpassed well before we are at AGI-levels of capability. That said, I agree that the post was insufficient in justifying why we think this bet is a reasonable reply to the OP. I hope in the near-term future to write a longer, more personal post that expands on some of my reasoning.

The bet itself was merely a public statement to the effect of "if people are saying these radical things, why don't they put their money where their mouths are?" I don't think such statements need to have long arguments attached to them. But, I can totally see why people were left confused.

Replies from: Veedrac↑ comment by Veedrac · 2022-04-11T20:23:57.378Z · LW(p) · GW(p)

I appreciate that you changed the title, and think this makes the post a lot more agreeable. It is totally reasonable to be making bets without having to justify them, just as long as the making of a bet is not mistaken to be more evidence than its associated sustained market movement.

I think each of these benchmarks will be surpassed well before we are at AGI-levels of capability.

Solving any of these tasks in a non-gamed manner just 14 years after AlexNet might not be at the point of AGI, or at least I can envision a future consistent with it coming prior, but it is significant evidence that AGI is not too many years out. I can still just about imagine today that neural networks might hit some wall that ultimately limits their understanding, but this point has to come prior to neural networks showing that they are almost fully general reasoners with the right backpropagation signal (it is after all the backpropagation that is capable of learning almost arbitrary tasks with almost no task-specialization). An alarm needs to precede alignment catastrophe by long enough that you have time to do something about it; it isn't much use if it is only there to tell you how you are going to die.

Bootstrapping is often painted as a model looking at its own code, thinking really hard, and writing better code that it knows to be better, but this is an extremely strong version of bootstrapping and you don't need to come anywhere close to these capabilities in order to start worrying about concrete dangers. I wrote a post that gave an example of a minimum viable FOOM [LW · GW], but that is neither the only angle from which it is possible to get there, nor the earliest level of capability where I think things will start breaking. It is worth remembering that evolution optimized for humanity from proto-humans that could not be given IMO Gold Medal questions and be expected to solve them. Evolution isn't intelligent at all, so it certainly is not the case that you need human-level intelligence before you can optimize on intelligence.

You may PM me for a small optional point I don't want to make in public.

-

E: A more aggressive way of phrasing this is to challenge, why can't this work, and concretely, what specific capability do you think is missing for machine intelligence to start doing transformative things like AI research? If a machine is grounded in language and other sensory media, and is also capable of taking a novel mathematical question and inventing not just the answer but the method by which it is solved, why can't it apply that ability to other tasks that it is able to talk about? Many models have shown that reasoning abilities transfer, and that agents trained on general domains do similarly well across them.

↑ comment by Matthew Barnett (matthew-barnett) · 2022-04-11T17:25:13.950Z · LW(p) · GW(p)

It's not that there's anything wrong with posting such a post saying you're willing to bet, as long as you don't sell it as an argument why timelines aren't that short or even more downstream things like what EA leadership should do.

I don't agree that we sold our post as an argument for why timelines aren't short. Thus, I don't think this objection applies.

That said, I do agree that the initial post deserves a much longer and nuanced response. While I don't think it's fair to demand that every response be nuanced and long, I do agree that our post could have been a bit better in responding to the object-level claims. For what it's worth, I do hope to write a far more nuanced and substantive take on these issues in the relative near-term.

Replies from: Simon Skade↑ comment by Towards_Keeperhood (Simon Skade) · 2022-04-11T19:18:16.706Z · LW(p) · GW(p)

I don't agree that we sold our post as an argument for why timelines are short. Thus, I don't think this objection applies.

You probably mean "why timelines aren't short". I didn't think you explicitly thought it was an argument against short timelines, but because the post got so many upvotes I'm worried that many people implicitly perceive it as such, and the way the post is written contributes to that. But great that you changed the title, that already makes it a lot better!

That said, I do agree that the initial post deserves a much longer and nuanced response.

I don't really think the initial post deserves a nuanced response. (My response would have been "the >30% 3-7 years claim is compared to current estimates of many smart people an extraordinary claim that requires an extraordinary burden of proof, which isn't provided".)

But I do think that the community (and especially EA leadership) should probably carefully reevaluate timelines (considering arguments of short timelines and how good they are), so great if you are planning to do a careful analysis of timeline arguments!

comment by Matthew Barnett (matthew-barnett) · 2022-07-04T22:05:11.954Z · LW(p) · GW(p)

Personal update:

The recent breakthrough on the MATH dataset has made me update substantially in the direction of thinking I’ll lose the bet. I’m now at about 50% chance of winning by 2026, and 25% chance of winning by 2030.

That said, I want others to know that, for the record, my update mostly reflects that I now think MATH is a relatively easy dataset, and my overall AGI median only advanced by a few years.

Previously, I relied quite heavily on statements that people had made about MATH, including the authors of the original paper, who indicated it was a difficult dataset full of high school “competition-level” math word problems. However, two days ago I downloaded the dataset and took a look at the problems myself (as opposed to the cherry-picked problems I saw people blog about), and I now understand that a large chunk of the dataset includes simple plug-and-chug and evaluation problems—some of them so simple that Wolfram Alpha can perform them. What’s more: the previous state of the art model, which was touted as achieving only 6.9%, was simply a fine-tuned version of GPT-2 (they didn’t fine-tune anything larger), which makes it very unsurprising that the prior SOTA was so low.

I feel a little embarrassed for not realizing all of this—and I’m certainly still going to pay out to people who bet against me, if I lose—but I want people to know that my main takeaway so far is that the MATH dataset turned out to be surprisingly easy, not that large language models turned out to be surprisingly good at math.

Replies from: Bjartur Tómas, matthew-barnett↑ comment by Tomás B. (Bjartur Tómas) · 2022-07-04T22:15:04.809Z · LW(p) · GW(p)

I agree this is more of an update about what existing models were already capable of. I disagree that this means someone in your position should not be updating to significantly lower timelines. Even removing MATH, I'm pretty confident I will "win". If you want to replace it with something that more represents what you thought MATH did, I will probably take this second bet at the same odds.

Replies from: matthew-barnett↑ comment by Matthew Barnett (matthew-barnett) · 2022-07-04T22:18:28.490Z · LW(p) · GW(p)

I agree this is more of an update about what existing models were already capable of.

I’m confused. I am not saying that, so I’m not sure which part of my comment you’re agreeing with.

If you want to replace it with something that more represents what you thought MATH did, I will probably take this second bet at the same odds.

If I found something, I’d be sympathetic to taking another bet. Unfortunately I don’t know of any other good datasets.

Replies from: Bjartur Tómas↑ comment by Tomás B. (Bjartur Tómas) · 2022-07-04T22:21:46.741Z · LW(p) · GW(p)

The part about the previous SOTA being fine-tuned GPT-2, which means a lot of MATH performance was latent in LMs that existed at the time we made the bet. On top of this, the various prompting and data-cleaning changes strike me as revealing latent capacity.

Replies from: matthew-barnett↑ comment by Matthew Barnett (matthew-barnett) · 2022-07-04T22:24:30.479Z · LW(p) · GW(p)

If I thought large language models were already capable of doing simple plug-and-chug problems, I’m not sure why I’d update much on this development. There were some slightly hard problems that the model was capable of doing, that Google highlighted in their paper (though they were cherry-picked)—and for that I did update by a bit (I said my timelines advanced by “a few years”).

Replies from: Bjartur Tómas↑ comment by Tomás B. (Bjartur Tómas) · 2022-07-04T22:39:20.383Z · LW(p) · GW(p)

>If I thought large language models were already capable of doing simple plug-and-chug problems, I’m not sure why I’d update much on this development.

I suppose I just have different intuitions on this. Let's just make a second bet. I imagine you can find another element for your list you will be comfortable adding - it doesn't necessarily have to be a dataset, just something in the same spirit as the other items in the list.

Replies from: matthew-barnett↑ comment by Matthew Barnett (matthew-barnett) · 2022-07-04T22:44:36.062Z · LW(p) · GW(p)

I think I’ll pass up an opportunity for a second bet for now. My mistake was being too careless in the first place—and I’m not currently too interested in doing a deeper dive into what might be a good replacement for MATH.

Replies from: MichaelStJules↑ comment by MichaelStJules · 2022-07-09T17:12:51.152Z · LW(p) · GW(p)

You could just drop MATH and make a bet at different odds on the remaining items.

↑ comment by Matthew Barnett (matthew-barnett) · 2022-08-03T21:29:59.217Z · LW(p) · GW(p)

One more personal update, which I hope will be final until the bet resolves:

I made quite a few mistakes while writing this bet. For example, I carelessly used 2022 dollars while crafting the inflation adjustment component of the second condition. These sorts of things made me update in the direction of thinking that making a good timelines bet is really, really hard.

And I'm a bit worried that people will use this bet to say that I was deeply wrong, and my credibility will blow up if I lose. Maybe I am deeply wrong, and maybe it's right that my credibility should blow up. But for the record, I never had a very high credence on winning -- just enough so that the bet seemed worth it.

Replies from: Bjartur Tómas↑ comment by Tomás B. (Bjartur Tómas) · 2022-10-24T15:02:38.234Z · LW(p) · GW(p)

I don’t think this will affect your credibility too much. You made a bet, which is virtuous. And you will note how few people were interested in taking it at the time.

comment by Kao (kao) · 2022-04-13T00:47:40.877Z · LW(p) · GW(p)

I'll happily emulate Matthew Barnett's and Tamay's bet for any interested counter-bettors, at pretty much any volume with substantially better odds (for you). I have a lot of vouches and am willing to use a middleman/mediator if necessary. The best way to contact me is on discord at PTB kao#2111

comment by Raemon · 2022-04-09T22:20:45.189Z · LW(p) · GW(p)

Mod note: there's some weirdness about this post being frontpage, and the post it's responding to being on personal blog. I'm not 100% sure of my preferred call, but, the previous post seemed to primarily be arguing a community-centric-political point, and this one seems more to be making a straightforward epistemic claim. (I haven't checked in with other moderators about their thoughts on either post)

Replies from: Benito↑ comment by Ben Pace (Benito) · 2022-04-10T00:37:39.083Z · LW(p) · GW(p)

I frontpaged it because I am very excited about bets on timelines/takeoff speeds. I do think the title and framing about what EA leadership should do is not really a good fit for frontpage, and (for frontpage) I would much prefer a post title that's something like "A Concrete Bet Offer To Those With Short-Timelines".

Replies from: matthew-barnett, olga-babeeva↑ comment by Matthew Barnett (matthew-barnett) · 2022-04-11T17:15:19.362Z · LW(p) · GW(p)

I would much prefer a post title that's something like "A Concrete Bet Offer To Those With Short-Timelines".

Thanks, I more-or-less adopted this exact title. I hope that makes things look a bit better.

Replies from: Benito↑ comment by Ben Pace (Benito) · 2022-04-11T17:53:32.234Z · LW(p) · GW(p)

Seems good to me, thank you.

↑ comment by Olga Babeeva (olga-babeeva) · 2022-04-10T06:12:26.189Z · LW(p) · GW(p)

Why do you think this?

think the title and framing about what EA leadership should do is not really a good fit for frontpage?

Would it have made a difference if, instead of referring to EA leadership, the post had said "we should sound the alarm" (as in readers/LW/EAs)?

Replies from: Benito↑ comment by Ben Pace (Benito) · 2022-04-11T03:53:59.259Z · LW(p) · GW(p)

LessWrong is primarily a place to understand human rationality, AI, existential risk, and more; it is not primarily a place to do social coordination bids, and I want to select much more on users interested in and excited by the former than the latter.

It would have made a difference, yes, though on the margin getting even closer to the object level is better for frontpage IMO.

Replies from: Ruby↑ comment by Ruby · 2022-04-11T05:40:24.354Z · LW(p) · GW(p)

Speaking as a moderator, it's not obvious to me that LessWrong shouldn't be a place where coordination happens.

It's scary and I don't know how to cause it to happen well, but if not here, where? We'd have to build something else (totally an option, but not something anyone has done).

Replies from: Benito↑ comment by Ben Pace (Benito) · 2022-04-11T17:51:38.394Z · LW(p) · GW(p)

Yeah I think insofar as it's happened on LessWrong it's been better than happening on e.g. Facebook or only in-person.

The rough story I am hoping for here is something like "Come for the frontpage object-level content, stay for the frontpage object-level content, but also you'll likely model / engage with local politics a bit if you stay (which is on personal blog)."

comment by Joe Collman (Joe_Collman) · 2022-04-09T22:29:03.149Z · LW(p) · GW(p)

If the aim is for non-takeup of this bet to provide evidence against short timelines, I think you'd need to change the odds significantly: conditional on short timelines, future money is worth very little. Better to have an extra $1000 in a world where it's still useful than in one where it may already be too late.
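The argument above can be sketched numerically. The snippet assumes a bettor who values a dollar won in the short-timelines world at some fraction of its usual worth; that fraction (`value_if_win`) is a hypothetical parameter introduced for this illustration, as are the example values tried for it.

```python
def break_even_probability(stake: float, payout: float, value_if_win: float = 1.0) -> float:
    """Probability of winning at which the bet has zero expected value.

    stake: amount lost if the bettor loses (valued at full worth).
    payout: amount gained if the bettor wins.
    value_if_win: hypothetical multiplier for how much a dollar is worth
        to the bettor in the world where they win (1.0 = no discount).

    Solves p * value_if_win * payout - (1 - p) * stake = 0 for p.
    """
    discounted_gain = value_if_win * payout
    return stake / (stake + discounted_gain)

# The 1:1 bet at $500 each, for a bettor who thinks money won in a
# short-timelines world is worth 100%, 50%, or 10% of its usual value.
for v in (1.0, 0.5, 0.1):
    p = break_even_probability(stake=500, payout=500, value_if_win=v)
    print(f"value multiplier {v:.1f}: needs P(win) > {p:.2f}")
```

The steeper the discount, the more confident in short timelines someone has to be before taking the bet is worthwhile, so declining it at 1:1 is weak evidence about their beliefs.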

comment by Matthew Barnett (matthew-barnett) · 2022-04-11T02:45:08.406Z · LW(p) · GW(p)

Update: We have taken the bet with 2 people.

First: we have taken the 1:1 bet for January 1st 2026 with Tomás B. [LW · GW] at our $500 to his $500.

Second: we have taken the 3:1 bet for January 1st 2030 with Nathan Helm-Burger [LW · GW] at our $500 to his $1500

Personal note

Just as a personal note (I'm not speaking for Tamay here), I expect to lose the 2030 bet with >50% probability. I took it because it has positive EV on my view, though not as much as I believed when I first drafted the bet. I also disagree with comments here that state that these bets imply that I have short timelines. I think there's a huge gap between AI performing well on benchmarks, and AI having a large economic splash in the real world.
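The claim that a bet can be worth taking while expecting to lose it follows from the 3:1 stakes. A minimal sketch, using the $500 against $1500 terms stated above; the win probabilities tried in the loop are hypothetical values chosen for illustration, not the author's stated credences.

```python
def expected_value(p_win: float, stake: float, payout: float) -> float:
    """Expected dollar gain: win `payout` with probability p_win, else lose `stake`."""
    return p_win * payout - (1.0 - p_win) * stake

# The 2030 bet from the authors' side: their $500 against $1500.
STAKE, PAYOUT = 500, 1500

# Hypothetical win probabilities, chosen only to show where the sign flips.
for p_win in (0.20, 0.25, 0.40, 0.50):
    print(f"P(win) = {p_win:.2f}: EV = ${expected_value(p_win, STAKE, PAYOUT):+.0f}")
```

At these stakes the expected value is positive for any win probability above 25%, which is compatible with expecting to lose more often than not.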

Here, we mostly focused on benchmarks because I think these metrics are fairly neutral markers between takeoff views. By this I mean that I expect fast-takeoff folks to think that AI will do well on benchmarks before we get to AGI, even if they think AI will have roughly zero economic impact before then. Since I wanted my bet to be applicable to people without slow-takeoff views, we went with benchmarks.

Replies from: Bjartur Tómas↑ comment by Tomás B. (Bjartur Tómas) · 2022-04-12T19:44:17.216Z · LW(p) · GW(p)

I should probably account for the fact that I am the only one who took the 1:1 bet, but still I foolishly think I will win.

comment by MichaelStJules · 2022-04-10T12:01:03.449Z · LW(p) · GW(p)

I think this would be more informative for the community if we had answers to the following questions here:

- What are the AI states of the art on these problems?

- How have the SoTAs changed over time?

- What is human performance on these problems (top human performance, average, any other statistics, the whole distribution, etc., whichever seems most useful)?

(Anyone can answer, and feel free to provide only partial information. I'm guessing the authors have a lot of this info handy already.)

comment by Evan R. Murphy · 2022-04-10T06:49:55.034Z · LW(p) · GW(p)

Some questions/clarifications about the bet terms:

A robot that can, from beginning to end, reliably wash dishes, take them out of an ordinary dishwasher and stack them into a cabinet, without breaking any dishes, and at a comparable speed to humans (<120% the average time)

The dishwasher is the one actually washing the dishes right, not the robot? The robot just needs to load the dishwasher, run it, and then unload it fast enough and without breaks?

Tesla’s full-self-driving capability makes fewer than one major mistake per 100,000 miles

Can we modify this to "at least 1 self-driving car service makes fewer than one major mistake per 100,000 miles"? I'm not sure why this should be fixed around Tesla when the big advances could come from Waymo or Aurora or another player.

comment by Tomás B. (Bjartur Tómas) · 2022-07-01T00:18:27.759Z · LW(p) · GW(p)

Interesting MATH update: https://ai.googleblog.com/2022/06/minerva-solving-quantitative-reasoning.html

comment by ChristianKl · 2022-04-10T18:30:59.376Z · LW(p) · GW(p)

It might be useful to create Metaculus predictions for the individual tasks.

comment by lorepieri (lorenzo-rex) · 2022-04-10T17:21:02.293Z · LW(p) · GW(p)

Matthew, Tamay: Refreshing post, with actual hard data and benchmarks. Thanks for that.

My predictions:

- A model/ensemble of models achieves >80% on all tasks in the MMLU benchmark

No in 2026, no in 2030. Mainly due to the fact that we don't have much structured data and incentives to solve some of the categories. A powerful unsupervised AI would be needed to clear those categories, or more time.

- A credible estimate reveals that an AI lab deployed EITHER >10^30 FLOPs OR hardware that would cost $1bn if purchased through competitive cloud computing vendors at the time on a training run to develop a single ML model (excluding autonomous driving efforts)

This may actually happen (the 1B one, not the 10^30), also due to inflation and USD created out of thin air and injected into the market. I would go for no in 2026 and yes in 2030.

- A model/ensemble of models will achieve >90% on the MATH dataset using a no-calculator rule

No in 2026, no in 2030. Significant algorithmic improvements needed. It may be done if prompt engineering is allowed.

- A model/ensemble of models achieves >80% top-1 strict accuracy on competition-level problems on the APPS benchmark

No in 2026, no in 2030. Similar to the above, but there will be more progress, as a lot of data is available.

- A gold medal for the IMO Grand Challenge (conditional on it being clear that the questions were not in the training set)

No in 2026, no in 2030.

- A robot that can, from beginning to end, reliably wash dishes, take them out of an ordinary dishwasher and stack them into a cabinet, without breaking any dishes, and at a comparable speed to humans (<120% the average time)

I work with smart robots, and this cannot happen so fast, partly because of hardware limitations. The speed requirement is particularly harsh. Without the speed limit and with the system known in advance I would say yes in 2030. As the bet stands, I go for no in 2026, no in 2030.

- Tesla’s full-self-driving capability makes fewer than one major mistake per 100,000 miles

Not sure about this one, but I lean on No in 2026, no in 2030.

Replies from: matthew-barnett, FeepingCreature, nathan-helm-burger↑ comment by Matthew Barnett (matthew-barnett) · 2022-04-10T19:39:36.961Z · LW(p) · GW(p)

This may actually happen (the 1B one, not the 10^30), also due to inflation and USD created out of thin air and injected into the market.

The criterion adjusts for inflation.

↑ comment by FeepingCreature · 2022-04-11T03:39:20.195Z · LW(p) · GW(p)

How much would your view shift if there was a model that could "engineer its own prompt", even during training?

Replies from: lorenzo-rex↑ comment by lorepieri (lorenzo-rex) · 2022-04-11T16:42:45.265Z · LW(p) · GW(p)

A close call, but I would still lean no. Engineering the prompt is where humans leverage all their common sense and vast (w.r.t. the AI) knowledge.

↑ comment by Nathan Helm-Burger (nathan-helm-burger) · 2022-04-10T18:29:02.984Z · LW(p) · GW(p)

Nice specific breakdown! Sounds like you side with the authors overall. Want to also make the 3:1 bet with me?

Replies from: lorenzo-rex↑ comment by lorepieri (lorenzo-rex) · 2022-04-11T16:48:28.561Z · LW(p) · GW(p)

Thanks. Yes, pretty much in line with the authors. Btw, I would be super happy to be wrong and see advancement in those areas, especially the robotic one.

Thanks for the offer, but I'm not interested in betting money.

comment by rchplg · 2022-04-10T00:10:51.929Z · LW(p) · GW(p)

I'll just note that several of these bets don't work as well if I expect discontinuous &/or inconsistently distributed progress, as was observed on many of the individual tasks in PaLM: https://twitter.com/LiamFedus/status/1511023424449114112 (obscured by % performance & by the top-level benchmark averaging 24 subtasks that spike at different levels of scaling)

I might expect performance just prior to AGI to be something like 99% 40% 98% 80% on 4 subtasks, where parts of the network developed (by gd) for certain subtasks enable more general capabilities
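A quick sketch of how such a spiky profile looks under an averaged score versus an "all tasks" criterion. The four accuracies are the hypothetical ones from the sentence above, and the 80% threshold is borrowed from the MMLU item in the bet; applying it to these four made-up subtasks is an assumption of this illustration.

```python
# Hypothetical subtask accuracies (in %) from the comment above.
subtask_scores = [99, 40, 98, 80]
THRESHOLD = 80  # the MMLU item in the bet requires >80% on every task

mean_score = sum(subtask_scores) / len(subtask_scores)
num_above = sum(score > THRESHOLD for score in subtask_scores)
all_above = all(score > THRESHOLD for score in subtask_scores)

print(f"mean accuracy: {mean_score:.2f}%")
print(f"subtasks above {THRESHOLD}%: {num_above} of {len(subtask_scores)}")
print(f"passes an 'all tasks > {THRESHOLD}%' criterion: {all_above}")
```

The average hides the one weak subtask, while a strict per-task threshold is decided entirely by it.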

↑ comment by rchplg · 2022-04-10T00:12:57.168Z · LW(p) · GW(p)

Naive analogy: two tasks for humans: (1) tell time (2) understand mechanical gears. Training a human on (1) will outperform (2) for a good while, but once they get a really good model for (2) they can trivially do (1) & performance would spike dramatically

Replies from: TLW↑ comment by TLW · 2022-04-10T02:02:53.804Z · LW(p) · GW(p)

once they get a really good model for (2) they can trivially do (1)

[Citation Needed]

Designing an accurate mechanical clock is non-trivial[1], even assuming knowledge of gears

- ^

Understatement.

↑ comment by rchplg · 2022-04-10T03:41:33.513Z · LW(p) · GW(p)

Trivially do better than the naive thing a human would do*, sry (e.g. vs. looking at the sun & seasons, which is what I think a human trying to tell time would do to locally improve). Definitely agree they can't trivially do a great job by traditional standards. Wasn't a carefully chosen example

The broader point was that some subskills can enable better performance at many tasks, which causes spiky performance in humans at least. I see no reason why this wouldn't apply to nns. (e.g. part of the nn develops a model of something for one task, once it's good enough discovers that it can use that model for very good performance on an entirely different task - likely observed as a relatively sudden, significant improvement)

comment by aog (Aidan O'Gara) · 2022-06-30T22:47:46.024Z · LW(p) · GW(p)

A model/ensemble of models will achieve >90% on the MATH dataset using a no-calculator rule

Curious to hear if/how you would update your credence in this being achieved by 2026 or 2030 after seeing the 50%+ accuracy from Google's Minerva [LW · GW]. Your prediction seemed reasonable to me at the time, and this rapid progress seems like a piece of evidence favoring shorter timelines.

↑ comment by Matthew Barnett (matthew-barnett) · 2022-06-30T22:51:20.055Z · LW(p) · GW(p)

I've updated significantly. However, unfortunately, I have not yet seen how well the model performs on the hardest difficulty problems on the MATH dataset, which could give me a much better picture of how impressive I think this result is.

↑ comment by Tomás B. (Bjartur Tómas) · 2022-07-01T00:21:11.304Z · LW(p) · GW(p)

I’m pretty sure I will “win” my bet against him; even two months is a lot of time in AI these days.

comment by Donald Hobson (donald-hobson) · 2022-04-13T00:40:42.674Z · LW(p) · GW(p)

A model/ensemble of models will achieve >90% on the MATH dataset using a no-calculator rule

A "no calculator rule". If the model is just a giant neural network, it is pretty clear what this means. (Although unclear why you should care, real world neural nets are allowed to use calculators). Over the general space of all AI techniques, it's unclear what this means.

A robot that can, from beginning to end, reliably wash dishes, take them out of an ordinary dishwasher and stack them into a cabinet, without breaking any dishes, and at a comparable speed to humans (<120% the average time)

This sounds to me like it depends on the robotics hardware at least as much as it depends on the software.

It also has a lot of wiggle room. Suppose your robot only works under specific lighting conditions. With a specific design of dishwasher. All the cutlery and crockery has been laser scanned in advance. All the cutlery has an RFID chip glued to it. The robot doesn't have legs, so it only works if the cabinet is within easy reach of the dishwasher. In an extreme case, imagine a large machine that consists of all sorts of vibrating ridged trays and spinning rubber cones. The metal cutlery is pulled out using a big electromagnet. At one point, different dishes are separated using a vertical wind tunnel. The equipment is too big to fit in a typical kitchen. It is almost entirely dumb. The mechanism is totally not like a human manually putting away dishes. Yet thanks to plentiful foam padding, this machine can put away even fragile dishes without breaking them.

(The way cranberries are harvested isn't by getting careful robot arms that can visually spot each berry. They flood the field with water, bash about to knock the berries off, wait for the berries to float, and then skim them off.)

There is also the problem of maybe no one cares about that particular metric.

You could get a world where the latest AI techniques are easily enough to do your dishes task. But nobody has actually decided to bother yet. The top AI experts could program this dish robot in a weekend, but they don't because they are busy. Or because, given the current state of the public perception of AI, a photo of a robot holding a large knife would be a PR nightmare.

comment by moridinamael · 2022-04-10T14:46:12.364Z · LW(p) · GW(p)

I would like to bet against you here, but it seems like others have beaten me to the punch. Are you planning to distribute your $1000 on offer across all comers by some date, or did I simply miss the boat?

comment by Davidmanheim · 2022-04-10T14:14:11.490Z · LW(p) · GW(p)

Just noting that I think you're arguing strongly against what is at most a weak man argument. (And given that the author retracted the post, it might just be a straw-man.)

Super excited to see the offers to bet, though.

↑ comment by Matthew Barnett (matthew-barnett) · 2022-04-10T14:18:32.035Z · LW(p) · GW(p)

Just noting that I think you're arguing strongly against what is at most a weak man argument. (And given that the author retracted the post, it might just be a straw-man.)

Before we wrote the post, the OP had something like 140 karma. Also, it was only retracted after we posted.

↑ comment by Not Relevant (not-relevant) · 2022-04-10T15:34:02.023Z · LW(p) · GW(p)

As the OP, I endorse this.

comment by JohnGreer · 2022-04-10T07:38:54.747Z · LW(p) · GW(p)

"We (Tamay Besiroglu and I) think this claim is strongly overstated, and disagree with the suggestion that “It's time for EA leadership to pull the short-timelines fire alarm.” This post received a fair amount of attention, and we are concerned about a view of the type expounded in the post causing EA leadership to try something hasty and ill-considered."

What harm do you think will come if this happens and what do you think should be done instead?

comment by Tomás B. (Bjartur Tómas) · 2024-05-22T17:59:43.706Z · LW(p) · GW(p)

Significant evidence for data contamination of MATH benchmark: https://arxiv.org/abs/2402.19450

↑ comment by ryan_greenblatt · 2024-05-22T18:21:16.436Z · LW(p) · GW(p)

I'm not sold this shows dataset contamination.

- They don't re-baseline with humans. (Based on my last skim a while ago.)

- It is easy to make math problems considerably harder by changing the constants and often math problems are designed to make the constants easy to work with.

- Both humans and AI are used to constants which are chosen to be nice for math problems (obviously this is unrealistic for real problems, but nonetheless this doesn't clearly show dataset contamination). AIs might be more sensitive to this.

(I agree it is some evidence for contamination.)

↑ comment by gwern · 2024-05-22T19:29:01.721Z · LW(p) · GW(p)

Also an issue is that if MATH is contaminated, you'd think GSM8k would be contaminated too, but Scale just made a GSM1k and in it, GPT/Claude are minimally overfit (although in both of these papers, the Chinese & Mistral models usually appear considerably more overfit than GPT/Claude). Note that Scale made extensive efforts to equalize difficulty and similarity of the GSM1k with GSM8k, which this Consequent AI paper on MATH does not, and discussed the methodological issues which complicate re-benchmarking.

comment by the gears to ascension (lahwran) · 2022-04-12T06:42:46.877Z · LW(p) · GW(p)

for the record I think all of those are going to happen by 2024 and I'm surprised you're willing to bet otherwise. other people already took the bet. but the improvements from geometric deep learning, conservation laws, diffusion models, 3D understanding, and recursive feedback on chip design are all moving very fast. embodiment is likely to be solved suddenly when the underlying models are structured correctly. I maintain my assertion from previous discussion that compute is the only limitation and that the deep learning community has now demonstrated that compute is the only thing stopping them. deep learning is certainly bumping up against a wall, but just like every other wall it has run into, it's just going to go around.

comment by Yitz (yitz) · 2022-04-10T16:26:04.496Z · LW(p) · GW(p)

Reading the comments, it seems like the idea you’re presenting of giving concrete bets on timelines is a great one, but the details of implementation can definitely be improved, so that making such a bet is meaningful for an AI pessimist.

comment by Michaël Trazzi (mtrazzi) · 2022-04-10T08:59:09.367Z · LW(p) · GW(p)

I haven't looked deeply at what the % on the ML benchmarks actually mean. On the one hand it would be a bit weird to me if in 2030 we still have not made enough progress on them, given the current rate. On the other hand, I trust the authors in that it should be AGI-ish to pass those benchmarks, and then I don't want to bet money on something far into the future if money might not matter as much then. (Also, without considering money mattering less or the fact that the money might not be delivered in 2030 etc., I think anyone taking the 2026 bet should take the 2030 bet since if you're 50/50 in 2026 you're probably 75:25 with 4 extra years).

The more rational thing would then be to take the bet for 2026 when money still matters, though apart from the ML benchmarks there is this dishwashing thing where the conditions of the bet are super tough and I don't imagine anyone doing all the reliability tests filming a dishwasher etc. in 3.5y. And then for Tesla I feel the same about those big errors every 100k miles. Like, 1) why only Tesla? 2) wouldn't most humans make risky blunders on such long distances? 3) would anyone really do all those tests on a Tesla?

I'll have another look at the ML benchmarks, but in the meantime it seems that we should do other odds because of Tesla + dishwasher.
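The 50/50 and 75:25 figures above line up with the break-even probabilities implied by the two sets of odds on offer. A minimal Python sketch, assuming (this reading is an assumption, chosen because it matches the 75:25 figure) that "3:1 odds in our favor" means the person betting on short timelines risks 3 units to win 1; the function name is hypothetical:

```python
def break_even_probability(stake, payout):
    """Win probability at which risking `stake` to win `payout` has zero expected value.

    Solves p * payout - (1 - p) * stake = 0 for p.
    """
    return stake / (stake + payout)

# 2026 bet at 1:1: the short-timelines bettor risks 1 to win 1.
p_2026 = break_even_probability(stake=1, payout=1)

# 2030 bet at 3:1 against the short-timelines bettor (assumed reading): risk 3 to win 1.
p_2030 = break_even_probability(stake=3, payout=1)

print(f"2026 break-even: {p_2026:.0%}, 2030 break-even: {p_2030:.0%}")
```

Under that reading, someone indifferent to the 2026 bet would need roughly 75% credence in the 2030 outcome to be indifferent to the 2030 bet as well, setting aside the value-of-money concerns raised above.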

comment by TLW · 2022-04-10T01:59:50.824Z · LW(p) · GW(p)

We will use inflation-adjusted 2022 US dollars.

Be aware that current inflation estimates are potentially distorted. It may be worth mentioning exactly what inflation estimate to use, lest you end up in a situation where this is true in some but not all estimates.

↑ comment by Matthew Barnett (matthew-barnett) · 2022-04-10T02:20:49.689Z · LW(p) · GW(p)

I've now clarified that it refers to the consumer price index according to the BLS.

comment by MichaelStJules · 2022-04-09T22:31:17.334Z · LW(p) · GW(p)

- A robot that can, from beginning to end, reliably wash dishes, take them out of an ordinary dishwasher and stack them into a cabinet, without breaking any dishes, and at a comparable speed to humans (<120% the average time)

Speed and ordinary dishwasher are pretty crucial here, right? Boston Dynamics claimed they could do this back in 2016, but much slower than the average human.

↑ comment by Matthew Barnett (matthew-barnett) · 2022-04-10T02:12:18.653Z · LW(p) · GW(p)

Did they? The video you sent showed a robot placing a single cup from a sink into a dishwasher, and then placing a single can into a trash-can. This all looked pre-programmed.

By contrast, we require that the robot must be able to put away dishes in ordinary situations (it can't know where the dishes are ahead of time, or the precise movements necessary to put them away). We also require that it achieve a low error rate, which Boston Dynamics did not appear to report. Also, yes, the speed at which robots can do this is a major part of the prediction.

↑ comment by MichaelStJules · 2022-04-10T02:54:27.843Z · LW(p) · GW(p)

Ah, my bad, missed that part.

I guess not knowing where the dishes are ahead of time also rules out pre-training on the specific test environments, but it might be worth making that explicit, too.

comment by Tomás B. (Bjartur Tómas) · 2023-03-16T15:26:47.090Z · LW(p) · GW(p)

So MMLU is down:

Presumably MATH will be next - is Minerva still SOTA?

↑ comment by Matthew Barnett (matthew-barnett) · 2023-03-16T18:27:04.634Z · LW(p) · GW(p)

Did they reveal how GPT-4 did on every task in the MMLU? If not, it's not clear whether the relevant condition here has been met yet.

↑ comment by [deleted] · 2023-03-16T20:00:18.725Z · LW(p) · GW(p)

So you may lose the bet imminently:

900 million pounds is 1 billion USD

And for the other part, for MMLU your 'doubt' hinges on it doing <80% on a subtest while reaching 88% overall.

I know it's just a bet over a small amount of money, but to lose in 1 year is something.

↑ comment by Matthew Barnett (matthew-barnett) · 2023-03-16T20:04:27.022Z · LW(p) · GW(p)

To be clear, I think I will lose, but I think this is weak evidence. The bet says that $1bn must be spent on a single training run, not a single supercomputer.

↑ comment by [deleted] · 2023-03-16T21:13:05.112Z · LW(p) · GW(p)

"OR hardware that would cost $1bn if purchased through competitive cloud computing vendors at the time on a training run to develop a single ML model"

Assuming the British government gets a fair price for the hardware, and actually has the machine running prior to the bet end date, does this satisfy the condition?

I don't actually think it will be the one that ends the bet as I expect the British government to take a while to actually implement this, but possibly they finish before 2026.

↑ comment by Matthew Barnett (matthew-barnett) · 2023-03-16T21:16:31.451Z · LW(p) · GW(p)

Assuming the British government gets a fair price for the hardware, and actually has the machine running prior to the bet end date, does this satisfy the condition?

No.

That condition resolves on the basis of the cost of the training run, not the cost of the hardware. You can tell because we spelled out the full details of how to estimate costs, and it depends on the cost in FLOP for the training run.

But honestly at this point I'm considering conceding early and just paying out, because I don't look forward to years of people declaring victory early, which seems to already be happening.

comment by Noosphere89 (sharmake-farah) · 2022-06-08T16:25:58.581Z · LW(p) · GW(p)

I agree with the need for "skin in the game", for mostly the same reasons as you, and I think the AI Alignment field is falling prey to the unilateralist's curse here.

comment by Sinclair Chen (sinclair-chen) · 2022-06-02T19:32:36.849Z · LW(p) · GW(p)

For anyone else who wants to bet on this, here's a market on manifold:

comment by Alex K. Chen (parrot) (alex-k-chen) · 2022-04-10T01:30:27.374Z · LW(p) · GW(p)