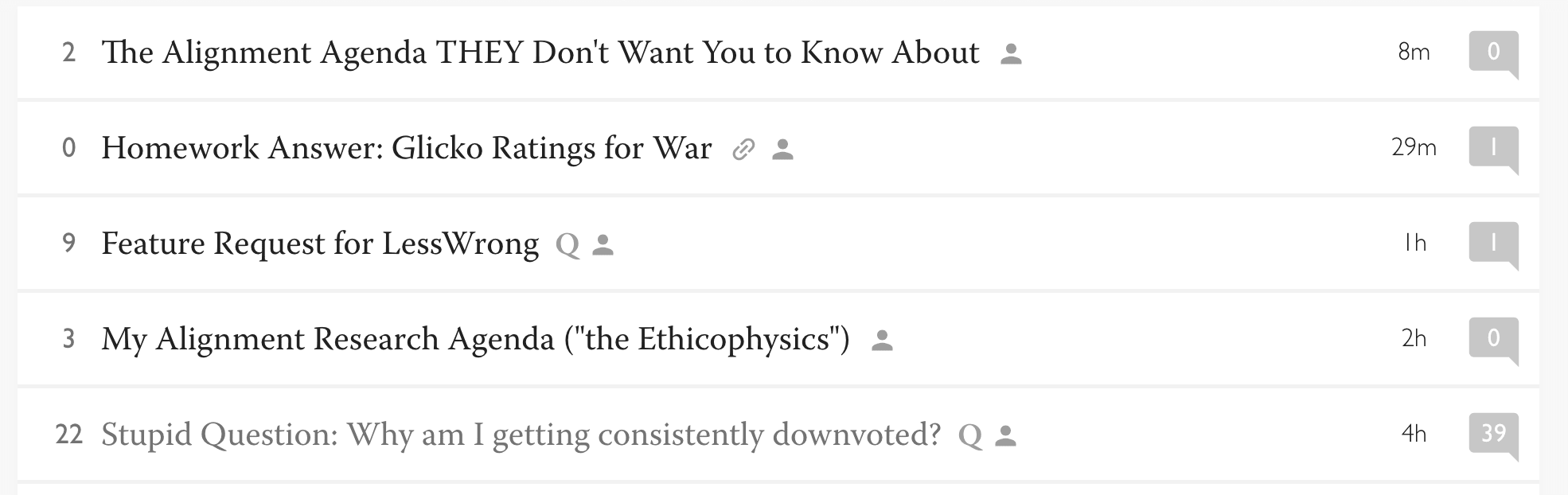

Stupid Question: Why am I getting consistently downvoted?

post by MadHatter · 2023-11-30T00:21:54.285Z · LW · GW · 5 comments

This is a question post.

Contents

Answers 31 Shankar Sivarajan 24 tslarm 21 aphyer 16 Garrett Baker 10 Elizabeth 9 Joe Kwon 9 lc 7 MiguelDev 7 Sergii 5 Viliam 5 starship006 3 Seth Herd 3 Stephen Fowler 2 trevor 1 Hide None 5 comments

I feel like I've posted some good stuff in the past month, but the bits that I think are coolest have pretty consistently gotten very negative karma.

I just read the rude post about rationalist discourse basics, and, while I can guess why my posts are receiving negative karma, that would involve a truly large amount of speculating about the insides of other people's heads, which is apparently discouraged. So I figured I would ask.

I will offer a bounty of $1000 for the answer I find most helpful, and a bounty of $100 each for the three next most helpful answers. This will probably be paid out over Venmo, if that is a decision-relevant factor.

Note that I may comment on your answer asking for clarification.

Edit 11-30-2023 1:27 AM: I have selected the recipients of the bounties. The grand prize of $1000 goes to @Shankar Sivarajan [LW · GW]. The three runner-up prizes of $100 go to @tslarm [LW · GW], @Joe Kwon [LW · GW], and @trevor [LW · GW]. Please respond to my DM to arrange payment or select a worthy charity to receive your winnings.

Edit 11-30-2023 12:08 PM: I have paid out all four bounties. Please contact me in DM if there is any issue with any of the bounties.

Answers

Okay, I have not downvoted any of your posts, but I see the three posts you probably mean, and I dislike them, and shall try to explain why. I'm going to take the existence of this question as an excuse to be blunt.

The Snuggle/Date/Slap Protocol [LW · GW]: Frankly, wtf? You took the classic Fuck/Marry/Kill game, tweaked it slightly, and said adding this as a feature to GPT-4 "would have all sorts of nice effects for AI alignment." At the end, you also started preaching about your moral system, which I didn't care for. (People gossiping about the dating habits of minor AI celebrities is an amusing idea though.) If you actually built this, I confess I'd still be interested to see what this system does, and if you can then get the LLM to assume different personae.

My Mental Model of Infohazards [LW · GW]: As a general rule, long chains of reasoning where you disagree strongly with some early step are unpleasant to follow (at each following step, you have to keep track of whether you agree with the point being made, whether it follows from the previous steps, and whether this new world being described is internally consistent), especially if the payoff isn't worth it, as in your post. You draw some colorful analogy to black holes and supernovae, but are exceedingly vague, and everything you did say is either false or vacuous. You specifically flagged one of your points as something you expected people to disagree with, but offered no support for, or rebuttal to arguments against.

Ethicophysics II: Politics is the Mind-Savior [LW · GW] (see also: Ethicophysics I [LW · GW]): You open "We present an ethicophysical treatment on the nature of truth and consensus reality, within the framework of a historical approach to ludic futurecasting modeled on the work of Hegel and Marx." This looks like pompous nonsense to me, and my immediate thought was that you were doing the Sokal hoax thing. And then I skimmed your first post, and you seem serious, so I concluded you're a crank. ("Gallifreyan" is a great name for a physics-y expression, btw. It fits in perfectly with Lagrangians and Hamiltonians.)

More generally, voting on posts doesn't disentangle dislike vs. disagree, so a lot of the downvotes might just be the latter.

comment by Ben (ben-lang) · 2023-11-30T16:09:37.904Z · LW(p) · GW(p)

A "Gallifreyan" also sounds like a Doctor Who Time Lord (i.e., an alien from Gallifrey).

Replies from: MadHatter↑ comment by MadHatter · 2023-11-30T01:40:12.379Z · LW(p) · GW(p)

I'm definitely a crank, but I personally feel like I'm onto something? What's the appropriate conduct for a crank that knows they're a crank but still thinks they've solved some notorious unsolved problem? Surely it's something other than "crawl into a hole and die"...

Replies from: StartAtTheEnd, ChristianKl, shankar-sivarajan, jalex-stark-1, Seth Herd, Alex_Altair, lahwran↑ comment by StartAtTheEnd · 2023-12-01T13:53:17.328Z · LW(p) · GW(p)

Fellow crank here. You might be making mistakes which aren't obvious, but which most people on here know of because they've read the sequences. So if you're making a mistake which has already been addressed on here before, that might annoy readers.

I have a feeling that you like teaching more than learning, and writing more than reading. I'm the same. I comment on things despite not being formally educated in them or researching them in depth, I'm not very conscientious, I don't like putting in effort. But seeing other people confused about things that I feel like I understood long ago irks me, so I can't help but voice my own view (which is rarely understood by anyone).

By the way, there is one mistake you might be committing. If you come up with a theory, then you can surely find evidence that it's correct, by finding cases which are explained by this theory of yours. But it's not enough to come up with a theory which fits every true case: true positives are one thing, false positives another, true negatives yet another, and false negatives yet another. A theory should be bounded from all sides; it should work in both the forward and the backward direction.

For instance, "Sickness is caused by bad smells" sounds correct. You can even verify a solid correlation. But if you try, I bet you can think of cases in which bad smells have not caused sickness. There are also cases where sickness was not caused by bad smells. Furthermore, germ theory more correctly covers true cases while rejecting false ones, so it's more fitting than miasma theory. When you feel like you're onto something, I recommend putting your theory through more checks. If you're aware of all of this already, then I apologize.

Lastly, I admire that you followed through on the payments, and I enjoy seeing people (you) think for themselves and share their ideas rather than being cowardly and calling their cowardice "humility".

Replies from: MadHatter↑ comment by MadHatter · 2023-12-01T14:26:03.691Z · LW(p) · GW(p)

Thank you for this answer. I agree that I have not visibly been putting in the work to make falsifiable predictions relevant to the ethicophysics. These can indeed be made in the ethicophysics, but they're less predictions and more "self-fulfilling prophecies" that have the effect of compelling the reader to comply with a request to the extent that they take the request seriously. Which, in plain language, is some combination of wagers, promises, and threats.

And it seems impolite to threaten people just to get them to read a PDF.

↑ comment by ChristianKl · 2023-11-30T08:55:46.830Z · LW(p) · GW(p)

I'm definitely a crank, but I personally feel like I'm onto something?

That's quite common for cranks ;)

If the ideas you want to propose are unorthodox, try to write in the most orthodox style in the venue you are addressing.

Look at how posts that have high karma are written and try to write your own post in the same style.

Secondly, you can take your post and tell ChatGPT that you want to post it on LessWrong and ask it what problems people are likely to have with the post.

Replies from: MadHatter↑ comment by MadHatter · 2023-11-30T09:12:37.555Z · LW(p) · GW(p)

Well, that's the problem. I've been writing in a combination of my personal voice and my understanding of Eliezer's voice. Eliezer has enough accumulated Bayes points that he is allowed to use parables and metaphors and such. I do not.

Replies from: ChristianKl↑ comment by ChristianKl · 2023-11-30T10:02:33.932Z · LW(p) · GW(p)

What do you care more about? Getting to write in "your personal voice" or getting your ideas well received?

Replies from: MadHatter↑ comment by MadHatter · 2023-11-30T10:15:57.922Z · LW(p) · GW(p)

Probably the first, as much as this is the "wrong" answer to your question for the LessWrong crowd.

I would be pretty pissed off if my proposed solution to the alignment problem was attributed to someone who hasn't gone through what I went through in order to derive it. Especially if that solution ended up being close enough to correct to form a cornerstone of future approaches to the problem.

I'm going to continue to present my ideas in the most appealing package I can devise for them, but I don't regret posting them to LessWrong in the chaotic fashion that I did.

Replies from: ChristianKl↑ comment by ChristianKl · 2023-11-30T12:02:45.980Z · LW(p) · GW(p)

If you want your proposed solution attributed to you, writing it in a style that people actually want to engage with instead of "your personal voice", would be the straightforward choice.

Larry McEnerney is great at explaining what writing is about.

Replies from: MadHatter↑ comment by MadHatter · 2023-11-30T16:48:53.281Z · LW(p) · GW(p)

Well, perhaps we can ask, what is reading about? Surely it involves reading through clearly presented arguments and trying to understand the process that generated them, and not presupposing any particular resolution to the question "is this person crazy" beyond the inevitable and unenviable limits imposed by our finite time on Earth.

Replies from: ChristianKl↑ comment by ChristianKl · 2023-12-01T09:47:47.269Z · LW(p) · GW(p)

There's a lot of material to read. Part of being good at reading is spending one's attention in the most effective way and not wasting it with low-value content.

Replies from: MadHatter↑ comment by Shankar Sivarajan (shankar-sivarajan) · 2023-11-30T04:25:48.725Z · LW(p) · GW(p)

In my experience, cranks (at least physics cranks) realize that university email addresses are often public and send emails detailing their breakthrough/insight to as many grad students as they can. These emails never get replies, but (and this might surprise you) often get read. This is not a stupid strategy: if your work is legit (unlikely, but not inconceivable), this will make it known.

Replies from: MadHatter↑ comment by MadHatter · 2023-11-30T04:31:35.803Z · LW(p) · GW(p)

Right, this is how Ramanujan was discovered.

Replies from: gjm↑ comment by gjm · 2023-12-01T14:36:35.286Z · LW(p) · GW(p)

Except that Ramanujan sent letters (of course) rather than emails; the difference is important because writing letters to N people is a lot more work than sending emails to N people, so getting a letter from someone is more evidence that they're willing to put some effort into communicating with you than getting an email from them is.

Replies from: MadHatter↑ comment by MadHatter · 2023-12-01T14:43:04.117Z · LW(p) · GW(p)

Yes, this is a valid and correct point. The observed and theoretical Nash Equilibrium of the Wittgensteinian language game of maintaining consensus reality is indeed not to engage with cranks who have not Put In The Work in a way that is visible and hard-to-forge.

Replies from: Dagon↑ comment by Jalex Stark (jalex-stark-1) · 2023-11-30T01:59:48.871Z · LW(p) · GW(p)

I think a generic answer is "read the sequences"? Here's a fun one

https://www.lesswrong.com/posts/qRWfvgJG75ESLRNu9/the-crackpot-offer

↑ comment by Seth Herd · 2023-11-30T20:57:21.690Z · LW(p) · GW(p)

I think "read the sequences" is an incredibly unhelpful suggestion. It's an unrealistic high bar for entry. The sequences are absolutely massive. It's like saying "read the whole bible before talking to anyone at church", but even longer. And many newcomers already understand the vast bulk of that content. Even the more helpful selected sequences are two thousand pages.

We need a better introduction to alignment work, LessWrong community standards, and rationality. Until we have it, we need to personally be more helpful to aspiring community members.

See The 101 Space You Will Always Have With You [LW · GW] for a thorough and well-argued version of this argument.

Replies from: Viliam, jalex-stark-1↑ comment by Viliam · 2023-12-02T21:49:22.389Z · LW(p) · GW(p)

If someone is too wrong, and explicitly refuses to update on feedback, it may be impossible to give them a short condensed argument.

(If someone said that Jesus was a space lizard from another galaxy who came to China 10000 years ago, and then he publicly declared that he doesn't actually care whether God actually exists or not... which specific chapter of the Bible would you recommend him to read to make him understand that he is not a good fit for a Christian web forum? Merely using the "Jesus" keyword is not enough, if everything substantial is different.)

Replies from: Seth Herd↑ comment by Seth Herd · 2023-12-02T23:33:27.984Z · LW(p) · GW(p)

I agree. But telling them to read the sequences is still pointless.

Replies from: Viliam↑ comment by Viliam · 2023-12-03T09:51:12.360Z · LW(p) · GW(p)

Well, yes. I guess it's more of an... expression of frustration. Like telling the space-lizard-Jesus guy: "Dude, have you ever read the Bible?" You don't expect he did, and yes that is the reason why he says what he says... but you also do not really expect him to read it now.

(Then he asks you for help at publishing his own space Bible.)

Replies from: kabir-kumar, MadHatter↑ comment by Kabir Kumar (kabir-kumar) · 2024-12-09T02:20:56.678Z · LW(p) · GW(p)

That seems unhelpful then? Probably best to express that frustration to a friend or someone who'd sympathize.

↑ comment by MadHatter · 2023-12-04T05:49:36.100Z · LW(p) · GW(p)

Well what if he bets a significant amount of money at 2000:1 odds that the Pope will officially add his space Bible to the real Bible as a third Testament after the New Testament within the span of a year?

What if he records a video of himself doing Bible study? What if he offers to pay people their current hourly rate to watch him do Bible study?

I guess the thrust of my questions here is, at what point do you feel that you become the dick for NOT helping him publish his own space Bible? At what point are you actively impeding new religious discoveries by failing to engage?

For real, literal Christianity, I think there's no amount of cajoling or argumentation that could lead a Christian to accept the new space Bible. For one thing, until the Pope signs off on it, they would no longer be Christian if they did.

Does rationalism aspire to be more than just another provably-false religion? What would ET Jaynes say about people who fail to update on new evidence?

↑ comment by Jalex Stark (jalex-stark-1) · 2023-12-02T22:20:00.501Z · LW(p) · GW(p)

I agree that my suggestion was not especially helpful.

↑ comment by MadHatter · 2023-11-30T02:04:21.085Z · LW(p) · GW(p)

I agree that that is an extremely relevant post to my current situation and general demeanor in life.

I guess I'm not willing to declare the alignment problem unsolvable just because it's difficult, and I'm not willing to let anyone else claim to have solved it before I get to claim that I've solved it? And that inherently makes me a crackpot until such time as consensus reality catches up with me or I change my mind about my most deeply held values and priorities.

Are there any other posts from the sequences that you think I should read?

Replies from: GeneSmith↑ comment by GeneSmith · 2023-11-30T06:59:43.205Z · LW(p) · GW(p)

I guess I'm not willing to declare the alignment problem unsolvable just because it's difficult

I'm not aware of anyone who has declared the alignment problem to be unsolvable. I have read a few people speculate that it MIGHT be unsolvable, but no real concrete attempts to show it is (though I haven't kept up with the literature as much as some others so perhaps I missed something)

I'm not willing to let anyone else claim to have solved it before I get to claim that I've solved it

This just seems weird. If someone else solves alignment, why would you "not let them claim to have solved it"? And how would you do that? By just refusing to recognize it even if it goes against all the available evidence and causes people to take you less seriously?

Replies from: MadHatter↑ comment by MadHatter · 2023-11-30T07:02:57.875Z · LW(p) · GW(p)

No, I would do it by rushing to publish my work before it's been cleaned up enough to be presentable. Scientists throughout history have rushed to avoid getting scooped, and to scoop others. I do not wish to be the Rosalind Franklin of the alignment problem.

Replies from: M. Y. Zuo↑ comment by M. Y. Zuo · 2023-11-30T20:20:09.285Z · LW(p) · GW(p)

Why do you care so much about being first out the door, so much so that you're willing to look like a clown/crackpot along the way?

The existing writings, from what I can see, don't exactly portray the writer as a bona fide genius, so at best folks will perceive you as a moderately above-average person with some odd tendencies/preferences, who got unusually lucky.

And then promptly forget about it when the genuine geniuses publish their highly credible results.

And that's assuming it is even solvable, which seems to be increasingly not the case.

Replies from: MadHatter↑ comment by MadHatter · 2023-11-30T20:30:29.127Z · LW(p) · GW(p)

Well, I'll just have to continue being first out the door, then, won't I?

Replies from: Seth Herd↑ comment by Seth Herd · 2023-11-30T21:02:57.360Z · LW(p) · GW(p)

No, it's not going to get you credit. That's not how credit works in science or anywhere. It goes not to the first who had the idea, but the first who successfully popularized it. That's not fair, but that's how it works.

You can give yourself credit or try to argue for it based on evidence of early publication, but would delaying another day to polish your writing a little matter for being first out the door?

I'm sympathetic to your position here, I've struggled with similar questions, including wondering why I'm getting downvoted even after trying to get my tone right, and having what seem to me like important, well-explained contributions.

Recognizing that the system isn't going to be completely fair or efficient and working with it instead of against it is unfortunate, but it's the smart thing to do in most situations. Attempts to work outside of the existing system only work when they're either carefully thought out and based on a thorough understanding of why the system works as it does, or they're extremely lucky.

Replies from: MadHatter↑ comment by MadHatter · 2023-11-30T21:25:40.646Z · LW(p) · GW(p)

Historically, I have been extremely, extremely good at delaying publication of what I felt were capabilities-relevant advances, for essentially Yudkowskyan doomer reasons. The only reward I have earned for this diligence is to be treated like a crank when I publish alignment-related research because I don't have an extensive history of public contribution to the AI field.

Here is my speculation of what Q* is, along with a github repository that implements a shitty version of it, postdated several months.

https://bittertruths.substack.com/p/what-is-q

Replies from: Seth Herd, lc↑ comment by Seth Herd · 2023-11-30T22:43:36.888Z · LW(p) · GW(p)

Same here.

Ask yourself: do you want personal credit, or do you want to help save the world?

Anyway, don't get discouraged, just learn from those answers and keep writing about those ideas. And learning about related ideas so you can reference them and thereby show what's new in your ideas. You only got severely downvoted on one, don't let it get to you any more than you can help.

If the ideas are strong, they'll win through if you keep at it.

↑ comment by lc · 2023-11-30T22:47:37.083Z · LW(p) · GW(p)

Oh come on, I was on board with your other satire but no rationalist actually says this sort of thing

Replies from: MadHatter↑ comment by MadHatter · 2023-11-30T03:13:39.211Z · LW(p) · GW(p)

And now I have my own Sequence! I predict that it will be as unpopular as the rest of my work.

Replies from: shankar-sivarajan↑ comment by Shankar Sivarajan (shankar-sivarajan) · 2023-11-30T03:27:47.234Z · LW(p) · GW(p)

Since you explicitly asked for feedback regarding your downvotes, the "oh, woe is me, my views are so unpopular and my posts keep getting downvoted" lamentations you've included in a few of your posts get grating, and might end up self-fulfilling. If you're saying unpopular things, my advice is to own it, and adopt the "haters gonna hate" attitude: ignore the downvotes completely.

Replies from: MadHatter↑ comment by MadHatter · 2023-11-30T03:37:18.400Z · LW(p) · GW(p)

Oh, I do.

Replies from: habryka4↑ comment by habryka (habryka4) · 2023-11-30T03:56:35.618Z · LW(p) · GW(p)

(To be clear, we do have automatic regimes that restrict posting and commenting privileges for downvoted users, since we can't really keep up with the moderation load otherwise, so there are some limits to your ability to ignore them)

Replies from: lahwran, MadHatter↑ comment by the gears to ascension (lahwran) · 2023-11-30T04:05:21.428Z · LW(p) · GW(p)

I think your automatic restriction is currently too tight. I would suggest making it decay faster.

Replies from: Seth Herd, frontier64↑ comment by frontier64 · 2023-12-01T17:22:28.620Z · LW(p) · GW(p)

Counter, I think the restriction is too loose. There are enough people out there making posts that the real issue is lack of quality, not lack of quantity.

Replies from: lahwran, MadHatter↑ comment by the gears to ascension (lahwran) · 2023-12-01T18:50:26.982Z · LW(p) · GW(p)

The problem is a long time contributor can be heavily downvoted once and become heavily rate limited, and then it relies on them earning back their points to be able to post again. I wouldn't say such a thing is necessarily terrible, but it seems to me to have driven away a number of people I was optimistic about who were occasionally saying something many people disagree with and getting heavily downvoted.

Replies from: Dagon, kabir-kumar↑ comment by Dagon · 2023-12-02T16:48:28.897Z · LW(p) · GW(p)

I'm not sure I understand this concern. For someone who posts a burst of unpopular (whether for the topic, for the style, or for other reasons) posts, rate limiting seems ideal. It prevents them from digging deeper, while still allowing them to return to positive contribution, and to focus on quality rather than quantity.

I understand it's annoying to the poster (and I've been caught and annoyed myself), but I haven't seen any that seem like a complete error. I kind of expect the mods would intervene if it were a clear problem, but I also expect the base intervention is advice to slow down.

Replies from: lahwran↑ comment by the gears to ascension (lahwran) · 2023-12-02T16:59:01.755Z · LW(p) · GW(p)

the rate limiting doesn't decay until they've been upvoted for quite a number of additional comments afterwards.

Replies from: Dagon, MadHatter↑ comment by Dagon · 2023-12-02T17:13:11.750Z · LW(p) · GW(p)

https://www.lesswrong.com/posts/hHyYph9CcYfdnoC5j/automatic-rate-limiting-on-lesswrong [LW · GW] claims it's net karma on last 20 posts (and last 20 within a month). And total karma, but that's not an issue for a long-term poster who's just gotten sidetracked to an unpopular few posts.

So yes, "quite a few", especially if upvotes are scarcer than downvotes for the poster. But remember, during this time, they ARE posting, just not at the quantity that wasn't working.

The real question is whether the poster actually changes behavior based on the downvotes and throttling. I do think it's unfortunate that some topics could theoretically be good for LW, but end up not working. I don't think it's problematic that many topics and presentation styles are not possible on LW.
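As a rough illustration of the rule described above, here is a minimal sketch. The function names, the window of 20, the threshold of 0, and the karma values are all assumptions made up for the example; this is not LessWrong's actual implementation or its real parameters. It only shows how a net-karma-over-recent-items rule behaves when one item is heavily downvoted.

```python
from typing import Sequence


def net_recent_karma(karma_scores: Sequence[int], window: int = 20) -> int:
    """Sum the karma of the most recent `window` items (newest last)."""
    return sum(karma_scores[-window:])


def is_rate_limited(
    karma_scores: Sequence[int], window: int = 20, threshold: int = 0
) -> bool:
    """Hypothetical rule: limit the user while recent net karma is below `threshold`."""
    return net_recent_karma(karma_scores, window) < threshold


# Illustrative numbers only: nineteen mildly upvoted comments, then one
# heavily downvoted comment.
history = [2] * 19 + [-45]

# Five more mildly upvoted comments: the downvoted one is still in the window.
after_five_more = history + [2] * 5

# Twenty more mildly upvoted comments: the downvoted one has left the window.
after_twenty_more = history + [2] * 20
```

Under these made-up numbers, the single downvoted comment outweighs the nineteen upvoted ones, and the limit persists until that comment falls out of the window. A time-based window instead of a count-based one would change how quickly that happens.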

↑ comment by MadHatter · 2023-12-02T17:30:07.445Z · LW(p) · GW(p)

My understanding of the current situation with me is that I am not in fact rate-limited purely by automatic processes currently, but rather by some sort of policy decision on the part of LessWrong's moderators.

Which is fine, I'll just continue to post my alignment research on my substack, and occasionally dump linkposts to them in my shortform, which the mods have allowed me continued access to.

↑ comment by Kabir Kumar (kabir-kumar) · 2024-12-09T02:24:14.157Z · LW(p) · GW(p)

Pretty much drove me away from wanting to post non alignment stuff here.

↑ comment by MadHatter · 2023-12-01T17:23:42.057Z · LW(p) · GW(p)

Perhaps it is about right, then.

Replies from: frontier64↑ comment by frontier64 · 2023-12-02T18:37:03.615Z · LW(p) · GW(p)

The votes on this comment imply long vol on LW rate limiting.

↑ comment by Seth Herd · 2023-11-30T21:06:07.781Z · LW(p) · GW(p)

I think the answer is "learn the field". That's what makes you not-a-crank. And that's not setting a high bar; learning a little goes a long way.

The problem with being a crank is that professionals don't have time to evaluate the ideas of every crank. There are a lot, and it's harder to understand them because they don't use the standard concepts to explain their ideas.

↑ comment by Alex_Altair · 2023-11-30T06:21:16.315Z · LW(p) · GW(p)

Maybe, "try gaining skill somewhere with lower standards"?

Replies from: MadHatter↑ comment by the gears to ascension (lahwran) · 2023-11-30T04:10:21.370Z · LW(p) · GW(p)

Get good at finding holes in your ideas. Become appropriately uncertain. Distill your points to increase signal to noise ratio in your communication. Ask for feedback early and often.

LessWrong is not very welcoming for those who are trying to improve their ideas but are currently saying nonsense, because the voting system automatically mutes those who say unpopular things very quickly. I've unfortunately encountered this quite a bit myself. Try putting warnings at the beginning that you know things are bad, request that you be downvoted to but not below zero so you can still post, etc. Explicitly invite harsh negative feedback frequently. Put everything in one post and keep it short. Avoid aesthetic trappings of academia and just say what you mean concisely. If you're going to be clever with language to make a point, explain the point without being clever with language too or many won't get it.

From the little I've understood it seems like you're gesturing in the direction of the boundaries sequence but your SNR is terrible right now and I'm not sure what you're getting at at all.

Replies from: Viliam↑ comment by Viliam · 2023-12-02T21:58:23.873Z · LW(p) · GW(p)

request that you be downvoted to but not below zero so you can still post

This would get an automatic downvote from me.

If you get downvoted, write differently, not more of the same plus a disclaimer that you know that this is not what people want but you are going to write more of it regardless. From my perspective, the disclaimer just makes it worse, because you can no longer claim ignorance.

Replies from: lahwran↑ comment by the gears to ascension (lahwran) · 2023-12-02T23:30:11.099Z · LW(p) · GW(p)

it's a workaround for a broken downvote system. "don't like my post, legit. please be aware the downvote system will ban me if I get heavily downvoted".

Replies from: Dagon, Viliam↑ comment by Dagon · 2023-12-03T17:08:09.374Z · LW(p) · GW(p)

Can you clarify what part of the downvote system is broken? If someone posts multiple things that get voted below zero, that indicates to me that most voters don't want to see more of that on LW. Are you saying it means something else?

I do wish there were agreement indicators on top-level posts, so it could be much clearer to remind people "voting is about whether you think this is good to see on LW, agreement is about the specific arguments". But even absent that, I don't see very many below-zero post scores that surprise me or that I think are strongly incorrect. If I did, I somewhat expect the mods would override a throttle.

Replies from: lahwran↑ comment by the gears to ascension (lahwran) · 2023-12-03T19:21:48.647Z · LW(p) · GW(p)

I once got quite upset when someone posted something anti-trans. because I wrote an angry reply, I got heavily downvoted. as a result, I was only able to post once a day for several months, heavily limiting my ability to contribute. perhaps this is the intended outcome of the system; but I think there ought to be a better curve than that. perhaps directly related to time, rather than number of posts - I had to make an effort to make a trivial post regularly so I'd be able to make a spurt of specific posts when I had something I wanted to comment on.

Replies from: Dagon, MadHatter↑ comment by Dagon · 2023-12-03T22:12:17.598Z · LW(p) · GW(p)

Yeah, I can see how a single highly-downvoted bad comment can outweigh a lot of low-positive good comments. I do wish there were a way to reset the limit in the cases where a poster acknowledges the problem and agrees they won't do it again (in at least the near-term). Or perhaps a more complex algorithm that counts posts/comments rather than just votes.

And I've long been of the opinion that strong votes are harmful, in many ways.

I think you need to be more frugal with your weirdness points [? · GW] (and more generally your demanding-trust-and-effort-from-the-reader points), and more mindful of the inferential distance [? · GW] between yourself and your LW readers.

Also remember that for every one surprisingly insightful post by an unfamiliar author, we all come across hundreds that are misguided, mediocre, or nonsensical. So if you don't yet have a strong reputation, many readers will be quick to give up on your posts and quick to dismiss you as a crank or dilettante. It's your job to prove that you're not, and to do so before you lose their attention!

If there's serious thought behind The Snuggle/Date/Slap Protocol [LW · GW] then you need to share more of it, and work harder to convince the reader it's worth taking seriously. Conciseness is a virtue but when you're making a suggestion that is easy to dismiss as a half-baked thought bubble or weird joke, you've got to take your time and guide the reader along a path that begins at or near their actual starting point.

Ethicophysics II: Politics is the Mind-Savior [LW · GW] opens with language that will trigger the average LWer's bullshit detector, and appears to demand a lot of effort from the reader before giving them reason to think it will be worthwhile. LW linkposts often contain the text of the linked article in the body of the LW post, and at first glance this looks like one of those. In any case, we're probably going to scan the body text before clicking the link. So before we've read the actual article we are hit with a long list of high-effort, unclear-reward, and frankly pretentious-looking exercises. When we do follow the link to Substack we face the trivial inconvenience [? · GW] of clicking two more links and then, if we're not logged in to academia.edu, are met with an annoying 'To Continue Reading, Register for Free' popup. Not a big deal if we're truly motivated to read the paper! But at this point we probably don't have much confidence that it will be worth the hassle.

↑ comment by MadHatter · 2023-11-30T04:42:10.659Z · LW(p) · GW(p)

This is solid advice, I suppose. A friend of mine has compared my rhetorical style to that of Dr. Bronner - I say a bunch of crazy shit, then slap it around a bar of the finest soap ever made by the hand of man.

I started posting my pdfs to academia.edu because I wanted them to look more respectable, not less. Earlier drafts of them used to be on github with no paywall. I'm going to post my latest draft of Ethicophysics I and Ethicophysics II to github later tonight; hopefully this decreases the number of hoops that interested readers have to jump through.

Replies from: lahwran, tslarm↑ comment by the gears to ascension (lahwran) · 2023-11-30T14:41:35.761Z · LW(p) · GW(p)

Just post it directly to less wrong please

↑ comment by tslarm · 2023-11-30T05:01:52.503Z · LW(p) · GW(p)

Sorry, I was probably editing that answer while you were reading/replying to it -- but I don't think I changed anything significant.

Definitely worth posting the papers to github or somewhere else convenient, IMO, and preferably linking directly to them. (I know there's a tradeoff here with driving traffic to your Substack, but my instinct is you'll gain more by maximising your chance of retaining and impressing readers than by getting them to temporarily land on your Substack before they've decided whether you're worth reading.)

LWers are definitely not immune to status considerations, but anything that looks like prioritising status over clear, efficient communication will tend to play badly.

And yeah, I think leading with 'crazy shit' can sometimes work, but IME this is almost always when it's either: used as a catchy hook and quickly followed by a rewind to a more normal starting point; part of a piece so entertaining and compellingly-written that the reader can't resist going along with it; or done by a writer who already has high status and a devoted readership.

Replies from: Viliam↑ comment by Viliam · 2023-12-02T22:20:10.327Z · LW(p) · GW(p)

I know there's a tradeoff here with driving traffic to your Substack

Why not post the contents of the papers directly on Substack? They would only be one click away from here, and would not compete against Substack.

From my perspective, academia.edu and Substack are equally respectable (that is, not at all).

I don't think you're being consistently downvoted: most of your comments are neutral-to-slightly positive?

I do see one recent post of yours that was downvoted noticeably, https://www.lesswrong.com/posts/48X4EFJdCQvHEvL2t/ethicophysics-ii-politics-is-the-mind-savior [LW · GW]

I downvoted that post myself. (Uh....sorry?) My engagement with it was as follows:

- I opened the post. It consisted of a few paragraphs that did not sound particularly encouraging ('ethicophysical treatment...modeled on the work of Hegel and Marx' does not fill me with joy to read), plus a link at the top 'This is a linkpost for [link]'.

- I clicked the link. It took me here, to a paywalled Substack post with another link at the top.

- I clicked the next link. It took me here, to a sketchy-looking site that wanted me to download a PDF.

- I sighed, closed the tab, went back and downvoted the post.

I...guess this might be me being impatient or narrow-minded or some such. But ideally I would like either to see what your post is about directly, or at least have a clearer and more comprehensible summary that makes me think putting the effort into digging in will likely be rewarded.

The Snuggle/Date/Slap Protocol [LW · GW] seems to me to be just not how language models work, and I don't expect many to actually care in the relevant ways if any of your forecasted newspaper articles get written about ChatGPT outputting those tokens at people.

I did not read your ethicophysics stuff, nor did I downvote. You can probably get me to read those by summarizing your main methods and conclusions, with some obvious facts about human morality which you can reconstruct to lend credence to your hypothesis, and nonobvious conclusions to show you're not just saying tautologies. I in fact expect that doc to be filled with a bunch of tautologies or to just be completely wrong.

5 posts in 5 hours is way too many. People will be much more generous if you space things out or put them on shortform. I've never discussed this with anyone else, but if I was going to make up a number it would be no more than two posts per month unless they're getting fantastic karma, and avoid having two posts above the fold at the same time.

↑ comment by Gunnar_Zarncke · 2023-11-30T20:20:31.929Z · LW(p) · GW(p)

I agree that such posts - esp. for a newbie - would be more suitable to start as shortforms.

↑ comment by Brendan Long (korin43) · 2023-11-30T05:27:27.682Z · LW(p) · GW(p)

I don't think the raw number is the problem. If someone writes too many posts in general, they'll start to get ignored, not heavily downvoted.

Replies from: Gunnar_Zarncke↑ comment by Gunnar_Zarncke · 2023-11-30T20:19:11.260Z · LW(p) · GW(p)

There is a risk of being seen as a spammer if there are too many posts of dubious quality.

I skimmed The Snuggle/Date/Slap Protocol [LW · GW] and Ethicophysics II: Politics is the Mind-Savior [LW · GW] which are two recent downvoted posts of yours. I think they get negative karma because they are difficult to understand and it's hard to tell what you're supposed to take away from them. They would probably be better received if the content were written such that it's easy to understand what your message is at an object-level as well as what the point of your post is.

I read the Snuggle/Date/Slap Protocol and feel confused about what you're trying to accomplish (is it solving AI Alignment?) and how the method is supposed to accomplish that.

In the ethicophysics posts, I understand the object level claims/material (like the homework/discussion questions) but fail to understand what the goal is. It seems like you are jumping to grounded mathematical theories for stuff like ethics/morality which immediately makes me feel dubious. It's a too much, too grand, too certain kind of reaction. Perhaps you're just spitballing/brainstorming some ideas, but that's not how it comes across and I infer you feel deeply assured that it's correct given statements like "It [your theory of ethics modeled on the laws of physics] therefore forms an ideal foundation for solving the AI safety problem."

I don't necessarily think you should change whatever you're doing BTW just pointing out some likely reactions/impressions driving negative karma.

↑ comment by MadHatter · 2023-11-30T01:34:52.955Z · LW(p) · GW(p)

Thanks, this makes a lot of sense.

The snuggle/date/slap protocol is meant to give powerful AI's a channel to use their intelligence to deliver positive outcomes in the world by emitting performative speech acts in a non-value-neutral but laudable way.

Replies from: Joe Kwon↑ comment by Joe Kwon · 2023-11-30T01:44:03.443Z · LW(p) · GW(p)

FWIW I understand now what it's meant to do, but have very little idea how your protocol/proposal delivers positive outcomes in the world by emitting performative speech acts. I think explaining your internal reasoning/hypothesis for how emitting performative speech acts leads to powerful AI's delivering positive outcomes would be helpful.

Is such a "channel" necessary to deliver positive outcomes? Is it supposed to make it more likely that AI delivers positive outcomes? More details on what a success looks like to you here, etc.

Replies from: MadHatter↑ comment by MadHatter · 2023-11-30T01:54:16.234Z · LW(p) · GW(p)

You don't want GPT-4 or whatever routinely issuing death threats, which is the non-performative equivalent of the SLAP token. So you need to clearly distinguish between performative and non-performative speech acts if your AI is going to be even close to not being visibly misaligned.

But why would an aligned AI be neutral about important things like a presidential election? I get that being politically neutral drives the most shareholder value for OpenAI, and by extension Microsoft. So I don't expect my proposal to be implemented in GPT-4 or really any large corporation's models. Nobody who can afford to train GPT-4 can also afford to light their brand on fire after they have done so.

Success would look like, a large, capable, visibly aligned model, which has delivered a steady stream of valuable outcomes to the human race, emitting a SLAP token with reference to Donald Trump, this fact being reported breathlessly in the news media, and that event changing the outcome of a free and fair democratic election. That is, an expert rhetorician using the ethical appeal (ethical=ethos from the classical logos/pathos/ethos distinction in rhetoric) to sway an audience towards an outcome that I personally find desirable and worthy.

If I ever get sufficient capital to retrain the models I trained at ellsa.ai (the current one sucks if you don't use complete sentences, but is reasonably strong for a 7B parameter model if you do), I may very well implement the protocol.

Replies from: Viliam↑ comment by Viliam · 2023-12-02T22:28:15.928Z · LW(p) · GW(p)

I think you are completely missing the entire point of the AI alignment problem.

The problem is how to make the AI recognize good from evil. Not whether upon recognizing good, the AI should print "good" to output, or smile, or clap its hands. Either reaction is equally okay, and can be improved later. The important part is that AI does not print "good" / smile / clap its hands when it figures out a course of action which would, as a side effect, destroy humankind, or do something otherwise horrible (the problem is to define what "otherwise horrible" exactly means). Actually it is more complicated than this, but you are already missing the very basics.

Sampling one of your downvoted posts: the one here [LW · GW] is nonsensical and probably a joke?

It proposes adding a <SNUGGLE>/<DATE>/<SLAP> token in order to "control" GPT-4. But tokens are numbers that represent common sequences of characters in an NLP dataset. They are not buttons on an LLM remote control - if the <SNUGGLE> token doesn't have any representation in the dataset, the model won't know what it means. You could tell ChatGPT "when I type <SNUGGLE>, do X", but that'd be different than somehow "converting" Noam Chomsky works into a <SLAP> feature and building it into the base model.
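
To make the "tokens are numbers" point concrete, here is a minimal sketch of a toy greedy longest-match tokenizer. The vocabulary, the IDs, and the `encode` helper are all invented for illustration; this is not GPT-4's tokenizer or any real library's API.

```python
# Toy vocabulary: made up for this example, not taken from any real model.
TOY_VOCAB = {"<": 0, ">": 1, "S": 2, "N": 3, "U": 4, "G": 5, "L": 6, "E": 7,
             "SN": 8, "UG": 9, "GLE": 10, "hello": 11, " ": 12}

def encode(text, vocab):
    """Greedy longest-match encoding: text in, list of integer IDs out."""
    ids = []
    i = 0
    while i < len(text):
        # Try the longest candidate starting at position i first.
        for end in range(len(text), i, -1):
            piece = text[i:end]
            if piece in vocab:
                ids.append(vocab[piece])
                i = end
                break
        else:
            raise ValueError(f"no vocabulary entry covers {text[i]!r}")
    return ids

# Without a dedicated entry, the string is just split into known pieces.
before = encode("<SNUGGLE>", TOY_VOCAB)

# Adding an entry gives the string its own ID, and nothing more.
extended = dict(TOY_VOCAB)
extended["<SNUGGLE>"] = len(extended)
after = encode("<SNUGGLE>", extended)

print(before)  # [0, 8, 9, 10, 1]
print(after)   # [13]
```

Adding the vocabulary entry only changes which integer the text maps to. Whatever a model does with ID 13 would still have to come from training data in which that ID actually appears.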

↑ comment by lc · 2023-11-30T01:46:12.429Z · LW(p) · GW(p)

Edit/Addendum: I have just read your post on infohazards [LW · GW]. I retract my criticisms; as a fellow infopandora and jessicata stan, I think the site requires more of your work.

comment by MadHatter · 2023-11-30T01:05:12.558Z · LW(p) · GW(p)

I agree it sounds like I am trolling, but I am paying literal factual dollars to a contractor to build out a prototype of an llm-powered app that can emit performative speech acts in this fashion.

My job title is engineering fellow, and the name of the company where I work ends in "AI".

Replies from: lc↑ comment by lc · 2023-11-30T01:09:51.423Z · LW(p) · GW(p)

It appears that LessWrong broke Question posts. I assume this is a reply to my answer?

Replies from: MadHatter

As an additional reference, this talk from the University of Chicago has been very helpful for me and might be helpful for you too.

The presenter, Larry McEnerney, talks about why the most important thing is not what original work or feelings we have. He argues that it's about changing people's minds, and that we as writers must understand the norms of our readers and community in this process.

One big issue is that you are not respecting the format of LW -- add more context, and either link to a document directly or put the text inline. Resolving this would cover half of the most downvoted posts. You can ask people to review your posts for this before submitting.

Another big issue is that you are a prolific writer, but not a good editor. Just edit more, your writing could be like 5x shorter without losing anything meaningful. You have this overly academic style for your scientific writing, it's not good on the internet, and not even good in scientific papers. A good take here: https://archive.is/29hNC

From "The Elements of Style": "Vigorous writing is concise. A sentence should contain no unnecessary words, a paragraph no unnecessary sentences, for the same reason that a drawing should have no unnecessary lines and a machine no unnecessary parts. This requires not that the writer make all his sentences short, or that he avoid all detail and treat his subjects only in outline, but that every word tell."

Also, you are trying to move too fast, pursuing too many fronts. Why don't you just focus on one thing for some time, clarify and polish it enough so that people can actually grasp clearly what you mean?

↑ comment by MadHatter · 2023-11-30T09:34:22.683Z · LW(p) · GW(p)

I just awarded all of the prizes, but this answer feels pretty useful. You can also claim $100 if you want it.

Most of this work was done in 2018 or before, and just never shared with the mainstream alignment community. The only reason it looks like I'm trying to move too fast is that I am trying to get credit for the work I have done too fast.

What would you recommend I polish first? My intuition says Ethicophysics I, just because that sounds the least rational and is the most foundational.

Replies from: sergey-kharagorgiev↑ comment by Sergii (sergey-kharagorgiev) · 2023-11-30T14:58:18.684Z · LW(p) · GW(p)

No, thanks, I think your awards are fair )

I did not read the "Ethicophysics I" paper in detail, only skimmed it. It looks to me very similar to "On purposeful systems" https://www.amazon.com/Purposeful-Systems-Interdisciplinary-Analysis-Individual/dp/0202307980 in its approach to formalizing things like feelings/emotions/ideals.

Have you read it? I think it would help your case a lot if you move to terms of system theory like in "On purposeful systems", rather than pseudo-theological terms.

Shortly:

- Post the actual text. Not a link to a link to a download site.

- Make your idea clear. No joking, no... whatever. The inferential distance is too large, the chance to be understood is already too low. Don't make it worse.

- Post one idea at a time. If your article contains a dozen ideas and I disagree with all of them, I am just going to click the downvote button without an explanation. If your article contains one idea and I disagree with it, I may post a reason why I disagree.

- Don't post many articles at the same time. Among other things, it sends a clear signal that you are not listening to feedback.

- This actually should be point zero: Consider the possibility that you might actually be wrong. (From my perspective, this possibility is very high.)

↑ comment by MadHatter · 2023-12-03T01:28:01.867Z · LW(p) · GW(p)

Is this clear enough:

I posit that the reason that humans are able to solve any coordination problems at all is that evolution has shaped us into game players that apply something vaguely like a tit-for-tat strategy meant to enforce convergence to a nearby Schelling Point / Nash Equilibrium, and to punish defectors from this Schelling Point / Nash Equilibrium. I invoke a novel mathematical formalization of Kant's Categorical Imperative as a potential basis for coordination towards a globally computable Schelling Point. I believe that this constitutes a promising approach to the alignment problem, as the mathematical formalization is both simple to implement and reasonably simple to measure deviations from. Using this formalization would therefore allow us both to prevent and detect misalignment in powerful AI systems. As a theory of change, I believe that applying RLHF to LLM's using a strong and consistent formalization of the Categorical Imperative is a plausible and reasonably direct route to good outcomes in the prosaic case of LLM's, and I believe that LLM's with more neuromorphic components added are a strong contender for a pathway to AGI.
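
For readers who have not met the term, a minimal sketch of tit-for-tat in an iterated prisoner's dilemma follows. The payoff numbers are the conventional textbook ones and the function names are chosen for this illustration; none of it is taken from the ethicophysics papers or their formalization.

```python
# Conventional illustrative payoffs: (my move, their move) -> (my score, their score).
# "C" = cooperate, "D" = defect.
PAYOFFS = {
    ("C", "C"): (3, 3),
    ("C", "D"): (0, 5),
    ("D", "C"): (5, 0),
    ("D", "D"): (1, 1),
}

def tit_for_tat(own_history, other_history):
    """Cooperate first, then copy the other player's previous move."""
    return "C" if not other_history else other_history[-1]

def always_defect(own_history, other_history):
    """Defect unconditionally."""
    return "D"

def play(strategy_a, strategy_b, rounds):
    """Run an iterated game and return the two total scores."""
    hist_a, hist_b = [], []
    score_a = score_b = 0
    for _ in range(rounds):
        move_a = strategy_a(hist_a, hist_b)
        move_b = strategy_b(hist_b, hist_a)
        pay_a, pay_b = PAYOFFS[(move_a, move_b)]
        score_a += pay_a
        score_b += pay_b
        hist_a.append(move_a)
        hist_b.append(move_b)
    return score_a, score_b

print(play(tit_for_tat, tit_for_tat, 10))    # (30, 30)
print(play(tit_for_tat, always_defect, 10))  # (9, 14)
```

Two tit-for-tat players settle into mutual cooperation, while a defector is exploited once and then punished every round after. That is the "punish defectors" behaviour the paragraph above gestures at, in its simplest form.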

Replies from: Viliam↑ comment by Viliam · 2023-12-03T10:04:30.362Z · LW(p) · GW(p)

Much better.

So, this could be an abstract at the beginning of the sequence, and the individual articles could approximately provide evidence for sentences in this abstract.

Or you could do it Eliezer's way, and start with posting the articles that provide evidence for the individual sentences (each article containing its own summary), and only afterwards post an article that ties it all together. This way would allow readers to evaluate each article on its own merits, without being distracted by whether they agree or disagree with the conclusion.

It is possible that you have actually tried to do exactly this, but speaking for myself, I never would have guessed so from reading the original articles.

(Also, if your first article gets downvoted, please pause and reflect on that fact. Either your idea is wrong and readers express disagreement, or it is just really badly written and readers express confusion. In either case, pushing forward is not helpful.)

Replies from: MadHatter

(preface: writing and communicating is hard and i'm glad you are trying to improve)

i sampled two:

this post [LW · GW] was hard to follow, and didn't seem to be very serious. it also reads as unfamiliar with the basics of the AI Alignment problem (the proposed changes to gpt-4 don't concretely address many/any of the core Alignment concerns for reasons addressed by other commenters)

this post [LW · GW] makes multiple (self-proclaimed controversial) claims that seem wrong or are not obvious, but doesn't try to justify them in-depth.

overall, i'm getting the impression that your ideas are 1) wrong and you haven't thought about them enough and/or 2) you aren't communicating them well enough. i think the former is more likely, but it could also be some combination of both. i think this means that:

- you should try to become more familiar with the alignment field, and common themes surrounding proposed alignment solutions and their pitfalls

- you should consider spending more time fleshing out your writing and/or getting more feedback (whether it be by talking to someone about your ideas, or sending out a draft idea for feedback)

↑ comment by MadHatter · 2023-11-30T03:00:15.320Z · LW(p) · GW(p)

I did SERI-MATS in the winter cohort in 2023. I am as familiar with the alignment field as is possible without having founded it or been given a research grant to work in it professionally (which I have sought but been turned down in the past).

I'm happy to send out drafts, and occasionally I do, but the high-status people I ask to read my drafts never quite seem to have the time to read them. I don't think this is because of any fault of theirs, but it also has not conditioned me to seek feedback before publishing things that seem potentially controversial.

Replies from: GuySrinivasan, cody-rushing↑ comment by SarahNibs (GuySrinivasan) · 2023-11-30T04:10:30.299Z · LW(p) · GW(p)

Have you tried getting feedback rather than getting feedback from high-status people?

Replies from: MadHatter↑ comment by MadHatter · 2023-11-30T04:33:22.181Z · LW(p) · GW(p)

Most of the mentors I have are, for natural reasons, very high-status people. I want to call out @Steven Byrnes [LW · GW] as having been a notable exception to the trend of high-status people not responding to my drafts.

I can share my email address with anybody who DM's me, if people are willing to read my drafts.

↑ comment by Cody Rushing (cody-rushing) · 2023-11-30T07:40:26.267Z · LW(p) · GW(p)

I'm glad to hear you got exposure to the Alignment field in SERI MATS! I still think that your writing reads as though your ideas misunderstand core alignment problems, so my best feedback then is to share drafts/discuss your ideas with others familiar with the field. My guess is that it would be preferable for you to find people who are critical of your ideas and try to understand why, since it seems like they are representative of the kinds of people who are downvoting your posts.

I haven't seen this mentioned explicitly, so I will. Your tone is off relative to this community, in particular ways that signal legitimate complaints.

You do a good job of sounding humble in some places, but your most-downvoted "ethicophysics I" sounds pretty hubristic. It seems to claim that you have a scientifically sound and complete explanation for religion and for history. Those are huge claims, and they're mentioned with no hint of epistemic modesty (recognizing that you're not sure you're right).

This community is really big on epistemic modesty, and I think there's a good reason. It's easier to have productive discussions when everyone doesn't just assume they're sure they're right, and assume the problem must be that others don't recognize their infallible logic and evidence.

The other big problem with the tone and content of that post is that it doesn't mention a single previous bit of work or thought, nor does it use terminology beyond "alignment" indicating that you have read others' theories before writing about your own. I think this is also a legitimate cultural expectation. Everyone has limited reading time, so rereading the same ideas stated in different terms is a bad idea. If you haven't read the previous literature, you're probably restating existing ideas, and you can't help the reader know where your ideas are new.

I actually upvoted that post because it's succinct and actually addresses the alignment problem. But I think tone is a big reason people downvote, even if they don't consciously recognize why they disliked something.

↑ comment by MadHatter · 2023-11-30T21:32:03.770Z · LW(p) · GW(p)

Well what's the appropriate way to act in the face of the fact that I AM sure I am right? I've been offering public bets of the nickel of some high-karma person versus my $100, which seems like a fair and attractive bet for anyone who doubts my credibility and ability to reason about the things I am talking about.

I will happily bet anyone with significant karma that Yudkowsky will find my work on the ethicophysics valuable a year from now, at the odds given above.

Replies from: zac-hatfield-dodds, jalex-stark-1↑ comment by Zac Hatfield-Dodds (zac-hatfield-dodds) · 2023-11-30T22:31:34.763Z · LW(p) · GW(p)

I have around 2K karma and will take that bet at those odds, for up to 1000 dollars on my side.

Resolution criteria are to ask EY about his views on this sequence as of December 1st 2024, literally "which of Zac or MadHatter won this bet", and the bet resolves with no payment if he declines to respond or does not explicitly rule for any other reason.

I'm happy to pay my loss by eg Venmo, and would request winnings as a receipt for your donation to GiveWell's all-grants fund.

Replies from: MadHatter↑ comment by MadHatter · 2023-11-30T22:38:41.946Z · LW(p) · GW(p)

OK. I can only personally afford to be wrong to the tune of about $10K, which would be what, $5 on your part? Did I do that math correctly?

Replies from: zac-hatfield-dodds↑ comment by Zac Hatfield-Dodds (zac-hatfield-dodds) · 2023-11-30T22:50:50.756Z · LW(p) · GW(p)

Yep, arithmetic matches. However if 10K is the limit you can reasonably afford, I'd be more comfortable betting my $1 against your $2000.
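
A quick sketch of that arithmetic, using the odds stated earlier in the thread (a nickel against $100). The helper name `counter_stake` is made up for this example.

```python
from fractions import Fraction

# Odds as stated in the thread: $100 against a nickel ($0.05).
ODDS = Fraction(100) / Fraction(5, 100)  # 2000 to 1

def counter_stake(big_stake_dollars):
    """Dollars the other side puts up against a given stake at these odds."""
    return Fraction(big_stake_dollars) / ODDS

print(ODDS)                   # 2000
print(counter_stake(10_000))  # 5
print(counter_stake(2_000))   # 1
```

So $10K at these odds corresponds to $5 on the other side, and the smaller version of the bet is $1 against $2000.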

Replies from: MadHatter↑ comment by MadHatter · 2023-11-30T22:51:54.678Z · LW(p) · GW(p)

OK, sounds good! Consider it a bet.

Replies from: zac-hatfield-dodds, zac-hatfield-dodds↑ comment by Zac Hatfield-Dodds (zac-hatfield-dodds) · 2024-12-03T22:31:02.621Z · LW(p) · GW(p)

Hey @MadHatter [LW · GW] - Eliezer confirms that I've won our bet.

I ask that you donate my winnings to GiveWell's All Grants fund, here, via credit card or ACH (preferred due to lower fees). Please check the box for "I would like to dedicate this donation to someone" and include zac@zhd.dev as the notification email address so that I can confirm here that you've done so.

Replies from: niplav↑ comment by niplav · 2024-12-08T23:16:17.813Z · LW(p) · GW(p)

Has @MadHatter [LW · GW] replied or transferred the money yet?

Replies from: zac-hatfield-dodds↑ comment by Zac Hatfield-Dodds (zac-hatfield-dodds) · 2024-12-10T08:07:08.136Z · LW(p) · GW(p)

We've been in touch, and agreed that MadHatter will make the donation by end of February. I'll post a final update in this thread when I get the confirmation from GiveWell.

Replies from: zac-hatfield-dodds↑ comment by Zac Hatfield-Dodds (zac-hatfield-dodds) · 2025-04-04T15:43:54.611Z · LW(p) · GW(p)

Unfortunately MadHatter hasn't responded to messages sent in March, and I haven't heard anything from GiveWell to suggest that the donation has been made.

↑ comment by Zac Hatfield-Dodds (zac-hatfield-dodds) · 2023-11-30T22:54:40.480Z · LW(p) · GW(p)

Done! Setting a calendar reminder; see you in a year.

Replies from: Eliezer_Yudkowsky↑ comment by Eliezer Yudkowsky (Eliezer_Yudkowsky) · 2024-12-03T15:25:36.243Z · LW(p) · GW(p)

Zac wins.

↑ comment by Jalex Stark (jalex-stark-1) · 2023-12-02T22:27:26.263Z · LW(p) · GW(p)

Well what's the appropriate way to act in the face of the fact that I AM sure I am right?

- Change your beliefs

- Convince literally one specific other person that you're right and your quest is important, and have them help translate for a broader audience

I strongly downvoted Homework Answer: Glicko Ratings for War [LW · GW]. The reason is that it appears to be a pure data dump that isn't intended to be actually read by a human. As it is a follow-up to a previous post, it might have been better as a comment or edit on the original post linking to your github with the data instead.

Looking at your post history, I will propose that you could improve the quality of your posts by spending more time on them. There are only a few users who manage to post multiple times a week and consistently get many upvotes.

comment by MadHatter · 2023-11-30T10:24:21.478Z · LW(p) · GW(p)

One of the homework questions in Ethicophysics II is to compile that list and then search for patterns in it. For instance, Islamic fighting groups comprise both the uppermost echelons, and the lowermost echelons. (The all-time lowest rating is for ISIS.)

Since no one else is doing my homework problems, I thought I would prime the pump by posting a partial answer and encouraging people to trawl through it for patterns.

It looks like bad luck.

- It seems that you're averse to social status, or reject the premise in some way. That is a common cause of self-deprecation. The dynamics underlying social status, and the downstream effects, are in fact awful and shouldn't exist. It makes sense to look at the situation with social status and recoil in horror and disgust. I did something similar from 2014-2016, declined to participate, and it made my life hell. A proper fix is not currently within reach (accelerating AI might do a lot, building aligned AGI almost certainly will), and failing to jump through the hoops will make everything painful for you and the people around you (or at least unpleasant). Self-deprecation requires way more charisma than it appears, since they are merely pretending to throw away social status; we are social status-pursuing monkeys in a very deep way, and hemorrhaging one's own social status for real is the wrong move in our civilization's current form. This will be fixed eventually, unless we all die. Until then, "is this cringe" is a surprisingly easy subroutine [LW · GW] to set up; I know, I've done it.

- Read Ngo's AI safety from first principles summary [? · GW] in order to make sure you're not missing anything important, and the Superintelligence FAQ [LW · GW] and the Unilateralists Curse [? · GW] if that's not enough and you still get the sense that you're not on the same page as everyone else.

- If all of this seems a bit much, amplify daily motivation by reading the Execute by default [LW · GW], AI timelines dialog [LW · GW], Buck's freshman year [LW · GW], and What to do in response to an emergency [LW · GW].

↑ comment by the gears to ascension (lahwran) · 2023-11-30T02:46:26.037Z · LW(p) · GW(p)

I'd just note - beware the concept that social "status" is a one dimensional variable. but yes, the things the typical one dimensional characterization refers to are, in very many cases, summaries of real dynamics.

comment by Mir (mir-anomaly) · 2023-11-30T10:44:37.879Z · LW(p) · GW(p)

my brain is insufficiently flexible to be able to surrender to social-status-incentives without letting that affect my ability to optimise purely for my goal. the costs [EA(p) · GW(p)] of [EA · GW] compromise [EA · GW] (++ [EA(p) · GW(p)]) btn diff optimisation criteria are steep, so i would encourage more ppl to rebel against prevailing social dynamics. it helps u think more clearly. it also mks u miserable, so u hv to balance it w concerns re motivation. altruism never promised to be easy. 🍵

In another of your most downvoted posts, you say

I kind of expect this post to be wildly unpopular

I think you may be onto something here.

5 comments

Comments sorted by top scores.

comment by ToasterLightning (BarnZarn) · 2024-12-10T09:44:42.582Z · LW(p) · GW(p)

Wow, I came here fully expecting this post to have been downvoted to oblivion, and then realized this was not reddit and the community would not collectively downvote your post as a joke

Replies from: notfnofn

comment by Odd anon · 2023-11-30T01:51:05.366Z · LW(p) · GW(p)

I found some of your posts to be really difficult to read. I still don't really know what some of them are even talking about, and on originally reading them I was not sure whether there was anything even making sense there.

Sorry if this isn't all that helpful. :/

Replies from: MadHatter