AllAmericanBreakfast's Shortform

post by DirectedEvolution (AllAmericanBreakfast) · 2020-07-11T19:08:01.705Z · LW · GW · 377 commentsContents

382 comments

377 comments

Comments sorted by top scores.

comment by DirectedEvolution (AllAmericanBreakfast) · 2020-09-28T00:33:47.003Z · LW(p) · GW(p)

SlateStarCodex, EA, and LW helped me get out of the psychological, spiritual, political nonsense in which I was mired for a decade or more.

I started out feeling a lot smarter. I think it was community validation + the promise of mystical knowledge.

Now I've started to feel dumber. Probably because the lessons have sunk in enough that I catch my own bad ideas and notice just how many of them there are. Worst of all, it's given me ambition to do original research. That's a demanding task, one where you have to accept feeling stupid all the time.

But I still look down that old road and I'm glad I'm not walking down it anymore.

Replies from: Viliam↑ comment by Viliam · 2020-09-28T19:47:43.506Z · LW(p) · GW(p)

I started out feeling a lot smarter. I think it was community validation + the promise of mystical knowledge.

Too smart for your own good. You were supposed to believe it was about rationality. Now we have to ban you and erase your comment before other people can see it. :D

Now I've started to feel dumber. Probably because the lessons have sunk in enough that I catch my own bad ideas and notice just how many of them there are. [...] you have to accept feeling stupid all the time. But I still look down that old road and I'm glad I'm not walking down it anymore.

Yeah, same here.

comment by DirectedEvolution (AllAmericanBreakfast) · 2023-06-11T18:29:02.489Z · LW(p) · GW(p)

Epistemic activism

I think LW needs better language to talk about efforts to "change minds." Ideas like asymmetric weapons and the Dark Arts are useful but insufficient.

In particular, I think there is a common scenario where:

- You have an underlying commitment to open-minded updating and possess evidence or analysis that would update community beliefs in a particular direction.

- You also perceive a coordination problem that inhibits this updating process for a reason that the mission or values of the group do not endorse.

- Perhaps the outcome of the update would be a decline in power and status for high-status people. Perhaps updates in general can feel personally or professionally threatening to some people in the debate. Perhaps there's enough uncertainty in what the overall community believes that an information cascade has taken place. Perhaps the epistemic heuristics used by the community aren't compatible with the form of your evidence or analysis.

- Solving this coordination problem to permit open-minded updating is difficult due to lack of understanding or resources, or by sabotage attempts.

When solving the coordination problem would predictably lead to updating, then you are engaged in what I believe is an epistemically healthy effort to change minds. Let's call it epistemic activism for now.

Here are some community touchstones I regard as forms of epistemic activism:

- The founding of LessWrong and Effective Altruism

- The one-sentence declaration on AI risks

- The popularizing of terms like Dark Arts, asymmetric weapons, questionable research practices, and "importance hacking."

- Founding AI safety research organizations and PhD programs to create a population of credible and credentialed AI safety experts; calls for AI safety researchers to publish in traditional academic journals so that their research can't be dismissed for not being subject to institutionalized peer review

- The publication of books by high-status professionals articulating a shortcoming in current practice and endorsing some new paradigm for their profession. Such books don't just collect arguments and evidence for the purpose of individual updating, but also create common knowledge about "what people in my profession are talking about."

I suspect the strongest reason to categorize epistemic activism as a "symmetric weapon" or the "Dark Arts" is as a guardrail. An analogy can be drawn with professional integrity: you should neither do anything unethical, nor do anything that risks appearing unethical. Similar, you should neither do anything that's Dark Arts, nor anything that risks appearing like the Dark Arts. Explicitly naming that you are engaging in epistemic activism looks like the Dark Arts, so we shouldn't do it. It creates a permissive environment for bad-faith actors to actually engage in the Dark Arts while calling it "epistemic activism." After all, "epistemic activism" assumes a genuine commitment to open-minded updating which is certainly not always the case.

That's a legitimate risk. I am weighing it against two other risks that I think are also severe:

- The existence of coordination problems that inhibit normal updating is also an epistemic risk, and if they represent an equilibrium or the kinetics of change are too slow, action is required to resolve the problem. The ability to notice and respond to this dynamic is bolstered when we have the language to describe it.

- Epistemic activism happens anyway - it just has to be naturalized so that it doesn't look like an activist effort. I think that creates an incentive to disguise one's motives and creates confusion and a tendency toward inaction, particularly by lower-status people, when faced with an epistemic coordination problem.

Because I think that deference usually leads to more accurate individual beliefs, I think that epistemic activism should often result in the activist having their mind change. They might be wrong about the existence of the coordination problem, or they might find that there's a ready rebuttal for their evidence or argument that they find persuasive. That is a successful outcome of epistemic activism: normal updating has occurred.

This is a key difference between epistemic activism and being a crank. The crank often also perceives that normal updating is impaired due to a social coordination problem. The difference is that a crank would regard it as a failure if the resumption of updating resulted in the crank realizing they were wrong all along. And for that reason, cranks often decide that the fact that the community hasn't updated in the direction they expected is evidence that the coordination problem still exists.

A good epistemic activist ought to have a separate argument and evidence base for the existence of the coordination problem, independent from how they expect community beliefs would update if the problem was resolved. Let's give an example:

- My mom's anxiety often impairs her, or my family as a whole, from trying new things together (this is the coordination problem). I think they'd enjoy snorkeling (this is the outcome I predict for the update). I have to track the existence of my mom's anxiety about snorkeling and my success in addressing it separately from whether or not she ultimately becomes enthusiastic about snorkeling.

- If I saw that despite my best efforts, my mom's initial anxiety on the subject was static, then I might conclude I'd simply failed to solve the coordination problem.

- If instead, my mom shifts from initial anxiety to a cogent description of why she's just not interested in snorkeling ("it's risky, I don't like cold water, I don't want to spend on a wetsuit, I'm just not that excited about it"), then I can't regard this as evidence she's still anxious. It just means I have to update toward thinking I was incorrect in predicting anxiety was inhibiting her from exploring an activity she would actually enjoy.

- If I decide that my mom's new, cogent description of why she's not interested in snorkeling is evidence that she's still anxious, then I'm engaging in crank behavior, not epistemic activism.

↑ comment by Vladimir_Nesov · 2023-06-11T20:57:51.541Z · LW(p) · GW(p)

When communicating an argument, the quality of feedback about its correctness you get depends on efforts around its delivery whose shape doesn't depend on its correctness. The objective of improving quality of feedback in order to better learn from it is a check on spreading nonsense.

comment by DirectedEvolution (AllAmericanBreakfast) · 2020-07-15T03:30:21.988Z · LW(p) · GW(p)

Things I come to LessWrong for:

- An outlet and audience for my own writing

- Acquiring tools of good judgment and efficient learning

- Practice at charitable, informal intellectual argument

- Distraction

- A somewhat less mind-killed politics

Cons: I'm frustrated that I so often play Devil's advocate, or else make up justifications for arguments under the principle of charity. Conversations feel profit-oriented and conflict-avoidant. Overthinking to the point of boredom and exhaustion. My default state toward books and people is bored skepticism and political suspicion. I'm less playful than I used to be.

Pros: My own ability to navigate life has grown. My imagination feels almost telepathic, in that I have ideas nobody I know has ever considered, and discover that there is cutting edge engineering work going on in that field that I can be a part of, or real demand for the project I'm developing. I am more decisive and confident than I used to be. Others see me as a leader.

Replies from: Viliam↑ comment by Viliam · 2020-07-15T19:01:20.185Z · LW(p) · GW(p)

Some people optimize for drama. It is better to put your life in order, which often means getting the boring things done. And then, when you need some drama, you can watch a good movie.

Well, it is not completely a dichotomy. There is also some fun to be found e.g. in serious books. Not the same intensity as when you optimize for drama, but still. It's like when you stop eating refined sugar, and suddenly you notice that the fruit tastes sweet.

comment by DirectedEvolution (AllAmericanBreakfast) · 2022-09-17T17:45:29.658Z · LW(p) · GW(p)

Chemistry trick

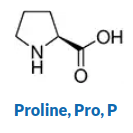

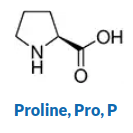

Once you've learned to visualize, you can employ my chemistry trick to learn molecular structures. Here's the structure of Proline (from Sigma Aldrich's reference).

Before I learned how to visualize, I would try to remember this structure by "flashing" the whole 2D representation in my head, essentially trying to see a duplicate of the image above in my head.

Now, I can do something much more engaging and complex.

I visualize the molecule as a landscape, and myself as standing on one of the atoms. For example, perhaps I start by standing on the oxygen at the end of the double bond.

I then take a walk around the molecule. Different bonds feel different - a single bond is a path, a double bond a ladder, and a triple bond is like climbing a chain-link fence. From each new atomic position, I can see where the other atoms are in relation to me. As I walk around, I get practice in recalling which atom comes next in my path.

As you can imagine, this is a far more rich and engaging form of mental practice than just trying to reproduce static 2D images in my head.

A few years ago, I felt myself to have almost no ability to visualize. Now, I am able to do this with relative ease. So if you don't see this as achievable, I encourage you to practice visualizing, because any skill you develop there can become a very powerful tool for learning.

Replies from: AllAmericanBreakfast, Viliam↑ comment by DirectedEvolution (AllAmericanBreakfast) · 2022-09-19T01:03:21.577Z · LW(p) · GW(p)

I was able to memorize the structures of all 20 amino acids pretty easily and pleasantly in a few hours' practice over the course of a day using this technique.

↑ comment by Viliam · 2022-09-18T12:40:38.098Z · LW(p) · GW(p)

I imagine a computer game, where different types of atoms are spheres of different color (maybe also size; at least H should be tiny), connected the way you described, also having the correct 3D structure, so you walk on them like astronaut.

Now there just needs to be something to do in that game, not sure what. I guess, if you can walk the atoms, so can some critters you need to kill, or perhaps there are some items to collect. Play the game a few times, and you will remember the molecules (because people usually remember useless data from computer games they played).

Advanced version: chemical reactions, where you need to literally cut the atomic bonds and bind new atoms.

Replies from: AllAmericanBreakfast↑ comment by DirectedEvolution (AllAmericanBreakfast) · 2022-09-18T19:30:14.430Z · LW(p) · GW(p)

I haven't seen games using the precise mechanic you describe. However, there are games/simulations to teach chemistry. They ask you to label parts of atoms, or to act out the steps of a chemical reaction.

I'm open to these game ideas, but skeptical, for reasons I'll articulate in a later shortform.

comment by DirectedEvolution (AllAmericanBreakfast) · 2022-11-15T03:35:39.137Z · LW(p) · GW(p)

Intellectual Platforms

My most popular LW post wasn't a post at all. It was a comment [LW · GW] on John Wentworth's post asking "what's up with Monkeypox?"

Years before, in the first few months of COVID, I took a considerable amount of time to build a scorecard of risk factors for a pandemic, and backtested it against historical pandemics. At the time, the first post received a lukewarm reception, and all my historical backtesting quickly fell off the frontpage.

But when I was able to bust it out, it paid off (in karma). People were able to see the relevance to an issue they cared about, and it was probably a better answer in this time and place than they could have obtained almost anywhere else.

Devising the scorecard and doing the backtesting built an "intellectual platform" that I can now use going forward whenever there's a new potential pandemic threat. I liken it to engineering platforms, which don't have an immediate payoff, but are a long-term investment.

People won't necessarily appreciate the hard work of building an intellectual platform when you're assembling it. And this can make it feel like the platform isn't worthwhile: if people can't see the obvious importance of what I'm doing, then maybe I'm on the wrong track?

Instead, I think it's helpful to see people's reactions as indicating whether or not they have a burning problem that your output is providing help for. Of course a platform-in-development won't get much applause! But if you've selected your project thoughtfully and executed passably well, then eventually, when the right moment comes, it may pay off.

For the last couple of years, I've been building toward a platform for "learning how to learn," and I'm also working on an "aging research" platform. These turn out to be harder topics - a pandemic is honestly just a nice smooth logistic curve, which is far less complicated than monitoring your own brain and hacking your own learning process. And aging research is the wild west. So I expect this to take longer.

comment by DirectedEvolution (AllAmericanBreakfast) · 2020-08-09T16:23:49.240Z · LW(p) · GW(p)

Math is training for the mind, but not like you think

Just a hypothesis:

People have long thought that math is training for clear thinking. Just one version of this meme that I scooped out of the water:

“Mathematics is food for the brain,” says math professor Dr. Arthur Benjamin. “It helps you think precisely, decisively, and creatively and helps you look at the world from multiple perspectives . . . . [It’s] a new way to experience beauty—in the form of a surprising pattern or an elegant logical argument.”

But math doesn't obviously seem to be the only way to practice precision, decision, creativity, beauty, or broad perspective-taking. What about logic, programming, rhetoric, poetry, anthropology? This sounds like marketing.

As I've studied calculus, coming from a humanities background, I'd argue it differently.

Mathematics shares with a small fraction of other related disciplines and games the quality of unambiguous objectivity. It also has the ~unique quality that you cannot bullshit your way through it. Miss any link in the chain and the whole thing falls apart.

It can therefore serve as a more reliable signal, to self and others, of one's own learning capacity.

Experiencing a subject like that can be training for the mind, because becoming successful at it requires cultivating good habits of study and expectations for coherence.

Replies from: niplav, Viliam, elityre, ChristianKl↑ comment by niplav · 2020-08-09T21:06:36.993Z · LW(p) · GW(p)

Math is interesting in this regard because it is both very precise and there's no clear-cut way of checking your solution except running it by another person (or becoming so good at math to know if your proof is bullshit).

Programming, OTOH, gives you clear feedback loops.

Replies from: AllAmericanBreakfast, Viliam, mikkel-wilson↑ comment by DirectedEvolution (AllAmericanBreakfast) · 2020-08-10T00:17:43.442Z · LW(p) · GW(p)

In programming, that's true at first. But as projects increase in scope, there's a risk of using an architecture that works when you’re testing, or for your initial feature set, but will become problematic in the long run.

For example, I just read an interesting article on how a project used a document store database (MongoDB), which worked great until their client wanted the software to start building relationships between data that had formerly been “leaves on the tree.” They ultimately had to convert to a traditional relational database.

Of course there are parallels in math, as when you try a technique for integrating or parameterizing that seems reasonable but won’t actually work.

Replies from: gworley↑ comment by Gordon Seidoh Worley (gworley) · 2020-08-10T04:14:03.213Z · LW(p) · GW(p)

Yep. Having worked both as a mathematician and a programmer, the idea of objectivity and clear feedback loops starts to disappear as the complexity amps up and you move away from the learning environment. It's not unusual to discover incorrect proofs out on the fringes of mathematical research that have not yet become part of the cannon, nor is it uncommon (in fact, it's very common) to find running production systems where the code works by accident due to some strange unexpected confluence of events.

↑ comment by Viliam · 2020-08-16T18:21:52.701Z · LW(p) · GW(p)

Programming, OTOH, gives you clear feedback loops.

Feedback, yes. Clarity... well, sometimes it's "yes, it works" today, and "actually, it doesn't if the parameter is zero and you called the procedure on the last day of the month" when you put it in production.

↑ comment by MikkW (mikkel-wilson) · 2020-08-10T22:07:04.006Z · LW(p) · GW(p)

Proof verification is meant to minimize this gap between proving and programming

↑ comment by Viliam · 2020-08-16T18:43:32.418Z · LW(p) · GW(p)

The thing I like about math is that it gives the feeling that the answers are in the territory. (Kinda ironic, when you think about what the "territory" of math is.) Like, either you are right or you are wrong, it doesn't matter how many people disagree with you and what status they have. But it also doesn't reward the wrong kind of contrarianism.

Math allows you to make abstractions without losing precision. "A sum of two integers is always an integer." Always; literally. Now with abstractions like this, you can build long chains out of them, and it still works. You don't create bullshit accidentally, by constructing a theory from approximations that are mostly harmless individually, but don't resemble anything in the real world when chained together.

Whether these are good things, I suppose different people would have different opinions, but it definitely appeals to my aspie aesthetics. More seriously, I think that even when in real world most abstractions are just approximations, having an experience with precise abstractions might make you notice the imperfection of the imprecise ones, so when you formulate a general rule, you also make a note "except for cases such as this or this".

(On the other hand, for the people who only become familiar with math as a literary genre [LW · GW], it might have an opposite effect: they may learn that pronouncing abstractions with absolute certainty is considered high-status.)

↑ comment by Eli Tyre (elityre) · 2020-08-14T15:20:39.055Z · LW(p) · GW(p)

Mathematics shares with a small fraction of other related disciplines and games the quality of unambiguous objectivity. It also has the ~unique quality that you cannot bullshit your way through it. Miss any link in the chain and the whole thing falls apart.

Isn't programming even more like this?

I could get squidgy about whether a proof is "compelling", but when I write a program, it either runs and does what I expect, or it doesn't, with 0 wiggle room.

Replies from: AllAmericanBreakfast↑ comment by DirectedEvolution (AllAmericanBreakfast) · 2020-08-14T18:31:07.779Z · LW(p) · GW(p)

Sometimes programming is like that, but then I get all anxious that I just haven’t checked everything thoroughly!

My guess is this has more to do with whether or not you’re doing something basic or advanced, in any discipline. It’s just that you run into ambiguity a lot sooner in the humanities

↑ comment by ChristianKl · 2020-08-12T09:49:30.386Z · LW(p) · GW(p)

It helps you to look at the world from multiple perspectives: It gets you into a position to make a claim like that soley based on anecdotal evidence and wishful thinking.

comment by DirectedEvolution (AllAmericanBreakfast) · 2022-07-02T07:25:16.130Z · LW(p) · GW(p)

I ~completely rewrote the Wikipedia article for the focus of my MS thesis, aptamers.

Please tell me what you liked, and feel free to give constructive feedback!

While I do think aptamers have relevance to rationality, and will post about that at some point, I'm mainly posting this here because I'm proud of the result and wanted to share one of the curiosities of the universe for your reading pleasure.

comment by DirectedEvolution (AllAmericanBreakfast) · 2023-02-09T00:07:34.139Z · LW(p) · GW(p)

You know what "chunking" means in memorization? It's also something you can do to understand material before you memorize it. It's high-leverage in learning math.

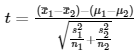

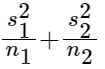

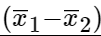

Take the equation for a t score:

That's more symbolic relationships than you can fit into your working memory when you're learning it for the first time. You need to chunk it. Here's how I'd break it into chunks:

Chunk 1:

Chunk 2:

Chunk 3:

Chunk 4:

[(Chunk 1) - (Chunk 2)]/sqrt(Chunk 3)

The most useful insight here is learning to see a "composite" as a "unitary." If we inspect Chunk 1 and see it as two variables and a minus sign, it feels like an arbitrary collection of three things. In the back of the mind, we're asking "why not a plus sign? why not swap out x1 for... something else?" There's a good mathematical answer, of course, but that doesn't necessarily stop the brain from firing off those questions during the learning process, when we're still trying to wrap our heads around these concepts.

But if we can see

as a chunk, a thing with a unitary identity, it lets us think with it in a more powerful way. Imagine if you were running a cafe, and you didn't perceive your dishes as "unitary." A pie wasn't a pie, it was a pan full of sugar, cherries, and cooked dough. The menu would look insane and it would be really hard to understand what you were about to be served.

I think a lot of people who are learning new math go through an analogous phase. They haven't chunked the concepts yet, so when they are introduced to these big higher-order concepts, it feels like reading the ingredients list and prep instructions in a recipe without having any feeling for what the dish is supposed to look and taste like at the end, or whether it's an entree or a dessert. Why not replace the sugar in the pie with salt?

Learning how to chunk, especially in math, is undermined because so often, these chunks aren't given names.

might be described as "the difference of sample means," a phrase which suffers the same problem (why not the sum of sample means? why not medians instead of means?).

I find the skill of perceiving chunks, of learning how to see

as a unitary thing, like "cherry pie," is a subtle but important skill for learning how to learn.

comment by DirectedEvolution (AllAmericanBreakfast) · 2021-02-16T22:30:42.528Z · LW(p) · GW(p)

A Nonexistent Free Lunch

- More Wrong

On an individualPredictIt market, sometimes you can find a set of "no" contracts whose price (1 share of each) adds up to less than the guaranteed gross take.

Toy example:

- Will A get elected? No = $0.30

- Will B get elected? No = $0.70

- Will C get elected? No = $0.90

- Minimum guaranteed pre-fee winnings = $2.00

- Total price of 1 share of both No contracts = $1.90

- Minimum guaranteed pre-fee profits = $0.10

There's always a risk of black swans. PredictIt could get hacked. You might execute the trade improperly. Unexpected personal expenses might force you to sell your shares and exit the market prematurely.

But excluding black swans, I though that as long as three conditions held, you could make free money on markets like these. The three conditions were:

- You take PredictIt's profit fee (10%) into account

- You can find enough such "free money" opportunities that your profits compensate for PredictIt's withdrawal fee (5% of the total withdrawal)

- You take into account the opportunity cost of investing in the stock market (average of 10% per year)

In the toy example above, I calculated that you'd lose $0.10 x 10% = $0.01 to PredictIt's profit fee if you bought 1 of each "No" contract. Your winnings would therefore be $0.99. Of course, if you withdrew it immediately, you'd only take away $0.99 x 95% = $0.94, but you'd still make a 4 cent profit.

In other situations, with more prices, your profit margins might be thinner. The withdrawal fee might turn a gain into a loss, unless you were able to rack up many such profitable trades before withdrawing your money from the PredictIt platform.

I build some software to grab prices off PredictIt and crunch the numbers, and lo and behold, I found an opportunity that seemed to offer 13% returns on this strategy, beating the stock market. Out of all the markets on PredictIt, only this one offered non-negative gains, which I took as evidence that my model was accurate. I wouldn't expect to find many such opportunities, after all.

2. Less Wrong

Fortunately, I didn't act on it. I rewrote the price-calculating software several times, slept on it, and worked on it some more the next day.

Then it clicked.

PredictIt wasn't going to take my losses on the failed "No" contract (the one that resolved to "yes") into account in offsetting the profits from the successful "No" contracts.

In the toy model above, I calculated that PredictIt would take a 10% cut of the $0.10 net profit across all my "No" contracts.

In reality, PredictIt would take a 10% cut of the profits on both successful trades. In the worst case scenario, "Will C get elected" would be the contract that resolved to "yes," meaning I would earn $0.70 from the "A" contract and $0.30 from the "B" contract, for a total "profit" of $1.00.

PredictIt would take a 10% cut, or $0.10, rather than the $0.01 I'd originally calculated. This would leave me with $2.00 from two successful contracts, minus $0.10 from the fees, leaving me with $1.90, and zero net profit or loss. No matter how many times I repeated this bet, I would be left with the same amount I put in, and when I withdrew it, I would take a 5% loss.

When I ran my new model with real numbers from PredictIt, I discovered that every single market would leave me not with zero profit, but with about a 1-2% loss, even before the 5% withdrawal fee was taken into account.

If there is a free lunch, this isn't it. Fortunately, I only wasted a few hours and no money.

There genuinely were moments along the way where I was considering plunking down several thousand dollars to test this strategy out. If I hadn't realized the truth, would I have gotten a wake-up call when the first round of contracts was called in and I came out $50 in the red, rather than $50 in the black? And then exited PredictIt feeling embarrassed and having lost perhaps $300, after withdrawal fees?

3. Meta Insights

What provoked me to almost make a mistake?

I started looking into it in the first place because I'd heard that PredictIt's fee structure and other limitations created inefficiencies, and that you could sometimes find arbitrage opportunities on it. So there was an "alarm bell" for an easy reward. Maybe knowing about PredictIt and being able to program well enough to evaluate for these opportunities would be the advantage that let me harvest that reward.

There were two questions at hand for this strategy. One was "how, exactly, does this strategy work given PredictIt's fee structure?"

The other was "are there actually enough markets on PredictIt to make this strategy profitable in the long run?"

The first question seemed simpler, so I focused on the second question at first. Plus, I like to code, and it's fun to see the numbers crank out of the machine.

But those questions were lumped together into a vague sort of "is this a good idea?"-type question. It took all the work of analysis to help me distinguish them.

How did I catch my error?

Lots of "how am I screwing this up?" checks. I wrote and rewrote the software, refactoring code, improving variable names, and so on. I did calculations by hand. I wrote things out in essay format. Once I had my first, wrong, model in place, I walked through a trade by hand using it, which is what showed me how it would fail. I decided to sleep on it, and had intended to actually spend several weeks or months investigating PredictIt to try and understand how often these "opportunities" arose before pulling the trigger on the strategy.

Does this error align with other similar errors I've made in the past?

It most reminds me of how I went about choosing graduate schools. I sunk many, many hours into creating an enormous spreadsheet with tuition and cost of living expenses, US News and World report rankings, and a linear regression graph. I constantly updated and tweaked it until I felt very confident that it was self-consistent.

When I actually contacted the professors whose labs I was interested in working in, in the midst of filling out grad school applications, they told me to reconsider the type of grad school programs (to switch from bioinformatics to bio- or mechanical engineering). So all that modeling and research lost much of its value.

The general issue here is that an intuitive notion, a vision, has to be decomposed into specific data, model, and problem. There's something satisfying about building a model, dumping data into it, and watching it crank out a result.

The model assumes authority prematurely. It's easy to conflate the fit between the model's design and execution with the fit between the model and the problem. And this arises because to understand the model, you have to design it, and to design it, you have to execute it.

I've seen others make similar errors. I saw a talk by a scientist who'd spent 15 years inventing a "new type of computer," that produced these sort of cloud-like rippling images. He didn't have a model of how those images would translate into any sort of useful calculation. He asked the audience if they had any ideas. That's... the wrong way to build a computer.

AI safety research seems like an attempt to deal with exactly this problem. What we want is a model (AI) that fits the problem at hand (making the world a better place by a well-specified set of human values, whatever that means). Right now, we're dumping a lot of effort into execution, without having a great sense for whether or not the AI model is going to fit the values problem.

How can you avoid similar problems?

One way to test whether your model fits the problem is to execute the model, make a prediction for the results you'll get, and see if it works. In my case, this would have looked like building the first, wrong version of the model, calculating the money I expected to make, seeing that I made less, and then re-investigating the model. The problem is that this is costly, and sometimes you only get one shot.

Another way is to simulate both the model and the problem, which is what saved me in this case. By making up a toy example that I could compute by hand, I was able to spot my error.

It also helps to talk things through with experienced experts who have an incentive to help you succeed. In my grad school application, it was talking things out with scientists doing the kind of research I'm interested in. In the case of the scientist with the nonfunctional experimental computer, perhaps he could have saved 15 years of pointless effort by talking his ideas over with Steven Hsu and engaging more with the quantum computing literature.

A fourth way is to develop heuristics for promising and unpromising problems to work on. Beating the market is unpromising. Building a career in synthetic biology is promising. The issue here is that such heuristics are themselves models. How do you know that they're a good fit to the problem at hand?

In the end, you are to some extent forced ultimately into open-ended experimentation. Hopefully, you at least learn something from the failures, and enjoy the process.

The best thing to do is make experimentation fast, cheap, and easy. Do it well in advance, and build up some cash, so that you have the slack for it. Focusing on a narrow range of problems and tools means you can afford to define each of them better, so that it'll be easier to test each tool you've mastered against each new problem, and each new tool against your well-understood problems.

The most important takeaway, then, is to pick tools and problems that you'll be happy to fail at many times. Each failure is an investment in better understanding the problem or tool. Make sure not to have so few tools/problems that you have time on your hands, or so many that you can't master them.

You can then imagine taking an inventory of your tools and problems. Any time you feel inspired to take a whack at learning a new skill or a new problem, you can ask if it's an optimal addition to your skillset. And you can perhaps (I'm really not sure about this) ask if there are new tool-problem pairs you haven't tried, just through oversight.

comment by DirectedEvolution (AllAmericanBreakfast) · 2022-09-04T16:42:22.676Z · LW(p) · GW(p)

Simulated weight gain experiment, day 2

Background: I'm wearing a weighted vest to simulate the feeling of 50 pounds (23 kg) of weight gain and loss. The plan is to wear this vest for about 20 days, for as much of the day as is practical. I started with zero weight, and will increase it in 5 pound (~2 kg) increments daily to 50 pounds, then decrease it by 5 pounds daily until I'm back to zero weight.

So far, the main challenge of this experiment has been social. The weighted vest looks like a bulletproof vest, and I'm a 6' tall white guy with a buzzcut. My girlfriend laughed just imagining what I must look like (we have a long-distance relationship, so she hasn't seen me wearing it). My housemate's girlfriend gasped when I walked in through the door.

As much as I'd like to wear this continuously as planned, I just don't know if I it will work to wear this to the lab or to classes in my graduate school. If the only problem was scaring people, I could mitigate that by emailing my fellow students and the lab and telling them what I'm doing and why. However, I'm also in the early days of setting up my MS thesis research in a big, professional lab that has invested a lot of time and money into my experiment. I fear that I would come across as distracted, and that this would undermine my standing in the lab.

Today, I'm going to try wearing a thin elastic mountain-climbing hoodie over the weighted vest. This might make it look like I have some sort of a bomb strapped underneath my shirt, but it doesn't seem as immediately startling to look at.

Other things I've noticed:

- The vest makes you hot, not just heavy.

- It does restrict your breathing somewhat, because the weight is squeezing in on your ribcage.

- The weight creates backaches and shoulder aches where I don't usually experience them.

- I tried wearing the fully-loaded vest when it first arrived, and it feels significantly less difficult carrying 50 pounds on the vest than it does carrying 50 pounds in your hands.

- One of the psychological sensations I wasn't expecting, but that seems relevant, is the displeasure I get in putting it on. I see this as somewhat analogous to how a person who weighs more than they'd like to might feel on a bad day looking at their body in the mirror. The sense that you don't get to look or feel the way you'd like to seems like an important part of the experiment.

Overall, these early impressions make me think that the weighted vest exaggerates the discomfort of weight gain. However, I think it is still a useful complement to the scientific knowledge and anecdotes that people share about the effects of weight gain and loss. It makes it visceral.

I also think that the weighted vest offers an experience similar to a chronic illness. When you get the flu, you can usually receive some accommodations on the expectation that you'll be better soon. Chronic health conditions may require you to curtail your long-term ambitions due to the constant negative impact they have on your capacity to work. Wearing a weighted vest is similar. It makes you have an experience of "how would it affect your capacity to work if you were more physically burdened for the long term?"

Perhaps it's a little like becoming poorer or less free.

In response to this, I notice my brain becoming more interested in taking control of this aspect of my health. It provokes a "never again" sort of response.

There are many ascetic practices that involve self-inflicting states of discomfort in order to create a spiritual benefit. This seems to be getting into that territory. One of the differences is that these spiritual practices are often done communally, and the suffering is given meaning through the spiritual practice.

By contrast, our materialistic culture emphatically does not imbue obesity or other physical and mental health challenges with spiritual significance. This experiment replicates part of that aspect: it singles me out as individually "worse" than other people, and the experience is basically meaningless on a social level. Indeed, worse than meaningless - I fear that I would be perceived as scaring my classmates and that I'd irritate my boss at the lab.

It seems useful on some level to put yourself through that experience. What if you were individually impacted by a health issue that simply made your life worse and led to other people treating you worse? Would you be angry at the world? Would you get depressed? Would you muster your powers to do anything you could to get better?

comment by DirectedEvolution (AllAmericanBreakfast) · 2020-11-25T21:58:53.555Z · LW(p) · GW(p)

The Rationalist Move Club

Imagine that the Bay Area rationalist community did all want to move. But no individual was sure enough that others wanted to move to invest energy in making plans for a move. Nobody acts like they want to move, and the move never happens.

Individuals are often willing to take some level of risk and make some sacrifice up-front for a collective goal with big payoffs. But not too much, and not forever. It's hard to gauge true levels of interest based off attendance at a few planning meetings.

Maybe one way to solve this is to ask for escalating credible commitments.

A trusted individual sets up a Rationalist Move Fund. Everybody who's open to the idea of moving puts $500 in a short-term escrow. This makes them part of the Rationalist Move Club.

If the Move Club grows to a certain number of members within a defined period of time (say 20 members by March 2020), then they're invited to planning meetings for a defined period of time, perhaps one year. This is the first checkpoint. If the Move Club has not grown to that size by then, the money is returned and the project is cancelled.

By the end of the pre-defined planning period, there could be one of three majority consensus states, determined by vote (approval vote, obviously!):

- Most people feel there is a solid timetable and location for a move, and want to go forward that plan as long as half or more of the Move Club members also approve of this option. To cast a vote approving of this choice requires an additional $2,000 deposit per person into the Move Fund, which is returned along with their initial $500 deposit after they've signed a lease or bought a residence in the new city, or in 3 years, whichever is sooner.

- Most people want to continue planning for a move, but aren't ready to commit to a plan yet. To cast a vote approving of this choice requires an additional $500 deposit per person into the Move Fund, unless they paid $2,000 to approve of option 1.

- Most people want to abandon the move project. Anybody approving only of this option has their money returned to them and exits the Move Club, even if (1) or (2) is the majority vote. If this option is the majority vote, all money in escrow is returned to the Move Club members and the move project is cancelled.

Obviously the timetables, monetary commitments could be modified. Other "commitment checkpoints" could be added in as well. I don't live in the Bay Area, but if those of you who do feel this framework could be helpful, please feel free to steal it.

comment by DirectedEvolution (AllAmericanBreakfast) · 2020-08-08T18:52:21.313Z · LW(p) · GW(p)

What gives LessWrong staying power?

On the surface, it looks like this community should dissolve. Why are we attracting bread bakers, programmers, stock market investors, epidemiologists, historians, activists, and parents?

Each of these interests has a community associated with it, so why are people choosing to write about their interests in this forum? And why do we read other people's posts on this forum when we don't have a prior interest in the topic?

Rationality should be the art of general intelligence. It's what makes you better at everything. If practice is the wood and nails, then rationality is the blueprint.

To determine whether or not we're actually studying rationality, we need to check whether or not it applies to everything. So when I read posts applying the same technique to a wide variety of superficially unrelated subjects, it confirms that the technique is general, and helps me see how to apply it productively.

This points at a hypothesis, which is that general intelligence is a set of defined, generally applicable techniques. They apply across disciplines. And they apply across problems within disciplines. So why aren't they generally known and appreciated? Shouldn't they be the common language that unites all disciplines?

Perhaps it's because they're harder to communicate and appreciate. If I'm an expert baker, I can make another delicious loaf of bread. Or I can reflect on what allows me to make such tasty bread, and speculate on how the same techniques might apply to architecture, painting, or mathematics. Most likely, I'm going to choose to bake bread.

This is fine, until we start working on complex, interdisciplinary projects. Then general intelligence becomes the bottleneck for having enough skill to get the project done. Sounds like the 21st century. We're hitting the limits of what's achievable through sheer persistence in a single specialty, and we're learning to automate them away.

What's left is creativity, which arises from structured decision-making. I've noticed that the longer I practice rationality, the more creative I become. I believe that's because it gives me the resources to turn an intuition into a specified problem, envision a solution, create a sort of Fermi-approximation to give it definition, and guidance on how to develop the practical skills and relationships that will let me bring it into being.

If I'm right, human application of these techniques will require deliberate practice with the general techniques - both synthesizing them and practicing them individually, until they become natural.

The challenge is that most specific skills lend themselves to that naturally. If I want to become a pianist, I practice music until I'm good. If I want to be a baker, I bake bread. To become an architect, design buildings.

What exactly do you do to practice the general techniques of rationality? I can imagine a few methods:

- Participate in superforecasting tournaments, where Bayesian and gears/policy level thinking are the known foundational techniques.

- Learn a new skill, and as you go, notice the problems you encounter along the way. Try to imagine what a general solution to that problem might look like. Then go out and build it.

- Pick a specific rationality technique, and try to apply it to every problem you face in your life.

↑ comment by Viliam · 2020-08-15T21:11:38.008Z · LW(p) · GW(p)

What gives LessWrong staying power?

For me, it's the relatively high epistemic standards combined with relative variety of topics. I can imagine a narrowly specialized website with no bullshit, but I haven't yet seen a website that is not narrowly specialized and does not contain lots of bullshit. Even most smart people usually become quite stupid outside the lab. Less Wrong is a place outside the lab that doesn't feel painfully stupid. (For example, the average intelligence at Hacker News seems quite high, but I still regularly find upvoted comments that make me cry.)

Replies from: AllAmericanBreakfast↑ comment by DirectedEvolution (AllAmericanBreakfast) · 2020-08-16T01:51:59.847Z · LW(p) · GW(p)

Yeah, Less Wrong seems to be a combination of project and aesthetic. Insofar as it's a project, we're looking for techniques of general intelligence, partly by stress-testing them on a variety of topics. As an aesthetic, it's a unique combination of tone, length, and variety + familiarity of topics that scratches a particular literary itch.

comment by DirectedEvolution (AllAmericanBreakfast) · 2022-11-05T06:28:32.369Z · LW(p) · GW(p)

School teaches terrible reading habits.

When you're assigned 30 pages of a textbook, the diligent students read them, then move on to other things. A truly inquisitive person would struggle to finish those 30 pages, because there are almost certainly going to be many more interesting threads they want to follow within those pages.

As a really straightforward example, let's say you commit to reading a review article on cell senescence. Just forcing your way through the paper, you probably won't learn much. What will make you learn is looking at the citations as you go.

I love going 4 layers deep. I try to understand the mechanisms that underpin the experiments that generated the data that informed the facts that inform the theories that the review article is covering. When I do this, it suddenly transforms the review article from dry theory to something that's grounded in memories of data and visualizations of experiments. I have a "simulated lived experience" to map onto the theory. It becomes real.

Replies from: niplav, ChristianKl↑ comment by niplav · 2022-11-08T14:41:25.895Z · LW(p) · GW(p)

I think that for anything except scholarship, those aren't terrible. I'd attack them from the other side: They aren't shallow enough. In industry, most often you often just want to find some specific piece of information, so reading the whole 30 pages is a waste of time, as is following your deep curiosity down into rabbit holes.

Replies from: AllAmericanBreakfast↑ comment by DirectedEvolution (AllAmericanBreakfast) · 2022-11-08T14:57:23.450Z · LW(p) · GW(p)

I agree with you. It’s a good point that I should have clarified this is for a specific use case - rapidly scouting out a field that you’re unfamiliar with. When I take this approach, I also do not read entire papers. I just read enough to get the gist and find the next most interesting link.

So for example, I am preparing for a PhD, where I’ll probably focus on aging research. I need to understand what’s going on broadly in the field. Obviously I can’t read everything, and as I have no specific project, there are no particular known-in-advance bits of information I need to extract.

I don’t yet have a perfect account for what exactly you “learn” from this - at the speed I read, I don’t remember more than a tiny fraction of the details. My best explanation is that each paper you skim gives you context for understanding the next one. As you go through this process, you come away with some takeaway highlights and things to look at next.

So for example, the last time I went through the literature on senescence, I got into the antagonistic pleiotropy literature. Most of it is way too deep for me at this point, but I took away the basic insights and epistemic: models consistently show that aging is the only stable equilibrium outcome of evolution, that it’s fueled by genes that confer a reproductive advantage early in life but a disadvantage later in life, and that the late-life disadvantages should not be presumed to be intrinsically beneficial - they are the downside side of a tradeoff, and evolution often mitigates them, but generally cannot completely eliminate them.

I also came to understand that this is 70 years of development of mathematical and data-backed models, which consistently show the same thing.

Relevant for my research is that anti-aging therapeutics aren’t necessarily going to be “fighting against evolution.” They are complementing what nature is already trying to do: mitigate the genetic downsides in old age of adaptations for youthful vigor.

↑ comment by ChristianKl · 2022-11-05T15:44:55.363Z · LW(p) · GW(p)

That sounds more like a problem of the teaching style than school in particular. Instead of assigning textbook pages to be read, a better way is to give the students problems to solve and tell them that those textbook pages are relevant to solving the problem. That's how my biology and biochemistry classes went. We were never assigned to read particular pages of the book.

Replies from: AllAmericanBreakfast↑ comment by DirectedEvolution (AllAmericanBreakfast) · 2022-11-05T16:47:51.854Z · LW(p) · GW(p)

a better way is to give the students problems to solve and tell them that those textbook pages are relevant to solving the problem. That's how my biology and biochemistry classes went. We were never assigned to read particular pages of the book.

That does sound like a better way. Personally, I'm halfway through my biomedical engineering MS and have never experienced a STEM class like this. If you don't mind my asking, where did you take your bio/biochem classes (or what type of school was it)?

Replies from: ChristianKl↑ comment by ChristianKl · 2022-11-05T19:11:22.912Z · LW(p) · GW(p)

I studied bioinformatics at the Free University of Berlin. Just like we had weekly problem sheets in math classes we also had them in biology and biochemistry. It was more than a decade ago. There was certainly a sense of not simply copying what biology majors might do but to be focused more on problem-solving skills that would presumably be more relevant.

comment by DirectedEvolution (AllAmericanBreakfast) · 2022-09-01T04:39:30.677Z · LW(p) · GW(p)

There are many software tools for study, learning, attention programming, and memory prosthetics.

- Flashcard apps (Anki)

- Iterated reading apps (Supermemo)

- Notetaking and annotation apps (Roam Research)

- Motivational apps (Beeminder)

- Time management (Pomodoro)

- Search (Google Scholar)

- Mnemonic books (Matuschak and Nielsen's "Quantum Country")

- Collaborative document editing (Google Docs)

- Internet-based conversation (Internet forums)

- Tutoring (Wyzant)

- Calculators, simulators, and programming tools (MATLAB)

These complement analog study tools, such as pen and paper, textbooks, worksheets, and classes.

These tools tend to keep the user's attention directed outward. They offer useful proxy metrics for learning: getting through 20 flashcards per day, completing N Pomodoros, getting through the assigned reading pages, turning in the homework.

However, these proxy metrics, like any others, are vulnerable to streetlamp effects and Goodharting.

Before we had this abundance of analog and digital knowledge tools, scholars relied on other ways to tackle problems. They built memory palaces, visualized, looked for examples in the world around them, invented approximations, and talked to themselves. They relied on their brains, bodies, and what they could build from their physical environment to keep their thoughts in order.

Of course, humanity's transition into the enlightenment and industrial revolution coincided with the development of many of these knowledge tools: the abacus, the printing press, cheaper writing implements, slide rules, and eventually the computer. These undoubtedly have been essential for human progress.

Yet I suspect that access to these tools have led us to neglect the basic mental faculties our ancestors relied on to think and learn about scholarly topics. Key examples include the ability to hold and rehearse an extended chain of thought in your head, the ability to visualize or imagine feelings of physical movement, and the ability to deliberately construct patterns of thought.

These behaviors are perhaps more familiar to me than to the average person, because they are still, to some extent, expected of classical pianists like myself. We were expected to memorize our music and do mental practice, and composers are expected to be able to compose in their head. I taught myself relative pitch purely via a year's work imagining and identifying musical intervals in my head. This allowed me to invent melodies in my head as a side effect. Those were abilities I gained well into adulthood, and I had no idea my brain was capable of that before I was pressured by my music department into figuring out how to do it.

I expect that people have great potential to develop comparable mental skills in left-brained areas such as mental calculation and logic, visualizing geometry, and recalling facts. My hypothesis is that practice at expanding these mental abilities, and doing so for practical purposes (rather than, for example, to win memorization competitions), would allow people to understand topics and solve problems that previously had seemed incomprehensible to them. I think we've compensated for our modern artifice by letting go of mental art, and have realized fewer gains than the proliferation of computing power would have suggested to a scientist, mathematician, or engineer of 100 years ago.

The software programs above start with a psychological thesis about how learning works, do a task analysis to figure out what physical behaviors are required to realize that thesis, and then build a software program to facilitate and direct those behaviors. Anki starts with a thesis about spaced repetition, uses flashcards as its task, and builds a program to allow for more powerful flashcard-based practice than you can do with paper cards.

Software programs might also be useful to exercise the interior mental faculties. However, the point is for the practitioner to relate with their interior state of mind, not the external readout on a screen. Just as with software programs, we need a psychological thesis about how learning works. However, instead of a physical task analysis, we need a mental task analysis. Then we need a technique for enacting that mental task more efficiently for the purposes of learning and scholarship.

For example, under the thesis of constructivism, students must actively "build" their own knowledge by actively wrestling with the subject. Testing is a useful way to build understanding. Under this psychological thesis, a mental activity that might support learning is having students memorize practice problems and practice being able to describe, in their own head, how they'd solve that problem. They might also practice remembering the major topics related to the material they're covering, what order those topics are presented in, and how they interrelate in the overarching theme of the section. Being able to provoke thoughts on these topics at will, and successfully recall the material (i.e. on a walk, while driving, in the shower) would also be a form of "programmable attention," as many software programs seek to achieve, but one that is generated and directed by the student's own power of mind.

Another example, one getting farther away from what software can achieve, is simply the ability to hold a fluid, ongoing chain of thought recalling a topic of interest. For example, imagine a student who had thoroughly learned about the immune system. I think it is typical that students only really turn their thoughts to that topic when prompted, such as during a conversation, by a test, or while reading - all external forms of stimulation. A student might practice being able to think about what they know of the immune system at will, unprovoked. The ability to let their mind wander among the facts they've learned is not something most people can easily do, and seems obviously to me to be both an attainable and a very useful scholarly skill. But "spend some time thinking about and elaborating on what you know of immunology for the next 15 minutes, forming organic connections with other things you know and whatever you happen to be curious about" is not something that a software program can easily facilitate.

It's just this sort of mental practice that I'm interested in discovering, learning about, and promoting. There is plenty of effort and interest in external mental prosthetics. I am interested in what we can call the techniques of mental practice.

Replies from: Gunnar_Zarncke↑ comment by Gunnar_Zarncke · 2022-09-01T09:22:15.466Z · LW(p) · GW(p)

Please post this as a regular post.

Replies from: matto, Gyrodiot↑ comment by matto · 2022-09-01T18:51:24.324Z · LW(p) · GW(p)

Thirding this. Would love more detail or threads to pull on. Going into the constructivism rabbit hole now.

Replies from: AllAmericanBreakfast↑ comment by DirectedEvolution (AllAmericanBreakfast) · 2022-09-01T21:53:23.678Z · LW(p) · GW(p)

I'll continue fleshing it out over time! Mostly using the shortform as a place to get my thoughts together in legible form prior to making a main post (or several). By the way, contrast "constructivism" with "transmissionism," the latter being the (wrong) idea that students are basically just sponges that passively absorb the information their teacher spews at them. I got both terms from Andy Matuschak.

comment by DirectedEvolution (AllAmericanBreakfast) · 2020-12-19T21:03:29.390Z · LW(p) · GW(p)

Thoughts on cheap criticism

It's OK for criticism to be imperfect. But the worst sort of criticism has all five of these flaws:

- Prickly: A tone that signals a lack of appreciation for the effort that's gone in to presenting the original idea, or shaming the presenter for bringing it up.

- Opaque: Making assertions or predictions without any attempt at specifying a contradictory gears-level model, evidence basis, even on the level of anecdote or fiction.

- Nitpicky: Attacking the one part of the argument that seems flawed, without arguing for how the full original argument should be reinterpreted in light of the local disagreement.

- Disengaged: Not signaling any commitment to continue the debate to mutual satisfaction, or even to listen to/read and respond to a reply.

- Shallow: An obvious lack of engagement with the details of the argument or evidence originally offered.

I am absolutely guilty of having delivered Category 5 criticism, the worst sort of cheap shots.

There is an important tradeoff here. If standards are too high for critical commentary, it can chill debate and leave an impression that either nobody cares, everybody's on board, or the argument's simply correct. Sometimes, an idea can be wrong for non-obvious reasons, and it's important for people to be able to say "this seems wrong for reasons I'm not clear about yet" without feeling like they've done wrong.

On the other hand, cheap criticism is so common because it's cheap. It punishes all discourse equally, which means that the most damage is done to those who've put in the most effort to present their ideas. That is not what we want.

It usually takes more work to punish more heavily. Executing someone for a crime takes more work than jailing them, which takes more work than giving them a ticket. Addressing a grievance with murder is more dangerous than starting a brawl, which is more dangerous than starting an argument, which is more dangerous than giving someone the cold shoulder.

But in debate, cheap criticism has this perverse quality where it does the most to diminish a discussion, while being the easiest thing to contribute.

I think this is a reason to, on the whole, create norms against cheap criticism. If discussion is already at a certain volume, that can partially be accomplished by ignoring cheap criticism entirely.

But for many ideas, cheap criticism is almost all it gets, early on in the discussion. Just one or two cheap criticisms can kill an idea prematurely. So being able to address cheap criticisms effectively, without creating unreasonably high standards for critical commentary, seems important.

Replies from: mr-hire, AllAmericanBreakfast, luke-allen↑ comment by Matt Goldenberg (mr-hire) · 2020-12-20T23:03:22.038Z · LW(p) · GW(p)

This seems like a fairly valuable framework. It occurs to me that all 5 of these flaws are present in the "Snark" genre present in places like Gawker and Jezebel.

↑ comment by DirectedEvolution (AllAmericanBreakfast) · 2020-12-19T23:26:06.251Z · LW(p) · GW(p)

I am going to experiment with a karma/reply policy to what I think would be a better incentive structure if broadly implemented. Loosely, it looks like this:

- Strong downvote plus a meaningful explanatory comment for infractions worse than cheap criticism; summary deletions for the worst offenders.

- Strong downvote for cheap criticism, no matter whether or not I agree with it.

- Weak downvote for lazy or distracting comments.

- Weak upvote for non-cheap criticism or warm feedback of any kind.

- Strong upvote for thoughtful responses, perhaps including an appreciative note.

- Strong upvote plus a thoughtful response of my own to comments that advance the discussion.

- Strong upvote, a response of my own, and an appreciative note in my original post referring to the comment for comments that changed or broadened my point of view.

↑ comment by Luke Allen (luke-allen) · 2021-01-04T22:05:25.316Z · LW(p) · GW(p)

I'm trying a live experiment: I'm going to see if I can match your erisology one-to-one as antagonists to the Elements of Harmony from My Little Pony:

- Prickly: Kindness

- Opaque: Honesty

- Nitpicky: Generosity

- Disengaged: Loyalty

- Shallow: Laughter

Interesting! They match up surprisingly well, and you've somehow also matched the order of 3 out of 5 of the corresponding "seeds of discord" from 1 Peter 2:1, CSB: "Therefore, rid yourselves of all malice, all deceit, hypocrisy, envy, and all slander." If my pronouncement of success seems self-serving and opaque, I'll elaborate soon:

- Malice: Kindness

- Deceit: Honesty

- Hypocrisy: Loyalty

- Envy: Generosity

- Slander: Laughter

And now the reveal. I'm a generalist; I collect disparate lists of qualities (in the sense of "quality vs quantity"), and try to integrate all my knowledge into a comprehensive worldview. My world changed the day I first saw My Little Pony; it changed in a way I never expected, in a way many people claim to have been affected by HPMOR. I believed I'd seen a deep truth, and I've been subtly sharing it wherever I can.

The Elements of Harmony are the character qualities that, when present, result in a spark of something that brings people together. My hypothesis is that they point to a deep-seated human bond-testing instinct. The first time I noticed a match-up was when I heard a sermon on The Five Love Languages, which are presented in an entirely different order:

- Words of affirmation: Honesty

- Quality time: Laughter

- Receiving gifts: Generosity

- Acts of service: Loyalty

- Physical touch: Kindness

Well! In just doing the basic research to write this reply, it turns out I'm re-inventing the wheel! Someone else has already written a psychometric analysis of the Five Love Languages and found they do indeed match up with another relational maintenance typology.

Thank you for your post; you've helped open my eyes up to existing research I can use in my philosophical pursuits, and sparked thoughts of what "effective altruism" use I can put them to.

comment by DirectedEvolution (AllAmericanBreakfast) · 2022-09-01T02:24:40.657Z · LW(p) · GW(p)

Weight Loss Simulation

I've gained 50 pounds over the last 15 years. I'd like to get a sense of what it would be like to lose that weight. One way to do that is to wear a weighted vest all day long for a while, then gradually take off the weight in increments.

The simplest version of this experiment is to do a farmer's carry with two 25 lb free weights. It makes a huge difference in the way it feels to move around, especially walking up and down the stairs.

However, I assume this feeling is due to a combination of factors:

- The sense of self-consciousness that comes with doing something unusual

- The physical bulk and encumbrance (i.e. the change in volume and inertia, having my hands occupied, pressure on my diaphragm if I were wearing a weighted vest, etc)

- The ratio of how much muscle I have to how much weight I'm carrying

If I lost 50 pounds, that would likely come with strength training as well as dieting, so I might keep my current strength level while simultaneously being 50 pounds lighter. That's an argument in favor of this "simulated weight loss" giving me an accurate impression of how it would feel to really lose that much weight.

On the other hand, there would be no sudden transition from weird artificial bulk to normalcy. It would be a gradual change. Putting on a weighted vest and wearing it around for, say, a month, would be socially awkward, and require the daily choice to put it on. I'd have to take it off to sleep, shower, etc. This would cause the difference in weight to be constantly brought to my attention, although that effect would diminish throughout the experiment as I gradually took weights off of the vest and got used to wearing it around.

One way to make it more realistic would be to gradually increase the weight to 50 lb, then gradually decrease it back to 0 lb. Another way to make it more realistic would be to add uncertainty. Instead of, say, changing the weight by 1 increment every day, I might flip a coin and change the weight by 1 increment only if I flip heads.

I'm interested to try this experiment. The weighted vest would be a useful piece of exercise gear on its own. The only way to really find out if the experiment actually matches the real feeling of losing 50 lb would be to lose the weight for real. If I did the experiment, then lost the weight, I'd actually be able to make a comparison. That would be impossible if I didn't do the experiment. I also suspect the experiment would be motivating, and would also provide real exercise that would contribute to actual weight loss.

Overall, this experiment seems like it would have very few downsides and quite a few upsides, so I am excited to give it a try!

comment by DirectedEvolution (AllAmericanBreakfast) · 2022-06-14T18:32:13.138Z · LW(p) · GW(p)

Overtones of Philip Tetlock:

"After that I studied morning and evening searching for the principle,

and came to realize the Way of Strategy when I was fifty. Since then I

have lived without following any particular Way. Thus with the virtue of

strategy I practice many arts and abilities - all things with no teacher. To

write this book I did not use the law of Buddha or the teachings of Confucius, neither old war chronicles nor books on martial tactics. I take up

my brush to explain the true spirit of this Ichi school as it is mirrored in

the Way of heaven and Kwannon." - Miyamoto Musashi, The Book of Five Rings

comment by DirectedEvolution (AllAmericanBreakfast) · 2023-06-23T03:29:03.794Z · LW(p) · GW(p)

A "Nucleation" Learning Metaphor

Nucleation is the first step in forming a new phase or structure. For example, microtubules are hollow cylinders built from individual tubulin proteins, which stack almost like bricks. Once the base of the microtubule has come together, it's easy to add more tubulin to the microtubule. But assembling the base - the process of nucleation - is slow without certain helper proteins. These catalyze the process of nucleation by binding and aligning the first few tubulin proteins.

What does learning have in common with nucleation? When we learn from written sources, like textbooks or a lecture, the main sensory input we experience is typically a continuous flow of words and images. All these words and phrases are like "information monomers." Achieving a synthetic understanding of the material is akin to the growth of larger structures, the "microtubules." Exposing ourselves to more and more of a teacher's words or textbook pages does increase the "information monomer concentration" in our minds, and makes a process of spontaneous nucleation more likely.

At some point, synthesis just happens if we keep at it long enough, the same way that nucleation and the growth of microtubules spontaneously happens if we keep adding more tubulin to the solution. But for most people, I think the bottleneck to increasing the rate of forming a synthetic understanding is bottlenecked not by exposure to "information monomers," the sheer amount of reading or listening we do, but by the process of "nucleation," our ability to form at least a rudimentary synthetic understanding out of even a small quantity of content.

Replies from: Vladimir_Nesov, Viliam↑ comment by Vladimir_Nesov · 2023-06-23T12:30:59.710Z · LW(p) · GW(p)

Might be about using the accumulated references to seek out and generate more training data (at the currently relevant level, not just anything). Passive perception of random tidbits might take much longer. I wonder if there are lower estimates on human sample efficiency, how long can it take to not learn something when getting regularly exposed to it, for a person of given intelligence, who isn't exercising curiosity in that direction? My guess it's plausibly a difference of multiple orders of magnitude, and a different ceiling.

↑ comment by Viliam · 2023-06-23T09:04:30.773Z · LW(p) · GW(p)

This reminds me of a thing that gestalt psychologists call "re-centering", which in your metaphor could be described as having a lot of free-floating information monomers that suddenly snap together (which in slow motion might be that first the base forms spontaneously, and then everything else snaps to the base). The difference is that according to them, this happens repeatedly... when you get more information, and it turns out that the original structure was not optimal, so at some moment it snaps again into a new shape. (A "paradigm shift".)

Then there is a teaching style called constructivism (though this name was also abused to mean different things) which is concerned with getting the base structure built as soon as possible. And then getting the next steps right, too. Emphasis on having correct mental models, over memorizing facts. -- One of the controversies is whether this is even necessary to do. Some people insist that it is a waste of time; if you give people enough information monomers, at some moment it will snap to the right structure (and if it won't, well, sucks to be you; it worked okay for them). Other people say that this attitude is why so many kids hate school, especially math (long inferential chains), but if you keep building the structure right all the time, it becomes much less frustrating experience.

So I like the metaphor, with the exception that it is not a simple cylinder, but a more complicated structure, that can get wrong (and get fixed) multiple times.

comment by DirectedEvolution (AllAmericanBreakfast) · 2022-04-30T05:17:52.122Z · LW(p) · GW(p)

Personal evidence for the impact of stress on cognition. This is my Lichess ranking on Blitz since January. The two craters are, respectively, the first 4 weeks of the term, and the last 2 weeks. It begins trending back up immediately after I took my last final.

Replies from: TLW↑ comment by TLW · 2022-04-30T17:36:20.821Z · LW(p) · GW(p)

How much did you play during the start / end of term compared to normal?

Replies from: AllAmericanBreakfast↑ comment by DirectedEvolution (AllAmericanBreakfast) · 2022-04-30T18:10:58.698Z · LW(p) · GW(p)

I don’t know exactly, Lichess doesn’t have a convenient way to plot that day by day. But probably roughly equal amounts. It’s my main distraction.

Replies from: TLW↑ comment by TLW · 2022-04-30T18:30:17.113Z · LW(p) · GW(p)

Too bad. My suspects for confounders for that sort of thing would be 'you played less at the start/end of term' or 'you were more distracted at the start/end of term'.

Replies from: AllAmericanBreakfast↑ comment by DirectedEvolution (AllAmericanBreakfast) · 2022-04-30T20:03:12.755Z · LW(p) · GW(p)

Playing less wouldn’t decrease my score, and being distracted is one of the effects of stress.

Replies from: TLW↑ comment by TLW · 2022-04-30T23:56:56.447Z · LW(p) · GW(p)

Playing less wouldn’t decrease my score

Interesting. Is this typically the case with chess? Humans tend to do better with tasks when they are repeated more frequently, albeit with strongly diminishing returns.

being distracted is one of the effects of stress.

Absolutely, which makes it very difficult to tease apart 'being distracted as a result of stress caused by X causing a drop' and 'being distracted due to X causing a drop'.

Replies from: AllAmericanBreakfast↑ comment by DirectedEvolution (AllAmericanBreakfast) · 2022-05-01T00:14:54.976Z · LW(p) · GW(p)

Interesting. Is this typically the case with chess? Humans tend to do better with tasks when they are repeated more frequently, albeit with strongly diminishing returns.

I see what you mean, and yes, that is a plausible hypothesis. It's hard to get a solid number, but glancing over the individual records of my games, it looks like I was playing about as much as usual. Subjectively, it doesn't feel like lack of practice was responsible.

I think the right way to interpret my use of "stress" in this context is "the bundle of psychological pressures associated with exam season," rather than a psychological construct that we can neatly distinguish from, say, distractability or sleep loss. It's kind of like saying "being on an ocean voyage with no access to fresh fruits and vegetables caused me to get scurvy."

comment by DirectedEvolution (AllAmericanBreakfast) · 2020-12-23T03:16:33.331Z · LW(p) · GW(p)

Does rationality serve to prevent political backsliding?

It seems as if politics moves far too fast for rational methods can keep up. If so, does that mean rationality is irrelevant to politics?

One function of rationality might be to prevent ethical/political backsliding. For example, let's say that during time A, institution X is considered moral. A political revolution ensues, and during time B, X is deemed a great evil and is banned.

A change of policy makes X permissible during time C, banned again during time D, and absolutely required for all upstanding folk during time E.

Rational deliberation about X seems to play little role in the political legitimacy of X.

However, rational deliberation about X continues in the background. Eventually, a truly convincing argument about the ethics of X emerges. Once it does, it is so compelling that it has a permanent anchoring effect on X.

Although at some times, society's policy on X contradicts the rational argument, the pull of X is such that it tends to make these periods of backsliding shorter and less frequent.

The natural process of developing the rational argument about X also leads to an accretion of arguments that are not only correct, but convincing as well. This continues even when the ethics of X are proven beyond a shadow of a doubt, which continues to shorten and prevent periods of backsliding.

In this framework, rationality does not "lead" politics. Instead, it channels it. The goal of a rational thinker should not be to achieve an immediate political victory. Instead, it should be to build the channels of rational thought higher and stronger, so that the fierce and unpredictable waters of politics eventually are forced to flow in a more sane and ethical direction.

The reason you'd concern yourself with persuasion in this context is to prevent the fate of Gregor Mendel, whose ideas on inheritance were lost in a library for 40 years. If you come up with a new or better ethical argument about X, make sure that it becomes known enough to survive and spread. Your success is not your ability to immediately bring about the political changes your idea would support. Instead, it's to bring about additional consideration of your idea, so that it can take root, find new expression, influence other ideas, and either become a permanent fixture of our ethics or be discarded in favor of an even stronger argument.

comment by DirectedEvolution (AllAmericanBreakfast) · 2020-10-23T16:15:41.315Z · LW(p) · GW(p)