Forecasting Thread: AI Timelines

post by Amandango, Daniel Kokotajlo (daniel-kokotajlo), Ben Pace (Benito) · 2020-08-22T02:33:09.431Z · LW · GW · 19 comments

This is a question post.

Contents

- How to make a distribution using Elicit

- How to overlay distributions on the same graph

- How to add an image to your comment

- Top Forecast Comparisons

- Answers (score, author): 44 datscilly, 34 Ethan Perez, 33 Daniel Kokotajlo, 25 SDM, 23 stuhlmueller, 20 Ben Pace, 17 Mark Xu, 17 Adele Lopez, 15 steven0461, 14 Rohin Shah, 13 Matthew Barnett, 12 Mati_Roy, 11 NunoSempere, 10 VermillionStuka, 9 elifland, 8 ChosunOne, 6 PabloAMC, 4 paulgu, 3 tim_dettmers, -1 JoshuaFox

- 19 comments

This is a thread for displaying your timeline until human-level AGI.

Every answer to this post should be a forecast. In this case, a forecast showing your AI timeline.

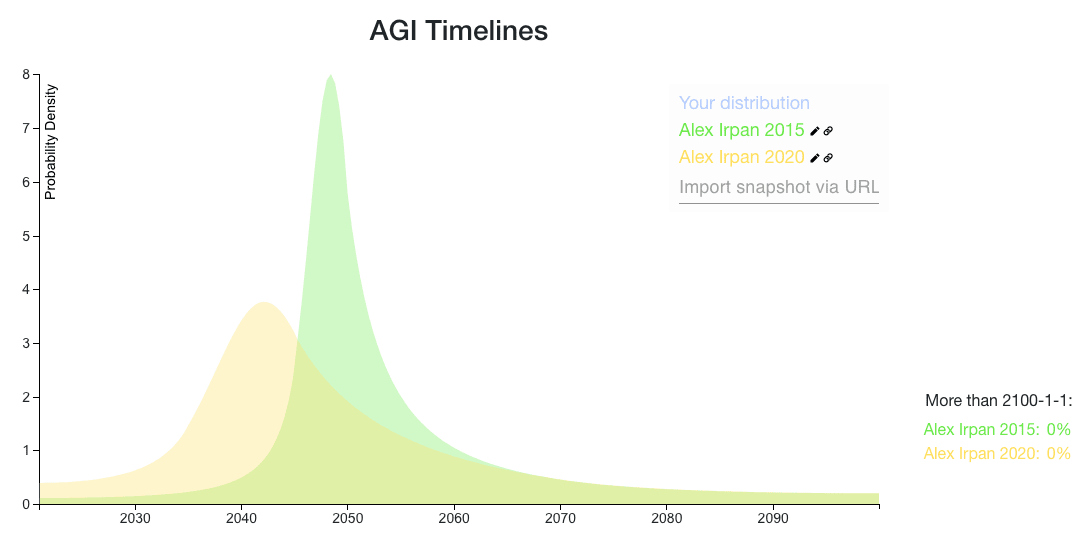

For example, here are Alex Irpan’s AGI timelines.

For extra credit, you can:

- Say why you believe it (what factors are you tracking?)

- Include someone else's distribution who you disagree with, and speculate as to the disagreement

How to make a distribution using Elicit

- Go to this page.

- Enter your beliefs in the bins.

- Specify an interval using the Min and Max bin, and put the probability you assign to that interval in the probability bin.

- For example, if you think there's a 50% probability of AGI before 2050, you can leave Min blank (it will default to the Min of the question range), enter 2050 in the Max bin, and enter 50% in the probability bin.

- The minimum of the range is January 1, 2021, and the maximum is January 1, 2100. You can assign probability above January 1, 2100 (which also includes 'never') or below January 1, 2021 using the Edit buttons next to the graph.

- Click 'Save snapshot' to save your distribution to a static URL.

- A timestamp will appear below the 'Save snapshot' button. This links to the URL of your snapshot.

- Make sure to copy it before refreshing the page, otherwise it will disappear.

- Copy the snapshot timestamp link and paste it into your LessWrong comment.

- You can also add a screenshot of your distribution using the instructions below.

How to overlay distributions on the same graph

- Copy your snapshot URL.

- Paste it into the Import snapshot via URL box on the snapshot you want to compare your prediction to (e.g. the snapshot of Alex's distributions).

- Rename your distribution to keep track.

- Take a new snapshot if you want to save or share the overlaid distributions.

How to add an image to your comment

- Take a screenshot of your distribution

- Then do one of two things:

- If you have beta-features turned on in your account settings, drag-and-drop the image into your comment

- If not, upload it to an image hosting service, then write the following markdown syntax for the image to appear, with the url appearing where it says ‘link’: `![](link)`

- If it worked, you will see the image in the comment before hitting submit.

If you have any bugs or technical issues, reply to Ben (here [LW(p) · GW(p)]) in the comment section.

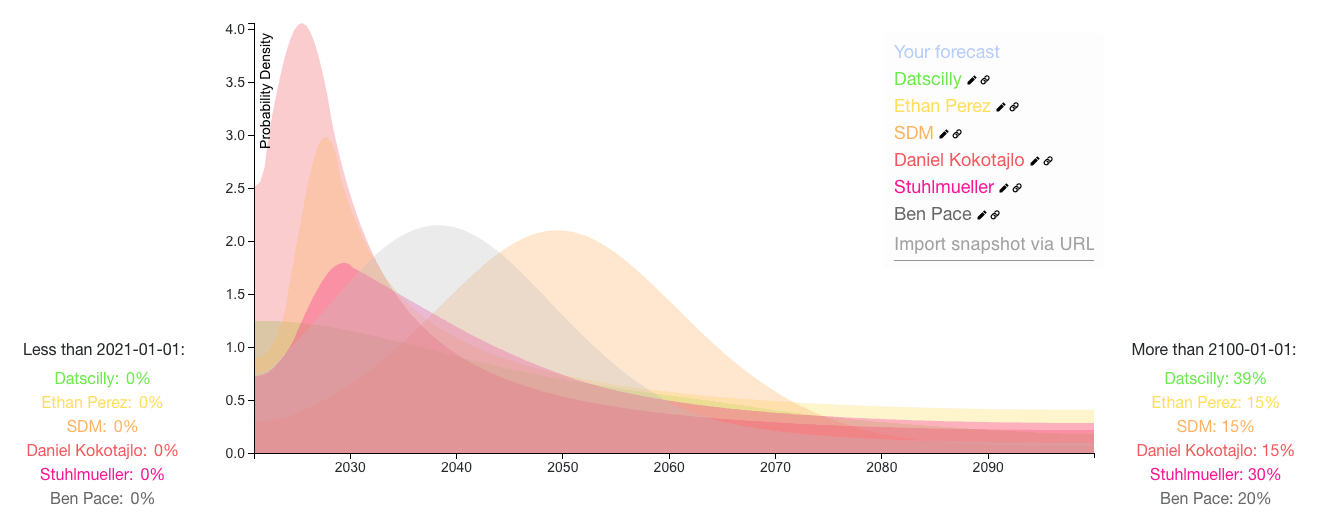

Top Forecast Comparisons

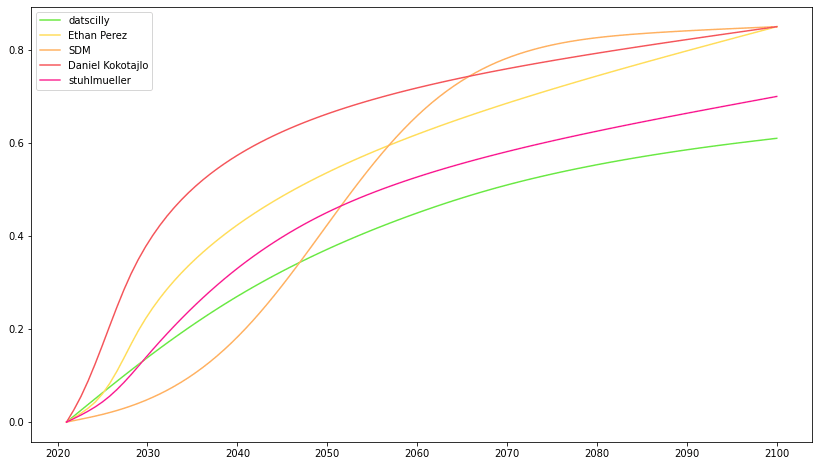

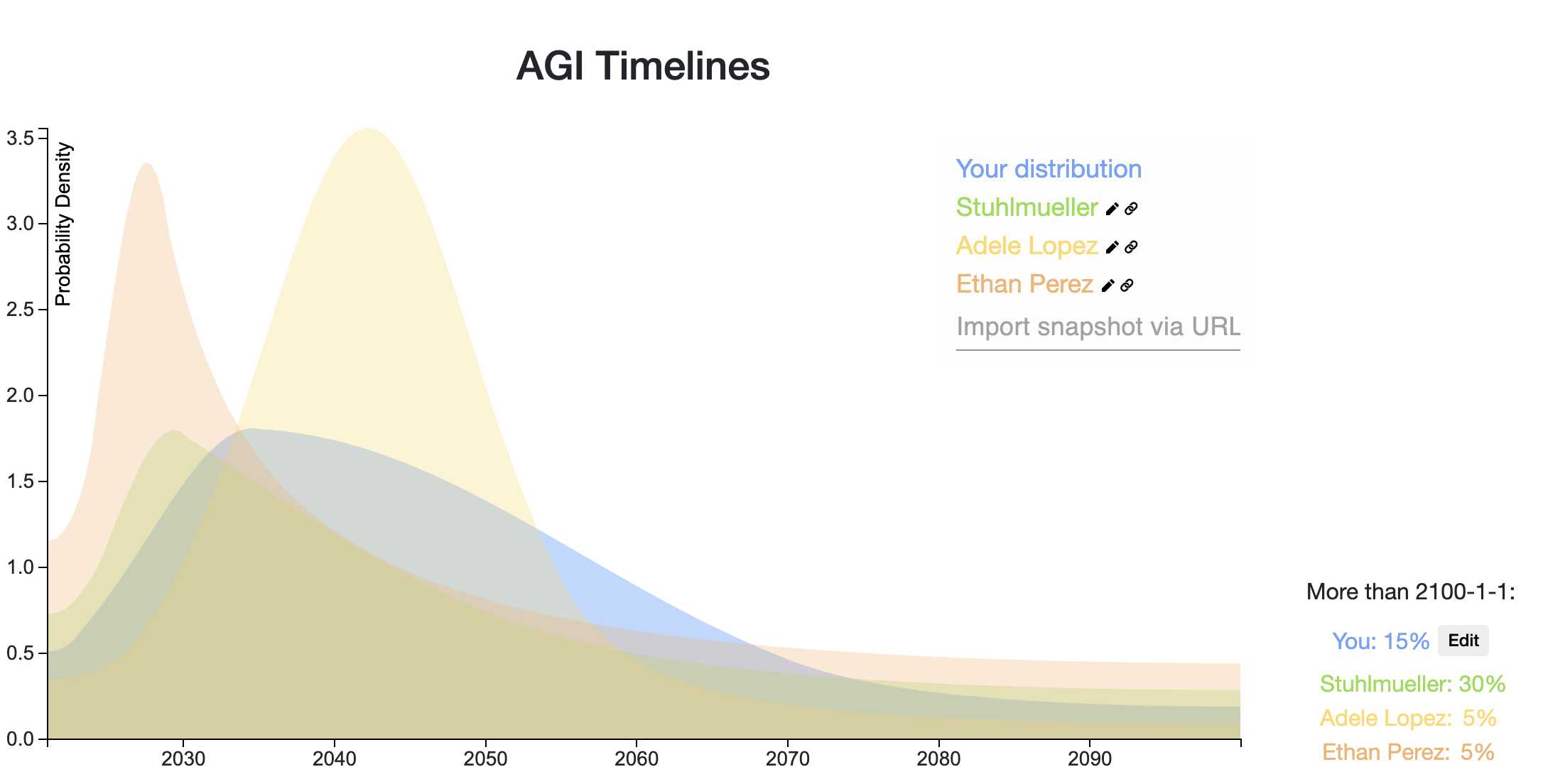

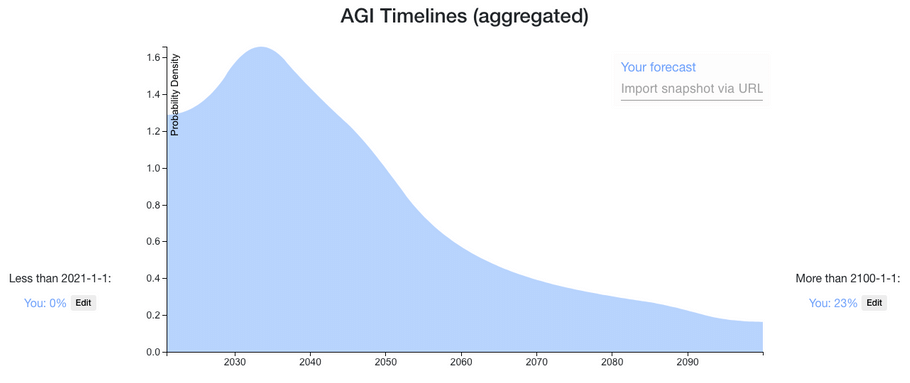

Here is a snapshot of the top voted forecasts from this thread, last updated 9/01/20. You can click the dropdown box near the bottom right of the graph to see the bins for each prediction.

Here is a comparison of the forecasts as a CDF:

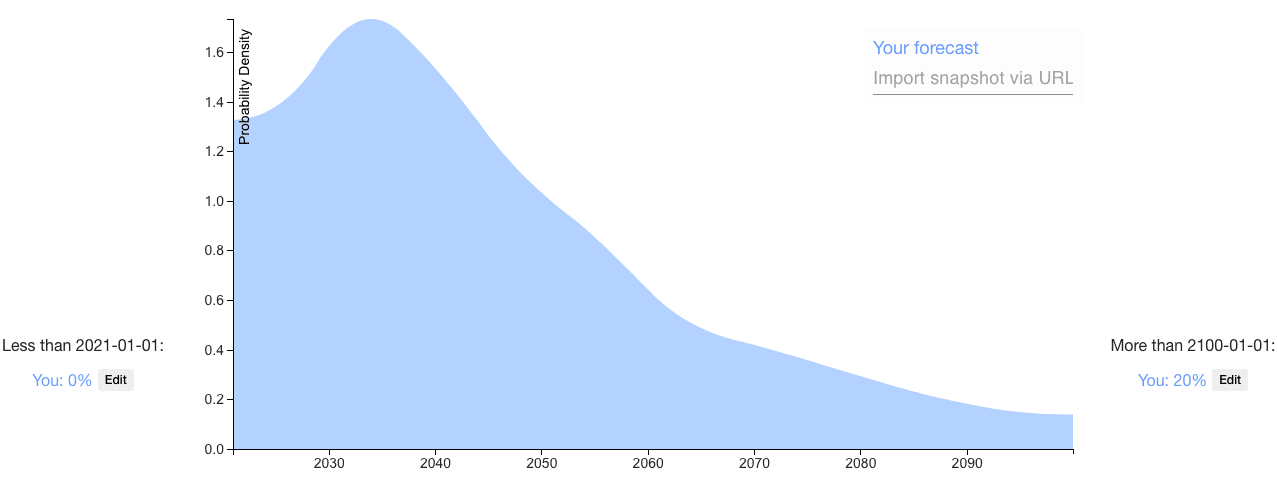

Here is a mixture of the distributions on this thread, weighted by normalized votes (last updated 9/01/20). The median is June 20, 2047. You can click the Interpret tab on the snapshot to see more percentiles.
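A vote-weighted mixture like the one described above can be sketched as follows. This is only an illustration of the idea, not Elicit's implementation: the three CDFs are invented placeholders (not forecasts from this thread), the helper names are hypothetical, and only the vote counts (the scores of the top three answers) come from the page.

```python
# Sketch: mixture of forecast CDFs weighted by normalized votes.
# The CDF values below are hypothetical placeholders, NOT real forecasts.

def mixture_cdf(cdfs, votes):
    """Weight each forecaster's CDF by their share of the total votes."""
    total = sum(votes)
    weights = [v / total for v in votes]
    n_points = len(cdfs[0])
    return [sum(w * cdf[i] for w, cdf in zip(weights, cdfs)) for i in range(n_points)]

def quantile_year(years, cdf, q):
    """Linearly interpolate the year at which the CDF reaches q (None if never)."""
    for i in range(len(years) - 1):
        c0, c1 = cdf[i], cdf[i + 1]
        if c0 <= q <= c1:
            if c1 == c0:
                return float(years[i])
            return years[i] + (years[i + 1] - years[i]) * (q - c0) / (c1 - c0)
    return None

years = [2030, 2040, 2050, 2060, 2080, 2100]
cdfs = [  # hypothetical P(AGI by year) for three forecasters
    [0.10, 0.30, 0.50, 0.65, 0.80, 0.90],
    [0.30, 0.55, 0.65, 0.72, 0.80, 0.85],
    [0.05, 0.15, 0.30, 0.45, 0.70, 0.85],
]
votes = [44, 34, 33]  # scores of the top three answers on this page

mixed = mixture_cdf(cdfs, votes)
median = quantile_year(years, mixed, 0.5)
print([round(c, 3) for c in mixed])
print(median)
```

Because the mixture is a weighted average of CDFs, heavily upvoted forecasts pull the combined median toward their own.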

Answers

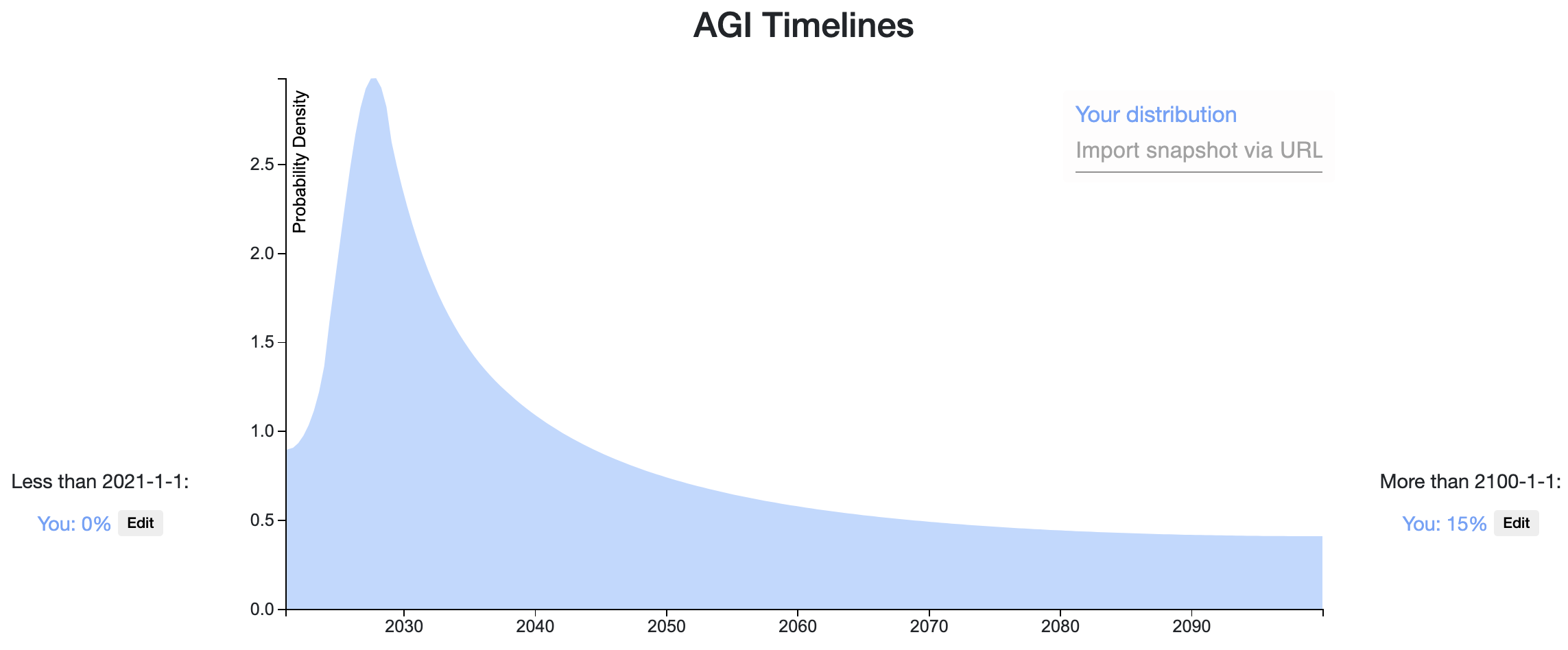

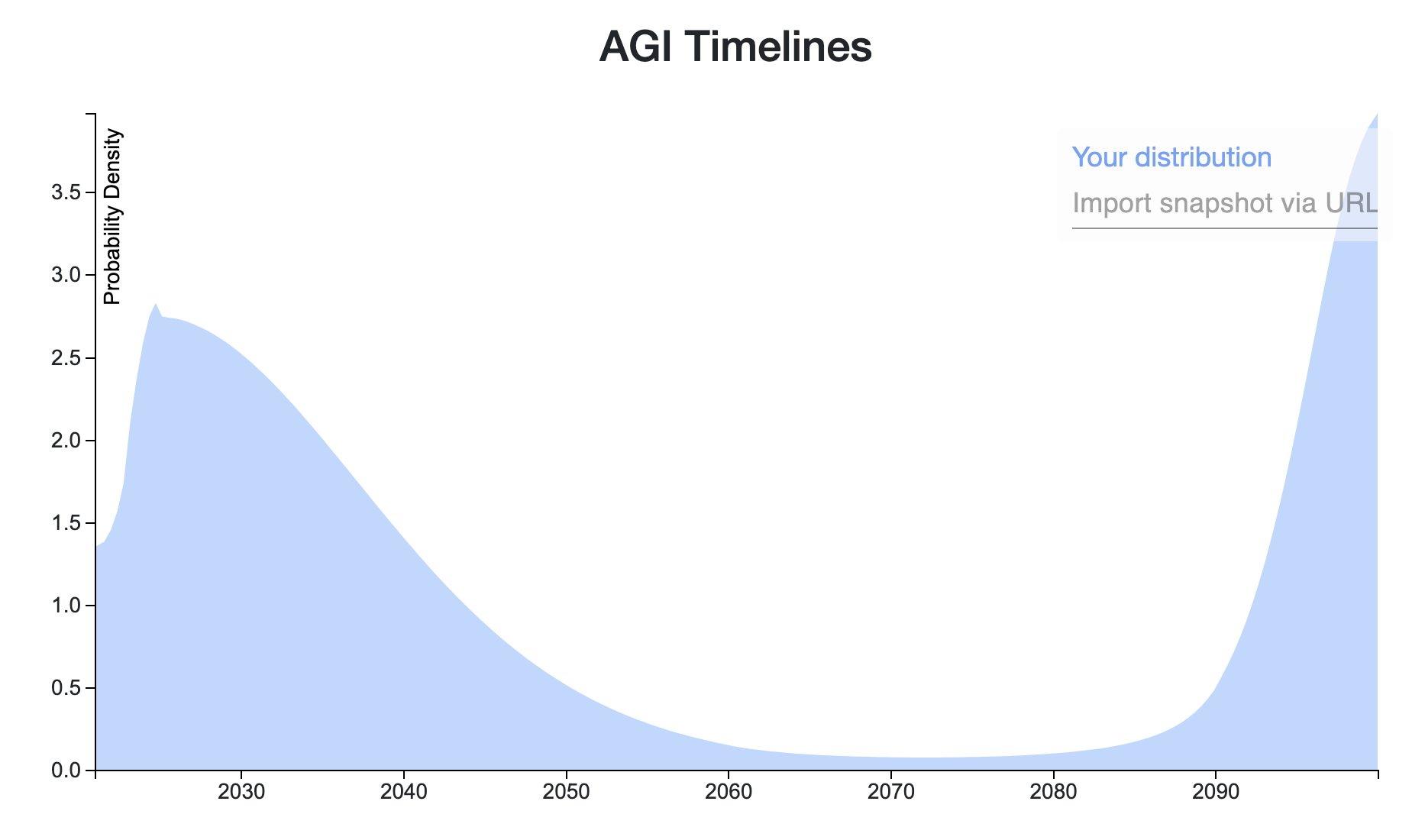

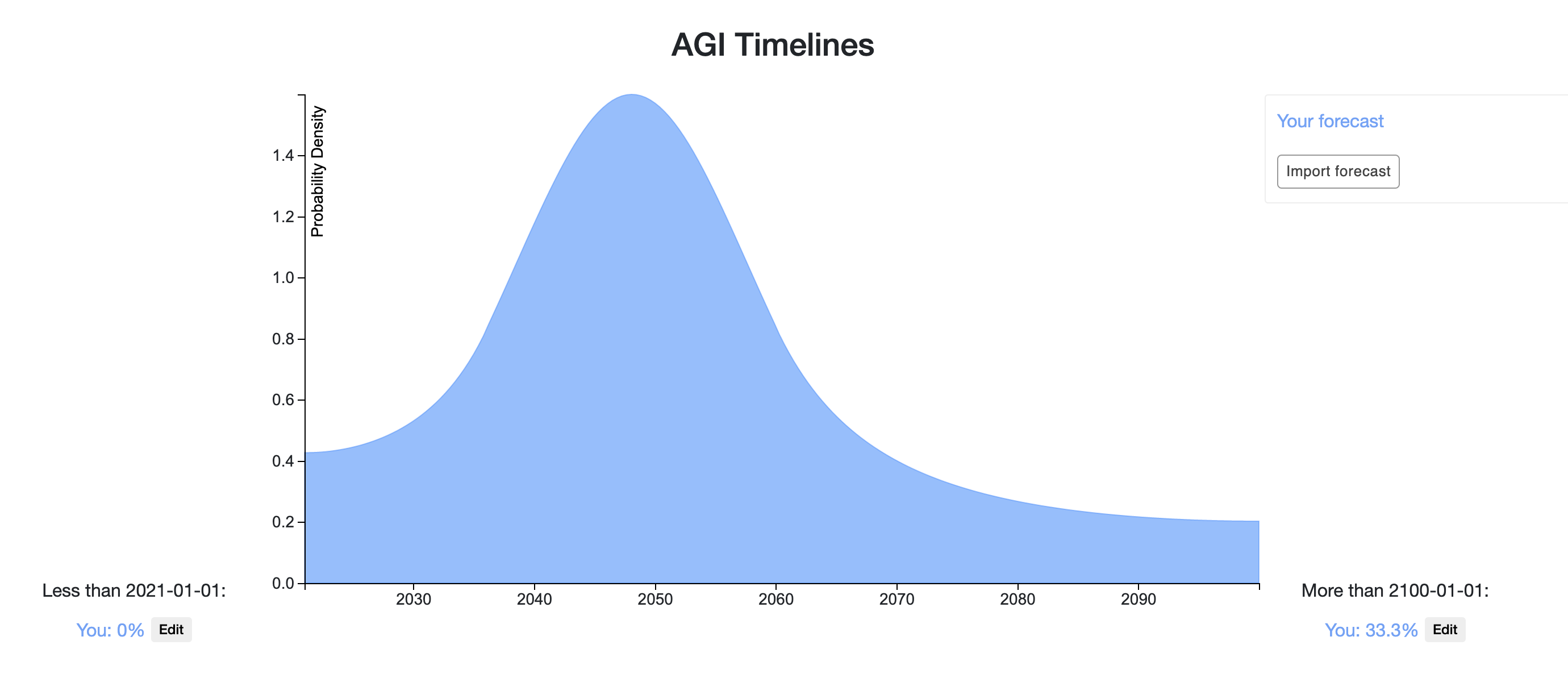

A week ago I recorded a prediction on AI timeline after reading a Vox article on GPT-3. In general I'm much more spread out in time than the LessWrong community. Also, I weigh outside view considerations more heavily than detailed inside view information. For example, a main consideration of my prediction is using the heuristic With 50% probability, things will last twice as long as they already have [EA(p) · GW(p)], with the starting time of 1956, the time of the Dartmouth College summer AI conference.

If AGI will definitely happen eventually, then the heuristic gives us [21.3, 64, 192] years until AGI at the [25th, 50th, 75th] percentiles. AGI may never happen, but the chance of that is small enough that adjusting for that here will not make a big difference (I put ~10% that AGI will not happen for 500 years or more, but it already matches that distribution quite well).
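The percentiles above can be reproduced with a short calculation. This is a minimal sketch, assuming the heuristic corresponds to the survival function P(no AGI within t more years) = T / (T + t), with T = 64 years elapsed between 1956 and 2020; the function names are hypothetical.

```python
# Sketch of the "50% chance it lasts twice as long" heuristic.
# Assumption: P(remaining time > t) = T / (T + t), T = elapsed time so far.

def remaining_years_at_percentile(elapsed: float, p: float) -> float:
    """Years t such that P(event happens within t more years) = p."""
    # 1 - T / (T + t) = p  =>  t = T * p / (1 - p)
    return elapsed * p / (1.0 - p)

def prob_not_within(elapsed: float, t: float) -> float:
    """P(event has still not happened after t more years)."""
    return elapsed / (elapsed + t)

ELAPSED = 2020 - 1956  # 64 years since the Dartmouth conference

percentiles = [remaining_years_at_percentile(ELAPSED, p) for p in (0.25, 0.5, 0.75)]
print([round(x, 1) for x in percentiles])       # [21.3, 64.0, 192.0]
print(round(prob_not_within(ELAPSED, 500), 3))  # 0.113
```

Under this assumed form, the chance of no AGI for 500 or more years comes out near 11%, in line with the ~10% mentioned above.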

A more inside view consideration is: what happens if the current machine learning paradigm scales to AGI? Given that assumption, a 50% confidence interval might be [2028, 2045] (since the current burst of machine learning research began in 2012-2013), which is more in line with the LessWrong predictions and the Metaculus community prediction. Taking the super outside view consideration and the outside view-ish consideration together, I get the prediction I made a week ago.

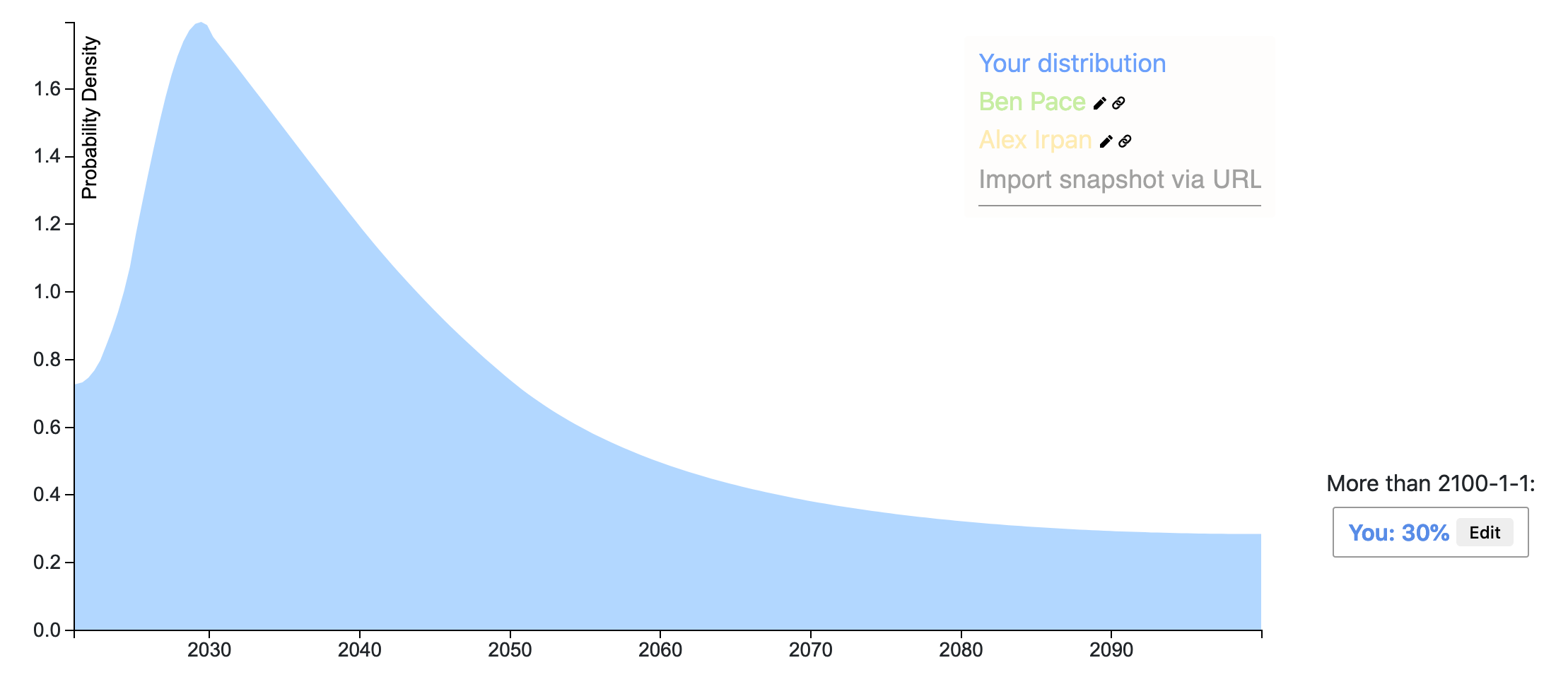

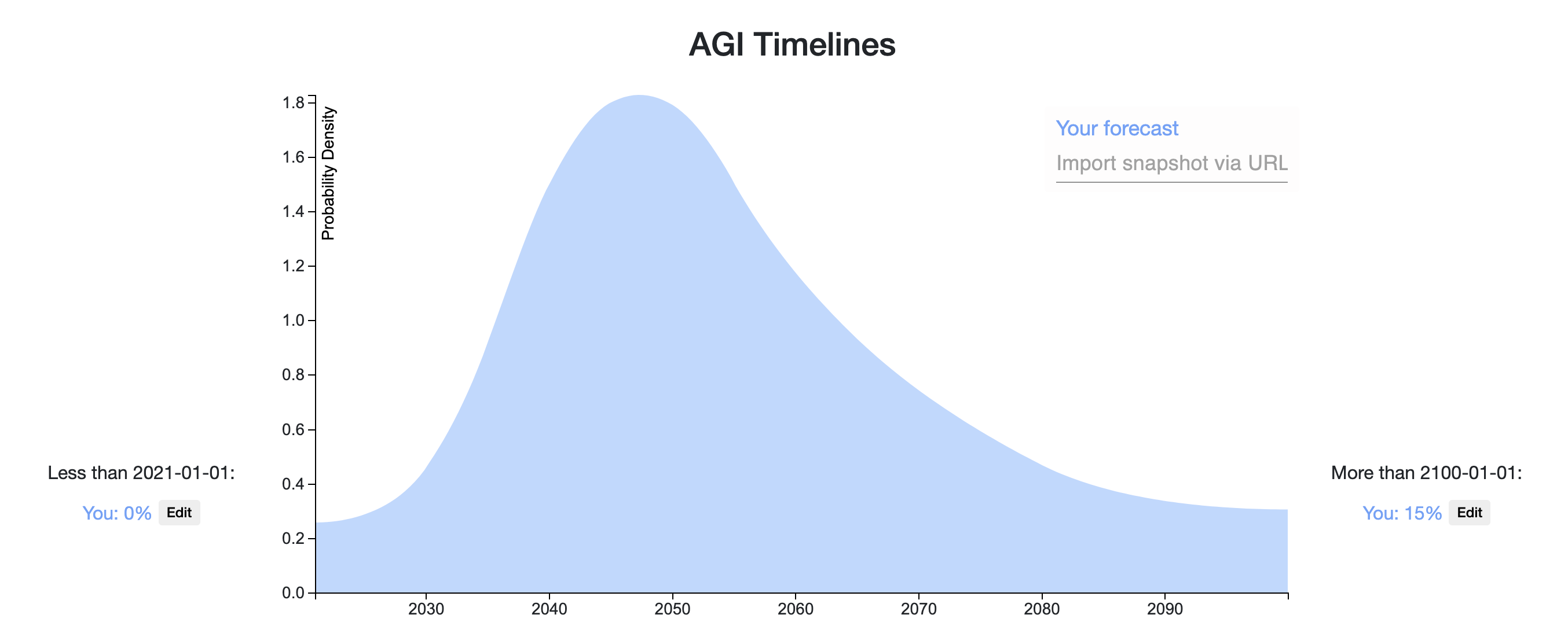

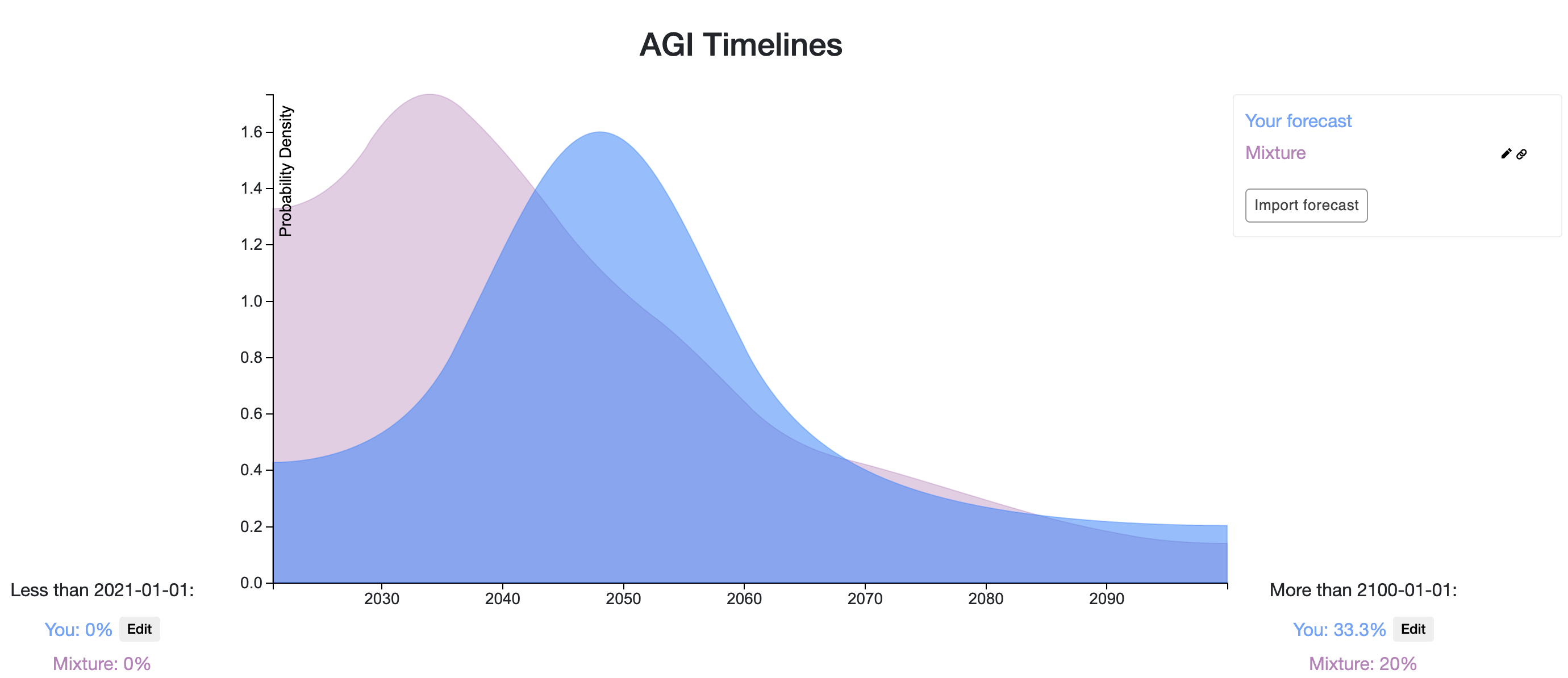

I adapted my prediction to the timeline of this post [1], and compared it with some other commenters' predictions [2].

↑ comment by Ben Pace (Benito) · 2020-08-24T19:17:14.549Z · LW(p) · GW(p)

For example, a main consideration of my prediction is using the heuristic With 50% probability, things will last twice as long as they already have [EA(p) · GW(p)], with the starting time of 1956, the time of the Dartmouth College summer AI conference.

A counter hypothesis I’ve heard (not original to me) is: With 50% probability, we will be half-way through the AI researcher-years required to get AGI.

I think this suggests much shorter timelines, as most researchers have been doing research in the last ~10 years.

It's not clear to me what reference class makes sense here though. Like, I feel like 50% doesn’t make any sense. It implies that for all outstanding AI problems we’re fifty percent there. We’re 50% of the way to a rat brain, to a human emulation, to a vastly superintelligent AGI, etc. It’s not a clearly natural category for a field to be “done”, and it’s not clear which thing counts as “done” in this particular field.

Replies from: gwern↑ comment by gwern · 2020-08-25T01:18:30.730Z · LW(p) · GW(p)

I was looking at the NIPS growth numbers last June and I made a joke:

AI researcher anthropics: 'researchers [should] tend to think AI is ~20 years away because given exponential growth of researchers & careers of ~30 years, the final generation of researchers will make up a majority of all researchers, hence, by SSA+Outside View, one must assume 20 years.'

(Of course, I'm making a rather carbon-chauvinistic assumption here that it's only human researchers/researcher-years which matter.)

↑ comment by NunoSempere (Radamantis) · 2020-08-30T22:43:45.775Z · LW(p) · GW(p)

Your prediction has the interesting property that (starting in 2021), you assign more probability to the next n seconds/ n years than to any subsequent period of n seconds/ n years.

Specifically, I think your distribution assigns too much probability to AGI in the immediately following three months/year/5 years, but I feel like we do have a bunch of information that points us away from such short timelines. If one takes that into account, then one might end up with a bump, maybe like so, where the location of the bump is debatable, and the decay afterwards is per Laplace's rule.

Replies from: Radamantis↑ comment by NunoSempere (Radamantis) · 2020-08-30T23:49:18.054Z · LW(p) · GW(p)

The location of the bump could be estimated by using Daniel Kokotajlo's answer as the "earliest plausible AGI."

↑ comment by grantschneider · 2020-09-05T19:24:08.631Z · LW(p) · GW(p)

https://elicit.ought.org/builder/J2lLvPmAY quick estimate, mostly influenced by the "outside view" and other commenters (of which I found the reasoning of tim_dettmers to be most convincing).

Replies from: Amandango↑ comment by Amandango · 2020-09-06T23:44:21.917Z · LW(p) · GW(p)

This links to a uniform distribution, guessing you didn't mean that! To link to your distribution, take a snapshot of your distribution, and then copy the snapshot url (which appears as a timestamp at the bottom of the page) and link that.

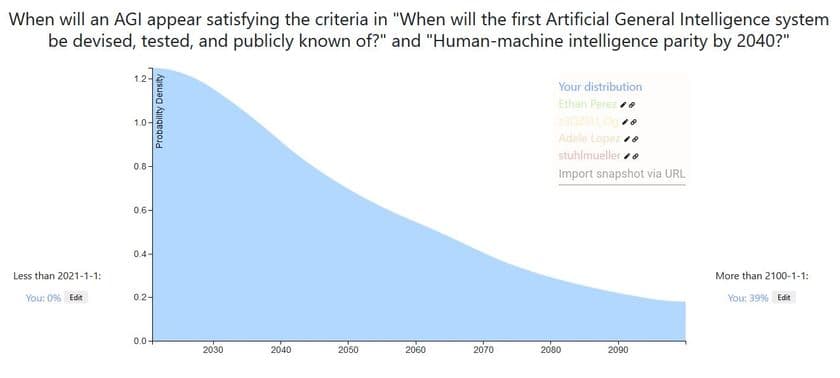

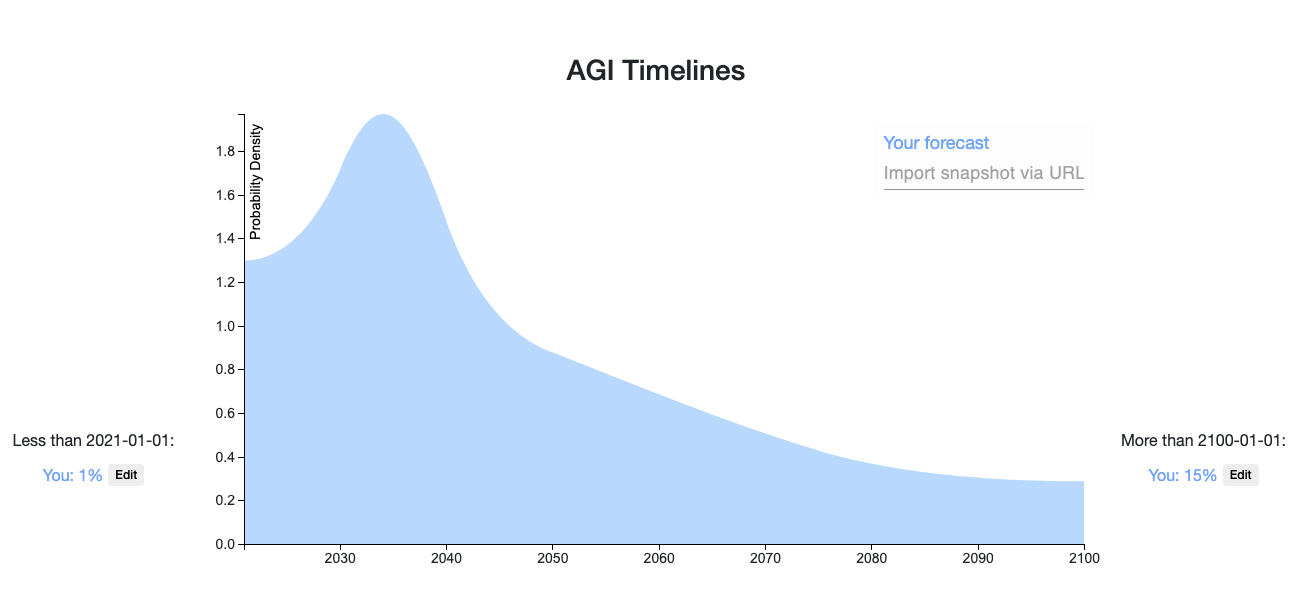

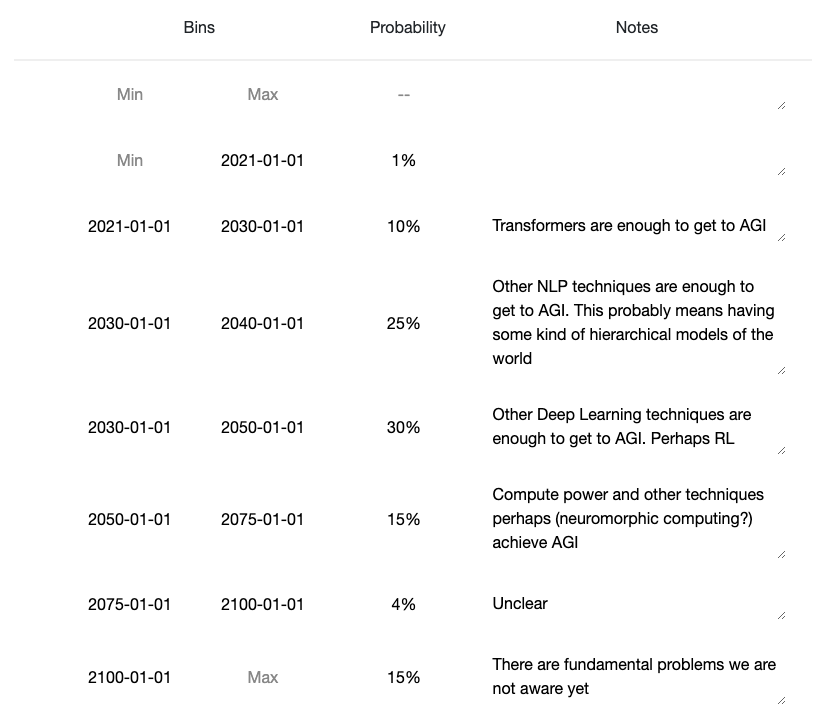

Here is my Elicit Snapshot.

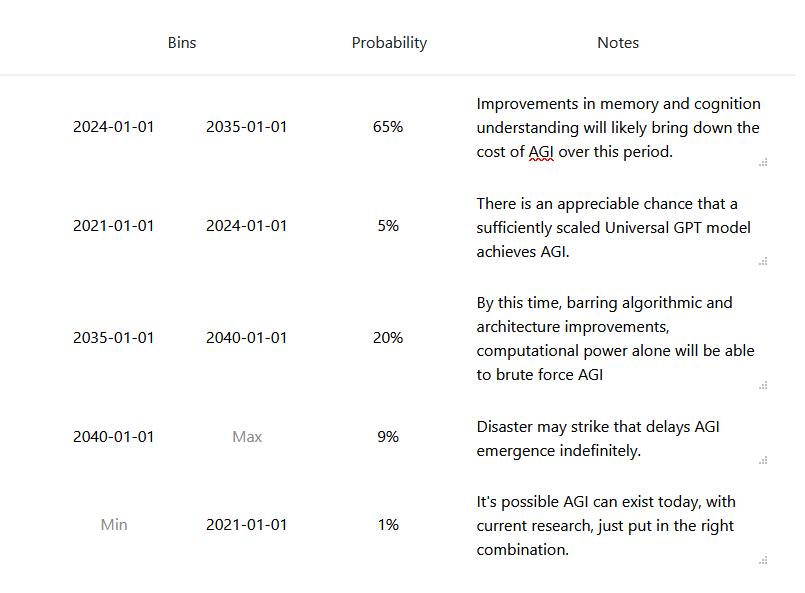

I'll follow the definition of AGI given in this Metaculus challenge, which roughly amounts to a single model that can "see, talk, act, and reason." My predicted distribution is a weighted sum of two component distributions described below:

- Prosaic AGI (25% probability). Timeline: 2024-2037 (Median: 2029): We develop AGI by scaling and combining existing techniques. The most probable path I can foresee loosely involves 3 stages: (1) developing a language model with human-level language ability, then (2) giving it visual capabilities (i.e., talk about pictures and videos, solve SAT math problems with figures), and then (3) giving it capabilities to intelligently act in the world (i.e., trade stocks or navigate webpages). Below are my timelines for the above stages:

- Human-level Language Model: 1.5-4.5 years (Median: 2.5 years). We can predictably improve our language models by increasing model size (parameter count), which we can do in the following two ways:

- Scaling Language Model Size by 1000x relative to GPT3. 1000x is pretty feasible, but we'll hit difficult hardware/communication bandwidth constraints beyond 1000x as I understand.

- Increasing Effective Parameter Count by 100x using modeling tricks (Mixture of Experts, Sparse Transformers, etc.)

- +Visual Capabilities: 2-6 extra years (Median: 4 years). We'll need good representation learning techniques for learning from visual input (which I think we mostly have). We'll also need to combine vision and language models, but there are many existing techniques for combining vision and language models to try here, and they generally work pretty well. A main potential bottleneck time-wise is that the language+vision components will likely need to be pretrained together, which slows the iteration time and reduces the number of research groups that can contribute (especially for learning from video, which is expensive). For reference, Language+Image pretrained models like ViLBERT came out 10 months after BERT did.

- +Action Capabilities: 0-6 extra years (Median: 2 years). GPT3-style zero-shot or few-shot instruction following is the most feasible/promising approach to me here; this approach could work as soon as we have a strong, pretrained vision+language model. Alternatively, we could use that model within a larger system, e.g. a policy trained with reinforcement learning, but this approach could take a while to get to work.

- Breakthrough AGI (75% probability). Timeline: Uniform probability over the next century: We need several fundamental breakthroughs to achieve AGI. Breakthroughs are hard to predict, so I'll assume a uniform distribution that we'll hit upon the necessary breakthroughs at any year <2100, with 15% total probability mass after 2100 (a rough estimate); I'm estimating 15% roughly based on a 5% probability that we won't find the right insights by 2100, 5% probability that we have the right insights but not enough compute by 2100, and 5% probability to account for planning fallacy, unknown unknowns, and the fact that a number of top AI researchers believe that we are very far from AGI.

My probability for Prosaic AGI is based on an estimated probability of each of the 3 stages of development working (described above):

P(Prosaic AGI) = P(Stage 1) x P(Stage 2) x P(Stage 3) = 3/4 x 2/3 x 1/2 = 1/4
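As a quick check of the arithmetic, the sketch below multiplies the three stage probabilities and also adds up the per-stage timelines listed earlier. Two assumptions are mine rather than the answer's: that the clock starts in August 2020 (when the answer was posted), and that simply summing the low, median, and high values is a fair approximation (the median of a sum is not in general the sum of the medians).

```python
from fractions import Fraction

# Stage success probabilities as stated in the answer.
stage_probs = [Fraction(3, 4), Fraction(2, 3), Fraction(1, 2)]

p_prosaic = Fraction(1)
for p in stage_probs:
    p_prosaic *= p
print(p_prosaic)  # 1/4

# Stage durations in years as (low, median, high), as stated in the answer.
stages = {
    "human-level language model": (1.5, 2.5, 4.5),
    "+visual capabilities": (2.0, 4.0, 6.0),
    "+action capabilities": (0.0, 2.0, 6.0),
}

START = 2020.6  # assumption: August 2020, the date of the thread

# Sum each column (low, median, high) across the three stages.
low, mid, high = (sum(s[i] for s in stages.values()) for i in range(3))
print(low, mid, high)                                         # 3.5 8.5 16.5
print(int(START + low), int(START + mid), int(START + high))  # 2024 2029 2037
```

Under these assumptions the summed stages land on the stated 2024-2037 window with a 2029 median.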

------------------

Updates/Clarification after some feedback from Adam Gleave:

- Updated from 5% -> 15% probability that AGI won't happen by 2100 (see reasoning above). I've updated my Elicit snapshot appropriately.

- There are other concrete paths to AGI, but I consider these fairly low probability to work first (<5%) and experimental enough that it's hard to predict when they will work. For example, I can't think of a good way to predict when we'll get AGI from training agents in a simulated, multi-agent environment (e.g., in the style of OpenAI's Emergent Tool Use paper). Thus, I think it's reasonable to group such other paths to AGI into the "Breakthrough AGI" category and model these paths with a uniform distribution.

- I think you can do better than a uniform distribution for the "Breakthrough AGI" category, by incorporating the following information:

- Breakthroughs will be less frequent as time goes on, as the low-hanging fruit/insights are picked first. Adam suggested an exponential decay over time / Laplacian prior, which sounds reasonable.

- Growth of AI research community: Estimate the size of the AI research community at various points in time, and estimate the pace of research progress given that community size. It seems reasonable to assume that the pace of progress will increase logarithmically in the size of the research community, but I can also see arguments for why we'd benefit more or less from a larger community (or even have slower progress).

- Growth of funding/compute for AI research: As AI becomes increasingly monetizable, there will be more incentives for companies and governments to support AI research, e.g., in terms of growing industry labs, offering grants to academic labs to support researchers, and funding compute resources - each of these will speed up AI development.

↑ comment by Veedrac · 2020-08-25T00:02:33.072Z · LW(p) · GW(p)

Scaling Language Model Size by 1000x relative to GPT3. 1000x is pretty feasible, but we'll hit difficult hardware/communication bandwidth constraints beyond 1000x as I understand.

I think people are hugely underestimating how much room there is to scale.

The difficulty, as you mention, is bandwidth and communication, rather than cost per bit in isolation. An A100 manages 1.6TB/sec of bandwidth to its 40 GB of memory. We can handle sacrificing some of this speed, but something like SSDs aren't fast enough; 350 TB of SSD memory would cost just $40k, but would only manage 1-2 TB/s over the whole array, and could not push it to a single GPU. More DRAM on the GPU does hit physical scaling issues, and scaling out to larger clusters of GPUs does start to hit difficulties after a point.
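To put rough numbers on the bandwidth problem, here is a back-of-the-envelope sketch. The A100 figures and the 1-2 TB/s SSD array figure are from the comment; the GPT-3 parameter count (175 billion) and the 2 bytes per parameter (fp16) are my assumptions. The resulting size matches the 350 TB mentioned above, which suggests, but does not confirm, that this is where that figure comes from.

```python
# Back-of-the-envelope memory arithmetic for a model 1000x the size of GPT-3.
# Assumptions (not stated in the comment): 175e9 parameters, 2 bytes each.

GPT3_PARAMS = 175e9
BYTES_PER_PARAM = 2          # assumption: fp16 weights
SCALE = 1000

A100_MEMORY_BYTES = 40e9     # 40 GB, from the comment
A100_BANDWIDTH = 1.6e12      # 1.6 TB/s, from the comment

model_bytes = GPT3_PARAMS * SCALE * BYTES_PER_PARAM
print(model_bytes / 1e12)    # 350.0 (TB)

# Number of A100s needed just to hold the weights in GPU memory.
gpus_to_hold_weights = model_bytes / A100_MEMORY_BYTES
print(gpus_to_hold_weights)  # 8750.0

# Seconds for one full read of the weights at various aggregate bandwidths.
for label, bandwidth in [("SSD array, 1 TB/s", 1e12),
                         ("one A100's memory, 1.6 TB/s", A100_BANDWIDTH),
                         ("SSD array, 2 TB/s", 2e12)]:
    print(label, model_bytes / bandwidth)
```

A single pass over the weights taking minutes rather than milliseconds is the sense in which SSD-class storage is too slow here.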

This problem is not due to physical law, but the technologies in question. DRAM is fast, but has hit a scaling limit, whereas NAND scales well, but is much slower. And the larger the cluster of machines, the more bandwidth you have to sacrifice for signal integrity and routing.

Thing is, these are fixable issues if you allow for technology to shift. For example,

- Various sorts of persistent memories allow fast dense memories, like NRAM. There's also 3D XPoint and other ReRAMs, various sorts of MRAMs, etc.

- Multiple technologies allow for connecting hardware significantly more densely than we currently do, primarily things like chiplets and memory stacking. Intel's Ponte Vecchio intends to tie 96 (or 192?) compute dies together, across 6 interconnected GPUs, each made of 2 (or 4?) groups of 8 compute dies.

- Neural networks are amenable to ‘spatial computing’ (visualization), and using appropriate algorithms the end-to-end latency can largely be ignored as long as the block-to-block latency and throughput is sufficiently high. This means there's no clear limit to this sort of scaling, since the individual latencies are invariant to scale.

- The switches themselves between the computers are not at a limit yet, because of silicon photonics, which can even be integrated alongside compute dies. That example is in a switch, but they can also be integrated alongside GPUs.

- You mention this, but to complete the list, sparse training makes scale-out vastly easier, at the cost of reducing the effectiveness of scaling. GShard showed effectiveness at >99.9% sparsities for mixture-of-experts models, and it seems natural to imagine that a more flexible scheme with only, say, 90% training sparsity and support for full-density inference would allow for 10x scaling without meaningful downsides.

It seems plausible to me that a Manhattan Project could scale to models with a quintillion parameters, aka. 10,000,000x scaling, within 15 years, using only lightweight training sparsity. That's not to say it's necessarily feasible, but that I can't rule out technology allowing that level of scaling.

Replies from: ethan-perez↑ comment by Ethan Perez (ethan-perez) · 2020-08-25T23:53:45.928Z · LW(p) · GW(p)

When I cite scaling limit numbers, I'm mostly deferring to my personal discussions with Tim Dettmers (whose research is on hardware, sparsity, and language models), so I'd check out his comment [LW(p) · GW(p)] on this post for more details on his view of why we'll hit scaling limits soon!

Replies from: Veedrac↑ comment by Veedrac · 2020-08-26T06:16:20.425Z · LW(p) · GW(p)

I disagree with that post and its first two links so thoroughly that any direct reply or commentary on it would be more negative than I'd like to be on this site. (I do appreciate your comment, though, don't take this as discouragement for clarifying your position.) I don't want to leave it at that, so instead let me give a quick thought experiment.

A neuron's signal hop latency is about 5ms, and in that time light can travel about 1500km, a distance approximately equal to the radius of the moon. You could build a machine literally the size of the moon, floating in deep space, before the speed of light between the neurons became a problem relative to the chemical signals in biology, as long as no single neuron went more than half way through. Unlike today's silicon chips, a system like this would be restricted by the same latency propagation limits that the brain is, but still, it's the size of the moon. You could hook this moon-sized computer to a human-shaped shell on Earth, and as long as the computer was directly overhead, the human body could be as responsive and fully updatable as a real human.
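The distance figure in this thought experiment is easy to verify. A minimal sketch, taking the 5 ms hop latency from the comment and using a mean lunar radius of about 1,737 km (my figure, not the comment's):

```python
# Check: how far does light travel during one neuron signal hop?

SPEED_OF_LIGHT_KM_S = 299_792.458  # exact, by definition of the metre
NEURON_HOP_LATENCY_S = 0.005       # ~5 ms, from the comment
MOON_RADIUS_KM = 1_737.4           # assumption: approximate mean lunar radius

distance_km = SPEED_OF_LIGHT_KM_S * NEURON_HOP_LATENCY_S
print(round(distance_km))                      # 1499
print(round(distance_km / MOON_RADIUS_KM, 2))  # 0.86
```

So light covers roughly 1,500 km per hop, a bit under the Moon's radius, which is why the comparison is described as approximate.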

While such a computer is obviously impractical on so many levels, I find it a good frame of reference to think about the characteristics of how computers scale upwards, much like Feynman's There's Plenty of Room at the Bottom was a good frame of reference for scaling down, considered back when transistors were still wired by hand. In particular, the speed of light is not a problem, and will never become one, except where it's a resource we use inefficiently.

↑ comment by NunoSempere (Radamantis) · 2020-08-30T22:48:38.731Z · LW(p) · GW(p)

That sharp peak feels really suspicious.

Replies from: ethan-perez↑ comment by Ethan Perez (ethan-perez) · 2020-09-01T22:40:10.026Z · LW(p) · GW(p)

Yes, the peak comes from (1) a relatively high (25%) confidence that current methods will lead to AGI and (2) my view that we'll achieve Prosaic AGI in a pretty small (~13-year) window if it's possible, after which it will be quite unlikely that scaling current methods will result in AGI (e.g., due to hitting scaling limits or a fundamental technical problem).

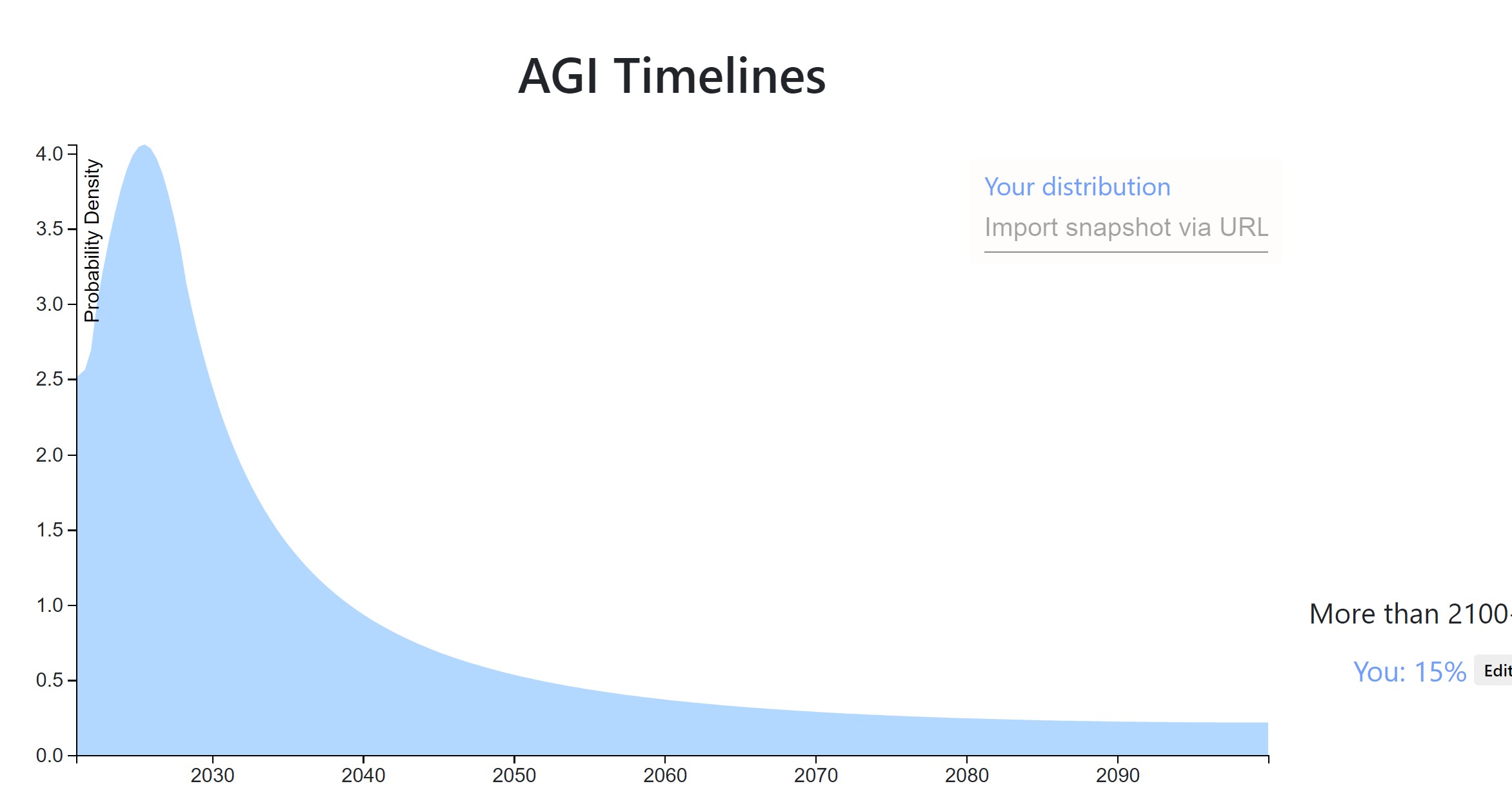

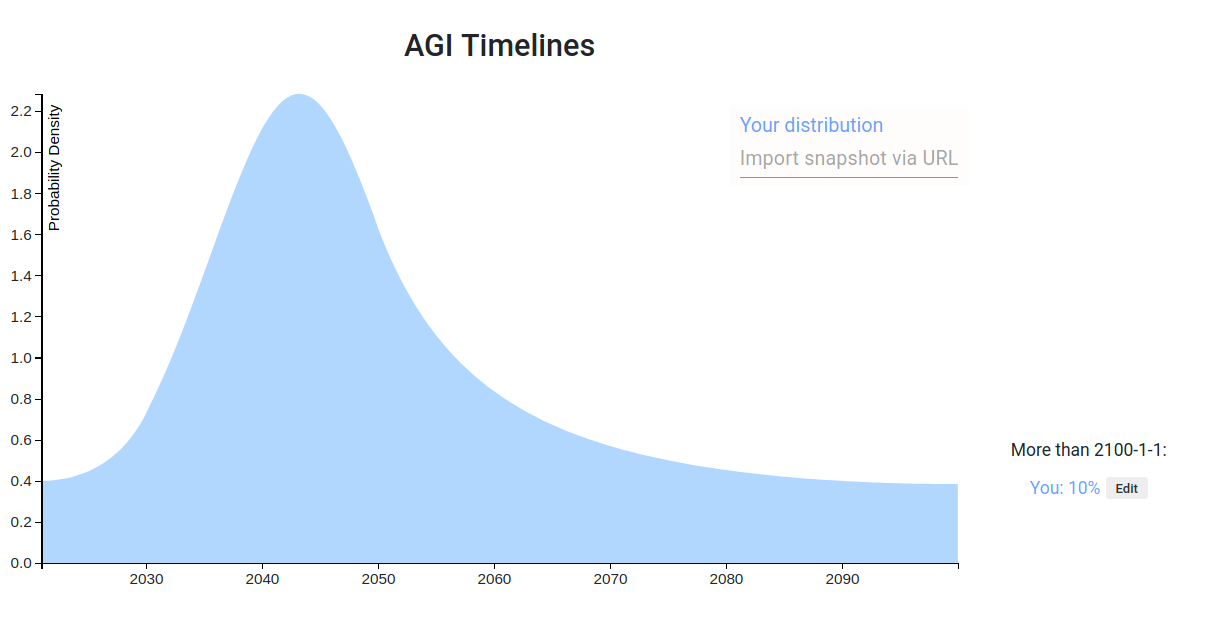

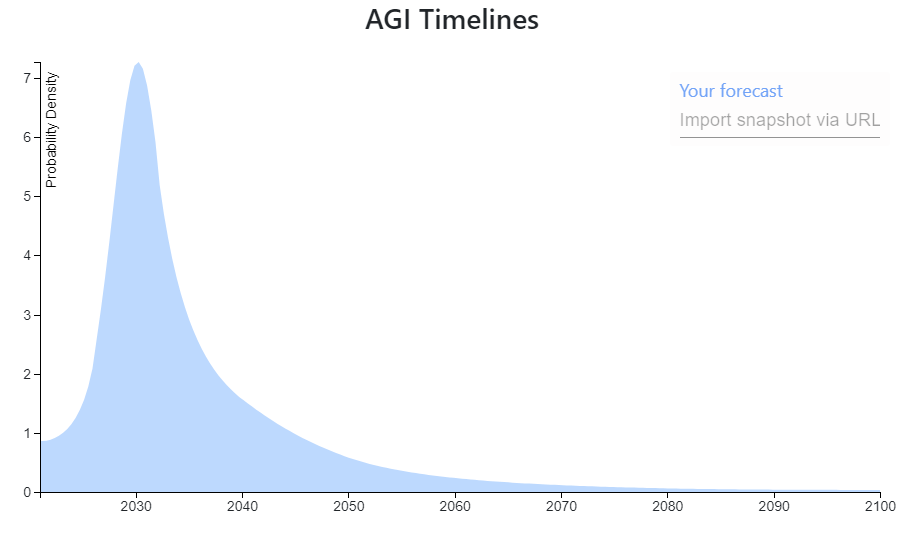

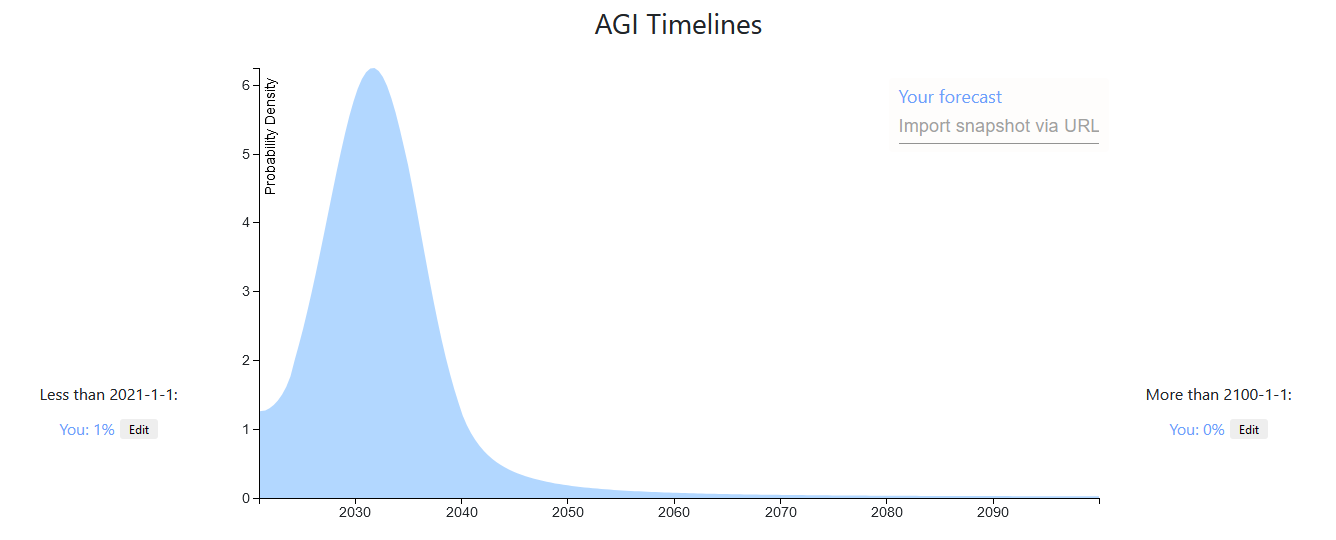

Here is my snapshot. My reasoning is basically similar to Ethan Perez's, it's just that I think that if transformative AI is achievable in the next five orders of magnitude of compute improvement (e.g. prosaic AGI?), it will likely be achieved in the next five years or so. I also am slightly more confident that it is, and slightly less confident that TAI will ever be achieved.

I am aware that my timelines are shorter than most... Either I'm wrong and I'll look foolish, or I'm right and we're doomed. Sucks to be me.

[Edited the snapshot slightly on 8/23/2020]

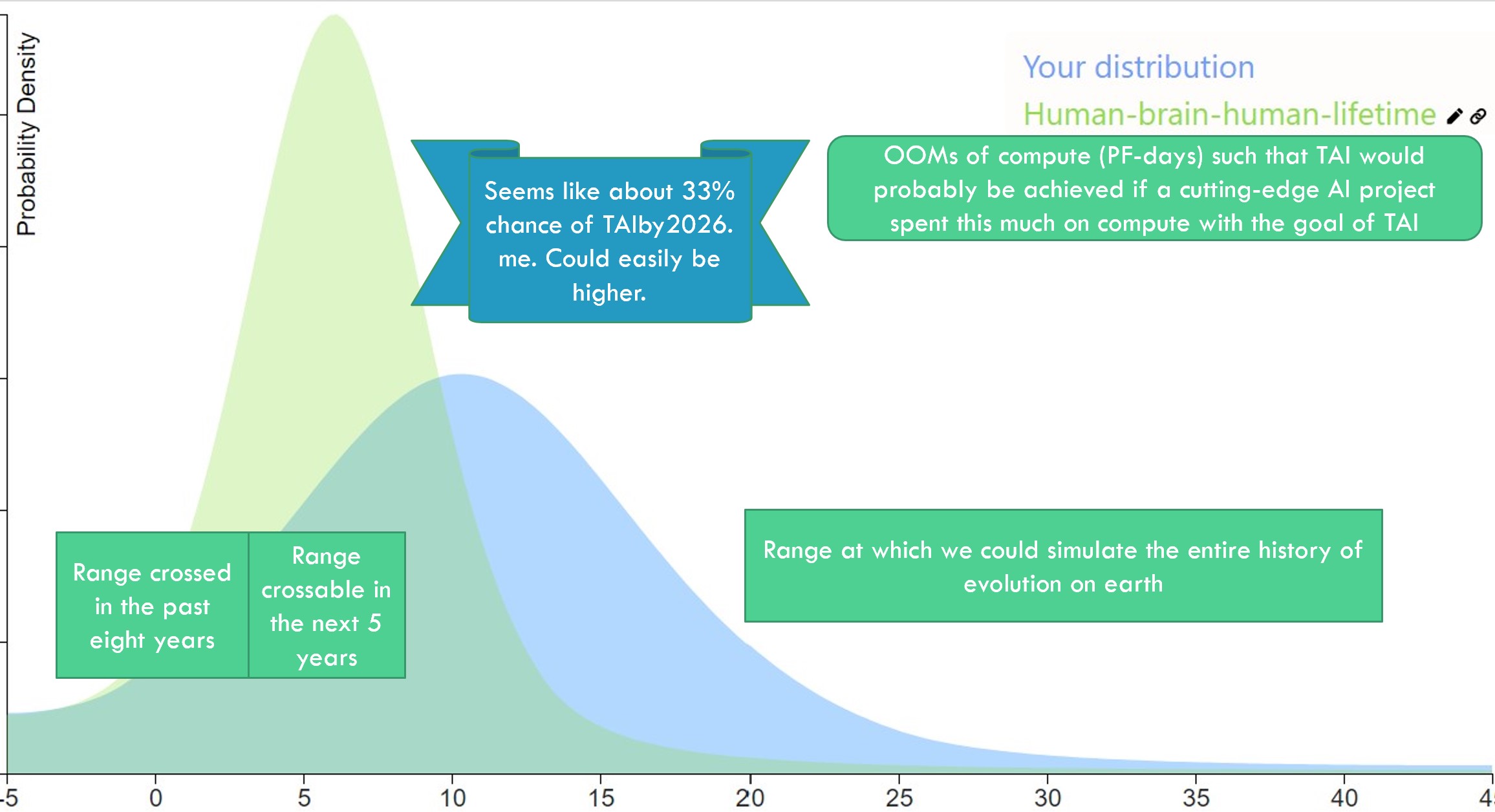

[Edited to add the following powerpoint slide that gets a bit more at my reasoning]

↑ comment by Ben Pace (Benito) · 2020-08-23T16:52:21.890Z · LW(p) · GW(p)

I feel like taking some bets against this, it’s very extreme.

Replies from: daniel-kokotajlo↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2020-08-23T18:51:00.705Z · LW(p) · GW(p)

I'd be happy to bet on this. I already have a 10:1 bet with someone to the tune of $1000/$100.

However, I'd be even happier to just have a chat with you and discuss models/evidence. I could probably be updated away from my current position fairly easily. It looks like your distribution isn't that different from my own though? [EDIT 8/26/2020: Lest I give the wrong impression, I have thought about this a fair amount and as a result don't expect to update substantially away from my current position. There are a few things that would result in substantial updates, which I could name, but mostly I'm expecting small updates.]

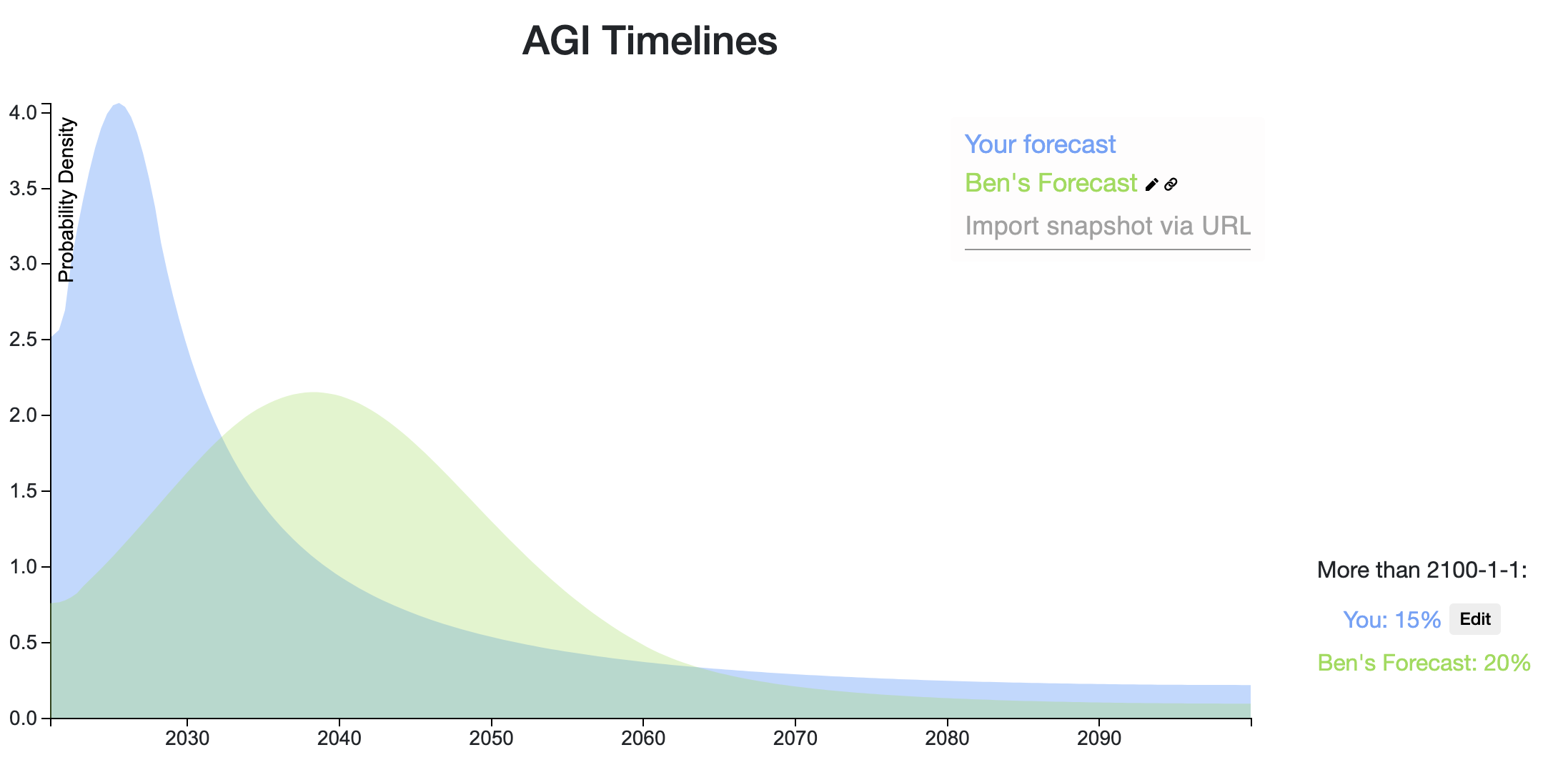

↑ comment by Ben Pace (Benito) · 2020-08-23T22:16:39.576Z · LW(p) · GW(p)

Me: Wonders how much disagreement we have.

Me: Plots it.

I'd be down to chat with you :) although plotting it I wonder that maybe I don't have that much to say.

I think the main differences are that (a) you're assigning much more probability to the next 10 years, and (b) you're assigning way less to worlds where it's just harder, takes more effort, but overall we're still on the right path.

My strawman is that mine is yours plus planning fallacy. I feel like my crux between us is something like "I have a bunch of probability on (even though we're on the right track) it just having a lot of details and requiring human coordination of big projects that we're not great at right now" but that sounds very vague and uncompelling.

Added: I think you're focused on the scaling hypothesis being true. Given that, how do you feel about this story:

Over the next five years we scale up AI compute and use up all the existing overhang, and this doesn't finish it but it provides strong evidence that we're nearly there. This makes us confident that if we scaled it up another three orders of magnitude that we'd get AGI, and then we figure out how to get there faster using lots of fine-grained optimisation, so we raise funds to organise a Manhattan project with 1000s of scientists, and this takes 5 years to set up and 10 years to execute. Oh, and also there's a war over control of the compute and so on that makes things difficult.

In a world like this, I feel like it's bottlenecked by our ability to organise large scale projects, which I have smoother uncertainty bars over than spiky. (Like, how long did the Large Hadron Collider take to build?) Does that sound plausible to you, that it's bottlenecked by large scale human projects?

Replies from: daniel-kokotajlo↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2020-08-24T01:03:00.091Z · LW(p) · GW(p)

Oh, whoa, OK I guess I was looking at your first forecast, not your second. Your second is substantially different. Yep let's talk then? Want to video chat someday?

I tried to account for the planning fallacy in my forecast, but yeah I admit I probably didn't account for it enough. Idk.

My response to your story is that yeah, that's a possible scenario, but it's a "knife edge" result. It might take <5 OOMs more, in which case it'll happen with existing overhang. Or it'll take >7 OOMs more compute, in which case it'll not happen until new insights/paradigms are invented. If it takes 5-7 OOMs more, then yeah, we'll first burn through the overhang and then need to launch some huge project in order to reach AGI. But that's less likely than the other two scenarios.

(I mean, it's not literally knife's edge. It's probably about as likely as the we-get-AGI-real-soon scenario. But then again I have plenty of probability mass around 2030, and I think 10 years from now is plenty of time for more Manhattan projects.)

↑ comment by Ben Pace (Benito) · 2020-08-25T21:43:27.368Z · LW(p) · GW(p)

Let's do it. I'm super duper busy, please ping me if I've not replied here within a week.

Replies from: daniel-kokotajlo, daniel-kokotajlo↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2020-09-10T14:13:55.028Z · LW(p) · GW(p)

Ping?

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2020-08-26T02:28:14.632Z · LW(p) · GW(p)

Sounds good. Also, check out the new image I added to my answer! This image summarizes the weightiest model in my mind.

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2021-09-13T12:52:34.428Z · LW(p) · GW(p)

It's been a year, what do my timelines look like now?

My median has shifted to the left a bit, it's now 2030. However, I have somewhat less probability in the 2020-2025 range I think, because I've become more aware of the difficulties in scaling up compute. You can't just spend more money. You have to do lots of software engineering and for 4+ OOMs you literally need to build more chip fabs to produce more chips. (Also because 2020 has passed without TAI/AGI/etc., so obviously I won't put as much mass there...)

So if I were to draw a distribution it would look pretty similar, just a bit more extreme of a spike and the tip of the spike might be a bit to the right.

Replies from: mtrazzi↑ comment by Michaël Trazzi (mtrazzi) · 2022-01-12T14:57:01.505Z · LW(p) · GW(p)

You have to do lots of software engineering and for 4+ OOMs you literally need to build more chip fabs to produce more chips.

I have probably missed many considerations you have mentioned elsewhere, but in terms of software engineering, how do you think the "software production rate" for scaling up large models evolved from 2020 to late 2021? I don't see why we couldn't get 4 OOM between 2020 and 2025.

If we just take the example of large LMs, we went from essentially 1-10 publicly known models in 2020, to 10-100 in 2021 (cf. China, Korea, Microsoft, DM, etc.), and I expect the number of private models to be even higher, so it makes sense to me that we could have 4 OOM more SWE in that area by 2025.

Now, for the chip fabs, I feel like one update from 2020 to 2022 has been NVIDIA & Apple doing unexpected hardware advances (A100, M1) and Nvidia stock growing massively, so I would be more optimistic about "build more fabs" than in 2020. Though I'm not an expert in hardware at all and those two advances I mentioned were maybe not that useful for scaling.

Replies from: daniel-kokotajlo↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2022-01-12T17:08:50.887Z · LW(p) · GW(p)

If I understand you correctly, you are asking something like: How many programmer-hours of effort and/or how much money was being spent specifically on scaling up large models in 2020? What about in 2025? Is the latter plausibly 4 OOMs more than the former? (You need some sort of arbitrary cutoff for what counts as large. Let's say GPT-3 sized or bigger.)

Yeah maybe, I don't know! I wish I did. It's totally plausible to me that it could be +4 OOMs in this metric by 2025. It's certainly been growing fast, and prior to GPT-3 there may not have been much of it at all.

Replies from: mtrazzi↑ comment by Michaël Trazzi (mtrazzi) · 2022-01-12T18:19:22.651Z · LW(p) · GW(p)

Yes, something like: given (programmer-hours-into-scaling(July 2020) - programmer-hours-into-scaling(Jan 2022)), and how much progress there has been on hardware for such training (I don't know the right metric for this, but probably something to do with FLOP and parallelization), the extrapolation to 2025 (either linear or exponential) would give the 4 OOM you mentioned.

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2023-12-29T13:52:54.770Z · LW(p) · GW(p)

Blast from the past!

I'm biased but I'm thinking this "33% by 2026" forecast is looking pretty good.

↑ comment by NunoSempere (Radamantis) · 2020-08-30T22:57:23.876Z · LW(p) · GW(p)

Is your P(AGI | no AGI before 2040) really that low?

Replies from: daniel-kokotajlo↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2020-08-30T23:14:06.559Z · LW(p) · GW(p)

Eyeballing the graph in light of the fact that the 50th percentile is 2034.8, it looks like P(AGI | no AGI before 2040) is about 30%. Maybe that's too low, but it feels about right to me, unfortunately. 20 years from now, science in general (or at least AI research) may have stagnated, with Moore's Law etc. ended and a plateau in new AI researchers. Or maybe a world war or other disaster will have derailed everything. Etc. Meanwhile 20 years is plenty of time for powerful new technologies to appear that accelerate AI research.

Replies from: Radamantis↑ comment by NunoSempere (Radamantis) · 2020-08-30T23:46:26.282Z · LW(p) · GW(p)

Is this your inside view, or your "all things considered" forecast? I.e., how do you update, if at all, on other people disagreeing with you?

Replies from: daniel-kokotajlo↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2020-08-31T14:48:22.373Z · LW(p) · GW(p)

This is my all things considered forecast, though I'm open to the idea that I should weight other people's opinions more than I do. It's not that different from my inside view forecast, i.e. I haven't modified it much in light of the opinions of others. I haven't tried to graph my inside view, it would probably look similar to this only with a higher peak and a bit less probability mass in the "never" category and in the "more than 20 years" region.

↑ comment by [deleted] · 2020-08-23T15:33:20.426Z · LW(p) · GW(p)

I’m somewhat confused as to how "slightly more confident" and "slightly less confident" equate to doom, which is a pretty strong claim imo.

Replies from: daniel-kokotajlo, None↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2020-08-23T18:47:33.147Z · LW(p) · GW(p)

I don't literally think we are doomed. I'm just rather pessimistic about our chances of aligning AI if it is happening in the next 5 years or so.

My confidence in prosaic AGI is 30% to Ethan's 25%, and my confidence in "more than 2100" is 15% to Ethan's... Oh wait he has 15% too, huh. I thought he had less.

↑ comment by Ethan Perez (ethan-perez) · 2020-08-23T20:26:29.096Z · LW(p) · GW(p)

I updated to 15% (from 5%) after some feedback so you're right that I had less originally :)

↑ comment by [deleted] · 2020-08-23T15:58:13.469Z · LW(p) · GW(p)

Just as well, I’m also less confident that shorter timelines are congruent with a high, irreducible probability of failure.

EDIT: If doom is instead congruent simply with the advent of Prosaic AGI, then I still disagree, even more so, actually.

Here's my answer. I'm pretty uncertain compared to some of the others!

First, I'm assuming that by AGI we mean an agent-like entity that can do the things associated with general intelligence, including things like planning towards a goal and carrying that out. If we end up in a CAIS-like world where there is some AI service or other that can do most economically useful tasks, but nothing with very broad competence, I count that as never developing AGI.

I've been impressed with GPT-3, and could imagine it or something like it scaling to produce near-human level responses to language prompts in a few years, especially with RL-based extensions [LW(p) · GW(p)].

But, following the list (below) of missing capabilities by Stuart Russell, I still think things like long-term planning would elude GPT-N, so it wouldn't be agentive general intelligence. Even though you might get those behaviours with trivial extensions of GPT-N, I don't think it's very likely [LW(p) · GW(p)].

That's why I think AGI before 2025 is very unlikely (not enough time for anything except scaling up of existing methods). This is also because I tend to expect progress to be continuous [LW · GW], though potentially quite fast, and going from current AI to AGI in less than 5 years requires a very sharp discontinuity.

AGI before 2035 or so happens if systems quite a lot like current deep learning can do the job, but which aren't just trivial extensions of them - this seems reasonable to me on the inside view - e.g. it takes us less than 15 years to take GPT-N and add layers on top of it that handle things like planning and discovering new actions. This is probably my 'inside view' answer.

I put a lot of weight on a tail peaking around 2050 because of how quickly we've advanced up this 'list of breakthroughs needed for general intelligence' -

There is this list of remaining capabilities needed for AGI in an older post I [LW · GW] wrote, with the capabilities of 'GPT-6' as I see them underlined:

Stuart Russell’s List

human-like language comprehension

cumulative learning

discovering new action sets

managing its own mental activity

For reference, I’ve included two capabilities we already have that I imagine being on a similar list in 1960

So we'd have discovering new action sets, and managing mental activity - effectively, the things that facilitate long-range complex planning, remaining.

So (very oversimplified) if around the 1980s we had efficient search algorithms, by 2015 we had image recognition (basic perception) and by 2025 we have language comprehension courtesy of GPT-8, that leaves cumulative learning (which could be obtained by advanced RL?), then discovering new action sets and managing mental activity (no idea). It feels a bit odd that we'd breeze past all the remaining milestones in one decade after it took ~6 to get to where we are now. Say progress has sped up to be twice as fast, then it's 3 more decades to go. Add to this the economic evidence from things like Modelling the Human Trajectory [LW(p) · GW(p)], which suggests a roughly similar time period of around 2050.
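The back-of-the-envelope extrapolation above can be written out explicitly. This is only a sketch of the arithmetic: the base year of 2020 (the date of this thread) and the assumption that the remaining milestones represent about as much work as the ones already passed are mine, and the function name is hypothetical.

```python
def extrapolated_arrival(decades_so_far: float, speedup: float, base_year: int = 2020) -> float:
    """Year the remaining milestones fall, if they take as much work as the
    milestones passed so far but progress is `speedup` times faster."""
    remaining_decades = decades_so_far / speedup
    return base_year + 10 * remaining_decades


# ~6 decades of progress so far, assumed to be twice as fast from here on.
arrival_year = extrapolated_arrival(decades_so_far=6, speedup=2)
print(arrival_year)
```

With no speed-up the same calculation lands around 2080, so the 2050 figure depends heavily on the assumed factor of two.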

Finally, I think it's unlikely but not impossible that we never build AGI and instead go for tool AI or CAIS, most likely because we've misunderstood the incentives such that it isn't actually economical or agentive behaviour doesn't arise easily. Then there's the small (few percent) chance of catastrophic or existential disaster which wrecks our ability to invent things. This is the one I'm most unsure about - I put 15% for both but it may well be higher.

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2020-08-26T13:34:07.388Z · LW(p) · GW(p)

This is also because I tend to expect progress to be continuous [LW · GW], though potentially quite fast, and going from current AI to AGI in less than 5 years requires a very sharp discontinuity.

I object! I think your argument from extrapolating when milestones have been crossed is good, but it's just one argument among many. There are other trends which, if extrapolated, get to AGI in less than five years. For example if you extrapolate the AI-compute trend and the GPT-scaling trends you get something like "GPT-5 will appear 3 years from now and be 3 orders of magnitude bigger and will be human-level at almost all text-based tasks." No discontinuity required.

Replies from: Amandango, SDM

↑ comment by Amandango · 2020-08-28T22:50:13.191Z · LW(p) · GW(p)

Daniel and SDM, what do you think of a bet with 78:22 odds (roughly 3.5:1) based on the differences in your distributions, i.e.: If AGI happens before 2030, SDM owes Daniel $78. If AGI doesn't happen before 2030, Daniel owes SDM $22.

This was calculated by:

- Identifying the earliest possible date with substantial disagreement (in this case, 2030)

- Finding the probability each person assigns to the date range of now to 2030:

- Finding a fair bet

- According to this post, a bet based on the arithmetic mean of 2 differing probability estimates yields the same expected value for each participant. In this case, the mean is (5%+39%)/2=22% chance of AGI before 2030, equivalent to 22:78 odds.

- $78 and $22 can be scaled appropriately for whatever size bet you're comfortable with
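The steps above can be checked with a short sketch. The probabilities (39% and 5%) and the $100 pot come from the comment; the function names are hypothetical and this is just one way to lay out the arithmetic, not the calculation Elicit itself runs.

```python
def fair_bet(p_high: float, p_low: float, pot: float = 100.0):
    """Split a pot at the arithmetic mean of two probability estimates.

    Returns (mean probability, stake of the person with the higher estimate,
    stake of the person with the lower estimate).
    """
    p_mid = (p_high + p_low) / 2
    high_stake = p_mid * pot        # paid by the higher estimator if the event does NOT happen
    low_stake = (1 - p_mid) * pot   # paid by the lower estimator if the event DOES happen
    return p_mid, high_stake, low_stake


def expected_value(p_win: float, win: float, loss: float) -> float:
    """Expected payoff under the bettor's own probability of winning."""
    return p_win * win - (1 - p_win) * loss


p_daniel, p_sdm = 0.39, 0.05  # P(AGI before 2030) according to each person
p_mid, daniel_stake, sdm_stake = fair_bet(p_daniel, p_sdm)

# Daniel wins if AGI arrives before 2030; SDM wins if it does not.
ev_daniel = expected_value(p_daniel, win=sdm_stake, loss=daniel_stake)
ev_sdm = expected_value(1 - p_sdm, win=daniel_stake, loss=sdm_stake)

print(f"mean probability: {p_mid:.2f}")
print(f"Daniel risks ${daniel_stake:.0f} to win ${sdm_stake:.0f}; EV by his own lights: ${ev_daniel:.2f}")
print(f"SDM risks ${sdm_stake:.0f} to win ${daniel_stake:.0f}; EV by his own lights: ${ev_sdm:.2f}")
```

Each side expects to gain about $17 per $100 pot according to their own probabilities, which is the sense in which the bet is fair.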

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2020-08-29T07:19:34.198Z · LW(p) · GW(p)

The main issue for me is that if I win this bet I either won't be around to collect on it, or I'll be around but have much less need for money. So for me the bet you propose is basically "61% chance I pay SDM $22 in 10 years, 39% chance I get nothing."

Jonas Vollmer helped sponsor my other bet on this matter, to get around this problem. He agreed to give me a loan for my possible winnings up front, which I would pay back (with interest) in 2030, unless I win in which case the person I bet against would pay it. Meanwhile the person I bet against would get his winnings from me in 2030, with interest, assuming I lose. It's still not great because from my perspective it amounts to a loan with a higher interest rate basically, so it would be better for me to just take out a long-term loan. (The chance of never having to pay it back is nice, but I only never have to pay it back in worlds where I won't care about money anyway.) Still though it was better than nothing so I took it.

↑ comment by Sammy Martin (SDM) · 2020-08-29T11:11:04.240Z · LW(p) · GW(p)

I'll take that bet! If I do lose, I'll be far too excited/terrified/dead to worry in any case.

↑ comment by Sammy Martin (SDM) · 2020-08-26T14:35:28.173Z · LW(p) · GW(p)

The 'progress will be continuous' argument, to apply to our near future, does depend on my other assumptions - mainly that the breakthroughs on that list are separable, so agentive behaviour and long-term planning won't drop out of a larger GPT by themselves and can't be considered part of just 'improving language model accuracy'.

We currently have partial progress on human-level language comprehension, a bit on cumulative learning, but near zero on managing mental activity for long term planning, so if we were to suddenly reach human level on long-term planning in the next 5 years, that would probably involve a discontinuity, which I don't think is very likely for the reasons given here.

If language models scale to near-human performance but the other milestones don't fall in the process, and my initial claim is right, that gives us very transformative AI but not AGI. I think that the situation would look something like this:

If GPT-N reaches par-human:

discovering new action sets

managing its own mental activity

(?) cumulative learning

human-like language comprehension

perception and object recognition

efficient search over known facts

So there would be 2 (maybe 3?) breakthroughs remaining. It seems like you think just scaling up a GPT will also resolve those other milestones, rather than just giving us human-like language comprehension. Whereas if I'm right and also those curves do extrapolate, what we would get at the end would be an excellent text generator, but it wouldn't be an agent, wouldn't be capable of long-term planning and couldn't be accurately described as having a utility function over the states of the external world, and I don't see any reason why trivial extensions of GPT would be able to do that either since those seem like problems that are just as hard as human-like language comprehension. GPT seems like it's also making some progress on cumulative learning, though it might need some RL-based help [LW(p) · GW(p)] with that, but none at all on managing mental activity for long-term planning or discovering new action sets.

As an additional argument, admittedly from authority - Stuart Russell also clearly sees human-like language comprehension as only one of several really hard and independent problems that need to be solved.

A humanlike GPT-N would certainly be a huge leap into a realm of AI we don't know much about, so we could be surprised and discover that agentive behaviour and having a utility function over states of the external world spontaneously appears in a good enough language model, but that argument has to be made, and you need that argument to hold and GPT to keep scaling for us to reach AGI in the next five years, and I don't see the conjunction of those two as that likely - it seems as though your argument rests solely on whether GPT scales or not, when there's also this other conceptual premise that's much harder to justify.

I'm also not sure if I've seen anyone make the argument that GPT-N will also give us these specific breakthroughs - but if you have reasons that GPT scaling would solve all the remaining barriers to AGI, I'd be interested to hear it. Note that this isn't the same as just pointing out how impressive the results scaling up GPT could be - Gwern's piece here, for example, seems to be arguing for a scenario more like what I've envisaged, where GPT-N ends up a key piece of some future AGI but just provides some of the background 'world model':

Models like GPT-3 suggest that large unsupervised models will be vital components of future DL systems, as they can be ‘plugged into’ systems to immediately provide understanding of the world, humans, natural language, and reasoning.

If GPT does scale, and we get human-like language comprehension in 2025, that will mean we're moving up that list much faster, and in turn suggests that there might not be a large number of additional discoveries required to make the other breakthroughs, which in turn suggests they might also occur within the Deep Learning paradigm, and relatively soon. I think that if this happens, there's a reasonable chance that when we do build an AGI a big part of its internals looks like a GPT, as gwern suggested, but by then we're already long past simply scaling up existing systems.

Alternatively, perhaps you're not including agentive behaviour in your definition of AGI - a par-human text generator for most tasks that isn't capable of discovering new action sets or managing its mental activity is, I think, a 'mere' transformative AI and not a genuine AGI.

↑ comment by Ben Pace (Benito) · 2020-08-25T08:47:46.856Z · LW(p) · GW(p)

(I can't see your distribution in your image.)

↑ comment by NunoSempere (Radamantis) · 2020-08-30T22:55:35.330Z · LW(p) · GW(p)

That small tail at the end feels really suspicious. I.e., it implies that if we haven't reached AGI by 2080, then we probably won't reach it at all. I feel like this might be an artifact of specifying a small number of bins on Elicit, though.

Here's my quick forecast, to get things going. Probably if anyone asks me questions about it I'll realise I'm embarrassed by it and change it.

It has three buckets:

10%: We get to AGI with the current paradigm relatively quickly without major bumps.

60%: We get to it eventually sometime in the next ~50 years.

30%: We manage to move into a stable state where nobody can unilaterally build an AGI, then we focus on alignment for as long as it takes before we build it.

2nd attempt

Adele Lopez is right that 30% is super optimistic. Also I accidentally put a bunch within '2080-2100', instead of 'after 2100'. And also I thought about it more. Here's my new one.

Link.

It has four buckets:

20% Current work leads directly into AI in the next 15 years.

55% There are some major bottlenecks, new insights needed, and some engineering projects comparable in size to the Manhattan Project. This is 2035 to 2070.

10% This is to fill out 2070 to 2100.

15% We manage to move to a stable state, or alternatively civilizational collapse / non-AI x-risk stops AI research. This is beyond 2100.

↑ comment by romeostevensit · 2020-08-22T09:17:53.940Z · LW(p) · GW(p)

Interesting, I would have guessed your big bathtub bottom was something like a long AI winter due to the current approach stalling and no one finding the needed breakthrough until the 2080s.

↑ comment by Adele Lopez (adele-lopez-1) · 2020-08-22T15:35:04.186Z · LW(p) · GW(p)

That 30% where we get our shit together seems wildly optimistic to me!

Replies from: Benito

↑ comment by Ben Pace (Benito) · 2020-08-23T01:23:43.905Z · LW(p) · GW(p)

You're right. Updated.

My rough take: https://elicit.ought.org/builder/oTN0tXrHQ

3 buckets, similar to Ben Pace's

- 5% chance that current techniques just get us all the way there, e.g. something like GPT-6 is basically AGI

- 10% chance AGI doesn't happen this century, e.g. humanity sort of starts taking this seriously and decides we ought to hold off + the problem being technically difficult enough that small groups can't really make AGI themselves

- 50% chance that something like current techniques and some number of new insights gets us to AGI.

If I thought about this for 5 additional hours, I can imagine assigning the following ranges to the scenarios:

- [1, 25]

- [1, 30]

- [20, 80]

Roughly my feelings: https://elicit.ought.org/builder/trBX3uNCd

Reasoning: I think lots of people have updated too much on GPT-3, and that the current ML paradigms are still missing key insights into general intelligence. But I also think enough research is going into the field that it won't take too long to reach those insights.

To the extent that it differs from others' predictions, probably the most important factor is that I think even if AGI is hard, there are a number of ways in which human civilization could become capable of doing almost arbitrarily hard things, like through human intelligence enhancement or sufficiently transformative narrow AI. I think that means the question is less about how hard AGI is and more about general futurism than most people think. It's moderately hard for me to imagine how business as usual could go on for the rest of the century, but who knows.

My snapshot: https://elicit.ought.org/builder/xPoVZh7Xq

Idk what we mean by "AGI", so I'm predicting when transformative AI will be developed instead. This is still a pretty fuzzy target: at what point do we say it's "transformative"? Does it have to be fully deployed and we already see the huge economic impact? Or is it just the point at which the model training is complete? I'm erring more on the side of "when the model training is complete", but also there may be lots of models contributing to TAI, in which case it's not clear which particular model we mean. Nonetheless, this feels a lot more concrete and specific than AGI.

Methodology: use a quantitative model, and then slightly change the prediction to account for important unmodeled factors. I expect to write about this model in a future newsletter.

↑ comment by Rohin Shah (rohinmshah) · 2022-06-22T16:42:56.864Z · LW(p) · GW(p)

Some updates:

- This should really be thought of as "when we see the transformative economic impact", I don't like the "when model training is complete" framing (for basically the reason mentioned above, that there may be lots of models).

- I've updated towards shorter timelines; my median is roughly 2045 with a similar shape of the distribution as above.

- One argument for shorter timelines than that in bio anchors is "bio anchors doesn't take into account how non-transformative AI would accelerate AI progress".

- Another relevant argument is "the huge difference between training time compute and inference time compute suggests that we'll find ways to get use out of lots of inferences with dumb models rather than a few inferences with smart models; this means we don't need models as smart as the human brain, thus lessening the needed compute at training time".

- I also feel more strongly about short horizon models probably being sufficient (whereas previously I mostly had a mixture between short and medium horizon models).

- Conversely, reflecting on regulation and robustness made me think I was underweighting those concerns, and lengthened my timelines.

↑ comment by Rohin Shah (rohinmshah) · 2023-03-26T13:25:48.687Z · LW(p) · GW(p)

Interestingly, I apparently had a median around 2040 back in 2019, so my median is still later than it used to be prior to reading the bio anchors report.

If AGI is taken to mean, the first year that there is radical economic, technological, or scientific progress, then these are my AGI timelines.

My percentiles

- 5th: 2029-09-09

- 25th: 2049-01-17

- 50th: 2079-01-24

- 75th: above 2100-01-01

- 95th: above 2100-01-01

I have a somewhat lower probability for near-term AGI than many people here do. I model my biggest disagreement as being about how much work is required to move from high-cost impressive demos to real economic performance. I also have an intuition that it is really hard to automate everything and that progress will be bottlenecked by the tasks that are essential but very hard to automate.

↑ comment by Matthew Barnett (matthew-barnett) · 2022-07-15T23:15:16.565Z · LW(p) · GW(p)

Some updates:

- I now have an operationalization of AGI I feel happy about, and I think it's roughly just as difficult as creating transformative AI (though perhaps still slightly easier).

- I have less probability now on very long timelines (>80 years). Previously I had 39% credence on AGI arriving after 2100, but I now only have about 25% credence.

- I also have a bit more credence on short timelines, mostly because I think the potential for massive investment is real, and it doesn't seem implausible that we could spend >1% of our GDP on AI development at some point in the near future.

- I still have pretty much the same reasons for having longer timelines than other people here, though my thinking has become more refined. Here are my biggest reasons, summarized: delays from regulation, difficulty of making AI reliable [LW · GW], the very high bar of automating general physical labor and management, and the fact that previous impressive-seeming AI milestones ended up mattering much less in hindsight than we thought at the time.

- Taking these considerations together, my new median is around 2060. My mode is still probably in the 2040s, perhaps 2042.

- I want to note that I'm quite impressed with recent AI demos, and I think that we are making quite rapid progress at the moment in the field. My longish timelines are mostly a result of the possibility of delays, which I think are non-trivial.

2022-03-19: someone talked to me for many hours about the scaling hypothesis, and I've now updated to shorter timelines; I haven't thought about quantifying the update yet, but I can see a path to AGI by 2040 now

(as usual, conditional on understanding the question and the question making sense)

where human-level AGI means an AI better at any task than any human living in 2020, where biologically-improved and machine-augmented humans don't count as AIs for the purpose of this question, but uploaded humans do

said AGI needs to be created by our civilisation

the probability density is the sum of my credences for different frequency of future worlds with AGIs

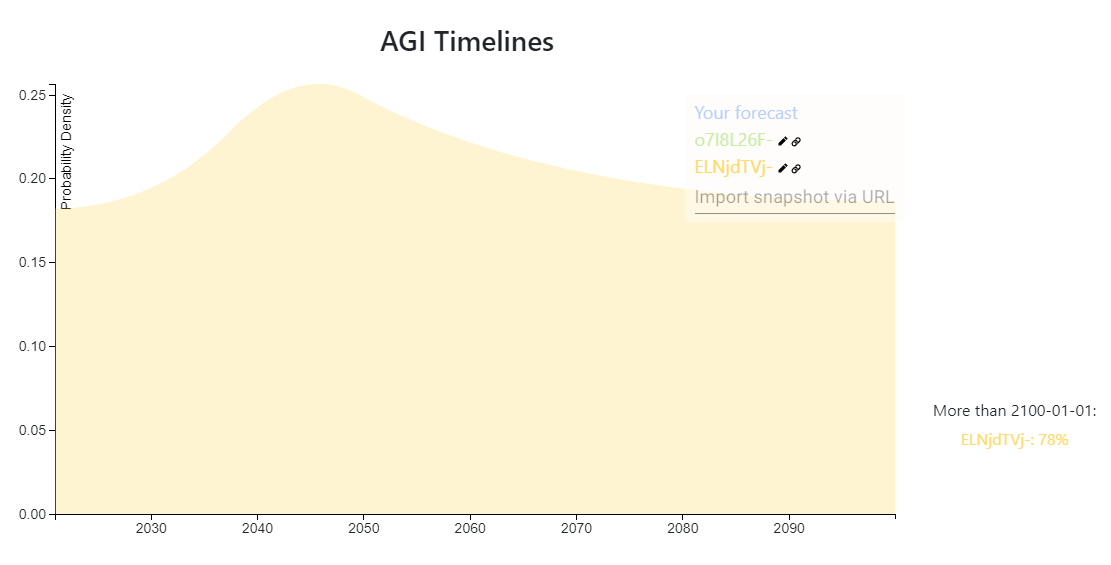

https://elicit.ought.org/builder/ELNjdTVj-

1% it already happened

52% it won't happen (most likely because we'll go extinct or stop being simulated)

26% after 2100

EtA: moved a 2% from >2100 to <2050

Someone asked me:

Any reason why you have such low probability on AGI within like 80 years

partly just wide priors and I didn't update much, partly Hansonian view, partly Drexler's view, partly seems like a hard problem

Note to self; potentially to read:

↑ comment by Matthew Barnett (matthew-barnett) · 2020-09-04T00:53:07.514Z · LW(p) · GW(p)

- Your percentiles:

- 5th: 2040-10-01

- 25th: above 2100-01-01

- 50th: above 2100-01-01

- 75th: above 2100-01-01

- 95th: above 2100-01-01

XD

↑ comment by Mati_Roy (MathieuRoy) · 2023-03-26T21:28:10.935Z · LW(p) · GW(p)

Update: 18% <2033; 18% 2033-2043; 18% 2043-2053; 18% 2050-2070; 28% 2070+ or won't happen

see more details on my shortform: https://www.lesswrong.com/posts/DLepxRkACCu8SGqmT/mati_roy-s-shortform?commentId=KjxnsyB7EqdZAuLri [LW(p) · GW(p)]

↑ comment by Mati_Roy (MathieuRoy) · 2021-06-14T21:54:39.411Z · LW(p) · GW(p)

Without consulting my old prediction here, I answered someone asking me:

What is your probability mass for the date with > 50% chance of agi?

with:

I used to use the AGI definition "better and cheaper than humans at all economic tasks", but now I think even if we're dumber, we might still be better at some economic tasks simply because we know human values more. Maybe the definition could be "better and cheaper at any well defined tasks". In that case, I'd say maybe 2080, taking into account some probability of economic stagnation and some probability that sub-AGI AIs cause an existential catastrophe (and so we don't develop AGI)

↑ comment by Mati_Roy (MathieuRoy) · 2020-09-28T16:09:49.789Z · LW(p) · GW(p)

will start tracking some of the things I read on this here:

- 2020-09-28: finished the summary of Conversation with Robin Hanson

note to self -- read:

↑ comment by Mati_Roy (MathieuRoy) · 2020-09-15T06:39:33.765Z · LW(p) · GW(p)

topic: AI timelines

probably nothing here is new, but it's some insights I had

summary: alignment will likely become the bottleneck; we'll have human-capable AIs but they won't do every task because we won't know how to specify them

epistemic status: stated more confidently than I am, but seems like a good consideration to add to my portfolio of plausible models of AI development

when I was asking my inner sim "when will we have an AI better than a human at any task", it was returning 21% before 2100 (52% we won't) (see: https://www.lesswrong.com/posts/hQysqfSEzciRazx8k/forecasting-thread-ai-timelines?commentId=AhA3JsvwaZ7h6JbJj), which is a low probability among AI researchers and longtermist forecasters.

but then I asked my inner sim "when will we have an AI better than a human at any game". the timeline for this seemed much shorter.

but a game is just a task that has been operationalized.

so what my inner sim was saying is not that human-level capable AI was far away, but that human-level capable AND aligned AI was far away. I was imagining AIs wouldn't clean up my place anytime soon not because it's hard to do (well, not for an AI XD), but because it's hard to specify what we mean by "don't cause any harm in the process".

in other words, I think alignment is likely to be the bottleneck

the main problem won't be to create an AI that can solve a problem, it will be to operationalize the problem in a way that properly captures what we care about. it won't be about winning games, but creating them.

I should have known; I was well familiar with the orthogonality thesis for a long time

also see David's comment about alignment vs capabilities: https://www.lesswrong.com/posts/DmLg3Q4ZywCj6jHBL/capybaralet-s-shortform?commentId=rdGAv6S6W3SbK6eta

I discussed the above with Matthew Barnett and David Krueger

the Turing Test might be hard to pass because even if you're as smart as a human, if you don't already know what humans want, it seems like it could be hard to learn (as well as a human) for a human-level AI (?) (side note: learning what humans want =/= wanting what humans want; that's a classic confusion) so maybe a better test for human-level intelligence would be: when an AI can beat a human at any game (where a game is a well operationalized task, and doesn't include figuring out what humans want)

(2021-10-10 update: I'm not at all confident about the above paragraph, and it's not central to this thesis. The Turing Test can be a well-defined game, and we could have AIs that pass it while not having AIs doing other tasks humans can do simply because we haven't been able to operationalize those other tasks)

I want to update my AI timelines. I'm now (re)reading some stuff on https://aiimpacts.org/ (I think they have a lot of great writings!) Just read this which was kind of related ^^

> Hanson thinks we shouldn’t believe it when AI researchers give 50-year timescales:

> Rephrasing the question in different ways, e.g. “When will most people lose their jobs?” causes people to give different timescales.

> People consistently give overconfident estimates when they’re estimating things that are abstract and far away.

(https://aiimpacts.org/conversation-with-robin-hanson/)

I feel like for me it was the other way around. Initially I was just thinking more abstractly about "AIs better than humans at everything", but then thinking in terms of games seems like it's somewhat more concrete.

x-post: https://www.facebook.com/mati.roy.09/posts/10158870283394579

↑ comment by Mati_Roy (MathieuRoy) · 2020-10-02T21:32:50.271Z · LW(p) · GW(p)

The key observation is, imitation learning algorithms[1] might produce close-to-human-level intelligence even if they are missing important ingredients of general intelligence that humans have.

See Vanessa Kosoy's post [LW(p) · GW(p)]

↑ comment by Mati_Roy (MathieuRoy) · 2020-10-02T21:23:59.612Z · LW(p) · GW(p)

Related predictions:

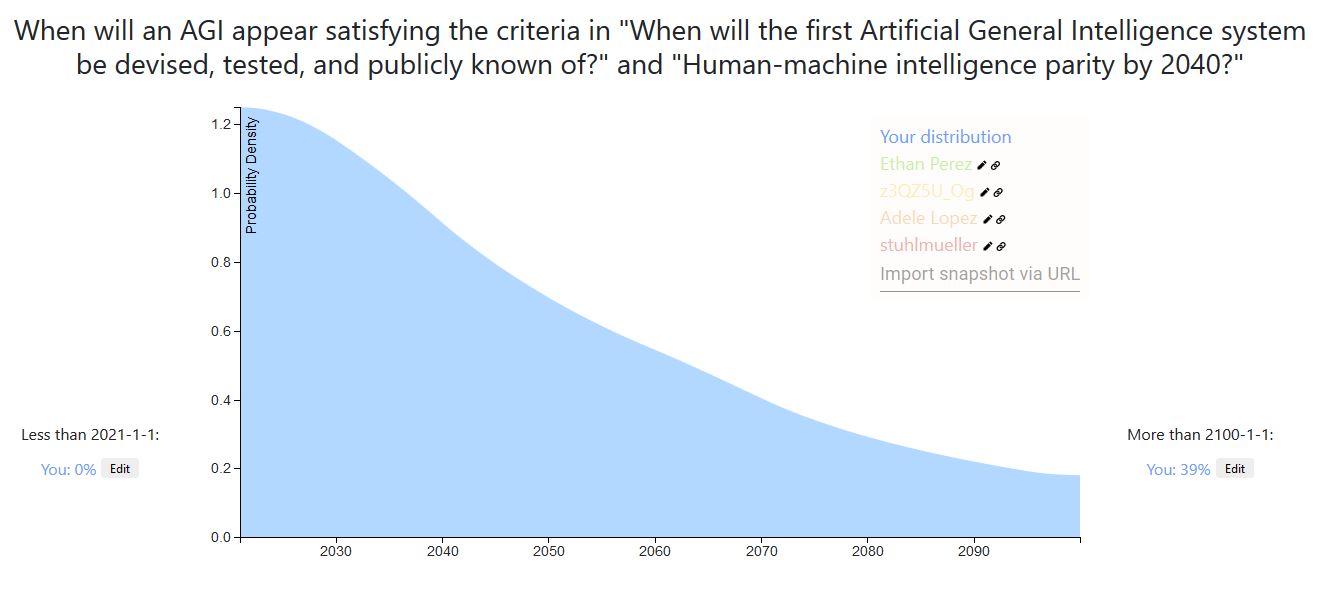

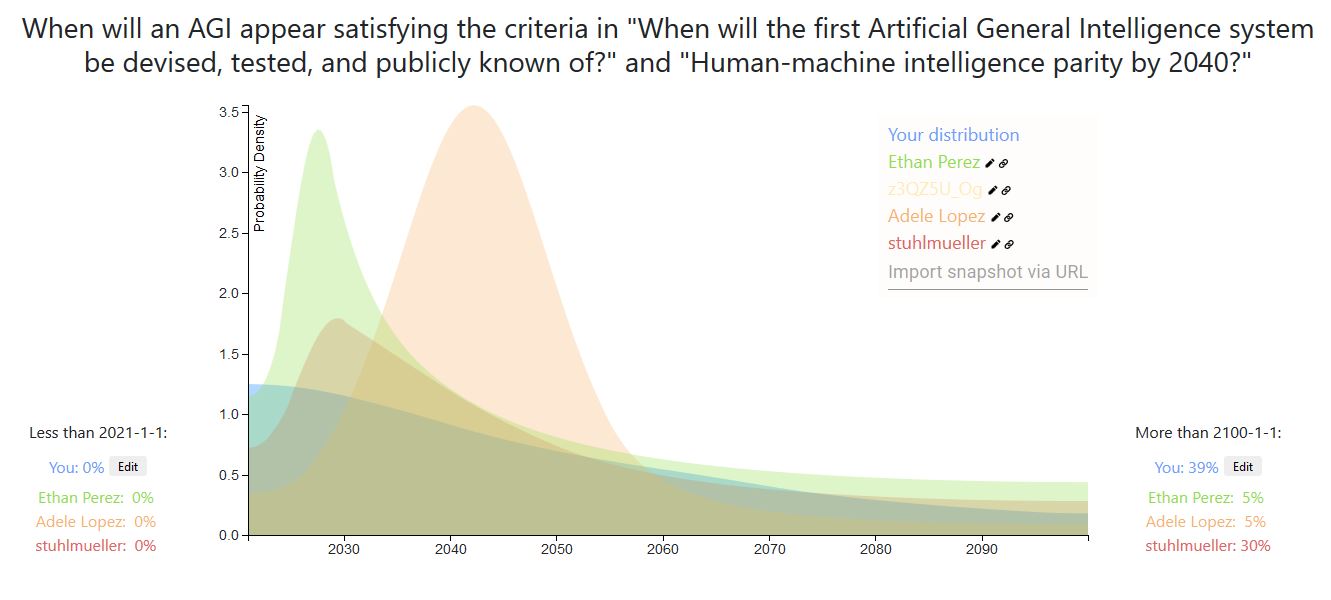

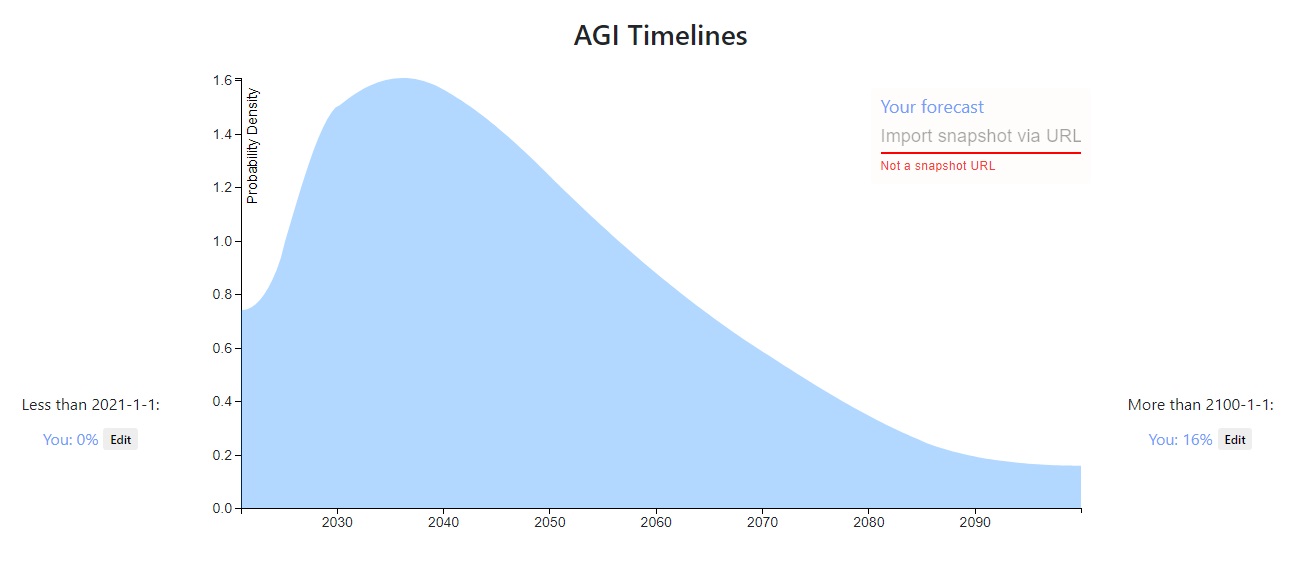

Here is my own answer.

- It takes as a starting point datscilly's own prediction, i.e., the result of applying Laplace's rule from the Dartmouth conference. This seems like the most straightforward historical base rate / model to use, and on a meta-level I trust datscilly and I've worked with him before.

- I then subtract some probability from the beginning and move it towards the end because I think it's unlikely we'll get human parity in the next 5 years. In particular, even Daniel Kokotajlo, the most bullish among the other predictors, puts his peak somewhere around 2025.

- I then apply some smoothing.
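For readers unfamiliar with the base rate mentioned in the first step, here is a minimal sketch of Laplace's rule of succession applied to AGI. It assumes one trial per year, counting from the 1956 Dartmouth conference up to 2020 with no success so far; those counting choices and the function name are my assumptions, and datscilly's actual model may differ in its details.

```python
def laplace_cdf(target_year: int, start_year: int = 1956, now_year: int = 2020) -> float:
    """P(first success by target_year) under Laplace's rule of succession.

    After n failed yearly trials, the chance of failing the next m trials is
    (n + 1) / (n + m + 1), so the chance of at least one success is the complement.
    """
    n = now_year - start_year    # years without AGI so far
    m = target_year - now_year   # years into the future
    if m <= 0:
        return 0.0
    return 1 - (n + 1) / (n + m + 1)


for year in (2030, 2050, 2085, 2100):
    print(year, round(laplace_cdf(year), 3))
```

Under these assumptions the distribution has a long tail: the median lands in the 2080s, and a large share of the probability sits beyond 2100.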

My resulting distribution looks similar to the current aggregate (and this I noticed after building it)

Datscilly's prediction:

My prediction:

The previous aggregate:

Some things I don't like about the other predictions:

- Not long enough tails. There have been AI winters before; there could be AI winters again. Shit happens.

- Very spiky maximums. I get that specific models can provide sharp predictions, but the question seems hard enough that I'd expect there to be a large amount of model error. I'd also expect predictions which take into account multiple models to do better.

- Not updating on other predictions. Some of the other forecasters seem to have one big idea, rather than multiple uncertainties.

Things that would change my mind:

At the five minute level:

- Getting more information about Daniel Kokotajlo's models. On a meta-level, learning that he is a superforecaster.

- Some specific definitions of "human level".

At the longer-discussion level:

- Object level arguments about AI architectures

- Some information about whether experts believe that current AI methods can lead to AGI.

- Some object level arguments about Moore's law. I.e., by which year does Moore's law predict we'll have much more computing power than the higher estimates for the human brain?

I'm also uncertain about what probability to assign to AGI after 2100.

I might revisit this as time goes on.

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2020-09-16T06:10:12.303Z · LW(p) · GW(p)

I'm not a superforecaster. I'd be happy to talk more about my models if you like. You may be interested to know that my prediction was based on aggregating various different models, and also that I did try to account for things usually taking longer than expected. I'm trying to arrange a conversation with Ben Pace, perhaps you could join. I could also send you a powerpoint I made, or we could video chat.

I can answer your question #3. There's been some good work on the question recently by people at OpenPhil and AI Impacts.

Prediction: https://elicit.ought.org/builder/ZfFUcNGkL

I (a non-expert) heavily speculate the following scenario for an AGI based on Transformer architectures:

The scaling hypothesis is likely correct (and is the majority of the probability density for the estimate), and maybe only two major architectural breakthroughs are needed before AGI. The first is a functioning memory system capable of handling short and long term memories with lifelong learning without the problems of fine tuning.

The second architectural breakthrough needed would be allowing the system to function in an 'always on' kind of fashion. For example, current transformers get an input, then spit out an output and are done. Whereas a human can receive an input, output a response, but then keep running, seeing the result of their own output. I think an 'always on' functionality will allow for multi-step reasoning, and functional 'pruning' as opposed to 'babble'. As an example of what I mean, think of a human carefully writing a paragraph and iterating and fixing/rewriting past work as they go, rather than just the output being their stream of consciousness. Additionally it could allow a system to not have to store all information within its own mind, but rather use tools to store information externally. Getting an output that has been vetted for release rather than a thought stream seems very important for high quality.

Additionally I think functionality such as agent behavior and self awareness only require embedding an agent in a training environment simulating a virtual world and its interactions (See https://www.lesswrong.com/posts/p7x32SEt43ZMC9r7r/embedded-agents [LW · GW] ). I think this may be the most difficult to implement, and there are uncertainties. For example, does all training need to take place within this environment? Or is only an additional training run necessary, after it has been trained like current systems?

I think such a system utilizing all the above may be able to introspectively analyse its own knowledge/model gaps and actively research to correct them. I think that could cause a discontinuous jump in capabilities.

I think that none of those capabilities/breakthroughs seem out of reach this decade, and that scaling will continue to quadrillions of parameters by the end of the decade (in addition to continued efficiency improvements).

I hope an effective control mechanism can be found by then. (Assuming any of this is correct; 5 months ago I would have laughed at this.)

Holden Karnofsky wrote on Cold Takes:

I estimate that there is more than a 10% chance we'll see transformative AI within 15 years (by 2036); a ~50% chance we'll see it within 40 years (by 2060); and a ~2/3 chance we'll see it this century (by 2100).

I copied these bins to create Holden's approximate forecasted distribution (note that Holden's forecast is for Transformative AI rather than human-level AGI):

Compared to the upvote-weighted mixture in the OP, it puts more probability on longer timelines, with a median of 2060 vs. 2047 and 1/3 vs. 1/5 on after 2100. Holden gives a 10% chance by 2036 while the mixture gives approximately 30%. Snapshot is here.
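Turning cumulative statements like Holden's into Elicit-style bins is just a matter of differencing. The sketch below treats "more than 10%" as exactly 10% and "~2/3" as exactly 2/3, and starts the first bin at 2021 (the minimum of the question range); those simplifications and the helper name are assumptions for illustration, not a record of the exact bins entered in the snapshot.

```python
def cumulative_to_bins(cumulative, start_year=2021):
    """Convert [(year, P(event by year)), ...] into (min, max, probability) bins.

    The final bin has max=None and holds the mass after the last year,
    which here also covers 'never'.
    """
    bins = []
    prev_year, prev_p = start_year, 0.0
    for year, p in cumulative:
        bins.append((prev_year, year, p - prev_p))
        prev_year, prev_p = year, p
    bins.append((prev_year, None, 1 - prev_p))
    return bins


holden = [(2036, 0.10), (2060, 0.50), (2100, 2 / 3)]
bins = cumulative_to_bins(holden)
for lo, hi, p in bins:
    label = f"{lo}-{hi}" if hi is not None else f"after {lo}"
    print(f"{label}: {p:.1%}")
```

This gives roughly 10% before 2036, 40% for 2036 to 2060, 17% for 2060 to 2100, and 33% after 2100, matching the "1/3 on after 2100" figure quoted above.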

Here is a link to my forecast

And here are the rough justifications for this distribution:

I don't have much else to add beyond what others have posted, though it's in part influenced by an AIRCS event I attended in the past. Though I do remember being laughed at for suggesting GPT-2 represented a very big advance toward AGI.

I've also never really understood the resistance to why current models of AI are incapable of AGI. Sure, we don't have AGI with current models, but how do we know it isn't a question of scale? Our brains are quite efficient, but the total energy consumption is comparable to that of a light bulb. I find it very hard to believe that a server farm in an Amazon, Microsoft, or Google Datacenter would be incapable of running the final AGI algorithm. And for all the talk of the complexity in the brain, each neuron is agonizingly slow (200-300Hz).

That's also to say nothing of the fact that the vast majority of brain matter is devoted to sensory processing. Advances in autonomous vehicles are already proving that isn't an insurmountable challenge.

Current AI models are performing very well at pattern recognition. Isn't that most of what our brains do anyway?

Self attended recurrent transformer networks with some improvements to memory (attention context) access and recall to me look very similar to our own brain. What am I missing?

↑ comment by TurnTrout · 2020-08-26T13:46:44.549Z · LW(p) · GW(p)

I've also never really understood the resistance to why current models of AI are incapable of AGI. Sure, we don't have AGI with current models, but how do we know it isn't a question of scale? Our brains are quite efficient, but the total energy consumption is comparable to that of a light bulb. I find it very hard to believe that a server farm in an Amazon, Microsoft, or Google Datacenter would be incapable of running the final AGI algorithm. And for all the talk of the complexity in the brain, each neuron is agonizingly slow (200-300Hz).

First, you ask why it isn't a question of scale. But then you seem to wonder why we need any more scaling? This seems to mix up two questions: can current hardware support AGI for some learning paradigm, and can it support AGI for the deep learning paradigm?

Replies from: ChosunOne↑ comment by ChosunOne · 2020-08-26T15:33:54.444Z · LW(p) · GW(p)

My point here was that even if the deep learning paradigm is not anywhere close to as efficient as the brain, it has a reasonable chance of getting to AGI anyway, since the brain does not use all that much energy. The biggest GPT-3 models can run on a fraction of what a datacenter can supply, hence the original question: how do we know AGI isn't just a question of scale in the current deep learning paradigm?

My prediction. Some comments

- Your percentiles:

- 5th: 2023-05-16

- 25th: 2033-03-31

- 50th: 2046-04-13

- 75th: 2075-11-27

- 95th: above 2100-01-01

- You think 2034-03-27 is the most likely point

- You think it's 6.9X as likely as 2099-05-29

Here is a quick approximation of mine. I want more powerful Elicit features to make it easier to translate from sub-problem beliefs to a final probability distribution. Without taking the time to write code, I had to intuit some translations. https://elicit.ought.org/builder/CeAJFku1S

My estimate is very different from what others suggest and this stems from my background and my definition of AGI. I see AGI as human-level intelligence. If we present a problem to an AGI system, we would expect that it does not make any "silly" mistakes, but that it makes reasonable responses like a competent human would do.

My background: I work in deep learning on very large language models. I worked on the parallelization of deep learning in the past. I also have in-depth knowledge of GPUs and accelerators in general. I developed the fastest algorithm for top-k sparse-sparse matrix multiplication on a GPU.

I wrote about this 5 years ago, and my opinion has not changed since then: I believe that AGI will be physically impossible with classical computers.

It is very clear that intelligence is all about compute capabilities. The intelligence of mammals, including the intelligence of humans, is currently limited by energy intake. I believe that the same is true for AI algorithms, and these patterns seem to be very clear if you look at the trajectory of compute requirements over the past years.

The main issues are these: You cannot build structures smaller than atoms; you can only dissipate a certain amount of heat per unit area; the smaller the structures are that you print with lasers, the higher the probability of artifacts; light can only go a certain distance per second; the speed of SRAM scales sub-linearly with its size. These are all hard physical boundaries that we cannot alter. Yet all these physical boundaries will be hit within a couple of years, and we will fall very, very far short of human processing capabilities, and our models will not improve much further. Two orders of magnitude of additional capability are realistic, but anything beyond that is just wishful thinking.

You may say it is just a matter of scale. Our hardware will not be as fast as brains but we just build more of them. Well, the speed of light is fixed and networking scales abysmally. With that, you have a maximum cluster size that you cannot exceed without losing processing power. The thing is, even in current neural networks, doubling the number of GPUs sometimes doubles training time. We will design neural networks that scale better, such as mixtures of experts, but this will not be nearly enough (this will give us another 1-2 orders of magnitude).

We will switch to wafer-scale compute in the next years and this will yield a huge boost in performance, but even wafer-scale chips will not yield the long-term performance that we need to get anywhere near AGI.

The only real possibility that I see is quantum computing. It is currently not clear what quantum AI algorithms would look like, but we seem to be able to scale quantum computers doubly exponentially over time (aka Neven's law). The real question is whether quantum error correction also scales exponentially (the current data suggests this) or whether it can scale sub-exponentially. If it scales exponentially, quantum computers will be interesting for chemistry, but they would be useless for AI. If it scales sub-exponentially, we will have quantum computers that are faster than classical computers in 2035. Due to doubly exponential scaling, the quantum computers of 2037 would be unbelievably more powerful than all classical computers before them. We still might not reach AGI, because we cannot feed such a computer data quickly enough to keep up with its processing power, but I expect we could figure something out to feed a single powerful quantum computer. Currently, the input requirements for pretraining large deep learning models are minimal for natural language but high for images and videos. As such, we might still not have AGI with quantum computers, but we would have computers with excellent natural language processing capabilities.
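To make the gap between ordinary exponential and doubly exponential growth concrete, here is a small illustrative sketch. It is not a model of any real hardware: the step size is left unspecified, both curves are assumed to use base 2, and the function names are hypothetical.

```python
def exponential(steps):
    """Capability after `steps` doublings: 2**steps."""
    return 2 ** steps


def doubly_exponential(steps):
    """Capability when the exponent itself doubles each step: 2**(2**steps)."""
    return 2 ** (2 ** steps)


# Compare the two growth curves over a handful of abstract time steps.
for step in range(6):
    print(step, exponential(step), doubly_exponential(step))
```

After five steps the exponential curve has reached 32, while the doubly exponential one has reached 2**32 = 4294967296, which is the kind of separation the argument about 2035 versus 2037 relies on.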

If quantum computers do not work, I would predict that we will never reach AGI; hence the probability is zero after the peak in 2035-2037.

If the definition of AGI is relaxed so that models are allowed to make "stupid mistakes" and the requirement is just that they solve problems better than humans on average, I would be pretty much in line with Ethan's predictions (Ethan and I chatted about this before).

Edit: A friend told me that it is better to put my probability after 2100 if I believe it is not happening after the peak in 2037. Updated the graph.

↑ comment by Ben Pace (Benito) · 2020-08-25T21:42:49.011Z · LW(p) · GW(p)

I so want to see a bet come out of this.

↑ comment by Ethan Perez (ethan-perez) · 2020-08-25T22:04:37.418Z · LW(p) · GW(p)

Love this take. Tim, did you mean to put some probability on the >2100 bin? I think that would include the "no AGI ever" prediction, and I'm curious to know exactly how much probability you assign to that scenario.

Replies from: tim_dettmers↑ comment by tim_dettmers · 2020-08-26T00:03:17.695Z · LW(p) · GW(p)

Thanks, Ethan! I updated the post with that info!

↑ comment by Raemon · 2020-08-25T21:23:07.769Z · LW(p) · GW(p)

Do you think the human brain uses quantum computing? (It's not obvious that human brain structure is any easier to replicate than quantum computing, and I haven't thought about this at all, but it seems like an existence proof of a suitable "hardware", and I'd weakly guess it doesn't use QC)

Replies from: tim_dettmers↑ comment by tim_dettmers · 2020-08-26T00:02:56.943Z · LW(p) · GW(p)

The brain does not use quantum computing as far as I know, at least not in a sense that would be highly beneficial computationally. The brain achieves a compute density many orders of magnitude higher than that of any classical computer we will ever be able to design, at about 20 W of power; that is the primary advantage of the brain. We cannot build such a structure with silicon, because it would overheat. Even if we were able to cool it (room-temperature superconductors), there is no way to manufacture it. 3D memory is just a couple of extra layers, but the yield is so bad that it is almost not economically viable to produce, because the manufacturing process is so unreliable. It is unlikely that we will be able to manufacture it more reliably in the future, because our tools are already very close to physical limits.

You might say, "Why not just build a brain biologically?" Well, for that we would need to understand the brain at the protein level and how to coordinate proteins from scratch. Given our tools, it is unlikely that this will ever happen. You cannot measure a collection of tiny things that are closely bundled together when you need large instruments to measure the tiny things. There is just not enough space, geometrically, to do that. And there are more problems after you understand how the brain works at the protein level. I think efficient biological computation is just not a physically plausible concept.

With those two eliminated, there is just not a way to replicate a human brain. There are different ways to achieve super-human compute capabilities by exploiting some physical dimensions which are limited for brains, but such an intelligence would be very different from human intelligence. Maybe superior in many aspects, but not AGI in the general sense.

19 comments

Comments sorted by top scores.

comment by Raemon · 2020-08-26T02:28:47.559Z · LW(p) · GW(p)

Curated.

I think this was a quite interesting experiment in LW Post format. Getting to see everyone's probability-distributions in visual graph format felt very different from looking at a bunch of numbers in a list, or seeing them averaged together. I especially liked some of the weirder shapes of some people's curves.

This is a bit of an offbeat curation, but I think it's good to periodically highlight experimental formats like this.

comment by A Ray (alex-ray) · 2020-08-25T15:47:06.990Z · LW(p) · GW(p)

It might be useful for every person responding to attempt to define precisely what they mean by human level AGI.

comment by Matthew Barnett (matthew-barnett) · 2020-08-27T03:57:18.595Z · LW(p) · GW(p)

It's unclear to me what "human-level AGI" is, and it's also unclear to me why the prediction is about the moment an AGI is turned on somewhere. From my perspective, the important thing about artificial intelligence is that it will accelerate technological, economic, and scientific progress. So, the more important thing to predict is something like, "When will real economic growth rates reach at least 30% worldwide?"

It's worth comparing the vagueness in this question with the specificity in this one on Metaculus. From the virtues of rationality,

The tenth virtue is precision. One comes and says: The quantity is between 1 and 100. Another says: the quantity is between 40 and 50. If the quantity is 42 they are both correct, but the second prediction was more useful and exposed itself to a stricter test. What is true of one apple may not be true of another apple; thus more can be said about a single apple than about all the apples in the world. The narrowest statements slice deepest, the cutting edge of the blade.

Replies from: jungofthewon

↑ comment by jungofthewon · 2020-08-28T14:02:52.469Z · LW(p) · GW(p)

I generally agree with this but think the alternative goal of "make forecasting easier" is just as good, might actually make aggregate forecasts more accurate in the long run, and may require things that seemingly undermine the virtue of precision.

More concretely, if an underdefined question makes it easier for people to share whatever beliefs they already have, then facilitates rich conversation among those people, that's better than if a highly specific question prevents people from making a prediction at all. At least as much, if not more, of the value of making public, visual predictions like this comes from the ensuing conversation and feedback than from the precision of the forecasts themselves.

Additionally, a lot of assumptions get made at the time the question is defined more precisely, which could prematurely limit the space of conversation or ideas. There are good reasons why different people define AGI the way they do, or the moment of "AGI arrival" the way they do, that might not come up if the question askers had taken a point of view.

comment by Ben Pace (Benito) · 2020-08-22T02:46:29.494Z · LW(p) · GW(p)

Comment here if you have technical issues with the Elicit tool, with putting images in your comments, or with anything else.

Replies from: josh-jacobson↑ comment by Josh Jacobson (josh-jacobson) · 2020-09-24T21:20:40.759Z · LW(p) · GW(p)

I'm colorblind, and I'm unable to distinguish (without using an eyedropper tool) between many of the colors used here, including in the top-level post's graph. Ideally, this tool would be updated to use a colorblind-friendly palette by default.

Replies from: Benito, Amandango↑ comment by Ben Pace (Benito) · 2020-09-24T21:28:04.866Z · LW(p) · GW(p)

Yeah. Hover-overs that tell you whose distribution it is would help here.

comment by Teerth Aloke · 2020-08-22T14:43:00.149Z · LW(p) · GW(p)

Apparently, GPT-3 has greatly influenced the forecasts. I wonder if this is true?

Replies from: daniel-kokotajlo↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2020-08-23T09:56:39.574Z · LW(p) · GW(p)

It significantly influenced mine, though the majority of that influence wasn't the evidence it provided but rather the motivation it gave me to think more carefully and deeply about timelines.

Replies from: Teerth Aloke↑ comment by Teerth Aloke · 2020-08-24T12:08:19.529Z · LW(p) · GW(p)

But your timeline should be highly significant for your actions. How has your timeline update affected your planned decisions?

Replies from: daniel-kokotajlo↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2020-08-24T12:20:32.414Z · LW(p) · GW(p)

I've shifted my investment portfolio, taken on a higher-risk and more short-term-focused career path, become less concerned about paying off debt, and done more thinking and talking about timelines.

That said, I think it's actually less significant for our actions than most people seem to think. The difference between 30% chance of TAI in the next 6 years, and 5%, may seem like a lot, but it's probably a small contribution to the EV difference between your options compared to the other relevant differences such as personal fit, neglectedness, tractability, etc.

↑ comment by Teerth Aloke · 2020-08-27T07:45:53.046Z · LW(p) · GW(p)