chinchilla's wild implications

post by nostalgebraist · 2022-07-31T01:18:28.254Z · LW · GW · 128 comments

Contents

- 1. the scaling law
  - plugging in real models
- 2. are we running out of data?
  - web scrapes
    - MassiveWeb
    - The GLaM/PaLM web corpus
  - domain-specific corpora
    - Code
    - Arxiv
    - Books
  - "all the data we have"
- what is compute? (on a further barrier to data scaling)
- appendix: to infinity

(Colab notebook here.)

This post is about language model scaling laws, specifically the laws derived in the DeepMind paper that introduced Chinchilla.[1]

The paper came out a few months ago, and has been discussed a lot, but some of its implications deserve more explicit notice in my opinion. In particular:

- Data, not size, is the currently active constraint on language modeling performance. Current returns to additional data are immense, and current returns to additional model size are minuscule; indeed, most recent landmark models are wastefully big.

- If we can leverage enough data, there is no reason to train ~500B param models, much less 1T or larger models.

- If we have to train models at these large sizes, it will mean we have encountered a barrier to exploitation of data scaling, which would be a great loss relative to what would otherwise be possible.

- The literature is extremely unclear on how much text data is actually available for training. We may be "running out" of general-domain data, but the literature is too vague to know one way or the other.

- The entire available quantity of data in highly specialized domains like code is woefully tiny, compared to the gains that would be possible if much more such data were available.

Some things to note at the outset:

- This post assumes you have some familiarity with LM scaling laws.

- As in the paper[2], I'll assume here that models never see repeated data in training.

- This simplifies things: we don't need to draw a distinction between data size and step count, or between train loss and test loss.

- I focus on the parametric scaling law from the paper's "Approach 3," because it provides useful intuition.

- Keep in mind, though, that Approach 3 yielded somewhat different results from Approaches 1 and 2 (which agreed with one another, and were used to determine Chinchilla's model and data size).

- So you should take the exact numbers below with a grain of salt. They may be off by a few orders of magnitude (but not many orders of magnitude).

1. the scaling law

The paper fits a scaling law for LM loss $L$, as a function of model size $N$ and data size $D$.

Its functional form is very simple, and easier to reason about than the law from the earlier Kaplan et al papers. It is a sum of three terms:

$$L(N, D) = \frac{A}{N^{\alpha}} + \frac{B}{D^{\beta}} + E$$

The first term only depends on the model size. The second term only depends on the data size. And the third term is a constant.

You can think about this as follows.

An "infinitely big" model, trained on "infinite data," would achieve loss $E$ (the constant term). To get the loss for a real model, you add on two "corrections":

- one for the fact that the model only has $N$ parameters, not infinitely many

- one for the fact that the model only sees $D$ training examples, not infinitely many

Here's the same thing, with the constants fitted to DeepMind's experiments on the MassiveText dataset[3].

$$L(N, D) = \frac{406.4}{N^{0.34}} + \frac{410.7}{D^{0.28}} + 1.69$$

plugging in real models

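Gopher is a model with 280B parameters, trained on 300B tokens of data. What happens if we plug in those numbers?

$$L(280 \cdot 10^{9},\ 300 \cdot 10^{9}) = \underbrace{0.052}_{\text{finite model}} + \underbrace{0.251}_{\text{finite data}} + \underbrace{1.69}_{\text{irreducible}} = 1.993$$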
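Plugging Gopher's numbers into the law can be sketched in a few lines of Python. This is a minimal illustration, not the author's notebook: the constants are the Approach 3 fit as I understand it from the paper's equation (10), and the names `loss_terms` and `loss` are made up for this example.

```python
# Constants of the parametric law L(N, D) = A / N**alpha + B / D**beta + E.
# Assumption: these are the paper's Approach 3 fitted values.
A, B, E = 406.4, 410.7, 1.69
ALPHA, BETA = 0.34, 0.28


def loss_terms(n_params: float, n_tokens: float) -> tuple:
    """Return (finite-model term, finite-data term, irreducible loss)."""
    finite_model = A / n_params ** ALPHA
    finite_data = B / n_tokens ** BETA
    return finite_model, finite_data, E


def loss(n_params: float, n_tokens: float) -> float:
    """Predicted LM loss: the sum of the three terms."""
    return sum(loss_terms(n_params, n_tokens))


# Gopher: 280B parameters, 300B training tokens.
model_term, data_term, floor = loss_terms(280e9, 300e9)
print(f"finite model: {model_term:.3f}")
print(f"finite data:  {data_term:.3f}")
print(f"irreducible:  {floor:.2f}")
print(f"total:        {loss(280e9, 300e9):.3f}")
```

Keeping the three terms separate, rather than returning only the sum, makes it easy to see which "correction" dominates for a given model.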

What jumps out here is that the "finite model" term is tiny.

In terms of the impact on LM loss, Gopher's parameter count might as well be infinity. There's a little more to gain on that front, but not much.

Scale the model up to 500B params, or 1T params, or 100T params, or infinitely many params . . . and the most this can ever do for you is a 0.052 reduction in loss[4].

Meanwhile, the "finite data" term is not tiny. Gopher's training data size is very much not infinity, and we can go a long way by making it bigger.

Chinchilla is a model with the same training compute cost as Gopher, allocated more evenly between the two terms in the equation.

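It's 70B params, trained on 1.4T tokens of data. Let's plug that in:

$$L(70 \cdot 10^{9},\ 1400 \cdot 10^{9}) = \underbrace{0.083}_{\text{finite model}} + \underbrace{0.163}_{\text{finite data}} + \underbrace{1.69}_{\text{irreducible}} = 1.936$$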

Much better![5]

Without using any more compute, we've improved the loss by 0.057. That's bigger than Gopher's entire "finite model" term!

The paper demonstrates that Chinchilla roundly defeats Gopher on downstream tasks, as we'd expect.

Even that understates the accomplishment, though. At least in terms of loss, Chinchilla doesn't just beat Gopher. It beats any model trained on Gopher's data, no matter how big.
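The claim that Chinchilla beats any model trained on Gopher's data can be checked by setting the parameter count to infinity, which zeroes out the finite-model term. A rough sketch, assuming the Approach 3 constants from the paper (the function and variable names are illustrative):

```python
import math

# Assumed Approach 3 constants for L(N, D) = A / N**alpha + B / D**beta + E.
A, B, E = 406.4, 410.7, 1.69
ALPHA, BETA = 0.34, 0.28


def loss(n_params: float, n_tokens: float) -> float:
    """Predicted LM loss under the parametric scaling law."""
    return A / n_params ** ALPHA + B / n_tokens ** BETA + E


gopher = loss(280e9, 300e9)
chinchilla = loss(70e9, 1.4e12)
# math.inf ** ALPHA is inf, and A / inf is 0.0, so the finite-model term vanishes.
gopher_infinite_params = loss(math.inf, 300e9)

print(f"Gopher:                  {gopher:.3f}")
print(f"Chinchilla:              {chinchilla:.3f}")
print(f"Gopher, infinite params: {gopher_infinite_params:.3f}")
print(f"Chinchilla's edge over Gopher: {gopher - chinchilla:.3f}")
```

Under these constants, even the infinite-parameter Gopher stays slightly above Chinchilla's predicted loss, because its finite-data term alone is larger than both of Chinchilla's correction terms combined.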

To put this in context: until this paper, it was conventional to train all large LMs on roughly 300B tokens of data. (GPT-3 did it, and everyone else followed.)

Insofar as we trust our equation, this entire line of research -- which includes GPT-3, LaMDA, Gopher, Jurassic, and MT-NLG -- could never have beaten Chinchilla, no matter how big the models got[6].

People put immense effort into training models that big, and were working on even bigger ones, and yet none of this, in principle, could ever get as far as Chinchilla did.

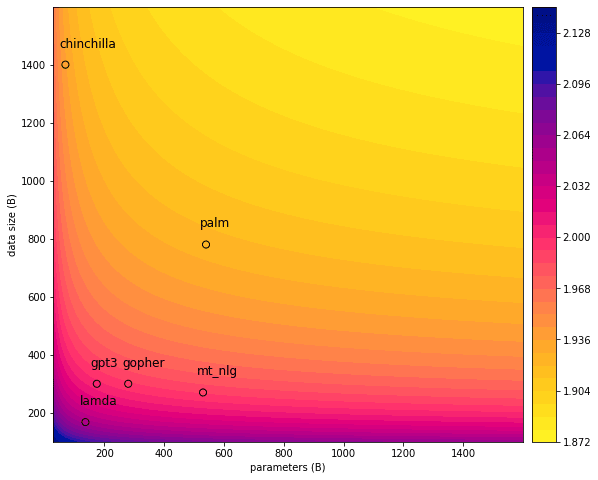

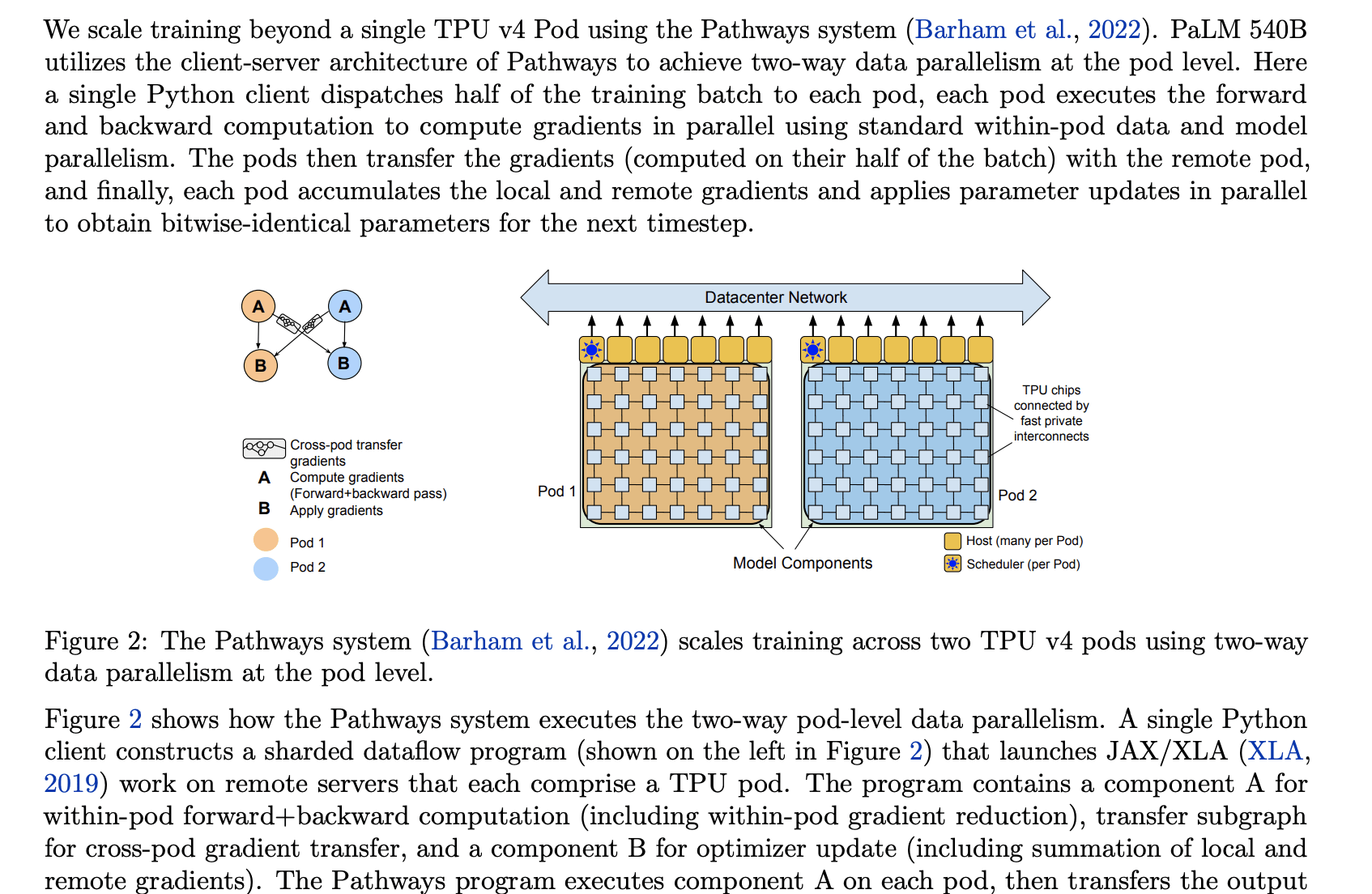

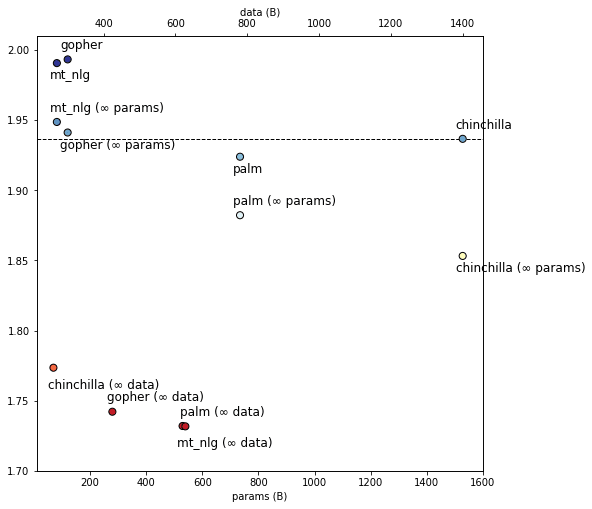

Here's where the various models lie on a contour plot of LM loss (per the equation), with model size $N$ on one axis and data size $D$ on the other.

Only PaLM is remotely close to Chinchilla here. (Indeed, PaLM does slightly better.)

PaLM is a huge model. It's the largest one considered here, though MT-NLG is a close second. Everyone writing about PaLM mentions that it has 540B parameters, and the PaLM paper does a lot of experiments on the differences between the 540B PaLM and smaller variants of it.

According to this scaling law, though, PaLM's parameter count is a mere footnote relative to PaLM's training data size.

PaLM isn't competitive with Chinchilla because it's big. MT-NLG is almost the same size, and yet it's trapped in the pinkish-purple zone on the bottom-left, with Gopher and the rest.

No, PaLM is competitive with Chinchilla only because it was trained on more tokens (780B) than the other non-Chinchilla models. For example, this change in data size constitutes 85% of the loss improvement from Gopher to PaLM.

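Here's the precise breakdown for PaLM:

$$L(540 \cdot 10^{9},\ 780 \cdot 10^{9}) = \underbrace{0.042}_{\text{finite model}} + \underbrace{0.192}_{\text{finite data}} + \underbrace{1.69}_{\text{irreducible}} = 1.924$$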
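The PaLM breakdown, and the share of the Gopher-to-PaLM improvement that comes from data, can be reproduced roughly as follows. This is a sketch under the assumption that the Approach 3 constants apply; `correction_terms` is a hypothetical helper name.

```python
# Assumed Approach 3 constants for L(N, D) = A / N**alpha + B / D**beta + E.
A, B, E = 406.4, 410.7, 1.69
ALPHA, BETA = 0.34, 0.28


def correction_terms(n_params: float, n_tokens: float) -> tuple:
    """Return (finite-model term, finite-data term) for a model."""
    return A / n_params ** ALPHA, B / n_tokens ** BETA


gopher_model, gopher_data = correction_terms(280e9, 300e9)
palm_model, palm_data = correction_terms(540e9, 780e9)
palm_total = palm_model + palm_data + E

# Split the Gopher -> PaLM loss improvement by which term it came from.
data_gain = gopher_data - palm_data
model_gain = gopher_model - palm_model
data_share = data_gain / (data_gain + model_gain)

print(f"PaLM: {palm_model:.3f} + {palm_data:.3f} + {E:.2f} = {palm_total:.3f}")
print(f"share of improvement due to more data: {data_share:.0%}")
```

Because the law is a plain sum, the attribution is unambiguous: each term depends on only one of the two quantities.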

PaLM's gains came with a great cost, though. It used way more training compute than any previous model, and its size means it also takes a lot of inference compute to run.

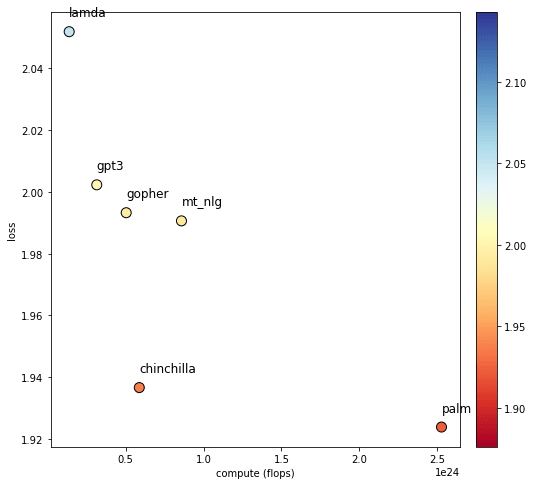

Here's a visualization of loss vs. training compute (loss on the y-axis and in color as well):

Man, we spent all that compute on PaLM, and all we got was the slightest edge over Chinchilla!

Could we have done better? In the equation just above, PaLM's terms look pretty unbalanced. Given that compute, we probably should have used more data and trained a smaller model.

The paper tells us how to pick optimal values for params and data, given a compute budget. Indeed, that's its main focus.

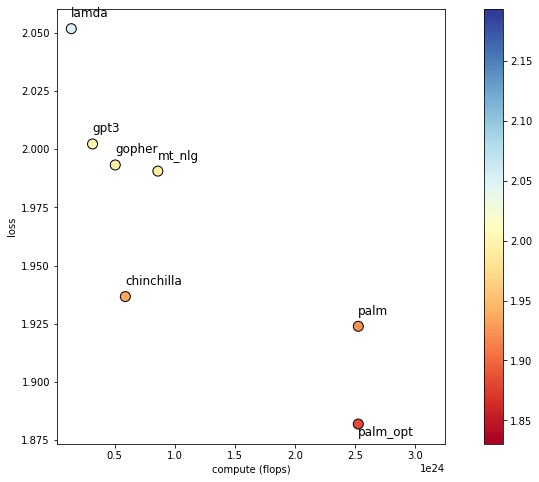

If we use its recommendations for PaLM's compute, we get the point "palm_opt" on this plot:

Ah, now we're talking!

"palm_opt" sure looks good. But how would we train it, concretely?

Let's go back to the $N$-vs.-$D$ contour plot world.

I've changed the axis limits here, to accommodate the massive data set you'd need to spend PaLM's compute optimally.

How much data would that require? Around 6.7T tokens, or ~4.8 times as much as Chinchilla used.

Meanwhile, the resulting model would not be nearly as big as PaLM. The optimal compute law actually puts it at 63B params[7].
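One way to reproduce these numbers is to minimize the parametric law subject to a fixed budget. The sketch below assumes the common approximation that training FLOPs are proportional to $N \cdot D$ (roughly $6ND$), so holding the product $N \cdot D$ fixed is equivalent to holding compute fixed. Setting the derivative of $A/N^{\alpha} + B\,(N/K)^{\beta}$ to zero, with $K = N \cdot D$, gives the closed form used here. The constants are the assumed Approach 3 fit, and `compute_optimal` is a name invented for this example.

```python
# Assumed Approach 3 constants (the irreducible term E does not affect the optimum).
A, B = 406.4, 410.7
ALPHA, BETA = 0.34, 0.28


def compute_optimal(nd_budget: float) -> tuple:
    """Split a fixed product K = N * D so that A/N**alpha + B/D**beta is minimal.

    The stationarity condition gives
    N_opt = (alpha*A / (beta*B)) ** (1/(alpha+beta)) * K ** (beta/(alpha+beta)).
    """
    g = (ALPHA * A / (BETA * B)) ** (1 / (ALPHA + BETA))
    n_opt = g * nd_budget ** (BETA / (ALPHA + BETA))
    d_opt = nd_budget / n_opt
    return n_opt, d_opt


# PaLM's budget: 540B params trained on 780B tokens.
palm_n, palm_d = compute_optimal(540e9 * 780e9)
print(f"palm_opt: {palm_n / 1e9:.0f}B params, {palm_d / 1e12:.1f}T tokens")

# Chinchilla's budget: 70B params trained on 1.4T tokens.
chin_n, chin_d = compute_optimal(70e9 * 1.4e12)
print(f"Approach 3 'Chinchilla': {chin_n / 1e9:.0f}B params, {chin_d / 1e12:.1f}T tokens")
```

Running the same function on Chinchilla's own budget gives a smaller model than the real Chinchilla, which reflects the difference between Approach 3 and Approaches 1/2 rather than a bug in the sketch.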

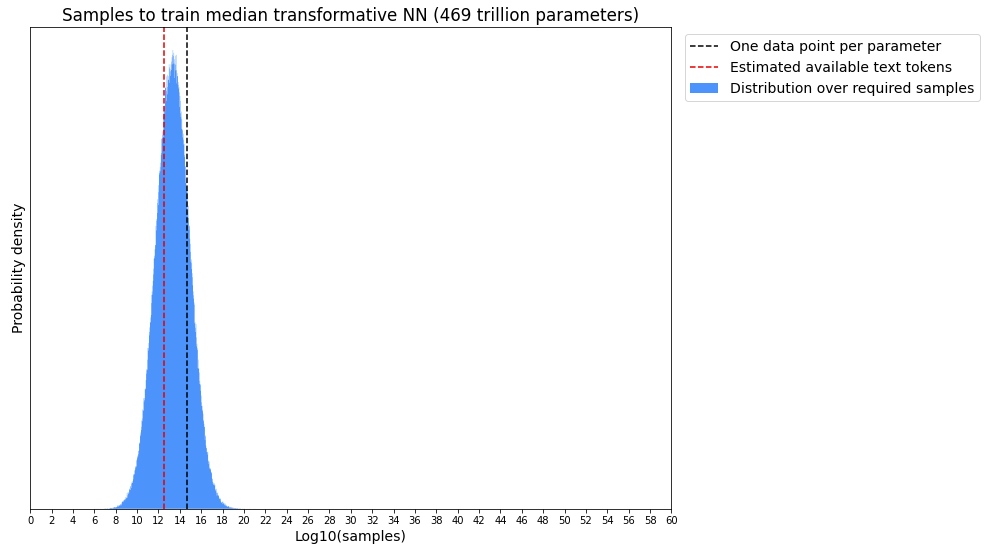

Okay, so we just need to get 6.7T tokens and . . . wait, how exactly are we going to get 6.7T tokens? How much text data is there, exactly?

2. are we running out of data?

It is frustratingly hard to find an answer to this question.

The main moral I want to get across in this post is that the large LM community has not taken data scaling seriously enough.

LM papers are meticulous about $N$ -- doing all kinds of scaling analyses on models of various sizes, etc. There has been tons of smart discussion about the hardware and software demands of training high-$N$ models. The question "what would it take to get to 1T params? (or 10T?)" is on everyone's radar.

Yet, meanwhile:

- Everyone trained their big models on 300B tokens, for no particular reason, until this paper showed how hilariously wasteful this is

- Papers rarely do scaling analyses that vary data size -- as if the concepts of "LM scaling" and "adding more parameters" have effectively merged in people's minds

- Papers basically never talk about what it would take to scale their datasets up by 10x or 50x

- The data collection sections of LM papers tend to be vague and slapdash, often failing to answer basic questions like "where did you scrape these webpages from?" or "how many more could you scrape, if you wanted to?"

As a particularly egregious example, here is what the LaMDA paper says about the composition of their training data:

The pre-training data, called Infiniset, is a combination of dialog data from public dialog data and other public web documents. It consists of 2.97B documents and 1.12B dialogs with 13.39B utterances. The composition of the data is as follows: 50% dialogs data from public forums; 12.5% C4 data [11]; 12.5% code documents from sites related to programming like Q&A sites, tutorials, etc; 12.5% Wikipedia (English); 6.25% English web documents; and 6.25% Non-English web documents. The total number of words in the dataset is 1.56T.

"Dialogs data from public forums"? Which forums? Did you use all the forum data you could find, or only 0.01% of it, or something in between? And why measure words instead of tokens -- unless they meant tokens?

If people were as casual about scaling $N$ as this quotation is about scaling $D$, the methods sections of large LM papers would all be a few sentences long. Instead, they tend to look like this (excerpted from ~3 pages of similar material):

...anyway. How much more data could we get?

This question is complicated by the fact that not all data is equally good.

(This messy Google sheet contains the calculations behind some of what I say below.)

web scrapes

If you just want a lot of text, the easiest way to get it is from web scrapes like Common Crawl.

But these are infamously full of garbage, and if you want to train a good LM, you probably want to aggressively filter them for quality. And the papers don't tell us how much total web data they have, only how much filtered data.

MassiveWeb

The training dataset used for Gopher and Chinchilla is called MassiveText, and the web scrape portion of it is called MassiveWeb. This data originates in a mysterious, unspecified web scrape[8], which is funneled through a series of filters, including quality heuristics and an attempt to only keep English text.

MassiveWeb is 506B. Could it be made bigger, by scaling up the original web scrape? That depends on how complete the original web scrape was -- but we know nothing about it.

The GLaM/PaLM web corpus

PaLM used a different web scrape corpus. It was first used in this paper about "GLaM," which again did not say anything about the original scraping process, only describing the quality filtering they did (and not in much detail).

The GLaM paper says its filtered web corpus is 143B tokens. That's a lot smaller than MassiveWeb. Is that because of the filtering? Because the original scrape was smaller? Dunno.

To further complicate matters, the PaLM authors used a variant of the GLaM dataset which made multilingual versions of (some of?) the English-only components.

How many tokens did this add? They don't say[9].

We are told that 27% (211B) of PaLM's training tokens came from this web corpus, and we are separately told that they tried to avoid repeating data. So the PaLM version of the GLaM web corpus is probably at least 211B, versus the original 143B. (Though I am not very confident of that.)

Still, that's much smaller than MassiveWeb. Is this because they had a higher quality bar (which would be bad news for further data scaling)? They do attribute some of PaLM's success to quality filtering, citing the ablation on this in the GLaM paper[10].

It's hard to tell, but there is this ominous comment, in the section where they talk about PaLM vs. Chinchilla:

Although there is a large amount of very high-quality textual data available on the web, there is not an infinite amount. For the corpus mixing proportions chosen for PaLM, data begins to repeat in some of our subcorpora after 780B tokens, which is why we chose that as the endpoint of training. It is unclear how the “value” of repeated data compares to unseen data for large-scale language model training[11].

The subcorpora that start to repeat are probably the web and dialogue ones.

Read literally, this passage seems to suggest that even the vast web data resources available to Google Research (!) are starting to strain against the data demands of large LMs. Is that plausible? I don't know.

domain-specific corpora

We can speak with more confidence about text in specialized domains that's less common on the open web, since there's less of it out there, and people are more explicit about where they're getting it.

Code

If you want code, it's on Github. There's some in other places too, but if you've exhausted Github, you probably aren't going to find orders of magnitude of additional code data. (I think?)

We've more-or-less exhausted Github. It's been scraped a few times with different kinds of filtering, which yielded broadly similar data sizes:

- The Pile's scrape had 631GB[12] of text, and ~299B tokens

- The MassiveText scrape had 3.1TB of text, and 506B tokens

- The PaLM scrape had only 196GB of text (we aren't told how many tokens)

- The Codex paper's scrape was python-only and had 159GB of text

(The text to token ratios vary due to differences in how whitespace was tokenized.)

All of these scrapes contained a large fraction of the total code available on Github (in the Codex paper's case, just the python code).

Generously, there might be ~1T tokens of code out there, but not vastly more than that.

Arxiv

If you want to train a model on advanced academic research in physics or mathematics, you go to Arxiv.

For example, Arxiv was about half the training data for the math-problem-solving LM Minerva.

We've exhausted Arxiv. Both the Minerva paper and the Pile use basically all of Arxiv, and it amounts to a measly 21B tokens.

Books

Books? What exactly are "books"?

In the Pile, "books" means the Books3 corpus, which means "all of Bibliotik." It contains 196,640 full-text books, amounting to only 27B tokens.

In MassiveText, a mysterious subset called "books" has 560B tokens. That's a lot more than the Pile has! Are these all the books? In . . . the world? In . . . Google books? Who even knows?

In the GLaM/PaLM dataset, an equally mysterious subset called "books" has 390B tokens.

Why is the GLaM/PaLM number so much smaller than the MassiveText number? Is it a tokenization thing? Both of these datasets were made by Google, so it's not like the Gopher authors have special access to some secret trove of forbidden books (I assume??).

If we want LMs to learn the kind of stuff you learn from books, and not just from the internet, this is what we have.

As with the web, it's hard to know what to make of it, because we don't know whether this is "basically all the books in the world" or just some subset that an engineer pulled at one point in time[13].

"all the data we have"

In my spreadsheet, I tried to make a rough, erring-on-generous estimate of what you'd get if you pooled together all the sub-corpora mentioned in the papers I've discussed here.

I tried to make it an overestimate, and did some extreme things like adding up both MassiveWeb and the GLaM/PaLM web corpus as though they were disjoint.

The result was ~3.2T tokens, or

- about 1.4x the size of MassiveText

- about 48% of the data we would need to train palm_opt

Recall that this already contains "basically all" of the open-source code in the world, and "basically all" of the theoretical physics papers written in the internet era -- within an order of magnitude, anyway. In these domains, the "low-hanging fruit" of data scaling are not low-hanging at all.

what is compute? (on a further barrier to data scaling)

Here's another important comment from the PaLM paper's Chinchilla discussion. This is about barriers to doing a head-to-head comparison experiment:

If the smaller model were trained using fewer TPU chips than the larger model, this would proportionally increase the wall-clock time of training, since the total training FLOP count is the same. If it were trained using the same number of TPU chips, it would be very difficult to maintain TPU compute efficiency without a drastic increase in batch size. The batch size of PaLM 540B is already 4M tokens, and it is unclear if even larger batch sizes would maintain sample efficiency.

In LM scaling research, all "compute" is treated as fungible. There's one resource, and you spend it on params and steps, where compute = params * steps.

But params can be parallelized, while steps cannot.

You can take a big model and spread it (and its activations, gradients, Adam buffers, etc.) across a cluster of machines in various ways. This is how people scale up in practice.

But to scale up $D$, you have to either:

- take more optimization steps -- an inherently serial process, which takes linearly more time as you add data, no matter how fancy your computers are

- increase the batch size -- which tends to degrade model quality beyond a certain critical size, and current high-$N$ models are already pushing against that limit

Thus, it is unclear whether the "compute" you spend in high-$D$ models is as readily available (and as bound to grow over time) as we typically imagine "compute" to be.
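To get a feel for the serial cost, here is a back-of-the-envelope sketch of how many optimizer steps a data budget implies at a fixed batch size. It assumes a constant batch of 4M tokens (the rounded figure quoted from the PaLM paper) for the whole run, which is a simplification, and `optimizer_steps` is a hypothetical helper.

```python
import math

# Assumption: a constant batch of 4M tokens, the rounded figure quoted above.
PALM_BATCH_TOKENS = 4_000_000


def optimizer_steps(n_tokens: int, batch_tokens: int = PALM_BATCH_TOKENS) -> int:
    """Sequential optimizer steps needed to see n_tokens exactly once."""
    return math.ceil(n_tokens / batch_tokens)


palm_steps = optimizer_steps(780_000_000_000)        # PaLM's 780B tokens
palm_opt_steps = optimizer_steps(6_700_000_000_000)  # ~6.7T tokens for palm_opt

print(f"PaLM:     {palm_steps:,} steps")
print(f"palm_opt: {palm_opt_steps:,} steps")
print(f"ratio:    {palm_opt_steps / palm_steps:.1f}x more serial steps")
```

Unless the batch size can grow without hurting sample efficiency, the extra steps translate directly into extra wall-clock time, regardless of how many chips are available.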

If LM researchers start getting serious about scaling up data, no doubt people will think hard about this question, but that work has not yet been done.

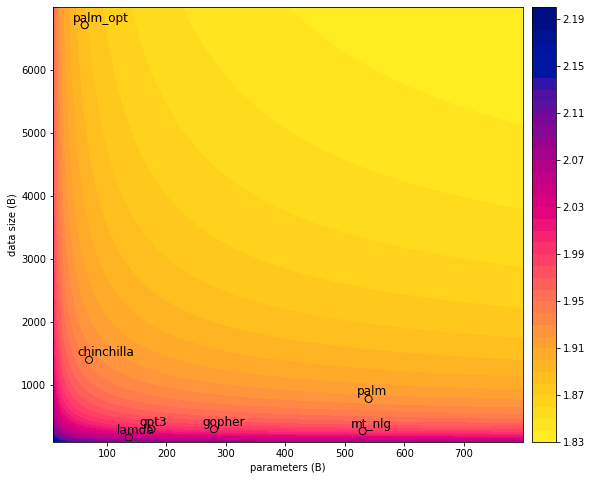

appendix: to infinity

Earlier, I observed that Chinchilla beats any Gopher of arbitrary size.

The graph below expands on that observation, by including two variants of each model:

- one with the finite-model term set to zero, i.e. the infinite-parameter limit

- one with the finite-data term set to zero, i.e. the infinite-data limit

(There are two x-axes, one for data and one for params. I included the latter so I have a place to put the infinite-data models without making an infinitely big plot.

The dotted line is Chinchilla, to emphasize that it beats infinite-params Gopher.)

The main takeaway IMO is the size of the gap between ∞ data models and all the others. Just another way of emphasizing how skewed these models are toward $N$, and away from $D$.
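The two limiting variants can be computed directly by passing infinity for one argument of the law. A minimal sketch, assuming the Approach 3 constants and covering only the three models whose sizes are stated in this post (the `MODELS` table and `variants` dict are illustrative):

```python
import math

# Assumed Approach 3 constants for L(N, D) = A / N**alpha + B / D**beta + E.
A, B, E = 406.4, 410.7, 1.69
ALPHA, BETA = 0.34, 0.28

# (parameters, training tokens) as stated in the post.
MODELS = {
    "gopher": (280e9, 300e9),
    "chinchilla": (70e9, 1.4e12),
    "palm": (540e9, 780e9),
}


def loss(n_params: float, n_tokens: float) -> float:
    """Predicted LM loss; an infinite argument zeroes the matching term."""
    return A / n_params ** ALPHA + B / n_tokens ** BETA + E


variants = {}
for name, (n, d) in MODELS.items():
    variants[name] = {
        "actual": loss(n, d),
        "inf_params": loss(math.inf, d),  # finite-model term set to zero
        "inf_data": loss(n, math.inf),    # finite-data term set to zero
    }
    row = variants[name]
    print(f"{name:>10}: actual={row['actual']:.3f} "
          f"inf_params={row['inf_params']:.3f} inf_data={row['inf_data']:.3f}")
```

For these three models, every infinite-data variant lands well below every actual and infinite-parameter variant, which is the gap the plot is meant to highlight.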

- ^

- ^

See their footnote 2

- ^

See their equation (10)

- ^

Is 0.052 a "small" amount in some absolute sense? Not exactly, but (A) it's small compared to the loss improvements we're used to seeing from new models, and (B) small compared to the improvements possible by scaling data.

In other words, (A) we have spent a few years plucking low-hanging fruit much bigger than this, and (B) there are more such fruit available.

- ^

The two terms are still a bit imbalanced, but that's largely due to the "Approach 3 vs 1/2" nuances mentioned above.

- ^

Caveat: Gopher and Chinchilla were trained on the same data distribution, but these other models were not. Plugging them into the equation won't give us accurate loss values for the datasets they used. Still, the datasets are close enough that the broad trend ought to be accurate.

- ^

Wait, isn't that smaller than Chinchilla?

This is another Approach 3 vs. 1/2 difference.

Chinchilla was designed with Approaches 1/2. Using Approach 3, like we're doing here, gives you a Chinchilla of only 33B params, which is lower than our palm_opt's 63B.

- ^

Seriously, I can't find anything about it in the Gopher paper. Except that it was "collected in November 2020."

- ^

It is not even clear that this multilingual-ization affected the web corpus at all.

Their datasheet says they "used multilingual versions of Wikipedia and conversations data." Read literally, this would suggest they didn't change the web corpus, only those other two.

I also can't tell if the original GLaM web corpus was English-only to begin with, since that paper doesn't say.

- ^

This ablation only compared filtered web data to completely unfiltered web data, which is not a very fine-grained signal. (If you're interested, EleutherAI has done more extensive experiments on the impact of filtering at smaller scales.)

- ^

They are being a little coy here. The current received wisdom by now is that repeating data is really bad for LMs and you should never do it. See this paper and this one.

EDIT 11/15/22: but see also the Galactica paper, which casts significant doubt on this claim.

- ^

The Pile authors only included a subset of this in the Pile.

- ^

The MassiveText datasheet says only that "the books dataset contains books from 1500 to 2008," which is not especially helpful.

128 comments

Comments sorted by top scores.

comment by Ivan Vendrov (ivan-vendrov) · 2022-07-31T04:31:48.058Z · LW(p) · GW(p)

Thought-provoking post, thanks.

One important implication is that pure AI companies such as OpenAI, Anthropic, Conjecture, Cohere are likely to fall behind companies with access to large amounts of non-public-internet text data like Facebook, Google, Apple, perhaps Slack. Email and messaging are especially massive sources of "dark" data, provided they can be used legally and safely (e.g. without exposing private user information). Taking just email, something like 500 billion emails are sent daily, which is more text than any LLM has ever been trained on (admittedly with a ton of duplication and low quality content).

Another implication is that federated learning, data democratization efforts, and privacy regulations like GDPR are much more likely to be critical levers on the future of AI than previously thought.

Replies from: Thirkle, yitz, hackpert, TrevorWiesinger

↑ comment by Thirkle · 2022-07-31T22:44:42.078Z · LW(p) · GW(p)

Another implication is that centralised governments with the ability to aggressively collect and monitor citizens' data, such as China, could be major players.

A government such as China has no need to scrape data from the Internet, while being mindful of privacy regulations and copyright. Instead they can demand 1.4 billion people's data from all of their domestic tech companies. This includes everything such as emails, texts, WeChat, anything that the government desires.

↑ comment by Yitz (yitz) · 2022-07-31T17:56:05.028Z · LW(p) · GW(p)

I suspect that litigation over copyright concerns with LLMs could significantly slow timelines, although it may come with the disadvantage of favoring researchers who don’t care about following regulations/data use best practices.

↑ comment by hackpert · 2022-08-06T17:23:41.007Z · LW(p) · GW(p)

I mean Microsoft for one seems fully invested in (married to) OpenAI and will continue to be for the foreseeable future, and Outlook/Exchange is probably the biggest source of "dark" data in the world, so I wouldn't necessarily put OpenAI on the same list as the others without strong traditional tech industry partnerships.

Replies from: ChristianKl

↑ comment by ChristianKl · 2022-08-06T20:54:26.253Z · LW(p) · GW(p)

Allowing OpenAI to use Microsoft's customer data to train the model essentially means releasing confidential customer information to the public. I doubt that's something that Microsoft is willing to do.

↑ comment by trevor (TrevorWiesinger) · 2022-07-31T18:04:17.163Z · LW(p) · GW(p)

> Another implication is that federated learning, data democratization efforts, and privacy regulations like GDPR are much more likely to be critical levers on the future of AI than previously thought.

And presumably data poisoning as well? This sort of thing isn't easily influenced because it's deep in the turf of major militaries, but it would definitely be good news in the scenario that data becomes the bottleneck.

comment by Scott Alexander (Yvain) · 2022-08-01T23:19:32.367Z · LW(p) · GW(p)

Thanks for posting this, it was really interesting. Some very dumb questions from someone who doesn't understand ML at all:

1. All of the loss numbers in this post "feel" very close together, and close to the minimum loss of 1.69. Does loss only make sense on a very small scale (like from 1.69 to 2.2), or is this telling us that language models are very close to optimal and there are only minimal remaining possible gains? What was the loss of GPT-1?

2. Humans "feel" better than even SOTA language models, but need less training data than those models, even though right now the only way to improve the models is through more training data. What am I supposed to conclude from this? Are humans running on such a different paradigm that none of this matters? Or is it just that humans are better at common-sense language tasks, but worse at token-prediction language tasks, in some way where the tails come apart once language models get good enough?

3. Does this disprove claims that "scale is all you need" for AI, since we've already maxed out scale, or are those claims talking about something different?

Replies from: nostalgebraist, jack-armstrong

↑ comment by nostalgebraist · 2022-08-02T01:28:35.711Z · LW(p) · GW(p)

(1)

Loss values are useful for comparing different models, but I don't recommend trying to interpret what they "mean" in an absolute sense. There are various reasons for this.

One is that the "conversion rate" between loss differences and ability differences (as judged by humans) changes as the model gets better and the abilities become less trivial.

Early in training, when the model's progress looks like realizing "huh, the word 'the' is more common than some other words", these simple insights correspond to relatively large decreases in loss. Once the model basically kinda knows English or whatever the language is, it's already made most of the loss progress it's going to make, and the further insights we really care about involve much smaller changes in loss. See here for more on this by gwern.

(2)

No one really knows, but my money is on "humans are actually better at this through some currently-unknown mechanism," as opposed to "humans are actually bad at this exact thing."

Why do I think this?

Well, the reason we're here talking about this at all is that LMs do write text of spookily high quality, even if they aren't as good as humans at it. That wasn't always true. Before the transformer architecture was invented in 2017, LMs used to be nowhere near this good, and few people knew or talked about them except researchers.

What changed with the transformer? To some extent, the transformer is really a "smarter" or "better" architecture than the older RNNs. If you do a head-to-head comparison with the same training data, the RNNs do worse.

But also, it's feasible to scale transformers much bigger than we could scale the RNNs. You don't see RNNs as big as GPT-2 or GPT-3 simply because it would take too much compute to train them.

So, even though all these models take tons of data to train, we could make the transformers really big and still train them on the tons-of-data they require. And then, because scaling up really does help, you get a model good enough that you and I are here talking about it.

That is, I don't think transformers are the best you can do at language acquisition. I suspect humans are doing something better that we don't understand yet. But transformers are easy to scale up really big, and in ML it's usually possible for sheer size to compensate for using a suboptimal architecture.

(P.S. Buck says in another thread that humans do poorly when directly asked to do language modeling -- which might mean "humans are actually bad at this exact thing," but I suspect this is due to the unfamiliarity of the task rather than a real limitation of humans. That is, I suspect humans could be trained to perform very well, in the usual sense of "training" for humans where not too much data/time is necessary.)

(3)

This is sort of a semantic issue.

"Scaling" was always a broader concept than just scaling in model size. In this post and the paper, we're talking about scaling with respect to model size and also with respect to data, and earlier scaling papers were like that too. The two types of scaling look similar in equations.

So "data scale" is a kind of scale, and always has been.

On the other hand, the original OpenAI/Kaplan scaling paper found kinda the opposite result from this one -- model size was what mattered practically, and the data we currently have would be enough for a long time.

People started to conflate "scaling" and "scaling in model size," because we thought the OpenAI/Kaplan result meant these were the same thing in practice. The way the "scale is all you need" meme gets used, it has this assumption kind of baked in.

There are some things that "scaling enthusiasts" were planning to do that might change in light of this result (if the result is really true) -- like specialized hardware or software that only helps for very large models. But, if we can get much larger-scale data, we may be able to just switch over to a "data scaling world" that, in most respects, looks like the "parameter scaling world" that the scaling enthusiasts imagined.

Replies from: Buck, beth-barnes, iceman, Owain_Evans

↑ comment by Buck · 2022-08-02T15:55:45.235Z · LW(p) · GW(p)

> That is, I suspect humans could be trained to perform very well, in the usual sense of "training" for humans where not too much data/time is necessary.

I paid people to try to get good at this game, and also various smart people like Paul Christiano tried it for a few hours, and everyone was still notably worse than GPT-2-sm (about the size of GPT-1).

EDIT: These results are now posted here [LW · GW].

↑ comment by paulfchristiano · 2022-08-02T16:24:15.274Z · LW(p) · GW(p)

I expect I would improve significantly with additional practice (e.g. I think a 2nd hour of playing the probability-assignment game would get a much higher score than my 1st in expectation). My subjective feeling was that I could probably learn to do as well as GPT-2-small (though estimated super noisily) but there's definitely no way I was going to get close to GPT-2.

↑ comment by nostalgebraist · 2022-08-02T18:05:24.857Z · LW(p) · GW(p)

I'm wary of the assumption that we can judge "human ability" on a novel task X by observing performance after an hour of practice.

There are some tasks where performance improves with practice but plateaus within one hour. I'm thinking of relatively easy video games. Or relatively easy games in general, like casual card/board/party games with simple rules and optimal policies. But most interesting things that humans "can do" take much longer to learn than this.

Here are some things that humans "can do," but require >> 1 hour of practice to "do," while still requiring far less exposure to task-specific example data than we're used to in ML:

- Superforecasting

- Reporting calibrated numeric credences, a prerequisite for both superforecasting and the GPT game (does this take >> 1 hour? I would guess so, but I'm not sure)

- Playing video/board/card games of nontrivial difficulty or depth

- Speaking any given language, even when learned during the critical language acquisition period

- Driving motor vehicles like cars (arguably) and planes (definitely)

- Writing good prose, for any conventional sense of "good" in any genre/style

- Juggling

- Computer programming (with any proficiency, and certainly e.g. competitive programming)

- Doing homework-style problems in math or physics

- Acquiring and applying significant factual knowledge in academic subjects like law or history

The last 3 examples are the same ones Owain_Evans mentioned [LW(p) · GW(p)] in another thread, as examples of things LMs can do "pretty well on."

If we only let the humans practice for an hour, we'll conclude that humans "cannot do" these tasks at the level of current LMs either, which seems clearly wrong (that is, inconsistent with the common-sense reading of terms like "human performance").

Replies from: Buck↑ comment by Buck · 2022-08-02T18:17:14.121Z · LW(p) · GW(p)

Ok, sounds like you're using "not too much data/time" in a different sense than I was thinking of; I suspect we don't disagree. My current guess is that some humans could beat GPT-1 with ten hours of practice, but that GPT-2 or larger would be extremely difficult and plausibly impossible with any amount of practice.

Replies from: jacob_cannell↑ comment by jacob_cannell · 2022-08-22T07:49:20.519Z · LW(p) · GW(p)

The human brain internally is performing very similar computations to transformer LLMs - as expected from all the prior research indicating strong similarity between DL vision features and primate vision - but that doesn't mean we can immediately extract those outputs and apply them towards game performance.

↑ comment by Owain_Evans · 2022-08-02T16:24:49.100Z · LW(p) · GW(p)

It could be useful to look at performance of GPT-3 on foreign languages. We know roughly how long it takes humans to reach a given level at a foreign language. E.g. You might find GPT-3 is at a level on 15 different languages that would take a smart human (say) 30 months to achieve (2 months per language). Foreign languages are just a small fraction of the training data.

Replies from: mateusz-baginski↑ comment by Mateusz Bagiński (mateusz-baginski) · 2023-08-25T13:11:48.622Z · LW(p) · GW(p)

I think I remember seeing somewhere that LLMs learn more slowly on languages with "more complex" grammar (in the sense of their loss decreasing more slowly per the same number of tokens) but I can't find the source right now.

↑ comment by Beth Barnes (beth-barnes) · 2022-08-02T02:37:16.495Z · LW(p) · GW(p)

Based on the language modeling game that Redwood made, it seems like humans are much worse than models at next word prediction (maybe around the performance of a 12-layer model)

↑ comment by iceman · 2022-08-02T15:08:39.137Z · LW(p) · GW(p)

What changed with the transformer? To some extent, the transformer is really a "smarter" or "better" architecture than the older RNNs. If you do a head-to-head comparison with the same training data, the RNNs do worse.

But also, it's feasible to scale transformers much bigger than we could scale the RNNs. You don't see RNNs as big as GPT-2 or GPT-3 simply because it would take too much compute to train them.

You might be interested in looking at the progress being made on the RWKV-LM architecture, if you aren't following it. It's an attempt to train an RNN like a transformer. Initial numbers look pretty good.

↑ comment by Owain_Evans · 2022-08-02T16:16:46.580Z · LW(p) · GW(p)

A few points:

- Current models do pretty well on tricky math problems (Minerva), coding competition problems (AlphaCode), and multiple-choice quizzes at college level (MMLU).

- In some ways, the models' ability to learn from data is far superior to humans. For example, models trained mostly on English text are still pretty good at Spanish, while English speakers in parts of the US who hear Spanish (passively) every week of their lives usually retain almost nothing. The same is true for being able to imitate other styles or dialects of English, and for programming languages. (Humans after their earlier years can spend years hearing a foreign language every day and learn almost nothing! Most people need to make huge efforts to learn.)

- RNNs are much worse than transformers at in-context learning. It's not just a difference in generative text quality. See this study by DeepMind: https://twitter.com/FelixHill84/status/1524352818261499911

↑ comment by Jose Miguel Cruz y Celis (jose-miguel-cruz-y-celis) · 2023-05-22T20:35:06.469Z · LW(p) · GW(p)

I'm curious about where you get that "models trained mostly on English text are still pretty good at Spanish". Do you have a reference?

↑ comment by wickemu (jack-armstrong) · 2022-08-03T16:04:32.676Z · LW(p) · GW(p)

2. Humans "feel" better than even SOTA language models, but need less training data than those models, even though right now the only way to improve the models is through more training data. What am I supposed to conclude from this? Are humans running on such a different paradigm that none of this matters? Or is it just that humans are better at common-sense language tasks, but worse at token-prediction language tasks, in some way where the tails come apart once language models get good enough?

Why do we say that we need less training data? Every minute instant of our existence is a multisensory point of data from before we've even exited the womb. We spend months, arguably years, hardly capable of anything at all yet still taking and retaining data. Unsupervised and mostly redundant, sure, but certainly not less than a curated collection of Internet text. By the time we're teaching a child to say "dog" for the first time they've probably experienced millions of fragments of data on creatures of various limb quantities, hair and fur types, sizes, sounds and smells, etc.; so they're already effectively pretrained on animals before we first provide a supervised connection between the sound "dog" and the sight of a four-limbed hairy creature with long ears on a leash.

I believe that humans exceed the amount of data ML models have by multiple orders of magnitude by the time we're adults, even if it's extremely messy.

Replies from: jose-miguel-cruz-y-celis, Lanrian↑ comment by Jose Miguel Cruz y Celis (jose-miguel-cruz-y-celis) · 2023-05-23T05:07:19.427Z · LW(p) · GW(p)

I did some calculations with a bunch of assumptions and simplifications but here's a high estimate, back of the envelope calculation for the data and "tokens" a 30 year old human would have "trained" on:

- Visual data: 130 million photoreceptor cells, firing at 10 Hz = 1.3 Gbits/s = 162.5 MB/s over 30 years (approx. 946,080,000 seconds) = 153.74 Petabytes

- Auditory data: Humans can hear frequencies up to 20,000 Hz; high-quality audio is sampled at 44.1 kHz, satisfying the Nyquist-Shannon sampling theorem. If we assume 16 bits (CD quality) * 2 (channels for stereo) = 1.41 Mbits/s = .18 MB/s over 30 years = .167 Petabytes

- Tactile data: 4 million touch receptors providing 8 bits/s (assuming they account for temperature, pressure, pain, hair movement, vibration) = 5 MB/s over 30 years = 4.73 Petabytes

- Olfactory data: We can detect up to 1 trillion smells; assuming we process 1 smell every second and each smell is represented as its own piece of data, i.e. log2(1 trillion) ≈ 40 bits/s = 0.0000050 MB/s over 30 years = .000005 Petabytes

- Taste data: 10,000 receptors, assuming a unique identifier for each basic taste (sweet, sour, salty, bitter and umami), log2(5) ≈ 2.3 bits rounded up to 3 = 30 kbits/s = 0.00375 MB/s over 30 years = .0035 Petabytes

This amounts to 153.74 + .167 + 4.73 + .000005 + .0035 = 158.64 Petabytes. Assuming 5 bytes per token (i.e. 5 characters), this amounts to 31,728 T tokens.

This is of course a high estimate and most of this data will clearly have huge compression capacity, but I wanted to get a rough estimate of a high upper bound.
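For anyone who wants to check the arithmetic without opening the sheet, here is a minimal Python sketch of the same sum. It takes the per-sense MB/s rates exactly as stated in the bullets above (it does not re-derive them from receptor counts), and the names `RATES_MB_PER_S`, `petabytes` and `total_tokens` are just illustrative.

```python
# Back-of-the-envelope check of the 30-year sensory data estimate.
# Rates are the MB/s figures stated in the comment, taken as given.

SECONDS_30_YEARS = 30 * 365 * 24 * 60 * 60  # 946,080,000 s

RATES_MB_PER_S = {
    "visual": 162.5,        # 130M photoreceptors * 10 Hz, 1 bit each
    "auditory": 0.1764,     # 44.1 kHz * 16 bit * 2 channels
    "tactile": 5.0,         # as stated in the comment
    "olfactory": 0.000005,  # 40 bits/s
    "taste": 0.00375,       # 30 kbit/s
}

BYTES_PER_TOKEN = 5  # the comment's assumption (5 characters per token)


def petabytes(rate_mb_per_s, seconds=SECONDS_30_YEARS):
    """Convert a sustained MB/s rate into total petabytes (1 PB = 1e15 bytes)."""
    return rate_mb_per_s * 1e6 * seconds / 1e15


def total_tokens(rates=RATES_MB_PER_S, bytes_per_token=BYTES_PER_TOKEN):
    """Total 'tokens' seen over 30 years under the bytes-per-token assumption."""
    total_bytes = sum(rates.values()) * 1e6 * SECONDS_30_YEARS
    return total_bytes / bytes_per_token


if __name__ == "__main__":
    for sense, rate in RATES_MB_PER_S.items():
        print(f"{sense:>10}: {petabytes(rate):.6f} PB")
    total_pb = sum(petabytes(r) for r in RATES_MB_PER_S.values())
    print(f"total: {total_pb:.2f} PB, {total_tokens() / 1e12:,.0f} T tokens")
```

Almost all of the total comes from the visual term, so the result is only as good as the photoreceptor count and firing-rate assumptions.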

Here's the google sheet if anyone wants to copy it or contribute

↑ comment by Lukas Finnveden (Lanrian) · 2022-08-14T18:18:52.764Z · LW(p) · GW(p)

There's a billion seconds in 30 years. Chinchilla was trained on 1.4 trillion tokens. So for a human adult to have as much data as chinchilla would require us to process the equivalent of ~1400 tokens per second. I think that's something like 2 kilobytes per second.

Inputs to the human brain are probably dominated by vision. I'm not sure how many bytes per second we see, but I don't think it's many orders of magnitude higher than 2kb.
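The tokens-per-second figure is simple enough to sketch in a few lines of Python. The bytes-per-token values in the loop are purely hypothetical (the comment doesn't say which conversion it used); they are only there to show that a rate of "something like 2 kilobytes per second" falls out for any plausible choice.

```python
# Rough check: how fast would a human need to take in tokens to match Chinchilla?

CHINCHILLA_TOKENS = 1.4e12  # training tokens, from the comment
SECONDS_30_YEARS = 1e9      # the comment's round figure (exact value is ~9.46e8)


def tokens_per_second(tokens=CHINCHILLA_TOKENS, seconds=SECONDS_30_YEARS):
    """Tokens per second needed to see `tokens` within `seconds`."""
    return tokens / seconds


def bytes_per_second(bytes_per_token, tokens=CHINCHILLA_TOKENS,
                     seconds=SECONDS_30_YEARS):
    """Same rate in bytes, for an assumed (hypothetical) bytes-per-token value."""
    return tokens_per_second(tokens, seconds) * bytes_per_token


if __name__ == "__main__":
    print(f"{tokens_per_second():.0f} tokens/s")
    for bpt in (1, 2, 4):  # hypothetical conversions, not from the thread
        print(f"{bpt} bytes/token -> {bytes_per_second(bpt) / 1e3:.1f} kB/s")
```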

Replies from: ChristianKl↑ comment by ChristianKl · 2022-08-14T18:29:28.091Z · LW(p) · GW(p)

I'm not sure how many bytes per second we see, but I don't think it's many orders of magnitude higher than 2kb.

That depends a lot on how you count. A quick Googling suggests that the optic nerve has 1.7 million nerve fibers.

If you think about a neuron firing rate of 20 hz that gives you 34 MB per second.

Replies from: Lanrian↑ comment by Lukas Finnveden (Lanrian) · 2022-08-14T22:13:11.741Z · LW(p) · GW(p)

(If 1 firing = 1 bit, that should be 34 megabit ~= 4 megabyte.)

This random article (which I haven't fact-checked in the least) claims a bandwidth of 8.75 megabit ~= 1 megabyte. So that's like 2.5 OOMs higher than the number I claimed for chinchilla. So yeah, it does seem like humans get more raw data.

(But I still suspect that chinchilla gets more data if you adjust for (un)interestingness. Where totally random data and easily predictable/compressible data are uninteresting, and data that is hard-but-possible to predict/compress is interesting.)
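The bandwidth comparison in this exchange can be sketched in Python as follows. It assumes 1 firing = 1 bit, uses the ~2 kB/s Chinchilla-equivalent rate from upthread as the baseline, and the helper names are made up for illustration.

```python
import math

# Baseline: the ~2 kB/s "Chinchilla-equivalent" rate claimed upthread.
CHINCHILLA_BYTES_PER_S = 2_000


def bits_to_bytes(bits_per_second):
    """Convert a bit rate to a byte rate."""
    return bits_per_second / 8


def ooms_above(rate_bytes_per_s, baseline=CHINCHILLA_BYTES_PER_S):
    """Orders of magnitude by which a rate exceeds the baseline."""
    return math.log10(rate_bytes_per_s / baseline)


# Optic nerve: 1.7 million fibers * 20 Hz, assuming 1 firing = 1 bit.
optic_nerve = bits_to_bytes(1.7e6 * 20)
# The 8.75 megabit/s figure from the (un-fact-checked) article.
article = bits_to_bytes(8.75e6)

if __name__ == "__main__":
    print(f"optic nerve: {optic_nerve / 1e6:.2f} MB/s, "
          f"{ooms_above(optic_nerve):.1f} OOMs above baseline")
    print(f"article:     {article / 1e6:.2f} MB/s, "
          f"{ooms_above(article):.1f} OOMs above baseline")
```

Under these assumptions the article's figure lands a bit under 3 OOMs above the baseline, in the same ballpark as the "like 2.5 OOMs" estimate.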

comment by IL · 2022-07-31T18:26:15.708Z · LW(p) · GW(p)

When you exhaust all the language data from text, you can start extracting language from audio and video.

As far as I know the largest public repository of audio and video is YouTube. We can do a rough back-of-the-envelope computation for how much data is in there:

- According to some 2019 article I found, in every minute 50 hours of video are uploaded to YouTube. If we assume this was the average for the last 15 years, that gets us 200 billion minutes of video.

- An average conversation has 150 words per minute, according to a Google search. That gets us 30T words, or 30T tokens if we assume 1 token per word (is this right?)

- Let's say 1% of that is actually useful, so that gets us 300B tokens, which is... a lot less than I expected.

So it seems like video doesn't save us, if we just use it for the language data. We could do self-supervised learning on the video data, but for that we need to know the scaling laws for video (has anyone done that?).

Replies from: nostalgebraist, sbowman↑ comment by nostalgebraist · 2022-08-02T00:17:01.621Z · LW(p) · GW(p)

Very interesting!

There are a few things in the calculation that seem wrong to me:

- If I did things right, 15 years * (365 days/yr) * (24 hours/day) * (60 mins/hour) * (50 youtube!hours / min) * (60 youtube!mins / youtube!hour) = 24B youtube!minutes, not 200B.

- I'd expect much less than 100% of Youtube video time to contain speech. I don't know what a reasonable discount for this would be, though.

- In the opposite direction, 1% useful seems too low. IIRC, web scrape quality pruning discards less than 99%, and this data is less messy than a web scrape.

In any case, yeah, this does not seem like a huge amount of data. But there's enough order-of-magnitude fuzziness in the estimate that it does seem like it's worth someone's time to look into more seriously.
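Here is the unit conversion above as a small Python sketch, so the knobs are easy to turn. The upload rate, words per minute and 1% "useful" fraction are the figures from the parent comment; `speech_fraction` is a hypothetical extra parameter for the "not all video contains speech" discount and defaults to no discount.

```python
# YouTube language-data estimate, with every assumption exposed as a parameter.


def youtube_minutes(years=15, upload_hours_per_minute=50):
    """Total minutes of video uploaded, assuming a constant upload rate."""
    wall_clock_minutes = years * 365 * 24 * 60
    return wall_clock_minutes * upload_hours_per_minute * 60


def useful_tokens(minutes, words_per_minute=150, speech_fraction=1.0,
                  useful_fraction=0.01, tokens_per_word=1.0):
    """Tokens left after discounting non-speech time and low-quality speech."""
    words = minutes * speech_fraction * words_per_minute
    return words * useful_fraction * tokens_per_word


if __name__ == "__main__":
    minutes = youtube_minutes()
    print(f"{minutes / 1e9:.1f}B video minutes")
    print(f"{minutes * 150 / 1e12:.2f}T words before filtering")
    print(f"{useful_tokens(minutes) / 1e9:.0f}B tokens at 1% useful")
    print(f"{useful_tokens(minutes, useful_fraction=0.1) / 1e9:.0f}B tokens at 10% useful")
```

With the corrected minute count, the 1% assumption gives tens of billions of tokens rather than hundreds, and a 10% assumption gives a few hundred billion.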

↑ comment by Sam Bowman (sbowman) · 2022-08-02T00:04:31.800Z · LW(p) · GW(p)

I agree that this points in the direction of video becoming increasingly important.

But why assume only 1% is useful? And more importantly, why use only the language data? Even if we don't have the scaling laws, it seems pretty clear that there's a ton of information in the non-language parts of videos that'd be useful to a general-purpose agent, almost certainly more than in the language parts. (Of course, it'll take more computation to extract the same amount of useful information from video than from text.)

comment by MSRayne · 2022-07-31T13:32:27.370Z · LW(p) · GW(p)

Does this imply that AGI is not as likely to emerge from language models as might have been thought? To me it looks like it's saying that the only way to get enough data would be to have the AI actively interacting in the world - getting data itself.

Replies from: nostalgebraist, MathiasKirkBonde↑ comment by nostalgebraist · 2022-07-31T16:36:27.312Z · LW(p) · GW(p)

I definitely think it makes LM --> AGI less likely, although I didn't think it was very likely to begin with [LW · GW].

I'm not sure that the AI interacting with the world would help, at least with the narrow issue described here.

If we're talking about data produced by humans (perhaps solicited from them by an AI), then we're limited by the timescales of human behavior. The data sources described in this post were produced by millions of humans writing text over the course of decades (in rough order-of-magnitude terms).

All that text was already there in the world when the current era of large LMs began, so large LMs got to benefit from it immediately, "for free." But once it's exhausted, producing more is slow.

IMO, most people are currently overestimating the potential of large generative models -- including image models like DALLE2 -- because of this fact.

There was all this massive data already sitting around from human activity (the web, Github, "books," Instagram, Flickr, etc) long before ML compute/algorithms were anywhere near the point where they needed more data than that.

When our compute finally began to catch up with our data, we effectively spent all the "stored-up potential energy" in that data all at once, and then confused ourselves into thinking that compute was the only necessary input for the reaction.

But now compute has finally caught up with data, and it wants more. We are forced for the first time to stop thinking of data as effectively infinite and free, and to face the reality of how much time and how many people it took to produce our huge-but-finite store of "data startup capital."

I suppose the AI's interactions with the world could involve soliciting more data of the kind it needs to improve (ie active learning), which is much more valuable per unit than generic data.

I would still be surprised if this approach could get much of anywhere without requiring solicitation-from-humans on a massive scale, but it'd be nice to see a back-of-the-envelope calculation using existing estimates of the benefit of active learning.

Replies from: MSRayne, clone of saturn, Evan R. Murphy↑ comment by MSRayne · 2022-07-31T21:54:52.254Z · LW(p) · GW(p)

It seems to me that the key to human intelligence is nothing like what LMs do anyway; we don't just correlate vast quantities of text tokens. They have meanings. That is, words correlate to objects in our world model, learned through lived experience, and sentences correspond to claims about how those objects are related to one another or are changing. Without being rooted in sensory, and perhaps even motor, experience, I don't think general intelligence can be achieved. Language by itself can only go so far.

↑ comment by clone of saturn · 2022-08-08T08:00:55.700Z · LW(p) · GW(p)

Language models seem to do a pretty good job at judging text "quality" in a way that agrees with humans. And of course, they're good at generating new text. Could it be useful for a model to generate a bunch of output, filter it for quality by its own judgment, and then continue training on its own output? If so, would it be possible to "bootstrap" arbitrary amounts of extra training data?

Replies from: Vladimir_Nesov↑ comment by Vladimir_Nesov · 2022-08-08T22:53:25.177Z · LW(p) · GW(p)

It might be even better to just augment the data with quality judgements instead of only keeping the high-quality samples. This way, quality can have the form of a natural language description instead of a one-dimensional in-built thing, and you can later prime the model for an appropriate sense/dimension/direction of quality, as a kind of objective, without retraining.

↑ comment by Evan R. Murphy · 2022-08-01T06:24:49.034Z · LW(p) · GW(p)

But now compute has finally caught up with data, and it wants more. We are forced for the first time to stop thinking of data as effectively infinite and free, and to face the reality of how much time and how many people it took to produce our huge-but-finite store of "data startup capital."

We may be running up against text data limits on the public web. But the big data companies got that name for a reason. If they can tap into the data of a Gmail, Facebook Messenger or YouTube then they will find tons more fuel for their generative models.

↑ comment by MathiasKB (MathiasKirkBonde) · 2022-07-31T15:23:30.245Z · LW(p) · GW(p)

I don't think the real world is good enough either.

The fact that humans feel a strong sense of the Tetris effect suggests to me that the brain is constantly generating and training on synthetic data.

Replies from: yitz↑ comment by Yitz (yitz) · 2022-07-31T17:57:51.399Z · LW(p) · GW(p)

Aka dreams?

comment by Roman Leventov · 2022-08-01T10:14:54.802Z · LW(p) · GW(p)

My two cents contra updates towards longer or more uncertain AGI timelines given the information in this post:

- The training of language models is many orders of magnitude less efficient than the training of the human brain, which acquires comparable language comprehension and generation ability on a tiny fraction of the text corpora discussed in this post. So we can expect more innovations that improve the training efficiency. Even one such innovation, improving the training efficiency (in terms of data) by a single order of magnitude, would probably ensure that the total size of publicly available text data is not a roadblock on the path to AGI, even if it currently is. I think the probability that we will see at least one such innovation in the next 5 years is quite high, more than 10%.

- Perhaps DeepMind's Gato is already a response to the realisation that "there is not enough text", explained in this post. So they train Gato on game replays, themselves generated programmatically, using RL agents. They can generate practically unlimited amounts of training data in this way. The speculation is then probably that at some scale, Gato will generalise the knowledge acquired in games to text, or will indeed enable much more efficient training on text (à la few-shot learning in current LMs) if the model is pre-trained on games and other tasks.

comment by Jay Bailey · 2022-07-31T15:19:32.983Z · LW(p) · GW(p)

I am curious about this "irreducible" term in the loss. Apologies if this is covered by the familiarity with LM scaling laws mentioned as a prerequisite for this article.

When you say "irreducible", does that mean "irreducible under current techniques" or "mathematically irreducible", or something else?

Do we have any idea what a model with, say, 1.7 loss (i.e, a model almost arbitrarily big in compute and data, but with the same 1.69 irreducible) would look like?

Replies from: nostalgebraist↑ comment by nostalgebraist · 2022-07-31T15:56:32.620Z · LW(p) · GW(p)

When you say "irreducible", does that mean "irreducible under current techniques" or "mathematically irreducible", or something else?

Closer to the former, and even more restrictive: "irreducible with this type of model, trained in this fashion on this data distribution."

Because language is a communication channel, there is presumably also some nonzero lower bound on the loss that any language model could ever achieve. This is different from the "irreducible" term here, and presumably lower than it, although little is known about this issue.

Do we have any idea what a model with, say, 1.7 loss (i.e, a model almost arbitrarily big in compute and data, but with the same 1.69 irreducible) would look like?

Not really, although section 5 of this post [LW · GW] expresses some of my own intuitions about what this limit looks like.

Keep in mind, also, that we're talking about LMs trained on a specific data distribution, and only evaluating their loss on data sampled from that same distribution.

So if an LM achieved 1.69 loss on MassiveText (or a scaled-up corpus that looked like MassiveText in all respects but size), it would do very well at mimicking all the types of text present in MassiveText, but that does not mean it could mimic every existing kind of text (much less every conceivable kind of text).

Replies from: yitz↑ comment by Yitz (yitz) · 2022-07-31T18:06:25.729Z · LW(p) · GW(p)

Do we have a sense of what the level of loss is in the human brain? If I’m understanding correctly, if the amount of loss in a model is known to be finitely large, then it will be incapable of perfectly modeling the world in principle (implying that to such a model physics is non-computable?)

Replies from: conor-sullivan↑ comment by Lone Pine (conor-sullivan) · 2022-07-31T22:24:49.338Z · LW(p) · GW(p)

Theoretically we could measure it by having humans play "the language model game" where you try to predict the next word in a text, repeatedly. How often you would get the next word wrong is a function of your natural loss. Of course, you'd get better at this game as you went along, just like LMs do, so what we'd want to measure is how well you'd do after playing for a few days.

There might have been a psychological study that resembles this. (I don't know.) We could probably also replicate it via citizen science: create a website where you play this game, and get people to play it. My prediction is that DL LMs are already far superior to even the best humans at this game. (Note that this doesn't mean I think DL is smarter than humans.)
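To make "natural loss" concrete: if a player reports the probability they assigned to the word that actually came next, their loss is the average negative log of those probabilities, the same quantity an LM is trained on. The Python sketch below is a generic illustration under that assumption, not the scoring rule of any existing game, and the function names are hypothetical.

```python
import math


def cross_entropy(probs_of_true_word):
    """Average negative log-probability (in nats) assigned to the true next word."""
    return -sum(math.log(p) for p in probs_of_true_word) / len(probs_of_true_word)


def perplexity(probs_of_true_word):
    """exp(cross-entropy): the effective number of equally likely guesses."""
    return math.exp(cross_entropy(probs_of_true_word))


if __name__ == "__main__":
    # A hypothetical player: confident and right twice, badly wrong once.
    player = [0.6, 0.5, 0.01]
    print(f"loss = {cross_entropy(player):.2f} nats, "
          f"perplexity = {perplexity(player):.1f}")
```

A single confident miss dominates the average, which is one reason calibration matters so much in this kind of game.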

Replies from: yitz↑ comment by Yitz (yitz) · 2022-08-01T03:50:38.186Z · LW(p) · GW(p)

Such a game already exists! See https://rr-lm-game.herokuapp.com/whichonescored2 and https://rr-lm-game.herokuapp.com/. I’ve been told humans tend to do pretty badly at the games (I didn’t do too well myself), so if you feel discouraged playing and want a similar style of game that’s perhaps a bit more fun (if slightly less relevant to the question at hand), I recommend https://www.redactle.com/. Regardless, I guess I’m thinking of loss (in humans) in the more abstract sense of “what’s the distance between the correct and human-given answer [to an arbitrary question about the real world]?” If there’s some mathematically necessary positive amount of loss humans must have at a minimum, that would seemingly imply that there are fundamental limits to the ability of human cognition to model reality.

Replies from: Buck, JBlack↑ comment by Buck · 2022-08-01T20:14:23.377Z · LW(p) · GW(p)

Yes, humans are way worse than even GPT-1 at next-token prediction, even after practicing for an hour.

EDIT: These results are now posted here [LW · GW]

↑ comment by Yitz (yitz) · 2022-08-02T04:41:00.764Z · LW(p) · GW(p)

Is there some reasonable-ish way to think about loss in the domain(s) that humans are (currently) superior at? (This might be equivalent to asking for a test of general intelligence, if one wants to be fully comprehensive)

↑ comment by JBlack · 2022-08-01T08:58:44.474Z · LW(p) · GW(p)

The scoring for that first game is downright bizarre. The optimal strategy for picking probabilities does not reflect the actual relative likelihoods of the options, but says "don't overthink it". In order to do well, you must overthink it.

Replies from: Buck, yitz↑ comment by Buck · 2022-08-01T20:13:54.729Z · LW(p) · GW(p)

(I run the team that created that game. I made the guess-most-likely-next-token game and Fabien Roger made the other one.)

The optimal strategy for picking probabilities in that game is to say what your probability for those two next tokens would have been if you hadn't updated on being asked about them. What's your problem with this?

It's kind of sad that this scoring system is kind of complicated. But I don't know how to construct simpler games such that we can unbiasedly infer human perplexity from what the humans do.

↑ comment by Yitz (yitz) · 2022-08-01T17:54:35.520Z · LW(p) · GW(p)

Yeah, if anyone builds a better version of this game, please let me know!

comment by Julian Schrittwieser (julian-schrittwieser) · 2022-07-31T12:29:15.615Z · LW(p) · GW(p)

An important distinction here is that the number of tokens a model was trained for should not be confused with the number of tokens in a dataset: if each token is seen exactly once during training then it has been trained for one "epoch".

In my experience scaling continues for quite a few epochs over the same dataset, only if the model has more parameters than the dataset tokens and training for >10 epochs does overfitting kick in and scaling break down.

Replies from: nostalgebraist, gwern↑ comment by nostalgebraist · 2022-07-31T17:08:44.346Z · LW(p) · GW(p)

This distinction exists in general, but it's irrelevant when training sufficiently large LMs.

It is well-established that repeating data during large LM training is not a good practice. Depending on the model size and the amount of repeating, one finds that it is either

- a suboptimal use of compute (relative to training a bigger model for 1 epoch), or

- actively harmful, as measured by test loss or loss on out-of-distribution data

with the second (active harm) kicking in earlier (in terms of the amount of repeating) for larger models, as shown in this paper (Figure 4 and surrounding discussion).

For more, see

- references linked in footnote 11 [LW(p) · GW(p)] of this post, on how repeating data can be harmful

- my earlier post here [LW · GW], on how repeating data can be compute-inefficient even when it's not harmful

- this report on my own experience finetuning a 6.1B model, where >1 epoch was harmful

↑ comment by p.b. · 2022-08-01T10:24:23.857Z · LW(p) · GW(p)

I think it would be a great follow-up post to explain why you think repeating data is not going to be the easy way out for the scaling enthusiasts at Deepmind and OpenAI.

I find the Figure 4 discussion at your first link quite confusing. They study repeated data, i.e. imbalanced datasets, to then draw conclusions about repeating data, i.e. training for several epochs. The performance hit they observe seems to not be massive (when talking about scaling a couple of OOMs) and they keep the number of training tokens constant.

I really can't tell how this informs me about what would happen if somebody tried to scale compute 1000-fold and had to repeat data to do it compute-optimally, which seems to be the relevant question.

↑ comment by ErickBall · 2022-08-04T16:58:33.143Z · LW(p) · GW(p)

So do you think, once we get to the point where essentially all new language models are trained on essentially all existing language data, it will always be more compute efficient to increase the size of the model rather than train for a second epoch?

This would seem very unintuitive and is not directly addressed by the papers you linked in footnote 11, which deal with small portions of the dataset being repeated.

Replies from: nostalgebraist↑ comment by nostalgebraist · 2022-08-04T18:30:56.098Z · LW(p) · GW(p)

You're right, the idea that multiple epochs can't possibly help is one of the weakest links in the post. Sometime soon I hope to edit the post with a correction / expansion of that discussion, but I need to collect my thoughts more first -- I'm kinda confused by this too.

After thinking more about it, I agree that the repeated-data papers don't provide much evidence that multiple epochs are harmful.

For example, the Anthropic repeated-data paper does consider cases where a non-small fraction of total training tokens are repeated more than once. In their most extreme case,

- half of the training tokens are never repeated during training, and

- the other half of training tokens are some (smaller) portion of the original dataset, repeated 2 or more times

But this effectively lowers the total size of the model's training dataset -- the number of training tokens is held constant (100B), so the repeated copies are taking up space that would otherwise be used for fresh data. For example, if the repeated tokens are repeated 2 times, then we are only using 3/4 of the data we could be (we select 1/2 for the unrepeated part, and then select 1/4 and repeat it twice for the other part).
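The 3/4 figure generalizes to any repeat count. Here is a tiny Python sketch, assuming the setup described above (a fixed token budget, a share of it filled by a smaller subset repeated `k` times); the function name and parameters are illustrative, not taken from the paper.

```python
def unique_fraction(repeated_share=0.5, repeats=2):
    """Fraction of a fixed token budget that is unique data.

    `repeated_share` of the budget is filled by a subset repeated `repeats`
    times; the rest is fresh, never-repeated data.
    """
    fresh = 1.0 - repeated_share
    unique_in_repeated_part = repeated_share / repeats
    return fresh + unique_in_repeated_part


if __name__ == "__main__":
    budget = 100e9  # training tokens, held constant
    for k in (2, 10, 100):
        frac = unique_fraction(0.5, k)
        print(f"{k:>3} repeats: {frac:.3f} of budget unique "
              f"({frac * budget / 1e9:.1f}B tokens)")
```

As the repeat count grows, the unique fraction falls toward 1/2, so in the most extreme case the model sees barely more than half as much distinct data as a no-repeat run with the same budget.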

We'd expect this to hurt the model, and to hurt larger models more, which explains some fraction of the observed effect.

I think there's a much stronger case that multiple epochs are surprisingly unhelpful for large models, even if they aren't harmful. I went over that case in this post [LW · GW]. (Which was based on the earlier Kaplan et al papers, but I think the basic result still holds.)

However, multiple epochs do help, just less so as N (model size) grows... so even if they are negligibly helpful at GPT-3 size or above, they still might be relevantly helpful at Chinchilla size or below. (And this would then push the compute optimal even further down relative to Chinchilla, preferring smaller models + more steps.)

It would be really nice to see an extension of the Chinchilla experiment that tried multiple epochs, which would directly answer the question.

I'm not sure what I'd expect the result to be, even directionally. Consider that if you are setting your learning rate schedule length to the full length of training (as in Chinchilla), then "doing a 2-epoch run" is not identical to "doing a 1-epoch run, then doing another epoch." You'll have a higher LR during the first epoch than the 1-epoch run would have had, which would have been suboptimal if you had stopped at the first epoch.
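To illustrate the schedule point, here is a minimal Python sketch. It assumes a plain cosine decay from a peak LR down to 10% of peak over the full run, with no warmup; that shape and the `floor_ratio` value are assumptions for illustration, not a reproduction of any particular training setup.

```python
import math


def cosine_lr(step, total_steps, peak=1.0, floor_ratio=0.1):
    """Cosine decay from `peak` to `peak * floor_ratio` over `total_steps`."""
    progress = step / total_steps
    floor = peak * floor_ratio
    return floor + 0.5 * (peak - floor) * (1.0 + math.cos(math.pi * progress))


if __name__ == "__main__":
    steps_per_epoch = 1000
    # LR at the moment the first pass over the data finishes:
    one_epoch_run = cosine_lr(steps_per_epoch, total_steps=steps_per_epoch)
    two_epoch_run = cosine_lr(steps_per_epoch, total_steps=2 * steps_per_epoch)
    print(f"1-epoch run, end of epoch 1: {one_epoch_run:.2f} x peak")
    print(f"2-epoch run, end of epoch 1: {two_epoch_run:.2f} x peak")
```

Under these assumptions the 2-epoch run is still at about 0.55x peak when it finishes its first pass, while the 1-epoch run has already decayed to 0.1x peak, so the two first epochs are not the same training run.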

Replies from: ErickBall↑ comment by ErickBall · 2022-08-04T19:45:53.366Z · LW(p) · GW(p)

Thanks, that's interesting... the odd thing about using a single epoch, or even two epochs, is that you're treating the data points differently. To extract as much knowledge as possible from each data point (to approach L(D)), there should be some optimal combination of pre-training and learning rate. The very first step, starting from random weights, presumably can't extract high level knowledge very well because the model is still trying to learn low level trends like word frequency. So if the first batch has valuable high level patterns and you never revisit it, it's effectively leaving data on the table. Maybe with a large enough model (or a large enough batch size?) this effect isn't too bad though.

↑ comment by Tao Lin (tao-lin) · 2022-08-02T12:10:48.721Z · LW(p) · GW(p)

This paper is very unrepresentative - it seems to test 1 vs 64-1,000,000 repeats of data, not 1 vs 2-10 repeats as you would use in practice

↑ comment by Simon Lermen (dalasnoin) · 2022-08-01T06:12:48.262Z · LW(p) · GW(p)

I can't access the wandb link, maybe you have to change the access rules

I was interested in the report on fine-tuning a model for more than 1 epoch, even though finetuning is obviously not the same as training.

Replies from: nostalgebraist↑ comment by nostalgebraist · 2022-08-01T13:23:57.818Z · LW(p) · GW(p)

It should work now, sorry about that.

↑ comment by gwern · 2022-07-31T21:05:58.095Z · LW(p) · GW(p)

only if the model has more parameters than the dataset tokens and training for >10 epochs does overfitting kick in and scaling break down.

That sounds surprising. You are claiming that you observe the exact same loss, and downstream benchmarks, if you train a model on a dataset for 10 epochs as you do training on 10x more data for 1 epoch?

I would have expected some substantial degradation in efficiency such that the 10-epoch case was equivalent to training on 5x the data or something.

Replies from: gwern↑ comment by gwern · 2022-08-02T01:42:25.755Z · LW(p) · GW(p)

Twitter points me to an instance of this with T5, Figure 6/Table 9: at the lowest tested level of 64 repeats, there is slight downstream benchmark harm but still a lot less than I would've guessed.

Not sure how strongly to take this: those benchmarks are weak, not very comprehensive, and wouldn't turn up harm to interesting capabilities like few-shots or emergent ones like inner-monologues; but on the other hand, T5 is also a pretty strong model-family, was SOTA in several ways at the time & the family regularly used in cutting-edge work still, and so it's notable that it's harmed so little.

comment by harsimony · 2022-08-01T19:24:46.973Z · LW(p) · GW(p)

Some other order-of-magnitude estimates on available data, assuming words roughly equal tokens:

Wikipedia: 4B English words, according to this page.

Library of Congress: from this footnote I assume there are at most 100 million books' worth of text in the LoC, and from this page I assume that books are 100k words each, giving 10T words at most.

Constant writing: I estimate that a typical person writes at most 1000 words per day, with maybe 100 million people writing this amount of English on the internet. Over the last 10 years, these writers would have produced 370T words.

Research papers: this page estimates ~4m papers are published each year; at 10k words per paper and 100 years of research, this amounts to 4T words total.

So it looks like 10T words is an optimistic order-of-magnitude estimate of the total amount of data available.
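The arithmetic behind these estimates is easy to re-run. Here is a minimal Python sketch that uses only the figures quoted above; the variable names are illustrative, and words are treated as roughly equal to tokens, as assumed at the top of this comment:

```python
# Back-of-envelope word counts, using only the figures quoted in this comment.
# All inputs are rough guesses; words are treated as roughly equal to tokens.

T = 1e12  # one trillion

estimates = {
    "Wikipedia (English)": 4e9,
    # at most 100 million books, ~100k words each
    "Library of Congress": 100e6 * 100e3,
    # 100 million writers * 1000 words/day * 365 days * 10 years
    "Constant writing (10 years)": 100e6 * 1000 * 365 * 10,
    # ~4 million papers/year * 10k words/paper * 100 years
    "Research papers": 4e6 * 10e3 * 100,
}

for source, words in estimates.items():
    print(f"{source}: {words / T:.3g}T words")
```

The constant-writing line works out to 365T words, which is rounded to 370T above.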

I assume the importance of a large quantity of clean text data will lead to the construction of a text database of ~1T tokens and that this database (or models trained on it) will eventually be open-sourced.

From there, it seems like really digging in to the sources of irreducible error will be necessary for further scaling. I would guess that a small part of this is "method error" (training details, context window, etc.) but that a significant fraction comes from intrinsic text entropy. Some entropy has to be present, or else text would have no information value.

I would guess that this irreducible error can probably be broken down into:

- Uncertainty about the specific type of text the model is trying to predict (e.g. it needs some data to figure out that it's supposed to write in modern English, then more data to learn that the writing is flamboyant/emotional, then more to learn that there is a narrative structure, then more to determine that it is a work of fiction etc.). The model will always need some data to specify which text-generating sub-model to use. This error can be reduced with better prompts (though not completely eliminated).

- Uncertainty about location within the text. For example, even if the model had memorized a specific play by Shakespeare, if you asked it to do next-word prediction on a random paragraph from the text, it would have trouble predicting the first few words simply because it hasn't determined which paragraph it has been given. This error should go away when looking at next-word prediction after the model has been fed enough data. Better prompts and a larger context window should help.

- Uncertainty inherent to the text. This relates to the actual information content of the text, and should be irreducible. I'm not sure about the relative size of this uncertainty compared to the other ones, but this paper suggests an entropy of ~10 bits/word in English (which seems high?). I don't know how entropy translates into training loss for these models. Memorization of key facts (or database access) can reduce the average information content of a text.
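On the units question: LM loss is conventionally reported as cross-entropy in nats per token, so a per-word entropy in bits can be converted by multiplying by ln 2 and dividing by the number of tokens per word. Here is a rough sketch, assuming (as elsewhere in this comment) that one word is about one token. The function name and the `tokens_per_word` parameter are illustrative, and it is not clear that the ~10 bits/word figure measures the same thing as a model's next-token loss given long context.

```python
import math

def bits_per_word_to_nats_per_token(bits_per_word, tokens_per_word=1.0):
    """Convert an entropy in bits/word to nats/token.

    tokens_per_word is an assumption; 1.0 matches the rough
    "words ~ tokens" simplification used in this comment.
    """
    nats_per_word = bits_per_word * math.log(2)  # 1 bit = ln(2) nats
    return nats_per_word / tokens_per_word

print(f"{bits_per_word_to_nats_per_token(10):.2f} nats/token")
```

For comparison, the irreducible term in the paper's fitted law is 1.69, so a figure near 7 nats/token may well be measuring something other than entropy conditioned on a long context.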

EDIT: also note that going from 10T to 100T tokens would only reduce the loss by 0.045, so it may not be worthwhile to increase dataset size beyond the 10T order-of-magnitude.
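That 0.045 figure can be checked against the data term of the paper's fitted law, L(N, D) = 1.69 + 406.4/N^0.34 + 410.7/D^0.28. Here is a minimal sketch that holds N fixed so only the data term changes; the function name is illustrative, and the constants are the paper's Approach 3 fit:

```python
# Data-dependent term of the Chinchilla parametric fit: B / D**beta.
B = 410.7    # fitted coefficient from the paper (Approach 3)
BETA = 0.28  # fitted exponent on dataset size D (in tokens)

def data_term(num_tokens):
    """Loss contribution from finite data, per the fitted law."""
    return B / num_tokens ** BETA

reduction = data_term(10e12) - data_term(100e12)
print(f"loss reduction from 10T -> 100T tokens: {reduction:.3f}")
```

The data term falls from roughly 0.094 at 10T tokens to roughly 0.049 at 100T, a difference of about 0.045.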

Replies from: peter-hrosso↑ comment by Peter Hroššo (peter-hrosso) · 2022-08-21T05:17:03.147Z · LW(p) · GW(p)

Uncertainty about location within the text

I think the models are evaluated on inputs that fill their whole context window, i.e. ~1024 tokens long. I doubt there are many parts of Shakespeare's plays with the same 1024 tokens repeated.

Replies from: harsimony

comment by Alex_Altair · 2023-04-05T17:22:51.913Z · LW(p) · GW(p)

I have some thoughts that are either confusions, or suggestions for things that should be differently emphasized in this post (which is overall great!).

The first is that, as far as I can tell, these scaling laws are all determined empirically, as in, they literally trained a bunch of models with different parameters and then fit a curve to the points. This is totally fine, that's how a lot of things are discovered, and the fits look good to me, but a lot of this post reads as though the law is a Law. For example:

At least in terms of loss, Chinchilla doesn't just beat Gopher. It beats any model trained on Gopher's data, no matter how big.

This is not literally true, because saying "any model" could include totally different architectures that obey nothing like the empirical curves in this paper.

I'm generally unclear on what the scope of the empirical discovery is. (I'm also not particularly knowledgeable about machine learning.) Do we have reason to think that it applies in domains outside text completion? Does it apply to models that don't use transformers? (Is that even a thing now?) Does it apply across all the other bazillion parameters that go into a particular model, like, I dunno, the learning rate, or network width vs depth?

It also feels like the discussion over "have we used all the data" is skimming over what the purpose of a language model is, or what loss even means. To make an analogy for comparison, consider someone saying "the US census has gathered all possible data on the heights of US citizens. To get a more accurate model, we need to create more US citizens."

I would say that the point of a language model is to capture all statistical irregularities in language. If we've used all the data, then that's it, we did it. Creating more data will be changing the actual population that we are trying to run stats on, it will be adding more patterns that weren't there before.

I can imagine a counter argument to this that says, the text data that humanity has generated is being generated from some Platonic distribution that relates to what humans think and talk about, and we want to capture the regularities in that distribution. The existing corpus of text isn't the population, it is itself a sampling, and the LLMs are trying to evaluate the regularities from that sample.

Which, sure, that sounds fine, but I think the post sort of just makes it sound like we want to make number go down, and more data make number go down, without really talking about what it means.

Replies from: nostalgebraist↑ comment by nostalgebraist · 2023-04-06T17:50:36.546Z · LW(p) · GW(p)

I'm generally unclear on what the scope of the empirical discovery is. (I'm also not particularly knowledgeable about machine learning.) Do we have reason to think that it applies in domains outside text completion? Does it apply to models that don't use transformers? (Is that even a thing now?) Does it apply across all the other bazillion parameters that go into a particular model, like, I dunno, the learning rate, or network width vs depth?

The answer to each of these questions is either "yes" or "tentatively, yes."

But the evidence doesn't come from the Chinchilla paper. It comes from the earlier Kaplan et al. papers, to which the Chinchilla paper is a response/extension/correction:

- Scaling Laws for Neural Language Models (original scaling law paper, includes experiments with width/depth/etc, includes an experiment with a non-transformer model class)

- Scaling Laws for Autoregressive Generative Modeling (includes experiments in various non-text and multimodal domains)

If you want to understand this post better, I'd recommend reading those papers, or a summary of them.

This post, and the Chinchilla paper itself, are part of the "conversation" started by the Kaplan papers. They implicitly take some of the results from the Kaplan papers for granted, e.g.

- "Scaling Laws for Neural Language Models" found that architectural "shape" differences, like width vs. depth, mattered very little compared to N and D (parameter count and data size). So, later work tends to ignore these differences.

- Even if they got some of the details wrong, the Kaplan papers convinced people that LM loss scales in a very regular, predictable manner. It's empirical work, but it's the kind of empirical work where your data really does look like it's closely following some simple curve -- not the kind where you fit a simple curve for the sake of interpretation, while understanding that there is a lot of variation it cannot capture.

So, later work tends to be casual about the distinction between "the curve we fit to the data" and "the law governing the real phenomena." (Theoretical work in this area generally tries to explain why LM loss might follow a simple power law -- under the assumption it really does follow such a law -- rather than trying to derive some more complicated, real-er functional form.)

I would say that the point of a language model is to capture all statistical irregularities in language. [...]

I can imagine a counter argument to this that says, the text data that humanity has generated is being generated from some Platonic distribution that relates to what humans think and talk about, and we want to capture the regularities in that distribution. The existing corpus of text isn't the population, it is itself a sampling, and the LLMs are trying to evaluate the regularities from that sample.

Which, sure, that sounds fine, but I think the post sort of just makes it sound like we want to make number go down, and more data make number go down, without really talking about what it means.

Hmm, I think these days the field views "language modeling" as a means to an end -- a way to make something useful, or something smart.

We're not trying to model language for its own sake. It just so happens that, if you (say) want to make a machine that can do all the stuff ChatGPT can do, training a language model is the right first step.

You might find models like DALLE-2 and Stable Diffusion a helpful reference point. These are generative models -- what they do for images is (handwaving some nuances) very close to what LMs do for text. But the people creating and using these things aren't asking, "is this a good/better model of the natural distribution of text-image pairs?" They care about creating pictures on demand, and about how good the pictures are.

Often, it turns out that if you want a model to do cool and impressive things, the best first step is to make a generative model, and make it as good as you can. People want to "make number go down," not because we care about the number, but because we've seen time and time again that when it goes down, all the stuff we do care about gets better.

This doesn't fully address your question, because it's not clear that the observed regularity ("number goes down -- stuff gets better") will continue to hold if we change the distribution we use to train the generative model. As an extreme example, if we added more LM training data that consisted of random numbers or letters, I don't think anyone would expect that to help.

However, if we add data that's different but still somehow interesting, it does tend to help -- on the new data, obviously, but also to some extent on the old data as well. (There's another Kaplan scaling paper about that, for instance.)

And at this point, I'd feel wary betting against "more data is better (for doing cool and impressive things later)," as long as the data is interestingly structured and has some relationship to things we care about. (See my exchange with gwern here [LW(p) · GW(p)] from a few years ago -- I think gwern's perspective more than mine has been borne out over time.)

Replies from: Alex_Altair↑ comment by Alex_Altair · 2023-04-06T19:16:19.503Z · LW(p) · GW(p)

Thanks! This whole answer was understandable and clarifying for me.

comment by Legionnaire · 2022-08-01T19:58:37.281Z · LW(p) · GW(p)

We're not running out of data to train on, just text.

Why did I not need 1 trillion language examples to speak (debatably) intelligently? I'd suspect the reason is partly inherited training examples from my ancestors, but more importantly, that language output is only the surface layer.

In order for language models to get much better, I suspect they need to be training on more than just language. It's difficult to talk intelligently about complex subjects if you've only ever read about them. Especially if you have no eyes, ears, or any other sense data. The best language models are still missing crucial context/info which could be gained through video, audio, and robotic IO.

Combined with this post, this would also suggest our hardware can already train more parameters than we need in order to get much more intelligent models, if we can get that data from non-text sources.

comment by Dirichlet-to-Neumann · 2022-07-31T06:43:50.070Z · LW(p) · GW(p)

Interesting and thought-provoking.

"It's hard to tell, but there is this ominous comment, in the section where they talk about PaLM vs. Chinchilla:". In the context of fears about AI alignment, I would say "hopeful" rather than "ominous"!

comment by Raemon · 2022-08-14T07:43:46.813Z · LW(p) · GW(p)

Something I'm unsure about (commenting from my mod-perspective but not making a mod pronouncement) is how LW should relate to posts that lay out ideas that may advance AI capabilities.

My current understanding is that all major AI labs have already figured out the Chinchilla results on their own, but that younger or less in-the-loop AI orgs may have needed to run experiments that took a couple months of staff time. This post was one of the most-read posts on LW this month, and was shared heavily around Twitter. It's plausible to me that spreading these arguments speeds up AI timelines by 1-4 weeks on average.