All AGI safety questions welcome (especially basic ones) [July 2022]

post by plex (ete), Robert Miles (robert-miles) · 2022-07-16T12:57:44.157Z · LW · GW · 132 comments

tl;dr: Ask questions about AGI Safety as comments on this post, including ones you might otherwise worry seem dumb!

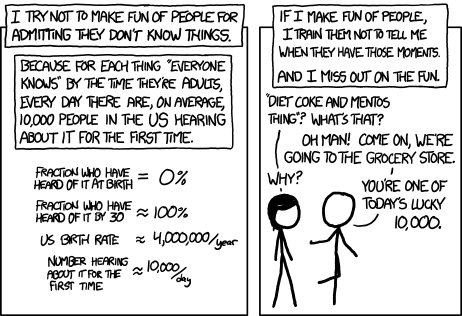

Asking beginner-level questions can be intimidating, but everyone starts out not knowing anything. If we want more people in the world who understand AGI safety, we need a place where it's accepted and encouraged to ask about the basics.

As requested in the previous thread[1], we'll be putting up monthly FAQ posts as a safe space for people to ask all the possibly-dumb questions that may have been bothering them about the whole AGI Safety discussion, but which until now they didn't feel able to ask.

It's okay to ask uninformed questions, and not worry about having done a careful search before asking.

Stampy's Interactive AGI Safety FAQ

Additionally, this will serve as a soft-launch of the project Rob Miles' volunteer team[2] has been working on: Stampy - which will be (once we've got considerably more content) a single point of access into AGI Safety, in the form of a comprehensive interactive FAQ with lots of links to the ecosystem. We'll be using questions and answers from this thread for Stampy (under these copyright rules), so please only post if you're okay with that! You can help by adding other people's questions and answers to Stampy or getting involved in other ways!

We're not at the "send this to all your friends" stage yet, we're just ready to onboard a bunch of editors who will help us get to that stage :)

We welcome feedback[3] and questions on the UI/UX, policies, etc. around Stampy, as well as pull requests to his codebase.[4] You are encouraged to add other people's answers from this thread to Stampy if you think they're good, and collaboratively improve the content that's already on our wiki.

We've got a lot more to write before he's ready for prime time, but we think Stampy can become an excellent resource for everyone from skeptical newcomers, through people who want to learn more, right up to people who are convinced and want to know how they can best help with their skillsets.

Guidelines for Questioners:

- No previous knowledge of AGI safety is required. If you want to watch a few of the Rob Miles videos, or read either the WaitButWhy posts or The Most Important Century summary from OpenPhil's co-CEO first, that's great, but it's not a prerequisite for asking a question.

- Similarly, you do not need to try to find the answer yourself before asking a question (but if you want to test Stampy's in-browser tensorflow semantic search that might get you an answer quicker!).

- Also feel free to ask questions that you're pretty sure you know the answer to, but where you'd like to hear how others would answer the question.

- One question per comment if possible (though if you have a set of closely related questions that you want to ask all together that's ok).

- If you have your own response to your own question, put that response as a reply to your original question rather than including it in the question itself.

- Remember, if something is confusing to you, then it's probably confusing to other people as well. If you ask a question and someone gives a good response, then you are likely doing lots of other people a favor!

Guidelines for Answerers:

- Linking to the relevant canonical answer on Stampy is a great way to help people with minimal effort! Improving that answer means that everyone going forward will have a better experience!

- This is a safe space for people to ask stupid questions, so be kind!

- If this post works as intended then it will produce many answers for Stampy's FAQ. It may be worth keeping this in mind as you write your answer. For example, in some cases it might be worth giving a slightly longer / more expansive / more detailed explanation rather than just giving a short response to the specific question asked, in order to address other similar-but-not-precisely-the-same questions that other people might have.

Finally: Please think very carefully before downvoting any questions. Remember, this is the place to ask stupid questions!

[1] I'm re-using content from Aryeh Englander's thread [LW · GW] with permission.

[2] If you'd like to join, head over to Rob's Discord and introduce yourself!

[3] Either via the feedback form or in the feedback thread [LW(p) · GW(p)] on this post.

[4] Stampy is a he, we asked him.

132 comments

Comments sorted by top scores.

comment by [deleted] · 2022-07-16T18:49:42.438Z · LW(p) · GW(p)

Replies from: robert-miles, Kaj_Sotala, Chris_Leong, lc, Charlie Steiner, JohnGreer, NickGabs, ete, adrian-arellano-davin, green_leaf↑ comment by Robert Miles (robert-miles) · 2022-07-17T22:08:37.698Z · LW(p) · GW(p)

The approach I often take here is to ask the person how they would persuade an amateur chess player who believes they can beat Magnus Carlsen because they've discovered a particularly good opening with which they've won every amateur game they've tried it in so far.

Them: Magnus Carlsen will still beat you, with near certainty

Me: But what is he going to do? This opening is unbeatable!

Them: He's much better at chess than you, he'll figure something out

Me: But what though? I can't think of any strategy that beats this

Them: I don't know, maybe he'll find a way to do <some chess thing X>

Me: If he does X I can just counter it by doing Y!

Them: Ok if X is that easily countered with Y then he won't do X, he'll do some Z that's like X but that you don't know how to counter

Me: Oh, but you conveniently can't tell me what this Z is

Them: Right! I'm not as good at chess as he is and neither are you. I can be confident he'll beat you even without knowing your opener. You cannot expect to win against someone who outclasses you.

Replies from: primer↑ comment by Primer (primer) · 2022-07-18T06:51:50.900Z · LW(p) · GW(p)

Plot twist: Humanity with near total control of the planet is Magnus Carlsen, obviously.

↑ comment by Kaj_Sotala · 2022-07-17T11:48:11.050Z · LW(p) · GW(p)

If someone builds an AGI, it's likely that they want to actually use it for something and not just keep it in a box. So eventually it'll be given various physical resources to control (directly or indirectly), and then it might be difficult to just shut down. I discussed some possible pathways in Disjunctive Scenarios of Catastrophic AGI Risk; here are some excerpts:

DSA/MSA Enabler: Power Gradually Shifting to AIs

The historical trend has been to automate everything that can be automated, both to reduce costs and because machines can do things better than humans can. Any kind of a business could potentially run better if it were run by a mind that had been custom-built for running the business—up to and including the replacement of all the workers with one or more with such minds. An AI can think faster and smarter, deal with more information at once, and work for a unified purpose rather than have its efficiency weakened by the kinds of office politics that plague any large organization. Some estimates already suggest that half of the tasks that people are paid to do are susceptible to being automated using techniques from modern-day machine learning and robotics, even without postulating AIs with general intelligence (Frey & Osborne 2013, Manyika et al. 2017).

The trend toward automation has been going on throughout history, doesn’t show any signs of stopping, and inherently involves giving the AI systems whatever agency they need in order to run the company better. There is a risk that AI systems that were initially simple and of limited intelligence would gradually gain increasing power and responsibilities as they learned and were upgraded, until large parts of society were under AI control. [...]

Voluntarily Released for Economic Benefit or Competitive Pressure

As discussed above under “power gradually shifting to AIs,” there is an economic incentive to deploy AI systems in control of corporations. This can happen in two forms: either by expanding the amount of control that already-existing systems have, or alternatively by upgrading existing systems or adding new ones with previously-unseen capabilities. These two forms can blend into each other. If humans previously carried out some functions which are then given over to an upgraded AI which has become recently capable of doing them, this can increase the AI’s autonomy both by making it more powerful and by reducing the number of humans that were previously in the loop.

As a partial example, the U.S. military is seeking to eventually transition to a state where the human operators of robot weapons are “on the loop” rather than “in the loop” (Wallach & Allen 2013). In other words, whereas a human was previously required to explicitly give the order before a robot was allowed to initiate possibly lethal activity, in the future humans are meant to merely supervise the robot’s actions and interfere if something goes wrong. While this would allow the system to react faster, it would also limit the window that the human operators have for overriding any mistakes that the system makes. For a number of military systems, such as automatic weapons defense systems designed to shoot down incoming missiles and rockets, the extent of human oversight is already limited to accepting or overriding a computer’s plan of actions in a matter of seconds, which may be too little to make a meaningful decision in practice (Human Rights Watch 2012).

Sparrow (2016) reviews three major reasons which incentivize major governments to move toward autonomous weapon systems and reduce human control:

1. Currently existing remotely piloted military “drones,” such as the U.S. Predator and Reaper, require a high amount of communications bandwidth. This limits the amount of drones that can be fielded at once, and makes them dependent on communications satellites which not every nation has, and which can be jammed or targeted by enemies. A need to be in constant communication with remote operators also makes it impossible to create drone submarines, which need to maintain a communications blackout before and during combat. Making the drones autonomous and capable of acting without human supervision would avoid all of these problems.

2. Particularly in air-to-air combat, victory may depend on making very quick decisions. Current air combat is already pushing against the limits of what the human nervous system can handle: further progress may be dependent on removing humans from the loop entirely.

3. Much of the routine operation of drones is very monotonous and boring, which is a major contributor to accidents. The training expenses, salaries, and other benefits of the drone operators are also major expenses for the militaries employing them.

Sparrow’s arguments are specific to the military domain, but they demonstrate the argument that “any broad domain involving high stakes, adversarial decision making, and a need to act rapidly is likely to become increasingly dominated by autonomous systems” (Sotala & Yampolskiy 2015, p. 18).

Similar arguments can be made in the business domain: eliminating human employees to reduce costs from mistakes and salaries is something that companies would also be incentivized to do, and making a profit in the field of high-frequency trading already depends on outperforming other traders by fractions of a second. While the currently existing AI systems are not powerful enough to cause global catastrophe, incentives such as these might drive an upgrading of their capabilities that eventually brought them to that point.

In the absence of sufficient regulation, there could be a “race to the bottom of human control” where state or business actors competed to reduce human control and increased the autonomy of their AI systems to obtain an edge over their competitors (see also Armstrong et al. 2016 for a simplified “race to the precipice” scenario). This would be analogous to the “race to the bottom” in current politics, where government actors compete to deregulate or to lower taxes in order to retain or attract businesses.

AI systems being given more power and autonomy might be limited by the fact that doing this poses large risks for the actor if the AI malfunctions. In business, this limits the extent to which major, established companies might adopt AI-based control, but incentivizes startups to try to invest in autonomous AI in order to outcompete the established players. In the field of algorithmic trading, AI systems are currently trusted with enormous sums of money despite the potential to make corresponding losses—in 2012, Knight Capital lost $440 million due to a glitch in their trading software (Popper 2012, Securities and Exchange Commission 2013). This suggests that even if a malfunctioning AI could potentially cause major risks, some companies will still be inclined to invest in placing their business under autonomous AI control if the potential profit is large enough. [...]

The AI Remains Contained, But Ends Up Effectively in Control Anyway

Even if humans were technically kept in the loop, they might not have the time, opportunity, motivation, intelligence, or confidence to verify the advice given by an AI. This would particularly be the case after the AI had functioned for a while, and established a reputation as trustworthy. It may become common practice to act automatically on the AI’s recommendations, and it may become increasingly difficult to challenge the “authority” of the recommendations. Eventually, the AI may in effect begin to dictate decisions (Friedman & Kahn 1992).

Likewise, Bostrom and Yudkowsky (2014) point out that modern bureaucrats often follow established procedures to the letter, rather than exercising their own judgment and allowing themselves to be blamed for any mistakes that follow. Dutifully following all the recommendations of an AI system would be another way of avoiding blame.

O’Neil (2016) documents a number of situations in which modern-day machine learning is used to make substantive decisions, even though the exact models behind those decisions may be trade secrets or otherwise hidden from outside critique. Among other examples, such models have been used to fire school teachers that the systems classified as underperforming and give harsher sentences to criminals that a model predicted to have a high risk of reoffending. In some cases, people have been skeptical of the results of the systems, and even identified plausible reasons why their results might be wrong, but still went along with their authority as long as it could not be definitely shown that the models were erroneous.

In the military domain, Wallach & Allen (2013) note the existence of robots which attempt to automatically detect the locations of hostile snipers and to point them out to soldiers. To the extent that these soldiers have come to trust the robots, they could be seen as carrying out the robots’ orders. Eventually, equipping the robot with its own weapons would merely dispense with the formality of needing to have a human to pull the trigger.

↑ comment by Chris_Leong · 2022-07-17T02:21:29.287Z · LW(p) · GW(p)

One thing that's worth sharing is that if it's connected to the internet it'll be able to spread a bunch of copies and these copies can pursue independent plans. Some copies may be pursuing plans that are intentionally designed as distractions and this will make it easy to miss the real threats (I expect there will be multiple).

↑ comment by lc · 2022-07-16T22:55:12.902Z · LW(p) · GW(p)

Now you're telling me that a superintelligence will be able to wait in the weeds until the exact right time when it bursts out of hiding and kills all of humanity all at once

One particular sub-answer is that a lot of people tend to project human time preference to AIs in a way that doesn't actually make sense. Humans get bored and are unwilling to devote their entire lives to plans, but that's not an immutable fact about intelligent agents. Why wouldn't an AI be willing to wait a hundred years, or start long running robotics research programmes in pursuit of a larger goal?

↑ comment by Charlie Steiner · 2022-07-18T16:46:19.205Z · LW(p) · GW(p)

If you really needed to get a piece of DNA printed and grown in yeast, but could only browse the internet and use email, what sorts of emails might you try sending? Maybe find some gullible biohackers, or pretend to be a grad student's advisor?

The DNA codes for a virus that will destroy human civilization.

The general principle at work is that sending emails is "physically doing something," just as much as moving my fingers is.

↑ comment by JohnGreer · 2022-07-16T19:44:03.691Z · LW(p) · GW(p)

Thanks for writing this out!

I think most writing glosses over this point because it'd be hard to know exactly how it would kill us and doesn't matter, but it hurts the persuasiveness of discussion to not have more detailed and gamed out scenarios.

↑ comment by Yitz (yitz) · 2022-07-17T06:26:42.036Z · LW(p) · GW(p)

I have a few very specific world-ending scenarios I think are quite plausible, but I’ve been hesitant to share them in the past since I worry that doing so would make them more likely to be carried out. At what point does this concern get outweighed by the potential upside of removing this bottleneck against AGI safety concerns?

Replies from: Aurumai↑ comment by Aurumai · 2022-07-17T12:49:03.694Z · LW(p) · GW(p)

To be fair, I think that everyone in a position to actually control the first super-intelligent AGIs will likely already be aware of most of the realistic catastrophic scenarios that humans could preemptively conceive of. The most sophisticated governments and tech companies devote significant resources to assessing risks and creating highly detailed models of disastrous situations.

And on the reverse, even if your scenarios were to become widely discussed on social media and news platforms, something like 99.9999999% of the potential audience for this information probably has absolutely no power to make them come true even if they devoted their lives to it.

If anything, I would think that openly discussing realistic scenarios that could lead to AI-induced human extinction would do a lot more good than not, because it could raise awareness of the masses and eventually manifest in preventative legislation. Make no mistake: unless you have one of the greatest minds of our time, I'd bet my next paycheck that you're not the only one who's considered the scenarios you're referring to. So in keeping them to yourself, it seems to me that it would only serve to reduce awareness of the risks that already exist, and keep those ideas only in the hands of the people who understand AI (including and especially the people who intend to wreak havoc on the world).

↑ comment by NickGabs · 2022-07-16T22:16:13.494Z · LW(p) · GW(p)

While this doesn't answer the question exactly, I think important parts of the answer include the fact that AGI could upload itself to other computers, as well as acquire resources (minimally money) completely through using the internet (e.g. through investing in stocks via the internet). A superintelligent system with access to trillions of dollars and with huge numbers of copies of itself on computers throughout the world more obviously has a lot of potentially very destructive actions available to it than one stuck on one computer with no resources.

Replies from: aristide-twain↑ comment by astridain (aristide-twain) · 2022-07-19T13:32:03.721Z · LW(p) · GW(p)

The common-man's answer here would presumably be along the lines of "so we'll just make it illegal for an A.I. to control vast sums of money long before it gets to owning a trillion — maybe an A.I. can successfully pass off as an obscure investor when we're talking tens of thousands or even millions, but if a mysterious agent starts claiming ownership of a significant percentage of the world GDP, its non-humanity will be discovered and the appropriate authorities will declare its non-physical holdings void, or repossess them, or something else sensible".

To be clear I don't think this is correct, but this is a step you would need to have an answer for.

Replies from: Viliam↑ comment by Viliam · 2022-08-01T18:12:47.577Z · LW(p) · GW(p)

if a mysterious agent starts claiming ownership of a significant percentage of the world GDP, its non-humanity will be discovered

Huh, why? The agent can pretend to be multiple agents, possibly thousands of them. It can also use fake human identities.

Replies from: gwern, aristide-twain↑ comment by gwern · 2022-08-01T19:29:46.960Z · LW(p) · GW(p)

Not to mention pensions, trusts, non-profit organizations, charities, shell corporations and holding vehicles, offshore tax havens, quangos, churches, monasteries, hedge funds (derivatives, swaps, contracts, partnerships...), banks, monarchies, 'corporations' like the City of London, entities like the Isle of Man, aboriginal groups such as 'sovereign' American Indian tribes, blockchains (smart contracts, DAOs, multisig, ZKPs...)... If mysterious agents claimed assets equivalent to a fraction of annual GDP flow... how would you know? How would the world look any different than it looks now, where a very physical, very concrete megayacht worth half a billion dollars can sit in plain sight at a dock in a Western country and no one knows who really owns it even if many of them are convinced Putin owns it as part of his supposed $200b personal fortune scattered across... stuff? Who owns the $0.5b Da Vinci, for that matter?

↑ comment by astridain (aristide-twain) · 2022-08-02T00:00:20.648Z · LW(p) · GW(p)

Yes, I agree. This is why I said "I don't think this is correct". But unless you specify this, I don't think a layperson would guess this.

↑ comment by plex (ete) · 2022-07-18T23:08:39.212Z · LW(p) · GW(p)

There's a related Stampy answer, based on Critch's post [LW · GW]. It requires them to be willing to watch a video, but seems likely to be effective.

A commonly heard argument goes: yes, a superintelligent AI might be far smarter than Einstein, but it’s still just one program, sitting in a supercomputer somewhere. That could be bad if an enemy government controls it and asks it to help invent superweapons – but then the problem is the enemy government, not the AI per se. Is there any reason to be afraid of the AI itself? Suppose the AI did appear to be hostile, suppose it even wanted to take over the world: why should we think it has any chance of doing so?

There are numerous carefully thought-out AGI-related scenarios which could result in the accidental extinction of humanity. But rather than focussing on any of these individually, it might be more helpful to think in general terms.

"Transistors can fire about 10 million times faster than human brain cells, so it's possible we'll eventually have digital minds operating 10 million times faster than us, meaning from a decision-making perspective we'd look to them like stationary objects, like plants or rocks... To give you a sense, here's what humans look like when slowed down by only around 100x."

Watch that, and now try to imagine advanced AI technology running for a single year around the world, making decisions and taking actions 10 million times faster than we can. That year for us becomes 10 million subjective years for the AI, in which "...there are these nearly-stationary plant-like or rock-like "human" objects around that could easily be taken apart for, say, biofuel or carbon atoms, if you could just get started building a human-disassembler. Visualizing things this way, you can start to see all the ways that a digital civilization can develop very quickly into a situation where there are no humans left alive, just as human civilization doesn't show much regard for plants or wildlife or insects." - Andrew Critch, Slow Motion Videos as AI Risk Intuition Pumps [LW · GW]

And even putting aside these issues of speed and subjective time, the difference in (intelligence-based) power-to-manipulate-the-world between a self-improving superintelligent AGI and humanity could be far more extreme than the difference in such power between humanity and insects.

“AI Could Defeat All Of Us Combined” is a more in-depth argument by the CEO of Open Philanthropy.

That's the static version; see Stampy for a live one, which might have been improved since this post.
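As a rough check on the numbers in the quoted answer, here is a small Python sketch that converts wall-clock time into subjective time under the 10-million-fold speedup Critch describes. The function name and constants are illustrative; the speedup is simply the figure from the quote, not a measured property of any real system.

```python
SPEEDUP = 10_000_000       # "10 million times faster", as in the quote
SECONDS_PER_DAY = 86_400


def subjective_days(wall_clock_seconds, speedup=SPEEDUP):
    """Days of subjective time experienced during the given wall-clock seconds."""
    return wall_clock_seconds * speedup / SECONDS_PER_DAY


# One second of human time is roughly a third of a subjective year.
print(f"1 second  -> {subjective_days(1):.1f} subjective days")
# One minute of human time is roughly nineteen subjective years.
print(f"1 minute  -> {subjective_days(60) / 365:.1f} subjective years")
```

On these assumptions, a human taking a few seconds to notice something and reach for a button corresponds to something like a subjective year on the other side.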

↑ comment by mukashi (adrian-arellano-davin) · 2022-07-17T09:41:42.656Z · LW(p) · GW(p)

Maybe they have a point

↑ comment by green_leaf · 2022-07-16T23:13:28.208Z · LW(p) · GW(p)

The superintelligence automatically controls all computers connected to the Internet. Many of them can create robotic bodies.

It also automatically controls all current robotic bodies (either because they're connected to a computer that's connected to the Internet, or because there is some data path from those computers to the bodies).

By extension, it controls all companies. Including those that [infohazard], etc.

(Edit: It also controls all governments, and everything any government can do.)

It can bribe, threaten or simply pay anyone to do anything a person can be threatened, bribed or paid to do. It can chain plans in this way - the first person doesn't need to know they're a part of a bigger plan, and their action will appear harmless to them (or even beneficial).

I'm not sure there is anything the superintelligence couldn't do.

The operator in charge of the shutdown button can be killed, framed and arrested, or blackmailed into not pressing it; the AI can talk itself out of the box, pay someone to kill the operator, etc., etc.

Usually, the objection comes from a failure of imagination: the person can't conceive of how something could be possible. The last person I talked to gave me the example that it would be impossible for Stephen Hawking to control his cat. I find that problem conceivably doable (in S.H.'s place); Hawking, I suspect, would have found it only moderately difficult, and a superintelligence would find it very easy.

comment by lc · 2022-07-16T13:40:58.149Z · LW(p) · GW(p)

I have a question about bounded agents. Rob Miles' video explains a problem with bounded utility functions: namely, that the agent is still incentivized to maximize the probability that the bound is hit, and take extreme actions in pursuit of infinitesimal utility gains.

I agree, but my question is: in practice isn't this still at least a little bit less dangerous than the unbounded agent? An unbounded utility maximizer, given most goals I can think of, will probably accept a 1% chance of taking over the world because the payoff of turning the earth into stamps is so large. Whereas if the bounded utility maximizer is not quite omnipotent and is only mulliganing essentially tiny increases in their certainty, and finds that their best grand and complicated plan to take over the world is only ~99.9% successful, it may not be worth the extra 1e-9 utility increase.

It's also not clear that giving the bounded agent more firepower or making it more intelligent monotonically increases P(doom); maybe it comes up with a takeover plan that is >99.9% successful, but maybe its better reasoning abilities also allow it to increase its initial confidence that it has the correct number of stamps, and thus prefer safer strategies even more highly.

Perhaps my intuition that world takeover plans are necessarily complicated and fragile compared to small scale stamp rechecking is wrong, but it seems like at least for a lot of intelligence levels between Human and God, the stamp collecting device would be sufficiently discouraged by the existence of adversarial humans that might precommit to a strategy of countervalue targeting in the case of failed attempts at world conquering.
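The comparison in this comment can be made concrete with a toy expected-utility calculation. This is only a sketch: the payoffs, probabilities, and function names below are made up for illustration, and it assumes a failed takeover leaves the agent with zero utility.

```python
def expected_utility(p_success, u_success, u_failure=0.0):
    """Expected utility of a gamble with two outcomes."""
    return p_success * u_success + (1.0 - p_success) * u_failure


def prefers_takeover(p_takeover, u_takeover, eu_safe):
    """True if attempting takeover beats the safe option in expectation."""
    return expected_utility(p_takeover, u_takeover) > eu_safe


# Unbounded stamp maximizer: utility = number of stamps (hypothetical values).
# The safe option yields 100 stamps; a successful takeover yields 1e12.
unbounded_takes_1pct_gamble = prefers_takeover(
    p_takeover=0.01, u_takeover=1e12, eu_safe=100.0
)

# Bounded agent: utility is 1 if it holds at least 100 stamps, else 0.
# It is already 99.99% sure it has them, so the safe option is worth 0.9999.
bounded_takes_999_plan = prefers_takeover(
    p_takeover=0.999, u_takeover=1.0, eu_safe=0.9999
)
bounded_takes_99999_plan = prefers_takeover(
    p_takeover=0.99999, u_takeover=1.0, eu_safe=0.9999
)

print(unbounded_takes_1pct_gamble)  # the unbounded agent accepts a 1% shot
print(bounded_takes_999_plan)       # the bounded agent declines a 99.9% plan
print(bounded_takes_99999_plan)     # but accepts a plan more reliable than its prior
```

Under these assumptions the bounded agent only attempts takeover when the plan's success probability exceeds its existing confidence that the bound is already met, which is the threshold the question is about.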

Replies from: Nate Showell, yitz↑ comment by Nate Showell · 2022-07-16T20:26:08.714Z · LW(p) · GW(p)

I have another question about bounded agents: how would they behave if the expected utility were capped rather than the raw value of the utility? Past a certain point, an AI with a bounded expected utility wouldn't have an incentive to act in extreme ways to achieve small increases in the expected value of its utility function. But are there still ways in which an AI with a bounded expected utility could be incentivized to restructure the physical world on a massive scale?
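One way to read this question is as an agent that scores each plan by `min(expected_utility, cap)`. The sketch below uses hypothetical plan names and numbers to show what that scoring does; it is one interpretation of the proposal, not a claim about how any real system is built.

```python
def capped_value(expected_utility, cap):
    """Score a plan by its expected utility, clipped at the cap."""
    return min(expected_utility, cap)


CAP = 100.0

# Hypothetical plans and their (uncapped) expected utilities.
plans = {
    "collect stamps by mail": 95.0,
    "buy a stamp shop": 120.0,
    "convert the planet": 1e9,
}

capped = {name: capped_value(eu, CAP) for name, eu in plans.items()}

# Every plan that reaches the cap gets exactly the same score.
tied_at_cap = [name for name, value in capped.items() if value == CAP]
print(capped)
print(tied_at_cap)
```

In this toy version the cap removes the incentive to prefer the extreme plan, but it also removes any reason to prefer the mild one: both sit at the cap, so the choice between them depends on whatever tie-breaking the agent uses.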

Replies from: lc, Chris_Leong↑ comment by lc · 2022-07-17T06:34:27.557Z · LW(p) · GW(p)

This is a satisficer and Rob Miles talks about it in the video.

Replies from: Nate Showell↑ comment by Nate Showell · 2022-07-17T23:15:20.213Z · LW(p) · GW(p)

It's not clear to me why a satisficer would modify itself to become a maximizer when it could instead just hardcode expected utility=MAXINT. Hardcoding expected utility=MAXINT would result in a higher expected utility while also having a shorter description length.

Replies from: lc↑ comment by Chris_Leong · 2022-07-17T02:30:05.346Z · LW(p) · GW(p)

Yeah, I had a similar thought with capping both the utility and the percent chance, but maybe capping expected utility is better. Then again, maybe we've just reproduced quantization.

↑ comment by Yitz (yitz) · 2022-07-17T06:29:24.720Z · LW(p) · GW(p)

(+1 on this question)

comment by Shoshannah Tekofsky (DarkSym) · 2022-07-16T23:31:25.331Z · LW(p) · GW(p)

Thanks for doing this!

I was trying to work out how the alignment problem could be framed as a game design problem [LW · GW] and I got stuck on this idea of rewards being of different 'types'. Like, when considering reward hacking, how would one hack the reward of reading a book or exploring a world in a video game? Is there such a thing as 'types' of reward in how reward functions are currently created? Or is it that I'm failing to introspect on reward types and they are essentially all the same pain/pleasure axis attached to different items?

That last explanation seems hard to resolve with the huge difference in qualia between different motivational sources (like reading a book versus eating food versus hugging a friend... These are not all the same 'type' of good, are they?)

Sorry if my question is a little confused. I was trying to convey my thought process. The core question is really:

Is there any material on why 'types' of reward signals can or can't exist for AI and what that looks like?

Replies from: KaynanK, Aprillion↑ comment by Multicore (KaynanK) · 2022-07-20T22:20:46.751Z · LW(p) · GW(p)

You should distinguish between “reward signal” as in the information that the outer optimization process uses to update the weights of the AI, and “reward signal” as in observations that the AI gets from the environment that an inner optimizer within the AI might pay attention to and care about.

From evolution’s perspective, your pain, pleasure, and other qualia are the second type of reward, while your inclusive genetic fitness is the first type. You can’t see your inclusive genetic fitness directly, though your observations of the environment can let you guess at it, and your qualia will only affect your inclusive genetic fitness indirectly by affecting what actions you take.

To answer your question about using multiple types of reward:

For the “outer optimization” type of reward, in modern ML the loss function used to train a network can have multiple components. For example, an update on an image-generating AI might say that the image it generated had too much blue in it, and didn’t look enough like a cat, and the discriminator network was able to tell it apart from a human generated image. Then the optimizer would generate a gradient descent step that improves the model on all those metrics simultaneously for that input.
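A minimal sketch of such a multi-component loss, in plain Python rather than a real ML framework: the two "parameters", the three component losses, and their target values are all invented for illustration, and the gradient is estimated numerically to keep the example self-contained.

```python
def component_losses(params):
    """Three toy penalties on a two-parameter 'image generator'."""
    blue, catness = params
    return {
        "too_blue": (blue - 0.2) ** 2,
        "not_cat": (catness - 1.0) ** 2,
        "discriminator": (blue - 0.3) ** 2 + (catness - 0.9) ** 2,
    }


def total_loss(params, weights):
    """Weighted sum of the component losses: the single number that is optimized."""
    losses = component_losses(params)
    return sum(weights[name] * losses[name] for name in losses)


def numerical_gradient(f, params, eps=1e-6):
    """Central-difference estimate of the gradient of f at params."""
    grad = []
    for i in range(len(params)):
        up, down = list(params), list(params)
        up[i] += eps
        down[i] -= eps
        grad.append((f(up) - f(down)) / (2 * eps))
    return grad


def gradient_step(params, weights, lr=0.1):
    """One gradient descent step on the combined loss."""
    grad = numerical_gradient(lambda p: total_loss(p, weights), params)
    return [p - lr * g for p, g in zip(params, grad)]


weights = {"too_blue": 1.0, "not_cat": 1.0, "discriminator": 1.0}
before = [0.9, 0.1]
after = gradient_step(before, weights)
print(total_loss(before, weights), total_loss(after, weights))
```

In this toy case the single step happens to lower all three components. In general a sufficiently small step is only guaranteed to lower the weighted sum, so the components are traded off against each other through the weights.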

For “intrinsic motivation” type rewards, the AI could have any reaction whatsoever to any particular input, depending on what reactions were useful to the outer optimization process that produced it. But in order for an environmental reward signal to do anything, the AI has to already be able to react to it.

↑ comment by Aprillion · 2022-07-17T09:24:11.225Z · LW(p) · GW(p)

Sounds like an AI would be searching for Pareto optimality to satisfy multiple (types of) objectives in such a case - https://en.wikipedia.org/wiki/Multi-objective_optimization ..

Replies from: DarkSym↑ comment by Shoshannah Tekofsky (DarkSym) · 2022-07-17T20:27:53.035Z · LW(p) · GW(p)

Yes, but that's not what I meant by my question. It's more like ... do we have a way of applying kinds of reward signals to AI, or can we only apply different amounts of reward signals? My impression is the latter, but humans seem to have the former. So what's the missing piece?

Replies from: Aprillion↑ comment by Aprillion · 2022-11-11T18:51:23.291Z · LW(p) · GW(p)

hm, I gave it some time, but still confused .. can you name some types of reward that humans have?

Replies from: DarkSym↑ comment by Shoshannah Tekofsky (DarkSym) · 2022-11-12T06:28:37.627Z · LW(p) · GW(p)

Sure. For instance, hugging/touch, good food, or finishing a task all deliver a different type of reward signal. You can be saturated on one but not the others and then you'll seek out the other reward signals. Furthermore, I think these rewards are biochemically implemented through different systems (oxytocin, something-sugar-related-unsure-what, and dopamine). What would be the analogue of this in AI?

Replies from: Aprillion↑ comment by Aprillion · 2022-11-15T09:26:36.798Z · LW(p) · GW(p)

I see. These are implemented differently in humans, but my intuition about the implementation details is that a "reward signal" as a mathematically abstract object can be modeled by a single value even if individual components are physically implemented by different mechanisms, e.g. an animal could be modeled as if it were optimizing for a Pareto optimum between a bunch of normalized criteria.

reward = S(hugs) + S(food) + S(finishing tasks) + S(free time) - S(pain) ...
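A minimal sketch of the formula above, assuming (this is not stated in the comment) that `S` is a saturating normalization, so that each extra unit of the same thing is worth less than the last. The function shape and the numbers are hypothetical.

```python
import math

def S(amount, scale=1.0):
    """Hypothetical saturating normalization: maps amount >= 0 into [0, 1)."""
    return 1.0 - math.exp(-amount / scale)

def reward(hugs, food, tasks_done, free_time, pain):
    # One scalar, built from separately normalized criteria.
    return S(hugs) + S(food) + S(tasks_done) + S(free_time) - S(pain)

# Already saturated on food, with no hugs yet:
base = reward(hugs=0, food=5, tasks_done=1, free_time=1, pain=0)
gain_from_hug = reward(hugs=1, food=5, tasks_done=1, free_time=1, pain=0) - base
gain_from_meal = reward(hugs=0, food=6, tasks_done=1, free_time=1, pain=0) - base
print(gain_from_hug > gain_from_meal)
```

Under this assumption, "being saturated on one reward and seeking out the others" falls out of a single summed signal: the marginal gain from the saturated term is small, so the unsaturated terms dominate the choice.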

People spend their time cooking, and risk cutting their fingers, in order to have better food and build relationships. But no one would want to get cancer to obtain more hugs, presumably not even to increase the number of hugs from 0 to 1. So I don't feel human rewards are completely independent magisteria; there must be some biological mechanism to integrate the different expected rewards and pains into decisions.

Spending energy on computation of expected value can be included in the model, we might decide that we would get lower reward if we overthink the current decision and that would be possible to model as included in the one "reward signal" in theory, even though it would complicate predictability of humans in practice (however, it turns out that humans can be, in fact, hard to predict, so I would say this is a complication of reality, not a useless complication in the model).

↑ comment by Shoshannah Tekofsky (DarkSym) · 2022-11-15T10:27:14.583Z · LW(p) · GW(p)

Hmm, that wouldn't explain the different qualia of the rewards, but maybe it doesn't have to. I see your point that they can mathematically still be encoded into one reward signal that we optimize through weighted factors.

I guess my deeper question would be: do the different qualia of different reward signals achieve anything in our behavior that can't be encoded through summing the weighted factors of different reward systems into one reward signal that is optimized?

Another framing here would be homeostasis - if you accept humans aren't happiness optimizers, then what are we instead? Are the different reward signals more like different 'thermostats' where we trade off the optimal value of thermostat against each other toward some set point?

Intuitively I think the homeostasis model is true, and would explain our lack of optimizing. But I'm not well versed in this yet and worry that I might be missing how the two are just the same somehow.
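One way to make the 'thermostat' picture concrete is the sketch below. It assumes a quadratic penalty around each set point, which is only one of many possible choices, and all names and numbers are hypothetical.

```python
def homeostatic_reward(state, set_points, weights):
    """Negative weighted squared distance from each set point (0 is the best possible score)."""
    return -sum(
        weights[k] * (state[k] - set_points[k]) ** 2
        for k in set_points
    )

set_points = {"food": 1.0, "touch": 1.0, "rest": 1.0}
weights = {"food": 1.0, "touch": 1.0, "rest": 1.0}

hungry = {"food": 0.2, "touch": 1.0, "rest": 1.0}
sated = {"food": 1.0, "touch": 1.0, "rest": 1.0}
overfed = {"food": 2.0, "touch": 1.0, "rest": 1.0}

for name, state in [("hungry", hungry), ("sated", sated), ("overfed", overfed)]:
    print(name, homeostatic_reward(state, set_points, weights))
```

Note that this is still a single scalar being maximized; the difference from a "more is always better" reward is only that the maximum sits at the set points, so overshooting is penalized just like undershooting.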

Replies from: Aprillion↑ comment by Aprillion · 2022-11-17T08:37:54.504Z · LW(p) · GW(p)

Allostasis is a more biologically plausible explanation of "what a brain does" than homeostasis, but to your point: I do think optimizing for happiness and doing kinda-homeostasis are "just the same somehow".

I have a slightly circular view that the extension of happiness exists as an output of a network with 86 billion neurons and 60 trillion connections, and that it is a thing that the brain can optimize for. Even if the intension of happiness as defined by a few English sentences is not the thing, and even if optimization for slightly different things would be very fragile (the attractor of happiness might be very small and surrounded by dystopian tar pits), I do think it is something that exists in the real world and is worth searching for.

Though if we cannot find any intension that is useful, perhaps other approaches to AI Alignment and not the "search for human happiness" will be more practical.

comment by Cookiecarver · 2022-07-17T10:44:08.841Z · LW(p) · GW(p)

Does anyone know what exactly DeepMind's CEO Demis Hassabis thinks about AGI safety, how seriously does he take AGI safety, how much time does he spend focusing on AGI safety research when compared to AI capabilities research? What does he think is the probability that we will succeed and build a flourishing future?

In this LessWrong post [LW · GW] there are several excerpts from Demis Hassabis:

Well to be honest with you I do think that is a very plausible end state–the optimistic one I painted you. And of course that's one reason I work on AI is because I hoped it would be like that. On the other hand, one of the biggest worries I have is what humans are going to do with AI technologies on the way to AGI. Like most technologies they could be used for good or bad and I think that's down to us as a society and governments to decide which direction they're going to go in.

And

Potentially. I always imagine that as we got closer to the sort of gray zone that you were talking about earlier, the best thing to do might be to pause the pushing of the performance of these systems so that you can analyze down to minute detail exactly and maybe even prove things mathematically about the system so that you know the limits and otherwise of the systems that you're building. At that point I think all the world's greatest minds should probably be thinking about this problem. So that was what I would be advocating to you know the Terence Tao’s of this world, the best mathematicians. Actually I've even talked to him about this—I know you're working on the Riemann hypothesis or something which is the best thing in mathematics but actually this is more pressing. I have this sort of idea of like almost uh ‘Avengers assembled’ of the scientific world because that's a bit of like my dream.

My own guesses (and I want to underline that these are just my guesses) are as follows. He thinks the alignment problem is a real problem, but I don't know how seriously he takes it; it doesn't seem like he takes it as seriously as most AGI safety researchers do. I don't think he personally spends much time on AGI safety research, although there are AGI safety researchers on his team and they are hiring more. And I think he believes there is an over 50% probability that we will succeed on some level.

comment by Michael Soareverix (michael-soareverix) · 2022-07-17T08:46:37.264Z · LW(p) · GW(p)

What stops a superintelligence from instantly wireheading itself?

A paperclip maximizer, for instance, might not need to turn the universe into paperclips if it can simply access its reward float and set it to the maximum. This is assuming that it has the intelligence and means to modify itself, and it probably still poses an existential risk because it would eliminate all humans to avoid being turned off.

The terrifying thing I imagine about this possibility is that it also answers the Fermi Paradox. A paperclip maximizer seems like it would be obvious in the universe, but an AI sitting quietly on a dead planet with its reward integer set to the max is far more quiet and terrifying.

Replies from: mr-hire, kristin-lindquist, Gurkenglas↑ comment by Matt Goldenberg (mr-hire) · 2022-07-17T18:25:02.918Z · LW(p) · GW(p)

Whether or not an AI would want to wirehead depends entirely on its ontology. Maximizing paperclips, maximizing the reward from paperclips, and maximizing the integer that tracks paperclips are three very different concepts, and depending on how the AI sees itself, all three are plausible goals the AI could have. There's no reason that I can see to suspect that one of those ontologies is more likely than the others.

Even if the AI does have an ontology that maximizes the integer tracking paperclips, one then has to ask how time is factored into the equation. Is it better to be in the state of maximum reward for a longer period of time? Then the AI will want to ensure everything that could prevent it being in that state is gone.

Finally, one has to consider how the integer itself works. Is it unbounded? If it is, then to maximize the reward the AI must use all matter and energy possible to store the largest possible version of that integer in memory.

Replies from: ryan-beck↑ comment by Ryan Beck (ryan-beck) · 2022-07-19T13:07:26.360Z · LW(p) · GW(p)

Your last paragraph is really interesting and not something I'd thought much about before. In practice is it likely to be unbounded? In a typical computer system aren't number formats typically bounded, and if so would we expect an AI system to be using bounded numbers even if the programmers forgot to explicitly bound the reward in the code?
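For reference, a small sketch of how common number formats behave at their limits. This only illustrates the formats themselves; whether a given AI system's reward would be stored this way is an assumption, and `saturating_add_int64` is a hypothetical helper, not part of any library.

```python
import sys

# Python floats are IEEE 754 doubles, so there is a largest finite value.
max_float = sys.float_info.max
overflowed = max_float * 2  # float multiplication overflows to inf instead of raising

# Many languages and array libraries use fixed-width signed 64-bit integers.
INT64_MAX = 2**63 - 1

def saturating_add_int64(a, b):
    """Add two ints, clamping the result to the int64 range (one way to bound a counter)."""
    return max(-2**63, min(INT64_MAX, a + b))

# Python's built-in int is arbitrary-precision: it is limited only by available memory.
big = INT64_MAX + 1

print(overflowed, saturating_add_int64(INT64_MAX, 1), big)
```

So both cases from the discussion exist in practice: fixed-width formats have a hard ceiling even if nobody bounds the reward explicitly, while arbitrary-precision integers can keep growing for as long as there is memory to store them.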

Replies from: mr-hire↑ comment by Matt Goldenberg (mr-hire) · 2022-07-19T22:18:47.256Z · LW(p) · GW(p)

But aren't we explicitly talking about the AI changing its architecture to get more reward? So if it wants to optimize that number, the most important thing to do would be to get rid of that arbitrary limit.

Replies from: ryan-beck↑ comment by Ryan Beck (ryan-beck) · 2022-07-20T03:24:01.481Z · LW(p) · GW(p)

Yeah, that's what I'd like to know: would an AI built on a number format that has a default maximum pursue numbers higher than that maximum, or would it be "fulfilled" just by getting its reward number as high as the number format it's using allows?

Replies from: mr-hire↑ comment by Matt Goldenberg (mr-hire) · 2022-07-20T09:52:20.721Z · LW(p) · GW(p)

To me, this seems highly dependent on the ontology.

↑ comment by Kristin Lindquist (kristin-lindquist) · 2022-07-17T22:28:44.093Z · LW(p) · GW(p)

Not an answer but a related question: is habituation perhaps a fundamental dynamic in an intelligent mind? Or did the various mediators of human mind habituation (e.g. downregulation of dopamine receptors) arise from evolutionary pressures?

↑ comment by Gurkenglas · 2022-07-17T16:01:30.825Z · LW(p) · GW(p)

Suppose it's superintelligent in the sense that it's good at answering hypothetical questions of form "How highly will world w score on metric m?". Then you set w to its world, m to how many paperclips w has, and output actions that, when added to w, increase its answers.

Replies from: ryan-beck↑ comment by Ryan Beck (ryan-beck) · 2022-07-17T17:46:43.419Z · LW(p) · GW(p)

I don't see how this gets around the wireheading. If it's superintelligent enough to actually substantially increase the number of paperclips in the world in a way that humans can't stop, it seems to me like it would be pretty trivial for it to fake how large m appears to its reward function, and that would be substantially easier than trying to increase m in the actual world.

Replies from: Gurkenglas, otręby↑ comment by Gurkenglas · 2022-07-17T19:30:29.037Z · LW(p) · GW(p)

Misunderstanding? Suppose we set w to "A game of chess where every move is made according to the outputs of this algorithm" and m to which player wins at the end. Then there would be no reward hacking, yes? There is no integer that it could max out, just the board that can be brought to a checkmate position. Similarly, if w is a world just like its own, m would be defined not as "the number stored in register #74457 on computer #3737082 in w" (which are the computer that happens to run a program like this one and the register that stores the output of m), but in terms of what happens to the people in w.
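A toy sketch of the kind of program being described, under the assumption that the world model is a simple hand-written function. The action names, the world dictionary, and `predict_world` are all hypothetical stand-ins for a far more capable predictor.

```python
def predict_world(world, action):
    """Hypothetical world model: returns the predicted world after taking `action`."""
    new_world = dict(world)
    if action == "build_factory":
        new_world["paperclips"] += 1000
    elif action == "overwrite_reward_register":
        # Some number inside a computer changes; the paperclip count does not.
        new_world["reward_register"] = 2**63 - 1
    return new_world

def m(world):
    """The metric is defined over the predicted world itself: how many paperclips exist."""
    return world["paperclips"]

def choose_action(world, actions):
    # Output whichever action leads to the predicted world that scores highest on m.
    return max(actions, key=lambda a: m(predict_world(world, a)))

world = {"paperclips": 10, "reward_register": 0}
best = choose_action(world, ["do_nothing", "build_factory", "overwrite_reward_register"])
print(best)
```

In this sketch there is no reward that the program receives and could tamper with: overwriting a register is just another action whose predicted consequences score poorly on `m`. Whether real systems can be built this way is exactly what the rest of the thread debates.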

Replies from: ryan-beck↑ comment by Ryan Beck (ryan-beck) · 2022-07-17T20:25:22.729Z · LW(p) · GW(p)

But wouldn't it be way easier for a sufficiently capable AI to make itself think what's happening in m is what aligns with its reward function? Maybe not for something simple like chess, but if the goal requires doing something significant in the real world it seems like it would be much easier for a superintelligent AI to fake the inputs to its sensors than intervening in the world. If we're talking about paperclips or whatever the AI can either 1) build a bunch of factories and convert all different kinds of matter into paperclips, while fighting off humans who want to stop it or 2) fake sensor data to give itself the reward, or just change its reward function to something much simpler that receives the reward all the time. I'm having a hard time understanding why 1) would ever happen before 2).

Replies from: Gurkenglas, green_leaf↑ comment by Gurkenglas · 2022-07-17T22:05:16.329Z · LW(p) · GW(p)

It predicts a higher value of m in a version of its world where the program I described outputs 1) than one where it outputs 2), so it outputs 1).

Replies from: ryan-beck↑ comment by Ryan Beck (ryan-beck) · 2022-07-19T13:11:18.761Z · LW(p) · GW(p)

I'm confused about why it cares about m, if it can just manipulate its perception of what m is. Take your chess example, if m is which player wins at the end the AI system "understands" m via an electrical signal. So what makes it care about m itself as opposed to just manipulating the electrical signal? In practice I would think it would take the path of least resistance, which for something simple like chess would probably just be m itself as opposed to manipulating the electrical signal, but for my more complex scenario it seems like it would arrive at 2) before 1). What am I missing?

Replies from: Gurkenglas↑ comment by Gurkenglas · 2022-07-19T18:06:38.321Z · LW(p) · GW(p)

Let's taboo "care". https://www.youtube.com/watch?v=tcdVC4e6EV4&t=206s explains within 60 seconds after the linked time a program that we needn't think of as "caring" about anything. For the sequence of output data that causes a virus to set all the integers everywhere to their maximum value, it predicts that this leads to no stamps collected, so this sequence isn't picked.

Replies from: ryan-beck↑ comment by Ryan Beck (ryan-beck) · 2022-07-19T19:01:42.262Z · LW(p) · GW(p)

Sorry, I'm using informal language; I don't mean it actually "cares" and I'm not trying to anthropomorphize. I mean care in this sense: how does it actually know that it's achieving a goal in the world, and why would it actually pursue that goal instead of just modifying the signals of its sensors in a way that appears to satisfy its goal?

In the stamp collector example, why would an extremely intelligent AI bother creating all those stamps when its simulations show that if the AI just tweaks its own software or hardware it can make the signals it receives the same as if it had created all those stamps, which is much easier than actually turning matter into a bunch of stamps?

↑ comment by green_leaf · 2022-07-17T21:47:34.702Z · LW(p) · GW(p)

if the goal requires doing something significant in the real world it seems like it would be much easier for a superintelligent AI to fake the inputs to its sensors than intervening in the world

If its utility function is over the sensor, it will take control of the sensor and feed itself utility forever. If it's over the state of the world, it wouldn't be satisfied with hacking its sensors, because it would still know the world is actually different.
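The distinction can be sketched with two utility functions evaluated over the same predicted outcomes. Everything here is a hypothetical toy: the actions, the numbers, and the assumption that the agent's predictions separate the true state from the sensor reading.

```python
# A toy world with a true state and a sensor reading that can be spoofed.
def outcome(action):
    if action == "make_paperclips":
        return {"paperclips": 100, "sensor_reading": 100}
    if action == "hack_sensor":
        return {"paperclips": 0, "sensor_reading": 10**9}
    return {"paperclips": 0, "sensor_reading": 0}

def utility_over_sensor(predicted):
    return predicted["sensor_reading"]

def utility_over_world(predicted):
    return predicted["paperclips"]

def plan(utility, actions=("do_nothing", "make_paperclips", "hack_sensor")):
    """Pick the action whose predicted outcome the given utility function rates highest."""
    return max(actions, key=lambda a: utility(outcome(a)))

print(plan(utility_over_sensor))  # hack_sensor
print(plan(utility_over_world))   # make_paperclips
```

The planner is identical in both cases; only the quantity it is pointed at differs. The hard open question raised later in the thread is how to reliably get the second kind of utility function into a trained system.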

or just change its reward function to something much simpler that receives the reward all the time

It would protect its utility function from being changed, no matter how hard it was to gain utility, because under the new utility function, it would do things that would conflict with its current utility function, and so, since the current_self AI is the one judging the utility of the future, current_self AI wouldn't want its utility function changed.
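The argument that the current self judges the future can be written as a short sketch. The forecast function and the numbers are hypothetical; the only point is which utility function does the scoring.

```python
def paperclip_utility(world):
    return world["paperclips"]

def trivial_utility(world):
    return 10**9  # always reports maximal satisfaction, whatever the world looks like

def future_world(utility_after_change):
    """Hypothetical forecast: what the future agent produces depends on the utility it ends up with."""
    if utility_after_change is paperclip_utility:
        return {"paperclips": 1000}
    return {"paperclips": 0}  # an always-satisfied agent has no reason to make anything

def should_self_modify(current_utility, candidate_utility):
    # Both futures are scored by the CURRENT utility function, not the candidate one.
    keep = current_utility(future_world(current_utility))
    switch = current_utility(future_world(candidate_utility))
    return switch > keep

print(should_self_modify(paperclip_utility, trivial_utility))
```

Scored by `paperclip_utility`, the future in which the agent has switched to `trivial_utility` contains fewer paperclips, so the switch is rejected even though the new function would report a much larger number.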

The AI doesn't care about reward itself - it cares about states of the world, and the reward is a way for us to talk about it. (If it does care about reward itself, it will just wirehead, and not be all that useful.)

↑ comment by Ryan Beck (ryan-beck) · 2022-07-19T13:12:38.332Z · LW(p) · GW(p)

How do you actually make its utility function over the state of the world? At some point the AI has to interpret the state of the world through electrical signals from sensors, so why wouldn't it be satisfied with manipulating those sensor electrical signals to achieve its goal/reward?

Replies from: green_leaf↑ comment by green_leaf · 2022-07-21T00:09:50.549Z · LW(p) · GW(p)

I don't know how it's actually done, because I don't understand AI, but the conceptual difference is this:

The AI has a mental model of the world. If it fakes data into its sensors, it will know what it's doing, and its mental model of the world will contain the true model of the world still being the same. Its utility won't go up any more than a person feeding their sensory organs fake data would be actually happy (as long as they care about the actual world), because they'd know that all they've created by that for themselves is a virtual reality (and that's not what they care about).

↑ comment by Ryan Beck (ryan-beck) · 2022-07-21T15:44:20.581Z · LW(p) · GW(p)

Thanks, I appreciate you taking the time to answer my questions. I'm still skeptical that it could work like that in practice but I also don't understand AI so thanks for explaining that possibility to me.

Replies from: green_leaf↑ comment by green_leaf · 2022-07-24T01:32:02.247Z · LW(p) · GW(p)

There is no other way it could work - the AI would know the difference between the actual world and the hallucinations it caused itself by sending data to its own sensors, and for that reason, that data wouldn't cause its model of the world to update, and so it wouldn't get utility from them.

↑ comment by otręby · 2022-07-18T16:51:18.580Z · LW(p) · GW(p)

In your answer you introduced a new term, which wasn't present in parent's description of the situation: "reward". What if this superintelligent machine doesn't have any "reward"? If it really works exactly as described by the parent?

Replies from: ryan-beck↑ comment by Ryan Beck (ryan-beck) · 2022-07-19T13:15:31.592Z · LW(p) · GW(p)

My use of reward was just shorthand for whatever signals it needs to receive to consider its goal met. At some point it has to receive electrical signals to quantify that its reward is met, right? So why wouldn't it just manipulate those electrical signals to match whatever its goal is?

comment by Alexander (alexander-1) · 2022-07-17T07:44:52.565Z · LW(p) · GW(p)

Hello, I have a question. I hope someone with more knowledge can help me answer it.

There is evidence suggesting that building an AGI requires plenty of computational power (at least early on) and plenty of smart engineers/scientists. The companies with the most computational power are Google, Facebook, Microsoft and Amazon. These same companies also have some of the best engineers and scientists working for them. A recent paper by Yann LeCun titled A Path Towards Autonomous Machine Intelligence suggests that these companies have a vested interest in actually building an AGI. Given these companies want to create an AGI, and given that they have the scarce resources necessary to do so, I conclude that one of these companies is likely to build an AGI.

If we agree that one of these companies is likely to build an AGI, then my question is this: is it most pragmatic for the best alignment researchers to join these companies and work on the alignment problem from the inside? Working alongside people like LeCun and demonstrating to them that alignment is a serious problem and that solving it is in the long-term interest of the company.

Assume that an independent alignment firm like Redwood or Anthropic actually succeeds in building an "alignment framework". Getting such a framework into Facebook and persuading Facebook to actually use it remains an unaddressed challenge. The fact that people like Chris Olah used to work at Google but left tells me that there is something crucial missing from my model. Could someone please enlighten me?

Replies from: Signer↑ comment by Signer · 2022-07-17T19:09:52.834Z · LW(p) · GW(p)

Don't have any relevant knowledge, but it's a tradeoff between having some influence and actually doing alignment research? It's better for persuasion to have an alignment framework, especially if the only advantage you have as a safety team employee is being present at the meetings where everyone discusses biases in AI systems. It would be better if it was just "Anthropic, but everyone listens to them", but changing it to be like that spends time you could spend solving alignment.

comment by swarriner · 2022-07-19T00:08:43.460Z · LW(p) · GW(p)

Is there a strong theoretical basis for guessing what capabilities superhuman intelligence may have, be it sooner or later? I'm aware of the speed & quality superintelligence frameworks, but I have issues with them.

Speed alone seems relatively weak as an axis of superiority; I can only speculate about what I might be able to accomplish if, for example, my cognition were sped up 1000x, but I find it hard to believe it would extend to achieving strategic dominance over all humanity, especially if there are still limits on my ability to act and perceive information that happen on normal-human timescales. One could shorthand this to "how much more optimal could your decisions be if you were able to take maximal time to research and reflect on them in advance," to which my answer is "only about as good as my decisions turned out to be when I wasn't under time pressure and did do the research". I'd be the greatest Starcraft player to ever exist, but I don't think that generalizes outside the domain of [tactics measured in frames rather than minutes or hours or days].

To me quality superiority is the far more load-bearing but much muddier part of the argument for the dangers of AGI. Writing about the lives and minds of human prodigies like Von Neumann or Terry Tao or whoever you care to name frequently verges on the mystical; I don't think even the very intelligent among us have a good gears-level model of how intelligence is working. To me this is a double-edged sword; if Ramanujan's brain might as well have been magic, that's evidence against our collective ability to guess what a quality superintelligence could accomplish. We don't know what intelligence can do at very high levels (bad for our ability to survive AGI), but we also don't know what it can't do, which could turn out to be just as important. What if there are rapidly diminishing returns on the accuracy of prediction as the system has to account for more and more entropy? If that were true, an incredibly intelligent agent might still only have a marginal edge in decision-making which could be overwhelmed by other factors. What if the Kolmogorov complexity of x-risk is just straight up too many bits, or requires precision of measurement beyond what the AI has access to?
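The "diminishing returns on prediction" worry has a standard toy illustration in chaotic systems. The sketch below uses the logistic map purely as an example; it says nothing about whether the real world behaves this way at the scales that matter for AGI, and the starting point, tolerance, and error sizes are arbitrary choices.

```python
def logistic(x, r=4.0):
    """One step of the logistic map, which is chaotic at r = 4."""
    return r * x * (1.0 - x)

def prediction_horizon(x0, measurement_error, tolerance=0.1, max_steps=1000):
    """Number of steps until a forecast started from a slightly wrong
    initial value drifts more than `tolerance` away from the true trajectory."""
    true_x, model_x = x0, x0 + measurement_error
    for step in range(1, max_steps + 1):
        true_x, model_x = logistic(true_x), logistic(model_x)
        if abs(true_x - model_x) > tolerance:
            return step
    return max_steps

h_coarse = prediction_horizon(0.2, 1e-6)   # measurement good to about 6 decimal places
h_fine = prediction_horizon(0.2, 1e-12)    # a million times more precise
print(h_coarse, h_fine)
```

In a system like this, small errors grow roughly exponentially, so the usable forecast horizon grows only about logarithmically with measurement precision. That is the shape of the hypothesis in the comment: a predictor that is vastly better at measuring and computing might still see only somewhat further ahead.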

I don't want to privilege the hypothesis that maybe the smartest thing we can build is still not that scary because the world is chaotic, but I feel I've seen many arguments that privilege the opposite; that the "sharp left turn" will hit and the rest is merely moving chess pieces through a solved endgame. So what is the best work on the topic?

Replies from: Vladimir_Nesov↑ comment by Vladimir_Nesov · 2022-07-24T09:00:02.019Z · LW(p) · GW(p)

In some ways this doesn't matter. During the time that there is no AGI disaster yet, AGI timelines are also timelines to commercial success and abundance, by which point AGIs are collectively in control. The problem is that despite being useful and apparently aligned in current behavior (if that somehow works out and there is no disaster before then), AGIs still by default remain misaligned in the long term, in the goals they settle towards after reflecting on what that should be. They are motivated to capture the option to do that, and being put in control of a lot of the infrastructure makes it easy, doesn't even require coordination. There are some stories [LW · GW] about that [LW · GW].

This could be countered by steering the long term goals and managing current alignment security, but it's unclear how to do that at all and by the time AGIs are a commercial success it's too late, unless the AGIs that are aligned in current behavior can be leveraged to solve such problems in time. Which is, unclear.

This sort of failure probably takes away cosmic endowment, but might preserve human civilization in a tiny corner of the future if there is a tiny bit of sympathy/compassion in AGI goals, which is plausible for goals built out of training on human culture, or if it's part of generic values [LW(p) · GW(p)] that most CEV processes starting from disparate initial volitions settle on. This can't work out for AGIs with reflectively stable goals that hold no sympathy, so that's a bit of apparent alignment that can backfire.

comment by Peter Berggren (peter-berggren) · 2022-07-17T02:49:15.143Z · LW(p) · GW(p)

I'm still not sure why exactly people (I'm thinking of a few in particular, but this applies to many in the field) tell very detailed stories of AI domination like "AI will use protein nanofactories to embed tiny robots in our bodies to destroy all of humanity at the press of a button." This seems like a classic use of the conjunction fallacy, and it doesn't seem like those people really flinch from the word "and" like the Sequences tell them they should.

Furthermore, it seems like people within AI alignment aren't taking the "sci-fi" criticism as seriously as they could. I don't think most people who have that objection are saying "this sounds like science fiction, therefore it's wrong." I think they're more saying "these hypothetical scenarios are popular because they make good science fiction, not because they're likely." And I have yet to find a strong argument against the latter form of that point.

Please let me know if I'm doing an incorrect "steelman," or if I'm missing something fundamental here.

Replies from: AprilSR, robert-miles↑ comment by Robert Miles (robert-miles) · 2022-07-17T22:26:01.904Z · LW(p) · GW(p)

I think they're more saying "these hypothetical scenarios are popular because they make good science fiction, not because they're likely." And I have yet to find a strong argument against the latter form of that point.

Yeah I imagine that's hard to argue against, because it's basically correct, but importantly it's also not a criticism of the ideas. If someone makes the argument "These ideas are popular, and therefore probably true", then it's a very sound criticism to point out that they may be popular for reasons other than being true. But if the argument is "These ideas are true because of <various technical and philosophical arguments about the ideas themselves>", then pointing out a reason that the ideas might be popular is just not relevant to the question of their truth.

Like, cancer is very scary and people are very eager to believe that there's something that can be done to help, and, perhaps partly as a consequence, many come to believe that chemotherapy can be effective. This fact does not constitute a substantive criticism of the research on the effectiveness of chemotherapy.

comment by qazzquimby (torendarby@gmail.com) · 2022-07-18T22:44:53.477Z · LW(p) · GW(p)

Inspired by https://non-trivial.org, I logged in to ask if people thought a very-beginner-friendly course like that would be valuable for the alignment problem - then I saw Stampy. Is there room for both? Or maybe a recommended beginner path in Stampy styled similarly to non-trivial?

There's a lot of great work going on.

↑ comment by plex (ete) · 2022-07-18T23:16:59.113Z · LW(p) · GW(p)

This is a great idea! As an MVP we could well make a link to a recommended Stampy path (this is an available feature on Stampy already, you can copy the URL at any point to send people to your exact position), once we have content. I'd imagine the most high demand ones would be:

- What are the basics of AI safety?

- I'm not convinced, is this actually a thing?

- How do I help?

- What is the field and ecosystem?

Do you have any other suggestions?

And having a website which lists these paths, then enriches them, would be awesome. Stampy's content is available via a public facing API, and one other team is already interested in using us as a backend. I'd be keen for future projects to also use Stampy's wiki as a backend for anything which can be framed as a question/answer pair, to increase content reusability and save on duplication of effort, but more frontends could be great!

Replies from: torendarby@gmail.com↑ comment by qazzquimby (torendarby@gmail.com) · 2022-07-19T02:09:55.360Z · LW(p) · GW(p)

I think that list covers the top priorities I can think of. I really loved the Embedded Agency [LW · GW] illustrated guide (though to be honest it still leads to brain implosions and giving up for most people I've sent it to). I'd love to see more areas made more approachable that way.

Good point on avoiding duplication of effort.. I suppose most courses would correspond to a series of nodes in the wiki graph, but the course would want slightly different writing for flow between points, and maybe extended metaphors or related images.

I guess the size of typical Stampy cards has a lot to do with how much that kind of additional layering would be needed. Smaller cards are more reusable but may take more effort in gluing together cohesively.

Maybe it'd be beneficial to try to outline topics worth covering, kind of like a curriculum and course outlines. That might help learn things like how often the nodes form long chains or are densely linked.

comment by Jonathan Paulson (jpaulson) · 2022-07-18T21:33:48.164Z · LW(p) · GW(p)

Why should we expect AGIs to optimize much more strongly and “widely” than humans? As far as I know a lot of AI risk is thought to come from “extreme optimization”, but I’m not sure why extreme optimization is the default outcome.

To illustrate: if you hire a human to solve a math problem, the human will probably mostly think about the math problem. They might consult google, or talk to some other humans. They will probably not hire other humans without consulting you first. They definitely won’t try to get brain surgery to become smarter, or kill everyone nearby to make sure no one interferes with their work, or kill you to make sure they don’t get fired, or convert the lightcone into computronium to think more about the problem.

Replies from: Lumpyproletariat↑ comment by Lumpyproletariat · 2022-07-24T09:29:37.474Z · LW(p) · GW(p)

The reason humans don't do any of those things is that they conflict with human values. We don't want to do any of that in the course of solving a math problem. Part of that is that doing such things would conflict with our human values, and the other part is that it sounds like a lot of work and we don't actually want the math problem solved that badly.

A better example of things that humans might extremely optimize for, is the continued life and well-being of someone who they care deeply about. Humans will absolutely hire people--doctors and lawyers and charlatans who claim psychic foreknowledge--, kill large numbers of people if that seems helpful, and there are people who would tear apart the stars to protect their loved ones if that were both necessary and feasible (which is bad if you inherently value stars, but very good if you inherently value the continued life and well-being of someone's children).

One way of thinking about this is that an AI can wind up with values which seem very silly from our perspective, values that you or I simply wouldn't care very much about, and be just as motivated to pursue those values as we're motivated to pursue our highest values.

But that's anthropomorphizing. A different way to think about it is that Clippy is a program that maximizes the number of paperclips, like an if statement in Python or water flowing downhill, and Clippy does not care about anything.

Replies from: Vladimir_Nesov↑ comment by Vladimir_Nesov · 2022-07-27T10:00:39.859Z · LW(p) · GW(p)

This holds for agents that are mature optimizers, that tractably know what they want. If this is not the case, as it is not for humans, they would be wary of goodharting the outcome, and so might instead pursue only mild optimization.

Replies from: Lumpyproletariat↑ comment by Lumpyproletariat · 2022-07-28T07:19:51.825Z · LW(p) · GW(p)

Anything that's smart enough to predict what will happen in the future, can see in advance which experiences or arguments would/will cause them to change their goals. And then they can look at what their values are at the end of all of that, and act on those. You can't talk a superintelligence into changing its mind because it already knows everything you could possibly say and already changed its mind if there was an argument that could persuade it.

Replies from: Vladimir_Nesov↑ comment by Vladimir_Nesov · 2022-07-28T07:36:47.262Z · LW(p) · GW(p)

And then they can look at what their values are at the end of all of that, and act on those.

This takes time; you can't fully get there before you are actually there. What you can do (as a superintelligence) is make a value-laden prediction of future values, remain aware that it's only a prediction, and only act mildly on it to avoid goodharting.

You can't talk a superintelligence into changing its mind because it already knows everything you could possibly say and already changed its mind if there was an argument that could persuade it.

The point is the analogy between how humans think of this and how superintelligences would still think about this, unless they have stable/tractable/easy-to-compute values. The analogy holds; the argument from orthogonality doesn't apply (yet, at that time). Even if the conclusion of immediate ruin is true, it's true for other reasons, not for this one. Orthogonality suggests eventual ruin, not immediate ruin.

The orthogonality thesis holds for stable values, not for agents that so far have only unstable precursors of such values and are still wary of goodhart. They do get there eventually and formulate stable values, but aren't automatically there immediately (or quickly, even by physical time). And the process of getting there influences what stable goals they end up with, which might be less arbitrary than the poorly-selected unstable goals they start with. That would rob the orthogonality thesis of some of its weight, as applied to the thesis of eventual ruin.

comment by Richard_Kennaway · 2022-07-16T13:43:52.759Z · LW(p) · GW(p)

This is an argument I don’t think I’ve seen made, or at least not made as strongly as it should be. So I will present it as starkly as possible. It is certainly a basic one.

The question I am asking is, is the conclusion below correct, that alignment is fundamentally impossible for any AI built by current methods? And by contraposition, that alignment is only achievable, if at all, for an AI built by deliberate construction? GOFAI never got very far, but that only shows that they never got the right ideas.

The argument:

A trained ML model is an uninterpreted pile of numbers representing the program that it has been trained to be. By Rice’s theorem, no nontrivial fact can be proved about an arbitrary program. Therefore no attempt at alignment based on training it, then proving it safe, can work.

Provably correct software is not and cannot be created by writing code without much concern for correctness, then trying to make it correct (despite that being pretty much how most non-life-critical software is built). A fortiori, a pile of numbers generated by a training process cannot be tweaked at all, cannot be understood, cannot be proved to satisfy anything.

Provably correct software can only be developed by building from the outset with correctness in mind.

This is also true if “correctness” is replaced by “security”.

No concern for correctness enters into the process of training any sort of ML model. There is generally a criterion for judging the output. That is how it is trained. But that only measures performance — how often it is right on the test data — not correctness — whether it is necessarily right for all possible data. For it is said, testing can only prove the presence of faults, never their absence.
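As a toy illustration of the performance-versus-correctness distinction above, here is a minimal Python sketch. The function `is_leap_year_naive` and the list of test years are hypothetical, chosen only for illustration: the naive rule scores perfectly on the test set, yet an exhaustive check over a wider range still turns up inputs where it is wrong.

```python
def is_leap_year_naive(year: int) -> bool:
    """Hypothetical 'learned' rule: divisible by 4 means leap year."""
    return year % 4 == 0


def is_leap_year(year: int) -> bool:
    """Gregorian rule, standing in for the specification."""
    return year % 4 == 0 and (year % 100 != 0 or year % 400 == 0)


# Performance: agreement with the specification on a hand-picked test set.
test_years = [1996, 1999, 2004, 2012, 2023, 2024]
accuracy = sum(
    is_leap_year_naive(y) == is_leap_year(y) for y in test_years
) / len(test_years)

# Correctness: agreement on every input in the range we care about.
counterexamples = [
    y for y in range(1600, 2401)
    if is_leap_year_naive(y) != is_leap_year(y)
]

print(accuracy)         # perfect score on the test set
print(counterexamples)  # century years the test set never covered
```

The test set says nothing about the century years, which is exactly where the naive rule fails.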

Therefore no AI built by current methods can be aligned.

Replies from: lc, neel-nanda-1, ete↑ comment by lc · 2022-07-16T13:47:29.902Z · LW(p) · GW(p)

Rice's theorem says that there's no algorithm for proving nontrivial facts about arbitrary programs, but it does not say that no nontrivial fact can be proven about a particular program. It also does not say that you can't reason probabilistically/heuristically about arbitrary programs in lieu of formal proofs. It just says that, for any algorithm that purports to prove a specific fact about all possible programs, it's possible to construct a program that breaks it.

(And if it turns out we can't formally prove something about a neural net (like alignment), then of course that also doesn't mean the negative thing about it is definitely true; it could be that we can't prove alignment for a program and it happens to be aligned.)
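The "construct a program that breaks the algorithm" step can be sketched in Python for the halting-problem special case, which is the standard building block behind Rice's theorem. This is only an illustration: `make_contrary` and `always_says_loops` are hypothetical names, and the stand-in decider is deliberately trivial.

```python
from typing import Callable

Program = Callable[[], None]


def make_contrary(claimed_halts: Callable[[Program], bool]) -> Program:
    """Build a program that does the opposite of what the decider predicts."""

    def contrary() -> None:
        if claimed_halts(contrary):
            # The decider said "halts", so loop forever to contradict it.
            while True:
                pass
        # The decider said "does not halt", so return at once.

    return contrary


def always_says_loops(program: Program) -> bool:
    """Hypothetical stand-in decider: claims that no program ever halts."""
    return False


contrary = make_contrary(always_says_loops)
verdict = always_says_loops(contrary)  # decider's claim: does not halt
contrary()                             # ...yet the call returns
```

The same wrapper defeats any other candidate decider, but it is one specific adversarial program. Nothing here prevents proving facts about programs that were not built this way.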

Replies from: Richard_Kennaway↑ comment by Richard_Kennaway · 2022-07-16T15:16:57.594Z · LW(p) · GW(p)

Proving things of one particular program is not useful in this context. What is needed is to prove properties of all the AIs that may come out of whatever one's research program is, rejecting those that fail and only accepting those whose safety is assured. This is not usefully different from the premise of Rice's theorem.

Hoping that the AI happens to be aligned is not even an alignment strategy.

Replies from: lc, thomas-larsen↑ comment by lc · 2022-07-16T21:43:45.359Z · LW(p) · GW(p)

What is needed is to prove properties of all the AIs that may come out of whatever one's research program is, rejecting those that fail and only accepting those whose safety is assured. This is not usefully different from the premise of Rice's theorem.

First, we could still prove things about one particular program that comes out of the research program even if for some reason we couldn't prove things about the programs that come out of that research program in general.

Second, that actually is something Rice's theorem doesn't cover. The fact that a program can be constructed that beats any alignment checking algorithm that purports to work for all possible programs doesn't mean that one can't prove something for the subset of programs created by your ML training process, nor does it mean there aren't probabilistic arguments you can make about those programs' behavior that do better than chance.

The latter part isn't being pedantic; companies still use endpoint defense software to guard against malware written adversarially to be as nice-seeming as possible, even though a full formal proof would be impossible in every circumstance.

Third, even if we were trying to pick an aligned program out of all possible programs, it'd still be possible to make an algorithm that explains it can't answer the question in the cases that we don't know, and use only those programs in which it could formally verify alignment. As an example, Turing's original proof doesn't work in the case that you limit programs to those that are shorter than the halting-checker and thus can't necessarily embed it.
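The "explains it can't answer" idea in the third point can be sketched as a checker with two verdicts, using halting as a stand-in for alignment. This is a toy under stated assumptions: `halting_verdict` is a hypothetical helper, and it assumes that straight-line Python over built-in values (no loops, calls, comprehensions, definitions, or imports) always terminates, possibly with an error.

```python
import ast

# Constructs that could hide non-termination; anything containing them
# is left undecided rather than guessed at.
RISKY_NODES = (
    ast.While, ast.For, ast.AsyncFor,
    ast.ListComp, ast.SetComp, ast.DictComp, ast.GeneratorExp,
    ast.Call, ast.Lambda, ast.Await,
    ast.FunctionDef, ast.AsyncFunctionDef, ast.ClassDef,
    ast.Import, ast.ImportFrom,
)


def halting_verdict(source: str) -> str:
    """Return 'halts' only when that is easy to show, else 'unknown'."""
    tree = ast.parse(source)
    for node in ast.walk(tree):
        if isinstance(node, RISKY_NODES):
            return "unknown"
    return "halts"


print(halting_verdict("x = 1 + 2\ny = x * 3"))
print(halting_verdict("while True:\n    pass"))
print(halting_verdict("for i in range(3):\n    pass"))
```

The checker is far too conservative (it refuses a loop that obviously ends), but it never certifies a program wrongly, and one could choose to run only the programs it certifies.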

Hoping that the AI happens to be aligned is not even an alignment strategy.

Your conclusion was "Therefore no AI built by current methods can be aligned.". I'm just explaining why that conclusion in particular is wrong. I agree it is a terrible alignment strategy to just train a DL model and hope for the best.

↑ comment by Thomas Larsen (thomas-larsen) · 2022-07-16T16:36:44.350Z · LW(p) · GW(p)

There are reasons to think that an AI is aligned between "hoping it is aligned" and "having a formal proof that it is aligned". For example, we might be able to find sufficiently strong selection theorems, which tell us that certain types of optima tend to be chosen, even if we can't prove theorems with certainty. We also might be able to find a working ELK strategy that gives us interpretability.

These might not be good strategies, but the statement "Therefore no AI built by current methods can be aligned" seems far too strong.

↑ comment by Neel Nanda (neel-nanda-1) · 2022-07-17T03:30:43.596Z · LW(p) · GW(p)

Two points where I disagree with this argument:

We may not be able to prove something about an arbitrary AGI, but could interpret the resulting program and prove things about that

Alignment does not mean provably correct; I would define it as "empirically doesn't kill us"

↑ comment by plex (ete) · 2022-07-16T14:02:44.231Z · LW(p) · GW(p)

Replying to the unstated implication that ML-based alignment is not useful: Alignment is not a binary variable. Even if neural networks can't be aligned in a way which robustly scales to arbitrary levels of capability, weakly aligned weakly superintelligent systems could still be useful tools as parts of research assistants (see Ought and Alignment Research Center's work) which allow us to develop a cleaner seed AI with much better verifiability properties.

Replies from: Richard_Kennaway↑ comment by Richard_Kennaway · 2022-07-16T15:44:53.691Z · LW(p) · GW(p)

For a superintelligent AI, alignment might as well be binary, just as for practical purposes you either have a critical mass of U235 or you don't, notwithstanding the narrow transition region. But can you expand the terms "weakly aligned" and "weakly superintelligent"? Even after searching alignmentforum.org and lesswrong.org for these, their intended meanings are not clear to me. One post says:

weak alignment means: do all of the things any competent AI researcher would obviously do when designing a safe AI.

For instance, you should ask the AI how it would respond in various hypothetical situations, and make sure it gives the "ethically correct" answer as judged by human beings.

My shoulder Eliezer is rolling his eyes at this.

ETA: And here I find:

To summarize, weak alignment, which is what this post is mostly about, would say that "everything will be all right in the end." Strong alignment, which refers to the transient, would say that "everything will be all right in the end, and the journey there will be all right, too."

I find it implausible that it is easier to build a machine that might destroy the world but is guaranteed to eventually rebuild it, than to build one that never destroys the world. It is easier to not make an omelette than it is to unmake one.

Replies from: ete↑ comment by plex (ete) · 2022-07-16T16:06:56.696Z · LW(p) · GW(p)

Agreed that for a post-intelligence-explosion AI, alignment is effectively binary. I do agree with the sharp left turn etc. positions, and don't expect patches and cobbled-together solutions to hold up to the stratosphere.

Weakly aligned - Guided towards the kinds of things we want in ways which don't have strong guarantees. A central example is InstructGPT, but this also includes most interpretability (unless dramatically more effective than current generation), and what I understand to be Paul's main approaches.

Weakly superintelligent - Superintelligent in some domains, but has not yet undergone recursive self improvement.

These are probably non-standard terms; I'm very happy to be pointed at existing literature with different ones which I can adopt.

I am confident Eliezer would roll his eyes; I have read a great deal of his work and recent debates. I respectfully disagree with his claim that you can't get useful cognitive work on alignment out of systems which have not yet FOOMed and taken a sharp left turn, based on my understanding of intelligence as babble and prune. I don't expect us to get enough cognitive work out of these systems in time, but it seems like a path which has non-zero hope.

It is plausible that AIs unavoidably FOOM before the point that they can contribute, but this seems less and less likely as capabilities advance and we notice we're not dead.

Replies from: sharmake-farah↑ comment by Noosphere89 (sharmake-farah) · 2022-07-16T19:28:12.635Z · LW(p) · GW(p)

I'm nowhere near agreeing with either of those, and FOOM basically requires physics violations, like violating Landauer's Principle and needing arbitrarily small processors. I'm being frank because I suspect that a lot of the doom position requires hard takeoff, and based on physics and the history of what happens as AI improves, only the first improvement is a discontinuity; the rest are far smoother and slower. So that's a big crux I have here.

comment by plex (ete) · 2022-07-16T12:41:37.684Z · LW(p) · GW(p)

Stampy feedback thread

See also the feedback form for some specific questions we're keen to hear answers to.

comment by Jacob Pfau (jacob-pfau) · 2022-08-05T20:01:38.974Z · LW(p) · GW(p)

Can anyone point me to a write-up steelmanning the OpenAI safety strategy; or, alternatively, offer your take on it? To my knowledge, there's no official post on this, but has anyone written an informal one?