LW Team is adjusting moderation policy

post by Raemon · 2023-04-04T20:41:07.603Z · 185 comments


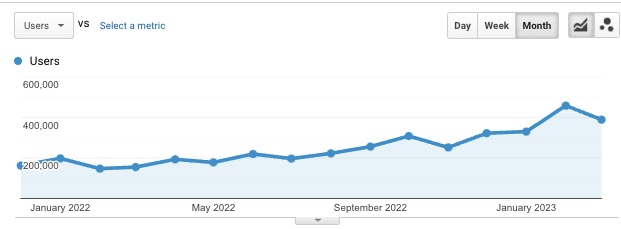

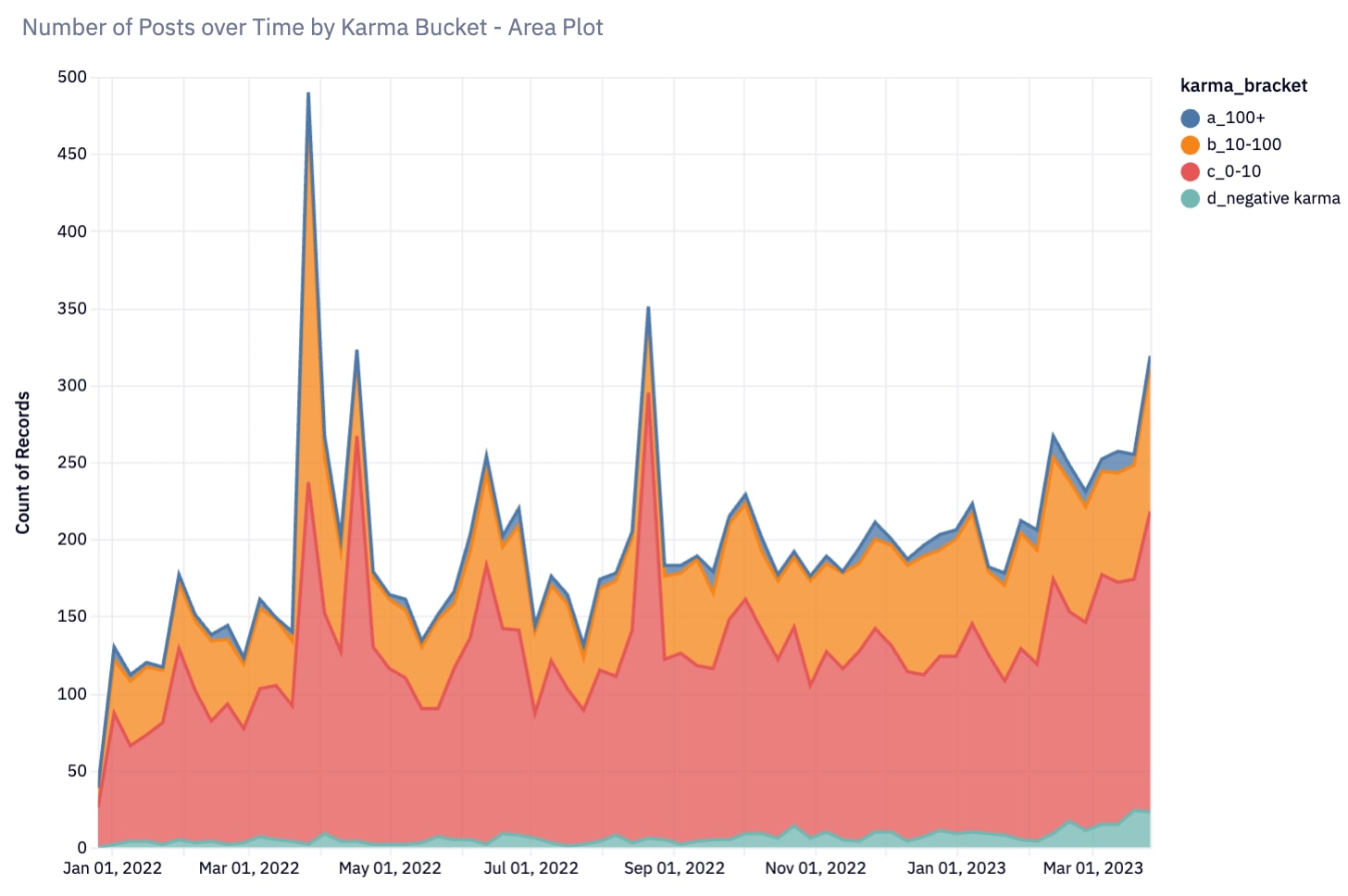

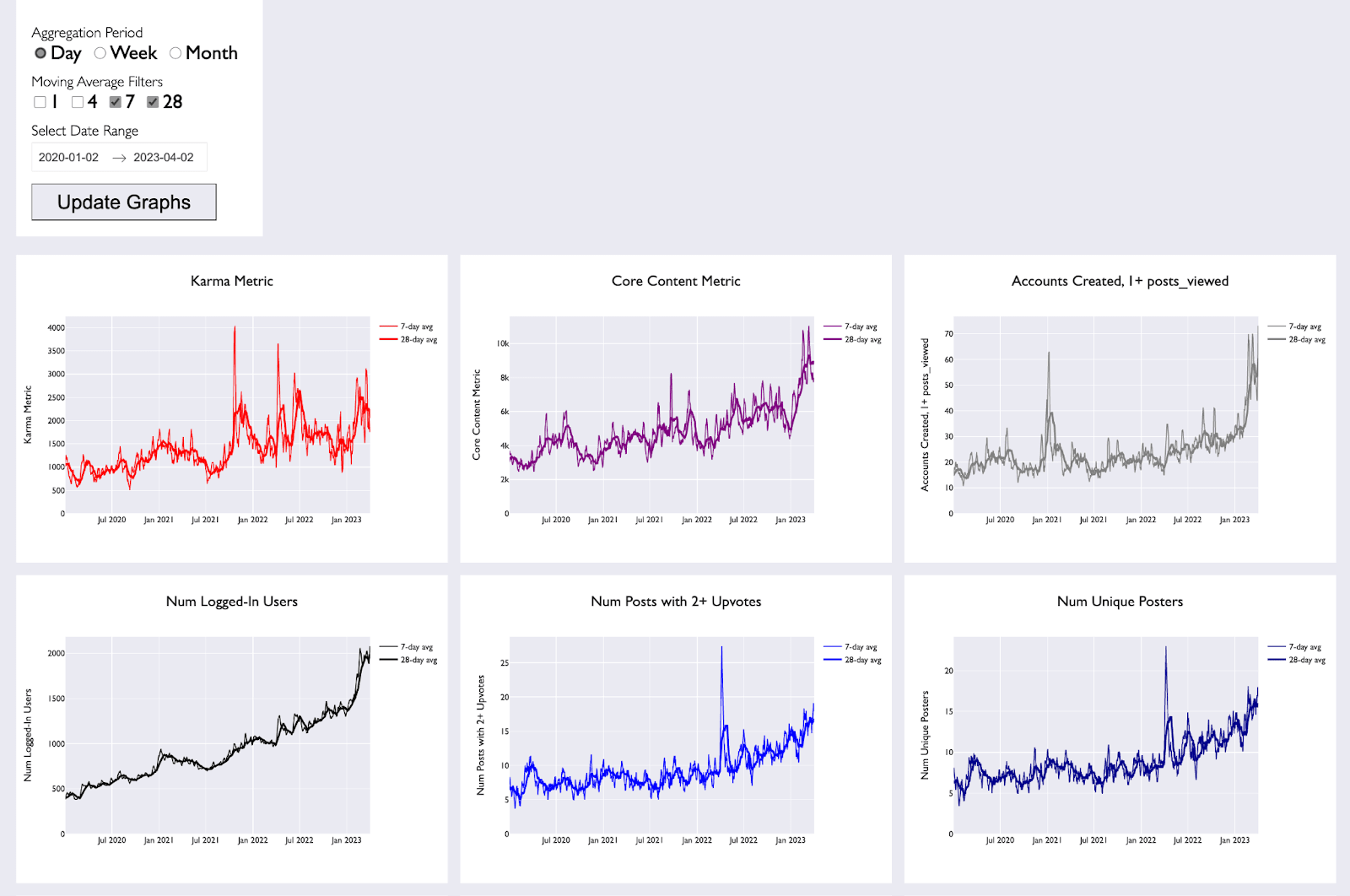

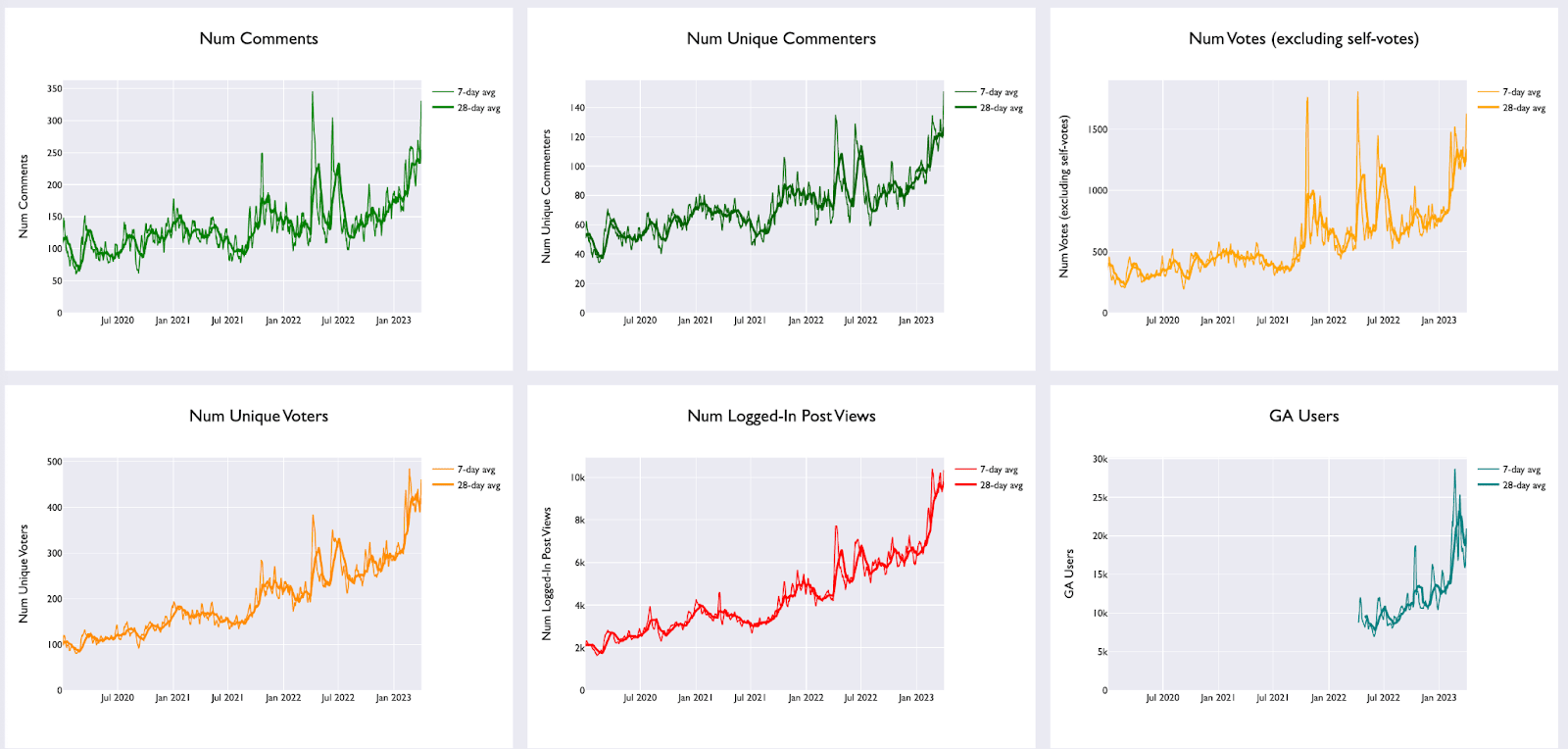

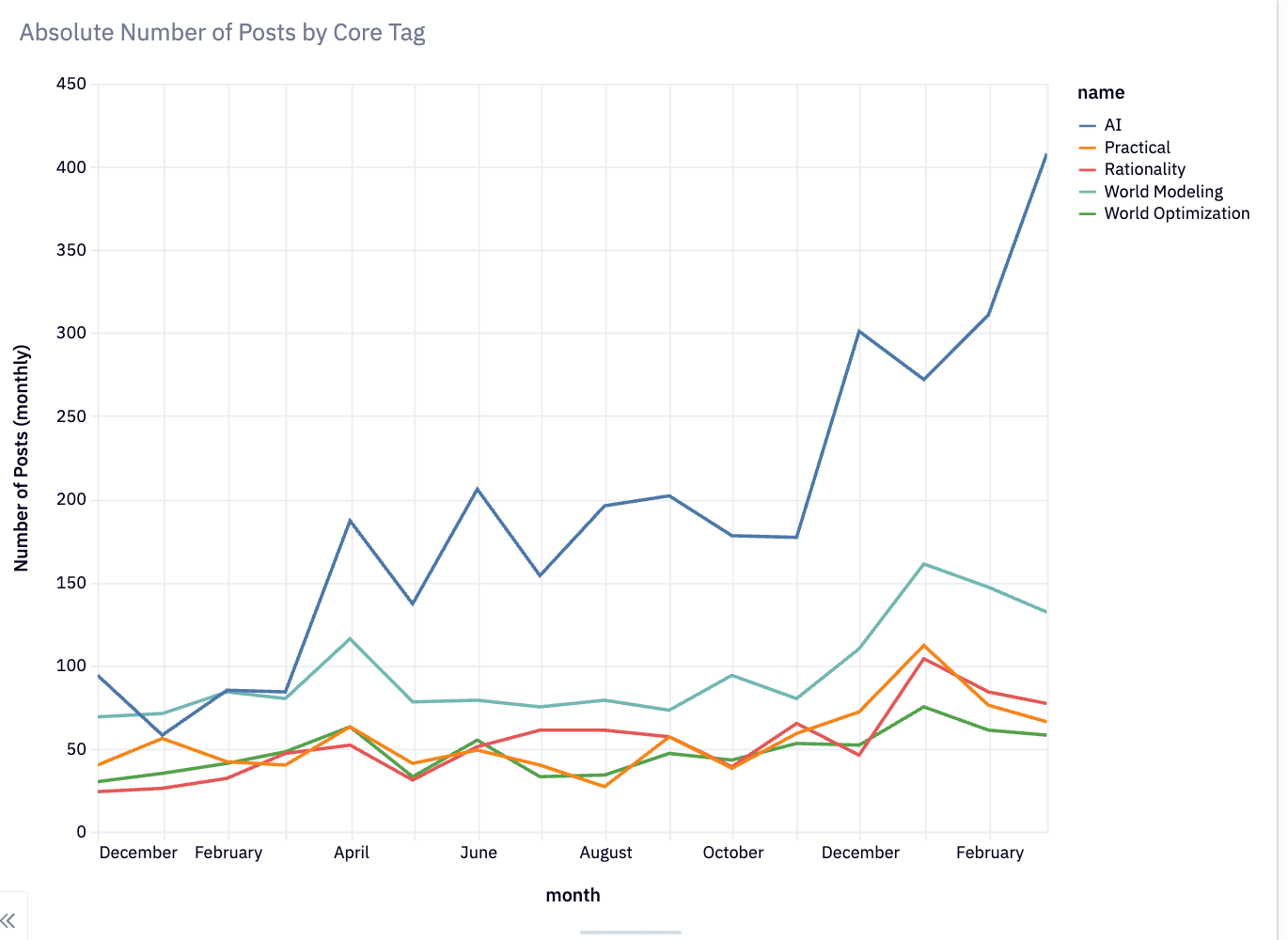

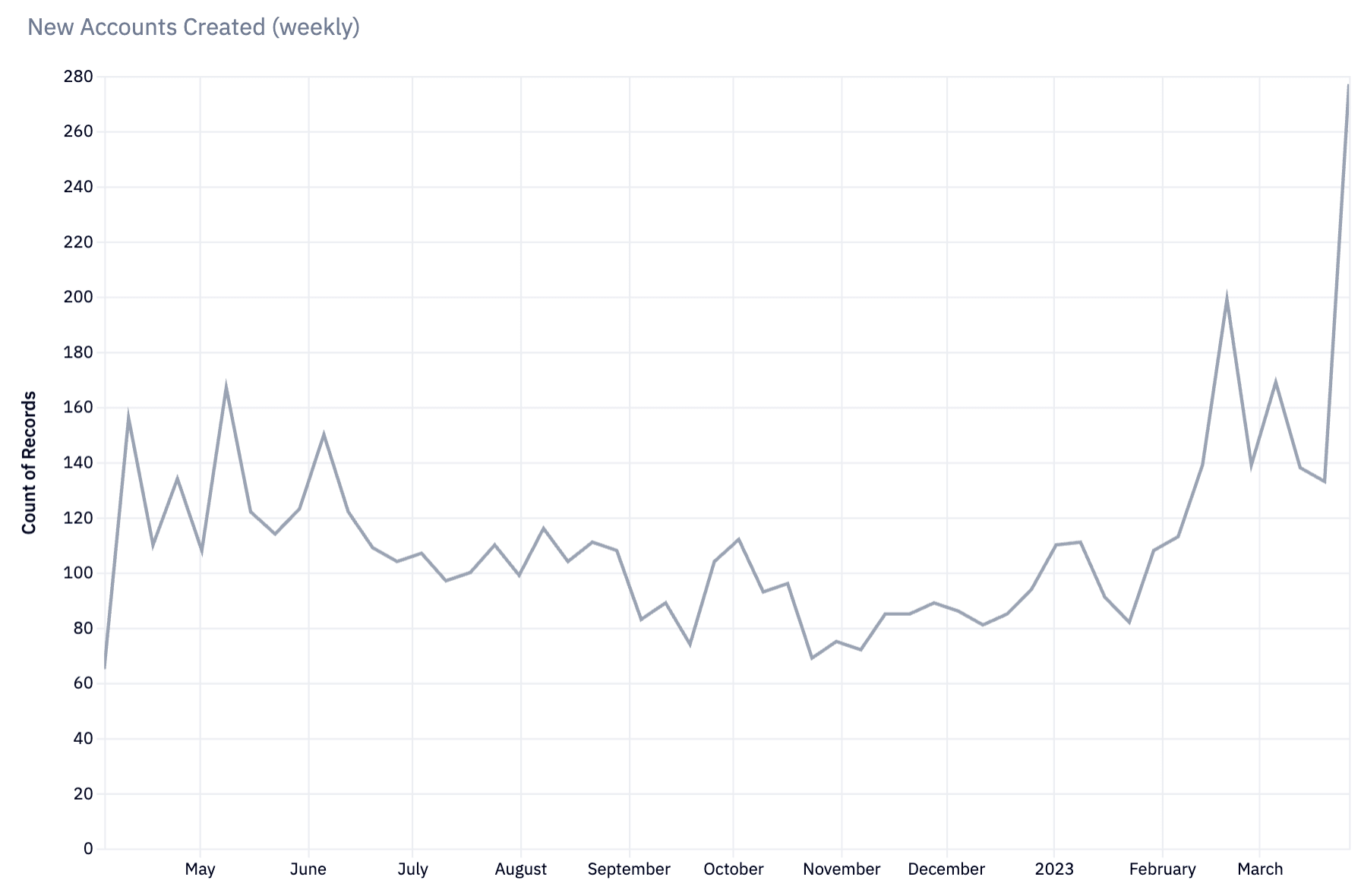

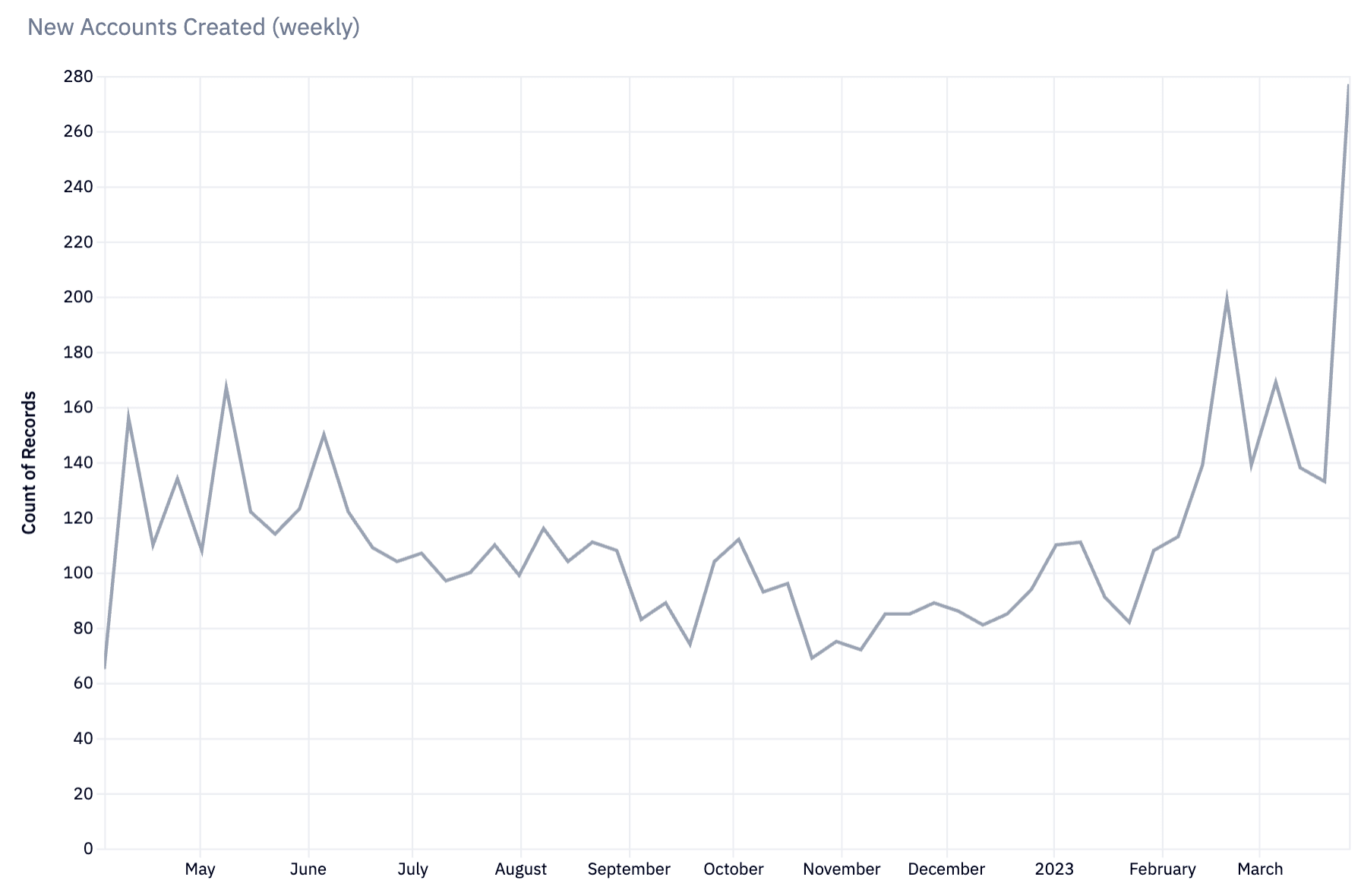

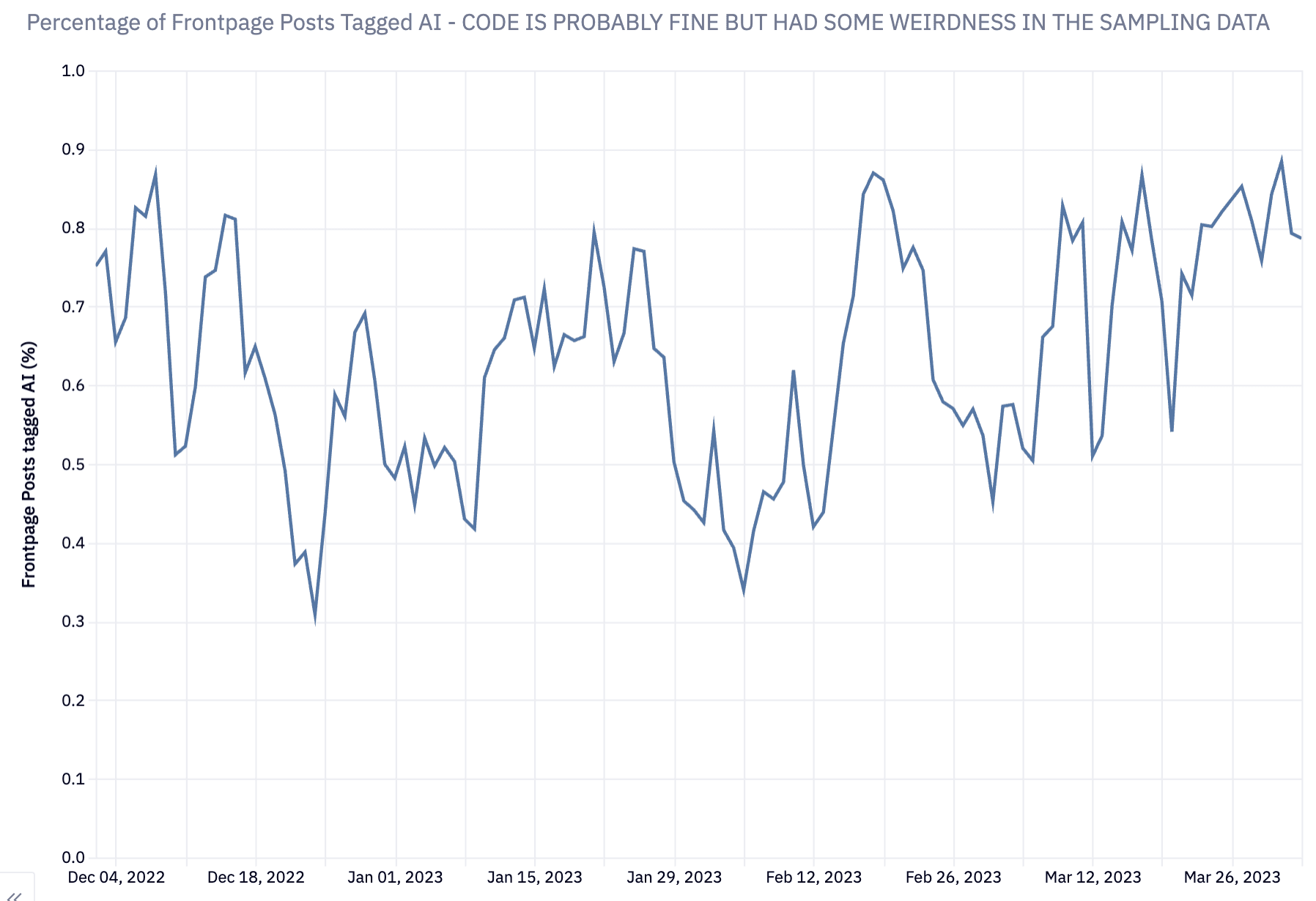

Lots of new users have been joining LessWrong recently, who seem more filtered for "interest in discussing AI" than for being bought into any particular standards for rationalist discourse. I think there's been a shift in this direction over the past few years, but it's gotten much more extreme in the past few months.

So the LessWrong team is thinking through "what standards make sense for 'how people are expected to contribute on LessWrong'?" We'll likely be tightening up moderation standards, and laying out a clearer set of principles so those tightened standards make sense and feel fair.

In coming weeks we'll be thinking about those principles as we look over existing users, comments, and posts and ask, "Are these contributions making LessWrong better?"

Hopefully within a week or two, we'll have a post that outlines our current thinking in more detail.

Generally, expect heavier moderation, especially for newer users.

Two particular changes that should be going live within the next day or so:

- Users will need at least N karma in order to vote, where N is probably somewhere between 1 and 10.

- Comments from new users won't display by default until they've been approved by a moderator.

Broader Context

LessWrong has always had a goal of being a well-kept garden. We have higher and more opinionated standards than most of the rest of the internet. In many cases we treat some issues as more "settled" than the rest of the internet does, so that instead of endlessly rehashing the same questions we can move on to more difficult and interesting ones.

What this translates to in terms of moderation policy is a bit murky. We've been stepping up moderation over the past couple months and frequently run into issues like "it seems like this comment is missing some kind of 'LessWrong basics', but 'the basics' aren't well indexed and easy to reference." It's also not quite clear how to handle that from a moderation perspective.

I'm hoping to get "the basics" better indexed, but meanwhile, it's generally the case that if you participate on LessWrong, you are expected to have absorbed the set of principles in The Sequences (AKA Rationality A-Z).

In some cases you can get away without doing that while participating in local object-level conversations, and pick up norms along the way. But if you're getting downvoted and you haven't read them, it's likely you're missing a lot of concepts or norms that are considered basic background reading on LessWrong. I recommend starting with the Sequences Highlights, and I'd also note that you don't need to read the Sequences in order; you can pick some random posts that seem fun and jump around based on your interest.

(Note: it's of course pretty important to be able to question all your basic assumptions. But I think doing that productively requires actually understanding why the current set of background assumptions is the way it is, and engaging with the object-level reasoning.)

There's also a straightforward question of quality. LessWrong deals with complicated questions. It's a place for making serious progress on those questions. One model I have of LessWrong is something like a university – there's a role for undergrads who are learning lots of stuff but aren't yet expected to be contributing to the cutting edge. There are grad students and professors who conduct novel research. But all of this is predicated on there being some barrier to entry. Not everyone gets accepted to any given university. You need some combination of intelligence, conscientiousness, etc., to get accepted in the first place.

See this post by habryka for some more models of moderation.

Ideas we're considering, and questions we're trying to answer:

- What quality threshold does content need to hit in order to show up on the site at all? When is the right solution to approve but downvote immediately?

- How do we deal with low quality criticism? There's something sketchy about rejecting criticism. There are obvious hazards of groupthink. But a lot of criticism isn't well thought out, or is rehashing ideas we've spent a ton of time discussing and doesn't feel very productive.

- What are the actual rationality concepts LWers are basically required to understand to participate in most discussions? (for example: "beliefs are probabilistic, not binary, and you should update them incrementally")

- What philosophical and/or empirical foundations can we take for granted and build on (e.g. reductionism, meta-ethics)?

- How familiar with the existing discussion of AI should you be in order to participate in comment threads about it?

- How does moderation of LessWrong intersect with moderating the Alignment Forum?

Again, hopefully in the near future we'll have a more thorough writeup about our answers to these. Meanwhile it seemed good to alert people this would be happening.

185 comments

Comments sorted by top scores.

comment by Raemon · 2023-04-05T00:02:14.260Z

I'm about to process the last few days' worth of posts and comments. I'll be linking to this comment as a "here are my current guesses for how to handle various moderation calls".

↑ comment by Raemon · 2023-04-05T00:02:25.569Z

Succinctly explain the main point.

When we're processing many new-user posts a day, we don't have much time to evaluate each one.

So, one principle I think is fairly likely to become a "new user guideline" is "Make it pretty clear off the bat what the point of the post is." In ~3 sentences, try to make it clear who your target audience is, and what core point you're trying to communicate to them. If you're able to quickly gesture at the biggest-bit-of-evidence or argument that motivates your point, even better. (Though I understand sometimes this is hard).

This isn't necessarily how you have to write all the time on LessWrong! But your first post is something like an admissions essay and should be optimized more for being legibly coherent and useful. (And honestly, I think most LW posts should lean more in this direction.)

In some sense this is similar to submitting something to a journal or magazine. Editors get tons of submissions. For your first couple of posts, don't write something that takes a lot of work for us to evaluate.

Corollary: Posts that are more likely to end up in the reject pile include...

- Fiction, especially if it looks like it's trying to make some kind of philosophical point, while being wrapped in a structure that makes that harder to evaluate. (I think good fiction plays a valuable role on LessWrong, I just don't recommend it until you've gotten more of a handle on the culture and background knowledge.)

- Long manifestos. Like fiction, these are sometimes valuable. They can communicate something like an overarching way-of-seeing-the-world that is valuable in a different way from individual factual claims. But, a) I think it's a reasonable system to first make some more succinct posts, build up some credibility, and then ask the LessWrongOSphere to evaluate your lengthy treatise. b) honestly... your first manifesto just probably isn't very good. That's okay. No judgment. I've written manifestos that weren't very good and they were an important part of my learning process. Even my more recent manifestos tend to be less well received than my posts that argue a particular object-level claim.

Some users have asked: "Okay, but, when will I be allowed to post the long poetic prose that expresses the nuances of the idea I have in my heart?"

Often the answer is, well, once you've gotten good enough at thinking and expressing yourself clearly that you end up writing a significantly different piece.

↑ comment by Ruby · 2023-04-05T00:51:51.719Z

I've always liked the pithy advice "you have to know the rules to break the rules", which I do consider valid in many domains.

Before I let users break generally good rules like "explain what your point is up front", I want to know that they could keep to the rule before they break it. The posts of many first-time users give me the feeling that their author isn't being rambly on purpose; they don't know how to write otherwise (or aren't willing to).

↑ comment by Raemon · 2023-04-05T00:22:54.127Z

Some top-level post topics that get much higher scrutiny:

1. Takes on AI

Simply because of the volume of it, the standards are higher. I recommend reading Scott Alexander's Superintelligence FAQ as a good primer to make sure you understand the basics. Make sure you're familiar with the Orthogonality Thesis and Instrumental Convergence. I recommend both Eliezer's AGI Ruin: A List of Lethalities and Paul Christiano's response post so you understand what sort of difficulties the field is actually facing.

I suggest going to the most recent AI Open Questions thread, or looking into the FAQ at https://ui.stampy.ai/

2. Quantum Suicide/Immortality, Roko's Basilisk and Acausal Extortion.

In theory, these are topics that have room for novel questions and contributions. In practice, they seem to attract people who are... looking for something to be anxious about? I don't have great advice for these people, but my impression is that they're almost always trapped in a loop where they're trying to think about it in enough detail that they don't have to be anxious anymore, but that doesn't work. They just keep finding new subthreads to be anxious about.

For Acausal Trade, I do think Critch's Acausal normalcy might be a useful perspective that points your thoughts in a more productive direction. Alas, I don't have a great primer that succinctly explains why quantum immortality isn't a great frame without a ton of philosophical dependencies.

I mostly recommend... going outside, hanging out with friends and finding other more productive things to get intellectually engrossed in.

3. Needing help with depression, akrasia, or medical advice with confusing mystery illness.

This is pretty sad and I feel quite bad saying it – on one hand, I do think LessWrong has some useful stuff to offer here. But too much focus on this has previously warped the community in weird ways – people with all kinds of problems come trying to get help and we just don't have the resources to help all of them.

For your first post on LessWrong, think of it more like you're applying to a university. Yes, universities have mental health departments for students and faculty... but when we're evaluating "does it make sense to let this person into this university", the focus should be on "does this person have the ability to make useful intellectual contributions?" not "do they need help in a way we can help with?"

↑ comment by Sherrinford · 2023-04-11T05:36:30.602Z

Maybe an FAQ for the intersection of #1, #2 and #3, "depressed/anxious because of AI", might be a good thing to be able to link to, though?

↑ comment by Celarix · 2023-04-07T14:38:45.621Z

3. Needing help with depression, akrasia, or medical advice with confusing mystery illness.

Bit of a shame to see this one, but I understand it. It's crunch time for AGI alignment and there's a lot on the line. Maybe those of us interested in self-help can go to (or post our thoughts on) some of the rationalsphere blogs, or maybe start our own.

I got a lot of value out of the more self-help and theory of mind posts here, especially Kaj Sotala's and Valentine's work on multiagent models of mind, and it'd be cool to have another place to continue discussions around that.

↑ comment by Raemon · 2023-04-05T02:09:38.473Z

A key question when I look at a new user on LessWrong trying to help with AI is, well, are they actually likely to be able to contribute to the field of AI safety?

If they are aiming to make direct novel intellectual contributions, this is in fact fairly hard. People have argued back and forth about how much raw IQ, conscientiousness or other signs of promise a person needs to have. There have been some posts arguing that people are overly pessimistic and gatekeeping-y about AI safety.

But, I think it's just pretty importantly true that it takes a fairly significant combination of intelligence and dedication to contribute. Not everyone is cut out for doing original research. Many people pre-emptively focus on community building and governance because that feels easier and more tractable to them than original research. But those areas still require a pretty good understanding of the field you're trying to govern or build a community for.

If someone writes a post on AI that seems like a bad take, which isn't really informed by the real challenges, should I be encouraging that person to make improvements and try again? Or just say "idk man, not everyone is cut out for this?"

Here's my current answer.

If you've written a take on AI that didn't seem to hit the LW team's quality bar, I would recommend some combination of:

- Read ~16 hours of background content, so you're not just completely missing the point. (I have some material in mind that I'll compile later; for now I'm just highlighting roughly the amount of effort involved.)

- Set aside ~4 hours to think seriously about the topic. Try to find one sub-question you don't know the answer to, and make progress answering that sub-question.

- Write up your thoughts as a LW post.

(For each of these steps, organizing some friends to work together as a reading or thinking group can be helpful to make it more fun)

This doesn't guarantee that you'll be a good fit for AI safety work, but I think this is an amount of effort where it's possible for a LW mod to look at your work, and figure out if this is likely to be a good use of your time.

Some people may object "this is a lot of work." Yes, it is. If you're the right sort of person you may just find this work fun. But the bottom line is yes, this is work. You should not expect to contribute to the field without putting in serious work, and I'm basically happy to filter out of LessWrong people who a) seem to superficially have pretty confused takes, and b) are unwilling to put in 20 hours of research work.

↑ comment by Raemon · 2023-04-06T23:01:22.962Z

Draft in progress. Common failure modes for AI posts that I want to reference later:

Trying to help with AI Alignment

"Let's make the AI not do anything."

This is essentially a very expensive rock. Other people will be building AIs that do do stuff. How does your AI help the situation over not building anything at all?

"Let's make the AI do [some specific thing that seems maybe helpful when parsed as an English sentence]," without actually describing how to make sure it does exactly, or even approximately, what that sentence says.

The problem is a) we don't know how to point an AI at doing anything at all, and b) your simple English sentence includes a ton of hidden assumptions.

(Note: I think Mark Xu sort of disagreed with Oli on something related to this recently, so I don't consider this class of solution completely settled. I think Mark Xu thinks that we don't currently know how to get an AI to do moderately complicated actions with our current tech, but that our current paradigms for training AIs are likely to yield AIs that can do moderately complicated actions.)

I think the typical new user who says things like this still isn't advancing the current paradigm though, nor saying anything useful that hasn't already been said.

Arguing Alignment is Doomed

[less well formulated]

Lately there's been a crop of posts arguing alignment is doomed. I... don't even strongly disagree with them, but they tend to be poorly argued and seem confused about what good problem solving looks like.

Arguing AI Risk is a dumb concern that doesn't make sense

Lately (in particular since Eliezer's TIME article), we've had a bunch of people coming in to say we're a bunch of doomsday cultists and/or gish gallopers.

And, well, if you're just tuning into the TIME article, or you have only been paying bits of attention over the years, I think this is a kinda reasonable belief-state to have. From the outside, when you hear an extreme-sounding claim, it's reasonable for alarm bells to go off and to assume it's maybe crazy.

If you were the first person bringing up this concern, I'd be interested in your take. But, we've had a ton of these, so you're not saying anything new by bringing it up.

You're welcome to post your take somewhere else, but if you want to participate on LessWrong, you need to engage with the object level arguments.

Here are a couple of things I'll say about this:

- One particularly gishgallopy-feeling thing is that many arguments for AI catastrophe are disjunctive. So, yeah, there's not just one argument you can overturn and then we'll all be like "okay great, we can change our mind about this problem." BUT, we are pretty curious about individual arguments getting overturned. If individual disjunctive arguments turned out to be flawed, that'd make the problem easier. So I'd be fairly excited about someone who digs into the details of various claims in AGI Ruin: A List of Lethalities and either disproves them or finds a way around them.

- Another potentially gishgallopy-feeling thing is that if you're discussing things on LessWrong, you'll be expected to have absorbed the concepts from the Sequences (such as how to think about subjective probability, tribalism, etc.), either by reading the Sequences or lurking a lot. I acknowledge this is pretty gishgallopy at first glance, if you came here to debate one particular thing. Alas, that's just how it is [see other FAQ question delving more into this]
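To make the disjunctive point in the first bullet concrete, here is a toy calculation. It assumes, purely for illustration, that catastrophe can happen through any one of n independent routes with probabilities p_1 to p_n; real routes are unlikely to be fully independent, and the 0.3 figures are hypothetical, not anyone's actual estimates.

```latex
\begin{aligned}
P(\text{catastrophe}) &= 1 - \prod_{i=1}^{n} (1 - p_i) \\
\text{three routes, each } p_i = 0.3: \quad 1 - 0.7^3 &= 1 - 0.343 = 0.657 \\
\text{one route refuted:} \quad 1 - 0.7^2 &= 1 - 0.49 = 0.51
\end{aligned}
```

Under these assumptions, refuting one argument lowers the total without eliminating it, which matches both halves of the bullet: no single rebuttal settles the question, but each one makes the problem easier.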

↑ comment by Ruby · 2023-04-06T20:22:44.011Z

Here's a quickly written draft for an FAQ we might send users whose content gets blocked from appearing on the site.

The “My post/comment was rejected” FAQ

Why was my submission rejected?

Common reasons that the LW Mod team will reject your post or comment:

- It fails to acknowledge or build upon standard responses and objections that are well-known on LessWrong.

- The LessWrong website is 14 years old and the community behind it older still. Our core readings [link] are over half a million words. So understandably, there’s a lot you might have missed!

- Unfortunately, as the amount of interest in LessWrong grows, we can’t afford to let cutting-edge content get submerged under content from people who aren’t yet caught up to the rest of the site.

- It is poorly reasoned. It contains some mix of bad arguments and obviously bad positions that it does not feel worth the LessWrong mod team or LessWrong community’s time or effort responding to.

- It is difficult to read. Not all posts and comments are equally well written or make their points equally clearly. While established users might get more charity, for new users we require that we (and others) can follow what you’re saying easily and well enough to know whether or not you’re saying something interesting.

- There are multiple ways to end up difficult to read:

- Poorly crafted sentences and paragraphs

- Long and rambly

- Poor structure without signposting

- Poor formatting


- It’s a post and doesn’t say very much.

- Each post takes up some space and requires effort to click on and read, and the content of the post needs to make that worthwhile. Your post might have had a reasonable thought, but it didn’t justify a top-level post.

- If it’s a quick thought about AI, try an “AI No Dumb Questions Open Thread”

- Sometimes this just means you need to put in more effort, though effort isn’t the core thing.


- You are rude or hostile. C’mon.

Can I appeal or try again?

You are welcome to message us; however, note that due to volume, even though we read most messages, we will not necessarily respond, and if we do, we can’t engage in a lengthy back-and-forth.

In an ideal world, we’d have a lot more capacity to engage with each new contributor to discuss what was/wasn’t good about their content. Unfortunately, with new submissions increasing on short timescales, we can’t afford that and have to be pretty strict in order to ensure site quality stays high.

This is censorship, etc.

LessWrong, while on the public internet, is not the general public. It was built to host a certain kind of discussion between certain kinds of users who’ve agreed to certain basics of discourse, who are on board with a shared philosophy, and can assume certain background knowledge.

Sometimes we say that LessWrong is a little bit like a “university”, and one way that it is true is that not just anybody is entitled to walk in and demand that people host the conversation they like.

We hope to keep allowing new accounts to be created and new users to submit content, but the only way we can do that is if we reject content and users who would degrade the site’s standards.

But diversity of opinion is important, echo chamber, etc.

That’s a good point and a risk that we face when moderating. The LessWrong moderation team tries hard to not reject things just because we disagree, and instead only do so if it feels like the content is failing on some other criterion.

Notably, one of the best ways to disagree (and that will likely get you upvotes) is to criticize not just a commonly-held position on LessWrong, but the reasons why it is held. If you show that you understand why people believe what they do, they’re much more likely to be interested in your criticisms.

How is the mod team kept accountable?

If your post or comment was rejected from the main site, it will be viewable alongside some indication of why it was rejected. [we haven't built this yet but plan to soon]

Anyone who wants to audit our decision-making and moderation policies can review blocked content there.

↑ comment by Ben Pace (Benito) · 2023-04-06T20:33:14.294Z

It fails to acknowledge or build upon standard responses and objections that are well-known on LessWrong.

- The LessWrong website is 14 years old and the community behind it older still. Our core readings [link] are over half a million words. So understandably, there’s a lot you might have missed!

- Unfortunately, as the amount of interest in LessWrong grows, we can’t afford to let cutting-edge content get submerged under content from people who aren’t yet caught up to the rest of the site.

I do want to emphasize a subtle distinction between "you have brought up arguments that have already been brought up" and "you are challenging basic assumptions of the ideas here". I think challenging basic assumptions is all well and good (like here), while bringing up "but general intelligences can't exist because of no-free-lunch theorems" or "how could a computer ever do any harm, we can just unplug it" is quite understandably met with "we've spent 100s or 1000s of hours discussing and rebutting that specific argument, please go read about it <here> and come back when you're confident you're not making the same arguments as the last dozen new users".

I would like to make sure new users are specifically not given the impression that LW mods aren't open to basic assumptions being challenged, and I think it might be worth the space to specifically rule out that interpretation somehow.

↑ comment by Ruby · 2023-04-06T23:26:03.335Z

Can be made more explicit, but this is exactly why the section opens with "acknowledge [existing stuff on topic]".

↑ comment by Ben Pace (Benito) · 2023-04-06T23:28:25.221Z

Well, I don't think you have to acknowledge existing stuff on this topic if you have a new and good argument.

Added: I think the phrasing I'd prefer is "You made an argument that has already been addressed extensively on LessWrong" rather than "You have talked about a topic without reading everything we've already written about on that topic".

↑ comment by Ruby · 2023-04-07T00:06:43.933Z

I do think there is an interesting question of "how much should people have read?" which is actually hard to answer.

There are people who don't need to read as much in order to say sensible and valuable things, and some people that no amount of reading seems to save.

The half a million words is the Sequences. I don't obviously want a rule that says you need to have read all of them in order to post/comment (nor do I think doing so is a guarantee), but also I do want to say that if you make mistakes the Sequences would teach you not to make, that could be grounds for not having your content approved.

A lot of the AI newbie stuff I'm disinclined to approve is the kind that makes claims that are actually countered in the Sequences, e.g. orthogonality thesis, treating the AI too much like humans, various fallacies involving words.

↑ comment by Ruby · 2023-04-06T23:36:45.543Z

How do you know you have a new and good argument if you don't know the standard things said on the topic?

And relatedly, why should I or other readers on LW assume that you have a new and good argument without any indication that you know the arguments in general?

This is aimed at users making their very first post/comment. If your post claims "AIs won't be dangerous because X", I think a good policy/heuristic for the mod team is to want it to tell me early on that you're not wasting my time, because you're already aware of all the standard arguments.

In a world where every day a few dozen people who started thinking about AI two weeks ago show up on LessWrong and want to give their "why not just X?", I think it's reasonable to say "we want you to give some indication that you're aware of the basic discussion this site generally assumes".

↑ comment by Portia (Making_Philosophy_Better) · 2023-04-07T01:53:11.400Z

I find it hilarious that you can say this, while simultaneously, the vast majority of this community is deeply upset they are being ignored by academia and companies, because they often have no formal degrees or peer reviewed publication, or other evidence of having considered the relevant science. LessWrong fails the standards of these fields and areas. You routinely re-invent concepts that already exist. Or propose solutions that would be immediately rejected as infeasible if you tried to get them into a journal.

Explaining a concept your community takes for granted to outsiders can help you refresh it, understand it better, and spot potential problems. A lot of things taken for granted here are rejected by outsiders because they are not objectively plausible.

And a significant number of newcomers, while lacking LW canon, will have other relevant knowledge. If you make the bar too high, you deter them.

All this is particularly troubling because your canon is spread all over the place, extremely lengthy, and individually usually incomplete or outdated, and filled in implicitly from prior forum interactions. Academic knowledge is more accessible that way.

↑ comment by Ruby · 2023-04-08T00:25:20.932Z

Writing hastily in the interests of time, sorry if not maximally clear.

Explaining a concept your community takes for granted to outsiders can help you refresh it, understand it better, and spot potential problems.

It's very much a matter of how many newcomers there are relative to existing members. If the number of existing members is large compared to newcomers, it's not so bad to take the time to explain things.

If the number of newcomers threatens to overwhelm the existing community, it's just not practical to let everyone in. Among other factors, certain conversations are possible because you can assume that most people have certain background knowledge, and even if they disagree, they at least know the things you know.

The need to get stricter comes from the current (and forecasted) increase in new users. This means we can't afford to become 50% posts that ignore everything our community has already figured out.

LessWrong is an internet forum, but it's in the direction of a university/academic publication, and such publications only work because editors don't accept everything.

↑ comment by lc · 2023-04-07T05:09:36.553Z

the vast majority of this community is deeply upset they are being ignored by academia and companies, because they often have no formal degrees or peer reviewed publication, or other evidence of having considered the relevant science.

Source?

↑ comment by the gears to ascension (lahwran) · 2023-04-07T05:16:52.852Z

my guess is that that claim is slightly exaggerated, but I expect sources exist for an only mildly weaker claim. I certainly have been specifically mocked for my username in places that watch this site, for example.

↑ comment by lc · 2023-04-07T05:19:35.332Z

I certainly have been specifically mocked for my username in places that watch this site, for example.

This is an example of people mocking LW for something. Portia is making a claim about LW users' internal emotional states; she is asserting that they care deeply about academic recognition and feel infuriated they're not getting it. Does this describe you or the rest of the website, in your experience?

↑ comment by the gears to ascension (lahwran) · 2023-04-07T05:29:50.063Z

lens portia's writing out of frustrated tone first and it makes more sense. they're saying that recognition is something folks care about (yeah, I think so) and aren't getting to an appropriate degree (also seems true). like I said in my other comment - tone makes it harder to extract intended meaning.

↑ comment by lc · 2023-04-07T05:43:39.062Z

Well, I disagree. I have literally zero interest in currying the favor of academics, and think Portia is projecting a respect and yearning for status within universities onto the rest of us that mostly doesn't exist. I would additionally prefer if this community were able to set standards for its members without having to worry about or debate whether or not asking people to read canon is a status grab.

↑ comment by the gears to ascension (lahwran) · 2023-04-07T06:02:50.242Z

sure. I do think it's helpful to be academically valid sometimes though. you don't need to care, but some do some of the time somewhat. maybe not as much as the literal wording used here. catch ya later, anyhow.

↑ comment by the gears to ascension (lahwran) · 2023-04-07T05:13:08.159Z

strong agree, single upvote: harsh tone, but reasonable message. I hope the tone doesn't lead this point to be ignored, as I do think it's important. but leading with mocking does seem like it's probably why others have downvoted. downvote need not indicate refusal to consider, merely negative feedback to tone, but I worry about that, given the agree votes are also in the negative.

Replies from: Making_Philosophy_Better↑ comment by Portia (Making_Philosophy_Better) · 2023-04-07T12:37:51.492Z · LW(p) · GW(p)

Thank you. And I apologise for the tone. I think the back of my mind was haunted by a Shoggoth with a smiley face giving me advice for my weekend plans, and that emotional turmoil came out the wrong way.

I am in the strange position of being both on this forum and in academia, and it is jarring to see both sides engage in the same gatekeeping behaviour, calling it elitist and misguided in the other group but a necessary way to ensure quality and affirm a superior identity in their own. I've found valuable and admirable practices and insights in both, else I would not be there.

Replies from: thoth-hermes↑ comment by Thoth Hermes (thoth-hermes) · 2023-04-07T15:20:26.030Z · LW(p) · GW(p)

Any group that bears a credential, or performs negative selection of some kind, will bear the traits you speak of. 'Tis the nature of most task-performing groups human society produces. Alas, one cannot escape it, even coming to a group that once claimed to eschew credentialism. Nonetheless, it is still worthwhile to engage with these groups intellectually.

↑ comment by papetoast · 2023-04-10T02:06:17.358Z · LW(p) · GW(p)

I cannot access www.lesswrong.com/rejectedcontent (404 error). I suspect you guys forgot to give access to non-moderators, or you meant www.lesswrong.com/moderation (but there are no rejected posts there, only comments).

Replies from: Raemon↑ comment by Raemon · 2023-04-10T02:13:43.361Z · LW(p) · GW(p)

We didn't build that yet but plan to soon. (I think what happened was Ruby wrote this up in a private google doc, I encouraged him to post it as a comment so I could link to it, and both of us forgot it included that explicit link. Sorry about that, I'll edit it to clarify)

comment by Raemon · 2023-04-09T22:19:41.906Z · LW(p) · GW(p)

Here's my best guess for an overall "moderation frame", new this week, to handle the volume of users. (Note: I've discussed this with other LW team members, and I think there's rough buy-in for trying this out, but it's still pretty early in our discussion process, and other team members might end up arguing for different solutions.)

I think to scale the LessWrong userbase, it'd be really helpful to shift the default assumptions of LessWrong to "users by default have a rate limit of 1-comment-per day" and "1 post per week."

If people get somewhat upvoted, their rate limit fairly quickly loosens to either "1 comment per hour" or "~3 comments per day" (I'm not sure which is better), so they can start participating in conversations. If they get somewhat more upvoted, the rate limit disappears completely.

But to preserve this, users need to be producing content that is actively upvoted. If they get downvoted (or just produce a long string of barely-upvoted comments), they go back to the 1-per-day rate limit. If they're getting significantly downvoted, the rate limit ratchets up (to 1 per 3 days, then once per week, and eventually once per month, which is essentially saying "you're sort of banned, but you can periodically try again, and if your new comments get upvoted you'll get your privileges restored").

Getting the tuning here exactly right, so that it isn't really annoying to existing users who weren't doing anything wrong, is somewhat tricky, but a) I think there are at least some situations where the rules would be pretty straightforward, and b) I think it's an achievable goal to tune the system to basically work as intended.

When users have a rate limit, they get UI elements giving them some recommendations for what to do differently. (I think it's likely we can also build some quick-feedback buttons that moderators and some trusted users can use, so people have a bit more idea of what to do differently).

Once users have produced multiple highly upvoted posts/comments, they get more leniency (i.e. they can have a larger string of downvotes or longer non-upvoted back-and-forths before getting rate limited).
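A minimal Python sketch of the tiered rate limit described above, assuming the tier is recomputed from the net karma of a user's recent comments. The tier labels come from the comment; every numeric threshold, the `leniency` multiplier, and the name `rate_limit_tier` are hypothetical illustrations, not anything the LW team has specified.

```python
from typing import Optional, Sequence, Tuple

# Tiers from the proposal, most to least restrictive:
# (label, minimum hours between comments); None means no limit.
TIERS = [
    ("1 per month", 30 * 24),
    ("1 per week", 7 * 24),
    ("1 per 3 days", 3 * 24),
    ("1 per day", 24),   # proposed default for new users
    ("3 per day", 8),
    ("unlimited", None),
]
DEFAULT = 3  # index of "1 per day"


def rate_limit_tier(recent_scores: Sequence[int], leniency: int = 1) -> Tuple[str, Optional[int]]:
    """Map the karma of a user's recent comments to a tier (hypothetical thresholds).

    `leniency` > 1 stands in for the extra slack proposed for users who
    already have several highly upvoted posts/comments.
    """
    net = sum(recent_scores)
    # Significant downvoting ratchets the limit further and further down.
    if net <= -15 * leniency:
        return TIERS[0]
    if net <= -8 * leniency:
        return TIERS[1]
    if net <= -4 * leniency:
        return TIERS[2]
    if net < 0:
        return TIERS[DEFAULT]  # mildly downvoted: back to the default
    # A long string of barely-upvoted comments also drops back to the default.
    window = 10 * leniency
    if len(recent_scores) >= window and net / len(recent_scores) < 1.5:
        return TIERS[DEFAULT]
    if net >= 10:
        return TIERS[5]  # "somewhat more upvoted": limit disappears
    if net >= 1:
        return TIERS[4]  # "somewhat upvoted": loosened limit
    return TIERS[DEFAULT]  # no upvotes yet
```

For example, `rate_limit_tier([2])` lands on the loosened "3 per day" tier, while twelve +1 comments in a row fall back to "1 per day". Summing recent scores is just one plausible choice; a decaying average would let old downvotes fade faster.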

If we were starting a forum from scratch with this sort of design at its foundation, I think this could feel more like a positive thing (kinda like a videogame incentivizing good discussion and idea-generation, with built-in self-moderation).

Since we're not starting from scratch, I do expect this to feel pretty jarring and unfair to people. I think this is sad, but I think some kind of change is necessary and we just have to pay the costs somewhere.

My model of @Vladimir_Nesov pops up to warn about negative selection here. (I'm not sure whether he thinks rate-limiting is as risky as banning, for negative-selection reasons.) It certainly will still cause some people to bounce off. I definitely see risks with negative selection punishing variance, but even the current number of mediocre comments has IMO been pretty bad for LessWrong, the growing amount I'm expecting in the coming year seems even worse, and I'm not sure what else to do.

Replies from: Benito, Raemon, pktechgirl, Vladimir_Nesov, Ruby, Vladimir_Nesov↑ comment by Ben Pace (Benito) · 2023-04-09T23:18:08.802Z · LW(p) · GW(p)

shift the default assumptions of LessWrong to "users by default have a rate limit of 1-comment-per day"

Natural times I expect this to be frustrating are when someone's written a post, got 20 comments, and tries to reply to 5 of them, but is locked after the first one. 1 per day seems too strong there. I might say "unlimited daily comments on your own posts".

I also think I'd prefer a cut-off after which you're trusted to comment freely. Reading the positive-selection post (which I agree with), I think some bars here could include having written a curated post, or a post with 200+ karma, or having 1000 karma on your account.

Replies from: Raemon↑ comment by Raemon · 2023-04-10T00:20:55.679Z · LW(p) · GW(p)

I'm not particularly attached to these numbers, but fyi the scale I was originally imagining was "after the very first upvote, you get something like 3 comments a day, and after like 5-10 karma you don't have a rate limit." (And note, initially you get one post and one comment, so you get to reply to your post's first comment)

I think in practice, in the world where you receive 4 comments but a) your post hasn't been upvoted much and b) none of your responses to the first three comments got upvoted, my expectation is you're a user I'd indeed prefer to slow down, read up on site guidelines, and put more effort into subsequent comments.

I think having 1000 karma isn't actually a very high bar, but yeah, I think users with 2+ posts that either have 100+ karma or are curated should get a lot more leeway.
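The two proposed "trusted user" bars can be compared side by side in a short sketch. The `Post` type and both function names are hypothetical; the thresholds are the ones Ben and Ray mention, and reading Ray's "a lot more leeway" as a plain yes/no check is a simplification.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class Post:
    karma: int
    curated: bool = False


def trusted_ben(posts: Sequence[Post], account_karma: int) -> bool:
    """Ben's suggested bar: a curated post, a 200+ karma post, or 1000 account karma."""
    return account_karma >= 1000 or any(p.curated or p.karma >= 200 for p in posts)


def trusted_ray(posts: Sequence[Post]) -> bool:
    """Ray's suggested bar: at least two posts that are curated or have 100+ karma."""
    return sum(1 for p in posts if p.curated or p.karma >= 100) >= 2
```

With two posts at 150 and 120 karma and 500 account karma, `trusted_ray` passes while `trusted_ben` does not; a single 250-karma post flips that result.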

Replies from: Benito↑ comment by Ben Pace (Benito) · 2023-04-10T00:53:30.900Z · LW(p) · GW(p)

Ah good, I thought you were proposing a drastically higher bar.

↑ comment by Raemon · 2023-04-09T22:26:17.723Z · LW(p) · GW(p)

Here are some principles that are informing some of my thinking here, some pushing in different directions

- Karma isn't that great a metric – I think people often vote for dumb reasons, and they vote highest in drama-threads that don't actually reflect important new intellectual principles. I think there are maybe ways we can improve on the karma system, and I want to consider those soon. But I still think karma-as-is is at least a pretty decent proxy metric to keep the site running smoothly and scaling.

- Because karma is only a proxy metric, I'd still expect moderator judgment to play a significant role in making sure the system isn't going off the rails in the immediate future

- each comment comes with a bit of an attentional cost. If you make a hundred comments and get 10 karma (and no downvotes), I think you're most likely not a net-positive contributor. (i.e. each comment maybe costs 1/5th of a karma in attention or something like that)

- in addition, I think highly upvoted comments/posts tend to be dramatically more valuable than weakly upvoted comments/posts (i.e. a 50 karma comment is more than 10 times as valuable as a 5 karma comment, most of the time, with an exception IMO for drama threads)
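The attention-cost arithmetic in the list above can be made explicit. This sketch assumes a flat cost of 0.2 karma per comment (the comment's own rough guess) and models "dramatically more valuable" with a hypothetical `karma ** 1.5` curve; neither function is part of any actual LW system.

```python
ATTENTION_COST = 0.2  # "maybe 1/5th of a karma in attention" per comment, a rough guess


def net_contribution(num_comments: int, total_karma: float, cost: float = ATTENTION_COST) -> float:
    """Karma earned minus the estimated attention cost of all comments made."""
    return total_karma - num_comments * cost


def comment_value(karma: float, exponent: float = 1.5) -> float:
    """Hypothetical superlinear value curve; any exponent above 1 makes a
    50-karma comment worth more than 10 times a 5-karma one."""
    return karma ** exponent
```

`net_contribution(100, 10)` gives `10 - 100 * 0.2`, i.e. -10, matching the claim that such a commenter is probably not net-positive, while a single 50-karma comment nets about 49.8.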

The current karma system kinda encourages people to write lots of comments that get slightly upvoted, which gives them the impression of being an established regular. I think in most cases, users averaging ~1-2 karma per comment are commenting in ways that are persistently annoying: each individual comment would be sort of fine, but together they add up to a "death by a thousand cuts" that makes the site worse.

On the other hand, lots of people drawn to LessWrong have a lot of anxiety and scrupulosity issues and I generally don't want people overthinking this and spending a lot of time worrying about it.

My hope is to frame the thing more around positive rewards than punishments.

↑ comment by Elizabeth (pktechgirl) · 2023-04-10T05:24:17.801Z · LW(p) · GW(p)

I suggest not counting people's comments on their own posts towards the rate limit or the “barely upvoted” count. This both seems philosophically correct, and avoids penalizing authors of medium-karma posts for replying to questions (which often don’t get much if any karma).

Replies from: Raemon↑ comment by Vladimir_Nesov · 2023-04-09T23:39:21.550Z · LW(p) · GW(p)

risks with negative selection

There should be fast tracks that present no practical limits to the new users. First few comments should be available immediately upon registration, possibly regenerating quickly. This should only degrade if there is downvoting or no upvoting, and the limits should go away completely according to an algorithm that passes backtesting on first comments made by users in good standing who started commenting within the last 3-4 years. That is, if hypothetically such a rate-limiting algorithm were to be applied 3 years ago to a user who started commenting then, who later became a clearly good contributor, the algorithm should succeed in (almost) never preventing that user from making any of the comments that were actually made, at the rate they were actually made.

If backtesting shows that this isn't feasible, implementing this feature is very bad. Crowdsource moderation instead, allow high-Karma users to rate-limit-vote on new users, but put rate-limit-level of new users to "almost unlimited" by default, until rate-limit-downvoted manually.
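Nesov's backtesting criterion could be checked roughly like this: replay each established user's real comment history against a candidate policy and count how many comments it would have blocked. The `(timestamp, karma)` history format, the 24-hour sliding window, the 1% tolerance, and all names here are assumptions. Using each comment's final karma also leaks information the live system would not have had at posting time, so this is an optimistic approximation.

```python
from datetime import datetime, timedelta
from typing import Callable, List, Sequence, Tuple

Comment = Tuple[datetime, int]  # (posted_at, final karma score)
Policy = Callable[[Sequence[Comment]], int]  # prior comments -> max comments per 24h


def blocked_comments(history: Sequence[Comment], policy: Policy) -> int:
    """Replay one user's actual comments (oldest first) and count the ones
    the policy would have refused, at the rate they were actually made."""
    blocked = 0
    for i, (posted_at, _) in enumerate(history):
        prior = history[:i]
        window_start = posted_at - timedelta(days=1)
        in_window = sum(1 for t, _ in prior if t > window_start)
        if in_window >= policy(prior):
            blocked += 1
    return blocked


def backtest(good_users: List[Sequence[Comment]], policy: Policy, max_fraction: float = 0.01) -> bool:
    """Pass if the policy would have blocked at most `max_fraction` of
    good users' real comments ("(almost) never")."""
    total = sum(len(h) for h in good_users)
    blocked = sum(blocked_comments(h, policy) for h in good_users)
    return total == 0 or blocked / total <= max_fraction
```

A policy allowing effectively unlimited comments trivially passes; the useful question is how strict a policy can get while still passing on real histories.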

↑ comment by Ruby · 2023-04-10T04:11:40.053Z · LW(p) · GW(p)

I'm less optimistic than Ray about rate limits, but still think they're worth exploring. I think getting the limits/rules correct will be tricky since I do care about the normal flow of good conversation not getting impeded.

I think it's something we'll try soon, but not sure if it'll be the priority for this week.

↑ comment by Vladimir_Nesov · 2023-04-09T23:21:11.337Z · LW(p) · GW(p)

"users by default have a rate limit of 1-comment-per day" and "1 post per week."

Imagine a system that lets a user write their comments or posts in advance, and then publishes comments according to these limits automatically. Then these limits wouldn't be enough. On the other hand, if you want to write a comment, you want to write it right away, instead of only starting to write it the next day because you are out of commenting chits. It's very annoying if the UI doesn't allow you to do that and instead you need to write it down in a file on your own device, make a reminder to go back to the site once the timeout is up, and post it at that time, all the while remaining within the bounds of the rules.

Also, being able to reply to responses to your comments is important, especially when the responses are requests for clarification, as long as that doesn't turn into an infinite discussion. So I think commenting chits should accumulate to a maximum of at least 3-4, even if it takes a week to get there, possibly even more if it's been a month. But maybe an even better option is for all but one of these to be "reply chits" that are weaker than full "comment chits" and only work for replies-to-replies to your own comments or posts, while full "comment chits" allow commenting anywhere.
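One way to read the chit proposal above is as a capped token bucket: chits regenerate over time, at most one of them is a full "comment chit", and the rest are "reply chits" usable only for replies in the user's own threads. This is a hypothetical sketch; the class name, the one-chit-per-day rate, and the cap of three reply chits are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class ChitWallet:
    regen_hours: float = 24.0  # assumed: one chit regenerates per day
    max_reply_chits: int = 3   # assumed: total cap of 4 = 1 full + 3 reply
    full: float = 1.0          # the single full "comment chit", capped at 1
    reply: float = 0.0         # "reply chits": only for replies in the user's own threads

    def tick(self, hours: float) -> None:
        """Regenerate chits: refill the full chit first, then overflow into reply chits."""
        gained = hours / self.regen_hours
        to_full = min(1.0 - self.full, gained)
        self.full += to_full
        self.reply = min(self.max_reply_chits, self.reply + gained - to_full)

    def try_comment(self, reply_in_own_thread: bool) -> bool:
        """Spend a chit if possible; return whether the comment is allowed."""
        if reply_in_own_thread and self.reply >= 1:
            self.reply -= 1
            return True
        if self.full >= 1:
            self.full -= 1
            return True
        return False
```

Spending a reply chit before the full chit, when both would work, is a design choice that keeps the one unrestricted chit available for commenting elsewhere.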

I don't see a way around the annoyance of personally managed posting-schedule workarounds being feasible, other than implementing a queued-posting feature on LW, together with the ability to manage the queue by arranging the order/schedule in which pending comments will be posted. That is pretty convoluted, which puts this whole development in question.

Replies from: Raemon↑ comment by Raemon · 2023-04-09T23:32:21.065Z · LW(p) · GW(p)

LessWrong already stores comments you write in local storage, so you can edit them over the course of the day and post them later.

I… don’t see a reason to actively facilitate users having an easier time posting as often as possible, and not sure I understand your objection here.

Replies from: Vladimir_Nesov↑ comment by Vladimir_Nesov · 2023-04-09T23:52:41.112Z · LW(p) · GW(p)

An obvious issue that could be fixed by the UI but isn't, and that can be worked around outside the UI, is a deliberate degradation of user experience. The blame is squarely on the developers, because it's an intentional decision by the developers. This should always be avoided, either by not creating this situation, or by fixing the UI. If this is not done, users will be annoyed, I think justifiably.

a reason to actively facilitate users having an easier time posting as often as possible

When users want to post, not facilitating that annoys them. If you actually knew that we want them to go away, you could've banned them already. You don't actually know, that's the whole issue here, so some of them are the reason there is a site at all, and it's very important to be a good host for them.

comment by Raemon · 2023-04-06T19:26:16.697Z · LW(p) · GW(p)

After chatting for a bit about what to do with low-quality new posts and comments, while being transparent and inspectably fair, the LW Team is currently somewhat optimistic about adding a section to lesswrong.com/moderation which lists all comments/posts that we've rejected for quality.

We haven't built it yet, so for the immediate future we'll just be strong-downvoting content that doesn't meet our quality bar. And for the immediate future, if existing users in good standing want to defend particular pieces as worth inclusion, they can do so here.

This is not a place for users who submitted rejected content to write an appeal (they can do that via PM, although we don't promise to reply since often we were just pretty confident in our take and the user hasn't offered new information), and I'll be deleting such comments that appear here.

(Is this maximally transparent? No. But, consider that it's still dramatically more transparent than a university or journal)

j/k I just tried this for 5 minutes and a) I don't actually want to approve users to make new posts (which is necessary currently to make their post appear), b) there's no current transparent solution that isn't a giant pain. So, not doing this for now, but we'll hopefully build a Rejected Content section at some point.

comment by Garrett Baker (D0TheMath) · 2023-04-05T13:26:34.870Z · LW(p) · GW(p)

What are the actual rationality concepts LWers are basically required to understand to participate in most discussions?

My prior favors having this bar set pretty high, like 80-100% of the sequences level. I remember years ago when I finished the sequences, I spent several months practicing everyday rationality in isolation, and only then deigned to visit LessWrong and talk to other rationalists. I was pretty disappointed with the average quality level, and felt like I'd dodged a bullet by spending those months thinking alone rather than with the wider community.

It also seems like average quality has decreased over the years.

Predictable confusion some will have: I’m talking about average quality here. Not 90th percentile quality posters.

Replies from: MondSemmel↑ comment by MondSemmel · 2023-04-06T08:52:32.844Z · LW(p) · GW(p)

I think I'd prefer setting the bar lower, and instead using downvotes as a filter for merely low-quality (rather than abysmal-quality) content. For instance, most posts on LW receive almost no comments, so I'd suspect that filtering for even higher quality would just dry up the discussion even more.

Replies from: D0TheMath, Benito↑ comment by Garrett Baker (D0TheMath) · 2023-04-06T13:28:57.287Z · LW(p) · GW(p)

The main reason I don’t reply to most posts is because I’m not guaranteed an interesting conversation, and it is not uncommon that I’d just be explaining a concept which seems obvious if you’ve read the sequences, which aren’t super fun conversations to have compared to alternative uses of my time.

For example, the other day I got into a discussion on LessWrong about whether I should worry about claims which are provably useless, and was accused of ignoring inconvenient truths for not doing so.

If the bar to entry was a lot higher, I think I’d comment more (and I think others would too, like TurnTrout).

Replies from: MondSemmel↑ comment by MondSemmel · 2023-04-06T13:49:12.007Z · LW(p) · GW(p)

Maybe we have different experiences because we tend to read different LW content? I skip most of the AI content, so I don't have a great sense of the quality of comments there. If most AI discussions get a healthy amount of comments, but those comments are mostly noise, then I can certainly understand your perspective.

↑ comment by Ben Pace (Benito) · 2023-04-06T17:03:11.304Z · LW(p) · GW(p)

In my experience actively getting terrible comments can be more frustrating than a lack-of-comments is demotivating.

Replies from: awg↑ comment by awg · 2023-04-06T17:06:47.981Z · LW(p) · GW(p)

Agreed. I think this also trends exponentially with the number of terrible comments. It is possible to be overwhelmed to death and have to completely relocate/start over (without proper prevention).

One thing that I think in the long term might be worth considering is something like the SomethingAwful approach: a one-time payment per account that is high enough to discourage trolls but low enough for most anyone to afford in combination with a strong culture and moderation (something LessWrong already has/is working on).

comment by lionhearted (Sebastian Marshall) (lionhearted) · 2023-04-04T23:54:50.237Z · LW(p) · GW(p)

Hey, first just wanted to say thanks and love and respect. The moderation team did such an amazing job bringing LW back from nearly defunct into the thriving place it is now. I'm not so active in posting now, but check the site logged out probably 3-5 times a week and my life is much better for it.

After that, a few ideas:

(1) While I don't 100% agree with every point he made, I think Duncan Sabien did an incredible job with "Basics of Rationalist Discourse" - https://www.lesswrong.com/posts/XPv4sYrKnPzeJASuk/basics-of-rationalist-discourse-1 - perhaps a boiled-down canonical version of that could be created. Obviously the pressure to get something like that perfect would be high, so maybe something like "Our rough thoughts on how to be a good contributor here, which might get updated from time to time". Or just link Duncan's piece as "non-canonical for rules but a great starting place." I'd hazard a guess that 90% of regular users here agree with at least 70% of it? If everyone followed all of Sabien's guidelines, there'd be a rather high quality standard.

(2) I wonder if there are some reasonably precise questions you could ask new users to check for understanding, which could serve as a friendly-ish guidepost if a new user is going wayward. Your example - "(for example: "beliefs are probabilistic, not binary, and you should update them incrementally")" - seems like a really good one. Obviously those should be incredibly non-contentious, but something that would demonstrate a core understanding. Perhaps 3-5 of those, maybe with a person formally writing up some commentary on them on their personal blog before posting?

(3) It's fallen from its peak glory years, but sonsofsamhorn.net might be an interesting reference case to look at — it was one of the top analytical sports discussion forums for quite a while. At the height of its popularity, many users wanted to join but wouldn't understand the basics - for instance, that a poorly-positioned player on defense making a flashy "diving play" to get the baseball wasn't a sign of good defense, but rather a sign that that player has a fundamental weakness in their game, which could be investigated more deeply with statistics - and we can't just trust flashy replay videos to be accurate indicators of defensive skill. (Defense in American baseball is particularly hard to measure and sometimes contentious.) What SOSH did was create an area called "The Sandbox" which was relatively unrestricted — spam and abuse still weren't permitted of course, but the standard of rigor was a lot lower. Regular members would engage in Sandbox threads from time to time, and users who made excellent posts and comments in The Sandbox would get invited to full membership. Probably not needed at the current scale level, but might be worth starting to think about for a long-term solution if LW keeps growing.

Thanks so much for everything you and the team do.

Replies from: Ruby, Raemon, NicholasKross↑ comment by Ruby · 2023-04-05T00:25:17.446Z · LW(p) · GW(p)

I did have the idea of there being regions with varying standards and barriers, in particular places where new users cannot comment easily and places where they can.

Replies from: TekhneMakre↑ comment by TekhneMakre · 2023-04-05T09:57:06.688Z · LW(p) · GW(p)

This feels like a/the natural solution. In particular, what occurred to me was:

- Make LW about rationality again.

- Expand the Alignment Forum:
  - By default, everything is as it is currently: a small set of users post, comment, and upvote, and that's what people see by default.
  - There's another section that's open to whoever.

The reasoning being that the influx is specifically about AI, not just a big influx.

Replies from: steve2152, niplav↑ comment by Steven Byrnes (steve2152) · 2023-04-05T15:27:04.927Z · LW(p) · GW(p)

The idea of AF having both a passing-the-current-AF-bar section and a passing-the-current-LW-bar section is intriguing to me. With some thought about labeling etc., it could be a big win for non-alignment people (since LW can suppress alignment content more aggressively by default), and a big win for people trying to get into alignment (since they can host their stuff on a more professional-looking dedicated alignment site), and no harm done to the current AF people (since the LW-bar section would be clearly labeled and lower on the frontpage).

I didn’t think it through very carefully though.

↑ comment by niplav · 2023-04-05T11:46:47.173Z · LW(p) · GW(p)

I like this direction, but I'm not sure how broadly one would want to define rationality: Would a post collecting quotes about intracranial ultrasound stimulation for meditation enhancement be rationality related enough? What about weird quantified self experiments?

In general I appreciate LessWrong because it is so much broader than other fora, while still staying interesting.

Replies from: TekhneMakre↑ comment by TekhneMakre · 2023-04-05T14:58:43.611Z · LW(p) · GW(p)

Well, at least we can say, "whatever LW has been, minus most AI stuff".

↑ comment by Raemon · 2023-04-05T00:04:03.392Z · LW(p) · GW(p)

I do agree Duncan's post is pretty good, and while I don't think it's perfect I don't really have an alternative I think is better for new users getting a handle on the culture here.

Replies from: Duncan_Sabien, gilch, Vladimir_Nesov, Benito, SaidAchmiz↑ comment by Duncan Sabien (Deactivated) (Duncan_Sabien) · 2023-04-05T06:02:01.993Z · LW(p) · GW(p)

I'd be willing to put serious effort into editing/updating/redrafting the two sections that got the most constructive pushback, if that would help tip things over the edge.

Replies from: Ilio↑ comment by Ilio · 2023-04-06T14:30:54.085Z · LW(p) · GW(p)

If you could add QAs, this could turn into a certificate that one has verifiably read (or copy-pasted) what LessWrong expects its users to know before writing under some tag (with a specific set of QAs for each main tag). Of course LW could also certify that this or that trusted user is welcome on all tags, and choose which tags can or can't appear on the front page.

↑ comment by gilch · 2023-04-07T00:19:21.585Z · LW(p) · GW(p)

I vaguely remember being not on board with that one and downvoting it. Basics of Rationalist Discourse doesn't seem to get to the core of what rationality is, and seems to preclude other approaches that might be valuable. Too strict and misses the point. I would hate for this to become the standard.

↑ comment by Vladimir_Nesov · 2023-04-05T20:42:19.100Z · LW(p) · GW(p)

I don't really have an alternative I think is better for new users getting a handle on the culture here

Culture is not systematically rationality (dath ilan wasn't built in a day). Not having a better alternative can coexist with this particular thing not being any good for the same purpose. And a thing that's any good could well be currently infeasible to make, for anyone.

Zack's post describes the fundamental difficulty with this project pretty well. Adherence to most rules of discourse is not systematically an improvement for processes of finding truth, and there is a risk of costly cargo-cultist activities, even if they are actually good for something else. The cost could be negative selection by culture, losing its stated purpose.

↑ comment by Ben Pace (Benito) · 2023-04-05T02:25:52.486Z · LW(p) · GW(p)

I would vastly prefer new users to read it than to not read anything at all.

↑ comment by Said Achmiz (SaidAchmiz) · 2023-04-05T11:33:07.594Z · LW(p) · GW(p)

There is no way that a post which some (otherwise non-banned) members of the site are banned from commenting on should be used as an onboarding tool for the site culture. The very fact of such bannings is a clear demonstration of the post’s unsuitability for purpose.

Replies from: gjm, Duncan_Sabien↑ comment by gjm · 2023-04-05T15:48:57.754Z · LW(p) · GW(p)

How does the fact of such bannings demonstrate the post's unsuitability for purpose?

I think it doesn't. For instance, I think the following scenario is clearly possible:

- There are users A and B who detest one another for entirely non-LW-related reasons. (Maybe they had a messy and distressing divorce or something.)

- A and B are both valuable contributors to LW, as long as they stay away from one another.

- A and B ban one another from commenting on their posts, because they detest one another. (Or, more positively, because they recognize that if they start interacting then sooner or later they will start behaving towards one another in unhelpful ways.)

- A writes an excellent post about LW culture, and bans B from it just like A bans B from all their posts (and vice versa).

If you think that Duncan's post specifically shouldn't be an LW-culture-onboarding tool because he banned you specifically from commenting on it, then I think you need reasons tied to the specifics of the post, or the specifics of your banning, or both.

(To be clear: I am not claiming that you don't have any such reasons, nor that Duncan is right to ban you from commenting on his frontpaged posts, nor that Duncan's "basics" post is good, nor am I claiming the opposite of any of those. I'm just saying that the thing you're saying doesn't follow from the thing you're saying it follows from.)

Replies from: SaidAchmiz↑ comment by Said Achmiz (SaidAchmiz) · 2023-04-05T16:34:20.874Z · LW(p) · GW(p)

I suspect that you know perfectly well that the sort of scenario you describe doesn’t apply here. (If, by some chance, you did not know this: I affirm it now. There is no “messy and distressing divorce”, or any such thing; indeed I have never interacted with Duncan, in any way, in any venue other than on Less Wrong.)

The other people in question were, to my knowledge, also banned from commenting on Duncan’s posts due to their criticism of “Basics of Rationalist Discourse” (or due to related discussions, on related topics on Less Wrong), and likewise have no such “out of band” relationship with Duncan.

(All of this was, I think, obvious from context. But now it has been said explicitly. Given these facts, your objection does not apply.)

Replies from: gjm, Duncan_Sabien↑ comment by gjm · 2023-04-05T22:42:29.407Z · LW(p) · GW(p)

I did not claim that my scenario describes the actual situation; in fact, it should be very obvious from my last two paragraphs that I thought (and think) it likely not to.

What I claimed (and still claim) is that the mere fact that some people are banned from commenting on Duncan's frontpage posts is not on its own anything like a demonstration that any particular post he may have written isn't worthy of being used for LW culture onboarding.

Evidently you think that some more specific features of the situation do have that consequence. But you haven't said what those more specific features are, nor how they have that consequence.

Actually, elsewhere in the thread you've said something that at least gestures in that direction:

the point is that if the conversational norms cannot be discussed openly [...] there's no reason to believe that they're good norms. How were they vetted? [...] the more people are banned from commenting on the norms as a consequence of their criticism of said norms, the less we should believe that the norms are any good!

I don't think this argument works. (There may well be a better version of it, along the lines of "There seem to be an awful lot of people Duncan is unable or unwilling to get on with on LW. That suggests that there's something wrong with his style of interaction, or with how he deals with other people's styles of interaction. And that suggests that we should be very skeptical of anything he writes that purports to tell us what's a good style of interaction". But that would be a different argument.)

It's not true that the norms can't be discussed openly; at least, it seems to me that in general "there are a few people who aren't allowed to talk in place X" is importantly different from "there are a few people who aren't allowed to talk about the thing that's mostly talked about in place X" which in turn is importantly different from "the thing that's mostly talked about in place X cannot be discussed openly".

It does matter how extensively they've been discussed, and that question is worth asking. Part of the answer is that right now that post has 179 comments. Another part is that in fact quite a few of those comments are from you.

If it were true that criticizing Duncan's proposed norms gets you banned from interacting with Duncan (and hence from commenting on that post) then indeed that would be a problem. I do not get the impression that that's true. In particular, to whatever extent it's true that you were banned "as a consequence of [your] criticism of said norms", I think that has to be interpreted as "as a consequence of the manner of your criticism of said norms" as opposed to "as a consequence of the fact that you criticized said norms" or "as a consequence of the content of your criticism of said norms", and I don't think there's any particular conflict between "these norms have been subject to robust debate" and "some people argued about them in a way Duncan found disagreeable enough to trigger a ban".

(Again, it might be that Duncan is too sensitive to some styles of argument, or too ban-happy, or something, and that that is reason to be skeptical of his proposed principles. Again, that's a different argument.)

Replies from: SaidAchmiz↑ comment by Said Achmiz (SaidAchmiz) · 2023-04-05T23:39:08.287Z · LW(p) · GW(p)

> In particular, to whatever extent it’s true that you were banned “as a consequence of [your] criticism of said norms”, I think that has to be interpreted as “as a consequence of the manner of your criticism of said norms” as opposed to “as a consequence of the fact that you criticized said norms” or “as a consequence of the content of your criticism of said norms”

This is false.

> I don’t think there’s any particular conflict between “these norms have been subject to robust debate” and “some people argued about them in a way Duncan found disagreeable enough to trigger a ban”.

There certainly is a conflict, if “the way Duncan found disagreeable” is “robust”.

> (Again, it might be that Duncan is too sensitive to some styles of argument, or too ban-happy, or something, and that that is reason to be skeptical of his proposed principles. Again, that’s a different argument.)

Sorry, no. It’s the very argument in question. The “styles of argument” are “the ones that are directly critical of the heart of the claims being made”.

↑ comment by gjm · 2023-04-06T00:34:30.283Z

It is at best debatable that "this is false". Duncan (who, of course, is the person who did the banning) explicitly denies that you were banned for criticizing his proposed norms. Maybe he's just lying, but it's certainly not obvious that he is and it looks to me as if he isn't.

Duncan has also been pretty explicit about what he dislikes about your interactions with him, and what he says he objects to is definitely not simply "robust disagreement". Again, of course it's possible he's just lying; again, I don't see any reason to think he is.

You are claiming very confidently, as if it's a matter of common knowledge, that Duncan banned you from commenting on his frontpage posts because he can't accept direct criticism of his claims and proposals. I do not see any reason to think that that is true. You have not, so far as I can see, given any reason to think it is true. I think you should stop making that claim without either justification or it-seems-to-me-that hedging.

(Since it's fairly clear[1] that this is a matter of something like enmity rather than mere disagreement and in such contexts everything is liable to be taken as a declaration of What Side One Is On, I will say that I think both you and Duncan are clear net-positive contributors to LW, that I am pretty sure I understand what each of you finds intolerable about the other, and that I have zero intention of picking a side in the overall fight.)

[1] So it seems to me. I suspect you disagree, and perhaps Duncan does too, but this is the sort of thing it is very easy to deceive oneself about.

↑ comment by Vladimir_Nesov · 2023-04-06T18:32:22.171Z

> You are claiming very confidently, as if it's a matter of common knowledge

As a decoupled aside, something not being a matter of common knowledge is not grounds for making claims of it less confidently; it's only grounds for a tiny bit of acknowledgment that it isn't common knowledge, or that the claim isn't expected to be persuasive in isolation.

↑ comment by gjm · 2023-04-06T18:59:00.195Z

I agree. If you are very certain of X but X isn't common knowledge (actually, "common knowledge" in the technical sense isn't needed; it's something like "agreed on by basically everyone around you"), then it's fine to say e.g. "I am very certain of X, from which I infer Y", but I think there is something rude about simply saying "X, therefore Y" without any acknowledgement that some of your audience may disagree with X. (It feels as if the subtext is "if you don't agree with X then you're too ignorant/stupid/crazy for me to care at all what you think".)

In practice, it's rather common to do the thing I'm claiming is rude. I expect I do it myself from time to time. But I think it would be better if we didn't.

↑ comment by Vladimir_Nesov · 2023-04-06T19:29:01.910Z

My point is that this concern is adequately summarized by something like "claiming without acknowledgment/disclaimers", but not "claiming confidently" (which would change credence in the name of something that's not correctness).

I disagree that this is a problem in most cases (acknowledgment is a cost, and usually not informative), but acknowledge that this is debatable. It's similar to forms of politeness that require more words, as opposed to forms of politeness that, all else equal, leave the message length unchanged. Acknowledgment is useful where the claim is actually in doubt.

↑ comment by gjm · 2023-04-06T22:13:54.306Z

In this case, Said is both (1) claiming the thing very confidently, when it seems pretty clear to me that that confidence is not warranted, and (2) claiming it as if it's common knowledge, when it seems pretty clear to me that it's far from being common knowledge.

↑ comment by Said Achmiz (SaidAchmiz) · 2023-04-06T00:56:50.968Z

> It is at best debatable that “this is false”. Duncan (who, of course, is the person who did the banning) explicitly denies that you were banned for criticizing his proposed norms.

But of course he would deny it. As I’ve said, that’s the problem with giving members the power to ban people from their posts: it creates a conflict of interest. It lets people ban commenters for simply disagreeing with them, while being able to claim that it’s for some other reason. Why would Duncan say “yeah, I banned these people because I don’t like it when people point out the flaws in my arguments, the ways in which something I’ve written makes no sense, etc.”? It would make him look pretty bad to admit that, wouldn’t it? Why shouldn’t he instead say that he banned the people in question for some respectable reason? What downside is there, for him?

And given that, why in the world would we believe him when he says such things? Why would we ever believe any post author who, after banning a commenter who’s made a bunch of posts disagreeing with said author, claims that the ban was actually for some other reason? It doesn’t make any sense at all to take such claims seriously!

The reason why the “ban people from your own post(s)” feature is bad is that it gives people an incentive to make such false claims, not just to deceive others (that would be merely bad) but—much worse!—to deceive themselves about their reasons for issuing bans.

> Duncan has also been pretty explicit about what he dislikes about your interactions with him, and what he says he objects to is definitely not simply “robust disagreement”. Again, of course it’s possible he’s just lying; again, I don’t see any reason to think he is.

The obvious reason to think so is that, having written something which is deserving of strong criticism—something which is seriously flawed, etc.—both letting people point this out, in clear and unmerciful terms, and banning them but admitting that you’ve banned them because you can’t take criticism, are unpleasant. (The latter more so than the former… or so we might hope!) Given the option to simply declare that the critics have supposedly violated some supposed norm (and that their violation is so terrible, so absolutely intolerable, that it outweighs the benefit of permitting their criticisms to be posted—quite a claim!), it would take an implausible, an almost superhuman, degree of integrity and force of will to resist doing just that. (Which is why it’s so bad to offer the option.)

> it’s fairly clear[1] that this is a matter of something like enmity rather than mere disagreement

I have no idea where you think such “enmity” could come from. As I said, I haven’t interacted with Duncan in any venue except on Less Wrong, ever. I have no personal feelings, positive or negative, toward him.

↑ comment by gjm · 2023-04-06T02:59:41.017Z

We would believe it because

- on the whole, people are more likely to say true things than false things

- Duncan has said at some length what he claims to find unpleasant about interacting with you, it isn't just "Said keeps finding mistakes in what I have written", and it is (to me) very plausible that someone might find it unpleasant and annoying

- (I'm pretty sure that) other people have disagreed robustly with Duncan and not had him ban them from commenting on his posts.

You don't give any concrete reason for disbelieving the plausible explanations Duncan gives, you just say -- as you could say regardless of the facts of the matter in this case -- that of course someone banning someone from commenting on their posts won't admit to doing so for lousy reasons. No doubt that's true, but that doesn't mean they all are doing it for lousy reasons.

It seems pretty obvious to me where enmity could come from. You and Duncan have said a bunch of negative things about one another in public; it is absolutely commonplace to resent having people say negative things about you in public. Maybe it all started with straightforward disagreement about some matter of fact, but where we are now is that interactions between you and Duncan tend to get hostile, and this happens faster and further than (to me) seems adequately explained just by disagreements on the points ostensibly at issue.

(For the avoidance of doubt, I was not at all claiming that whatever enmity there might be started somewhere other than LW.)

↑ comment by Dagon · 2023-04-06T08:23:07.045Z

[ I don't follow either participant closely enough to have a strong opinion on the disagreement, aside from noting that the disagreement seems to use a lot of words, and not a lot of effort to distill their own positions toward a crux, as opposed to attacking/defending. ]

> on the whole, people are more likely to say true things than false things

In the case of contentious or adversarial discussions, people say incorrect and misleading things. "More likely true than false" is a uselessly low bar for seeking any truth or basing any decisions on.

↑ comment by Said Achmiz (SaidAchmiz) · 2023-04-06T05:15:47.761Z

> on the whole, people are more likely to say true things than false things

This is a claim so general as to be meaningless. If we knew absolutely nothing except “a person said a thing”, then retreating to this sort of maximally-vague prior might be relevant. But we in fact are discussing a quite specific situation, with quite specific particular and categorical features. There is no good reason to believe that the quoted prior survives that descent to specificity unscathed (and indeed it seems clear to me that it very much does not).

> it isn’t just “Said keeps finding mistakes in what I have written”

It’s slightly more specific, of course—but this is, indeed, a good first approximation.

> it is (to me) very plausible that someone might find it unpleasant and annoying

Of course it is! What is surprising about the fact that being challenged on your claims, being asked to give examples of alleged principles, having your theories questioned, having your arguments picked apart, and generally being treated as though you’re basically just some dude saying things which could easily be wrong in all sorts of ways, is unpleasant and annoying? People don’t like such things! On the scale of “man bites dog” to the reverse thereof, this particular insight is all the way at the latter end.

The whole point of this collective exercise that we’re engaged in, with the “rationality” and the “sanity waterline” and all that, is to help each other overcome this sort of resistance, and thereby to more consistently and quickly approach truth.

> (I’m pretty sure that) other people have disagreed robustly with Duncan and not had him ban them from commenting on his posts.

Let’s see some examples, then we can talk.

> You don’t give any concrete reason for disbelieving the plausible explanations Duncan gives, you just say—as you could say regardless of the facts of the matter in this case—that of course someone banning someone from commenting on their posts won’t admit to doing so for lousy reasons. No doubt that’s true, but that doesn’t mean they all are doing it for lousy reasons.

If Alice criticizes one of Bob’s posts, and Bob immediately or shortly thereafter bans Alice from commenting on Bob’s posts, the immediate default assumption should be that the criticism was the reason for the ban. Knowing nothing else, just based on these bare facts, we should jump right to the assumption that Bob’s reasons for banning Alice were lousy.

If we then learn that Bob has banned multiple people who criticized him robustly/forcefully/etc., and Bob claims that the bans in all of these cases were for good reasons, valid reasons, definitely not just “these people criticized me”… then unless Bob has some truly heroic evidence (of the sort that, really, it is almost never possible to get), his claims should be laughed out of the room.

(Indeed, I’ll go further and say that the default assumption—though a slightly weaker default—in all cases of anyone banning anyone else[1] from commenting on their posts is that the ban was for lousy reasons. Yes, in some cases that default is overridden by some exceptional circumstances. But until we learn of such circumstances, evaluate them, and judge them to be good reasons for such a ban, we should assume that the reasons are bad.)

And the problem here isn’t that our hypothetical Bob, or the actual Duncan, is a bad person, a liar, etc. Nothing of the sort need be true! (And in Duncan’s case, I think that probably nothing of the sort is true.) But it would be very foolish of us to simply take someone’s word in a case like this.