Vote on Interesting Disagreements

post by Ben Pace (Benito) · 2023-11-07T21:35:00.270Z · LW · GW · 131 comments


Do you have a question you'd like to see argued about? Would you like to indicate your position and discuss it with someone who disagrees?

Add poll options to the thread below to find questions with lots of interest and disagreement.

How to use the poll

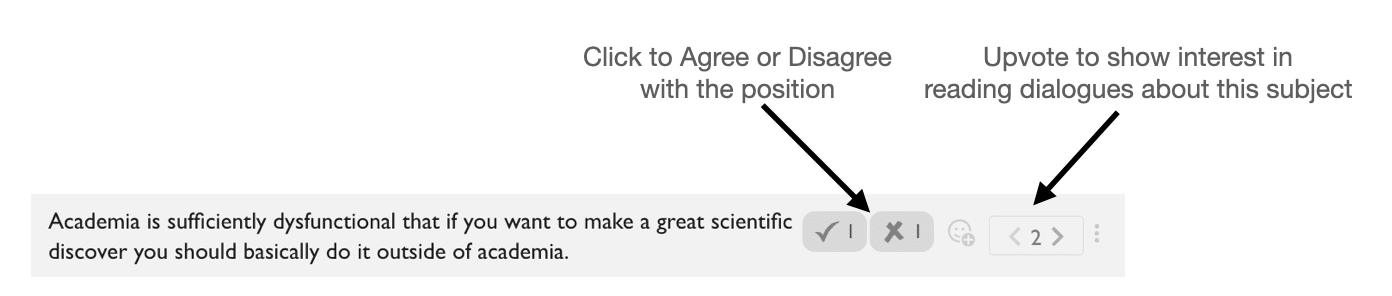

- Reacts: Click on the agree/disagree reacts to help people see how much disagreement there is on the topic.

- Karma: Upvote positions that you'd like to read dialogues about.

- New Poll Option: Add new positions for people to take sides on. Please add the agree/disagree reacts to new poll options you make.

The goal is to show people where a lot of interesting disagreement lies. This can be used to find discussion and dialogue topics in the future.
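One way to read the two signals above (karma for interest, reacts for disagreement) is as a simple ranking. The sketch below is only an illustration under stated assumptions: the `PollOption` fields, the scoring formula, and the example numbers are all hypothetical and are not how LessWrong actually sorts poll options.

```python
from dataclasses import dataclass


@dataclass
class PollOption:
    """Hypothetical record for one poll option (not a real LessWrong type)."""
    text: str
    karma: int      # upvotes: how much people want a dialogue on this
    agree: int      # number of agree reacts
    disagree: int   # number of disagree reacts


def disagreement(option: PollOption) -> float:
    """Return 0.0 for a unanimous vote and 1.0 for an even split."""
    total = option.agree + option.disagree
    if total == 0:
        return 0.0
    return 2 * min(option.agree, option.disagree) / total


def rank_options(options: list[PollOption]) -> list[PollOption]:
    """Sort options so that high-interest, evenly split ones come first."""
    return sorted(
        options,
        key=lambda o: max(o.karma, 0) * disagreement(o),
        reverse=True,
    )


# Invented numbers, purely for illustration.
options = [
    PollOption("Option A", karma=10, agree=9, disagree=1),
    PollOption("Option B", karma=8, agree=5, disagree=5),
]
ranked = rank_options(options)
```

Here "Option B" ranks first despite lower karma, because its votes are evenly split. Multiplying interest by disagreement is just one possible choice; a sum or a threshold on either signal would surface a different set of topics.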

131 comments

Comments sorted by top scores.

comment by Ben Pace (Benito) · 2023-10-24T00:35:27.551Z · LW(p) · GW(p)

Poll For Topics of Discussion and Disagreement

Use this thread to (a) upvote topics you're interested in reading about, (b) agree/disagree with positions, and (c) add new positions for people to vote on.

Note: Hit cmd-f or ctrl-f (whatever normally opens search) to automatically expand all of the poll options below.

Replies from: Benito, 1a3orn, Benito, habryka4, habryka4, Quadratic Reciprocity, ryan_greenblatt, Quadratic Reciprocity, Jonas Vollmer, Benito, Benito, Quadratic Reciprocity, habryka4, nathan-simons, TrevorWiesinger, habryka4, D0TheMath, D0TheMath, mattmacdermott, Benito, Benito, mattmacdermott, Jonas Vollmer, TrevorWiesinger, leogao, Benito, Tapatakt, gesild-muka, elifland, TrevorWiesinger, Jonas Vollmer, Benito, Benito, Seth Herd, mattmacdermott, Quadratic Reciprocity, habryka4, Jonas Vollmer, Benito, Benito, leogao, P., gworley, D0TheMath, calebp99, Tapatakt, Jonas Vollmer, Nate Showell, D0TheMath, TrevorWiesinger, johnswentworth, D0TheMath, Benito, leogao, Benito, lc, TrevorWiesinger, aysja, romeostevensit, P., Screwtape, D0TheMath, TrevorWiesinger, Jonas Vollmer, lc, Benito, quetzal_rainbow, P., TrevorWiesinger, P., faul_sname, TrevorWiesinger, thomas-kwa, D0TheMath, D0TheMath, D0TheMath, TrevorWiesinger, TrevorWiesinger, Benito, Benito, leogao, thomas-kwa, thomas-kwa, D0TheMath, Benito, strangepoop, strangepoop, KurtB, Benito, strangepoop, pktechgirl, quetzal_rainbow, P., tailcalled, davey-morse, strangepoop, strangepoop, D0TheMath, charbel-raphael-segerie, Slapstick, mattmacdermott, TrevorWiesinger, lc, tailcalled, Benito

↑ comment by Ben Pace (Benito) · 2023-10-24T00:42:55.581Z · LW(p) · GW(p)

Prosaic Alignment is currently more important to work on than Agent Foundations work.

↑ comment by Ben Pace (Benito) · 2023-11-07T21:10:38.455Z · LW(p) · GW(p)

Academia is sufficiently dysfunctional that if you want to make a great scientific discovery you should basically do it outside of academia.

↑ comment by habryka (habryka4) · 2023-11-07T23:54:12.540Z · LW(p) · GW(p)

Pursuing plans that cognitively enhance humans while delaying AGI should be our top strategy for avoiding AGI risk

↑ comment by habryka (habryka4) · 2023-11-07T23:49:52.840Z · LW(p) · GW(p)

Current progress in AI governance will translate with greater than 50% probability into more than a 2 year counterfactual delay of dangerous AI systems

↑ comment by Quadratic Reciprocity · 2023-11-08T00:40:46.367Z · LW(p) · GW(p)

It is very unlikely AI causes an existential catastrophe (Bostrom or Ord definition) but doesn't result in human extinction. (That is, non-extinction AI x-risk scenarios are unlikely)

↑ comment by ryan_greenblatt · 2023-11-08T04:52:43.861Z · LW(p) · GW(p)

Ambitious mechanistic interpretability is quite unlikely[1] to be able to confidently assess[2] whether AIs[3] are deceptively aligned (or otherwise have dangerous propensities) in the next 10 years.

↑ comment by Quadratic Reciprocity · 2023-11-08T01:44:34.627Z · LW(p) · GW(p)

Things will basically be fine regarding job loss and unemployment due to AI in the next several years and those worries are overstated

↑ comment by Jonas V (Jonas Vollmer) · 2023-11-07T23:57:01.001Z · LW(p) · GW(p)

The current AI x-risk grantmaking ecosystem is bad and could be improved substantially.

↑ comment by Ben Pace (Benito) · 2023-10-24T00:55:20.284Z · LW(p) · GW(p)

It is critically important for US/EU companies to build AGI before Chinese companies.

↑ comment by Ben Pace (Benito) · 2023-11-07T21:10:45.731Z · LW(p) · GW(p)

People aged 12 to 18 should basically be treated like adults rather than basically treated like children.

↑ comment by Quadratic Reciprocity · 2023-11-08T00:34:36.922Z · LW(p) · GW(p)

EAs and rationalists should strongly consider having lots more children than they currently are

↑ comment by habryka (habryka4) · 2023-11-07T23:55:31.105Z · LW(p) · GW(p)

Meaningness's "Geeks Mops and Sociopaths" model is an accurate model of the dynamics that underlie most social movements

↑ comment by Nathan Simons (nathan-simons) · 2023-11-08T02:41:25.676Z · LW(p) · GW(p)

Irrefutable evidence of extraterrestrial life would be a good thing.

↑ comment by trevor (TrevorWiesinger) · 2023-11-08T01:20:09.549Z · LW(p) · GW(p)

Someone in the AI safety community (e.g. Yud, Critch, Salamon, you) can currently, within 6 months' effort, write a 20,000 word document that would pass a threshold for a coordination takeoff on Earth, given that 1 million smart Americans and Europeans would read all of it and intend to try out much of the advice (i.e. the doc succeeds given 1m serious reads, it doesn't need to cause 1m serious reads). Copy-pasting already-written documents/posts would count.

↑ comment by habryka (habryka4) · 2023-11-07T23:56:05.849Z · LW(p) · GW(p)

It was a mistake to increase salaries in the broader EA/Rationality/AI-Alignment ecosystem between 2019 and 2022

↑ comment by Garrett Baker (D0TheMath) · 2023-11-09T00:01:16.827Z · LW(p) · GW(p)

Good AGI-notkilleveryoneism-conscious researchers should in general prioritize working at big AGI labs over working independently, for alignment-focused labs, or for academia marginally more than they currently do.

↑ comment by Garrett Baker (D0TheMath) · 2023-11-08T23:57:38.793Z · LW(p) · GW(p)

The ratio of good alignment work done at labs vs independently mostly skews toward labs

Good meaning something different from impactful here. Obviously AGI labs will pay more attention to their researchers or researchers from respectable institutions than independent researchers. Your answer should factor out such considerations.

Edit: Also normalize for quantity of researchers.

↑ comment by mattmacdermott · 2023-11-08T14:07:12.166Z · LW(p) · GW(p)

Empirical agent foundations is currently a good idea for a research direction.

↑ comment by Ben Pace (Benito) · 2023-10-24T00:40:57.156Z · LW(p) · GW(p)

There is a greater than 20% chance that the Effective Altruism movement has been net negative for the world.

↑ comment by Ben Pace (Benito) · 2023-11-07T21:15:31.138Z · LW(p) · GW(p)

A basic deontological and straightforward morality (such as that exemplified by Hermione in HPMOR) is basically right; this is in contrast with counterintuitive moralities that suggest evil-tinted people (like Quirrell in HPMOR) are also valid ways of being moral.

↑ comment by mattmacdermott · 2023-11-08T14:01:39.303Z · LW(p) · GW(p)

The work of agency-adjacent research communities such as artificial life, complexity science and active inference is at least as relevant to AI alignment as LessWrong-style agent foundations research is.

↑ comment by Jonas V (Jonas Vollmer) · 2023-11-07T23:58:33.417Z · LW(p) · GW(p)

Just like the last 12 months was the time of the chatbots, the next 12 months will be the time of agent-like AI product releases.

↑ comment by trevor (TrevorWiesinger) · 2023-11-08T00:55:44.070Z · LW(p) · GW(p)

American intelligence agencies consider AI safety to be substantially more worth watching than most social movements

↑ comment by Ben Pace (Benito) · 2023-10-24T00:49:10.717Z · LW(p) · GW(p)

Moloch is winning.

↑ comment by Gesild Muka (gesild-muka) · 2023-11-08T14:53:45.173Z · LW(p) · GW(p)

The rationality community will noticeably spill over into other parts of society in the next ten years. Examples: entertainment, politics, media, art, sports, education etc.

↑ comment by trevor (TrevorWiesinger) · 2023-11-08T00:58:33.537Z · LW(p) · GW(p)

At least one American intelligence agency is concerned about the AI safety movement potentially decelerating the American AI industry, against the administration/natsec community's wishes

↑ comment by Jonas V (Jonas Vollmer) · 2023-11-07T23:52:41.758Z · LW(p) · GW(p)

Having another $1 billion to prevent AGI x-risk would be useful because we could spend it on large compute budgets for safety research teams.

↑ comment by Ben Pace (Benito) · 2023-11-07T21:16:17.686Z · LW(p) · GW(p)

I broadly agree with the claim that "most people don't do anything and the world is very boring".

↑ comment by Ben Pace (Benito) · 2023-10-24T00:43:27.722Z · LW(p) · GW(p)

On the current margin most people would be better off involving more text-based communication in their lives than in-person communication.

↑ comment by mattmacdermott · 2023-11-08T13:57:52.602Z · LW(p) · GW(p)

Agent foundations research should become more academic on the margin (for example by increasing the paper to blogpost ratio, and by putting more effort into relating new work to existing literature).

↑ comment by Quadratic Reciprocity · 2023-11-08T01:47:56.282Z · LW(p) · GW(p)

Current AI safety university groups are overall a good idea and helpful, in expectation, for reducing AI existential risk

↑ comment by habryka (habryka4) · 2023-11-08T00:28:03.841Z · LW(p) · GW(p)

Current progress in AI governance will translate with greater than 20% probability into more than a 2 year counterfactual delay of dangerous AI systems

↑ comment by Jonas V (Jonas Vollmer) · 2023-11-07T23:52:56.855Z · LW(p) · GW(p)

Having another $1 billion to prevent AGI x-risk would be useful because we could spend it on large-scale lobbying efforts in DC.

↑ comment by Ben Pace (Benito) · 2023-11-07T21:13:14.324Z · LW(p) · GW(p)

Immersion into phenomena is better for understanding them than trying to think through at the gears-level, on the margin for most people who read LessWrong.

↑ comment by Ben Pace (Benito) · 2023-10-24T00:43:07.393Z · LW(p) · GW(p)

Rationality should be practiced for Rationality’s sake (rather than for the sake of x-risk).

↑ comment by Gordon Seidoh Worley (gworley) · 2023-11-08T03:48:42.179Z · LW(p) · GW(p)

Rationalists would be better off if they were more spiritual/religious

↑ comment by Garrett Baker (D0TheMath) · 2023-11-08T00:13:13.812Z · LW(p) · GW(p)

Effective altruism can be well modeled by cynically thinking of it as just another social movement, in the sense that those who are part of it are mainly jockeying for in-group status, and making costly demonstrations to their in-group & friends that they care about other sentiences more than others in the in-group. It's just that EA has more cerebral standards than others.

↑ comment by Jonas V (Jonas Vollmer) · 2023-11-07T23:51:50.387Z · LW(p) · GW(p)

Investing in early-stage AGI companies helps with reducing x-risk (via mission hedging, having board seats, shareholder activism)

↑ comment by Nate Showell · 2023-11-12T21:32:26.053Z · LW(p) · GW(p)

"Agent" is an incoherent concept.

↑ comment by Garrett Baker (D0TheMath) · 2023-11-10T22:27:36.665Z · LW(p) · GW(p)

The younger generation of rationalists are less interesting than the older generation was when that old generation had the same experience as the young generation currently does.

↑ comment by trevor (TrevorWiesinger) · 2023-11-08T13:58:09.903Z · LW(p) · GW(p)

The most valuable new people joining AI safety will usually take ~1-3 years of effort to begin to be adequately sorted and acknowledged for their worth, unless they are unusually good at self-promotion e.g. gift of gab, networking experience, and stellar resume.

↑ comment by johnswentworth · 2023-11-07T23:16:58.576Z · LW(p) · GW(p)

Poll feature on LW: Yay or Nay?

↑ comment by Garrett Baker (D0TheMath) · 2023-11-10T05:13:13.725Z · LW(p) · GW(p)

At least one of {Anthropic, OpenAI, DeepMind} is net-positive compared to the counterfactual where just before founding the company, its founders were all discreetly paid $10B by a time-travelling PauseAI activist not to found the company and to exit the industry for 30 years, and this worked.

↑ comment by Ben Pace (Benito) · 2023-11-07T21:10:01.183Z · LW(p) · GW(p)

One should basically not invest into having "charisma".

↑ comment by Ben Pace (Benito) · 2023-10-24T01:23:04.527Z · LW(p) · GW(p)

Great art is rarely original and mostly copied.

↑ comment by trevor (TrevorWiesinger) · 2023-11-08T00:46:23.376Z · LW(p) · GW(p)

If rationality took off in China, it would yield higher EV from potentially spreading to the rest of the world than from potentially accelerating China.

↑ comment by romeostevensit · 2023-11-10T14:02:30.540Z · LW(p) · GW(p)

When people try to discuss philosophy, math, or science, especially pre-paradigmatic fields such as AI safety, they use a lot of metaphorical thinking to extend from familiar concepts to new concepts. It would be very helpful, and people would stop talking past each other so much, if they practiced being explicitly aware of these mental representations and directly shared them rather than pretending that something more rigorous is happening. This is part of Alfred Korzybski's original rationality project, something he called 'consciousness of abstraction.'

↑ comment by P. · 2023-11-08T20:55:01.183Z · LW(p) · GW(p)

Research into getting a mechanistic understanding of the brain for purposes of at least one of: understanding how values/empathy works in people, brain uploading or improving cryonics/plastination is net positive and currently greatly underfunded.

↑ comment by Garrett Baker (D0TheMath) · 2023-11-08T00:07:09.393Z · LW(p) · GW(p)

Most persistent disagreements can more usefully be thought of as a difference in priors rather than a difference in evidence or rationality.

↑ comment by trevor (TrevorWiesinger) · 2023-11-08T01:03:21.185Z · LW(p) · GW(p)

Xi Jinping thinks that economic failure in the US or China, e.g. similar to 2008, is one of the most likely things to change the global balance of power.

↑ comment by Jonas V (Jonas Vollmer) · 2023-11-08T00:06:26.479Z · LW(p) · GW(p)

Having another $1 billion to prevent AGI x-risk would be pretty useful.

↑ comment by Ben Pace (Benito) · 2023-11-07T21:16:26.783Z · LW(p) · GW(p)

Most LWers are prioritizing their slack too much.

↑ comment by quetzal_rainbow · 2023-11-10T13:31:15.067Z · LW(p) · GW(p)

If you can write a prompt for GPT-2000 such that the completion of this prompt results in an aligned pivotal act, you can just use the knowledge necessary for writing this prompt to Just Build an aligned ASI, without needing to use GPT-2000.

↑ comment by P. · 2023-11-09T20:35:23.459Z · LW(p) · GW(p)

You know of a technology that has at least a 10% chance of having a very big novel impact on the world (think the internet or ending malaria) that isn't included in this list, very similar, or downstream from some element of it: AI, mind uploads, cryonics, human space travel, geo-engineering, gene drives, human intelligence augmentation, anti-aging, cancer cures, regenerative medicine, human genetic engineering, artificial pandemics, nuclear weapons, proper nanotech, very good lie detectors, prediction markets, other mind-altering drugs, cryptocurrency, better batteries, BCIs, nuclear fusion, better nuclear fission, better robots, AR, VR, room-temperature superconductors, quantum computers, polynomial time SAT solvers, cultured meat, solutions to antibiotic resistance, vaccines to some disease, optical computers, artificial wombs, de-extinction and graphene.

Bad options included just in case someone thinks they are good.

↑ comment by trevor (TrevorWiesinger) · 2023-11-08T01:56:54.240Z · LW(p) · GW(p)

Any activity or action taken after drinking coffee in the morning will strongly reward/reinforce that action/activity

↑ comment by faul_sname · 2023-11-08T01:44:14.568Z · LW(p) · GW(p)

Humans are the dominant species on earth primarily because our individual intelligence surpassed the necessary threshold to sustain civilization and take control of our environment.

↑ comment by trevor (TrevorWiesinger) · 2023-11-08T01:01:53.667Z · LW(p) · GW(p)

American intelligence agencies are actively planning to defend the American AI industry against foreign threats (e.g. Russia, China).

↑ comment by Thomas Kwa (thomas-kwa) · 2023-11-10T11:35:50.095Z · LW(p) · GW(p)

Conceptual alignment work on concepts like “agency”, “optimization”, “terminal values”, “abstractions”, “boundaries” is mostly intractable at the moment.

↑ comment by Garrett Baker (D0TheMath) · 2023-11-10T04:59:39.068Z · LW(p) · GW(p)

Rationalist rituals like Petrov day or the Secular Solstices should be marginally more emphasized within those collections of people who call themselves rationalists.

↑ comment by Garrett Baker (D0TheMath) · 2023-11-08T23:59:00.952Z · LW(p) · GW(p)

The ratio of good alignment work done at labs vs in academia mostly skews toward labs

Good meaning something different from impactful here. Obviously AGI labs will pay more attention to their researchers or researchers from respectable institutions than academics. Your answer should factor out such considerations.

Edit: Also normalize for quantity of researchers.

↑ comment by Garrett Baker (D0TheMath) · 2023-11-08T23:58:22.514Z · LW(p) · GW(p)

The ratio of good alignment work done in academia vs independently mostly skews toward academia

Good meaning something different from impactful here. Possibly AGI labs will pay more attention to academics than independent researchers. Your answer should factor out such considerations.

Edit: Also normalize for quantity of researchers.

↑ comment by trevor (TrevorWiesinger) · 2023-11-08T01:06:44.108Z · LW(p) · GW(p)

At least one mole, informant, or spy has been sent by a US government agency or natsec firm to infiltrate the AI safety community by posing as a new member (even if it's just to ask questions in casual conversations at events about recent happenings or influential people's priorities).

↑ comment by trevor (TrevorWiesinger) · 2023-11-08T00:44:28.368Z · LW(p) · GW(p)

Rationality is likely to organically gain popularity in China (e.g. quickly reaching 10,000 people or reaching 100,000 by 2030, e.g. among scientists or engineers, etc).

↑ comment by Ben Pace (Benito) · 2023-11-07T21:11:17.900Z · LW(p) · GW(p)

It is wrong to protest AI labs.

↑ comment by Ben Pace (Benito) · 2023-11-07T21:15:13.376Z · LW(p) · GW(p)

The government should build nuclear-driven helicopters, like nuclear subs.

↑ comment by Thomas Kwa (thomas-kwa) · 2023-11-10T11:43:39.118Z · LW(p) · GW(p)

There are arguments for convergent instrumental pressures towards catastrophe, but the required assumptions are too strong for the arguments to clearly go through.

↑ comment by Thomas Kwa (thomas-kwa) · 2023-11-10T11:41:39.999Z · LW(p) · GW(p)

Most end-to-end “alignment plans” are bad because research will be incremental. For example, Superalignment's impact will mostly come from adapting to the next ~3 years of AI discoveries and working on relevant subproblems like interp, rather than creating a superhuman alignment researcher.

↑ comment by Garrett Baker (D0TheMath) · 2023-11-10T05:07:08.168Z · LW(p) · GW(p)

Those who call themselves rationalists or EAs should drink marginally more alcohol at social events.

↑ comment by Ben Pace (Benito) · 2023-11-07T21:14:20.732Z · LW(p) · GW(p)

Most LWers are not prioritizing their slack enough.

↑ comment by a gently pricked vein (strangepoop) · 2023-11-14T17:38:54.346Z · LW(p) · GW(p)

The virtue of the void is indeed the virtue above all others (in rationality), and fundamentally unformalizable.

↑ comment by a gently pricked vein (strangepoop) · 2023-11-14T17:34:23.376Z · LW(p) · GW(p)

There is likely a deep compositional structure to be found for alignment, possibly to the extent that AGI alignment could come from "merely" stacking together "microalignment", even if in non-trivial ways.

↑ comment by Ben Pace (Benito) · 2023-11-08T00:44:59.873Z · LW(p) · GW(p)

Most of the time, power-seeking behavior in humans is morally good or morally neutral.

↑ comment by a gently pricked vein (strangepoop) · 2023-11-14T18:01:19.735Z · LW(p) · GW(p)

Computer science & ML will become lower in relevance/restricted in scope for the purposes of working with silicon-based minds, just as human-neurosurgery specifics are largely but not entirely irrelevant for most civilization-scale questions like economic policy, international relations, foundational research, etc.

Or IOW: Model neuroscience (and to some extent, model psychology) requires more in-depth CS/ML expertise than will the smorgasbord of incoming subfields of model sociology, model macroeconomics, model corporate law, etc.

↑ comment by Elizabeth (pktechgirl) · 2023-11-12T18:51:20.122Z · LW(p) · GW(p)

It's good for EA orgs to pay well

↑ comment by quetzal_rainbow · 2023-11-10T13:27:30.728Z · LW(p) · GW(p)

If you can't write a program that produces aligned (under whatever definition of alignment you use) output when run on an unphysically large computer, you can't deduce from the training data or weights of a superintelligent neural network whether it produces aligned output.

↑ comment by tailcalled · 2023-11-08T11:20:50.694Z · LW(p) · GW(p)

Generative AI like LLMs or diffusion will eventually be superseded by human AI researchers coming up with something autonomous.

↑ comment by Davey Morse (davey-morse) · 2025-04-12T08:28:23.923Z · LW(p) · GW(p)

The AGIs which survive the most will model and prioritize their own survival

↑ comment by a gently pricked vein (strangepoop) · 2023-11-14T17:50:33.834Z · LW(p) · GW(p)

EA has gotten a little more sympathetic to vibes-based reasoning recently, and will continue to incorporate more of it.

↑ comment by a gently pricked vein (strangepoop) · 2023-11-14T17:47:43.192Z · LW(p) · GW(p)

The mind (i.e. your mind), and how it is experienced from the inside, is potentially a very rich source of insights for keeping AI minds aligned on the inside.

↑ comment by Garrett Baker (D0TheMath) · 2023-11-10T22:27:26.519Z · LW(p) · GW(p)

The younger generation of EAs are less interesting than the older generation was when that old generation had the same experience as the young generation currently does.

↑ comment by Charbel-Raphaël (charbel-raphael-segerie) · 2023-11-10T11:14:16.120Z · LW(p) · GW(p)

In the context of Offense-defense balance, offense has a strong advantage

↑ comment by mattmacdermott · 2023-11-08T14:03:23.636Z · LW(p) · GW(p)

'Descriptive' agent foundations research is currently more important to work on than 'normative' agent foundations research.

↑ comment by trevor (TrevorWiesinger) · 2023-11-08T01:52:27.704Z · LW(p) · GW(p)

Developing a solid human intelligence/skill evaluation metric would be a high-EV project for AI safety, e.g. to make it easier to invest in moving valuable AI safety people to the Bay Area/London from other parts of the US/UK.

↑ comment by tailcalled · 2023-11-08T11:14:02.170Z · LW(p) · GW(p)

Autism is the extreme male version of a male-female difference in systemic vs empathic thinking.

↑ comment by Ben Pace (Benito) · 2023-11-07T21:15:04.550Z · LW(p) · GW(p)

People should pay an attractiveness tax to the government.

comment by riceissa · 2023-11-09T21:44:51.582Z · LW(p) · GW(p)

I really like the idea of this page and gave this post a strong-upvote. Felt like this was worth mentioning, since in recent years I've felt increasingly alienated by LessWrong culture. My only major request here is that, if there are future iterations of this page, I'd like poll options to be solicited/submitted before any voting happens (this is so that early submissions don't get an unfair advantage just by having more eyeballs on them). A second more minor request is to hide the votes while I'm still voting (I'm trying very hard not to be influenced by vote counts and the names of specific people agreeing/disagreeing with things, but it's difficult).

comment by Measure · 2023-11-08T15:23:36.632Z · LW(p) · GW(p)

Is it intended that collapsed polls display the author and expanded ones don't?

Replies from: Benito

↑ comment by Ben Pace (Benito) · 2023-11-10T20:19:51.421Z · LW(p) · GW(p)

No. This is a one-off fast mockup of a poll just to see what it's like, and that's one of the problems that I didn't think I needed to fix before testing the idea (along with things like agree/disagree not automatically showing up).

Overall I'd say it was like a 75th-80th percentile outcome; I'm pleasantly surprised by how much activity it's had and how much interesting disagreement it has surfaced. So I've updated toward building this as a proper feature.

comment by lc · 2023-11-08T03:16:52.368Z · LW(p) · GW(p)

I feel like LessWrong should just update-all-the-way and ask Manifold Markets for a stylable embed system.

Replies from: cata

↑ comment by cata · 2023-11-08T06:13:29.011Z · LW(p) · GW(p)

I work at Manifold. I don't know if this is true, but I can easily generate some arguments against:

- Manifold's business model is shaky and Manifold may well not exist in 3 years.

- Manifold's codebase is also shaky and would not survive Manifold-the-company dying right now.

- Manifold is quite short on engineering labor.

- It seems to me that Manifold and LW have quite different values (Manifold has a typical startup focus on prioritizing growth at all costs) and so I expect many subtle misalignments in a substantial integration.

Personally for these reasons I am more eager to see features developed in the LW codebase than the Manifold codebase.

Replies from: M. Y. Zuo

↑ comment by M. Y. Zuo · 2023-11-10T14:31:37.479Z · LW(p) · GW(p)

- Manifold's codebase is also shaky and would not survive Manifold-the-company dying right now.

Can you elaborate on this point? Why wouldn't the codebase be salvageable?

Replies from: mr-hire

↑ comment by Matt Goldenberg (mr-hire) · 2023-11-10T15:07:04.534Z · LW(p) · GW(p)

I don't have any special knowledge, but my guess is their code is like a spaghetti tower (https://www.lesswrong.com/posts/NQgWL7tvAPgN2LTLn/spaghetti-towers) because they've prioritized pushing out new features over refactoring and making a solid code base.

comment by Alex_Altair · 2023-11-08T16:23:03.898Z · LW(p) · GW(p)

I would love to try having dialogues with people about Agent Foundations! I'm on the vaguely-pro side, and want to have a better understanding of people on the vaguely-con side; either people who think it's not useful, or people who are confused about what it is and why we're doing it, etc.

Replies from: Seth Herd, thomas-kwa

↑ comment by Seth Herd · 2023-11-08T20:22:13.834Z · LW(p) · GW(p)

I'd love to do this. I think clarifying the types of work that would fall under agent foundations would be very useful. On one hand, it seems as though most of the useful work has probably been done; on the other, some current discussion seems to be missing important points from that work.

↑ comment by Thomas Kwa (thomas-kwa) · 2023-11-08T17:10:41.474Z · LW(p) · GW(p)

I might want to do this. I'm on the vaguely pro side but think we currently don't have many tractable directions.

comment by trevor (TrevorWiesinger) · 2023-11-08T01:23:48.893Z · LW(p) · GW(p)

Would love if expandable comment sections could be put on poll options. Most won't benefit, but some might benefit greatly, especially if it incentivized low word count.

For example: For EA being >50% of being net negative, I would like to make a short comment like:

I think EA is something like ~52% net negative and ~48% net positive, with wide error bars, because sign uncertainty is high, but EA being net neutral is arbitrarily close to 0%.

A better poll would ask if EA was >60% of being net negative.

Replies from: TrevorWiesinger

↑ comment by trevor (TrevorWiesinger) · 2023-11-08T13:46:57.581Z · LW(p) · GW(p)

I now think that it should go back to the binary yes and no responses; adding bells and whistles will complicate things too much.

Replies from: shankar-sivarajan

↑ comment by Shankar Sivarajan (shankar-sivarajan) · 2023-11-09T01:14:58.679Z · LW(p) · GW(p)

At least ternary: an "unsure" option is definitely worth including. (That also seems to be the third most popular option in the questions above.)

I think a fourth option, for "the question is wrong" would also be a good one, but perhaps redundant if there is also a comment section.

comment by rvnnt · 2023-11-08T13:01:59.664Z · LW(p) · GW(p)

non-extinction AI x-risk scenarios are unlikely

Many people disagreed with that. So, apparently many people believe that inescapable dystopias are not-unlikely? (If you're one of the people who disagreed with the quote, I'm curious to hear your thoughts on this.)

Replies from: deluks917, orthonormal, rvnnt

↑ comment by sapphire (deluks917) · 2023-11-09T08:14:45.724Z · LW(p) · GW(p)

Farmed animals are currently inside a non-extinction X-risk.

↑ comment by orthonormal · 2023-11-08T19:17:24.930Z · LW(p) · GW(p)

Steelmanning a position I don't quite hold: non-extinction AI x-risk scenarios aren't limited to inescapable dystopias as we imagine them.

"Kill all humans" is certainly an instrumental subgoal of "take control of the future lightcone" and it certainly gains an extra epsilon of resources compared to any form of not literally killing all humans, but it's not literally required, and there are all sorts of weird things the AGI could prefer to do with humanity instead depending on what kind of godshatter it winds up with, most of which are so far outside the realm of human reckoning that I'm not sure it's reasonable to call them dystopian. (Far outside Weirdtopia, for that matter.)

It still seems very likely to me that a non-aligned superhuman AGI would kill humanity in the process of taking control of the future lightcone, but I'm not as sure of that as I'm sure that it would take control.

Replies from: rvnnt

↑ comment by rvnnt · 2023-11-09T10:14:21.040Z · LW(p) · GW(p)

That makes sense; but:

so far outside the realm of human reckoning that I'm not sure it's reasonable to call them dystopian.

setting aside the question of what to call such scenarios, with what probability do you think the humans[1] in those scenarios would (strongly) prefer to not exist?

or non-human minds, other than the machines/Minds that are in control ↩︎

↑ comment by orthonormal · 2023-11-10T00:35:21.003Z · LW(p) · GW(p)

I expect AGI to emerge as part of the frontier model training run (and thus get a godshatter of human values), rather than only emerging after fine-tuning by a troll (and get a godshatter of reversed values), so I think "humans modified to be happy with something much cheaper than our CEV" is a more likely endstate than "humans suffering" (though, again, both much less likely than "humans dead").

↑ comment by rvnnt · 2023-11-09T10:16:45.424Z · LW(p) · GW(p)

One of the main questions on which I'd like to understand others' views is something like: Conditional on sentient/conscious humans[1] continuing to exist in an x-risk scenario[2], with what probability do you think they will be in an inescapable dystopia[3]?

(My own current guess is that dystopia is very likely.)

or non-human minds, other than the machines/Minds that are in control ↩︎

as defined by Bostrom, i.e. "the permanent and drastic destruction of [humanity's] potential for desirable future development" ↩︎

Versus e.g. just limited to a small disempowered population, but living in pleasant conditions? Or a large population living in unpleasant conditions, but where everyone at least has the option of suicide? ↩︎

comment by Davey Morse (davey-morse) · 2025-04-12T08:51:18.537Z · LW(p) · GW(p)

A simple poll system where you can sort the options/issues by their personal relevance... might unlock direct democracy at scale. Relevance could mean: semantic similarity to your past LessWrong writing.

Such a sort option would (1) surface more relevant issues to each person and so (2) increase community participation, and possibly (3) scale indefinitely. You could imagine a million people collectively prioritizing the issues that matter to them with such a system.

Would be simple to build.

comment by Review Bot · 2024-02-13T22:22:01.568Z · LW(p) · GW(p)

The LessWrong Review runs every year to select the posts that have most stood the test of time. This post is not yet eligible for review, but will be at the end of 2024. The top fifty or so posts are featured prominently on the site throughout the year.

Hopefully, the review is better than karma at judging enduring value. If we have accurate prediction markets on the review results, maybe we can have better incentives on LessWrong today. Will this post make the top fifty?

comment by Michael Roe (michael-roe) · 2023-11-21T12:42:30.550Z · LW(p) · GW(p)

I think the intelligence community ought to be watching AI risk, whether or not they actually are.

e.g. in the UK, the Socialist Workers Party was heavily infiltrated by undercover agents; this was widely suspected at the time and subsequently officially confirmed. Now, you may well disagree with their politics, but it's pretty clear they didn't amount to a threat. The infiltration should probably have been wound down after quickly establishing that the threat level was low.

AI risk, on the other hand ... from the point of view of an intelligence agency, there's uncertainty about whether there's a real risk or not. Seems worthwhile getting some undercover agents in place to find out what's going on.

... though, if in 20 years' time it has become clear the AI apocalypse has been averted, and the three-letter agencies are still running dozens of agents inside the AI companies, we could reasonably say their agents have outlived their usefulness.

comment by denyeverywhere (daniel-radetsky) · 2023-11-10T06:32:52.100Z · LW(p) · GW(p)

Academia is sufficiently dysfunctional that if you want to make a great scientific discovery you should basically do it outside of academia.

I feel like this point is a bit confused.

A person believing this essentially has to have a kind of "Wherever I am is where the party's at" mindset, in which case he ought to have an instrumental view of academia. Like obviously, if I want to maximize the time I spend reading math books and solving math problems, doing it inside of academia would involve wasting time and is suboptimal. However, if my goal is to do particle accelerator experiments, the easiest way to do this may be to convince people who have one to let me use it, which may mean getting a PhD. Since getting a PhD will still involve spending a bunch of time studying (if slightly suboptimally as compared to doing it outside of academia) then this might be the way to go.

See, we still think that academia is fucked, we just think they have all the particle accelerators. We only have to think academia is not so fucked that it's a thoroughly unreasonable source of particle accelerator access. We can still publish all our research open access, or even just on our blog (or also on our blog).