2013 Survey Results

post by Scott Alexander (Yvain) · 2014-01-19 · 560 comments


Thanks to everyone who took the 2013 Less Wrong Census/Survey. Extra thanks to Ozy, who helped me out with the data processing and statistics work, and to everyone who suggested questions.

This year's results are below. Some of them may make more sense in the context of the original survey questions, which can be seen here. Please do not try to take the survey as it is over and your results will not be counted.

I. Population

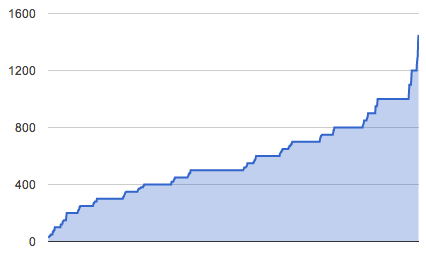

1636 people answered the survey.

Compare this to 1195 people last year, and 1090 people the year before that. It would seem the site is growing, but we do have to consider that each survey lasted a different amount of time; for example, last survey lasted 23 days, but this survey lasted 40.

However, almost everyone who takes the survey takes it in the first few weeks it is available. 1506 of the respondents answered within the first 23 days, proving that even if the survey ran the same length as last year's, there would still have been growth.
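The like-for-like comparison above is simple arithmetic, and can be sketched as follows. The variable and function names are illustrative only; the counts are the ones quoted in the text.

```python
def growth_rate(current: int, previous: int) -> float:
    """Fractional change from `previous` to `current`."""
    return (current - previous) / previous


respondents_2012 = 1195            # last year's total (23-day survey)
respondents_2013_first_23 = 1506   # this year's answers within the first 23 days
respondents_2013_total = 1636      # this year's total (40-day survey)

# Same-length window: compare only the first 23 days of this year's survey.
like_for_like = growth_rate(respondents_2013_first_23, respondents_2012)
# Raw totals: includes the extra 17 days this year's survey stayed open.
overall = growth_rate(respondents_2013_total, respondents_2012)

print(f"like-for-like growth: {like_for_like:.1%}")
print(f"overall growth: {overall:.1%}")
```

Even restricted to the same 23-day window, the respondent count is up by roughly a quarter.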

As we will see lower down, growth is smooth across all categories of users (lurkers, commenters, posters) EXCEPT people who have posted to Main, the number of which remains nearly the same from year to year.

We continue to have very high turnover - only 40% of respondents this year say they also took the survey last year.

II. Categorical Data

SEX:

Female: 161, 9.8%

Male: 1453, 88.8%

Other: 1, 0.1%

Did not answer: 21, 1.3%

[[Ozy is disappointed that we've lost 50% of our intersex readers.]]

GENDER:

F (cisgender): 140, 8.6%

F (transgender MtF): 20, 1.2%

M (cisgender): 1401, 85.6%

M (transgender FtM): 5, 0.3%

Other: 49, 3%

Did not answer: 21, 1.3%

SEXUAL ORIENTATION:

Asexual: 47, 2.9%

Bisexual: 188, 12.2%

Heterosexual: 1287, 78.7%

Homosexual: 45, 2.8%

Other: 39, 2.4%

Did not answer: 19, 1.2%

RELATIONSHIP STYLE:

Prefer monogamous: 829, 50.7%

Prefer polyamorous: 234, 14.3%

Other: 32, 2.0%

Uncertain/no preference: 520, 31.8%

Did not answer: 21, 1.3%

NUMBER OF CURRENT PARTNERS:

0: 797, 48.7%

1: 728, 44.5%

2: 66, 4.0%

3: 21, 1.3%

4: 1, .1%

6: 3, .2%

Did not answer: 20, 1.2%

RELATIONSHIP STATUS:

Married: 304, 18.6%

Relationship: 473, 28.9%

Single: 840, 51.3%

RELATIONSHIP GOALS:

Looking for more relationship partners: 617, 37.7%

Not looking for more relationship partners: 993, 60.7%

Did not answer: 26, 1.6%

HAVE YOU DATED SOMEONE YOU MET THROUGH THE LESS WRONG COMMUNITY?

Yes: 53, 3.3%

I didn't meet them through the community but they're part of the community now: 66, 4.0%

No: 1482, 90.5%

Did not answer: 35, 2.1%

COUNTRY:

United States: 895, 54.7%

United Kingdom: 144, 8.8%

Canada: 107, 6.5%

Australia: 69, 4.2%

Germany: 68, 4.2%

Finland: 35, 2.1%

Russia: 22, 1.3%

New Zealand: 20, 1.2%

Israel: 17, 1.0%

France: 16, 1.0%

Poland: 16, 1.0%

LESS WRONGERS PER CAPITA:

Finland: 1/154,685.

New Zealand: 1/221,650.

Canada: 1/325,981.

Australia: 1/328,659.

United States: 1/350,726

United Kingdom: 1/439,097

Israel: 1/465,176.

Germany: 1/1,204,264.

Poland: 1/2,408,750.

France: 1/4,106,250.

Russia: 1/6,522,727
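The per-capita figures are presumably each country's population divided by its number of respondents. That is an assumption, since the method and the population source are not stated here. Under that assumption, multiplying the two published numbers back together recovers the population figure that was used, which makes a quick sanity check:

```python
# Respondent counts and "1 per N people" denominators, copied from the lists above.
respondents = {
    "Finland": 35, "New Zealand": 20, "Canada": 107, "Australia": 69,
    "United States": 895, "United Kingdom": 144, "Israel": 17,
    "Germany": 68, "Poland": 16, "France": 16, "Russia": 22,
}
people_per_respondent = {
    "Finland": 154_685, "New Zealand": 221_650, "Canada": 325_981,
    "Australia": 328_659, "United States": 350_726, "United Kingdom": 439_097,
    "Israel": 465_176, "Germany": 1_204_264, "Poland": 2_408_750,
    "France": 4_106_250, "Russia": 6_522_727,
}


def implied_population(country: str) -> int:
    """Population implied by the published figures, assuming
    per-capita = population / respondents (rounded)."""
    return respondents[country] * people_per_respondent[country]


for country in respondents:
    print(f"{country}: about {implied_population(country):,} people")
```

Because the published denominators are rounded, the implied populations are only approximate.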

RACE:

Asian (East Asian): 60, 3.7%

Asian (Indian subcontinent): 37, 2.3%

Black: 11, .7%

Middle Eastern: 9, .6%

White (Hispanic): 73, 4.5%

White (non-Hispanic): 1373, 83.9%

Other: 51, 3.1%

Did not answer: 22, 1.3%

WORK STATUS:

Academics (teaching): 77, 4.7%

For-profit work: 552, 33.7%

Government work: 55, 3.4%

Independently wealthy: 14, .9%

Non-profit work: 46, 2.8%

Self-employed: 103, 6.3%

Student: 661, 40.4%

Unemployed: 105, 6.4%

Did not answer: 23, 1.4%

PROFESSION:

Art: 27, 1.7%

Biology: 26, 1.6%

Business: 44, 2.7%

Computers (AI): 47, 2.9%

Computers (other academic computer science): 107, 6.5%

Computers (practical): 505, 30.9%

Engineering: 128, 7.8%

Finance/economics: 92, 5.6%

Law: 36, 2.2%

Mathematics: 139, 8.5%

Medicine: 31, 1.9%

Neuroscience: 13, .8%

Philosophy: 41, 2.5%

Physics: 92, 5.6%

Psychology: 34, 2.1%

Statistics: 23, 1.4%

Other hard science: 31, 1.9%

Other social science: 43, 2.6%

Other: 139, 8.5%

Did not answer: 38, 2.3%

DEGREE:

None: 84, 5.1%

High school: 444, 27.1%

2 year degree: 68, 4.2%

Bachelor's: 554, 33.9%

Master's: 323, 19.7%

MD/JD/other professional degree: 31, 2.0%

PhD.: 90, 5.5%

Other: 22, 1.3%

Did not answer: 19, 1.2%

POLITICAL:

Communist: 11, .7%

Conservative: 64, 3.9%

Liberal: 580, 35.5%

Libertarian: 437, 26.7%

Socialist: 502, 30.7%

Did not answer: 42, 2.6%

COMPLEX POLITICAL WITH WRITE-IN:

Anarchist: 52, 3.2%

Conservative: 16, 1.0%

Futarchist: 42, 2.6%

Left-libertarian: 142, 8.7%

Liberal: 5

Moderate: 53, 3.2%

Pragmatist: 110, 6.7%

Progressive: 206, 12.6%

Reactionary: 40, 2.4%

Social democrat: 154, 9.5%

Socialist: 135, 8.2%

Did not answer: 26.2%

[[All answers with more than 1% of the Less Wrong population included. Other answers which made Ozy giggle included "are any of you kings?! why do you CARE?!", "Exclusionary: you are entitled to an opinion on nuclear power when you know how much of your power is nuclear", "having-well-founded-opinions-is-really-hard-ist", "kleptocrat", "pirate", and "SPECIAL FUCKING SNOWFLAKE."]]

AMERICAN PARTY AFFILIATION:

Democratic Party: 226, 13.8%

Libertarian Party: 31, 1.9%

Republican Party: 58, 3.5%

Other third party: 19, 1.2%

Not registered: 447, 27.3%

Did not answer or non-American: 856, 52.3%

VOTING:

Yes: 936, 57.2%

No: 450, 27.5%

My country doesn't hold elections: 2, 0.1%

Did not answer: 249, 15.2%

RELIGIOUS VIEWS:

Agnostic: 165, 10.1%

Atheist and not spiritual: 1163, 71.1%

Atheist but spiritual: 132, 8.1%

Deist/pantheist/etc.: 36, 2.2%

Lukewarm theist: 53, 3.2%

Committed theist: 64, 3.9%

RELIGIOUS DENOMINATION (IF THEIST):

Buddhist: 22, 1.3%

Christian (Catholic): 44, 2.7%

Christian (Protestant): 56, 3.4%

Jewish: 31, 1.9%

Mixed/Other: 21, 1.3%

Unitarian Universalist or similar: 25, 1.5%

[[This includes all religions with more than 1% of Less Wrongers. Minority religions include Dzogchen, Daoism, various sorts of Paganism, Simulationist, a very confused secular humanist, Kopmist, Discordian, and a Cultus Deorum Romanum practitioner whom Ozy wants to be friends with.]]

FAMILY RELIGION:

Agnostic: 129, 11.6%

Atheist and not spiritual: 225, 13.8%

Atheist but spiritual: 73, 4.5%

Committed theist: 423, 25.9%

Deist/pantheist, etc.: 42, 2.6%

Lukewarm theist: 563, 34.4%

Mixed/other: 97, 5.9%

Did not answer: 24, 1.5%

RELIGIOUS BACKGROUND:

Bahai: 3, 0.2%

Buddhist: 13, .8%

Christian (Catholic): 418, 25.6%

Christian (Mormon): 38, 2.3%

Christian (Protestant): 631, 38.4%

Christian (Quaker): 7, 0.4%

Christian (Unitarian Universalist or similar): 32, 2.0%

Christian (other non-Protestant): 99, 6.1%

Christian (unknown): 3, 0.2%

Eckankar: 1, 0.1%

Hindu: 29, 1.8%

Jewish: 136, 8.3%

Muslim: 12, 0.7%

Native American Spiritualist: 1, 0.1%

Mixed/Other: 85, 5.3%

Sikhism: 1, 0.1%

Traditional Chinese: 11, .7%

Wiccan: 1, 0.1%

None: 8, 0.4%

Did not answer: 107, 6.7%

MORAL VIEWS:

Accept/lean towards consequentialism: 1049, 64.1%

Accept/lean towards deontology: 77, 4.7%

Accept/lean towards virtue ethics: 197, 12.0%

Other/no answer: 276, 16.9%

Did not answer: 37, 2.3%

CHILDREN

0: 1414, 86.4%

1: 77, 4.7%

2: 90, 5.5%

3: 25, 1.5%

4: 7, 0.4%

5: 1, 0.1%

6: 2, 0.1%

Did not answer: 20, 1.2%

MORE CHILDREN:

Have no children, don't want any: 506, 31.3%

Have no children, uncertain if want them: 472, 29.2%

Have no children, want children: 431, 26.7%

Have no children, didn't answer: 5, 0.3%

Have children, don't want more: 124, 7.6%

Have children, uncertain if want more: 25, 1.5%

Have children, want more: 53, 3.2%

HANDEDNESS:

Right: 1256, 76.6%

Left: 145, 9.5%

Ambidextrous: 36, 2.2%

Not sure: 7, 0.4%

Did not answer: 182, 11.1%

LESS WRONG USE:

Lurker (no account): 584, 35.7%

Lurker (account): 221, 13.5%

Poster (comment, no post): 495, 30.3%

Poster (Discussion, not Main): 221, 12.9%

Poster (Main): 103, 6.3%

SEQUENCES:

Never knew they existed: 119, 7.3%

Knew they existed, didn't look at them: 48, 2.9%

~25% of the Sequences: 200, 12.2%

~50% of the Sequences: 271, 16.6%

~75% of the Sequences: 225, 13.8%

All the Sequences: 419, 25.6%

Did not answer: 24, 1.5%

MEETUPS:

No: 1134, 69.3%

Yes, once or a few times: 307, 18.8%

Yes, regularly: 159, 9.7%

HPMOR:

No: 272, 16.6%

Started it, haven't finished: 255, 15.6%

Yes, all of it: 912, 55.7%

CFAR WORKSHOP ATTENDANCE:

Yes, a full workshop: 105, 6.4%

A class but not a full-day workshop: 40, 2.4%

No: 1446, 88.3%

Did not answer: 46, 2.8%

PHYSICAL INTERACTION WITH LW COMMUNITY:

Yes, all the time: 94, 5.7%

Yes, sometimes: 179, 10.9%

No: 1316, 80.4%

Did not answer: 48, 2.9%

VEGETARIAN:

No: 1201, 73.4%

Yes: 213, 13.0%

Did not answer: 223, 13.6%

SPACED REPETITION:

Never heard of them: 363, 22.2%

No, but I've heard of them: 495, 30.2%

Yes, in the past: 328, 20%

Yes, currently: 219, 13.4%

Did not answer: 232, 14.2%

HAVE YOU TAKEN PREVIOUS INCARNATIONS OF THE LESS WRONG SURVEY?

Yes: 638, 39.0%

No: 784, 47.9%

Did not answer: 215, 13.1%

PRIMARY LANGUAGE:

English: 1009, 67.8%

German: 58, 3.6%

Finnish: 29, 1.8%

Russian: 25, 1.6%

French: 17, 1.0%

Dutch: 16, 1.0%

Did not answer: 15.2%

[[This includes all answers that more than 1% of respondents chose. Other languages include Urdu, both Czech and Slovakian, Latvian, and Love.]]

ENTREPRENEUR:

I don't want to start my own business: 617, 37.7%

I am considering starting my own business: 474, 29.0%

I plan to start my own business: 113, 6.9%

I've already started my own business: 156, 9.5%

Did not answer: 277, 16.9%

EFFECTIVE ALTRUIST:

Yes: 468, 28.6%

No: 883, 53.9%

Did not answer: 286, 17.5%

WHO ARE YOU LIVING WITH?

Alone: 348, 21.3%

With family: 420, 25.7%

With partner/spouse: 400, 24.4%

With roommates: 450, 27.5%

Did not answer: 19, 1.3%

DO YOU GIVE BLOOD?

No: 646, 39.5%

No, only because I'm not allowed: 157, 9.6%

Yes: 609, 37.2%

Did not answer: 225, 13.7%

GLOBAL CATASTROPHIC RISK:

Pandemic (bioengineered): 374, 22.8%

Environmental collapse including global warming: 251, 15.3%

Unfriendly AI: 233, 14.2%

Nuclear war: 210, 12.8%

Pandemic (natural): 145, 8.8%

Economic/political collapse: 175, 10.7%

Asteroid strike: 65, 3.9%

Nanotech/grey goo: 57, 3.5%

Didn't answer: 99, 6.0%

CRYONICS STATUS:

Never thought about it / don't understand it: 69, 4.2%

No, and don't want to: 414, 25.3%

No, still considering: 636, 38.9%

No, would like to: 265, 16.2%

No, would like to, but it's unavailable: 119, 7.3%

Yes: 66, 4.0%

Didn't answer: 68, 4.2%

NEWCOMB'S PROBLEM:

Don't understand/prefer not to answer: 92, 5.6%

Not sure: 103, 6.3%

One box: 1036, 63.3%

Two box: 119, 7.3%

Did not answer: 287, 17.5%

GENOMICS:

Yes: 177, 10.8%

No: 1219, 74.5%

Did not answer: 241, 14.7%

REFERRAL TYPE:

Been here since it started in the Overcoming Bias days: 285, 17.4%

Referred by a friend: 241, 14.7%

Referred by a search engine: 148, 9.0%

Referred by HPMOR: 400, 24.4%

Referred by a link on another blog: 373, 22.8%

Referred by a school course: 1, .1%

Other: 160, 9.8%

Did not answer: 29, 1.9%

REFERRAL SOURCE:

Common Sense Atheism: 33

Slate Star Codex: 20

Hacker News: 18

Reddit: 18

TVTropes: 13

Y Combinator: 11

Gwern: 9

RationalWiki: 8

Marginal Revolution: 7

Unequally Yoked: 6

Armed and Dangerous: 5

Shtetl Optimized: 5

Econlog: 4

StumbleUpon: 4

Yudkowsky.net: 4

Accelerating Future: 3

Stares at the World: 3

xkcd: 3

David Brin: 2

Freethoughtblogs: 2

Felicifia: 2

Givewell: 2

hatrack.com: 2

HPMOR: 2

Patri Friedman: 2

Popehat: 2

Overcoming Bias: 2

Scientiststhesis: 2

Scott Young: 2

Stardestroyer.net: 2

TalkOrigins: 2

Tumblr: 2

[[This includes all sources with more than one referral; needless to say there was a long tail]]

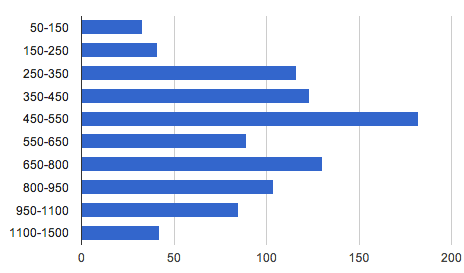

III. Numeric Data

(in the form mean + stdev (1st quartile, 2nd quartile, 3rd quartile) [n = number responding])

Age: 27.4 + 8.5 (22, 25, 31) [n = 1558]

Height: 176.6 cm + 16.6 (173, 178, 183) [n = 1267]

Karma Score: 504 + 2085 (0, 0, 100) [n = 1438]

Time in community: 2.62 years + 1.84 (1, 2, 4) [n = 1443]

Time on LW: 13.25 minutes/day + 20.97 (2, 10, 15) [n = 1457]

IQ: 138.2 + 13.6 (130, 138, 145) [n = 506]

SAT out of 1600: 1474 + 114 (1410, 1490, 1560) [n = 411]

SAT out of 2400: 2207 + 161 (2130, 2240, 2330) [n = 333]

ACT out of 36: 32.8 + 2.5 (32, 33, 35) [n = 265]

P(Aliens in observable universe): 74.3 + 32.7 (60, 90, 99) [n = 1496]

P(Aliens in Milky Way): 44.9 + 38.2 (5, 40, 85) [n = 1482]

P(Supernatural): 7.7 + 22 (0E-9, .000055, 1) [n = 1484]

P(God): 9.1 + 22.9 (0E-11, .01, 3) [n = 1490]

P(Religion): 5.6 + 19.6 (0E-11, 0E-11, .5) [n = 1497]

P(Cryonics): 22.8 + 28 (2, 10, 33) [n = 1500]

P(AntiAgathics): 27.6 + 31.2 (2, 10, 50) [n = 1493]

P(Simulation): 24.1 + 28.9 (1, 10, 50) [n = 1400]

P(ManyWorlds): 50 + 29.8 (25, 50, 75) [n = 1373]

P(Warming): 80.7 + 25.2 (75, 90, 98) [n = 1509]

P(Global catastrophic risk): 72.9 + 25.41 (60, 80, 95) [n = 1502]

Singularity year: 1.67E+11 + 4.089E+12 (2060, 2090, 2150) [n = 1195]

[[Of course, this question was hopelessly screwed up by people who insisted on filling the whole answer field with 9s, or other such nonsense. I went back and eliminated all outliers - answers with more than 4 digits or answers in the past - which changed the results to: 2150 + 226 (2060, 2089, 2150)]]
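The outlier rule described above (drop answers with more than 4 digits, drop answers in the past) can be sketched as a simple filter. This is a minimal sketch, not the processing actually used: the cutoff year of 2013 and the decision to keep an answer equal to the survey year are assumptions, and the sample answers are made up.

```python
from typing import Iterable, List

SURVEY_YEAR = 2013  # assumption: "in the past" means before the survey year


def is_plausible_year(answer: float, survey_year: int = SURVEY_YEAR) -> bool:
    """Keep answers that have at most 4 digits and are not in the past."""
    return survey_year <= answer <= 9999


def drop_outliers(answers: Iterable[float]) -> List[float]:
    """Return only the answers that pass the plausibility rule."""
    return [a for a in answers if is_plausible_year(a)]


# Hypothetical raw answers, including a field stuffed with 9s and a past year.
raw = [2060, 1990, 99999999, 2150, 9999]
print(drop_outliers(raw))
```

A single answer of 99999999 is enough to drag the mean into the millions, which is why the quartiles are the more informative numbers before cleaning.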

Yearly Income: $73,226 + 423,310 (10,000, 37,000, 80,000) [n = 910]

Yearly Charity: $1181.16 + 6037.77 (0, 50, 400) [n = 1231]

Yearly Charity to MIRI/CFAR: $307.18 + 4205.37 (0, 0, 0) [n = 1191]

Yearly Charity to X-risk (excluding MIRI or CFAR): $6.34 + 55.89 (0, 0, 0) [n = 1150]

Number of Languages: 1.49 + .8 (1, 1, 2) [n = 1345]

Older Siblings: 0.5 + 0.9 (0, 0, 1) [n = 1366]

Time Online/Week: 42.7 hours + 24.8 (25, 40, 60) [n = 1292]

Time Watching TV/Week: 4.2 hours + 5.7 (0, 2, 5) [n = 1316]

[[The next nine questions ask respondents to rate how favorable they are to the political idea or movement above on a scale of 1 to 5, with 1 being "not at all favorable" and 5 being "very favorable". You can see the exact wordings of the questions on the survey.]]

Abortion: 4.4 + 1 (4, 5, 5) [n = 1350]

Immigration: 4.1 + 1 (3, 4, 5) [n = 1322]

Basic Income: 3.8 + 1.2 (3, 4, 5) [n = 1289]

Taxes: 3.1 + 1.3 (2, 3, 4) [n = 1296]

Feminism: 3.8 + 1.2 (3, 4, 5) [n = 1329]

Social Justice: 3.6 + 1.3 (3, 4, 5) [n = 1263]

Minimum Wage: 3.2 + 1.4 (2, 3, 4) [n = 1290]

Great Stagnation: 2.3 + 1 (2, 2, 3) [n = 1273]

Human Biodiversity: 2.7 + 1.2 (2, 3, 4) [n = 1305]

IV. Bivariate Correlations

Ozy ran bivariate correlations between all the numerical data and recorded all correlations that were significant at the .001 level in order to maximize the chance that these are genuine results. The format is variable/variable: Pearson correlation (n). Yvain is not hugely on board with the idea of running correlations between everything and seeing what sticks, but will grudgingly publish the results because of the very high bar for significance (p < .001 on ~800 correlations suggests < 1 spurious result) and because he doesn't want to have to do it himself.
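The "< 1 spurious result" figure is the expected number of false positives: the number of tests times the significance threshold. A short sketch of that arithmetic follows. The second quantity, the chance of at least one false positive, additionally assumes the tests are independent and that every null hypothesis is true, neither of which holds exactly for survey data, so treat it as a rough guide only.

```python
n_tests = 800   # approximate number of correlations, as stated above
alpha = 0.001   # significance threshold

# Expected number of spurious "significant" results if every null were true.
expected_false_positives = n_tests * alpha

# Chance of at least one spurious result, ASSUMING independent tests.
p_at_least_one = 1 - (1 - alpha) ** n_tests

print(f"expected spurious results: {expected_false_positives:.2f}")
print(f"P(at least one spurious result): {p_at_least_one:.2f}")
```

An expected count below one still leaves a real chance that one of the weaker correlations listed below is noise.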

Less Political:

SAT score (1600)/SAT score (2400): .835 (56)

Charity/MIRI and CFAR donations: .730 (1193)

SAT score out of 2400/ACT score: .673 (111)

SAT score out of 1600/ACT score: .544 (102)

Number of children/age: .507 (1607)

P(Cryonics)/P(AntiAgathics): .489 (1515)

SAT score out of 1600/IQ: .369 (173)

MIRI and CFAR donations/XRisk donations: .284 (1178)

Number of children/ACT score: -.279 (269)

Income/charity: .269 (884)

Charity/Xrisk charity: .262 (1161)

P(Cryonics)/P(Simulation): .256 (1419)

P(AntiAgathics)/P(Simulation): .253 (1418)

Number of current partners/age: .238 (1607)

Number of children/SAT score (2400): -.223 (345)

Number of current partners/number of children: .205 (1612)

SAT score out of 1600/age: -.194 (422)

Charity/age: .175 (1259)

Time on Less Wrong/IQ: -.164 (492)

P(Warming)/P(GlobalCatastrophicRisk): .156 (1522)

Number of current partners/IQ: .155 (521)

P(Simulation)/age: -.153 (1420)

Immigration/P(ManyWorlds): .150 (1195)

Income/age: .150 (930)

P(Cryonics)/age: -.148 (1521)

Income/children: .145 (931)

P(God)/P(Simulation): .142 (1409)

Number of children/P(Aliens): .140 (1523)

P(AntiAgathics)/Hours Online: .138 (1277)

Number of current partners/karma score: .137 (1470)

Abortion/P(ManyWorlds): .122 (1215)

Feminism/Xrisk charity donations: -.122 (1104)

P(AntiAgathics)/P(ManyWorlds): .118 (1381)

P(Cryonics)/P(ManyWorlds): .117 (1387)

Karma score/Great Stagnation: .114 (1202)

Hours online/P(simulation): .114 (1199)

P(Cryonics)/Hours Online: .113 (1279)

P(AntiAgathics)/Great Stagnation: -.111 (1259)

Basic income/hours online: .111 (1200)

P(GlobalCatastrophicRisk)/Great Stagnation: -.110 (1270)

Age/X risk charity donations: .109 (1176)

P(AntiAgathics)/P(GlobalCatastrophicRisk): -.109 (1513)

Time on Less Wrong/age: -.108 (1491)

P(AntiAgathics)/Human Biodiversity: .104 (1286)

Immigration/Hours Online: .104 (1226)

P(Simulation)/P(GlobalCatastrophicRisk): -.103 (1421)

P(Supernatural)/height: -.101 (1232)

P(GlobalCatastrophicRisk)/height: .101 (1249)

Number of children/hours online: -.099 (1321)

P(AntiAgathics)/age: -.097 (1514)

Karma score/time on LW: .096 (1404)

This year for the first time P(Aliens) and P(Aliens2) are entirely uncorrelated with each other. Time in Community, Time on LW, and IQ are not correlated with anything particularly interesting, suggesting all three fail to change people's views.

Results we find amusing: high-IQ and high-karma people have more romantic partners, suggesting that those are attractive traits. There is definitely a Cryonics/Antiagathics/Simulation/Many Worlds cluster of weird beliefs, which younger people and people who spend more time online are slightly more likely to have - weirdly, that cluster seems slightly less likely to believe in global catastrophic risk. Older people and people with more children have more romantic partners (it'd be interesting to see if that holds true for the polyamorous). People who believe in anti-agathics and global catastrophic risk are less likely to believe in a great stagnation (presumably because both of the above rely on inventions). People who spend more time on Less Wrong have lower IQs. Height is, bizarrely, correlated with belief in the supernatural and global catastrophic risk.

All political viewpoints are correlated with each other in pretty much exactly the way one would expect. They are also correlated with one's level of belief in God, the supernatural, and religion. There are minor correlations with some of the beliefs and number of partners (presumably because polyamory), number of children, and number of languages spoken. We are doing terribly at avoiding Blue/Green politics, people.

More Political:

P(Supernatural)/P(God): .736 (1496)

P(Supernatural)/P(Religion): .667 (1492)

Minimum wage/taxes: .649 (1299)

P(God)/P(Religion): .631 (1496)

Feminism/social justice: .619 (1293)

Social justice/minimum wage: .508 (1262)

P(Supernatural)/abortion: -.469 (1309)

Taxes/basic income: .463 (1285)

P(God)/abortion: -.461 (1310)

Social justice/taxes: .456 (1267)

P(Religion)/abortion: -.413

Basic income/minimum wage: .392 (1283)

Feminism/taxes: .391 (1318)

Feminism/minimum wage: .391 (1312)

Feminism/human biodiversity: -.365 (1331)

Immigration/feminism: .355 (1336)

P(Warming)/taxes: .340 (1292)

Basic income/social justice: .311 (1270)

Immigration/social justice: .307 (1275)

P(Warming)/feminism: .294 (1323)

Immigration/human biodiversity: -.292 (1313)

P(Warming)/basic income: .290 (1287)

Social justice/human biodiversity: -.289 (1281)

Basic income/feminism: .284 (1313)

Human biodiversity/minimum wage: -.273 (1293)

P(Warming)/social justice: .271 (1261)

P(Warming)/minimum wage: .262 (1284)

Human biodiversity/taxes: -.251 (1270)

Abortion/feminism: .239 (1356)

Abortion/social justice: .220 (1292)

P(Warming)/immigration: .215 (1315)

Abortion/immigration: .211 (1353)

P(Warming)/abortion: .192 (1340)

Immigration/taxes: .186 (1322)

Basic income/taxes: .174 (1249)

Abortion/taxes: .170 (1328)

Abortion/minimum wage: .169 (1317)

P(warming)/human biodiversity: -.168 (1301)

Abortion/basic income: .168 (1314)

Immigration/Great Stagnation: -.163 (1281)

P(God)/feminism: -.159 (1294)

P(Supernatural)/feminism: -.158 (1292)

Human biodiversity/Great Stagnation: .152 (1287)

Social justice/Great Stagnation: -.135 (1242)

Number of languages/taxes: -.133 (1242)

P(God)/P(Warming): -.132 (1491)

P(Supernatural)/immigration: -.131 (1284)

P(Religion)/immigration: -.129 (1296)

P(God)/immigration: -.127 (1286)

P(Supernatural)/P(Warming): -.125 (1487)

P(Supernatural)/social justice: -.125 (1227)

P(God)/taxes: -.145

Minimum wage/Great Stagnation: -.124 (1269)

Immigration/minimum wage: .122 (1308)

Great Stagnation/taxes: -.121 (1270)

P(Religion)/P(Warming): -.113 (1505)

P(Supernatural)/taxes: -.113 (1265)

Feminism/Great Stagnation: -.112 (1295)

Number of children/abortion: -.112 (1386)

P(Religion)/basic income: -.108 (1296)

Number of current partners/feminism: .108 (1364)

Basic income/human biodiversity: -.106 (1301)

P(God)/Basic Income: -.105 (1255)

Number of current partners/basic income: .105 (1320)

Human biodiversity/number of languages: .103 (1253)

Number of children/basic income: -.099 (1322)

Number of children/P(Warming): -.091 (1535)

V. Hypothesis Testing

A. Do people in the effective altruism movement donate more money to charity? Do they donate a higher percent of their income to charity? Are they just generally more altruistic people?

1265 people told us how much they give to charity; of those, 450 gave nothing. On average, effective altruists (n = 412) donated $2503 to charity, and other people (n = 853) donated $523 - obviously a significant result. Effective altruists gave on average $800 to MIRI or CFAR, whereas others gave $53. Effective altruists gave on average $16 to other x-risk related charities; others gave only $2.

In order to calculate percent donated I divided charity donations by income in the 947 people helpful enough to give me both numbers. Of those 947, 602 donated nothing to charity, and so had a percent donated of 0. At the other extreme, three people donated 50% of their (substantial) incomes to charity, and 55 people donated at least 10%. I don't want to draw any conclusions about the community from this because the people who provided both their income numbers and their charity numbers are a highly self-selected sample.

303 effective altruists donated, on average, 3.5% of their income to charity, compared to 645 others who donated, on average, 1% of their income to charity. A small but significant (p < .001) victory for the effective altruism movement.

But are they more compassionate people in general? After throwing out the people who said they wanted to give blood but couldn't for one or another reason, I got 1255 survey respondents giving me an unambiguous answer (yes or no) about whether they'd ever given blood. I found that 51% of effective altruists had given blood compared to 47% of others - a difference which did not reach statistical significance.

Finally, at the end of the survey I had a question offering respondents a chance to cooperate (raising the value of a potential monetary prize to be given out by raffle to a random respondent) or defect (decreasing the value of the prize, but increasing their own chance of winning the raffle). 73% of effective altruists cooperated compared to 70% of others - an insignificant difference.

Conclusion: effective altruists give more money to charity, both absolutely and as a percent of income, but are no more likely (or perhaps only slightly more likely) to be compassionate in other ways.

B. Can we finally resolve this IQ controversy that comes up every year?

The story so far - our first survey in 2009 found an average IQ of 146. Everyone said this was stupid, no community could possibly have that high an average IQ, it was just people lying and/or reporting results from horrible Internet IQ tests.

Although IQ fell somewhat the next few years - to 140 in 2011 and 139 in 2012 - people continued to complain. So in 2012 we started asking for SAT and ACT scores, which are known to correlate well with IQ and are much harder to get wrong. These scores confirmed the 139 IQ result on the 2012 test. But people still objected that something must be up.

This year our IQ has fallen further to 138 (no Flynn Effect for us!) but for the first time we asked people to describe the IQ test they used to get the number. So I took a subset of the people with the most unimpeachable IQ tests - ones taken after the age of 15 (when IQ is more stable), and from a seemingly reputable source. I counted a source as reputable either if it name-dropped a specific scientifically validated IQ test (like WAIS or Raven's Progressive Matrices), if it was performed by a reputable institution (a school, a hospital, or a psychologist), or if it was a Mensa exam proctored by a Mensa official.

This subgroup of 101 people with very reputable IQ tests had an average IQ of 139 - exactly the same as the average among survey respondents as a whole.

I don't know for sure that Mensa is on the level, so I tried again deleting everyone who took a Mensa test - leaving just the people who could name-drop a well-known test or who knew it was administered by a psychologist in an official setting. This caused a precipitous drop all the way down to 138.

The IQ numbers have time and time again answered every challenge raised against them and should be presumed accurate.

C. Can we predict who does or doesn't cooperate on prisoner's dilemmas?

As mentioned above, I included a prisoner's dilemma type question in the survey, offering people the chance to make a little money by screwing all the other survey respondents over.

Tendency to cooperate on the prisoner's dilemma was most highly correlated with items in the general leftist political cluster identified by Ozy above. It was most notable for support for feminism, with which it had a correlation of .15, significant at the p < .01 level, and minimum wage, with which it had a correlation of .09, also significant at p < .01. It was also significantly correlated with belief that other people would cooperate on the same question.

I compared two possible explanations for this result. First, leftists are starry-eyed idealists who believe everyone can just get along - therefore, they expected other people to cooperate more, which made them want to cooperate more. Or, second, most Less Wrongers are white, male, and upper class, meaning that support for leftist values - which often favor nonwhites, women, and the lower class - is itself a symbol of self-sacrifice and altruism which one would expect to correlate with a question testing self-sacrifice and altruism.

I tested the "starry-eyed idealist" hypothesis by checking whether leftists were more likely to believe other people would cooperate. They were not - the correlation was not significant at any level.

I tested the "self-sacrifice" hypothesis by testing whether the feminism correlation went away in women. For women, supporting feminism is presumably not a sign of willingness to self-sacrifice to help an out-group, so we would expect the correlation to disappear.

In the all-female sample, the correlation between feminism and PD cooperation shrank from .15 to a puny .04, whereas the correlation between the minimum wage and PD was previously .09 and stayed exactly the same at .09. This provides some small level of support for the hypothesis that the leftist correlation with PD cooperation represents a willingness to self-sacrifice in a population who are not themselves helped by leftist values.

(on the other hand, neither leftists nor cooperators were more likely to give money to charity, so if this is true it's a very selective form of self-sacrifice)

VI. Monetary Prize

1389 people answered the prize question at the bottom. 71.6% of these [n = 995] cooperated; 28.4% [n = 394] defected.

The prize goes to a person whose two word phrase begins with "eponymous". If this person posts below (or PMs or emails me) the second word in their phrase, I will give them $60 * 71.6%, or about $43. I can pay to a PayPal account, a charity of their choice that takes online donations, or a snail-mail address via check.
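The prize amount follows directly from the cooperation rate. A minimal sketch of the arithmetic, using the counts from this section (the variable names are illustrative):

```python
BASE_PRIZE = 60.0   # dollars, scaled by the share of cooperators
cooperators = 995
defectors = 394

answered = cooperators + defectors
cooperation_rate = cooperators / answered

# Prize using the unrounded cooperation rate.
prize_exact = BASE_PRIZE * cooperation_rate
# Prize using the rate rounded to 71.6%, as quoted in the text.
prize_quoted = BASE_PRIZE * 0.716

print(f"{answered} answers, {cooperation_rate:.1%} cooperated")
print(f"prize: ${prize_exact:.2f} (or ${prize_quoted:.2f} with the rounded rate)")
```

Either way the result rounds to about $43.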

VII. Calibration Questions

The population of Europe, according to designated arbiter Wikipedia, is 739 million people.

People were really really bad at giving their answers in millions. I got numbers anywhere from 3 (really? three million people in Europe?) to 3 billion (3 million billion people = 3 quadrillion). I assume some people thought they were answering in billions, others in thousands, and other people thought they were giving a straight answer in number of individuals.

My original plan was to just adjust these to make them fit, but this quickly encountered some pitfalls. Suppose someone wrote 1 million (as one person did). Could I fairly guess they meant 100 million, even though there's really no way to guess that from the text itself? 1 billion? Maybe they just thought there were really one million people in Europe?

If I was too aggressive correcting these, everyone would get close to the right answer not because they were smart, but because I had corrected their answers. If I wasn't aggressive enough, I would end up with some guy who answered 3 quadrillion Europeans totally distorting the mean.

I ended up deleting 40 answers that suggested there were less than ten million or more than eight billion Europeans, on the grounds that people probably weren't really that far off so it was probably some kind of data entry error, and correcting everyone who entered a reasonable answer in individuals to answer in millions as the question asked.
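The cleaning rule above can be sketched as a single function. This is one possible reading, not the exact procedure used: it assumes an answer counts as "a reasonable answer in individuals" when dividing it by one million lands it inside the accepted range, and the function name and constants are made up for illustration.

```python
from typing import Optional

MIN_MILLIONS = 10       # answers implying fewer than ten million people are dropped
MAX_MILLIONS = 8_000    # answers implying more than eight billion people are dropped


def normalize_population(answer: float) -> Optional[float]:
    """Return the answer in millions, or None if it should be deleted."""
    if MIN_MILLIONS <= answer <= MAX_MILLIONS:
        return answer                 # already a plausible answer in millions
    as_millions = answer / 1_000_000
    if MIN_MILLIONS <= as_millions <= MAX_MILLIONS:
        return as_millions            # plausibly entered as a count of individuals
    return None                       # too far off to interpret; treat as an entry error


# Hypothetical raw answers: millions, individuals, and two uninterpretable ones.
for raw in (700, 739_000_000, 3, 3e15):
    print(raw, "->", normalize_population(raw))
```

The design choice here is to correct only the one unit mistake that can be identified unambiguously and to delete everything else, rather than guess which power of ten the respondent meant.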

The remaining 1457 people who can either follow simple directions or at least fail to follow them in a predictable way estimated an average European population in millions of 601 + 35.6 (380, 500, 750).

Respondents were told to aim for within 10% of the real value, which means they wanted between 665 million and 812 million. 18.7% of people [n = 272] got within that window.
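The 10% window and the share of respondents inside it can be checked with a few lines. The names below are illustrative; the numbers are the ones quoted above.

```python
TRUE_POPULATION_MILLIONS = 739   # Wikipedia figure used as the answer key
TOLERANCE = 0.10                 # respondents were asked to land within 10%


def is_within_tolerance(estimate_millions: float) -> bool:
    """True if the estimate is within 10% of the reference value."""
    allowed_error = TOLERANCE * TRUE_POPULATION_MILLIONS
    return abs(estimate_millions - TRUE_POPULATION_MILLIONS) <= allowed_error


low = TRUE_POPULATION_MILLIONS * (1 - TOLERANCE)
high = TRUE_POPULATION_MILLIONS * (1 + TOLERANCE)
share_correct = 272 / 1457       # respondents inside the window / valid answers

print(f"window: {low:.1f} to {high:.1f} million")
print(f"share inside the window: {share_correct:.1%}")
```

The computed bounds are 665.1 and 812.9 million, which the text gives as 665 and 812. Note that the mean estimate of 601 million falls outside the window.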

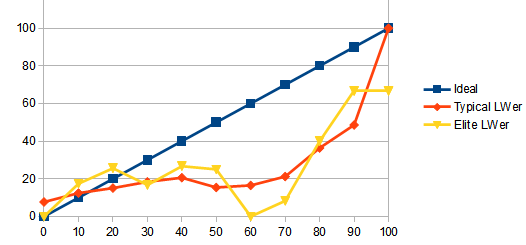

I divided people up into calibration brackets of [0,5], [6,15], [16, 25] and so on. The following are what percent of people in each bracket were right.

[0,5]: 7.7%

[6,15]: 12.4%

[16,25]: 15.1%

[26,35]: 18.4%

[36,45]: 20.6%

[46,55]: 15.4%

[56,65]: 16.5%

[66,75]: 21.2%

[76,85]: 36.4%

[86,95]: 48.6%

[96,100]: 100%
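The bracketing described above can be sketched as follows. This is a minimal sketch that assumes whole-number confidence values from 0 to 100; the function names and the sample responses are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

Bracket = Tuple[int, int]


def bracket(confidence: int) -> Bracket:
    """Map a confidence (0-100) to its bracket: [0,5], [6,15], ..., [86,95], [96,100]."""
    if not 0 <= confidence <= 100:
        raise ValueError("confidence must be between 0 and 100")
    if confidence <= 5:
        return (0, 5)
    if confidence >= 96:
        return (96, 100)
    low = ((confidence - 6) // 10) * 10 + 6
    return (low, low + 9)


def hit_rates(responses: Iterable[Tuple[int, bool]]) -> Dict[Bracket, float]:
    """Fraction of correct answers per bracket, given (confidence, was_correct) pairs."""
    totals: Dict[Bracket, int] = defaultdict(int)
    hits: Dict[Bracket, int] = defaultdict(int)
    for confidence, was_correct in responses:
        b = bracket(confidence)
        totals[b] += 1
        hits[b] += int(was_correct)
    return {b: hits[b] / totals[b] for b in sorted(totals)}


# Hypothetical responses: (stated confidence in percent, answer was within 10%).
sample = [(90, True), (90, False), (50, False), (3, False)]
for b, rate in hit_rates(sample).items():
    print(f"[{b[0]},{b[1]}]: {rate:.1%}")
```

A well-calibrated group would show a hit rate near the middle of each bracket, for example about 90% in [86,95].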

Among people who should know better (those who have read all or most of the Sequences and have > 500 karma, a group of 162 people)

[0,5]: 0

[6,15]: 17.4%

[16,25]: 25.6%

[26,35]: 16.7%

[36,45]: 26.7%

[46,55]: 25%

[56,65]: 0%

[66,75]: 8.3%

[76,85]: 40%

[86,95]: 66.6%

[96,100]: 66.6%

Clearly, the people who should know better don't.

This graph represents your performance relative to ideal performance. Dipping below the blue ideal line represents overconfidence; rising above it represents underconfidence. With few exceptions you were very overconfident. Note that there were so few "elite" LWers at certain levels that the graph becomes very noisy and probably isn't representing much; that huge drop at 60 represents like two or three people. The orange "typical LWer" line is much more robust.

There is one other question that gets at the same idea of overconfidence. 651 people were willing to give a valid 90% confidence interval on what percent of people would cooperate (this is my fault; I only added this question about halfway through the survey once I realized it would be interesting to investigate). I deleted four for giving extremely high outliers like 9999% which threw off the results, leaving 647 valid answers. The average confidence interval was [28.3, 72.0], which just BARELY contains the correct answer of 71.6%. Of the 647 of you, only 346 (53.5%) gave 90% confidence intervals that included the correct answer!
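The coverage figure can be checked with a few lines of arithmetic. The sketch below is a minimal illustration: the `coverage` helper and the sample intervals are hypothetical, while 346, 647 and 71.6 are the figures quoted above.

```python
def coverage(intervals, true_value):
    """Fraction of (low, high) intervals that contain true_value, bounds inclusive."""
    hits = sum(1 for low, high in intervals if low <= true_value <= high)
    return hits / len(intervals)


# Figures quoted above: 346 of the 647 valid intervals contained the answer.
observed_coverage = 346 / 647

# Hypothetical intervals, purely for illustration (not survey data).
sample_intervals = [(20, 60), (50, 90), (70, 95), (10, 30)]
sample_coverage = coverage(sample_intervals, 71.6)
```

For well-calibrated 90% intervals, the coverage should come out near 0.9 rather than the roughly 0.53 observed here.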

Last year I complained about horrible performance on calibration questions, but we all decided it was probably just a fluke caused by a particularly weird question. This year's results suggest that was no fluke and that we haven't even learned to overcome the one bias that we can measure super-well and which is most easily trained away. Disappointment!

VIII. Public Data

There's still a lot more to be done with this survey. User:Unnamed has promised to analyze the "Extra Credit: CFAR Questions" section (not included in this post), but so far no one has looked at the "Extra Credit: Questions From Sarah" section, which I didn't really know what to do with. And of course this is the most complete survey yet for seeking classic findings like "People who disagree with me about politics are stupid and evil".

1480 people - over 90% of the total - kindly allowed me to make their survey data public. I have included all their information except the timestamp (which would make tracking pretty easy) including their secret passphrases (by far the most interesting part of this exercise was seeing what unusual two word phrases people could come up with on short notice).

560 comments


comment by jamesf · 2014-01-19T03:32:04.506Z · LW(p) · GW(p)

Next survey, I'd be interested in seeing statistics involving:

- Recreational drug use

- Quantified Self-related activities

- Social media use

- Self-perceived physical attractiveness on the 1-10 scale

- Self-perceived holistic attractiveness on the 1-10 scale

- Personal computer's operating system

Excellent write-up and I look forward to next year's.

Replies from: Acidmind, Desrtopa, ChristianKl, Frazer, shokwave

↑ comment by Acidmind · 2014-01-19T11:04:04.863Z · LW(p) · GW(p)

I'd like:

- Estimated average self-perceived physical attractiveness in the community

- Estimated average self-perceived holistic attractiveness in the community

Oh, we are really self-serving elitist overconfident pricks, aren't we?

Replies from: Creutzer

↑ comment by Creutzer · 2014-01-19T11:11:25.937Z · LW(p) · GW(p)

How do you expect anybody to be able to answer that and what does it even mean? First, what community, exactly? Second, average - over what?

Replies from: ChristianKl, jkaufman

↑ comment by ChristianKl · 2014-01-19T15:55:13.140Z · LW(p) · GW(p)

I think he means the people who take the survey.

If you ask in the survey for the self-perceived physical attractiveness you can ask in the same survey for the estimated average of all survey takers.

↑ comment by jefftk (jkaufman) · 2014-01-19T15:44:35.884Z · LW(p) · GW(p)

I think Acidmind means we should ask people their self-perceived attractiveness, and then ask them to estimate the average that will be given by all people taking the survey.

↑ comment by Desrtopa · 2014-01-21T21:48:26.513Z · LW(p) · GW(p)

Self-perceived physical attractiveness on the 1-10 scale

Self-perceived holistic attractiveness on the 1-10 scale

While I don't remember the precise level, I would note that there are studies suggesting a rather surprisingly low level of correlation between self perceived attractiveness and attractiveness as perceived by others, and if we could induce a sufficient sample of participants to submit images of themselves to be rated by others (possibly in a context where they would not themselves find out the rating they received,) I think the comparison of those two values would be much more interesting than self-perceived attractiveness alone.

Replies from: jamesf

↑ comment by jamesf · 2014-01-22T04:44:13.429Z · LW(p) · GW(p)

That's kind of the idea. I'm more interested in correlations involving self-perceived attractiveness, particularly the holistic one, than correlations involving measured physical attractiveness. It's a nice proxy for self-esteem.

Anonymity is a bit of a problem, though I suppose a pool of people that are as likely as your average human to know anyone who uses LW could be wrangled with some effort.

Replies from: Desrtopa

↑ comment by ChristianKl · 2014-01-19T16:08:27.170Z · LW(p) · GW(p)

Quantified Self-related activities

I thought quite a bit about this and couldn't decide on many good questions.

The Anki question is sort of a result of this desire.

I thought of asking about pedometer usage such as Fitbit/Nike Plus etc but I'm not sure if the amount of people is enough to warrant the question.

Which specific questions would you want?

Social media use

By what metric? Total time investment? Few people can give you an accurate answer to that question.

Asking good questions isn't easy.

Self-perceived physical attractiveness on the 1-10 scale

I personally don't think that term is very meaningful. I do have hotornot pictures that scored a 9, but what does that mean? The last time I used Tinder I clicked through a lot of female images and very few liked me back. But I haven't yet isolated factors or learned the average success rates for guys using Tinder.

Recreational drug use

There's interest in not gathering data that would cause someone to admit criminal behavior. A person might be findable if you know their stances on a few questions. There's also the issue of possible outsiders being able to say: "30% of LW participants are criminals!"

Personal computer's operating system

I agree, that would be a nice question.

Replies from: jamesf, shokwave

↑ comment by jamesf · 2014-01-19T17:17:56.958Z · LW(p) · GW(p)

Quantified Self examples:

- Have you attempted and stuck with the recording of personal data for >1 month for any reason? (Y/N)

- If so, did you find it useful? (Y/N)

Social media example:

- How many hours per week do you think you spend on social media?

Asking about self-perceived attractiveness tells us little about how attractive a person is, but quite a bit about how they see themselves, and I want to learn how that's correlated with answers to all these other questions.

Maybe the recreational drug use question(s) could be stripped from the public data?

Replies from: ChristianKl

↑ comment by ChristianKl · 2014-01-19T18:17:16.961Z · LW(p) · GW(p)

Have you attempted and stuck with the recording of personal data for >1 month for any reason? (Y/N)

Keeping a calendar with the times at which you do various actions is recording personal data, and most people do that for timeframes longer than a month.

Anyone who uses Anki gets automated background data recording of how many minutes per day he uses Anki.

Replies from: jamesf

↑ comment by jamesf · 2014-01-19T18:42:51.187Z · LW(p) · GW(p)

I might be willing to call either of those self-quantifying activities. Definitely the first one, if you actually put most activities you do on there rather than just the ones that aren't habit or important enough to definitely not forget. I think the question could be modified to capture the intent. Let's see...

Have you ever made an effort to record personal data for future analysis and stuck with it for >1 month? (Y/N)

Replies from: ChristianKl

↑ comment by ChristianKl · 2014-01-19T19:02:58.259Z · LW(p) · GW(p)

That sounds like a good question. Hopefully we remember when the time comes up.

↑ comment by shokwave · 2014-01-20T13:23:43.664Z · LW(p) · GW(p)

There's interest in not gathering data that would cause someone to admit criminal behavior.

As far as I'm aware - and correct me if I'm wrong - drug use is not a crime (and by extension admitting past drug use isn't either). Possession, operating a vehicle under the influence, etc, are all crimes, but actually having used drugs isn't a criminal act.

There's also the issue of possible outsiders being able to say: "30% of LW participants are criminals!"

The current survey (hell, the IQ section alone) gives them more ammunition than they could possibly expend, I feel.

Replies from: ChristianKl, Lalartu, nshepperd

↑ comment by ChristianKl · 2014-01-20T13:38:02.783Z · LW(p) · GW(p)

The current survey (hell, the IQ section alone)

What's the problem with someone external writing an article about how LW is a group who thinks they are high IQ?

Replies from: shokwave, lmm

↑ comment by shokwave · 2014-01-21T04:25:00.745Z · LW(p) · GW(p)

The same problem you presumably have with someone external writing an article about how LW is a group of criminals: it makes us look bad.

You might not agree with self-proclaimed high IQ being a social negative, but most of the world does.

Replies from: Lumifer, ChristianKl, Emile

↑ comment by Lumifer · 2014-01-23T16:14:23.221Z · LW(p) · GW(p)

You might not agree with self-proclaimed high IQ being a social negative, but most of the world does.

So? Fuck 'em.

Replies from: shokwave, army1987

↑ comment by shokwave · 2014-01-24T13:12:15.386Z · LW(p) · GW(p)

Excellent in-group signalling but terrible public relations move.

Replies from: Mestroyer, Lumifer

↑ comment by Mestroyer · 2014-01-24T16:30:51.532Z · LW(p) · GW(p)

We don't need or want to signal friendliness to absolutely everyone. We want to carefully choose what kind of filters and how many filters we apply to people who might be interested in our community. Every filter comes with a cost in that it reduces our growth, and must be justified through increasing the quality of our discussions. However, filter not at all, and you might as well just step out onto the street and talk to strangers.

Personally, I am all for filtering out the "punish for not putting modesty before facts" attitude. Both because I find it irritating, and because it drives away boastful awesome people, and I like substantiated boasting and the people who do it.

In other words, "Yeah, fuck 'em."

↑ comment by Lumifer · 2014-01-24T15:56:55.673Z · LW(p) · GW(p)

So is admitting to being an atheist, for example. Optimizing for public relations is rarely a good move.

Replies from: TheAncientGeek

↑ comment by TheAncientGeek · 2014-01-24T15:59:48.351Z · LW(p) · GW(p)

So is admitting to being an atheist, for example.

That's a lot more culture specific.

↑ comment by A1987dM (army1987) · 2014-01-24T10:16:04.940Z · LW(p) · GW(p)

I would also say exactly the same thing with “recreational drug use” replacing “high IQ”.

Replies from: Lumifer

↑ comment by ChristianKl · 2014-01-21T12:50:13.581Z · LW(p) · GW(p)

I don't think the goal of LW is to win social approval from the average person.

On the one hand it's to grow people who might want to participate in LW. The fact that LW has many smart people in it, could draw the right people into LW.

On the other hand it's to further the agenda of CFAR, MIRI and FHI. I don't think the world listens less to a programmer who wants to warn about the dangers of UFAI when the programmer proclaims that he's smart.

It's very hard for me to see a media article that wouldn't describe CFAR as a bunch of people who think they are smart. If you write the advancement of rationality on your banner, that's something that everyone is going to assume anyway. Having polled IQ data doesn't do further damage.

Replies from: private_messaging, shokwave

↑ comment by private_messaging · 2014-01-24T13:51:53.707Z · LW(p) · GW(p)

On the other hand it's to further the agenda of CFAR, MIRI and FHI. I don't think the world listens less to a programmer who wants to warn about the dangers of UFAI when the programmer proclaims that he's smart.

Mostly, of people who proclaim IQ of, say, 150 or higher, over 9 out of 10 times it's going to be because of some kind of issue such as narcissism.

The funniest aspect of self declared bayesianism is that "bayesians" never imagine that it could be applied to what they say (and go on fuming about punishments and status games and reflexes whenever it is).

Replies from: Vaniver, Jiro

↑ comment by Vaniver · 2014-01-24T17:25:29.175Z · LW(p) · GW(p)

The funniest aspect of self declared bayesianism is that "bayesians" never imagine that it could be applied to what they say (and go on fuming about punishments and status games and reflexes whenever it is).

Emphasis mine. Alternatively, those Bayesians with social graces aren't available, because they don't do anything ridiculous enough to remember.

Replies from: private_messaging

↑ comment by private_messaging · 2014-01-24T18:11:10.774Z · LW(p) · GW(p)

Fair enough, albeit social graces in that case would imply good understanding of how other people process evidence, which would make self-labeling as "bayesian" seem very silly.

↑ comment by Jiro · 2014-01-24T16:05:37.773Z · LW(p) · GW(p)

Imagine that 1% of the population have high IQs (and will claim so) and 10% of the population are narcissistic, and half of those like to claim they have high IQ. The Bayesian calculation would be P(high IQ|claim high IQ) = P(claim high IQ|high IQ) × P(high IQ) divided by [P(claim high IQ|high IQ) × P(high IQ) + P(claim high IQ|narcissism) × P(narcissism)] = (1.00 × 0.01) / (1.00 × 0.01 + 0.5 × 0.10) = 1/6.

You can quibble about the exact figures, but private_messaging is correct here. Because narcissism is relatively common, the claim of having high IQ is very weak evidence for having high IQ but very strong evidence for being narcissistic. (Although it's stronger evidence for high IQ in a community where high IQ is more common.)
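The arithmetic in this comment can be reproduced with a short sketch. It assumes, as the comment implicitly does, that claims of high IQ come only from two non-overlapping groups (people with high IQ and narcissists); the function and parameter names are made up for illustration.

```python
def posterior_high_iq(p_high_iq, p_claim_given_high_iq,
                      p_narcissist, p_claim_given_narcissist):
    """P(high IQ | claims high IQ) under a two-group model.

    Assumes the only people who claim high IQ are either genuinely high-IQ
    or narcissistic, and that the two groups do not overlap.
    """
    true_claims = p_claim_given_high_iq * p_high_iq
    false_claims = p_claim_given_narcissist * p_narcissist
    return true_claims / (true_claims + false_claims)


# Figures from the comment: 1% high IQ (all of whom claim it),
# 10% narcissistic (half of whom claim it).
posterior = posterior_high_iq(0.01, 1.0, 0.10, 0.5)

# Lowering P(claim high IQ | high IQ) makes the posterior smaller still.
posterior_if_few_claim = posterior_high_iq(0.01, 0.2, 0.10, 0.5)
```

With the comment's figures the posterior is 1/6, and it only shrinks if fewer genuinely high-IQ people make the claim.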

Replies from: army1987, private_messaging

↑ comment by A1987dM (army1987) · 2014-01-25T08:50:46.748Z · LW(p) · GW(p)

You can quibble about the exact figures,

Indeed, I think you're way overestimating P(claim high IQ|high IQ).

↑ comment by private_messaging · 2014-01-24T16:50:29.657Z · LW(p) · GW(p)

To clarify, it's still as strong of evidence of having high IQ as a statement can be, it is just not strong enough to overcome the low prior.

Then there's the issue that - I do not know about the US but it seems fairly uncommon to have taken a professionally administered IQ test here, whether you are smart or not. It may be that LW has an unusually high percentage of people who took such a test.

↑ comment by shokwave · 2014-01-23T12:33:41.253Z · LW(p) · GW(p)

If you replace "smart" with "used drugs recreationally" you might see my point?

Replies from: ChristianKl

↑ comment by ChristianKl · 2014-01-23T14:42:15.026Z · LW(p) · GW(p)

If you replace "smart" with "used drugs recreationally" you might see my point?

Actually I don't think that rationality (as the CFAR mission) has much to do with using drugs recreationally; it does have something to do with being smart. You could have a CFAR that experiments with various mind altering substances to see which of those improve rationality. That's not the CFAR that we have.

I did a lot of QS PR. That means having a 2 hour interview where the journalist might pick 30 seconds of phrases that come on TV. I wouldn't have had any issue in that context of playing into a nerd stereotype. On the other hand I wouldn't have said something that fits QS users into the stereotype of drug users.

Replies from: shokwave

↑ comment by lmm · 2014-01-20T20:54:33.107Z · LW(p) · GW(p)

It makes it easy to portray LW as a bunch of out-of-touch nerds?

Replies from: blacktrance, ChristianKl

↑ comment by blacktrance · 2014-01-20T21:00:53.859Z · LW(p) · GW(p)

"I'm part of a community, you live in a bubble, he's out of touch."

↑ comment by ChristianKl · 2014-01-20T23:12:48.926Z · LW(p) · GW(p)

How does having a high IQ mean someone is out-of-touch?

Yes, people can argue that LW is a bunch of nerds, but I don't think that's much of a problem. If we get news articles about how smart nerds think that unfriendly AI is a big risk for humanity, I don't think the fact that those smart nerds think that they are high IQ is a problem.

It's different for arguing criminality or for arguing being delusional because of drug use.

Replies from: Nornagest

↑ comment by Nornagest · 2014-01-20T23:34:33.398Z · LW(p) · GW(p)

There is a stereotype -- at least in the United States -- of nerds believing that high intelligence entitles them to claim insight and moral purity beyond their actual abilities, and implicitly of their inevitable downfall and the triumph of good old-fashioned common sense. We risk pattern-matching to this stereotype in any case, thanks to bandying about unusual ethical considerations in academic language, but talking up our own intelligence doesn't help at all.

It isn't having high IQ, in other words, so much as talking about it.

Replies from: ChristianKl

↑ comment by ChristianKl · 2014-01-20T23:47:22.923Z · LW(p) · GW(p)

We risk pattern-matching to this stereotype in any case

I can't see how you could structure LW in a way that someone who wants to talk about LW as a bunch of nerds can't do so. You don't need a statistic about the average IQ of LW to do so. Gathering the IQ data doesn't bring up anything that wasn't there before.

The basilisk episode is a lot more useful if you want to argue that LW is a group of out of touch nerds. See rationalwiki.

↑ comment by Frazer · 2014-05-02T03:35:41.782Z · LW(p) · GW(p)

I'd also like to see time spent per day meditating, or other form of mental training

Replies from: ChristianKl

↑ comment by ChristianKl · 2014-05-02T12:07:59.939Z · LW(p) · GW(p)

How would you word the question?

↑ comment by shokwave · 2014-01-19T10:20:51.748Z · LW(p) · GW(p)

- Are you Ask or Guess culture?

↑ comment by ChristianKl · 2014-01-19T15:55:48.164Z · LW(p) · GW(p)

I'm not culture.

In some social circles I might behave in one way, in others another way. In different situations I act differently depending on how strongly I want to communicate a demand.

Replies from: shokwave

comment by Vaniver · 2014-01-19T06:15:13.357Z · LW(p) · GW(p)

Repeating complaints from last year:

So in 2012 we started asking for SAT and ACT scores, which are known to correlate well with IQ and are much harder to get wrong. These scores confirmed the 139 IQ result on the 2012 test.

The 2012 estimate from SATs was about 128, since the 1994 renorming destroyed the old relationship between the SAT and IQ. Our average SAT (on 1600) was again about 1470, which again maps to less than 130, but not by much. (And, again, self-reported average probably overestimates actual population average.)

Last year I complained about horrible performance on calibration questions, but we all decided it was probably just a fluke caused by a particularly weird question. This year's results suggest that was no fluke and that we haven't even learned to overcome the one bias that we can measure super-well and which is most easily trained away. Disappointment!

I still think you're asking this question in a way that's particularly hard for people to get right. (The issue isn't the fact you ask about, but what sort of answers you look for.)

You've clearly got an error in your calibration chart; you can't have 2 out of 3 elite LWers be right in the [95,100] category but 100% of typical LWers are right in that category. Or are you not including the elite LWers in typical LWers? Regardless, the person who gave a calibration of 99% and the two people who gave calibrations of 100% aren't elite LWers (karmas of 0, 0, and 4; two 25% of the sequences and one 50%).

With few exceptions you were very overconfident.

The calibration chart doesn't make clear the impact of frequency. If most people are providing probabilities of 20%, and they're about 20% right, then most people are getting it right, and the 2-3 people who provided a probability of 60% don't matter.

There are a handful of ways to depict this. One I haven't seen before, which is probably ugly, is to scale the width of the points by the frequency. Instead, here's a flat graph of the proportion of survey respondents who gave each calibration bracket:

Significant is that if you add together the 10, 20, and 30 brackets (the ones around the correct baseline probability of ~20% of getting it right) you get 50% for typical LWers and 60% for elite LWers; so most people were fairly close to correctly calibrated, and the people who thought they had more skill on the whole dramatically overestimated how much more skill they had.

(I put down 70% probability, but was answering the wrong question; I got the population of the EU almost exactly right, which I knew from GDP and per-capita comparisons to the US. Oops.)

Replies from: private_messaging

↑ comment by private_messaging · 2014-01-19T14:24:50.865Z · LW(p) · GW(p)

The 2012 estimate from SATs was about 128, since the 1994 renorming destroyed the old relationship between the SAT and IQ. Our average SAT (on 1600) was again about 1470, which again maps to less than 130, but not by much. (And, again, self-reported average probably overestimates actual population average.)

It's very interesting that the same mistake was boldly made again this year... I guess this mistake is sort of self reinforcing due to the uncannily perfect equality between mean IQ and what's incorrectly estimated from the SAT scores.

Replies from: Vaniver, Yvain

↑ comment by Vaniver · 2014-01-19T21:16:43.852Z · LW(p) · GW(p)

Actually, I just ran the numbers on the SAT2400 and they're closer; the average percentile predicted from that is 99th, which corresponds to about 135.

Replies from: private_messaging, None, Yvain

↑ comment by private_messaging · 2014-01-19T23:10:39.405Z · LW(p) · GW(p)

For non-Americans, what's the difference between SAT 2400 and SAT 1600 ?

Averaging SAT scores is a little iffy because, given a cut-off, they won't have a Gaussian distribution. Also, given imperfect correlation it is unclear how one should convert the scores. If I pick someone with SAT in top 1% I shouldn't expect IQ in the top 1% because of regression towards the mean. (Granted I can expect both scores to be closer if I were picking by some third factor influencing both).

It'd be interesting to compare frequency of advanced degrees with the scores, for people old enough to have advanced degrees.

Replies from: Prismattic, Richard_Kennaway

↑ comment by Prismattic · 2014-01-20T00:18:45.061Z · LW(p) · GW(p)

The SAT used to have only two sections, with a maximum of 800 points each, for a total of 1600 (the worst possible score, IIRC, was 200 on each for 400). At some point after I graduated high school, they added a 3rd 800 point section (I think it might be an essay), so the maximum score went from 1600 to 2400.

Replies from: Fermatastheorem

↑ comment by Fermatastheorem · 2014-01-21T04:32:15.364Z · LW(p) · GW(p)

Yes, it's a timed essay.

↑ comment by Richard_Kennaway · 2014-01-20T00:06:31.772Z · LW(p) · GW(p)

Also, given imperfect correlation it is unclear how one should convert the scores. If I pick someone with SAT in top 1% I shouldn't expect IQ in the top 1% because of regression towards the mean.

The correlation is the slope of the regression line in coordinates normalised to unit standard deviations. Assuming (for mere convenience) a bivariate normal distribution, let F be the cumulative distribution function of the unit normal distribution, with inverse invF. If someone is at the 1-p level of the SAT distribution (in the example p=0.01) then the level to guess they are at in the IQ distribution (or anything else correlated with SAT) is q = F(c invF(p)). For p=0.01, here are a few illustrative values:

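| c | 0.0000 | 0.1000 | 0.2000 | 0.3000 | 0.4000 | 0.5000 | 0.6000 | 0.7000 | 0.8000 | 0.9000 | 1.0000 |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| q | 0.5000 | 0.4080 | 0.3209 | 0.2426 | 0.1760 | 0.1224 | 0.0814 | 0.0517 | 0.0314 | 0.0181 | 0.0100 |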

The standard deviation of the IQ value, conditional on the SAT value, is the unconditional standard deviation multiplied by c' = sqrt(1-c^2). The q values for 1 standard deviation above and below are therefore given by qlo = F(-c' + c invF(p)) and qhi = F(c' + c invF(p)).

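| c | 0.0000 | 0.1000 | 0.2000 | 0.3000 | 0.4000 | 0.5000 | 0.6000 | 0.7000 | 0.8000 | 0.9000 | 1.0000 |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| qlo | 0.1587 | 0.1098 | 0.0742 | 0.0493 | 0.0324 | 0.0212 | 0.0141 | 0.0096 | 0.0069 | 0.0057 | 0.0100 |
| qhi | 0.8413 | 0.7771 | 0.6966 | 0.6010 | 0.4944 | 0.3832 | 0.2757 | 0.1803 | 0.1036 | 0.0487 | 0.0100 |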
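The q, qlo and qhi values in this comment can be reproduced with Python's standard library. This is a minimal sketch under the same bivariate-normal assumption stated in the comment; the function names are hypothetical, and `NormalDist().cdf` and `NormalDist().inv_cdf` play the roles of F and invF.

```python
from math import sqrt
from statistics import NormalDist

STD_NORMAL = NormalDist()  # unit normal: cdf is F, inv_cdf is invF


def expected_tail(c, p=0.01):
    """q = F(c * invF(p)): the tail level to guess on the correlated measure."""
    return STD_NORMAL.cdf(c * STD_NORMAL.inv_cdf(p))


def tail_band(c, p=0.01):
    """(qlo, qhi): q one conditional standard deviation either side of the guess."""
    spread = sqrt(1 - c * c)              # c' = sqrt(1 - c^2)
    center = c * STD_NORMAL.inv_cdf(p)
    return STD_NORMAL.cdf(center - spread), STD_NORMAL.cdf(center + spread)


# Print the same values as listed above for c = 0.0, 0.1, ..., 1.0.
for tenth in range(11):
    c = tenth / 10
    qlo, qhi = tail_band(c)
    print(f"c={c:.1f}  q={expected_tail(c):.4f}  qlo={qlo:.4f}  qhi={qhi:.4f}")
```

For example, with a correlation of 0.5, someone in the top 1% on the SAT would be guessed to sit at roughly the top 12% on the correlated measure.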

↑ comment by private_messaging · 2014-01-24T15:44:42.566Z · LW(p) · GW(p)

There are subtleties though. E.g. if we take some programming contest finalists / winners, and take their IQ scores, those are regressed towards the mean from their programming contest performance. Their other abilities will be regressed towards the mean from the same height, not from IQ. This might explain the dramatic cognitive skill disparity between, say, Mensa and some professional group of same IQs.

↑ comment by [deleted] · 2014-02-21T16:26:47.326Z · LW(p) · GW(p)

2210 was 98th percentile in 2013. But it was 99th in 2007.

I haven't seen an SAT-IQ comparison site I trust. This one listed on gwern's website for example seems wrong.

Replies from: Vaniver

↑ comment by Vaniver · 2014-02-21T21:30:38.277Z · LW(p) · GW(p)

2210 was 98th percentile in 2013. But it was 99th in 2007.

If I remember correctly, I did SAT->percentile->average, rather than SAT->average->percentile; the first method should lead to a higher estimate if the tail is negative (which I think it is).

[edit] Over here is the work and source for that particular method. It turns out I did SAT->average->percentile to get that result, with a slightly different table, and I guess I didn't report the average percentile that I calculated (which you had to rely on interpolation for anyway).

This one listed on gwern's website for example seems wrong.

It's only accurate up to 1994.

↑ comment by Scott Alexander (Yvain) · 2014-01-20T02:41:20.920Z · LW(p) · GW(p)

One reason SAT1600 and SAT2400 scores may differ is that some of the SAT1600 scores might in fact have come from before the 1994 renorming. Have you tried doing pre-1994 and post-1994 scores separately (guessing when someone took the SAT based on age?)

Replies from: Vaniver

↑ comment by Vaniver · 2014-01-20T04:52:47.181Z · LW(p) · GW(p)

SAT1600 scores by age:

Average SAT for LWers 30 and under (217 total): 1491. (27 1600s.)

Average SAT for LWers 31 to 35 (74 total): 1462.7 (9 1600s.)

Average SAT for LWers 36 and older (81 total): 1437. (One 1600, by someone who's 56.)

I'm pretty sure the 36 and above are all the older SAT, suspect the middle group contains both, and pretty confident the younger group is mostly the newer SAT. The strong majority comes from the post 1995 test, and the scores don't seem to have changed by all that much in nominal terms.

Replies from: private_messaging

↑ comment by private_messaging · 2014-01-20T11:12:33.028Z · LW(p) · GW(p)

Which creates another question, why do the SAT 2400 and SAT 1600 differ so much?

↑ comment by Scott Alexander (Yvain) · 2014-01-21T03:02:46.423Z · LW(p) · GW(p)

According to Vaniver's data downthread, SAT taken only from LWers older than 36 (taking the old SAT) predicts 140 IQ.

I can't calculate the IQ of LWers younger than 36 because I can't find a site I trust to predict IQ from new SAT. The only ones I get give absurd results like average SAT 1491 implies average IQ 151.

comment by jefftk (jkaufman) · 2014-01-19T16:12:22.727Z · LW(p) · GW(p)

The IQ numbers have time and time again answered every challenge raised against them and should be presumed accurate.

What if the people who have taken IQ tests are on average smarter than the people who haven't? My impression is that people mostly take IQ tests when they're somewhat extreme: either low and trying to qualify for assistive services or high and trying to get "gifted" treatment. If we figure lesswrong draws mostly from the high end, then we should expect the IQ among test-takers to be higher than what we would get if we tested random people who had not previously been tested.

The IQ Question read: "Please give the score you got on your most recent PROFESSIONAL, SCIENTIFIC IQ test - no Internet tests, please! All tests should have the standard average of 100 and stdev of 15."

Among the subset of people making their data public (n=1480), 32% (472) put an answer here. Those 472 reports average 138, in line with past numbers. But 32% is low enough that we're pretty vulnerable to selection bias.

(I've never taken an IQ test, and left this question blank.)

Replies from: VincentYu, ArisKatsaris

↑ comment by VincentYu · 2014-01-20T15:01:31.166Z · LW(p) · GW(p)

What if the people who have taken IQ tests are on average smarter than the people who haven't? My impression is that people mostly take IQ tests when they're somewhat extreme: either low and trying to qualify for assistive services or high and trying to get "gifted" treatment. If we figure lesswrong draws mostly from the high end, then we should expect the IQ among test-takers to be higher than what we would get if we tested random people who had not previously been tested.

This sounds plausible, but from looking at the data, I don't think this is happening in our sample. In particular, if this were the case, then we would expect the SAT scores of those who did not submit IQ data to be different from those who did submit IQ data. I ran an Anderson–Darling test on each of the following pairs of distributions:

- SAT out of 2400 for those who submitted IQ data (n = 89) vs SAT out of 2400 for those who did not submit IQ data (n = 230)

- SAT out of 1600 for those who submitted IQ data (n = 155) vs SAT out of 1600 for those who did not submit IQ data (n = 217)

The p-values came out as 0.477 and 0.436 respectively, which means that the Anderson–Darling test was unable to distinguish between the two distributions in each pair at any significance.

As I did for my last plot, I've once again computed for each distribution a kernel density estimate with bootstrapped confidence bands from 999 resamples. From visual inspection, I tend to agree that there is no clear difference between the distributions. The plots should be self-explanatory:

(More details about these plots are available in my previous comment.)

Edit: Updated plots. The kernel density estimates are now fixed-bandwidth using the Sheather–Jones method for bandwidth selection. The density near the right edge is bias-corrected using an ad hoc fix described by whuber on stats.SE.

Replies from: jkaufman

↑ comment by jefftk (jkaufman) · 2014-01-20T22:53:41.130Z · LW(p) · GW(p)

Thanks for digging into this! Looks like the selection bias isn't significant.

↑ comment by ArisKatsaris · 2014-01-19T17:06:20.310Z · LW(p) · GW(p)

But 32% is low enough that we're pretty vulnerable to selection bias

The large majority of LessWrongers in the USA have however also provided their SAT scores, and those are also very high values (from what little I know of SATs)...

Replies from: Vaniver

↑ comment by Vaniver · 2014-01-19T20:45:42.256Z · LW(p) · GW(p)

The large majority of LessWrongers in the USA have however also provided their SAT scores, and those are also very high values (from what little I know of SATs)...

The reported SAT numbers are very high, but the reported IQ scores are extremely high. The mean reported SAT score, if received on the modern 1600 test, corresponds to an IQ in the upper 120s, not the upper 130s. The mean reported SAT2400 score was 2207, which corresponds to 99th but not 99.5th percentile. 99th percentile is an IQ of 135, which suggests that the self-reports may not be that off compared to the SAT self-reports.
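The percentile-to-IQ step used here can be sketched as follows. It assumes IQ is normally distributed with mean 100 and standard deviation 15, and that a percentile among SAT takers can be read directly as an IQ percentile, which is a simplification; `iq_at_percentile` is a hypothetical helper name.

```python
from statistics import NormalDist

# IQ scale assumed normal with mean 100 and standard deviation 15.
IQ_SCALE = NormalDist(mu=100, sigma=15)


def iq_at_percentile(percentile):
    """IQ score at the given percentile (strictly between 0 and 100)."""
    return IQ_SCALE.inv_cdf(percentile / 100)


iq_99 = iq_at_percentile(99)      # rounds to 135, matching the figure above
iq_99_5 = iq_at_percentile(99.5)  # between 138 and 139
```

Because the SAT and IQ are imperfectly correlated, this direct mapping ignores regression towards the mean and should be read as a rough upper guide only.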

Replies from: michaelsullivan, jaime2000

↑ comment by michaelsullivan · 2014-01-22T17:29:19.800Z · LW(p) · GW(p)

Some of us took the SAT before 1995, so it's hard to disentangle those scores. A pre-1995 1474 would be at 99.9x percentile, in line with an IQ score around 150-155. If you really want to compare, you should probably assume anyone age 38 or older took the old test and use the recentering adjustment for them.

I'm also not sure how well the SAT distinguishes at the high end. It's apparently good enough for some high IQ societies, who are willing to use the tests for certification. I was shown my results and I had about 25 points off perfect per question marked wrong. So the distinction between 1475 and 1600 on my test would probably be about 5 total questions. I don't remember any questions that required reasoning I considered difficult at the time. The difference between my score and one 100 points above or below might say as much about diligence or proofreading as intelligence.

Admittedly, the variance due to non-g factors should mostly cancel in a population the size of this survey, and is likely to be a feature of almost any IQ test.

That said, the 1995 score adjustment would have to be taken into account before using it as a proxy for IQ.

Replies from: private_messaging↑ comment by private_messaging · 2014-01-22T17:38:01.478Z · LW(p) · GW(p)

Conversion is a very tricky matter, because the correlation is much less than 1 (0.369 in the survey, apparently).

With correlation less than 1, regression towards the mean comes into play, so the predicted IQ from perfect SAT is actually not that high (someone posted coefficients in a parallel discussion), and predicted SAT from very high IQ is likewise not that awesome.
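The regression effect can be sketched in standardized units. This assumes a simple linear relationship, with both scores expressed as standard deviations from the mean of the same population. The 0.369 figure is the correlation quoted above, and `predicted_z` is a hypothetical helper name.

```python
R_SAT_IQ = 0.369  # SAT/IQ correlation quoted in this thread

def predicted_z(z_observed: float, r: float = R_SAT_IQ) -> float:
    """Best linear prediction of one standardized score from the other.

    With correlation r, a score z SDs from the mean predicts r * z SDs
    on the other variable, which is the regression toward the mean.
    """
    return r * z_observed

for z in (1.0, 2.0, 3.0):
    print(f"SAT {z:+.1f} SD -> predicted IQ {predicted_z(z):+.2f} SD")
```

The same function works in the other direction (predicting SAT from IQ), which is why a very high score on either test predicts a much less extreme score on the other.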

The reason the figures seem rather strange is that they imply some kind of extreme filtering by IQ here. The negative correlation between time here and IQ suggests that the content is not acting as much of a filter, or is acting as a filter in the opposite direction.

Replies from: Vaniver↑ comment by Vaniver · 2014-01-22T18:38:44.221Z · LW(p) · GW(p)

The negative correlation between time here and IQ suggests that the content is not acting as much of a filter, or is acting as a filter in the opposite direction.

Well, alternatively, old-timers feel it's more important to accurately estimate their IQ, and newcomers feel it's more important to be impressive. There also might not be an effect that needs explaining: I haven't looked at a scatterplot of IQ by time in community or karma yet for this year; last year, there were a handful of low-karma people who reported massive IQs, and once you removed those outliers the correlation mostly vanished.

Replies from: private_messaging↑ comment by private_messaging · 2014-01-22T20:02:05.337Z · LW(p) · GW(p)

You still need to explain how the population ended up so extremely filtered.

Without the rest of the survey, one might imagine that the various unusual beliefs here are something that only very smart people can see as correct, and so only very smart people agree and join, but the survey has shown that said unusual beliefs weren't correlated with self-reported IQ or SAT score.

↑ comment by jaime2000 · 2014-01-20T19:55:39.143Z · LW(p) · GW(p)

The Wikipedia article states that those are percentiles of test-takers, not the population as a whole. What percentage of seniors take the SAT? I tried googling, but I could not find the figure.

My first thought is that most people who don't take the SAT don't intend to go to college and are likely to be below the mean reported SAT score, but then I realized that a non-negligible subset of those people must have taken only the ACT as their admission exam.

Replies from: Vaniver↑ comment by Vaniver · 2014-01-20T20:01:39.418Z · LW(p) · GW(p)

I don't have solid numbers myself, but percentile of test-takers should underestimate percentile of population. However, there is regression to the mean to take into account, as well as that many people take the SAT multiple times and report the most favorable score, both of which suggest that score on test should overestimate IQ, and I'm fudging it by treating those two as if they cancel out.

Replies from: michaelsullivan↑ comment by michaelsullivan · 2014-01-22T17:31:59.650Z · LW(p) · GW(p)

Don't most people who report IQ scores do the same thing if they have taken multiple tests?

Replies from: Vaniver, Elund↑ comment by Vaniver · 2014-01-22T18:35:39.370Z · LW(p) · GW(p)

Possibly. My suspicion is that fewer people have taken multiple professional IQ tests (I've only taken one professional one) than multiple SATs (I think I took it three times, at various ages). I score significantly better on the Raven's subtest than on other subtests, and so my IQ.dk score was significantly higher than my professional IQ test last year, but this year I only reported the professional one, because that was all that was asked for. (I might not be representative.)

comment by Zack_M_Davis · 2014-01-19T00:47:42.705Z · LW(p) · GW(p)

The second word in the winning secret phrase is pony (chosen because you can't spell the former without the latter); I'll accept the prize money via PayPal to main att zackmdavis daht net.

(As I recall, I chose to Defect after looking at the output of one call to Python's random.random() and seeing a high number, probably point-eight-something. But I shouldn't get credit for following my proposed procedure (which turned out to be wrong anyway) because I don't remember deciding beforehand that I was definitely using a "result > 0.8 means Defect" convention (when "result < 0.2 means Defect" is just as natural). I think I would have chosen Cooperate if the random number had come up less than 0.8, but I haven't actually observed the nearby possible world where it did, so it's at least possible that I was rationalizing.)

(Also, I'm sorry for being bad at reading; I don't actually think there are seven hundred trillion people in Europe.)

Replies from: simplicio↑ comment by simplicio · 2014-01-20T14:18:35.347Z · LW(p) · GW(p)

When I heard about Yvain's PD contest, I flipped a coin. I vowed that if it came up heads, I would Paypal the winner $200 (on top of their winnings), and if it came up tails I would ask them for the prize money they won.

It came up tails. YOUR MOVE.

(No, not really. But somebody here SHOULD have made such a commitment.)

Replies from: Zack_M_Davis↑ comment by Zack_M_Davis · 2014-01-21T04:47:53.322Z · LW(p) · GW(p)

Hey, it's not too late: if you should have made such a commitment, then the mere fact that you didn't actually do so shouldn't stop you now. Go ahead, flip a coin; if it comes up heads, you pay me $200; if it comes up tails, I'll ask Yvain to give you the $42.96.

Replies from: Eliezer_Yudkowsky, simplicio↑ comment by Eliezer Yudkowsky (Eliezer_Yudkowsky) · 2014-01-21T15:52:19.794Z · LW(p) · GW(p)

...I don't think this is a very wise offer to make on the Internet unless the "coin" is somewhere you can both see it.

Replies from: Zack_M_Davis, Fermatastheorem↑ comment by Zack_M_Davis · 2014-01-22T04:20:25.610Z · LW(p) · GW(p)

Yes, of course I thought of that when considering my reply, but in this particular context (where we're considering counterfactual dealmaking presumably because the idea of pulling such a stunt in real life is amusing), I thought it was more in the spirit of things to be trusting. As you know, Newcomblike arguments still go through when Omega is merely a very good and very honest predictor rather than a perfect one, and my prior beliefs about reasonably-well-known Less Wrongers make me willing to bet that Simplicio probably isn't going to lie in order to scam me out of forty-three dollars. (If it wasn't already obvious, my offer was extended to Simplicio only and for the specified amounts only.)

↑ comment by Fermatastheorem · 2014-01-21T20:33:14.241Z · LW(p) · GW(p)

Never mind - I thought I'd found a site that would flip a coin and save the result with a timestamp.

Why hasn't anybody made this yet?

Replies from: gwern, MugaSofer↑ comment by gwern · 2014-01-22T02:52:14.035Z · LW(p) · GW(p)

Precommitment is a solved problem which doesn't need a trusted website. For example, simplicio could've released a hash precommitment (made using a local hash utility like sha512sum) to Yvain after taking the survey and just now unveiled that input, if he was serious about the counterfactual.

(He would also want to replace the 'flip a coin' with, e.g., 'total number of survey participants was odd'.)

You can even still easily do a verifiable coin flip now. For example, you could pick a commonly observable future event like a property of a Bitcoin block 24 hours from now, or you could both post a hash precommitment of a random bit; then, once both are posted, each party releases the chosen bit, verifies the other's hash, and XORs the two bits to choose the winner.
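The two-party version can be sketched roughly as follows. This is an illustration, not a vetted protocol: the function names are made up, and the random nonce is an added assumption, included because a bare hash of a single bit could be reversed by hashing both possible values. SHA-512 is used to match the `sha512sum` suggestion above.

```python
import hashlib
import secrets

def commit(bit: int) -> tuple[str, bytes]:
    """Return (commitment, nonce) for a secret bit; publish only the commitment."""
    nonce = secrets.token_bytes(32)  # assumption: random salt hides the bit
    digest = hashlib.sha512(nonce + bytes([bit])).hexdigest()
    return digest, nonce

def verify(commitment: str, nonce: bytes, bit: int) -> bool:
    """Check that a revealed (nonce, bit) pair matches the earlier commitment."""
    return hashlib.sha512(nonce + bytes([bit])).hexdigest() == commitment

def fair_flip() -> int:
    """Simulate both parties locally and return the shared coin (0 or 1)."""
    # Step 1: each party picks a random bit and posts only its commitment.
    bit_a, bit_b = secrets.randbelow(2), secrets.randbelow(2)
    commit_a, nonce_a = commit(bit_a)
    commit_b, nonce_b = commit(bit_b)
    # Step 2: once both commitments are public, each reveals nonce and bit,
    # and each checks the other's reveal against the posted hash.
    if not (verify(commit_a, nonce_a, bit_a) and verify(commit_b, nonce_b, bit_b)):
        raise ValueError("a revealed bit does not match its commitment")
    # Step 3: the XOR is unbiased as long as at least one party chose randomly.
    return bit_a ^ bit_b

print("coin:", fair_flip())
```

In real use the two halves would run on different machines, with the commitments posted publicly before either nonce is revealed.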

Replies from: ciphergoth↑ comment by Paul Crowley (ciphergoth) · 2014-01-22T21:18:09.547Z · LW(p) · GW(p)

No need for Bitcoin etc; one side commits to a bit, then the other side calls heads or tails, and they win if the call was correct.

comment by [deleted] · 2014-01-19T18:32:29.462Z · LW(p) · GW(p)

Yvain - Next year, please include a question asking if the person taking the survey uses PredictionBook. I'd be curious to see if these people are better calibrated.

Replies from: John_Maxwell_IV↑ comment by John_Maxwell (John_Maxwell_IV) · 2014-01-23T06:28:27.827Z · LW(p) · GW(p)

Maybe ask them how many predictions they have made so we can see if using it more makes you better.

Replies from: NancyLebovitz↑ comment by NancyLebovitz · 2014-01-26T01:36:32.275Z · LW(p) · GW(p)

Probably a good idea. I use PredictionBook for casual entertainment, not as a serious effort at self-calibration.

comment by Shmi (shminux) · 2014-01-19T04:15:24.199Z · LW(p) · GW(p)

Yvain is not hugely on board with the idea of running correlations between everything and seeing what sticks, but will grudgingly publish the results because of the very high bar for significance (p < .001 on ~800 correlations suggests < 1 spurious result) and because he doesn't want to have to do it himself.

The standard way to fix this is to run them on half the data only and then test their predictive power on the other half. This eliminates almost all spurious correlations.

Replies from: Nominull, Kawoomba↑ comment by Nominull · 2014-01-19T04:59:15.868Z · LW(p) · GW(p)

Does that actually work better than just setting a higher bar for significance? My gut says that data is data and chopping it up cleverly can't work magic.

Replies from: Dan_Weinand, ChristianKl↑ comment by Dan_Weinand · 2014-01-19T05:53:07.811Z · LW(p) · GW(p)

Cross-validation is actually hugely useful for predictive models. For a simple correlation like this, it's less of a big deal. But if you are fitting a locally weighted linear regression line, for instance, chopping the data up is absolutely standard operating procedure.

↑ comment by ChristianKl · 2014-01-19T16:04:10.933Z · LW(p) · GW(p)

Does that actually work better than just setting a higher bar for significance? My gut says that data is data and chopping it up cleverly can't work magic.

How do you decide how high to set your bar for significance? It is very hard to estimate how high you have to set it, because that depends on how you go fishing in your data. The advantage of the two-step procedure is that you are completely free to fish however you want in the first step. There are even cases where you might want a three-step procedure.

↑ comment by Kawoomba · 2014-01-19T08:48:10.459Z · LW(p) · GW(p)

Alternatively, Bonferroni correction.
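For scale, a small sketch of what that would mean here. The roughly 800 tests and the p < .001 bar come from the post; the family-wise alpha of 0.05 is an assumption chosen for illustration, as are the function names.

```python
def expected_false_positives(n_tests: int, alpha: float) -> float:
    """Expected number of spurious hits if every null hypothesis is true."""
    return n_tests * alpha

def bonferroni_threshold(n_tests: int, family_alpha: float = 0.05) -> float:
    """Per-test significance threshold that caps the family-wise error rate."""
    return family_alpha / n_tests

N_TESTS = 800  # approximate number of correlations mentioned in the post

print("Expected spurious results at p < .001:",
      expected_false_positives(N_TESTS, 0.001))
print("Bonferroni per-test threshold for family alpha 0.05:",
      bonferroni_threshold(N_TESTS))
```

Under these assumptions the Bonferroni bar is stricter than the p < .001 cutoff actually used.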

Replies from: Pablo_Stafforini↑ comment by Pablo (Pablo_Stafforini) · 2014-01-19T09:51:25.543Z · LW(p) · GW(p)