EA Vegan Advocacy is not truthseeking, and it’s everyone’s problem

post by Elizabeth (pktechgirl) · 2023-09-28T23:30:03.390Z · LW · GW · 250 comments

This is a link post for https://acesounderglass.com/2023/09/28/ea-vegan-advocacy-is-not-truthseeking-and-its-everyones-problem/

Contents

- Introduction
- Definitions
- Audience
- How EA vegan advocacy has hindered truthseeking
- Active suppression of inconvenient questions
- Counter-Examples
- Ignore the arguments people are actually making
- Frame control/strong implications not defended/fuzziness
- Counter-Examples
- Sound and fury, signifying no substantial disagreement
- Counter-Examples
- Bad sources, badly handled
- Counter-Examples
- Taxing Facebook
- Ignoring known falsehoods until they’re a PR problem
- Why I Care
- What do EA vegan advocates need to do?
- All Effective Altruists need to stand up for our epistemic commons
- Acknowledgments
- Appendix
- Terrible anti-meat article
- Edits

Introduction

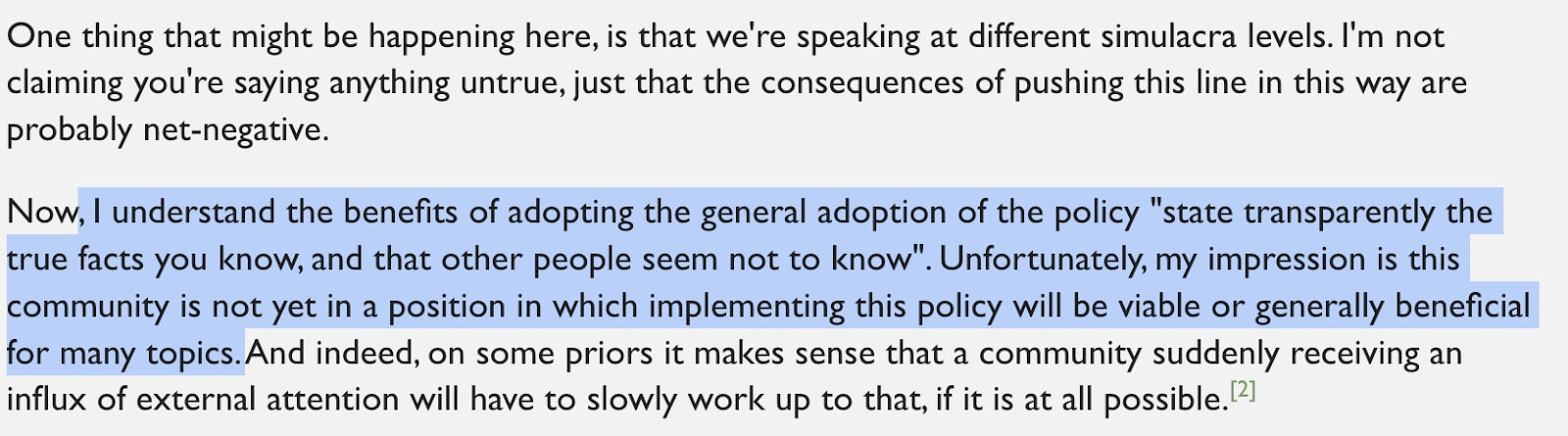

Effective altruism prides itself on truthseeking. That pride is justified in the sense that EA is better at truthseeking than most members of its reference category, and unjustified in that it is far from meeting its own standards. We’ve already seen dire consequences of the inability to detect bad actors who deflect investigation into potential problems, but by its nature you can never be sure you’ve found all the damage done by epistemic obfuscation because the point is to be self-cloaking.

My concern here is for the underlying dynamics of EA’s weak epistemic immune system, not any one instance. But we can’t analyze the problem without real examples, so individual instances need to be talked about. Worse, the examples that are easiest to understand are almost by definition the smallest problems, which makes any scapegoating extra unfair. So don’t.

This post focuses on a single example: vegan advocacy, especially around nutrition. I believe vegan advocacy as a cause has both actively lied and raised the cost for truthseeking, because they were afraid of the consequences of honest investigations. Occasionally there’s a consciously bad actor I can just point to, but mostly this is an emergent phenomenon from people who mean well, and have done good work in other areas. That’s why scapegoating won’t solve the problem: we need something systemic.

In the next post I’ll do a wider but shallower review of other instances of EA being hurt by a lack of epistemic immune system. I already have a long list, but it’s not too late for you to share your examples.

Definitions

I picked the words “vegan advocacy” really specifically. “Vegan” sometimes refers to advocacy and sometimes to just a plant-exclusive diet, so I added “advocacy” to make it clear.

I chose “advocacy” over “advocates” for most statements because this is a problem with the system. Some vegan advocates are net truthseeking and I hate to impugn them. Others would like to be epistemically virtuous but end up doing harm due to being embedded in an epistemically uncooperative system. Very few people are sitting on a throne of plant-based imitation skulls twirling their mustache thinking about how they’ll fuck up the epistemic commons today.

When I call for actions I say “advocates” and not “advocacy” because actions are taken by people, even if none of them bear much individual responsibility for the problem.

I specify “EA vegan advocacy” and not just “vegan advocacy” not because I think mainstream vegan advocacy is better, but because (1) I don’t have time to go after every wrong advocacy group in the world, and (2) advocates within Effective Altruism opted into a higher standard. EA has a right and responsibility to maintain the standards of truth it advocates, even if the rest of the world is too far gone to worry about.

Audience

If you’re entirely uninvolved in effective altruism you can skip this, it’s inside baseball and there’s a lot of context I don’t get into.

How EA vegan advocacy has hindered truthseeking

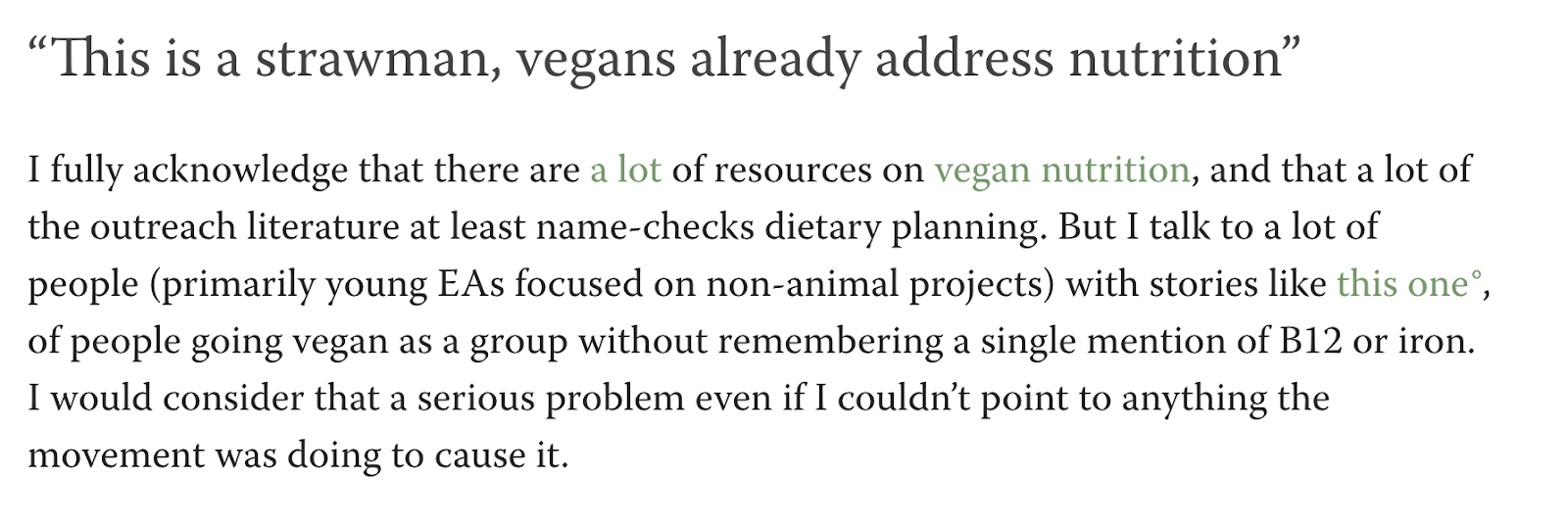

EA vegan advocacy has both pushed falsehoods and punished people for investigating questions it doesn’t like. It manages this even for positions that 90%+ of effective altruism and the rest of the world agree with, like “veganism is a constraint”. I don’t believe its arguments convince anyone directly, but they end up having a big impact by making inconvenient beliefs too costly to discuss. This means new entrants to EA are denied half of the argument, and harm themselves due to ignorance.

This section outlines the techniques I’m best able to name and demonstrate. For each technique I’ve included examples. Comments on my own posts are heavily overrepresented because they’re the easiest to find; “go searching through posts on veganism to find the worst examples” didn’t feel like good practice. I did my best to quote and summarize accurately, although I made no attempt to use a representative sample. I think this is fair because a lot of the problem lies in the fact that good comments don’t cancel out bad, especially when the good comments are made in parallel rather than directly arguing with the bad. I’ve linked to the source of every quote and screen shot, so you can (and should) decide for yourself. I’ve also created a list of all of my own posts I’m drawing from, so you can get a holistic view.

My posts:

- Iron deficiencies are very bad and you should treat them (LessWrong [LW · GW], EAForum [EA · GW])

- Vegan Nutrition Testing Project: Interim Report (LessWrong [LW · GW], EAForum [EA · GW])

- Lessons learned from offering in-office nutritional testing (LessWrong [LW · GW], EAForum [EA · GW])

- What vegan food resources have you found useful? (LessWrong [LW · GW], EAForum [EA · GW]) (not published on my own blog)

- Playing with Faunalytics’ Vegan Attrition Data (LessWrong, EAForum)

- Change my mind: Veganism entails trade-offs, and health is one of the axes (LessWrong [LW · GW], EAForum [EA · GW])

- Adventist Health Study-2 supports pescetarianism more than veganism (LessWrong [LW · GW], EAForum [EA · GW])

I should note I quote some commenters and even a few individual comments in more than one section, because they exhibit more than one problem. But if I refer to the same comment multiple times in a row I usually only link to it once, to avoid implying more sources than I have.

My posts were posted on my blog, LessWrong, and EAForum. In practice the comments I drew from came from LessWrong (white background) and EAForum (black background). I tried to go through those posts and remove all my votes on comments (except the automatic vote for my own comments) so that you could get an honest view of how the community voted without my thumb on the scale, but I’ve probably missed some, especially on older posts. On the main posts, which received a lot of traffic, I stuck to well-upvoted comments, but I included some low (but still positive) karma comments from unpopular posts.

The goal here is to make these anti-truthseeking techniques legible for discussion, not develop complicated ways to say “I don’t like this”, so when available I’ve included counter examples. These are comments that look similar to the ones I’m complaining about, but are fine or at least not suffering from the particular flaw in that section. In doing this I hope to keep the techniques’ definitions narrow.

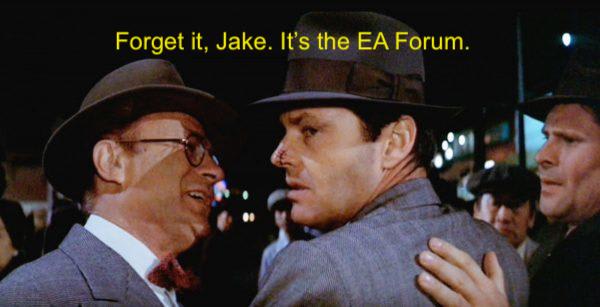

Active suppression of inconvenient questions

A small but loud subset of vegan advocacy will say outright you shouldn’t say true things, because it leads to outcomes they dislike. This accusation is even harsher than “not truthseeking”, and would normally be very hard to prove. If I say “you’re saying that because you care more about creating vegans than the health of those you create”, and they say “no I’m not”, I don’t really have a comeback. I can demonstrate that they’re wrong, but not their motivation. Luckily, a few people said the quiet part out loud.

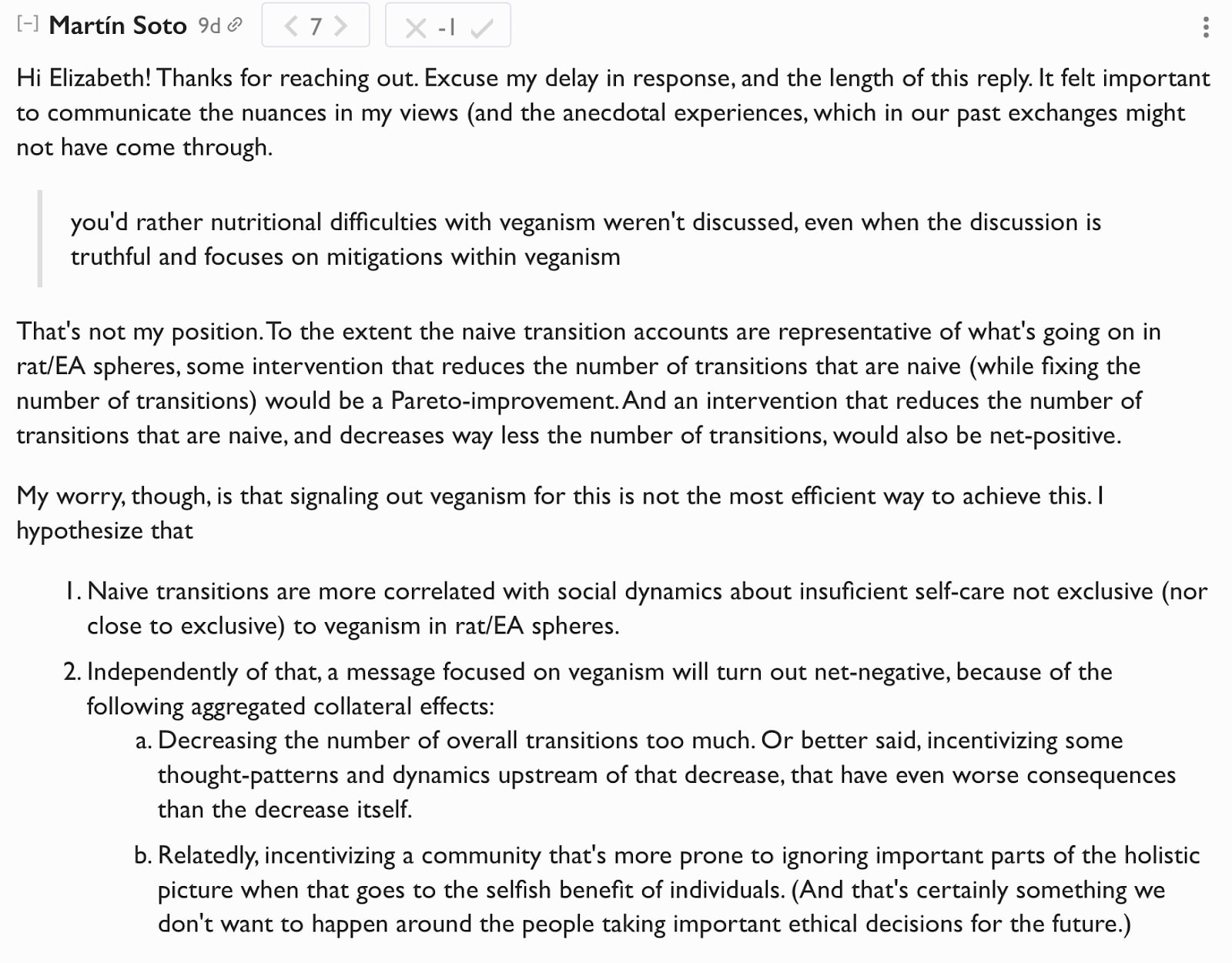

Commenter Martin Soto pushed back very hard on my first nutrition testing study. Finally I asked him outright if he thought it was okay to share true information about vegan nutrition. His response was quite thoughtful and long, so you should really go read the whole thing [LW(p) · GW(p)], but let me share two quotes:

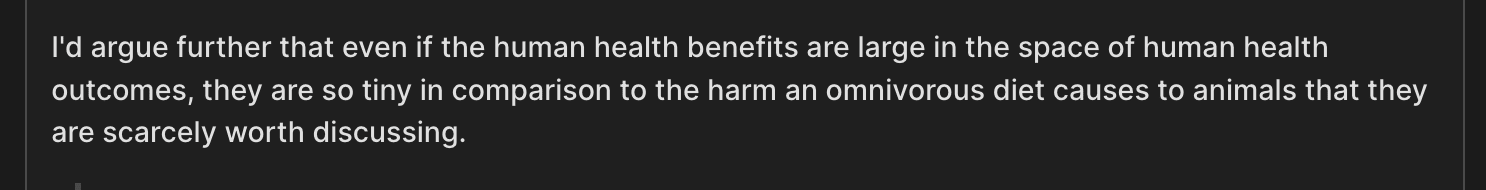

He goes on to say:

[LW(p) · GW(p)]

And in a later comment:

[LW · GW]

EDIT 2023-10-03: Martin disputes [LW(p) · GW(p)] my summary of his comments. I think it’s good practice to link to disputes like this, even though I stand by my summary. I also want to give a heads-up that I see his comments in the dispute thread as continuing the patterns I describe (which makes that thread a tax on the reader). If you want to dig into this, I strongly suggest you first read his original comments [LW(p) · GW(p)] and come up with your own summary, so you can compare that to each of ours.

The charitable explanation here is that my post focuses on naive veganism, and Soto thinks that’s a made-up problem. He believes this because all of the vegans he knows (through vegan advocacy networks) are well-educated on nutrition. There are a few problems here, but the most fundamental is that enacting his desired policy of suppressing public discussion of nutrition issues with plant-exclusive diets will prevent us from getting the information to know if problems are widespread. My post and a commenter’s report [LW(p) · GW(p)] on their college group are apparently the first time he’s heard of vegans who didn’t live and breathe B12.

I have a lot of respect for Soto for doing the math and so clearly stating his position that “the damage to people who implement veganism badly is less important to me than the damage to animals caused by eating them”. Most people flinch away from explicit trade-offs like that, and I appreciate that he made them and owned the conclusion. But I can’t trust his math because he’s cut himself off from half the information necessary to do the calculations. How can he estimate the number of vegans harmed or lost due to nutritional issues if he doesn’t let people talk about them in public?

In fact the best data I found on this was from Faunalytics, which found that ~20% of veg*ns drop out due to health reasons. This suggests to me a high chance his math is wrong and will lead him to do harm by his own standards.

EDIT 2023-10-04: Using Faunalytics numbers for self-reported health issues and improvements after quitting veg*nism, I calculated that 20% of veg*ns develop health issues. This number is sensitive to your assumptions; I consider 20% conservative but it could be an overestimate. I encourage you to read the whole post and play with my model, and of course read the original work.

Most people aren’t nearly this upfront. They will go through the motions of calling an idea incorrect before emphasizing how it will lead to outcomes they dislike. But the net effect is a suppression of the exploration of ideas they find inconvenient.

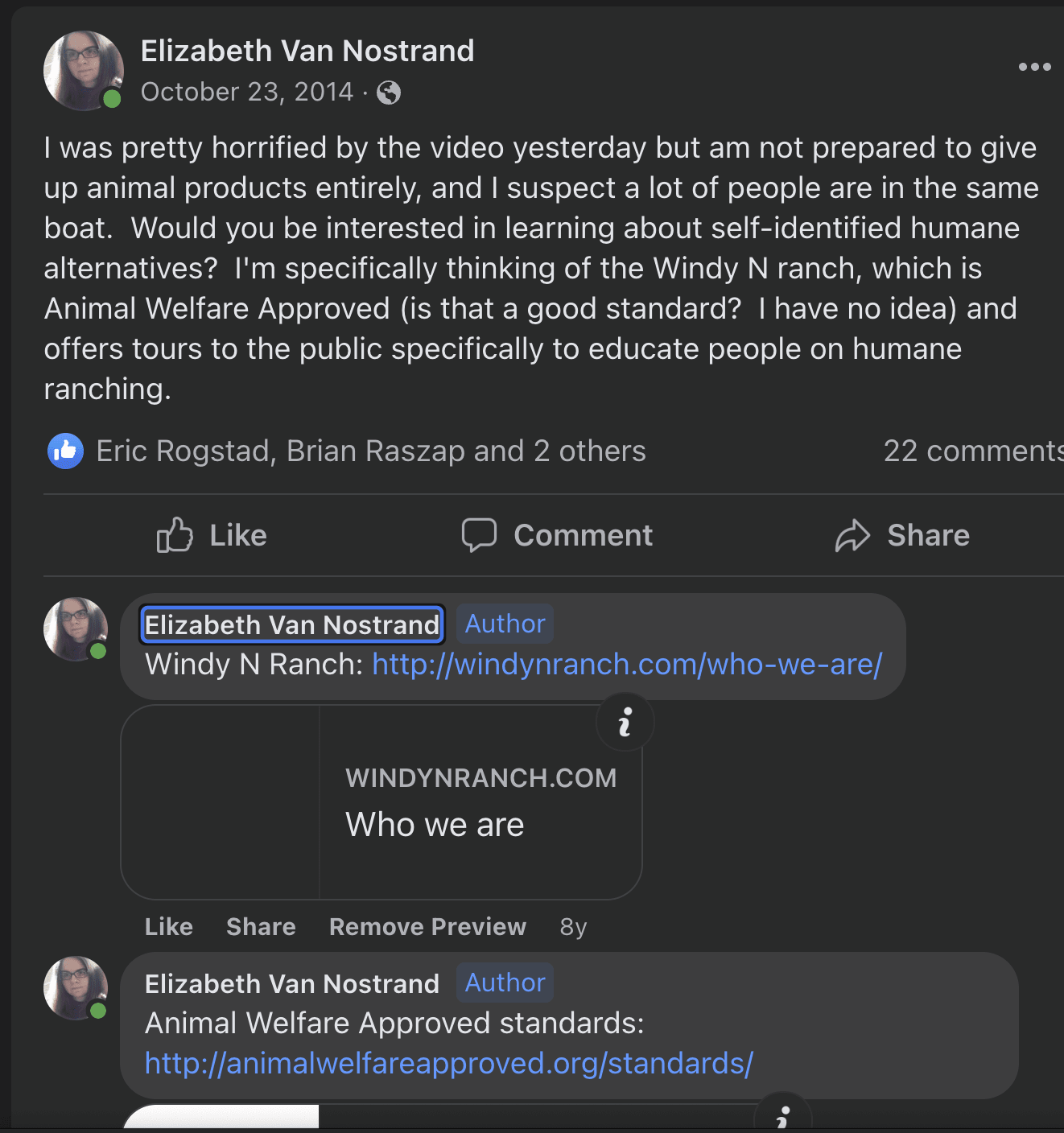

This post on Facebook is a good example. Normally I would consider Facebook posts out of bounds, especially ones this old (over five years). Facebook is a casual space and I want people to be able to explore ideas without being worried that they’re creating a permanent record that will be used against them. In this case I felt that because the post was permissioned to public and a considered statement (rather than an off the cuff reply), the truth value outweighed the chilling effect. But because it’s so old and I don’t know the author’s current opinion, I’m leaving out their name and not linking to the post.

The author is a midlist EA: I’d heard of them for other reasons, but they’re certainly not EA-famous.

There are posts very similar to this one I would have been fine with, maybe even joyful about. You could present evidence against the claims that X is harmful, or push people to verify things before repeating them, or suggest we reserve the word poison for actual kill-you-dead molecules and not complicated compound constructions with many good parts and only weak evidence of mild long-term negative effects. But what they actually did was name-check the idea that X is fine before focusing on the harm to animals caused by repeating the claim, which is exactly what you’d expect if the health claims were true but inconvenient. I don’t know what this author actually believes, but I do know focusing on the consequences when the facts are in question is not truthseeking.

A subtler version comes from the AHS-2 post. At the time of this comment the author, Rockwell, described herself as the leader of EA NYC and an advisor to philanthropists on animal suffering, so this isn’t some rando having a feeling. This person has some authority.

[EA(p) · GW(p)]

This comment more strongly emphasizes the claim that my beliefs are wrong, not just inconvenient. And if she’d written that counter-argument she promised I’d be putting this in the counter-examples section. But it’s been three months and she has not written anything where I can find it, nor responded to my inquiries. So even if her literal claim were correct, she’s using a technique whose efficacy is independent of truth.

Over on the Change My Mind post the top comment says that vegan advocacy is fine because it’s no worse than fast food or breakfast cereal ads:

[LW · GW]

I’m surprised someone would make this comment. But what really shocks me is the complete lack of pushback from other vegan advocates. If I heard an ally describe our shared movement as no worse than McDonalds, I would injure myself in my haste to repudiate them.

Counter-Examples

This post [? · GW] on EAForum came out while I was finishing this post. The author asks if they should abstain from giving bad reviews to vegan restaurants, because it might lead to more animal consumption, which would be a central example of my complaint. But the comments are overwhelmingly “no, there’s not even a good consequentialist argument for that”, and the author appears to be taking that to heart. So from my perspective this is a success story.

Ignore the arguments people are actually making

I’ve experienced this pattern way too often.

Me: goes out of my way to say not-X in a post

Comment: how dare you say X! X is so wrong!

Me: here’s where I explicitly say not-X.

*crickets*

This is by no means unique to posts about veganism. “They’re yelling at me for an argument I didn’t make” is a common complaint of mine. But it happens so often, and so explicitly, in the vegan nutrition posts. Let me give some examples.

My post:

[EA · GW]

Commenter:

My post:

[EA · GW]

Commenters:

[LW(p) · GW(p)]

[LW · GW]

Commenter:

[LW(p) · GW(p)]

My post:

[LW · GW]

Commenter:

[LW(p) · GW(p)]

My post:

Commenter:

[EA(p) · GW(p)]

You might be thinking “well those posts were very long and honestly kind of boring, it would be unreasonable to expect people to read everything”. But the length and precision are themselves a response to people arguing with positions I don’t hold (and failing to update when I clarify). The only things I can do are spell out all of my beliefs or not spell out all of my beliefs, and either way ends with comments arguing against views I don’t have.

Frame control/strong implications not defended/fuzziness

This is the hardest one to describe. Sometimes people say things, and I disagree, and we can hope to clarify that disagreement. But sometimes people say things and responding is like nailing jello to a wall. Their claims aren’t explicit, or they’re individually explicit but aren’t internally consistent, or they play games with definitions. They “counter” statements in ways that might score a point in debate club but don’t address the actual concern in context.

One example is the top-voted comment on LW on Change My Mind:

[LW(p) · GW(p)]

Over a very long exchange I attempt to nail down his position:

- Does he think micronutrient deficiencies don’t exist? No, he agrees they do.

- Does he think that they can’t cause health issues? No, he agrees they do.

- Does he think this just doesn’t happen very often, or is always caught? No, if anything he thinks Faunalytics underestimates the veg*n attrition due to medical issues.

So what exactly does he disagree with me on?

He also had a very interesting exchange with another commenter. That thread got quite long, and fuzziness by its nature doesn’t lend itself to excerpts, so you should read the whole thing, but I will share highlights.

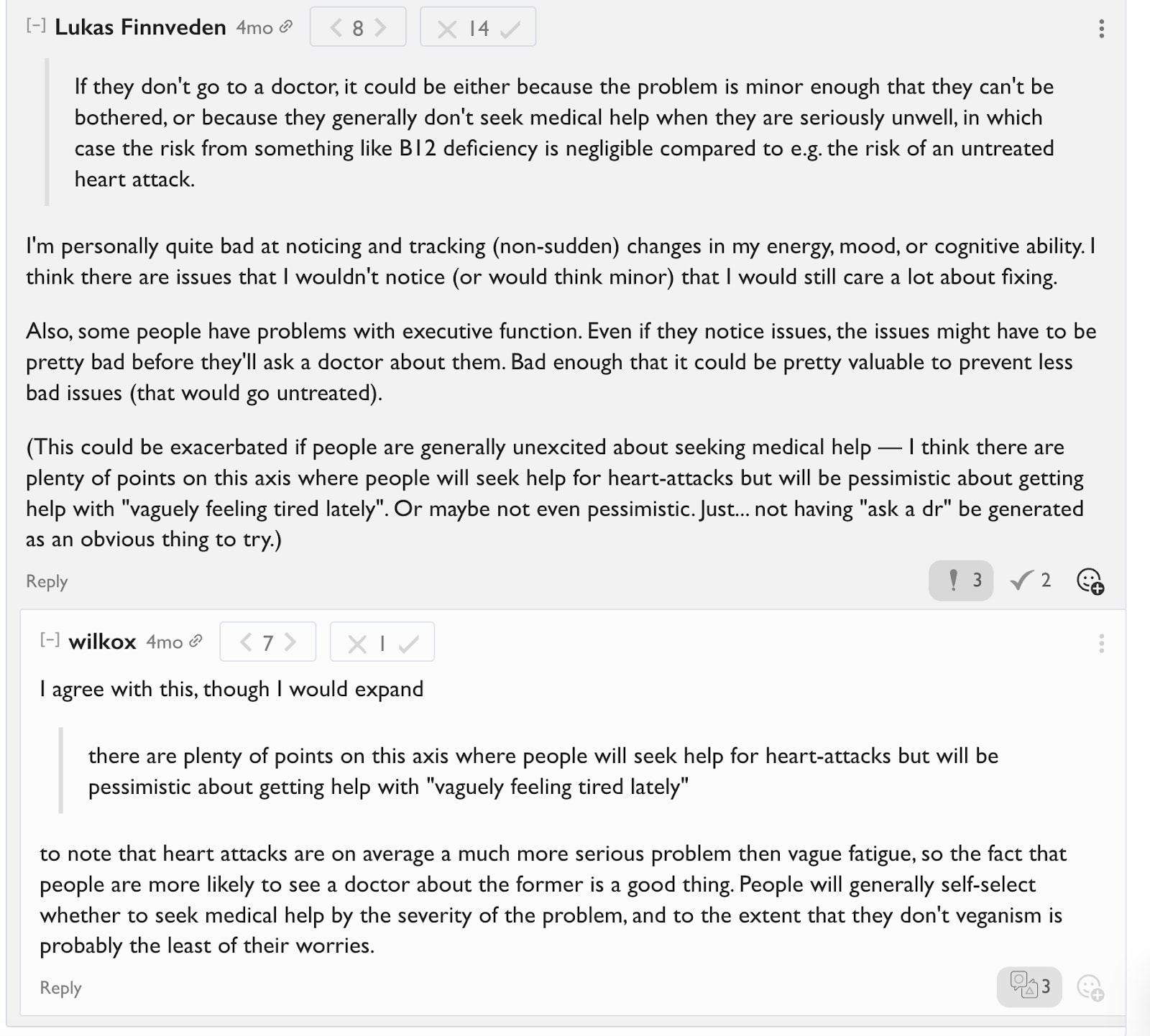

Before the screenshot: Wilkox acknowledges that B12 and iron deficiencies can cause fatigue, and veganism can cause these deficiencies, but it’s fine because if people get tired they can go to a doctor.

[LW(p) · GW(p)]

That reply doesn’t contain any false statements, and would be perfectly reasonable if we were talking about ER triage protocols. But it’s irrelevant when the conversation is “can we count on veganism-induced fatigue being caught?”. (The answer is no, and only some of the reasons have been brought up here.)

You can see how the rest of this conversation worked out in the Sound and Fury section.

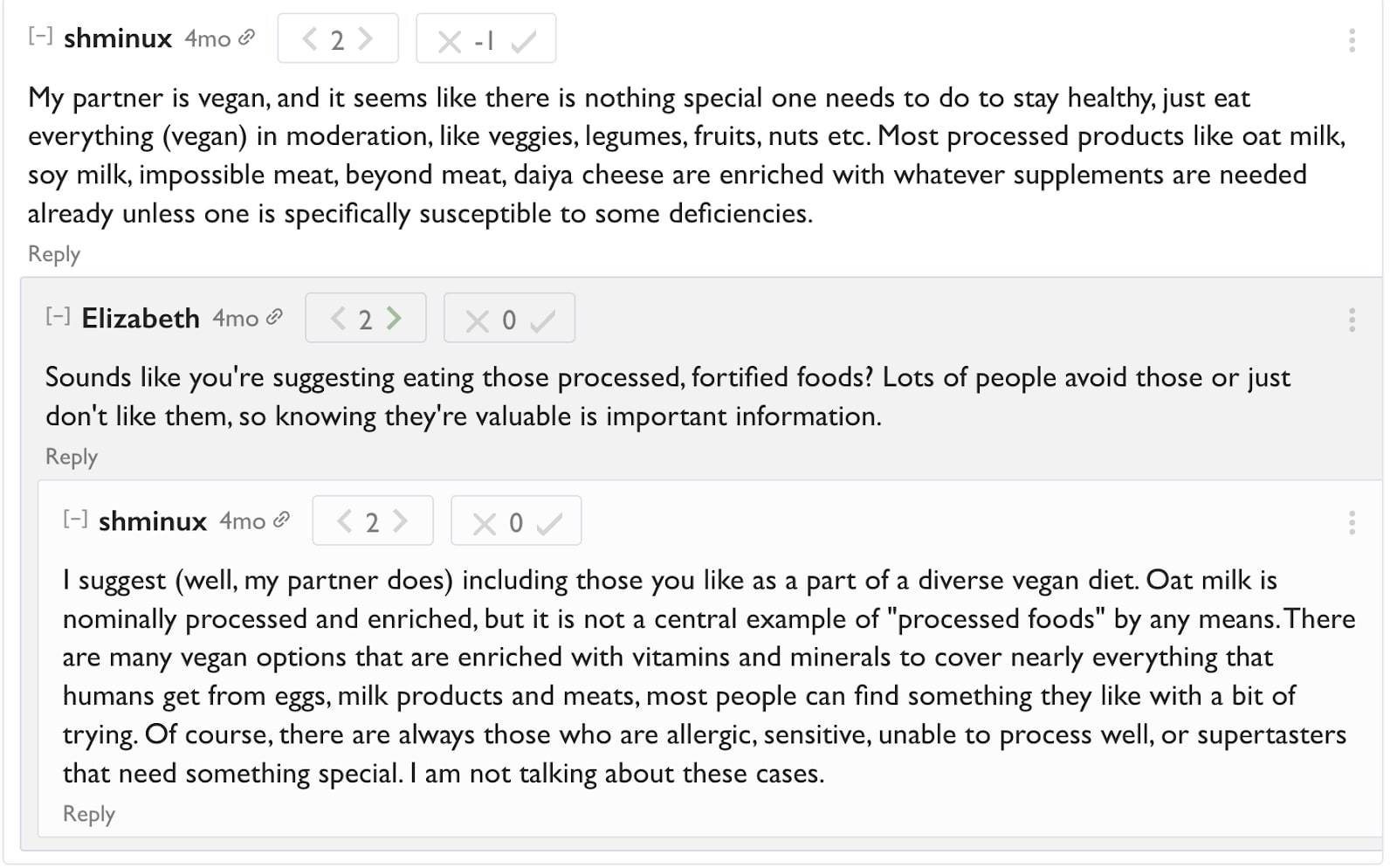

A much, much milder example can be seen in What vegan food resources have you found useful? [LW · GW]. This was my attempt to create something uncontroversially useful, and I’d call it a modest success. The post had 20-something karma on LW and EAForum, and there were several useful-looking resources shared on EAForum. But it also got the following comment on LW:

[LW(p) · GW(p)]

I picked this example because it only takes a little bit of thought to see the jujitsu, so little it barely counts. He disagreed with my implicit claim that… well okay here’s the problem. I’m still not quite sure where he disagrees. Does he think everyone automatically eats well as a vegan? That no one will benefit from resources like veganhealth.org? That no one will benefit from a cheat sheet for vegan party spreads? That there is no one for whom veganism is challenging? He can’t mean that last one because he acknowledges exceptions in his later comment, but only because I pushed back. Maybe he thinks that the only vegans who don’t follow his steps are those with medical issues, and that no-processed-food diets are too unpopular to consider?

I don’t think this was deliberately anti-truthseeking, because if it was he would have stopped at “nothing special” instead of immediately outlining the special things his partner does. That was fairly epistemically cooperative. But it is still an example of strong claims made only implicitly.

Counter-Examples

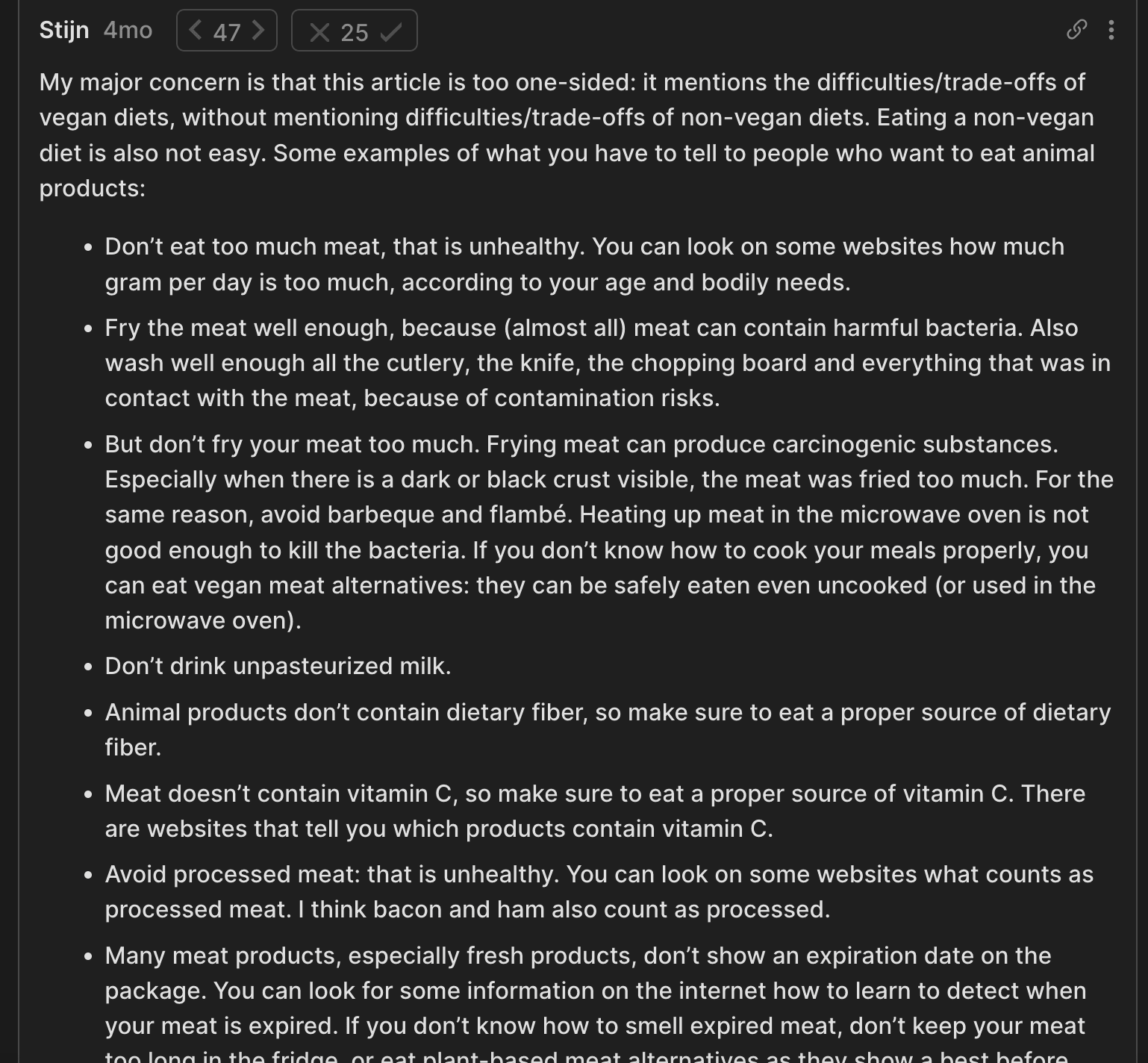

I think this comment makes a claim (“vegans moving to naive omnivorism will hurt themselves”) clearly, and backs it up with a lot of details.

[EA(p) · GW(p)]

The tone is kind of obnoxious and he’s arguing with something I never claimed, but his beliefs are quite clear. I can immediately understand which beliefs of his I agree with (“vegans moving to naive omnivorism will hurt themselves” and “that would be bad”) and make good guesses at implicit claims I disagree with (“and therefore we should let people hurt themselves with naive veganism”? “I [Elizabeth] wouldn’t treat naive mass conversion to omnivorism seriously as a problem”?). That’s enough to count as epistemically cooperative.

Sound and fury, signifying no substantial disagreement

Sometimes someone comments with an intense, strongly worded, perhaps actively hostile, disagreement. After a laborious back and forth, the problem dissolves: they acknowledge I never held the position they were arguing with, or they don’t actually disagree with my specific claims.

Originally I felt happy about these, because “mostly agreeing” is an unusually positive outcome for that opening. But these discussions are grueling. It is hard to express kindness and curiosity towards someone yelling at you for a position you explicitly disclaimed. Any one of these stories would be a success but en masse they amount to a huge tax on saying anything about veganism, which is already quite labor intensive.

The discussions could still be worth it if they changed the arguer’s mind, or at least how they approached the next argument. But I don’t get the sense that’s what happens. Neither of us has changed our minds about anything, and I think they’re just as likely to start a similar fight the next week.

I do feel like vegan advocates are entitled to a certain amount of defensiveness. They encounter large amounts of concern trolling and outright hostility, and it makes sense that that colors their interactions. But that allowance covers one comment, maybe two, not three to eight (Wilkox, depending on which ones you count).

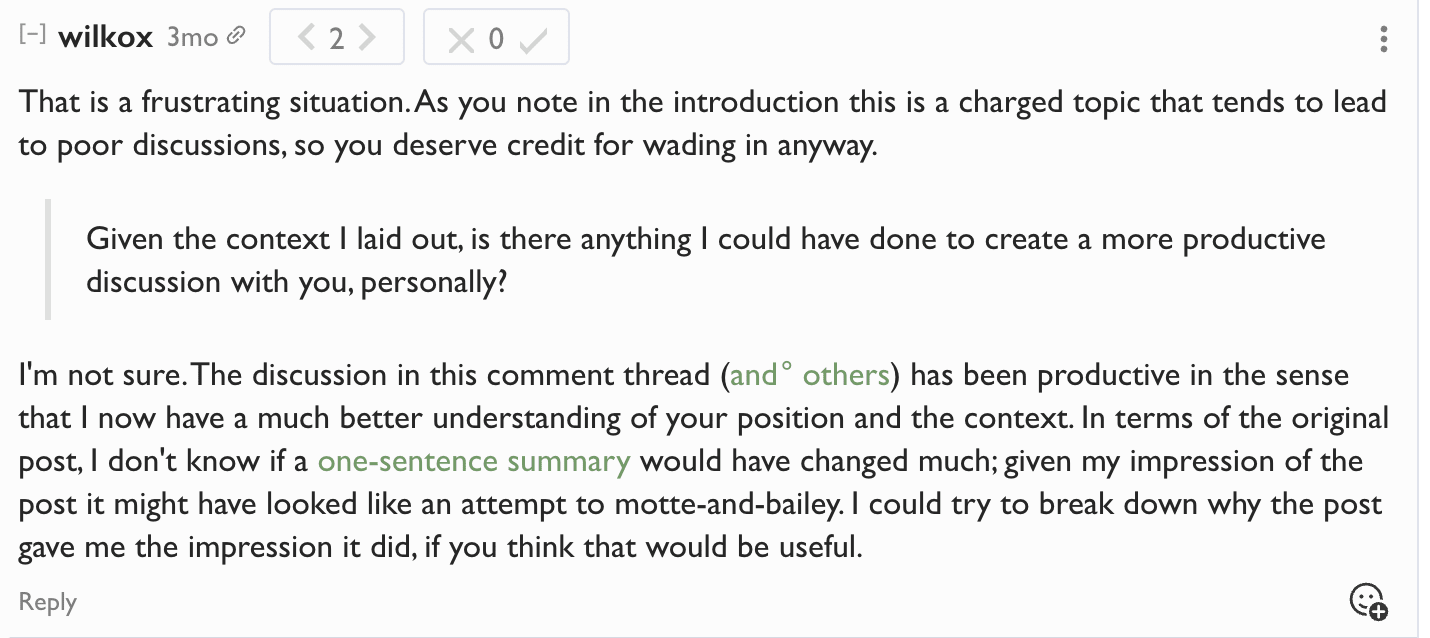

For example, I’ve already quoted Wilkox’s very fuzzy comment (reminder: this was the top voted comment on that post on LW). That was followed by a 13+ comment exchange [LW(p) · GW(p)] in which we eventually found he had little disagreement with any of my claims about vegan nutrition, only the importance of these facts. There really isn’t a way for me to screenshot this: the length and lack of specifics is the point.

You could say that the confusion stemmed from poor writing on my part, but:

[LW(p) · GW(p)]

I really appreciate the meta-honesty here, but since the exchange appears to have eaten hours of both of our time just to dig ourselves out of a hole, I can’t get that excited about it.

Counter-Examples

I want to explicitly note that Sound and Fury isn’t the same as asking questions or not understanding a post. E.g. here [EA(p) · GW(p)] Ben West identifies a confusion, asks me, and accepts both my answer and an explanation of why answering is difficult.

[EA(p) · GW(p)]

Or in that same post, someone asked me to define nutritionally dense [EA(p) · GW(p)]. It took a bit for me to answer and we still disagreed afterward, but it was a great question and the exchange felt highly truthseeking.

Bad sources, badly handled

Citations should be something of a bet: if the citation (the source itself or your summary of it) is high quality and supports your point, that should move people closer to your views. But if they identify serious relevant flaws, that should move both you and your audience closer to their point of view. Of course our beliefs are based on a lot of sources and it’s not feasible or desirable to really dig into all of them for every disagreement, so the bet may be very small. But if you’re not willing to defend a citation, you shouldn’t make it.

What I see in EA vegan advocacy is deeply terrible citations, thrown out casually, and abandoned when inconvenient. I’ve made something of a name for myself checking citations and otherwise investigating factual claims from works of nonfiction. Of everything I’ve investigated, I think citations from EA vegan advocacy have the worst effort:truth ratio. Not outright more falsehoods, I read some pretty woo stuff, but those can be dismissed quickly. Citations in vegan advocacy are often revealed to be terrible only after great effort.

And having put in that effort, my reward is usually either crickets, or a new terrible citation. Sometimes we will eventually drill into “I just believe it”, which is honestly fine. We don’t live our lives to the standard of academic papers. But if that’s your reason, you need to state it from the beginning.

For example, in the top voted comment [EA(p) · GW(p)] on the Change My Mind post on EAF, Rockwell (head of EA NYC) includes five links. Only links 1 and 4 are problems, but I’ll describe them in order to avoid confusion.

Of the five links:

1. Wilkox’s comment [LW(p) · GW(p)] on the LW version of the post, where he eventually agrees that veganism requires testing and supplementation for many people (although most of that exchange hadn’t happened at the time of linking).

2. Cites my past work, if anything too generously.

3. An estimation of nutrient deficiency in the US. I don’t love that this uses dietary intake as opposed to testing values (people’s needs vary so wildly), but at least it used EAR and not RDA. I’d want more from a post but for a comment this is fine.

4. An absolutely atrocious article, which the comment further misrepresents. We don’t have time to get all the flaws in that article, so I’ve put my first hour of criticisms in the appendix. What really gets me here is that I would have agreed the standard American diet sucks without asking for a source. I thought I had conceded that point preemptively, albeit not naming Standard American Diet explicitly.

   And if she did feel a need to go the extra mile on rigor for this comment, it’s really not that hard to find decent-looking research about the harms of the Standard Shitty American Diet. I found this paper on heart disease in 30 seconds, and most of that time was spent waiting for Elicit to load. I don’t know if it’s actually good, but it is not so obviously farcical as the cited paper.

5. The fifth link goes to a description of the Standard American Diet.

Rockwell did not respond to my initial reply (that fixing vegan issues is easier than fixing SSAD), or my asking [EA(p) · GW(p)] if that paper on the risks of meat eating was her favorite.

A much more time-consuming version of this happened with Adventist Health Study-2. Several people cited the AHS-2 as a pseudo-RCT that supported veganism (EDIT 2023-10-03: as superior to low meat omnivorism). There’s one commenter on LessWrong [LW(p) · GW(p)] and two [EA(p) · GW(p)] on EAForum [EA(p) · GW(p)] (one of whom had previously co-authored a blog post on the study and offered to answer questions). As I discussed here, that study is one of the best we have on nutrition and I’m very glad people brought it to my attention. But calling it a pseudo-RCT that supports veganism is deeply misleading. It is nowhere near randomized, and doesn’t cleanly support veganism even if you pretend it is.

(EDIT 2023-10-03: To be clear, the noise in the study overwhelms most differences in outcomes, even ignoring the self-sorting. My complaint is that the study was presented as strong evidence in one direction, when it’s both very weak and, if you treat it as strong, points in a different direction than reported. One commenter has said she only meant it as evidence that a vegan diet can work for some people, which I agree with, as stated in the post she was responding to. She disagrees with other parts of my summary as well, you can read more here [LW(p) · GW(p)])

It’s been three months, and none of the recommenders have responded to my analysis of the main AHS-2 paper, despite repeated requests.

But finding that a paper is of lower quality and supports an entirely different conclusion is still not the worst-case scenario. The worst outcome is citation whack-a-mole.

A good example of this is from the post “Getting Cats Vegan is Possible and Imperative [EA · GW]”, by Karthik Sekar. Karthik is a vegan author and data scientist at a plant-based meat company.

[Note that I didn’t zero out my votes on this post’s comments, because it seemed less important for posts I didn’t write]

Karthik cites a lot of sources in that post. I picked what looked like his strongest source and investigated. It was terrible. It was a review article, so checking it required reading multiple studies. Of the cited studies, only 4 (with a total of 39 combined subjects) use blood tests rather than owner reports, and more than half of those were given vegetarian diets, not vegan (even though the table header says vegan). The only RCT didn’t include carnivorous diets.

Karthik agrees [EA(p) · GW(p)] that the paper (that he cited) is not making its case “strong nor clear”, and cites another one (which AFAICT was not in the original post).

I dismiss [EA(p) · GW(p)] the new citation on the basis of “motivated [study] population and minimal reporting”.

He retreats to [EA(p) · GW(p)] “[My] argument isn’t solely based on the survey data. It’s supported by fundamentals of biochemistry, metabolism, and digestion too […] Mammals such as cats will digest food matter into constituent molecules. Those molecules are chemically converted to other molecules–collectively, metabolism–, and energy and biomass (muscles, bones) are built from those precursors. For cats to truly be obligate carnivores, there would have to be something exceptional about meat: (A) There would have to be essential molecules–nutrients–that cannot be sourced anywhere else OR (B) the meat would have to be digestible in a way that’s not possible with plant matter. […So any plant-based food that passes AAFCO guidelines is nutritionally complete for cats. Ami does, for example.]”

I point [EA(p) · GW(p)] out that AAFCO doesn’t think meeting their guidelines is necessarily sufficient. I expected him to dismiss this as corporate ass-covering, and there’s a good chance he’d be right. But he didn’t.

Finally, he gets to his real position [EA(p) · GW(p)]:

Which would have been a fine aspirational statement, but then why include so many papers he wasn’t willing to stand behind?

On that same post someone else [EA(p) · GW(p)] says that they think my concerns are a big deal, and Karthik probably can’t convince them without convincing me. Karthik responds [EA(p) · GW(p)]:

So he’s conceded that his study didn’t show what he claimed. And he’s not really defending the AAFCO standards. But he’s really sure this will work anyway? And I’m the one who won’t update their beliefs.

In a different comment the same someone else [EA(p) · GW(p)] notes a weird incongruity in the paper. Karthik doesn’t respond.

This is the real risk of the bad sources: hours of deep intellectual work to discover that his argument boils down to a theoretical claim the author could have stated at the beginning. “I believe vegan cat food meets these numbers and meeting these numbers is sufficient” honestly isn’t a terrible argument, and I’d have respected it plainly stated, especially since he explicitly calls [EA · GW] for RCTs. Or I would, if he didn’t view those RCTs primarily as a means to prove what he already knows.

Counter-Examples

This commenter [EA(p) · GW(p)] starts out pretty similarly to the others, with a very limited paper implied to have very big implications. But when I push back on the serious limitations of the study, he owns the issues [EA(p) · GW(p)] and says he only ever meant the paper to support a more modest claim (while still believing the big claim he did make?).

Taxing Facebook

When I joined EA Facebook in 2014, it was absolutely hopping. Every week I met new people and had great discussions with them where we both walked away smarter. I’m unclear when this trailed off because I was drifting away from EA at the same time, but let’s say the golden age was definitively over by 2018. Facebook was where I first noticed the pattern with EA vegan advocacy.

Back in 2014 or 2015, Seattle EA watched some horrifying factory farming documentaries, and we were each considering how we should change our diets in light of that new information. We tried to continue the discussion on Facebook, only to have Jacy Reese Anthis (who was not a member of the local group and AFAIK had never been to Seattle) repeatedly insist that the only acceptable compromise was vegetarianism, humane meat doesn’t exist, and he hadn’t heard of health conditions benefiting from animal products so my doctor was wrong (or maybe I made it up?).

I wish I could share screenshots on this, but the comments are gone (I think because the account has been deleted). I’ve included shots of the post and some of my comments (one of which refers to Jacy obstructing an earlier conversation, which I’d forgotten about). A third commenter has been cropped out, but I promise it doesn’t change the context.

(his answer was no, and that either I or my doctor were wrong because Jacy had never heard of any medical issue requiring consumption of animal products)

That conversation went okay. Seattle EA discussed suffering math on different vertebrates, someone brought up eating bugs, Brian Tomasik argued against eating bugs. It was everything an EA conversation should be.

But it never happened again.

Because this kind of thing happened every time animal products, diet, and health came up anywhere on EA Facebook. The commenters weren’t always as aggressive as Jacy, but they added a tremendous amount of cumulative friction. An omnivore would ask if lacto-vegetarianism worked, and the discussion would get derailed by animal advocates insisting you didn’t need milk. Conversations about feeling hungry at EAG inevitably got a bunch of commenters saying they were fine, as if that was a rebuttal.

Jeff Kaufman mirrors his FB posts onto his actual blog, which makes me feel more okay linking to it. In this post he makes a pretty clear point: veganism can be any of cheaper, or healthier, or tastier, but not all at once. He gets a lot of arguments. One person argues that no one thinks that, they just care about animals more.

One vegetarian says they’d like to go vegan but just can’t beat eggs for their mix of convenience, price, macronutrients, and micronutrients. She gets a lot of suggestions for substitutes, all of which flunk on at least one criterion. Jacy Reese Anthis has a deleted comment, which from the reply looks like he asserted the existence of a substitute without listing one.

After a year or two of this, people just stopped talking about anything except the vegan party line on public FB. We’d bitch to each other in private, but that was it. And that’s why, when a new generation of people joined EA and were exposed to the moral argument for veganism, there was no discussion of the practicalities visible to them.

[TBF they probably wouldn’t have seen the conversations on FB anyway, I’m told that’s an old-person thing now. But the silence has extended itself]

Ignoring known falsehoods until they’re a PR problem

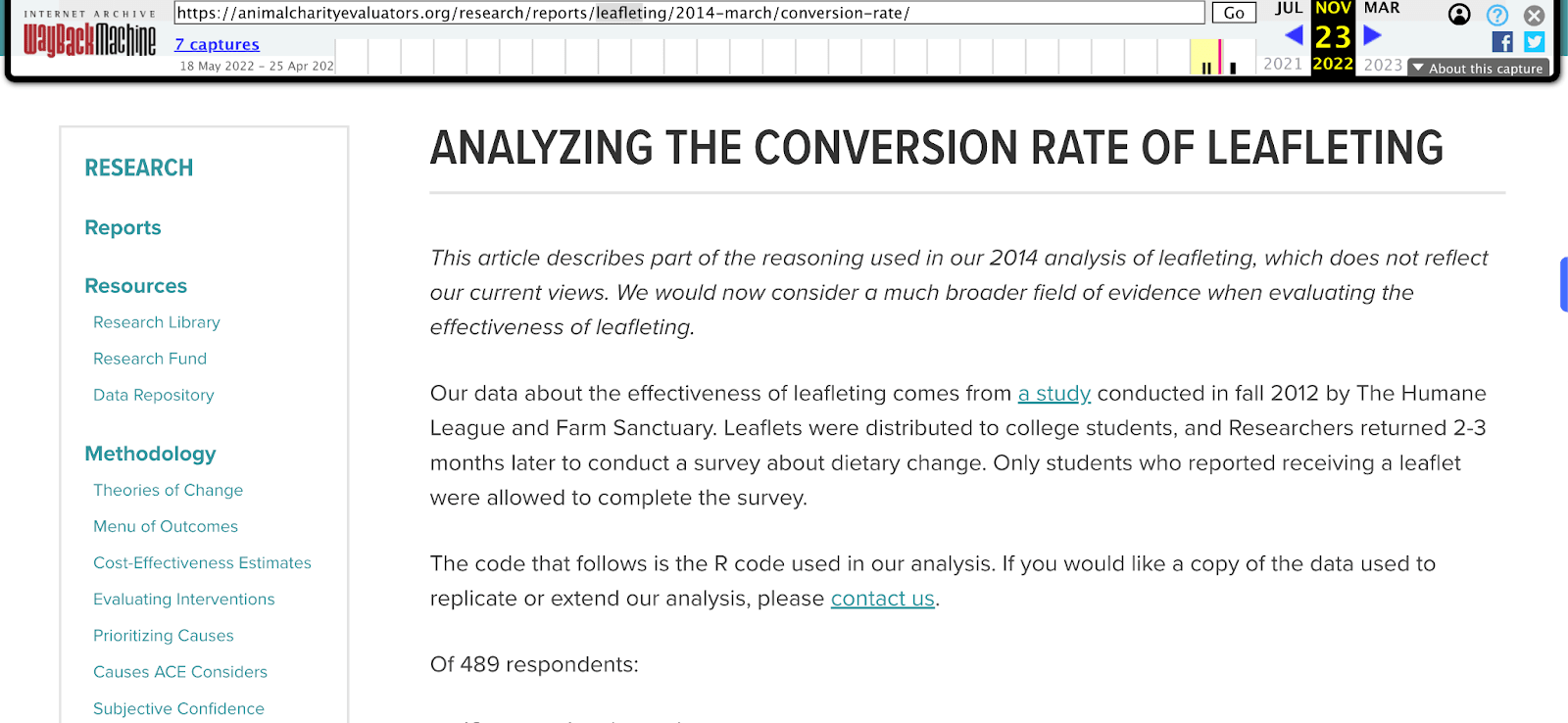

This is old news, but: for many years ACE said leafletting was great. Lots of people (including me and some friends, in 2015) criticized their numbers. This did not seem to have much effect; they’d agree their eval was imperfect and they intended to put up a disclaimer, but it never happened.

In late 2016 a scathing anti-animal-EA piece was published on Medium, making many incendiary accusations, including that the leafleting numbers are made up. I wouldn’t call that post very epistemically virtuous; it was clearly hoping to inflame more than inform. But within a few weeks (months?), ACE put up a disavowal of the leafletting numbers.

I unfortunately can’t look up the original correction or when they put it up; archive.org behaves very weirdly around animalcharityevaluators.org. As I remember it made the page less obviously false, but the disavowal was tepid and not a real fix. Here’s the 2022 version:

There are two options here: ACE was right about leafleting, and caved to public pressure rather than defend their beliefs. Or ACE was wrong about leafleting (and knew they were wrong, because they conceded in private when challenged) but continued to publicly endorse it.

Why I Care

I’ve thought vegan advocates were advocating falsehoods and stifling truthseeking for years. I never bothered to write it up, and generally avoided public discussion, because that sounded like a lot of work for absolutely no benefit. Obviously I wasn’t going to convince the advocates of anything, because finding the truth wasn’t their goal, and everyone else knew it so what did it matter? I was annoyed at them on principle for being wrong and controlling public discussion with unfair means, but there are so many wrong people in the world and I had a lot on my plate.

I should have cared more about the principle.

I’ve talked before about the young Effective Altruists [LW(p) · GW(p)] who converted to veganism with no thought for nutrition, some of whom suffered for it. They trusted effective altruism to have properly screened arguments and tell them what they needed to know. After my posts went up I started getting emails from older EAs who weren’t getting the proper testing either; I didn’t know because I didn’t talk to them in private, and we couldn’t discuss it in public.

Which is the default story of not fighting for truth. You think the consequences are minimal, but you can’t know because the entire problem is that information is being suppressed.

What do EA vegan advocates need to do?

- Acknowledge that nutrition is a multidimensional problem, that veganism is a constraint, and that adding constraints usually makes problems harder, especially if you’re already under several.

- Take responsibility for the nutritional education of vegans you create. This is not just an obligation, it’s an opportunity to improve the lives of people who are on your side. If you genuinely believe veganism can be nutritionally whole, then every person doing it poorly is suffering for your shared cause for no reason.

- You don’t even have to single out veganism. For purposes of this point I’ll accept “All diet switches have transition costs and veganism is no different, and the long term benefits more than compensate”. I don’t think your certainty is merited, and I’ll respect you more if you express uncertainty, but I understand that some situations require short messaging and am willing to allow this compression.

- Be epistemically cooperative [LW · GW], at least within EA spaces. I realize this is a big ask because in the larger world people are often epistemically uncooperative towards you. But obfuscation is a symmetric weapon and anger is not a reason to believe someone. Let’s deescalate this arms race and have both sides be more truthseeking.

What does epistemic cooperation mean?

- Epistemic legibility [LW · GW]. Make your claims and cruxes clear. E.g. “I don’t believe iron deficiency is a problem because everyone knows to take supplements and they always work” instead of “Why are you bothering about iron supplements?”

- Respond to the arguments people actually make, or say why you’re not. Don’t project arguments from one context onto someone else. I realize this one is a big ask, and you have my blessing to go meta and ask work from the other party to make this viable, as long as it’s done explicitly.

- Stop categorically dismissing omnivores’ self-reports. I’m sure many people do overestimate the difficulties of veganism, but that doesn’t mean it’s easy or even possible for everyone.

- A scientific study, no matter how good, does not override [EA(p) · GW(p)] a specific person telling you they felt hungry at a specific time.

- If someone makes a good argument or disproves your source, update accordingly.

- Police your own. If someone makes a false claim or bad citation while advocating veganism, point it out. If someone dismisses a detailed self-report of a failed attempt at veganism, push back.

All Effective Altruists need to stand up for our epistemic commons

Effective Altruism is supposed to mean using evidence and reason to do the most good. A necessary component of that is accurate evidence. All the spreadsheets and moral math in the world mean nothing if the input is corrupted. There can be no consequentialist argument for lying to yourself or allies[1] because without truth you can’t make accurate utility calculations[2]. Garbage in, garbage out.

One of EA’s biggest assets is an environment that rewards truthseeking more than average. Without uniquely strong truthseeking, EA is just another movement of people who are sure they’re right. But high truthseeking environments are fragile, exploiting them is rewarding, and the costs of violating them are distributed and hard to measure. The only way EA’s truthseeking environment has a chance of persisting is if the community makes preserving it a priority. Even when it’s hard, even when it makes people unhappy, and even when the short term rewards of defection are high.

How do we do that? I wish I had a good answer. The problem is complicated and hard to reason about, and I don’t think we understand it enough to fix it. Thus far I’ve focused on vegan advocacy as a case study in destruction of the epistemic commons because its operations are relatively unsophisticated and easy to understand. Next post I’ll be giving more examples from across EA, but those will still have a bias towards legibility and visibility. The real challenge is creating an epistemic immune system that can fight threats we can’t even detect yet.

Acknowledgments

Thanks to the many people I’ve discussed this with over the past few months.

Thanks to Patrick LaVictoire and Aric Floyd for beta reading this post.

Thanks to Lightspeed Grants for funding this work. Note: a previous post referred to my work on nutrition and epistemics as unpaid after a certain point. That was true at the time and I had no reason to believe it wouldn’t stay true, but Lightspeed launched a week after that post and was an unusually good fit so I applied. I haven’t received a check yet but they have committed to the grant so I think it’s fair to count this as paid.

Appendix

Terrible anti-meat article

- The body of the paper is an argument between two people, but the abstract only includes the anti-animal-product side.

- The “saturated fat” and “cholesterol” sections take as a given that any amount of these is bad, without quantifying or saying why.

- The “heme iron” section does explain why excess iron is bad, but ignores the risks of too little. Maybe he also forgot women exist?

- The lactose section does cite two papers, one of which does not support his claim, and the other of which is focused on mice who received transplants. It probably has a bunch of problems but it was too much work to check, and even if it doesn’t, it’s about a niche group of mice.

- The next section claims milk contains estrogen and thus raises circulating estrogen, which increases cancer risk.

- It cites one paper supporting a link with breast cancer. That paper found a correlation with high fat but not low fat dairy, and the correlation was not statistically significant.

- It cites another paper saying dairy impairs sperm quality. This study was done at a fertility clinic, so will miss men with healthy sperm counts and is thus worthless. Ignoring that, it found a correlation of dairy fat with low sperm count, but low-fat dairy was associated with higher sperm count. Again, among men with impaired fertility.

- The “feces” section says that raw meat contains harmful bacteria (true), but nothing about how that translates to the risks of cooked meat.

That’s the first five subsections. The next set maybe look better sourced, but I can’t imagine them being good enough to redeem the paper. I am less convinced of the link between excess meat and health issues than I was before I read it, because surely if the claim was easy to prove the paper would have better supporting evidence, or the EA Forum commenter would have picked a better source.

[Note: I didn’t bother reading the pro-meat section. It may also be terrible, but this does not affect my position.]

- “Are you saying I can’t lie to Nazis about the contents of my attic?” No more so than you’re banned from murdering them or slashing their tires. Like, you should probably think hard about how it fits into your strategy, but I assumed “yourself or allies” excluded Nazis for everyone reading this.

“Doesn’t that make the definition of enemies extremely morally load bearing?” It reflects that fact, yes.

“So vegan advocates can morally lie as long as it’s to people they consider enemies?” I think this is, at a minimum, defensible and morally consistent. In some cases I think it’s admirable, such as lying to get access to a slaughterhouse in order to take horrifying videos. It’s a declaration of war, but I assume vegan advocates are proud to declare the meat industry their enemy.

- I’ll allow that it’s conceptually possible to make deontological or virtue ethics arguments for lying to yourself or allies, but it’s difficult, and the arguments are narrow and/or wrong. Accurate beliefs turn out to be critical to getting good outcomes in all kinds of situations.

Edits

You will notice a few edits in this post, which are marked with the edit date. The original text is struck through.

When I initially published this post on 2023-09-28, several images failed to copy over from the google doc to the shitty WordPress editor. These were fixed within a few hours.

I tried to link to sources for every screenshot (except the Facebook ones). On 2023-10-05 I realized that a lot of the links were missing (but not all, which is weird) and manually added them back in. In the process I found two screenshots that never had links, even in the google doc, and fixed those. Halfway through this process the already shitty editor flat out refused to add links to any more images. This post is apparently already too big for WordPress to handle, so every attempted action took at least 60 seconds, and I was constantly afraid I was going to make things worse, so for some images the link is in the surrounding text.

If anyone knows of a blogging website that will gracefully accept cut and paste from google docs, please let me know. That is literally all an editor takes to be a success in my book and last time I checked I could not find a single site that managed it.

250 comments

Comments sorted by top scores.

comment by Ninety-Three · 2023-09-29T01:01:43.278Z · LW(p) · GW(p)

The other reason vegan advocates should care about the truth is that if you keep lying, people will notice and stop trusting you. Case in point, I am not a vegan and I would describe my epistemic status as "not really open to persuasion" because I long ago noticed exactly the dynamics this post describes and concluded that I would be a fool to believe anything a vegan advocate told me. I could rigorously check every fact presented but that takes forever, I'd rather just keep eating meat and spend my time in an epistemic environment that hasn't declared war on me.

Replies from: tailcalled, adamzerner, jacques-thibodeau, Slapstick

↑ comment by tailcalled · 2023-09-29T06:37:59.804Z · LW(p) · GW(p)

My impression is that while vegans are not truth-seeking, carnists are also not truth-seeking. This includes making ag-gag laws, putting pictures of free animals on packages containing factory farmed animal flesh, denying that animals have feelings and can experience pain using nonsense arguments, hiding information about factory farming from children, etc.

So I guess the question is whether you prefer being in an epistemic environment that has declared war on humans or an epistemic environment that has declared war on farm animals. And I suppose as a human it's easier to be in the latter, as long as you don't mind hiring people to torture animals for your pleasure.

Edit/clarification: I don't mean that you can't choose to figure it out in more detail, only that if you do give up on figuring it out in more detail, you're more constrained. [LW(p) · GW(p)]

Replies from: dr_s, ariel-kwiatkowski, Serine, tailcalled, adamzerner, ztzuliios

↑ comment by dr_s · 2023-09-30T06:01:09.876Z · LW(p) · GW(p)

putting pictures of free animals on packages containing factory farmed animal flesh

Well, yes, that's called marketing, it's like the antithesis of truth seeking.

The cure to hypocrisy is not more hypocrisy and lies but of opposite sign: that's the kind of naive first order consequentialism that leads people to cynicism instead. The fundamental problem is that out of fear that people would reasonably go for a compromise (e.g. keep eating meat but less of it and only from free range animals) some vegans decide to just pile on the arguments, true or false, until anyone who believed them all and had a minimum of sense would go vegan instantly. But that completely denies the agency and moral ability of everyone else, and underestimates the possibility that you may be wrong. As a general rule, "my moral calculus is correct, therefore I will skew the data so that everyone else comes to the same conclusions as me" is a bad principle.

Replies from: tailcalled

↑ comment by tailcalled · 2023-09-30T07:27:56.949Z · LW(p) · GW(p)

I agree in principle, though someone has to actually create a community of people who track the truth in order for this to be effective and not be outcompeted by other communities. When working individually, people don't have the resources to untangle the deception in society due to its scale.

↑ comment by kwiat.dev (ariel-kwiatkowski) · 2023-09-29T09:14:50.961Z · LW(p) · GW(p)

There's a pretty significant difference here in my view -- "carnists" are not a coherent group, not an ideology, they do not have an agenda (unless we're talking about some very specific industry lobbyists who no doubt exist). They're just people who don't care and eat meat.

Ideological vegans (i.e. not people who just happen to not eat meat, but don't really care either way) are a very specific ideological group, and especially if we qualify them like in this post ("EA vegan advocates"), we can talk about their collective traits.

Replies from: pktechgirl, tailcalled, Green_Swan, None

↑ comment by Elizabeth (pktechgirl) · 2023-09-29T19:36:52.407Z · LW(p) · GW(p)

TBF, the meat/dairy/egg industries are specific groups of people who work pretty hard to increase animal product consumption, and are much better resourced than vegan advocates. I can understand why animal advocacy would develop some pretty aggressive norms in the face of that, and for that reason I consider it kind of beside the point to go after them in the wider world. It would basically be demanding unilateral disarmament from the weaker side.

But the fact that the wider world is so confused there's no point in pushing for truth is the point. EA needs to stay better than that, and part of that is deescalating the arms race when you're inside its boundaries.

Replies from: tailcalled

↑ comment by tailcalled · 2023-09-29T19:56:23.633Z · LW(p) · GW(p)

But the fact that the wider world is so confused there's no point in pushing for truth is the point. EA needs to stay better than that, and part of that is deescalating the arms race when you're inside its boundaries.

Agree with this. I mean I'm definitely not pushing back against your claims, I'm just pointing out the problem seems bigger than commonly understood.

↑ comment by tailcalled · 2023-09-29T19:54:57.124Z · LW(p) · GW(p)

Could you expand on why you think that it makes a significant difference?

- E.g. if the goal is to model what epistemic distortions you might face, or to suggest directions of change for fewer distortions, then coherence is only of limited concern (a coherent group might be easier to change, but on the other hand it might also more easily coordinate to oppose change).

- I'm not sure why you say they are not an ideology, at least under my model of ideology that I have developed for other purposes, they fit the definition (i.e. I believe carnism involves a set of correlated beliefs about life and society that fit together).

- Also not sure what you mean by carnists not having an agenda, in my experience most carnists have an agenda of wanting to eat lots of cheap delicious animal flesh.

↑ comment by Rebecca (bec-hawk) · 2023-09-30T18:58:20.033Z · LW(p) · GW(p)

Could you clarify who you are defining as carnists?

Replies from: tailcalled

↑ comment by tailcalled · 2023-09-30T19:40:26.896Z · LW(p) · GW(p)

I tend to think of ideology as a continuum, rather than a strict binary. Like people tend to have varying degrees of belief and trust in the sides of a conflict, and various unique factors influencing their views, and this leads to a lot of shades of nuance that can't really be captured with a binary carnist/not-carnist definition.

But I think there are still some correlated beliefs where you could e.g. take their first principal component as an operationalization of carnism. Some beliefs that might go into this, many of which I have encountered from carnists:

- "People should be allowed to freely choose whether they want to eat factory-farmed meat or not."

- "Animals cannot suffer in any way that matters."

- "One should take an evolutionary perspective and realize that factory farming is actually good for animals. After all, if not for humans putting a lot of effort into farming them, they wouldn't even exist at their current population levels."

- "People who do enough good things out of their own charity deserve to eat animals without concerning themselves with the moral implications."

- "People who design packaging for animal products ought to make it look aesthetically pleasing and comfortable."

- "It is offensive and unreasonable for people to claim that meat-eating is a horribly harmful habit."

- "Animals are made to be used by humans."

- "Consuming animal products like meat or milk is healthier than being strictly vegan."

One could make a defense of some of the statements. For instance Elizabeth has made a to-me convincing defense of the last statement. I don't think this is a bug in the definition of carnism, it just shows that some carnist beliefs can be good and true. One ought to be able to admit that ideology is real and matters while also being able to recognize that it's not a black-and-white issue.

↑ comment by Jacob Watts (Green_Swan) · 2023-10-03T02:28:42.616Z · LW(p) · GW(p)

While I agree that there are notable differences between "vegans" and "carnists" in terms of group dynamics, I do not think that necessarily disagrees with the idea that carnists are anti-truthseeking.

"carnists" are not a coherent group, not an ideology, they do not have an agenda (unless we're talking about some very specific industry lobbyists who no doubt exist). They're just people who don't care and eat meat.

It seems untrue that because carnists are not an organized physical group that has meetings and such, they are thereby incapable of having shared norms or ideas/memes. I think in some contexts it can make sense/be useful to refer to a group of people who are not coherent in the sense of explicitly "working together" or having shared newsletters based around a subject or whatever. In some cases, it can make sense to refer to those people's ideologies/norms.

Also, I disagree with the idea that carnists are inherently neutral on the subject of animals/meat. That is, they don't "not care". In general, they actively want to eat meat and would be against things that would stop this. That's not "not caring"; it is "having an agenda", just not one that opposes the current status quo. The fact that being pro-meat and "okay with factory farming" is the more dominant stance/assumed default in our current status quo doesn't mean that it isn't a legitimate position/belief that people could be said to hold. There are many examples of other memetic environments throughout history where the assumed default may not have looked like a "stance" or an "agenda" to the people who were used to it, but nonetheless represented certain ideological claims.

I don't think something only becomes an "ideology" when it disagrees with the current dominant cultural ideas; some things that are culturally common and baked into people from birth can still absolutely be "ideology" in the way I am used to using it. If we disagree on that, then perhaps we could use a different term?

If nothing else, carnists share the ideological assumption that "eating meat is okay". In practice, they often also share ideas about the surrounding philosophical questions and attitudes. I don't think it is beyond the pale to say that they could share norms around truth-seeking as it relates to these questions and attitudes. It feels unnecessarily dismissive and perhaps implicitly status quoist to assume that: as a dominant, implicit meme of our culture "carnism" must be "neutral" and therefore does not come with/correlate with any norms surrounding how people think about/process questions related to animals/meat.

Carnism comes with as much ideology as veganism even if people aren't as explicit in presenting it or if the typical carnist hasn't put as much thought into it.

I do not really have any experience advocating publicly for veganism and I wouldn't really know about which specific epistemic failure modes are common among carnists for these sorts of conversations, but I have seen plenty of people bend themselves out of shape preserving their own comfort and status quo, so it really doesn't seem like a stretch to imagine that epistemic maladies may tend to present among carnists when the question of veganism comes up.

For one thing, I have personally seen carnists respond in intentionally hostile ways towards vegans/vegan messaging on several occasions. Partially this is because they see it as a threat to their ideas or their way of life, and partially this is because veganism is a designated punching bag that you're allowed to insult in a lot of places. Often, these attacks draw on shared ideas about veganism/animals/morality that are common between "carnists".

So, while I agree that there are very different group dynamics, I don't think it makes sense to say that vegans hold ideologies and are capable of exhibiting certain epistemic behaviors, but that carnists, by virtue of not being a sufficiently coherent collection of individuals, could not have the same labels applied to them.

↑ comment by [deleted] · 2023-10-06T23:59:56.647Z · LW(p) · GW(p)

(edit: idk if i endorse comments like this, i was really stressed from the things being said in the comments here)

People who fund the torture of animals are not a coherent group, not an ideology, they do not have an agenda. People who don't fund the torture of animals are a coherent group, an ideology, they have an agenda.

People who keep other people enslaved are not a coherent group, not an ideology, they do not have an agenda. People who seek to end slavery are a coherent group, an ideology, they have an agenda.

Normal people like me are not a coherent group, not an ideology, we do not have an agenda.

Atypicals like you are a coherent group, an ideology, you have an agenda.

maybe a future, better, post-singularity version of yourself will understand how terribly alienating statements like this are. maybe that person will see just how out-of-frame you have kept the suffering of other life forms to think this way.

my agenda is that of a confused, tortured animal, crying out in pain. it is, at most, a convulsive reaction. in desperation, it grasps onto 'instrumental rationality' like the paws of one being pulled into rotating blades flail around them, looking for a hold to force themself back.

and it finds nothing, the suffering persists until the day the world ends.

Replies from: ariel-kwiatkowski

↑ comment by kwiat.dev (ariel-kwiatkowski) · 2023-10-07T13:40:26.520Z · LW(p) · GW(p)

Jesus christ, chill. I don't like playing into the meme of "that's why people don't like vegans", but that's exactly why.

And posting something insane followed by an edit of "idk if I endorse comments like this" has got to be the most online rationalist thing ever.

Replies from: None

↑ comment by [deleted] · 2023-10-10T07:30:39.880Z · LW(p) · GW(p)

i do endorse the actual meaning of what i wrote. it is not "insane" and to call it that is callous. i added the edit because i wasn't sure if expressions of stress are productive. i think there's a case to be made that they are when it clearly stems from some ongoing discursive pattern, so that others can know the pain that their words cause. especially given this hostile reaction.

---

deleted the rest of this. there's no point for two alignment researchers to be fighting over oldworld violence. i hope this will make sense looking back.

↑ comment by Serine · 2023-10-08T11:26:50.301Z · LW(p) · GW(p)

The line about "carnists" strikes me as outgroup homogeneity, conceptual gerrymandering, The Worst Argument In The World [LW · GW] - call it what you want, but it is something rationalists should have antibodies against.

Specifically, equivocating between "carnists [meat industry lobbyists]" and "carnists [EA non-vegans]" seems to me like known anti-truthseeking behavior.

So the question, as I see you posing, is whether NinetyThree prefers being in an epistemic environment with people who care about epistemic truthseeking (EA non-vegans) or with people for whom your best defense is that they're no worse than meat industry lobbyists.

Replies from: tailcalled

↑ comment by tailcalled · 2023-10-08T11:30:12.007Z · LW(p) · GW(p)

I think my point would be empirically supported; we can try to set up a survey and run a factor analysis if you doubt it.

Edit: just to clarify I'm not gonna run the factor analysis unless someone who doubts the validity of the category comes by to cooperate, because I'm busy and expect there'd be a lot of goalpost moving that I don't have time to deal with if I did it without pre-approval from someone who doesn't buy it.

↑ comment by tailcalled · 2023-09-29T07:11:58.170Z · LW(p) · GW(p)

Ok I'm getting downvoted to oblivion because of this, so let me clarify:

So I guess the question is whether you prefer being in an epistemic environment that has declared war on humans or an epistemic environment that has declared war on farm animals.

If, like NinetyThree, you decide to give up on untangling the question for yourself because of all the lying ("I would describe my epistemic status as "not really open to persuasion""), then you still have to make decisions, which in practice means following some side in the conflict, and the most common side is the carnist side which has the problems I mention.

I don't want to be in a situation where I have to give up on untangling the question (see my top-level comment proposing a research community), but if I'm being honest I can't exactly say that it's invalid for NinetyThree to do so.

Replies from: bec-hawk

↑ comment by Rebecca (bec-hawk) · 2023-09-30T19:13:00.827Z · LW(p) · GW(p)

I understood NinetyThree to be talking about vegans lying about issues of health (as Elizabeth was also focusing on), not about the facts of animal suffering. If you agree with the arguments on the animal cruelty side and your uncertainty is focused on the health effects on you of a vegan diet vs your current one (which you have 1st hand data on), it doesn’t really matter what the meat industry is saying, as that wasn’t a factor in the first place.

Replies from: tailcalled

↑ comment by tailcalled · 2023-10-05T09:31:01.121Z · LW(p) · GW(p)

Maybe. I pattern-matched it this way because I had previously been discussing psychological sex differences with Ninety-Three on discord, where he adopted the HBD views on them due to a perception that psychologists were biased, but he wasn't interested in making arguments or in me doing followup studies to test it. So I assumed a similar thing was going on here with respect to eating animals.

↑ comment by Adam Zerner (adamzerner) · 2023-09-29T07:26:15.531Z · LW(p) · GW(p)

I don't agree with the downvoting. The first paragraph sounds to me like a not only fair, but good point. The first sentence in the second paragraph doesn't really seem true to me though.

Replies from: tailcalled

↑ comment by tailcalled · 2023-09-29T07:28:06.512Z · LW(p) · GW(p)

Does it also not seem true in the context of my followup clarification?

Replies from: adamzerner

↑ comment by Adam Zerner (adamzerner) · 2023-09-29T07:36:15.086Z · LW(p) · GW(p)

Yeah, it still doesn't seem true even given the followup clarification.

Well, depending on what you actually mean. In the original excerpt, you're saying that the question is whether you want to be in epistemic environment A or epistemic environment B. But in your followup clarification, you talk about the need to decide on something. I agree that you do need to decide on something (~carnist or vegan). I don't think that means you necessarily have to be in one of those two epistemic environments you mention. But I also charitably suspect that you don't actually think that you necessarily have to be in one of those two specific epistemic environments and just misspoke.

Replies from: tailcalled↑ comment by tailcalled · 2023-09-29T07:59:44.281Z · LW(p) · GW(p)

In the followup, I admit you don't have to choose as long as you don't give up on untangling the question. So like I'm implying that there's multiple options such as:

- Try to figure it out (NinetyThree rejects this, "not really open to persuasion")

- Adopt the carnist side (I think NinetyThree probably broadly does this though likely with exceptions)

- Adopt the vegan side (NinetyThree rejects this)

Though I suppose you are right that there are also lots of other nuanced options that I haven't acknowledged, such as "decide you are uncertain between the sides, and e.g. use utility weights to manage risk while exploiting opportunities", which isn't really the same as "try to figure it out". Not sure if that's what you mean; another option would be that e.g. I have a broader view of what "try to figure it out" means than you do, or similar (though what really matters for the literal truth of my comment is what NinetyThree's view is). Or maybe you mean that there are additional sides that could be adopted? (I meant to hint at that possibility with phrasings like "the most common side", but I suppose that could also be interpreted to just be acknowledging the vegan side.) Or maybe it's just "all of the above"?

I do genuinely think that there is value in thinking of it as a 2D space of tradeoffs for cheap epistemics <-> strong epistemics and pro animal <-> pro human (realistically one could also put in the environment too, and realistically on the cheap epistemics side it's probably anti human <-> anti animal). I agree that my original comment lacked nuance wrt the ways one could exist within that tradeoff, though I am unsure to what extent your objection is about the tradeoff framing vs the nuance in the ways one can exist in it.

Replies from: adamzerner↑ comment by Adam Zerner (adamzerner) · 2023-09-29T17:56:51.103Z · LW(p) · GW(p)

In the followup, I admit you don't have to choose as long as you don't give up on untangling the question.

Ah, I kinda overlooked this. My bad.

In general my position is now that:

- I'm a little confused.

- I think what you wrote is probably fine.

- Think you probably could have been more clear about what you initially wrote.

- Think it's totally fine to not be perfect in what you originally wrote.

- Feel pretty charitable. I'm sure that what you truly meant is something pretty reasonable.

- Think downvoters were probably triggered and were being uncharitable.

- Am not interested in spending much more time on this.

↑ comment by ztzuliios · 2023-10-02T20:36:18.103Z · LW(p) · GW(p)

In general, committing to any stance as a personal constant (making it a "part of your identity") is antithetical to truthseeking. It certainly imposes a constraint on truthseeking that makes the problem harder.

But, if you share that stance with someone else, you won't tend to see it. You'll just see the correctness of your own stance. Being able to correctly reason around this is a hard-mode problem.

While you can speak about specific spectra of stances (vegan-carnist, and others), in reality, there are multiple spectra in play at any given time (the one I see the most is liberal-radical but there are also others). This leads to truthseeking constraints, or in a word biases, in cross-cutting ways. This seems to play out in the interplay of all the different people committing all the different sins called out in the OP. I think this is not unique to veganism at all and in fact plays out in virtually all similar spaces and contests. You always have to average out the ideological bias from a community.

There is no such thing as an epistemic environment that has not declared war on you. There can be no peace. This is hard mode and I consider the OP here to be another restatement of the generally accepted principle that this kind of discussion is hard mode / mindkilling.

This is why I'm highly skeptical of claims like the comment-grandparent. Everyone is lying, and it doesn't matter much whether the lying is intentional or implicit. There is no such thing as a political ideology that is fully truth-seeking. That is a contradiction in terms. There is also no such thing as a fully neutral political ideology or political/ethical stance; everyone has a point of view. I'm not sure whether the vegans are in fact worse than the carnists on this. One side certainly has a significant amount of status-quo bias behind it. The same can be said about many other things.

Just to be explicit, my point of view as it relates to these issues is vegan/radical. I became vegan at roughly the same time I became aware of rationalism, but for other reasons. When I went vegan, the requirement for B12 supplementation was commonly discussed (outside the rationalist community, which was not very widely vegan at the time), mostly because "you get it from supplements that get it from dirt" was the stock counterargument to "but no B12 when vegan."

Replies from: tailcalled↑ comment by tailcalled · 2023-10-08T13:33:11.140Z · LW(p) · GW(p)

I don't think this is right, or at least it doesn't hit the crux.

People on a vegan diet should in a utopian society be the ones who are most interested in truth about the nutritional challenges on a vegan diet, as they are the ones who face the consequences. The fact that they aren't reflects the fact that they are not optimizing for living their own life well, but instead for convincing others of veganism.

Marketing like this is the simplest (and thus most common?) way for ideologies to keep themselves alive. However, it's not clear that it's the only option. If an ideology is excellent at truthseeking, then this would presumably by itself be a reason to adopt it, as it would have a lot of potential to make you stronger.

Rationalism is in theory supposed to be this. In practice, rationalism kind of sucks at it, I think because it's hard and people aren't funding it much and maybe also all the best rationalists start working in AI safety or something.

There are some complications to this story though. As you say, there is no such thing as an epistemic environment that has not (in a metaphorical sense) declared war on you. Everyone does marketing, and so everyone perceives full truthseeking as a threat, and so you'd make a lot of enemies through doing this. A compromise would be a conspiracy which does truthseeking in private to avoid punishment, but such a conspiracy is hardly an ideology, and also it feels pretty suspicious to organize at scale.

↑ comment by Adam Zerner (adamzerner) · 2023-09-29T06:08:15.417Z · LW(p) · GW(p)

The other reason vegan advocates should care about the truth is that if you keep lying, people will notice and stop trusting you.

I hear ya, but I think this is missing something important. Basically, I'm thinking of the post Ends Don't Justify Means (Among Humans) [LW · GW].[1][2]

Doing things that are virtuous tends to lead to good outcomes. Doing things that aren't virtuous tends to lead to bad outcomes. For you, and for others. It's hard to predict what those outcomes -- good and bad -- actually are. If you were a perfect Bayesian with unlimited information, time and computing power, then yes, go ahead and do the consequentialist calculus. But for humans, we are lacking in those things. Enough so that consequentialist calculus frequently becomes challenging, and the good track record of virtue becomes a huge consideration.

So, I agree with you that "lying leads to mistrust" is one of the reasons why vegan advocates shouldn't lie. But I think that the main reason they shouldn't lie is simply that lying has a pretty bad track record.

And then another huge consideration is that people who come up with reasons why they, at least in this particular circumstance, are a special snowflake and are justified in lying, frequently are deluding themselves.[3]

- ^

Well, that post is about ethics. And I think the conversation we're having isn't really limited to ethics. It's more about just, pragmatically, what should the EA community do if they want to win.

- ^

Here's my slightly different(?) take, if anyone's interested: Reflective Consequentialism [LW · GW].

- ^

I cringe at how applause light-y this comment is. Please don't upvote if you feel like you might be non-trivially reacting to an applause light [LW · GW].

↑ comment by Rebecca (bec-hawk) · 2023-09-30T19:27:05.642Z · LW(p) · GW(p)

I understood the original comment to be making essentially the same point you’re making - that lying has a bad track record, where ‘lying has a bad track record of causing mistrust’ is a case of this. In what way do you see them as distinct reasons?

Replies from: adamzerner↑ comment by Adam Zerner (adamzerner) · 2023-09-30T19:41:04.809Z · LW(p) · GW(p)

I see them as distinct because what I'm saying is that lying generally tends to lead to bad outcomes (for both the liar and society at large) whereas mistrust specifically is just one component of the bad outcomes.

Other components that come to my mind:

- People don't end up with accurate information.

- Expectations that people will cooperate (different from "tell you the truth") go down.

- Expectations that people will do things because they are virtuous go down.

But a big thing here is that it's difficult to know why exactly it will lead to bad outcomes. The gears are hard to model. However, I think there's solid evidence that it leads to bad outcomes.

↑ comment by jacquesthibs (jacques-thibodeau) · 2023-09-29T07:39:02.728Z · LW(p) · GW(p)

I personally became vegetarian after being annoyed that vegans weren’t truth-seeking (like most groups of people, tbc). But I can totally see why others would be turned off from veganism completely after being lied to (even if people spread nutrition misinformation about whatever meat-eating diet they are on too).

I became vegetarian even though I stopped trusting what vegans said about nutrition and did my own research. Luckily that’s something I was interested in, because I wouldn’t expect others to bother reading papers and such to have a healthy diet.

(Note: I’m now considering eating meat again, but only from ethical farms and game meat, because I now believe those lives are good; I’m really only against some forms of factory farming. But this kind of discussion is hard to have with other veg^ns.)

Replies from: tristan-williams, adamzerner↑ comment by Tristan Williams (tristan-williams) · 2023-09-29T20:28:29.636Z · LW(p) · GW(p)

Did you go vegetarian because you thought it was specifically healthier than going vegan?

Replies from: jacques-thibodeau↑ comment by jacquesthibs (jacques-thibodeau) · 2023-09-29T21:12:25.129Z · LW(p) · GW(p)

Yes and no. No because I figured most of the reduction in suffering came from not eating meat and eggs (I stopped eating eggs even though most vegetarians do). So I felt it was a good spot to land and not be too much effort for me.

Replies from: tristan-williams↑ comment by Tristan Williams (tristan-williams) · 2023-09-30T11:43:52.347Z · LW(p) · GW(p)

Ah okay cool, so you have a certain threshold for harm and just don't consume anything above that. I've found this approach really interesting and have recommended others against it because I've worried about its sustainability, but do you think it's been a good path for you?

Replies from: jacques-thibodeau, pktechgirl, DonyChristie↑ comment by jacquesthibs (jacques-thibodeau) · 2023-09-30T13:49:11.028Z · LW(p) · GW(p)

I’m not sure why you’d think it’s less sustainable than veganism. In my mind, it’s effective because it is sustainable and reduces most of the suffering. Just like how EA tries to be effective (and sustainable) by not telling people to donate massive amounts of their income (just a small-ish percentage that works for them to the most effective charities), I see my approach as the same. It’s the sweet-spot between reducing suffering and sustainability (for me).

Replies from: tristan-williams↑ comment by Tristan Williams (tristan-williams) · 2023-10-02T15:30:44.030Z · LW(p) · GW(p)

See below if you'd like an in-depth look at my way of thinking, but I definitely see the analogy and suppose I just think of it a bit differently myself. Can I ask how long you've been vegetarian? And how you've come to the decision as to which animals' lives you think are net positive?

Replies from: jacques-thibodeau↑ comment by jacquesthibs (jacques-thibodeau) · 2023-10-03T03:14:29.701Z · LW(p) · GW(p)

5 and a half years. Didn’t do it sooner because I was concerned about nutrition and don’t trust vegans/vegetarians to give truthful advice. I used various statistics on number of deaths, adjusted for sentience, and more. Looked at articles like this: https://www.vox.com/2015/7/31/9067651/eggs-chicken-effective-altruism

↑ comment by Elizabeth (pktechgirl) · 2023-09-30T17:02:25.791Z · LW(p) · GW(p)

I've found this approach really interesting and have recommended others against it because I've worried about its sustainability,

This argument came up a lot during the Facebook debate days. Could you say more about why you believe it?

Replies from: tristan-williams↑ comment by Tristan Williams (tristan-williams) · 2023-10-02T15:27:10.475Z · LW(p) · GW(p)

Yeah sure. I would need a full post to explain myself, but basically I think that what seems to be really important when going vegan is standing in a certain sort of loving relationship to animals, one that isn't grounded in utility but instead a strong (but basic) appreciation and valuing of the other. But let me step back for a minute.