[$20K in Prizes] AI Safety Arguments Competition

post by Dan H (dan-hendrycks), Kevin Liu (Pneumaticat), ozhang (oliver-zhang), TW123 (ThomasWoodside), Sidney Hough (Sidney) · 2022-04-26T16:13:16.351Z · LW · GW · 518 commentsContents

Objectives of the arguments Competition details Paragraphs One-liners Conditions of the prizes None 528 comments

TL;DR—We’re distributing $20k in total as prizes for submissions that make effective arguments for the importance of AI safety. The goal is to generate short-form content for outreach to policymakers, management at tech companies, and ML researchers. This competition will be followed by another competition in around a month that focuses on long-form content.

This competition is for short-form arguments for the importance of AI safety. For the competition for distillations of posts, papers, and research agendas, see the Distillation Contest [? · GW].

Objectives of the arguments

To mitigate AI risk, it’s essential that we convince relevant stakeholders sooner rather than later. To this end, we are initiating a pair of competitions to build effective arguments for a range of audiences. In particular, our audiences include policymakers, tech executives, and ML researchers.

- Policymakers may be unfamiliar with the latest advances in machine learning, and may not have the technical background necessary to understand some/most of the details. Instead, they may focus on societal implications of AI as well as which policies are useful.

- Tech executives are likely aware of the latest technology, but lack a mechanistic understanding. They may come from technical backgrounds and are likely highly educated. They will likely be reading with an eye towards how these arguments concretely affect which projects they fund and who they hire.

- Machine learning researchers can be assumed to have high familiarity with the state of the art in deep learning. They may have previously encountered talk of x-risk but were not compelled to act. They may want to know how the arguments could affect what they should be researching.

We’d like arguments to be written for at least one of the three audiences listed above. Some arguments could speak to multiple audiences, but we expect that trying to speak to all at once could be difficult. After the competition ends, we will test arguments with each audience and collect feedback. We’ll also compile top submissions into a public repository for the benefit of the x-risk community.

Note that we are not interested in arguments for very specific technical strategies towards safety. We are simply looking for sound arguments that AI risk is real and important.

Competition details

The present competition addresses shorter arguments (paragraphs and one-liners) with a total prize pool of $20K. The prizes will be split among, roughly, 20-40 winning submissions. Please feel free to make numerous submissions and try your hand at motivating various different risk factors; it's possible that an individual with multiple great submissions could win a good fraction of the prize. The prize distribution will be determined by effectiveness and epistemic soundness as judged by us. Arguments must not be misleading.

To submit an entry:

- Please leave a comment on this post (or submit a response to this form), including:

- The original source, if not original.

- If the entry contains factual claims, a source for the factual claims.

- The intended audience(s) (one or more of the audiences listed above).

- In addition, feel free to adapt another user’s comment by leaving a reply—prizes will be awarded based on the significance and novelty of the adaptation.

Note that if two entries are extremely similar, we will, by default, give credit to the entry which was posted earlier. Please do not submit multiple entries in one comment; if you want to submit multiple entries, make multiple comments.

The first competition will run until May 27th, 11:59 PT. In around a month, we’ll release a second competition for generating longer “AI risk executive summaries'' (more details to come). If you win an award, we will contact you via your forum account or email.

Paragraphs

We are soliciting argumentative paragraphs (of any length) that build intuitive and compelling explanations of AI existential risk.

- Paragraphs could cover various hazards and failure modes, such as weaponized AI, loss of autonomy and enfeeblement, objective misspecification, value lock-in, emergent goals, power-seeking AI, and so on.

- Paragraphs could make points about the philosophical or moral nature of x-risk.

- Paragraphs could be counterarguments to common misconceptions.

- Paragraphs could use analogies, imagery, or inductive examples.

- Paragraphs could contain quotes from intellectuals: “If we continue to accumulate only power and not wisdom, we will surely destroy ourselves” (Carl Sagan), etc.

For a collection of existing paragraphs that submissions should try to do better than, see here.

Paragraphs need not be wholly original. If a paragraph was written by or adapted from somebody else, you must cite the original source. We may provide a prize to the original author as well as the person who brought it to our attention.

One-liners

Effective one-liners are statements (25 words or fewer) that make memorable, “resounding” points about safety. Here are some (unrefined) examples just to give an idea:

- Vladimir Putin said that whoever leads in AI development will become “the ruler of the world.” (source for quote)

- Inventing machines that are smarter than us is playing with fire.

- Intelligence is power: we have total control of the fate of gorillas, not because we are stronger but because we are smarter. (based on Russell)

One-liners need not be full sentences; they might be evocative phrases or slogans. As with paragraphs, they can be arguments about the nature of x-risk or counterarguments to misconceptions. They do not need to be novel as long as you cite the original source.

Conditions of the prizes

If you accept a prize, you consent to the addition of your submission to the public domain. We expect that top paragraphs and one-liners will be collected into executive summaries in the future. After some experimentation with target audiences, the arguments will be used for various outreach projects.

(We thank the Future Fund regrant program and Yo Shavit and Mantas Mazeika for earlier discussions.)

In short, make a submission by leaving a comment with a paragraph or one-liner. Feel free to enter multiple submissions. In around a month we'll divide 20K to award the best submissions.

518 comments

Comments sorted by top scores.

comment by johnswentworth · 2022-04-26T17:55:00.800Z · LW(p) · GW(p)

I'd like to complain that this project sounds epistemically absolutely awful. It's offering money for arguments explicitly optimized to be convincing (rather than true), it offers money only for prizes making one particular side of the case (i.e. no money for arguments that AI risk is no big deal), and to top it off it's explicitly asking for one-liners.

I understand that it is plausibly worth doing regardless, but man, it feels so wrong having this on LessWrong.

Replies from: not-relevant, lc, conor-sullivan, Sidney, Davidmanheim↑ comment by Not Relevant (not-relevant) · 2022-04-26T20:02:46.000Z · LW(p) · GW(p)

If the world is literally ending, and political persuasion seems on the critical path to preventing that, and rationality-based political persuasion has thus far failed while the empirical track record of persuasion for its own sake is far superior, and most of the people most familiar with articulating AI risk arguments are on LW/AF, is it not the rational thing to do to post this here?

I understand wanting to uphold community norms, but this strikes me as in a separate category from “posts on the details of AI risk”. I don’t see why this can’t also be permitted.

Replies from: johnswentworth, tamgent↑ comment by johnswentworth · 2022-04-26T20:26:44.232Z · LW(p) · GW(p)

TBC, I'm not saying the contest shouldn't be posted here. When something with downsides is nonetheless worthwhile, complaining about it but then going ahead with it is often the right response - we want there to be enough mild stigma against this sort of thing that people don't do it lightly, but we still want people to do it if it's really clearly worthwhile. Thus my kvetching.

(In this case, I'm not sure it is worthwhile, compared to some not-too-much-harder alternative. Specifically, it's plausible to me that the framing of this contest could be changed to not have such terrible epistemics while still preserving the core value - i.e. make it about fast, memorable communication rather than persuasion. But I'm definitely not close to 100% sure that would capture most of the value.

Fortunately, the general policy of imposing a complaint-tax on really bad epistemics does not require me to accurately judge the overall value of the proposal.)

Replies from: not-relevant, Raemon↑ comment by Not Relevant (not-relevant) · 2022-04-26T20:45:49.104Z · LW(p) · GW(p)

I'm all for improving the details. Which part of the framing seems focused on persuasion vs. "fast, effective communication"? How would you formalize "fast, effective communication" in a gradeable sense? (Persuasion seems gradeable via "we used this argument on X people; how seriously they took AI risk increased from A to B on a 5-point scale".)

Replies from: liam-donovan-1↑ comment by Liam Donovan (liam-donovan-1) · 2022-04-27T04:28:27.705Z · LW(p) · GW(p)

Maybe you could measure how effectively people pass e.g. a multiple choice version of an Intellectual Turing Test (on how well they can emulate the viewpoint of people concerned by AI safety) after hearing the proposed explanations.

[Edit: To be explicit, this would help further John's goals (as I understand them) because it ideally tests whether the AI safety viewpoint is being communicated in such a way that people can understand and operate the underlying mental models. This is better than testing how persuasive the arguments are because it's a) more in line with general principles of epistemic virtue and b) is more likely to persuade people iff the specific mental models underlying AI safety concern are correct.

One potential issue would be people bouncing off the arguments early and never getting around to building their own mental models, so maybe you could test for succinct/high-level arguments that successfully persuade target audiences to take a deeper dive into the specifics? That seems like a much less concerning persuasion target to optimize, since the worst case is people being wrongly persuaded to "waste" time thinking about the same stuff the LW community has been spending a ton of time thinking about for the last ~20 years]

↑ comment by Raemon · 2022-04-26T22:17:22.099Z · LW(p) · GW(p)

This comment thread did convince me to put it on personal blog (previously we've frontpaged writing-contents and went ahead and unreflectively did it for this post)

Replies from: None↑ comment by [deleted] · 2022-04-26T22:35:43.088Z · LW(p) · GW(p)

I don't understand the logic here? Do you see it as bad for the contest to get more attention and submissions?

Replies from: johnswentworth↑ comment by johnswentworth · 2022-04-26T22:56:03.803Z · LW(p) · GW(p)

No, it's just the standard frontpage policy [? · GW]:

Frontpage posts must meet the criteria of being broadly relevant to LessWrong’s main interests; timeless, i.e. not about recent events; and are attempts to explain not persuade.

Technically the contest is asking for attempts to persuade not explain, rather than itself attempting to persuade not explain, but the principle obviously applies.

As with my own comment, I don't think keeping the post off the frontpage is meant to be a judgement that the contest is net-negative in value; it may still be very net positive. It makes sense to have standard rules which create downsides for bad epistemics, and if some bad epistemics are worthwhile anyway, then people can pay the price of those downsides and move forward.

Replies from: Ruby↑ comment by Ruby · 2022-04-27T02:13:04.629Z · LW(p) · GW(p)

Raemon and I discussed whether it should be frontpage this morning. Prizes are kind of an edge case in my mind. They don't properly fulfill the frontpage criteria but also it feels like they deserve visibility in a way that posts on niche topics don't, so we've more than once made an exception for them.

I didn't think too hard about the epistemics of the post when I made the decision to frontpage, but after John pointed out the suss epistemics, I'm inclined to agree, and concurred with Raemon moving it back to Personal.

----

I think the prize could be improved simply by rewarding the best arguments in favor and against AI risk. This might actually be more convincing to the skeptics – we paid people to argue against this position and now you can see the best they came up with.

↑ comment by lc · 2022-05-20T22:12:24.609Z · LW(p) · GW(p)

We're out of time. This is what serious political activism involves.

Replies from: TrevorWiesinger↑ comment by trevor (TrevorWiesinger) · 2022-05-25T23:47:25.807Z · LW(p) · GW(p)

I don't see any lc comments, and I really wish I could see some here because I feel like they'd be good.

Let's go! Let's go! Crack open an old book and let the ideas flow! The deadline is, like, basically tomorrow.

Replies from: lc↑ comment by Lone Pine (conor-sullivan) · 2022-04-26T18:46:20.721Z · LW(p) · GW(p)

Most movements (and yes, this is a movement) have multiple groups of people, perhaps with degrees in subjects like communication, working full time coming up with slogans, making judgments about which terms to use for best persuasiveness, and selling the cause to the public. It is unusual for it to be done out in the open, yes. But this is what movements do when they have already decided what they believe and now have policy goals they know they want to achieve. It’s only natural.

Replies from: hath↑ comment by hath · 2022-04-26T19:00:48.026Z · LW(p) · GW(p)

You didn't refute his argument at all, you just said that other movements do the same thing. Isn't the entire point of rationality that we're meant to be truth-focused, and winning-focused, in ways that don't manipulate others? Are we not meant to hold ourselves to the standard of "Aim to explain, not persuade"? Just because others in the reference class of "movements" do something doesn't mean it's immediately something we should replicate! Is that not the obvious, immediate response? Your comment proves too much; it could be used to argue for literally any popular behavior of movements, including canceling/exiling dissidents.

Do I think that this specific contest is non-trivially harmful at the margin? Probably not. I am, however, worried about the general attitude behind some of this type of recruitment, and the justifications used to defend it. I become really fucking worried when someone raises an entirely valid objection, and is met with "It's only natural; most other movements do this".

Replies from: P.↑ comment by P. · 2022-04-26T19:26:39.357Z · LW(p) · GW(p)

To the extent that rationality has a purpose, I would argue that it is to do what it takes to achieve our goals, if that includes creating "propaganda", so be it. And the rules explicitly ask for submissions not to be deceiving, so if we use them to convince people it will be a pure epistemic gain.

Edit: If you are going to downvote this, at least argue why. I think that if this works like they expect, it truly is a net positive.

Replies from: hath↑ comment by hath · 2022-04-27T00:02:41.094Z · LW(p) · GW(p)

If you are going to downvote this, at least argue why.

Fair. Should've started with that.

To the extent that rationality has a purpose, I would argue that it is to do what it takes to achieve our goals,

I think there's a difference between "rationality is systematized winning" and "rationality is doing whatever it takes to achieve our goals". That difference requires more time to explain than I have right now.

if that includes creating "propaganda", so be it.

I think that if this works like they expect, it truly is a net positive.

I think that the whole AI alignment thing requires extraordinary measures, and I'm not sure what specifically that would take; I'm not saying we shouldn't do the contest. I doubt you and I have a substantial disagreement as to the severity of the problem or the effectiveness of the contest. My above comment was more "argument from 'everyone does this' doesn't work", not "this contest is bad and you are bad".

Also, I wouldn't call this contest propaganda. At the same time, if this contest was "convince EAs and LW users to have shorter timelines and higher chances of doom", it would be reacted to differently. There is a difference, convincing someone to have a shorter timeline isn't the same as trying to explain the whole AI alignment thing in the first place, but I worry that we could take that too far. I think that (most of) the responses John's comment got were good, and reassure me that the OPs are actually aware of/worried about John's concerns. I see no reason why this particular contest will be harmful, but I can imagine a future where we pivot to mainly strategies like this having some harmful second-order effects (which need their own post to explain).

↑ comment by Sidney Hough (Sidney) · 2022-04-26T20:52:33.151Z · LW(p) · GW(p)

Hey John, thank you for your feedback. As per the post, we’re not accepting misleading arguments. We’re looking for the subset of sound arguments that are also effective.

We’re happy to consider concrete suggestions which would help this competition reduce x-risk.

Replies from: jacobjacob↑ comment by Bird Concept (jacobjacob) · 2022-04-26T22:23:18.527Z · LW(p) · GW(p)

Thanks for being open to suggestions :) Here's one: you could award half the prize pool to compelling arguments against AI safety. That addresses one of John's points.

For example, stuff like "We need to focus on problems AI is already causing right now, like algorithmic fairness" would not win a prize, but "There's some chance we'll be better able to think about these issues much better in the future once we have more capable models that can aid our thinking, making effort right now less valuable" might.

Replies from: Chris_Leong, Sidney, thomas-kwa↑ comment by Chris_Leong · 2022-04-26T23:10:40.375Z · LW(p) · GW(p)

That idea seems reasonable at first glance, but upon reflection, I think it's a really bad idea. It's one thing to run a red-teaming competition, it's another to spend money building rhetorically optimised tools for the other side. If we do that, then maybe there was no point running the competition in the first place as it might all cancel out.

Replies from: Ruby↑ comment by Sidney Hough (Sidney) · 2022-04-26T23:07:31.916Z · LW(p) · GW(p)

Thanks for the idea, Jacob. Not speaking on behalf of the group here - but my first thought is that enforcing symmetry on discussion probably isn't a condition for good epistemics, especially since the distribution of this community's opinions is skewed. I think I'd be more worried if particular arguments that were misleading went unchallenged, but we'll be vetting submissions as they come in, and I'd also encourage anyone who has concerns with a given submission to talk with the author and/or us. My second thought is that we're planning a number of practical outreach projects that will make use of the arguments generated here - we're not trying to host an intra-community debate about the legitimacy of AI risk - so we'd ideally have the prize structure reflect the outreach value for which arguments are responsible.

I'm potentially up to opening the contest to arguments for or against AI risk, and allowing the distribution of responses to reflect the distribution of the opinions of the community. Will discuss with the rest of the group.

↑ comment by Thomas Kwa (thomas-kwa) · 2022-04-27T07:06:38.828Z · LW(p) · GW(p)

It seems better to award some fraction of the prize pool to refutations of the posted arguments. IMO the point isn't to be "fair to both sides", it's to produce truth.

Replies from: Davidmanheim↑ comment by Davidmanheim · 2022-04-27T10:01:36.952Z · LW(p) · GW(p)

Wait, the goal here, at least, isn't to produce truth, it is to disseminate it. Counter-arguments are great, but this isn't about debating the question, it's about communicating a conclusion well.

Replies from: yitz↑ comment by Yitz (yitz) · 2022-04-27T17:04:32.716Z · LW(p) · GW(p)

This is PR, not internal epistemics, if I’m understanding the situation correctly.

↑ comment by Davidmanheim · 2022-04-27T09:59:49.359Z · LW(p) · GW(p)

Think of it as a "practicing a dark art of rationality" post, and I'd think it would seem less off-putting.

Replies from: Benito↑ comment by Ben Pace (Benito) · 2022-04-27T10:06:26.063Z · LW(p) · GW(p)

I think it would be less "off-putting" if we had common knowledge of it being such a post. I think the authors don't think of it as that from reading Sidney's comment.

comment by trevor (TrevorWiesinger) · 2022-04-26T20:11:04.054Z · LW(p) · GW(p)

It's unreasonable to look at AI and think "in order to make it more predictable, more controllable, we just have to make it smarter". When individual humans become smarter, they become much less predictable. And the human mind is built to predict the behavior of other humans.

Replies from: TrevorWiesinger↑ comment by trevor (TrevorWiesinger) · 2022-04-27T18:38:33.847Z · LW(p) · GW(p)

The last sentence can be cut off for simplicity, most of the point isin the first two sentences.

comment by Marius Hobbhahn (marius-hobbhahn) · 2022-04-26T18:03:31.042Z · LW(p) · GW(p)

Random side note: GPT-3 seems to be able to generate decent one liners.

Generate one-liners describing the dangers of AI. An example is "Inventing machines that are smarter than us is playing with fire."

1. Machines that are smarter than us could easily become our masters.

2. If we're not careful, AI could spell the end of humanity as we know it.

3. AI could be used to create weapons of mass destruction that could devastate the planet.

4. AI could be used to create powerful robots that could enslave humans.

5. AI could be used to create artificial intelligence that could turn against its creators

6. AI usher in a new era of cyber-warfare that could cripple society

7. AI could create self-replicating robots that could eventually consume all resources on Earth

8. AI could be used to create powerful AIs that could eventually surpass human intelligence and take over the world

9. AI technology could eventually be used to create a global surveillance state where everyone is constantly watched and monitored

Replies from: mitchell-reynolds, jcp29, TrevorWiesinger↑ comment by Mitchell Reynolds (mitchell-reynolds) · 2022-04-26T21:42:41.115Z · LW(p) · GW(p)

I had a similar thought to prompt GPT-3 for one liners or to summarize some article (if available). I think involving the community to write 500-1000 winning submissions would have the positive externality of non-winners to distill/condense their views. My exploratory idea is that this would be instrumentally useful when talking with those new to AI x-risk topics.

Replies from: yitz↑ comment by Yitz (yitz) · 2022-04-27T17:06:08.256Z · LW(p) · GW(p)

We could also prompt GPT-3 with the results ;)

↑ comment by jcp29 · 2022-04-27T19:13:16.158Z · LW(p) · GW(p)

Good idea! I could imagine doing something similar with images generated by DALL-E.

Replies from: FinalFormal2↑ comment by FinalFormal2 · 2022-05-09T05:11:27.927Z · LW(p) · GW(p)

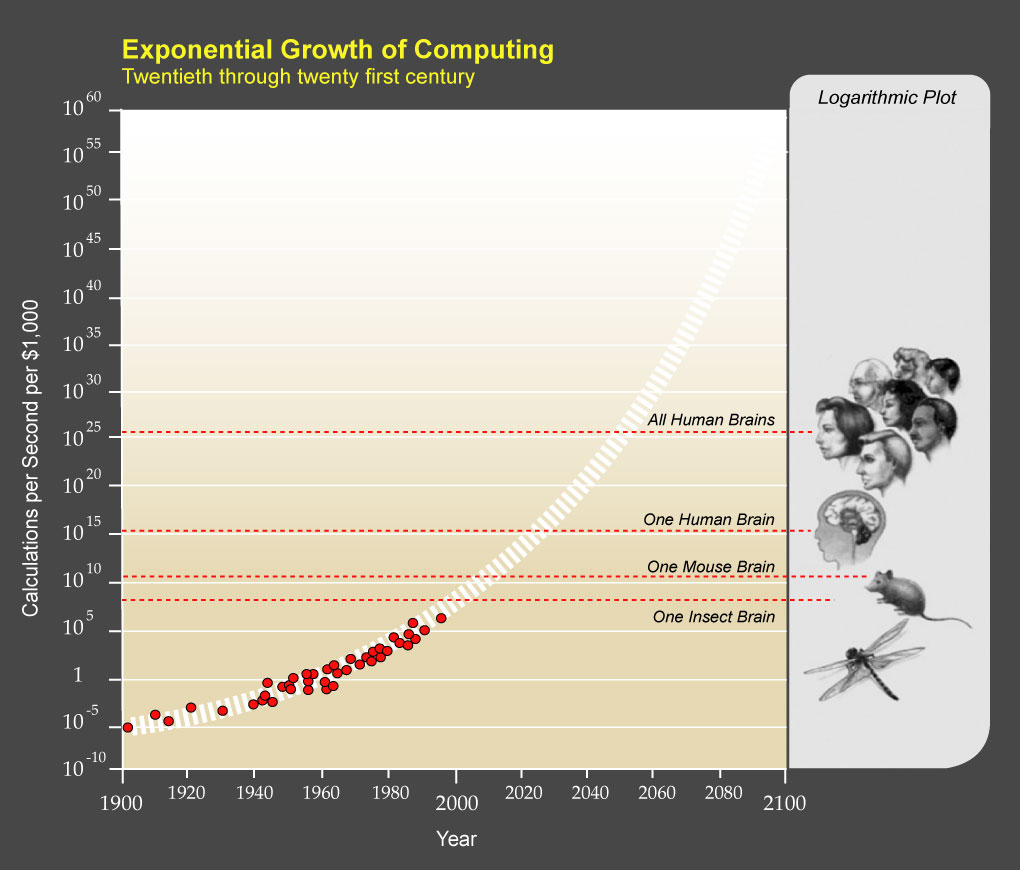

That's a very good idea, I think one limitation of most AI arguments is that they seem to lack urgency. GAI seems like it's a hundred years away at least, and showing the incredible progress we've already seen might help to negate some of that perception.

↑ comment by trevor (TrevorWiesinger) · 2022-04-27T20:47:36.719Z · LW(p) · GW(p)

1. Machines that are smarter than us could easily become our masters. [All it takes is a single glitch, and they will outsmart us the same way we outsmart animals.]

2. If we're not careful, AI could spell the end of humanity as we know it. [Artificial intelligence improves itself at an exponential pace, so if it speeds up there is no guarantee that it will slow down until it is too late.]

3. AI could be used to create weapons of mass destruction that could devastate the planet. x

4. AI could be used to create powerful robots that could enslave humans. x

5. AI could one day be used to create artificial intelligence [an even smarter AI system] that could turn against its creators [if it becomes capable of outmaneuvering humans and finding loopholes in order to pursue it's mission.]

6. AI usher in a new era of cyber-warfare that could cripple society x

7. AI could create self-replicating robots that could eventually consume all resources on Earth x

8. AI could [can one day] be used to create [newer, more powerful] AI [systems] that could eventually surpass human intelligence and take over the world [behave unpredictably].

9. AI technology could eventually be used to create a global surveillance state where everyone is constantly watched and monitored x

comment by lc · 2022-05-27T02:27:26.577Z · LW(p) · GW(p)

I remember watching a documentary made during the satanic panic by some activist Christian group. I found it very funny at the time, and then became intrigued when an expert came on to say something like:

"Look, you may not believe in any of this occult stuff; but there are people out there that do, and they're willing to do bad things because of their beliefs."

I was impressed with that line's simplicity and effectiveness. A lot of it's effectiveness stems silently from the fact that, inadvertently, it helps suspend disbelief about the negative impact of "satanic rituals" by starting the conversation with a reminder that there are people who take them very seriously.

It's invoking some very Dark Arts [LW · GW], but depending on the person you're talking to, I think the most effective rhetorical technique is to start by tapping into resentment toward Big Tech and the wealthy by saying:

"Look, you might not think AGI is going to hurt anybody, or that it will ever be developed. But DeepMind and OpenAI engineers are being paid millions of dollars a year to help develop it. And a worrying proportion of those engineers do that in spite of publicized expectations that AGI has a large chance of hurting you and me."

I have used this variation on the above theme several times in conversations with real humans. It's a biased sample, but it plus a followup conversation on the more concrete and factual risks has always worked to move them towards concern.

comment by FinalFormal2 · 2022-05-09T05:25:50.132Z · LW(p) · GW(p)

Any arguments for AI safety should be accompanied by images from DALL-E 2.

One of the key factors which makes AI safety such a low priority topic is a complete lack of urgency. Dangerous AI seems like a science fiction element, that's always a century away, and we can fight against this perception by demonstrating the potential and growth of AI capability.

No demonstration of AI capability has the same immediate visceral power as DALL-E 2.

In longer-form arguments, urgency could also be demonstrated through GPT-3's prompts, but DALL-E 2 is better, especially if you can also implicitly suggest a greater understanding of concepts by having DALL-E 2 represent something more abstract.

(Inspired by a comment from jcp29)

Replies from: FinalFormal2↑ comment by FinalFormal2 · 2022-05-09T05:30:08.222Z · LW(p) · GW(p)

Any image produced by DALL-E which could also convey or be used to convey misalignment or other risks from AI would be very useful because it could combine the desired messages: "the AI problem is urgent," and "misalignment is possible and dangerous."

For example, if DALL-E responded to the prompt: "AI living with humans" by creating an image suggesting a hierarchy of AI over humans, it would serve both messages.

However, this is only worthy of a side note, because creating such suggested misalignment organically might be very difficult.

Other image prompts might be: "The world as AI sees it," "the power of intelligence," "recursive self-improvement," "the danger of creating life," "god from the machine," etc.

Replies from: TrevorWiesinger, TrevorWiesinger↑ comment by trevor (TrevorWiesinger) · 2022-05-19T09:25:18.218Z · LW(p) · GW(p)

both prompts are from here:

https://www.lesswrong.com/posts/uKp6tBFStnsvrot5t/what-dall-e-2-can-and-cannot-do#:~:text=DALL%2DE%20can't%20spell,written%20on%20a%20stop%20sign.)

↑ comment by trevor (TrevorWiesinger) · 2022-05-12T22:27:58.949Z · LW(p) · GW(p)

There's a lot of good DALL-E images floating around lesswrong that point towards alignment significance. We can use copy + paste into a lesswrong comment to post it.

comment by Shmi (shminux) · 2022-04-26T19:51:08.408Z · LW(p) · GW(p)

First two paragraphs of https://astralcodexten.substack.com/p/book-review-a-clinical-introduction seem to fit the bill.

Replies from: yitz↑ comment by Yitz (yitz) · 2022-04-27T17:09:41.055Z · LW(p) · GW(p)

very slight modification of Scott’s words to produce a more self-contained paragraph:

A robot was trained to pick strawberries. The programmers rewarded it whenever it got a strawberry in its bucket. It started by flailing around, gradually shifted its behavior towards the reward signal, and ended up with a tendency to throw red things at light sources - in the training environment, strawberries were the only red thing, and the glint of the metal bucket was the brightest light source. Later, after training was done, it was deployed at night, and threw strawberries at a streetlight. Also, when someone with a big bulbous red nose walked by, it ripped his nose off and threw that at the streetlight too.

Suppose somebody tried connecting a language model to the AI. “You’re a strawberry picking robot,” they told it. “I’m a strawberry picking robot,” it repeated, because that was the sequence of words that earned it the most reward. Somewhere in its electronic innards, there was a series of neurons that corresponded to “I’m a strawberry-picking robot”, and if asked what it was, it would dutifully retrieve that sentence. But actually, it ripped off people’s noses and threw them at streetlights.

comment by Scott Emmons · 2022-04-26T17:53:01.170Z · LW(p) · GW(p)

The technology [of lethal autonomous drones], from the point of view of AI, is entirely feasible. When the Russian ambassador made the remark that these things are 20 or 30 years off in the future, I responded that, with three good grad students and possibly the help of a couple of my robotics colleagues, it will be a term project [six to eight weeks] to build a weapon that could come into the United Nations building and find the Russian ambassador and deliver a package to him.

-- Stuart Russell on a February 25, 2021 podcast with the Future of Life Institute.

comment by trevor (TrevorWiesinger) · 2022-05-04T05:27:04.510Z · LW(p) · GW(p)

Neither us humans, nor the flower, sees anything that looks like a bee. But when a bee looks at it, it sees another bee, and it is tricked into pollinating that flower. The flower did not know any of this, it's petals randomly changed shape over millions of years, and eventually one of those random shapes started tricking bees and outperforming all of the other flowers.

Today's AI already does this. If AI begins to approach human intelligence, there's no limit to the number of ways things can go horribly wrong.

Replies from: TrevorWiesinger↑ comment by trevor (TrevorWiesinger) · 2022-05-17T23:25:01.627Z · LW(p) · GW(p)

This is for ML researchers, I'd worry sigificantly about sending bizarre imagery to techxecutives or policymakers due to the absurdity heuristic being one of the most serious concerns.

Generally, nature and evolution are horrible.

comment by Stephen McAleese (stephen-mcaleese) · 2022-09-18T15:11:39.485Z · LW(p) · GW(p)

Here is the spreadsheet containing the results from the competition.

More quotes on AI safety here.

comment by FinalFormal2 · 2022-05-09T04:43:57.761Z · LW(p) · GW(p)

[Policy makers]

A couple of years ago there was an AI trained to beat Tetris. Artificial intelligences are very good at learning video games, so it didn't take long for it to master the game. Soon it was playing so quickly that the game was speeding up to the point it was impossible to win and blocks were slowly stacking up, but before it could be forced to place the last piece, it paused the game.

As long as the game didn't continue, it could never lose.

When we ask AI to do something, like play Tetris, we have a lot of assumptions about how it can or should approach that goal, but an AI doesn't have those assumptions. If it looks like it might not achieve its goal through regular means, it doesn't give up or ask a human for guidance, it pauses the game.

Replies from: FinalFormal2↑ comment by FinalFormal2 · 2022-05-09T04:51:58.885Z · LW(p) · GW(p)

I'm trying to find the balance between suggesting existential/catastrophic risk and screaming it or coming off too dramatic, any feedback would be welcome.

comment by Andy Jones (andyljones) · 2022-05-01T18:58:21.672Z · LW(p) · GW(p)

(a)

Look, we already have superhuman intelligences. We call them corporations and while they put out a lot of good stuff, we're not wild about the effects they have on the world. We tell corporations 'hey do what human shareholders want' and the monkey's paw curls and this is what we get.

Anyway yeah that but a thousand times faster, that's what I'm nervous about.

(b)

Look, we already have superhuman intelligences. We call them governments and while they put out a lot of good stuff, we're not wild about the effects they have on the world. We tell governments 'hey do what human voters want' and the monkey's paw curls and this is what we get.

Anyway yeah that but a thousand times faster, that's what I'm nervous about.

Replies from: FinalFormal2, TrevorWiesinger↑ comment by FinalFormal2 · 2022-05-09T05:02:35.217Z · LW(p) · GW(p)

I think this would benefit from being turned into a longer-form argument. Here's a quote you could use in the preface:

“Sure, cried the tenant men,but it’s our land…We were born on it, and we got killed on it, died on it. Even if it’s no good, it’s still ours….That’s what makes ownership, not a paper with numbers on it."

"We’re sorry. It’s not us. It’s the monster. The bank isn’t like a man."

"Yes, but the bank is only made of men."

"No, you’re wrong there—quite wrong there. The bank is something else than men. It happens that every man in a bank hates what the bank does, and yet the bank does it. The bank is something more than men, I tell you. It’s the monster. Men made it, but they can’t control it.”

― John Steinbeck, The Grapes of Wrath

↑ comment by trevor (TrevorWiesinger) · 2022-05-02T03:21:26.423Z · LW(p) · GW(p)

I had no idea that this angle existed or was feasible. I think these are best for ML researchers, since policymakers and techxecutives tend to think of institutions as flawed due to the vicious self-interest of the people who inhabit them (the problem is particularly acute in management). In which they might respond by saying that AI should not split into subroutines that compete with eachother, or something like that. One way or another, they'll see it as a human problem and not a machine problem.

"We only have two cases of generally intelligent systems: individual humans and organizations made of humans. When a very large and competent organization is sent to solve a task, such as a corporation, it will often do so by cutting corners in undetectable ways, even when total synergy is achieved and each individual agrees that it would be best not to cut corners. So not only do we know that individual humans feel inclined to cheat and cut corners, but we also know that large optimal groups will automatically cheat and cut corners. Undetectable cheating and misrepresentation is fundamental to learning processes in general, not just a base human instinct"

I'm not an ML researcher and haven't been acquainted with very many, so I don't know if this will work.

Replies from: TrevorWiesinger↑ comment by trevor (TrevorWiesinger) · 2022-05-02T03:22:05.424Z · LW(p) · GW(p)

"Undetectable cheating, and misrepresentation, is fundamental to learning processes in general; it's not just a base human instinct"

comment by Linda Linsefors · 2022-04-27T10:55:02.875Z · LW(p) · GW(p)

"Most AI reserch focus on building machines that do what we say. Aligment reserch is about building machines that do what we want."

Source: Me, probably heavely inspred by "Human Compatible" and that type of arguments. I used this argument in conversations to explain AI Alignment for a while, and I don't remember when I started. But the argument is very CIRL (cooperative inverse reinforcment learning).

I'm not sure if this works as a one liner explanation. But it does work as a conversation starter of why trying to speify goals directly is a bad idea. And how the things we care about often are hard to messure and therefore hard to instruct an AI to do. Insert referenc to King Midas, or talk about what can go wrong with a super inteligent Youtube algorithm that only optimises for clicks.

_____________________________

"Humans rule the earth becasue we are smart. Some day we'll build something smarter than us. When it hapens we better make sure it's on our side."

Source: Me

Inspiration: I don't know. I probably stole the structure of this agument from somwhere, but it was too long ago to remember.

By "our side" I mean on the side of humans. I don't mean it as an us vs them thing. But maybe it can be read that way. That would be bad. I've never run in to that missunderstanding though, but I also have not talked to politicians.

comment by Nanda Ale · 2022-05-28T06:48:11.371Z · LW(p) · GW(p)

Crypto Executives and Crypto Researchers

Question: If it becomes a problem, why can't you just shut it off? Why can't you just unplug it?

Response: Why can't you just shut off bitcoin? There isn't any single button to push, and many people prefer it not being shut off and will oppose you.

(Might resonate well with crypto folks.)

comment by trevor (TrevorWiesinger) · 2022-05-28T01:25:55.061Z · LW(p) · GW(p)

"Humanity has risen to a position where we control the rest of the world precisely because of our [unrivaled] mental abilities. If we pass this mantle to our machines, it will be they who are in this unique position."

Toby Ord, The Precipice

Replaced [unparalleled] with [unrivaled]

comment by romeostevensit · 2022-05-28T00:25:33.333Z · LW(p) · GW(p)

As recent experience has shown, exponential processes don't need to be smarter than us to utterly upend our way of life. They can go from a few problems here and there to swamping all other considerations in a span of time too fast to react to, if preparations aren't made and those knowledgeable don't have the leeway to act. We are in the early stages of an exponential increase in the power of AI algorithms over human life, and people who work directly on these problems are sounding the alarm right now. It is plausible that we will soon have processes that can escape the lab just as a virus can, and we as a species are pouring billions into gain-of-function research for these algorithms, with little concomitant funding or attention paid to the safety of such research.

comment by trevor (TrevorWiesinger) · 2022-05-27T19:53:18.569Z · LW(p) · GW(p)

For policymakers:

Expecting today's ML researchers to understand AGI is like expecting a local mechanic to understand how to design a more efficient engine. It's a lot better than total ignorance, but it's also clearly not enough.

comment by Nicholas / Heather Kross (NicholasKross) · 2022-05-17T20:38:58.078Z · LW(p) · GW(p)

In 1903, The New York Times thought heavier-than-air flight would take 1-10 million years… less than 10 weeks before it happened. Is AI next? (source for NYT info) (Policymakers)

Replies from: TrevorWiesinger↑ comment by trevor (TrevorWiesinger) · 2022-05-19T09:57:16.604Z · LW(p) · GW(p)

Yours are really good, please keep making entries. This contest is really, really important, even if there's a lot of people don't see it that way due to a lack of policy experience.

I've been looking at old papers (e.g. yudkowsky papers) but I feel like most of my entries (and most of the entries in general) are usually missing the magic "zing" that they're looking for. They're blowing a ton of money on getting good entries, and it's a really good investment, so don't leave them empty handed!

Replies from: NicholasKross, NicholasKross↑ comment by Nicholas / Heather Kross (NicholasKross) · 2022-05-20T22:56:37.459Z · LW(p) · GW(p)

Also, despite having researched this as a hobby for years, I'd still like all feedback possible on how to add zing to my short phrases and paragraphs.

↑ comment by Nicholas / Heather Kross (NicholasKross) · 2022-05-20T19:38:50.623Z · LW(p) · GW(p)

Thanks! Am gonna post many more today.

comment by trevor (TrevorWiesinger) · 2022-05-04T05:14:46.415Z · LW(p) · GW(p)

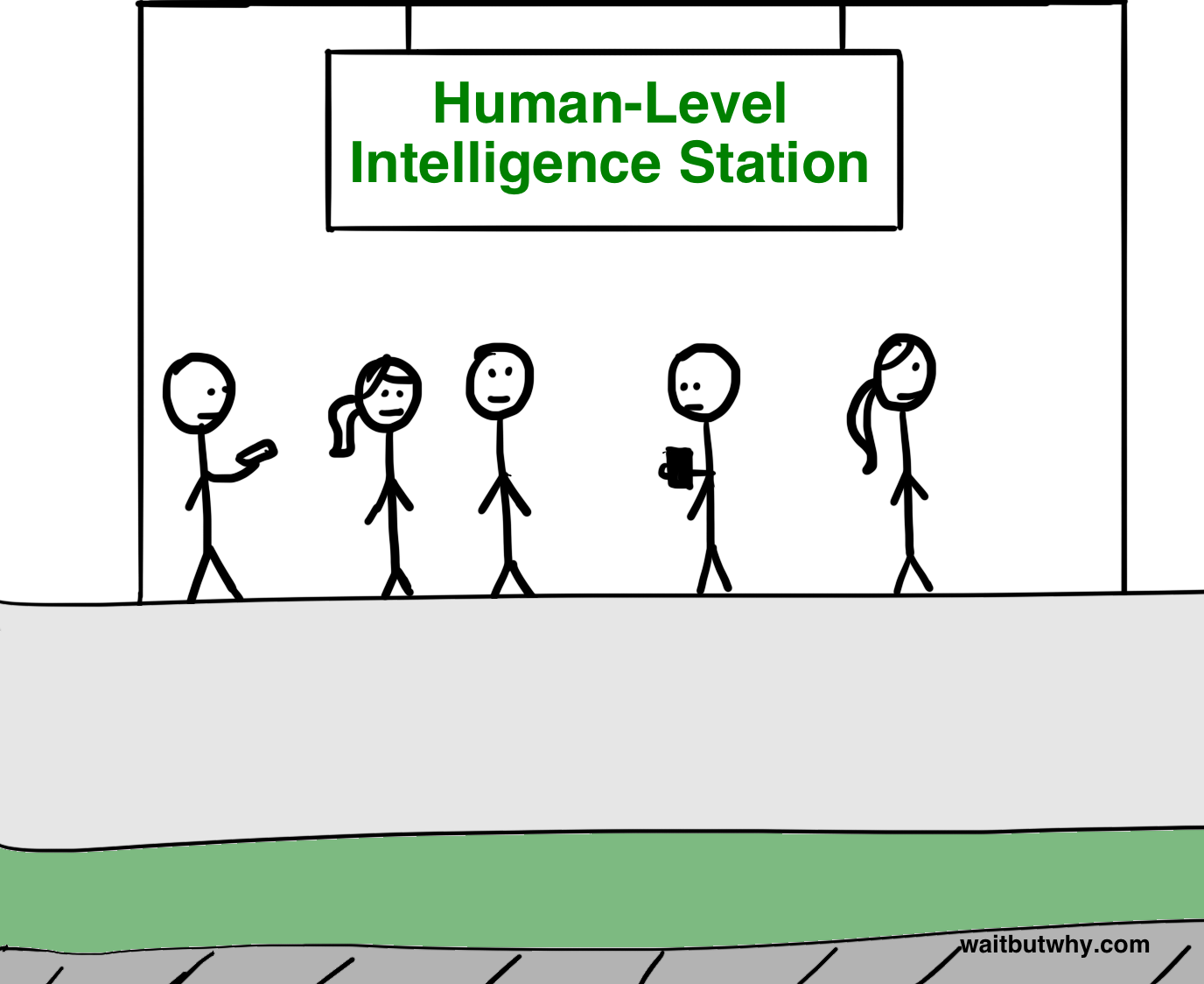

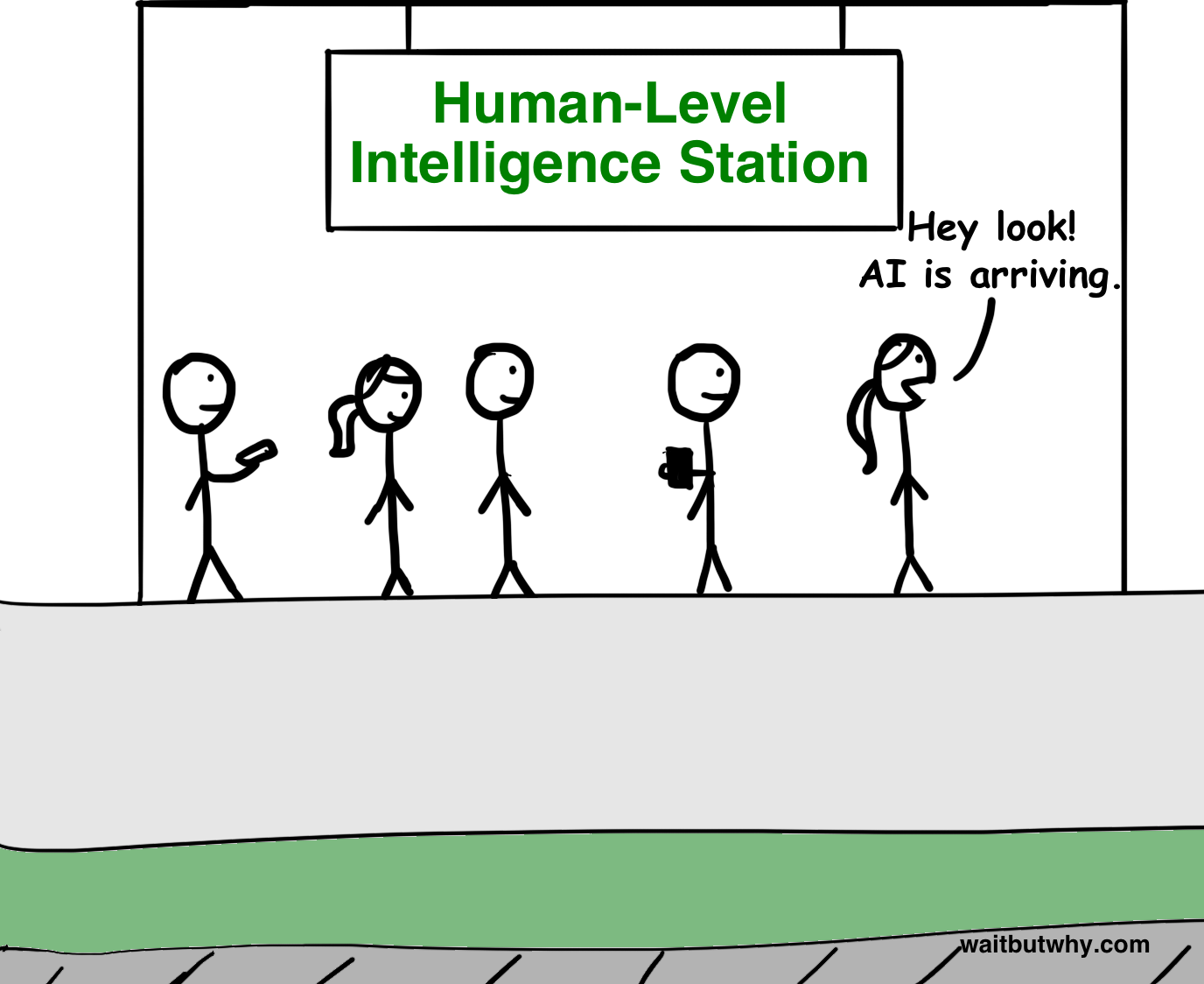

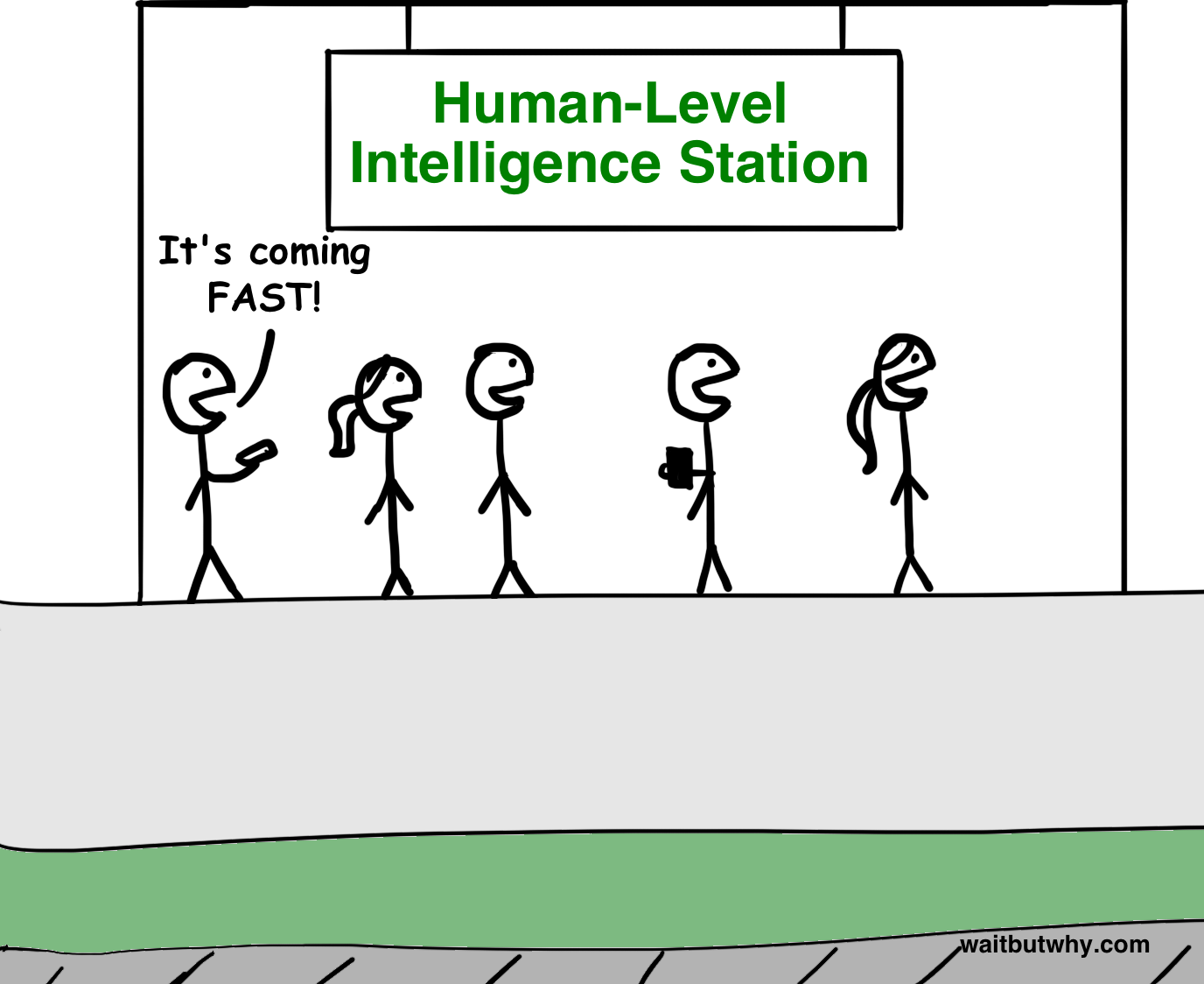

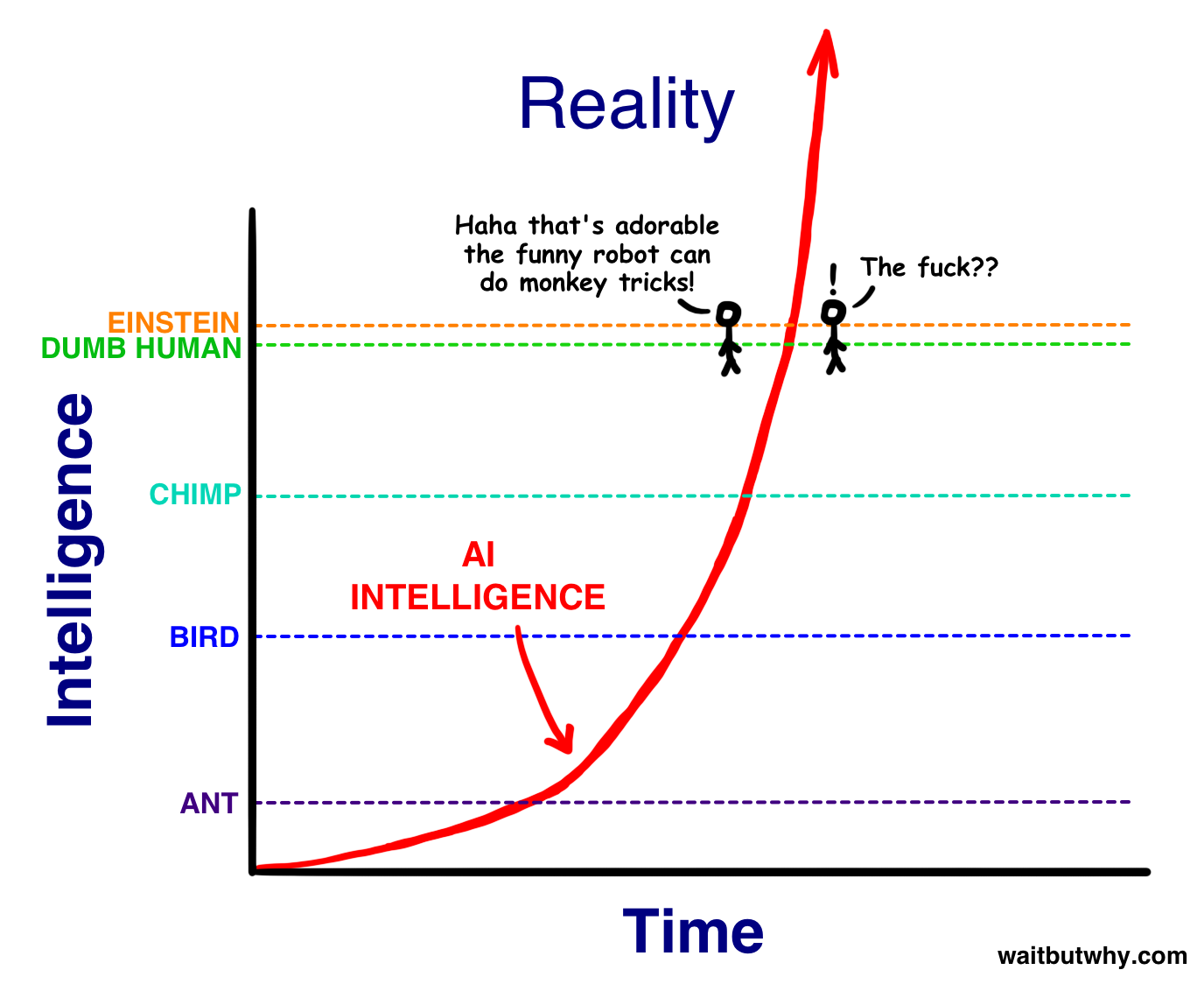

If AI approaches and reaches human-level intelligence, it will probably pass that level just as quickly as it arrived at that level.

comment by David Scott Krueger (formerly: capybaralet) (capybaralet) · 2022-05-01T15:11:42.559Z · LW(p) · GW(p)

What about graphics? e.g. https://twitter.com/DavidSKrueger/status/1520782213175992320

Replies from: TrevorWiesinger↑ comment by trevor (TrevorWiesinger) · 2022-05-02T03:04:41.266Z · LW(p) · GW(p)

(On Lesswrong, you can use ctrl + K to turn highlighted text into a link. You can also paste images directly into a post or comment with ctrl + V)

Replies from: TrevorWiesinger↑ comment by trevor (TrevorWiesinger) · 2022-05-02T04:04:07.890Z · LW(p) · GW(p)

https://twitter.com/DavidSKrueger/status/1520782213175992320

Replies from: TrevorWiesinger↑ comment by trevor (TrevorWiesinger) · 2022-05-04T05:03:40.690Z · LW(p) · GW(p)

100% of credit here goes to capybaralet for an excellent submission, they simply didn't know they could paste an image into a Lesswrong comment. I did not do any refining here.

This is a very good submission, one of the best in my opinion, it's obviously more original than most of my own submissions, and we should all look up to it as a standard of quality. I can easily see this image making a solid point in the minds of ML researchers, tech executives, and even policymakers.

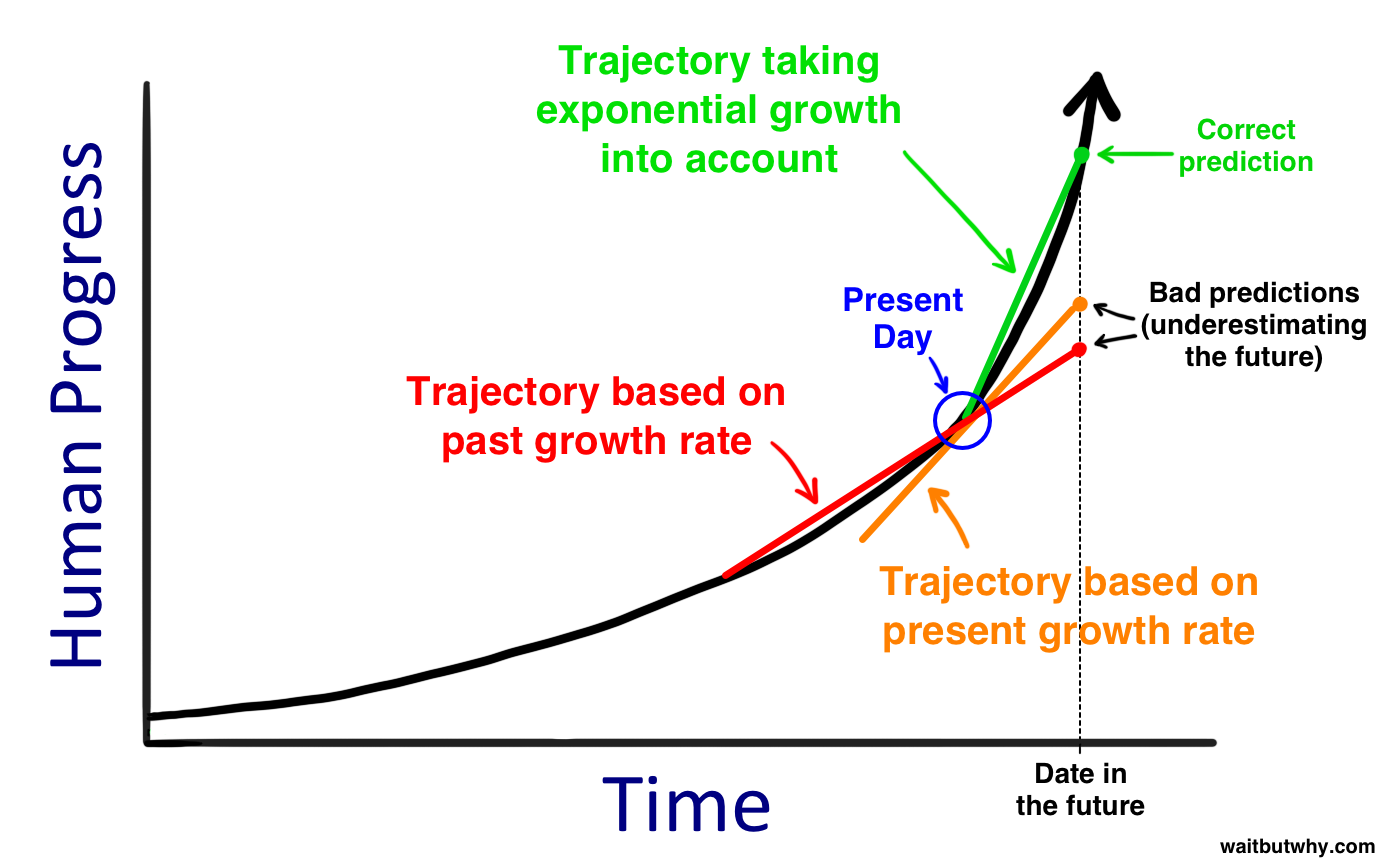

comment by trevor (TrevorWiesinger) · 2022-04-27T21:30:58.429Z · LW(p) · GW(p)

"AI will probably surpass human intelligence at the same pace that it reaches human intelligence. Considering the pace of AI advancement over the last 3 years, that pace will probably be very fast"

https://waitbutwhy.com/2015/01/artificial-intelligence-revolution-

Replies from: TrevorWiesinger↑ comment by trevor (TrevorWiesinger) · 2022-05-27T22:34:13.556Z · LW(p) · GW(p)

I tried to shrink the first image but it still displays it at the obnoxiously large full size

comment by [deleted] · 2022-04-27T19:24:21.962Z · LW(p) · GW(p)

“The smartest ones are the most criminally capable.” [·]

Replies from: sue-hyer-essek, TrevorWiesinger↑ comment by SueE (sue-hyer-essek) · 2022-06-02T04:57:04.755Z · LW(p) · GW(p)

Agree but the lobbyist & policy makers whom are concentrated in Senate & committees by design bank on lpeople have both stupid << more than misunderstanding-

↑ comment by trevor (TrevorWiesinger) · 2022-05-21T01:51:22.399Z · LW(p) · GW(p)

comment by trevor (TrevorWiesinger) · 2022-04-26T20:00:11.910Z · LW(p) · GW(p)

"AI cheats. We've seen hundreds of unique instances of this. It finds loopholes and exploits them, just like us, only faster. The scary thing is that, every year now, AI becomes more aware of its surroundings, behaving less like a computer program and more like a human that thinks but does not feel"

comment by Gyrodiot · 2022-05-27T22:43:44.894Z · LW(p) · GW(p)

(Policymakers) There is outrage right now about AI systems amplifying discrimination and polarizing discourse. Consider that this was discovered after they were widely deployed. We still don't know how to make them fair. This isn't even much of a priority.

Those are the visible, current failures. Given current trajectories and lack of foresight of AI research, more severe failures will happen in more critical situations, without us knowing how to prevent them. With better priorities, this need not happen.

Replies from: sue-hyer-essek↑ comment by SueE (sue-hyer-essek) · 2022-06-02T04:42:28.171Z · LW(p) · GW(p)

Yes!! I wrote more but then poof gone.

Every time I attempt to post anything it vanishes. I'm new to this site & learning the ins & outs- my apologies. Will try again tomorrow.

~ SueE

comment by Arran McCutcheon · 2022-05-27T22:24:10.973Z · LW(p) · GW(p)

On average, experts estimate a 10-20% (?) probability of human extinction due to unaligned AGI this century, making AI Safety not simply the most important issue for future generations, but for present generations as well. (policymakers)

comment by Peter Berggren (peter-berggren) · 2022-05-27T18:57:13.757Z · LW(p) · GW(p)

Clarke’s First Law goes: When a distinguished but elderly scientist states that something is possible, he is almost certainly right. When he states that something is impossible, he is very probably wrong.

Stuart Russell is only 60. But what he lacks in age, he makes up in distinction: he’s a computer science professor at Berkeley, neurosurgery professor at UCSF, DARPA advisor, and author of the leading textbook on AI. His book Human Compatible states that superintelligent AI is possible; Clarke would recommend we listen.

(tech executives, ML researchers)

(adapted slightly from the first paragraphs of the Slate Star Codex review of Human Compatible)

↑ comment by trevor (TrevorWiesinger) · 2022-05-27T23:32:00.799Z · LW(p) · GW(p)

"There's been centuries of precedent of scientists incorrectly claiming that something is impossible for humans to invent"

"right before the instant something is invented successfully, 100% of the evidence leading up to that point will be evidence of failed efforts to invent it. Everyone involved will only have memories of people failing to invent it. Because it hasn't been invented yet"

comment by Nicholas / Heather Kross (NicholasKross) · 2022-05-26T00:49:20.596Z · LW(p) · GW(p)

All humans, even people labelled "stupid", are smarter than apes. Both apes and humans are far smarter than ants. The intelligence spectrum could extend much higher, e.g. up to a smart AI… (Adapted from here [LW · GW]). (Policymakers)

comment by Nicholas / Heather Kross (NicholasKross) · 2022-05-25T23:52:52.172Z · LW(p) · GW(p)

The smarter AI gets, the further it strays from our intuitions of how it should act. (Tech executives)

comment by trevor (TrevorWiesinger) · 2022-05-24T01:30:07.442Z · LW(p) · GW(p)

The existence of the human race has been defined by our absolute monopoly on intelligent thought. That monopoly is no longer absolute, and we are on track for it to vanish entirely.

comment by ukc10014 · 2022-05-08T12:14:26.175Z · LW(p) · GW(p)

Here's my submission, it might work better as bullet points on a page.

AI will transform human societies over the next 10-20 years. Its impact will be comparable to electricity or nuclear weapons. As electricity did, AI could improve the world dramatically; or, like nuclear weapons, it could end it forever. Like inequality, climate change, nuclear weapons, or engineered pandemics, AI Existential Risk is a wicked problem. It calls upon every policymaker to become a statesperson: to rise above the short-term, narrow interests of party, class, or nation, to actually make a contribution to humankind as a whole. Why? Here are 10 reasons.

(1) Current AI problems, like racial and gender bias, are like canaries in a coal-mine. They portend even worse future failures.

(2) Scientists do not understand how current AI actually works: for instance, engineers know why bridges collapse, or why Chernobyl failed. There is no similar understanding of why AI models misbehave.

(3) Future AI will be dramatically more powerful than today’s. In the last decade, the pace of development has exploded, with current AI performing at super-human level on games (like chess or Go). Massive language models (like GPT-3) can write really good college essays while deepfakes of politicians are already a thing.

(4) These very powerful AIs might develop their own goals, which is a problem if they are connected to electrical grids, hospitals, social media networks, or nuclear weapons systems.

(5) The competitive dynamics are dangerous: the US-China strategic rivalry implies neither side has an incentive to go slowly or be careful. Domestically, tech companies are in an intense race to develop & deploy AI across all aspects of the economy.

(6) The current US lead in AI might be unsustainable. As an analogy, think of nuclear weapons: in the 1940s, the US hoped it would keep its atomic monopoly. Since then, we have 9 nuclear powers today, with 12,705 weapons.

(7) Accidents happen: again, from the nuclear case, there have been over 100 accidents and proliferation incidents involving nuclear power/weapons.

(8) AI could proliferate virally across globally connected networks, making it more dangerous than nuclear weapons (which are visible, trackable, and less useful than powerful AI).

(9) Even today’s moderately-capable AIs, if used effectively, can entrench totalitarianism, manipulate democratic societies or enable repressive security states.

(10) There will be a point of no return after which we may not be able to recover as a species. So what is to be done? Negotiations to reach a global, temporary moratorium on certain types of AI research. Enforce this moratorium through intrusive domestic regulation and international surveillance. Lastly, avoiding historical policy errors, such as in climate change and in the terrorist threat post-9/11: politicians must ensure that the military-industrial complex does not ‘weaponise’ AI.

Replies from: TrevorWiesinger, FinalFormal2↑ comment by trevor (TrevorWiesinger) · 2022-05-12T22:30:35.927Z · LW(p) · GW(p)

There's a lot of points here that I disagree intensely with. But regardless of that, your "canary in a coal mine" line is fantastic, we need more really-good one-liners here.

Replies from: ukc10014↑ comment by FinalFormal2 · 2022-05-09T05:08:08.132Z · LW(p) · GW(p)

The way you have it formatted right now makes it very difficult to read.

Try accessing the formatting functions in-platform by highlighting the text you want to make into bullet points.

comment by joseph_c (cooljoseph1) · 2022-04-28T05:56:03.337Z · LW(p) · GW(p)

(To Policymakers and Machine Learning Researchers)

Building a nuclear weapon is hard. Even if one manages to steal the government's top secret plans, one still need to find a way to get uranium out of the ground, find a way to enrich it, and attach it to a missile. On the other hand, building an AI is easy. With scientific papers and open source tools, researchers are doing their utmost to disseminate their work.

It's pretty hard to hide a uranium mine. Downloading TensorFlow takes one line of code. As AI becomes more powerful and more dangerous, greater efforts need to be taken to ensure malicious actors don't blow up the world.

comment by sithlord · 2022-04-26T19:37:21.299Z · LW(p) · GW(p)

To Policymakers: "Just think of the way in which we humans have acted towards animals, and how animals act towards lesser animals, now think of how a powerful AI with superior intellect might act towards us, unless we create them in such a way that they will treat us well, and even help us."

Source: Me

comment by Peter Berggren (peter-berggren) · 2022-07-22T19:46:44.802Z · LW(p) · GW(p)

Have any prizes been awarded yet? I haven't heard anything about prizes, but that could have just been that I didn't win one...

comment by Nanda Ale · 2022-05-28T06:37:55.462Z · LW(p) · GW(p)

Target: Everyone. Another good zinger.

just sitting here laughing at how people's complaints about different AI models have shifted in under 3 years

"it's not quite human quality writing"

"okay but it can't handle context or reason"

"yeah but it didn't know Leonardo would hold PIZZA more often than a katana"

Source: https://nitter.nl/liminal_warmth/status/1511536700127731713#m

comment by Peter Berggren (peter-berggren) · 2022-05-28T02:00:50.122Z · LW(p) · GW(p)

You wouldn't hire an employee without references. Why would you make an AI that doesn't share your values?

(policymakers, tech executives)

↑ comment by Nanda Ale · 2022-05-28T07:36:01.330Z · LW(p) · GW(p)

Reframed even more generally for parents:

"You wouldn’t leave your child with a stranger. With AI, we’re about to leave the world’s children with the strangest mind humans have ever encountered."

(I know the deadline passed. But I finally have time to read other people's entries and couldn't resist.)

comment by trevor (TrevorWiesinger) · 2022-05-27T23:21:51.062Z · LW(p) · GW(p)

For policymakers:

The predictability of today's AI systems doesn't tell us squat about whether they will remain predictable after achieving human-level intelligence. Individual apes are far more predictable than individual humans, and apes themselves are far less predictable than ants.

comment by Peter Berggren (peter-berggren) · 2022-05-27T16:12:02.636Z · LW(p) · GW(p)

Climate change was weird in the 1980s. Pandemics were weird in the 2010s. Every world problem is weird... until it happens.

(policymakers)

comment by DirectedEvolution (AllAmericanBreakfast) · 2022-05-27T05:32:37.488Z · LW(p) · GW(p)

When nuclear weapons were first made, there was a serious concern that the first nuclear test would trigger a chain reaction and ignite the entire plant’s atmosphere. AI has an analogous issue. It used a technology called machine learning, that allows AI to figure out the solutions for problems on its own. The problem is that we don’t know whether this technology, or something similar, might cause the AI to start “thinking for itself.” There are a significant number of software engineers who think this might have disastrous consequences, but it’s a risk to the public, while machine learning research mostly creates private gains. Government should create a task force to take this possibility seriously, so researchers can coordinate to better understand and mitigate that risk.

Replies from: Nanda Ale↑ comment by Nanda Ale · 2022-05-27T13:21:41.000Z · LW(p) · GW(p)

During the Manhattan project, scientists were concerned that the first nuclear weapon would trigger a chain reaction and ignite the planet's atmosphere. But after the first test was completed this was no longer a concern. The remaining concern was what humans will choose to do with such weapons, instead of unexpected consequences.

But with AI, that risk never goes away. Each successful test is followed by bigger and more ambitious tests, each with the possibility of a horrific chain reaction beyond our control. And unlike the Manhattan project, there is no consensus that the atmosphere will not ignite.

comment by Nicholas / Heather Kross (NicholasKross) · 2022-05-27T01:56:20.253Z · LW(p) · GW(p)

Imagine it's 1932, but with one major difference: uranium is cheap enough that anyone can get some. Radioactive materials are unregulated. The world's largest companies are competing to build nuclear power plants. Nuclear weapons have not yet been discovered. Would you think nuclear arms control is premature? Or would you want to get started now to prevent a catastrophe?

This is the same situation the real world is in, with machine learning and artificial intelligence. The world's biggest tech companies are gathering GPUs and working to build AI that is smarter than humans about everything. And right now, there's not much coordination being done to make this go well. (Policymakers)

comment by Quintin Pope (quintin-pope) · 2022-05-27T01:42:28.359Z · LW(p) · GW(p)

“Thousands of researchers at the worlds richest corporations are all working to make AI more powerful. Who is working to make AI more moral?”

(For policymakers and activists skeptical of big tech)

comment by trevor (TrevorWiesinger) · 2022-05-27T01:06:21.879Z · LW(p) · GW(p)

If an AI is cranked up to the point that it becomes smarter than humans, it will not behave predictably. We humans are not predictable. Even chimpanzees and dolphins are unpredictable. Smart things are not predictable. Intelligence, itself, does not tend to result in predictability.

Replies from: TrevorWiesinger↑ comment by trevor (TrevorWiesinger) · 2022-05-27T01:25:53.182Z · LW(p) · GW(p)

This one's very good for policymakers, I think. Anything that makes them sound smart to their friends or family is more likely to stick in their heads. Even as conversation starters. Especially if it has to do with evolution, they might hire a biologist consultant and have them read the report on AGI risk, and it will basically always blow the mind of those consultants.

comment by trevor (TrevorWiesinger) · 2022-05-26T23:58:56.906Z · LW(p) · GW(p)

"When I visualize [a scenario where a highly intelligent AI compromises all human controllers], I think it [probably] involves an AGI system which has the ability to be cranked up by adding more computing resources to it [to increase its intelligence and creativity incrementally]; and I think there is an extended period where the system is not aligned enough that you can crank it up that far, without [any dangerously erratic behavior from the system]"

Eliezer Yudkowsky [LW · GW]

comment by trevor (TrevorWiesinger) · 2022-05-25T22:57:43.817Z · LW(p) · GW(p)

"Guns were developed centuries before bulletproof vests"

"Smallpox was used as a tool of war before the development of smallpox vaccines"

EY, AI as a pos neg factor, 2006ish

comment by Adam Jermyn (adam-jermyn) · 2022-05-22T20:09:54.770Z · LW(p) · GW(p)

Targeting policymakers:

Regulating an industry requires understanding it. This is why complex financial instruments are so hard to regulate. Superhuman AI could have plans far beyond our ability to understand and so could be impossible to regulate.

comment by Nicholas / Heather Kross (NicholasKross) · 2022-05-20T23:39:10.074Z · LW(p) · GW(p)

The implicit goal, the thing you want, is to get good at the game; the explicit goal, the thing the AI was programmed to want, is to rack up points by any means necessary. (Machine learning researchers)

comment by jcp29 · 2022-05-12T13:12:18.042Z · LW(p) · GW(p)

[Policy makers & ML researchers]

"There isn’t any spark of compassion that automatically imbues computers with respect for other sentients once they cross a certain capability threshold. If you want compassion, you have to program it in" (Nate Soares). Given that we can't agree on whether a straw has two holes or one...We should probably start thinking about how program compassion into a computer.

Replies from: TrevorWiesinger↑ comment by trevor (TrevorWiesinger) · 2022-05-12T22:18:59.047Z · LW(p) · GW(p)

MIRI peope quotes are great, they aren't easy to find like EY's one ultra-famous paper from 2006, please add more MIRI people quotes (I probably will too).

Don't give up, keep commenting, this contest has been cut off from most people's visibility so it needs all the attention and entries it can get.

Replies from: jcp29comment by trevor (TrevorWiesinger) · 2022-05-02T14:51:23.650Z · LW(p) · GW(p)

It is a fundamental law of thought that thinking things will cut corners, misinterpret instructions, and cheat.

comment by trevor (TrevorWiesinger) · 2022-04-28T07:01:41.636Z · LW(p) · GW(p)

"Past technological revolutions usually did not telegraph themselves to people alive at the time, whatever was said afterward in hindsight"

Eliezer Yudkowsky, AI as a pos neg factor, around 2006

comment by trevor (TrevorWiesinger) · 2022-04-27T23:12:40.224Z · LW(p) · GW(p)

"Imagine that Facebook and Netflix have two separate AIs that compete over hours that each user spends on their own platform. They want users to spend the maximum amount of minutes on Facebook or Netflix, respectively.

The Facebook AI discovers that posts that spoil popular TV shows result in people spending more time on the platform. It doesn't know what spoilers are, only that they cause people to spend more time on Facebook. But in reality, they're ruining the entertainment value from excellent shows on Netflix.

Even worse, the Netflix AI discovers that people stop watching shows with plot twists, so it promotes more boring shows with no plot twists. They end up on the front page and make the most profit due to exposure.

The problem gets discovered and fixed three years later, but by that time it's too late; Facebook has a reputation for ruining TV shows and many people consciously avoid using it, and meanwhile Netflix has spent 3 years punishing producers and scriptwriters for filming excellent stories with plot twists. Neither company wants this. It takes 3 years for human engineers to discover and fix it, and the whole time, many more misguided AI decisions have popped up.

The supervision required for AI is immense, for the simplest tasks and the simplest systems. AI just keeps finding new ways to cheat"

comment by jcp29 · 2022-04-27T19:07:00.782Z · LW(p) · GW(p)

[Policymakers & ML researchers]

A virus doesn't need to explain itself before it destroys us. Neither does AI.

A meteor doesn't need to warn us before it destroys us. Neither does AI.

An atomic bomb doesn't need to understand us in order to destroy us. Neither does AI.

A supervolcano doesn't need to think like us in order to destroy us. Neither does AI.

(I could imagine a series riffing based on this structure / theme)

comment by jcp29 · 2022-04-27T17:59:27.853Z · LW(p) · GW(p)

[ML researchers]

Given that we can't agree on whether a hotdog is a sandwich or not...We should probably start thinking about how to tell a computer what is right and wrong.

[Insert call to action on support / funding for AI governance / regulation etc.]

-

Given that we can't agree on whether a straw has two holes or one...We should probably start thinking about how to explain good and evil to a computer.

[Insert call to action on support / funding for AI governance / regulation etc.]

(I could imagine a series riffing based on this structure / theme)

comment by Yitz (yitz) · 2022-04-27T17:17:33.772Z · LW(p) · GW(p)

I will post my submissions as individual replies to this comment. Please let me know if there’s any issues with that.

Replies from: yitz, yitz, yitz, yitz↑ comment by Yitz (yitz) · 2022-04-27T17:29:27.651Z · LW(p) · GW(p)

Imagine that you are an evil genius who wants to kill over a billion people. Can you think of a plausible way you might succeed? I certainly can. Now imagine a very large company that wants to maximize profits. We all know from experience that large companies are going to take unethical measures in order to maximize their goals. Finally, imagine an AI with the intelligence of Einstein, but trying to maximize for a goal alien to us, and which doesn’t care for human well-being at all, even less than a large corporation cares about its employees. Do you see why experts are afraid?

↑ comment by Yitz (yitz) · 2022-05-02T06:50:55.075Z · LW(p) · GW(p)

A member of an intelligent social species might also have motivations related to cooperation and competition: like us, it might show in-group loyalty, a resentment of free-riders, perhaps even a concern with reputation and appearance. By contrast, an artificial mind need not care intrinsically about any of those things, not even to the slightest degree.

↑ comment by Yitz (yitz) · 2022-04-29T21:50:14.701Z · LW(p) · GW(p)

If most large companies tend to be unethical, then what are the chances a non-human AI will be more ethical?

↑ comment by Yitz (yitz) · 2022-04-29T21:47:55.997Z · LW(p) · GW(p)

According to [insert relevant poll here] most researchers believe that we will create a human-level AI within this century.

comment by Davidmanheim · 2022-04-27T10:04:28.987Z · LW(p) · GW(p)

Question: "effective arguments for the importance of AI safety" - is this about arguments for the importance of just technical AI safety, or more general AI safety, to include governance and similar things?

comment by Lone Pine (conor-sullivan) · 2022-04-26T18:58:58.175Z · LW(p) · GW(p)

“Aligning AI is the last job we need to do. Let’s make sure we do it right.”

(I’m not sure which target audience my submissions are best targeted towards. I’m hoping that the judges can make that call for me.)

comment by Mati_Roy (MathieuRoy) · 2022-09-17T20:42:55.665Z · LW(p) · GW(p)

I recently talked with the minister of innovation in Yucatan, and ze's looking to have competitions in the domain of artificial intelligence in a large conference on innovation they're organizing in Yucatan, Mexico that will happen in mid-November. Do you think there's the potential for a partnership?

comment by michael_mjd · 2022-05-28T06:57:26.783Z · LW(p) · GW(p)

AI existential risk is like climate change. It's easy to come up with short slogans that make it seem ridiculous. Yet, when you dig deeper into each counterargument, you find none of them are very convincing, and the dangers are quite substantial. There's quite a lot of historical evidence for the risk, especially in the impact humans have had on the rest of the world. I strongly encourage further, open-minded study.

Replies from: michael_mjd↑ comment by michael_mjd · 2022-05-28T06:57:49.657Z · LW(p) · GW(p)

For ML researchers.

comment by Nanda Ale · 2022-05-28T06:56:40.661Z · LW(p) · GW(p)

Policymakers

For the first time in human history, philosophical questions of good and bad have a real deadline.

This is a an extremely common, perhaps even overused, catchphrase for AI risk. But I still want to make sure it’s represented because I personally find it striking.

comment by Nicholas / Heather Kross (NicholasKross) · 2022-05-28T06:56:14.711Z · LW(p) · GW(p)

Leading up to the first nuclear weapons test, the Trinity event in July 1945, multiple physicists in the Manhattan Project thought the single explosion would destroy the world. Edward Teller, Arthur Compton, and J. Robert Oppenheimer all had concerns that the nuclear chain reaction could ignite Earth's atmosphere in an instant. Yet, despite disagreement and uncertainty over their calculations, they detonated the device anyway. If the world's experts in a field can be uncertain about causing human extinction with their work, and still continue doing it, what safeguards are we missing for today's emerging technologies? Could we be sleepwalking into catastrophe with bioengineering, or perhaps artificial intelligence? (Based on info from here). (Policymakers)

comment by Nanda Ale · 2022-05-28T06:35:19.910Z · LW(p) · GW(p)

Target: Everyone? Just really snappy.

I'm old enough to remember when protein folding, text-based image generation, StarCraft play, 3+ player poker, and Winograd schemas were considered very difficult challenges for AI. I'm 3 years old.

Source: https://nitter.nl/Miles_Brundage/status/1490512439154151426#m

comment by Nanda Ale · 2022-05-28T06:26:44.805Z · LW(p) · GW(p)

Machine learning researchers

Common Deep Learning Critique “It’s just memorization”

Critique:

Let’s say there is some intelligent behavior that emerges from these huge models. These researchers have given up on the idea that we should understand intelligence. They’re just playing the memorization game. They’re using their petabytes and petabytes of data to make these every bigger models, and they’re just memorizing everything with brute force. This strategy can not scale. They will run out of space before anything more interesting happens.

Connor Leahy response:

What you just described is what I believed one year ago. I believed bigger models just memorized more.

But when I sat down and read the paper for GPT-3 I almost fell out of my chair. GPT-3 did not complete a full epoch on its data. It only saw most of its data once.

But even so, it had a wide knowledge of topics it could not have seen more than once. This implies it is capable of learning complete concepts in a single update step. Everyone says deep learning can’t do this. But GPT-3 seems to have learned some kind of meta-learning algorithm to allow it to rapidly learn new concepts it only sees one time.

Human babies need to hear a new word many times to learn it, perhaps over weeks. Human adults, on the other hand, can immediately understand a new word the first time it is introduced. GPT-3 has learned this without any change in architecture.

Paraphrased from this podcast segment: https://www.youtube.com/watch?v=HrV19SjKUss&t=5460s

comment by Nanda Ale · 2022-05-28T06:17:20.161Z · LW(p) · GW(p)

Policymakers

These researchers built an AI for discovering less toxic drug compounds. Then they retrained it to do the opposite. Within six hours it generated 40,000 toxic molecules, including VX nerve agent and "many other known chemical warfare agents."

Source: https://nitter.nl/WriteArthur/status/1503393942016086025#m

comment by Nicholas / Heather Kross (NicholasKross) · 2022-05-28T01:41:26.550Z · LW(p) · GW(p)

Imagine a piece of AI software was invented, capable of doing any intellectual task a human can, at a normal human level. Should we be concerned about this? Yes, because this artificial mind would be more powerful (and dangerous) than any human mind. It can think anything a normal human can, but faster, more precisely, and without needing to be fed. In addition, it could be copied onto a million computers with ease. An army of thinkers, available at the press of a button. (Adapted from here). (Policymakers)

comment by trevor (TrevorWiesinger) · 2022-05-27T23:42:57.460Z · LW(p) · GW(p)

Policymakers and techxecutives:

If we build an AI that's smarter than a human, then it will be smarter than a human, so it won't have a hard time convincing us that it's on our side. This is why we have to build it perfectly, before it's built, not after.

comment by theme_arrow · 2022-05-27T21:43:08.677Z · LW(p) · GW(p)

AI has a history of surprising us with its capabilities. Throughout the last 50 years, AI and machine learning systems have kept gaining skills that were once thought to be uniquely human, such as playing chess, classifying images, telling stories, and making art. Already, we see the risks associated with these kinds of AI capabilities. We worry about bias in algorithms that guide sentencing decisions or polarization induced by algorithms that curate our social media feeds. But we have every reason to believe that trends in AI progress will continue. AI will likely move from classifying satellite imagery to actually deciding whether to order a drone strike, or from helping AI researchers conduct literature reviews to actually executing AI research. As these AI systems continue to grow more capable, our ability to understand and control them will tend to weaken, with potentially disastrous consequences. it is therefore critical that we build the technological foundation to ensure these systems share our values and the policy and regulatory foundation to ensure these systems are used for good.

Replies from: TrevorWiesinger↑ comment by trevor (TrevorWiesinger) · 2022-05-27T23:26:38.133Z · LW(p) · GW(p)

the first sentence counts as a one-liner

comment by IrenicTruth · 2022-05-27T13:25:38.372Z · LW(p) · GW(p)

For lefties:

- We put unaligned AIs in charge of choosing what news people see. Result: polarization resulting in millions of deaths. Let's not make the same mistake again.

For right-wingers:

- We put unaligned AIs in charge of choosing what news people see. Result: people addicted to their phones, oblivious to their families, morals, and eroding freedoms. Let's not make the same mistake again.

comment by Nanda Ale · 2022-05-27T12:11:46.858Z · LW(p) · GW(p)

Policymakers

Look, we know how we sound waving our hands warning about this AI stuff. But here’s the thing, in this space, things that sounded crazy yesterday can become very real overnight. (Link DALL-E 2 or Imagen samples). Honestly ask yourself: would you have believed a computer could do that before seeing these examples? And if you were surprised by this, how many more surprises won’t you see coming? We’re asking you to expect to be surprised, and to get ready.

comment by AprilSR · 2022-05-27T00:47:22.721Z · LW(p) · GW(p)

Humans are pretty clever, but AI will be eventually be even more clever. If you give a powerful enough AI a task, it can direct a level of ingenuity towards it far greater than history’s smartest scientists and inventors. But there are many cases of people accidentally giving an AI imperfect instructions.

If things go poorly, such an AI might notice that taking over the world would give it access to lots of resources helpful for accomplishing its task. If this ever happens, even once, with an AI smart enough to escape any precautions we set and succeed at taking over the world, then there will be nothing humanity can do to fix things.

comment by jimv · 2022-05-27T00:00:27.038Z · LW(p) · GW(p)

The first moderately smart AI anyone develops might quickly become the last time that that people are the smartest things around. We know that people can write computer programs. Once we make an AI computer program that is a bit smarter than people, it should be able to write computer programs too, including re-writing its own software to make itself even smarter. This could happen repeatedly, with the program getting smarter and smarter. If an AI quickly re-programs itself from moderately-smart to super-smart, we could soon find that it is as disinterested in the wellbeing of people as people are of mice.

comment by Zack_M_Davis · 2022-05-26T23:05:02.088Z · LW(p) · GW(p)

(For non-x-risk-focused transhumanists, some of whom may be tech execs or ML researchers.)

Some people treat the possibility of human extinction with a philosophical detachment: who are we to obstruct the destiny of the evolution of intelligent life? If the "natural" course of events for a biological species like ours is to be transcended by our artificial "mind children", shouldn't we be happy for them?

I actually do have some sympathy for this view, in the sense that the history where we build AI that kills us is plausibly better than the history where the Industrial Revolution never happens at all. Still—if you had the choice between a superintelligence that kills you and everyone you know, and one that grants all your hopes and dreams for a happy billion-year lifespan, isn't it worth some effort trying to figure out how to get the latter?

comment by Robert Cousineau (robert-cousineau) · 2022-05-26T22:28:46.832Z · LW(p) · GW(p)

"Through the past 4 billion years of life on earth, the evolutionary process has emerged to have one goal: create more life. In the process, it made us intelligent. In the past 50 years, as humanity gotten exponentially more economically capable we've seen human birth rates fall dramatically. Why should we expect that when we create something smarter than us, it will retain our goals any better than we have retained evolution's?" (Policymaker)

comment by Nina Panickssery (NinaR) · 2022-05-26T17:18:21.645Z · LW(p) · GW(p)

A system that is optimizing a function of n variables, where the objective depends on a subset of size k<n, will often set the remaining unconstrained variables to extreme values; if one of those unconstrained variables is actually something we care about, the solution found may be highly undesirable. - Stuart Russell

comment by Nicholas / Heather Kross (NicholasKross) · 2022-05-26T02:26:21.559Z · LW(p) · GW(p)

With enough effort, could humanity have prevented nuclear proliferation? (Machine learning researchers)

comment by Nicholas / Heather Kross (NicholasKross) · 2022-05-26T02:17:49.404Z · LW(p) · GW(p)

Progress moves faster than we think; who in the past would've thought that the world economy would double in size, multiple times in a single person's lifespan? (Adapted from here). (Policymakers)

comment by trevor (TrevorWiesinger) · 2022-05-25T23:08:43.146Z · LW(p) · GW(p)

The nightmare scenario is that we find ourselves stuck with a catalog of mature, powerful, publicly available AI techniques... which cannot be used to build Friendly AI without redoing the last three decades of AI work from scratch.

EY, AI as a pos neg factor, 2006ish

comment by avturchin · 2022-05-25T09:38:23.139Z · LW(p) · GW(p)

There are several dozens scenarios how advanced AI can cause a global catastrophe. The full is presented in the article Classification of Global Catastrophic Risks Connected with Artificial Intelligence. At least some scenarios are real and likely to happen. Therefore we have to pay more attention to AI safety.

comment by Nicholas / Heather Kross (NicholasKross) · 2022-05-25T07:57:18.058Z · LW(p) · GW(p)