Voting Results for the 2022 Review

post by Ben Pace (Benito) · 2024-02-02T20:34:59.768Z · LW · GW · 3 comments

The 5th Annual LessWrong Review has come to a close!

Review Facts

There were 5330 posts published in 2022.

Here's how many posts passed through the different review phases.

| Phase | No. of posts | Eligibility |
|---|---|---|
| Nominations Phase | 579 | Any 2022 post could be given preliminary votes |
| Review Phase | 363 | Posts with 2+ votes could be reviewed |
| Voting Phase | 168 | Posts with 1+ reviews could be voted on |

Here's how many votes and voters there were by karma bracket.

| Karma Bucket | No. of Voters | No. of Votes Cast |
|---|---|---|
| Any | 333 | 5007 |
| 1+ | 307 | 4944 |
| 10+ | 298 | 4902 |
| 100+ | 245 | 4538 |
| 1,000+ | 121 | 2801 |
| 10,000+ | 24 | 816 |

To give some context on this annual tradition, here are the absolute numbers compared to last year and to the first year of the LessWrong Review.

| | 2018 | 2021 | 2022 |
|---|---|---|---|
| Voters | 59 | 238 | 333 |
| Nominations | 75 | 452 | 579 |
| Reviews | 120 | 209 | 227 |
| Votes | 1272 | 2870 | 5007 |
| Total LW Posts | 1703 | 4506 | 5330 |

Review Prizes

There were lots of great reviews this year! Here's a link to all of them [? · GW]

Of the 227 reviews, 31 are receiving prizes.

This follows up on Habryka, who gave out about half of these prizes two months ago. [LW(p) · GW(p)]

Note that two users were paid to produce reviews and so will not be receiving the prize money. They're still here because I wanted to indicate that they wrote some really great reviews.


Excellent ($200) (7 reviews)

- ambigram [LW · GW] for their review [LW(p) · GW(p)] of Meadow Theory

- Buck [LW · GW] for his self-review [LW(p) · GW(p)] of Causal Scrubbing: a method for rigorously testing interpretability hypotheses

- DirectedEvolution [LW · GW] for their paid review [LW(p) · GW(p)] of How satisfied should you expect to be with your partner?

- LawrenceC [LW · GW] for their paid review [LW(p) · GW(p)] of Some Lessons Learned from Studying Indirect Object Identification in GPT-2 small

- LawrenceC [LW · GW] for their paid review [LW(p) · GW(p)] of How "Discovering Latent Knowledge in Language Models Without Supervision" Fits Into a Broader Alignment Scheme

- LoganStrohl [LW · GW] for their self-review [LW(p) · GW(p)] of the Intro to Naturalism sequence

- porby [LW · GW] for their self-review [LW(p) · GW(p)] of Why I think strong general AI is coming soon

Great ($100) (6 reviews)

- DirectedEvolution [LW · GW] for their paid review [LW(p) · GW(p)] of Slack matters more than any outcome

- janus [LW · GW] for their self-review [LW(p) · GW(p)] of Simulators

- Lee Sharkey [LW · GW] for their self-review [LW(p) · GW(p)] of Taking features out of superposition with sparse autoencoders

- Neel Nanda [LW · GW] for their review [LW(p) · GW(p)] of "Some Lessons Learned from Studying Indirect Object Identification in GPT-2 small"

- nostalgebraist [LW · GW] for their review [LW(p) · GW(p)] of Simulators

- Writer [LW · GW] for their review [LW(p) · GW(p)] of "Inner and outer alignment decompose one hard problem into two extremely hard problems"

Good ($50) (18 reviews)

- Alex_Altair [LW · GW] for their review [LW(p) · GW(p)] of the Intro to Naturalism sequence

- Buck [LW · GW] for their review [LW(p) · GW(p)] of K-complexity is silly; use cross-entropy instead

- Davidmanheim [LW · GW] for their review [LW(p) · GW(p)] of It's Probably not Lithium

- [DEACTIVATED] Duncan Sabien [LW · GW] for their review [LW(p) · GW(p)] of Here's the exit.

- [DEACTIVATED] Duncan Sabien [LW · GW] for their self-review [LW(p) · GW(p)] of Benign Boundary Violations

- eukaryote [LW · GW] for their self-review [LW(p) · GW(p)] of Fiber arts, mysterious dodecahedrons, and waiting on “Eureka!”

- Jan_Kulveit [LW · GW] for their review [LW(p) · GW(p)] of Human values & biases are inaccessible to the genome

- Jan_Kulveit [LW · GW] for their review [LW(p) · GW(p)] of The shard theory of human values

- johnswentworth [LW · GW] for their review [LW(p) · GW(p)] of Revisiting algorithmic progress

- L Rudolf L [LW · GW] for their self-review [LW(p) · GW(p)] of Review: Amusing Ourselves to Death

- Nathan Young [LW · GW] for their review [LW(p) · GW(p)] of Introducing Pastcasting: A tool for forecasting practice

- Neel Nanda [LW · GW] for their self-review [LW(p) · GW(p)] of A Longlist of Theories of Impact for Interpretability

- Screwtape [LW · GW] for their review [LW(p) · GW(p)] of How To: A Workshop (or anything)

- Screwtape [LW · GW] for their review [LW(p) · GW(p)] of Sazen

- TurnTrout [LW · GW] for their review [LW(p) · GW(p)] of Simulators

- Vanessa Kosoy [LW · GW] for their post-length review [LW(p) · GW(p)] of Where I agree and disagree with Eliezer

- Vika [LW · GW] for their self-review [LW(p) · GW(p)] of DeepMind alignment team opinions on AGI ruin arguments

- Vika [LW · GW] for their self-review [LW(p) · GW(p)] of Refining the Sharp Left Turn threat model, part 1: claims and mechanisms

We'll reach out to prizewinners in the coming weeks to give them their prizes.

We have been working on a new way of celebrating the best posts of the year

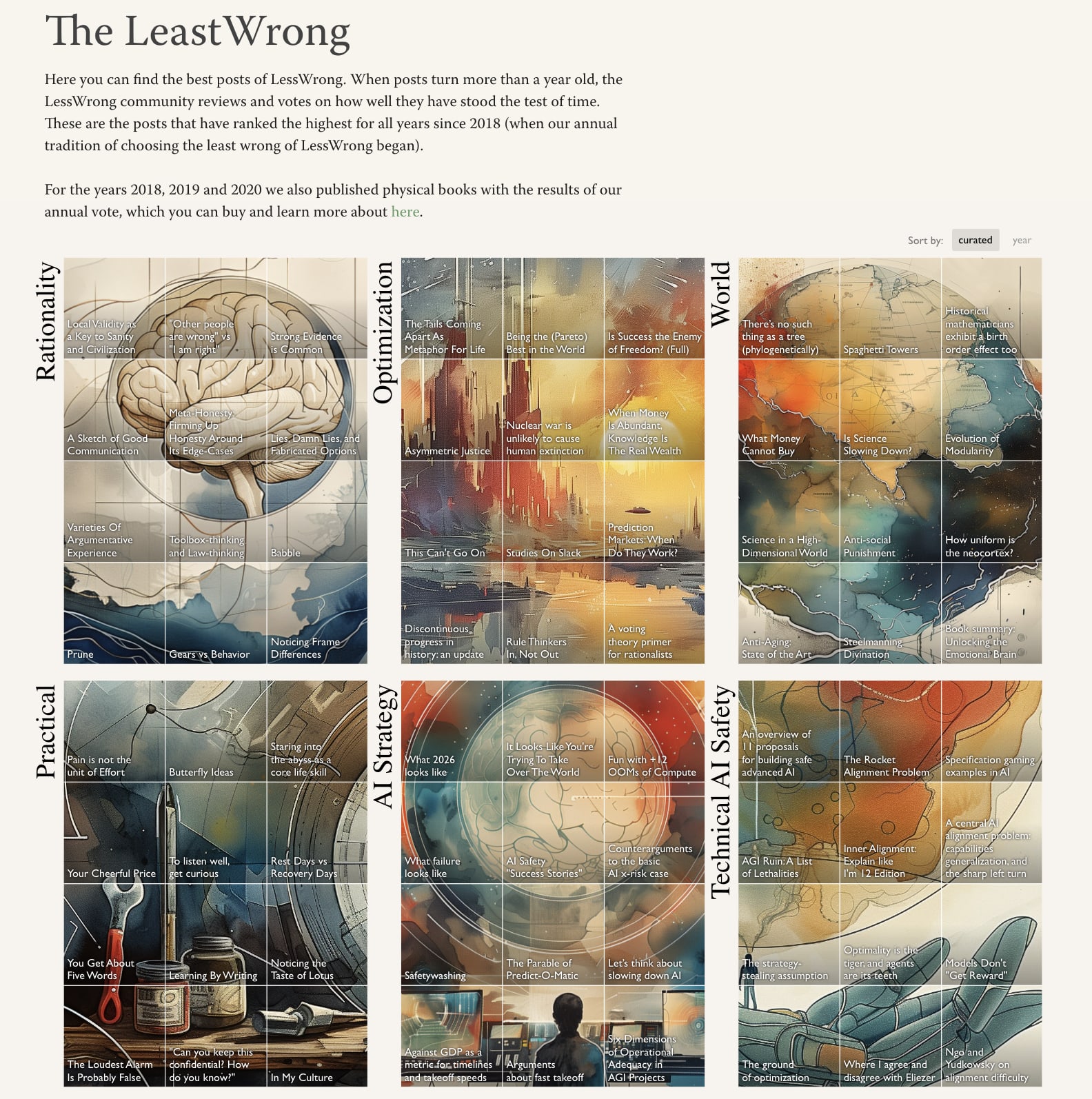

The top 50 posts of each year are being celebrated in a new way! Read this companion post [LW · GW] to find out all the details, but for now here's a preview of the sorts of changes we've made for the top-voted posts of the annual review.

And there's a new LeastWrong page [? · GW] with the top 50 posts from all 5 annual reviews so far, sorted into categories.

You can learn more about what we've built in the companion post [LW · GW].

Okay, now onto the voting results!

Voting Results

Voting is visualized here with dots of varying sizes, roughly indicating that a user thought a post was "good" (+1), "important" (+4), or "extremely important" (+9).

Green dots indicate positive votes. Red dots indicate negative votes.

If a user spent more than their budget of 500 points, all of their votes were scaled down slightly, so some of the circles are slightly smaller than others.
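As a rough illustration of the budget rule above, here is a minimal Python sketch. The post does not say how a vote's point cost is computed or exactly how the scaling was done, so both `triangular_cost` (a vote of size n costs n(n+1)/2 points) and the proportional rule in `scale_to_budget` are assumptions made for illustration, not the site's actual implementation.

```python
from typing import Callable, Dict

BUDGET = 500  # point budget stated in the post


def triangular_cost(strength: float) -> float:
    """Hypothetical cost of one vote: n(n+1)/2 points for a vote of size n."""
    n = abs(strength)
    return n * (n + 1) / 2


def scale_to_budget(
    votes: Dict[str, float],
    cost: Callable[[float], float] = triangular_cost,
    budget: float = BUDGET,
) -> Dict[str, float]:
    """Shrink every vote by one common factor if the ballot is over budget.

    The proportional factor (budget / total cost) is an assumed rule.
    """
    total = sum(cost(v) for v in votes.values())
    if total <= budget:
        return dict(votes)  # within budget: votes are left unchanged
    factor = budget / total
    # Multiplying by a positive factor keeps the sign of negative votes.
    return {post: v * factor for post, v in votes.items()}


# Twelve "extremely important" (+9) votes under the assumed cost rule.
ballot = {f"post{i}": 9 for i in range(12)}
scaled = scale_to_budget(ballot)
print(scaled["post0"])
```

Under these assumed rules, twelve +9 votes would cost 540 points, so each vote shrinks by a factor of 500/540. That is consistent with some circles appearing slightly smaller than others, though the real scaling rule may differ.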

These are the 161 posts that got a net positive score, out of 168 posts that were eligible for the vote.

3 comments

Comments sorted by top scores.

comment by Alex_Altair · 2024-02-28T17:48:10.468Z · LW(p) · GW(p)

Just noticing that every post has at least one negative vote, which feels interesting for some reason.

Replies from: habryka4

↑ comment by habryka (habryka4) · 2024-02-28T17:53:34.997Z · LW(p) · GW(p)

Technically the optimal way to spend your points to influence the vote outcome is to center them (i.e. have the mean be zero). In practice, this means giving a -1 to lots of posts. It doesn't provide much of an advantage, but I vaguely remember some people saying they did it, which IMO would explain there being some very small number of negative votes on everything.
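(Illustration, not part of the comment above.) A minimal sketch of why centering helps, assuming a ballot's point cost is the sum of squared vote strengths. That cost rule and the helper names `center` and `quadratic_cost` are assumptions for illustration; the thread does not state the actual formula.

```python
from typing import List


def center(votes: List[float]) -> List[float]:
    """Subtract the mean so the votes average to zero."""
    mean = sum(votes) / len(votes)
    return [v - mean for v in votes]


def quadratic_cost(votes: List[float]) -> float:
    """Assumed cost rule: each vote costs its strength squared."""
    return sum(v * v for v in votes)


raw = [2, 2, 0, 0]       # two posts favored, two left at zero
centered = center(raw)   # [1.0, 1.0, -1.0, -1.0]

# The gap between any two posts is unchanged by centering...
assert raw[0] - raw[2] == centered[0] - centered[2]

# ...but the ballot is cheaper under the assumed quadratic cost.
print(quadratic_cost(raw), quadratic_cost(centered))  # 8 4.0
```

Shifting every vote by the same constant leaves the differences between posts unchanged, and the sum of squares is smallest when the mean is zero. Under this assumed cost rule, the saved points can be spent on stronger votes elsewhere, at the price of small negative votes on posts the voter was otherwise neutral about.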

comment by Neil (neil-warren) · 2024-02-29T11:00:00.412Z · LW(p) · GW(p)

The new designs are cool, I'd just be worried about venturing too far into insight porn. You don't want people reading the posts just because they like how they look (although reading them superficially is probably better than not reading them at all). Clicking on the posts and seeing a giant image that bleeds color into the otherwise sober text format is distracting.

I guess if I don't like it there's always GreaterWrong.