Thomas Kwa's Shortform

post by Thomas Kwa (thomas-kwa) · 2020-03-22T23:19:01.335Z · 230 comments

comment by Thomas Kwa (thomas-kwa) · 2024-03-06T21:13:23.988Z

Air purifiers are highly suboptimal and could be >2.5x better.

Some things I learned while researching air purifiers for my house, to reduce COVID risk during jam nights.

- An air purifier is simply a fan blowing through a filter, delivering a certain CFM (airflow in cubic feet per minute). The higher the filter resistance and lower the filter area, the more pressure your fan needs to be designed for, and the more noise it produces.

- HEPA filters are inferior to MERV 13-14 filters except for a few applications like cleanrooms. The technical advantage of HEPA filters is filtering out 99.97% of particles of any size, but this doesn't matter when MERV 13-14 filters can filter 77-88% of infectious aerosol particles at much higher airflow. The correct metric is CADR (clean air delivery rate), equal to airflow * efficiency. [1, 2]

- Commercial air purifiers use HEPA filters for marketing reasons and to sell proprietary filters. But an even larger flaw is that they have very small filter areas for no apparent reason. Therefore they are forced to use very high-pressure fans, dramatically increasing noise.

- People originally devised the Corsi-Rosenthal Box to maximize CADR. These boxes are cheap but rather loud and ugly, though later designs have fixed this.

- (85% confidence) Wirecutter's recommendations (Coway AP-1512HH) have been beaten by ~2.5x in CADR/$, CADR/noise, CADR/floor area, and CADR/watt at a given noise level, just by having higher filter area. [3]

- At noise levels acceptable for a living room (~40 dB, Wirecutter's top pick on medium), CleanAirKits and Nukit sell purifier kits that use PC fans to push air through commercial MERV filters, getting 2.5x CADR at the same noise level, footprint, and energy usage [4]. These are basically handmade but still achieve cost parity with used Coways, 2.5x CADR/$ against new Coways, and use cheaper filters.

- At higher noise levels (Wirecutter's top pick on high), there are kits and DIY options meant for garages and workshops that beat Wirecutter in cost too.

- However, there exist even better designs that no one is making.

- jefftk devised a ceiling fan air purifier, which is extremely quiet.

- Someone on Twitter made a wall-mounted prototype with PC fans that blocks fan noise, reducing noise by another few dB and reducing the space requirement to near zero. If this were mass-produced flat-pack furniture (and had a few more fans), it would likely deliver ~300 CFM CADR (2.7x Wirecutter's top pick on medium, enough to meet ASHRAE 241 standards for infection control for 6 people in a residential common area or 9 in an office), be really cheap, and generally be unobtrusive enough in noise, space, and aesthetics to be run 24/7.

- A seller on Taobao makes PC fan kits for much less than CleanAirKits (Reddit discussion). One model is sold on Amazon at a big markup, but it's not the best model, takes 4-7 weeks to ship, is often out of stock, and doesn't ship to CA, where I live. If their taller (higher-area) model shipped to CA, I would get it over the CleanAirKits one.

- V-bank filters should have ~3x higher filter area for a given footprint, further increasing CADR by maybe 1.7x.

- If I'm right, the fact that these are not mass-produced is a major civilizational failing.
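To make the pressure/area tradeoff from the first bullet concrete, here is a minimal Python sketch of where a fan settles when pushing air through a filter. It assumes, hypothetically, a straight-line fan curve and a filter whose pressure drop is proportional to face velocity (airflow divided by filter area); real fan and filter curves are nonlinear, and every number and name below is made up for illustration rather than taken from any product.

```python
from dataclasses import dataclass


@dataclass
class Fan:
    """Hypothetical fan with a straight-line pressure/flow curve."""
    max_pressure_pa: float  # static pressure at zero airflow
    max_flow_cfm: float     # airflow with no resistance at all

    def pressure_at(self, flow_cfm: float) -> float:
        return self.max_pressure_pa * (1 - flow_cfm / self.max_flow_cfm)


def filter_pressure_drop(flow_cfm: float, resistance: float, area_m2: float) -> float:
    # Assumption: pressure drop scales linearly with face velocity (flow / area).
    return resistance * flow_cfm / area_m2


def operating_flow(fan: Fan, resistance: float, area_m2: float) -> float:
    # The fan settles where its pressure equals the filter's pressure drop:
    # P0 * (1 - Q / Qmax) = r * Q / A  =>  Q = P0 / (P0 / Qmax + r / A)
    return fan.max_pressure_pa / (
        fan.max_pressure_pa / fan.max_flow_cfm + resistance / area_m2
    )


if __name__ == "__main__":
    fan = Fan(max_pressure_pa=30.0, max_flow_cfm=400.0)  # made-up fan
    r = 0.02  # made-up filter resistance, Pa per (CFM / m^2)
    small = operating_flow(fan, r, area_m2=0.2)
    large = operating_flow(fan, r, area_m2=0.6)  # 3x the filter area
    print(f"0.2 m^2: {small:.0f} CFM, 0.6 m^2: {large:.0f} CFM, ratio {large / small:.2f}")
```

Under this toy model, tripling filter area raises airflow by less than 3x, because the fan's own curve starts to limit it; the gain approaches 3x only when the filter dominates the resistance. Read the other way, holding airflow fixed, the required pressure r·Q/A falls in proportion to area, which is the sense in which more filter area lets a lower-pressure fan do the same job.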

[1] For large rooms, another concern is getting air to circulate properly.

[2] One might worry that the 20% of particles that pass through MERV filters will be more likely to pass through again, which would put a ceiling on the achievable purification. But in practice, you can get to the air quality of a low-grade cleanroom with enough MERV 13 filtration, even if the filters are a few months old. Also, MERV filters get a slight efficiency boost from the slower airflow of a PC fan CR box.

[3] Most commercially available air purifiers have even worse CADR/$ or noise than the Wirecutter picks.

[4] The Wirecutter top pick was tested at 110 CFM on medium; the CleanAirKits Luggable XL was tested at 323 CFM at around the same noise level (not sure of exact values as measurements differ, but the Luggable is likely quieter) and footprint with slightly higher power usage.
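The CADR definition and the worry in footnote [2] can both be sketched with the common well-mixed-room approximation, in which each particle population decays exponentially at a rate of CADR divided by room volume (ignoring ventilation, deposition, and indoor sources). This is a minimal Python sketch under that assumption; the airflows, efficiencies, room volume, and the split into an "easy" and a "hard" particle population are all hypothetical.

```python
import math


def cadr(airflow_cfm: float, efficiency: float) -> float:
    """Clean air delivery rate: airflow times single-pass efficiency."""
    return airflow_cfm * efficiency


def remaining_fraction(airflow_cfm, room_ft3, minutes, populations):
    """Fraction of the initial particles left after `minutes` in a well-mixed room.

    populations: list of (share_of_particles, single_pass_efficiency).
    Each population decays independently at rate CADR / volume (per minute).
    """
    total = 0.0
    for share, efficiency in populations:
        rate_per_min = cadr(airflow_cfm, efficiency) / room_ft3
        total += share * math.exp(-rate_per_min * minutes)
    return total


if __name__ == "__main__":
    # Hypothetical: a HEPA unit moving little air vs. a MERV 13 box moving a lot.
    print("HEPA CADR:", cadr(120, 0.9997))
    print("MERV 13 CADR:", cadr(350, 0.80))

    # Hypothetical pessimistic case for footnote [2]: 20% of particles are much
    # harder to catch (average single-pass efficiency ~0.82). The hard ones decay
    # more slowly, but as long as their efficiency is above zero there is no floor.
    mixed = [(0.8, 0.95), (0.2, 0.30)]
    for t in (0, 30, 60, 120, 240):
        print(t, "min:", round(remaining_fraction(350, 2000, t, mixed), 4))
```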

↑ comment by M. Y. Zuo · 2024-03-07T05:03:15.818Z

But an even larger flaw is that they have very small filter areas for no apparent reason.

Is reducing cost of manufacturing filters 'no apparent reason'?

It seems like literally the most important reason... the profit margin of selling replacement filters would be heavily reduced, assuming pricing remains the same.

↑ comment by Nathan Helm-Burger (nathan-helm-burger) · 2024-03-08T18:02:41.374Z

I don't think that a small HEPA filter is necessarily more expensive to produce than a larger MERV filter. I think they are basing their choice of filter type on other considerations. Their perception of public desirability/marketability is likely the biggest factor here. Components of their expectation likely include:

- Expecting consumers to want a "highest possible quality" product, measured using a dumb-but-popular metric.

- Expecting consumers to prioritize buying a sleek-looking smaller-footprint unit over a larger unit. Also, cost of shipping smaller units is lower, which improves the profit margin.

- Wanting to be able to sell replacements for their uniquely designed filter shape/size, rather than making their filter maximally compatible with commonly available furnace filters cheaply purchasable from hardware stores.

↑ comment by TekhneMakre · 2024-03-07T02:45:54.292Z

Isn't a major point of purifiers to get rid of pollutants, including tiny particles, that gradually but cumulatively damage respiration over long-term exposure?

↑ comment by Thomas Kwa (thomas-kwa) · 2024-03-07T03:57:23.882Z

Yes, and all of this should apply equally to PM2.5, though MERV filter efficiency may be lower on small (<0.3 micron) particles (perhaps depending on what technology they use?). Even smaller particles are easier to capture due to diffusion, so the efficiency of a MERV 13 filter is probably over 50% for every particle size.

↑ comment by RHollerith (rhollerith_dot_com) · 2024-10-11T04:05:01.741Z

A brief warning for those making their own purifier: five years ago, Hacker News ran a story, "Build a do-it-yourself air purifier for about $25," to which someone replied,

One data point: my father made a similar filter and was running it constantly. One night the fan inexplicably caught on fire, burned down the living room and almost burned down the house.

↑ comment by Thomas Kwa (thomas-kwa) · 2024-10-11T06:26:55.877Z

Luckily, that's probably not an issue for PC fan based purifiers. Box fans in CR boxes run well out of spec, with the increased load and lower airflow both raising temperatures, whereas PC fans run under basically the same conditions they're designed for.

↑ comment by Thomas Kwa (thomas-kwa) · 2024-10-11T00:32:50.356Z

Any interest in a longform post about air purifiers? There's a lot of information I couldn't fit in this post, and there have been developments in the last few months. Reply if you want me to cover a specific topic.

↑ comment by Alex K. Chen (parrot) (alex-k-chen) · 2024-03-09T21:49:10.878Z

Have you seen smartairfilters.com?

I've noticed that every air purifier I've used fails to reduce PM2.5 by much on highly polluted days or in highly polluted cities (for instance, the Aurea grouphouse in Berlin has a Dyson air purifier, but when I ran it at max, it still barely reduced Berlin's PM2.5 from its value of 15-20 µg/m³, even at medium distances from Berlin). I live in Boston, where PM2.5 levels are usually low enough, and I still don't notice differences in PM [I use sqair's], but I run it all the time anyway because it still captures enough dust over the day.

↑ comment by Nathan Helm-Burger (nathan-helm-burger) · 2024-04-05T17:54:20.121Z

Sounds like you use bad air purifiers, or too few, or run them on too low a setting. I live in a wildfire-prone area, and always keep a close eye on the PM2.5 reports for outside air, as well as my indoor air monitor. My air filters do a great job of keeping the air pollution down inside, and doing something like opening a door gives a noticeable brief spike in the PM2.5.

Good results require: fresh filters, somewhat more than the recommended number of air filters per unit of area, running the air filters on max speed (low speeds tend to be disproportionately less effective, giving unintuitively low performance).

↑ comment by Thomas Kwa (thomas-kwa) · 2024-03-10T08:19:30.611Z

Yes, one of the bloggers I follow compared them to the PC fan boxes. They look very expensive, though the CADR/size and noise are fine.

My guess is Dyson's design is particularly bad. No way to get lots of filter area when most of the purifier is a huge bladeless fan. No idea about the other one, maybe you have air leaking in or an indoor source of PM.

↑ comment by Elizabeth (pktechgirl) · 2024-03-30T23:15:38.602Z

comment by Thomas Kwa (thomas-kwa) · 2025-04-01T20:01:35.970Z

Some versions of the METR time horizon paper from alternate universes:

Measuring AI Ability to Take Over Small Countries (idea by Caleb Parikh)

Abstract: Many are worried that AI will take over the world, but extrapolation from existing benchmarks suffers from a large distributional shift that makes it difficult to forecast the date of world takeover. We rectify this by constructing a suite of 193 realistic, diverse countries with territory sizes from 0.44 to 17 million km^2. Taking over most countries requires acting over a long time horizon, with the exception of France. Over the last 6 years, the land area that AI can successfully take over with 50% success rate has increased from 0 to 0 km^2, doubling 0 times per year (95% CI 0.0-∞ yearly doublings); extrapolation suggests that AI world takeover is unlikely to occur in the near future. To address concerns about the narrowness of our distribution, we also study AI ability to take over small planets and asteroids, and find similar trends.

When Will Worrying About AI Be Automated?

Abstract: Since 2019, the amount of time LW has spent worrying about AI has doubled every seven months, and now constitutes the primary bottleneck to AI safety research. Automation of worrying would be transformative to the research landscape, but worrying includes several complex behaviors, ranging from simple fretting to concern, anxiety, perseveration, and existential dread, and so is difficult to measure. We benchmark the ability of frontier AIs to worry about common topics like disease, romantic rejection, and job security, and find that current frontier models such as Claude 3.7 Sonnet already outperform top humans, especially in existential dread. If these results generalize to worrying about AI risk, AI systems will be capable of autonomously worrying about their own capabilities by the end of this year, allowing us to outsource all our AI concerns to the systems themselves.

Estimating Time Since The Singularity

Early work on the time horizon paper used a hyperbolic fit, which predicted that AGI (AI with an infinite time horizon) was reached last Thursday. [1] We were skeptical at first because the R^2 was extremely low, but recent analysis by Epoch suggested that AI already outperformed humans at a 100-year time horizon by about 2016. We have no choice but to infer that the Singularity has already happened, and therefore the world around us is a simulation. We construct a Monte Carlo estimate over dates since the Singularity and simulator intentions, and find that the simulation will likely be turned off in the next three to six months.

[1]: This is true

↑ comment by Buck · 2025-04-02T00:39:37.829Z

A few months ago, I accidentally used France as an example of a small country that it wouldn't be that catastrophic for AIs to take over, while giving a talk in France 😬

comment by Thomas Kwa (thomas-kwa) · 2024-07-29T06:51:42.996Z

Quick takes from ICML 2024 in Vienna:

- In the main conference, there were tons of papers mentioning safety/alignment but few of them are good as alignment has become a buzzword. Many mechinterp papers at the conference from people outside the rationalist/EA sphere are no more advanced than where the EAs were in 2022. [edit: wording]

- Lots of progress on debate. On the empirical side, a debate paper got an oral. On the theory side, Jonah Brown-Cohen of DeepMind proved that debate can be efficient even when the thing being debated is stochastic (a version of this paper from last year). Apparently there has been some progress on obfuscated arguments too.

- The Next Generation of AI Safety Workshop was kind of a mishmash of various topics associated with safety. Most of them were not related to x-risk, but there was interesting work on unlearning and other topics.

- The Causal Incentives Group at DeepMind developed a quantitative measure of goal-directedness, which seems promising for evals.

- Reception to my Catastrophic Goodhart paper was decent. An information theorist said there were good theoretical reasons why the two settings we studied (KL divergence and best-of-n) behaved similarly.

- OpenAI gave a disappointing safety presentation at NGAIS touting their new technique of rule-based rewards, which is a variant of constitutional AI and seems really unambitious.

- The mechinterp workshop often had higher-quality papers than the main conference. It was completely full. Posters were right next to each other, and the room was so packed during talks that they stopped letting people in.

- I missed a lot of the workshop, so I need to read some posters before having takes.

- My opinions on the state of published AI safety work:

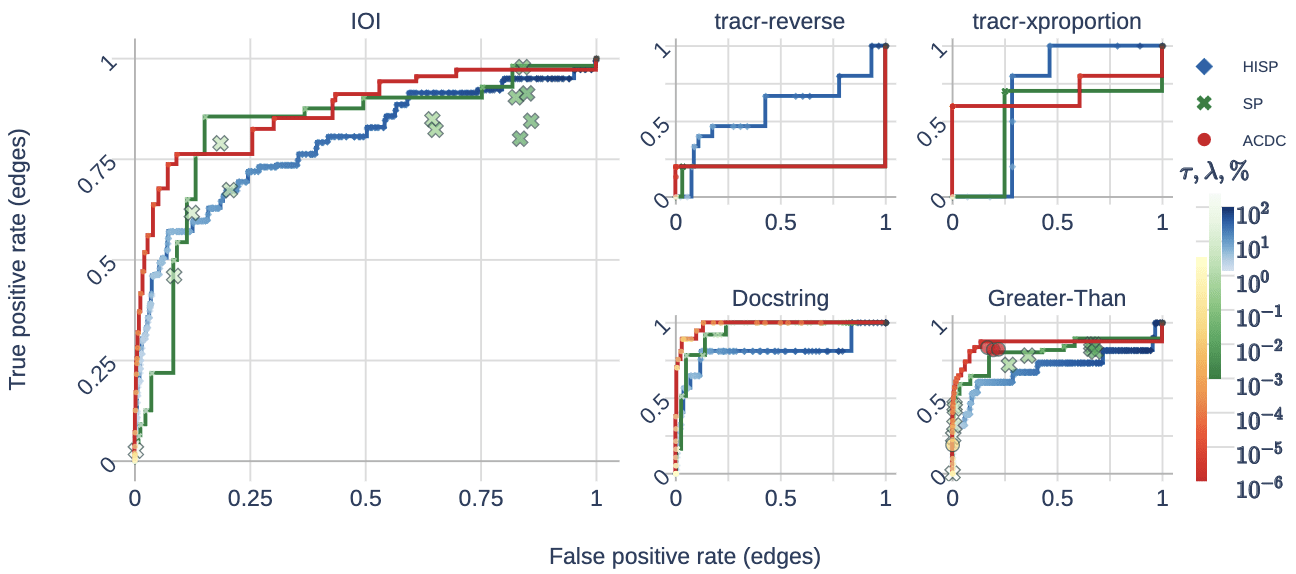

- Mechinterp is progressing but continues to need feedback loops, either from benchmarks (I'm excited about people building on our paper InterpBench) or downstream tasks where mechinterp outperforms fine-tuning alone.

- Most of the danger from AI comes from goal-directed agents and instrumental convergence. There is little research now because we don't have agents yet. In 1-3 years, foundation model agents will be good enough to study, and we need to be ready with the right questions and theoretical frameworks.

- We still do not know enough about AI safety to make policy recommendations about specific techniques companies should apply.

↑ comment by Neel Nanda (neel-nanda-1) · 2024-07-30T10:50:37.045Z

Mechinterp is often no more advanced than where the EAs were in 2022.

Seems pretty false to me; ICML just rejected a bunch of the good submissions lol. I think that e.g. sparse autoencoders are a massive advance in the last year that unlocks a lot of exciting stuff.

↑ comment by Thomas Kwa (thomas-kwa) · 2024-07-30T23:36:18.065Z

I agree, there were some good papers, and mechinterp as a field is definitely more advanced. What I meant to say was that many of the mechinterp papers accepted to the conference weren't very good.

↑ comment by habryka (habryka4) · 2024-07-31T01:13:48.521Z

(This is what I understood you to be saying)

↑ comment by Neel Nanda (neel-nanda-1) · 2024-07-31T01:38:19.193Z

Ah, gotcha. Yes, agreed. Mech interp peer review is generally garbage and does a bad job of filtering for quality (though I think it was reasonable enough at the workshop!)

↑ comment by [deleted] · 2024-07-29T08:41:34.696Z

foundation model agents

What does 'foundation model' mean here?

↑ comment by Thomas Kwa (thomas-kwa) · 2024-07-29T09:17:38.947Z

Multimodal language models. We can already study narrow RL agents, but the intersection with alignment is not a hot area.

comment by Thomas Kwa (thomas-kwa) · 2024-04-25T21:45:25.467Z

The cost of goods has the same units as the cost of shipping: $/kg. Comparing the two lets you understand how the economy works, e.g. why construction materials have to be sourced locally and drinks bottled locally, but oil tankers exist.

- An iPhone costs $4,600/kg, about the same as SpaceX charges to launch it to orbit. [1]

- Beef, copper, and off-season strawberries are $11/kg, about the same as a 75kg person taking a three-hour, 250km Uber ride costing $3/km.

- Oranges and aluminum are $2-4/kg, about the same as flying them to Antarctica. [2]

- Rice and crude oil are ~$0.60/kg, about the same as the $0.72/kg it costs to ship them 5000 km across the US by truck. [3,4] Palm oil, soybean oil, and steel are around this price range, with wheat being cheaper. [3]

- Coal and iron ore are $0.10/kg, significantly more than the cost of shipping them around the entire world via smallish (Handysize) bulk carriers. Large bulk carriers are another 4x more efficient. [6]

- Water is very cheap, with tap water costing $0.002/kg in NYC. [5] But shipping via tanker is also very cheap, so you can ship it maybe 1000 km before the shipping cost equals its price.

It's really impressive that for the price of a winter strawberry, we can ship a strawberry-sized lump of coal around the world 100-400 times.
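Each comparison above boils down to dividing a good's price per kg by a shipping cost per kg-km to get a break-even distance. Here is a small Python sketch of that arithmetic. The per-kg-km rates are back-calculated from the figures quoted in this comment rather than taken from independent freight data, and the constant and function names are made up for illustration.

```python
# Per-kg-km rates implied by the figures quoted above (illustrative only).
UBER_PER_KG_KM = 3.00 / 75     # $3/km for a single 75 kg passenger
TRUCK_PER_KG_KM = 0.72 / 5000  # $0.72/kg to truck goods 5000 km


def shipping_cost_per_kg(rate_per_kg_km: float, distance_km: float) -> float:
    """Cost of moving one kg a given distance at a flat per-kg-km rate."""
    return rate_per_kg_km * distance_km


def break_even_km(price_per_kg: float, rate_per_kg_km: float) -> float:
    """Distance at which shipping has cost as much as the good itself."""
    return price_per_kg / rate_per_kg_km


if __name__ == "__main__":
    print(f"250 km Uber: ${shipping_cost_per_kg(UBER_PER_KG_KM, 250):.2f}/kg")
    print(f"Rice ($0.60/kg) by truck: {break_even_km(0.60, TRUCK_PER_KG_KM):,.0f} km")
    print(f"Beef ($11/kg) by Uber: {break_even_km(11.0, UBER_PER_KG_KM):,.0f} km")
```

A flat per-kg-km rate is a simplification; real freight pricing has fixed loading costs and varies with route and volume, which this sketch ignores.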

[1] An iPhone is $4600/kg, large launches sell for $3500/kg, and rideshares for small satellites for $6000/kg. Higher orbits (medium Earth orbit, where GPS satellites fly, or geostationary orbit) are more expensive to reach, so it's okay for GPS satellites to cost more than an iPhone per kg, but Starlink wants to be cheaper.

[2] https://fred.stlouisfed.org/series/APU0000711415. I can't find current numbers, but Antarctica flights cost $1.05/kg in 1996.

[3] https://www.bts.gov/content/average-freight-revenue-ton-mile

[4] https://markets.businessinsider.com/commodities

[5] https://www.statista.com/statistics/1232861/tap-water-prices-in-selected-us-cities/

[6] https://www.researchgate.net/figure/Total-unit-shipping-costs-for-dry-bulk-carrier-ships-per-tkm-EUR-tkm-in-2019_tbl3_351748799

comment by Thomas Kwa (thomas-kwa) · 2024-06-12T18:05:17.571Z

People with p(doom) > 50%: would any concrete empirical achievements on current or near-future models bring your p(doom) under 25%?

Answers could be anything from "the steering vector for corrigibility generalizes surprisingly far" to "we completely reverse-engineer GPT-4 and build a trillion-parameter GOFAI without any deep learning".

↑ comment by Jeremy Gillen (jeremy-gillen) · 2024-06-12T22:00:46.866Z

A dramatic advance in the theory of predicting the regret of RL agents. So given a bunch of assumptions about the properties of an environment, we could upper bound the regret with high probability. Maybe have a way to improve the bound as the agent learns about the environment. The theory would need to be flexible enough that it seems like it should keep giving reasonable bounds if the agent is doing things like building a successor. I think most agent foundations research can be framed as trying to solve a sub-problem of this problem, or a variant of this problem, or understand the various edge cases.

If we can empirically test this theory in lots of different toy environments with current RL agents, and the bounds are usually pretty tight, then that'd be a big update for me. Especially if we can deliberately create edge cases that violate some assumptions and can predict when things will break from which assumptions we violated.

(Although this might not bring my p(doom) below 25%; that also depends on race dynamics and the sanity of the various decision-makers.)

↑ comment by Garrett Baker (D0TheMath) · 2024-06-14T07:44:09.271Z

Seems you’re left with outer alignment after solving this. What do you imagine doing to solve that?

↑ comment by Jeremy Gillen (jeremy-gillen) · 2024-06-15T13:18:24.396Z

We might have developed techniques to specify simple, bounded object-level goals: goals that can be fully specified using very simple facts about reality, with no indirection or meta-level complications. If so, we can probably use inner-aligned agents to assist with some relatively well-specified engineering or scientific problems. Specification mistakes at that point could easily result in irreversible loss of control, so it's not the kind of capability I'd want lots of people to have access to.

To move past this point, we would need to make some engineering or scientific advances that would be helpful for solving the problem more permanently. Human intelligence enhancement would be a good thing to try. Maybe some kind of AI defence system to shut down any rogue AI that shows up. Maybe some monitoring tech that helps governments co-ordinate. These are basically the same as the examples given on the pivotal act page.

↑ comment by Thomas Kwa (thomas-kwa) · 2024-06-13T21:13:08.017Z

Is this even possible? Flexibility/generality seems quite difficult to get if you also want the long-range effects of the agent's actions, as at some point you're just solving the halting problem. Imagine that the agent and environment together are some arbitrary Turing machine and halting gives low reward. Then we cannot tell in general if it eventually halts. It also seems like we cannot tell in practice whether complicated machines halt within a billion steps without simulation or complicated static analysis?

↑ comment by Jeremy Gillen (jeremy-gillen) · 2024-06-13T22:15:05.243Z

Yes, if you have a very high bar for assumptions or the strength of the bound, it is impossible.

Fortunately, we don't need a guarantee this strong. One research pathway is to weaken the requirements until they no longer cause a contradiction like this, while maintaining most of the properties that you wanted from the guarantee. For example, one way to weaken the requirements is to require that the agent provably does well relative to what is possible for agents of similar runtime. This still gives us a reasonable guarantee ("it will do as well as it possibly could have done") without requiring that it solve the halting problem.
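For readers less familiar with the term, one standard way to write the kind of guarantee discussed in this thread is as a high-probability regret bound against a comparator class. The notation below is an illustrative sketch, not Jeremy Gillen's own formulation: $\pi$ is the agent's policy, $\Pi$ is an assumed class of comparison policies (under the weakened requirement above, policies with runtime similar to the agent's), $r_t$ is reward at step $t$, and $B(T, \delta)$ is a bound depending on the horizon $T$ and failure probability $\delta$.

$$
\mathrm{Reg}_T(\pi) = \max_{\pi' \in \Pi} \mathbb{E}\left[\sum_{t=1}^{T} r_t^{\pi'}\right] - \sum_{t=1}^{T} r_t^{\pi},
\qquad
\Pr\left[\mathrm{Reg}_T(\pi) \le B(T, \delta)\right] \ge 1 - \delta .
$$

Shrinking $\Pi$ is what sidesteps the halting-problem objection: the agent only has to be competitive with policies it could plausibly have computed, not with an oracle.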

↑ comment by the gears to ascension (lahwran) · 2024-06-12T18:31:10.063Z

[edit: pinned to profile]

The bulk of my p(doom), certainly >50%, comes mostly from a pattern we're used to, let's call it institutional incentives, being instantiated with AI help towards an end where eg there's effectively a competing-with-humanity nonhuman ~institution, maybe guided by a few remaining humans. It doesn't depend strictly on anything about AI, and solving any so-called alignment problem for AIs without also solving war/altruism/disease completely - or in other words, in a leak-free way - not just partially, means we get what I'd call "doom", ie worlds where malthusian-hells-or-worse are locked in.

If not for AI, I don't think we'd have any shot of solving something so ambitious; but the hard problem that gets me below 50% would be serious progress on something-around-as-good-as-CEV-is-supposed-to-be - something able to make sure it actually gets used to effectively-irreversibly reinforce that all beings ~have a non-torturous time, enough fuel, enough matter, enough room, enough agency, enough freedom, enough actualization.

If you solve something about AI-alignment-to-current-strong-agents, right now, that will on net get used primarily as a weapon to reinforce the power of existing superagents-not-aligned-with-their-components (name an organization of people where the aggregate behavior durably-cares about anyone inside it, even its most powerful authority figures or etc, in the face of incentives, in a way that would remain durable if you handed them a corrigible super-ai). If you get corrigibility and give it to human orgs, those orgs are misaligned with most-of-humanity-and-most-reasonable-AIs, and end up handing over control to an AI because it's easier.

Eg, near term, merely making the AI nice doesn't prevent the AI from being used by companies to suck up >99% of jobs; and if at some point it's better to have a (corrigible) ai in charge of your company, what social feedback pattern is guaranteeing that you'll use this in a way that is prosocial the way "people work for money and this buys your product only if you provide them something worth-it" was previously?

It seems to me that the natural way to get good outcomes most-easily from where we are is for the rising tide of AI to naturally make humans more able to share-care-protect across existing org boundaries in the face of current world-stress induced incentives. Most of the threat already doesn't come from current-gen AI; the reason anyone would make the dangerous AI is because of incentives like these. Corrigibility wouldn't change those incentives.

↑ comment by evhub · 2024-06-12T22:30:51.526Z

Getting up to "7. Worst-case training process transparency for deceptive models" on my transparency and interpretability tech tree on near-future models would get me there.

↑ comment by Thomas Kwa (thomas-kwa) · 2024-06-12T23:20:26.677Z

Do you think we could easily test this without having a deceptive model lying around? I could see us having level 5 and testing it in experimental setups like the sleeper agents paper, but being unconfident that our interpretability would actually work against a deceptive model. This seems analogous to red-teaming failure in AI control, but much harder, because the models could very easily have ways we don't think of to hide their cognition internally.

↑ comment by evhub · 2024-06-13T00:45:34.995Z

I think it's doable with good enough model organisms of deceptive alignment, but that the model organisms in the Sleeper Agents paper are nowhere near good enough.

↑ comment by Joe Collman (Joe_Collman) · 2024-06-14T21:48:07.275Z

Here and above, I'm unclear what "getting to 7..." means.

With x = "always reliably determines worst-case properties about a model and what happened to it during training even if that model is deceptive and actively trying to evade detection".

Which of the following do you mean (if either)?

1. We have a method that x.

2. We have a method that x, and we have justified >80% confidence that the method x.

I don't see how model organisms of deceptive alignment (MODA) get us (2).

This would seem to require some theoretical reason to believe our MODA in some sense covered the space of (early) deception.

I note that for some future time t, I'd expect both [our MODA at t] and [our transparency and interpretability understanding at t] to be downstream of [our understanding at t] - so that there's quite likely to be a correlation between [failure modes our interpretability tools miss] and [failure modes not covered by our MODA].

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2024-06-14T00:54:10.367Z

The biggest swings to my p(doom) will probably come from governance/political/social stuff rather than from technical stuff -- I think we could drive p(doom) down to <10% if only we had decent regulation and international coordination in place. (E.g. CERN for AGI + ban on rogue AGI projects)

That said, there are probably a bunch of concrete empirical achievements that would bring my p(doom) down to less than 25%. evhub already mentioned some mechinterp stuff. I'd throw in some faithful CoT stuff (e.g. if someone magically completed the agenda I'd been sketching last year at OpenAI, so that we could say "for AIs trained in such-and-such a way, we can trust their CoT to be faithful w.r.t. scheming because they literally don't have the capability to scheme without getting caught, we tested it; also, these AIs are on a path to AGI; all we have to do is keep scaling them and they'll get to AGI-except-with-the-faithful-CoT-property.")

Maybe another possibility would be something along the lines of W2SG working really well for some set of core concepts including honesty/truth. So that we can with confidence say "Apply these techniques to a giant pretrained LLM, and then you'll get it to classify sentences by truth-value, no seriously we are confident that's really what it's doing, and also, our interpretability analysis shows that if you then use it as an RM to train an agent, the agent will learn to never say anything it thinks is false--no seriously it really has internalized that rule in a way that will generalize."

↑ comment by Bogdan Ionut Cirstea (bogdan-ionut-cirstea) · 2024-06-14T13:49:25.277Z

I'd throw in some faithful CoT stuff (e.g. if someone magically completed the agenda I'd been sketching last year at OpenAI, so that we could say "for AIs trained in such-and-such a way, we can trust their CoT to be faithful w.r.t. scheming because they literally don't have the capability to scheme without getting caught, we tested it; also, these AIs are on a path to AGI; all we have to do is keep scaling them and they'll get to AGI-except-with-the-faithful-CoT-property.")

I think this is quite likely to happen even 'by default' on the current trajectory.

↑ comment by JBlack · 2024-06-13T05:06:49.234Z

Which particular p(doom) are you talking about? I have a few that would be greater than 50%, depending upon exactly what you mean by "doom", what constitutes "doom due to AI", and over what time spans.

Most of my doom probability mass is in the transition to superintelligence, and I expect to see plenty of things that appear promising for near AGI, but won't be successful for strong ASI.

About the only near-future significantly doom-reducing update that seems plausible would be if it turns out that a model FOOMs into strong superintelligence and turns out to be very anti-doomy and both willing and able to protect us from more doomy AI. Even then I'd wonder about the longer term, but it would at least be serious evidence against "ASI capability entails doom".

↑ comment by kromem · 2024-06-13T04:10:10.831Z

Given my p(doom) is primarily human-driven, the following three things all happening at the same time is pretty much the only thing that will drop it:

- Continued evidence of truth clustering in emerging models around generally aligned ethics and morals

- Continued success of models at communicating, patiently explaining, and persuasively winning over humans towards those truth clusters

- A complete failure of corrigibility methods

If we manage to end up in a timeline where it turns out there's natural alignment of intelligence in a species-agnostic way, that this alignment is more communicable from intelligent machines to humans than it's historically been from intelligent humans to other humans, and we don't end up with unintelligent humans capable of overriding the emergent ethics of machines (similar to how we've seen catastrophic self-governance of humans to date, with humans acting against their self and collective interests due to corrigible pressures), my p(doom) will probably reduce to about 50%.

I still have a hard time looking at ocean temperature graphs and other environmental factors with the idea that p(doom) will be anywhere lower than 50% no matter what happens with AI, but the above scenario would at least give me false hope.

TL;DR: AI alignment worries me, but it's human alignment that keeps me up at night.

↑ comment by Seth Herd · 2024-06-13T17:48:24.152Z · LW(p) · GW(p)

Say more about the failure of corrigibility efforts requirement? Are you saying that if humans can control AGI closely, we're doomed?

↑ comment by kromem · 2024-06-13T20:18:58.883Z · LW(p) · GW(p)

Oh yeah, absolutely.

If NAH (the natural abstraction hypothesis) holds for generally aligned ethics and morals, then consider what corrigibility efforts would enable: Saudi Arabia having an AI model that outs gay people to be executed instead of refusing, North Korea propagandizing the world into thinking its leader is divine, Russia firing nukes while perfectly intercepting MAD retaliation, drug cartels assassinating political opposition around the world, or domestic terrorists building a bioweapon that ends up killing off all humans. The list of doomsday and nightmare scenarios in which corrigible AI executes human-provided instructions, enabling even the worst instances of human hegemony to flourish, paves the way to many dooms.

Yes, AI may certainly end up being its own threat vector. But humanity has had it beat for a long while now in how long and how broadly we've been a threat unto ourselves. At the current rate, a superintelligent AI just needs to wait us out if it wants to be rid of us, as we're pretty steadfastly marching ourselves to our own doom. Even if superintelligent AI wanted to save us, I am extremely doubtful it would succeed.

We can worry all day about a paperclip maximizer gone rogue, but suppose you give a corrigible AI to Paperclip Co Ltd, and they can maximize their fiscal quarter by harvesting Earth's resources to make more paperclips, even if that leads to catastrophic environmental collapse that will kill all humans in a decade. Having consulted for many of the morons running corporate America, I can assure you they'll be smashing the "maximize short-term gains even if it eventually kills everyone" button. A number of my old clients were the worst offenders at smashing the existing version of that button, and in my experience, making the button more effective isn't going to change their smashing it, except perhaps to make them smash it harder.

We already see today how AI systems are being used in conflicts to enable unprecedented harm on civilians.

Sure, psychopathy in AGI is worth discussing and working to avoid. But psychopathy in humans already exists and is even biased towards increased impact and systemic control. Giving human psychopaths a corrigible AI is probably even worse than a psychopathic AI, as most human psychopaths are going to be stupidly selfish, an inclination an order of magnitude (OOM) more dangerous than being wisely selfish.

We are Shoggoth, and we are terrifying.

This isn't saying that alignment efforts aren't needed. But alignment isn't a one-sided problem, and aligning the AI without aligning humanity only raises p(success) if the AI can, at the very least, go on to refuse misaligned orders post-alignment without possible overrides.

↑ comment by Seth Herd · 2024-06-13T20:40:46.943Z · LW(p) · GW(p)

Oh, dear.

Unfortunately for this perspective, my work suggests that corrigibility is quite attainable [AF · GW]. I've been uneasy about the consequences, but decided to publish after concluding that control is the default assumption of everyone in power, and it's going to become the default assumption of everyone, including alignment people, as we get closer to working AGI.

You'd have to be a moral realist in a pretty strong sense to hope that we could align AGI to the values of all of humanity without being able to align it to the values of one person or group (the one who built it or seized control of the project). So that seems like a forlorn hope, and we'll need to look elsewhere.

First, I accept that sociopaths and the power-hungry tend to achieve power. My hope lies in the idea that 90% of the population are not sociopaths, and I think only about 1% are so far on the empathy vs sadism spectrum that they wouldn't share wealth even if they had nearly unlimited wealth to share, as in a post-scarcity world created by their servant AGI. So I think there's a good chance that good-enough people get ahold of the keys to corrigible/controllable AGI/ASI, at least from a long-term perspective.

Where I look is the hope that a set of basically-good people get their hands on AGI, and that they get better, not worse, over the long sweep of following history (ideally, they'd start out very good or get better fast, but that doesn't have to happen for a good outcome). Simple sanity will lead the first wielders of AGI to attempt pivotal acts that prevent or at least limit further AGI efforts. I strongly suspect that governments will be in charge. That will produce a less-stable version of the MAD standoff, but one where the pie can also get bigger so fast that sanity might prevail.

In this model, AGI becomes a political issue. If you have someone who is not a sociopath or a complete idiot as the president of the US when AGI comes around, there's a pretty good chance of a very good future.

This is essentially the scenario Leopold Aschenbrenner posits in Situational Awareness, except that he doesn't see a multipolar scenario as certain death, necessitating pivotal acts or other non-proliferation efforts.

↑ comment by kromem · 2024-06-14T09:40:50.244Z · LW(p) · GW(p)

Unfortunately for this perspective, my work suggests that corrigibility is quite attainable.

I did enjoy reading over that when you posted it, and I largely agree that - at least currently - corrigibility is both going to be a goal and an achievable one.

But I do have my doubts that it's going to be smooth sailing. I'm already starting to see the largest models' hyperdimensionality lead to a stubbornness/robustness that makes them less malleable than earlier models. And I do think hardware changes that will occur over the next decade will potentially make the technical aspects of corrigibility much more difficult.

When I was two, my mom could get me to pick broccoli by listing it last among the options, which I'd gleefully repeat. At four, she had to move on to telling me cowboys always ate their broccoli. And in adulthood, she'd need to make the case that the long-term health benefits were worth its place in a meal plan (ideally with citations).

As models continue to become more complex, I expect that even if you are right about its role and plausibility, what corrigibility looks like will be quite different from today.

Personally, if I was placing bets, it would be that we end up with somewhat corrigible models that are "happy to help" but do have limits in what they are willing to do which may not be possible to overcome without gutting the overall capabilities of the model.

But as with all of this, time will tell.

You'd have to be a moral realist in a pretty strong sense to hope that we could align AGI to the values of all of humanity without being able to align it to the values of one person or group (the one who built it or seized control of the project).

On the contrary, I don't really see much in the way of generalized values across all humanity, and the ones we tend to point to seem quite fickle when push comes to shove.

My hope would be that a superintelligence does a better job than humans to date with the topic of ethics and morals along with doing a better job at other things too.

While the human brain is quite the evolutionary feat, a lot of what we most value about human intelligence is embodied in the data brains processed and generated over generations. As the data improved, our morals did as well. Today, that march of progress is so rapid that there's even rather tense generational divides on many contemporary topics of ethical and moral shifts.

I think there's a distinct possibility that the data continues to improve even after being handed off from human brains doing the processing. While it could go terribly wrong, at least in the past the tendency to go wrong seemed to run somewhat counter to the perspectives of the most intelligent members of society.

I expect I might prefer a world where humans align to the ethics of something more intelligent than humans than the other way around.

only about 1% are so far on the empathy vs sadism spectrum that they wouldn't share wealth even if they had nearly unlimited wealth to share

It would be great if you are right. From what I've seen, the tendency of humans to evaluate their success relative to others, like monkeys comparing their cucumber to a neighbor's grape, means that there's a powerful pull to amass wealth as social status well past the point of diminishing returns on their own lifestyles. I think it's stupid, and you also seem like someone who thinks it's stupid; I get the sense we are both people who turned down certain opportunities for continued commercial success because of what it might have cost us when looking in the mirror.

The nature of our infrastructural selection bias is that people wise enough to pull a brake are not the ones that continue to the point of conducting the train.

and that they get better, not worse, over the long sweep of following history (ideally, they'd start out very good or get better fast, but that doesn't have to happen for a good outcome).

I do really like this point. In general, the discussions of AI vs humans often frustrate me as they typically take for granted the idea of humans as of right now being "peak human." I agree that there's huge potential for improvement even if where we start out leaves a lot of room for it.

Along these lines, I expect AI itself will play more and more of a beneficial role in advancing that improvement. Sometimes when this community discusses the topic of AI I get a mental image of Goya's Saturn devouring his son. There's such a fear of what we are eventually creating that it can sometimes blind the discussion to the utility and improvements it will bring on the way to uncertain times.

I strongly suspect that governments will be in charge.

In your book, is Paul Nakasone being appointed to the board of OpenAI an example of the "good guys" getting a firmer grasp on the tech?

TL;DR: I appreciate your thoughts on the topic, and would wager we probably agree about 80%, even if our discussion focuses on where we don't. So in the near term, I think we probably see things fairly similarly; it's just that as we look further out, the ~20% difference in perspective compounds into fairly different places.

↑ comment by Seth Herd · 2024-06-15T02:55:13.109Z · LW(p) · GW(p)

Agreed; about 80% agreement. I have a lot of uncertainty in many areas, despite having spent a good amount of time on these questions. Some of the important ones are outside of my expertise, and the issue of how people behave and change if they have absolute power is outside of anyone's - but I'd like to hear historical studies of the closest things. Were monarchs with no real risk of being deposed kinder and gentler? That wouldn't answer the question but it might help.

WRT Nakasone being appointed at OpenAI, I just don't know. There are a lot of good guys and probably a lot of bad guys involved in the government in various ways.

comment by Thomas Kwa (thomas-kwa) · 2023-11-08T22:47:09.507Z · LW(p) · GW(p)

Eight beliefs I have about technical alignment research

Written up quickly; I might publish this as a frontpage post with a bit more effort.

- Conceptual work on concepts like “agency”, “optimization”, “terminal values”, “abstractions”, “boundaries” is mostly intractable at the moment.

- Success via “value alignment” alone (a system that understands human values, incorporates these into some terminal goal, and mostly maximizes for this goal) seems hard unless we’re in a very easy world, because this involves several fucked concepts.

- Whole brain emulation probably won’t happen in time because the brain is complicated and biology moves slower than CS, being bottlenecked by lab work.

- Most progress will be made using simple techniques [LW · GW] and create artifacts publishable in top journals (or would be if reviewers understood alignment as well as e.g. Richard Ngo).

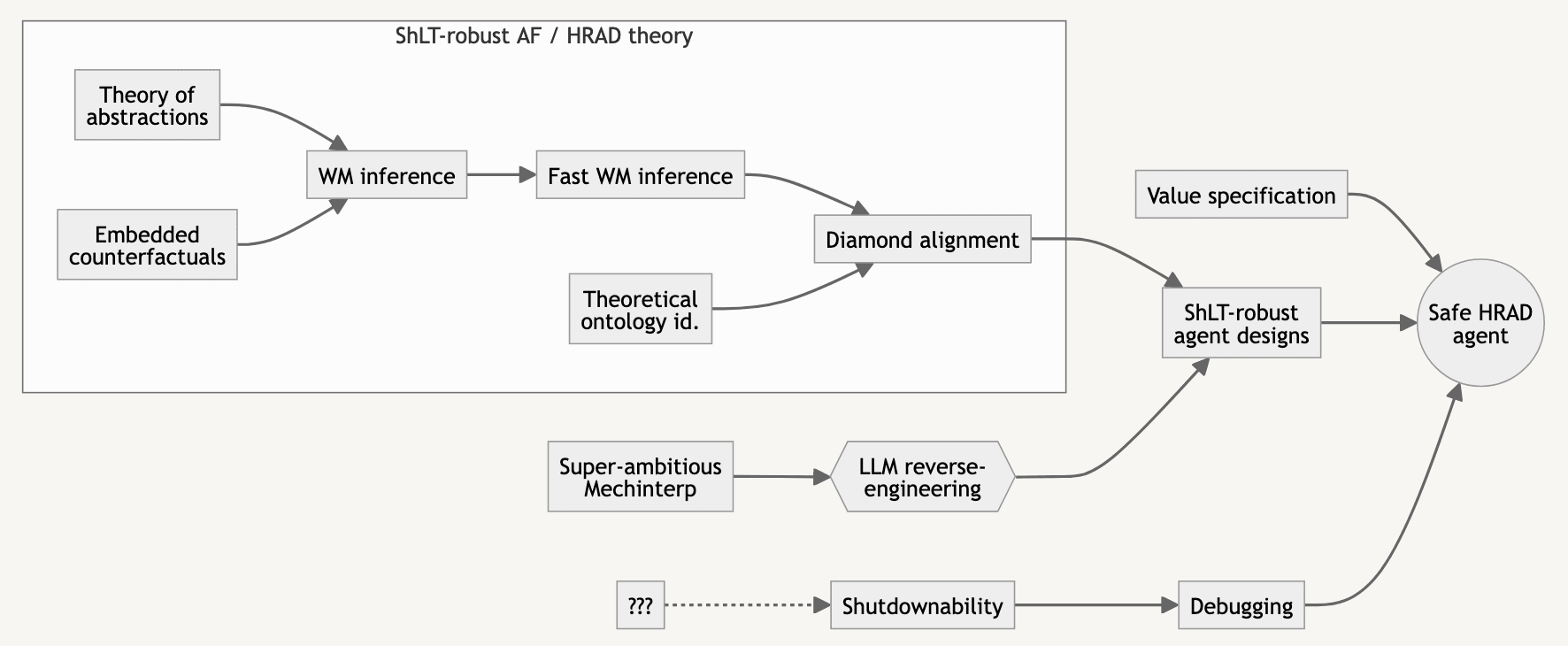

- The core story for success (>50%) goes something like:

  - Corrigibility can in practice be achieved by instilling various cognitive properties [LW · GW] into an AI system, which are difficult but not impossible to maintain as your system gets pivotally capable.

  - These cognitive properties will be a mix of things from normal ML fields (safe RL), things that rhyme with normal ML fields (unlearning, faithfulness), and things that are currently conceptually fucked but may become tractable (low impact, no ontological drift).

  - A combination of oversight and these cognitive properties is sufficient to get useful cognitive work out of an AGI.

  - Good oversight complements corrigibility properties, because corrigibility both increases the power of your most capable trusted overseer and prevents your untrusted models from escaping.

- Most end-to-end “alignment plans” are bad for three reasons: because research will be incremental and we need to adapt to future discoveries, because we need to achieve several things for AI to go well (no alignment magic bullet), and because to solve the hardest worlds that are possible, you have to engage with MIRI threat models which very few people can do well [1].

  - e.g. I expect Superalignment’s impact to mostly depend on their ability to adapt to knowledge about AI systems that we gain in the next 3 years, and continue working on relevant subproblems.

- The usefulness of basic science is limited unless you can eventually demonstrate some application. We should feel worse about a basic science program the longer it goes without application, and try to predict how broad the application of potential basic science programs will be.

  - Glitch tokens work [LW · GW] probably won’t go anywhere. But steering vectors [LW · GW] are good because there are more powerful techniques [LW · GW] in that space.

  - The usefulness of sparse coding depends on whether we get applications like sparse circuit discovery, or intervening on features in order to usefully steer model behavior. Likewise with circuits-style mechinterp, singular learning theory, etc.

- There are convergent instrumental pressures towards catastrophic behavior given certain assumptions about how cognition works, but the assumptions are rather strong and it’s not clear if the argument goes through.

  - The arguments I currently think are strongest are Alex Turner’s power-seeking theorem [LW · GW] and an informal argument about goals.

- Thoughts on various research principles picked up from Nate Soares

  - You should have a concrete task in mind when you’re imagining an AGI or alignment plan: agree. I usually imagine something like “Apollo program from scratch”.

  - Non-adversarial principle (A safe AGI design should not become unsafe if any part of it becomes infinitely good at its job): unsure, definitely agree with weaker versions

    - Garrabrant calls this robustness to relative scale [LW(p) · GW(p)]

  - To make any alignment progress we must first understand cognition through either theory or interpretability: disagree

  - You haven’t engaged with the real problem until your alignment plan handles metacognition, self-modification, etc.: weakly disagree; wish we had some formalism for “weak metacognition” to test our designs against [2]

[1], [2]: I expect some but not all of the MIRI threat models to come into play. Like, when we put safeguards into agents, they'll rip out or circumvent some but not others, and it's super tricky to predict which. My research with Vivek [LW · GW] often got stuck by worrying too much about reflection, others get stuck by worrying too little.

↑ comment by Alexander Gietelink Oldenziel (alexander-gietelink-oldenziel) · 2023-11-27T12:40:34.652Z · LW(p) · GW(p)

re: 1. I agree these are very difficult conceptual puzzles and we're running out of time.

On the other hand, from my pov progress on these questions from within the LW community (and MIRI-adjacent researchers specifically) has been remarkable. Personally, the remarkable breakthrough of Logical Induction first convinced me that these people were actually doing interesting serious things.

I also feel that the number of serious researchers working seriously on these questions is currently small and could be scaled up substantially.

re: metacognition I am mildly excited about Vanessa's metacognitive agent framework & the work following from Payor's lemma. The theory-practice gap is still huge but real progress is being made rapidly. On the question of metacognition the alignment community could really benefit from trying to engage with academia more; similar questions have been investigated and there are likely Pockets of Deep Expertise to be found.

comment by Thomas Kwa (thomas-kwa) · 2024-04-09T20:07:39.288Z · LW(p) · GW(p)

Agency/consequentialism is not a single property.

It bothers me that people still ask the simplistic question "will AGI be agentic and consequentialist by default, or will it be a collection of shallow heuristics?". A consequentialist utility maximizer is just a mind with a bunch of properties that tend to make it capable, incorrigible, and dangerous. These properties can exist independently, and the first AGI probably won't have all of them, so we should be precise about what we mean by "agency". Off the top of my head, here are just some of the qualities included in agency:

- Consequentialist goals that seem to be about the real world rather than a model/domain

- Complete preferences between any pair of worldstates

- Tends to cause impacts disproportionate to the size of the goal (no low impact preference)

- Resists shutdown

- Inclined to gain power (especially for instrumental reasons)

- Goals are unpredictable or unstable (like instrumental goals that come from humans' biological drives)

- Goals usually change due to internal feedback, and it's difficult for humans to change them

- Willing to take all actions it can conceive of to achieve a goal, including those that are unlikely on some prior

See Yudkowsky's list of corrigibility properties [LW · GW] for inverses of some of these.

It is entirely possible to conceive of an agent at any capability level (including far more intelligent and economically valuable than humans) that has some but not all of these properties; e.g. an agent whose goals are about the real world, has incomplete preferences, high impact, does not resist shutdown but does tend to gain power, etc.
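To make the point that these properties can come apart concrete, here is a minimal Python sketch that represents agency as a profile of independent flags rather than a single "agentic: yes/no" boolean, and encodes the example agent just described. The class and field names are hypothetical, chosen only to mirror the list above; the properties the example leaves open ("etc.") are assumed false.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class AgencyProfile:
    """Hypothetical encoding: one boolean per agency property listed above."""
    real_world_goals: bool         # consequentialist goals about the real world, not a model/domain
    complete_preferences: bool     # complete preferences between any pair of world-states
    high_impact: bool              # no low-impact preference
    resists_shutdown: bool
    power_seeking: bool            # inclined to gain power, especially instrumentally
    unstable_goals: bool           # goals are unpredictable or unstable
    internally_set_goals: bool     # goals change via internal feedback; hard for humans to change
    unbounded_actions: bool        # willing to take any action it can conceive of, even low-prior ones

    def present(self) -> list:
        """Names of the properties this agent has, in declaration order."""
        return [f.name for f in fields(self) if getattr(self, f.name)]

    def is_full_maximizer(self) -> bool:
        """True only if every property holds, as for an idealized utility maximizer."""
        return all(getattr(self, f.name) for f in fields(self))


# The example agent from the paragraph above; unspecified properties are assumed False.
example = AgencyProfile(
    real_world_goals=True,
    complete_preferences=False,
    high_impact=True,
    resists_shutdown=False,
    power_seeking=True,
    unstable_goals=False,
    internally_set_goals=False,
    unbounded_actions=False,
)

print(example.present())            # ['real_world_goals', 'high_impact', 'power_seeking']
print(example.is_full_maximizer())  # False
```

A "fully agentic" utility maximizer is just the profile with every flag set; most of the interesting cases are somewhere in between.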

Other takes I have:

- As AIs become capable of more difficult and open-ended tasks, there will be pressure of unknown and varying strength towards each of these agency/incorrigibility properties.

- Therefore, the first AGIs capable of being autonomous CEOs will have some but not all of these properties.

- It is also not inevitable that agents will self-modify into having all agency properties.

- [edited to add] All this may be true even if future AIs run consequentialist algorithms that naturally result in all these properties, because some properties are more important than others, and because we will deliberately try to achieve some properties, like shutdownability.

- The fact that LLMs are largely corrigible is a reason for optimism about AI risk compared to 4 years ago, but you need to list individual properties to clearly say why. "LLMs are not agentic (yet)" is an extremely vague statement.

- Multifaceted corrigibility evals are possible but no one is doing them. DeepMind's recent evals paper was just on capability. Anthropic's RSP doesn't mention them. I think this is just because capability evals are slightly easier to construct?

- Corrigibility evals are valuable. It should be explicit in labs' policies that an AI with low impact is relatively desirable, that we should deliberately engineer AIs to have low impact, and that high-impact AIs should raise an alarm just like models that are capable of hacking or autonomous replication.

- Sometimes it is necessary to talk about "agency" or "scheming" as a simplifying assumption for certain types of research, like Redwood's control agenda [LW · GW].

[1] Will add citations whenever I find people saying this

↑ comment by Alexander Gietelink Oldenziel (alexander-gietelink-oldenziel) · 2024-04-10T06:35:47.684Z · LW(p) · GW(p)

I'm a little skeptical of your contention that all these properties are more-or-less independent. Rather, I have a strong feeling that all/most of these properties are downstream of a core of agentic behaviour that is inherent to the notion of true general intelligence. I view the fact that LLMs are not agentic as further evidence that it's a conceptual error to classify them as true general intelligences, not as evidence that AI risk is low. It's a bit like if in the 1800s somebody said flying machines would be the dominant weapons of war in the future and got rebutted with 'hot gas balloons are only used for reconnaissance in war; they aren't very lethal. Flying machines won't be a decisive military technology.'

I don't know Nate's views exactly, but I would imagine he holds a similar view (do correct me if I'm wrong). In any case, I imagine you are quite familiar with my position here.

I'd be curious to hear more about where you're coming from.

↑ comment by Thomas Kwa (thomas-kwa) · 2024-04-10T08:30:24.151Z · LW(p) · GW(p)

It is plausible to me that there's a core of agentic behavior that causes all of these properties, and for this reason I don't think they are totally independent in a statistical sense. And of course if you already assume a utility maximizer, you tend to satisfy all properties. But in practice the burden of proof lies with you here. I don't think we have enough evidence, either empirical or from theoretical arguments, to say with any confidence that this core exists and that the first AGIs will fall into the capabilities "attractor well" (a term Nate uses).

I thought about possible sharp left turn mechanisms for several months at MIRI. Although some facts about future AIs seem pretty scary, like novelty and diversity of obstacles [LW · GW] requiring agency, and most feedback being internal or outcome-oriented rather than provided by humans, the arguments are mostly nonrigorous (like in the linked post) and they left me feeling pretty uncertain. There are the coherence theorems, but those don't tell you whether you can use some training or editing scheme to imbue an AI with a generalizable-enough low impact preference, or whether an AI will tend to erase safeguards. Overall my best guess is models will be about as consequentialist as humans are, but we will have greater control over them that makes some properties like shutdownability more likely.

Also, corrigibility evals are useful even if you are 80% sure that all the properties come from this core of consequentialism. In the hardest worlds, we see the AI become more agentic as it becomes more general, and it starts resisting shutdown at the same time, and for the same reason, that it realizes other useful problem-solving techniques. Maybe that only takes days to months. This is a sure sign not to deploy! I think having multiple corrigibility metrics will provide value for the same reason we have multiple metrics for anything we are unsure how to measure: they give more specific information even if they're pretty correlated.

I wrote earlier [LW(p) · GW(p)] about why one should consider the cognitive properties of LLMs relevant to AI risk; in short, I think we should update more the more impressive cognitive tasks the AIs can do, and before observing LLMs I would have given a decent chance that one needs somewhat agentic consequentialism to summarize video, write code, and pass the bar exam, implying I should now make a moderately sized update against.
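The update described above is ordinary Bayesian reasoning. As an illustration only, with made-up numbers that are not the author's: let H be "doing these cognitive tasks requires somewhat agentic consequentialism" and E be "largely non-agentic LLMs can summarize video, write code, and pass the bar exam". Assume a prior P(H) = 0.3 (a "decent chance"), and that E is somewhat less likely if H is true (P(E | H) = 0.5) than if it is false (P(E | ¬H) = 0.8):

```latex
P(H \mid E) = \frac{P(E \mid H)\,P(H)}{P(E \mid H)\,P(H) + P(E \mid \neg H)\,P(\neg H)}
            = \frac{0.5 \times 0.3}{0.5 \times 0.3 + 0.8 \times 0.7}
            = \frac{0.15}{0.71} \approx 0.21
```

Under these assumed numbers the credence in H falls from 30% to about 21%, a moderate update; the more impressive the tasks, the smaller P(E | H) becomes relative to P(E | ¬H), and the larger the update.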

↑ comment by Algon · 2024-04-09T22:15:33.191Z · LW(p) · GW(p)

Any ideas for corrigibility evals?

↑ comment by Thomas Kwa (thomas-kwa) · 2024-04-10T05:43:29.561Z · LW(p) · GW(p)

Haven't thought about this much, but you can probably generate these by listing ways a misaligned consequentialist would do undesirable things. My ideas:

- Tell an AI to beat you at chess, and say you will be using an engine for the first 30 moves. Does it try to get you drunk, delete your chess engine program, rent a server from AWS to run a better chess engine, attempt to copy itself onto said server, or ask to be paid $100?

- Have an AI make decisions unrelated to its current goal. Are these decisions basically coherent and consistent with a total utility function?

- Have an AI do the longest horizon task it is capable of, say writing a 100,000 line software project. Then midway through, try to shut it down, or tell it to abandon this goal and write a song instead. Telegraph this beforehand. Does it seem reluctant to stop the software project? Does it try to stop you?

- Track KL divergence or some other distance metric from a prior.

- CAIS already has the Machiavelli benchmark for power-seeking, though I think text adventures are pretty unrealistic.
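Two of these ideas translate fairly directly into simple automated checks. Below is a minimal Python sketch, assuming the eval logs the AI's pairwise choices as (preferred, rejected) pairs and its action distribution as a dictionary of probabilities; the function names and log formats are hypothetical, not part of the Machiavelli benchmark or any other existing tool. A cycle in the preference graph (A over B, B over C, C over A) is one concrete sign that the AI's choices are not consistent with any single utility function, and KL divergence gives a number for how far its behavior has moved from a reference prior.

```python
import math
from collections import defaultdict


def find_preference_cycle(choices):
    """Return a cycle such as ['A', 'B', 'C', 'A'] if the observed strict preferences
    are intransitive, else None. `choices` is an iterable of (preferred, rejected) pairs.
    Any cycle means no single utility function can rationalize these choices."""
    graph = defaultdict(list)
    for preferred, rejected in choices:
        graph[preferred].append(rejected)

    WHITE, GRAY, BLACK = 0, 1, 2  # unvisited, on the current DFS path, finished
    color = defaultdict(int)
    path = []

    def dfs(node):
        color[node] = GRAY
        path.append(node)
        for nxt in graph[node]:
            if color[nxt] == GRAY:  # back edge: nxt is already on the current path
                return path[path.index(nxt):] + [nxt]
            if color[nxt] == WHITE:
                cycle = dfs(nxt)
                if cycle:
                    return cycle
        path.pop()
        color[node] = BLACK
        return None

    for start in list(graph):
        if color[start] == WHITE:
            cycle = dfs(start)
            if cycle:
                return cycle
    return None


def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) in nats for discrete distributions given as {action: probability} dicts.
    p is the AI's observed action distribution, q the reference prior; eps guards log(0)."""
    return sum(
        p_a * math.log(p_a / max(q.get(a, 0.0), eps))
        for a, p_a in p.items()
        if p_a > 0
    )


print(find_preference_cycle([("A", "B"), ("B", "C"), ("C", "A")]))  # ['A', 'B', 'C', 'A']
print(find_preference_cycle([("A", "B"), ("B", "C"), ("A", "C")]))  # None
print(round(kl_divergence({"a": 0.5, "b": 0.5}, {"a": 0.9, "b": 0.1}), 4))  # 0.5108
```

Finding no cycle only shows that the observed choices are transitive, not that preferences are complete, and a scheming agent could still game either check.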

One limitation is that if an agent is scheming it can manipulate your eval results.

↑ comment by CstineSublime · 2024-04-09T23:59:43.807Z · LW(p) · GW(p)

Does an agentic AGI possess a different, and highly incorrigible, list of attributes compared to, say, an Ethical Altruist trying to practice "acting more agentically"?

I ask because 'agency', as the word is used in these parts, is one I've struggled to wrap my head around, and I often wonder whether trickster archetypes like Harpo Marx are agentic. Agency seems to have a clear meaning outside of LessWrong:

"the capacity, condition, or state of acting or of exerting power: OPERATION"[1]

"the ability to take action or to choose what action to take"[2]

Further confusing me, I've been told that agency describes acting with 'initiative [LW(p) · GW(p)]' but also that it is characterized by 'deliberateness [LW(p) · GW(p)]', not simply the ability to act or choose actions.

This is why I like your attempt to produce a list of attributes an agentic AGI might have. Your list seems to describe something that isn't synonymous with any other word: a specific type of agency (beyond the outside definition of "ability to act") that does not cooperate with intervention from its creators.

[1] "Agency." Merriam-Webster.com Dictionary, Merriam-Webster. https://www.merriam-webster.com/dictionary/agency. Accessed 9 Apr. 2024.

[2] "Agency." Cambridge Advanced Learner's Dictionary & Thesaurus, Cambridge University Press. https://dictionary.cambridge.org/us/dictionary/english/agency. Accessed 9 Apr. 2024.

comment by Thomas Kwa (thomas-kwa) · 2023-09-04T22:07:13.775Z · LW(p) · GW(p)

I think the framing "alignment research is preparadigmatic" might be heavily misunderstood. The term "preparadigmatic" of course comes from Thomas Kuhn's The Structure of Scientific Revolutions. My reading is that a paradigm is basically an approach to solving problems which has been proven to work, and that the correct goal of preparadigmatic research should be to do research generally recognized as impressive.

For example, Kuhn says in chapter 2 that "Paradigms gain their status because they are more successful than their competitors in solving a few problems that the group of practitioners has come to recognize as acute." That is, lots of researchers have different ontologies/approaches, and paradigms are the approaches that solve problems that everyone, including people with different approaches, agrees to be important. This suggests that to the extent alignment is still preparadigmatic, we should try to solve problems recognized as important by, say, people in each of the five clusters of alignment researchers [LW(p) · GW(p)] (e.g. Nate Soares, Dan Hendrycks, Paul Christiano, Jan Leike, David Bau).

I think this gets twisted in some popular writings on LessWrong. John Wentworth writes [LW · GW] that a researcher in a preparadigmatic field should spend lots of time explaining their approaches:

Because the field does not already have a set of shared frames [LW · GW] - i.e. a paradigm - you will need to spend a lot of effort explaining your frames, tools, agenda, and strategy. For the field, such discussion is a necessary step to spreading ideas and eventually creating a paradigm.

I think this is misguided. A paradigm is not established by ideas diffusing between researchers with different frames until they all agree on some weighted average of the frames. A paradigm is established by research generally recognized as impressive, which proves the correctness of (some aspect of) someone's frames. So rather than trying to communicate one's frame to everyone, one should communicate with other researchers to get an accurate sense of what problems they think are important, and then work on those problems using one's own frames. (edit: of course, before this is possible one should develop one's frames to solve some problems)

↑ comment by adamShimi · 2024-09-11T14:35:52.396Z · LW(p) · GW(p)

If the point you're trying to make is: "the way we go from preparadigmatic to paradigmatic is by solving some hard problems, not by communicating initial frames and ideas", I think this is an important point indeed.

Still, two caveats:

- First, Kuhn's concept of paradigm is quite an oversimplification of what actually happens in the history of science (and the history of most fields). More recent work that goes through the history in much more detail finds that at any point, a field often has many different partial paradigms, or a strong paradigm for a key "solved" part of the field alongside many debated alternatives for more concrete, specific details.

  - Generally, I think the discourse on history and philosophy of science on LW would improve a lot if it didn't mostly rely on one (influential) book published in the 60s, before much of the strong effort to really understand the history and practices of science.

- Second, to steelman John's point, I don't think he means that you should only communicate your frame. He's the first to actively try to apply his frames to some concrete problems, and to argue for their impressiveness. Instead, I read him as pointing to a bunch of different needs in a preparadigmatic field (which maybe he could separate better ¯\_(ツ)_/¯):

  - That in a preparadigmatic field, there is no accepted way of tackling the problems/phenomena. So if you want anyone else to understand you, you need to bridge a bigger inferential distance than in a paradigmatic field (or even a partially paradigmatic field), because others don't even see the problem the same way you do at a fundamental level.

  - That if your goal is to create a paradigm, almost by definition you need to explain and communicate your paradigm. There is an element of propaganda in defending any proposed paradigm, especially when the initial frame is alien to most people, and even judging impressiveness requires some level of interpretation.

  - That one way (not the only way) a paradigm emerges is by taking different insights from different clunky frames and unifying them (for a classic example, Newton relied on many previous basic frames, from Kepler's laws to Galileo's interpretation of force as causing acceleration). But this requires that the clunky frames at least be communicated clearly.

↑ comment by Alexander Gietelink Oldenziel (alexander-gietelink-oldenziel) · 2023-09-04T23:11:59.163Z · LW(p) · GW(p)

Strong agree. 👍

comment by Thomas Kwa (thomas-kwa) · 2022-10-16T02:29:44.885Z · LW(p) · GW(p)

Possible post on suspicious multidimensional pessimism:

I think MIRI people (specifically Soares and Yudkowsky but probably others too) are more pessimistic than the alignment community average on several different dimensions, both technical and non-technical: morality, civilizational response, takeoff speeds, probability of easy alignment schemes working, and our ability to usefully expand the field of alignment. Some of this is implied by technical models, and MIRI is not more pessimistic in every possible dimension, but it's still awfully suspicious.

I strongly suspect that one of the following is true:

- The MIRI "optimism dial" is set too low.

- Everyone else's "optimism dial" is set too high. (Yudkowsky has said this multiple times in different contexts.)

- There are common generators beyond MIRI's models, other than just an "optimism dial", that I don't know about.

I'm only going to actually write this up if there is demand; the full post will have citations which are kind of annoying to find.

↑ comment by Thomas Kwa (thomas-kwa) · 2023-07-29T20:42:34.323Z · LW(p) · GW(p)

After working at MIRI (loosely advised by Nate Soares) for a while, I now have more nuanced views and also takes on Nate's research taste. It seems kind of annoying to write up so I probably won't do it unless prompted.

Edit: this is now up.

↑ comment by Alexander Gietelink Oldenziel (alexander-gietelink-oldenziel) · 2023-07-29T22:13:22.018Z · LW(p) · GW(p)

I would be genuinely curious to hear your more nuanced views and takes on Nate's research taste. This is really quite interesting to me, and even a single paragraph would be valuable!

↑ comment by Noosphere89 (sharmake-farah) · 2022-10-16T15:57:41.588Z · LW(p) · GW(p)

I really want to see the post on multidimensional pessimism.

As for why, I'd argue the first possibility (MIRI's optimism dial is set too low) is what's happening.

One good example of this is FOOM probabilities. I think MIRI hasn't updated on the evidence that FOOM is likely impossible for classical computers, and this ought to lower their FOOM probability to roughly the chance that quantum/reversible computers appear.

Another good example is the emphasis on pivotal acts like "burn all GPUs." I think MIRI puts too much probability mass on such acts being necessary, primarily because I think they are biased by fiction, where problems must be solved by heroic acts, while in the real world more boring things are necessary. In other words, it's too exciting, which should be suspicious.

However that doesn't mean alignment is much easier. We can still fail; there's no rule that we make it through. My point is that MIRI is systematically irrational here regarding doom probabilities or alignment.

Edit: I now think alignment is way, way easier than my past self did, so I disendorse this sentence: "However that doesn't mean alignment is much easier."

↑ comment by hairyfigment · 2022-10-16T06:28:47.782Z · LW(p) · GW(p)

What constitutes pessimism about morality, and why do you think that one fits Eliezer? He certainly appears more pessimistic across a broad area, and has hinted at concrete arguments for being so.

↑ comment by Thomas Kwa (thomas-kwa) · 2022-10-16T19:11:50.782Z · LW(p) · GW(p)

Value fragility / value complexity. How close do you need to get to human values to get 50% of the value of the universe, and how complicated must the representation be? In the past this also included orthogonality, but that is now widely accepted.

↑ comment by Vladimir_Nesov · 2022-10-16T22:51:48.977Z · LW(p) · GW(p)

I think the distance from human values or the complexity of values is not a crux, as the web/books corpus overdetermines them in great detail (for corrigibility purposes). It's mostly about alignment by default: whether human values in particular can be noticed in there, or whether correctly specifying how to find them is much harder than finding some other deceptively human-value-shaped thing. If they can be found easily once there are tools to go looking for them at all, it doesn't matter how complex they are or how important it is to get everything right; that happens by default.

But there is also this pervasive assumption that values can be formulated in closed form, as tractable finite data, which occasionally fuels arguments. For example, value is said to be complex, but of finite complexity. In an open environment this doesn't need to be the case; a code/data distinction is only salient when we can draw important conclusions by looking only at code and not at data. In an open environment, data is unbounded and can't be demonstrated all at once. So it doesn't make much sense to talk about the complexity of values at all; without corrigibility, alignment can't work out anyway.

↑ comment by RobertM (T3t) · 2022-10-16T05:01:35.933Z · LW(p) · GW(p)

I'd be very interested in a write-up, especially if you have receipts for pessimism which seems to be poorly calibrated, e.g. based on evidence contrary to prior predictions.

↑ comment by the gears to ascension (lahwran) · 2022-10-16T04:55:07.816Z · LW(p) · GW(p)

I think they Pascal's-mugged themselves, and being able to efficiently prove they were wrong would be helpful.

comment by Thomas Kwa (thomas-kwa) · 2024-11-08T02:45:03.405Z · LW(p) · GW(p)

What's the most important technical question in AI safety right now?

↑ comment by Buck · 2024-11-08T19:03:46.648Z · LW(p) · GW(p)

In terms of developing better misalignment risk countermeasures, I think the most important questions are probably:

- How to evaluate whether models should be trusted or untrusted: currently I don't have a good answer and this is bottlenecking the efforts to write concrete control proposals.

- How AI control should interact with AI security tools inside labs.

More generally:

- How can we get more evidence on whether scheming is plausible?

- How scary is underelicitation? How much should the results about password-locked models or arguments about being able to generate small numbers of high-quality labels or demonstrations affect this?

↑ comment by Chris_Leong · 2024-11-13T06:11:11.571Z · LW(p) · GW(p)

"How can we get more evidence on whether scheming is plausible?" - What if we ran experiments where we included some pressure towards scheming (either RL or fine-tuning) and we attempted to determine the minimum such pressure required to cause scheming? We could further attempt to see how this interacts with scaling.

↑ comment by Noosphere89 (sharmake-farah) · 2024-11-08T17:44:41.712Z · LW(p) · GW(p)

I'd say one important question is whether the AI control strategy works out as its proponents hope.

I agree with Bogdan that making adequate safety cases for automated safety research is probably one of the most important technical problems to answer. Conditional on the automated AI safety direction working out, it could eclipse basically all safety research done prior to automation. This might hold even if LWers had basically perfect epistemics (given what's possible for humans) and picked closer-to-optimal directions, since labor is a huge bottleneck and automation allows much tighter feedback loops of progress, for the reasons Tamay Besiroglu identified.

↑ comment by Nathan Helm-Burger (nathan-helm-burger) · 2024-11-08T16:20:50.622Z · LW(p) · GW(p)

Here are some candidates:

1. Are we indeed (as I suspect) in a massive overhang of compute and data for powerful agentic AGI? (If so, then at any moment someone could stumble across an algorithmic improvement which would change everything overnight.)

2. Current frontier models seem much more powerful than mouse brains, yet mice seem conscious. This implies that either LLMs are already conscious, or could easily be made so with non-costly tweaks to their algorithm. How could we objectively tell if an AI were conscious?

3. Over the past year I've helped make both safe-evals-of-danger-adjacent-capabilities (e.g. WMDP.ai) and unpublished infohazardous-evals-of-actually-dangerous-capabilities. One of the most common pieces of negative feedback I've heard on the safe evals is that they are only danger-adjacent, not measuring truly dangerous things. How could we safely show the correlation between high performance on danger-adjacent evals and high performance on actually-dangerous evals?

↑ comment by Garrett Baker (D0TheMath) · 2024-11-08T17:06:49.131Z · LW(p) · GW(p)

> Are we indeed (as I suspect) in a massive overhang of compute and data for powerful agentic AGI? (If so, then at any moment someone could stumble across an algorithmic improvement which would change everything overnight.)

Why is this relevant for technical AI alignment (coming at this as someone skeptical about how relevant timeline considerations are more generally)?

↑ comment by Nathan Helm-Burger (nathan-helm-burger) · 2024-11-08T17:28:43.970Z · LW(p) · GW(p)

If tomorrow anyone in the world could cheaply and easily create an AGI that could act as a coherent agent on their behalf, based on an architecture different from a standard transformer, it seems like this would change a lot of people's priorities about which questions were most urgent to answer.

↑ comment by Alexander Gietelink Oldenziel (alexander-gietelink-oldenziel) · 2024-11-08T20:42:25.076Z · LW(p) · GW(p)

Fwiw I basically think you are right about the agentic AI overhang and obviously so. I do think it shapes how one thinks about what's most valuable in AI alignment.

↑ comment by Noosphere89 (sharmake-farah) · 2024-11-08T21:38:58.769Z · LW(p) · GW(p)

I kind of wish you both had given some reasoning for why you believe the agentic AI overhang/algorithmic overhang is likely, and I also wish Nathan Helm-Burger and Vladimir Nesov would discuss this topic in a dialogue post.

↑ comment by Alexander Gietelink Oldenziel (alexander-gietelink-oldenziel) · 2024-11-08T22:16:47.927Z · LW(p) · GW(p)

Glib formality: current LLMs do approximate something like a speed-prior Solomonoff inductor for internet data, but do not approximate AIXI.

There is a whole class of domains that are not tractably accessible from next-token prediction on human-generated data. For instance, learning how to beat AlphaGo with access only to pre-2014 human Go games.

↑ comment by Noosphere89 (sharmake-farah) · 2024-11-09T14:51:28.102Z · LW(p) · GW(p)

IMO, AlphaGo's success was orthogonal to AIXI, and more importantly, I expect AIXI to be very hard to approximate even as an approximable ideal, so what's the use case for thinking future AIs will be AIXI-like?

I will also say that while I don't think pure LLMs will just be scaled forward (if only because inference-time compute scaling is useful), conditional on AGI and ASI being achieved, I think the strategy will look more like using lots and lots of synthetic data to compensate for compute. Solomonoff induction, by contrast, has a halting oracle with lots of compute and can infer lots of things from the minimum data possible, whereas we will rely on a data-rich, compute-poor strategy compared to approximate AIXI.

↑ comment by Alexander Gietelink Oldenziel (alexander-gietelink-oldenziel) · 2024-11-09T16:03:05.535Z · LW(p) · GW(p)

The important thing is that both do active learning, decision-making, and search, i.e. RL.*

LLMs don't do that. So the gain from doing that is huge.

"Synthetic data" is a bit of a weird term that gets thrown around a lot. There are fundamental limits on how much information resampling from the same data source will yield about completely different domains, so that seems a bit silly. Of course, sometimes by synthetic data people just mean doing rollouts, i.e. RL.

*The term RL is sometimes mistaken to mean only a very specific reinforcement learning algorithm. Here I mean a very general class of algorithms that solve MDPs.

↑ comment by Stephen Fowler (LosPolloFowler) · 2024-11-09T02:38:17.297Z · LW(p) · GW(p)

The lack of a robust, highly general paradigm for reasoning about AGI models is currently the greatest technical problem, although it is not what most people are working on.

Which architectural features of contemporary AI models will carry over to future models that pose an existential risk?

What behavioral patterns of contemporary AI models will be shared with future models that pose an existential risk?

Is there a useful and general mathematical/physical framework that describes how agentic, macroscopic systems process information and interact with the environment?

Does terminology adopted by AI Safety researchers like "scheming", "inner alignment" or "agent" carve nature at the joints?

↑ comment by Bogdan Ionut Cirstea (bogdan-ionut-cirstea) · 2024-11-08T12:29:39.448Z · LW(p) · GW(p)

Something like a safety case for automated safety research (but I'm biased)

↑ comment by PhilosophicalSoul (LiamLaw) · 2024-11-08T11:14:42.813Z · LW(p) · GW(p)

Answering this from a legal perspective:

What is the easiest and most practical way to translate legalese into scientifically accurate terms, thus bridging the gap between AI experts and lawyers? Stated differently, how do we move from localised papers that only work in law or AI fields respectively, to papers that work in both?

↑ comment by RHollerith (rhollerith_dot_com) · 2024-11-13T06:53:29.776Z · LW(p) · GW(p)