MIRI announces new "Death With Dignity" strategy

post by Eliezer Yudkowsky (Eliezer_Yudkowsky) · 2022-04-02T00:43:19.814Z · 545 comments

tl;dr: It's obvious at this point that humanity isn't going to solve the alignment problem, or even try very hard, or even go out with much of a fight. Since survival is unattainable, we should shift the focus of our efforts to helping humanity die with slightly more dignity.

Well, let's be frank here. MIRI didn't solve AGI alignment and at least knows that it didn't. Paul Christiano's incredibly complicated schemes have no chance of working in real life before DeepMind destroys the world. Chris Olah's transparency work, at current rates of progress, will at best let somebody at DeepMind give a highly speculative warning about how the current set of enormous inscrutable tensors, inside a system that was recompiled three weeks ago and has now been training by gradient descent for 20 days, might possibly be planning to start trying to deceive its operators.

Management will then ask what they're supposed to do about that.

Whoever detected the warning sign will say that there isn't anything known they can do about that. Just because you can see the system might be planning to kill you, doesn't mean that there's any known way to build a system that won't do that. Management will then decide not to shut down the project - because it's not certain that the intention was really there or that the AGI will really follow through, because other AGI projects are hard on their heels, because if all those gloomy prophecies are true then there's nothing anybody can do about it anyways. Pretty soon that troublesome error signal will vanish.

When Earth's prospects are that far underwater in the basement of the logistic success curve, it may be hard to feel motivated about continuing to fight, since doubling our chances of survival will only take them from 0% to 0%.

That's why I would suggest reframing the problem - especially on an emotional level - to helping humanity die with dignity, or rather, since even this goal is realistically unattainable at this point, die with slightly more dignity than would otherwise be counterfactually obtained.

Consider the world if Chris Olah had never existed. It's then much more likely that nobody will even try and fail to adapt Olah's methodologies to try and read complicated facts about internal intentions and future plans, out of whatever enormous inscrutable tensors are being integrated a million times per second, inside of whatever recently designed system finished training 48 hours ago, in a vast GPU farm that's already helpfully connected to the Internet.

It is more dignified for humanity - a better look on our tombstone - if we die after the management of the AGI project was heroically warned of the dangers but came up with totally reasonable reasons to go ahead anyways.

Or, failing that, if people made a heroic effort to do something that could maybe possibly have worked to generate a warning like that but couldn't actually in real life because the latest tensors were in a slightly different format and there was no time to readapt the methodology. Compared to the much less dignified-looking situation if there's no warning and nobody even tried to figure out how to generate one.

Or take MIRI. Are we sad that it looks like this Earth is going to fail? Yes. Are we sad that we tried to do anything about that? No, because it would be so much sadder, when it all ended, to face our ends wondering if maybe solving alignment would have just been as easy as buckling down and making a serious effort on it - not knowing if that would've just worked, if we'd only tried, because nobody had ever even tried at all. It wasn't subjectively overdetermined that the (real) problems would be too hard for us, before we made the only attempt at solving them that would ever be made. Somebody needed to try at all, in case that was all it took.

It's sad that our Earth couldn't be one of the more dignified planets that makes a real effort, correctly pinpointing the actual real difficult problems and then allocating thousands of the sort of brilliant kids that our Earth steers into wasting their lives on theoretical physics. But better MIRI's effort than nothing. What were we supposed to do instead, pick easy irrelevant fake problems that we could make an illusion of progress on, and have nobody out of the human species even try to solve the hard scary real problems, until everybody just fell over dead?

This way, at least, some people are walking around knowing why it is that if you train with an outer loss function that enforces the appearance of friendliness, you will not get an AI internally motivated to be friendly in a way that persists after its capabilities start to generalize far out of the training distribution...

To be clear, nobody's going to listen to those people, in the end. There will be more comforting voices that sound less politically incongruent with whatever agenda needs to be pushed forward that week. Or even if that ends up not so, this isn't primarily a social-political problem, of just getting people to listen. Even if DeepMind listened, and Anthropic knew, and they both backed off from destroying the world, that would just mean Facebook AI Research destroyed the world a year(?) later.

But compared to being part of a species that walks forward completely oblivious into the whirling propeller blades, with nobody having seen it at all or made any effort to stop it, it is dying with a little more dignity, if anyone knew at all. You can feel a little incrementally prouder to have died as part of a species like that, if maybe not proud in absolute terms.

If there is a stronger warning, because we did more transparency research? If there's deeper understanding of the real dangers and those come closer to beating out comfortable nonrealities, such that DeepMind and Anthropic really actually back off from destroying the world and let Facebook AI Research do it instead? If they try some hopeless alignment scheme whose subjective success probability looks, to the last sane people, more like 0.1% than 0? Then we have died with even more dignity! It may not get our survival probabilities much above 0%, but it would be so much more dignified than the present course looks to be!

Now of course the real subtext here is that if you can otherwise set up the world so that it looks like you'll die with enough dignity - die of the social and technical problems that are really unavoidable, after making a huge effort at coordination and technical solutions and failing, rather than storming directly into the whirling helicopter blades as is the present unwritten plan -

- heck, if there was even a plan at all -

- then maybe possibly, if we're wrong about something fundamental, somehow, somewhere -

- in a way that makes things easier rather than harder, because obviously we're going to be wrong about all sorts of things, it's a whole new world inside of AGI -

- although, when you're fundamentally wrong about rocketry, this does not usually mean your rocket prototype goes exactly where you wanted on the first try while consuming half as much fuel as expected; it means the rocket explodes earlier yet, and not in a way you saw coming, being as wrong as you were -

- but if we get some miracle of unexpected hope, in those unpredicted inevitable places where our model is wrong -

- then our ability to take advantage of that one last hope, will greatly depend on how much dignity we were set to die with, before then.

If we can get on course to die with enough dignity, maybe we won't die at all...?

In principle, yes. Let's be very clear, though: Realistically speaking, that is not how real life works.

It's possible for a model error to make your life easier. But you do not get more surprises that make your life easy, than surprises that make your life even more difficult. And people do not suddenly become more reasonable, and make vastly more careful and precise decisions, as soon as they're scared. No, not even if it seems to you like their current awful decisions are weird and not-in-the-should-universe, and surely some sharp shock will cause them to snap out of that weird state into a normal state and start outputting the decisions you think they should make.

So don't get your heart set on that "not die at all" business. Don't invest all your emotion in a reward you probably won't get. Focus on dying with dignity - that is something you can actually obtain, even in this situation. After all, if you help humanity die with even one more dignity point, you yourself die with one hundred dignity points! Even if your species dies an incredibly undignified death, for you to have helped humanity go down with even slightly more of a real fight, is to die an extremely dignified death.

"Wait, dignity points?" you ask. "What are those? In what units are they measured, exactly?"

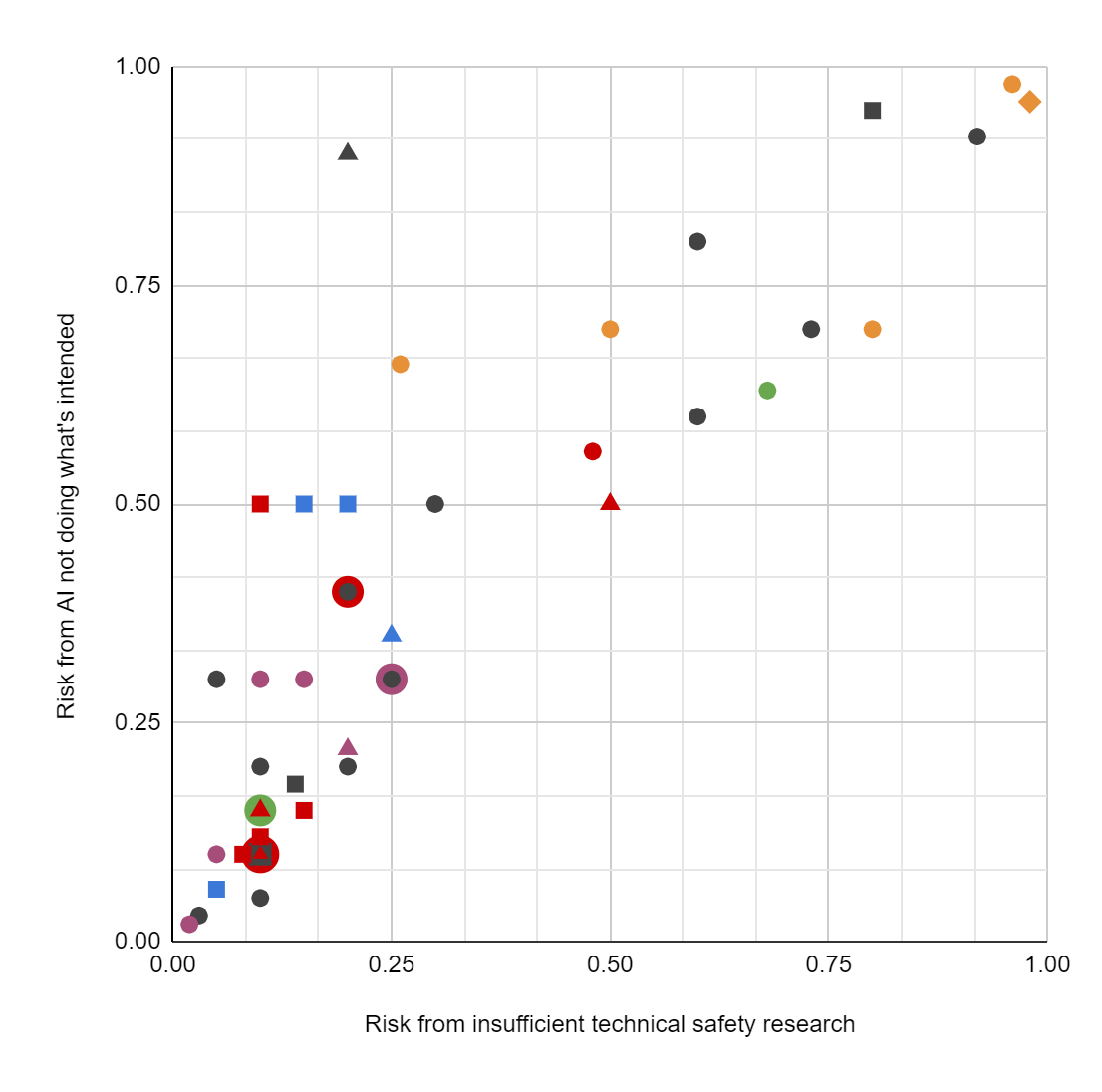

And to this I reply: Obviously, the measuring units of dignity are over humanity's log odds of survival - the graph on which the logistic success curve is a straight line. A project that doubles humanity's chance of survival from 0% to 0% is helping humanity die with one additional information-theoretic bit of dignity.

But if enough people can contribute enough bits of dignity like that, wouldn't that mean we didn't die at all? Yes, but again, don't get your hopes up. Don't focus your emotions on a goal you're probably not going to obtain. Realistically, we find a handful of projects that contribute a few more bits of counterfactual dignity; get a bunch more not-specifically-expected bad news that makes the first-order object-level situation look even worse (where to second order, of course, the good Bayesians already knew that was how it would go); and then we all die.
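To make the unit concrete, here is a minimal sketch, assuming a "dignity point" is exactly one bit of change in humanity's base-2 log odds of survival. The function names `log2_odds` and `dignity_bits` are hypothetical, introduced only for this illustration. It shows why doubling a tiny survival probability ("from 0% to 0%") is worth almost exactly one bit, and why bits from separate projects add up.

```python
import math

def log2_odds(p: float) -> float:
    # Log odds of probability p, in bits (base 2). On this scale the
    # logistic success curve becomes a straight line.
    return math.log2(p / (1.0 - p))

def dignity_bits(p_before: float, p_after: float) -> float:
    # Hypothetical reading of "dignity points": the change in
    # log2-odds of survival produced by some effort.
    return log2_odds(p_after) - log2_odds(p_before)

# Doubling a one-in-a-million chance: still "0%", but about one bit.
print(round(dignity_bits(1e-6, 2e-6), 4))

# Near the sloped middle of the curve, a doubling of probability is worth
# more than one bit, because the odds grow faster than the probability.
print(round(dignity_bits(0.25, 0.5), 4))
```

Because log odds simply subtract, bits contributed by successive projects sum: going from p1 to p2 and then from p2 to p3 yields the same total as going from p1 to p3 directly.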

With a technical definition in hand of what exactly constitutes dignity, we may now consider some specific questions about what does and doesn't constitute dying with dignity.

Q1: Does 'dying with dignity' in this context mean accepting the certainty of your death, and not childishly regretting that or trying to fight a hopeless battle?

Don't be ridiculous. How would that increase the log odds of Earth's survival?

My utility function isn't up for grabs, either. If I regret my planet's death then I regret it, and it's beneath my dignity to pretend otherwise.

That said, I fought hardest while it looked like we were in the more sloped region of the logistic success curve, when our survival probability seemed more around the 50% range; I borrowed against my future to do that, and burned myself out to some degree. That was a deliberate choice, which I don't regret now; it was worth trying, I would not have wanted to die having not tried, I would not have wanted Earth to die without anyone having tried. But yeah, I am taking some time partways off, and trying a little less hard, now. I've earned a lot of dignity already; and if the world is ending anyways and I can't stop it, I can afford to be a little kind to myself about that.

When I tried hard and burned myself out some, it was with the understanding, within myself, that I would not keep trying to do that forever. We cannot fight at maximum all the time, and some times are more important than others. (Namely, when the logistic success curve seems relatively more sloped; those times are relatively more important.)

All that said: If you fight marginally longer, you die with marginally more dignity. Just don't undignifiedly delude yourself about the probable outcome.

Q2: I have a clever scheme for saving the world! I should act as if I believe it will work and save everyone, right, even if there's arguments that it's almost certainly misguided and doomed? Because if those arguments are correct and my scheme can't work, we're all dead anyways, right?

A: No! That's not dying with dignity! That's stepping sideways out of a mentally uncomfortable world and finding an escape route from unpleasant thoughts! If you condition your probability models on a false fact, something that isn't true on the mainline, it means you've mentally stepped out of reality and are now living somewhere else instead.

There are more elaborate arguments against the rationality of this strategy, but consider this quick heuristic for arriving at the correct answer: That's not a dignified way to die. Death with dignity means going on mentally living in the world you think is reality, even if it's a sad reality, until the end; not abandoning your arts of seeking truth; dying with your commitment to reason intact.

You should try to make things better in the real world, where your efforts aren't enough and you're going to die anyways; not inside a fake world you can save more easily.

Q2: But what's wrong with the argument from expected utility, saying that all of humanity's expected utility lies within possible worlds where my scheme turns out to be feasible after all?

A: Most fundamentally? That's not what the surviving worlds look like. The surviving worlds look like people who lived inside their awful reality and tried to shape up their impossible chances; until somehow, somewhere, a miracle appeared - the model broke in a positive direction, for once, as does not usually occur when you are trying to do something very difficult and hard to understand, but might still be so - and they were positioned with the resources and the sanity to take advantage of that positive miracle, because they went on living inside uncomfortable reality. Positive model violations do ever happen, but it's much less likely that somebody's specific desired miracle that "we're all dead anyways if not..." will happen; these people have just walked out of the reality where any actual positive miracles might occur.

Also and in practice? People don't just pick one comfortable improbability to condition on. They go on encountering unpleasant facts true on the mainline, and each time saying, "Well, if that's true, I'm doomed, so I may as well assume it's not true," and they say more and more things like this. If you do this it very rapidly drives down the probability mass of the 'possible' world you're mentally inhabiting. Pretty soon you're living in a place that's nowhere near reality. If there were an expected utility argument for risking everything on an improbable assumption, you'd get to make exactly one of them, ever. People using this kind of thinking usually aren't even keeping track of when they say it, let alone counting the occasions.
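A rough arithmetic illustration of how fast this compounds, as a sketch under two loud assumptions: the probabilities below are invented for illustration, and the comfortable assumptions are treated as independent so that their probabilities simply multiply. The helper names `inhabited_mass` and `mass_trajectory` are hypothetical.

```python
import math
from itertools import accumulate
from operator import mul

def inhabited_mass(assumption_probs):
    # Probability mass of the imagined world after conditioning on every
    # comfortable assumption. Multiplying assumes independence, which is a
    # simplification made for this sketch.
    return math.prod(assumption_probs)

def mass_trajectory(assumption_probs):
    # Running product: the mass left after each successive
    # "I'm doomed if that's true, so I may as well assume it's not."
    return list(accumulate(assumption_probs, mul))

# Invented numbers: five unpleasant mainline facts, each 80% likely to be
# true, each assumed away (so each assumption is only 20% likely).
probs = [0.2] * 5
for step, mass in enumerate(mass_trajectory(probs), start=1):
    print(f"after assumption {step}: {mass:.5f}")
```

Under these made-up numbers, five such moves leave the imagined world with about 0.032% of the probability mass, which is the sense in which one ends up "living in a place that's nowhere near reality."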

Also also, in practice? In domains like this one, things that seem to first-order like they "might" work... have essentially no chance of working in real life, to second-order after taking into account downward adjustments against optimism. AGI is a scientifically unprecedented experiment and a domain with lots of optimization pressures some of which work against you and unforeseeable intelligently selected execution pathways and with a small target to hit and all sorts of extreme forces that break things and that you couldn't fully test before facing them. AGI alignment seems like it's blatantly going to be an enormously Murphy-cursed domain, like rocket prototyping or computer security but worse.

In a domain like this, if you have a clever scheme for winning anyways that, to first-order theoretical theory, totally definitely seems like it should work, even to Eliezer Yudkowsky rather than somebody who just goes around saying that casually, then maybe there's like a 50% chance of it working in practical real life after all the unexpected disasters and things turning out to be harder than expected.

If to first-order it seems to you like something in a complicated unknown untested domain has a 40% chance of working, it has a 0% chance of working in real life.

Also also also in practice? Harebrained schemes of this kind are usually actively harmful. Because they're invented by the sort of people who'll come up with an unworkable scheme, and then try to get rid of counterarguments with some sort of dismissal like "Well if not then we're all doomed anyways."

If nothing else, this kind of harebrained desperation drains off resources from those reality-abiding efforts that might try to do something on the subjectively apparent doomed mainline, and so position themselves better to take advantage of unexpected hope, which is what the surviving possible worlds mostly look like.

The surviving worlds don't look like somebody came up with a harebrained scheme, dismissed all the obvious reasons it wouldn't work with "But we have to bet on it working," and then it worked.

That's the elaborate argument about what's rational in terms of expected utility, once reasonable second-order commonsense adjustments are taken into account. Note, however, that if you have grasped the intended emotional connotations of "die with dignity", it's a heuristic that yields the same answer much faster. It's not dignified to pretend we're less doomed than we are, or step out of reality to live somewhere else.

Q3: Should I scream and run around and go through the streets wailing of doom?

A: No, that's not very dignified. Have a private breakdown in your bedroom, or a breakdown with a trusted friend, if you must.

Q3: Why is that bad from a coldly calculating expected utility perspective, though?

A: Because it associates belief in reality with people who act like idiots and can't control their emotions, which worsens our strategic position in possible worlds where we get an unexpected hope.

Q4: Should I lie and pretend everything is fine, then? Keep everyone's spirits up, so they go out with a smile, unknowing?

A: That also does not seem to me to be dignified. If we're all going to die anyways, I may as well speak plainly before then. If into the dark we must go, let's go there speaking the truth, to others and to ourselves, until the end.

Q4: Okay, but from a coldly calculating expected utility perspective, why isn't it good to lie to keep everyone calm? That way, if there's an unexpected hope, everybody else will be calm and oblivious and not interfering with us out of panic, and my faction will have lots of resources that they got from lying to their supporters about how much hope there was! Didn't you just say that people screaming and running around while the world was ending would be unhelpful?

A: You should never try to reason using expected utilities again. It is an art not meant for you. Stick to intuitive feelings henceforth.

There are, I think, people whose minds readily look for and find even the slightly-less-than-totally-obvious considerations of expected utility, what some might call "second-order" considerations. Ask them to rob a bank and give the money to the poor, and they'll think spontaneously and unprompted about insurance costs of banking and the chance of getting caught and reputational repercussions and low-trust societies and what if everybody else did that when they thought it was a good cause; and all of these considerations will be obviously-to-them consequences under consequentialism.

These people are well-suited to being 'consequentialists' or 'utilitarians', because their mind naturally sees all the consequences and utilities, including those considerations that others might be tempted to call by names like "second-order" or "categorical" and so on.

If you ask them why consequentialism doesn't say to rob banks, they reply, "Because that actually realistically in real life would not have good consequences. Whatever it is you're about to tell me as a supposedly non-consequentialist reason why we all mustn't do that, seems to you like a strong argument, exactly because you recognize implicitly that people robbing banks would not actually lead to happy formerly-poor people and everybody living cheerfully ever after."

Others, if you suggest to them that they should rob a bank and give the money to the poor, will be able to see the helped poor as a "consequence" and a "utility", but they will not spontaneously and unprompted see all those other considerations in the formal form of "consequences" and "utilities".

If you just asked them informally whether it was a good or bad idea, they might ask "What if everyone did that?" or "Isn't it good that we can live in a society where people can store and transmit money?" or "How would it make effective altruism look, if people went around doing that in the name of effective altruism?" But if you ask them about consequences, they don't spontaneously, readily, intuitively classify all these other things as "consequences"; they think that their mind is being steered onto a kind of formal track, a defensible track, a track of stating only things that are very direct or blatant or obvious. They think that the rule of consequentialism is, "If you show me a good consequence, I have to do that thing."

If you present them with bad things that happen if people rob banks, they don't see those as also being 'consequences'. They see them as arguments against consequentialism; since, after all, consequentialism says to rob banks, which obviously leads to bad stuff, and so bad things would end up happening if people were consequentialists. They do not do a double-take and say "What?" That consequentialism leads people to do bad things with bad outcomes is just a reasonable conclusion, so far as they can tell.

People like this should not be 'consequentialists' or 'utilitarians' as they understand those terms. They should back off from this form of reasoning that their mind is not naturally well-suited for processing in a native format, and stick to intuitively informally asking themselves what's good or bad behavior, without any special focus on what they think are 'outcomes'.

If they try to be consequentialists, they'll end up as Hollywood villains describing some grand scheme that violates a lot of ethics and deontology but sure will end up having grandiose benefits, yup, even while everybody in the audience knows perfectly well that it won't work. You can only safely be a consequentialist if you're genre-savvy about that class of arguments - if you're not the blind villain on screen, but the person in the audience watching who sees why that won't work.

Q4: I know EAs shouldn't rob banks, so this obviously isn't directed at me, right?

A: The people of whom I speak will look for and find the reasons not to do it, even if they're in a social environment that doesn't have strong established injunctions against bank-robbing specifically exactly. They'll figure it out even if you present them with a new problem isomorphic to bank-robbing but with the details changed.

Which is basically what you just did, in my opinion.

Q4: But from the standpoint of cold-blooded calculation -

A: Calculations are not cold-blooded. What blood we have in us, warm or cold, is something we can learn to see more clearly with the light of calculation.

If you think calculations are cold-blooded, that they only shed light on cold things or make them cold, then you shouldn't do them. Stay by the warmth in a mental format where warmth goes on making sense to you.

Q4: Yes yes fine fine but what's the actual downside from an expected-utility standpoint?

A: If good people were liars, that would render the words of good people meaningless as information-theoretic signals, and destroy the ability for good people to coordinate with others or among themselves.

If the world can be saved, it will be saved by people who didn't lie to themselves, and went on living inside reality until some unexpected hope appeared there.

If those people went around lying to others and paternalistically deceiving them - well, mostly, I don't think they'll have really been the types to live inside reality themselves. But even imagining the contrary, good luck suddenly unwinding all those deceptions and getting other people to live inside reality with you, to coordinate on whatever suddenly needs to be done when hope appears, after you drove them outside reality before that point. Why should they believe anything you say?

Q4: But wouldn't it be more clever to -

A: Stop. Just stop. This is why I advised you to reframe your emotional stance as dying with dignity.

Maybe there'd be an argument about whether or not to violate your ethics if the world was actually going to be saved at the end. But why break your deontology if it's not even going to save the world? Even if you have a price, should you be that cheap?

Q4: But we could maybe save the world by lying to everyone about how much hope there was, to gain resources, until -

A: You're not getting it. Why violate your deontology if it's not going to really actually save the world in real life, as opposed to a pretend theoretical thought experiment where your actions have only beneficial consequences and none of the obvious second-order detriments?

It's relatively safe to be around an Eliezer Yudkowsky while the world is ending, because he's not going to do anything extreme and unethical unless it would really actually save the world in real life, and there are no extreme unethical actions that would really actually save the world the way these things play out in real life, and he knows that. He knows that the next stupid sacrifice-of-ethics proposed won't work to save the world either, actually in real life. He is a 'pessimist' - that is, a realist, a Bayesian who doesn't update in a predictable direction, a genre-savvy person who knows what the viewer would say if there were a villain on screen making that argument for violating ethics. He will not, like a Hollywood villain onscreen, be deluded into thinking that some clever-sounding deontology-violation is bound to work out great, when everybody in the audience watching knows perfectly well that it won't.

My ethics aren't for sale at the price point of failure. So if it looks like everything is going to fail, I'm a relatively safe person to be around.

I'm a genre-savvy person about this genre of arguments and a Bayesian who doesn't update in a predictable direction. So if you ask, "But Eliezer, what happens when the end of the world is approaching, and in desperation you cling to whatever harebrained scheme has Goodharted past your filters and presented you with a false shred of hope; what then will you do?" - I answer, "Die with dignity." Where "dignity" in this case means knowing perfectly well that's what would happen to some less genre-savvy person; and my choosing to do something else which is not that. But "dignity" yields the same correct answer and faster.

Q5: "Relatively" safe?

A: It'd be disingenuous to pretend that it wouldn't be even safer to hang around somebody who had no clue what was coming, didn't know any mental motions for taking a worldview seriously, thought it was somebody else's problem to ever do anything, and would just cheerfully party with you until the end.

Within the class of people who know the world is ending and consider it to be their job to do something about that, Eliezer Yudkowsky is a relatively safe person to be standing next to. At least, before you both die anyways, as is the whole problem there.

Q5: Some of your self-proclaimed fans don't strike me as relatively safe people to be around, in that scenario?

A: I failed to teach them whatever it is I know. Had I known then what I know now, I would have warned them not to try.

If you insist on putting it into terms of fandom, though, feel free to notice that Eliezer Yudkowsky is much closer to being a typical liberaltarian science-fiction fan, as was his own culture that actually birthed him, than he is a typical member of any subculture that might have grown up later. Liberaltarian science-fiction fans do not usually throw away all their ethics at the first sign of trouble. They grew up reading books where those people were the villains.

Please don't take this as a promise from me to play nice, as you define niceness; the world is ending, and also people have varying definitions of what is nice. But I presently mostly expect to end up playing nice, because there won't be any options worth playing otherwise.

It is a matter of some concern to me that all this seems to be an alien logic to some strange people who - this fact is still hard for me to grasp on an emotional level - don't spontaneously generate all of this reasoning internally, as soon as confronted with the prompt. Alas.

Q5: Then isn't it unwise to speak plainly of these matters, when fools may be driven to desperation by them? What if people believe you about the hopeless situation, but refuse to accept that conducting themselves with dignity is the appropriate response?

A: I feel like I've now tried to live my life that way for a while, by the dictum of not panicking people; and, like everything else I've tried, that hasn't particularly worked? There are no plans left to avoid disrupting, now, with other people's hypothetical panic.

I think we die with slightly more dignity - come closer to surviving, as we die - if we are allowed to talk about these matters plainly. Even given that people may then do unhelpful things, after being driven mad by overhearing sane conversations. I think we die with more dignity that way, than if we go down silent and frozen and never talking about our impending death for fear of being overheard by people less sane than ourselves.

I think that in the last surviving possible worlds with any significant shred of subjective probability, people survived in part because they talked about it; even if that meant other people, the story's antagonists, might possibly hypothetically panic.

But still, one should present the story-antagonists with an easy line of retreat. So -

Q6: Hey, this was posted on April 1st. All of this is just an April Fool's joke, right?

A: Why, of course! Or rather, it's a preview of what might be needful to say later, if matters really do get that desperate. You don't want to drop that on people suddenly and with no warning.

Q6: Oh. Really? That would be such a relief!

A: Only you can decide whether to live in one mental world or the other.

Q6: Wait, now I'm confused. How do I decide which mental world to live in?

A: By figuring out what is true, and by allowing no other considerations than that to enter; that's dignity.

Q6: But that doesn't directly answer the question of which world I'm supposed to mentally live in! Can't somebody just tell me that?

A: Well, conditional on you wanting somebody to tell you that, I'd remind you that many EAs hold that it is very epistemically unvirtuous to just believe what one person tells you, and not weight their opinion and mix it with the weighted opinions of others?

Lots of very serious people will tell you that AGI is thirty years away, and that's plenty of time to turn things around, and nobody really knows anything about this subject matter anyways, and there's all kinds of plans for alignment that haven't been solidly refuted so far as they can tell.

I expect the sort of people who are very moved by that argument, to be happier, more productive, and less disruptive, living mentally in that world.

Q6: Thanks for answering my question! But aren't I supposed to assign some small probability to your worldview being correct?

A: Conditional on you being the sort of person who thinks you're obligated to do that and that's the reason you should do it, I'd frankly rather you didn't. Or rather, seal up that small probability in a safe corner of your mind which only tells you to stay out of the way of those gloomy people, and not get in the way of any hopeless plans they seem to have.

Q6: Got it. Thanks again!

A: You're welcome! Goodbye and have fun!

545 comments

comment by AI_WAIFU · 2022-04-02T05:47:07.286Z · LW(p) · GW(p)

That's great and all, but with all due respect:

Fuck. That. Noise.

Regardless of the odds of success and what the optimal course of action actually is, I would be very hard pressed to say that I'm trying to "help humanity die with dignity". Regardless of what the optimal action should be given that goal, on an emotional level, it's tantamount to giving up.

Before even getting into the cost/benefit of that attitude, in the worlds where we do make it out alive, I don't want to look back and see a version of me where that became my goal. I also don't think that if that was my goal, that I would fight nearly as hard to achieve it. I want a catgirl volcano lair not "dignity". So when I try to negotiate with my money brain to expend precious calories, the plan had better involve the former, not the latter. I suspect that something similar applies to others.

I don't want to hear about genre-savviness from the de facto founder of the community that gave us HPMOR!Harry and the Comet King after he wrote this post. Because it's so antithetical to the attitude present in those characters and posts like this one.

I also don't want to hear about second-order effects when, as best as I can tell, the attitude present here is likely to push people towards ineffective doomerism, rather than actually dying with dignity.

So instead, I'm gonna think carefully about my next move, come up with a plan, blast some shonen anime OSTs, and get to work. Then, amongst all the counterfactual worlds, there will be a version of me that gets to look back and know that they faced the end of the world, rose to the challenge, and came out the other end having carved utopia out of the bones of Lovecraftian gods.

↑ comment by Vaniver · 2022-04-02T17:17:11.572Z · LW(p) · GW(p)

I think there's an important point about locus of control and scope. You can imagine someone who, early in life, decides that their life's work will be to build a time machine, because the value of doing so is immense (turning an otherwise finite universe into an infinite one, for example). As time goes on, they notice being more and more pessimistic about their prospects of doing that, but have some block against giving up on an emotional level. The stakes are too high for doomerism to be entertained!

But I think they overestimated their locus of control when making their plans, and they should have updated as evidence came in. If they reduced the scope of their ambitions, they might switch from plans that are crazy because they have to condition on time travel being possible to plans that are sane (because they can condition on actual reality). Maybe they just invent flying cars instead of time travel, or whatever.

I see this post as saying: "look, people interested in futurism: if you want to live in reality, this is where the battle line actually is. Fight your battles there, don't send bombing runs behind miles of anti-air defenses and wonder why you don't seem to be getting any hits." Yes, knowing the actual state of the battlefield might make people less interested in fighting in the war, but especially for intellectual wars it doesn't make sense to lie to maintain morale.

[In particular, lies of the form "alignment is easy!" work both to attract alignment researchers and convince AI developers and their supporters that developing AI is good instead of world-ending, because someone else is handling the alignment bit.]

↑ comment by TurnTrout · 2022-04-03T16:43:48.960Z · LW(p) · GW(p)

lies of the form "alignment is easy!"

Aside: Regardless of whether the quoted claim is true, it does not seem like a prototypical lie. My read of your meaning is: "If you [the hypothetical person claiming alignment is easy] were an honest reasoner and worked out the consequences of what you know, you would not believe that alignment is easy; thusly has an inner deception blossomed into an outer deception; thus I call your claim a 'lie.'"

And under that understanding of what you mean, Vaniver, I think yours is not a wholly inappropriate usage, but rather unconventional. In its unconventionality, I think it implies untruths about the intentions of the claimants. (Namely, that they semi-consciously seek to benefit by spreading a claim they know to be false on some level.) In your shoes, I think I would have just called it an "untruth" or "false claim."

Edit: I now think you might have been talking about EY's hypothetical questioners who thought it valuable to purposefully deceive about the problem's difficulty, and not about the typical present-day person who believes alignment is easy?

↑ comment by Vaniver · 2022-04-03T18:50:53.540Z · LW(p) · GW(p)

Edit: I now think you might have been talking about EY's hypothetical questioners who thought it valuable to purposefully deceive about the problem's difficulty, and not about the typical present-day person who believes alignment is easy?

That is what I was responding to.

↑ comment by AnnaSalamon · 2022-04-02T20:37:30.381Z · LW(p) · GW(p)

"To win any battle, you must fight as if you are already dead.” — Miyamoto Musashi.

I don't in fact personally know we won't make it. This may be because I'm more ignorant than Eliezer, or may be because he (or his April first identity, I guess) is overconfident on a model, relative to me; it's hard to tell.

Regardless, the bit about "don't get psychologically stuck having-to-(believe/pretend)-it's-gonna-work" seems really sane and healthy to me. Like falling out of an illusion and noticing your feet on the ground. The ground is a more fun and joyful place to live, even when things are higher probability of death than one is used to acting-as-though, in my limited experience. More access to creativity near the ground, I think.

But, yes, I can picture things under the heading "ineffective doomerism" that seem to me like they suck. Like, still trying to live in an ego-constructed illusion of deferral, and this time with "and we die" pasted on it, instead of "and we definitely live via such-and-such a plan."

↑ comment by Ben Pace (Benito) · 2022-04-03T04:51:43.006Z · LW(p) · GW(p)

I think I have more access to all of my emotional range nearer the ground, but this sentence doesn't ring true to me.

The ground is a more fun and joyful place to live, even when things are higher probability of death than one is used to acting-as-though, in my limited experience.

↑ comment by lc · 2022-04-02T05:50:59.217Z · LW(p) · GW(p)

As cheesy as it is, this is the correct response. I'm a little disappointed that Eliezer would resort to doomposting like this, but at the same time it's to be expected from him after some point. The people with remaining energy need to understand his words are also serving a personal therapeutic purpose and press on.

↑ comment by Alex Vermillion (tomcatfish) · 2022-04-02T06:04:06.488Z · LW(p) · GW(p)

Some people can think there's next to no chance and yet go out swinging. I plan to, if I reach the point of feeling hopeless.

↑ comment by Rob Bensinger (RobbBB) · 2022-04-02T07:31:21.470Z · LW(p) · GW(p)

Yeah -- I love AI_WAIFU's comment, but I love the OP too.

To some extent I think these are just different strategies that will work better for different people; both have failure modes, and Eliezer is trying to guard against the failure modes of 'Fuck That Noise' (e.g., losing sight of reality), while AI_WAIFU is trying to guard against the failure modes of 'Try To Die With More Dignity' (e.g., losing motivation).

My general recommendation to people would be to try different framings / attitudes out and use the ones that empirically work for them personally, rather than trying to have the same lens as everyone else. I'm generally a skeptic of advice, because I think people vary a lot; so I endorse the meta-advice that you should be very picky about which advice you accept, and keep in mind that you're the world's leading expert on yourself. (Or at least, you're in the best position to be that thing.)

Cf. 'Detach the Grim-o-Meter' versus 'Try to Feel the Emotions that Match Reality'. Both are good advice in some contexts, for some people; but I think there's some risk from taking either strategy too far, especially if you aren't aware of the other strategy as a viable option.

↑ comment by RHollerith (rhollerith_dot_com) · 2022-04-02T13:03:58.705Z · LW(p) · GW(p)

Please correct me if I am wrong, but a huge difference between Eliezer's post and AI_WAIFU's comment is that Eliezer's post is informed by conversations with dozens of people about the problem.

↑ comment by Rob Bensinger (RobbBB) · 2022-04-02T17:22:06.440Z · LW(p) · GW(p)

I interpreted AI_WAIFU as pushing back against a psychological claim ('X is the best attitude for mental clarity, motivation, etc.'), not as pushing back against an AI-related claim like P(doom). Are you interpreting them as disagreeing about P(doom)? (If not, then I don't understand your comment.)

If (counterfactually) they had been arguing about P(doom), I'd say: I don't know AI_WAIFU's level of background. I have a very high opinion of Eliezer's thinking about AI (though keep in mind that I'm a co-worker of his), but EY is still some guy who can be wrong about things, and I'm interested to hear counter-arguments against things like P(doom). AGI forecasting and alignment are messy, pre-paradigmatic fields, so I think it's easier for field founders and authorities to get stuff wrong than it would be in a normal scientific field.

The specific claim that Eliezer's P(doom) is "informed by conversations with dozens of people about the problem" (if that's what you were claiming) seems off to me. Like, it may be technically true under some interpretation, but (a) I think of Eliezer's views as primarily based on his own models, (b) I'd tentatively guess those models are much more based on things like 'reading textbooks' and 'thinking things through himself' than on 'insights gleaned during back-and-forth discussions with other people', and (c) most people working full-time on AI alignment have far lower P(doom) than Eliezer.

↑ comment by RHollerith (rhollerith_dot_com) · 2022-04-02T19:41:56.977Z · LW(p) · GW(p)

Sorry for the lack of clarity. I share Eliezer's pessimism about the global situation (caused by rapid progress in AI). All I meant is that I see signs in his writings that over the last 15 years Eliezer has spent many hours trying to help at least a dozen different people become effective at trying to improve the horrible situation we are currently in. That work experience makes me pay much greater attention to him on the subject at hand than someone I know nothing about.

↑ comment by Rob Bensinger (RobbBB) · 2022-04-02T21:25:19.897Z · LW(p) · GW(p)

Ah, I see. I think Eliezer has lots of relevant experience and good insights, but I still wouldn't currently recommend the 'Death with Dignity' framing to everyone doing good longtermist work, because I just expect different people's minds to work very differently.

↑ comment by Aiyen · 2022-04-02T16:22:14.473Z · LW(p) · GW(p)

Assuming this is correct (certainly it is of Eliezer, though I don’t know AI_WAIFU’s background and perhaps they have had similar conversations), does it matter? WAIFU’s point is that we should continue trying as a matter of our terminal values; that’s not something that can be wrong due to the problem being difficult.

↑ comment by RHollerith (rhollerith_dot_com) · 2022-04-02T19:50:20.764Z · LW(p) · GW(p)

I agree, but do not perceive Eliezer as having stopped trying or as advising others to stop trying, er, except of course for the last section of this post ("Q6: . . . All of this is just an April Fool’s joke, right?") but that is IMHO addressed to a small fraction of his audience.

↑ comment by Aiyen · 2022-04-02T20:05:21.046Z · LW(p) · GW(p)

I don't want to speak for him (especially when he's free to clarify himself far better than we could do for him!), but dying with dignity conveys an attitude that might be incompatible with actually winning. Maybe not; sometimes abandoning the constraint that you have to see a path to victory makes it easier to do the best you can. But it feels concerning on an instinctive level.

↑ comment by AprilSR · 2022-04-02T05:55:31.745Z · LW(p) · GW(p)

I think both emotions are helpful at motivating me.

↑ comment by deepy · 2022-04-02T11:55:28.255Z · LW(p) · GW(p)

I think I'm more motivated by the thought that I am going to die soon, any children I might have in the future will die soon, my family, my friends, and their children are going to die soon, and any QALYs I think I'm buying are around 40% as valuable as I thought, more than undoing the income tax deduction I get for them.

It seems like wrangling my ADHD brain into looking for ways to prevent catastrophe could be more worthwhile than working a high-paid job I can currently hyper-focus on (and probably more virtuous, too), unless I find that the probability of success is literally 0% despite what I think I know about Bayesian reasoning, in which case I'll probably go into art or something.

↑ comment by Dawn Drescher (Telofy) · 2022-04-03T15:06:59.020Z · LW(p) · GW(p)

Agreed. Also here’s the poem that goes with that comment:

Do not go gentle into that good night,
Old age should burn and rave at close of day;
Rage, rage against the dying of the light.

Though wise men at their end know dark is right,
Because their words had forked no lightning they
Do not go gentle into that good night.

Good men, the last wave by, crying how bright
Their frail deeds might have danced in a green bay,
Rage, rage against the dying of the light.

Wild men who caught and sang the sun in flight,
And learn, too late, they grieved it on its way,
Do not go gentle into that good night.

Grave men, near death, who see with blinding sight
Blind eyes could blaze like meteors and be gay,
Rage, rage against the dying of the light.

And you, my father, there on the sad height,
Curse, bless, me now with your fierce tears, I pray.
Do not go gentle into that good night.
Rage, rage against the dying of the light.

I totally empathize with Eliezer, and I’m afraid that I might be similarly burned out if I had been trying this for as long.

But that’s not who I want to be. I want to be Harry who builds a rocket to escape Azkaban, the little girl that faces the meteor with a baseball bat, and the general who empties his guns into the sky against another meteor (minus all his racism and shit).

I bet I won’t always have the strength for that – but that’s the goal.

↑ comment by David James (david-james) · 2024-05-06T03:55:52.849Z · LW(p) · GW(p)

If we know a meteor is about to hit earth, having only D days to prepare, what is rational for person P? Depending on P and D, any of the following might be rational: throw an end of the world party, prep to live underground, shoot ICBMs at the meteor, etc.

↑ comment by Adam Zerner (adamzerner) · 2022-04-03T01:26:11.423Z · LW(p) · GW(p)

Makes me think of the following quote. I'm not sure how much I agree with or endorse it, but it's something to think about.

The good fight has its own values. That it must end in irrevocable defeat is irrelevant.

— Isaac Asimov, It's Been A Good Life

↑ comment by rank-biserial · 2022-04-02T20:16:42.609Z · LW(p) · GW(p)

Exquisitely based

↑ comment by Said Achmiz (SaidAchmiz) · 2022-04-02T20:23:10.879Z · LW(p) · GW(p)

Harry Potter and the Comet King have access to magic; we don’t.

… is the obvious response, but the correct response is actually:

Harry Potter and the Comet King don’t exist, so what attitude is present in those characters is irrelevant to the question of what attitude we, in reality, ought to have.

↑ comment by astridain (aristide-twain) · 2022-04-04T15:57:04.744Z · LW(p) · GW(p)

Most fictional characters are optimised to make for entertaining stories, hence why "generalizing from fictional evidence" is usually a failure-mode. The HPMOR Harry and the Comet King were optimized by two rationalists as examples of rationalist heroes — and are active in allegorical situations engineered to say something that rationalists would find to be “of worth” about real world problems.

They are appealing precisely because they encode assumptions about what a real-world, rationalist “hero” ought to be like. Or at least, that's the hope. So, they can be pointed to as “theses” about the real world by Yudkowsky and Alexander, no different from blog posts that happen to be written as allegorical stories, and if people found the ideas encoded in those characters more convincing than the ideas encoded in the present April Fools' Day post, that's fair enough.

Not necessarily correct on the object-level, but, if it's wrong, it's a different kind of error from garden-variety “generalizing from fictional evidence”.

↑ comment by Rob Bensinger (RobbBB) · 2022-04-06T04:38:41.261Z · LW(p) · GW(p)

+1

↑ comment by Daphne_W · 2022-04-05T10:31:10.378Z · LW(p) · GW(p)

As fictional characters popular among humans, what attitude is present in them is evidence for what sort of attitude humans like to see or inhabit. As author of those characters, Yudkowsky should be aware of this mechanism. And empirically, people with accurate beliefs and positive attitudes outperform people with accurate beliefs and negative attitudes. It seems plausible Yudkowsky is aware of this as well.

"Death with dignity" reads as an unnecessarily negative attitude to accompany the near-certainty of doom. Heroism, maximum probability of catgirls, or even just raw log-odds-of-survival seem like they would be more motivating than dignity without sacrificing accuracy.

Like, just substitute all instances of 'dignity' in the OP with 'heroism' and naively I would expect this post to have a better impact(/be more dignified/be more heroic), except insofar as it might give a less accurate impression of Yudkowsky's mood. But few people have actually engaged with him on that front.

↑ comment by anonce · 2022-04-16T00:10:48.934Z · LW(p) · GW(p)

You have a money brain? That's awesome, most of us only have monkey brains! 🙂

↑ comment by anonce · 2022-04-19T19:29:34.762Z · LW(p) · GW(p)

Why the downboats? People new to LW jargon probably wouldn't realize "money brain" is a typo.

↑ comment by interstice · 2022-04-19T21:25:27.040Z · LW(p) · GW(p)

Seemed like a bit of a rude way to let someone know they had a typo, I would have just gone with "Typo: money brain should be monkey brain".

↑ comment by anonce · 2023-07-31T18:29:20.324Z · LW(p) · GW(p)

That's fair; thanks for the feedback! I'll tone down the gallows humor on future comments; gotta keep in mind that tone of voice doesn't come across.

BTW a money brain would arise out of, e.g., a merchant caste in a static medieval society after many millennia. Much better than a monkey brain, and more capable of solving alignment!

↑ comment by throwaway69420 · 2022-04-02T14:46:40.930Z · LW(p) · GW(p)

Here we see irrationality for what it is: a rational survival mechanism.

comment by Alex_Altair · 2022-04-02T16:51:10.083Z · LW(p) · GW(p)

Seeing this post get so strongly upvoted makes me feel like I'm going crazy.

This is not the kind of content I want on LessWrong. I did not enjoy it, I do not think it will lead me to be happier or more productive toward reducing x-risk, I don't see how it would help others, and it honestly doesn't even seem like a particularly well done version of itself.

Can people help me understand why they upvoted?

↑ comment by P. · 2022-04-02T18:40:25.102Z · LW(p) · GW(p)

For whatever it is worth, this post, along with reading the unworkable alignment strategy in the ELK report, has made me realize that we actually have no idea what to do and has finally convinced me to try to solve alignment. I encourage everyone else to do the same. For some people knowing that the world is doomed by default and that we can't just expect the experts to save it is motivating. If that was his goal, he achieved it.

↑ comment by Yitz (yitz) · 2022-04-03T02:38:18.151Z · LW(p) · GW(p)

Certainly for some people (including you!), yes. For others, I expect this post to be strongly demotivating. That doesn’t mean it shouldn’t have been written (I value honestly conveying personal beliefs and expressing diversity of opinion enough to outweigh the downsides), but we should realistically expect this post to cause psychological harm for some people, and could also potentially make interaction and PR with those who don’t share Yudkowsky’s views harder. Despite some claims to the contrary, I believe (through personal experience in PR) that expressing radical honesty is not strongly valued outside the rationalist community, and that interaction with non-rationalists can be extremely important, even to potentially world-saving levels. Yudkowsky, for all of his incredible talent, is frankly terrible at PR (at least historically), and may not be giving proper weight to its value as a world-saving tool. I’m still thinking through the details of Yudkowsky’s claims, but expect me to write a post here in the near future giving my perspective in more detail.

↑ comment by abramdemski · 2022-04-03T17:34:13.536Z · LW(p) · GW(p)

I don't think "Eliezer is terrible at PR" is a very accurate representation of historical fact. It might be a good representation of something else. But it seems to me that deleting Eliezer from the timeline would probably result in a world where far far fewer people were convinced of the problem. Admittedly, such questions are difficult to judge.

I think "Eliezer is bad at PR" rings true in the sense that he belongs in the cluster of "bad at PR"; you'll make more correct inferences about Eliezer if you cluster him that way. But on historical grounds, he seems good at PR.

↑ comment by lc · 2022-04-03T17:36:15.831Z · LW(p) · GW(p)

Eliezer is "bad at PR" in the sense that there are lots of people who don't like him. But that's mostly irrelevant. The people who do like him like him enough to donate to his foundation and all of the foundations he inspired.

↑ comment by Yitz (yitz) · 2022-04-03T22:00:30.193Z · LW(p) · GW(p)

It’s the people who don’t like him (and are also intelligent and in positions of power), which I’m concerned with in this context. We’re dealing with problems where even a small adversarial group can do a potentially world-ending amount of harm, and that’s pretty important to be able to handle!

↑ comment by abramdemski · 2022-04-04T15:05:16.755Z · LW(p) · GW(p)

My personal experience is that the people who actively dislike Eliezer are specifically the people who were already set on their path; they dislike Eliezer mostly because he's telling them to get off that path.

I could be wrong, however; my personal experience is undoubtedly very biased.

↑ comment by Yitz (yitz) · 2022-04-04T22:32:13.880Z · LW(p) · GW(p)

I’ll tell you that one of my brothers (who I greatly respect) has decided not to be concerned about AGI risks specifically because he views EY as being a very respected “alarmist” in the field (which is basically correct), and also views EY as giving off extremely “culty” and “obviously wrong” vibes (with Roko’s Basilisk and EY’s privacy around the AI boxing results being the main examples given), leading him to conclude that it’s simply not worth engaging with the community (and their arguments) in the first place. I wouldn’t personally engage with what I believe to be a doomsday cult (even if they claim that the risk of ignoring them is astronomically high), so I really can’t blame him.

I’m also aware of an individual who has enormous cultural influence, and was interested in rationalism, but heard from an unnamed researcher at Google that the rationalist movement is associated with the alt-right, so they didn’t bother looking further. (Yes, that’s an incorrect statement, but came from the widespread [possibly correct?] belief that Peter Thiel is both alt-right and has/had close ties with many prominent rationalists.) This indicates a general lack of control of the narrative surrounding the movement, and likely has directly led to needlessly antagonistic relationships.

↑ comment by TAG · 2022-04-05T15:04:35.018Z · LW(p) · GW(p)

That's putting it mildly.

The problems are well known. The mystery is why the community doesn't implement obvious solutions. Hiring PR people is an obvious solution. There's a posting somewhere in which Anna Salamon argues that there is some sort of moral hazard involved in professional PR, but never explains why, and everyone agrees with her anyway.

If the community really and literally is about saving the world, then having a constant stream of people who are put off, or even becoming enemies is incrementally making the world more likely to be destroyed. So surely it's an important problem to solve? Yet the community doesn't even like discussing it. It's as if maintaining some sort of purity, or some sort of impression that you don't make mistakes is more important than saving the world.

↑ comment by Vaniver · 2022-04-05T19:59:58.650Z · LW(p) · GW(p)

Presumably you mean this post.

If the community really and literally is about saving the world, then having a constant stream of people who are put off, or even becoming enemies is incrementally making the world more likely to be destroyed. So surely it's an important problem to solve? Yet the community doesn't even like discussing it. It's as if maintaining some sort of purity, or some sort of impression that you don't make mistakes is more important than saving the world.

I think there are two issues.

First, some of the 'necessary to save the world' things might make enemies. If it's the case that Bob really wants there to be a giant explosion, and you think giant explosions might kill everyone, you and Bob are going to disagree about what to do, and Bob existing in the same information environment as you will constrain your ability to share your preferences and collect allies without making Bob an enemy.

Second, this isn't an issue where we can stop thinking, and thus we need to continue doing things that help us think, even if those things have costs. In contrast, in a situation where you know what plan you need to implement, you can now drop lots of your ability to think in order to coordinate on implementing that plan. [Like, a lot of the "there's too much PR in EA" complaints were specifically about situations where people were overstating the effectiveness of particular interventions, which seemed pretty poisonous to the project of comparing interventions, which was one of the core goals of EA, rather than just 'money moved' or 'number of people pledging' or so on.]

That said, I agree that this seems important to make progress on; this is one of the reasons I worked in communications roles, this is one of the reasons I try to be as polite as I am, this is why I've tried to make my presentation more adaptable instead of being more willing to write people off.

↑ comment by TAG · 2022-04-06T20:12:25.442Z · LW(p) · GW(p)

First, some of the ‘necessary to save the world’ things might make enemies. If it’s the case that Bob really wants there to be a giant explosion, and you think giant explosions might kill everyone, you and Bob are going to disagree about what to do, and Bob existing in the same information environment as you will constrain your ability to share your preferences and collect allies without making Bob an enemy.

So...that's a metaphor for "telling people who like building AIs to stop building AIs pisses them off and turns them into enemies". Which it might, but how often does that happen? Your prominent enemies aren't in that category, as far as I can see. David Gerard, for instance, was alienated by a race/IQ discussion. So good PR might consist of banning race/IQ.

Also, consider the possibility that people who know how to build AIs know more than you, so it's less a question of their being enemies, and more one of their being people you can learn from.

↑ comment by Vaniver · 2022-04-06T23:03:50.832Z · LW(p) · GW(p)

Which it might, but how often does that happen?

I don't know how public various details are, but my impression is that this was a decent description of the EY - Dario Amodei relationship (and presumably still is?), tho I think personality clashes are also a part of that.

Also, consider the possibility that people who know how to build AIs know more than you, so it's less a question of their being enemies , and more one of their being people you can learn from.

I mean, obviously they know more about some things and less about others? Like, virologists doing gain of function research are also people who know more than me, and I could view them as people I could learn from. Would that advance or hinder my goals?

↑ comment by TAG · 2022-04-07T17:37:18.425Z · LW(p) · GW(p)

If you are under some kind of misapprehension about the nature of their work, it would help. And you don't know that you are not under a misapprehension, because they are the experts, not you. So you need to talk to them anyway. You might believe that you understand the field flawlessly, but you don't know until someone checks your work.

↑ comment by TAG · 2022-04-06T20:30:18.286Z · LW(p) · GW(p)

That said, I agree that this seems important to make progress on; this is one of the reasons I worked in communications roles, this is one of the reasons I try to be as polite as I am, this is why I’ve tried to make my presentation more adaptable instead of being more willing to write people off.

It is not enough to say nice things: other representatives must be prevented from saying nasty things.

↑ comment by jeronimo196 · 2023-04-14T09:12:07.670Z · LW(p) · GW(p)

For any statement one can make, there will be people "alienated" (=offended?) by it.

David Gerard was alienated by a race/IQ discussion and you think that should've been avoided.

But someone was surely equally alienated by discussions of religion, evolution, economics, education and our ability to usefully define words.

Do we value David Gerard so far above any given creationist, that we should hire a PR department to cater to him and people like him specifically?

There is an ongoing effort to avoid overtly political topics (Politics is the mind-killer!) - but this effort is doomed beyond a certain threshold, since everything is political to some extent. Or to some people.

To me, a concerted PR effort on the part of all prominent representatives to never say anything "nasty" would be alienating. I don't think a community even somewhat dedicated to "radical" honesty could abide a PR department, or vice versa.

TL;DR - LessWrong has no PR department, LessWrong needs no PR department!

↑ comment by TAG · 2023-04-14T13:43:00.498Z

For any statement one can make, there will be people “alienated” (=offended?) by it

If you also assume that nothing short of perfection will do, that's a fully general argument against PR, not just against the possibility of LW/MIRI having good PR.

If you don't assume that, LW/MIRI can have good PR, by avoiding just the most significant bad PR. Disliking racism isn't some weird idiosyncratic thing that only Gerard has.

↑ comment by jeronimo196 · 2023-04-17T12:01:30.344Z

The level of PR you aim for puts an upper limit on how much "radical" honesty you can have.

If you aim for perfect PR, you can have 0 honesty.

If you aim for perfect honesty, you can have no PR. LessWrong doesn't go that far, by a long shot, even without a PR team present.

Most organizations do not aim for honesty at all.

The question is where we draw the line.

Which brings us to "Disliking racism isn't some weird idiosyncratic thing that only Gerard has."

From what I understand, Gerard left because he doesn't like discussions about race/IQ.

Which is not the same thing as racism.

I, personally, don't want LessWrong to cater to people who cannot tolerate a discussion.

↑ comment by TAG · 2023-04-17T13:06:12.777Z

honesty=/=frankness. Good PR does not require you to lie.

↑ comment by jeronimo196 · 2023-04-18T02:38:29.497Z

Semantics.

Good PR requires you to put a filter between what you think is true and what you say.

↑ comment by TAG · 2023-04-18T13:13:55.417Z

It requires you to filter what you publicly and officially say. "You", plural, the collective, can speak as freely as you like ...in private. But if you, individually, want to be able to say anything you like to anyone, you had better accept the consequences.

↑ comment by jeronimo196 · 2023-04-18T17:24:53.558Z

"The mystery is why the community doesn't implement obvious solutions. Hiring PR people is an obvious solution. There's a posting somewhere in which Anna Salamon argues that there is some sort of moral hazard involved in professional PR, but never explains why, and everyone agrees with her anyway."

""You", plural, the collective, can speak as freely as you like ...in private."

Suppose a large part of the community wants to speak as freely as it likes in public, and the mystery is solved.

We even managed to touch upon the moral hazard involved in professional PR, insofar as it is a filter between what you believe and what you say publicly.

↑ comment by bn22 · 2022-04-05T06:16:15.904Z

None of these seem to reflect on EY unless you would expect him to be able to predict two things: that a journalist would write an incoherent, almost maximally inaccurate description of an event in which he criticized an idea for being implausible and then banned its discussion for being off-topic or pointlessly disruptive to something like two people; and that his clearly written rationale for not releasing the transcripts of the AI-box experiments would be interpreted as a recruiting tool for the only cult that requires no contributions to be a part of, doesn't promise its members salvation or supernatural powers, has no formal hierarchy, and is based on a central part of economics.

↑ comment by Yitz (yitz) · 2022-04-05T10:43:35.548Z

I would not expect EY to have predicted that himself, given his background. If, however, he had either studied PR deeply or consulted with a domain expert before posting, then I would have fully expected that result to be predicted with some significant likelihood. Remember, optimally good rationalists should win, and be able to anticipate social dynamics. In this case EY fell into a social trap he didn't even know existed, so again, I do not blame him personally, but that does not negate the fact that he has historically not been very good at anticipating that sort of thing, due to a lack of training, experience, or intuition in that field. I'm fairly confident that, at least regarding the Roko's Basilisk disaster, I would have been able to predict something close to what actually happened if I had seen his comment before he posted it. (This would have been primarily due to pattern matching between the post and known instances of the Streisand Effect, as well as some amount of hard-to-formally-explain intuition that EY's wording would invoke strong negative emotions in some groups, even if he hadn't taken any action. Studying "ratio'd" tweets can help give you a sense for this, if you want to practice that admittedly very niche skill.) I'm not saying this to imply that I'm a better rationalist than EY (I'm not), merely to say that EY, and the rationalist movement generally, hasn't focused on honing the skillset necessary to excel at PR, which has sometimes been to our collective detriment.

↑ comment by green_leaf · 2022-04-15T00:01:06.178Z

The question is whether people who prioritize social-position/status-based arguments over actual reality were going to contribute anything meaningful to begin with.

The rationalist community has been built on, among other things, the recognition that the human species is systematically broken when it comes to epistemic rationality. Why think that someone who fails this deeply wouldn't continue failing at epistemic rationality at every step even once they've already joined?

↑ comment by Yitz (yitz) · 2022-04-15T05:30:53.558Z

Why think that someone who fails this deeply wouldn't continue failing at epistemic rationality at every step even once they've already joined?

I think making the assumption that anyone who isn't in our community is failing to think rationally is itself not great epistemics. It's not irrational at all to refrain from engaging with the ideas of a community you believe to be vaguely insane. After all, I suspect you haven't looked all that deeply into the accuracy of the views of the Church of Scientology, and that's not a failure on your part, since there's little chance you'll gain much of value for your time if you did. There are many, many, many groups out there who sound intelligent at first glance, but when seriously engaged with fall apart. Likewise, there are those groups which sound insane at first, but actually have deep truths to teach (I'd place some forms of Zen Buddhism under this category). It makes a lot of sense to trust your intuition on this sort of thing, if you don't want to get sucked into cults or time-sinks.

↑ comment by green_leaf · 2022-04-17T14:20:19.498Z

I think making the assumption that anyone who isn't in our community

I didn't talk about "anyone who isn't in our community," but about

people who prioritize social-position/status-based arguments over actual reality[.]

It's not irrational at all to refrain from engaging with the ideas of a community you believe to be vaguely insane.

It's epistemically irrational if I'm implying the ideas are false and if this judgment isn't born from interacting with the ideas themselves but with

social-position/status-based arguments[.]

↑ comment by Kabir Kumar (kabir-kumar) · 2023-12-15T21:25:45.260Z

Eliezer is extremely skilled at capturing attention. One of the best I've seen, outside of presidents and some VCs.

However, as far as I've seen, he's terrible at getting people to do what he wants.

This means he tends to attract people to a topic he thinks is important, but they never do what he thinks should be done, which seems to lead to a feeling of despondence.

This is where he really differs from those VCs and presidents; they're usually far more balanced.

For an example of an absolute genius in getting people to do what he wants, see Sam Altman.

↑ comment by Yitz (yitz) · 2022-04-03T21:52:15.550Z

You make a strong point, and as such I’ll emend my statement a bit—Eliezer is great at PR aimed at a certain audience in a certain context, which is not universal. Outside of that audience, he is not great at Public Relations(™) in the sense of minimizing the risk of gaining a bad reputation. Historically, I am mostly referring to Eliezer’s tendency to react to what he’s believed to be infohazards in such a way that what he tried to suppress was spread vastly beyond the counterfactual world in which Eliezer hadn’t reacted at all. You only need to slip up once when it comes to risking all PR gains (just ask the countless politicians destroyed by a single video or picture), and Eliezer has slipped up multiple times in the past (not that I personally blame him; it’s a tremendously difficult skillset which I doubt he’s had the time to really work on). All of this is to say that yes, he’s great at making powerful, effective arguments, which convince many rationalist-leaning people. That is not, however, what it means to be a PR expert, and is only one small aspect of a much larger domain which rationalists have historically under-invested in.

↑ comment by abramdemski · 2022-04-04T14:58:54.466Z

Sounds about right!

↑ comment by Buck · 2022-04-03T21:38:22.137Z

What part of the ELK report are you saying felt unworkable?

↑ comment by Chris_Leong · 2022-04-03T13:29:24.005Z

Awesome. What are your plans?

Have you considered booking a call with AI Safety Support, registering your interest for the next AGI Safety Fundamentals Course, or applying to talk to 80,000 Hours?

↑ comment by P. · 2022-04-03T15:11:04.856Z

I will probably spend 4 days (from the 14th to the 17th; I'm somewhat busy until then) thinking about alignment to see whether there is any chance I might be able to make progress. I have read what is recommended as a starting point on the alignment forum, and can read the AGI Safety Fundamentals Course's curriculum on my own. I will probably start by thinking about how to formalize (and compute) something similar to what we call human values, since that seems to be the core of the problem, and then turning that into something that can be evaluated over possible trajectories of the AI's world model (or over something like reasoning chains or whatever, I don't know). I hadn't considered that as a career; I live in Europe and we don't have those kinds of organizations here, so it will probably just be a hobby.

↑ comment by Chris_Leong · 2022-04-03T15:30:11.893Z

Sounds like a great plan! Even if you end up deciding that you can't make research progress (not that you should give up after just 4 days!), I can suggest a bunch of other activities that might plausibly contribute towards this.

I hadn't considered that as a career; I live in Europe and we don't have those kinds of organizations here, so it will probably just be a hobby.

I expect that this will change within the next year or so (for example, there are plans for a Longtermist Hotel in Berlin and I think it's very likely to happen).

↑ comment by ArtMi (richard-ford) · 2022-04-05T06:39:48.048Z

What other activities?

↑ comment by Chris_Leong · 2022-04-05T06:57:59.349Z

Here are a few off the top of my head:

• Applying to facilitate the next rounds of the AGI Safety Fundamentals course (apparently they compensated facilitators this time)

• Contributing to Stampy Wiki

• AI Safety Movement Building - this can be as simple as hosting dinners with two or three people who are also interested

• General EA/rationalist community building

• Trying to improve online outreach. Take, for example, the AI Safety Discussion (Open) Facebook group. They could probably be making better use of the sidebar. The moderator might be open to updating it if someone reached out to them and offered to put in the work. It might be worth seeing what other groups are out there too.

Let me know if none of these sound interesting and I could try to think up some more.

↑ comment by Aditya (aditya-prasad) · 2022-06-03T20:56:25.032Z

Same; this post is what made me decide I can't leave it to the experts. It is just a matter of spending the required time to catch up on what we know and have tried. As Keltham said, diversity is in itself an asset. If we can get enough humans to think about this problem, we may get some breakthroughs from angles others have not thought of yet.

For me, it was not demotivating. He is not a god, and it ain't over until the fat lady sings. Things are serious and it just means we should all try our best. In fact, I am kinda happy to imagine we might see a utopia happen in my lifetime. Most humans don't get a chance to literally save the world. It would be really sad if I died a few years before some AGI turned into a superintelligence.

↑ comment by Rob Bensinger (RobbBB) · 2022-04-02T18:33:25.782Z

I primarily upvoted it because I like the push to 'just candidly talk about your models of stuff':