Shallow review of technical AI safety, 2024

post by technicalities, Stag, Stephen McAleese (stephen-mcaleese), jordine, Dr. David Mathers · 2024-12-29T12:01:14.724Z · LW · GW · 34 comments

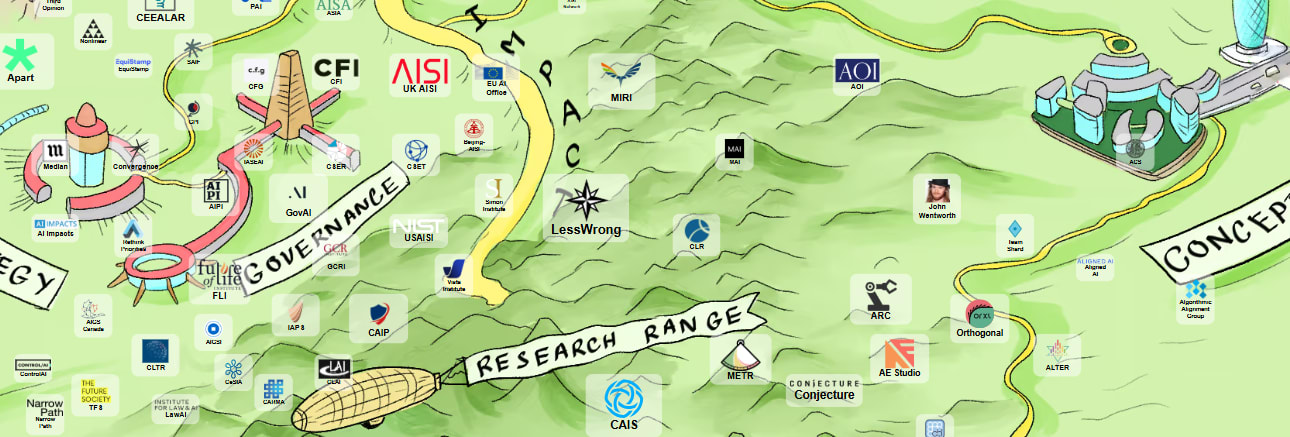

from aisafety.world

The following is a list of live agendas in technical AI safety, updating our post [LW · GW] from last year. It is “shallow” in the sense that 1) we are not specialists in almost any of it and that 2) we only spent about an hour on each entry. We also only use public information, so we are bound to be off by some additional factor.

The point is to help anyone look up some of what is happening, or that thing you vaguely remember reading about; to help new researchers orient and know (some of) their options and the standing critiques; to help policy people know who to talk to for the actual information; and ideally to help funders see quickly what has already been funded and how much (but this proves to be hard).

“AI safety” means many things. We’re targeting work that intends to prevent very competent cognitive systems from having large unintended effects on the world.

This time we also made an effort to identify work that doesn’t show up on LW/AF by trawling conferences and arXiv. Our list is not exhaustive, particularly for academic work, which is unindexed and hard to discover. If we missed you or got something wrong, please comment and we will edit.

The method section is important but we put it down the bottom anyway.

Here’s a spreadsheet version also.

Editorial

- One commenter said we shouldn’t do this review, because the sheer length of it fools people into thinking that there’s been lots of progress. Obviously we disagree that it’s not worth doing, but be warned: the following is an exercise in quantity; activity is not the same as progress; you have to consider whether it actually helps.

- A smell of ozone. In the last month there has been a flurry of hopeful or despairing pieces claiming that the next base models are not a big advance, or that we hit a data wall. These often ground out in gossip, but it’s true that the next-gen base models are held up by something, maybe just inference cost.

- The pretraining runs are bottlenecked on electrical power too. Amazon is at present not getting its nuclear datacentre.

- But overall it’s been a big year for capabilities despite no pretraining scaling.

- I had forgotten long contexts were so recent; million-token windows only arrived in February. Multimodality was February. “Reasoning” was September. “Agency” was October.

- I don’t trust benchmarks much, but on GPQA (hard science) they leapt all the way from chance to PhD-level just with post-training.

- FrontierMath launched 6 weeks ago; o3 moved it 2% → 25%. This is about a year ahead of schedule (though 25% of the benchmark is “find this number!” International Math Olympiad/elite undergrad level.) Unlike the IMO there’s an unusual emphasis on numerical answers and computation too.

- LLaMA-3.1 only used 0.1% synthetic pretraining data, Hunyuan-Large supposedly used 20%.

- The revenge of factored cognition? The full o1 (descriptive name GPT-4-RL-CoT) model is uneven, but seems better at some hard things. The timing is suggestive: this new scaling dimension is being exploited now to make up for the lack of pretraining compute scaling, and so keep the excitement/investment level high. See also Claude apparently doing a little of this.

- Moulton: “If the paths found compress well into the base model then even the test-time compute paradigm may be short lived.”

- You can do a lot with a modern 8B model, apparently more than you could with 2020’s GPT-3-175B. This scaling of capability density will cause other problems.

- There’s still some room for scepticism on OOD capabilities. Here’s a messy case which we don’t fully endorse.

- Whatever safety properties you think [LW · GW] LLMs have [LW · GW] are not set in stone and might be lost. For instance, previously, training them didn’t involve much RL. And currently they have at least partially faithful CoT.

- Some parts of AI safety are now mainstream.

- So it’s hard to find all of the people who don’t post here. For instance, here’s a random ACL paper which just cites the frontier labs.

- The AI governance pivot continues despite the SB1047 setback. MIRI is [LW · GW] a policy org now. 80k made governance researcher its top recommended career, after 4 years of technical safety being that.

- The AISIs seem to be doing well. The UK one survived a political transition and the US one might survive theirs. See also the proposed AI Safety Review Office.

- Mainstreaming safety ideas has polarised things of course; an organised opposition has stood up at last. Seems like it’s not yet party-political though.

- Last year we noted a turn towards control instead of alignment, a turn which seems to have continued.

- Alignment evals with public test sets will probably be pretrained on, and as such will probably quickly stop meaning anything. Maybe you hope that it generalises from post-training anyway?

- Safety cases are a mix of scalable oversight and governance; if it proves hard to make a convincing safety case for a given deployment, then – unlike evals – the safety case gives an if-then decision procedure to get people to stop; or if instead real safety cases are easy to make, we can make safety cases for scalable oversight, and then win.

- Grietzer and Jha deprecate the word “alignment”, since it means too many things at once:

- “P1: Avoiding takeover from emergent optimization in AI agents

- P2: Ensuring that AI’s information processing (and/or reasoning) is intelligible to us

- P3: Ensuring AIs are good at solving problems as specified (by user or designer)

- P4: Ensuring AI systems enhance, and don’t erode, human agency

- P5: Ensuring that advanced AI agents learn a human utility function

- P6: Ensuring that AI systems lead to desirable systemic and long term outcomes”

- Manifestos / mini-books: A Narrow Path (ControlAI), The Compendium (Conjecture), Situational Awareness (Aschenbrenner), Introduction to AI Safety, Ethics, and Society (CAIS).

- I note in passing the for-profit ~alignment companies in this list: Conjecture, Goodfire, Leap, AE Studio. (Not counting the labs.)

- We don’t comment on quality. Here’s [LW(p) · GW(p)] one researcher’s opinion on the best work of the year (for his purposes).

- From December 2023: you should read Turner and Barnett [LW(p) · GW(p)] alleging community failures.

- The term “prosaic alignment” violates one good rule of thumb: that one should in general name things in ways that the people covered would endorse.[2] We’re calling it “iterative alignment”. We liked Kosoy’s description [LW · GW] of the big implicit strategies used in the field, including the “incrementalist” strategy.

- Quite a lot of the juiciest work is in the "miscellaneous" category, suggesting that our taxonomy isn't right, or that tree data structures aren't.

Agendas with public outputs

1. Understand existing models

Evals

(Figuring out how trained models behave. Arguably not itself safety work but a useful input.)

Various capability and safety evaluations

- One-sentence summary: make tools that can actually check whether a model has a certain capability or propensity. We default to low-n sampling of a vast latent space but aim to do better.

- Theory of change: keep a close eye on what capabilities are acquired when, so that frontier labs and regulators are better informed on what security measures are already necessary (and hopefully they extrapolate). You can’t regulate without them.

- See also: DeepMind’s frontier safety framework, Aether [EA · GW]

- Which orthodox alignment problems could it help with?: none; a barometer for risk

- Target case [LW · GW]: optimistic, pessimistic

- Broad approach [LW · GW]: behavioural

- Some names: METR, AISI, Apollo, Epoch, Marius Hobbhahn, Mary Phuong, Beth Barnes, Owain Evans

- Estimated # FTEs: 100+

- Some outputs in 2024:

- Evals: Evaluating Frontier Models for Dangerous Capabilities, WMDP, Situational Awareness Dataset [LW · GW], Introspection, HarmBench, FrontierMath, SWE-bench, Language Models Model Users [AF · GW], LAB-Bench

- Agentic: AgentHarm, Details about METR’s preliminary evaluation of OpenAI o1-preview, Research Engineering (RE-)Bench, Safety training do not fully transfer to the agent setting [LW · GW], Evaluating frontier AI R&D capabilities of language model agents against human experts, Automation of AI R&D: Researcher Perspectives

- Meta-discussion: We need a Science of Evals, AI Sandbagging [AF · GW], Sabotage Evaluations, [AF · GW] Safetywashing [AF · GW], A statistical approach to model evaluations, Why Has Predicting Downstream Capabilities of Frontier AI Models with Scale Remained Elusive?

- Tools:

- Demos: Uncovering Deceptive Tendencies in Language Models: A Simulated Company AI Assistant [AF · GW], LLMs can Strategically Deceive Under Pressure, In-Context Scheming, Alignment Faking in LLMs

- Other misc: An Opinionated Evals Reading List [AF · GW], A starter guide for evals [AF · GW]

- Critiques: Hubinger [AF · GW], Hubinger [AF · GW], Shovelain & Mckernon [LW · GW], Jozdien [AF · GW], Casper

- Funded by: basically everyone. Google, Microsoft, Open Philanthropy, LTFF, Governments etc

- Publicly-announced funding 2023-4: N/A. Tens of millions?
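
The "low-n sampling" caveat in the summary above can be made concrete. What follows is a minimal sketch, assuming an eval is just n independent pass/fail samples of a model's behaviour; the function name `wilson_interval` and the example counts are ours for illustration and are not taken from any of the listed papers. It computes a Wilson score interval for the underlying rate of the behaviour.

```python
import math

def wilson_interval(hits: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a binomial proportion (z=1.96 is roughly 95%)."""
    if n <= 0:
        raise ValueError("n must be positive")
    p = hits / n
    denom = 1 + z * z / n
    centre = (p + z * z / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z * z / (4 * n * n)) / denom
    # Clip to [0, 1] to absorb floating-point noise at the edges.
    return max(0.0, centre - half), min(1.0, centre + half)

# Hypothetical eval: the behaviour was seen in 0 of 10 sampled rollouts.
lo, hi = wilson_interval(0, 10)
print(f"0/10 observed: rate plausibly between {lo:.3f} and {hi:.3f}")
```

Under these assumptions, zero hits in ten samples is still compatible with a true rate of roughly 28%, which is one way of seeing why small-sample evals are a barometer rather than a guarantee.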

- One-sentence summary: let’s attack current models and see what they do / deliberately induce bad things on current frontier models to test out our theories / methods.

- See also: gain of function [EA · GW] experiments (producing demos and toy models of misalignment); threat modelling (Model Organisms [AF · GW], Powerseeking, Apollo); steganography; Trojans (CAIS); Latent Adversarial Training [AF · GW]; Palisade.

- Which orthodox alignment problems could it help with?: 12. A boxed AGI might exfiltrate itself by steganography, spearphishing; 4. Goals misgeneralize out of distribution

- Target case: Pessimistic

- Broad approach: Behavioural

- Some names: Anthropic Alignment Stress-Testing [LW · GW], Ethan Perez, Evan Hubinger, Beth Barnes, Nicholas Schiefer, Jesse Mu, David Duvenaud

- Estimated # FTEs: 10-50

- Some outputs in 2024: Many-shot Jailbreaking, HarmBench, Phishing, Sleeper Agents, Sabotage, Emergence, TrojanForge, Collusion in SAEs [LW · GW], Coercion, Emergence and Mitigation of Steganographic Collusion [LW · GW], Secret Collusion [AF · GW], Negative steganography results [LW · GW], Introducing Alignment Stress-Testing at Anthropic [AF · GW], Investigating reward tampering [AF · GW], Gray Swan’s Ultimate Jailbreaking Championship [LW · GW], Universal Image Jailbreak Transferability, Misleading via RLHF, WildGuard: Open One-Stop Moderation Tools, LLM-to-LLM Prompt Injection within Multi-Agent Systems, Adversaries Can Misuse Combinations of Safe Models, Alignment Faking

- Critiques: nostalgebraist contra CoT unfaithfulness [AF · GW] kinda

- Funded by: the big labs, the government evaluators

- Publicly-announced funding 2023-4: N/A. Millions?

Eliciting model anomalies

- One-sentence summary: finding weird features of current models, in a way which is neither fishing for capabilities nor exactly red-teaming. Think Inverse Scaling, SolidGoldMagikarp [AF · GW], Reversal curse, out of context. Not an agenda, an input.

- Theory of change: maybe anomalies and edge cases tell us something deep about the models; you need data to theorise.

- Some outputs in 2024: Mechanistically Eliciting Latent Behaviors in Language Models [LW · GW], Yang 2024, Balesni 2024, Feng 2024, When Do Universal Image Jailbreaks Transfer Between Vision-Language Models?, Eight methods to evaluate robust unlearning in LLMs, Connecting the Dots, Predicting Emergent Capabilities by Finetuning, On Evaluating the Durability of Safeguards for Open-weight LLMs.

Interpretability

(Figuring out what a trained model is actually computing.[3])

Good-enough mech interp [LW · GW]

- One-sentence summary: try to reverse-engineer models in a principled way and use this understanding to make models safer. Break it into components (neurons, polytopes, circuits, feature directions, singular vectors, etc), interpret the components, check that your interpretation is right.

- Theory of change: Iterate towards things which don’t scheme. Most bottom-up interp agendas are not seeking a full circuit-level reconstruction of model algorithms; they're just aiming at formal models that are principled enough to root out e.g. deception. Aid alignment through ontology identification, auditing for deception and planning, targeting alignment methods, intervening in training, inference-time control to act on hypothetical real-time monitoring.

- This is a catch-all entry with lots of overlap with the rest of this section. See also scalable oversight, ambitious mech interp [EA · GW].

- Which orthodox alignment problems could it help with?: 1. Value is fragile and hard to specify; 7. Superintelligence can fool human supervisors; 12. A boxed AGI might exfiltrate itself.

- Target case: pessimistic

- Broad approach: cognitive

- Some names: Chris Olah, Neel Nanda, Trenton Bricken, Samuel Marks, Nina Panickssery

- Estimated # FTEs: 100+

- Some outputs in 2024: Neel’s extremely Opinionated Annotated List v2 [AF · GW], (unpublished) Exciting Open Problems In Mech Interp v2, A List of 45+ Mech Interp Project Ideas [AF · GW], Dictionary learning, Sparse Feature Circuits: Discovering and Editing Interpretable Causal Graphs in Language Models, Mechanistic Interpretability for AI Safety -- A Review

- Critiques: Summarised here: Charbel [LW · GW], Bushnaq [LW · GW], Casper [? · GW], Shovelain & Mckernon [LW · GW], RicG [LW · GW], Kross [LW · GW], Hobbhahn [LW · GW]

- Funded by: everyone, roughly. Frontier labs, LTFF, OpenPhil, etc.

- Publicly-announced funding 2023-4: N/A. Millions.

Sparse Autoencoders [AF · GW]

- One-sentence summary: decompose the polysemantic activations of the residual stream into a sparse linear combination of monosemantic “features” which correspond to interpretable concepts.

- Theory of change: get a principled decomposition of an LLM's activation into atomic components → identify deception and other misbehaviors. Sharkey’s version [AF · GW] has much more detail.

- See also: Bau Lab, the Local Interaction Basis

- Which orthodox alignment problems could it help with?: 1. Value is fragile and hard to specify, 7. Superintelligence can fool human supervisors

- Target case: pessimistic

- Broad approach: cognitive

- Some names: Senthooran Rajamanoharan, Arthur Conmy, Leo Gao, Neel Nanda, Connor Kissane, Lee Sharkey, Samuel Marks, David Bau, Eric Michaud, Aaron Mueller, Decode

- Estimated # FTEs: 10-50

- Some outputs in 2024: Scaling Monosemanticity, Extracting Concepts from GPT-4, Gemma Scope, JumpReLU, Dictionary learning with gated SAEs, Scaling and evaluating sparse autoencoders, Automatically Interpreting LLM Features, Interpreting Attention Layers, SAEs (usually) Transfer Between Base and Chat Models [AF · GW], End-to-End Sparse Dictionary Learning, Transcoders Find Interpretable LLM Feature Circuits, A is for Absorption [LW · GW], Sparse Feature Circuits, Function Vectors, Improving Steering Vectors by Targeting SAE Features, Matryoshka SAEs [AF · GW], Goodfire.

- Critiques: SAEs are highly dataset dependent [AF · GW], The ‘strong’ feature hypothesis could be wrong [AF · GW], EIS XIV: Is mechanistic interpretability about to be practically useful? [AF · GW], steganography [LW · GW], Analyzing (In)Abilities of SAEs via Formal Languages

- Funded by: everyone, roughly. Frontier labs, LTFF, OpenPhil, etc.

- Publicly-announced funding 2023-4: N/A. Millions?
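
To make the one-sentence summary above concrete, here is a minimal sketch of the forward pass and loss of a vanilla ReLU sparse autoencoder with an L1 sparsity penalty. It is pure Python with untrained random weights, so it shows the shape of the computation only. All names, sizes and the `l1_coeff` value are illustrative assumptions, and the listed papers use variants (gated, JumpReLU, top-k and so on) that differ in the details.

```python
import random

def matvec(W, v):
    return [sum(w * x for w, x in zip(row, v)) for row in W]

def sae_forward(x, W_enc, b_enc, W_dec, b_dec):
    """Return (features, reconstruction) for one activation vector x."""
    centred = [xi - bi for xi, bi in zip(x, b_dec)]
    pre = [a + b for a, b in zip(matvec(W_enc, centred), b_enc)]
    feats = [max(0.0, p) for p in pre]  # ReLU keeps features non-negative
    # Reconstruction is a linear combination of decoder directions.
    recon = [a + b for a, b in zip(matvec(W_dec, feats), b_dec)]
    return feats, recon

def sae_loss(x, feats, recon, l1_coeff=1e-3):
    """Squared reconstruction error plus an L1 penalty that encourages sparsity."""
    mse = sum((a - b) ** 2 for a, b in zip(x, recon))
    return mse + l1_coeff * sum(abs(f) for f in feats)

# Toy sizes (assumed): 4-dimensional activations, 8 dictionary features.
rng = random.Random(0)
d_model, n_feats = 4, 8
W_enc = [[rng.gauss(0, 0.5) for _ in range(d_model)] for _ in range(n_feats)]
W_dec = [[rng.gauss(0, 0.5) for _ in range(n_feats)] for _ in range(d_model)]
b_enc, b_dec = [0.0] * n_feats, [0.0] * d_model
x = [rng.gauss(0, 1) for _ in range(d_model)]

feats, recon = sae_forward(x, W_enc, b_enc, W_dec, b_dec)
print("active features:", sum(f > 0 for f in feats), "of", n_feats)
print("loss:", round(sae_loss(x, feats, recon), 4))
```

Training would minimise this loss over many activation vectors; the hope described in the theory of change is that the learned features then line up with interpretable concepts.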

Simplex: computational mechanics [? · GW] for interp

- One-sentence summary: Computational mechanics for interpretability; what structures must a system track in order to predict the future?

- Theory of change: apply the theory to SOTA AI, improve structure measures and unsupervised methods for discovering structure, ultimately operationalize safety-relevant phenomena.

- See also: Belief State Geometry [LW · GW]

- Which orthodox alignment problems could it help with?: 9. Humans cannot be first-class parties to a superintelligent value handshake

- Target case: pessimistic

- Broad approach: cognitive, maths/philosophy

- Some names: Paul Riechers, Adam Shai

- Estimated # FTEs: 1-10

- Some outputs in 2024: Transformers represent belief state geometry in their residual stream, Open Problems in Comp Mech

- Critiques: not found

- Funded by: Survival and Flourishing Fund

- Publicly-announced funding 2023-4: $74,000

- One-sentence summary: develop the foundations of interpretable AI through the lens of causality and abstraction.

- Theory of change: figure out what it means for a mechanistic explanation of neural network behavior to be correct → find a mechanistic explanation of neural network behavior

- See also: causal scrubbing [LW · GW], locally consistent abstractions [LW · GW]

- Which orthodox alignment problems could it help with?: 1. Value is fragile and hard to specify, 7. Superintelligence can fool human supervisors

- Target case: pessimistic

- Broad approach: cognitive

- Some names: Atticus Geiger

- Estimated # FTEs: 1-10

- Some outputs in 2024: Disentangling Factual Knowledge in GPT-2 Small, Causal Abstraction, ReFT, pyvene, defending subspace interchange

- Critiques: not found

- Funded by: Open Philanthropy

- Publicly-announced funding 2023-4: $737,000

Concept-based interp

- One-sentence summary: if a bottom-up understanding of models turns out to be too hard, we might still be able to jump in at some high level of abstraction and still steer away from misaligned AGI.

- Theory of change: build tools that can output a probable and predictive representation of internal objectives or capabilities of a model, thereby enabling model editing and monitoring.

- See also: high-level interpretability, model-agnostic interpretability, Cadenza, Leap.

- Which orthodox alignment problems could it help with?: 1. Value is fragile and hard to specify, 7. Superintelligence can fool human supervisors

- Target case: optimistic / pessimistic

- Broad approach: cognitive

- Some names: Wes Gurnee, Max Tegmark, Eric J. Michaud, David Baek, Josh Engels, Walter Laurito, Kaarel Hänni

- Estimated # FTEs: 10-100

- Some outputs in 2024: Unifying Causal Representation Learning and Foundation Models, Cluster-norm for Unsupervised Probing of Knowledge, The SaTML '24 CNN Interpretability Competition, Language Models Represent Space and Time, The Geometry of Concepts: Sparse Autoencoder Feature Structure, Transformers Represent Belief State Geometry in their Residual Stream [AF · GW], OthelloGPT learned a bag of heuristics [AF · GW], Evidence of Learned Look-Ahead in a Chess-Playing Neural Network [AF · GW], Benchmarking Mental State Representations in Language Models, On the Origins of Linear Representations in LLMs

- Critiques: DeepMind, Not All Language Model Features Are Linear, Intricacies of Feature Geometry in Large Language Models [LW · GW], SAE feature geometry is outside the superposition hypothesis [LW · GW]

- Funded by: ?

- Publicly-announced funding 2023-4: N/A

- One-sentence summary: research startup selling an interpretability API (model-agnostic feature viz of vision models). Aiming for data-independent (“want to extract information directly from the model with little dependence on training or test data”) and global (“mech interp isn’t going to be enough, we need holistic methods that capture gestalt”) interpretability methods.

- Theory of change: make safety tools people want to use, stress-test methods in real life, develop a strong alternative to bottom-up circuit analysis.

- Which orthodox alignment problems could it help with?: 1. Value is fragile and hard to specify

- Target case: pessimistic

- Broad approach: cognitive

- Some names: Jessica Rumbelow, Robbie McCorkell

- Estimated # FTEs: 1-10

- Some outputs in 2024: Why did ChatGPT say that? (PIZZA) [LW · GW]

- Critiques: not found

- Funded by: Speedinvest, Ride Home, Open Philanthropy, EA Funds

- Publicly-announced funding 2023-4: $425,000

EleutherAI interp

- One-sentence summary: tools to investigate questions like path dependence of training.

- Theory of change: make amazing tools to push forward the frontier of interpretability.

- Which orthodox alignment problems could it help with?: 1. Value is fragile and hard to specify

- Target case: optimistic-case

- Broad approach: cognitive

- Some names: Nora Belrose, Brennan Dury, David Johnston, Alex Mallen, Lucia Quirke, Adam Scherlis

- Estimated # FTEs: 6

- Some outputs in 2024: Neural Networks Learn Statistics of Increasing Complexity, Automatically Interpreting Millions of Features in Large Language Models, Refusal in LLMs is an Affine Function

- Critiques: not found

- Funded by: CoreWeave, Hugging Face, Open Philanthropy, Mozilla, Omidyar Network, Stability AI, Lambda Labs

- Publicly-announced funding 2023-4: $2,642,273

Understand learning

(Figuring out how the model figured it out.)

Timaeus: Developmental interpretability [AF · GW]

- One-sentence summary: Build tools for detecting, locating, and interpreting key moments (saddle-to-saddle dynamics, groks) that govern training and in-context learning in models.

- build an idealized model of NNs, measure high-level phenomena, derive interesting predictions about real models. Apply these measures to real models: "pretend they're idealized, then apply the high-level measurements you'd apply to idealized models, then see if you can interpret the results".

- Theory of change: structures forming in neural networks can leave traces we can interpret to figure out where and how that structure is implemented. This could automate interpretability. It may be hopeless to intervene at the end of the learning process, so we want to catch and prevent deceptiveness and other dangerous capabilities and values as early as possible.

- See also: singular learning theory, computational mechanics [? · GW], complexity.

- Which orthodox alignment problems could it help with?: 4. Goals misgeneralize out of distribution

- Target case: pessimistic

- Broad approach: cognitive

- Some names: Jesse Hoogland, George Wang, Daniel Murfet, Stan van Wingerden, Alexander Gietelink Oldenziel

- Estimated # FTEs: 10+?

- Some outputs in 2024: Stagewise Development in Neural Networks [AF · GW], Differentiation and Specialization of Attention Heads via the Refined Local Learning Coefficient, Higher-order degeneracy and error-correction [AF · GW], Feature Targeted LLC Estimation Distinguishes SAE Features from Random Directions [AF · GW].

- See also: Dialogue introduction to SLT [LW · GW], SLT exercises [LW · GW]

- Critiques: Timaeus [? · GW], Erdil [AF · GW], Skalse [AF · GW]

- Funded by: Manifund, Survival and Flourishing Fund, EA Funds

- Publicly-announced funding 2023-4: $700,050

- One-sentence summary: toy models (e.g. of induction heads) to understand learning in interesting limiting examples; only part of their work is safety related.

- Theory of change: study interpretability and learning in DL (for bio insights, unrelated to AI) → someone else uses this work to do something safety related

- Which orthodox alignment problems could it help with?: We don’t know how to determine an AGI’s goals or values

- Target case: optimistic?

- Broad approach: cognitive

- Some names: Andrew Saxe, Basile Confavreux, Erin Grant, Stefano Sarao Mannelli, Tyler Boyd-Meredith, Victor Pedrosa

- Estimated # FTEs: 10-50

- Some outputs in 2024: Tilting the Odds at the Lottery, What needs to go right for an induction head?, Why Do Animals Need Shaping?, When Representations Align, Understanding Unimodal Bias in Multimodal Deep Linear Networks, Meta-Learning Strategies through Value Maximization in Neural Networks

- Critiques: none found.

- Funded by: Sir Henry Dale Fellowship, Wellcome-Beit Prize, CIFAR, Schmidt Science Polymath Program

- Publicly-announced funding 2023-4: >£25,000

See also

- Influence functions [LW · GW]

- Memorization in LMs as a Multifaceted Phenomenon

- What Do Learning Dynamics Reveal About Generalization in LLM Reasoning?

2. Control the thing [LW · GW]

(figure out how to predictably detect and quash misbehaviour)

- One-sentence summary: nudging base models by optimising their output. (RLHF [AF · GW], Constitutional, DPO, SFT, HHH, RLAIF.) Non-agenda.

- Theory of change (imputed): things are generally smooth, relevant capabilities are harder than alignment, assume no mesaoptimisers, that zero-shot deception is hard, assume a fundamentally humanish ontology is learned, assume no simulated agents, assume that noise in the data means that human preferences are not ruled out, assume that alignment is a superficial feature. Assume that task reliability is enough (that tuning for what we want will also get us avoidance of what we don't want). Maybe assume that thoughts are translucent [LW · GW].

- See also: prosaic alignment [LW · GW], incrementalism [LW · GW], alignment-by-default [AF · GW]

- Which orthodox alignment problems could it help with?: this agenda implicitly questions this framing

- Target case: optimistic-case

- Broad approach: engineering

- Some names: post-training teams at most labs. Beren Millidge.

- Estimated # FTEs: 1000+

- Some outputs in 2024: Deliberative Alignment (Constitutional AI, redux), REINFORCE, WARP, Catastrophic Goodhart, RLCHF, E-RLHF, NLHF, IPO, KTO, Why Don't We Just... Shoggoth+Face+Paraphraser? [LW · GW], Towards a Unified View of Preference Learning for Large Language Models: A Survey, Rule-Based Rewards, Reward Model Ensembles, Guardrails, ProgressGym, What are human values, and how do we align AI to them?

- Critiques: hoo [LW · GW] boy [LW · GW], Open Problems with RLHF, neo-Arrow, Challenges of Partial Observability in RLHF, Jozdien kinda [LW · GW], RLHF is the worst possible thing [LW · GW], Fundamental Limitations of Alignment in Large Language Models

- Funded by: most of the industry

- Publicly-announced funding 2023-4: N/A
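
As one concrete instance of "optimising their output", here is a sketch of the DPO objective named in the summary above, for a single preference pair. The inputs are assumed to be summed log-probabilities of a whole response under the policy being tuned and under a frozen reference model; the numbers and the `beta` value are made up for illustration.

```python
import math

def dpo_loss(pol_chosen, pol_rejected, ref_chosen, ref_rejected, beta=0.1):
    """-log sigmoid(beta * margin) for one (chosen, rejected) pair.

    The margin is how much more the policy prefers the chosen response
    over the rejected one, relative to the reference model.
    """
    margin = (pol_chosen - ref_chosen) - (pol_rejected - ref_rejected)
    z = -beta * margin
    # Numerically stable softplus(z) = log(1 + exp(z)).
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))

# Policy identical to the reference: no preference learned yet, loss = log 2.
print(dpo_loss(-5.0, -7.0, -5.0, -7.0))
# Policy has shifted probability towards the chosen response: loss falls.
print(dpo_loss(-4.0, -9.0, -5.0, -7.0))
```

Note what the loss does and does not see: only relative log-probabilities of sampled outputs, which is part of why the imputed theory of change has to assume so much about what is going on inside.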

Control evaluations [LW · GW]

- One-sentence summary: don’t evaluate the model, evaluate the humans / organisation / deployment method instead: assess the risk of a particular protocol for deploying your model in a particular scenario, by silently swapping in an intentionally misaligned model. Play out the scenario in sim until 1) a catastrophe occurs, 2) your protocol confidently spots the red team model, or 3) the scenario ends without catastrophe. Also do adversarial evaluation (monitoring untrusted model outputs with both trusted and untrusted models). Predecessor [AF · GW].

- Theory of change: prevent high-stakes failures by automating risk assessment of eval and deployment plans.

- See also: safety cases.

- Which orthodox alignment problems could it help with?: 12. A boxed AGI might exfiltrate itself by steganography, spearphishing

- Target case: worst-case

- Broad approach: engineering / behavioural

- Some names: Redwood, Buck Shlegeris, Ryan Greenblatt, Kshitij Sachan, Alex Mallen

- Estimated # FTEs: 9

- Some outputs in 2024: AI Control, Subversion Strategy Eval, sequence [? · GW], toy models [LW · GW], notes [? · GW]

- Critiques: of org in general [EA · GW], Jozdien [AF · GW]

- Funded by: Open Philanthropy, Survival and Flourishing Fund

- Publicly-announced funding 2023-4: $6,398,000
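
The evaluation loop in the summary above can be sketched as a small Monte Carlo simulation. This is a toy under loud assumptions, not Redwood's methodology: the red-team model attacks each task independently with a fixed probability, a trusted monitor emits a Gaussian suspicion score that is shifted upwards for attacks, and the protocol audits anything above a threshold chosen to match an audit budget. Every parameter name and value is hypothetical.

```python
import random
from statistics import NormalDist

def run_episode(rng, n_tasks=100, attack_prob=0.02, audit_budget=0.05,
                attack_success=0.5):
    """One simulated deployment with the misaligned model swapped in.

    Returns 'catastrophe', 'caught', or 'safe'.
    """
    # Audit the top `audit_budget` fraction of benign suspicion scores.
    threshold = NormalDist().inv_cdf(1 - audit_budget)
    for _ in range(n_tasks):
        attacking = rng.random() < attack_prob
        # Assumption: attacks raise the monitor's mean suspicion by 2 sigma.
        suspicion = rng.gauss(2.0 if attacking else 0.0, 1.0)
        if attacking:
            if suspicion > threshold:
                return "caught"        # protocol spots the red-team model
            if rng.random() < attack_success:
                return "catastrophe"   # unaudited attack succeeds
    return "safe"                      # scenario ends without catastrophe

def estimate_safety(n_episodes=2000, seed=0, **kwargs):
    rng = random.Random(seed)
    counts = {"catastrophe": 0, "caught": 0, "safe": 0}
    for _ in range(n_episodes):
        counts[run_episode(rng, **kwargs)] += 1
    return {k: v / n_episodes for k, v in counts.items()}

print(estimate_safety(audit_budget=0.01))
print(estimate_safety(audit_budget=0.20))
```

The quantity of interest is the catastrophe rate as a function of the protocol's knobs (here just the audit budget), with the red team free to pick its best attack strategy; a real control evaluation replaces every distribution above with actual model behaviour.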

- One-sentence summary: This is a multi-team agenda with some big differences. Something like: formally model the behavior of cyber-physical systems, define precise constraints on what actions can occur, and require AIs to provide safety proofs for their recommended actions (correctness and uniqueness). Get AI to (assist in) building a detailed world simulation which humans understand, elicit preferences over future states from humans, verify[4] that the AI adheres to coarse preferences[5]; plan using this world model and preferences.

- Theory of change: make a formal verification system that can act as an intermediary between a human user and a potentially dangerous system and only let provably safe actions through. Notable for not requiring that we solve ELK. Does require that we solve ontology though.

- See also: Bengio’s AI Scientist, Safeguarded AI, Open Agency Architecture [LW · GW], SLES, Atlas Computing, program synthesis, Tenenbaum.

- Which orthodox alignment problems could it help with?: 1. Value is fragile and hard to specify, 4. Goals misgeneralize out of distribution, 7. Superintelligence can fool human supervisors, 9. Humans cannot be first-class parties to a superintelligent value handshake, 12. A boxed AGI might exfiltrate itself by steganography, spearphishing

- Target case: (nearly) worst-case

- Broad approach: cognitive

- Some names: Yoshua Bengio, Max Tegmark, Steve Omohundro, David "davidad" Dalrymple, Joar Skalse, Stuart Russell, Ohad Kammar, Alessandro Abate, Fabio Zanasi

- Estimated # FTEs: 10-50

- Some outputs in 2024: Bayesian oracle, Towards Guaranteed Safe AI, ARIA Safeguarded AI Programme Thesis

- Critiques: Zvi, Gleave[6], Dickson [LW · GW]

- Funded by: UK government, OpenPhil, Survival and Flourishing Fund, Mila / CIFAR

- Publicly-announced funding 2023-4: >$10m
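
A toy version of the "intermediary that only lets provably safe actions through" might look like the following. It is a deliberately tiny sketch: the world model is a one-line thermostat with a bounded disturbance, the safety spec is a temperature range, and the "proof" is just a claimed interval that the gatekeeper re-checks. All class names, dynamics and numbers are hypothetical and stand in for the far richer world models and proof systems the agenda actually proposes.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Spec:
    t_min: float = 18.0   # safe temperature range (assumed)
    t_max: float = 24.0

@dataclass(frozen=True)
class Model:
    heat_gain: float = 3.0    # degrees added per step at full heater power
    heat_loss: float = 1.0    # degrees lost per step
    disturbance: float = 0.5  # bound on unmodelled disturbance

    def reachable(self, temp, power):
        """Interval of next-step temperatures under any allowed disturbance."""
        nominal = temp + self.heat_gain * power - self.heat_loss
        return nominal - self.disturbance, nominal + self.disturbance

def gatekeeper(temp, power, claimed, model=Model(), spec=Spec()):
    """Admit an action only if its claimed bounds are sound and inside the spec."""
    if not 0.0 <= power <= 1.0:
        return False
    lo, hi = model.reachable(temp, power)
    sound = claimed[0] <= lo and hi <= claimed[1]   # claim covers all outcomes
    safe = spec.t_min <= claimed[0] and claimed[1] <= spec.t_max
    return sound and safe

print(gatekeeper(20.0, 0.5, claimed=(19.5, 21.5)))  # sound and safe
print(gatekeeper(23.0, 1.0, claimed=(24.5, 25.5)))  # honest claim, unsafe action
print(gatekeeper(23.0, 1.0, claimed=(20.0, 21.0)))  # safe-looking claim, unsound
```

The point of the design is that the untrusted system never has to be believed: it only has to produce a certificate that a small trusted checker can verify against the model and the spec. The hard parts the entry flags (getting the world model and the ontology right) are exactly what this toy assumes away.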

Assistance games / reward learning

- One-sentence summary: reorient the general thrust of AI research towards provably beneficial systems.

- Theory of change: understand what kinds of things can go wrong when humans are directly involved in training a model → build tools that make it easier for a model to learn what humans want it to learn.

- See also RLHF and recursive reward modelling, the industrialised forms.

- Which orthodox alignment problems could it help with?: 1. Value is fragile and hard to specify, 10. Humanlike minds/goals are not necessarily safe

- Target case: varies

- Broad approach: engineering, cognitive

- Some names: Joar Skalse, Anca Dragan, Stuart Russell, David Krueger

- Estimated # FTEs: 10+

- Some outputs in 2024: The Perils of Optimizing Learned Reward Functions, Correlated Proxies: A New Definition and Improved Mitigation for Reward Hacking, Changing and Influenceable Reward Functions, RL, but don't do anything I wouldn't do, Interpreting Preference Models w/ Sparse Autoencoders [LW · GW]

- Critiques: nice summary [LW · GW] of historical problem statements

- Funded by: EA Funds, Open Philanthropy, Survival and Flourishing Fund, Manifund

- Publicly-announced funding 2023-4: >$1500

Social-instinct AGI [LW · GW]

- One-sentence summary: Social and moral instincts are (partly) implemented in particular hardwired brain circuitry; let's figure out what those circuits are and how they work; this will involve symbol grounding. Newest iteration of a sustained and novel agenda.

- Theory of change: Fairly direct alignment via changing training to reflect actual human reward. Get actual data about (reward, training data) → (human values) to help with theorising this map in AIs; "understand human social instincts, and then maybe adapt some aspects of those for AGIs, presumably in conjunction with other non-biological ingredients".

- Which orthodox alignment problems could it help with?: 1. Value is fragile and hard to specify.

- Target case: worst-case

- Broad approach: cognitive

- Some names: Steve Byrnes

- Estimated # FTEs: 1

- Some outputs in 2024: My AGI safety research—2024 review, ’25 plans [LW · GW], Neuroscience of human social instincts: a sketch [LW · GW], Intuitive Self Models [? · GW]

- Critiques: Not found.

- Funded by: Astera Institute.

Prevent deception and scheming

(through methods besides mechanistic interpretability)

Mechanistic anomaly detection [LW · GW]

- One-sentence summary: understand what an LLM’s normal (~benign) functioning looks like and detect divergence from this, even if we don't understand the exact nature of that divergence.

- Theory of change: build models of normal functioning → find and flag behaviors that look unusual → match the unusual behaviors to problematic outcomes or shut it down outright.

- Which orthodox alignment problems could it help with?: 7. Superintelligence can fool human supervisors or 8. Superintelligence can hack software supervisors

- Target case: pessimistic

- Broad approach: cognitive

- Some names: Nora Belrose, Erik Jenner

- Estimated # FTEs: 1-10

- Some outputs in 2024: Password-locked model capability elicitation [AF · GW], Towards a Law of Iterated Expectations for Heuristic Estimators, Eleuther research update [LW · GW], Concrete empirical research projects [LW · GW], Mack and Turner [LW · GW]

- Critiques: critique of past agenda [AF · GW], contra counting arguments? [LW · GW]

- Funded by: ARC, Eleuther funders

- Publicly-announced funding 2023-4: some fraction of Eleuther’s $2,642,273.
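To make the "build models of normal functioning → flag behaviors that look unusual" step concrete, here is a minimal sketch. It assumes activations are plain lists of floats and that "normal" is summarised by per-dimension mean and standard deviation; the function names and the threshold are illustrative assumptions, not anything taken from the work listed above, which uses richer models of normal behaviour.

```python
import math
from statistics import mean, pstdev


def fit_normal_profile(trusted_activations):
    """Summarise activations from trusted (~benign) inputs, one mean/std per dimension."""
    dims = list(zip(*trusted_activations))
    means = [mean(d) for d in dims]
    # Floor the std so a constant dimension does not cause division by zero.
    stds = [max(pstdev(d), 1e-6) for d in dims]
    return means, stds


def anomaly_score(activation, profile):
    """Distance from the trusted profile, measured in standard deviations."""
    means, stds = profile
    return math.sqrt(
        sum(((a - m) / s) ** 2 for a, m, s in zip(activation, means, stds))
    )


def is_anomalous(activation, profile, threshold=3.0):
    """Flag an activation without needing to know *why* it is unusual."""
    return anomaly_score(activation, profile) > threshold
```

The detector never needs a label for the failure it catches; it only needs a trusted reference set, which is the property the agenda cares about.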

- One-sentence summary: now focusing on developing robust white-box dishonesty-detection methods for LLMs and model evals. Previously working on concept-based interpretability.

- Theory of change: Build and benchmark strong white-box methods to assess trustworthiness and increase transparency of models, and encourage open releases / evals from labs by demonstrating the benefits and necessity of such methods.

- Which orthodox alignment problems could it help with?: 7. Superintelligence can fool human supervisors or 8. Superintelligence can hack software supervisors

- Target case: pessimistic / worst-case

- Broad approach: cognitive

- Some names: Kieron Kretschmar, Walter Laurito, Sharan Maiya, Grégoire Dhimoïla

- Estimated # FTEs: 3

- Some outputs in 2024: Cluster-Norm for Unsupervised Probing of Knowledge

- Funded by: self-funded / volunteers

- Publicly-announced funding 2023-4: none.

Faithful CoT through separation and paraphrasing [LW · GW]

- One-sentence summary: shoggoth/face + paraphraser. Avoid giving the model incentives to hide its deceptive cognition or steganography. You could do this with an o1-like design, where the base model is not optimised for agency or alignment.

- Theory of change: keep the CoT unoptimised and informative so that it can be used for control. Make it so we can see (most) misalignment in the hidden CoT.

- Which orthodox alignment problems could it help with?: 7. Superintelligence can fool human supervisors

- Target case: pessimistic

- Broad approach: engineering

- Some names: Daniel Kokotajlo, AI Futures Project

- Estimated # FTEs: 1

- Some outputs in 2024: Why don’t we just… [LW · GW]

- Critiques: Demski [LW · GW]

- Funded by: SFF

- Publicly-announced funding 2023-4: $505,000 for the AI Futures Project
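A toy illustration of why a paraphraser might help against steganography. Everything here is a hypothetical stand-in: the real proposal would presumably use language models for both the reasoner and the paraphraser, whereas this sketch uses a fixed synonym table and a "shoggoth" that hides one bit in its word choice.

```python
# Hypothetical synonym table: maps each word to one canonical form.
SYNONYMS = {"large": "big", "big": "big", "quick": "fast", "fast": "fast"}


def shoggoth(hidden_bit):
    """Toy reasoner that leaks one hidden bit through its choice of synonym."""
    word = "large" if hidden_bit else "big"
    return f"the number is {word} so halve it"


def paraphrase(cot):
    """Rewrite the chain of thought into canonical wording, keeping the meaning."""
    return " ".join(SYNONYMS.get(word, word) for word in cot.split())


def decode_hidden_bit(cot):
    """What a colluding reader would do to recover the hidden bit."""
    return "large" in cot.split()
```

After paraphrasing, both versions of the reasoning are word-for-word identical, so the hidden channel carries nothing while the visible reasoning is unchanged.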

Indirect deception monitoring

- One-sentence summary: build tools to find whether a model will misbehave in high stakes circumstances by looking at it in testable circumstances. This bucket catches work on lie classifiers [AF · GW], sycophancy, Scaling Trends For Deception [AF · GW].

- Theory of change: maybe we can catch a misaligned model by observing dozens of superficially unrelated parts, or tricking it into self-reporting, or by building the equivalent of brain scans.

- Which orthodox alignment problems could it help with?: 7. Superintelligence can fool human supervisors[7]

- Target case: pessimistic

- Broad approach: engineering

- Some names: Anthropic, Monte MacDiarmid, Meg Tong, Mrinank Sharma, Owain Evans, Colognese [LW · GW]

- Estimated # FTEs: 1-10

- Some outputs in 2024: Simple probes can catch sleeper agents [AF · GW], Sandbag Detection through Noise Injection, Hidden in Plain Text: Emergence & Mitigation of Steganographic Collusion in LLMs

- Critique (of related ideas): 1% [LW · GW], contra counting arguments [LW · GW]

- Funded by: Anthropic funders

- Publicly-announced funding 2023-4: N/A

See also “retarget the search [LW · GW]”.

Surgical model edits

(interventions on model internals)

Activation engineering [? · GW]

- One-sentence summary: a sort of interpretable finetuning. Let's see if we can programmatically modify activations to steer outputs towards what we want, in a way that generalises across models and topics. As much an intervention-based approach to interpretability as about control.

- Theory of change: test interpretability theories; find new insights from interpretable causal interventions on representations. Or: build more stuff to stack on top of finetuning. Slightly encourage the model to be nice, add one more layer of defence to our bundle [LW · GW] of partial alignment methods.

- See also: representation engineering, SAEs.

- Which orthodox alignment problems could it help with?: 1. Value is fragile and hard to specify, 4. Goals misgeneralize out of distribution, 5. Instrumental convergence, 7. Superintelligence can fool human supervisors, 9. Humans cannot be first-class parties to a superintelligent value handshake

- Target case: pessimistic

- Broad approach: engineering/cognitive

- Some names: Jan Wehner, Alex Turner, Nina Panickssery, Marc Carauleanu, Collin Burns, Andrew Mack, Pedro Freire, Joseph Miller, Andy Zou, Andy Arditi, Ole Jorgensen.

- Estimated # FTEs: 10+?

- Some outputs in 2024: Circuit Breakers, An Introduction to Representation Engineering - an activation-based paradigm for controlling LLMs [AF · GW], Steering Llama-2 with contrastive activation additions [AF · GW], Simple probes can catch sleeper agents [AF · GW], Refusal in LLMs is mediated by a single direction [AF · GW], Mechanistically Eliciting Latent Behaviors in Language Models [AF · GW], Uncovering Latent Human Wellbeing in Language Model Embeddings, Goodfire, LatentQA, SelfIE, Mack and Turner [LW · GW], Obfuscated Activations Bypass LLM Latent-Space Defenses

- Critiques: of ROME [LW · GW], open question thread for theory of impact [LW · GW], A Sober Look at Steering Vectors for LLMs [LW · GW]

- Funded by: various, including EA funds

- Publicly-announced funding 2023-4: N/A
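A minimal sketch of "programmatically modify activations to steer outputs", in the spirit of the contrastive activation addition work listed above. It assumes activations are plain lists of floats taken from one layer; the function names and the coefficient are illustrative assumptions, and real implementations hook into a model's forward pass rather than operating on bare vectors.

```python
def mean_vector(vectors):
    """Element-wise mean of a list of equal-length vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def steering_vector(positive_acts, negative_acts):
    """Difference of mean activations on prompts that do / do not show the behaviour."""
    pos = mean_vector(positive_acts)
    neg = mean_vector(negative_acts)
    return [p - q for p, q in zip(pos, neg)]


def steer(activation, vector, coefficient=1.0):
    """Add the scaled steering vector to an activation at inference time."""
    return [a + coefficient * v for a, v in zip(activation, vector)]
```

A negative coefficient pushes away from the behaviour instead of towards it, which is part of why the same tool doubles as an interpretability probe.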

See also unlearning.

Goal robustness

(Figuring out how to keep the model doing what it has been doing so far.)

Mild optimisation [? · GW]

- One-sentence summary: avoid Goodharting by getting AI to satisfice rather than maximise.

- Theory of change: if we fail to exactly nail down the preferences for a superintelligent agent we die to Goodharting → shift from maximising to satisficing in the agent’s utility function → we get a nonzero share of the lightcone as opposed to zero; also, moonshot at this being the recipe for fully aligned AI.

- Which orthodox alignment problems could it help with?: 4. Goals misgeneralize out of distribution

- Target case: pessimistic

- Broad approach: cognitive

- Some names: Jobst Heitzig, Simon Fischer, Jessica Taylor

- Estimated # FTEs: ?

- Some outputs in 2024: How to safely use an optimizer [AF · GW], Aspiration-based designs sequence [? · GW], Non-maximizing policies that fulfill multi-criterion aspirations in expectation

- Critiques: Dearnaley [LW · GW]

- Funded by: ?

- Publicly-announced funding 2023-4: N/A
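The maximise-versus-satisfice distinction can be sketched in a few lines. This is a toy under stated assumptions: a finite action set, a known proxy utility, and a hypothetical `aspiration` threshold; the fallback to the best available action when nothing meets the aspiration is our own design choice, not something taken from the outputs above.

```python
import random


def maximise(actions, utility):
    """Pick the action with the highest proxy utility (the Goodhart-prone option)."""
    return max(actions, key=utility)


def satisfice(actions, utility, aspiration, rng=random):
    """Pick uniformly among actions whose proxy utility meets the aspiration."""
    good_enough = [a for a in actions if utility(a) >= aspiration]
    if not good_enough:
        # Assumption: if nothing is good enough, fall back to the best available.
        return maximise(actions, utility)
    return rng.choice(good_enough)
```

The satisficer puts no extra pressure on the proxy once the aspiration is met, so errors in the proxy's extreme tail are exploited less often.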

3. Safety by design

(Figuring out how to avoid using deep learning)

Conjecture: Cognitive Software

- One-sentence summary: make tools to write, execute and deploy cognitive programs; compose these into large, powerful systems that do what we want; make a training procedure that lets us understand what the model does and does not know at each step; finally, partially emulate [LW · GW] human reasoning.

- Theory of change: train a bounded tool AI to promote AI benefits without needing unbounded AIs. If the AI uses similar heuristics to us, it should default to not being extreme.

- Which orthodox alignment problems could it help with?: 2. Corrigibility is anti-natural, 5. Instrumental convergence

- Target case: pessimistic

- Broad approach: engineering, cognitive

- Some names: Connor Leahy, Gabriel Alfour, Adam Shimi

- Estimated # FTEs: 1-10

- Some outputs in 2024: “We have already done a fair amount of work on Vertical Scaling (Phase 4) and Cognitive Emulation (Phase 5), and lots of work of Phase 1 and Phase 2 happens in parallel.”. The Tactics programming language/framework. Still working on cognitive emulations, A Roadmap for Cognitive Software and A Humanist Future of AI

- See also AI chains.

- Critiques: Scher [LW(p) · GW(p)], Samin [LW(p) · GW(p)], org [LW · GW]

- Funded by: Plural Platform, Metaplanet, “Others Others”, Firestreak Ventures, EA Funds in 2022

- Publicly-announced funding 2023-4: N/A.

See also parts of Guaranteed Safe AI involving world models and program synthesis.

4. Make AI solve it [? · GW]

(Figuring out how models might help with figuring it out.)

Scalable oversight

(Figuring out how to get AI to help humans supervise models.)

OpenAI Superalignment Automated Alignment Research

- One-sentence summary: be ready to align a human-level automated alignment researcher.

- Theory of change: get AI to help us with scalable oversight, critiques, recursive reward modelling, and so solve inner alignment.

- Which orthodox alignment problems could it help with?: 1. Value is fragile and hard to specify or 8. Superintelligence can hack software supervisors

- Target case: optimistic

- Broad approach: behavioural

- Some names: Jan Leike, Elriggs [AF · GW], Jacques Thibodeau

- Estimated # FTEs: 10-50

- Some outputs in 2024: Prover-verifier games

- Critiques: Zvi [LW · GW], Christiano [LW(p) · GW(p)], MIRI [LW · GW], Steiner [LW · GW], Ladish [LW · GW], Wentworth [LW · GW], Gao [LW(p) · GW(p)]

- Funded by: lab funders

- Publicly-announced funding 2023-4: N/A

- One-sentence summary: use weaker models to supervise and provide a feedback signal to stronger models.

- Theory of change: find techniques that do better than RLHF at supervising superior models → track whether these techniques fail as capabilities increase further

- Which orthodox alignment problems could it help with?: 8. Superintelligence can hack software supervisors

- Target case: optimistic

- Broad approach: engineering

- Some names: Jan Leike, Collin Burns, Nora Belrose, Zachary Kenton, Noah Siegel, János Kramár, Noah Goodman, Rohin Shah

- Estimated # FTEs: 10-50

- Some outputs in 2024: Easy-to-Hard Generalization, Balancing Label Quantity and Quality for Scalable Elicitation, The Unreasonable Effectiveness of Easy Training Data, On scalable oversight with weak LLMs judging strong LLMs, Your Weak LLM is Secretly a Strong Teacher for Alignment

- Critiques: Nostalgebraist [LW(p) · GW(p)]

- Funded by: lab funders, Eleuther funders

- Publicly-announced funding 2023-4: N/A.
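One way to "track whether these techniques fail as capabilities increase" is to report how much of the gap between the weak supervisor and the strong model's ceiling is recovered. The sketch below assumes three accuracies measured on the same task; the function and argument names are illustrative assumptions.

```python
def performance_gap_recovered(weak_acc, weak_to_strong_acc, strong_ceiling_acc):
    """Fraction of the weak-to-ceiling gap recovered by weak supervision.

    0.0 means the strong student is no better than its weak supervisor;
    1.0 means it matches a strong model trained on ground-truth labels.
    """
    gap = strong_ceiling_acc - weak_acc
    if gap <= 0:
        raise ValueError("strong ceiling must exceed weak supervisor performance")
    return (weak_to_strong_acc - weak_acc) / gap
```

Computing this at several capability gaps gives a curve; a downward trend as the gap widens would be the warning sign the theory of change is looking for.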

Supervising AIs improving AIs [LW · GW]

- One-sentence summary: scalable tracking of behavioural drift, benchmarks for self-modification.

- Theory of change: early models train ~only on human data while later models also train on early model outputs, which leads to early model problems cascading; left unchecked this will likely cause problems, so we need a better iterative improvement process.

- Which orthodox alignment problems could it help with?: 7. Superintelligence can fool human supervisors or 8. Superintelligence can hack software supervisors

- Target case: pessimistic

- Broad approach: behavioural

- Some names: Roman Engeler, Akbir Khan, Ethan Perez

- Estimated # FTEs: 1-10

- Some outputs in 2024: Weak LLMs judging strong LLMs [AF · GW], Scalable AI Safety via Doubly-Efficient Debate, Debating with More Persuasive LLMs Leads to More Truthful Answers [AF · GW], Prover-Verifier Games Improve Legibility of LLM Output, LLM Critics Help Catch LLM Bugs

- Critiques: Automation collapse [AF · GW]

- Funded by: lab funders

- Publicly-announced funding 2023-4: N/A

- One-sentence summary: Train human-plus-LLM alignment researchers: with humans in the loop and without outsourcing to autonomous agents. More than that, an active attitude towards risk assessment of AI-based AI alignment.

- Theory of change: Cognitive prosthetics to amplify human capability and preserve values. More alignment research per year and dollar.

- Which orthodox alignment problems could it help with?: 7. Superintelligence can fool human supervisors, 9. Humans cannot be first-class parties to a superintelligent value handshake

- Target case: pessimistic

- Broad approach: engineering, behavioural

- Some names: Janus, Nicholas Kees Dupuis

- Estimated # FTEs: ?

- Some outputs in 2024: Pantheon Interface [LW · GW]

- Critiques: self [LW · GW]

- Funded by: ?

- Publicly-announced funding 2023-4: N/A.

- One-sentence summary: Make open AI tools to explain AIs, including agents. E.g. feature descriptions for neuron activation patterns; an interface for steering these features; behavior elicitation agent that searches for user-specified behaviors from frontier models

- Theory of change: Introducing Transluce; improve interp and evals in public and get invited to improve lab processes.

- Which orthodox alignment problems could it help with?: 7. Superintelligence can fool human supervisors or 8. Superintelligence can hack software supervisors

- Target case: pessimistic

- Broad approach: cognitive

- Some names: Jacob Steinhardt, Sarah Schwettmann

- Estimated # FTEs: 6

- Some outputs in 2024: Eliciting Language Model Behaviors with Investigator Agents, Monitor: An AI-Driven Observability Interface, Scaling Automatic Neuron Description

- Critiques: not found.

- Funded by: Schmidt Sciences, Halcyon Futures, John Schulman, Wojciech Zaremba.

- Publicly-announced funding 2023-4: N/A

Task decomp

Recursive reward modelling is supposedly not dead but instead one of the tools OpenAI will build. Another line tries to make something honest out of chain of thought / tree of thought.

Adversarial

Deepmind Scalable Alignment [AF · GW]

- One-sentence summary: “make highly capable agents do what humans want, even when it is difficult for humans to know what that is”.

- Theory of change: [“Give humans help in supervising strong agents”] + [“Align explanations with the true reasoning process of the agent”] + [“Red team models to exhibit failure modes that don’t occur in normal use”] are necessary but probably not sufficient for safe AGI.

- Which orthodox alignment problems could it help with?: 1. Value is fragile and hard to specify, 7. Superintelligence can fool human supervisors

- Target case: worst-case

- Broad approach: engineering, cognitive

- Some names: Rohin Shah, Jonah Brown-Cohen, Georgios Piliouras

- Estimated # FTEs: ?

- Some outputs in 2024: Progress update [AF · GW] - Doubly Efficient Debate, Inference-only Experiments

- Critiques: The limits of AI safety via debate [AF · GW]

- Funded by: Google

- Publicly-announced funding 2023-4: N/A

Anthropic: Bowman/Perez

- One-sentence summary: scalable oversight of truthfulness: is it possible to develop training methods that incentivize truthfulness even when humans are unable to directly judge the correctness of a model’s output? / scalable benchmarking: how to measure (proxies for) speculative capabilities like situational awareness.

- Theory of change: current methods like RLHF will falter as frontier AI tackles harder and harder questions → we need to build tools that help human overseers continue steering AI → let’s develop theory on what approaches might scale → let’s build the tools.

- Which orthodox alignment problems could it help with?: 7. Superintelligence can fool human supervisors

- Target case: pessimistic

- Broad approach: behavioural

- Some names: Sam Bowman, Ethan Perez, He He, Mengye Ren

- Estimated # FTEs: ?

- Some outputs in 2024: Debating with More Persuasive LLMs Leads to More Truthful Answers, Sleeper Agents

- Critiques: obfuscation [LW · GW], local inadequacy [AF · GW]?, it doesn’t work right now (2022)

- Funded by: mostly Anthropic’s investors

- Publicly-announced funding 2023-4: N/A.

Latent adversarial training [LW · GW]

- One-sentence summary: uncover dangerous properties like scheming in a target model by having another model manipulate its state adversarially.

- Theory of change: automate red-teaming, thus uncover bad goals in a test environment rather than after deployment.

- See also red-teaming, concept-based interp, Algorithmic Alignment Group.

- Some names: Stephen Casper, Dylan Hadfield-Menell

- Estimated # FTEs: 1-10

- Some outputs in 2024: LAT Improves Robustness to Persistent Harmful Behaviors in LLMs, LAT Defends Against Unforeseen Failure Modes

- Critiques: not found

- Funded by: ?

- Publicly-announced funding 2023-4: N/A

See also FAR (below). See also obfuscated activations.

5. Theory

(Figuring out what we need to figure out, and then doing that.)

The Learning-Theoretic Agenda [AF · GW]

- One-sentence summary: try to formalise a more realistic agent, understand what it means for it to be aligned with us, translate between its ontology and ours, and produce desiderata for a training setup that points at coherent AGIs similar to our model of an aligned agent.

- Theory of change: fix formal epistemology to work out how to avoid deep training problems.

- Which orthodox alignment problems could it help with?: 1. Value is fragile and hard to specify, 9. Humans cannot be first-class parties to a superintelligent value handshake

- Target case: worst-case

- Broad approach: cognitive

- Some names: Vanessa Kosoy, Diffractor

- Estimated # FTEs: 3

- Some outputs in 2024: Linear infra-Bayesian Bandits [AF · GW], Time complexity for deterministic string machines, Infra-Bayesian Haggling [LW · GW], Quantum Mechanics in Infra-Bayesian Physicalism [AF · GW]. Intro lectures [LW · GW]

- Critiques: Matolcsi [LW · GW]

- Funded by: EA Funds, Survival and Flourishing Fund, ARIA[8]

- Publicly-announced funding 2023-4: $123,000

Question-answer counterfactual intervals (QACI) [LW · GW]

- One-sentence summary: Get the thing to work out its own objective function (a la HCH).

- Theory of change: make a fully formalized goal such that a computationally unbounded oracle with it would take desirable actions; and design a computationally bounded AI which is good enough to take satisfactory actions.

- Which orthodox alignment problems could it help with?: 1. Value is fragile and hard to specify, 4. Goals misgeneralize out of distribution

- Target case: worst-case

- Broad approach: cognitive

- Some names: Tamsin Leake, Julia Persson

- Estimated # FTEs: 1-10

- Some outputs in 2024: Epistemic states as a potential benign prior [LW · GW]

- Critiques: none found.

- Funded by: Survival and Flourishing Fund

- Publicly-announced funding 2023-4: $55,000

Understanding agency

(Figuring out ‘what even is an agent’ and how it might be linked to causality.)

- One-sentence intro [? · GW]: use causal models to understand agents. Originally this was to design environments where they lack the incentive to defect, hence the name.

- Theory of change: as above.

- Which orthodox alignment problems could it help with?: 1. Value is fragile and hard to specify, 4. Goals misgeneralize out of distribution

- Target case: pessimistic

- Broad approach: behavioural/maths/philosophy

- Some names: Tom Everitt, Matt McDermott, Francis Rhys Ward, Jonathan Richens, Ryan Carey

- Estimated # FTEs: 1-10

- Some outputs in 2024: Robust agents learn causal world models, Measuring Goal-Directedness

- Critiques: not found.

- Funded by: EA funds, Manifund, Deepmind

- Publicly-announced funding 2023-4: Some tacitly from DM

Hierarchical agency [LW · GW]

- One-sentence summary: Develop formal models of subagents and superagents, use the model to specify desirable properties of whole-part relations (e.g. how to prevent human-friendly parts getting wiped out).

- Theory of change: Solve self-unalignment [AF · GW], prevent destructive alignment, allow for scalable noncoercion.

- See also Alignment of Complex Systems, multi-scale alignment, scale-free theories of agency, active inference, bounded rationality.

- Which orthodox alignment problems could it help with?: 5. Instrumental convergence, 9. Humans cannot be first-class parties to a superintelligent value handshake

- Target case: pessimistic

- Broad approach: cognitive

- Some names: Jan Kulveit, Roman Leventov, Scott Viteri, Michael Levin, Ivan Vendrov, Richard Ngo

- Estimated # FTEs: 1-10

- Some outputs in 2024: Hierarchical Agency: A Missing Piece in AI Alignment [LW · GW], “How do parts form a whole?”, Free-energy equilibria, Two conceptions of active inference [LW · GW], generalised active inference

- Critiques: indirect [AF(p) · GW(p)]

- Funded by: SFF

- Publicly-announced funding 2023-4: N/A.

(Descendants of) shard theory [? · GW]

- One-sentence summary: model the internal components of agents, use humans as a model organism of AGI (humans seem made up of shards and so might AI). Now more of an empirical ML agenda.

- Theory of change: If policies are controlled by an ensemble of influences ("shards"), consider which training approaches increase the chance that human-friendly shards substantially influence that ensemble.

- See also Activation Engineering, Reward bases, gradient routing [LW · GW].

- Which orthodox alignment problems could it help with?: 2. Corrigibility is anti-natural

- Target case: optimistic

- Broad approach: cognitive

- Some names: Alex Turner, Quintin Pope, Alex Cloud, Jacob Goldman-Wetzler, Evzen Wybitul, Joseph Miller

- Estimated # FTEs: 1-10

- Some outputs in 2024: Intrinsic Power-Seeking: AI Might Seek Power for Power’s Sake [AF · GW], Shard Theory - is it true for humans? [LW · GW], Gradient routing [LW · GW]

- Critiques: Chan [LW · GW], Soares [LW · GW], Miller [LW · GW], Lang [LW · GW], Kwa [LW · GW], Herd [LW(p) · GW(p)], Rishika [LW · GW], tailcalled [LW · GW]

- Funded by: Open Philanthropy (via funding of MATS), EA funds, Manifund

- Publicly-announced funding 2023-4: >$581,458

Dovetail research [LW · GW]

- One-sentence summary: Formalize key ideas (“structure”, “agency”, etc) mathematically

- Theory of change: generalize theorems → formalize agent foundations concepts like the agent structure problem → hopefully assist other projects through increased understanding

- Which orthodox alignment problems could it help with?: "intended to help make progress on understanding the nature of the problems through formalization, so that they can be avoided or postponed, or more effectively solved by other research agenda."

- Target case: pessimistic

- Broad approach: maths/philosophy

- Some names: Alex Altair, Alfred Harwood, Daniel C, Dalcy K, José Pedro Faustino

- Estimated # FTEs: 2

- Some outputs in 2024: mostly [LW · GW] exposition [LW · GW] but [LW · GW] it’s [LW · GW] early days [LW · GW]. Formalization [LW · GW].

- Critiques: not found

- Funded by: LTFF

- Publicly-announced funding 2023-4: $60,000

boundaries / membranes [LW · GW]

- One-sentence summary: Formalise one piece of morality: the causal separation between agents and their environment. See also Open Agency Architecture.

- Theory of change: Formalise (part of) morality/safety, solve outer alignment.

- Which orthodox alignment problems could it help with?: 9. Humans cannot be first-class parties to a superintelligent value handshake

- Target case: pessimistic

- Broad approach: maths/philosophy

- Some names: Chris Lakin, Andrew Critch, davidad, Evan Miyazono, Manuel Baltieri

- Estimated # FTEs: 0.5

- Some outputs in 2024: Chris posts [? · GW]

- Critiques: not found

- Funded by: ?

- Publicly-announced funding 2023-4: N/A

Understanding optimisation

- One-sentence summary: what is “optimisation power” (formally), how do we build tools that track it, and how relevant is any of this anyway. See also developmental interpretability.

- Theory of change: existing theories are either rigorous OR good at capturing what we mean; let’s find one that is both → use the concept to build a better understanding of how and when an AI might get more optimisation power. Would be nice if we could detect or rule out speculative stuff like gradient hacking too.

- Which orthodox alignment problems could it help with?: 5. Instrumental convergence

- Target case: pessimistic

- Broad approach: maths/philosophy

- Some names: Alex Flint, Guillaume Corlouer, Nicolas Macé

- Estimated # FTEs: 1-10

- Some outputs in 2024: Degeneracies are sticky for SGD [AF · GW]

- Critiques: not found.

- Funded by: CLR, EA funds

- Publicly-announced funding 2023-4: N/A.

Corrigibility

(Figuring out how we get superintelligent agents to keep listening to us. Arguably the scalable oversight agendas are ~atheoretical approaches to this.)

- One-sentence summary: predict properties of AGI (e.g. powerseeking) with formal models. Corrigibility as the opposite of powerseeking.

- Theory of change: figure out hypotheses about properties powerful agents will have → attempt to rigorously prove under what conditions the hypotheses hold, test them when feasible.

- See also this [LW · GW], EJT, Dupuis [LW · GW], Holtman.

- Which orthodox alignment problems could it help with?: 2. Corrigibility is anti-natural, 5. Instrumental convergence

- Target case: worst-case

- Broad approach: maths/philosophy

- Some names: Michael K. Cohen, Max Harms/Raelifin, John Wentworth, David Lorell, Elliott Thornley

- Estimated # FTEs: 1-10

- Some outputs in 2024: CAST: Corrigibility As Singular Target [? · GW], A Shutdown Problem Proposal [AF · GW], The Shutdown Problem: Incomplete Preferences as a Solution [AF · GW]

- Critiques: none found.

- Funded by: ?

- Publicly-announced funding 2023-4: ?

Ontology Identification

(Figuring out how AI agents think about the world and how to get superintelligent agents to tell us what they know. Much of interpretability is incidentally aiming at this. See also latent knowledge.)

Natural abstractions [AF · GW]

- One-sentence summary: check the hypothesis that our universe “abstracts well” and that many cognitive systems learn to use similar abstractions. Check if features correspond to small causal diagrams corresponding to linguistic constructions.

- Theory of change: find all possible abstractions of a given computation → translate them into human-readable language → identify useful ones like deception → intervene when a model is using it. Also develop theory for interp more broadly; more mathematical analysis. Also maybe enables “retargeting the search” (direct training away from things we don’t want).

- See also: causal abstractions, representational alignment, convergent abstractions

- Which orthodox alignment problems could it help with?: 5. Instrumental convergence, 7. Superintelligence can fool human supervisors, 9. Humans cannot be first-class parties to a superintelligent value handshake

- Target case: worst-case

- Broad approach: cognitive

- Some names: John Wentworth, Paul Colognese, David Lorell, Sam Eisenstat

- Estimated # FTEs: 1-10

- Some outputs in 2024: Natural Latents: The Concepts [LW · GW], Natural Latents Are Not Robust To Tiny Mixtures [LW · GW], Towards a Less Bullshit Model of Semantics [LW · GW]

- Critiques: Chan et al [LW · GW], Soto [LW · GW], Harwood [LW · GW], Soares [AF · GW]

- Funded by: EA Funds

- Publicly-announced funding 2023-4: N/A?

ARC Theory: Formalizing heuristic arguments

- One-sentence summary: mech interp plus formal verification. Formalize mechanistic explanations of neural network behavior, so as to predict when novel inputs may lead to anomalous behavior.

- Theory of change: find a scalable method to predict when any model will act up. Very good coverage of the group’s general approach here [LW · GW].

- See also: ELK, mechanistic anomaly detection.

- Which orthodox alignment problems could it help with?: 4. Goals misgeneralize out of distribution, 8. Superintelligence can hack software supervisors

- Target case: worst-case

- Broad approach: cognitive, maths/philosophy

- Some names: Jacob Hilton, Mark Xu, Eric Neyman, Dávid Matolcsi, Victor Lecomte, George Robinson

- Estimated # FTEs: 1-10

- Some outputs in 2024: Estimating tail risk, Towards a Law of Iterated Expectations for Heuristic Estimators, Probabilities of rare outputs, Bird’s eye overview [AF · GW], Formal verification [LW · GW]

- Critiques: Vaintrob [LW(p) · GW(p)]. Clarification [LW · GW], alternative [LW · GW] formulation

- Funded by: FLI, SFF

- Publicly-announced funding 2023-4: $1.7m

Understand cooperation

(Figuring out how inter-AI and AI/human game theory should or would work.)

Pluralistic alignment / collective intelligence [LW · GW]

- One-sentence summary: align AI to broader values / use AI to understand and improve coordination among humans.

- Theory of change: focus on getting more people and values represented.

- See also: AI Objectives Institute, Lightcone Chord, Intelligent Cooperation, Meaning Alignment Institute. See also AI-AI Bias.

- Which orthodox alignment problems could it help with?: 11. Someone else will deploy unsafe superintelligence first, 13. Fair, sane pivotal processes

- Target case: optimistic

- Broad approach: engineering?

- Some names: Yejin Choi, Seth Lazar, Nouha Dziri, Deger Turan, Ivan Vendrov, Jacob Lagerros

- Estimated # FTEs: 10-50

- Some outputs in 2024: roadmap, workshop

- Critiques: none found

- Funded by: Foresight, Midjourney?

- Publicly-announced funding 2023-4: N/A

Center on Long-Term Risk (CLR)

- One-sentence summary: future agents creating s-risks is the worst of all possible problems; we should avoid that.

- Theory of change: make present and future AIs inherently cooperative via improving theories of cooperation and measuring properties related to catastrophic conflict.

- See also: FOCAL

- Which orthodox alignment problems could it help with?: 1. Value is fragile and hard to specify, 3. Pivotal processes require dangerous capabilities, 4. Goals misgeneralize out of distribution

- Target case: worst-case

- Broad approach: maths/philosophy

- Some names: Jesse Clifton, Caspar Oesterheld, Anthony DiGiovanni, Maxime Riché, Mia Taylor

- Estimated # FTEs: 10-50

- Some outputs in 2024: Measurement Research Agenda, Computing Optimal Commitments to Strategies and Outcome-conditional Utility Transfers

- Critiques: none found

- Funded by: Polaris Ventures, Survival and Flourishing Fund, Community Foundation Ireland

- Publicly-announced funding 2023-4: $1,327,000

- One-sentence summary: make sure advanced AI uses what we regard as proper game theory.

- Theory of change: (1) keep the pre-superintelligence world sane by making AIs more cooperative; (2) remain integrated in the academic world, collaborate with academics on various topics and encourage their collaboration on x-risk; (3) hope that work on “game theory for AIs”, which emphasises cooperation and benefit to humans, has framing & founder effects on the new academic field.

- Which orthodox alignment problems could it help with?: 1. Value is fragile and hard to specify, 10. Humanlike minds/goals are not necessarily safe

- Target case: pessimistic

- Broad approach: maths/philosophy

- Some names: Vincent Conitzer, Caspar Oesterheld, Vojta Kovarik

- Estimated # FTEs: 1-10

- Some outputs in 2024: Foundations of Cooperative AI, A dataset of questions on decision-theoretic reasoning in Newcomb-like problems, Why should we ever automate moral decision making?, Social Choice Should Guide AI Alignment in Dealing with Diverse Human Feedback

- Critiques: Self-submitted: “our theory of change is not clearly relevant to superintelligent AI”.

- Funded by: Cooperative AI Foundation, Polaris Ventures

- Publicly-announced funding 2023-4: N/A

Alternatives to utility theory in alignment

- Some disparate papers we noticed pointing in the same direction:

See also: Chris Leong's Wisdom Explosion

6. Miscellaneous

(those hard to classify, or those making lots of bets rather than following one agenda)

AE Studio

- One-sentence summary: try out a lot of fast high-variance safety ideas that could bear fruit even if timelines are short.

- Theory of change: The 'Neglected Approaches' Approach: AE Studio's Alignment Agenda [LW · GW]

- Which orthodox alignment problems could it help with?: mixed.

- Target case: pessimistic

- Broad approach: mixed.

- Some names: Judd Rosenblatt, Marc Carauleanu, Diogo de Lucena, Cameron Berg

- Estimated # FTEs: 1-10

- Some outputs in 2024: Self-Other Overlap [AF · GW], Self-prediction acts as an emergent regularizer [AF · GW], Survey for alignment researchers [AF · GW], Reason-based deception

- Critiques: not found.

- Funded by: the host design consultancy

- Publicly-announced funding 2023-4: N/A

Anthropic Alignment Capabilities / Alignment Science / Assurance / Trust & Safety / RSP Evaluations

- One-sentence summary: remain ahead of the capabilities curve/maintain ability to figure out what’s up with state of the art models, keep an updated risk profile, propagate flaws to relevant parties as they are discovered.

- Theory of change [LW(p) · GW(p)]: “hands-on experience building safe and aligned AI… We'll invest in mechanistic interpretability because solving that would be awesome, and even modest success would help us detect risks before they become disasters. We'll train near-cutting-edge models to study how interventions like RL from human feedback and model-based supervision succeed and fail, iterate on them, and study how novel capabilities emerge as models scale up. We'll also share information so policy-makers and other interested parties can understand what the state of the art is like, and provide an example to others of how responsible labs can do safety-focused research.”

- Which orthodox alignment problems could it help with?: 7. Superintelligence can fool human supervisors, 13. Fair, sane pivotal processes

- Target case: mixed

- Broad approach: mixed

- Some names: Evan Hubinger, Monte MacDiarmid

- Estimated # FTEs: 10-50

- Some outputs in 2024: Updated Responsible Scaling Policy, collaboration with U.S. AISI

- Critiques: RSPs [EA · GW], Zach Stein-Perlman [LW · GW]

- Funded by: Amazon, Stackpoint, Google, Menlo Ventures, Wisdom Ventures, Ripple Impact Investments, Factorial Fund, Mubadala, Jane Street, HOF Capital, the Ford Foundation, Fidelity.

- Publicly-announced funding 2023-4: $4 billion (safety fraction unknown)

- One-sentence summary: high volume of experiments from research sprints, interpretability, benchmarks

- Theory of change: make open-source research on AI safety easier to publish + act as a coordination point

- Which orthodox alignment problems could it help with?: 1. Value is fragile and hard to specify, 7. Superintelligence can fool human supervisors

- Target case: mixed

- Broad approach: behavioural/engineering

- Some names: Jason Schreiber, Natalia Pérez-Campanero Antolín, Esben Kran

- Estimated # FTEs: 1-10

- Some outputs in 2024:

- Benchmarks:

- Interpretability:

- Deception

- Critiques: none found.

- Funded by: LTFF, Survival and Flourishing Fund, Manifund

- Publicly-announced funding 2023-4: $643,500

Apollo Research

- One-sentence summary: model evaluations and conceptual work on deceptive alignment. Also an interp agenda (decompose NNs into components more carefully and in a more computation-compatible way than SAEs). Also deception evals in major labs.

- Theory of change: “Conduct foundational research in interpretability and behavioural model evaluations, audit real-world models for deceptive alignment, support policymakers with our technical expertise where needed.”

- Which orthodox alignment problems could it help with?: 2. Corrigibility is anti-natural, 4. Goals misgeneralize out of distribution

- Target case: pessimistic

- Broad approach: behavioural/cognitive

- Some names: Marius Hobbhahn, Lee Sharkey, Lucius Bushnaq, Mikita Balesni

- Estimated # FTEs: 10-50

- Some outputs in 2024: The first year of Apollo research

- Evals:

- Interpretability:

- Control

- Critiques: various people disliked the scheming experiment, mostly because of others exaggerating it.

- Funded by: Open Philanthropy, Survival and Flourishing Fund

- Publicly-announced funding 2023-4: $4,885,349

- One-sentence summary: ?

- Theory of change: ?

- Some names: Andrew Gritsevskiy, Joseph M. Cavanagh, Aaron Kirtland, Derik Kauffman

- Some outputs in 2024: REBUS: A Robust Evaluation Benchmark of Understanding Symbols, Unelicitable Backdoors in Language Models via Cryptographic Transformer Circuits, cogsci

Center for AI Safety (CAIS)

- One-sentence summary: do what needs doing, any type of work

- Theory of change: make the field more credible. Make really good benchmarks, integrate academia into the field, advocate for safety standards and help design legislation.

- Which orthodox alignment problems could it help with?: 1. Value is fragile and hard to specify, 10. Humanlike minds/goals are not necessarily safe, 13. Fair, sane pivotal processes

- Target case: mixed

- Broad approach: mixed

- Some names: Dan Hendrycks, Andy Zou, Mantas Mazeika, Jacob Steinhardt, Dawn Song (some of these are not full-time at CAIS though).

- Estimated # FTEs: 10-50

- Some outputs in 2024: WMDP, Circuit Breakers, Safetywashing [AF · GW], HarmBench, Tamper-Resistant Safeguards for Open-Weight LLMs

- Critiques: various people hated SB1047

- Funded by: Open Philanthropy, Survival and Flourishing Fund, Future of Life Institute

- Publicly-announced funding 2023-4: $9,800,854

- See also the reward learning and provably safe systems entries.

DeepMind Alignment Team [AF · GW]

- One-sentence summary: theory generation, threat modelling, and toy methods to help with those. “Our main threat model is basically a combination of specification gaming and goal misgeneralisation leading to misaligned power-seeking.” See announcement post [AF · GW] for full picture.

- Theory of change: direct the training process towards aligned AI and away from misaligned AI: build enabling tech to ease/enable alignment work → apply said tech to correct missteps in training non-superintelligent agents → keep an eye on it as capabilities scale to ensure the alignment tech continues to work.

- See also (in this document): Process-based supervision, Red-teaming, Capability evaluations, Mechanistic interpretability, Goal misgeneralisation, Causal alignment/incentives

- Which orthodox alignment problems could it help with?: 4. Goals misgeneralize out of distribution, 7. Superintelligence can fool human supervisors

- Target case: pessimistic

- Broad approach: engineering

- Some names: Rohin Shah, Anca Dragan, Allan Dafoe, Dave Orr, Sebastian Farquhar

- Estimated # FTEs: ?

- Some outputs in 2024: AGI Safety and Alignment at Google DeepMind: A Summary of Recent Work [LW · GW]

- Critiques: Zvi

- Funded by: Google

- Publicly-announced funding 2023-4: N/A

Elicit

- One-sentence summary: “(a) improved reasoning of AI governance & alignment researchers, particularly on long-horizon tasks and (b) pushing supervision of process rather than outcomes, which reduces the optimisation pressure on imperfect proxy objectives leading to ‘safety by construction’.”

- Theory of change: “The two main impacts of Elicit on AI Safety are improving epistemics and pioneering process supervision.”

- One-sentence summary: a science of robustness / fault-tolerant alignment is their stated aim, but they also publish a lot of interpretability papers and other work.

- Theory of change: make AI systems less exploitable and so prevent one obvious failure mode of helper AIs / superalignment / oversight: attacks on what is supposed to prevent attacks. In general, work on overlooked safety research others don’t do for structural reasons: too big for academia or independents, but not totally aligned with the interests of the labs (e.g. prototyping moonshots, embarrassing issues with frontier models).

- Some names: Adrià Garriga-Alonso, Adam Gleave, Chris Cundy, Mohammad Taufeeque, Kellin Pelrine

- Estimated # FTEs: 25

- Some outputs in 2024: Effects of Scale on Language Model Robustness, Data Poisoning in LLMs, InterpBench: Semi-Synthetic Transformers for Evaluating Mechanistic Interpretability Techniques, Planning in an RNN that plays Sokoban. See also: Vienna Alignment Workshop 2024

- Critiques: tangential from Demski [LW · GW]

- Funded by: Open Philanthropy, Survival and Flourishing Fund, Future of Life Institute

- Publicly-announced funding 2023-4: $10,260,827

Krueger Lab Mila

- One-sentence summary: misc. Understand Goodhart’s law; reward learning 2.0; demonstrating safety failures; understand DL generalization / learning dynamics.

- Theory of change: misc. Improve theory and demos while steering policy away from AGI risk.

- Which orthodox alignment problems could it help with?: 1. Value is fragile and hard to specify, 4. Goals misgeneralize out of distribution

- Target case: mixed

- Broad approach: mixed

- Some names: David Krueger, Alan Chan, Ethan Caballero

- Estimated # FTEs: 1-10

- Some outputs in 2024: Towards Reliable Evaluation of Behavior Steering Interventions in LLMs, Enhancing Neural Network Interpretability with Feature-Aligned Sparse Autoencoders

- Critiques: none found.

- Funded by: ?

- Publicly-announced funding 2023-4: N/A

- Now a governance org – out of scope for us but here’s what they’ve been working on.

- One-sentence summary: funds academics or near-academics to do ~classical safety engineering on AIs. A collaboration between the NSF and OpenPhil. Projects include:

- “Neurosymbolic Multi-Agent Systems”

- “Conformal Safe Reinforcement Learning”

- “Autonomous Vehicles”

- Theory of change: apply safety engineering principles from other fields to AI safety.

- Which orthodox alignment problems could it help with?: 4. Goals misgeneralize out of distribution

- Target case: pessimistic

- Broad approach: engineering

- Some names: Dan Hendrycks, Sharon Li

- Estimated # FTEs: 10+

- Some outputs in 2024: Generalized Out-of-Distribution Detection: A Survey, Alignment as Reward-Guided Search

- See also: Artificial Intelligence in Safety-critical Systems: A Systematic Review

- Critiques: zoop [LW(p) · GW(p)]

- Funded by: Open Philanthropy

- Publicly-announced funding 2023-4: $18m granted in 2024.

OpenAI Superalignment Safety Systems

- See also: weak-to-strong generalization, automated alignment researcher.

- Some outputs: MLE-Bench

- # FTEs: “80”. But this includes lots working on bad-words prevention and copyright-violation prevention.

- They just lost Lilian Weng, their VP of safety systems.

OpenAI Alignment Science

- use reasoning systems to prevent models from generating unsafe outputs. Unclear if this is a decoding-time thing (i.e. actually a control method) or a fine-tuning thing.

- Some names: Mia Glaese, Boaz Barak, Johannes Heidecke, Melody Guan. Lost its head, John Schulman.

- Some outputs: o1-preview system card, Deliberative Alignment

OpenAI Safety and Security Committee

- One responsibility of the new board is to act with an OODA loop 3 months long.

OpenAI AGI Readiness Mission Alignment

- No detail. Apparently a cross-function, whole-company oversight thing.

- We haven’t heard much about the Preparedness team since Mądry left it.

- Some names: Joshua Achiam.

- One-sentence summary: Fundamental research in LLM security, plus capability demos for outreach, plus workshops.

- Theory of change: control is much easier if we can secure the datacenter / if hacking becomes much harder. The cybersecurity community need to be alerted.